Network Sharing

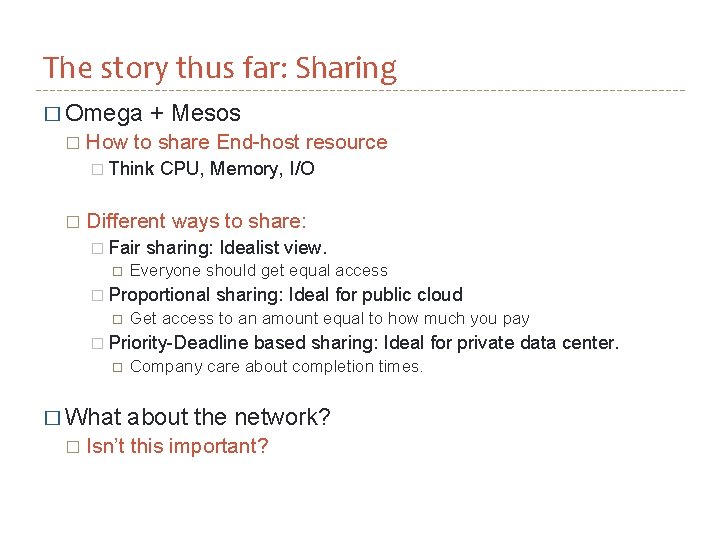

The story thus far: Sharing

- Omega + Mesos: how to share end-host resources
  - Think CPU, memory, I/O
- Different ways to share:
  - Fair sharing: the idealist view. Everyone should get equal access.
  - Proportional sharing: ideal for public clouds. You get access to an amount equal to how much you pay.
  - Priority/deadline-based sharing: ideal for private data centers. Companies care about completion times.
- What about the network? Isn't this important?

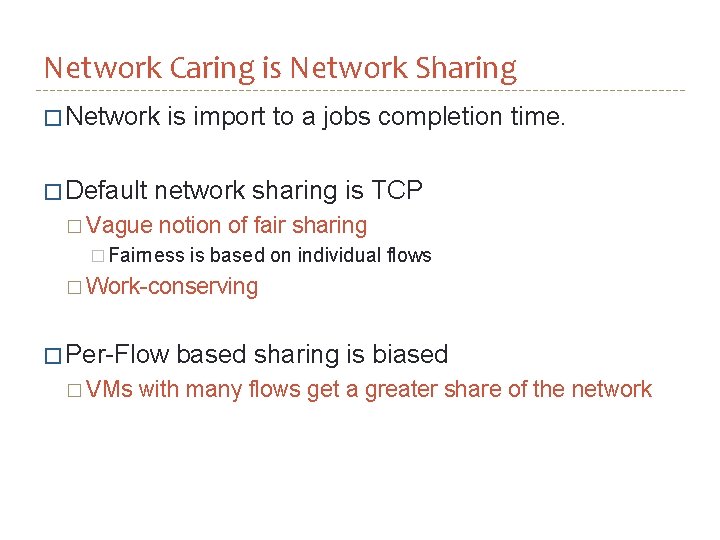

Network Caring is Network Sharing

- The network is important to a job's completion time
- Default network sharing is TCP
  - Vague notion of fair sharing
  - Fairness is based on individual flows
  - Work-conserving
- Per-flow based sharing is biased
  - VMs with many flows get a greater share of the network
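The per-flow bias can be illustrated with a small calculation. This is a minimal sketch, assuming an idealized link that TCP splits exactly equally among all flows; the function name, the VM names, and the 1000 Mbps link are hypothetical and not taken from the slides.

```python
def per_flow_shares(link_mbps, flows_per_vm):
    """Split a link equally among flows, then total the result per VM.

    Assumption: every flow receives exactly the same rate, which is an
    idealization of TCP's per-flow fairness.
    """
    total_flows = sum(flows_per_vm.values())
    per_flow = link_mbps / total_flows
    return {vm: n * per_flow for vm, n in flows_per_vm.items()}


# Hypothetical example: one VM opens 1 flow, the other opens 9.
shares = per_flow_shares(1000, {"vm_a": 1, "vm_b": 9})
print(shares)
```

Under this model the VM with nine flows ends up with nine times the bandwidth of the VM with one flow, even though both are single VMs on the same link.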

What is the best form of Network Sharing?

- Fair sharing:
  - Per-source based fairness?
    - Reducers cheat: many flows to one destination
  - Per-destination based fairness?
    - Mappers can cheat
- Fairness === Bad:
  - No one can predict anything
  - And we like things to be predictable: we like short and predictable latency
- Min-bandwidth guarantees
  - Perfect!! But:
    - Implementation can lead to inefficiency
    - How do you predict bandwidth demands?

How can you share the network?

- Endhost sharing schemes
  - Use default TCP? Never!
  - Change the hypervisor!
    - Requires virtualization
  - Change the endhost's TCP stack
    - Invasive and undesirable
- In-network sharing schemes
  - Use queues and rate-limiters
    - Limited to enforcing 7-8 different guarantees
  - Utilize ECN
    - Requires switches that support the ECN mechanism
  - Other switch modifications
    - Expensive and highly unlikely, except maybe OpenFlow

ElasticSwitch: Practical Work-Conserving Bandwidth Guarantees for Cloud Computing

Lucian Popa, Praveen Yalagandula*, Sujata Banerjee, Jeffrey C. Mogul+, Yoshio Turner, Jose Renato Santos

HP Labs, * Avi Networks, + Google

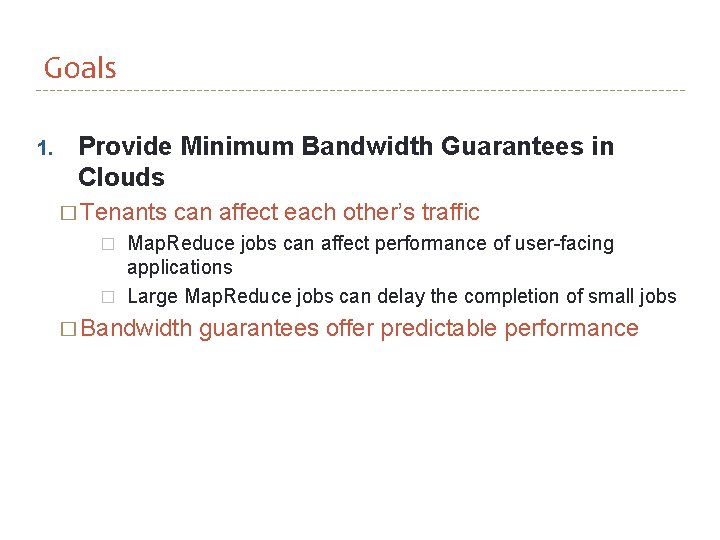

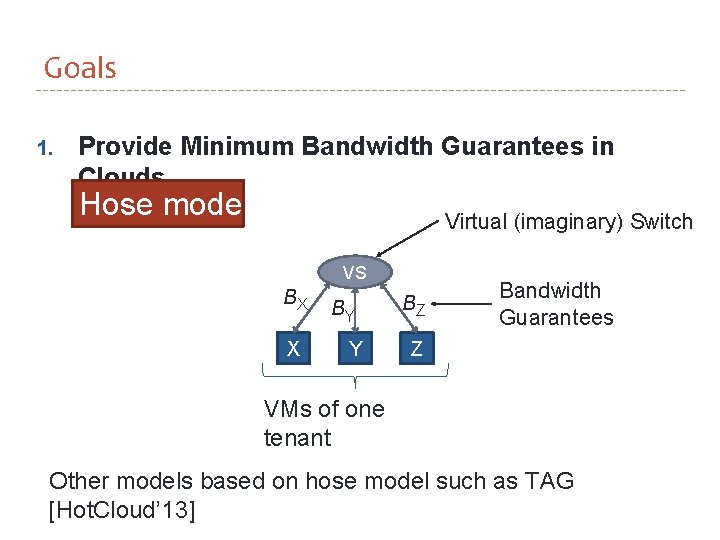

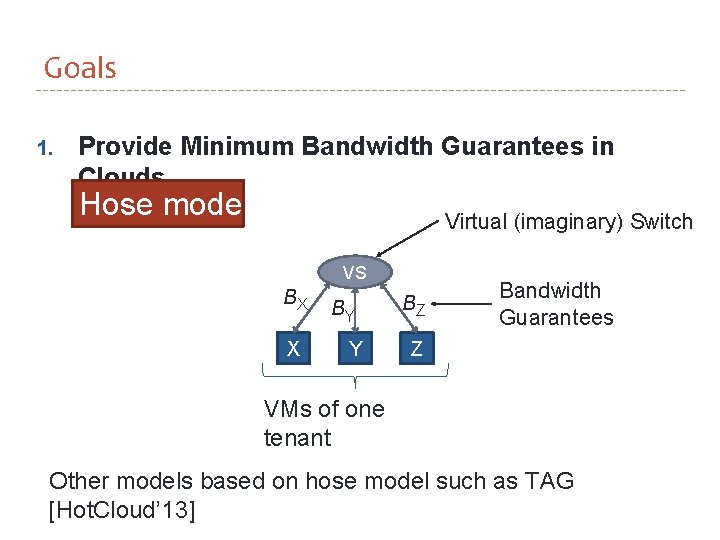

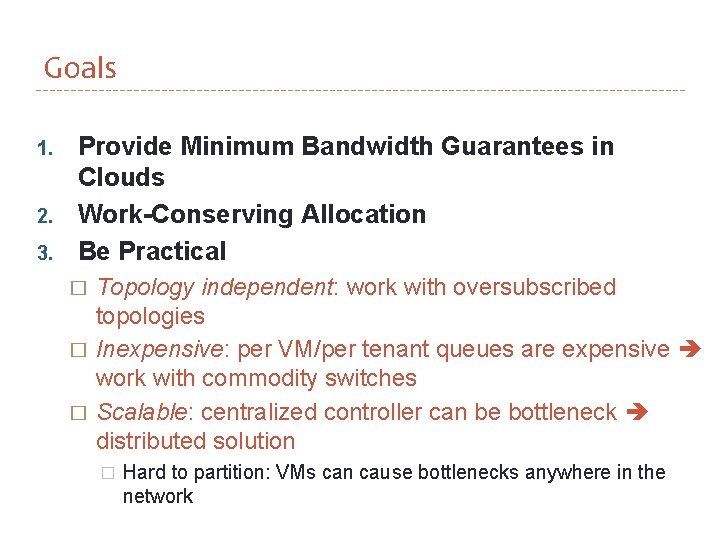

Goals

1. Provide Minimum Bandwidth Guarantees in Clouds
   - Tenants can affect each other's traffic
     - MapReduce jobs can affect performance of user-facing applications
     - Large MapReduce jobs can delay the completion of small jobs
   - Bandwidth guarantees offer predictable performance

Goals

1. Provide Minimum Bandwidth Guarantees in Clouds
   - Hose model

[Figure: the VMs of one tenant (X, Y, Z) attached to a virtual (imaginary) switch VS, with bandwidth guarantees BX, BY, BZ.]

Other models are based on the hose model, such as TAG [HotCloud'13].

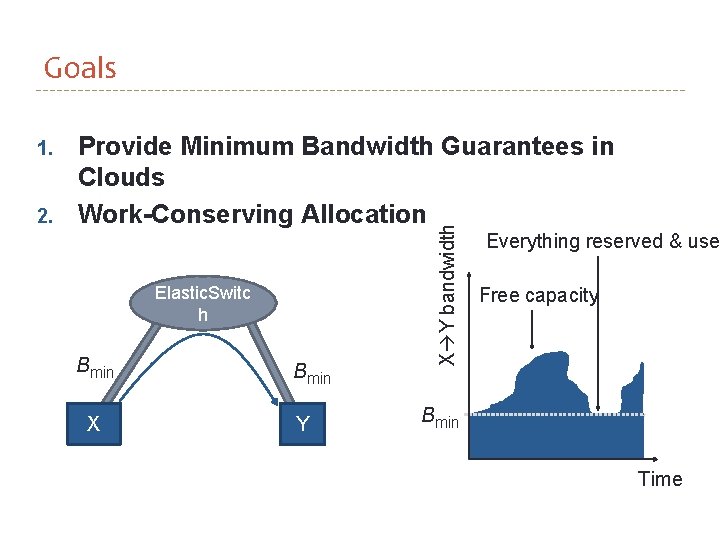

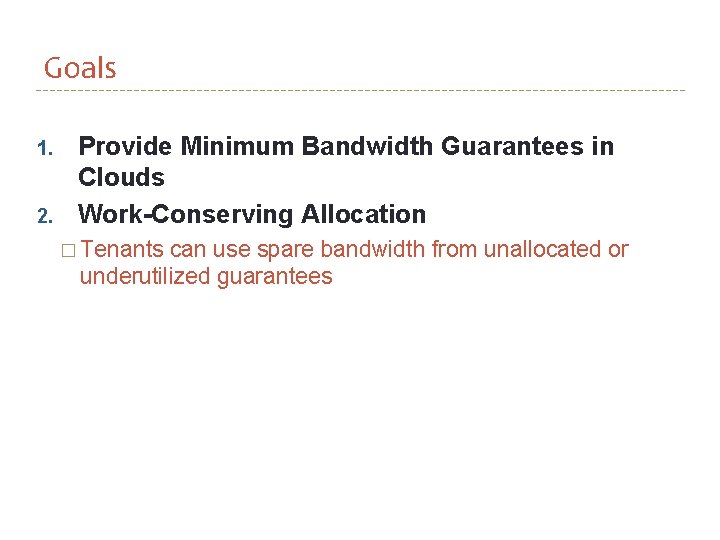

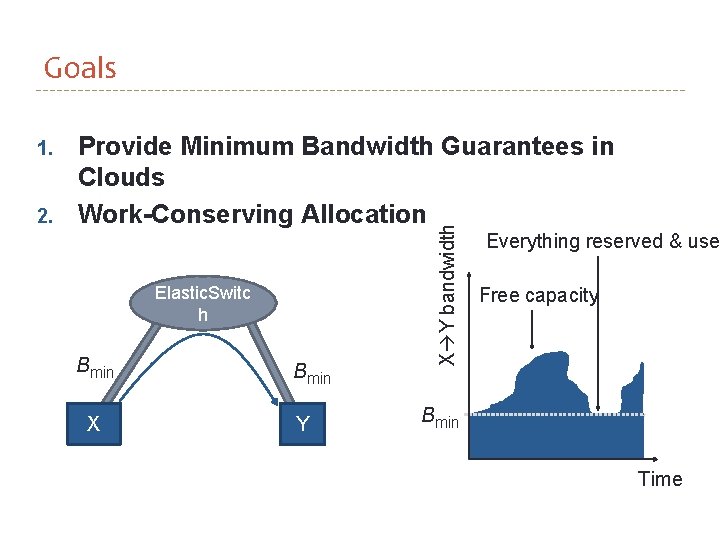

Goals

1. Provide Minimum Bandwidth Guarantees in Clouds
2. Work-Conserving Allocation
   - Tenants can use spare bandwidth from unallocated or underutilized guarantees
   - Significantly increases performance
     - Average traffic is low [IMC09, IMC10]
     - Traffic is bursty

Goals

1. Provide Minimum Bandwidth Guarantees in Clouds
2. Work-Conserving Allocation

[Figure: ElasticSwitch, bandwidth of X to Y over time relative to the minimum guarantee Bmin. Labels: "Everything reserved & used", "Free capacity".]

Goals

1. Provide Minimum Bandwidth Guarantees in Clouds
2. Work-Conserving Allocation
3. Be Practical
   - Topology independent: work with oversubscribed topologies
   - Inexpensive: per-VM/per-tenant queues are expensive, so work with commodity switches
   - Scalable: a centralized controller can be a bottleneck, so use a distributed solution
     - Hard to partition: VMs can cause bottlenecks anywhere in the network

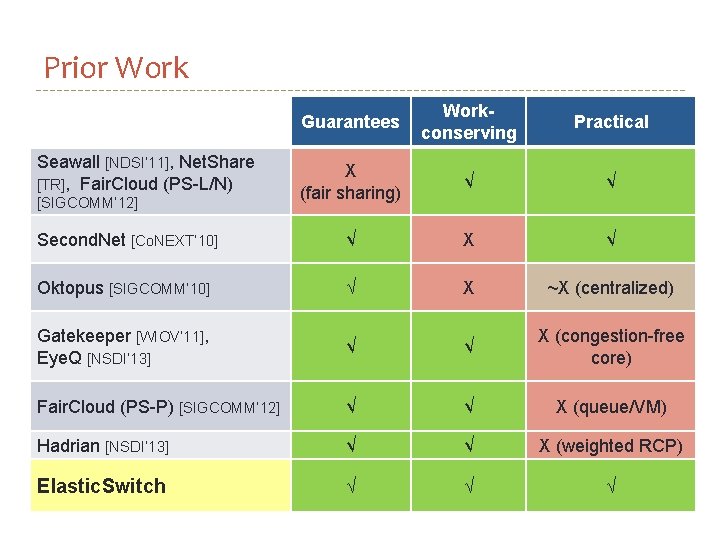

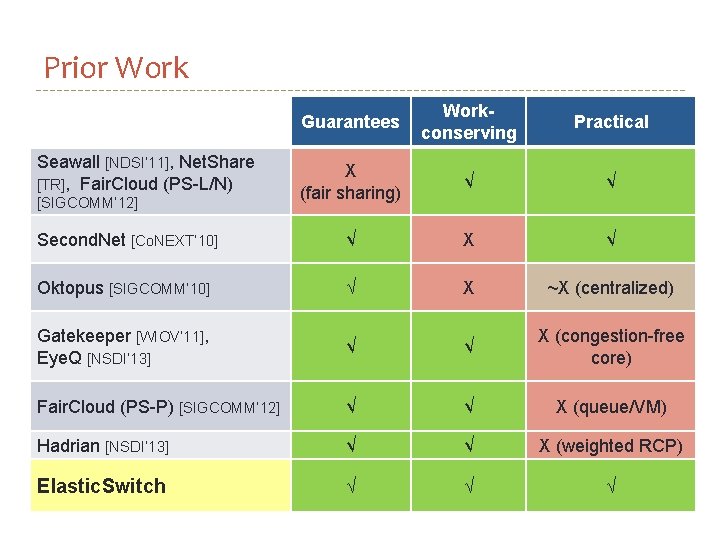

| Prior Work | Guarantees | Work-conserving | Practical |
| --- | --- | --- | --- |
| Seawall [NSDI'11], NetShare [TR], FairCloud (PS-L/N) [SIGCOMM'12] | X (fair sharing) | √ | √ |
| SecondNet [CoNEXT'10] | √ | X | √ |
| Oktopus [SIGCOMM'10] | √ | X | ~X (centralized) |
| Gatekeeper [WIOV'11], EyeQ [NSDI'13] | √ | √ | X (congestion-free core) |
| FairCloud (PS-P) [SIGCOMM'12] | √ | √ | X (queue/VM) |
| Hadrian [NSDI'13] | √ | √ | X (weighted RCP) |
| ElasticSwitch | √ | √ | √ |

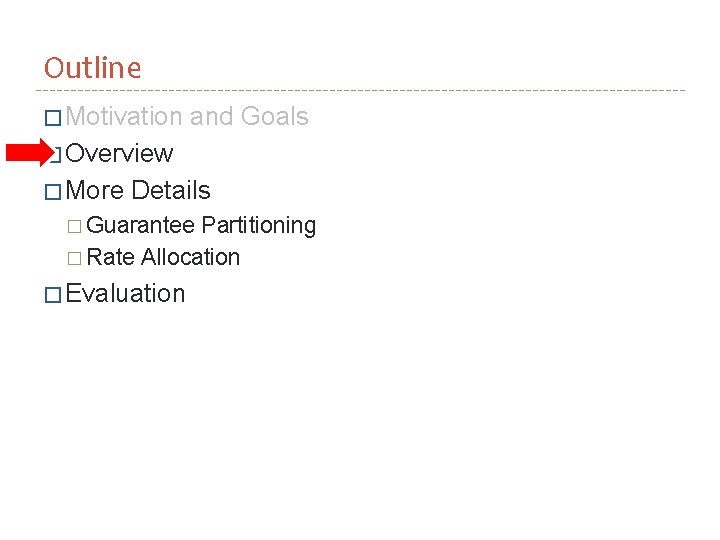

Outline

- Motivation and Goals
- Overview
- More Details
  - Guarantee Partitioning
  - Rate Allocation
- Evaluation

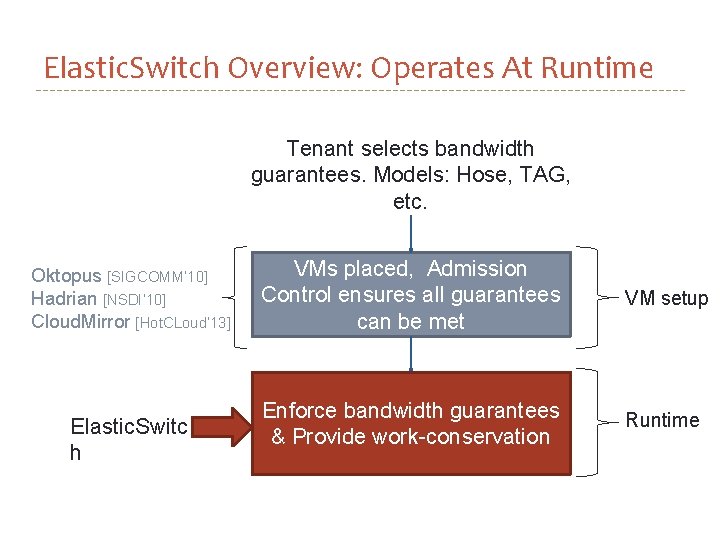

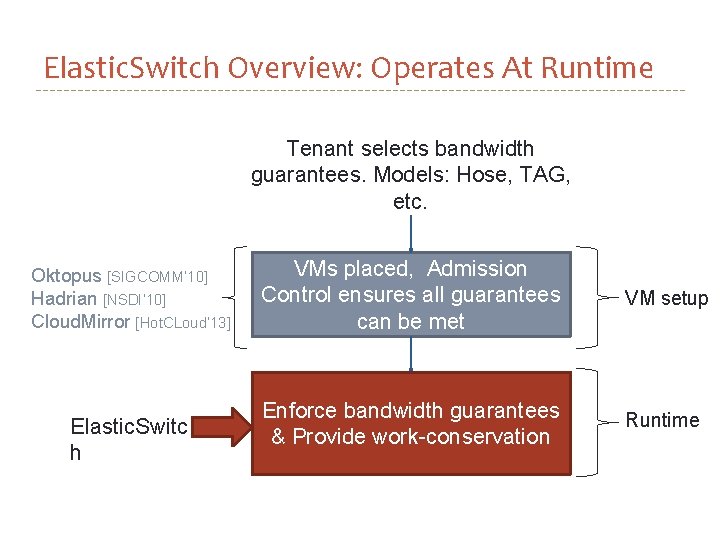

ElasticSwitch Overview: Operates At Runtime

- Tenant selects bandwidth guarantees. Models: hose, TAG, etc.
- VM setup: VMs are placed, and admission control ensures all guarantees can be met
  - Oktopus [SIGCOMM'10], Hadrian [NSDI'13], CloudMirror [HotCloud'13]
- Runtime: ElasticSwitch enforces bandwidth guarantees and provides work-conservation

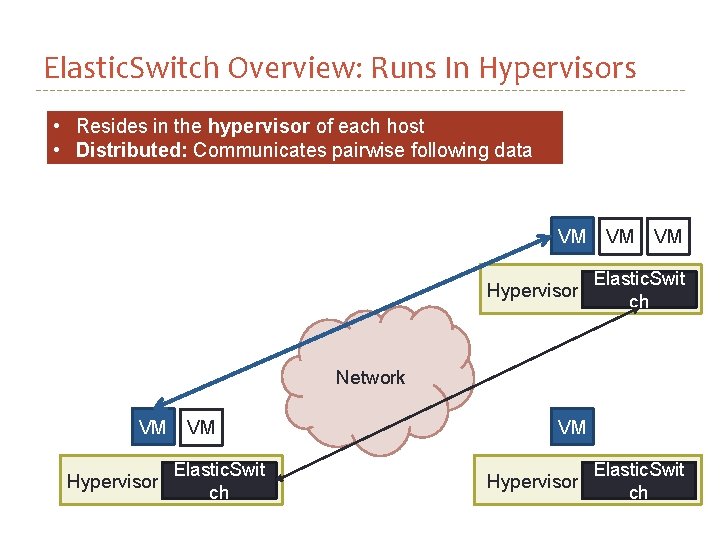

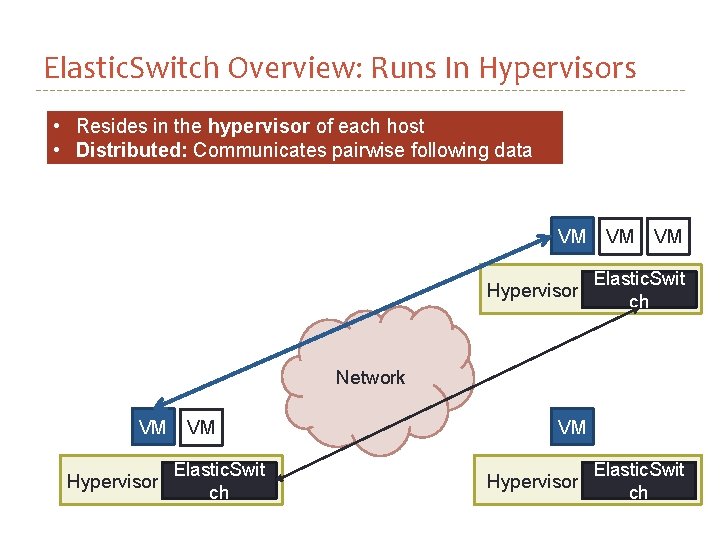

ElasticSwitch Overview: Runs In Hypervisors

- Resides in the hypervisor of each host
- Distributed: communicates pairwise following data flows

[Figure: three hosts connected by the network, each running VMs on a hypervisor that contains an ElasticSwitch instance.]

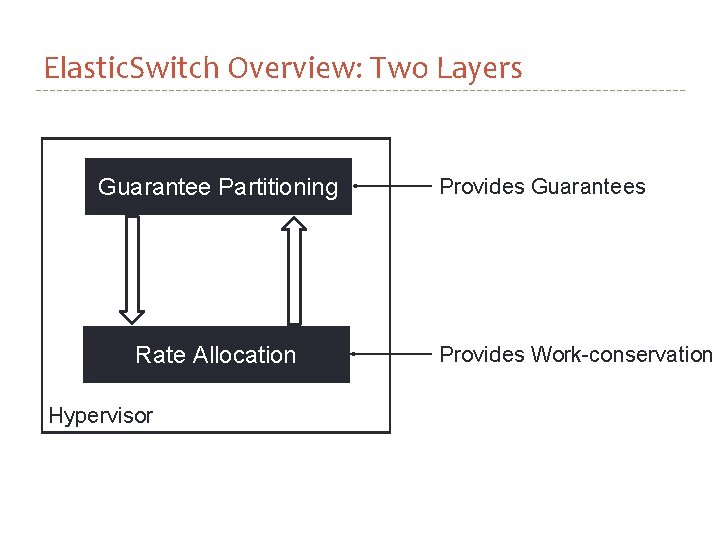

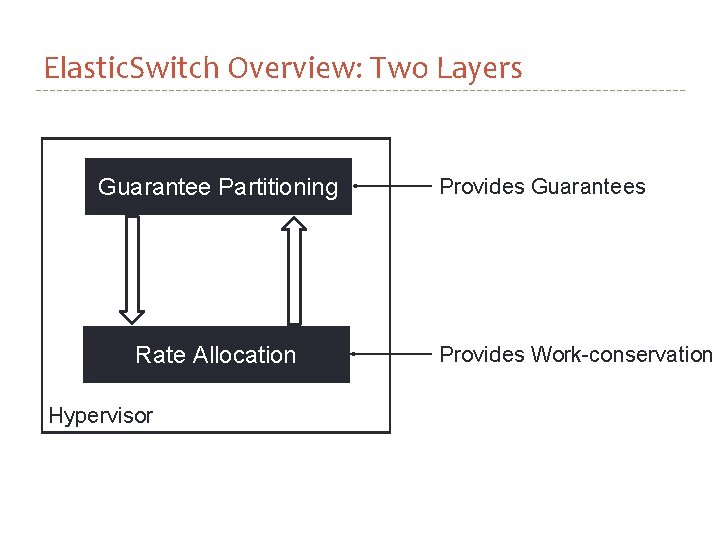

ElasticSwitch Overview: Two Layers

- Guarantee Partitioning: provides guarantees
- Rate Allocation: provides work-conservation

[Figure: both layers run inside the hypervisor.]

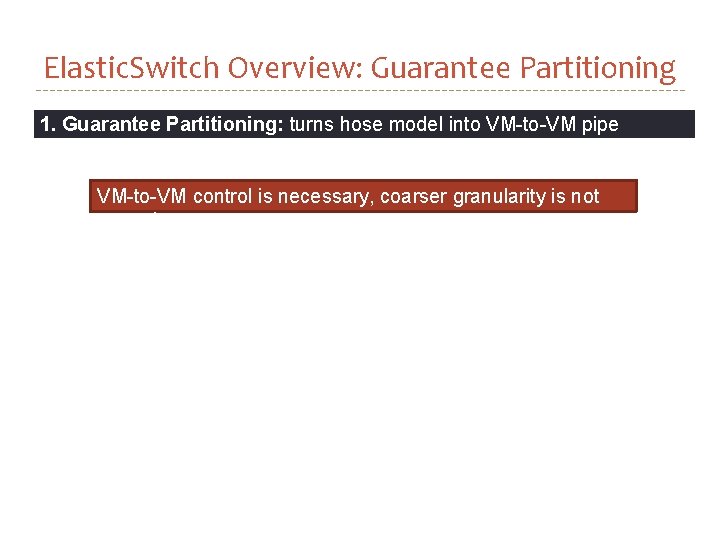

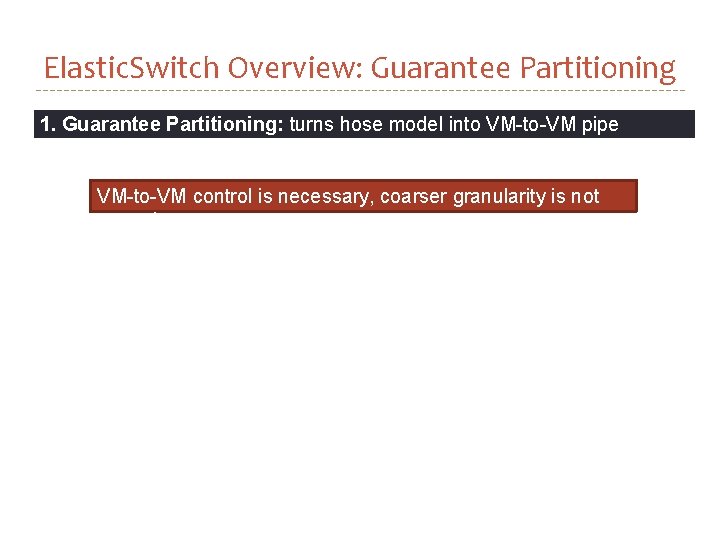

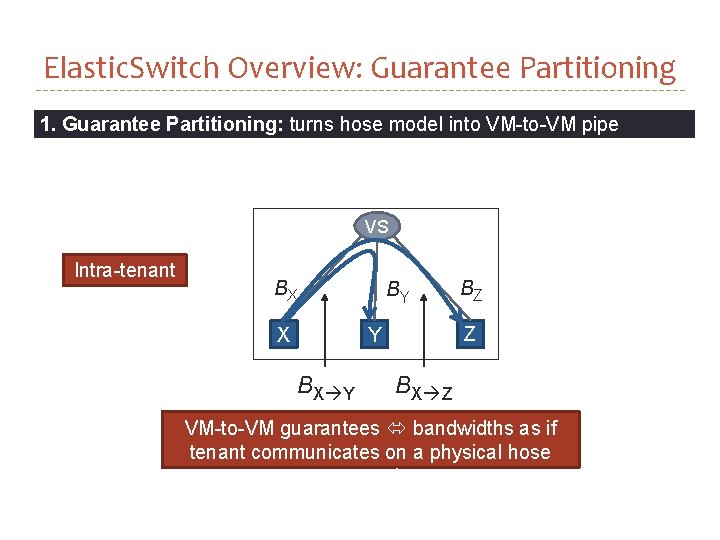

ElasticSwitch Overview: Guarantee Partitioning

1. Guarantee Partitioning: turns the hose model into VM-to-VM pipe guarantees
   - VM-to-VM control is necessary; coarser granularity is not enough

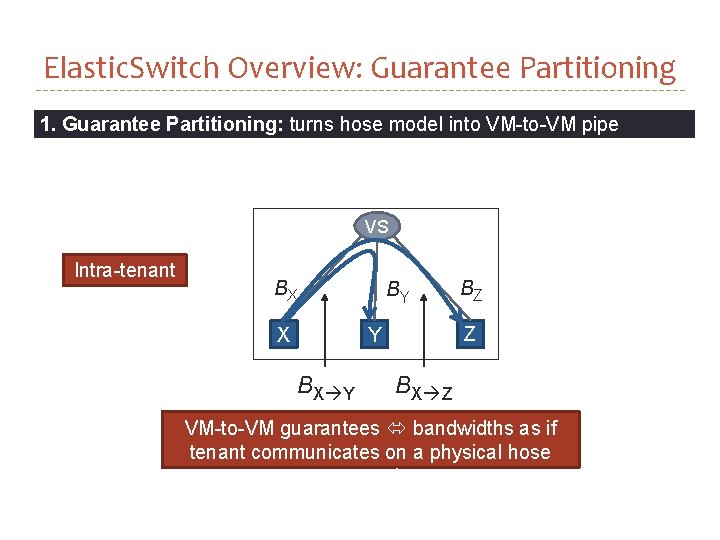

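ElasticSwitch Overview: Guarantee Partitioning

1. Guarantee Partitioning: turns the hose model into VM-to-VM pipe guarantees
   - VM-to-VM guarantees give bandwidths as if the tenant communicates on a physical hose network

[Figure: intra-tenant view. Hose guarantees $B_X$, $B_Y$, $B_Z$ on the virtual switch VS are turned into VM-to-VM guarantees $B^{X \to Y}$ and $B^{X \to Z}$.]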

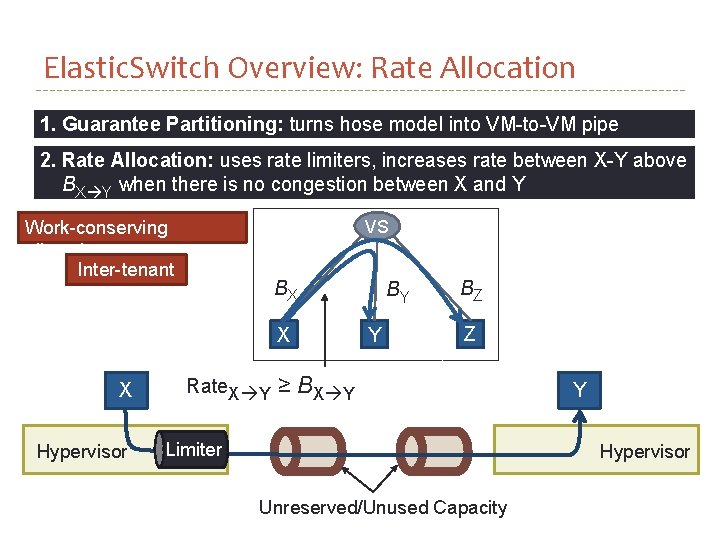

ElasticSwitch Overview: Rate Allocation

1. Guarantee Partitioning: turns the hose model into VM-to-VM pipe guarantees
2. Rate Allocation: uses rate limiters, and increases the rate between X and Y above $B^{X \to Y}$ when there is no congestion between X and Y

[Figure: inter-tenant view, work-conserving allocation. The rate limiter in X's hypervisor is set to $\mathrm{Rate}^{X \to Y} \ge B^{X \to Y}$, drawing on unreserved/unused capacity.]

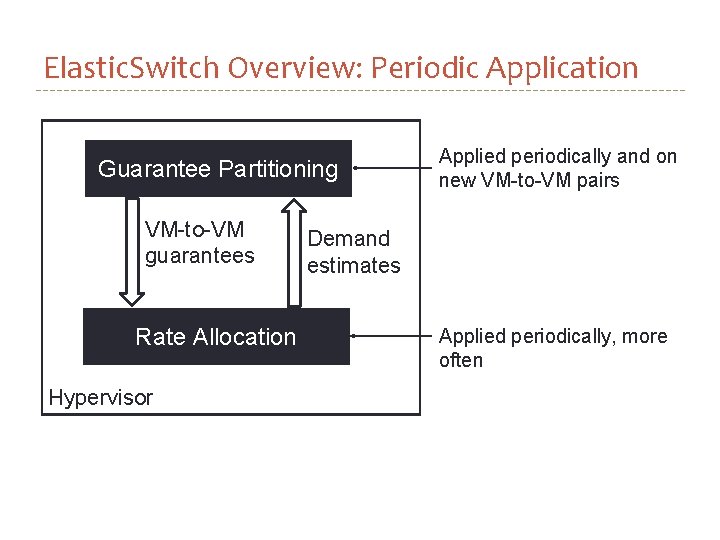

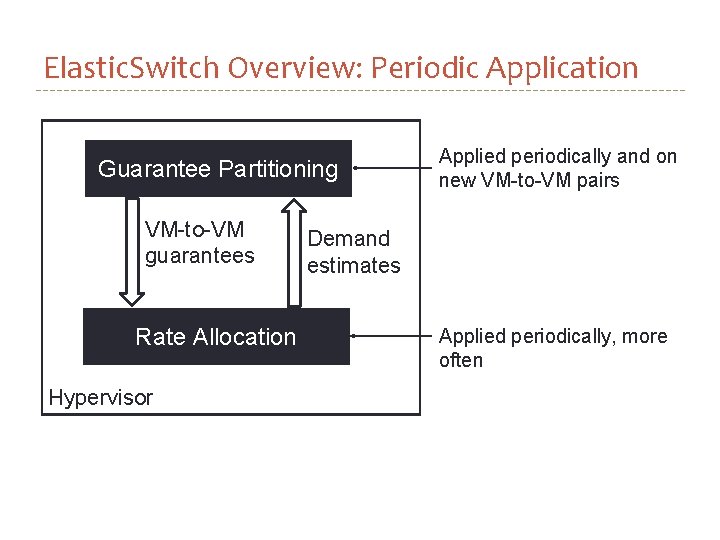

ElasticSwitch Overview: Periodic Application

- Guarantee Partitioning: applied periodically and on new VM-to-VM pairs
  - Passes VM-to-VM guarantees to Rate Allocation
- Rate Allocation: applied periodically, more often
  - Passes demand estimates to Guarantee Partitioning

[Figure: both layers run inside the hypervisor.]


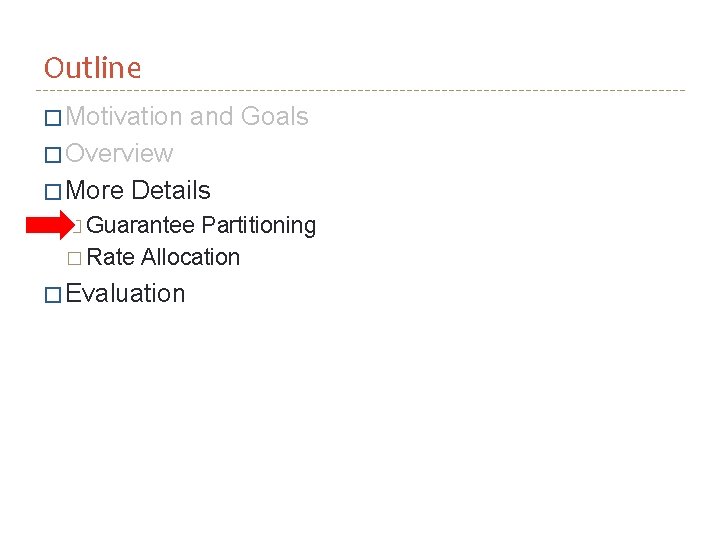

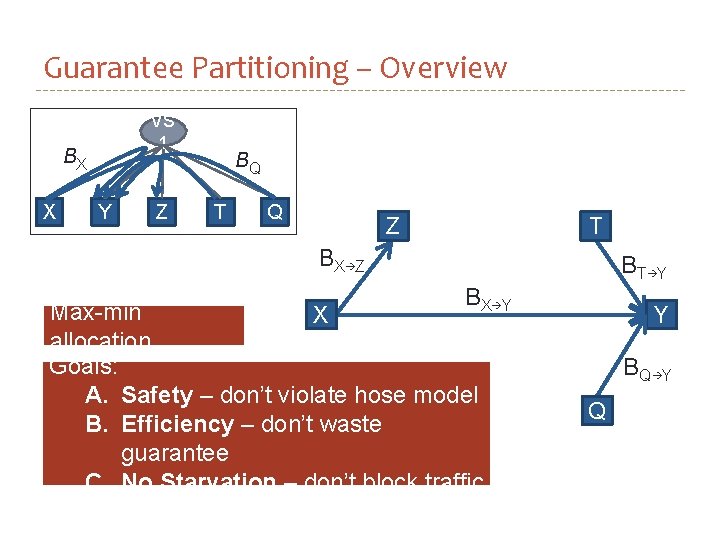

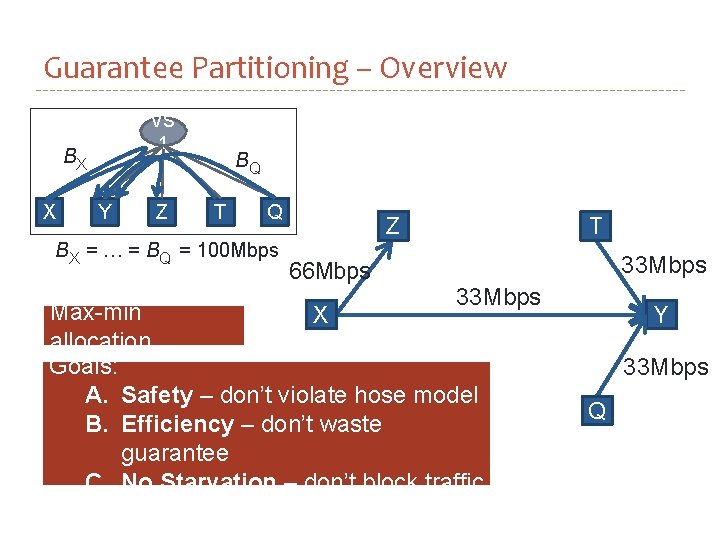

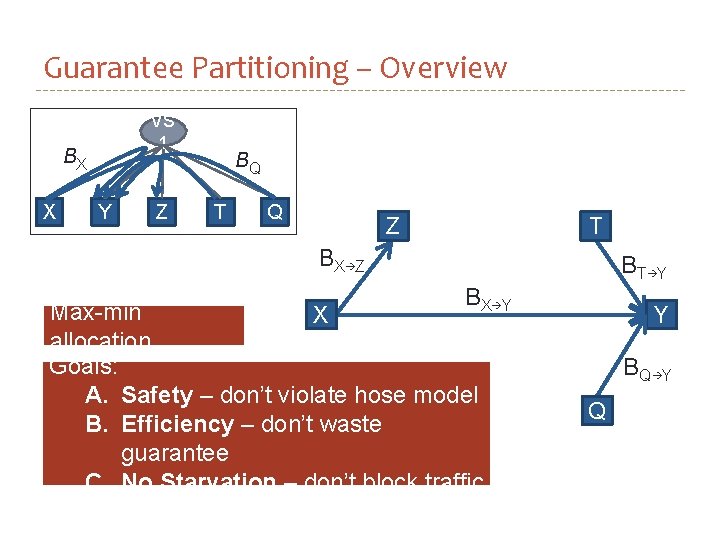

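Guarantee Partitioning – Overview

[Figure: VMs X, Y, Z, T, Q attached to virtual switch VS1 with hose guarantees $B_X$ through $B_Q$. VM-to-VM guarantees $B^{X \to Z}$, $B^{X \to Y}$, $B^{T \to Y}$, $B^{Q \to Y}$ are set by max-min allocation.]

Goals:

- A. Safety: don't violate the hose model
- B. Efficiency: don't waste guarantee
- C. No starvation: don't block traffic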

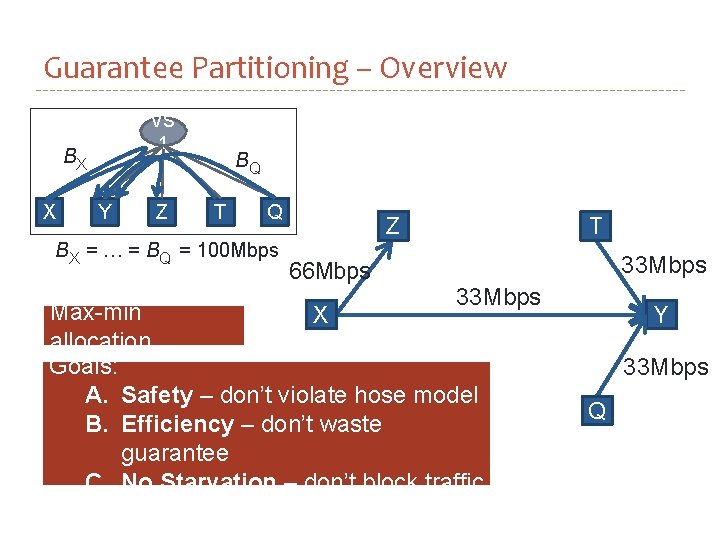

Guarantee Partitioning – Overview

[Figure: VMs X, Y, Z, T, Q on virtual switch VS1 with $B_X = \dots = B_Q = 100$ Mbps. Max-min allocation gives X→Z 66 Mbps, and X→Y, T→Y, Q→Y 33 Mbps each.]

Goals:

- A. Safety: don't violate the hose model
- B. Efficiency: don't waste guarantee
- C. No starvation: don't block traffic

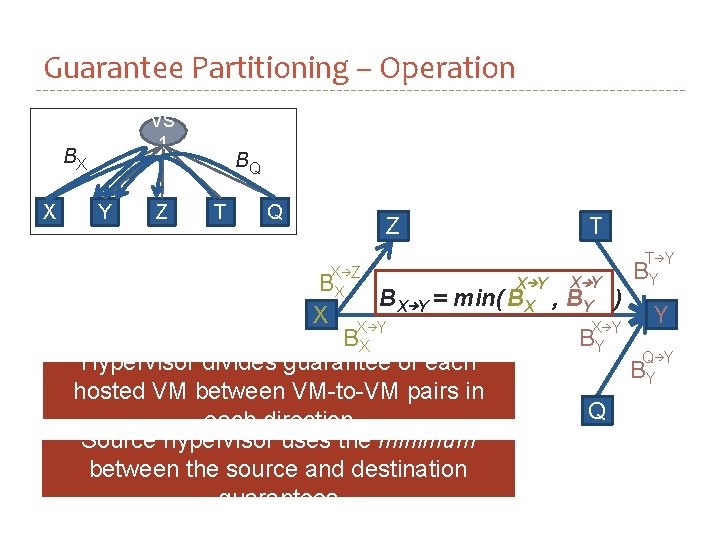

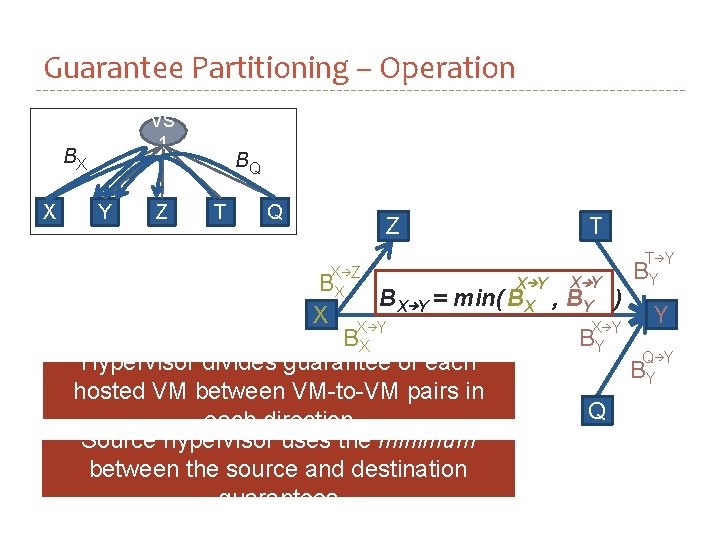

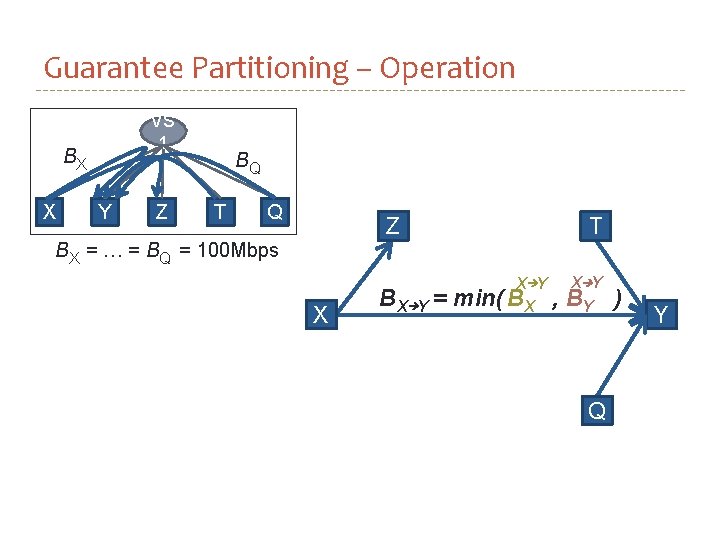

Guarantee Partitioning – Operation

$B^{X \to Y} = \min(B_X^{X \to Y}, B_Y^{X \to Y})$

- The hypervisor divides the guarantee of each hosted VM between VM-to-VM pairs in each direction
- The source hypervisor uses the minimum of the source and destination guarantees

[Figure: VMs X, Y, Z, T, Q on virtual switch VS1. X's hypervisor assigns $B_X^{X \to Y}$; Y's hypervisor assigns $B_Y^{X \to Y}$, $B_Y^{T \to Y}$, $B_Y^{Q \to Y}$.]

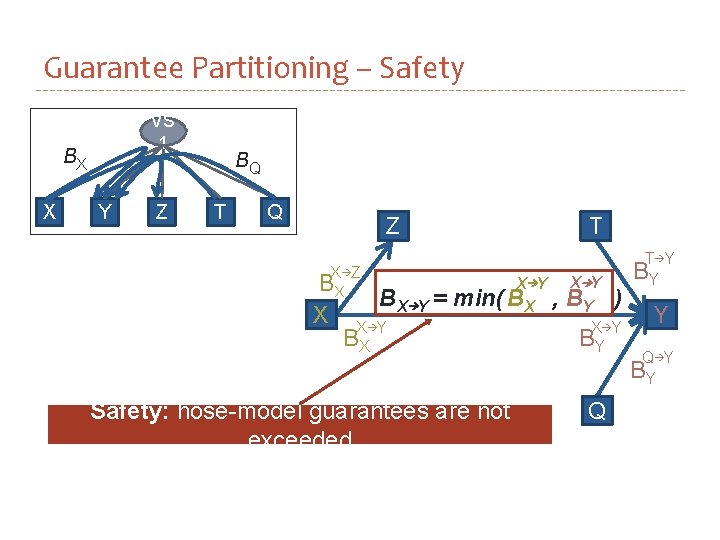

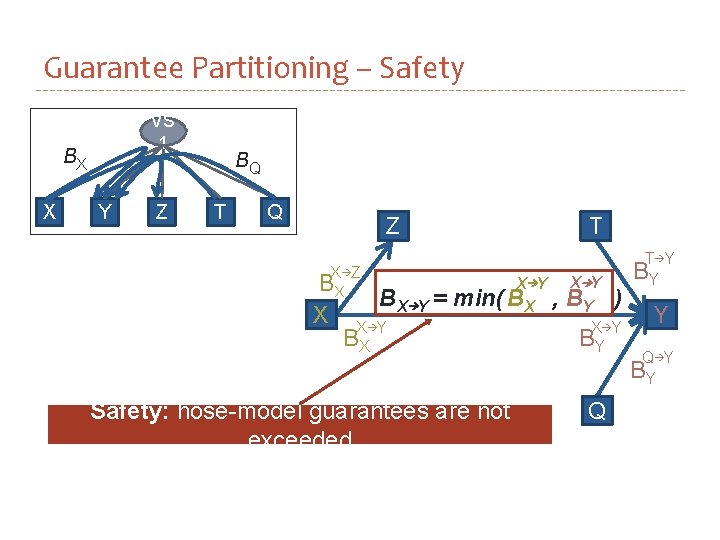

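Guarantee Partitioning – Safety

$B^{X \to Y} = \min(B_X^{X \to Y}, B_Y^{X \to Y})$

Safety: hose-model guarantees are not exceeded.

[Figure: VMs X, Y, Z, T, Q on virtual switch VS1 with per-pair shares $B_X^{X \to Y}$, $B_Y^{X \to Y}$, $B_Y^{T \to Y}$, $B_Y^{Q \to Y}$.]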

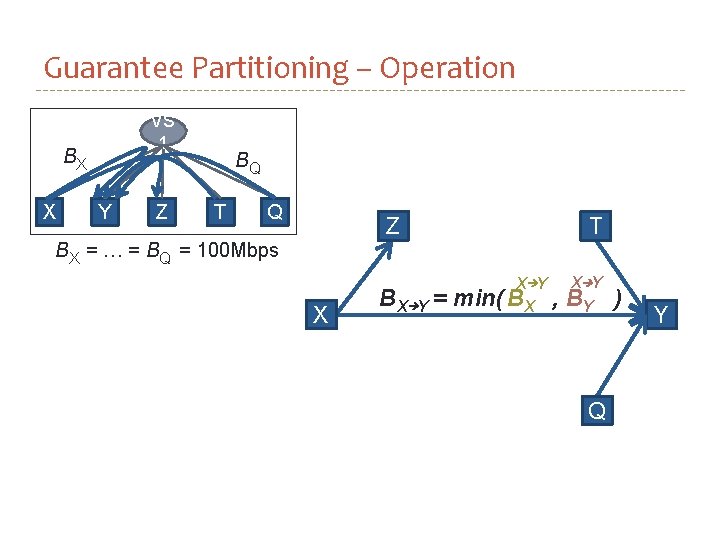

Guarantee Partitioning – Operation

$B_X = \dots = B_Q = 100$ Mbps

$B^{X \to Y} = \min(B_X^{X \to Y}, B_Y^{X \to Y})$

[Figure: VMs X, Y, Z, T, Q on virtual switch VS1.]

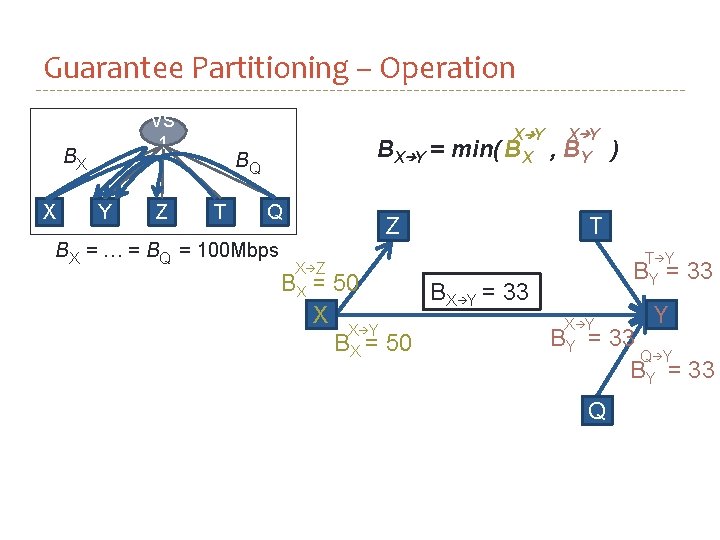

Guarantee Partitioning – Operation

$B_X = \dots = B_Q = 100$ Mbps

$B^{X \to Y} = \min(B_X^{X \to Y}, B_Y^{X \to Y})$

- Source X: $B_X^{X \to Z} = 50$, $B_X^{X \to Y} = 50$
- Destination Y: $B_Y^{X \to Y} = 33$, $B_Y^{T \to Y} = 33$, $B_Y^{Q \to Y} = 33$
- Result: $B^{X \to Y} = 33$
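The numbers on this slide can be reproduced with a few lines of code. This is a minimal sketch, assuming each hypervisor starts by splitting a VM's hose guarantee equally among its active pairs; `equal_split` and the variable names are hypothetical helpers, not part of ElasticSwitch.

```python
def equal_split(guarantee_mbps, peers):
    """Divide one VM's hose guarantee equally among its communication peers.

    Assumption: an equal split is used as the starting point; demand-aware
    division is not modeled here.
    """
    return {peer: guarantee_mbps / len(peers) for peer in peers}


B = 100.0  # every VM has a 100 Mbps hose guarantee, as on the slide

# X sends to Y and Z, so X's hypervisor offers 50 Mbps to each pair.
src_x = equal_split(B, ["Y", "Z"])

# Y receives from X, T and Q, so Y's hypervisor offers about 33 Mbps to each.
dst_y = equal_split(B, ["X", "T", "Q"])

# The X->Y guarantee is the minimum of the source and destination shares.
pair_xy = min(src_x["Y"], dst_y["X"])
print(round(pair_xy, 1))
```

Taking the minimum keeps the pair guarantee within both hoses: neither X's outgoing nor Y's incoming hose guarantee can be exceeded by the sum of its pair guarantees.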

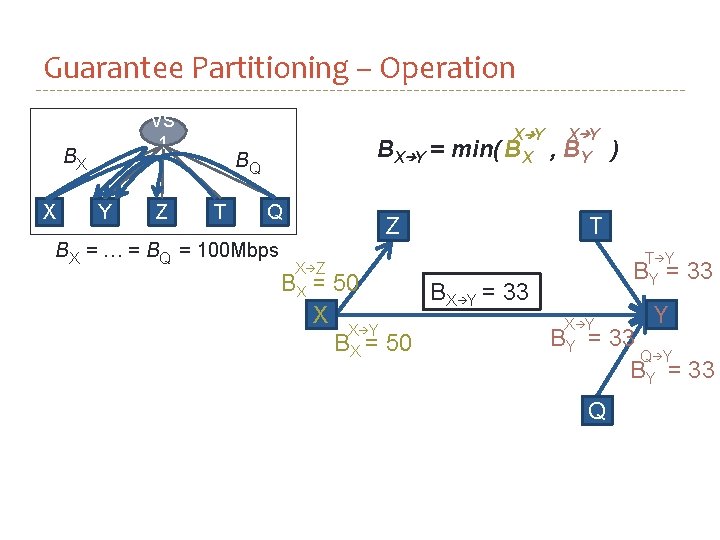

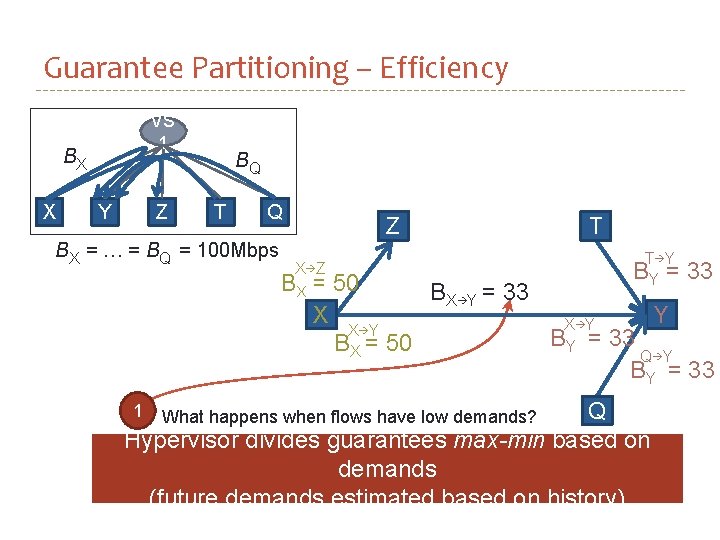

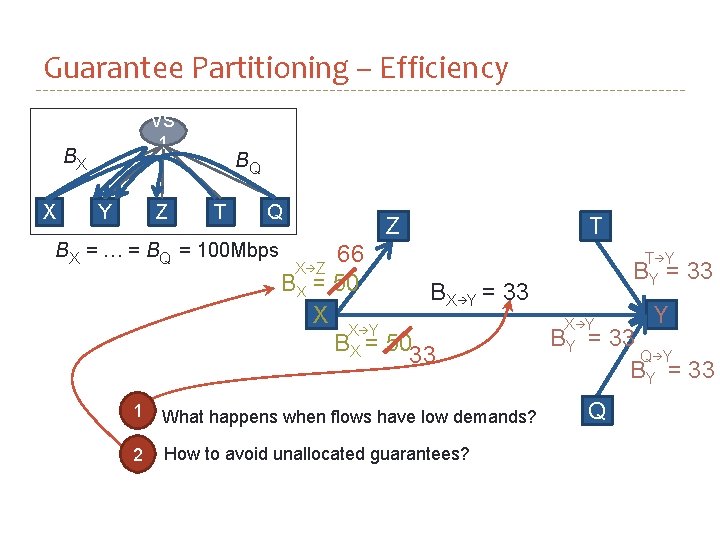

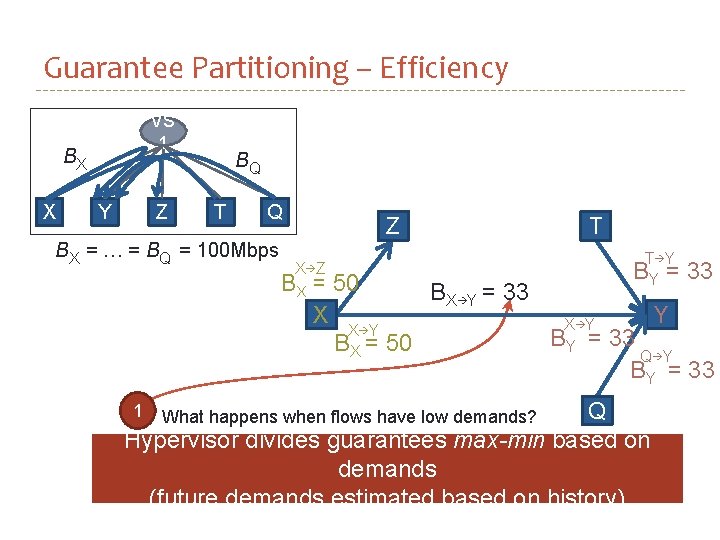

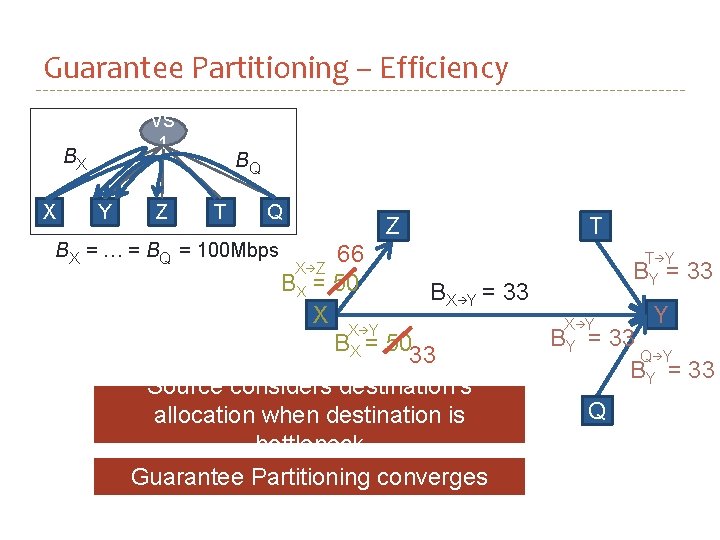

Guarantee Partitioning – Efficiency

$B_X = \dots = B_Q = 100$ Mbps

- Source X: $B_X^{X \to Z} = 50$, $B_X^{X \to Y} = 50$
- Destination Y: $B_Y^{X \to Y} = 33$, $B_Y^{T \to Y} = 33$, $B_Y^{Q \to Y} = 33$
- Result: $B^{X \to Y} = 33$

1. What happens when flows have low demands?
   - The hypervisor divides guarantees max-min based on demands (future demands are estimated from history)

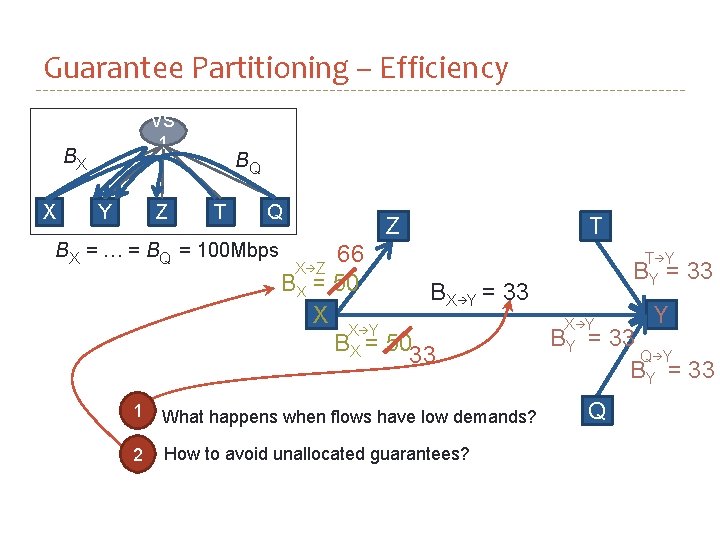

Guarantee Partitioning – Efficiency

$B_X = \dots = B_Q = 100$ Mbps

- Source X: $B_X^{X \to Z}$ changes from 50 to 66, and $B_X^{X \to Y}$ changes from 50 to 33
- Destination Y: $B_Y^{X \to Y} = 33$, $B_Y^{T \to Y} = 33$, $B_Y^{Q \to Y} = 33$
- Result: $B^{X \to Y} = 33$

1. What happens when flows have low demands?
2. How to avoid unallocated guarantees?

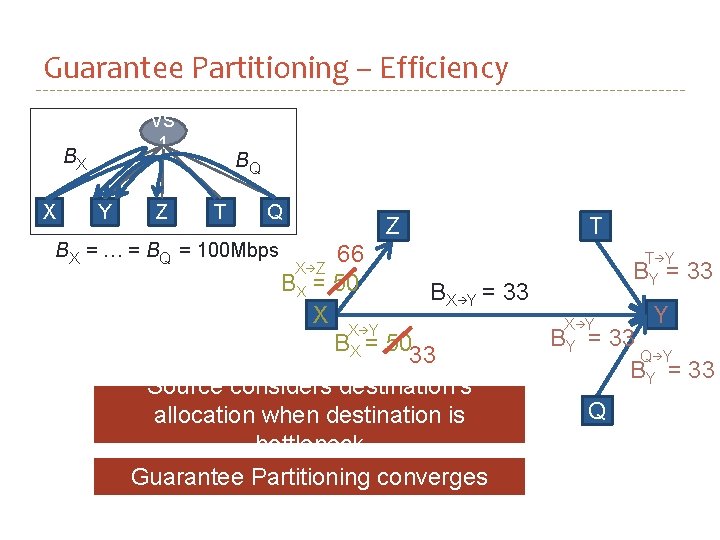

Guarantee Partitioning – Efficiency

$B_X = \dots = B_Q = 100$ Mbps

- Source X: $B_X^{X \to Z}$ changes from 50 to 66, and $B_X^{X \to Y}$ changes from 50 to 33
- Destination Y: $B_Y^{X \to Y} = 33$, $B_Y^{T \to Y} = 33$, $B_Y^{Q \to Y} = 33$
- Result: $B^{X \to Y} = 33$

The source considers the destination's allocation when the destination is the bottleneck.

Guarantee Partitioning converges.
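The reallocation from 50/50 to 66/33 can be sketched as a max-min split in which the source caps each pair at what the destination offers. This is a simplified one-pass illustration, assuming all pairs are backlogged and destinations split their guarantee equally; it does not model the iterative exchange between hypervisors, and `max_min` is a hypothetical helper.

```python
INF = float("inf")


def max_min(capacity, caps):
    """Max-min (water-filling) split of `capacity` among pairs with upper caps."""
    alloc, remaining = {}, float(capacity)
    ordered = sorted(caps.items(), key=lambda kv: kv[1])  # smallest cap first
    for i, (pair, cap) in enumerate(ordered):
        fair_share = remaining / (len(ordered) - i)
        alloc[pair] = min(cap, fair_share)
        remaining -= alloc[pair]
    return alloc


B = 100.0  # hose guarantee of every VM, as on the slide
pairs = [("X", "Y"), ("X", "Z"), ("T", "Y"), ("Q", "Y")]

# Destination side: each receiver splits its guarantee among its senders.
dst = {}
for vm in sorted({d for _, d in pairs}):
    dst.update(max_min(B, {p: INF for p in pairs if p[1] == vm}))

# Source side: each sender caps a pair at the destination's share, so
# guarantee that a bottlenecked destination cannot use goes to other pairs.
src = {}
for vm in sorted({s for s, _ in pairs}):
    src.update(max_min(B, {p: dst[p] for p in pairs if p[0] == vm}))

guarantee = {p: min(src[p], dst[p]) for p in pairs}
for pair, mbps in guarantee.items():
    print(pair, round(mbps, 1))
```

In this sketch X no longer reserves 50 Mbps for X→Y when Y can only accept about 33 Mbps, so the remainder of X's guarantee moves to X→Z.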


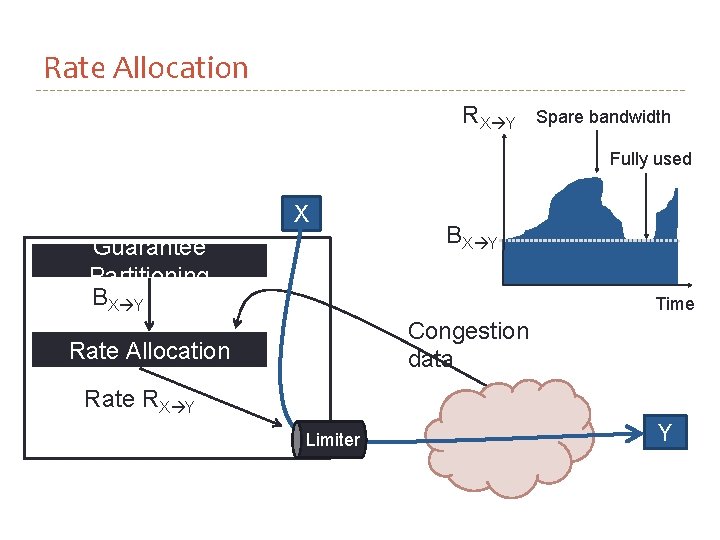

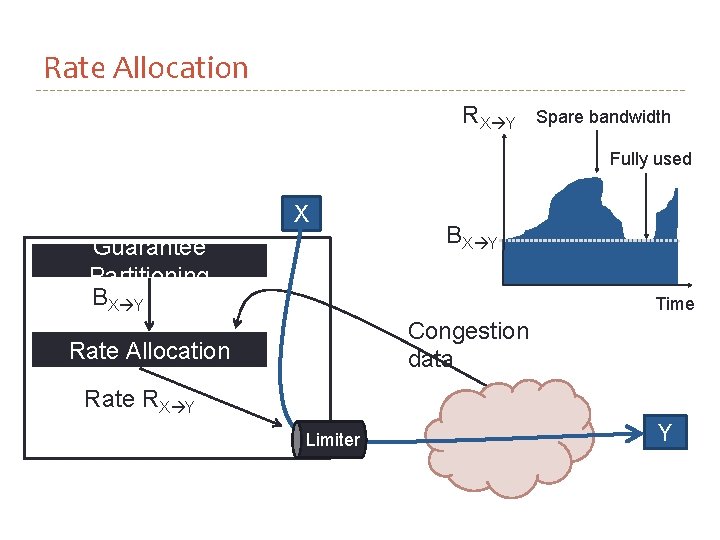

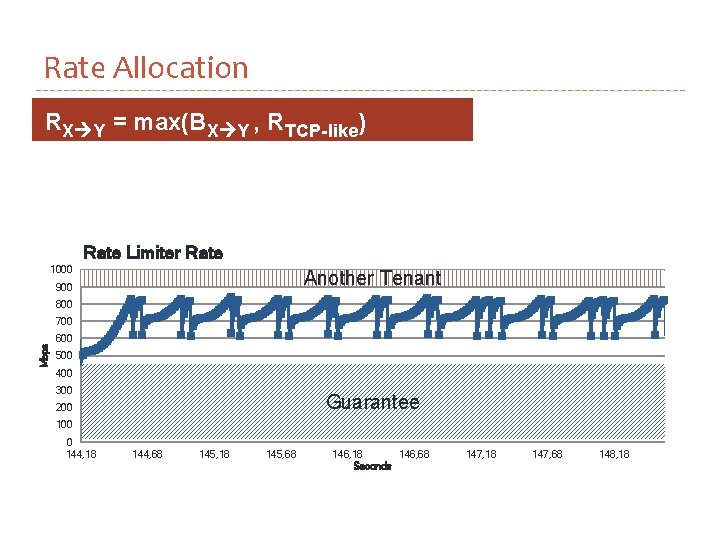

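Rate Allocation

[Figure: the rate $R^{X \to Y}$ plotted over time against the guarantee $B^{X \to Y}$, with phases labeled "Spare bandwidth" and "Fully used". Block diagram: Guarantee Partitioning passes $B^{X \to Y}$ to Rate Allocation, which also takes congestion data and sets the rate limiter $R^{X \to Y}$ on traffic from X to Y.]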

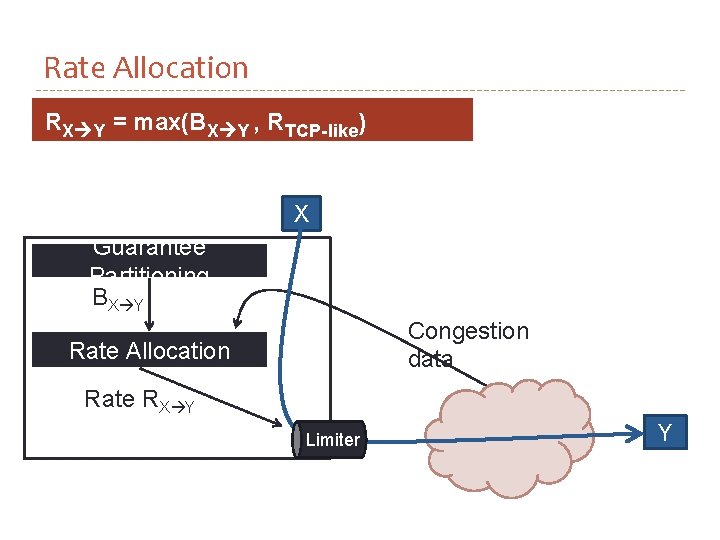

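Rate Allocation

$R^{X \to Y} = \max(B^{X \to Y}, R_{\text{TCP-like}})$

[Figure: Guarantee Partitioning passes $B^{X \to Y}$ to Rate Allocation, which also takes congestion data and sets the rate limiter $R^{X \to Y}$ on traffic from X to Y.]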

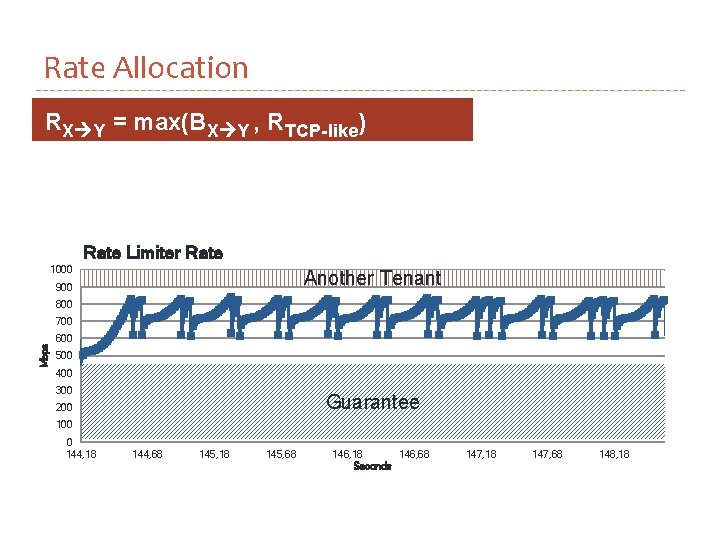

Rate Allocation

$R^{X \to Y} = \max(B^{X \to Y}, R_{\text{TCP-like}})$

[Figure: rate-limiter rate in Mbps (0 to 1000) against time in seconds, showing the guarantee level and the traffic of another tenant.]

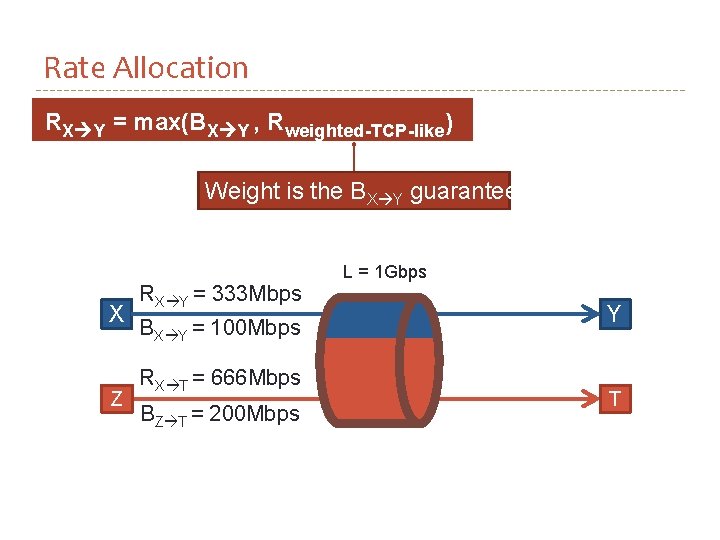

Rate Allocation

$R^{X \to Y} = \max(B^{X \to Y}, R_{\text{weighted-TCP-like}})$

The weight is the $B^{X \to Y}$ guarantee.

- Link capacity: $L = 1$ Gbps
- $B^{X \to Y} = 100$ Mbps, $R^{X \to Y} = 333$ Mbps
- $B^{Z \to T} = 200$ Mbps, $R^{Z \to T} = 666$ Mbps
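The 333/666 Mbps split follows from weighting each pair by its guarantee. The sketch below is a steady-state approximation, assuming a single fully used link that a weighted TCP-like algorithm divides exactly in proportion to the weights; `weighted_rates` is a hypothetical helper, not the rate-allocation algorithm itself.

```python
def weighted_rates(link_mbps, guarantees):
    """Share one congested link in proportion to each pair's guarantee.

    Assumption: the weighted TCP-like algorithm converges to an exact
    proportional split. The rate never drops below the guarantee.
    """
    total_weight = sum(guarantees.values())
    return {
        pair: max(g, link_mbps * g / total_weight)
        for pair, g in guarantees.items()
    }


rates = weighted_rates(1000, {"X->Y": 100, "Z->T": 200})
for pair, mbps in rates.items():
    print(pair, round(mbps, 1))
```

Both pairs receive more than their guarantee because the link has 700 Mbps of unreserved capacity, and that spare capacity is split 1:2 like the guarantees.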

Rate Allocation – Congestion Detection

- Detect congestion through dropped packets
  - Hypervisors add/monitor sequence numbers in packet headers
- Use ECN, if available
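Loss detection from sequence numbers can be sketched as gap counting at the receiving hypervisor. This is an illustrative sketch only, assuming in-order delivery and no sequence-number wraparound; the function name and the header layout it implies are hypothetical.

```python
def count_losses(received_seqs, first_expected=0):
    """Count missing packets in a stream of per-pair sequence numbers.

    Assumptions: packets arrive in order and sequence numbers do not wrap.
    A late (reordered) packet is ignored rather than un-counting a loss.
    """
    expected = first_expected
    lost = 0
    for seq in received_seqs:
        if seq > expected:
            lost += seq - expected  # packets expected..seq-1 never arrived
        if seq >= expected:
            expected = seq + 1
    return lost


# Packets 3, 4, 7 and 8 are missing from this stream.
print(count_losses([0, 1, 2, 5, 6, 9]))
```

A non-zero count over a measurement interval would be treated as a congestion signal for that VM-to-VM pair.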


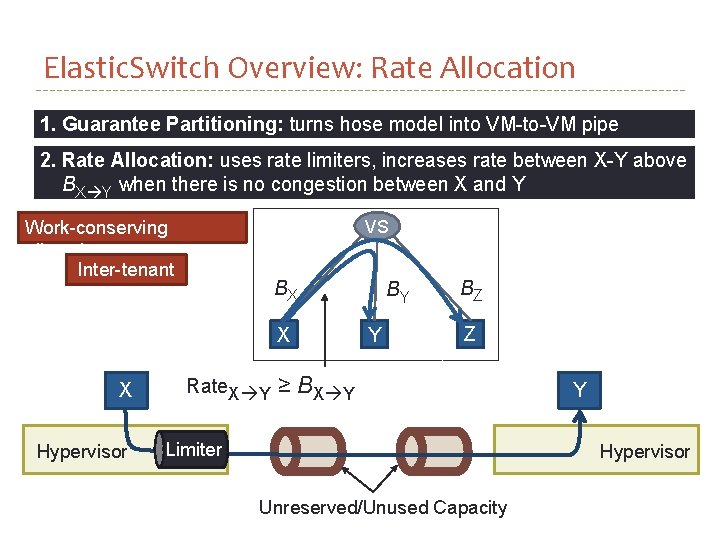

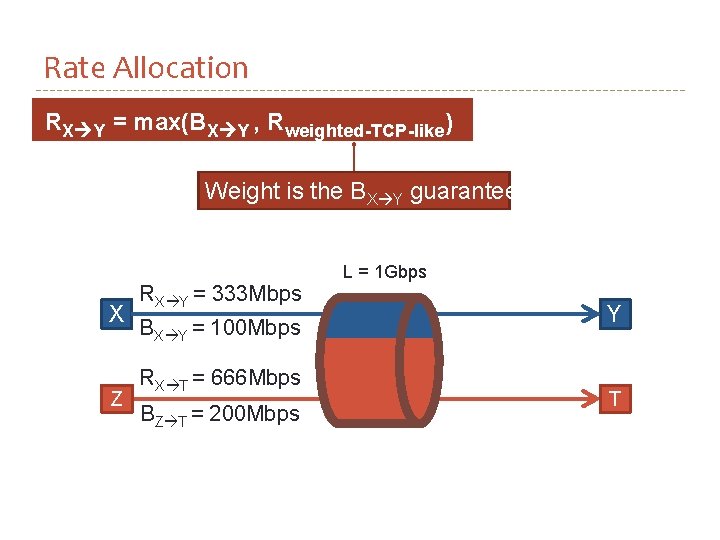

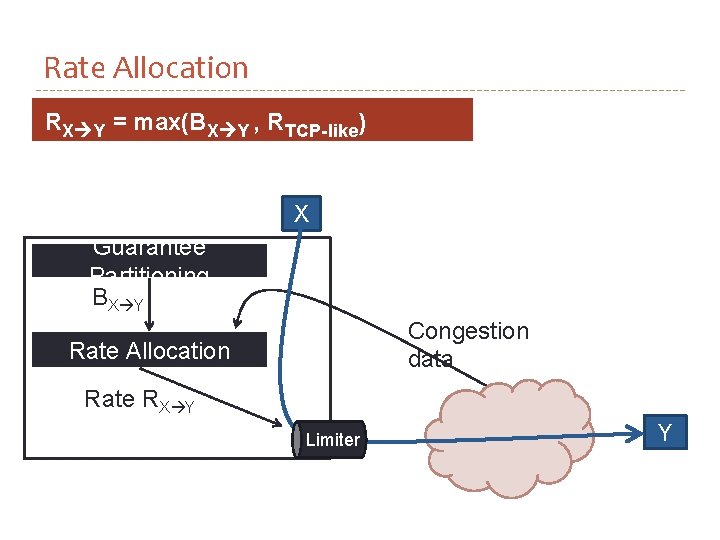

Rate Allocation – Adaptive Algorithm

- Use Seawall [NSDI'11] as the rate-allocation algorithm
  - TCP-Cubic like
- Essential improvements (for when using dropped packets)
  - Many flows probing for spare bandwidth affect the guarantees of others


Rate Allocation – Adaptive Algorithm

- Use Seawall [NSDI'11] as the rate-allocation algorithm
  - TCP-Cubic like
- Essential improvements (for when using dropped packets)
  - Hold-increase: hold probing for free bandwidth after a congestion event. The holding time is inversely proportional to the guarantee.

[Figure: rate over time relative to the guarantee, with the phases "Rate increasing" and "Holding time" marked.]
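Hold-increase can be sketched as one step of a rate controller. This is a minimal sketch under stated assumptions: the halving on congestion, the additive `step_mbps`, and the constant `k` are placeholders chosen for illustration and are not Seawall's or ElasticSwitch's actual control law. Only the two properties from the slide are modeled: the rate is held after a congestion event, and the holding time is inversely proportional to the guarantee.

```python
def next_rate(rate, guarantee, congested, ms_since_congestion,
              step_mbps=10.0, k=10_000.0):
    """Return the next rate limit (Mbps) for one VM-to-VM pair.

    Assumptions (hypothetical): halve on congestion, add `step_mbps` when
    probing, and hold for k / guarantee milliseconds after congestion.
    """
    if congested:
        return max(guarantee, rate / 2)  # never drop below the guarantee
    holding_time_ms = k / guarantee      # larger guarantee, shorter hold
    if ms_since_congestion < holding_time_ms:
        return rate                      # hold: do not probe yet
    return rate + step_mbps              # probe for spare bandwidth


# A 100 Mbps guarantee holds for 100 ms; a 200 Mbps guarantee for 50 ms.
print(next_rate(200, 100, congested=False, ms_since_congestion=60))
print(next_rate(300, 200, congested=False, ms_since_congestion=60))
```

With these placeholder constants, the pair with the larger guarantee resumes probing sooner, which gives it earlier access to spare bandwidth after a congestion event.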


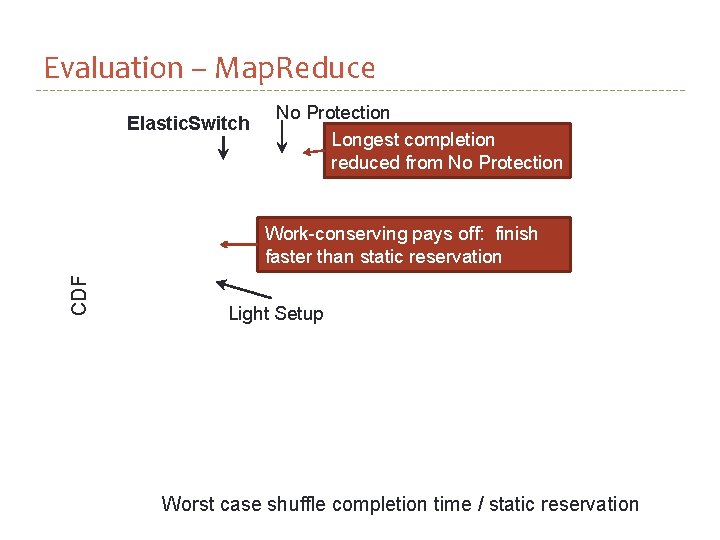

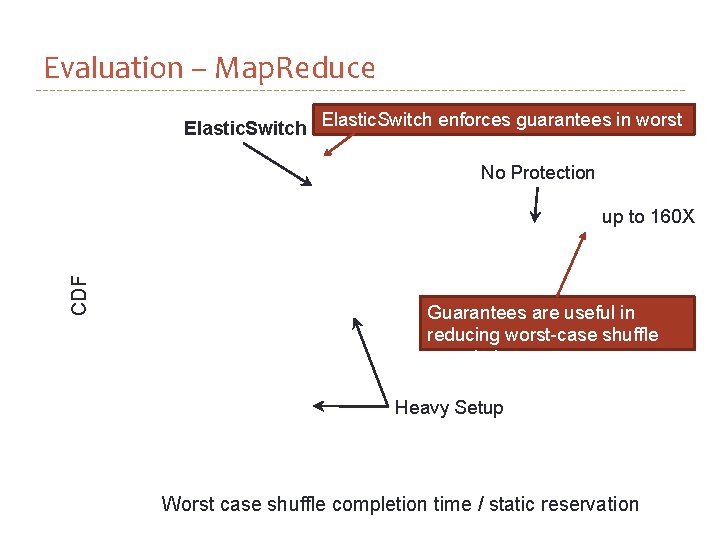

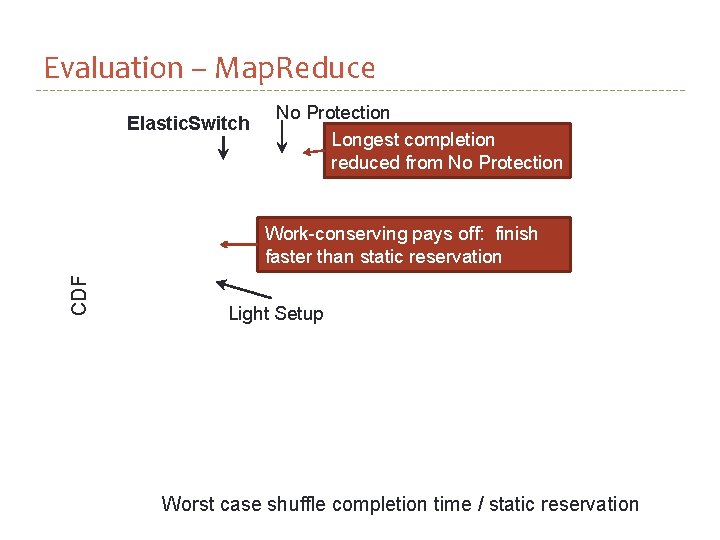

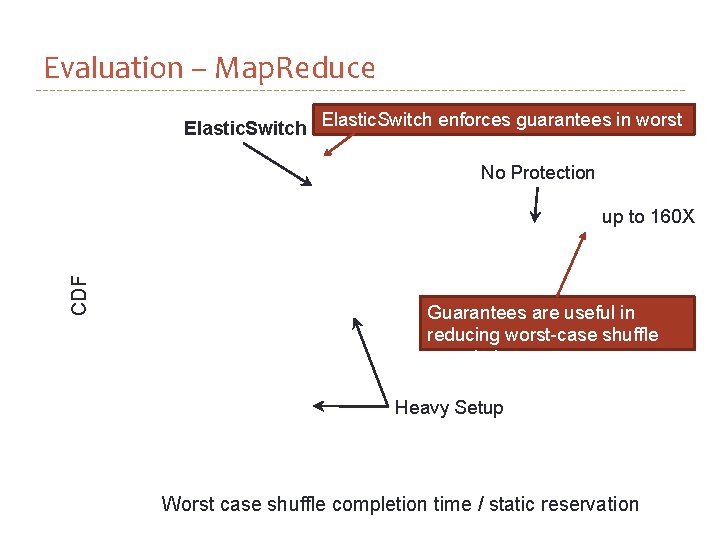

Evaluation – MapReduce

- Setup
  - 44 servers, 4x oversubscribed topology, 4 VMs/server
  - Each tenant runs one job; all VMs of all tenants have the same guarantee
- Two scenarios:
  - Light
    - 10% of VM slots are either a mapper or a reducer
    - Randomly placed
  - Heavy
    - 100% of VM slots are either a mapper or a reducer
    - Mappers are placed in one half of the datacenter

Evaluation – MapReduce

[Figure: CDF of worst-case shuffle completion time / static reservation.]

Evaluation – MapReduce

[Figure: light setup. CDF of worst-case shuffle completion time / static reservation, for ElasticSwitch and No Protection.]

- Work-conserving pays off: jobs finish faster than with static reservation
- Longest completion time is reduced compared with No Protection

Evaluation – MapReduce

[Figure: heavy setup. CDF of worst-case shuffle completion time / static reservation, for ElasticSwitch and No Protection.]

- ElasticSwitch enforces guarantees in the worst case
- No Protection: up to 160X
- Guarantees are useful in reducing worst-case shuffle completion time

ElasticSwitch Summary

- Properties
  1. Bandwidth guarantees: hose model or derivatives
  2. Work-conserving
  3. Practical: oversubscribed topologies, commodity switches, decentralized
- Design: two layers
  - Guarantee Partitioning: provides guarantees by transforming hose-model guarantees into VM-to-VM guarantees
  - Rate Allocation: enables work conservation by increasing rate limits above guarantees when there is no congestion

HP Labs is hiring!

Future Work

- Reduce overhead
  - ElasticSwitch: average 1 core / 15 VMs, worst case 1 core / VM
- Multi-path solution
  - Single-path reservations are inefficient
  - No existing solution works on multi-path networks
- VM placement
  - Placing VMs in different locations impacts the guarantees that can be made

Open Questions

- How do you integrate network sharing with endhost sharing?
- What are the implications of different sharing mechanisms for each other?
- How does the network architecture affect network sharing?
- How do you do admission control?
- How do you detect demand?
- How does payment fit into this question? And if it does, when VMs from different people communicate, who dictates the price, and who gets charged?

ElasticSwitch – Detecting Demand

- Optimize for a bimodal distribution of flows
  - Most flows are short; a few flows carry most bytes
  - Short flows care about latency; long flows care about throughput
- Start with a small guarantee for a new VM-to-VM flow
- If demand is not satisfied, increase the guarantee exponentially
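The exponential ramp-up can be sketched as follows. This is a minimal sketch, assuming a doubling factor, a 1 Mbps starting guarantee, and a 33 Mbps upper limit for the pair; all three values and the function name are hypothetical and chosen only to show the shape of the growth.

```python
def grow_guarantee(current_mbps, limit_mbps, demand_satisfied, factor=2.0):
    """Grow a pair's guarantee exponentially while its demand is unmet.

    Assumptions (hypothetical): doubling per round, capped at `limit_mbps`,
    the most this pair could be given by guarantee partitioning.
    """
    if demand_satisfied:
        return current_mbps
    return min(current_mbps * factor, limit_mbps)


# A long flow that keeps asking for more, starting from a small guarantee.
g = 1.0
history = [g]
while g < 33.0:
    g = grow_guarantee(g, 33.0, demand_satisfied=False)
    history.append(g)
print(history)
```

Short flows finish while the guarantee is still small, so little guarantee is tied up by them, while a long flow reaches its full share after a handful of rounds.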

Perfect Network Architecture

- What happens in the perfect network architecture?
- Implications:
  - No loss in the network core
  - Losses only at the edge of the network:
    - Edge uplinks between server and ToR
    - Or hypervisor to VM: virtual links
- These networks are real:
  - VL2 at Azure
  - Clos at Google
