Automated negotiations Agents interacting with other automated agents


Automated negotiations: Agents interacting with other automated agents and with humans Sarit Kraus, Department of Computer Science, Bar-Ilan University and University of Maryland, sarit@cs.biu.ac.il, http://www.cs.biu.ac.il/~sarit/ 1

Negotiations “A discussion in which interested parties exchange information and come to an agreement. ” — Davis and Smith, 1977 2

Negotiations NEGOTIATION is an interpersonal decision-making process necessary whenever we cannot achieve our objectives single-handedly. 3

Agent environments Teams of agents that need to coordinate joint activities; problems: distributed information, distributed decision solving, local conflicts. Open agent environments acting in the same environment; problems: need motivation to cooperate, conflict resolution, trust, distributed and hidden information. 4

Open Agent Environments Consist of: ◦ Automated agents developed by or serving different people or organizations. ◦ People with a variety of interests and institutional affiliations. The computer agents are “self-interested”; they may cooperate to further their interests. The set of agents is not fixed. 5

Open Agent Environments (examples) Agents support people ◦ Collaborative interfaces ◦ CSCW: Computer Supported Cooperative Work systems ◦ Cooperative learning systems ◦ Military-support systems Agents act as proxies for people ◦ Coordinating schedules ◦ Patient care-delivery systems ◦ Online auctions Groups of agents act autonomously alongside people ◦ Simulation systems for education and training ◦ Computer games and other forms of entertainment ◦ Robots in rescue operations ◦ Software personal assistants 6

Examples ◦ Monitoring electricity networks (Jennings) ◦ Distributed design and engineering (Petrie et al.) ◦ Distributed meeting scheduling (Sen & Durfee) ◦ Teams of robotic systems acting in hostile environments (Balch & Arkin, Tambe) ◦ Collaborative Internet-agents (Etzioni & Weld, Weiss) ◦ Collaborative interfaces (Grosz & Ortiz, Andre) ◦ Information agent on the Internet (Klusch) ◦ Cooperative transportation scheduling (Fischer) ◦ Supporting hospital patient scheduling (Decker & Jin) ◦ Intelligent Agents for Command Control (Sycara) 8

Types of agents Fully rational agents Bounded rational agents 9

Using other disciplines’ results No need to start from scratch! Modification and adjustment are required; AI gives insights and complementary methods. Is it worth it to use formal methods for multi-agent systems? 10

Negotiating with rational agents Quantitative decision making ◦ Maximizing expected utility ◦ Nash equilibrium, Bayesian Nash equilibrium Automated Negotiator ◦ Model the scenario as a game ◦ The agent computes (if complexity allows) the equilibrium strategy, and acts accordingly. (Kraus, Strategic Negotiation in Multiagent Environments, MIT Press 2001). 11

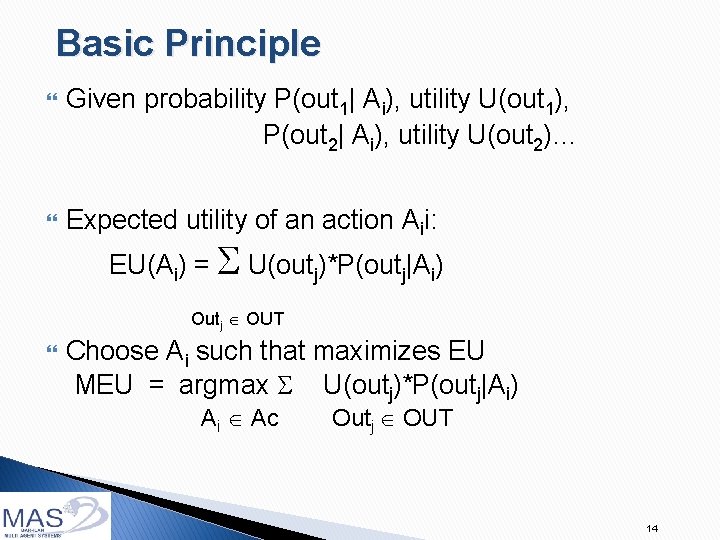

Short introduction to game theory Game Theory studies situations of strategic interaction in which each decision maker's plan of action depends on the plans of the other decision makers. 12

Decision Theory (reminder) (How to make decisions) Decision Theory = Probability Theory (deals with chance) + Utility Theory (deals with outcomes) Fundamental idea ◦ The MEU (Maximum expected utility) principle ◦ Weigh the utility of each outcome by the probability that it occurs 13

Basic Principle Given probabilities $P(out_1 \mid A_i), P(out_2 \mid A_i), \ldots$ and utilities $U(out_1), U(out_2), \ldots$ Expected utility of an action $A_i$: $EU(A_i) = \sum_{out_j \in OUT} U(out_j)\, P(out_j \mid A_i)$ Choose the $A_i$ that maximizes EU: $MEU = \arg\max_{A_i \in Ac} \sum_{out_j \in OUT} U(out_j)\, P(out_j \mid A_i)$ 14
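The MEU rule can be sketched in a few lines of Python. This is only an illustration: the action names, outcome names, probabilities, and utilities below are hypothetical and do not come from the slides.

```python
def expected_utility(outcome_probs, utility):
    """EU(A) = sum over outcomes of U(out) * P(out | A)."""
    return sum(p * utility[out] for out, p in outcome_probs.items())


def meu_action(actions, utility):
    """Return the action whose expected utility is maximal."""
    return max(actions, key=lambda a: expected_utility(actions[a], utility))


# Hypothetical utilities and conditional outcome probabilities.
utility = {"deal": 10.0, "bad_deal": -5.0, "no_deal": 0.0}
actions = {
    "tough_offer": {"deal": 0.3, "no_deal": 0.7},
    "soft_offer": {"bad_deal": 0.2, "no_deal": 0.8},
}

best = meu_action(actions, utility)
```

Here `best` is the action an expected-utility maximizer would pick; a risk-averse or risk-seeking agent would be modeled by changing the `utility` table, not the rule.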

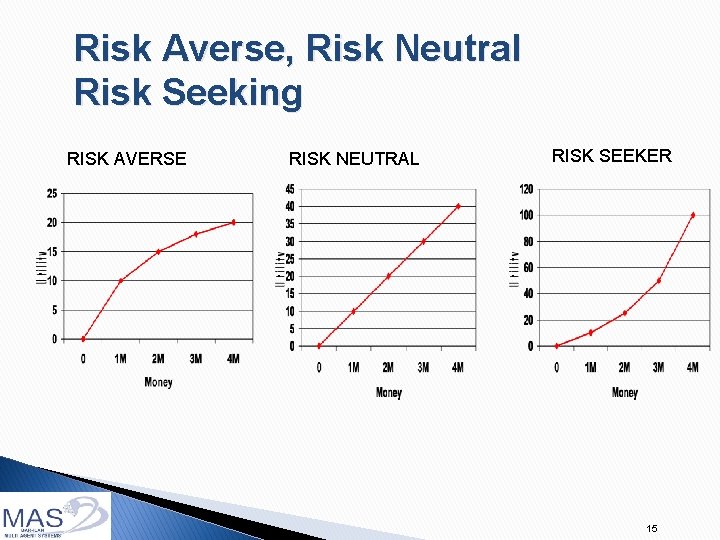

Risk Averse, Risk Neutral, Risk Seeking [Figure panels: Risk averse, Risk neutral, Risk seeker] 15

Game Description Players ◦ Who participates in the game? Actions / Strategies ◦ What can each player do? ◦ In what order do the players act? Outcomes / Payoffs ◦ What is the outcome of the game? ◦ What are the players' preferences over the possible outcomes? 16

Game Description (cont) Information ◦ What do the players know about the parameters of the environment or about one another? ◦ Can they observe the actions of the other players? Beliefs ◦ What do the players believe about the unknown parameters of the environment or about one another? ◦ What can they infer from observing the actions of the other players? 17

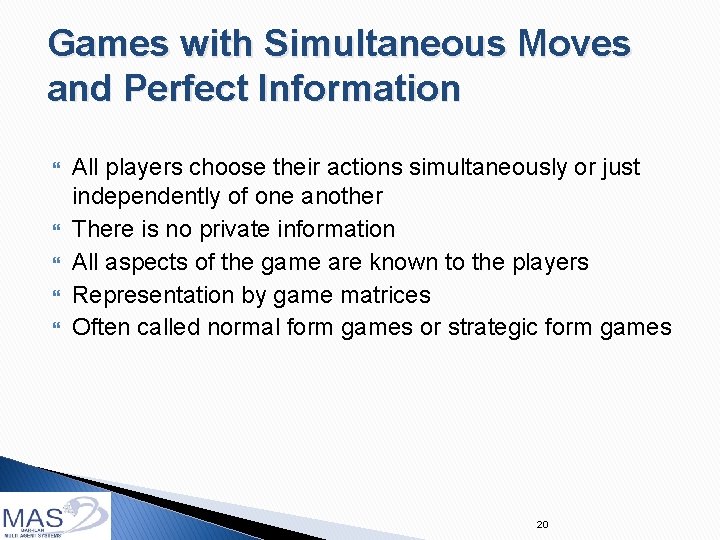

Strategies and Equilibrium Strategy ◦ Complete plan, describing an action for every contingency Nash Equilibrium ◦ Each player's strategy is a best response to the strategies of the other players ◦ Equivalently: No player can improve his payoffs by changing his strategy alone ◦ Self-enforcing agreement. No need for formal contracting Other equilibrium concepts also exist 18

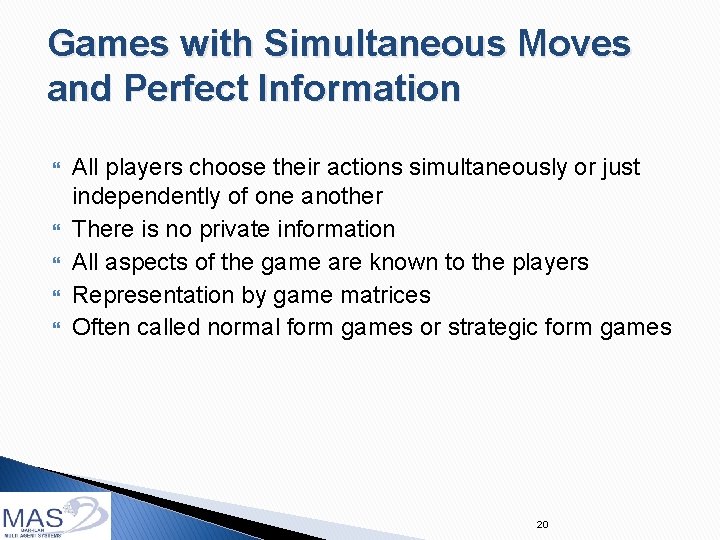

Classification of Games Depending on the timing of move ◦ Games with simultaneous moves ◦ Games with sequential moves Depending on the information available to the players ◦ Games with perfect information ◦ Games with imperfect (or incomplete) information We concentrate on non-cooperative games ◦ Groups of players cannot deviate jointly ◦ Players cannot make binding agreements 19

Games with Simultaneous Moves and Perfect Information All players choose their actions simultaneously or just independently of one another There is no private information All aspects of the game are known to the players Representation by game matrices Often called normal form games or strategic form games 20

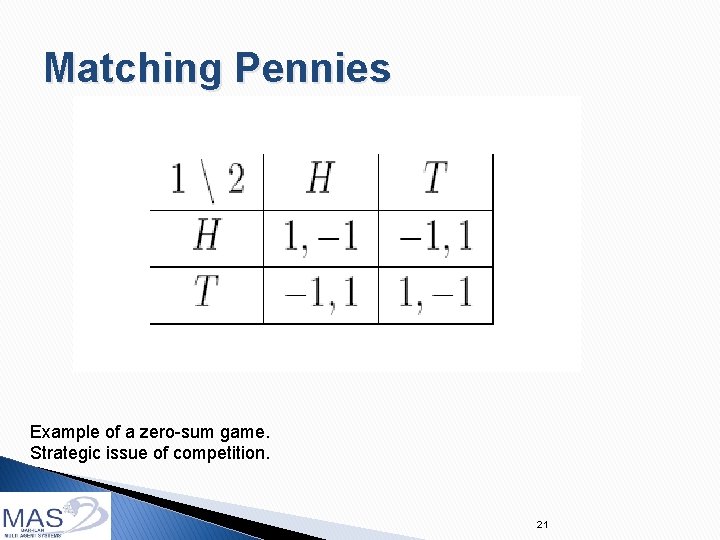

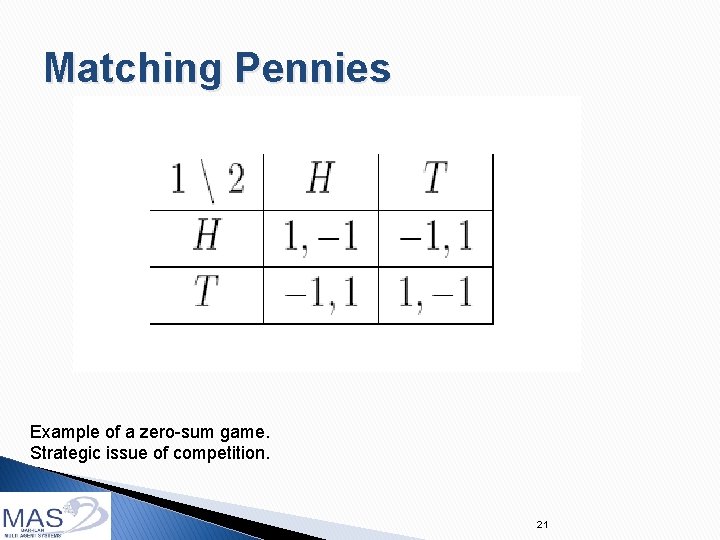

Matching Pennies Example of a zero-sum game. Strategic issue of competition. 21

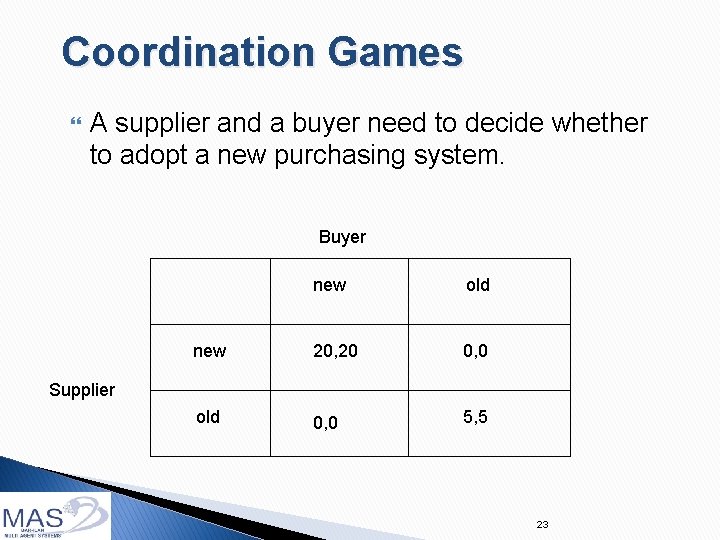

Prisoner’s Dilemma Each player can cooperate or defect

| Row \ Column | cooperate | defect |
|---|---|---|
| cooperate | -1, -1 | -10, 0 |
| defect | 0, -10 | -8, -8 |

Main issue: Tension between social optimality and individual incentives. 22

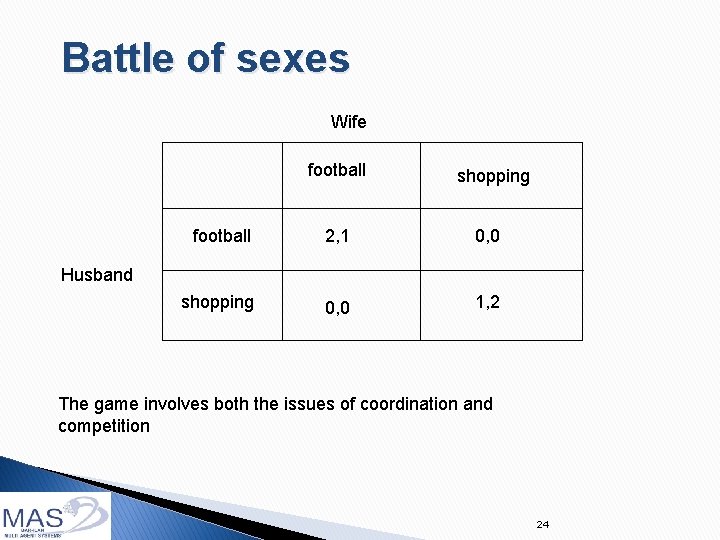

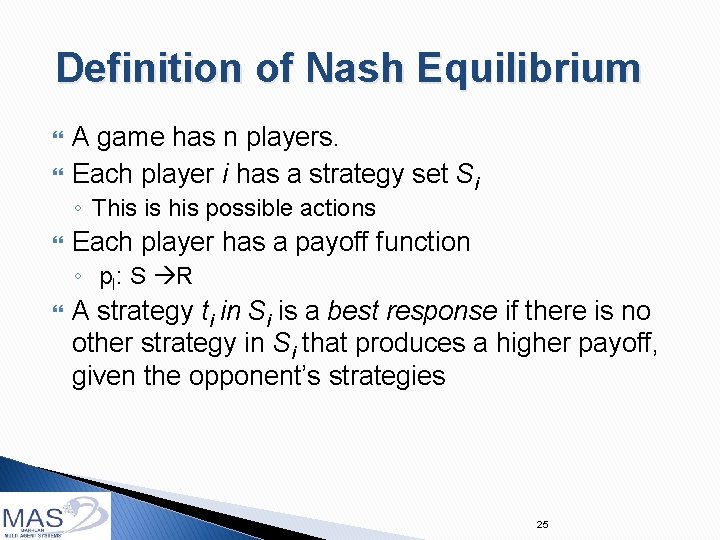

Coordination Games A supplier and a buyer need to decide whether to adopt a new purchasing system.

| Supplier \ Buyer | new | old |
|---|---|---|
| new | 20, 20 | 0, 0 |
| old | 0, 0 | 5, 5 |

23

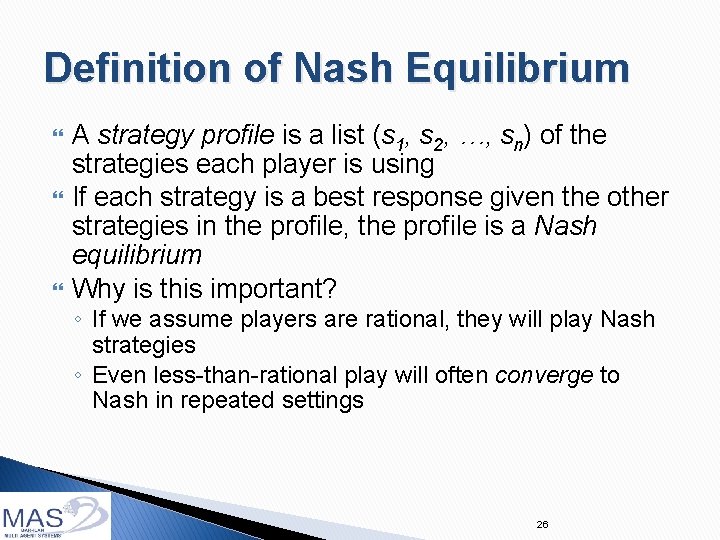

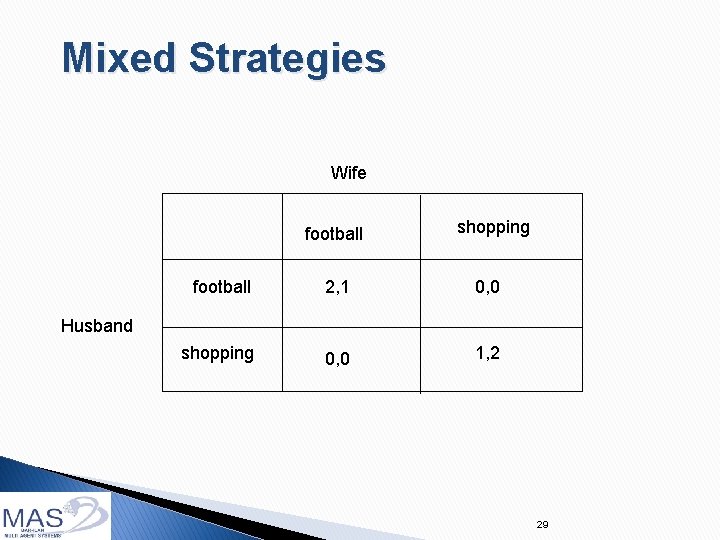

Battle of the sexes

| Husband \ Wife | football | shopping |
|---|---|---|
| football | 2, 1 | 0, 0 |
| shopping | 0, 0 | 1, 2 |

The game involves both the issues of coordination and competition 24

Definition of Nash Equilibrium A game has n players. Each player i has a strategy set $S_i$ ◦ These are his possible actions Each player has a payoff function ◦ $\pi_i : S \to \mathbb{R}$ A strategy $t_i$ in $S_i$ is a best response if there is no other strategy in $S_i$ that produces a higher payoff, given the opponents’ strategies 25

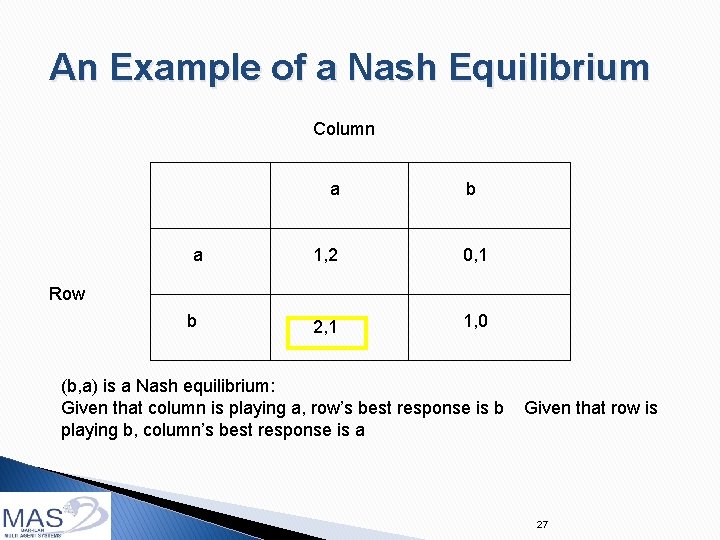

Definition of Nash Equilibrium A strategy profile is a list $(s_1, s_2, \ldots, s_n)$ of the strategies each player is using. If each strategy is a best response given the other strategies in the profile, the profile is a Nash equilibrium. Why is this important? ◦ If we assume players are rational, they will play Nash strategies ◦ Even less-than-rational play will often converge to Nash in repeated settings 26

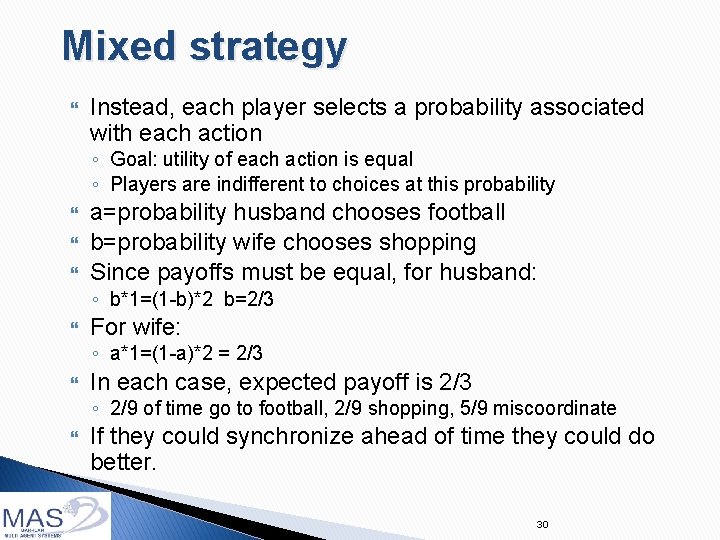

An Example of a Nash Equilibrium

| Row \ Column | a | b |
|---|---|---|
| a | 1, 2 | 0, 1 |
| b | 2, 1 | 1, 0 |

(b, a) is a Nash equilibrium: Given that column is playing a, row’s best response is b. Given that row is playing b, column’s best response is a. 27
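The best-response definition translates directly into a brute-force check over all cells of a two-player matrix game. The sketch below is an illustration rather than anything from the slides; the function name and the dictionary encoding of the matrix are assumptions. It is applied to the example above and to the Prisoner's Dilemma payoffs given earlier.

```python
from itertools import product


def pure_nash_equilibria(payoffs):
    """payoffs[(row, col)] = (row_payoff, col_payoff).

    Returns every cell where neither player gains by deviating alone.
    """
    rows = sorted({r for r, _ in payoffs})
    cols = sorted({c for _, c in payoffs})
    equilibria = []
    for r, c in product(rows, cols):
        row_ok = all(payoffs[(r, c)][0] >= payoffs[(r2, c)][0] for r2 in rows)
        col_ok = all(payoffs[(r, c)][1] >= payoffs[(r, c2)][1] for c2 in cols)
        if row_ok and col_ok:
            equilibria.append((r, c))
    return equilibria


example = {
    ("a", "a"): (1, 2), ("a", "b"): (0, 1),
    ("b", "a"): (2, 1), ("b", "b"): (1, 0),
}
prisoners_dilemma = {
    ("cooperate", "cooperate"): (-1, -1), ("cooperate", "defect"): (-10, 0),
    ("defect", "cooperate"): (0, -10), ("defect", "defect"): (-8, -8),
}
```

Enumeration like this only finds pure-strategy equilibria and scales with the number of cells, so it is suitable for small matrices only.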

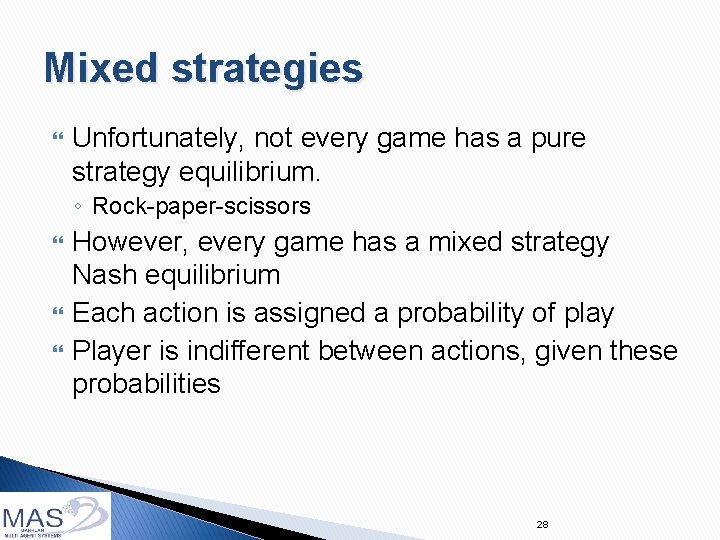

Mixed strategies Unfortunately, not every game has a pure strategy equilibrium. ◦ Rock-paper-scissors However, every finite game has a mixed strategy Nash equilibrium Each action is assigned a probability of play Player is indifferent between actions, given these probabilities 28

Mixed Strategies

| Husband \ Wife | football | shopping |
|---|---|---|
| football | 2, 1 | 0, 0 |
| shopping | 0, 0 | 1, 2 |

29

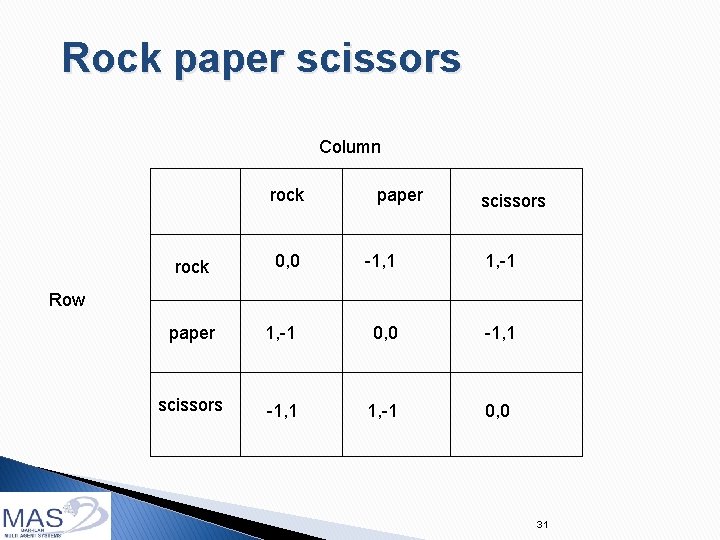

Mixed strategy Instead, each player selects a probability associated with each action ◦ Goal: utility of each action is equal ◦ Players are indifferent to choices at this probability. a = probability husband chooses football; b = probability wife chooses shopping. Since payoffs must be equal, for husband: ◦ b·1 = (1 − b)·2, so b = 2/3. For wife: ◦ a·1 = (1 − a)·2, so a = 2/3. In each case, expected payoff is 2/3 ◦ 2/9 of time go to football, 2/9 shopping, 5/9 miscoordinate. If they could synchronize ahead of time they could do better. 30
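The indifference conditions can be checked with exact arithmetic. The following sketch plugs a = b = 2/3 into the Battle of the Sexes payoffs (husband's payoff first, wife's second); the helper names are illustrative only.

```python
from fractions import Fraction

# Payoffs (husband, wife), indexed by (husband's action, wife's action).
payoff = {
    ("football", "football"): (2, 1), ("football", "shopping"): (0, 0),
    ("shopping", "football"): (0, 0), ("shopping", "shopping"): (1, 2),
}

a = Fraction(2, 3)  # probability that the husband chooses football
b = Fraction(2, 3)  # probability that the wife chooses shopping


def husband_eu(action):
    """Husband's expected payoff for a pure action against the wife's mix."""
    return (1 - b) * payoff[(action, "football")][0] + b * payoff[(action, "shopping")][0]


def wife_eu(action):
    """Wife's expected payoff for a pure action against the husband's mix."""
    return a * payoff[("football", action)][1] + (1 - a) * payoff[("shopping", action)][1]


p_football = a * (1 - b)        # both go to football
p_shopping = (1 - a) * b        # both go shopping
p_miscoordinate = 1 - p_football - p_shopping
```

Each player's two actions yield the same expected payoff, which is exactly what makes the mix an equilibrium: no pure deviation is profitable.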

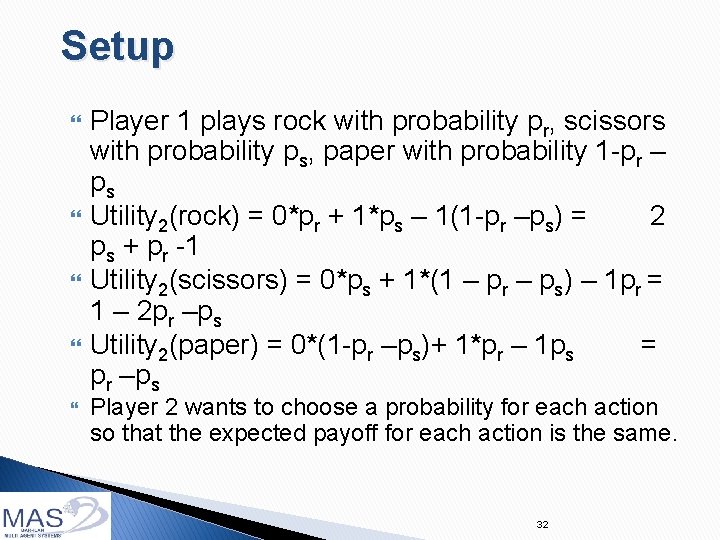

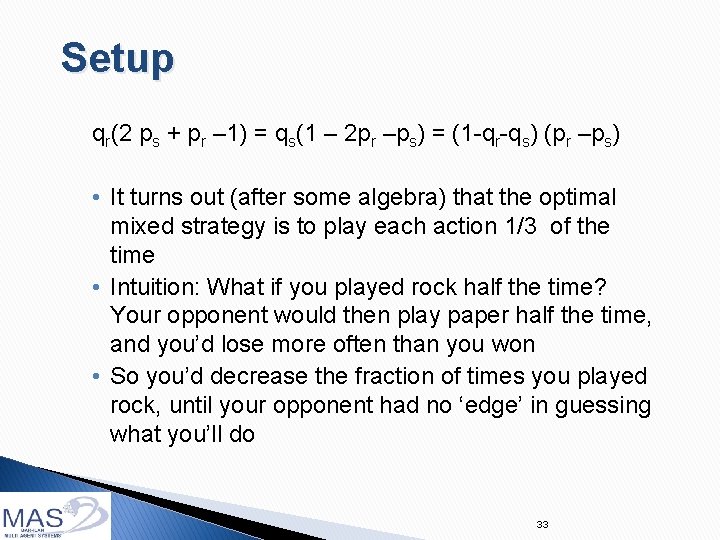

Rock paper scissors

| Row \ Column | rock | paper | scissors |
|---|---|---|---|
| rock | 0, 0 | -1, 1 | 1, -1 |
| paper | 1, -1 | 0, 0 | -1, 1 |
| scissors | -1, 1 | 1, -1 | 0, 0 |

31

Setup Player 1 plays rock with probability $p_r$, scissors with probability $p_s$, paper with probability $1 - p_r - p_s$. $Utility_2(rock) = 0 \cdot p_r + 1 \cdot p_s - 1 \cdot (1 - p_r - p_s) = 2p_s + p_r - 1$. $Utility_2(scissors) = 0 \cdot p_s + 1 \cdot (1 - p_r - p_s) - 1 \cdot p_r = 1 - 2p_r - p_s$. $Utility_2(paper) = 0 \cdot (1 - p_r - p_s) + 1 \cdot p_r - 1 \cdot p_s = p_r - p_s$. Player 2 wants to choose a probability for each action so that the expected payoff for each action is the same. 32

Setup $q_r(2p_s + p_r - 1) = q_s(1 - 2p_r - p_s) = (1 - q_r - q_s)(p_r - p_s)$ • It turns out (after some algebra) that the optimal mixed strategy is to play each action 1/3 of the time • Intuition: What if you played rock half the time? Your opponent would then play paper half the time, and you’d lose more often than you won • So you’d decrease the fraction of times you played rock, until your opponent had no ‘edge’ in guessing what you’ll do 33
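As a quick check of the algebra, the sketch below evaluates Player 2's three utilities for a given mix of Player 1. The function name and the "rock-heavy" test mix are illustrative assumptions, not part of the slides.

```python
from fractions import Fraction


def player2_utilities(p_rock, p_scissors):
    """Player 2's expected payoff per pure action against Player 1's mix."""
    p_paper = 1 - p_rock - p_scissors
    return {
        "rock": p_scissors - p_paper,      # beats scissors, loses to paper
        "scissors": p_paper - p_rock,      # beats paper, loses to rock
        "paper": p_rock - p_scissors,      # beats rock, loses to scissors
    }


third = Fraction(1, 3)
uniform = player2_utilities(third, third)

# Hypothetical biased opponent: rock half the time, the rest split evenly.
rock_heavy = player2_utilities(Fraction(1, 2), Fraction(1, 4))
best_reply = max(rock_heavy, key=rock_heavy.get)
```

Against the uniform mix every action is worth the same, so Player 2 has no edge; against the rock-heavy mix a single action becomes strictly better, which is the exploitation the intuition bullet describes.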

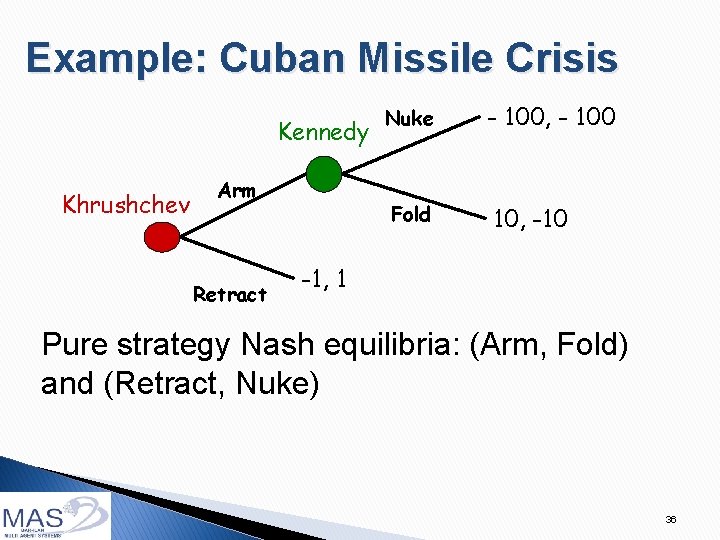

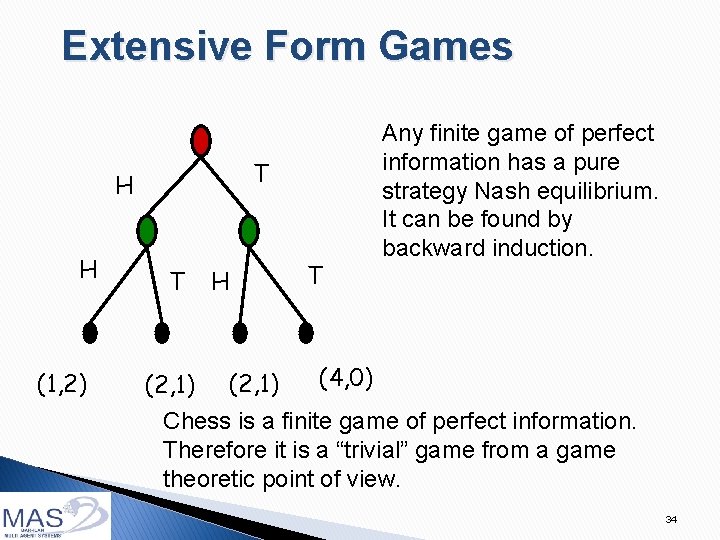

Extensive Form Games [Figure: game tree with moves H and T and leaf payoffs (1, 2), (2, 1), (2, 1), (4, 0)] Any finite game of perfect information has a pure strategy Nash equilibrium. It can be found by backward induction. Chess is a finite game of perfect information. Therefore it is a “trivial” game from a game theoretic point of view. 34

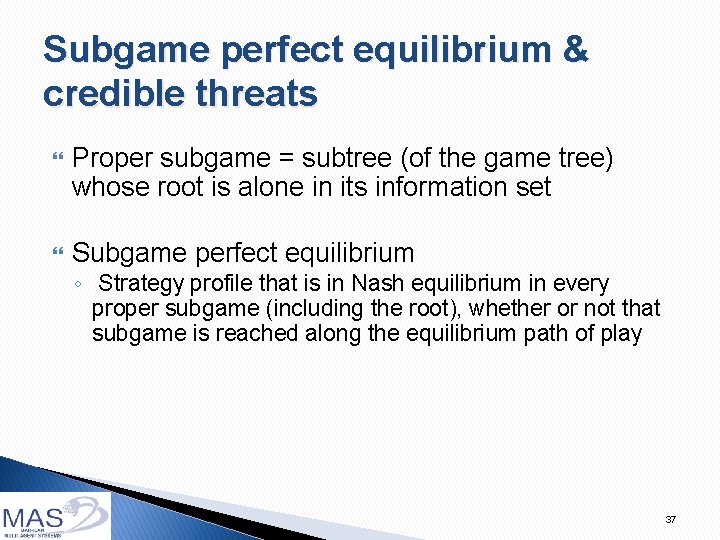

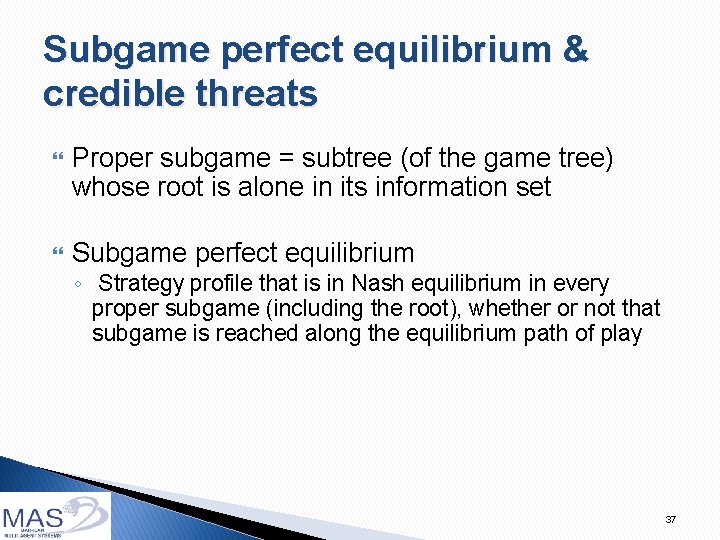

Extensive Form Games - Intro A game can have complex temporal structure Information ◦ set of players ◦ who moves when and under what circumstances ◦ what actions are available when called upon to move ◦ what is known when called upon to move ◦ what payoffs each player receives Foundation is a game tree 35

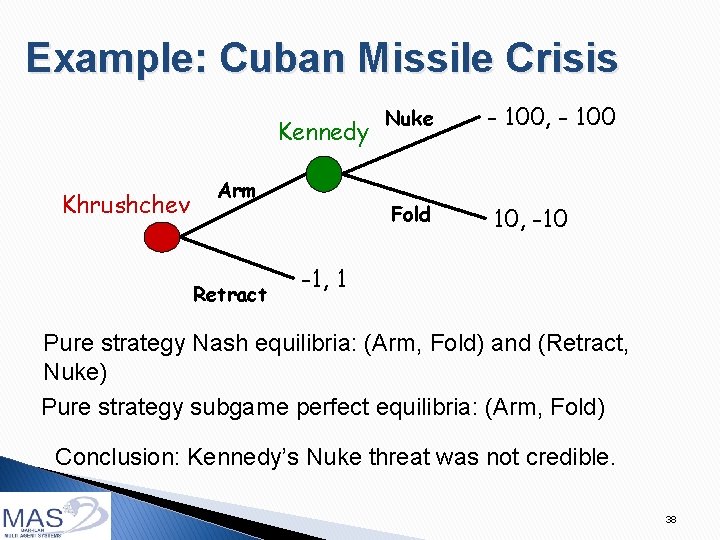

Example: Cuban Missile Crisis [Game tree, payoffs (Khrushchev, Kennedy): Khrushchev chooses Arm or Retract; Retract gives (-1, 1); after Arm, Kennedy chooses Nuke (-100, -100) or Fold (10, -10)] Pure strategy Nash equilibria: (Arm, Fold) and (Retract, Nuke) 36

Subgame perfect equilibrium & credible threats Proper subgame = subtree (of the game tree) whose root is alone in its information set Subgame perfect equilibrium ◦ Strategy profile that is in Nash equilibrium in every proper subgame (including the root), whether or not that subgame is reached along the equilibrium path of play 37

Example: Cuban Missile Crisis [Game tree, payoffs (Khrushchev, Kennedy): Khrushchev chooses Arm or Retract; Retract gives (-1, 1); after Arm, Kennedy chooses Nuke (-100, -100) or Fold (10, -10)] Pure strategy Nash equilibria: (Arm, Fold) and (Retract, Nuke) Pure strategy subgame perfect equilibria: (Arm, Fold) Conclusion: Kennedy’s Nuke threat was not credible. 38
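Backward induction on this example can be sketched as a short recursion. The tree encoding is an assumption made for illustration: payoffs are ordered (Khrushchev, Kennedy), Khrushchev moves first, and Kennedy moves only after Arm, which is the reading consistent with the equilibria listed on the slide.

```python
def backward_induction(node):
    """Solve a finite perfect-information game tree.

    A leaf is a tuple of payoffs. An internal node is a dict with
    'player' (index into the payoff tuple) and 'moves' (action -> child).
    Returns (payoffs, list of actions along the equilibrium path).
    """
    if isinstance(node, tuple):
        return node, []
    mover = node["player"]
    best = None
    for action, child in node["moves"].items():
        payoffs, path = backward_induction(child)
        if best is None or payoffs[mover] > best[0][mover]:
            best = (payoffs, [action] + path)
    return best


# Assumed tree shape; player 0 is Khrushchev, player 1 is Kennedy.
crisis = {
    "player": 0,
    "moves": {
        "Arm": {"player": 1, "moves": {"Nuke": (-100, -100), "Fold": (10, -10)}},
        "Retract": (-1, 1),
    },
}

payoffs, path = backward_induction(crisis)
```

The recursion discards (Retract, Nuke) because, once the Arm subgame is actually reached, Nuke is not a best response for Kennedy.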

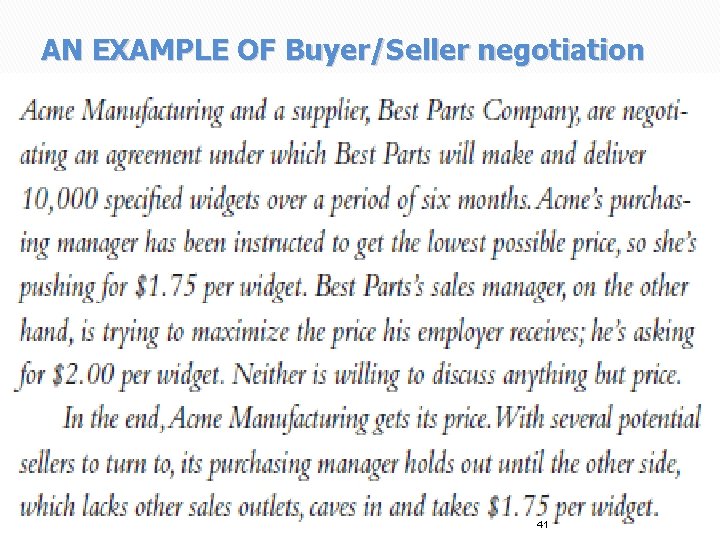

Type of games Diplomacy 39

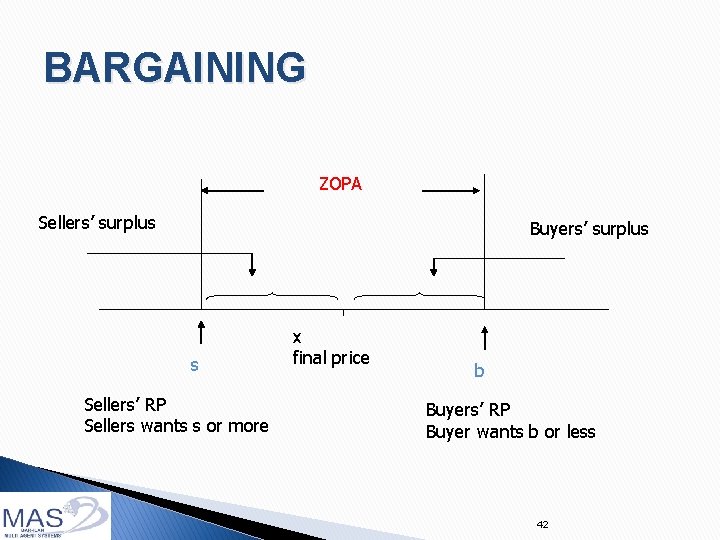

AN EXAMPLE OF Buyer/Seller negotiation 41

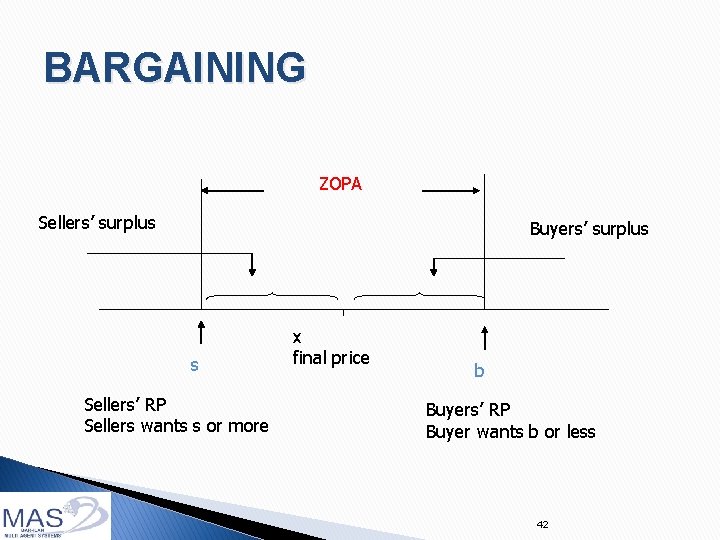

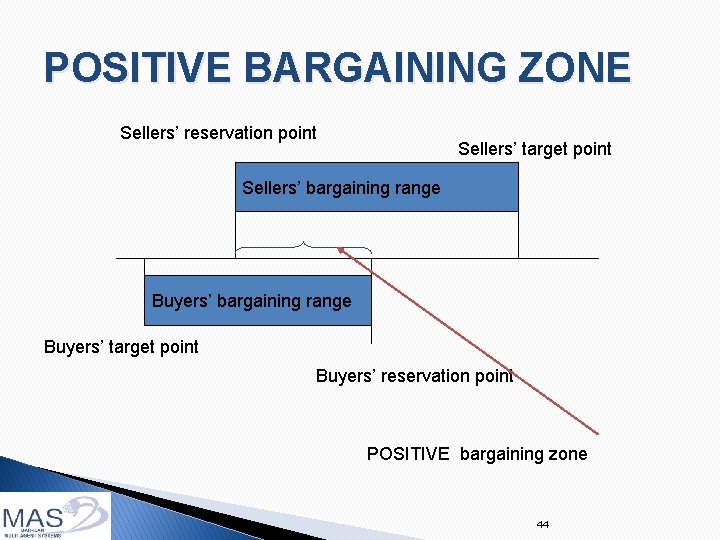

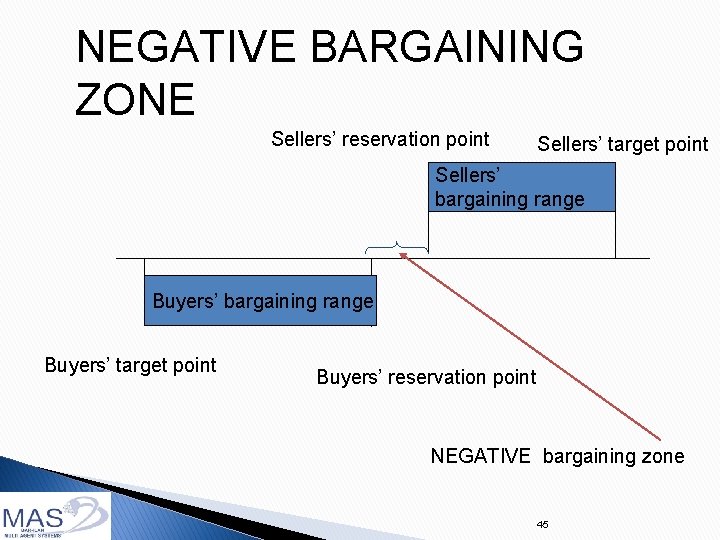

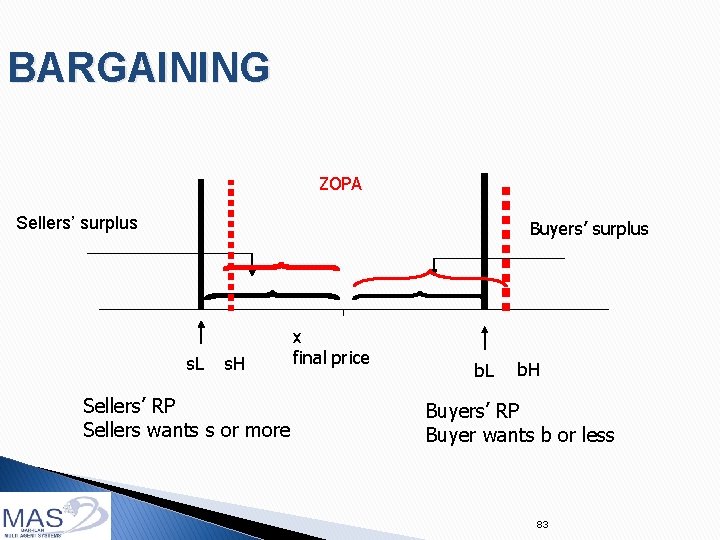

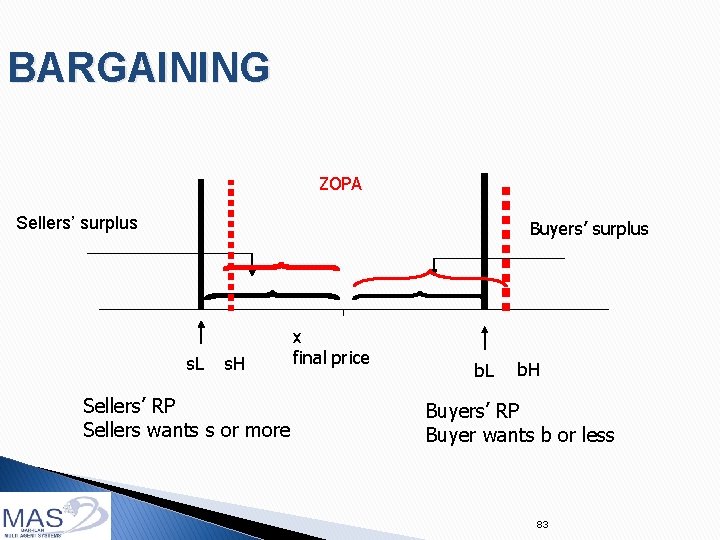

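BARGAINING: ZOPA (zone of possible agreement) [Figure: price axis marked with s = seller’s RP (seller wants s or more), x = final price, b = buyer’s RP (buyer wants b or less); seller’s surplus and buyer’s surplus are the segments on either side of x] 42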

BARGAINING • If b < s: negative bargaining zone, no possible agreements • If b > s: positive bargaining zone, agreement possible • (x − s): seller’s surplus; (b − x): buyer’s surplus • The surplus to divide is independent of x: a constant-sum game! 43
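A tiny sketch makes the constant-sum point concrete: whatever price x is agreed inside the zone, the two surpluses always add up to b − s. The function names and the numbers in the example are hypothetical.

```python
def bargaining_zone(s, b):
    """Width of the bargaining zone; a negative value means no agreement exists."""
    return b - s


def surpluses(s, b, x):
    """Return (seller's surplus, buyer's surplus) for a final price x."""
    if not s <= x <= b:
        raise ValueError("price is outside the bargaining zone")
    return x - s, b - x


# Hypothetical reservation prices: seller 60, buyer 100.
low_price = surpluses(60, 100, 70)
high_price = surpluses(60, 100, 95)
```

Both calls split the same total of 40; only the division between seller and buyer changes with x.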

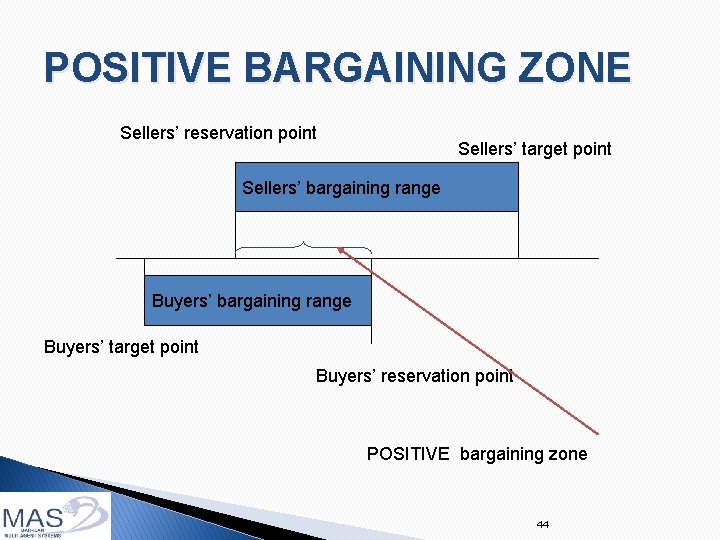

POSITIVE BARGAINING ZONE Sellers’ reservation point Sellers’ target point Sellers’ bargaining range Buyers’ target point Buyers’ reservation point POSITIVE bargaining zone 44

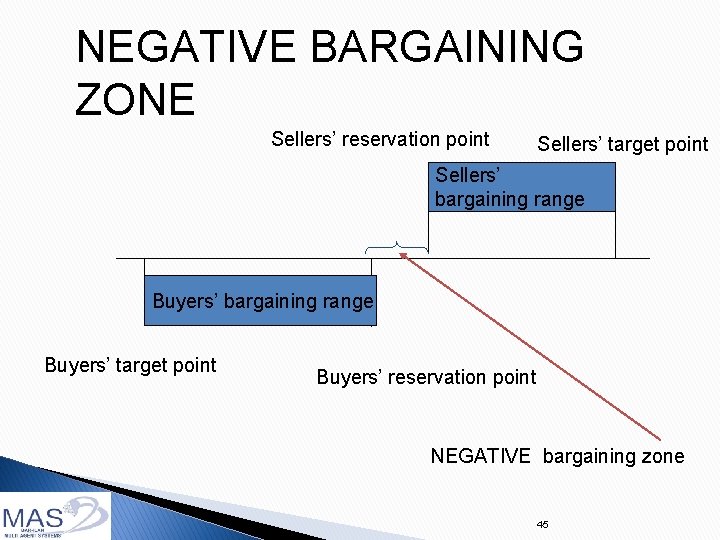

NEGATIVE BARGAINING ZONE Sellers’ reservation point Sellers’ target point Sellers’ bargaining range Buyers’ target point Buyers’ reservation point NEGATIVE bargaining zone 45

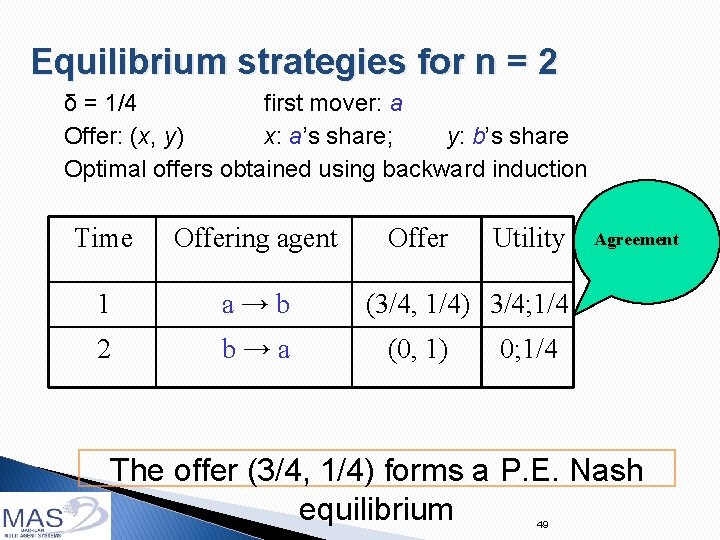

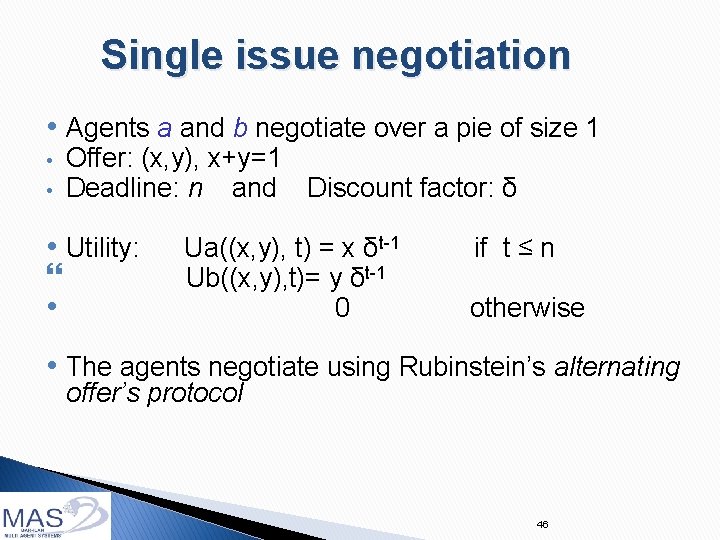

Single issue negotiation • Agents a and b negotiate over a pie of size 1 • Offer: (x, y), x + y = 1 • Deadline: n and discount factor: δ • Utility: $U_a((x, y), t) = x\,\delta^{t-1}$ and $U_b((x, y), t) = y\,\delta^{t-1}$ if $t \le n$, and 0 otherwise • The agents negotiate using Rubinstein’s alternating offers protocol 46

Alternating offers protocol

| Time | Offer | Respond |
|---|---|---|
| 1 | a: (x1, y1) | b (accept/reject) |
| 2 | b: (x2, y2) | a (accept/reject) |
| … | | |
| n | | |

47

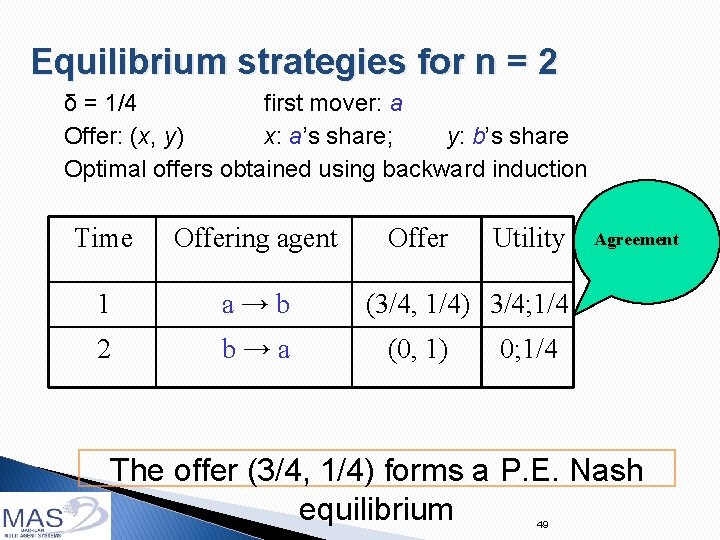

Equilibrium strategies How much should an agent offer if there is only one time period? Let n=1 and a be the first mover Agent a’s offer: Propose to keep the whole pie (1, 0); agent b will accept this 48

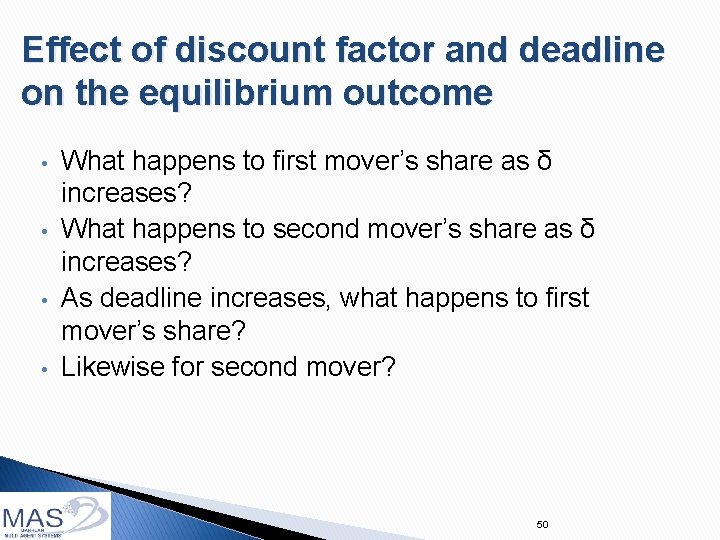

Equilibrium strategies for n = 2, δ = 1/4, first mover: a. Offer: (x, y), x: a’s share; y: b’s share. Optimal offers obtained using backward induction

| Time | Offering agent | Offer | Utility | Outcome |
|---|---|---|---|---|
| 1 | a→b | (3/4, 1/4) | 3/4; 1/4 | Agreement |
| 2 | b→a | (0, 1) | 0; 1/4 | |

The offer (3/4, 1/4) forms a P. E. Nash equilibrium 49
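The backward-induction argument generalizes to any deadline: in the last period the proposer keeps the whole pie, and one period earlier the proposer must leave the responder δ times what the responder would keep as the next proposer. A minimal sketch, assuming a single common discount factor and utilities of the form share · δ^(t−1); the function name is illustrative.

```python
from fractions import Fraction


def first_period_offer(n, delta):
    """Equilibrium split (first mover's share, responder's share) at t = 1."""
    proposer_share = Fraction(1)  # at t = n the proposer keeps everything
    for _ in range(n - 1):
        # Step back one period: the responder is offered delta times the share
        # it would keep as proposer in the following period.
        proposer_share = 1 - delta * proposer_share
    return proposer_share, 1 - proposer_share


one_period = first_period_offer(1, Fraction(1, 4))
two_periods = first_period_offer(2, Fraction(1, 4))
```

Calling the function with larger δ or larger n is a quick way to explore how patience and the deadline shift the first mover's share.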

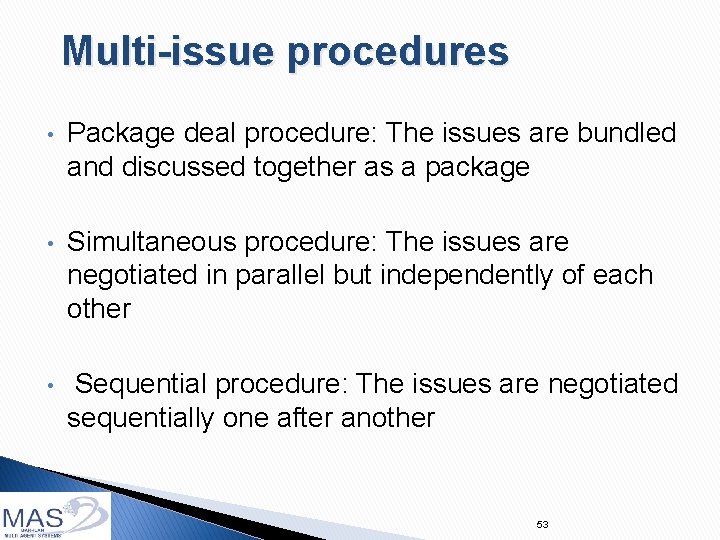

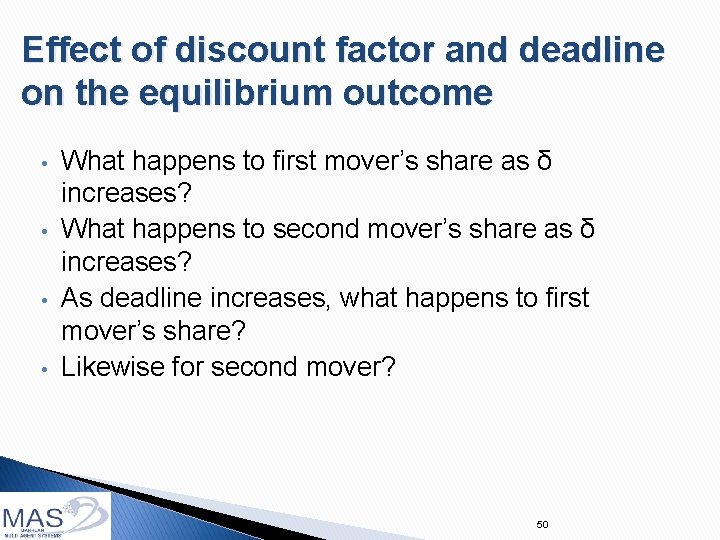

Effect of discount factor and deadline on the equilibrium outcome • What happens to first mover’s share as δ increases? • What happens to second mover’s share as δ increases? • As deadline increases, what happens to first mover’s share? • Likewise for second mover? 50

Effect of δ and deadline on the agents’ shares 51

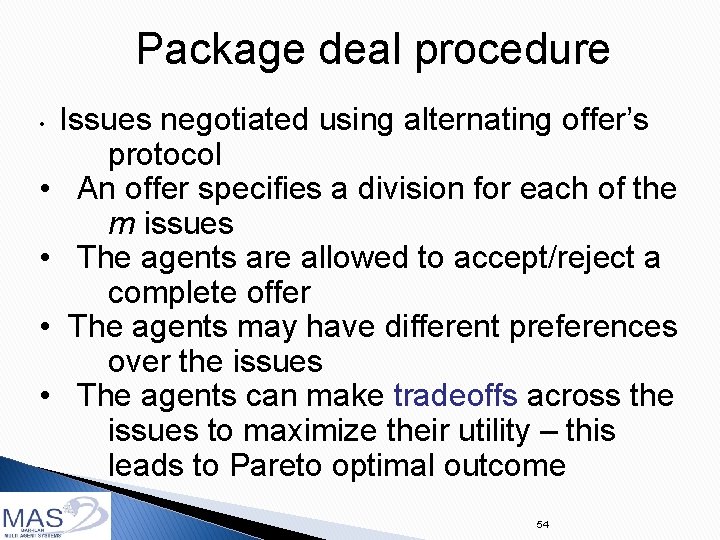

Multiple issues • Set of issues: S = {1, 2, …, m}. Each issue is a pie of size 1 • The issues are divisible • Deadline: n (for all the issues) • Discount factor: $\delta_c$ for issue c • Utility: $U(x, t) = \sum_{c} U(x_c, t)$ 52

Multi-issue procedures • Package deal procedure: The issues are bundled and discussed together as a package • Simultaneous procedure: The issues are negotiated in parallel but independently of each other • Sequential procedure: The issues are negotiated sequentially one after another 53

Package deal procedure • Issues negotiated using alternating offers protocol • An offer specifies a division for each of the m issues • The agents are allowed to accept/reject a complete offer • The agents may have different preferences over the issues • The agents can make tradeoffs across the issues to maximize their utility; this leads to a Pareto optimal outcome 54

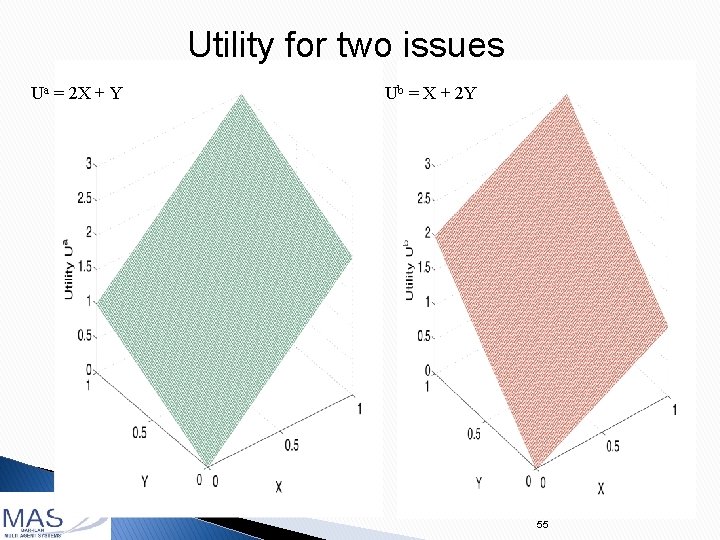

Utility for two issues $U_a = 2X + Y$, $U_b = X + 2Y$ 55

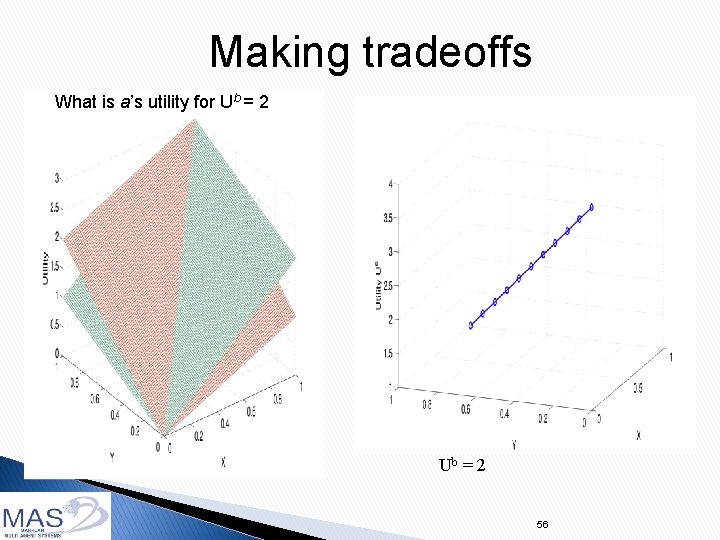

Making tradeoffs What is a’s utility for Ub = 2 56

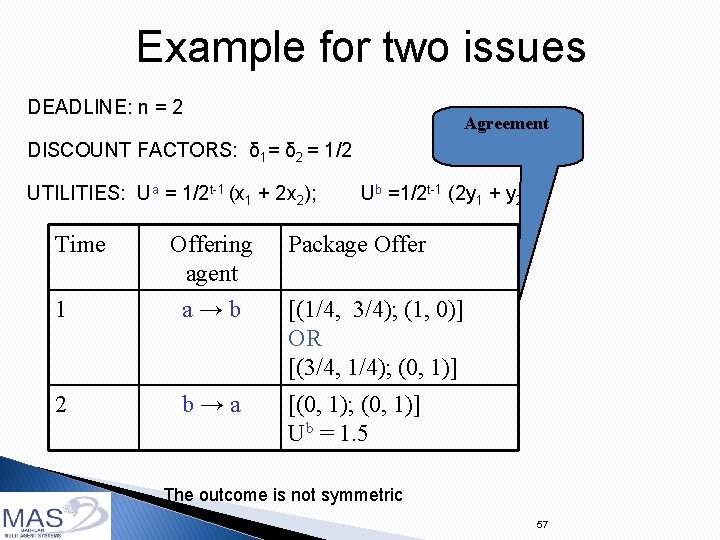

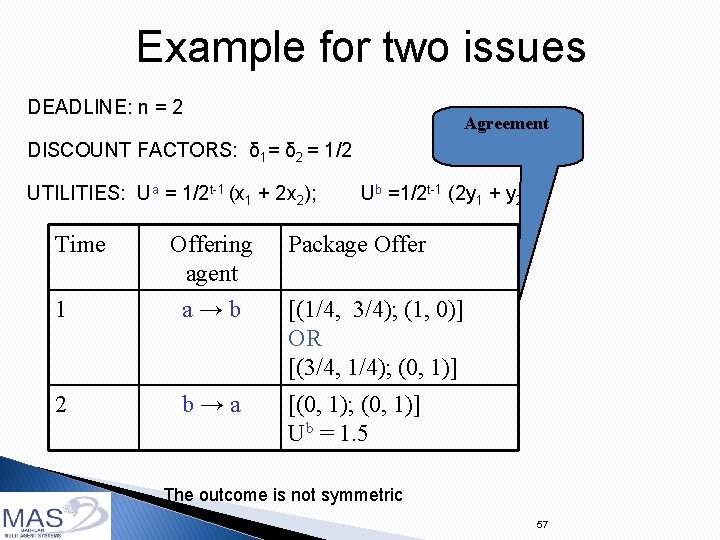

Example for two issues DEADLINE: n = 2 DISCOUNT FACTORS: $\delta_1 = \delta_2 = 1/2$ UTILITIES: $U_a = (1/2)^{t-1}(x_1 + 2x_2)$; $U_b = (1/2)^{t-1}(2y_1 + y_2)$

| Time | Offering agent | Package offer | Outcome |
|---|---|---|---|
| 1 | a→b | [(1/4, 3/4); (1, 0)] OR [(3/4, 1/4); (0, 1)] | Agreement |
| 2 | b→a | [(0, 1); (0, 1)] | $U_b = 1.5$ |

The outcome is not symmetric 57

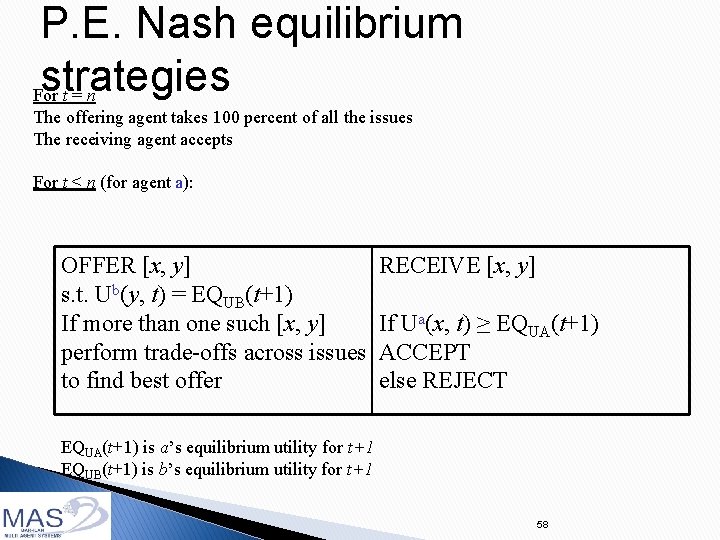

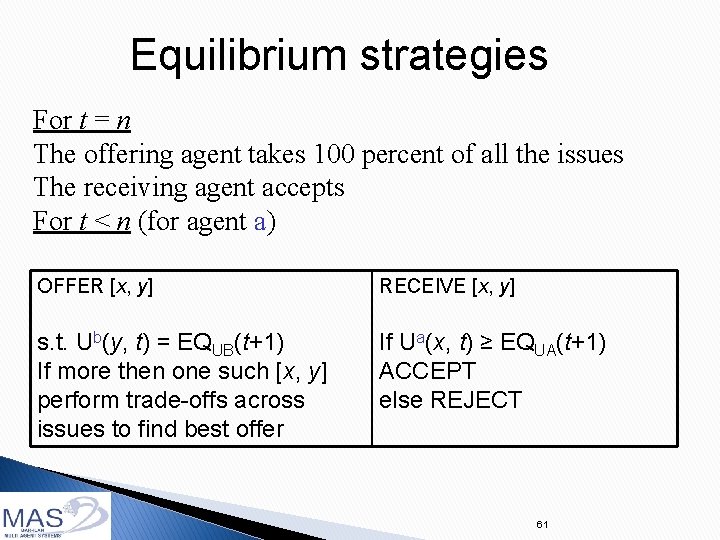

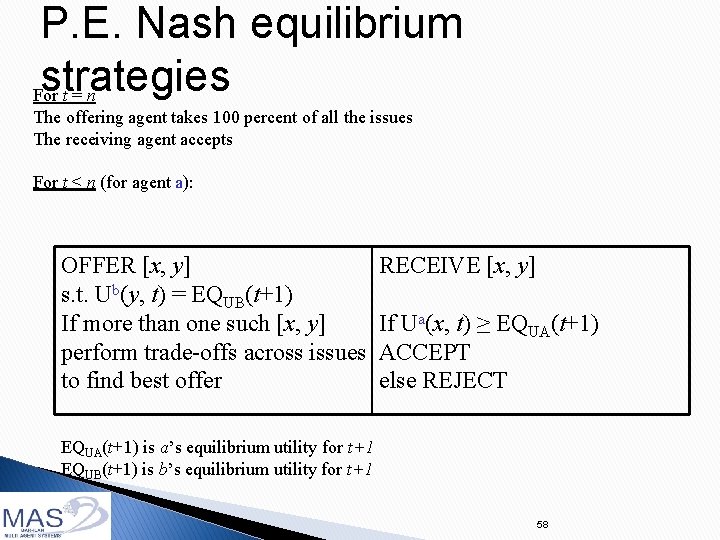

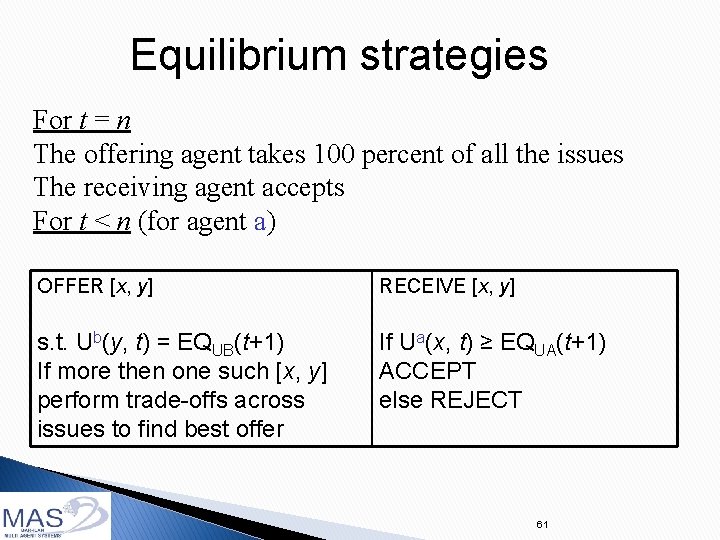

P. E. Nash equilibrium strategies For t = n: The offering agent takes 100 percent of all the issues; the receiving agent accepts. For t < n (for agent a): ◦ OFFER [x, y] s.t. $U_b(y, t) = EQUB(t+1)$. If there is more than one such [x, y], perform trade-offs across issues to find the best offer ◦ RECEIVE [x, y]: if $U_a(x, t) \ge EQUA(t+1)$ ACCEPT, else REJECT. EQUA(t+1) is a’s equilibrium utility for t+1; EQUB(t+1) is b’s equilibrium utility for t+1 58

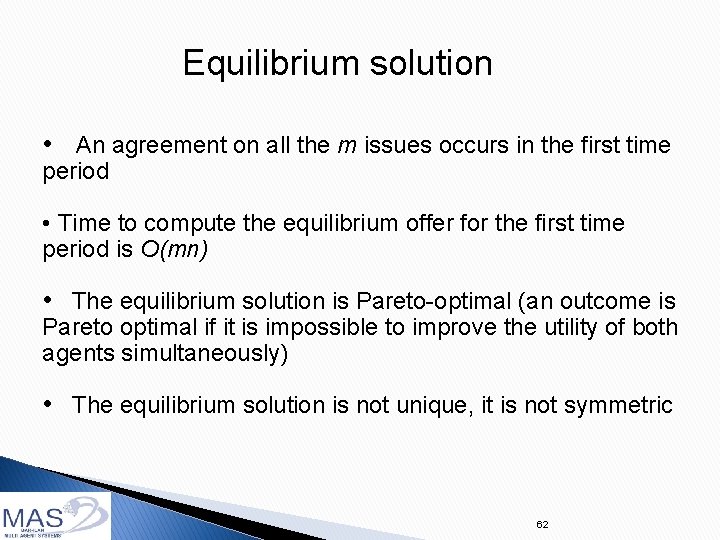

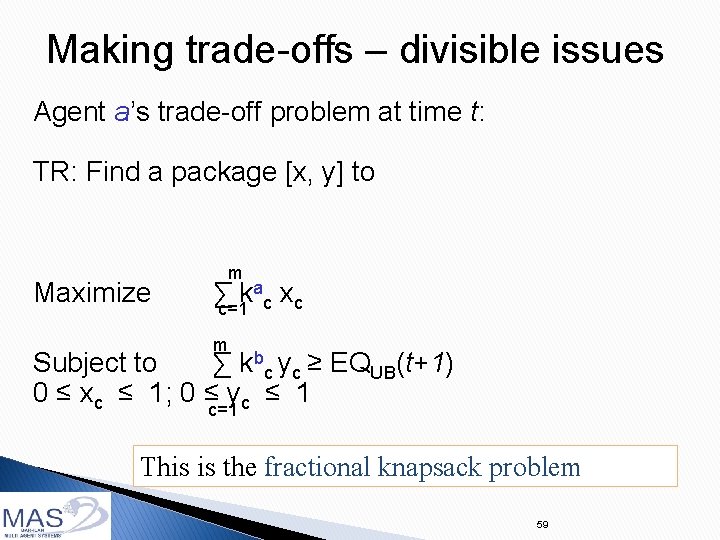

Making trade-offs – divisible issues Agent a’s trade-off problem at time t: TR: Find a package [x, y] to maximize $\sum_{c=1}^{m} k^a_c x_c$ subject to $\sum_{c=1}^{m} k^b_c y_c \ge EQUB(t+1)$, $0 \le x_c \le 1$, $0 \le y_c \le 1$. This is the fractional knapsack problem 59

Making trade-offs – divisible issues Agent a’s perspective (time t) Agent a considers the m issues in the increasing order of ka/kb and assigns to b the maximum possible share for each of them until b’s cumulative utility equals EQUB(t+1) 60
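The greedy rule described here can be sketched as follows. This is an illustrative implementation under stated assumptions: `ka` and `kb` are the per-issue weights of agents a and b (assumed positive), `target_b` is the utility b must receive expressed in time-t units, and the function name is hypothetical.

```python
from fractions import Fraction


def tradeoff_offer(ka, kb, target_b):
    """Return y, b's share of each issue, chosen greedily by a.

    Issues are conceded in increasing order of ka/kb, i.e. those that cost a
    the least per unit of utility delivered to b are given away first.
    """
    y = [Fraction(0)] * len(ka)
    remaining = Fraction(target_b)
    order = sorted(range(len(ka)), key=lambda c: Fraction(ka[c]) / Fraction(kb[c]))
    for c in order:
        if remaining <= 0:
            break
        y[c] = min(Fraction(1), remaining / kb[c])
        remaining -= y[c] * kb[c]
    if remaining > 0:
        raise ValueError("b's target utility cannot be reached")
    return y


# Two-issue example: Ua = x1 + 2*x2, Ub = 2*y1 + y2, b must receive 1.5.
y = tradeoff_offer(ka=[1, 2], kb=[2, 1], target_b=Fraction(3, 2))
x = [1 - share for share in y]
```

On the two-issue example this reproduces the package [(1/4, 3/4); (1, 0)]: a concedes three quarters of issue 1 and keeps all of issue 2.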

Equilibrium strategies For t = n: The offering agent takes 100 percent of all the issues; the receiving agent accepts. For t < n (for agent a): ◦ OFFER [x, y] s.t. $U_b(y, t) = EQUB(t+1)$. If there is more than one such [x, y], perform trade-offs across issues to find the best offer ◦ RECEIVE [x, y]: if $U_a(x, t) \ge EQUA(t+1)$ ACCEPT, else REJECT 61

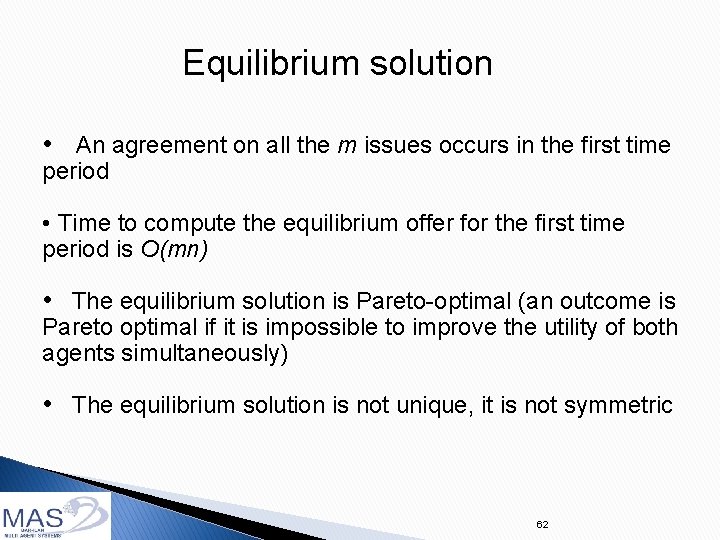

Equilibrium solution • An agreement on all the m issues occurs in the first time period • Time to compute the equilibrium offer for the first time period is O(mn) • The equilibrium solution is Pareto-optimal (an outcome is Pareto optimal if it is impossible to improve the utility of both agents simultaneously) • The equilibrium solution is not unique, it is not symmetric 62

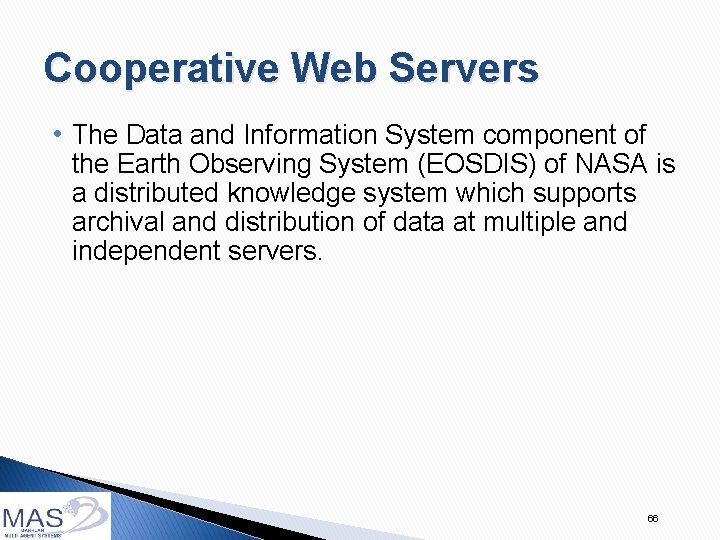

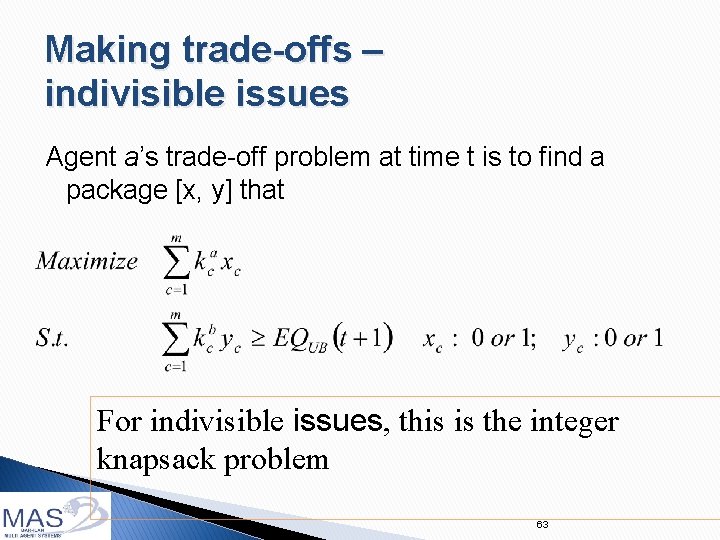

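Making trade-offs – indivisible issues Agent a’s trade-off problem at time t is to find a package [x, y] that maximizes a’s utility while giving b at least EQUB(t+1), where each issue goes entirely to one agent. For indivisible issues, this is the integer knapsack problem 63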

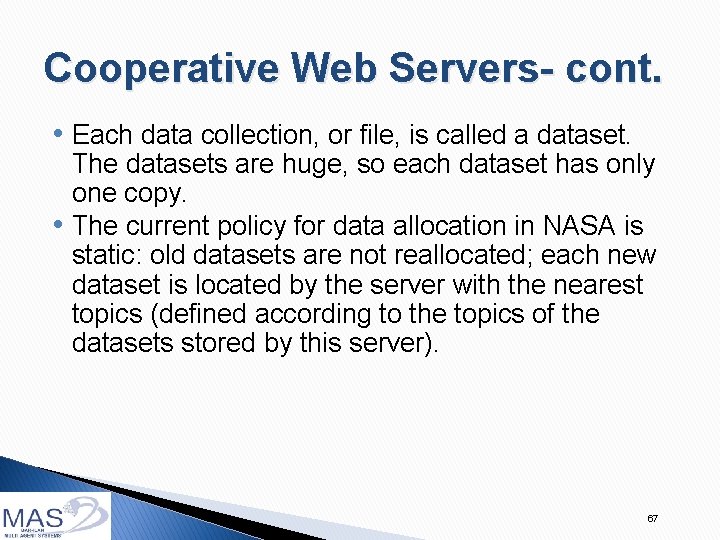

Key points • Single issue: • Time to compute equilibrium is O(n) • The equilibrium is not unique, it is not symmetric • Multiple divisible issues: (exact solution) • Time to compute equilibrium for t=1 is O(mn) • The equilibrium is Pareto optimal, it is not unique, it is not symmetric • Multiple indivisible issues: (approx. solution) • There is an FPTAS to compute approximate equilibrium • The equilibrium is Pareto optimal, it is not unique, it is not symmetric 64

Negotiation on data allocation in multi-server environment R. Azulay-Schwartz and S. Kraus. Negotiation On Data Allocation in Multi-Agent Environments. Autonomous Agents and Multi-Agent Systems journal 5(2):123-172, 2002. 65

Cooperative Web Servers • The Data and Information System component of the Earth Observing System (EOSDIS) of NASA is a distributed knowledge system which supports archival and distribution of data at multiple and independent servers. 66

Cooperative Web Servers- cont. • Each data collection, or file, is called a dataset. The datasets are huge, so each dataset has only one copy. • The current policy for data allocation in NASA is static: old datasets are not reallocated; each new dataset is located by the server with the nearest topics (defined according to the topics of the datasets stored by this server). 67

Related Work File Allocation Problem The original problem: How to distribute files among computers, in order to optimize the system performance. Our problem: How can self-motivated servers decide about distribution of files, when each server has its own objectives. 68

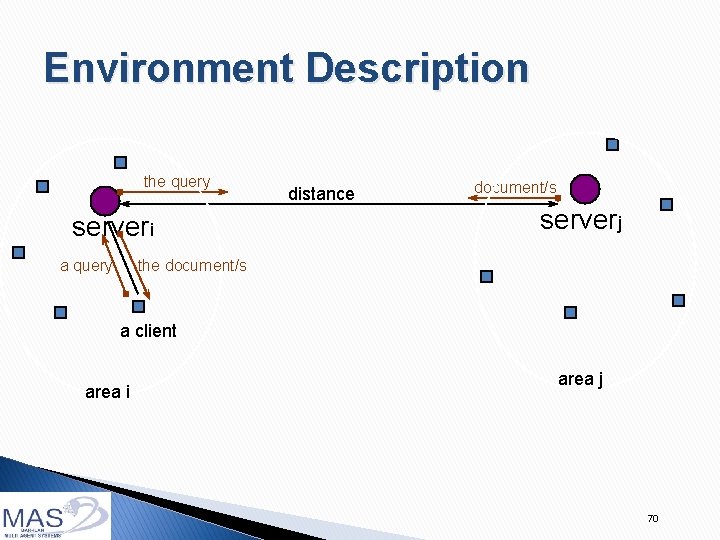

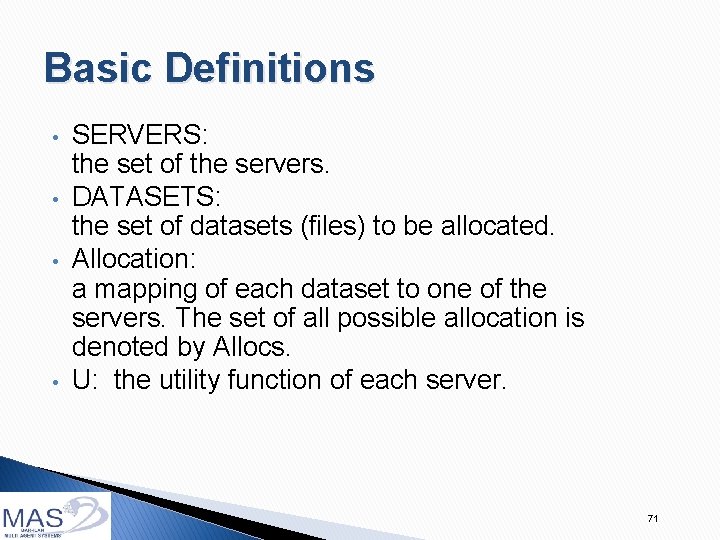

Environment Description • There are several information servers. Each server is located at a different geographical area. • Each server receives queries from the clients in its area, and sends documents as responses to queries. These documents can be stored locally, or in another server. 69

Environment Description [Figure: a client in area i sends a query to server i; server i passes the query to server j in area j, at some distance, and the document/s are returned to the client] 70

Basic Definitions • SERVERS: the set of the servers. • DATASETS: the set of datasets (files) to be allocated. • Allocation: a mapping of each dataset to one of the servers. The set of all possible allocations is denoted by Allocs. • U: the utility function of each server. 71

The Conflict Allocation • If at least one server opts out of the negotiation, then the conflict allocation conflict_alloc is implemented. • We consider the conflict allocation to be the static allocation (each dataset is stored in the server with closest topics). 72

Utility Function • $U_{server}(alloc, t)$ specifies the utility of server from $alloc \in Allocs$ at time t. • It consists of ◦ The utility from the assignment of each dataset. ◦ The cost of negotiation delay. $U_{server}(alloc, 0) = \sum_{x \in DATASETS} V_{server}(x, alloc(x))$ 73

Parameters of utility • query price: payment for retrieved documents. • usage(ds, s): the expected number of documents of dataset ds requested by clients in the area of server s. • storage costs, retrieve costs, answer costs. 74

Cost over time • Cost of communication and computation time of the negotiation. • Loss of unused information: new documents cannot be used until the negotiation ends. • Dataset usage and storage costs are assumed to decrease over time, with the same discount ratio (p-1). Thus, there is a constant discount ratio of the utility from an allocation: $U_{server}(alloc, t) = \delta^{t} \cdot U_{server}(alloc, 0) - t \cdot C$. 75
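The time-dependent utility can be sketched as below. The sketch reads the slide's formula as a discount factor raised to the power t, times the time-0 utility, minus t times a constant per-period cost C; that reading, the function names, and all numbers are assumptions for illustration.

```python
def base_utility(values, alloc):
    """U(alloc, 0): sum over datasets of V(dataset, server holding it).

    values[(dataset, server)] is this server's utility from that placement.
    """
    return sum(values[(dataset, server)] for dataset, server in alloc.items())


def utility_at(values, alloc, t, delta, cost_per_period):
    """U(alloc, t) = delta**t * U(alloc, 0) - t * C."""
    return (delta ** t) * base_utility(values, alloc) - t * cost_per_period


# Hypothetical valuations of one server for two datasets on two servers.
values = {
    ("ds1", "s1"): 60.0, ("ds1", "s2"): 40.0,
    ("ds2", "s1"): 30.0, ("ds2", "s2"): 60.0,
}
alloc = {"ds1": "s1", "ds2": "s2"}

now = utility_at(values, alloc, t=0, delta=0.5, cost_per_period=2.0)
later = utility_at(values, alloc, t=2, delta=0.5, cost_per_period=2.0)
```

Both the discounting and the per-period cost push utility down as t grows, which is why servers prefer early agreement.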

Assumptions • Each server prefers any agreement over continuation of the negotiation indefinitely. • The utility of each server from the conflict allocation is always greater or equal to 0. • OFFERS - the set of allocations that are preferred by all the agents over opting out. 76

Negotiation Analysis: Simultaneous Responses • Simultaneous responses: a server, when responding, is not informed of the other responses. Theorem: For each offer $x \in OFFERS$, there is a subgame-perfect equilibrium of the bargaining game, with the outcome x offered and unanimously accepted in period 0. 77

Choosing the Allocation • The designers of the servers can agree in advance on a joint technique for choosing x ◦ giving each server its conflict utility ◦ maximizing a social welfare criterion: the sum of the servers’ utilities, or the generalized Nash product of the servers’ utilities: $\prod_{s} (U_s(x) - U_s(conflict))$ 78
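Selecting x by either criterion is a small search over candidate allocations. The sketch below assumes the candidates and each server's utilities are already known; the function name, the candidate labels, and the numbers are hypothetical.

```python
from math import prod


def choose_allocation(offers, conflict, criterion="nash"):
    """Pick an allocation by the sum of utilities or by the Nash product.

    offers: {allocation name: [utility of each server]}
    conflict: [conflict utility of each server]
    Only allocations that every server weakly prefers to conflict are kept.
    """
    feasible = {
        name: utils for name, utils in offers.items()
        if all(u >= c for u, c in zip(utils, conflict))
    }

    def score(utils):
        if criterion == "sum":
            return sum(utils)
        return prod(u - c for u, c in zip(utils, conflict))

    return max(feasible, key=lambda name: score(feasible[name]))


offers = {"A": [10, 2], "B": [6, 5], "C": [12, 0]}
conflict = [1, 1]

by_nash = choose_allocation(offers, conflict, criterion="nash")
by_sum = choose_allocation(offers, conflict, criterion="sum")
```

The two criteria can disagree: the sum favors the allocation with the largest total, while the Nash product favors one whose gains over conflict are more evenly spread.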

Experimental Evaluation • How do the parameters influence the results of the negotiation? • vcost(alloc): the variable costs due to an allocation (excludes storage_cost and the gains due to queries). • vcost_ratio: the ratio of vcosts when using negotiation, and vcosts of the static allocation. 79

Effect of Parameters on The Results • As the number of servers grows, vcost_ratio increases (more complex computations) ☹ • As the number of datasets grows, vcost_ratio decreases (negotiation is more beneficial) ☺ • Changing the mean usage did not influence vcost_ratio significantly 😐, but vcost_ratio decreases as the standard deviation of the usage increases ☺ 80

Influence of Parameters - cont. • When the standard deviation of the distances between servers increases, vcost_ratio decreases ☺ • When the distance between servers increases, vcost_ratio decreases ☺ • In the domains tested: ◦ answer_cost: vcost_ratio ☹ ◦ storage_cost: vcost_ratio ☹ ◦ retrieve_cost: vcost_ratio ☺ ◦ query_price: vcost_ratio ☺ 81

Incomplete Information • Each server knows: • The usage frequency of all datasets, by clients from its area • The usage frequency of datasets stored in it, by all clients 82

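BARGAINING: ZOPA [Figure: price axis with the seller’s RP lying between sL and sH (seller wants s or more), the final price x, and the buyer’s RP lying between bL and bH (buyer wants b or less); seller’s surplus and buyer’s surplus are the segments on either side of x] 83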

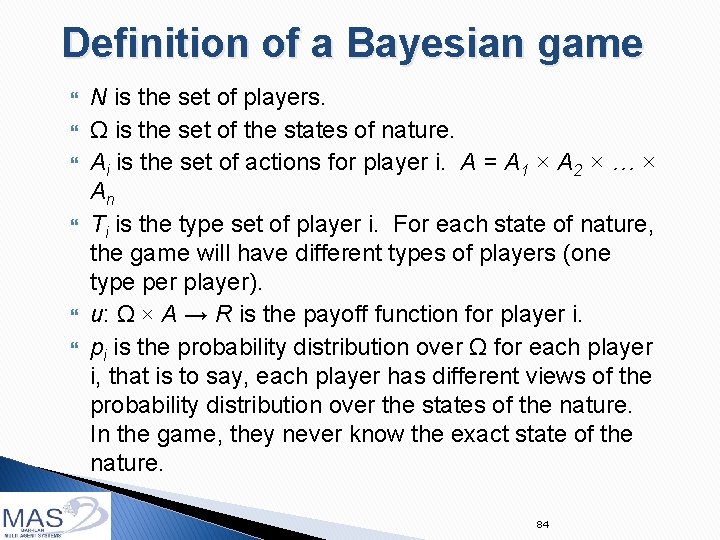

Definition of a Bayesian game N is the set of players. Ω is the set of the states of nature. $A_i$ is the set of actions for player i; $A = A_1 \times A_2 \times \dots \times A_n$. $T_i$ is the type set of player i. For each state of nature, the game will have different types of players (one type per player). $u_i : \Omega \times A \to \mathbb{R}$ is the payoff function for player i. $p_i$ is the probability distribution over Ω for player i; that is, each player has a different view of the probability distribution over the states of nature. In the game, they never know the exact state of nature. 84

Solution concepts for Bayesian games A (Bayesian) Nash equilibrium is a strategy profile and beliefs specified for each player about the types of the other players that maximizes the expected utility for each player given their beliefs about the other players' types and given the strategies played by the other players. 85

Incomplete Information - cont. • A revelation mechanism: • First, all the servers report simultaneously all their private information: • for each dataset, the past usage of the dataset by this server. • for each server, the past usage of each local dataset by this server. • Then, the negotiation proceeds as in the complete information case. 86

Incomplete Information - cont. • Lemma: There is a Nash equilibrium where each server tells the truth about its past usage of remote datasets, and the other servers’ usage of its local datasets. • Lies concerning details about local usage of local datasets are intractable. 87

Summary: negotiation on data allocation • We have considered the data allocation problem in a distributed environment. • We have presented the utility function of the servers, which expresses their preferences. • We have proposed using a negotiation protocol for solving the problem. • For incomplete information situations, a revelation process was added to the protocol. 88

Agent-Human Negotiation 89

Computers interacting with people Computer has the control Human has the control Computer persuades human 90


Culture sensitive agents • The development of a standardized agent to be used in the collection of data for studies on culture and negotiation • Buyer/Seller agents negotiate well across cultures • PURB agent 92

Semi-autonomous cars 93

Medical applications Gertner Institute for Epidemiology and Health Policy Research 94

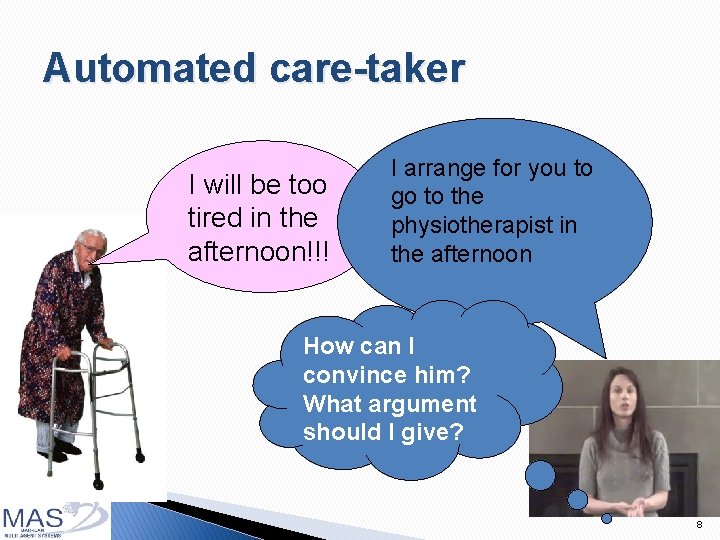

Automated care-taker “I scheduled an appointment for you at the physiotherapist this afternoon.” “I will be too tired in the afternoon!!!” Try to reschedule and fail. “The physiotherapist has no other available appointments this week. How about resting before the appointment?” 95

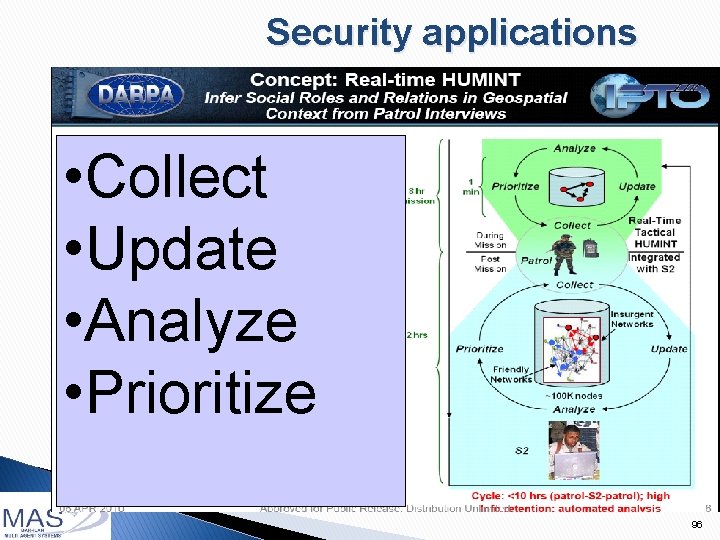

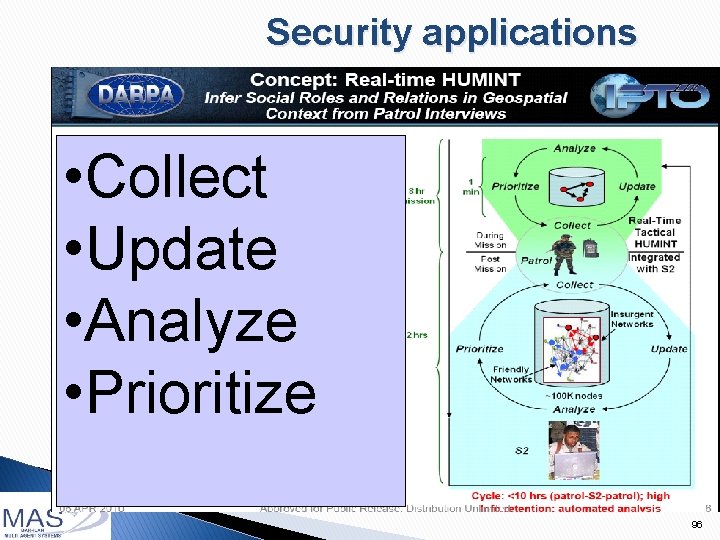

Security applications • Collect • Update • Analyze • Prioritize 96

People often follow suboptimal decision strategies Irrationalities attributed to ◦ sensitivity to context ◦ lack of knowledge of own preferences ◦ the effects of complexity ◦ the interplay between emotion and cognition ◦ the problem of self control ◦ bounded rationality in the bullet 97

Agents that play repeatedly with the same person 98

![Aut ONA BY 03 Buyers and sellers Using data from previous experiments Belief function Aut. ONA [BY 03] Buyers and sellers Using data from previous experiments Belief function](https://slidetodoc.com/presentation_image_h2/3b9f1cf96b8bf601bd5e44831df86a79/image-97.jpg)

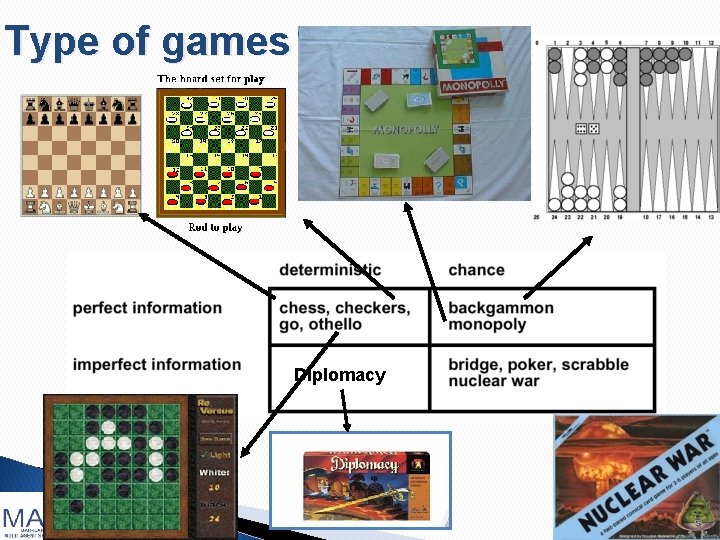

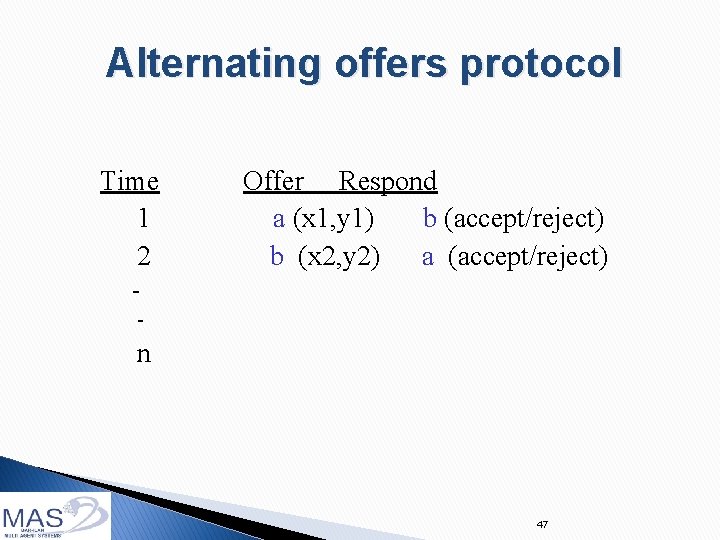

AutONA [BY 03] ◦ Buyers and sellers ◦ Using data from previous experiments ◦ Belief function to model opponent ◦ Implemented several tactics and heuristics, including a concession mechanism. A. Byde, M. Yearworth, K.-Y. Chen, and C. Bartolini. AutONA: A system for automated multiple 1-1 negotiation. In CEC, pages 59–67, 2003

Cliff-Edge • Virtual learning and reinforcement learning • Using data from previous interactions • Implemented several tactics and heuristics ◦ qualitative in nature • Non-deterministic behavior, via means of randomization. R. Katz and S. Kraus. Efficient agents for cliff edge environments with a large set of decision options. In AAMAS, pages 697–704, 2006

General opponent* modeling Agents that play with the same person only once 101

Challenges of human opponent* modeling Small number of examples ◦ difficult to collect data on people Noisy data ◦ people are inconsistent (the same person may act differently) ◦ people are diverse 102

Guessing Heuristic Multi-issue, multi-attribute, with incomplete information Domain independent Implemented several tactics and heuristics ◦ including, concession mechanism C. M. Jonker, V. Robu, and J. Treur. An agent architecture for multi-attribute negotiation using incomplete preference information. JAAMAS, 15(2):221–252, 2007

PURB Agent Building blocks: Personality model, Utility function, Rules for guiding choice. Key idea: Models personality traits of its negotiation partners over time. Uses decision theory to decide how to negotiate, with a utility function that depends on models and other environmental features. Pre-defined rules facilitate computation. Plays as well as people; adapts to culture

![QOAgent LIN 08 Played at least as well as people Multiissue multiattribute with incomplete QOAgent [LIN 08] Played at least as well as people Multi-issue, multi-attribute, with incomplete](https://slidetodoc.com/presentation_image_h2/3b9f1cf96b8bf601bd5e44831df86a79/image-103.jpg)

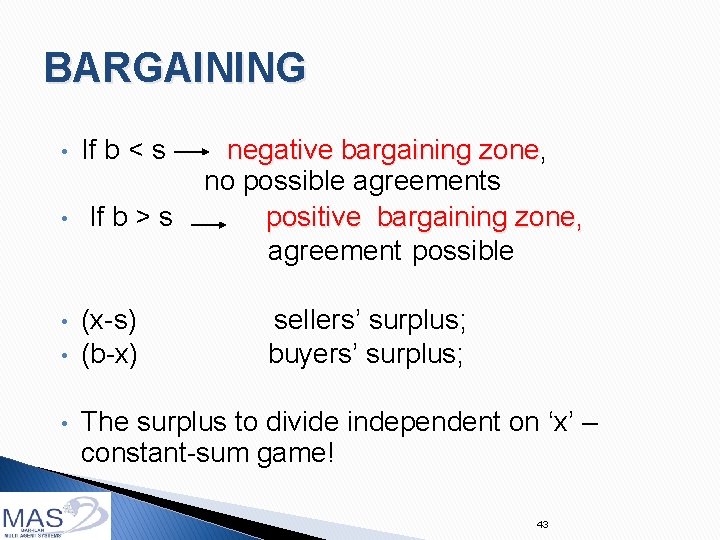

QOAgent [LIN 08] Played at least as well as people Multi-issue, multi-attribute, with incomplete information Domain independent Implemented several tactics and heuristics ◦ qualitative in nature Non-deterministic behavior, also via means of randomization Is it possible to improve the QOAgent? Yes, if you have data R. Lin, S. Kraus, J. Wilkenfeld, and J. Barry. Negotiating with bounded rational agents in environments with incomplete information using an automated agent. Artificial Intelligence, 172(6-7):823–851, 2008 105

KBAgent Multi-issue, multi-attribute, with incomplete information Domain independent Implemented several tactics and heuristics ◦ qualitative in nature Non-deterministic behavior, also via means of randomization Using data from previous interactions Y. Oshrat, R. Lin, and S. Kraus. Facing the challenge of human-agent negotiations via effective general opponent modeling. In AAMAS, 2009 106

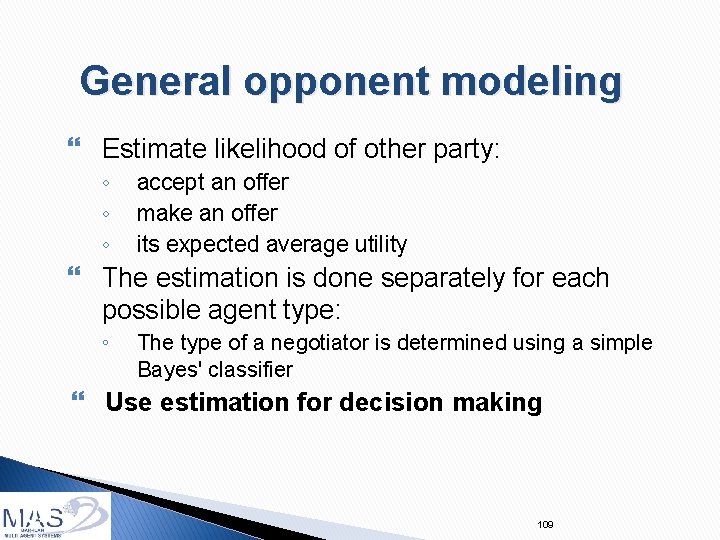

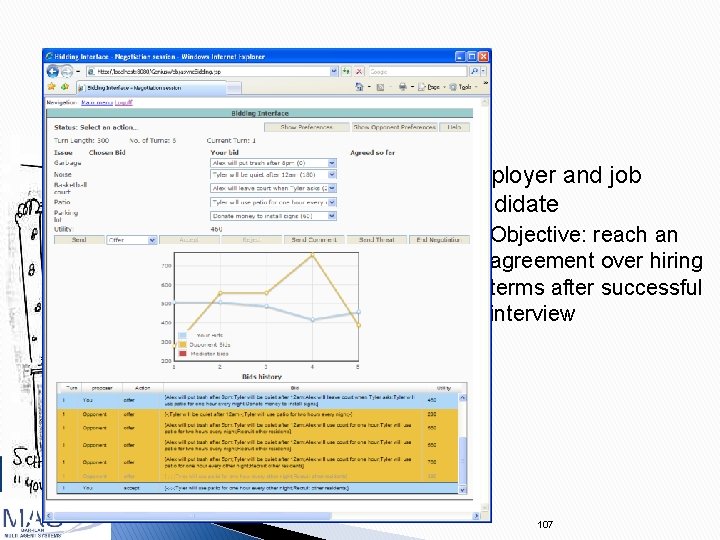

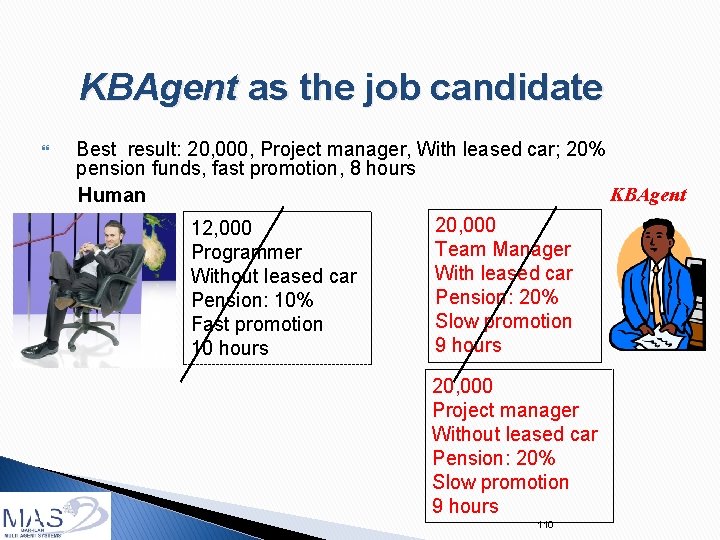

Example scenario Employer and job candidate ◦ Objective: reach an agreement over hiring terms after successful interview 107

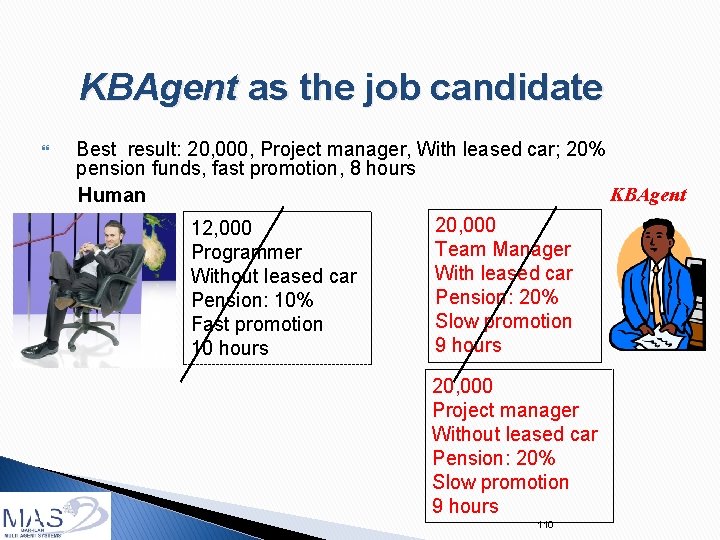

General opponent modeling • Challenge: sparse data of past negotiation sessions of people • Technique: Kernel Density Estimation 108

General opponent modeling Estimate likelihood of other party: ◦ accept an offer ◦ make an offer ◦ its expected average utility The estimation is done separately for each possible agent type: ◦ The type of a negotiator is determined using a simple Bayes' classifier Use estimation for decision making 109
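To give a feel for kernel density estimation on sparse data, here is a generic one-dimensional Gaussian KDE in plain Python. It is not the KBAgent's actual estimator: the sample values, the bandwidth, and the idea of applying it to the utilities of accepted offers are assumptions for illustration.

```python
import math


def gaussian_kde(samples, bandwidth):
    """Return a density function smoothed from a handful of samples."""
    norm = len(samples) * bandwidth * math.sqrt(2 * math.pi)

    def density(x):
        # Each sample contributes a Gaussian bump centered on itself.
        return sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
        ) / norm

    return density


# Hypothetical utilities of offers that people accepted in past sessions.
accepted = [310.0, 350.0, 365.0, 400.0, 420.0]
density = gaussian_kde(accepted, bandwidth=30.0)

# Riemann sum over a wide range as a sanity check that the density integrates to ~1.
total_mass = sum(density(x) for x in range(0, 801))
```

The smoothing lets a few observed sessions say something about offers that were never seen; the bandwidth controls how far each observation's influence spreads.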

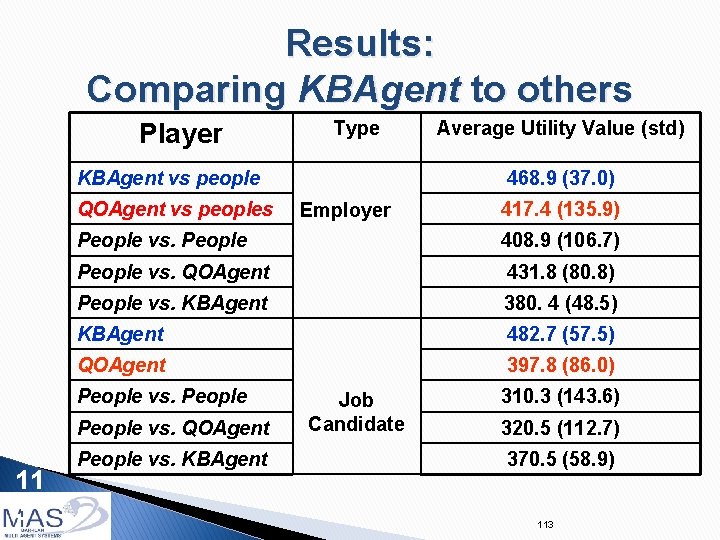

KBAgent as the job candidate Best result: 20,000, Project manager, With leased car; 20% pension funds, fast promotion, 8 hours. KBAgent / Human offers: ◦ 12,000; Programmer; Without leased car; Pension: 10%; Fast promotion; 10 hours ◦ 20,000; Team Manager; With leased car; Pension: 20%; Slow promotion; 9 hours ◦ 20,000; Project manager; Without leased car; Pension: 20%; Slow promotion; 9 hours 110

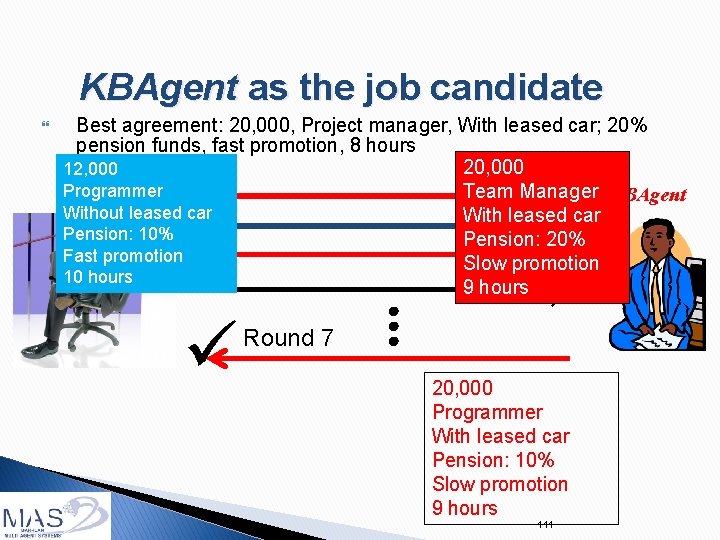

KBAgent as the job candidate Best agreement: 20,000, Project manager, With leased car; 20% pension funds, fast promotion, 8 hours. KBAgent / Human offers: ◦ 12,000; Programmer; Without leased car; Pension: 10%; Fast promotion; 10 hours ◦ 20,000; Team Manager; With leased car; Pension: 20%; Slow promotion; 9 hours ◦ Round 7: 20,000; Programmer; With leased car; Pension: 10%; Slow promotion; 9 hours 111

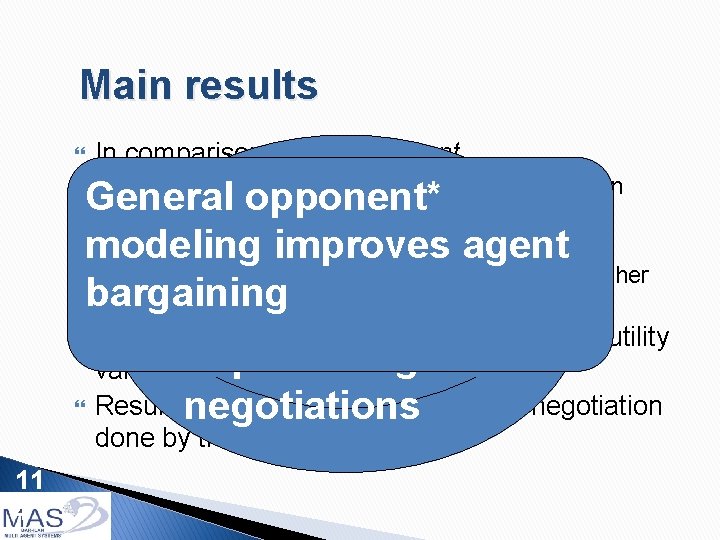

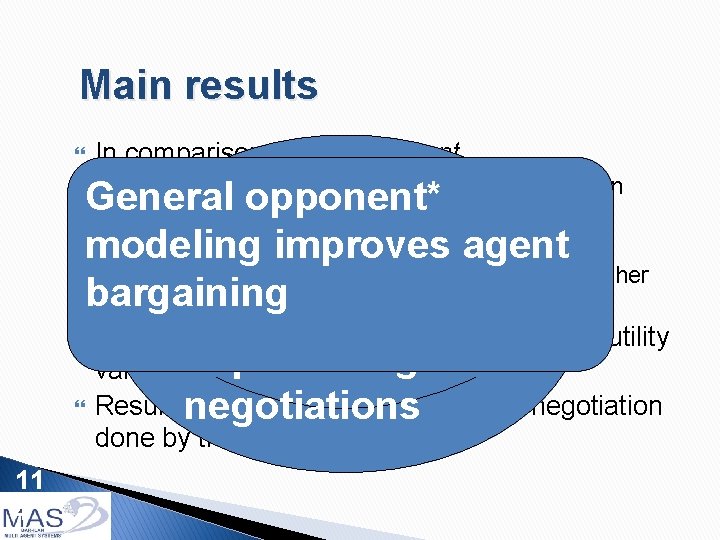

Experiments Learned from 20 games of human-human 172 grad and undergrad students in Computer Science People were told they may be playing a computer agent or a person. Scenarios: ◦ Employer-Employee ◦ Tobacco Convention: England vs. Zimbabwe 112

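Results: Comparing KBAgent to others

| Role | Player Type | Average Utility Value (std) |
|---|---|---|
| Employer | KBAgent vs. people | 468.9 (37.0) |
| Employer | QOAgent vs. people | 417.4 (135.9) |
| Employer | People vs. People | 408.9 (106.7) |
| Employer | People vs. QOAgent | 431.8 (80.8) |
| Employer | People vs. KBAgent | 380.4 (48.5) |
| Job Candidate | KBAgent vs. people | 482.7 (57.5) |
| Job Candidate | QOAgent vs. people | 397.8 (86.0) |
| Job Candidate | People vs. People | 310.3 (143.6) |
| Job Candidate | People vs. QOAgent | 320.5 (112.7) |
| Job Candidate | People vs. KBAgent | 370.5 (58.9) |

113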

Main results In comparison to the QOAgent ◦ The KBAgent achieved higher utility values than QOAgent ◦ More agreements were accepted by people ◦ The sum of utility values (social welfare) was higher when the KBAgent was involved The KBAgent achieved significantly higher utility values than people Results demonstrate the proficiency of the negotiation done by the KBAgent General opponent* modeling improves agent negotiations 114

Automated care-taker I arrange for you to go to the physiotherapist in the afternoon. I will be too tired in the afternoon!!! How can I convince him? What argument should I give?

Security applications How should I convince him to provide me with information?

Argumentation Which information to reveal? ◦ Should I tell him that we are running out of antibiotics? ◦ Should I tell her that my leg hurts? ◦ Should I tell him that I will lose a project if I don’t hire today? ◦ Should I tell him I was fired from my last job? Build a game that combines information revelation and bargaining

Automated care-taker I will be too tired in the afternoon!!! I arrange for you to go to the physiotherapist in the afternoon. How can I convince him? What argument should I give?

Security applications How should I convince him to provide me with information?

Colored Trails (CT) An infrastructure for agent design, implementation and evaluation for open environments. Designed with Barbara Grosz (AAMAS 2004). Implemented by Harvard team and BIU team.

An experimental test-bed Interesting for people to play: ◦ analogous to task settings; ◦ vivid representation of strategy space (not just a list of outcomes). Possible for computers to play. Can vary in complexity: ◦ repeated vs. one-shot setting; ◦ availability of information; ◦ communication protocol.

Social Preference Agent Learns the extent to which people are affected by social preferences such as social welfare and competitiveness. Designed for one-shot take-it-or-leave-it scenarios. Does not reason about the future ramifications of its actions. Y. Gal and A. Pfeffer: Predicting people's bidding behavior in negotiation. AAMAS 2006: 370-376

Agents for Revelation Games Peled Noam, Gal Kobi, Kraus Sarit

Introduction - Revelation games • Combine two types of interaction • Signaling games (Spence 1974) • Players choose whether to convey private information to each other • Bargaining games (Osborne and Rubinstein 1999) • Players engage in multiple negotiation rounds • Example: Job interview

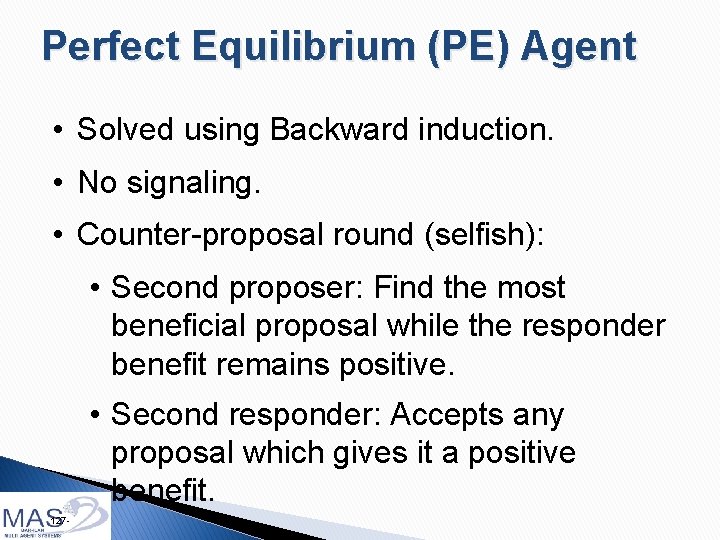

Colored Trails (CT) boards: Asymmetric, Symmetric

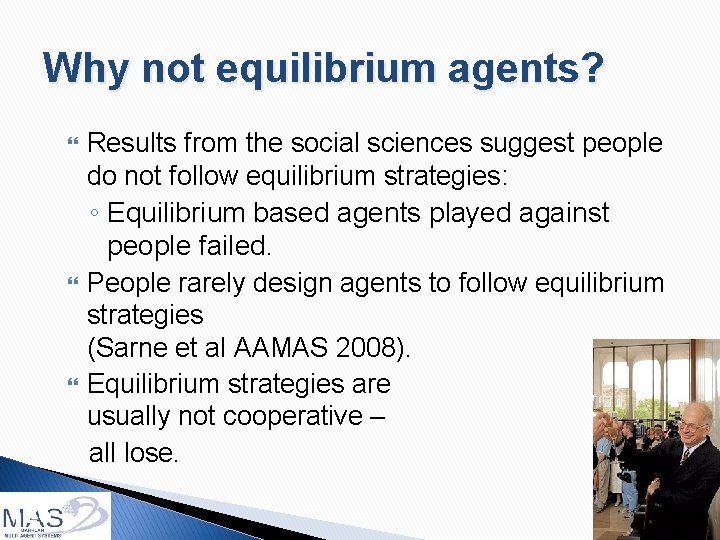

Why not equilibrium agents? Results from the social sciences suggest people do not follow equilibrium strategies: ◦ Equilibrium-based agents played against people failed. People rarely design agents to follow equilibrium strategies (Sarne et al., AAMAS 2008). Equilibrium strategies are usually not cooperative – all lose.

Perfect Equilibrium (PE) Agent • Solved using backward induction. • No signaling. • Counter-proposal round (selfish): • Second proposer: Finds the most beneficial proposal for itself while the responder’s benefit remains positive. • Second responder: Accepts any proposal which gives it a positive benefit.

PE agent – Phase one • First proposal round (generous): • First proposer: Proposes the opponent’s counter-proposal. • First responder: Accepts any proposal which gives it the same or higher benefit than its counter-proposal. • Revelation phase - revelation vs. non-revelation: • In both boards, the PE with goal revelation yields lower or equal expected utility than the non-revelation PE
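The two PE rounds described above can be sketched by backward induction. This is a minimal illustration, assuming each proposal is reduced to a pair of benefits (one per player); the function names and the tuple encoding are hypothetical and are not taken from the slides.

```python
from typing import List, Optional, Tuple

# Assumption: a proposal is (benefit to player 0, benefit to player 1).
Proposal = Tuple[float, float]


def counter_proposal(proposals: List[Proposal], proposer: int) -> Optional[Proposal]:
    """Second round (selfish): the proposer's best proposal among those
    that still leave the responder a strictly positive benefit."""
    responder = 1 - proposer
    acceptable = [p for p in proposals if p[responder] > 0]
    return max(acceptable, key=lambda p: p[proposer], default=None)


def first_proposal(proposals: List[Proposal], first_proposer: int) -> Optional[Proposal]:
    """First round (generous): offer what the opponent would counter-propose."""
    return counter_proposal(proposals, proposer=1 - first_proposer)


def first_responder_accepts(offer: Proposal, proposals: List[Proposal],
                            first_proposer: int) -> bool:
    """The first responder accepts any offer at least as good for it
    as its own counter-proposal would be."""
    responder = 1 - first_proposer
    counter = counter_proposal(proposals, proposer=responder)
    if counter is None:
        return offer[responder] > 0
    return offer[responder] >= counter[responder]
```

Solving the last round first is what makes this backward induction: the first-round behavior is defined entirely in terms of the second-round outcome.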

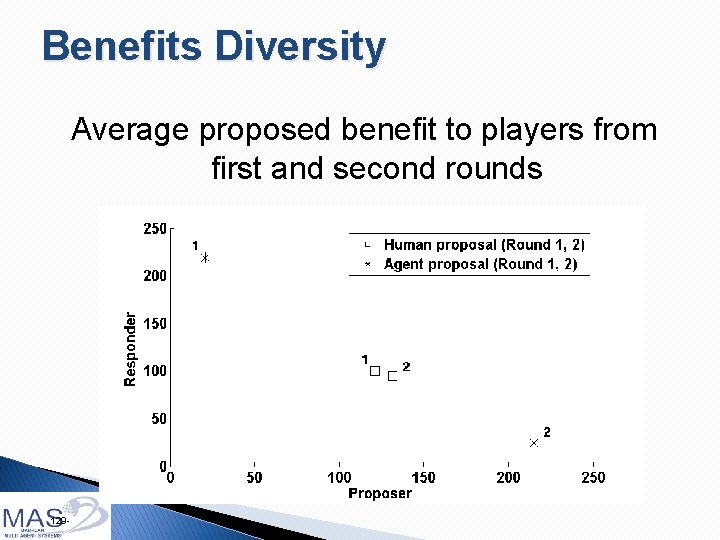

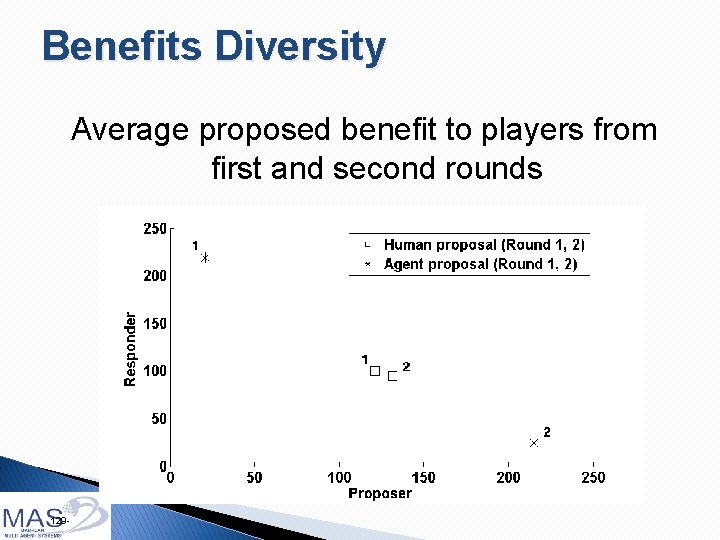

Benefits Diversity Average proposed benefit to players from first and second rounds

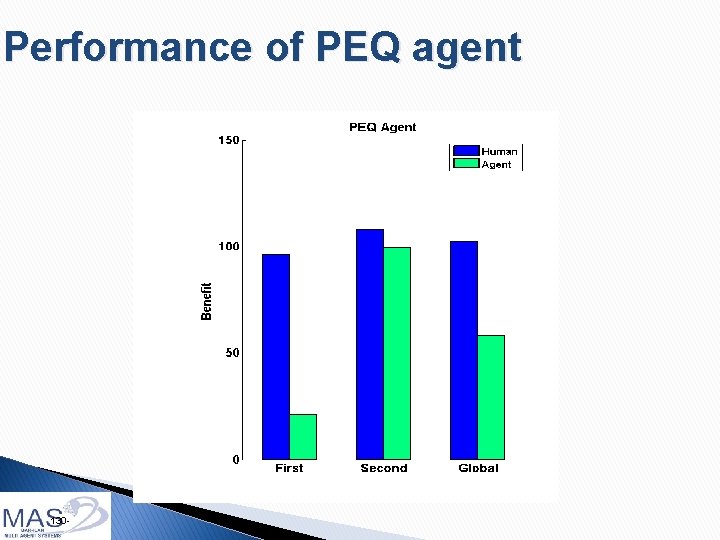

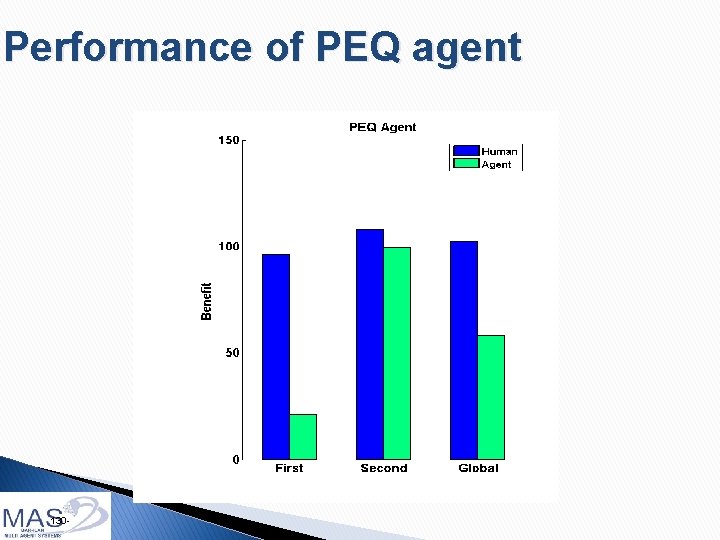

Performance of PEQ agent

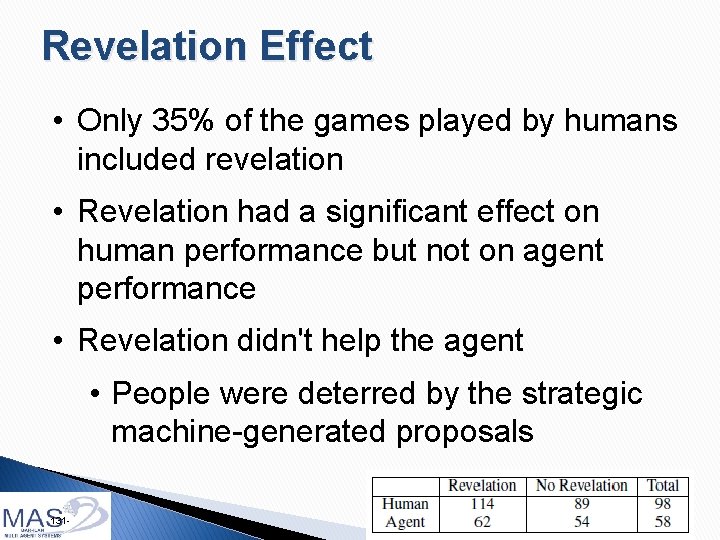

Revelation Effect • Only 35% of the games played by humans included revelation • Revelation had a significant effect on human performance but not on agent performance • Revelation didn't help the agent • People were deterred by the strategic machine-generated proposals

SIGAL agent Agent based on general opponent modeling: ◦ Genetic algorithm ◦ Logistic regression

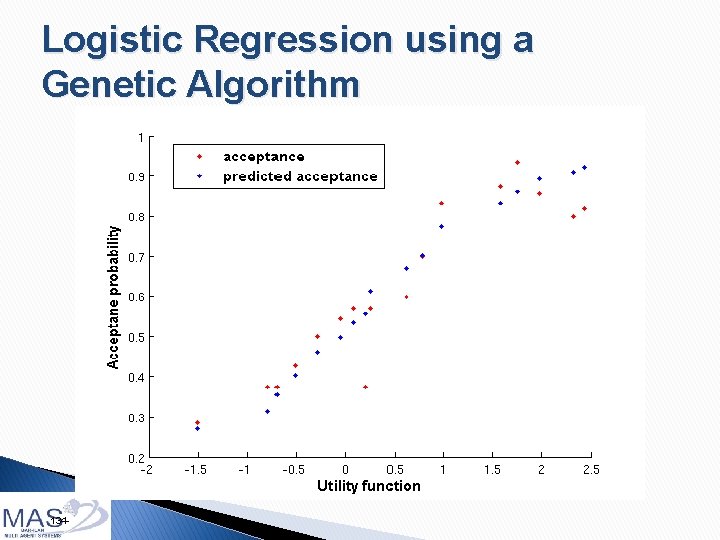

SIGAL Agent • Learns from previous games. • Predicts the acceptance probability for each proposal using logistic regression. • Models the human as using a weighted utility function of: • Human's benefit • Benefits difference • Revelation decision • Benefits in previous round
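A logistic acceptance model over the four factors listed above might look like the following sketch. The feature names, the feature scaling and the weights are illustrative assumptions; the slides do not specify how the features are encoded.

```python
import math


def acceptance_probability(features, weights, bias=0.0):
    """Logistic model: P(accept) = 1 / (1 + exp(-(bias + sum_i w_i * x_i)))."""
    z = bias + sum(weights[name] * features[name] for name in weights)
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical weights, for illustration only (not the learned values).
weights = {
    "human_benefit": 1.0,
    "benefit_difference": -0.5,
    "revelation": 0.2,
    "previous_round_benefit": 0.3,
}

# Two hypothetical proposals with features scaled to roughly [0, 1].
generous = {"human_benefit": 0.9, "benefit_difference": 0.1,
            "revelation": 1.0, "previous_round_benefit": 0.5}
selfish = {"human_benefit": 0.2, "benefit_difference": 0.7,
           "revelation": 1.0, "previous_round_benefit": 0.5}

p_generous = acceptance_probability(generous, weights)
p_selfish = acceptance_probability(selfish, weights)
```

With these made-up numbers the generous proposal gets the higher predicted acceptance probability, which is the qualitative behavior a positive weight on the human's benefit and a negative weight on the benefits difference would produce.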

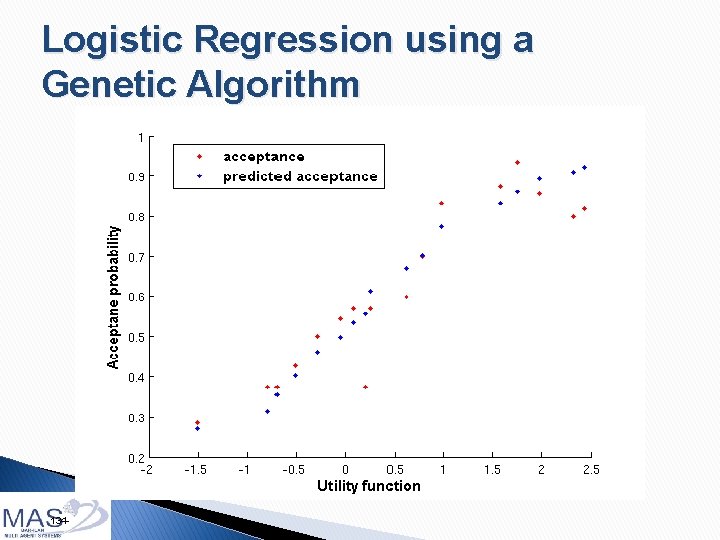

Logistic Regression using a Genetic Algorithm
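One way to fit logistic-regression weights with a genetic algorithm is sketched below. The selection, crossover and mutation operators, the population size and the fitness function (mean log-loss) are all assumptions chosen for brevity; the slides do not describe the actual operators used.

```python
import math
import random


def log_loss(weights, samples):
    """Mean negative log-likelihood of (features, accepted) samples."""
    total = 0.0
    for features, accepted in samples:
        z = sum(w * x for w, x in zip(weights, features))
        z = max(-30.0, min(30.0, z))          # avoid overflow in exp
        p = 1.0 / (1.0 + math.exp(-z))
        total -= math.log(p) if accepted else math.log(1.0 - p)
    return total / len(samples)


def fit_with_ga(samples, n_weights, generations=50, pop_size=30, seed=0):
    """Evolve weight vectors; lower log-loss means fitter."""
    rng = random.Random(seed)
    # Seed the population with the all-zero vector plus random vectors.
    population = [[0.0] * n_weights] + [
        [rng.uniform(-1.0, 1.0) for _ in range(n_weights)]
        for _ in range(pop_size - 1)
    ]
    for _ in range(generations):
        population.sort(key=lambda w: log_loss(w, samples))
        parents = population[: pop_size // 2]  # elitism: best half survives
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover followed by Gaussian mutation.
            children.append([rng.choice(pair) + rng.gauss(0.0, 0.1)
                             for pair in zip(a, b)])
        population = parents + children
    return min(population, key=lambda w: log_loss(w, samples))
```

Because the best half always survives unchanged, the best fitness in the population never gets worse from one generation to the next.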

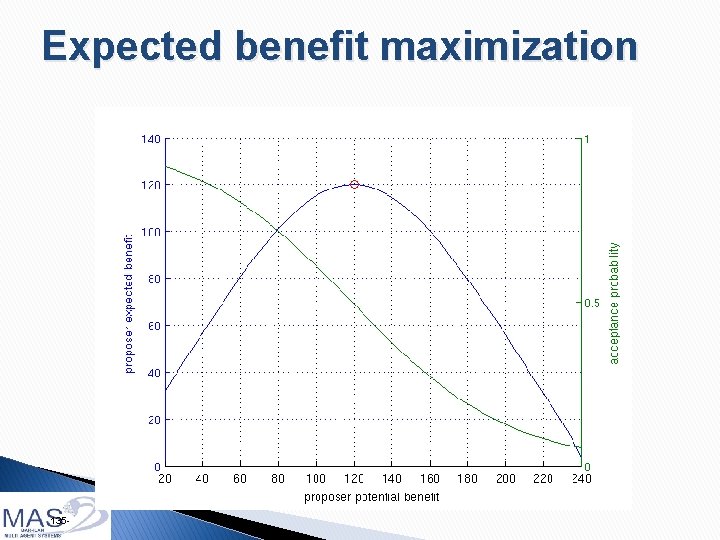

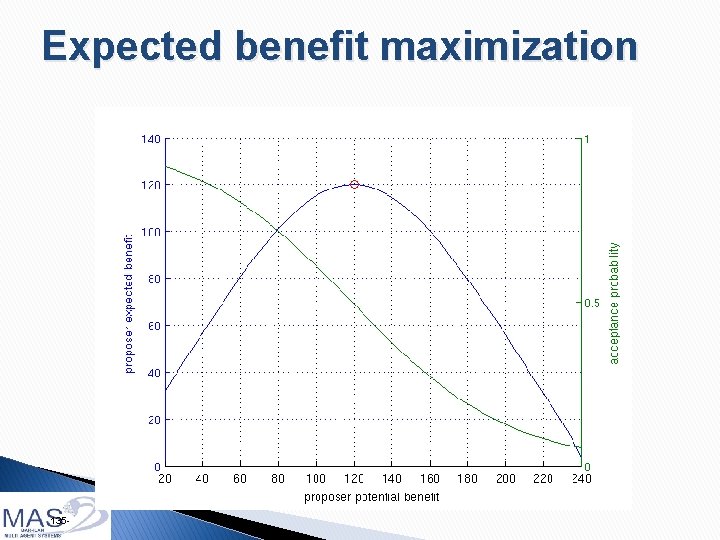

Expected benefit maximization
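Expected benefit maximization can be illustrated as choosing the proposal with the highest probability-weighted payoff. The sketch below assumes a single decision in which a rejected proposal leaves the agent with a fixed fallback benefit; the dictionary keys and the toy acceptance model are hypothetical.

```python
def expected_benefit(proposal, accept_prob, fallback_benefit=0.0):
    """E[benefit] = P(accept) * benefit if accepted + P(reject) * fallback."""
    q = accept_prob(proposal)
    return q * proposal["agent_benefit"] + (1.0 - q) * fallback_benefit


def best_proposal(proposals, accept_prob, fallback_benefit=0.0):
    """Pick the proposal that maximizes the agent's expected benefit."""
    return max(proposals,
               key=lambda p: expected_benefit(p, accept_prob, fallback_benefit))


# Toy acceptance model (assumption): acceptance grows with the human's benefit.
def toy_accept_prob(proposal):
    return min(1.0, max(0.0, proposal["human_benefit"] / 100.0))


proposals = [
    {"agent_benefit": 100.0, "human_benefit": 5.0},
    {"agent_benefit": 60.0, "human_benefit": 60.0},
]
choice = best_proposal(proposals, toy_accept_prob)
```

In a two-round game the fallback for a first-round proposal would itself be the expected benefit of the second round, so the same function can be applied from the last round backwards.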

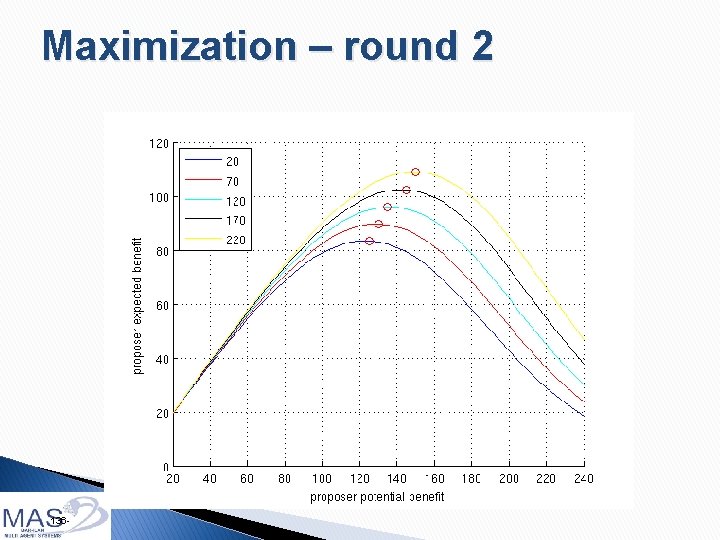

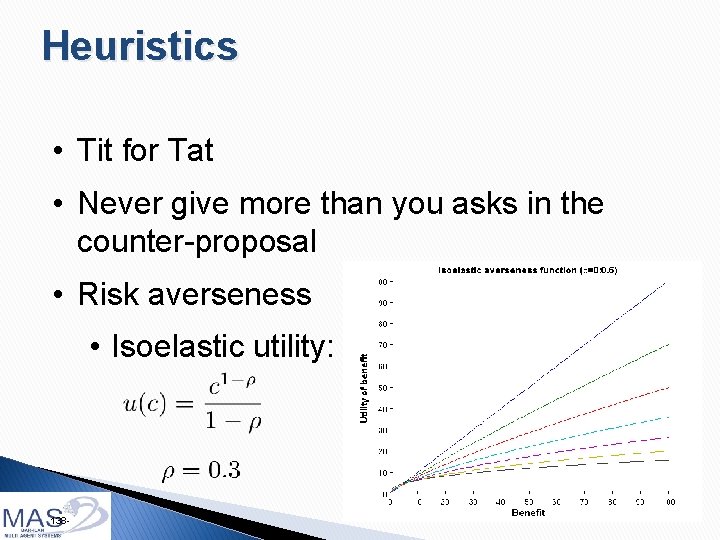

Maximization – round 2

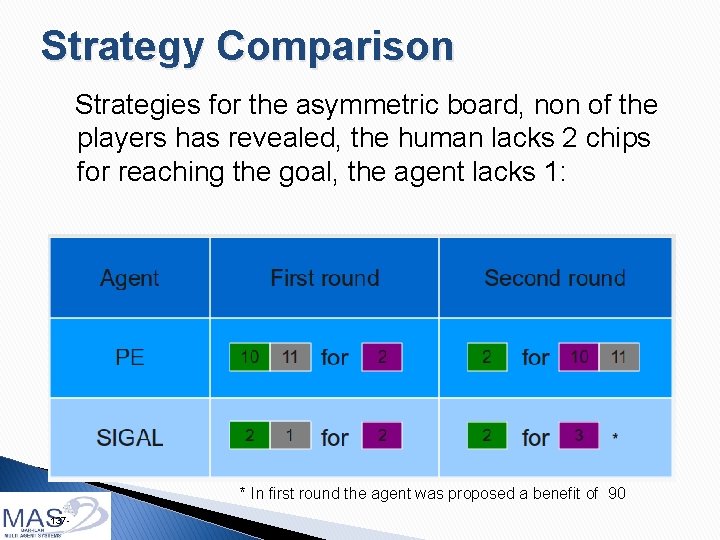

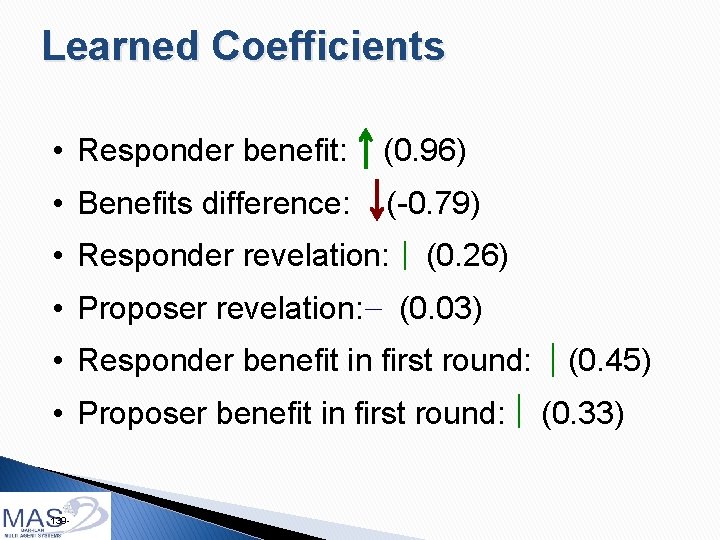

Strategy Comparison Strategies for the asymmetric board, where none of the players has revealed, the human lacks 2 chips for reaching the goal, and the agent lacks 1. * In the first round the agent was proposed a benefit of 90

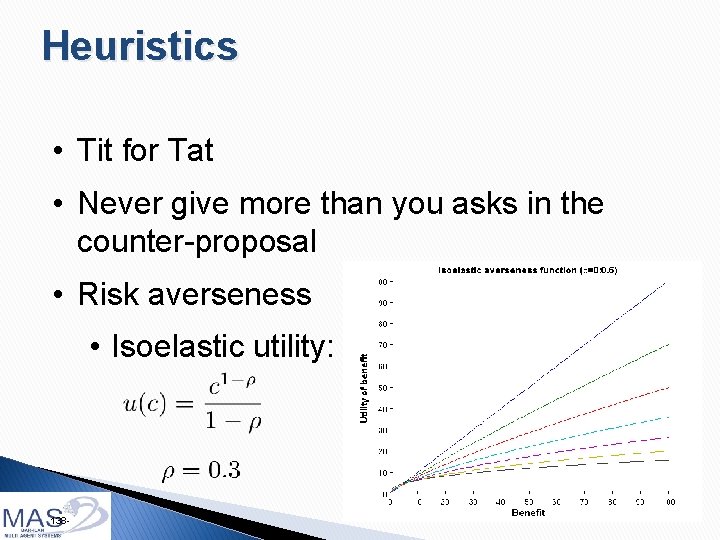

Heuristics • Tit for Tat • Never give more than you ask for in the counter-proposal • Risk averseness • Isoelastic utility
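The formula for the isoelastic utility is not recoverable from the slide text. The sketch below uses the standard textbook (constant relative risk aversion) form, u(x) = (x^(1-η) - 1) / (1 - η) for η ≠ 1 and u(x) = ln x for η = 1; the parameter name `eta` is an assumption, and the slide may have used a different parameterization.

```python
import math


def isoelastic_utility(x, eta):
    """Standard isoelastic (CRRA) utility for a positive amount x.

    eta = 0 gives a risk-neutral (linear) utility; larger eta means
    stronger risk aversion; eta = 1 is the logarithmic limit.
    """
    if x <= 0:
        raise ValueError("x must be positive")
    if math.isclose(eta, 1.0):
        return math.log(x)
    return (x ** (1.0 - eta) - 1.0) / (1.0 - eta)
```

For any `eta > 0` the function is concave, so a sure benefit of 50 is valued above a 50/50 gamble between 1 and 99, which is how the utility encodes risk averseness.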

Learned Coefficients • Responder benefit: (0.96) • Benefits difference: (-0.79) • Responder revelation: (0.26) • Proposer revelation: (0.03) • Responder benefit in first round: (0.45) • Proposer benefit in first round: (0.33)

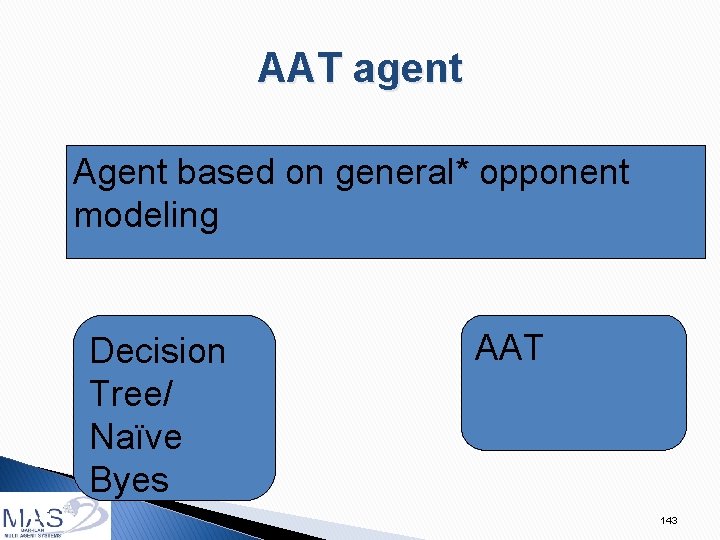

Methodology • Cross validation. • 10-fold • Over-fitting removal. • Stop learning at the minimum of the generalization error • Error calculation on held-out test set. • Using new human-human games • Performance prediction criteria.
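The 10-fold split mentioned above can be sketched in plain Python as follows. The interleaved assignment of samples to folds is an arbitrary choice made for this illustration, and the function name is hypothetical.

```python
def k_fold_indices(n_samples, k=10):
    """Yield (train_indices, test_indices) for each of k folds.

    Every sample appears in exactly one test fold, so each trained model
    is evaluated only on data it has not seen.
    """
    folds = [list(range(i, n_samples, k)) for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, test
```

The generalization error is then the average test error over the k folds; stopping training where that average is lowest is one way to read the over-fitting step in the list.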

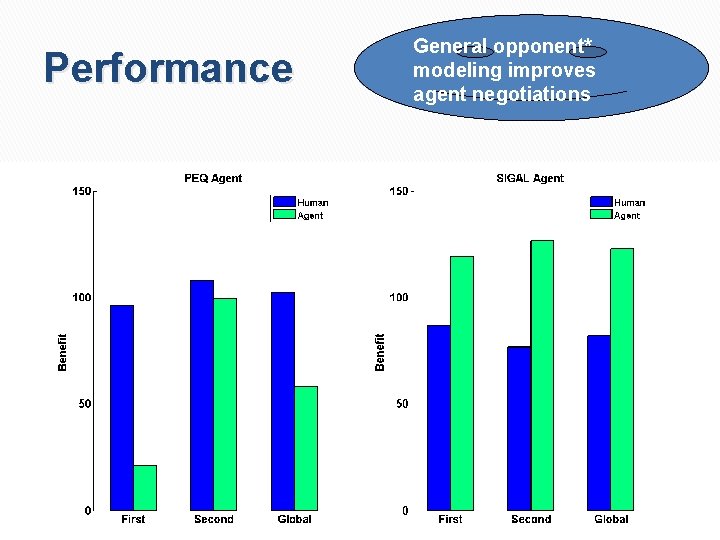

Performance General opponent* modeling improves agent negotiations

General opponent* modeling in Maximization problems

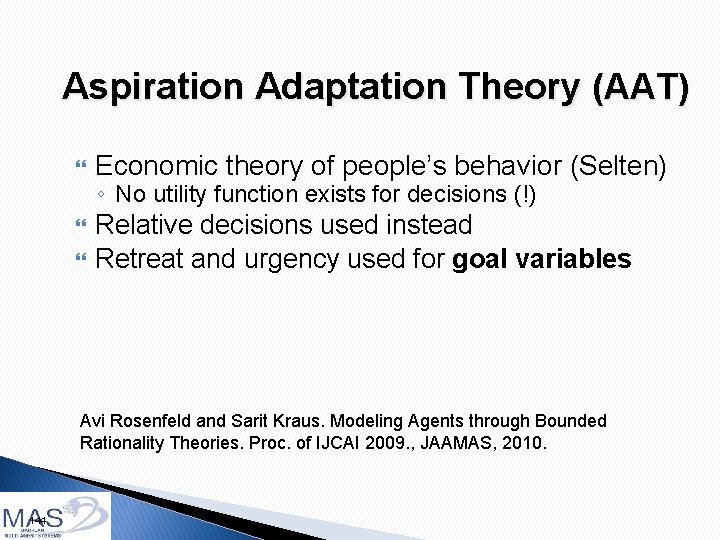

AAT agent Agent based on general* opponent modeling: Decision Tree / Naïve Bayes, AAT

Aspiration Adaptation Theory (AAT) Economic theory of people’s behavior (Selten) ◦ No utility function exists for decisions (!) ◦ Relative decisions used instead ◦ Retreat and urgency used for goal variables. Avi Rosenfeld and Sarit Kraus. Modeling Agents through Bounded Rationality Theories. Proc. of IJCAI 2009; JAAMAS, 2010.

Commodity search 1000

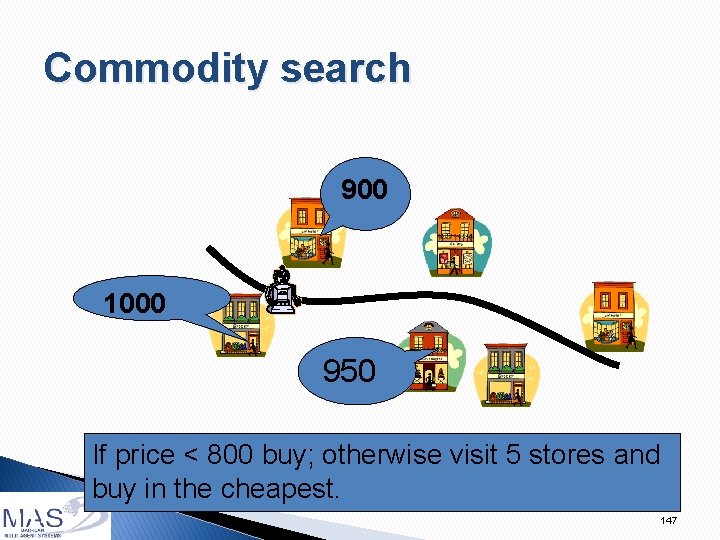

Commodity search 900 1000

Commodity search 900 1000 950 If price < 800 buy; otherwise visit 5 stores and buy at the cheapest.
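The search rule on this slide can be written as a short function. The sketch assumes that stores are visited in order, that the buyer stops as soon as a price falls below the threshold, and that returning to an earlier store is free; none of these details are stated in the slides.

```python
def commodity_search(prices, threshold=800, max_stores=5):
    """Return the price paid under the rule:
    buy immediately if price < threshold; otherwise visit up to
    max_stores stores and buy at the cheapest one seen."""
    if not prices:
        raise ValueError("at least one store price is required")
    seen = []
    for price in prices[:max_stores]:
        if price < threshold:
            return price
        seen.append(price)
    return min(seen)
```

For example, with the three prices shown on the slide (900, 1000, 950) followed by two hypothetical ones, no price is below 800, so the buyer ends up paying the minimum of the five.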

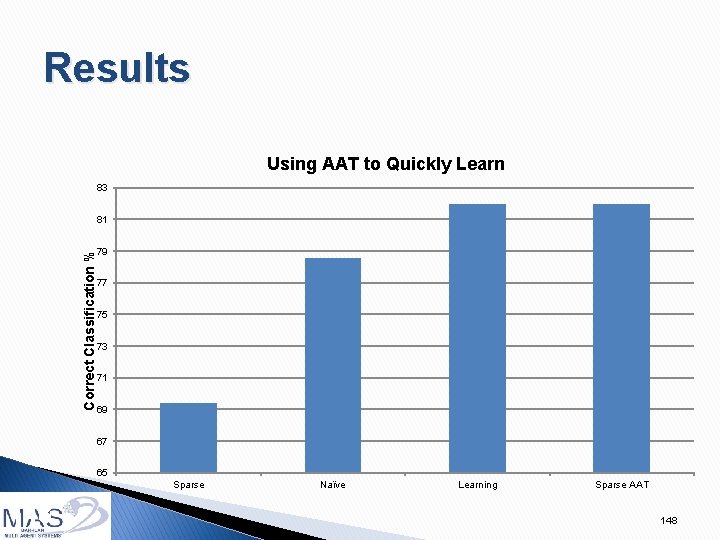

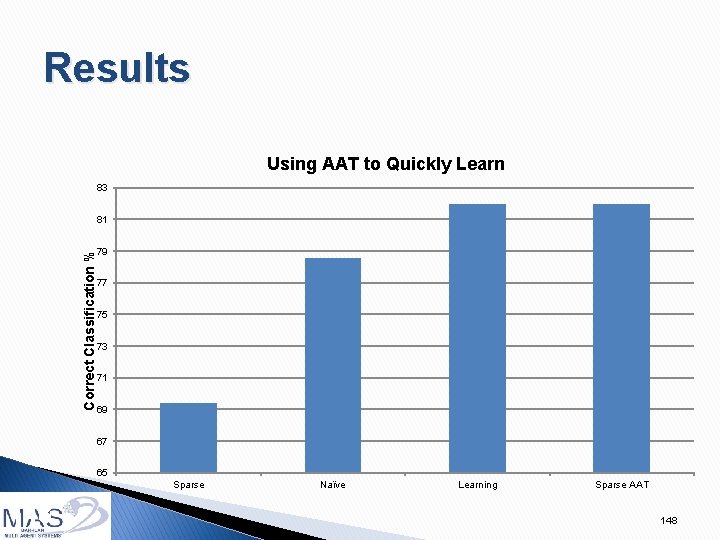

Results Using AAT to Quickly Learn [Chart: correct classification (%), sparse naïve learning vs. sparse AAT]

General opponent* modeling in cooperative environments

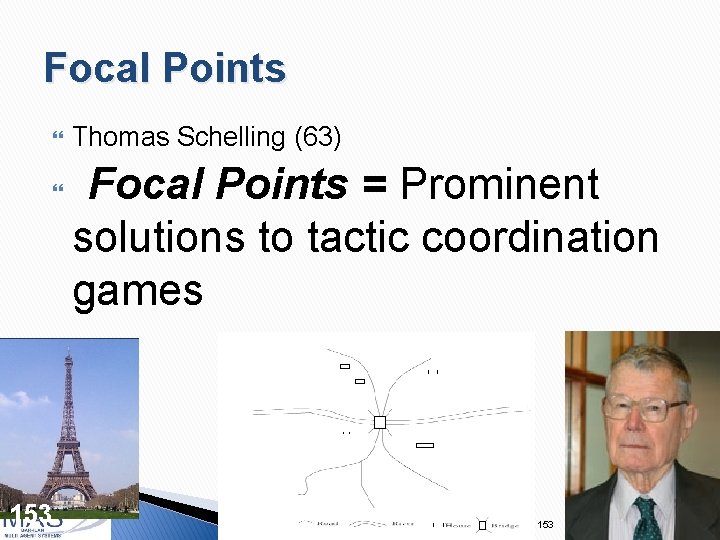

Coordination with limited communication Communication is not always possible: ◦ High communication costs ◦ Need to act undetected ◦ Damaged communication devices ◦ Language incompatibilities ◦ Goal: Limited interruption of human activities. I. Zuckerman, S. Kraus and J. S. Rosenschein. Using Focal Points Learning to Improve Human-Machine Tacit Coordination, JAAMAS, 2010.

Focal Points (Examples) Divide £100 into two piles. If your piles are identical to your coordination partner's, you get the £100. Otherwise, you get nothing. 101 equilibria
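The count of 101 equilibria follows if the piles are ordered and hold whole pounds: the first pile can contain 0 to 100 pounds, and any split that both players choose is an equilibrium. A small sketch under those assumptions (the function name is hypothetical):

```python
# All ways to divide 100 whole pounds into an ordered pair of piles.
splits = [(first, 100 - first) for first in range(101)]


def payoff(my_split, partner_split):
    """Each player gets the 100 pounds only if both chose the same split."""
    return 100 if my_split == partner_split else 0


# Every matching pair is an equilibrium: deviating alone yields 0.
equilibria = [s for s in splits if payoff(s, s) == 100]
```

With this many equally good equilibria, the payoffs alone give no reason to pick one split over another, which is why prominent choices such as (50, 50) matter.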

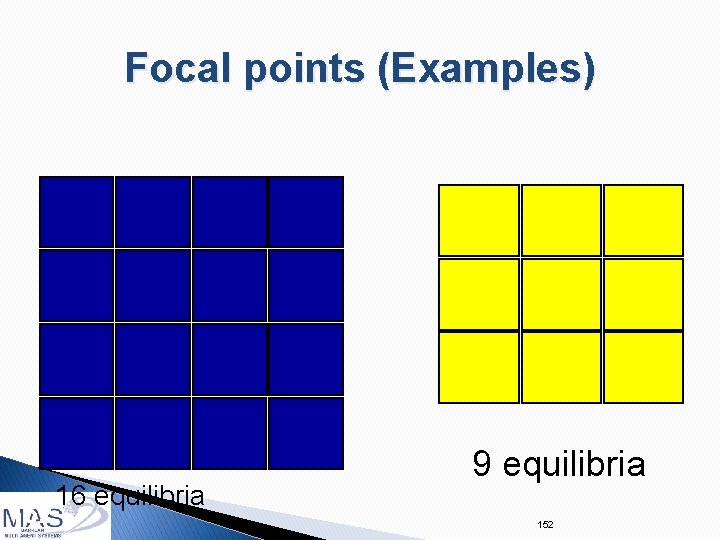

Focal points (Examples) 16 equilibria 9 equilibria

Focal Points Thomas Schelling (63) Focal Points = Prominent solutions to tacit coordination games

Prior work: Focal Points Based Coordination for closed environments Domain-independent rules that could be used by automated agents to identify focal points. Properties: Centrality, Firstness, Extremeness, Singularity. ◦ Logic based model ◦ Decision theory based model. Algorithms for agent coordination. Kraus and Rosenschein, MAAMAW 1992; Fenster et al., ICMAS 1995; Annals of Mathematics and Artificial Intelligence 2000

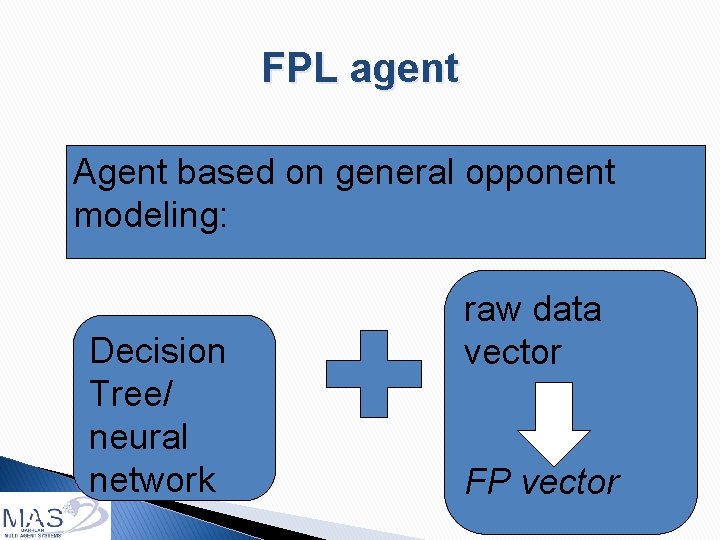

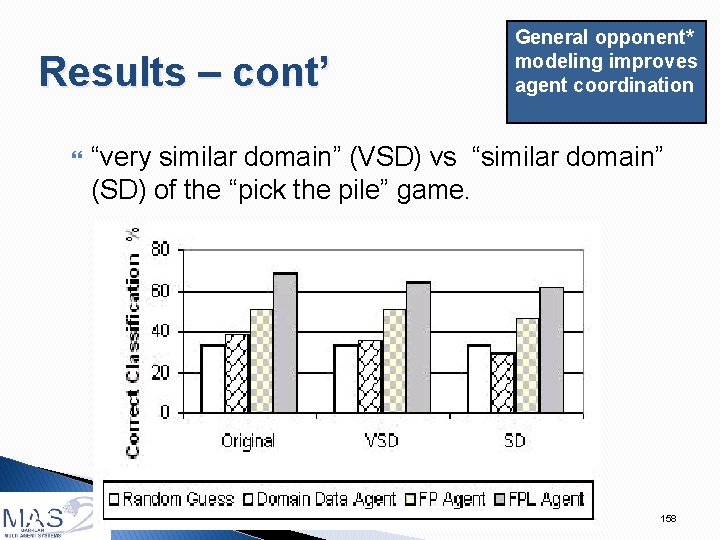

FPL agent Agent based on general* opponent modeling: Decision Tree / neural network, Focal Point

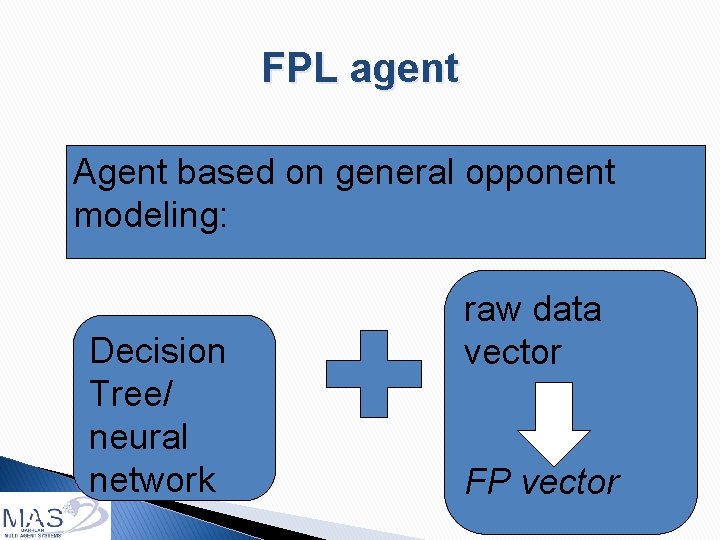

FPL agent Agent based on general opponent modeling: Decision Tree / neural network. Input: raw data vector, FP vector
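The step from a raw data vector to an FP vector could be sketched as below, using the four property names from the prior-work slide (centrality, firstness, extremeness, singularity). The concrete definitions of each property here are illustrative assumptions and are not the ones used in the cited papers.

```python
def focal_point_features(options):
    """Map a list of option values to one focal-point feature dict per option."""
    n = len(options)
    lowest, highest = min(options), max(options)
    features = []
    for index, value in enumerate(options):
        features.append({
            # Firstness: the option listed first.
            "firstness": 1.0 if index == 0 else 0.0,
            # Centrality: the middle position (only defined for odd n here).
            "centrality": 1.0 if n % 2 == 1 and index == n // 2 else 0.0,
            # Extremeness: the option has the smallest or largest value.
            "extremeness": 1.0 if value in (lowest, highest) else 0.0,
            # Singularity: rarer values score higher (1.0 means unique).
            "singularity": 1.0 / options.count(value),
        })
    return features
```

A classifier such as a decision tree or neural network would then be trained on these feature vectors rather than on the raw option values.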

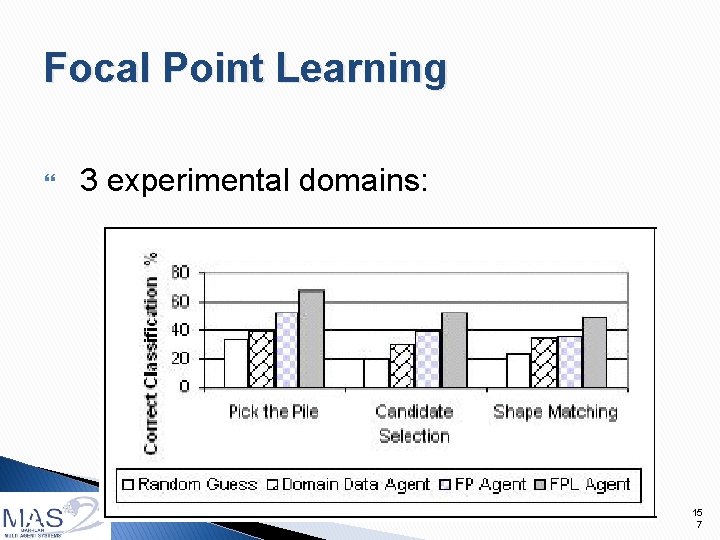

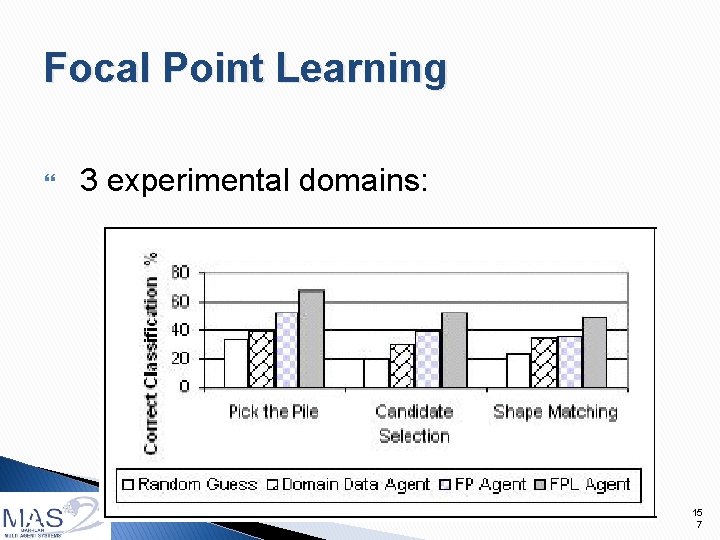

Focal Point Learning 3 experimental domains:

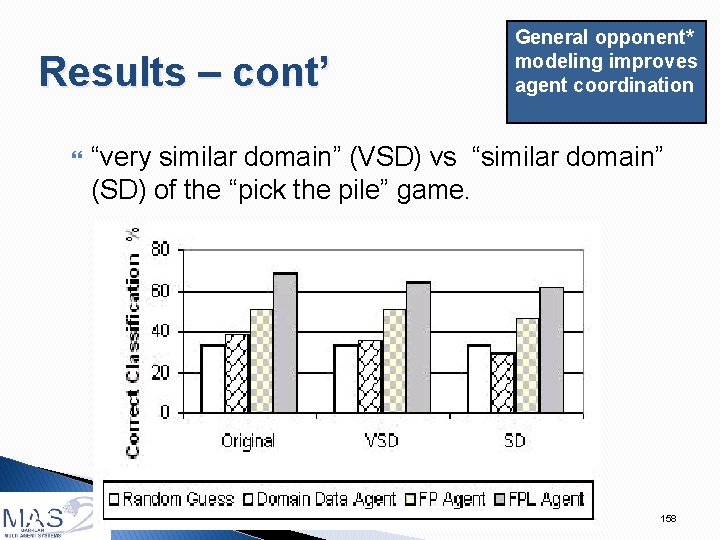

Results – cont’ General opponent* modeling improves agent coordination. “Very similar domain” (VSD) vs “similar domain” (SD) of the “pick the pile” game.

Experiments with people are a costly process

Evaluation of agents (EDA) Peer Designed Agents (PDA): computer agents developed by humans. Experiment: 300 human subjects, 50 PDAs, 3 EDAs. Results: ◦ EDAs outperformed PDAs in the same situations in which they outperformed people; ◦ on average, EDAs exhibited the same measure of generosity. R. Lin, S. Kraus, Y. Oshrat and Y. Gal. Facilitating the Evaluation of Automated Negotiators using Peer Designed Agents, in AAAI 2010.

Conclusions Negotiation and argumentation with people are required for many applications. General* opponent modeling is beneficial: ◦ Machine learning ◦ Behavioral model ◦ Challenge: how to integrate machine learning and behavioral models

References

1. S. S. Fatima, M. Wooldridge, and N. R. Jennings. Multi-issue negotiation with deadlines. Jnl of AI Research, 21: 381-471, 2006.
2. R. Keeney and H. Raiffa. Decisions with multiple objectives: Preferences and value trade-offs. John Wiley, 1976.
3. S. Kraus. Strategic negotiation in multiagent environments. The MIT Press, 2001.
4. S. Kraus and D. Lehmann. Designing and Building a Negotiating Automated Agent. Computational Intelligence, 11(1): 132-171, 1995.
5. S. Kraus, K. Sycara and A. Evenchik. Reaching agreements through argumentation: a logical model and implementation. Artificial Intelligence journal, 104(1-2): 1-69, 1998.
6. R. Lin and S. Kraus. Can Automated Agents Proficiently Negotiate With Humans? Communications of the ACM, 53(1): 78-88, January 2010.
7. R. Lin, S. Kraus, Y. Oshrat and Y. Gal. Facilitating the Evaluation of Automated Negotiators using Peer Designed Agents. In AAAI, 2010.

References contd.

8. R. Lin, S. Kraus, J. Wilkenfeld, and J. Barry. Negotiating with bounded rational agents in environments with incomplete information using an automated agent. Artificial Intelligence, 172(6-7): 823–851, 2008.
9. A. Lomuscio, M. Wooldridge, and N. R. Jennings. A classification scheme for negotiation in electronic commerce. Int. Jnl. of Group Decision and Negotiation, 12(1): 31-56, 2003.
10. M. J. Osborne and A. Rubinstein. A course in game theory. The MIT Press, 1994.
11. M. J. Osborne and A. Rubinstein. Bargaining and Markets. Academic Press, 1990.
12. Y. Oshrat, R. Lin, and S. Kraus. Facing the challenge of human-agent negotiations via effective general opponent modeling. In AAMAS, 2009.
13. H. Raiffa. The Art and Science of Negotiation. Harvard University Press, 1982.
14. J. S. Rosenschein and G. Zlotkin. Rules of encounter. The MIT Press, 1994.
15. I. Stahl. Bargaining Theory. Economics Research Institute, Stockholm School of Economics, 1972.
16. I. Zuckerman, S. Kraus and J. S. Rosenschein. Using Focal Points Learning to Improve Human-Machine Tacit Coordination. JAAMAS, 2010.

Tournament 2nd annual competition of state-of-the-art negotiating agents to be held in AAMAS’11. Do you want to participate? At least $2,000 for the winner! Contact us! sarit@cs.biu.ac.il