The theory of concurrent programming for a seasoned programmer


The theory of concurrent programming for a seasoned programmer © Roman Elizarov, Devexperts, 2012

What? For whom? • Practical experience in writing concurrent programs is assumed - Here, concurrent == using shared memory - The audience is assumed to know and to have used in practice locks, synchronized sections, compare-and-set, etc. - Knowledge of “Java Concurrency in Practice” is a plus! • The theory behind the practical constructs will be explained - Formal models - Key definitions - Important facts and theorems (without proofs) - Practical corollaries • But some concepts are simplified

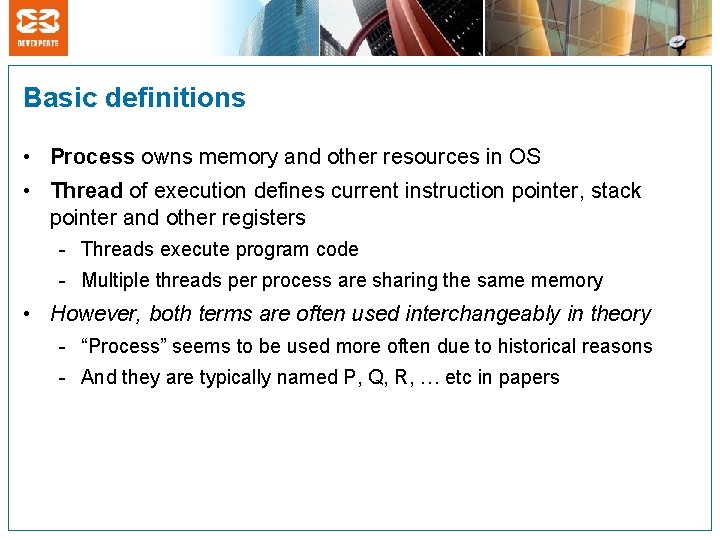

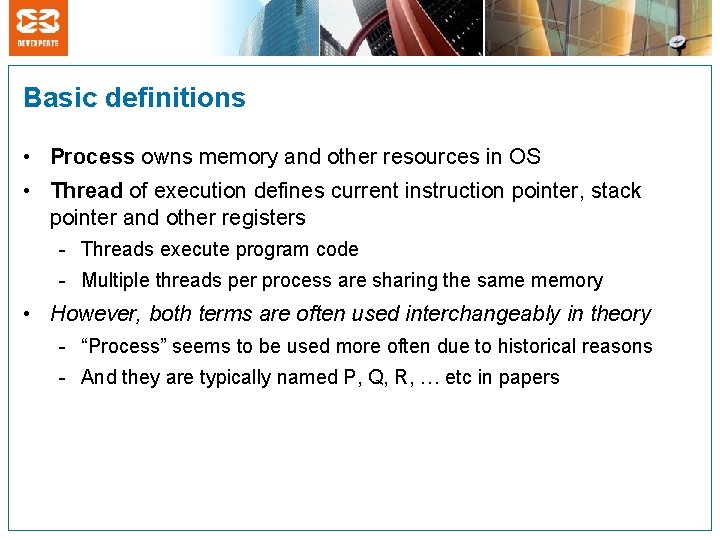

Just a reminder: the free lunch is over http://www.gotw.ca/publications/concurrency-ddj.htm

Basic definitions • A process owns memory and other resources in the OS • A thread of execution defines the current instruction pointer, stack pointer and other registers - Threads execute program code - Multiple threads of one process share the same memory • However, both terms are often used interchangeably in theory - “Process” seems to be used more often, for historical reasons - And they are typically named P, Q, R, … in papers

Why model? • Formal models of computation let you define and prove certain desired properties of your programs • The models let you prove the impossibility of achieving certain results under specific constraints - Saving you the time you would otherwise spend trying to find a working solution

![The model with shared objects](https://slidetodoc.com/presentation_image/56c1dcf8c43e2988600888d8b578e98e/image-6.jpg)

The model with shared objects: Thread 1, Thread 2, …, Thread N operate on [Shared] Object 1, [Shared] Object 2, …, [Shared] Object M in [shared] memory

Concurrency http://www.nassaulibrary.org/nclacler_files/LILC7.JPG

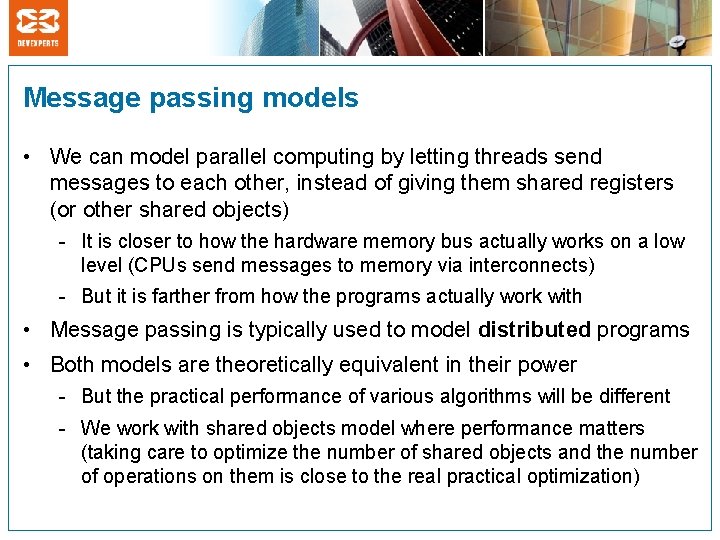

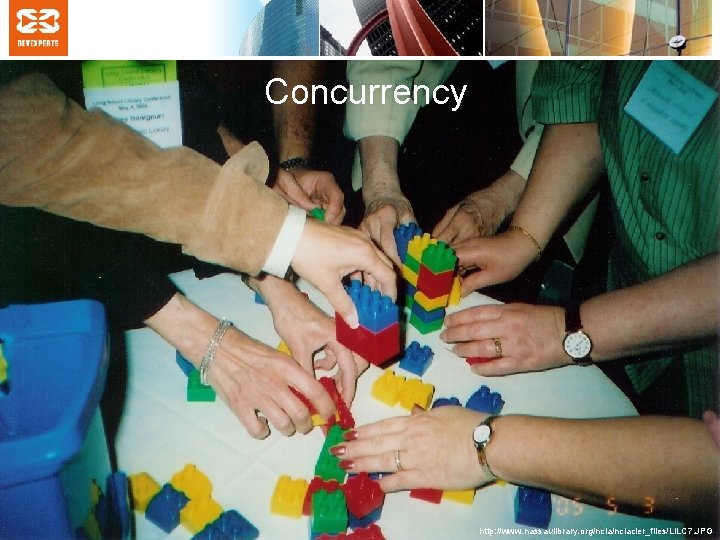

Shared objects • Threads (or processes) perform operations on shared memory objects • This model doesn’t care about operations that are internal to threads: - Computations performed by threads - Updates to threads’ CPU registers - Updates to threads’ stacks - Updates to any “thread local” memory regions • Only inter-thread communication matters • The only type of inter-thread communication in this model is via shared objects

![Shared registers](https://slidetodoc.com/presentation_image/56c1dcf8c43e2988600888d8b578e98e/image-9.jpg)

[Shared] Registers • Don’t confuse with CPU registers (eax, ebx, etc. in x86) - They are just part of “thread state” in concurrent programming theory • In concurrent programming, a [shared] register is the simplest kind of shared object: - It has some value type (typically boolean or integer) - With read and write operations • Registers are basic building blocks for many practical concurrent algorithms • The model of threads + shared registers is a decent abstraction for modern multicore hardware systems - It abstracts away enough actual complexity to make theoretical reasoning possible

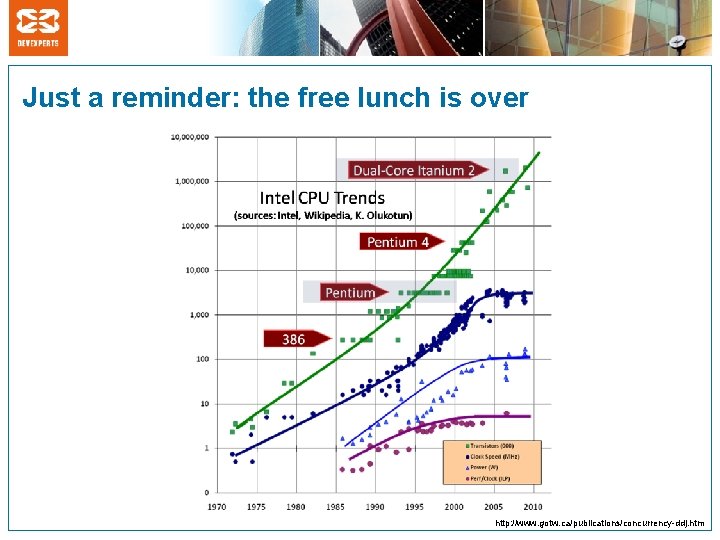

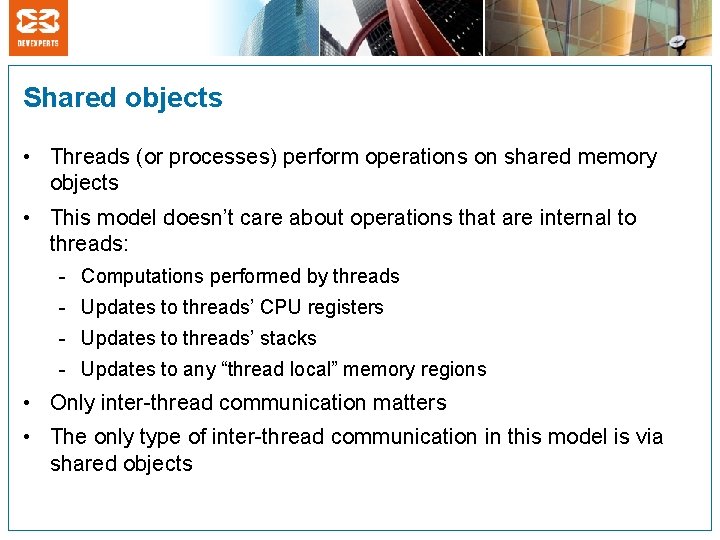

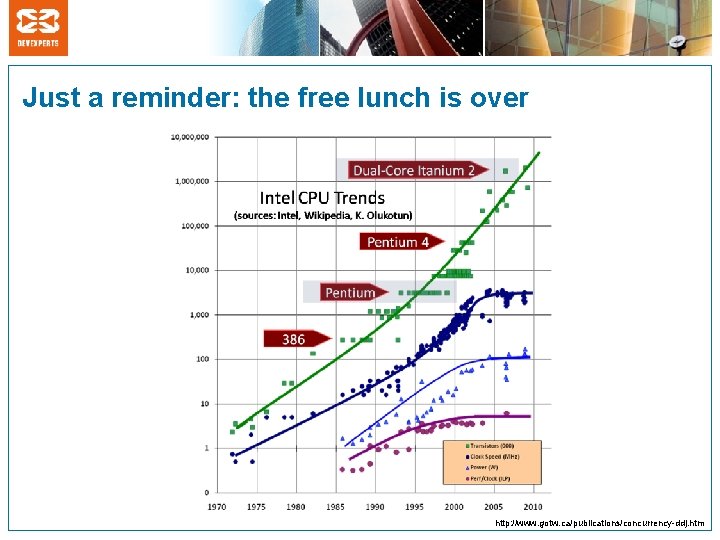

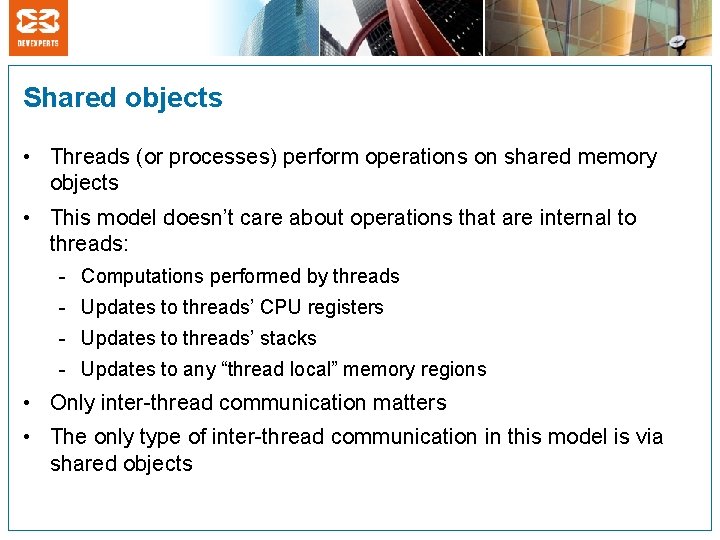

Message passing models • We can model parallel computing by letting threads send messages to each other, instead of giving them shared registers (or other shared objects) - It is closer to how the hardware memory bus actually works on a low level (CPUs send messages to memory via interconnects) - But it is farther from how programs actually work with memory • Message passing is typically used to model distributed programs • Both models are theoretically equivalent in their power - But the practical performance of various algorithms will differ - We work with the shared objects model where performance matters (optimizing the number of shared objects and the number of operations on them is close to real practical optimization)

![Parallel, concurrent (shared memory) and distributed (message passing) programming](https://slidetodoc.com/presentation_image/56c1dcf8c43e2988600888d8b578e98e/image-11.jpg)

Parallel: concurrent [shared memory] and distributed [message passing] * NOTE: There is no general consensus on this terminology

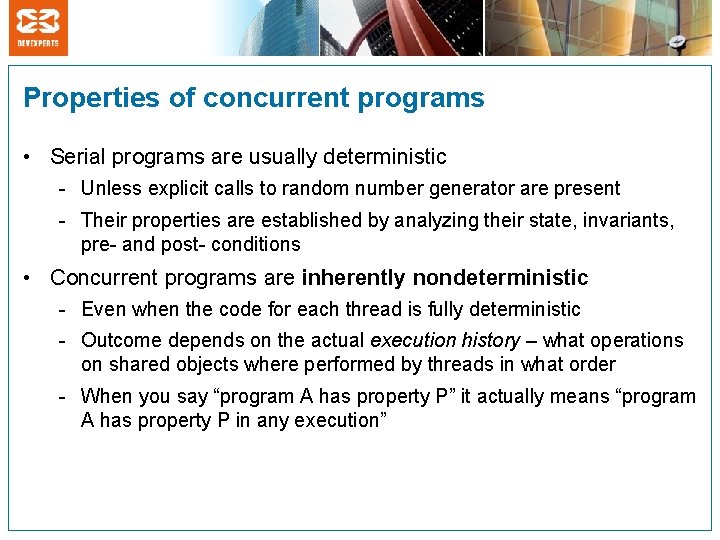

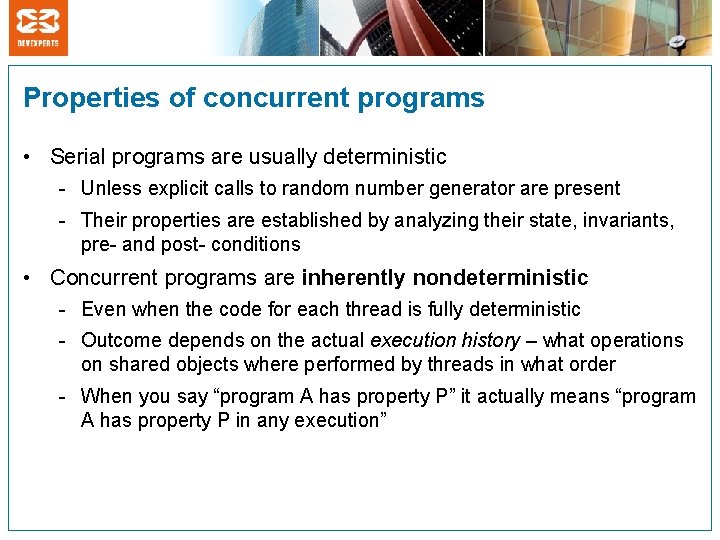

Properties of concurrent programs • Serial programs are usually deterministic - Unless explicit calls to a random number generator are present - Their properties are established by analyzing their state, invariants, pre- and post-conditions • Concurrent programs are inherently nondeterministic - Even when the code for each thread is fully deterministic - The outcome depends on the actual execution history: which operations on shared objects were performed by which threads, and in what order - When you say “program A has property P”, it actually means “program A has property P in any execution”

Modeling executions • S is a global state, which includes: - The state of all threads - The state of all shared objects, or all “in flight” messages (in a distributed system) • f and g are operations on shared objects - For registers, an operation is either ri.read(value) or ri.write(value) - There are as many possible operations in each state as there are active threads • (it is not as simple in the distributed case) • f(S) is the new state after operation f was performed in state S [Figure: from state S, operation f leads to state f(S) and operation g leads to state g(S)]

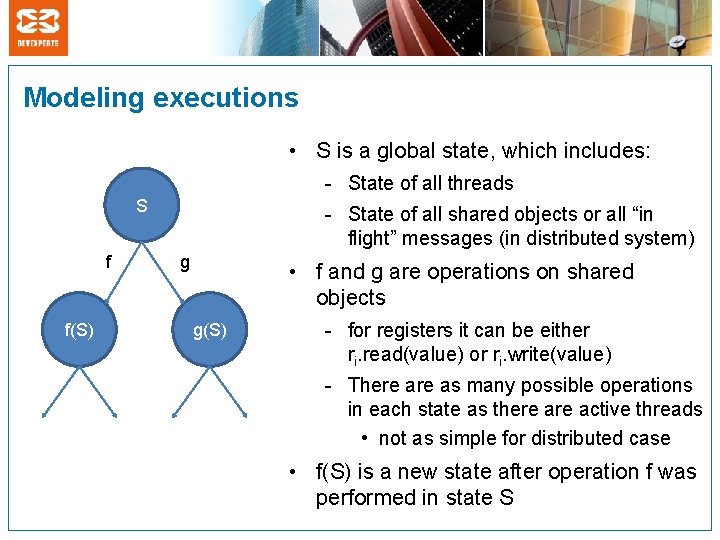

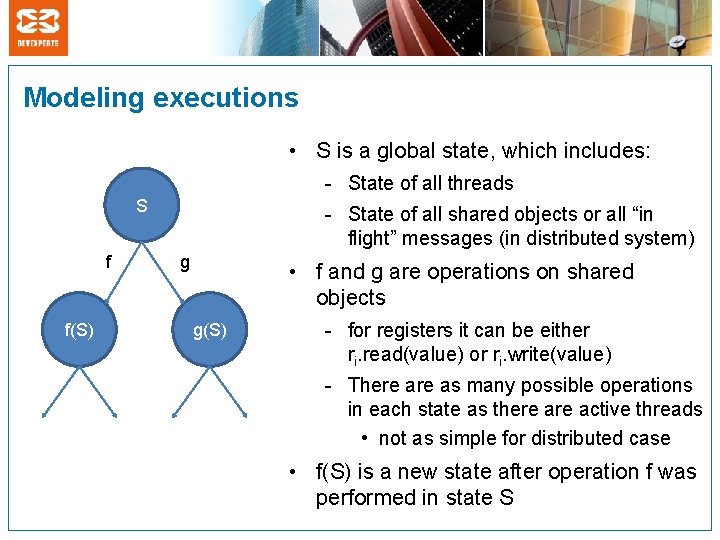

Example

```
shared int x       // initially 0

thread P:          thread Q:
0: x = 1           0: x = 2
1: print x         1: print x
2: stop            2: stop
```

[Figure: the graph of reachable states. A state lists the program counters of P and Q, the value of x and the values printed by (P, Q), with “-” for nothing printed yet. It starts at P0, Q0, x=0, (-, -) and has a total of 17 states, e.g. P1, Q0, x=1, (-, -); P0, Q1, x=2, (-, -); P1, Q1, x=2, (-, -); P1, Q1, x=1, (-, -). The final states are P2, Q2 with x=2, (1, 2); x=2, (2, 2); x=1, (1, 1); and x=1, (1, 2).]
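The count of 17 states can be checked mechanically. Below is a minimal sketch in C, assuming that each numbered line of P and Q is one atomic step; the names `state_t`, `step` and `explore` are hypothetical, introduced only for this illustration. It runs a breadth-first search over all interleavings and counts the distinct global states it reaches.

```c
#include <assert.h>
#include <stdbool.h>

/* A global state: both program counters, the shared register x and what each
   thread printed (0 = nothing printed yet; the program never prints 0). */
typedef struct {
    int pc[2];   /* index 0 is thread P, index 1 is thread Q */
    int x;
    int out[2];
} state_t;

enum { MAX_STATES = 64 };
static const int written[2] = {1, 2};   /* P writes 1, Q writes 2 */

static bool same_state(const state_t *a, const state_t *b) {
    return a->pc[0] == b->pc[0] && a->pc[1] == b->pc[1] && a->x == b->x &&
           a->out[0] == b->out[0] && a->out[1] == b->out[1];
}

/* Executes one atomic step of thread t; returns false if t has stopped. */
static bool step(state_t s, int t, state_t *next) {
    switch (s.pc[t]) {
    case 0: s.x = written[t]; break;   /* 0: x = 1 (P) or x = 2 (Q) */
    case 1: s.out[t] = s.x; break;     /* 1: print x */
    default: return false;             /* 2: stop */
    }
    s.pc[t]++;
    *next = s;
    return true;
}

/* Breadth-first search over all interleavings. Returns the number of distinct
   reachable states; *terminal receives the number of final states. */
static int explore(int *terminal) {
    state_t seen[MAX_STATES];
    int n = 0;
    seen[n++] = (state_t){{0, 0}, 0, {0, 0}};
    *terminal = 0;
    for (int i = 0; i < n; i++) {      /* seen[] doubles as the BFS queue */
        bool any_step = false;
        for (int t = 0; t < 2; t++) {
            state_t nx;
            if (!step(seen[i], t, &nx)) continue;
            any_step = true;
            bool known = false;
            for (int j = 0; j < n && !known; j++) known = same_state(&seen[j], &nx);
            if (!known) {
                assert(n < MAX_STATES);
                seen[n++] = nx;
            }
        }
        if (!any_step) (*terminal)++;
    }
    return n;
}
```

Calling `explore(&terminal)` should return 17 with `terminal` set to 4: x=2 with outputs (1, 2) or (2, 2), and x=1 with outputs (1, 1) or (1, 2). No interleaving lets P print 2 while Q prints 1.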

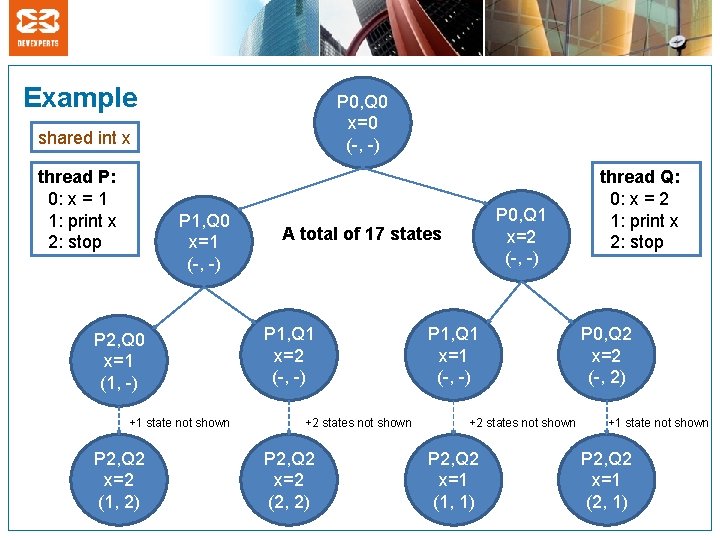

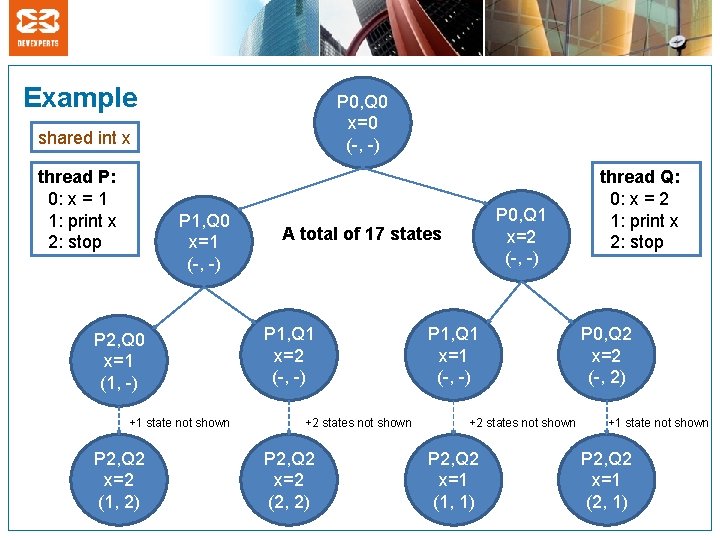

Discussion of the execution model with states • This model is not truly “parallel” - All operations happen serially (albeit in an undefined order) • In reality (on a modern CPU) - A read or write operation is not instantaneous; it takes time - There are multiple memory banks that work in parallel, so multiple read or write operations can happen at the same time • However, you can safely use this model for atomic registers - Atomic (linearizable) registers work as if each write or read is instantaneous and as if there is no parallelism - What this means precisely will be defined later • A more general model of execution is needed to analyze a wider class of primitives

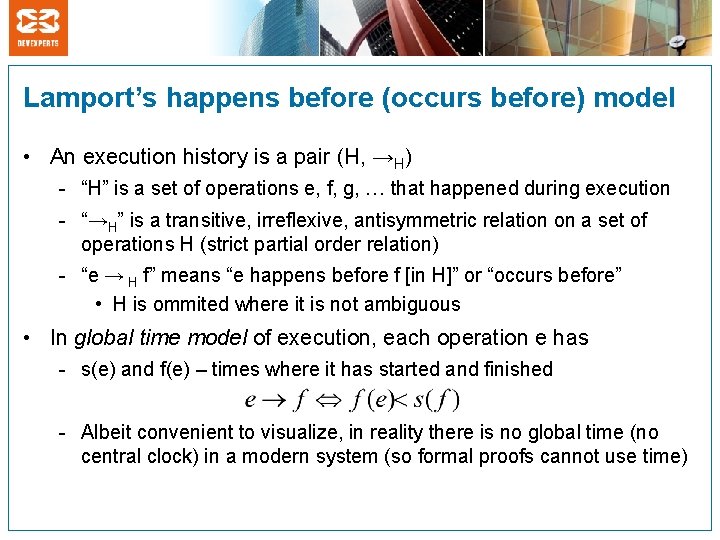

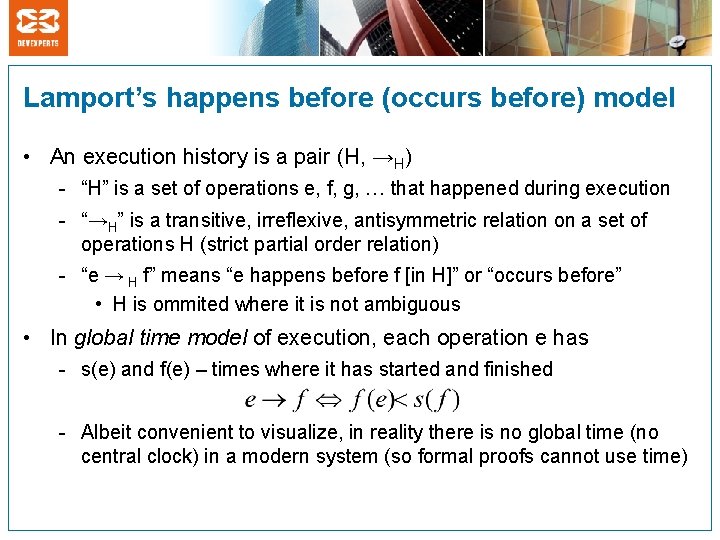

Lamport’s happens before (occurs before) model • An execution history is a pair (H, →H) - “H” is a set of operations e, f, g, … that happened during execution - “→H” is a transitive, irreflexive, antisymmetric relation on the set of operations H (a strict partial order relation) - “e →H f” means “e happens before f [in H]” or “e occurs before f” • H is omitted where it is not ambiguous • In the global time model of execution, each operation e has - s(e) and f(e), the times when it started and finished - e → f if and only if f(e) < s(f) - Albeit convenient to visualize, in reality there is no global time (no central clock) in a modern system (so formal proofs cannot use time)

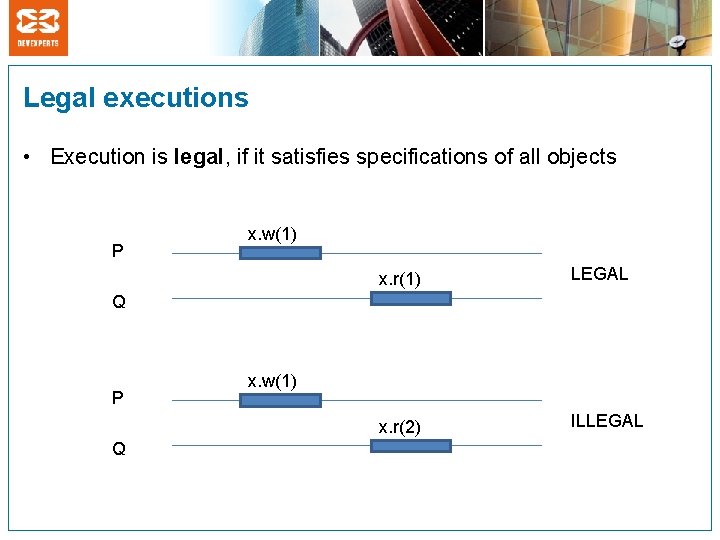

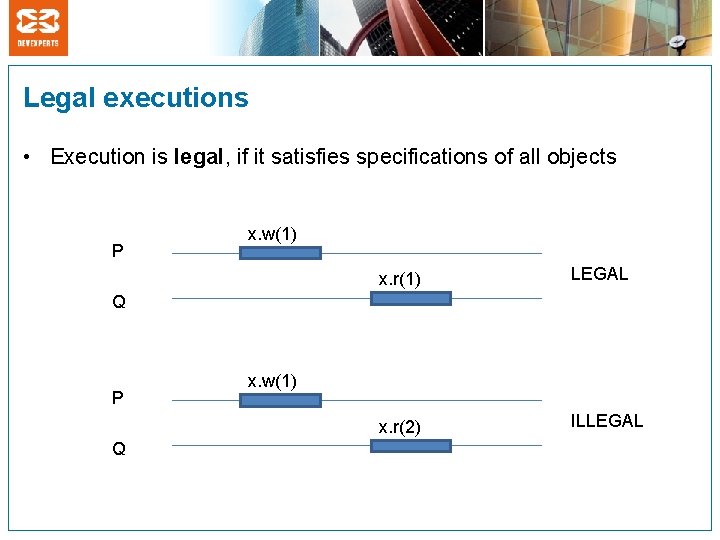

Legal executions • An execution is legal if it satisfies the specifications of all objects [Figure: P: x.w(1), then Q: x.r(1) is LEGAL; P: x.w(1), then Q: x.r(2) is ILLEGAL]

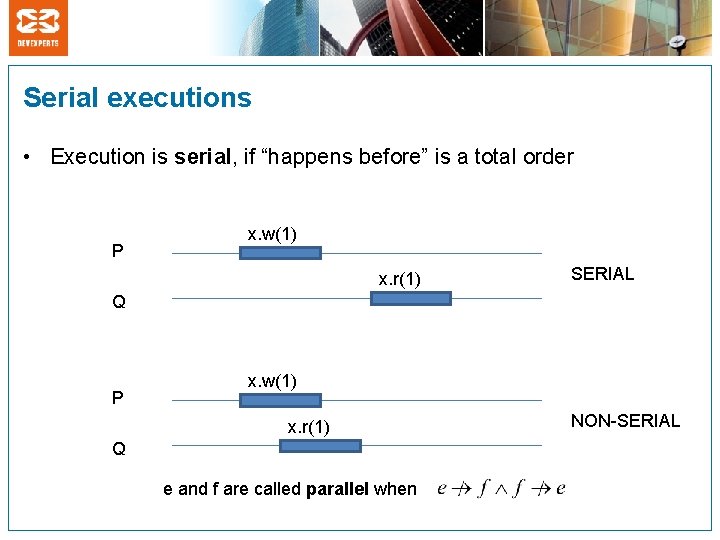

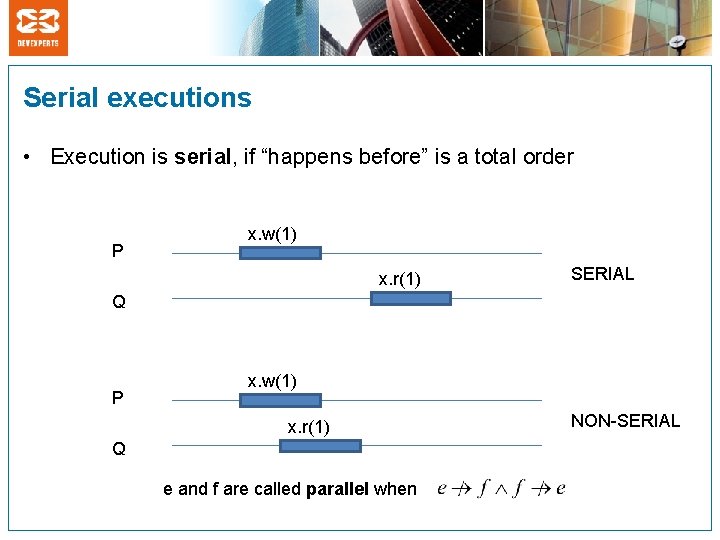

Serial executions • An execution is serial if “happens before” is a total order [Figure: P: x.w(1) completes before Q: x.r(1) starts: SERIAL; P: x.w(1) overlaps Q: x.r(1): NON-SERIAL] • e and f are called parallel when neither e → f nor f → e

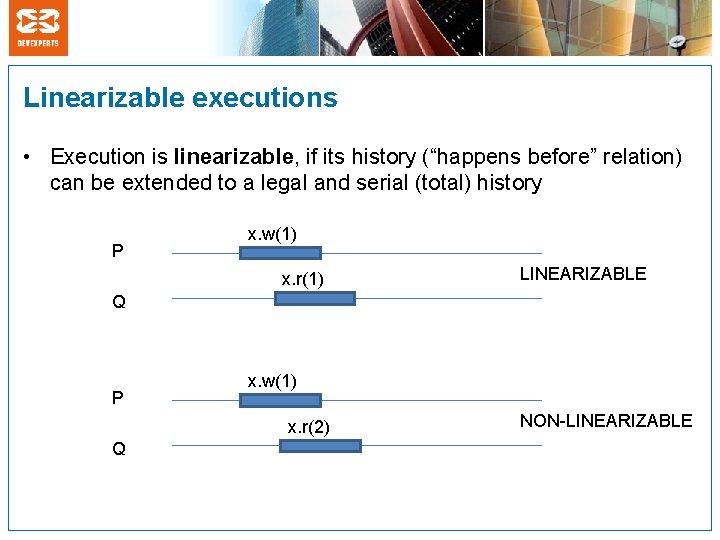

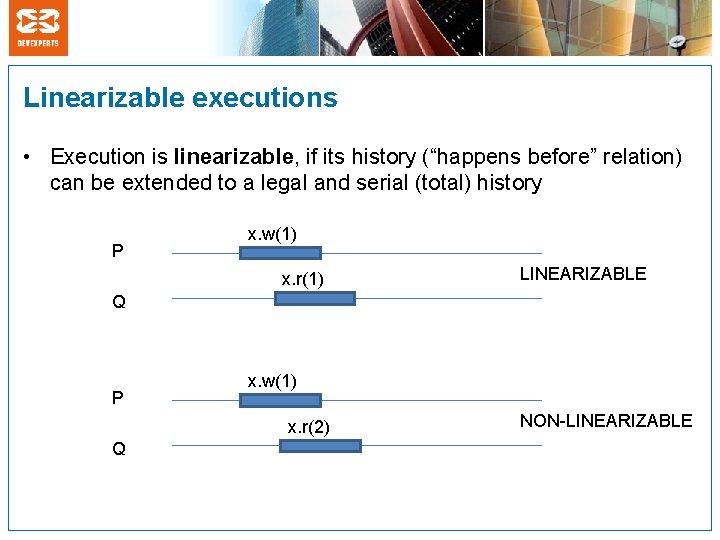

Linearizable executions • An execution is linearizable if its history (“happens before” relation) can be extended to a legal and serial (total) history [Figure: P: x.w(1) with Q: x.r(1) is LINEARIZABLE; P: x.w(1) with Q: x.r(2) is NON-LINEARIZABLE]
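For very small histories the definition can be applied directly: search for a total order that extends “happens before” and is legal. The sketch below is a brute-force C checker for a single integer register, assuming the global time model (e → f exactly when e finishes before f starts) and an initial register value of 0; `op_t`, `extend` and `linearizable` are hypothetical names. Its running time is exponential, so it is only meant for a handful of operations.

```c
#include <assert.h>
#include <stdbool.h>

/* A completed operation on one integer register in the global time model. */
typedef struct {
    bool is_write;
    int value;              /* value written, or value returned by a read */
    double start, finish;   /* s(e) and f(e) */
} op_t;

enum { MAX_OPS = 16 };

/* e -> f iff e finishes before f starts. */
static bool happens_before(const op_t *e, const op_t *f) {
    return e->finish < f->start;
}

/* Depth-first search for a total order that extends "happens before" and is
   legal for a register: every read returns the latest written value. */
static bool extend(const op_t *ops, int n, bool *used, int placed, int reg) {
    if (placed == n) return true;
    for (int i = 0; i < n; i++) {
        if (used[i]) continue;
        bool minimal = true;   /* all predecessors of ops[i] are already placed */
        for (int j = 0; j < n && minimal; j++)
            if (!used[j] && j != i && happens_before(&ops[j], &ops[i])) minimal = false;
        if (!minimal) continue;
        if (!ops[i].is_write && ops[i].value != reg) continue;   /* illegal read */
        used[i] = true;
        if (extend(ops, n, used, placed + 1, ops[i].is_write ? ops[i].value : reg))
            return true;
        used[i] = false;   /* backtrack */
    }
    return false;
}

/* Brute-force check, assuming the register initially holds 0. */
static bool linearizable(const op_t *ops, int n) {
    assert(n <= MAX_OPS);
    bool used[MAX_OPS] = {false};
    return extend(ops, n, used, 0, 0);
}
```

With P's x.w(1) over the interval [0, 2] and Q's read over [1, 3], a read returning 1 is accepted and a read returning 2 is rejected, matching the two cases on the slide. A read returning 0 is also accepted while it overlaps the write, but rejected once it starts after the write has finished.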

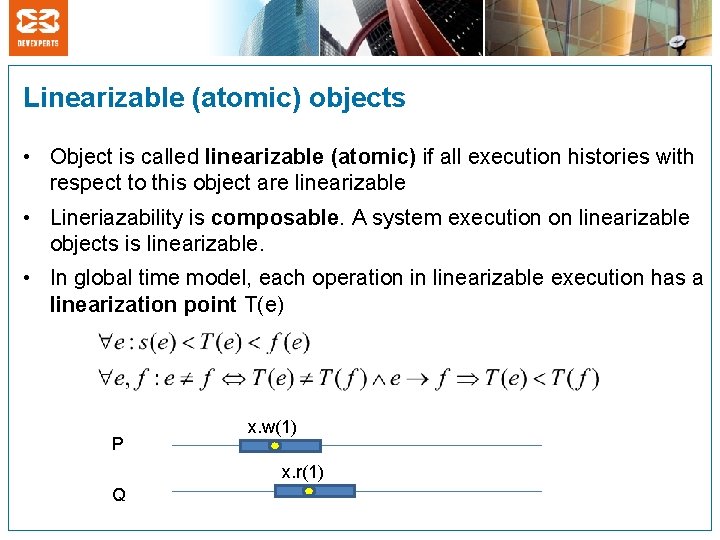

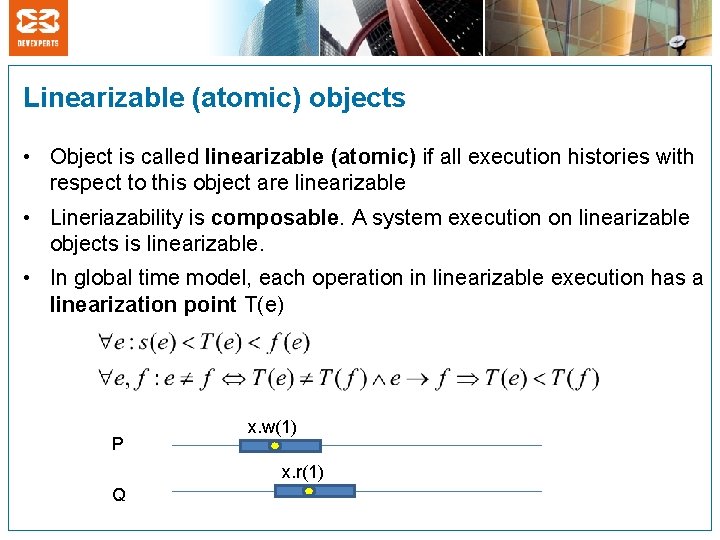

Linearizable (atomic) objects • An object is called linearizable (atomic) if all execution histories with respect to this object are linearizable • Linearizability is composable: a system execution on linearizable objects is linearizable • In the global time model, each operation e in a linearizable execution has a linearization point T(e), with s(e) ≤ T(e) ≤ f(e) [Figure: P: x.w(1) and Q: x.r(1) with their linearization points]

Atomic registers and other objects • Atomic register == linearizable register - They work as if read/write operations happen instantaneously at the linearization point and in some specific serial order - Thus we can use the “global state” model of execution to analyze the behavior of a program whose threads work with shared atomic registers (or with other atomic objects) • volatile fields in Java work like atomic registers - AtomicXXX classes are atomic registers, too (with additional ops) • Thread-safe classes (synchronized, ConcurrentXXX) are atomic (linearizable) unless explicitly specified otherwise - “thread-safe” in practice means “linearizable”, i.e. designed to work as if all operations happen in some serial order, without outside synchronization, even if accessed concurrently

Locks http://www.flickr.com/photos/xserve/368758286/

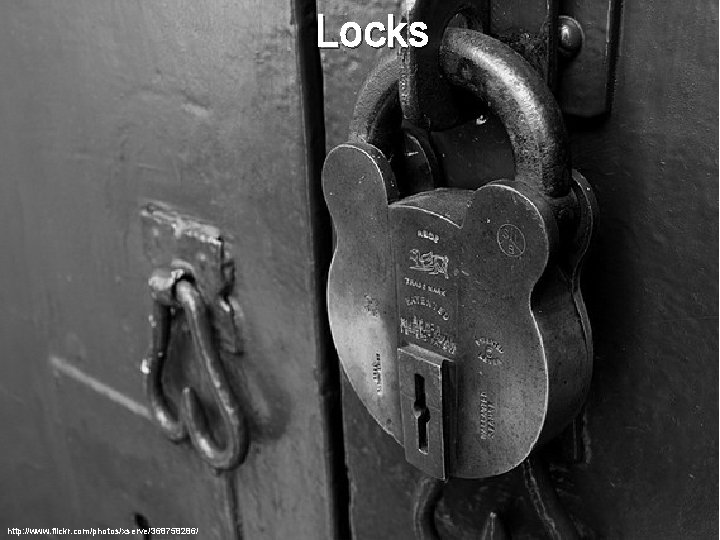

Mutual exclusion (lock)

The mutex protocol:

```
thread Pid:
    loop forever:
        nonCriticalSection
        mutex.lock
        criticalSection
        mutex.unlock
```

• The main desired property of the protocol is mutual exclusion: two executions of the critical section cannot be parallel, i.e. for any two executions CSi and CSj, either CSi → CSj or CSj → CSi • It is also known as the correctness requirement for a mutual exclusion protocol

![Mutex attempt #1](https://slidetodoc.com/presentation_image/56c1dcf8c43e2988600888d8b578e98e/image-24.jpg)

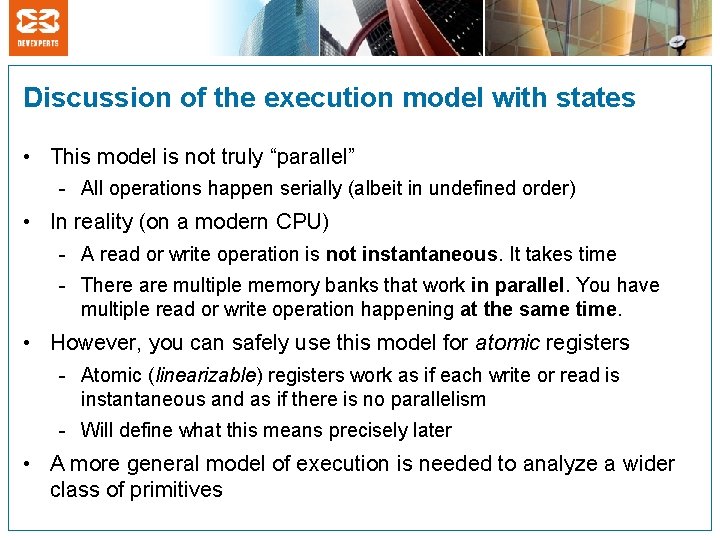

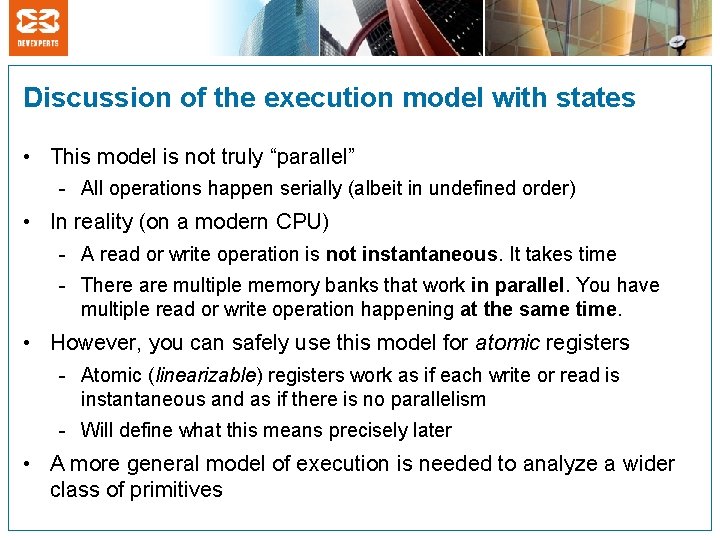

Mutex attempt #1

```
threadlocal int id        // 0 or 1
shared boolean want[2]

def lock:
    want[id] = true
    while want[1 - id]: pass

def unlock:
    want[id] = false
```

• This protocol does guarantee mutual exclusion • But there is no guarantee of progress: it can get into a live-lock (both threads spinning forever in lock) • So, the other desired property is progress: the critical section should be entered infinitely often
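Both claims about attempt #1 can be checked by exhaustively exploring its state space, treating each line of the pseudo-code as one atomic step on atomic registers (an assumption of this sketch). The hypothetical `check_attempt1` reports whether both threads can be in the critical section at once, and whether a state is reachable in which neither thread can do anything but spin.

```c
#include <assert.h>
#include <stdbool.h>

/* Program counter of each thread: 0 = about to lock (want[id] = true next),
   1 = spinning in "while want[1 - id]", 2 = in the critical section. */
typedef struct { int pc[2]; bool want[2]; } mstate_t;

enum { NSTATES = 3 * 3 * 2 * 2 };

static int encode(mstate_t s) {
    return ((s.pc[0] * 3 + s.pc[1]) * 2 + s.want[0]) * 2 + s.want[1];
}

/* Applies one step of thread t; returns false if the step leaves the state
   unchanged (thread t spins because want[1 - t] is true). */
static bool step_attempt1(mstate_t *s, int t) {
    switch (s->pc[t]) {
    case 0: s->want[t] = true; s->pc[t] = 1; return true;     /* want[id] = true */
    case 1: if (s->want[1 - t]) return false;                 /* while want[1 - id]: pass */
            s->pc[t] = 2; return true;                        /* enter critical section */
    default: s->want[t] = false; s->pc[t] = 0; return true;   /* unlock */
    }
}

/* Explores every reachable state. *both_in_cs reports a mutual exclusion
   violation; *stuck reports a reachable state where both threads only spin. */
static void check_attempt1(bool *both_in_cs, bool *stuck) {
    bool seen[NSTATES] = {false};
    mstate_t queue[NSTATES];
    int head = 0, tail = 0;
    mstate_t init = {{0, 0}, {false, false}};
    seen[encode(init)] = true;
    queue[tail++] = init;
    *both_in_cs = *stuck = false;
    while (head < tail) {
        mstate_t s = queue[head++];
        if (s.pc[0] == 2 && s.pc[1] == 2) *both_in_cs = true;
        bool moved = false;
        for (int t = 0; t < 2; t++) {
            mstate_t nx = s;
            if (!step_attempt1(&nx, t)) continue;
            moved = true;
            int k = encode(nx);
            if (!seen[k]) {
                seen[k] = true;
                assert(tail < NSTATES);
                queue[tail++] = nx;
            }
        }
        if (!moved) *stuck = true;
    }
}
```

It should report that mutual exclusion holds and that the stuck state is reachable: thread 0 sets want[0], thread 1 sets want[1], and from then on both can only spin.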

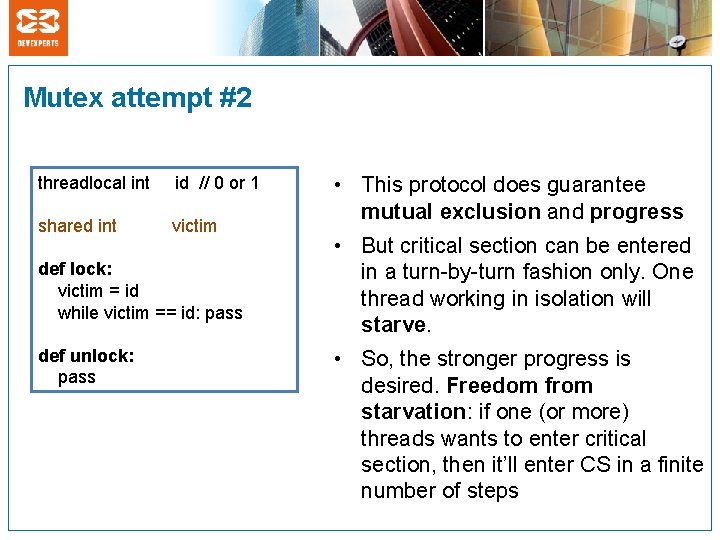

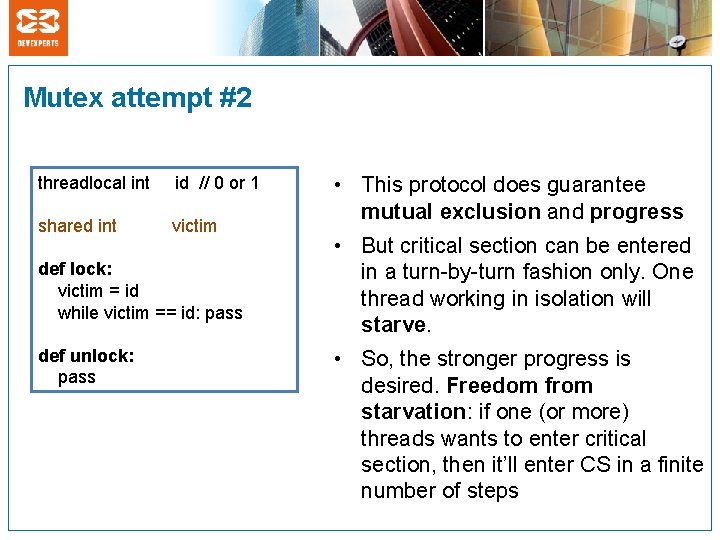

Mutex attempt #2

```
threadlocal int id        // 0 or 1
shared int victim

def lock:
    victim = id
    while victim == id: pass

def unlock:
    pass
```

• This protocol does guarantee mutual exclusion and progress • But the critical section can be entered only in a turn-by-turn fashion. One thread working in isolation will starve • So, a stronger progress property is desired, freedom from starvation: if one (or more) threads want to enter the critical section, each of them will enter it in a finite number of steps

![Peterson’s mutual exclusion algorithm](https://slidetodoc.com/presentation_image/56c1dcf8c43e2988600888d8b578e98e/image-26.jpg)

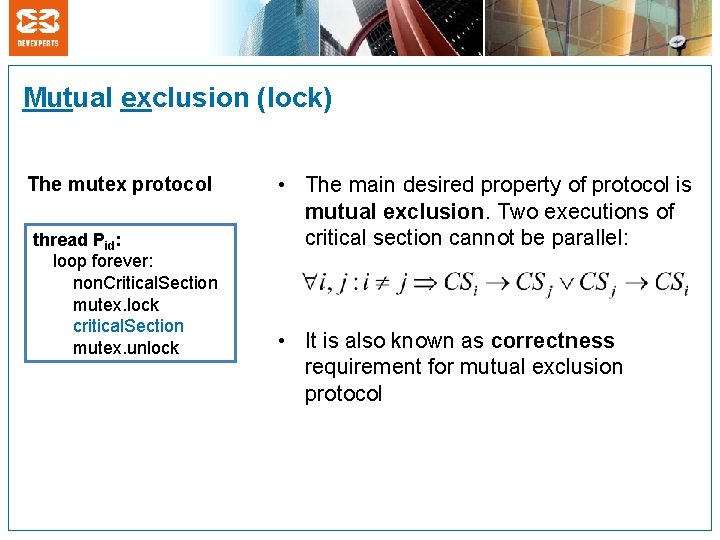

Peterson’s mutual exclusion algorithm

```
threadlocal int id        // 0 or 1
shared boolean want[2]
shared int victim

def lock:
    want[id] = true
    victim = id
    while want[1 - id] and victim == id: pass

def unlock:
    want[id] = false
```

• This protocol does guarantee mutual exclusion, progress and freedom from starvation • The order of operations in this pseudo-code is important • Published in 1981, it is not the first such algorithm, but it is the simplest two-thread one • It is hard to generalize to N threads (it can be done, but the result is complex)
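A minimal sketch of Peterson’s algorithm in C11, assuming `<stdatomic.h>` and POSIX threads are available. The demo around it (a plain shared counter plus an `inside` check for mutual exclusion) is hypothetical scaffolding, and the `sched_yield` call in the spin loop only keeps the demo fast on machines with few cores.

```c
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Shared registers. Default (sequentially consistent) C11 atomics are needed:
   with plain variables or weaker orderings, the compiler or CPU may reorder
   the stores with the following loads, and both threads can enter the CS. */
static atomic_bool want[2];
static atomic_int victim;

static void peterson_lock(int id) {
    atomic_store(&want[id], true);
    atomic_store(&victim, id);
    while (atomic_load(&want[1 - id]) && atomic_load(&victim) == id)
        sched_yield();
}

static void peterson_unlock(int id) {
    atomic_store(&want[id], false);
}

enum { ITERATIONS = 20000 };
static long counter;        /* plain variable, protected by the lock */
static atomic_int inside;   /* number of threads inside the critical section */

static void *worker(void *arg) {
    int id = *(const int *)arg;
    for (int i = 0; i < ITERATIONS; i++) {
        peterson_lock(id);
        int others = atomic_fetch_add(&inside, 1);
        assert(others == 0);   /* mutual exclusion */
        (void)others;
        counter++;
        atomic_fetch_sub(&inside, 1);
        peterson_unlock(id);
    }
    return NULL;
}

/* Runs two threads (ids 0 and 1); returns the final counter value. */
static long run_peterson_demo(void) {
    static int ids[2] = {0, 1};
    pthread_t t[2];
    counter = 0;
    for (int i = 0; i < 2; i++) {
        int rc = pthread_create(&t[i], NULL, worker, &ids[i]);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < 2; i++) pthread_join(t[i], NULL);
    return counter;
}
```

`run_peterson_demo()` should return 2 × ITERATIONS; a lost increment or a failed `inside` check would indicate broken mutual exclusion.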

![Lamport’s bakery mutual exclusion algorithm](https://slidetodoc.com/presentation_image/56c1dcf8c43e2988600888d8b578e98e/image-27.jpg)

Lamport’s [bakery] mutual exclusion algorithm

```
threadlocal int id        // 0 to N-1
shared boolean want[N]
shared int label[N]

def lock:
    want[id] = true                      // doorway
    label[id] = max(label) + 1           // doorway
    while exists k: k != id and want[k] and (label[k], k) < (label[id], id):
        pass

def unlock:
    want[id] = false
```

• This protocol does guarantee mutual exclusion, progress and freedom from starvation for N threads • This protocol has an additional first-come, first-served (FCFS) property: the thread that finishes the doorway first gets the lock first • But it relies on infinite labels. They can be replaced with “concurrent bounded timestamps”
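A sketch of the bakery algorithm in C11 with N = 3 threads; the wait loop re-checks the whole `exists k` condition, as the pseudo-code does. Assumptions: sequentially consistent atomics stand in for atomic registers, a 64-bit `atomic_long` stands in for the infinite labels, and the counter demo and names such as `someone_ahead` are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stdbool.h>

enum { N = 3, ITERATIONS = 5000 };   /* thread ids are 0..N-1 */

/* Shared registers; a 64-bit label will not overflow in a demo run. */
static atomic_bool want[N];
static atomic_long label[N];

/* Is (lk, k) < (lid, id) in lexicographic order? */
static bool ahead_of(long lk, int k, long lid, int id) {
    return lk < lid || (lk == lid && k < id);
}

static bool someone_ahead(int id) {
    long mine = atomic_load(&label[id]);
    for (int k = 0; k < N; k++)
        if (k != id && atomic_load(&want[k]) && ahead_of(atomic_load(&label[k]), k, mine, id))
            return true;
    return false;
}

static void bakery_lock(int id) {
    atomic_store(&want[id], true);        /* doorway */
    long max = 0;
    for (int k = 0; k < N; k++) {
        long l = atomic_load(&label[k]);
        if (l > max) max = l;
    }
    atomic_store(&label[id], max + 1);    /* end of doorway */
    while (someone_ahead(id))
        sched_yield();
}

static void bakery_unlock(int id) {
    atomic_store(&want[id], false);
}

static long counter;        /* plain variable, protected by the lock */
static atomic_int inside;

static void *worker(void *arg) {
    int id = *(const int *)arg;
    for (int i = 0; i < ITERATIONS; i++) {
        bakery_lock(id);
        int others = atomic_fetch_add(&inside, 1);
        assert(others == 0);   /* mutual exclusion */
        (void)others;
        counter++;
        atomic_fetch_sub(&inside, 1);
        bakery_unlock(id);
    }
    return NULL;
}

static long run_bakery_demo(void) {
    static int ids[N];
    pthread_t t[N];
    counter = 0;
    for (int i = 0; i < N; i++) {
        ids[i] = i;
        int rc = pthread_create(&t[i], NULL, worker, &ids[i]);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < N; i++) pthread_join(t[i], NULL);
    return counter;
}
```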

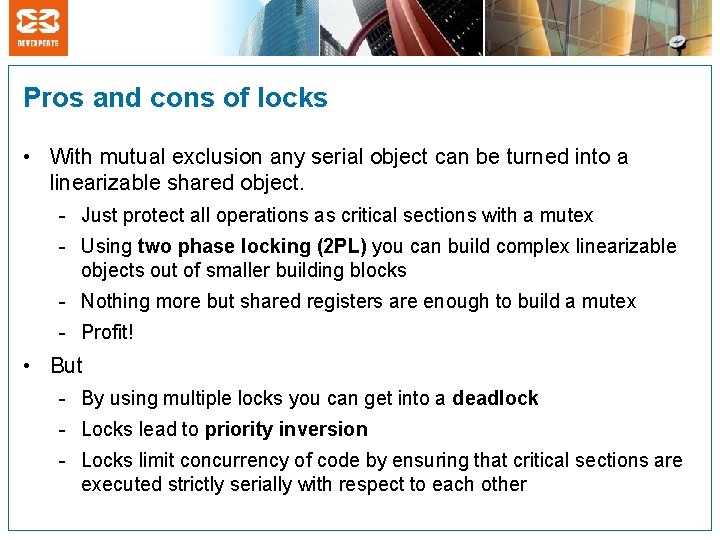

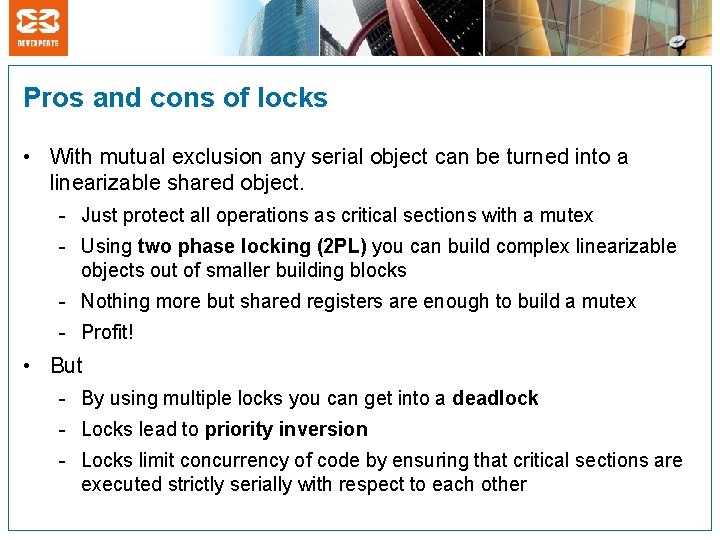

Pros and cons of locks • With mutual exclusion any serial object can be turned into a linearizable shared object - Just protect all operations as critical sections with a mutex - Using two-phase locking (2PL) you can build complex linearizable objects out of smaller building blocks - Nothing more than shared registers is needed to build a mutex - Profit! • But - By using multiple locks you can get into a deadlock - Locks lead to priority inversion - Locks limit the concurrency of code by ensuring that critical sections are executed strictly serially with respect to each other

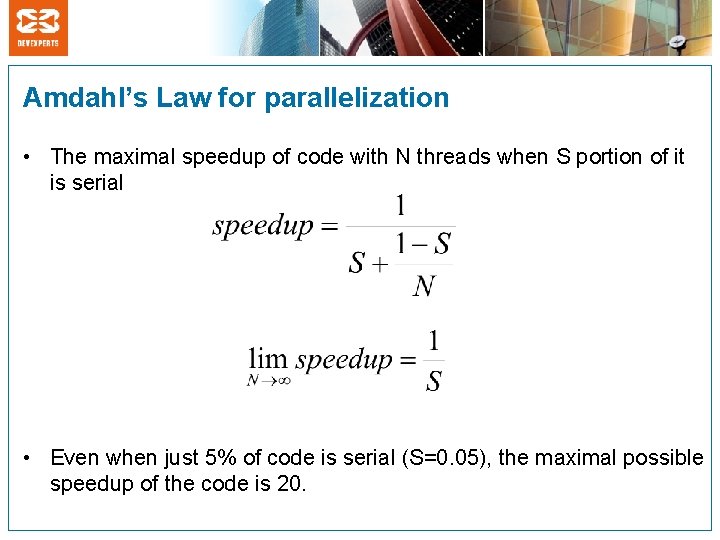

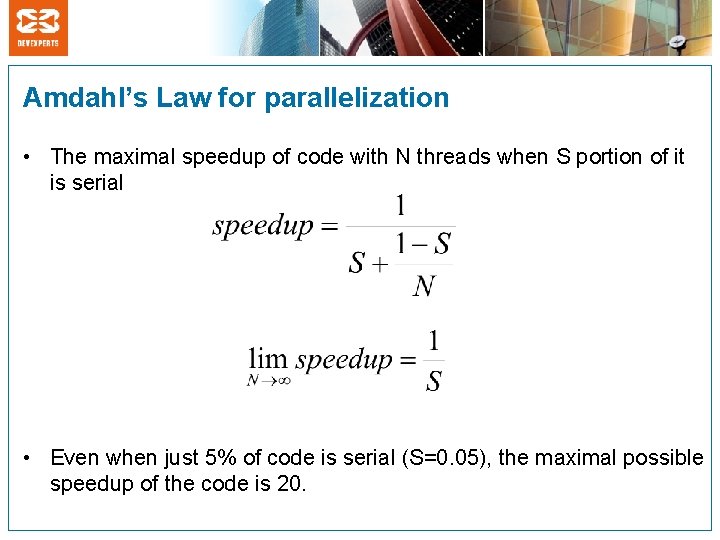

Amdahl’s Law for parallelization • The maximal speedup of code with N threads when a portion S of it is serial: speedup ≤ 1 / (S + (1 − S) / N) • Even when just 5% of code is serial (S = 0.05), the maximal possible speedup of the code is 20 (the limit 1/S as N → ∞)
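The bound as a function, a trivial sketch (the name `amdahl_speedup` is hypothetical) that assumes the non-serial part parallelizes perfectly:

```c
#include <assert.h>

/* Amdahl's law: the maximal speedup with n threads when a fraction s of the
   work is serial and the remaining 1 - s parallelizes perfectly. */
static double amdahl_speedup(double s, double n) {
    assert(s >= 0.0 && s <= 1.0 && n >= 1.0);
    return 1.0 / (s + (1.0 - s) / n);
}
```

For S = 0.05, `amdahl_speedup(0.05, 20)` is only about 10.26, and as N grows the value approaches 1/S = 20.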

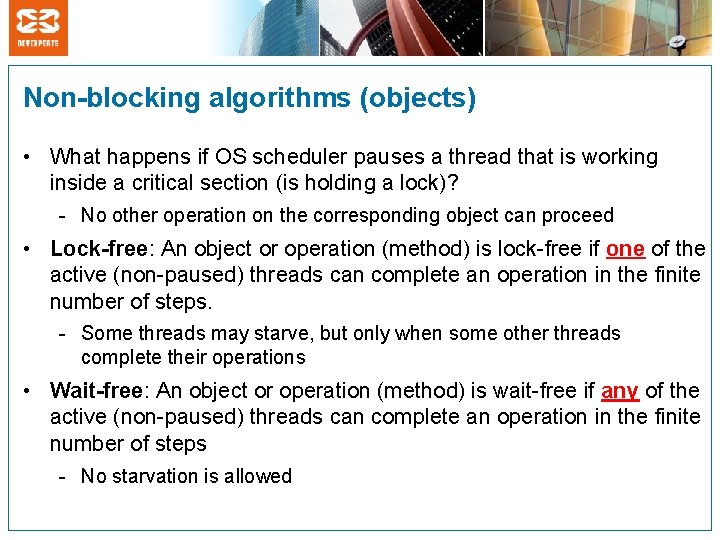

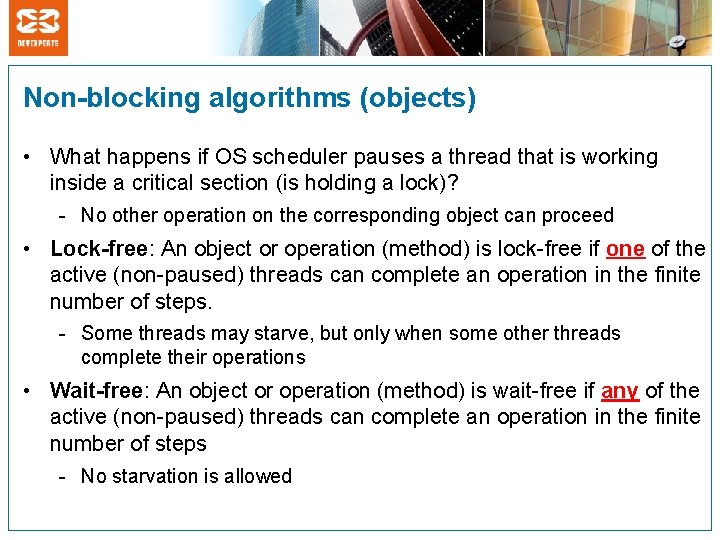

Non-blocking algorithms (objects) • What happens if the OS scheduler pauses a thread that is working inside a critical section (is holding a lock)? - No other operation on the corresponding object can proceed • Lock-free: an object or operation (method) is lock-free if some active (non-paused) thread completes an operation in a finite number of steps - Some threads may starve, but only while other threads complete their operations • Wait-free: an object or operation (method) is wait-free if every active (non-paused) thread completes its operation in a finite number of steps - No starvation is allowed
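Two ways to increment a shared counter in C11 illustrate the difference. This is a sketch under a hardware assumption: `atomic_fetch_add` is wait-free only where the CPU has a fetch-and-add instruction (for example LOCK XADD on x86); where it compiles to an LL/SC retry loop it is only lock-free. The demo names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

/* Lock-free increment: a CAS retry loop. A strong CAS fails only if another
   thread changed the counter, i.e. some other increment succeeded, so the
   system as a whole progresses, but one thread may retry indefinitely. */
static long cas_increment(atomic_long *c) {
    long old = atomic_load(c);
    while (!atomic_compare_exchange_strong(c, &old, old + 1))
        ;   /* on failure, old now holds the current value */
    return old;
}

/* Wait-free increment on hardware with a fetch-and-add instruction:
   every call completes in a bounded number of steps. */
static long faa_increment(atomic_long *c) {
    return atomic_fetch_add(c, 1);
}

enum { THREADS = 4, PER_THREAD = 100000 };
static atomic_long counter;

static void *cas_worker(void *arg) {
    (void)arg;
    for (int i = 0; i < PER_THREAD; i++) cas_increment(&counter);
    return NULL;
}

static void *faa_worker(void *arg) {
    (void)arg;
    for (int i = 0; i < PER_THREAD; i++) faa_increment(&counter);
    return NULL;
}

/* Runs THREADS copies of worker on a zeroed counter; returns the final count. */
static long run_counter(void *(*worker)(void *)) {
    pthread_t t[THREADS];
    atomic_store(&counter, 0);
    for (int i = 0; i < THREADS; i++) {
        int rc = pthread_create(&t[i], NULL, worker, NULL);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < THREADS; i++) pthread_join(t[i], NULL);
    return atomic_load(&counter);
}
```

Both variants end with THREADS × PER_THREAD; they differ only in their progress guarantee for an individual thread.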

What can we do without locks?

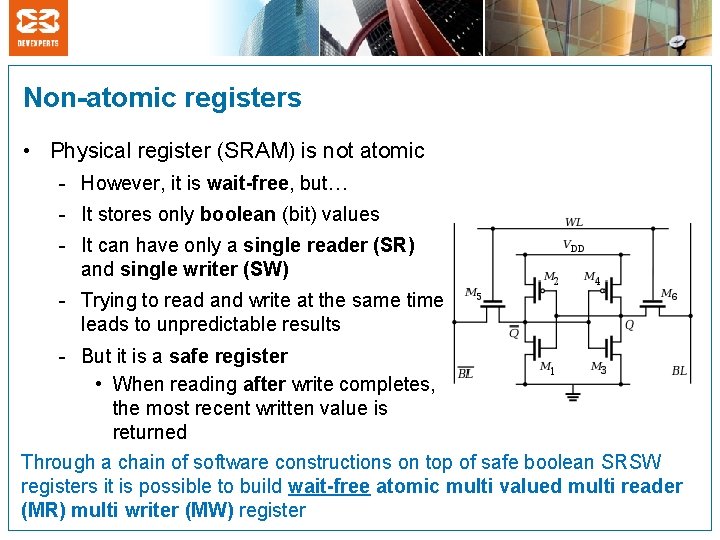

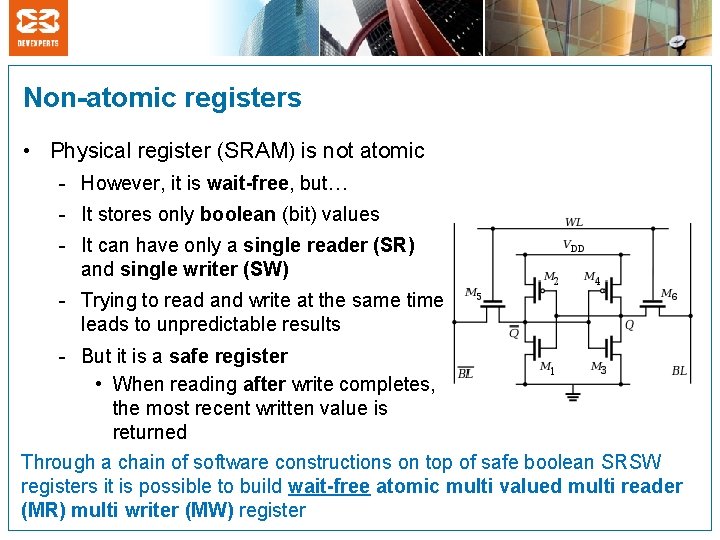

Non-atomic registers • A physical register (SRAM) is not atomic - However, it is wait-free, but… - It stores only boolean (bit) values - It can have only a single reader (SR) and a single writer (SW) - Trying to read and write at the same time leads to unpredictable results - But it is a safe register • When a read happens after a write completes, the most recently written value is returned • Through a chain of software constructions on top of safe boolean SRSW registers, it is possible to build a wait-free atomic multi-valued multi-reader (MR) multi-writer (MW) register

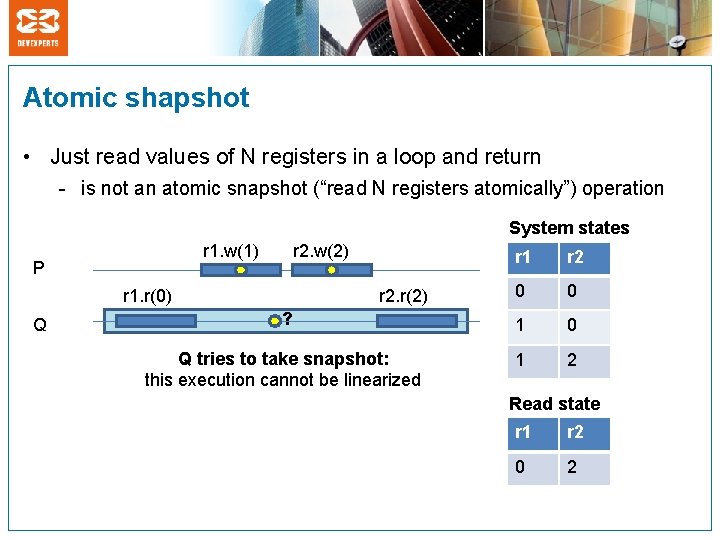

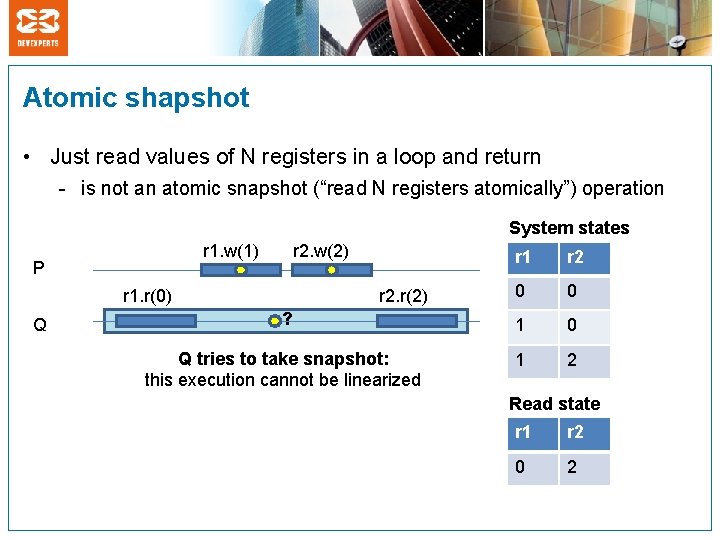

Atomic snapshot • Just reading the values of N registers in a loop and returning them is not an atomic snapshot (“read N registers atomically”) operation [Figure: P performs r1.w(1) and then r2.w(2), so the system state (r1, r2) goes (0, 0) → (1, 0) → (1, 2). Q tries to take a snapshot with r1.r(0) followed by r2.r(2) and obtains (0, 2), a state the system was never in: this execution cannot be linearized]

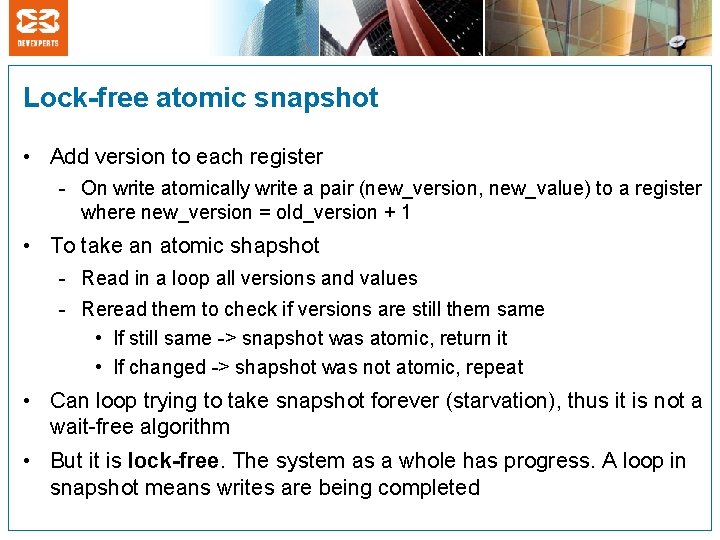

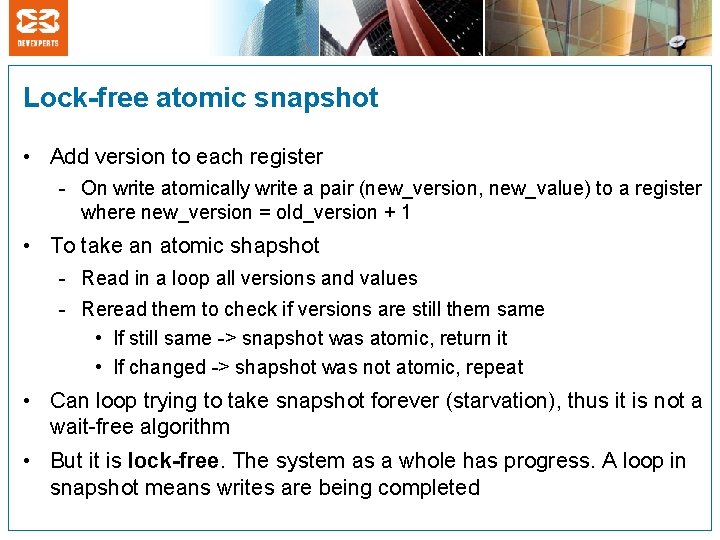

Lock-free atomic snapshot • Add a version to each register - On write, atomically write a pair (new_version, new_value) to the register, where new_version = old_version + 1 • To take an atomic snapshot - Read all versions and values in a loop - Reread them to check whether the versions are still the same • If still the same -> the snapshot was atomic, return it • If changed -> the snapshot was not atomic, repeat • It can loop trying to take a snapshot forever (starvation), thus it is not a wait-free algorithm • But it is lock-free: the system as a whole makes progress. Looping in snapshot means that writes are being completed
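A sketch of the versioned double-collect snapshot in C11. It assumes a single writer per register and 32-bit values, so that a (version, value) pair fits in one 64-bit atomic word and can be written and read atomically; `reg_write`, `snapshot` and the writer demo are hypothetical names. The demo writer updates r0 and then r1 to the same number, so every real system state has r0 == r1 or r0 == r1 + 1, and a correct snapshot must never show anything else.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

enum { NREGS = 2, WRITES = 100000 };

/* Each register holds (version << 32) | value; one writer per register makes
   "read the old version, store version + 1" safe. */
static _Atomic uint64_t regs[NREGS];

static void reg_write(int i, uint32_t value) {
    uint64_t version = (atomic_load(&regs[i]) >> 32) + 1;
    atomic_store(&regs[i], (version << 32) | value);
}

/* Double collect: read every register, read them all again, and accept the
   values only if nothing changed in between; otherwise retry (lock-free). */
static void snapshot(uint32_t out[NREGS]) {
    uint64_t prev[NREGS], cur[NREGS];
    for (int i = 0; i < NREGS; i++) prev[i] = atomic_load(&regs[i]);
    for (;;) {
        bool unchanged = true;
        for (int i = 0; i < NREGS; i++) {
            cur[i] = atomic_load(&regs[i]);
            if (cur[i] != prev[i]) unchanged = false;
        }
        if (unchanged) break;
        for (int i = 0; i < NREGS; i++) prev[i] = cur[i];
    }
    for (int i = 0; i < NREGS; i++) out[i] = (uint32_t)cur[i];
}

static atomic_bool done;

/* Writes r0 = i and then r1 = i for i = 1..WRITES. */
static void *writer(void *arg) {
    (void)arg;
    for (uint32_t i = 1; i <= WRITES; i++) {
        reg_write(0, i);
        reg_write(1, i);
    }
    atomic_store(&done, true);
    return NULL;
}

/* Takes snapshots while the writer runs; returns how many of them show a
   state the system was never in. */
static long count_bad_snapshots(void) {
    pthread_t w;
    long bad = 0;
    int rc = pthread_create(&w, NULL, writer, NULL);
    assert(rc == 0);
    (void)rc;
    while (!atomic_load(&done)) {
        uint32_t v[NREGS];
        snapshot(v);
        if (!(v[0] == v[1] || v[0] == v[1] + 1)) bad++;
    }
    pthread_join(w, NULL);
    return bad;
}
```

The versions make the comparison immune to a register being rewritten with the same value between the two collects.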

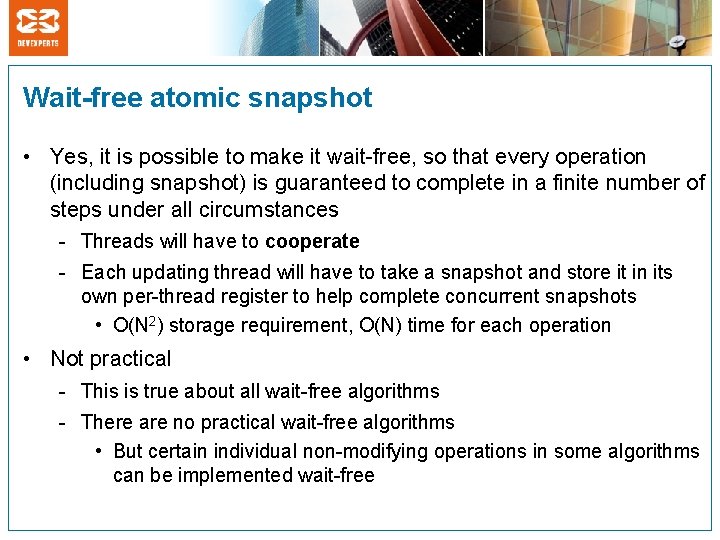

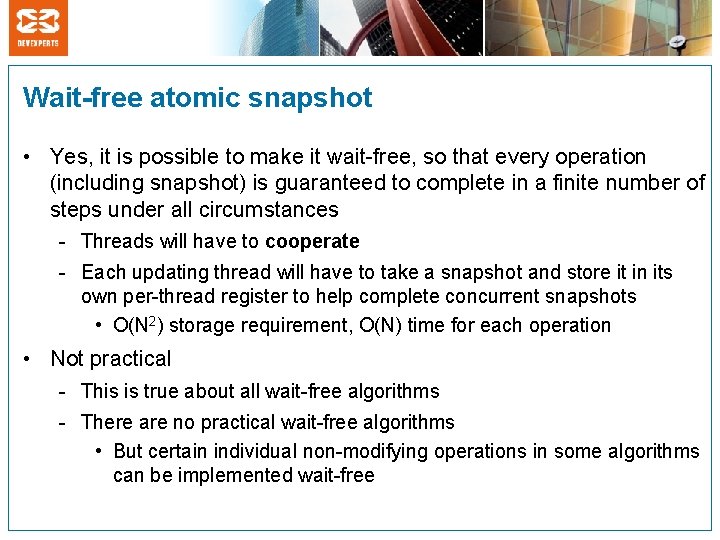

Wait-free atomic snapshot • Yes, it is possible to make it wait-free, so that every operation (including snapshot) is guaranteed to complete in a finite number of steps under all circumstances - Threads will have to cooperate - Each updating thread will have to take a snapshot and store it in its own per-thread register to help complete concurrent snapshots • O(N²) storage requirement, O(N) time for each operation • Not practical - This is true of all wait-free algorithms - There are no practical wait-free algorithms • But certain individual non-modifying operations in some algorithms can be implemented wait-free

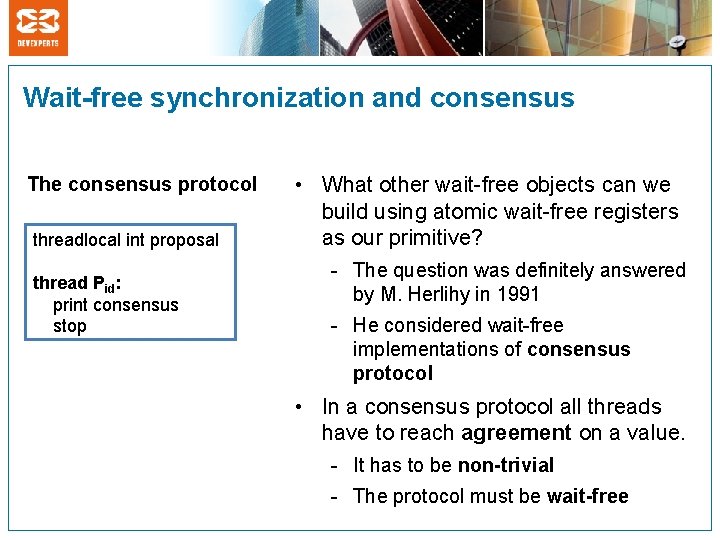

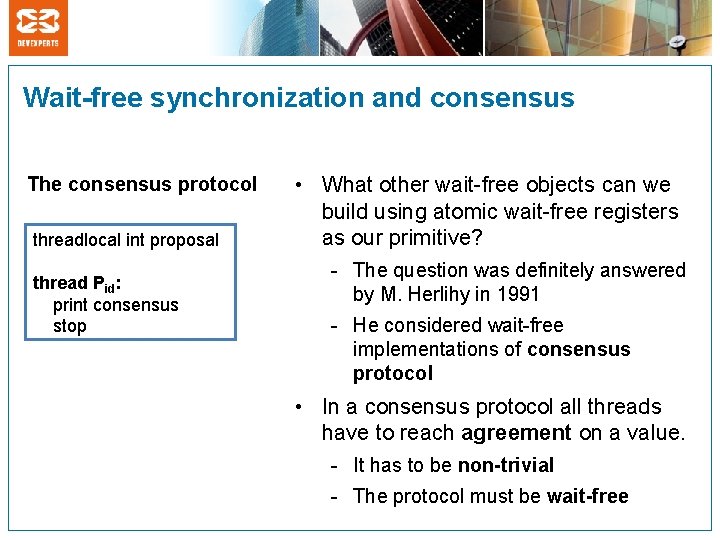

Wait-free synchronization and consensus

The consensus protocol:

```
threadlocal int proposal

thread Pid:
    print consensus
    stop
```

• What other wait-free objects can we build using atomic wait-free registers as our primitive? - The question was definitively answered by M. Herlihy in 1991 - He considered wait-free implementations of the consensus protocol • In a consensus protocol all threads have to reach agreement on a value - It has to be non-trivial (the agreed value must be one of the proposals) - The protocol must be wait-free

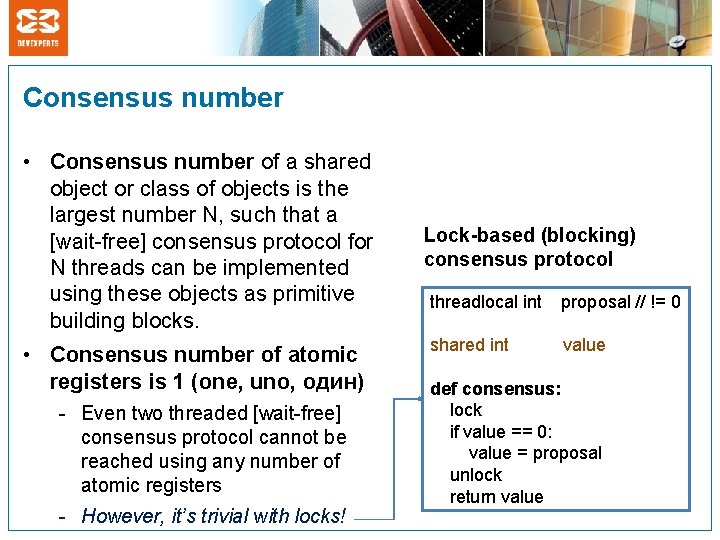

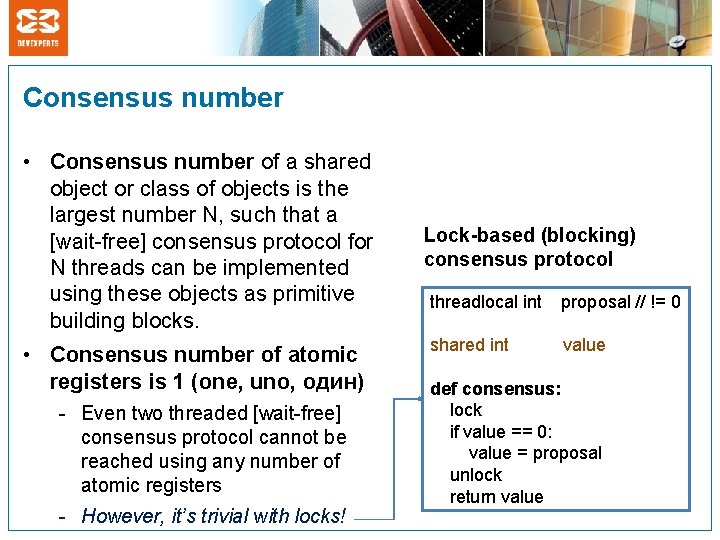

Consensus number • The consensus number of a shared object or class of objects is the largest number N such that a [wait-free] consensus protocol for N threads can be implemented using these objects as primitive building blocks • The consensus number of atomic registers is 1 (one, uno, один) - Not even a two-thread [wait-free] consensus protocol can be implemented using any number of atomic registers - However, it’s trivial with locks!

Lock-based (blocking) consensus protocol:

```
threadlocal int proposal   // != 0
shared int value

def consensus:
    lock
    if value == 0:
        value = proposal
    unlock
    return value
```
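The lock-based protocol translates almost line for line to C with a POSIX mutex. This is a sketch; `consensus_lock` and the demo functions are hypothetical names. It is blocking, not wait-free: a thread paused while holding the mutex stalls everyone else.

```c
#include <assert.h>
#include <pthread.h>

/* 0 in value means "not decided yet", so proposals must be non-zero. */
static pthread_mutex_t consensus_lock = PTHREAD_MUTEX_INITIALIZER;
static int value;

static int consensus(int proposal) {
    assert(proposal != 0);
    pthread_mutex_lock(&consensus_lock);
    if (value == 0)
        value = proposal;          /* the first thread to get the lock decides */
    int decided = value;
    pthread_mutex_unlock(&consensus_lock);
    return decided;
}

enum { THREADS = 8 };
static int decisions[THREADS];

static void *propose(void *arg) {
    int id = *(const int *)arg;
    decisions[id] = consensus(id + 1);   /* thread id proposes id + 1 */
    return NULL;
}

/* Runs THREADS proposers; returns the common decision, or -1 on disagreement. */
static int run_lock_consensus_demo(void) {
    static int ids[THREADS];
    pthread_t t[THREADS];
    for (int i = 0; i < THREADS; i++) {
        ids[i] = i;
        int rc = pthread_create(&t[i], NULL, propose, &ids[i]);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < THREADS; i++) pthread_join(t[i], NULL);
    for (int i = 1; i < THREADS; i++)
        if (decisions[i] != decisions[0]) return -1;
    return decisions[0];
}
```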

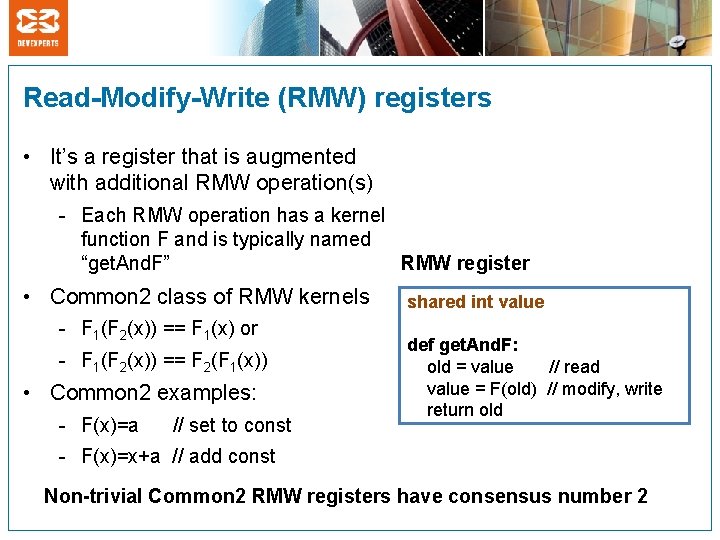

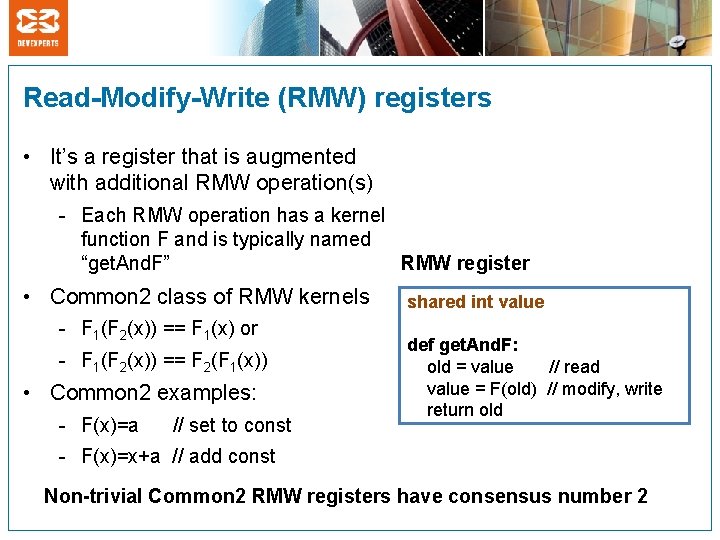

Read-Modify-Write (RMW) registers • It’s a register that is augmented with additional RMW operation(s) - Each RMW operation has a kernel function F and is typically named “getAndF”:

```
shared int value

def getAndF:           // the whole operation is atomic
    old = value        // read
    value = F(old)     // modify, write
    return old
```

• Common2 class of RMW kernels: for any two kernels F1 and F2 of the register, either - F1(F2(x)) == F1(x) (F1 overwrites F2), or - F1(F2(x)) == F2(F1(x)) (F1 and F2 commute) • Common2 examples: - F(x) = a // set to const - F(x) = x + a // add const • Non-trivial Common2 RMW registers have consensus number 2
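Why getAndSet reaches consensus number 2: a standard two-thread protocol built from one getAndSet register, which C11 provides as `atomic_exchange`. This is a sketch; `proposed`, `taken` and `consensus2` are hypothetical names, and the two proposal slots are ordinary atomic registers. There are no loops, so each call finishes in a bounded number of steps.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

static atomic_int proposed[2];   /* plain atomic registers */
static atomic_int taken;         /* the getAndSet register, 0 until first use */

static int consensus2(int id, int proposal) {
    assert(id == 0 || id == 1);
    atomic_store(&proposed[id], proposal);       /* publish before competing */
    if (atomic_exchange(&taken, 1) == 0)
        return proposal;                          /* got the old 0: I won */
    return atomic_load(&proposed[1 - id]);        /* the winner published first */
}

static int decided[2];

static void *propose2(void *arg) {
    int id = *(const int *)arg;
    decided[id] = consensus2(id, 100 + id);   /* thread 0 proposes 100, thread 1 proposes 101 */
    return NULL;
}

/* Returns the common decision, or -1 if the two threads disagree. */
static int run_consensus2_demo(void) {
    static int ids[2] = {0, 1};
    pthread_t t[2];
    for (int i = 0; i < 2; i++) {
        int rc = pthread_create(&t[i], NULL, propose2, &ids[i]);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < 2; i++) pthread_join(t[i], NULL);
    return decided[0] == decided[1] ? decided[0] : -1;
}
```

The trick does not extend to three threads: a loser learns that it lost, but not which of the other threads won.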

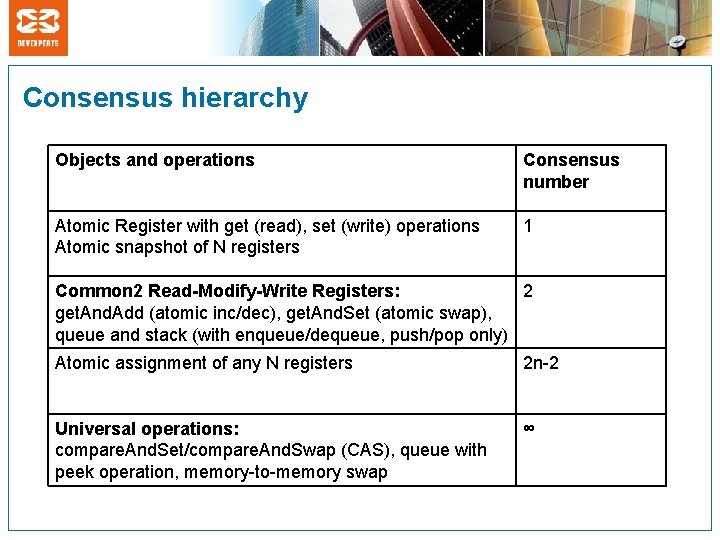

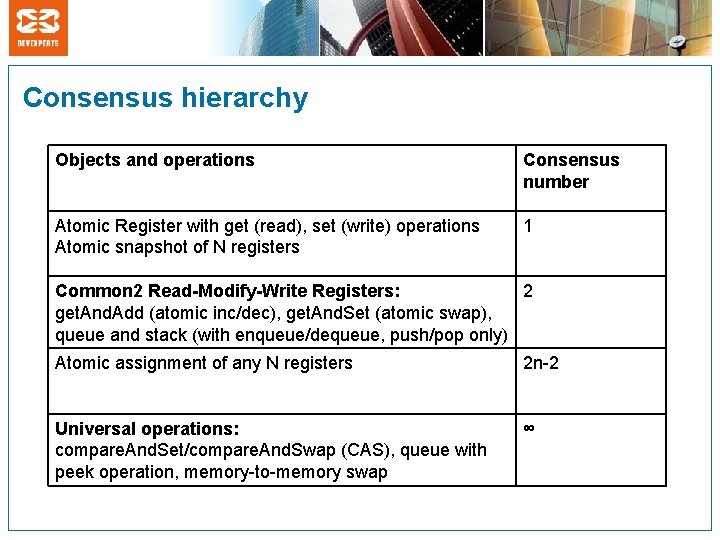

Consensus hierarchy

| Objects and operations | Consensus number |
|---|---|
| Atomic register with get (read) and set (write) operations; atomic snapshot of N registers | 1 |
| Common2 read-modify-write registers: getAndAdd (atomic inc/dec), getAndSet (atomic swap); queue and stack (with enqueue/dequeue, push/pop only) | 2 |
| Atomic assignment of any N registers | 2N − 2 |
| Universal operations: compareAndSet/compareAndSwap (CAS), queue with peek operation, memory-to-memory swap | ∞ |
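CAS sits at the top of the hierarchy because a single CAS register solves consensus for any number of threads. A minimal C11 sketch, assuming proposals are non-zero so that 0 can mean “undecided”; `cas_consensus` and the demo are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

static atomic_int decision;   /* 0 = undecided */

static int cas_consensus(int proposal) {
    assert(proposal != 0);
    int expected = 0;
    /* Only the first CAS succeeds; a failed CAS stores the winner in expected. */
    if (atomic_compare_exchange_strong(&decision, &expected, proposal))
        return proposal;
    return expected;
}

enum { THREADS = 8 };
static int decided[THREADS];

static void *proposer(void *arg) {
    int id = *(const int *)arg;
    decided[id] = cas_consensus(id + 1);   /* thread id proposes id + 1 */
    return NULL;
}

/* Returns the common decision, or -1 if any two threads disagree. */
static int run_cas_consensus_demo(void) {
    static int ids[THREADS];
    pthread_t t[THREADS];
    for (int i = 0; i < THREADS; i++) {
        ids[i] = i;
        int rc = pthread_create(&t[i], NULL, proposer, &ids[i]);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < THREADS; i++) pthread_join(t[i], NULL);
    for (int i = 1; i < THREADS; i++)
        if (decided[i] != decided[0]) return -1;
    return decided[0];
}
```

Every call performs exactly one CAS, so the protocol is wait-free for any number of threads.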

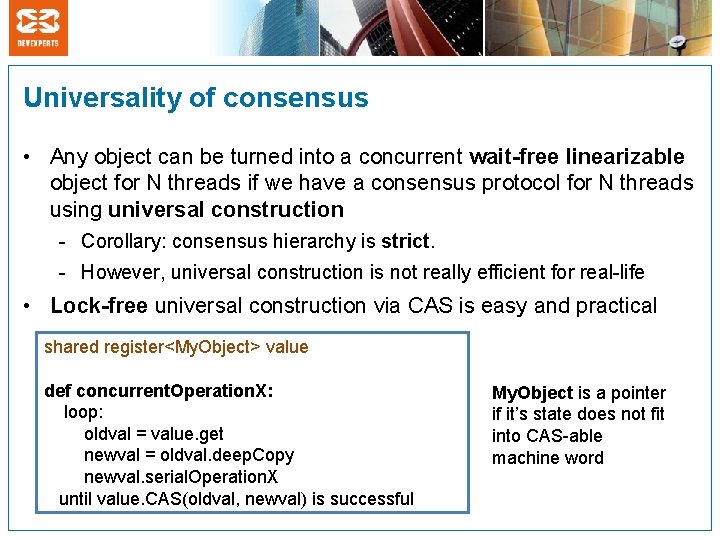

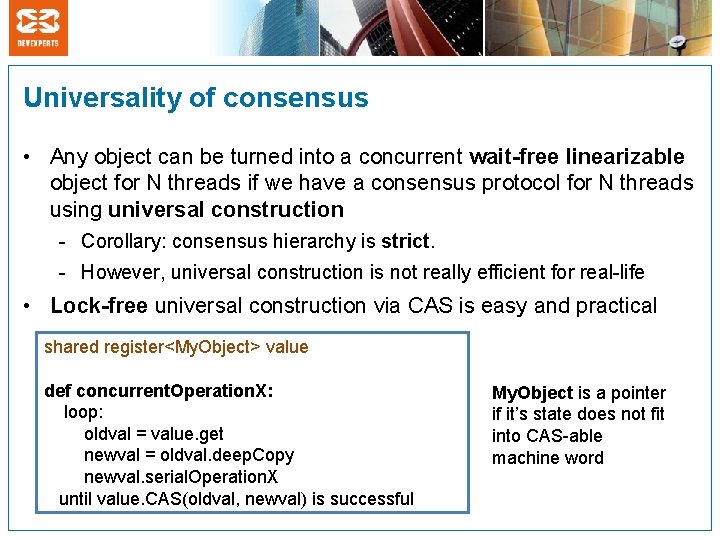

Universality of consensus • Any object can be turned into a concurrent wait-free linearizable object for N threads using a universal construction, if we have a consensus protocol for N threads - Corollary: the consensus hierarchy is strict - However, the universal construction is not efficient enough for real life • A lock-free universal construction via CAS is easy and practical:

```
shared register<MyObject> value

def concurrentOperationX:
    loop:
        oldval = value.get
        newval = oldval.deepCopy
        newval.serialOperationX
    until value.CAS(oldval, newval) is successful
```

MyObject is a pointer if its state does not fit into a CAS-able machine word
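When MyObject fits in a CAS-able word, the construction needs no memory management at all. A sketch in C11 with a hypothetical object `stats_t` (a count of added items and their sum, 32 bits each) packed into one 64-bit atomic; the serial operation `serial_add` is written with no concurrency in mind, and the CAS loop makes it lock-free. Because no memory is allocated or freed, a CAS that succeeds after the value changed and changed back is still correct.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdint.h>

typedef struct { uint32_t count, sum; } stats_t;   /* hypothetical MyObject */

static stats_t unpack(uint64_t w) { return (stats_t){(uint32_t)(w >> 32), (uint32_t)w}; }
static uint64_t pack(stats_t s) { return ((uint64_t)s.count << 32) | s.sum; }

/* The serial operation. */
static void serial_add(stats_t *s, uint32_t item) {
    s->count += 1;
    s->sum += item;
}

static _Atomic uint64_t value;   /* shared register<MyObject> */

/* Universal construction: copy, apply the serial operation to the private
   copy, publish it with CAS, retry if another thread got there first. */
static void concurrent_add(uint32_t item) {
    uint64_t oldval = atomic_load(&value);
    for (;;) {
        stats_t newval = unpack(oldval);   /* the "deep copy" is a value copy */
        serial_add(&newval, item);
        if (atomic_compare_exchange_weak(&value, &oldval, pack(newval)))
            return;                        /* on failure, oldval was reloaded */
    }
}

enum { THREADS = 4, ITEMS = 1000 };

static void *adder(void *arg) {
    (void)arg;
    for (uint32_t i = 1; i <= ITEMS; i++) concurrent_add(i);
    return NULL;
}

static stats_t run_universal_demo(void) {
    pthread_t t[THREADS];
    atomic_store(&value, 0);
    for (int i = 0; i < THREADS; i++) {
        int rc = pthread_create(&t[i], NULL, adder, NULL);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < THREADS; i++) pthread_join(t[i], NULL);
    return unpack(atomic_load(&value));
}
```

After `run_universal_demo()`, count should be 4000 and sum 4 × 500500; no update is lost even though `serial_add` itself knows nothing about threads.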

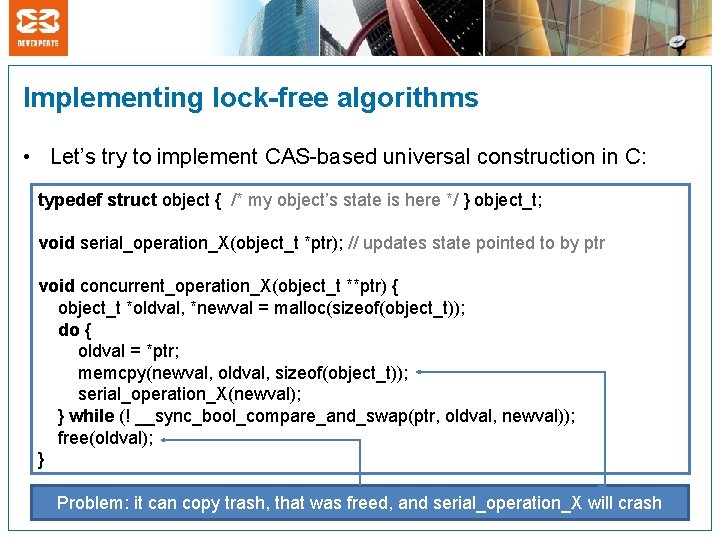

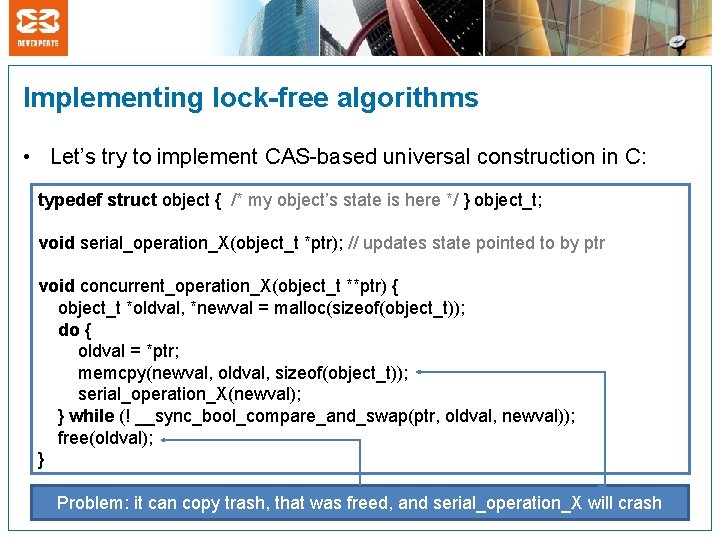

Implementing lock-free algorithms • Let’s try to implement the CAS-based universal construction in C:

```c
#include <stdlib.h>   /* malloc, free */
#include <string.h>   /* memcpy */

typedef struct object { /* my object's state is here */ } object_t;

void serial_operation_X(object_t *ptr); // updates state pointed to by ptr

void concurrent_operation_X(object_t **ptr) {
    object_t *oldval, *newval = malloc(sizeof(object_t));
    do {
        oldval = *ptr;
        memcpy(newval, oldval, sizeof(object_t)); // *oldval may already be freed!
        serial_operation_X(newval);
    } while (!__sync_bool_compare_and_swap(ptr, oldval, newval));
    free(oldval);
}
```

Problem: it can copy trash that was already freed by another thread, and serial_operation_X will crash

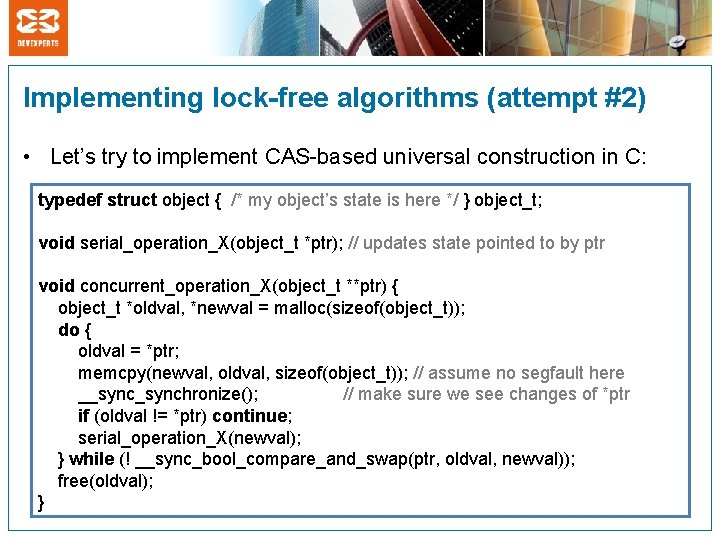

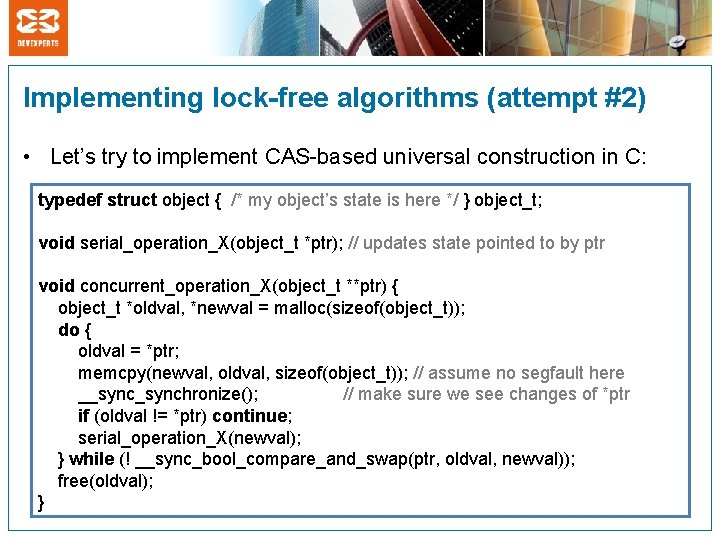

Implementing lock-free algorithms (attempt #2) • Let’s try to implement the CAS-based universal construction in C:

```c
#include <stdlib.h>   /* malloc, free */
#include <string.h>   /* memcpy */

typedef struct object { /* my object's state is here */ } object_t;

void serial_operation_X(object_t *ptr); // updates state pointed to by ptr

void concurrent_operation_X(object_t **ptr) {
    object_t *oldval, *newval = malloc(sizeof(object_t));
    do {
        oldval = *ptr;
        memcpy(newval, oldval, sizeof(object_t)); // assume no segfault here
        __sync_synchronize();  // full barrier: make sure we see changes of *ptr
        if (oldval != *ptr)    // the copy may be trash: retry
            continue;          // in a do/while, continue jumps to the CAS below,
                               // which fails because *ptr != oldval
        serial_operation_X(newval);
    } while (!__sync_bool_compare_and_swap(ptr, oldval, newval));
    free(oldval);
}
```

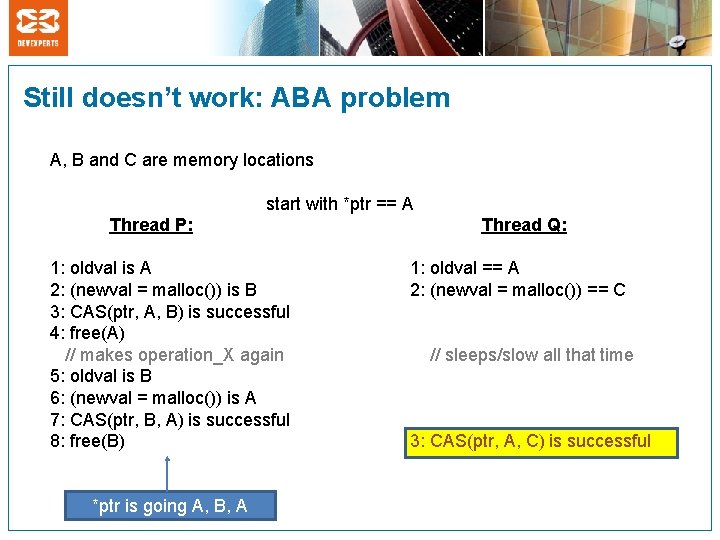

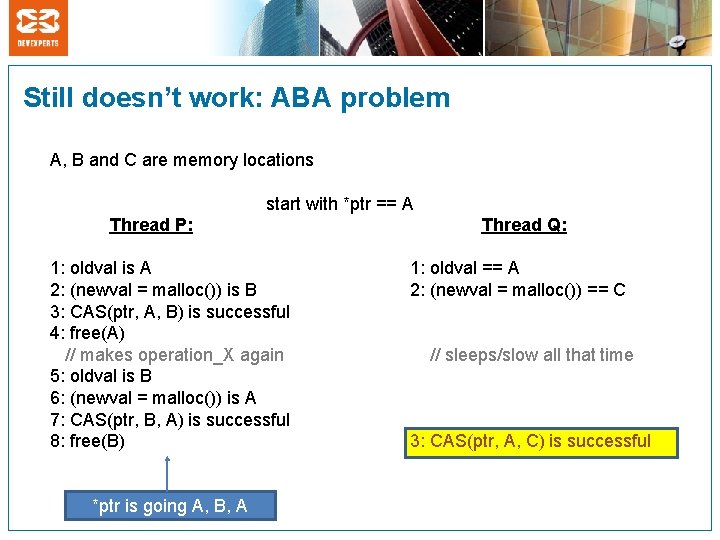

Still doesn’t work: ABA problem. A, B and C are memory locations; we start with `*ptr == A`

| Thread P | Thread Q |
|---|---|
| 1: oldval is A | 1: oldval is A |
| 2: (newval = malloc()) is B | 2: (newval = malloc()) is C |
| 3: CAS(ptr, A, B) is successful | // sleeps (or is slow) all that time |
| 4: free(A) | |
| // performs operation_X again: | |
| 5: oldval is B | |
| 6: (newval = malloc()) is A | |
| 7: CAS(ptr, B, A) is successful | |
| 8: free(B) | |
| | 3: CAS(ptr, A, C) is successful |

`*ptr` goes A, B, A, so Q's CAS succeeds even though C was computed from the old contents of A: both of P's updates are lost

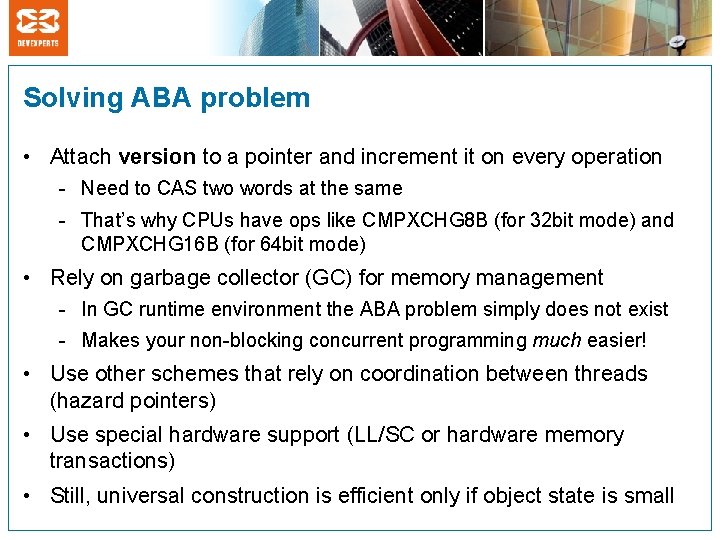

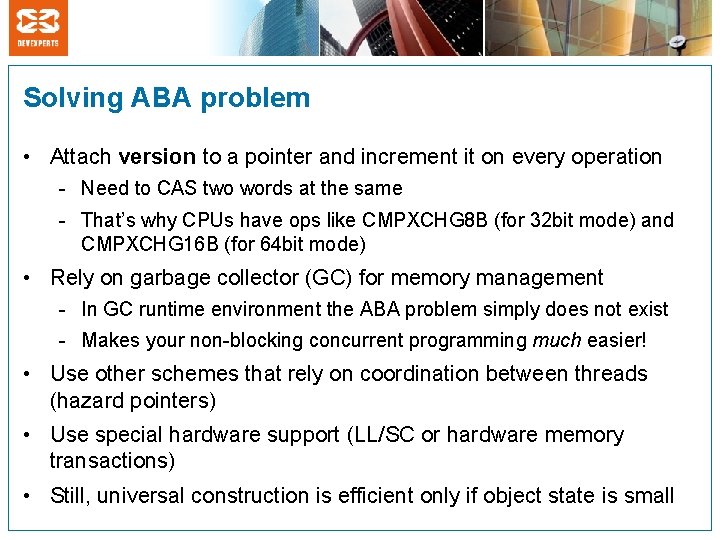

Solving the ABA problem • Attach a version to a pointer and increment it on every operation - Need to CAS two words at the same time - That’s why CPUs have ops like CMPXCHG8B (for 32-bit mode) and CMPXCHG16B (for 64-bit mode) • Rely on a garbage collector (GC) for memory management - In a GC runtime environment the ABA problem simply does not exist - Makes your non-blocking concurrent programming much easier! • Use other schemes that rely on coordination between threads (hazard pointers) • Use special hardware support (LL/SC or hardware memory transactions) • Still, the universal construction is efficient only if the object state is small

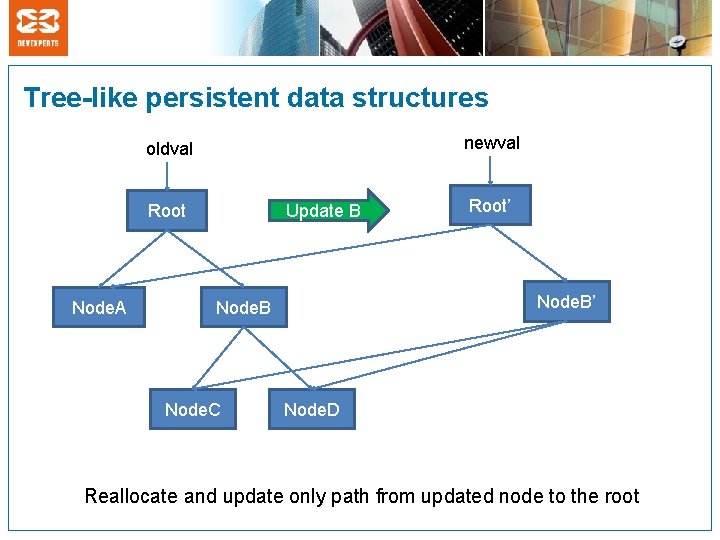

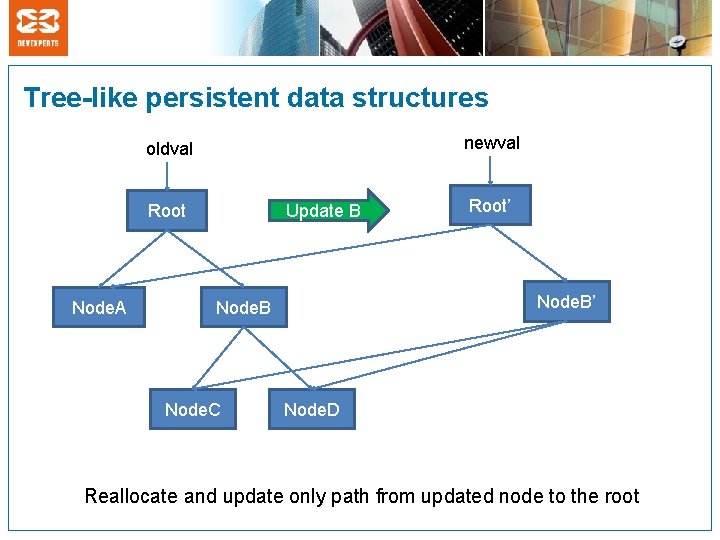

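Tree-like persistent data structures [Figure: oldval points to Root, newval points to Root′. Updating Node B allocates Node B′ and Root′; the other nodes (Node A, Node C, Node D) are shared by the old and the new version of the tree] • Reallocate and update only the path from the updated node to the root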

Quick overview of other topics http://liveearth.org/en/liveearthblog/run-for-water?page=7

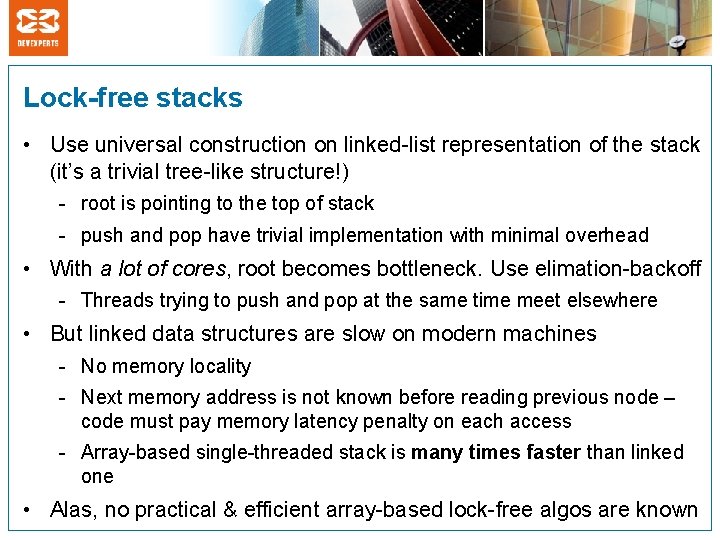

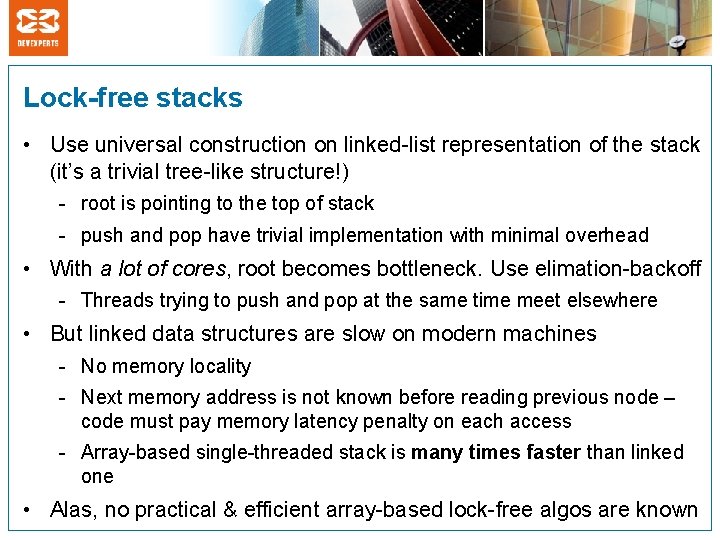

Lock-free stacks • Use the universal construction on a linked-list representation of the stack (it’s a trivial tree-like structure!) - root points to the top of the stack - push and pop have trivial implementations with minimal overhead • With a lot of cores, root becomes a bottleneck. Use elimination backoff - Threads trying to push and pop at the same time meet elsewhere and cancel each other out, without touching root • But linked data structures are slow on modern machines - No memory locality - The next memory address is not known before reading the previous node, so code must pay the memory latency penalty on each access - An array-based single-threaded stack is many times faster than a linked one • Alas, no practical & efficient array-based lock-free algorithms are known
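A sketch of the linked-list stack (often called a Treiber stack) that also applies the versioned-pointer fix for ABA. Instead of a double-width CAS on a real pointer, it assumes nodes come from a fixed pool that is never freed and packs a 32-bit version with a 32-bit node index into one 64-bit word. Pool size, thread counts and all names are hypothetical; the demo only checks that concurrent pops and pushes neither lose nor duplicate nodes.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

enum { POOL = 1024, THREADS = 4, ROUNDS = 100000 };
#define NIL 0u   /* node indices are 1-based, so 0 means "no node" */

/* Nodes are never freed, so reading next_of[] of a node that another thread
   has just popped is harmless. */
static _Atomic uint32_t next_of[POOL + 1];

/* Top of the stack: (version << 32) | node index, changed by one 64-bit CAS.
   The version grows on every change (wrapping after 2^32), defeating ABA. */
static _Atomic uint64_t top;

static uint64_t retag(uint64_t old, uint32_t idx) {
    return (((old >> 32) + 1) << 32) | idx;
}

static void push(uint32_t idx) {
    uint64_t old = atomic_load(&top);
    do {
        atomic_store(&next_of[idx], (uint32_t)old);   /* link to current top */
    } while (!atomic_compare_exchange_weak(&top, &old, retag(old, idx)));
}

/* Returns the popped node index, or NIL if the stack was empty. */
static uint32_t pop(void) {
    uint64_t old = atomic_load(&top);
    for (;;) {
        uint32_t idx = (uint32_t)old;
        if (idx == NIL) return NIL;
        uint32_t next = atomic_load(&next_of[idx]);
        /* Fails if top changed in any way, even back to the same index. */
        if (atomic_compare_exchange_weak(&top, &old, retag(old, next)))
            return idx;
    }
}

static void *churn(void *arg) {
    (void)arg;
    for (int i = 0; i < ROUNDS; i++) {
        uint32_t idx = pop();
        if (idx != NIL) push(idx);
    }
    return NULL;
}

/* Pushes all pool nodes, lets several threads pop and re-push concurrently,
   then drains the stack. Returns the number of distinct nodes drained, or
   -1 if some node came back twice. */
static int run_stack_demo(void) {
    static bool seen[POOL + 1];
    pthread_t t[THREADS];
    for (uint32_t i = 1; i <= POOL; i++) push(i);
    for (int i = 0; i < THREADS; i++) {
        int rc = pthread_create(&t[i], NULL, churn, NULL);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < THREADS; i++) pthread_join(t[i], NULL);
    int distinct = 0;
    uint32_t idx;
    while ((idx = pop()) != NIL) {
        assert(idx <= POOL);
        if (seen[idx]) return -1;
        seen[idx] = true;
        distinct++;
    }
    return distinct;
}
```

With the version tag, `run_stack_demo()` should return POOL. Dropping the tag reopens the ABA interleaving, which could then corrupt the list under the same churn.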

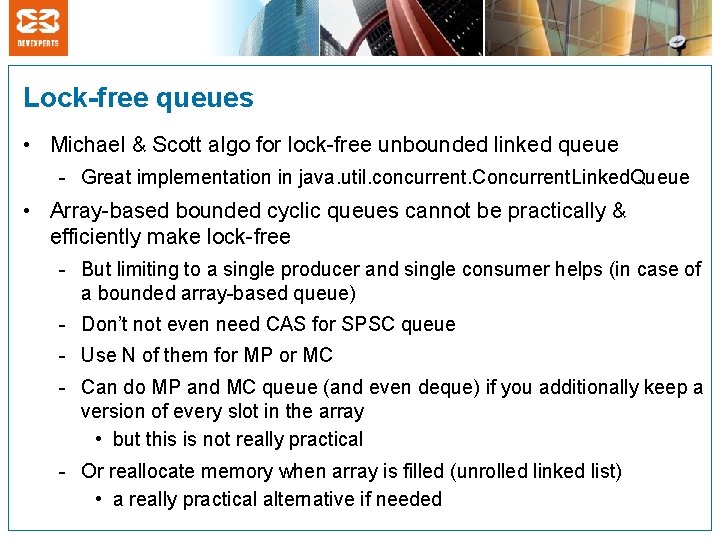

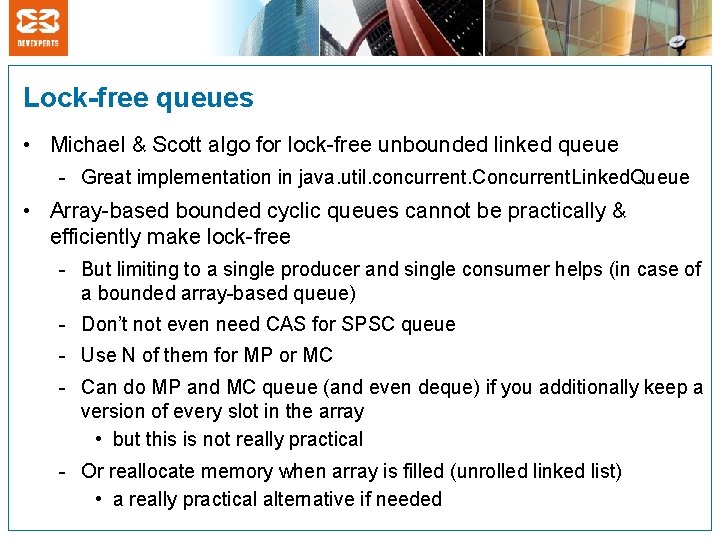

Lock-free queues • Michael & Scott algorithm for a lock-free unbounded linked queue - Great implementation in java.util.concurrent.ConcurrentLinkedQueue • Array-based bounded cyclic queues cannot be practically & efficiently made lock-free - But limiting to a single producer and single consumer helps (in the case of a bounded array-based queue) - You don't even need CAS for an SPSC queue - Use N of them for MP or MC - You can do an MP and MC queue (and even a deque) if you additionally keep a version of every slot in the array • but this is not really practical - Or reallocate memory when the array is filled (unrolled linked list) • a really practical alternative if needed
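A sketch of the SPSC case in C11: each index has exactly one writer (the producer owns `tail`, the consumer owns `head`), so plain atomic loads and stores with acquire/release ordering are enough and no CAS appears anywhere. The capacity, names and demo are hypothetical; `sched_yield` only keeps the demo fast when the queue is full or empty.

```c
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

enum { CAPACITY = 64, COUNT = 200000 };   /* CAPACITY must be a power of two */

/* Bounded single-producer/single-consumer queue with free-running counters. */
typedef struct {
    int slots[CAPACITY];
    _Atomic size_t head;   /* next slot to read; written only by the consumer */
    _Atomic size_t tail;   /* next slot to write; written only by the producer */
} spsc_t;

static bool spsc_push(spsc_t *q, int v) {              /* producer thread only */
    size_t t = atomic_load_explicit(&q->tail, memory_order_relaxed);
    size_t h = atomic_load_explicit(&q->head, memory_order_acquire);
    if (t - h == (size_t)CAPACITY) return false;        /* full */
    q->slots[t % CAPACITY] = v;
    atomic_store_explicit(&q->tail, t + 1, memory_order_release);  /* publish */
    return true;
}

static bool spsc_pop(spsc_t *q, int *v) {              /* consumer thread only */
    size_t h = atomic_load_explicit(&q->head, memory_order_relaxed);
    size_t t = atomic_load_explicit(&q->tail, memory_order_acquire);
    if (h == t) return false;                           /* empty */
    *v = q->slots[h % CAPACITY];
    atomic_store_explicit(&q->head, h + 1, memory_order_release);  /* free slot */
    return true;
}

static spsc_t queue;

static void *producer(void *arg) {
    (void)arg;
    for (int i = 0; i < COUNT; i++)
        while (!spsc_push(&queue, i))
            sched_yield();   /* queue full: let the consumer run */
    return NULL;
}

/* Consumes on the calling thread; returns true if 0, 1, ..., COUNT - 1
   arrived in FIFO order. */
static bool run_spsc_demo(void) {
    pthread_t p;
    int rc = pthread_create(&p, NULL, producer, NULL);
    assert(rc == 0);
    (void)rc;
    bool in_order = true;
    for (int expected = 0; expected < COUNT; expected++) {
        int v;
        while (!spsc_pop(&queue, &v))
            sched_yield();   /* queue empty: let the producer run */
        if (v != expected) in_order = false;
    }
    pthread_join(p, NULL);
    return in_order;
}
```

The release store of `tail` publishes the slot contents to the consumer, and the release store of `head` tells the producer the slot may be reused.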

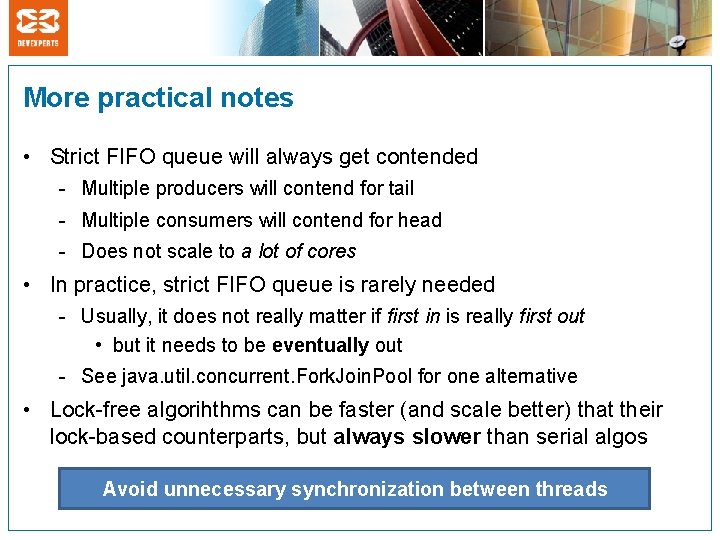

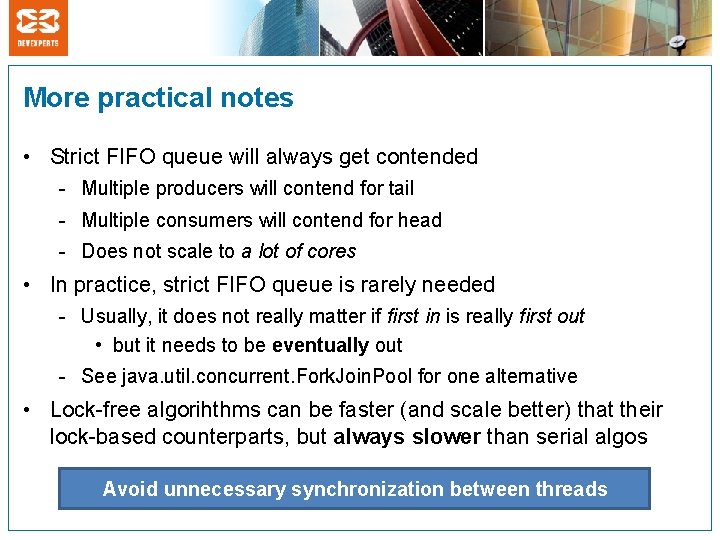

More practical notes • A strict FIFO queue will always be contended - Multiple producers will contend for the tail - Multiple consumers will contend for the head - It does not scale to a lot of cores • In practice, a strict FIFO queue is rarely needed - Usually, it does not really matter whether the first in is really the first out • but it needs to be eventually out - See java.util.concurrent.ForkJoinPool for one alternative • Lock-free algorithms can be faster (and scale better) than their lock-based counterparts, but are always slower than serial algorithms • Avoid unnecessary synchronization between threads

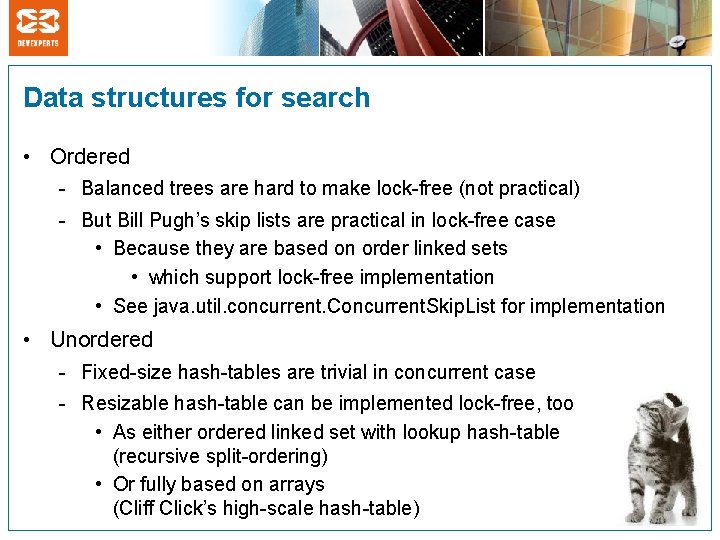

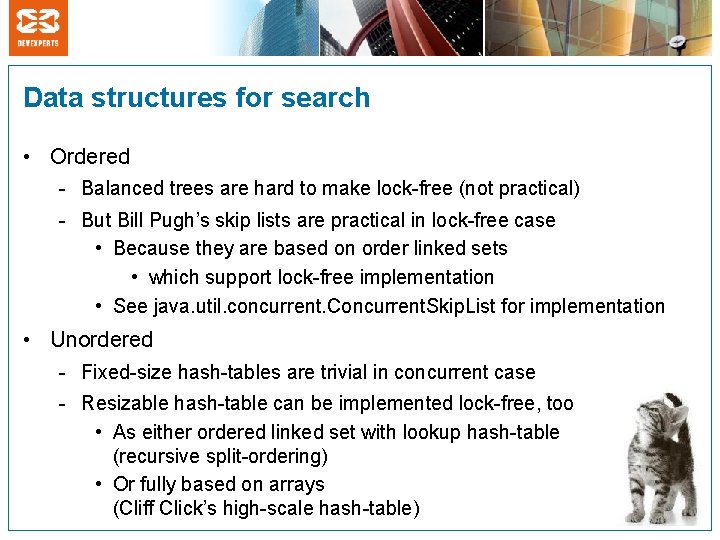

Data structures for search • Ordered - Balanced trees are hard to make lock-free (not practical) - But Bill Pugh’s skip lists are practical in the lock-free case • Because they are based on ordered linked sets • which support a lock-free implementation • See java.util.concurrent.ConcurrentSkipListMap for an implementation • Unordered - Fixed-size hash tables are trivial in the concurrent case - A resizable hash table can be implemented lock-free, too • As either an ordered linked set with a lookup hash table (recursive split-ordering) • Or fully based on arrays (Cliff Click’s high-scale hash table)

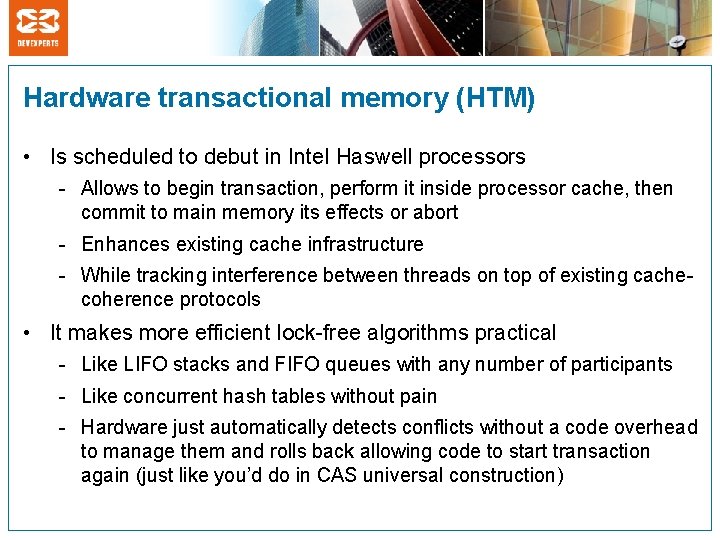

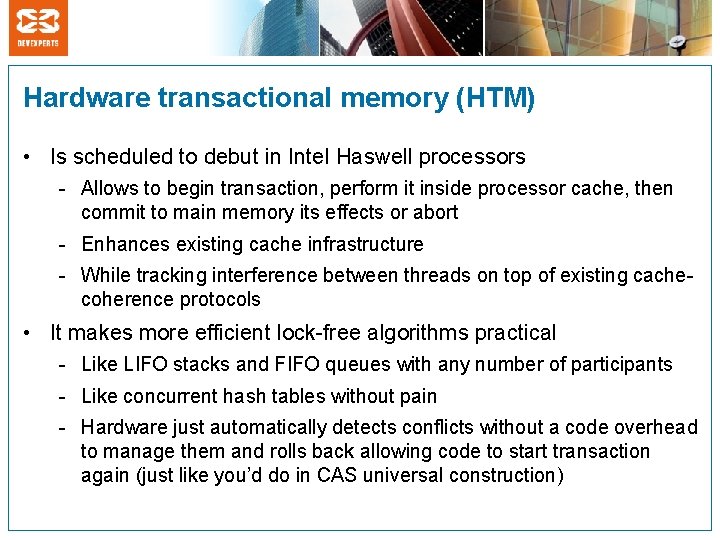

Hardware transactional memory (HTM) • Is scheduled to debut in Intel Haswell processors - Allows a thread to begin a transaction, perform it inside the processor cache, and then either commit its effects to main memory or abort - Enhances the existing cache infrastructure - Tracks interference between threads on top of existing cache-coherence protocols • It makes more efficient lock-free algorithms practical - Like LIFO stacks and FIFO queues with any number of participants - Like concurrent hash tables without pain - Hardware automatically detects conflicts, without code overhead to manage them, and rolls back, allowing code to start the transaction again (just like you’d do in the CAS universal construction)

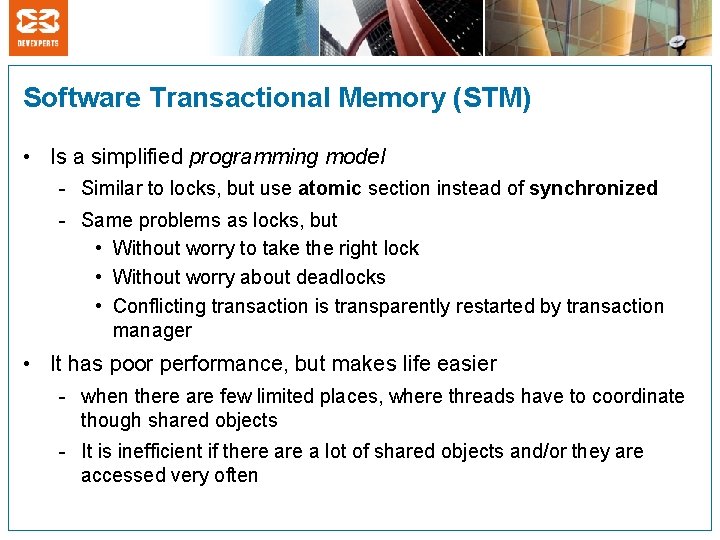

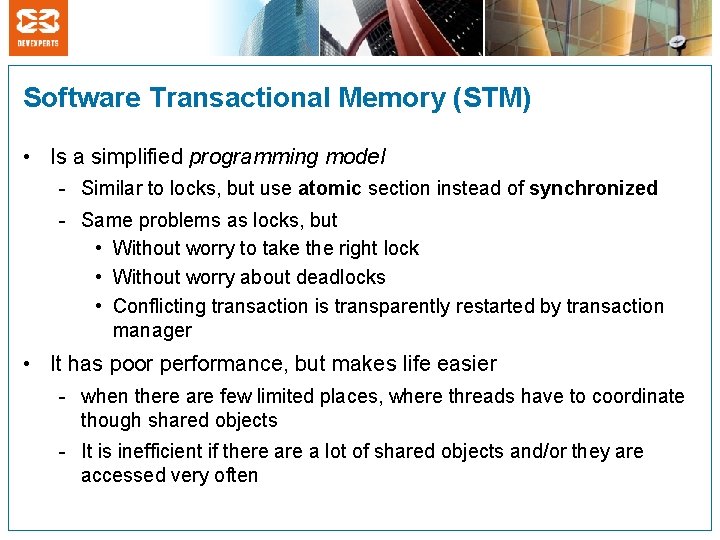

Software Transactional Memory (STM) • Is a simplified programming model - Similar to locks, but uses atomic sections instead of synchronized - Same problems as locks, but • No need to worry about taking the right lock • No need to worry about deadlocks • A conflicting transaction is transparently restarted by the transaction manager • It has poor performance, but makes life easier - when there are only a few limited places where threads have to coordinate through shared objects - It is inefficient if there are a lot of shared objects and/or they are accessed very often

There’s much more to it. It is an active area of research

Further reading

Thank you for your attention! Any questions? Slides will be posted to elizarov.livejournal.com