COSC 1306COMPUTER SCIENCE AND COSC 1306 PROGRAMMING COMPUTER

- Slides: 129

COSC 1306—COMPUTER SCIENCE AND COSC 1306 PROGRAMMING COMPUTER LITERACY FOR COMPUTER SCIENCE MAJORS ORGANIZATION Jehan-François Pâris jfparis@uh.edu

Module Overview • We will focus on the main challenges of computer architecture – Managing the I/O hierarchy • Caching, multiprogramming, virtual memory – Speeding up the CPU • Pipelined and multicore architectures – Protecting user computations and data • Memory protection, privileged

THE MEMORY HIERARCHY

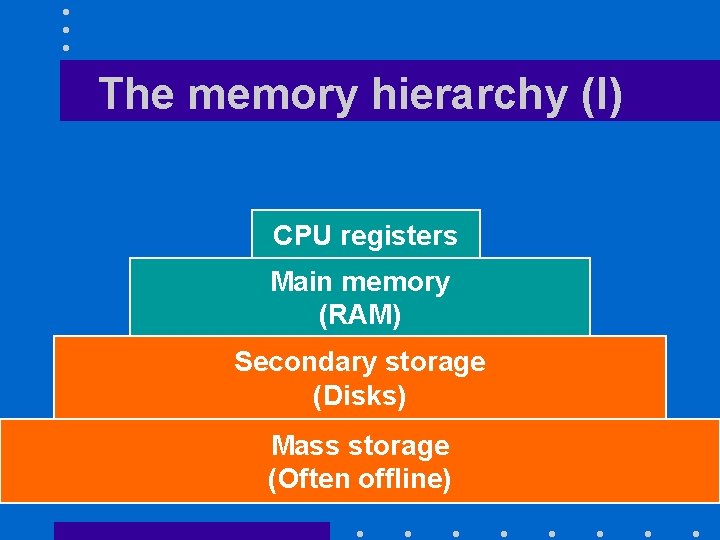

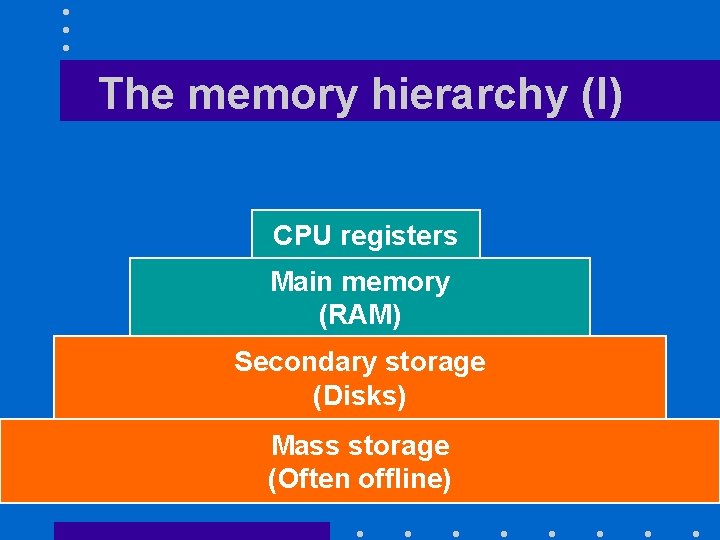

The memory hierarchy (I) CPU registers Main memory (RAM) Secondary storage (Disks) Mass storage (Often offline)

CPU registers • Inside the processor itself • Some can be accessed by our programs – Others cannot • Can be read or written in one processor cycle – If processor speed is 2 GHz • 2,000,000,000 cycles per second • One cycle every 0.5 ns

Main memory (I) • Byte accessible – Each group of 8 bits has an address • Dynamic random access memory (DRAM) – Slower but much cheaper than static RAM – Contents must be refreshed every 64 ms • Otherwise its contents are lost:

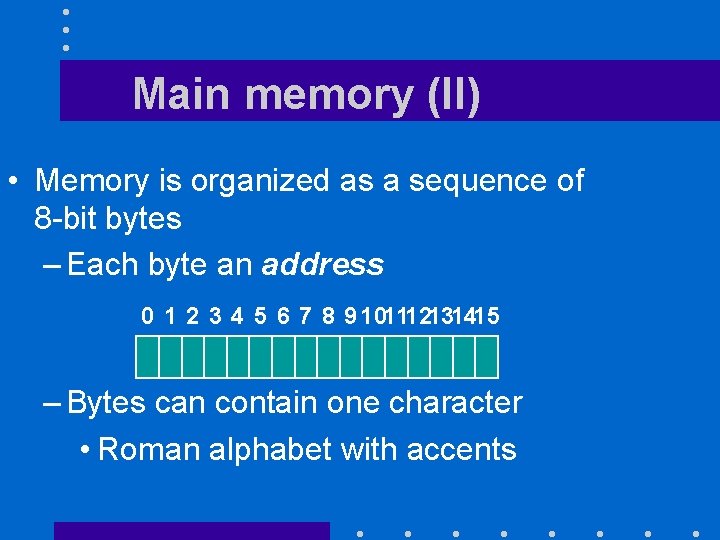

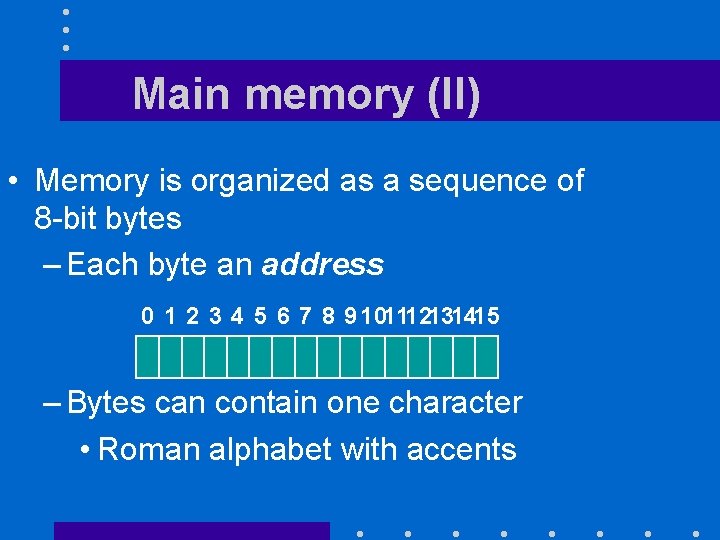

Main memory (II) • Memory is organized as a sequence of 8-bit bytes – Each byte has an address (0, 1, 2, ..., 15 in the figure) – Bytes can contain one character • Roman alphabet with accents

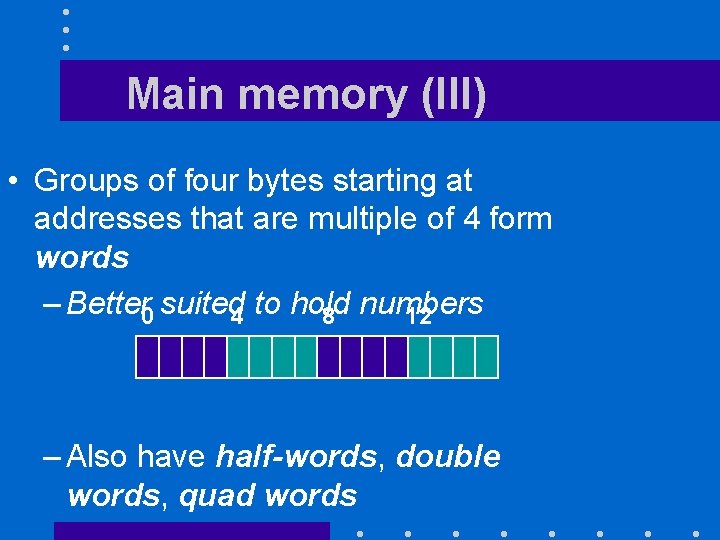

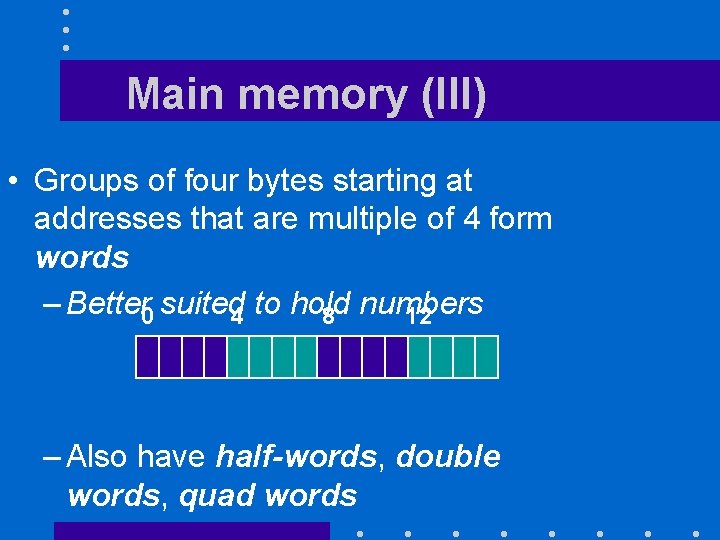

Main memory (III) • Groups of four bytes starting at addresses that are multiples of 4 (0, 4, 8, 12, ...) form words – Better suited to hold numbers – Also have half-words, double words, quad words
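The word layout described above can be sketched in a few lines of Python. This is only an illustration: the names `WORD_SIZE`, `is_word_aligned`, and `word_bytes` are made up for this example and do not come from the slides.

```python
WORD_SIZE = 4  # bytes per word, as stated on the slide


def is_word_aligned(address: int) -> bool:
    """A word may only start at an address that is a multiple of 4."""
    return address % WORD_SIZE == 0


def word_bytes(address: int) -> list[int]:
    """Return the addresses of the four bytes that make up the word at `address`."""
    if not is_word_aligned(address):
        raise ValueError(f"address {address} is not a multiple of {WORD_SIZE}")
    return list(range(address, address + WORD_SIZE))


print(word_bytes(512))        # the four byte addresses of the word at 512
print(is_word_aligned(4095))  # 4095 is a valid byte address but not a word address
```

A word is always named by the address of its first byte; the other three bytes follow it in memory.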

Accessing main memory contents (I) • When we look for some item, our search criteria can include the location of the item – The book on the table – The student behind you, … • More often our main search criterion is some attribute of the item – The color of a folder – The title or the authors of a book

Accessing main memory contents (II) • Computers always access their memory by location – The byte at address 4095 – The word at location 512 • States the address of the first byte in the word (bytes 512, 513, 514, and 515) • Why?

An analogy (I) • Some research libraries have a closed-stack policy – Only library employees can access the stacks – Patrons wanting to get an item fill out a form containing a call number specifying the location of the item • Could be Library of Congress classification if the stacks are

An analogy (II) • The procedure followed by the employee fetching the book is fairly simple – Go to the location specified by the book's call number – Check if the book is there – Bring it to the patron

An analogy (III) • The memory operates in an even simpler manner – Always fetch the contents of the addressed bytes • Junk or not

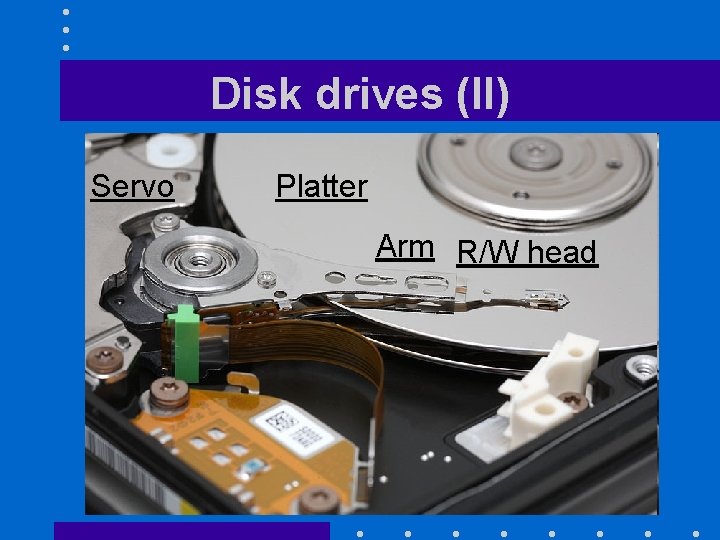

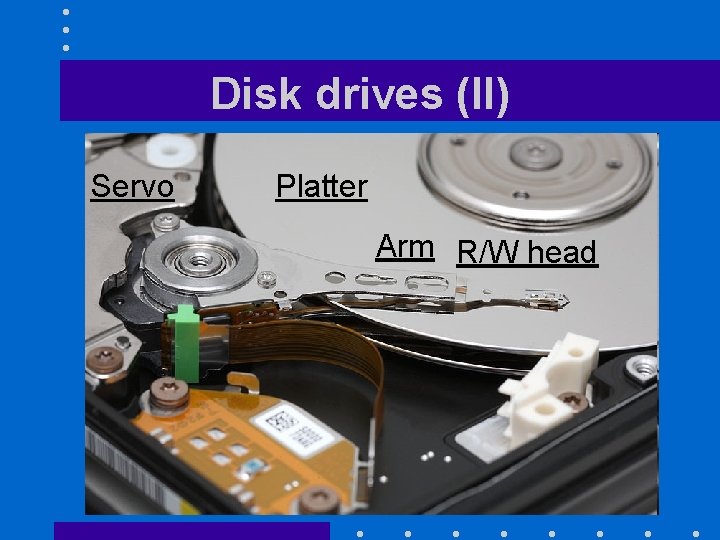

Disk drives (I) • Sole part of computer architecture with moving parts • Data stored on circular tracks of a disk – Spinning speed between 5,400 and 15,000 rotations per minute – Accessed through a read/write head

Disk drives (II) Servo Platter Arm R/W head

Disk drives (III) • Data can be accessed by blocks of 4 KB, 8 KB, … – Depends on disk partition parameters • User selectable • To access a disk block – Read/write head must be over the right track • Seek time – Data to be accessed must pass under

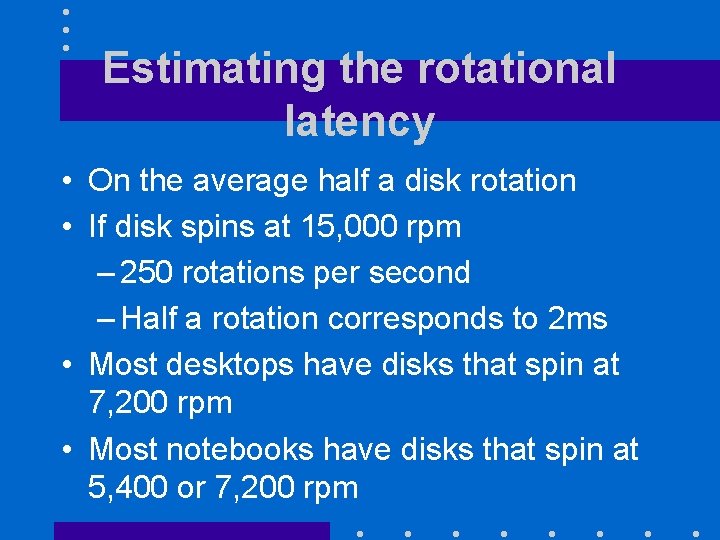

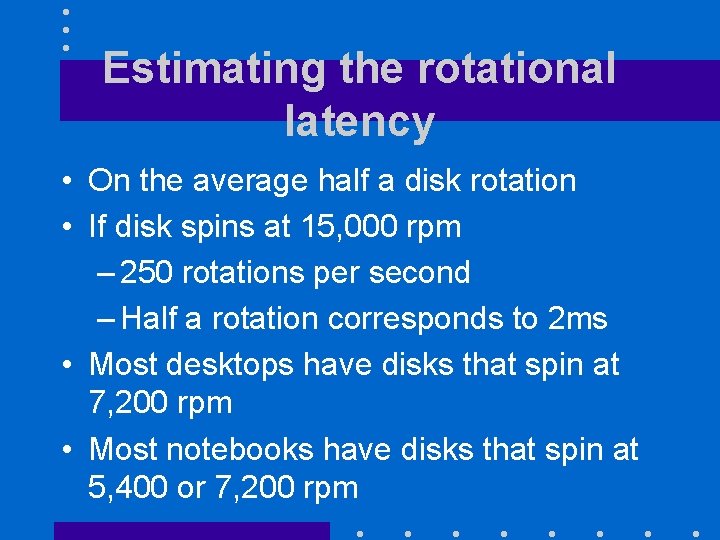

Estimating the rotational latency • On average, half a disk rotation • If disk spins at 15,000 rpm – 250 rotations per second – Half a rotation corresponds to 2 ms • Most desktops have disks that spin at 7,200 rpm • Most notebooks have disks that spin at 5,400 or 7,200 rpm
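The same arithmetic can be applied to the other spindle speeds mentioned above. The function name below is chosen for this sketch only; the calculation simply takes half the duration of one full rotation.

```python
def average_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency in milliseconds: the time for half a rotation."""
    rotations_per_second = rpm / 60
    full_rotation_ms = 1000 / rotations_per_second
    return full_rotation_ms / 2


for rpm in (15_000, 7_200, 5_400):
    print(f"{rpm:>6} rpm: {average_rotational_latency_ms(rpm):.2f} ms")
```

At 15,000 rpm this gives the 2 ms figure from the slide; slower disks take proportionally longer.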

Accessing disk contents • Each block on a disk has a unique address – Normally a single number • Logical block addressing (LBA) – Older PCs used a different scheme

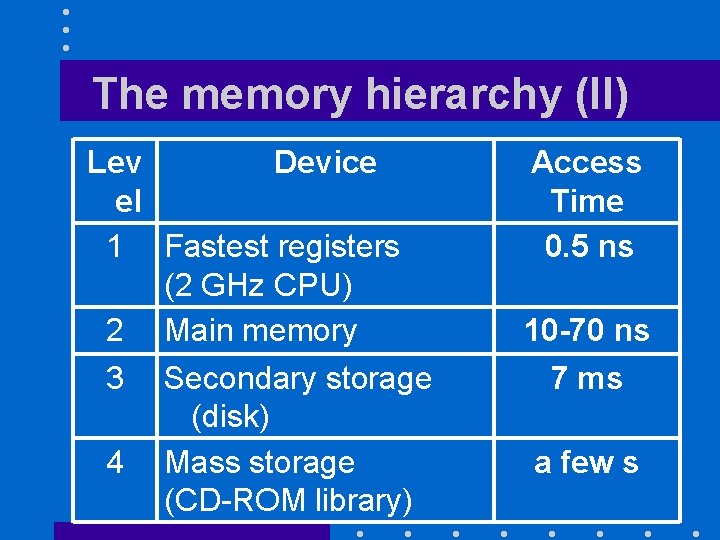

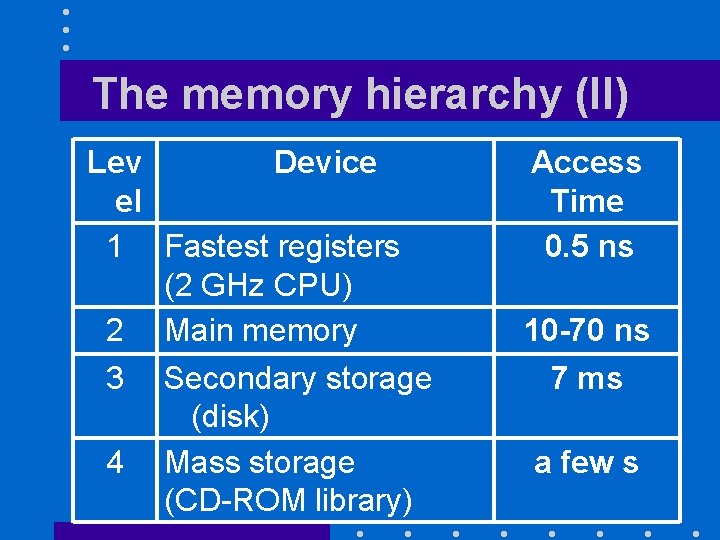

The memory hierarchy (II)

| Level | Device | Access time |
|---|---|---|
| 1 | Fastest registers (2 GHz CPU) | 0.5 ns |
| 2 | Main memory | 10-70 ns |
| 3 | Secondary storage (disk) | 7 ms |
| 4 | Mass storage (CD-ROM library) | a few s |

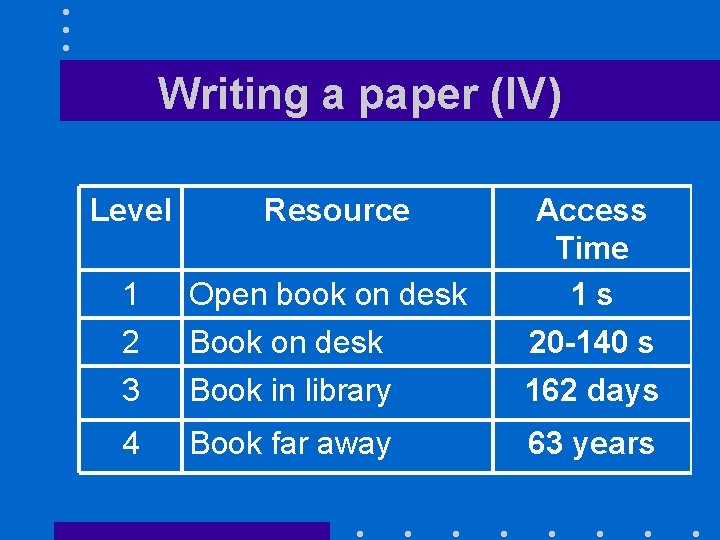

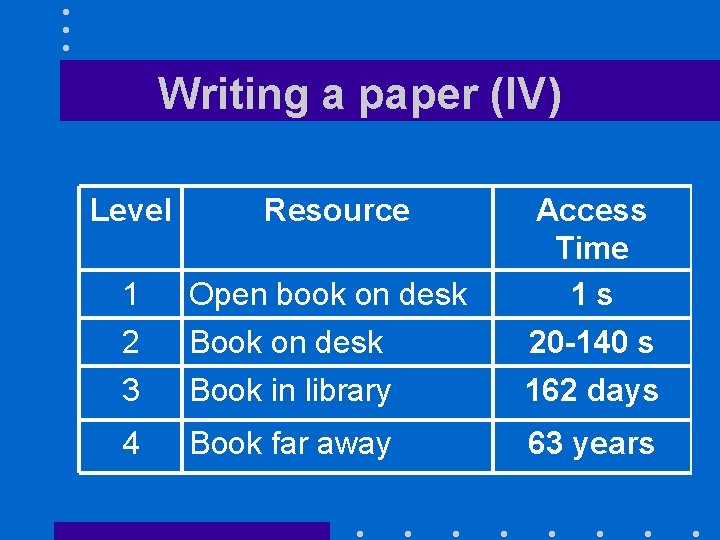

The memory hierarchy (III) • To make sense of these numbers, let us consider an analogy

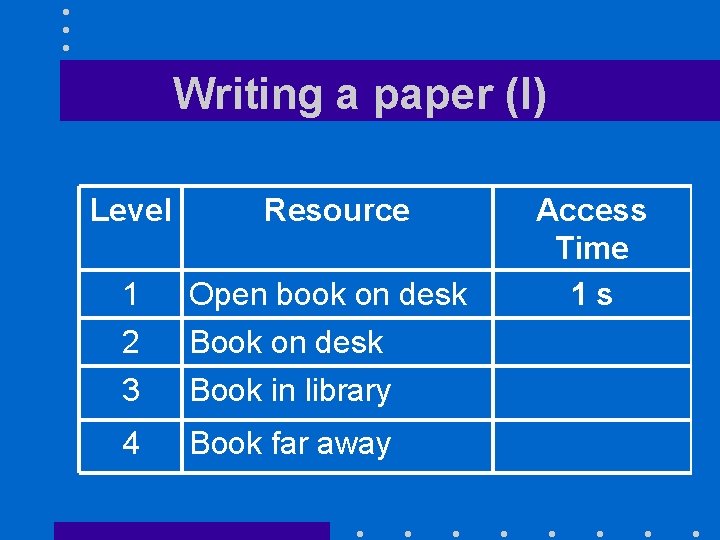

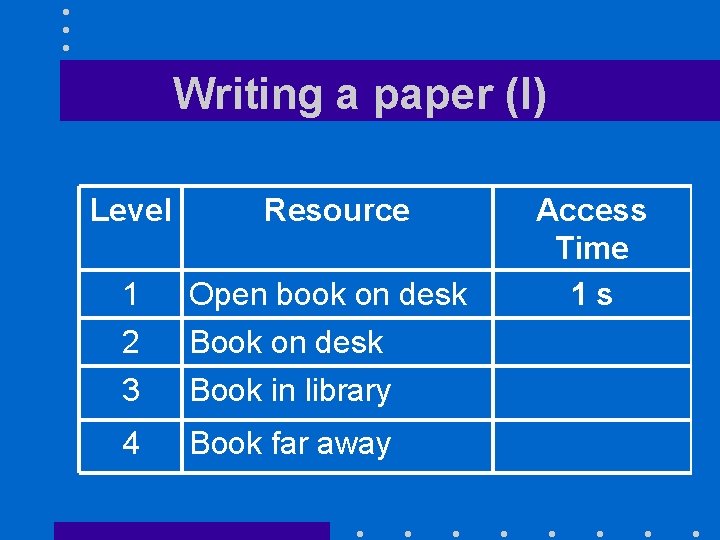


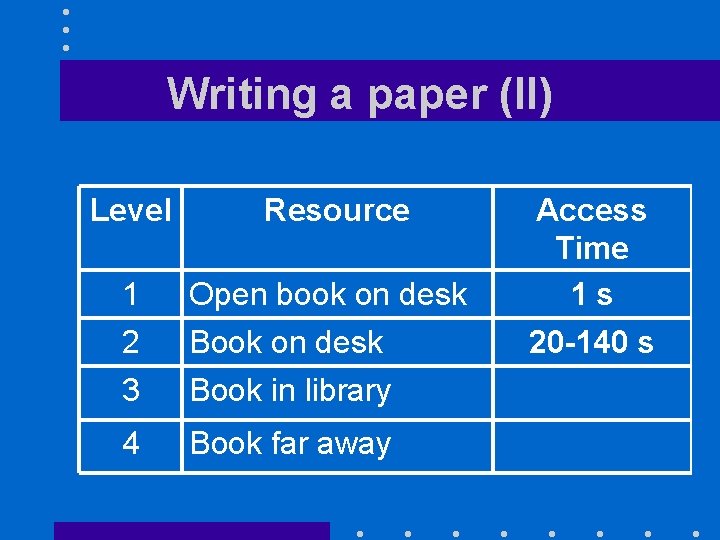

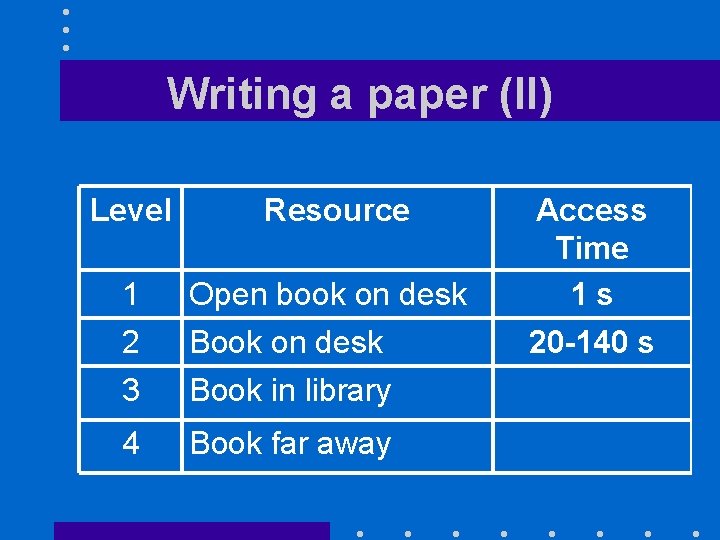


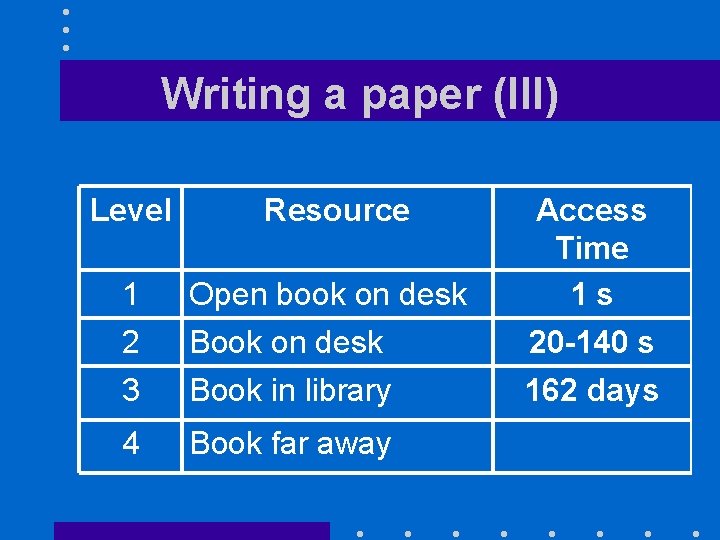

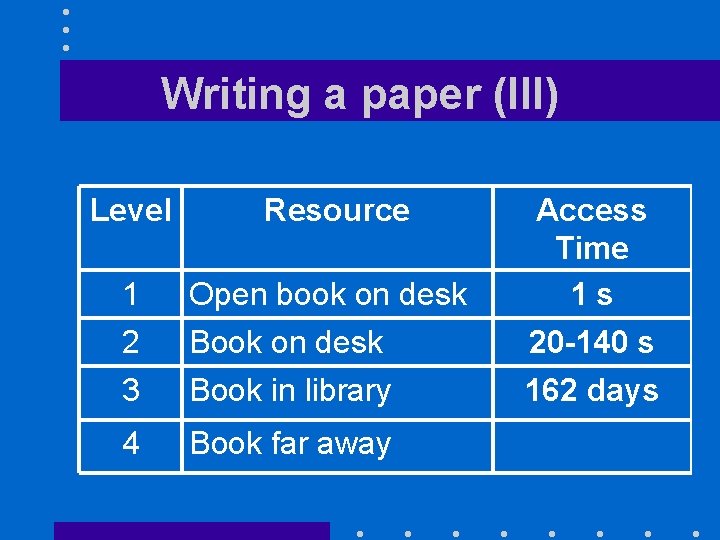


Writing a paper (IV)

| Level | Resource | Access time |
|---|---|---|
| 1 | Open book on desk | 1 s |
| 2 | | 20-140 s |
| 3 | Book in library | 162 days |
| 4 | Book far away | 63 years |
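The numbers in this analogy appear to come from stretching every access time by the same factor, so that a 0.5 ns register access becomes 1 s. The sketch below reproduces that scaling. It assumes that "a few s" for mass storage is taken as 1 s and that a year has 365 days; both are assumptions of this example, not statements from the slides.

```python
SCALE = 1.0 / 0.5e-9  # 0.5 ns of machine time becomes 1 s of human time


def scaled_seconds(access_time_s: float) -> float:
    """Stretch a real access time (in seconds) to the human scale of the analogy."""
    return access_time_s * SCALE


SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY  # assumption: 365-day year

main_memory_s = (scaled_seconds(10e-9), scaled_seconds(70e-9))
disk_days = scaled_seconds(7e-3) / SECONDS_PER_DAY
mass_storage_years = scaled_seconds(1.0) / SECONDS_PER_YEAR  # assumption: "a few s" = 1 s

print(f"main memory: {main_memory_s[0]:.0f} to {main_memory_s[1]:.0f} s")
print(f"disk: about {disk_days:.0f} days")
print(f"mass storage: about {mass_storage_years:.0f} years")
```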

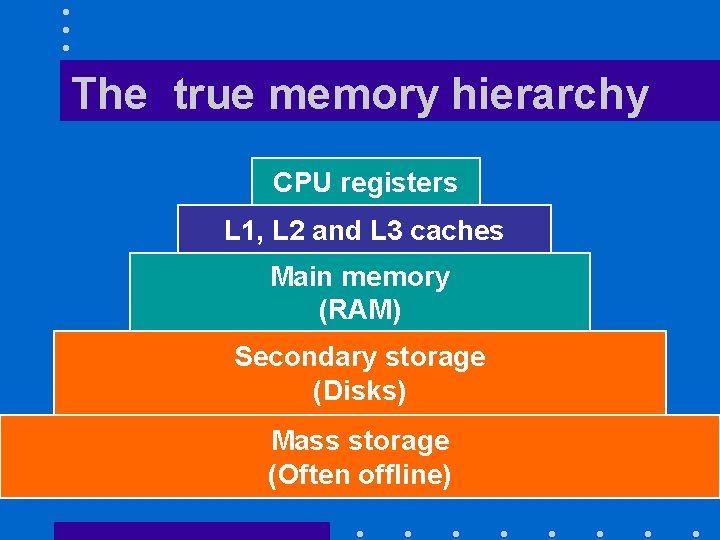

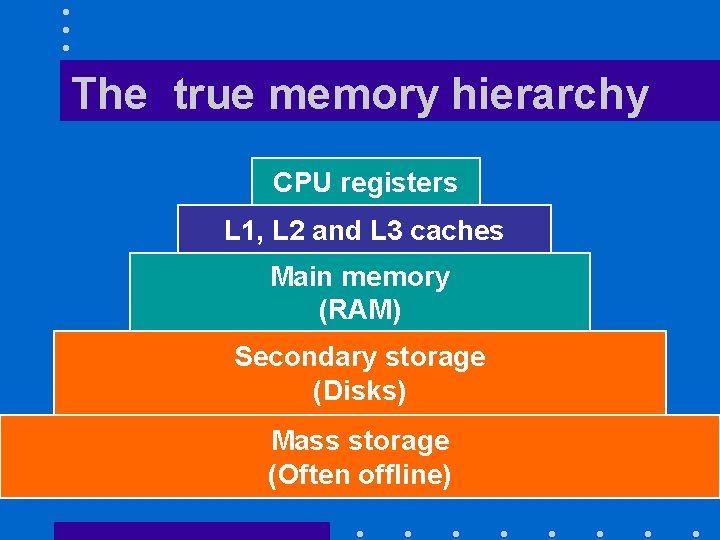

The two gaps (I) • Gap between CPU and main memory speeds: – Will add intermediary levels • L1, L2, and L3 caches – Will store contents of most recently accessed memory addresses • Most likely to be needed in the future – Purely hardware solution

Major issues • Huge gaps between – CPU speeds and SDRAM access times – SDRAM access times and disk access times • Both problems have very different solutions – Gap between CPU speeds and SDRAM access times handled by hardware

Why? • Having hardware handle an issue – Complicates hardware design – Offers a very fast solution – Standard approach for very frequent actions • Letting software handle an issue – Cheaper – Has a much higher overhead

Will the problem go away? • It will become worse – RAM access times are not improving as fast as CPU power – Disk access times are limited by rotational speed of disk drive

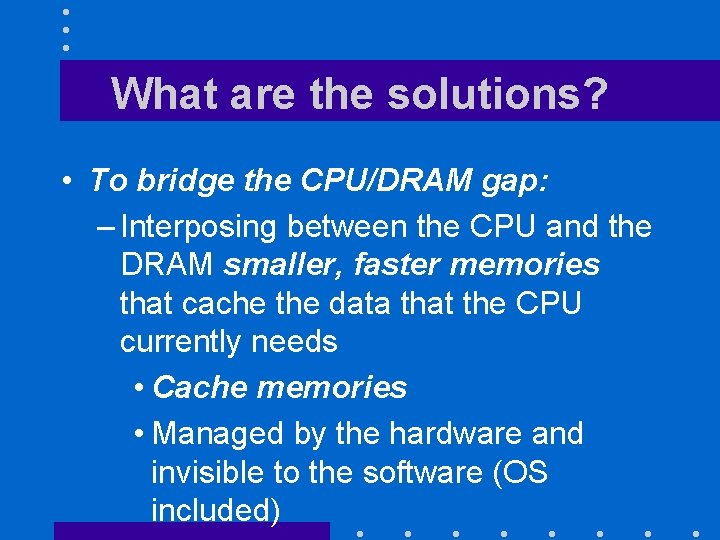

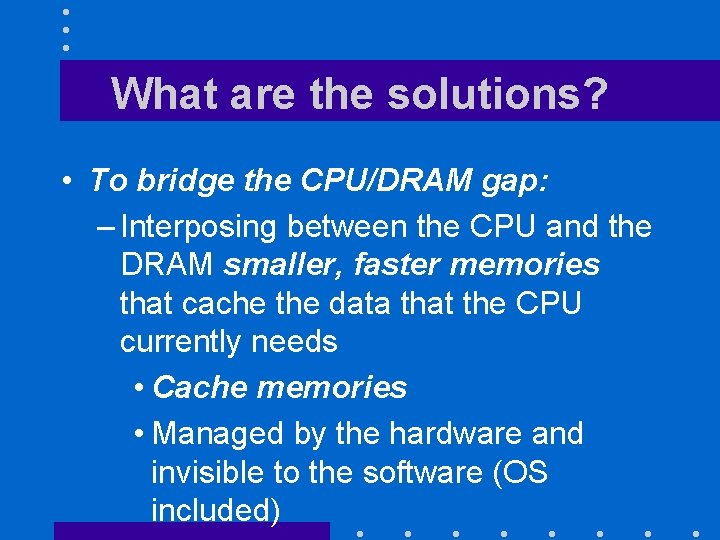

What are the solutions? • To bridge the CPU/DRAM gap: – Interposing between the CPU and the DRAM smaller, faster memories that cache the data that the CPU currently needs • Cache memories • Managed by the hardware and invisible to the software (OS included)

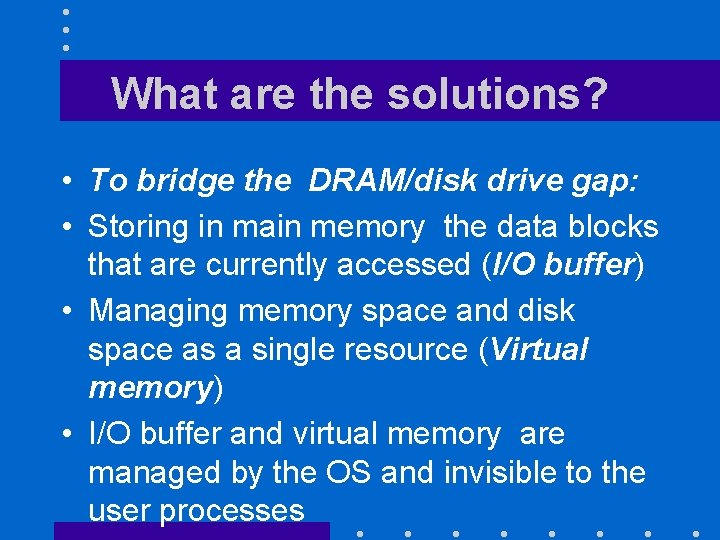

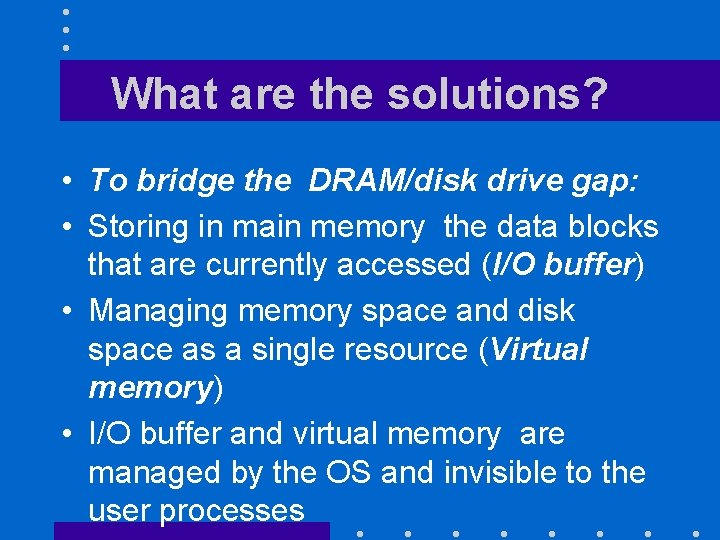

What are the solutions? • To bridge the DRAM/disk drive gap: • Storing in main memory the data blocks that are currently accessed (I/O buffer) • Managing memory space and disk space as a single resource (Virtual memory) • I/O buffer and virtual memory are managed by the OS and invisible to the user processes

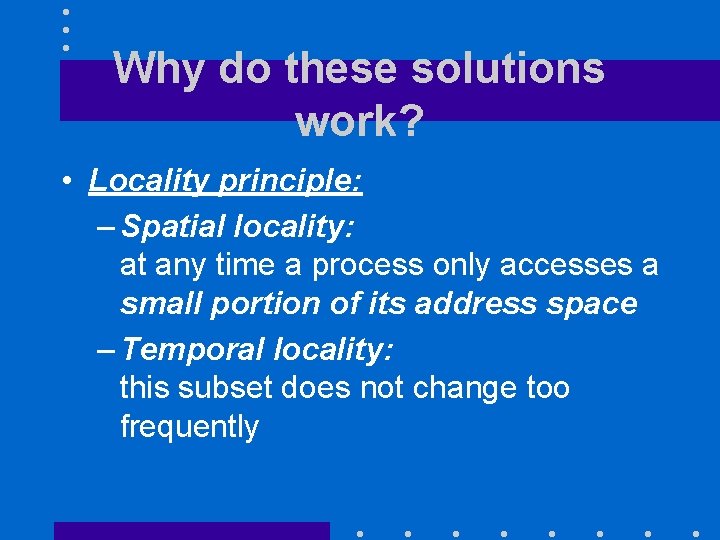

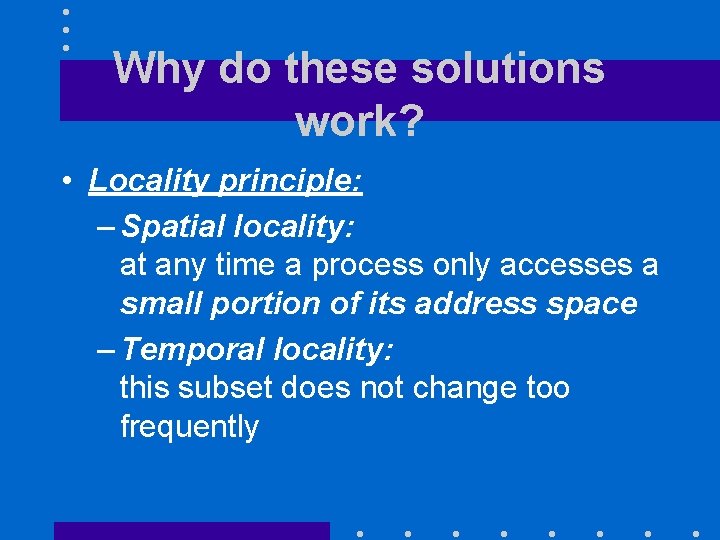

Why do these solutions work? • Locality principle: – Spatial locality: at any time a process only accesses a small portion of its address space – Temporal locality: this subset does not change too frequently

The true memory hierarchy • CPU registers • L1, L2, and L3 caches • Main memory (RAM) • Secondary storage (Disks) • Mass storage (Often offline)

Handling the CPU/DRAM speed gap

The technology • Caches use faster static RAM (SRAM) – (D flip-flops) • Can have – Separate caches for instructions and data • Great for pipelining – A unified cache

Basic principles • Assume we want to store in a faster memory 2^n words that are currently accessed by the CPU – Can be instructions or data or even both • When the CPU needs to fetch an instruction or load a word into a register – It will look first into the cache – Can have a hit or a miss

Cache hits • Occur when the requested word is found in the cache – Cache avoided a memory access – CPU can proceed

Cache misses • Occur when the requested word is not found in the cache – Will need to access the main memory – Will bring the new word into the cache • Must make space for it by expelling one of the cache entries –Need to decide which one

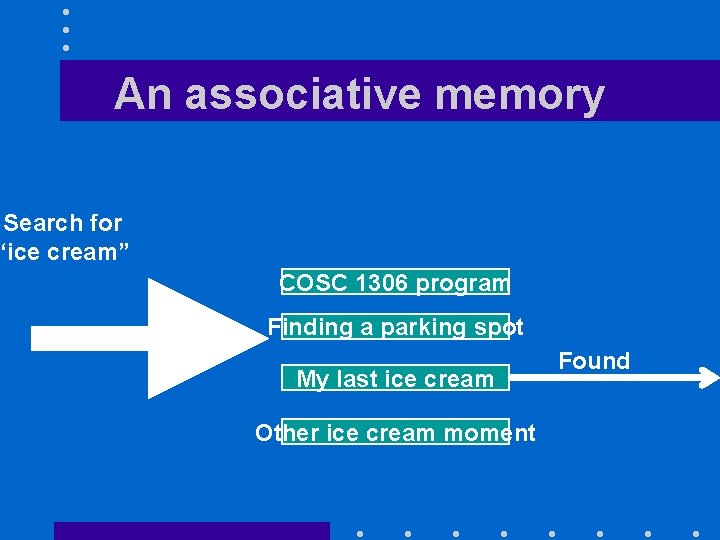

Cache design challenges • Cache contains a small subset of memory addresses • Must find a very fast access mechanism – No linear search, no binary search – Would like to have an associative memory • Can search by content all memory entries in parallel –Like human brains do

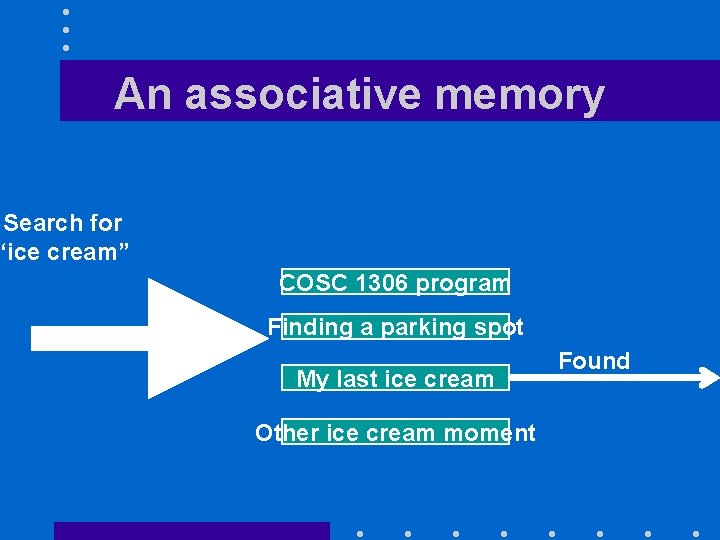

An associative memory Search for “ice cream” COSC 1306 program Finding a parking spot My last ice cream Other ice cream moment Found

An analogy (I) • Let us go back to our closed-stack library example – Librarians have noted that some books get asked for again and again • Want to put them closer to the circulation desk – Would result in much faster service – The problem is how to locate these books

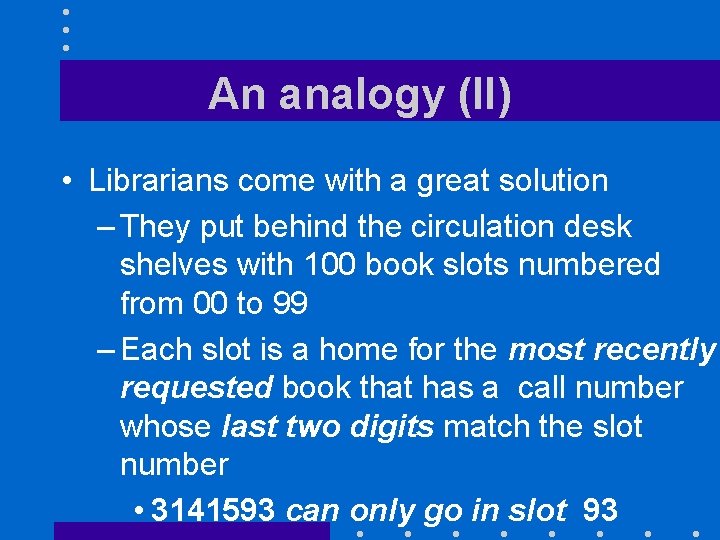

An analogy (II) • Librarians come up with a great solution – They put behind the circulation desk shelves with 100 book slots numbered from 00 to 99 – Each slot is a home for the most recently requested book that has a call number whose last two digits match the slot number • 3141593 can only go in slot 93

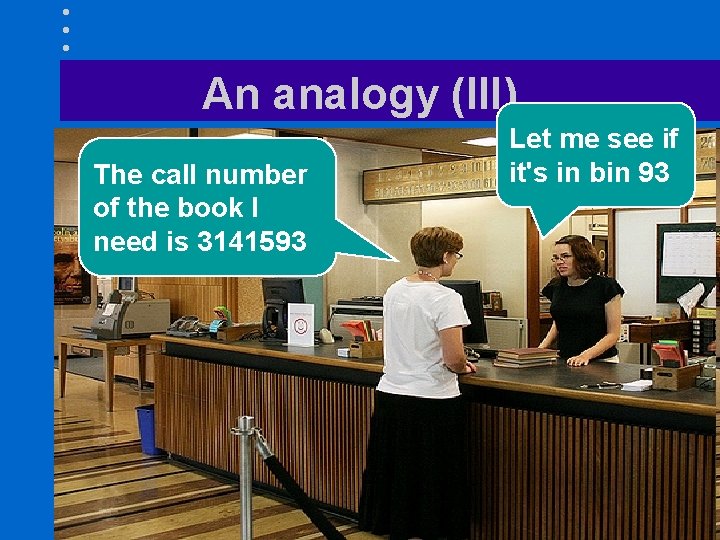

An analogy (III) The call number of the book I need is 3141593 Let me see if it's in bin 93

An analogy (IV) • To let the librarian do her job, each slot must contain either – Nothing or – A book and its reference number • There are many books whose reference number ends in 93 or 67 or any two given digits

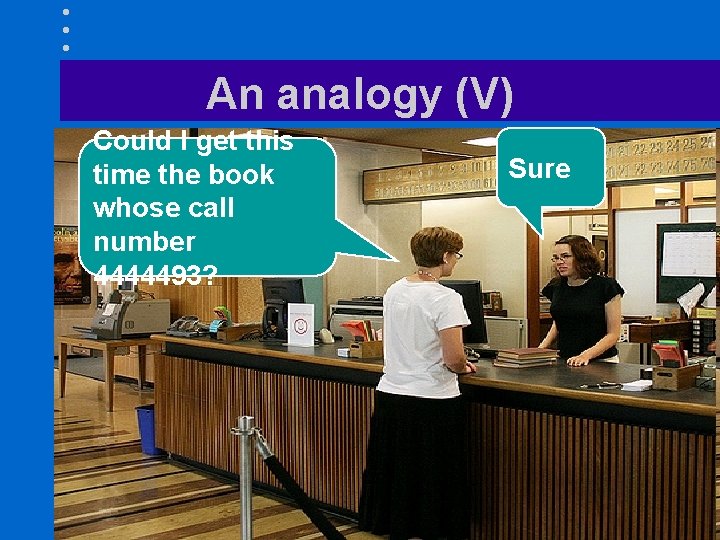

An analogy (V) Could I get this time the book whose call number is 4444493? Sure

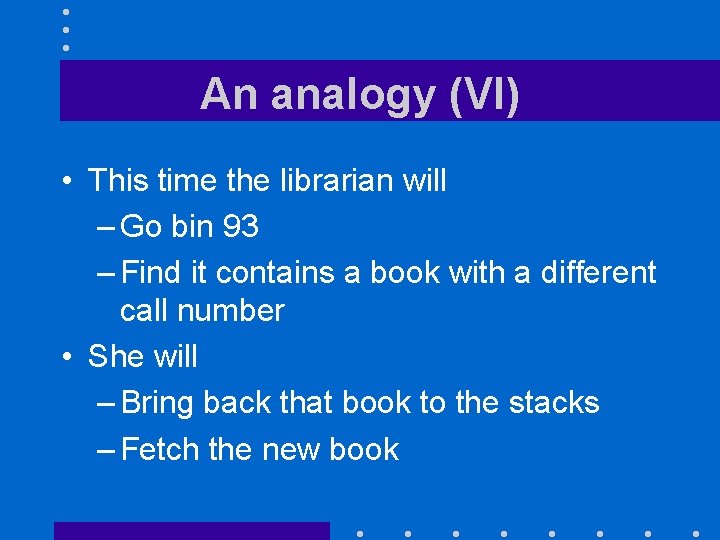

An analogy (VI) • This time the librarian will – Go to bin 93 – Find it contains a book with a different call number • She will – Bring that book back to the stacks – Fetch the new book

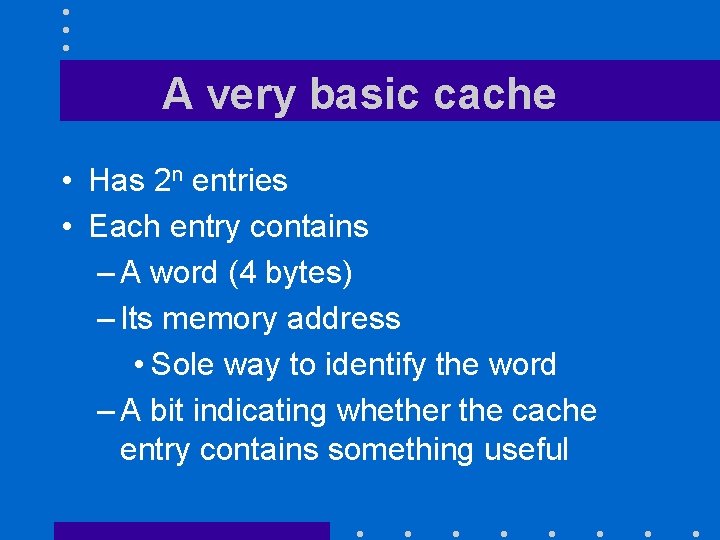

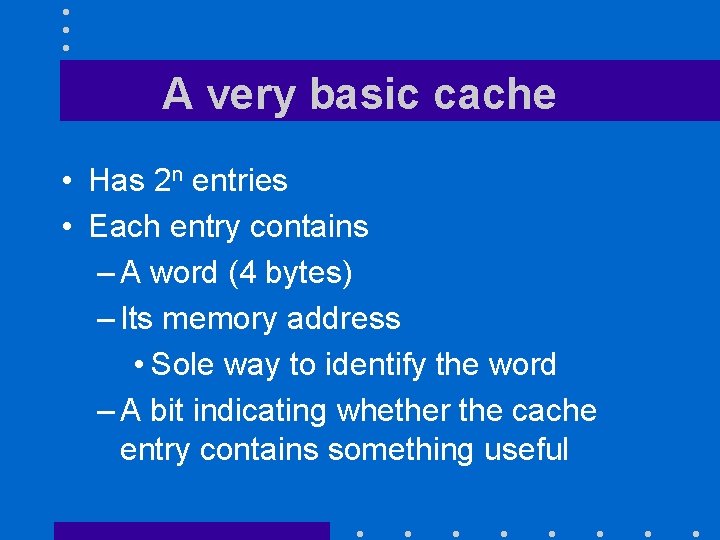

A very basic cache • Has 2^n entries • Each entry contains – A word (4 bytes) – Its memory address • Sole way to identify the word – A bit indicating whether the cache entry contains something useful
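A cache of this kind can be sketched as follows. This is a toy model, not a description of real hardware: the class name `DirectMappedCache` is invented here, the addresses are word addresses (byte offsets are ignored), and the entry is chosen from the low-order bits of the address, just as the library slot is chosen from the last two digits of the call number.

```python
class DirectMappedCache:
    """Toy cache with 2**n_bits entries, each holding a valid bit, an address, and a word."""

    def __init__(self, n_bits: int, memory: dict[int, int]):
        self.n_entries = 2 ** n_bits
        self.memory = memory                    # stands in for main memory
        self.valid = [False] * self.n_entries   # does the entry hold something useful?
        self.tag = [0] * self.n_entries         # memory address of the cached word
        self.word = [0] * self.n_entries        # the cached word itself
        self.hits = 0
        self.misses = 0

    def read(self, address: int) -> int:
        index = address % self.n_entries        # each address has exactly one possible entry
        if self.valid[index] and self.tag[index] == address:
            self.hits += 1                      # hit: no memory access needed
            return self.word[index]
        self.misses += 1                        # miss: fetch from memory, expel the old entry
        self.valid[index] = True
        self.tag[index] = address
        self.word[index] = self.memory[address]
        return self.word[index]


memory = {address: address * 10 for address in range(64)}
cache = DirectMappedCache(3, memory)
for address in (5, 5, 13, 5):                   # 5 and 13 compete for the same entry
    cache.read(address)
print(cache.hits, cache.misses)
```

Addresses 5 and 13 map to the same entry of an 8-entry cache, so each one expels the other, which is the collision problem the library example runs into with call numbers 3141593 and 4444493.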

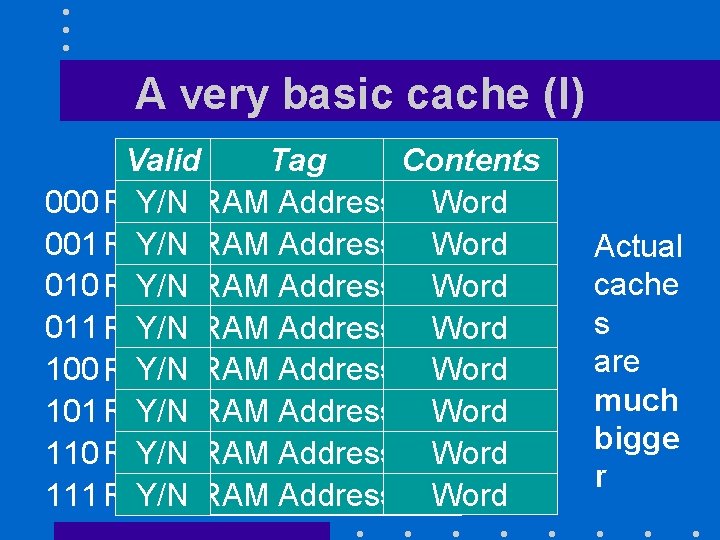

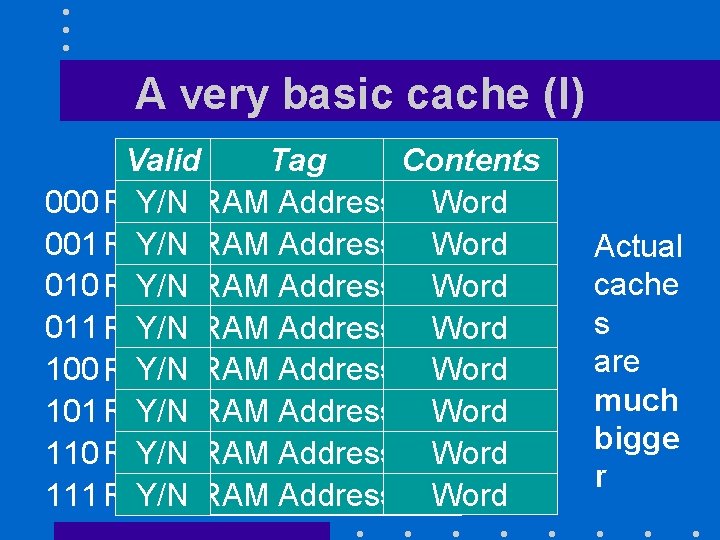

A very basic cache (I)

| Index | Valid | Tag | Contents |
|---|---|---|---|
| 000 | Y/N | RAM address | Word |
| 001 | Y/N | RAM address | Word |
| 010 | Y/N | RAM address | Word |
| 011 | Y/N | RAM address | Word |
| 100 | Y/N | RAM address | Word |
| 101 | Y/N | RAM address | Word |
| 110 | Y/N | RAM address | Word |
| 111 | Y/N | RAM address | Word |

Actual caches are much bigger

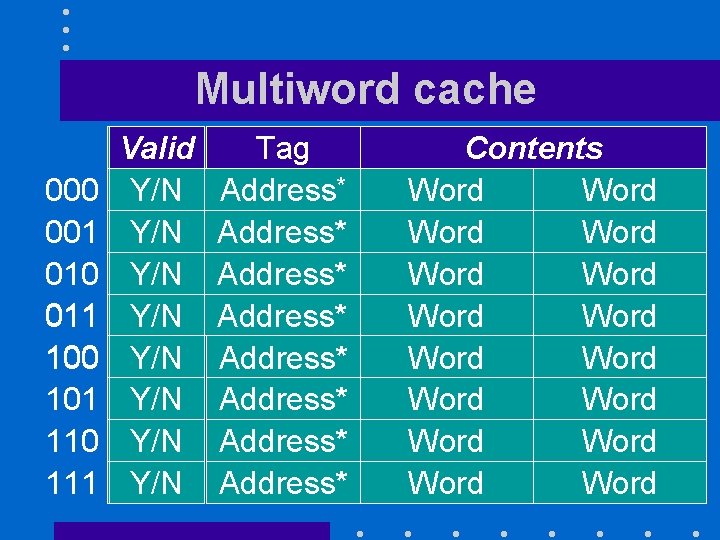

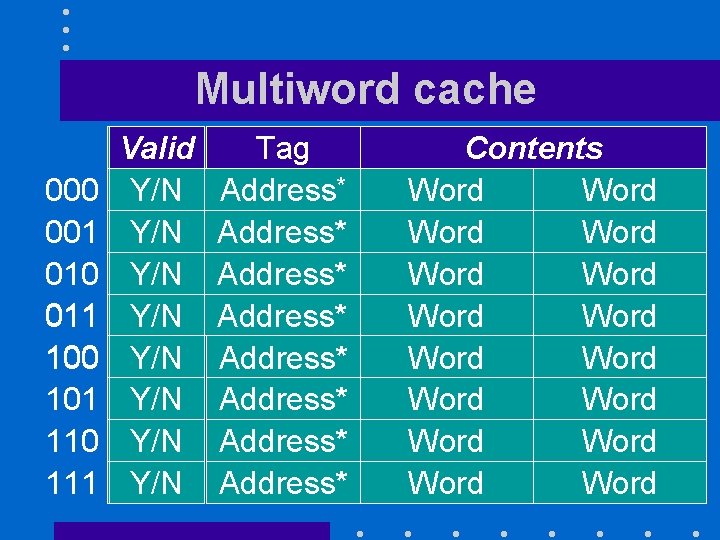

Multiword cache • Entries indexed 000 to 111 • Each entry holds a valid bit (Y/N), a tag (Address*), and several words of contents (Word, Word, Word, Word)

Set-associative caches (I) • Can be seen as 2, 4, 8 caches attached together • Reduces collisions

Back to our library example • What if two books whose call number have the same last two digits are often asked on the same day: – Say, 3141593 and 4444493 • Best solution is – Keep the number of book slots equal to 100 – Store more than one book with same last two digits in the same slot

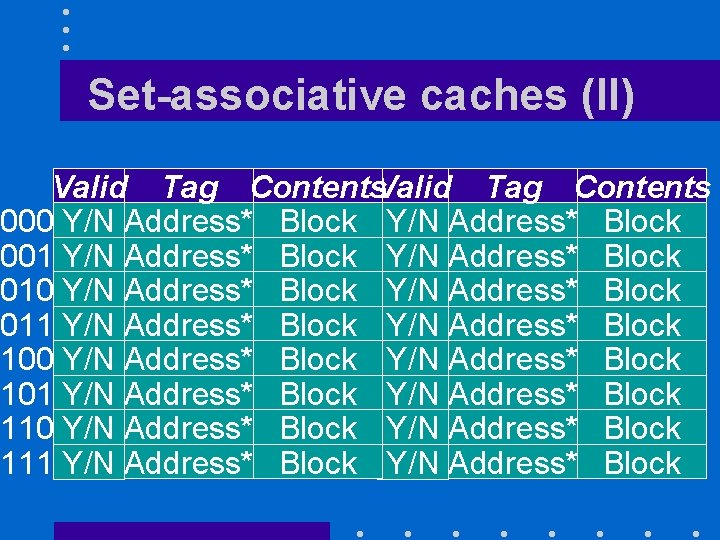

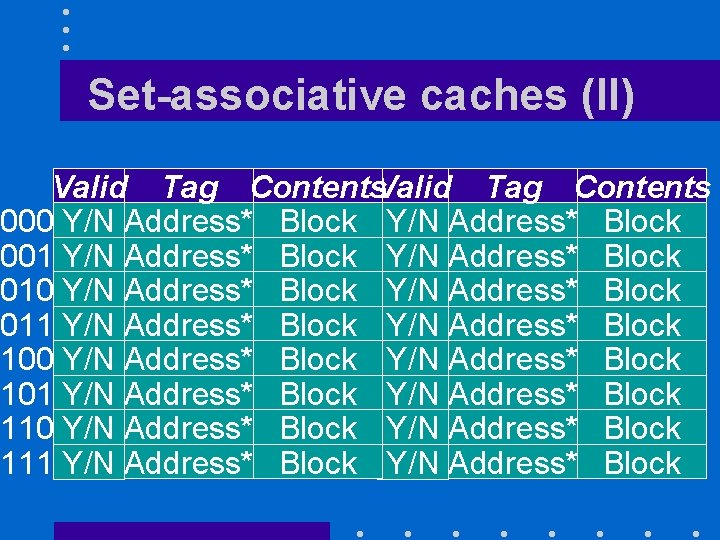

Set-associative caches (II)

| Index | Valid | Tag | Contents |
|---|---|---|---|
| 000 | Y/N | Address* | Block |
| 001 | Y/N | Address* | Block |
| 010 | Y/N | Address* | Block |
| 011 | Y/N | Address* | Block |
| 100 | Y/N | Address* | Block |
| 101 | Y/N | Address* | Block |
| 110 | Y/N | Address* | Block |
| 111 | Y/N | Address* | Block |

Set-associative caches (III) • Advantage: – We take care of more collisions • Like a hash table with a fixed bucket size – Results in lower miss rates than direct-mapped caches • Disadvantage: – Slower access – Best solution if miss penalty is very
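The effect on collisions can be illustrated with the two call numbers from the library example. The sketch below is a simplified model: the class name `SetAssociativeCache` is invented for this example, and it expels the least recently used entry of a full set, which is an assumption made here since the slides do not name a replacement policy.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy cache with n_sets slots, each able to hold up to `ways` items."""

    def __init__(self, n_sets: int, ways: int):
        self.n_sets = n_sets
        self.ways = ways
        self.sets = [OrderedDict() for _ in range(n_sets)]
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit, False on a miss."""
        entries = self.sets[address % self.n_sets]
        if address in entries:
            entries.move_to_end(address)    # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(entries) >= self.ways:
            entries.popitem(last=False)     # assumed policy: expel least recently used
        entries[address] = True
        return False


requests = [3141593, 4444493] * 3           # both call numbers end in 93

one_way = SetAssociativeCache(n_sets=100, ways=1)   # behaves like a direct-mapped cache
two_way = SetAssociativeCache(n_sets=100, ways=2)
for call_number in requests:
    one_way.access(call_number)
    two_way.access(call_number)

print("1 book per slot: ", one_way.hits, "hits,", one_way.misses, "misses")
print("2 books per slot:", two_way.hits, "hits,", two_way.misses, "misses")
```

With one book per slot the two call numbers keep expelling each other; with two books per slot only the first request for each book misses.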

Fully associative caches • The dream! • A block can occupy any index position in the cache • Requires an associative memory – Content-addressable – Like our brain! • Remains a dream

A cache hierarchy • Two or three levels (L1, L2, L3) • L1 cache: – Highest level – Optimized for speed: • Direct mapping and no associativity • L2 and L3 caches: – Optimized for hit ratio • Higher associativity

Handling the DRAM/disk speed gap

What can be done? • Two main techniques – Making disk accesses more efficient – Doing something else while waiting for an I/O operation • Not very different from what we do in our everyday lives

Optimizing read accesses (I) • When we shop in a market that’s far away from our home, we plan ahead and buy food for several days • The OS will read as many bytes as it can during each disk access – In practice, whole pages (4 KB or more) – Pages are stored in the I/O buffer

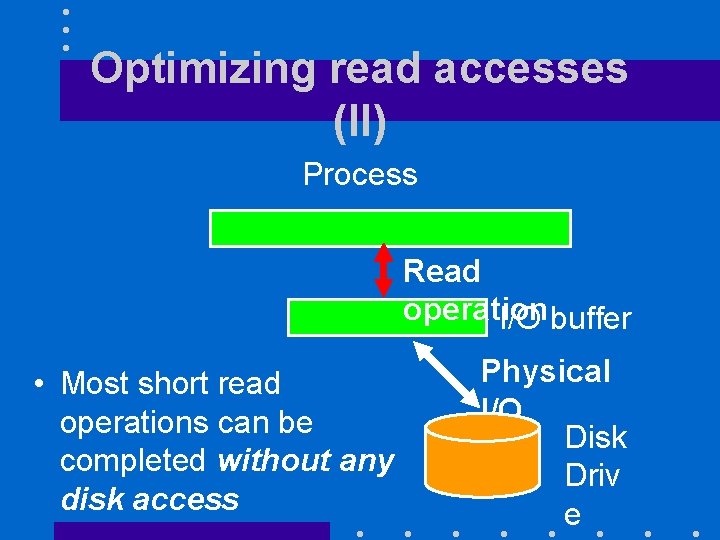

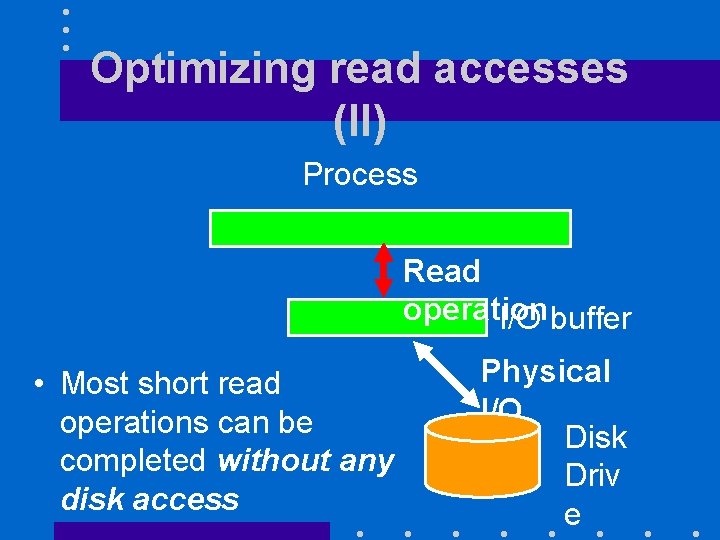

Optimizing read accesses (II) • Most short read operations can be completed without any disk access • Figure: a process issues a read operation to the I/O buffer, which is filled from the disk drive by physical I/O

Optimizing read accesses (III) • Buffered reads work quite well – Most systems use them • They have a major limitation – If we try to read too far ahead of the program, we risk bringing into main memory data that will never be used

Optimizing read accesses (IV) • Can also keep in a buffer recently accessed blocks hoping they will be accessed again – Caching • Works very well because we keep accessing again and again the data we are working with • Caching is a fundamental technique of OS and database design

Optimizing write accesses (I) • If we live far away from a library, we wait until we have several books to return before making the trip • The OS will delay writes for a few seconds then write an entire block – Since most writes are sequential, most short writes will not require any disk access

Optimizing write accesses (II) • Delayed writes work quite well – Most systems use them • They have a major drawback – We will lose data if the system or the program crashes • After the program issued a write but • Before the data were saved to disk

Doing something else • When we order something on the web, we do not remain idle until the goods are delivered • The OS can implement multiprogramming and let the CPU run another program while a program waits for an I/O

Advantages (I) • Multiprogramming is very important in business applications – Many of these applications use the peripherals much more than the CPU – For a long time the CPU was the most expensive component of a computer – Multiprogramming was invented to keep the CPU busy

Advantages (II) • Multiprogramming made time-sharing possible • Multiprogramming lets your PC run several applications at the same time – MS Word and MS Outlook

Requirements • Two basic requirements – Must have a mechanism that handles I/O without CPU intervention • I/O controller – Must have a way to notify the CPU when an I/O operation is completed • Interrupts

Analogy • When we buy a book in a brick-and-mortar store, – We wait until we get the book • May have to waste time waiting in line • When we order a book over the Internet, – We do other things while waiting for the book • UPS takes care of it

Interrupts • Normally the kernel is inactive – Users' programs are in control • When OS intervention is required – Must interrupt the flow of execution of the CPU – Give CPU to the OS

Interrupts • Detected by the CPU hardware – After it has executed the current instruction – Before it starts the next instruction

A very schematic view (I) • A very basic CPU would execute the following loop: forever { fetch_instruction(); decode_instruction(); execute_instruction(); } • Pipelining makes things more complicated

A very schematic view (II) • We add an extra step: forever { check_for_interrupts(); fetch_instruction(); decode_instruction(); execute_instruction(); }
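The loop with its extra step can be mimicked in Python. This is a toy simulation, not real CPU behavior: the function `run`, the `arrivals` mapping, and the string trace are all invented for the sketch. It shows that an interrupt raised while an instruction executes is only noticed after that instruction completes and before the next one is fetched.

```python
from collections import deque


def run(program: list[str], arrivals: dict[int, list[str]]) -> list[str]:
    """Execute `program`; arrivals[i] lists interrupts raised while instruction i runs."""
    pending: deque[str] = deque()
    trace: list[str] = []
    pc = 0
    while pc < len(program):        # a real CPU loops forever; this toy stops at the end
        # check_for_interrupts(): serve everything raised during earlier instructions
        while pending:
            trace.append(f"handle {pending.popleft()}")
        instruction = program[pc]   # fetch_instruction()
        # decode_instruction() is omitted in this toy: instructions are plain names
        trace.append(f"execute {instruction}")  # execute_instruction()
        # interrupts raised during this instruction wait until it has completed
        pending.extend(arrivals.get(pc, []))
        pc += 1
    return trace


trace = run(["load", "add", "store"], {0: ["timer"]})
for line in trace:
    print(line)
```

The timer interrupt arrives during `load` but is handled only between `load` and `add`, never in the middle of an instruction.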

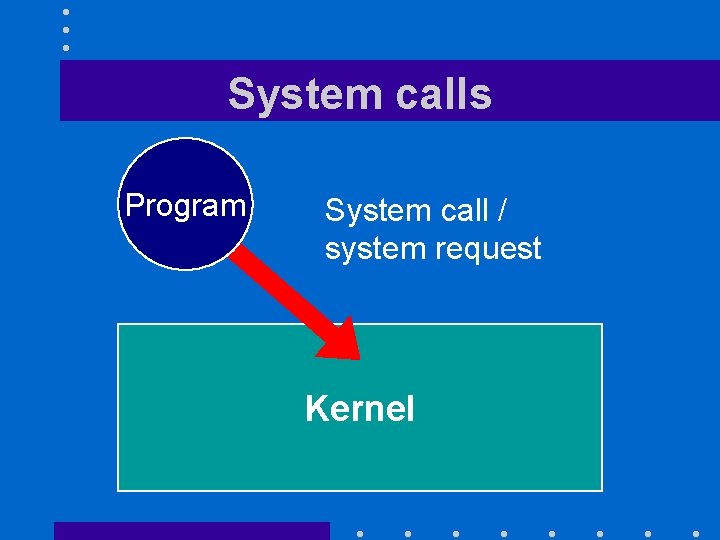

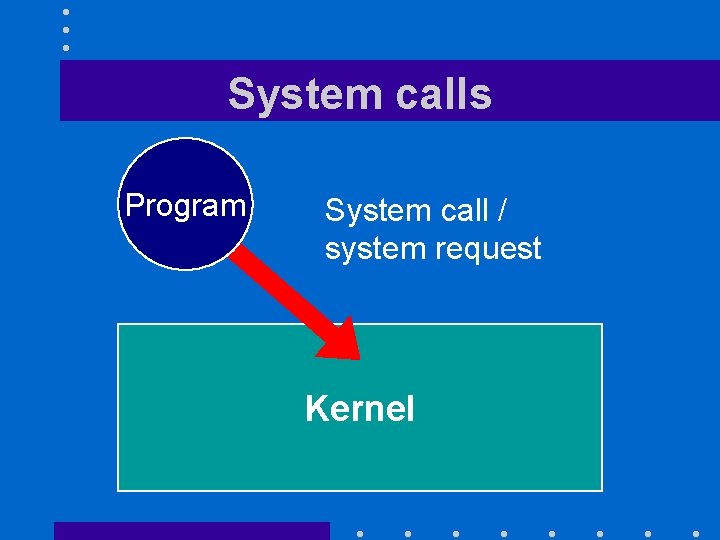

Types of interrupts • I/O completion interrupts – Requested data are now in memory • Timer interrupts – A process has been using the CPU for more than x ms • System calls – Running process needs something from the OS • …

System calls Program System call / system request Kernel

Managing the main memory

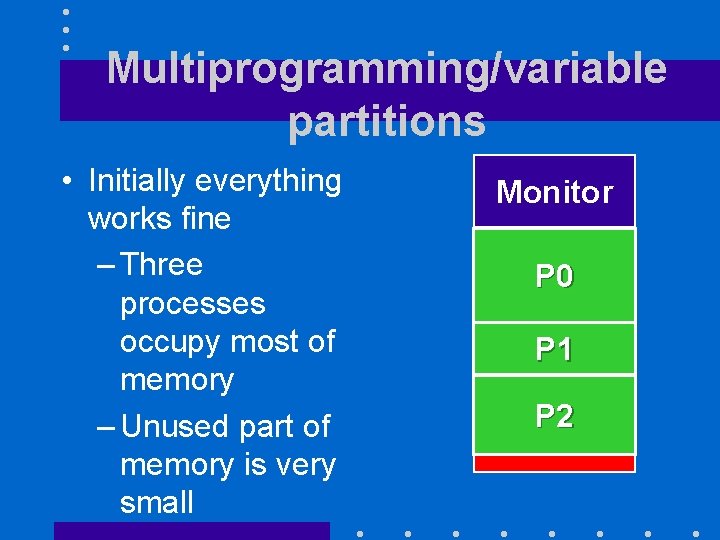

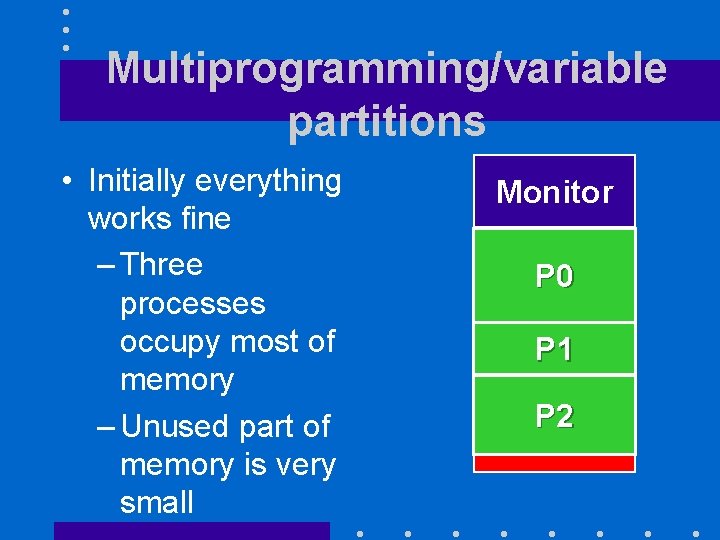

Multiprogramming/variable partitions • Initially everything works fine – Three processes occupy most of memory – Unused part of memory is very small • Memory layout: Monitor, P0, P1, P2

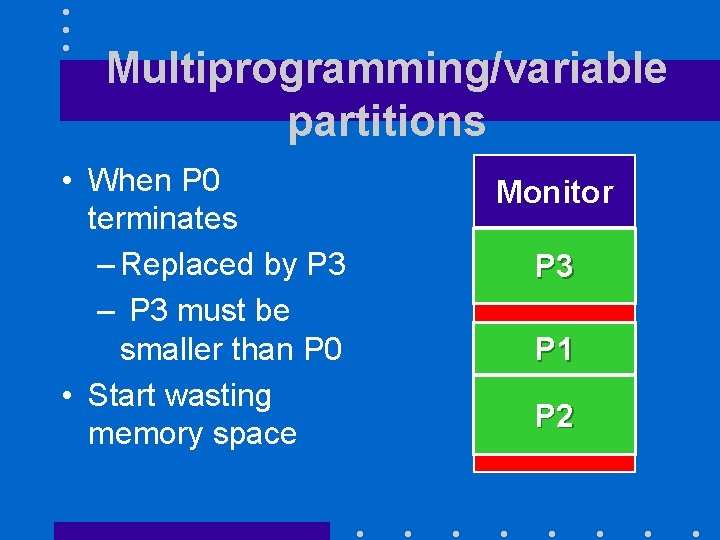

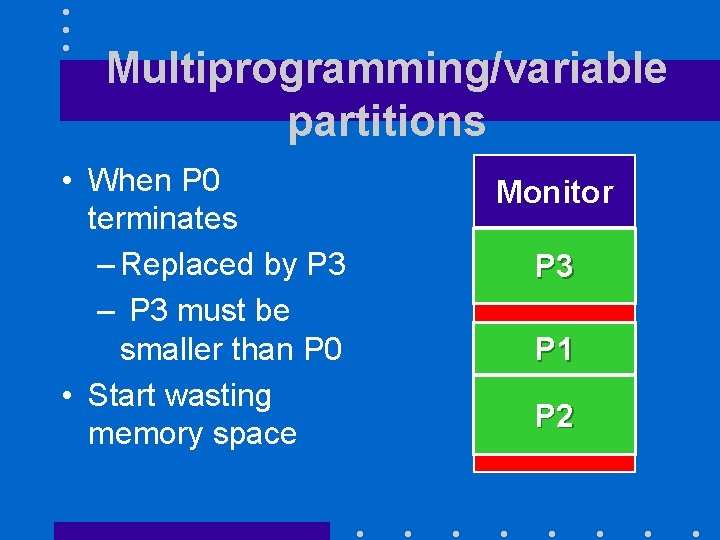

Multiprogramming/variable partitions • When P0 terminates – Replaced by P3 – P3 must be smaller than P0 • Start wasting memory space • Memory layout: Monitor, P3, P1, P2

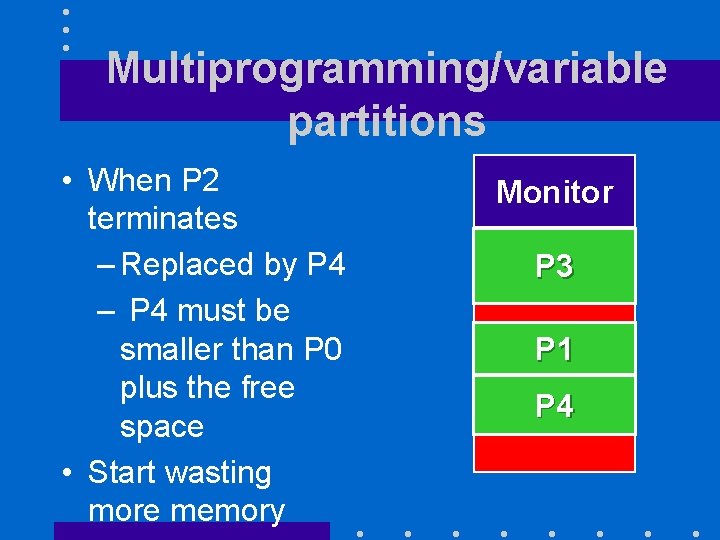

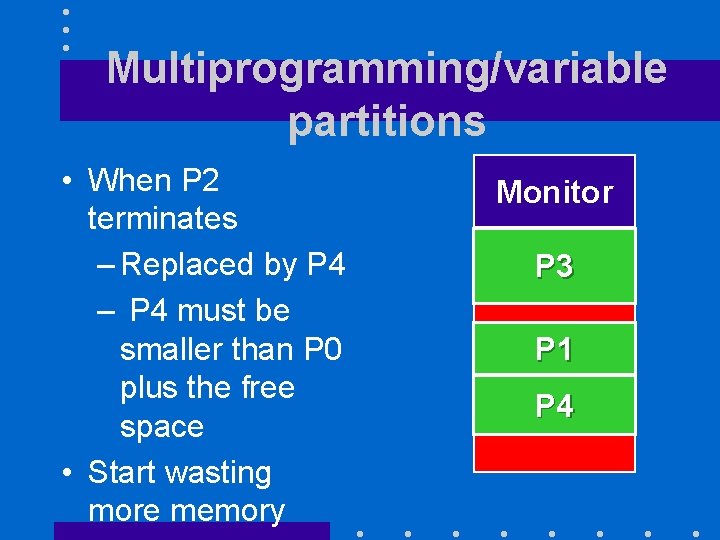

Multiprogramming/variable partitions • When P2 terminates – Replaced by P4 – P4 must be smaller than P2 plus the free space • Start wasting more memory • Memory layout: Monitor, P3, P1, P4

External fragmentation • Happens in all systems using multiprogramming with variable partitions • Occurs because a new process must fit in the hole left by a terminating process – Very low probability that both processes will have exactly the same size – Typically the new process will be a bit smaller than the terminating process

An Analogy • Replacing an old book by a new book on a bookshelf • New book must fit in the hole left by old book – Very low probability that both books have exactly the same width – We will end up with empty shelf space between books • Solution is to push books left and right

Virtual memory • Combines two big ideas – Non-contiguous memory allocation: processes are allocated page frames scattered all over the main memory – On-demand fetch: Process pages are brought into main memory when they are accessed for the first time

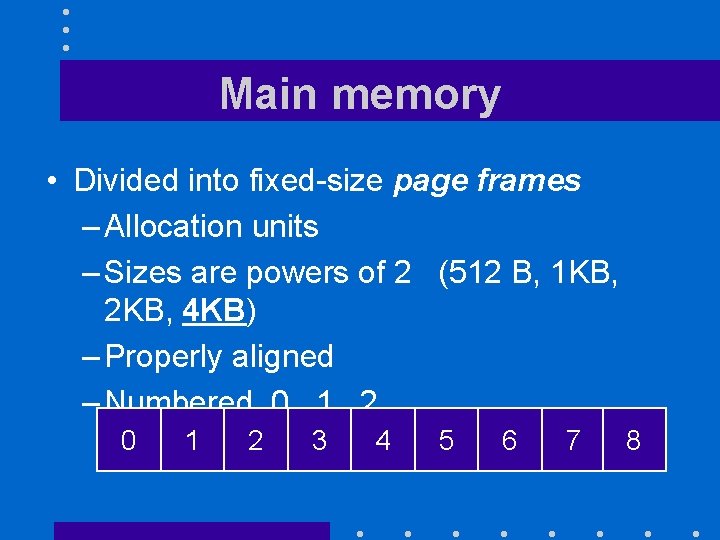

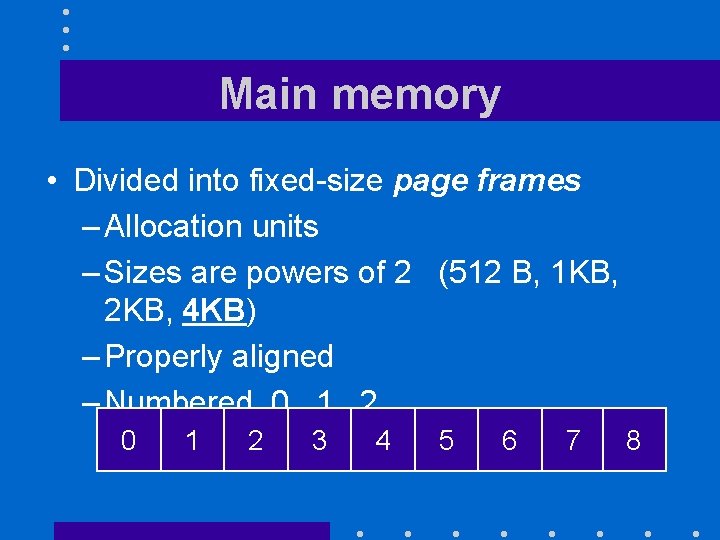

Main memory • Divided into fixed-size page frames – Allocation units – Sizes are powers of 2 (512 B, 1 KB, 2 KB, 4 KB) – Properly aligned – Numbered 0, 1, 2, ...

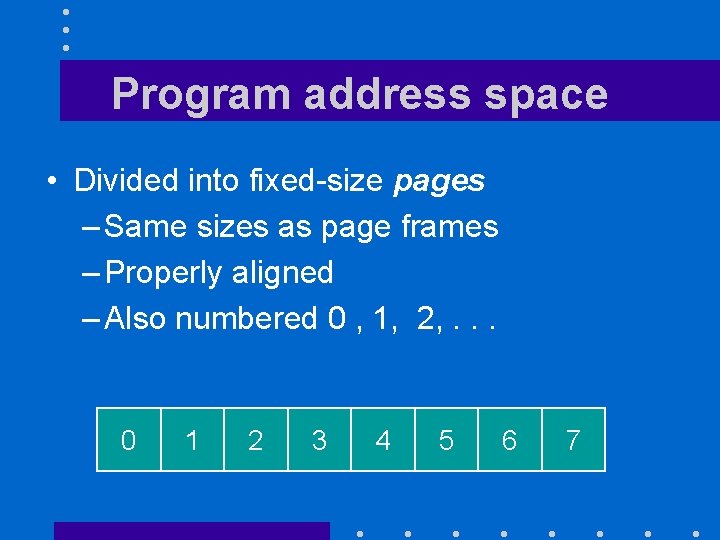

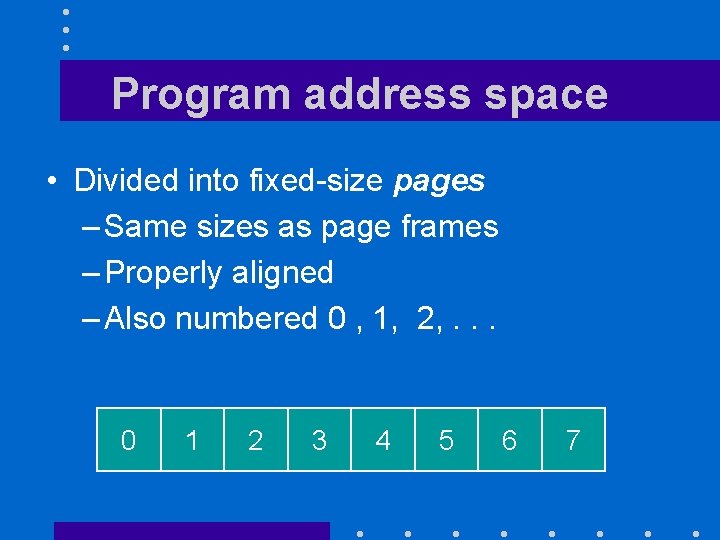

Program address space • Divided into fixed-size pages – Same sizes as page frames – Properly aligned – Also numbered 0, 1, 2, ...

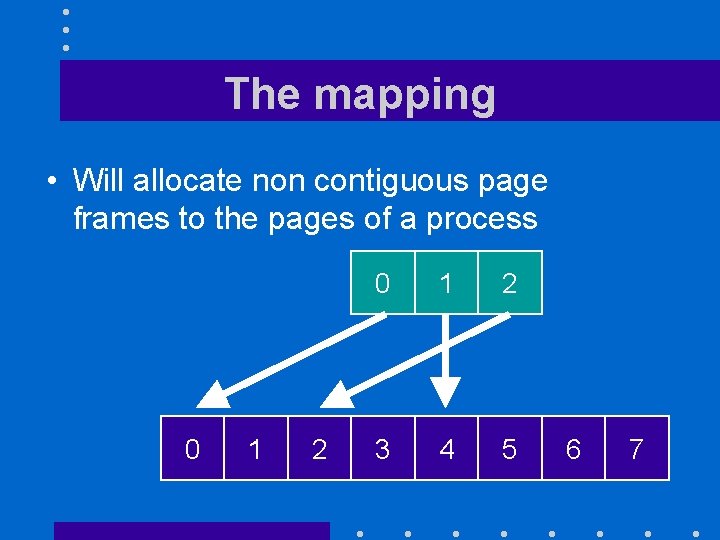

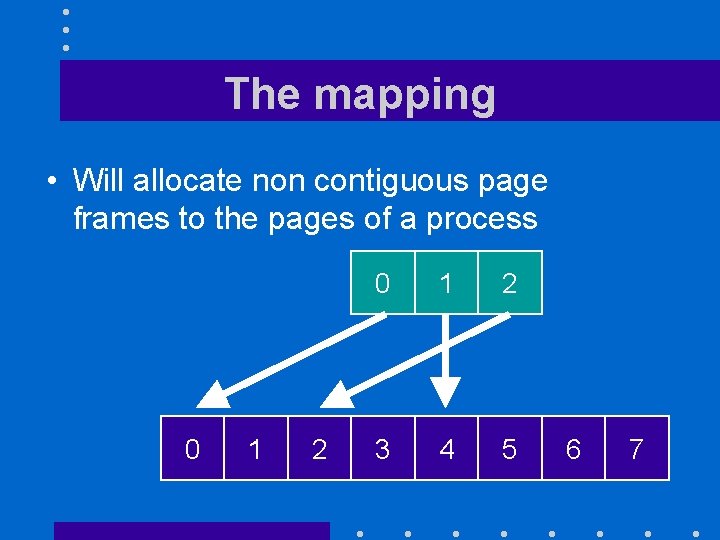

The mapping • Will allocate non-contiguous page frames to the pages of a process

Is it virtual or real? • MMU translates – Virtual addresses used by the process into – Real addresses in main memory
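The translation step can be sketched in software, keeping in mind that a real MMU does this in hardware. The example assumes 4 KB pages and uses a made-up page table; the names `PAGE_SIZE`, `translate`, and `page_table` are hypothetical and chosen for this illustration only.

```python
PAGE_SIZE = 4096  # assumption: 4 KB pages, one of the sizes listed earlier


def translate(virtual_address: int, page_table: dict[int, int]) -> int:
    """Map a virtual address to a real address through a page table."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    frame_number = page_table[page_number]   # KeyError here would correspond to a page fault
    return frame_number * PAGE_SIZE + offset  # the offset within the page is unchanged


# Hypothetical mapping: pages 0, 1, 2 live in non-contiguous frames 5, 2, 7
page_table = {0: 5, 1: 2, 2: 7}

print(translate(4100, page_table))  # page 1, offset 4 -> frame 2, offset 4
```

Only the page number is replaced by a frame number; because pages and page frames have the same size and alignment, the offset carries over unchanged.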

Realization

On-demand fetch (I) • Most processes terminate without having accessed their whole address space – Code handling rare error conditions, ... • Other processes go through multiple phases during which they access different parts of their address space – Compilers

On-demand fetch (II) • VM systems do not fetch the whole address space of a process when it is brought into memory • They fetch individual pages on demand when they are accessed for the first time – Page miss or page fault • When memory is full, they expel from memory pages that are not currently in use
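On-demand fetch can be simulated by counting page faults over a sequence of page references. The sketch below is an illustration under stated assumptions: the function name `count_page_faults` is invented, and "not currently in use" is approximated by expelling the least recently used page, which is one possible policy rather than the one any particular system uses.

```python
from collections import OrderedDict


def count_page_faults(references: list[int], n_frames: int) -> int:
    """Count page faults when pages are fetched on first access into n_frames frames."""
    resident: OrderedDict[int, bool] = OrderedDict()  # pages currently in main memory
    faults = 0
    for page in references:
        if page in resident:
            resident.move_to_end(page)        # page is in memory: no fault
            continue
        faults += 1                           # page fault: fetch the page on demand
        if len(resident) >= n_frames:
            resident.popitem(last=False)      # assumed policy: expel least recently used
        resident[page] = True
    return faults


references = [0, 1, 0, 2, 0, 3, 0, 1]
print(count_page_faults(references, n_frames=3))
print(count_page_faults(references, n_frames=4))
```

With four frames every page faults exactly once, on its first access; with three frames one page has to be expelled and later fetched again.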

Advantages • System does not waste time loading pages that will never be accessed. • Can have very large virtual address spaces • Could run programs that are too big to fit in main memory – Important during the 70's and early

Disadvantages • Slows down memory accesses – Address translation overhead – Page faults • Page faults introduce unpredictable delays – Very bad for real-time systems • No substitute for enough physical memory

Implementation • To speed up address translation – A few hundred recently accessed page table entries are cached in the Translation Lookaside Buffer (TLB) – Remainder of page table is divided between • Main memory (active page table entries) • Secondary storage (the other

SPEEDING UP THE CPU

Main techniques • Speeding up the CPU clock – A 2 GHz CPU goes through two computing cycles every nanosecond • Letting the CPU "pipeline" instructions – Let the CPU work as an assembly line • Have a multicore architecture

Limitations • Speeding up the CPU clock – CPUs with clock rates over 2 to 3 GHz become increasingly hard to cool • Letting the CPU "pipeline" instructions – Cannot have perfect pipelining • Have a multicore architecture – Harder to write software

Pipelining

The big idea • Making different parts of the CPU work at the same time on different steps of different instructions – Transforming the CPU into an assembly line

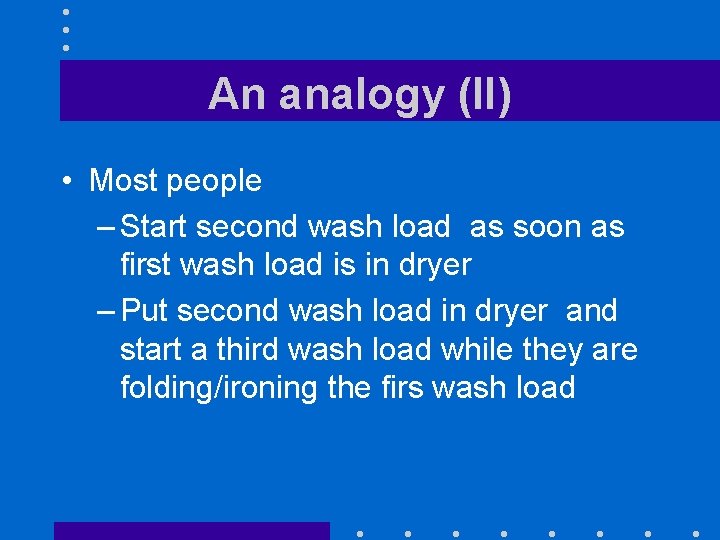

An analogy (I) • Washing your clothes – Four steps: 1. Putting in the washer 2. Putting in the dryer 3. Folding/ironing 4. Putting them away

An analogy (II) • Most people – Start second wash load as soon as first wash load is in dryer – Put second wash load in dryer and start a third wash load while they are folding/ironing the first wash load

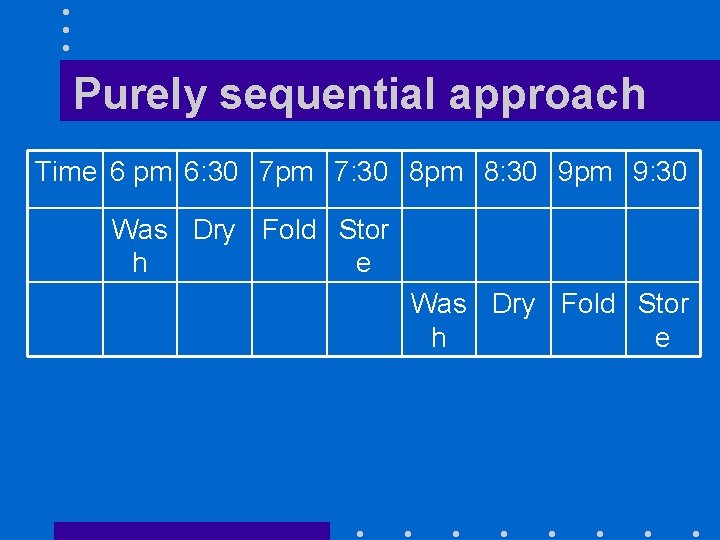

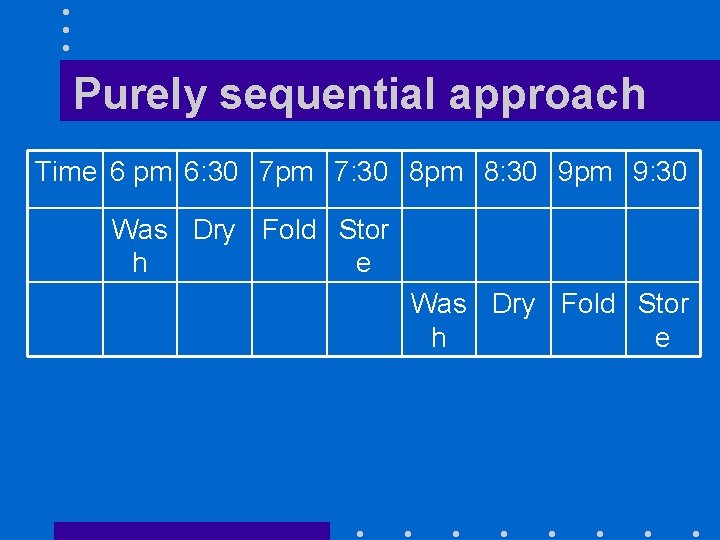

Purely sequential approach • Timeline from 6 pm to 9:30 pm in 30-minute steps • Stages: Wash, Dry, Fold, Store, with each load finishing all four stages before the next load starts

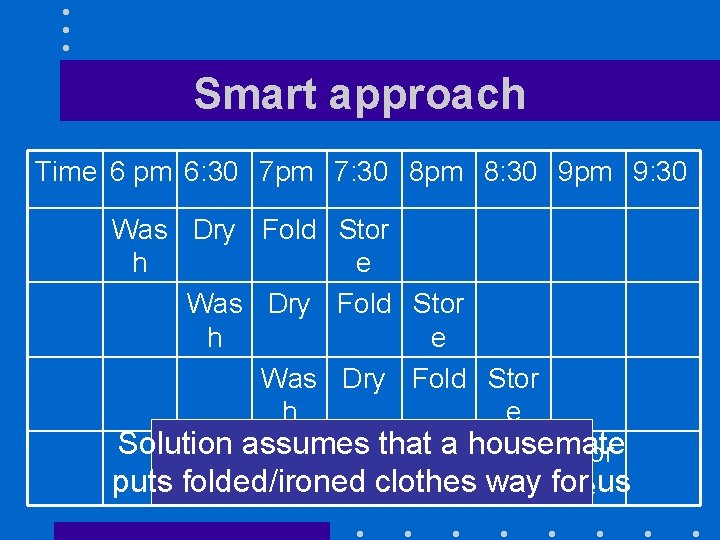

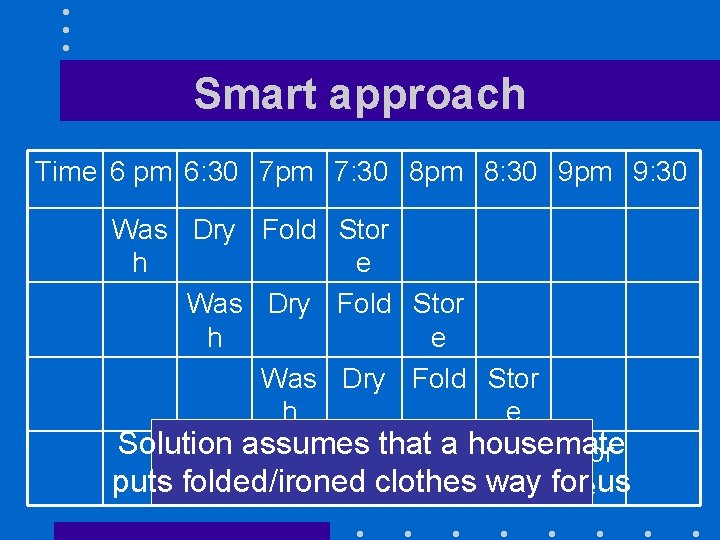

Smart approach • Timeline from 6 pm to 9:30 pm in 30-minute steps • Stages: Wash, Dry, Fold, Store, with successive loads overlapping • Solution assumes a housemate who puts the folded/ironed clothes away for us

Main advantage • Can do much more in much less time
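The gain can be quantified with a small calculation. The sketch assumes that each of the four laundry steps takes one 30-minute slot and that four loads are washed; both numbers are assumptions made for this example, as are the function names.

```python
N_STAGES = 4        # wash, dry, fold/iron, put away
SLOT_MINUTES = 30   # assumption: every step takes one 30-minute slot


def sequential_slots(n_loads: int) -> int:
    """Each load goes through all stages before the next load starts."""
    return n_loads * N_STAGES


def pipelined_slots(n_loads: int) -> int:
    """A new load starts every slot; the last one needs N_STAGES slots to finish."""
    if n_loads == 0:
        return 0
    return N_STAGES + (n_loads - 1)


loads = 4  # assumption: four loads of laundry
print("sequential:", sequential_slots(loads) * SLOT_MINUTES / 60, "hours")
print("pipelined: ", pipelined_slots(loads) * SLOT_MINUTES / 60, "hours")
```

Under these assumptions the pipelined schedule finishes four loads in 3.5 hours instead of 8, and the advantage grows with the number of loads because each additional load adds only one slot.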

An example • Pipelining in the MIPS architecture • Why? – Architecture developed by a team led by John Hennessy from Stanford University – Pipelining is well described in the architecture textbook by Hennessy and Patterson • WARNING: It is just an example

Multiprocessor architectures

The solutions • Many parallel processing solutions – Multiprocessor architectures • Two or more microprocessor chips • Multiple architectures – Multicore architectures • Several processors on a single chip – Can have both

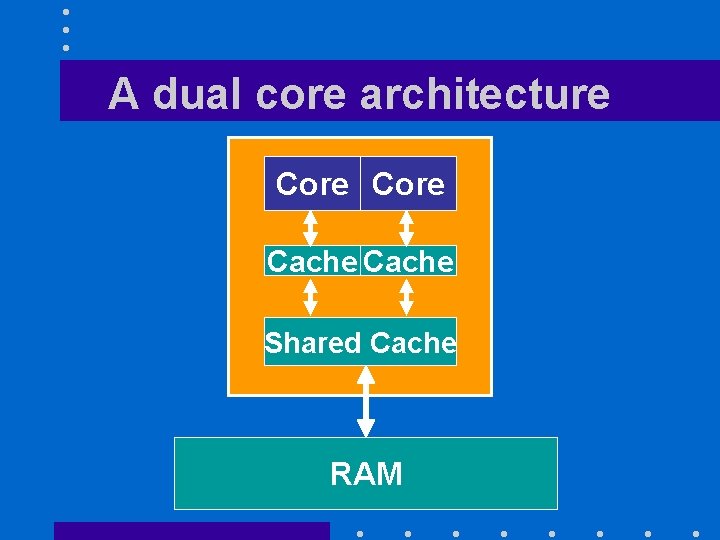

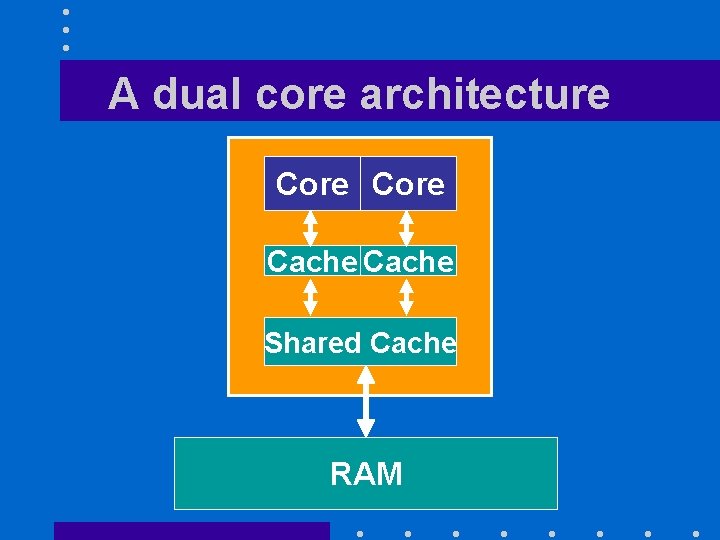

A dual core architecture Core Cache Shared Cache RAM

Even in your cell phone • Taiwanese chip maker MediaTek has introduced what it’s calling the first “true” octa-core chip. • The MT6592 can use up to 8 processor cores at once – It’s not clear what that will actually mean in terms of day-to-day performance.


A major challenge • Keeping the caches consistent – Contents of same memory location can be cached by different processing units – What if one of the processing units modifies these contents • All other caches will have the old values – Must update the memory location and

The software side • Two ways for software to exploit parallel processing capabilities of hardware – Job-level parallelism • Several sequential processes run in parallel • Easy to implement (OS does the job!) – Process-level parallelism • A single program runs on several processors at the same time

Main considerations • Some problems are embarrassingly parallel – Many computer graphics tasks – Brute force searches in cryptography or password guessing • Much more difficult for other applications – Communication overhead among subtasks – Balancing the load

A last issue • Humans like to address issues one after the other – We have meeting agendas – We do not like to be interrupted – We write sequential programs

Rene Descartes • Seventeenth-century French philosopher • Invented – Cartesian coordinates – Methodical doubt • [To] never to accept anything for true which I did not clearly know to be such • Proposed a scientific method based on

Method's third rule • The third, to conduct my thoughts in such order that, by commencing with objects the simplest and easiest to know, I might ascend by little and little, and, as it were, step by step, to the knowledge of the more complex; assigning in thought a certain order even to those objects which in their own nature do not stand in a relation of antecedence and sequence.

My take • Things will have to change

PROTECTION

Protecting users’ data (I) • Unless we have an isolated single-user system, we must prevent users from – Accessing – Deleting – Modifying without authorization other people's programs and data

Protecting users’ data (II) • Two aspects – Protecting users' files on disk – Preventing programs from interfering with each other

Historical Considerations • Earlier operating systems for personal computers did not have any protection – They were single-user machines – They typically ran one program at a time • Windows 2000, Windows XP, Vista, Windows 7 and Mac OS X are protected

Protecting users’ files • Key idea is to prevent users’ programs from directly accessing the disk • Will require I/O operations to be performed by the kernel • Make them privileged instructions that only the kernel can execute

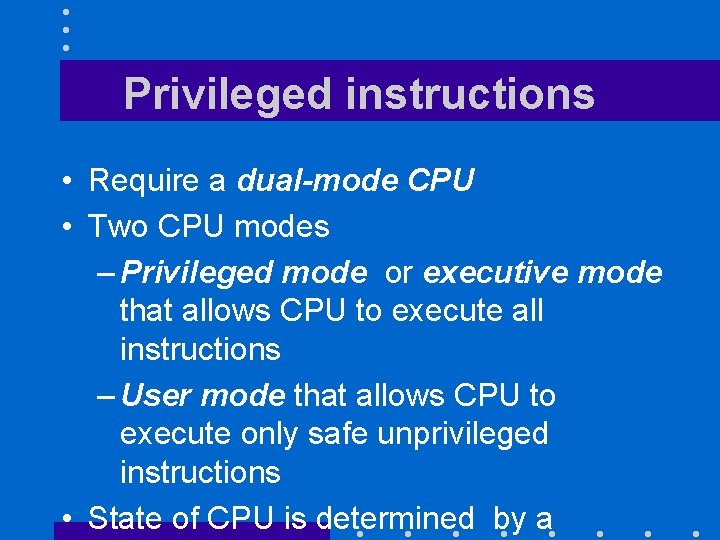

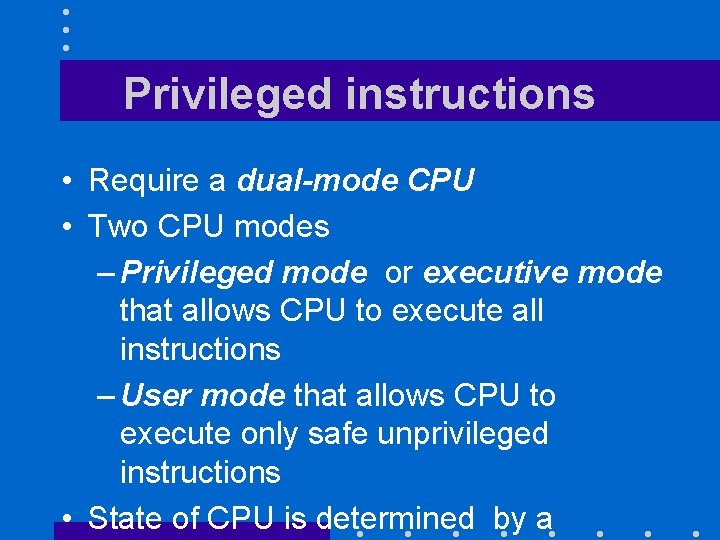

Privileged instructions • Require a dual-mode CPU • Two CPU modes – Privileged mode or executive mode that allows CPU to execute all instructions – User mode that allows CPU to execute only safe unprivileged instructions • State of CPU is determined by a

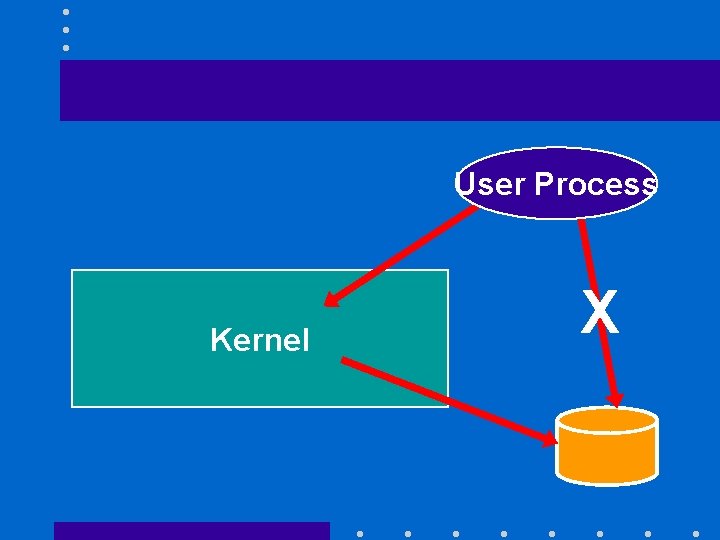

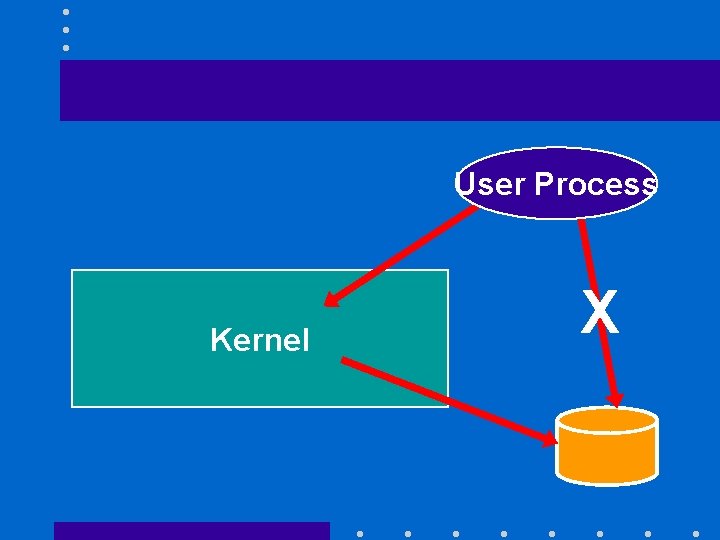

User Process Kernel X

Switching between states • User mode will be the default mode for all programs – Only the kernel can run in supervisor mode • Switching from user mode to supervisor mode is done through an interrupt – Safe because the jump address is at a well-defined location in main memory

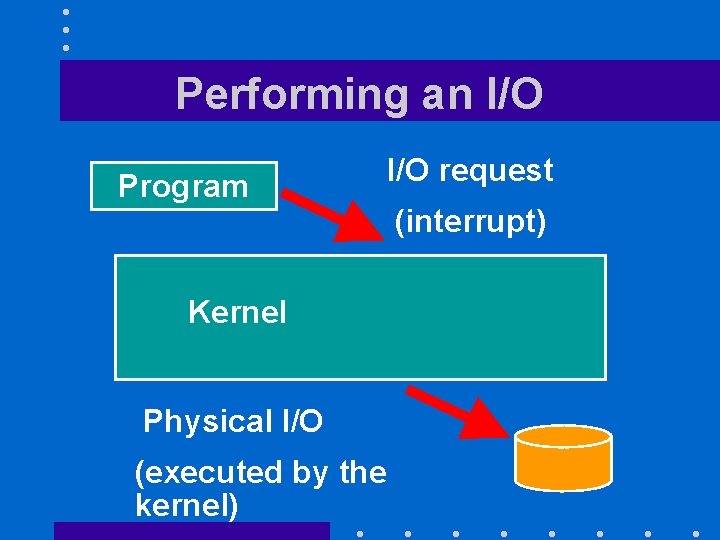

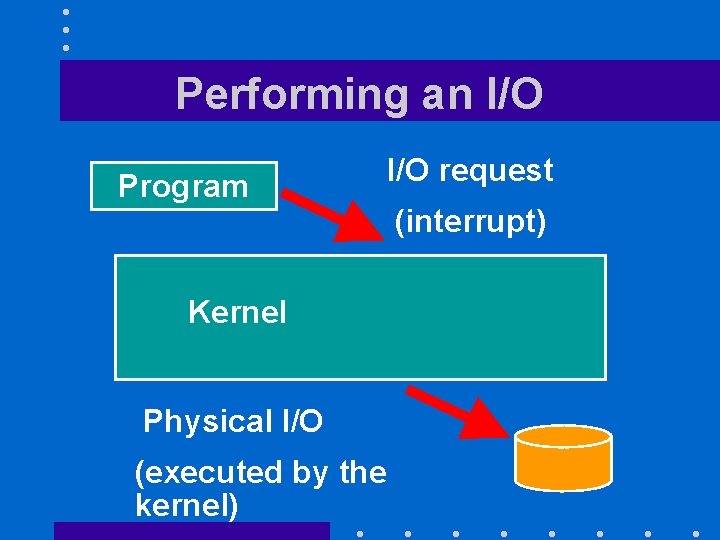

Performing an I/O Program Kernel Physical I/O (executed by the kernel) I/O request (interrupt)

An analogy (I) • Most UH libraries are open stacks – Anyone can consult books in the stacks and bring them to checkout • National libraries and the Library of Congress have closed-stack collections – Users fill out a request for a specific document – A librarian will bring the document to the circulation desk

An analogy (II) • Open-stack collections – Let users browse the collections – Users can misplace or vandalize books • Closed-stack collections – Much slower and less flexible – Much safer

More trouble • Having a dual-mode CPU is not enough to protect users’ files • Must also prevent rogue users from tampering with the kernel – Same as a rogue customer bribing a librarian in order to steal books • Done through memory protection

Memory protection (I) • Prevents programs from accessing any memory location outside their own address space • Requires special memory protection hardware • Memory protection hardware – Checks every reference issued by program – Generates an interrupt when it detects

Memory protection (II) • Has additional advantages: – Prevents programs from corrupting address spaces of other programs – Prevents programs from crashing the kernel • Not true for device drivers which are inside the kernel • Required part of any multiprogramming system

Even more trouble • Having both a dual-mode CPU and memory protection is not enough to protect users’ files • Must also prevent rogue users from booting the system with a doctored kernel – Example: • Can run Linux from a “live” CD Linux

Conclusion • As computer architecture becomes more complex – Some old problems continue to bother us: • Wide access time gaps between –CPU and main memory –Main memory and disk (or even flash) – Some solutions bring new challenges: