
Interest Points (10/25/19)

*Galatea of the Spheres*, Salvador Dali

Computational Photography, Derek Hoiem, University of Illinois (edited/presented by Wilfredo Torres Calderon)

Today's class
- Review of "Modeling the Physical World"
- Interest points

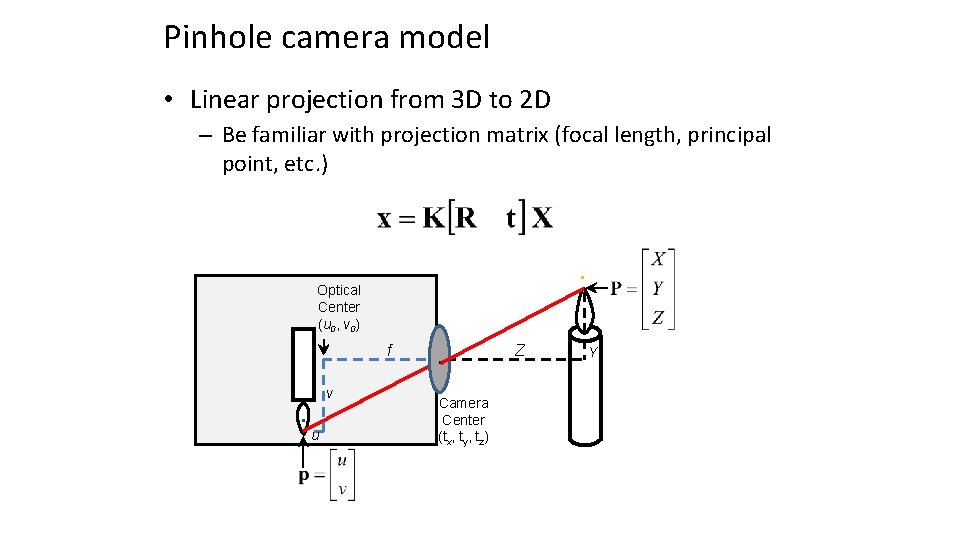

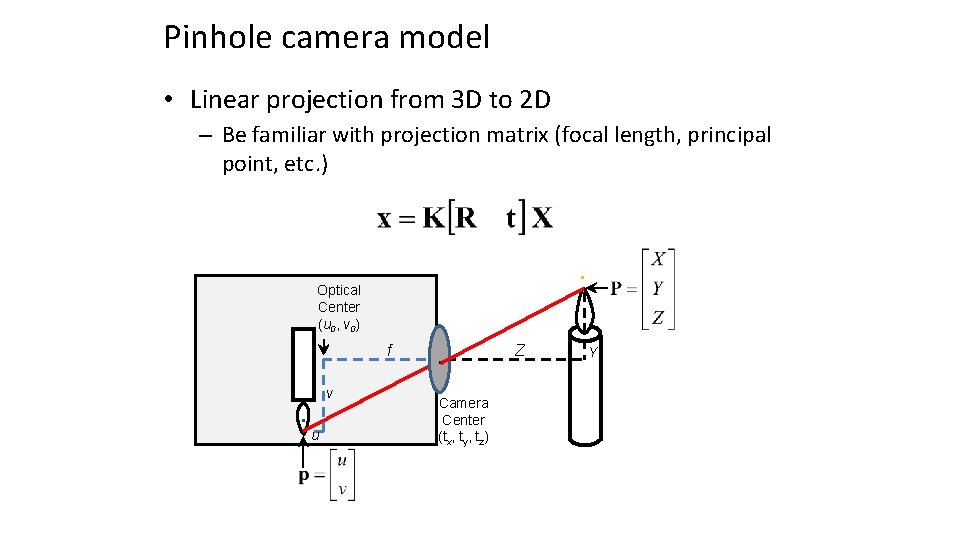

Pinhole camera model
- Linear projection from 3D to 2D
  - Be familiar with the projection matrix (focal length, principal point, etc.)

(Figure labels: optical center (u0, v0), image axes u and v, focal length f, camera center (tx, ty, tz), world axes Y and Z.)
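As a refresher, the linear projection can be written as x ~ K [R | t] X. Here K holds the focal length f and principal point (u0, v0), and [R | t] maps world coordinates to camera coordinates. The following is a minimal sketch that assumes square pixels and zero skew. It also assumes the convention t = -R C, where C = (tx, ty, tz) is the camera center. The function name `project` is illustrative.

```python
import numpy as np

def project(X_world, f, u0, v0, R, t):
    """Project N x 3 world points to N x 2 pixel coordinates: x ~ K [R | t] X."""
    K = np.array([[f, 0.0, u0],
                  [0.0, f, v0],
                  [0.0, 0.0, 1.0]])                 # intrinsics: focal length, principal point
    Rt = np.hstack([R, np.reshape(t, (3, 1))])      # 3 x 4 extrinsics (world -> camera)
    X_h = np.hstack([X_world, np.ones((len(X_world), 1))])  # homogeneous 3D points
    x_h = (K @ Rt @ X_h.T).T                        # homogeneous image points
    return x_h[:, :2] / x_h[:, 2:3]                 # perspective divide by depth

# Camera at the world origin, looking down +Z
uv = project(np.array([[1.0, 2.0, 10.0]]), f=500.0, u0=320.0, v0=240.0,
             R=np.eye(3), t=np.zeros(3))

# Same view, but with the camera center C = (0, 0, -10) and the point moved to Z = 0
C = np.array([0.0, 0.0, -10.0])
uv_moved = project(np.array([[1.0, 2.0, 0.0]]), f=500.0, u0=320.0, v0=240.0,
                   R=np.eye(3), t=-np.eye(3) @ C)
```

Both calls place the point at the same pixel: the point's depth and offset relative to the camera are identical in the two cases.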

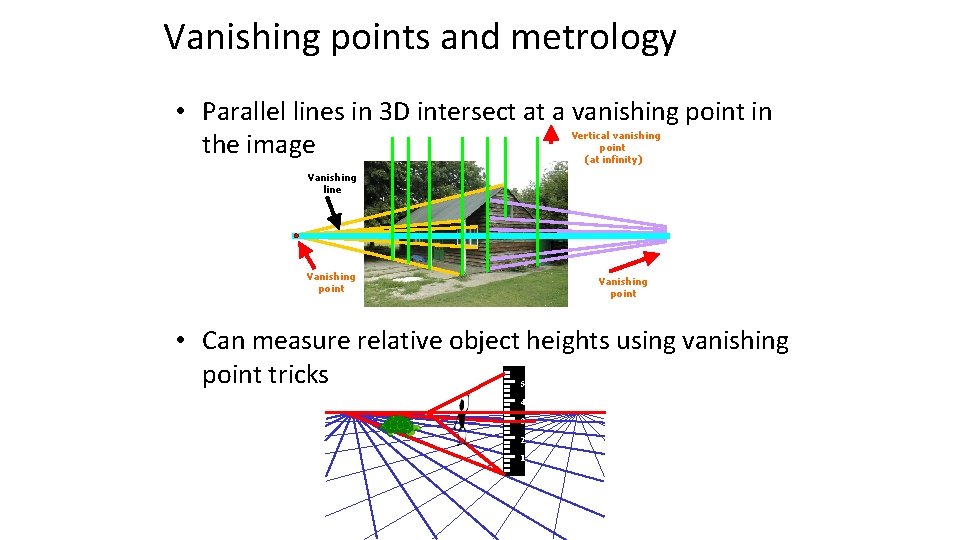

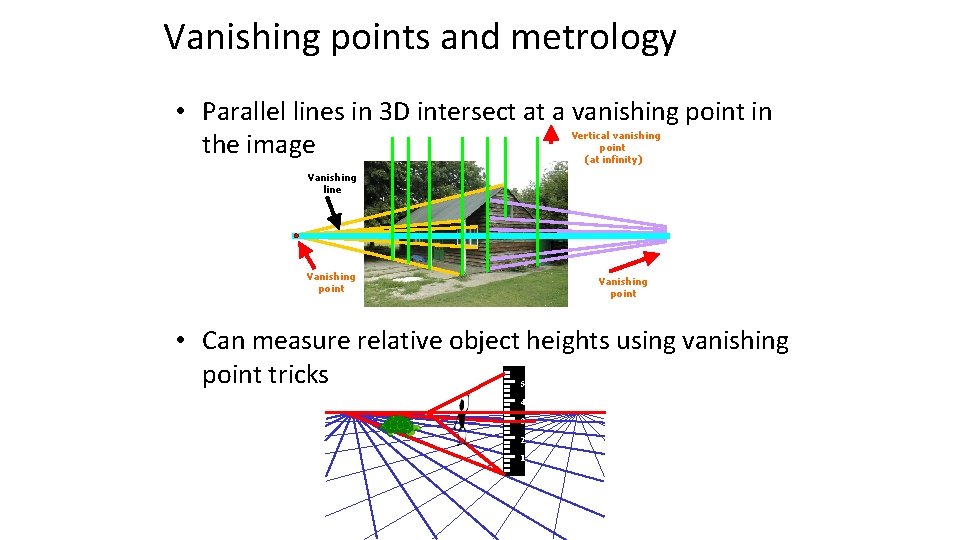

Vanishing points and metrology
- Parallel lines in 3D intersect at a vanishing point in the image
- Can measure relative object heights using vanishing point tricks

(Figure labels: vertical vanishing point (at infinity), vanishing line, vanishing point; a height scale marked 1 to 5.)
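In homogeneous coordinates, the line through two image points is their cross product. Two lines also intersect at the cross product of their line vectors. This gives a compact way to locate a vanishing point from two image segments that come from parallel 3D lines. The following is a minimal sketch with illustrative helper names. If the third coordinate is zero, the intersection lies at infinity, as with the vertical vanishing point on the slide.

```python
import numpy as np

def line_through(p, q):
    """Homogeneous line through two 2D image points."""
    return np.cross([p[0], p[1], 1.0], [q[0], q[1], 1.0])

def vanishing_point(line1, line2):
    """Intersection of two homogeneous lines as (x, y), or None if it is at infinity."""
    v = np.cross(line1, line2)
    if np.isclose(v[2], 0.0):
        return None  # lines are parallel in the image: vanishing point at infinity
    return v[:2] / v[2]

# Two image segments assumed to be projections of parallel 3D lines
l1 = line_through((0.0, 0.0), (2.0, 1.0))   # y = x / 2
l2 = line_through((0.0, 4.0), (2.0, 3.0))   # y = 4 - x / 2
vp = vanishing_point(l1, l2)                # they meet at (4, 2)
```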

Single-view 3D Reconstruction
- Recovering 3D from a single 2D image is impossible in general, but we can do it with simplifying models
  - Needs some user interaction or recognition algorithms
  - Uses basic vanishing point (VP) tricks and projective geometry

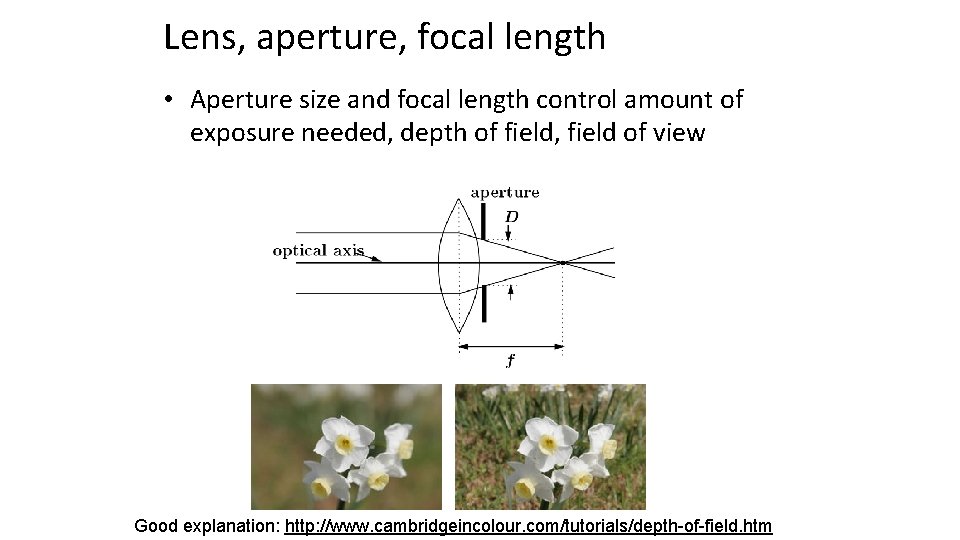

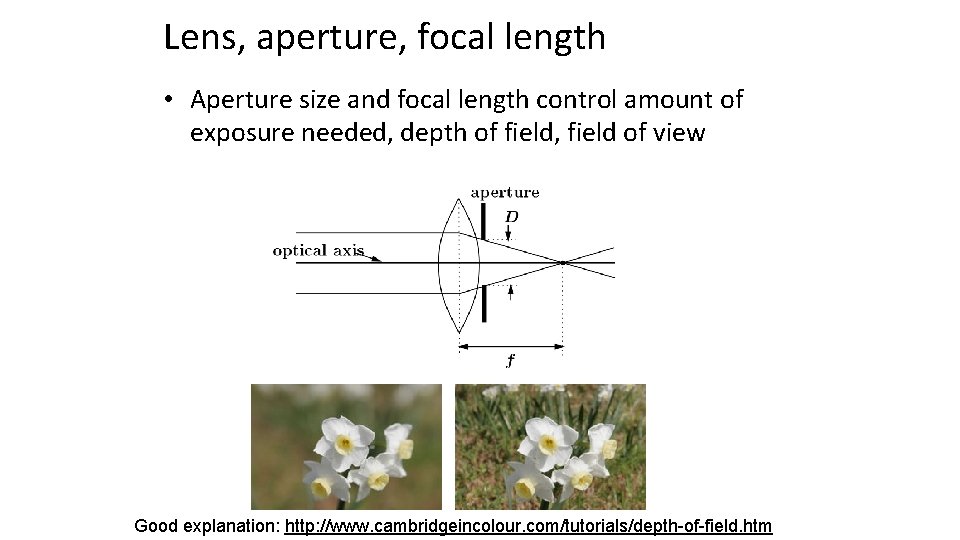

Lens, aperture, focal length
- Aperture size and focal length control the amount of exposure needed, the depth of field, and the field of view
- Good explanation: http://www.cambridgeincolour.com/tutorials/depth-of-field.htm
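Two standard relations make the field-of-view and exposure parts concrete. For a lens focused at infinity, a sensor dimension d gives an angle of view of 2·atan(d / 2f). The f-number is N = f / D for aperture diameter D, and image brightness scales roughly as 1 / N². The following is a small sketch that assumes those formulas and millimetre units. The function names are illustrative.

```python
import math

def field_of_view_deg(sensor_size_mm, focal_length_mm):
    """Angle of view for one sensor dimension (pinhole / lens focused at infinity)."""
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))

def f_number(focal_length_mm, aperture_diameter_mm):
    """N = f / D. Image brightness scales roughly with 1 / N**2."""
    return focal_length_mm / aperture_diameter_mm

wide = field_of_view_deg(36.0, 50.0)     # 36 mm-wide sensor with a 50 mm lens
tele = field_of_view_deg(36.0, 200.0)    # longer focal length -> narrower field of view
N = f_number(50.0, 25.0)                 # a 25 mm aperture on a 50 mm lens is f/2
```

Going from f/2 to f/4 cuts the light reaching the sensor by about 4x, so the exposure time must grow by about 4x. The smaller aperture also increases the depth of field.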

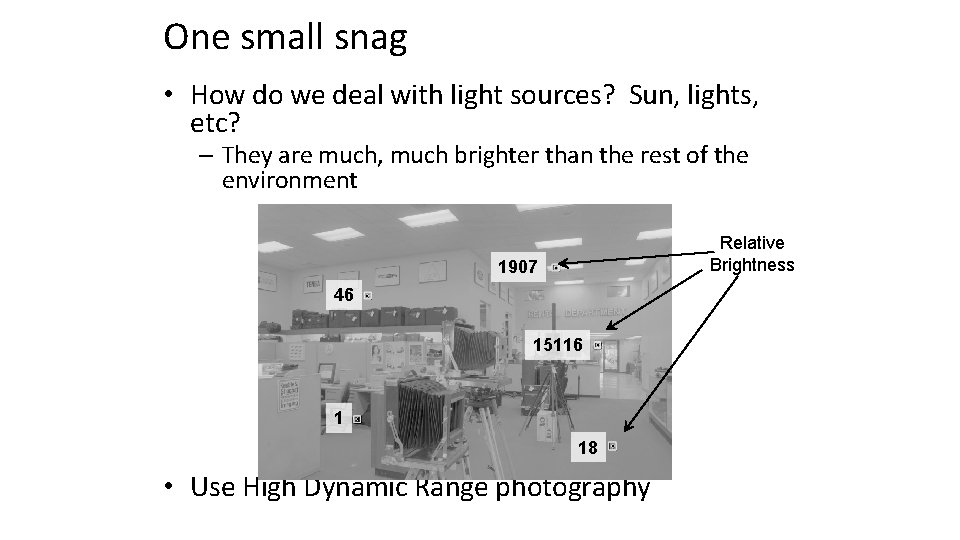

Capturing light with a mirrored sphere
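Each pixel of a mirrored-sphere photo sees the environment along the mirror reflection of its viewing ray. For view direction v and surface normal n, that reflection is r = v - 2 (v · n) n. The following is a minimal sketch under two simplifying assumptions: an orthographic camera looking toward -z, and a sphere that exactly fills a square image. The function name is illustrative.

```python
import numpy as np

def mirror_ball_directions(size):
    """Per-pixel world directions seen in a size x size mirrored-sphere image.

    Assumes an orthographic camera on the +z axis looking toward -z and that the
    sphere's silhouette is the image's inscribed disk. Returns (size, size, 3)
    unit vectors, with NaN outside the sphere.
    """
    coords = (np.arange(size) + 0.5) / size * 2.0 - 1.0   # pixel centers in [-1, 1]
    x, y = np.meshgrid(coords, -coords)                   # flip rows so +y points up
    r2 = x**2 + y**2
    inside = r2 <= 1.0
    nz = np.sqrt(np.clip(1.0 - r2, 0.0, None))
    n = np.stack([x, y, nz], axis=-1)          # unit-sphere surface normal
    v = np.array([0.0, 0.0, -1.0])             # viewing ray travels toward -z
    r = v - 2.0 * (n @ v)[..., None] * n       # mirror reflection r = v - 2 (v . n) n
    r[~inside] = np.nan
    return r

dirs = mirror_ball_directions(101)
```

The center pixel reflects straight back toward the camera. Pixels near the rim see the scene behind the sphere, which is why a single probe photo covers nearly the whole sphere of directions.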

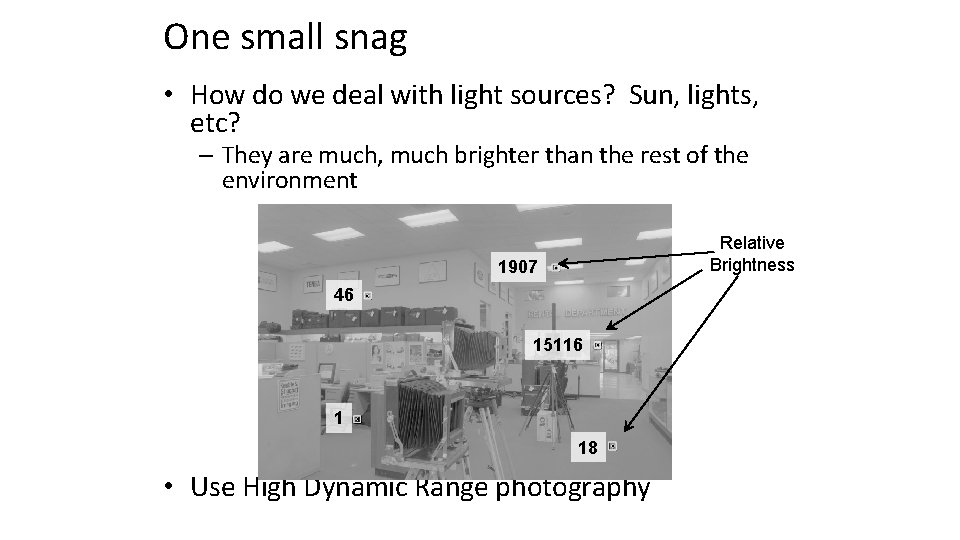

One small snag
- How do we deal with light sources? Sun, lights, etc.?
  - They are much, much brighter than the rest of the environment
- Use High Dynamic Range photography

(Figure: relative brightness of regions in the scene; labeled values 1, 18, 46, 1907, and 15116.)

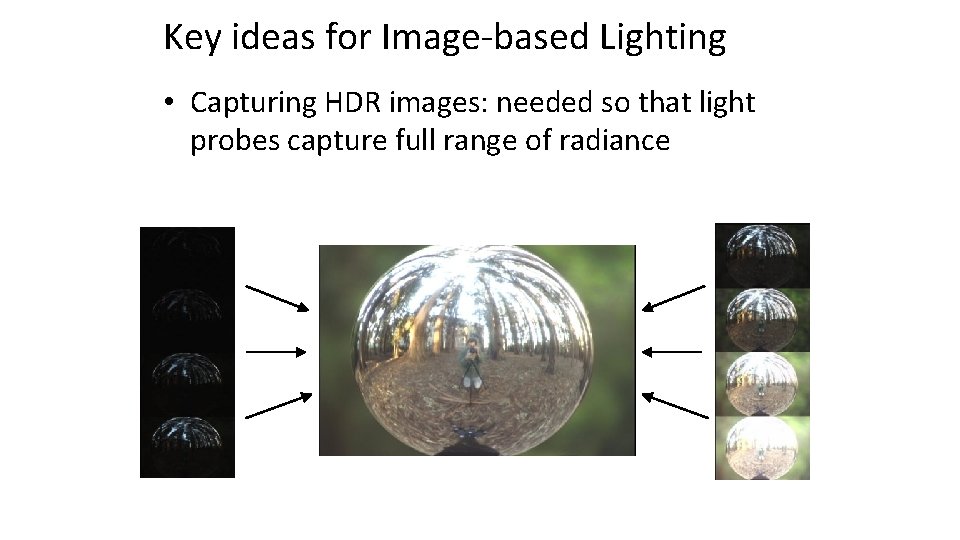

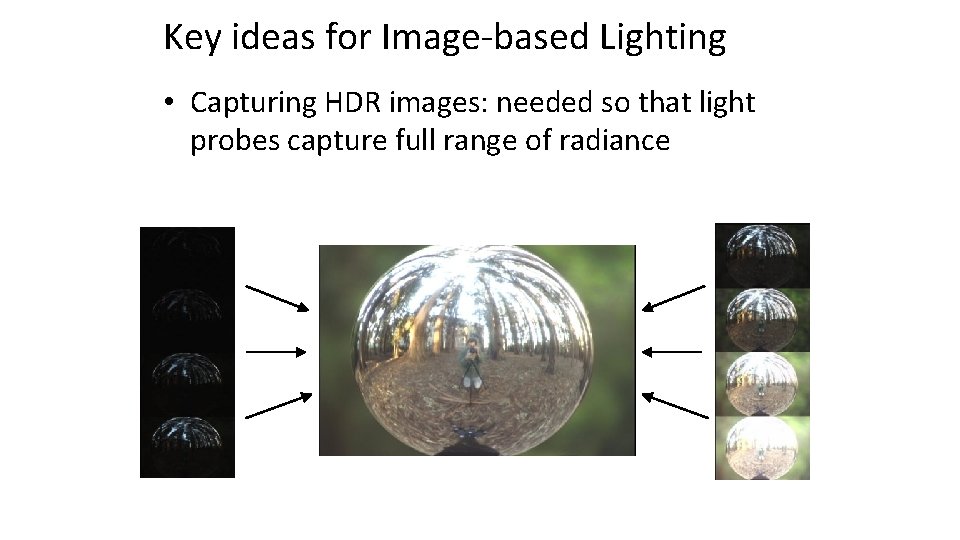

Key ideas for Image-based Lighting
- Capturing HDR images: needed so that light probes capture the full range of radiance
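One way to build an HDR image is to combine several exposures of the same scene. Each exposure gives a radiance estimate Z / t wherever the pixel is neither under- nor over-exposed. The following is a minimal sketch that assumes pixel values in [0, 1] that are linear in exposure. A full pipeline (e.g. Debevec and Malik's method) also recovers the camera response curve; this sketch skips that step. The hat-shaped weight and the function name are illustrative choices.

```python
import numpy as np

def merge_hdr(images, exposure_times):
    """Weighted average of per-exposure radiance estimates Z / t.

    Assumes linear pixel values in [0, 1]. The hat weight trusts mid-tones and
    ignores clipped pixels (Z = 0 or Z = 1). Pixels clipped in every exposure
    come out as 0.
    """
    num = np.zeros_like(images[0], dtype=float)
    den = np.zeros_like(images[0], dtype=float)
    for Z, t in zip(images, exposure_times):
        Z = np.asarray(Z, dtype=float)
        w = 1.0 - np.abs(2.0 * Z - 1.0)     # 0 at Z = 0 or 1, 1 at Z = 0.5
        num += w * Z / t
        den += w
    return num / np.maximum(den, 1e-8)

# Simulated scene: a dim pixel (0.5) and a bright light source (10.0)
radiance = np.array([0.5, 10.0])
times = [0.05, 0.5, 4.0]
shots = [np.clip(radiance * t, 0.0, 1.0) for t in times]
hdr = merge_hdr(shots, times)
```

No single shot records both values. The light source saturates the longer exposures, and the dim pixel is only well exposed in the middle one. The merged result recovers both.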

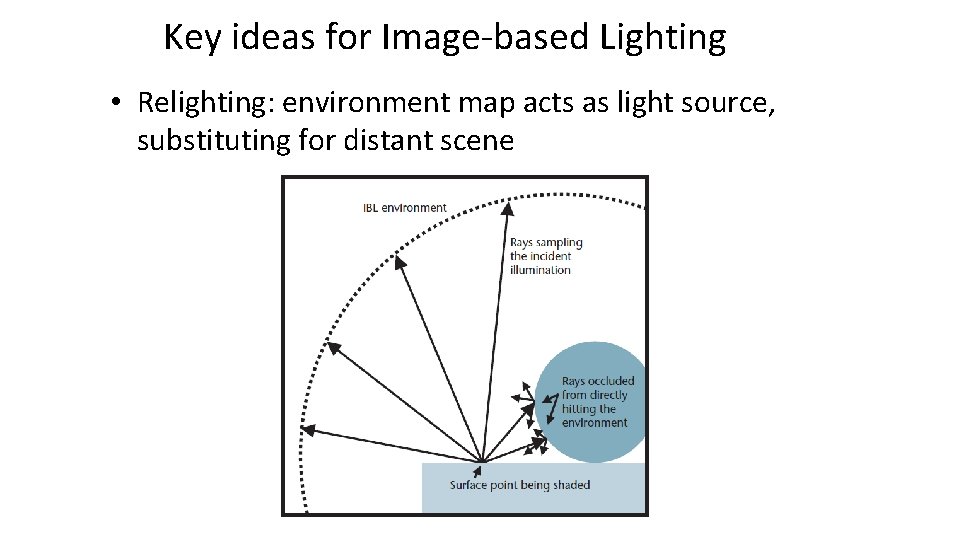

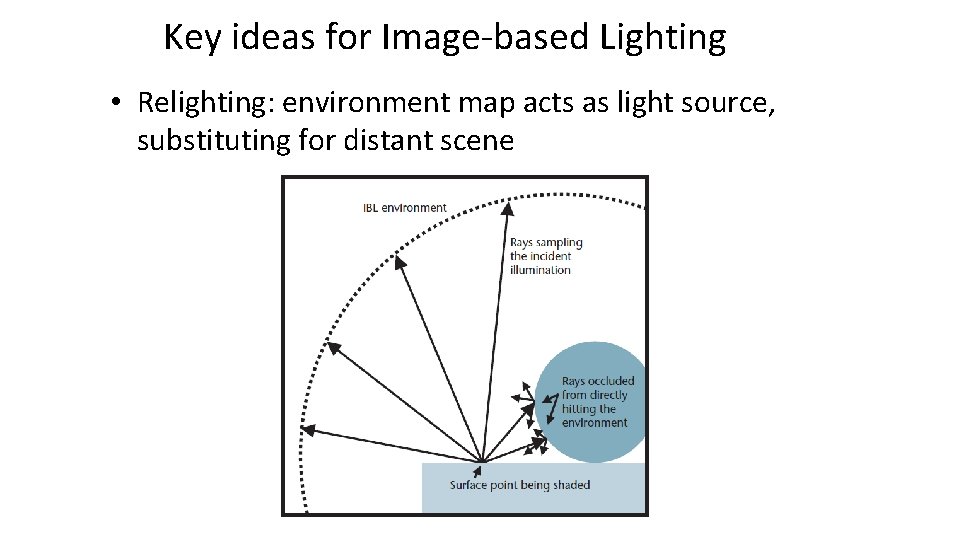

Key ideas for Image-based Lighting
- Relighting: the environment map acts as a light source, substituting for the distant scene
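To see how an environment map can act as the light source, consider a diffuse (Lambertian) surface point. It sums incoming radiance over all directions, weighted by the cosine to its normal. The following is a minimal Monte Carlo sketch that assumes the map is given as uniformly sampled directions, each with its own radiance. It is one simple way to do the integral, not the specific relighting method from the lecture.

```python
import numpy as np

def diffuse_shade(normal, env_dirs, env_radiance, albedo=1.0):
    """Outgoing radiance of a Lambertian point lit by a distant environment.

    env_dirs: (N, 3) unit directions sampled uniformly over the sphere.
    env_radiance: (N,) radiance arriving from each direction.
    Each sample represents a solid angle of 4*pi/N steradians.
    """
    cos_term = np.clip(env_dirs @ normal, 0.0, None)   # light from behind the surface is ignored
    irradiance = np.sum(env_radiance * cos_term) * (4.0 * np.pi / len(env_dirs))
    return albedo / np.pi * irradiance

rng = np.random.default_rng(0)
dirs = rng.normal(size=(200_000, 3))
dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)   # uniform directions on the sphere
up = np.array([0.0, 0.0, 1.0])

uniform_sky = diffuse_shade(up, dirs, np.ones(len(dirs)), albedo=0.7)
light_from_below = diffuse_shade(up, dirs, (dirs[:, 2] < 0).astype(float), albedo=0.7)
```

Under a constant environment, the shaded value should equal the albedo (a "white furnace" check). Light arriving only from below an upward-facing surface contributes nothing.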

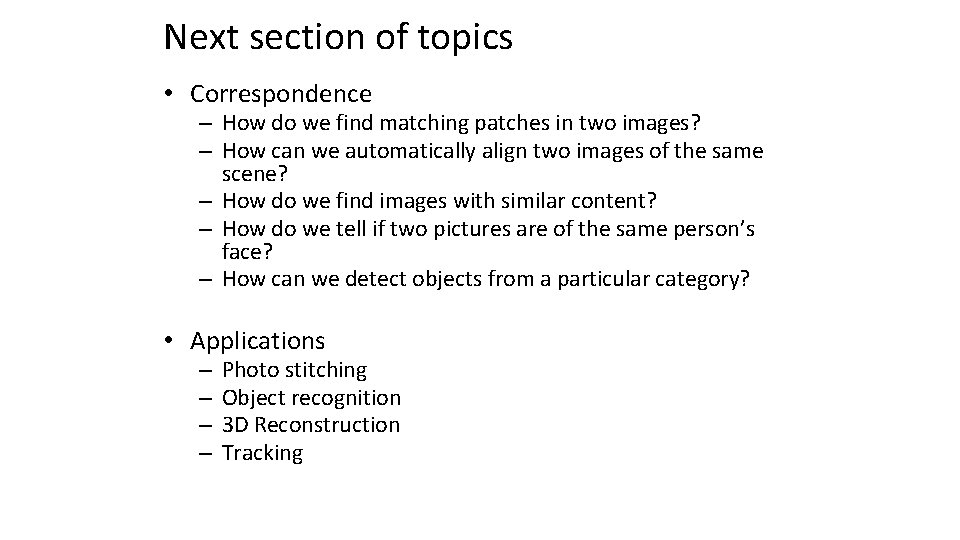

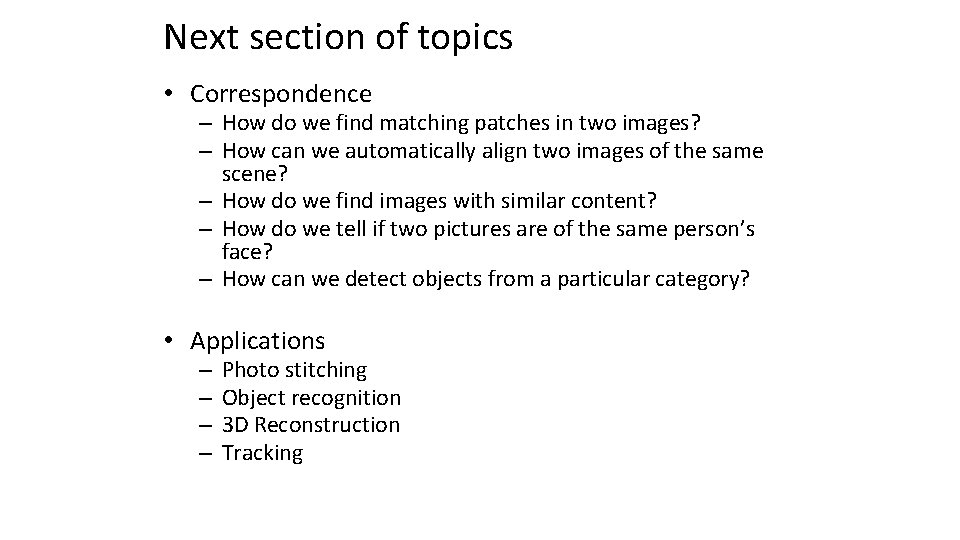

Next section of topics
- Correspondence
  - How do we find matching patches in two images?
  - How can we automatically align two images of the same scene?
  - How do we find images with similar content?
  - How do we tell if two pictures are of the same person's face?
  - How can we detect objects from a particular category?
- Applications
  - Photo stitching
  - Object recognition
  - 3D Reconstruction
  - Tracking

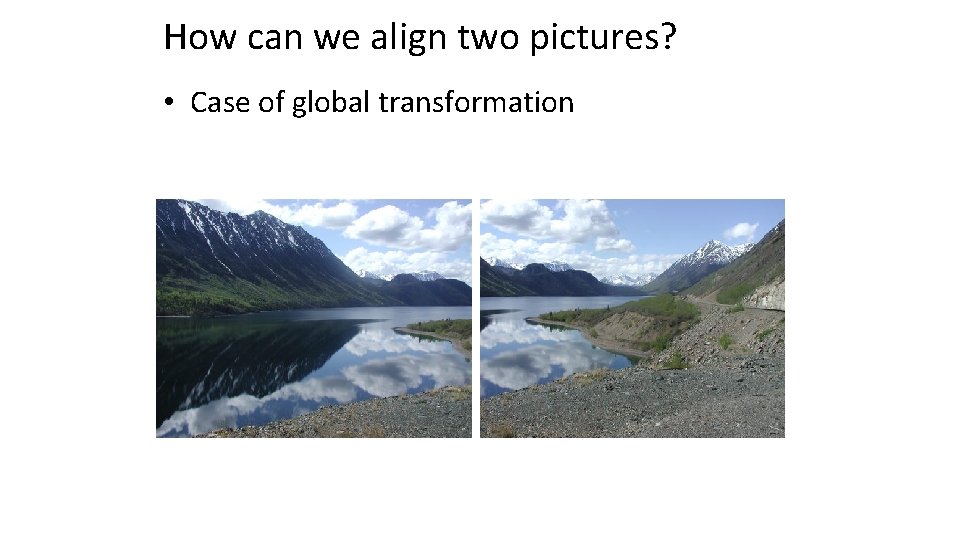

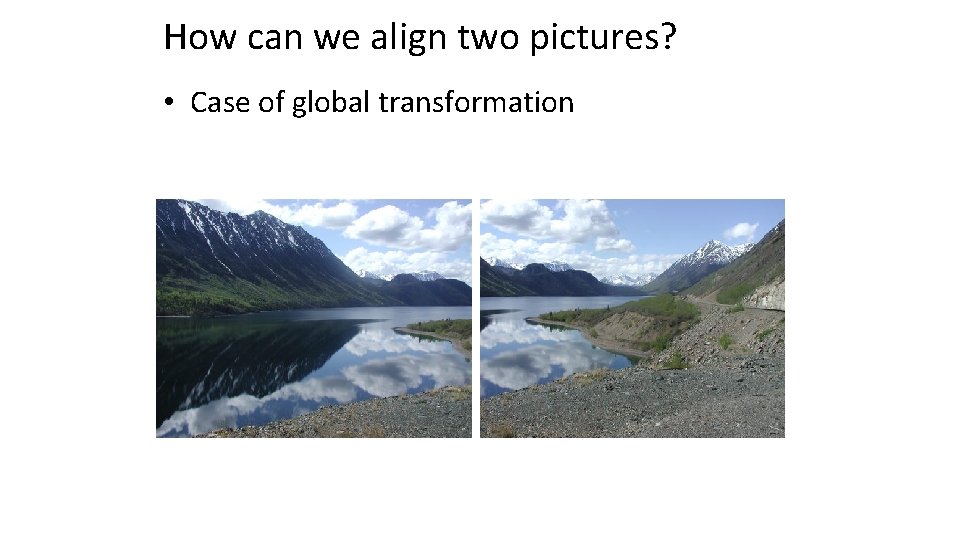

How can we align two pictures?
- Case of global transformation

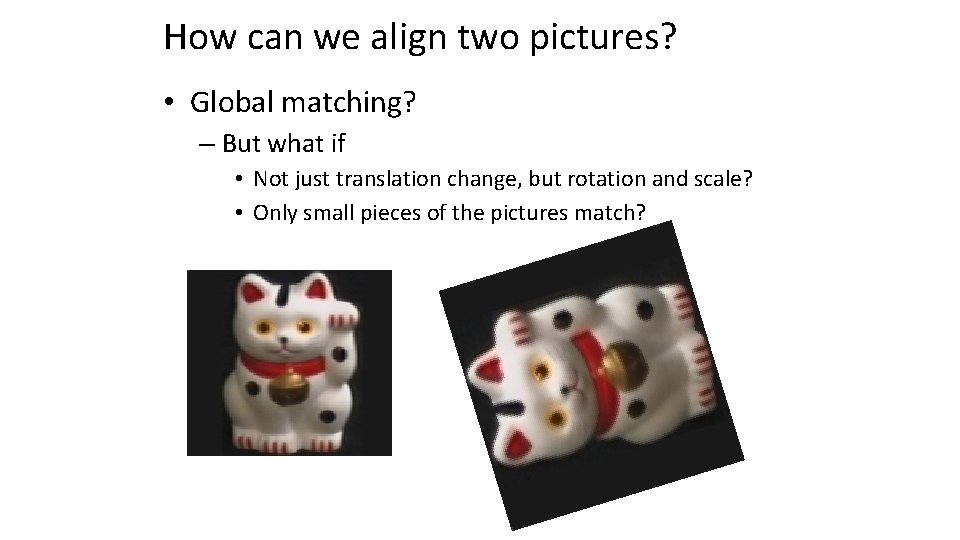

How can we align two pictures?
- Global matching?
  - But what if:
    - Not just translation change, but rotation and scale?
    - Only small pieces of the pictures match?
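Global matching in its simplest form tries every candidate translation and keeps the one with the lowest sum of squared differences. The following is a minimal sketch with an illustrative function name. It uses circular shifts (`np.roll`), so it assumes wrap-around at the image borders. The sketch also shows the limitation the slide raises: the search covers only integer translations, so rotation, scale change, or partial overlap would need a far larger search.

```python
import numpy as np

def best_translation(a, b, max_shift):
    """Integer (dy, dx) such that shifting `a` by it best matches `b` (min SSD)."""
    best, best_err = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err = np.sum((np.roll(a, (dy, dx), axis=(0, 1)) - b) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best

rng = np.random.default_rng(0)
a = rng.random((32, 32))
b = np.roll(a, (3, -2), axis=(0, 1))   # b is a shifted copy of a
shift = best_translation(a, b, max_shift=5)
```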

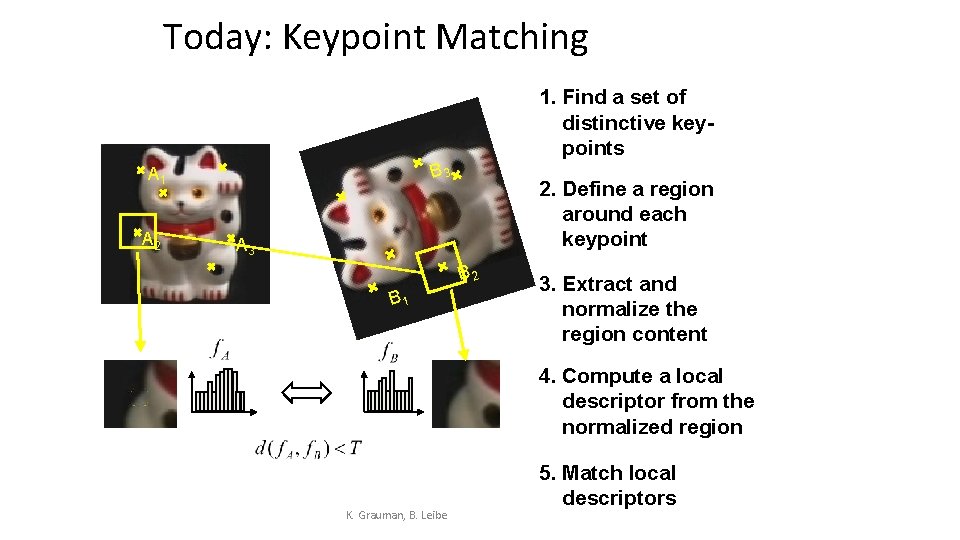

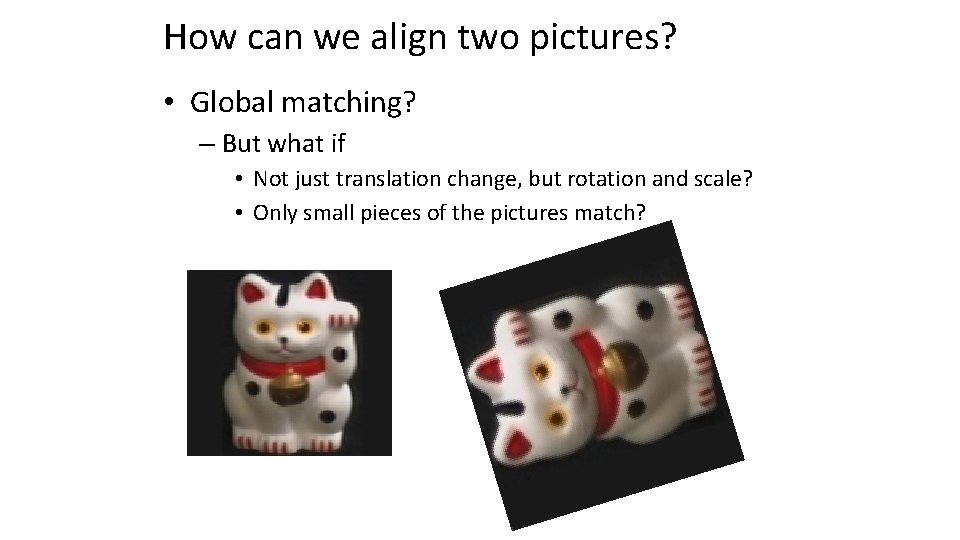

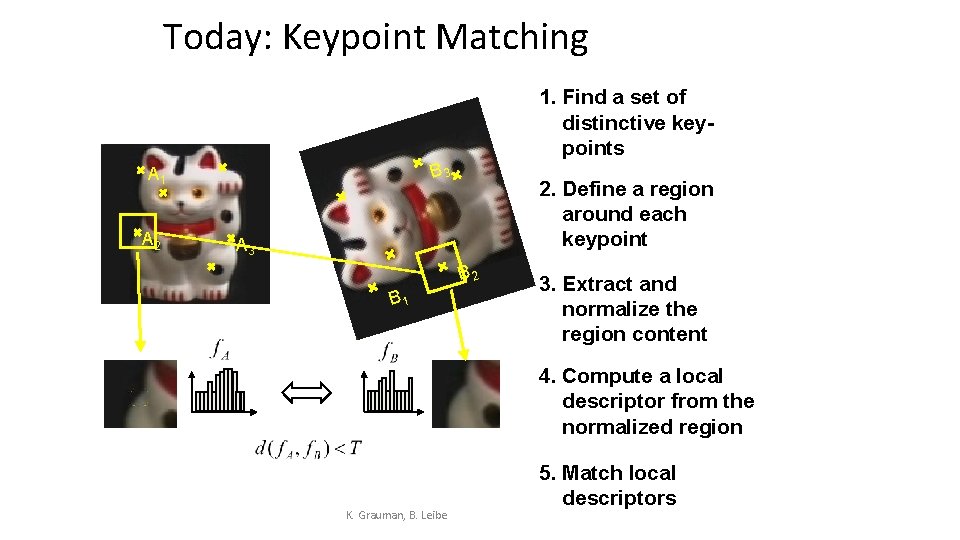

Today: Keypoint Matching
1. Find a set of distinctive keypoints
2. Define a region around each keypoint
3. Extract and normalize the region content
4. Compute a local descriptor from the normalized region
5. Match local descriptors

(Figure: keypoints A1, A2, A3 in one image and B1, B2, B3 in the other.)

K. Grauman, B. Leibe

Main challenges
- Change in position, scale, and rotation
- Change in viewpoint
- Occlusion
- Articulation, change in appearance

Question
- Why not just take every patch in the original image and find the best match in the second image?

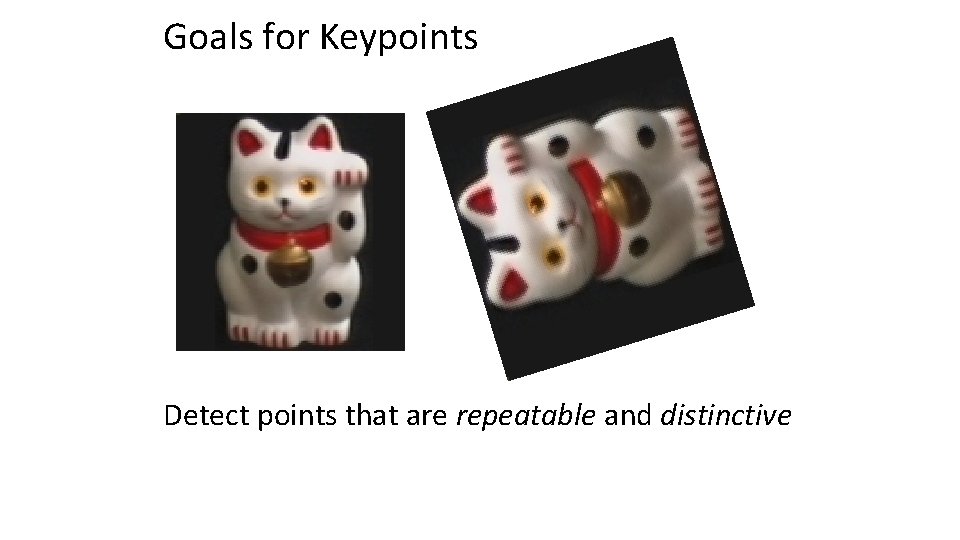

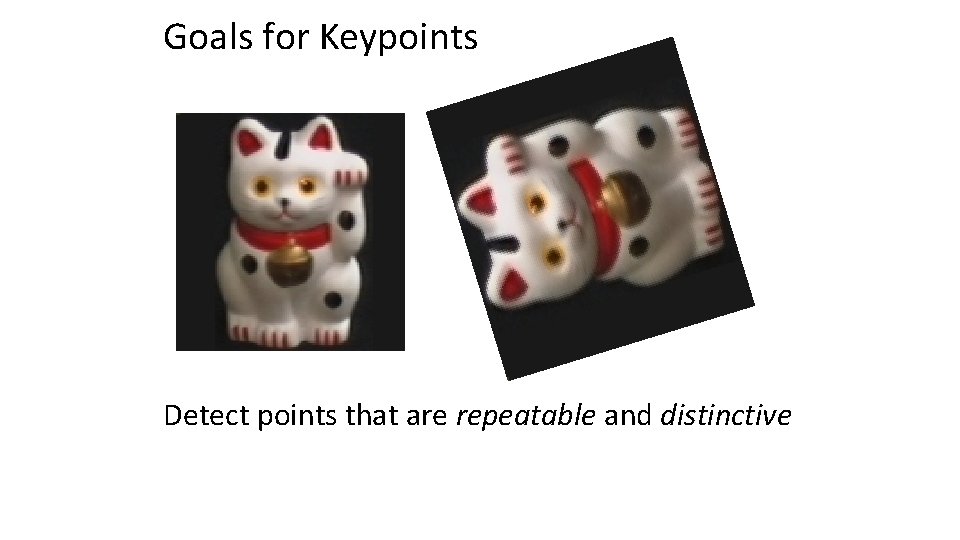

Goals for Keypoints

Detect points that are repeatable and distinctive.

Key trade-offs

Localization
- More points: robust to occlusion, works with less texture
- More repeatable: robust detection, precise localization

Description
- More robust: deal with expected variations, maximize correct matches
- More selective: minimize wrong matches

(Figure: keypoints A1, A2, A3 and B1, B2, B3 in two images.)

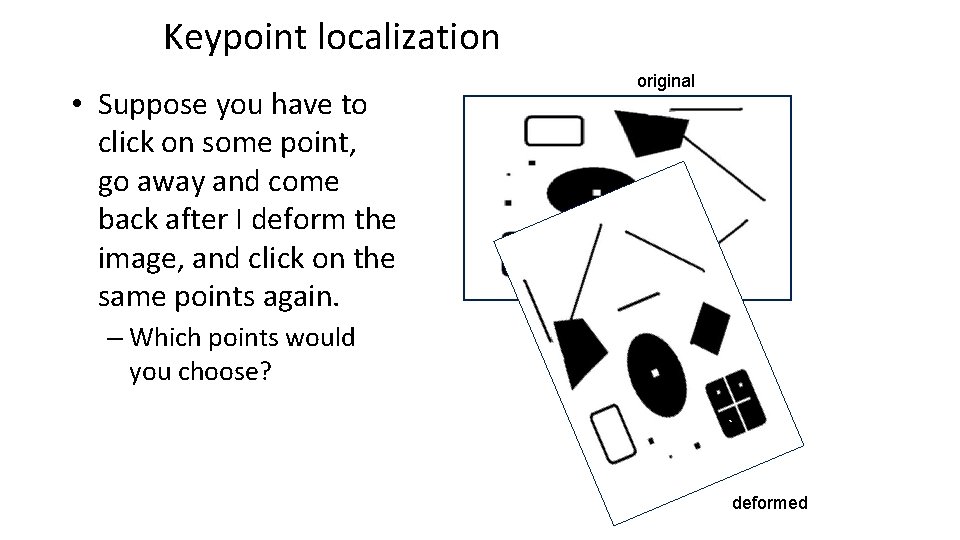

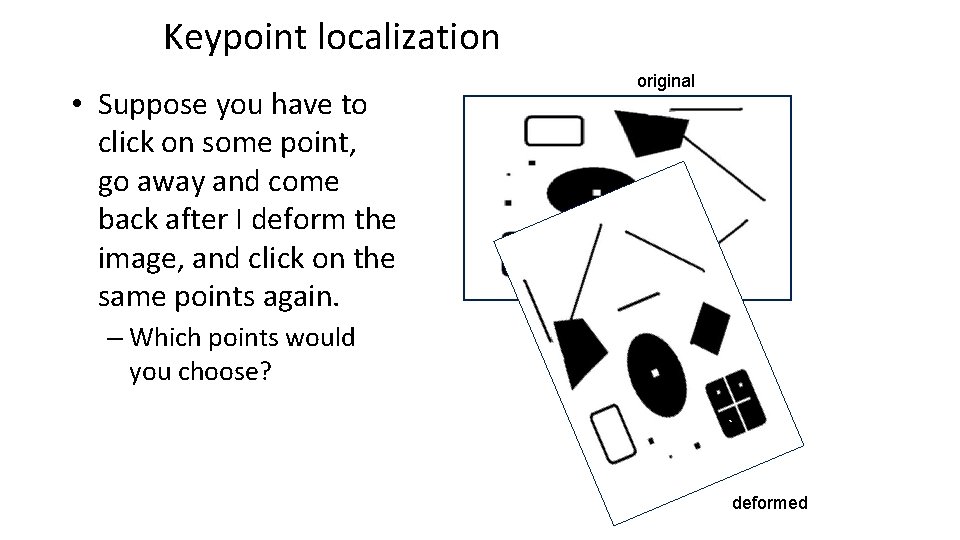

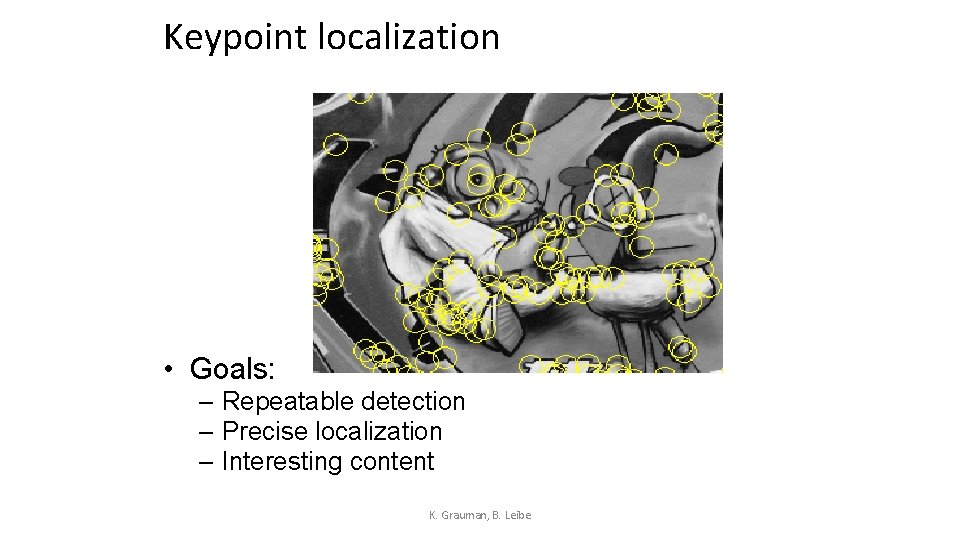

Keypoint localization
- Suppose you have to click on some point, go away, and come back after I deform the image, and click on the same points again.
  - Which points would you choose?

(Figure panels: original, deformed.)

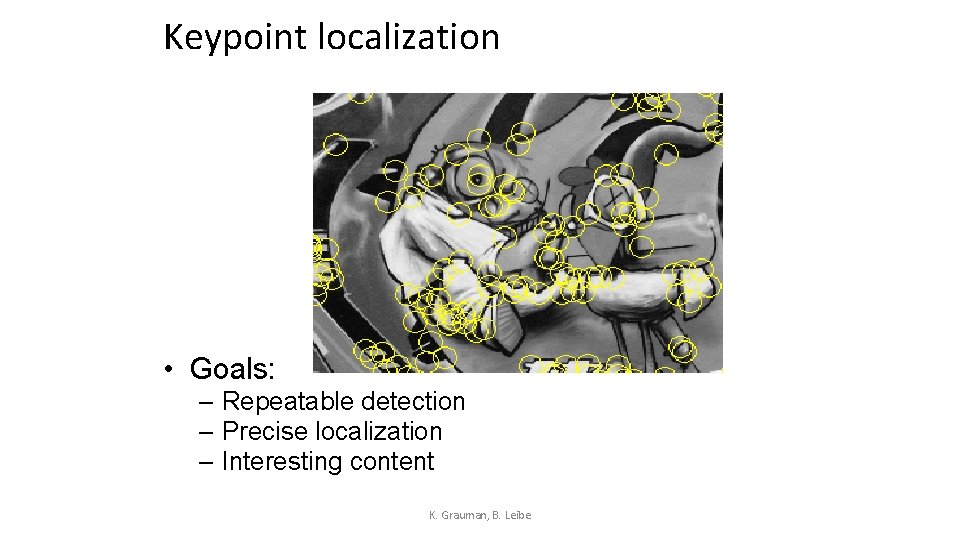

Keypoint localization
- Goals:
  - Repeatable detection
  - Precise localization
  - Interesting content

K. Grauman, B. Leibe

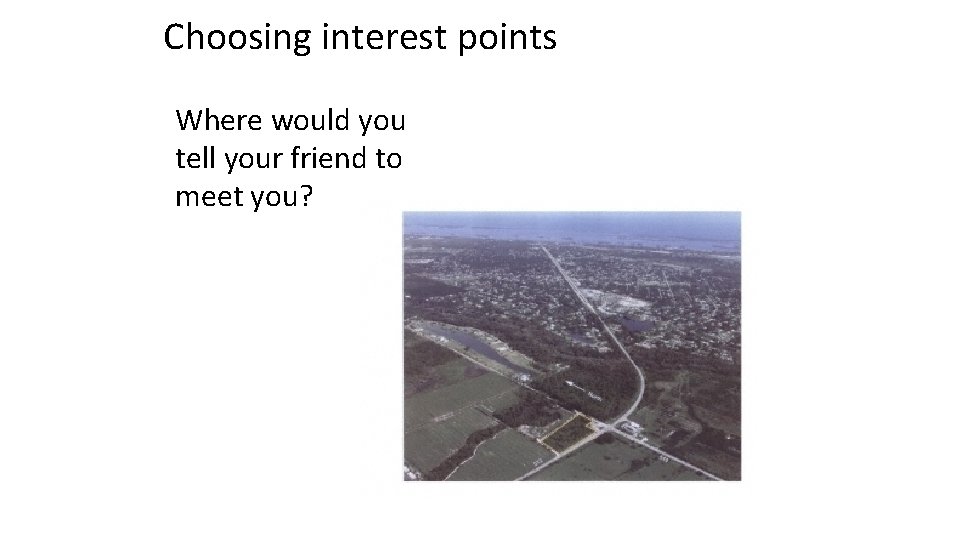

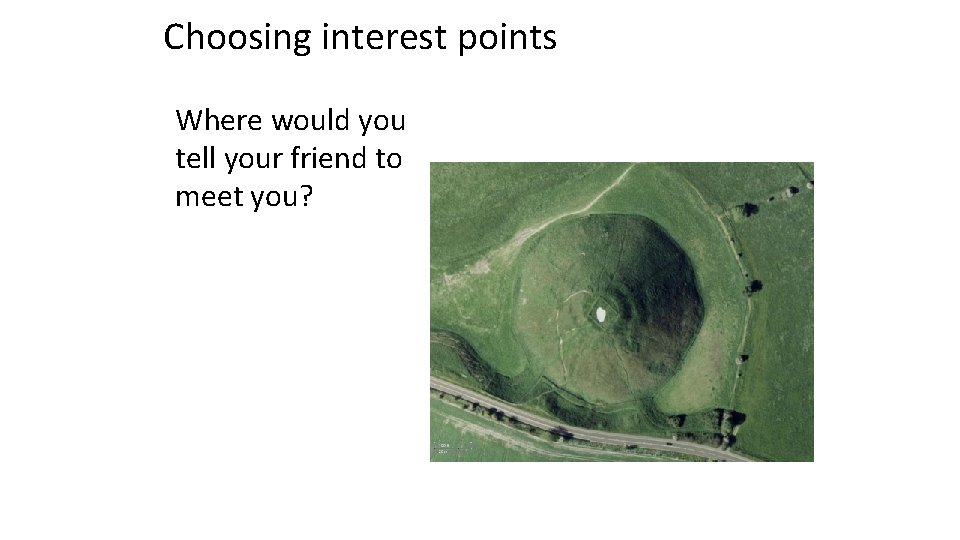

Choosing interest points Where would you tell your friend to meet you?


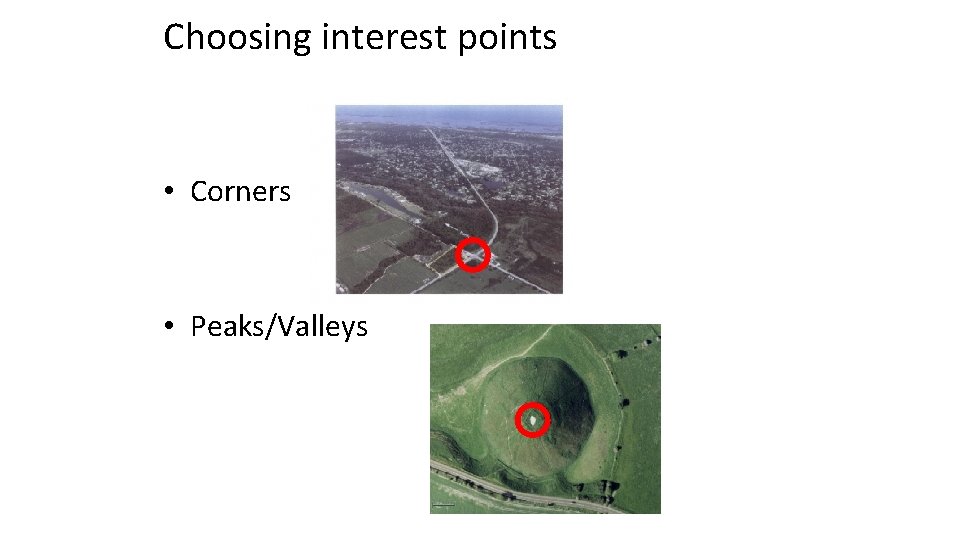

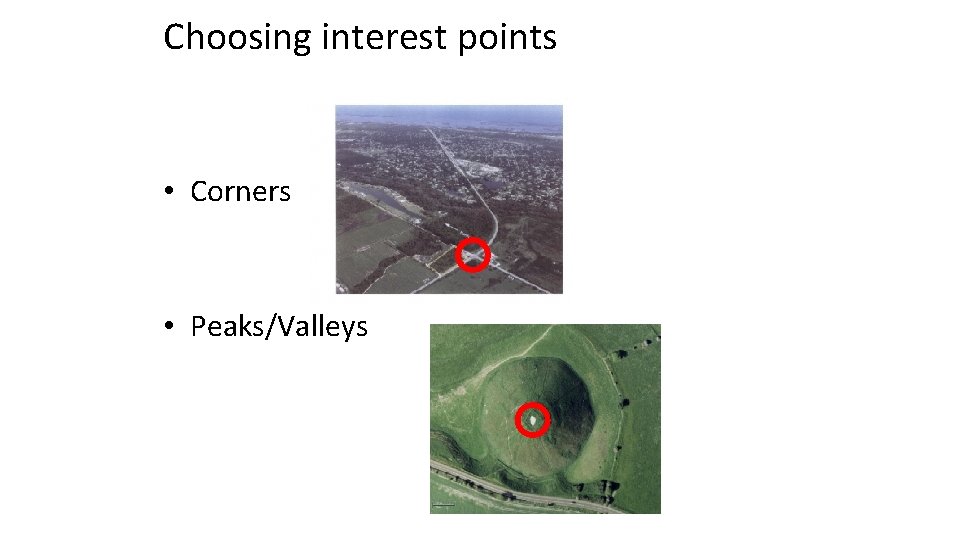

Choosing interest points
- Corners
- Peaks/Valleys

Choosing interest points
- If you wanted to meet a friend, would you say
  - a) "Let's meet on campus."
  - b) "Let's meet on Green Street."
  - c) "Let's meet at Green and Wright."
- Or if you were in a secluded area:
  - a) "Let's meet in the Plains of Akbar."
  - b) "Let's meet on the side of Mt. Doom."
  - c) "Let's meet on top of Mt. Doom."

Which patches are easier to match?

![Slide: Many Existing Detectors Available](https://slidetodoc.com/presentation_image/b8c72a6371d954938df6fb7e87b32152/image-26.jpg)

Many Existing Detectors Available
- Hessian & Harris [Beaudet '78], [Harris '88]
- Laplacian, DoG [Lindeberg '98], [Lowe 1999]
- Harris-/Hessian-Laplace [Mikolajczyk & Schmid '01]
- Harris-/Hessian-Affine [Mikolajczyk & Schmid '04]
- EBR and IBR [Tuytelaars & Van Gool '04]
- MSER [Matas '02]
- Salient Regions [Kadir & Brady '01]
- Others…

K. Grauman, B. Leibe

![Slide: Harris Detector, second moment matrix intuition](https://slidetodoc.com/presentation_image/b8c72a6371d954938df6fb7e87b32152/image-27.jpg)

Harris Detector [Harris 88]
- Second moment matrix
- Intuition: Search for local neighborhoods where the image gradient has two main directions (eigenvectors).

K. Grauman, B. Leibe
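The eigenvector intuition can be checked directly. Sum the gradient products over a patch into the 2x2 second moment matrix and look at its eigenvalues. A flat patch gives two near-zero eigenvalues, an edge gives one large eigenvalue, and a corner gives two. The following is a minimal sketch that uses an unweighted window instead of the Gaussian g(σ_I). The function name is illustrative.

```python
import numpy as np

def structure_tensor_eigs(patch):
    """Eigenvalues (ascending) of the second moment matrix summed over a patch."""
    Iy, Ix = np.gradient(np.asarray(patch, dtype=float))   # axis 0 is y, axis 1 is x
    M = np.array([[np.sum(Ix * Ix), np.sum(Ix * Iy)],
                  [np.sum(Ix * Iy), np.sum(Iy * Iy)]])
    return np.linalg.eigvalsh(M)

flat = np.zeros((9, 9))
edge = np.zeros((9, 9)); edge[:, 5:] = 1.0        # vertical edge: gradient only along x
corner = np.zeros((9, 9)); corner[5:, 5:] = 1.0   # corner: gradients along x and y

eig_flat = structure_tensor_eigs(flat)      # both zero
eig_edge = structure_tensor_eigs(edge)      # one zero, one large
eig_corner = structure_tensor_eigs(corner)  # both clearly positive
```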

![Slide: Harris Detector, second moment matrix computation steps](https://slidetodoc.com/presentation_image/b8c72a6371d954938df6fb7e87b32152/image-28.jpg)

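Harris Detector [Harris 88]
- Second moment matrix: $\mu(\sigma_I, \sigma_D) = g(\sigma_I) * \begin{bmatrix} I_x^2(\sigma_D) & I_x I_y(\sigma_D) \\ I_x I_y(\sigma_D) & I_y^2(\sigma_D) \end{bmatrix}$

1. Image derivatives: $I_x$, $I_y$
2. Square of derivatives: $I_x^2$, $I_y^2$, $I_x I_y$
3. Gaussian filter $g(\sigma_I)$: $g(I_x^2)$, $g(I_y^2)$, $g(I_x I_y)$
4. Cornerness function (both eigenvalues are strong): $har = \det[\mu(\sigma_I, \sigma_D)] - \alpha\,[\operatorname{trace}(\mu(\sigma_I, \sigma_D))]^2 = g(I_x^2)\,g(I_y^2) - [g(I_x I_y)]^2 - \alpha\,[g(I_x^2) + g(I_y^2)]^2$
5. Non-maxima suppression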

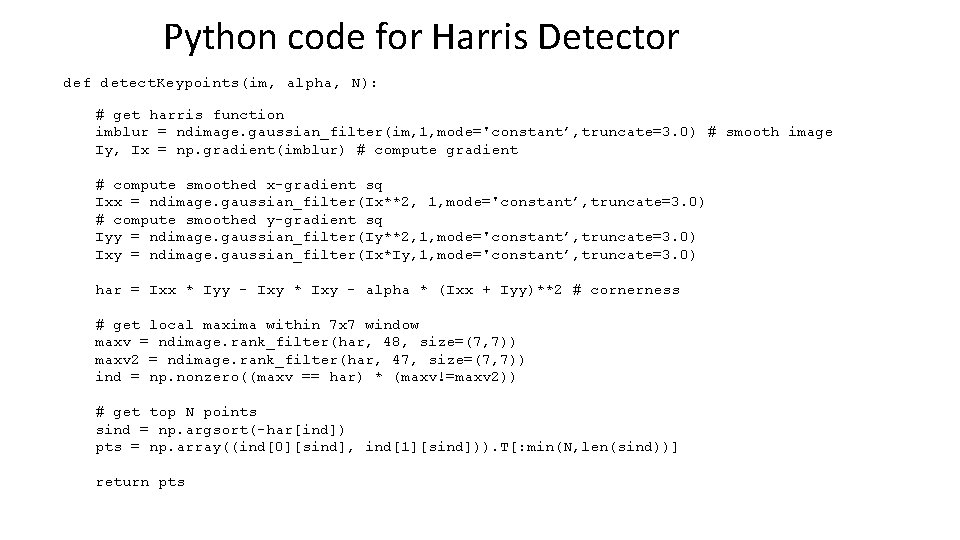

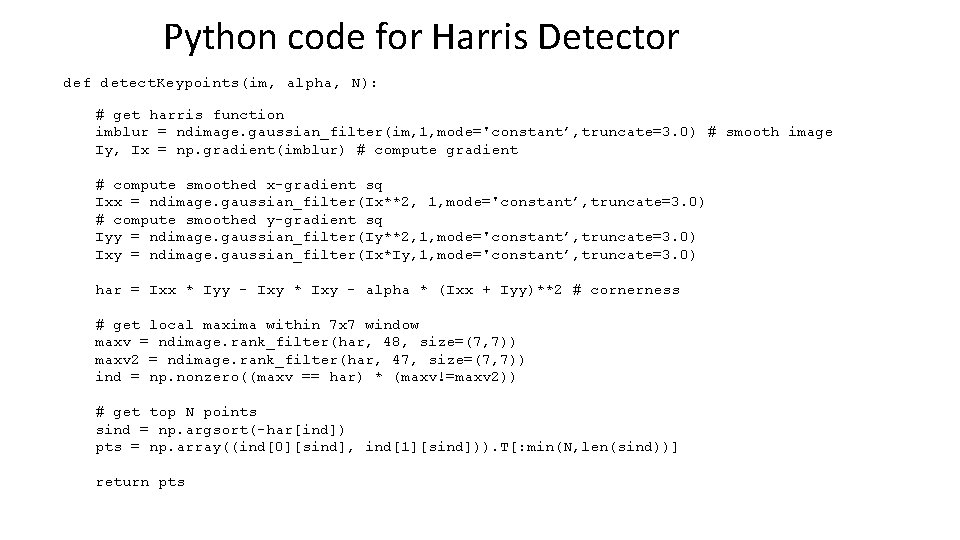

Python code for Harris Detector

    import numpy as np
    from scipy import ndimage

    def detectKeypoints(im, alpha, N):
        # work in floating point so filtering does not truncate integer images
        im = np.asarray(im, dtype=float)
        # get Harris function
        imblur = ndimage.gaussian_filter(im, 1, mode='constant', truncate=3.0)  # smooth image
        Iy, Ix = np.gradient(imblur)  # compute gradient (axis 0 = y, axis 1 = x)
        # compute smoothed x-gradient squared
        Ixx = ndimage.gaussian_filter(Ix**2, 1, mode='constant', truncate=3.0)
        # compute smoothed y-gradient squared
        Iyy = ndimage.gaussian_filter(Iy**2, 1, mode='constant', truncate=3.0)
        # compute smoothed product of x- and y-gradients
        Ixy = ndimage.gaussian_filter(Ix * Iy, 1, mode='constant', truncate=3.0)
        har = Ixx * Iyy - Ixy * Ixy - alpha * (Ixx + Iyy)**2  # cornerness
        # get local maxima within 7x7 window (49 values: rank 48 = max, rank 47 = 2nd largest)
        maxv = ndimage.rank_filter(har, 48, size=(7, 7))
        maxv2 = ndimage.rank_filter(har, 47, size=(7, 7))
        # keep pixels that are the strict (unique) maximum of their window
        ind = np.nonzero((maxv == har) * (maxv != maxv2))
        # get top N points, sorted by decreasing cornerness; each row is (row, col)
        sind = np.argsort(-har[ind])
        pts = np.array((ind[0][sind], ind[1][sind])).T[:min(N, len(sind))]
        return pts

![Slide: Harris Detector responses, a very precise corner detector](https://slidetodoc.com/presentation_image/b8c72a6371d954938df6fb7e87b32152/image-30.jpg)

Harris Detector – Responses [Harris 88] Effect: A very precise corner detector.

![Slide: Harris Detector responses](https://slidetodoc.com/presentation_image/b8c72a6371d954938df6fb7e87b32152/image-31.jpg)

Harris Detector – Responses [Harris 88]

So far: can localize in x-y, but not scale

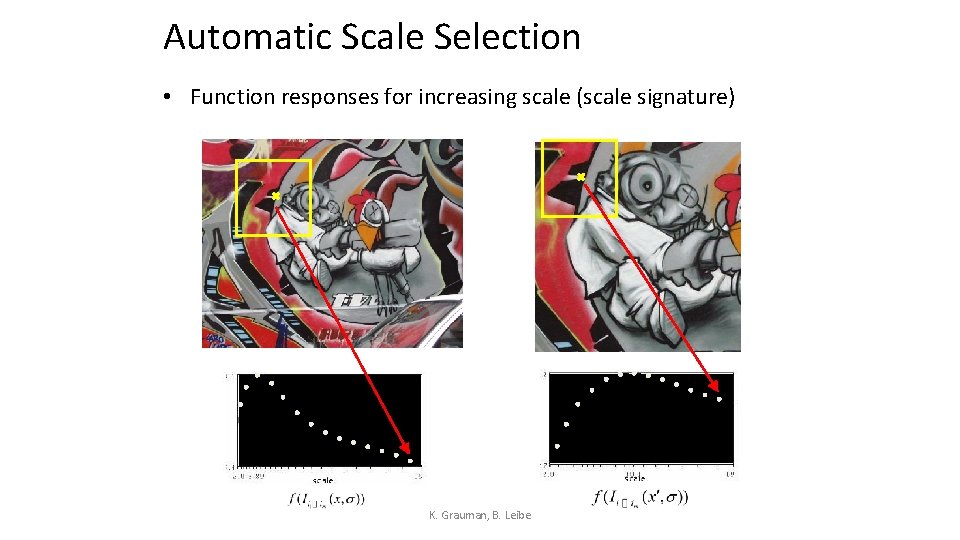

Automatic Scale Selection

How to find corresponding patch sizes?

K. Grauman, B. Leibe

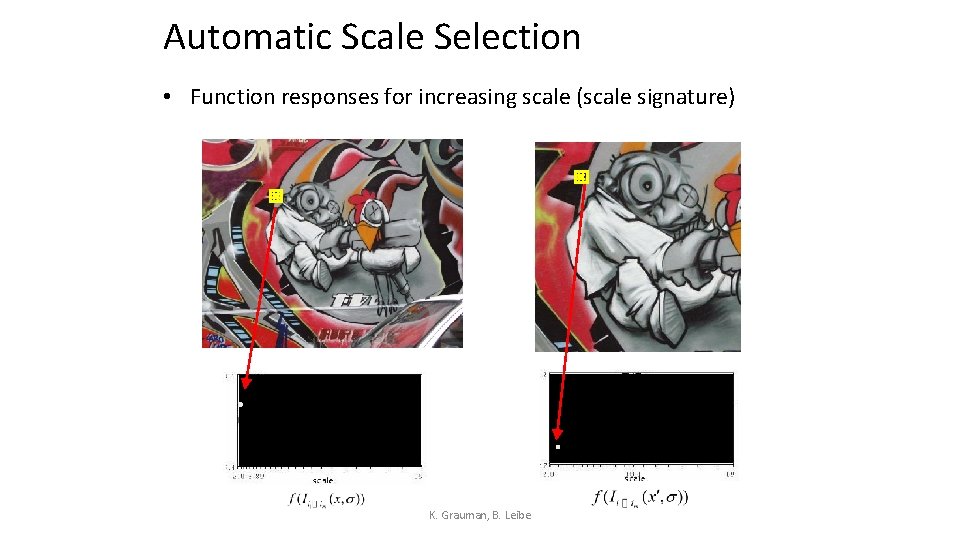

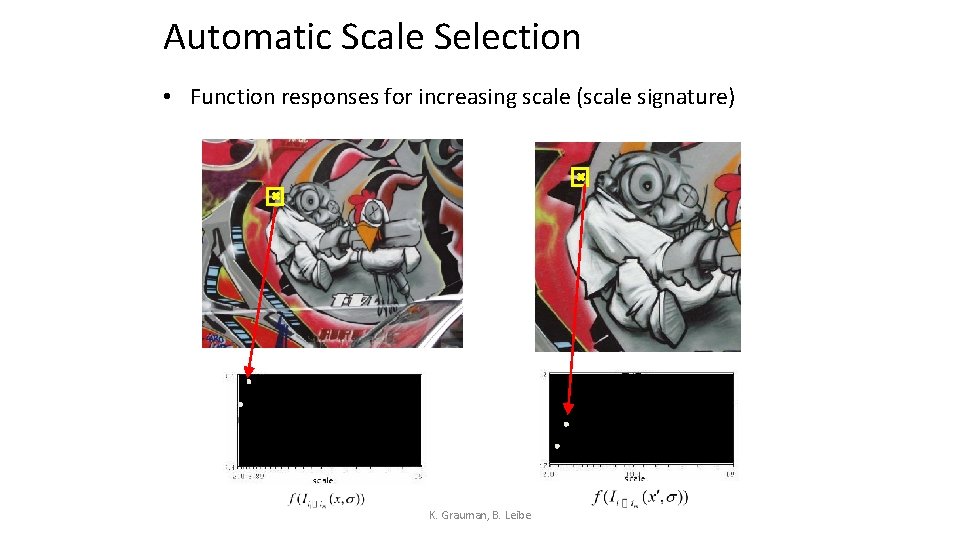

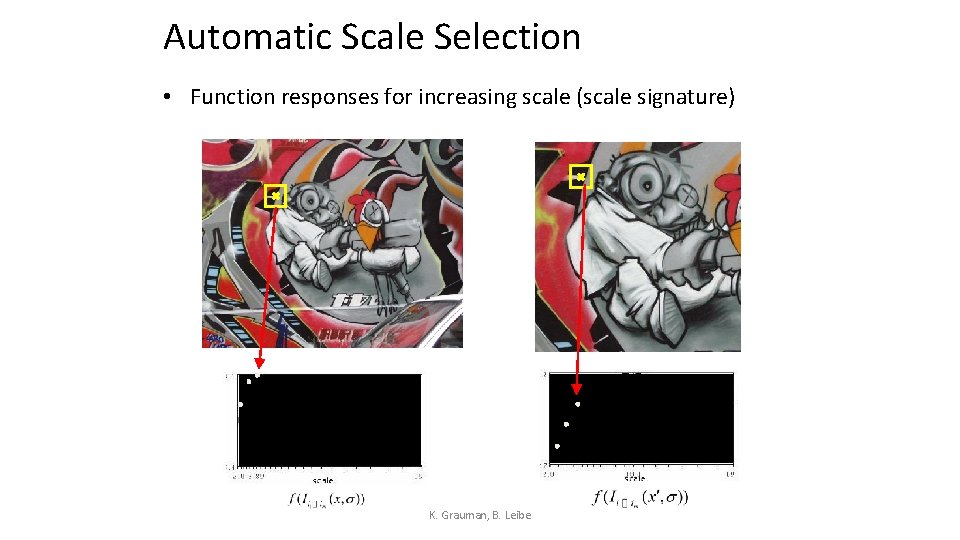

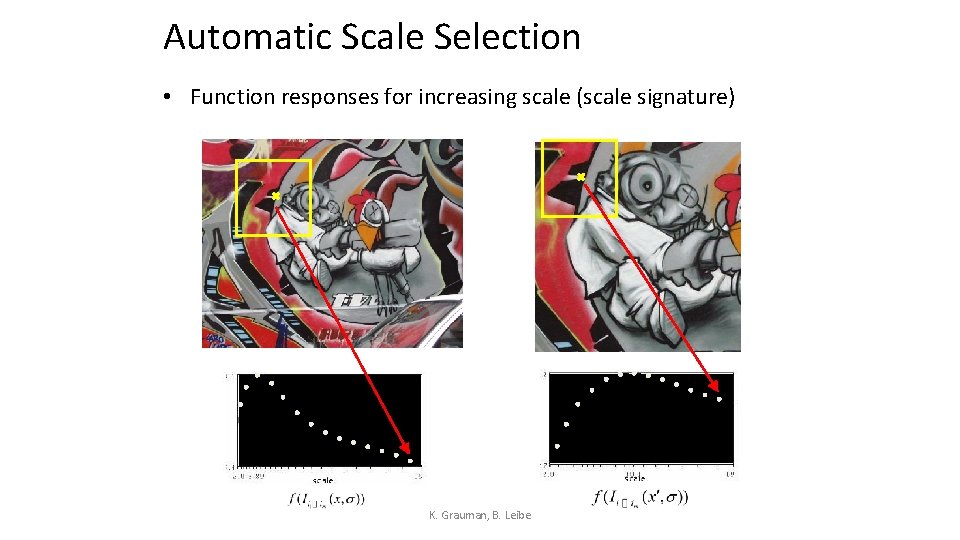

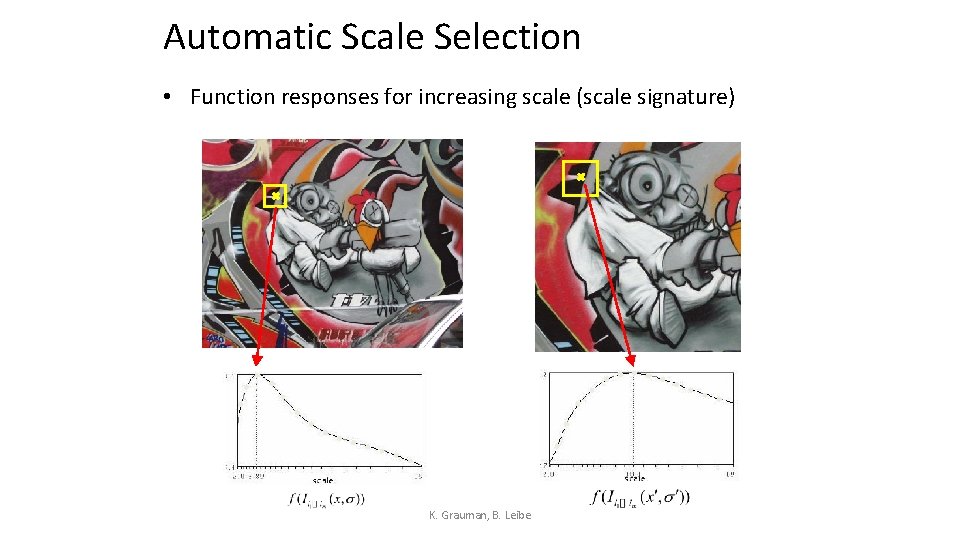

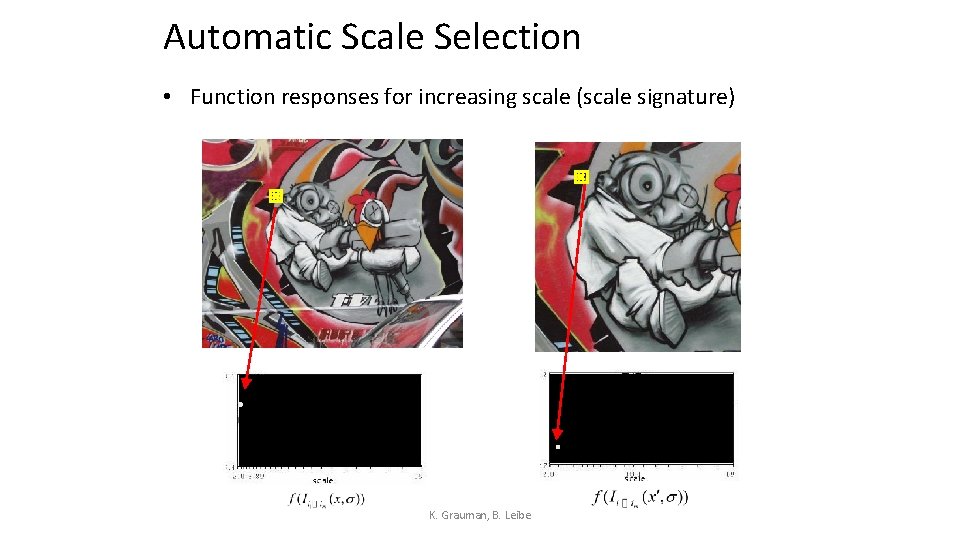

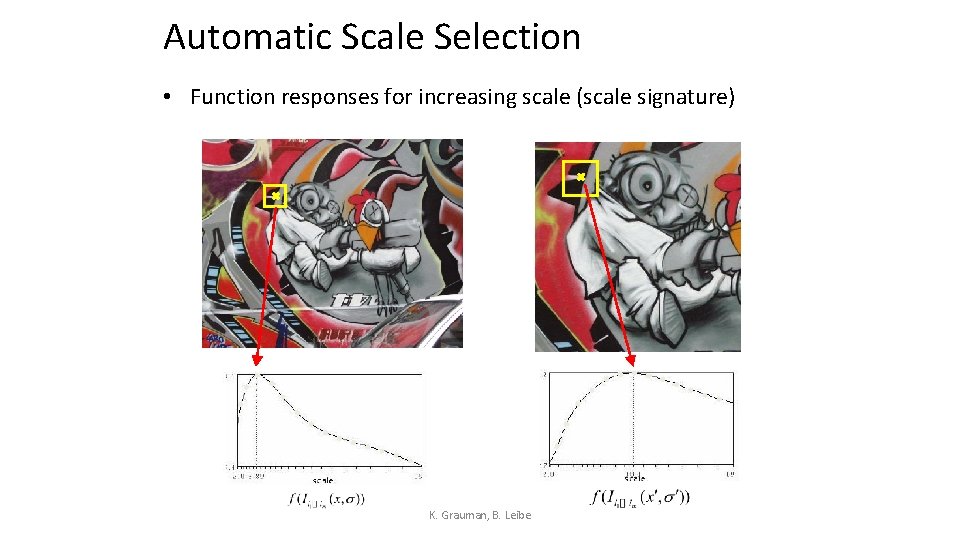

Automatic Scale Selection
- Function responses for increasing scale (scale signature)

K. Grauman, B. Leibe


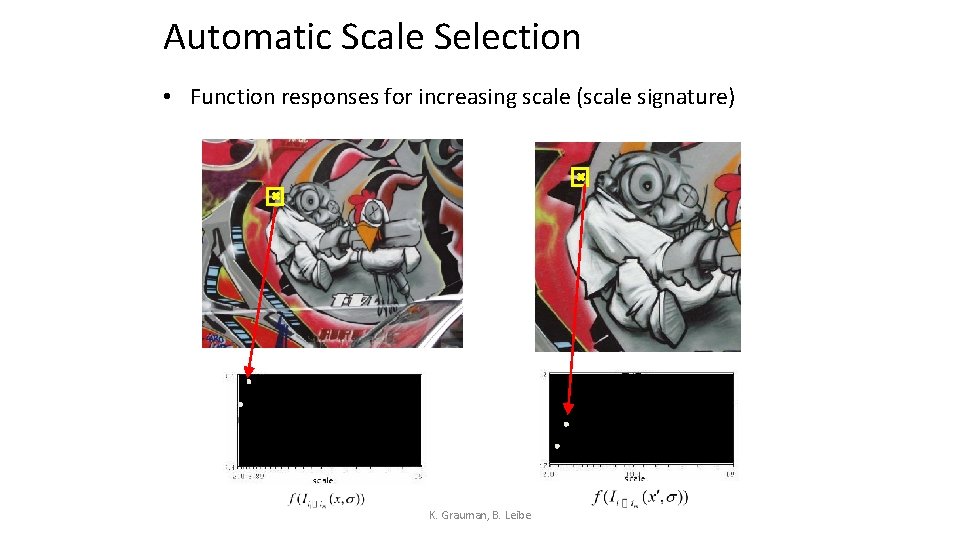


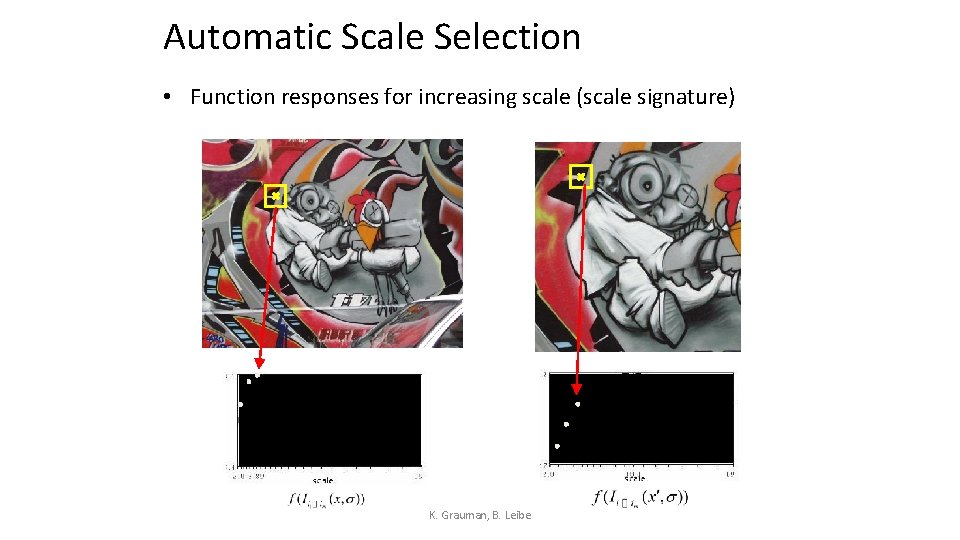


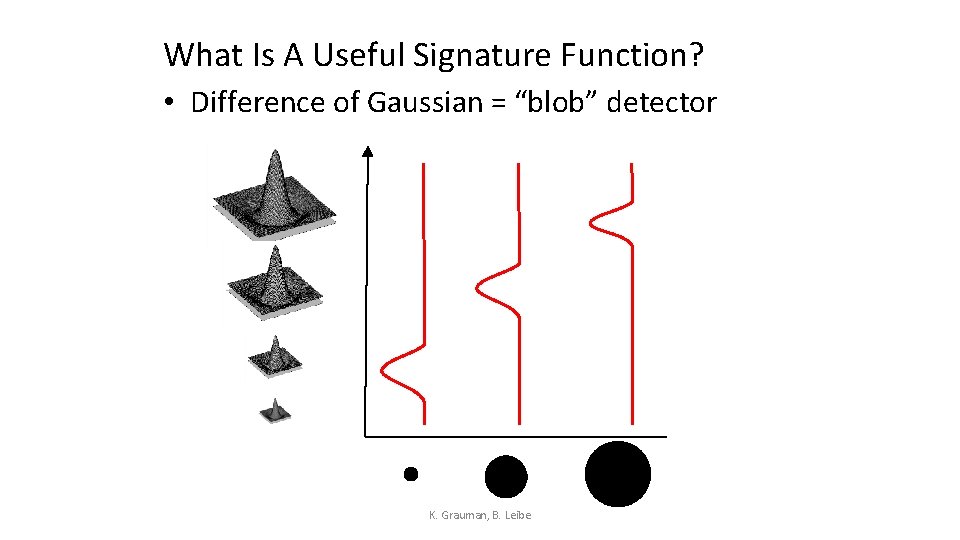

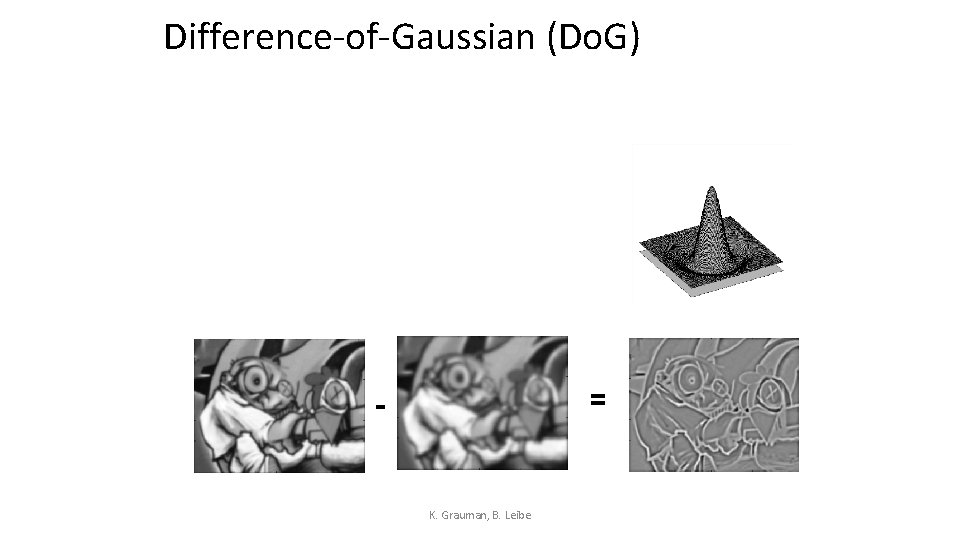

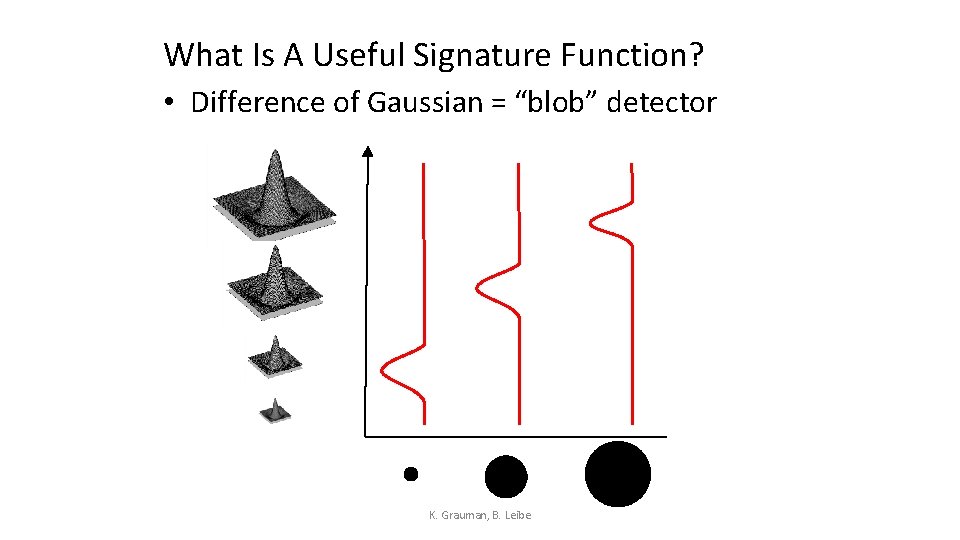
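A scale signature can be computed by evaluating a scale-normalized blob filter at one pixel for a range of σ. The characteristic scale is then the σ where the response magnitude peaks. The following is a minimal sketch that uses the scale-normalized Laplacian of Gaussian σ²∇²G via SciPy's `ndimage.gaussian_laplace`. For a disk of radius r, the continuous response at the center peaks at σ = r/√2. The function name and test image are illustrative.

```python
import numpy as np
from scipy import ndimage

def scale_signature(image, row, col, sigmas):
    """Scale-normalized LoG response sigma**2 * (LoG * image) at one pixel, per sigma."""
    image = np.asarray(image, dtype=float)
    return np.array([s**2 * ndimage.gaussian_laplace(image, s)[row, col] for s in sigmas])

# Bright disk of radius 8 pixels centered in a 128 x 128 image
yy, xx = np.mgrid[:128, :128]
disk = ((yy - 64) ** 2 + (xx - 64) ** 2 <= 8 ** 2).astype(float)

sigmas = np.linspace(1.0, 12.0, 45)
signature = scale_signature(disk, 64, 64, sigmas)
best_sigma = sigmas[np.argmax(np.abs(signature))]   # bright blob -> negative LoG, so use |.|
```

Without the σ² factor, the response would shrink steadily as σ grows, and there would be no meaningful peak to select.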

What Is A Useful Signature Function?
- Difference of Gaussian = "blob" detector

K. Grauman, B. Leibe

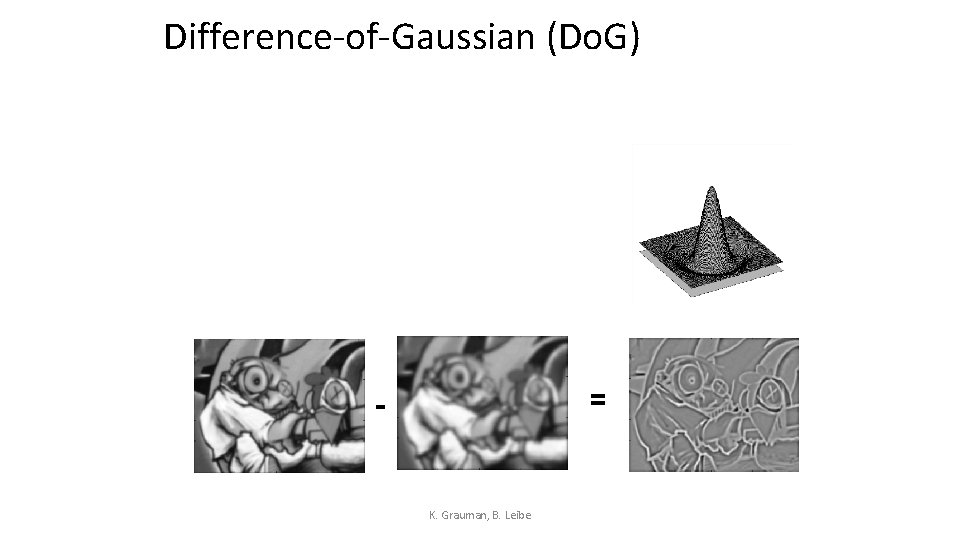

Difference-of-Gaussian (DoG): $G(x, y, k\sigma) - G(x, y, \sigma)$, a wider Gaussian minus a narrower one

K. Grauman, B. Leibe
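Why DoG works as a signature function: a short derivation (the argument given in Lowe, IJCV 2004) shows that it approximates the scale-normalized Laplacian of Gaussian. Here $G(x, y, \sigma) = \frac{1}{2\pi\sigma^2} e^{-(x^2 + y^2)/2\sigma^2}$ is the 2D Gaussian.

$$\frac{\partial G}{\partial \sigma} = \sigma \nabla^2 G \quad\Longrightarrow\quad \sigma \nabla^2 G \approx \frac{G(x, y, k\sigma) - G(x, y, \sigma)}{k\sigma - \sigma}$$

$$G(x, y, k\sigma) - G(x, y, \sigma) \approx (k - 1)\,\sigma^2 \nabla^2 G$$

The factor $(k-1)$ is the same at every scale when $k$ is fixed, so it does not move the location of extrema in $(x, y, \sigma)$.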

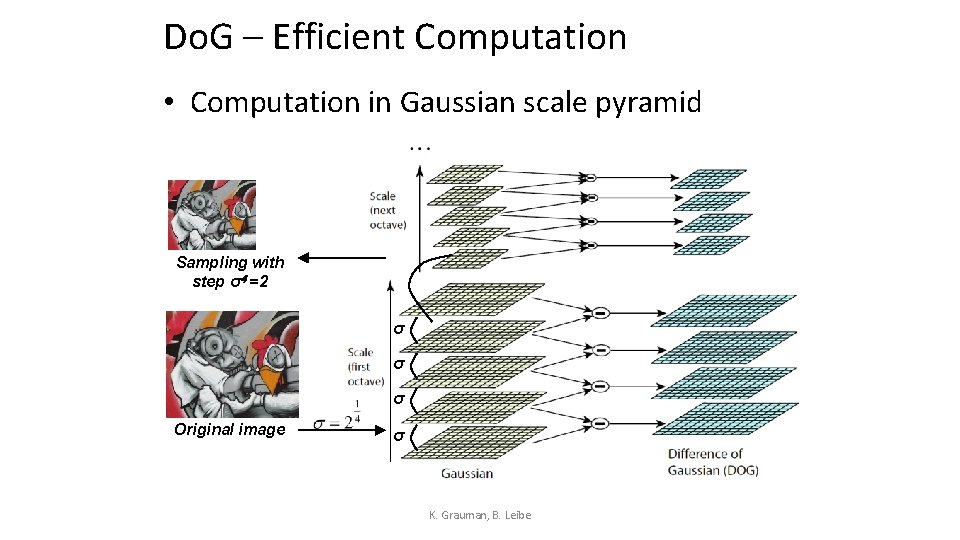

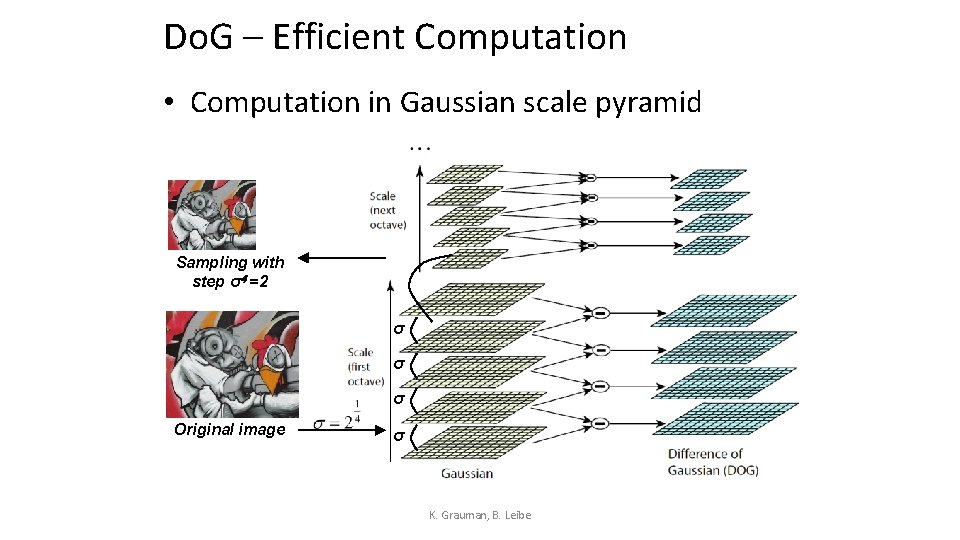

DoG: Efficient Computation
- Computation in Gaussian scale pyramid
- Sampling with step $\sigma^4 = 2$

(Figure: Gaussian pyramid built from the original image, with blur σ increasing level by level.)

K. Grauman, B. Leibe
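The pyramid idea can be sketched as follows. Within an octave, blur grows by a constant factor k per level. With four levels, k⁴ = 2, so σ doubles over the octave. Adjacent blurred images are subtracted to form DoG levels. The level with doubled σ is then subsampled by 2 and seeds the next octave. This is a minimal sketch that assumes an unblurred input and omits Lowe's extra levels per octave (he uses s + 3 blurred images). The parameter defaults are illustrative.

```python
import numpy as np
from scipy import ndimage

def dog_octaves(image, sigma0=1.6, levels_per_octave=4, num_octaves=3):
    """Difference-of-Gaussian stacks, one (levels_per_octave, H, W) array per octave."""
    k = 2.0 ** (1.0 / levels_per_octave)
    img = np.asarray(image, dtype=float)
    base_sigma = 0.0                     # assumption: the input image is unblurred
    octaves = []
    for _ in range(num_octaves):
        blurred = []
        for i in range(levels_per_octave + 1):
            target = sigma0 * k**i
            # Blur only by the amount still missing (Gaussian variances add).
            extra = np.sqrt(max(target**2 - base_sigma**2, 0.0))
            blurred.append(ndimage.gaussian_filter(img, extra) if extra > 0 else img.copy())
        octaves.append(np.stack([b - a for a, b in zip(blurred[:-1], blurred[1:])]))
        img = blurred[-1][::2, ::2]      # blur 2*sigma0 here equals sigma0 after subsampling
        base_sigma = sigma0
    return octaves

rng = np.random.default_rng(0)
pyramid = dog_octaves(rng.random((64, 64)))
```

Each octave works at half the resolution of the previous one, so the total cost stays close to that of the first octave.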

Results: Lowe's DoG

K. Grauman, B. Leibe

![Slide: Orientation Normalization](https://slidetodoc.com/presentation_image/b8c72a6371d954938df6fb7e87b32152/image-44.jpg)

Orientation Normalization [Lowe, SIFT, 1999]
- Compute orientation histogram
- Select dominant orientation
- Normalize: rotate to fixed orientation

(Figure: orientation histogram over the range 0 to 2π.)

T. Tuytelaars, B. Leibe
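A dominant orientation can be found by binning gradient angles into a 36-bin histogram. Each vote is weighted by gradient magnitude and a Gaussian window centered on the patch. Every bin close to the highest peak is kept. The following is a minimal sketch: it returns bin centers without parabolic refinement. The window width (half the patch size) is an assumption; Lowe ties it to the keypoint scale.

```python
import numpy as np

def dominant_orientations(patch, num_bins=36, peak_ratio=0.8):
    """Dominant gradient orientations of a patch, in radians within [0, 2*pi)."""
    patch = np.asarray(patch, dtype=float)
    gy, gx = np.gradient(patch)
    mag = np.hypot(gx, gy)
    ang = np.mod(np.arctan2(gy, gx), 2 * np.pi)
    h, w = patch.shape
    yy, xx = np.mgrid[:h, :w]
    sigma = 0.5 * max(h, w)   # assumed window width
    window = np.exp(-((yy - (h - 1) / 2) ** 2 + (xx - (w - 1) / 2) ** 2) / (2 * sigma**2))
    hist, edges = np.histogram(ang, bins=num_bins, range=(0, 2 * np.pi),
                               weights=mag * window)
    centers = 0.5 * (edges[:-1] + edges[1:])
    return centers[hist >= peak_ratio * hist.max()]

ramp = np.tile(np.arange(16.0), (16, 1))   # brightness increases to the right
orientations = dominant_orientations(ramp)
```

For the ramp, every gradient points along +x, so the single dominant orientation is the center of the first bin (π/36). Rotating the patch by minus that angle would normalize it.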

Available at a web site near you…
- For most local feature detectors, executables are available online:
  - http://robots.ox.ac.uk/~vgg/research/affine
  - http://www.cs.ubc.ca/~lowe/keypoints/
  - http://www.vision.ee.ethz.ch/~surf

K. Grauman, B. Leibe

How do we describe the keypoint?

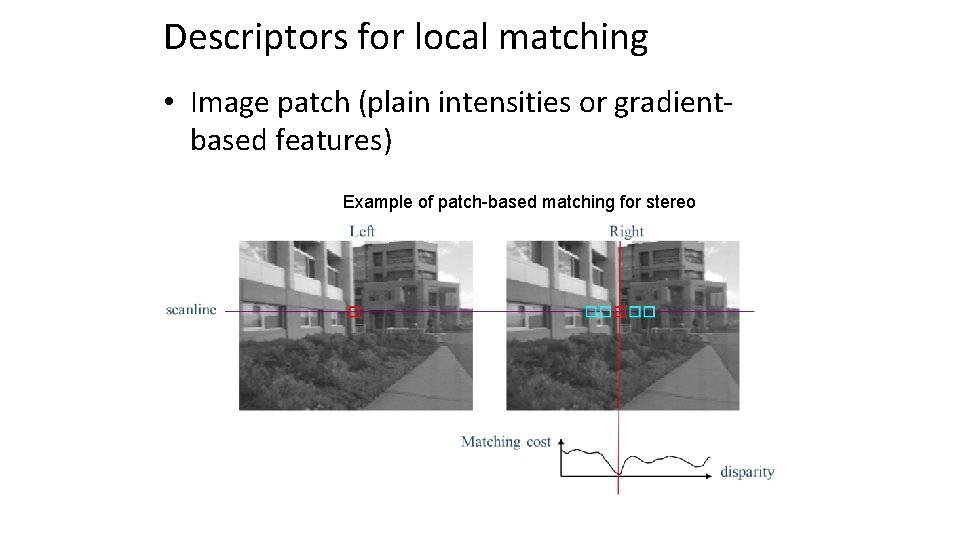

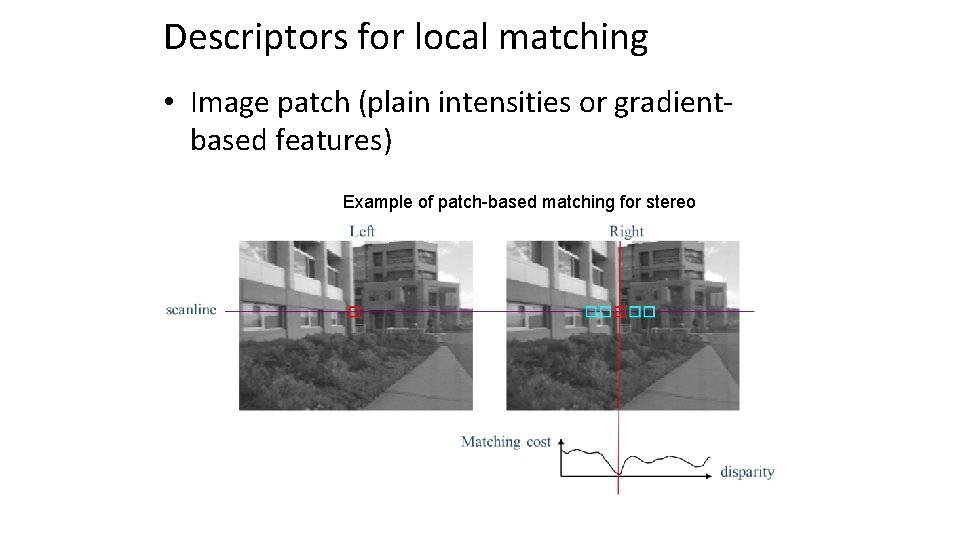

Descriptors for local matching
- Image patch (plain intensities or gradient-based features)

(Figure: example of patch-based matching for stereo.)
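Plain-intensity patches can be compared with normalized cross-correlation (NCC), which ignores brightness offset and contrast scale. In stereo, the search for a match runs along the same row of the other image. The following is a minimal sketch that assumes a rectified stereo pair, so the match for left pixel (row, col) lies at (row, col - d) in the right image. The function names are illustrative.

```python
import numpy as np

def ncc(p, q):
    """Normalized cross-correlation of two equally sized patches, in [-1, 1]."""
    p = (p - p.mean()) / (p.std() + 1e-8)
    q = (q - q.mean()) / (q.std() + 1e-8)
    return float(np.mean(p * q))

def match_along_row(left, right, row, col, half=3, max_disp=16):
    """Disparity d that maximizes NCC between left[row, col] and right[row, col - d]."""
    ref = left[row - half:row + half + 1, col - half:col + half + 1]
    scores = []
    for d in range(max_disp + 1):
        c = col - d
        if c - half < 0:
            break   # candidate window would leave the image
        cand = right[row - half:row + half + 1, c - half:c + half + 1]
        scores.append(ncc(ref, cand))
    return int(np.argmax(scores))

rng = np.random.default_rng(0)
left = rng.random((40, 60))
right = np.roll(left, -5, axis=1)   # synthetic pair with a disparity of 5 pixels
disparity = match_along_row(left, right, row=20, col=30)
```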

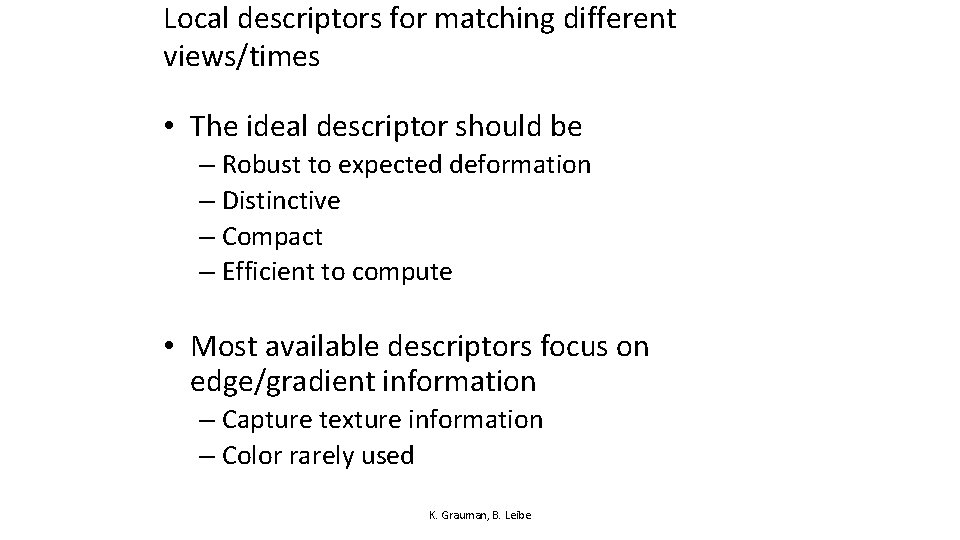

Local descriptors for matching different views/times
- The ideal descriptor should be
  - Robust to expected deformation
  - Distinctive
  - Compact
  - Efficient to compute
- Most available descriptors focus on edge/gradient information
  - Capture texture information
  - Color rarely used

K. Grauman, B. Leibe

![Slide: SIFT Descriptor, histogram of oriented gradients](https://slidetodoc.com/presentation_image/b8c72a6371d954938df6fb7e87b32152/image-49.jpg)

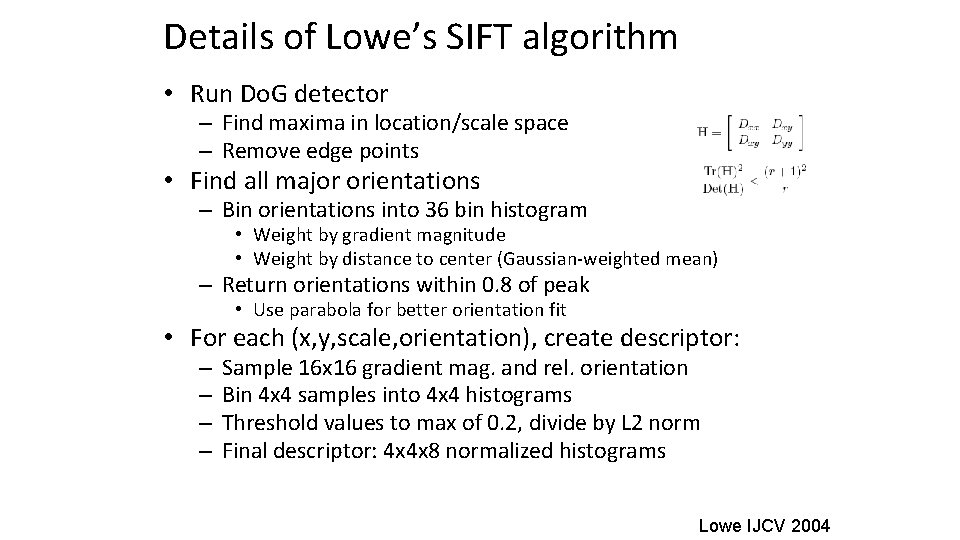

Local Descriptors: SIFT Descriptor [Lowe, ICCV 1999]
- Histogram of oriented gradients
  - Captures important texture information
  - Robust to small translations / affine deformations

K. Grauman, B. Leibe

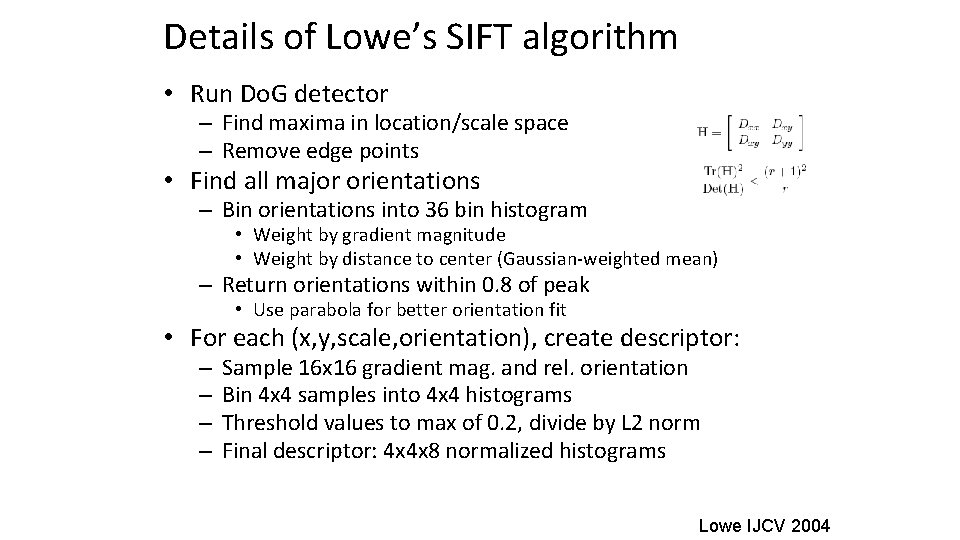

Details of Lowe's SIFT algorithm
- Run DoG detector
  - Find maxima in location/scale space
  - Remove edge points
- Find all major orientations
  - Bin orientations into a 36-bin histogram
    - Weight by gradient magnitude
    - Weight by distance to center (Gaussian-weighted mean)
  - Return orientations within 80% of the peak
    - Use a parabola for better orientation fit
- For each (x, y, scale, orientation), create a descriptor:
  - Sample 16x16 gradient magnitude and relative orientation
  - Bin 4x4 samples into 4x4 histograms
  - Threshold values to a max of 0.2, divide by L2 norm
  - Final descriptor: 4x4x8 normalized histograms

Lowe IJCV 2004
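The descriptor step can be sketched for a single 16x16 patch. Each gradient votes its magnitude into one of 8 orientation bins, in the 4x4 cell it falls into, giving 4x4x8 = 128 values. The vector is normalized to unit length, values are clipped at 0.2, and the result is renormalized, which is the order described in Lowe (IJCV 2004). This minimal sketch assumes the patch is already rotated to its dominant orientation and resampled at the keypoint scale. It also omits Lowe's Gaussian weighting and trilinear interpolation between bins.

```python
import numpy as np

def sift_like_descriptor(patch):
    """Simplified 128-D SIFT-style descriptor of a 16x16, orientation-normalized patch."""
    patch = np.asarray(patch, dtype=float)
    if patch.shape != (16, 16):
        raise ValueError("expected a 16x16 patch")
    gy, gx = np.gradient(patch)
    mag = np.hypot(gx, gy)
    # orientation bin 0..7; the % 8 guards against an angle that rounds to exactly 2*pi
    ori_bin = (np.mod(np.arctan2(gy, gx), 2 * np.pi) // (2 * np.pi / 8)).astype(int) % 8
    desc = np.zeros((4, 4, 8))
    for r in range(16):
        for c in range(16):
            desc[r // 4, c // 4, ori_bin[r, c]] += mag[r, c]   # 4x4 samples per cell
    desc = desc.ravel()
    desc /= np.linalg.norm(desc) + 1e-12   # unit length
    desc = np.minimum(desc, 0.2)           # limit influence of large gradients
    desc /= np.linalg.norm(desc) + 1e-12   # renormalize
    return desc

rng = np.random.default_rng(0)
patch = rng.random((16, 16))
d = sift_like_descriptor(patch)
d_brighter = sift_like_descriptor(2.0 * patch + 5.0)   # contrast and brightness change
```

Gradients remove the brightness offset and normalization removes the contrast factor, so `d` and `d_brighter` match.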

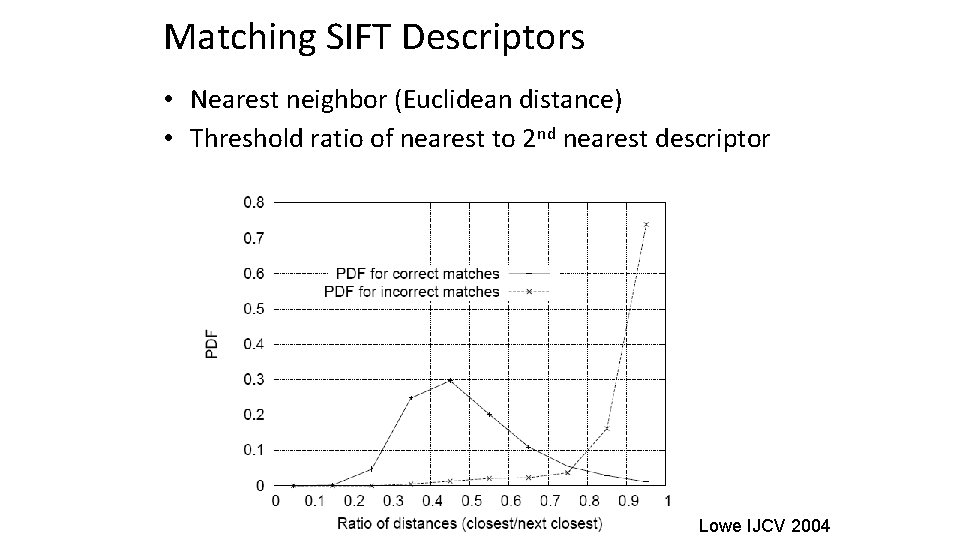

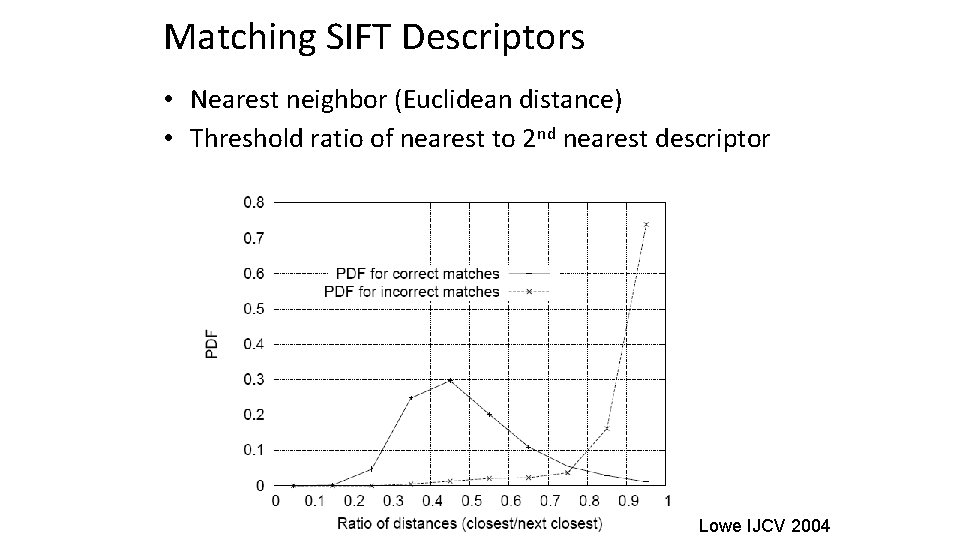

Matching SIFT Descriptors
- Nearest neighbor (Euclidean distance)
- Threshold ratio of nearest to 2nd nearest descriptor

Lowe IJCV 2004
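The ratio test accepts a nearest-neighbor match only when it is clearly better than the second-best candidate. An ambiguous descriptor, one that is about equally close to two others, is rejected. The following is a minimal brute-force sketch that uses 0.8 as the ratio threshold (the value Lowe reports in IJCV 2004). The function name is illustrative. Large descriptor sets usually need an approximate nearest-neighbor index instead of all pairwise distances.

```python
import numpy as np

def match_ratio_test(desc1, desc2, ratio=0.8):
    """(i, j) pairs where desc2[j] is desc1[i]'s nearest neighbor and passes d1 < ratio * d2."""
    d = np.linalg.norm(desc1[:, None, :] - desc2[None, :, :], axis=2)  # pairwise distances
    matches = []
    for i, row in enumerate(d):
        j1, j2 = np.argsort(row)[:2]   # nearest and second-nearest
        if row[j1] < ratio * row[j2]:
            matches.append((i, int(j1)))
    return matches

rng = np.random.default_rng(1)
desc2 = rng.random((10, 128))
desc1 = np.vstack([desc2[3] + 0.01 * rng.normal(size=128),   # noisy copy of #3
                   desc2[7] + 0.01 * rng.normal(size=128),   # noisy copy of #7
                   (desc2[0] + desc2[1]) / 2])               # ambiguous: halfway between two
matches = match_ratio_test(desc1, desc2)
```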

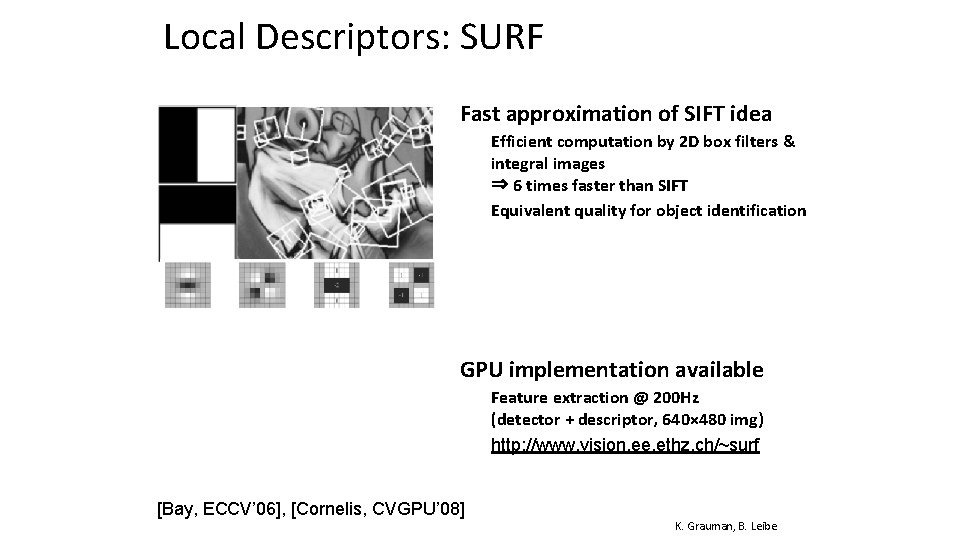

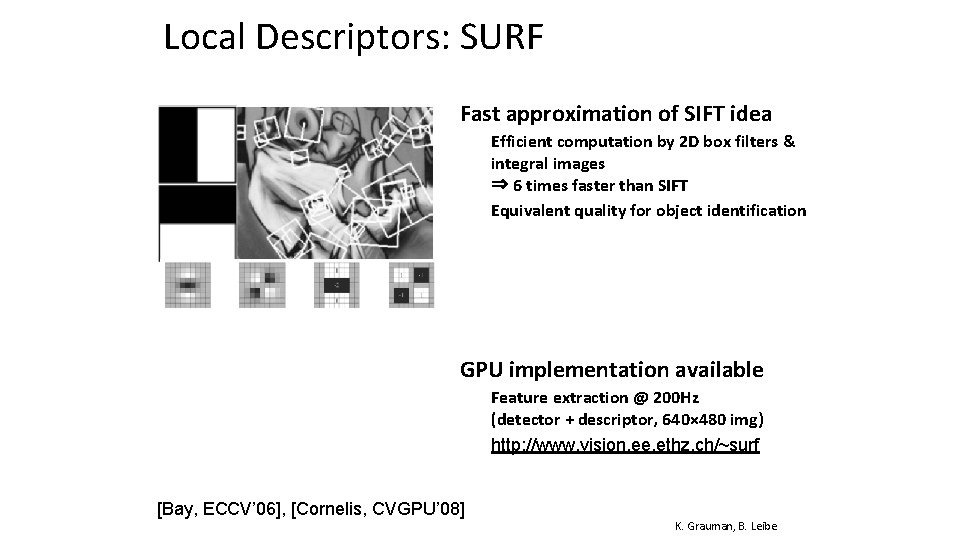

Local Descriptors: SURF
- Fast approximation of SIFT idea
  - Efficient computation by 2D box filters & integral images ⇒ 6 times faster than SIFT
  - Equivalent quality for object identification
- GPU implementation available
  - [Bay, ECCV'06], [Cornelis, CVGPU'08]
  - Feature extraction @ 200 Hz (detector + descriptor, 640×480 img)
  - http://www.vision.ee.ethz.ch/~surf

K. Grauman, B. Leibe
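The speed of box filters comes from the integral image. Once cumulative sums are computed, the sum over any axis-aligned rectangle costs four lookups, whatever its size. The following is a minimal sketch with illustrative function names. It pads the integral image with a zero row and column so box sums need no special cases at the border.

```python
import numpy as np

def integral_image(img):
    """S[y, x] = sum of img[:y, :x], with a zero first row and column."""
    S = np.zeros((img.shape[0] + 1, img.shape[1] + 1))
    S[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)
    return S

def box_sum(S, y0, x0, y1, x1):
    """Sum of img[y0:y1, x0:x1] in O(1) time from four lookups."""
    return S[y1, x1] - S[y0, x1] - S[y1, x0] + S[y0, x0]

rng = np.random.default_rng(0)
img = rng.random((20, 30))
S = integral_image(img)
total = box_sum(S, 2, 3, 10, 17)
```

A box-filter approximation of a derivative is then just a difference of such sums. For example, the right half of a window minus the left half approximates an x-derivative response.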

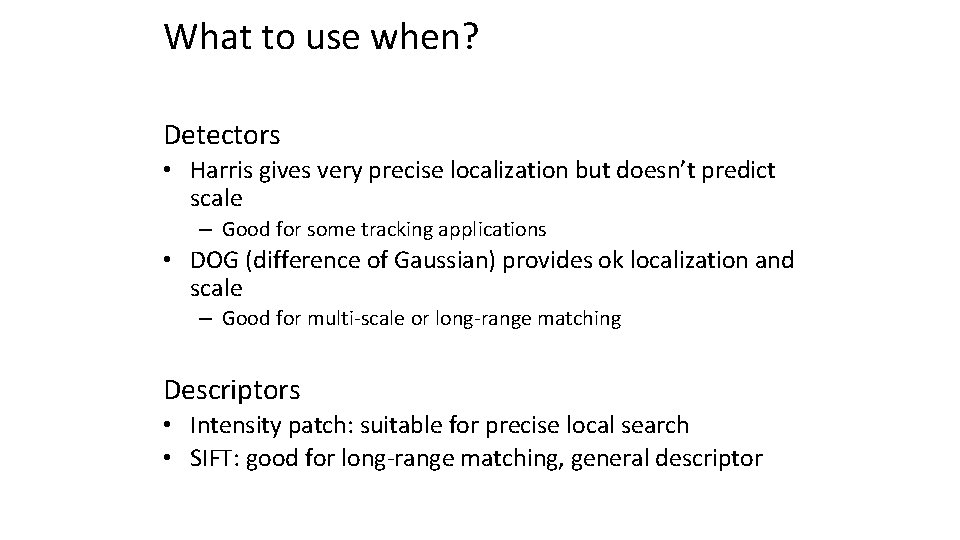

What to use when?

Detectors
- Harris gives very precise localization but doesn't predict scale
  - Good for some tracking applications
- DoG (difference of Gaussian) provides ok localization and scale
  - Good for multi-scale or long-range matching

Descriptors
- Intensity patch: suitable for precise local search
- SIFT: good for long-range matching, general descriptor

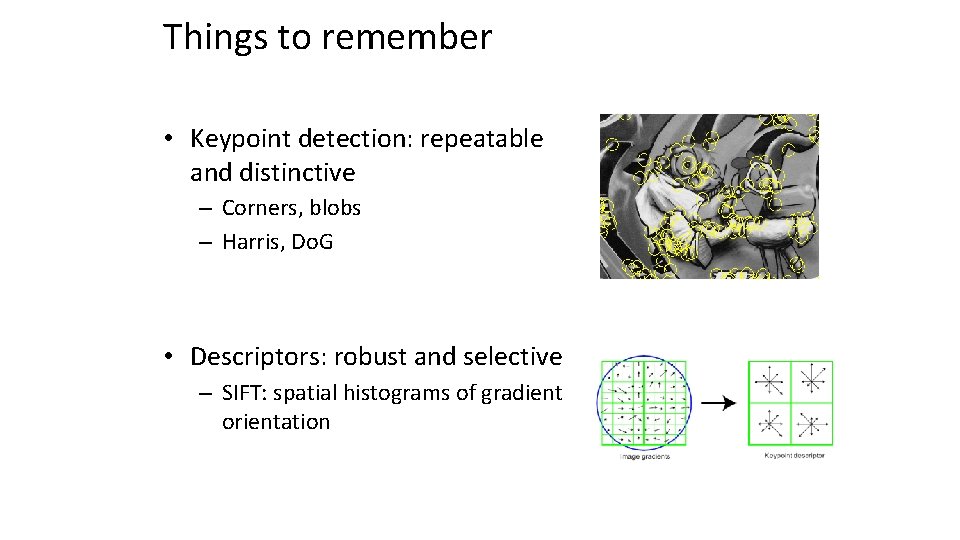

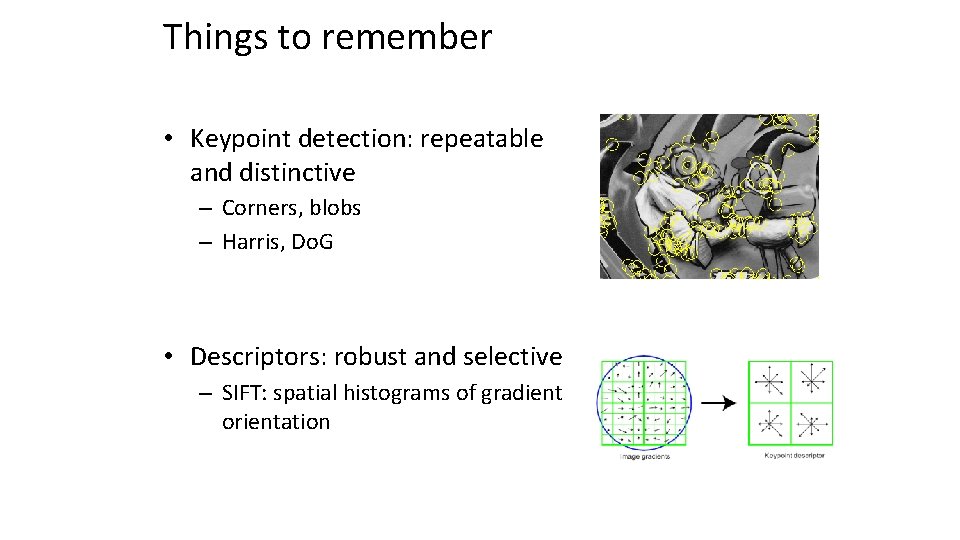

Things to remember
- Keypoint detection: repeatable and distinctive
  - Corners, blobs
  - Harris, DoG
- Descriptors: robust and selective
  - SIFT: spatial histograms of gradient orientation

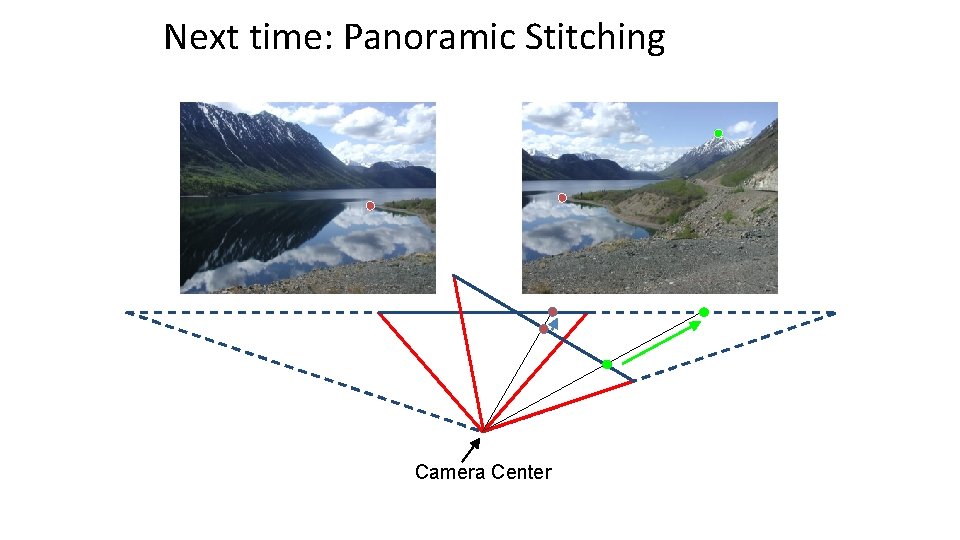

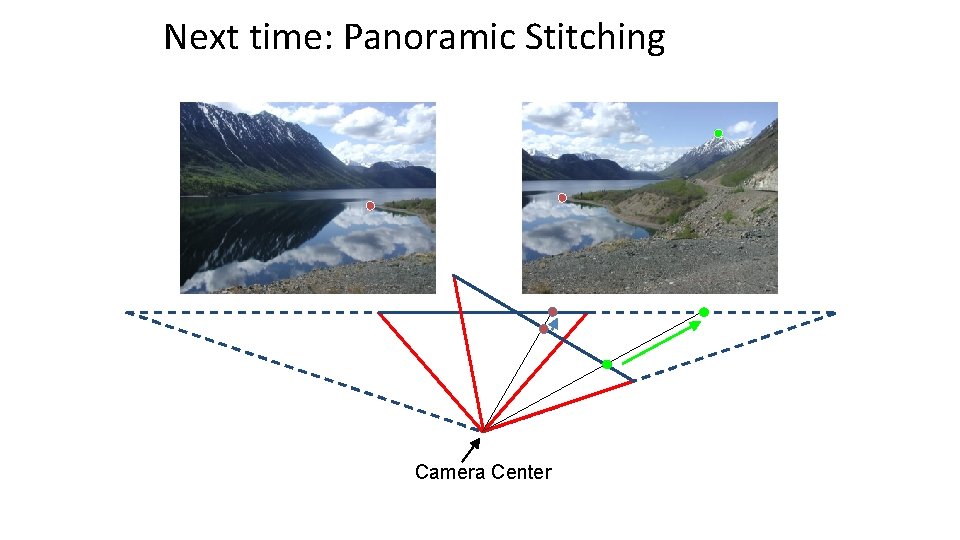

Next time: Panoramic Stitching

Galatea of the spheres

Galatea of the spheres Galatea of the spheres meaning

Galatea of the spheres meaning La galatea de que trata

La galatea de que trata Purpúreas rosas sobre galatea

Purpúreas rosas sobre galatea Nominal v. real interest rates

Nominal v. real interest rates Nominal.interest rate

Nominal.interest rate 0 965

0 965 Straddle positioning

Straddle positioning Point of difference and point of parity

Point of difference and point of parity Từ ngữ thể hiện lòng nhân hậu

Từ ngữ thể hiện lòng nhân hậu Diễn thế sinh thái là

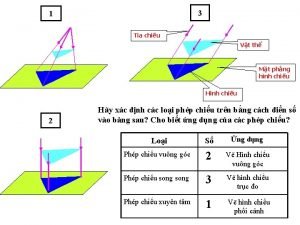

Diễn thế sinh thái là Vẽ hình chiếu vuông góc của vật thể sau

Vẽ hình chiếu vuông góc của vật thể sau Lp html

Lp html V cc cc

V cc cc Làm thế nào để 102-1=99

Làm thế nào để 102-1=99 Alleluia hat len nguoi oi

Alleluia hat len nguoi oi Lời thề hippocrates

Lời thề hippocrates Khi nào hổ con có thể sống độc lập

Khi nào hổ con có thể sống độc lập Tư thế worms-breton

Tư thế worms-breton đại từ thay thế

đại từ thay thế Quá trình desamine hóa có thể tạo ra

Quá trình desamine hóa có thể tạo ra Công thức tiính động năng

Công thức tiính động năng Thế nào là mạng điện lắp đặt kiểu nổi

Thế nào là mạng điện lắp đặt kiểu nổi Dạng đột biến một nhiễm là

Dạng đột biến một nhiễm là Bổ thể

Bổ thể Vẽ hình chiếu đứng bằng cạnh của vật thể

Vẽ hình chiếu đứng bằng cạnh của vật thể độ dài liên kết

độ dài liên kết Các môn thể thao bắt đầu bằng tiếng chạy

Các môn thể thao bắt đầu bằng tiếng chạy Thiếu nhi thế giới liên hoan

Thiếu nhi thế giới liên hoan Sự nuôi và dạy con của hươu

Sự nuôi và dạy con của hươu điện thế nghỉ

điện thế nghỉ Thế nào là sự mỏi cơ

Thế nào là sự mỏi cơ Một số thể thơ truyền thống

Một số thể thơ truyền thống Trời xanh đây là của chúng ta thể thơ

Trời xanh đây là của chúng ta thể thơ Số nguyên là gì

Số nguyên là gì Tỉ lệ cơ thể trẻ em

Tỉ lệ cơ thể trẻ em Phối cảnh

Phối cảnh Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Thế nào là hệ số cao nhất

Thế nào là hệ số cao nhất Sơ đồ cơ thể người

Sơ đồ cơ thể người Tư thế ngồi viết

Tư thế ngồi viết Cái miệng nó xinh thế

Cái miệng nó xinh thế Hình ảnh bộ gõ cơ thể búng tay

Hình ảnh bộ gõ cơ thể búng tay đặc điểm cơ thể của người tối cổ

đặc điểm cơ thể của người tối cổ Mật thư anh em như thể tay chân

Mật thư anh em như thể tay chân ưu thế lai là gì

ưu thế lai là gì Tư thế ngồi viết

Tư thế ngồi viết Thẻ vin

Thẻ vin Chó sói

Chó sói Thể thơ truyền thống

Thể thơ truyền thống Các châu lục và đại dương trên thế giới

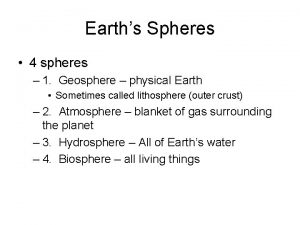

Các châu lục và đại dương trên thế giới Earths 4 spheres

Earths 4 spheres How to find volume of prisms and cylinders

How to find volume of prisms and cylinders What is biosphere and geosphere

What is biosphere and geosphere What are the three spheres of quality?

What are the three spheres of quality? Argument spheres

Argument spheres