High Performance Switches and Routers: Theory and Practice

- Slides: 126

High Performance Switches and Routers: Theory and Practice. Hot Interconnects 7, August 20, 1999, Stanford University. Nick McKeown: Assistant Professor of Electrical Engineering and Computer Science (nickm@stanford.edu, http://www.stanford.edu/~nickm); CTO and Founder, Abrizio Inc. (nickm@abrizio.com, http://www.abrizio.com). Copyright 1999. All Rights Reserved

Tutorial Outline • Introduction: What is a Packet Switch? • Packet Lookup and Classification: Where does a packet go next? • Switching Fabrics: How does the packet get there?

Introduction: What is a Packet Switch? • Basic Architectural Components • Some Example Packet Switches • The Evolution of IP Routers

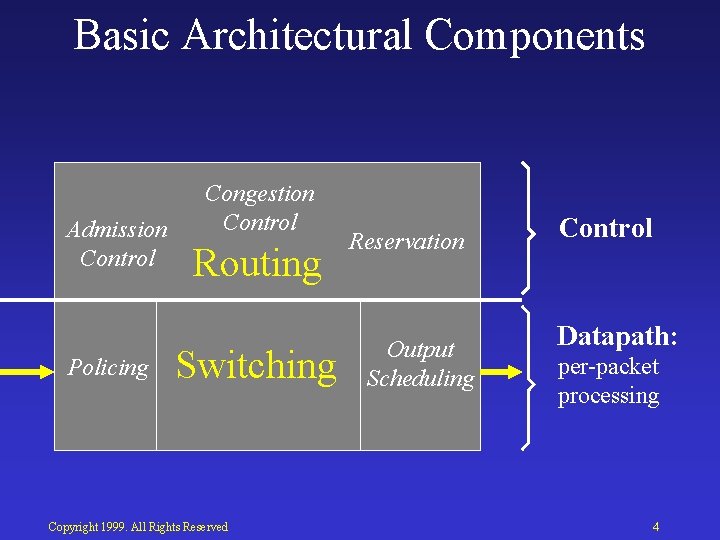

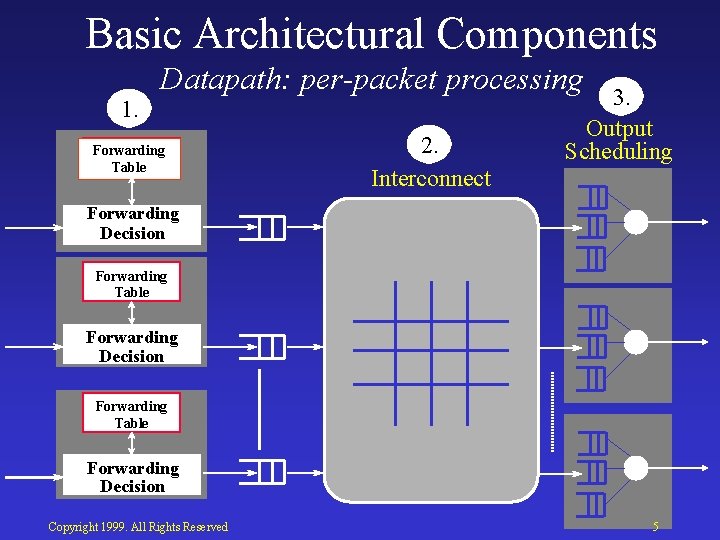

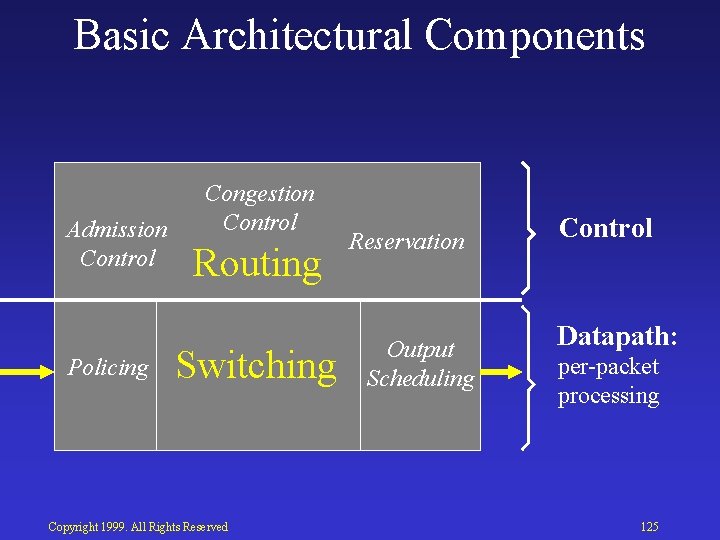

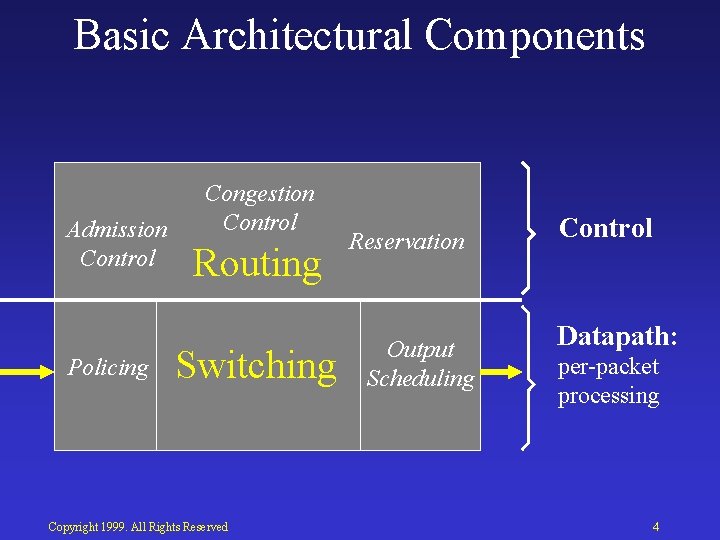

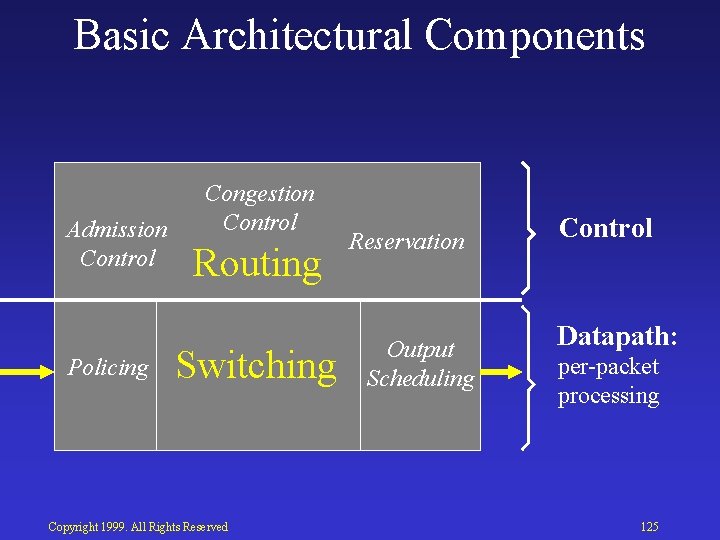

Basic Architectural Components (figure labels): Control; Datapath: per-packet processing; Routing, Admission Control, Congestion Control, Reservation, Policing, Switching, Output Scheduling

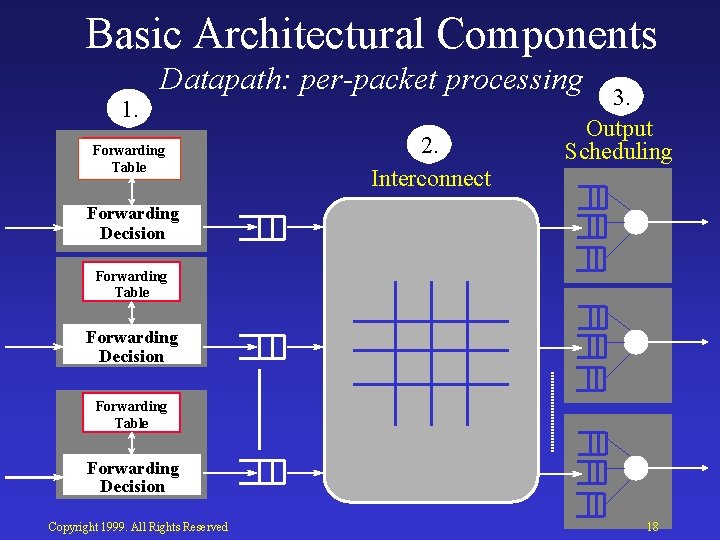

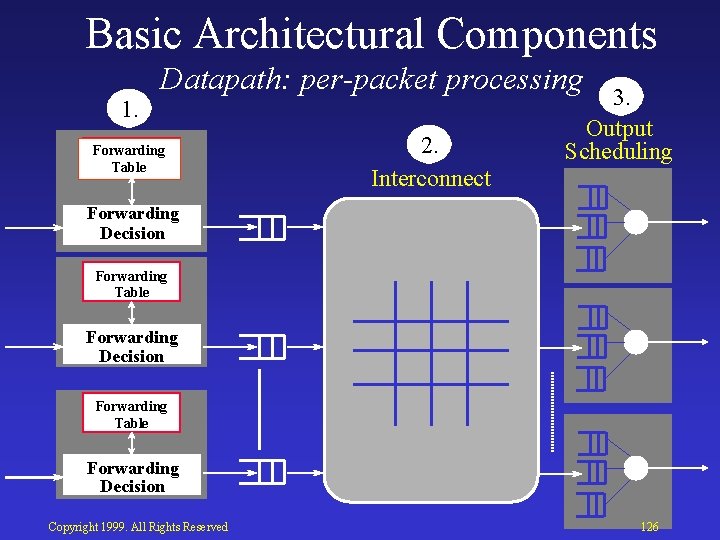

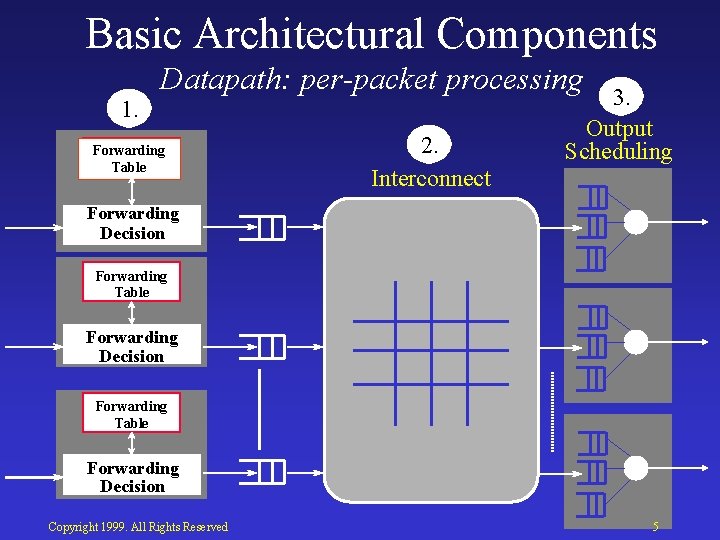

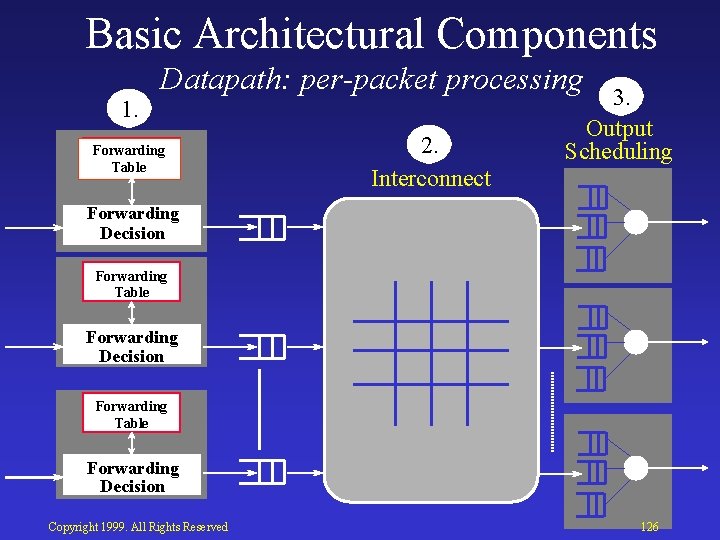

Basic Architectural Components. Datapath: per-packet processing. 1. Forwarding Decision (using a Forwarding Table) 2. Interconnect 3. Output Scheduling

Where high performance packet switches are used (figure labels): The Internet Core, Carrier Class Core Router, ATM Switch, Frame Relay Switch, Edge Router, Enterprise WAN access & Enterprise Campus Switch

Introduction: What is a Packet Switch? • Basic Architectural Components • Some Example Packet Switches • The Evolution of IP Routers

ATM Switch • Look up cell VCI/VPI in VC table. • Replace old VCI/VPI with new. • Forward cell to outgoing interface. • Transmit cell onto link.

Ethernet Switch • Look up frame DA in forwarding table. – If known, forward to correct port. – If unknown, broadcast to all ports. • Learn SA of incoming frame. • Forward frame to outgoing interface. • Transmit frame onto link.
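The Ethernet switch steps above can be sketched in a few lines. This is an illustrative model only: the class name `LearningSwitch`, the port numbering, and the choice to exclude the arrival port when flooding are assumptions, not details from the slide.

```python
class LearningSwitch:
    """Toy model of the lookup/learn steps of an Ethernet switch."""

    def __init__(self, num_ports):
        self.num_ports = num_ports
        self.table = {}  # MAC address -> port on which it was last seen

    def receive(self, in_port, src, dst):
        """Return the list of ports the frame is sent to."""
        out = self.table.get(dst)      # look up DA in the forwarding table
        self.table[src] = in_port      # learn SA of the incoming frame
        if out is None:
            # Unknown DA: flood (assumption: every port except the arrival port).
            return [p for p in range(self.num_ports) if p != in_port]
        return [out]


sw = LearningSwitch(4)
first = sw.receive(0, "aa", "bb")   # "bb" not yet learned, so the frame is flooded
reply = sw.receive(1, "bb", "aa")   # "aa" was learned on port 0
again = sw.receive(0, "aa", "bb")   # "bb" was learned on port 1
```

A real switch would also age out entries and drop frames whose destination sits on the arrival port; both are left out here.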

IP Router • Look up packet DA in forwarding table. – If known, forward to correct port. – If unknown, drop packet. • Decrement TTL, update header checksum. • Forward packet to outgoing interface. • Transmit packet onto link.
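The "decrement TTL, update header checksum" step can be illustrated as follows. This is a minimal sketch that recomputes the whole IPv4 header checksum rather than updating it incrementally; the function names and the sample 20-byte header are assumptions chosen for illustration.

```python
import struct
from typing import Optional


def checksum(header: bytes) -> int:
    """One's-complement sum of 16-bit words, as used by the IPv4 header."""
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def decrement_ttl(header: bytes) -> Optional[bytes]:
    """Return the updated header, or None if the TTL has expired."""
    hdr = bytearray(header)
    if hdr[8] <= 1:                  # byte 8 is the TTL field
        return None
    hdr[8] -= 1
    hdr[10:12] = b"\x00\x00"         # zero the checksum field before recomputing
    struct.pack_into("!H", hdr, 10, checksum(bytes(hdr)))
    return bytes(hdr)


# Sample header (assumed for illustration): TTL 0x40, protocol UDP.
sample = bytes.fromhex("45000073000040004011b861c0a80001c0a800c7")
updated = decrement_ttl(sample)
```

A header with a valid checksum sums to zero under `checksum`, which gives a quick sanity check on both the input and the output.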

Introduction: What is a Packet Switch? • Basic Architectural Components • Some Example Packet Switches • The Evolution of IP Routers

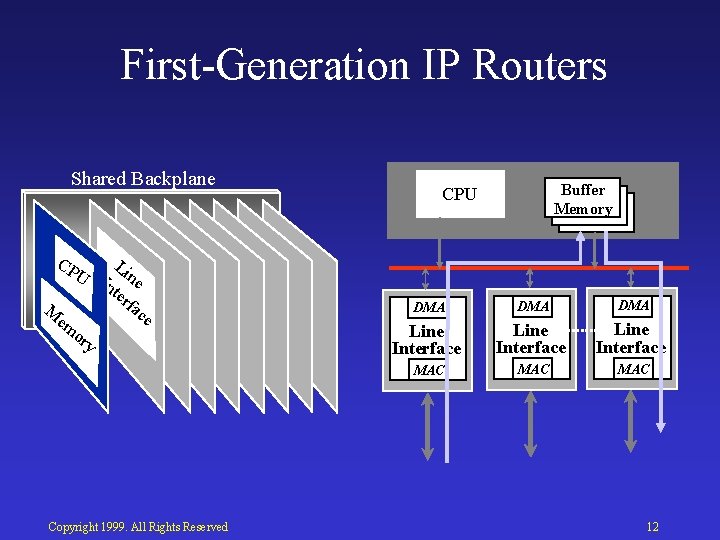

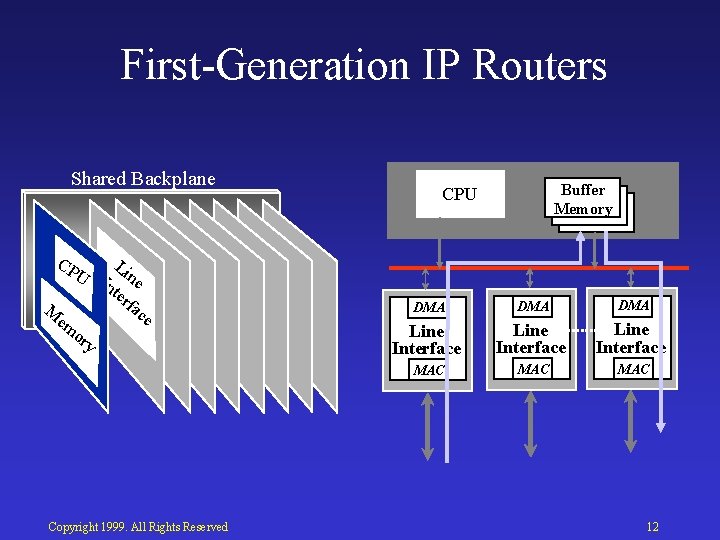

First Generation IP Routers (figure labels): Shared Backplane, CPU, Buffer Memory, Line Interface (DMA, MAC)

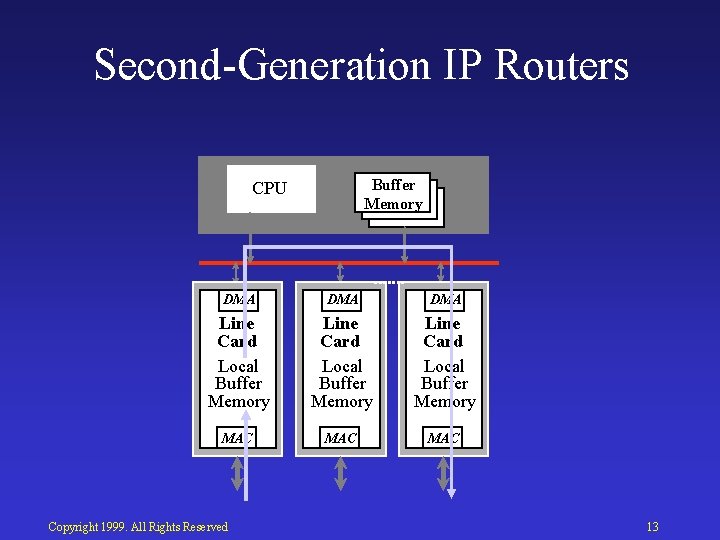

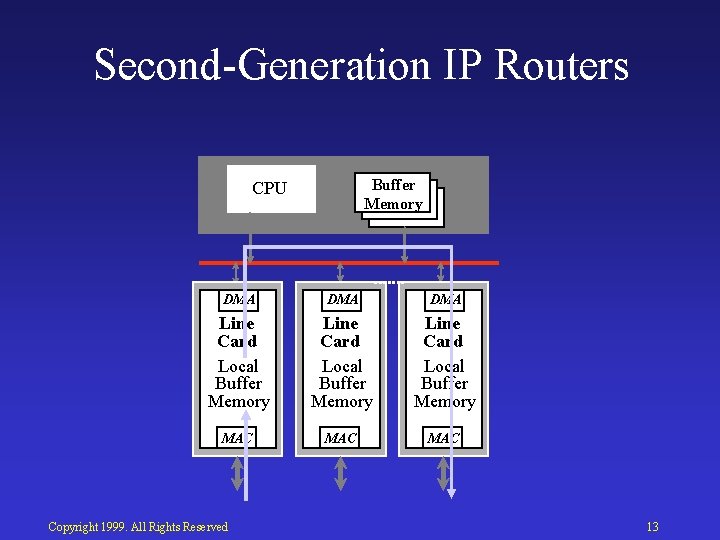

Second Generation IP Routers (figure labels): CPU, Buffer Memory, Line Card (DMA, Local Buffer Memory, MAC)

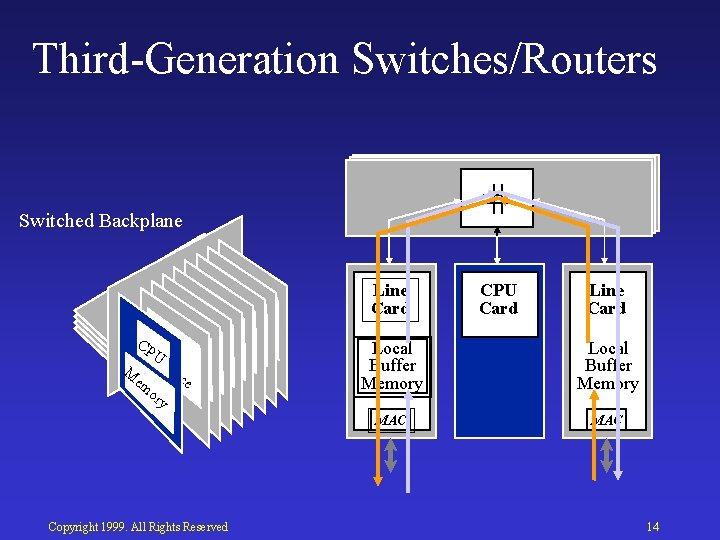

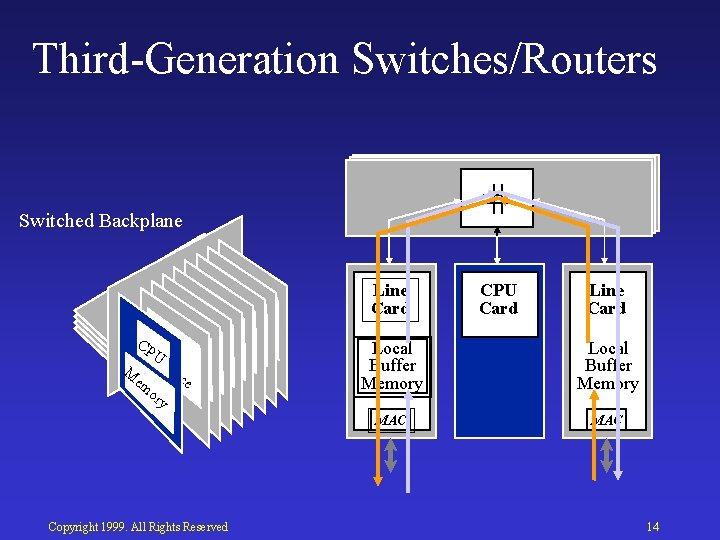

Third Generation Switches/Routers (figure labels): Switched Backplane, CPU Card (CPU, Memory), Line Card (Line Interface, Local Buffer Memory, MAC)

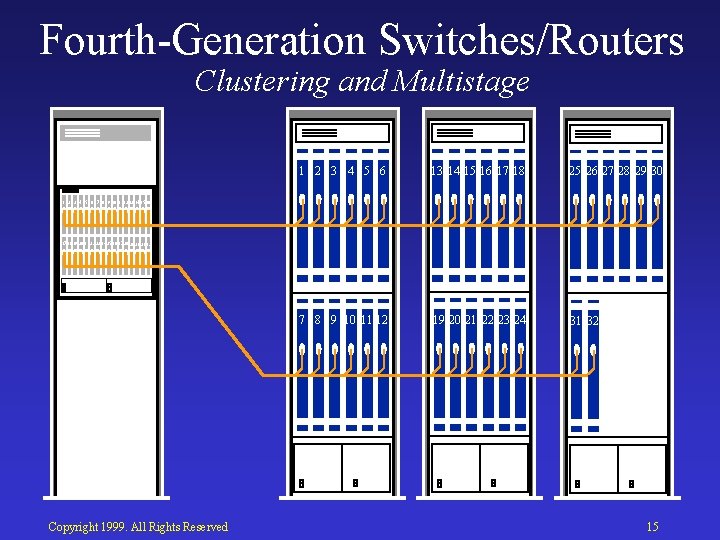

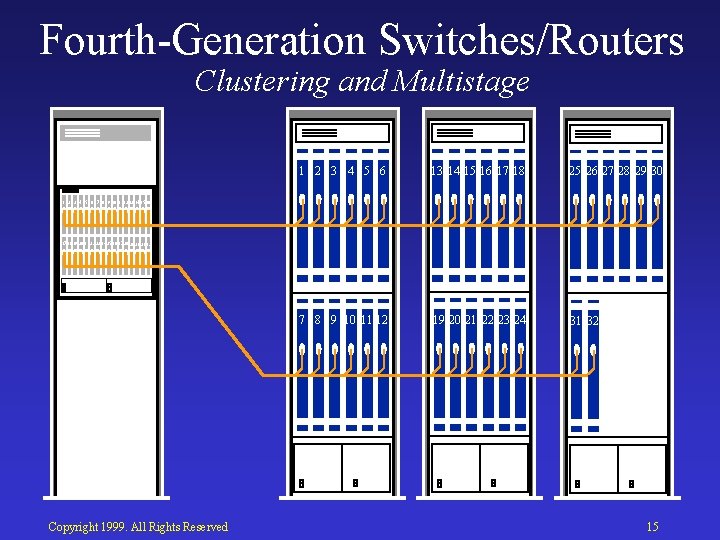

Fourth Generation Switches/Routers: Clustering and Multistage (figure: units numbered 1 to 32)

Packet Switches: References • J. Giacopelli, M. Littlewood, W. D. Sincoskie, “Sunshine: A high performance self-routing broadband packet switch architecture”, ISS ’90. • J. S. Turner, “Design of a Broadcast packet switching network”, IEEE Trans. Comm., June 1988, pp. 734-743. • C. Partridge et al., “A Fifty Gigabit per second IP Router”, IEEE Trans. Networking, 1998. • N. McKeown, M. Izzard, A. Mekkittikul, W. Ellersick, M. Horowitz, “The Tiny Tera: A Packet Switch Core”, IEEE Micro Magazine, Jan-Feb 1997.

Tutorial Outline • Introduction: What is a Packet Switch? • Packet Lookup and Classification: Where does a packet go next? • Switching Fabrics: How does the packet get there?

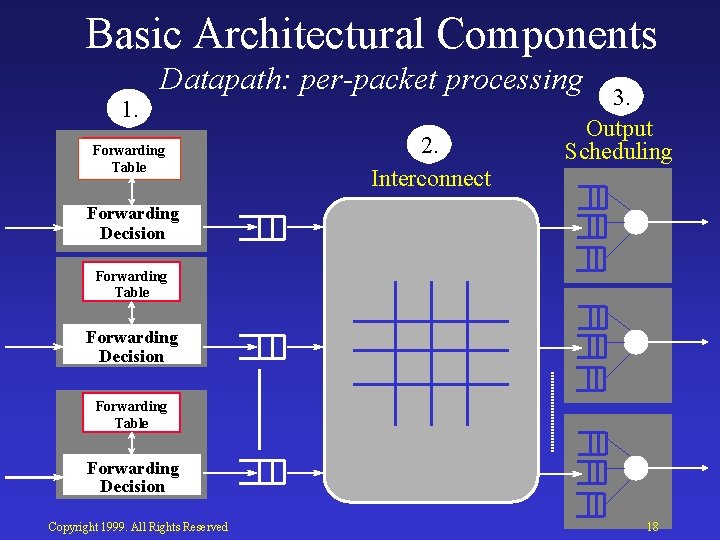

Basic Architectural Components. Datapath: per-packet processing. 1. Forwarding Decision (using a Forwarding Table) 2. Interconnect 3. Output Scheduling

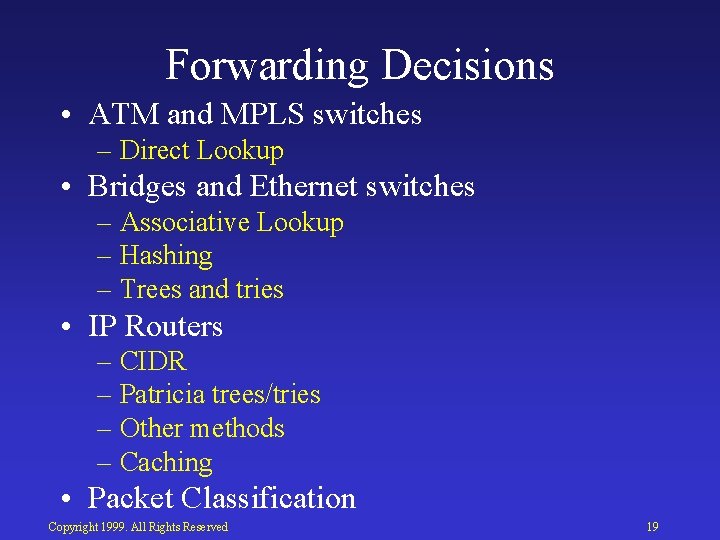

Forwarding Decisions • ATM and MPLS switches – Direct Lookup • Bridges and Ethernet switches – Associative Lookup – Hashing – Trees and tries • IP Routers – CIDR – Patricia trees/tries – Other methods – Caching • Packet Classification

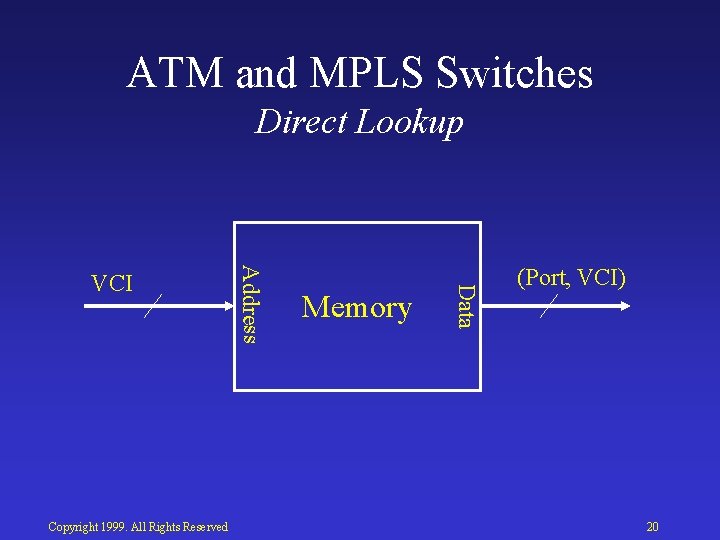

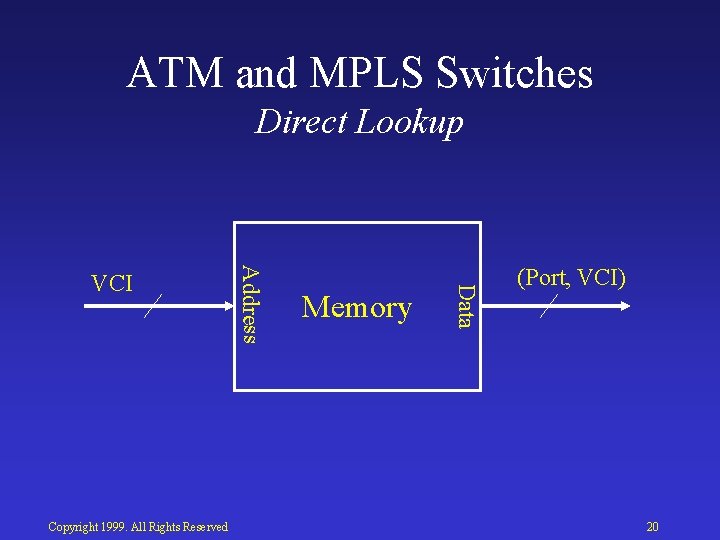

ATM and MPLS Switches: Direct Lookup (figure: the VCI is used as the memory Address; the memory Data returned is the (Port, VCI) pair)

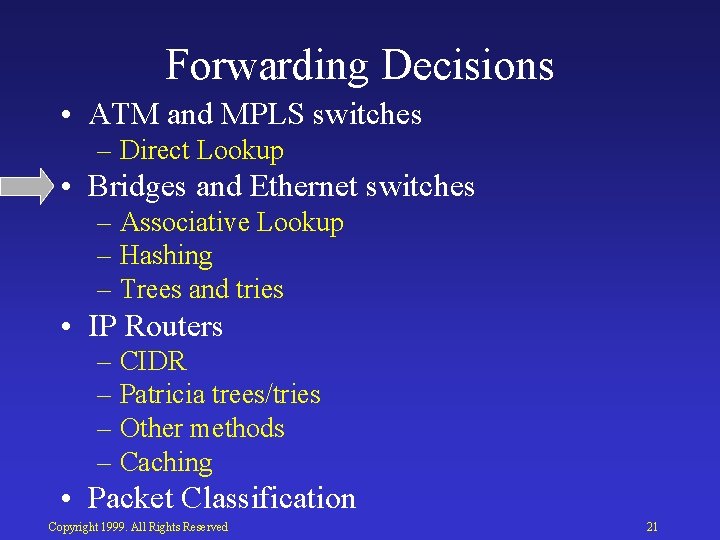

Forwarding Decisions • ATM and MPLS switches – Direct Lookup • Bridges and Ethernet switches – Associative Lookup – Hashing – Trees and tries • IP Routers – CIDR – Patricia trees/tries – Other methods – Caching • Packet Classification

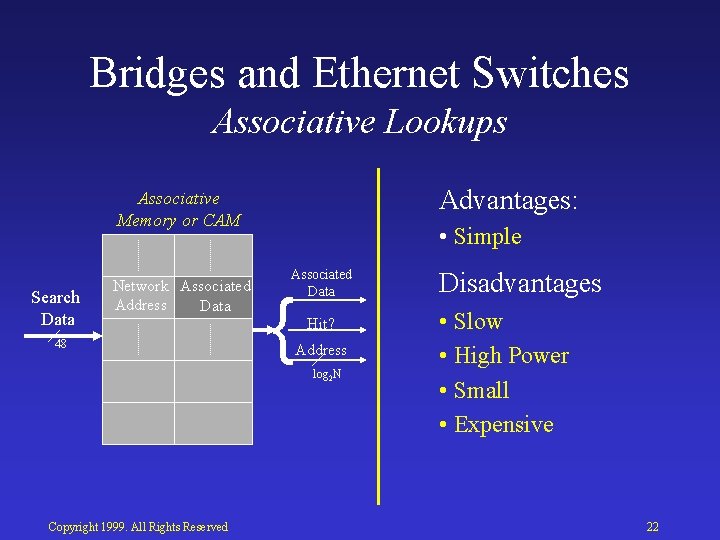

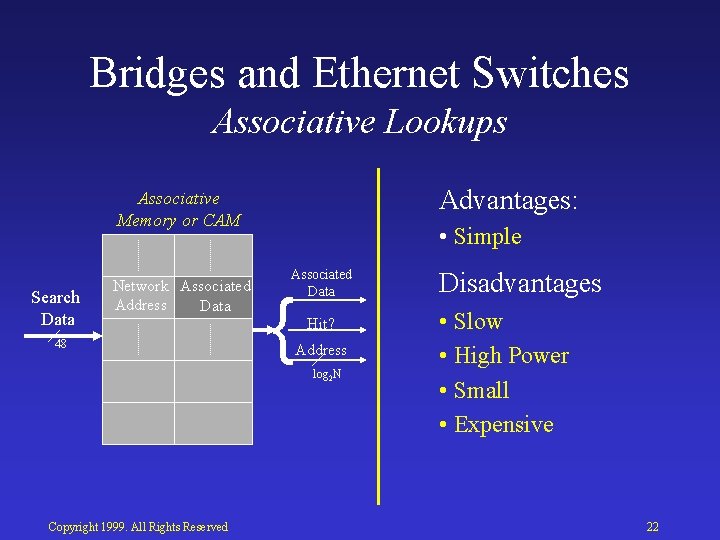

Bridges and Ethernet Switches: Associative Lookups (figure: Associative Memory or CAM; the 48-bit Network Address is the Search Data; outputs are Hit?, Associated Data, and a log2 N bit Address). Advantages: • Simple. Disadvantages: • Slow • High Power • Small • Expensive

Bridges and Ethernet Switches: Hashing (figure: a Hashing Function maps the 48-bit Search Data to a 16-bit memory Address; outputs are Hit?, Associated Data, and a log2 N bit Address)

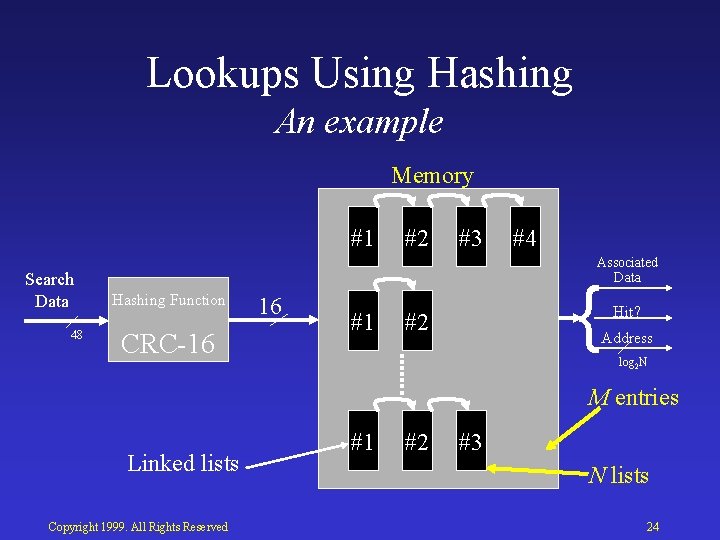

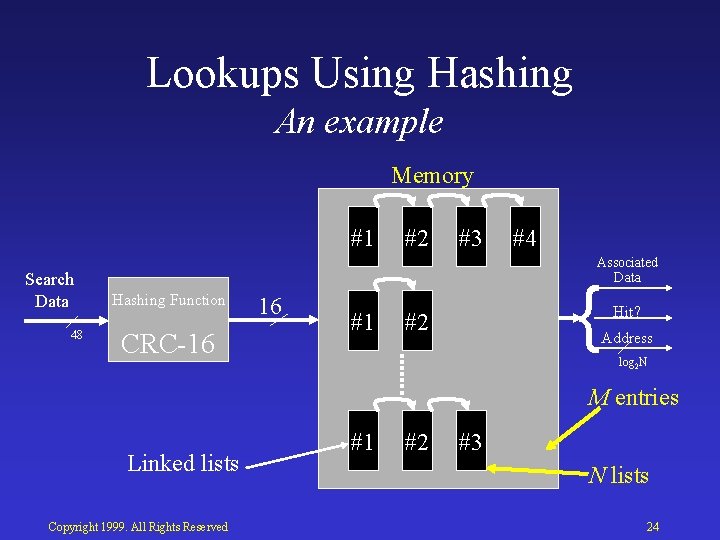

Lookups Using Hashing: An example (figure: the 48-bit Search Data is hashed with CRC-16 to a 16-bit value that selects one of N linked lists, #1, #2, ..., holding M entries in total; outputs are Hit?, Associated Data, and a log2 N bit Address)

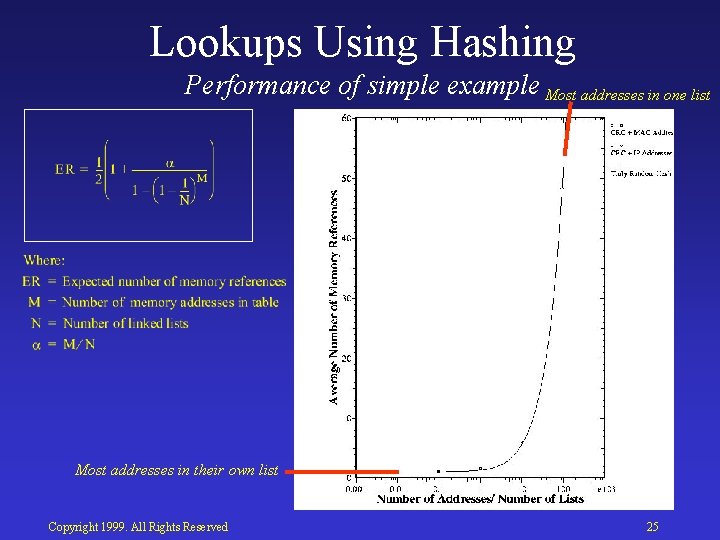

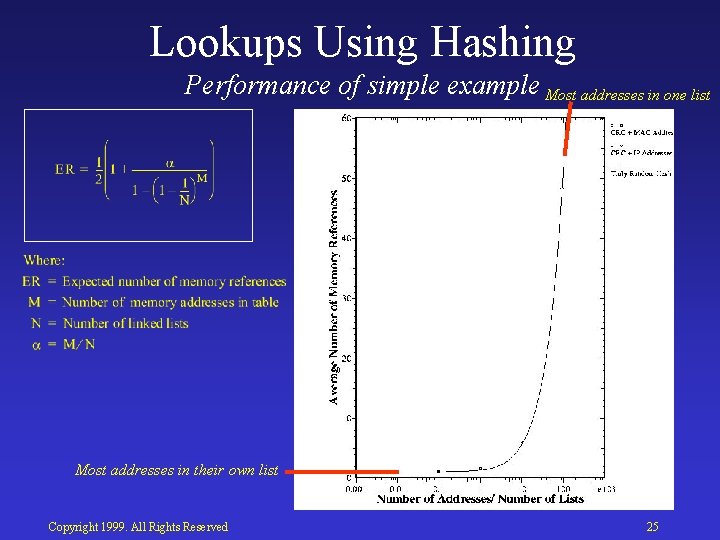

Lookups Using Hashing: Performance of simple example (plot; the two extremes are labeled “Most addresses in one list” and “Most addresses in their own list”)

Lookups Using Hashing. Advantages: • Simple • Expected lookup time can be small. Disadvantages: • Non-deterministic lookup time • Inefficient use of memory

Trees and Tries (figure: a Binary Search Tree over N entries, branching on < and >, with depth log2 N; a Binary Search Trie branching on bits 0 and 1, with example leaves 010 and 111)

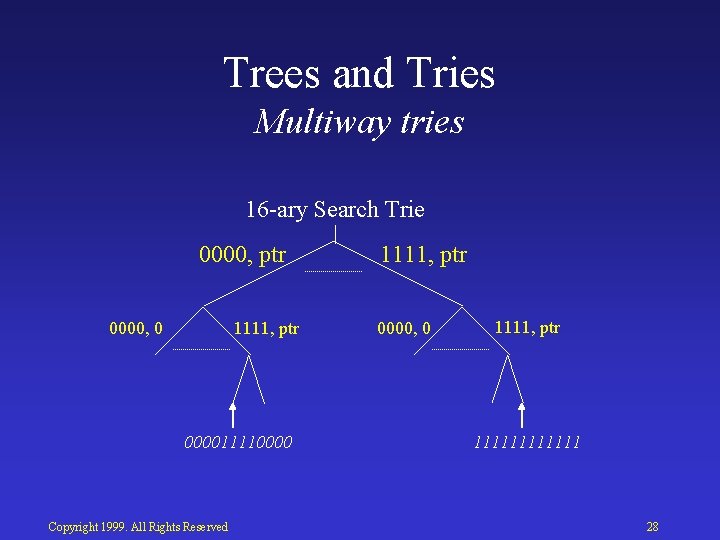

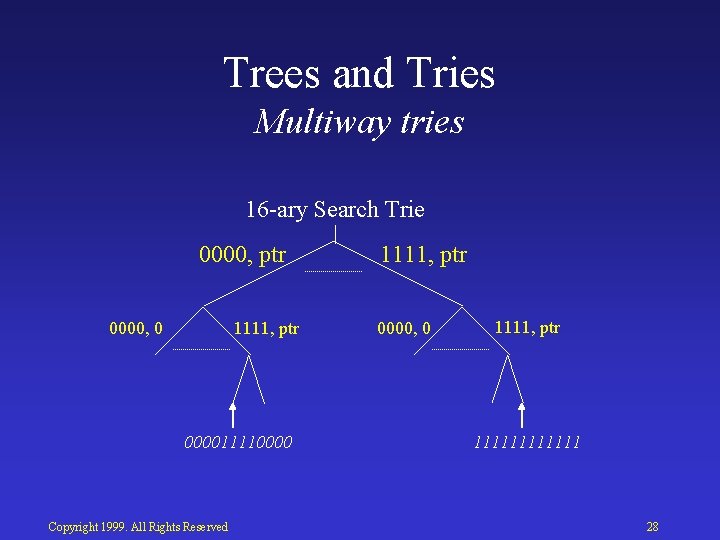

Trees and Tries: Multiway tries (figure: 16-ary Search Trie; each node has entries 0000 through 1111, each holding a pointer (ptr) or null (0); example keys 000011110000 and 111111)

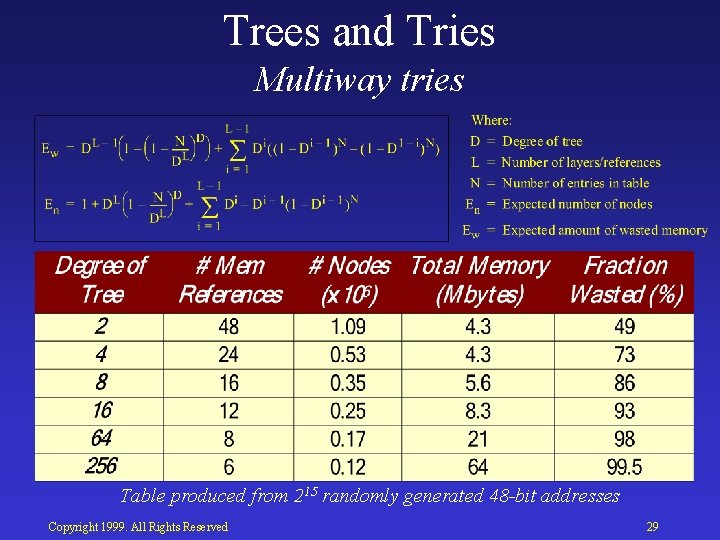

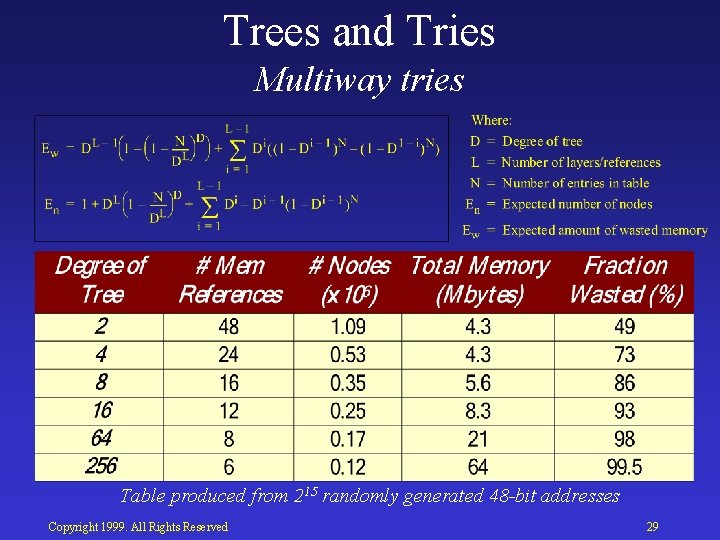

Trees and Tries: Multiway tries. Table produced from 2^15 randomly generated 48-bit addresses

Forwarding Decisions • ATM and MPLS switches – Direct Lookup • Bridges and Ethernet switches – Associative Lookup – Hashing – Trees and tries • IP Routers – CIDR – Patricia trees/tries – Other methods – Caching • Packet Classification

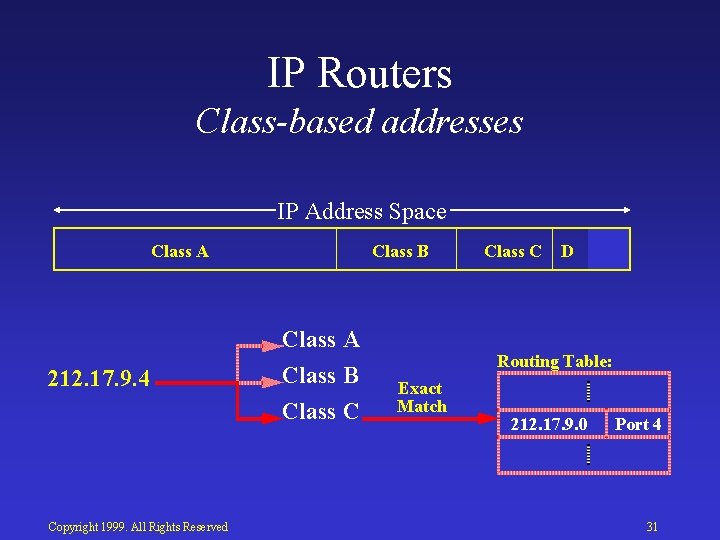

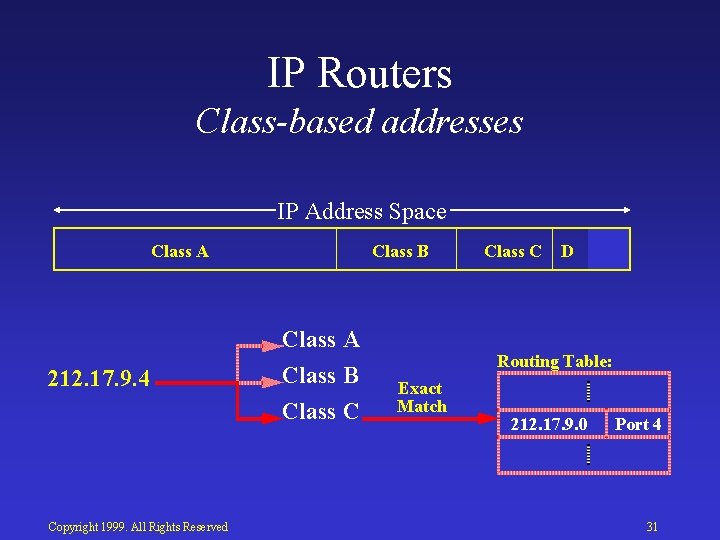

IP Routers: Class-based addresses (figure: the IP Address Space divided into Class A, Class B, Class C, and D; address 212.17.9.4 falls in Class C). Routing Table: Exact Match on 212.17.9.0 gives Port 4

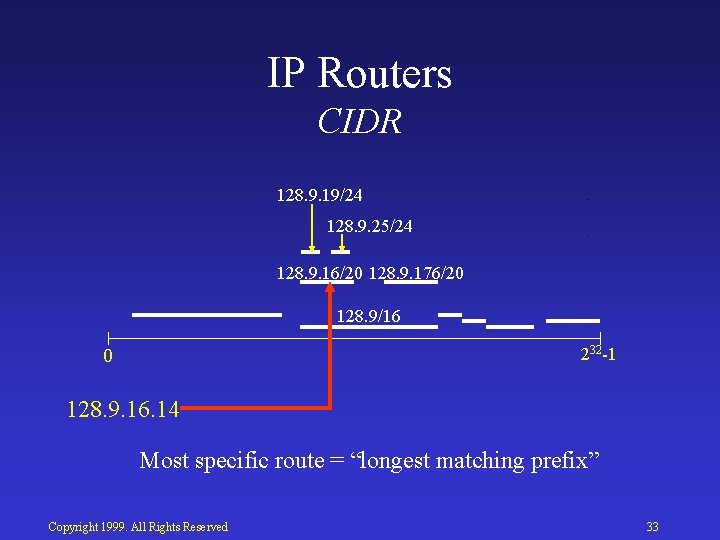

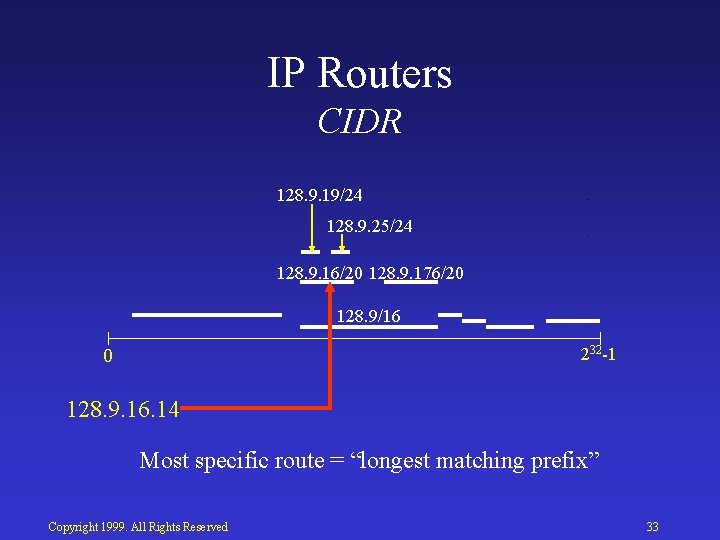

IP Routers: CIDR (figure: the address line from 0 to 2^32 - 1. Class based: A, B, C, D. Classless: prefixes 65/24, 128.9/16, and 142.12/19; 128.9.0.0; example address 128.9.16.14)

IP Routers: CIDR (figure: prefixes 128.9/16, 128.9.16/20, 128.9.176/20, 128.9.19/24, and 128.9.25/24 on the address line from 0 to 2^32 - 1; example address 128.9.16.14). Most specific route = “longest matching prefix”

IP Routers: Metrics for Lookups. Example address: 128.9.16.14

| Prefix | Port |
| --- | --- |
| 65/24 | 3 |
| 128.9/16 | 5 |
| 128.9.16/20 | 2 |
| 128.9.19/24 | 7 |
| 128.9.25/24 | 10 |
| 128.9.176/20 | 1 |
| 142.12/19 | 3 |

Metrics: • Lookup time • Storage space • Update time • Preprocessing time
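Longest-prefix matching on the table above can be sketched with a plain linear scan. This is a reference implementation for checking results, not one of the fast methods that follow. The expansion of shorthand prefixes (for example 65/24 written as 65.0.0.0/24) is an assumption about the slide's notation.

```python
import ipaddress

# Prefix -> port table from the slide, with shorthand prefixes written out in full.
TABLE = [
    ("65.0.0.0/24", 3),
    ("128.9.0.0/16", 5),
    ("128.9.16.0/20", 2),
    ("128.9.19.0/24", 7),
    ("128.9.25.0/24", 10),
    ("128.9.176.0/20", 1),
    ("142.12.0.0/19", 3),
]


def lookup(addr):
    """Return the port of the longest matching prefix, or None if none matches."""
    ip = ipaddress.ip_address(addr)
    best_len, best_port = -1, None
    for prefix, port in TABLE:
        net = ipaddress.ip_network(prefix)
        if ip in net and net.prefixlen > best_len:
            best_len, best_port = net.prefixlen, port
    return best_port


port = lookup("128.9.16.14")  # matches 128.9/16 and 128.9.16/20; the /20 wins
```

The scan costs one comparison per prefix, which is exactly the lookup-time problem the methods on the following slides try to avoid.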

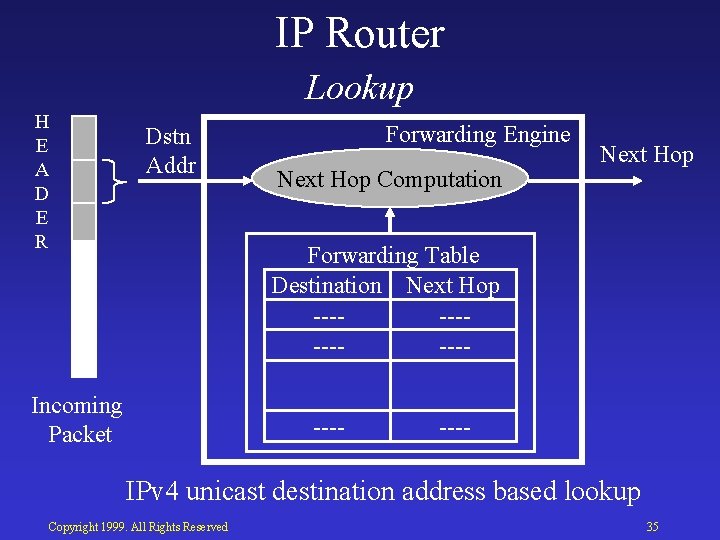

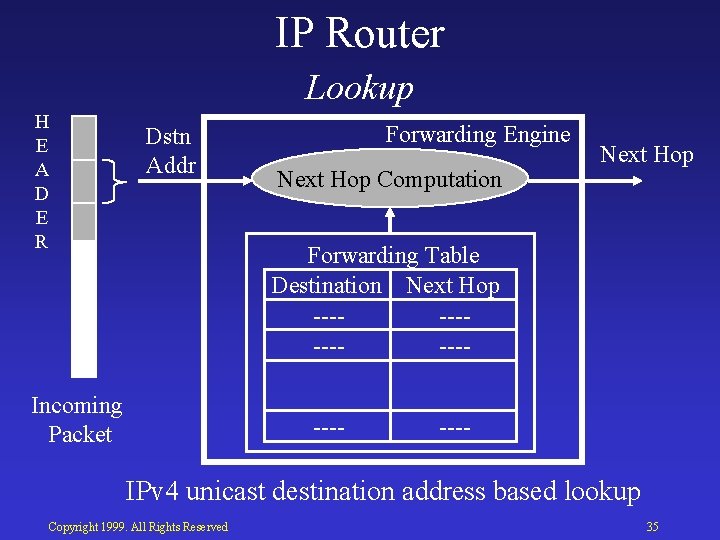

IP Router Lookup (figure: the Forwarding Engine takes the destination address from the header of the incoming packet; Next Hop Computation looks it up in the Forwarding Table of Destination and Next Hop). IPv4 unicast destination-address-based lookup

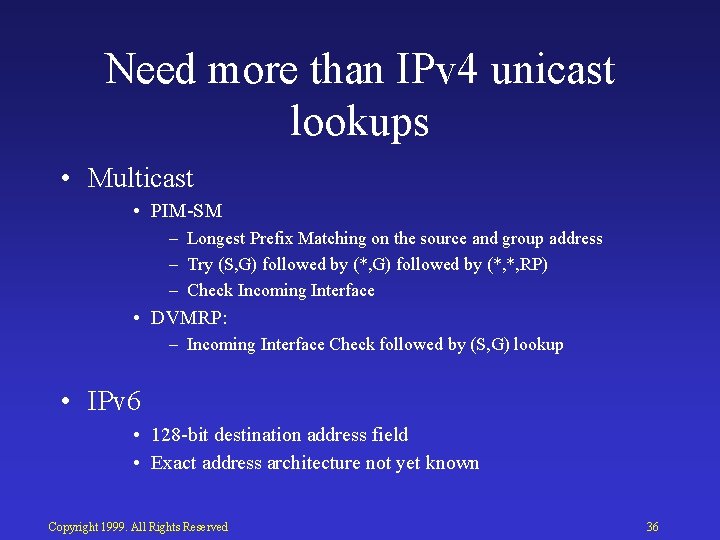

Need more than IPv4 unicast lookups • Multicast – PIM-SM: Longest Prefix Matching on the source and group address; try (S, G) followed by (*, *, RP); check Incoming Interface – DVMRP: Incoming Interface Check followed by (S, G) lookup • IPv6 – 128-bit destination address field – Exact address architecture not yet known

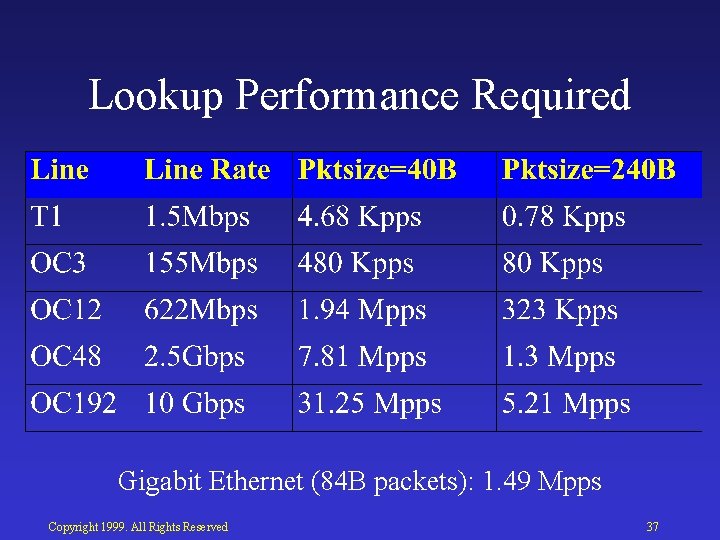

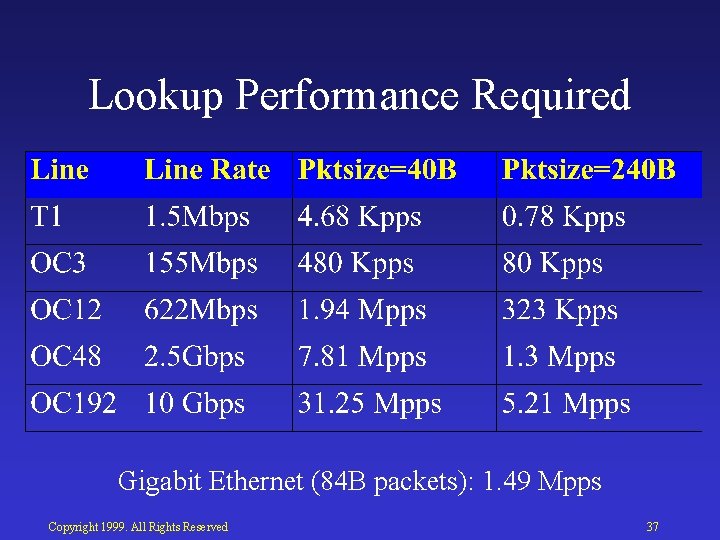

Lookup Performance Required. Gigabit Ethernet (84 B packets): 1.49 Mpps
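The Gigabit Ethernet figure follows from simple arithmetic. The breakdown of the 84 bytes (a 64-byte minimum frame plus 8 bytes of preamble and a 12-byte inter-frame gap) is standard Ethernet framing, stated here as background rather than taken from the slide.

$$
\frac{10^{9}\ \text{b/s}}{84\ \text{B} \times 8\ \text{b/B}} = \frac{10^{9}}{672}\ \text{packets/s} \approx 1.49 \times 10^{6}\ \text{packets/s}
$$

So a line-rate lookup engine for one Gigabit Ethernet port has roughly 672 ns per packet.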

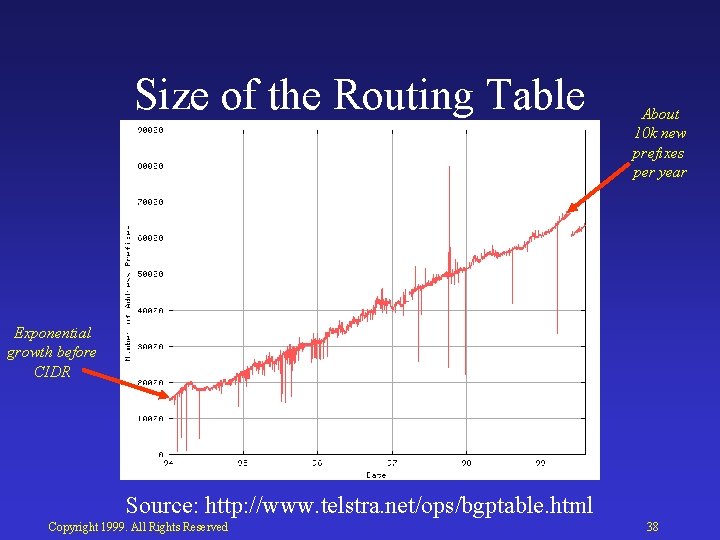

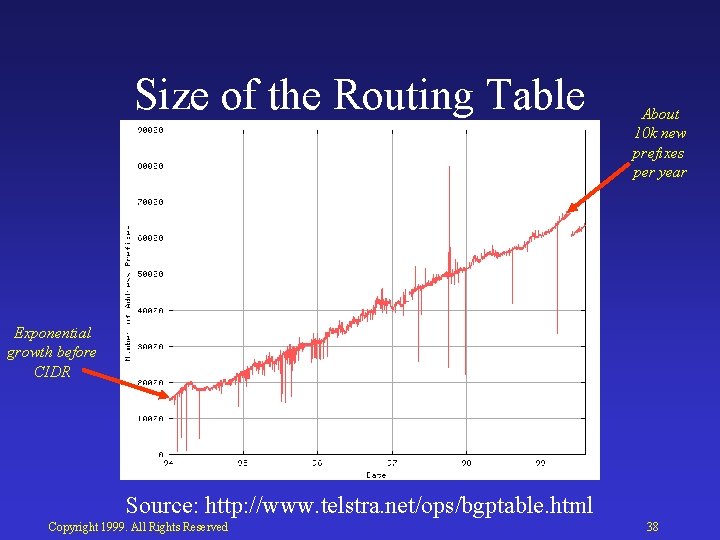

Size of the Routing Table (plot): about 10k new prefixes per year; exponential growth before CIDR. Source: http://www.telstra.net/ops/bgptable.html

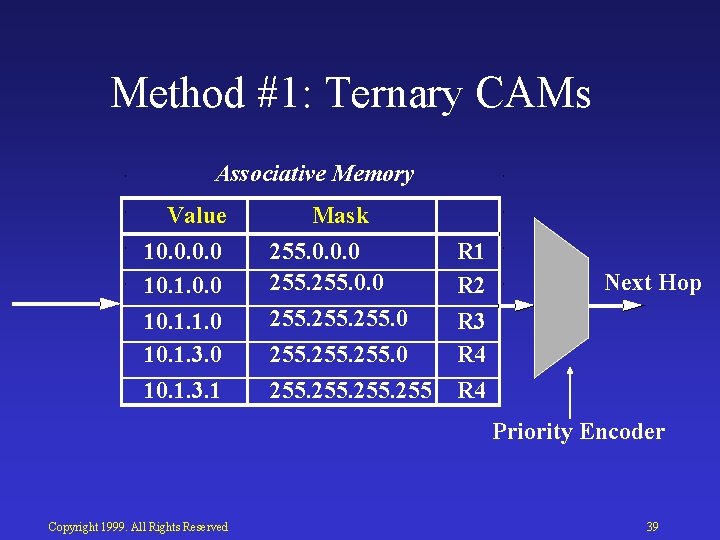

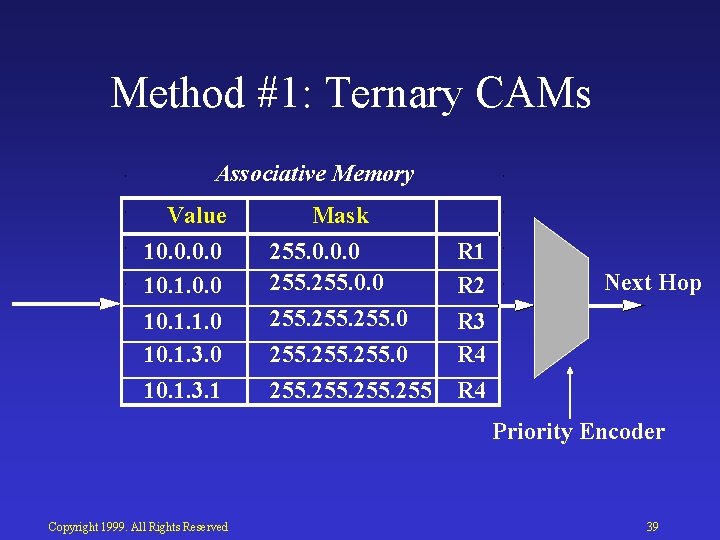

Method #1: Ternary CAMs (figure: an Associative Memory with Value and Mask columns, e.g. values 10.1.1.0 and 10.1.3.1 and mask 255.0.0.0; matching rows R1 to R4 feed a Priority Encoder, which selects the Next Hop)
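The ternary CAM lookup can be modeled in software as a masked compare on every row followed by a priority encoder. In this sketch the row contents, the masks longer than 255.0.0.0, and the next-hop names are hypothetical; rows are ordered longest prefix first so that the lowest-index hit is the longest match.

```python
import ipaddress


def ip(s):
    """Dotted-quad string to 32-bit integer."""
    return int(ipaddress.ip_address(s))


# (value, mask, next hop); hypothetical rows, most specific first.
ROWS = [
    (ip("10.1.3.1"), ip("255.255.255.255"), "hop-C"),
    (ip("10.1.1.0"), ip("255.255.255.0"), "hop-B"),
    (ip("10.0.0.0"), ip("255.0.0.0"), "hop-A"),
]


def tcam_lookup(addr):
    key = ip(addr)
    # Hardware compares all rows in parallel; the list models the hit lines.
    hits = [(key & mask) == (value & mask) for value, mask, _ in ROWS]
    # Priority encoder: the lowest-numbered matching row wins.
    for hit, (_, _, hop) in zip(hits, ROWS):
        if hit:
            return hop
    return None


hop = tcam_lookup("10.1.1.77")
```

Keeping rows sorted by prefix length is what makes updates awkward in practice: inserting a prefix may require moving other rows.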

Method #2: Binary Tries (figure: a binary trie with branches 0 and 1 and nodes a to j). Example Prefixes: a) 00001 b) 00010 c) 00011 d) 001 e) 0101 f) 011 g) 100 h) 1010 i) 1100 j) 11110000
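A binary trie over the example prefixes a to j can be sketched as below. The `Node` class and function names are illustrative; addresses are given as bit strings to match the slide's notation.

```python
class Node:
    def __init__(self):
        self.child = [None, None]  # child[0] for bit 0, child[1] for bit 1
        self.label = None          # set if a prefix ends at this node


PREFIXES = {
    "a": "00001", "b": "00010", "c": "00011", "d": "001", "e": "0101",
    "f": "011", "g": "100", "h": "1010", "i": "1100", "j": "11110000",
}


def build(prefixes):
    root = Node()
    for label, bits in prefixes.items():
        node = root
        for b in bits:
            i = int(b)
            if node.child[i] is None:
                node.child[i] = Node()
            node = node.child[i]
        node.label = label
    return root


def lookup(root, addr_bits):
    """Walk one bit at a time, remembering the last prefix seen on the path."""
    node, best = root, None
    for b in addr_bits:
        node = node.child[int(b)]
        if node is None:
            break
        if node.label is not None:
            best = node.label
    return best


root = build(PREFIXES)
match = lookup(root, "10101111")  # walks 1, 0, 1, 0 and stops at h
```

Each bit costs one memory access, so a 32-bit IPv4 address can take up to 32 accesses; the multi-bit and compressed variants on the following slides trade memory for fewer steps.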

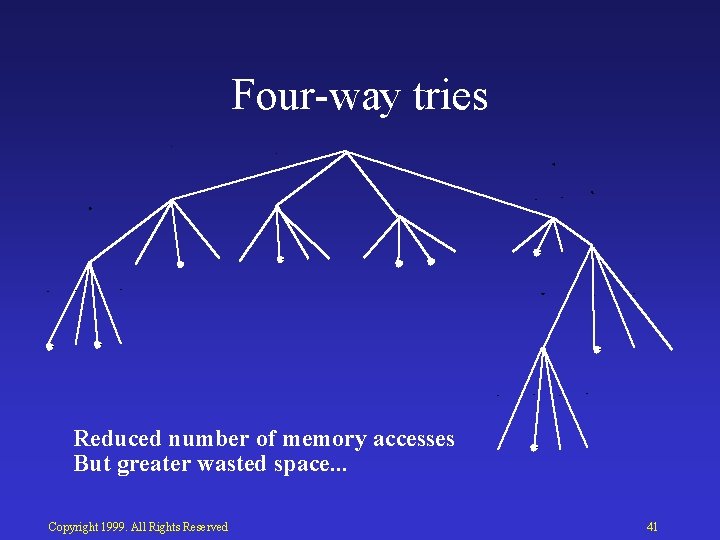

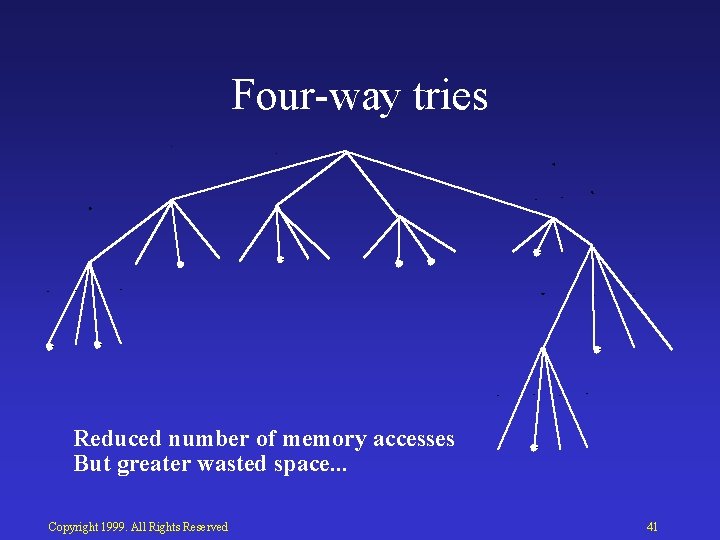

Four-way tries: reduced number of memory accesses, but greater wasted space...

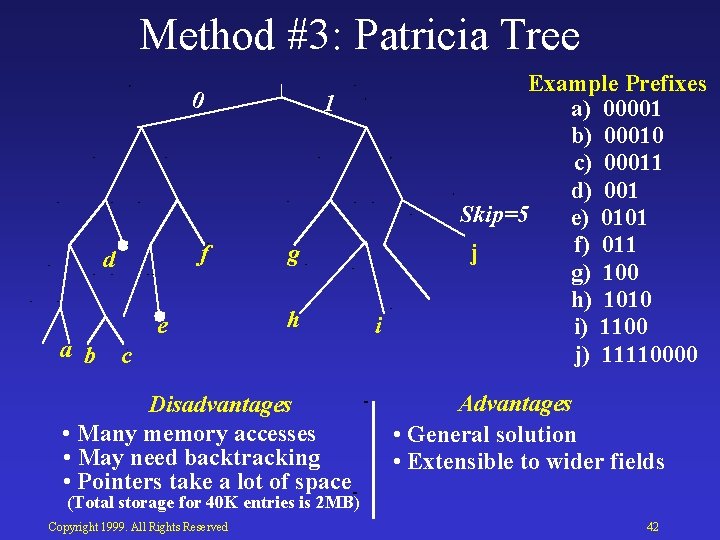

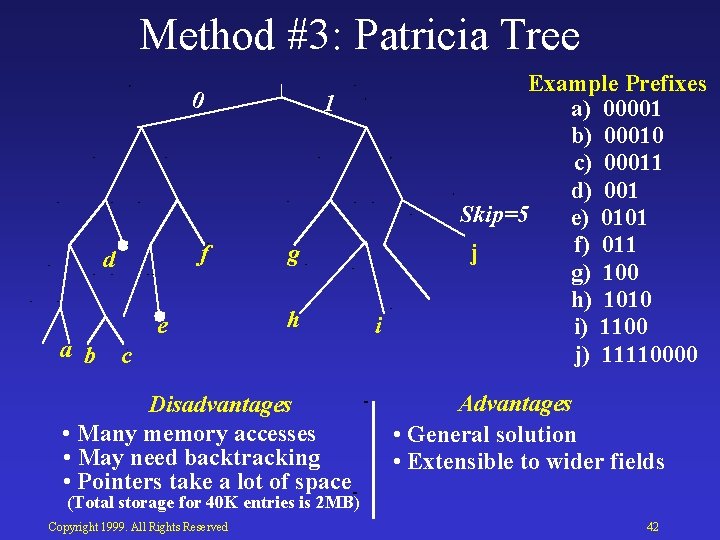

Method #3: Patricia Tree (figure: a path-compressed trie over nodes a to j, with a Skip=5 annotation on the path to j). Example Prefixes: a) 00001 b) 00010 c) 00011 d) 001 e) 0101 f) 011 g) 100 h) 1010 i) 1100 j) 11110000. Advantages • General solution • Extensible to wider fields. Disadvantages • Many memory accesses • May need backtracking • Pointers take a lot of space (Total storage for 40K entries is 2 MB)

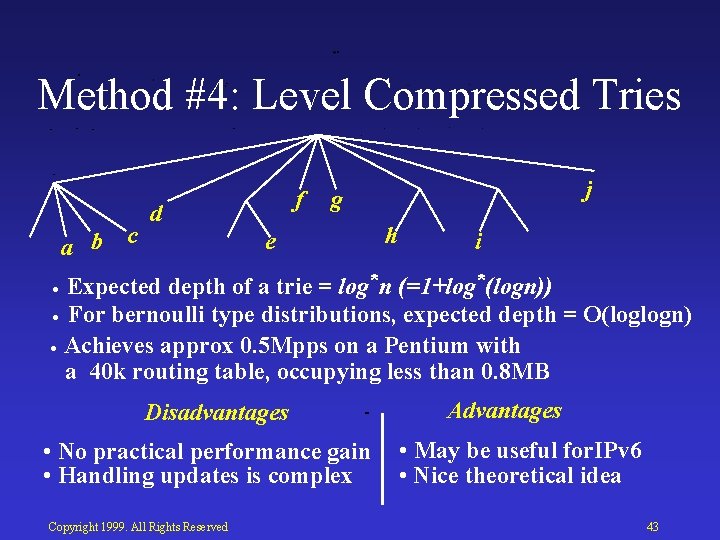

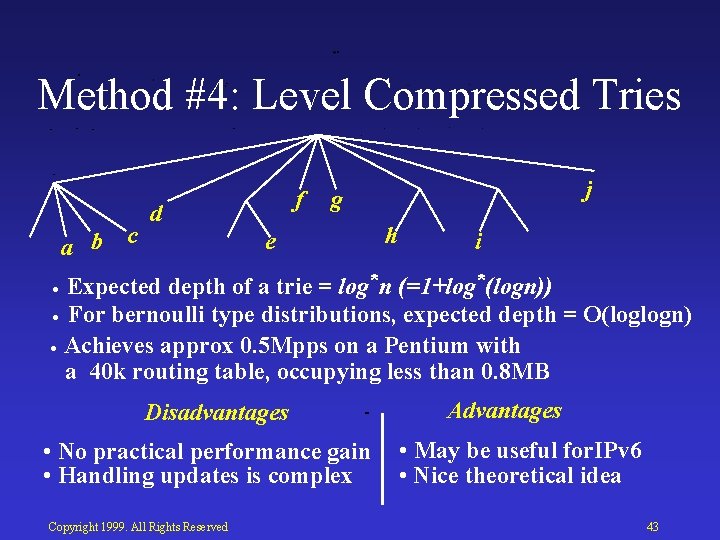

Method #4: Level Compressed Tries (figure: a level-compressed trie over nodes a to j). Expected depth of a trie = log* n (= 1 + log*(log n)). For Bernoulli-type distributions, expected depth = O(log log n). Achieves approx. 0.5 Mpps on a Pentium with a 40k routing table, occupying less than 0.8 MB. Advantages • May be useful for IPv6 • Nice theoretical idea. Disadvantages • No practical performance gain • Handling updates is complex

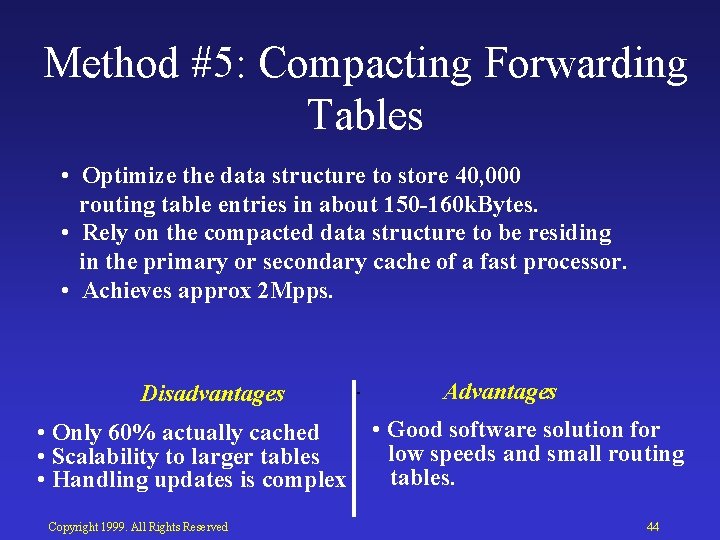

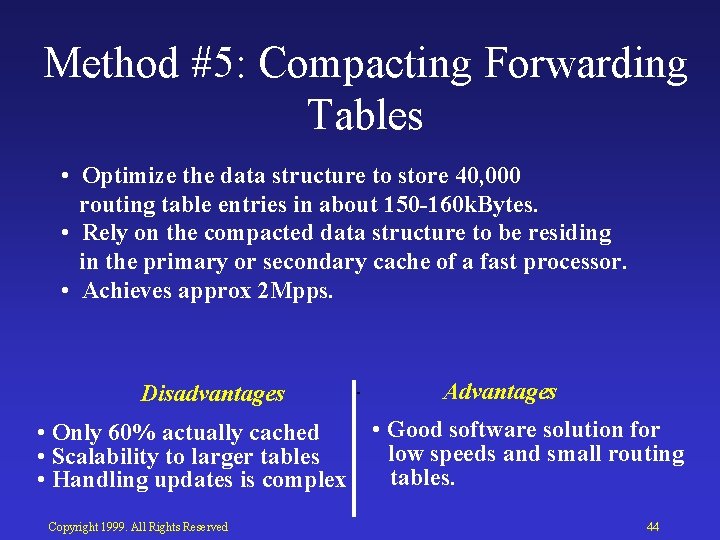

Method #5: Compacting Forwarding Tables • Optimize the data structure to store 40,000 routing table entries in about 150-160 kBytes. • Rely on the compacted data structure residing in the primary or secondary cache of a fast processor. • Achieves approx. 2 Mpps. Advantages • Good software solution for low speeds and small routing tables. Disadvantages • Only 60% actually cached • Scalability to larger tables • Handling updates is complex

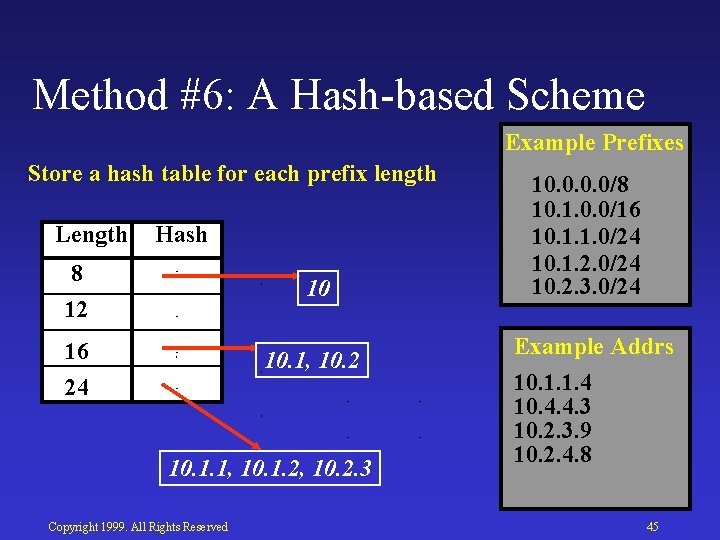

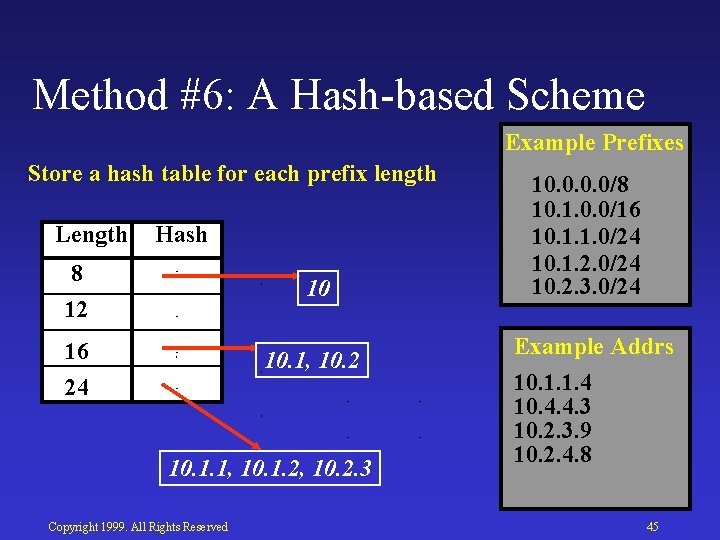

Method #6: A Hash based Scheme. Store a hash table for each prefix length. Example Prefixes: 10.0.0.0/8, 10.1.0.0/16, 10.1.1.0/24, 10.1.2.0/24, 10.2.3.0/24. Hash tables by Length (rows shown for lengths 8, 12, 16, 24): 8: 10; 16: 10.1, 10.2; 24: 10.1.1, 10.1.2, 10.2.3. Example Addrs: 10.1.1.4, 10.4.4.3, 10.2.3.9, 10.2.4.8
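The "one hash table per prefix length" idea can be sketched using the example prefixes and addresses from the slide. This version probes the lengths from longest to shortest, which is a simplifying assumption: it does not implement the binary search over lengths or the marker entries that a faster variant needs.

```python
import ipaddress

PREFIXES = ["10.0.0.0/8", "10.1.0.0/16", "10.1.1.0/24", "10.1.2.0/24", "10.2.3.0/24"]

# tables[length] maps the top `length` bits of an address to the stored prefix.
tables = {}
for p in PREFIXES:
    net = ipaddress.ip_network(p)
    key = int(net.network_address) >> (32 - net.prefixlen)
    tables.setdefault(net.prefixlen, {})[key] = p


def lookup(addr):
    """Probe one hash table per length, longest first; return the matching prefix."""
    a = int(ipaddress.ip_address(addr))
    for length in sorted(tables, reverse=True):
        hit = tables[length].get(a >> (32 - length))
        if hit is not None:
            return hit
    return None


results = {a: lookup(a) for a in ["10.1.1.4", "10.4.4.3", "10.2.3.9", "10.2.4.8"]}
```

The worst case is one hash probe per distinct prefix length, which is what motivates searching the lengths more cleverly.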

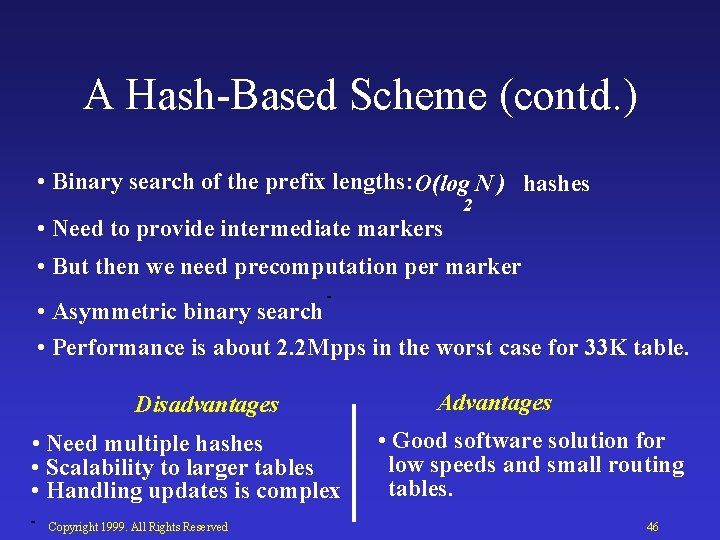

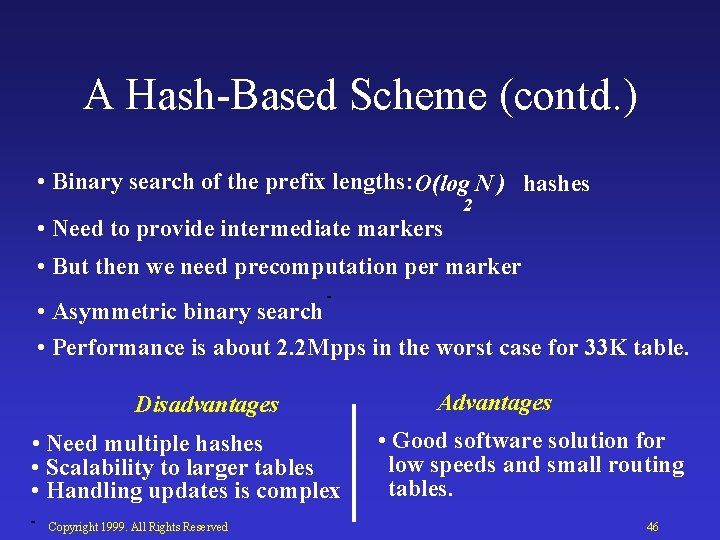

A Hash Based Scheme (contd.) • Binary search of the prefix lengths: O(log2 N) hashes • Need to provide intermediate markers • But then we need precomputation per marker • Asymmetric binary search • Performance is about 2.2 Mpps in the worst case for a 33K table. Advantages • Good software solution for low speeds and small routing tables. Disadvantages • Need multiple hashes • Scalability to larger tables • Handling updates is complex

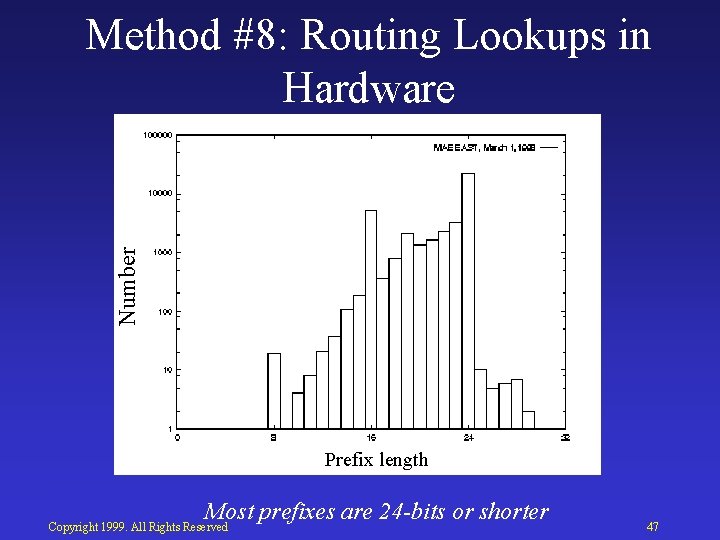

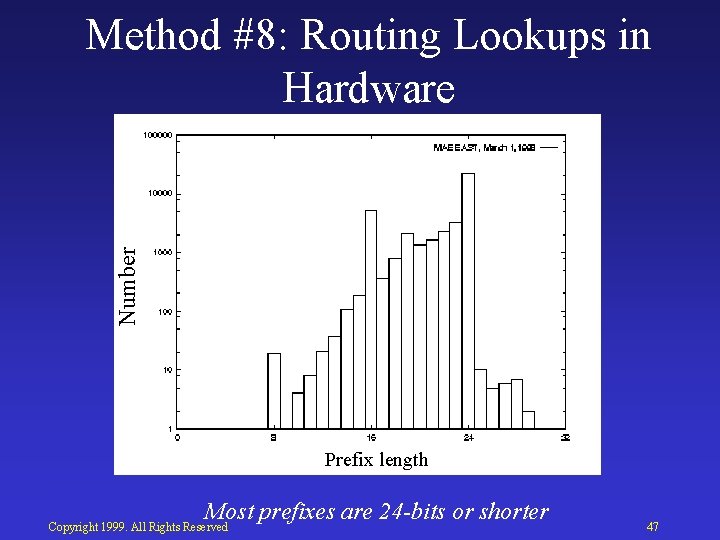

Method #8: Routing Lookups in Hardware (histogram: Number of prefixes vs. Prefix length). Most prefixes are 24 bits or shorter

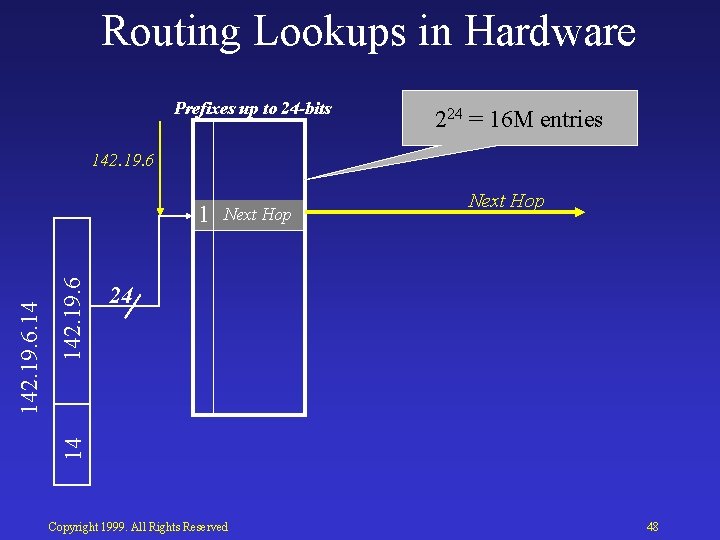

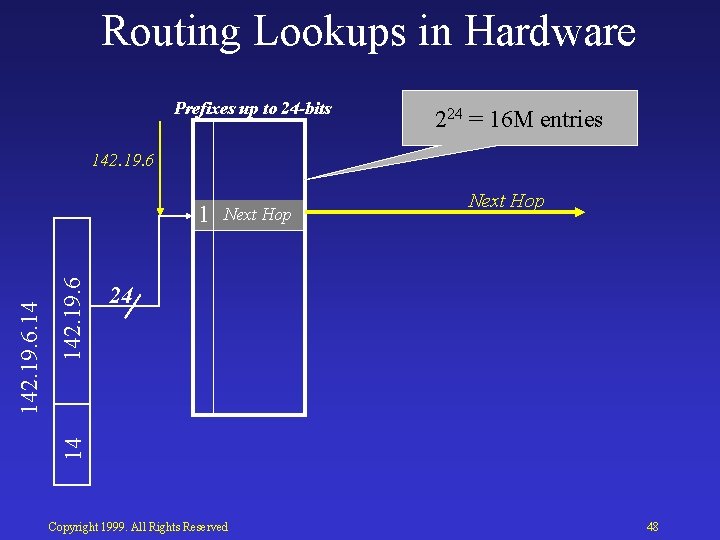

Routing Lookups in Hardware (figure: prefixes up to 24 bits are held in a table of 2^24 = 16M entries indexed by the first 24 bits of the address; for 142.19.6.14, the entry at 142.19.6 carries a flag bit and the Next Hop)

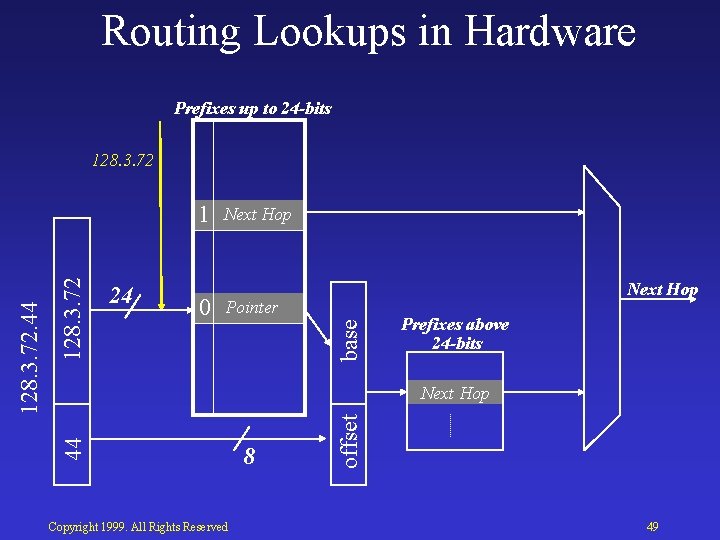

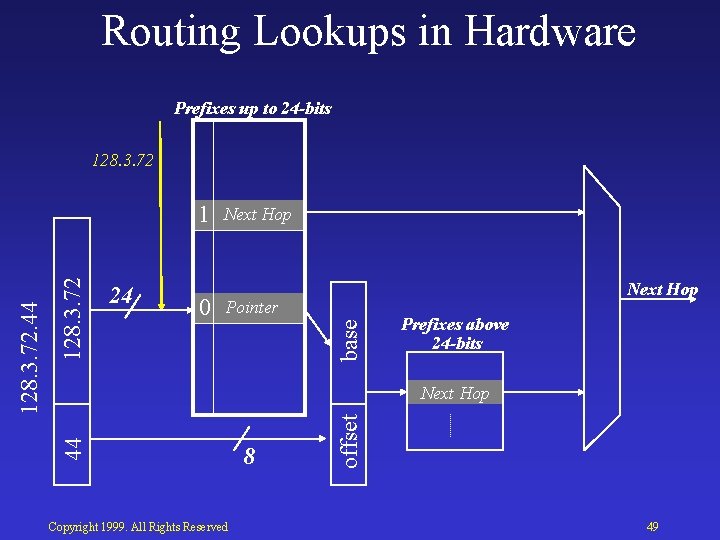

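Routing Lookups in Hardware (figure: for prefixes above 24 bits, the entry in the 24-bit table carries a flag bit and a Pointer; the pointer gives the base of a block in a second table, and the last 8 bits of the address give the offset to the Next Hop; for 128.3.72.44, the entry at 128.3.72 points to a block that is indexed with offset 44)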
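The two-table hardware scheme can be modeled in software as below. This is a sketch under several assumptions: a dictionary stands in for the 16M-entry first table, a tagged tuple stands in for the flag bit, and the routes and next-hop names are hypothetical.

```python
import ipaddress


def build(routes):
    """routes: list of (prefix, next_hop). Returns (tbl24, tbllong)."""
    tbl24 = {}     # 24-bit index -> ("hop", next_hop) or ("ptr", block number)
    tbllong = []   # list of 256-entry blocks for prefixes longer than 24 bits
    # Insert shorter prefixes first so longer ones overwrite them.
    for prefix, hop in sorted(routes, key=lambda r: ipaddress.ip_network(r[0]).prefixlen):
        net = ipaddress.ip_network(prefix)
        base, plen = int(net.network_address), net.prefixlen
        if plen <= 24:
            first = base >> 8
            for idx in range(first, first + (1 << (24 - plen))):
                tbl24[idx] = ("hop", hop)
        else:
            idx = base >> 8
            kind, val = tbl24.get(idx, ("hop", None))
            if kind == "hop":
                # Allocate a block, pre-filled with the covering shorter route.
                tbllong.append([val] * 256)
                val = len(tbllong) - 1
                tbl24[idx] = ("ptr", val)
            low = base & 0xFF
            for off in range(low, low + (1 << (32 - plen))):
                tbllong[val][off] = hop
    return tbl24, tbllong


def lookup(tbl24, tbllong, addr):
    a = int(ipaddress.ip_address(addr))
    kind, val = tbl24.get(a >> 8, ("hop", None))  # first memory access
    if kind == "hop":
        return val
    return tbllong[val][a & 0xFF]                 # second memory access


ROUTES = [("142.19.0.0/16", "A"), ("128.3.72.0/24", "B"), ("128.3.72.32/28", "C")]
tbl24, tbllong = build(ROUTES)
hop = lookup(tbl24, tbllong, "128.3.72.44")
```

Every lookup finishes in at most two memory accesses, at the cost of expanding short prefixes into many table entries.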

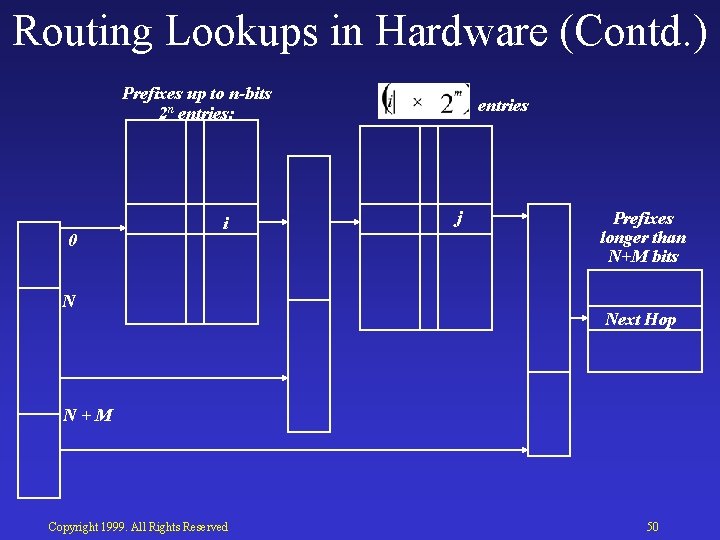

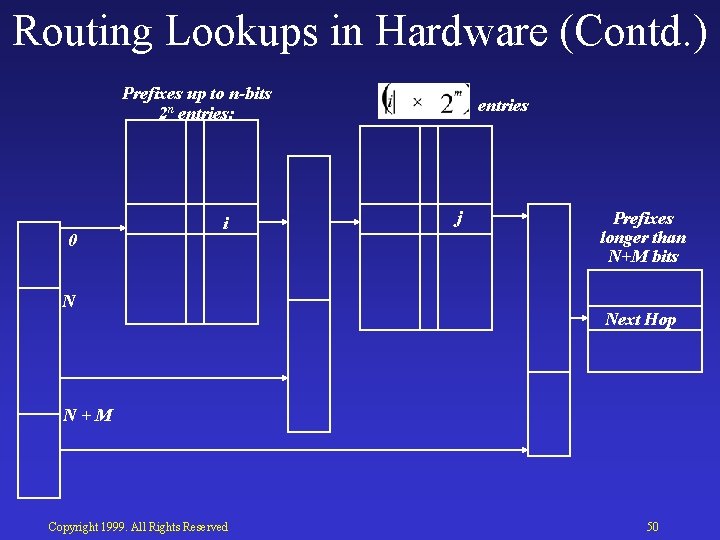

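Routing Lookups in Hardware (Contd.) (figure: the generalized scheme; a first table of 2^N entries covers prefixes up to N bits, entries i and j point into a second level indexed by the next M bits and holding the Next Hop, and prefixes longer than N+M bits go one level further)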

Routing Updates (figure: nested prefixes 10.0.0.0 at Depth 1, 10.4.0.0 at Depth 2, and 10.4.24.0 at Depth 3). Advantages • 20 Mpps with 50 ns DRAM • Easy to implement in hardware. Disadvantages • Large memory required • Depends on prefix length distribution

IP Router Lookups: References • A. Brodnik, S. Carlsson, M. Degermark, S. Pink, “Small Forwarding Tables for Fast Routing Lookups”, Sigcomm 1997, pp. 3-14. • B. Lampson, V. Srinivasan, G. Varghese, “IP lookups using multiway and multicolumn search”, Infocom 1998, pp. 1248-56, vol. 3. • M. Waldvogel, G. Varghese, J. Turner, B. Plattner, “Scalable high speed IP routing lookups”, Sigcomm 1997, pp. 25-36. • P. Gupta, S. Lin, N. McKeown, “Routing lookups in hardware at memory access speeds”, Infocom 1998, pp. 1241-1248, vol. 3. • S. Nilsson, G. Karlsson, “Fast address lookup for Internet routers”, IFIP Intl Conf on Broadband Communications, Stuttgart, Germany, April 1-3, 1998. • V. Srinivasan, G. Varghese, “Fast IP lookups using controlled prefix expansion”, Sigmetrics, June 1998.

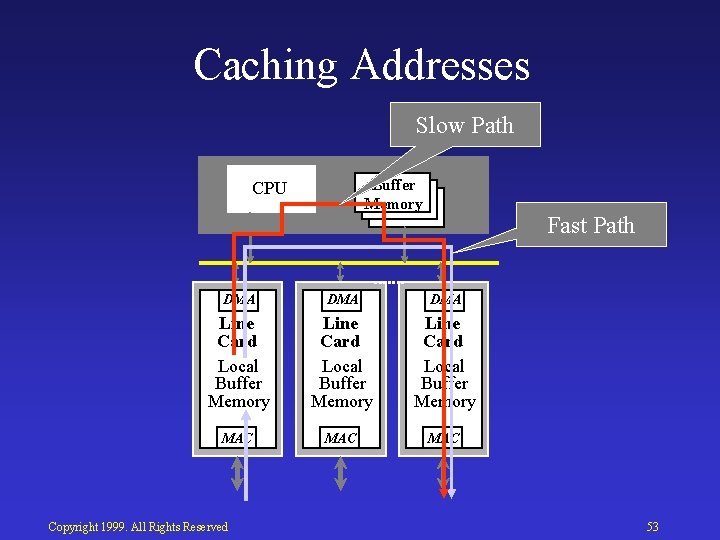

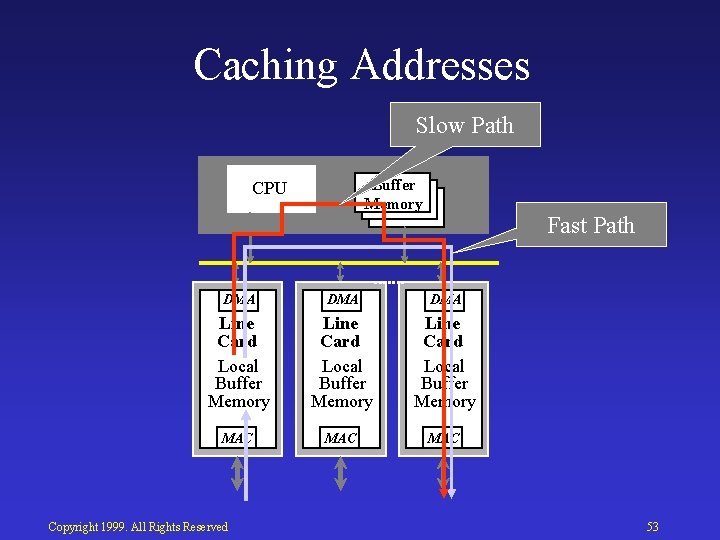

Caching Addresses (figure labels): Slow Path: CPU, Buffer Memory; Fast Path: Line Card (DMA, Local Buffer Memory, MAC)

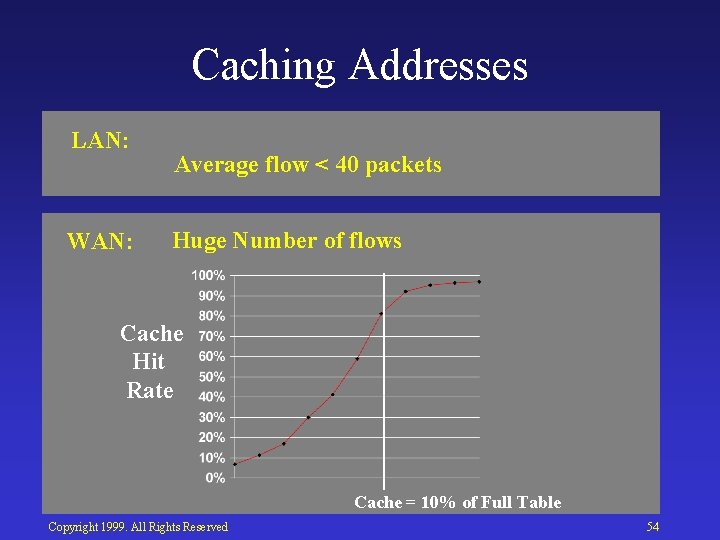

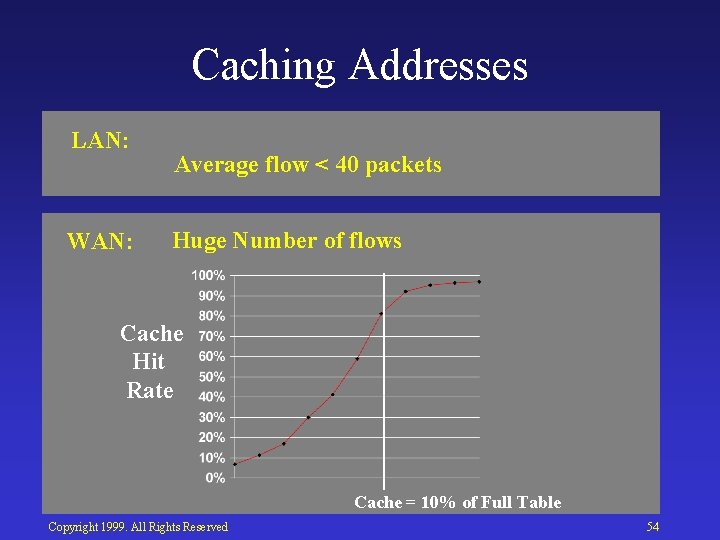

Caching Addresses (plot: Cache Hit Rate; Cache = 10% of Full Table). LAN: average flow < 40 packets. WAN: huge number of flows

Forwarding Decisions • ATM and MPLS switches – Direct Lookup • Bridges and Ethernet switches – Associative Lookup – Hashing – Trees and tries • IP Routers – CIDR – Patricia trees/tries – Other methods – Caching • Packet Classification

Providing Value Added Services: Some examples • Differentiated services – Regard traffic from AS#33 as ‘platinum grade’ • Access Control Lists – Deny udp host 194.72.33 194.72.6.64 0.0.0.15 eq snmp • Committed Access Rate – Rate limit WWW traffic from sub interface#739 to 10 Mbps • Policy based Routing – Route all voice traffic through the ATM network • Peering Arrangements – Restrict the total amount of traffic of precedence 7 from MAC address N to 20 Mbps between 10 am and 5 pm • Accounting and Billing – Generate hourly reports of traffic from MAC address M

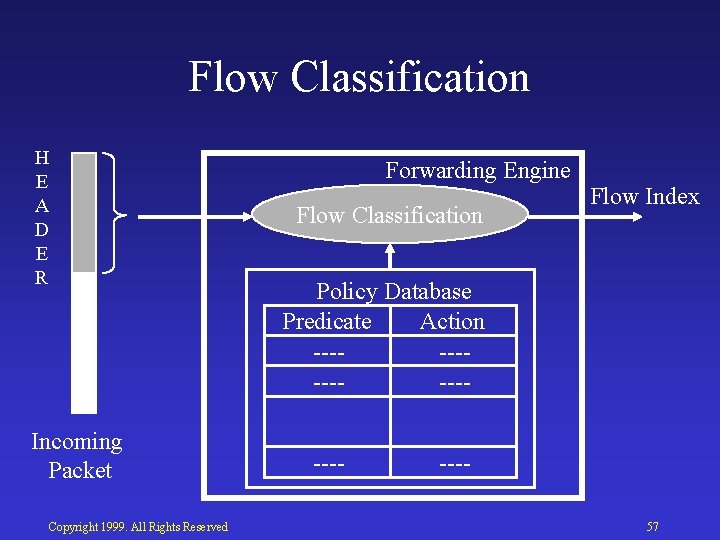

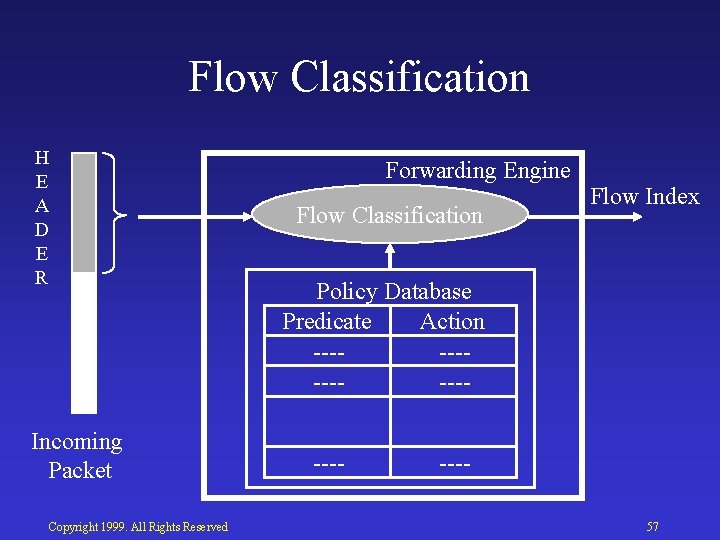

Flow Classification (figure: the Forwarding Engine takes the header of the incoming packet; Flow Classification consults the Policy Database of Predicate and Action entries and returns a Flow Index)

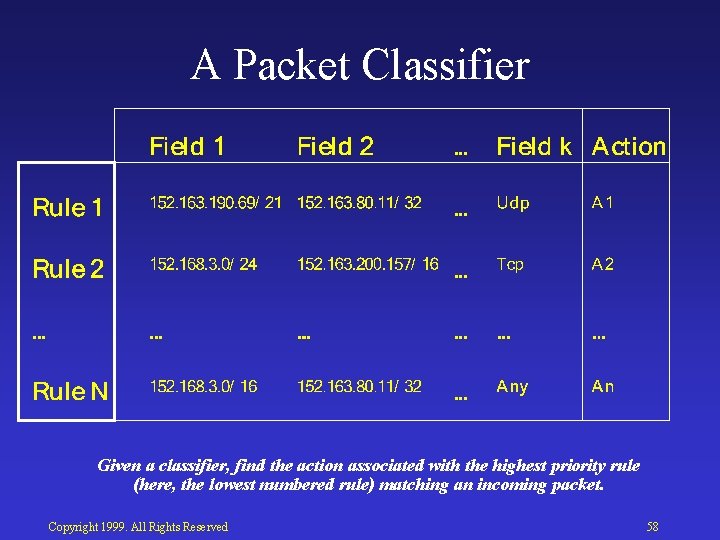

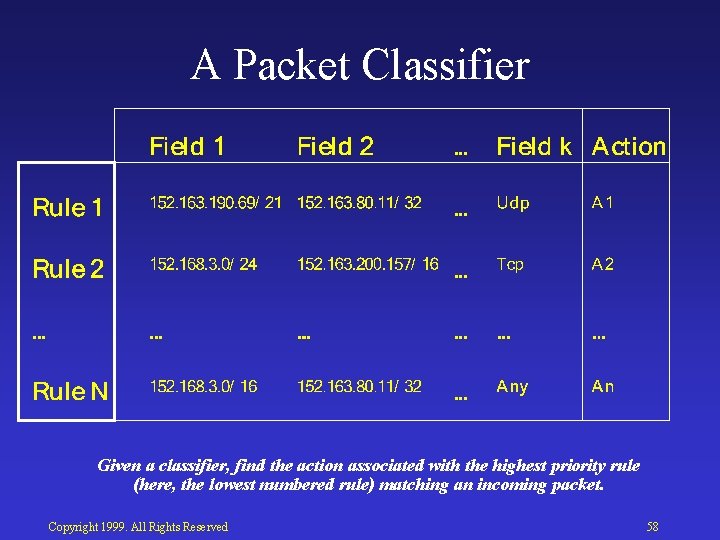

A Packet Classifier. Given a classifier, find the action associated with the highest priority rule (here, the lowest numbered rule) matching an incoming packet.
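The definition above corresponds to a first-match search over an ordered rule list. The sketch below uses a two-field classifier with hypothetical rules and actions; a linear scan like this is only a baseline for correctness, not one of the fast schemes.

```python
import ipaddress

# (source prefix, destination prefix, action); lowest index = highest priority.
# All rules and actions here are hypothetical.
RULES = [
    ("128.16.46.23/32", "0.0.0.0/0", "deny"),
    ("144.24.0.0/16", "64.0.0.0/24", "permit"),
    ("0.0.0.0/0", "0.0.0.0/0", "default"),
]


def classify(src, dst):
    """Return the action of the lowest-numbered rule matching both fields."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for src_prefix, dst_prefix, action in RULES:
        if s in ipaddress.ip_network(src_prefix) and d in ipaddress.ip_network(dst_prefix):
            return action
    return None


action = classify("144.24.7.7", "64.0.0.9")
```

The cost grows linearly with the number of rules and is paid on every packet, which is why faster multi-field schemes are of interest.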

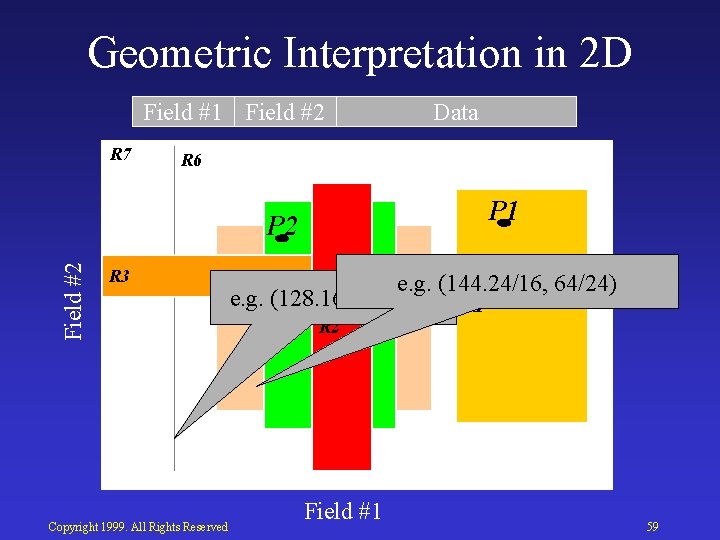

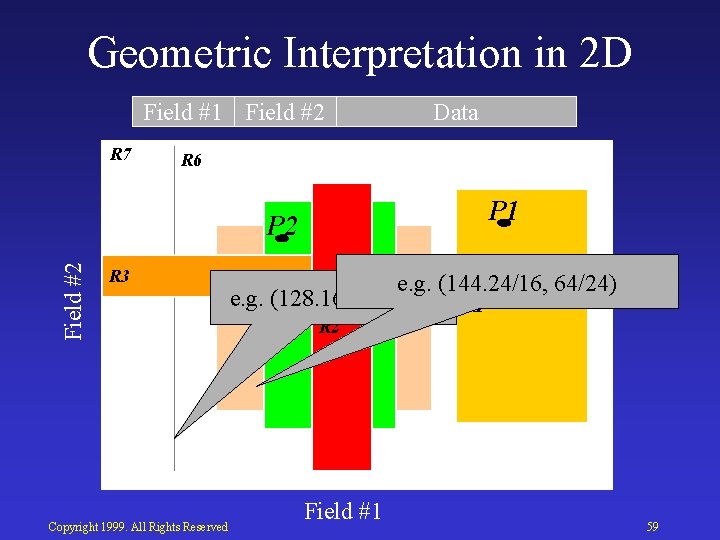

Geometric Interpretation in 2D (figure: rules R1 to R7 drawn as regions in the plane of Field #1 vs. Field #2; packets P1 and P2 are points). Examples: (144.24/16, 64/24) and (128.16.46.23, *)

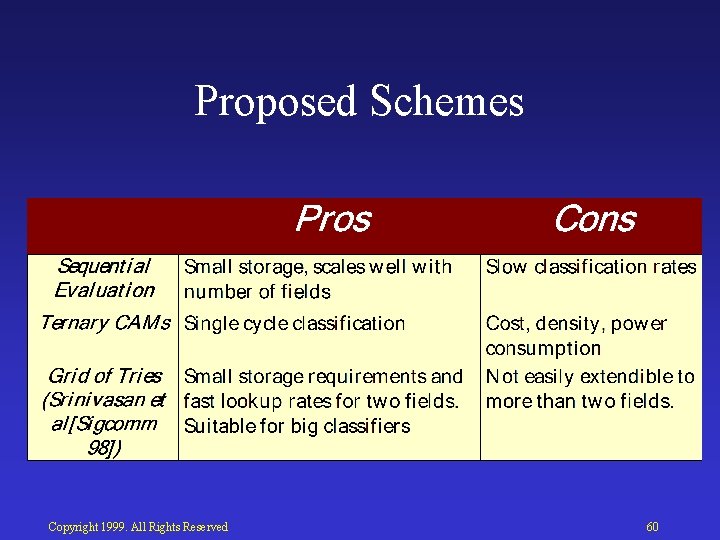

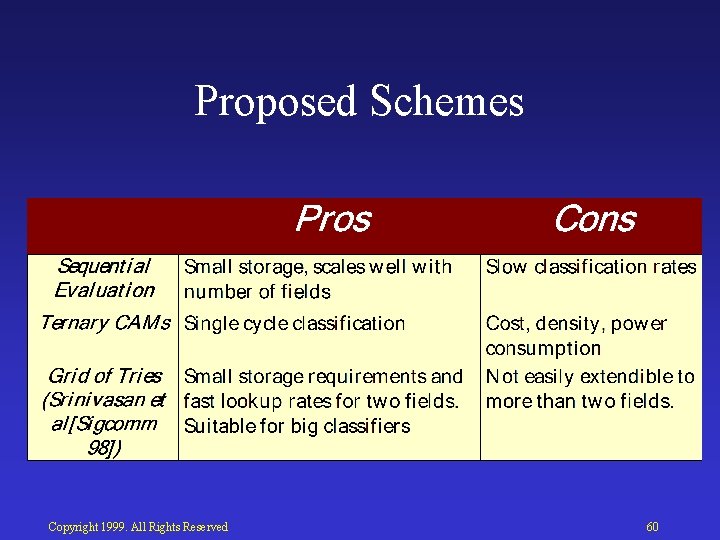

Proposed Schemes

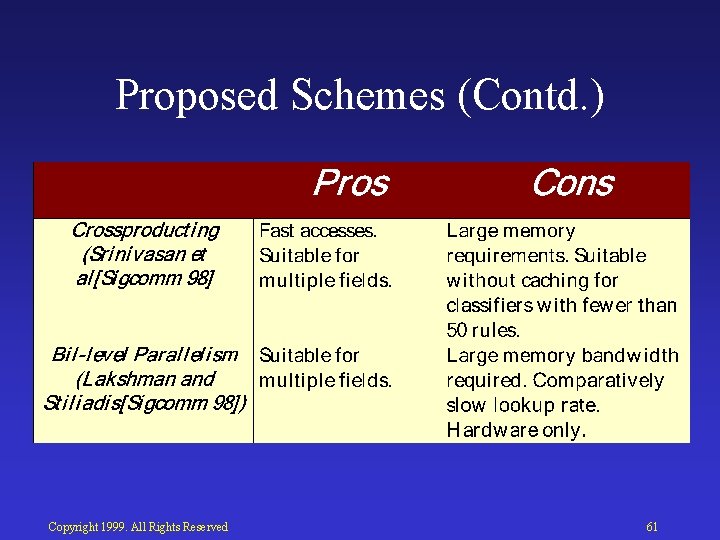

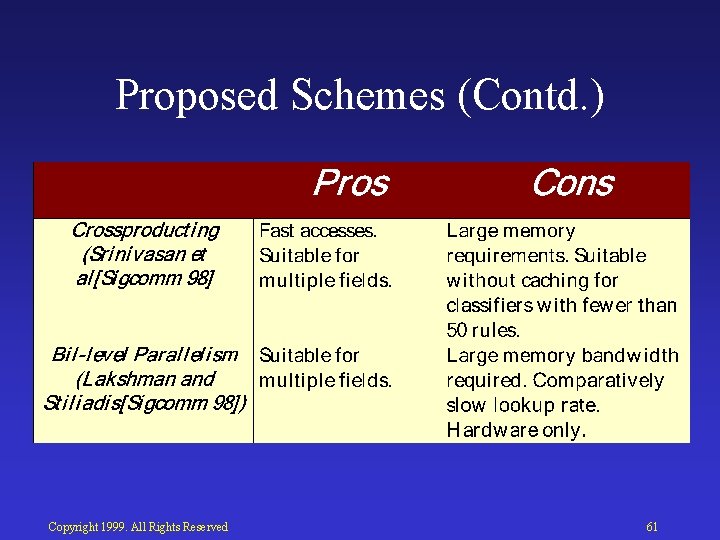

Proposed Schemes (Contd.)

Proposed Schemes (Contd.)

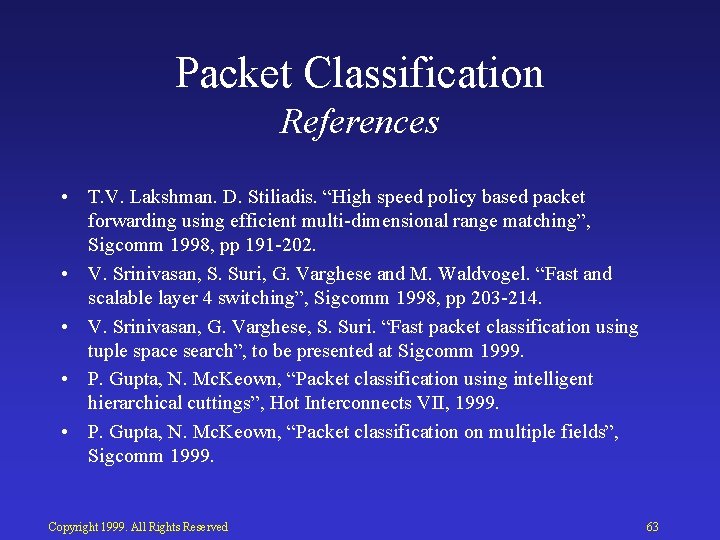

Packet Classification: References • T. V. Lakshman, D. Stiliadis, “High speed policy based packet forwarding using efficient multi-dimensional range matching”, Sigcomm 1998, pp. 191-202. • V. Srinivasan, S. Suri, G. Varghese, M. Waldvogel, “Fast and scalable layer 4 switching”, Sigcomm 1998, pp. 203-214. • V. Srinivasan, G. Varghese, S. Suri, “Fast packet classification using tuple space search”, to be presented at Sigcomm 1999. • P. Gupta, N. McKeown, “Packet classification using intelligent hierarchical cuttings”, Hot Interconnects VII, 1999. • P. Gupta, N. McKeown, “Packet classification on multiple fields”, Sigcomm 1999.

Tutorial Outline • Introduction: What is a Packet Switch? • Packet Lookup and Classification: Where does a packet go next? • Switching Fabrics: How does the packet get there?

Switching Fabrics • Output and Input Queueing • Output Queueing • Input Queueing – Scheduling algorithms – Combining input and output queues – Multicast traffic – Other non-blocking fabrics • Multistage Switches

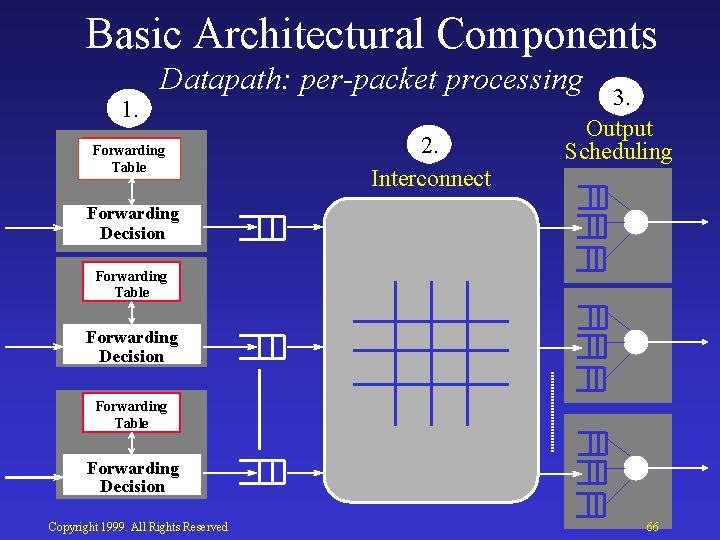

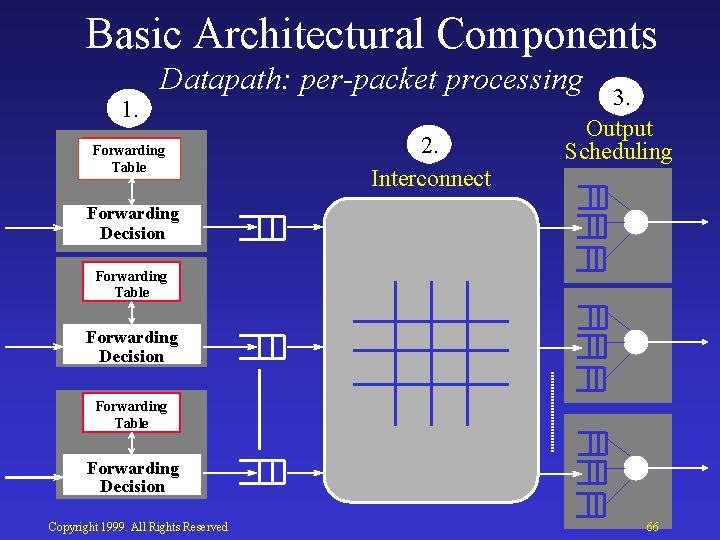

Basic Architectural Components. Datapath: per-packet processing. 1. Forwarding Decision (using a Forwarding Table) 2. Interconnect 3. Output Scheduling

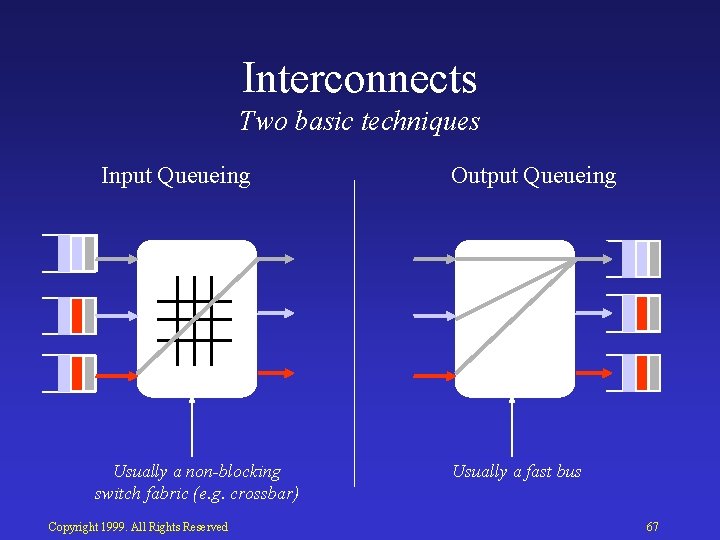

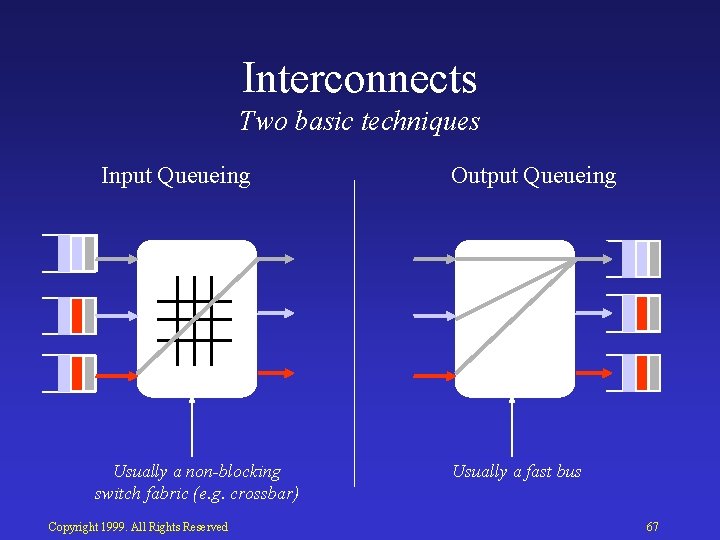

Interconnects: Two basic techniques. Input Queueing: usually a non-blocking switch fabric (e.g. crossbar). Output Queueing: usually a fast bus.

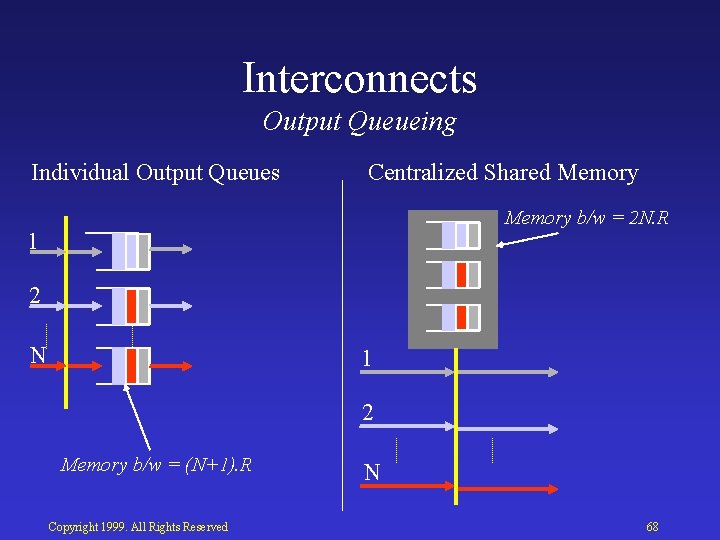

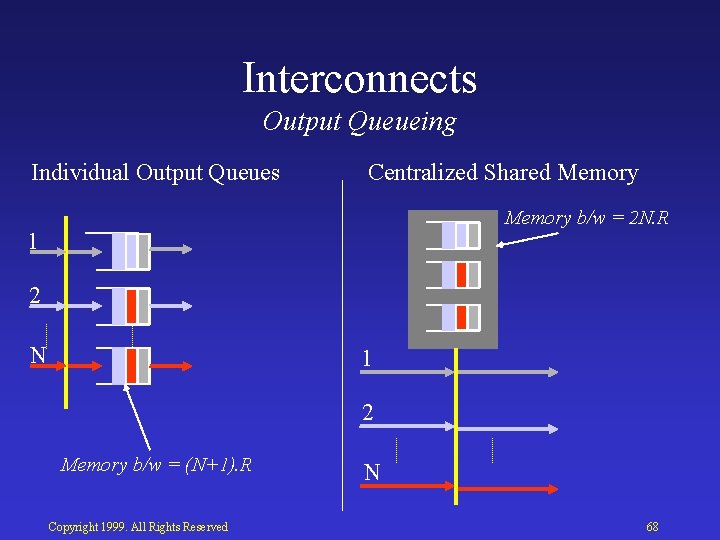

Interconnects: Output Queueing (figure, for N ports of rate R). Individual Output Queues: Memory b/w = (N+1)·R. Centralized Shared Memory: Memory b/w = 2N·R.

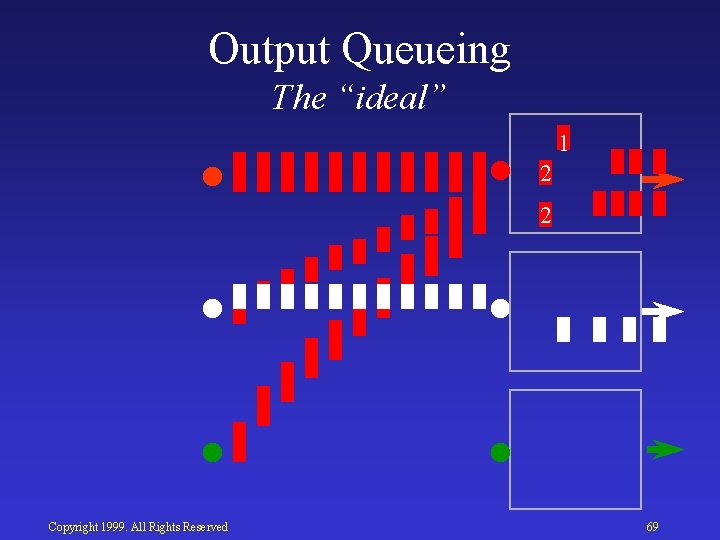

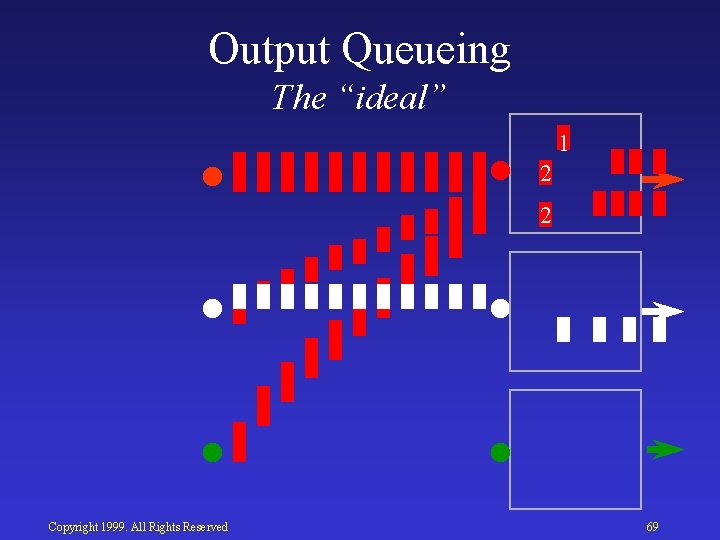

Output Queueing: The “ideal” (figure: cells labeled 1 and 2)

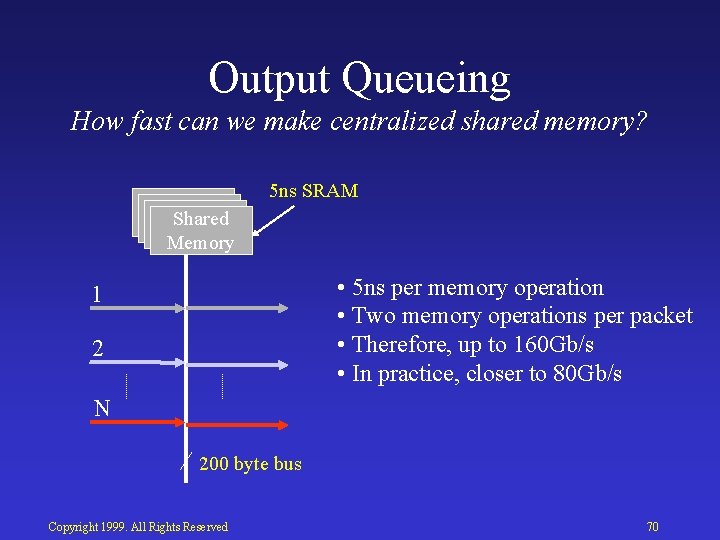

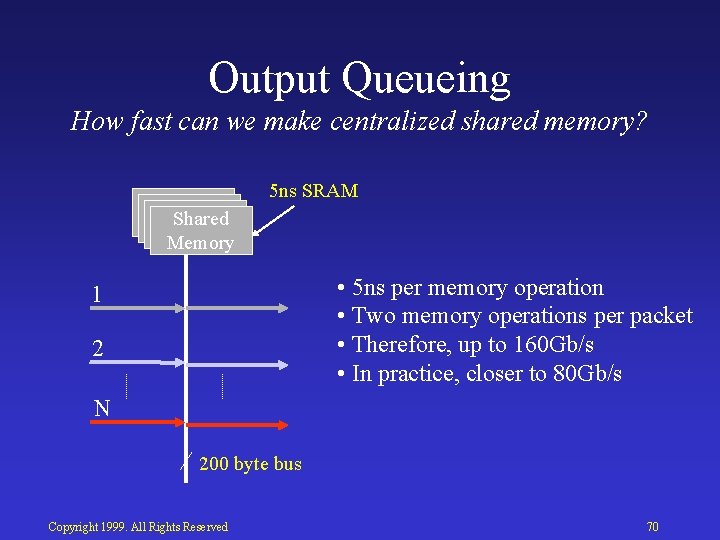

Output Queueing: How fast can we make centralized shared memory? (figure: ports 1 to N share a 5 ns SRAM Shared Memory over a 200 byte bus) • 5 ns per memory operation • Two memory operations per packet • Therefore, up to 160 Gb/s • In practice, closer to 80 Gb/s
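The 160 Gb/s figure can be reproduced from the numbers on the slide, reading "two memory operations per packet" as one write on arrival and one read on departure, each moving one 200-byte bus word (an interpretation of the slide, not a statement from it):

$$
\frac{200\ \text{B} \times 8\ \text{b/B}}{2 \times 5\ \text{ns}} = \frac{1600\ \text{b}}{10\ \text{ns}} = 160\ \text{Gb/s}
$$

This is the aggregate throughput of the memory, shared across all N ports.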

Switching Fabrics • Output and Input Queueing • Output Queueing • Input Queueing – Scheduling algorithms – Combining input and output queues – Multicast traffic – Other non-blocking fabrics • Multistage Switches

Interconnects: Input Queueing with Crossbar (figure: Data In, Data Out, and a Scheduler that sets the crossbar configuration). Memory b/w = 2R

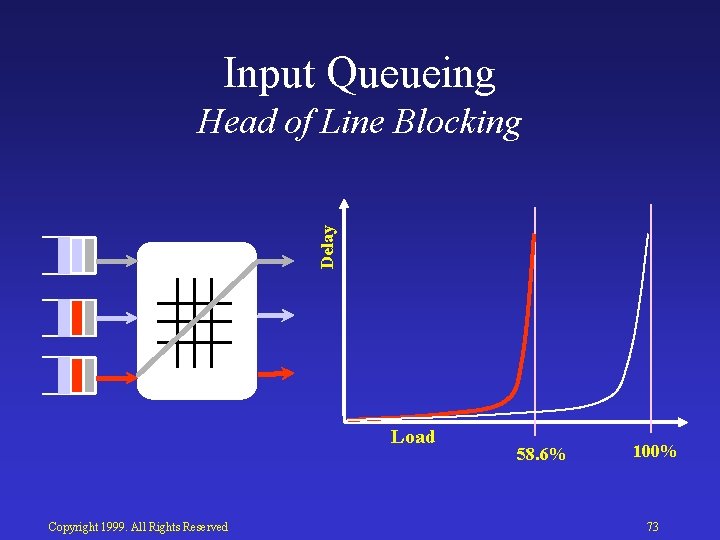

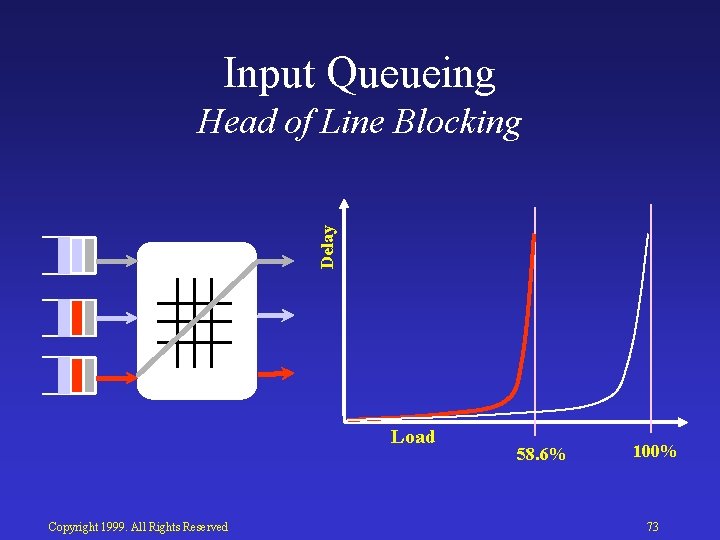

Input Queueing: Head of Line Blocking (plot of Delay vs. Load; the load axis is marked at 58.6% and 100%)
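The 58.6% mark is the well-known saturation throughput of a FIFO input-queued switch under uniform, independent arrivals as the number of ports grows large, the result associated with the Karol et al. paper in the input queueing references:

$$
\lim_{N \to \infty} \text{throughput} = 2 - \sqrt{2} \approx 0.586
$$

Beyond this load the input FIFOs grow without bound, even though the fabric itself is non-blocking.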

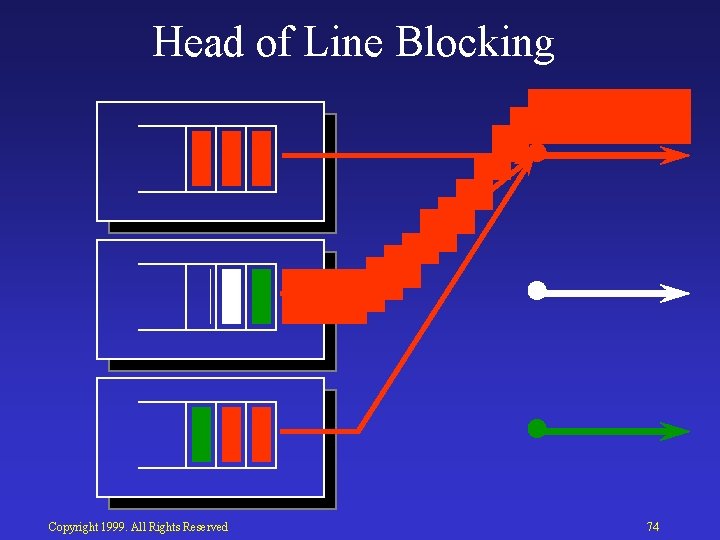

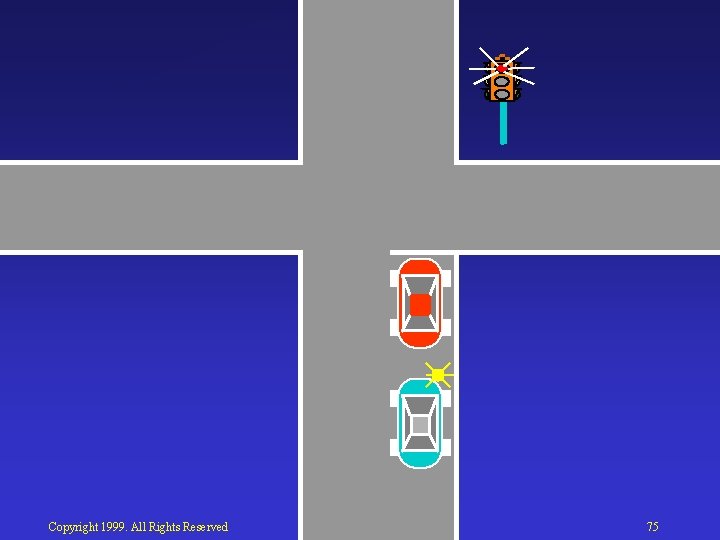

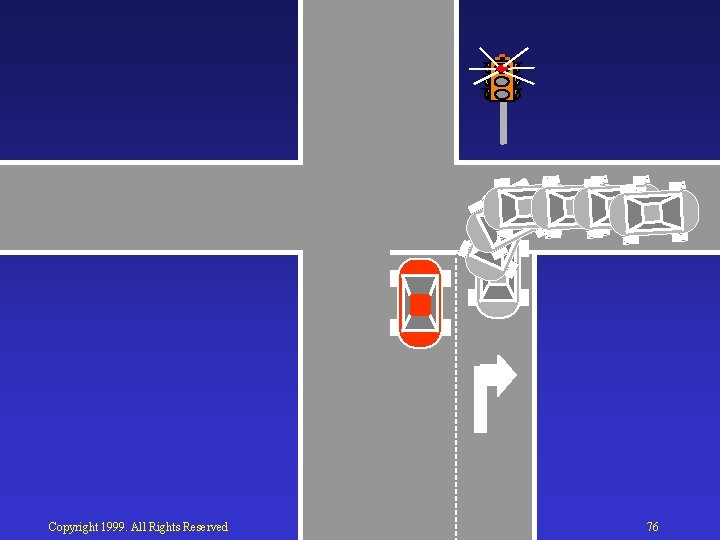

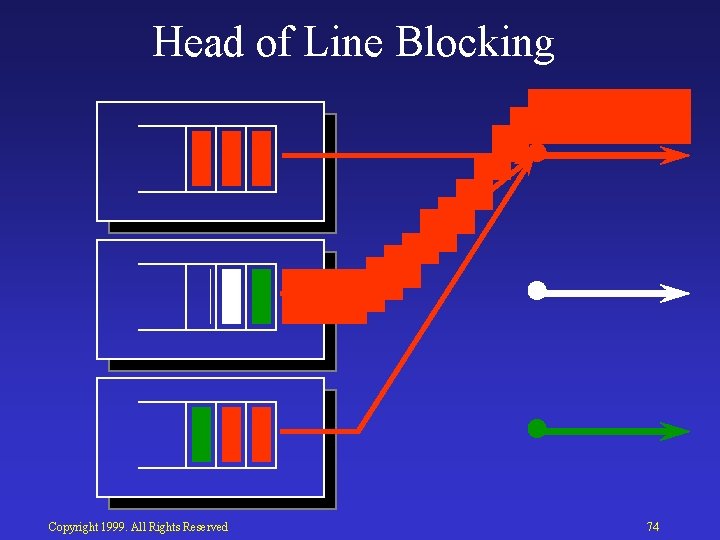

Head of Line Blocking

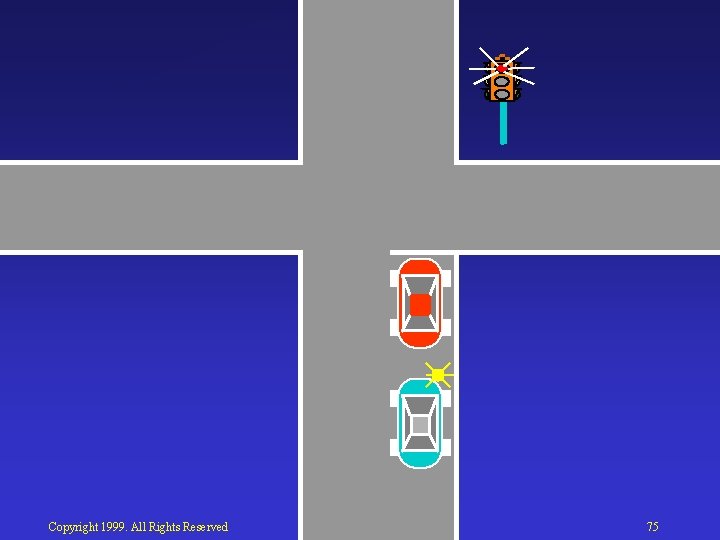


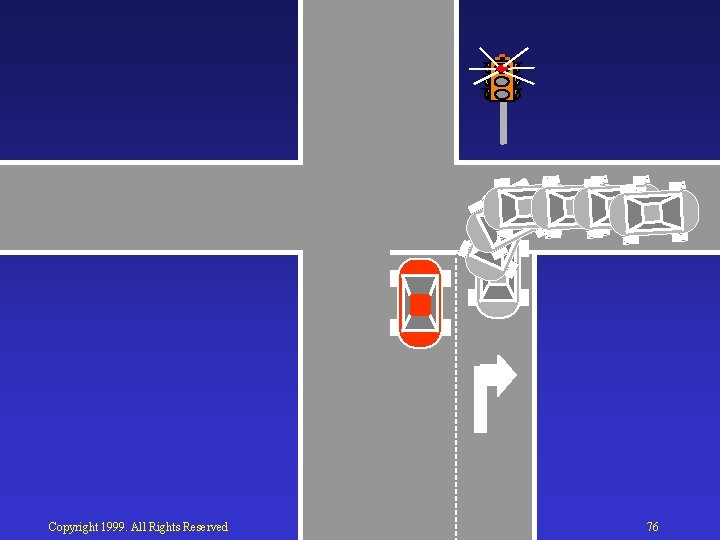


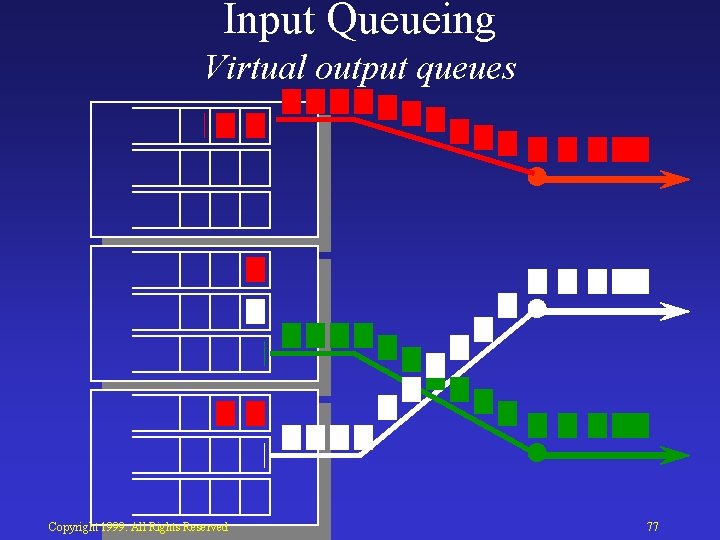

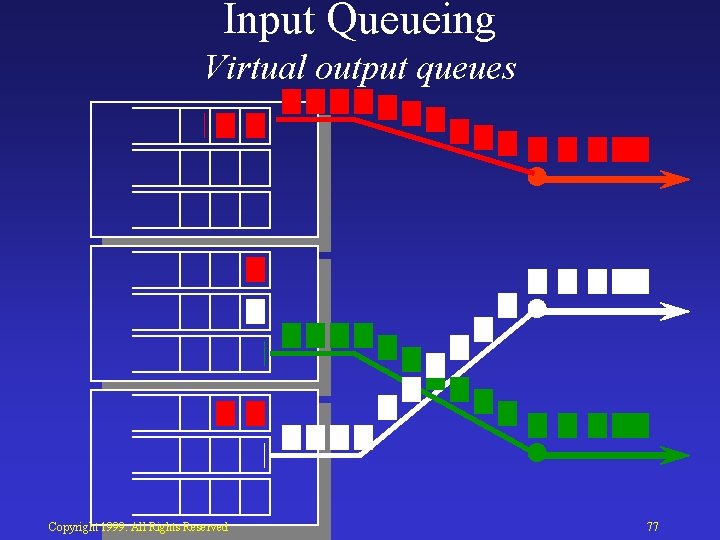

Input Queueing: Virtual output queues

Input Queues: Virtual Output Queues (plot of Delay vs. Load; the load axis is marked at 100%)

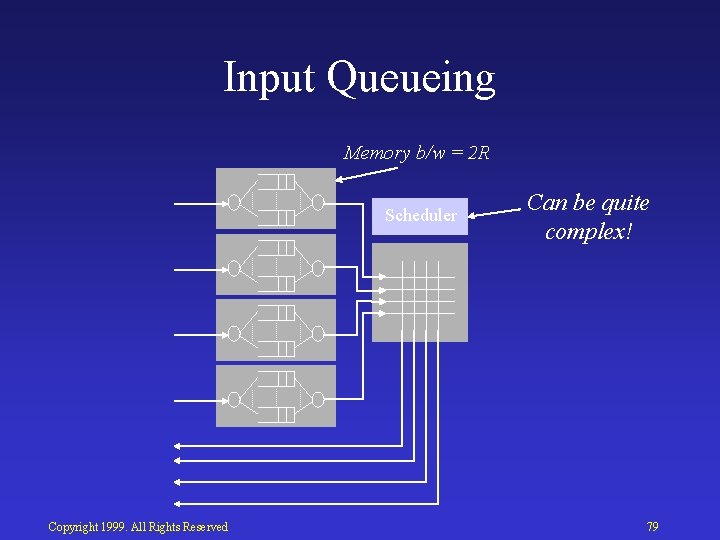

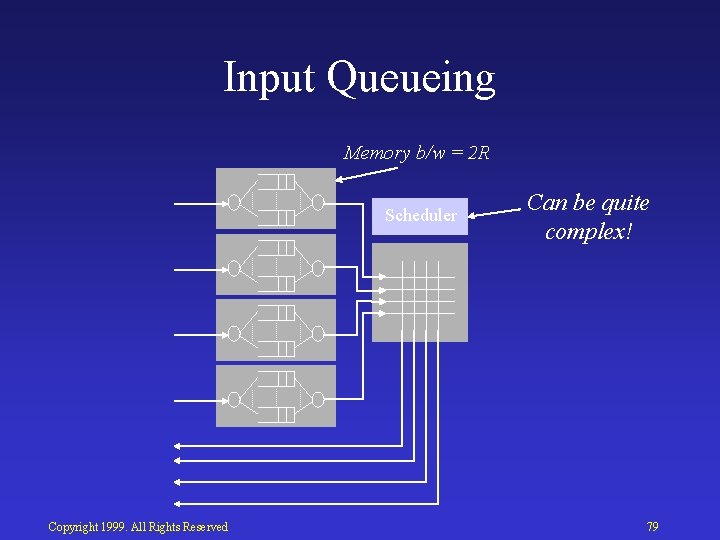

Input Queueing (figure): Memory b/w = 2R. The Scheduler can be quite complex!

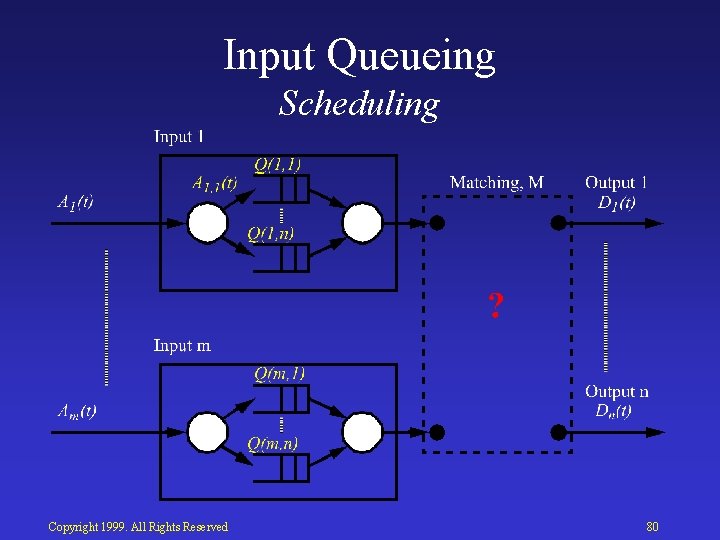

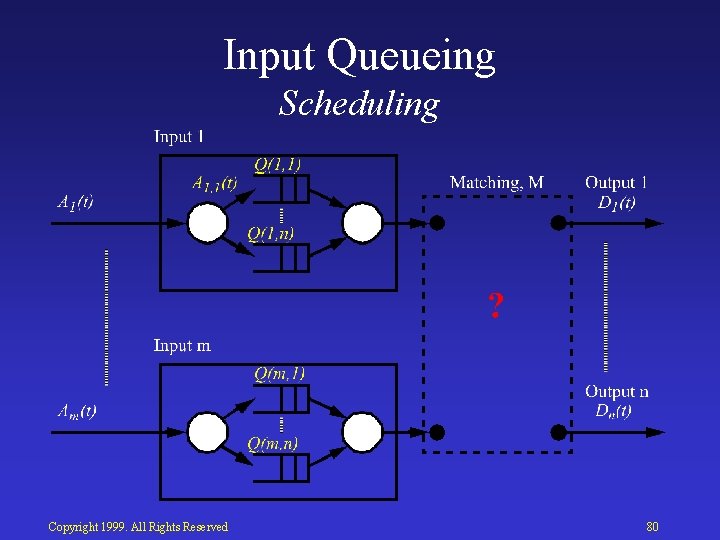

Input Queueing: Scheduling

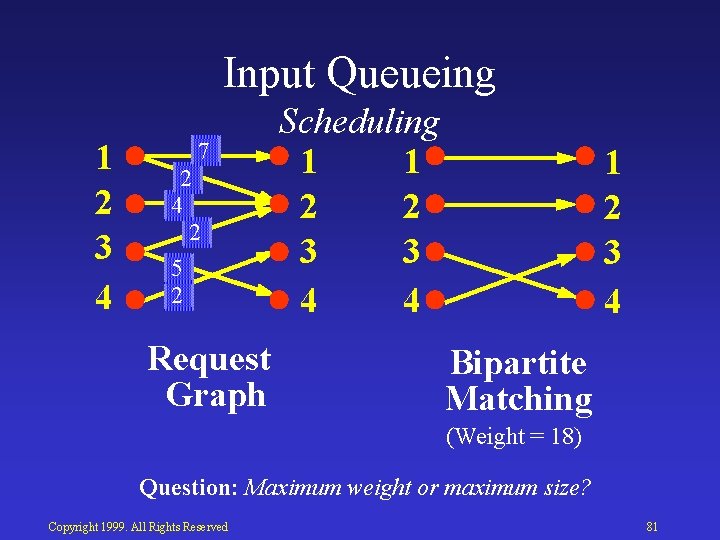

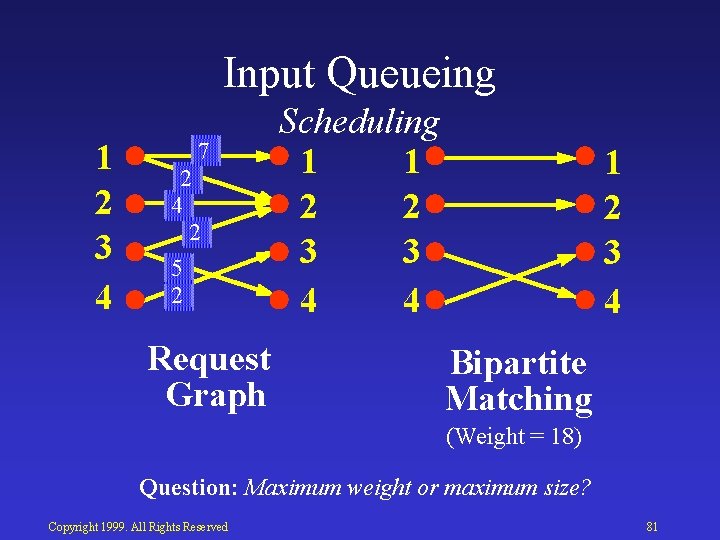

Input Queueing: Scheduling (figure: a Request Graph between inputs 1 to 4 and outputs 1 to 4 with edge weights 7, 2, 4, 2, 5, 2, and a Bipartite Matching of Weight = 18). Question: Maximum weight or maximum size?
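The difference between a maximum size and a maximum weight matching shows up even on a 2×2 switch. The sketch below finds both by brute force over all permutations, which is only feasible for tiny N. The occupancy matrix `L` is a hypothetical example, not the request graph from the slide.

```python
from itertools import permutations


def weight(edges, L):
    return sum(L[i][j] for i, j in edges)


def best_matchings(L):
    """L[i][j] = cells queued at input i for output j.
    Returns (maximum size matching, maximum weight matching) as edge lists."""
    n = len(L)
    # Every matching is contained in some permutation; keep only edges with traffic.
    candidates = [
        [(i, p[i]) for i in range(n) if L[i][p[i]] > 0]
        for p in permutations(range(n))
    ]
    max_size = max(candidates, key=lambda e: (len(e), weight(e, L)))
    max_weight = max(candidates, key=lambda e: (weight(e, L), len(e)))
    return max_size, max_weight


# Hypothetical occupancies: input 0 has a long queue for output 0.
L = [[10, 1],
     [1, 0]]
size_best, weight_best = best_matchings(L)
```

Here the maximum size matching serves two short queues, while the maximum weight matching serves only the long queue.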

Input Queueing: Scheduling • Maximum Size – Maximizes instantaneous throughput – Does it maximize long-term throughput? • Maximum Weight – Can clear most backlogged queues – But does it sacrifice long-term throughput?

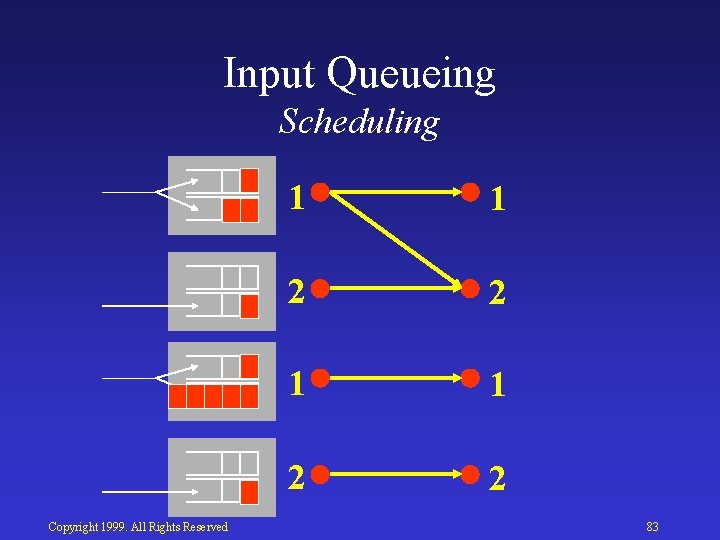

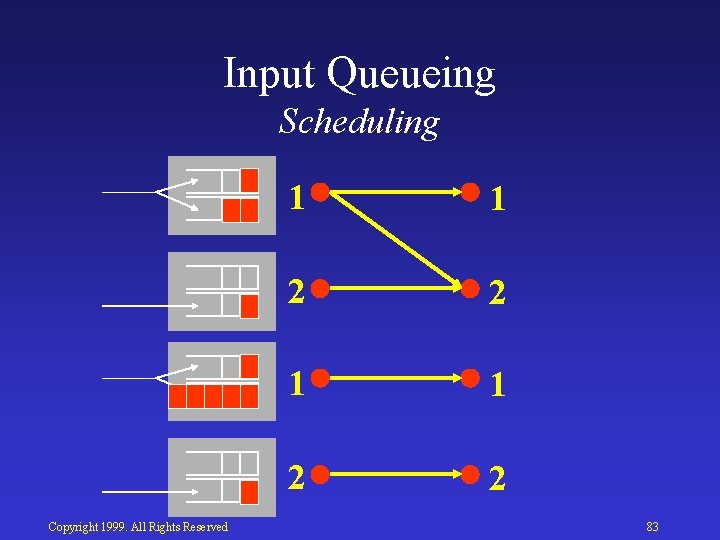

Input Queueing: Scheduling (figure: a switch with inputs 1, 2 and outputs 1, 2)

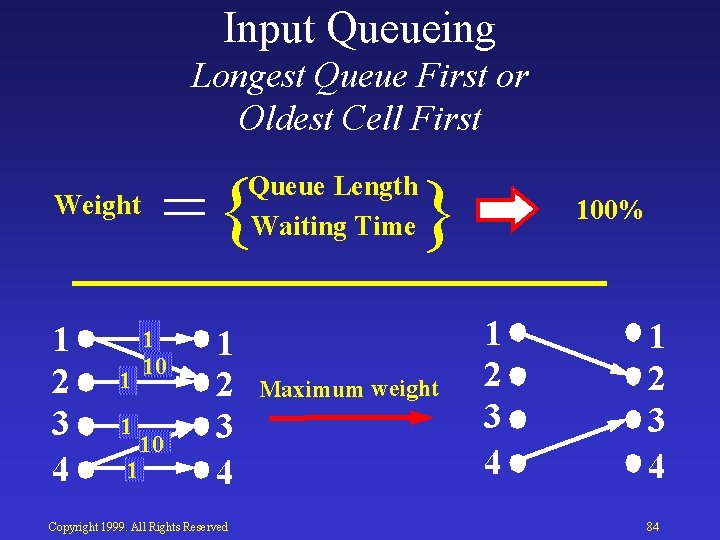

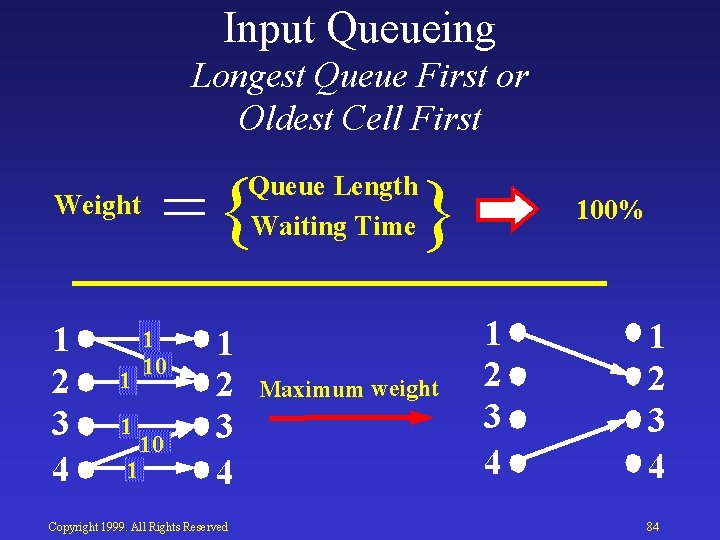

Input Queueing: Longest Queue First or Oldest Cell First (figure: a request graph between inputs 1 to 4 and outputs 1 to 4 with edge weights). Weight = {Queue Length, Waiting Time}. Maximum weight: 100%

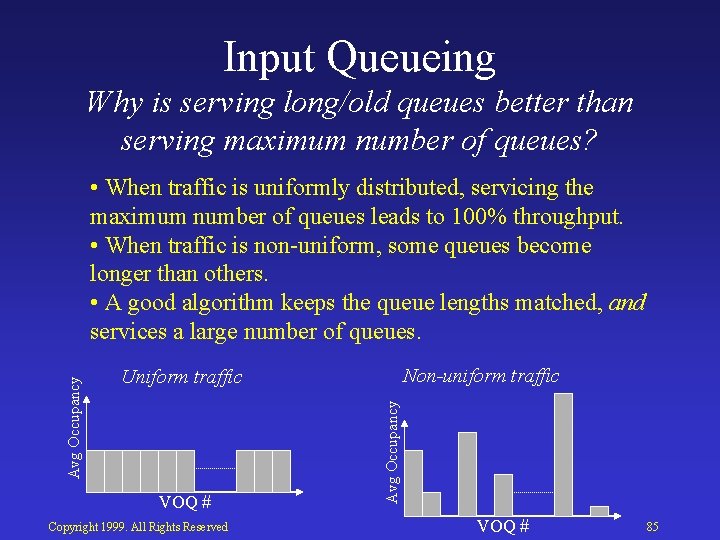

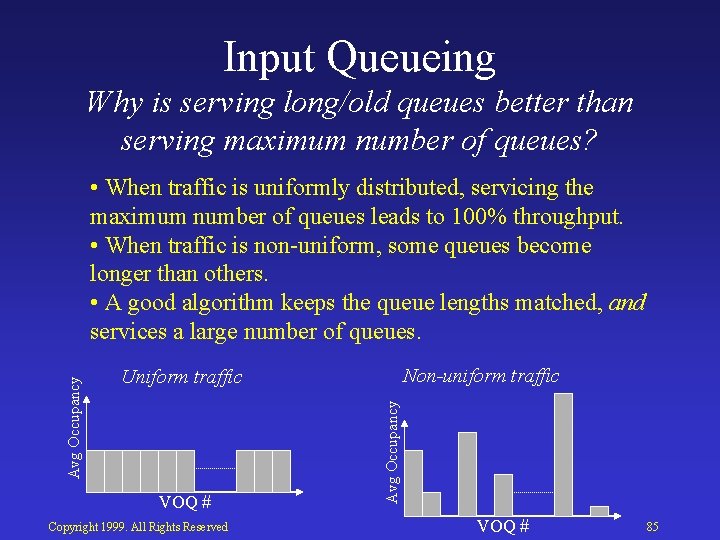

Input Queueing: Why is serving long/old queues better than serving the maximum number of queues? • When traffic is uniformly distributed, servicing the maximum number of queues leads to 100% throughput. • When traffic is non-uniform, some queues become longer than others. • A good algorithm keeps the queue lengths matched, and services a large number of queues. (plots: Avg Occupancy vs. VOQ # for Uniform traffic and Non-uniform traffic)

Input Queueing: Practical Algorithms • Maximal Size Algorithms – Wave Front Arbiter (WFA) – Parallel Iterative Matching (PIM) – iSLIP • Maximal Weight Algorithms – Fair Access Round Robin (FARR) – Longest Port First (LPF)

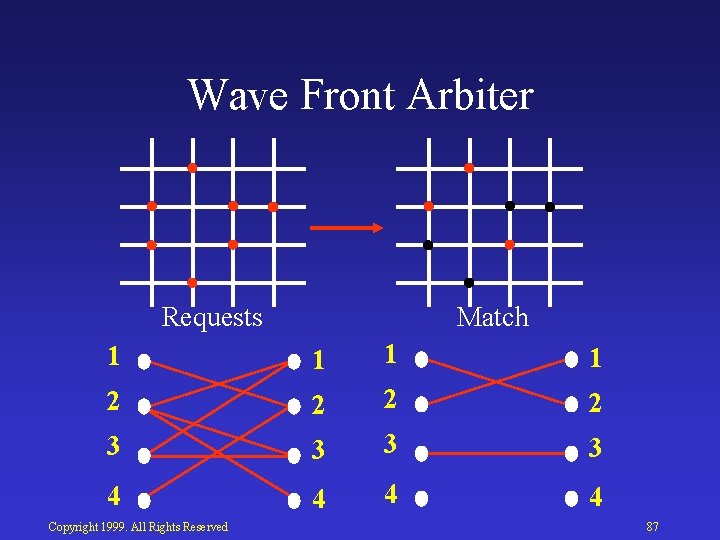

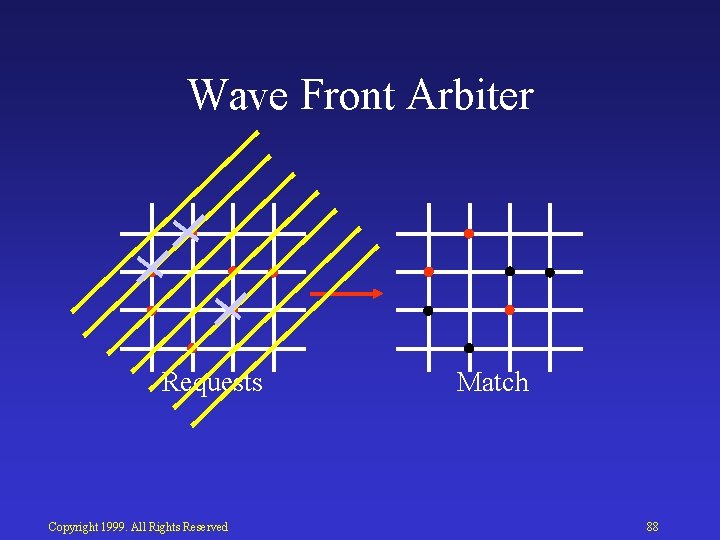

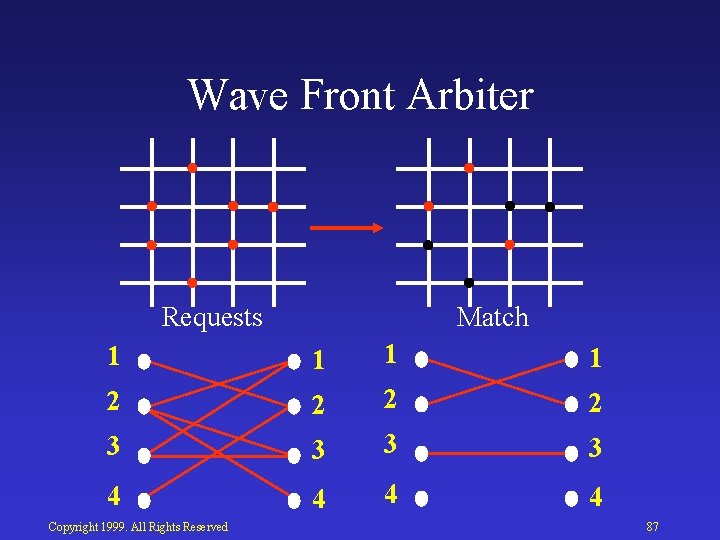

Wave Front Arbiter (figure: Requests and the resulting Match between inputs 1 to 4 and outputs 1 to 4)

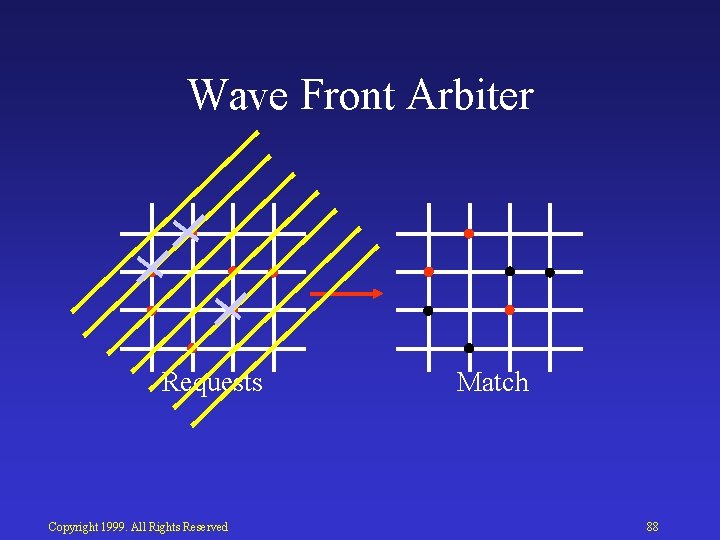

Wave Front Arbiter (figure: Requests, Match)

Wave Front Arbiter: Implementation (figure: a 4×4 array of Combinational Logic Blocks, (1,1) through (4,4))

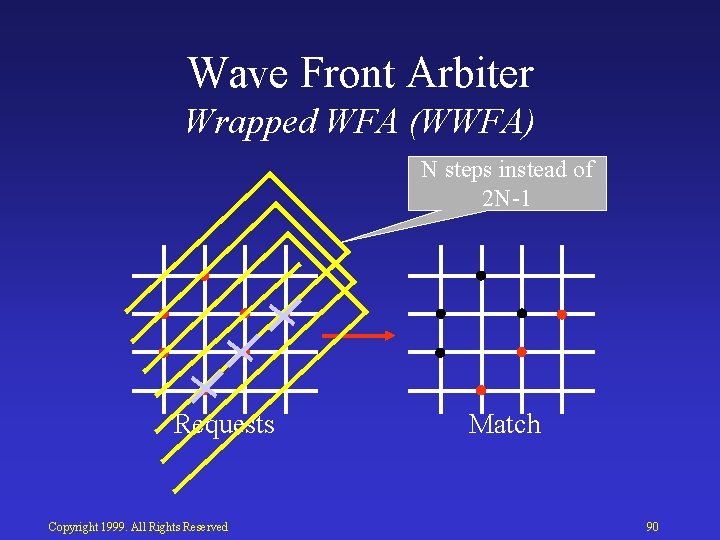

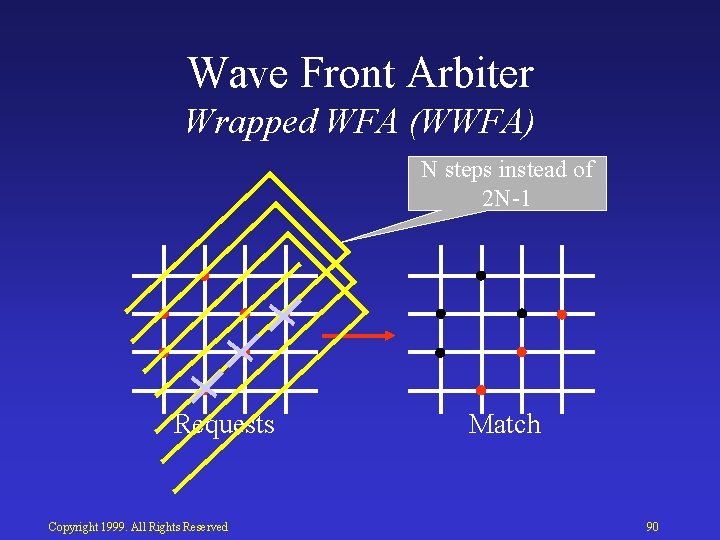

Wave Front Arbiter: Wrapped WFA (WWFA). N steps instead of 2N - 1 (figure: Requests, Match)

Input Queueing: Practical Algorithms • Maximal Size Algorithms – Wave Front Arbiter (WFA) – Parallel Iterative Matching (PIM) – iSLIP • Maximal Weight Algorithms – Fair Access Round Robin (FARR) – Longest Port First (LPF)

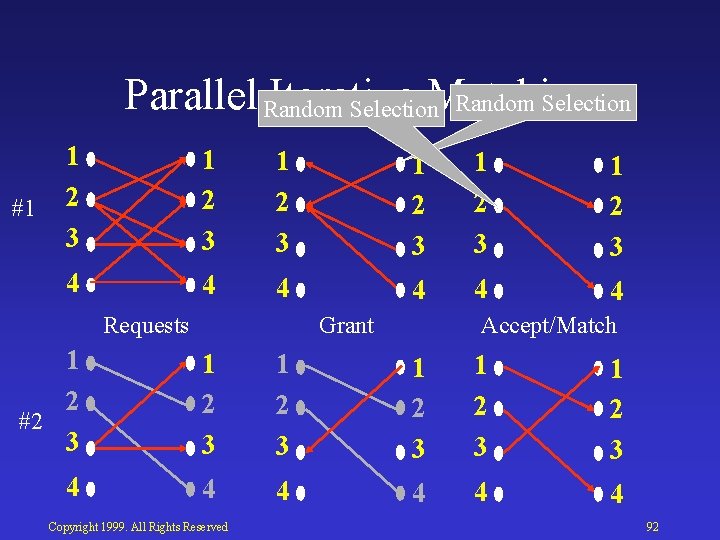

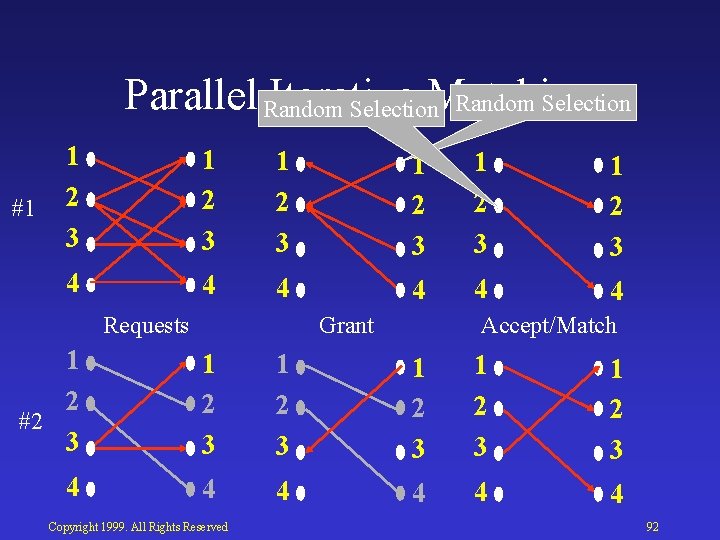

Parallel Iterative Matching: Random Selection (figure: iterations #1 and #2 on a 4×4 switch, each with Requests, Grant, and Accept/Match phases)
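The Request, Grant, Accept/Match phases of Parallel Iterative Matching can be sketched as follows. The function signature and the example request sets are assumptions for illustration; random choices come from a caller-supplied `random.Random` so that runs are repeatable.

```python
import random


def pim(requests, n, iterations, rng):
    """requests[i] = set of outputs that input i has cells for.
    Returns a dict mapping matched inputs to outputs."""
    match = {}     # input -> output
    taken = set()  # outputs already matched
    for _ in range(iterations):
        grants = {}  # input -> outputs that granted to it in this iteration
        # Request + Grant: each unmatched output picks one requesting input at random.
        for out in range(n):
            if out in taken:
                continue
            reqs = [i for i in range(n)
                    if i not in match and out in requests.get(i, set())]
            if reqs:
                grants.setdefault(rng.choice(reqs), []).append(out)
        if not grants:
            break  # nothing left to match: the matching is maximal
        # Accept: each input that received grants picks one at random.
        for inp, outs in grants.items():
            chosen = rng.choice(outs)
            match[inp] = chosen
            taken.add(chosen)
    return match


reqs = {0: {0, 1}, 1: {0}, 2: {1, 2, 3}, 3: {3}}  # hypothetical requests
result = pim(reqs, 4, 4, random.Random(1))
```

Each iteration that still has an outstanding request adds at least one match, so N iterations always reach a maximal (though not necessarily maximum) matching.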

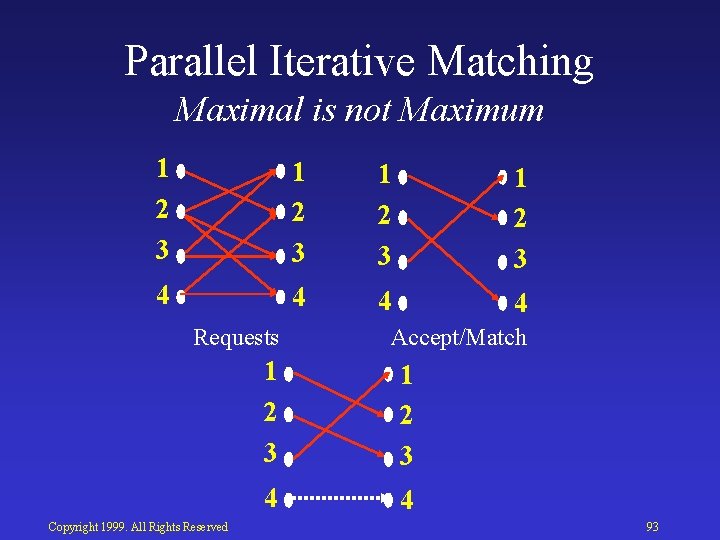

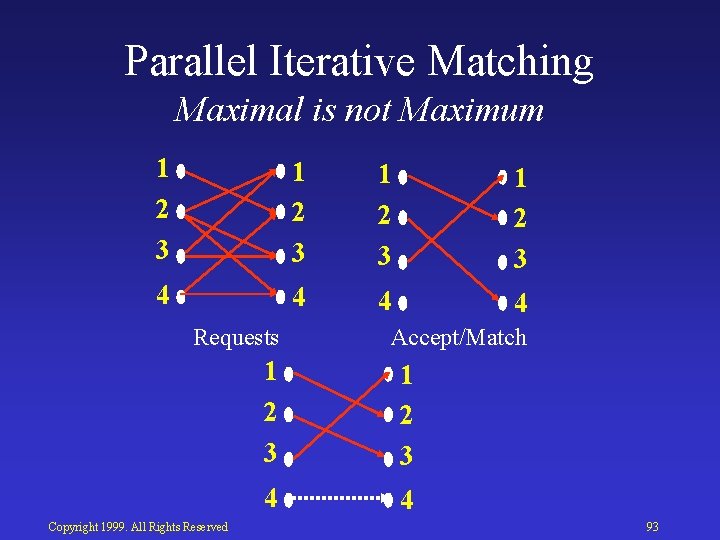

Parallel Iterative Matching: Maximal is not Maximum (figure: Requests and the resulting Accept/Match on a 4×4 switch)

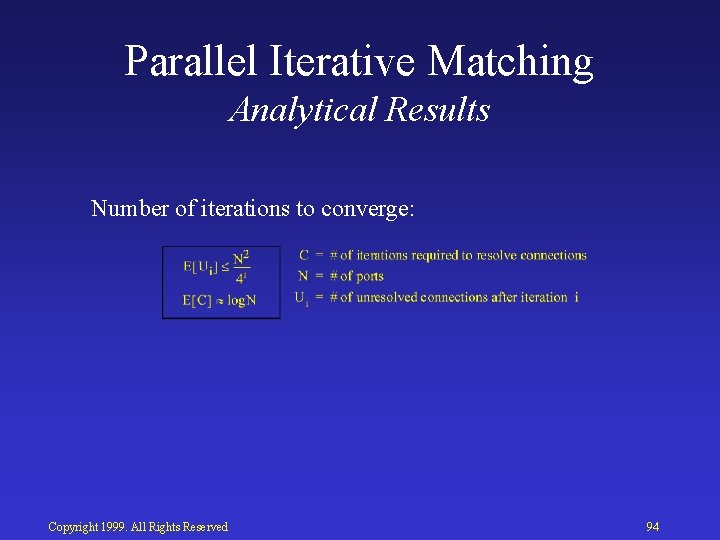

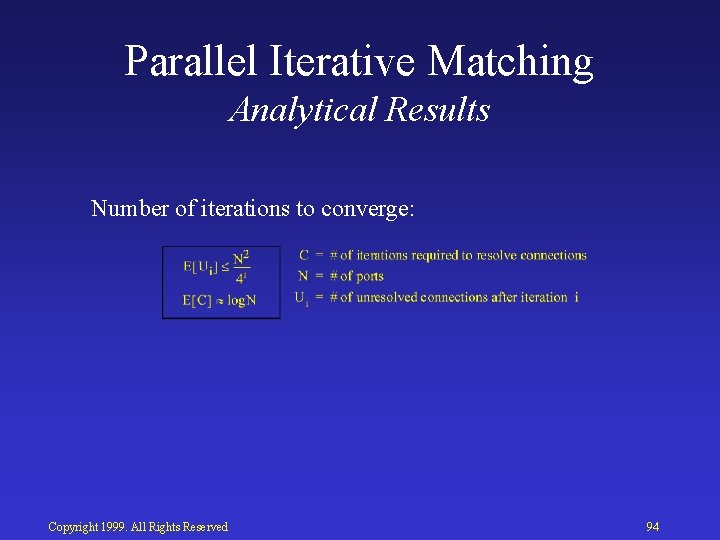

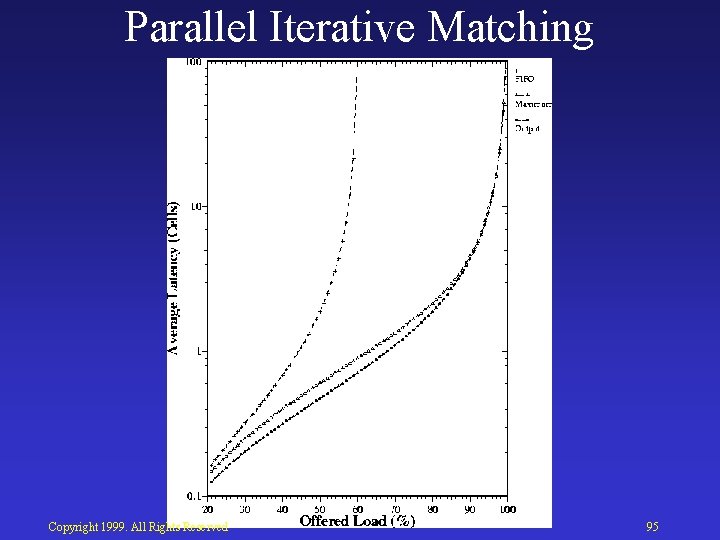

Parallel Iterative Matching: Analytical Results. Number of iterations to converge:

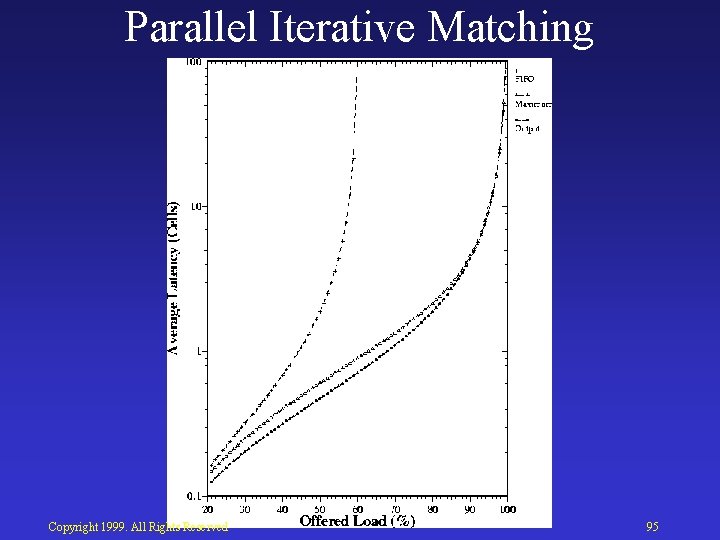

Parallel Iterative Matching

Parallel Iterative Matching

Parallel Iterative Matching

Input Queueing: Practical Algorithms • Maximal Size Algorithms – Wave Front Arbiter (WFA) – Parallel Iterative Matching (PIM) – iSLIP • Maximal Weight Algorithms – Fair Access Round Robin (FARR) – Longest Port First (LPF)

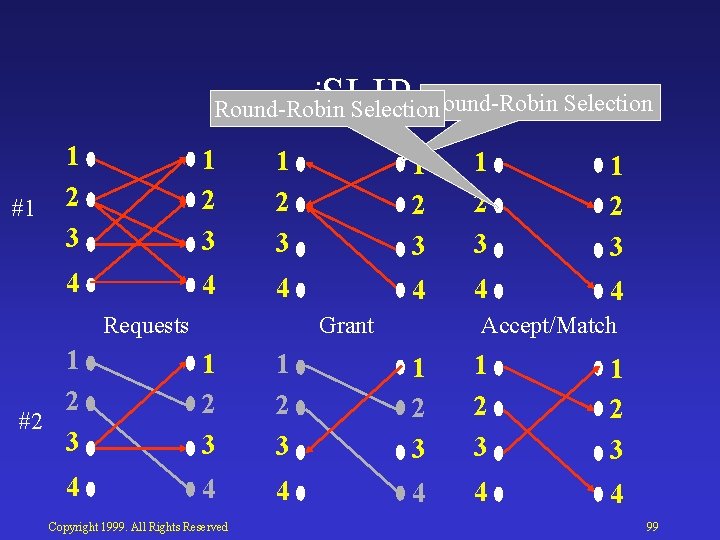

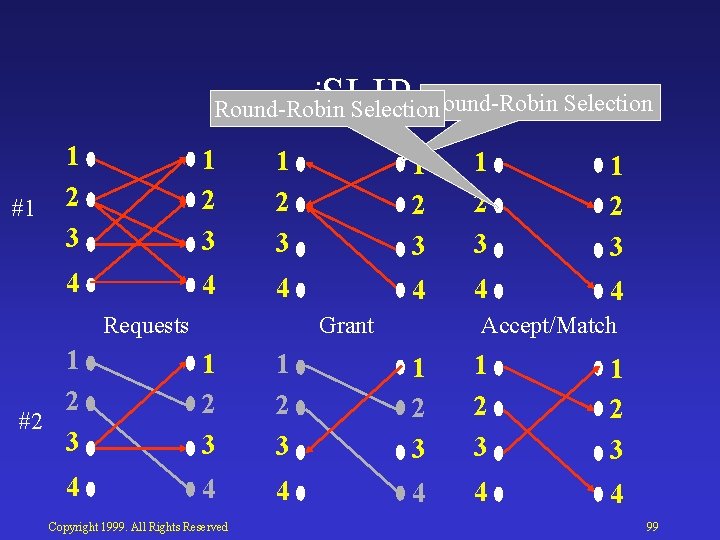

iSLIP: Round Robin Selection (figure: iterations #1 and #2 on a 4×4 switch, each with Requests, Grant, and Accept/Match phases)

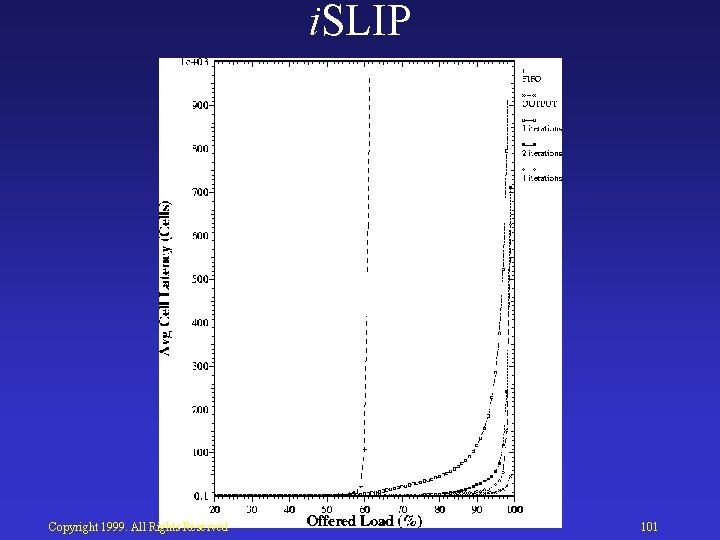

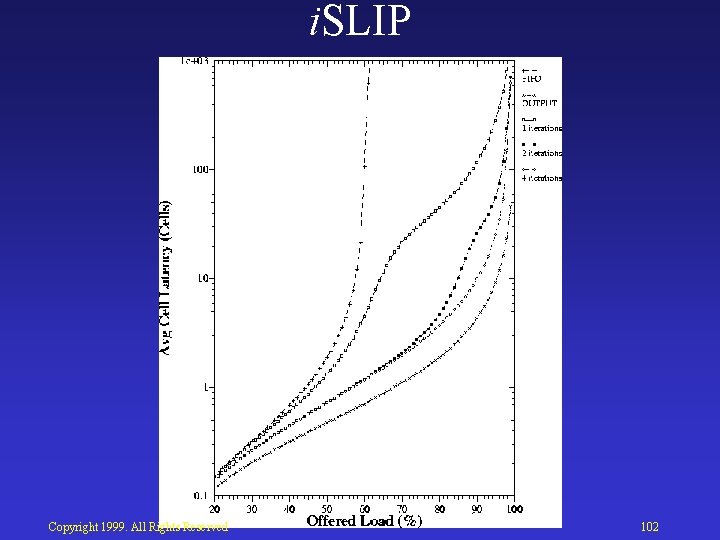

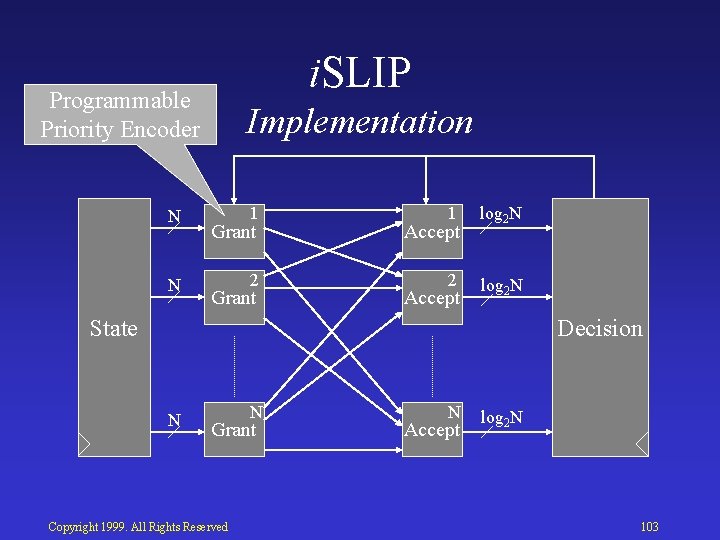

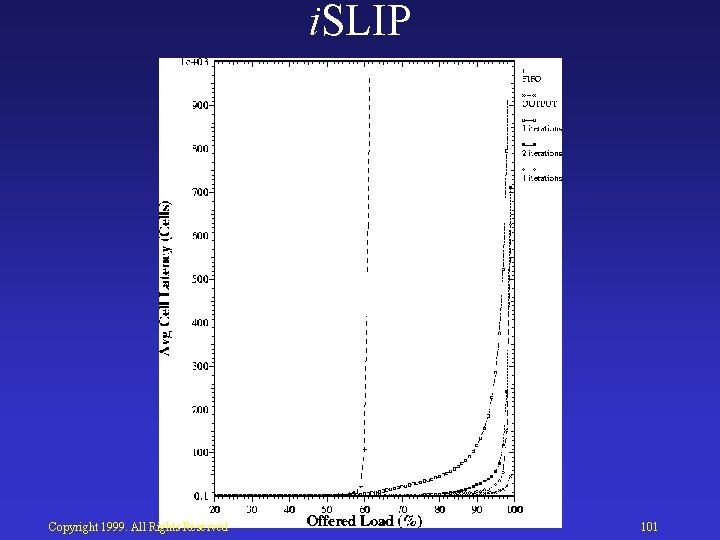

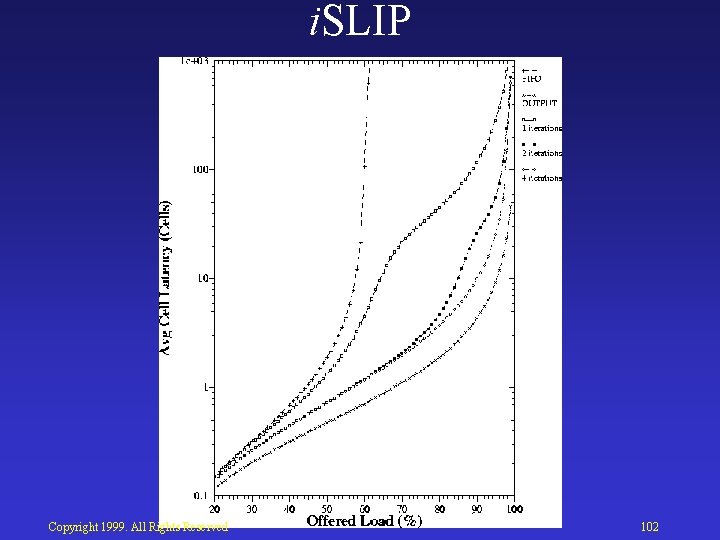

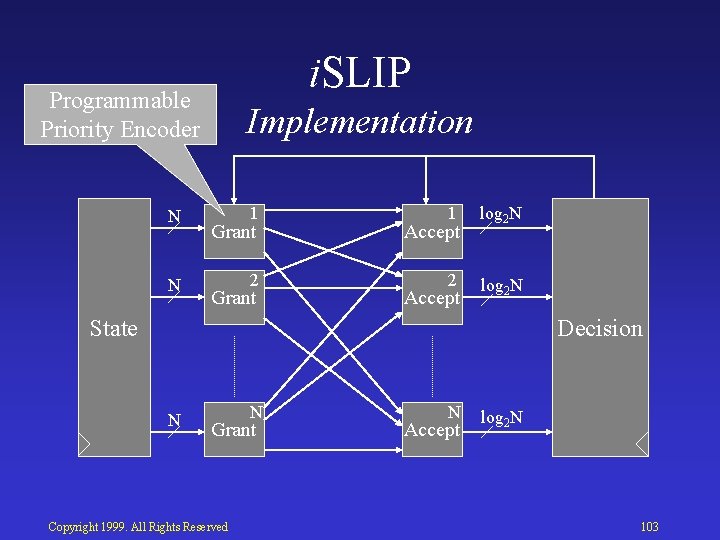

iSLIP Properties • Random under low load • TDM under high load • Lowest priority to MRU • 1 iteration: fair to outputs • Converges in at most N iterations. On average <= log2 N • Implementation: N priority encoders • Up to 100% throughput for uniform traffic
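The round-robin grant and accept steps behind these properties can be sketched as below. The function signature is an assumption; the pointer rule (move one past the matched port, and only for matches made in the first iteration) follows the published description of iSLIP.

```python
def islip(requests, grant_ptr, accept_ptr, n, iterations=1):
    """requests[i] = set of outputs that input i has cells for.
    grant_ptr[j] and accept_ptr[i] are round-robin pointers, updated in place.
    Returns a dict mapping matched inputs to outputs."""
    match, taken = {}, set()
    for it in range(iterations):
        grants = {}
        # Grant: each unmatched output picks the requester at or after its pointer.
        for out in range(n):
            if out in taken:
                continue
            reqs = [i for i in range(n)
                    if i not in match and out in requests.get(i, set())]
            if reqs:
                chosen = min(reqs, key=lambda i: (i - grant_ptr[out]) % n)
                grants.setdefault(chosen, []).append(out)
        # Accept: each input picks the granting output at or after its pointer.
        for inp, outs in grants.items():
            out = min(outs, key=lambda o: (o - accept_ptr[inp]) % n)
            match[inp] = out
            taken.add(out)
            if it == 0:
                # Most recently served port gets lowest priority next time.
                grant_ptr[out] = (inp + 1) % n
                accept_ptr[inp] = (out + 1) % n
    return match


n = 2
reqs = {0: {0, 1}, 1: {0, 1}}   # fully loaded 2x2 switch (hypothetical)
g, a = [0, 0], [0, 0]
slot1 = islip(reqs, g, a, n)    # pointers synchronized: only one match
slot2 = islip(reqs, g, a, n)    # pointers have desynchronized: full match
```

After the first cell time the grant pointers point at different inputs, so under sustained load the switch settles into a time-division pattern.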

iSLIP

iSLIP

iSLIP: Implementation (figure: N Grant arbiters and N Accept arbiters, each a Programmable Priority Encoder with N inputs and a log2 N bit output, together with State and Decision blocks)

Input Queueing: References • M. Karol et al., “Input vs Output Queueing on a Space Division Packet Switch”, IEEE Trans. Comm., Dec 1987, pp. 1347-1356. • Y. Tamir, “Symmetric Crossbar arbiters for VLSI communication switches”, IEEE Trans. Parallel and Dist. Sys., Jan 1993, pp. 13-27. • T. Anderson et al., “High Speed Switch Scheduling for Local Area Networks”, ACM Trans. Comp. Sys., Nov 1993, pp. 319-352. • N. McKeown, “The iSLIP scheduling algorithm for Input Queued Switches”, IEEE Trans. Networking, April 1999, pp. 188-201. • C. Lund et al., “Fair prioritized scheduling in an input buffered switch”, Proc. of IFIP-IEEE Conf., April 1996, pp. 358-69. • A. Mekkittikul et al., “A Practical Scheduling Algorithm to Achieve 100% Throughput in Input Queued Switches”, IEEE Infocom 98, April 1998.

Switching Fabrics • Output and Input Queueing • Output Queueing • Input Queueing – Scheduling algorithms – Combining input and output queues – Multicast traffic – Other non-blocking fabrics • Multistage Switches

Input Queueing: Speedup • Input queued switches cannot easily control delay. • But output queued switches can. • How can we emulate the behavior of an output queued switch?

Output Queueing: The “ideal” (figure: cells labeled 1 and 2)

Using Speedup (figure: cells labeled 1 and 2)

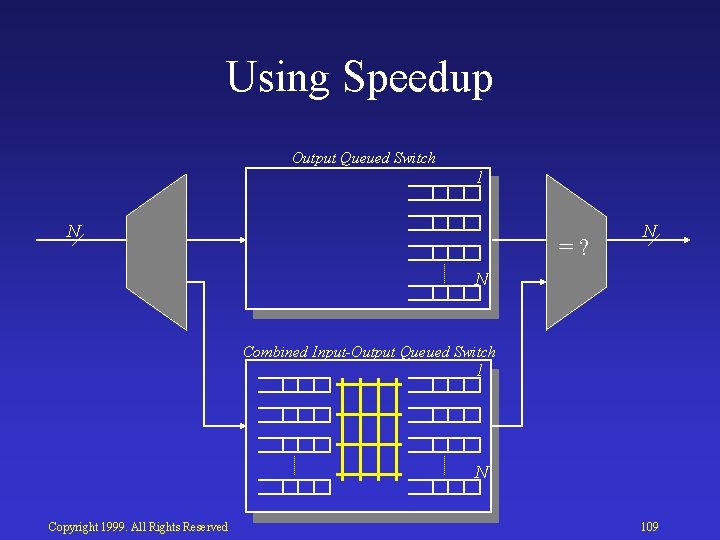

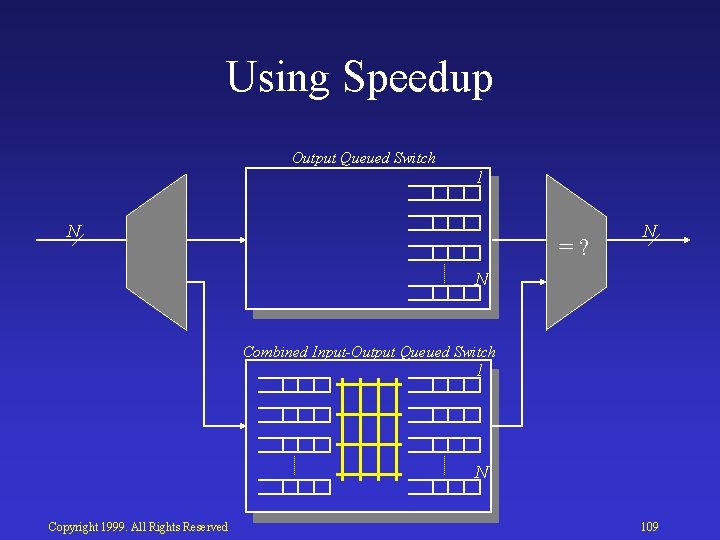

Using Speedup (figure: is an N×N Output Queued Switch equivalent to an N×N Combined Input-Output Queued Switch?)

Using Speedup. Theorem: For a switch with combined input and output queueing to exactly mimic an output queued switch, for all types of traffic, a speedup of 2 - 1/N is necessary and sufficient.

Switching Fabrics • Output and Input Queueing • Output Queueing • Input Queueing – Scheduling algorithms – Combining input and output queues – Multicast traffic – Other non-blocking fabrics • Multistage Switches

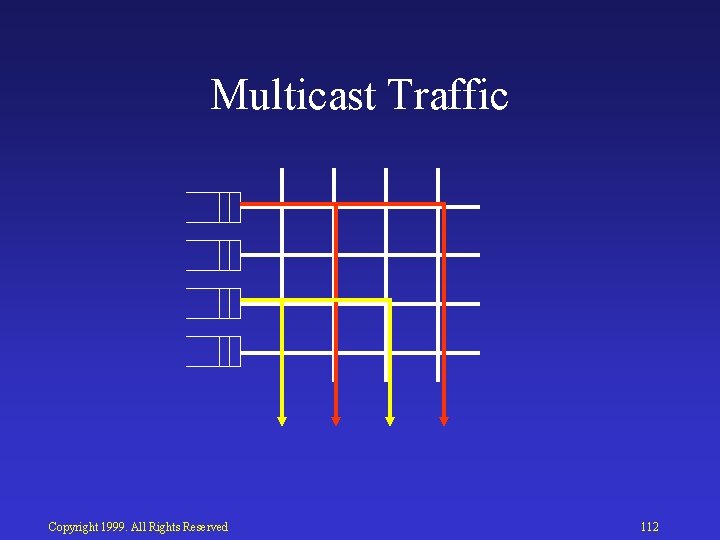

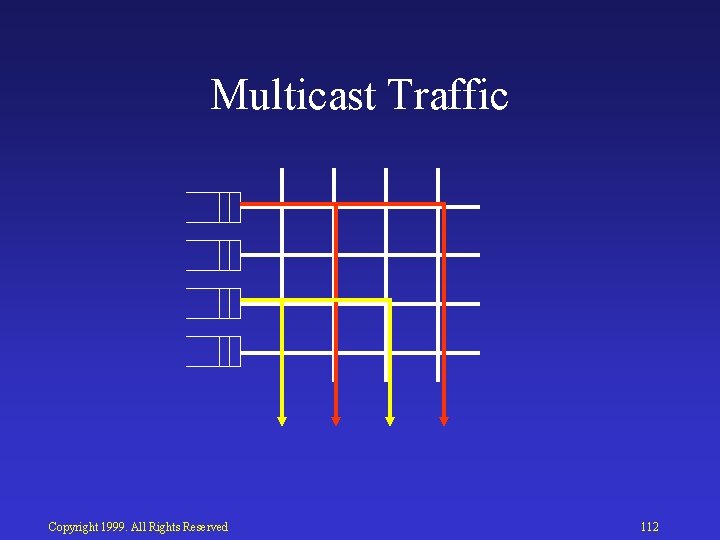

Multicast Traffic

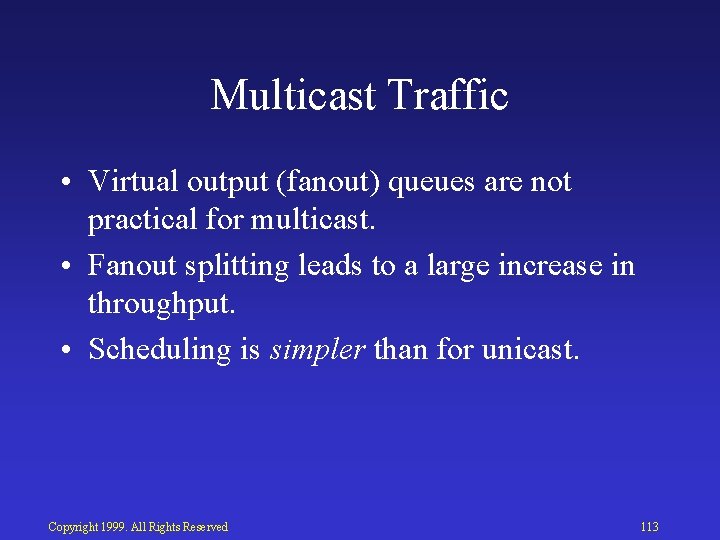

Multicast Traffic • Virtual output (fanout) queues are not practical for multicast. • Fanout splitting leads to a large increase in throughput. • Scheduling is simpler than for unicast.

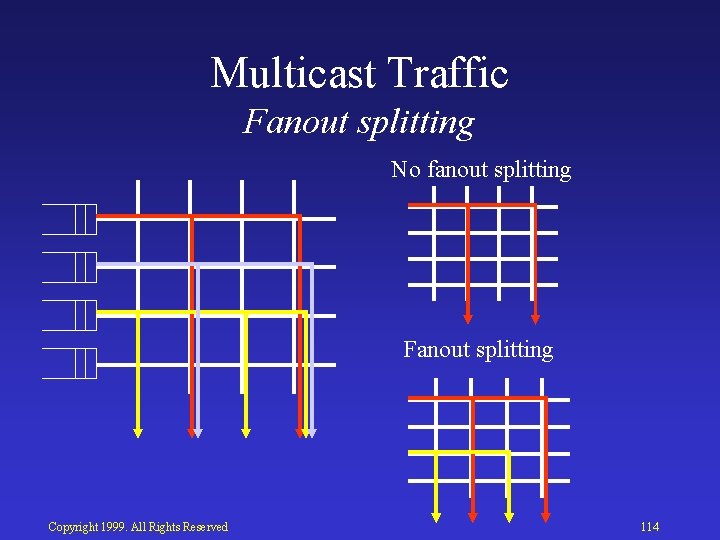

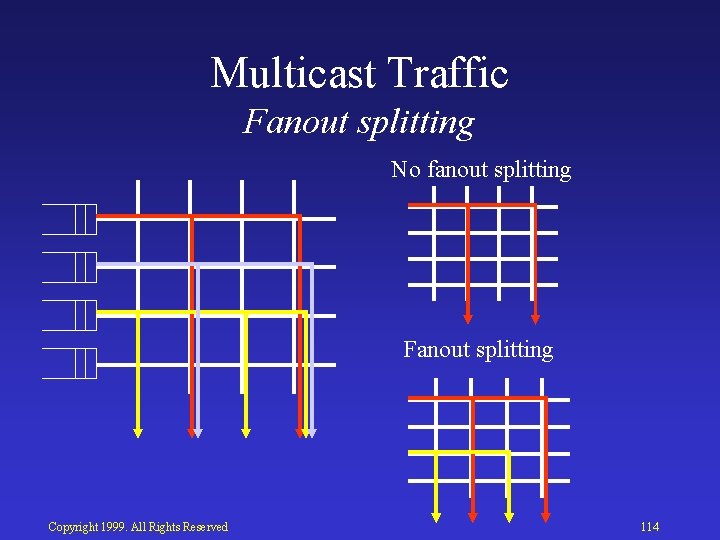

Multicast Traffic: Fanout splitting (plot comparing No fanout splitting with Fanout splitting)

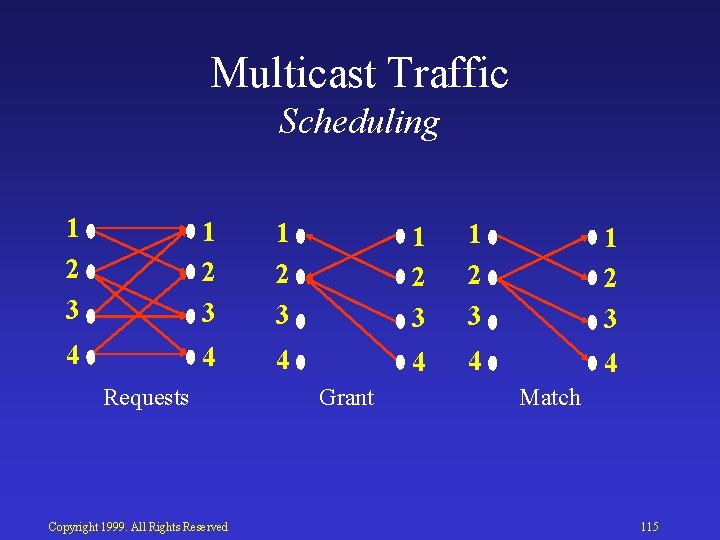

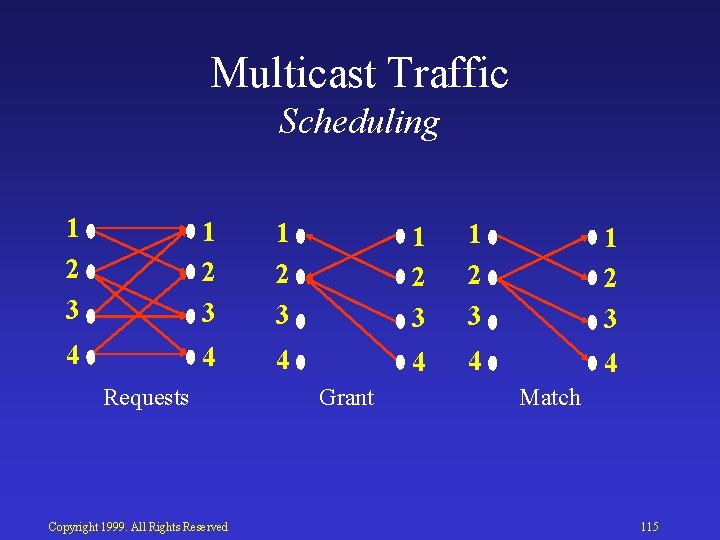

Multicast Traffic: Scheduling (figure: Requests, Grant, and Match phases on a 4×4 switch)

Switching Fabrics • Output and Input Queueing • Output Queueing • Input Queueing – Scheduling algorithms – Combining input and output queues – Multicast traffic – Other non-blocking fabrics • Multistage Switches

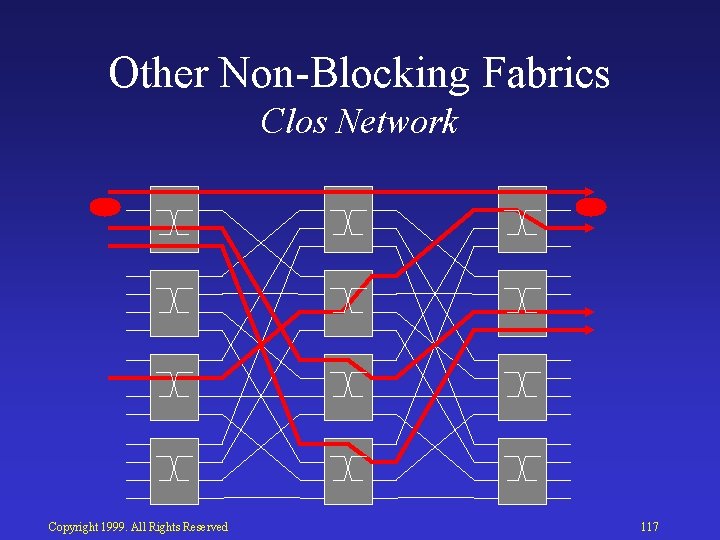

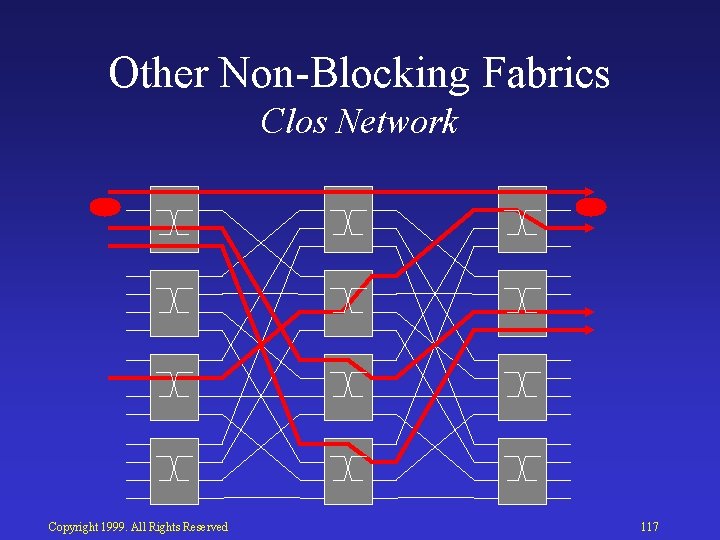

Other Non-Blocking Fabrics: Clos Network

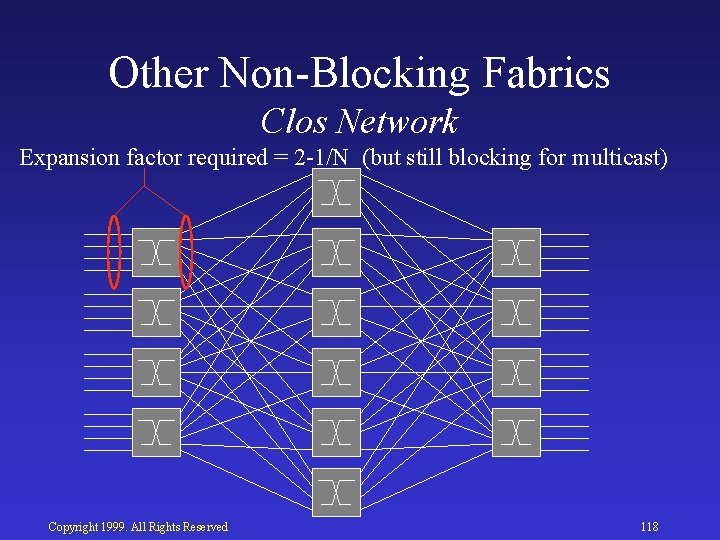

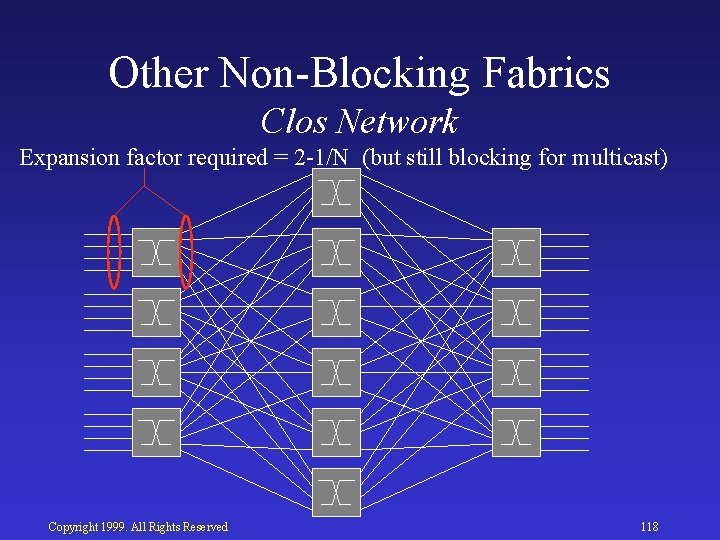

Other Non-Blocking Fabrics: Clos Network. Expansion factor required = 2 - 1/N (but still blocking for multicast)

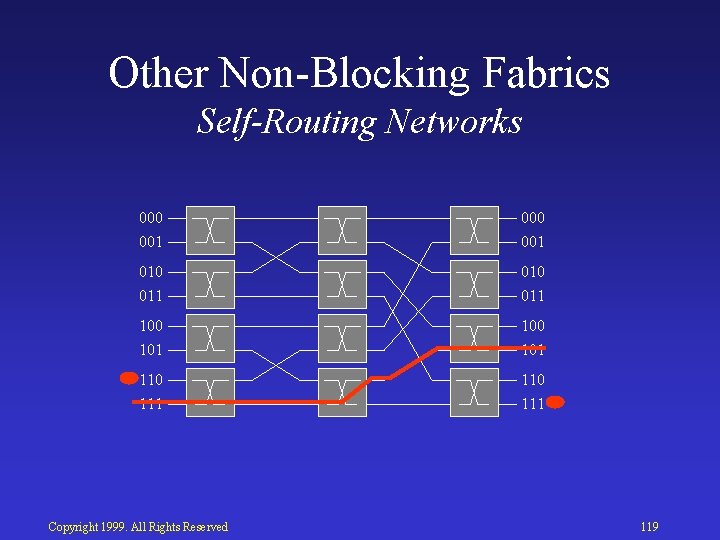

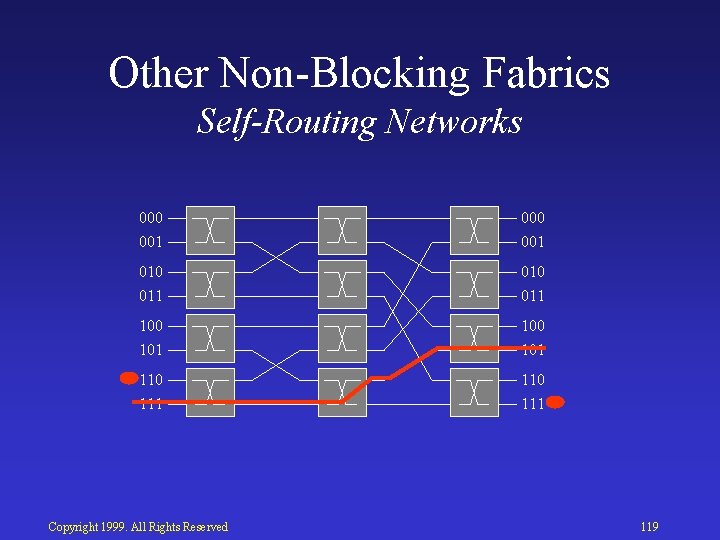

Other Non-Blocking Fabrics: Self-Routing Networks (figure: a network with outputs labeled 000 through 111)

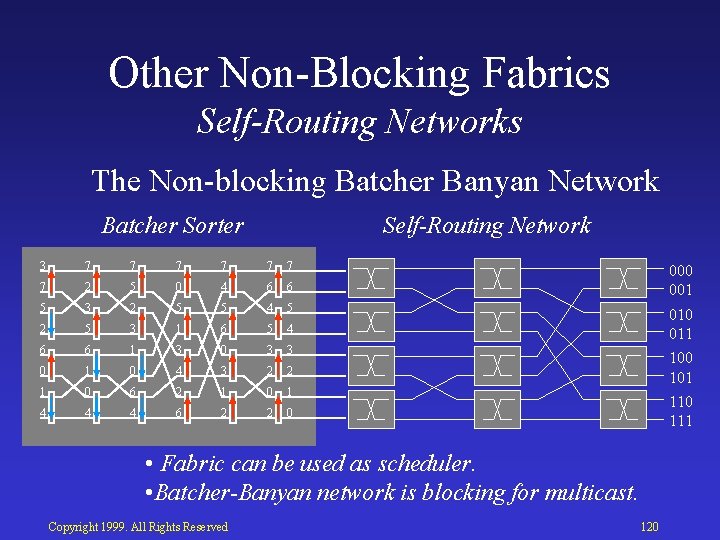

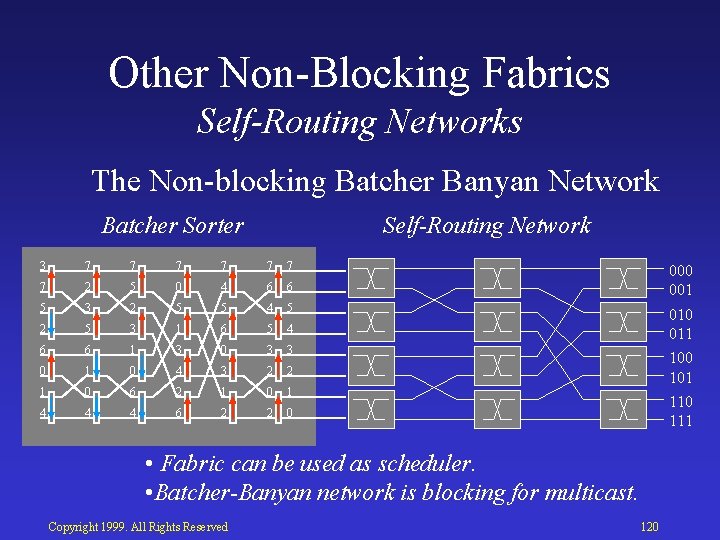

Other Non-Blocking Fabrics: Self-Routing Networks. The Non-blocking Batcher-Banyan Network (figure: a Batcher Sorter followed by a Self-Routing Network; cells tagged with destinations 0 to 7 are sorted and then delivered to outputs 000 through 111) • Fabric can be used as scheduler. • Batcher-Banyan network is blocking for multicast.

Switching Fabrics • Output and Input Queueing • Output Queueing • Input Queueing – Scheduling algorithms – Combining input and output queues – Multicast traffic – Other non-blocking fabrics • Multistage Switches

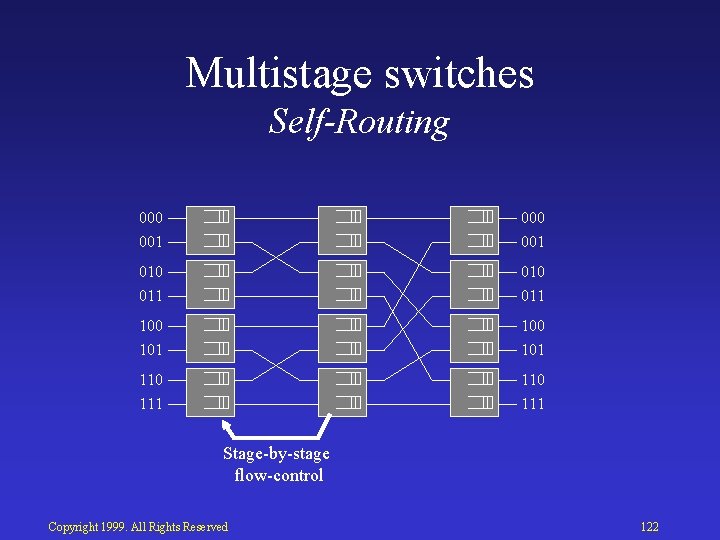

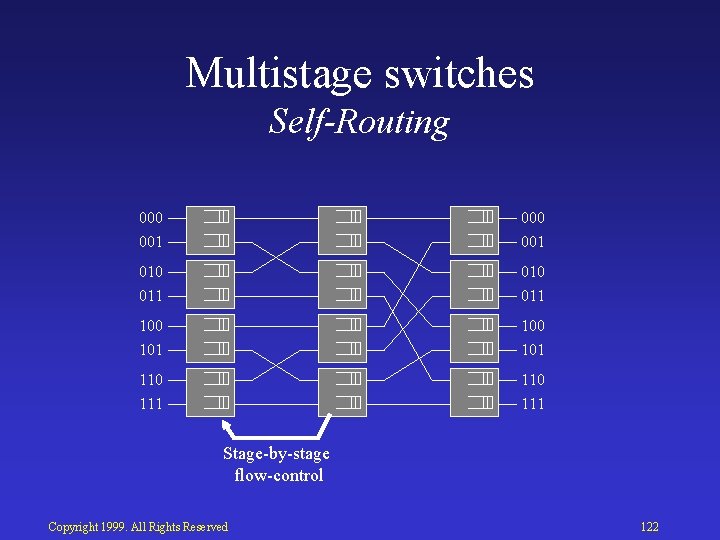

Multistage switches: Self-Routing (figure: outputs 000 through 111). Stage-by-stage flow control

Multistage switches: Self-Routing (figure: a Buffered multistage switch and a Multicast copy network, outputs 000 through 111). Stage-by-stage flow control

Tutorial Outline • Introduction: What is a Packet Switch? • Packet Lookup and Classification: Where does a packet go next? • Switching Fabrics: How does the packet get there?

Basic Architectural Components (figure labels): Control; Datapath: per-packet processing; Routing, Admission Control, Congestion Control, Reservation, Policing, Switching, Output Scheduling

Basic Architectural Components. Datapath: per-packet processing. 1. Forwarding Decision (using a Forwarding Table) 2. Interconnect 3. Output Scheduling