Accelerating High Performance Cluster Computing Through the Reduction of File System Latency

- Slides: 15

Accelerating High Performance Cluster Computing Through the Reduction of File System Latency

David Fellinger, Chief Scientist, DDN Storage

© 2015 DataDirect Networks, Inc. All rights reserved.

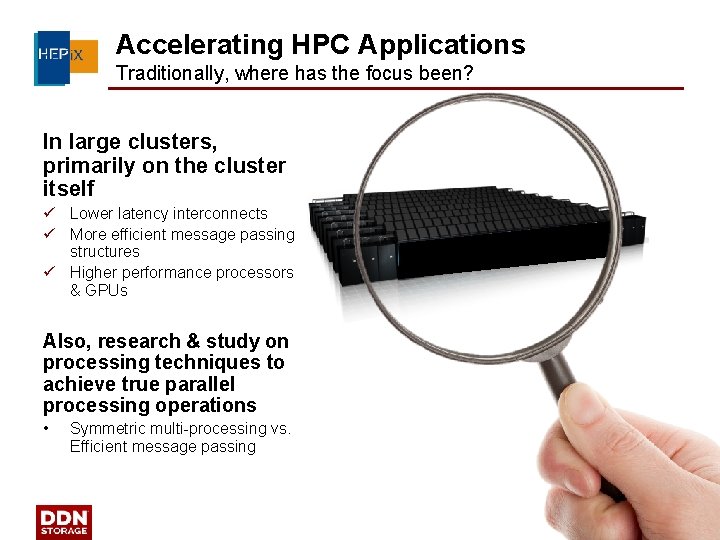

Accelerating HPC Applications

Traditionally, where has the focus been? In large clusters, primarily on the cluster itself:

- Lower latency interconnects
- More efficient message passing structures
- Higher performance processors & GPUs

Also, research and study on processing techniques to achieve true parallel processing operations:

- Symmetric multi-processing vs. efficient message passing

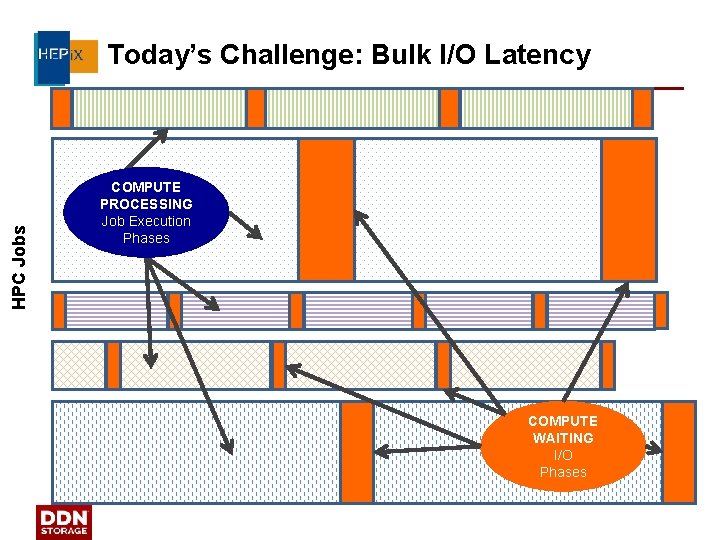

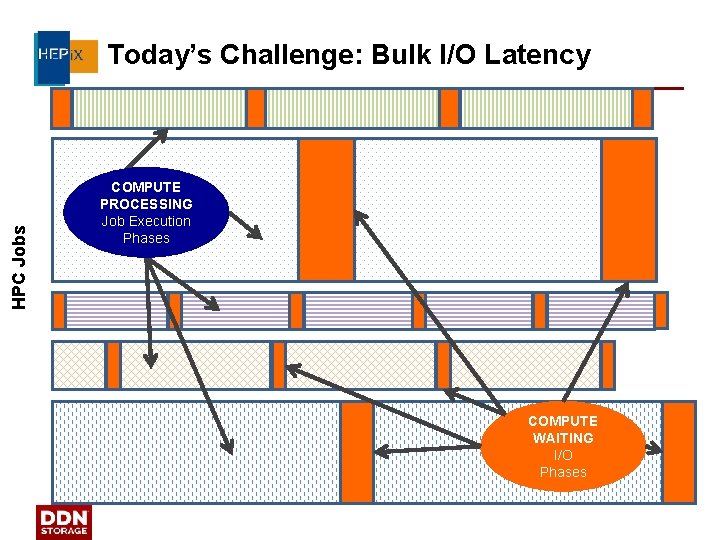

HPC Jobs: Today's Challenge: Bulk I/O Latency

- Job execution phases: compute processing
- I/O phases: compute waiting

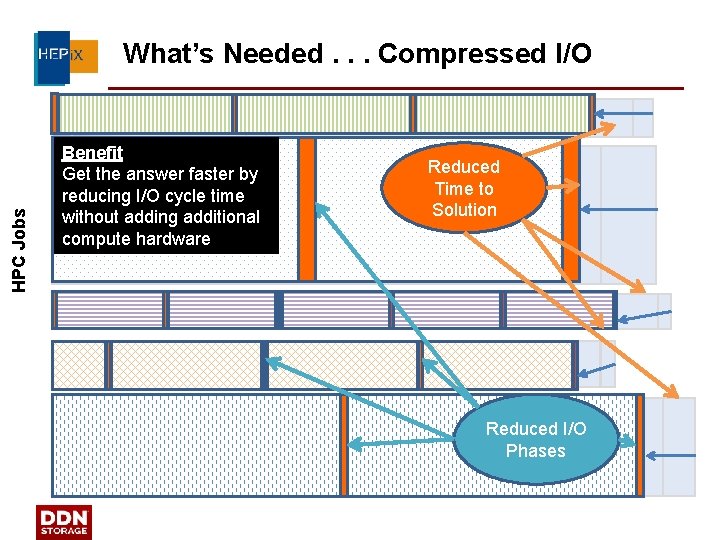

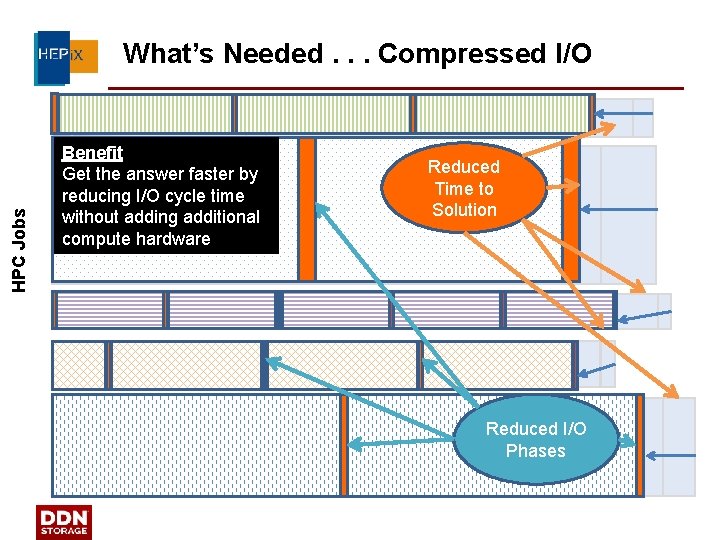

HPC Jobs: What's Needed... Compressed I/O

Benefit: get the answer faster by reducing I/O cycle time without adding compute hardware.

- Reduced I/O phases
- Reduced time to solution

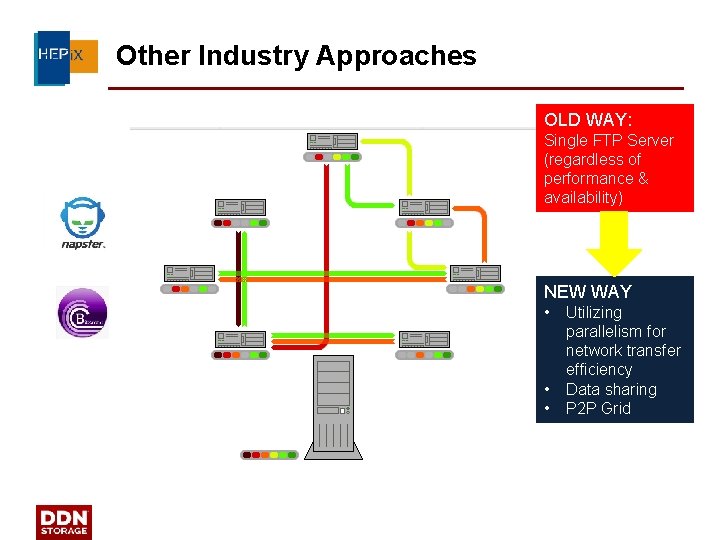

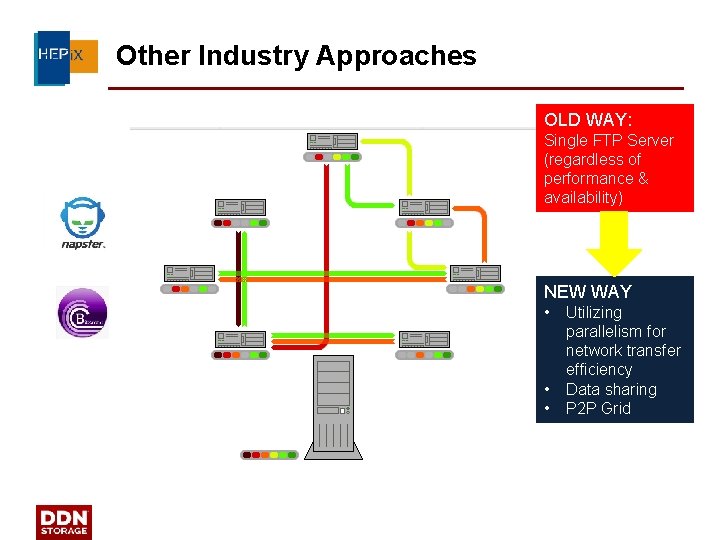

Other Industry Approaches

OLD WAY: Single FTP server (regardless of performance & availability)

NEW WAY:

- Utilizing parallelism for network transfer efficiency
- Data sharing
- P2P Grid

Why is HPC living in the “Internet 80’s”? This phone is way too heavy to have only 1 conversation at a time

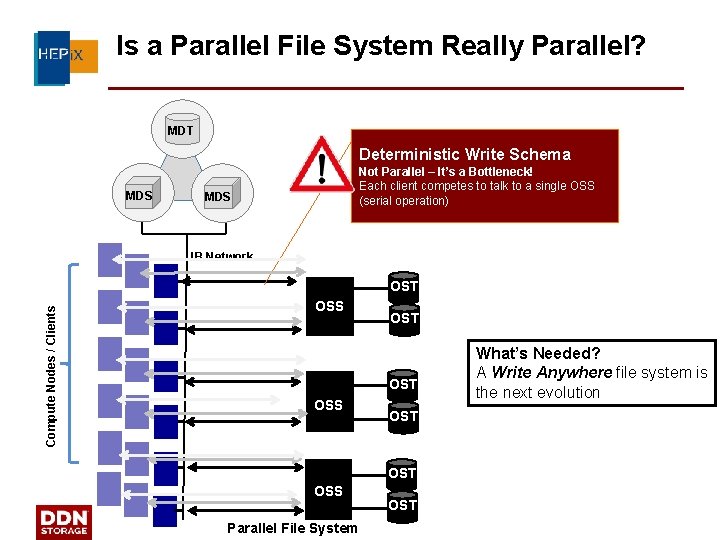

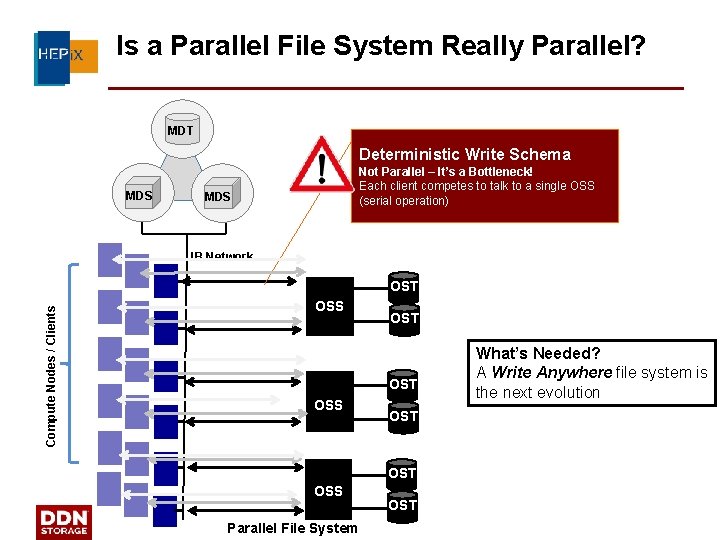

Is a Parallel File System Really Parallel?

Deterministic Write Schema

- Not parallel: it's a bottleneck! Each client competes to talk to a single OSS (serial operation).
- What's needed? A write-anywhere file system is the next evolution.

[Diagram labels: compute nodes / clients, IB network, parallel file system (MDS, MDT, OSS, OST)]

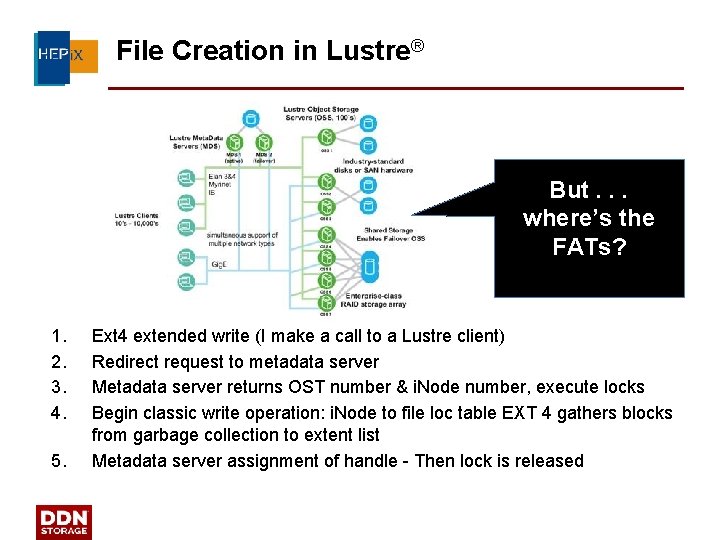

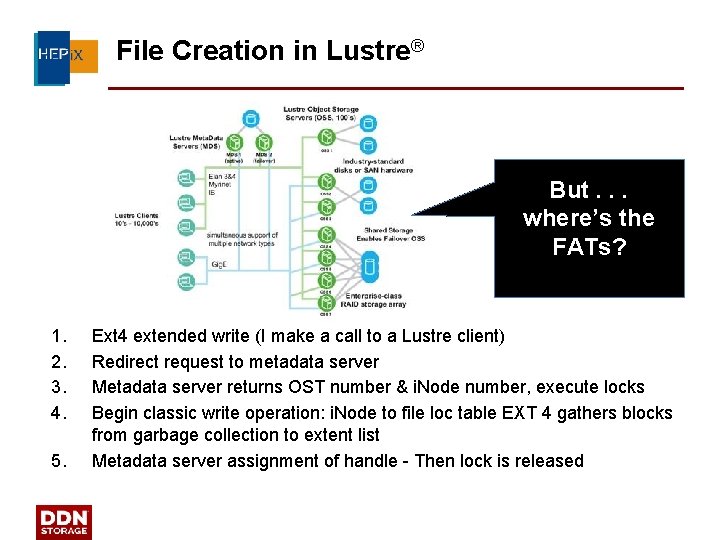

File Creation in Lustre®

But... where's the FATs?

1. Ext4 extended write (I make a call to a Lustre client)
2. Redirect request to metadata server
3. Metadata server returns OST number & inode number, execute locks
4. Begin classic write operation: inode to file location table; ext4 gathers blocks from garbage collection to extent list
5. Metadata server assignment of handle, then lock is released
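The creation sequence on this slide can be sketched as a toy model. This is not Lustre code: `ToyMetadataServer`, `begin_create`, `finish_create`, and the round-robin OST choice are all hypothetical names and simplifications, used only to show that every create passes through one metadata server that holds a lock from step 3 until step 5.

```python
from itertools import count


class ToyMetadataServer:
    """Hypothetical stand-in for a metadata server; not the Lustre API."""

    def __init__(self, num_osts):
        self.num_osts = num_osts
        self._next_inode = count(1)
        self.lock_held_by = None  # one create at a time in this toy model
        self.handles = {}

    def begin_create(self, client, path):
        # Step 3: return an OST number and an inode number, and take the lock.
        if self.lock_held_by is not None:
            raise RuntimeError(f"{client} must wait: lock held by {self.lock_held_by}")
        self.lock_held_by = client
        inode = next(self._next_inode)
        ost = inode % self.num_osts  # assumed placement rule, for illustration only
        return ost, inode

    def finish_create(self, client, path, inode):
        # Step 5: assign the handle, then release the lock.
        self.handles[path] = inode
        self.lock_held_by = None
        return inode


def create_file(mds, client, path):
    # Steps 1-2: the client's write call is redirected to the metadata server.
    ost, inode = mds.begin_create(client, path)
    # Step 4: the classic write on the chosen OST would happen here (not modeled).
    handle = mds.finish_create(client, path, inode)
    return ost, inode, handle


mds = ToyMetadataServer(num_osts=4)
results = [create_file(mds, f"client{i}", f"/scratch/out{i}") for i in range(3)]
```

In this sketch a second `begin_create` issued while the lock is held raises an error, which stands in for the waiting that the slide describes as the serial bottleneck.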

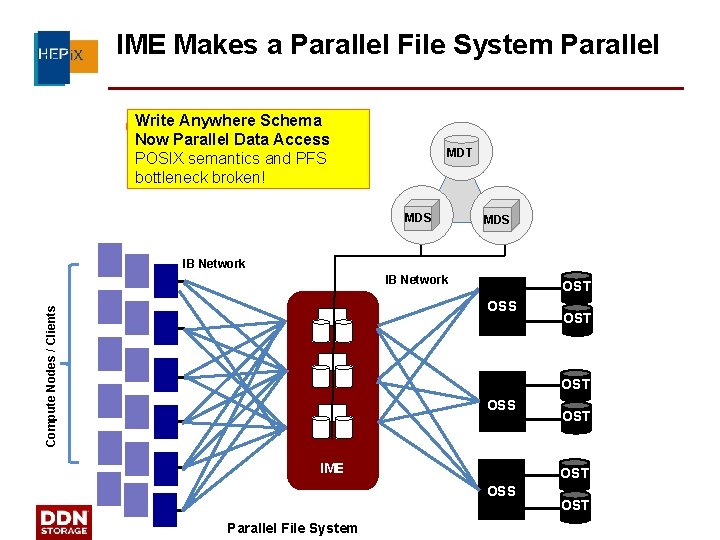

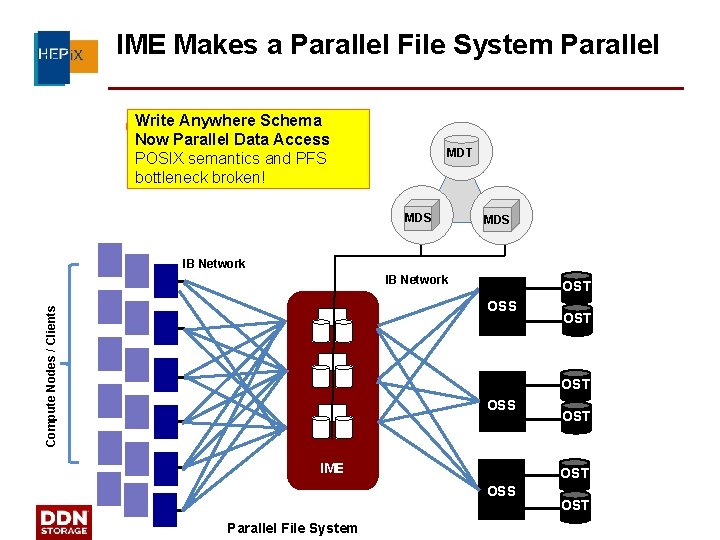

IME Makes a Parallel File System Parallel

Write Anywhere Schema

- Now parallel data access
- POSIX semantics and PFS bottleneck broken!

[Diagram labels: compute nodes / clients, IB network, IME, parallel file system (MDS, MDT, OSS, OST)]

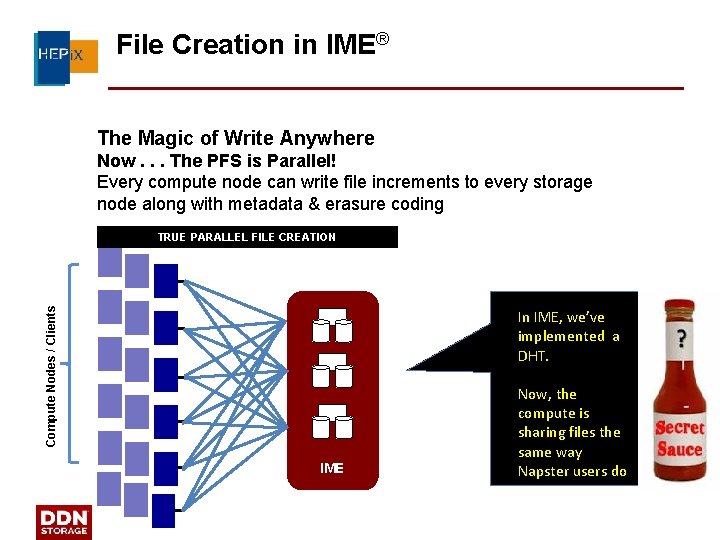

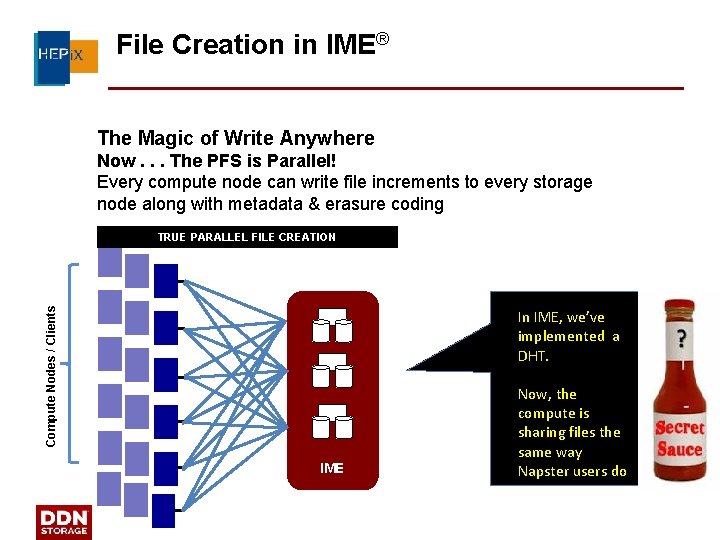

File Creation in IME®: The Magic of Write Anywhere

Now... the PFS is parallel!

- TRUE PARALLEL FILE CREATION: every compute node can write file increments to every storage node, along with metadata & erasure coding.
- In IME, we've implemented a DHT (distributed hash table).
- Now, the compute is sharing files the same way Napster users do.

[Diagram labels: compute nodes / clients, IME]
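The slides do not describe how IME's DHT is built, so the following is only a generic illustration of hash-based placement, the basic idea behind a distributed hash table. The function names, the `path#index` key format, and the node names are assumptions, and erasure coding and metadata are not modeled. Any client can compute where a file increment lives from its key alone, without asking a central server.

```python
import hashlib
from typing import Dict, Sequence


def node_for_key(key: str, nodes: Sequence[str]) -> str:
    """Map a key to a storage node with a stable hash (simple modulo placement)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]


def place_fragments(path: str, num_fragments: int, nodes: Sequence[str]) -> Dict[str, str]:
    """Assign each file increment (fragment) of a file to a storage node."""
    placement = {}
    for i in range(num_fragments):
        key = f"{path}#{i}"  # hypothetical key format: file path plus fragment index
        placement[key] = node_for_key(key, nodes)
    return placement


storage_nodes = ["ime0", "ime1", "ime2", "ime3"]  # hypothetical node names
placement = place_fragments("/scratch/checkpoint.dat", 8, storage_nodes)
```

Because the mapping depends only on the key and the node list, two clients that compute `place_fragments` independently get the same answer. Plain modulo placement reshuffles most keys when the node list changes; production DHTs typically use consistent hashing or a similar scheme to limit that.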

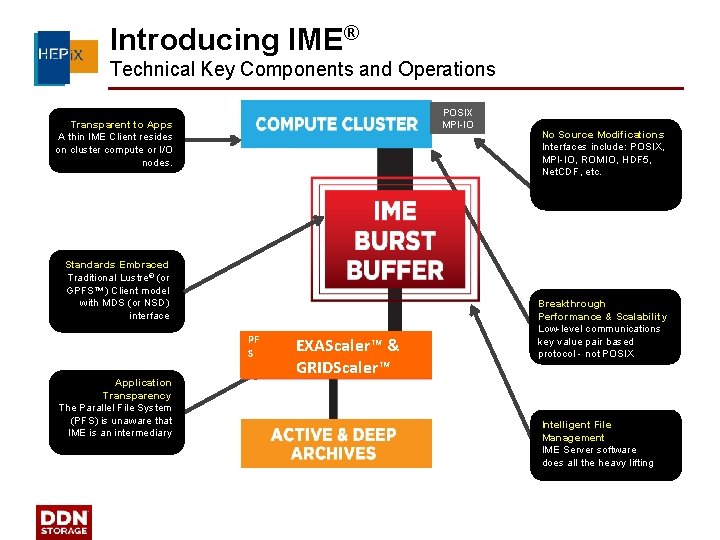

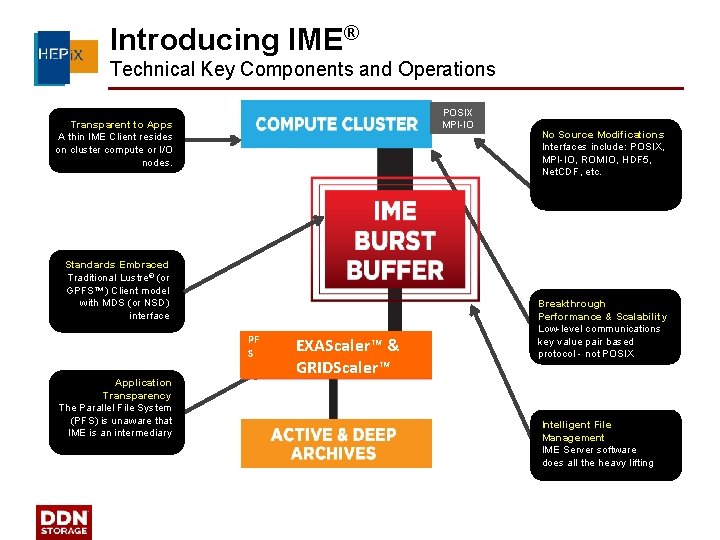

Introducing IME®: Technical Key Components and Operations

- Transparent to apps: a thin IME client resides on cluster compute or I/O nodes.
- Standards embraced: traditional Lustre® (or GPFS™) client model with MDS (or NSD) interface.
- Application transparency: the parallel file system (PFS) is unaware that IME is an intermediary.
- No source modifications: interfaces include POSIX, MPI-IO, ROMIO, HDF5, NetCDF, etc.
- Breakthrough performance & scalability: low-level communications use a key-value-pair-based protocol, not POSIX.
- Intelligent file management: IME server software does all the heavy lifting.

[Diagram labels: POSIX, MPI-IO, PFS, EXAScaler™ & GRIDScaler™]

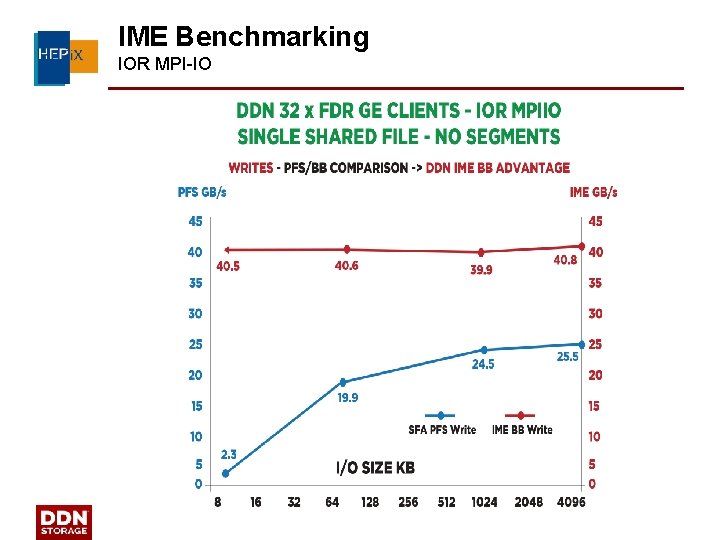

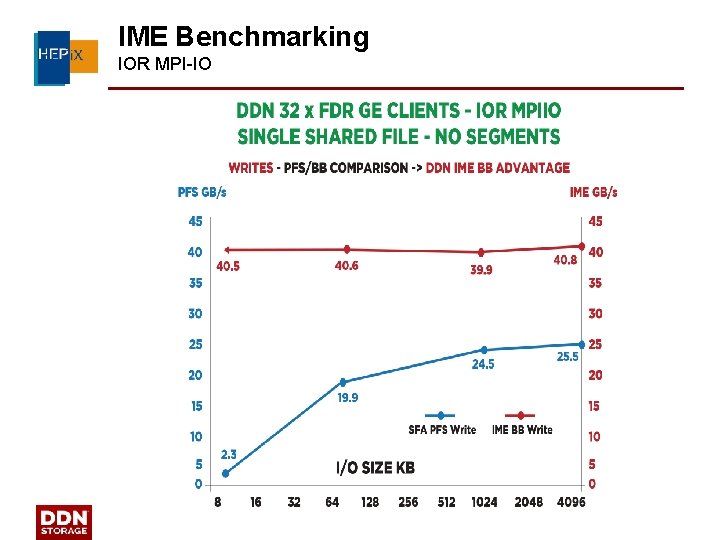

IME Benchmarking: IOR MPI-IO

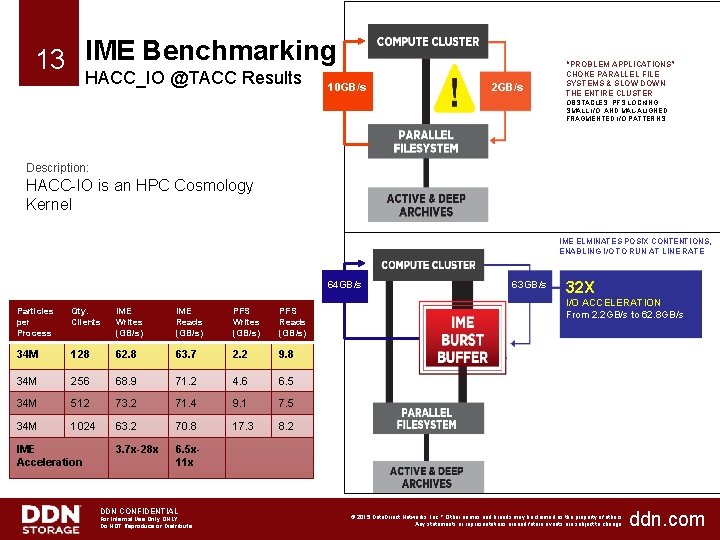

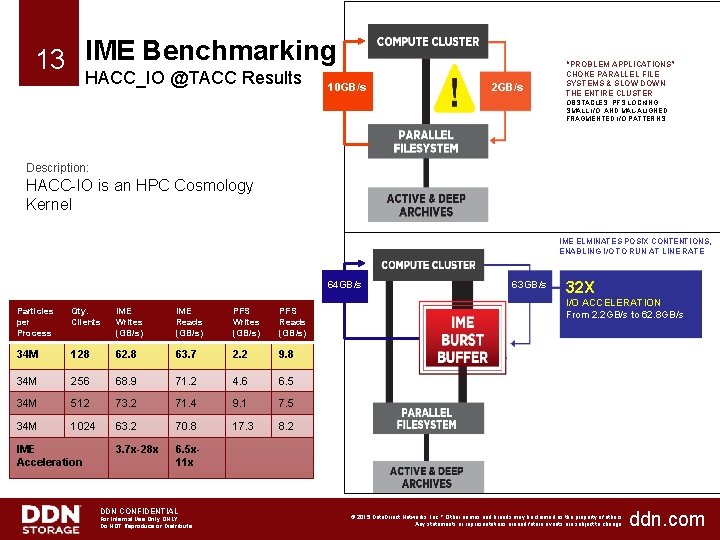

IME Benchmarking: HACC_IO @ TACC

Description: HACC-IO is an HPC cosmology kernel.

- "Problem applications" choke parallel file systems & slow down the entire cluster.
- Obstacles: PFS locking, small I/O, and mal-aligned, fragmented I/O patterns.
- IME eliminates POSIX contentions, enabling I/O to run at line rate.

Results

| Particles per Process | Qty. Clients | IME Writes (GB/s) | IME Reads (GB/s) | PFS Writes (GB/s) | PFS Reads (GB/s) |
|---|---|---|---|---|---|
| 34 M | 128 | 62.8 | 63.7 | 2.2 | 9.8 |
| 34 M | 256 | 68.9 | 71.2 | 4.6 | 6.5 |
| 34 M | 512 | 73.2 | 71.4 | 9.1 | 7.5 |
| 34 M | 1024 | 63.2 | 70.8 | 17.3 | 8.2 |

IME acceleration: 3.7x-28x for writes, 6.5x-11x for reads.

32X I/O ACCELERATION: from 2.2 GB/s to 62.8 GB/s

[Chart labels: 2 GB/s, 10 GB/s, 63 GB/s, 64 GB/s]

© 2015 DataDirect Networks, Inc. * Other names and brands may be claimed as the property of others. Any statements or representations around future events are subject to change. ddn.com
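The acceleration ranges quoted with the HACC-IO results can be re-derived from the four table rows by dividing each IME throughput by the matching PFS throughput. The short sketch below does that arithmetic; the variable and function names are illustrative, and the numbers are copied from the slide.

```python
# (clients, IME writes, IME reads, PFS writes, PFS reads), all in GB/s, from the slide
rows = [
    (128, 62.8, 63.7, 2.2, 9.8),
    (256, 68.9, 71.2, 4.6, 6.5),
    (512, 73.2, 71.4, 9.1, 7.5),
    (1024, 63.2, 70.8, 17.3, 8.2),
]


def speedups(table):
    """Return (clients, write speedup, read speedup) for each row."""
    return [
        (clients, ime_w / pfs_w, ime_r / pfs_r)
        for clients, ime_w, ime_r, pfs_w, pfs_r in table
    ]


ratios = speedups(rows)
write_range = (min(r[1] for r in ratios), max(r[1] for r in ratios))
read_range = (min(r[2] for r in ratios), max(r[2] for r in ratios))

for clients, write_x, read_x in ratios:
    print(f"{clients:>5} clients: writes {write_x:.1f}x, reads {read_x:.1f}x")
```

The write ratios span roughly 3.7x (1024 clients) to 28.5x (128 clients), and the read ratios roughly 6.5x to 11x, consistent with the ranges shown on the slide.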

Moral of the Story

HPC cluster = large group of data users

- Why haven't we learned what the internet P2P guys have known for a long time?
- Learn to share the precious resources of network bandwidth & storage!

Thank You! Keep in touch with us

- David Fellinger, Chief Scientist, DDN Storage
- dfellinger@ddn.com
- sales@ddn.com
- 2929 Patrick Henry Drive, Santa Clara, CA 95054
- @ddn_limitless
- 1.800.837.2298
- 1.818.700.4000
- company/datadirect-networks