Maui High Performance Computing Center Open System Support

- Slides: 13

Maui High Performance Computing Center Open System Support

An AFRL, MHPCC and UH Collaboration

December 18, 2007

Mike McCraney, MHPCC Operations Director

Agenda
- MHPCC Background and History
- Open System Description
- Scheduled and Unscheduled Maintenance
- Application Process
- Additional Information Required
- Summary and Q/A

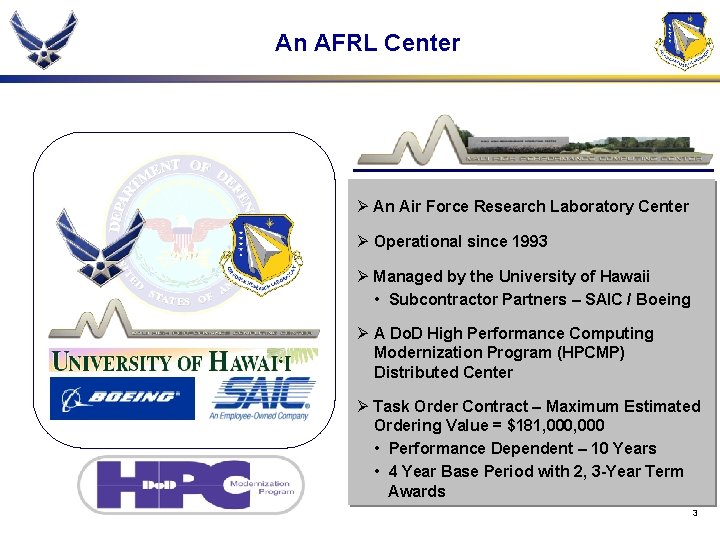

An AFRL Center
- An Air Force Research Laboratory Center
- Operational since 1993
- Managed by the University of Hawaii
  - Subcontractor Partners: SAIC / Boeing
- A DoD High Performance Computing Modernization Program (HPCMP) Distributed Center
- Task Order Contract: Maximum Estimated Ordering Value = $181,000
  - Performance Dependent: 10 Years
  - 4-Year Base Period with two 3-Year Term Awards

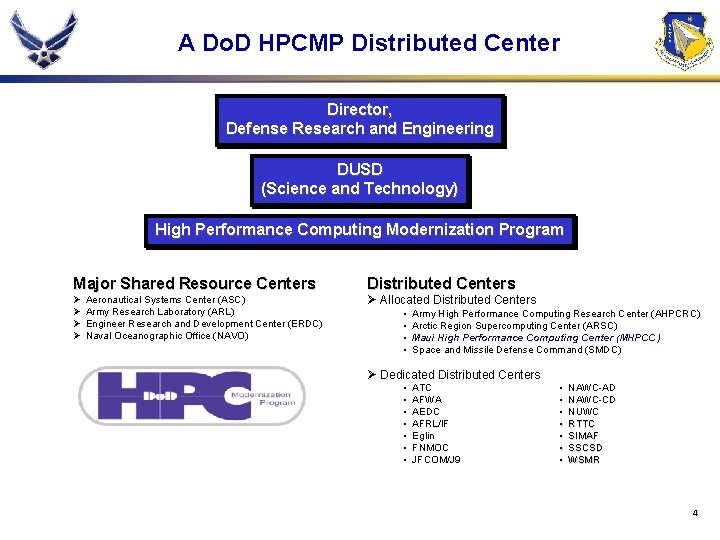

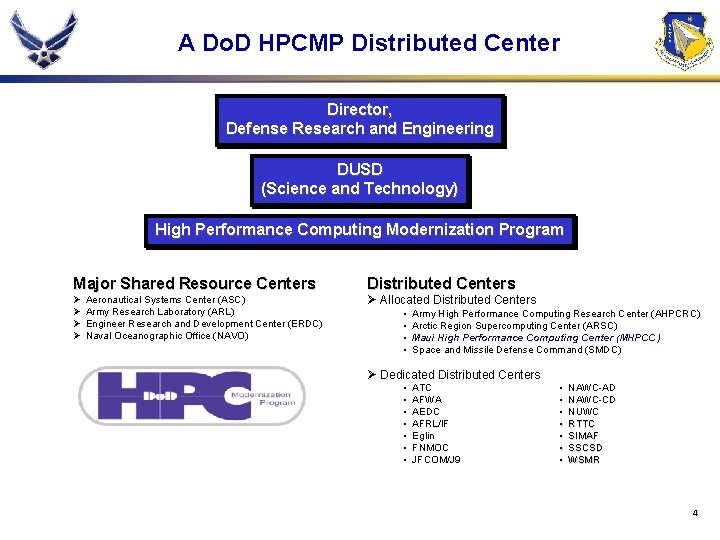

A DoD HPCMP Distributed Center
- Director, Defense Research and Engineering
- DUSD (Science and Technology)
- High Performance Computing Modernization Program
- Major Shared Resource Centers
  - Aeronautical Systems Center (ASC)
  - Army Research Laboratory (ARL)
  - Engineer Research and Development Center (ERDC)
  - Naval Oceanographic Office (NAVO)
- Distributed Centers
  - Allocated Distributed Centers
    - Army High Performance Computing Research Center (AHPCRC)
    - Arctic Region Supercomputing Center (ARSC)
    - Maui High Performance Computing Center (MHPCC)
    - Space and Missile Defense Command (SMDC)
  - Dedicated Distributed Centers
    - ATC, AFWA, AEDC, AFRL/IF, Eglin, FNMOC, JFCOM/J9
    - NAWC-AD, NAWC-CD, NUWC, RTTC, SIMAF, SSCSD, WSMR

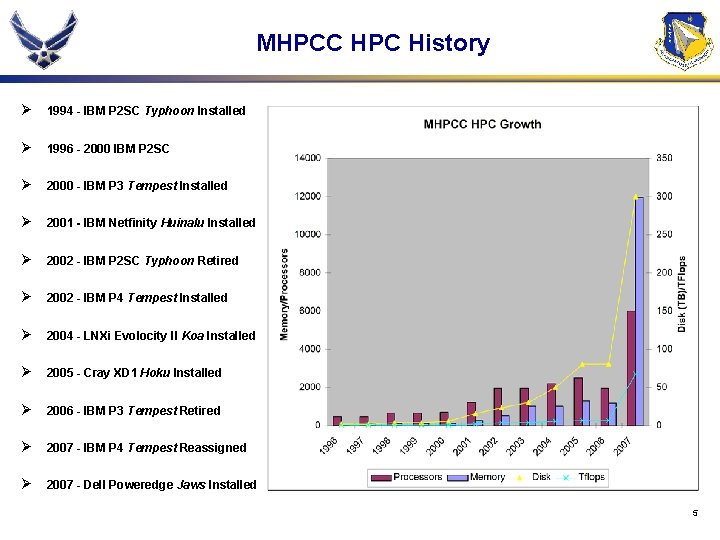

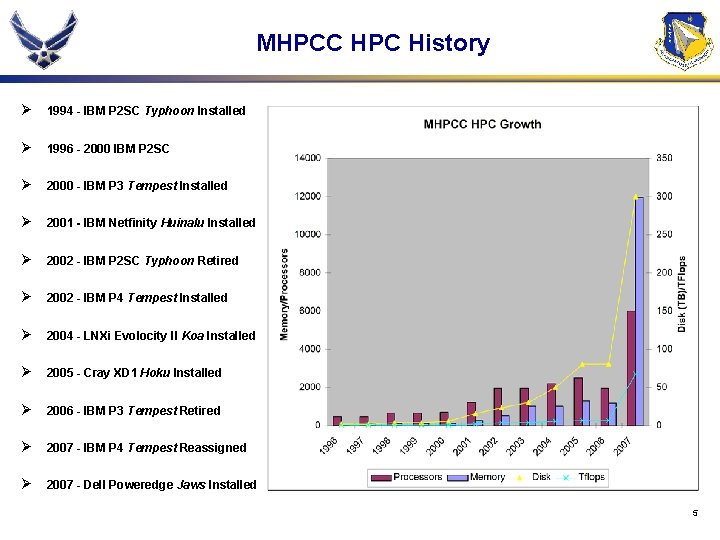

MHPCC HPC History
- 1994 - IBM P2SC Typhoon Installed
- 1996 - 2000 IBM P2SC
- 2000 - IBM P3 Tempest Installed
- 2001 - IBM Netfinity Huinalu Installed
- 2002 - IBM P2SC Typhoon Retired
- 2002 - IBM P4 Tempest Installed
- 2004 - LNXi Evolocity II Koa Installed
- 2005 - Cray XD1 Hoku Installed
- 2006 - IBM P3 Tempest Retired
- 2007 - IBM P4 Tempest Reassigned
- 2007 - Dell PowerEdge Jaws Installed

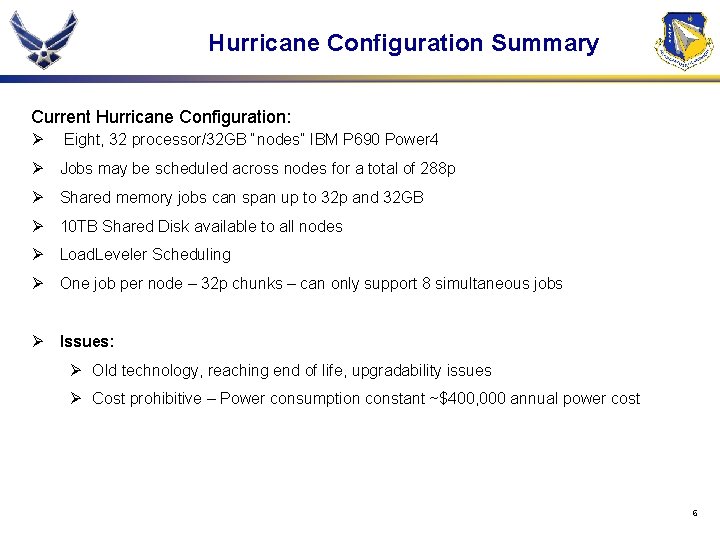

Hurricane Configuration Summary

Current Hurricane Configuration:
- Eight 32-processor/32 GB "nodes", IBM P690 Power4
- Jobs may be scheduled across nodes for a total of 288 p
- Shared memory jobs can span up to 32 p and 32 GB
- 10 TB Shared Disk available to all nodes
- LoadLeveler Scheduling
- One job per node (32 p chunks): can only support 8 simultaneous jobs

Issues:
- Old technology, reaching end of life, upgradability issues
- Cost prohibitive: power consumption constant, ~$400,000 annual power cost

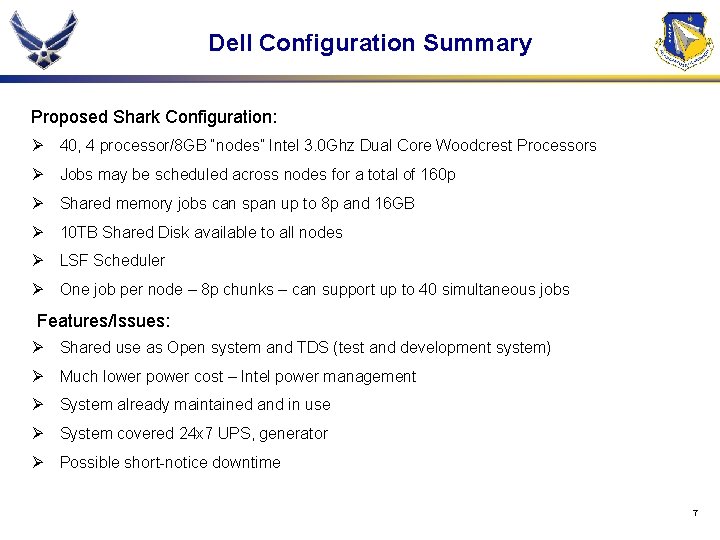

Dell Configuration Summary

Proposed Shark Configuration:
- 40 4-processor/8 GB "nodes", Intel 3.0 GHz Dual Core Woodcrest Processors
- Jobs may be scheduled across nodes for a total of 160 p
- Shared memory jobs can span up to 8 p and 16 GB
- 10 TB Shared Disk available to all nodes
- LSF Scheduler
- One job per node (8 p chunks): can support up to 40 simultaneous jobs

Features/Issues:
- Shared use as Open system and TDS (test and development system)
- Much lower power cost: Intel power management
- System already maintained and in use
- System covered 24x7 by UPS, generator
- Possible short-notice downtime
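The node arithmetic on this slide can be sketched in a few lines of Python. This is only an illustration, not an MHPCC tool: the names `NODES`, `PROCS_PER_NODE`, and `nodes_needed` are hypothetical, and the sketch assumes the "40 nodes, 4 processors each, one job per node" figures (the slide also mentions 8 p chunks; the 4-processor figure is the one that matches the 160 p total).

```python
import math

# Figures taken from the slide; the names themselves are hypothetical.
NODES = 40            # Shark nodes
PROCS_PER_NODE = 4    # processors per node (assumed from "4 processor/8 GB")

TOTAL_PROCS = NODES * PROCS_PER_NODE   # 160 p, matching the slide
MAX_SIMULTANEOUS_JOBS = NODES          # one job per node


def nodes_needed(procs: int) -> int:
    """Whole nodes a job occupies when nodes are not shared between jobs."""
    if procs < 1 or procs > TOTAL_PROCS:
        raise ValueError(f"request must be between 1 and {TOTAL_PROCS} processors")
    # Round up: a job that needs part of a node still occupies the whole node.
    return math.ceil(procs / PROCS_PER_NODE)
```

Under these assumptions, a 5-processor request occupies two whole nodes, which is why requests sized in multiples of the per-node processor count waste the least capacity.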

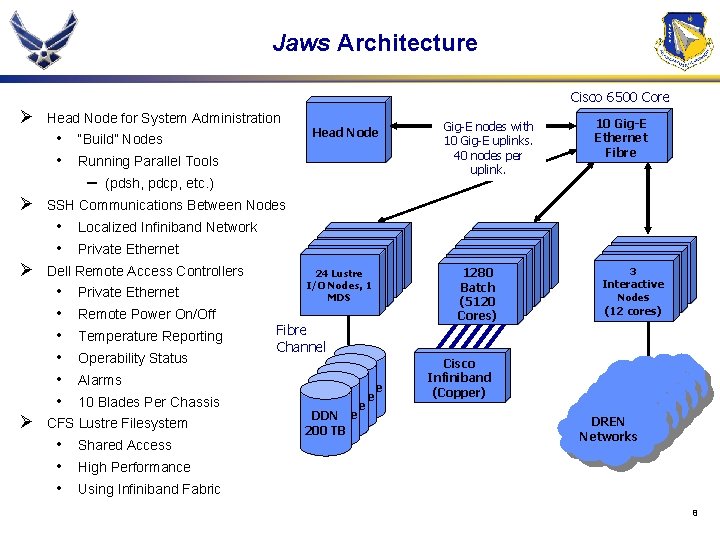

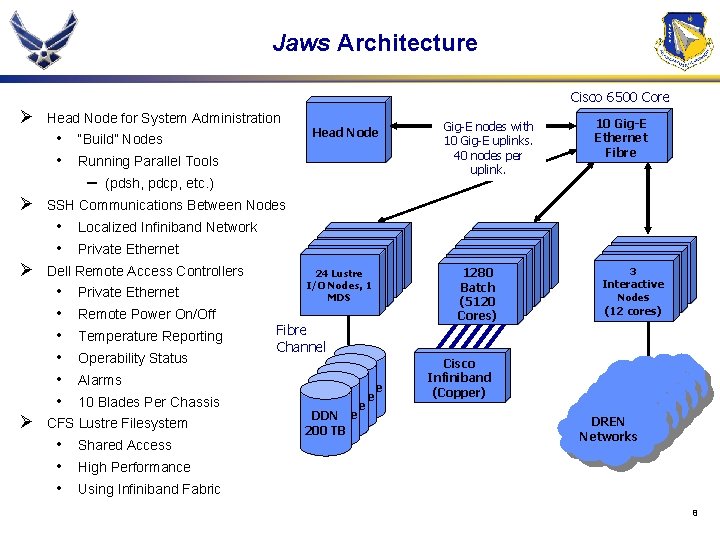

Jaws Architecture
- Head Node for System Administration
  - "Build" Nodes
  - Running Parallel Tools (pdsh, pdcp, etc.)
- SSH Communications Between Nodes
  - Localized Infiniband Network
  - Private Ethernet
- Dell Remote Access Controllers
  - Private Ethernet
  - Remote Power On/Off
  - Temperature Reporting
  - Operability Status Alarms
- CFS Lustre Filesystem
  - Shared Access
  - High Performance
  - Using Infiniband Fabric

[Architecture diagram labels: Cisco 6500 Core; 10 Gig-E Ethernet Fibre; Gig-E nodes with 10 Gig-E uplinks, 40 nodes per uplink; 10 Blades Per Chassis; 1280 batch nodes (5120 cores); 3 interactive nodes (12 cores); 24 Lustre I/O nodes, 1 MDS; Fibre Channel Storage, DDN 200 TB; Cisco Infiniband (Copper); DREN networks; user webtops]

Shark Software
- Systems Software
  - Red Hat Enterprise Linux v4: 2.6.9 Kernel
  - Infiniband: Cisco Software stack
  - MVAPICH: MPICH 1.2.7 over IB Library
  - Gnu 3.4.6 C/C++/Fortran
  - Intel 9.1 C/C++/Fortran
  - Platform LSF HPC 6.2
  - Platform Rocks

Maintenance Schedule
- Current
  - 2:00 pm to 4:00 pm
  - 2nd and 4th Thursday (as necessary)
  - Check website (mhpcc.hpc.mil) for maintenance notices
- New Proposed Schedule
  - 8:00 am to 5:00 pm
  - 2nd and 4th Wednesdays (as necessary)
  - Check website for maintenance notices
- Only take maintenance on scheduled systems
- Check on Mondays before submitting jobs
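For planning long-running jobs, the candidate dates under the proposed schedule can be computed ahead of time. The sketch below is illustrative only: `nth_weekday` and `maintenance_days` are hypothetical helper names, and it lists the 2nd and 4th Wednesdays of a month without knowing whether maintenance is actually taken on those days (the slide says "as necessary", so the website remains the authority).

```python
import datetime

WEDNESDAY = 2  # datetime.date.weekday(): Monday is 0, so Wednesday is 2


def nth_weekday(year: int, month: int, weekday: int, n: int) -> datetime.date:
    """Return the n-th occurrence (1-based) of a weekday in the given month."""
    first = datetime.date(year, month, 1)
    # Days from the 1st of the month to the first occurrence of the weekday.
    offset = (weekday - first.weekday()) % 7
    return first + datetime.timedelta(days=offset + 7 * (n - 1))


def maintenance_days(year: int, month: int) -> list:
    """Candidate maintenance dates: the 2nd and 4th Wednesdays of the month."""
    return [nth_weekday(year, month, WEDNESDAY, n) for n in (2, 4)]


if __name__ == "__main__":
    for day in maintenance_days(2008, 1):
        print(day.isoformat())
```

Switching `WEDNESDAY` to `3` would give the 2nd and 4th Thursdays of the current schedule.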

Account Applications and Documentation
- Contact Helpdesk or website for application information
- Documentation Needed:
  - Account names, systems, special requirements
  - Project title, nature of work, accessibility of code
  - Nationality of applicant
  - Collaborative relevance with AFRL
- New Requirements
  - "Case File" information
  - For use in AFRL research collaboration
  - Future AFRL applicability
  - Intellectual property shared with AFRL
- Annual Account Renewals
  - September 30 is final day of the fiscal year

Summary
- Anticipated migration to Shark
- Should be more productive and able to support wide range of jobs
- Cutting edge technology
- Cost savings from Hurricane (~$400,000 annual)
- Stay tuned for timeline: likely end of January, early February

Mahalo