Adaptive Insertion Policies for High-Performance Caching



Adaptive Insertion Policies for High-Performance Caching

Moinuddin K. Qureshi, Yale N. Patt, Aamer Jaleel, Simon C. Steely Jr., Joel Emer

International Symposium on Computer Architecture (ISCA) 2007

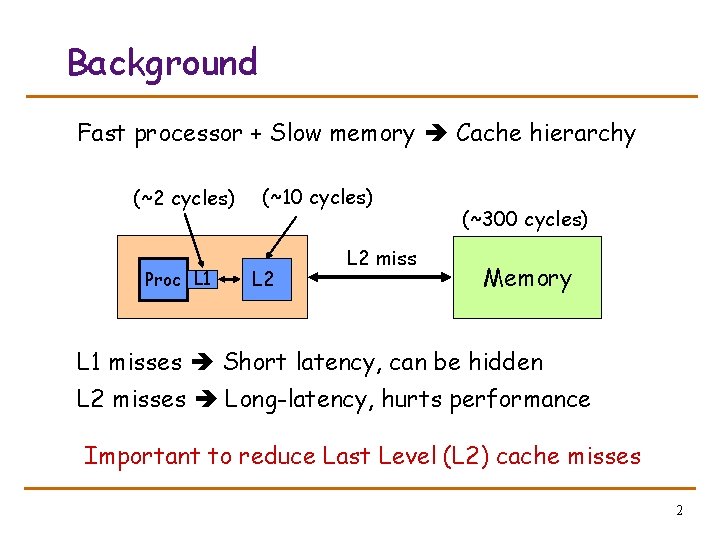

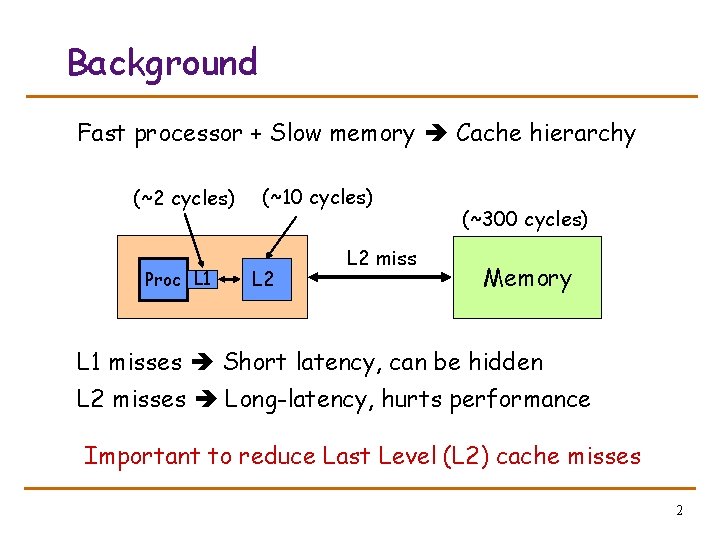

Background

Fast processor + slow memory: cache hierarchy

[Diagram: Proc, L1 (~2 cycles), L2 (~10 cycles), Memory (L2 miss, ~300 cycles)]

- L1 misses: short latency, can be hidden
- L2 misses: long latency, hurts performance

Important to reduce last-level (L2) cache misses.

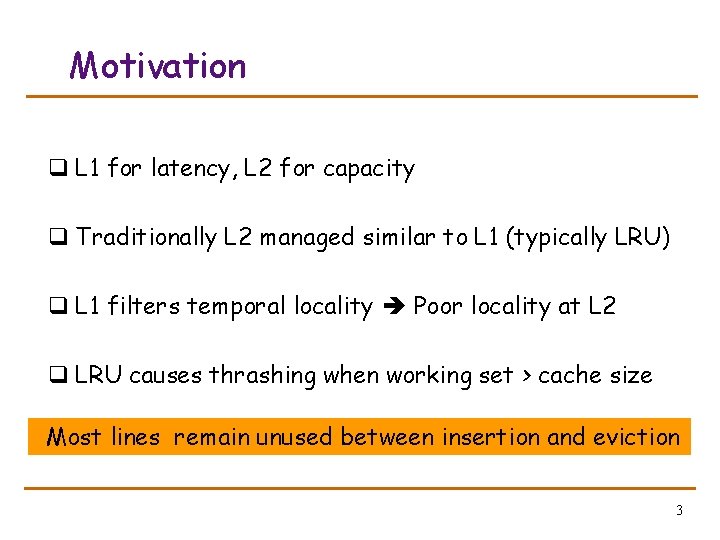

Motivation

- L1 for latency, L2 for capacity
- Traditionally, L2 is managed similarly to L1 (typically LRU)
- L1 filters temporal locality: poor locality at L2
- LRU causes thrashing when working set > cache size: most lines remain unused between insertion and eviction

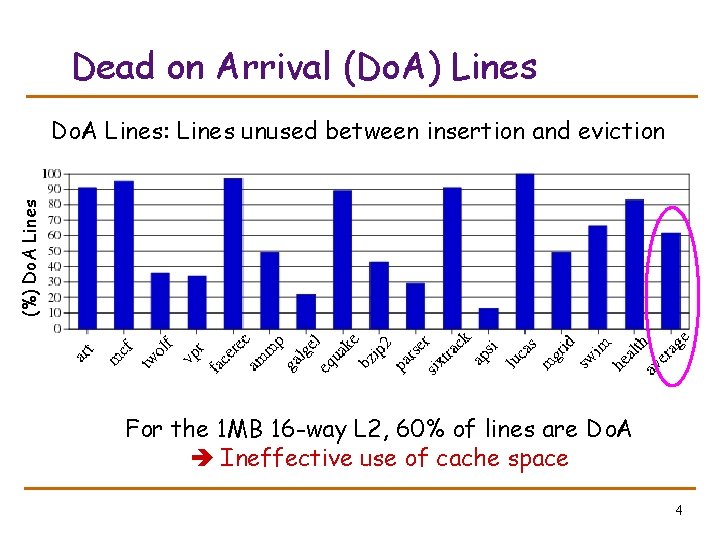

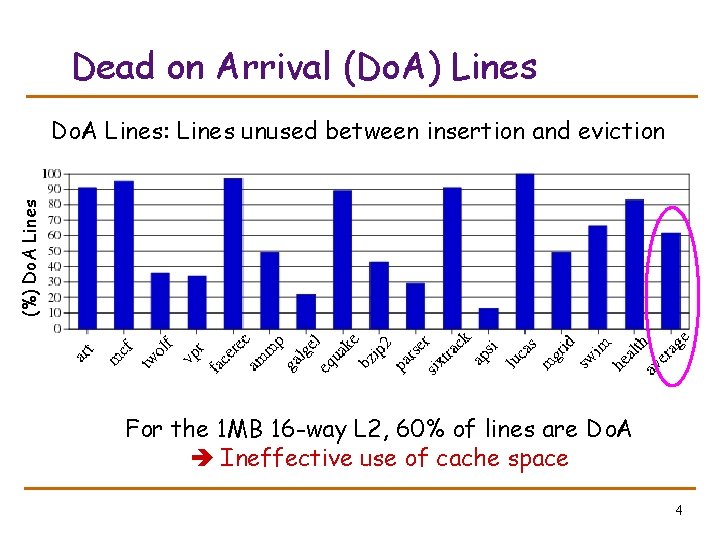

Dead on Arrival (DoA) Lines

DoA lines: lines unused between insertion and eviction.

[Chart: (%) DoA lines]

For the 1 MB 16-way L2, 60% of lines are DoA: ineffective use of cache space.

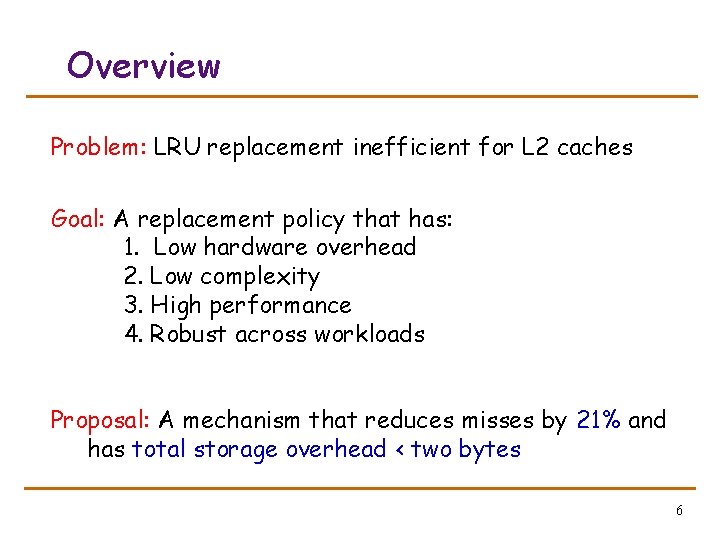

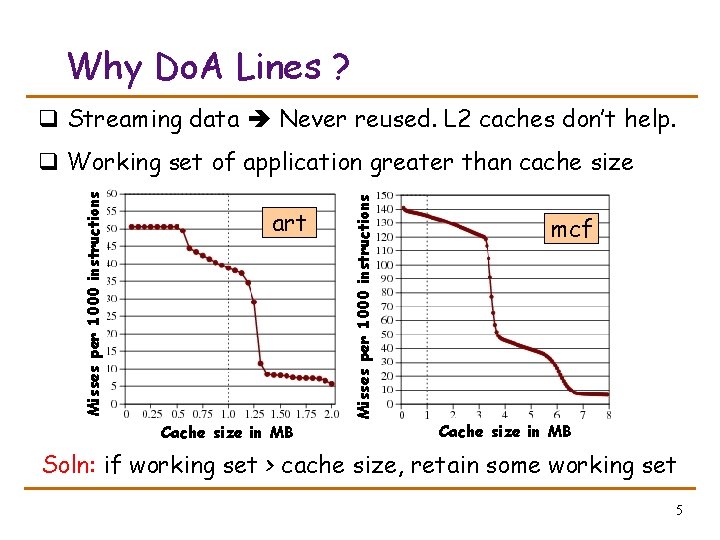

Why DoA Lines?

- Streaming data: never reused. L2 caches don't help.
- Working set of the application is greater than the cache size.

[Plots: misses per 1000 instructions vs. cache size in MB, for art and mcf]

Solution: if working set > cache size, retain some of the working set.

Overview

Problem: LRU replacement is inefficient for L2 caches.

Goal: a replacement policy that has:

1. Low hardware overhead
2. Low complexity
3. High performance
4. Robustness across workloads

Proposal: a mechanism that reduces misses by 21% and has a total storage overhead of < two bytes.

Outline

- Introduction
- Static Insertion Policies
- Dynamic Insertion Policies
- Summary

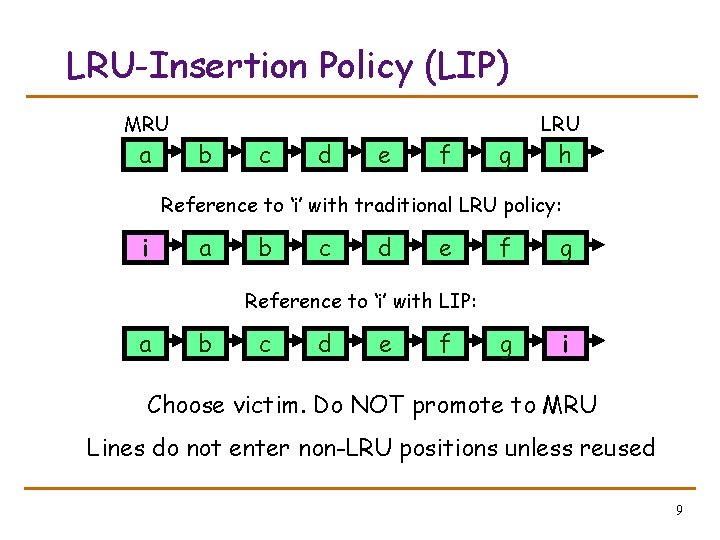

Cache Insertion Policy

Two components of cache replacement:

1. Victim selection: which line to replace for the incoming line? (e.g., LRU, Random, FIFO, LFU)
2. Insertion policy: where is the incoming line placed in the replacement list? (e.g., insert the incoming line at the MRU position)

Simple changes to the insertion policy can greatly improve cache performance for memory-intensive workloads.

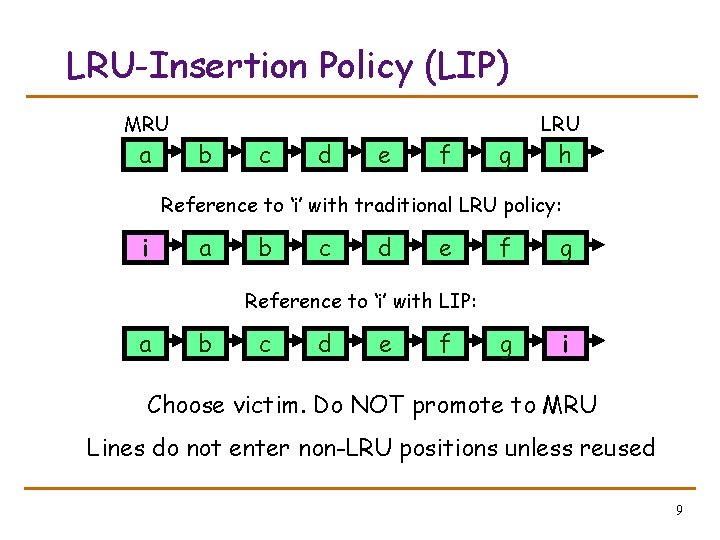

LRU-Insertion Policy (LIP)

Recency stack, MRU to LRU: a b c d e f g h

Reference to 'i' with traditional LRU policy: i a b c d e f g

Reference to 'i' with LIP: a b c d e f g i

Choose the victim as usual, but do NOT promote the incoming line to MRU. Lines do not enter non-LRU positions unless reused.
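The LRU and LIP examples above can be replayed with a small recency-stack sketch. This is an illustration only, not the authors' code: the function name `access` and the list-based stack (index 0 = MRU, last index = LRU) are assumptions made here.

```python
def access(stack, line, capacity, policy="LRU"):
    """Reference `line` in one cache set; return True on a hit.

    `stack` is a list ordered from MRU (index 0) to LRU (last index).
    `policy` only changes where a missing line is inserted.
    """
    if line in stack:
        # Hit: both policies promote the line to the MRU position.
        stack.remove(line)
        stack.insert(0, line)
        return True
    if len(stack) >= capacity:
        stack.pop()  # Victim selection: evict the line in the LRU position.
    if policy == "LRU":
        stack.insert(0, line)  # Traditional LRU: insert at MRU.
    elif policy == "LIP":
        stack.append(line)     # LIP: insert at LRU, no promotion.
    else:
        raise ValueError(f"unknown policy: {policy}")
    return False


lru_stack = list("abcdefgh")
lip_stack = list("abcdefgh")
access(lru_stack, "i", capacity=8, policy="LRU")
access(lip_stack, "i", capacity=8, policy="LIP")
print("LRU:", " ".join(lru_stack))  # i a b c d e f g
print("LIP:", " ".join(lip_stack))  # a b c d e f g i
```

Under LIP, 'i' is the next victim unless it is referenced again first; a second reference would promote it to MRU like any other hit.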

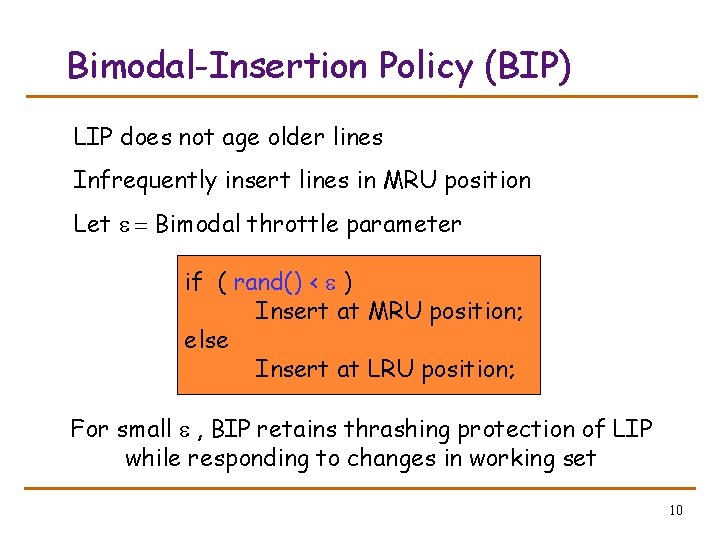

Bimodal-Insertion Policy (BIP)

LIP does not age older lines. BIP infrequently inserts lines in the MRU position.

Let ε = bimodal throttle parameter:

    if ( rand() < ε ) Insert at MRU position;
    else Insert at LRU position;

For small ε, BIP retains the thrashing protection of LIP while responding to changes in the working set.
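The BIP rule above can be sketched on the same kind of recency stack. This is a minimal illustration under assumptions made here: the name `bip_access`, the list-based stack (index 0 = MRU), the seeded `random.Random` generator, and the use of 1/32 as the throttle value in the demo.

```python
import random


def bip_access(stack, line, capacity, epsilon, rng):
    """Reference `line` under BIP; return True on a hit.

    `stack` is ordered from MRU (index 0) to LRU (last index).
    """
    if line in stack:
        stack.remove(line)
        stack.insert(0, line)  # Hits are promoted to MRU as usual.
        return True
    if len(stack) >= capacity:
        stack.pop()  # Evict the line in the LRU position.
    if rng.random() < epsilon:
        stack.insert(0, line)  # Infrequent case: insert at MRU.
    else:
        stack.append(line)     # Common case: insert at LRU, as in LIP.
    return False


rng = random.Random(0)  # Fixed seed so the sketch is repeatable.
mru_inserts = 0
trials = 10_000
for _ in range(trials):
    stack = list("abcdefgh")
    bip_access(stack, "i", capacity=8, epsilon=1 / 32, rng=rng)
    mru_inserts += stack[0] == "i"
print(f"fraction inserted at MRU: {mru_inserts / trials:.4f}")
```

Setting `epsilon` to 0 makes this behave like LIP, and setting it to 1 makes it behave like traditional LRU insertion.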

![Circular Reference Model (Smith & Goodman, ISCA ’84)](https://slidetodoc.com/presentation_image/29f6fe5af9d8c0009d43d26d325096a4/image-11.jpg)

Circular Reference Model [Smith & Goodman, ISCA ’84]

The reference stream has T blocks and repeats N times. The cache has K blocks (K < T and N >> T). Table entries are hit rates.

| Policy | $(a_1 a_2 a_3 \ldots a_T)^N$ | $(b_1 b_2 b_3 \ldots b_T)^N$ |
|---|---|---|
| LRU | 0 | 0 |
| OPT | (K-1)/(T-1) | (K-1)/(T-1) |
| LIP | (K-1)/T | 0 |
| BIP (small ε) | ≈ (K-1)/T | ≈ (K-1)/T |

For small ε, BIP retains the thrashing protection of LIP while adapting to changes in the working set.
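The circular reference model can be checked numerically with a short simulation. The sketch below is an assumption-laden illustration, not the authors' simulator: it models a single fully associative set, picks K = 16, T = 32, N = 400 and ε = 1/32 arbitrarily, and runs the `a` stream followed by the `b` stream on the same cache.

```python
import random


def hit_rate(trace, capacity, policy, epsilon=1 / 32, rng=None, stack=None):
    """Return (hit rate, final stack) for `trace` on one fully associative set."""
    if rng is None:
        rng = random.Random(0)
    if stack is None:
        stack = []  # MRU at index 0, LRU at the end.
    hits = 0
    for line in trace:
        if line in stack:
            stack.remove(line)
            stack.insert(0, line)  # Promote on hit.
            hits += 1
            continue
        if len(stack) >= capacity:
            stack.pop()  # Evict the LRU line.
        at_mru = policy == "LRU" or (policy == "BIP" and rng.random() < epsilon)
        if at_mru:
            stack.insert(0, line)
        else:
            stack.append(line)  # LIP, and the common case of BIP.
    return hits / len(trace), stack


K, T, N = 16, 32, 400  # Illustrative sizes with K < T.
a_phase = [("a", i) for i in range(T)] * N
b_phase = [("b", i) for i in range(T)] * N

rates = {}
for policy in ("LRU", "LIP", "BIP"):
    rng = random.Random(0)
    rate_a, stack = hit_rate(a_phase, K, policy, rng=rng)
    rate_b, _ = hit_rate(b_phase, K, policy, rng=rng, stack=stack)
    rates[policy] = (rate_a, rate_b)
    print(f"{policy}: a-phase {rate_a:.3f}, b-phase {rate_b:.3f}")
print(f"(K-1)/T = {(K - 1) / T:.3f}")
```

In this sketch LRU never hits, LIP hits at close to (K-1)/T on the first stream but never on the second, and BIP should stay near (K-1)/T on both, because its occasional MRU insertions let the new working set displace the old one.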

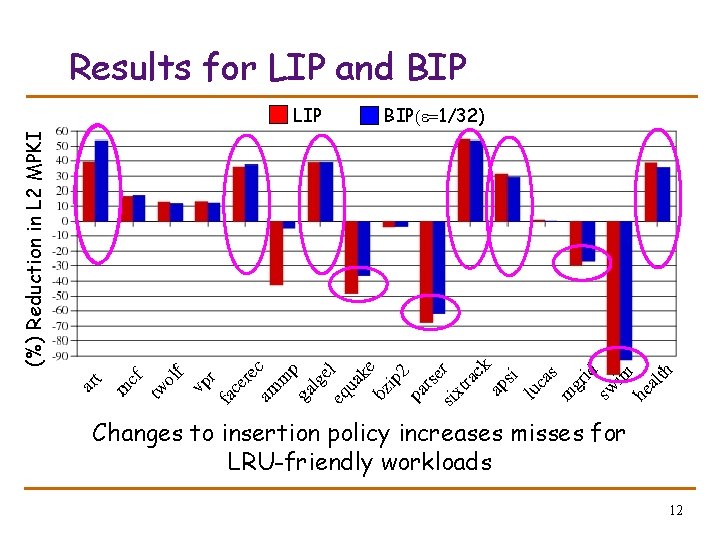

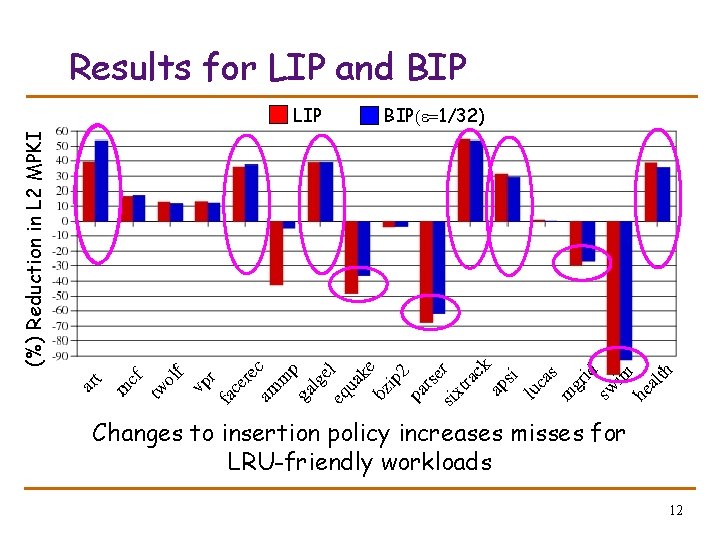

Results for LIP and BIP (ε = 1/32)

[Chart: (%) reduction in L2 MPKI for LIP and BIP]

Changes to the insertion policy increase misses for LRU-friendly workloads.


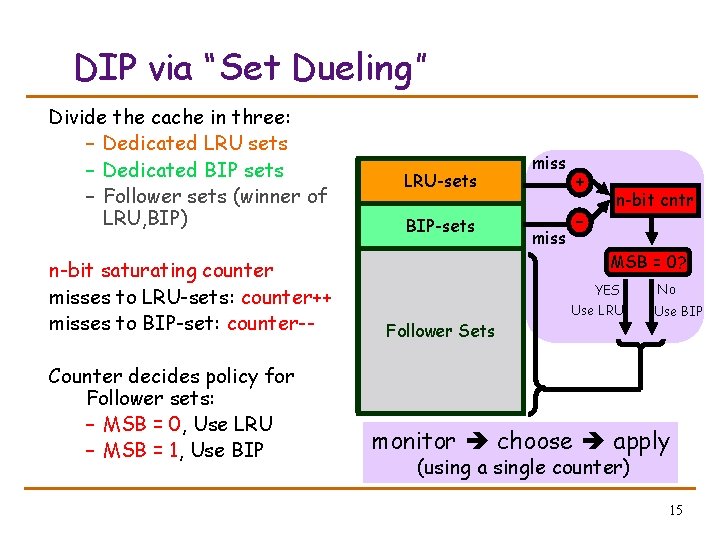

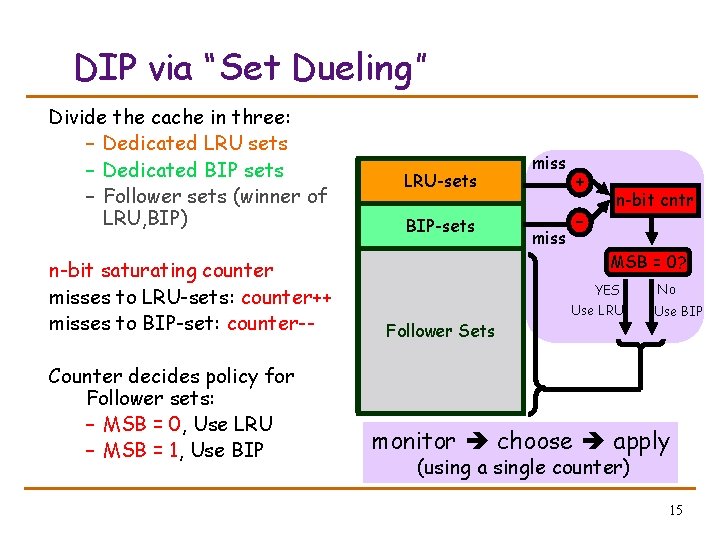

Dynamic-Insertion Policy (DIP)

Two types of workloads: LRU-friendly or BIP-friendly.

DIP can be implemented by:

1. Monitor both policies (LRU and BIP)
2. Choose the best-performing policy
3. Apply the best policy to the cache

Need a cost-effective implementation: “Set Dueling”

DIP via “Set Dueling”

Divide the cache in three:

- Dedicated LRU sets
- Dedicated BIP sets
- Follower sets (use the winner of LRU, BIP)

n-bit saturating counter:

- Misses to LRU sets: counter++
- Misses to BIP sets: counter--

Counter decides the policy for follower sets:

- MSB = 0: use LRU
- MSB = 1: use BIP

[Diagram: misses in the LRU sets increment (+) and misses in the BIP sets decrement (-) the n-bit counter; follower sets use LRU if MSB = 0, otherwise BIP]

Monitor, choose, apply (using a single counter).
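The counter logic of Set Dueling can be sketched as a small selector class. Several details below are assumptions for illustration, since the slide does not specify them: the class and method names, the way dedicated sets are spread through the cache (every `stride`-th set), the 1024-set cache, the 10-bit counter width, and the initial counter value.

```python
class SetDuelingSelector:
    """Pick LRU or BIP for follower sets using one saturating counter."""

    def __init__(self, num_sets=1024, dedicated_per_policy=32, counter_bits=10):
        # Assumption: dedicated sets are spread evenly through the cache.
        self.stride = num_sets // dedicated_per_policy
        if self.stride < 2:
            raise ValueError("need at least two sets per stride")
        self.max_value = (1 << counter_bits) - 1
        self.msb_mask = 1 << (counter_bits - 1)
        # Assumption: start just below the midpoint, so followers begin on LRU.
        self.counter = self.msb_mask - 1

    def set_kind(self, set_index):
        offset = set_index % self.stride
        if offset == 0:
            return "LRU"  # Dedicated LRU set.
        if offset == 1:
            return "BIP"  # Dedicated BIP set.
        return "FOLLOWER"

    def on_miss(self, set_index):
        kind = self.set_kind(set_index)
        if kind == "LRU":
            self.counter = min(self.counter + 1, self.max_value)
        elif kind == "BIP":
            self.counter = max(self.counter - 1, 0)
        # Misses in follower sets do not update the counter.

    def policy_for(self, set_index):
        kind = self.set_kind(set_index)
        if kind != "FOLLOWER":
            return kind  # Dedicated sets always use their own policy.
        return "BIP" if self.counter & self.msb_mask else "LRU"


selector = SetDuelingSelector()
print(selector.policy_for(5))  # LRU (counter MSB starts at 0)
selector.on_miss(0)            # A miss in a dedicated LRU set.
print(selector.policy_for(5))  # BIP (counter MSB is now 1)
```

A high counter means the LRU sets are missing more often than the BIP sets, so the followers switch to BIP; the only per-cache state is the counter itself.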

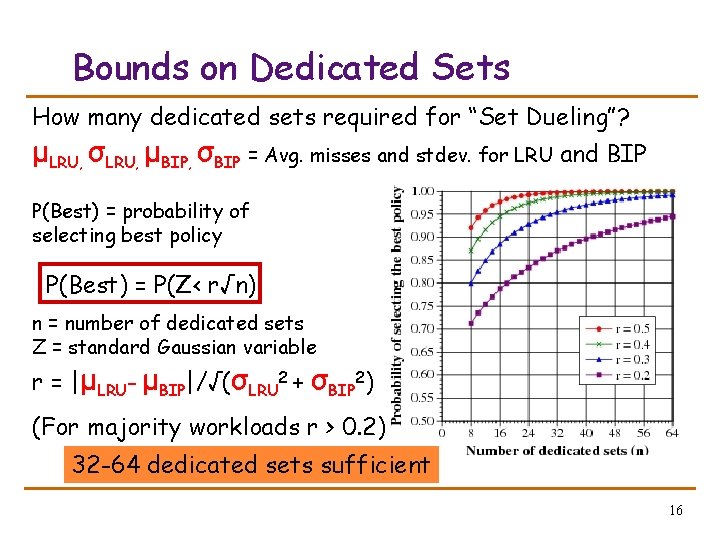

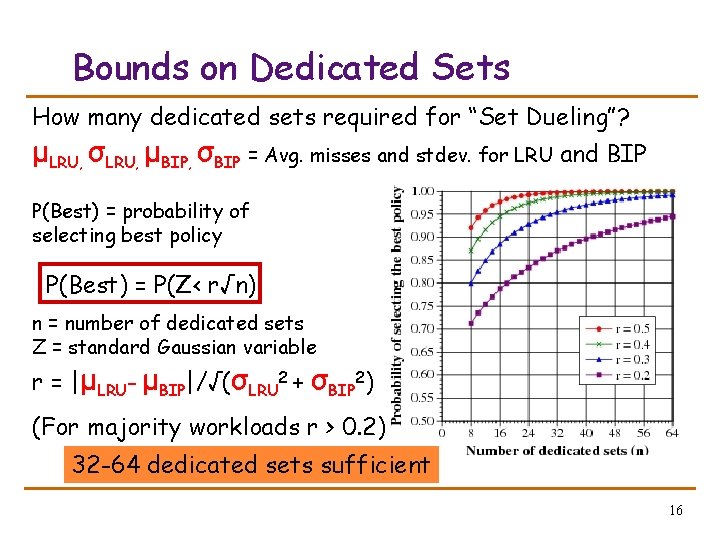

Bounds on Dedicated Sets

How many dedicated sets are required for “Set Dueling”?

- $\mu_{LRU}, \sigma_{LRU}, \mu_{BIP}, \sigma_{BIP}$ = average misses and standard deviation for LRU and BIP
- P(Best) = probability of selecting the best policy

$$P(\text{Best}) = P(Z < r\sqrt{n})$$

- $n$ = number of dedicated sets
- $Z$ = standard Gaussian variable
- $r = |\mu_{LRU} - \mu_{BIP}| / \sqrt{\sigma_{LRU}^2 + \sigma_{BIP}^2}$

For the majority of workloads, $r > 0.2$. 32-64 dedicated sets are sufficient.
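The bound above is easy to evaluate numerically, since P(Z < x) is the standard Gaussian CDF. The sketch below uses `math.erf` for the CDF; the function name `p_best` and the particular values of n are choices made here, and r = 0.2 is the threshold quoted on the slide.

```python
import math


def p_best(r, n):
    """P(Best) = P(Z < r * sqrt(n)) for a standard Gaussian Z."""
    x = r * math.sqrt(n)
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


for n in (8, 16, 32, 64, 128):
    print(f"n = {n:3d}: P(Best) = {p_best(0.2, n):.3f}")
```

With r = 0.2 this gives roughly 0.87 for n = 32 and 0.95 for n = 64, and workloads with larger r reach high probabilities with fewer dedicated sets.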

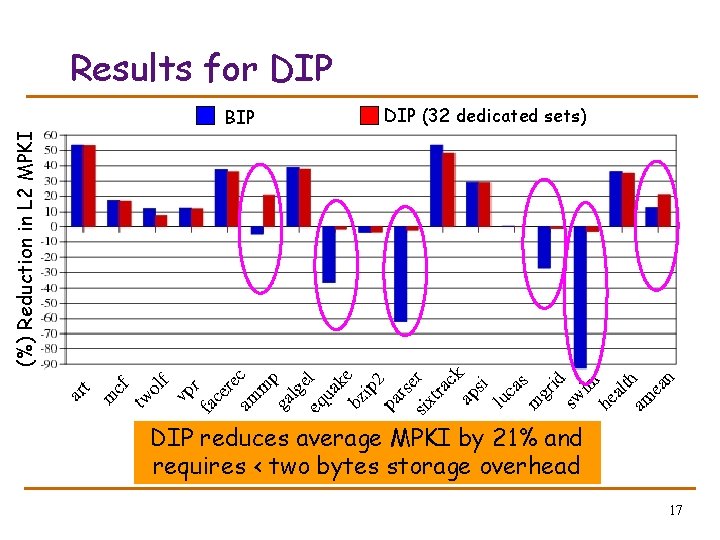

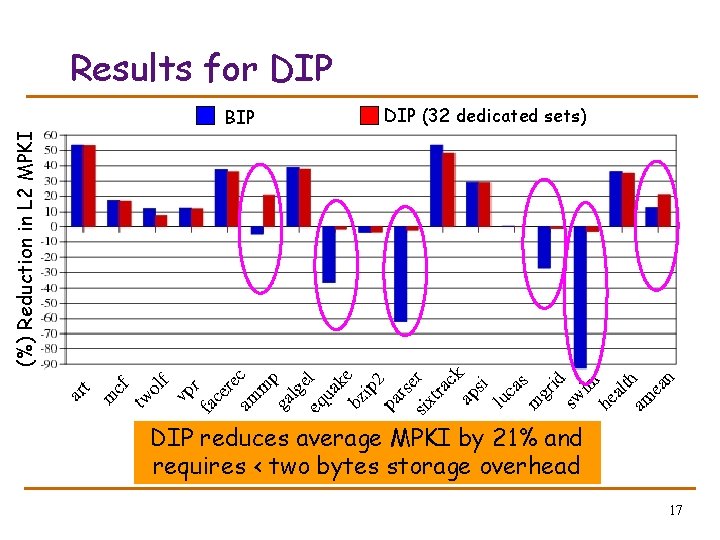

Results for DIP (32 dedicated sets)

[Chart: (%) reduction in L2 MPKI for BIP and DIP]

DIP reduces average MPKI by 21% and requires < two bytes of storage overhead.

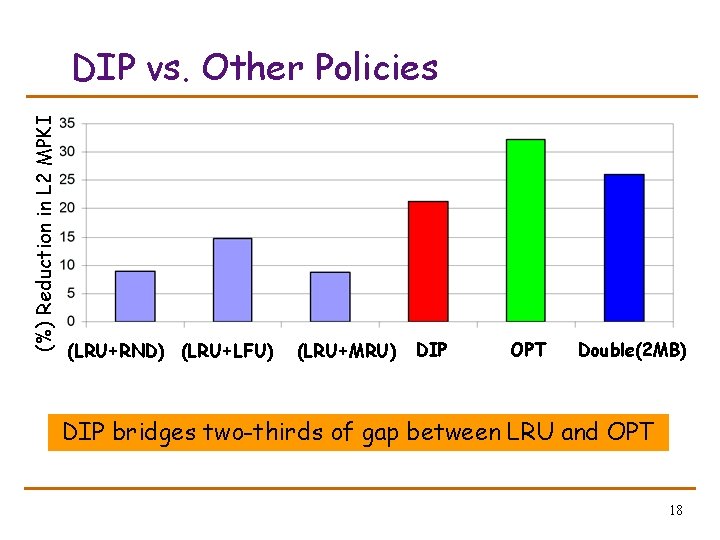

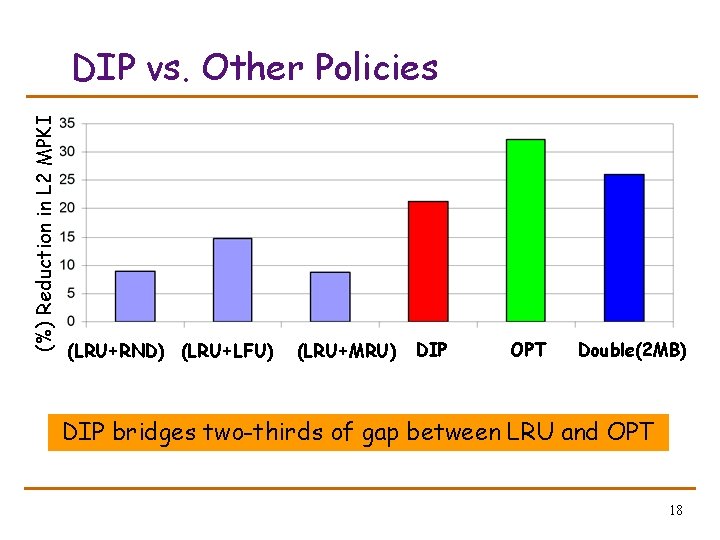

DIP vs. Other Policies

[Chart: (%) reduction in L2 MPKI for (LRU+RND), (LRU+LFU), (LRU+MRU), DIP, OPT, and Double (2 MB)]

DIP bridges two-thirds of the gap between LRU and OPT.

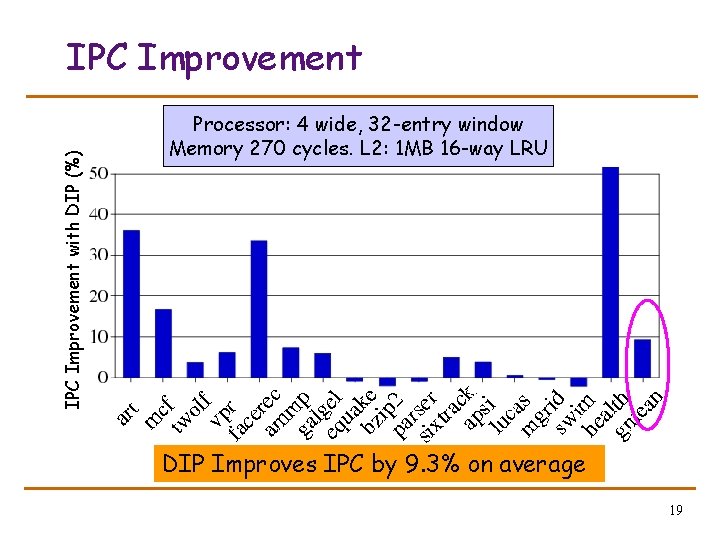

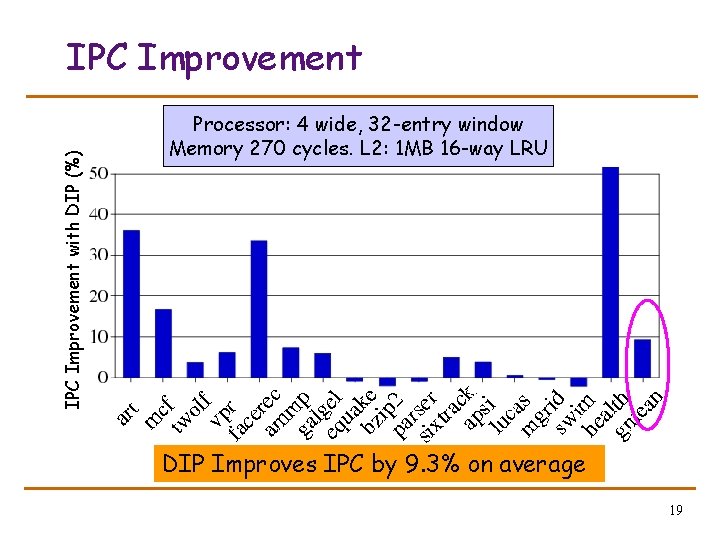

IPC Improvement with DIP

Processor: 4-wide, 32-entry window. Memory: 270 cycles. L2: 1 MB, 16-way, LRU.

[Chart: (%) IPC improvement]

DIP improves IPC by 9.3% on average.


Summary

LRU is inefficient for L2 caches. Most lines remain unused between insertion and eviction.

The proposed change to the cache insertion policy (DIP) has:

1. Low hardware overhead: requires < two bytes of storage overhead
2. Low complexity: trivial to implement, no changes to cache structure
3. High performance: reduces misses by 21%, two-thirds as good as OPT
4. Robustness across workloads: almost as good as LRU for LRU-friendly workloads

Source code: www.ece.utexas.edu/~qk/dip

Questions

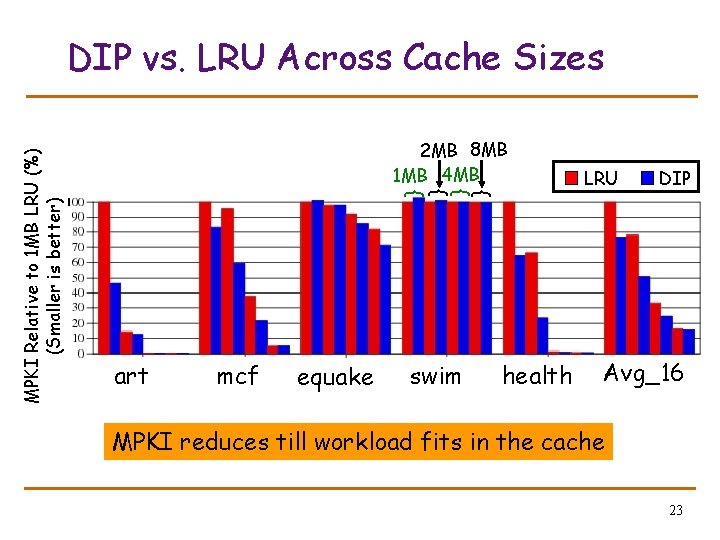

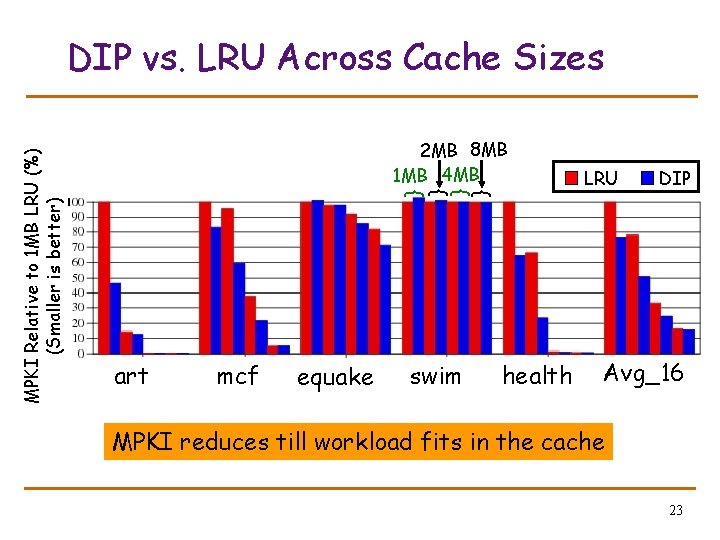

DIP vs. LRU Across Cache Sizes

[Chart: MPKI relative to 1 MB LRU (%), smaller is better; LRU and DIP at 1 MB, 2 MB, 4 MB, and 8 MB for art, mcf, equake, swim, health, and Avg_16]

MPKI reduces until the workload fits in the cache.

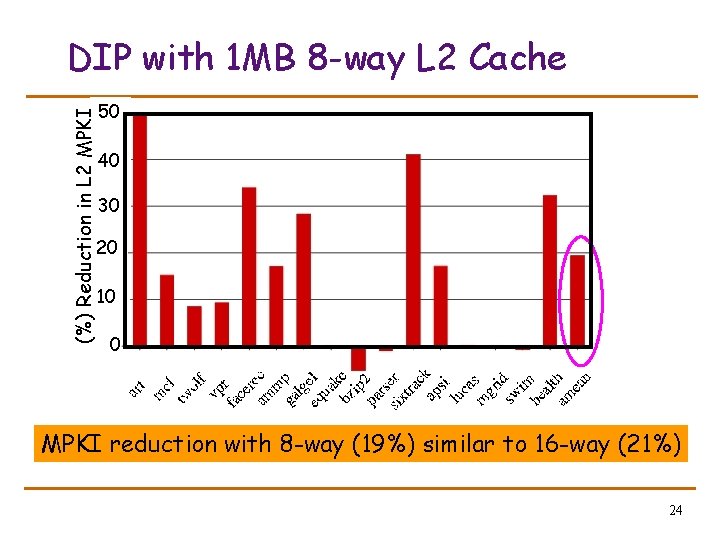

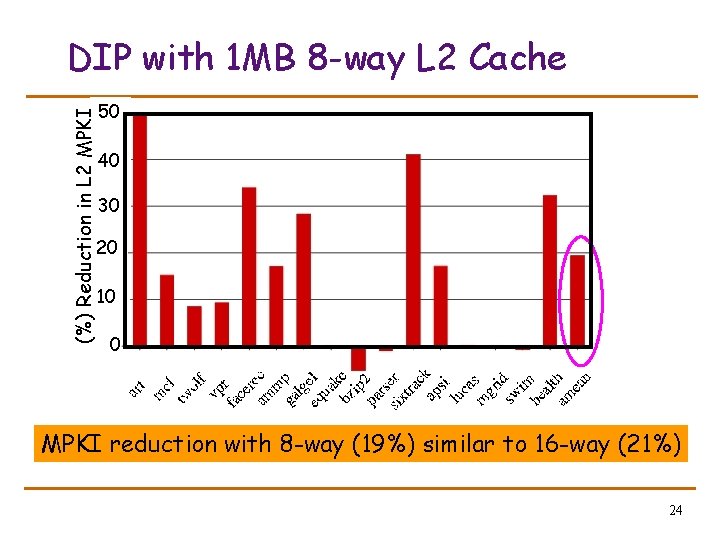

DIP with 1 MB 8-way L2 Cache

[Chart: (%) reduction in L2 MPKI]

MPKI reduction with 8-way (19%) is similar to 16-way (21%).

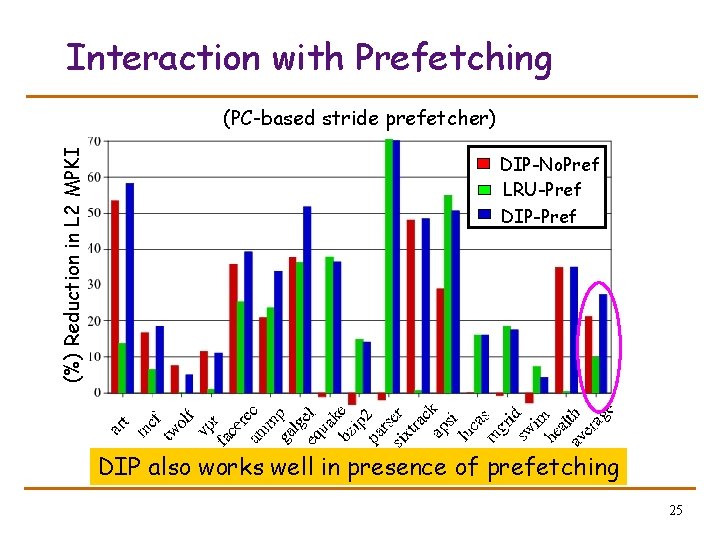

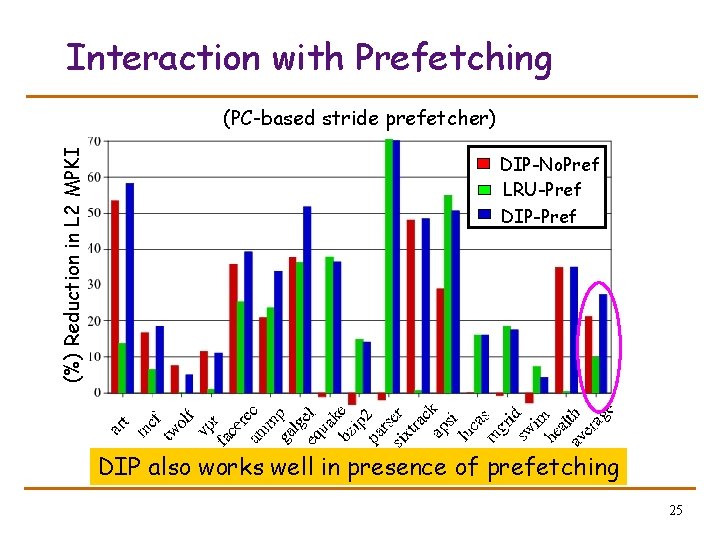

Interaction with Prefetching

[Chart: (%) reduction in L2 MPKI with a PC-based stride prefetcher; legend: DIP-NoPref, LRU-Pref]

DIP also works well in the presence of prefetching.

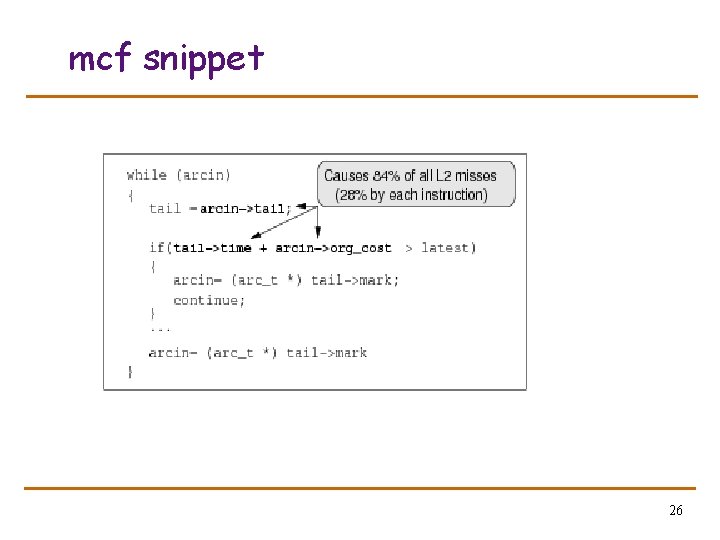

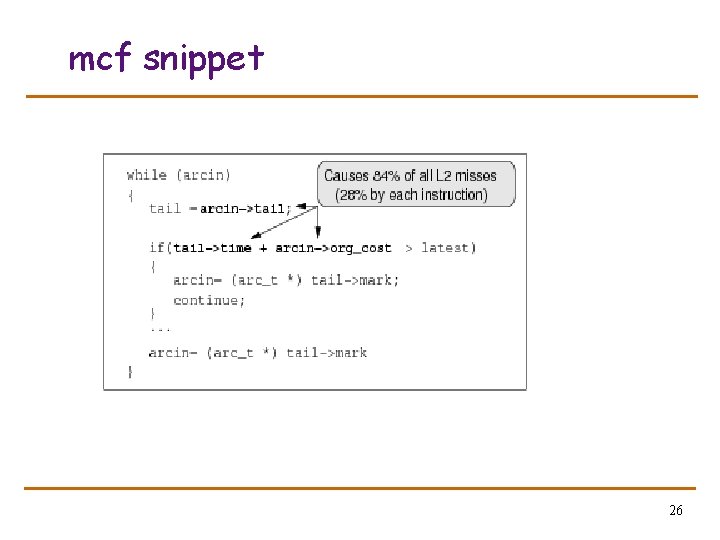

mcf snippet

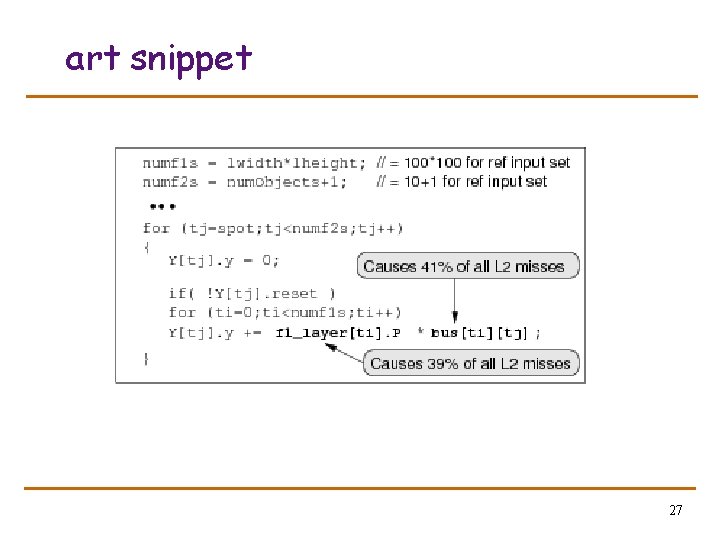

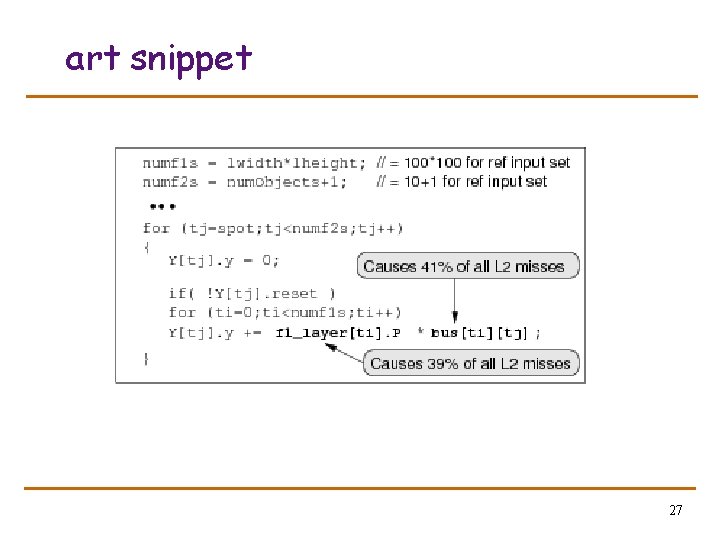

art snippet

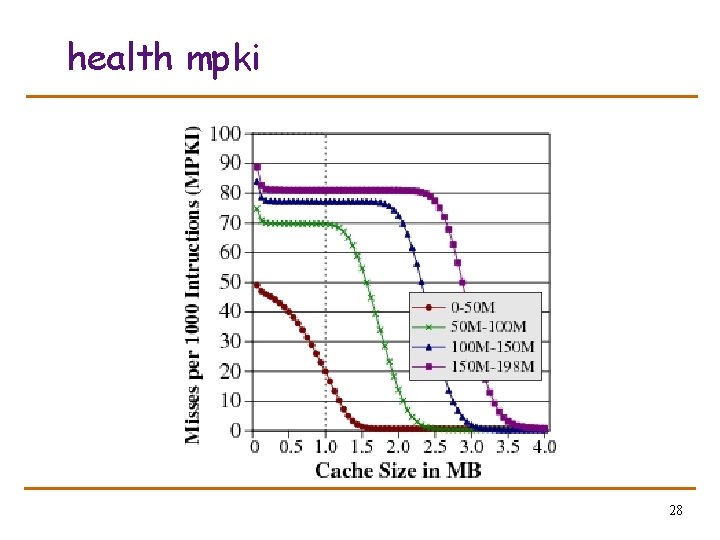

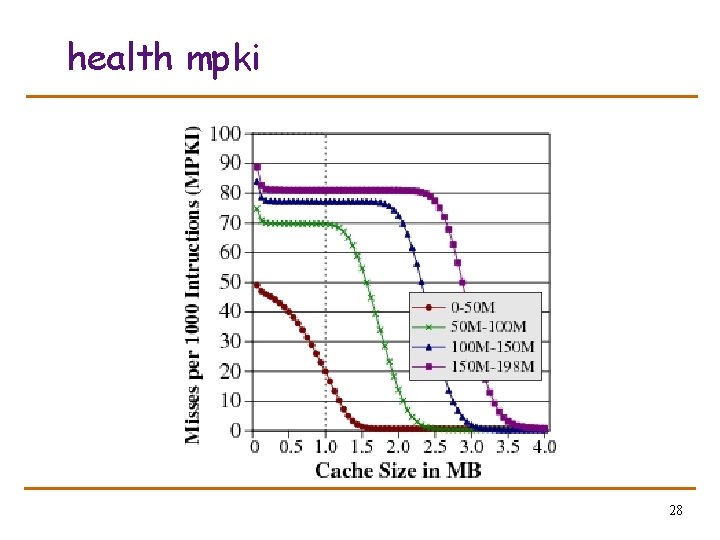

health MPKI

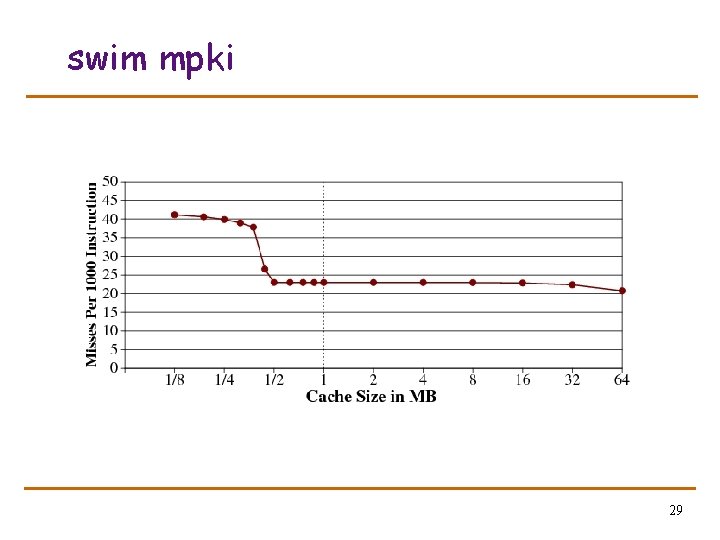

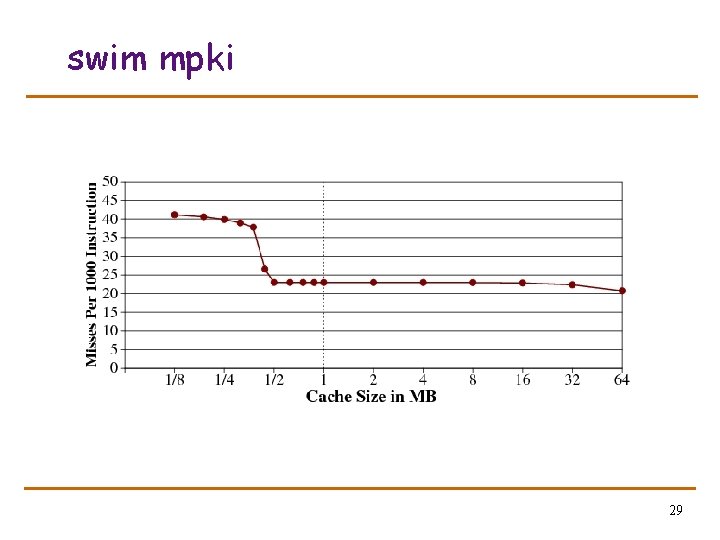

swim MPKI

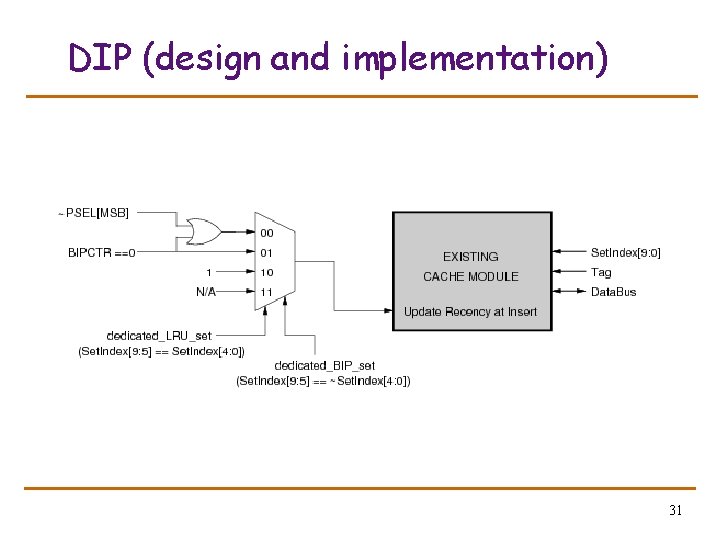

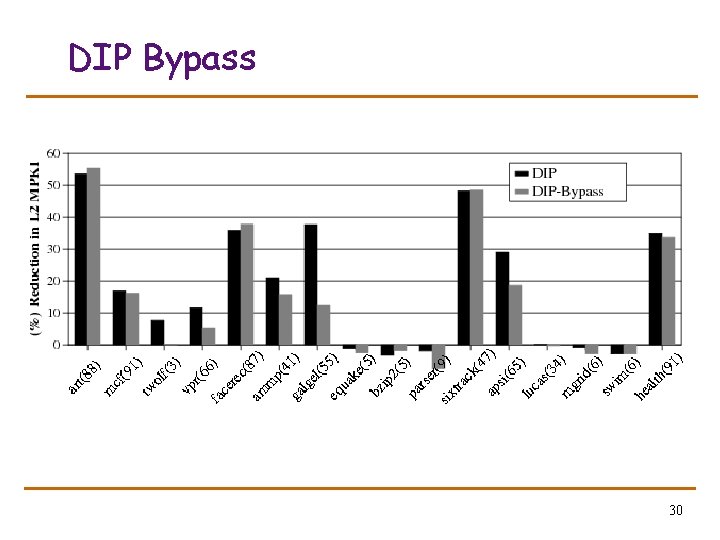

DIP Bypass

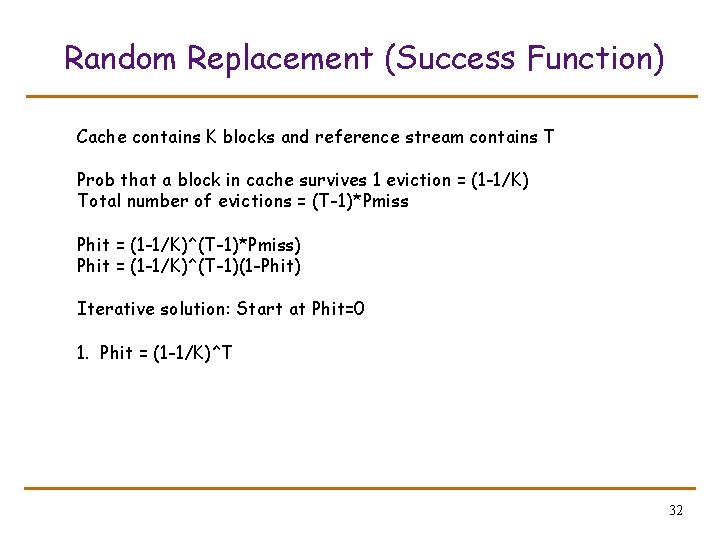

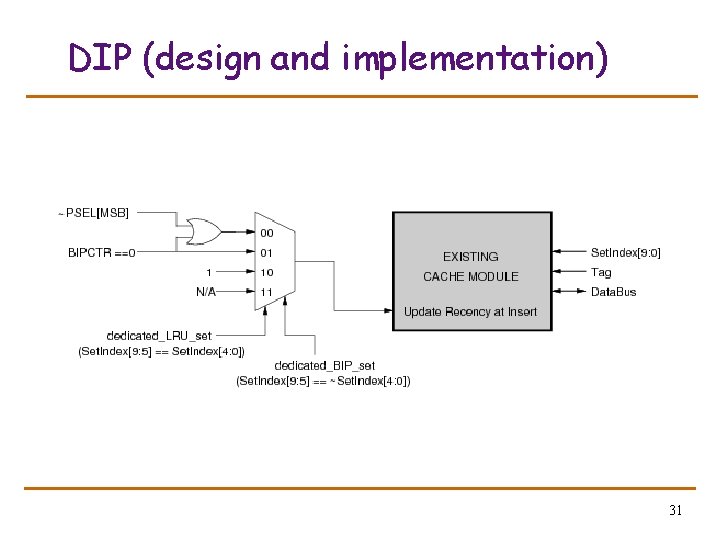

DIP (design and implementation)

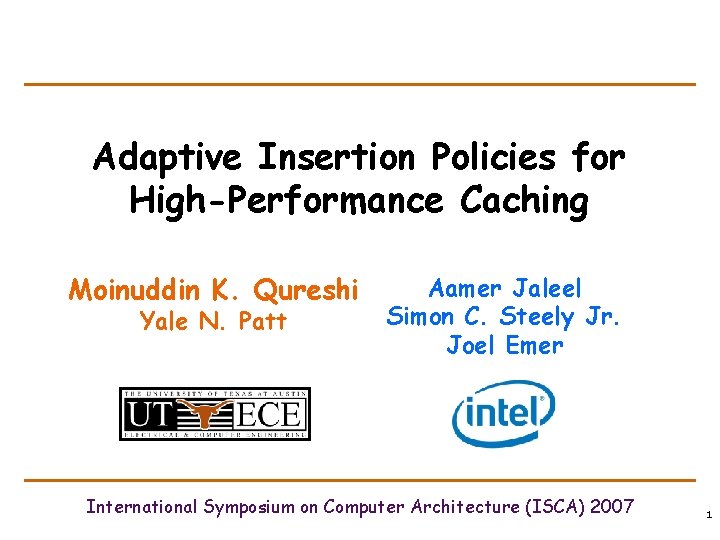

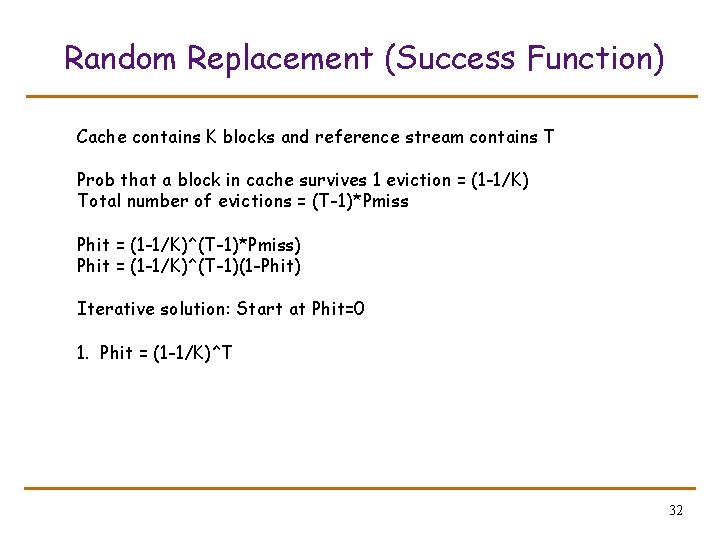

Random Replacement (Success Function)

The cache contains K blocks and the reference stream contains T blocks.

- Probability that a block in the cache survives one eviction = $(1 - 1/K)$
- Total number of evictions = $(T-1) \cdot P_{miss}$

$$P_{hit} = (1 - 1/K)^{(T-1) \cdot P_{miss}}$$

$$P_{hit} = (1 - 1/K)^{(T-1)(1 - P_{hit})}$$

Iterative solution: start at $P_{hit} = 0$.

1. $P_{hit} = (1 - 1/K)^{T-1}$
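The iterative solution can be sketched as a fixed-point iteration on the recurrence $P_{hit} = (1 - 1/K)^{(T-1)(1 - P_{hit})}$, starting from $P_{hit} = 0$. The function name, the tolerance, the iteration cap, and the example sizes K = 16 and T = 32 are assumptions chosen here for illustration.

```python
def random_replacement_hit_rate(K, T, tol=1e-12, max_iter=10_000):
    """Iterate P_hit = (1 - 1/K) ** ((T - 1) * (1 - P_hit)) from P_hit = 0."""
    survive = 1.0 - 1.0 / K  # Chance a block survives one eviction.
    p_hit = 0.0
    for _ in range(max_iter):
        next_p = survive ** ((T - 1) * (1.0 - p_hit))
        if abs(next_p - p_hit) < tol:
            return next_p
        p_hit = next_p
    return p_hit  # Best estimate if the tolerance was not reached.


p = random_replacement_hit_rate(K=16, T=32)
print(f"P_hit for K=16, T=32: {p:.4f}")
```

Note that $P_{hit} = 1$ always satisfies the recurrence as well; starting from 0 steers the iteration toward the smaller solution, which is the meaningful one when T is larger than K.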