Caching for File Systems

- Slides: 29

Caching for File Systems

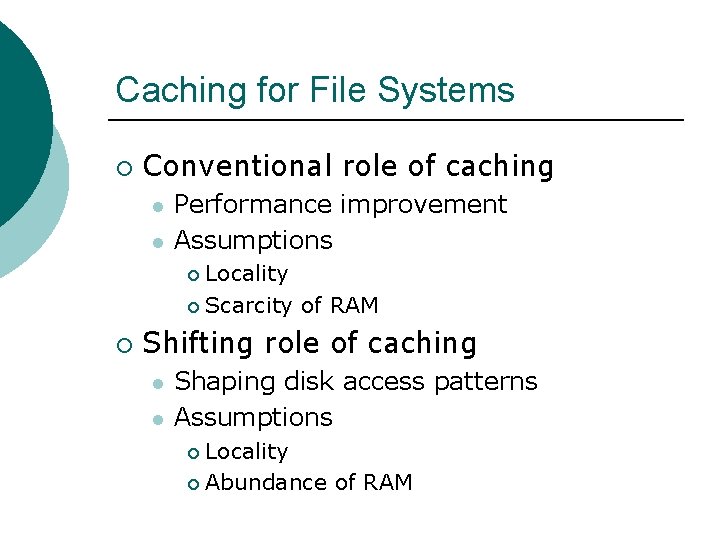

Caching for File Systems

- Conventional role of caching
  - Performance improvement
  - Assumptions
    - Locality
    - Scarcity of RAM
- Shifting role of caching
  - Shaping disk access patterns
  - Assumptions
    - Locality
    - Abundance of RAM

Performance Improvement

- Essentially all file systems rely on caching to achieve acceptable performance
- Goal is to make the FS run at memory speeds
  - Even though most of the data is on disk

Issues in I/O Buffer Caching

- Cache size
- Cache replacement policy
- Cache write handling
- Cache-to-process data handling

Cache size

- The bigger the cache, the fewer the cache misses
- But a bigger cache holds more data that must be kept in sync with disk

What if…

- RAM size = disk size?
- What are some implications in terms of disk layouts?
  - Memory dump?
  - LFS layout?

What if…

- RAM is big enough to cache all hot files
- What are some implications in terms of disk layouts?
  - Optimized for the remaining files

Cache Replacement Policy

- LRU works fairly well
  - Can use “stack of pointers” to keep track of LRU info cheaply
  - Need to watch out for cache pollution
- LFU doesn’t work well because a block may get lots of hits, then not be used
  - So, it takes a long time to get it out
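A minimal sketch of LRU bookkeeping, assuming a Python `OrderedDict` stands in for the “stack of pointers”. The class name `LRUCache`, the `fetch` callback, and `fake_disk_read` are hypothetical names chosen for illustration, not part of any file system described in these slides.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity block cache that evicts the least recently used block."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()  # block number -> data, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, block_no, fetch):
        """Return the block, calling fetch(block_no) only on a miss."""
        if block_no in self.blocks:
            self.blocks.move_to_end(block_no)  # mark as most recently used
            self.hits += 1
            return self.blocks[block_no]
        self.misses += 1
        data = fetch(block_no)
        self.blocks[block_no] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the oldest entry
        return data


def fake_disk_read(block_no):
    # Hypothetical stand-in for a real disk read.
    return f"data-{block_no}"


cache = LRUCache(capacity=3)
for b in [1, 2, 3, 1, 4, 2]:
    cache.read(b, fake_disk_read)
print(cache.hits, cache.misses, list(cache.blocks))
```

In this trace, reading block 4 evicts block 2 just before block 2 is needed again. A long one-time scan does the same thing at scale, which is the cache pollution the slide warns about.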

What is the optimal policy?

- MIN: Replacing a page that will not be used for the longest time… Hmm…
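The “Hmm…” is presumably because MIN needs to know future accesses. An offline simulation can still use it as a yardstick. The sketch below, with hypothetical function names and an illustrative trace, counts misses for MIN and for LRU on the same recorded access trace.

```python
from collections import OrderedDict


def count_misses_min(trace, capacity):
    """Offline MIN: evict the block whose next use is farthest in the future."""
    cache = set()
    misses = 0
    for i, block in enumerate(trace):
        if block in cache:
            continue
        misses += 1
        if len(cache) >= capacity:
            def next_use(b):
                # Position of the next access to b, or infinity if none.
                try:
                    return trace.index(b, i + 1)
                except ValueError:
                    return float("inf")
            cache.remove(max(cache, key=next_use))
        cache.add(block)
    return misses


def count_misses_lru(trace, capacity):
    """LRU on the same trace, for comparison."""
    cache = OrderedDict()
    misses = 0
    for block in trace:
        if block in cache:
            cache.move_to_end(block)
            continue
        misses += 1
        cache[block] = True
        if len(cache) > capacity:
            cache.popitem(last=False)
    return misses


trace = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
min_misses = count_misses_min(trace, capacity=3)
lru_misses = count_misses_lru(trace, capacity=3)
print(min_misses, lru_misses)  # 7 10
```

The gap between the two numbers bounds how much any online policy could still gain on this trace.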

What if your goal is to save power?

- Option 1: MIN replacement
  - RAM will cache the hottest data items
  - Disks will achieve maximum idleness… Hmm…

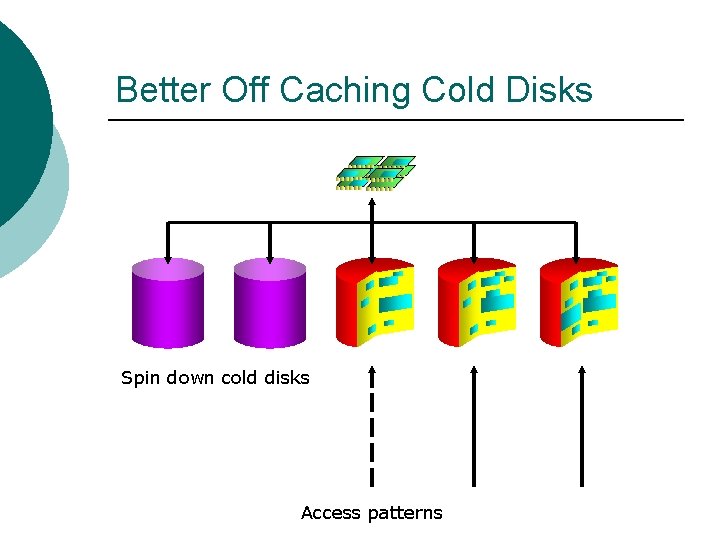

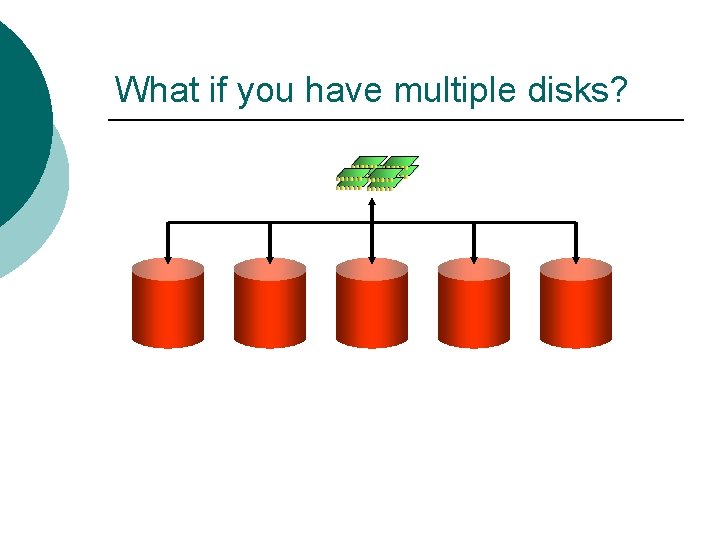

What if you have multiple disks?

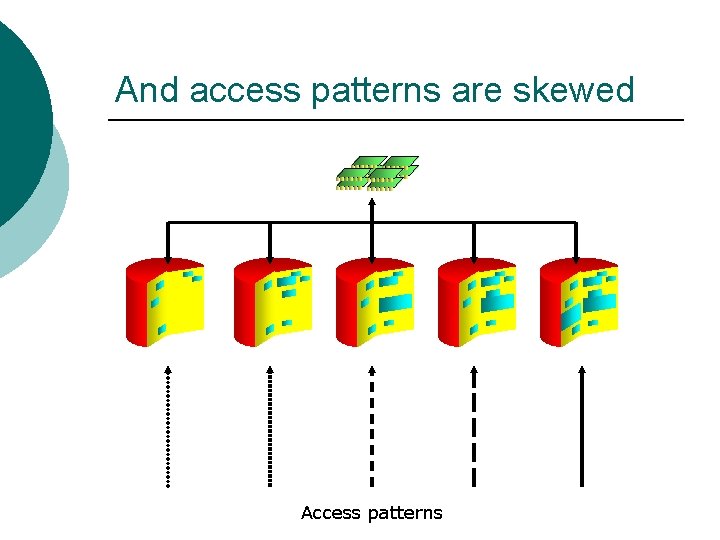

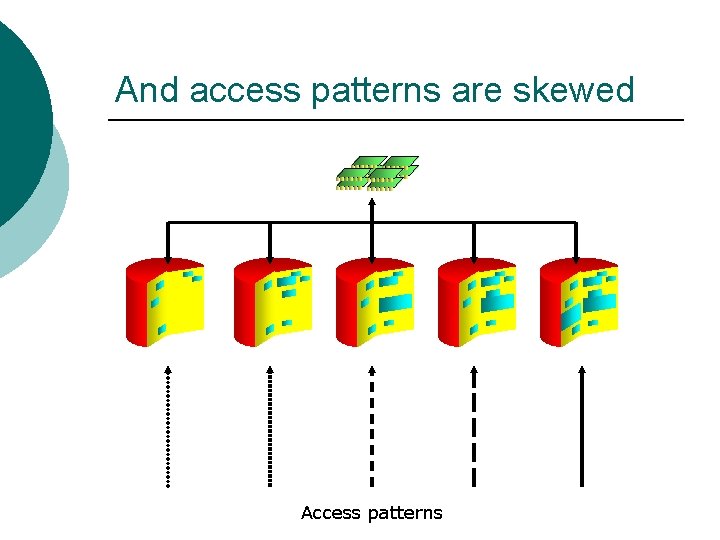

And access patterns are skewed

Figure label: Access patterns

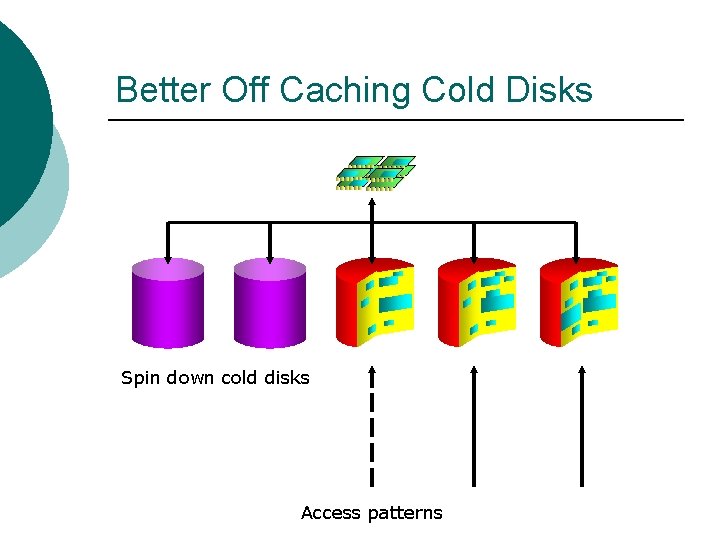

Better Off Caching Cold Disks

- Spin down cold disks

Figure label: Access patterns
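A toy illustration of the idea, under loud assumptions: two hypothetical disks `"A"` (hot) and `"B"` (cold), a cache pinned with two blocks, and a synthetic trace in which disk A receives nine accesses for every access to disk B. None of these numbers come from the slides. The sketch compares how many accesses still reach each disk when the cache holds the hottest blocks versus the cold disk's blocks.

```python
from collections import Counter


def disk_accesses(trace, cached):
    """Count accesses per disk that are not absorbed by the pinned cache."""
    counts = {}
    for disk, block in trace:
        counts.setdefault(disk, 0)
        if (disk, block) not in cached:
            counts[disk] += 1
    return counts


# Synthetic skewed trace: 90 accesses over 8 blocks of A, 10 over 2 blocks of B.
trace = []
n = 0
for round_no in range(10):
    for _ in range(9):
        trace.append(("A", n % 8))
        n += 1
    trace.append(("B", round_no % 2))

# Policy 1: pin the two most frequently accessed blocks (both live on A).
hottest = {item for item, _ in Counter(trace).most_common(2)}
hot_first = disk_accesses(trace, hottest)

# Policy 2: pin the cold disk's two blocks instead.
cold_first = disk_accesses(trace, {("B", 0), ("B", 1)})

print(hot_first)   # {'A': 66, 'B': 10}
print(cold_first)  # {'A': 90, 'B': 0}
```

Under the second policy the total number of disk accesses is higher, but disk B sees none at all, so it could stay spun down for the whole trace while disk A, which had to stay up anyway, absorbs the extra load.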

Handling Writes to Cached Blocks

- Write-through cache: updates propagate through the various levels of caches immediately
- Write-back cache: updates are delayed to amortize the cost of propagation
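The difference between the two policies can be sketched by counting how many writes reach the backing store. The class `WriteCache` and its fields are hypothetical, and a plain dictionary stands in for the disk.

```python
class WriteCache:
    """Single-level cache in front of a dict that stands in for the disk."""

    def __init__(self, write_back):
        self.write_back = write_back
        self.blocks = {}      # cached block contents
        self.dirty = set()    # blocks modified but not yet on disk
        self.disk = {}        # hypothetical backing store
        self.disk_writes = 0

    def _write_to_disk(self, block_no):
        self.disk[block_no] = self.blocks[block_no]
        self.disk_writes += 1

    def write(self, block_no, data):
        self.blocks[block_no] = data
        if self.write_back:
            self.dirty.add(block_no)       # defer propagation
        else:
            self._write_to_disk(block_no)  # propagate immediately

    def flush(self):
        """Propagate all delayed updates (a no-op for write-through)."""
        for block_no in sorted(self.dirty):
            self._write_to_disk(block_no)
        self.dirty.clear()


through = WriteCache(write_back=False)
back = WriteCache(write_back=True)
for i in range(100):
    through.write(7, i)
    back.write(7, i)
back.flush()
print(through.disk_writes, back.disk_writes)  # 100 1
```

The write-back cache amortizes 100 updates into one disk write, at the cost of a window in which the newest data exists only in memory.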

What if…

- Multiple levels of caching with different speeds and sizes?
- What are some tricky performance behaviors?

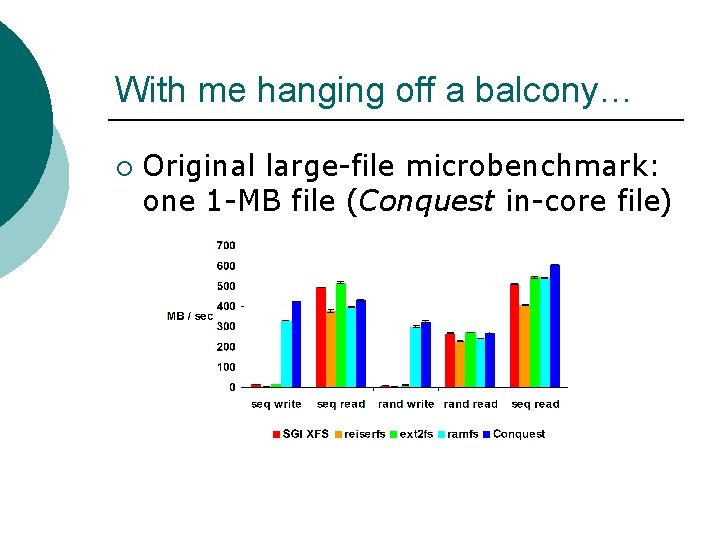

History’s Mystery

Puzzling Conquest Microbenchmark Numbers…

Geoff Kuenning: “If Conquest is slower than ext2fs, I will toss you off of the balcony…”

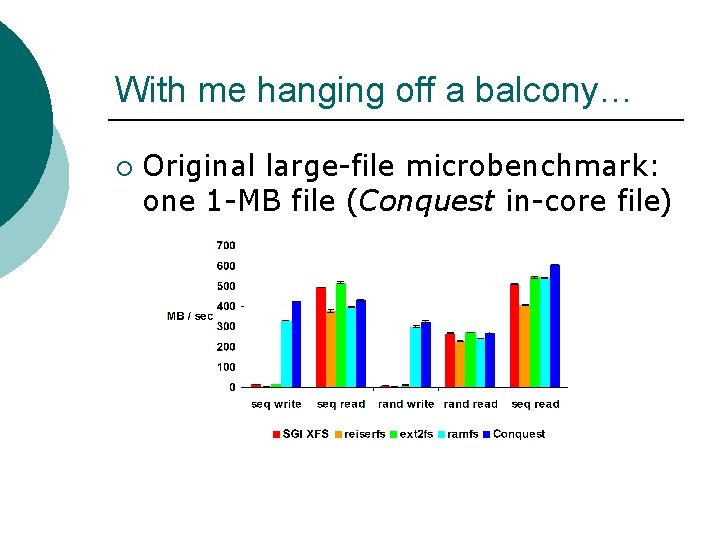

With me hanging off a balcony…

- Original large-file microbenchmark: one 1-MB file (Conquest in-core file)

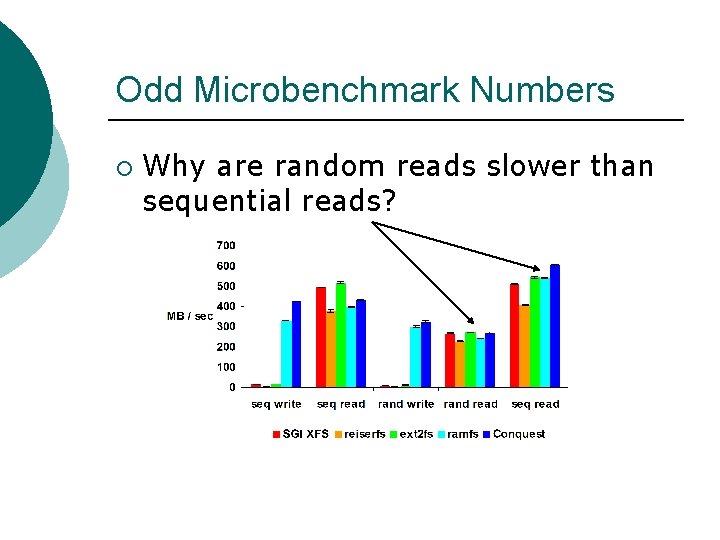

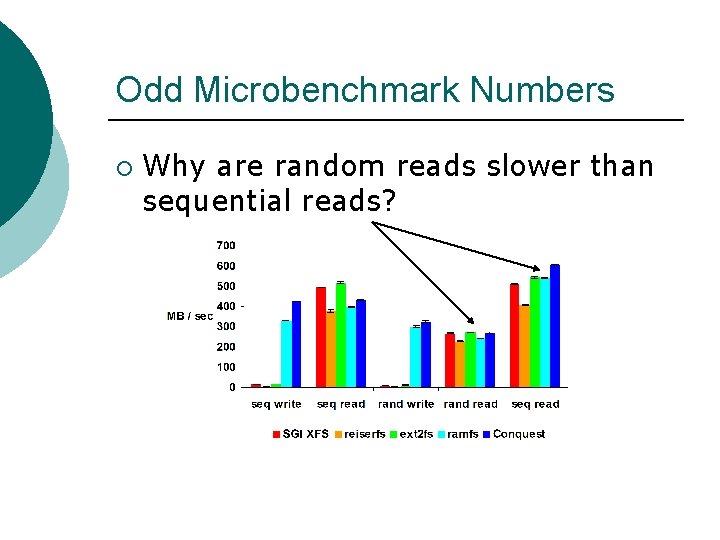

Odd Microbenchmark Numbers

- Why are random reads slower than sequential reads?

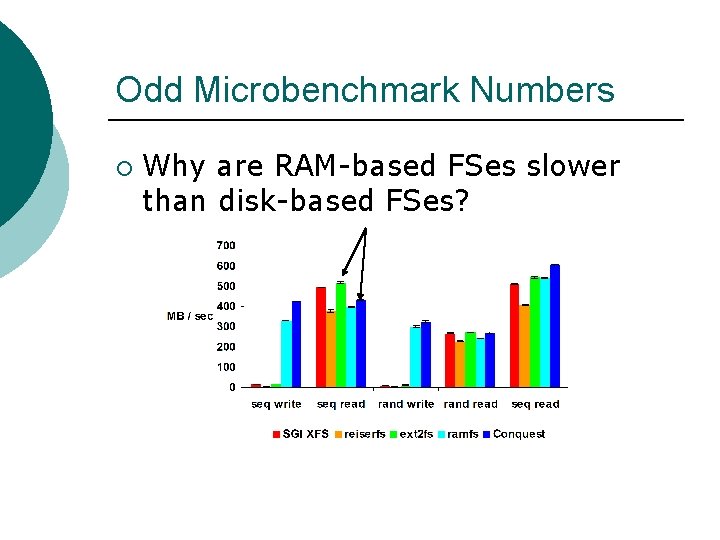

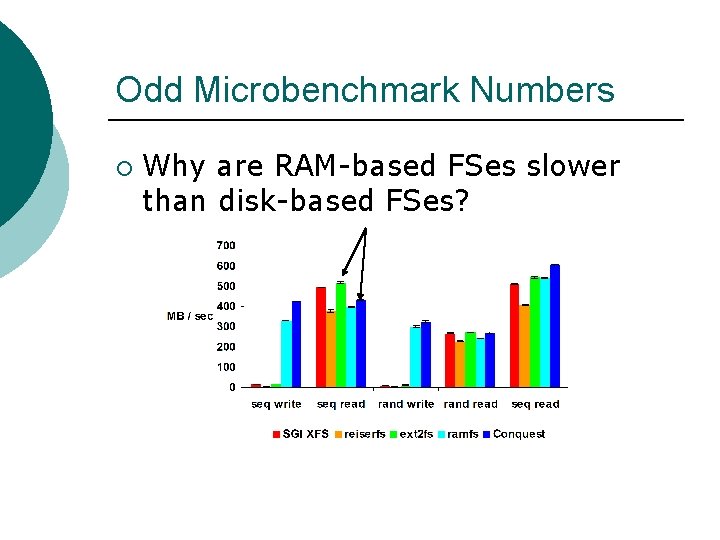

Odd Microbenchmark Numbers

- Why are RAM-based FSes slower than disk-based FSes?

A Series of Hypotheses

- Warm-up effect?
  - Maybe
  - Why do RAM-based systems warm up slower?
- Bad initial states?
  - No
- Pentium III streaming I/O option?
  - No

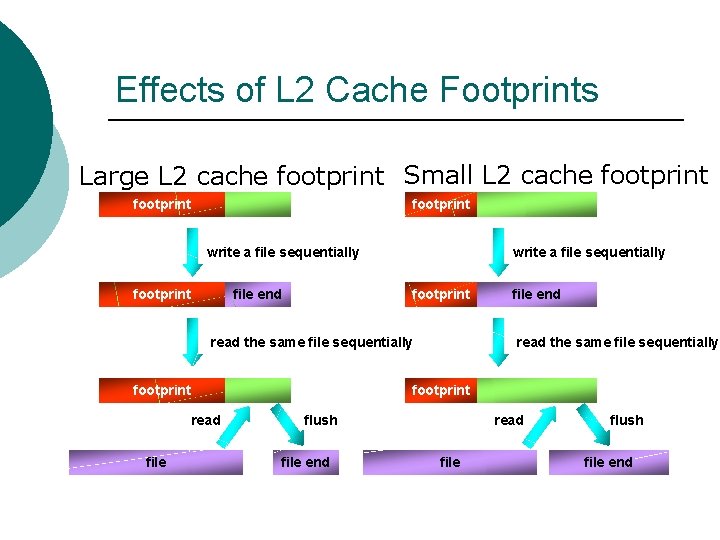

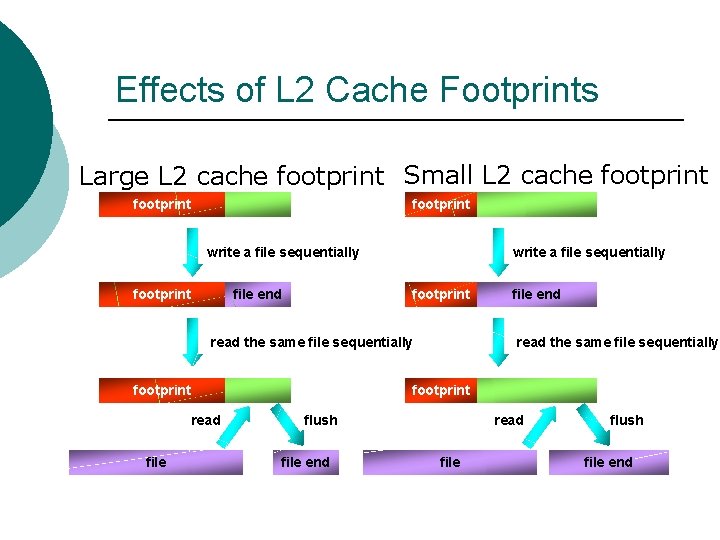

Effects of L2 Cache Footprints

Figure panels: “Large L2 cache footprint” and “Small L2 cache footprint”. Steps in each panel: write a file sequentially, then read the same file sequentially. Labels: footprint, file end, read, flush.
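One reading of the figure labels is that, after a sequential write, only the end of a large file is still cached, and a sequential read from the start then flushes that cached tail before reaching it. The sketch below tests this reading on a toy model. It assumes a fully associative LRU cache measured in blocks, which real L2 caches are not, and the function name and sizes are hypothetical.

```python
from collections import OrderedDict


def read_hits_after_write(file_blocks, cache_blocks):
    """Write a file sequentially, then read it sequentially; count read hits."""
    cache = OrderedDict()

    def touch(block):
        hit = block in cache
        if hit:
            cache.move_to_end(block)
        else:
            cache[block] = True
            if len(cache) > cache_blocks:
                cache.popitem(last=False)  # evict least recently used
        return hit

    for block in range(file_blocks):       # sequential write pass
        touch(block)
    return sum(touch(block) for block in range(file_blocks))  # read pass


small = read_hits_after_write(file_blocks=8, cache_blocks=16)
large = read_hits_after_write(file_blocks=20, cache_blocks=16)
print(small, large)  # 8 0
```

In this model a file only slightly larger than the cache gets no read hits at all, because each read miss at the front evicts the block that would have been the next hit at the tail.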

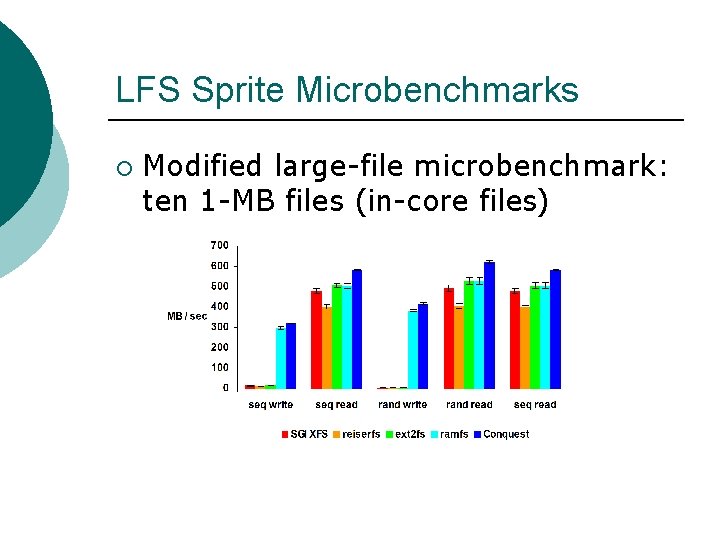

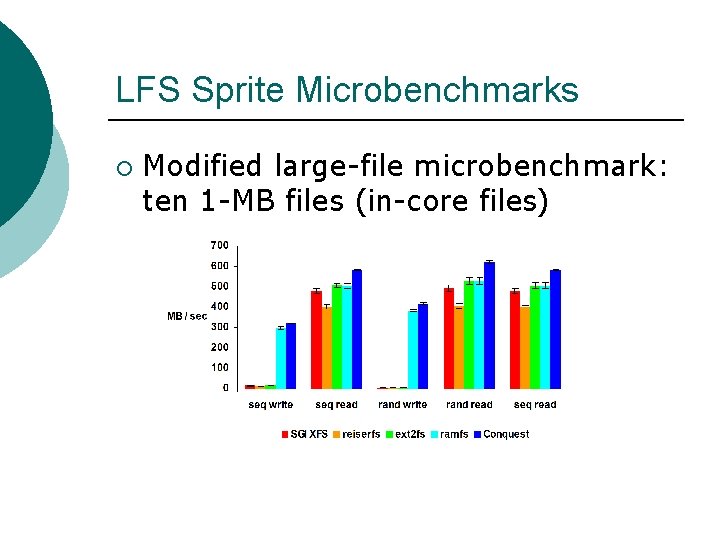

LFS Sprite Microbenchmarks

- Modified large-file microbenchmark: ten 1-MB files (in-core files)

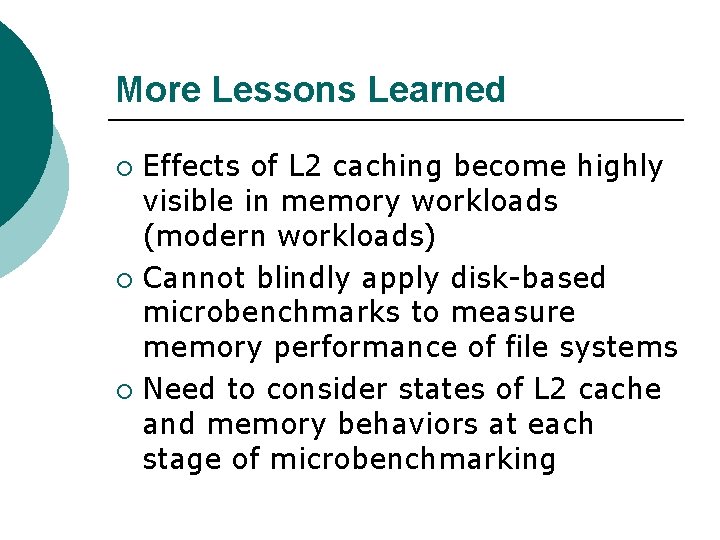

More Lessons Learned

- Effects of L2 caching become highly visible in memory workloads (modern workloads)
- Cannot blindly apply disk-based microbenchmarks to measure memory performance of file systems
- Need to consider states of L2 cache and memory behaviors at each stage of microbenchmarking

Additional Lessons Learned

- Don’t discuss your performance numbers next to a balcony…unless…

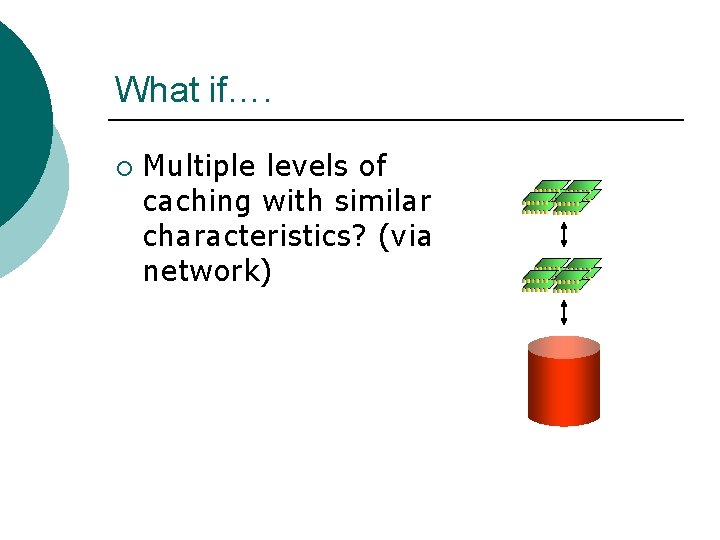

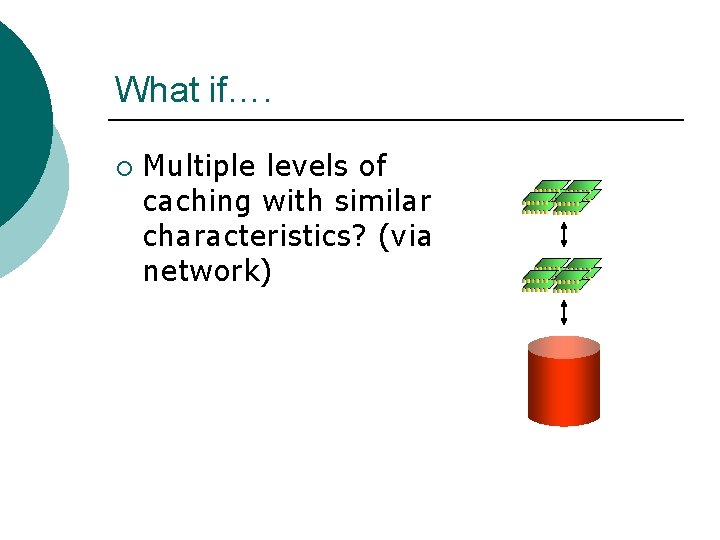

What if…

- Multiple levels of caching with similar characteristics? (via network)

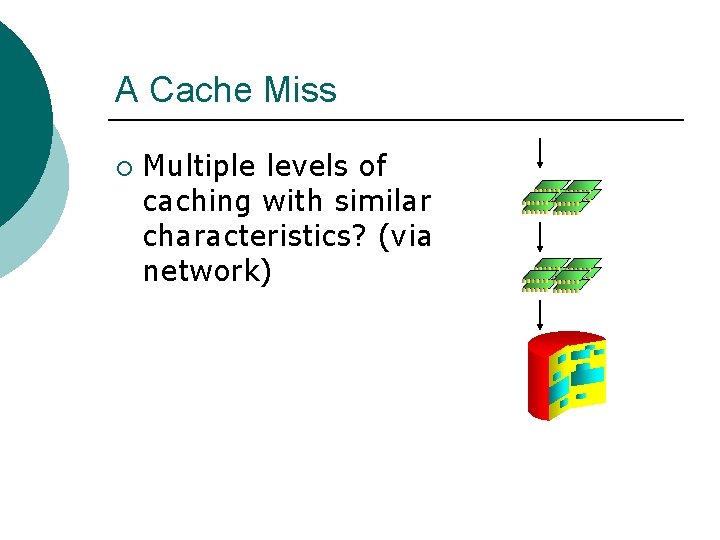

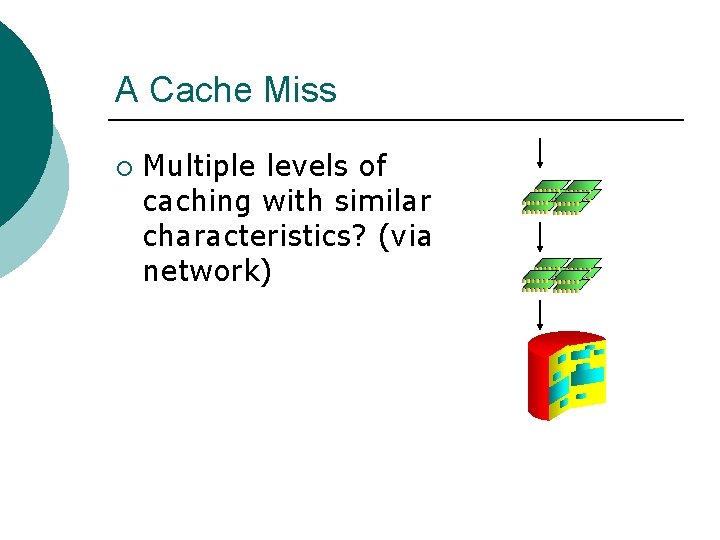

A Cache Miss

- Multiple levels of caching with similar characteristics? (via network)

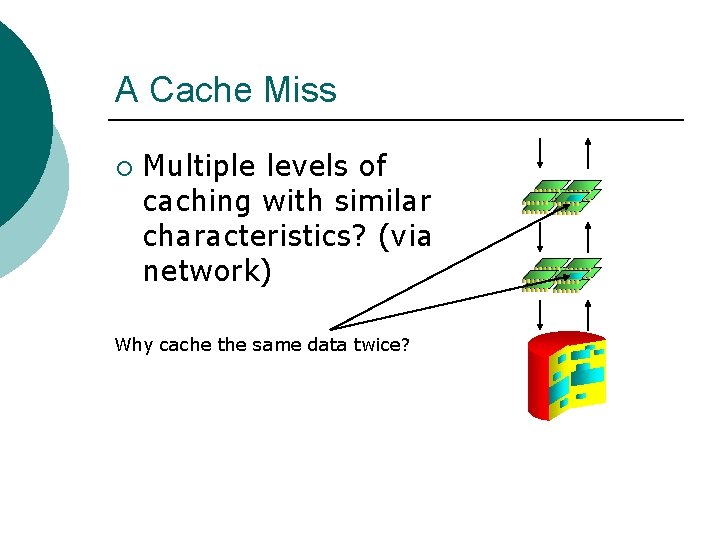

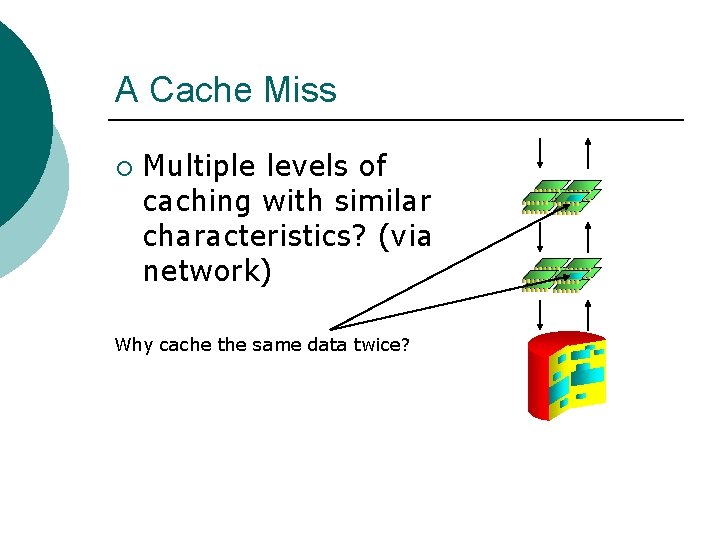

A Cache Miss

- Multiple levels of caching with similar characteristics? (via network)
- Why cache the same data twice?
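One way to explore the “twice” question is to simulate a client cache in front of a server cache. The sketch below compares a plain setup, where both levels keep a copy of every fetched block, with an exclusive setup in which the client demotes its evicted blocks to the server. The demotion scheme is an assumption brought in for illustration, not something the slides specify, and all names and sizes are hypothetical.

```python
from collections import OrderedDict


class LRU:
    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()

    def hit(self, key):
        if key in self.items:
            self.items.move_to_end(key)
            return True
        return False

    def insert(self, key):
        """Insert key and return the evicted key, or None."""
        self.items[key] = True
        self.items.move_to_end(key)
        if len(self.items) > self.capacity:
            return self.items.popitem(last=False)[0]
        return None

    def remove(self, key):
        self.items.pop(key, None)


def disk_reads(trace, client_size, server_size, exclusive):
    """Count blocks that neither cache level could supply."""
    client, server = LRU(client_size), LRU(server_size)
    reads = 0
    for block in trace:
        if client.hit(block):
            continue
        if exclusive:
            if block in server.items:
                server.remove(block)   # move up; keep no second copy
            else:
                reads += 1
            victim = client.insert(block)
            if victim is not None:
                server.insert(victim)  # demote the client's victim
        else:
            if not server.hit(block):
                reads += 1
                server.insert(block)   # server keeps a copy
            client.insert(block)       # client keeps another copy
    return reads


trace = list(range(8)) * 5  # loop over 8 blocks, 5 times
inclusive_reads = disk_reads(trace, 4, 4, exclusive=False)
exclusive_reads = disk_reads(trace, 4, 4, exclusive=True)
print(inclusive_reads, exclusive_reads)  # 40 8
```

With duplicated contents the two 4-block caches behave like one 4-block cache on this looping trace. Without duplication they behave like one 8-block cache, so only the first pass reaches the disk.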

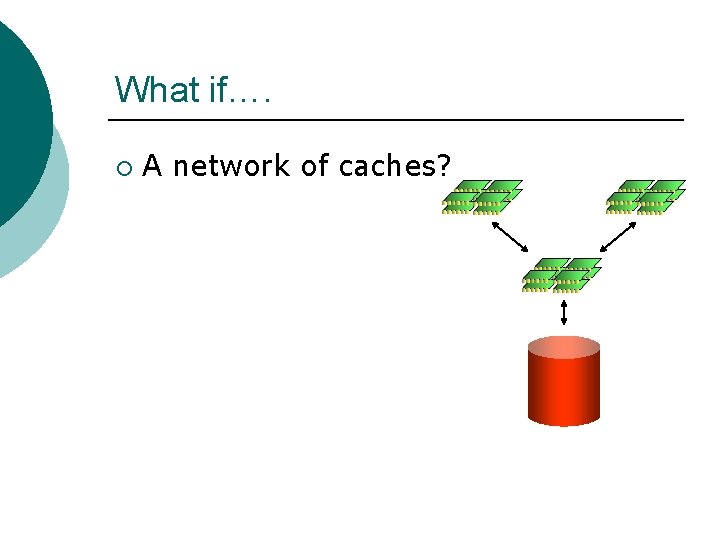

What if…

- A network of caches?

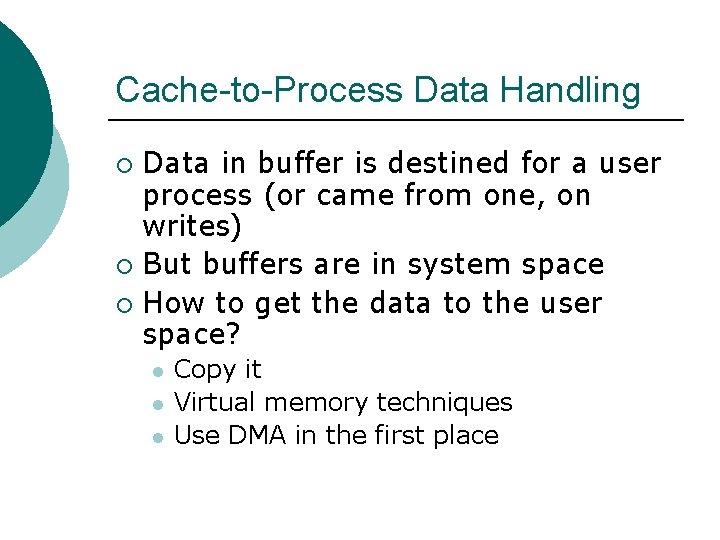

Cache-to-Process Data Handling

- Data in buffer is destined for a user process (or came from one, on writes)
- But buffers are in system space
- How to get the data to the user space?
  - Copy it
  - Virtual memory techniques
  - Use DMA in the first place
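The first two options can be seen from user space. The sketch below, using a hypothetical temporary file, contrasts `os.read`, which copies data into a user buffer, with `mmap`, which maps the file's pages into the process address space. DMA is a device-level mechanism and is not shown. Note that slicing the mapping in Python still creates a `bytes` copy, so this shows the interface difference rather than a measured saving.

```python
import mmap
import os
import tempfile

# Create a small scratch file to read back (hypothetical contents).
with tempfile.NamedTemporaryFile(delete=False) as f:
    f.write(b"hello from the buffer cache\n" * 4)
    path = f.name

# Option 1: copy it. read() copies bytes from the kernel's buffer
# into a new user-space bytes object.
fd = os.open(path, os.O_RDONLY)
copied = os.read(fd, 5)
os.close(fd)

# Option 2: virtual memory techniques. mmap() maps the file's pages
# into this process, so accesses go through the mapping instead of
# an explicit read() call per access.
with open(path, "rb") as f:
    with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
        first_word = mapped[:5]
        mapped_size = len(mapped)

os.remove(path)
print(copied, first_word, mapped_size)
```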