CERN Trigger data acquisition and control ICFA school


Trigger, data acquisition and control

ICFA school of instrumentation, Rio 2003. Richard Jacobsson, CERN.

Foreword

- The subject of this course is not an exact science! The challenges are often similar, but the aims and requirements of a particular experiment may be unique, so the solutions vary enormously.
- While I will try to give a general view of the strategies adopted, I will use the LHC experiments as examples.
- This course presents the work of many people who have been developing trigger, data acquisition and control systems for high energy physics experiments over many years!
- To prepare this course I have received input and comments from many sources. In particular, thanks to:
  - Clara Gaspar, CERN
  - Sergio Cittolin, CERN
  - Jean-Pierre Dufey, CERN
  - Irakli Mandjavidze, Saclay

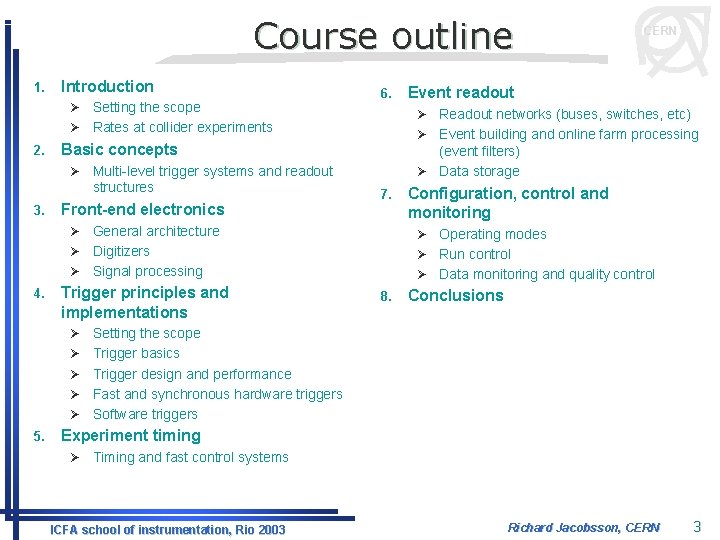

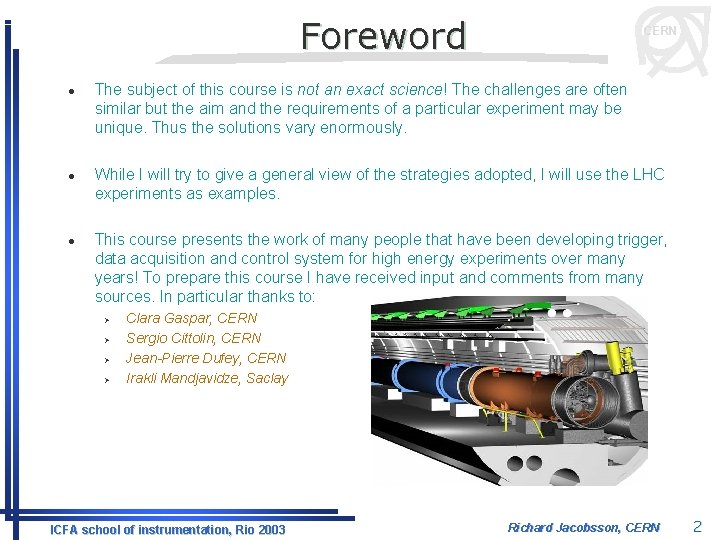

Course outline

1. Introduction
   - Setting the scope
   - Rates at collider experiments
2. Basic concepts
   - Multi-level trigger systems and readout structures
3. Front-end electronics
   - General architecture
   - Digitizers
   - Signal processing
4. Trigger principles and implementations
   - Setting the scope
   - Trigger basics
   - Trigger design and performance
   - Fast and synchronous hardware triggers
   - Software triggers
5. Experiment timing
   - Timing and fast control systems
6. Event readout
   - Readout networks (buses, switches, etc.)
   - Event building and online farm processing (event filters)
   - Data storage
7. Configuration, control and monitoring
   - Operating modes
   - Run control
   - Data monitoring and quality control
8. Conclusions

1. Introduction

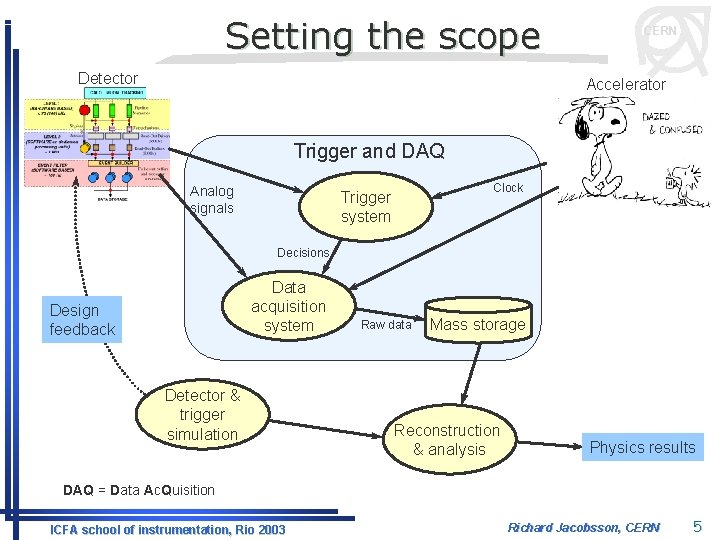

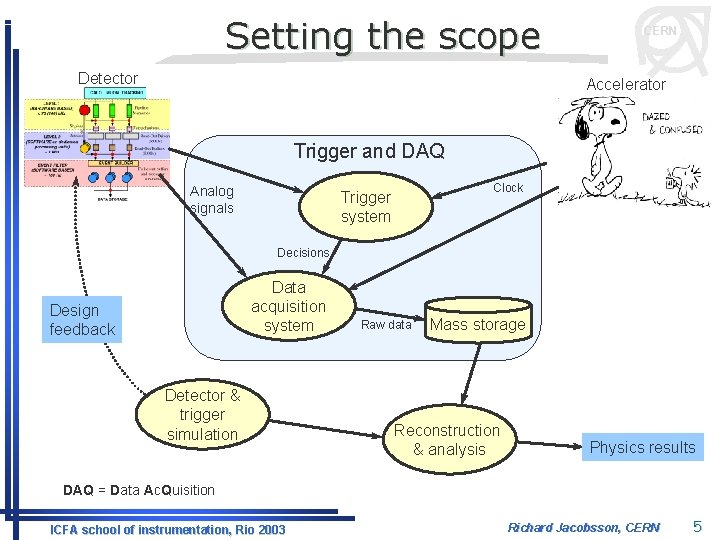

Setting the scope

[Figure: the accelerator provides the clock; analog signals from the detector go to the trigger system and the data acquisition system; trigger decisions drive the DAQ, which writes raw data to mass storage for reconstruction and analysis, leading to physics results; detector and trigger simulation provide design feedback.]

DAQ = Data AcQuisition

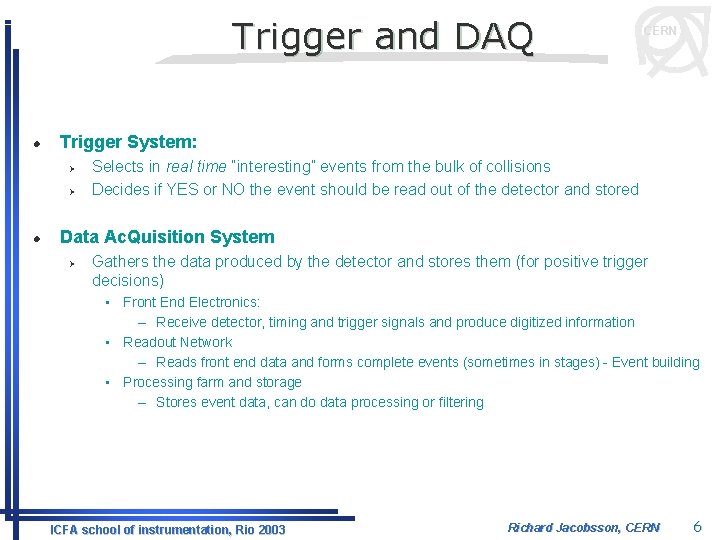

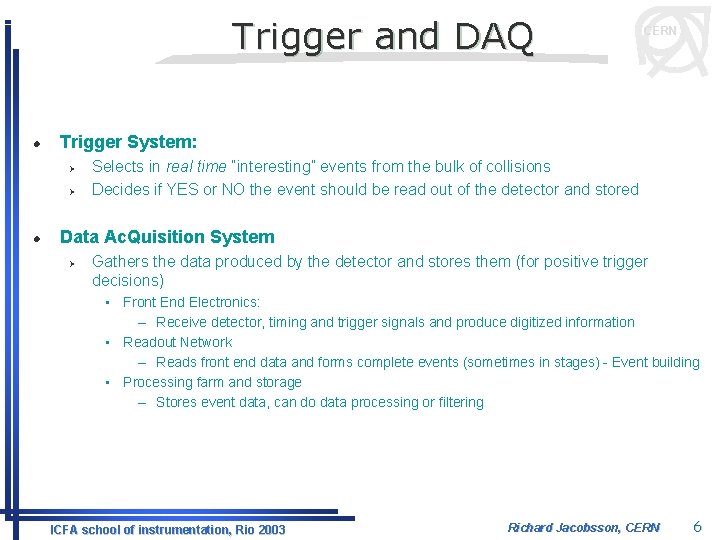

Trigger and DAQ

- Trigger system:
  - Selects in real time "interesting" events from the bulk of collisions
  - Decides, YES or NO, whether the event should be read out of the detector and stored
- Data AcQuisition system:
  - Gathers the data produced by the detector and stores them (for positive trigger decisions)
    - Front-end electronics: receive detector, timing and trigger signals and produce digitized information
    - Readout network: reads front-end data and forms complete events (sometimes in stages): event building
    - Processing farm and storage: stores event data; can do data processing or filtering

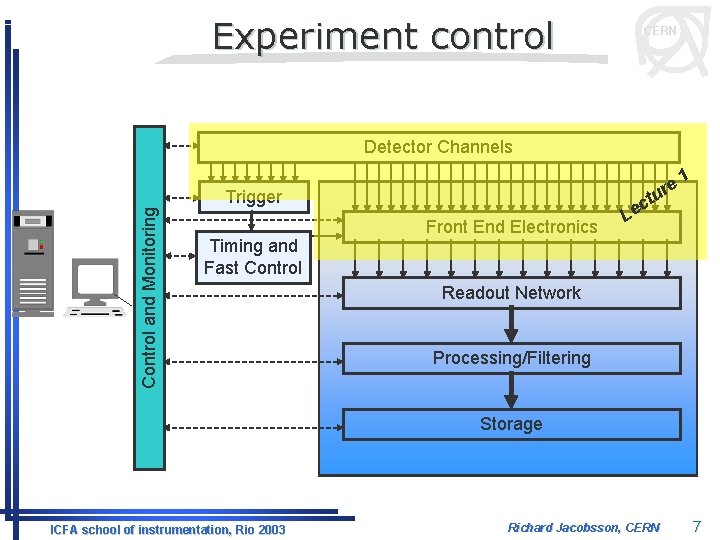

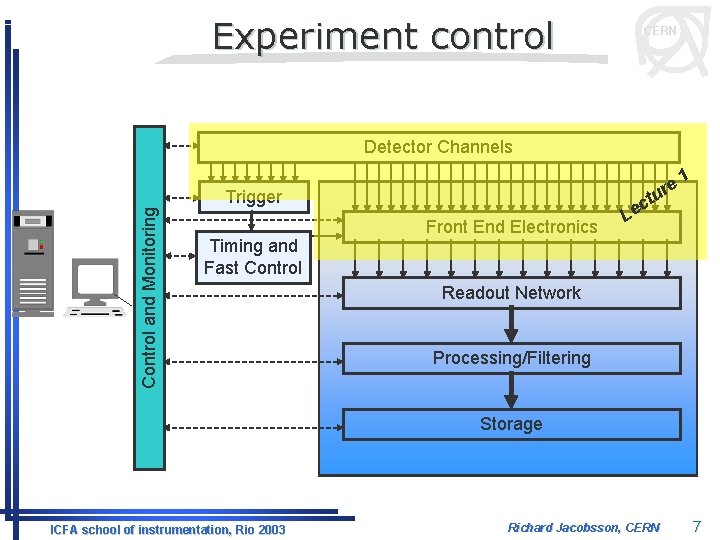

Experiment control

[Figure: detector channels → front-end electronics → readout network → processing/filtering → storage, with trigger, timing and fast control, and control and monitoring spanning all stages; the part covered by Lecture 1 is marked.]

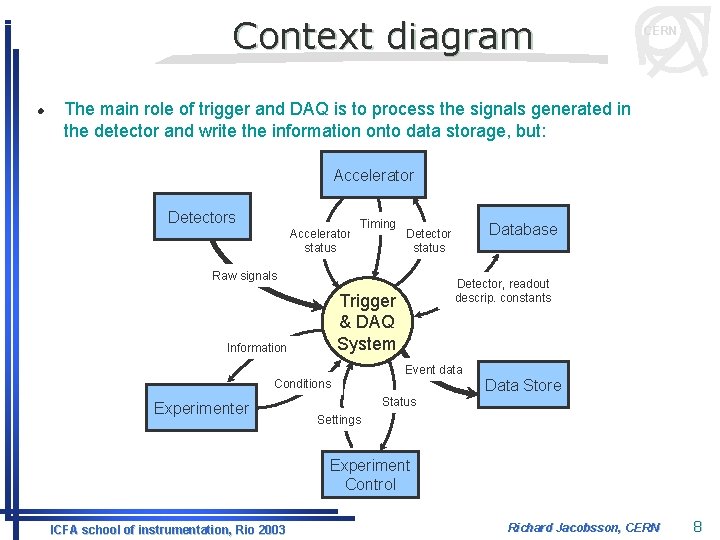

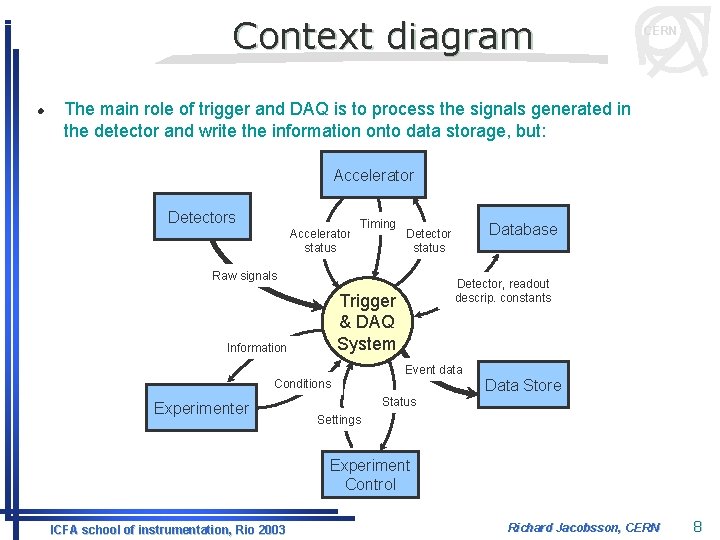

Context diagram

- The main role of trigger and DAQ is to process the signals generated in the detector and write the information onto data storage, but the system also interacts with its surroundings:

[Figure: the Trigger & DAQ system and its external interfaces: accelerator (accelerator status, timing), detectors (raw signals, detector status), information database (detector and readout descriptions, constants), data store (event data, conditions), and the experimenter through experiment control (status, settings).]

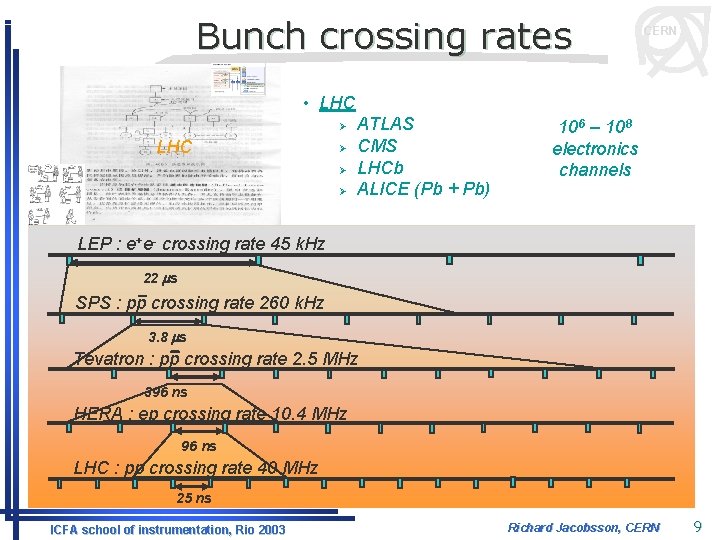

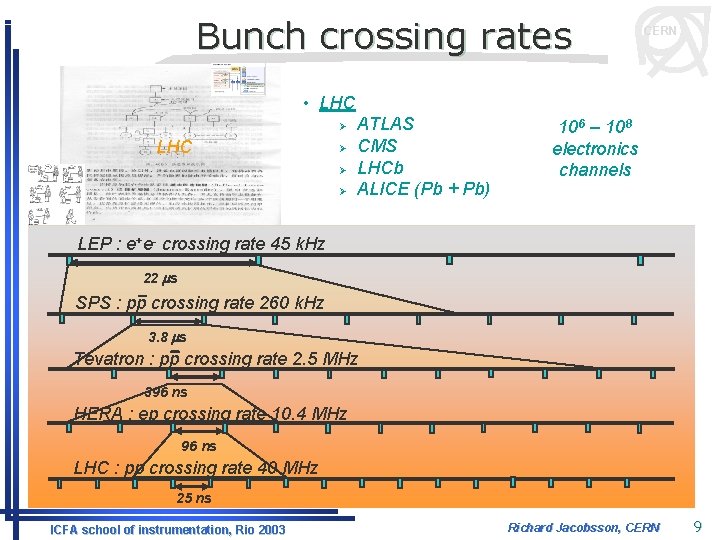

Bunch crossing rates

| Collider | Beams | Crossing rate | Crossing period |
|---|---|---|---|
| LEP | e⁺e⁻ | 45 kHz | 22 µs |
| SPS | pp̄ | 260 kHz | 3.8 µs |
| Tevatron | pp̄ | 2.5 MHz | 396 ns |
| HERA | ep | 10.4 MHz | 96 ns |
| LHC | pp | 40 MHz | 25 ns |

- LHC experiments: ATLAS, CMS, LHCb, ALICE (Pb + Pb)
- $10^6$–$10^8$ electronics channels

2. Basic concepts

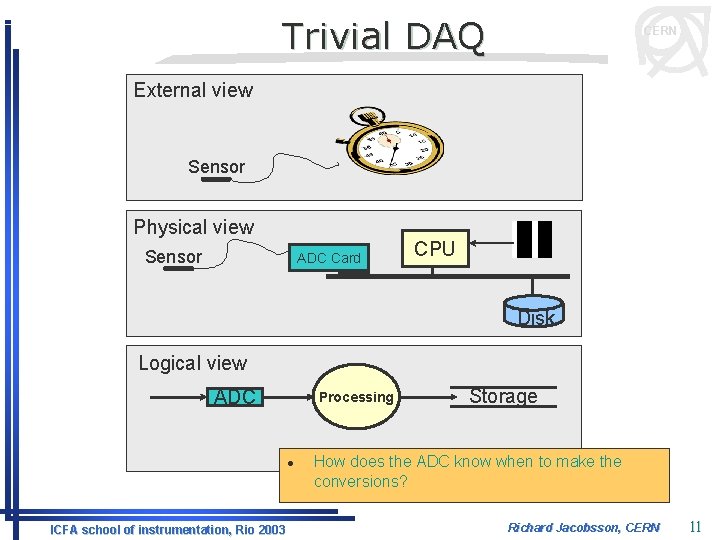

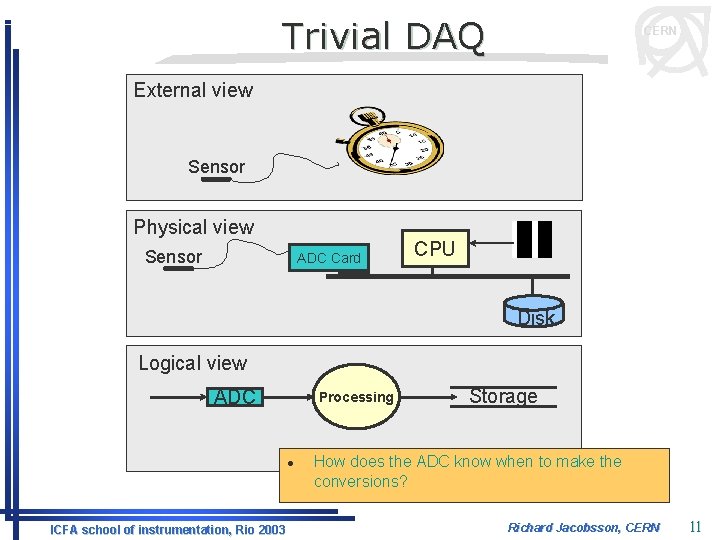

Trivial DAQ

[Figure: external view (sensor); physical view (sensor, ADC card, CPU, disk); logical view (ADC → processing → storage).]

- How does the ADC know when to make the conversions?

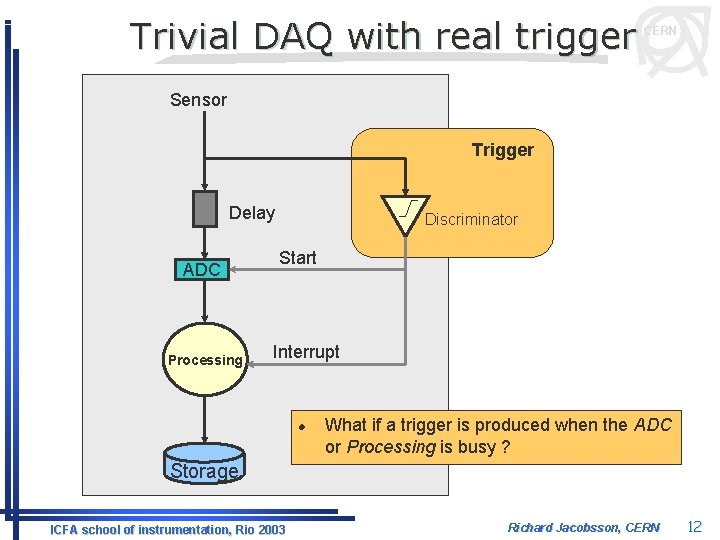

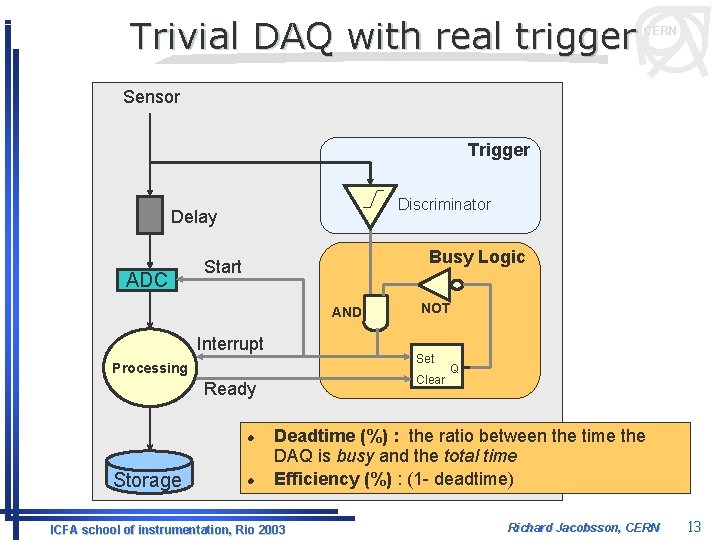

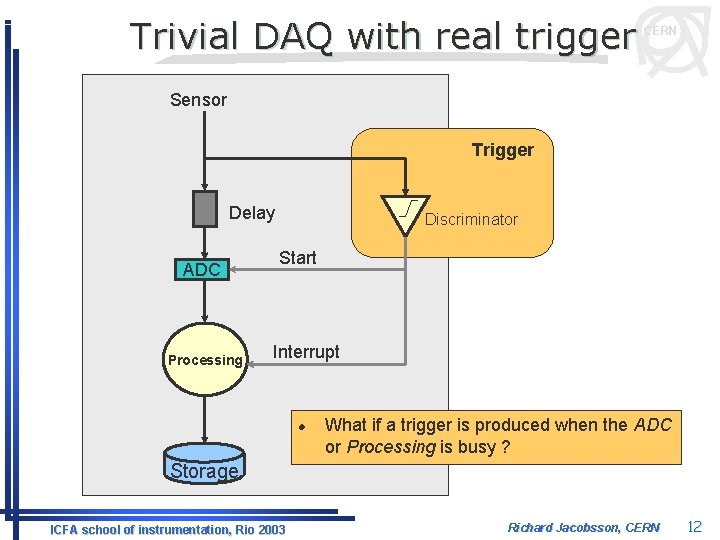

Trivial DAQ with real trigger

[Figure: sensor → delay → ADC → processing → storage; a discriminator on the sensor signal provides the trigger, which starts the ADC and sends an interrupt to the processing.]

- What if a trigger is produced when the ADC or the processing is busy?

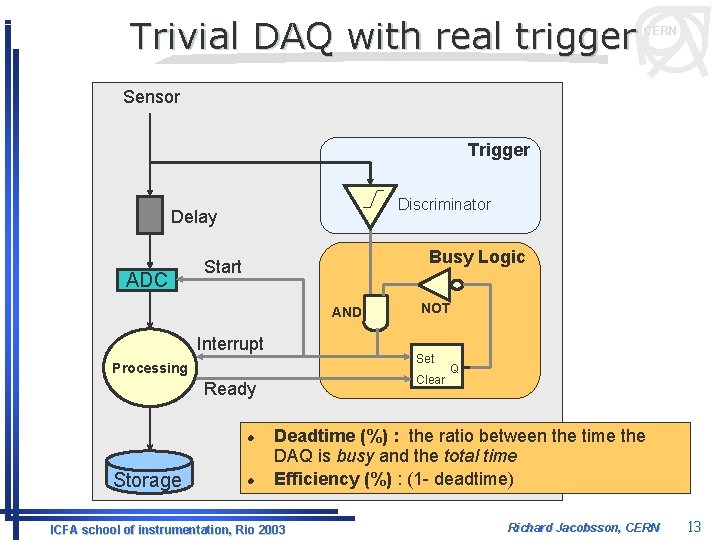

Trivial DAQ with real trigger

[Figure: as before, with busy logic: the trigger is ANDed with NOT Q of a set/clear flip-flop before starting the ADC; an accepted trigger sets the flip-flop (busy) and the processing clears it when ready.]

- Deadtime (%): the ratio between the time the DAQ is busy and the total time
- Efficiency (%): 1 − deadtime

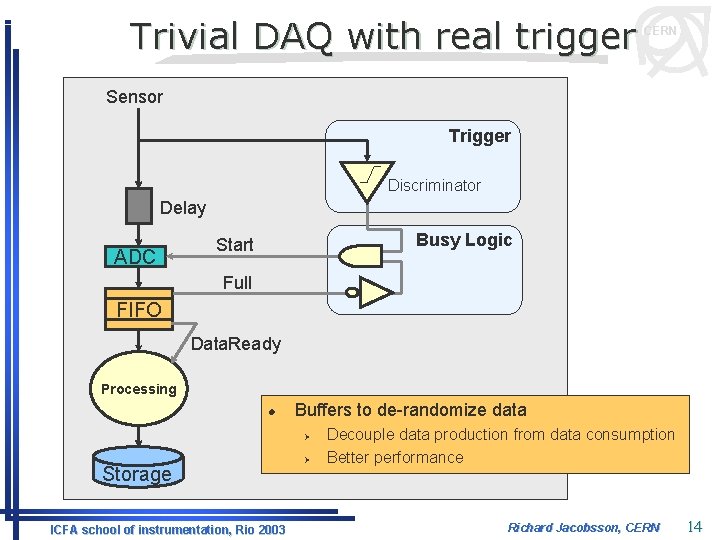

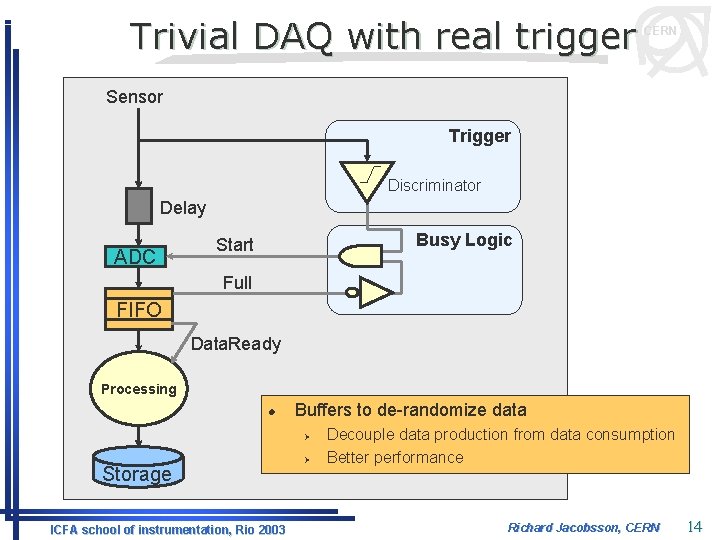

Trivial DAQ with real trigger

[Figure: a FIFO between the ADC and the processing; the busy logic now uses the FIFO "Full" flag, and "DataReady" tells the processing that data are waiting.]

- Buffers to de-randomize data:
  - Decouple data production from data consumption
  - Better performance
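The derandomizer can be modelled as a fixed-depth queue whose "full" state acts as the busy signal. This is a minimal Python sketch for illustration; the class and method names are hypothetical and not taken from any real DAQ software.

```python
from collections import deque


class DerandomizerFIFO:
    """Fixed-depth FIFO between the ADC (producer) and the processing (consumer).

    `full` plays the role of the Full/busy flag and `data_ready` the role of DataReady.
    """

    def __init__(self, depth: int) -> None:
        self.depth = depth
        self._events: deque = deque()

    @property
    def full(self) -> bool:
        return len(self._events) >= self.depth

    @property
    def data_ready(self) -> bool:
        return len(self._events) > 0

    def push(self, event) -> bool:
        """ADC side: store an event; return False if it is lost because the FIFO is full."""
        if self.full:
            return False
        self._events.append(event)
        return True

    def pop(self):
        """Processing side: return the oldest event (call only when data_ready is True)."""
        return self._events.popleft()


fifo = DerandomizerFIFO(depth=3)
accepted = [fifo.push(i) for i in range(5)]  # a burst of 5 triggers, no processing in between
print(accepted)    # [True, True, True, False, False]
oldest = fifo.pop()
print(oldest)      # 0
print(fifo.full)   # False
```

A burst longer than the FIFO depth still loses events, which is why the depth has to be chosen from the expected fluctuations of the trigger rate.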

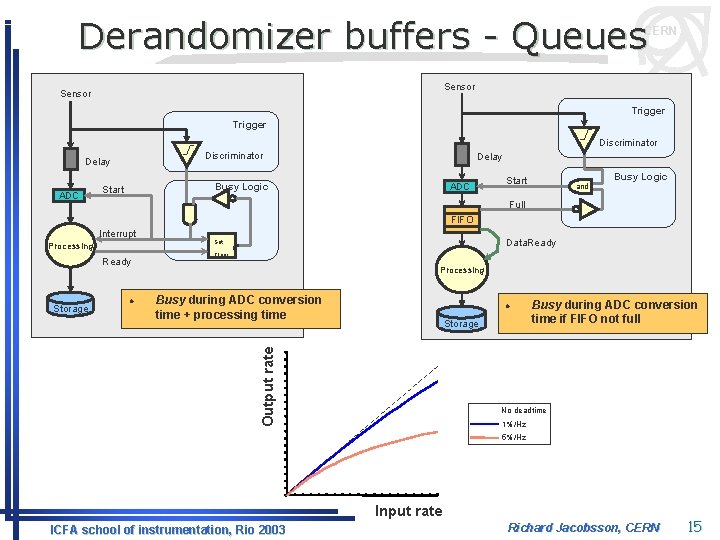

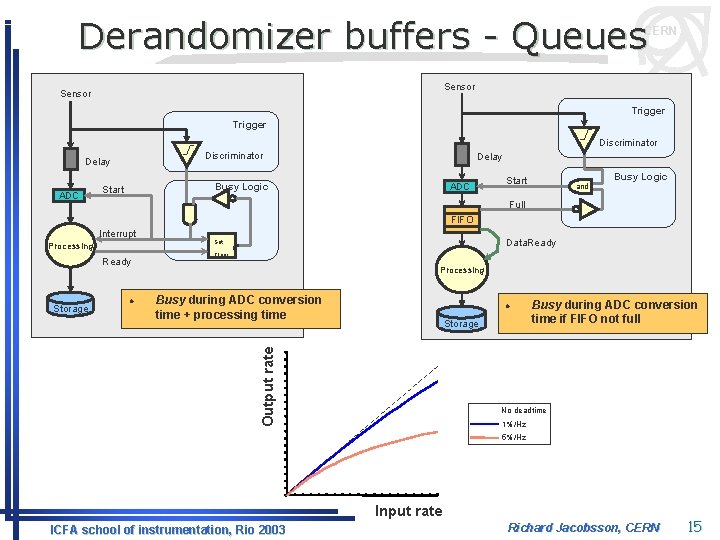

Derandomizer buffers: queues

[Figure: the busy-logic DAQ without and with a FIFO; plot of output rate versus input rate for no deadtime and for 1%/Hz and 5%/Hz.]

- Without FIFO: busy during ADC conversion time + processing time
- With FIFO: busy during ADC conversion time only, if the FIFO is not full

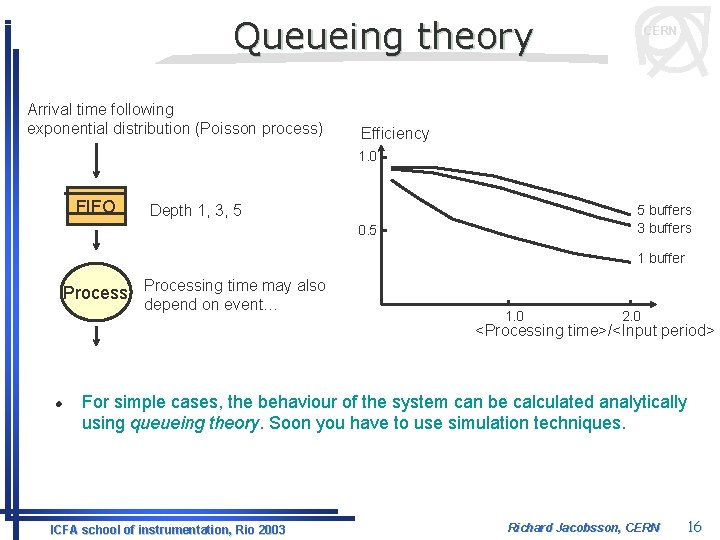

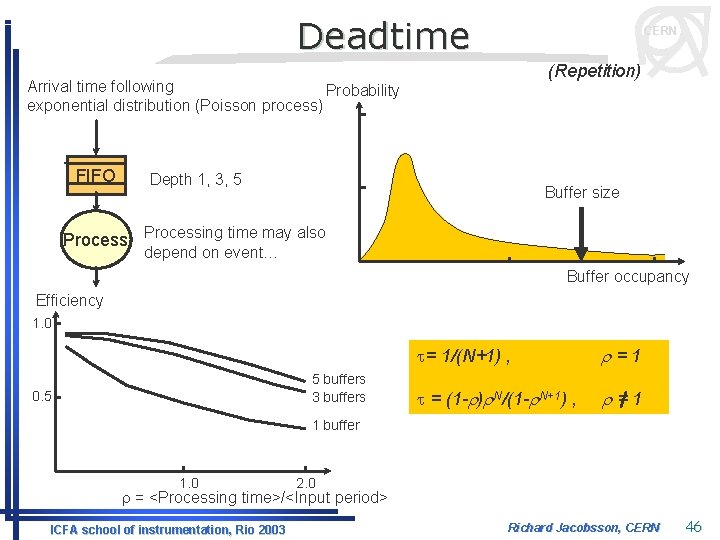

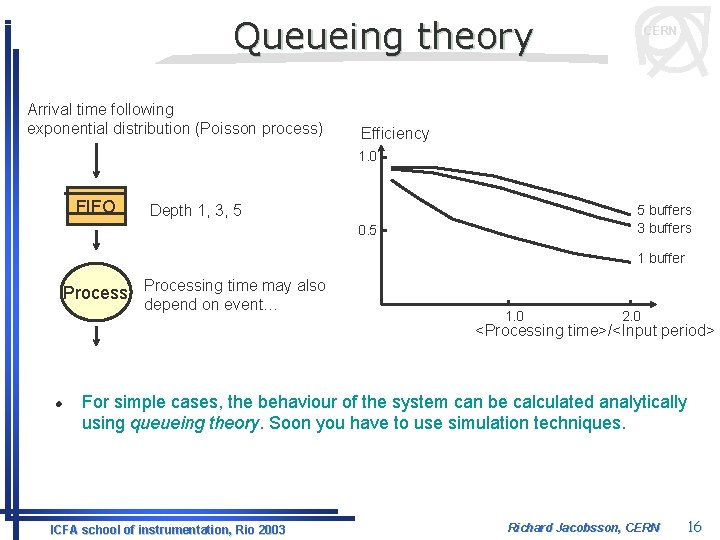

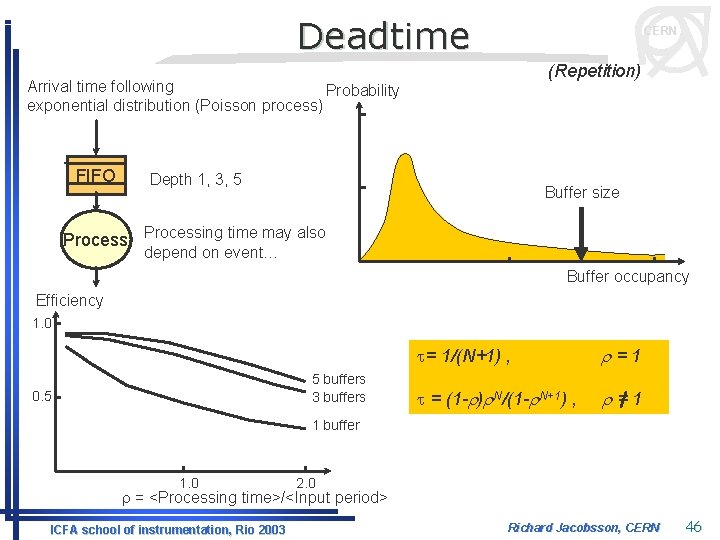

Queueing theory

- Arrival times follow an exponential distribution (Poisson process)
- Processing time may also depend on the event...

[Plot: efficiency (0–1.0) versus ⟨processing time⟩/⟨input period⟩ (0–2.0) for FIFO depths of 1, 3 and 5 buffers.]

- For simple cases, the behaviour of the system can be calculated analytically using queueing theory. Soon you have to use simulation techniques.
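When the analytic route runs out, a small discrete-event simulation reproduces curves like the one on the slide. The sketch below assumes Poisson triggers with mean input period 1, exponentially distributed processing times with mean `rho`, a single processor, and a depth that counts the event being processed. These are illustrative choices, not the model used for the original plot. For a single buffer at `rho = 1` the result should come out close to 0.5.

```python
import random


def simulate_efficiency(rho: float, depth: int, n_triggers: int = 100_000, seed: int = 1) -> float:
    """Fraction of triggers accepted by a FIFO of `depth` events feeding one processor.

    Assumptions: Poisson arrivals (mean period 1), exponential processing time
    with mean `rho`, and `depth` includes the event currently being processed.
    """
    rng = random.Random(seed)
    t = 0.0
    in_system = []  # completion times of buffered events, in FIFO (increasing) order
    accepted = 0
    for _ in range(n_triggers):
        t += rng.expovariate(1.0)                               # next trigger arrival
        in_system = [done for done in in_system if done > t]    # drop finished events
        if len(in_system) < depth:
            start = in_system[-1] if in_system else t           # wait for the previous event
            in_system.append(start + rng.expovariate(1.0 / rho))
            accepted += 1
    return accepted / n_triggers


for depth in (1, 3, 5):
    print(depth, round(simulate_efficiency(rho=1.0, depth=depth), 3))
```

Replacing `rng.expovariate(1.0 / rho)` with a constant or an event-dependent distribution is how one would study the "processing time may depend on event" case, where closed formulas are no longer available.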

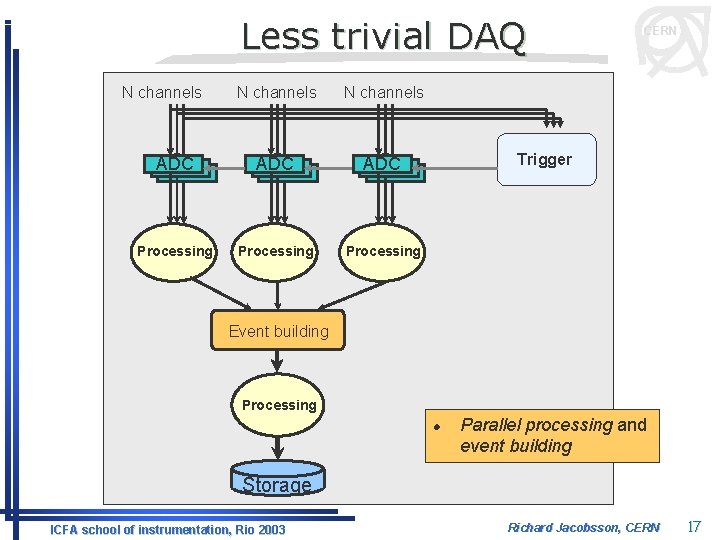

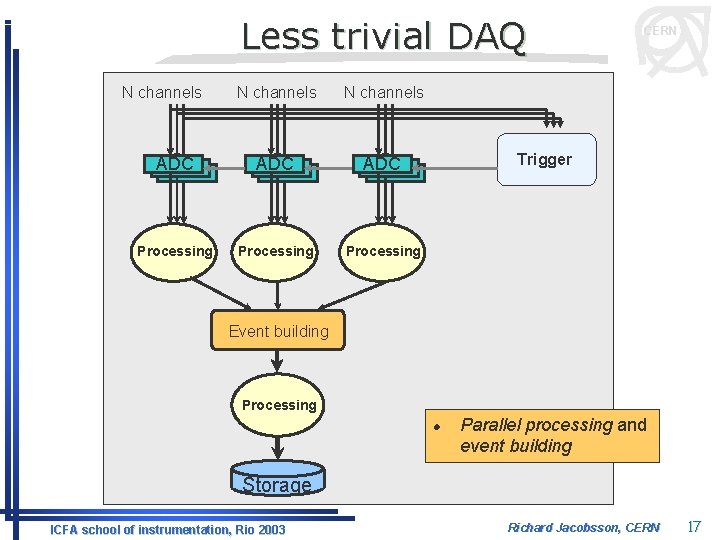

Less trivial DAQ

[Figure: N channels, each with its own ADC and processing, started by a common trigger; event building; processing; storage.]

- Parallel processing and event building
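Event building means collecting the fragments that the parallel channels produce for the same trigger and merging them into one event. The following is a minimal sketch, assuming every fragment carries an event number and a source identifier (hypothetical names). Real event builders also handle time-outs and flow control.

```python
from collections import defaultdict


def build_events(fragments, n_sources):
    """Assemble event fragments into complete events.

    `fragments` is an iterable of (event_id, source_id, payload) tuples arriving
    in any order; an event is complete once all `n_sources` have contributed.
    Returns (complete_events, incomplete_events), both keyed by event_id.
    """
    pending = defaultdict(dict)
    complete = {}
    for event_id, source_id, payload in fragments:
        pending[event_id][source_id] = payload
        if len(pending[event_id]) == n_sources:
            complete[event_id] = pending.pop(event_id)
    return complete, dict(pending)


# Three hypothetical readout sources; fragments of different events interleave.
fragments = [
    (0, "A", b"a0"), (1, "A", b"a1"), (0, "B", b"b0"),
    (1, "C", b"c1"), (0, "C", b"c0"), (2, "A", b"a2"), (1, "B", b"b1"),
]
complete, incomplete = build_events(fragments, n_sources=3)
print(sorted(complete))   # [0, 1]
print(incomplete)         # {2: {'A': b'a2'}}
```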

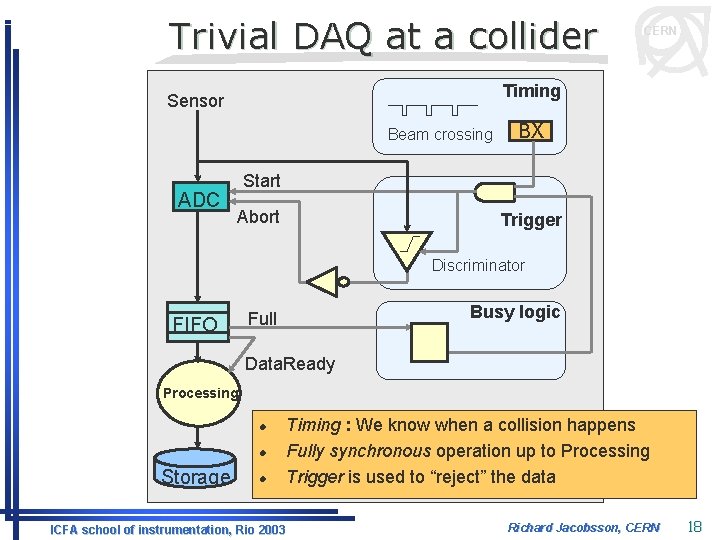

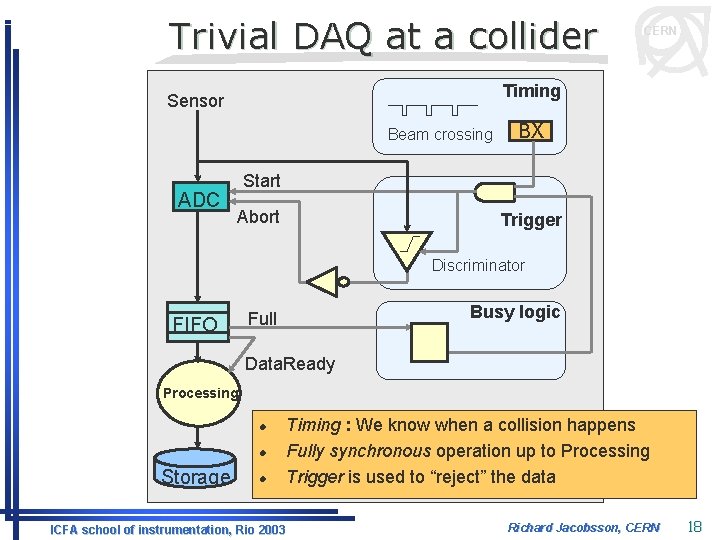

Trivial DAQ at a collider

[Figure: timing from the beam crossing (BX) starts the ADC; the discriminator-based trigger can abort the conversion; a FIFO with busy logic (Full, DataReady) feeds processing and storage.]

- Timing: we know when a collision happens
- Fully synchronous operation up to the processing
- The trigger is used to "reject" the data

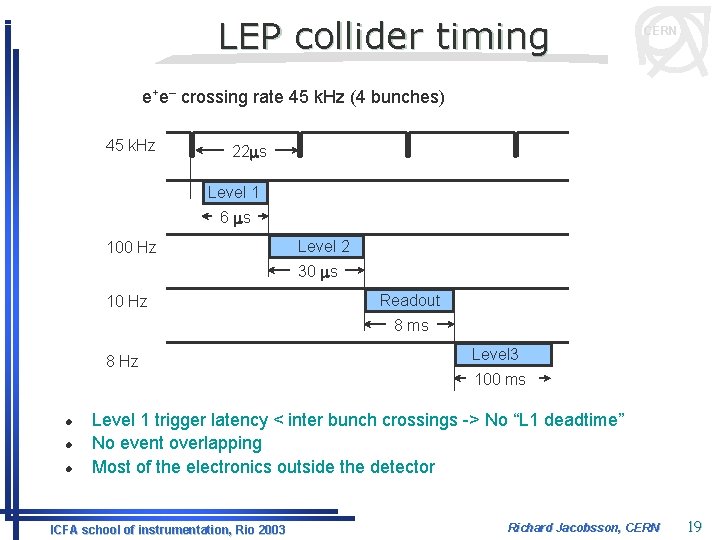

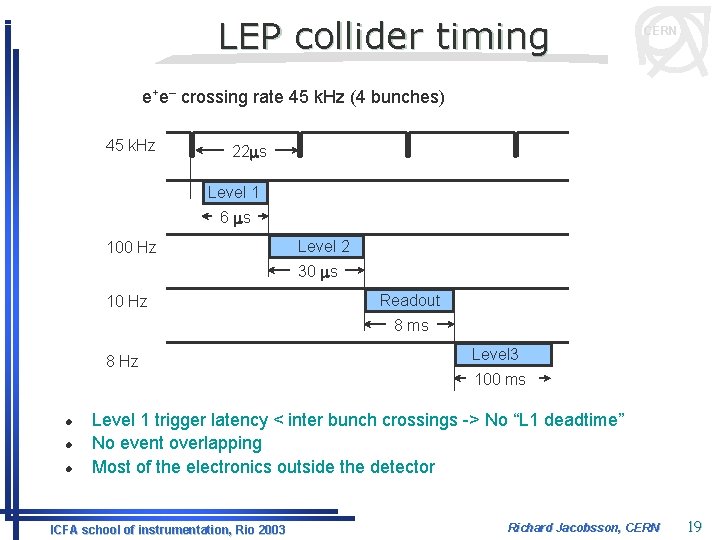

LEP collider timing

- e⁺e⁻ crossing rate 45 kHz (4 bunches)

[Figure: crossings every 22 µs (45 kHz) → Level 1 (6 µs) → 100 Hz → Level 2 (30 µs) → 10 Hz → readout (8 ms) → 8 Hz → Level 3 (100 ms).]

- Level 1 trigger latency < time between bunch crossings → no "L1 deadtime"
- No event overlapping
- Most of the electronics outside the detector

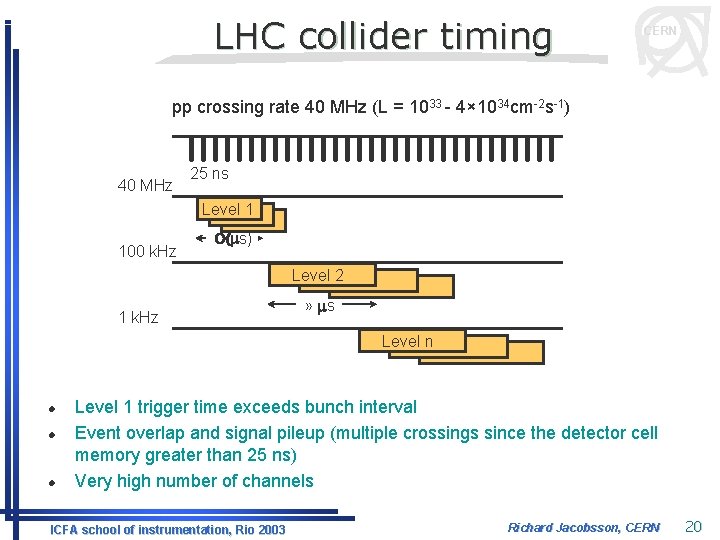

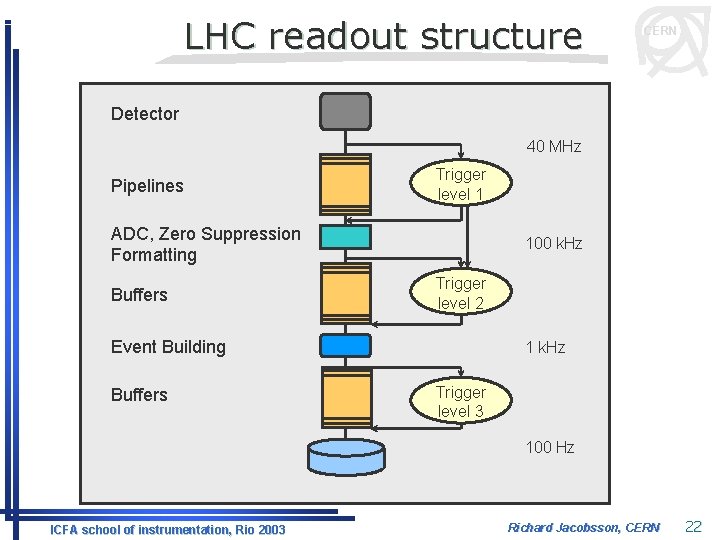

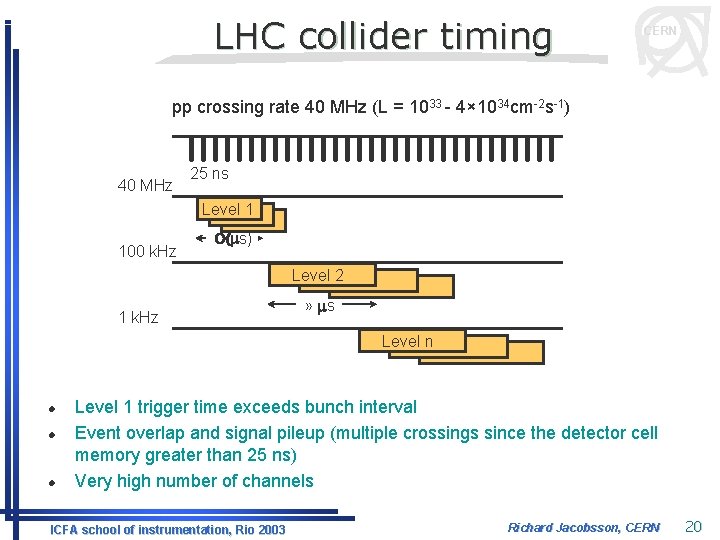

LHC collider timing

- pp crossing rate 40 MHz ($L = 10^{33}$–$4 \times 10^{34}\ \text{cm}^{-2}\text{s}^{-1}$)

[Figure: crossings every 25 ns (40 MHz) → Level 1 (O(µs)) → 100 kHz → Level 2 (~ms) → 1 kHz → Level n.]

- Level 1 trigger time exceeds the bunch interval
- Event overlap and signal pile-up (multiple crossings, since the detector cell memory is longer than 25 ns)
- Very high number of channels

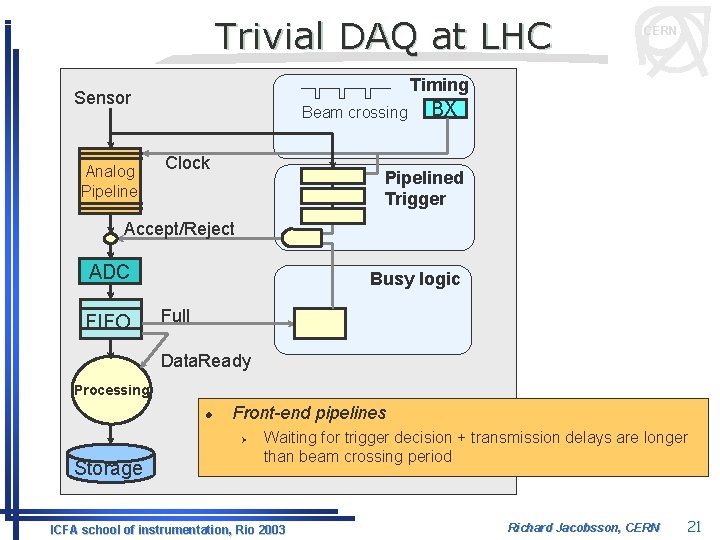

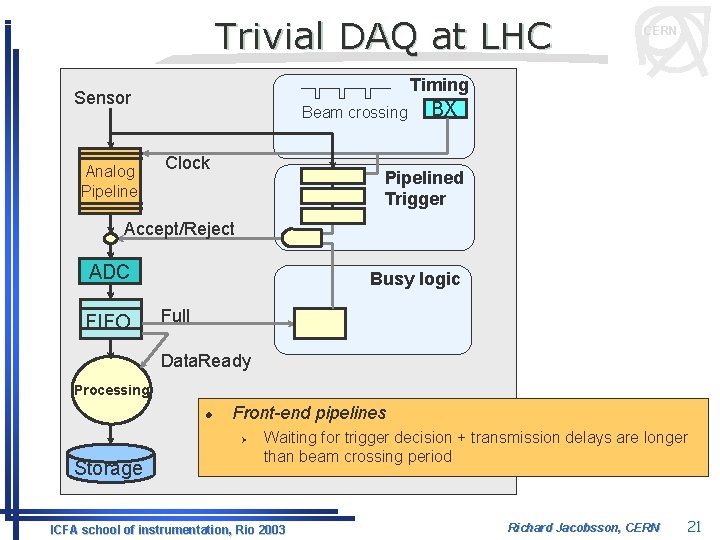

Trivial DAQ at LHC

[Figure: the beam-crossing clock (BX) drives an analog pipeline and a pipelined trigger; the accept/reject decision selects the pipeline sample sent to the ADC; FIFO with busy logic (Full, DataReady), processing and storage.]

- Front-end pipelines:
  - Waiting for the trigger decision plus transmission delays takes longer than the beam crossing period
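A front-end pipeline behaves like a circular buffer written at every bunch crossing. Its depth must cover the trigger latency divided by the bunch spacing. This minimal sketch assumes a 2.5 µs latency (the ATLAS Level-1 figure quoted in the event readout section), which gives 100 cells of 25 ns. The class is hypothetical and ignores the analog aspects entirely.

```python
BUNCH_SPACING_S = 25e-9
L1_LATENCY_S = 2.5e-6                            # assumed Level-1 latency
DEPTH = round(L1_LATENCY_S / BUNCH_SPACING_S)    # 100 pipeline cells


class Pipeline:
    """Circular buffer written at every bunch crossing (a model of a front-end pipeline)."""

    def __init__(self, depth: int) -> None:
        self.depth = depth
        self.cells = [None] * depth
        self.n_written = 0

    def write(self, sample) -> None:
        """Store the sample of the current crossing, overwriting the oldest cell."""
        self.cells[self.n_written % self.depth] = sample
        self.n_written += 1

    def read(self, age: int):
        """Return the sample written `age` crossings before the latest one."""
        if not 0 <= age < min(self.depth, self.n_written):
            raise ValueError("sample already overwritten or never written")
        return self.cells[(self.n_written - 1 - age) % self.depth]


pipe = Pipeline(DEPTH)
for bx in range(250):         # the sample value is simply the crossing number
    pipe.write(bx)
print(pipe.read(DEPTH - 1))   # 150: the oldest crossing still stored
```

When the accept for a given crossing arrives one latency later, the corresponding sample is exactly `DEPTH - 1` cells behind the write pointer. A longer latency would require a deeper pipeline.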

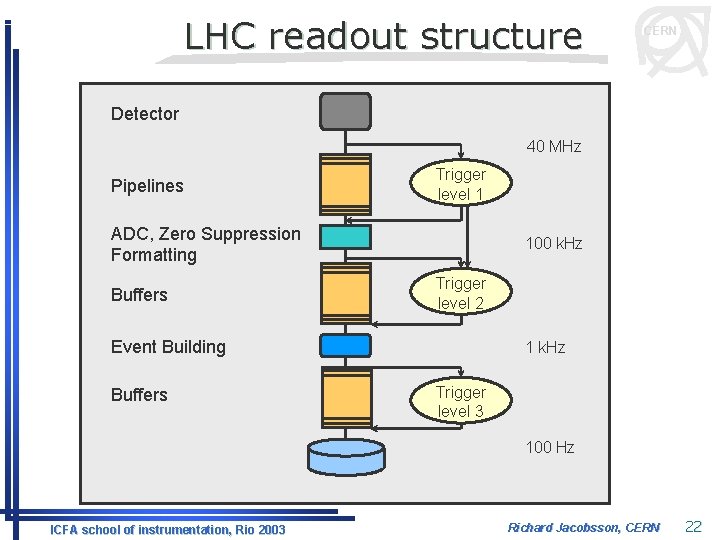

LHC readout structure

- 40 MHz: detector → pipelines, waiting for trigger level 1
- 100 kHz: ADC, zero suppression, formatting → buffers, waiting for trigger level 2
- 1 kHz: event building → buffers, waiting for trigger level 3
- 100 Hz: output
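The rates on this slide fix how much each level has to reject. A quick calculation, using only the numbers above, shows how the overall factor is shared between the levels:

```python
# Accept rates of the LHC readout structure on this slide, in Hz.
stages = [("detector", 40e6), ("level 1", 100e3), ("level 2", 1e3), ("level 3", 100.0)]

rejections = []
for (_, prev_rate), (name, rate) in zip(stages, stages[1:]):
    factor = prev_rate / rate
    rejections.append(factor)
    print(f"{name}: {prev_rate:g} Hz -> {rate:g} Hz (rejection x{factor:g})")

total = stages[0][1] / stages[-1][1]
print(f"overall rejection x{total:g}")   # overall rejection x400000
```

Most of the rejection (a factor of 400) has to come from the first, hardware level, because it is the only one that sees the full 40 MHz.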

3. Front-end electronics

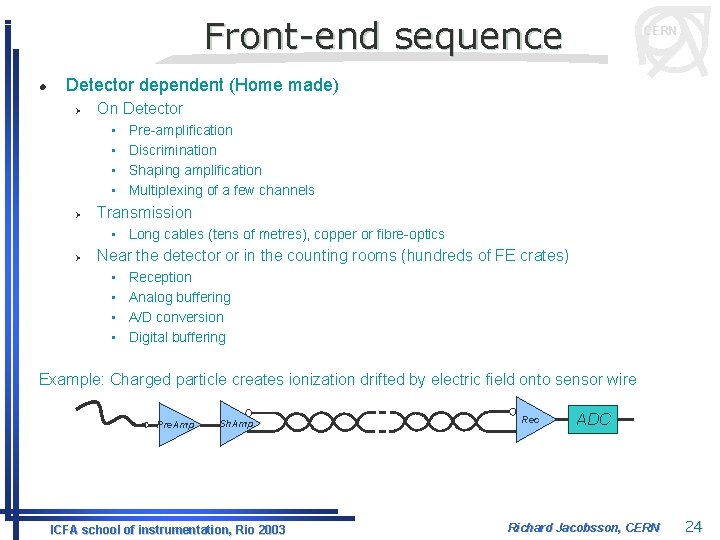

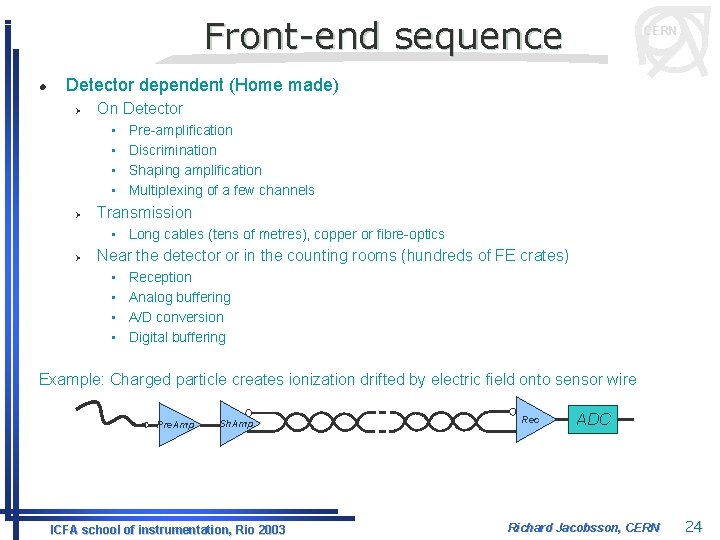

Front-end sequence

- Detector dependent (home made)
  - On the detector:
    - Pre-amplification
    - Discrimination
    - Shaping amplification
    - Multiplexing of a few channels
  - Transmission:
    - Long cables (tens of metres), copper or fibre optics
  - Near the detector or in the counting rooms (hundreds of FE crates):
    - Reception
    - Analog buffering
    - A/D conversion
    - Digital buffering
- Example: a charged particle creates ionization, which is drifted by the electric field onto a sensor wire [Figure: PreAmp → ShAmp → Rec → ADC]

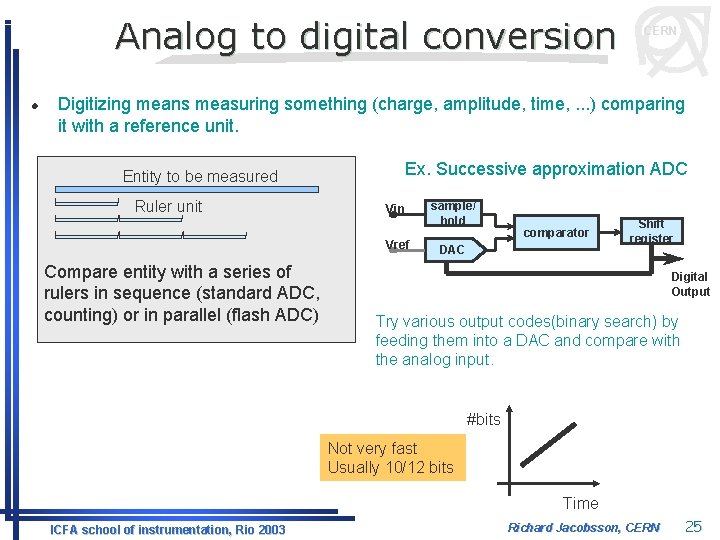

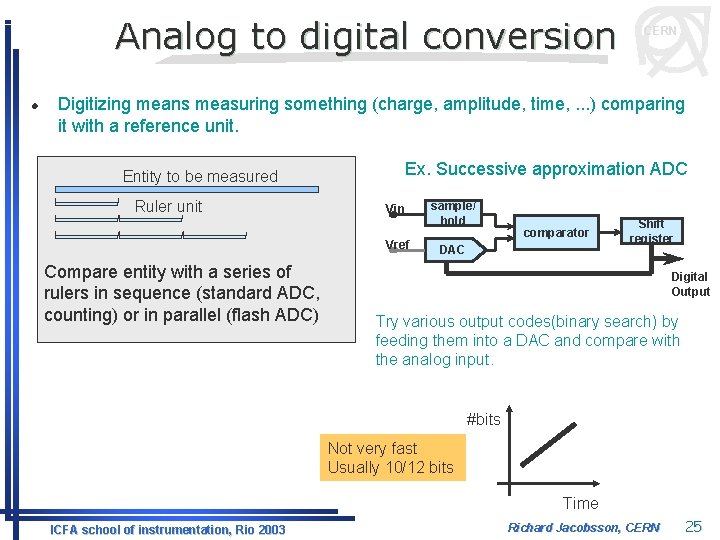

Analog to digital conversion

- Digitizing means measuring something (charge, amplitude, time, ...) by comparing it with a reference unit (the entity to be measured against a "ruler" unit)
- Compare the entity with a series of rulers in sequence (standard ADC, counting) or in parallel (flash ADC)
- Example: successive approximation ADC [Figure: Vin → sample/hold → comparator; a shift register drives a DAC (reference Vref) whose output is compared with the input; digital output]
  - Try various output codes (binary search) by feeding them into a DAC and comparing with the analog input
  - Not very fast: the conversion time grows with the number of bits
  - Usually 10/12 bits
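The successive approximation procedure is a binary search: one comparator decision per bit, most significant bit first. The sketch below models an ideal DAC whose output for code k is `vref * k / 2**n_bits`. That is an idealization; a real converter adds offsets, non-linearity and a finite settling time.

```python
def sar_adc(vin: float, vref: float, n_bits: int) -> int:
    """Successive approximation: decide one bit per step, MSB first (binary search).

    Ideal DAC: output for code k is vref * k / 2**n_bits. A trial bit is kept
    if the input is at least the DAC voltage for the trial code.
    """
    code = 0
    for bit in reversed(range(n_bits)):    # n_bits comparisons -> time grows with #bits
        trial = code | (1 << bit)          # set the next bit tentatively
        v_dac = vref * trial / (1 << n_bits)
        if vin >= v_dac:                   # comparator decision
            code = trial                   # keep the bit
    return code


print(sar_adc(0.5, 1.0, 10))     # 512
print(sar_adc(0.999, 1.0, 10))   # 1022
```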

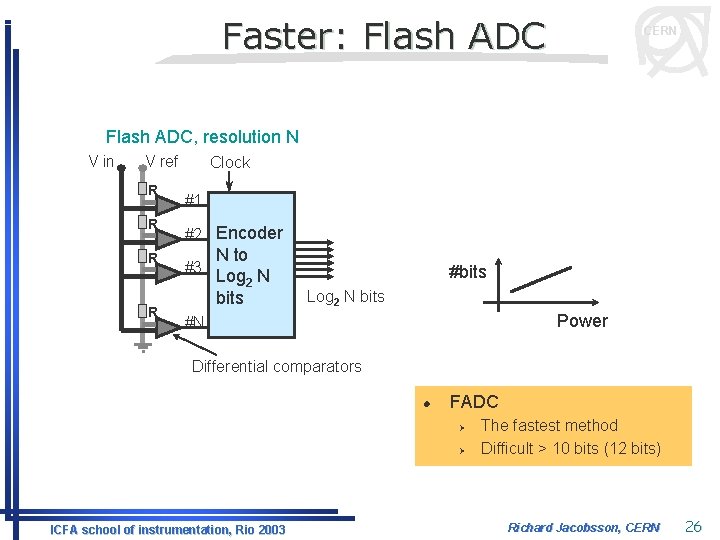

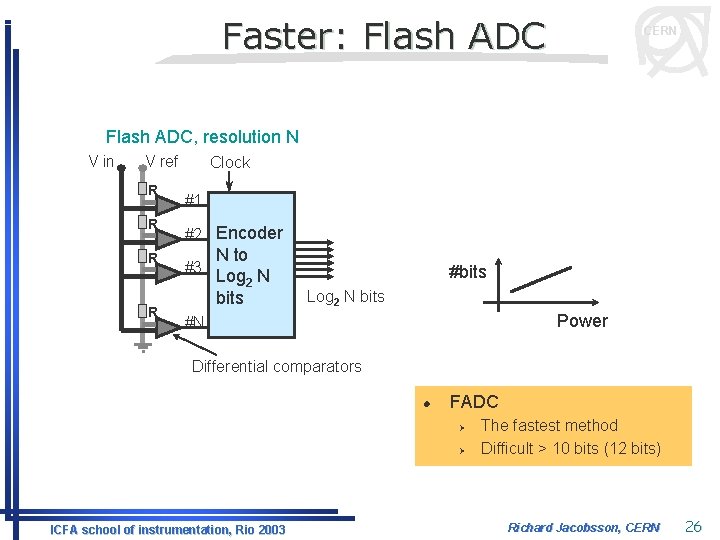

Faster: Flash ADC

[Figure: flash ADC with resolution N: a resistor ladder divides Vref into N levels, N clocked differential comparators compare Vin with every level at once, and an encoder converts the N comparator outputs into $\log_2 N$ bits; plot of power versus number of bits.]

- The fastest method
- Difficult above 10 bits (12 bits): the number of comparators, and with it the power, grows as $2^{\#\text{bits}}$
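The flash converter makes all comparisons in one step and then encodes the resulting "thermometer" pattern. This minimal model uses ideal thresholds `k * vref / 2**n_bits` and a simple count-the-ones encoder. It shows why the method becomes expensive: an n-bit flash ADC needs 2**n − 1 comparators.

```python
def flash_adc(vin: float, vref: float, n_bits: int):
    """All comparisons at once: one comparator per threshold, then an encoder.

    Returns (output code, number of comparators). The resistor ladder is
    modelled by ideal thresholds k * vref / 2**n_bits, k = 1 .. 2**n_bits - 1.
    """
    n_levels = 1 << n_bits
    thermometer = [vin >= vref * k / n_levels for k in range(1, n_levels)]
    # Encoder: for a clean thermometer code the output is the number of fired comparators.
    code = sum(thermometer)
    return code, len(thermometer)


for bits in (4, 8, 10, 12):
    code, n_comparators = flash_adc(0.3, 1.0, bits)
    print(bits, code, n_comparators)
```

Going from 8 to 12 bits multiplies the comparator count by 16, from 255 to 4095, which is the power problem mentioned on the slide.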

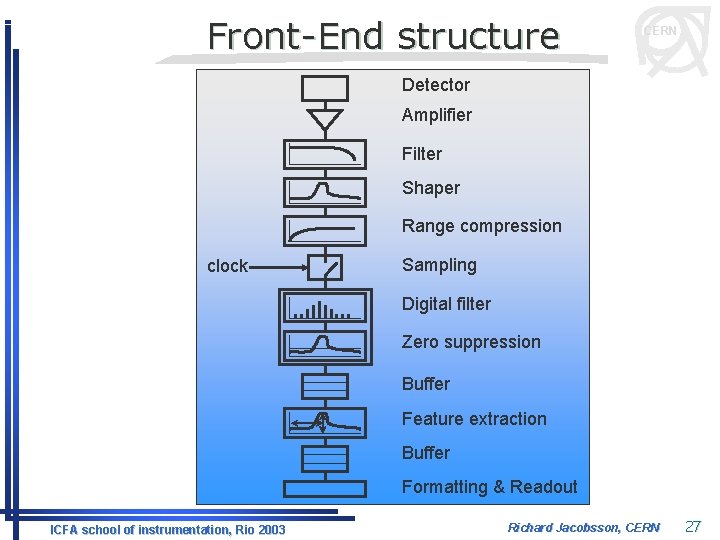

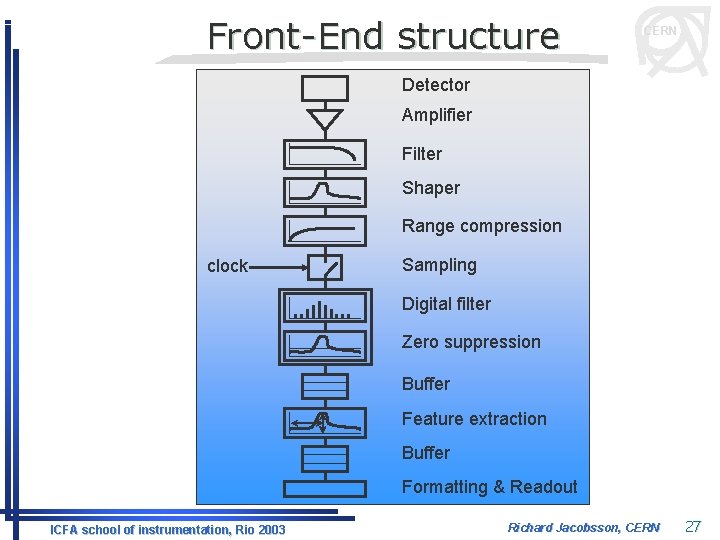

Front-end structure

- Detector → amplifier → filter → shaper → range compression → sampling (clock) → digital filter → zero suppression → buffer → feature extraction → buffer → formatting & readout
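Zero suppression is the step in this chain that reduces the data volume most directly. The sketch below assumes the common approach of subtracting a per-channel pedestal and applying a fixed threshold. Actual front-ends may use other schemes (e.g. neighbour logic or compression).

```python
def zero_suppress(adc_counts, pedestals, threshold):
    """Keep only channels whose pedestal-subtracted amplitude exceeds the threshold.

    Returns a list of (channel, amplitude) pairs; the channel number has to be
    kept because the empty channels are no longer transmitted.
    """
    hits = []
    for channel, (adc, pedestal) in enumerate(zip(adc_counts, pedestals)):
        amplitude = adc - pedestal
        if amplitude > threshold:
            hits.append((channel, amplitude))
    return hits


samples = [101, 99, 150, 100, 180, 102]
print(zero_suppress(samples, pedestals=[100] * 6, threshold=10))   # [(2, 50), (4, 80)]
```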

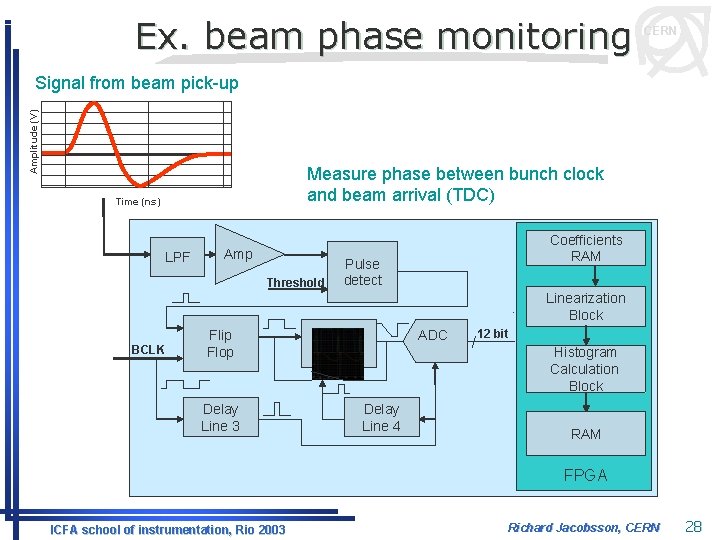

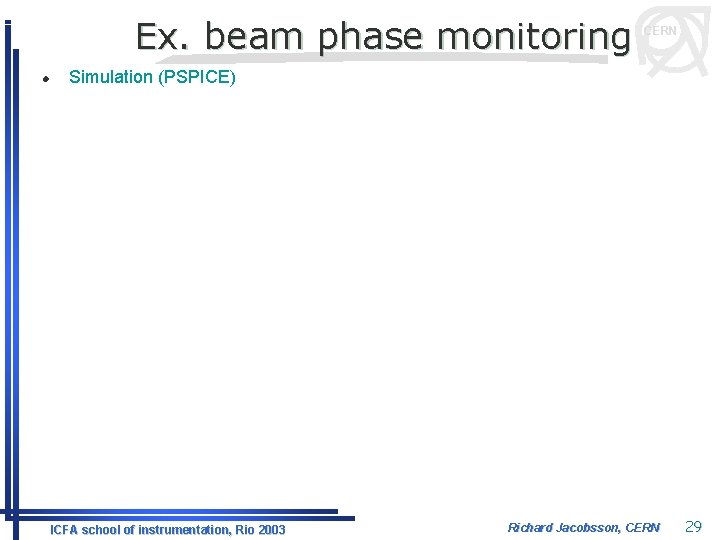

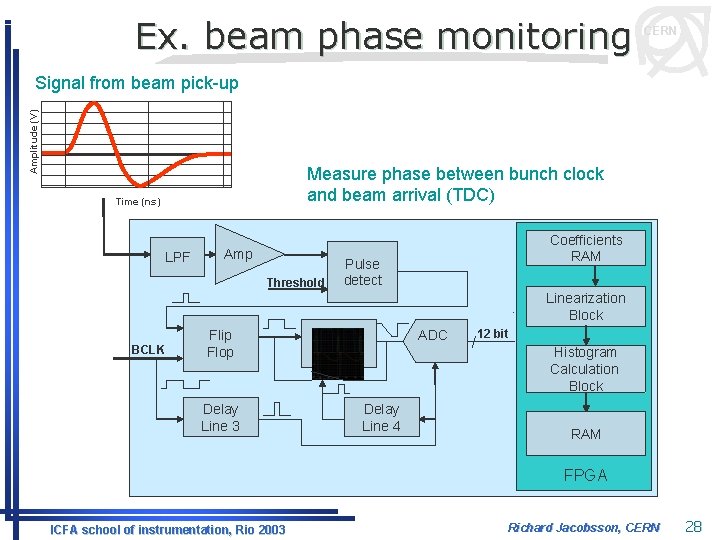

Ex. beam phase monitoring

- Signal from a beam pick-up
- Measure the phase between the bunch clock and the beam arrival (TDC)

[Figure: pick-up signal amplitude (V) versus time (ns); block diagram: LPF → amplifier → threshold → pulse detect → flip-flop with BCLK and delay lines 3 and 4 → ADC → FPGA containing a linearization block (coefficients RAM) and a 12-bit histogram calculation block (RAM).]

Ex. beam phase monitoring

- Simulation (PSPICE)

[Plot: simulated waveforms for the input, the flip-flop, the integrator, BCLK and the ADC sampling.]

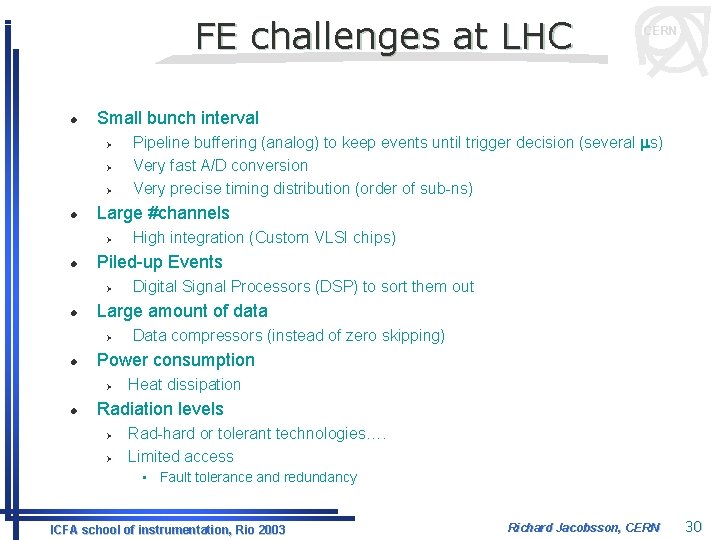

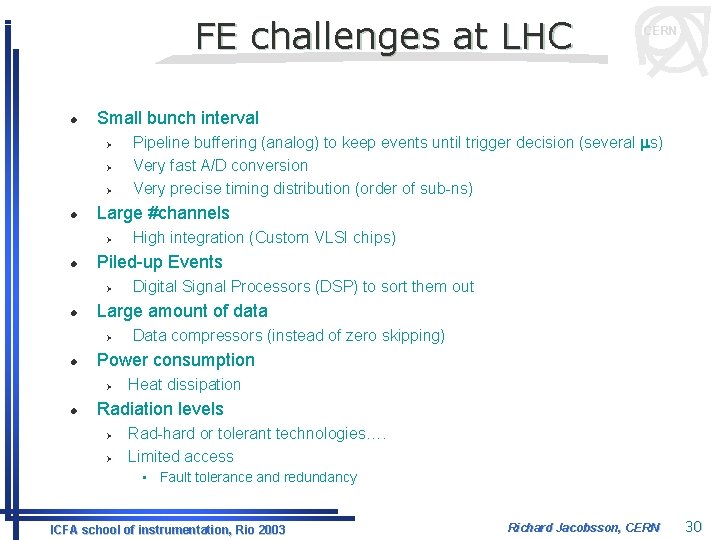

FE challenges at LHC

- Small bunch interval
  - Pipeline buffering (analog) to keep events until the trigger decision (several µs)
  - Very fast A/D conversion
  - Very precise timing distribution (order of sub-ns)
- Large number of channels
  - High integration (custom VLSI chips)
- Piled-up events
  - Digital Signal Processors (DSP) to sort them out
- Large amount of data
  - Data compressors (instead of zero skipping)
- Power consumption
  - Heat dissipation
- Radiation levels
  - Rad-hard or rad-tolerant technologies...
  - Limited access
    - Fault tolerance and redundancy

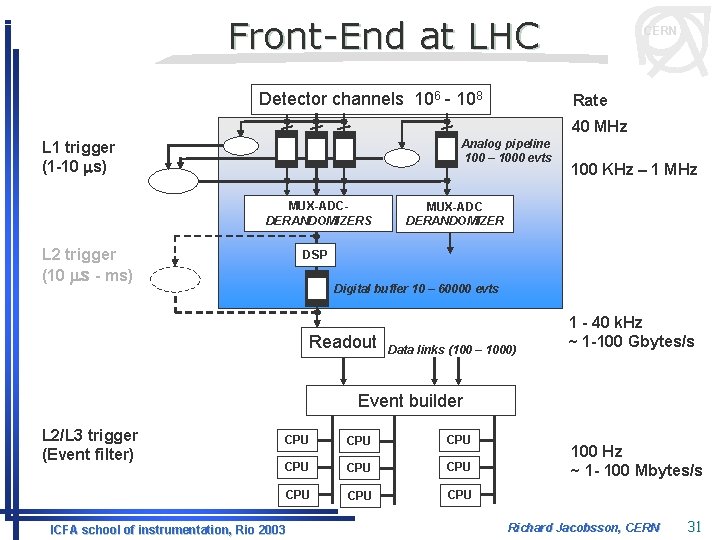

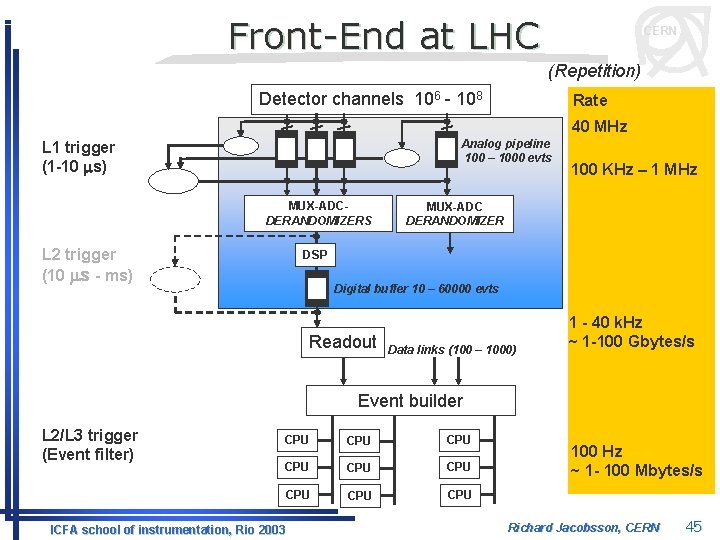

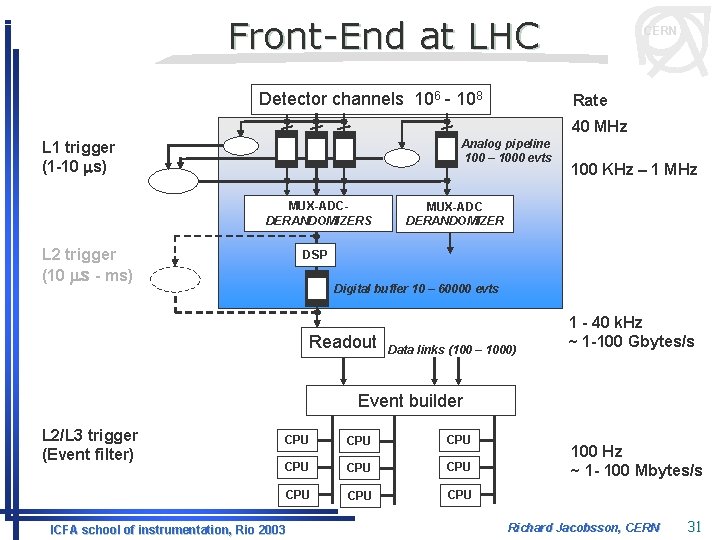

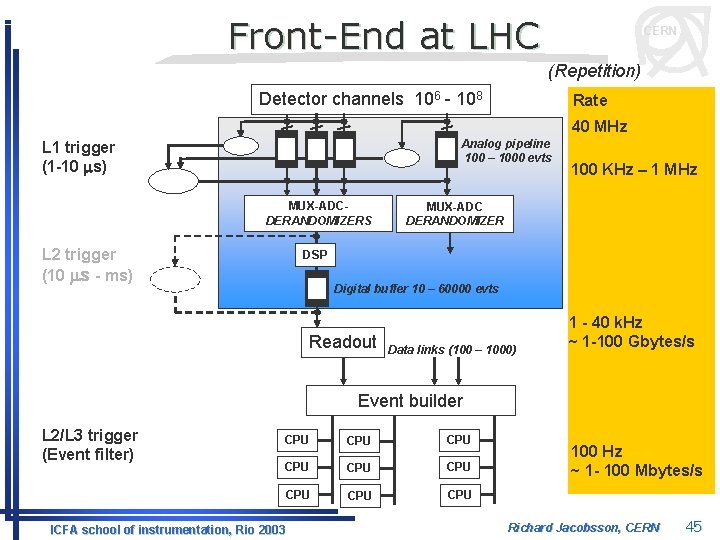

Front-End at LHC

- Detector channels: $10^6$–$10^8$
- 40 MHz: analog pipeline (100–1000 events), waiting for the L1 trigger (1–10 µs)
- 100 kHz – 1 MHz: MUX-ADC and derandomizers, waiting for the L2 trigger (10 µs – ms); MUX-ADC, derandomizer, DSP and digital buffer (10–60000 events)
- 1–40 kHz: readout data links (100–1000), ~1–100 Gbytes/s, into the event builder
- L2/L3 trigger (event filter) on CPU farms: 100 Hz, ~1–100 Mbytes/s

4. Trigger

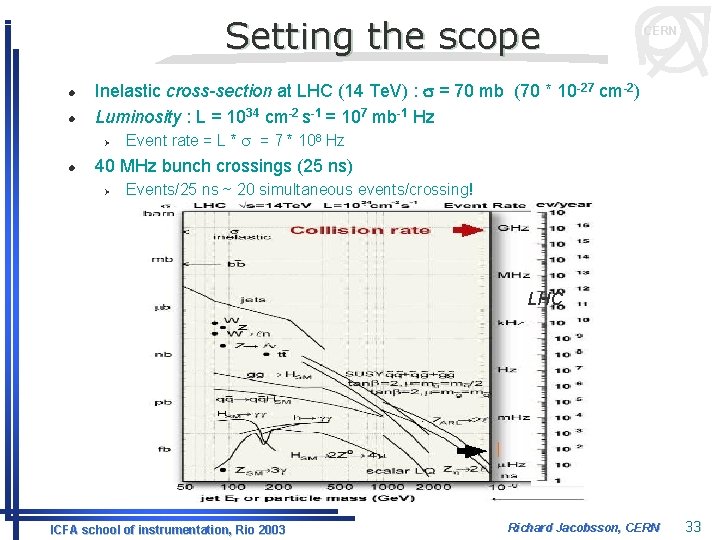

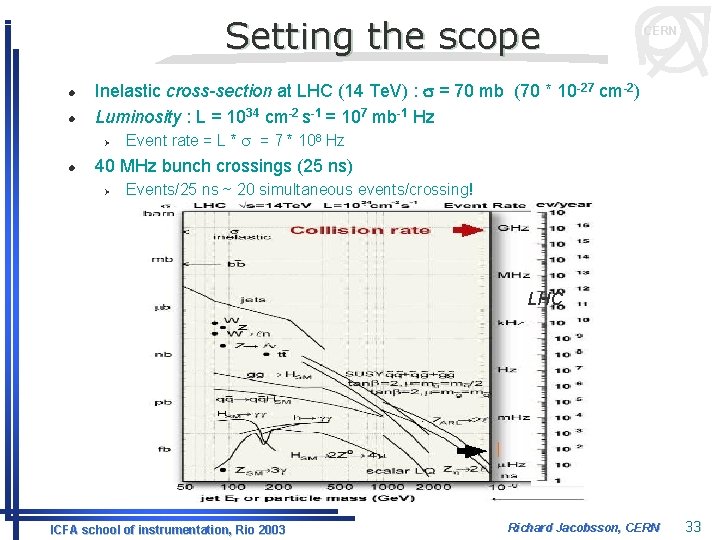

Setting the scope

- Inelastic cross-section at LHC (14 TeV): $\sigma = 70\ \text{mb} = 70 \times 10^{-27}\ \text{cm}^2$
- Luminosity: $L = 10^{34}\ \text{cm}^{-2}\text{s}^{-1} = 10^{7}\ \text{mb}^{-1}\,\text{Hz}$
  - Event rate $= L \times \sigma = 7 \times 10^{8}$ Hz
- 40 MHz bunch crossings (25 ns)
  - Events per 25 ns: ~20 simultaneous events per crossing!
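The event-rate and pile-up numbers follow from a one-line product. This back-of-envelope check uses only the values on the slide and assumes every 25 ns slot is filled. It gives 17.5 interactions per crossing, the same order as the ~20 quoted. The exact figure depends on how many bunch slots actually contain protons.

```python
MILLIBARN_CM2 = 1e-27                  # 1 mb = 1e-27 cm^2

sigma_inelastic = 70 * MILLIBARN_CM2   # cm^2
luminosity = 1e34                      # cm^-2 s^-1
bunch_spacing = 25e-9                  # s

event_rate = luminosity * sigma_inelastic           # ~7e8 Hz
events_per_crossing = event_rate * bunch_spacing    # ~17.5 if every 25 ns slot were filled

print(f"event rate = {event_rate:.2e} Hz")
print(f"mean interactions per 25 ns = {events_per_crossing:.1f}")
```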

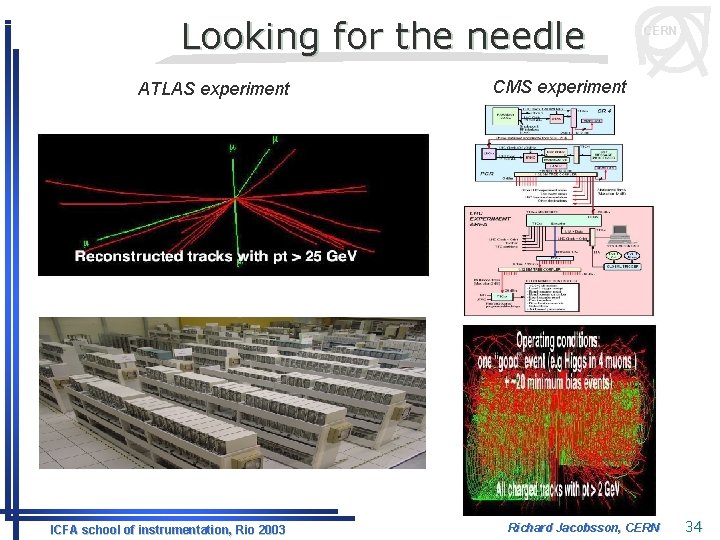

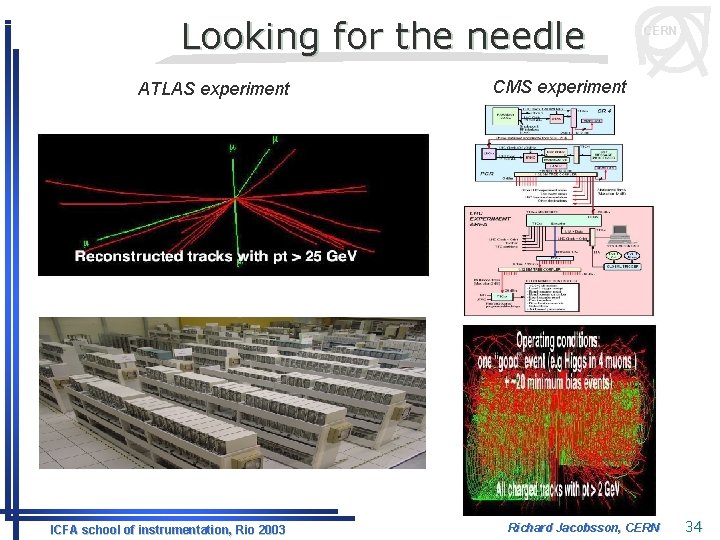

Looking for the needle

[Figure: the ATLAS experiment and the CMS experiment.]

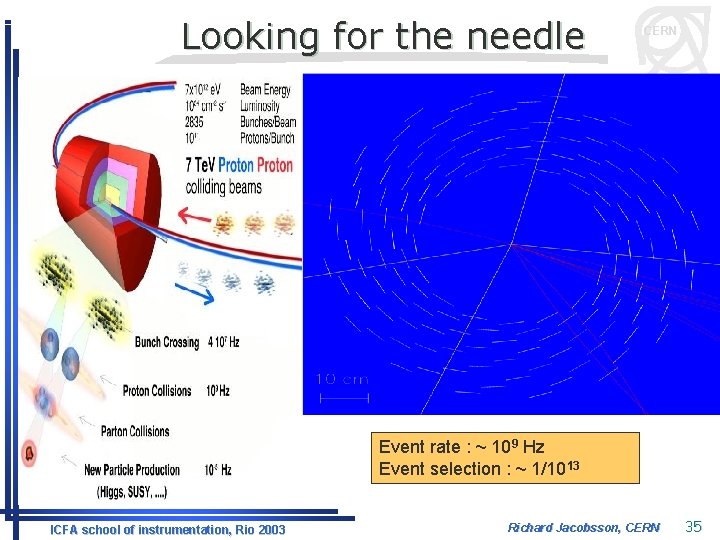

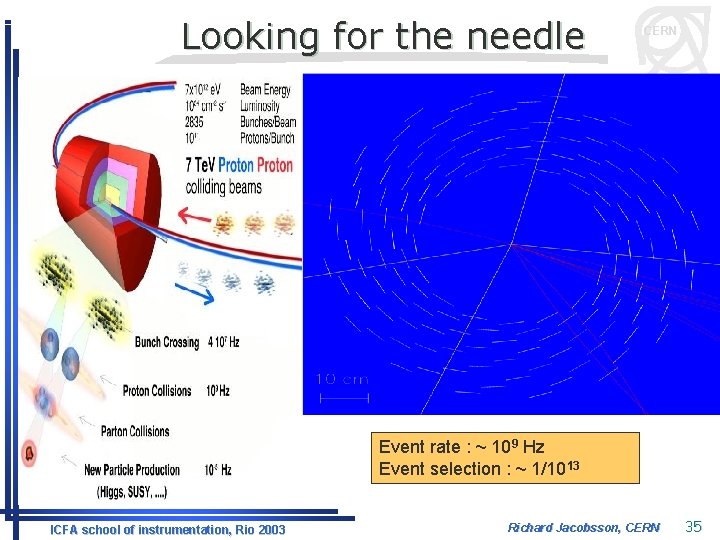

Looking for the needle

- Event rate: ~$10^{9}$ Hz
- Event selection: ~$1/10^{13}$

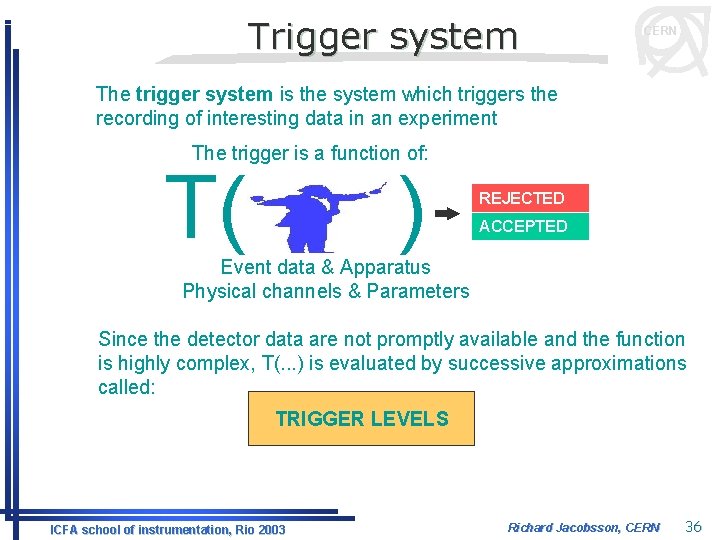

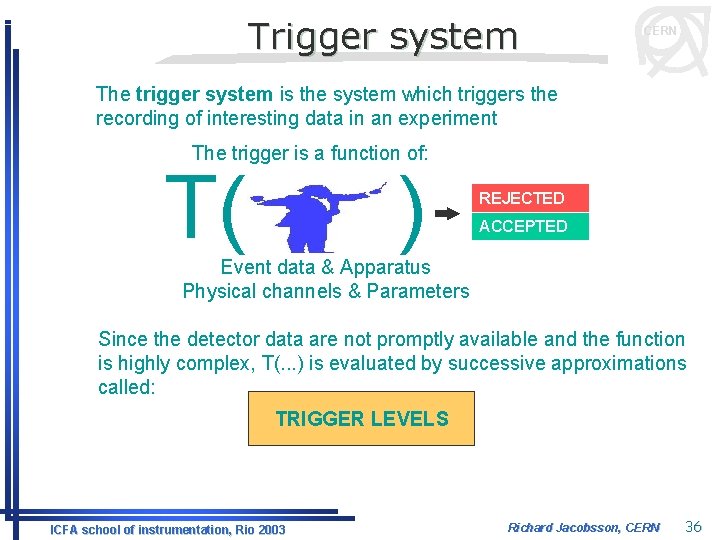

Trigger system

- The trigger system is the system which triggers the recording of interesting data in an experiment
- The trigger is a function T(event data & apparatus, physical channels & parameters) → ACCEPTED / REJECTED
- Since the detector data are not promptly available and the function is highly complex, T(...) is evaluated by successive approximations called TRIGGER LEVELS

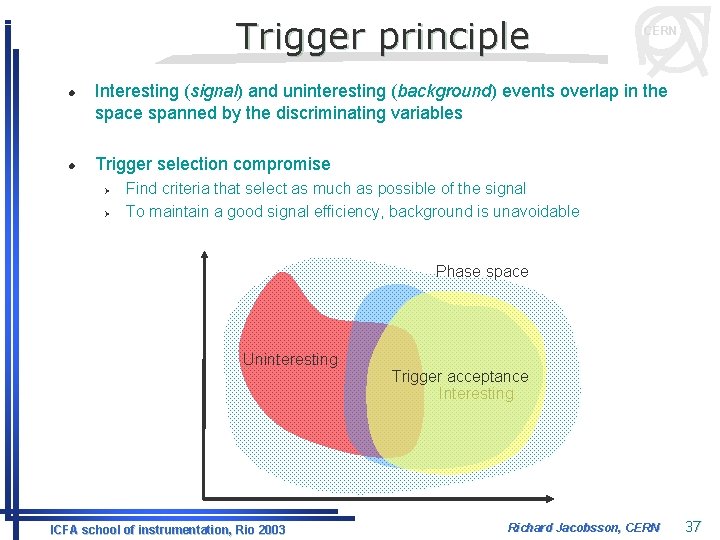

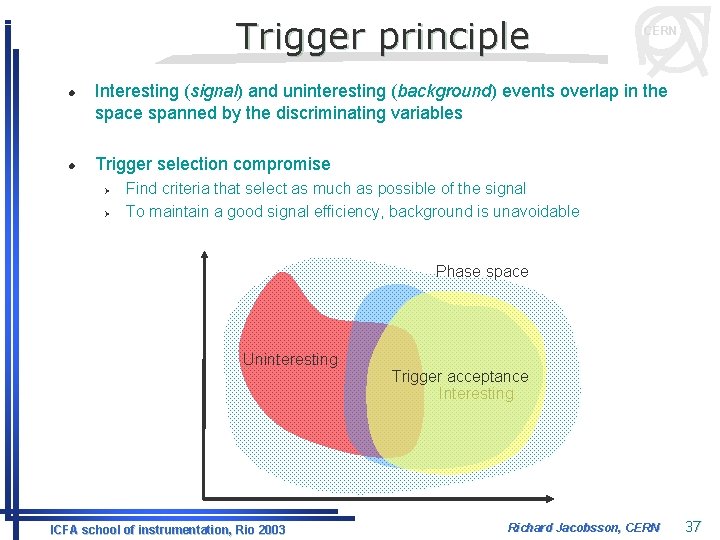

Trigger principle

- Interesting (signal) and uninteresting (background) events overlap in the space spanned by the discriminating variables
- Trigger selection is a compromise:
  - Find criteria that select as much of the signal as possible
  - To maintain a good signal efficiency, accepting some background is unavoidable

[Figure: phase space with overlapping uninteresting and interesting regions and the trigger acceptance.]

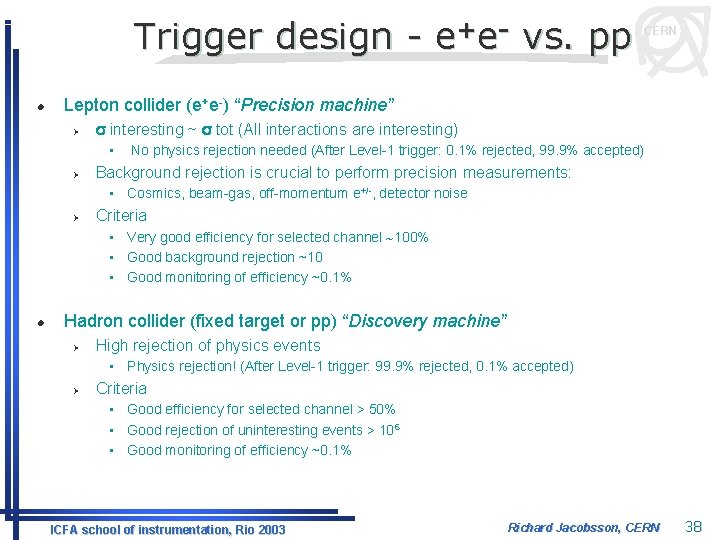

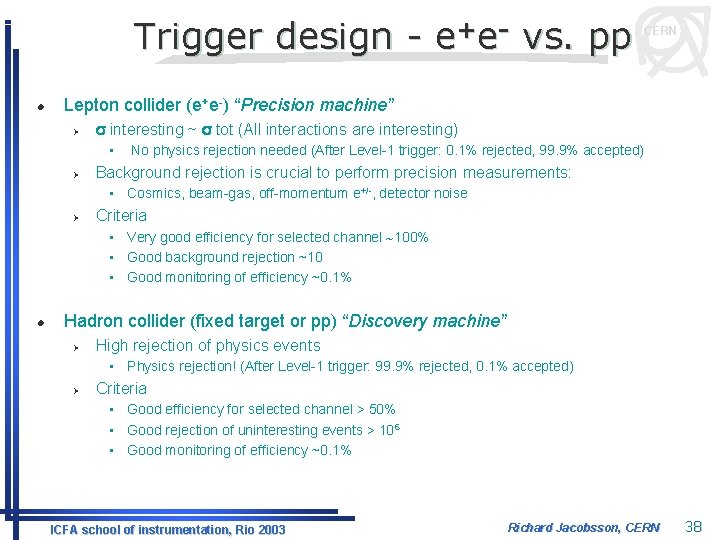

Trigger design: e⁺e⁻ vs. pp

- Lepton collider (e⁺e⁻): "precision machine"
  - $\sigma_{\text{interesting}} \sim \sigma_{\text{tot}}$ (all interactions are interesting)
    - No physics rejection needed (after the Level-1 trigger: 0.1% rejected, 99.9% accepted)
  - Background rejection is crucial to perform precision measurements:
    - Cosmics, beam-gas, off-momentum e⁺/e⁻, detector noise
  - Criteria:
    - Very good efficiency for the selected channel: ~100%
    - Good background rejection: ~10
    - Good monitoring of efficiency: ~0.1%
- Hadron collider (fixed target or pp): "discovery machine"
  - High rejection of physics events
    - Physics rejection! (after the Level-1 trigger: 99.9% rejected, 0.1% accepted)
  - Criteria:
    - Good efficiency for the selected channel: > 50%
    - Good rejection of uninteresting events: > $10^{6}$
    - Good monitoring of efficiency: ~0.1%

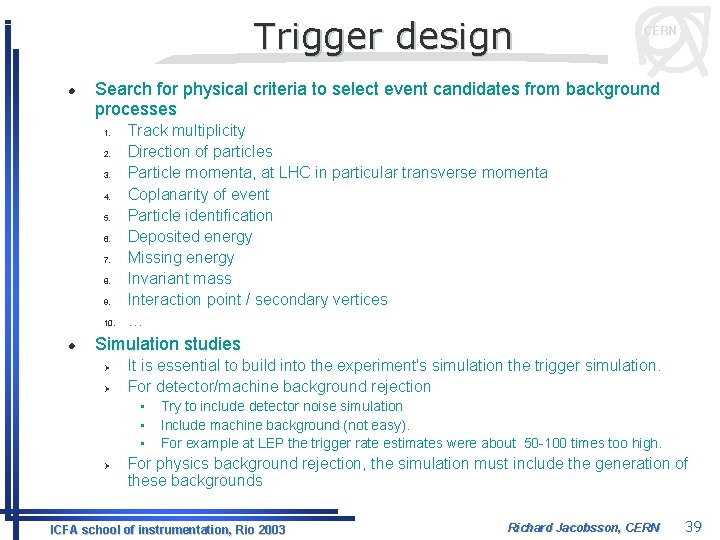

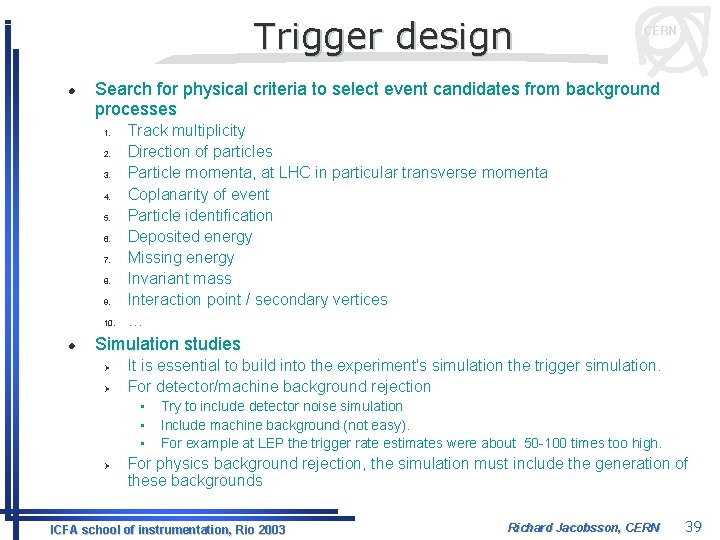

Trigger design

- Search for physical criteria to select event candidates from background processes:
  1. Track multiplicity
  2. Direction of particles
  3. Particle momenta, at LHC in particular transverse momenta
  4. Coplanarity of the event
  5. Particle identification
  6. Deposited energy
  7. Missing energy
  8. Invariant mass
  9. Interaction point / secondary vertices
  10. ...
- Simulation studies
  - It is essential to build the trigger simulation into the experiment's simulation
  - For detector/machine background rejection:
    - Try to include detector noise simulation
    - Include machine background (not easy); for example, at LEP the trigger rate estimates were about 50–100 times too high
  - For physics background rejection, the simulation must include the generation of these backgrounds

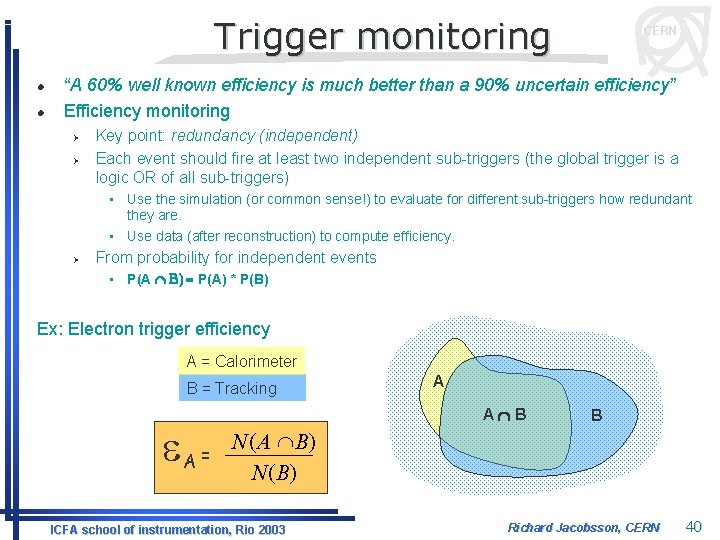

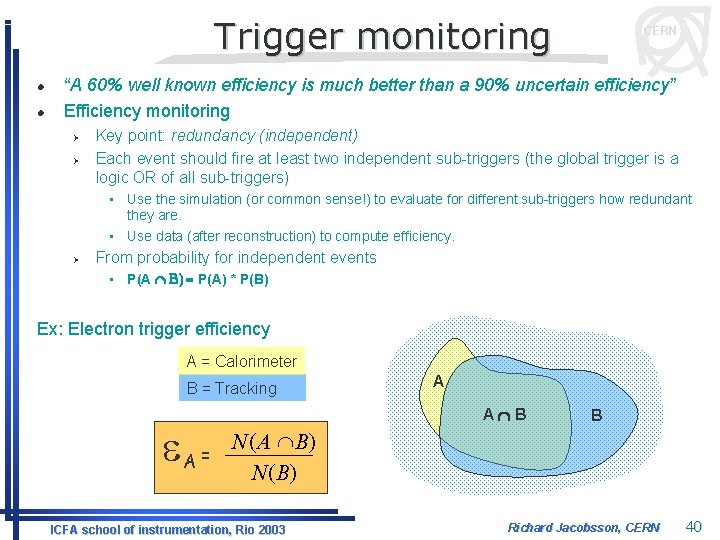

Trigger monitoring

- "A 60% well known efficiency is much better than a 90% uncertain efficiency"
- Efficiency monitoring:
  - Key point: redundancy (independent sub-triggers)
  - Each event should fire at least two independent sub-triggers (the global trigger is a logic OR of all sub-triggers)
    - Use the simulation (or common sense!) to evaluate how redundant the different sub-triggers are
    - Use data (after reconstruction) to compute the efficiency
  - From the probability for independent events: $P(A \cap B) = P(A)\,P(B)$
- Example: electron trigger efficiency, with A = calorimeter trigger and B = tracking trigger: $\varepsilon_A = N(A \cap B) / N(B)$
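The formula $\varepsilon_A = N(A \cap B)/N(B)$ translates directly into counting on recorded data. The per-event decisions below are hypothetical. The binomial error is a simple approximation added for illustration, and the estimate is only valid if A and B really are independent.

```python
import math


def efficiency_from_reference(fired_a, fired_b):
    """Estimate the efficiency of sub-trigger A using an independent sub-trigger B.

    eps_A = N(A and B) / N(B); also returns the binomial error sqrt(eps (1 - eps) / N(B)).
    """
    n_b = sum(1 for b in fired_b if b)
    n_ab = sum(1 for a, b in zip(fired_a, fired_b) if a and b)
    eps = n_ab / n_b
    return eps, math.sqrt(eps * (1.0 - eps) / n_b)


# Hypothetical per-event decisions: A = calorimeter trigger, B = tracking trigger.
calo = [True, True, False, True, True, False, True, True]
track = [True, True, True, True, False, False, True, True]
eps, err = efficiency_from_reference(calo, track)
print(f"calorimeter efficiency = {eps:.3f} +- {err:.3f}")
```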

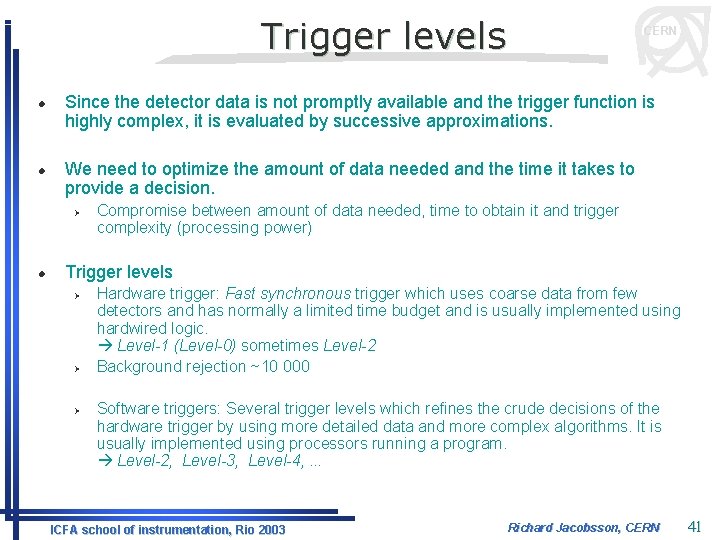

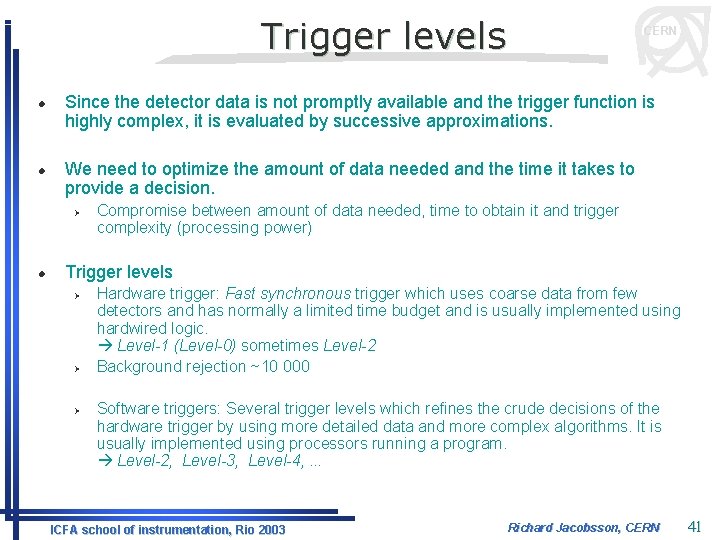

Trigger levels

- Since the detector data are not promptly available and the trigger function is highly complex, it is evaluated by successive approximations. We need to optimize the amount of data needed and the time it takes to provide a decision.
  - Compromise between the amount of data needed, the time to obtain it, and the trigger complexity (processing power)
- Trigger levels:
  - Hardware trigger: a fast, synchronous trigger which uses coarse data from a few detectors, normally has a limited time budget, and is usually implemented in hardwired logic
    - Level-1 (Level-0), sometimes Level-2
    - Background rejection ~10 000
  - Software triggers: several trigger levels which refine the crude decisions of the hardware trigger by using more detailed data and more complex algorithms; usually implemented as programs running on processors
    - Level-2, Level-3, Level-4, ...
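The idea of successive approximations can be expressed as a cascade of accept functions, where each level only runs on the events the previous one kept. The two levels and their cuts below are hypothetical stand-ins for "coarse data, cheap" and "detailed data, expensive".

```python
def run_cascade(events, levels):
    """Apply trigger levels in sequence; each level only sees events accepted by the previous one.

    `levels` is a list of (name, accept) pairs where accept(event) -> bool.
    Returns the surviving events and the number of events after each level.
    """
    surviving = list(events)
    counts = [("input", len(surviving))]
    for name, accept in levels:
        surviving = [event for event in surviving if accept(event)]
        counts.append((name, len(surviving)))
    return surviving, counts


# Hypothetical events, each with a coarse and a refined "energy" estimate.
events = [{"coarse_et": i % 100, "fine_et": i % 1000} for i in range(10_000)]
levels = [
    ("hardware (coarse data)", lambda e: e["coarse_et"] >= 90),    # cheap, crude
    ("software (refined data)", lambda e: e["fine_et"] >= 990),    # expensive, precise
]
kept, counts = run_cascade(events, levels)
print(counts)
```

Because the expensive level runs on only 10% of the input, its per-event time budget can be ten times larger for the same total processing power.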

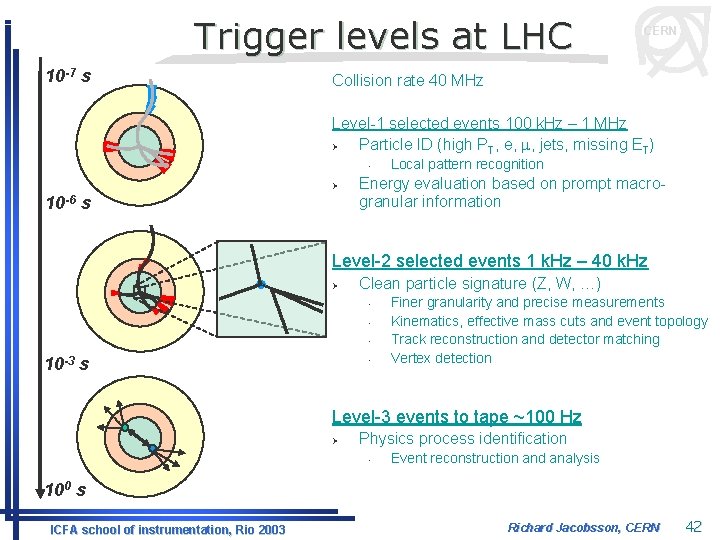

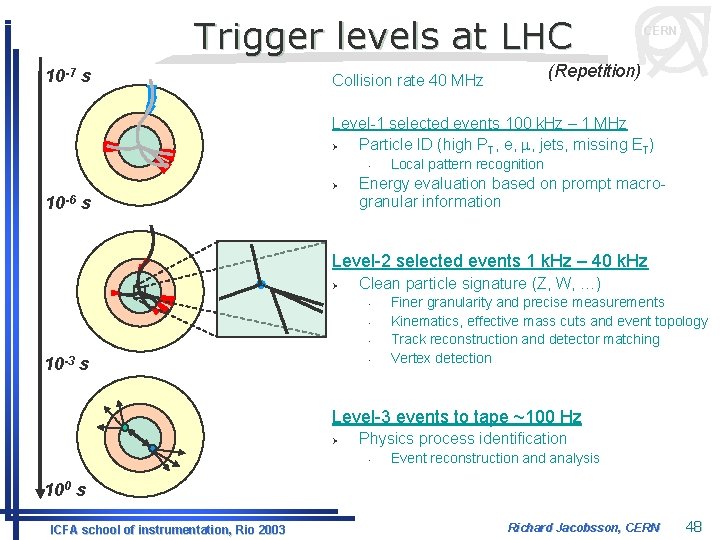

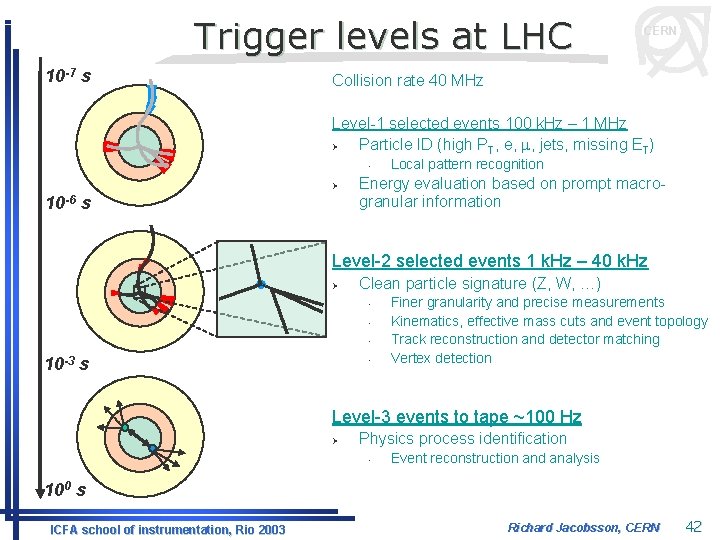

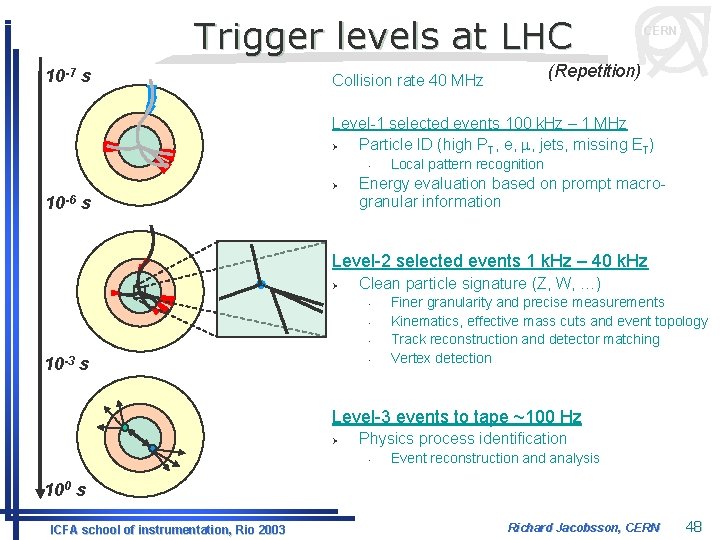

Trigger levels at LHC

- Collision rate: 40 MHz (time scale $10^{-7}$ s)
- Level-1 selected events: 100 kHz – 1 MHz (time scale $10^{-6}$ s)
  - Particle ID (high $p_T$, e, µ, jets, missing $E_T$)
    - Local pattern recognition
    - Energy evaluation based on prompt macro-granular information
- Level-2 selected events: 1 kHz – 40 kHz (time scale $10^{-3}$ s)
  - Clean particle signature (Z, W, ...)
    - Finer granularity and precise measurements
    - Kinematics, effective mass cuts and event topology
    - Track reconstruction and detector matching
    - Vertex detection
- Level-3 events to tape: ~100 Hz (time scale $10^{0}$ s)
  - Physics process identification
    - Event reconstruction and analysis

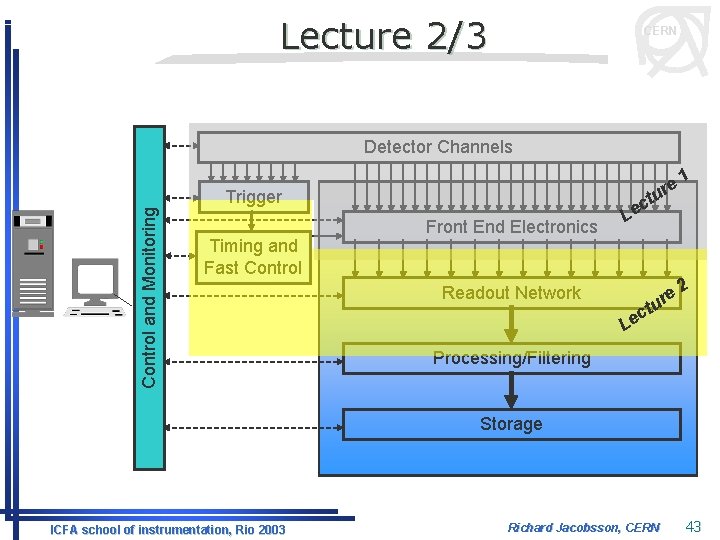

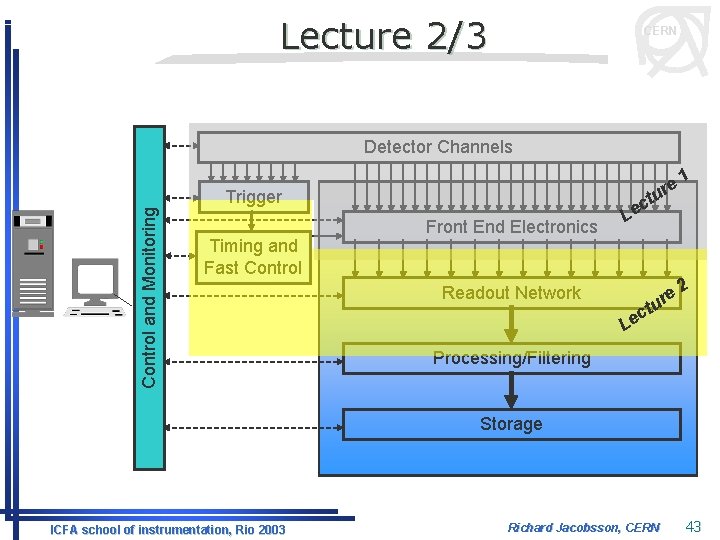

Lecture 2/3

[Figure: the experiment overview (detector channels, front-end electronics, readout network, processing/filtering, storage, with trigger, timing and fast control, and control and monitoring), marking the parts covered in Lectures 1 and 2.]

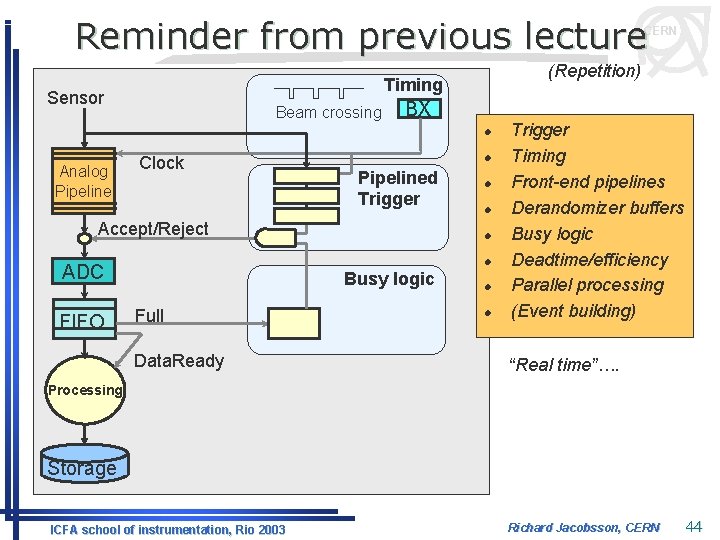

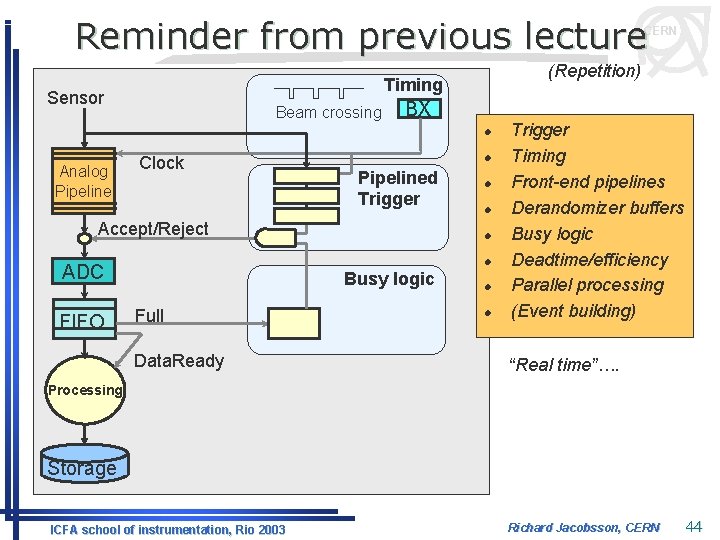

Reminder from previous lecture (repetition)

[Figure: the trivial DAQ at LHC: beam-crossing timing, analog pipeline, pipelined trigger (accept/reject), ADC, FIFO with busy logic (Full, DataReady), processing and storage.]

- Trigger
- Timing
- Front-end pipelines
- Derandomizer buffers
- Busy logic
- Deadtime/efficiency
- Parallel processing (event building)
- "Real time"...


Deadtime (repetition)

- Arrival times follow an exponential distribution (Poisson process)
- Processing time may also depend on the event...
- With a FIFO of depth (buffer size) $N$ and $\rho = \langle\text{processing time}\rangle / \langle\text{input period}\rangle$, the probability that the buffer is full (the deadtime) is $\tau = \dfrac{(1-\rho)\,\rho^{N}}{1-\rho^{N+1}}$ for $\rho \neq 1$ and $\tau = \dfrac{1}{N+1}$ for $\rho = 1$; the efficiency is $1 - \tau$.

[Plot: probability versus buffer occupancy; efficiency (0–1.0) versus ρ (0–2.0) for FIFO depths of 1, 3 and 5 buffers.]
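The closed-form deadtime above can be tabulated directly. This sketch is just the formula from the slide, with the ρ = 1 case handled separately. For a single buffer it reduces to an efficiency of 1/(1 + ρ).

```python
def fifo_efficiency(rho: float, n: int) -> float:
    """Efficiency 1 - tau for a FIFO of depth n, with rho = <processing time>/<input period>.

    tau = (1 - rho) rho^n / (1 - rho^(n+1)) for rho != 1, and 1 / (n + 1) for rho == 1.
    """
    if rho == 1.0:
        tau = 1.0 / (n + 1)
    else:
        tau = (1.0 - rho) * rho**n / (1.0 - rho ** (n + 1))
    return 1.0 - tau


for n in (1, 3, 5):
    row = [round(fifo_efficiency(rho, n), 3) for rho in (0.5, 1.0, 1.5, 2.0)]
    print(f"N = {n}: {row}")
```

Deeper buffers help most around ρ ≈ 1. Once ρ is well above 1 the processor is simply too slow and no amount of buffering recovers the efficiency.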

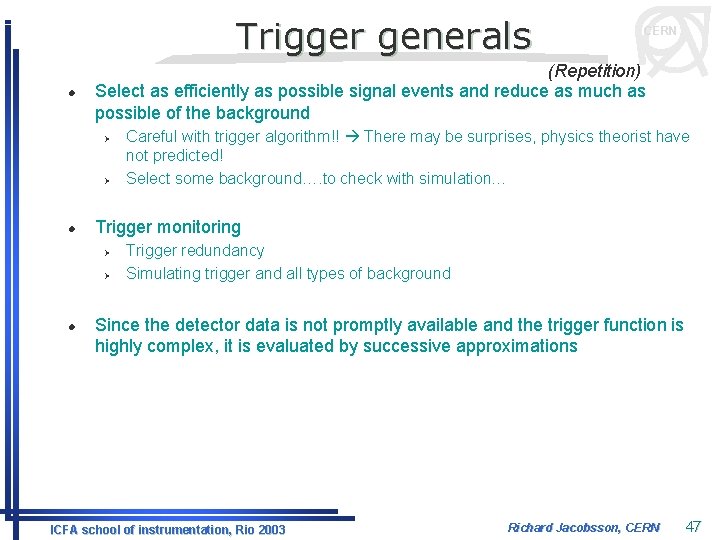

Trigger generals (repetition)

- Select signal events as efficiently as possible and reduce the background as much as possible
  - Be careful with the trigger algorithm!! There may be surprises that physics theorists have not predicted!
  - Select some background... to check with simulation...
- Trigger monitoring
  - Trigger redundancy
  - Simulating the trigger and all types of background
- Since the detector data are not promptly available and the trigger function is highly complex, it is evaluated by successive approximations


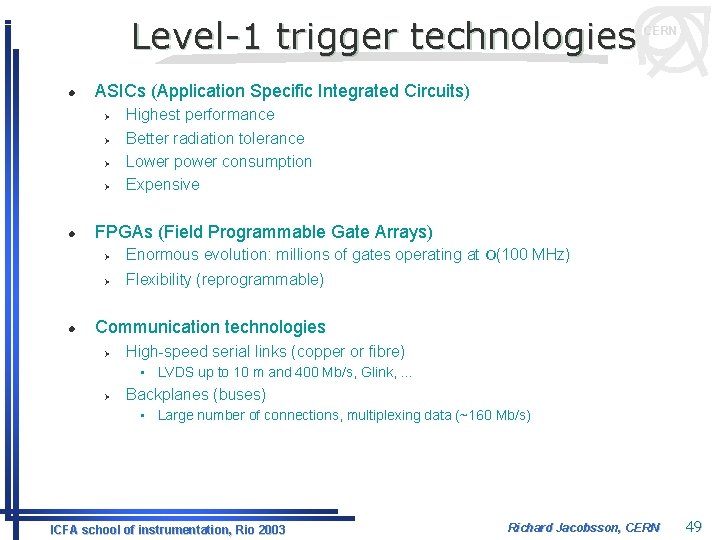

Level-1 trigger technologies

- ASICs (Application Specific Integrated Circuits)
  - Highest performance
  - Better radiation tolerance
  - Lower power consumption
  - Expensive
- FPGAs (Field Programmable Gate Arrays)
  - Enormous evolution: millions of gates operating at O(100 MHz)
  - Flexibility (reprogrammable)
- Communication technologies
  - High-speed serial links (copper or fibre)
    - LVDS up to 10 m and 400 Mb/s, G-Link, ...
  - Backplanes (buses)
    - Large number of connections, multiplexing data (~160 Mb/s)

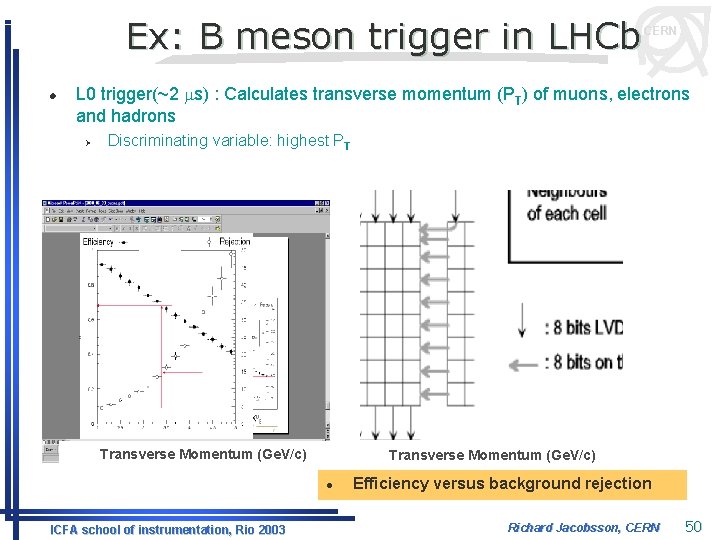

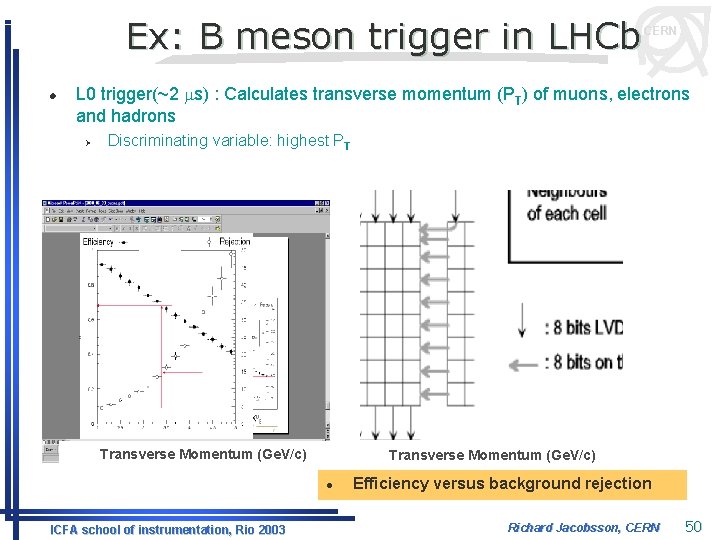

Ex: B meson trigger in LHCb

- L0 trigger (~2 µs): calculates the transverse momentum ($p_T$) of muons, electrons and hadrons
  - Discriminating variable: highest $p_T$
- Efficiency versus background rejection

[Plot: distributions of transverse momentum (GeV/c).]
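The efficiency-versus-rejection trade-off comes from scanning the threshold on the discriminating variable. This sketch uses small hypothetical samples of highest-$p_T$ values. It defines rejection as the inverse of the background fraction passing the cut, which is one common convention.

```python
def threshold_scan(signal_pt, background_pt, thresholds):
    """For each pT cut: (cut, signal efficiency, background rejection factor)."""
    rows = []
    for cut in thresholds:
        efficiency = sum(pt > cut for pt in signal_pt) / len(signal_pt)
        background_pass = sum(pt > cut for pt in background_pt) / len(background_pt)
        rejection = 1.0 / background_pass if background_pass > 0 else float("inf")
        rows.append((cut, efficiency, rejection))
    return rows


# Hypothetical highest-pT values (GeV/c) per event for signal and background samples.
signal = [1.5, 2.0, 2.5, 3.0, 4.0]
background = [0.2, 0.3, 0.5, 0.8, 1.0, 1.2, 1.6, 2.1]
for cut, eff, rej in threshold_scan(signal, background, [1.0, 2.0, 3.0]):
    print(f"pT > {cut} GeV/c: efficiency {eff:.2f}, rejection {rej:.1f}")
```

Raising the cut from 1 to 2 GeV/c triples the rejection here but loses 40% of the signal, the compromise described under "Trigger principle".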

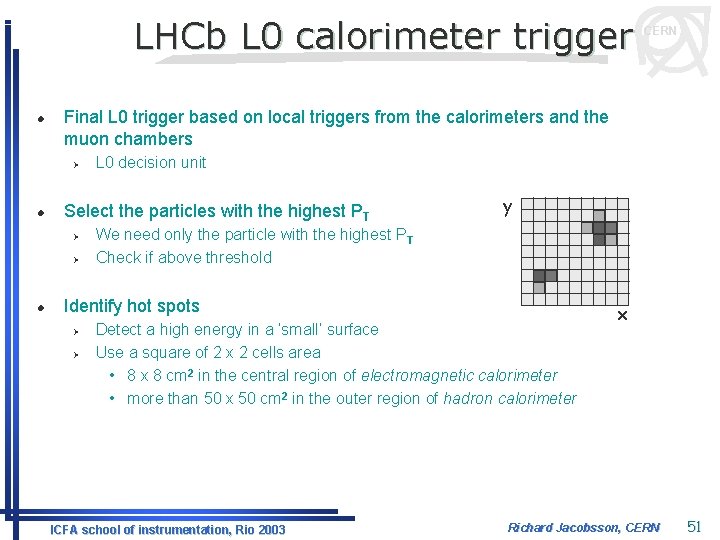

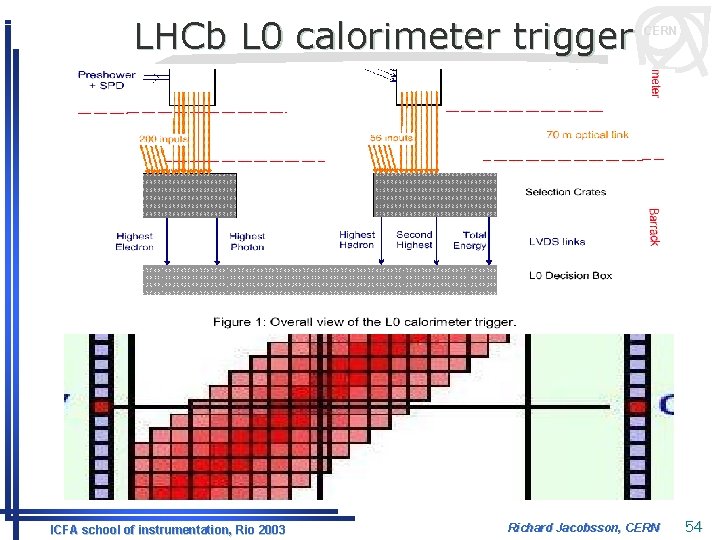

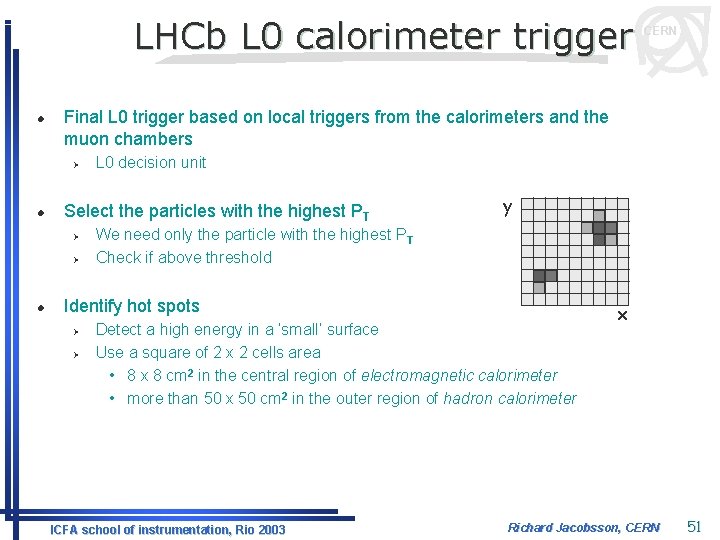

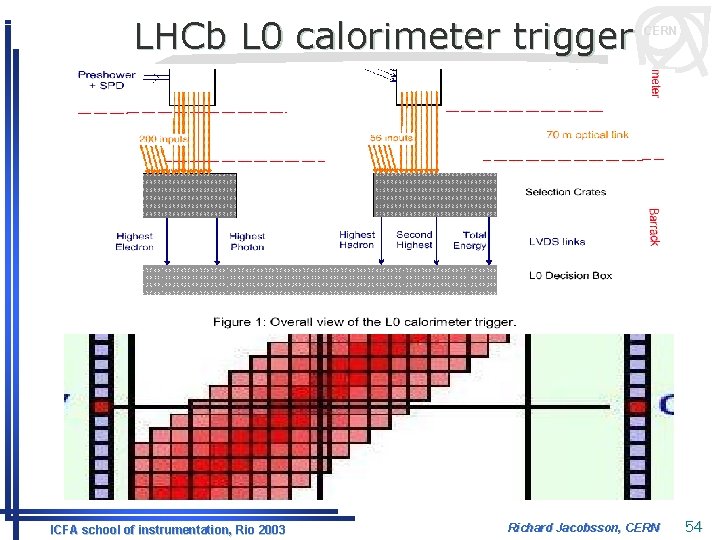

LHCb L0 calorimeter trigger

- The final L0 trigger is based on local triggers from the calorimeters and the muon chambers
  - L0 decision unit
- Select the particles with the highest $p_T$
  - We need only the particle with the highest $p_T$
  - Check if it is above threshold
- Identify hot spots
  - Detect a high energy in a 'small' surface
  - Use a square of 2 × 2 cells area:
    - 8 × 8 cm² in the central region of the electromagnetic calorimeter
    - more than 50 × 50 cm² in the outer region of the hadron calorimeter

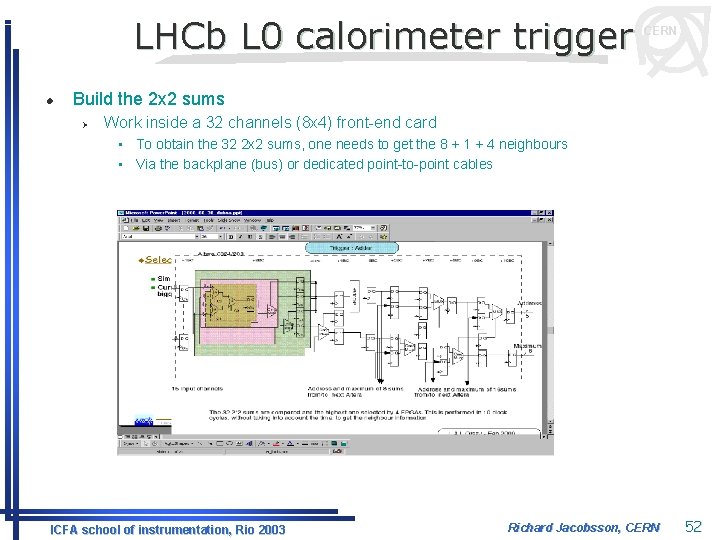

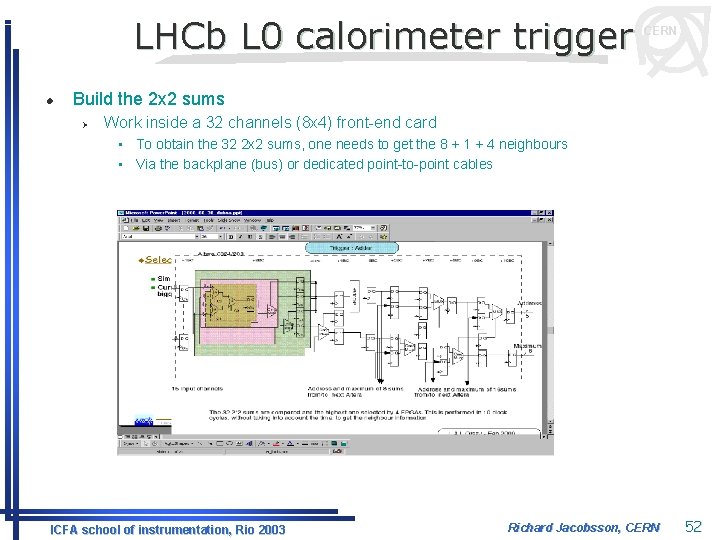

LHCb L0 calorimeter trigger

- Build the 2 × 2 sums
  - Work inside a 32-channel (8 × 4) front-end card
    - To obtain the 32 2 × 2 sums, one needs to get the 8 + 1 + 4 neighbours
    - Via the backplane (bus) or dedicated point-to-point cables

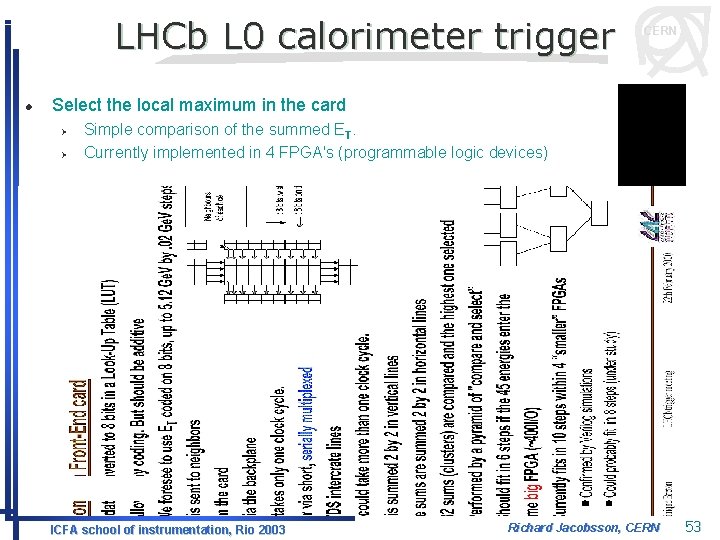

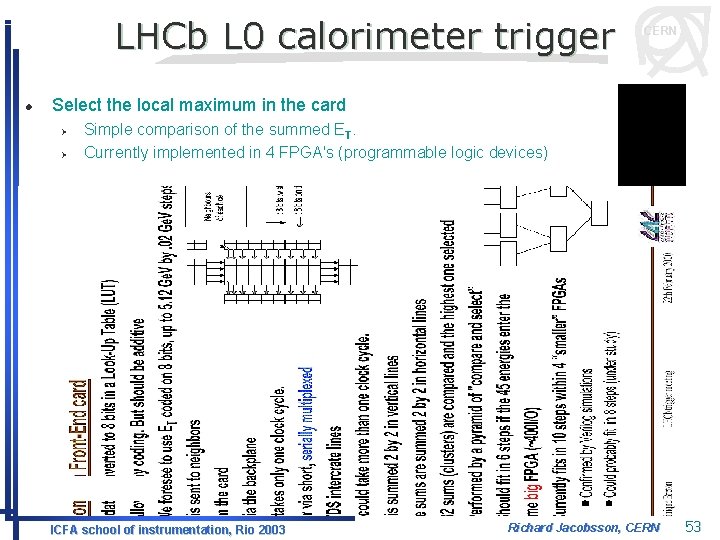

LHCb L0 calorimeter trigger

- Select the local maximum in the card
  - Simple comparison of the summed $E_T$
  - Currently implemented in 4 FPGAs (programmable logic devices)
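The two card-level steps, building the 2 × 2 sums and selecting the local maximum, can be sketched on a grid of cell energies. The layout below is an assumption: the card's 4 × 8 cells plus one extra row and one extra column of neighbours (8 + 4 + 1 corner = 13 cells). This is a software model only. The real implementation is FPGA logic.

```python
ROWS, COLS = 4, 8   # one 32-channel front-end card (8 x 4 cells)


def two_by_two_sums(et):
    """2 x 2 sums for every cell of the card.

    `et` is a (ROWS + 1) x (COLS + 1) grid: the card's own 32 cells plus one extra
    row and one extra column of neighbours (8 + 4 + 1 corner = 13 cells) that
    would arrive over the backplane or point-to-point cables.
    """
    assert len(et) == ROWS + 1 and all(len(row) == COLS + 1 for row in et)
    return [
        [et[r][c] + et[r][c + 1] + et[r + 1][c] + et[r + 1][c + 1] for c in range(COLS)]
        for r in range(ROWS)
    ]


def card_maximum(sums):
    """Local maximum in the card: largest 2 x 2 sum and its (row, column)."""
    return max((s, r, c) for r, row in enumerate(sums) for c, s in enumerate(row))


et = [[0] * (COLS + 1) for _ in range(ROWS + 1)]   # hypothetical transverse energies
et[2][3], et[2][4], et[3][3] = 5, 3, 2             # a small cluster
sums = two_by_two_sums(et)
print(card_maximum(sums))   # (10, 2, 3)
```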

LHCb L0 calorimeter trigger

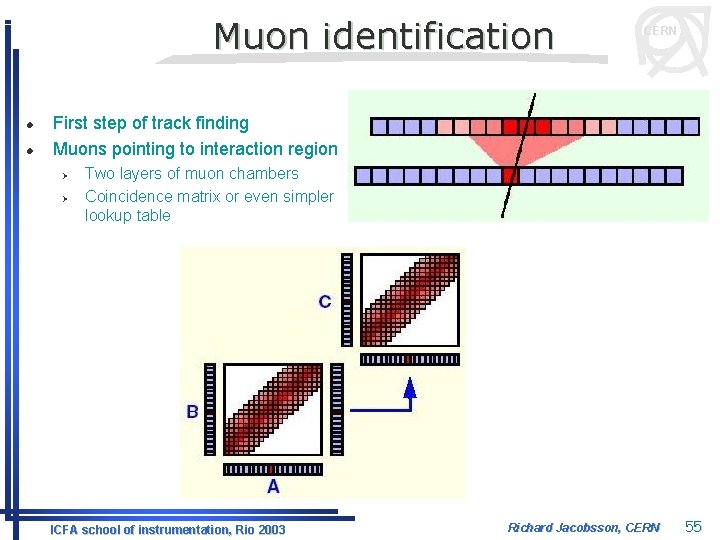

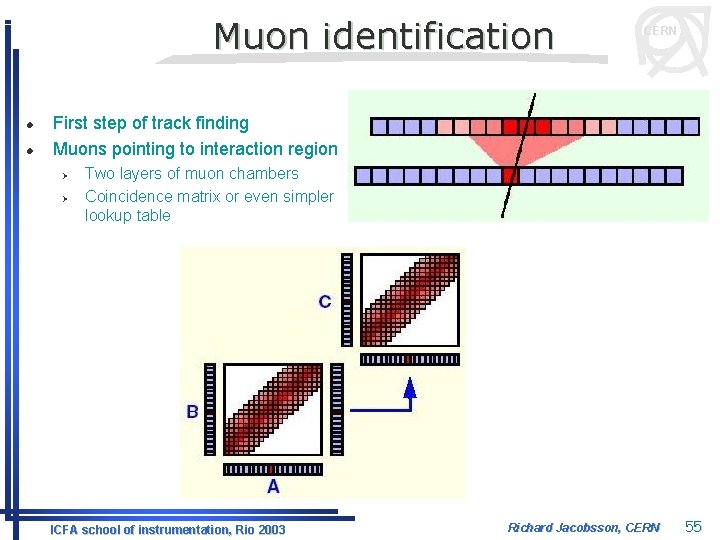

Muon identification

- First step of track finding
- Muons pointing to the interaction region
  - Two layers of muon chambers
  - Coincidence matrix or, even simpler, a lookup table
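A lookup table turns the pointing requirement into a simple membership test: all allowed (layer 1, layer 2) pad pairs are precomputed offline, and the trigger only checks whether a hit pair is in the table. The geometry below (pad counts, linear projection, ±1 pad window) is entirely hypothetical.

```python
def build_coincidence_lut(n_pads_1: int, n_pads_2: int, window: int) -> frozenset:
    """Precompute all allowed (pad in layer 1, pad in layer 2) pairs.

    Hypothetical geometry: a muon from the interaction region that hits pad i of
    the first layer is expected near pad i * n_pads_2 / n_pads_1 of the second
    layer; `window` allows for scattering and resolution.
    """
    lut = set()
    for i in range(n_pads_1):
        expected = round(i * n_pads_2 / n_pads_1)
        for j in range(expected - window, expected + window + 1):
            if 0 <= j < n_pads_2:
                lut.add((i, j))
    return frozenset(lut)


def muon_candidates(hits_1, hits_2, lut):
    """Coincidences between the two layers that point to the interaction region."""
    return [(i, j) for i in hits_1 for j in hits_2 if (i, j) in lut]


LUT = build_coincidence_lut(n_pads_1=16, n_pads_2=32, window=1)
print(muon_candidates(hits_1=[5], hits_2=[10, 20], lut=LUT))   # [(5, 10)]
```

In hardware the same table would typically live in a RAM addressed by the pad numbers, so the decision takes a fixed, short time.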

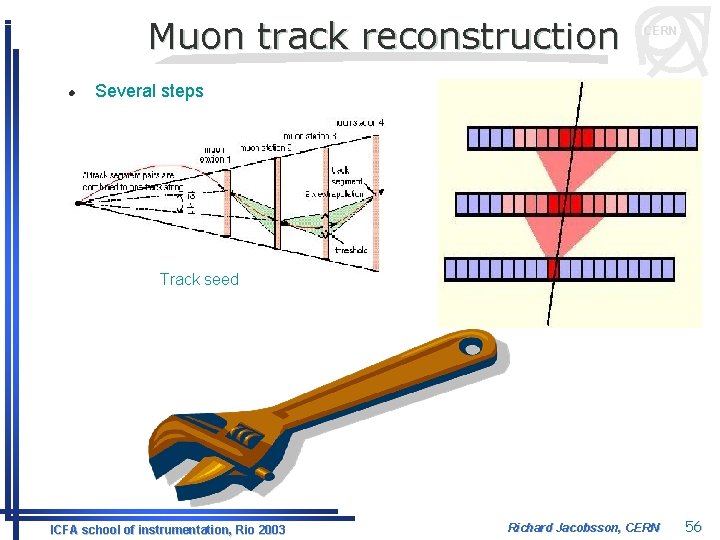

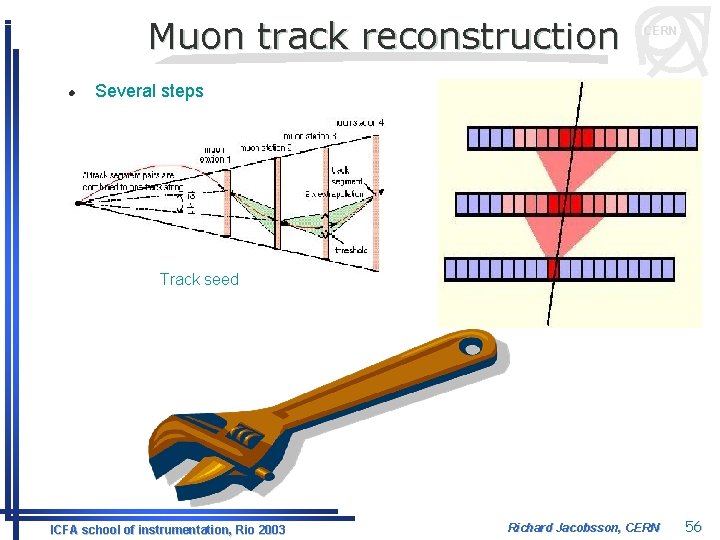

Muon track reconstruction

- Several steps, starting from a track seed

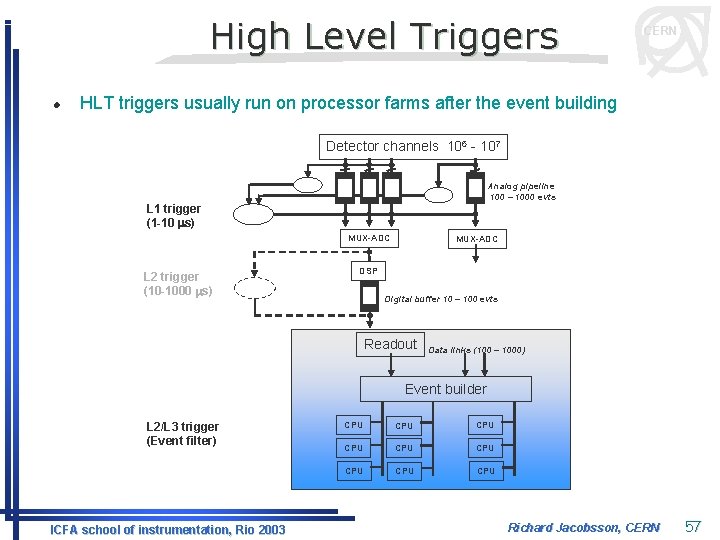

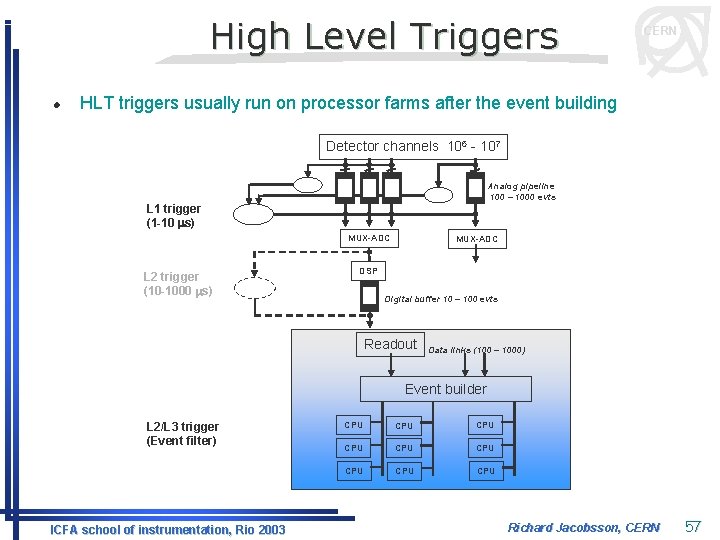

High Level Triggers

- HLT triggers usually run on processor farms after event building

[Figure: detector channels ($10^6$–$10^7$) → analog pipeline (100–1000 events) → L1 trigger (1–10 µs) → MUX-ADC → L2 trigger (10–1000 µs) → MUX-ADC, DSP, digital buffer (10–100 events) → readout data links (100–1000) → event builder → L2/L3 trigger (event filter) on a CPU farm.]

5. Experiment timing

Accelerator timing

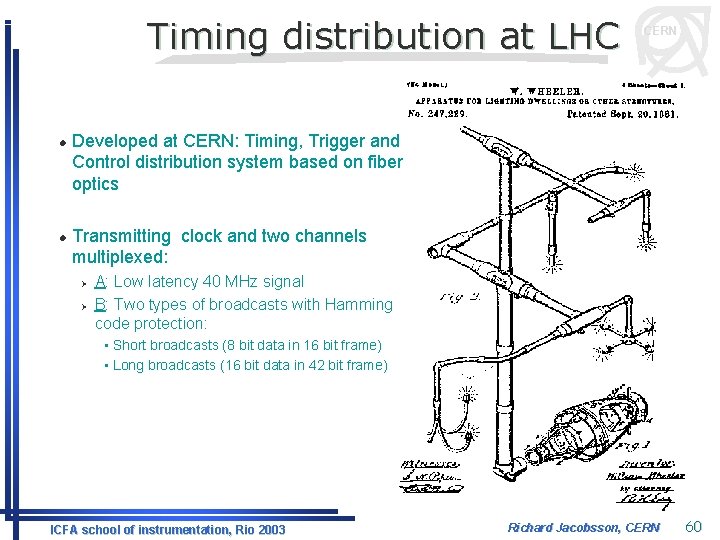

Timing distribution at LHC

- Developed at CERN: the Timing, Trigger and Control (TTC) distribution system, based on fibre optics
- Transmits the clock and two multiplexed channels:
  - A: low-latency 40 MHz signal
  - B: two types of broadcasts with Hamming code protection:
    - Short broadcasts (8-bit data in a 16-bit frame)
    - Long broadcasts (16-bit data in a 42-bit frame)
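Hamming code protection lets the receiver locate and correct a single flipped bit. This is a generic single-error-correcting Hamming code over 8 data bits (4 check bits at positions 1, 2, 4 and 8). It illustrates the principle only. It does not reproduce the actual TTC frame layout, which has additional fields.

```python
def hamming_encode(data_bits):
    """Single-error-correcting Hamming code; check bits sit at positions 1, 2, 4, 8, ..."""
    n_data = len(data_bits)
    n_check = 0
    while (1 << n_check) < n_data + n_check + 1:
        n_check += 1
    n = n_data + n_check
    code = [0] * (n + 1)             # index 0 unused; positions 1..n
    data = iter(data_bits)
    for pos in range(1, n + 1):
        if pos & (pos - 1):          # not a power of two: data position
            code[pos] = next(data)
    for p in range(n_check):
        mask = 1 << p
        code[mask] = sum(code[pos] for pos in range(1, n + 1) if pos & mask and pos != mask) % 2
    return code[1:]


def hamming_decode(codeword):
    """Return (data bits, syndrome); a non-zero syndrome is the position of the corrected bit."""
    n = len(codeword)
    code = [0] + list(codeword)
    syndrome = 0
    for pos in range(1, n + 1):
        if code[pos]:
            syndrome ^= pos
    if 0 < syndrome <= n:
        code[syndrome] ^= 1          # correct the single flipped bit
    data = [code[pos] for pos in range(1, n + 1) if pos & (pos - 1)]
    return data, syndrome


byte = [1, 0, 1, 1, 0, 0, 1, 0]      # 8 data bits, as in a short broadcast
word = hamming_encode(byte)          # 12 bits: 8 data + 4 check bits
corrupted = list(word)
corrupted[6] ^= 1                    # flip one bit in transmission (position 7)
print(hamming_decode(corrupted))     # ([1, 0, 1, 1, 0, 0, 1, 0], 7)
```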

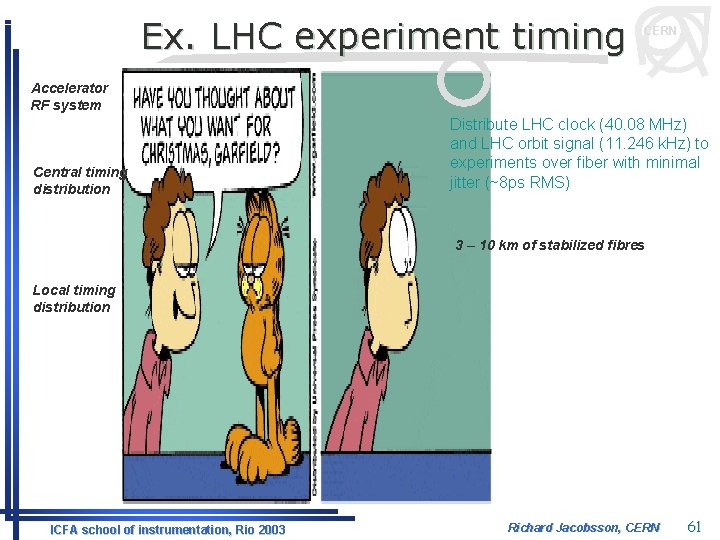

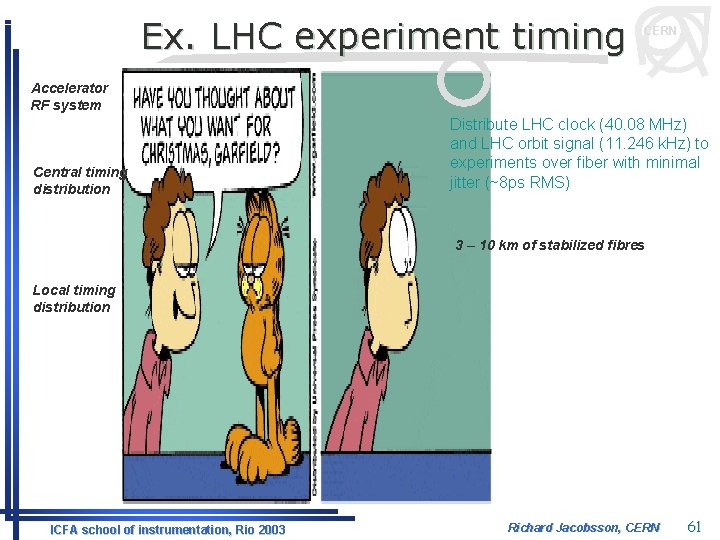

Ex. LHC experiment timing

[Figure: accelerator RF system → central timing distribution → 3–10 km of stabilized fibres → local timing distribution at each experiment.]

- Distribute the LHC clock (40.08 MHz) and the LHC orbit signal (11.246 kHz) to the experiments over fibre with minimal jitter (~8 ps RMS)
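The ratio of the two distributed frequencies gives the number of bunch slots per orbit, which is the range of a bunch-crossing counter. This minimal sketch computes it from the slide's values and models a counter that advances on every clock tick and is zeroed by a bunch counter reset derived from the orbit signal. The class and method names are hypothetical.

```python
BUNCH_CLOCK_HZ = 40.08e6   # LHC bunch clock quoted on the slide
ORBIT_HZ = 11.246e3        # LHC orbit signal quoted on the slide
BUNCHES_PER_ORBIT = round(BUNCH_CLOCK_HZ / ORBIT_HZ)   # 3564 bunch slots per turn


class BunchCounter:
    """Bunch-crossing identifier: incremented by the clock, zeroed by the orbit signal."""

    def __init__(self) -> None:
        self.bcid = 0

    def clock_tick(self) -> None:
        # Wrap as a safety net; normally the orbit-derived reset arrives first.
        self.bcid = (self.bcid + 1) % BUNCHES_PER_ORBIT

    def bunch_counter_reset(self) -> None:
        self.bcid = 0


counter = BunchCounter()
for _ in range(1000):
    counter.clock_tick()
print(BUNCHES_PER_ORBIT, counter.bcid)   # 3564 1000
counter.bunch_counter_reset()
```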

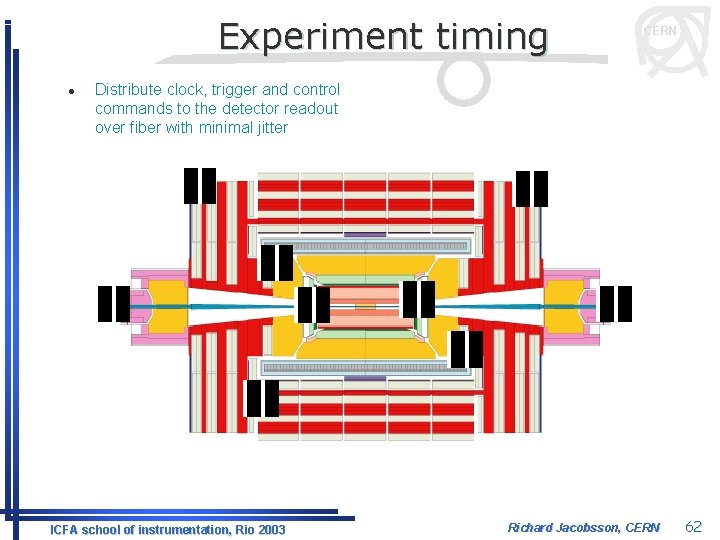

Experiment timing

- Distribute clock, trigger and control commands to the detector readout over fibre with minimal jitter

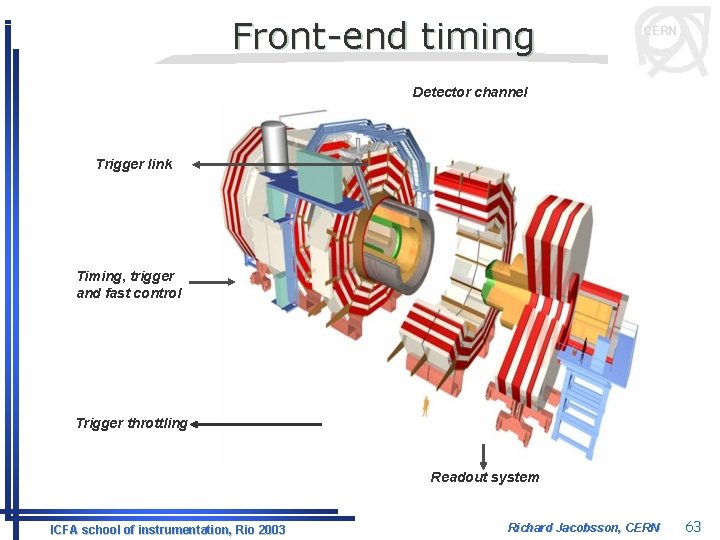

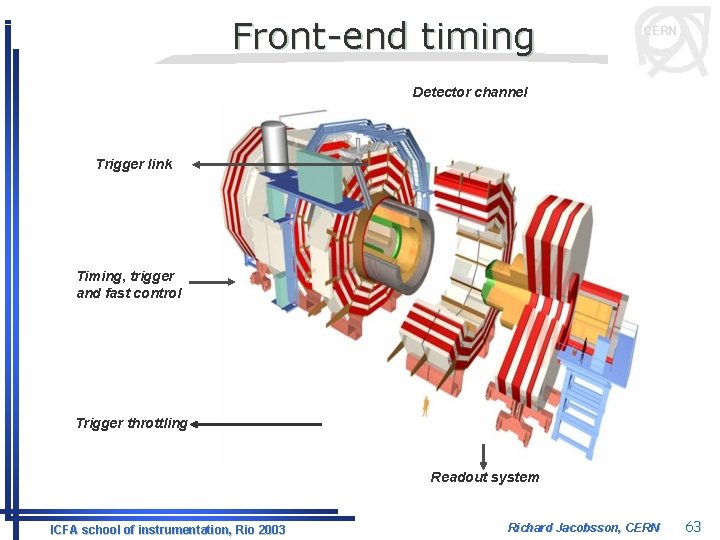

Front-end timing

[Figure: timing, trigger and fast control distributed to the detector channels over the trigger link; the readout system sends trigger throttling back.]

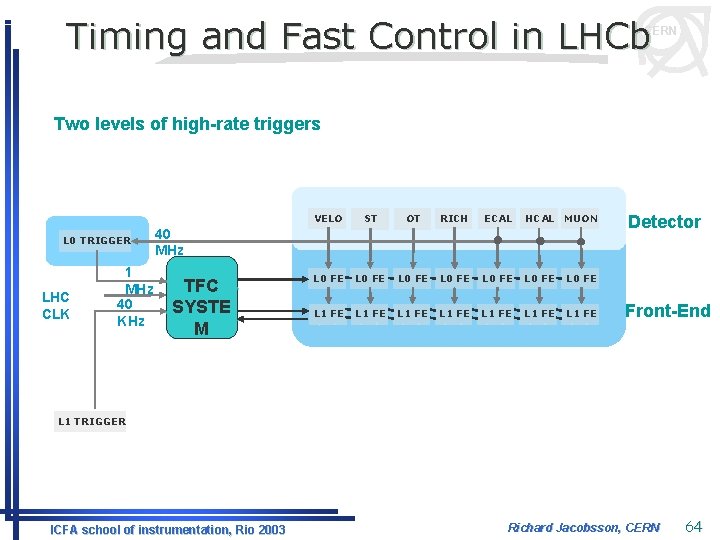

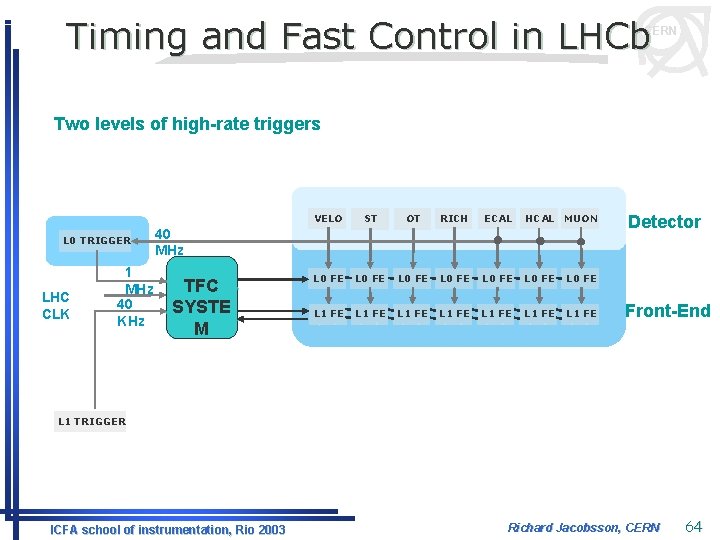

Timing and Fast Control in LHCb

- Two levels of high-rate triggers

[Figure: the TFC system receives the LHC clock (40 MHz), the L0 trigger (1 MHz) and the L1 trigger (40 kHz) and distributes them to the L0 and L1 front-ends of VELO, ST, OT, RICH, ECAL, HCAL and MUON.]

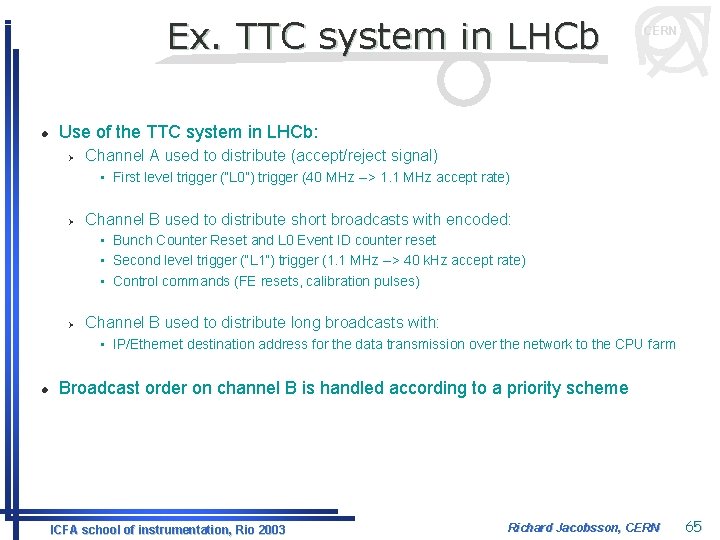

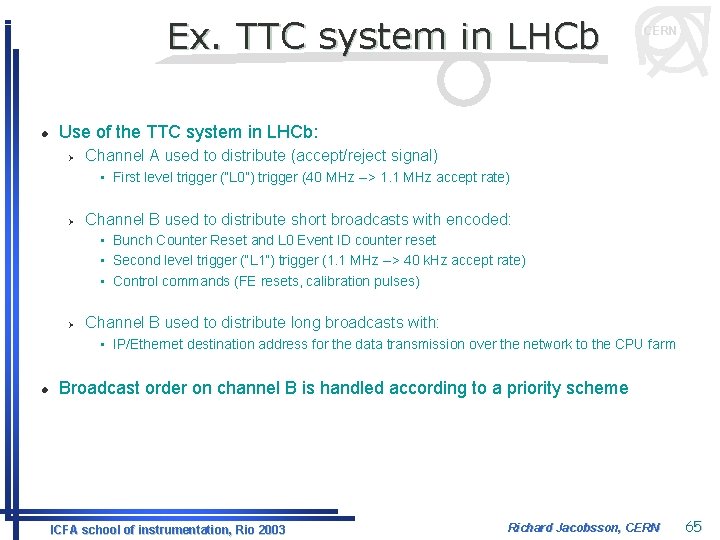

Ex. TTC system in LHCb

- Use of the TTC system in LHCb:
  - Channel A used to distribute the first-level trigger ("L0") accept/reject signal (40 MHz → 1.1 MHz accept rate)
  - Channel B used to distribute short broadcasts encoding:
    - Bunch Counter Reset and L0 Event ID counter reset
    - Second-level trigger ("L1") decisions (1.1 MHz → 40 kHz accept rate)
    - Control commands (FE resets, calibration pulses)
  - Channel B also used to distribute long broadcasts with:
    - The IP/Ethernet destination address for the data transmission over the network to the CPU farm
- The broadcast order on channel B is handled according to a priority scheme
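Because channel B can carry only one broadcast at a time, pending requests have to be ordered. The sketch below uses a priority queue to show the mechanism. The priority values are hypothetical: the slide states only that a priority scheme exists, not what the actual LHCb ordering is.

```python
import heapq
import itertools

# Hypothetical priorities (lower value = sent first).
PRIORITY = {"bunch_counter_reset": 0, "l1_decision": 1, "fe_reset": 2, "calibration_pulse": 3}


class BroadcastQueue:
    """Pending channel-B broadcasts, served one at a time by priority."""

    def __init__(self) -> None:
        self._heap = []
        self._order = itertools.count()   # FIFO order among equal priorities

    def request(self, kind: str, payload=None) -> None:
        heapq.heappush(self._heap, (PRIORITY[kind], next(self._order), kind, payload))

    def next_broadcast(self):
        """Return (kind, payload) of the next broadcast to send, or None if idle."""
        if not self._heap:
            return None
        _, _, kind, payload = heapq.heappop(self._heap)
        return kind, payload


queue = BroadcastQueue()
queue.request("calibration_pulse")
queue.request("l1_decision", payload=1)
queue.request("bunch_counter_reset")
queue.request("l1_decision", payload=0)
sent = []
while (item := queue.next_broadcast()) is not None:
    sent.append(item)
print(sent)
```

The sequence counter keeps L1 decisions of equal priority in the order they were requested, which matters if the front-ends expect decisions in event order.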

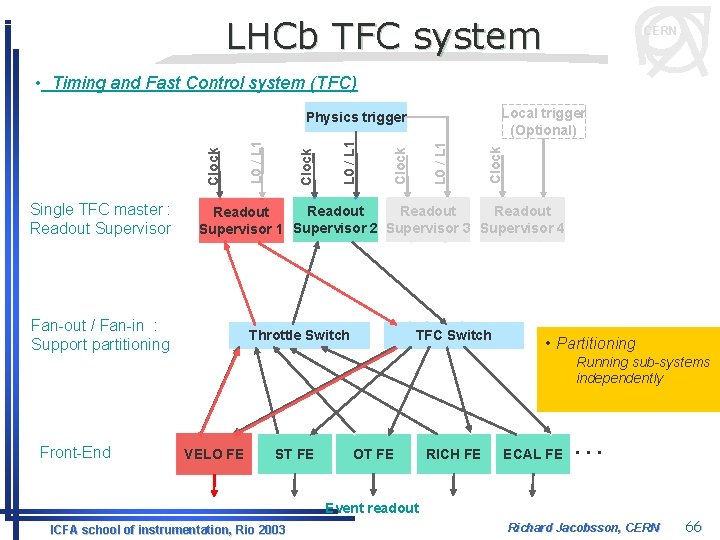

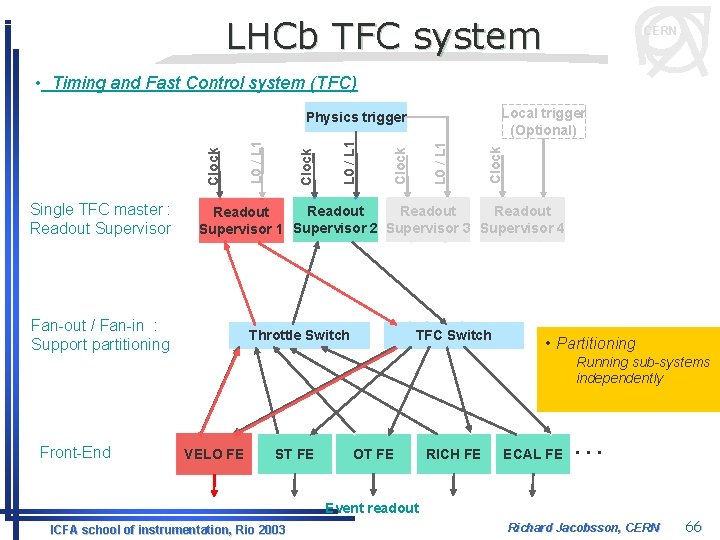

LHCb TFC system

- Timing and Fast Control (TFC) system
  - Single TFC master: the Readout Supervisor
  - Fan-out / fan-in (TFC switch, throttle switch): supports partitioning
- Partitioning: running sub-systems independently

[Figure: the LHC clock, L0 and L1 physics triggers and optional local triggers feed Readout Supervisors 1–4; the TFC switch distributes clock and triggers to the front-ends (VELO FE, ST FE, OT FE, RICH FE, ECAL FE, ...); throttle signals return through the throttle switch; event readout.]

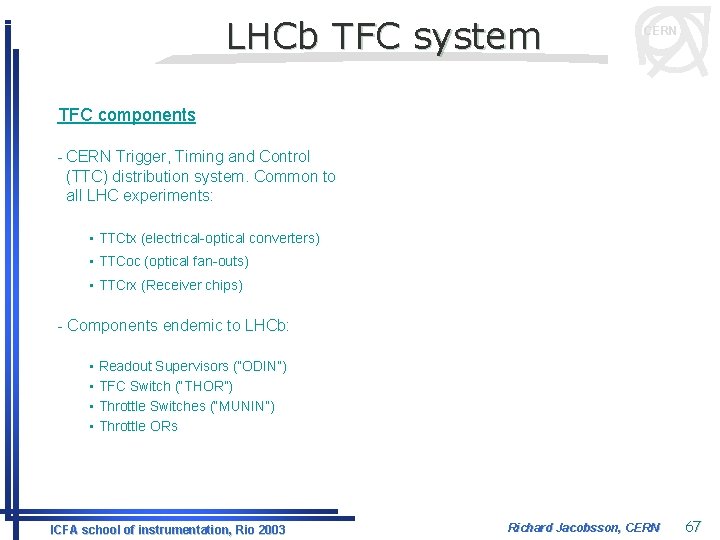

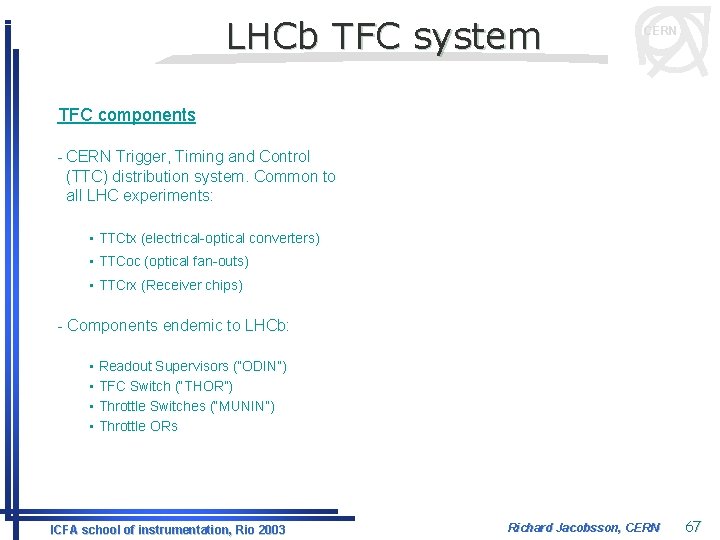

LHCb TFC system

- TFC components:
  - CERN Trigger, Timing and Control (TTC) distribution system, common to all LHC experiments:
    - TTCtx (electrical-optical converters)
    - TTCoc (optical fan-outs)
    - TTCrx (receiver chips)
  - Components specific to LHCb:
    - Readout Supervisors ("ODIN")
    - TFC Switch ("THOR")
    - Throttle Switches ("MUNIN")
    - Throttle ORs

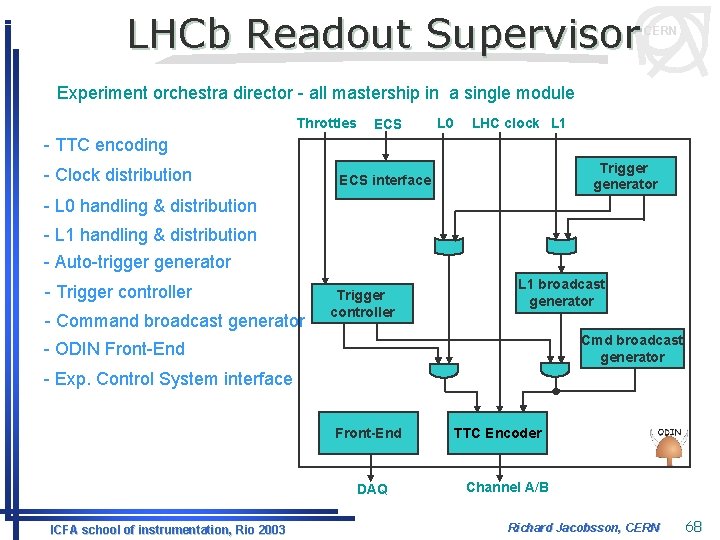

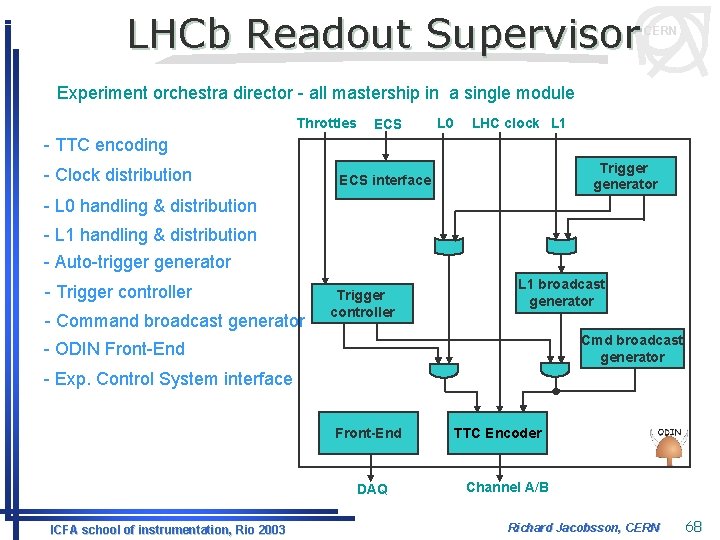

LHCb Readout Supervisor ("ODIN")

- Experiment "orchestra director": all mastership in a single module
- Functions:
  - TTC encoding
  - Clock distribution
  - L0 handling & distribution
  - L1 handling & distribution
  - Auto-trigger generator
  - Trigger controller
  - Command broadcast generator
  - Experiment Control System (ECS) interface

[Figure: inputs (LHC clock, L0 and L1 decisions, throttles, ECS); internal blocks (trigger generator, trigger controller, L1 broadcast generator, command broadcast generator, ECS interface, TTC encoder for channels A/B); outputs to the front-end and the DAQ.]

6. Event readout

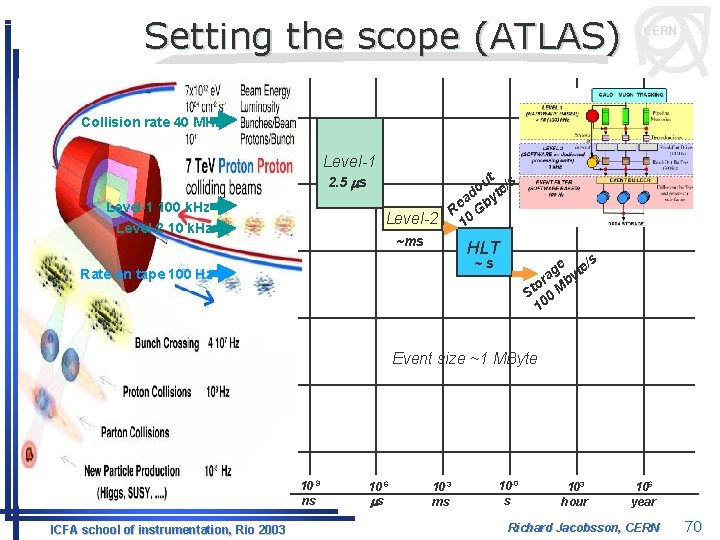

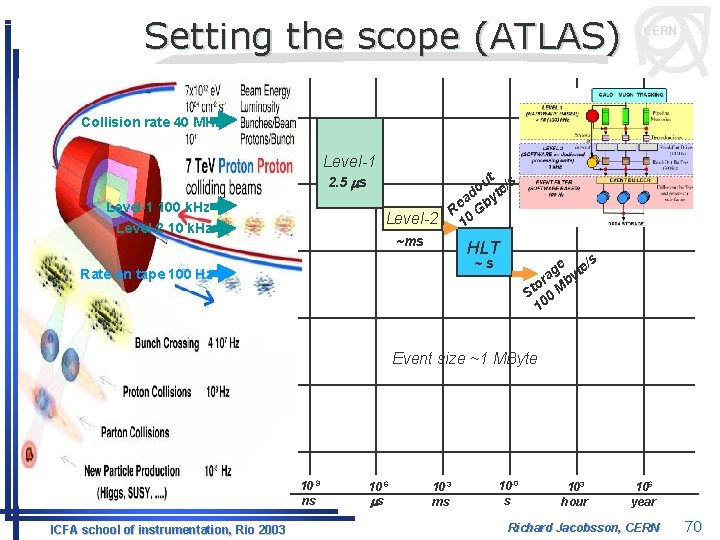

Setting the scope (ATLAS)

- Collision rate: 40 MHz
- Level-1: 2.5 µs, output 100 kHz
- Level-2: ~ms, output 10 kHz
- Readout: 10 Gbyte/s
- HLT: ~s
- Rate on tape: 100 Hz
- Storage: 100 Mbyte/s
- Event size: ~1 Mbyte

[Figure: these rates and latencies on a logarithmic time axis from ns to a year.]

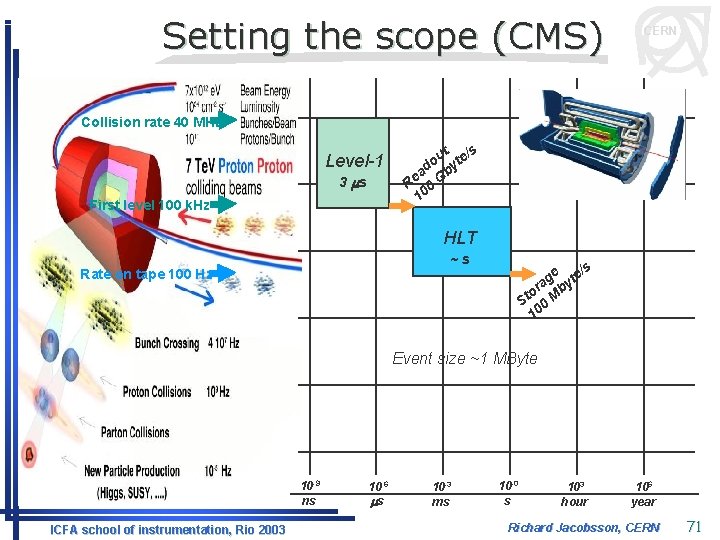

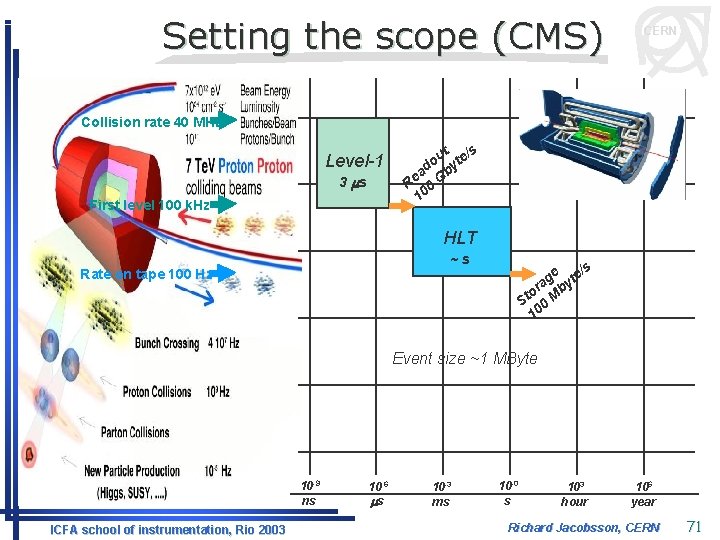

Setting the scope (CMS)

- Collision rate: 40 MHz
- Level-1: 3 µs, first level output 100 kHz
- Readout: 100 Gbyte/s
- HLT: ~s
- Rate on tape: 100 Hz
- Storage: 100 Mbyte/s
- Event size: ~1 Mbyte

[Figure: these rates and latencies on a logarithmic time axis from ns to a year.]

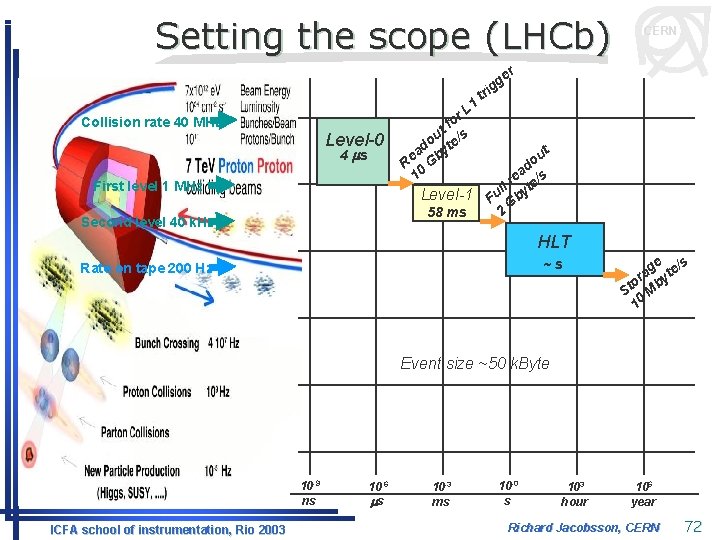

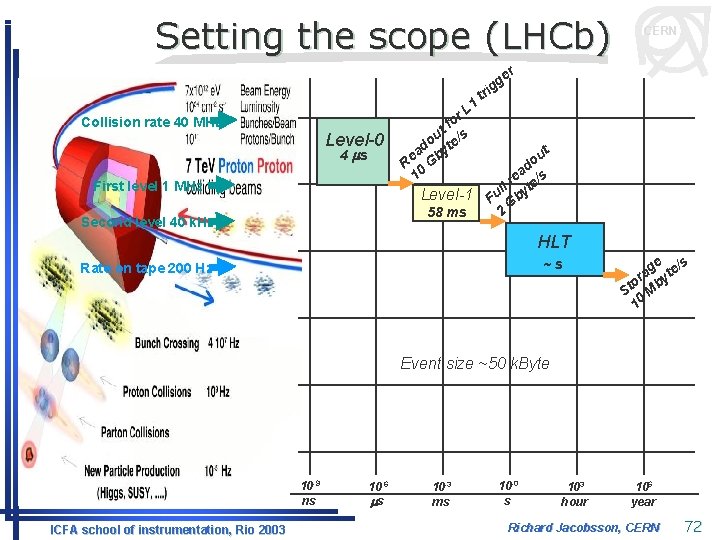

Setting the scope (LHCb)

- Collision rate: 40 MHz
- Level-0: 4 µs, first level output 1 MHz
- Readout for the L1 trigger: 10 Gbyte/s
- Level-1: 58 ms, second level output 40 kHz
- Full readout: 2 Gbyte/s
- HLT: ~s
- Rate on tape: 200 Hz
- Storage: 10 Mbyte/s
- Event size: ~50 kbyte

[Figure: these rates and latencies on a logarithmic time axis from ns to a year.]

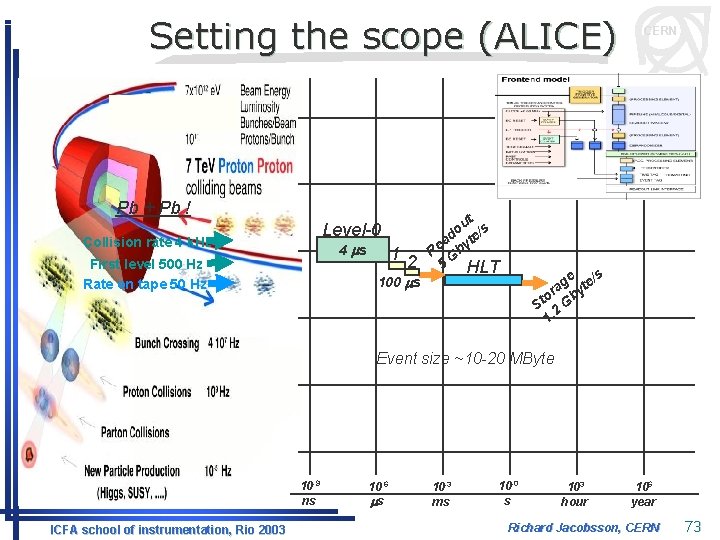

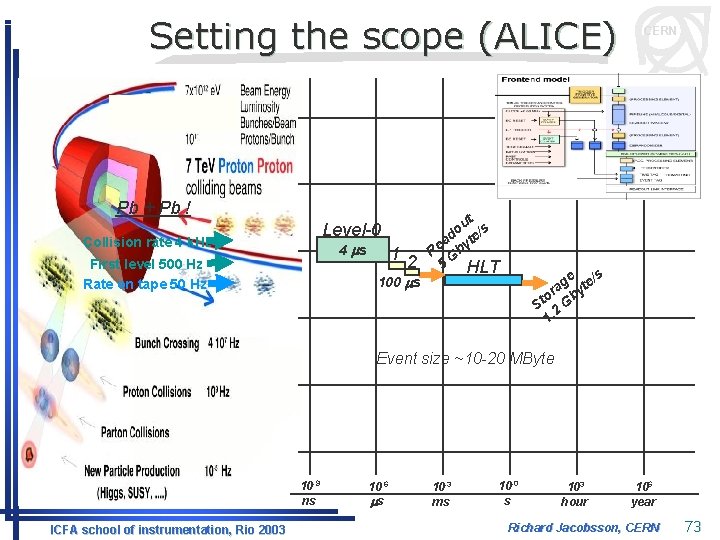

Setting the scope (ALICE): Pb + Pb!

- Collision rate: 4 kHz
- Level-0: 4 µs, first level output 500 Hz
- Readout: 5 Gbyte/s
- HLT: 100 ms
- Rate on tape: 50 Hz
- Storage: 1.2 Gbyte/s
- Event size: ~10–20 Mbyte

[Figure: these rates and latencies on a logarithmic time axis from ns to a year.]
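The readout and storage bandwidths on these slides are simply accept rate times event size. A quick check with decimal units reproduces several of the quoted figures from the CMS and LHCb slides and from the common ATLAS/CMS storage numbers.

```python
KB, MB = 1e3, 1e6   # decimal units, as used for rough bandwidth estimates


def bandwidth(rate_hz: float, event_size_bytes: float) -> float:
    """Required throughput in bytes/s = accept rate x event size."""
    return rate_hz * event_size_bytes


cases = {
    "CMS readout (100 kHz x 1 MB)": (100e3, 1 * MB),
    "ATLAS/CMS storage (100 Hz x 1 MB)": (100, 1 * MB),
    "LHCb full readout (40 kHz x 50 kB)": (40e3, 50 * KB),
    "LHCb storage (200 Hz x 50 kB)": (200, 50 * KB),
}
for name, (rate, size) in cases.items():
    print(f"{name}: {bandwidth(rate, size) / 1e9:g} GB/s")
```

The same product makes clear why CMS, with no intermediate hardware level, needs a readout network about fifty times faster than LHCb's full readout.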

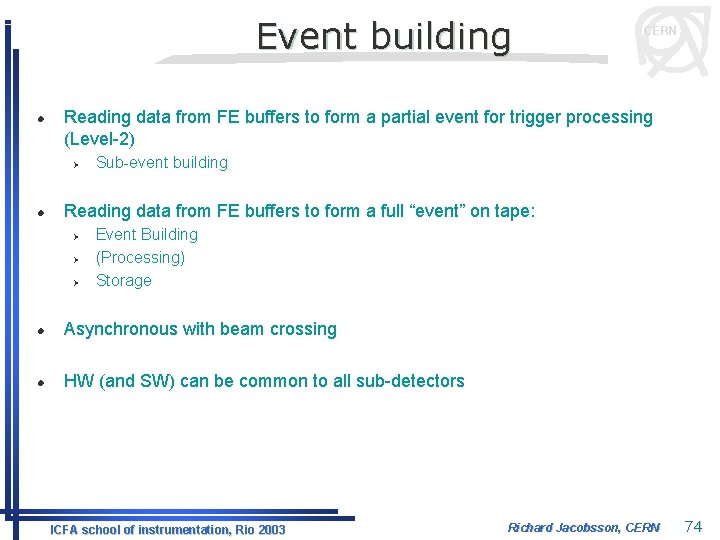

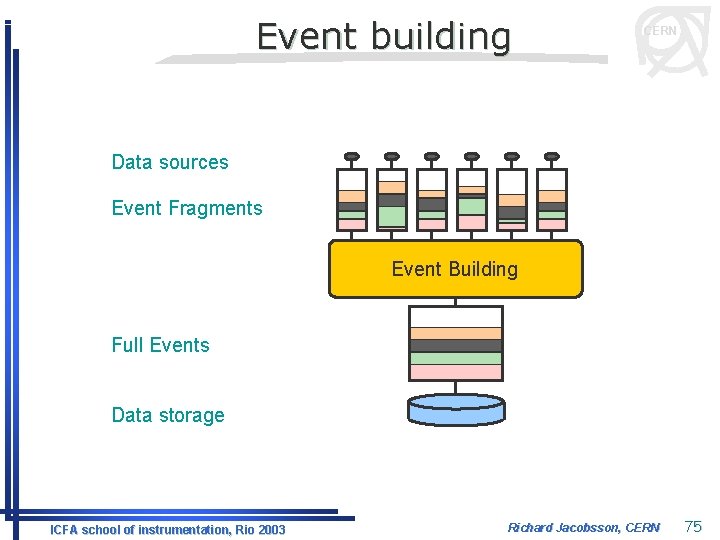

Event building

- Reading data from FE buffers to form a partial event for trigger processing (Level-2)
  - Sub-event building
- Reading data from FE buffers to form a full "event" on tape:
  - Event building
  - (Processing)
  - Storage
- Asynchronous with beam crossing
- HW (and SW) can be common to all sub-detectors

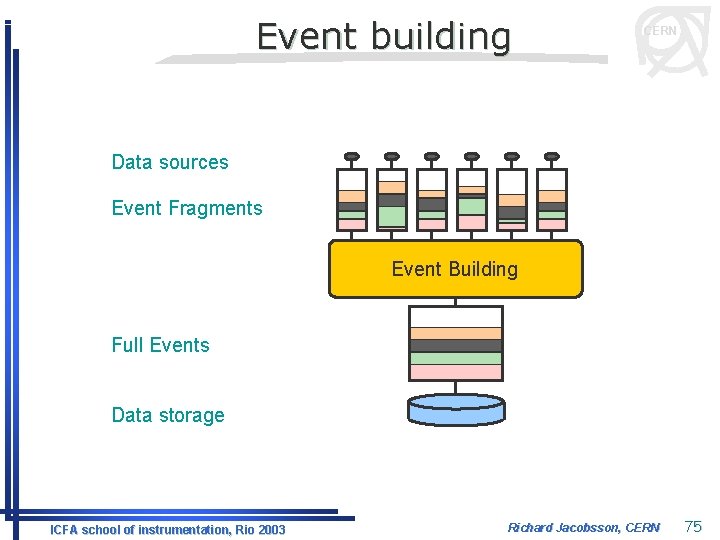

Event building

[Figure: data sources → event fragments → event building → full events → data storage.]

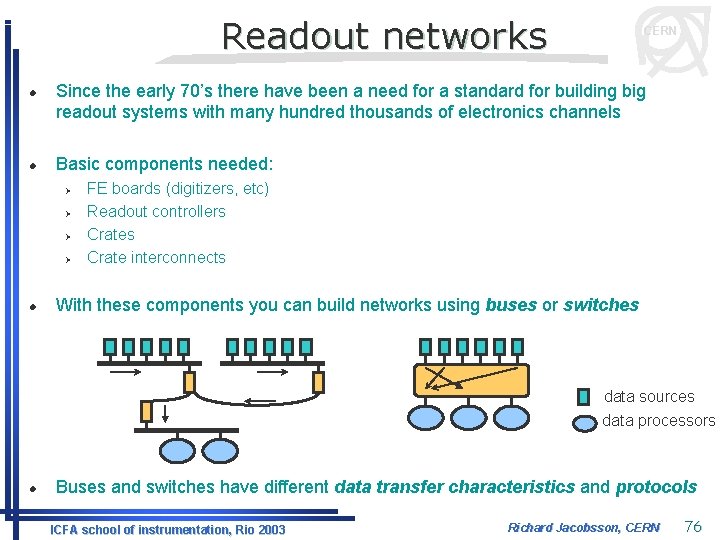

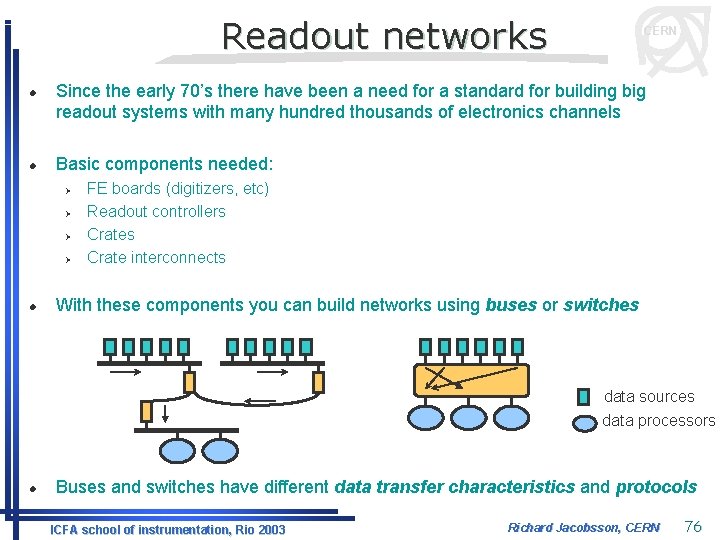

Readout networks

- Since the early 70s there has been a need for standards for building big readout systems with many hundreds of thousands of electronics channels
- Basic components needed:
  - FE boards (digitizers, etc.)
  - Readout controllers
  - Crate interconnects
- With these components you can build networks using buses or switches to connect data sources to data processors
- Buses and switches have different data transfer characteristics and protocols

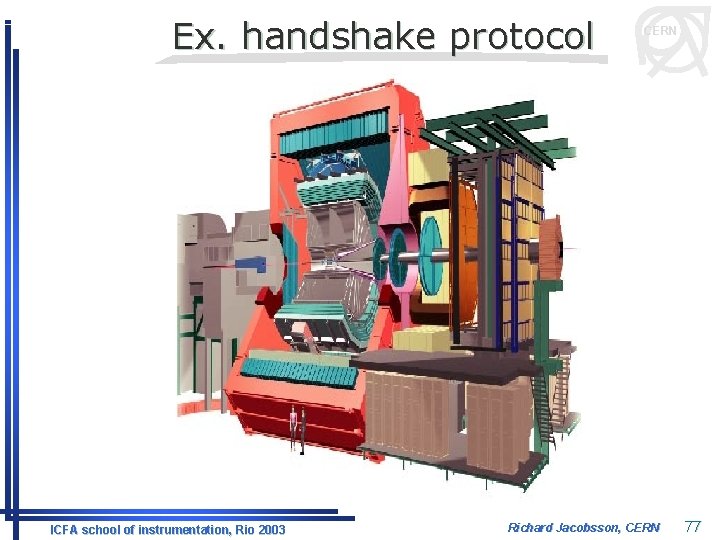

Example: handshake protocol
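The handshake figure itself is not recoverable from the extracted text. As a minimal sketch, assuming a generic four-phase (return-to-zero) request/acknowledge handshake similar in spirit to the strobe/acknowledge pair of asynchronous buses such as VME, the sequence of signal edges for each transferred word can be written out as below. `HandshakeBus`, `transfer` and the `req`/`ack` signal names are hypothetical, and the two sides run in lock-step rather than as real concurrent hardware.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class HandshakeBus:
    """Hypothetical bus with one request (REQ) and one acknowledge (ACK) line."""
    req: bool = False
    ack: bool = False
    data: Optional[int] = None
    trace: List[Tuple[str, bool, bool]] = field(default_factory=list)

    def log(self, edge: str) -> None:
        # Record which edge happened and the resulting (REQ, ACK) levels.
        self.trace.append((edge, self.req, self.ack))


def transfer(bus: HandshakeBus, words: List[int]) -> List[int]:
    """Move words from master to slave, one four-phase handshake per word."""
    received: List[int] = []
    for word in words:
        # 1. Master drives the data lines, then raises REQ ("data valid").
        bus.data = word
        bus.req = True
        bus.log("REQ rise")
        # 2. Slave sees REQ high, latches the data and raises ACK.
        received.append(bus.data)
        bus.ack = True
        bus.log("ACK rise")
        # 3. Master sees ACK high, releases the data lines and drops REQ.
        bus.data = None
        bus.req = False
        bus.log("REQ fall")
        # 4. Slave sees REQ low and drops ACK: the bus is idle again.
        bus.ack = False
        bus.log("ACK fall")
    return received


if __name__ == "__main__":
    bus = HandshakeBus()
    print(transfer(bus, [0xCAFE, 0xBEEF]))
    for edge in bus.trace:
        print(edge)
```

Since every word needs a full round trip of request and acknowledge, the rate of such a protocol is bounded by signal propagation and response times, not only by the width of the data path.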

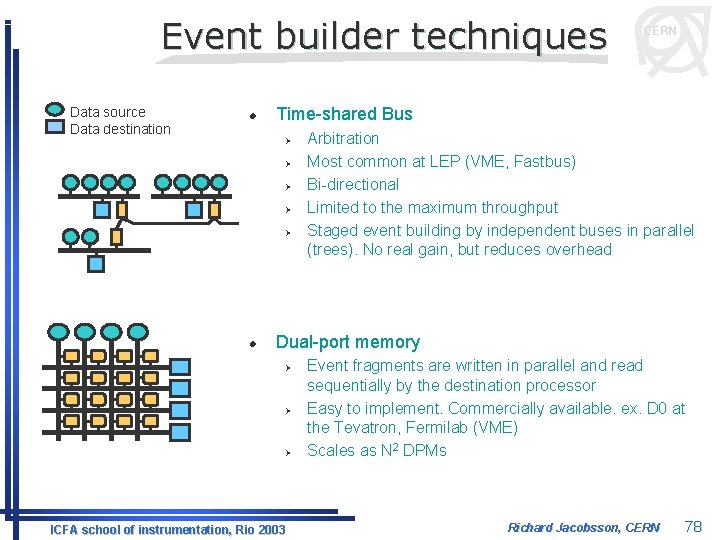

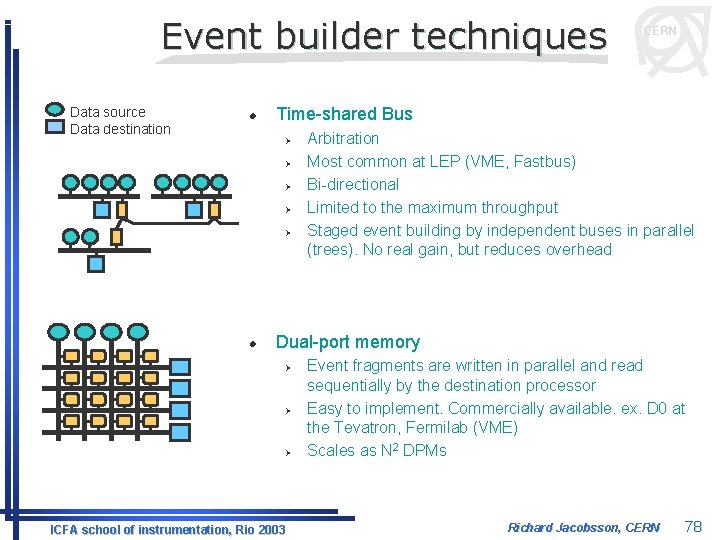

Event builder techniques (data source → data destination)

- Time-shared bus
  - Most common at LEP (VME, Fastbus)
  - Arbitration; bi-directional
  - Limited to the maximum throughput of the bus
  - Staged event building by independent buses in parallel (trees): no real gain, but reduces overhead
- Dual-port memory (DPM)
  - Event fragments are written in parallel and read sequentially by the destination processor
  - Easy to implement; commercially available. Example: D0 at the Tevatron, Fermilab (VME)
  - Scales as N² DPMs

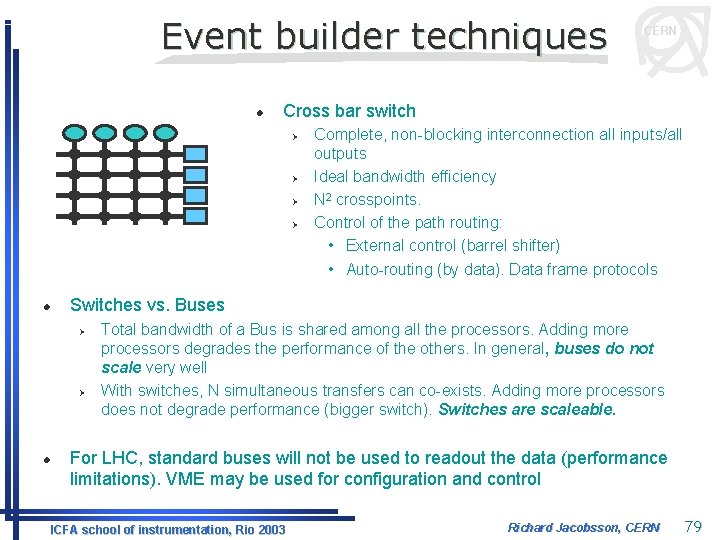

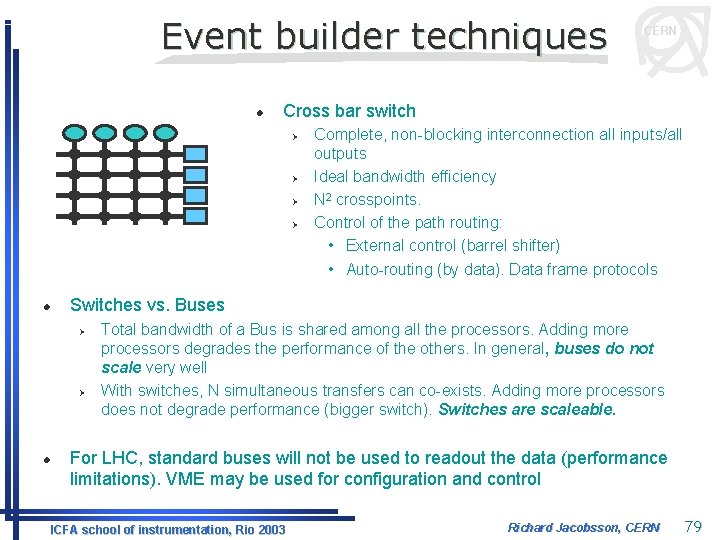

Event builder techniques

- Crossbar switch
  - Complete, non-blocking interconnection of all inputs to all outputs
  - Ideal bandwidth efficiency
  - N² crosspoints
  - Control of the path routing:
    - External control (barrel shifter)
    - Auto-routing (by data): data frame protocols
- Switches vs. buses
  - The total bandwidth of a bus is shared among all the processors. Adding more processors degrades the performance of the others. In general, buses do not scale very well.
  - With switches, N simultaneous transfers can co-exist. Adding more processors does not degrade performance (bigger switch). Switches are scalable.
- For the LHC, standard buses will not be used to read out the data (performance limitations). VME may be used for configuration and control.
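The bus-versus-switch scaling argument can be made concrete with an idealised model. This is a sketch assuming one shared bus of bandwidth `link_bw` against a non-blocking crossbar whose ports each run at `link_bw`, with all transfers going to different outputs and with arbitration overhead ignored; the function names are hypothetical.

```python
def per_transfer_bandwidth(n_transfers: int, link_bw: float, medium: str) -> float:
    """Idealised bandwidth seen by each of n_transfers simultaneous transfers.

    bus:    one medium time-shared by everybody, so each gets link_bw / n.
    switch: non-blocking crossbar; n transfers to n *different* outputs
            proceed in parallel, each at the full link bandwidth.
    """
    if n_transfers < 1:
        raise ValueError("need at least one transfer")
    if medium == "bus":
        return link_bw / n_transfers
    if medium == "switch":
        return link_bw
    raise ValueError(f"unknown medium {medium!r}")


def aggregate_bandwidth(n_transfers: int, link_bw: float, medium: str) -> float:
    """Total bandwidth of the whole event builder."""
    return n_transfers * per_transfer_bandwidth(n_transfers, link_bw, medium)


def crosspoints(n_ports: int) -> int:
    """An N x N crossbar needs one crosspoint per (input, output) pair."""
    return n_ports * n_ports


if __name__ == "__main__":
    # Hypothetical 40 MByte/s links.
    for n in (1, 4, 16, 64):
        print(n, aggregate_bandwidth(n, 40.0, "bus"),
              aggregate_bandwidth(n, 40.0, "switch"), crosspoints(n))
```

The bus aggregate stays flat however many processors are added, while the switch aggregate grows linearly; the price is the N² growth in crosspoints.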

Computing and network trends

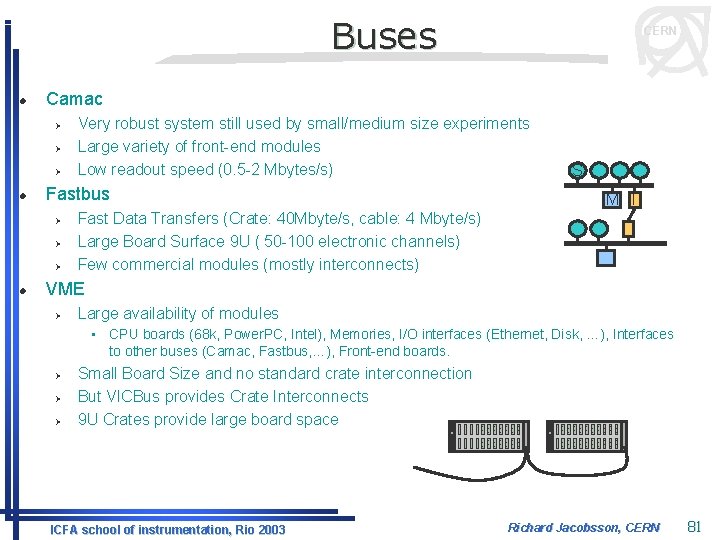

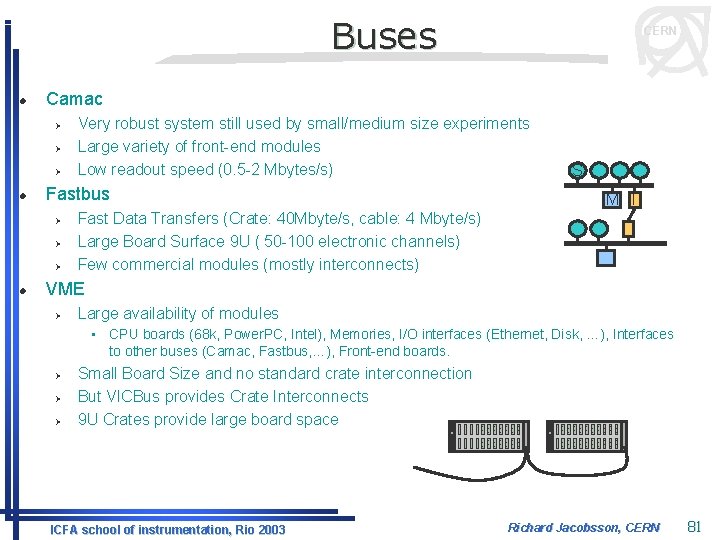

Buses

- CAMAC
  - Very robust system, still used by small/medium-size experiments
  - Large variety of front-end modules
  - Low readout speed (0.5-2 MByte/s)
- Fastbus
  - Fast data transfers (crate: 40 MByte/s, cable: 4 MByte/s)
  - Large board surface, 9U (50-100 electronic channels)
  - Few commercial modules (mostly interconnects)
- VME
  - Large availability of modules
    - CPU boards (68k, PowerPC, Intel), memories, I/O interfaces (Ethernet, disk, …), interfaces to other buses (CAMAC, Fastbus, …), front-end boards
  - Small board size and no standard crate interconnection
    - But VICbus provides crate interconnects
    - 9U crates provide large board space

Event readout with buses (LEP)

[Figure: FE data collected over buses into the full event]

Not always so easy…

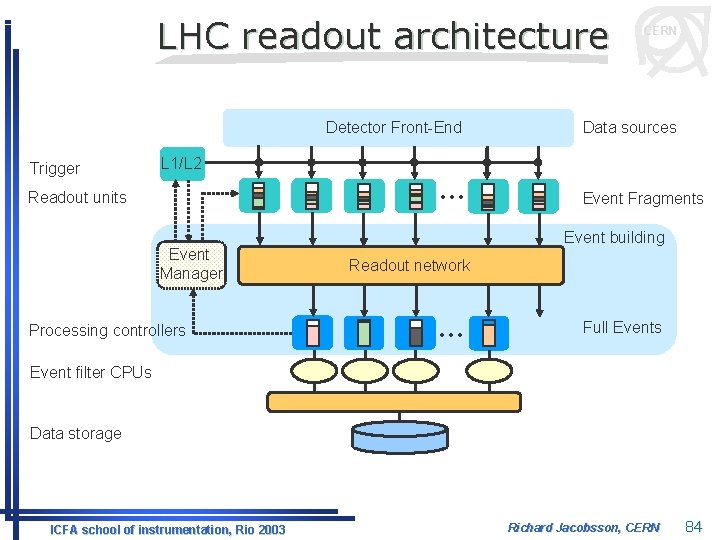

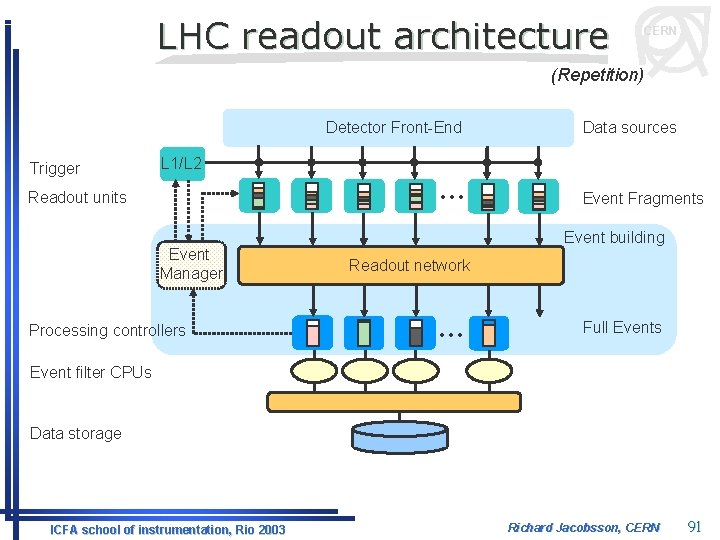

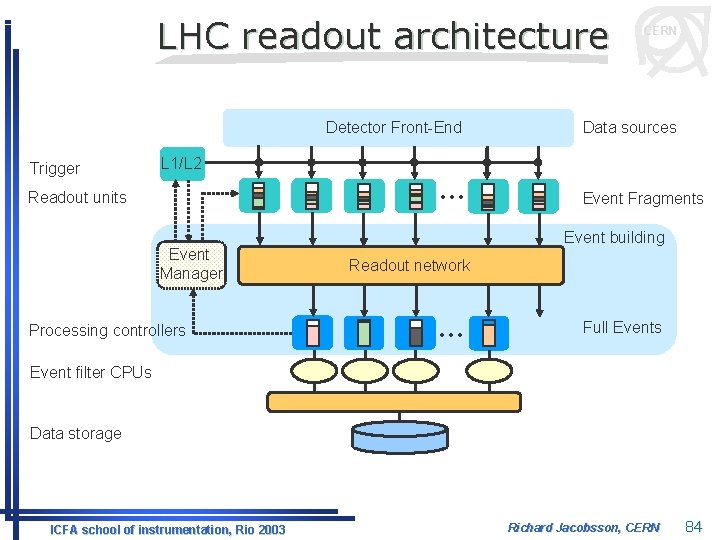

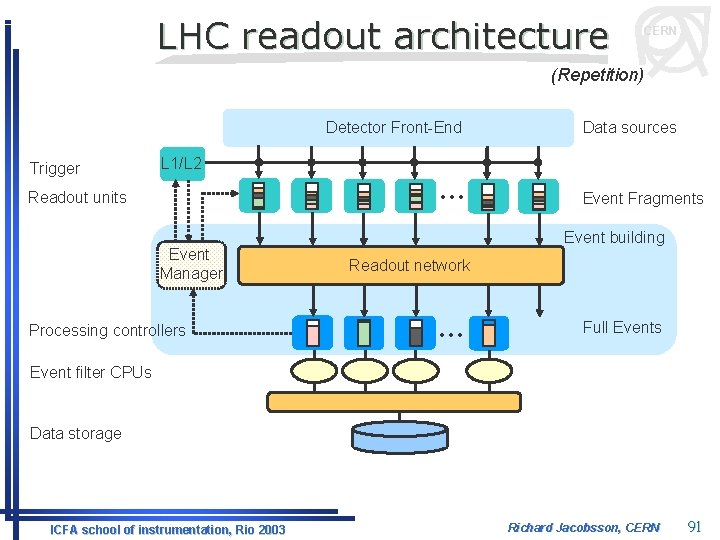

LHC readout architecture

[Figure: detector front-end → readout units (data sources, event fragments) → readout network (event building) → event filter CPUs (full events) → data storage; the Level-1/Level-2 trigger, the event manager and the processing controllers are also shown]

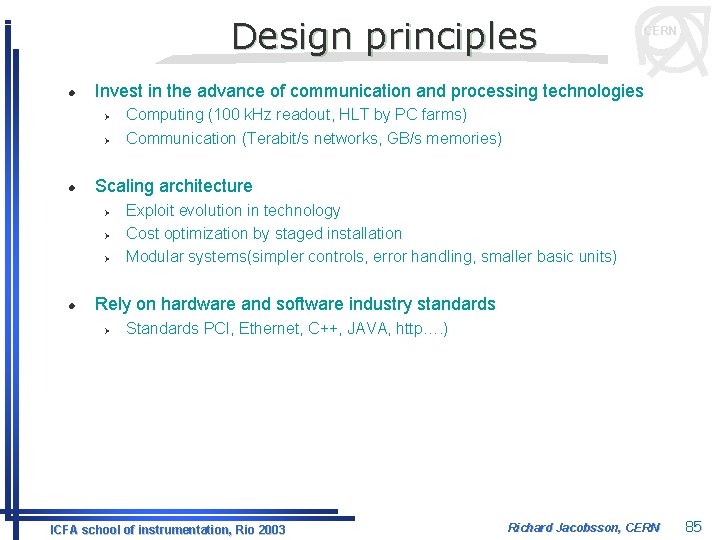

Design principles

- Invest in the advance of communication and processing technologies
  - Computing (100 kHz readout, HLT by PC farms)
  - Communication (Terabit/s networks, GB/s memories)
- Scaling architecture
  - Exploit evolution in technology
  - Cost optimization by staged installation
  - Modular systems (simpler controls, error handling, smaller basic units)
- Rely on hardware and software industry standards
  - Standards (PCI, Ethernet, C++, Java, HTTP, …)

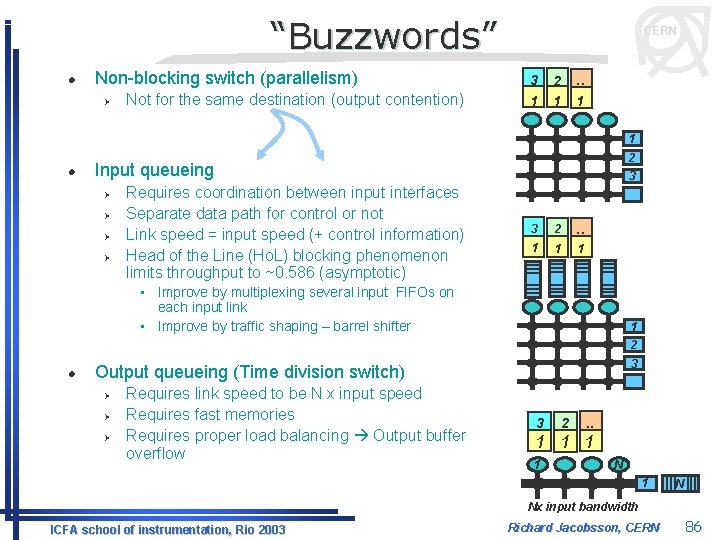

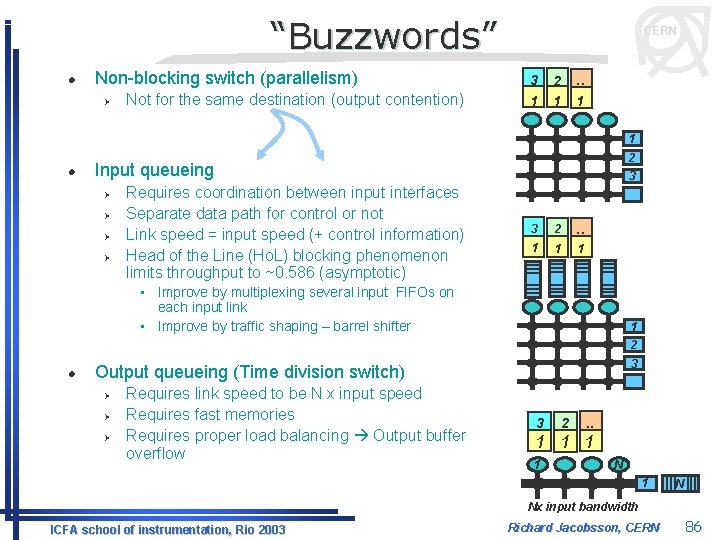

"Buzzwords"

- Non-blocking switch (parallelism)
  - Not for the same destination (output contention)
- Input queueing
  - Requires coordination between input interfaces
  - Separate data path for control or not
  - Link speed = input speed (+ control information)
  - The Head-of-Line (HoL) blocking phenomenon limits throughput to ~0.586 (asymptotic)
    - Improve by multiplexing several input FIFOs on each input link
    - Improve by traffic shaping: barrel shifter
- Output queueing (time-division switch)
  - Requires link speed to be N × input speed
  - Requires fast memories
  - Requires proper load balancing
  - Output buffer overflow
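The ~0.586 head-of-line figure is the asymptotic saturation throughput 2 - √2 ≈ 0.586 of an input-queued switch with one FIFO per input under uniform random traffic. A small Monte Carlo sketch reproduces it, assuming every input always has a packet waiting (saturation), each head-of-line packet is addressed to a uniformly random output, and each output accepts one randomly chosen contender per time slot. The function name and its parameters are illustrative.

```python
import random


def hol_saturation_throughput(n_ports: int, n_slots: int = 20_000, seed: int = 1) -> float:
    """Fraction of output capacity used by an N x N input-queued switch at saturation.

    Every input always has a packet at the head of its FIFO; a packet's
    destination is uniform over the outputs. In each time slot every output
    picks one of the packets addressed to it at random; the losers stay at the
    head of their FIFO and block everything behind them (head-of-line blocking).
    """
    rng = random.Random(seed)
    head = [rng.randrange(n_ports) for _ in range(n_ports)]  # HoL destination per input
    delivered = 0
    for _ in range(n_slots):
        contenders = {}
        for inp, dest in enumerate(head):
            contenders.setdefault(dest, []).append(inp)
        for inputs in contenders.values():
            winner = rng.choice(inputs)
            head[winner] = rng.randrange(n_ports)  # next packet moves up to the head
            delivered += 1
    return delivered / (n_ports * n_slots)


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 32):
        print(n, round(hol_saturation_throughput(n), 3))
```

For a single port the result is exactly 1, for two ports it is close to 0.75, and as the number of ports grows it falls toward 0.586. This loss is what the barrel shifter and multiple FIFOs per input are meant to avoid.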

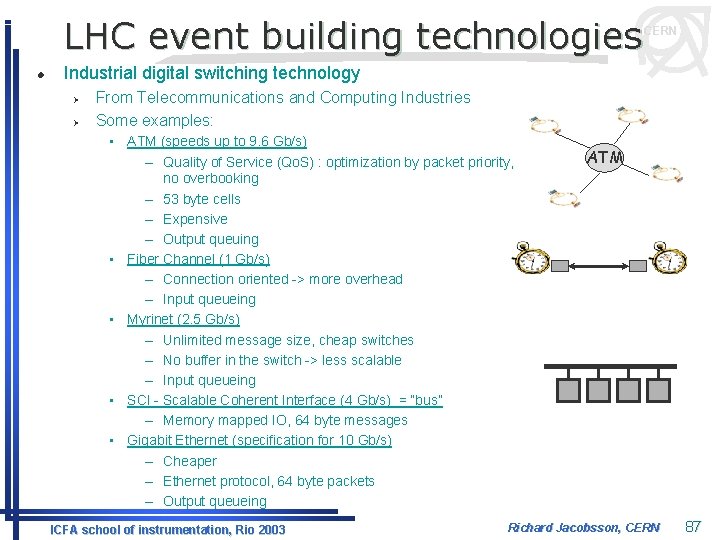

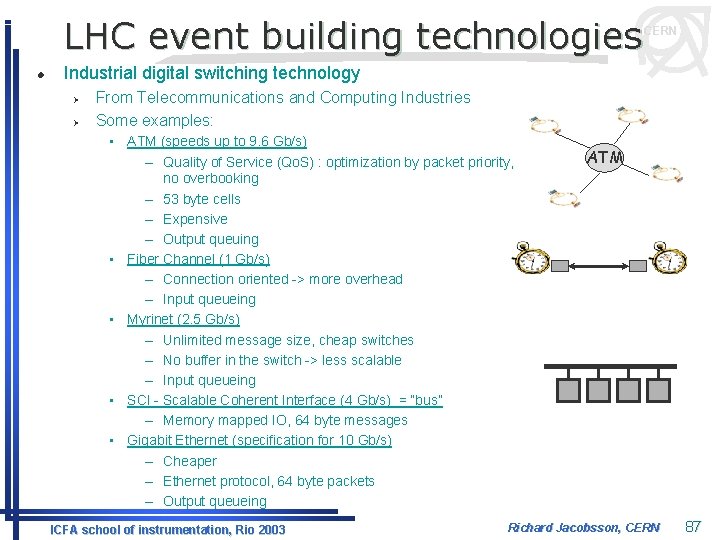

LHC event building technologies

- Industrial digital switching technology
  - From the telecommunications and computing industries
  - Some examples:
    - ATM (speeds up to 9.6 Gb/s)
      - Quality of Service (QoS): optimization by packet priority, no overbooking
      - 53-byte cells
      - Expensive
      - Output queueing
    - Fibre Channel (1 Gb/s)
      - Connection oriented → more overhead
      - Input queueing
    - Myrinet (2.5 Gb/s)
      - Unlimited message size, cheap switches
      - No buffer in the switch → less scalable
      - Input queueing
    - SCI, Scalable Coherent Interface (4 Gb/s) = "bus"
      - Memory-mapped I/O, 64-byte messages
    - Gigabit Ethernet (specification for 10 Gb/s)
      - Cheaper
      - Ethernet protocol, 64-byte packets
      - Output queueing

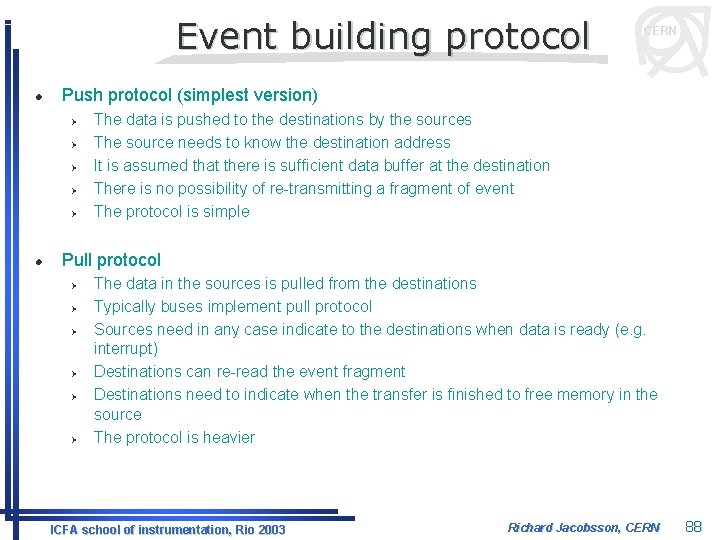

Event building protocol

- Push protocol (simplest version)
  - The data is pushed to the destinations by the sources
  - The source needs to know the destination address
  - It is assumed that there is sufficient data buffer at the destination
  - There is no possibility of re-transmitting a fragment of an event
  - The protocol is simple
- Pull protocol
  - The data in the sources is pulled by the destinations
  - Buses typically implement a pull protocol
  - Sources still need to indicate to the destinations when data is ready (e.g. interrupt)
  - Destinations can re-read the event fragment
  - Destinations need to indicate when the transfer is finished, to free memory in the source
  - The protocol is heavier
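The difference between the push and pull protocols described above lies in who initiates each transfer and who frees the source buffer. The sketch below shows this with in-memory objects standing in for readout units and event-building nodes. `Source`, `Destination`, `push_build`, `pull_build` and the `assign` callback are hypothetical names, and network transport, timeouts and retries are left out.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class Source:
    """Readout unit buffering event fragments, keyed by event number."""
    def __init__(self, name: str, fragments: Dict[int, bytes]):
        self.name = name
        self.buffer = dict(fragments)


class Destination:
    """Event-building node collecting fragments into full events."""
    def __init__(self, name: str):
        self.name = name
        self.events: Dict[int, Dict[str, bytes]] = defaultdict(dict)


def push_build(sources: List[Source], destinations: List[Destination],
               assign: Callable[[int], int]) -> None:
    # Push: every source must know the destination of each event (assign),
    # sends without being asked and frees its buffer at once. The destination
    # is assumed to have enough buffer; a lost fragment cannot be re-sent.
    for src in sources:
        for event_id in sorted(src.buffer):
            dst = destinations[assign(event_id)]
            dst.events[event_id][src.name] = src.buffer.pop(event_id)


def pull_build(sources: List[Source], dst: Destination, event_id: int) -> Dict[str, bytes]:
    # Pull: the destination reads the fragment from each source (a failed read
    # could simply be repeated), then tells every source it may free the memory.
    event = {src.name: src.buffer[event_id] for src in sources}
    for src in sources:
        del src.buffer[event_id]  # the "transfer finished" message
    dst.events[event_id] = event
    return event
```

In the push version the sources need no message from the destination, which keeps the protocol light; in the pull version the extra "finished" step is what lets a destination re-read a fragment safely.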

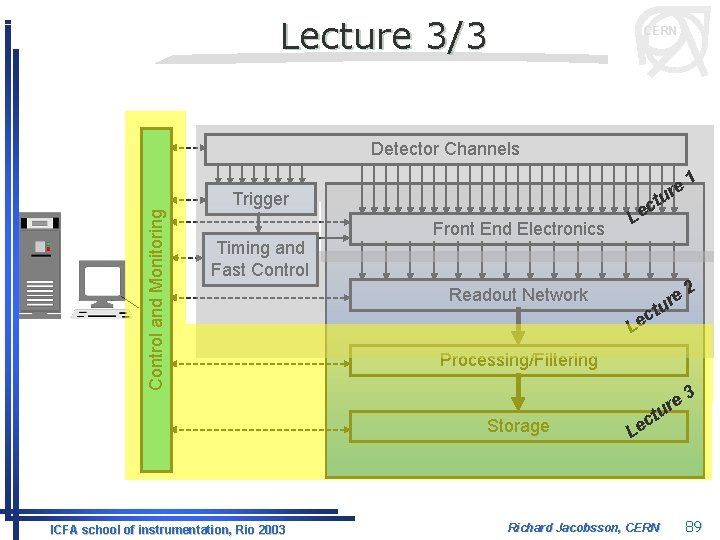

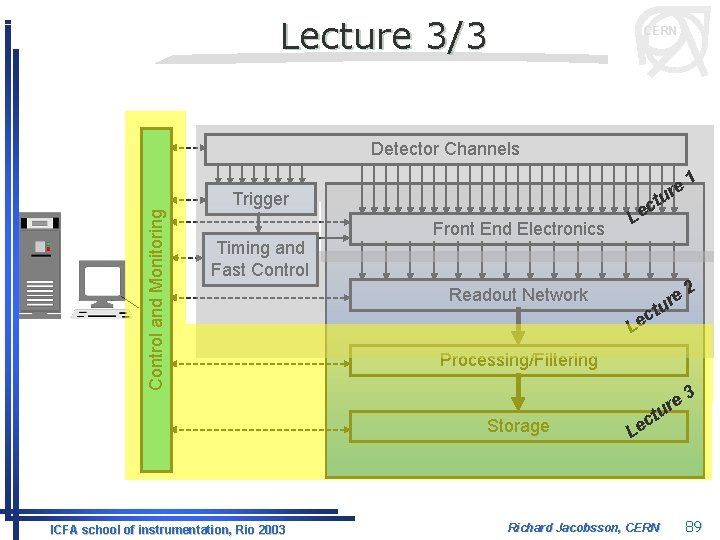

Lecture 3/3

[Figure: system overview (detector channels, trigger, timing and fast control, front-end electronics, readout network, processing/filtering, storage, control and monitoring) divided into the parts covered by lectures 1, 2 and 3]

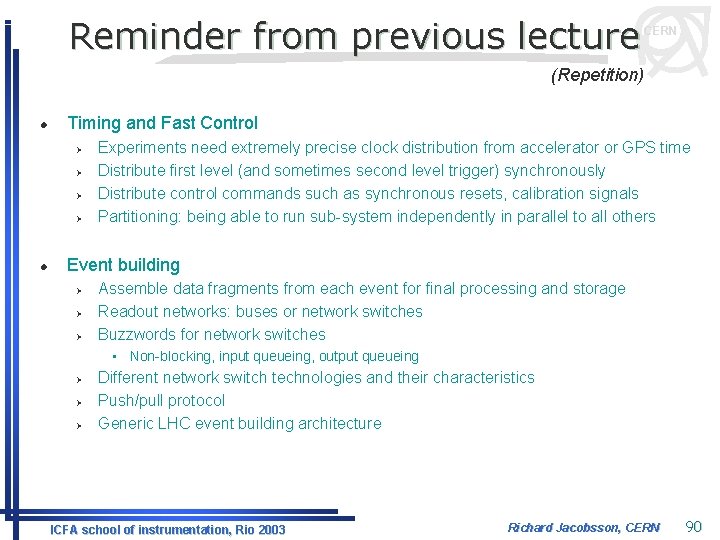

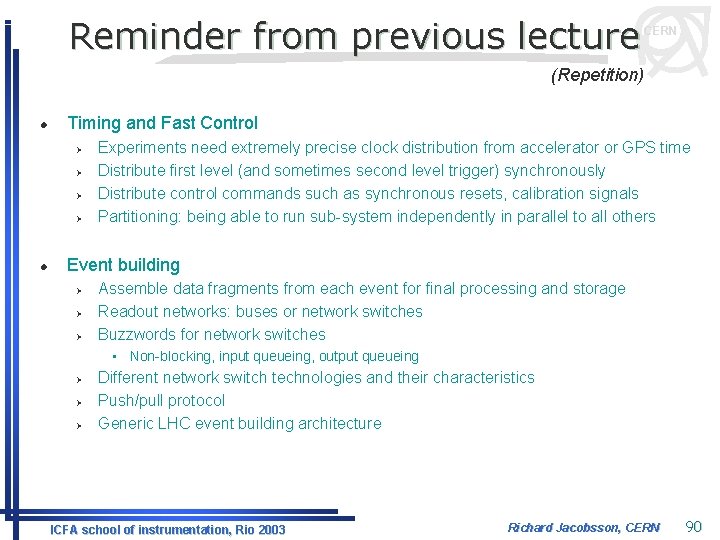

Reminder from previous lecture (repetition)

- Timing and fast control
  - Experiments need extremely precise clock distribution from the accelerator or from GPS time
  - Distribute the first-level (and sometimes second-level) trigger synchronously
  - Distribute control commands such as synchronous resets and calibration signals
  - Partitioning: being able to run sub-systems independently, in parallel to all others
- Event building
  - Assemble data fragments from each event for final processing and storage
  - Readout networks: buses or network switches
  - Buzzwords for network switches
    - Non-blocking, input queueing, output queueing
  - Different network switch technologies and their characteristics
  - Push/pull protocol
  - Generic LHC event building architecture

LHC readout architecture (repetition)

[Figure: detector front-end → readout units (data sources, event fragments) → readout network (event building) → event filter CPUs (full events) → data storage; the Level-1/Level-2 trigger, the event manager and the processing controllers are also shown]

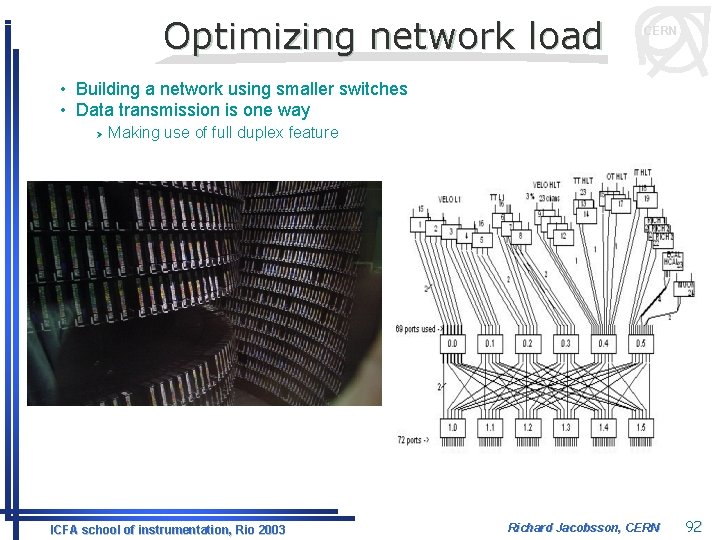

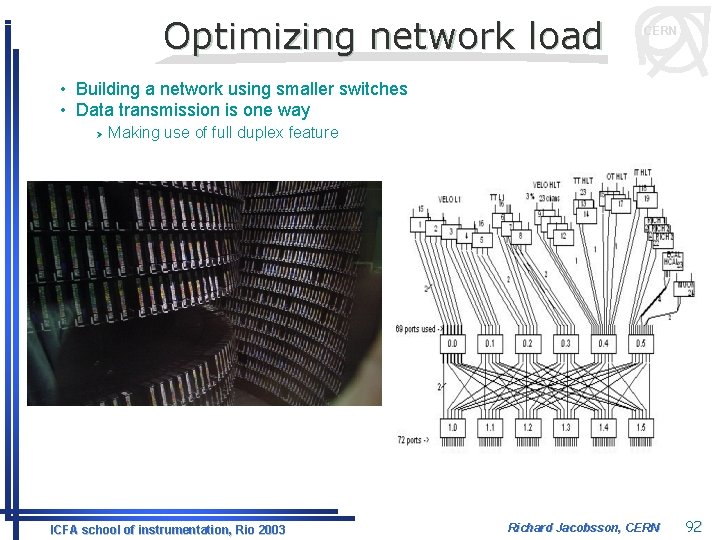

Optimizing network load

- Building a network using smaller switches
- Data transmission is one way
  - Making use of the full-duplex feature

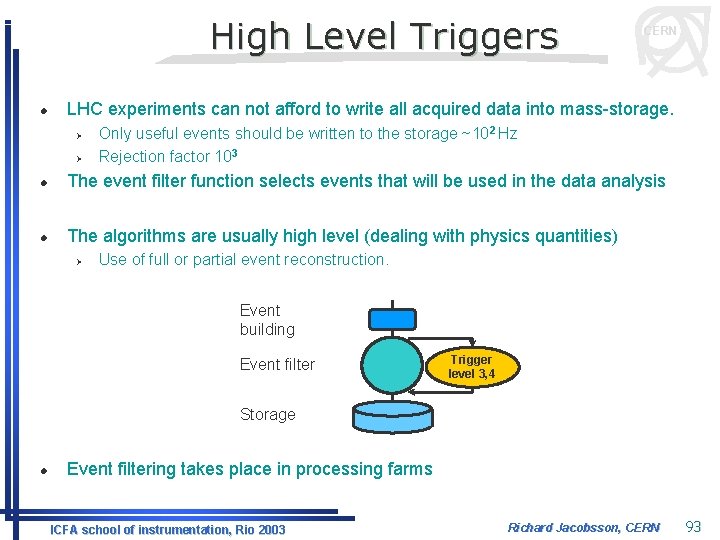

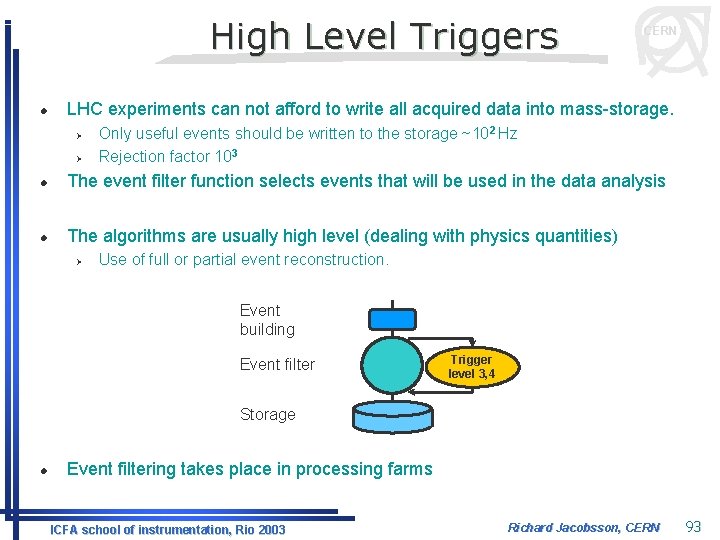

High Level Triggers

- LHC experiments cannot afford to write all acquired data into mass storage
  - Only useful events should be written to storage (~10² Hz)
  - Rejection factor 10³
- The event filter function selects the events that will be used in the data analysis
- The algorithms are usually high level (dealing with physics quantities)
  - Use of full or partial event reconstruction
- Event filtering takes place in processing farms

[Figure: event building → event filter (trigger levels 3, 4) → storage]

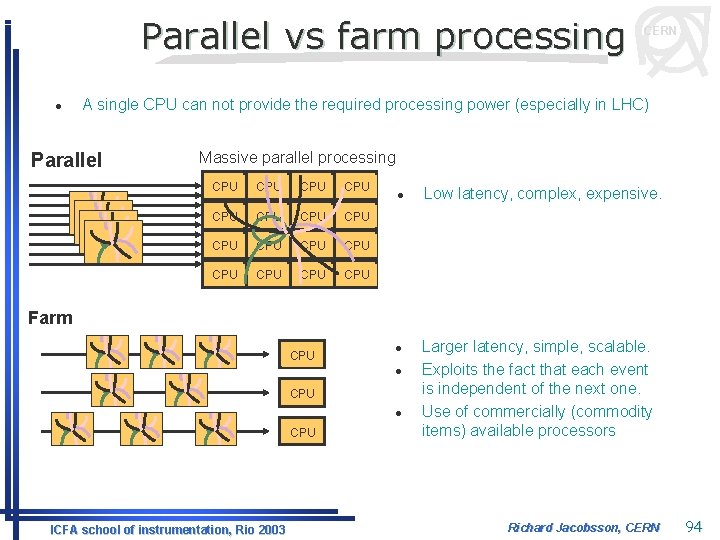

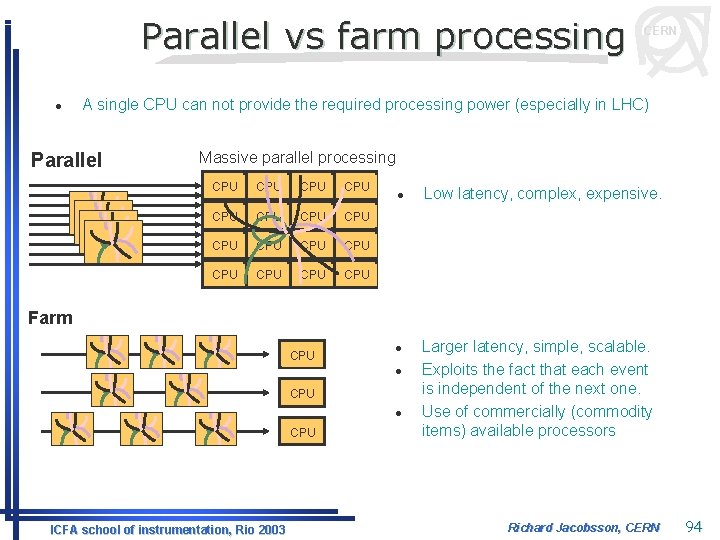

Parallel vs. farm processing

- A single CPU cannot provide the required processing power (especially at the LHC)
- Massively parallel processing
  - Low latency, complex, expensive
- Farm processing
  - Larger latency, simple, scalable
  - Exploits the fact that each event is independent of the next one
  - Use of commercially available (commodity) processors
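Farm processing relies on event independence: any free worker may take the next event, and the farm size follows from the input rate times the average processing time per event (Little's law), before any safety margin is added. A minimal sketch, assuming a user-supplied `select` function as the filter decision; `nodes_needed` and `run_farm` are hypothetical names. Threads keep the example self-contained; for CPU-bound filtering in CPython, `concurrent.futures.ProcessPoolExecutor` offers the same `map` interface.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

Event = TypeVar("Event")


def nodes_needed(input_rate_hz: float, seconds_per_event: float, cpus_per_node: int = 1) -> int:
    """Average number of busy nodes = rate x processing time, rounded up (no headroom)."""
    return math.ceil(input_rate_hz * seconds_per_event / cpus_per_node)


def run_farm(events: Sequence[Event], select: Callable[[Event], bool],
             n_workers: int = 4) -> List[Event]:
    # Each event is independent of the next, so any free worker may take it.
    # pool.map returns results in input order, so accepted events keep their order.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        decisions = list(pool.map(select, events))
    return [ev for ev, keep in zip(events, decisions) if keep]
```

For example, a hypothetical 40 kHz input with 0.5 s of processing per event would keep about 20 000 CPUs busy on average; the real figure depends on the algorithms and the hardware.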

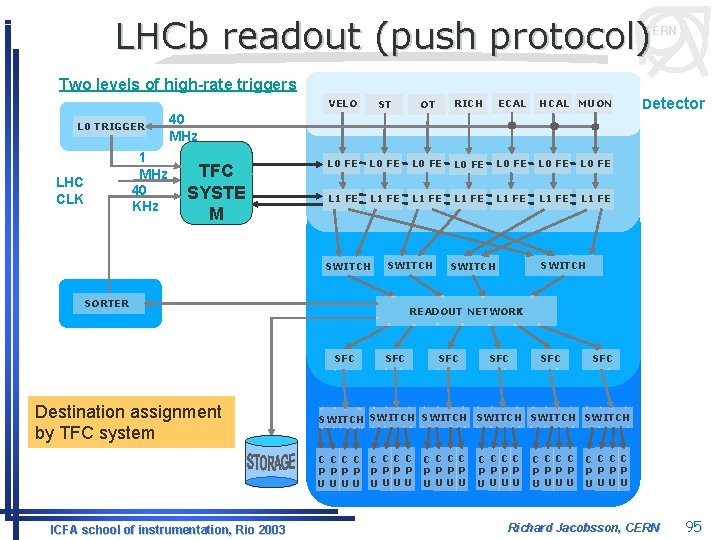

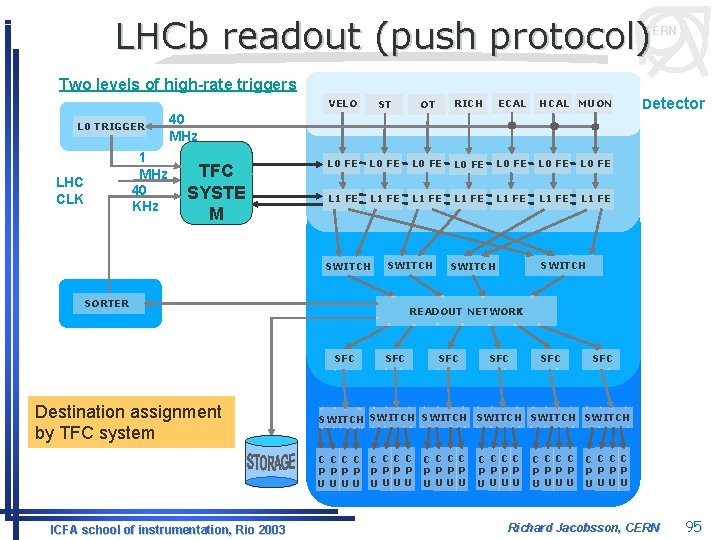

LHCb readout (push protocol)

- Two levels of high-rate triggers: Level-0 (40 MHz → 1 MHz) and Level-1 (1 MHz → 40 kHz)
- Destination assignment by the TFC system

[Figure: sub-detectors VELO, ST, OT, RICH, ECAL, HCAL and MUON with L0 and L1 front-end electronics, driven by the LHC clock and the L0 trigger through the TFC system; a readout network of switches, with a sorter, feeds the sub-farm controllers (SFC), each serving a group of CPUs]

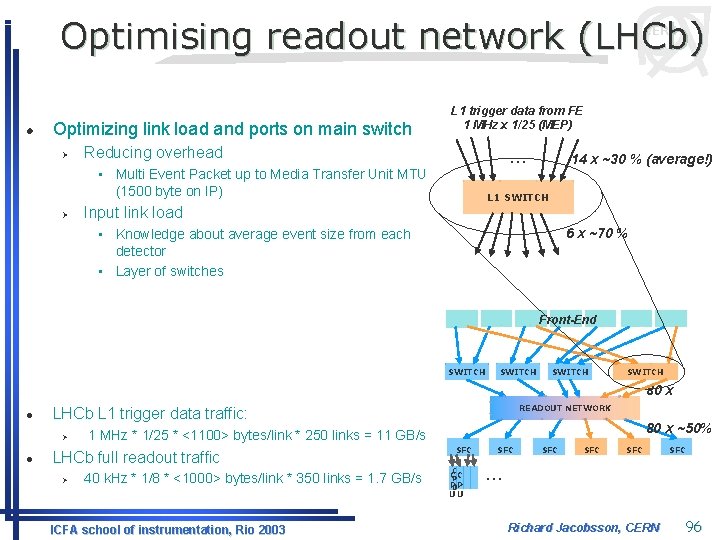

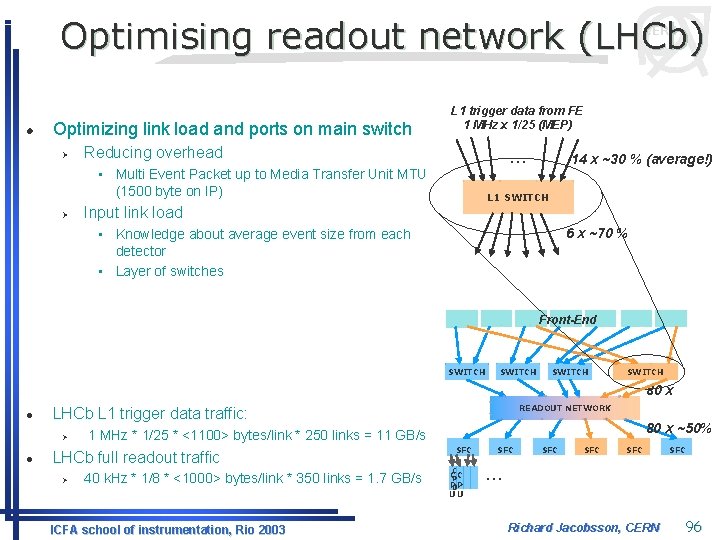

Optimising the readout network (LHCb)

- Optimizing link load and ports on the main switch
  - Reducing overhead:
    - Multi-Event Packets (MEP), filled up to the Maximum Transmission Unit (MTU, 1500 bytes for IP)
    - L1 trigger data from the FE: 1 MHz × 1/25 (MEP)
  - Knowledge of the average event size from each detector
  - Layer of switches between the front-end and the readout network
- LHCb L1 trigger data traffic:
  - 1 MHz × 1/25 × <1100> bytes/link × 250 links = 11 GB/s
- LHCb full readout traffic:
  - 40 kHz × 1/8 × <1000> bytes/link × 350 links = 1.7 GB/s
- Average link loads shown in the figure: 80 links at ~50%, 14 links at ~30%, 6 links at ~70%
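The two traffic estimates above are a product of four factors. The sketch below redoes the arithmetic under one reading of the slide, which is an interpretation rather than something the slide spells out: the first factor is the event rate entering the stage, the fraction means that 25 (Level-1) or 8 (full readout) events are packed into one multi-event packet, and the bracketed number is the average packet size per link. `link_traffic` and `fits_in_mtu` are hypothetical helpers.

```python
MTU_BYTES = 1500  # maximum transmission unit for IP, as quoted on the slide


def link_traffic(trigger_rate_hz: float, events_per_packet: int,
                 bytes_per_packet_per_link: float, n_links: int) -> float:
    """Aggregate traffic in bytes/s for a multi-event-packet (MEP) readout.

    Packets per second on each link = trigger rate / events per packet.
    """
    packets_per_s = trigger_rate_hz / events_per_packet
    return packets_per_s * bytes_per_packet_per_link * n_links


def fits_in_mtu(bytes_per_packet: float) -> bool:
    """A MEP should not exceed one MTU, so it is never fragmented."""
    return bytes_per_packet <= MTU_BYTES


l1 = link_traffic(1e6, 25, 1100, 250)    # Level-1 trigger data
full = link_traffic(40e3, 8, 1000, 350)  # full readout after Level-1
print(f"L1: {l1 / 1e9:.2f} GB/s, full readout: {full / 1e9:.2f} GB/s")
```

This prints 11.00 GB/s and 1.75 GB/s; the slide quotes the second figure as 1.7 GB/s. Packing 25 events per packet cuts the packet rate per link from 1 MHz to 40 kHz, which is where most of the per-packet overhead saving comes from.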

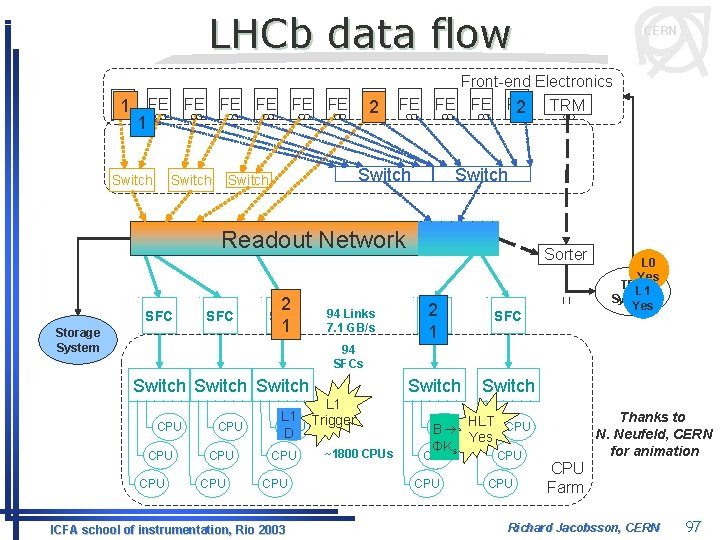

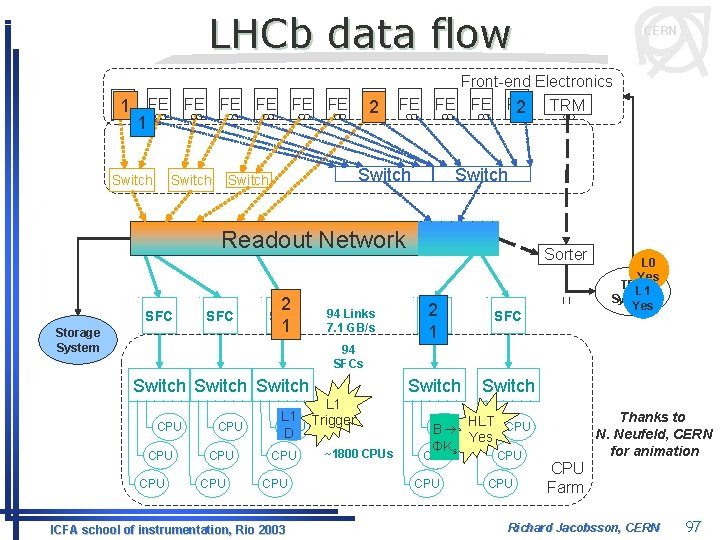

LHCb data flow

[Animation (thanks to N. Neufeld, CERN): front-end electronics (FE) and TRM → switches → readout network, 94 links at 7.1 GB/s → 94 SFCs → CPU farm of ~1800 CPUs running the L1 trigger and the HLT (e.g. B → ΦK_S) → storage system; the TFC system and the sorter handle the L0 and L1 "yes" decisions]

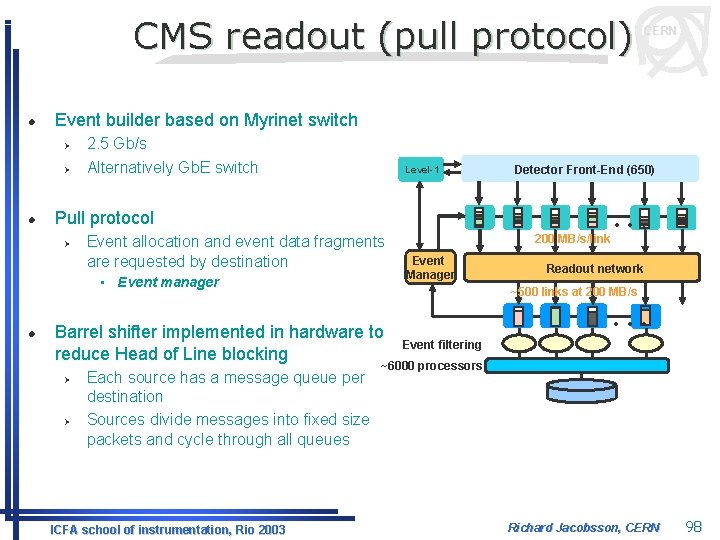

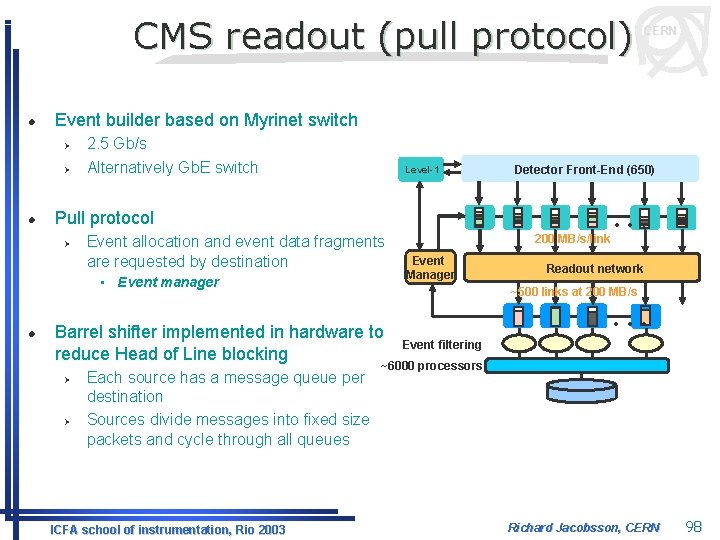

CMS readout (pull protocol)

- Event builder based on a Myrinet switch
  - 2.5 Gb/s
  - Alternatively a GbE switch
- Pull protocol
  - Level-1 event allocation; event data fragments are requested by the destination
    - Event manager
- Barrel shifter implemented in hardware to reduce Head-of-Line blocking
  - Each source has a message queue per destination
  - Sources divide messages into fixed-size packets and cycle through all queues

[Figure: detector front-end (650 sources) → readout network, ~500 links at 200 MB/s per link → event filtering, ~6000 processors; event manager]
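The barrel shifter described above can be modelled in software to show why it removes output contention. In time slot t, source i serves its queue for destination (i + t) mod N, so no two sources ever address the same output in the same slot. This is a sketch of the scheduling idea only, assuming N sources, N destinations, synchronous time slots and one fixed-size packet per slot (CMS implements it in hardware). The function names and the `(source, destination, sequence)` packet tuples are hypothetical.

```python
from collections import deque
from typing import Deque, List, Tuple

Packet = Tuple[int, int, int]  # (source, destination, sequence number)


def barrel_shifter_slot(n: int, t: int) -> List[Tuple[int, int]]:
    """(source, destination) pairs used in time slot t: source i -> (i + t) mod n."""
    return [(src, (src + t) % n) for src in range(n)]


def run_barrel_shifter(queues: List[List[Deque[Packet]]], n_slots: int) -> List[List[Packet]]:
    # queues[src][dst] holds the fixed-size packets waiting at source src for
    # destination dst (one message queue per destination).
    n = len(queues)
    received: List[List[Packet]] = [[] for _ in range(n)]
    for t in range(n_slots):
        for src, dst in barrel_shifter_slot(n, t):
            if queues[src][dst]:
                received[dst].append(queues[src][dst].popleft())
    return received
```

Each source visits every destination once per N slots, so with balanced traffic all links stay busy without any central arbitration.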

CMS event builder

- Staged installation

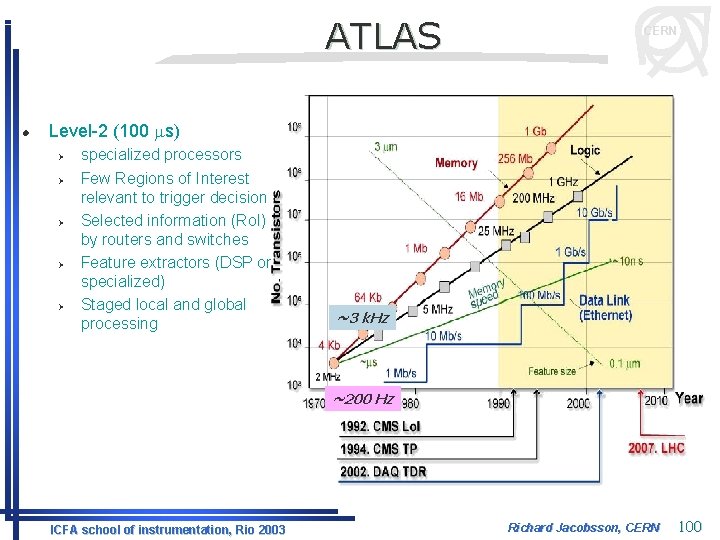

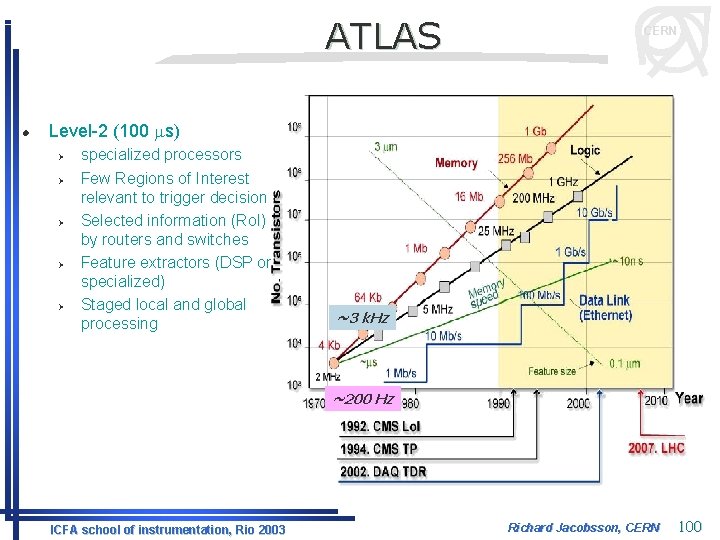

ATLAS

- Level-2 (100 ms)
  - Specialized processors
  - Few Regions of Interest (RoI) relevant to the trigger decision
  - Selected information (RoI) by routers and switches
  - Feature extractors (DSP or specialized)
  - Staged local and global processing

[Figure rates: ~3 kHz, ~200 Hz]

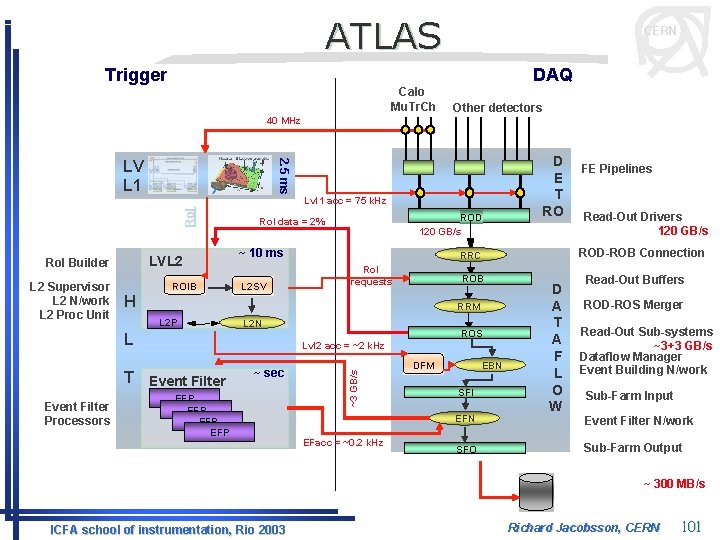

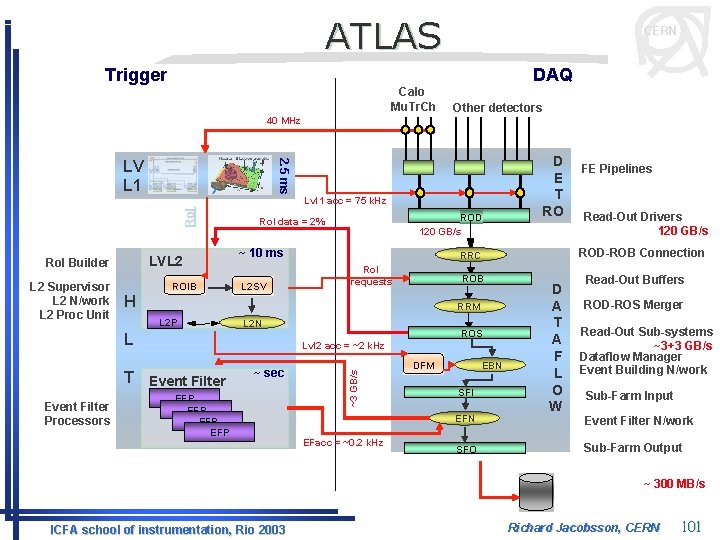

ATLAS

[Figure: ATLAS trigger and data flow]

- Level-1 (calorimeter, muon trigger chambers; latency 2.5 µs): 40 MHz → 75 kHz; front-end pipelines → Read-Out Drivers (ROD), 120 GB/s
- RoI Builder (ROIB) passes the Regions of Interest to the Level-2 Supervisor (L2SV); RoI data = 2% of the event
- Level-2 (~10 ms): the L2 supervisor, L2 network (L2N) and L2 processing units (L2P) send RoI requests to the Read-Out Buffers (ROB); Level-2 accept rate ~2 kHz
- ROD-ROB connection (RRC), ROD-ROS merger (RRM), Read-Out Sub-systems (ROS): ~3 GB/s and ~3+3 GB/s
- Dataflow Manager (DFM), Event Building network (EBN), Sub-Farm Input (SFI)
- Event Filter (~s): Event Filter network (EFN) and Event Filter Processors (EFP); accept rate ~0.2 kHz
- Sub-Farm Output (SFO): ~300 MB/s

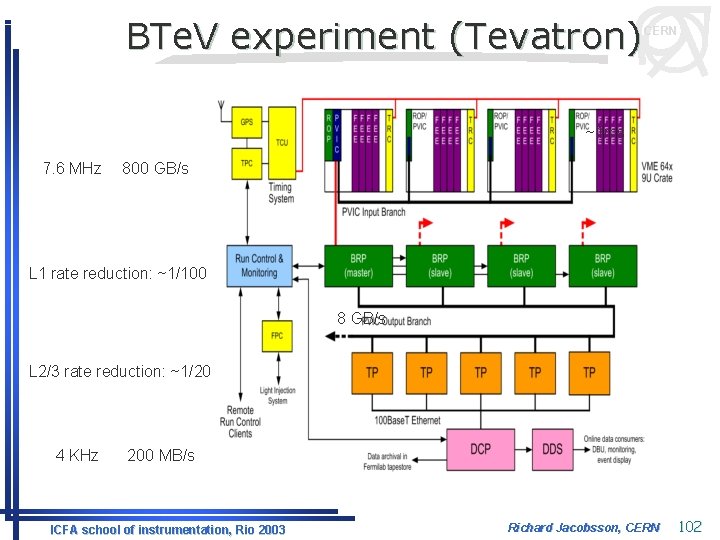

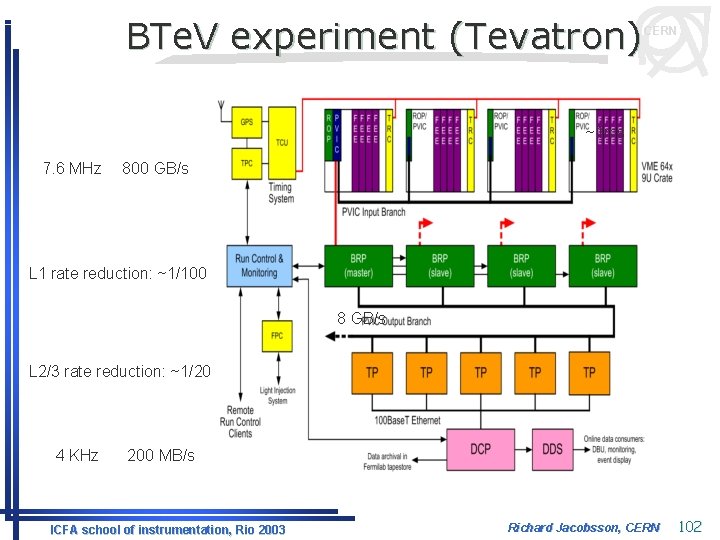

BTeV experiment (Tevatron)

- 7.6 MHz, 800 GB/s
- Level 1 (~1 ms): rate reduction ~1/100, 8 GB/s
- Levels 2/3: rate reduction ~1/20, 4 kHz, 200 MB/s

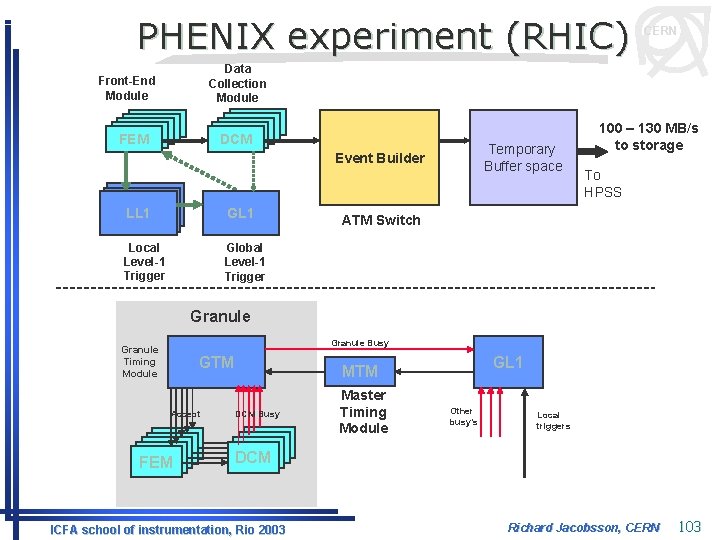

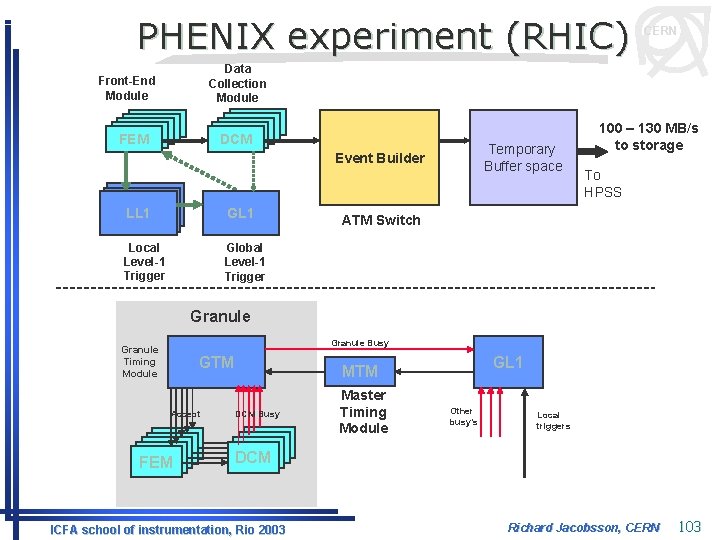

PHENIX experiment (RHIC)

[Figure: Front-End Modules (FEM) → Data Collection Modules (DCM) → Event Builder with temporary buffer space → ATM switch → HPSS, 100-130 MB/s to storage; Local Level-1 (LL1) and Global Level-1 (GL1) triggers; Granule Timing Modules (GTM) and the Master Timing Module (MTM) distribute accepts; granule and DCM busy signals, other busies and local triggers are fed back]

MINOS experiment

- MINOS: Main Injector Neutrino Oscillation Search
- A long-baseline neutrino experiment: 730 km
- Neutrino beam produced by the new NuMI beamline at Fermilab

[Figure label: 8 Hz]

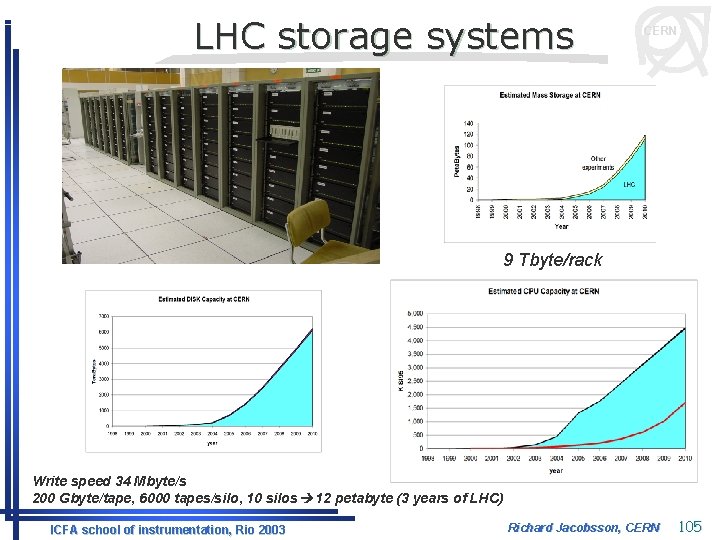

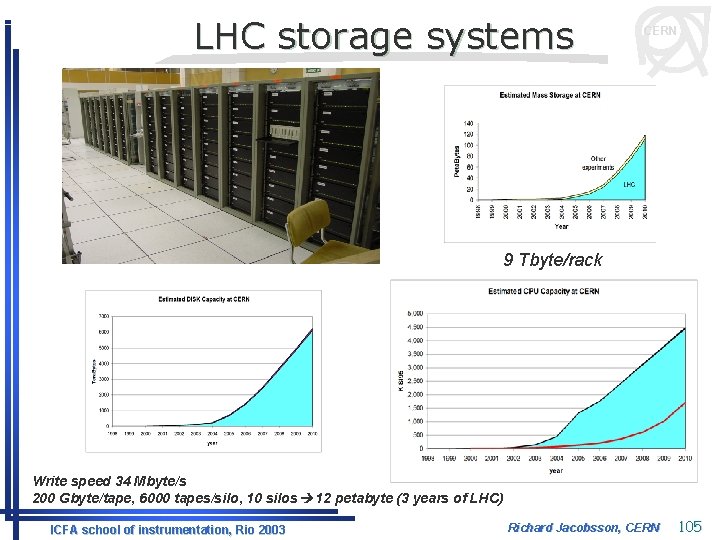

LHC storage systems

- 9 TByte/rack
- Write speed 34 MByte/s
- 200 GByte/tape, 6000 tapes/silo, 10 silos
- 12 petabyte (3 years of LHC)

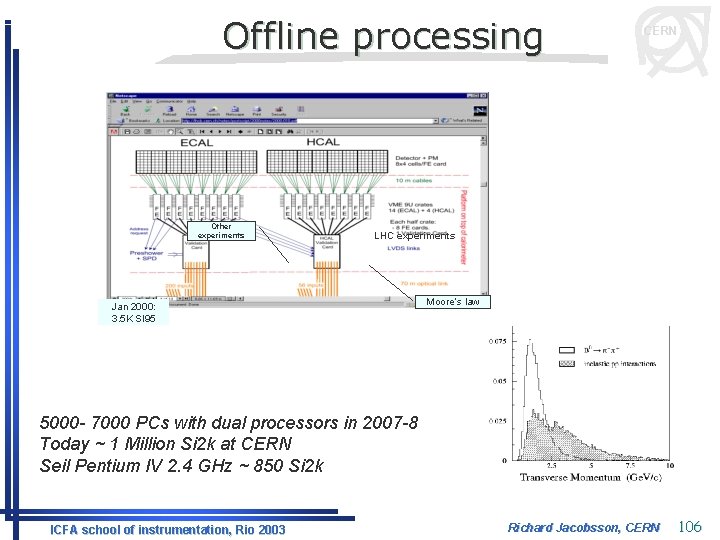

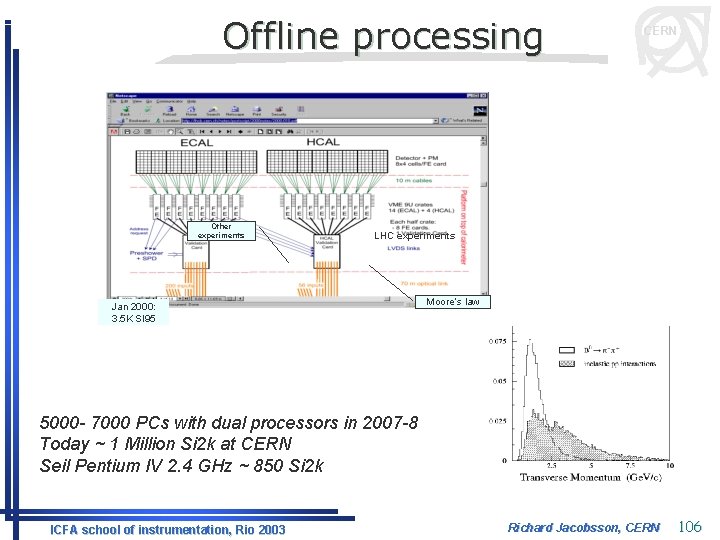

Offline processing

[Figure: offline computing needs of other experiments vs. the LHC experiments; Jan 2000: 3.5 kSI95; Moore's law]

- 5000-7000 PCs with dual processors in 2007-8
- Today ~1 million SI2k at CERN
- Pentium IV 2.4 GHz ~850 SI2k

7. Configuring, control and monitoring

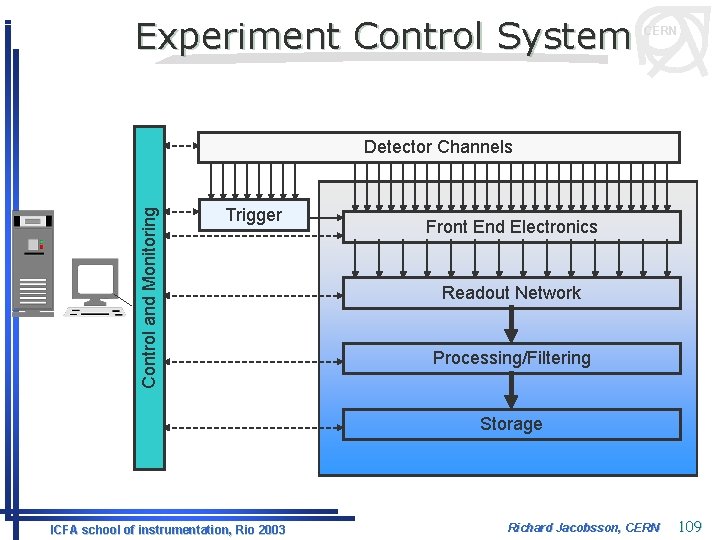

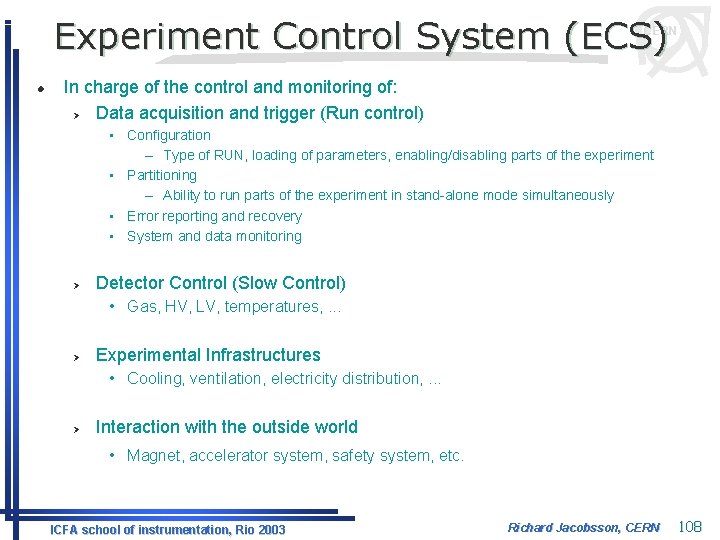

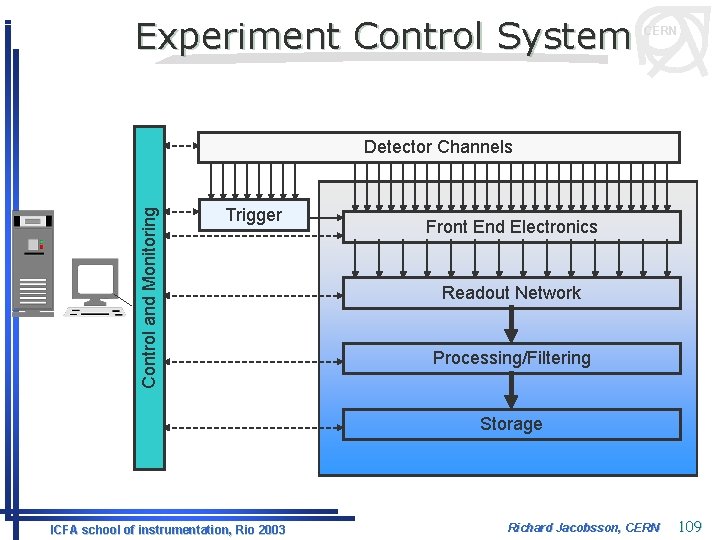

Experiment Control System (ECS)

- In charge of the control and monitoring of:
  - Data acquisition and trigger (run control)
    - Configuration: type of run, loading of parameters, enabling/disabling parts of the experiment
    - Partitioning: ability to run parts of the experiment in stand-alone mode simultaneously
    - Error reporting and recovery
    - System and data monitoring
  - Detector control (slow control)
    - Gas, HV, LV, temperatures, …
  - Experimental infrastructure
    - Cooling, ventilation, electricity distribution, …
  - Interaction with the outside world
    - Magnet, accelerator system, safety system, etc.

Experiment Control System

[Figure: control and monitoring spans detector channels, trigger, front-end electronics, readout network, processing/filtering and storage]

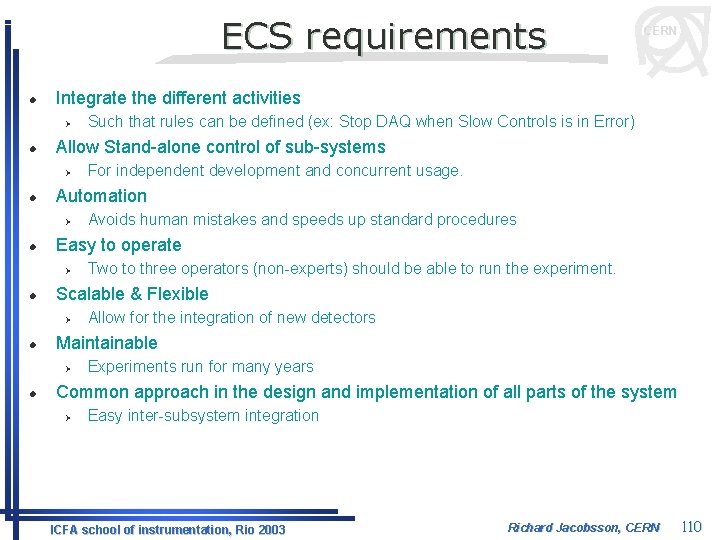

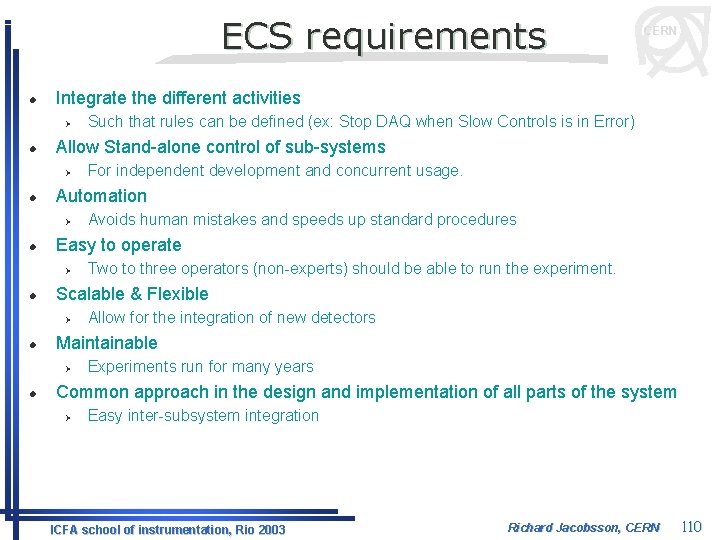

ECS requirements

- Integrate the different activities
  - Such that rules can be defined (ex: stop DAQ when slow control is in error)
- Allow stand-alone control of sub-systems
  - For independent development and concurrent usage
- Automation
  - Avoids human mistakes and speeds up standard procedures
- Easy to operate
  - Two to three operators (non-experts) should be able to run the experiment
- Scalable & flexible
  - Allow for the integration of new detectors
- Maintainable
  - Experiments run for many years
- Common approach in the design and implementation of all parts of the system
  - Easy inter-subsystem integration
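The example requirement "stop DAQ when slow control is in error" can be written as a condition/action rule evaluated over the states of the sub-systems. A minimal sketch, assuming each sub-system publishes a single state string; `Rule`, `evaluate`, `stop_daq` and the state names are hypothetical. In a real ECS the action would send a STOP command down the DAQ control tree rather than overwrite a dictionary entry.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

States = Dict[str, str]


@dataclass
class Rule:
    name: str
    condition: Callable[[States], bool]
    action: Callable[[States], None]


def evaluate(rules: List[Rule], states: States) -> List[str]:
    """Fire every rule whose condition holds; return the names of fired rules."""
    fired = []
    for rule in rules:
        if rule.condition(states):
            rule.action(states)
            fired.append(rule.name)
    return fired


def stop_daq(states: States) -> None:
    states["DAQ"] = "STOPPED"  # stands in for sending STOP to the DAQ


RULES = [
    Rule("stop DAQ on slow-control error",
         condition=lambda s: s["DCS"] == "ERROR" and s["DAQ"] == "RUNNING",
         action=stop_daq),
]

if __name__ == "__main__":
    states = {"DAQ": "RUNNING", "DCS": "ERROR"}
    print(evaluate(RULES, states), states)
```

Because the condition also checks that the DAQ is running, re-evaluating the rules after the action fires does nothing, so the rule does not keep re-triggering.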

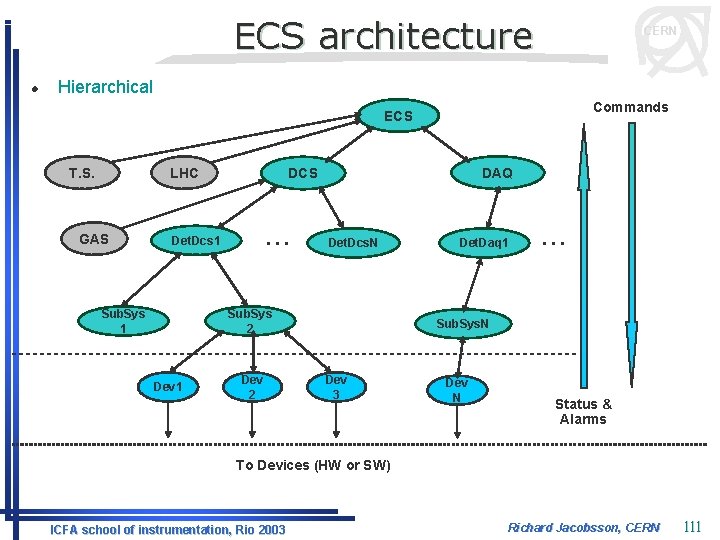

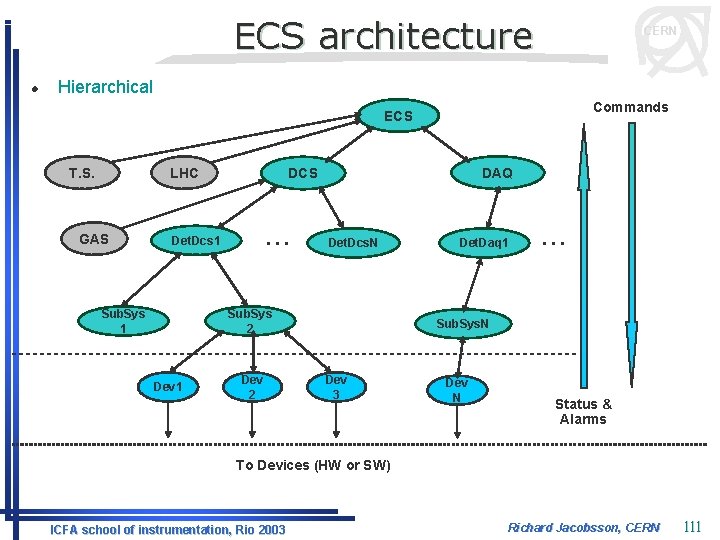

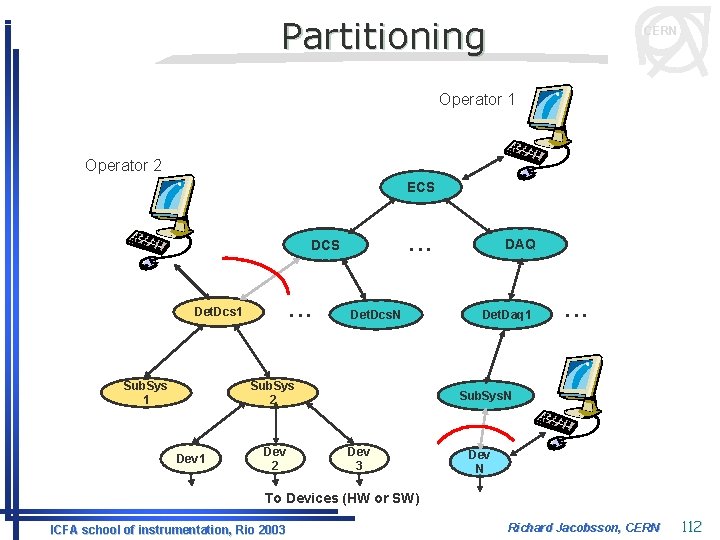

ECS architecture

- Hierarchical

[Figure: the ECS at the top of a tree with T.S., LHC, GAS, …, DAQ and DCS; below DCS the detector nodes DetDcs1 … DetDcsN, below DAQ DetDaq1 …, then sub-systems SubSys1 … SubSysN and devices Dev1 … DevN (hardware or software); commands flow down the tree, status and alarms flow up]
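The hierarchy above, with commands going down and status and alarms going up, can be sketched as a tree of control nodes. Leaves stand for devices. Each parent forwards commands to its children and summarises their states, with ERROR always propagating to the top. `ControlNode`, `TARGET_STATE` and the state names are hypothetical, and the summary rule ("any ERROR wins, otherwise a common state or MIXED") is only one possible choice.

```python
from typing import List, Optional

# Hypothetical mapping from a command to the state a device ends up in.
TARGET_STATE = {"CONFIGURE": "READY", "START": "RUNNING", "STOP": "READY"}


class ControlNode:
    def __init__(self, name: str, children: Optional[List["ControlNode"]] = None):
        self.name = name
        self.children = children or []
        self.device_state = "NOT_READY"  # only meaningful for leaves (devices)

    def command(self, cmd: str) -> None:
        # Commands travel down the tree until they reach the devices.
        if self.children:
            for child in self.children:
                child.command(cmd)
        else:
            self.device_state = TARGET_STATE.get(cmd, self.device_state)

    @property
    def state(self) -> str:
        # Status travels up: a parent summarises its children, and ERROR wins.
        if not self.children:
            return self.device_state
        states = {child.state for child in self.children}
        if "ERROR" in states:
            return "ERROR"
        return states.pop() if len(states) == 1 else "MIXED"
```

Sending a command to an intermediate node, for example only the DAQ branch, acts on that sub-tree alone. This is the mechanism that lets an operator control one part of the experiment independently of the rest.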

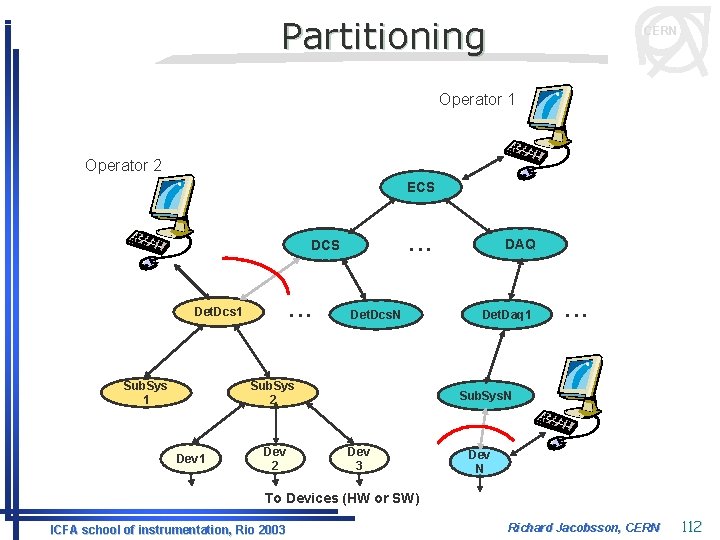

Partitioning

[Figure: the same control tree (ECS, DetDcs1 … DetDcsN, DAQ, DetDaq1 …, sub-systems, devices), with Operator 1 and Operator 2 each controlling a different part of it]

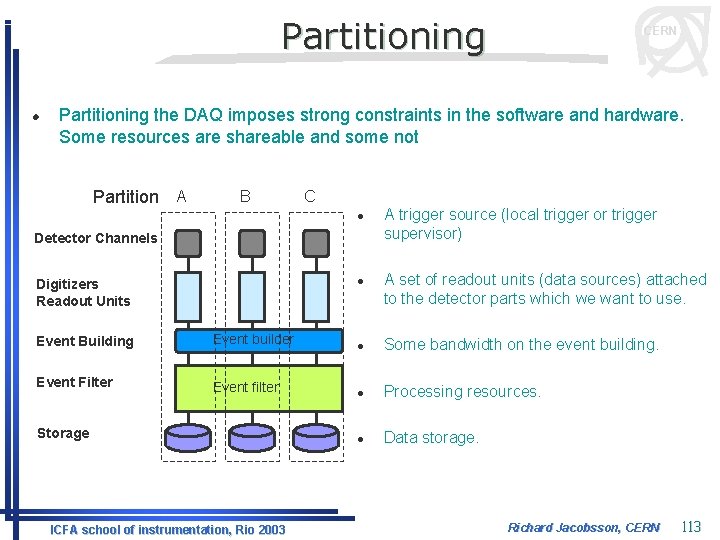

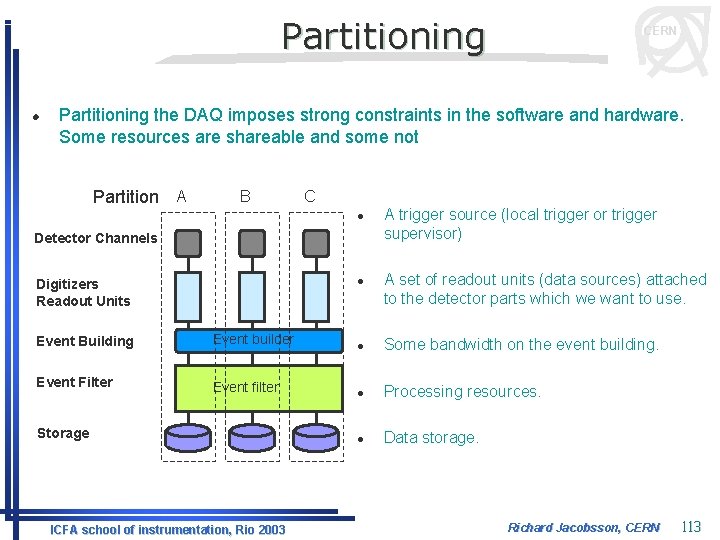

Partitioning

- Partitioning the DAQ imposes strong constraints on the software and hardware. Some resources are shareable and some are not
- A partition needs:
  - A trigger source (local trigger or trigger supervisor)
  - A set of readout units (data sources) attached to the detector parts we want to use
  - Some bandwidth on the event building
  - Processing resources
  - Data storage

[Figure: partitions A, B and C across detector channels, digitizers, readout units, event builder, event filter and storage]
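The split between exclusive and shareable resources can be expressed as simple bookkeeping. In this sketch, readout units and trigger sources belong to at most one partition, while event-building bandwidth is a shared capacity divided among partitions; processing and storage could be handled like the bandwidth. `PartitionManager` and its methods are hypothetical, and a request is committed only after every check has passed.

```python
from typing import Dict, Iterable, Set, Tuple


class PartitionManager:
    """Hypothetical bookkeeping of exclusive and shared DAQ resources."""

    def __init__(self, readout_units: Iterable[str], trigger_sources: Iterable[str],
                 eb_bandwidth: float):
        self.free_units: Set[str] = set(readout_units)       # exclusive
        self.free_triggers: Set[str] = set(trigger_sources)  # exclusive
        self.free_bandwidth = eb_bandwidth                   # shared capacity
        self.partitions: Dict[str, Tuple[Set[str], str, float]] = {}

    def allocate(self, name: str, units: Iterable[str], trigger: str, bandwidth: float) -> None:
        units = set(units)
        if name in self.partitions:
            raise ValueError(f"partition {name!r} already exists")
        busy = units - self.free_units
        if busy:
            raise ValueError(f"readout units not available: {sorted(busy)}")
        if trigger not in self.free_triggers:
            raise ValueError(f"trigger source {trigger!r} not available")
        if bandwidth > self.free_bandwidth:
            raise ValueError("not enough event-building bandwidth left")
        # Only commit once every check has passed.
        self.free_units -= units
        self.free_triggers.remove(trigger)
        self.free_bandwidth -= bandwidth
        self.partitions[name] = (units, trigger, bandwidth)

    def release(self, name: str) -> None:
        units, trigger, bandwidth = self.partitions.pop(name)
        self.free_units |= units
        self.free_triggers.add(trigger)
        self.free_bandwidth += bandwidth
```

For example, one partition can run VELO and RICH with the trigger supervisor while a second runs MUON with a local trigger. A third request for VELO is refused until the first partition is released.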

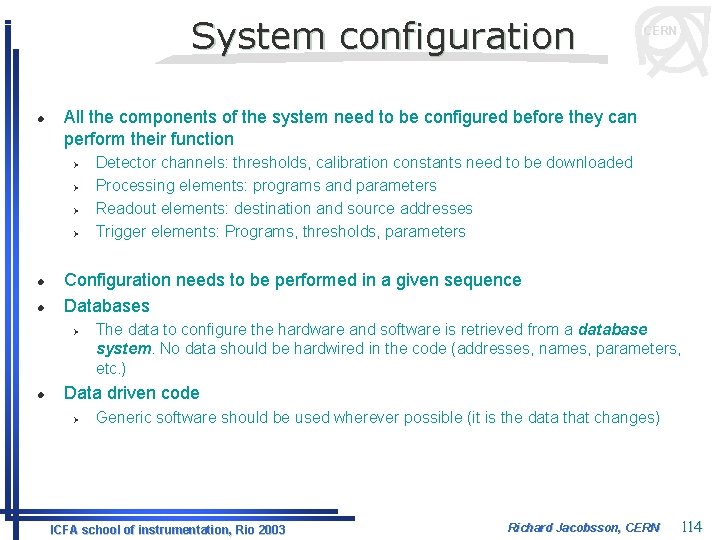

System configuration

- All the components of the system need to be configured before they can perform their function
  - Detector channels: thresholds and calibration constants need to be downloaded
  - Processing elements: programs and parameters
  - Readout elements: destination and source addresses
  - Trigger elements: programs, thresholds, parameters
- Configuration needs to be performed in a given sequence
- Databases
  - The data to configure the hardware and software is retrieved from a database system. No data should be hardwired in the code (addresses, names, parameters, etc.)
- Data-driven code
  - Generic software should be used wherever possible (it is the data that changes)

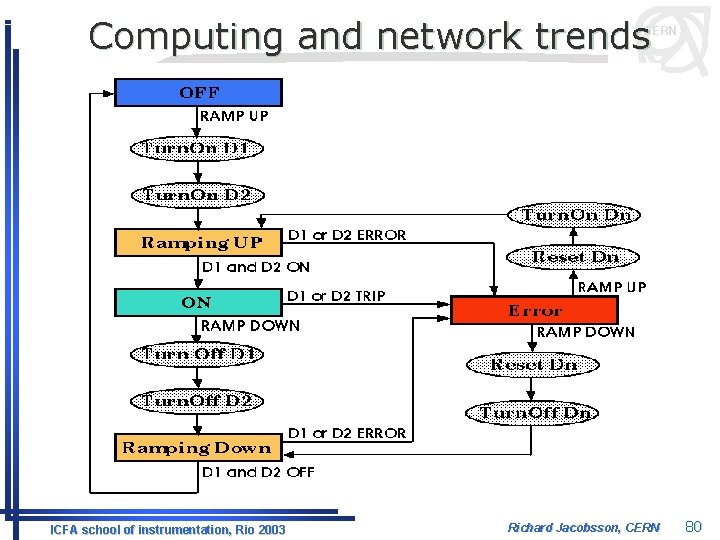

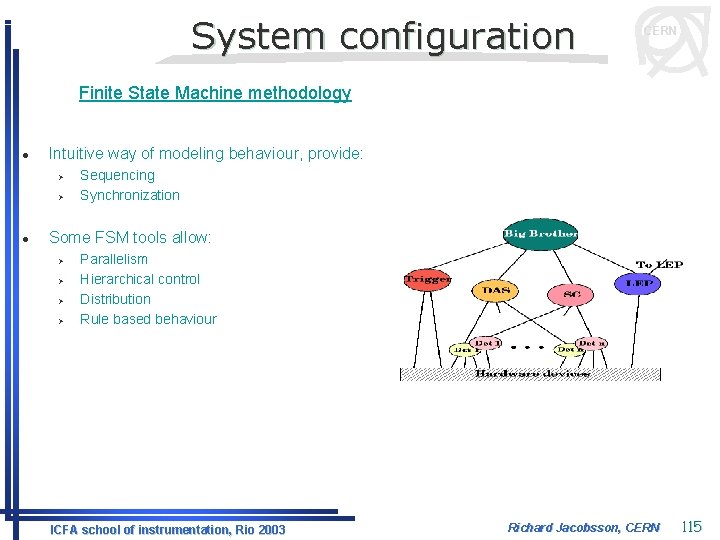

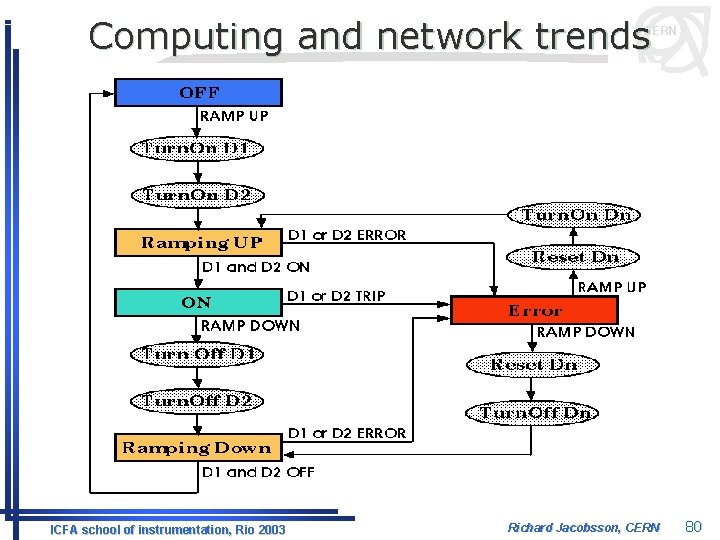

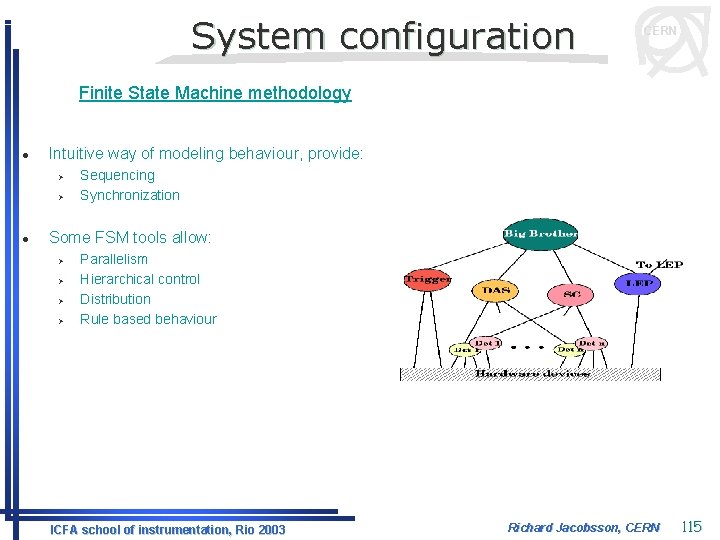

System configuration

- Finite State Machine methodology
  - Intuitive way of modeling behaviour; provides:
    - Sequencing
    - Synchronization
  - Some FSM tools allow:
    - Parallelism
    - Hierarchical control
    - Distribution
    - Rule-based behaviour
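The sequencing an FSM provides comes from allowing only the transitions listed in a table, so that, for instance, a run cannot be started before the system is configured. A minimal sketch with hypothetical run-control state and command names; FSM tools used in control systems add hierarchy, distribution and rules on top of this basic idea.

```python
from typing import Dict, Tuple

# Hypothetical run-control states and commands: (current state, command) -> next state.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("NOT_READY", "CONFIGURE"): "READY",
    ("READY", "START"): "RUNNING",
    ("RUNNING", "STOP"): "READY",
    ("READY", "RESET"): "NOT_READY",
}


class RunFSM:
    def __init__(self, state: str = "NOT_READY"):
        self.state = state

    def handle(self, command: str) -> str:
        """Apply a command; refuse it if the transition is not defined."""
        next_state = TRANSITIONS.get((self.state, command))
        if next_state is None:
            raise ValueError(f"{command!r} not allowed in state {self.state!r}")
        self.state = next_state
        return self.state
```

Keeping the transitions in a table rather than in code follows the data-driven approach: changing the allowed sequence means editing data, not the generic engine.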

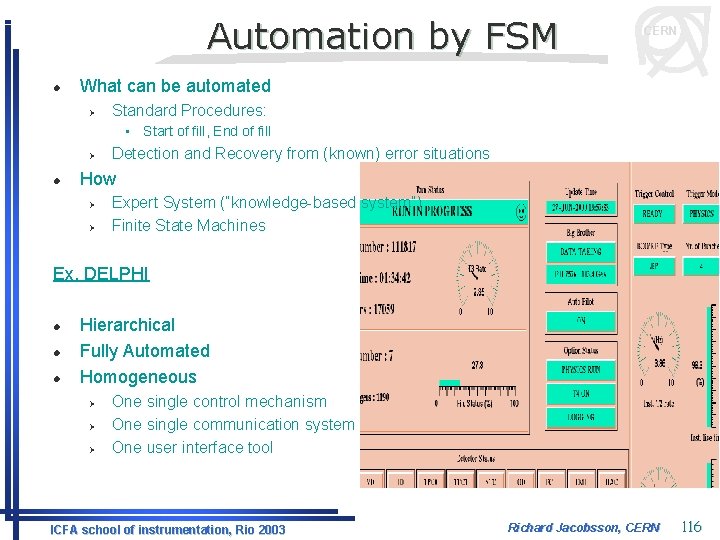

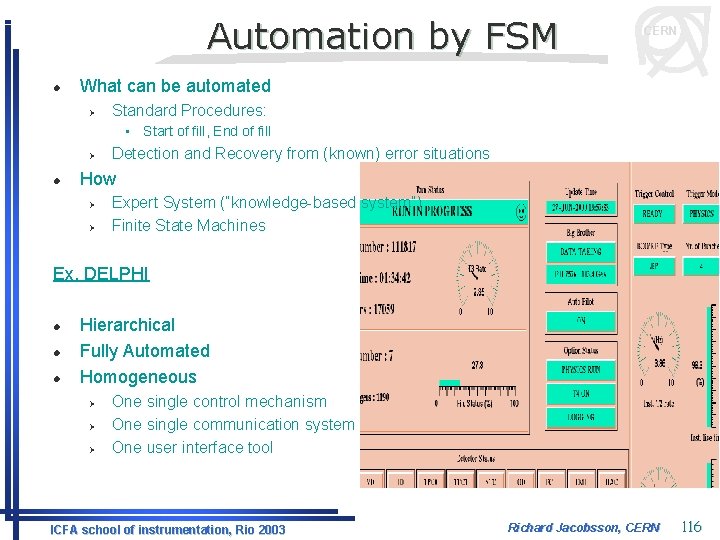

Automation by FSM

- What can be automated
  - Standard procedures:
    - Start of fill, end of fill
  - Detection of and recovery from (known) error situations
- How
  - Expert system ("knowledge-based system")
  - Finite state machines
- Example: DELPHI
  - Hierarchical
  - Fully automated
  - Homogeneous
    - One single control mechanism
    - One single communication system
    - One user interface tool

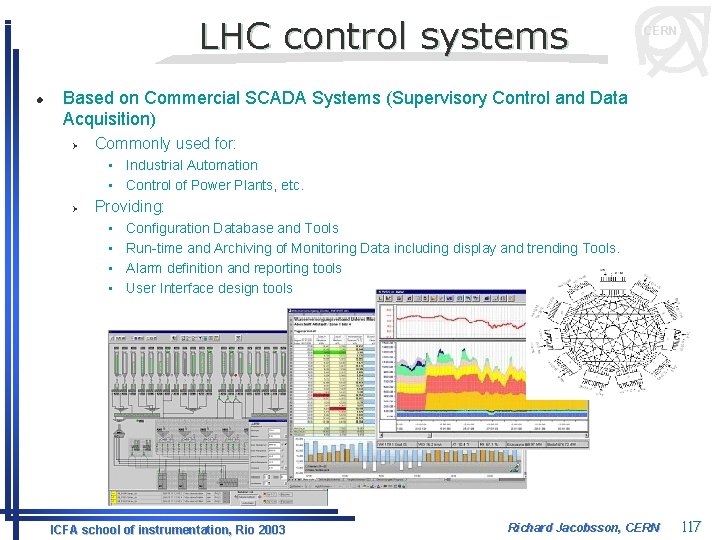

LHC control systems

- Based on commercial SCADA systems (Supervisory Control and Data Acquisition)
  - Commonly used for:
    - Industrial automation
    - Control of power plants, etc.
  - Providing:
    - Configuration database and tools
    - Run-time and archiving of monitoring data, including display and trending tools
    - Alarm definition and reporting tools
    - User interface design tools

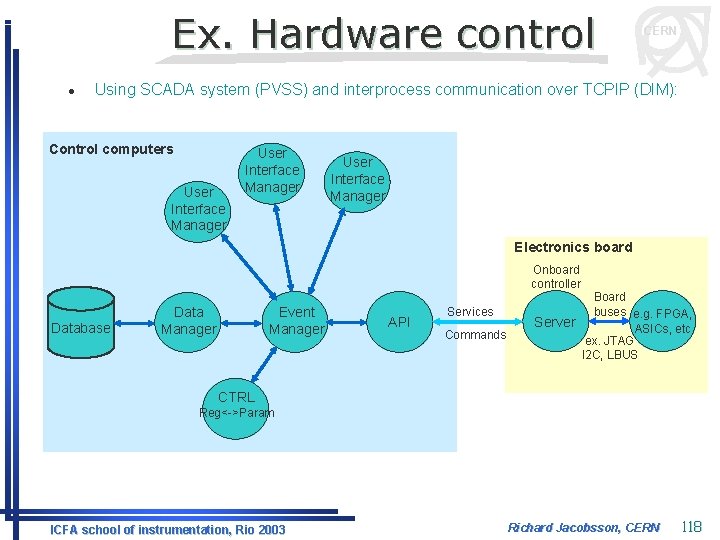

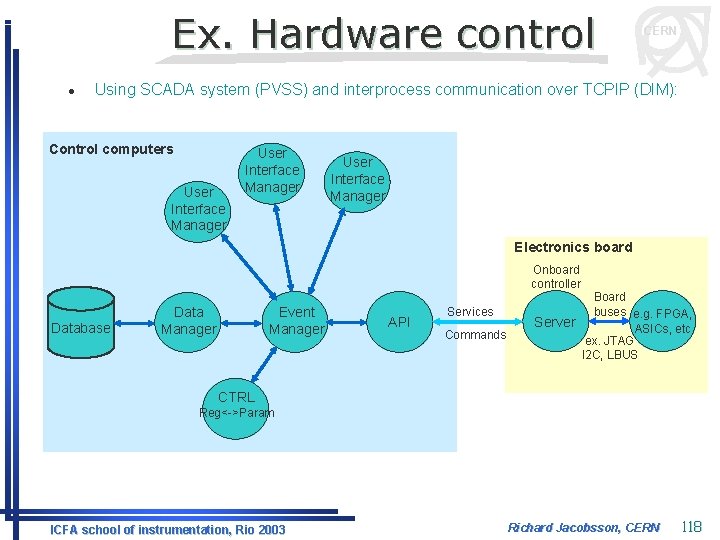

Example: hardware control

- Using a SCADA system (PVSS) and inter-process communication over TCP/IP (DIM)

[Figure: control computers (user interface manager, event manager, data manager, database, API) exchange DIM services and commands with a server on the onboard controller of each electronics board; the controller converts between registers and parameters (CTRL) and reaches FPGAs, ASICs, etc. over board buses, e.g. JTAG, I2C, LBUS]

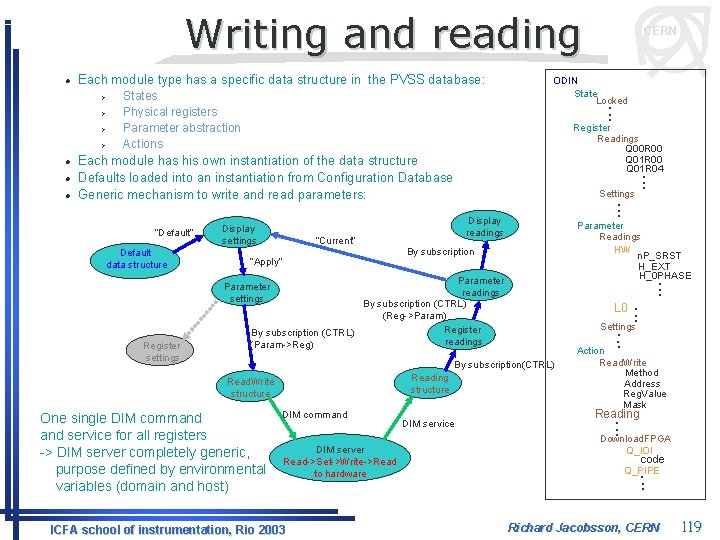

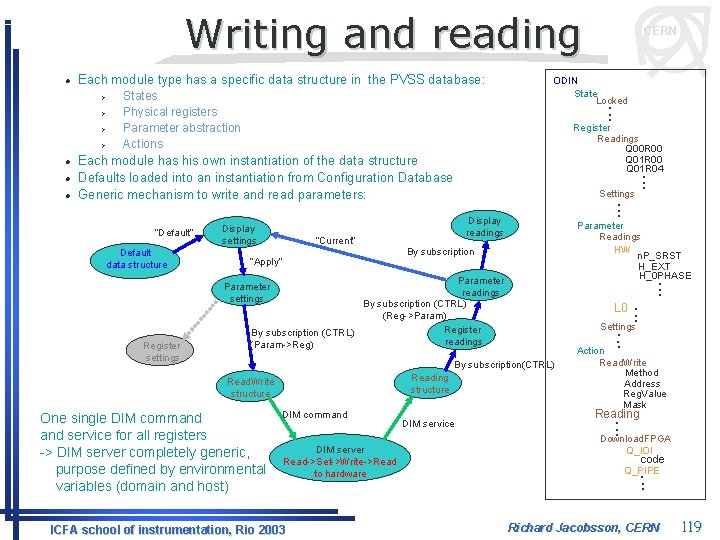

Writing and reading

- Each module type has a specific data structure in the PVSS database:
  - Register states: physical registers
  - Parameter abstraction: actions, readings
- Each module has its own instantiation of the data structure
- Defaults are loaded into an instantiation from the configuration database
- Generic mechanism to write and read parameters:
  - "Default" → "Current" → "Apply": parameter settings are converted to register settings (Param→Reg) and register readings back to parameter readings (Reg→Param), by subscription (CTRL)
  - Read/write structure per register: method, address, register value, mask; plus actions such as DownloadFPGA
- One single DIM command service for all registers → the DIM server is completely generic, its purpose defined by environment variables (domain and host)
  - DIM command → DIM server: Read → Set → Write → Read to hardware → DIM service

[Figure example: the ODIN module; state Locked; registers Q00, R00, Q01, R04, Q_IOI, Q_PIPE; parameters nP_SRST, H_EXT, H_0, PHASE, L0]
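The Read → Set → Write → Read cycle with an address, value and mask is a masked read-modify-write followed by a read-back check. The sketch below shows it against an in-memory register bank. `RegisterBank`, `masked_write` and `set_field` are hypothetical, and the 32-bit register width is an assumption. The real DIM server talks to the board over JTAG, I2C or a local bus instead.

```python
class RegisterBank:
    """Hypothetical stand-in for a board's registers (32-bit width assumed)."""

    def __init__(self):
        self.regs = {}

    def read(self, address: int) -> int:
        return self.regs.get(address, 0)

    def write(self, address: int, value: int) -> None:
        self.regs[address] = value & 0xFFFFFFFF


def masked_write(hw: RegisterBank, address: int, value: int, mask: int) -> int:
    """Read -> Set -> Write -> Read: change only the bits selected by mask."""
    current = hw.read(address)                       # Read
    new = (current & ~mask) | (value & mask)         # Set the masked bits only
    hw.write(address, new)                           # Write
    readback = hw.read(address)                      # Read back to verify
    if (readback & mask) != (value & mask):
        raise RuntimeError(f"read-back mismatch at 0x{address:X}")
    return readback


def set_field(hw: RegisterBank, address: int, shift: int, width: int, value: int) -> int:
    """Parameter -> register: write a `width`-bit parameter at bit offset `shift`."""
    mask = ((1 << width) - 1) << shift
    return masked_write(hw, address, value << shift, mask)
```

Since the mask travels with each request, one generic command can serve every register of every module. Only the data (address, value, mask) changes, in line with the data-driven approach.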

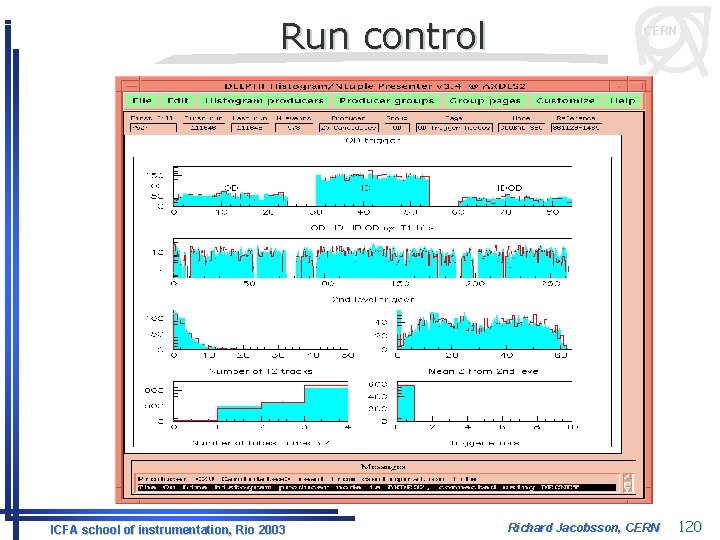

Run control

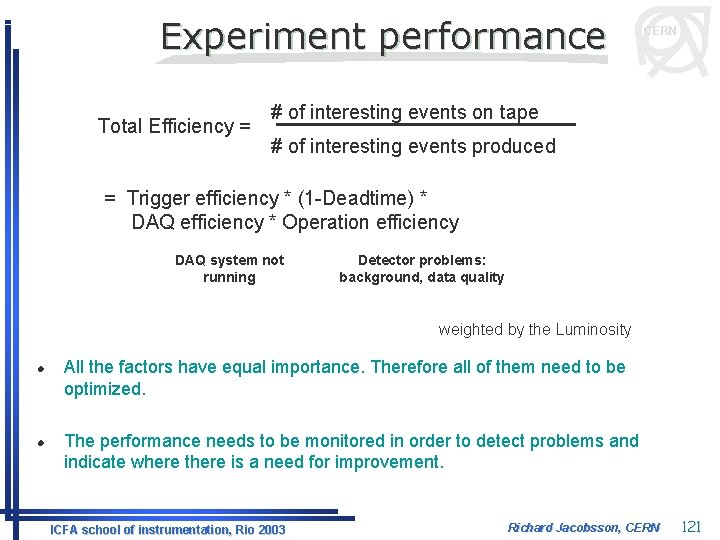

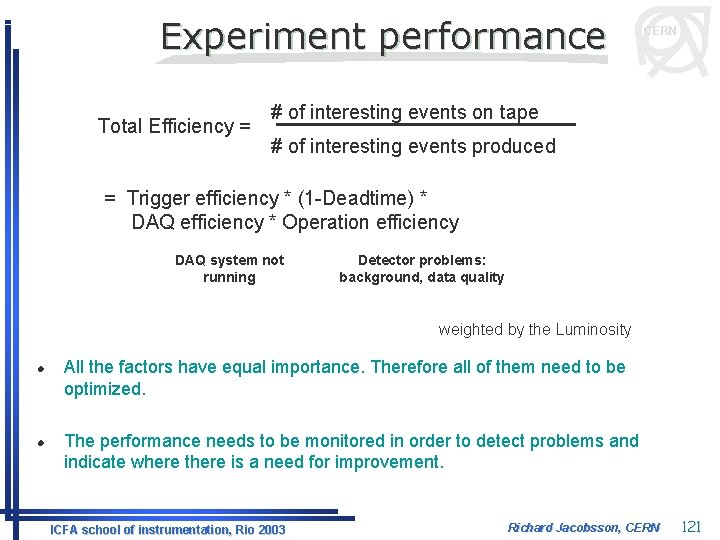

Experiment performance

$$
\text{Total efficiency} = \frac{\#\ \text{interesting events on tape}}{\#\ \text{interesting events produced}} = \varepsilon_{\text{trigger}} \times (1 - \text{deadtime}) \times \varepsilon_{\text{DAQ}} \times \varepsilon_{\text{operation}}
$$

- The factors are weighted by the luminosity; losses come e.g. from the DAQ system not running and from detector problems (background, data quality)
- All the factors have equal importance. Therefore all of them need to be optimized.
- The performance needs to be monitored in order to detect problems and indicate where there is a need for improvement.
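Because the total efficiency is a product, modest losses in each factor compound. The sketch below evaluates the formula and a luminosity-weighted operation efficiency. The numbers in the example (90% trigger efficiency, 5% deadtime, 98% DAQ efficiency, 95% operation efficiency) are hypothetical, and `total_efficiency` and `luminosity_weighted` are illustrative names.

```python
from typing import Iterable, Tuple


def total_efficiency(trigger_eff: float, deadtime: float,
                     daq_eff: float, operation_eff: float) -> float:
    """Fraction of interesting events produced that end up on tape."""
    return trigger_eff * (1.0 - deadtime) * daq_eff * operation_eff


def luminosity_weighted(periods: Iterable[Tuple[float, bool]]) -> float:
    """Operation efficiency: luminosity delivered while taking usable data / total.

    periods: (integrated luminosity of the period, True if the data were usable).
    """
    total = good = 0.0
    for lumi, usable in periods:
        total += lumi
        if usable:
            good += lumi
    return good / total if total else 0.0


if __name__ == "__main__":
    print(round(total_efficiency(0.9, 0.05, 0.98, 0.95), 4))  # hypothetical factors
```

With these hypothetical factors only about 80% of the interesting events reach tape. A 5% loss costs the same wherever it occurs, which is why every factor has to be optimized and monitored.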

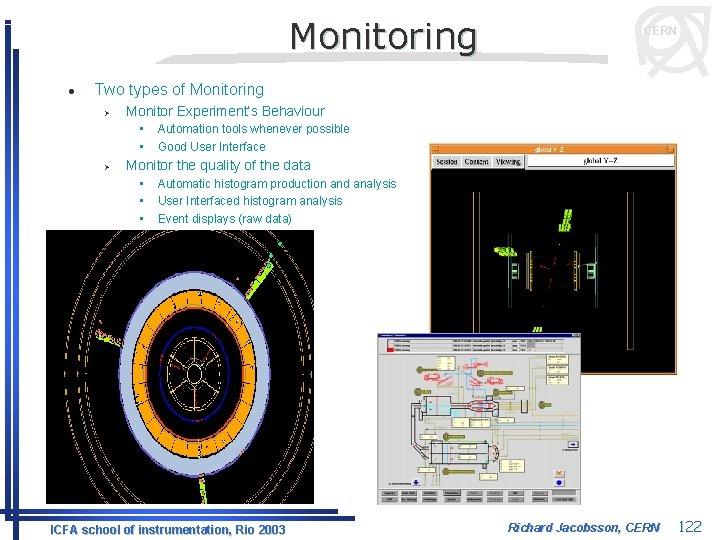

Monitoring

- Two types of monitoring
  - Monitor the experiment's behaviour
    - Automation tools whenever possible
    - Good user interface
  - Monitor the quality of the data
    - Automatic histogram production and analysis
    - User-interfaced histogram analysis
    - Event displays (raw data)

Well, we are at the end of the road…

Conclusions

- Trigger, data acquisition and control systems are becoming increasingly complex
- They are not static
  - They are expected to change with time and with accelerator and experiment conditions
  - Provide maximum flexibility in functionality and for upgrades
- Luckily, the requirements of telecommunications and computing in general have strongly contributed to the development of standard technologies:
  - Hardware: FPGAs, flash ADCs, analog memories, PCs, networks, helical scan recording, data compression, image processing, …
  - Software: distributed computing, software development environments, supervisory systems, …
- We can now build a large fraction of our systems using commercial components (customization will still be needed in the front-end)
- It is essential that we keep up to date with the progress being made by industry
- But it is also essential that we go beyond
  - Basic research is what we need to build a long-term potential for technical progress

Merry Christmas!

Next year a lot of central systems in the LHC project should reach production readiness…