18-742 Spring 2011 Parallel Computer Architecture Lecture


- Slides: 128

18-742 Spring 2011 Parallel Computer Architecture, Lecture 27: Shared Memory Management. Prof. Onur Mutlu, Carnegie Mellon University

Announcements

- Last year’s written exam posted online
- April 27 (Wednesday): Oral Exam
  - 30 minutes per person; in my office; closed book/notes
  - All content covered could be part of the exam
- May 6: Project poster session
  - HH 1112, 2-6 pm
- May 10: Project report due

Readings: Shared Main Memory

- Required
  - Mutlu and Moscibroda, “Stall-Time Fair Memory Access Scheduling for Chip Multiprocessors,” MICRO 2007.
  - Mutlu and Moscibroda, “Parallelism-Aware Batch Scheduling: Enabling High-Performance and Fair Memory Controllers,” ISCA 2008.
  - Kim et al., “ATLAS: A Scalable and High-Performance Scheduling Algorithm for Multiple Memory Controllers,” HPCA 2010.
  - Kim et al., “Thread Cluster Memory Scheduling,” MICRO 2010.
  - Ebrahimi et al., “Fairness via Source Throttling: A Configurable and High-Performance Fairness Substrate for Multi-Core Memory Systems,” ASPLOS 2010.
- Recommended
  - Rixner et al., “Memory Access Scheduling,” ISCA 2000.
  - Moscibroda and Mutlu, “Memory Performance Attacks,” USENIX Security 2007.
  - Lee et al., “Prefetch-Aware DRAM Controllers,” MICRO 2008.
  - Zheng et al., “Mini-Rank: Adaptive DRAM Architecture for Improving Memory Power Efficiency,” MICRO 2008.

Readings in Other Topics (Not Covered)

- Shared memory consistency
  - Lamport, “How to Make a Multiprocessor Computer That Correctly Executes Multiprocess Programs,” IEEE ToC 1979.
  - Gharachorloo et al., “Memory Consistency and Event Ordering in Scalable Shared Memory Multiprocessors,” ISCA 1990.
  - Gharachorloo et al., “Two Techniques to Enhance the Performance of Memory Consistency Models,” ICPP 1991.
  - Adve and Gharachorloo, “Shared memory consistency models: a tutorial,” IEEE Computer 1996.
  - Ceze et al., “BulkSC: bulk enforcement of sequential consistency,” ISCA 2007.

Readings in Other Topics (Not Covered)

- Rethinking main memory organization
  - Hsu and Smith, “Performance of Cached DRAM Organizations in Vector Supercomputers,” ISCA 1993.
  - Lee et al., “Architecting Phase Change Memory as a Scalable DRAM Alternative,” ISCA 2009.
  - Qureshi et al., “Scalable High-Performance Main Memory System Using Phase-Change Memory Technology,” ISCA 2009.
  - Udipi et al., “Rethinking DRAM design and organization for energy-constrained multi-cores,” ISCA 2010.
- SIMD processing
  - Kuck and Stokes, “The Burroughs Scientific Processor (BSP),” IEEE ToC 1982.
  - Lindholm et al., “NVIDIA Tesla: A Unified Graphics and Computing Architecture,” IEEE Micro 2008.

Shared Main Memory Systems

Sharing in Main Memory

- Bandwidth sharing
  - Which thread/core to prioritize?
  - How to schedule requests?
  - How much bandwidth to allocate to each thread?
- Capacity sharing
  - How much memory capacity to allocate to which thread?
  - Where to map that memory? (row, bank, rank, channel)
- Metrics for optimization
  - System performance
  - Fairness, QoS
  - Energy/power consumption

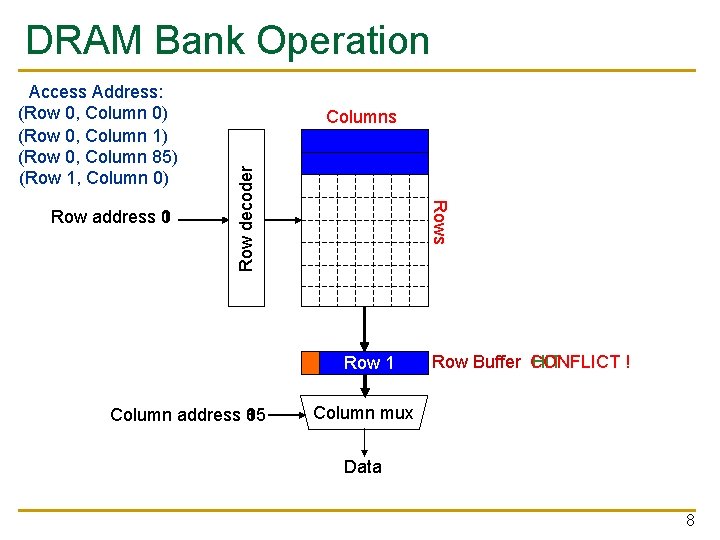

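DRAM Bank Operation

(Figure: a DRAM bank with rows and columns, a row decoder driven by the row address, a row buffer, and a column mux driven by the column address that outputs the data.)

Access address sequence: (Row 0, Column 0), (Row 0, Column 1), (Row 0, Column 85), (Row 1, Column 0). The row buffer starts empty; accesses to the row already in the row buffer are a HIT, and the access to Row 1 while Row 0 is in the row buffer is a CONFLICT.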
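The access sequence on this slide can be traced with a toy model. This is a minimal sketch that assumes a single bank whose row buffer keeps the last accessed row open; the class name `DramBank` and its `access` method are hypothetical names introduced only for illustration.

```python
from typing import Optional


class DramBank:
    """Toy model of one DRAM bank with a single row buffer (open-row policy assumed)."""

    def __init__(self) -> None:
        self.open_row: Optional[int] = None  # None means the row buffer is empty

    def access(self, row: int, column: int) -> str:
        """Classify one access and leave the accessed row in the row buffer."""
        if self.open_row is None:
            outcome = "empty"      # the row must be activated first
        elif self.open_row == row:
            outcome = "hit"        # only a column access is needed
        else:
            outcome = "conflict"   # close the open row, then activate the new one
        self.open_row = row
        return outcome


bank = DramBank()
trace = [(0, 0), (0, 1), (0, 85), (1, 0)]  # (row, column) pairs from the slide
outcomes = [bank.access(row, column) for row, column in trace]
print(outcomes)
```

The column argument does not affect the classification here; it is kept only so the trace matches the addresses on the slide.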

Generalized Memory Structure

Memory Controller

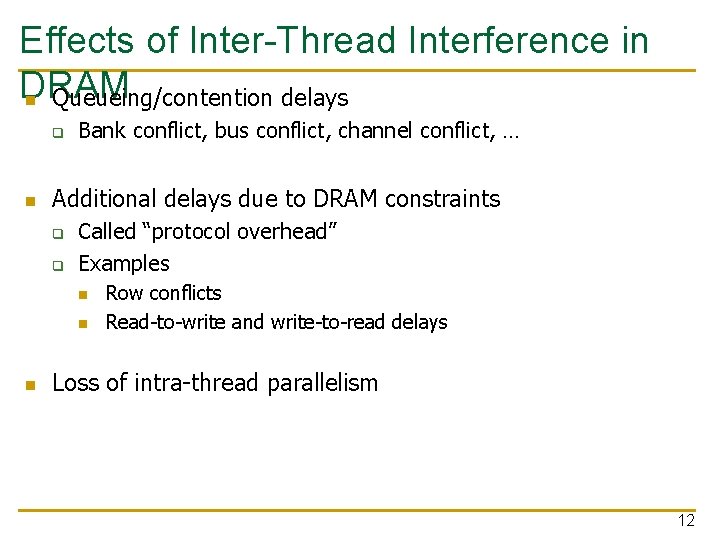

Inter-Thread Interference in DRAM

- Memory controllers, pins, and memory banks are shared
- Pin bandwidth is not increasing as fast as number of cores
  - Bandwidth per core reducing
- Different threads executing on different cores interfere with each other in the main memory system
- Threads delay each other by causing resource contention:
  - Bank, bus, row-buffer conflicts → reduced DRAM throughput
- Threads can also destroy each other’s DRAM bank parallelism
  - Otherwise parallel requests can become serialized

Effects of Inter-Thread Interference in DRAM

- Queueing/contention delays
  - Bank conflict, bus conflict, channel conflict, …
- Additional delays due to DRAM constraints
  - Called “protocol overhead”
  - Examples
    - Row conflicts
    - Read-to-write and write-to-read delays
- Loss of intra-thread parallelism

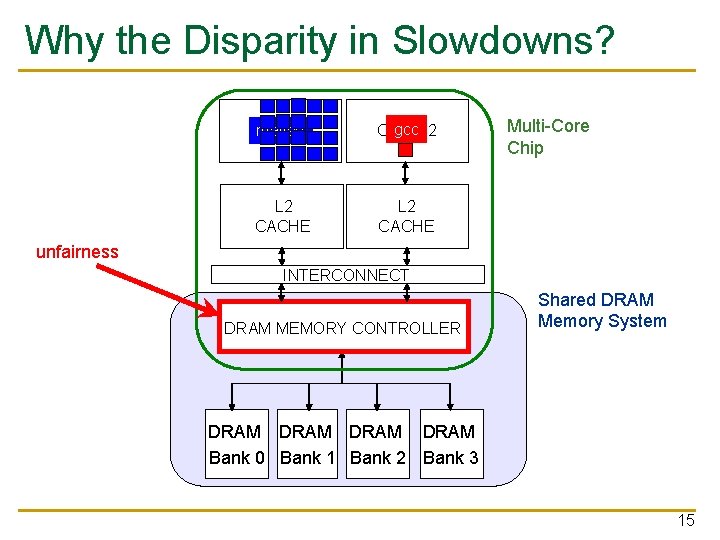

Inter-Thread Interference in DRAM

- Existing DRAM controllers are unaware of inter-thread interference in DRAM system
- They simply aim to maximize DRAM throughput
  - Thread-unaware and thread-unfair
  - No intent to service each thread’s requests in parallel
  - FR-FCFS policy: 1) row-hit first, 2) oldest first
    - Unfairly prioritizes threads with high row-buffer locality
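To make the FR-FCFS ordering concrete, here is a minimal sketch of the two-level priority (row-hit first, then oldest first). The `Request` record, the `open_rows` map, and the function name are assumptions made for this illustration, not the interface of any real controller.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    thread: int
    bank: int
    row: int
    arrival: int  # cycle at which the request entered the request buffer


def fr_fcfs_pick(requests: List[Request], open_rows: Dict[int, int]) -> Request:
    """Pick the next request: 1) row-hit first, 2) oldest first."""
    def priority(req: Request):
        row_hit = open_rows.get(req.bank) == req.row
        # False sorts before True, so row hits win; ties go to the oldest request.
        return (not row_hit, req.arrival)
    return min(requests, key=priority)


open_rows = {0: 0}  # bank 0 currently has row 0 in its row buffer
queue = [
    Request(thread=1, bank=0, row=5, arrival=1),  # oldest, but a row conflict
    Request(thread=0, bank=0, row=0, arrival=2),  # row hit
    Request(thread=0, bank=0, row=0, arrival=3),  # row hit
]
picked = fr_fcfs_pick(queue, open_rows)
print(picked)
```

In this example the younger row-hit request of thread 0 is picked ahead of thread 1's older request, which is the thread-unaware behavior the slide describes.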

Consequences of Inter-Thread Interference in DRAM

(Figure: DRAM is the only shared resource. A high-priority thread and a low-priority memory performance hog share it; the other cores make very slow progress.)

- Unfair slowdown of different threads
- System performance loss
- Vulnerability to denial of service
- Inability to enforce system-level thread priorities

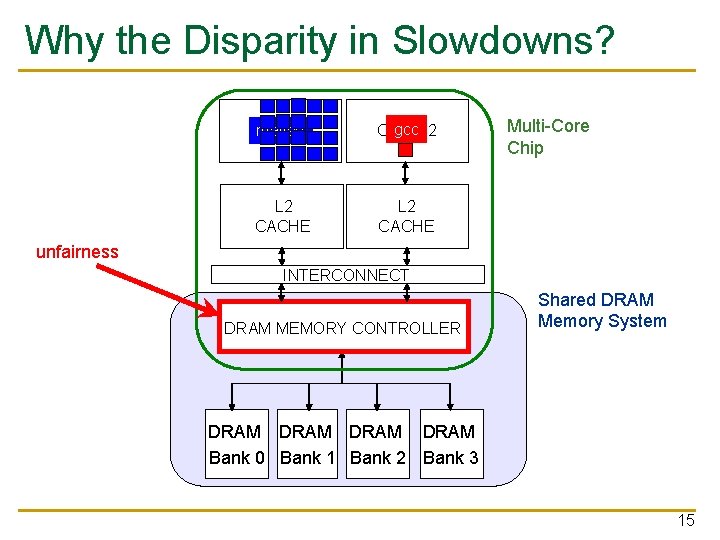

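Why the Disparity in Slowdowns?

(Figure: a multi-core chip with matlab on core 1 and gcc on core 2, each core with an L2 cache, connected through the interconnect to the shared DRAM memory system: the DRAM memory controller and DRAM banks 0 to 3. The unfairness arises in the shared DRAM memory system.)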

QoS-Aware Memory Systems: Challenges

- How do we reduce inter-thread interference?
  - Improve system performance and utilization
  - Preserve the benefits of single-thread performance techniques
- How do we control inter-thread interference?
  - Provide scalable mechanisms to enable system software to enforce a variety of QoS policies
  - While providing high system performance
- How do we make the memory system configurable/flexible?
  - Enable flexible mechanisms that can achieve many goals
    - Provide fairness or throughput when needed
    - Satisfy performance guarantees when needed

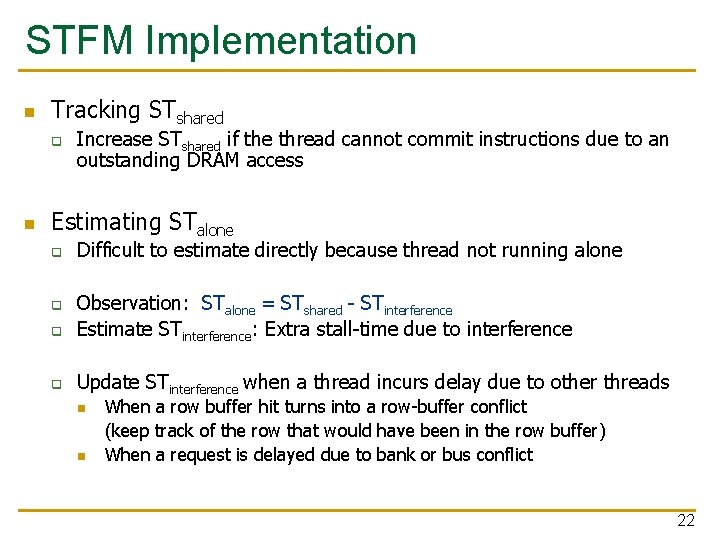

Designing QoS-Aware Memory Systems: Approaches

- Smart resources: Design each shared resource to have a configurable interference control/reduction mechanism
  - Fair/QoS-aware memory schedulers, interconnects, caches, arbiters
    - Fair memory schedulers [Mutlu+ MICRO 2007], parallelism-aware memory schedulers [Mutlu+ ISCA 2008, Top Picks 2009], ATLAS memory scheduler [Kim+ HPCA 2010], thread cluster memory scheduler [Kim+ MICRO’10, Top Picks’11]
    - Application-aware on-chip networks [Das+ MICRO 2009, ISCA 2010, Top Picks 2011, Grot+ MICRO 2009, ISCA 2011]
- Dumb resources: Keep each resource free-for-all, but control access to memory system at the cores/sources
  - Estimate interference/slowdown in the entire system and throttle cores that slow down others
    - Fairness via Source Throttling [Ebrahimi+, ASPLOS 2010, ISCA 2011]
    - Coordinated Prefetcher Throttling [Ebrahimi+, MICRO 2009]

Stall-Time Fair Memory Access Scheduling

Mutlu and Moscibroda, “Stall-Time Fair Memory Access Scheduling for Chip Multiprocessors,” MICRO 2007.

Stall-Time Fairness in Shared DRAM Systems

- A DRAM system is fair if it equalizes the slowdown of equal-priority threads relative to when each thread is run alone on the same system
- DRAM-related stall-time: The time a thread spends waiting for DRAM memory
- STshared: DRAM-related stall-time when the thread runs with other threads
- STalone: DRAM-related stall-time when the thread runs alone
- Memory-slowdown = STshared/STalone
  - Relative increase in stall-time
- Stall-Time Fair Memory scheduler (STFM) aims to equalize Memory-slowdown for interfering threads, without sacrificing performance
  - Considers inherent DRAM performance of each thread
  - Aims to allow proportional progress of threads


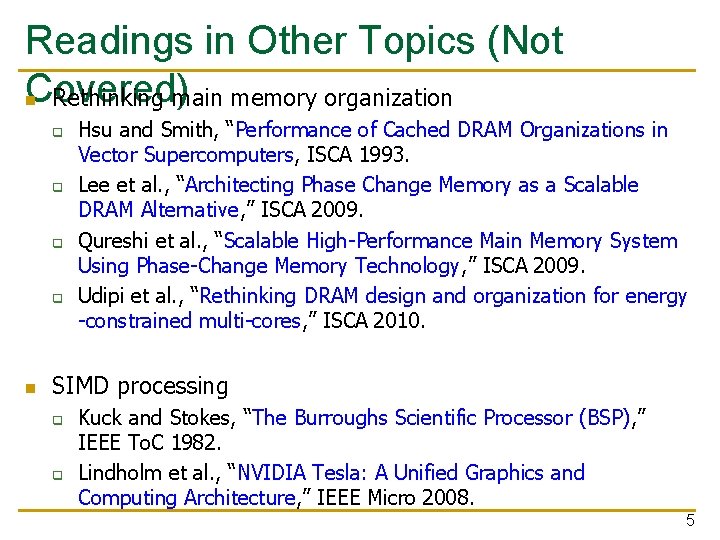

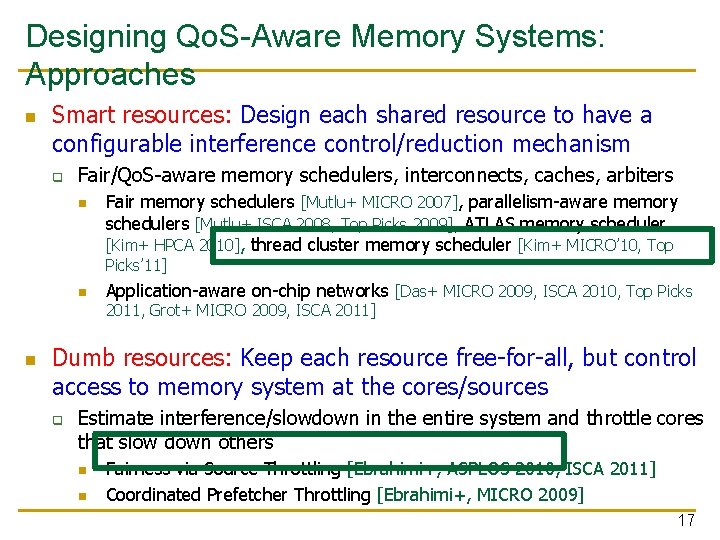

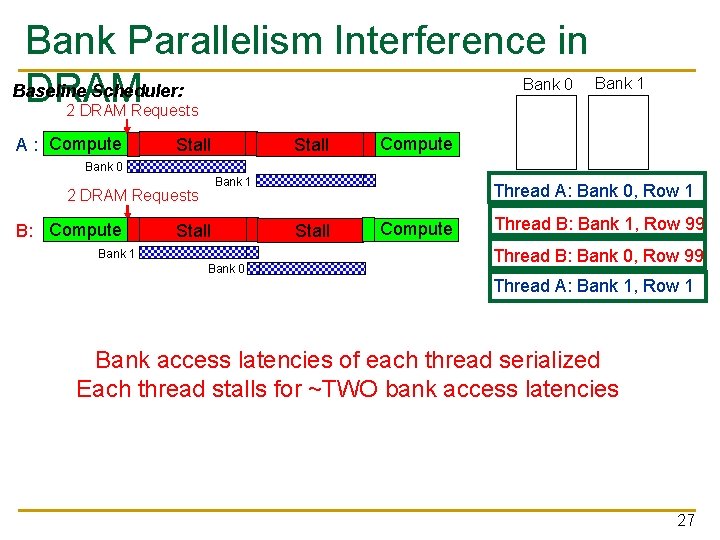

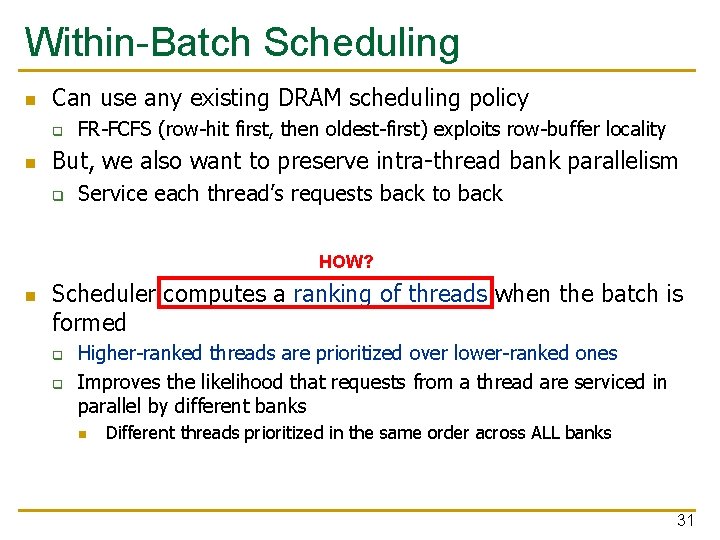

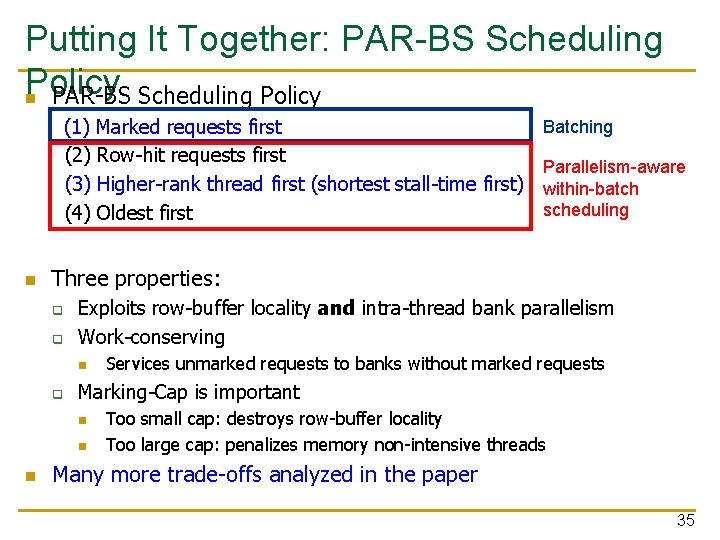

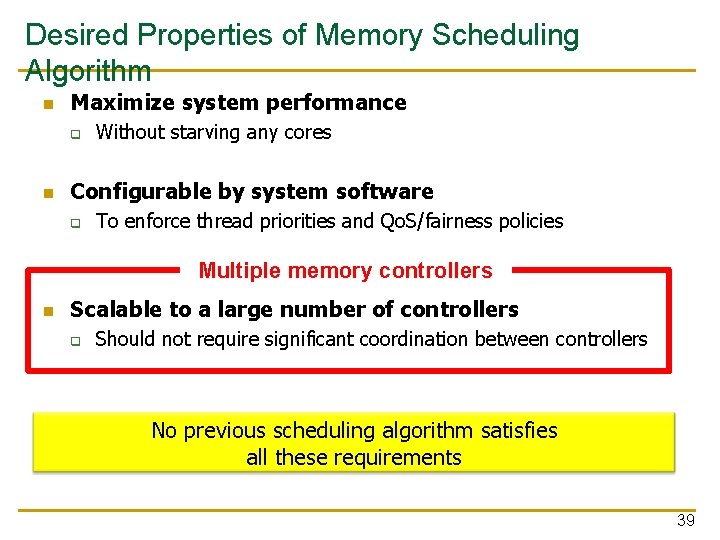

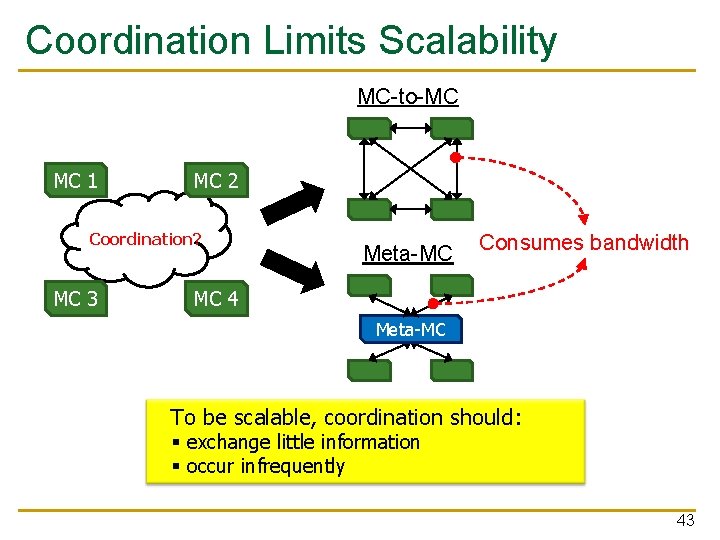

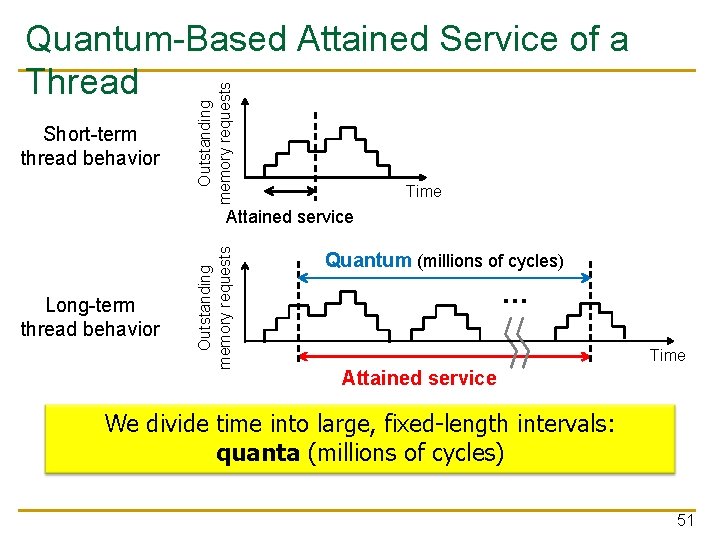

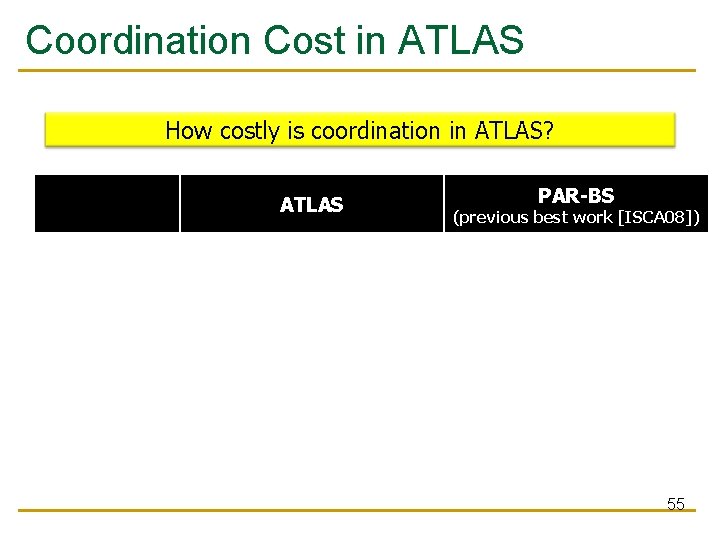

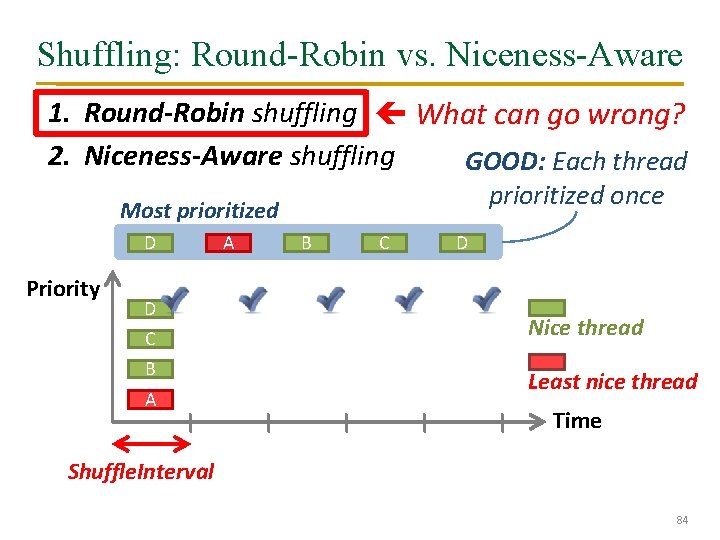

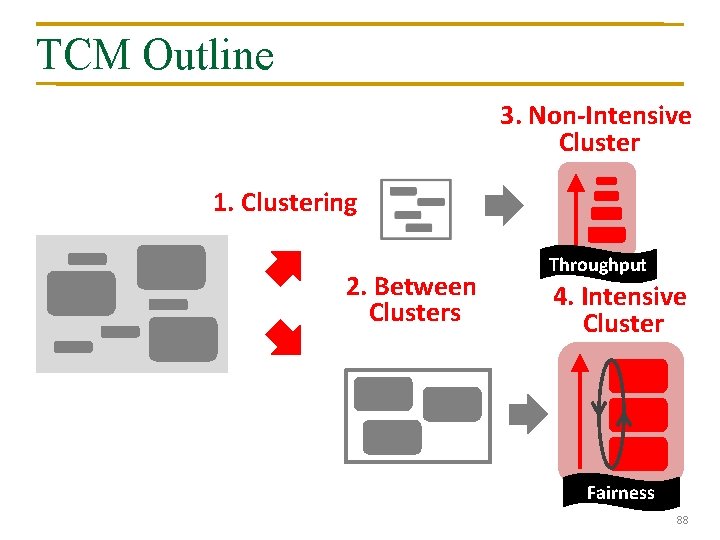

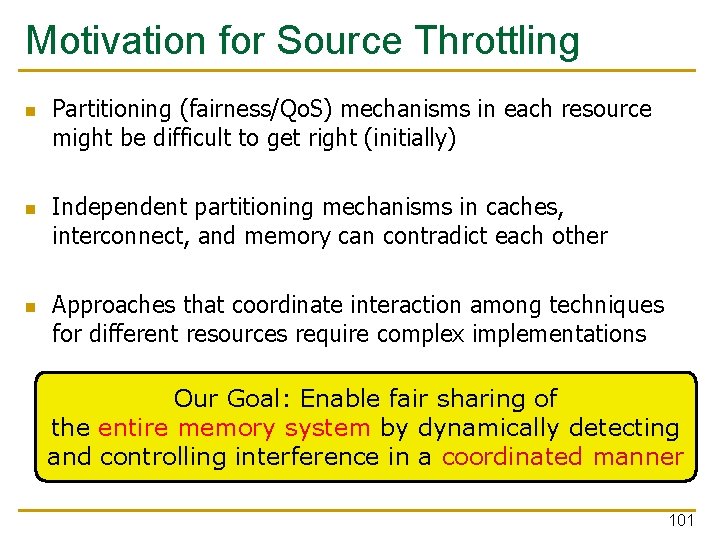

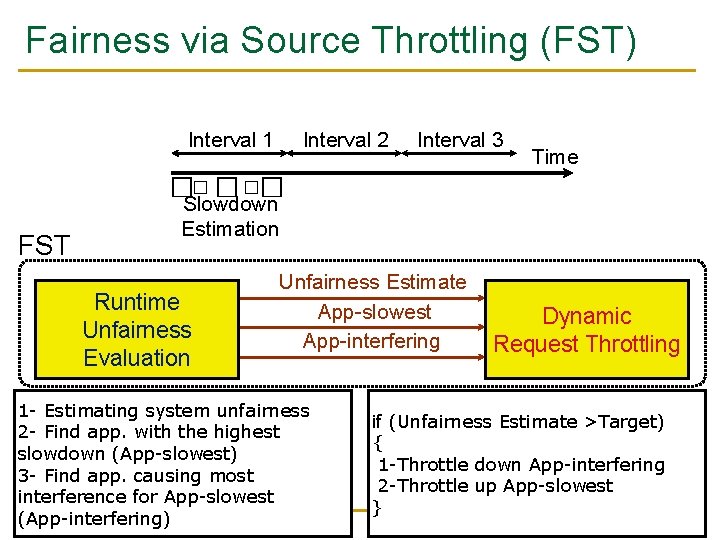

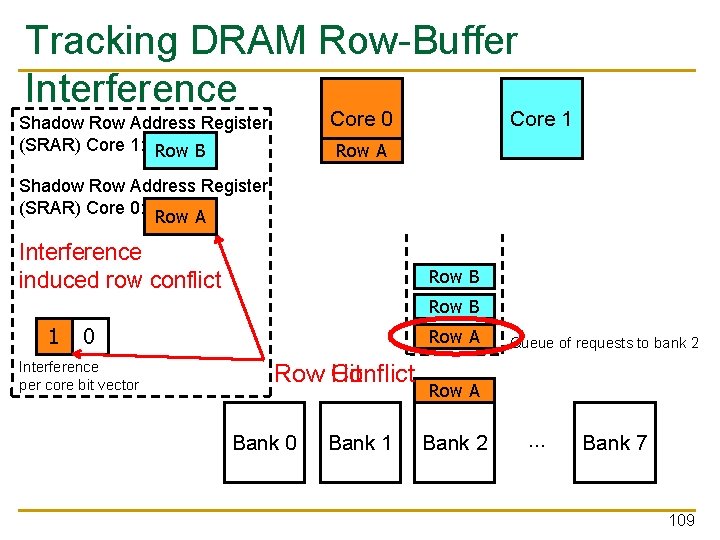

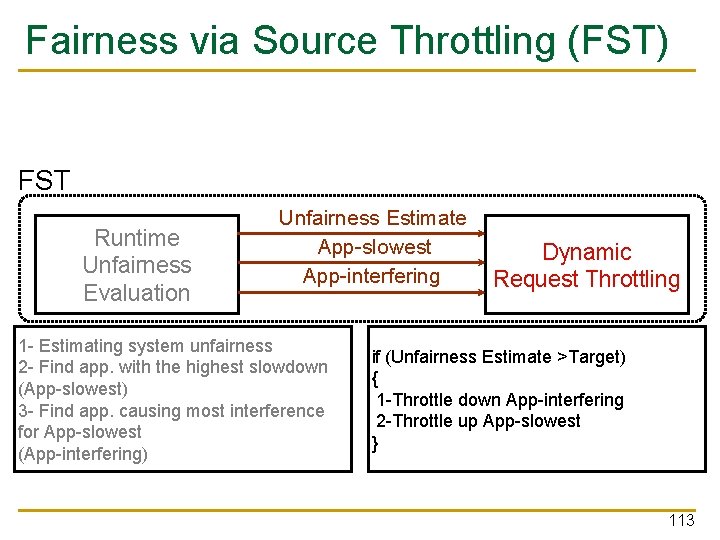

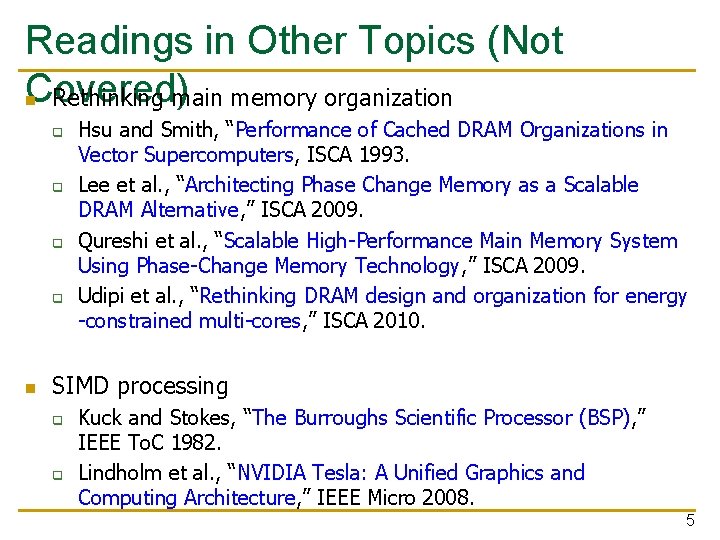

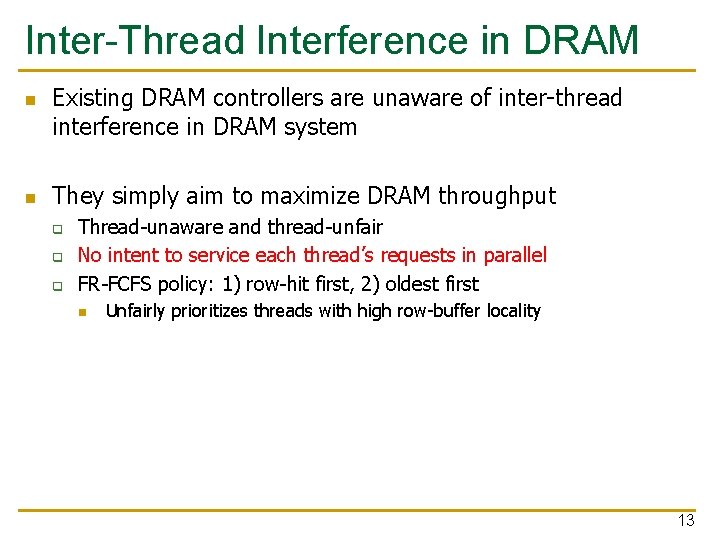

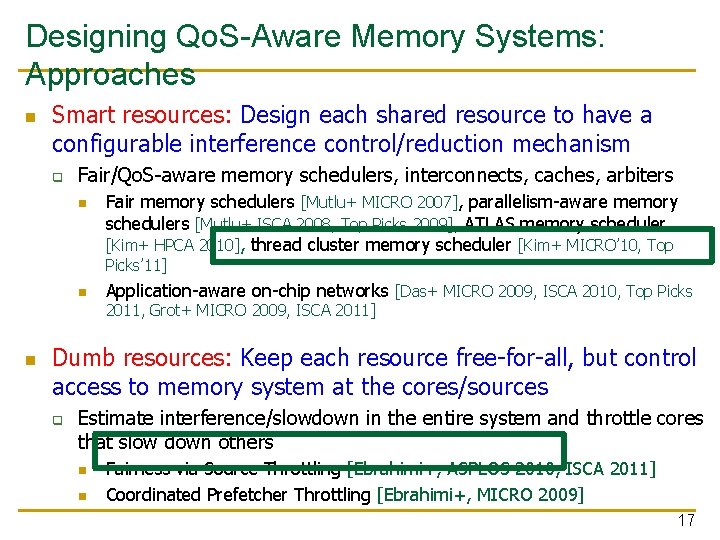

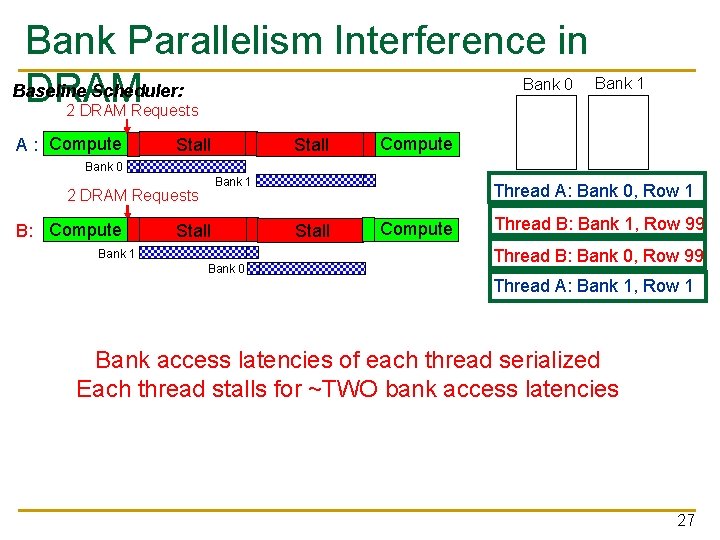

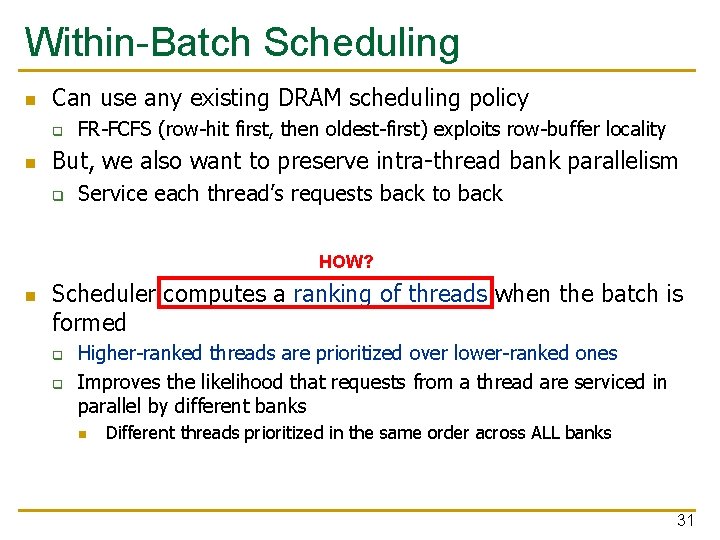

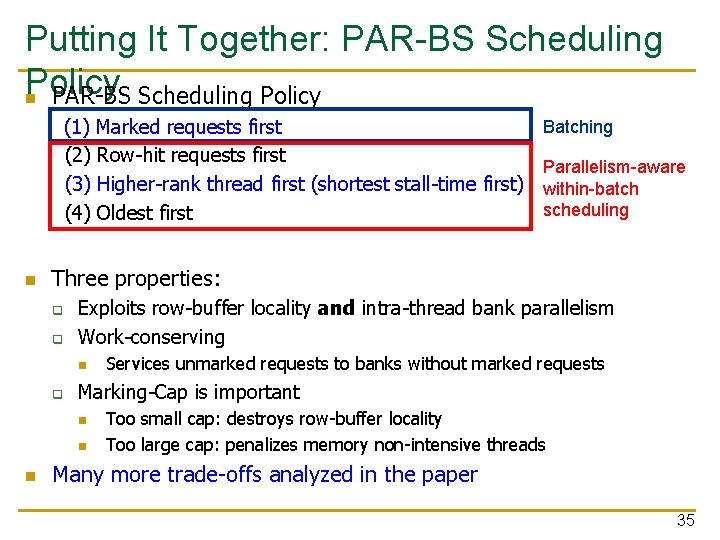

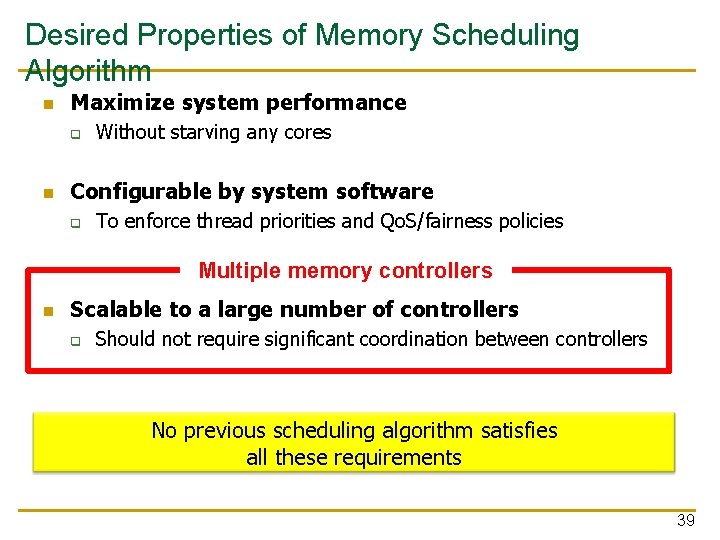

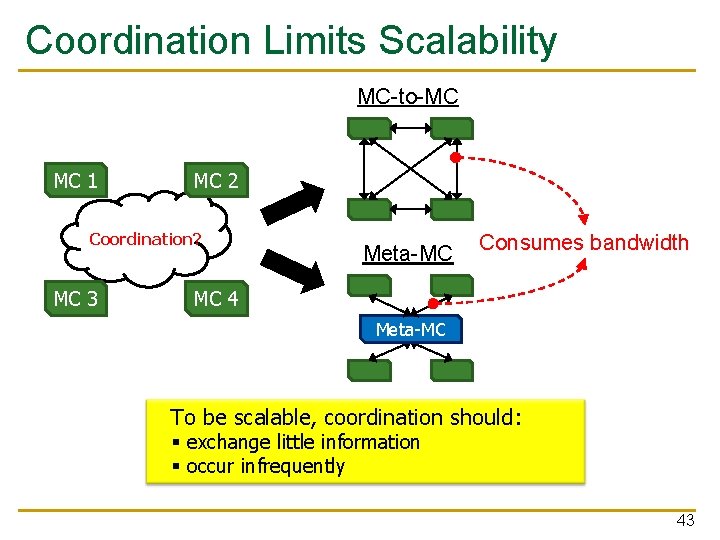

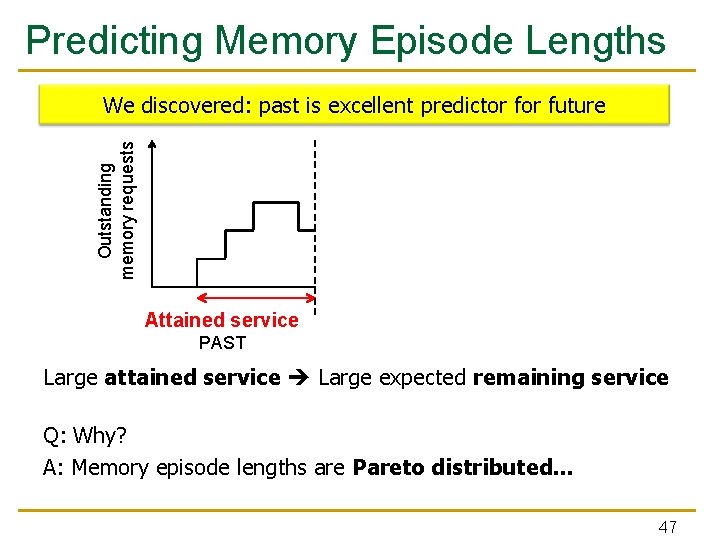

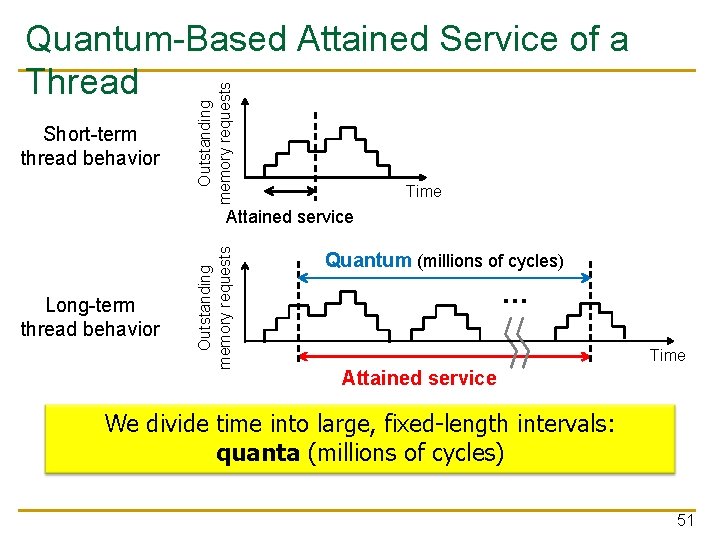

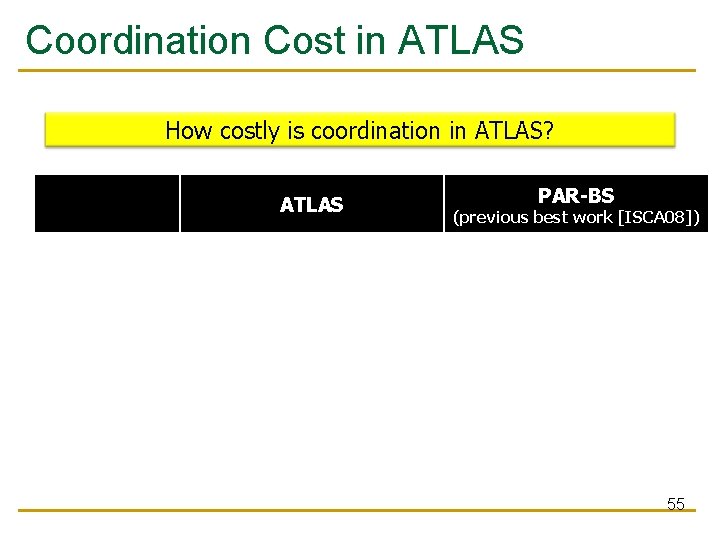

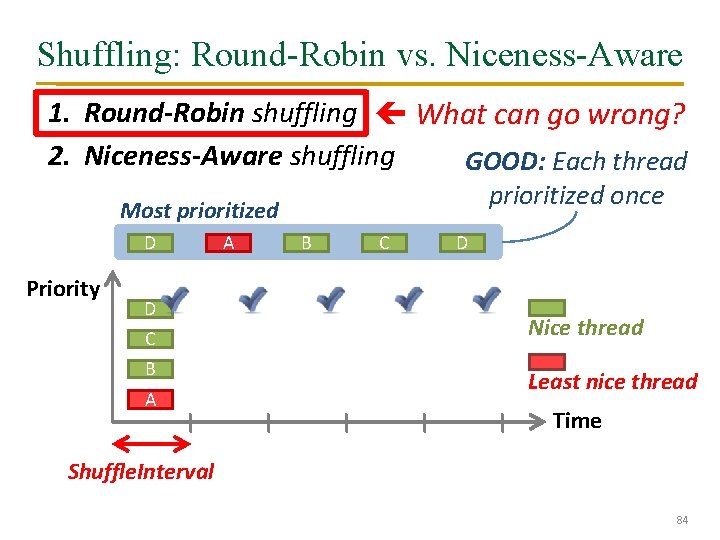

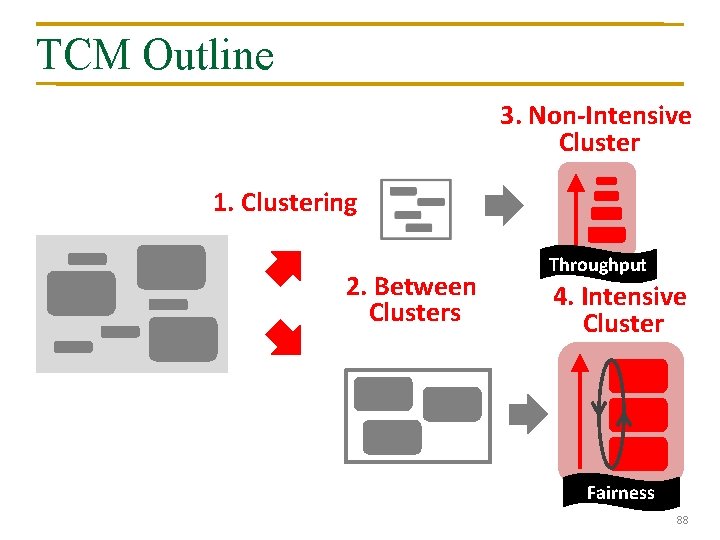

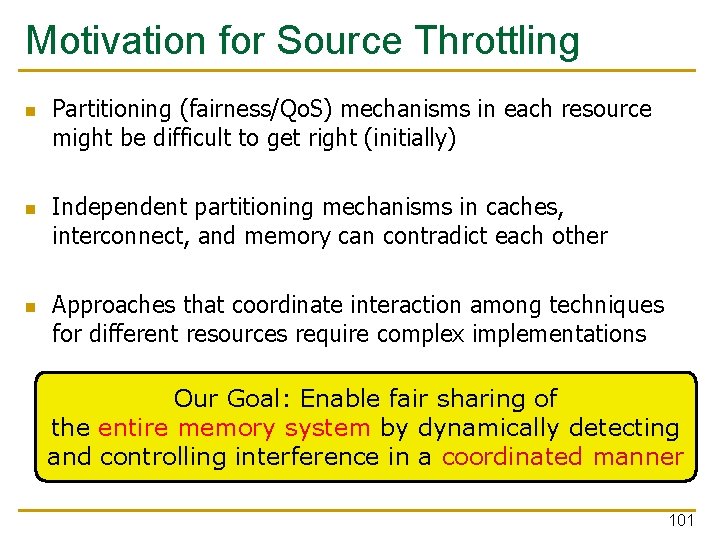

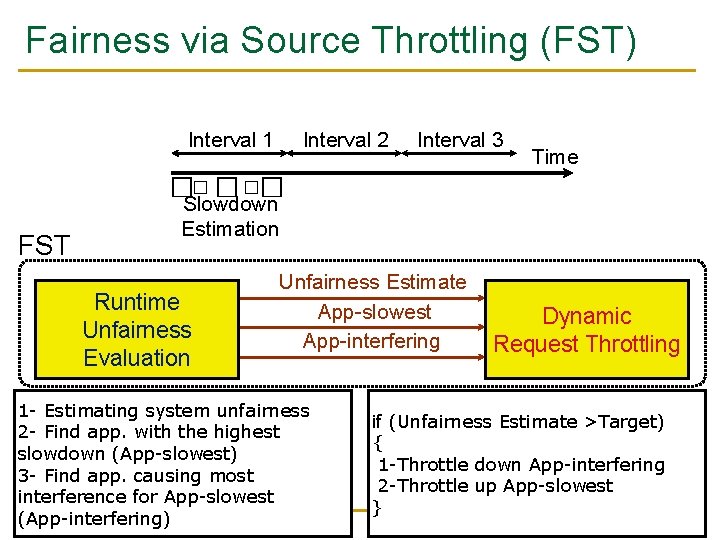

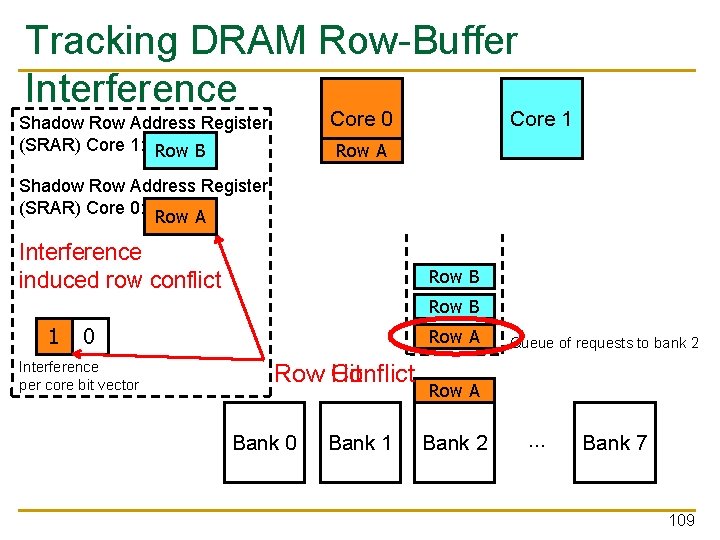

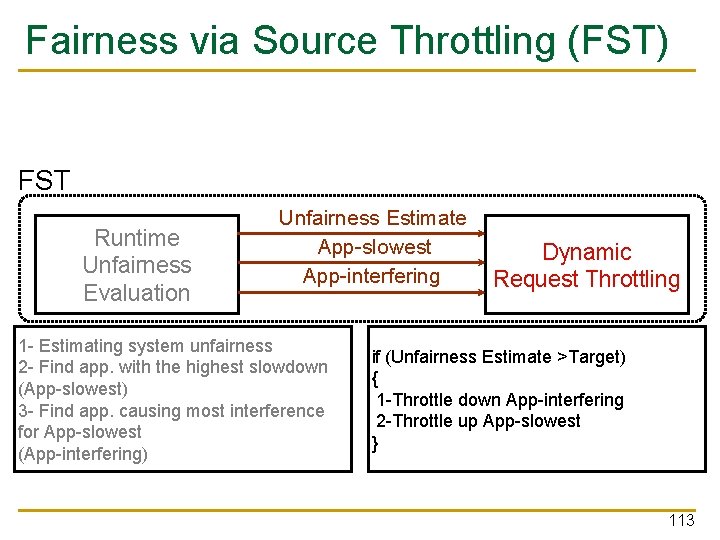

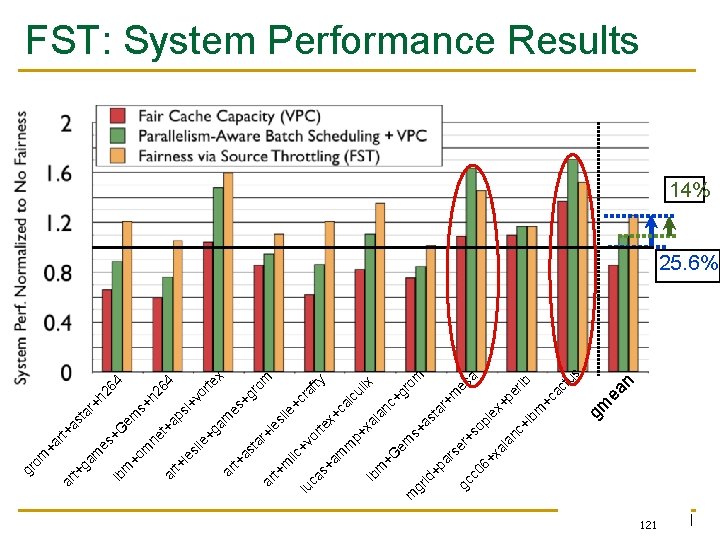

STFM Scheduling Algorithm [MICRO’07]

- For each thread, the DRAM controller
  - Tracks STshared
  - Estimates STalone
- Each cycle, the DRAM controller
  - Computes Slowdown = STshared/STalone for threads with legal requests
  - Computes unfairness = MAX Slowdown / MIN Slowdown
- If unfairness < α
  - Use DRAM throughput oriented scheduling policy
- If unfairness ≥ α
  - Use fairness-oriented scheduling policy
    - (1) requests from thread with MAX Slowdown first
    - (2) row-hit first, (3) oldest-first

How Does STFM Prevent Unfairness?

(Animated figure: requests from thread T0, all to Row 0, and from thread T1, to Rows 5, 111, and 16, contend for the row buffer of one bank. As each request is serviced, the slide updates T0 Slowdown, T1 Slowdown, and Unfairness; the individual values shown in the animation are not recoverable from the extracted text.)

STFM Implementation

- Tracking STshared
  - Increase STshared if the thread cannot commit instructions due to an outstanding DRAM access
- Estimating STalone
  - Difficult to estimate directly because thread not running alone
  - Observation: STalone = STshared - STinterference
  - Estimate STinterference: Extra stall-time due to interference
  - Update STinterference when a thread incurs delay due to other threads
    - When a row buffer hit turns into a row-buffer conflict (keep track of the row that would have been in the row buffer)
    - When a request is delayed due to bank or bus conflict
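The per-cycle STFM decision and the STalone estimate can be combined in a few lines. This is a minimal sketch under stated assumptions: the counters are plain dictionaries keyed by thread ID, `threshold` stands for the maximum tolerable unfairness, every thread has STshared > STinterference, and the function name is hypothetical. It only selects the policy; it does not model the DRAM itself.

```python
from typing import Dict, Optional, Tuple


def stfm_decide(
    st_shared: Dict[int, float],
    st_interference: Dict[int, float],
    threshold: float,
) -> Tuple[str, Optional[int], float]:
    """Return (policy, thread to favor, unfairness) for the current cycle."""
    slowdown = {}
    for thread, shared in st_shared.items():
        st_alone = shared - st_interference[thread]  # STalone = STshared - STinterference
        slowdown[thread] = shared / st_alone          # Slowdown = STshared / STalone
    unfairness = max(slowdown.values()) / min(slowdown.values())
    if unfairness >= threshold:
        # Fairness-oriented policy: requests of the most slowed-down thread go first.
        return "fairness", max(slowdown, key=slowdown.get), unfairness
    # Otherwise fall back to the throughput-oriented policy (e.g. FR-FCFS).
    return "throughput", None, unfairness


policy, favored, unfairness = stfm_decide(
    st_shared={0: 110.0, 1: 200.0},
    st_interference={0: 10.0, 1: 100.0},
    threshold=1.1,
)
print(policy, favored, round(unfairness, 3))
```

Here thread 0 has slowdown 1.1 and thread 1 has slowdown 2.0, so the unfairness exceeds the threshold and thread 1 is favored.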

Support for System Software

- System-level thread weights (priorities)
  - OS can choose thread weights to satisfy QoS requirements
  - Larger-weight threads should be slowed down less
  - OS communicates thread weights to the memory controller
  - Controller scales each thread’s slowdown by its weight
  - Controller uses the weighted slowdown for scheduling
    - Favors threads with larger weights
- α: Maximum tolerable unfairness set by system software
  - Don’t need fairness? Set α large.
  - Need strict fairness? Set α close to 1.
  - Other values of α: trade off fairness and throughput

Another Problem due to Interference

- Processors try to tolerate the latency of DRAM requests by generating multiple outstanding requests
  - Memory-Level Parallelism (MLP)
  - Out-of-order execution, non-blocking caches, runahead execution
- Effective only if the DRAM controller actually services the multiple requests in parallel in DRAM banks
- Multiple threads share the DRAM controller
- DRAM controllers are not aware of a thread’s MLP
  - Can service each thread’s outstanding requests serially, not in parallel

Parallelism-Aware Batch Scheduling Mutlu and Moscibroda, “Parallelism-Aware Batch Scheduling: …, ” ISCA 2008, IEEE Micro Top Picks 2009.

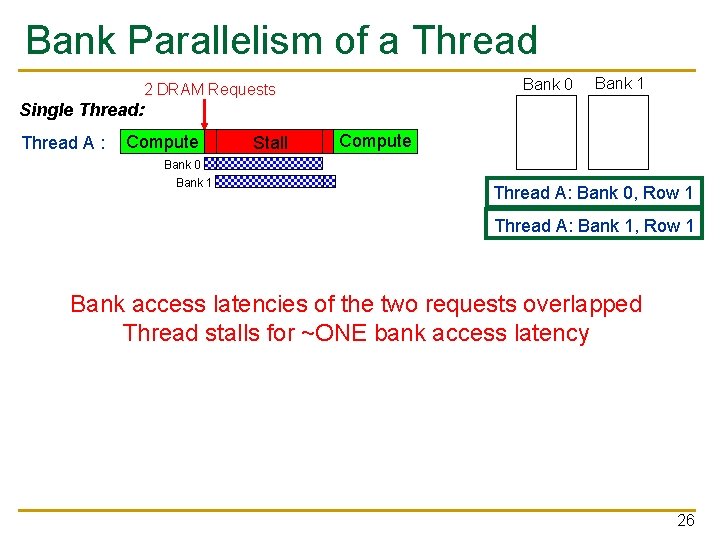

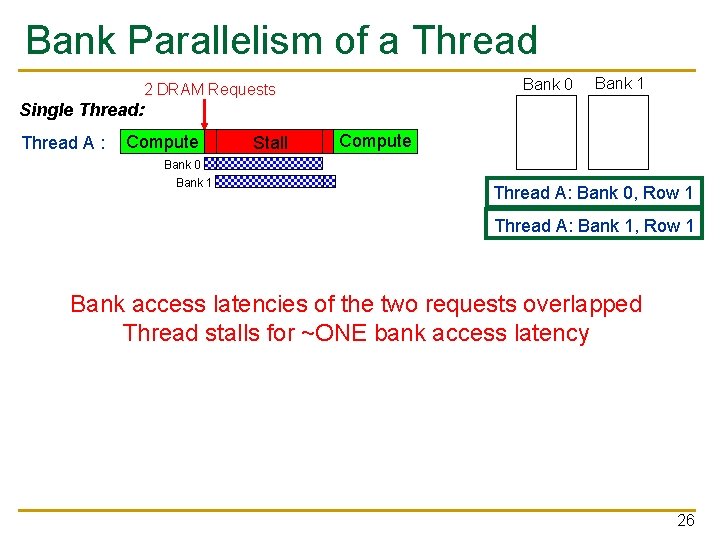

Bank Parallelism of a Thread

(Figure: a single thread A computes, issues 2 DRAM requests, stalls, then computes again. The requests are Thread A: Bank 0, Row 1 and Thread A: Bank 1, Row 1.)

- Bank access latencies of the two requests overlapped
- Thread stalls for ~ONE bank access latency

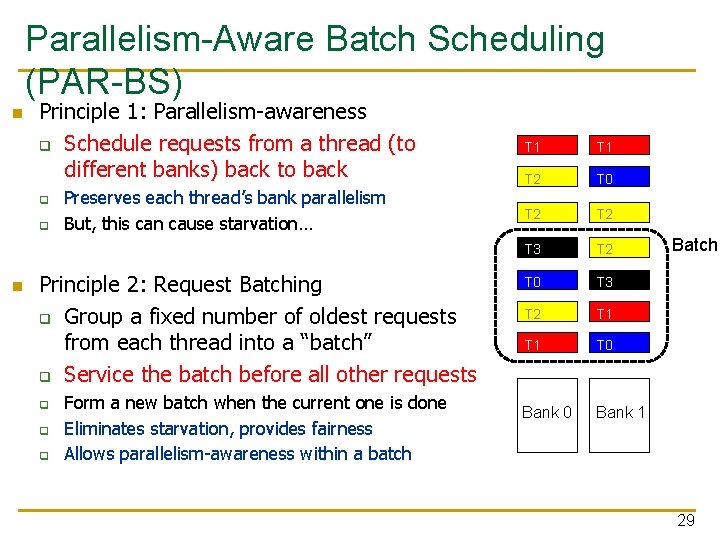

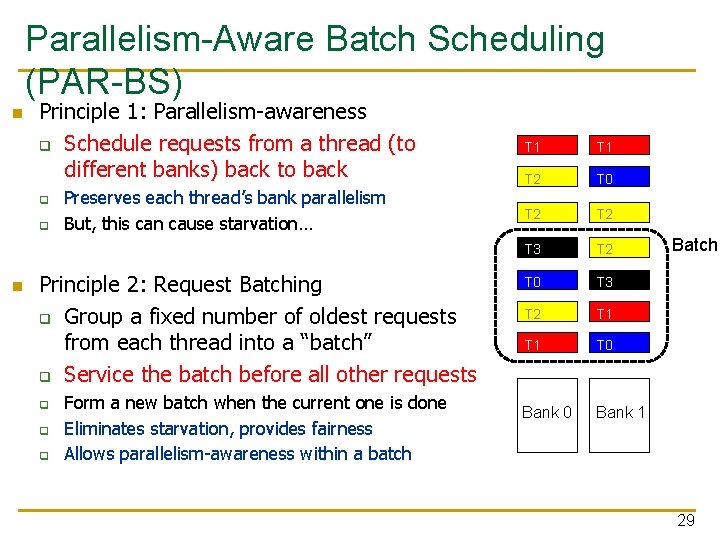

Bank Parallelism Interference in DRAM

(Figure, baseline scheduler: threads A and B each compute, issue 2 DRAM requests, and stall. The requests are Thread A: Bank 0, Row 1; Thread B: Bank 1, Row 99; Thread B: Bank 0, Row 99; Thread A: Bank 1, Row 1.)

- Bank access latencies of each thread serialized
- Each thread stalls for ~TWO bank access latencies

Parallelism-Aware Scheduler

(Figure: the same four requests, Thread A: Bank 0, Row 1; Thread B: Bank 1, Row 99; Thread B: Bank 0, Row 99; Thread A: Bank 1, Row 1, scheduled by the baseline scheduler and by a parallelism-aware scheduler. The parallelism-aware scheduler services one thread's two requests in parallel across Bank 0 and Bank 1, so that thread resumes computing earlier (saved cycles).)

- Average stall-time: ~1.5 bank access latencies

Parallelism-Aware Batch Scheduling (PAR-BS)

- Principle 1: Parallelism-awareness
  - Schedule requests from a thread (to different banks) back to back
  - Preserves each thread’s bank parallelism
  - But, this can cause starvation…
- Principle 2: Request Batching
  - Group a fixed number of oldest requests from each thread into a “batch”
  - Service the batch before all other requests
  - Form a new batch when the current one is done
  - Eliminates starvation, provides fairness
  - Allows parallelism-awareness within a batch

(Figure: requests from threads T0 to T3 queued per bank, with the oldest ones grouped into a batch.)

Request Batching

- Each memory request has a bit (marked) associated with it
- Batch formation:
  - Mark up to Marking-Cap oldest requests per bank for each thread
  - Marked requests constitute the batch
  - Form a new batch when no marked requests are left
- Marked requests are prioritized over unmarked ones
  - No reordering of requests across batches: no starvation, high fairness
- How to prioritize requests within a batch?
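Batch formation as described above can be sketched as follows. Requests are represented as `(thread, bank, arrival)` tuples, which is an assumption made for this illustration, as is the function name `form_batch`.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Req = Tuple[int, int, int]  # (thread, bank, arrival cycle); hypothetical layout


def form_batch(requests: List[Req], marking_cap: int) -> Set[Req]:
    """Mark up to marking_cap oldest requests per bank for each thread."""
    marked: Set[Req] = set()
    count: Dict[Tuple[int, int], int] = defaultdict(int)
    for req in sorted(requests, key=lambda r: r[2]):  # oldest first
        thread, bank, _ = req
        if count[(thread, bank)] < marking_cap:
            count[(thread, bank)] += 1
            marked.add(req)
    return marked


pending = [(0, 0, 1), (0, 0, 2), (0, 0, 3), (1, 0, 4), (0, 1, 5)]
batch = form_batch(pending, marking_cap=2)
print(sorted(batch, key=lambda r: r[2]))
```

With a cap of 2, thread 0's third request to bank 0 stays unmarked and waits for the next batch, while thread 1's single request is included in the current one.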

Within-Batch Scheduling

- Can use any existing DRAM scheduling policy
  - FR-FCFS (row-hit first, then oldest-first) exploits row-buffer locality
- But, we also want to preserve intra-thread bank parallelism
  - Service each thread’s requests back to back
- HOW? Scheduler computes a ranking of threads when the batch is formed
  - Higher-ranked threads are prioritized over lower-ranked ones
  - Improves the likelihood that requests from a thread are serviced in parallel by different banks
    - Different threads prioritized in the same order across ALL banks

How to Rank Threads within a Batch

- Ranking scheme affects system throughput and fairness
- Maximize system throughput
  - Minimize average stall-time of threads within the batch
- Minimize unfairness (Equalize the slowdown of threads)
  - Service threads with inherently low stall-time early in the batch
  - Insight: delaying memory non-intensive threads results in high slowdown
- Shortest stall-time first (shortest job first) ranking
  - Provides optimal system throughput [Smith, 1956]*
  - Controller estimates each thread’s stall-time within the batch
  - Ranks threads with shorter stall-time higher

\* W. E. Smith, “Various optimizers for single stage production,” Naval Research Logistics Quarterly, 1956.

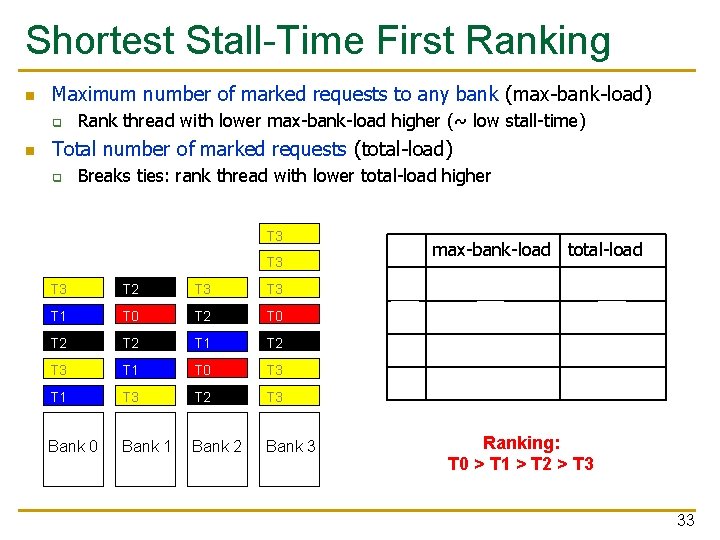

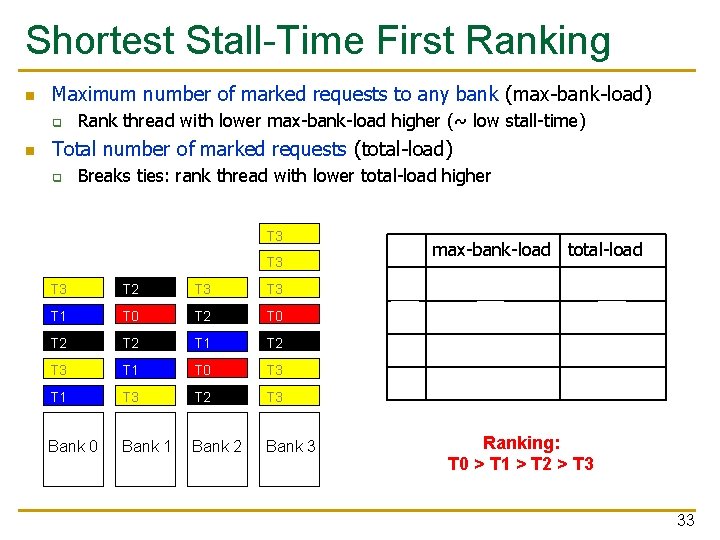

Shortest Stall-Time First Ranking

- Maximum number of marked requests to any bank (max-bank-load)
  - Rank thread with lower max-bank-load higher (~ low stall-time)
- Total number of marked requests (total-load)
  - Breaks ties: rank thread with lower total-load higher

(Figure: marked requests of threads T0 to T3 queued at Bank 0, Bank 1, Bank 2, and Bank 3.)

| Thread | max-bank-load | total-load |
|--------|---------------|------------|
| T0     | 1             | 3          |
| T1     | 2             | 4          |
| T2     | 2             | 6          |
| T3     | 5             | 9          |

Ranking: T0 > T1 > T2 > T3
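The ranking rule can be written as a two-part sort key. In this sketch the per-bank request counts are hypothetical: they were chosen only so that each thread's max-bank-load and total-load match the values on the slide, since the exact per-bank layout of the figure is not recoverable here.

```python
from typing import Dict, List


def rank_threads(load: Dict[str, List[int]]) -> List[str]:
    """Rank threads, highest rank first: lower max-bank-load wins, total-load breaks ties."""
    return sorted(load, key=lambda thread: (max(load[thread]), sum(load[thread])))


# Marked requests per bank (Bank 0..3) for each thread; hypothetical distribution.
marked_per_bank = {
    "T3": [1, 2, 1, 5],  # max-bank-load 5, total-load 9
    "T2": [1, 2, 1, 2],  # max-bank-load 2, total-load 6
    "T1": [1, 1, 2, 0],  # max-bank-load 2, total-load 4
    "T0": [1, 1, 1, 0],  # max-bank-load 1, total-load 3
}
ranking = rank_threads(marked_per_bank)
print(" > ".join(ranking))
```

T1 and T2 tie on max-bank-load, so the lower total-load of T1 places it above T2.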

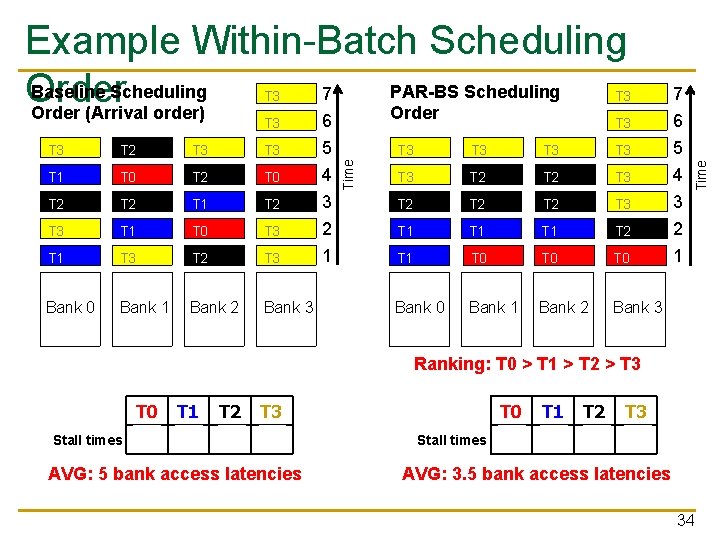

Example Within-Batch Scheduling

(Figure: the marked requests of T0 to T3 at Bank 0 to Bank 3, serviced over time in arrival order under baseline scheduling and in rank order, T0 > T1 > T2 > T3, under PAR-BS scheduling.)

| Scheduling                 | T0 | T1 | T2 | T3 | AVG stall time (bank access latencies) |
|----------------------------|----|----|----|----|----------------------------------------|
| Baseline (arrival order)   | 4  | 4  | 5  | 7  | 5                                      |
| PAR-BS (T0 > T1 > T2 > T3) | 1  | 2  | 4  | 7  | 3.5                                    |

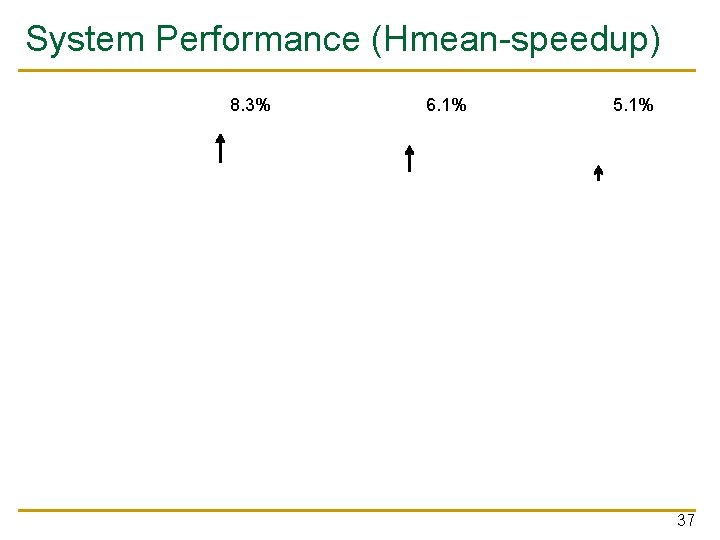

Putting It Together: PAR-BS Scheduling Policy

- PAR-BS Scheduling Policy
  - Batching
    - (1) Marked requests first
  - Parallelism-aware within-batch scheduling
    - (2) Row-hit requests first
    - (3) Higher-rank thread first (shortest stall-time first)
    - (4) Oldest first
- Three properties:
  - Exploits row-buffer locality and intra-thread bank parallelism
  - Work-conserving
    - Services unmarked requests to banks without marked requests
  - Marking-Cap is important
    - Too small cap: destroys row-buffer locality
    - Too large cap: penalizes memory non-intensive threads
- Many more trade-offs analyzed in the paper
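The four prioritization rules map directly onto a tuple sort key. This is a minimal sketch: the dictionary fields (`marked`, `row_hit`, `rank`, `arrival`) are hypothetical names, and `rank` is assumed to be 0 for the highest-ranked thread.

```python
from typing import Dict, List


def par_bs_key(req: Dict) -> tuple:
    """Smaller key = higher priority, following rules (1) to (4)."""
    return (
        not req["marked"],   # (1) marked requests first
        not req["row_hit"],  # (2) row-hit requests first
        req["rank"],         # (3) higher-rank thread first (0 is the highest rank)
        req["arrival"],      # (4) oldest first
    )


requests: List[Dict] = [
    {"id": "a", "marked": False, "row_hit": True,  "rank": 0, "arrival": 1},
    {"id": "b", "marked": True,  "row_hit": False, "rank": 1, "arrival": 2},
    {"id": "c", "marked": True,  "row_hit": False, "rank": 0, "arrival": 3},
    {"id": "d", "marked": True,  "row_hit": True,  "rank": 2, "arrival": 4},
]
order = [req["id"] for req in sorted(requests, key=par_bs_key)]
print(order)
```

Request `a` is the oldest and a row hit, yet it is serviced last because it is not part of the current batch.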

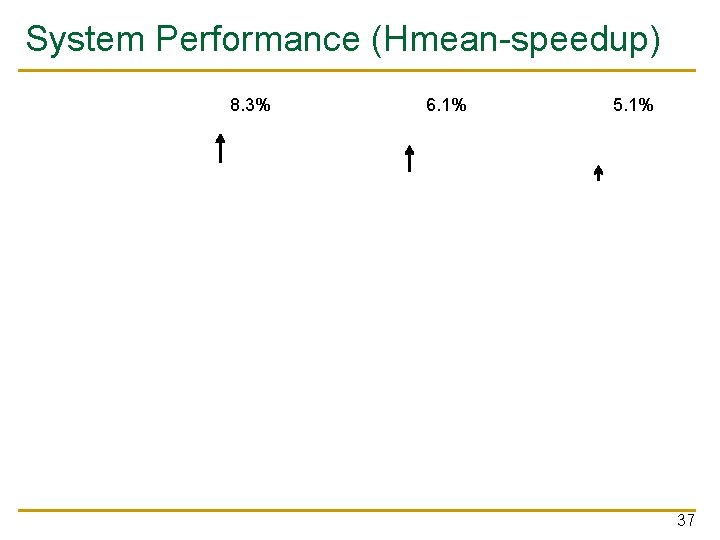

Unfairness on 4-, 8-, 16-core Systems

Unfairness = MAX Memory Slowdown / MIN Memory Slowdown [MICRO 2007]

(Figure annotations: 1.11X, 1.08X)

System Performance (Hmean-speedup)

(Figure annotations: 8.3%, 6.1%, 5.1%)

ATLAS Memory Scheduler

Yoongu Kim et al., “ATLAS: A Scalable and High-Performance Scheduling Algorithm for Multiple Memory Controllers,” HPCA 2010, Best Paper Session.

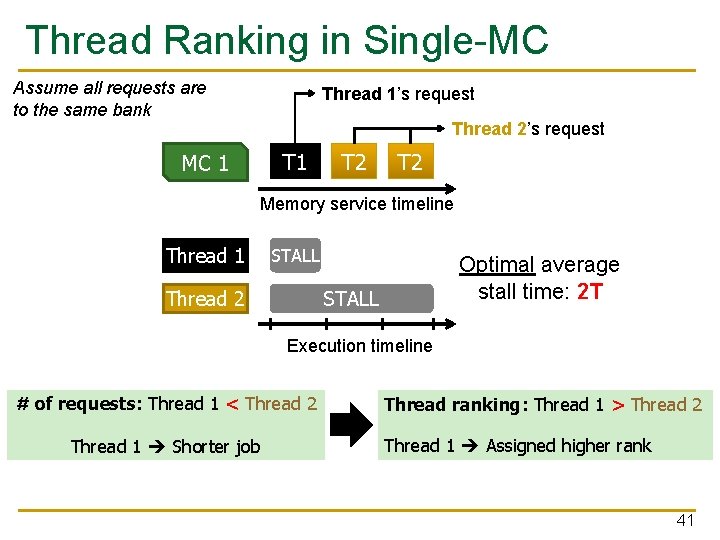

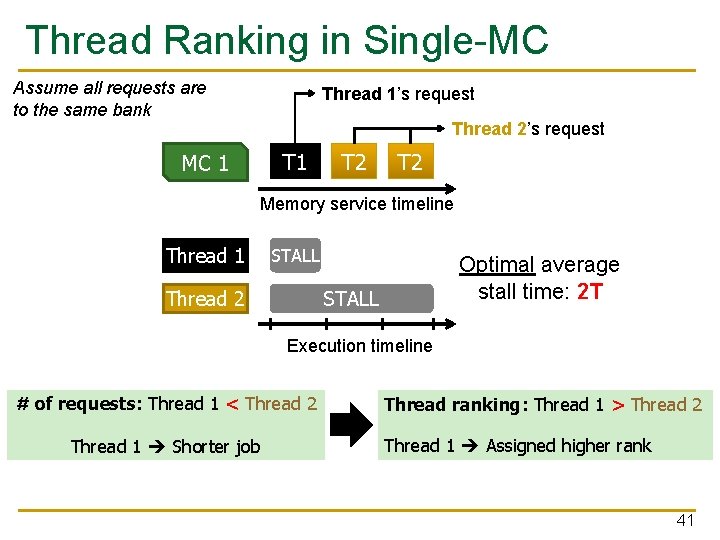

Desired Properties of Memory Scheduling Algorithm

- Maximize system performance
  - Without starving any cores
- Configurable by system software
  - To enforce thread priorities and QoS/fairness policies
- Scalable to a large number of controllers (multiple memory controllers)
  - Should not require significant coordination between controllers

No previous scheduling algorithm satisfies all these requirements

Multiple Memory Controllers

(Figure: a single-MC system, where the cores reach memory through one MC, next to a multiple-MC system, where the cores reach memory through several MCs.)

Difference? The need for coordination

Thread Ranking in Single-MC

Assume all requests are to the same bank.

(Figure: the memory service timeline at MC 1 with Thread 1’s and Thread 2’s requests, and the execution timeline showing how long each thread stalls.)

- # of requests: Thread 1 < Thread 2 → Thread 1 is the shorter job
- Thread ranking: Thread 1 > Thread 2 → Thread 1 is assigned the higher rank
- Optimal average stall time: 2T
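The benefit of ranking the shorter job first can be checked with a small calculation. The sketch below assumes one request for Thread 1 and two for Thread 2 (the slide only states that Thread 1 has fewer), that every request takes one service time T at the single bank, and that a thread stalls until its last request completes; the function name is hypothetical.

```python
from typing import Dict, List


def average_stall(order: List[str], num_requests: Dict[str, int]) -> float:
    """Average stall time, in units of T, when threads are serviced in the given order."""
    finish = 0
    total = 0
    for thread in order:
        finish += num_requests[thread]  # this thread's requests are serviced back to back
        total += finish                 # the thread stalls until its last request is done
    return total / len(order)


num_requests = {"T1": 1, "T2": 2}  # assumed request counts
shortest_first = average_stall(["T1", "T2"], num_requests)
longest_first = average_stall(["T2", "T1"], num_requests)
print(shortest_first, longest_first)
```

Under these assumptions, servicing Thread 1 first gives an average stall of 2T, matching the optimal value on the slide, while the reverse order gives 2.5T.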

Thread Ranking in Multiple-MC

(Figure: service timelines of MC 1 and MC 2 for requests of Thread 1 and Thread 2, without and with coordination.)

- Uncoordinated: MC 1’s shorter job is Thread 1, so MC 1 incorrectly assigns higher rank to Thread 1. Avg. stall time: 3T
- Coordinated: the global shorter job is Thread 2, so MC 1 correctly assigns higher rank to Thread 2. Avg. stall time: 2.5T (saved cycles)

Coordination → Better scheduling decisions

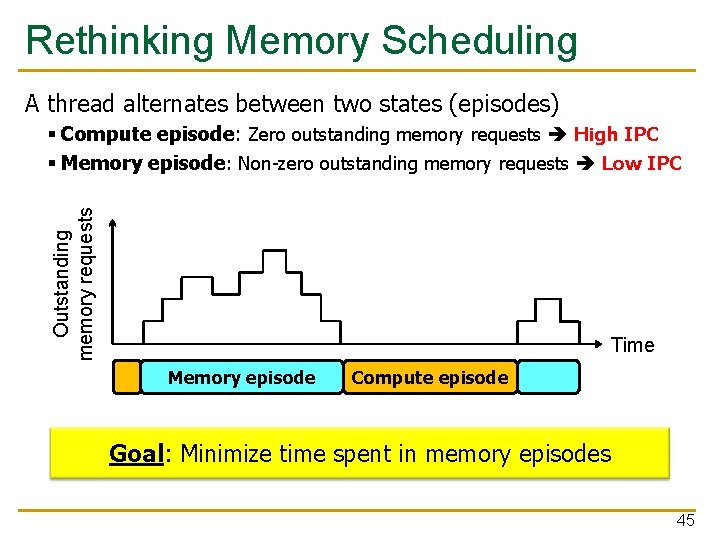

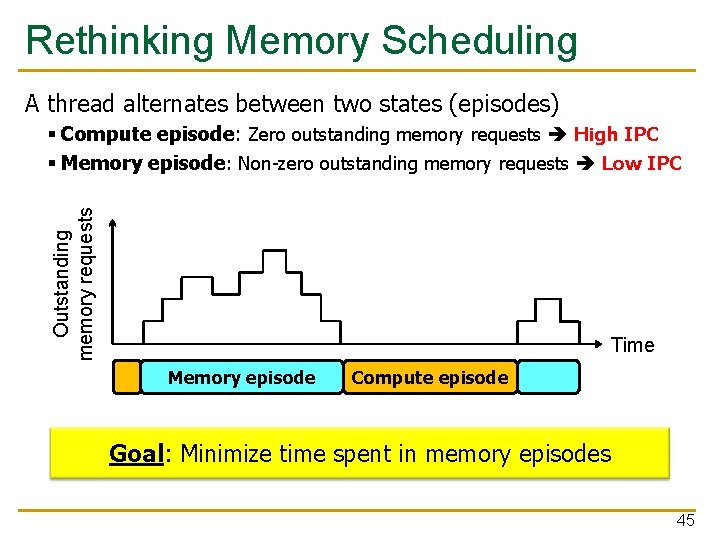

Coordination Limits Scalability

(Figure: MC 1 to MC 4 coordinating either MC-to-MC or through a Meta-MC. Coordination consumes bandwidth.)

To be scalable, coordination should:
- exchange little information
- occur infrequently

The Problem and Our Goal

Problem:
- Previous best memory scheduling algorithms are not scalable to many controllers
  - Not designed for multiple MCs
  - Low performance or require significant coordination

Our Goal:
- Fundamentally redesign the memory scheduling algorithm such that it
  - Provides high system throughput
  - Requires little or no coordination among MCs

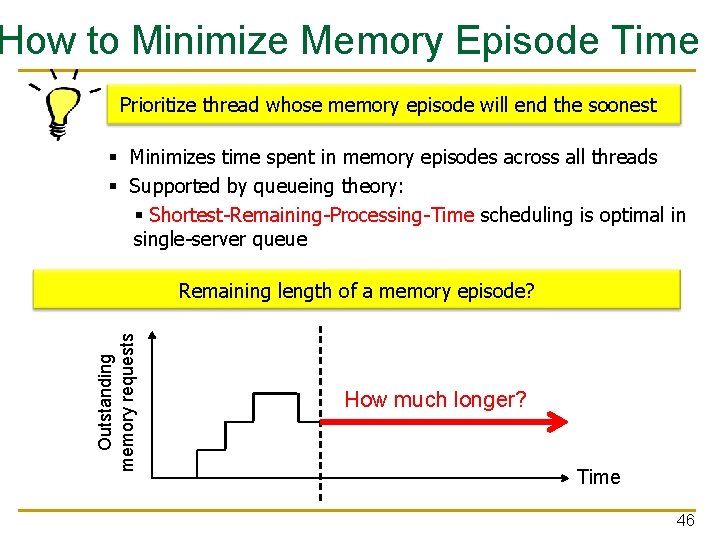

Rethinking Memory Scheduling

A thread alternates between two states (episodes):
- Compute episode: Zero outstanding memory requests → High IPC
- Memory episode: Non-zero outstanding memory requests → Low IPC

(Figure: outstanding memory requests over time, alternating between memory episodes and compute episodes.)

Goal: Minimize time spent in memory episodes

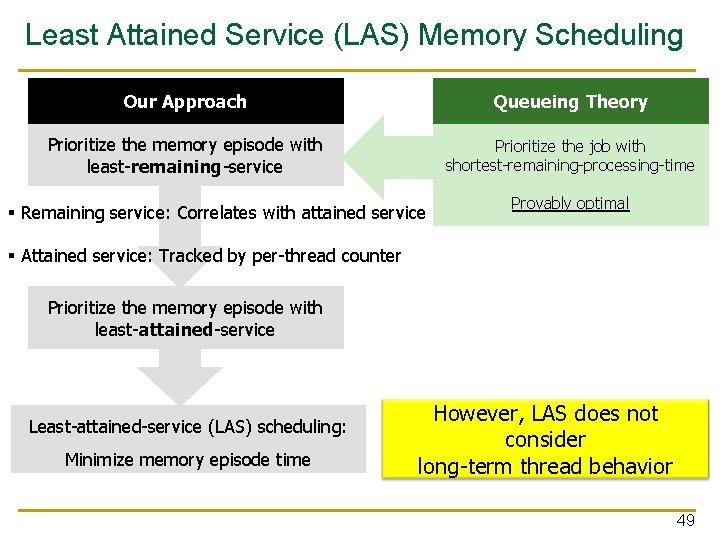

How to Minimize Memory Episode Time

Prioritize thread whose memory episode will end the soonest
- Minimizes time spent in memory episodes across all threads
- Supported by queueing theory:
  - Shortest-Remaining-Processing-Time scheduling is optimal in single-server queue

Remaining length of a memory episode? (Figure: outstanding memory requests over time; how much longer will the current episode last?)

Predicting Memory Episode Lengths

We discovered: the past is an excellent predictor of the future

(Figure: outstanding memory requests over time, split into attained service (PAST) and remaining service (FUTURE).)

Large attained service → Large expected remaining service

Q: Why?
A: Memory episode lengths are Pareto distributed…

Pareto Distribution of Memory Episode Lengths

(Figure: Pr{Mem. episode > x} versus x (cycles) for 401.bzip2. Memory episode lengths of SPEC benchmarks follow a Pareto distribution.)

- The longer an episode has lasted → the longer it will last further
- Attained service correlates with remaining service
- Favoring least-attained-service memory episode = Favoring memory episode which will end the soonest
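Why a Pareto distribution implies "the longer it has lasted, the longer it will last" can be seen from a short calculation. This is a sketch assuming a Pareto (type I) distribution with scale $x_m$ and shape $k > 1$; both symbols are introduced here for illustration and do not appear on the slide.

$$
\Pr\{X > x\} = \left(\frac{x_m}{x}\right)^{k}, \quad x \ge x_m
\qquad\Longrightarrow\qquad
\Pr\{X > x + y \mid X > x\} = \left(\frac{x}{x + y}\right)^{k}, \quad y \ge 0
$$

$$
\mathbb{E}[X - x \mid X > x] = \int_0^{\infty} \left(\frac{x}{x + y}\right)^{k} dy = \frac{x}{k - 1}
$$

The expected remaining length grows linearly with the attained length $x$, so under this assumption an episode that has received little service so far is also the one expected to finish soonest.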

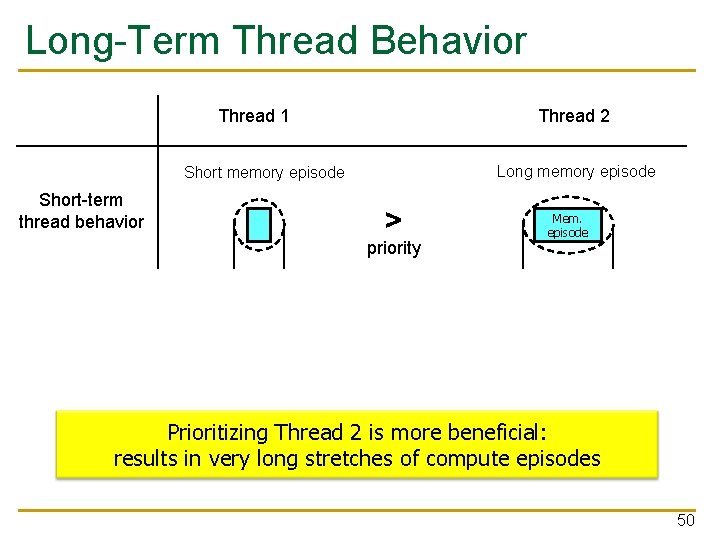

Least Attained Service (LAS) Memory Scheduling

- Queueing theory: Prioritize the job with shortest-remaining-processing-time (provably optimal)
- Our approach: Prioritize the memory episode with least-remaining-service
  - Remaining service: Correlates with attained service
  - Attained service: Tracked by per-thread counter
  - → Prioritize the memory episode with least-attained-service

Least-attained-service (LAS) scheduling: Minimize memory episode time

However, LAS does not consider long-term thread behavior

Long-Term Thread Behavior

(Figure: timelines of Thread 1 and Thread 2 made of memory episodes and compute episodes, one of them with a long memory episode. The slide compares the priority order suggested by short-term thread behavior with the opposite order suggested by long-term thread behavior.)

Prioritizing Thread 2 is more beneficial: results in very long stretches of compute episodes

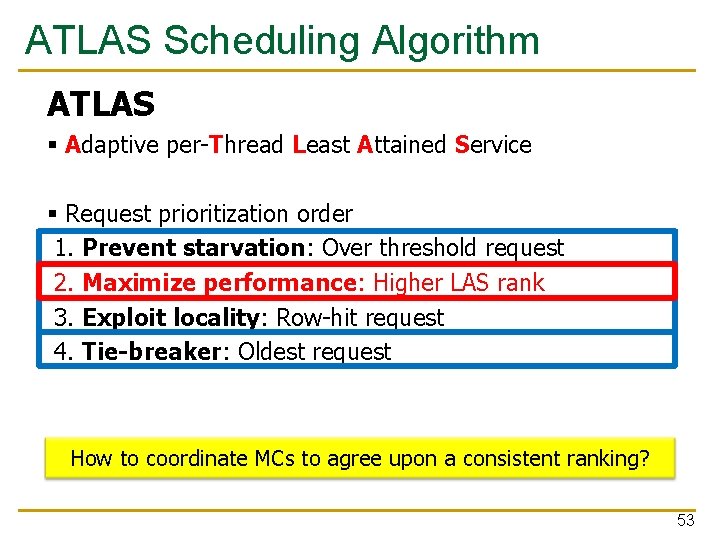

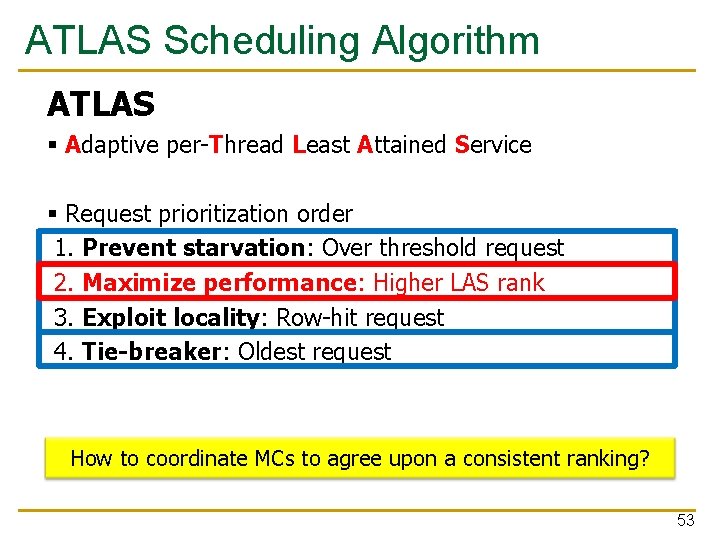

Quantum-Based Attained Service of a Thread

(Figure: outstanding memory requests over time. For short-term thread behavior, attained service is measured within one memory episode; for long-term thread behavior, attained service is measured over a quantum of millions of cycles.)

We divide time into large, fixed-length intervals: quanta (millions of cycles)

Quantum-Based LAS Thread Ranking

During a quantum:
- Each thread’s attained service (AS) is tracked by MCs
- $AS_i$ = A thread’s AS during only the i-th quantum

End of a quantum:
- Each thread’s TotalAS computed as: $\text{TotalAS}_i = \alpha \cdot \text{TotalAS}_{i-1} + (1 - \alpha) \cdot AS_i$
- High α → More bias towards history
- Threads are ranked, favoring threads with lower TotalAS

Next quantum:
- Threads are serviced according to their ranking

ATLAS Scheduling Algorithm

ATLAS: Adaptive per-Thread Least Attained Service

Request prioritization order:
1. Prevent starvation: Over threshold request
2. Maximize performance: Higher LAS rank
3. Exploit locality: Row-hit request
4. Tie-breaker: Oldest request

How to coordinate MCs to agree upon a consistent ranking?

ATLAS Coordination Mechanism

During a quantum:
- Each MC increments the local AS of each thread

End of a quantum:
- Each MC sends local AS of each thread to centralized meta-MC
- Meta-MC accumulates local AS and calculates ranking
- Meta-MC broadcasts ranking to all MCs → Consistent thread ranking
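The end-of-quantum step at the meta-MC can be sketched in a few lines, combining the accumulation of local AS with the TotalAS update from the quantum-based ranking slide. The data layout (one dictionary of local AS per MC) and the function name are assumptions made for illustration.

```python
from typing import Dict, List, Tuple


def end_of_quantum(
    local_as_per_mc: List[Dict[str, float]],
    total_as_prev: Dict[str, float],
    alpha: float,
) -> Tuple[Dict[str, float], List[str]]:
    """Meta-MC step: accumulate local AS, update TotalAS, and rank the threads."""
    total_as = {}
    for thread, previous in total_as_prev.items():
        as_i = sum(mc.get(thread, 0.0) for mc in local_as_per_mc)  # AS in this quantum
        total_as[thread] = alpha * previous + (1 - alpha) * as_i   # TotalAS update
    # Lower TotalAS means higher rank; the first entry is the highest-ranked thread.
    ranking = sorted(total_as, key=lambda thread: total_as[thread])
    return total_as, ranking


local_as = [{"A": 100.0, "B": 10.0}, {"A": 50.0, "B": 20.0}]  # one dict per MC
total_as, ranking = end_of_quantum(local_as, {"A": 0.0, "B": 400.0}, alpha=0.5)
print(total_as, ranking)
```

In this example thread B received little service in the current quantum, but its large history keeps its TotalAS above that of thread A, so A is ranked higher. The ranking list is what the meta-MC would broadcast to all MCs.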

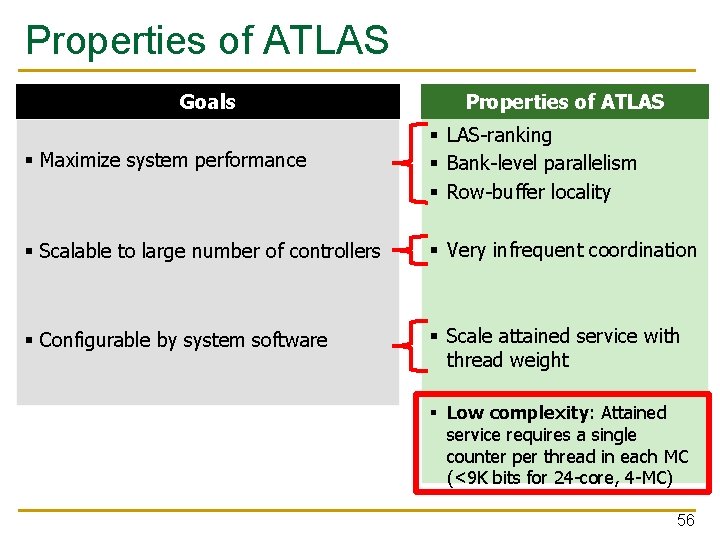

Coordination Cost in ATLAS

How costly is coordination in ATLAS?

|                                    | ATLAS                                                   | PAR-BS (previous best work [ISCA 08])                  |
|------------------------------------|---------------------------------------------------------|--------------------------------------------------------|
| How often?                         | Very infrequently: every quantum boundary (10 M cycles) | Frequently: every batch boundary (thousands of cycles) |
| Sensitive to coordination latency? | Insensitive: coordination latency << quantum length     | Sensitive: coordination latency ~ batch length         |

Properties of ATLAS

| Goals                                    | Properties of ATLAS                                    |
|------------------------------------------|--------------------------------------------------------|
| Maximize system performance              | LAS-ranking, bank-level parallelism, row-buffer locality |
| Scalable to large number of controllers  | Very infrequent coordination                           |
| Configurable by system software          | Scale attained service with thread weight              |

Low complexity: Attained service requires a single counter per thread in each MC (<9K bits for 24-core, 4-MC)

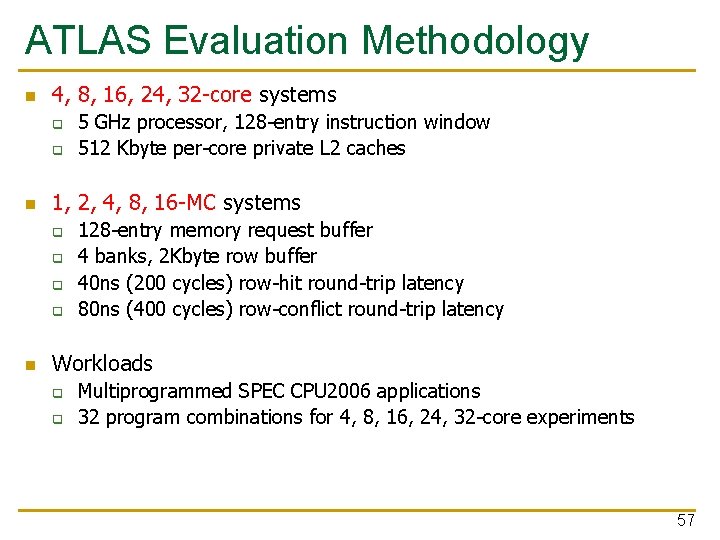

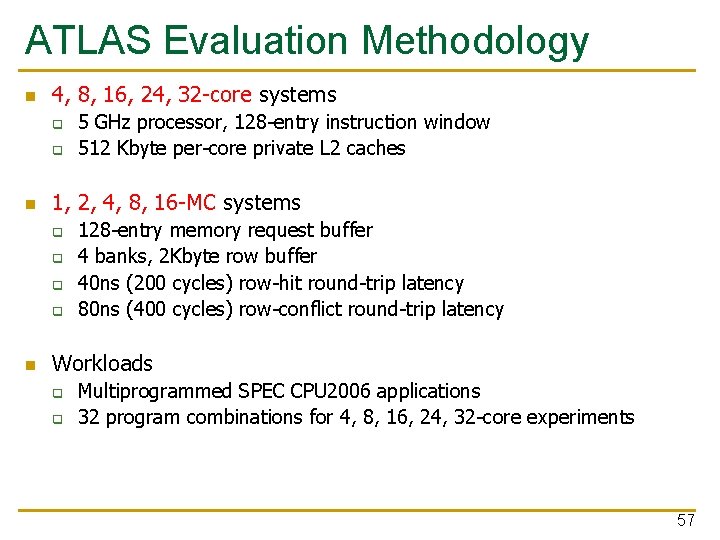

ATLAS Evaluation Methodology

- 4, 8, 16, 24, 32-core systems
  - 5 GHz processor, 128-entry instruction window
  - 512 Kbyte per-core private L2 caches
- 1, 2, 4, 8, 16-MC systems
  - 128-entry memory request buffer
  - 4 banks, 2 Kbyte row buffer
  - 40 ns (200 cycles) row-hit round-trip latency
  - 80 ns (400 cycles) row-conflict round-trip latency
- Workloads
  - Multiprogrammed SPEC CPU 2006 applications
  - 32 program combinations for 4, 8, 16, 24, 32-core experiments


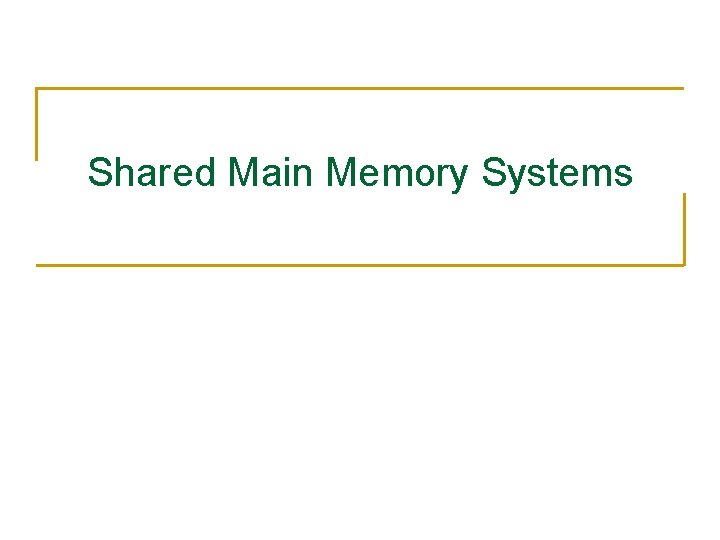

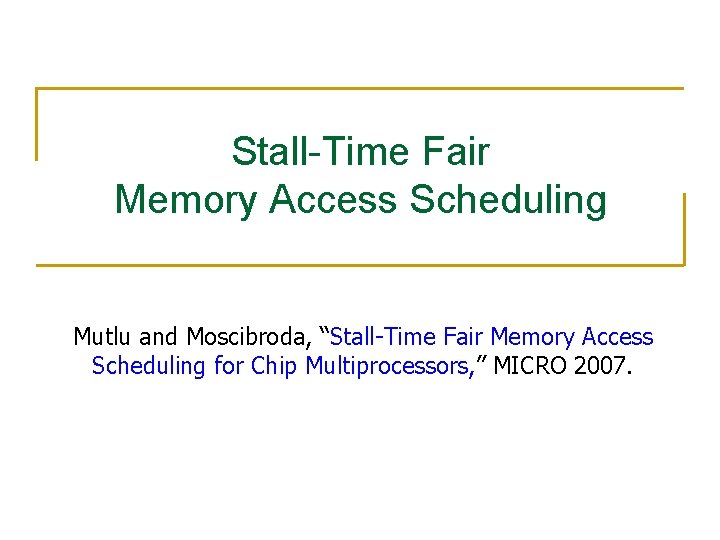

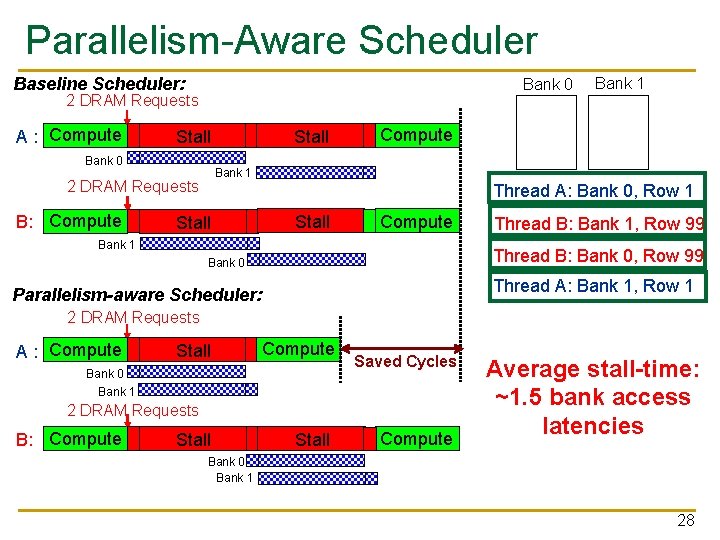

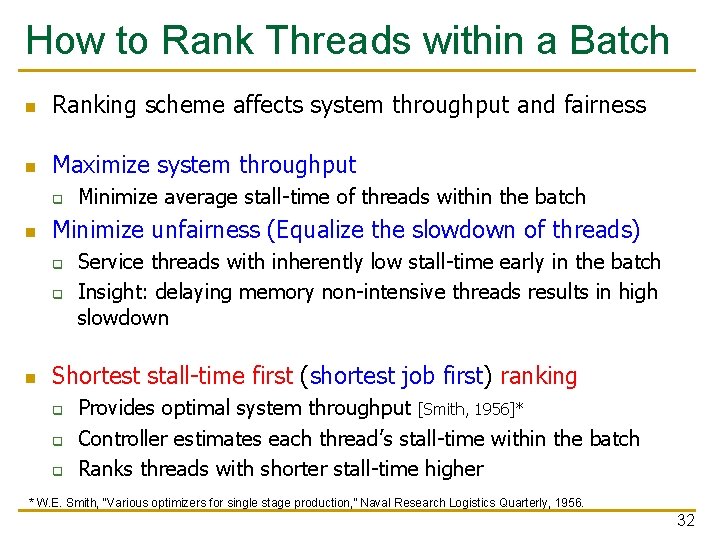

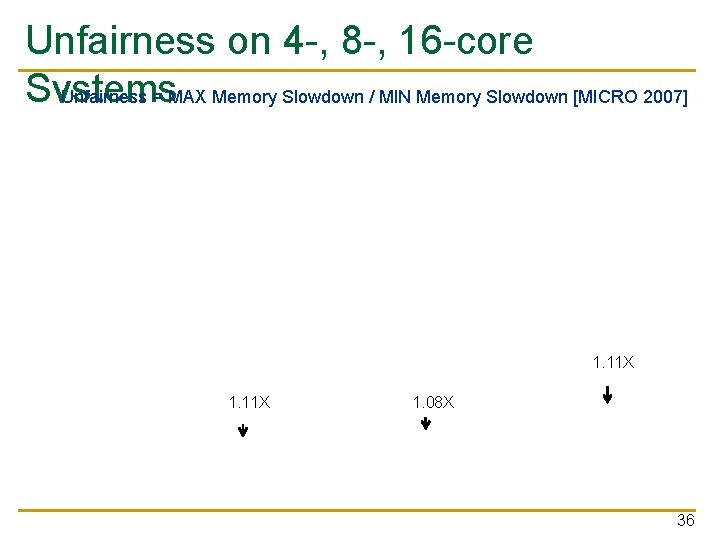

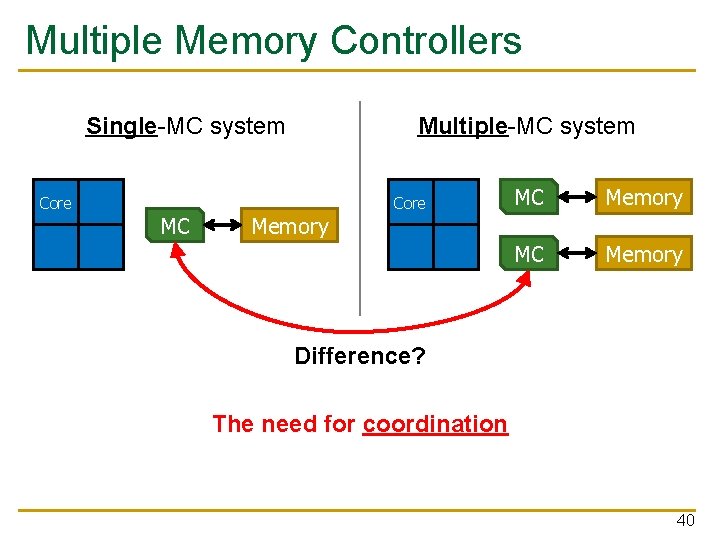

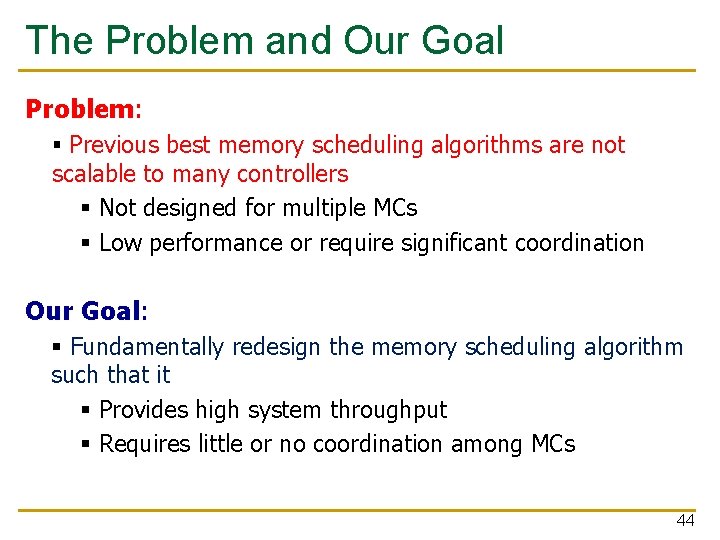

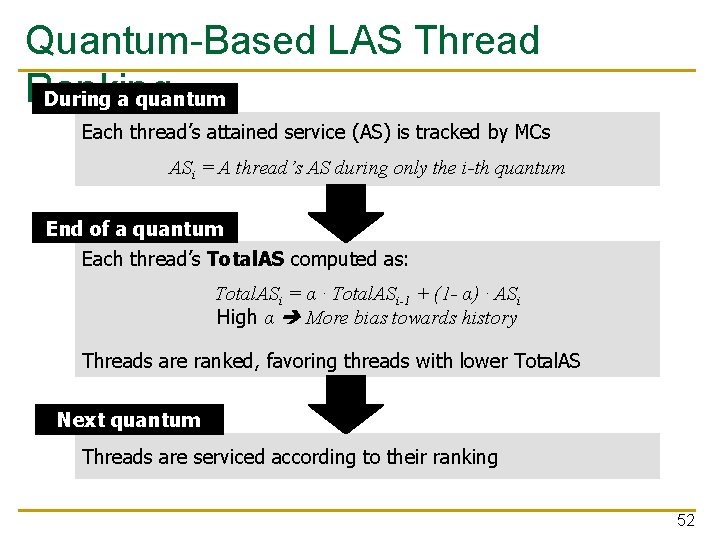

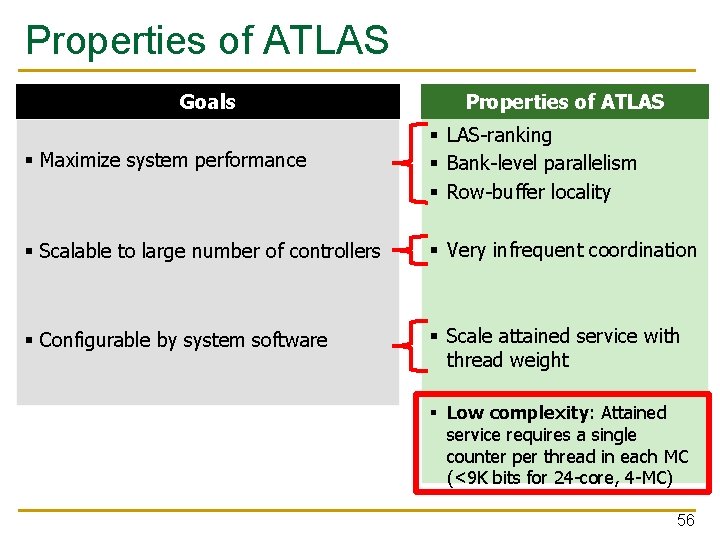

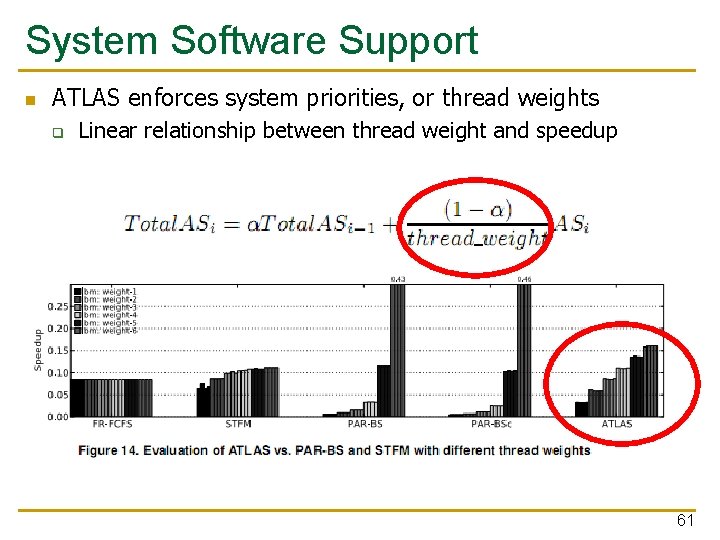

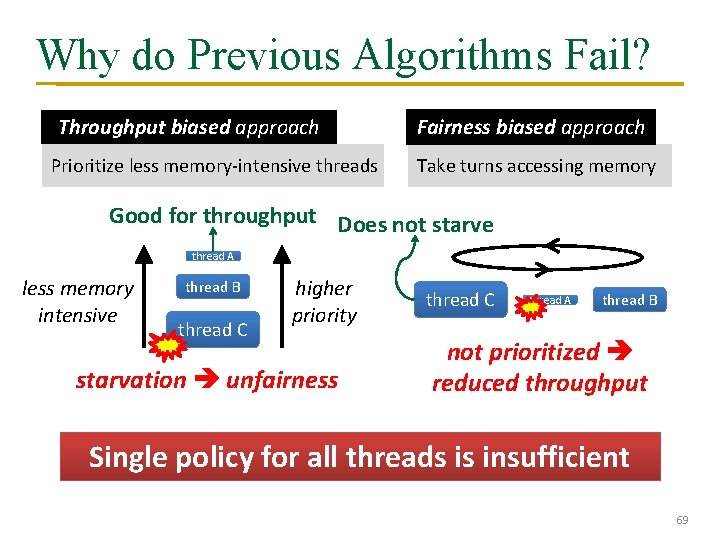

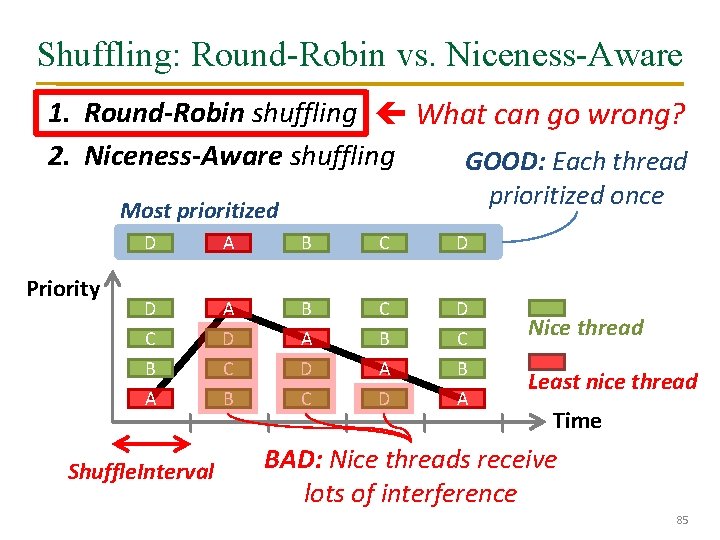

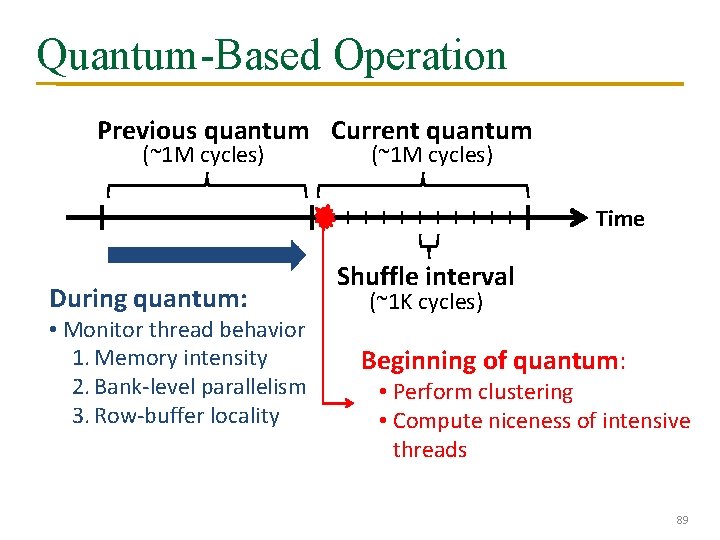

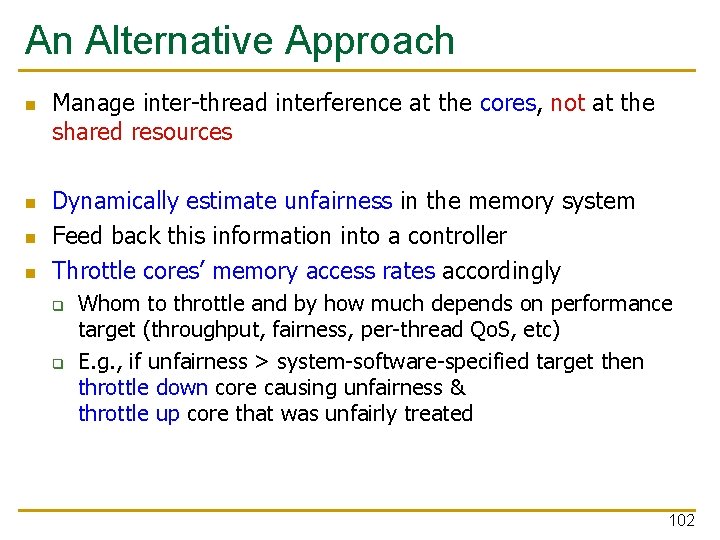

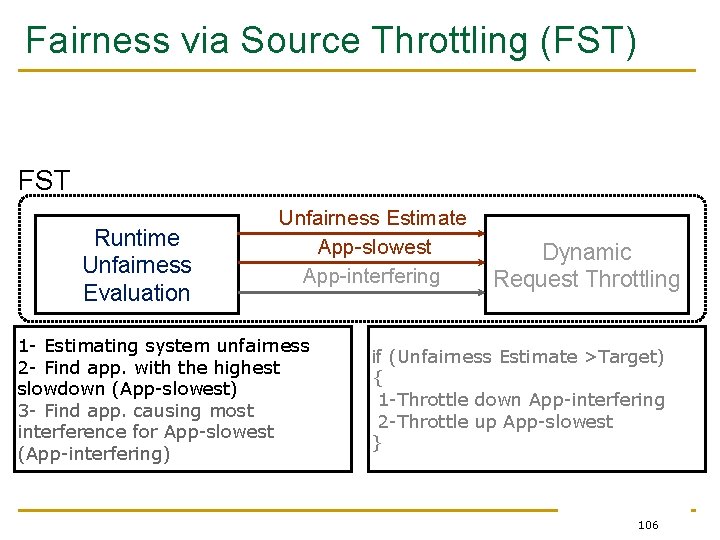

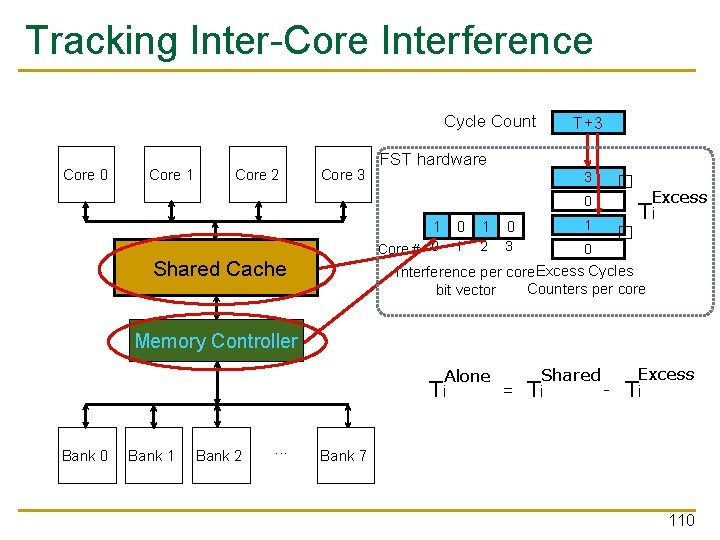

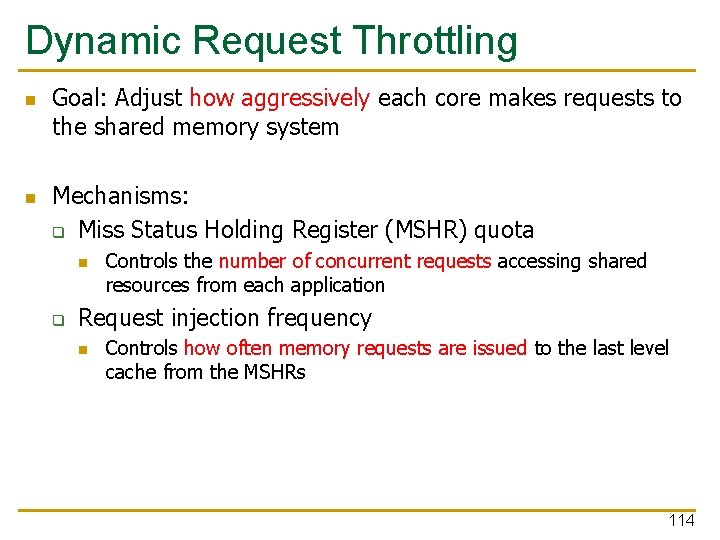

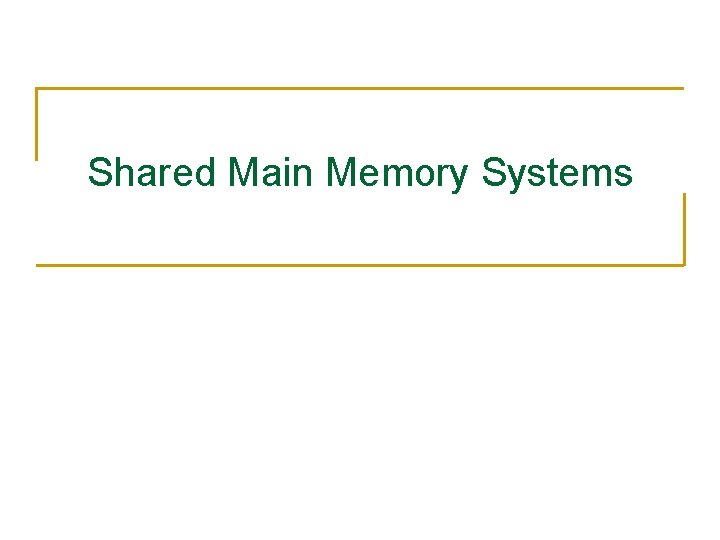

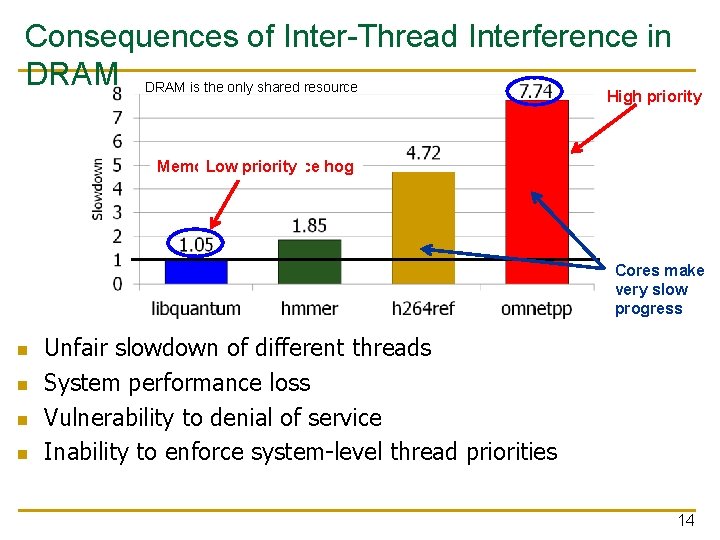

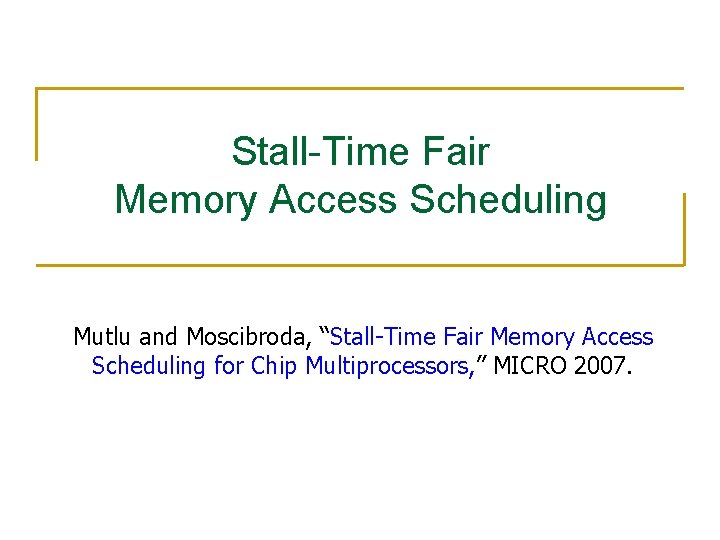

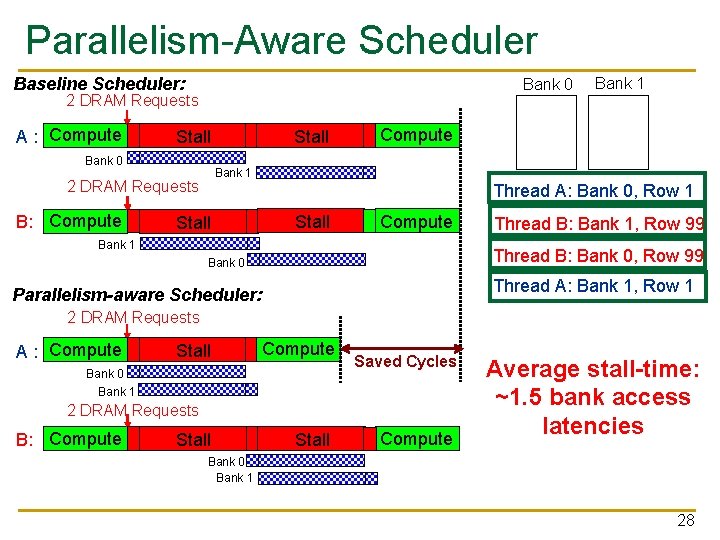

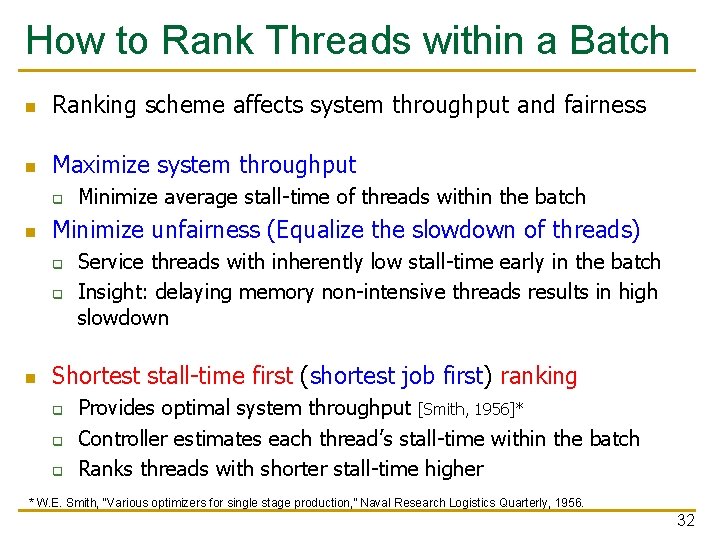

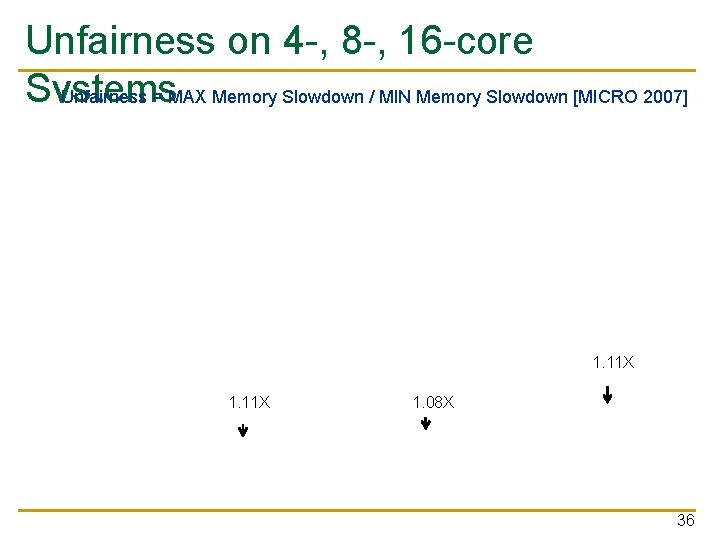

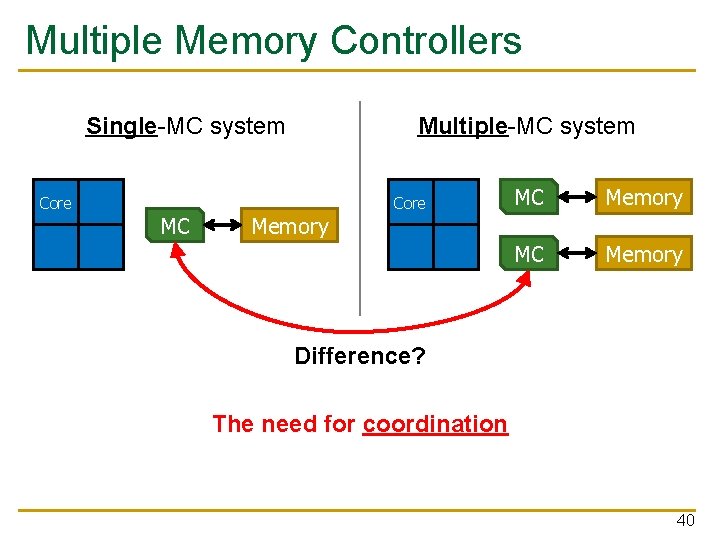

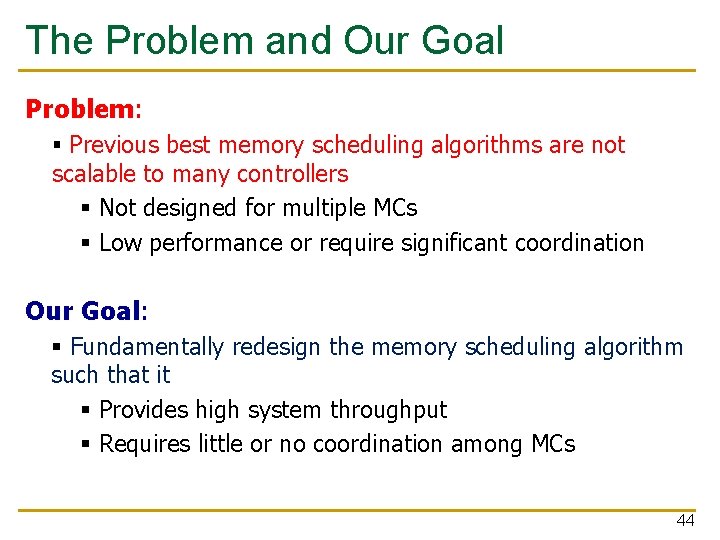

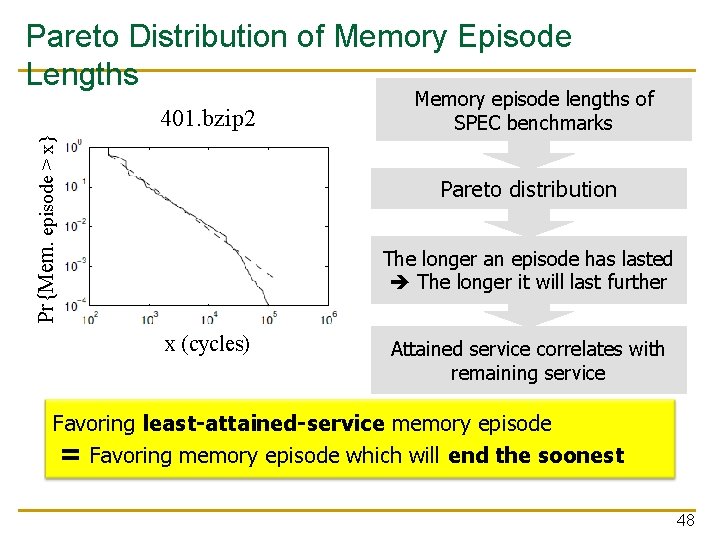

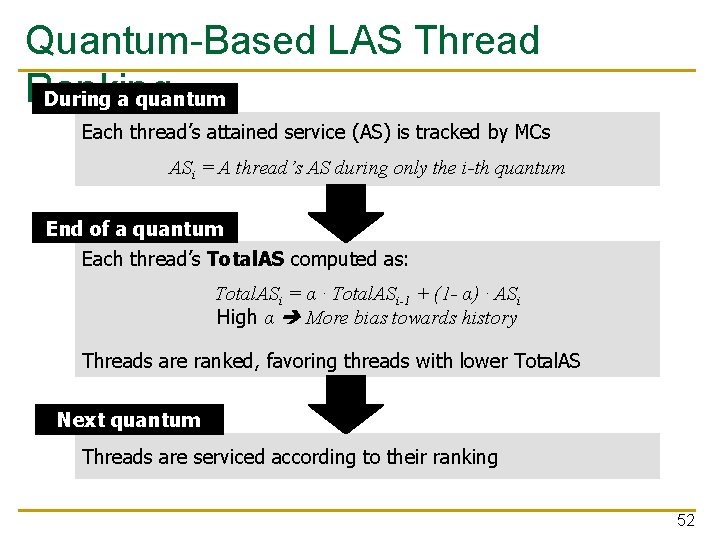

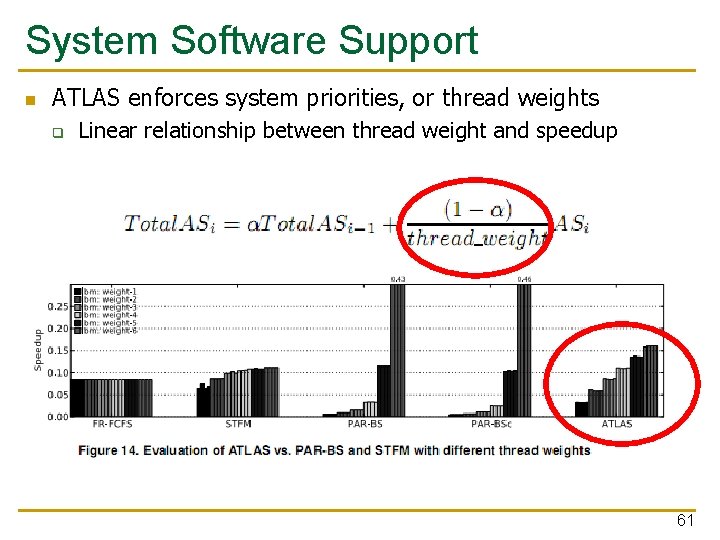

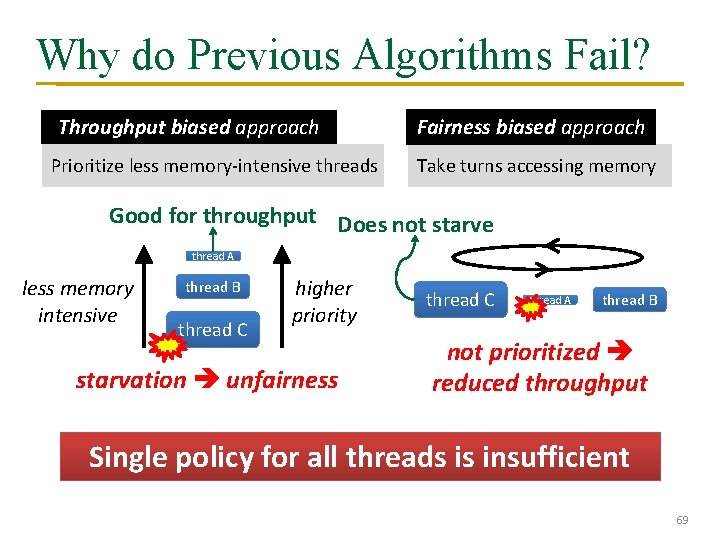

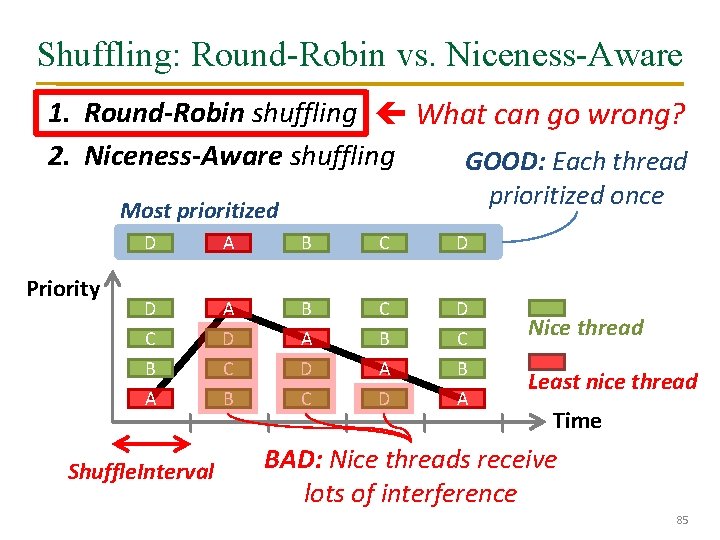

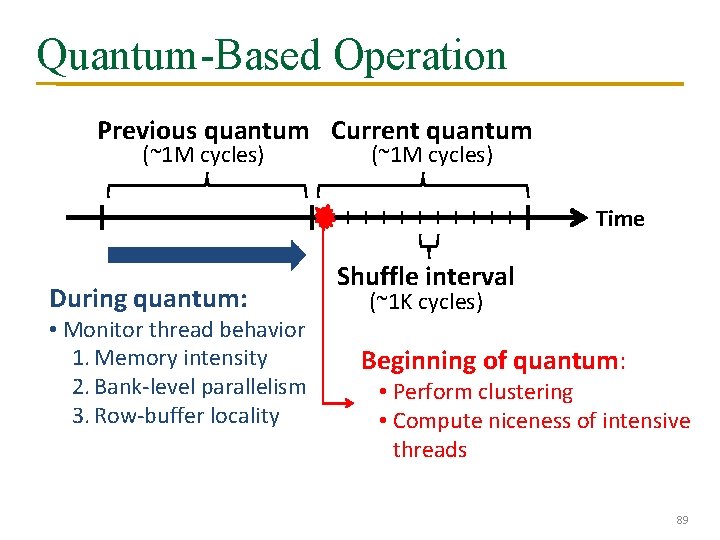

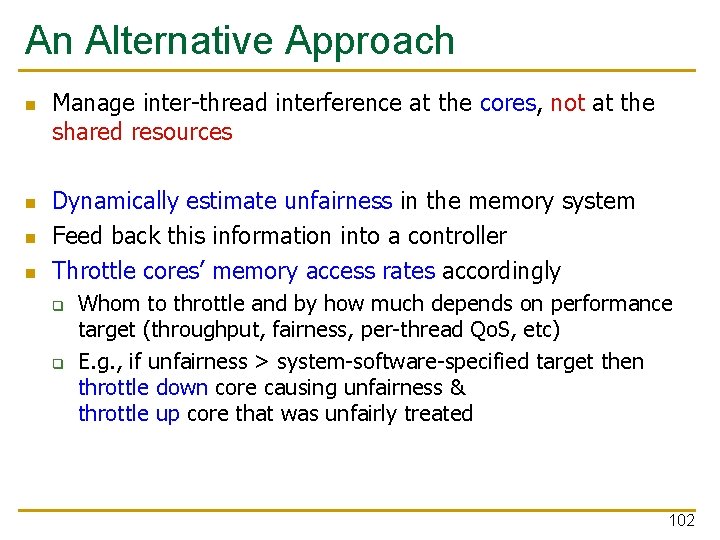

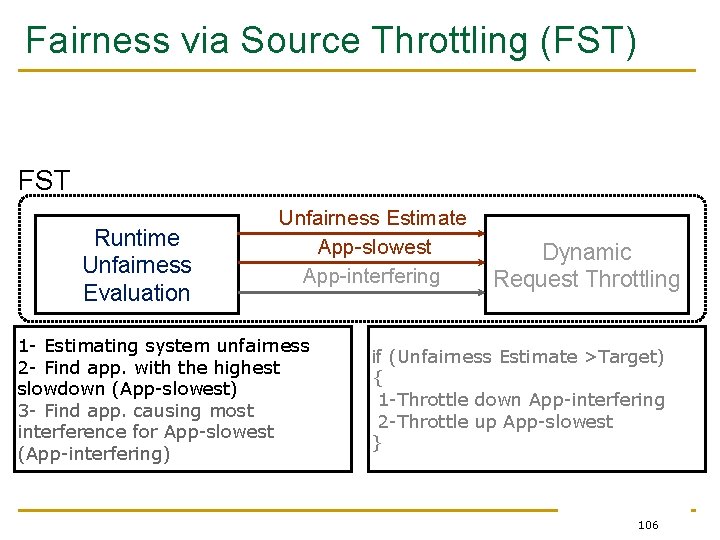

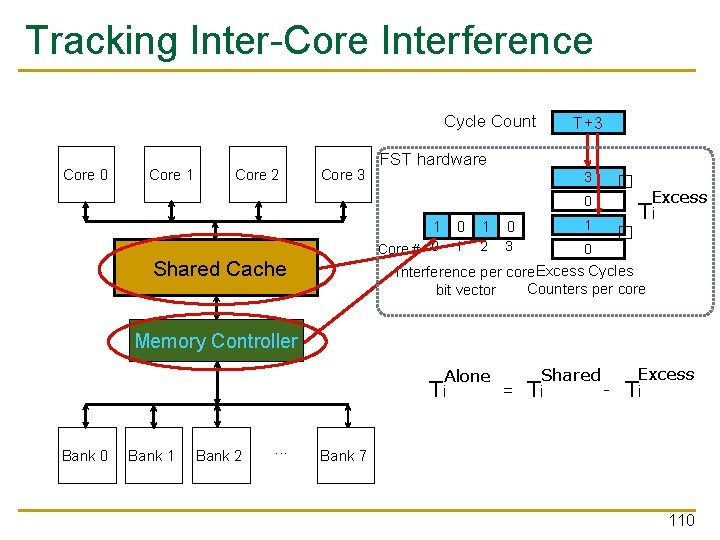

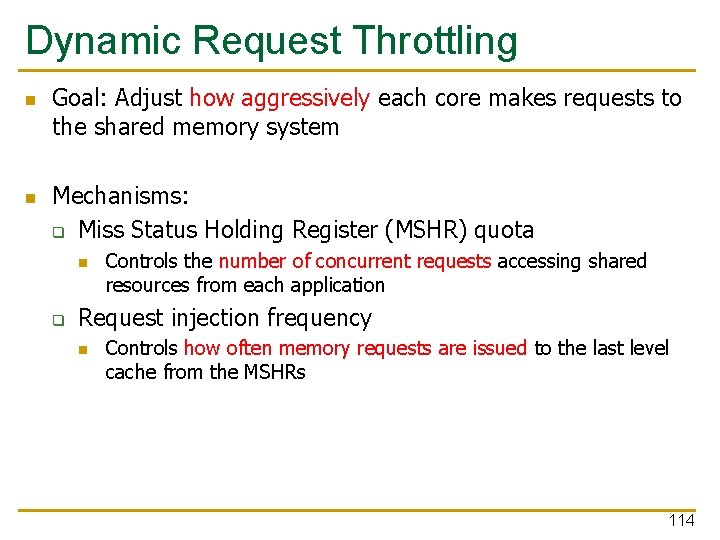

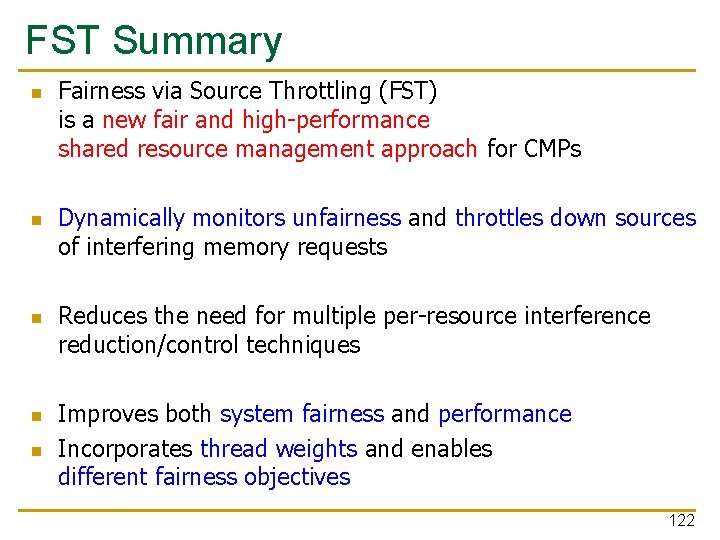

Comparison to Previous Scheduling Algorithms

- FCFS, FR-FCFS [Rixner+, ISCA 00]
  - Oldest-first, row-hit first
  - Low multi-core performance: Do not distinguish between threads
- Network Fair Queueing [Nesbit+, MICRO 06]
  - Partitions memory bandwidth equally among threads
  - Low system performance: Bank-level parallelism, locality not exploited
- Stall-time Fair Memory Scheduler [Mutlu+, MICRO 07]
  - Balances thread slowdowns relative to when run alone
  - High coordination costs: Requires heavy cycle-by-cycle coordination
- Parallelism-Aware Batch Scheduler [Mutlu+, ISCA 08]
  - Batches requests and performs thread ranking to preserve bank-level parallelism
  - High coordination costs: Batch duration is very short

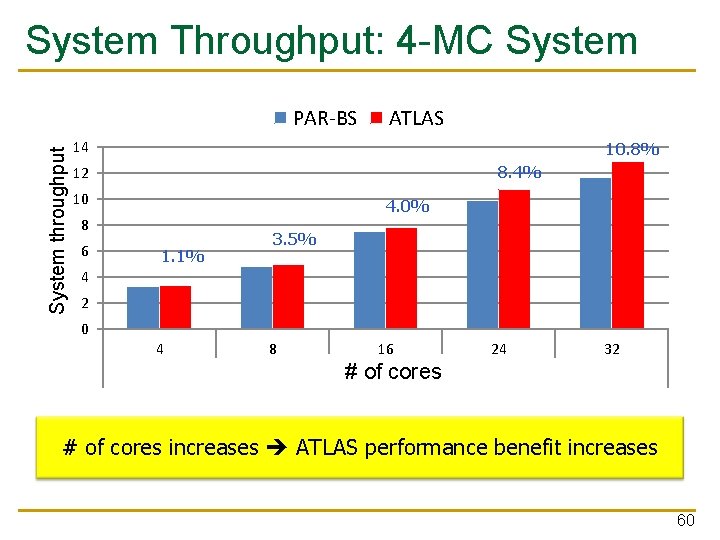

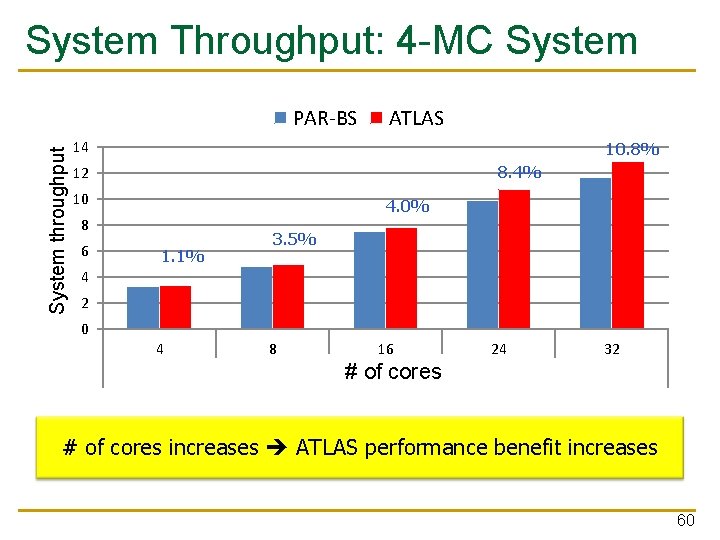

System Throughput: 24-Core

System throughput = ∑ Speedup

(Figure: system throughput of FCFS, FR_FCFS, STFM, PAR-BS, and ATLAS versus the number of memory controllers (1, 2, 4, 8, 16). Annotated improvements: 17.0%, 9.8%, 8.4%, 5.9%, 3.5%.)

ATLAS consistently provides higher system throughput than all previous scheduling algorithms
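The two performance metrics named in this lecture, system throughput as a sum of speedups and Hmean-speedup, can be computed as below. This sketch assumes that a thread's speedup is its IPC when sharing the system divided by its IPC when running alone; the IPC values and function names are made up for illustration.

```python
from typing import Dict, List


def speedups(ipc_shared: Dict[str, float], ipc_alone: Dict[str, float]) -> List[float]:
    """Per-thread speedup, assumed here to be IPC_shared / IPC_alone."""
    return [ipc_shared[thread] / ipc_alone[thread] for thread in ipc_shared]


def system_throughput(values: List[float]) -> float:
    """System throughput = sum of the per-thread speedups."""
    return sum(values)


def hmean_speedup(values: List[float]) -> float:
    """Harmonic mean of the per-thread speedups."""
    return len(values) / sum(1.0 / value for value in values)


s = speedups(ipc_shared={"a": 1.0, "b": 0.25}, ipc_alone={"a": 2.0, "b": 1.0})
throughput = system_throughput(s)
hmean = hmean_speedup(s)
print(s, throughput, round(hmean, 4))
```

The harmonic mean is pulled toward the slowest thread, which is why it is often read as a metric that balances throughput and fairness.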

System Throughput: 4-MC

(Figure: system throughput of PAR-BS and ATLAS versus the number of cores (4, 8, 16, 24, 32). Annotated improvements: 1.1%, 3.5%, 4.0%, 8.4%, 10.8%.)

As the # of cores increases, the ATLAS performance benefit increases

System Software Support

- ATLAS enforces system priorities, or thread weights
  - Linear relationship between thread weight and speedup

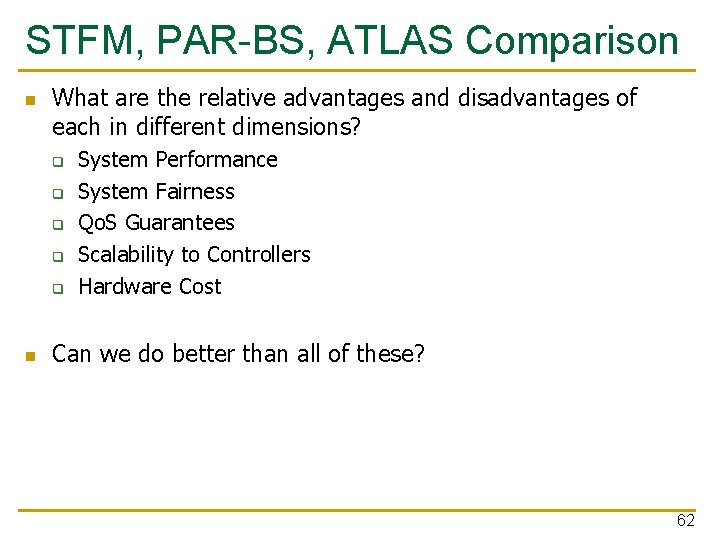

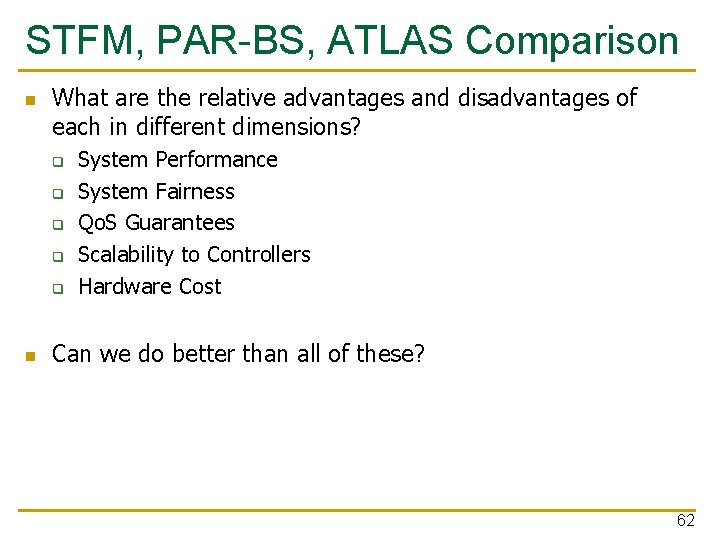

STFM, PAR-BS, ATLAS Comparison

- What are the relative advantages and disadvantages of each in different dimensions?
  - System Performance
  - System Fairness
  - QoS Guarantees
  - Scalability to Controllers
  - Hardware Cost
- Can we do better than all of these?

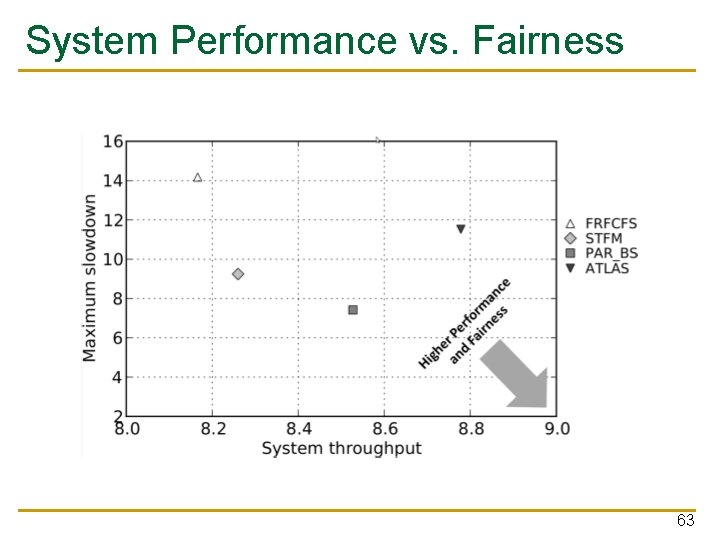

System Performance vs. Fairness

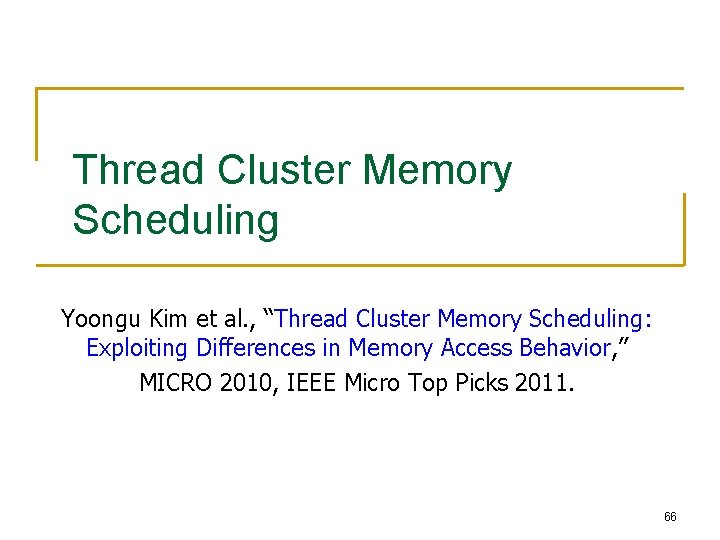

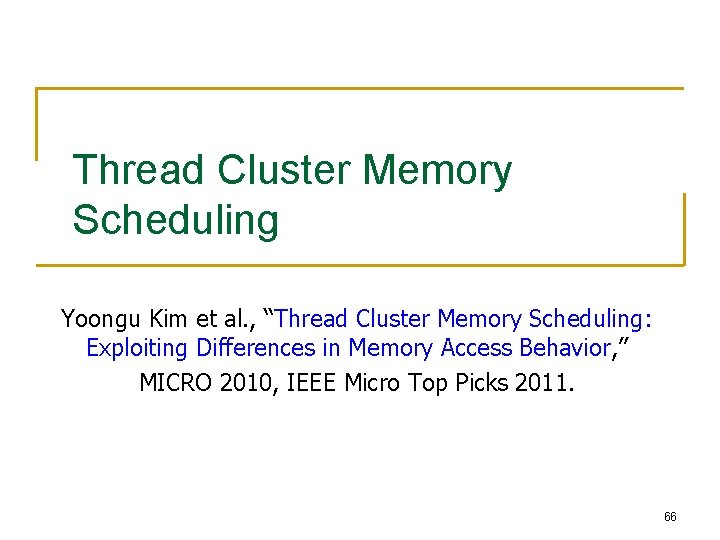

Achieving the Best of Both Worlds

- Yoongu Kim et al., “Thread Cluster Memory Scheduling: Exploiting Differences in Memory Access Behavior,” MICRO 2010, IEEE Micro Top Picks 2011.
- Idea: Dynamically cluster threads based on their memory intensity and employ a different scheduling algorithm in each cluster
  - Low intensity cluster: Prioritize over high intensity threads → maximize system throughput
  - High intensity cluster: Shuffle the priority order of threads periodically → maximize system fairness

Thread Cluster Memory Scheduling

Yoongu Kim et al., “Thread Cluster Memory Scheduling: Exploiting Differences in Memory Access Behavior,” MICRO 2010, IEEE Micro Top Picks 2011.

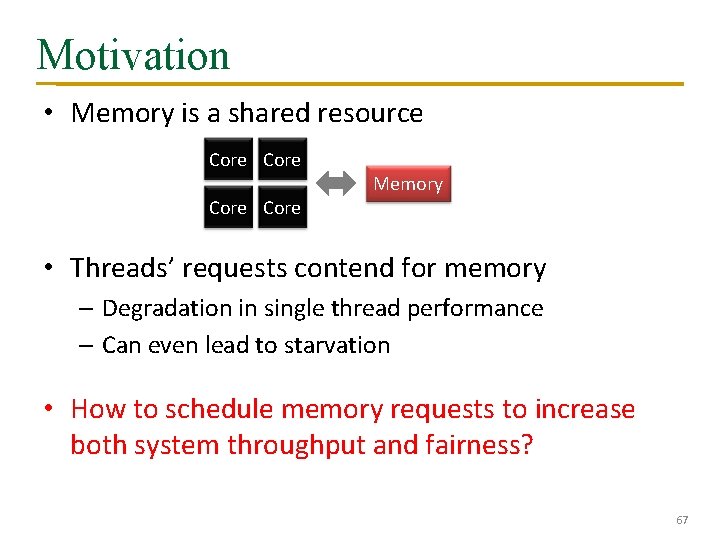

Motivation

- Memory is a shared resource
- Threads’ requests contend for memory
  - Degradation in single thread performance
  - Can even lead to starvation
- How to schedule memory requests to increase both system throughput and fairness?

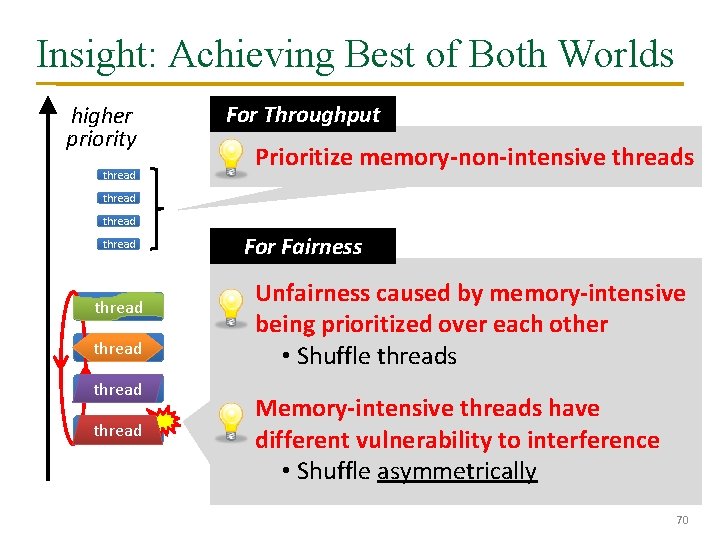

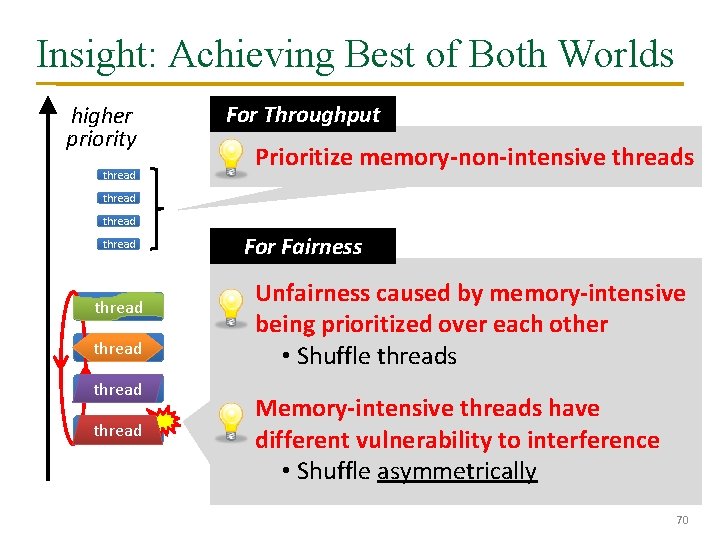

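Previous Scheduling Algorithms are Biased

(Figure: fairness versus system throughput, with axes pointing toward better fairness and better system throughput. Previous algorithms fall into a system throughput bias region or a fairness bias region; the ideal point has both.)

No previous memory scheduling algorithm provides both the best fairness and system throughput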

Why do Previous Algorithms Fail?

- Throughput biased approach: Prioritize less memory-intensive threads
  - Good for throughput
  - The lowest-priority thread suffers starvation → unfairness
- Fairness biased approach: Take turns accessing memory
  - Does not starve
  - The less memory-intensive thread is not prioritized → reduced throughput

Single policy for all threads is insufficient

Insight: Achieving Best of Both Worlds

- For Throughput: Prioritize memory-non-intensive threads
- For Fairness:
  - Unfairness is caused by memory-intensive threads being prioritized over each other → Shuffle threads
  - Memory-intensive threads have different vulnerability to interference → Shuffle asymmetrically

Overview: Thread Cluster Memory Scheduling

1. Group threads into two clusters
2. Prioritize non-intensive cluster
3. Different policies for each cluster

(Figure: the threads in the system are split into a non-intensive cluster of memory-non-intensive threads, which is prioritized and managed for throughput, and an intensive cluster of memory-intensive threads, which is managed for fairness.)

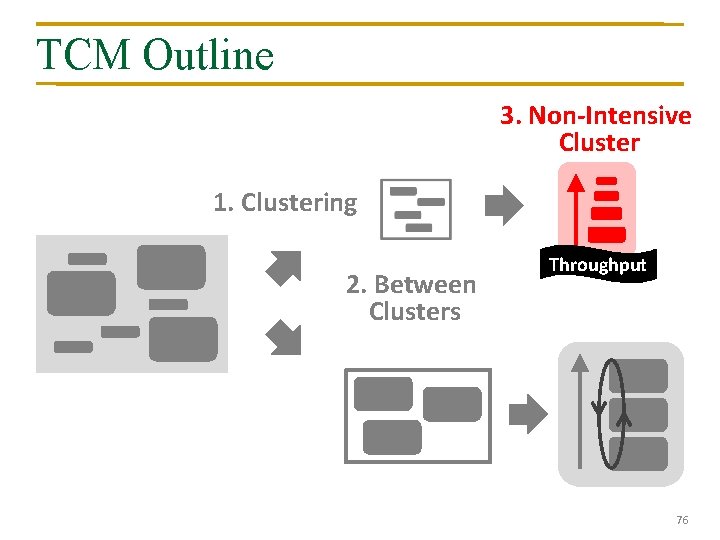

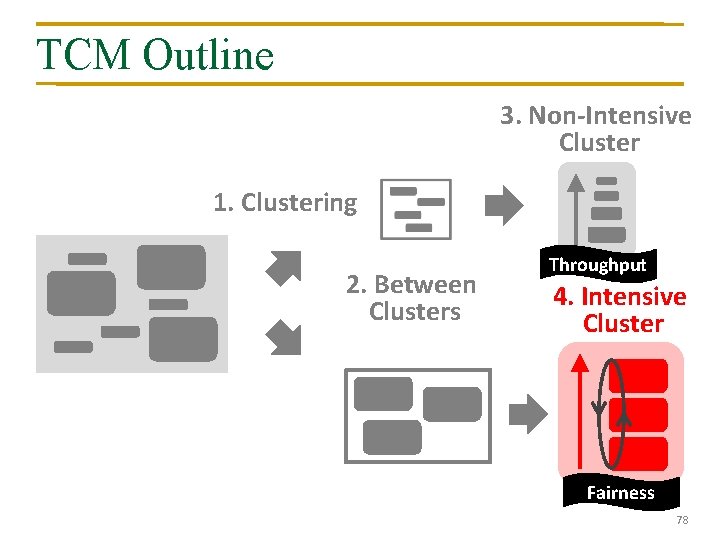

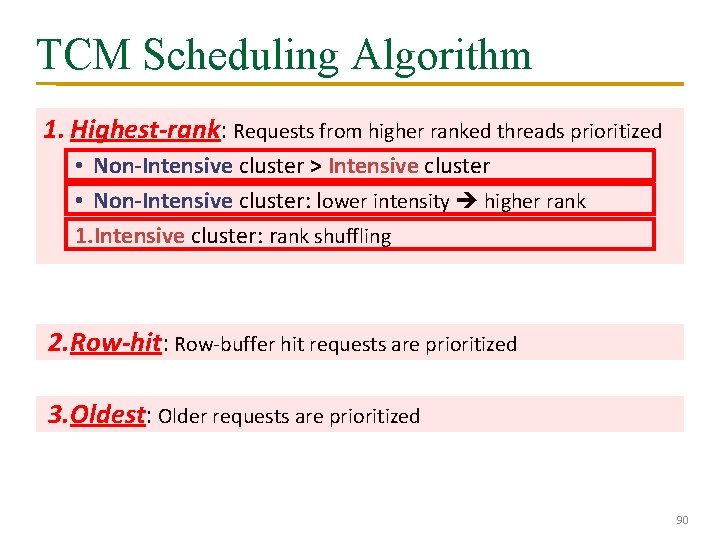

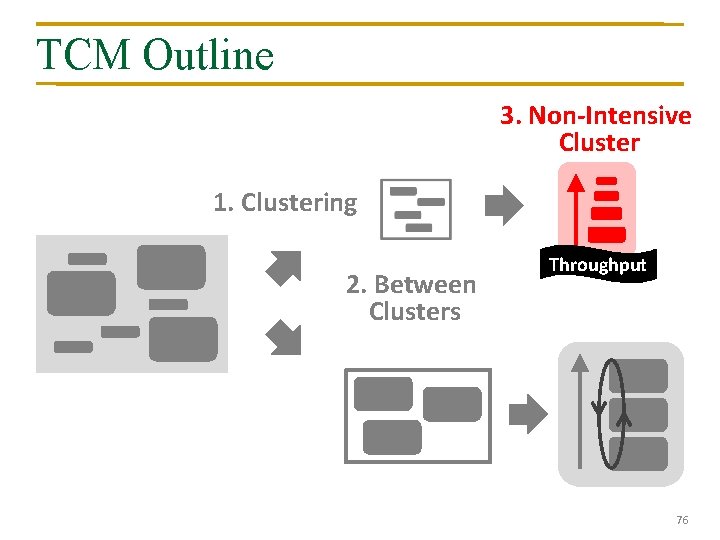

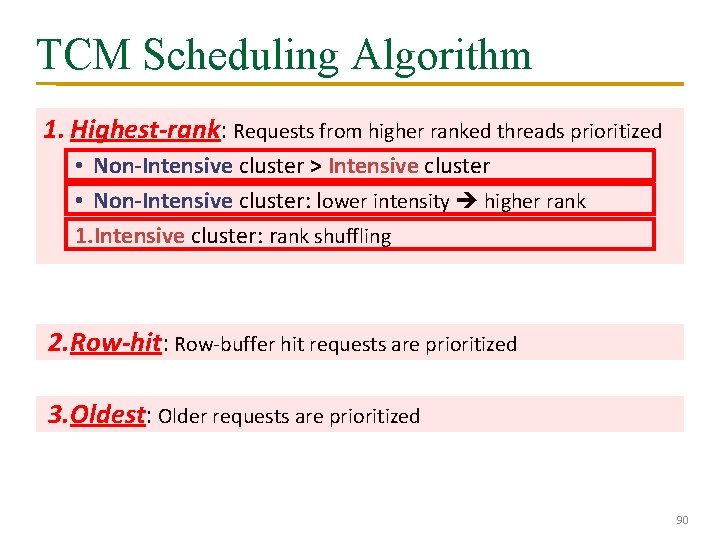

TCM Outline

1. Clustering

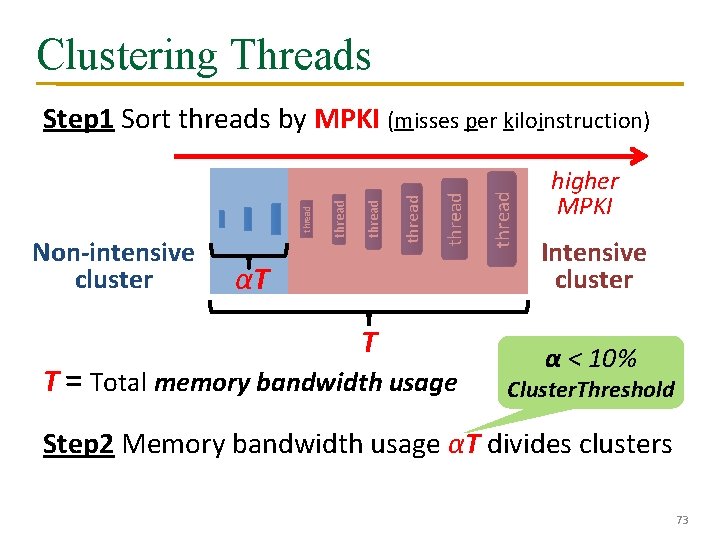

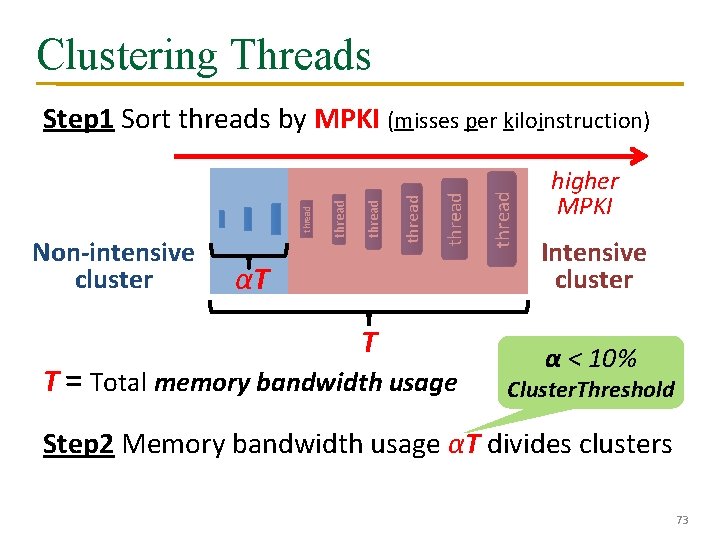

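Clustering Threads

- Step 1: Sort threads by MPKI (misses per kiloinstruction)
- Step 2: Memory bandwidth usage αT divides clusters
  - T = Total memory bandwidth usage
  - α < 10% (ClusterThreshold)

(Figure: threads ordered by increasing MPKI. The lowest-MPKI threads, which together use up to αT of the bandwidth, form the non-intensive cluster; the remaining threads form the intensive cluster.)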
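The two clustering steps can be sketched as follows. The per-thread MPKI and bandwidth numbers are made up, and the function and parameter names are hypothetical; the sketch assumes that threads are added to the non-intensive cluster in order of increasing MPKI until their combined bandwidth usage would exceed αT.

```python
from typing import Dict, List, Tuple


def cluster_threads(
    mpki: Dict[str, float],
    bw_usage: Dict[str, float],
    cluster_threshold: float,
) -> Tuple[List[str], List[str]]:
    """Split threads into (non_intensive, intensive) clusters."""
    total_bw = sum(bw_usage.values())          # T = total memory bandwidth usage
    budget = cluster_threshold * total_bw      # alpha * T
    non_intensive: List[str] = []
    intensive: List[str] = []
    used = 0.0
    for thread in sorted(mpki, key=lambda t: mpki[t]):  # Step 1: sort by MPKI
        # Step 2: once one thread no longer fits, all higher-MPKI threads are intensive.
        if not intensive and used + bw_usage[thread] <= budget:
            used += bw_usage[thread]
            non_intensive.append(thread)
        else:
            intensive.append(thread)
    return non_intensive, intensive


mpki = {"a": 0.5, "b": 1.0, "c": 20.0, "d": 40.0}
bw_usage = {"a": 2.0, "b": 3.0, "c": 45.0, "d": 50.0}
clusters = cluster_threads(mpki, bw_usage, cluster_threshold=0.10)
print(clusters)
```

With a threshold of 10%, threads `a` and `b` together use 5% of the total bandwidth and form the non-intensive cluster, while `c` and `d` are intensive.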

TCM Outline

1. Clustering
2. Between Clusters

Prioritization Between Clusters

Prioritize non-intensive cluster

- Increases system throughput
  - Non-intensive threads have greater potential for making progress
- Does not degrade fairness
  - Non-intensive threads are “light”
  - Rarely interfere with intensive threads

TCM Outline

1. Clustering
2. Between Clusters
3. Non-Intensive Cluster (Throughput)

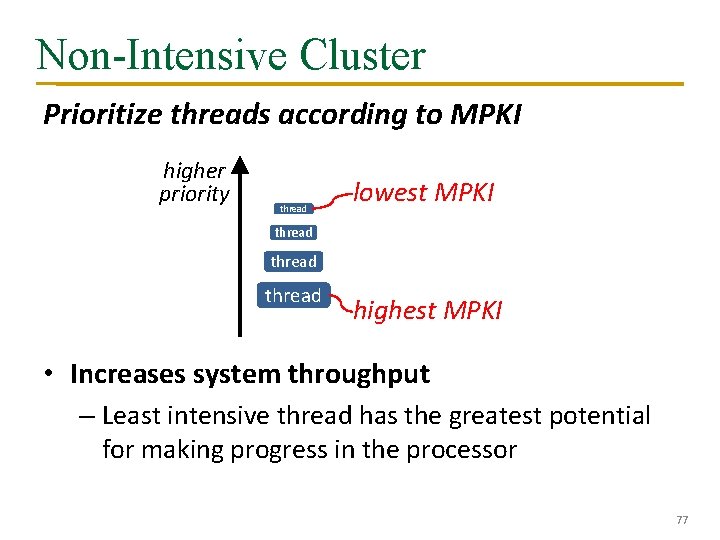

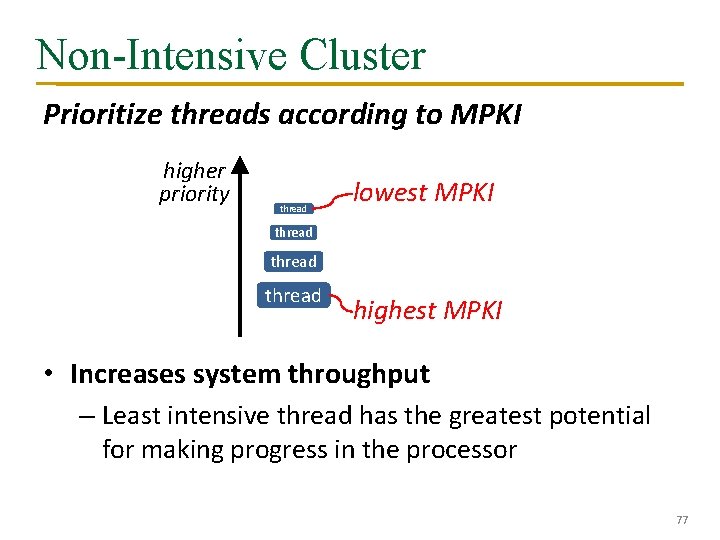

Non-Intensive Cluster

Prioritize threads according to MPKI (the thread with the lowest MPKI gets the highest priority, the thread with the highest MPKI the lowest)

- Increases system throughput
  - Least intensive thread has the greatest potential for making progress in the processor

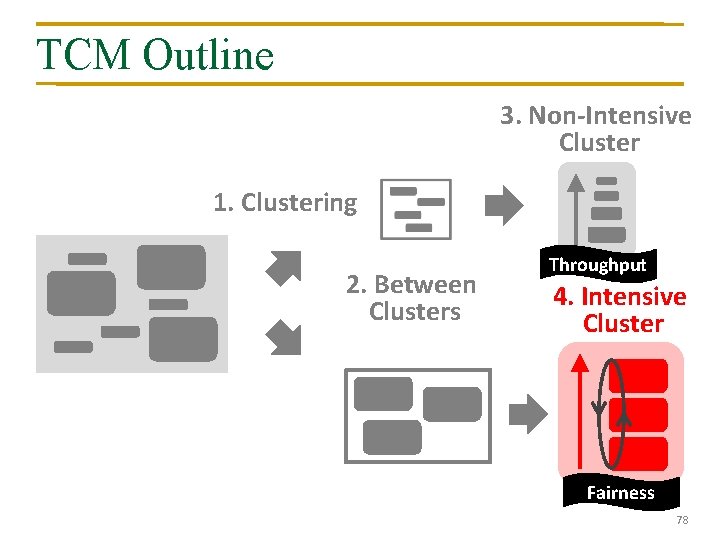

TCM Outline

1. Clustering
2. Between Clusters
3. Non-Intensive Cluster (Throughput)
4. Intensive Cluster (Fairness)

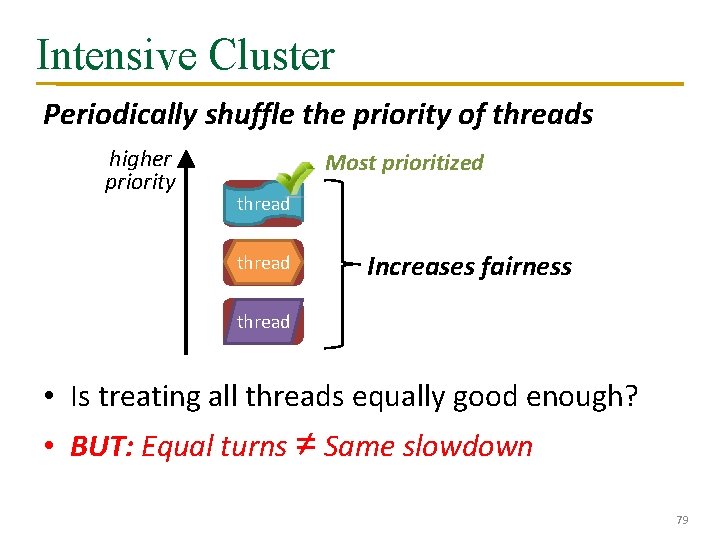

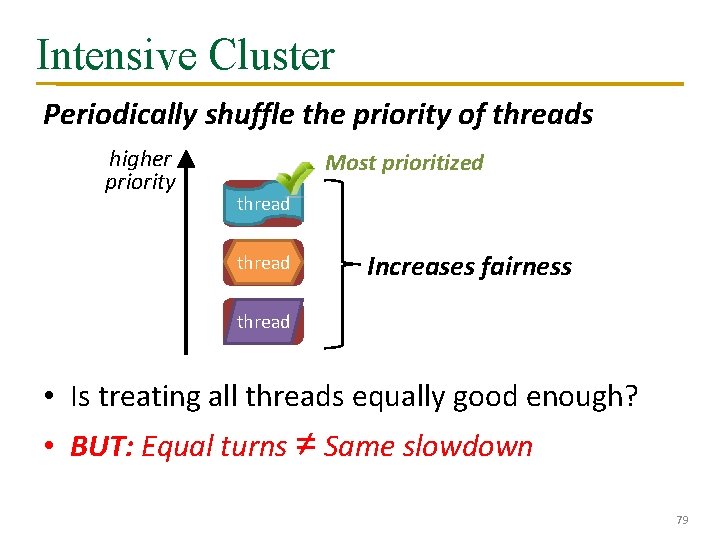

Intensive Cluster

Periodically shuffle the priority of threads

- Increases fairness
- Is treating all threads equally good enough?
- BUT: Equal turns ≠ Same slowdown

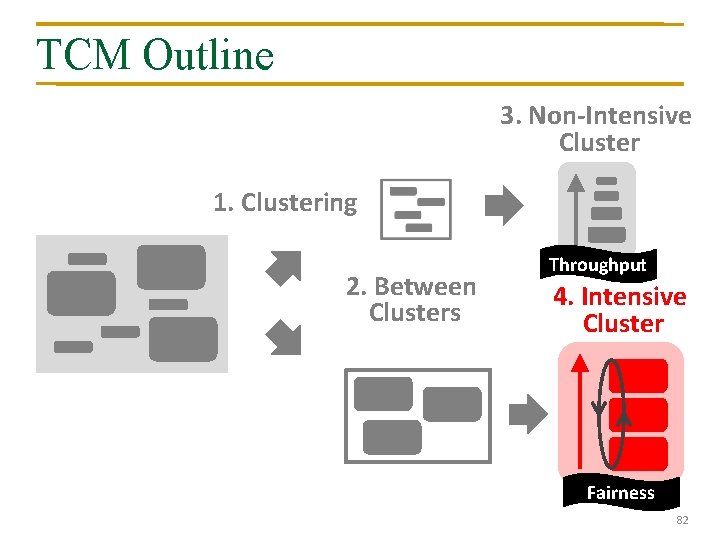

Case Study: A Tale of Two Threads

Case Study: Two intensive threads contending
1. random-access
2. streaming

Which is slowed down more easily?

- Prioritize random-access: the prioritized thread sees a 1x slowdown, the other thread 7x
- Prioritize streaming: the prioritized thread sees a 1x slowdown, the other thread 11x

The random-access thread is more easily slowed down

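Why are Threads Different?

(Figure: Bank 1 to Bank 4 in memory with their activated rows. The random-access thread spreads its requests over all banks; the streaming thread sends all its requests to the same row, and a random-access request to that bank gets stuck behind them.)

- random-access
  - All requests parallel
  - High bank-level parallelism
  - Vulnerable to interference
- streaming
  - All requests → Same row
  - High row-buffer locality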

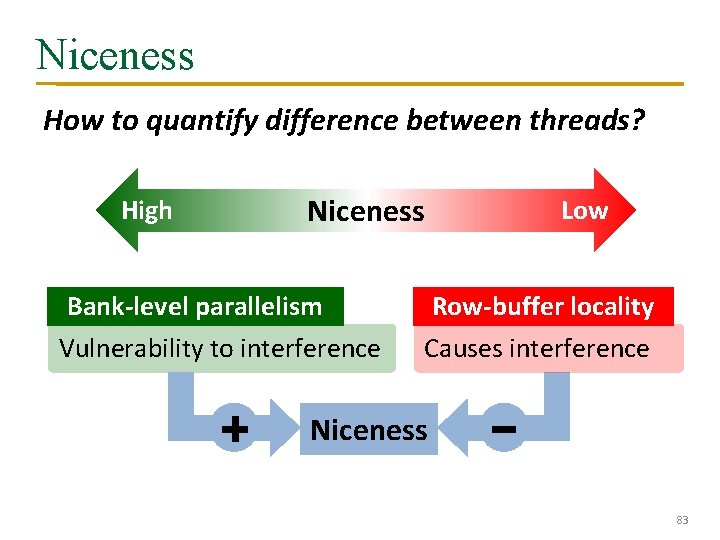

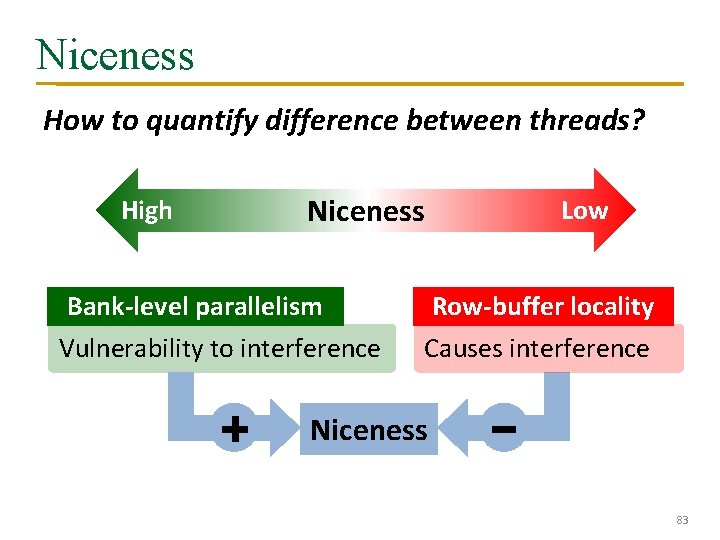

Niceness

How can the difference between threads be quantified? With niceness:

- High bank-level parallelism means high vulnerability to interference: raises niceness (+)
- High row-buffer locality means the thread causes interference: lowers niceness
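One way to turn these two observations into a number is to rank threads by each metric and subtract the ranks. The sketch below does that; treating niceness as "bank-level-parallelism rank minus row-buffer-locality rank" is an assumption made for illustration, and the function name `niceness` and the dictionary inputs are hypothetical.

```python
def niceness(blp, rbl):
    """Compute a niceness score per thread.

    blp, rbl: dicts mapping thread id -> measured bank-level parallelism
    and row-buffer locality. Higher score = nicer thread.
    """
    def ranks(metric):
        # Rank 1 goes to the smallest value, rank N to the largest.
        order = sorted(metric, key=lambda tid: metric[tid])
        return {tid: pos for pos, tid in enumerate(order, start=1)}

    b, r = ranks(blp), ranks(rbl)
    # High parallelism raises niceness; high locality lowers it.
    return {tid: b[tid] - r[tid] for tid in blp}
```

With a random-access thread (high parallelism, low locality) and a streaming thread (the reverse), the random-access thread comes out as the nicer of the two.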

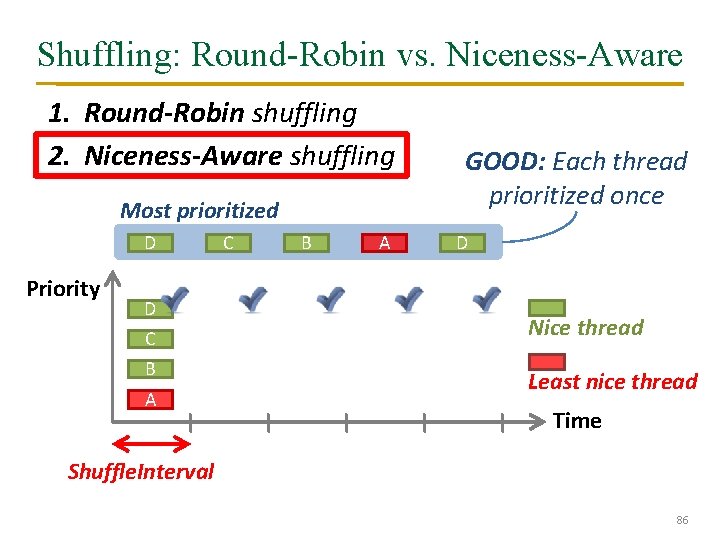

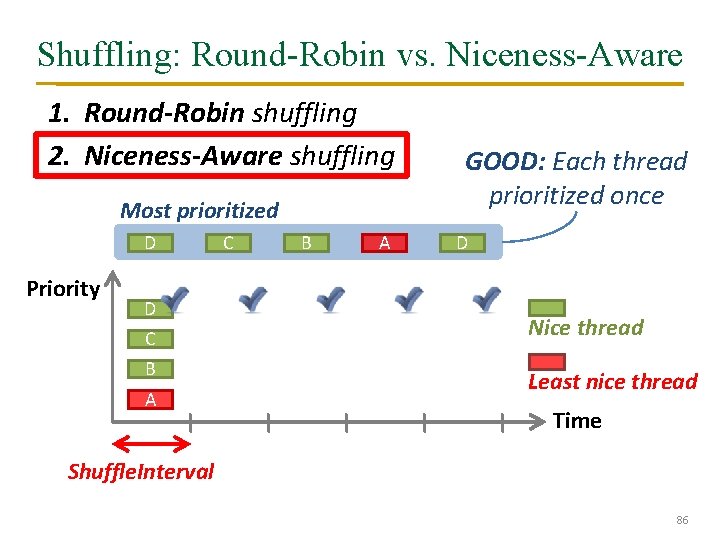

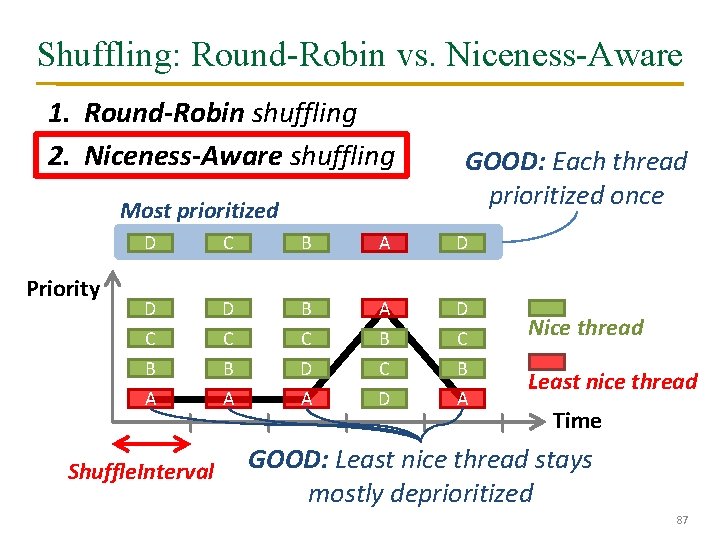

Shuffling: Round-Robin vs. Niceness-Aware

1. Round-robin shuffling
   - GOOD: Each thread is prioritized once
   - What can go wrong? BAD: Nice threads receive lots of interference
2. Niceness-aware shuffling
   - GOOD: Each thread is prioritized once
   - GOOD: The least nice thread stays mostly deprioritized

(Figure: priority order of threads A, B, C, and D over time, reshuffled every ShuffleInterval, for both shuffling schemes; the legend orders threads from nice to least nice.)
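The weakness of round-robin shuffling is easy to see in a small simulation. The sketch below models only the round-robin scheme as a plain rotation of the priority order; the niceness-aware scheme is not reproduced here. The function names are hypothetical, and labelling A as the nicest thread and D as the least nice is an assumption for the example.

```python
def round_robin_schedule(threads, intervals):
    """Return one priority order per shuffle interval (index 0 = most prioritized)."""
    order = list(threads)
    schedule = []
    for _ in range(intervals):
        schedule.append(tuple(order))
        order = order[1:] + order[:1]  # rotate: the next thread moves to the top
    return schedule

def intervals_outranked(schedule, victim, aggressor):
    """Count the intervals in which `aggressor` has higher priority than `victim`."""
    return sum(1 for order in schedule
               if order.index(aggressor) < order.index(victim))

schedule = round_robin_schedule(["A", "B", "C", "D"], 4)
top_threads = [order[0] for order in schedule]          # each thread is on top once
bad_intervals = intervals_outranked(schedule, "A", "D")  # D outranks A in 3 of 4
```

Every thread gets one turn at the top, yet the least nice thread outranks the nicest one in three of the four intervals, which is the interference problem the slide points out.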

Quantum-Based Operation

Time is divided into quanta (~1M cycles each); within a quantum, priorities are shuffled every shuffle interval (~1K cycles).

- During a quantum: monitor thread behavior
  1. Memory intensity
  2. Bank-level parallelism
  3. Row-buffer locality
- At the beginning of a quantum:
  - Perform clustering
  - Compute the niceness of intensive threads

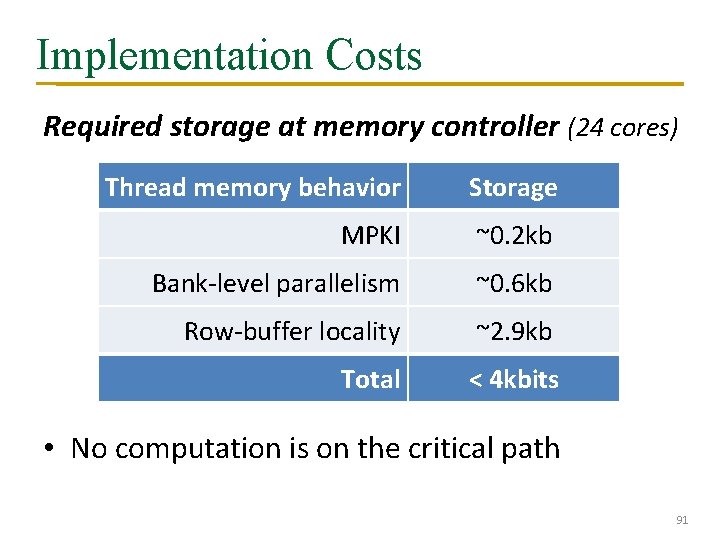

TCM Scheduling Algorithm

1. Highest-rank: Requests from higher-ranked threads are prioritized
   - Non-intensive cluster > intensive cluster
   - Non-intensive cluster: lower intensity means higher rank
   - Intensive cluster: rank shuffling
2. Row-hit: Row-buffer hit requests are prioritized
3. Oldest: Older requests are prioritized
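The three rules form a lexicographic priority, which maps naturally onto a tuple sort key. The following sketch assumes the thread ranking has already been computed (by clustering and shuffling) and is passed in as a dictionary; `Request`, `pick_next`, and `thread_rank` are hypothetical names for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Request:
    thread: str
    row_hit: bool   # would this request hit in the currently open row?
    arrival: int    # cycle at which the request entered the queue

def pick_next(requests, thread_rank):
    """Pick the request to service next.

    thread_rank maps thread -> rank position, where 0 is the highest rank.
    """
    return min(
        requests,
        key=lambda r: (
            thread_rank[r.thread],  # 1. highest-rank thread first
            not r.row_hit,          # 2. row hits before row misses
            r.arrival,              # 3. oldest first
        ),
    )
```

Because rank is compared first, a row-miss request from a high-ranked thread still beats a row-hit request from a lower-ranked one.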

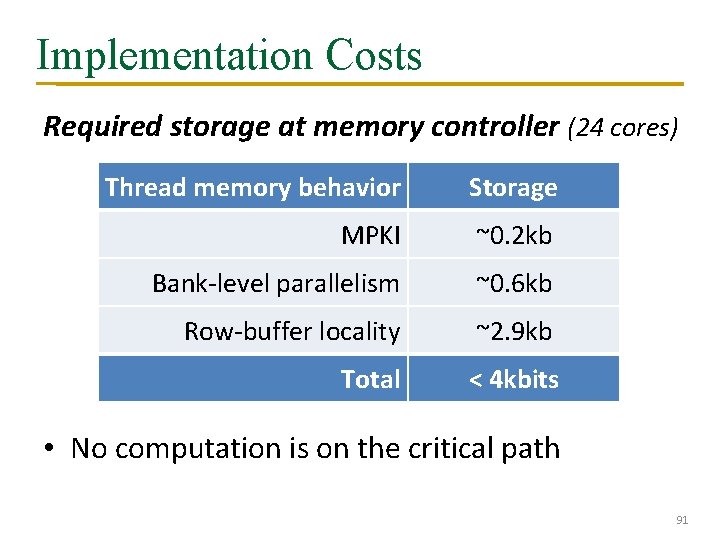

Implementation Costs

Required storage at the memory controller (24 cores):

| Thread memory behavior | Storage |
|---|---|
| MPKI | ~0.2 kb |
| Bank-level parallelism | ~0.6 kb |
| Row-buffer locality | ~2.9 kb |
| Total | < 4 kbits |

- No computation is on the critical path

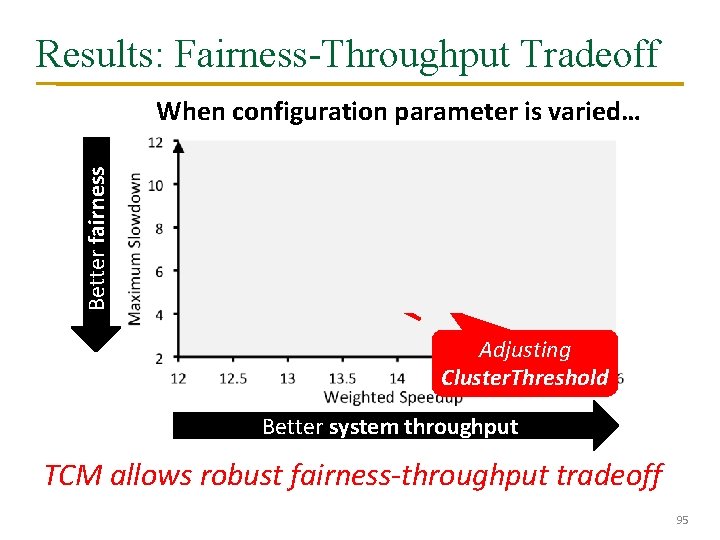

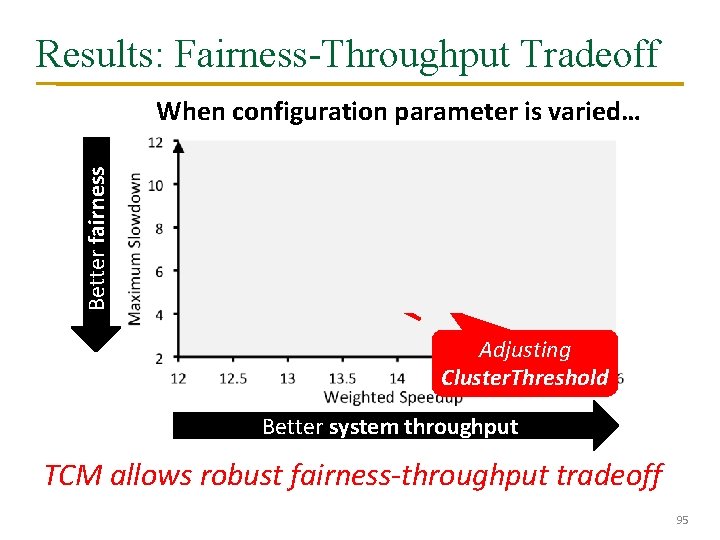

Metrics & Methodology

- Metrics
  - System throughput
  - Unfairness
- Methodology
  - Core model
    - 4 GHz processor, 128-entry instruction window
    - 512 KB/core L2 cache
  - Memory model: DDR2
  - 96 multiprogrammed SPEC CPU2006 workloads

![Previous Work FRFCFS Rixner et al ISCA 00 Prioritizes rowbuffer hits Threadoblivious Previous Work FRFCFS [Rixner et al. , ISCA 00]: Prioritizes row-buffer hits – Thread-oblivious](https://slidetodoc.com/presentation_image_h2/95951a814fa4830ec4f991a13ff97291/image-93.jpg)

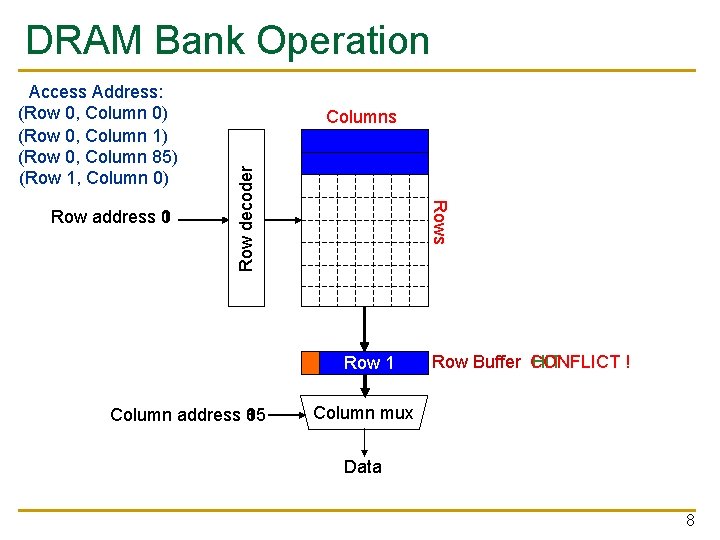

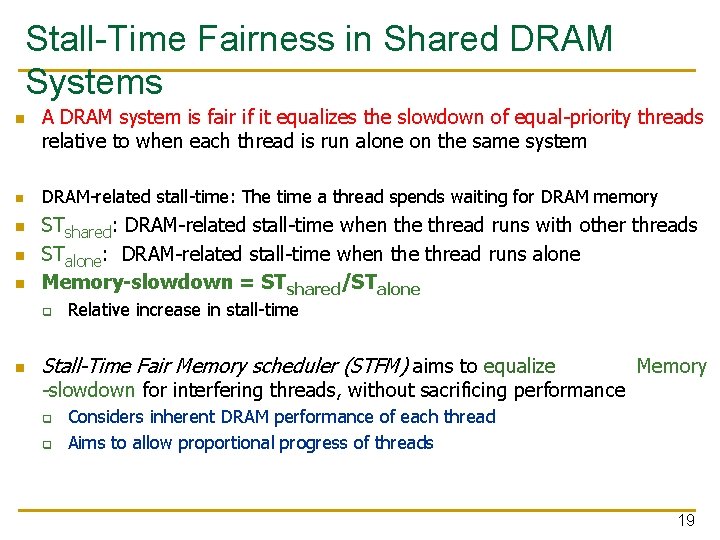

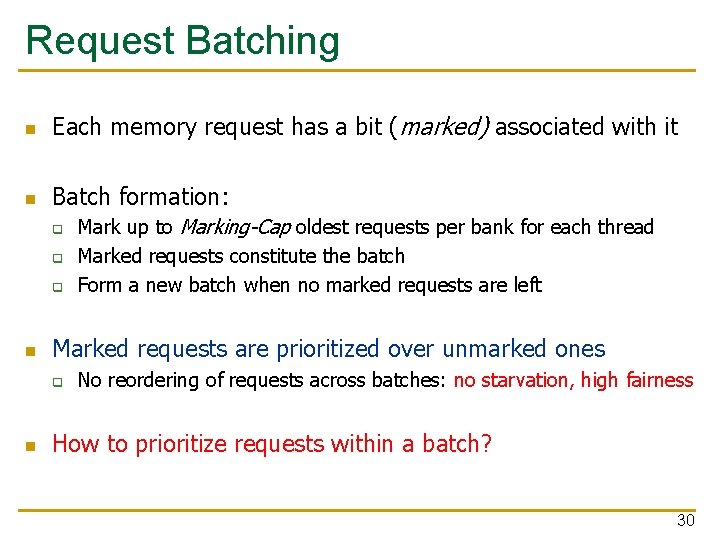

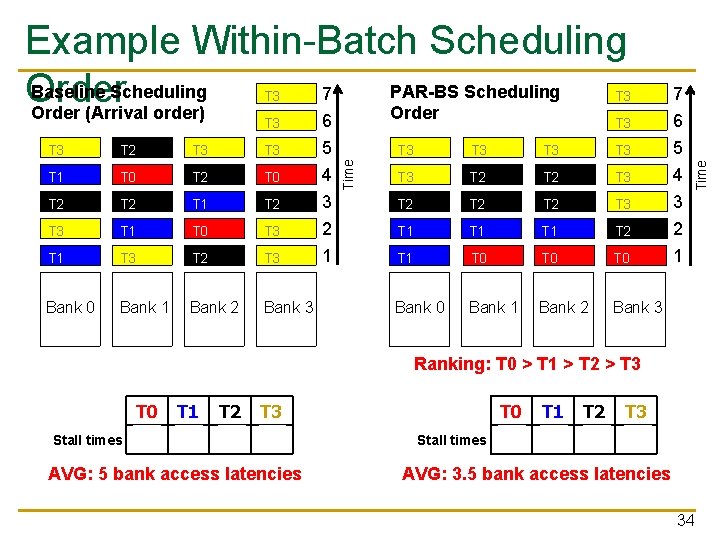

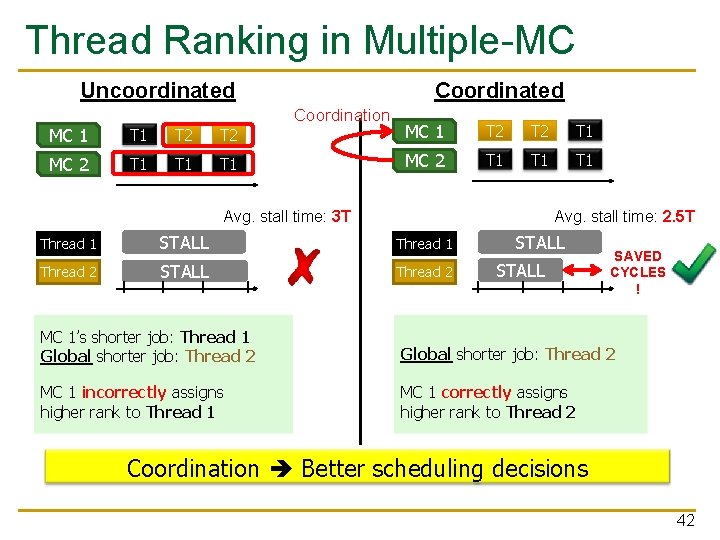

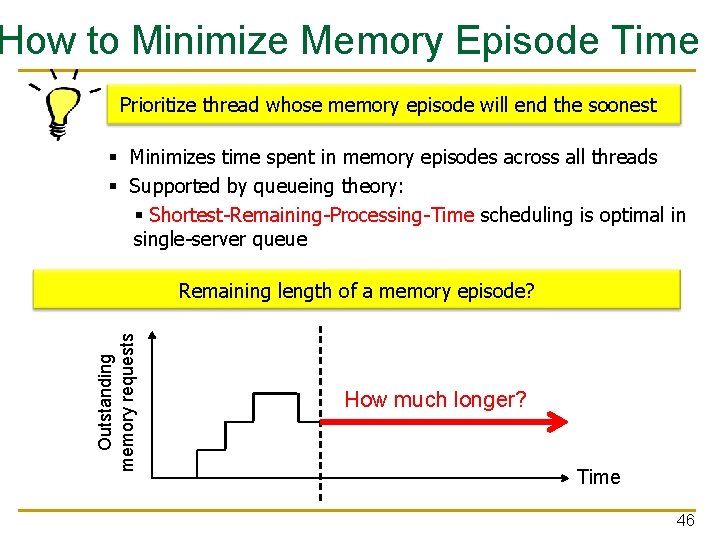

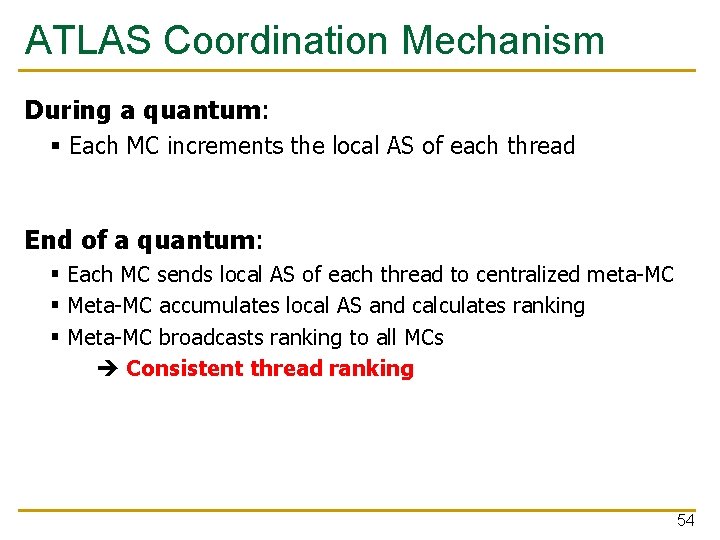

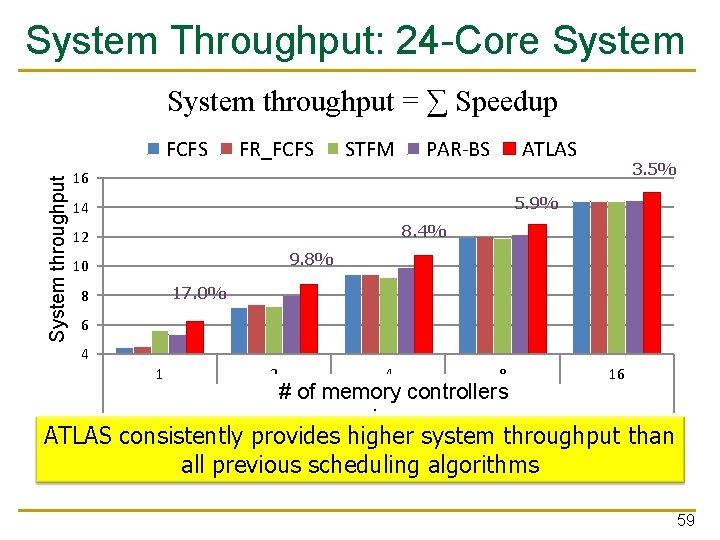

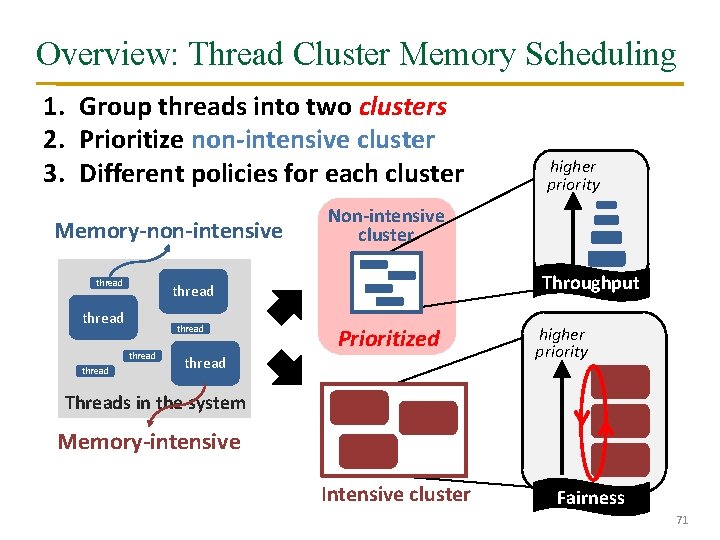

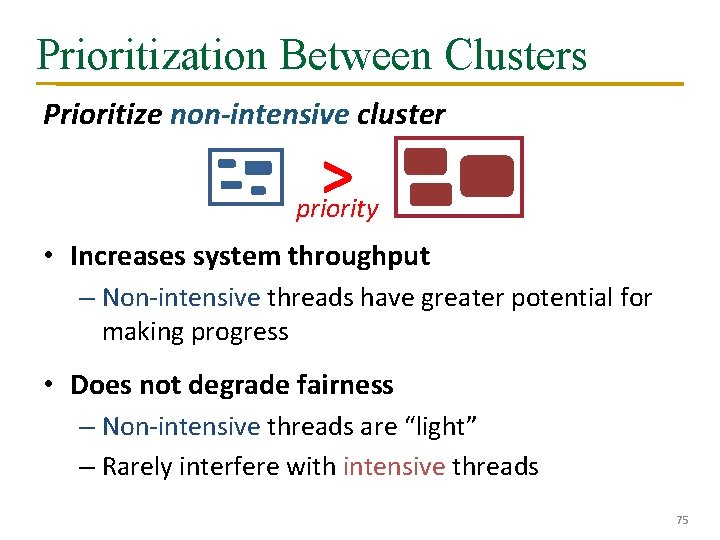

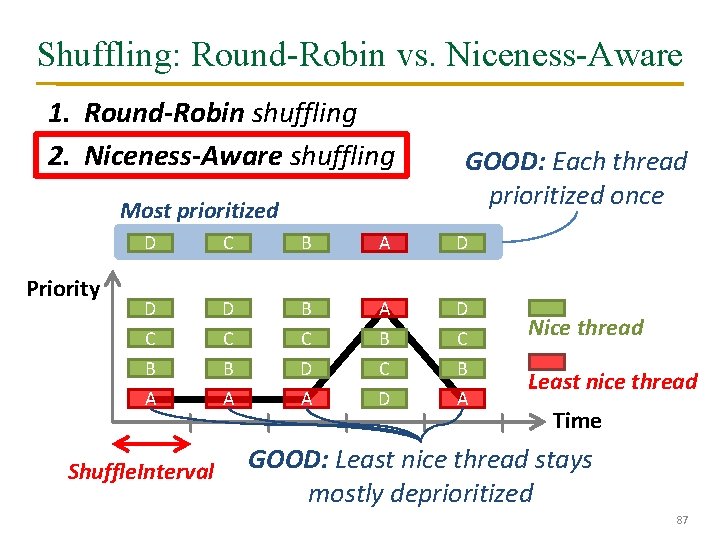

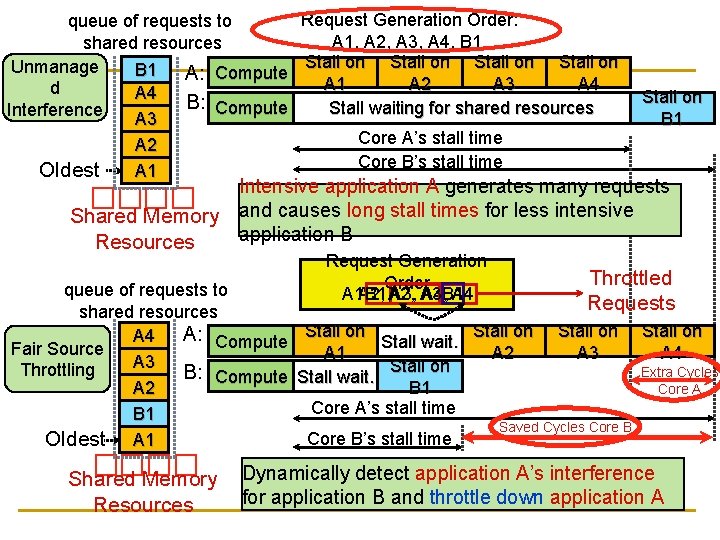

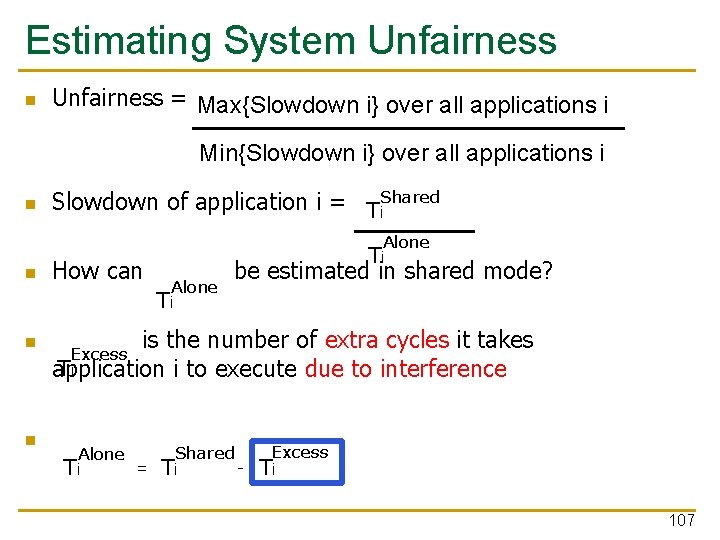

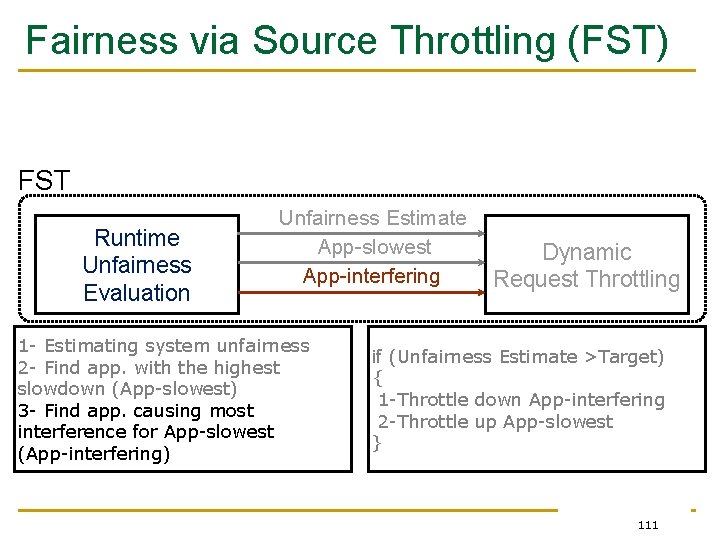

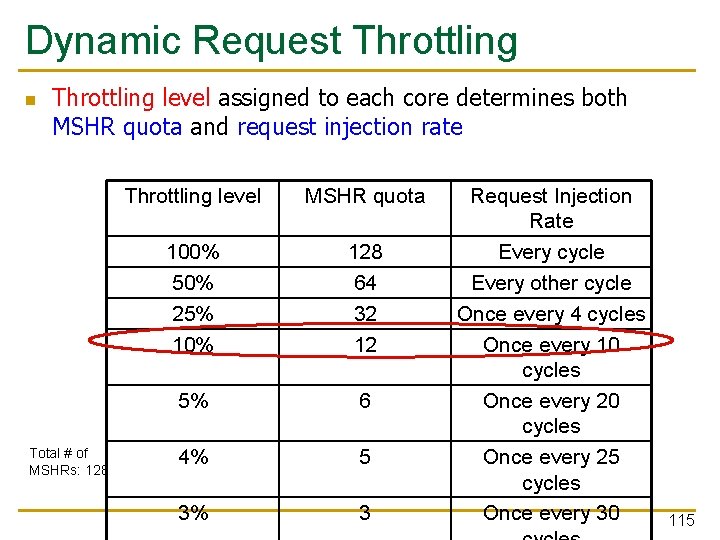

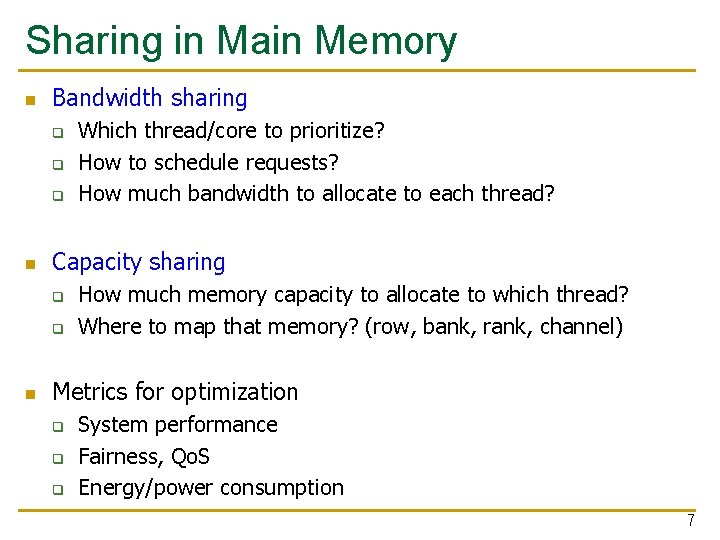

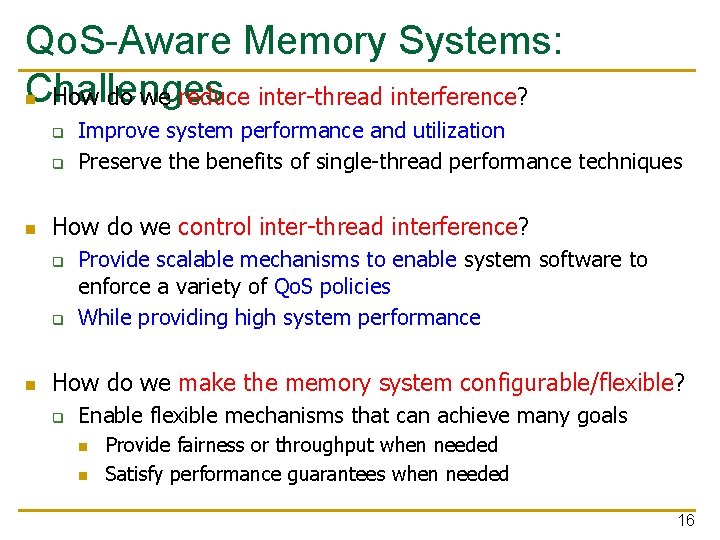

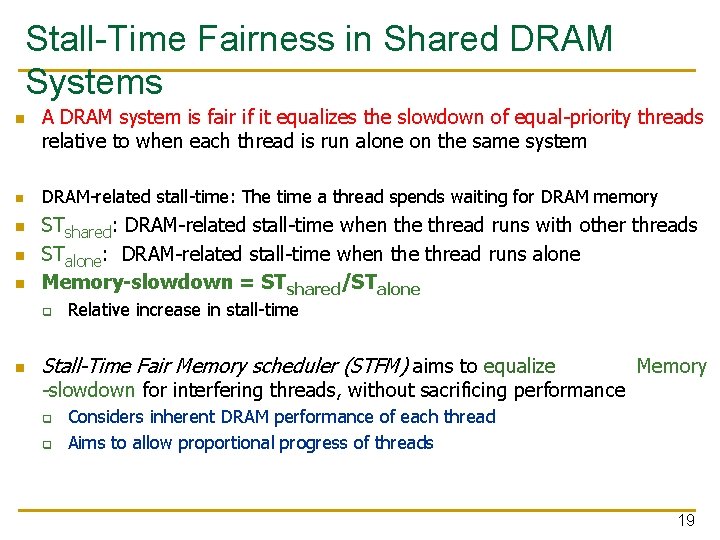

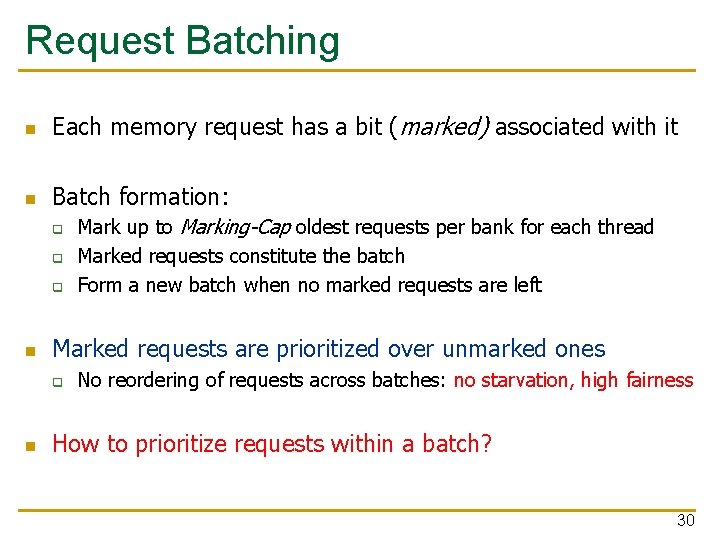

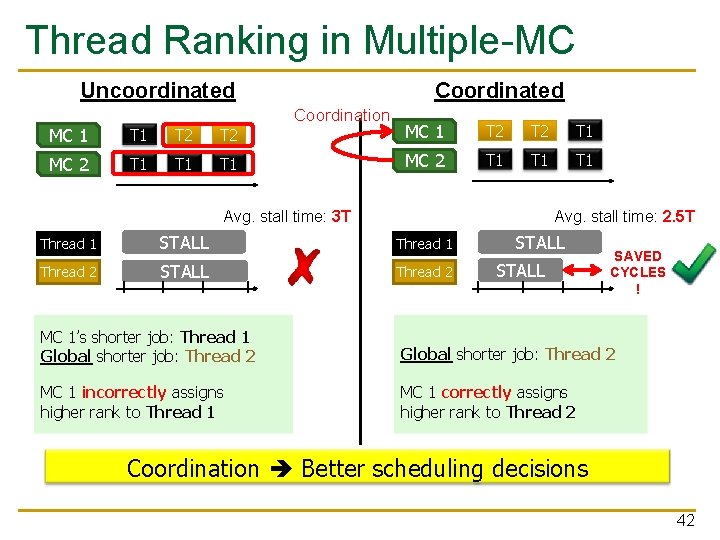

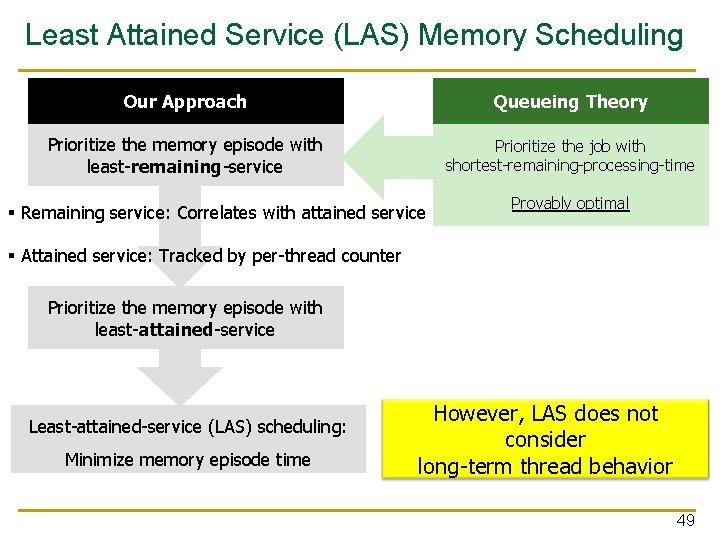

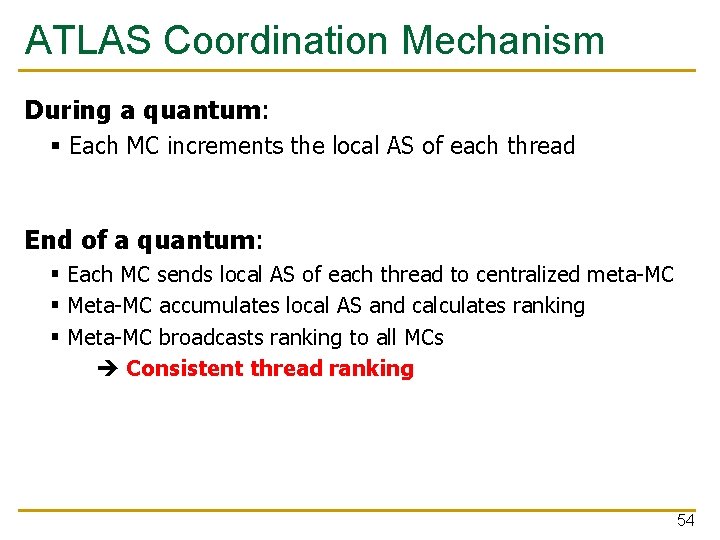

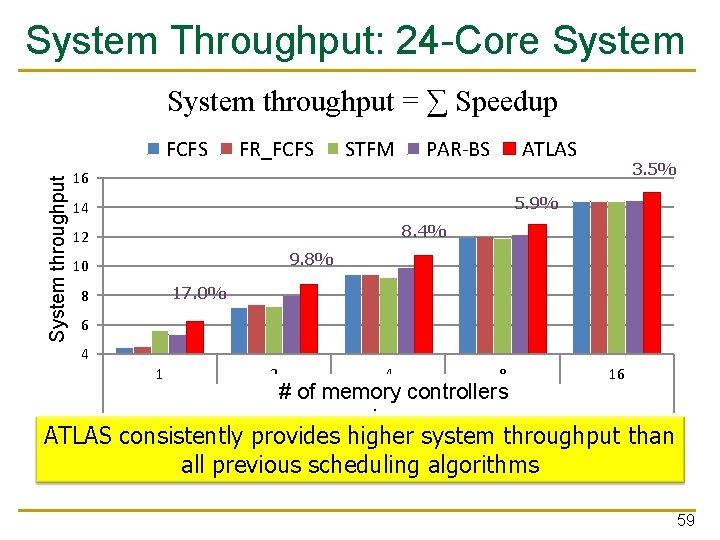

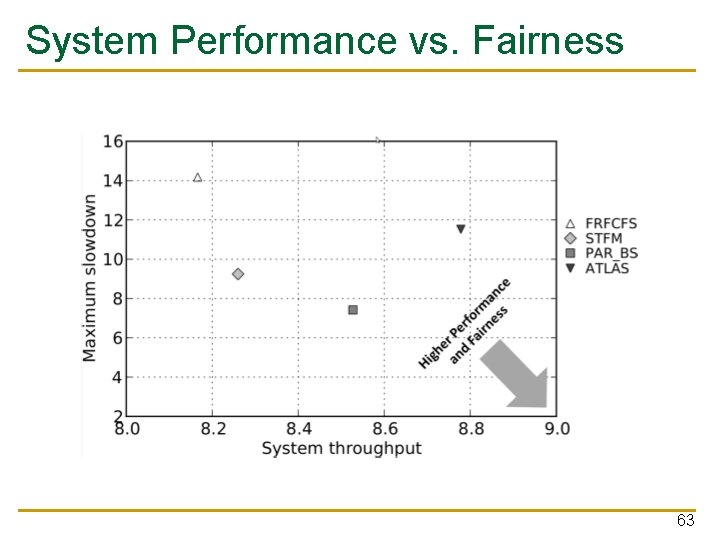

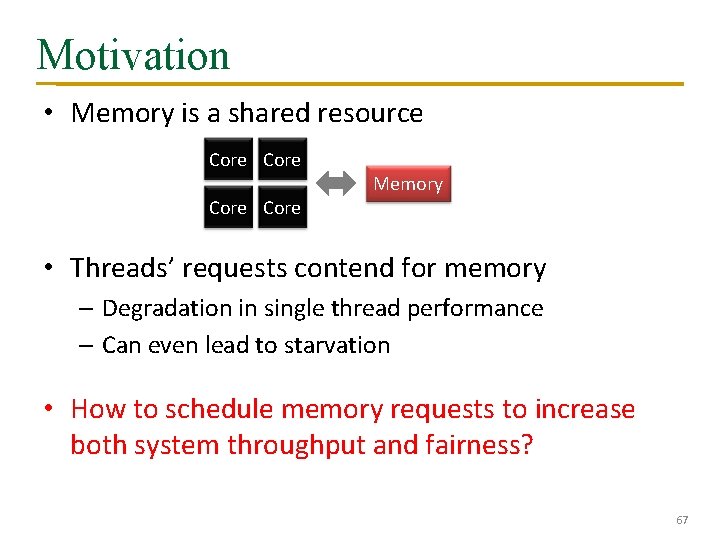

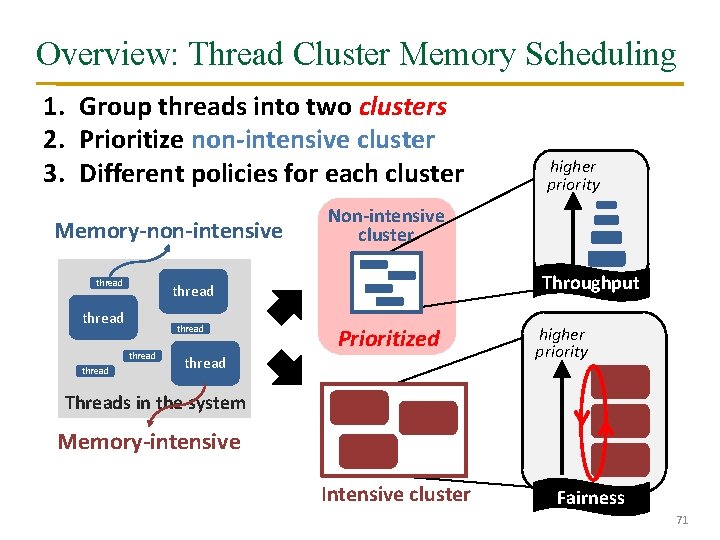

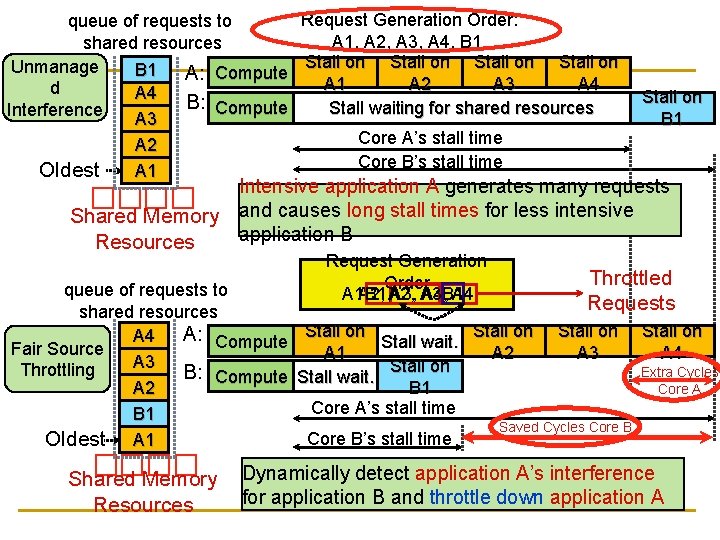

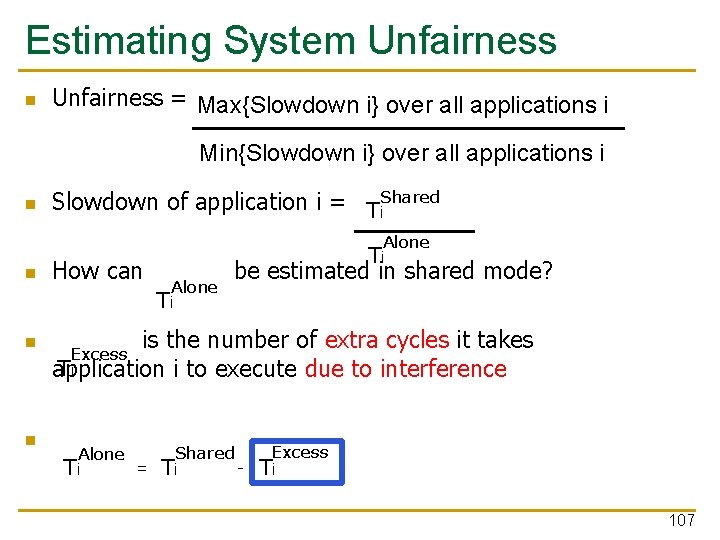

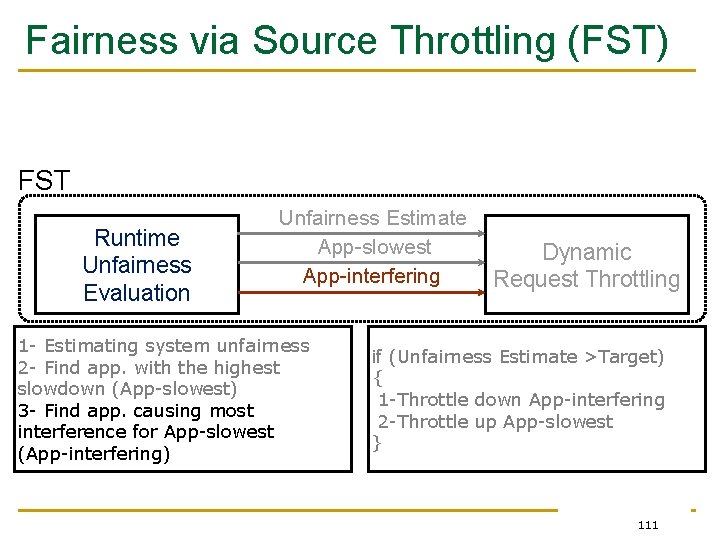

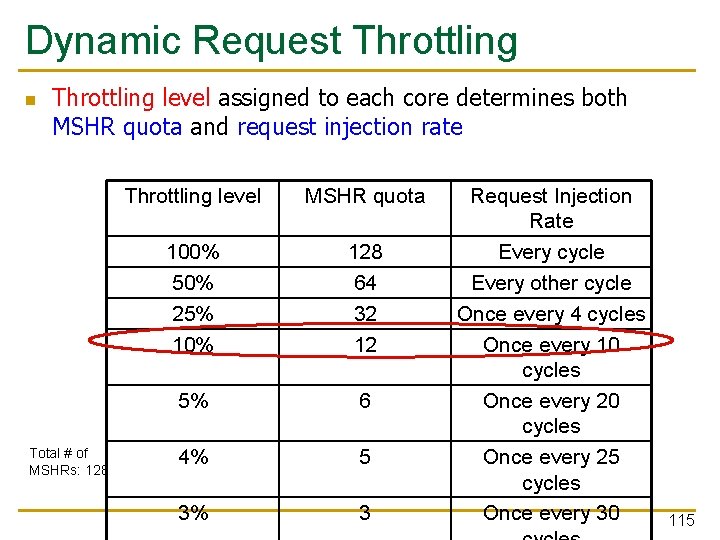

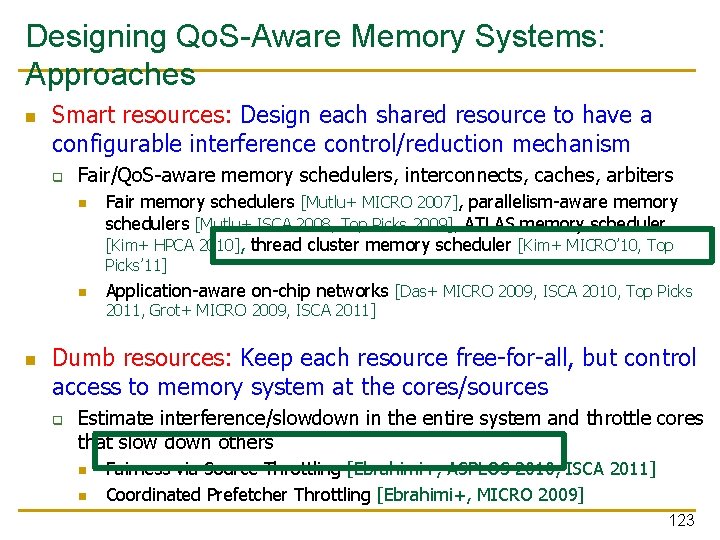

Previous Work

- FRFCFS [Rixner et al., ISCA 00]: Prioritizes row-buffer hits
  - Thread-oblivious: low throughput and low fairness
- STFM [Mutlu et al., MICRO 07]: Equalizes thread slowdowns
  - Non-intensive threads not prioritized: low throughput
- PAR-BS [Mutlu et al., ISCA 08]: Prioritizes the oldest batch of requests while preserving bank-level parallelism
  - Non-intensive threads not always prioritized: low throughput
- ATLAS [Kim et al., HPCA 10]: Prioritizes threads with less memory service
  - Most intensive thread starves: low fairness

Results: Fairness vs. Throughput

(Figure: fairness versus system throughput for the compared schedulers, averaged over 96 workloads; annotated differences: 5%, 39%, 5%, 8%.)

TCM provides the best fairness and system throughput.

Results: Fairness-Throughput Tradeoff

(Figure: fairness versus system throughput for ATLAS, STFM, PAR-BS, and TCM when each scheduler's configuration parameter is varied; for TCM the parameter is ClusterThreshold.)

TCM allows a robust fairness-throughput tradeoff.

Operating System Support

- ClusterThreshold is a tunable knob
  - The OS can trade off between fairness and throughput
- Enforcing thread weights
  - The OS assigns weights to threads
  - TCM enforces thread weights within each cluster

TCM Summary

- No previous memory scheduling algorithm provides both high system throughput and fairness
  - Problem: They use a single policy for all threads
- TCM groups threads into two clusters
  1. Prioritize the non-intensive cluster (throughput)
  2. Shuffle priorities in the intensive cluster (fairness)
  3. Shuffling should favor nice threads (fairness)
- TCM provides the best system throughput and fairness

Designing QoS-Aware Memory Systems: Approaches

- Smart resources: Design each shared resource to have a configurable interference control/reduction mechanism
  - Fair/QoS-aware memory schedulers, interconnects, caches, arbiters
    - Fair memory schedulers [Mutlu+ MICRO 2007], parallelism-aware memory schedulers [Mutlu+ ISCA 2008, Top Picks 2009], ATLAS memory scheduler [Kim+ HPCA 2010], thread cluster memory scheduler [Kim+ MICRO’10, Top Picks’11]
    - Application-aware on-chip networks [Das+ MICRO 2009, ISCA 2010, Top Picks 2011, Grot+ MICRO 2009, ISCA 2011]
- Dumb resources: Keep each resource free-for-all, but control access to the memory system at the cores/sources
  - Estimate interference/slowdown in the entire system and throttle cores that slow down others
    - Fairness via Source Throttling [Ebrahimi+, ASPLOS 2010, ISCA 2011]
    - Coordinated Prefetcher Throttling [Ebrahimi+, MICRO 2009]

Fairness via Source Throttling

Eiman Ebrahimi et al., “Fairness via Source Throttling: A Configurable and High-Performance Fairness Substrate for Multi-Core Memory Systems,” ASPLOS 2010, Best Paper Award.

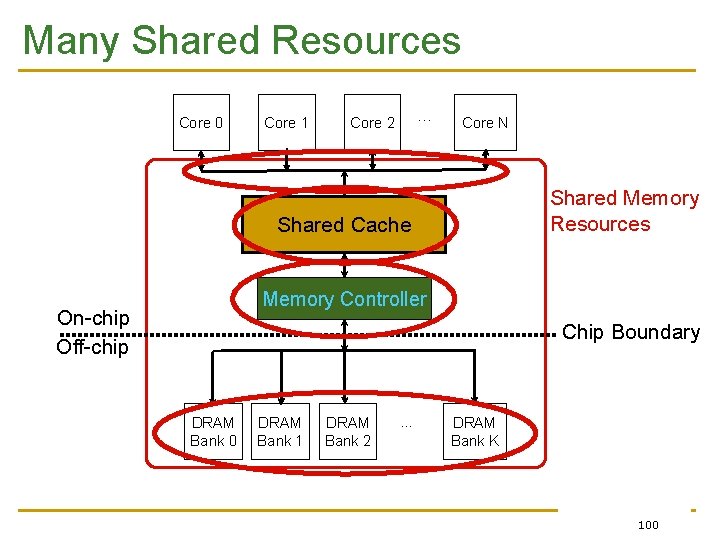

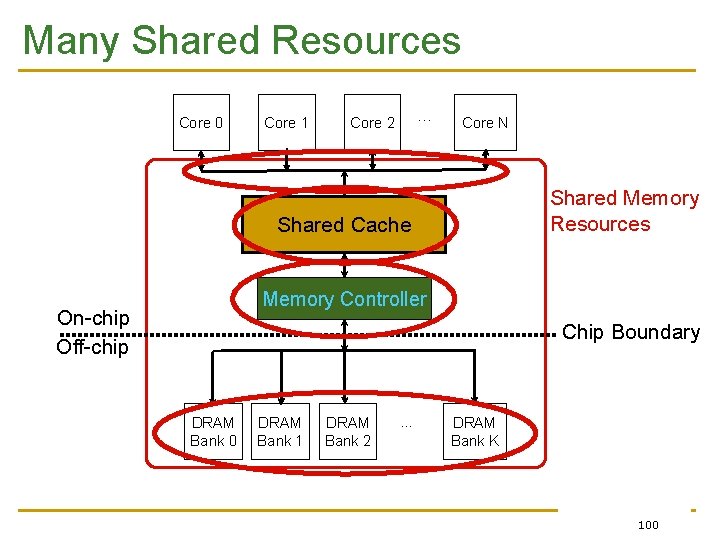

Many Shared Resources

(Figure: Cores 0 to N share the on-chip memory resources, namely the shared cache and the memory controller; across the chip boundary, off-chip DRAM Banks 0 to K are shared as well.)

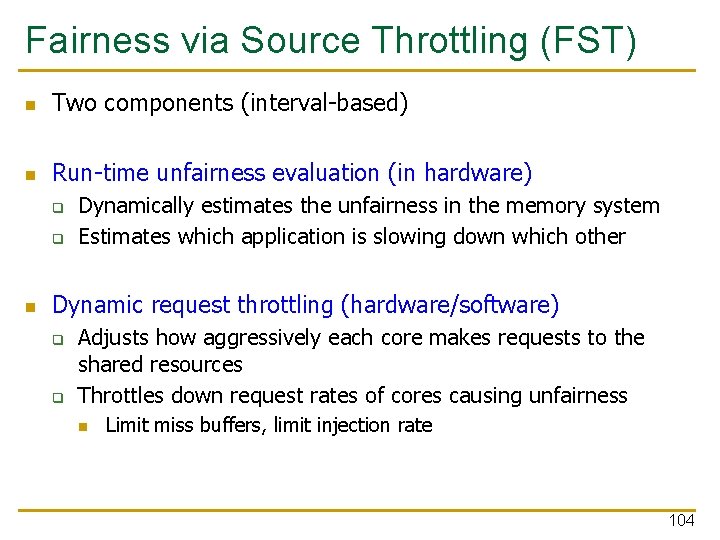

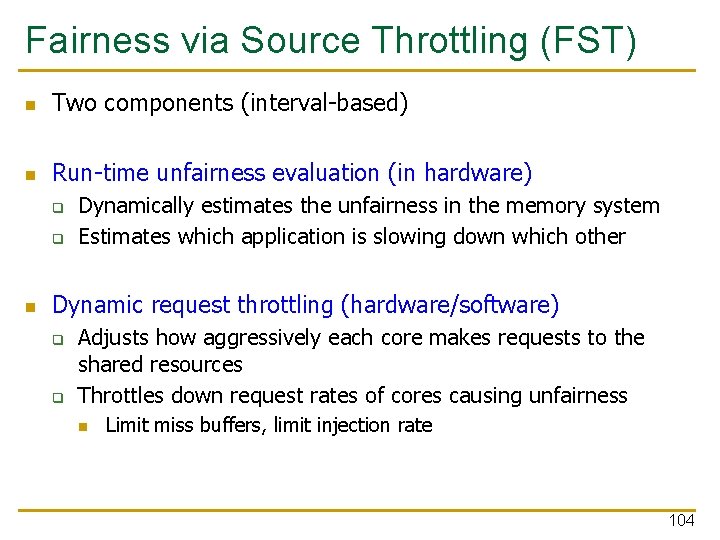

Motivation for Source Throttling

- Partitioning (fairness/QoS) mechanisms in each resource might be difficult to get right (initially)
- Independent partitioning mechanisms in caches, interconnect, and memory can contradict each other
- Approaches that coordinate interaction among techniques for different resources require complex implementations

Our goal: Enable fair sharing of the entire memory system by dynamically detecting and controlling interference in a coordinated manner.

An Alternative Approach

- Manage inter-thread interference at the cores, not at the shared resources
- Dynamically estimate unfairness in the memory system
- Feed back this information into a controller
- Throttle cores’ memory access rates accordingly
  - Whom to throttle and by how much depends on the performance target (throughput, fairness, per-thread QoS, etc.)
  - E.g., if unfairness > system-software-specified target, then throttle down the core causing unfairness and throttle up the core that was unfairly treated

Unmanaged Interference vs. Fair Source Throttling

- Unmanaged interference
  - Request generation order: A1, A2, A3, A4, B1
  - The queue of requests to the shared memory resources holds A1 (oldest) through A4 ahead of B1, so core B stalls waiting for shared resources before it stalls on B1
  - Intensive application A generates many requests and causes long stall times for less intensive application B
- Fair source throttling
  - Application A is throttled; request generation order: A1, B1, A2, A3, A4
  - Core B saves cycles, while core A incurs extra cycles
  - Dynamically detect application A’s interference for application B and throttle down application A

(Figure: compute and stall timelines of cores A and B in both cases, with core A’s and core B’s stall times marked.)

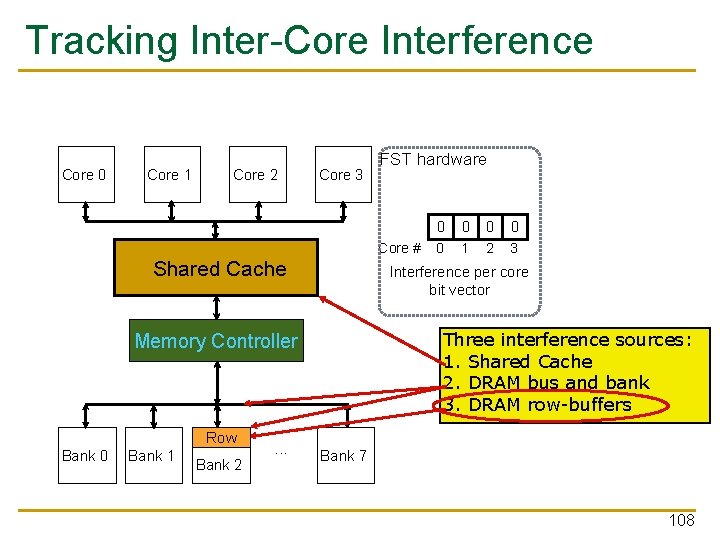

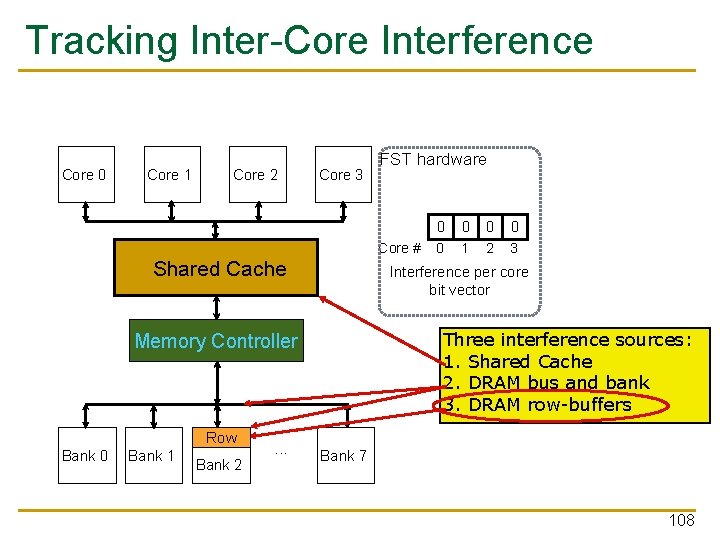

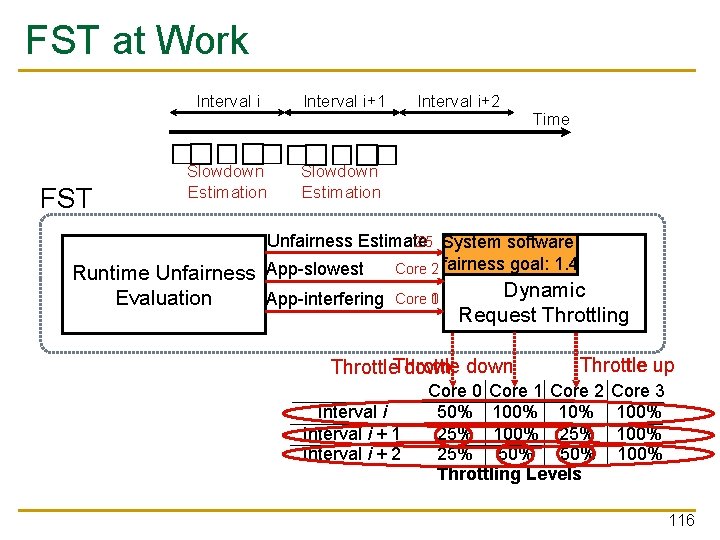

Fairness via Source Throttling (FST)

- Two components (interval-based)
- Run-time unfairness evaluation (in hardware)
  - Dynamically estimates the unfairness in the memory system
  - Estimates which application is slowing down which other
- Dynamic request throttling (hardware/software)
  - Adjusts how aggressively each core makes requests to the shared resources
  - Throttles down request rates of cores causing unfairness
    - Limit miss buffers, limit injection rate

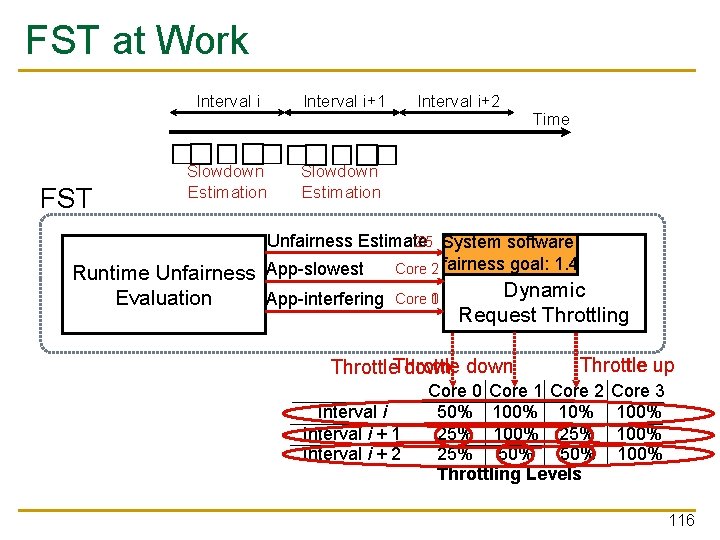

Fairness via Source Throttling (FST)

FST operates over successive intervals (Interval 1, Interval 2, Interval 3, ...), with slowdown estimation performed in each interval.

- Runtime Unfairness Evaluation produces the Unfairness Estimate, App-slowest, and App-interfering:
  1. Estimate system unfairness
  2. Find the app with the highest slowdown (App-slowest)
  3. Find the app causing the most interference for App-slowest (App-interfering)
- Dynamic Request Throttling: if Unfairness Estimate > Target, then
  1. Throttle down App-interfering
  2. Throttle up App-slowest

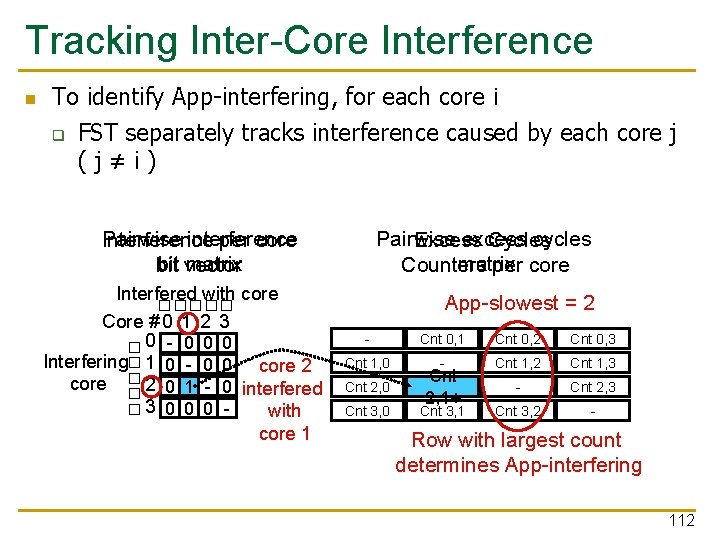

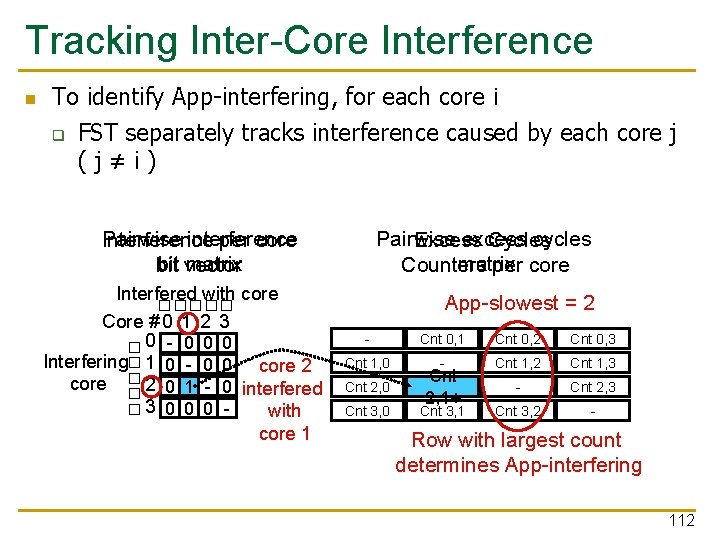

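Estimating System Unfairness

- Unfairness is the ratio of the largest to the smallest slowdown over all applications $i$:

  $$\text{Unfairness} = \frac{\max_i \text{Slowdown}_i}{\min_i \text{Slowdown}_i}$$

- Slowdown of application $i$:

  $$\text{Slowdown}_i = \frac{T_i^{\text{Shared}}}{T_i^{\text{Alone}}}$$

- How can $T_i^{\text{Alone}}$ be estimated in shared mode?
- $T_i^{\text{Excess}}$ is the number of extra cycles it takes application $i$ to execute due to interference:

  $$T_i^{\text{Alone}} = T_i^{\text{Shared}} - T_i^{\text{Excess}}$$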
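As a worked illustration of these definitions, the sketch below computes per-application slowdowns and the resulting unfairness from shared-mode cycle counts and excess-cycle counts. The function name `estimate_unfairness` and the dictionary-based inputs are hypothetical; the cycle counts in the usage lines are made-up numbers.

```python
def estimate_unfairness(t_shared, t_excess):
    """Return (unfairness, slowdowns).

    t_shared[i]: cycles application i took in shared mode.
    t_excess[i]: extra cycles application i spent due to interference.
    """
    slowdowns = {}
    for app in t_shared:
        t_alone = t_shared[app] - t_excess[app]   # T_alone = T_shared - T_excess
        slowdowns[app] = t_shared[app] / t_alone  # Slowdown = T_shared / T_alone
    unfairness = max(slowdowns.values()) / min(slowdowns.values())
    return unfairness, slowdowns

# Made-up example: app 1 lost half of its 1000 cycles to interference.
unfairness, slowdowns = estimate_unfairness({0: 1000, 1: 1000}, {0: 0, 1: 500})
app_slowest = max(slowdowns, key=slowdowns.get)
```

Here application 0 has slowdown 1.0, application 1 has slowdown 2.0, so the unfairness is 2.0 and application 1 is App-slowest.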

Tracking Inter-Core Interference

- FST hardware keeps an interference per-core bit vector, with one bit for each of Cores 0 to 3
- Three interference sources:
  1. Shared cache
  2. DRAM bus and bank
  3. DRAM row-buffers

(Figure: Cores 0 to 3, the shared cache, and the memory controller with Banks 0 to 7, alongside the FST hardware.)

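Tracking DRAM Row-Buffer Interference

- Each core has a Shadow Row Address Register (SRAR): Core 0’s SRAR holds Row A, and Core 1’s SRAR holds Row B
- Queue of requests to Bank 2: Core 0: Row A, Core 1: Row B, Core 0: Row A
- Core 0’s access to Row A is a row hit according to its SRAR, but a row conflict in the bank because Row B is open: an interference-induced row conflict
- The corresponding bit in the interference per-core bit vector is set

(Figure: Banks 0 to 7, the request queue of Bank 2, the two SRARs, and the interference per-core bit vector.)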
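The detection rule can be sketched as a small model: a request suffers an interference-induced row conflict when it conflicts with the row actually open in the bank but matches the row recorded in the requesting core's shadow register. This is an illustrative model under that assumption; the class name, the one-shadow-register-per-core-per-bank layout, and the method names are hypothetical.

```python
class RowBufferInterferenceTracker:
    def __init__(self, num_cores, num_banks):
        # Row actually open in each bank's row buffer.
        self.open_row = [None] * num_banks
        # Shadow row address: the row each core last accessed in each bank,
        # i.e. the row that would be open had the core run alone.
        self.srar = [[None] * num_banks for _ in range(num_cores)]
        self.interference_bit = [0] * num_cores

    def access(self, core, bank, row):
        """Service one request; return True if it hit an induced conflict."""
        conflict = self.open_row[bank] is not None and self.open_row[bank] != row
        hit_if_alone = self.srar[core][bank] == row
        induced = conflict and hit_if_alone
        if induced:
            self.interference_bit[core] = 1
        self.srar[core][bank] = row
        self.open_row[bank] = row
        return induced
```

Replaying the slide's request queue (Core 0 to Row A, Core 1 to Row B, Core 0 to Row A, all in Bank 2) flags only the third access and sets Core 0's interference bit.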

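Tracking Inter-Core Interference

- FST hardware keeps an interference per-core bit vector and excess cycles counters per core
- As the cycle count advances (T, T+1, T+2, T+3, ...), the excess cycles counter of each core whose interference bit is set accumulates $T_i^{\text{Excess}}$
- $T_i^{\text{Alone}} = T_i^{\text{Shared}} - T_i^{\text{Excess}}$

(Figure: Cores 0 to 3, the shared cache, and the memory controller with Banks 0 to 7, with the bit vector and counters updated over successive cycles.)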
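A minimal sketch of the counter update, assuming one increment per cycle for every core whose interference bit is set in that cycle. The function name and the list-of-bit-vectors input are hypothetical, and the three-cycle trace is a made-up example.

```python
def accumulate_excess(bit_vectors):
    """Sum per-core excess cycles.

    bit_vectors: one list of 0/1 values per cycle, indexed by core.
    Returns the excess cycles counter of each core.
    """
    num_cores = len(bit_vectors[0])
    excess = [0] * num_cores
    for bits in bit_vectors:
        for core, bit in enumerate(bits):
            excess[core] += bit  # count the cycle only if the bit is set
    return excess

# Made-up trace of three cycles on a 4-core system.
trace = [[0, 1, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1]]
excess = accumulate_excess(trace)
t_alone = [len(trace) - e for e in excess]  # T_alone = T_shared - T_excess
```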

Tracking Inter-Core Interference

To identify App-interfering, for each core i, FST separately tracks the interference caused by each core j (j ≠ i).

- The interference per-core bit vector becomes a pairwise interference matrix
- The excess cycles counters per core become a pairwise excess cycles matrix:

| Interfering core | Interfered-with core 0 | Interfered-with core 1 | Interfered-with core 2 | Interfered-with core 3 |
|---|---|---|---|---|
| 0 | - | Cnt 0,1 | Cnt 0,2 | Cnt 0,3 |
| 1 | Cnt 1,0 | - | Cnt 1,2 | Cnt 1,3 |
| 2 | Cnt 2,0 | Cnt 2,1 | - | Cnt 2,3 |
| 3 | Cnt 3,0 | Cnt 3,1 | Cnt 3,2 | - |

- Example: core 2 interfered with core 1, so the bit in row 2, column 1 of the interference matrix is set and Cnt 2,1 is incremented
- With App-slowest = 2, the row with the largest count in the column of core 2 determines App-interfering
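Selecting App-interfering from the pairwise matrix is an argmax over one column. The sketch below assumes the matrix is indexed as `cnt[interfering][interfered]`, matching the Cnt j,i layout on the slide; the function name and the counter values are hypothetical.

```python
def find_app_interfering(cnt, app_slowest):
    """Return the core that caused the most excess cycles for app_slowest.

    cnt[j][i]: excess cycles that core j caused for core i
    (diagonal entries are unused).
    """
    candidates = [j for j in range(len(cnt)) if j != app_slowest]
    return max(candidates, key=lambda j: cnt[j][app_slowest])

# Made-up counters for a 4-core system; column 2 belongs to App-slowest.
cnt = [
    [0, 10, 40, 5],
    [3, 0, 90, 1],
    [7, 2, 0, 8],
    [0, 0, 15, 0],
]
app_interfering = find_app_interfering(cnt, app_slowest=2)  # core 1
```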

Dynamic Request Throttling

- Goal: Adjust how aggressively each core makes requests to the shared memory system
- Mechanisms:
  - Miss Status Holding Register (MSHR) quota
    - Controls the number of concurrent requests accessing shared resources from each application
  - Request injection frequency
    - Controls how often memory requests are issued to the last-level cache from the MSHRs

Dynamic Request Throttling

The throttling level assigned to each core determines both its MSHR quota and its request injection rate. Total number of MSHRs: 128.

| Throttling level | MSHR quota | Request injection rate |
|---|---|---|
| 100% | 128 | Every cycle |
| 50% | 64 | Every other cycle |
| 25% | 32 | Once every 4 cycles |
| 10% | 12 | Once every 10 cycles |
| 5% | 6 | |
| 4% | 5 | Once every 25 cycles |
| 3% | 3 | Once every 30 cycles |
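The two throttling mechanisms can be modelled as two simple checks. In the sketch below the MSHR quotas are the ones listed on the slide; the function names are hypothetical, and the injection check takes the period as an explicit argument rather than looking it up, so it makes no claim about the rate of any particular level.

```python
# MSHR quota per throttling level (percent), as listed on the slide.
MSHR_QUOTA = {100: 128, 50: 64, 25: 32, 10: 12, 5: 6, 4: 5, 3: 3}

def can_allocate_mshr(level, outstanding):
    """True if a core at `level` may allocate one more MSHR entry."""
    return outstanding < MSHR_QUOTA[level]

def may_inject(cycle, period):
    """True if a request may be issued this cycle, given one issue every `period` cycles."""
    return cycle % period == 0
```

For example, a core throttled to 50% may hold at most 64 outstanding misses, and with a period of 4 it may issue a request only on cycles 0, 4, 8, and so on.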

FST at Work

(Figure: worked example over intervals i, i+1, and i+2. System software sets a fairness goal of 1.4. Slowdown estimation in successive intervals yields unfairness estimates of 2.5 and 3, with Core 2 as App-slowest. Because the estimates exceed the goal, dynamic request throttling throttles down App-interfering and throttles up App-slowest, changing the throttling levels of Cores 0 to 3 among 10%, 25%, 50%, and 100%.)
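One interval of the throttling decision can be sketched as follows. Moving exactly one step along the list of throttling levels per interval is an assumption made for this illustration, as are the function names; the starting levels in the usage lines are made-up values.

```python
LEVELS = [3, 4, 5, 10, 25, 50, 100]  # throttling levels in percent, ascending

def _step(level, delta):
    """Move `delta` positions along LEVELS, clamped to the ends of the list."""
    i = LEVELS.index(level) + delta
    return LEVELS[max(0, min(i, len(LEVELS) - 1))]

def fst_interval_step(levels, unfairness, target, app_slowest, app_interfering):
    """Return the throttling levels for the next interval.

    levels: dict mapping core -> current throttling level (percent).
    """
    new_levels = dict(levels)
    if unfairness > target:
        new_levels[app_interfering] = _step(levels[app_interfering], -1)  # throttle down
        new_levels[app_slowest] = _step(levels[app_slowest], +1)          # throttle up
    return new_levels

# Made-up starting point: unfairness 3.0 against a goal of 1.4.
nxt = fst_interval_step({0: 50, 1: 100, 2: 10, 3: 100}, 3.0, 1.4,
                        app_slowest=2, app_interfering=0)
```

In this example core 0 drops from 50% to 25% and core 2 rises from 10% to 25%; when the estimate is at or below the target, the levels are returned unchanged.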

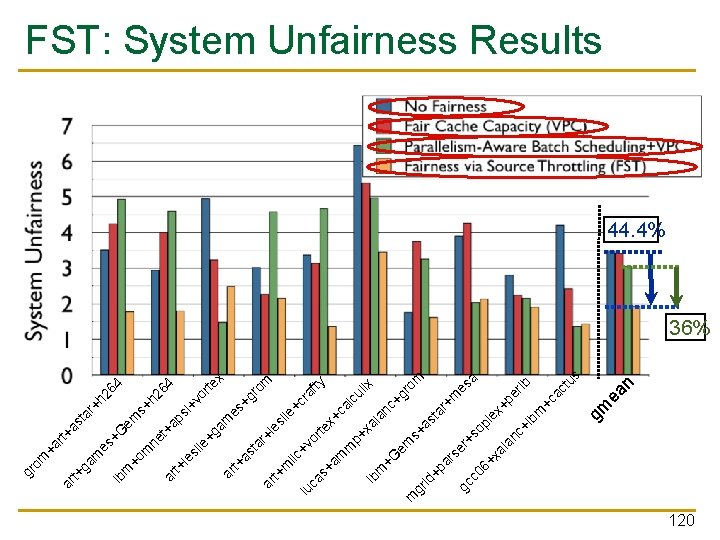

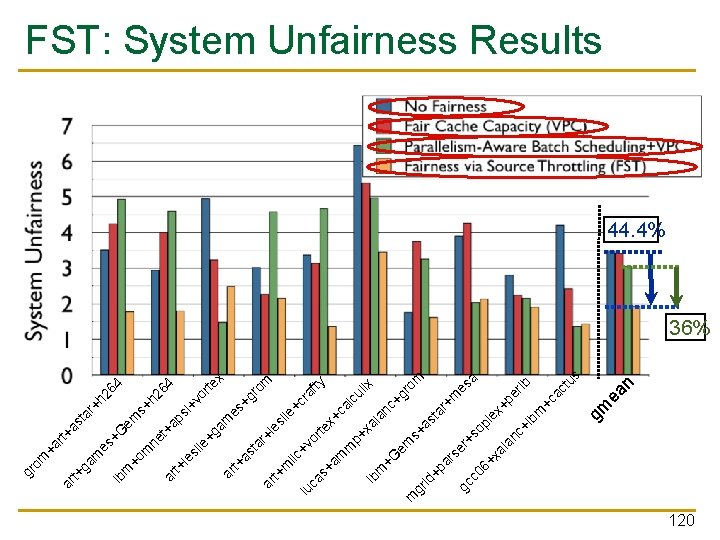

System Software Support

- Different fairness objectives can be configured by system software
  - Estimated Unfairness > Target Unfairness
  - Estimated Max Slowdown > Target Max Slowdown
  - Estimated Slowdown(i) > Target Slowdown(i)
- Support for thread priorities
  - Weighted Slowdown(i) = Estimated Slowdown(i) x Weight(i)

FST Hardware Cost

- Total storage cost required for 4 cores is ~12 KB
- FST does not require any structures or logic that are on the processor’s critical path

FST Evaluation Methodology

- x86 cycle-accurate simulator
- Baseline processor configuration
  - Per-core
    - 4-wide issue, out-of-order, 256-entry ROB
  - Shared (4-core system)
    - 128 MSHRs
    - 2 MB, 16-way L2 cache
  - Main memory
    - DDR3 1333 MHz
    - Latency of 15 ns per command (tRP, tRCD, CL)
    - 8 B wide core-to-memory bus

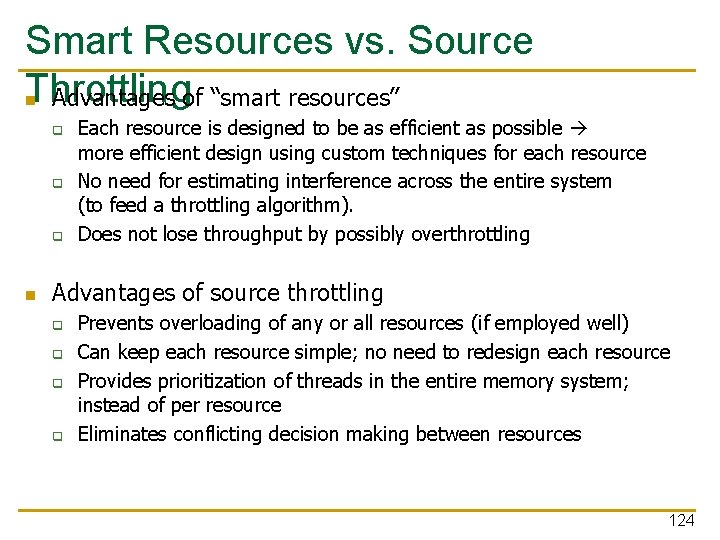

FST: System Unfairness Results

(Figure: system unfairness for each multiprogrammed workload mix and the geometric mean (gmean); annotated values: 44.4% and 36%.)

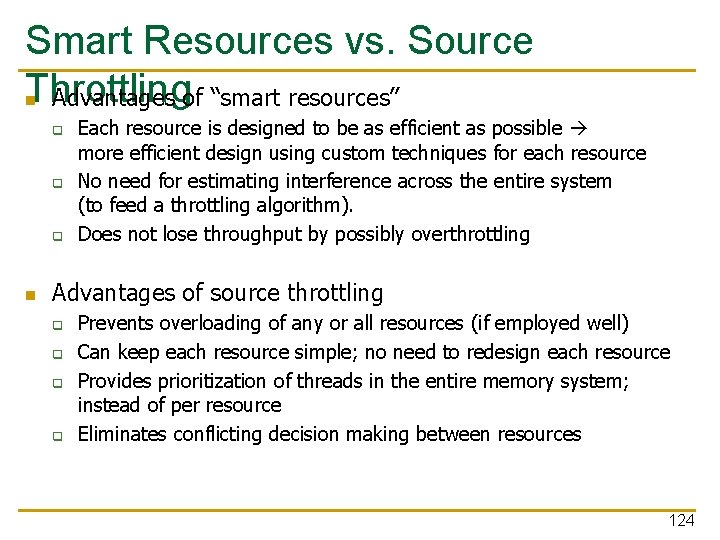

FST: System Performance Results

(Figure: system performance for each multiprogrammed workload mix and the geometric mean (gmean); annotated values: 14% and 25.6%.)

FST Summary

- Fairness via Source Throttling (FST) is a new fair and high-performance shared resource management approach for CMPs
- Dynamically monitors unfairness and throttles down sources of interfering memory requests
- Reduces the need for multiple per-resource interference reduction/control techniques
- Improves both system fairness and performance
- Incorporates thread weights and enables different fairness objectives

Smart Resources vs. Source Throttling

- Advantages of “smart resources”
  - Each resource is designed to be as efficient as possible: a more efficient design using custom techniques for each resource
  - No need for estimating interference across the entire system (to feed a throttling algorithm)
  - Does not lose throughput by possibly overthrottling
- Advantages of source throttling
  - Prevents overloading of any or all resources (if employed well)
  - Can keep each resource simple; no need to redesign each resource
  - Provides prioritization of threads in the entire memory system, instead of per resource
  - Eliminates conflicting decision making between resources

Other Ways of Reducing (DRAM) Interference

- DRAM bank/channel partitioning among threads
- Interference-aware address mapping/remapping
- Core/request throttling: How?
- Interference-aware thread scheduling: How?
- Better/interference-aware caching
- Interference-aware scheduling in the interconnect
- Randomized address mapping
- DRAM architecture/microarchitecture changes?
- These are general techniques that can be used to improve
  - System throughput
  - QoS/fairness
  - Power/energy consumption?

DRAM Partitioning Among Threads

- Idea: Map competing threads’ physical pages to different channels (or banks/ranks)
- Essentially, physically partition DRAM channels/banks/ranks among threads
  - Can be static or dynamic
- A research topic (no known publications)
- Advantages
  - Reduces interference
  - No/little need for hardware changes
- Disadvantages
  - Causes fragmentation in physical memory, possibly leading to more page faults
  - Can reduce each thread’s bank parallelism/locality
  - Scalability?

Core/Request Throttling

- Idea: Estimate the slowdown due to (DRAM) interference and throttle down threads that slow down others
  - Ebrahimi et al., “Fairness via Source Throttling: A Configurable and High-Performance Fairness Substrate for Multi-Core Memory Systems,” ASPLOS 2010.
- Advantages
  - Core/request throttling is easy to implement: no need to change the scheduling algorithm
  - Can be a general way of handling shared resource contention
- Disadvantages
  - Requires interference/slowdown estimations
  - Thresholds can become difficult to optimize

Research Topics in Main Memory Management

- Abundant
  - Interference reduction via different techniques
  - Distributed memory controller management
  - Co-design with on-chip interconnects and caches
  - Reducing waste, minimizing energy, minimizing cost
  - Enabling new memory technologies
    - Die stacking
    - Non-volatile memory
    - Latency tolerance
- You can come up with great solutions that will significantly impact the computing industry