18-742 Fall 2012 Parallel Computer Architecture Lecture


18-742 Fall 2012 Parallel Computer Architecture
Lecture 25: Main Memory Management II
Prof. Onur Mutlu
Carnegie Mellon University
11/12/2012

Reminder: New Review Assignments
- Due: Tuesday, November 13, 11:59 pm.
  - Mutlu and Moscibroda, "Parallelism-Aware Batch Scheduling: Enhancing both Performance and Fairness of Shared DRAM Systems," ISCA 2008.
  - Kim et al., "Thread Cluster Memory Scheduling: Exploiting Differences in Memory Access Behavior," MICRO 2010.
- Due: Thursday, November 15, 11:59 pm.
  - Ebrahimi et al., "Fairness via Source Throttling: A Configurable and High-Performance Fairness Substrate for Multi-Core Memory Systems," ASPLOS 2010.
  - Muralidhara et al., "Reducing Memory Interference in Multicore Systems via Application-Aware Memory Channel Partitioning," MICRO 2011.

Reminder: Literature Survey Process
- Done in groups: your research project group is likely ideal
- Step 1: Pick 3 or more research papers
  - Broadly related to your research project
- Step 2: Send me the list of papers with links to pdf copies (by Sunday, November 11)
  - I need to approve the 3 papers
  - We will iterate to ensure convergence on the list
- Step 3: Prepare a 2-page writeup on the 3 papers
- Step 4: Prepare a 15-minute presentation on the 3 papers
  - Total time: 15-minute talk + 5-minute Q&A
  - Talk should focus on insights and tradeoffs
- Step 5: Deliver the presentation in front of class (dates: November 26-28 or December 3-7) and turn in your writeup (due date: December 1)

Last Lecture
- Begin shared resource management
- Main memory as a shared resource
  - QoS-aware memory systems
  - Memory request scheduling
    - Memory performance attacks
    - STFM
    - PAR-BS
    - ATLAS

Today
- End QoS-aware memory request scheduling

More on QoS-Aware Memory Request Scheduling

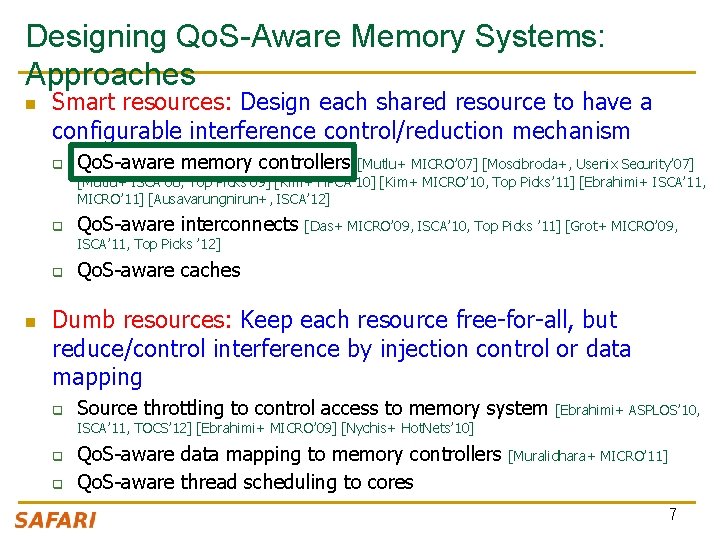

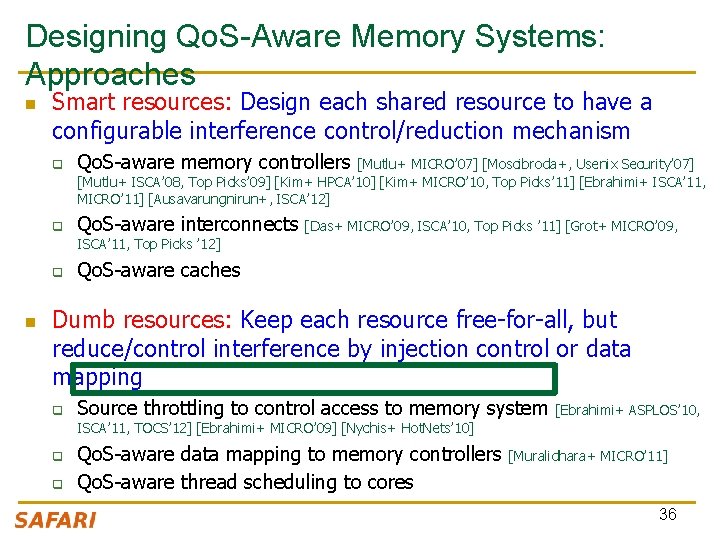

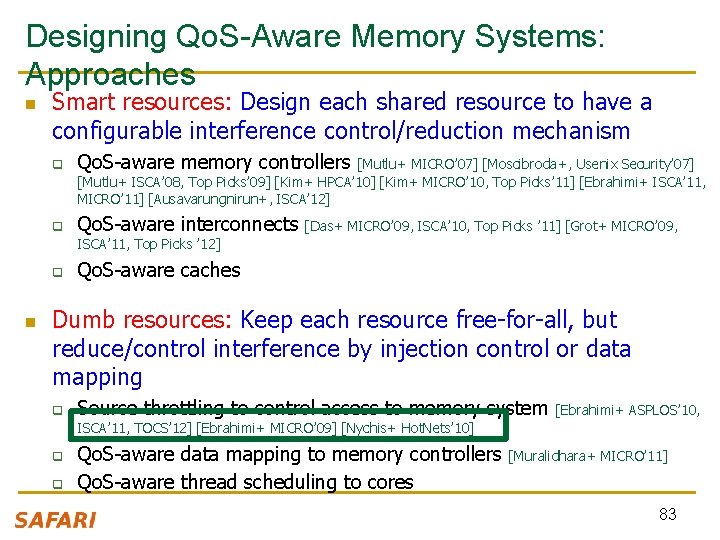

Designing QoS-Aware Memory Systems: Approaches
- Smart resources: Design each shared resource to have a configurable interference control/reduction mechanism
  - QoS-aware memory controllers [Mutlu+ MICRO’07] [Moscibroda+, Usenix Security’07] [Mutlu+ ISCA’08, Top Picks’09] [Kim+ HPCA’10] [Kim+ MICRO’10, Top Picks’11] [Ebrahimi+ ISCA’11, MICRO’11] [Ausavarungnirun+, ISCA’12]
  - QoS-aware interconnects [Das+ MICRO’09, ISCA’10, Top Picks’11] [Grot+ MICRO’09, ISCA’11, Top Picks’12]
  - QoS-aware caches
- Dumb resources: Keep each resource free-for-all, but reduce/control interference by injection control or data mapping
  - Source throttling to control access to memory system [Ebrahimi+ ASPLOS’10, ISCA’11, TOCS’12] [Ebrahimi+ MICRO’09] [Nychis+ HotNets’10]
  - QoS-aware data mapping to memory controllers [Muralidhara+ MICRO’11]
  - QoS-aware thread scheduling to cores

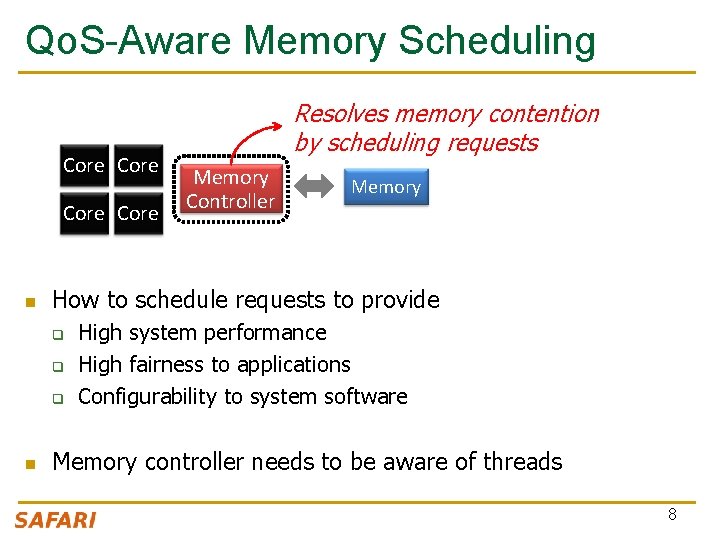

QoS-Aware Memory Scheduling
[Figure: cores share a memory controller that sits in front of memory and resolves memory contention by scheduling requests.]
- How to schedule requests to provide
  - High system performance
  - High fairness to applications
  - Configurability to system software
- Memory controller needs to be aware of threads

![QoS-Aware Memory Scheduling: Evolution](https://slidetodoc.com/presentation_image_h2/94aa5777ed5606dc7c116c1bf0fe384e/image-9.jpg)

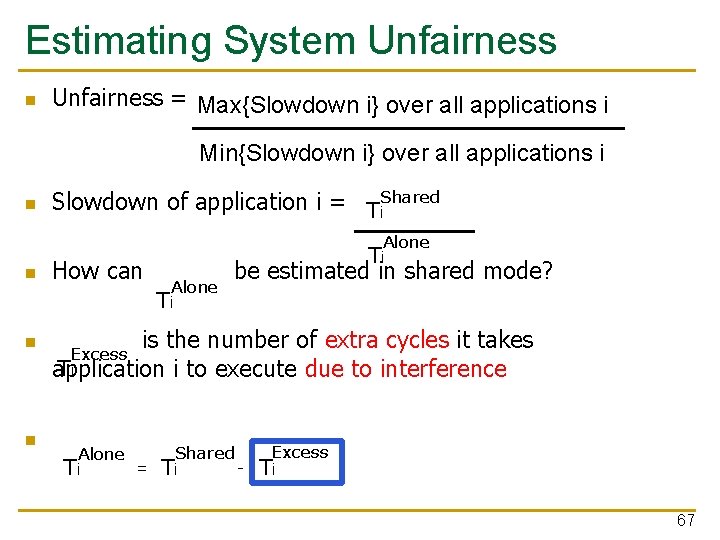

QoS-Aware Memory Scheduling: Evolution
- Stall-time fair memory scheduling [Mutlu+ MICRO’07]
  - Idea: Estimate and balance thread slowdowns
  - Takeaway: Proportional thread progress improves performance, especially when threads are “heavy” (memory intensive)
- Parallelism-aware batch scheduling [Mutlu+ ISCA’08, Top Picks’09]
  - Idea: Rank threads and service in rank order (to preserve bank parallelism); batch requests to prevent starvation
  - Takeaway: Preserving within-thread bank-parallelism improves performance; request batching improves fairness
- ATLAS memory scheduler [Kim+ HPCA’10]
  - Idea: Prioritize threads that have attained the least service from the memory scheduler
  - Takeaway: Prioritizing “light” threads improves performance

![QoS-Aware Memory Scheduling: Evolution](https://slidetodoc.com/presentation_image_h2/94aa5777ed5606dc7c116c1bf0fe384e/image-10.jpg)

QoS-Aware Memory Scheduling: Evolution
- Thread cluster memory scheduling [Kim+ MICRO’10]
  - Idea: Cluster threads into two groups (latency- vs. bandwidth-sensitive); prioritize the latency-sensitive ones; employ a fairness policy in the bandwidth-sensitive group
  - Takeaway: A heterogeneous scheduling policy that differs based on thread behavior maximizes both performance and fairness
- Staged memory scheduling [Ausavarungnirun+ ISCA’12]
  - Idea: Divide the functional tasks of an application-aware memory scheduler into multiple distinct stages, where each stage is significantly simpler than a monolithic scheduler
  - Takeaway: Staging enables the design of a scalable and relatively simpler application-aware memory scheduler that works on very large request buffers

![QoS-Aware Memory Scheduling: Evolution](https://slidetodoc.com/presentation_image_h2/94aa5777ed5606dc7c116c1bf0fe384e/image-11.jpg)

QoS-Aware Memory Scheduling: Evolution
- Parallel application memory scheduling [Ebrahimi+ MICRO’11]
  - Idea: Identify and prioritize limiter threads of a multithreaded application in the memory scheduler; provide fast and fair progress to non-limiter threads
  - Takeaway: Carefully prioritizing between limiter and non-limiter threads of a parallel application improves performance
- Integrated Memory Channel Partitioning and Scheduling [Muralidhara+ MICRO’11]
  - Idea: Only prioritize very latency-sensitive threads in the scheduler; mitigate all other applications’ interference via channel partitioning
  - Takeaway: Intelligently combining application-aware channel partitioning and memory scheduling provides better performance than either

![QoS-Aware Memory Scheduling: Evolution](https://slidetodoc.com/presentation_image_h2/94aa5777ed5606dc7c116c1bf0fe384e/image-12.jpg)

QoS-Aware Memory Scheduling: Evolution
- Prefetch-aware shared resource management [Ebrahimi+ ISCA’12] [Ebrahimi+ MICRO’09] [Lee+ MICRO’08]
  - Idea: Prioritize prefetches depending on how they affect system performance; even accurate prefetches can degrade performance of the system
  - Takeaway: Carefully controlling and prioritizing prefetch requests improves performance and fairness

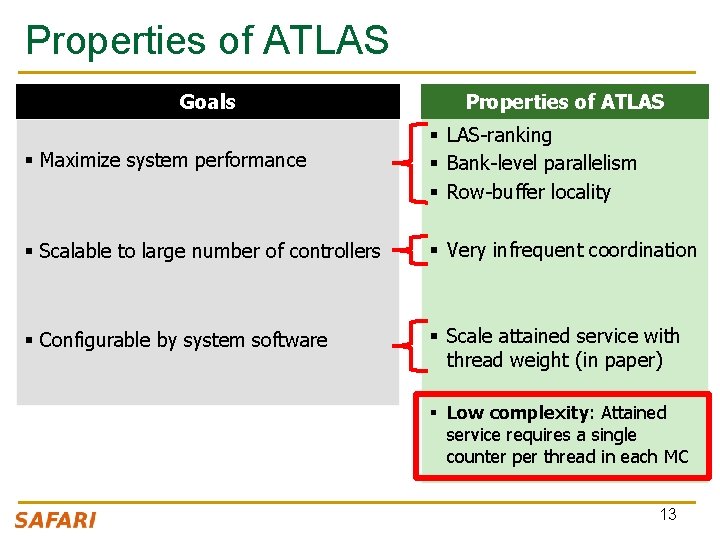

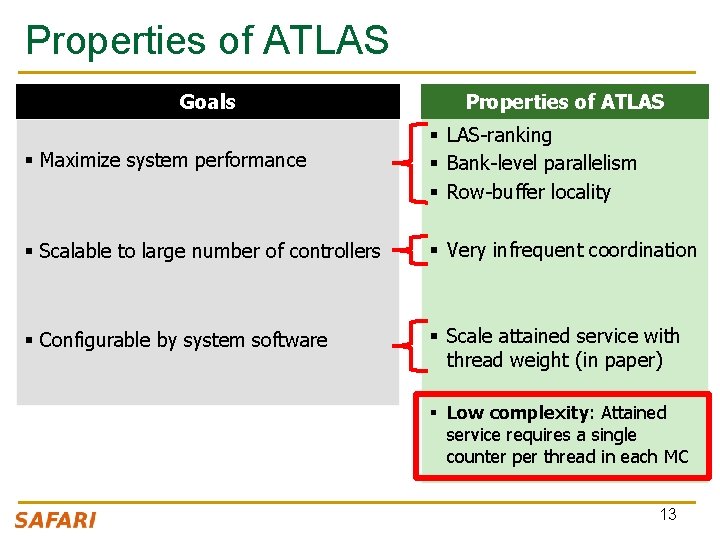

Properties of ATLAS
- Goal: Maximize system performance
  - Properties: LAS-ranking; bank-level parallelism; row-buffer locality
- Goal: Scalable to large number of controllers
  - Property: Very infrequent coordination
- Goal: Configurable by system software
  - Property: Scale attained service with thread weight (in paper)
- Low complexity: Attained service requires a single counter per thread in each MC
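To make the "single counter per thread in each MC" and "very infrequent coordination" properties concrete, here is a minimal Python sketch of least-attained-service (LAS) ranking. The class and method names are hypothetical, thread weights are omitted, and for simplicity the sketch resets the counters every quantum; the ATLAS paper's exact accounting (for example, how earlier quanta are weighted) may differ.

```python
from collections import defaultdict


class LasRanker:
    """Hypothetical sketch of ATLAS-style least-attained-service ranking."""

    def __init__(self, num_controllers: int):
        # One attained-service counter per thread in each memory controller (MC).
        self.attained = [defaultdict(int) for _ in range(num_controllers)]
        self.ranking = []  # thread ids, highest priority first

    def record_service(self, mc: int, thread: int, cycles: int) -> None:
        # Purely local bookkeeping: no communication between controllers.
        self.attained[mc][thread] += cycles

    def end_quantum(self, threads):
        # Infrequent coordination: controllers combine their counters once per quantum.
        total = {t: sum(c.get(t, 0) for c in self.attained) for t in threads}
        # Least attained service first, so "light" threads get the highest rank.
        self.ranking = sorted(threads, key=lambda t: total[t])
        for counters in self.attained:
            counters.clear()  # simplification: no history carried into the next quantum
        return self.ranking


ranker = LasRanker(num_controllers=2)
ranker.record_service(mc=0, thread=1, cycles=100)
ranker.record_service(mc=1, thread=1, cycles=50)
ranker.record_service(mc=0, thread=2, cycles=10)
ranker.record_service(mc=1, thread=3, cycles=40)
print(ranker.end_quantum([1, 2, 3]))  # [2, 3, 1]
```

Thread 1 attained the most service (150 cycles across both controllers), so it ends up with the lowest rank, which is exactly the source of the unfairness noted among ATLAS's downsides.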

ATLAS Pros and Cons
- Upsides:
  - Good at improving performance
  - Low complexity
  - Coordination among controllers happens infrequently
- Downsides:
  - Lowest-ranked threads get delayed significantly → high unfairness

TCM: Thread Cluster Memory Scheduling
Yoongu Kim, Michael Papamichael, Onur Mutlu, and Mor Harchol-Balter, "Thread Cluster Memory Scheduling: Exploiting Differences in Memory Access Behavior," 43rd International Symposium on Microarchitecture (MICRO), pages 65-76, Atlanta, GA, December 2010.

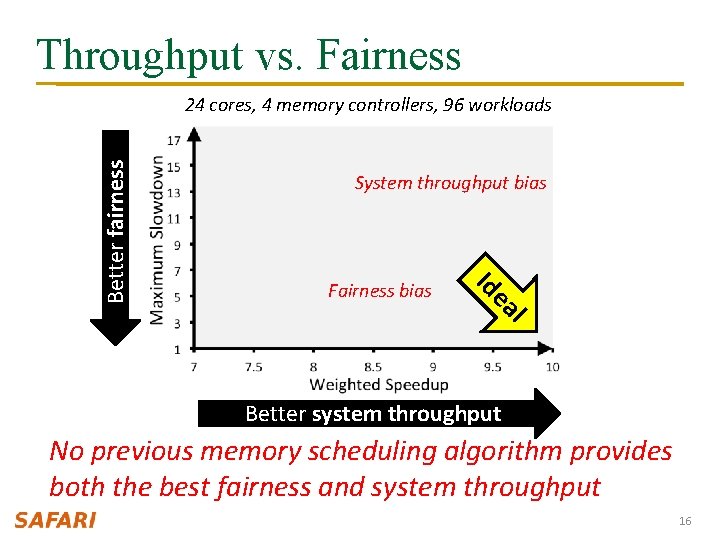

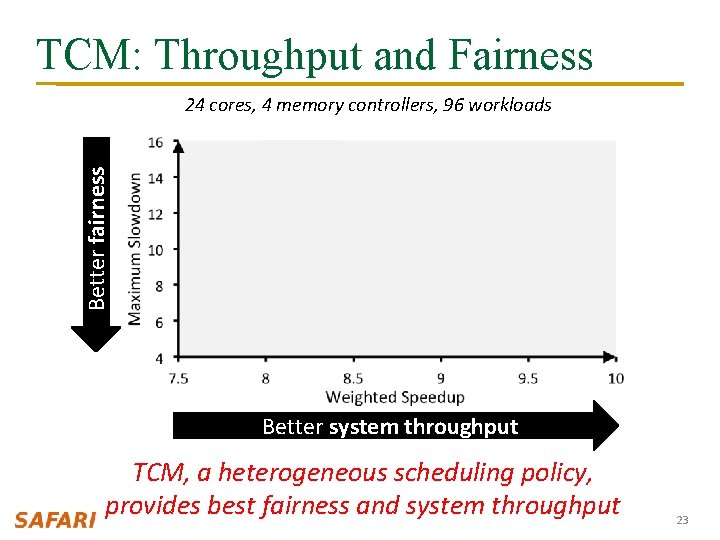

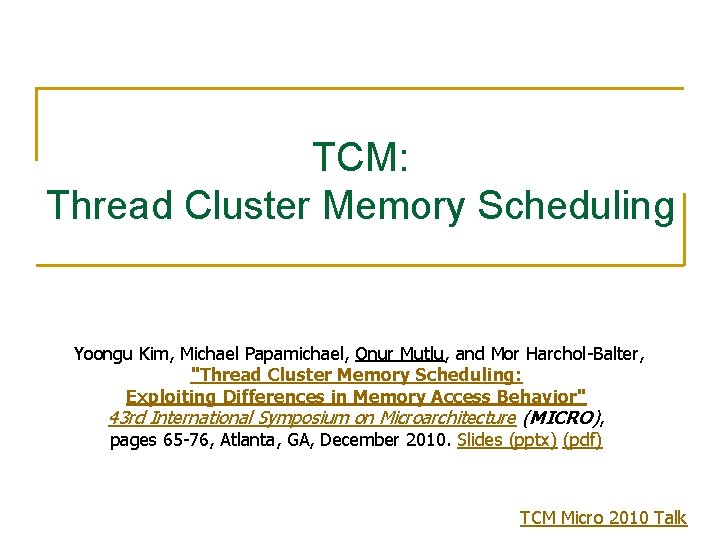

Throughput vs. Fairness
[Figure: fairness vs. system throughput of previous schedulers, some biased toward system throughput and some toward fairness, relative to the ideal; 24 cores, 4 memory controllers, 96 workloads.]
No previous memory scheduling algorithm provides both the best fairness and the best system throughput.

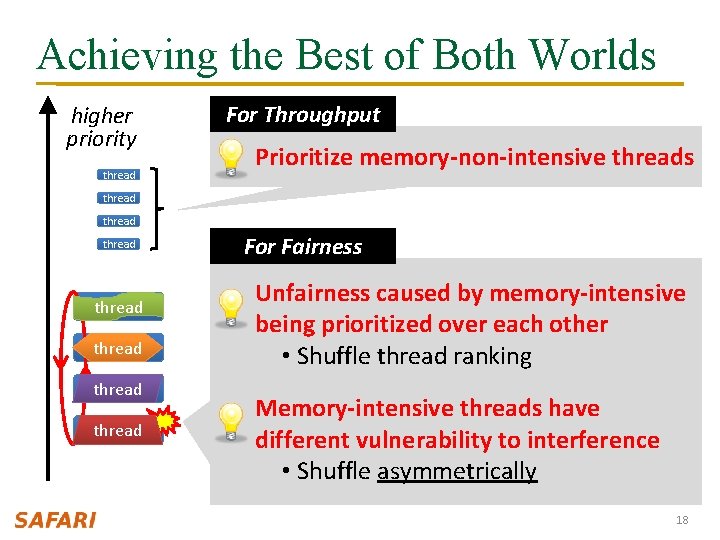

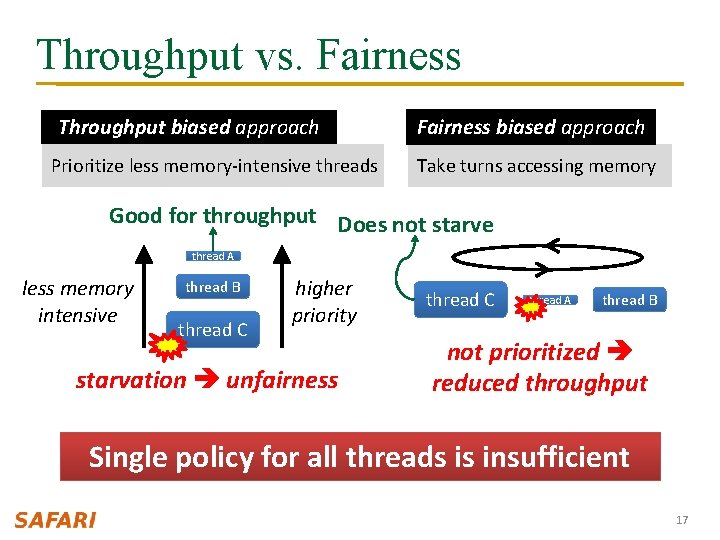

Throughput vs. Fairness
- Throughput-biased approach: prioritize less memory-intensive threads
  - Good for throughput
  - But the most memory-intensive thread (thread C) gets the lowest priority → starvation → unfairness
- Fairness-biased approach: threads take turns accessing memory
  - Does not starve any thread
  - But less memory-intensive threads are not prioritized → reduced throughput

A single policy for all threads is insufficient.

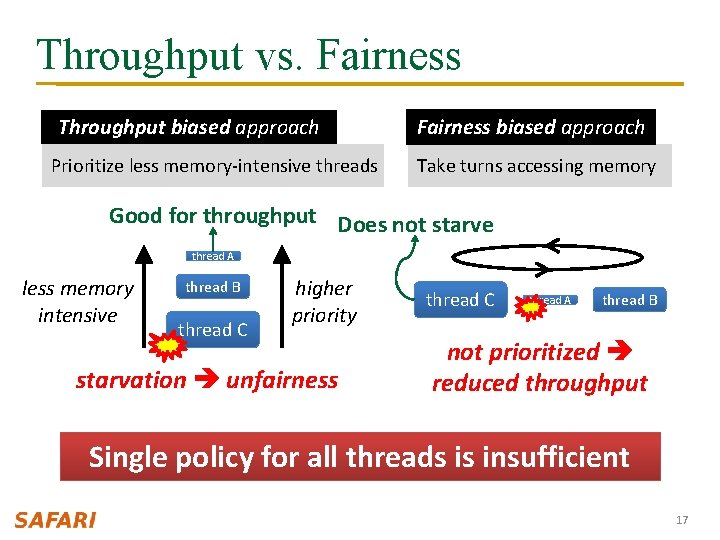

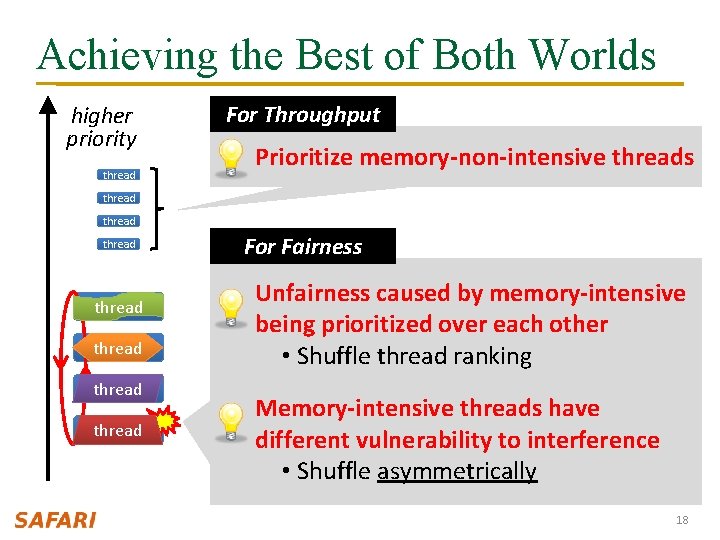

Achieving the Best of Both Worlds
- For throughput: prioritize memory-non-intensive threads (give them higher priority)
- For fairness:
  - Unfairness is caused by memory-intensive threads being prioritized over each other → shuffle the thread ranking
  - Memory-intensive threads have different vulnerability to interference → shuffle asymmetrically

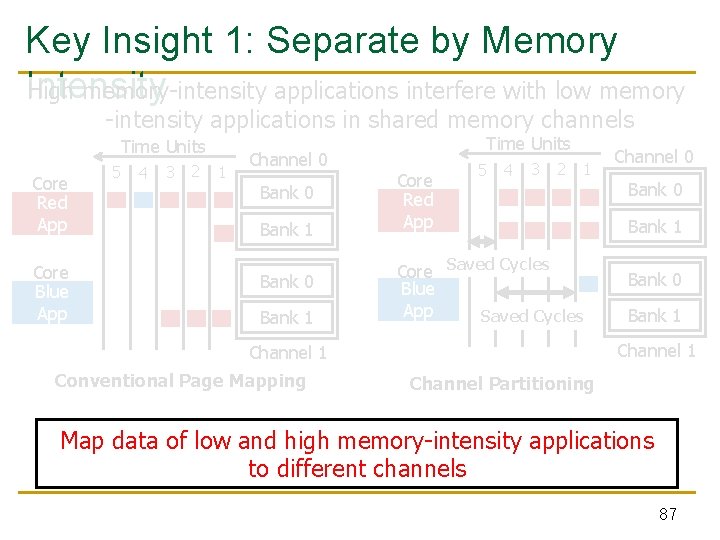

![Thread Cluster Memory Scheduling [Kim+ MICRO’10]](https://slidetodoc.com/presentation_image_h2/94aa5777ed5606dc7c116c1bf0fe384e/image-19.jpg)

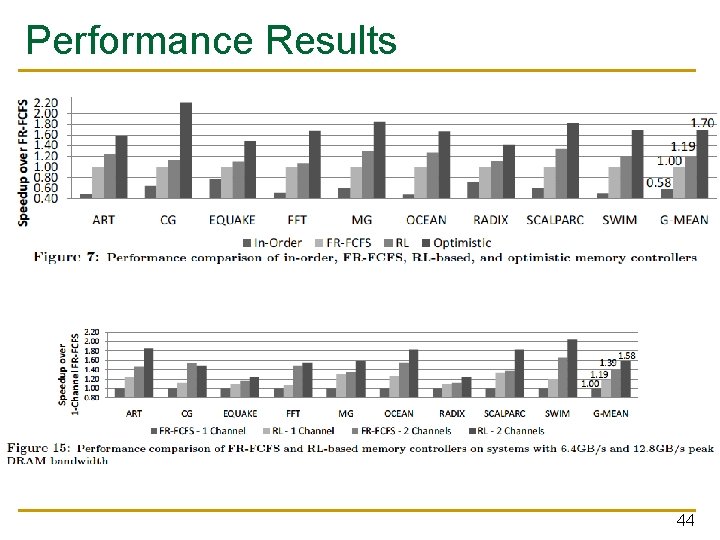

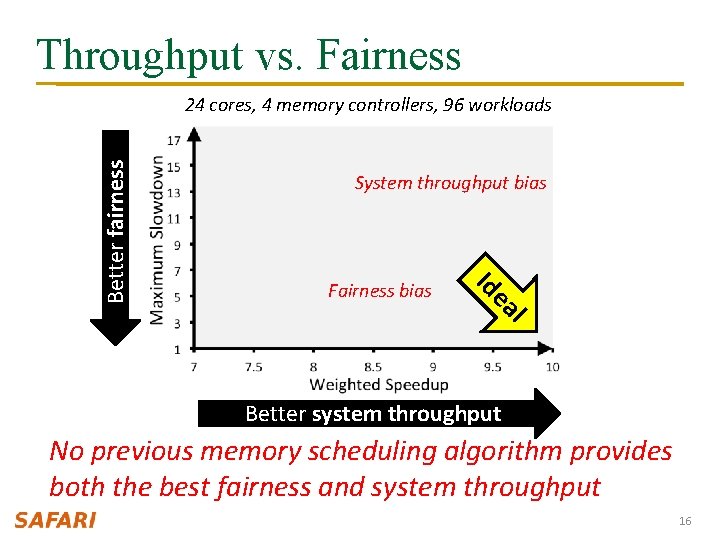

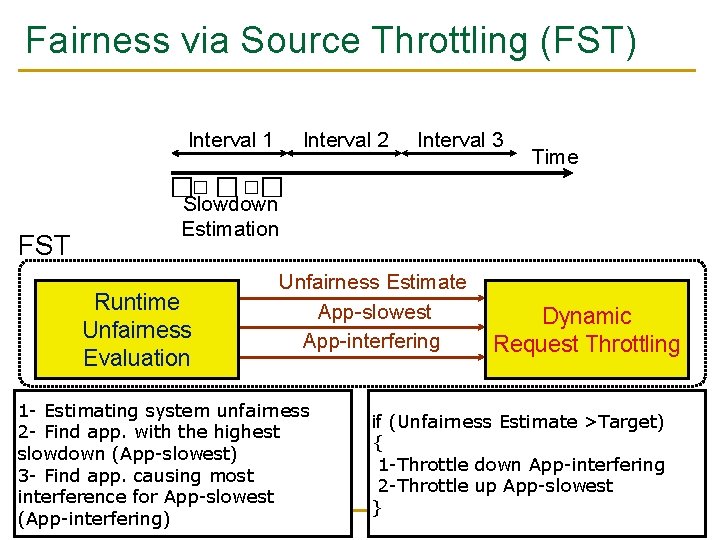

Thread Cluster Memory Scheduling [Kim+ MICRO’10]
1. Group threads into two clusters
2. Prioritize non-intensive cluster
3. Different policies for each cluster

[Figure: the threads in the system are split into a memory-non-intensive cluster, which is prioritized and scheduled for throughput, and a memory-intensive cluster, which is scheduled for fairness.]

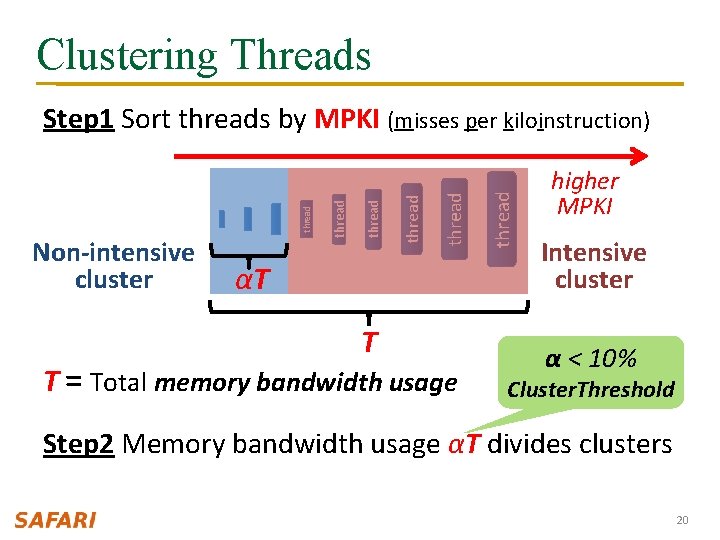

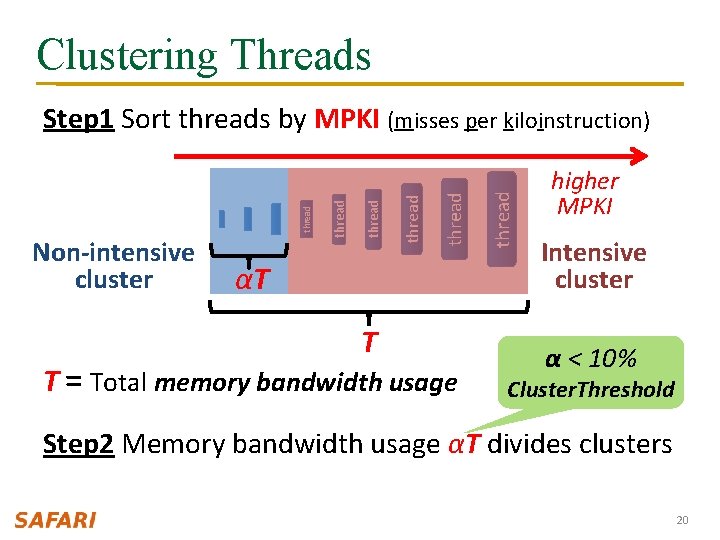

Clustering Threads
- Step 1: Sort threads by MPKI (misses per kiloinstruction)
- Step 2: Memory bandwidth usage αT divides the clusters
  - T = total memory bandwidth usage; α = ClusterThreshold (α < 10%)
  - The lowest-MPKI threads, whose bandwidth usage sums to at most αT, form the non-intensive cluster; the remaining, higher-MPKI threads form the intensive cluster
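The two clustering steps can be sketched in a few lines of Python. The `ThreadStats` record and the bandwidth unit are illustrative assumptions; the sketch only shows how sorting by MPKI and filling an αT bandwidth budget splits the threads.

```python
from dataclasses import dataclass


@dataclass
class ThreadStats:
    tid: int
    mpki: float       # misses per kiloinstruction in the last quantum
    bandwidth: float  # memory bandwidth used in the last quantum (any consistent unit)


def cluster_threads(threads, cluster_threshold=0.10):
    """Return (non_intensive_ids, intensive_ids) using an alpha*T bandwidth budget."""
    total_bw = sum(t.bandwidth for t in threads)     # T
    budget = cluster_threshold * total_bw             # alpha * T
    non_intensive, intensive = [], []
    used = 0.0
    for th in sorted(threads, key=lambda t: t.mpki):  # Step 1: sort by MPKI
        # Step 2: lowest-MPKI threads join the non-intensive cluster until their
        # combined bandwidth would exceed alpha * T; every thread after that
        # (higher MPKI) goes to the intensive cluster.
        if not intensive and used + th.bandwidth <= budget:
            non_intensive.append(th.tid)
            used += th.bandwidth
        else:
            intensive.append(th.tid)
    return non_intensive, intensive


threads = [ThreadStats(0, 0.5, 2.0), ThreadStats(1, 20.0, 40.0),
           ThreadStats(2, 1.0, 3.0), ThreadStats(3, 50.0, 55.0)]
print(cluster_threads(threads))  # ([0, 2], [1, 3])
```

Raising `cluster_threshold` moves more threads into the prioritized non-intensive cluster, which is the knob behind the fairness-throughput tradeoff.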

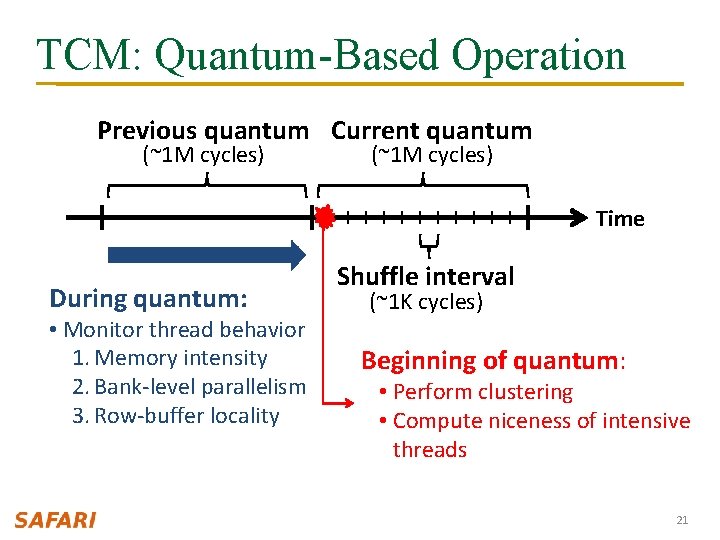

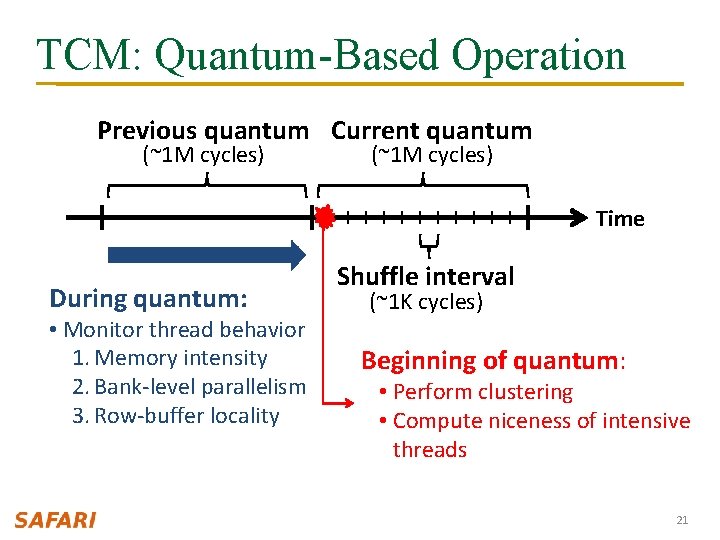

TCM: Quantum-Based Operation
[Figure: timeline of the previous quantum and the current quantum (~1M cycles each), subdivided into shuffle intervals (~1K cycles).]
- During quantum: monitor thread behavior
  1. Memory intensity
  2. Bank-level parallelism
  3. Row-buffer locality
- Beginning of quantum:
  - Perform clustering
  - Compute niceness of intensive threads
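The slides list what TCM monitors but not how niceness is computed. The following is a minimal sketch under an assumption: a thread is treated as "nicer" when its bank-level parallelism (BLP) is high, since that makes it more vulnerable to interference, and as less nice when its row-buffer locality (RBL) is high, since such a thread tends to monopolize row buffers. Niceness here is the difference of the two ranks; the function name and this exact formula are assumptions, not necessarily TCM's definition.

```python
def compute_niceness(stats):
    """stats: dict thread_id -> (blp, rbl) measured during the previous quantum.

    Assumed formula: niceness = rank by BLP - rank by RBL, where ranks run from
    0 (lowest value) to n-1 (highest value).
    """
    by_blp = sorted(stats, key=lambda t: stats[t][0])
    by_rbl = sorted(stats, key=lambda t: stats[t][1])
    blp_rank = {t: i for i, t in enumerate(by_blp)}
    rbl_rank = {t: i for i, t in enumerate(by_rbl)}
    return {t: blp_rank[t] - rbl_rank[t] for t in stats}


# Intensive-cluster threads: (bank-level parallelism, row-buffer hit rate)
intensive = {0: (4.0, 0.2), 1: (1.0, 0.9), 2: (2.0, 0.5)}
nice = compute_niceness(intensive)
print(nice)  # {0: 2, 1: -2, 2: 0}
print(sorted(intensive, key=nice.get, reverse=True))  # [0, 2, 1]: nicest first
```

An asymmetric shuffle could then keep nicer threads near the top of the intensive cluster's ranking more often than less nice ones.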

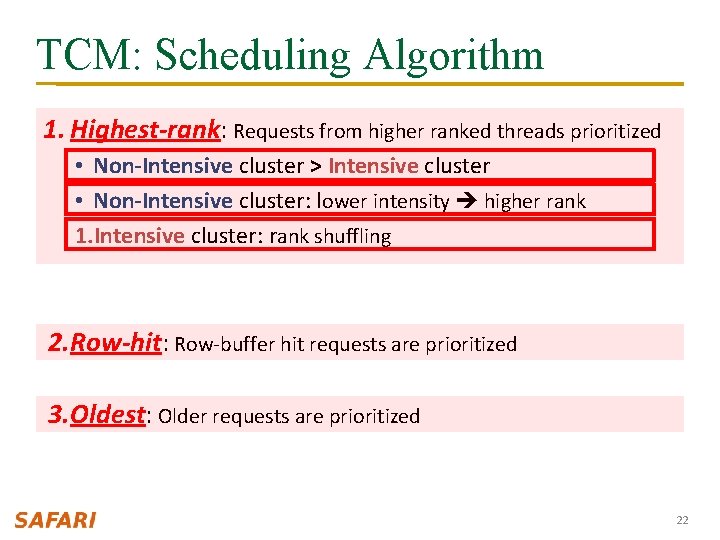

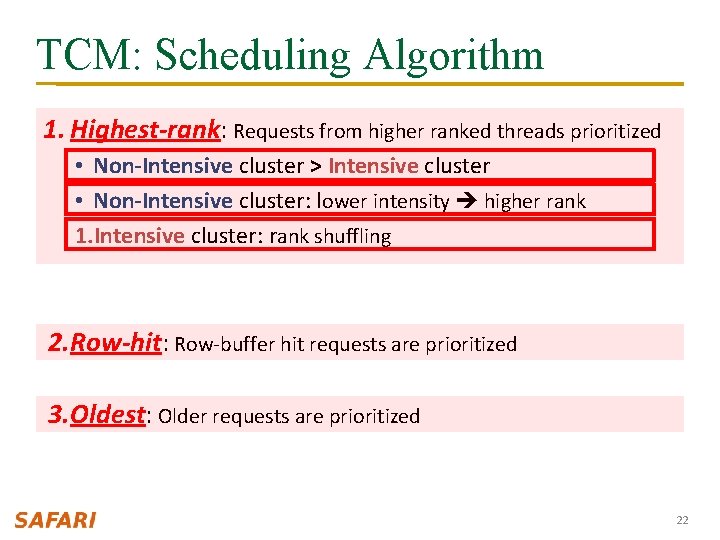

TCM: Scheduling Algorithm
1. Highest-rank: Requests from higher-ranked threads are prioritized
   - Non-intensive cluster > intensive cluster
   - Non-intensive cluster: lower intensity → higher rank
   - Intensive cluster: rank shuffling
2. Row-hit: Row-buffer hit requests are prioritized
3. Oldest: Older requests are prioritized
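The three prioritization rules map naturally onto a lexicographic sort key. This is a minimal Python sketch, not TCM's hardware: the `Request` record and `pick_next` are hypothetical, and the per-thread rank is assumed to already encode the cluster ordering and the current shuffle.

```python
from dataclasses import dataclass


@dataclass
class Request:
    thread: int
    bank: int
    row: int
    arrival: int  # cycle at which the request entered the queue


def pick_next(requests, thread_rank, open_rows):
    """Pick the request to service next: highest rank, then row hit, then oldest.

    thread_rank: thread id -> rank (larger = higher priority). Assumed to already
                 place the non-intensive cluster above the intensive one and to
                 reflect the current shuffle order inside the intensive cluster.
    open_rows:   bank -> row currently held in that bank's row buffer.
    """
    def priority(req):
        row_hit = open_rows.get(req.bank) == req.row
        return (thread_rank[req.thread], row_hit, -req.arrival)
    return max(requests, key=priority)


queue = [Request(thread=1, bank=0, row=5, arrival=1),   # row hit, lower-rank thread
         Request(thread=0, bank=0, row=7, arrival=3),   # row conflict, higher-rank thread
         Request(thread=0, bank=1, row=9, arrival=2)]   # row hit, higher-rank thread
print(pick_next(queue, thread_rank={0: 2, 1: 1}, open_rows={0: 5, 1: 9}))
# Request(thread=0, bank=1, row=9, arrival=2)
```

Because rank comes first in the tuple, a row hit from a lower-ranked thread never overtakes a higher-ranked thread's request.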

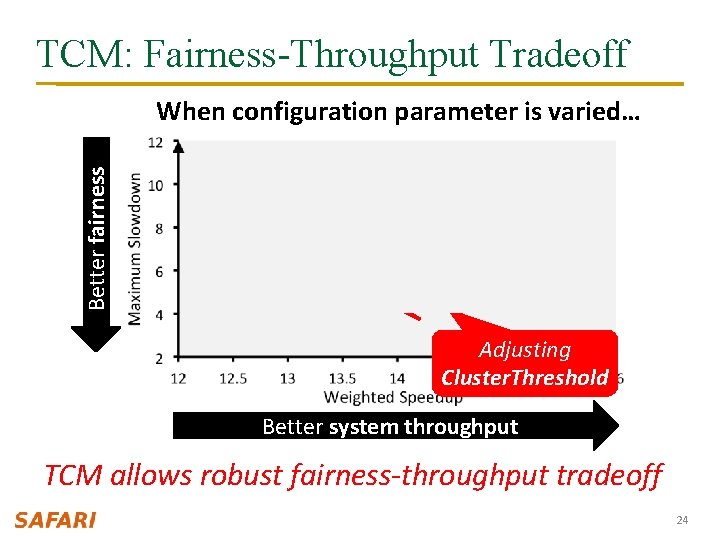

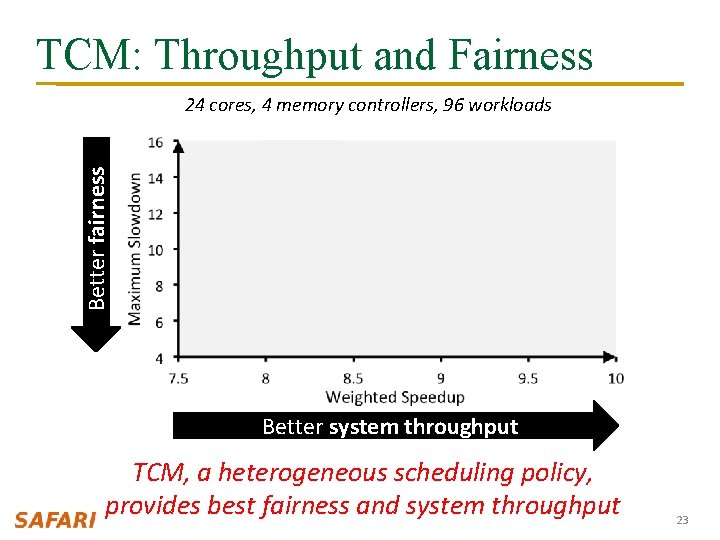

TCM: Throughput and Fairness
[Figure: fairness vs. system throughput of TCM and previous schedulers; 24 cores, 4 memory controllers, 96 workloads.]
TCM, a heterogeneous scheduling policy, provides the best fairness and system throughput.

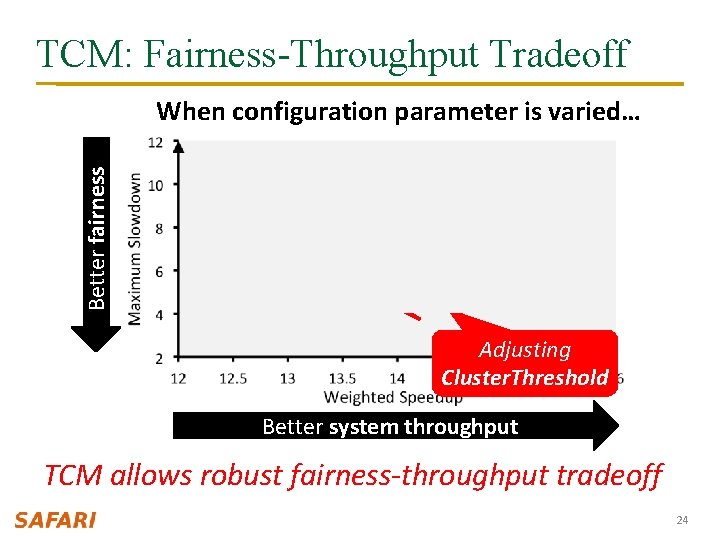

TCM: Fairness-Throughput Tradeoff
[Figure: fairness vs. system throughput as each scheduler's configuration parameter is varied, for FRFCFS, STFM, ATLAS, PAR-BS, and TCM (for TCM, adjusting ClusterThreshold).]
TCM allows a robust fairness-throughput tradeoff.

TCM Pros and Cons
- Upsides:
  - Provides both high fairness and high performance
- Downsides:
  - Scalability to large buffer sizes?
  - Effectiveness in a heterogeneous system?

Staged Memory Scheduling
Rachata Ausavarungnirun, Kevin Chang, Lavanya Subramanian, Gabriel Loh, and Onur Mutlu, "Staged Memory Scheduling: Achieving High Performance and Scalability in Heterogeneous Systems," 39th International Symposium on Computer Architecture (ISCA), Portland, OR, June 2012.

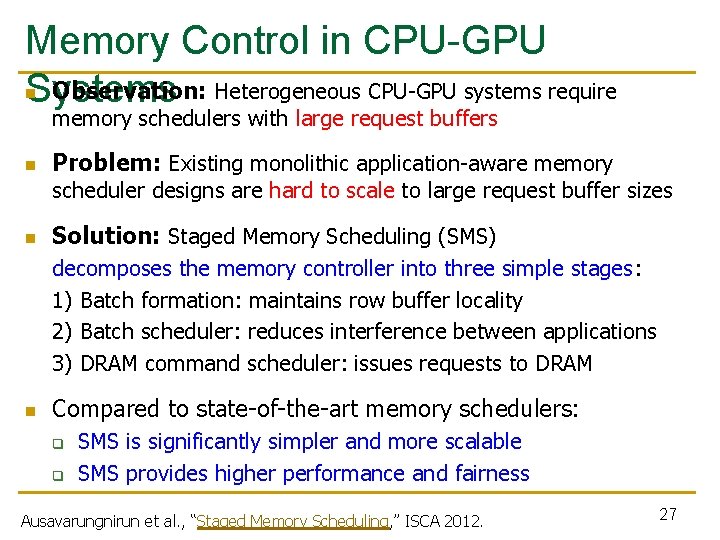

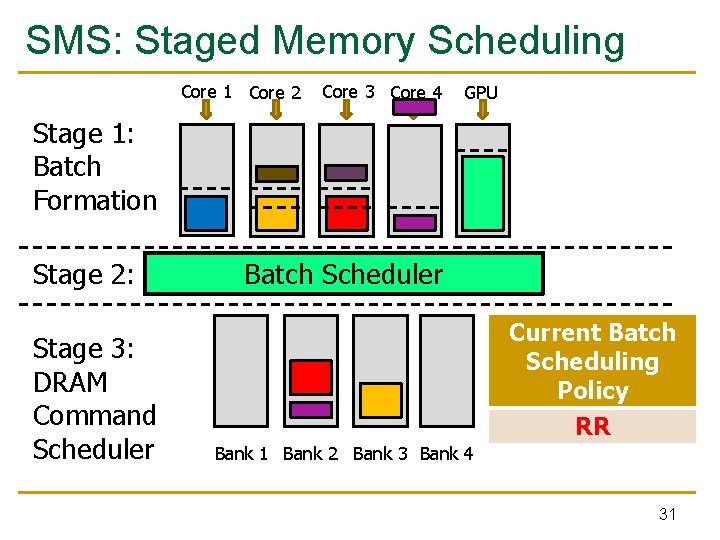

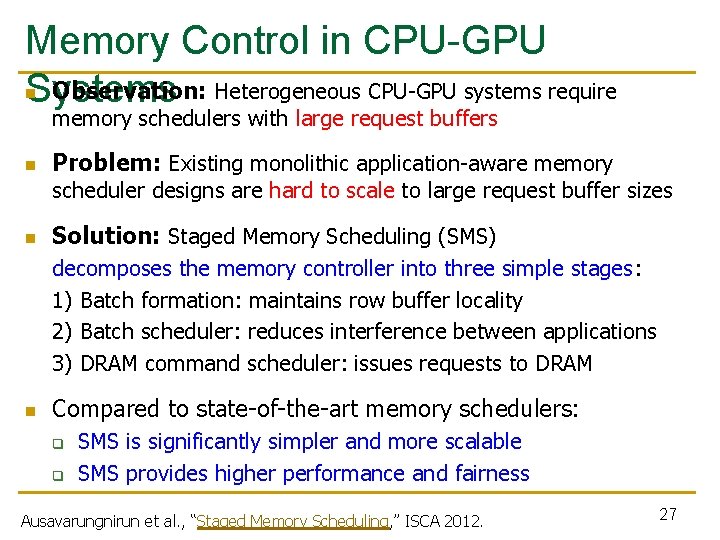

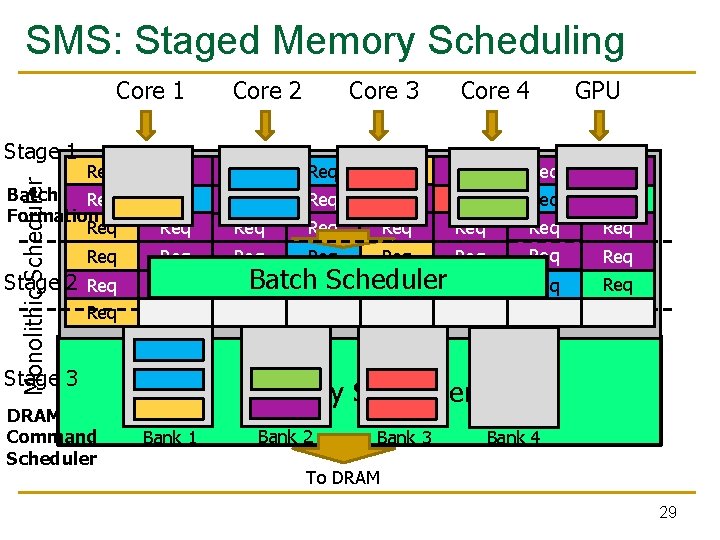

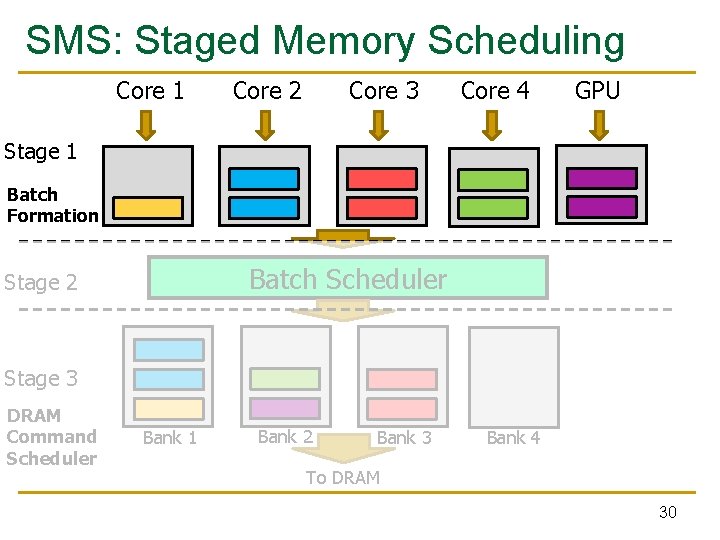

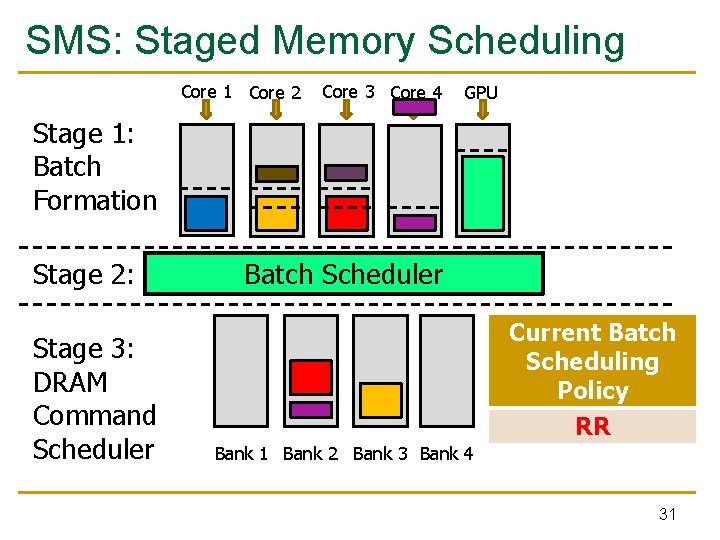

Memory Control in CPU-GPU Systems
- Observation: Heterogeneous CPU-GPU systems require memory schedulers with large request buffers
- Problem: Existing monolithic application-aware memory scheduler designs are hard to scale to large request buffer sizes
- Solution: Staged Memory Scheduling (SMS) decomposes the memory controller into three simple stages:
  1. Batch formation: maintains row buffer locality
  2. Batch scheduler: reduces interference between applications
  3. DRAM command scheduler: issues requests to DRAM
- Compared to state-of-the-art memory schedulers:
  - SMS is significantly simpler and more scalable
  - SMS provides higher performance and fairness

Ausavarungnirun et al., "Staged Memory Scheduling," ISCA 2012.

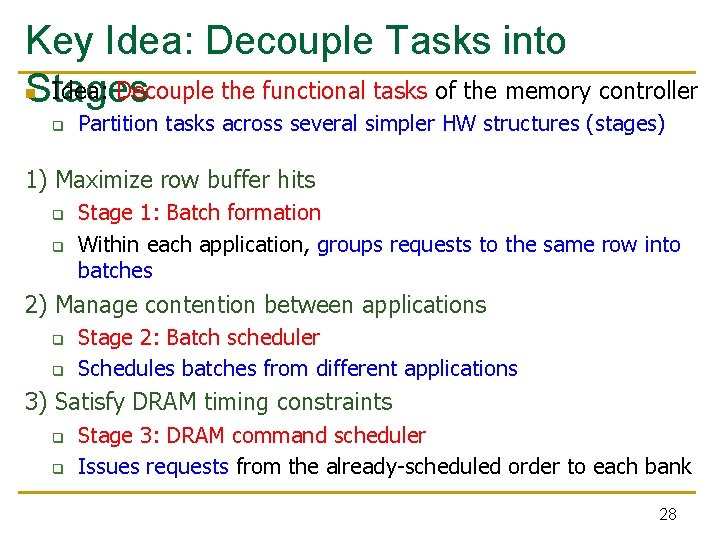

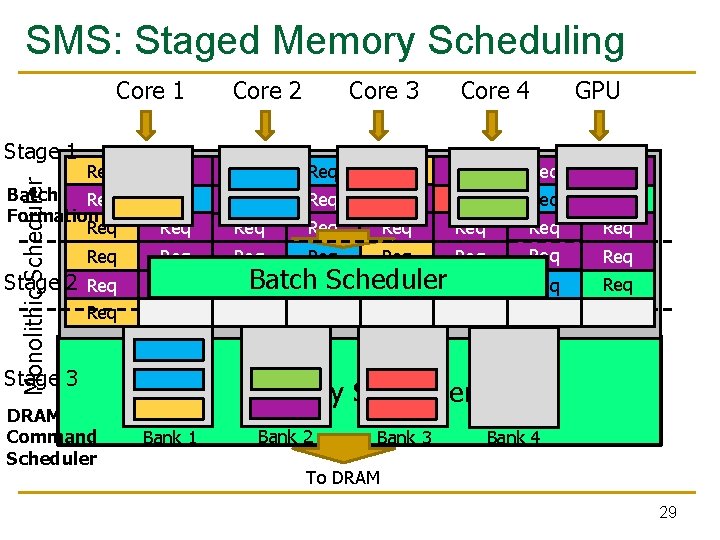

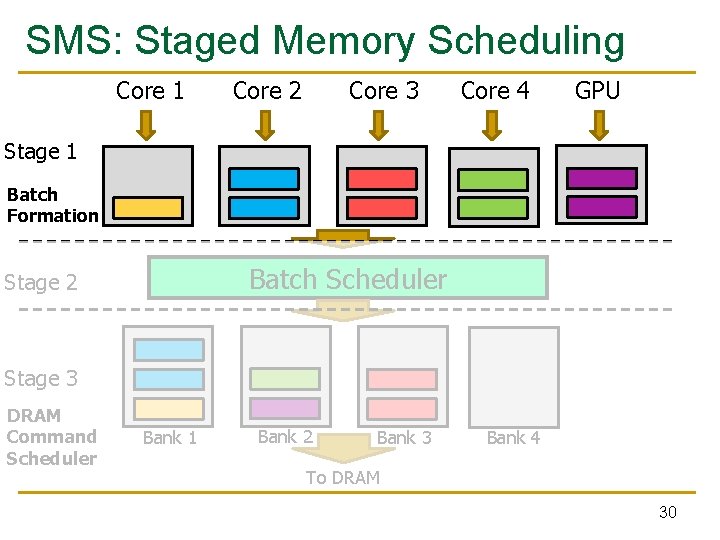

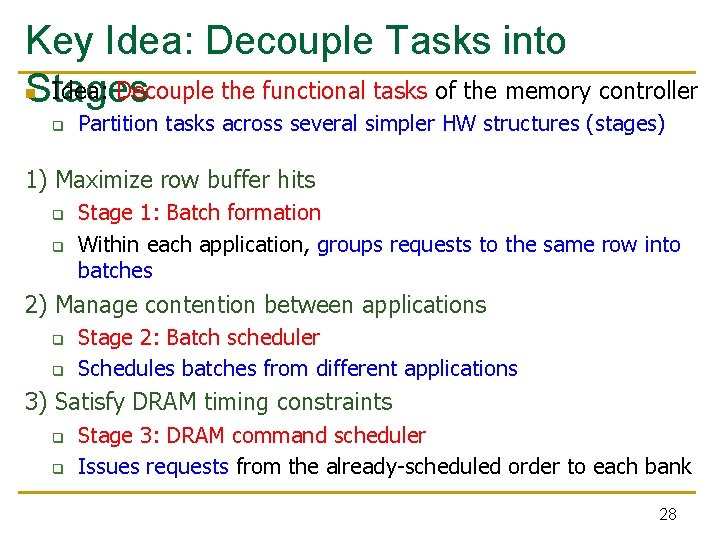

Key Idea: Decouple Tasks into Stages
- Idea: Decouple the functional tasks of the memory controller
  - Partition tasks across several simpler HW structures (stages)
1. Maximize row buffer hits
   - Stage 1: Batch formation
   - Within each application, groups requests to the same row into batches
2. Manage contention between applications
   - Stage 2: Batch scheduler
   - Schedules batches from different applications
3. Satisfy DRAM timing constraints
   - Stage 3: DRAM command scheduler
   - Issues requests from the already-scheduled order to each bank
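Stage 1 can be sketched as follows. This is a simplified Python model, not the SMS hardware: requests are reduced to hypothetical global row identifiers, and a batch closes when a request to a different row arrives or when it reaches an assumed size cap. Any other batch-completion rules SMS uses (such as an age-based timeout) are not modeled.

```python
from collections import deque


class BatchFormation:
    """Stage 1 sketch: per-source FIFOs that group consecutive same-row requests."""

    def __init__(self, max_batch: int = 4):
        self.max_batch = max_batch  # assumed cap on requests per batch
        self.forming = {}           # source -> list of rows in the batch being formed
        self.ready = {}             # source -> deque of completed batches (FIFO)

    def enqueue(self, source: str, row: int) -> None:
        current = self.forming.setdefault(source, [])
        # Close the current batch when the row changes or the batch is full.
        if current and (current[-1] != row or len(current) >= self.max_batch):
            self.ready.setdefault(source, deque()).append(current)
            current = self.forming[source] = []
        current.append(row)


stage1 = BatchFormation(max_batch=2)
for src, row in [("core1", 10), ("gpu", 7), ("core1", 10), ("core1", 10), ("core1", 11)]:
    stage1.enqueue(src, row)
print(list(stage1.ready["core1"]), stage1.forming)
# [[10, 10], [10]] {'core1': [11], 'gpu': [7]}
```

Each source only ever appends to the tail of its own FIFO, which is why this stage can use simple in-order buffers.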

SMS: Staged Memory Scheduling
[Figure: requests from Cores 1-4 and the GPU flow through Stage 1 (Batch Formation), Stage 2 (Batch Scheduler, which replaces the monolithic scheduler and selects the current batch using an RR or SJF scheduling policy), and Stage 3 (DRAM Command Scheduler), which issues them to Banks 1-4 of DRAM.]
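The Stage 2 choice between round-robin (RR) and shortest-job-first (SJF) can be sketched as a probabilistic pick. The probability parameter `p_sjf`, the use of outstanding-request counts as the SJF "job length", and all names here are assumptions for illustration; the SMS paper defines the exact policy.

```python
import random


def pick_source(outstanding, rr_order, rr_pos, p_sjf, rng):
    """Choose which source's ready batch Stage 2 schedules next.

    outstanding: source -> outstanding requests, for sources with a ready batch.
    rr_order:    fixed round-robin order of all sources.
    rr_pos:      index in rr_order of the source last picked by RR.
    Returns (source, new_rr_pos).
    """
    if not outstanding:
        raise ValueError("no source has a ready batch")
    if rng.random() < p_sjf:
        # SJF: the source with the fewest outstanding requests goes first.
        return min(outstanding, key=outstanding.get), rr_pos
    # RR: the next source after rr_pos that has a ready batch.
    for step in range(1, len(rr_order) + 1):
        pos = (rr_pos + step) % len(rr_order)
        if rr_order[pos] in outstanding:
            return rr_order[pos], pos
    raise ValueError("ready source missing from rr_order")


rng = random.Random(42)
ready = {"cpu0": 3, "cpu1": 1, "gpu": 40}
order = ["cpu0", "cpu1", "gpu"]
print(pick_source(ready, order, rr_pos=0, p_sjf=1.0, rng=rng))  # ('cpu1', 0)
print(pick_source({"gpu": 40, "cpu0": 3}, order, rr_pos=0, p_sjf=0.0, rng=rng))  # ('gpu', 2)
```

SJF favors light, latency-sensitive CPU sources, while RR guarantees the bandwidth-heavy GPU still gets turns; mixing the two trades off performance against fairness.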

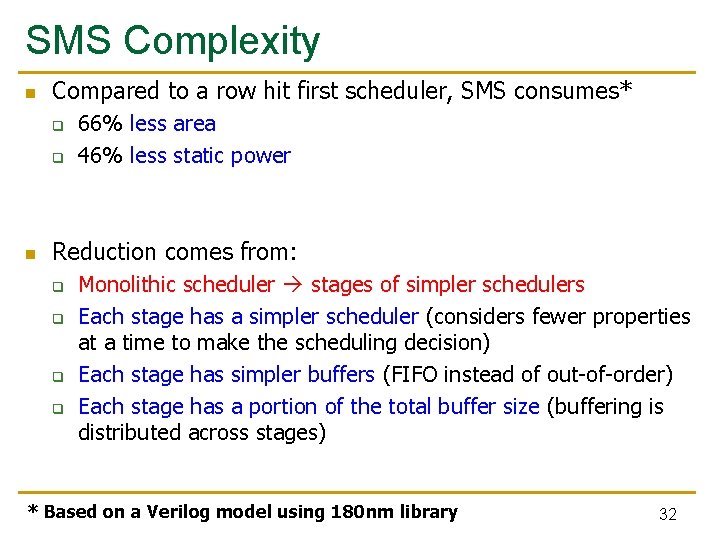

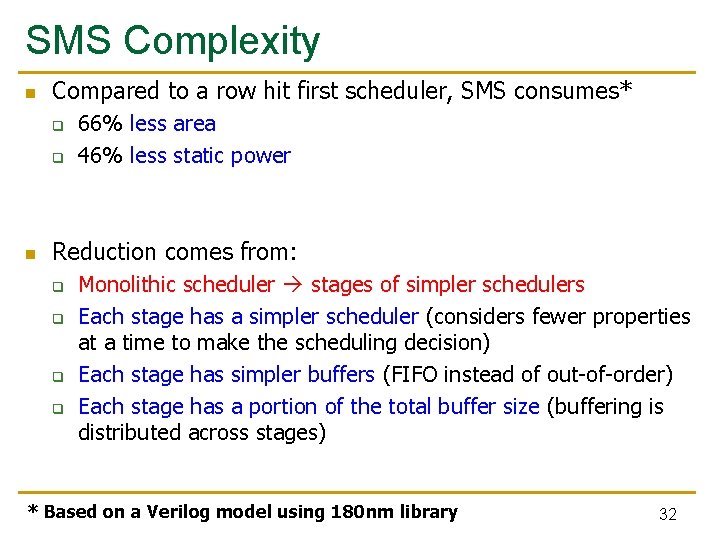

SMS Complexity
- Compared to a row-hit-first scheduler, SMS consumes*
  - 66% less area
  - 46% less static power
- Reduction comes from:
  - Monolithic scheduler → stages of simpler schedulers
  - Each stage has a simpler scheduler (considers fewer properties at a time to make the scheduling decision)
  - Each stage has simpler buffers (FIFO instead of out-of-order)
  - Each stage has a portion of the total buffer size (buffering is distributed across stages)

\* Based on a Verilog model using a 180 nm library

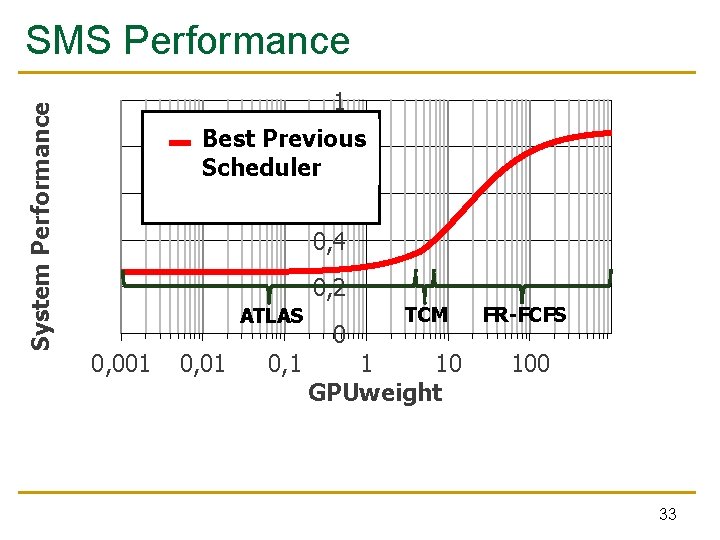

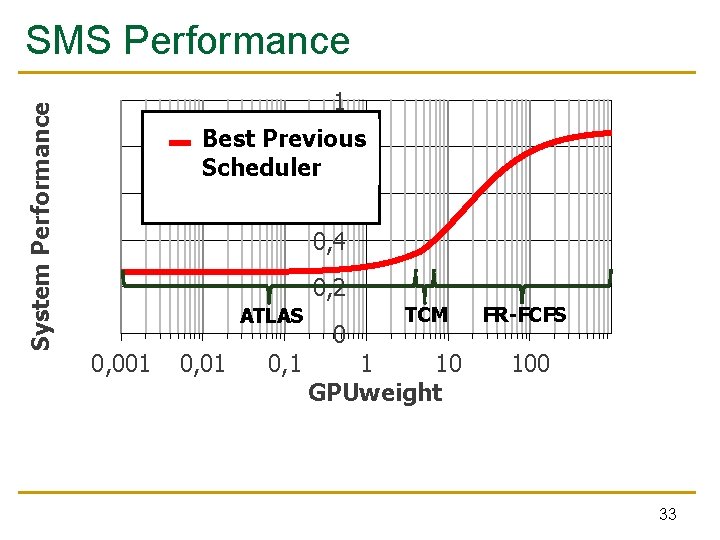

System Performance
[Figure: system performance of SMS and of the best previous scheduler at each GPUweight, for GPUweight from 0.001 to 100; the best previous scheduler varies with the weight among ATLAS, TCM, and FR-FCFS.]
At every GPU weight, SMS outperforms the best previous scheduling algorithm for that weight.

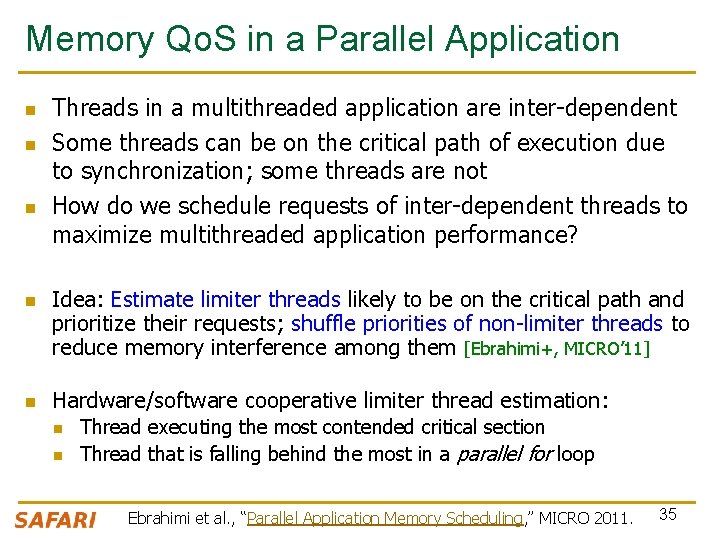

Memory QoS in a Parallel Application
- Threads in a multithreaded application are inter-dependent
- Some threads can be on the critical path of execution due to synchronization; some threads are not
- How do we schedule requests of inter-dependent threads to maximize multithreaded application performance?
- Idea: Estimate limiter threads likely to be on the critical path and prioritize their requests; shuffle priorities of non-limiter threads to reduce memory interference among them [Ebrahimi+, MICRO’11]
- Hardware/software cooperative limiter thread estimation:
  - Thread executing the most contended critical section
  - Thread that is falling behind the most in a parallel for loop

Ebrahimi et al., "Parallel Application Memory Scheduling," MICRO 2011.


We did not cover the following slides in lecture. These are for your preparation for the next lecture.

Self-Optimizing Memory Controllers
Engin Ipek, Onur Mutlu, José F. Martínez, and Rich Caruana, "Self-Optimizing Memory Controllers: A Reinforcement Learning Approach," Proceedings of the 35th International Symposium on Computer Architecture (ISCA), Beijing, China, June 2008.

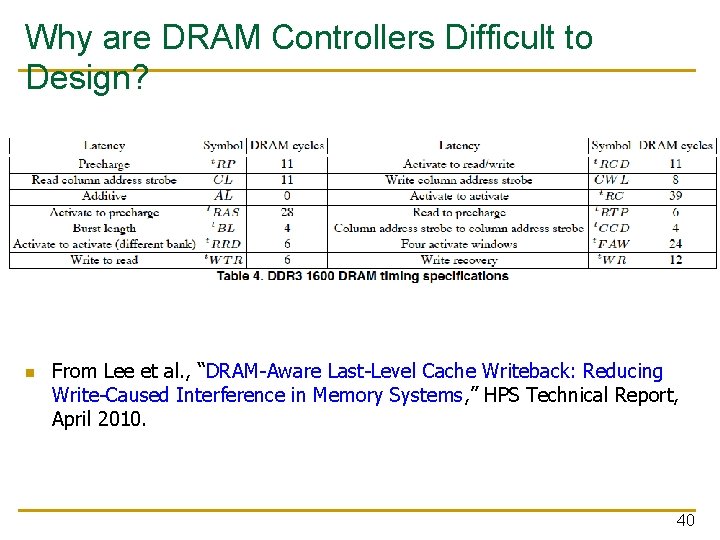

Why are DRAM Controllers Difficult to Design?
- Need to obey DRAM timing constraints for correctness
  - There are many (50+) timing constraints in DRAM
  - tWTR: Minimum number of cycles to wait before issuing a read command after a write command is issued
  - tRC: Minimum number of cycles between the issuing of two consecutive activate commands to the same bank
  - …
- Need to keep track of many resources to prevent conflicts
  - Channels, banks, ranks, data bus, address bus, row buffers
- Need to handle DRAM refresh
- Need to optimize for performance (in the presence of constraints)
  - Reordering is not simple
  - Predicting the future?
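A controller must check every candidate command against constraints like these before issuing it. The following is a minimal sketch that enforces only tWTR and tRC, measured from command issue as the slide defines them (real DRAM specifications measure some constraints from other events, such as the end of the write data burst). The timing values and class name are hypothetical, and refresh and all other constraints are ignored.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class TimingChecker:
    """Enforces two of the DRAM timing constraints listed above (values hypothetical)."""
    tWTR: int = 4    # cycles from a WRITE command to a READ command
    tRC: int = 39    # cycles between two ACTIVATE commands to the same bank
    last_write: Optional[int] = None
    last_activate: Dict[int, int] = field(default_factory=dict)  # bank -> cycle

    def can_issue(self, cmd: str, bank: int, now: int) -> bool:
        if cmd == "READ" and self.last_write is not None and now - self.last_write < self.tWTR:
            return False
        if cmd == "ACT" and bank in self.last_activate and now - self.last_activate[bank] < self.tRC:
            return False
        return True

    def issue(self, cmd: str, bank: int, now: int) -> None:
        if not self.can_issue(cmd, bank, now):
            raise RuntimeError(f"{cmd} to bank {bank} at cycle {now} violates timing")
        if cmd == "WRITE":
            self.last_write = now
        elif cmd == "ACT":
            self.last_activate[bank] = now


dram = TimingChecker()
dram.issue("ACT", bank=0, now=0)
dram.issue("WRITE", bank=0, now=10)
print(dram.can_issue("READ", bank=1, now=12))  # False: within tWTR of the write
print(dram.can_issue("ACT", bank=0, now=38))   # False: within tRC of the last ACT
print(dram.can_issue("ACT", bank=1, now=38))   # True: different bank
```

Even this two-constraint model shows why reordering is not simple: whether a request is issuable depends on the history of commands to other banks (tWTR) and to the same bank (tRC).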

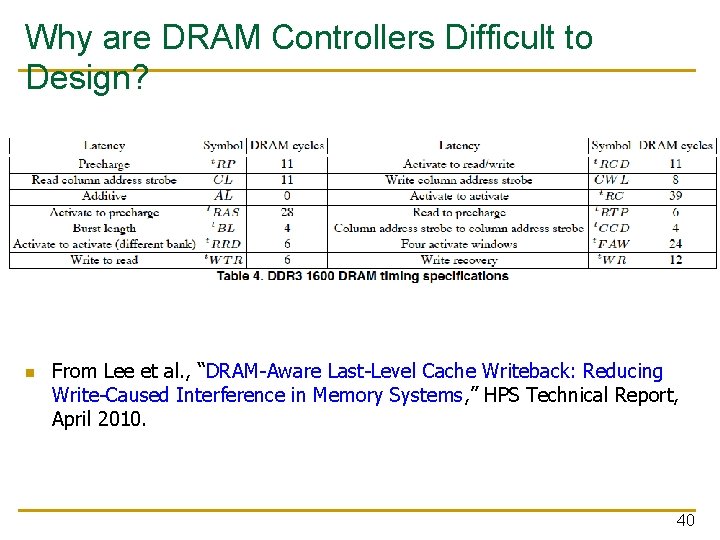

Why are DRAM Controllers Difficult to Design?
[Figure from Lee et al., "DRAM-Aware Last-Level Cache Writeback: Reducing Write-Caused Interference in Memory Systems," HPS Technical Report, April 2010.]

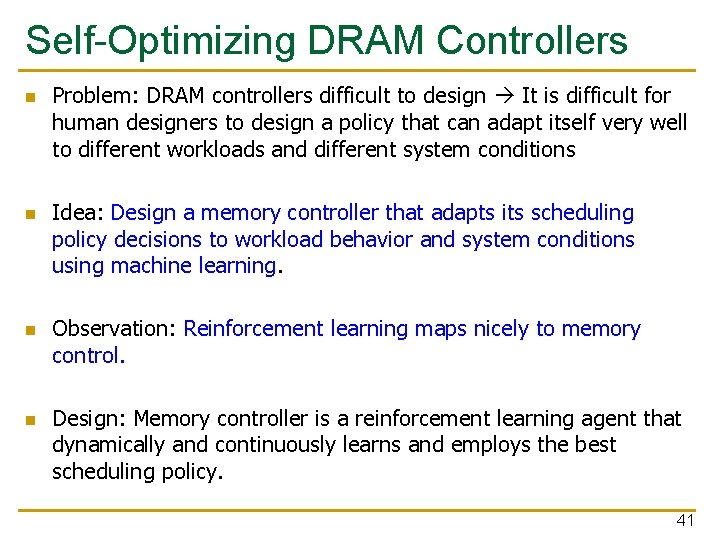

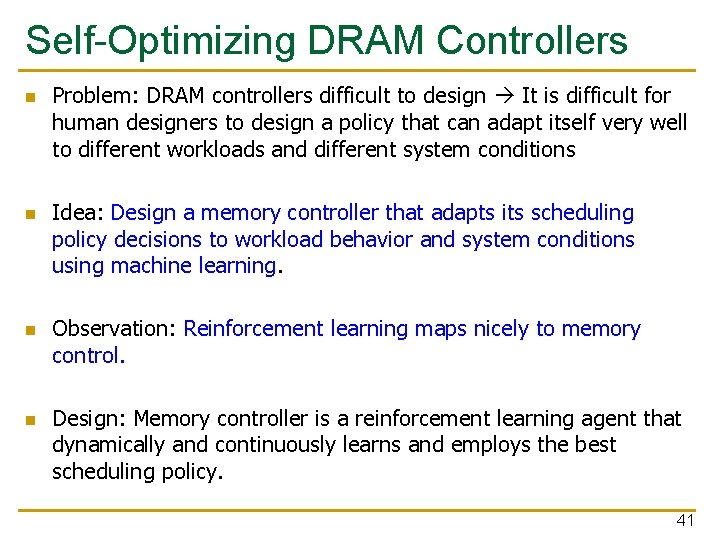

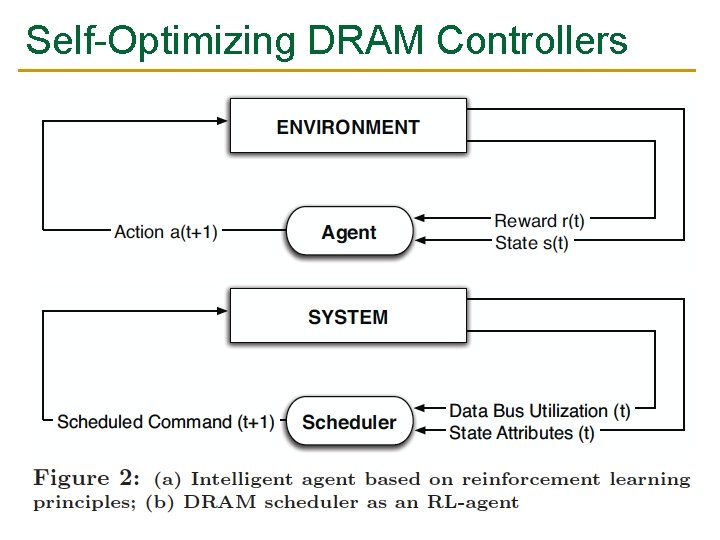

Self-Optimizing DRAM Controllers
- Problem: DRAM controllers are difficult to design
  - It is difficult for human designers to design a policy that can adapt itself very well to different workloads and different system conditions
- Idea: Design a memory controller that adapts its scheduling policy decisions to workload behavior and system conditions using machine learning.
- Observation: Reinforcement learning maps nicely to memory control.
- Design: Memory controller is a reinforcement learning agent that dynamically and continuously learns and employs the best scheduling policy.
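To make "memory controller as a reinforcement learning agent" concrete, here is a minimal tabular SARSA sketch. It is a deliberate simplification, not the paper's design: states and actions are opaque hashable labels, the caller supplies the reward (one plausible choice is a reward of 1 whenever the chosen command transfers data on the bus), and the hyperparameter values are arbitrary. Legal actions are assumed to be pre-filtered by the DRAM timing constraints.

```python
import random
from collections import defaultdict


class SarsaScheduler:
    """Tabular SARSA agent choosing among DRAM commands that are legal this cycle."""

    def __init__(self, alpha=0.1, gamma=0.95, epsilon=0.05, seed=0):
        self.q = defaultdict(float)  # (state, action) -> estimated long-term reward
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def choose(self, state, legal_actions):
        # Epsilon-greedy: mostly exploit the best-known command, sometimes explore.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(legal_actions)
        return max(legal_actions, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state, next_action):
        # SARSA: Q(s,a) += alpha * (r + gamma * Q(s',a') - Q(s,a))
        target = reward + self.gamma * self.q[(next_state, next_action)]
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])


agent = SarsaScheduler(epsilon=0.0)
agent.update("reads_pending", "READ", 1.0, "reads_pending", "READ")
print(agent.choose("reads_pending", ["PRECHARGE", "READ"]))  # READ
```

Because the value estimate includes the discounted future (`gamma`), the agent can learn to take a command with no immediate payoff, such as a precharge, when it enables more data transfers later.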

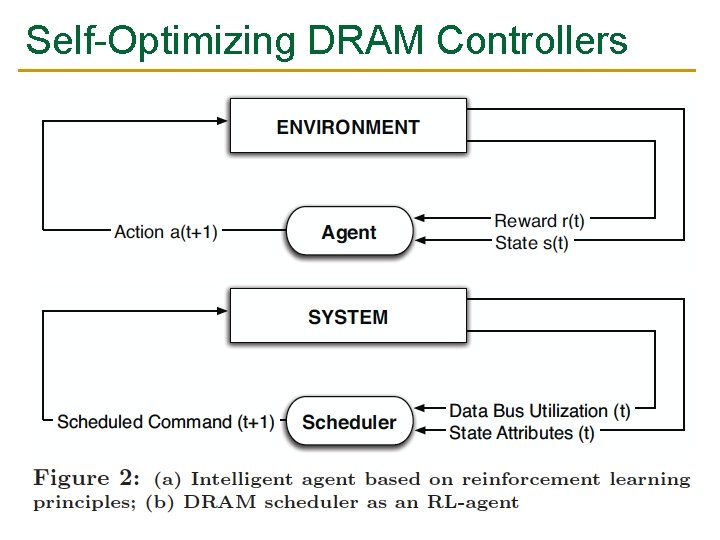

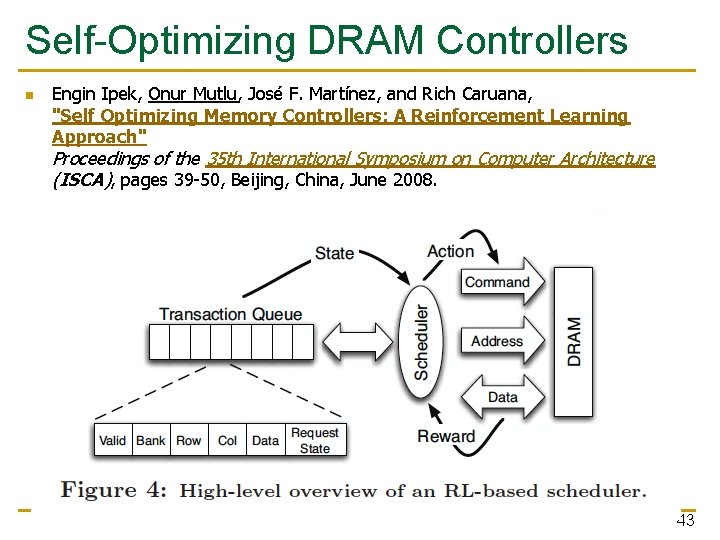

Self-Optimizing DRAM Controllers
Engin Ipek, Onur Mutlu, José F. Martínez, and Rich Caruana, "Self-Optimizing Memory Controllers: A Reinforcement Learning Approach," Proceedings of the 35th International Symposium on Computer Architecture (ISCA), pages 39-50, Beijing, China, June 2008.


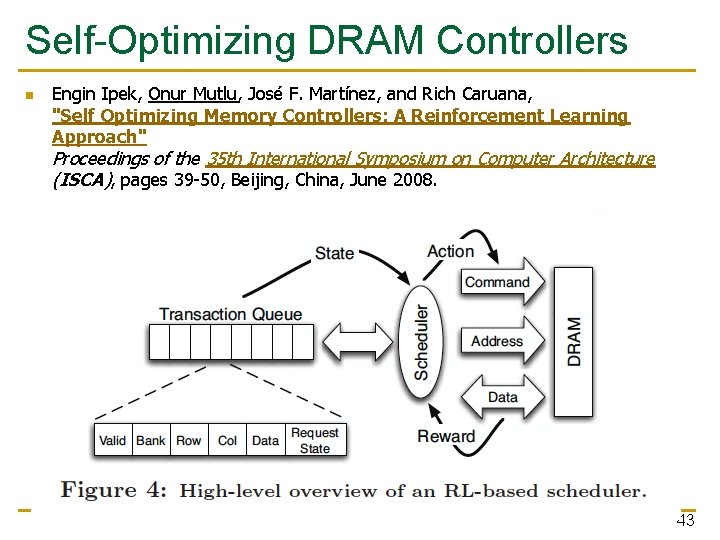

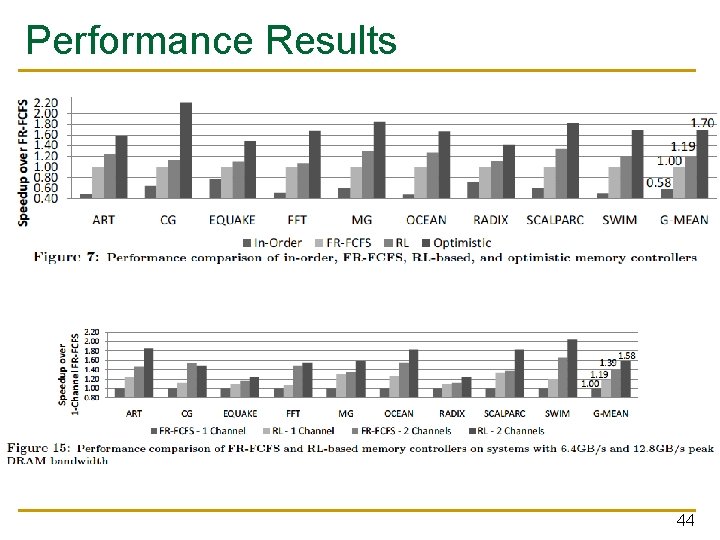

Performance Results

DRAM-Aware Cache Design: An Example of Resource Coordination
Chang Joo Lee, Veynu Narasiman, Eiman Ebrahimi, Onur Mutlu, and Yale N. Patt, "DRAM-Aware Last-Level Cache Writeback: Reducing Write-Caused Interference in Memory Systems," HPS Technical Report, TR-HPS-2010-002, April 2010.

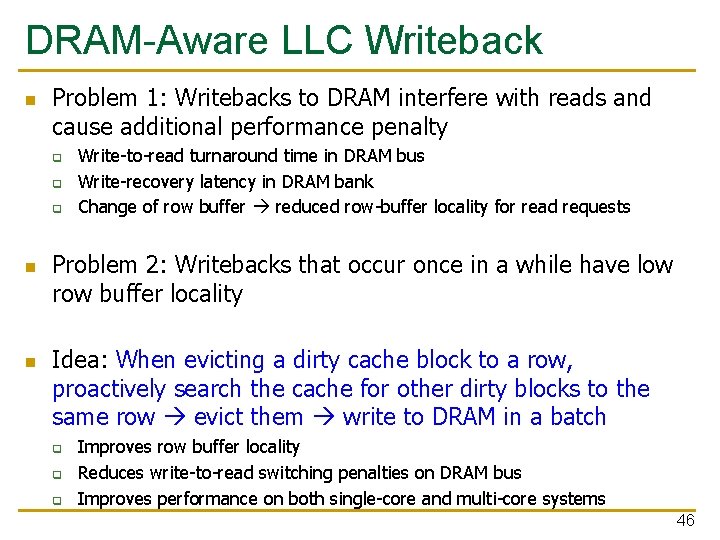

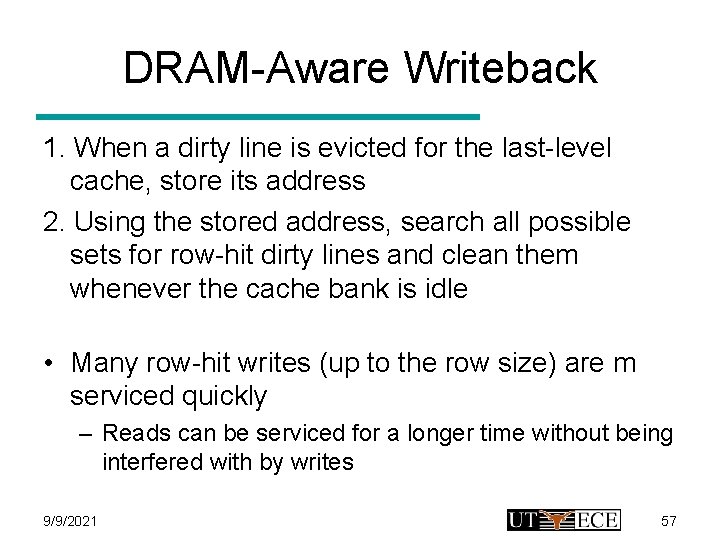

DRAM-Aware LLC Writeback
- Problem 1: Writebacks to DRAM interfere with reads and cause additional performance penalty
  - Write-to-read turnaround time in DRAM bus
  - Write-recovery latency in DRAM bank
  - Change of row buffer → reduced row-buffer locality for read requests
- Problem 2: Writebacks that occur once in a while have low row buffer locality
- Idea: When evicting a dirty cache block to a row, proactively search the cache for other dirty blocks to the same row → evict them → write to DRAM in a batch
  - Improves row buffer locality
  - Reduces write-to-read switching penalties on DRAM bus
  - Improves performance on both single-core and multi-core systems

More Information
- Chang Joo Lee, Veynu Narasiman, Eiman Ebrahimi, Onur Mutlu, and Yale N. Patt, "DRAM-Aware Last-Level Cache Writeback: Reducing Write-Caused Interference in Memory Systems," HPS Technical Report, TR-HPS-2010-002, April 2010.

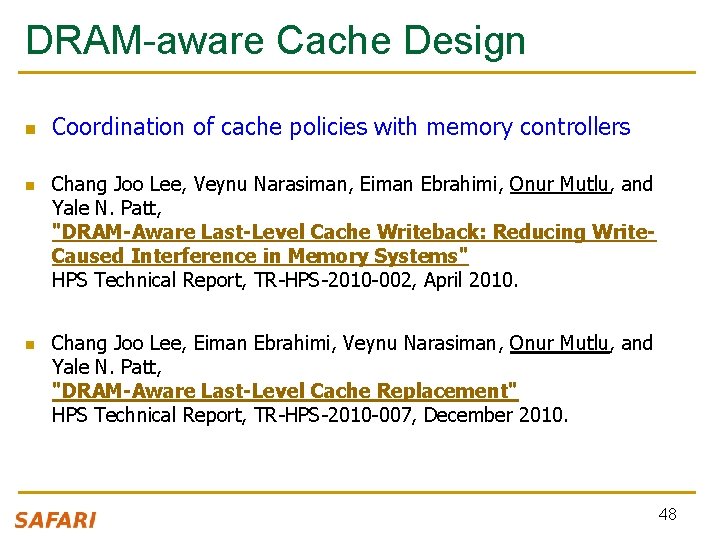

DRAM-aware Cache Design
- Coordination of cache policies with memory controllers
  - Chang Joo Lee, Veynu Narasiman, Eiman Ebrahimi, Onur Mutlu, and Yale N. Patt, "DRAM-Aware Last-Level Cache Writeback: Reducing Write-Caused Interference in Memory Systems," HPS Technical Report, TR-HPS-2010-002, April 2010.
  - Chang Joo Lee, Eiman Ebrahimi, Veynu Narasiman, Onur Mutlu, and Yale N. Patt, "DRAM-Aware Last-Level Cache Replacement," HPS Technical Report, TR-HPS-2010-007, December 2010.

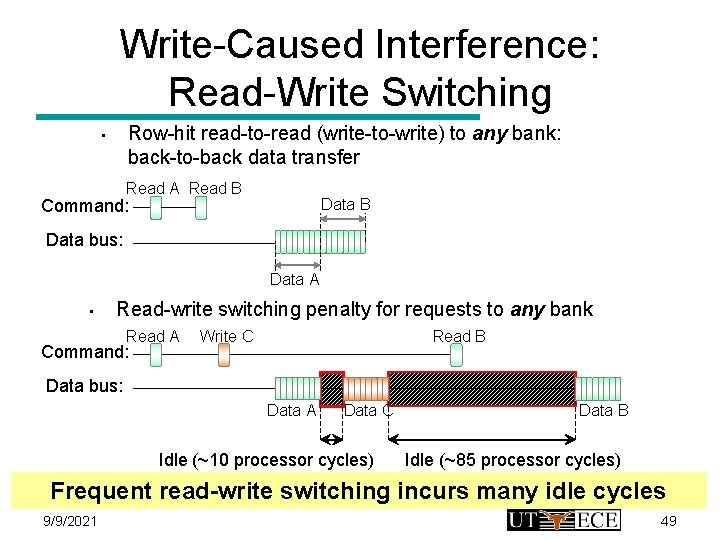

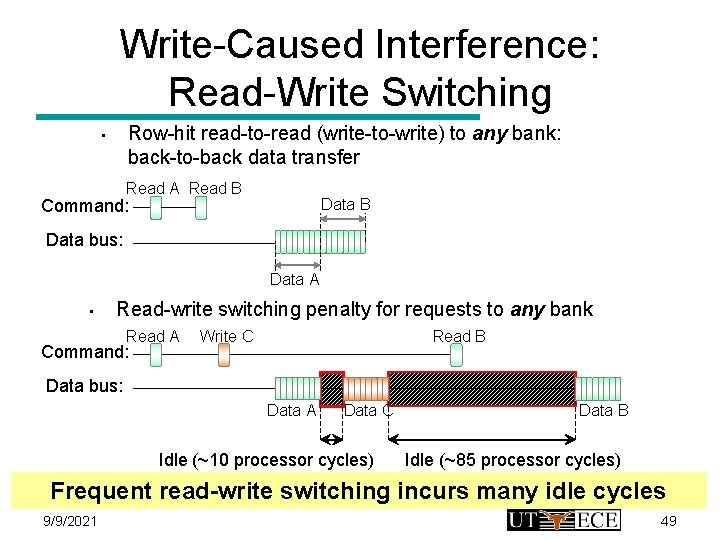

Write-Caused Interference: Read-Write Switching
- Row-hit read-to-read (write-to-write) to any bank: back-to-back data transfer
  [Figure: commands Read A, Read B; Data A and Data B occupy the data bus back to back.]
- Read-write switching penalty for requests to any bank
  [Figure: commands Read A, Write C, Read B; the data bus idles for ~10 processor cycles between Data A and Data C, and for ~85 processor cycles between Data C and Data B.]

Frequent read-write switching incurs many idle cycles.
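A quick way to see why switching matters is to total the bus idle time for a service order. This sketch uses the approximate gaps given on this slide, assuming ~10 processor cycles for a read-to-write switch and ~85 for a write-to-read switch (as the figure's layout suggests), and ignores every other latency.

```python
# Approximate data-bus idle gaps from the slide, in processor cycles (assumed mapping).
READ_TO_WRITE_IDLE = 10
WRITE_TO_READ_IDLE = 85


def bus_idle_cycles(ops):
    """ops: sequence of "R"/"W" row-hit requests (to any bank), in service order.

    Same-direction neighbours transfer back-to-back (no idle cycles); each
    direction switch adds the corresponding turnaround gap.
    """
    idle = 0
    for prev, cur in zip(ops, ops[1:]):
        if prev == "R" and cur == "W":
            idle += READ_TO_WRITE_IDLE
        elif prev == "W" and cur == "R":
            idle += WRITE_TO_READ_IDLE
    return idle


print(bus_idle_cycles("RWRWR"))  # 190: four switches
print(bus_idle_cycles("RRRWW"))  # 10: writes grouped after the reads
```

Servicing the same requests with the writes grouped together cuts the idle time from 190 to 10 cycles in this model, which is the motivation for batching writebacks.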

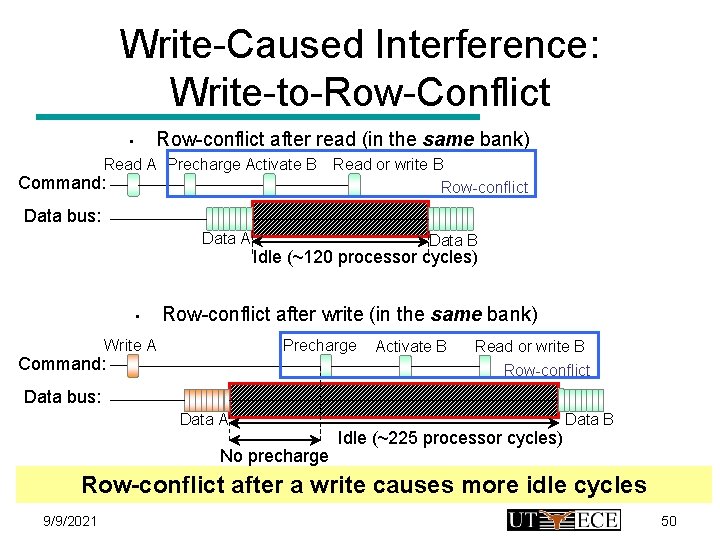

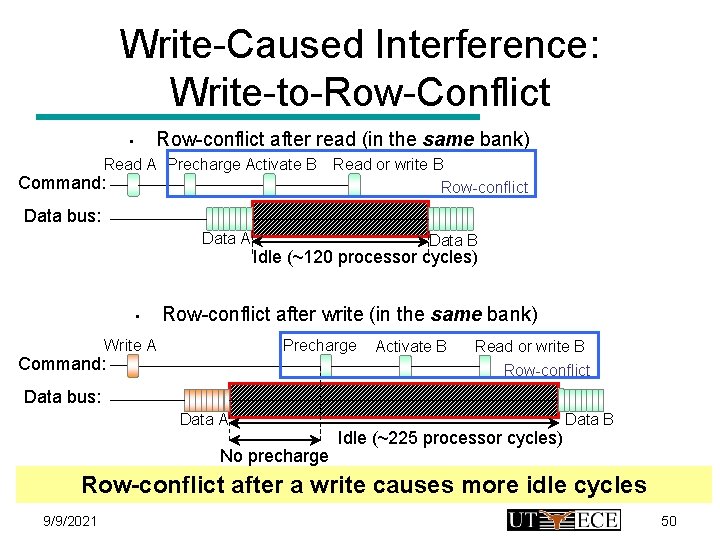

Write-Caused Interference: Write-to-Row-Conflict
- Row-conflict after read (in the same bank)
  [Figure: commands Read A, Precharge, Activate B, Read or write B; the row conflict leaves the data bus idle for ~120 processor cycles between Data A and Data B.]
- Row-conflict after write (in the same bank)
  [Figure: commands Write A, Precharge, Activate B, Read or write B; the data bus is idle for ~225 processor cycles between Data A and Data B. The figure also marks a ~60-processor-cycle "No precharge" interval after the write.]

Row-conflict after a write causes more idle cycles.

Write-Caused Interference
- Read-Write Switching
  - Frequent read-write switching incurs many idle cycles
- Write-to-Row-Conflict
  - A row-conflict after a write causes more idle cycles

Generating many row-hit writes rather than row-conflict writes is preferred.

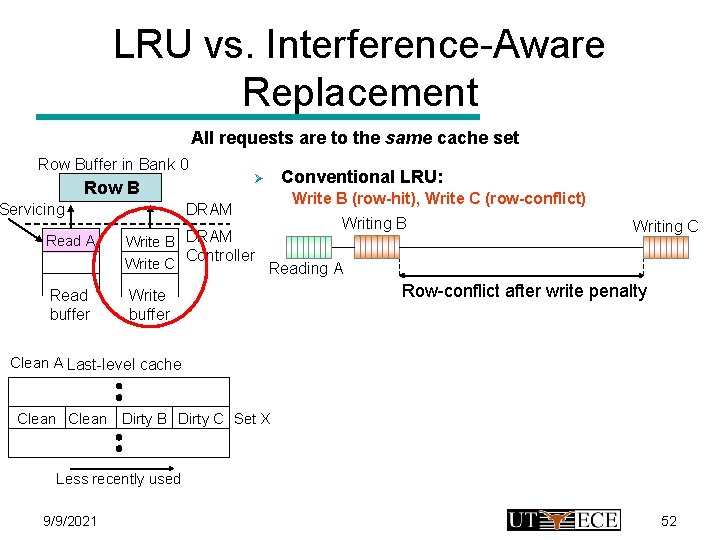

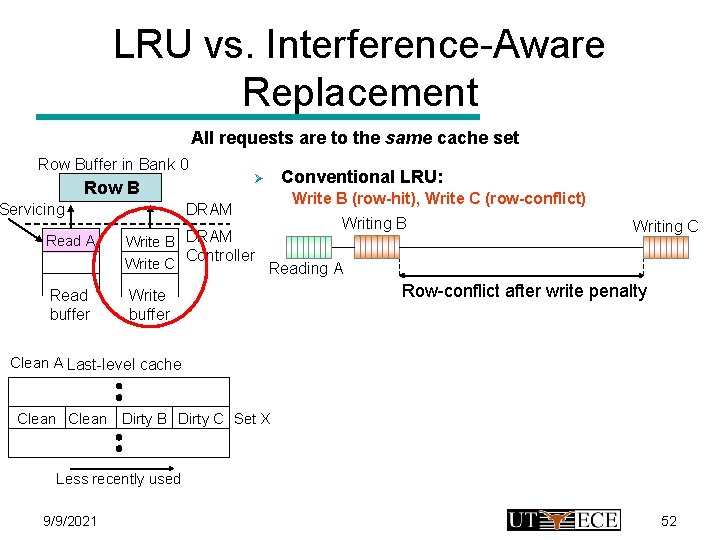

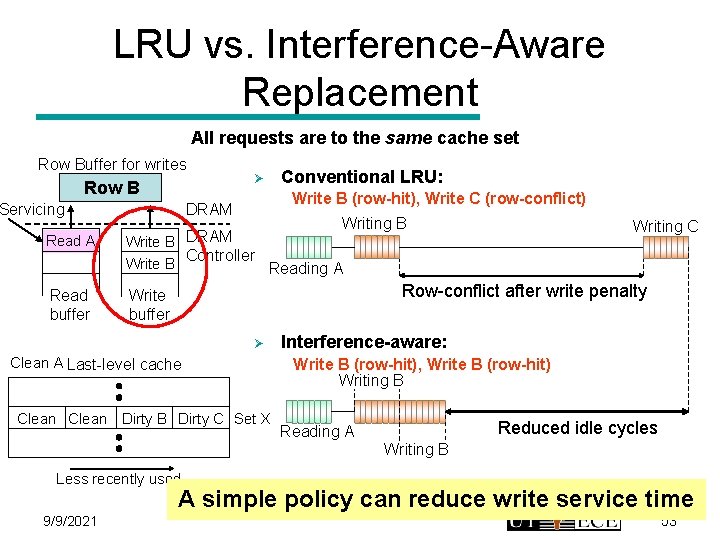

LRU vs. Interference-Aware Replacement
[Figure: all requests are to the same set X of the last-level cache, which holds Clean A, Dirty B, and Dirty C (toward the less-recently-used end). Row B is open in the row buffer of Bank 0; the DRAM controller holds Read A in its read buffer and Write B and Write C in its write buffer.]
- Conventional LRU: Write B (row-hit), Write C (row-conflict) → Writing C incurs a row-conflict-after-write penalty, delaying Reading A

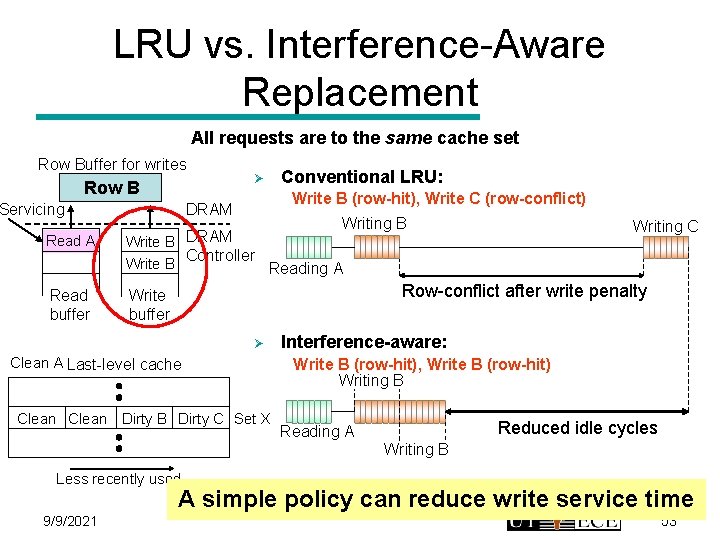

LRU vs. Interference-Aware Replacement
[Figure: same setup; set X of the last-level cache holds Clean A, Dirty B, and Dirty C, and Row B is open in the row buffer used for writes.]
- Conventional LRU: Write B (row-hit), Write C (row-conflict) → row-conflict-after-write penalty
- Interference-aware: Write B (row-hit), Write B (row-hit) → reduced idle cycles before Reading A

A simple policy can reduce write service time.
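One simple victim-selection rule in the spirit of this example: among the few least-recently-used lines of the set, prefer a dirty line whose writeback would hit the DRAM row currently open for writes; otherwise fall back to plain LRU. The `Line` type, the `window` size, and the rule itself are illustrative assumptions, not necessarily the exact policy in the technical report.

```python
from dataclasses import dataclass


@dataclass
class Line:
    tag: str
    dirty: bool
    dram_row: int  # DRAM row this line's address maps to


def choose_victim(lines_lru_first, open_write_row, window=2):
    """lines_lru_first: lines of one cache set, least recently used first."""
    for line in lines_lru_first[:window]:
        # A dirty line in the open row produces a row-hit writeback.
        if line.dirty and line.dram_row == open_write_row:
            return line
    return lines_lru_first[0]  # conventional LRU victim


cache_set = [Line("C", True, dram_row=3), Line("B2", True, dram_row=2), Line("A", False, dram_row=1)]
print(choose_victim(cache_set, open_write_row=2).tag)  # B2 (row hit)
print(choose_victim(cache_set, open_write_row=7).tag)  # C (LRU fallback)
```

Limiting the search to a small `window` keeps the policy close to LRU, so hit rate should suffer little while row-conflict writebacks are avoided when a cheap alternative exists.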

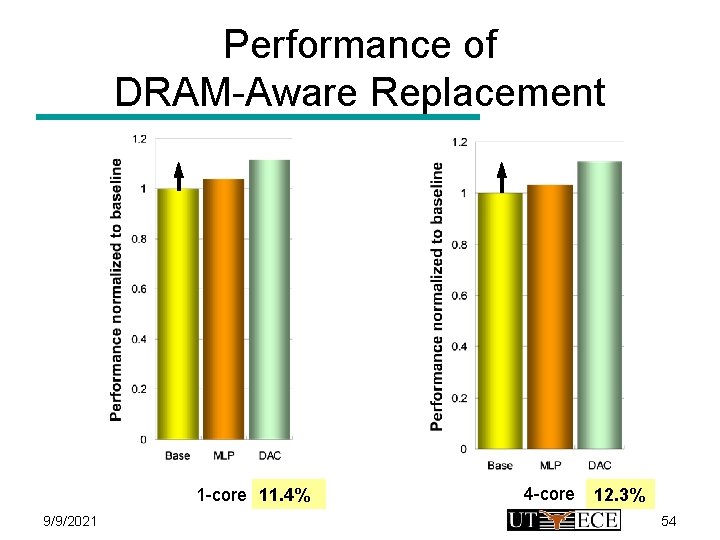

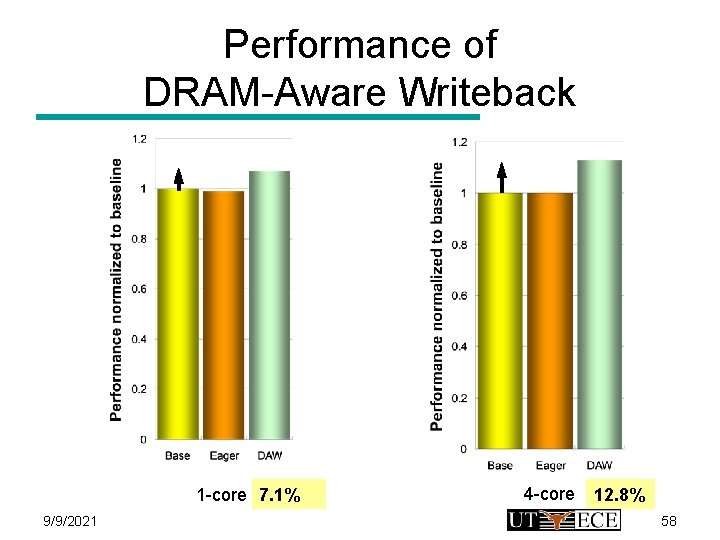

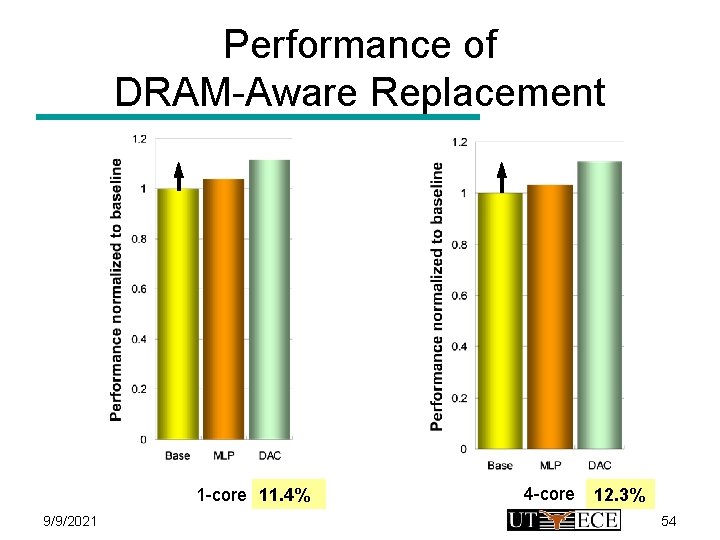

Performance of DRAM-Aware Replacement
[Figure: performance results; 1-core: 11.4%, 4-core: 12.3%.]

Outline
- Problem
- Solutions
  - Prefetch-Aware DRAM Controller
  - BLP-Aware Request Issue Policies
  - DRAM-Aware Cache Replacement
  - DRAM-Aware Writeback
- Combination of Solutions
- Related Work
- Conclusion

DRAM-Aware Writeback
- Write-caused interference-aware replacement is not enough
  - Row-hit writebacks are sent only when a replacement occurs
    - Lose opportunities to service more writes quickly
- To minimize write-caused interference, proactively clean row-hit dirty lines
  → Reads are serviced without write-caused interference for a longer period

DRAM-Aware Writeback
1. When a dirty line is evicted from the last-level cache, store its address
2. Using the stored address, search all possible sets for row-hit dirty lines and clean them whenever the cache bank is idle

- Many row-hit writes (up to the row size) are serviced quickly
  - Reads can be serviced for a longer time without being interfered with by writes
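The two steps can be sketched with the cache flattened into a dictionary. The flat model, the `row_of` address mapping, and the function names are hypothetical, and the sketch does not model the "whenever the cache bank is idle" timing; it only shows which lines get written back together.

```python
def dram_aware_writeback(cache, evicted_addr, row_of):
    """Evict a dirty line and proactively clean other dirty lines in the same DRAM row.

    cache:  dict line address -> dirty flag (a flat stand-in for the LLC's sets)
    row_of: function mapping a line address to its DRAM row id
    Returns the batch of addresses to write back, evicted line first.
    """
    cache.pop(evicted_addr)                   # the victim leaves the cache
    target_row = row_of(evicted_addr)         # step 1: remember the evicted line's row
    batch = [evicted_addr]
    for addr in sorted(cache):                # step 2: search for row-hit dirty lines
        if cache[addr] and row_of(addr) == target_row:
            cache[addr] = False               # cleaned: written back, but still cached
            batch.append(addr)
    return batch


def row_of(addr):
    return addr // 8  # hypothetical mapping: 8 consecutive lines share one DRAM row


llc = {0: True, 1: False, 3: True, 8: True, 9: True}
print(dram_aware_writeback(llc, evicted_addr=3, row_of=row_of))  # [3, 0]
print(llc)  # {0: False, 1: False, 8: True, 9: True}
```

Cleaned lines stay in the cache, so later reads to them still hit; only their dirty state changes, which is what lets the writes go out as one row-hit batch.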

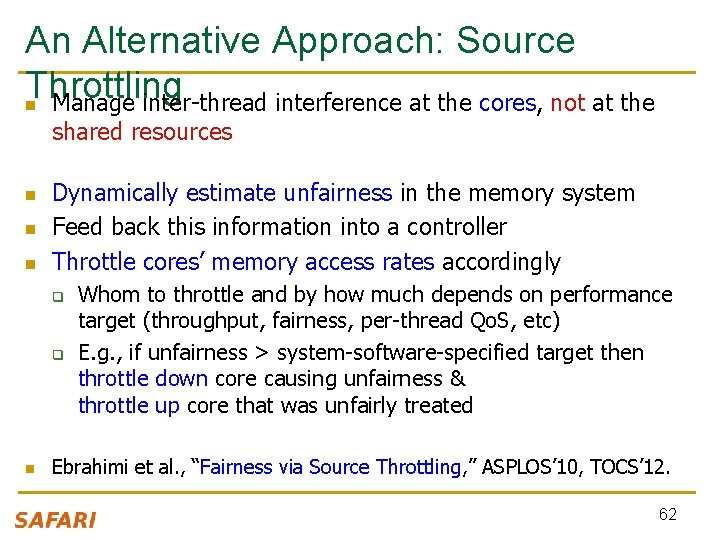

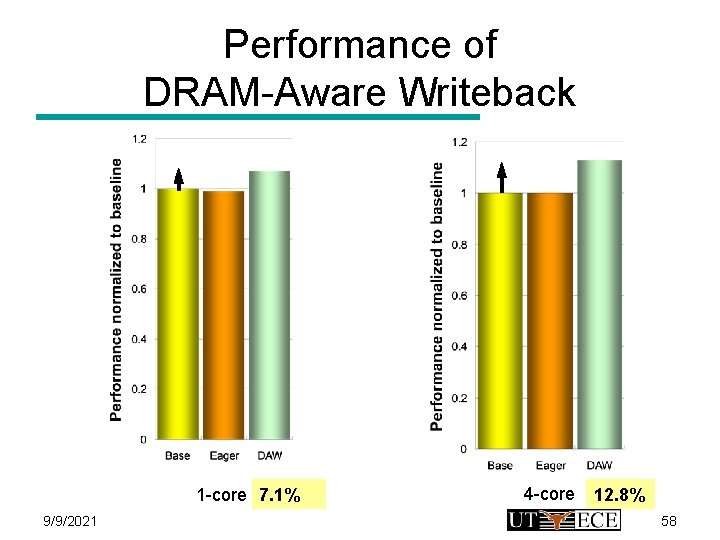

Performance of DRAM-Aware Writeback
[Figure: performance results; 1-core: 7.1%, 4-core: 12.8%.]

Fairness via Source Throttling
Eiman Ebrahimi, Chang Joo Lee, Onur Mutlu, and Yale N. Patt, "Fairness via Source Throttling: A Configurable and High-Performance Fairness Substrate for Multi-Core Memory Systems," 15th Intl. Conf. on Architectural Support for Programming Languages and Operating Systems (ASPLOS), pages 335-346, Pittsburgh, PA, March 2010.

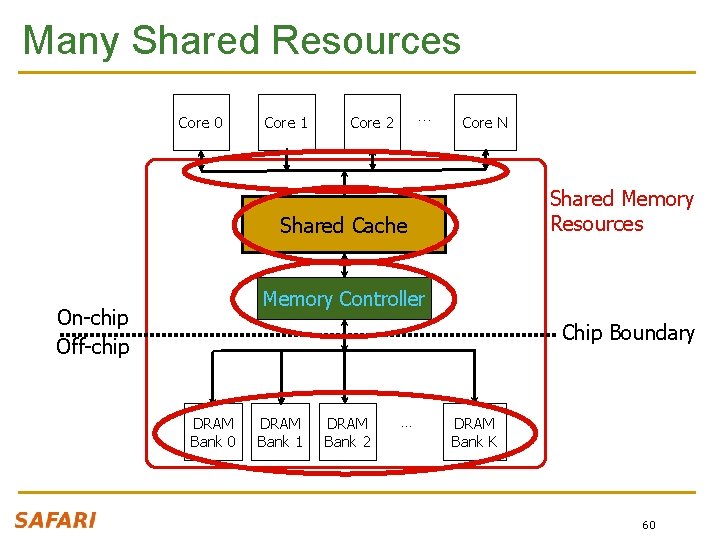

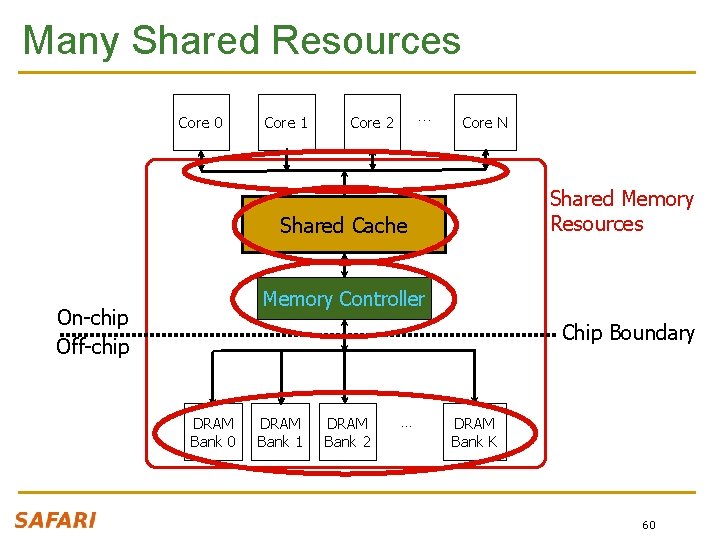

Many Shared Resources
[Figure: Cores 0 through N share the on-chip shared memory resources (shared cache and memory controller); beyond the chip boundary are the off-chip DRAM Banks 0 through K.]

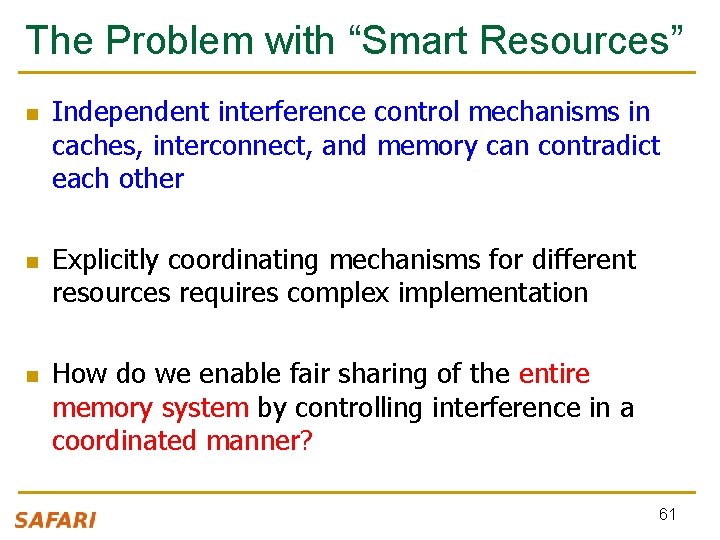

The Problem with “Smart Resources”
- Independent interference control mechanisms in caches, interconnect, and memory can contradict each other
- Explicitly coordinating mechanisms for different resources requires complex implementation
- How do we enable fair sharing of the entire memory system by controlling interference in a coordinated manner?

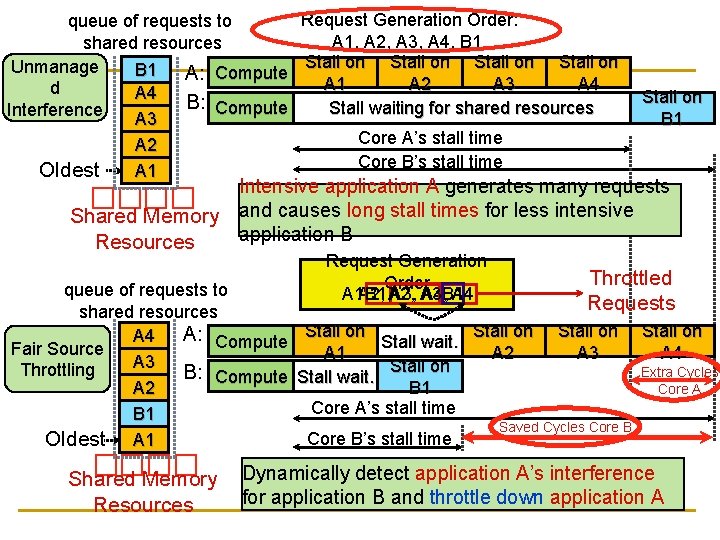

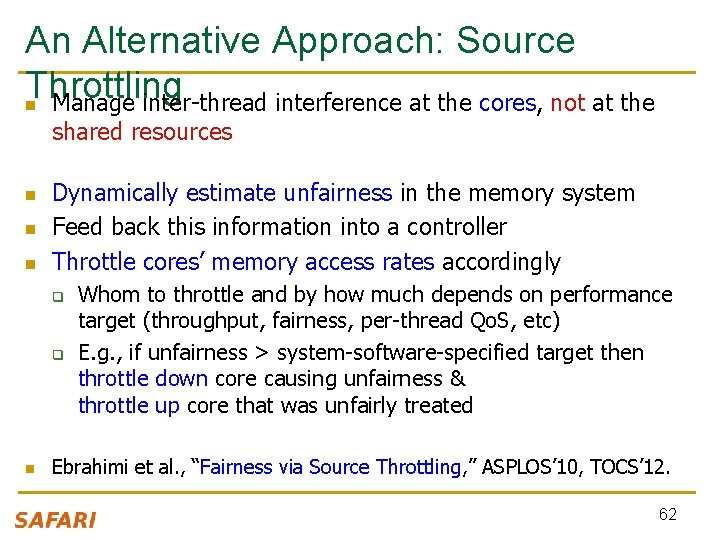

An Alternative Approach: Source Throttling
- Manage inter-thread interference at the cores, not at the shared resources
- Dynamically estimate unfairness in the memory system
- Feed back this information into a controller
- Throttle cores’ memory access rates accordingly
  - Whom to throttle and by how much depends on performance target (throughput, fairness, per-thread QoS, etc.)
  - E.g., if unfairness > system-software-specified target, then throttle down the core causing unfairness and throttle up the core that was unfairly treated

Ebrahimi et al., “Fairness via Source Throttling,” ASPLOS’10, TOCS’12.

[Figure, top (unmanaged): request generation order A1, A2, A3, A4, B1. Intensive application A generates many requests, so B1 waits behind A1-A4 in the queue of requests to shared resources; the interference causes a long stall time for less intensive application B.
Figure, bottom (fair source throttling): A's later requests are throttled, so B1 enters the queue ahead of them. Core A incurs some extra stall cycles, while core B saves cycles.]
Dynamically detect application A’s interference for application B and throttle down application A.

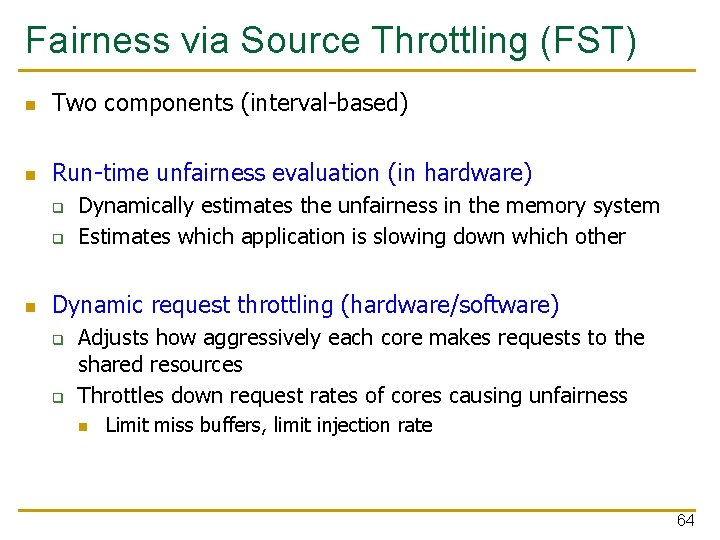

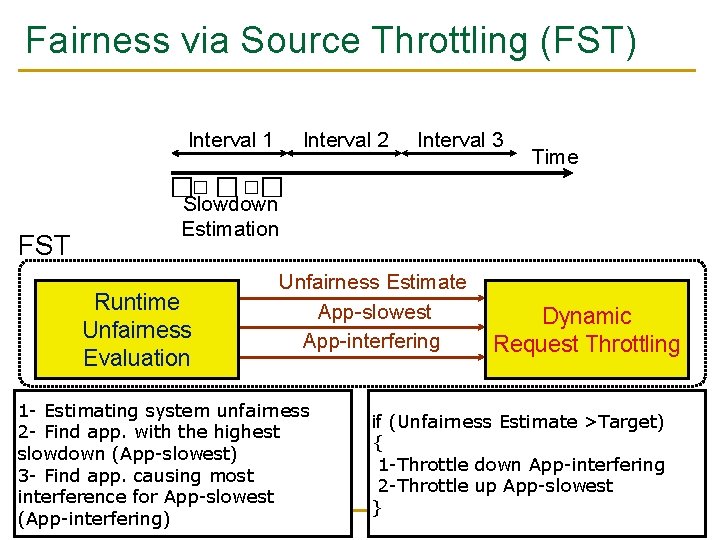

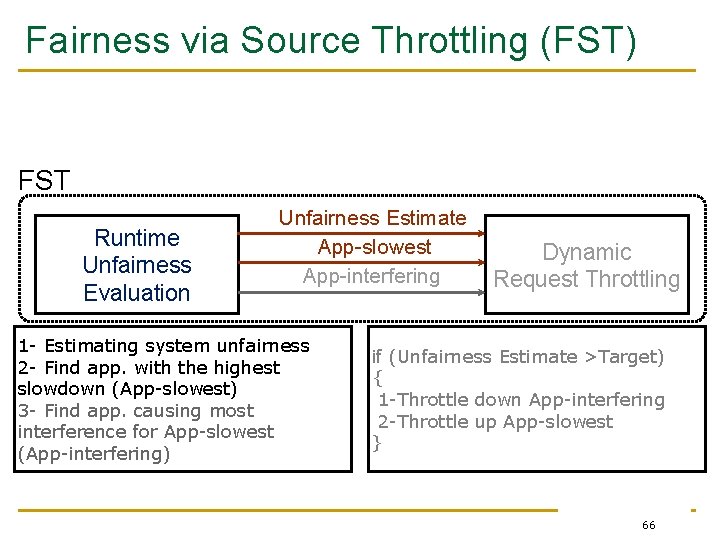

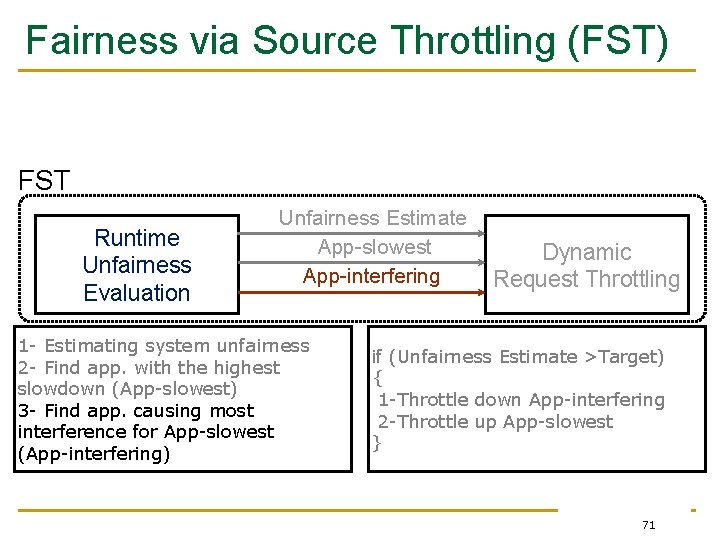

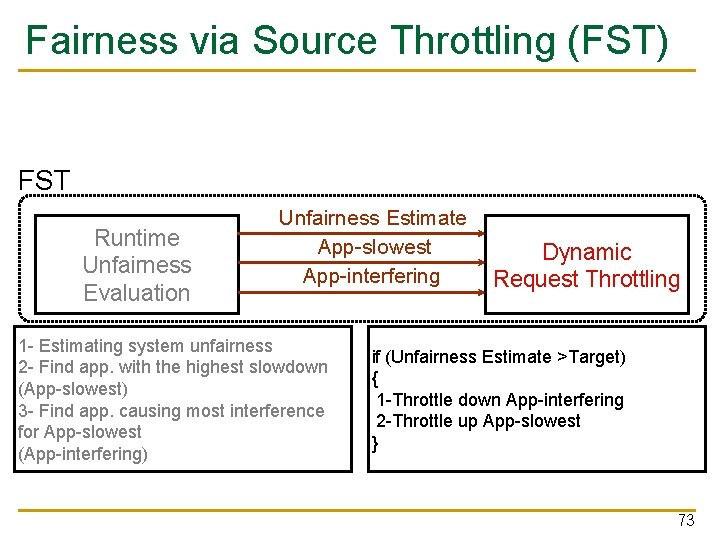

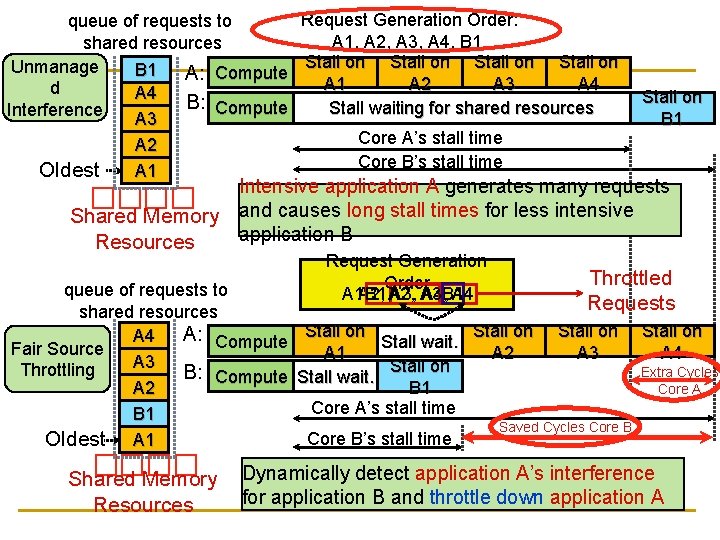

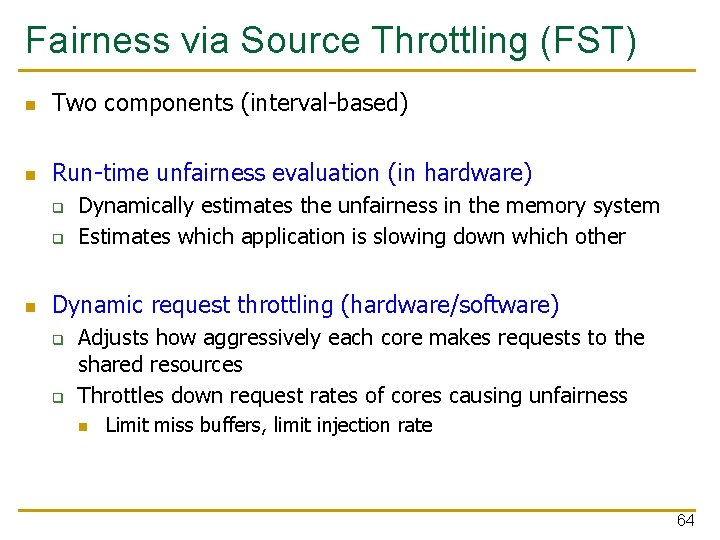

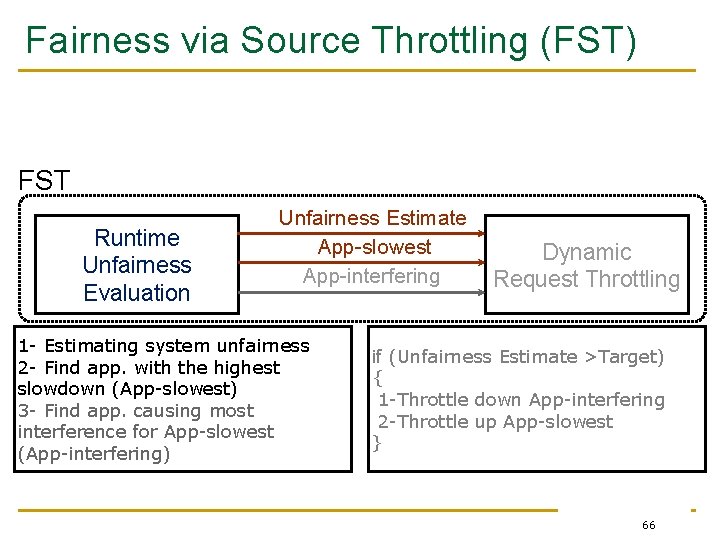

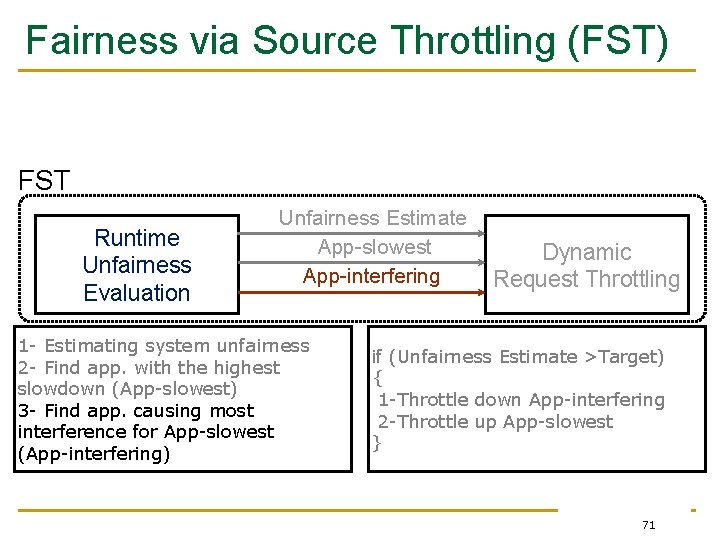

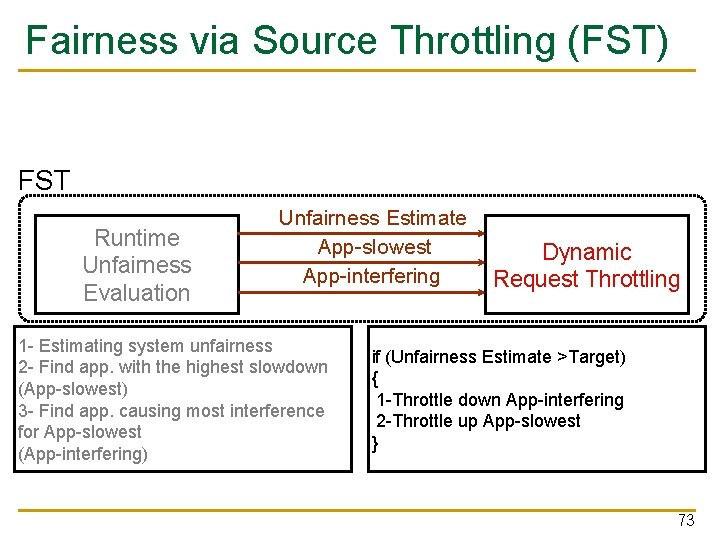

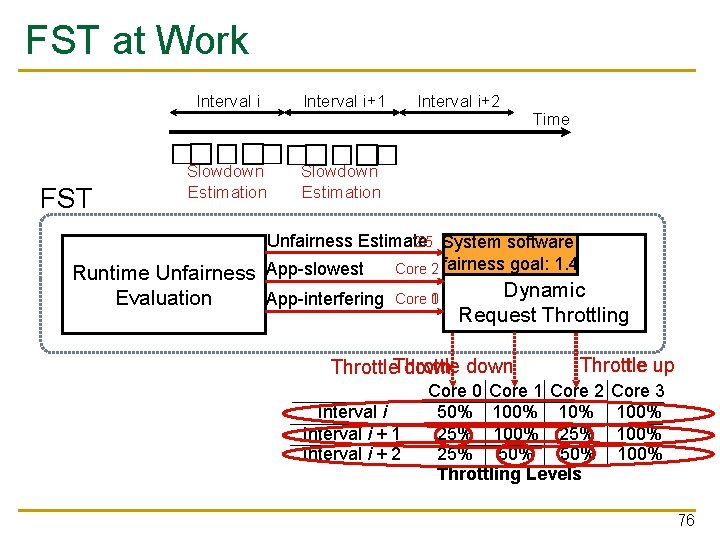

Fairness via Source Throttling (FST)
- Two components (interval-based)
- Run-time unfairness evaluation (in hardware)
  - Dynamically estimates the unfairness in the memory system
  - Estimates which application is slowing down which other
- Dynamic request throttling (hardware/software)
  - Adjusts how aggressively each core makes requests to the shared resources
  - Throttles down request rates of cores causing unfairness
    - Limit miss buffers, limit injection rate

Fairness via Source Throttling (FST)
[Figure: FST operates in successive intervals (Interval 1, Interval 2, Interval 3, ...); slowdown estimation during each interval feeds FST's decision.]
- Runtime Unfairness Evaluation (outputs: Unfairness Estimate, App-slowest, App-interfering)
  1. Estimate system unfairness
  2. Find the application with the highest slowdown (App-slowest)
  3. Find the application causing the most interference for App-slowest (App-interfering)
- Dynamic Request Throttling
  - If Unfairness Estimate > Target: (1) throttle down App-interfering; (2) throttle up App-slowest


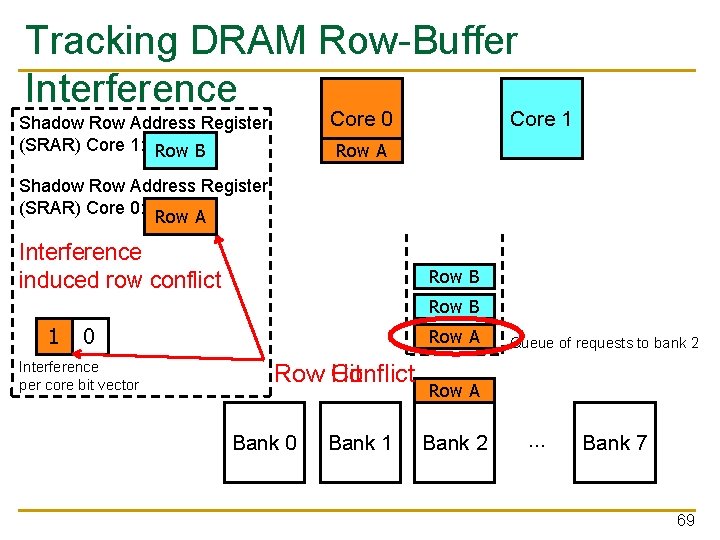

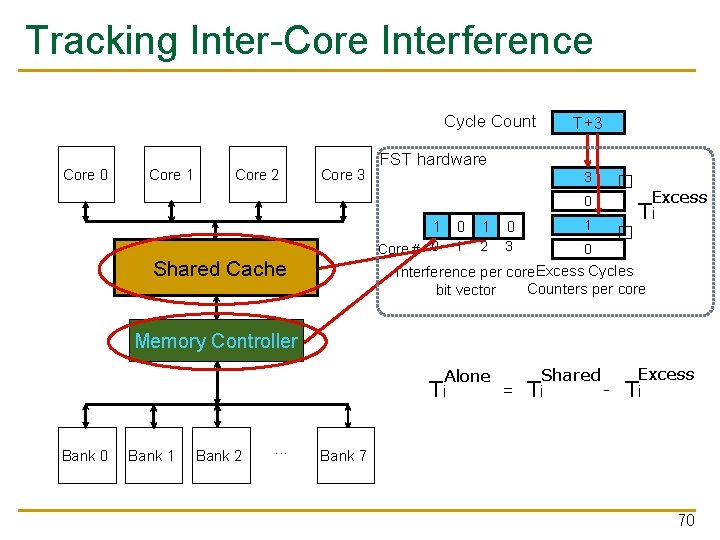

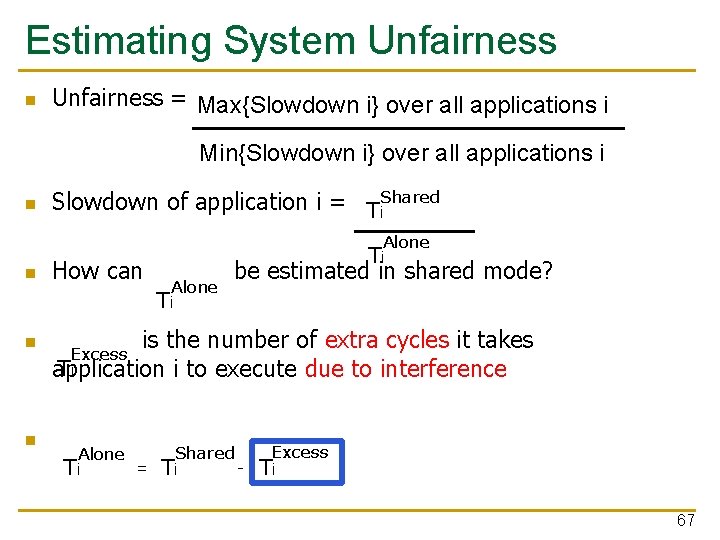

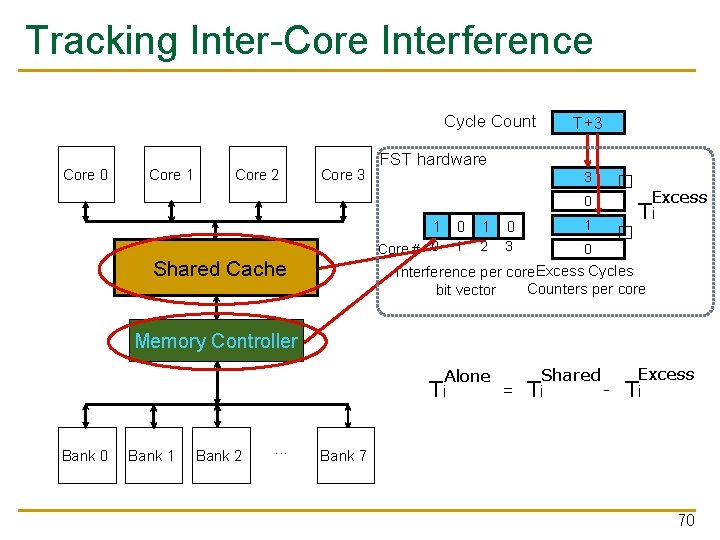

Estimating System Unfairness
- $\text{Unfairness} = \dfrac{\max_i \{\text{Slowdown}_i\}}{\min_i \{\text{Slowdown}_i\}}$, over all applications $i$
- Slowdown of application $i$: $\text{Slowdown}_i = \dfrac{T_i^{\text{Shared}}}{T_i^{\text{Alone}}}$
- How can $T_i^{\text{Alone}}$ be estimated in shared mode?
  - $T_i^{\text{Excess}}$ is the number of extra cycles it takes application $i$ to execute due to interference
  - $T_i^{\text{Alone}} = T_i^{\text{Shared}} - T_i^{\text{Excess}}$
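The unfairness estimate is simple arithmetic once per-application shared and excess cycle counts are available. The following sketch applies the three formulas directly; the function name and input format are assumptions for illustration.

```python
def estimate_unfairness(cycles):
    """cycles: app -> (T_shared, T_excess), both in cycles for the same interval.

    Returns (unfairness, app_slowest) using
      T_alone    = T_shared - T_excess
      Slowdown_i = T_shared / T_alone
      Unfairness = max_i Slowdown_i / min_i Slowdown_i
    """
    slowdown = {}
    for app, (t_shared, t_excess) in cycles.items():
        t_alone = t_shared - t_excess
        slowdown[app] = t_shared / t_alone
    app_slowest = max(slowdown, key=slowdown.get)
    return max(slowdown.values()) / min(slowdown.values()), app_slowest


print(estimate_unfairness({0: (1000, 500), 1: (1000, 200), 2: (1000, 0)}))  # (2.0, 0)
```

Here application 0 spent half of its 1000 shared-mode cycles on interference, so its estimated slowdown is 2.0, while application 2 suffered none (slowdown 1.0); the ratio of the two is the unfairness.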

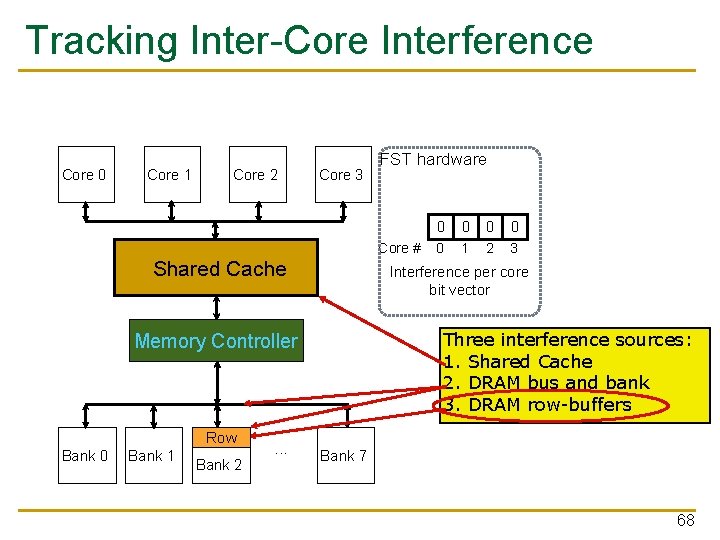

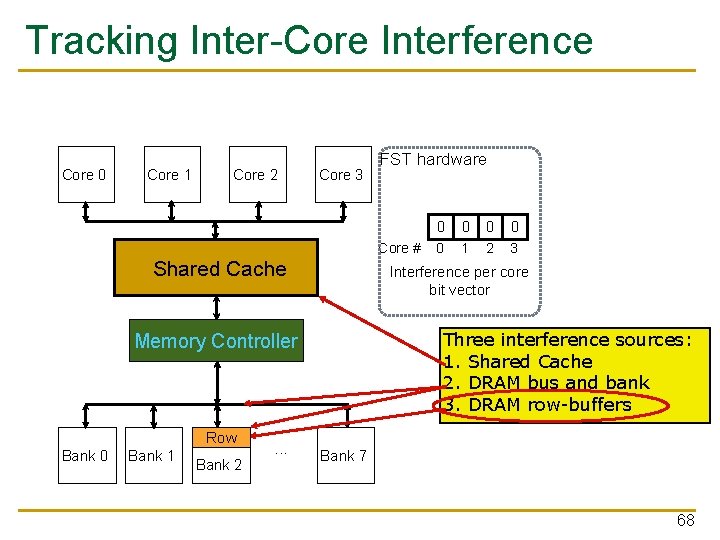

Tracking Inter-Core Interference
[Figure: FST hardware next to Cores 0-3 keeps an interference-per-core bit vector (one bit per core), fed by the shared cache, the memory controller, and DRAM Banks 0-7.]
Three interference sources:
1. Shared cache
2. DRAM bus and bank
3. DRAM row-buffers

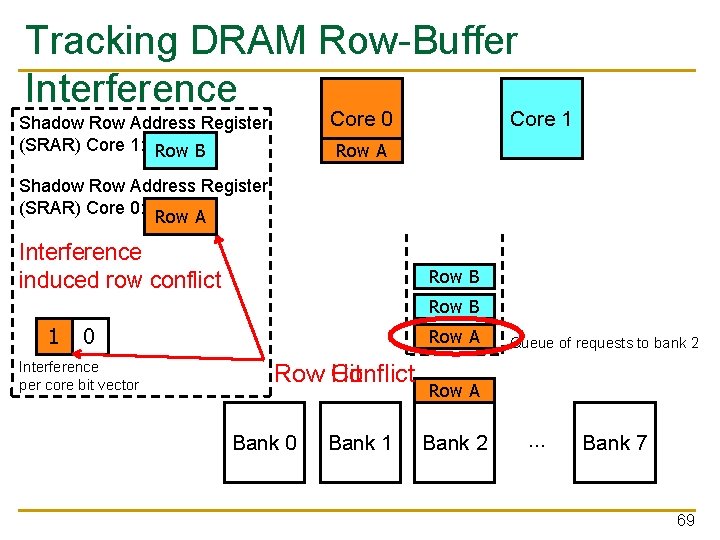

Tracking DRAM Row-Buffer Interference
[Figure: each core has a Shadow Row Address Register (SRAR) per bank recording the row it would have open if it ran alone; Core 0's SRAR holds Row A and Core 1's SRAR holds Row B. In the queue of requests to Bank 2, Core 0's request to Row A is a row hit according to its SRAR but a row conflict in the actual row buffer, which holds Row B: an interference-induced row conflict, recorded in the interference-per-core bit vector.]
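The SRAR check can be sketched as follows. This assumes a simplified open-row model in which every access leaves its row open and no precharges happen in between; identifying the interferer as the core that opened the current row is our simplification, and the class name is hypothetical.

```python
class RowBufferInterferenceTracker:
    """Shadow Row Address Registers (SRARs): one per (core, bank)."""

    def __init__(self):
        self.srar = {}      # (core, bank) -> row this core would have open if running alone
        self.open_row = {}  # bank -> (row actually open, core that opened it)

    def access(self, core, bank, row):
        """Record an access; return the interfering core's id, or None."""
        shadow_hit = self.srar.get((core, bank)) == row
        opened = self.open_row.get(bank)
        real_hit = opened is not None and opened[0] == row
        culprit = None
        if shadow_hit and not real_hit and opened is not None:
            culprit = opened[1]  # interference-induced row conflict
        self.srar[(core, bank)] = row
        self.open_row[bank] = (row, core)
        return culprit


tracker = RowBufferInterferenceTracker()
print(tracker.access(core=0, bank=2, row="A"))  # None: first access (cold)
print(tracker.access(core=1, bank=2, row="B"))  # None: core 1 opens row B
print(tracker.access(core=0, bank=2, row="A"))  # 1: would hit alone, conflicts due to core 1
```

A conflict that would have happened even when running alone (the SRAR does not match) is not counted, so only the extra row conflicts caused by sharing are charged as interference.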

Tracking Inter-Core Interference
[Figure: on each cycle (T, T+1, T+2, T+3, ...), the FST hardware increments the Excess Cycles counter of every core whose bit in the interference-per-core bit vector is set; each counter accumulates $T_i^{\text{Excess}}$, so that $T_i^{\text{Alone}} = T_i^{\text{Shared}} - T_i^{\text{Excess}}$.]


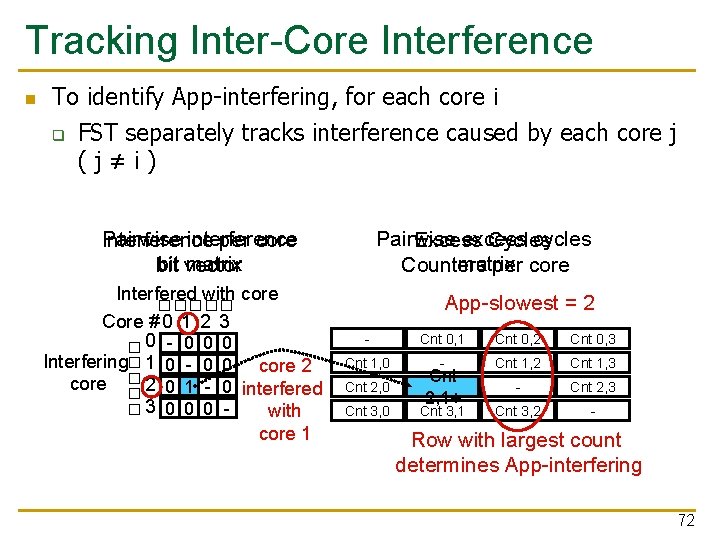

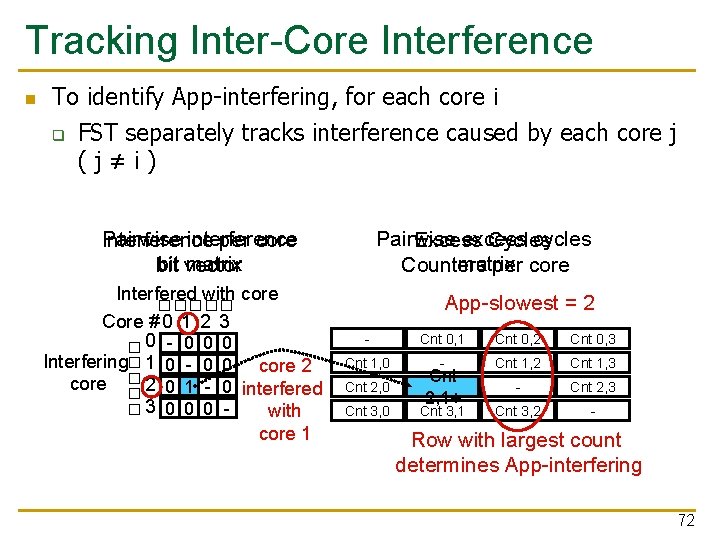

Tracking Inter-Core Interference (pairwise)

- To identify App-interfering, for each core i, FST separately tracks the interference caused by each core j (j ≠ i).
- The interference-per-core bit vector is extended to a pairwise interference matrix. The per-core Excess Cycles Counters are likewise extended to a pairwise excess cycles matrix of counters Cnt j,i.
- Rows are the interfering core and columns are the interfered-with core. For example, when core 2 interferes with core 1, Cnt 2,1 is incremented.
- With App-slowest = 2, the row with the largest count in column 2 determines App-interfering.
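The pairwise counters and the App-interfering selection can be sketched as follows. The sketch assumes Cnt[j][i] counts the excess cycles that core j causes core i, so rows are interfering cores and columns are interfered-with cores. All names are hypothetical.

```python
def new_counter_matrix(num_cores):
    """Cnt[j][i]: excess cycles of core i caused by core j (row = interfering core)."""
    return [[0] * num_cores for _ in range(num_cores)]


def record_interference(cnt, interfering, interfered):
    """Attribute one excess cycle of `interfered` to `interfering`."""
    if interfering != interfered:
        cnt[interfering][interfered] += 1


def find_app_interfering(cnt, app_slowest):
    """Row with the largest count in App-slowest's column."""
    rows = [j for j in range(len(cnt)) if j != app_slowest]
    return max(rows, key=lambda j: cnt[j][app_slowest])


if __name__ == "__main__":
    cnt = new_counter_matrix(4)
    record_interference(cnt, interfering=2, interfered=1)   # Cnt 2,1 += 1
    for _ in range(3):
        record_interference(cnt, interfering=1, interfered=2)
    record_interference(cnt, interfering=0, interfered=2)
    print(find_app_interfering(cnt, app_slowest=2))  # 1
```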


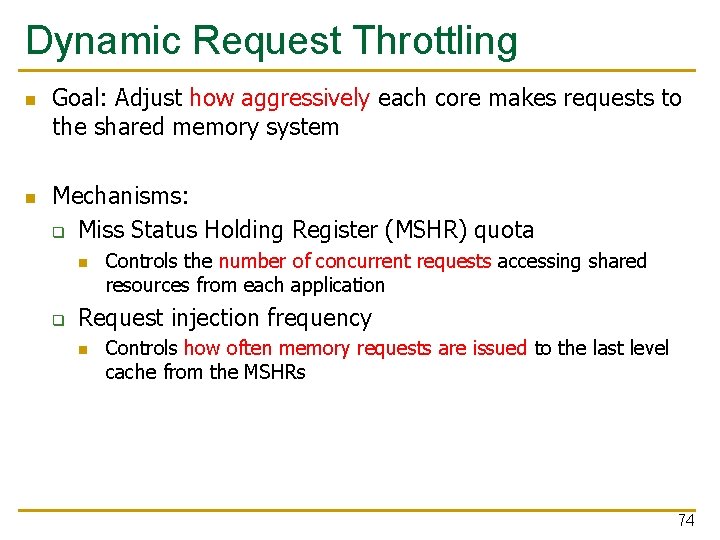

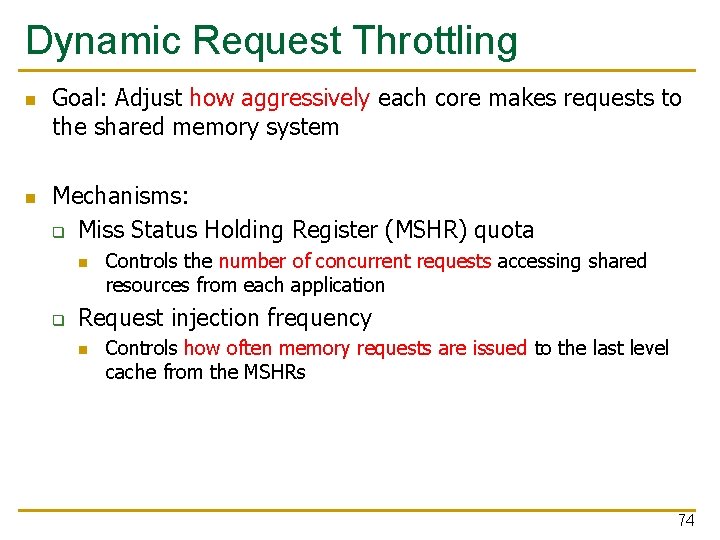

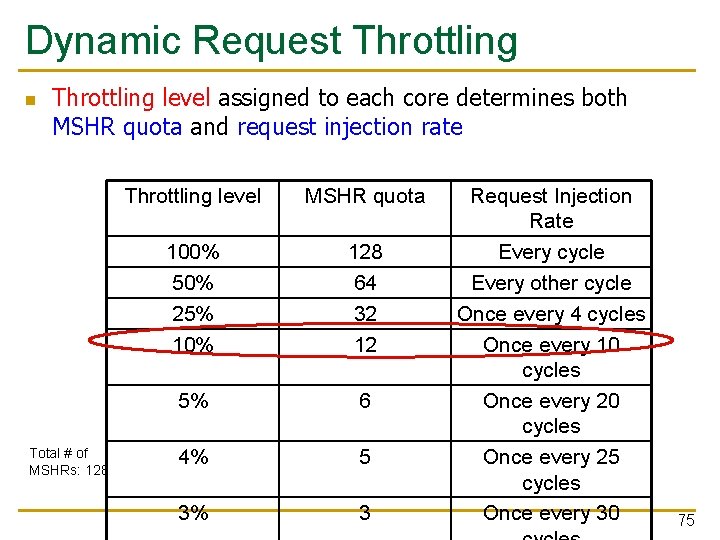

Dynamic Request Throttling

- Goal: Adjust how aggressively each core makes requests to the shared memory system
- Mechanisms:
  - Miss Status Holding Register (MSHR) quota
    - Controls the number of concurrent requests accessing shared resources from each application
  - Request injection frequency
    - Controls how often memory requests are issued to the last-level cache from the MSHRs

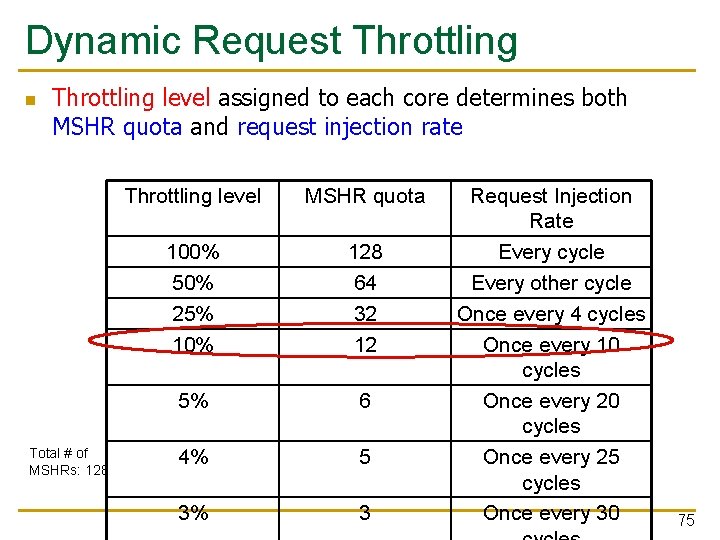

Dynamic Request Throttling (continued)

- The throttling level assigned to each core determines both its MSHR quota and its request injection rate.
- Total # of MSHRs: 128

| Throttling level | MSHR quota | Request injection rate |
|---|---|---|
| 100% | 128 | Every cycle |
| 50% | 64 | Every other cycle |
| 25% | 32 | Once every 4 cycles |
| 10% | 12 | Once every 10 cycles |
| 5% | 6 | |
| 4% | 5 | Once every 25 cycles |
| 3% | 3 | Once every 30 cycles |
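The throttling table can be expressed as two lookups. Taking the floor of 128 × level reproduces every MSHR quota listed (for example, 10% gives 12 and 3% gives 3), so the sketch computes the quota that way. Injection intervals are included only for the 100%, 50%, 25%, and 10% levels, whose rates are stated unambiguously. Function names are hypothetical.

```python
TOTAL_MSHRS = 128

# Injection interval in cycles, only for levels whose rate is unambiguous.
INJECTION_INTERVAL = {100: 1, 50: 2, 25: 4, 10: 10}


def mshr_quota(level_percent):
    """MSHRs a core may use at a throttling level: floor(128 * level / 100)."""
    return TOTAL_MSHRS * level_percent // 100


def can_allocate_mshr(outstanding, level_percent):
    """A new miss may take an MSHR only while the core is under its quota."""
    return outstanding < mshr_quota(level_percent)


def may_inject(cycle, level_percent):
    """True if the core may send a request from its MSHRs to the LLC this cycle."""
    return cycle % INJECTION_INTERVAL[level_percent] == 0


if __name__ == "__main__":
    print([mshr_quota(l) for l in (100, 50, 25, 10, 5, 4, 3)])
    # [128, 64, 32, 12, 6, 5, 3]
    print(sum(may_inject(c, 25) for c in range(100)))  # 25
```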

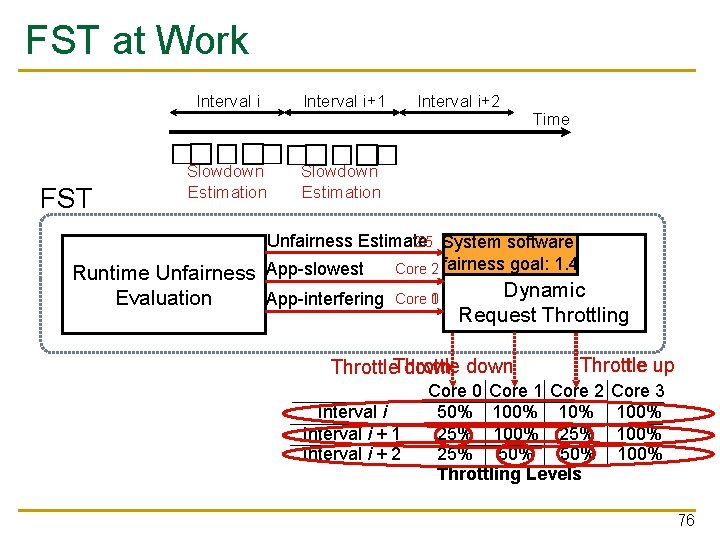

FST at Work

[Figure: timeline of intervals i, i+1, and i+2. During each interval FST performs slowdown estimation. At the interval's end, Runtime Unfairness Evaluation produces the Unfairness Estimate (values 3 and 2.5 appear), App-slowest (Core 2), and App-interfering (Cores 1 and 0 appear). Dynamic Request Throttling then throttles App-interfering down and App-slowest up. System software sets the fairness goal to 1.4. Per-core throttling levels for Cores 0 to 3 change across intervals (values shown include 100%, 50%, 25%, and 10%).]

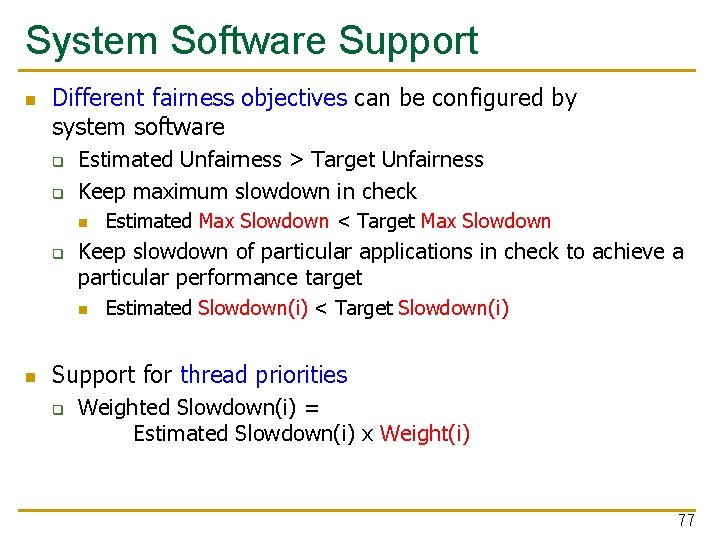

System Software Support

- Different fairness objectives can be configured by system software:
  - Estimated Unfairness > Target Unfairness
  - Keep maximum slowdown in check
    - Estimated Max Slowdown < Target Max Slowdown
  - Keep the slowdown of particular applications in check to achieve a particular performance target
    - Estimated Slowdown(i) < Target Slowdown(i)
- Support for thread priorities
  - Weighted Slowdown(i) = Estimated Slowdown(i) × Weight(i)
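The following is a hypothetical sketch of how system software might select among these objectives, with thread priorities folded in as weights. Applying the weights to every objective and the `objective` strings are assumptions for illustration. A result of True means the configured goal is not met and throttling should act.

```python
def weighted(slowdowns, weights):
    """Weighted Slowdown(i) = Estimated Slowdown(i) x Weight(i); default weight 1."""
    return {i: s * weights.get(i, 1.0) for i, s in slowdowns.items()}


def objective_violated(slowdowns, objective, target, weights=None, app=None):
    """Return True when FST should act under the configured objective (hypothetical API)."""
    ws = weighted(slowdowns, weights or {})
    if objective == "unfairness":      # Estimated Unfairness > Target Unfairness
        return max(ws.values()) / min(ws.values()) > target
    if objective == "max_slowdown":    # goal: Estimated Max Slowdown < Target
        return not max(ws.values()) < target
    if objective == "app_slowdown":    # goal: Estimated Slowdown(app) < Target(app)
        return not ws[app] < target
    raise ValueError(f"unknown objective: {objective}")


if __name__ == "__main__":
    s = {0: 1.0, 1: 2.0, 2: 2.5, 3: 1.25}
    print(objective_violated(s, "unfairness", 1.4))                      # True
    print(objective_violated(s, "max_slowdown", 3.0))                    # False
    print(objective_violated(s, "app_slowdown", 2.0, app=2))             # True
    print(objective_violated(s, "max_slowdown", 3.0, weights={0: 3.0}))  # True
```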

FST Hardware Cost

- Total storage cost required for 4 cores is about 12 KB
- FST does not require any structures or logic that are on the processor's critical path

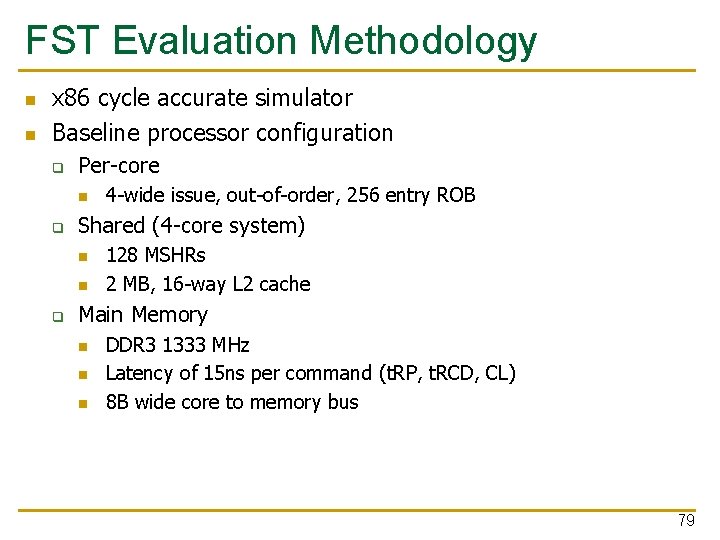

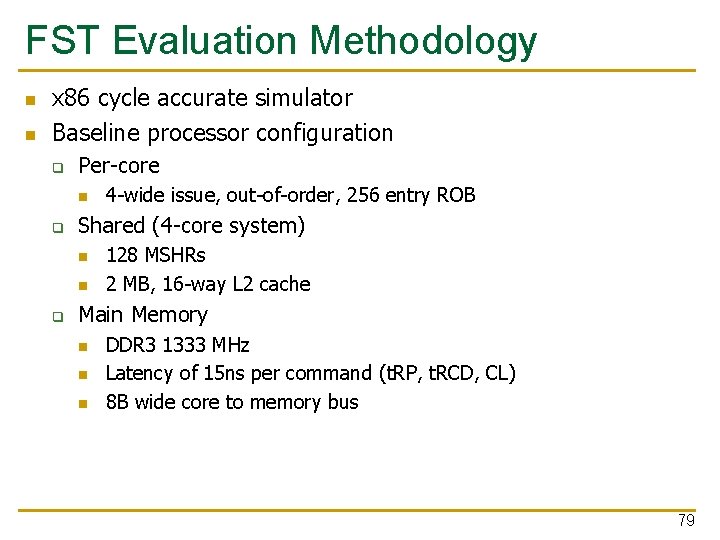

FST Evaluation Methodology

- x86 cycle-accurate simulator
- Baseline processor configuration
  - Per-core
    - 4-wide issue, out-of-order, 256-entry ROB
  - Shared (4-core system)
    - 128 MSHRs
    - 2 MB, 16-way L2 cache
  - Main memory
    - DDR3 1333 MHz
    - Latency of 15 ns per command (tRP, tRCD, CL)
    - 8 B wide core-to-memory bus

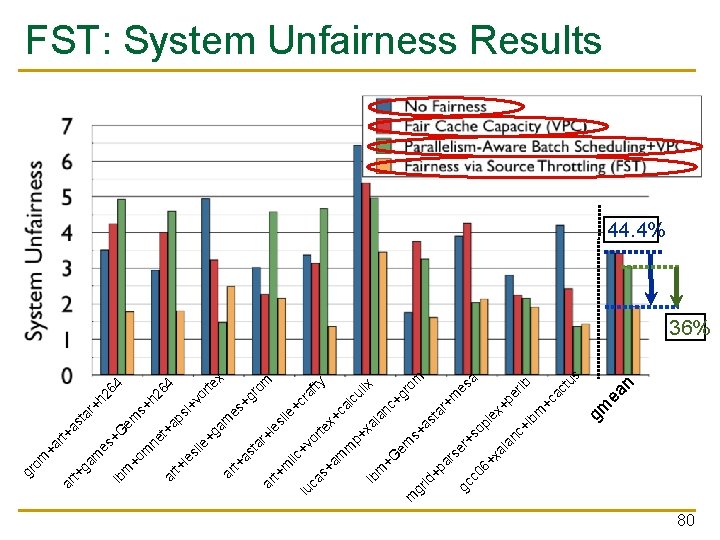

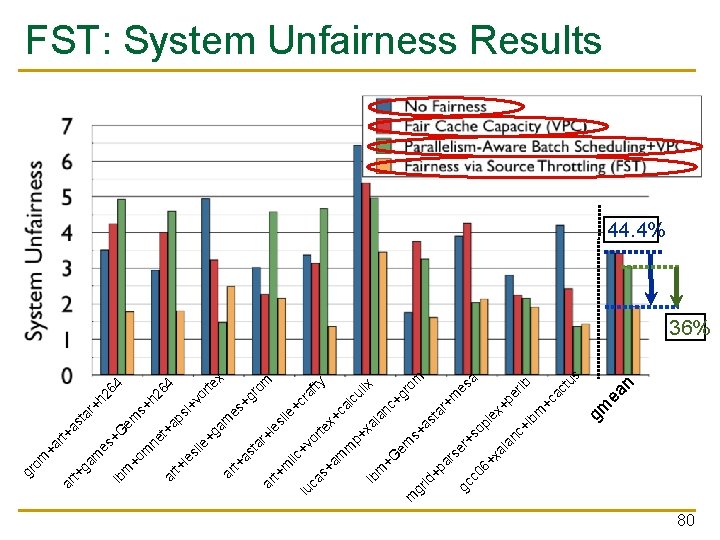

FST: System Unfairness Results

[Figure: system unfairness for each multiprogrammed workload mix and the geometric mean (gmean); labeled values: 44.4% and 36%.]

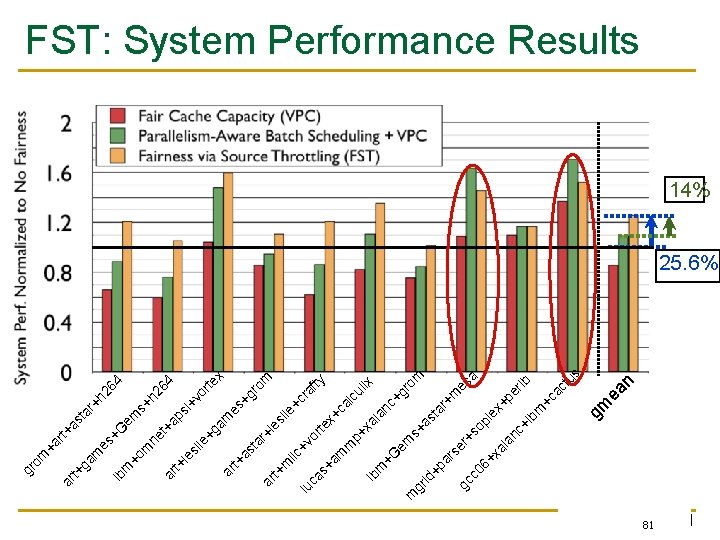

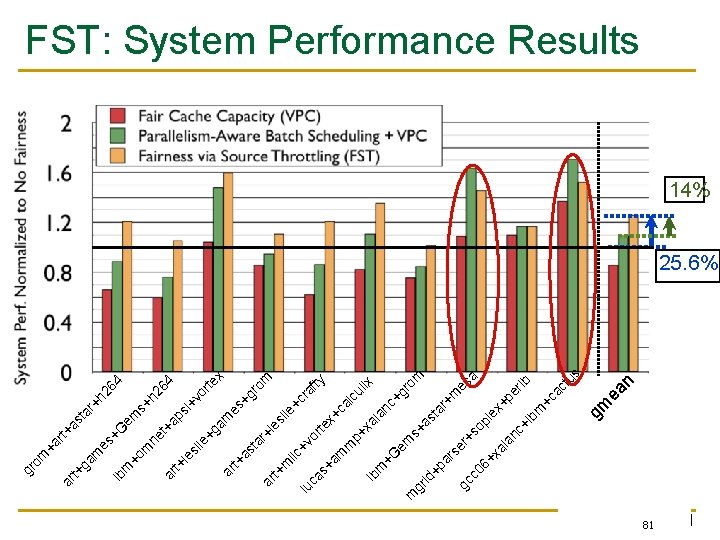

FST: System Performance Results

[Figure: system performance for each multiprogrammed workload mix and the geometric mean (gmean); labeled values: 14% and 25.6%.]

Source Throttling Results: Takeaways

- Source throttling alone provides better performance than a combination of "smart" memory scheduling and fair caching
  - Decisions made at the memory scheduler and the cache sometimes contradict each other
- Neither source throttling alone nor "smart resources" alone provides the best performance
- Combined approaches are even more powerful
  - Source throttling and resource-based interference control

FST ASPLOS 2010 Talk

Designing QoS-Aware Memory Systems: Approaches

- Smart resources: Design each shared resource to have a configurable interference control/reduction mechanism
  - QoS-aware memory controllers [Mutlu+ MICRO'07] [Moscibroda+, Usenix Security'07] [Mutlu+ ISCA'08, Top Picks'09] [Kim+ HPCA'10] [Kim+ MICRO'10, Top Picks'11] [Ebrahimi+ ISCA'11, MICRO'11] [Ausavarungnirun+, ISCA'12]
  - QoS-aware interconnects [Das+ MICRO'09, ISCA'10, Top Picks'11] [Grot+ MICRO'09, ISCA'11, Top Picks'12]
  - QoS-aware caches
- Dumb resources: Keep each resource free-for-all, but reduce/control interference by injection control or data mapping
  - Source throttling to control access to memory system [Ebrahimi+ ASPLOS'10, ISCA'11, TOCS'12] [Ebrahimi+ MICRO'09] [Nychis+ HotNets'10]
  - QoS-aware data mapping to memory controllers [Muralidhara+ MICRO'11]
  - QoS-aware thread scheduling to cores

Memory Channel Partitioning

Sai Prashanth Muralidhara, Lavanya Subramanian, Onur Mutlu, Mahmut Kandemir, and Thomas Moscibroda, "Reducing Memory Interference in Multicore Systems via Application-Aware Memory Channel Partitioning," 44th International Symposium on Microarchitecture (MICRO), Porto Alegre, Brazil, December 2011. Slides (pptx)

MCP Micro 2011 Talk

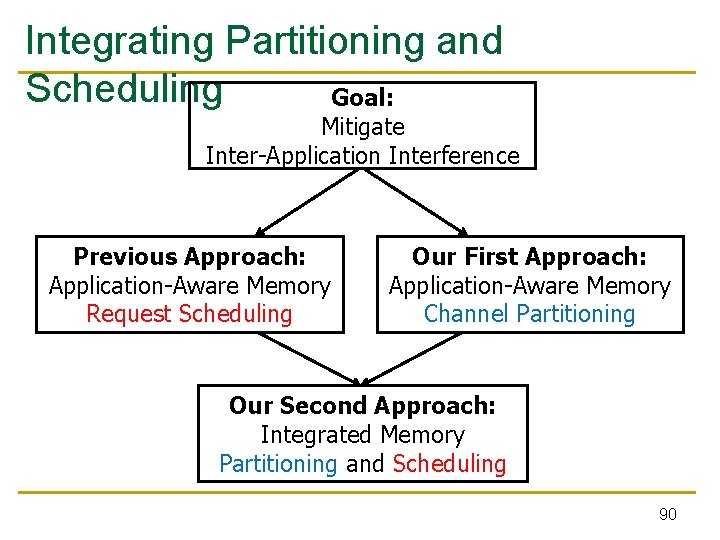

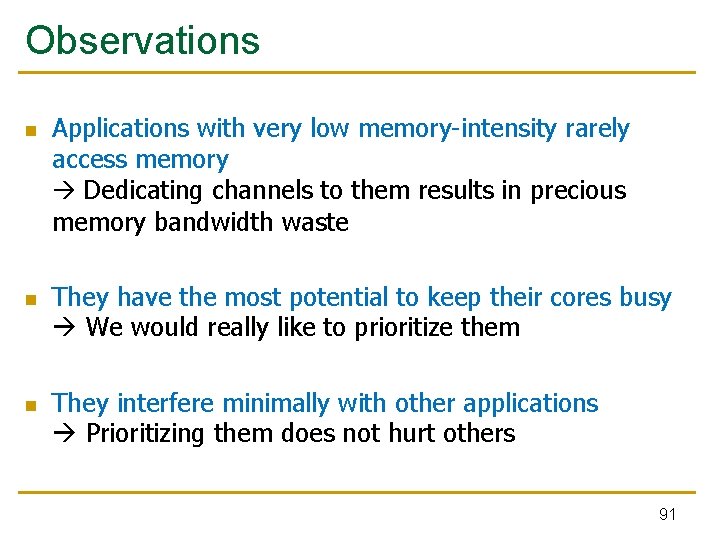

Outline

- Goal: Mitigate Inter-Application Interference
- Previous Approach: Application-Aware Memory Request Scheduling
- Our First Approach: Application-Aware Memory Channel Partitioning
- Our Second Approach: Integrated Memory Partitioning and Scheduling

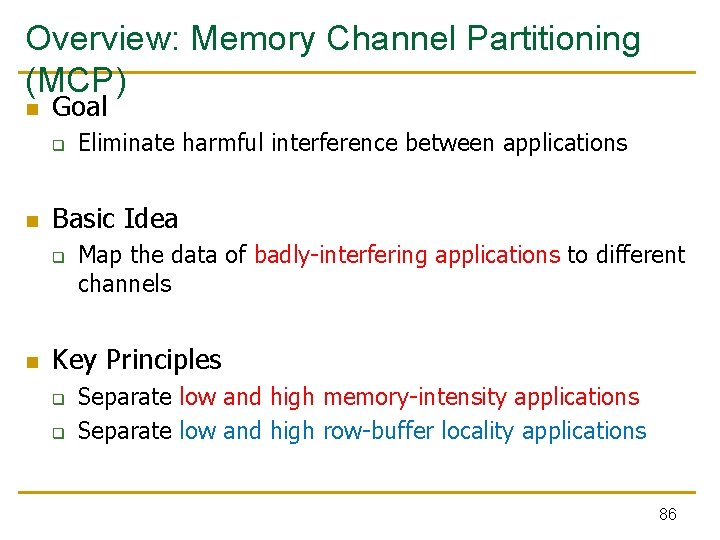

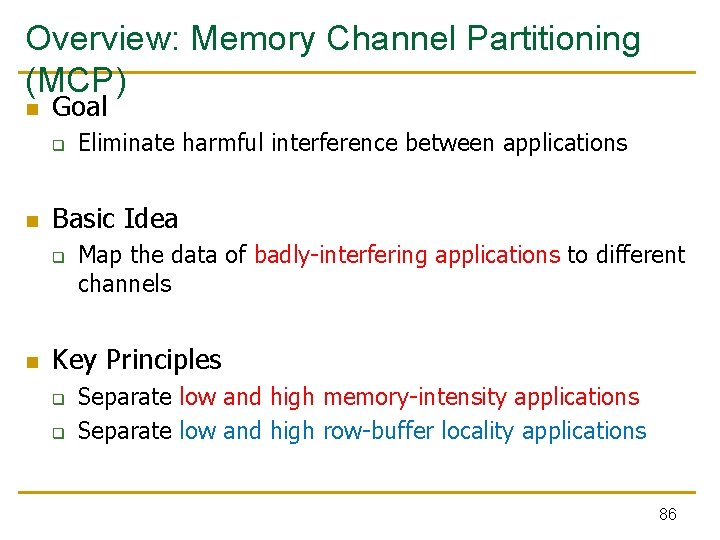

Overview: Memory Channel Partitioning (MCP)

- Goal
  - Eliminate harmful interference between applications
- Basic Idea
  - Map the data of badly-interfering applications to different channels
- Key Principles
  - Separate low and high memory-intensity applications
  - Separate low and high row-buffer locality applications

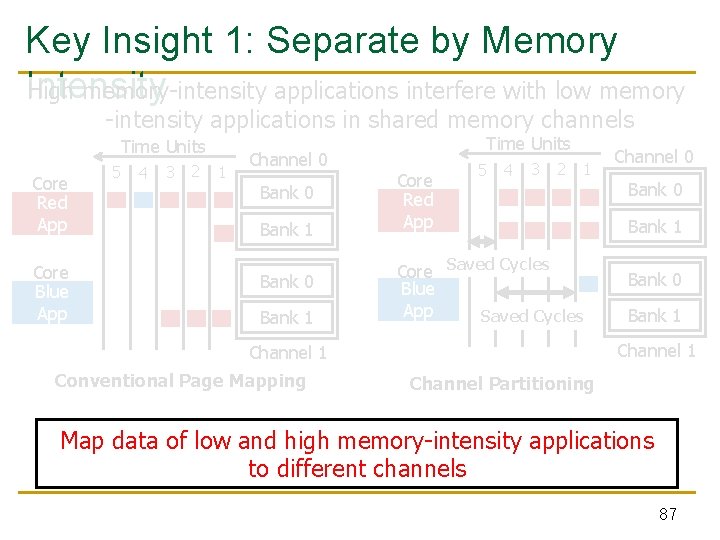

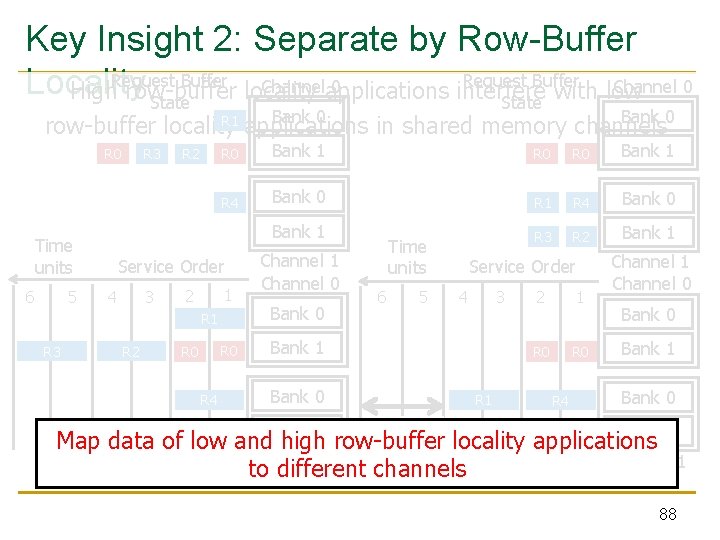

Key Insight 1: Separate by Memory Intensity

High memory-intensity applications interfere with low memory-intensity applications in shared memory channels.

[Figure: a red application's core and a blue application's core sharing Channel 0 and Channel 1 (Banks 0 and 1 each) over time units 1 to 5. Conventional page mapping is compared with channel partitioning, which yields saved cycles.]

Map data of low and high memory-intensity applications to different channels.

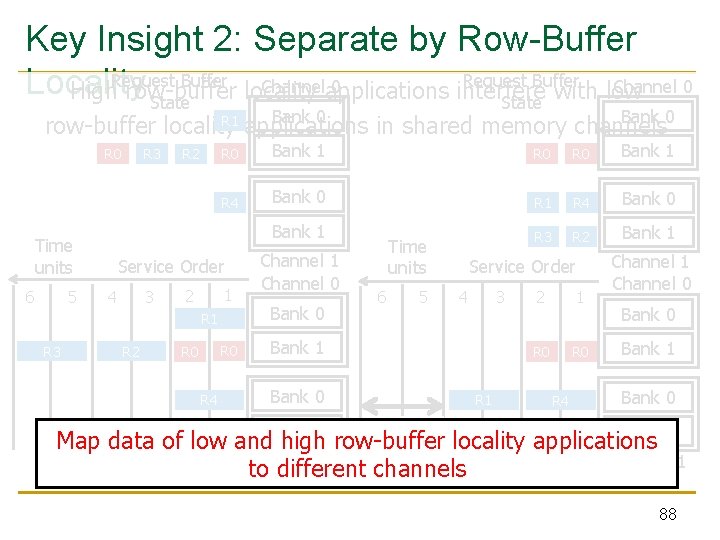

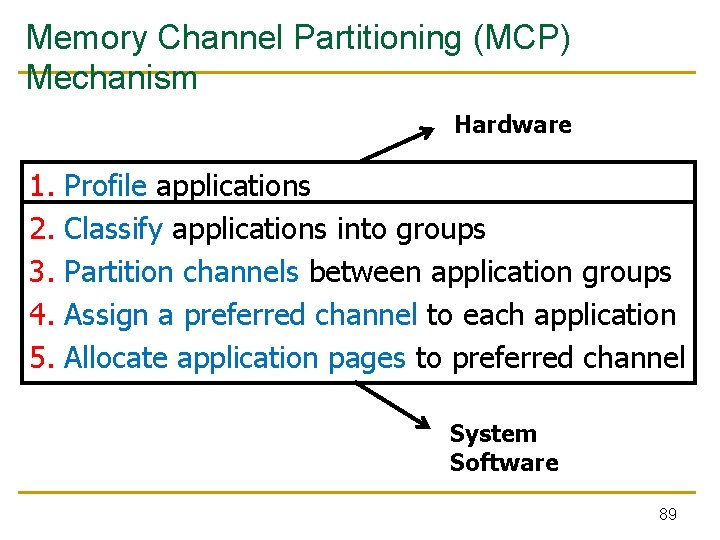

Key Insight 2: Separate by Row-Buffer Locality

High row-buffer locality applications interfere with low row-buffer locality applications in shared memory channels.

[Figure: requests R0 to R4 in the request buffer for Channels 0 and 1 (Banks 0 and 1 each), with their service order and row-buffer state over time units 1 to 6. Conventional page mapping is compared with channel partitioning, which yields saved cycles.]

Map data of low and high row-buffer locality applications to different channels.

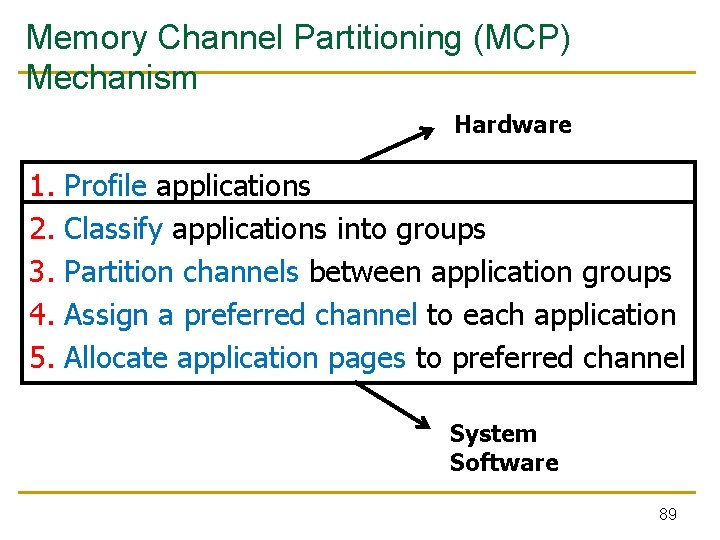

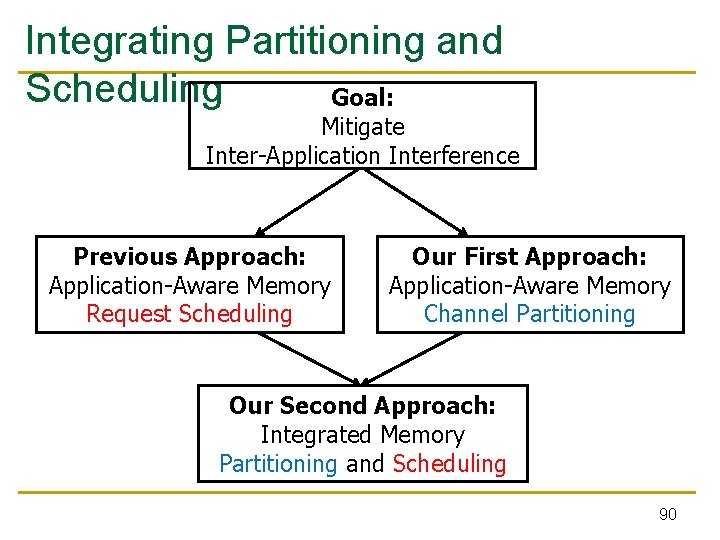

Memory Channel Partitioning (MCP) Mechanism

1. Profile applications
2. Classify applications into groups
3. Partition channels between application groups
4. Assign a preferred channel to each application
5. Allocate application pages to preferred channel

Step 1 is performed in hardware; steps 2 to 5 are performed by system software.
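The five steps can be sketched as follows. This is an illustrative approximation under stated assumptions, not the exact algorithm from the MCP paper. The MPKI and row-buffer hit-rate thresholds are hypothetical. Channels are split among groups in proportion to each group's summed MPKI. Each application's preferred channel is the least-loaded channel in its group's partition. Step 5 (page allocation by system software) is represented only by the returned map.

```python
from dataclasses import dataclass

# Hypothetical classification thresholds, for illustration only.
MPKI_THRESHOLD = 1.0   # below this: low memory-intensity
RBH_THRESHOLD = 0.5    # at or above this: high row-buffer locality


@dataclass
class AppProfile:
    name: str
    mpki: float          # profiled last-level cache misses per kilo-instruction
    row_hit_rate: float  # profiled fraction of requests that hit in the row buffer


def classify(app):
    """Step 2: group by memory intensity, then by row-buffer locality."""
    if app.mpki < MPKI_THRESHOLD:
        return "low_intensity"
    if app.row_hit_rate >= RBH_THRESHOLD:
        return "high_intensity_high_rbl"
    return "high_intensity_low_rbl"


def mcp(apps, num_channels):
    """Steps 2-4: classify, partition channels, pick a preferred channel per app."""
    groups = {}
    for app in apps:
        groups.setdefault(classify(app), []).append(app)
    names = sorted(groups)
    if num_channels < len(names):
        raise ValueError("need at least one channel per group")

    # Step 3: one channel per group, then each extra channel goes to the group
    # with the highest summed MPKI per channel already given (assumed metric).
    demand = {g: sum(a.mpki for a in groups[g]) for g in names}
    count = {g: 1 for g in names}
    for _ in range(num_channels - len(names)):
        best = max(names, key=lambda g: demand[g] / count[g])
        count[best] += 1

    channels, next_ch = {}, 0
    for g in names:
        channels[g] = list(range(next_ch, next_ch + count[g]))
        next_ch += count[g]

    # Step 4: heaviest apps first, each to the least-loaded channel of its group.
    load = [0.0] * num_channels
    preferred = {}
    for g in names:
        for app in sorted(groups[g], key=lambda a: a.mpki, reverse=True):
            ch = min(channels[g], key=lambda c: load[c])
            preferred[app.name] = ch
            load[ch] += app.mpki
    return preferred  # Step 5 (page allocation) would consult this map


if __name__ == "__main__":
    apps = [
        AppProfile("A", 0.2, 0.9),
        AppProfile("B", 20.0, 0.9),
        AppProfile("C", 25.0, 0.1),
        AppProfile("D", 18.0, 0.2),
    ]
    print(mcp(apps, num_channels=4))  # {'B': 0, 'C': 1, 'D': 2, 'A': 3}
```

In this example the low-intensity application A gets a channel to itself, and the two low-locality, high-intensity applications C and D are spread over the two channels given to their group.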

Integrating Partitioning and Scheduling

Our Second Approach: Integrated Memory Partitioning and Scheduling

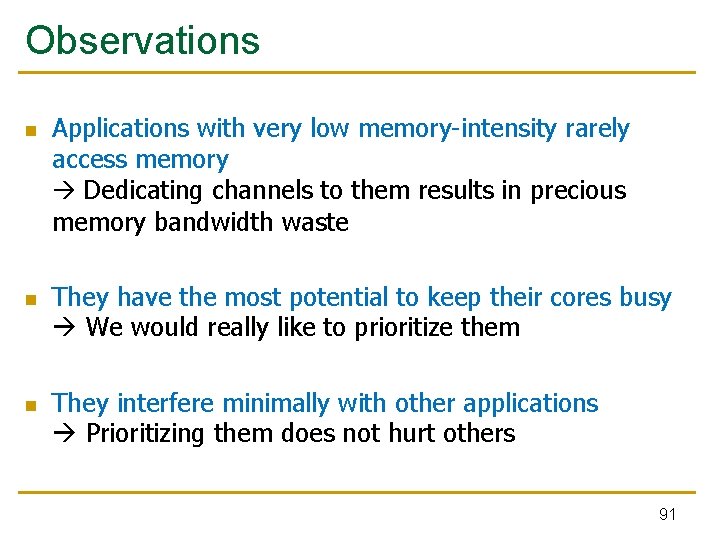

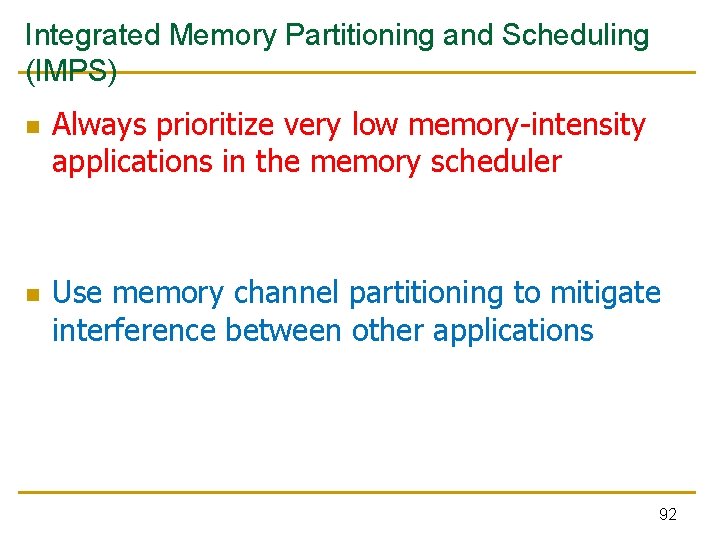

Observations

- Applications with very low memory-intensity rarely access memory
  - Dedicating channels to them results in precious memory bandwidth waste
- They have the most potential to keep their cores busy
  - We would really like to prioritize them
- They interfere minimally with other applications
  - Prioritizing them does not hurt others

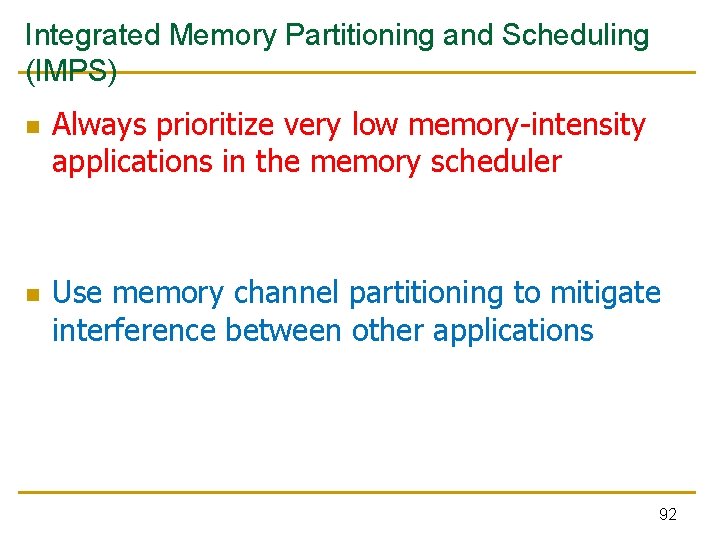

Integrated Memory Partitioning and Scheduling (IMPS)

- Always prioritize very low memory-intensity applications in the memory scheduler
- Use memory channel partitioning to mitigate interference between other applications
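IMPS's single priority bit sits on top of FR-FCFS. Below is a minimal sketch of the resulting request selection for one bank. It assumes the bit has already been set on requests from applications classified as very low memory-intensity. The `Request` fields and `imps_pick` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Request:
    arrival: int          # arrival time; smaller means older
    row: int              # DRAM row the request targets
    low_intensity: bool   # IMPS bit: issued by a very low memory-intensity app


def imps_pick(queue, open_row):
    """Choose the next request for a bank.

    Priority order: IMPS bit set, then row-buffer hit, then oldest (FR-FCFS).
    """
    return min(queue, key=lambda r: (not r.low_intensity, r.row != open_row, r.arrival))


if __name__ == "__main__":
    q = [Request(0, 5, False), Request(1, 7, False), Request(2, 9, True)]
    print(imps_pick(q, open_row=7))      # the low-intensity request (arrival 2)
    print(imps_pick(q[:2], open_row=7))  # plain FR-FCFS: the row hit (arrival 1)
```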

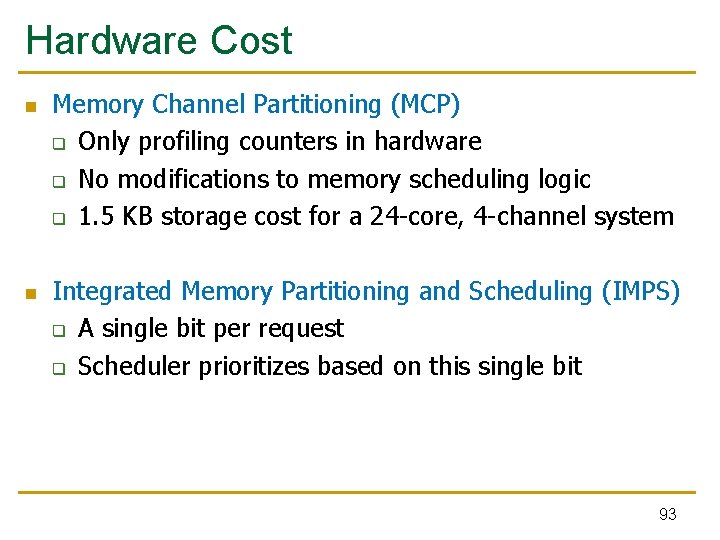

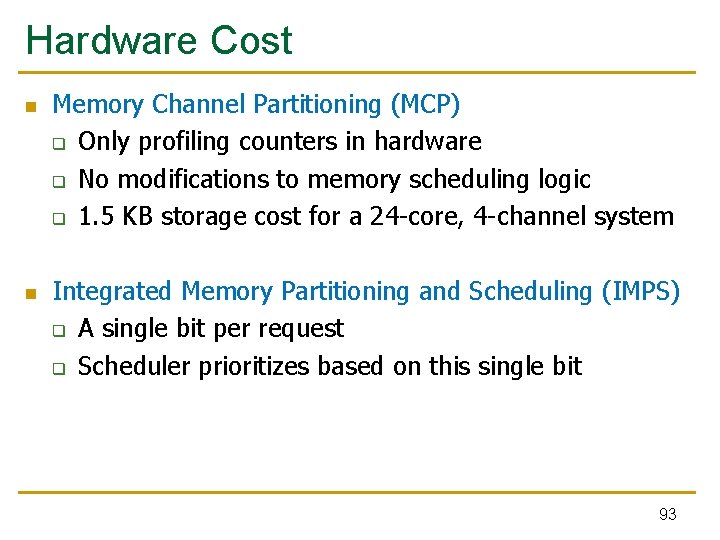

Hardware Cost

- Memory Channel Partitioning (MCP)
  - Only profiling counters in hardware
  - No modifications to memory scheduling logic
  - 1.5 KB storage cost for a 24-core, 4-channel system
- Integrated Memory Partitioning and Scheduling (IMPS)
  - A single bit per request
  - Scheduler prioritizes based on this single bit

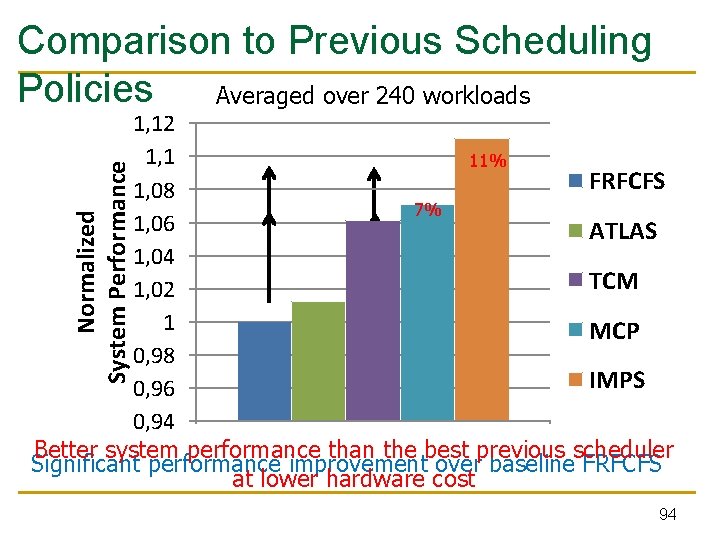

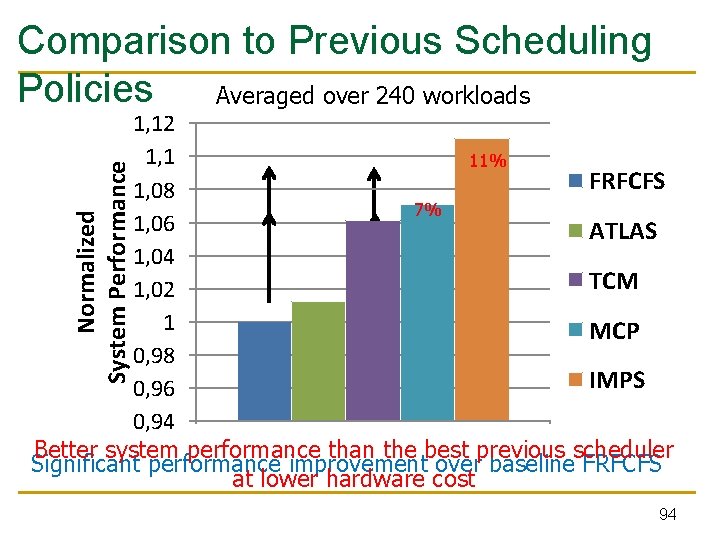

Comparison to Previous Scheduling Policies

[Figure: normalized system performance (y-axis 0.94 to 1.12) of FRFCFS, ATLAS, TCM, MCP, and IMPS, averaged over 240 workloads; labeled improvements: 11%, 5%, 7%, and 1%.]

- Better system performance than the best previous scheduler
- Significant performance improvement over baseline FRFCFS at lower hardware cost

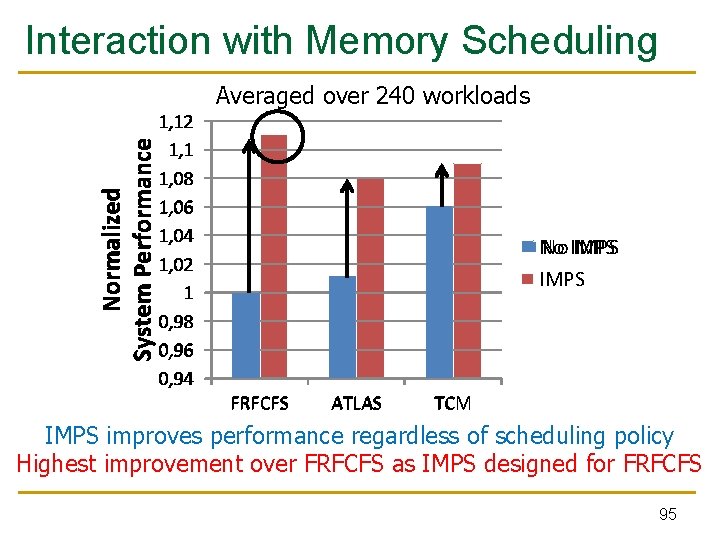

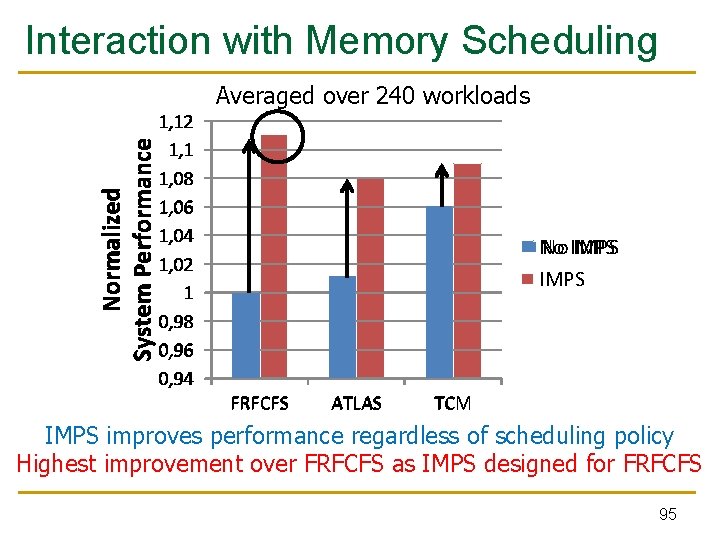

Interaction with Memory Scheduling

[Figure: normalized system performance (y-axis 0.94 to 1.12) without IMPS and with IMPS under FRFCFS, ATLAS, and TCM, averaged over 240 workloads.]

- IMPS improves performance regardless of scheduling policy
- Highest improvement over FRFCFS, as IMPS is designed for FRFCFS

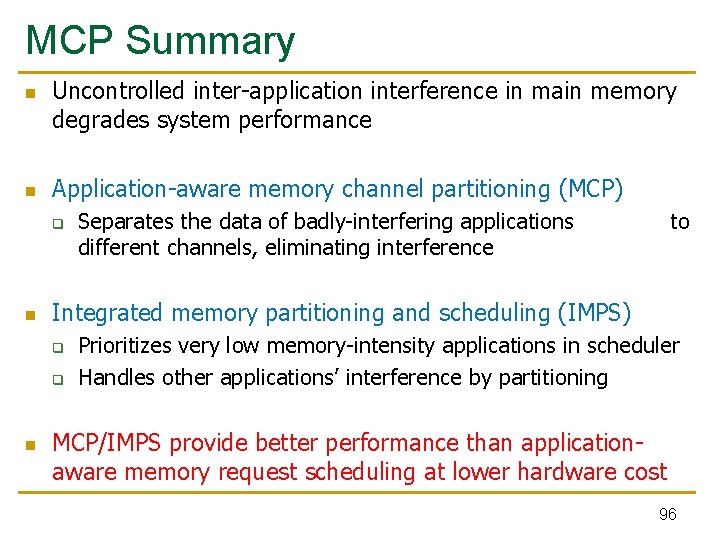

MCP Summary

- Uncontrolled inter-application interference in main memory degrades system performance
- Application-aware memory channel partitioning (MCP)
  - Separates the data of badly-interfering applications into different channels, eliminating interference
- Integrated memory partitioning and scheduling (IMPS)
  - Prioritizes very low memory-intensity applications in the scheduler
  - Handles other applications' interference by partitioning
- MCP/IMPS provide better performance than application-aware memory request scheduling at lower hardware cost

Summary: Memory QoS Approaches and Techniques

- Approaches: Smart vs. dumb resources
  - Smart resources: QoS-aware memory scheduling
  - Dumb resources: Source throttling; channel partitioning
  - Both approaches are effective in reducing interference
  - No single best approach for all workloads
- Techniques: Request scheduling, source throttling, memory partitioning
  - All techniques are effective in reducing interference
  - Can be applied at different levels: hardware vs. software
  - No single best technique for all workloads
- Combined approaches and techniques are the most powerful
  - Integrated Memory Channel Partitioning and Scheduling [MICRO'11]

Smart Resources vs. Source Throttling

- Advantages of "smart resources"
  - Each resource is designed to be as efficient as possible: more efficient design using custom techniques for each resource
  - No need for estimating interference across the entire system (to feed a throttling algorithm)
  - Does not lose throughput by possibly overthrottling
- Advantages of source throttling
  - Prevents overloading of any or all resources (if employed well)
  - Can keep each resource simple; no need to redesign each resource
  - Provides prioritization of threads in the entire memory system, instead of per resource
  - Eliminates conflicting decision making between resources

Handling Interference in Parallel Applications

- Threads in a multithreaded application are inter-dependent
- Some threads can be on the critical path of execution due to synchronization; some threads are not
- How do we schedule requests of inter-dependent threads to maximize multithreaded application performance?
- Idea: Estimate limiter threads likely to be on the critical path and prioritize their requests; shuffle priorities of non-limiter threads to reduce memory interference among them [Ebrahimi+, MICRO'11]
- Hardware/software cooperative limiter thread estimation:
  - Thread executing the most contended critical section
  - Thread that is falling behind the most in a parallel for loop

PAMS Micro 2011 Talk
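A hypothetical sketch of the idea follows. Limiter threads are estimated with the two heuristics listed and ranked first. The remaining threads get a random order, which would be reshuffled each interval. The input formats, the tie-breaking among limiters (original thread order), and the function names are assumptions, not the mechanism from the MICRO'11 paper.

```python
import random


def estimate_limiters(lock_contention, lock_holder, loop_progress):
    """Hypothetical limiter estimation using the two heuristics.

    lock_contention: lock id -> number of waiting threads
    lock_holder:     lock id -> thread currently inside that critical section
    loop_progress:   thread -> iterations completed in the current parallel for loop
    """
    limiters = set()
    if lock_contention:
        hottest = max(lock_contention, key=lock_contention.get)
        if hottest in lock_holder:
            limiters.add(lock_holder[hottest])   # runs the most contended critical section
    if loop_progress:
        limiters.add(min(loop_progress, key=loop_progress.get))  # falling behind the most
    return limiters


def thread_ranks(threads, limiters, rng):
    """Rank 0 is highest priority: limiters first, then shuffled non-limiters."""
    ranked = [t for t in threads if t in limiters]
    others = [t for t in threads if t not in limiters]
    rng.shuffle(others)                          # would be re-run every interval
    return {t: rank for rank, t in enumerate(ranked + others)}


if __name__ == "__main__":
    lim = estimate_limiters({"L1": 3, "L2": 1}, {"L1": "t2"},
                            {"t0": 40, "t1": 12, "t2": 35, "t3": 38})
    print(sorted(lim))  # ['t1', 't2']
    print(thread_ranks(["t0", "t1", "t2", "t3"], lim, random.Random(0)))
```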

Other Ways of Reducing (DRAM) Interference

- DRAM bank/subarray partitioning among threads
- Interference-aware address mapping/remapping
- Core/request throttling: How?
- Interference-aware thread scheduling: How?
- Better/interference-aware caching
- Interference-aware scheduling in the interconnect
- Randomized address mapping
- DRAM architecture/microarchitecture changes?
- These are general techniques that can be used to improve
  - System throughput
  - QoS/fairness
  - Power/energy consumption?

Research Topics in Main Memory Management

- Abundant
  - Interference reduction via different techniques
  - Distributed memory controller management
  - Co-design with on-chip interconnects and caches
  - Reducing waste, minimizing energy, minimizing cost
  - Enabling new memory technologies
    - Die stacking
    - Non-volatile memory
    - Latency tolerance
- You can come up with great solutions that will significantly impact the computing industry