18-742 Parallel Computer Architecture Lecture 5: Cache Coherence



Chris Craik (TA), Carnegie Mellon University

Readings: Coherence

- Required for review:
  - Papamarcos and Patel, “A low-overhead coherence solution for multiprocessors with private cache memories,” ISCA 1984.
  - Kelm et al., “Cohesion: A Hybrid Memory Model for Accelerators,” ISCA 2010.
- Required:
  - Censier and Feautrier, “A new solution to coherence problems in multicache systems,” IEEE Trans. Comput., 1978.
  - Goodman, “Using cache memory to reduce processor-memory traffic,” ISCA 1983.
  - Laudon and Lenoski, “The SGI Origin: a ccNUMA highly scalable server,” ISCA 1997.
  - Lenoski et al., “The Stanford DASH Multiprocessor,” IEEE Computer, 25(3): 63-79, 1992.
  - Martin et al., “Token coherence: decoupling performance and correctness,” ISCA 2003.
- Recommended:
  - Baer and Wang, “On the inclusion properties for multi-level cache hierarchies,” ISCA 1988.
  - Lamport, “How to Make a Multiprocessor Computer that Correctly Executes Multiprocess Programs,” IEEE Trans. Comput., Sept 1979, pp. 690-691.
  - Culler and Singh, Parallel Computer Architecture, Chapters 5 and 8.

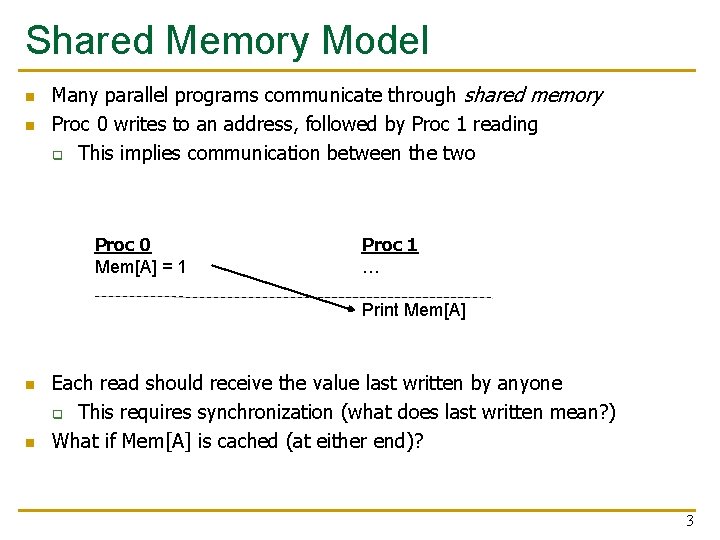

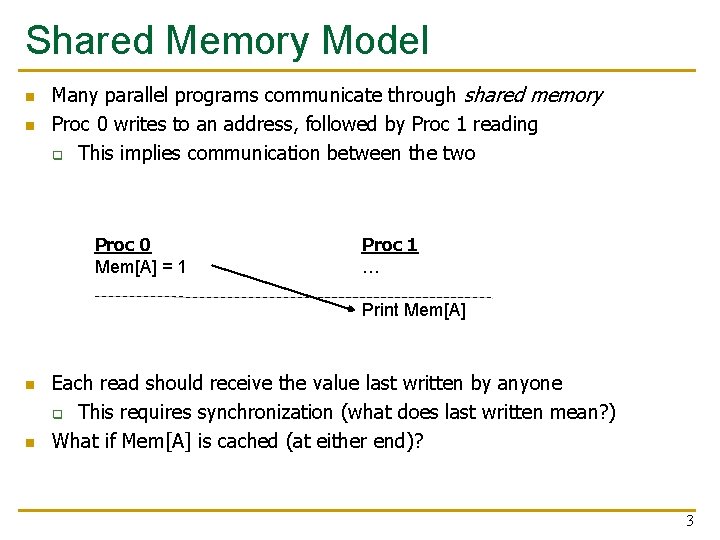

Shared Memory Model

- Many parallel programs communicate through shared memory.
- Proc 0 writes to an address, followed by Proc 1 reading it.
  - This implies communication between the two: Proc 0 executes `Mem[A] = 1`; Proc 1 later executes `… Print Mem[A]`.
- Each read should receive the value last written by anyone.
  - This requires synchronization (what does "last written" mean?).
- What if Mem[A] is cached (at either end)?

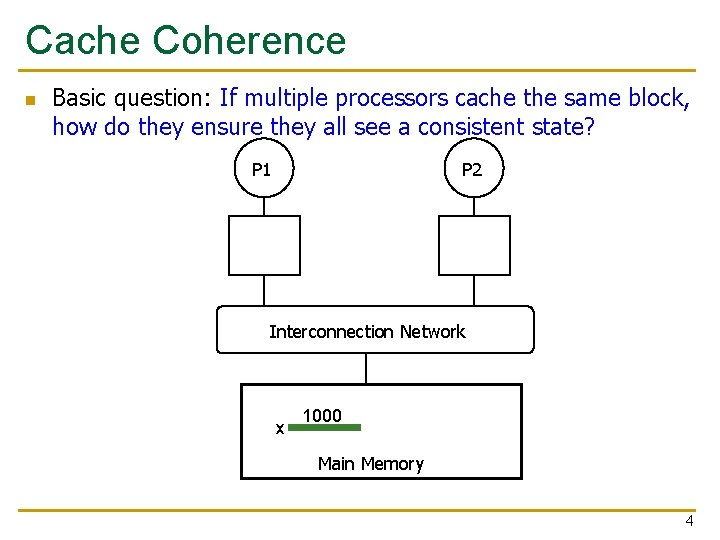

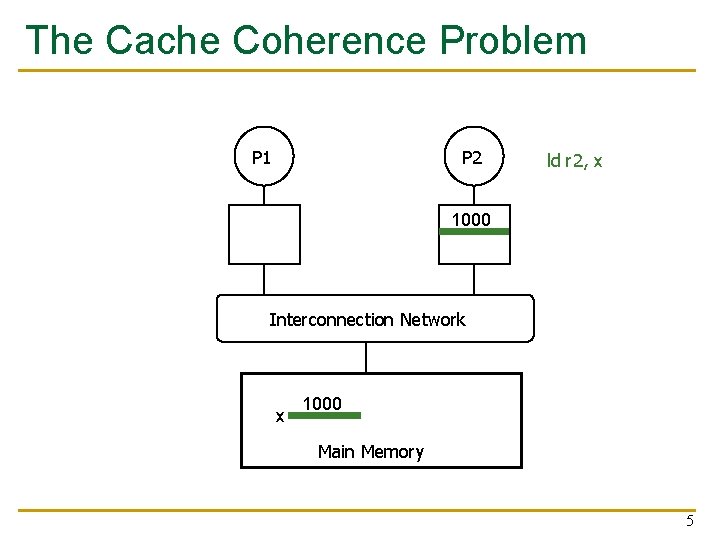

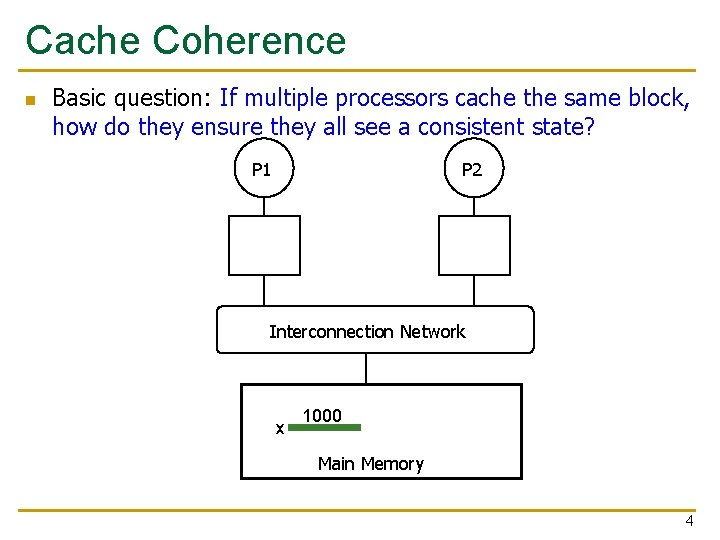

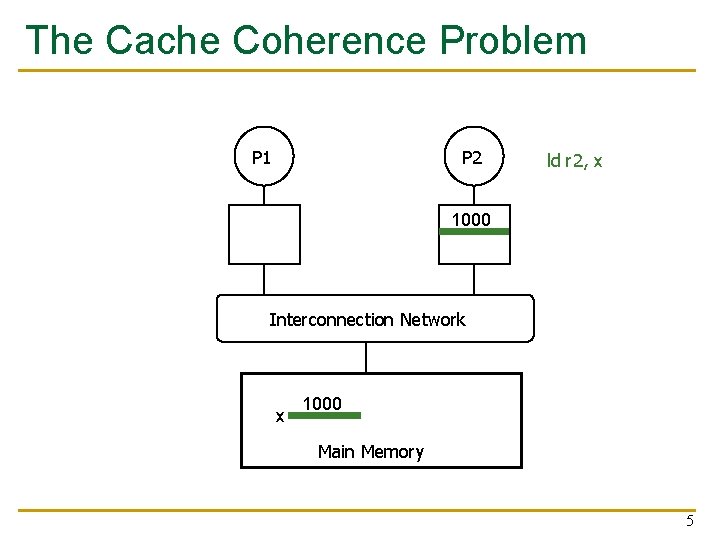

Cache Coherence

- Basic question: if multiple processors cache the same block, how do they ensure they all see a consistent state?

(Figure: processors P1 and P2 connected through an interconnection network to main memory, where location x holds 1000.)

The Cache Coherence Problem

- P1: `ld r2, x`. P1's cache now holds x = 1000.
- Main memory: x = 1000

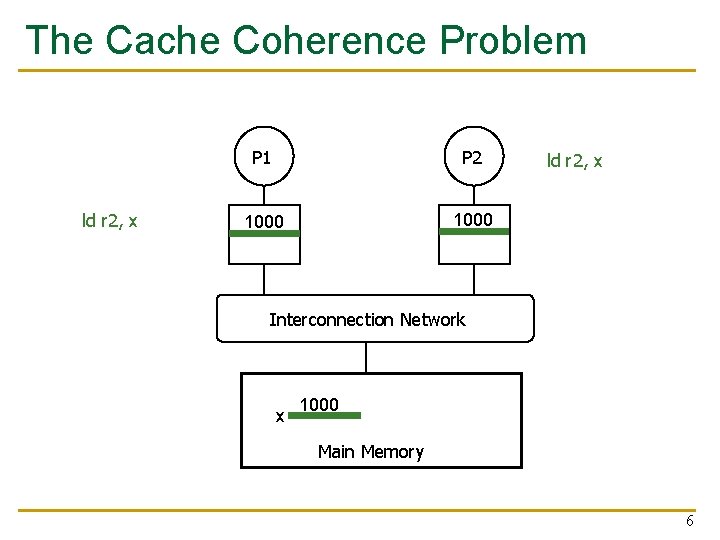

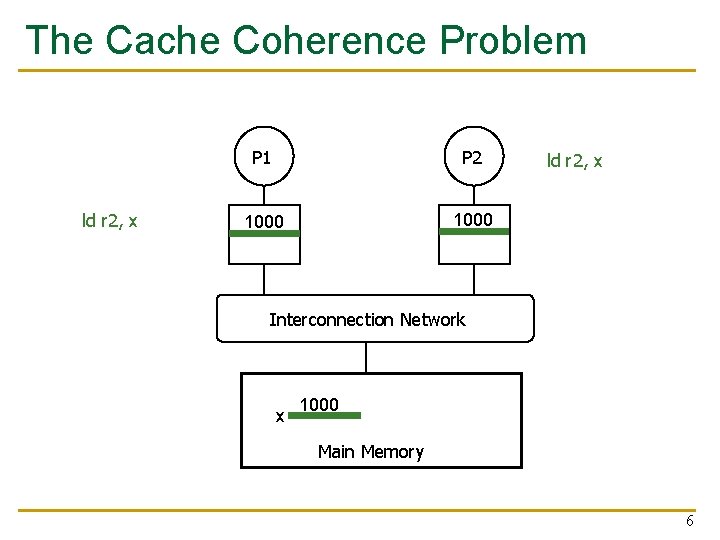

The Cache Coherence Problem

- P1: `ld r2, x`. P1's cache holds x = 1000.
- P2: `ld r2, x`. P2's cache also holds x = 1000.
- Main memory: x = 1000

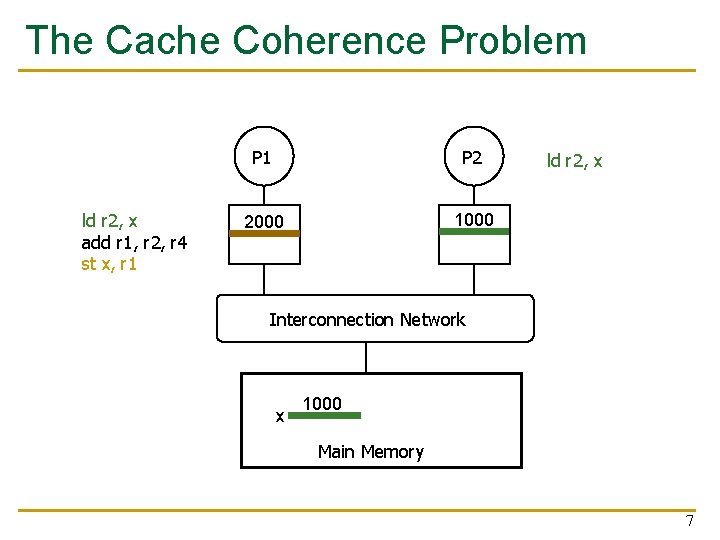

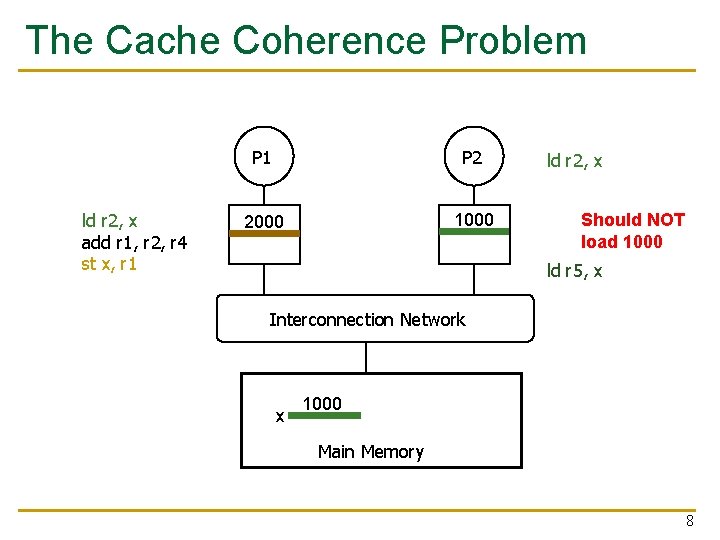

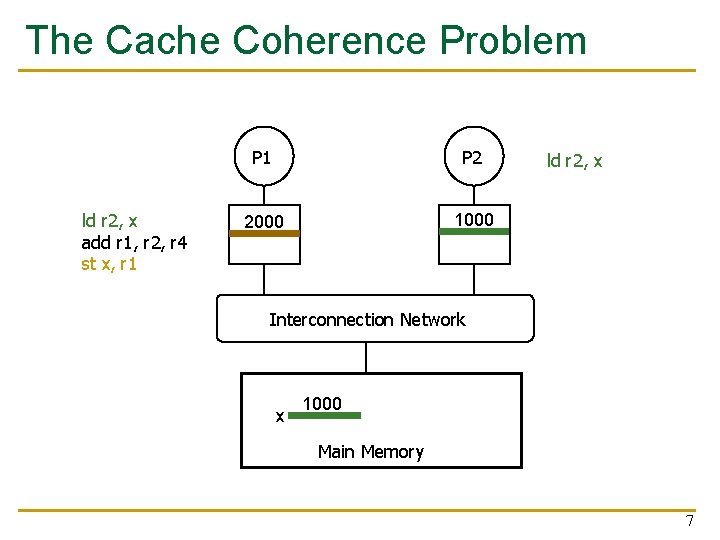

The Cache Coherence Problem

- P1: `ld r2, x`; `add r1, r2, r4`; `st x, r1`. P1's cache now holds x = 2000.
- P2: `ld r2, x`. P2's cache still holds x = 1000.
- Main memory: x = 1000

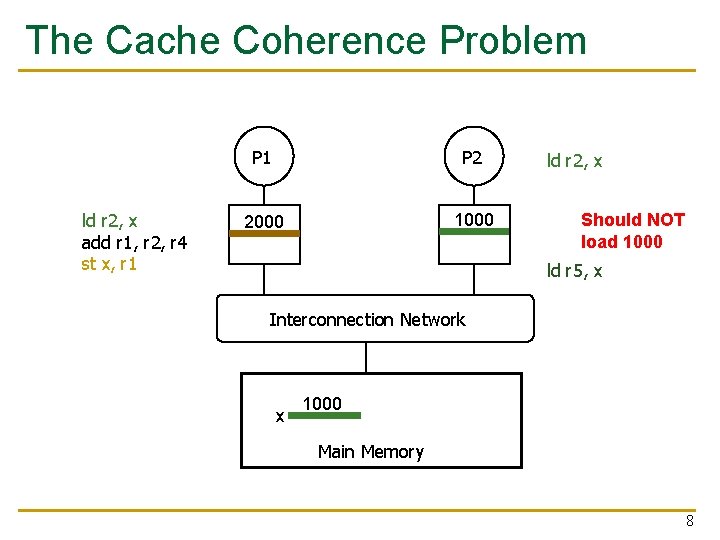

The Cache Coherence Problem

- P1: `ld r2, x`; `add r1, r2, r4`; `st x, r1`. P1's cache now holds x = 2000.
- P2: `ld r2, x` (P2's cache holds x = 1000), then `ld r5, x`, which should NOT load the stale 1000.
- Main memory: x = 1000
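The four-step sequence above can be reproduced with a toy model. This is a minimal Python sketch, assuming write-back caches with no coherence mechanism at all; the `Cache` class and the value chosen for `r4` are illustrative assumptions, not part of the lecture.

```python
class Cache:
    """A private write-back cache with NO coherence (hypothetical toy model)."""

    def __init__(self, memory):
        self.memory = memory  # shared backing store: address -> value
        self.lines = {}       # this cache's copies: address -> value

    def load(self, addr):
        # On a miss, fill from memory; on a hit, return the local (possibly stale) copy.
        if addr not in self.lines:
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]

    def store(self, addr, value):
        # Write-back: only the local copy changes; memory is not updated yet.
        self.lines[addr] = value


memory = {"x": 1000}
p1, p2 = Cache(memory), Cache(memory)

r2_p1 = p1.load("x")       # P1: ld r2, x   -> 1000
r2_p2 = p2.load("x")       # P2: ld r2, x   -> 1000
r4 = 1000                  # assumed value of r4, so that r1 = 2000
p1.store("x", r2_p1 + r4)  # P1: add r1, r2, r4; st x, r1
r5 = p2.load("x")          # P2: ld r5, x   -> hits its stale copy

print(p1.lines["x"], r5, memory["x"])  # 2000 1000 1000
```

P2's second load is a cache hit on the old value, and memory is stale as well, which is exactly the situation a coherence protocol must prevent.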

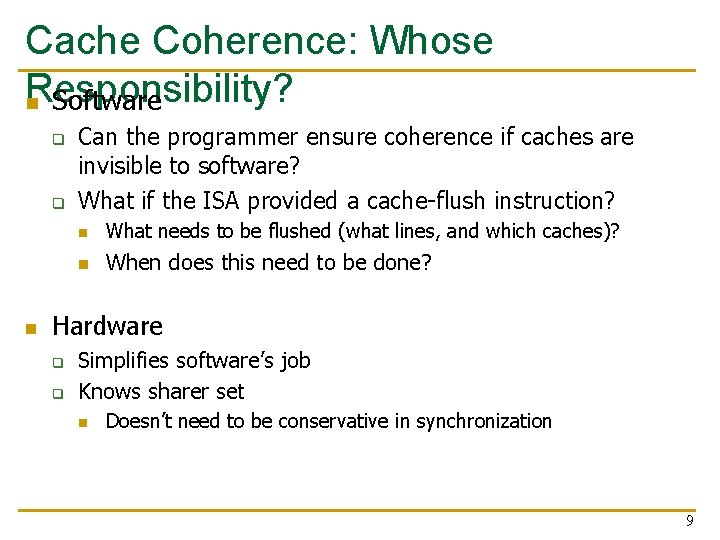

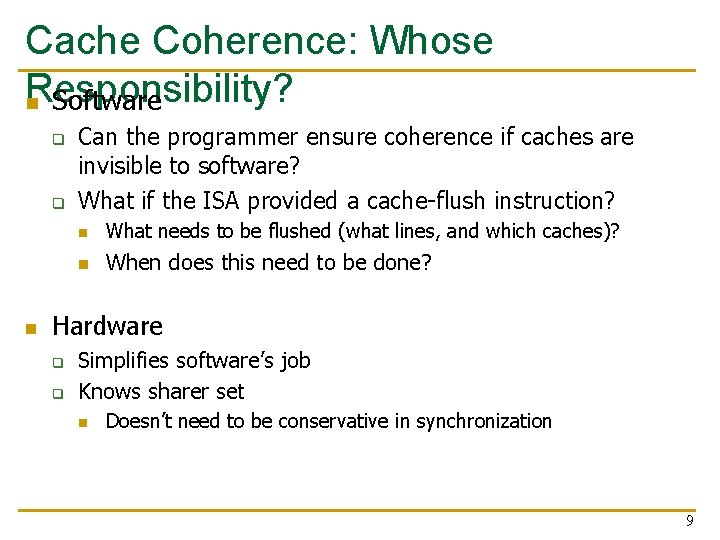

Cache Coherence: Whose Responsibility?

- Software
  - Can the programmer ensure coherence if caches are invisible to software?
  - What if the ISA provided a cache-flush instruction?
    - What needs to be flushed (which lines, and which caches)?
    - When does this need to be done?
- Hardware
  - Simplifies software's job
  - Knows the sharer set
    - Doesn't need to be conservative in synchronization

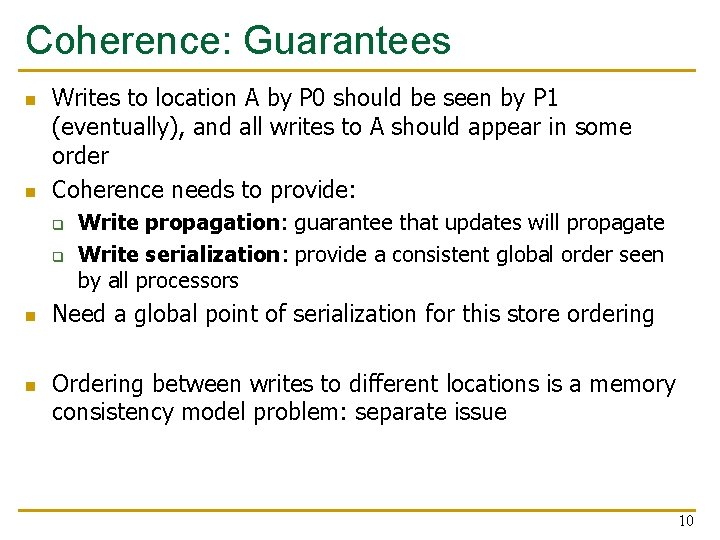

Coherence: Guarantees

- Writes to location A by P0 should be seen by P1 (eventually), and all writes to A should appear in some order.
- Coherence needs to provide:
  - Write propagation: guarantee that updates will propagate
  - Write serialization: provide a consistent global order seen by all processors
- This store ordering needs a global point of serialization.
- Ordering between writes to different locations is a memory consistency model problem: a separate issue.

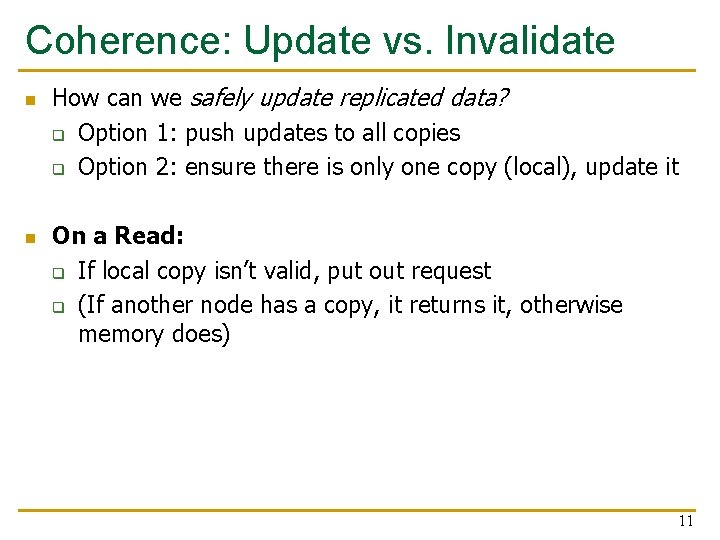

Coherence: Update vs. Invalidate

- How can we safely update replicated data?
  - Option 1: push updates to all copies
  - Option 2: ensure there is only one copy (local), then update it
- On a read:
  - If the local copy isn't valid, put out a request.
  - (If another node has a copy, it returns it; otherwise memory does.)

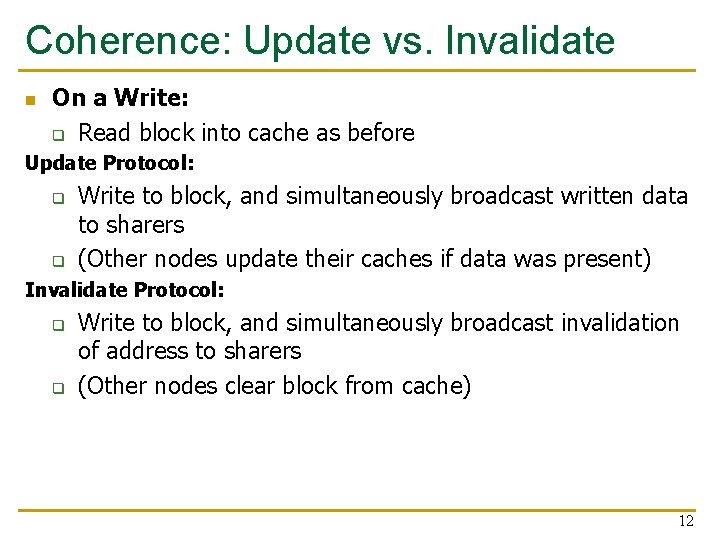

Coherence: Update vs. Invalidate

- On a write:
  - Read the block into the cache as before
- Update protocol:
  - Write to the block, and simultaneously broadcast the written data to sharers
  - (Other nodes update their caches if the data was present)
- Invalidate protocol:
  - Write to the block, and simultaneously broadcast an invalidation of the address to sharers
  - (Other nodes clear the block from their caches)
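The two write policies differ only in what the other sharers do with the broadcast. A minimal Python sketch, assuming the writer already holds the block and that each cache is a plain dict; the `write` function and its `policy` argument are hypothetical names.

```python
def write(caches, writer, addr, value, policy):
    """Apply a write by `writer` under the 'update' or 'invalidate' policy.

    caches: processor id -> dict of cached lines (toy model).
    Returns the processors whose copy was affected by the broadcast.
    """
    caches[writer][addr] = value  # the writer always updates its own copy
    sharers = [p for p, lines in caches.items() if p != writer and addr in lines]
    for p in sharers:
        if policy == "update":
            caches[p][addr] = value   # other nodes refresh their copy
        elif policy == "invalidate":
            del caches[p][addr]       # other nodes clear the block
        else:
            raise ValueError(policy)
    return sharers


upd = {0: {"A": 1}, 1: {"A": 1}, 2: {}}
print(write(upd, 0, "A", 2, "update"), upd)
# [1] {0: {'A': 2}, 1: {'A': 2}, 2: {}}
inv = {0: {"A": 1}, 1: {"A": 1}, 2: {}}
print(write(inv, 0, "A", 2, "invalidate"), inv)
# [1] {0: {'A': 2}, 1: {}, 2: {}}
```

After an invalidating write, P1's next read of A misses and must re-fetch the block; after an update it hits.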

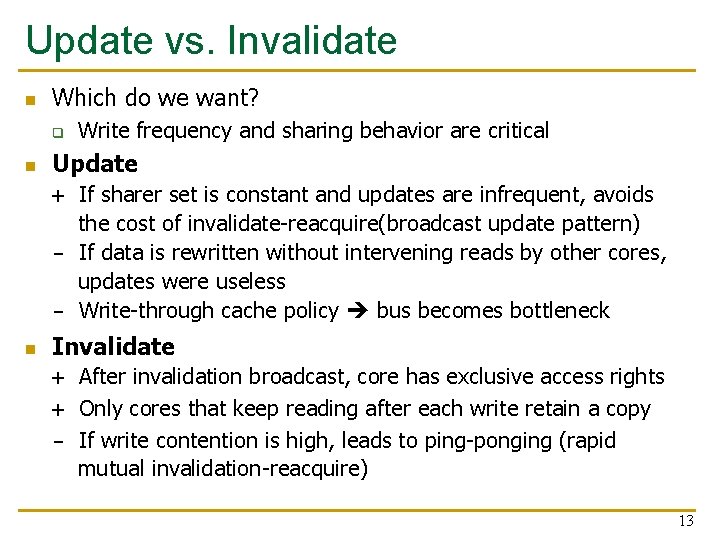

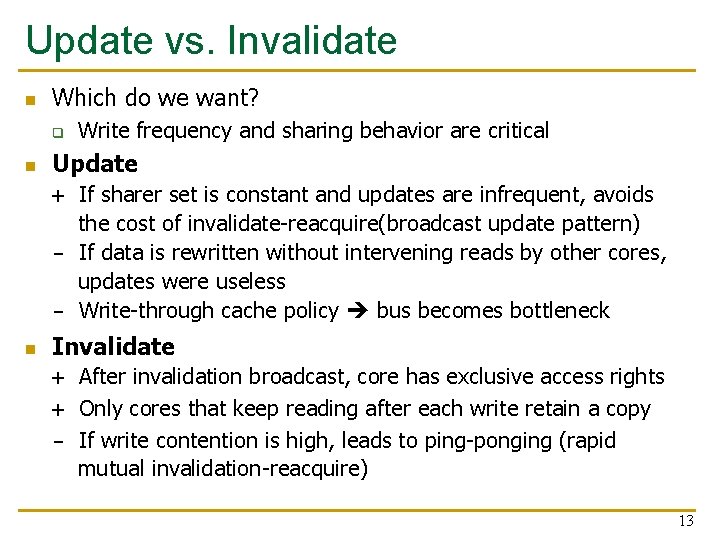

Update vs. Invalidate

- Which do we want?
  - Write frequency and sharing behavior are critical.
- Update
  - Pro: if the sharer set is constant and updates are infrequent, avoids the cost of the invalidate-reacquire pattern (by broadcasting updates instead)
  - Con: if data is rewritten without intervening reads by other cores, the updates were useless
  - Con: with a write-through cache policy, the bus becomes a bottleneck
- Invalidate
  - Pro: after the invalidation broadcast, the core has exclusive access rights
  - Pro: only cores that keep reading after each write retain a copy
  - Con: if write contention is high, leads to ping-ponging (rapid mutual invalidation-reacquire)
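The "write frequency and sharing behavior" point can be made concrete by counting bus transactions for one block. This is a simplified Python sketch under stated assumptions: no evictions, a fill on every miss, invalidation modeled MSI-style (one transaction to gain exclusive rights, then silent writes), and update modeled as one broadcast per write while any other cache holds a copy. `count_bus_ops` and the traces are hypothetical.

```python
def count_bus_ops(trace, policy):
    """Count bus transactions for one block. trace: list of (proc, "R" | "W")."""
    holders = set()  # processors holding a valid copy
    owner = None     # processor with exclusive write rights (invalidate only)
    ops = 0
    for p, op in trace:
        if policy == "invalidate":
            if op == "R":
                if p not in holders:
                    ops += 1          # read miss: fetch the block
                    holders.add(p)
                    owner = None      # an exclusive owner is downgraded
            elif owner != p:
                ops += 1              # read-exclusive / invalidation broadcast
                holders, owner = {p}, p
        elif policy == "update":
            if p not in holders:
                ops += 1              # miss: fetch the block first
                holders.add(p)
            if op == "W" and len(holders) > 1:
                ops += 1              # broadcast the new value to sharers
        else:
            raise ValueError(policy)
    return ops


rewrite_without_reads = [(1, "R")] + [(0, "W")] * 10 + [(1, "R")]
producer_consumer = [(0, "W"), (1, "R")] * 5
for name, trace in [("rewrite", rewrite_without_reads),
                    ("producer/consumer", producer_consumer)]:
    print(name, count_bus_ops(trace, "invalidate"), count_bus_ops(trace, "update"))
# rewrite 3 12
# producer/consumer 10 6
```

Under this model, rewriting without intervening reads favors invalidation, while a stable sharer set with a read after every write favors updates.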

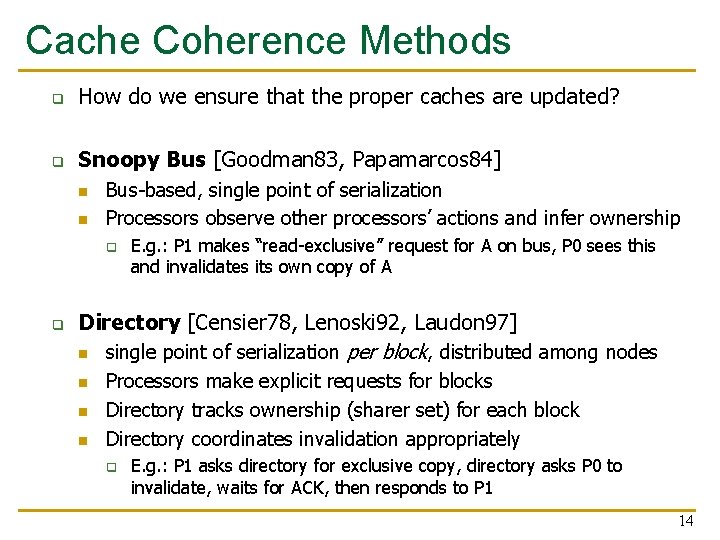

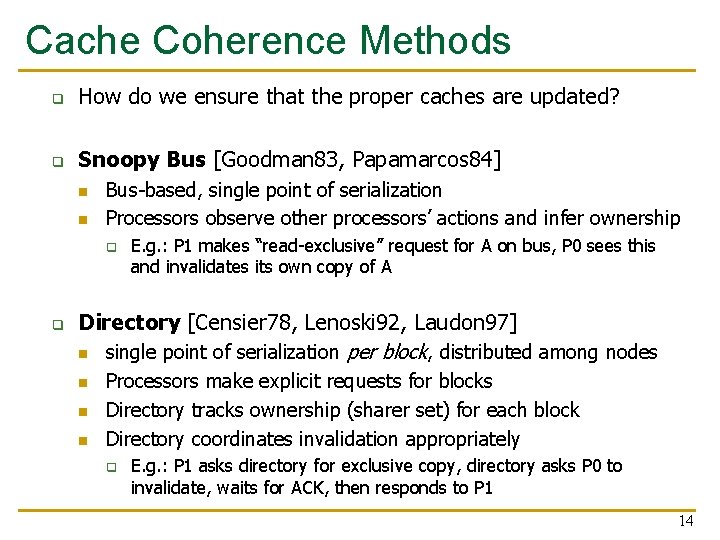

Cache Coherence Methods

- How do we ensure that the proper caches are updated?
- Snoopy bus [Goodman 83, Papamarcos 84]
  - Bus-based, single point of serialization
  - Processors observe other processors' actions and infer ownership
    - E.g., P1 makes a "read-exclusive" request for A on the bus; P0 sees this and invalidates its own copy of A
- Directory [Censier 78, Lenoski 92, Laudon 97]
  - Single point of serialization per block, distributed among nodes
  - Processors make explicit requests for blocks
  - Directory tracks ownership (sharer set) for each block
  - Directory coordinates invalidation appropriately
    - E.g., P1 asks the directory for an exclusive copy; the directory asks P0 to invalidate, waits for the ACK, then responds to P1

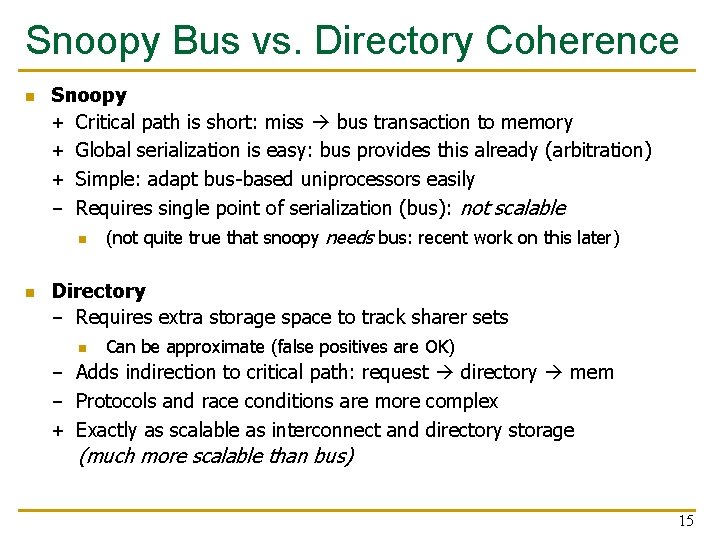

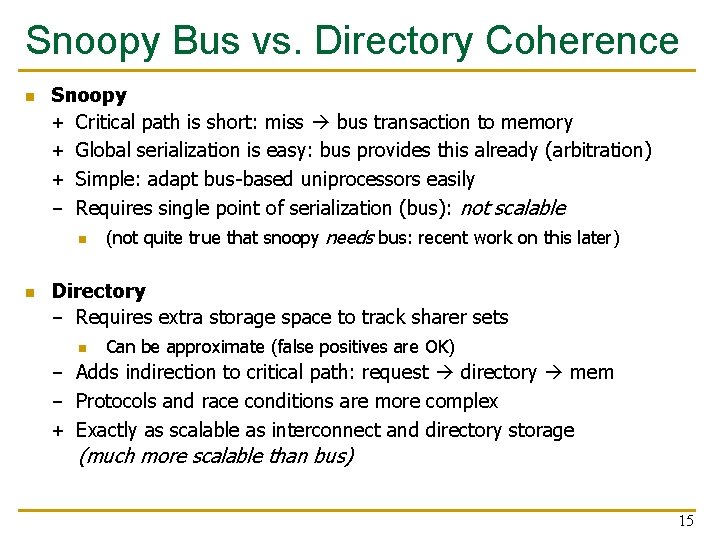

Snoopy Bus vs. Directory Coherence

- Snoopy
  - Pro: critical path is short: miss -> bus transaction to memory
  - Pro: global serialization is easy: the bus provides this already (arbitration)
  - Pro: simple: adapt bus-based uniprocessors easily
  - Con: requires a single point of serialization (bus): not scalable
    - (Not quite true that snoopy needs a bus: recent work on this later)
- Directory
  - Con: requires extra storage space to track sharer sets
    - Can be approximate (false positives are OK)
  - Con: adds indirection to the critical path: request -> directory -> mem
  - Con: protocols and race conditions are more complex
  - Pro: exactly as scalable as the interconnect and directory storage (much more scalable than a bus)

Snoopy-Bus Coherence

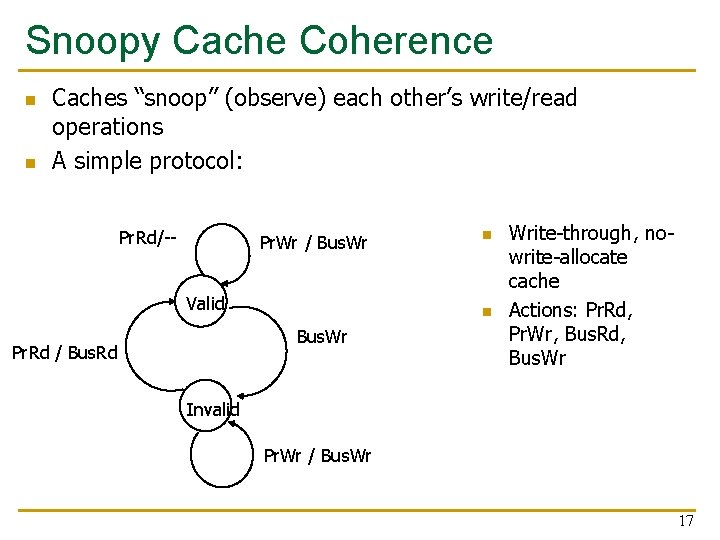

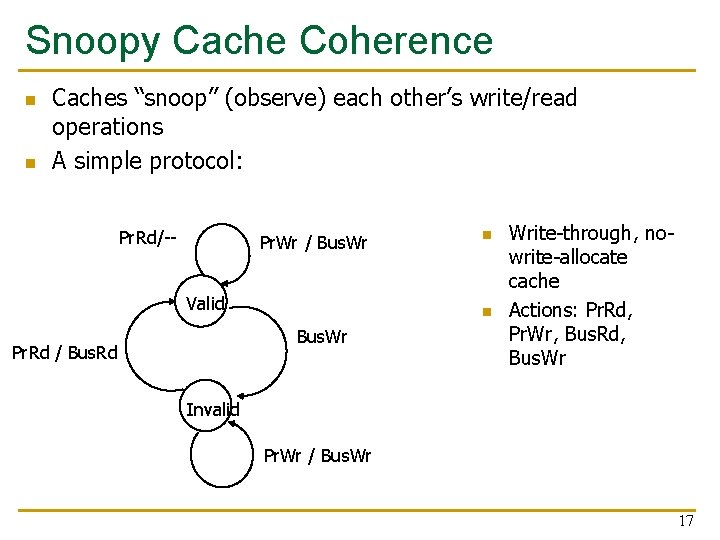

Snoopy Cache Coherence

- Caches "snoop" (observe) each other's write/read operations.
- A simple protocol for a write-through, no-write-allocate cache:
  - Actions: PrRd, PrWr, BusRd, BusWr
  - Valid: PrRd/-- and PrWr/BusWr stay in Valid; a snooped BusWr moves the block to Invalid
  - Invalid: PrRd/BusRd moves the block to Valid; PrWr/BusWr stays in Invalid
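The two-state protocol can be written as a transition function. A minimal Python sketch; the name `vi_transition` is ours, not the lecture's, and treating a snooped BusRd as a no-op is our assumption about what the diagram leaves implicit.

```python
VALID, INVALID = "V", "I"


def vi_transition(state, event):
    """(next_state, bus_action) for the two-state write-through,
    no-write-allocate protocol. Events: PrRd, PrWr, BusRd, BusWr."""
    if state == VALID:
        if event == "PrRd":
            return VALID, None      # read hit: no bus traffic
        if event == "PrWr":
            return VALID, "BusWr"   # write-through to memory
        if event == "BusWr":
            return INVALID, None    # another cache wrote: drop our copy
        return VALID, None          # snooped BusRd: nothing to do
    if event == "PrRd":
        return VALID, "BusRd"       # read miss: fetch the block
    if event == "PrWr":
        return INVALID, "BusWr"     # no-write-allocate: write memory only
    return INVALID, None            # snooped traffic is ignored


# P0 reads, P1 writes, P0 re-reads.
c0 = c1 = INVALID
c0, _ = vi_transition(c0, "PrRd")       # P0 read miss -> V
c1, bus = vi_transition(c1, "PrWr")     # P1 writes through with BusWr
c0, _ = vi_transition(c0, bus)          # P0 snoops BusWr -> I
c0, action = vi_transition(c0, "PrRd")  # P0 misses again, fetches the new value
print(c0, c1, action)  # V I BusRd
```

Because every write goes to memory, the re-fetch after invalidation always returns the latest value; the cost is a bus transaction on every write.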

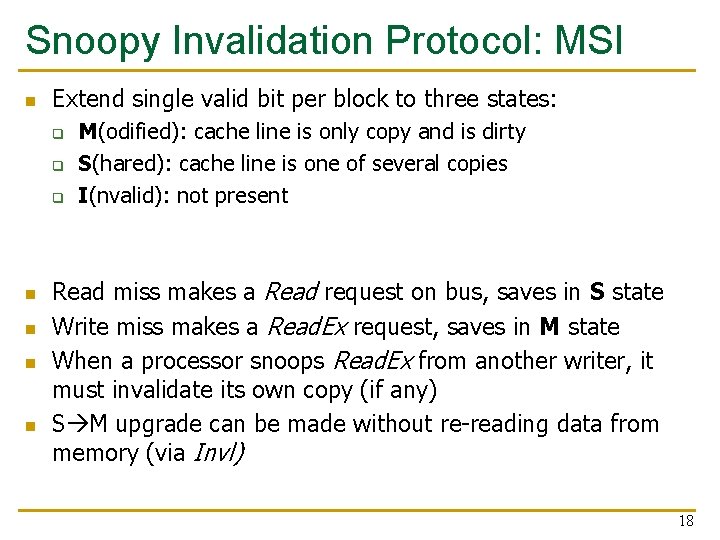

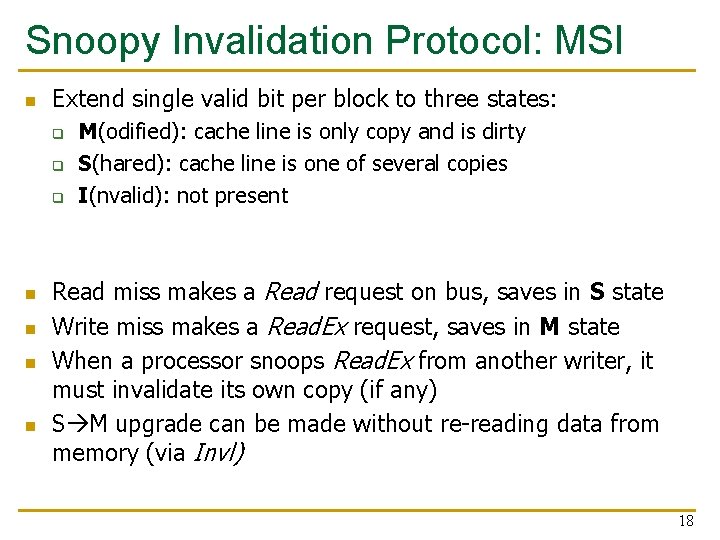

Snoopy Invalidation Protocol: MSI

- Extend the single valid bit per block to three states:
  - M(odified): cache line is the only copy and is dirty
  - S(hared): cache line is one of several copies
  - I(nvalid): not present
- A read miss makes a Read request on the bus and saves the line in S state.
- A write miss makes a ReadEx request and saves the line in M state.
- When a processor snoops a ReadEx from another writer, it must invalidate its own copy (if any).
- The S -> M upgrade can be made without re-reading data from memory (via Invl).

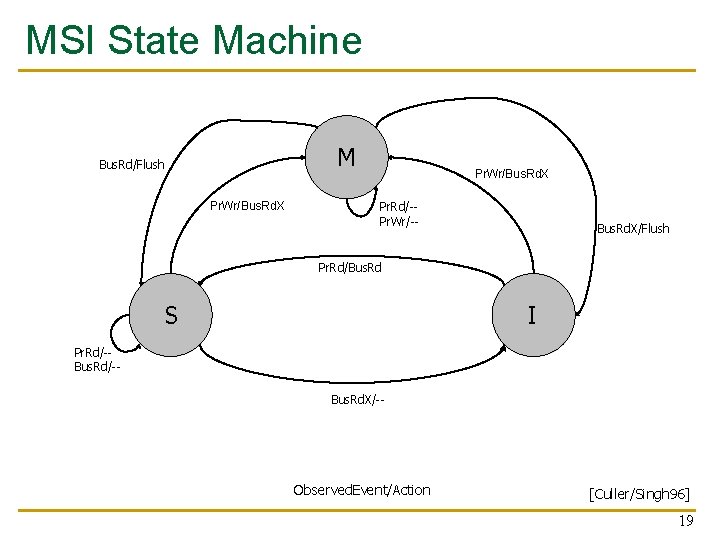

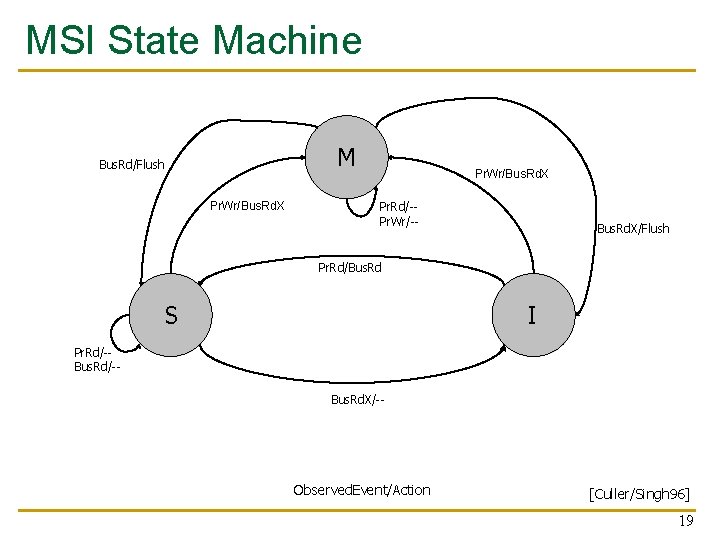

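MSI State Machine

| State | Observed event | Action | Next state |
|---|---|---|---|
| M | PrRd, PrWr | -- | M |
| M | BusRd | Flush | S |
| M | BusRdX | Flush | I |
| S | PrRd, BusRd | -- | S |
| S | PrWr | BusRdX | M |
| S | BusRdX | -- | I |
| I | PrRd | BusRd | S |
| I | PrWr | BusRdX | M |

Transitions use the Observed event/Action convention [Culler/Singh 96].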

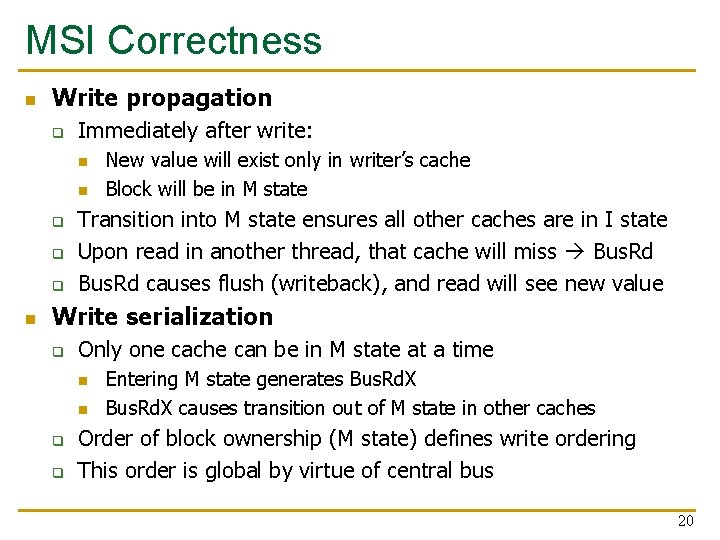

MSI Correctness

- Write propagation
  - Immediately after a write:
    - The new value exists only in the writer's cache
    - The block is in M state
  - The transition into M state ensures all other caches are in I state
  - Upon a read in another thread, that cache will miss
  - The BusRd causes a flush (writeback), and the read will see the new value
- Write serialization
  - Only one cache can be in M state at a time
    - Entering M state generates a BusRdX
    - The BusRdX causes a transition out of M state in the other caches
  - The order of block ownership (M state) defines the write ordering
  - This order is global by virtue of the central bus
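Both properties can be checked on a small simulation. This is a hypothetical Python sketch of a snoopy MSI system for a single block, assuming each bus transaction completes atomically (standing in for the central bus as the serialization point); `MSIBus` and its methods are illustrative names.

```python
class MSIBus:
    """Snoopy MSI system for one block; bus transactions are atomic."""

    def __init__(self, n, initial=0):
        self.memory = initial
        self.state = ["I"] * n
        self.value = [None] * n
        self.write_order = []  # order in which caches entered M state

    def _bus(self, requester, kind):
        # Every other valid cache snoops the transaction.
        for c in range(len(self.state)):
            if c == requester or self.state[c] == "I":
                continue
            if self.state[c] == "M":
                self.memory = self.value[c]   # Flush: write back dirty data
            if kind == "BusRdX":
                self.state[c], self.value[c] = "I", None
            else:                             # BusRd: M -> S, S stays S
                self.state[c] = "S"
        return self.memory                    # data returned to the requester

    def read(self, c):
        if self.state[c] == "I":              # read miss -> BusRd, enter S
            self.value[c] = self._bus(c, "BusRd")
            self.state[c] = "S"
        return self.value[c]

    def write(self, c, v):
        if self.state[c] != "M":              # I or S -> BusRdX, enter M
            self._bus(c, "BusRdX")
            self.state[c] = "M"
            self.write_order.append(c)
        self.value[c] = v                     # write-back: local update only
        self.check_invariant()

    def check_invariant(self):
        # Single writer: if some cache is in M, every other cache is in I.
        owners = [c for c, s in enumerate(self.state) if s == "M"]
        assert len(owners) <= 1
        if owners:
            assert all(s == "I" for c, s in enumerate(self.state) if c != owners[0])


bus = MSIBus(3, initial=1000)
bus.read(0)
bus.read(1)                                   # both caches in S
bus.write(0, 2000)                            # BusRdX invalidates P1
print(bus.state, bus.memory)                  # ['M', 'I', 'I'] 1000
print(bus.read(1), bus.state, bus.memory)     # 2000 ['S', 'S', 'I'] 2000
bus.write(1, 3000)
bus.write(0, 4000)
print(bus.write_order)                        # [0, 1, 0]
```

P1's miss after P0's write sees 2000 (write propagation), and `write_order` records the single global order of M-state ownership (write serialization).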

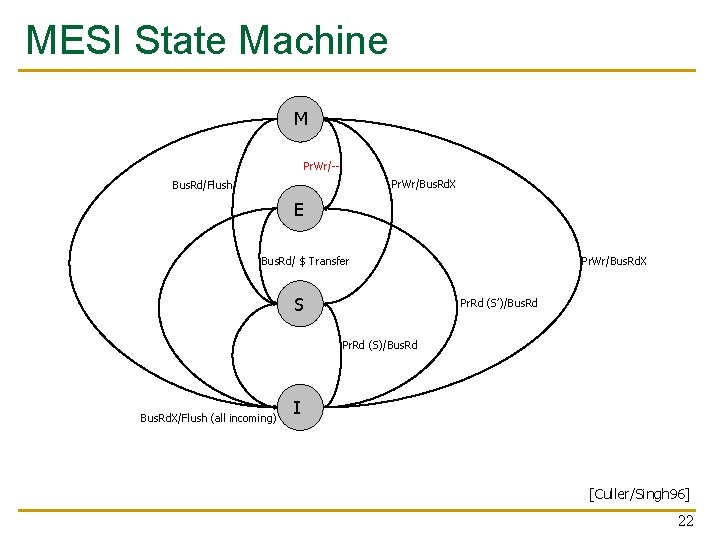

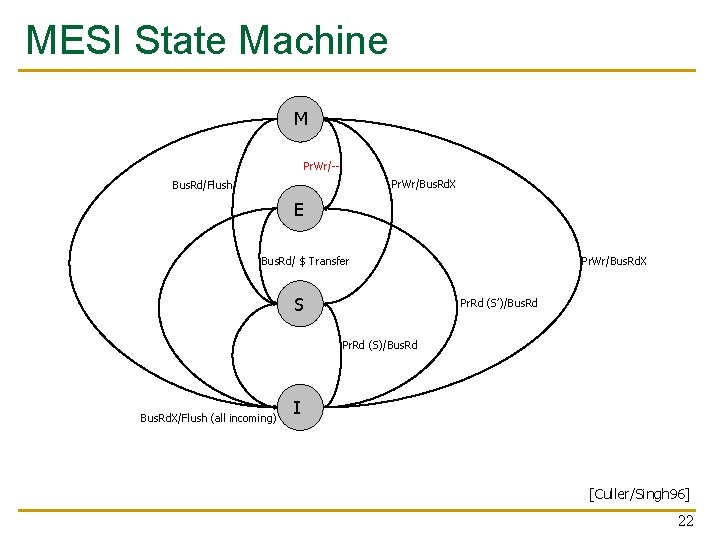

More States: MESI

- The Invalid -> Shared -> Modified sequence takes two bus operations.
- What if the data is not shared? Unnecessary broadcasts.
- Exclusive state: this is the only copy, and it is clean.
- A block is exclusive if, during the BusRd, no other cache had it.
  - A wired-OR "shared" signal on the bus can determine this: snooping caches assert the signal if they also have a copy.
- Another BusRd also causes a transition into Shared.
- A silent transition Exclusive -> Modified is possible on a write!
- MESI is also called the Illinois protocol [Papamarcos 84].

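MESI State Machine

| State | Observed event | Action | Next state |
|---|---|---|---|
| I | PrRd, shared signal not asserted (S') | BusRd | E |
| I | PrRd, shared signal asserted (S) | BusRd | S |
| I | PrWr | BusRdX | M |
| E | PrRd | -- | E |
| E | PrWr | -- | M |
| E | BusRd | $ Transfer (cache-to-cache) | S |
| S | PrRd, BusRd | -- | S |
| S | PrWr | BusRdX | M |
| M | PrRd, PrWr | -- | M |
| M | BusRd | Flush | S |
| E, S, M | BusRdX | Flush (all incoming transitions to I) | I |

[Culler/Singh 96]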
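The table can be encoded as a per-cache transition function that takes the wired-OR shared signal as an input. A minimal Python sketch; `mesi_step` and the action name `CacheTransfer` (for the diagram's "$ Transfer") are hypothetical, and the BusRdX/Flush label is applied to every valid state as the diagram shows.

```python
def mesi_step(state, event, shared=False):
    """(next_state, action) for one MESI cache controller.

    `shared` is the wired-OR shared signal sampled during this cache's own
    BusRd; it only matters for a read miss from I.
    """
    if event == "PrRd":
        if state == "I":
            return ("S" if shared else "E"), "BusRd"
        return state, None                 # hit in M, E or S
    if event == "PrWr":
        if state in ("M", "E"):
            return "M", None               # E -> M is silent
        return "M", "BusRdX"               # from S or I
    if event == "BusRd":
        if state == "M":
            return "S", "Flush"
        if state == "E":
            return "S", "CacheTransfer"    # "$ Transfer" in the diagram
        return state, None                 # S stays S, I stays I
    if event == "BusRdX":
        if state == "I":
            return "I", None
        return "I", "Flush"                # "all incoming" edges to I
    raise ValueError(event)


# Private data: a read miss with no other sharer, then a write.
s, a1 = mesi_step("I", "PrRd", shared=False)  # -> E, BusRd
s, a2 = mesi_step(s, "PrWr")                  # -> M, no bus operation
print(s, [a for a in (a1, a2) if a])          # M ['BusRd']
```

Under MSI the same read-then-write pair would need two bus operations (BusRd, then BusRdX); the E state removes the second one for unshared data.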

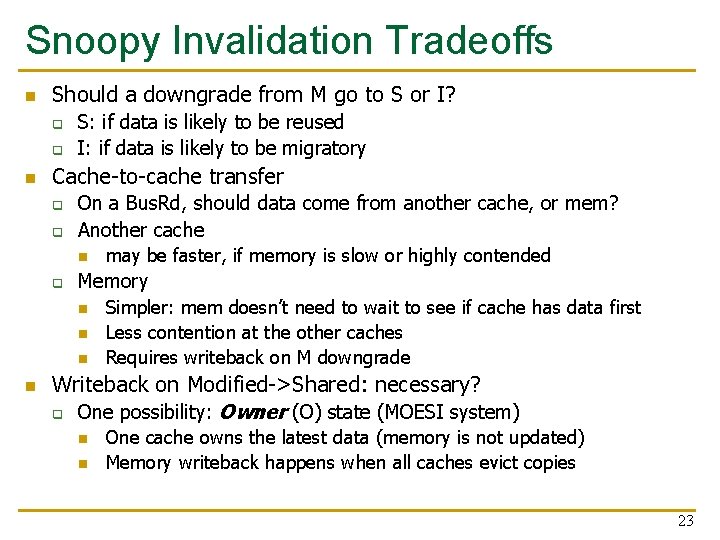

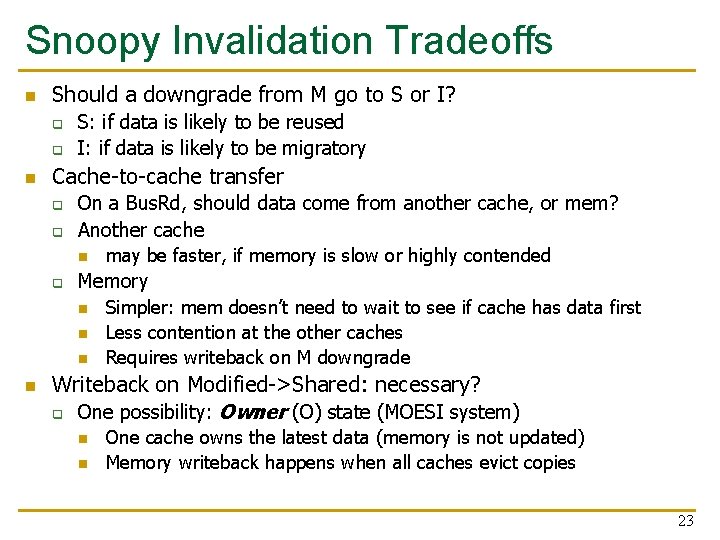

Snoopy Invalidation Tradeoffs

- Should a downgrade from M go to S or I?
  - S: if data is likely to be reused
  - I: if data is likely to be migratory
- Cache-to-cache transfer
  - On a BusRd, should data come from another cache, or from memory?
  - Another cache
    - May be faster, if memory is slow or highly contended
  - Memory
    - Simpler: memory doesn't need to wait to see if a cache has the data first
    - Less contention at the other caches
    - Requires a writeback on M downgrade
- Writeback on Modified -> Shared: necessary?
  - One possibility: Owner (O) state (MOESI system)
    - One cache owns the latest data (memory is not updated)
    - Memory writeback happens when all caches evict copies
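The effect of the Owner state on downgrade writebacks can be counted directly. A simplified Python sketch, assuming a single block, no evictions, and a trace in which only P0 writes (so snooped BusRdX requests never hit an M copy); `downgrade_writebacks` is a hypothetical helper.

```python
def downgrade_writebacks(trace, use_owner_state):
    """Count memory writebacks caused by downgrading a dirty block.

    trace: (proc, "R" | "W") pairs. With use_owner_state=False (MSI/MESI),
    a snooped BusRd moves M -> S and writes the dirty data back. With True
    (MOESI), M -> O keeps the dirty data in the owner, which supplies it
    cache-to-cache, so memory stays stale.
    """
    state = {}          # proc -> "M", "O" or "S"; absent means I
    writebacks = 0
    for p, op in trace:
        if op == "W":
            state = {p: "M"}             # BusRdX/upgrade invalidates all others
        elif p not in state:             # read miss -> BusRd
            for q, s in state.items():
                if s == "M":
                    if use_owner_state:
                        state[q] = "O"   # owner keeps the only up-to-date copy
                    else:
                        state[q] = "S"
                        writebacks += 1  # M -> S requires a writeback
            state[p] = "S"
    return writebacks


trace = [(0, "W"), (1, "R"), (0, "W"), (2, "R"), (0, "W"), (1, "R")]
print(downgrade_writebacks(trace, use_owner_state=False),
      downgrade_writebacks(trace, use_owner_state=True))  # 3 0
```

The writeback is deferred, not eliminated: the dirty data still has to reach memory once the copies are evicted, which this sketch does not model.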

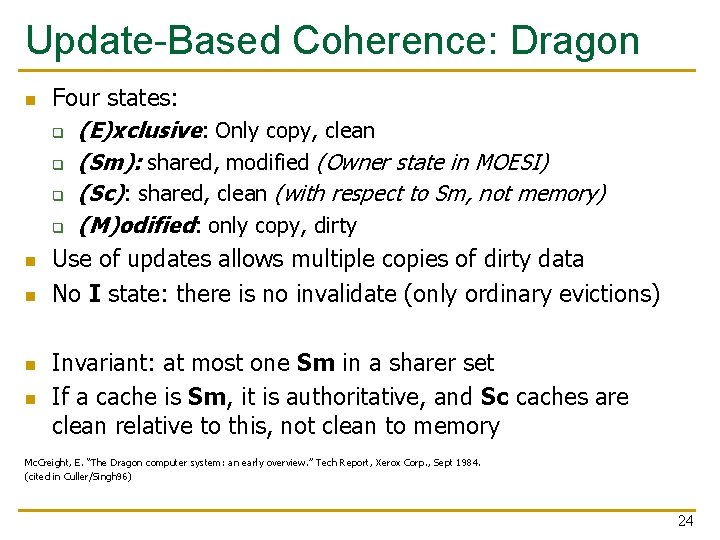

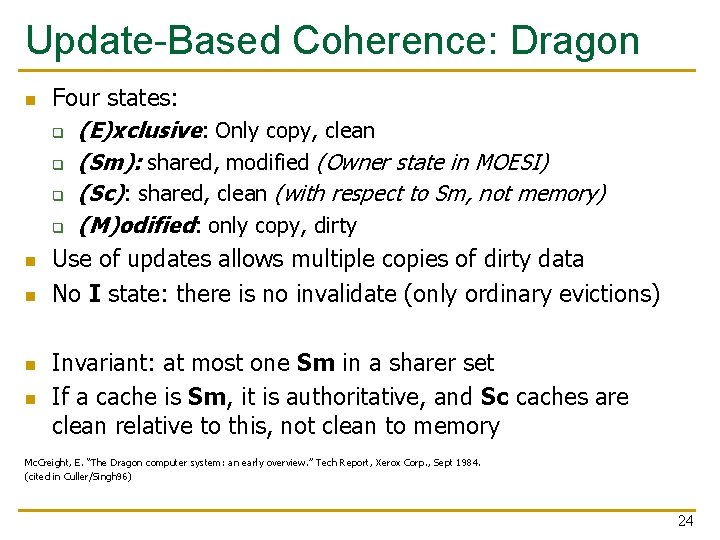

Update-Based Coherence: Dragon

- Four states:
  - (E)xclusive: only copy, clean
  - (Sm): shared, modified (the Owner state in MOESI)
  - (Sc): shared, clean (with respect to Sm, not memory)
  - (M)odified: only copy, dirty
- Use of updates allows multiple copies of dirty data.
- No I state: there is no invalidation (only ordinary evictions).
- Invariant: at most one Sm in a sharer set.
- If a cache is Sm, it is authoritative, and Sc caches are clean relative to it, not relative to memory.

McCreight, E. “The Dragon computer system: an early overview.” Tech Report, Xerox Corp., Sept 1984. (cited in Culler/Singh 96)

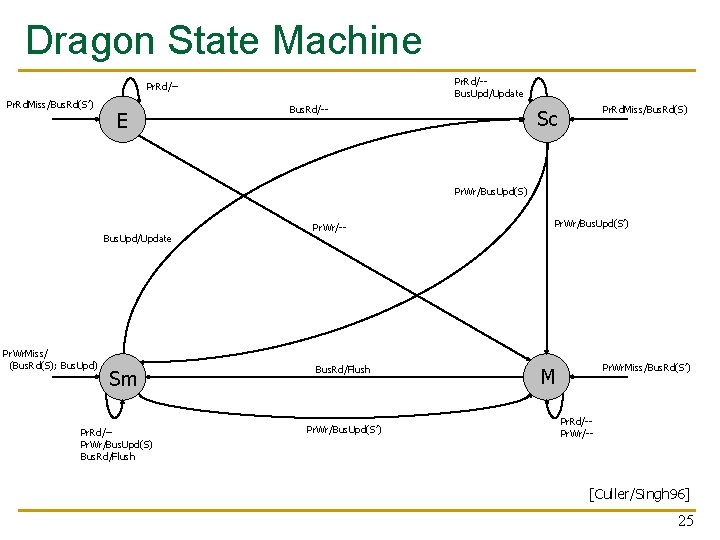

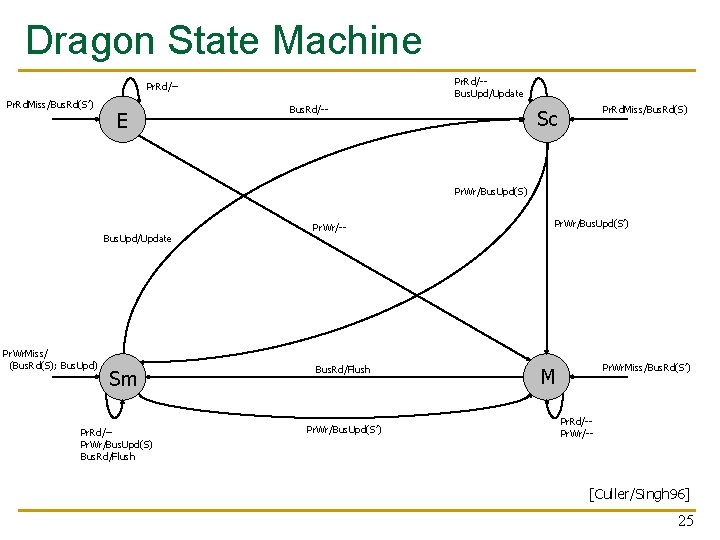

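Dragon State Machine

S means the shared signal is asserted during the transaction; S' means it is not.

| State | Event | Action | Next state |
|---|---|---|---|
| (not cached) | PrRdMiss | BusRd(S') | E |
| (not cached) | PrRdMiss | BusRd(S) | Sc |
| (not cached) | PrWrMiss | BusRd(S); BusUpd | Sm |
| (not cached) | PrWrMiss | BusRd(S') | M |
| E | PrRd | -- | E |
| E | PrWr | -- | M |
| E | BusRd | -- | Sc |
| Sc | PrRd | -- | Sc |
| Sc | BusUpd | Update | Sc |
| Sc | PrWr | BusUpd(S) | Sm |
| Sc | PrWr | BusUpd(S') | M |
| Sm | PrRd | -- | Sm |
| Sm | PrWr | BusUpd(S) | Sm |
| Sm | BusRd | Flush | Sm |
| Sm | BusUpd | Update | Sc |
| Sm | PrWr | BusUpd(S') | M |
| M | PrRd, PrWr | -- | M |
| M | BusRd | Flush | Sm |

[Culler/Singh 96]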
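The Dragon table can be encoded the same way, with `None` standing for a block that is not cached (there is no I state). A minimal Python sketch; `dragon_step` and its `shared` parameter (the S vs. S' signal) are hypothetical names.

```python
def dragon_step(state, event, shared=False):
    """(next_state, actions) for one Dragon cache controller.

    state is None when the block is not cached, so PrRd/PrWr on it are misses.
    `shared` models the shared signal sampled on this cache's own bus
    transaction (S when True, S' when False).
    """
    if state is None:
        if event == "PrRd":                      # PrRdMiss
            return ("Sc" if shared else "E"), ["BusRd"]
        if event == "PrWr":                      # PrWrMiss
            return ("Sm", ["BusRd", "BusUpd"]) if shared else ("M", ["BusRd"])
        return None, []                          # snooped traffic: nothing cached
    if event == "PrRd":
        return state, []                         # read hits never use the bus
    if event == "PrWr":
        if state in ("E", "M"):
            return "M", []                       # only copy: write locally
        return ("Sm" if shared else "M"), ["BusUpd"]  # from Sc or Sm
    if event == "BusRd":
        if state in ("M", "Sm"):
            return "Sm", ["Flush"]               # the owner supplies the data
        return "Sc", []                          # E -> Sc; Sc stays Sc
    if event == "BusUpd" and state in ("Sc", "Sm"):
        return "Sc", ["Update"]                  # take the new value
    raise ValueError((state, event))


p0, a = dragon_step(None, "PrWr", shared=False)  # M, ['BusRd']
p1, _ = dragon_step(None, "PrRd", shared=True)   # Sc (P0 asserts shared)
p0, _ = dragon_step(p0, "BusRd")                 # P0 snoops: M -> Sm, Flush
p0, a = dragon_step(p0, "PrWr", shared=True)     # stays Sm, broadcasts BusUpd
p1, _ = dragon_step(p1, "BusUpd")                # P1 updates its copy, stays Sc
print(p0, p1, a)  # Sm Sc ['BusUpd']
```

After the write, both caches hold the new value and P0 stays the single authoritative Sm copy, matching the invariant above.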

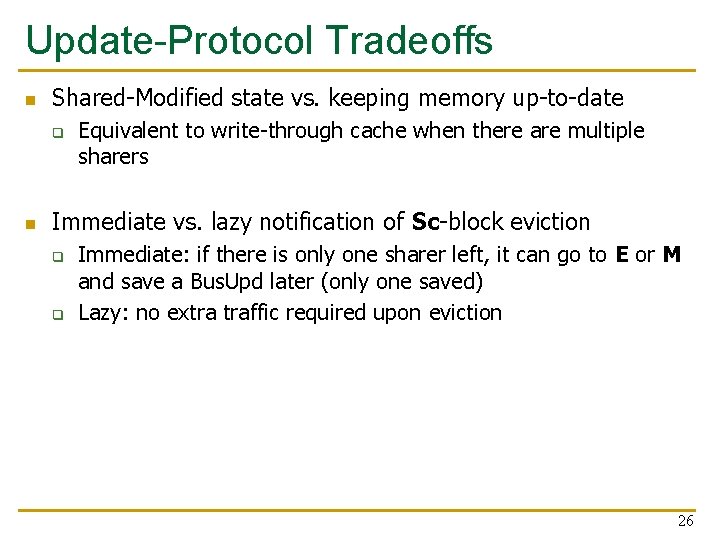

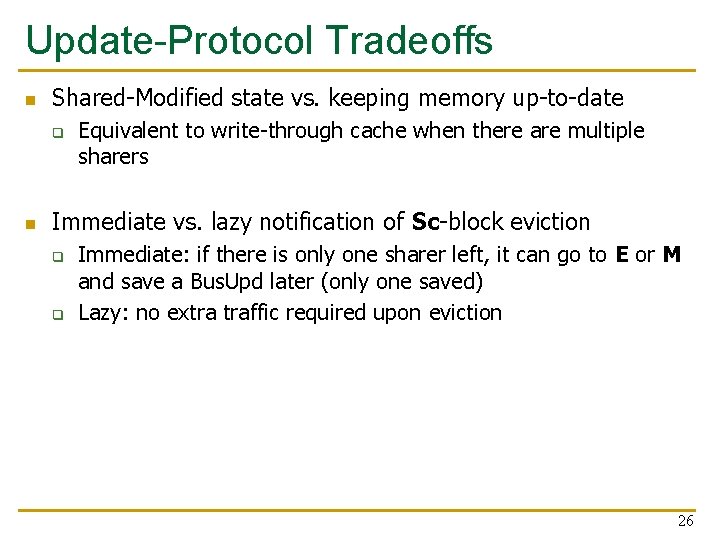

Update-Protocol Tradeoffs

- Shared-Modified state vs. keeping memory up-to-date
  - Keeping memory up-to-date is equivalent to a write-through cache when there are multiple sharers
- Immediate vs. lazy notification of Sc-block eviction
  - Immediate: if only one sharer is left, it can go to E or M and save a BusUpd later (only one is saved)
  - Lazy: no extra traffic is required upon eviction

Directory-Based Coherence

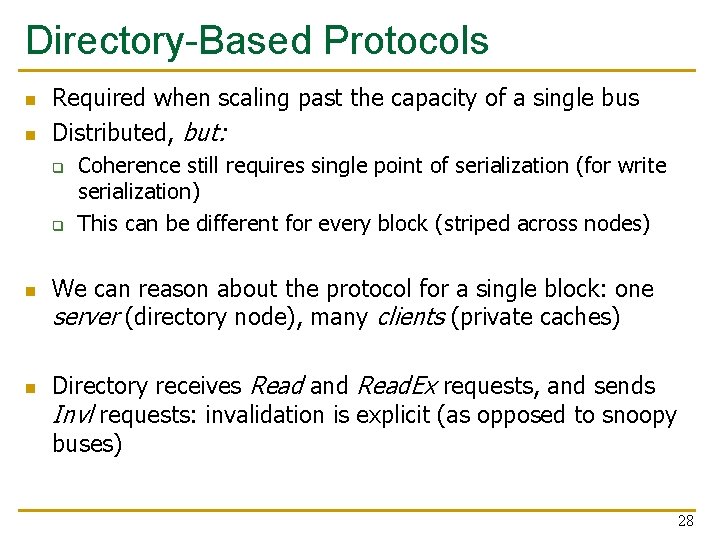

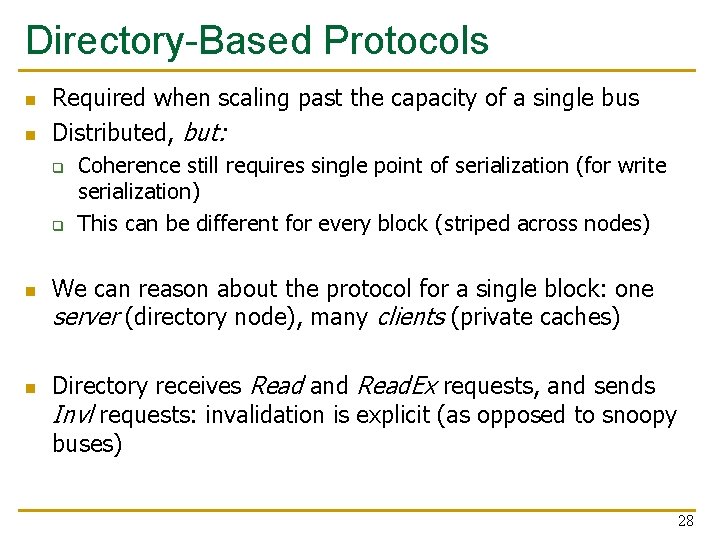

Directory-Based Protocols

- Required when scaling past the capacity of a single bus
- Distributed, but:
  - Coherence still requires a single point of serialization (for write serialization)
  - This point can be different for every block (striped across nodes)
- We can reason about the protocol for a single block: one server (the directory node), many clients (the private caches)
- The directory receives Read and ReadEx requests, and sends Invl requests: invalidation is explicit (as opposed to snoopy buses)

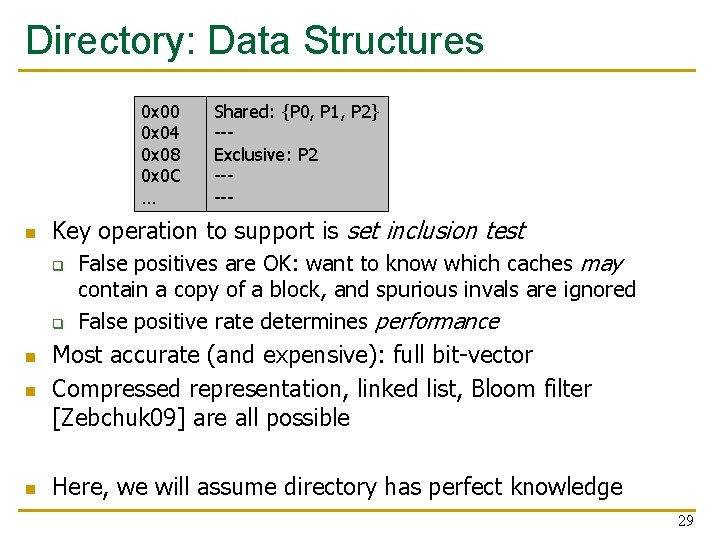

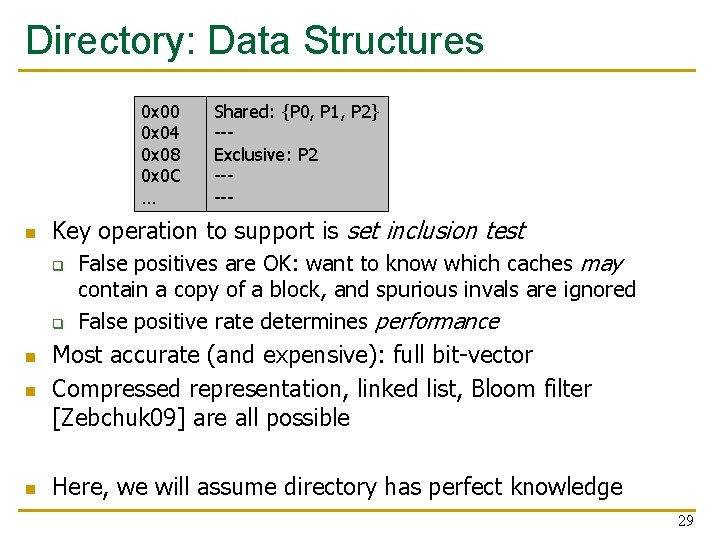

Directory: Data Structures

| Address | Directory entry |
|---|---|
| 0x00 | Shared: {P0, P1, P2} |
| 0x04 | -- |
| 0x08 | Exclusive: P2 |
| 0x0C | -- |
| … | |

- The key operation to support is a set inclusion test
  - False positives are OK: we want to know which caches may contain a copy of a block, and spurious invals are ignored
  - The false positive rate determines performance
- Most accurate (and expensive): full bit-vector
- Compressed representation, linked list, and Bloom filter [Zebchuk 09] are all possible
- Here, we will assume the directory has perfect knowledge
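The accuracy/storage tradeoff shows up in which caches receive an Invl. A minimal Python sketch comparing a full bit-vector with a coarse vector (one bit per group of processors), which we picked as one example of a compressed representation; the class and function names are hypothetical.

```python
class FullBitVector:
    """Exact sharer set: one presence bit per processor."""

    def __init__(self):
        self.bits = 0

    def add(self, p):
        self.bits |= 1 << p

    def may_contain(self, p):
        return bool((self.bits >> p) & 1)


class CoarseVector:
    """Approximate sharer set: one bit per group of `group` processors.
    It can report false positives but never false negatives."""

    def __init__(self, group):
        self.group = group
        self.bits = 0

    def add(self, p):
        self.bits |= 1 << (p // self.group)

    def may_contain(self, p):
        return bool((self.bits >> (p // self.group)) & 1)


def invalidation_targets(sharers, n, writer):
    """Processors sent an Invl when `writer` asks for exclusive access."""
    return [p for p in range(n) if p != writer and sharers.may_contain(p)]


N = 8
full, coarse = FullBitVector(), CoarseVector(group=4)
for p in (1, 5):            # the block is actually shared by P1 and P5
    full.add(p)
    coarse.add(p)
print(invalidation_targets(full, N, writer=1))    # [5]
print(invalidation_targets(coarse, N, writer=1))  # [0, 2, 3, 4, 5, 6, 7]
```

With 8 processors the coarse vector needs 2 bits instead of 8, at the cost of six spurious invalidations here; correctness is preserved because every real sharer is still included.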

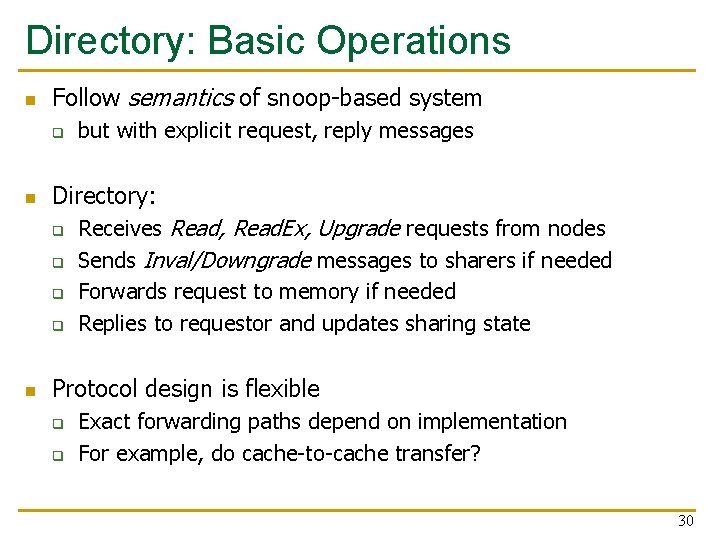

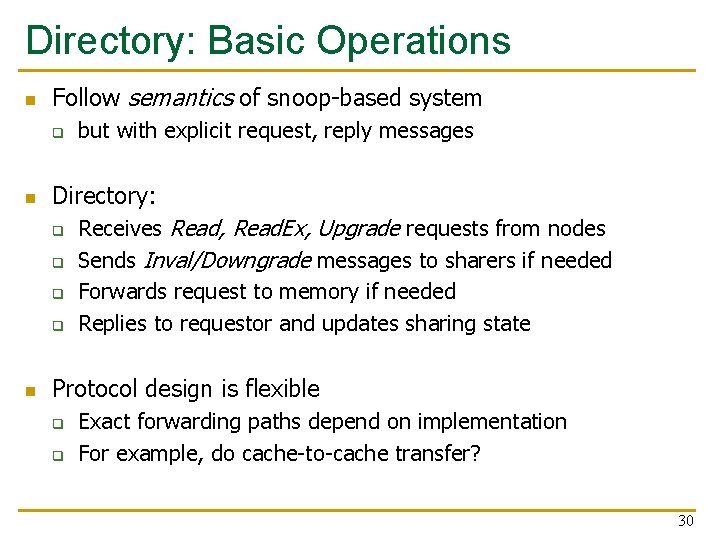

Directory: Basic Operations

- Follow the semantics of a snoop-based system
  - but with explicit request and reply messages
- Directory:
  - Receives Read, ReadEx, and Upgrade requests from nodes
  - Sends Inval/Downgrade messages to sharers if needed
  - Forwards the request to memory if needed
  - Replies to the requestor and updates the sharing state
- Protocol design is flexible
  - Exact forwarding paths depend on the implementation
  - For example, do we use cache-to-cache transfer?
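The directory's job for one block can be sketched as a small message-producing state machine. A hypothetical Python sketch, assuming perfect sharer knowledge and strict request-reply (the home node gathers any needed data, then replies); waiting for invalidation acks is not modeled, and the message names beyond Read/ReadEx/Upgrade/Inval/Downgrade are ours.

```python
class DirectoryEntry:
    """Directory state for one block at its home node (toy model).

    handle() returns the messages sent, as (destination, message) pairs.
    """

    def __init__(self):
        self.state = "U"       # U(ncached), S(hared) or E(xclusive)
        self.sharers = set()

    def handle(self, requester, request):
        msgs = []
        if request == "Read":
            # A Read from the current owner would hit in its own cache and
            # never reach the directory, so any exclusive holder is another node.
            if self.state == "E":
                (owner,) = self.sharers
                msgs.append((owner, "Downgrade"))  # owner writes back, keeps S
            self.state = "S"
            self.sharers.add(requester)
            msgs.append((requester, "Data"))
        elif request in ("ReadEx", "Upgrade"):
            for p in sorted(self.sharers - {requester}):
                msgs.append((p, "Inval"))
            # An Upgrade requester already holds the data, so it only needs an ack.
            msgs.append((requester, "Data" if request == "ReadEx" else "Ack"))
            self.state = "E"
            self.sharers = {requester}
        else:
            raise ValueError(request)
        return msgs


d = DirectoryEntry()
print(d.handle(0, "Read"))     # [(0, 'Data')]
print(d.handle(1, "Read"))     # [(1, 'Data')]
print(d.handle(2, "ReadEx"))   # [(0, 'Inval'), (1, 'Inval'), (2, 'Data')]
print(d.handle(0, "Read"))     # [(2, 'Downgrade'), (0, 'Data')]
print(d.handle(0, "Upgrade"))  # [(2, 'Inval'), (0, 'Ack')]
```

A forwarding protocol would instead have the old owner send data straight to the requester, shortening the critical path at the cost of extra protocol complexity.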

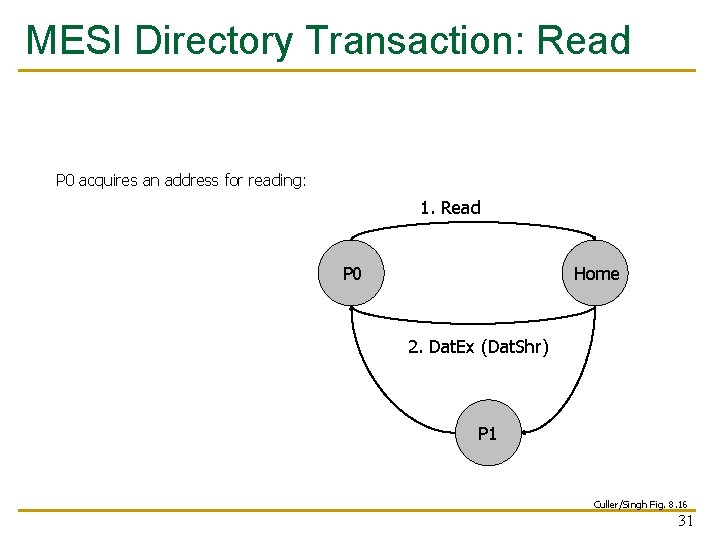

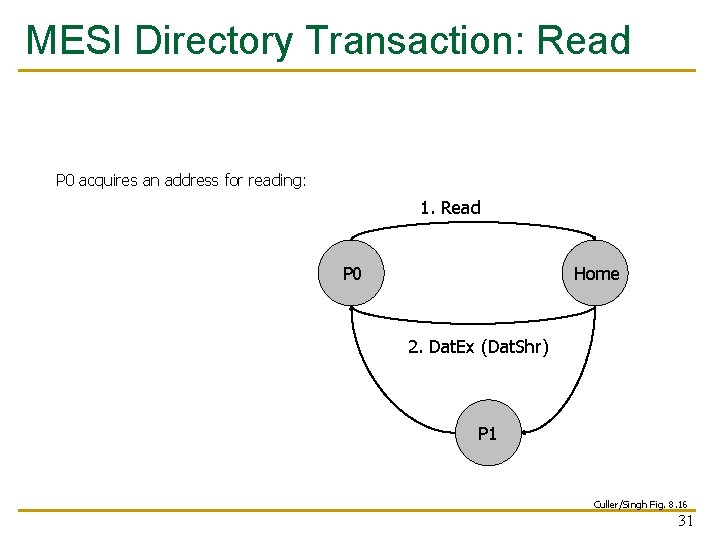

MESI Directory Transaction: Read

P0 acquires an address for reading:

- (1) Read: P0 -> Home
- (2) DatEx (or DatShr): Home -> P0

(Culler/Singh Fig. 8.16)

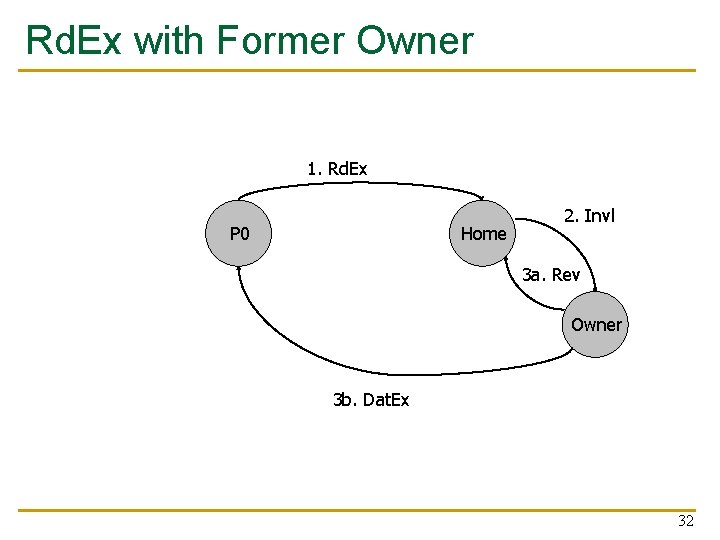

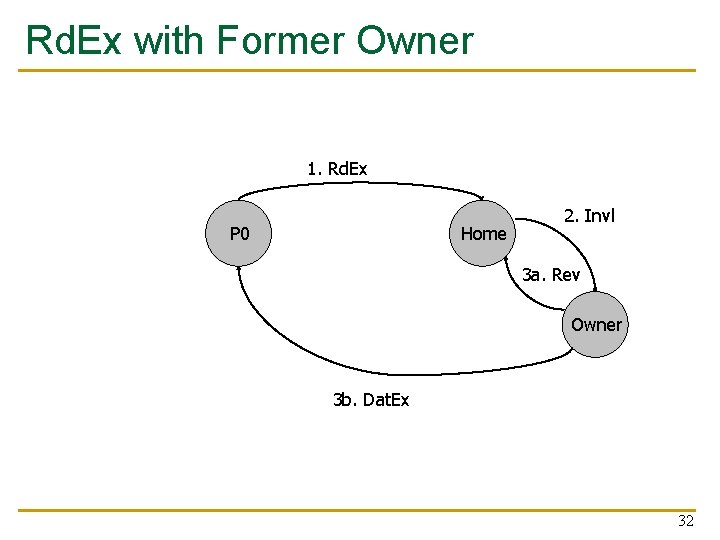

RdEx with Former Owner

- (1) RdEx: P0 -> Home
- (2) Invl: Home -> Owner
- (3a) Rev: Owner -> Home
- (3b) DatEx: Owner -> P0

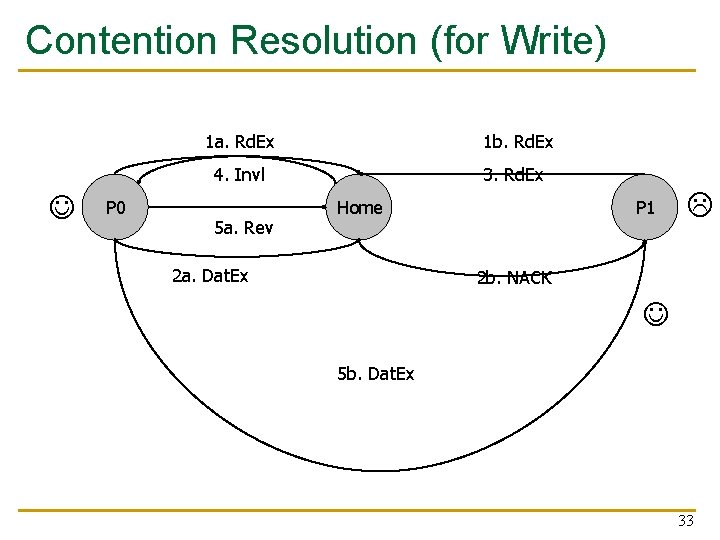

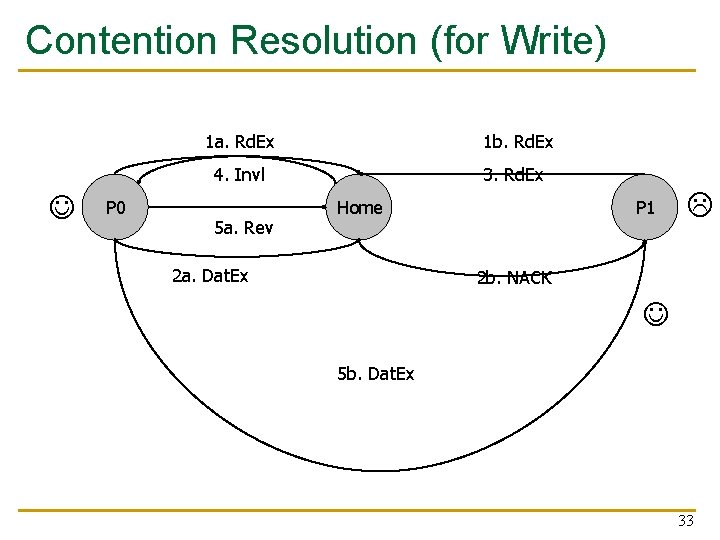

Contention Resolution (for Write)

- (1a) RdEx: P0 -> Home
- (1b) RdEx: P1 -> Home
- (2a) DatEx: Home -> P0
- (2b) NACK: Home -> P1
- (3) RdEx: P1 -> Home (retry)
- (4) Invl: Home -> P0
- (5a) Rev: P0 -> Home
- (5b) DatEx: P0 -> P1

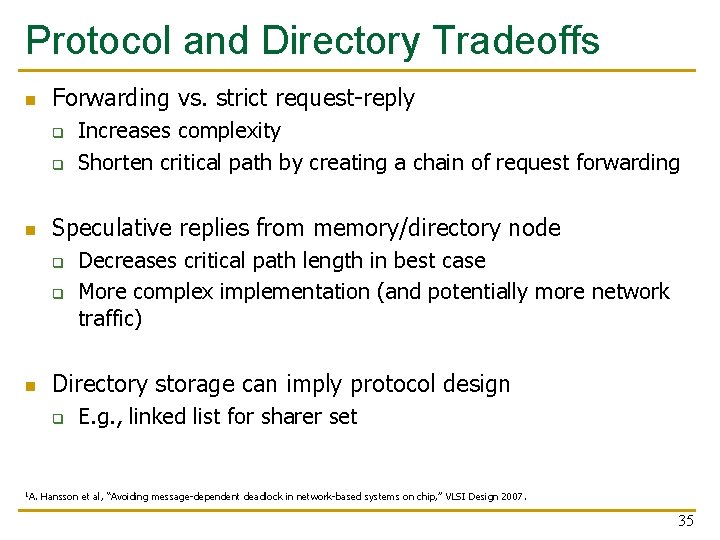

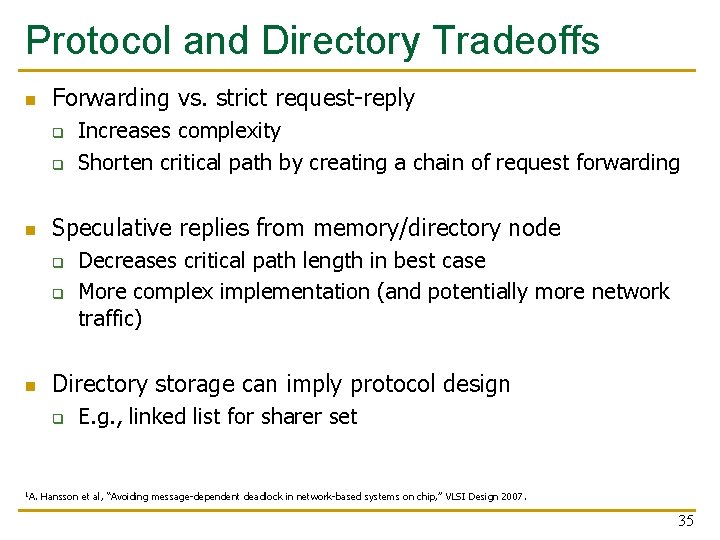

Issues with Contention Resolution

- Need to escape race conditions by:
  - NACKing requests to busy (pending invalidate) entries
    - The original requestor retries
  - OR, queuing requests and granting them in sequence
  - (Or some combination thereof)
- Fairness
  - Which requestor should be preferred in a conflict?
  - Interconnect delivery order and distance both matter
  - We guarantee that some node will make forward progress
- Ping-ponging is a higher-level issue
  - With solutions like combining trees (for locks/barriers) and better shared-data-structure design
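The NACK-and-retry option can be sketched as a directory entry with a busy flag. A hypothetical Python sketch: while the entry waits for the old owner's Rev, any new RdEx is NACKed and must be retried; the class name and message strings are illustrative.

```python
class BusyDirectoryEntry:
    """Directory entry that NACKs requests while a transaction is in flight."""

    def __init__(self, owner=None):
        self.owner = owner     # current exclusive holder, if any
        self.pending = None    # requester waiting for the old owner's Rev

    def read_ex(self, p):
        if self.pending is not None:
            return "NACK"                  # busy entry: requester retries later
        if self.owner is None:
            self.owner = p
            return "DatEx"                 # no other copy: reply at once
        self.pending = p
        return f"Invl->P{self.owner}"      # ask the old owner to give up the block

    def revision(self):
        """The old owner's Rev arrives: the pending request completes."""
        if self.pending is None:
            raise RuntimeError("no transaction in flight")
        self.owner, self.pending = self.pending, None


d = BusyDirectoryEntry(owner=2)
print(d.read_ex(0))   # Invl->P2  (P0's request is now pending)
print(d.read_ex(1))   # NACK      (entry busy; P1 must retry)
d.revision()          # P2's Rev arrives: P0 becomes the owner
print(d.read_ex(1))   # Invl->P0  (P1's retry is accepted)
d.revision()
print(d.owner)        # 1
```

Ownership passes P2 -> P0 -> P1 in the order requests were accepted, so writes stay serialized; a queuing design would hold P1's request instead of NACKing it, trading directory storage for fewer retries.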

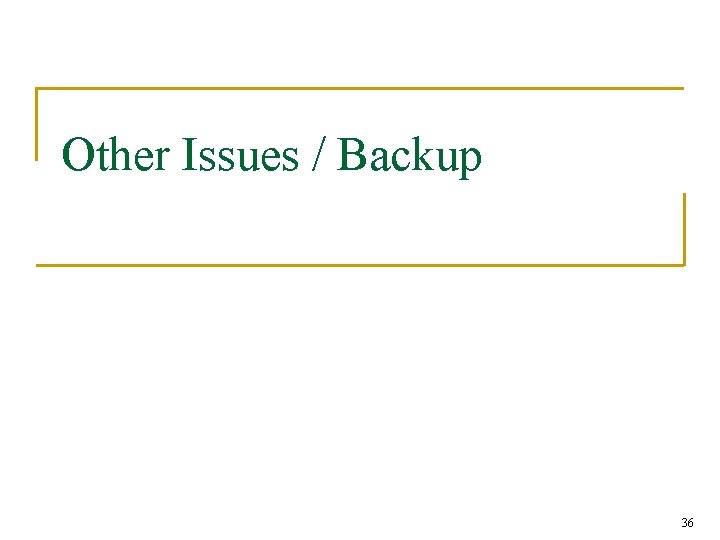

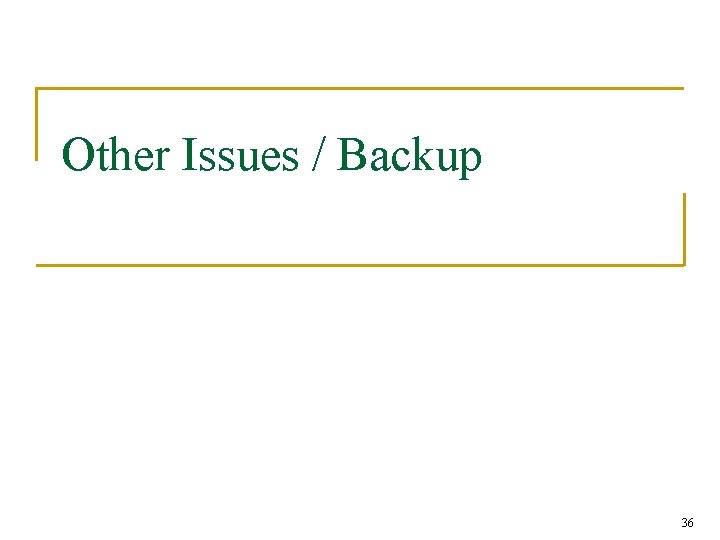

Protocol and Directory Tradeoffs

- Forwarding vs. strict request-reply
  - Shortens the critical path by creating a chain of request forwarding
  - Increases complexity [1]
- Speculative replies from the memory/directory node
  - Decrease critical path length in the best case
  - More complex implementation (and potentially more network traffic)
- Directory storage can imply protocol design
  - E.g., a linked list for the sharer set

[1] Hansson et al., “Avoiding message-dependent deadlock in network-based systems on chip,” VLSI Design 2007.

Other Issues / Backup

![Memory Consistency (Briefly)](https://slidetodoc.com/presentation_image/9a4f5a1ee8b5abfb27182ae98343bbf9/image-37.jpg)

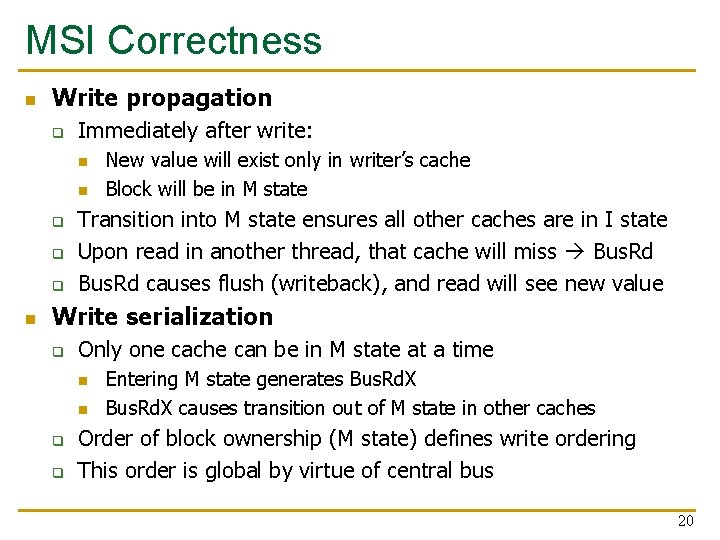

Memory Consistency (Briefly)

- We consider only sequential consistency [Lamport 79] here.
- Sequential consistency gives the appearance that:
  - All operations (R and W) happen atomically in a global order
  - Operations from a single thread occur in order in this stream
- Example:
  - Proc 0: `A = 1;`
  - Proc 1: `while (A == 0) ; B = 1;`
  - Proc 2: `while (B == 0) ; print A`
  - Does Proc 2 print A = 1?
- Thus, ordering between different memory locations exists.
- More relaxed models exist; they usually require memory barriers when synchronizing.
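The example can be checked by brute force: enumerate every interleaving that respects each thread's program order (the definition of SC) and look at what Proc 2 prints. A minimal Python sketch; each spin loop is modeled as its final, successful read, which is safe because failed iterations only read. The reversed-order run is our illustration of what reordering Proc 2's two reads (as a relaxed model without a barrier might) would allow.

```python
# Each operation: (thread, kind, location, value-or-register).
P0 = [("P0", "W", "A", 1)]
P1 = [("P1", "R", "A", "r1"), ("P1", "W", "B", 1)]
P2 = [("P2", "R", "B", "r2"), ("P2", "R", "A", "r3")]


def interleavings(*threads):
    """All merges of the threads that preserve each thread's program order."""
    if not any(threads):
        yield []
        return
    for i, t in enumerate(threads):
        if t:
            rest = list(threads)
            rest[i] = t[1:]
            for tail in interleavings(*rest):
                yield [t[0]] + tail


def run(order):
    mem, regs = {"A": 0, "B": 0}, {}
    for _, kind, loc, arg in order:
        if kind == "W":
            mem[loc] = arg
        else:
            regs[arg] = mem[loc]
    return regs


def outcomes(*threads):
    """Values Proc 2 can print, over executions where both spin loops exit."""
    results = set()
    for order in interleavings(*threads):
        regs = run(order)
        if regs["r1"] == 1 and regs["r2"] == 1:
            results.add(regs["r3"])
    return results


print(sorted(outcomes(P0, P1, P2)))        # [1]: under SC, A = 1 is printed
print(sorted(outcomes(P0, P1, P2[::-1])))  # [0, 1]: if Proc 2's reads were reordered
```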

Correctness Issue: Inclusion

- What happens with multilevel caches?
- The snooping level (say, L2) must know about all data in the private hierarchy above it (L1).
  - What about directories?
- An inclusive cache is one solution [Baer 88]
  - L2 must contain all data that L1 contains
  - Invalidates must be propagated upward to L1
- Other options
  - Non-inclusive: the inclusion property is optional
    - Why would L2 evict if it has more sets and more ways than L1? Prefetching!
  - Exclusive: a line is in L1 xor L2 (AMD K7)
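Inclusion can be enforced by back-invalidating L1 whenever L2 evicts a block. A toy Python sketch under stated assumptions: both levels are fully associative LRU caches, and L1 hits do not update L2's replacement order, so L2 can pick a victim that L1 still holds. `LRUCache` and `InclusiveHierarchy` are hypothetical names.

```python
from collections import OrderedDict


class LRUCache:
    """Fully associative LRU cache of block addresses (toy model)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()

    def __contains__(self, addr):
        return addr in self.blocks

    def touch(self, addr):
        """Insert or refresh addr; return the evicted block, if any."""
        if addr in self.blocks:
            self.blocks.move_to_end(addr)
            return None
        self.blocks[addr] = True
        if len(self.blocks) > self.capacity:
            victim, _ = self.blocks.popitem(last=False)
            return victim
        return None

    def remove(self, addr):
        self.blocks.pop(addr, None)


class InclusiveHierarchy:
    """Private L1 + L2, where L2 enforces inclusion by back-invalidating L1."""

    def __init__(self, l1_size, l2_size):
        self.l1, self.l2 = LRUCache(l1_size), LRUCache(l2_size)
        self.back_invalidations = []

    def access(self, addr):
        if addr in self.l1:
            self.l1.touch(addr)       # L1 hit: L2 never sees it
            return
        victim = self.l2.touch(addr)  # L1 miss: fill through L2
        if victim is not None and victim in self.l1:
            self.l1.remove(victim)    # back-invalidate to preserve inclusion
            self.back_invalidations.append(victim)
        self.l1.touch(addr)           # L1 victims just drop; L2 still holds them

    def snoop_invalidate(self, addr):
        # The snooping level checks only L2; inclusion makes that sufficient,
        # but the invalidate must still be propagated upward to L1.
        if addr in self.l2:
            self.l2.remove(addr)
            self.l1.remove(addr)

    def inclusion_holds(self):
        return all(a in self.l2 for a in self.l1.blocks)


h = InclusiveHierarchy(l1_size=2, l2_size=3)
for addr in ["a", "b", "a", "c", "d"]:
    h.access(addr)
print(h.back_invalidations, list(h.l1.blocks), list(h.l2.blocks))
# ['a'] ['c', 'd'] ['b', 'c', 'd']
```

Block "a" was recently used in L1 but was the LRU block in L2, so keeping L1 a subset of L2 cost an L1 eviction.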