18-742 Spring 2011 Parallel Computer Architecture Lecture


18-742 Spring 2011 Parallel Computer Architecture
Lecture 15: Multithreading
Prof. Onur Mutlu
Carnegie Mellon University

Announcements
- Project Milestone Meetings: Wednesday, March 2
  - 2:30-4:30 pm, HH-B 206
  - 20 minutes per group at most
  - Sign up on the 742 website
- Meeting format:
  - 10-minute presentation
  - 10-minute Q&A and feedback
  - Anyone can attend the meeting
- No class Friday (March 4): Spring Break

Reviews
- Due Yesterday (Feb 27)
  - Mukherjee et al., “Detailed Design and Evaluation of Redundant Multithreading Alternatives,” ISCA 2002.
- Due March 13
  - Snavely and Tullsen, “Symbiotic Jobscheduling for a Simultaneous Multithreading Processor,” ASPLOS 2000.

Last Lectures
- Interconnection Networks
  - Introduction & Terminology
  - Topology
  - Buffering and Flow control
  - Routing
  - Router design
  - Network performance metrics
  - On-chip vs. off-chip differences
  - Livelock, deadlock, the turn model
- Research on NoCs and packet scheduling
  - The problem with packet scheduling
  - Application-aware packet scheduling
  - Aergia: Latency slack based packet scheduling
  - Bufferless routing and livelock issues

Reminder: Some Questions
- What are the possible ways of handling contention in a router?
- What is head-of-line blocking?
- What is a non-minimal routing algorithm?
- What is the difference between deterministic, oblivious, and adaptive routing algorithms?
- Which routing algorithms need to worry about deadlock?
- Which routing algorithms need to worry about livelock?
- How do you handle deadlock?
- How do you handle livelock?
- What is zero-load latency?
- What is saturation throughput?
- What is an application-aware packet scheduling algorithm?

Today
- Multithreading, finally…

Multithreading

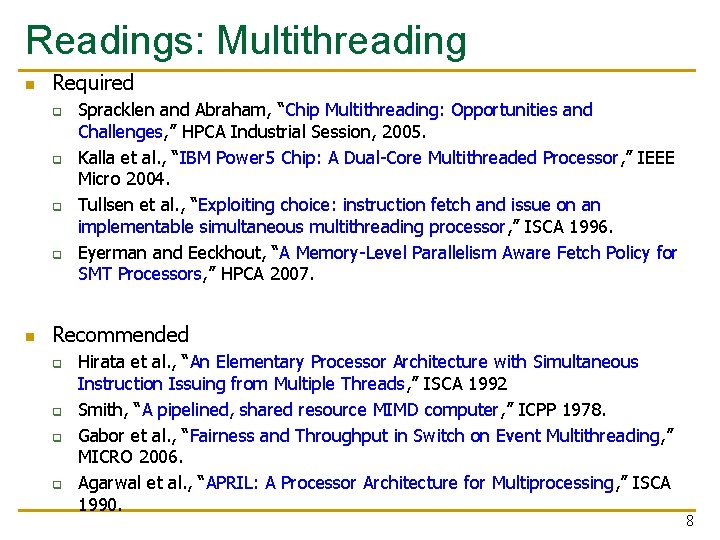

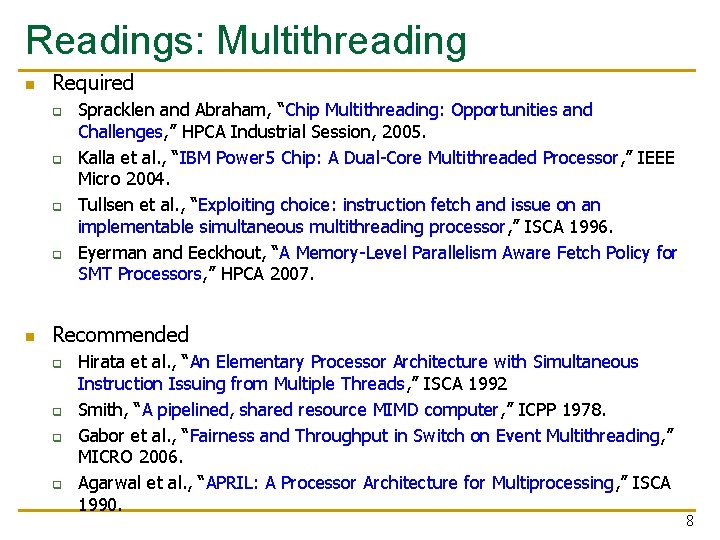

Readings: Multithreading
- Required
  - Spracklen and Abraham, “Chip Multithreading: Opportunities and Challenges,” HPCA Industrial Session, 2005.
  - Kalla et al., “IBM Power5 Chip: A Dual-Core Multithreaded Processor,” IEEE Micro 2004.
  - Tullsen et al., “Exploiting choice: instruction fetch and issue on an implementable simultaneous multithreading processor,” ISCA 1996.
  - Eyerman and Eeckhout, “A Memory-Level Parallelism Aware Fetch Policy for SMT Processors,” HPCA 2007.
- Recommended
  - Hirata et al., “An Elementary Processor Architecture with Simultaneous Instruction Issuing from Multiple Threads,” ISCA 1992.
  - Smith, “A pipelined, shared resource MIMD computer,” ICPP 1978.
  - Gabor et al., “Fairness and Throughput in Switch on Event Multithreading,” MICRO 2006.
  - Agarwal et al., “APRIL: A Processor Architecture for Multiprocessing,” ISCA 1990.

Multithreading (Outline)
- Multiple hardware contexts
- Purpose
- Initial incarnations
  - CDC 6600
  - HEP
  - Tera
- Levels of multithreading
  - Fine-grained (cycle-by-cycle)
  - Coarse-grained (multitasking)
    - Switch-on-event
  - Simultaneous
- Uses: traditional + creative (now that we have multiple contexts, why do we not do …)

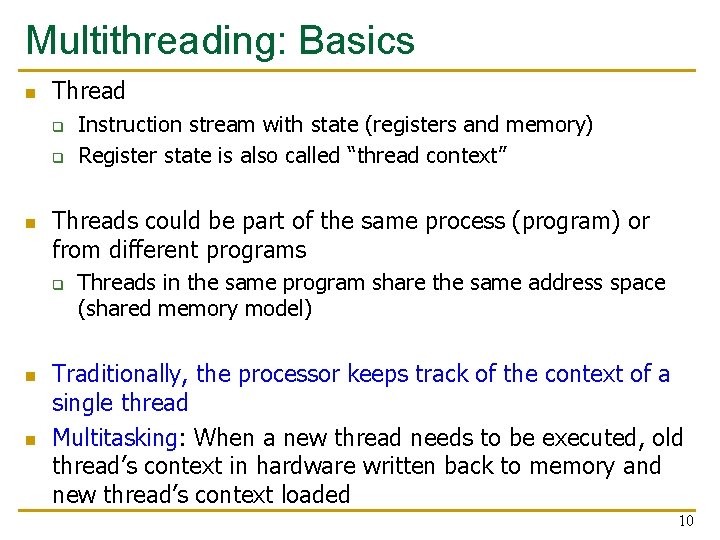

Multithreading: Basics
- Thread
  - Instruction stream with state (registers and memory)
  - Register state is also called “thread context”
- Threads could be part of the same process (program) or from different programs
  - Threads in the same program share the same address space (shared memory model)
- Traditionally, the processor keeps track of the context of a single thread
- Multitasking: When a new thread needs to be executed, the old thread’s context in hardware is written back to memory and the new thread’s context is loaded
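The multitasking case above can be sketched as a software context switch on a processor that holds only one thread context. This is a hypothetical Python model: the `ThreadContext` and `SingleContextCPU` names, the 32-register count, and the `memory` dictionary standing in for main memory are illustrative assumptions, not a real ISA.

```python
from dataclasses import dataclass, field

NUM_REGS = 32  # assumption: 32 architectural registers per thread


@dataclass
class ThreadContext:
    """Register state ("thread context") of one thread."""
    pc: int = 0
    regs: list = field(default_factory=lambda: [0] * NUM_REGS)


class SingleContextCPU:
    """Hypothetical processor that holds the context of only one thread."""

    def __init__(self):
        self.context = ThreadContext()
        self.memory = {}        # saved contexts, keyed by thread id
        self.current_tid = None
        self.words_moved = 0    # register words written or read by switches

    def switch_to(self, tid):
        # Write the old thread's hardware context back to memory.
        if self.current_tid is not None:
            self.memory[self.current_tid] = ThreadContext(
                self.context.pc, list(self.context.regs))
            self.words_moved += NUM_REGS + 1
        # Load the new thread's context (a fresh one if it never ran).
        saved = self.memory.get(tid, ThreadContext())
        self.context = ThreadContext(saved.pc, list(saved.regs))
        if tid in self.memory:
            self.words_moved += NUM_REGS + 1
        self.current_tid = tid
```

Switching 0 → 1 → 0 moves 99 register words (save 0, save 1, restore 0) while thread 0's values survive. A hardware-multithreaded processor keeps several such contexts on chip, so a switch only changes which one is active.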

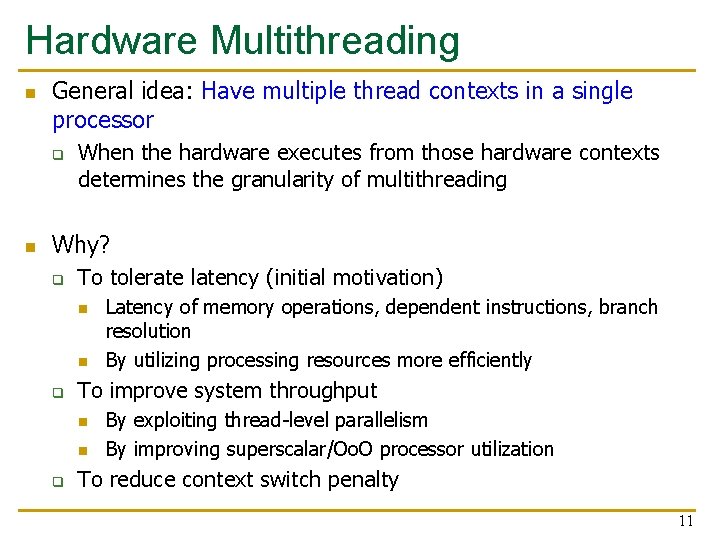

Hardware Multithreading
- General idea: Have multiple thread contexts in a single processor
  - When the hardware executes from those hardware contexts determines the granularity of multithreading
- Why?
  - To tolerate latency (initial motivation)
    - Latency of memory operations, dependent instructions, branch resolution
    - By utilizing processing resources more efficiently
  - To improve system throughput
    - By exploiting thread-level parallelism
    - By improving superscalar/OoO processor utilization
  - To reduce context switch penalty

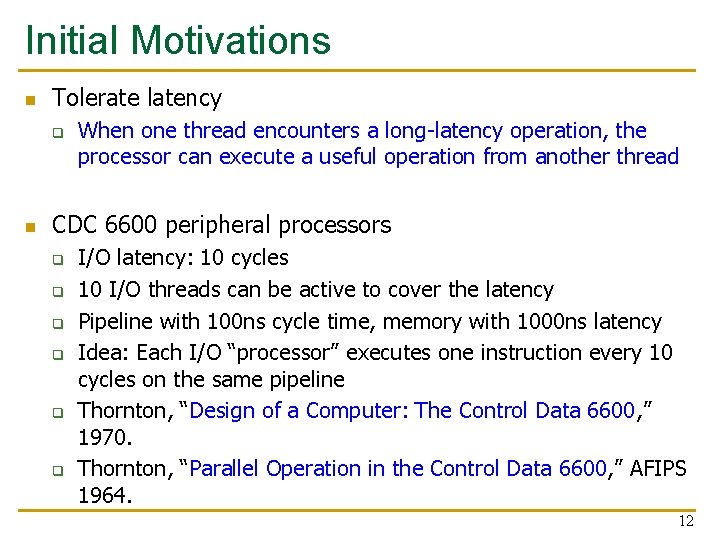

Initial Motivations
- Tolerate latency
  - When one thread encounters a long-latency operation, the processor can execute a useful operation from another thread
- CDC 6600 peripheral processors
  - I/O latency: 10 cycles
  - 10 I/O threads can be active to cover the latency
  - Pipeline with 100 ns cycle time, memory with 1000 ns latency
  - Idea: Each I/O “processor” executes one instruction every 10 cycles on the same pipeline
- Thornton, “Design of a Computer: The Control Data 6600,” 1970.
- Thornton, “Parallel Operation in the Control Data 6600,” AFIPS 1964.
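The CDC 6600 numbers can be checked with a short calculation: a 1000 ns memory latency over a 100 ns cycle is 10 cycles, so a 10-thread rotation gives each thread its next turn just as its previous operation completes. The Python sketch below assumes every operation has the same fixed latency and that the rotation is strict (a thread that is not ready forfeits its slot); the function names are illustrative.

```python
import math


def threads_to_hide_latency(latency_ns, cycle_ns):
    """Minimum rotation length so a thread's next turn comes after its
    previous operation has completed."""
    return math.ceil(latency_ns / cycle_ns)


def round_robin_utilization(num_threads, latency_cycles, total_cycles=1000):
    """Fraction of cycles that issue an instruction under strict rotation."""
    ready_at = [0] * num_threads   # cycle at which each thread may issue again
    issued = 0
    for cycle in range(total_cycles):
        tid = cycle % num_threads  # each thread owns one slot per rotation
        if ready_at[tid] <= cycle:
            issued += 1
            ready_at[tid] = cycle + latency_cycles
        # otherwise the slot is wasted: the owning thread is still waiting
    return issued / total_cycles
```

With a 10-cycle latency, 10 threads keep the pipeline fully busy, 5 threads fill half the cycles, and a single thread fills one cycle in ten.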

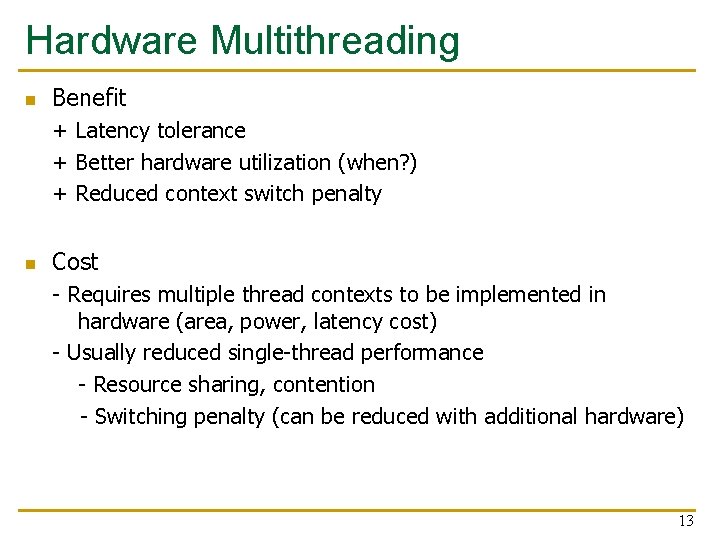

Hardware Multithreading
- Benefit
  + Latency tolerance
  + Better hardware utilization (when?)
  + Reduced context switch penalty
- Cost
  - Requires multiple thread contexts to be implemented in hardware (area, power, latency cost)
  - Usually reduced single-thread performance
  - Resource sharing, contention
  - Switching penalty (can be reduced with additional hardware)

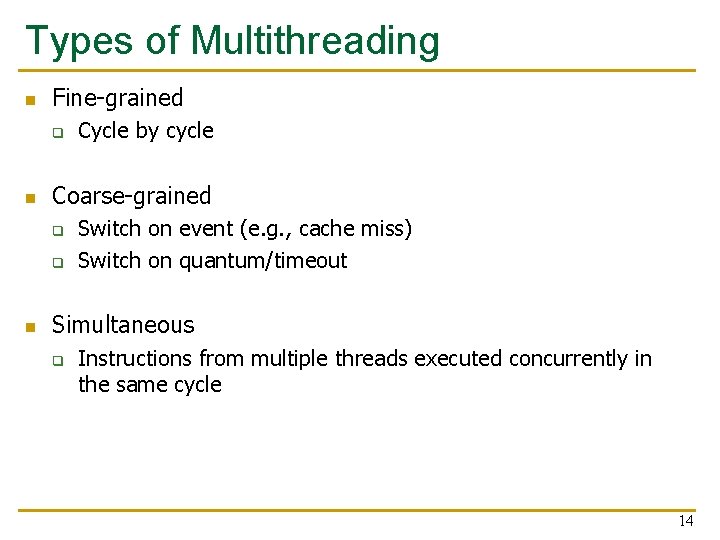

Types of Multithreading
- Fine-grained
  - Cycle by cycle
- Coarse-grained
  - Switch on event (e.g., cache miss)
  - Switch on quantum/timeout
- Simultaneous
  - Instructions from multiple threads executed concurrently in the same cycle

Fine-grained Multithreading
- Idea: Switch to another thread every cycle such that no two instructions from the same thread are in the pipeline concurrently
- Improves pipeline utilization by taking advantage of multiple threads
- Alternative way of looking at it: Tolerates the control and data dependency latencies by overlapping the latency with useful work from other threads
- Thornton, “Parallel Operation in the Control Data 6600,” AFIPS 1964.
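A minimal Python sketch of the round-robin selection described above. It assumes one instruction fetched per cycle, a fixed pipeline depth, and no stalls; the function names are hypothetical. With at least as many threads as pipeline stages, the check confirms that no thread ever has two instructions in flight, which is why no intra-thread dependency checking is needed.

```python
from collections import deque


def fgmt_pipeline_trace(num_threads, depth, cycles):
    """Fetch one instruction per cycle, rotating over threads.

    Returns, for every cycle, the thread IDs occupying the pipeline
    (youngest first). Each instruction stays in the pipeline `depth` cycles."""
    pipeline = deque(maxlen=depth)  # the oldest instruction falls off the end
    trace = []
    for cycle in range(cycles):
        pipeline.appendleft(cycle % num_threads)
        trace.append(list(pipeline))
    return trace


def has_same_thread_overlap(trace):
    """True if any cycle has two in-flight instructions from one thread."""
    return any(len(stages) != len(set(stages)) for stages in trace)
```

For example, 8 threads on an 8-stage pipeline never overlap, while 4 threads on the same pipeline do, so the hardware would then need dependency checks (or stalls) between a thread's instructions.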

Fine-grained Multithreading
- CDC 6600’s peripheral processing unit is fine-grained multithreaded
  - Processor executes a different I/O thread every cycle
  - An operation from the same thread is executed every 10 cycles
- Denelcor HEP
  - Smith, “A pipelined, shared resource MIMD computer,” ICPP 1978.
  - 120 threads/processor
    - 50 user, 70 OS functions
  - Available queue vs. unavailable (waiting) queue
  - Each thread can only have 1 instruction in the processor pipeline; each thread is independent
  - To each thread, the processor looks like a sequential machine
  - Throughput vs. single-thread speed

Fine-grained Multithreading in HEP
- Cycle time: 100 ns
- 8 stages
- 800 ns to complete an instruction
  - assuming no memory access
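The HEP numbers make the throughput vs. single-thread speed tradeoff concrete. Assuming no memory accesses and at most one instruction per thread in the pipeline:

$$
\begin{aligned}
\text{Latency per instruction} &= 8 \times 100\,\text{ns} = 800\,\text{ns} \\
\text{Per-thread rate} &= \frac{1}{800\,\text{ns}} = 1.25 \times 10^{6}\ \text{instructions/s} \\
\text{Pipeline rate (8 or more ready threads)} &= \frac{1}{100\,\text{ns}} = 10^{7}\ \text{instructions/s}
\end{aligned}
$$

Eight ready threads together reach the full pipeline rate, but each individual thread completes instructions at only one eighth of that rate.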

Fine-grained Multithreading
- Advantages
  + No need for dependency checking between instructions (only one instruction in pipeline from a single thread)
  + No need for branch prediction logic
  + Otherwise-bubble cycles used for executing useful instructions from different threads
  + Improved system throughput, latency tolerance, utilization
- Disadvantages
  - Extra hardware complexity: multiple hardware contexts, thread selection logic
  - Reduced single-thread performance (one instruction fetched every N cycles)
  - Resource contention between threads in caches and memory
  - Dependency checking logic between threads remains (load/store)

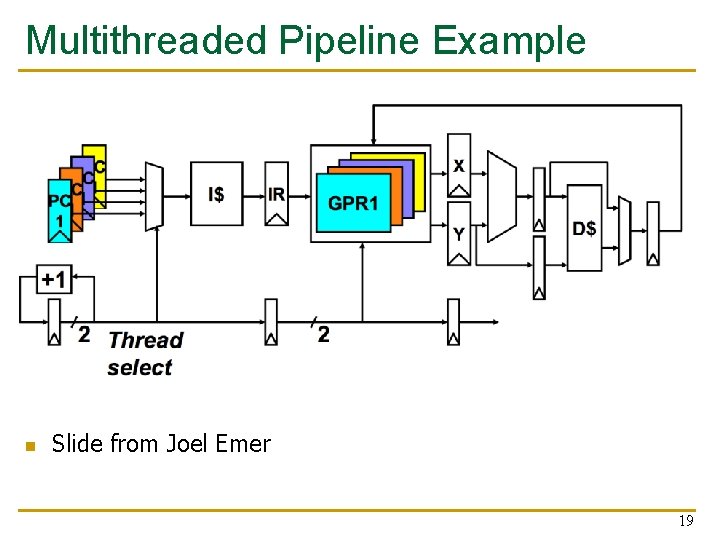

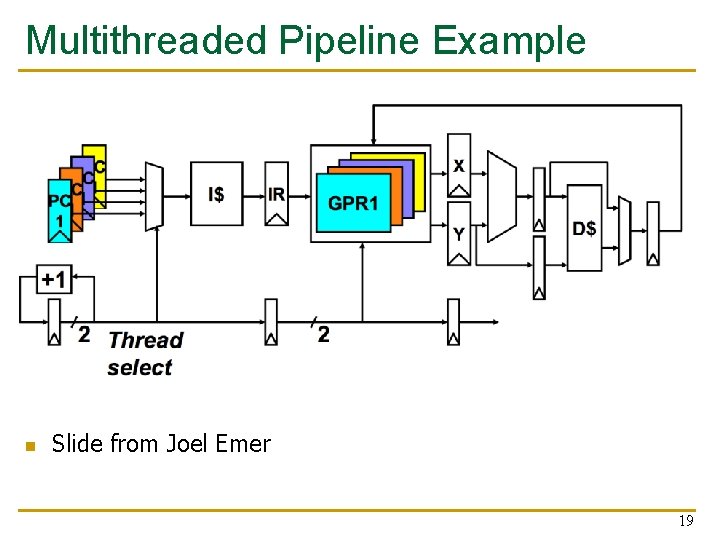

Multithreaded Pipeline Example
- Slide from Joel Emer

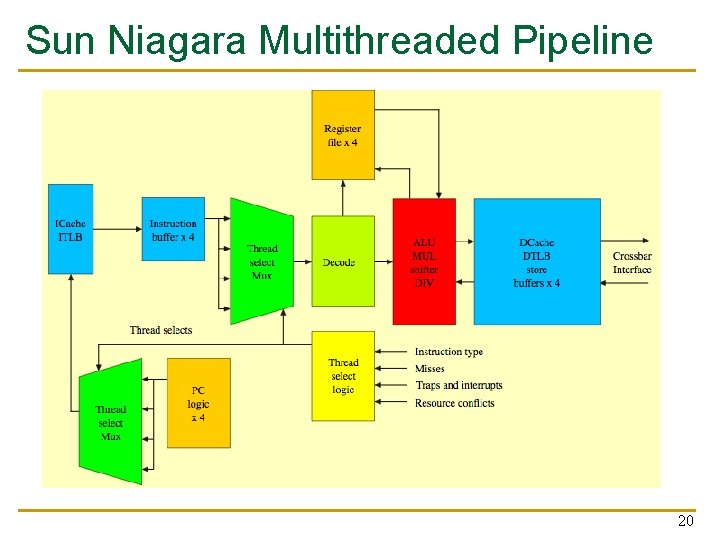

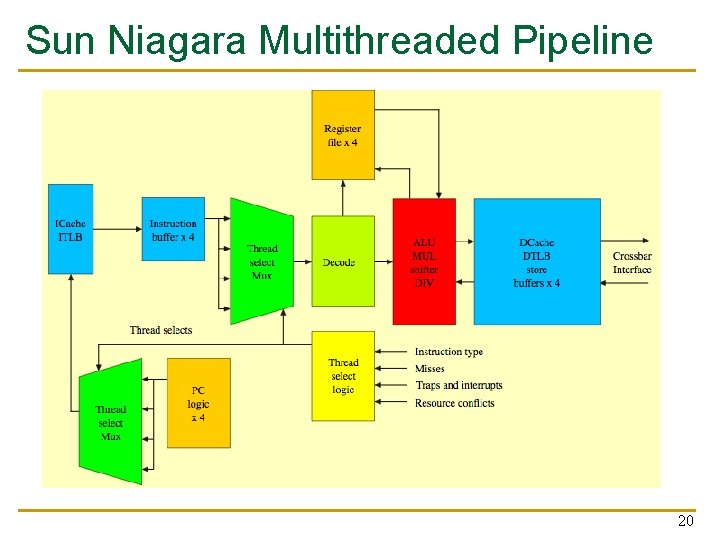

Sun Niagara Multithreaded Pipeline

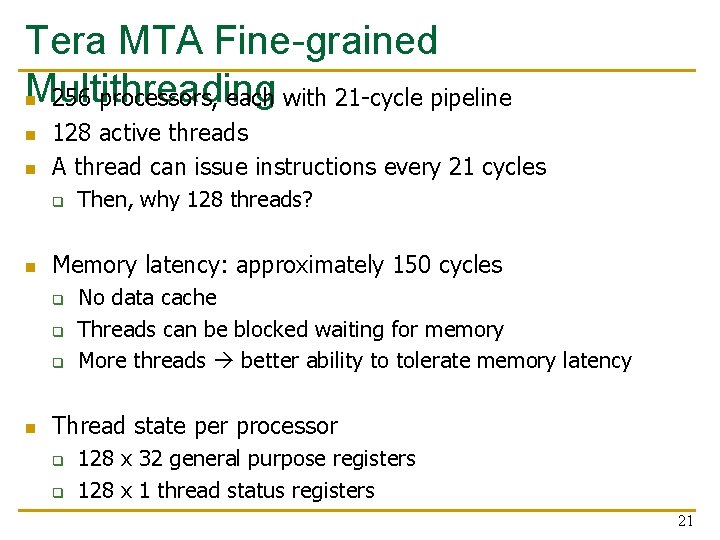

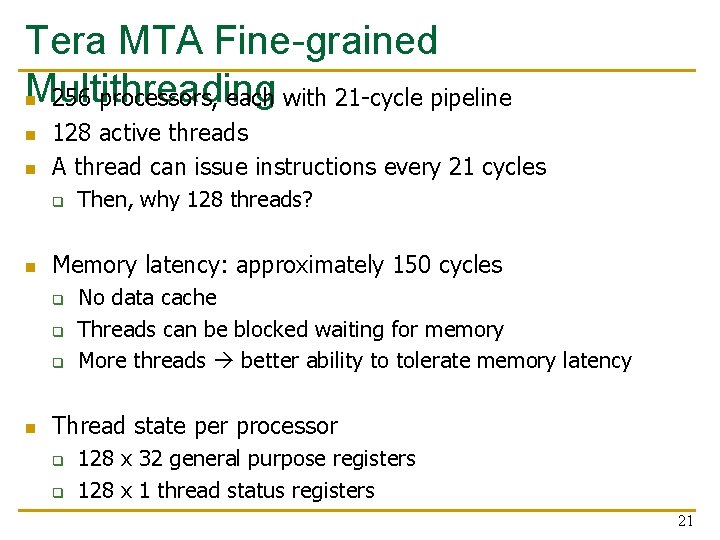

Tera MTA Fine-grained Multithreading
- 256 processors, each with a 21-cycle pipeline
- 128 active threads
- A thread can issue instructions every 21 cycles
  - Then, why 128 threads?
- Memory latency: approximately 150 cycles
  - No data cache
  - Threads can be blocked waiting for memory
  - More threads → better ability to tolerate memory latency
- Thread state per processor
  - 128 x 32 general purpose registers
  - 128 x 1 thread status registers
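Why 128 threads when a thread issues only every 21 cycles? A back-of-the-envelope Python model helps: assume (hypothetically) that every `mem_every`-th instruction of a thread is a memory access that blocks the thread for the ~150-cycle latency, while other instructions block it only for the 21-cycle pipeline. The model ignores queuing effects and the MTA's actual issue mechanisms; it only shows why the thread count must exceed the pipeline depth. The register count follows directly from the slide.

```python
import math

PIPELINE_CYCLES = 21        # a thread can issue at most once every 21 cycles
MEMORY_CYCLES = 150         # approximate memory latency (no data cache)
THREADS_PER_PROCESSOR = 128
GPRS_PER_THREAD = 32

# Per-processor general purpose register state held in hardware.
total_gprs = THREADS_PER_PROCESSOR * GPRS_PER_THREAD   # 4096 registers


def avg_issue_interval(mem_every):
    """Average cycles between issues of one thread, assuming every
    `mem_every`-th instruction waits for memory and the others wait
    only for the pipeline (a hypothetical instruction mix)."""
    cycles = (mem_every - 1) * PIPELINE_CYCLES + MEMORY_CYCLES
    return cycles / mem_every


def threads_for_full_pipeline(mem_every):
    return math.ceil(avg_issue_interval(mem_every))


def utilization(num_threads, mem_every):
    """Idealized issue-slot utilization, ignoring queuing effects."""
    return min(1.0, num_threads / avg_issue_interval(mem_every))
```

With one memory access every three instructions, a thread issues every 64 cycles on average, so 64 threads are needed and 21 threads fill only about a third of the slots. If every instruction accessed memory, about 150 threads would be needed: under this model, the more memory-intensive the code, the more threads it takes to keep the pipeline busy.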

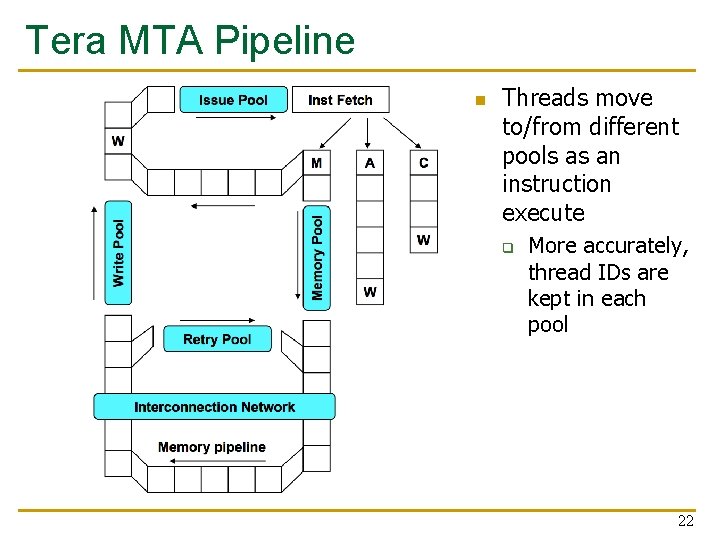

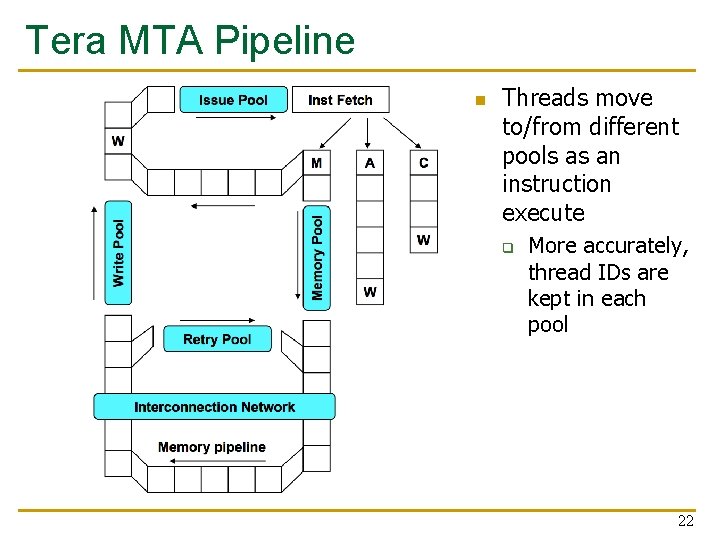

Tera MTA Pipeline
- Threads move to/from different pools as an instruction executes
  - More accurately, thread IDs are kept in each pool

Coarse-grained Multithreading
- Idea: When a thread is stalled due to some event, switch to a different hardware context
  - Switch-on-event multithreading
- Possible stall events
  - Cache misses
  - Synchronization events (e.g., load an empty location)
  - FP operations
- HEP, Tera combine fine-grained MT and coarse-grained MT
  - Thread waiting for memory becomes blocked (un-selectable)
- Agarwal et al., “APRIL: A Processor Architecture for Multiprocessing,” ISCA 1990.
  - Explicit switch on event

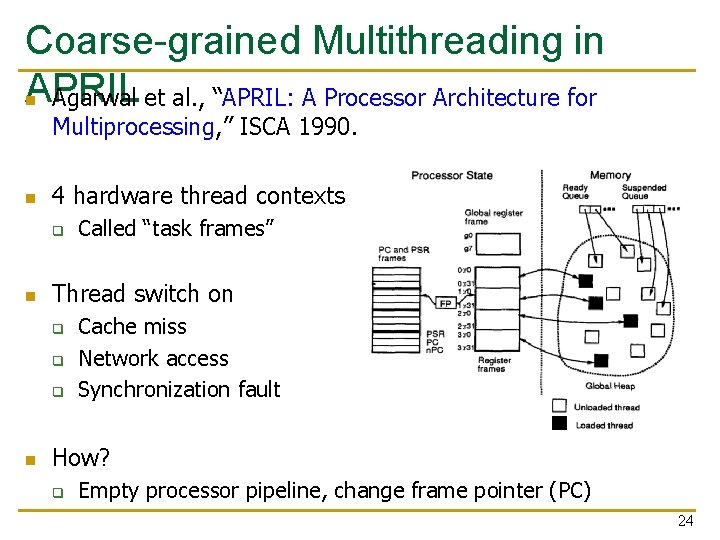

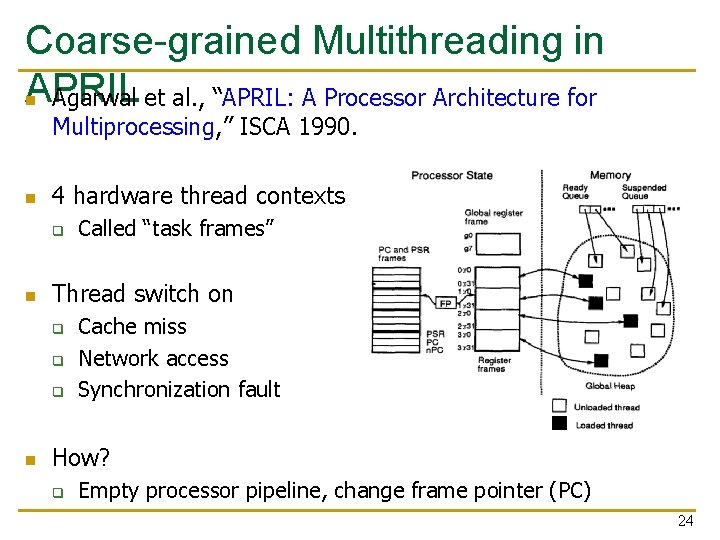

Coarse-grained Multithreading in APRIL
- Agarwal et al., “APRIL: A Processor Architecture for Multiprocessing,” ISCA 1990.
- 4 hardware thread contexts
  - Called “task frames”
- Thread switch on
  - Cache miss
  - Network access
  - Synchronization fault
- How?
  - Empty processor pipeline, change frame pointer (PC)
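The APRIL switch mechanism can be sketched as a frame pointer over on-chip task frames. In this hypothetical Python model, the event names, the fixed `pipeline_depth` cost charged for emptying the pipeline, and the policy of moving to the next unblocked frame are assumptions for illustration, not APRIL's exact hardware behavior.

```python
from dataclasses import dataclass, field

SWITCH_EVENTS = {"cache_miss", "network_access", "synchronization_fault"}


@dataclass
class TaskFrame:
    pc: int = 0
    regs: list = field(default_factory=lambda: [0] * 32)  # assumed size
    blocked: bool = False


class AprilLikeCore:
    """Hypothetical core with on-chip task frames and a frame pointer."""

    def __init__(self, num_frames=4, pipeline_depth=4):
        self.frames = [TaskFrame() for _ in range(num_frames)]
        self.fp = 0                           # frame pointer: active context
        self.pipeline_depth = pipeline_depth  # assumed cost to empty pipeline
        self.switch_cycles = 0

    def on_event(self, event):
        if event not in SWITCH_EVENTS:
            return                            # ordinary instruction: no switch
        self.frames[self.fp].blocked = True
        self.switch_cycles += self.pipeline_depth  # empty the pipeline
        n = len(self.frames)
        for step in range(1, n + 1):          # change the frame pointer
            candidate = (self.fp + step) % n
            if not self.frames[candidate].blocked:
                self.fp = candidate
                return
        # All frames blocked: fp stays put and the core waits.

    def unblock(self, frame_id):
        self.frames[frame_id].blocked = False
```

No registers are copied on a switch: the contexts stay in their frames, and only the frame pointer moves.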

Fine-grained vs. Coarse-grained MT
- Fine-grained advantages
  + Simpler to implement, can eliminate dependency checking, branch prediction logic completely
  + Switching need not have any performance overhead (i.e., dead cycles)
  + Coarse-grained requires a pipeline flush or a lot of hardware to save pipeline state
    - Higher performance overhead with deep pipelines and large windows
- Disadvantages
  - Low single-thread performance: each thread gets 1/Nth of the bandwidth of the pipeline

IBM RS64-IV
- 4-way superscalar, in-order, 5-stage pipeline
- Two hardware contexts
- On an L2 cache miss
  - Flush pipeline
  - Switch to the other thread
- Considerations
  - Memory latency vs. thread switch overhead
  - Short pipeline reduces the overhead of switching
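The tradeoff between memory latency and switch overhead can be sketched with a simple steady-state model of two contexts that switch on every miss. Assumptions (all hypothetical): each thread runs a fixed `run_cycles` between misses, every miss takes `miss_latency`, each switch costs `switch_cycles` (roughly the pipeline flush, so it grows with pipeline depth), and the two threads behave identically.

```python
def single_thread_utilization(run_cycles, miss_latency):
    """One context: the pipeline idles for the whole miss latency."""
    return run_cycles / (run_cycles + miss_latency)


def two_context_utilization(run_cycles, miss_latency, switch_cycles):
    """Two contexts, flush and switch on every miss, steady state.

    Thread A runs, misses, and the switch costs `switch_cycles`; thread B
    then does the same. A restarts once both switches are done and its
    miss has returned, so one period contains two useful runs."""
    period = max(2 * (run_cycles + switch_cycles), run_cycles + miss_latency)
    return 2 * run_cycles / period
```

With 50-cycle runs and a 200-cycle miss, utilization doubles from 0.2 to 0.4, and the 200-cycle latency still sets the period. When runs are long relative to the miss latency, the switch cost dominates instead, which is why a short pipeline, and hence a cheap flush, helps.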

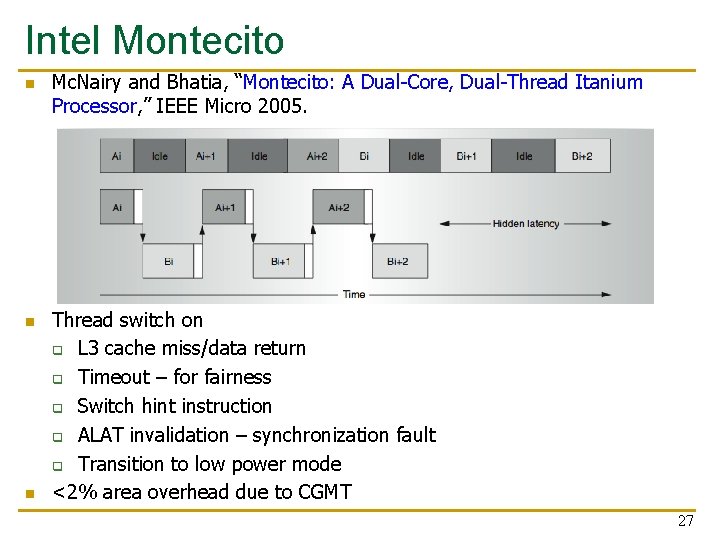

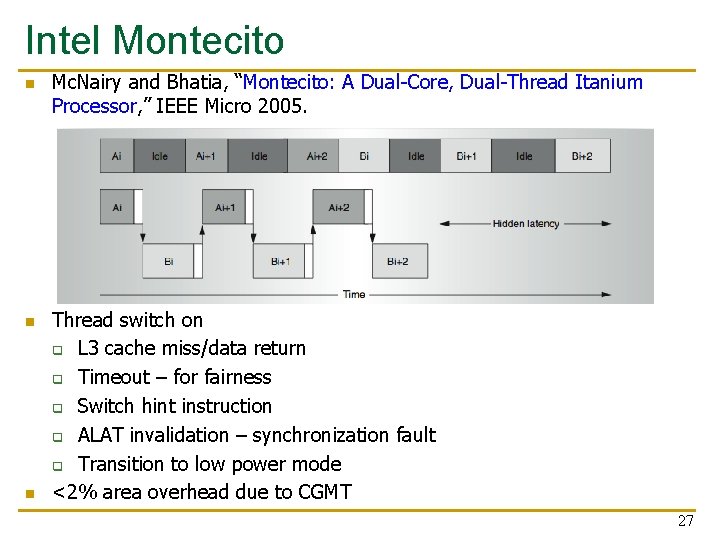

Intel Montecito
- McNairy and Bhatia, “Montecito: A Dual-Core, Dual-Thread Itanium Processor,” IEEE Micro 2005.
- Thread switch on
  - L3 cache miss/data return
  - Timeout (for fairness)
  - Switch hint instruction
  - ALAT invalidation (synchronization fault)
  - Transition to low power mode
- <2% area overhead due to CGMT

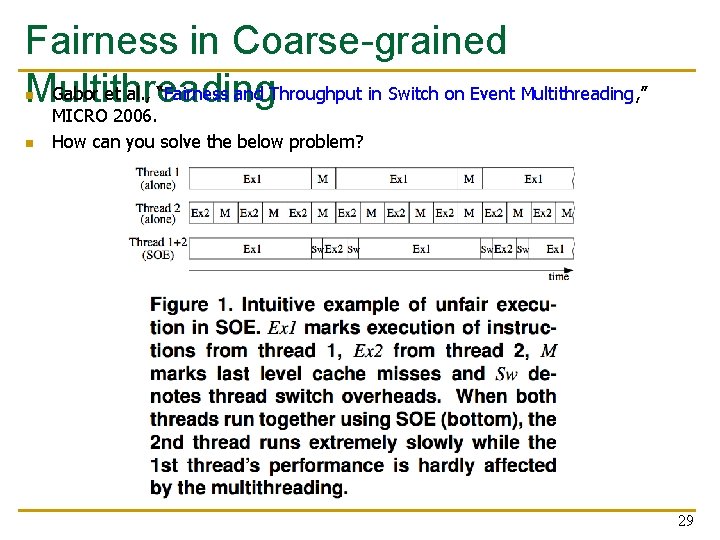

Fairness in Coarse-grained Multithreading
- Resource sharing in space and time always causes fairness considerations
  - Fairness: how much progress each thread makes
- In CGMT, the time allocated to each thread affects both fairness and system throughput
  - When do we switch?
  - For how long do we switch?
    - When do we switch back?
  - How does the hardware scheduler interact with the software scheduler for fairness?

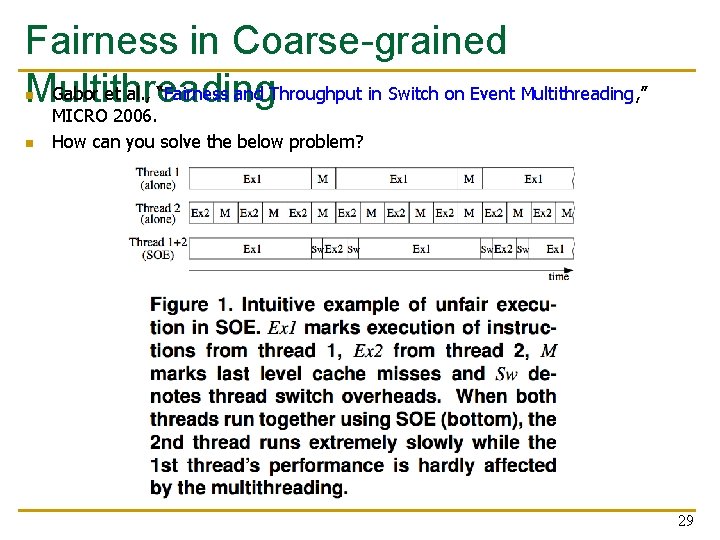

Fairness in Coarse-grained Multithreading
- Gabor et al., “Fairness and Throughput in Switch on Event Multithreading,” MICRO 2006.
- How can you solve the problem below?

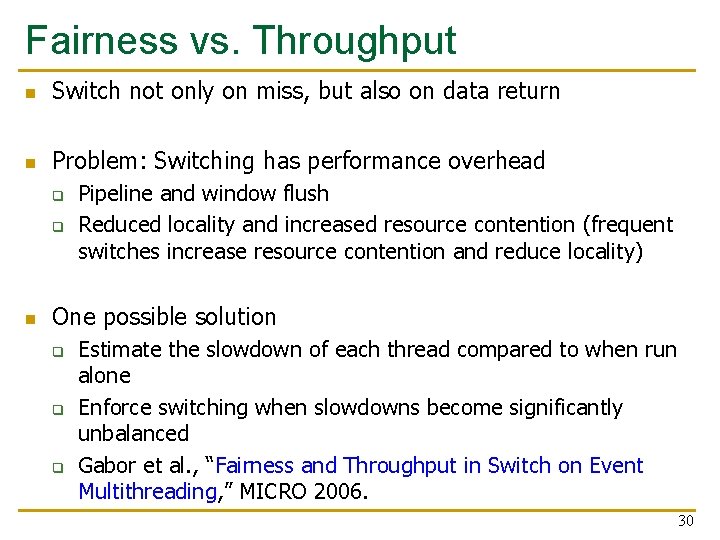

Fairness vs. Throughput
- Switch not only on miss, but also on data return
- Problem: Switching has performance overhead
  - Pipeline and window flush
  - Reduced locality and increased resource contention (frequent switches increase resource contention and reduce locality)
- One possible solution
  - Estimate the slowdown of each thread compared to when run alone
  - Enforce switching when slowdowns become significantly unbalanced
  - Gabor et al., “Fairness and Throughput in Switch on Event Multithreading,” MICRO 2006.
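The slowdown-balancing idea can be expressed in a few lines of code. This is a simplified Python sketch, not the mechanism from Gabor et al.: it assumes each thread's stand-alone IPC (`alone_ipc`) is known or estimated elsewhere, measures progress in retired instructions over a common elapsed time, and uses a hypothetical `threshold` of 1.5 to decide when slowdowns are "significantly unbalanced".

```python
def estimated_slowdown(elapsed_cycles, instructions_done, alone_ipc):
    """Slowdown = time actually taken / time the same work would take alone."""
    if instructions_done == 0:
        return float("inf")
    alone_cycles = instructions_done / alone_ipc
    return elapsed_cycles / alone_cycles


def pick_thread(foreground, elapsed_cycles, progress, alone_ipc, threshold=1.5):
    """Return the thread to run next.

    Stay on `foreground` unless the most slowed-down thread's slowdown
    exceeds `threshold` times that of the least slowed-down thread."""
    slowdowns = {
        tid: estimated_slowdown(elapsed_cycles, progress[tid], alone_ipc[tid])
        for tid in progress
    }
    worst = max(slowdowns, key=slowdowns.get)
    best = min(slowdowns, key=slowdowns.get)
    if slowdowns[worst] > threshold * slowdowns[best]:
        return worst   # enforce a switch toward the starved thread
    return foreground
```

After 1000 cycles, a thread that retired 100 instructions (slowdown 10) next to one that retired 900 (slowdown about 1.1) forces a switch; two threads at slowdowns 2.0 and about 2.2 do not, so switching overhead is paid only when fairness actually demands it.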

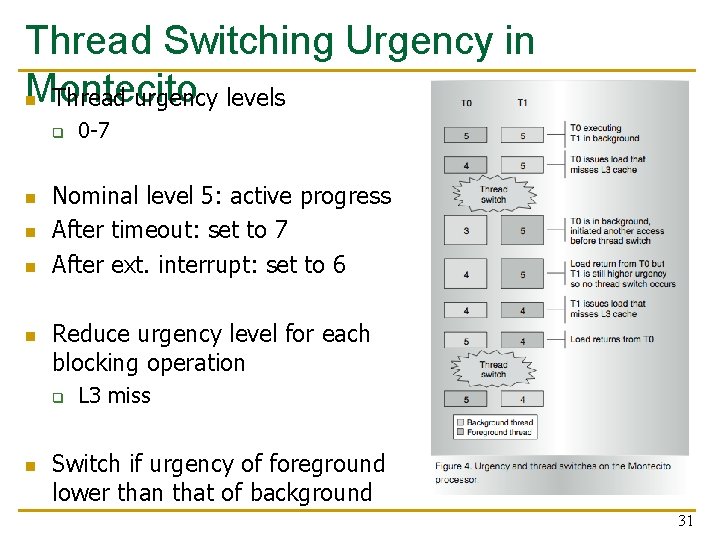

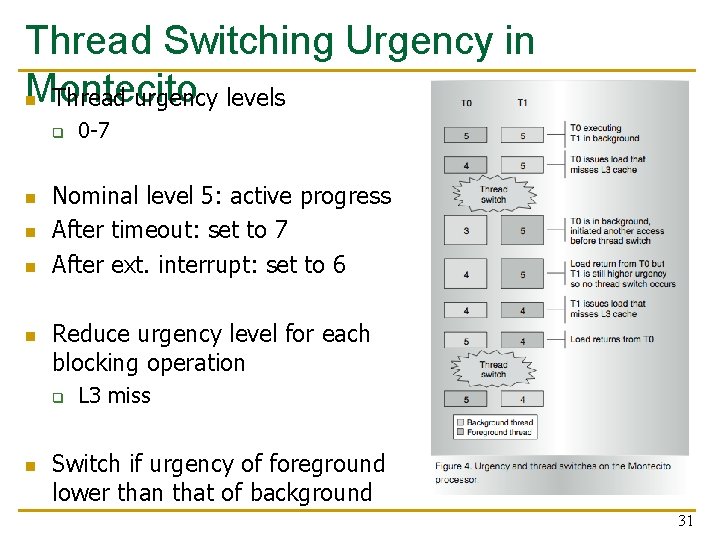

Thread Switching Urgency in Montecito
- Thread urgency levels
  - 0-7
- Nominal level 5: active progress
- After timeout: set to 7
- After ext. interrupt: set to 6
- Reduce urgency level for each blocking operation
  - L3 miss
- Switch if urgency of foreground is lower than that of background
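The urgency rules above map onto a small state machine. A hypothetical Python sketch: the levels (0-7, nominal 5, 7 after timeout, 6 after an external interrupt) and the switch condition come from the slide, while the step size of one per L3 miss and the saturation at level 0 are assumptions.

```python
NOMINAL = 5          # active progress
AFTER_INTERRUPT = 6  # after an external interrupt
AFTER_TIMEOUT = 7    # after a timeout
MIN_LEVEL = 0        # urgency levels range over 0-7


class ThreadUrgency:
    """Hypothetical model of one thread's urgency level."""

    def __init__(self):
        self.level = NOMINAL

    def on_timeout(self):
        self.level = AFTER_TIMEOUT

    def on_external_interrupt(self):
        self.level = AFTER_INTERRUPT

    def on_l3_miss(self):
        # Assumption: each blocking operation lowers urgency by one step,
        # saturating at the lowest level.
        self.level = max(MIN_LEVEL, self.level - 1)


def should_switch(foreground, background):
    """Switch if the foreground thread is less urgent than the background."""
    return foreground.level < background.level
```

An L3 miss drops a nominal foreground thread to level 4 and triggers a switch to a nominal background thread. A background thread that has itself missed twice (level 3) does not win the core until, for example, a timeout raises it to 7.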

Simultaneous Multithreading
- Fine-grained and coarse-grained multithreading can start execution of instructions from only a single thread at a given cycle
- Execution unit (or pipeline stage) utilization can be low if there are not enough instructions from a thread to “dispatch” in one cycle
  - In a machine with multiple execution units (i.e., superscalar)
- Idea: Dispatch instructions from multiple threads in the same cycle (to keep multiple execution units utilized)
  - Hirata et al., “An Elementary Processor Architecture with Simultaneous Instruction Issuing from Multiple Threads,” ISCA 1992.
  - Yamamoto et al., “Performance Estimation of Multistreamed, Superscalar Processors,” HICSS 1994.
  - Tullsen et al., “Simultaneous Multithreading: Maximizing On-Chip Parallelism,” ISCA 1995.

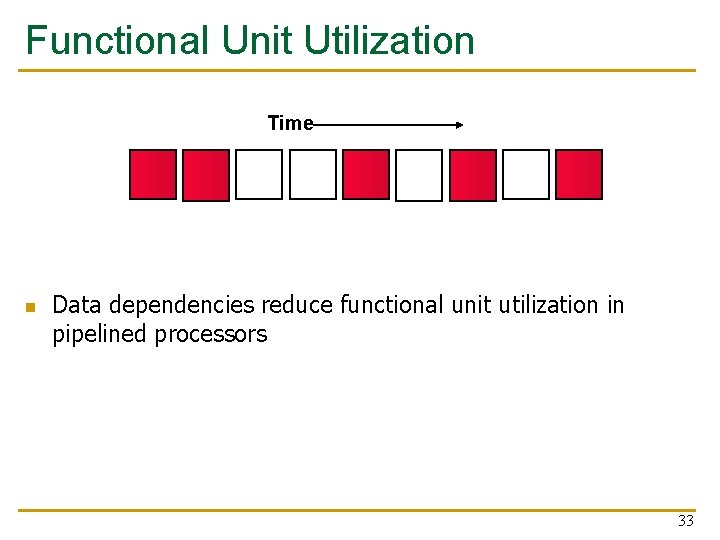

Functional Unit Utilization
- Data dependencies reduce functional unit utilization in pipelined processors

Functional Unit Utilization in Superscalar
- Functional unit utilization becomes lower in superscalar, OoO machines. Finding 4 instructions in parallel is not always possible

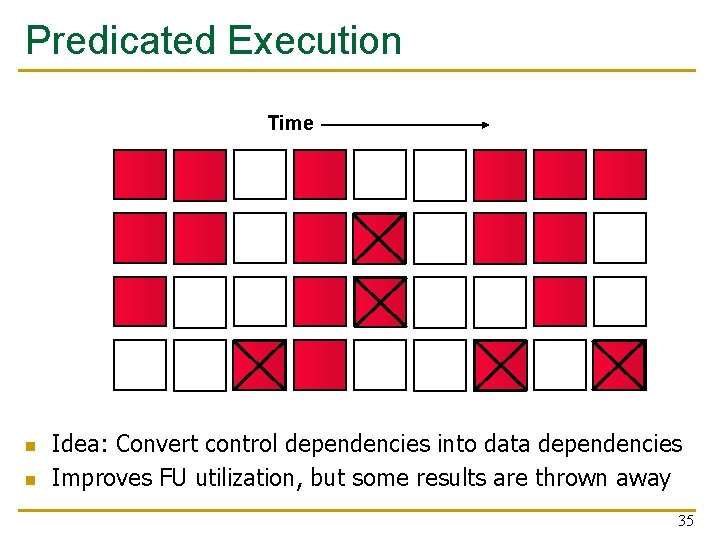

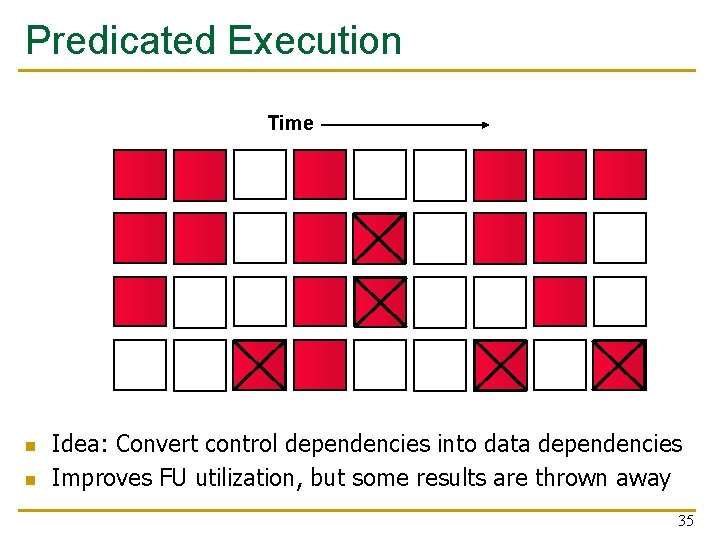

Predicated Execution
- Idea: Convert control dependencies into data dependencies
- Improves FU utilization, but some results are thrown away

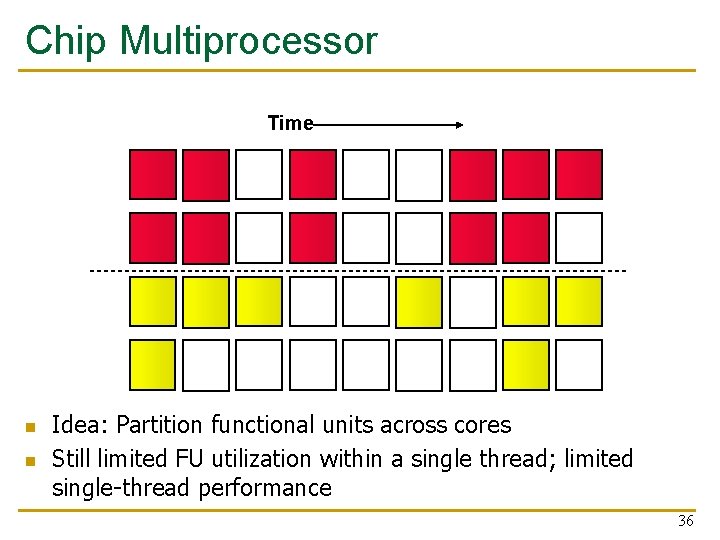

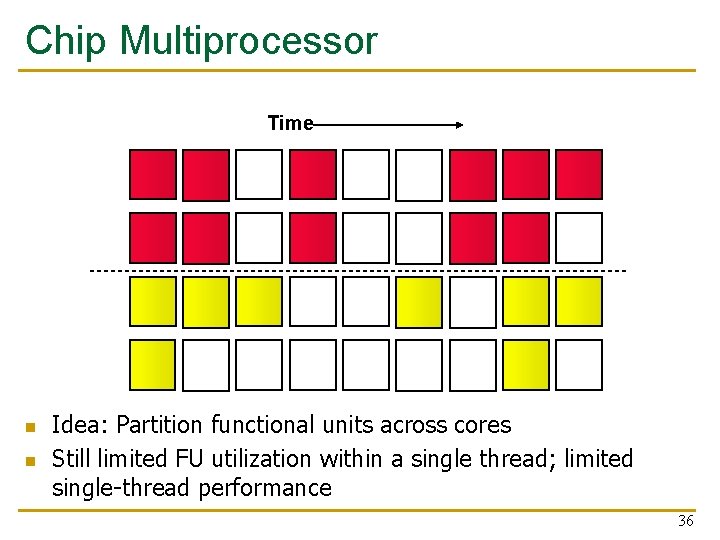

Chip Multiprocessor
- Idea: Partition functional units across cores
- Still limited FU utilization within a single thread; limited single-thread performance

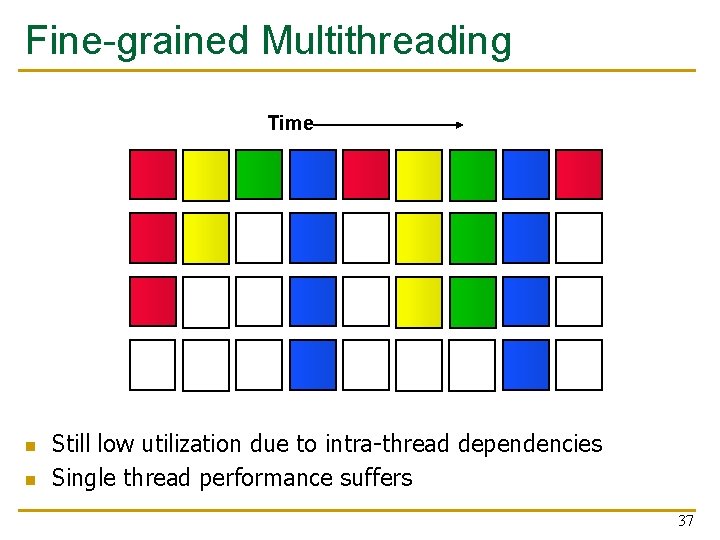

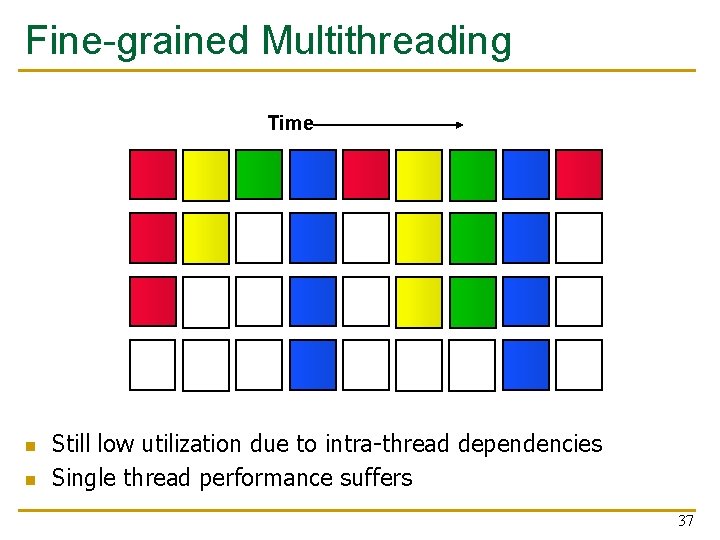

Fine-grained Multithreading
- Still low utilization due to intra-thread dependencies
- Single-thread performance suffers

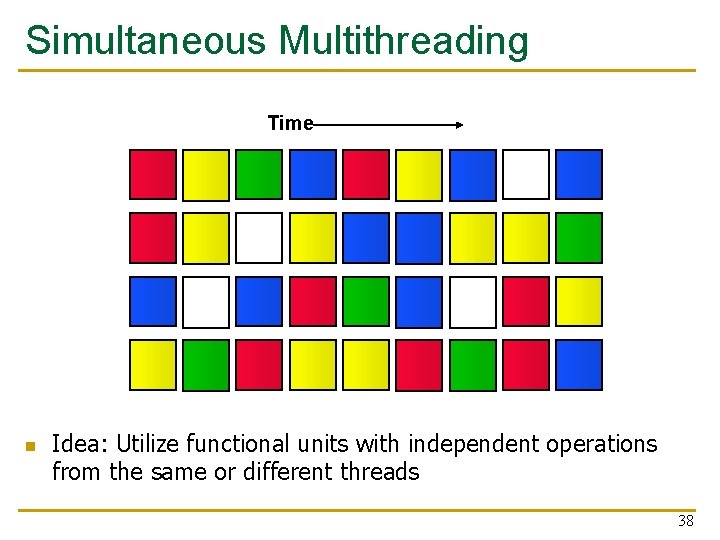

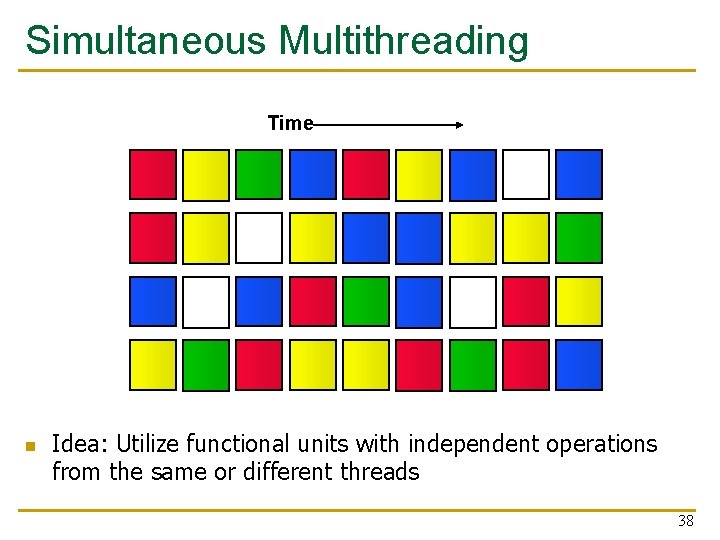

Simultaneous Multithreading
- Idea: Utilize functional units with independent operations from the same or different threads

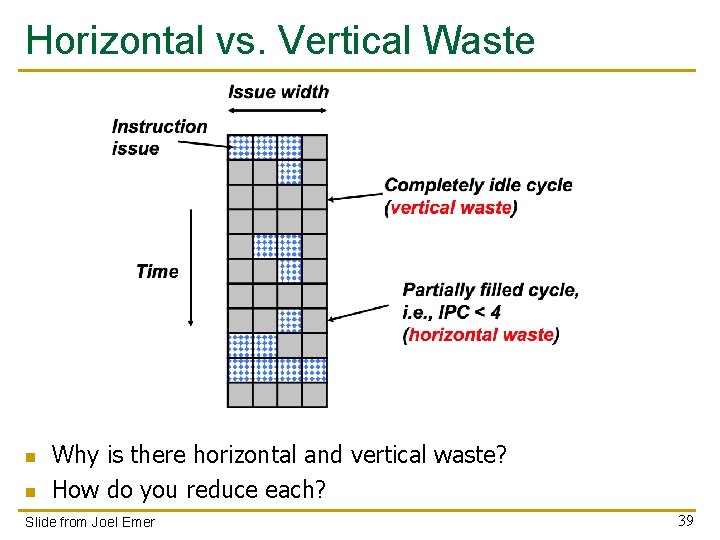

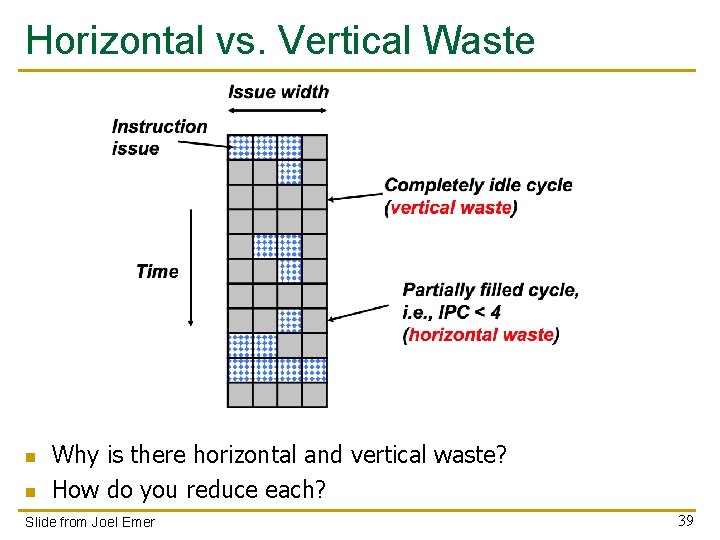

Horizontal vs. Vertical Waste
- Why is there horizontal and vertical waste?
- How do you reduce each?
- Slide from Joel Emer
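Taking vertical waste as issue slots lost in cycles where nothing issues, and horizontal waste as empty slots in cycles where something does issue, both can be counted from a schedule. The Python sketch below is a simplification: each thread's per-cycle count of ready instructions is a fixed hypothetical input, unaffected by what issued earlier or by contention between threads.

```python
def classify_waste(issued_per_cycle, width):
    """Return (vertical, horizontal) counts of empty issue slots."""
    vertical = horizontal = 0
    for n in issued_per_cycle:
        if n == 0:
            vertical += width          # the whole cycle is empty
        else:
            horizontal += width - n    # partially filled cycle
    return vertical, horizontal


def fgmt_issue(ready_per_thread, width):
    """Fine-grained MT: cycle c issues only from thread c mod N."""
    n = len(ready_per_thread)
    cycles = len(ready_per_thread[0])
    return [min(width, ready_per_thread[c % n][c]) for c in range(cycles)]


def smt_issue(ready_per_thread, width):
    """SMT: each cycle fills slots from all threads' ready instructions."""
    return [min(width, sum(cycle)) for cycle in zip(*ready_per_thread)]
```

For a 4-wide machine, with thread A ready `[2, 0, 1, 0]` and thread B ready `[2, 3, 1, 2]`, A alone wastes 8 slots vertically and 5 horizontally. Fine-grained switching removes the vertical waste but leaves 8 horizontal, and SMT cuts horizontal waste to 5 by filling the slots one thread leaves with instructions from the other.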

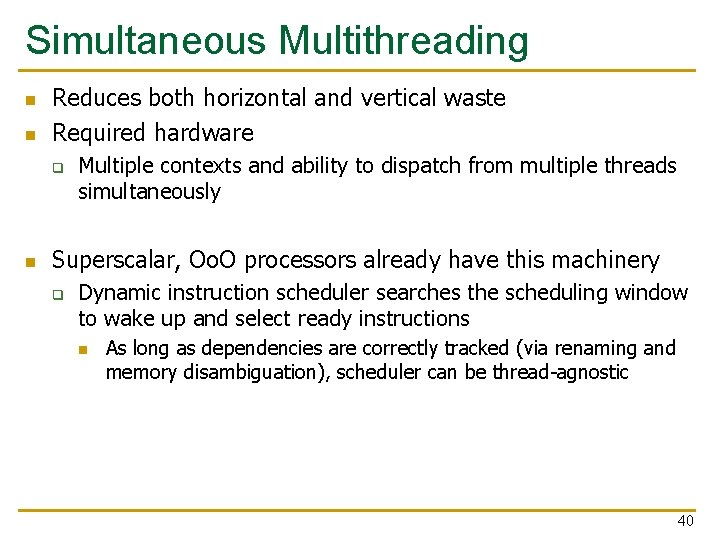

Simultaneous Multithreading
- Reduces both horizontal and vertical waste
- Required hardware
  - Multiple contexts and ability to dispatch from multiple threads simultaneously
- Superscalar, OoO processors already have this machinery
  - Dynamic instruction scheduler searches the scheduling window to wake up and select ready instructions
  - As long as dependencies are correctly tracked (via renaming and memory disambiguation), the scheduler can be thread-agnostic
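A minimal Python sketch of a thread-agnostic wakeup/select loop. It assumes register renaming has already given every thread's values distinct physical register numbers, a 1-cycle execution latency, and oldest-first selection; `WindowEntry` and the function names are hypothetical. The selection logic never reads `tid`, yet instructions from different threads issue in the same cycle.

```python
from dataclasses import dataclass


@dataclass(eq=False)
class WindowEntry:
    tid: int      # owning thread; reported in the output, ignored by select
    age: int      # smaller = older
    srcs: tuple   # renamed (physical) source registers
    dest: int     # renamed (physical) destination register


def wakeup_select(window, ready_regs, width):
    """Pick up to `width` oldest entries whose sources are all ready."""
    ready = [e for e in window if all(r in ready_regs for r in e.srcs)]
    ready.sort(key=lambda e: e.age)
    return ready[:width]


def schedule_all(window, ready_regs, width):
    """Issue every entry; return the (tid, dest) pairs issued per cycle.

    A destination becomes ready for dependents in the following cycle."""
    pending = list(window)
    ready_regs = set(ready_regs)
    cycles = []
    while pending:
        picked = wakeup_select(pending, ready_regs, width)
        if not picked:  # remaining entries wait on registers nobody produces
            break
        cycles.append([(e.tid, e.dest) for e in picked])
        pending = [e for e in pending if e not in picked]
        ready_regs.update(e.dest for e in picked)
    return cycles
```

With two threads each holding a two-instruction dependence chain (thread 0 on physical registers 0 → 1 → 2, thread 1 on 10 → 11 → 12), a 2-wide scheduler issues one instruction from each thread per cycle and finishes in two cycles; a 1-wide scheduler needs four.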

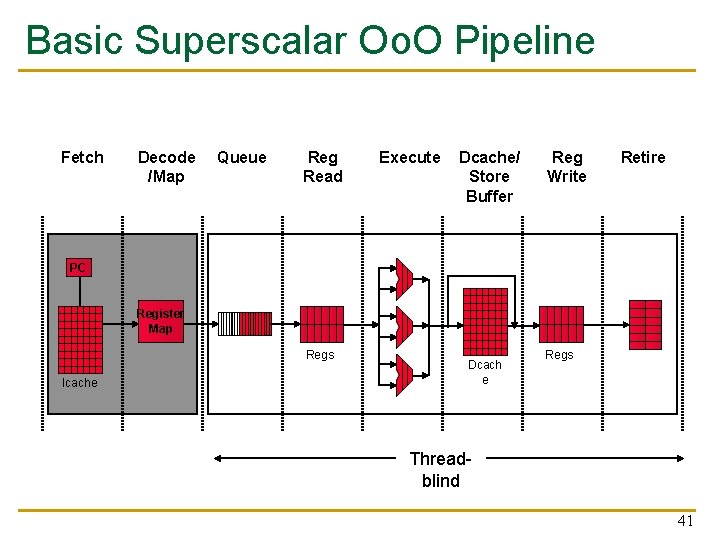

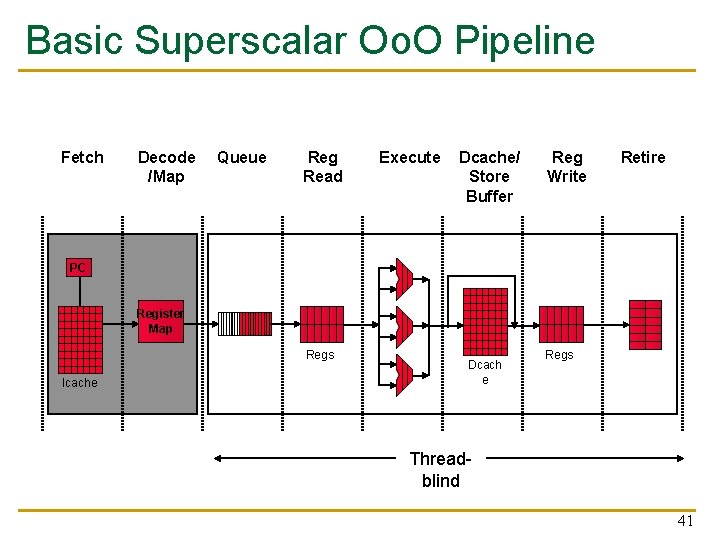

Basic Superscalar OoO Pipeline
[Figure: stages Fetch, Decode/Map, Queue, Reg Read, Execute, Dcache/Store Buffer, Reg Write, Retire; structures PC, Register Map, Regs, Icache, Dcache; the pipeline is labeled “thread-blind”]

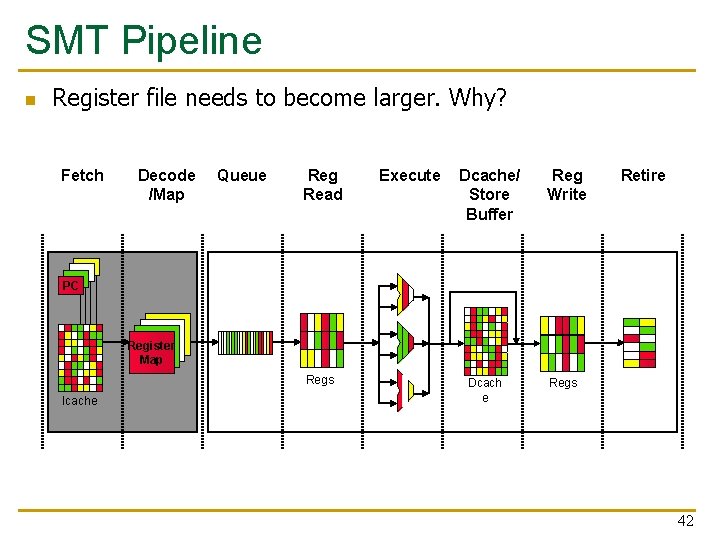

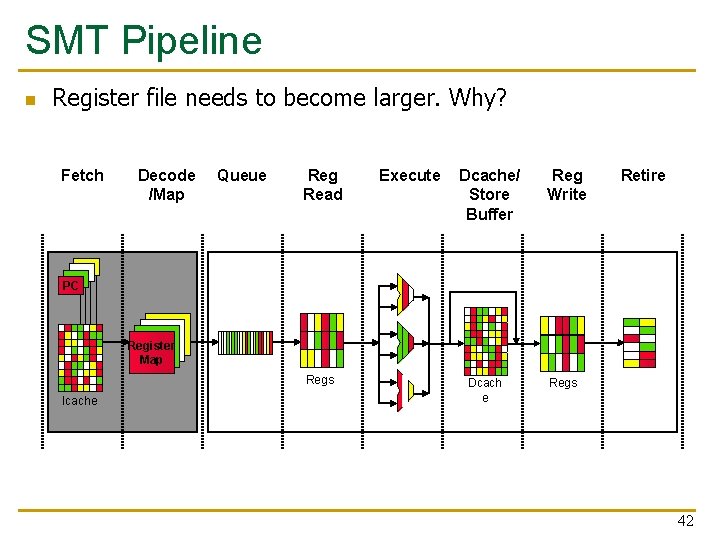

SMT Pipeline
- Register file needs to become larger. Why?
[Figure: stages Fetch, Decode/Map, Queue, Reg Read, Execute, Dcache/Store Buffer, Reg Write, Retire; structures PC, Register Map, Regs, Icache, Dcache]
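One way to see why the register file grows: every thread's committed architectural registers must occupy physical registers, on top of the extra registers used to rename in-flight instructions. The Python sketch below uses hypothetical sizes (32 architectural registers per thread, 96 rename registers) purely for illustration.

```python
def physical_regs_needed(num_threads, arch_regs=32, rename_regs=96):
    """Registers for every thread's architectural state plus rename headroom."""
    return num_threads * arch_regs + rename_regs


def rename_regs_left(total_regs, num_threads, arch_regs=32):
    """Rename registers remaining if the register file size is kept fixed."""
    return total_regs - num_threads * arch_regs
```

Under these assumed sizes, a 128-entry file leaves 96 rename registers for one thread but none for four, so adding contexts without enlarging the file would leave no room to rename in-flight instructions.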

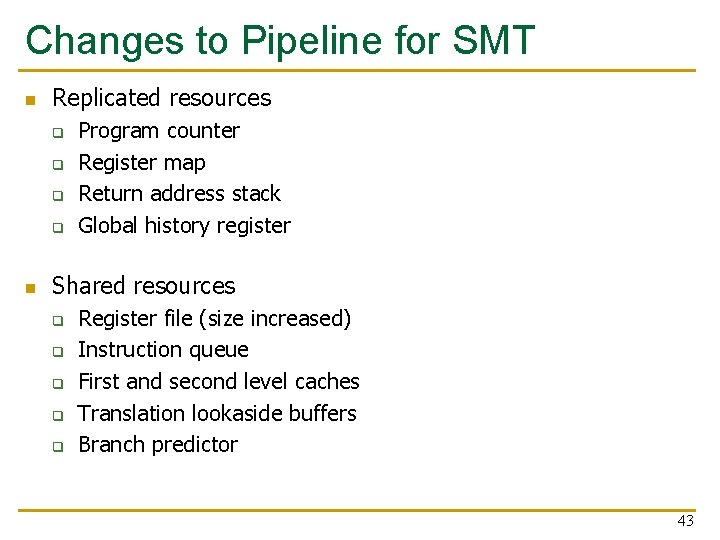

Changes to Pipeline for SMT
- Replicated resources
  - Program counter
  - Register map
  - Return address stack
  - Global history register
- Shared resources
  - Register file (size increased)
  - Instruction queue
  - First and second level caches
  - Translation lookaside buffers
  - Branch predictor