EECECS 6304 Computer Architecture Lecture 2 82317 Yiorgos

![Instruction Set Architecture. . . the attributes of a [computing] system as seen by Instruction Set Architecture. . . the attributes of a [computing] system as seen by](https://slidetodoc.com/presentation_image/23130af9f84479cee0c1dbad0acaf396/image-3.jpg)

- Slides: 25

EE/CE/CS 6304 Computer Architecture Lecture #2 (8/23/17) Yiorgos Makris Professor Department of Electrical Engineering University of Texas at Dallas Course Web-site: http: //www. utdallas. edu/~gxm 112130/EE 6304 FA 17

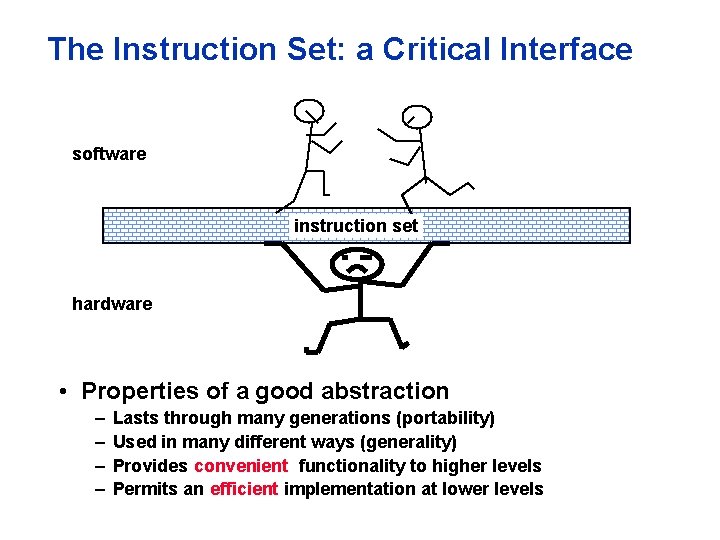

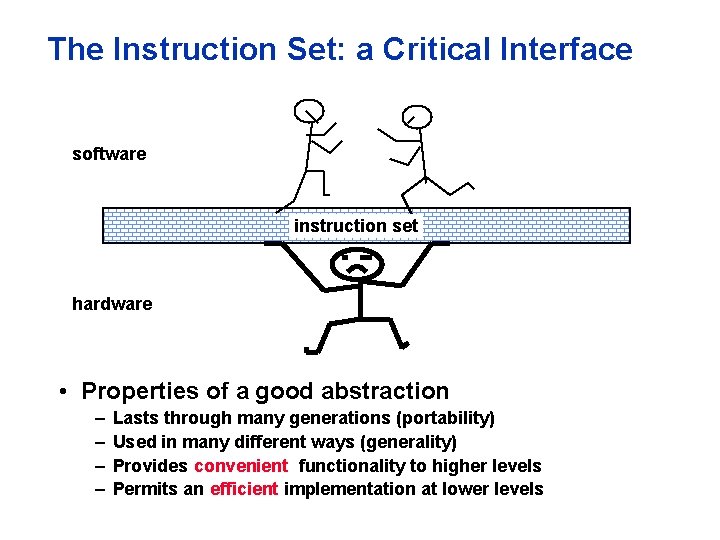

The Instruction Set: a Critical Interface software instruction set hardware • Properties of a good abstraction – – Lasts through many generations (portability) Used in many different ways (generality) Provides convenient functionality to higher levels Permits an efficient implementation at lower levels

![Instruction Set Architecture the attributes of a computing system as seen by Instruction Set Architecture. . . the attributes of a [computing] system as seen by](https://slidetodoc.com/presentation_image/23130af9f84479cee0c1dbad0acaf396/image-3.jpg)

Instruction Set Architecture. . . the attributes of a [computing] system as seen by the programmer, i. e. the conceptual structure and functional behavior, as distinct from the organization of the data flows and controls the logic design, and the physical implementation. – Amdahl, Blaaw, and Brooks, 1964 SOFTWARE -- Organization of Programmable Storage -- Data Types & Data Structures: Encodings & Representations -- Instruction Formats -- Instruction (or Operation Code) Set -- Modes of Addressing and Accessing Data Items and Instructions -- Exceptional Conditions

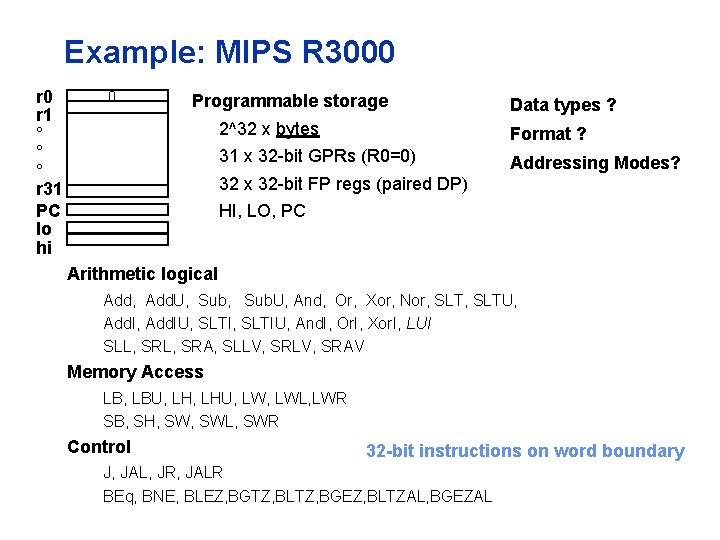

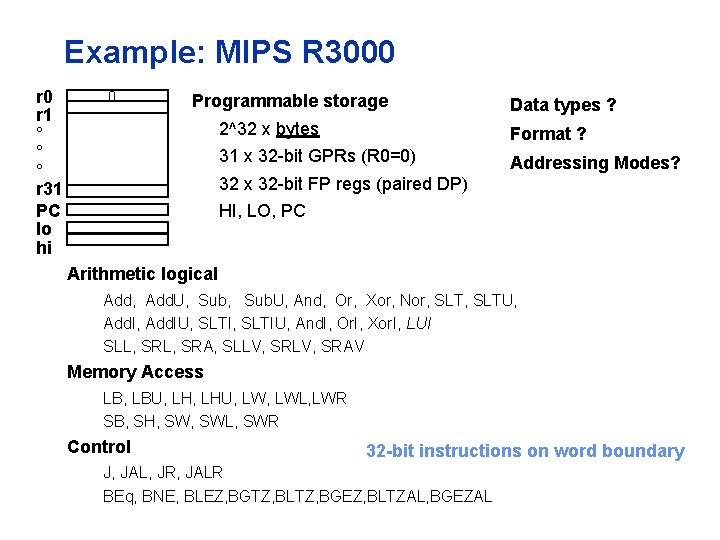

Example: MIPS R 3000 r 1 ° ° ° r 31 PC lo hi 0 Programmable storage Data types ? 2^32 x bytes Format ? 31 x 32 -bit GPRs (R 0=0) Addressing Modes? 32 x 32 -bit FP regs (paired DP) HI, LO, PC Arithmetic logical Add, Add. U, Sub. U, And, Or, Xor, Nor, SLTU, Add. IU, SLTIU, And. I, Or. I, Xor. I, LUI SLL, SRA, SLLV, SRAV Memory Access LB, LBU, LHU, LWL, LWR SB, SH, SWL, SWR Control 32 -bit instructions on word boundary J, JAL, JR, JALR BEq, BNE, BLEZ, BGTZ, BLTZ, BGEZ, BLTZAL, BGEZAL

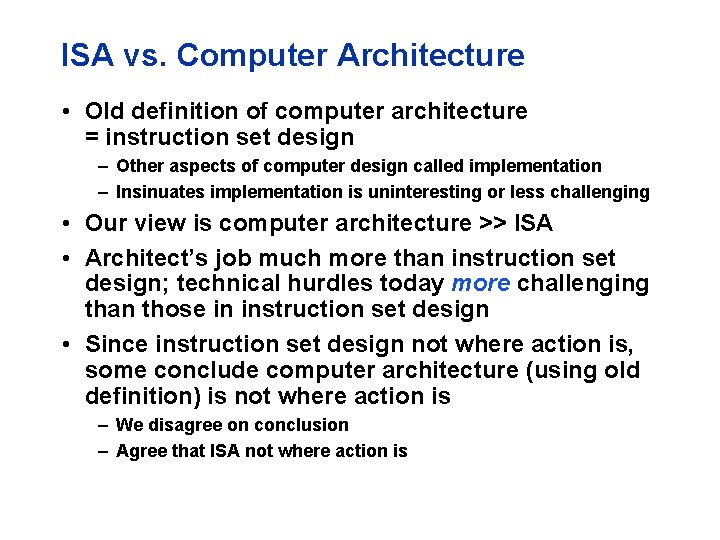

ISA vs. Computer Architecture • Old definition of computer architecture = instruction set design – Other aspects of computer design called implementation – Insinuates implementation is uninteresting or less challenging • Our view is computer architecture >> ISA • Architect’s job much more than instruction set design; technical hurdles today more challenging than those in instruction set design • Since instruction set design not where action is, some conclude computer architecture (using old definition) is not where action is – We disagree on conclusion – Agree that ISA not where action is

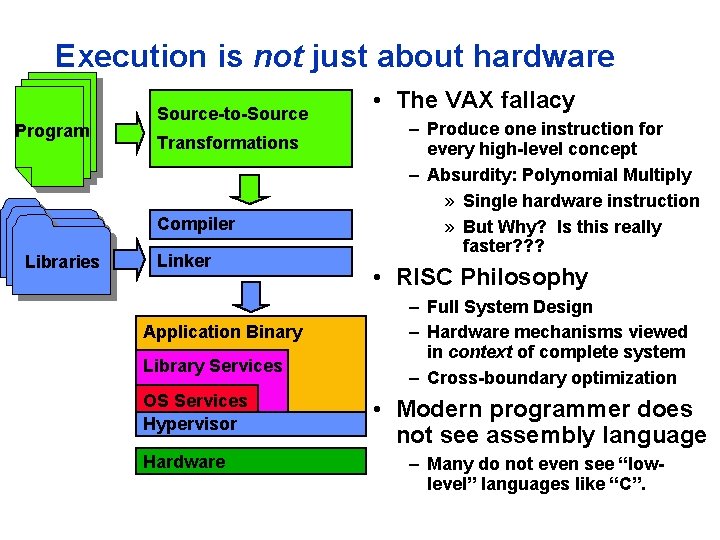

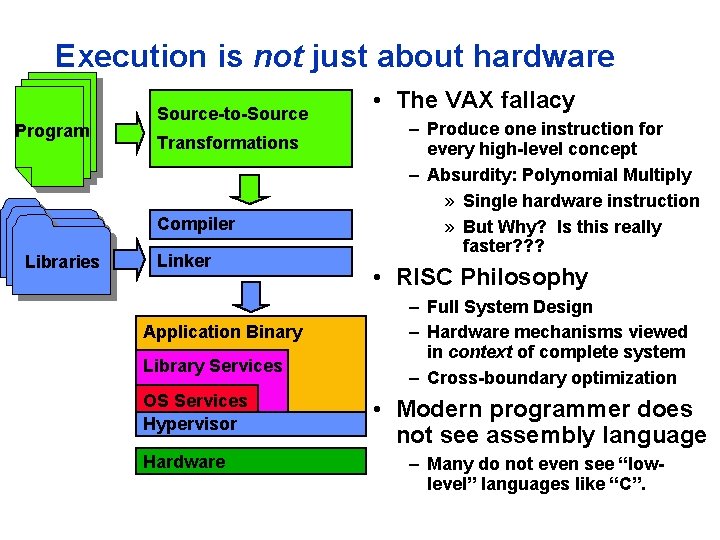

Execution is not just about hardware Program Source-to-Source Transformations Compiler Libraries Linker Application Binary Library Services OS Services Hypervisor Hardware • The VAX fallacy – Produce one instruction for every high-level concept – Absurdity: Polynomial Multiply » Single hardware instruction » But Why? Is this really faster? ? ? • RISC Philosophy – Full System Design – Hardware mechanisms viewed in context of complete system – Cross-boundary optimization • Modern programmer does not see assembly language – Many do not even see “lowlevel” languages like “C”.

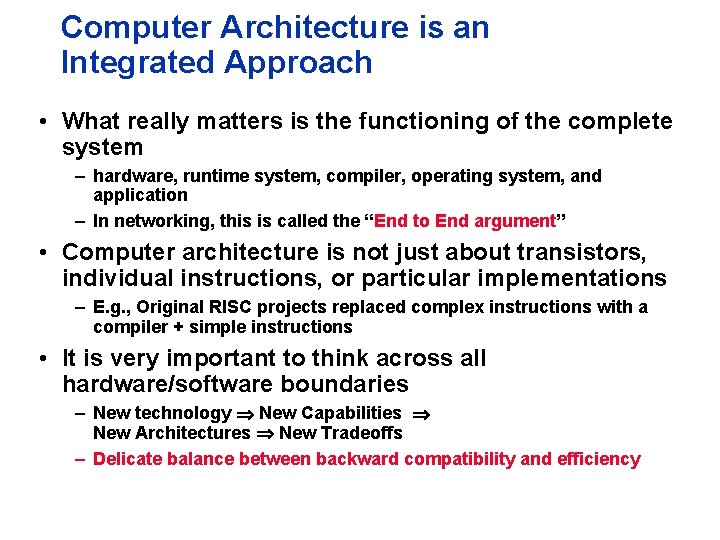

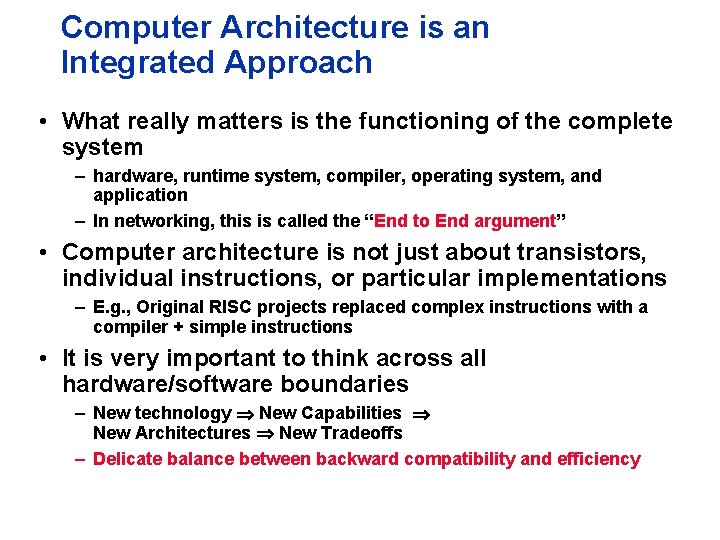

Computer Architecture is an Integrated Approach • What really matters is the functioning of the complete system – hardware, runtime system, compiler, operating system, and application – In networking, this is called the “End to End argument” • Computer architecture is not just about transistors, individual instructions, or particular implementations – E. g. , Original RISC projects replaced complex instructions with a compiler + simple instructions • It is very important to think across all hardware/software boundaries – New technology New Capabilities New Architectures New Tradeoffs – Delicate balance between backward compatibility and efficiency

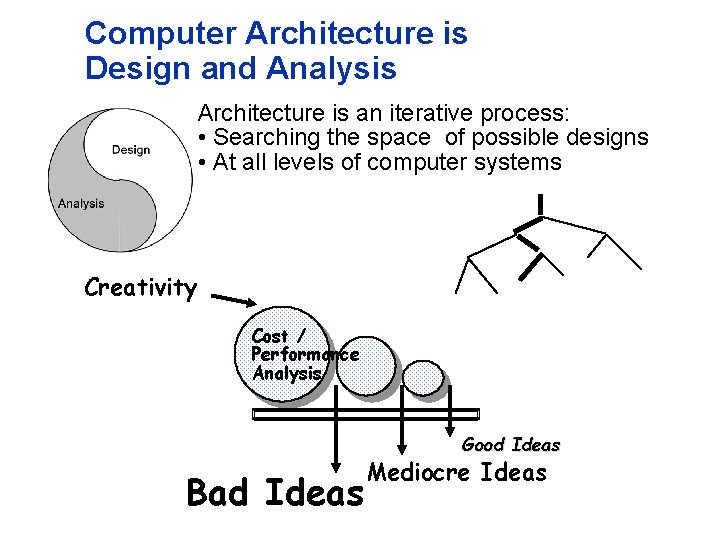

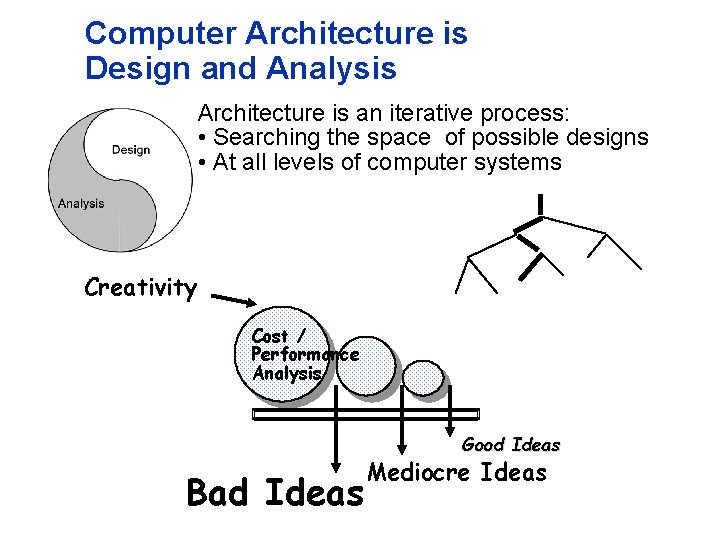

Computer Architecture is Design and Analysis Architecture is an iterative process: • Searching the space of possible designs • At all levels of computer systems Creativity Cost / Performance Analysis Good Ideas Bad Ideas Mediocre Ideas

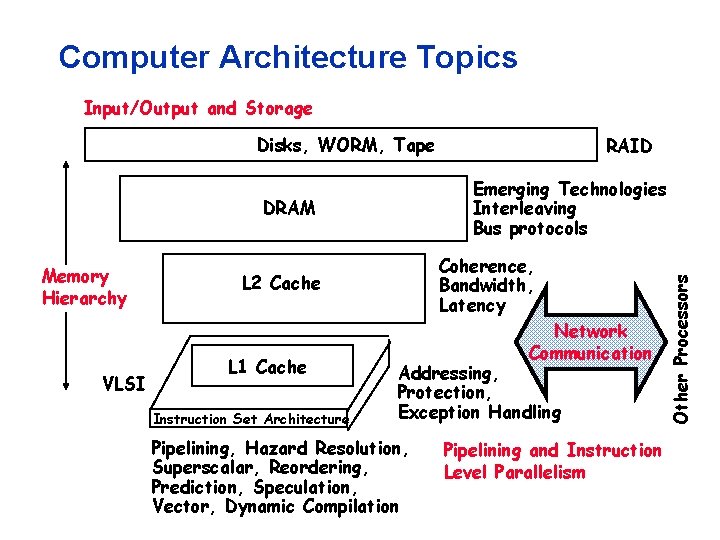

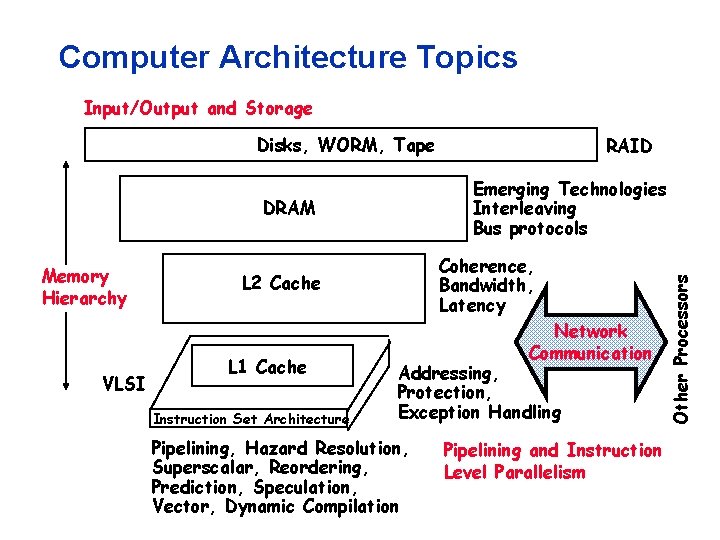

Computer Architecture Topics Input/Output and Storage Disks, WORM, Tape VLSI Coherence, Bandwidth, Latency L 2 Cache L 1 Cache Instruction Set Architecture Network Communication Addressing, Protection, Exception Handling Pipelining, Hazard Resolution, Superscalar, Reordering, Prediction, Speculation, Vector, Dynamic Compilation Pipelining and Instruction Level Parallelism Other Processors Emerging Technologies Interleaving Bus protocols DRAM Memory Hierarchy RAID

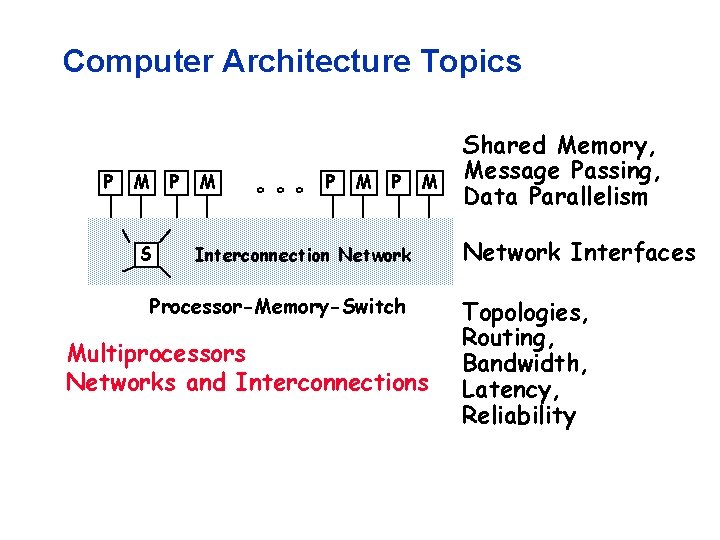

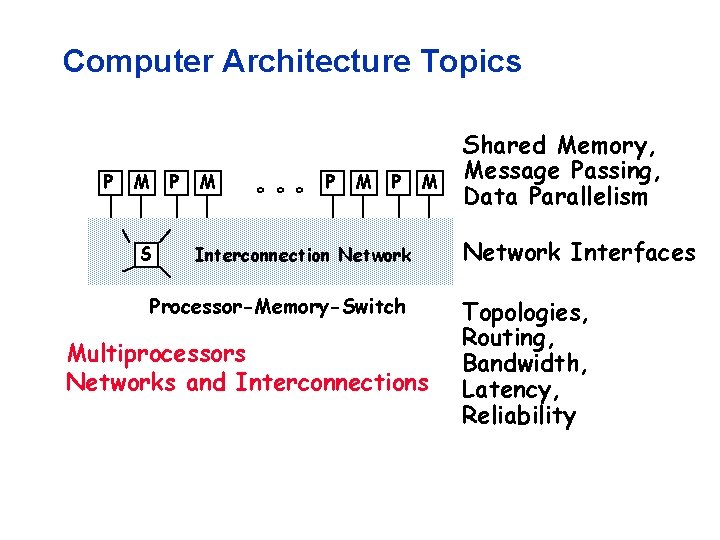

Computer Architecture Topics P M P S M ° ° ° P M Interconnection Network Processor-Memory-Switch Multiprocessors Networks and Interconnections Shared Memory, Message Passing, Data Parallelism Network Interfaces Topologies, Routing, Bandwidth, Latency, Reliability

Building Hardware that Computes

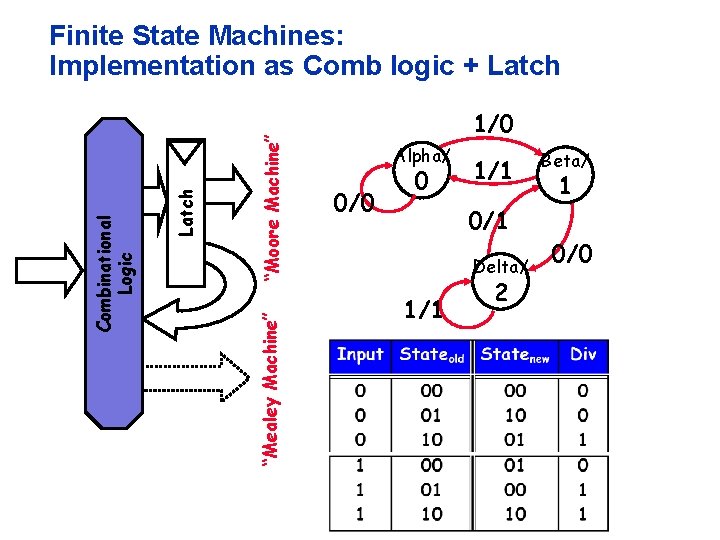

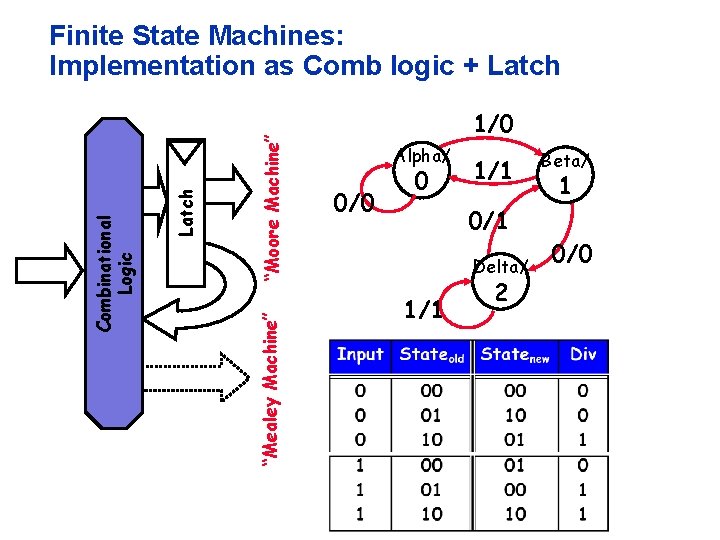

“Moore Machine” “Mealey Machine” Latch Combinational Logic Finite State Machines: Implementation as Comb logic + Latch 1/0 Alpha/ 0/0 0 1/1 0/1 Delta/ 1/1 2 Beta/ 1 0/0

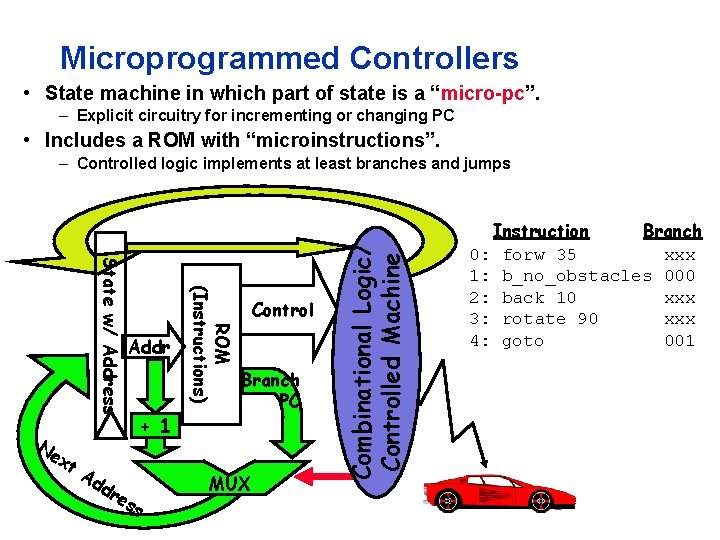

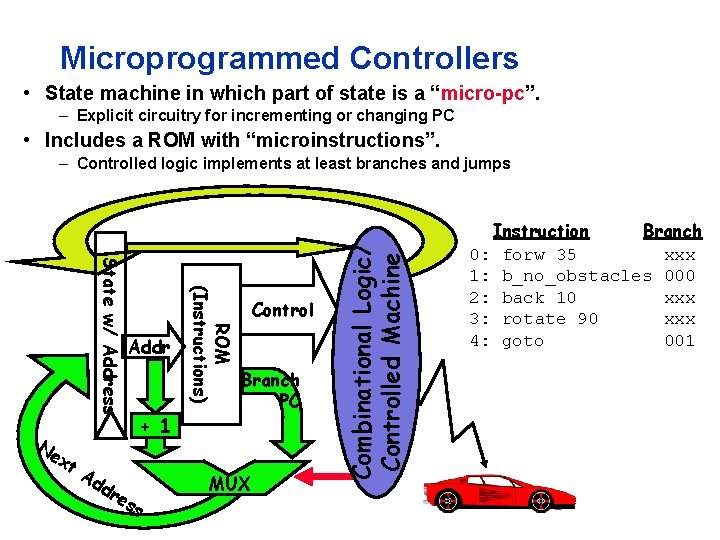

Microprogrammed Controllers • State machine in which part of state is a “micro-pc”. – Explicit circuitry for incrementing or changing PC • Includes a ROM with “microinstructions”. xt Ad d re + 1 ss ROM (Instructions) State w/ Address Ne Addr Control Branch PC MUX Combinational Logic/ Controlled Machine – Controlled logic implements at least branches and jumps Instruction Branch 0: forw 35 xxx 1: b_no_obstacles 000 2: back 10 xxx 3: rotate 90 xxx 4: goto 001

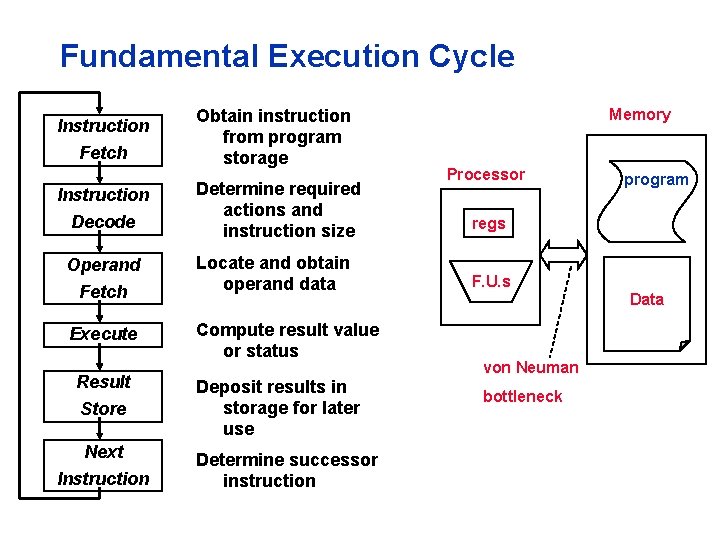

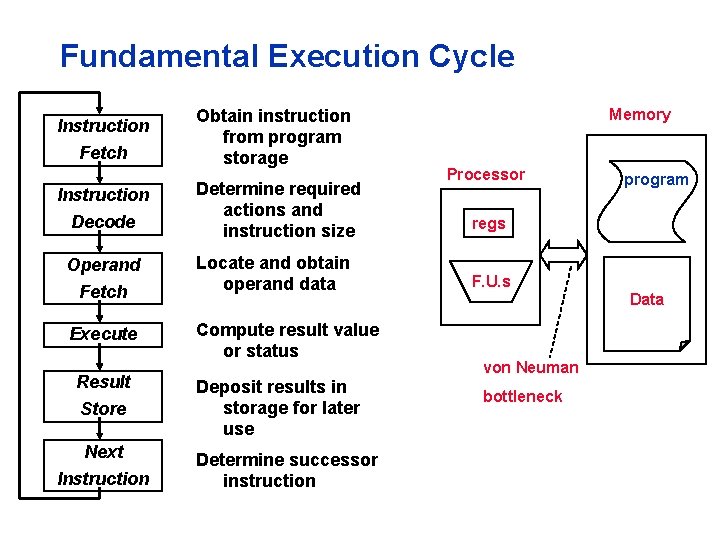

Fundamental Execution Cycle Instruction Fetch Instruction Decode Operand Fetch Execute Result Store Next Instruction Obtain instruction from program storage Determine required actions and instruction size Locate and obtain operand data Compute result value or status Deposit results in storage for later use Determine successor instruction Memory Processor program regs F. U. s von Neuman bottleneck Data

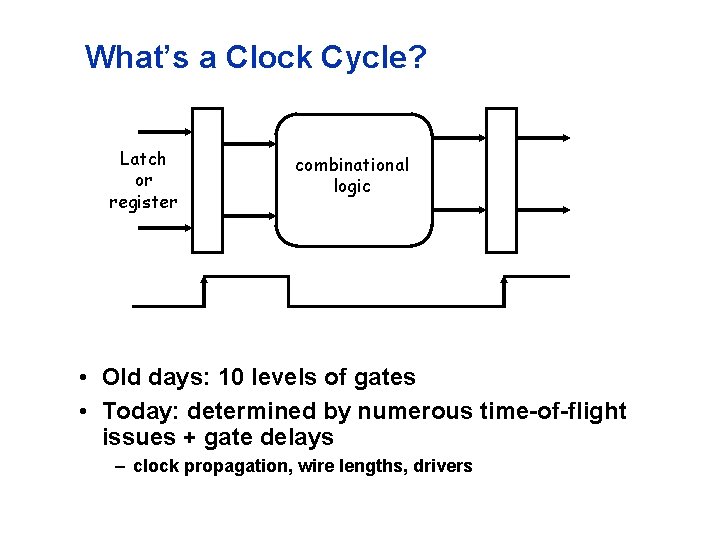

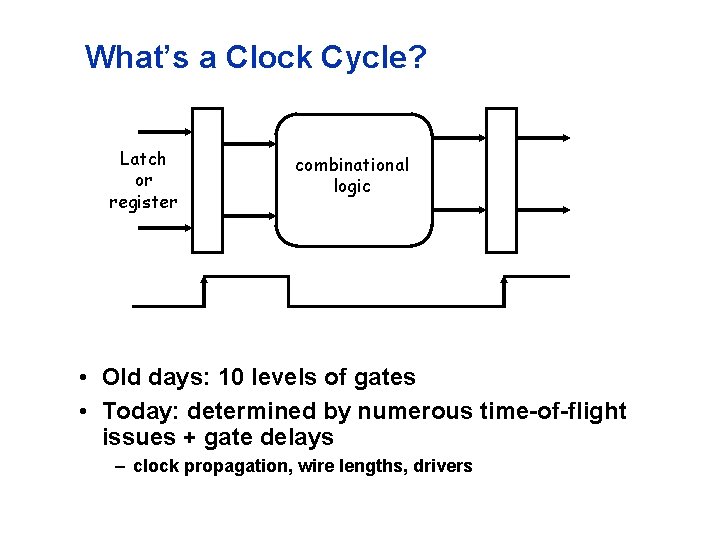

What’s a Clock Cycle? Latch or register combinational logic • Old days: 10 levels of gates • Today: determined by numerous time-of-flight issues + gate delays – clock propagation, wire lengths, drivers

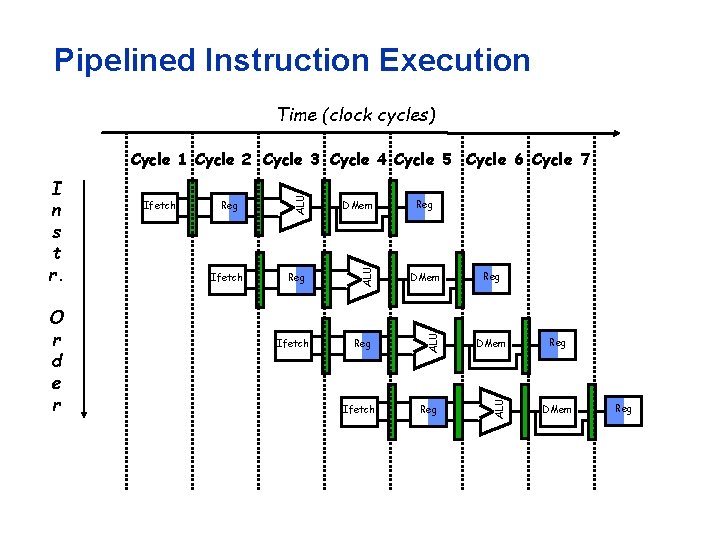

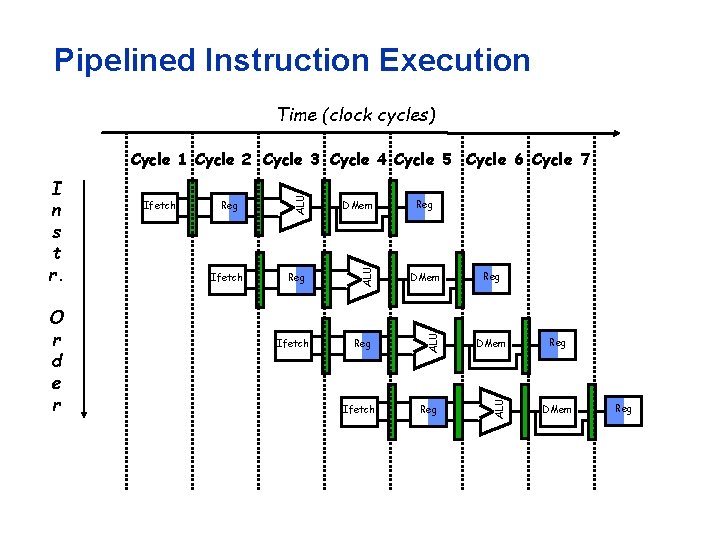

Pipelined Instruction Execution Time (clock cycles) Ifetch DMem Reg ALU O r d e r Ifetch ALU I n s t r. ALU Cycle 1 Cycle 2 Cycle 3 Cycle 4 Cycle 5 Cycle 6 Cycle 7 Ifetch Reg Reg DMem Reg

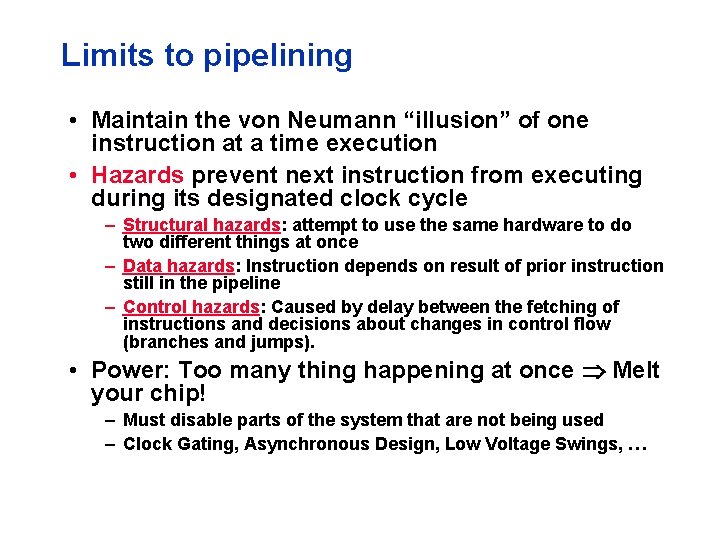

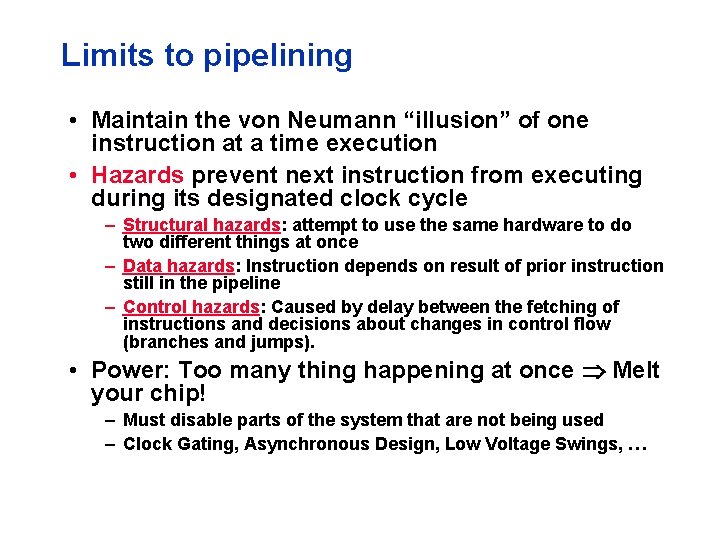

Limits to pipelining • Maintain the von Neumann “illusion” of one instruction at a time execution • Hazards prevent next instruction from executing during its designated clock cycle – Structural hazards: attempt to use the same hardware to do two different things at once – Data hazards: Instruction depends on result of prior instruction still in the pipeline – Control hazards: Caused by delay between the fetching of instructions and decisions about changes in control flow (branches and jumps). • Power: Too many thing happening at once Melt your chip! – Must disable parts of the system that are not being used – Clock Gating, Asynchronous Design, Low Voltage Swings, …

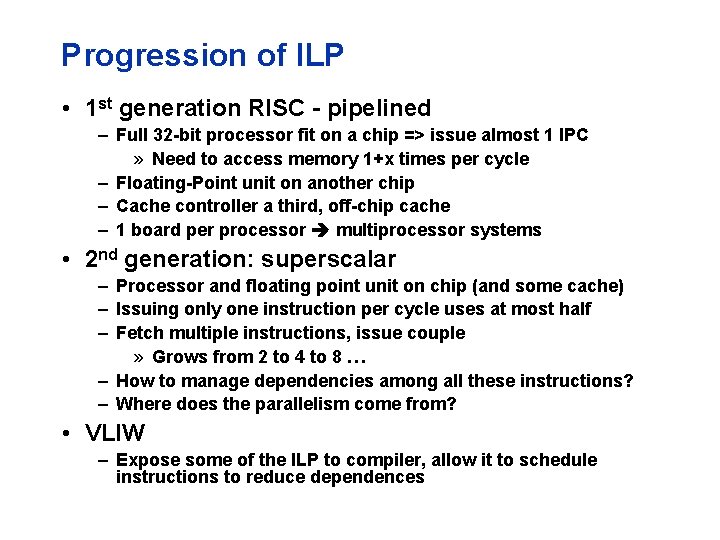

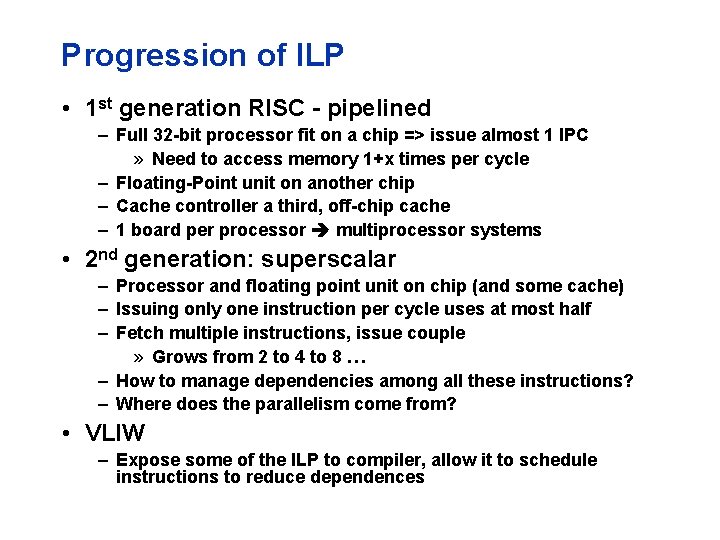

Progression of ILP • 1 st generation RISC - pipelined – Full 32 -bit processor fit on a chip => issue almost 1 IPC » Need to access memory 1+x times per cycle – Floating-Point unit on another chip – Cache controller a third, off-chip cache – 1 board per processor multiprocessor systems • 2 nd generation: superscalar – Processor and floating point unit on chip (and some cache) – Issuing only one instruction per cycle uses at most half – Fetch multiple instructions, issue couple » Grows from 2 to 4 to 8 … – How to manage dependencies among all these instructions? – Where does the parallelism come from? • VLIW – Expose some of the ILP to compiler, allow it to schedule instructions to reduce dependences

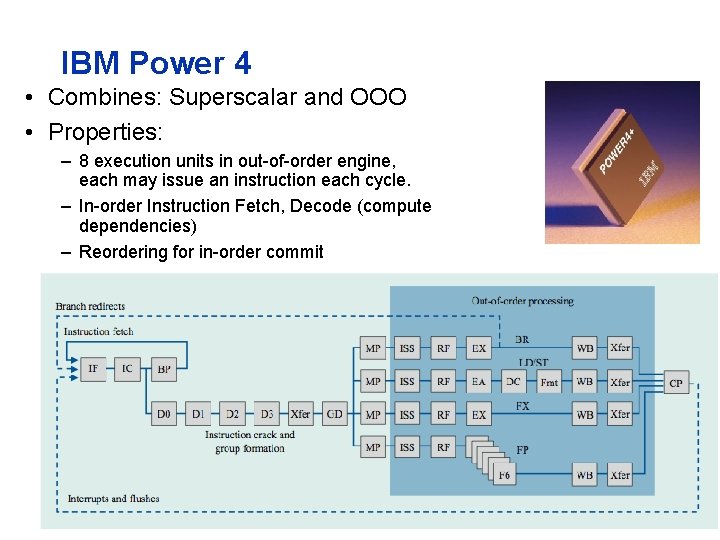

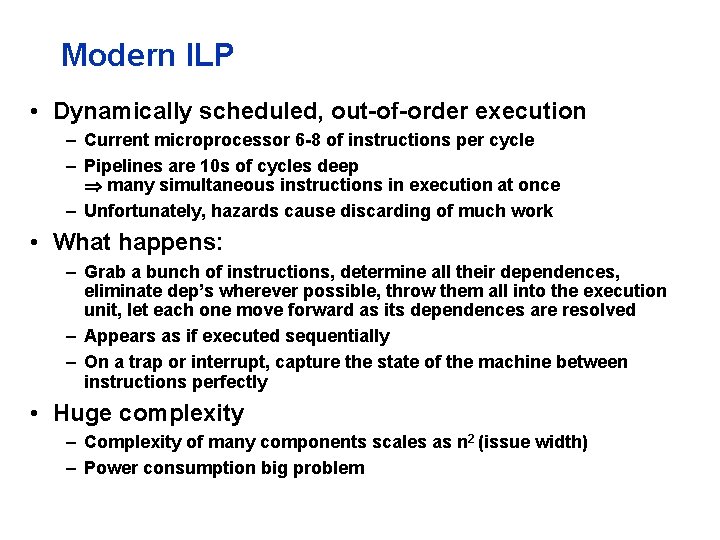

Modern ILP • Dynamically scheduled, out-of-order execution – Current microprocessor 6 -8 of instructions per cycle – Pipelines are 10 s of cycles deep many simultaneous instructions in execution at once – Unfortunately, hazards cause discarding of much work • What happens: – Grab a bunch of instructions, determine all their dependences, eliminate dep’s wherever possible, throw them all into the execution unit, let each one move forward as its dependences are resolved – Appears as if executed sequentially – On a trap or interrupt, capture the state of the machine between instructions perfectly • Huge complexity – Complexity of many components scales as n 2 (issue width) – Power consumption big problem

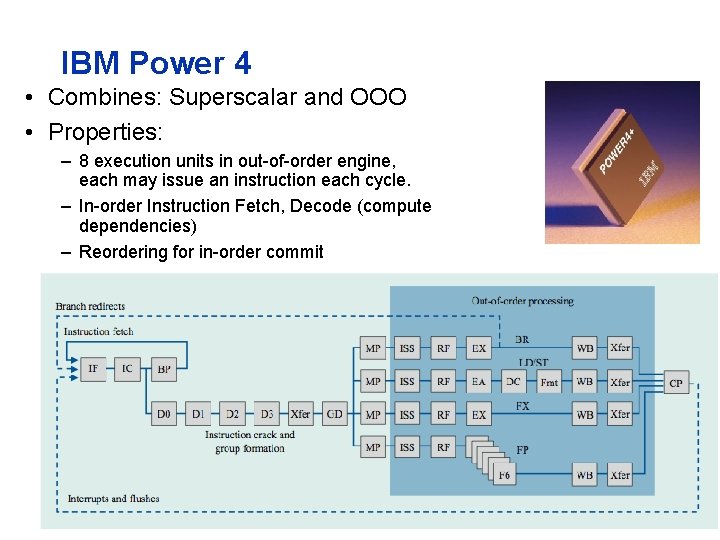

IBM Power 4 • Combines: Superscalar and OOO • Properties: – 8 execution units in out-of-order engine, each may issue an instruction each cycle. – In-order Instruction Fetch, Decode (compute dependencies) – Reordering for in-order commit

When all else fails - guess • Programs make decisions as they go – Conditionals, loops, calls – Translate into branches and jumps (1 of 5 instructions) • How do you determine what instructions for fetch when the ones before it haven’t executed? – Branch prediction – Lot’s of clever machine structures to predict future based on history – Machinery to back out of mis-predictions • Execute all the possible branches – Likely to hit additional branches, perform stores Þspeculative threads ÞWhat can hardware do to make programming (with performance) easier?

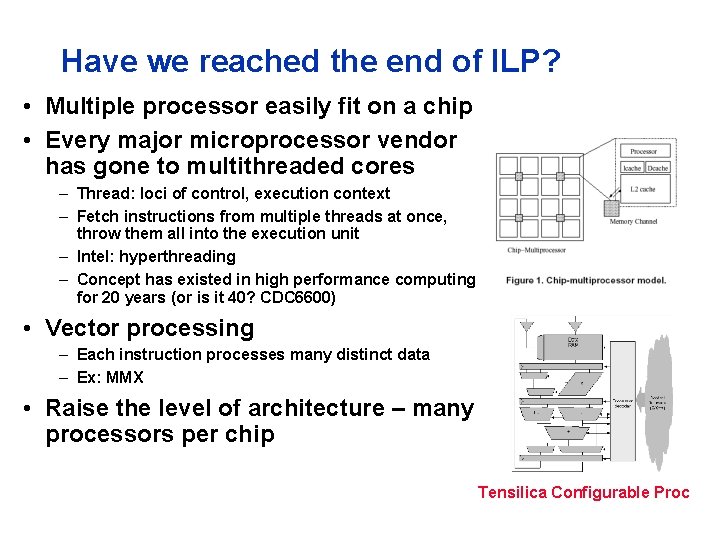

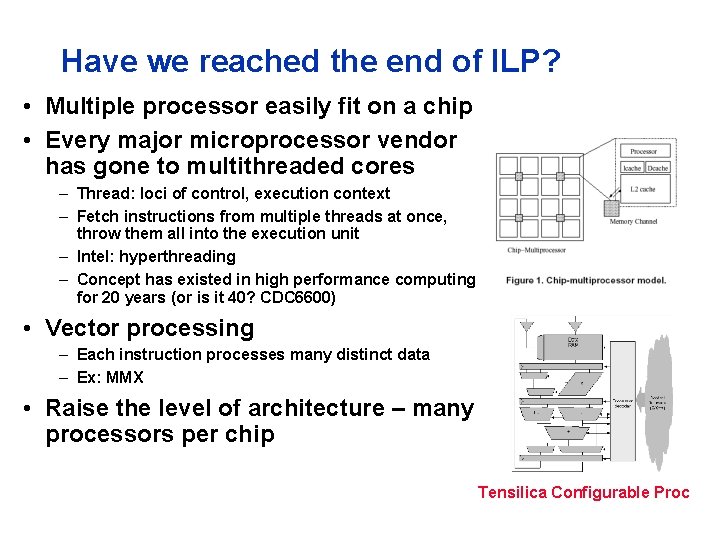

Have we reached the end of ILP? • Multiple processor easily fit on a chip • Every major microprocessor vendor has gone to multithreaded cores – Thread: loci of control, execution context – Fetch instructions from multiple threads at once, throw them all into the execution unit – Intel: hyperthreading – Concept has existed in high performance computing for 20 years (or is it 40? CDC 6600) • Vector processing – Each instruction processes many distinct data – Ex: MMX • Raise the level of architecture – many processors per chip Tensilica Configurable Proc

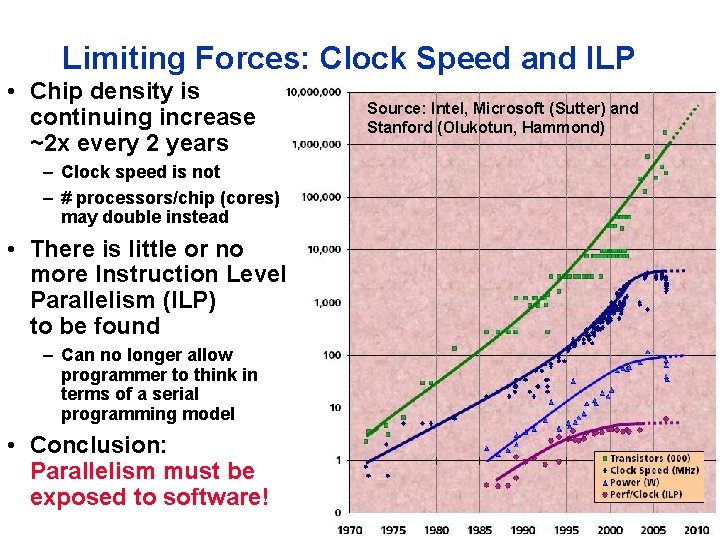

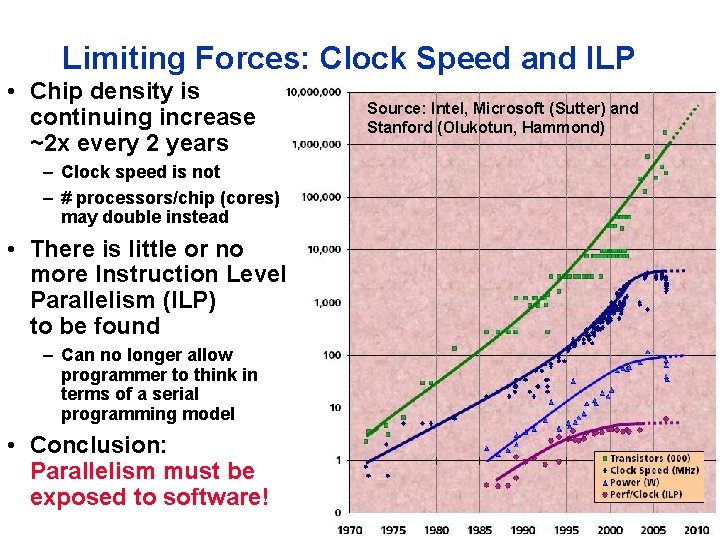

Limiting Forces: Clock Speed and ILP • Chip density is continuing increase ~2 x every 2 years – Clock speed is not – # processors/chip (cores) may double instead • There is little or no more Instruction Level Parallelism (ILP) to be found – Can no longer allow programmer to think in terms of a serial programming model • Conclusion: Parallelism must be exposed to software! Source: Intel, Microsoft (Sutter) and Stanford (Olukotun, Hammond)

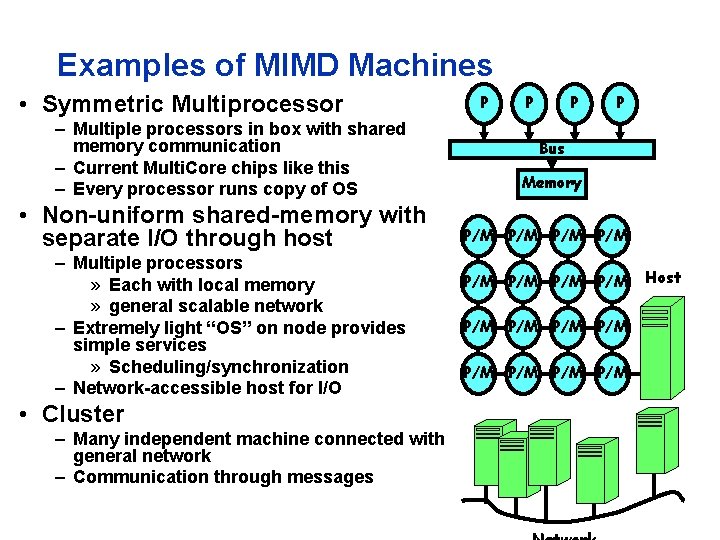

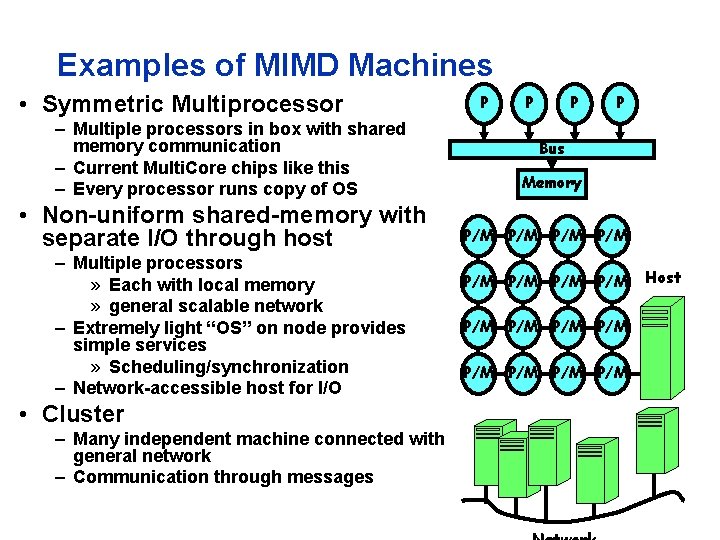

Examples of MIMD Machines • Symmetric Multiprocessor – Multiple processors in box with shared memory communication – Current Multi. Core chips like this – Every processor runs copy of OS • Non-uniform shared-memory with separate I/O through host – Multiple processors » Each with local memory » general scalable network – Extremely light “OS” on node provides simple services » Scheduling/synchronization – Network-accessible host for I/O • Cluster – Many independent machine connected with general network – Communication through messages P P Bus Memory P/M P/M P/M P/M Host

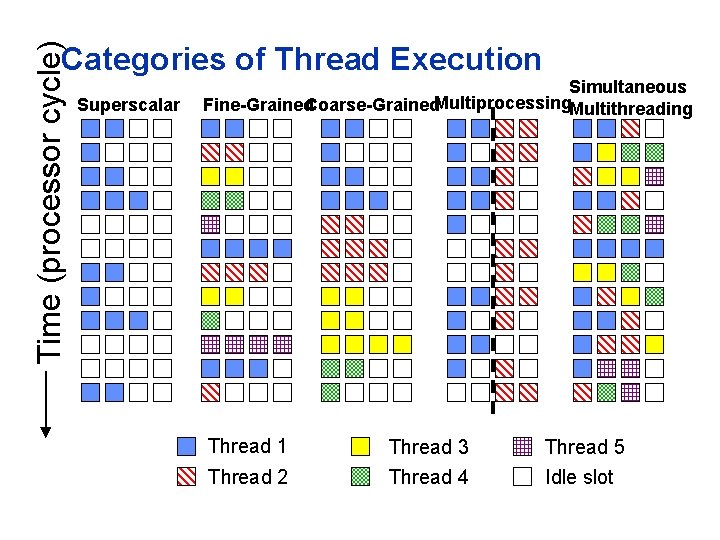

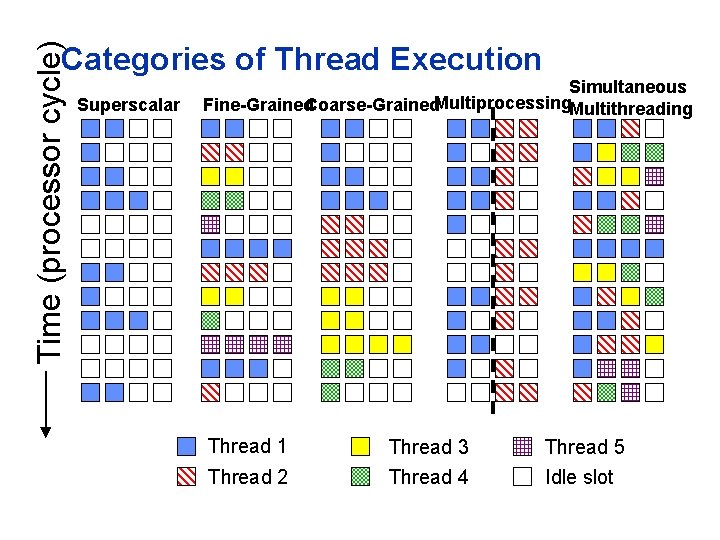

Time (processor cycle) Categories of Thread Execution Superscalar Simultaneous Fine-Grained. Coarse-Grained. Multiprocessing. Multithreading Thread 1 Thread 2 Thread 3 Thread 4 Thread 5 Idle slot