
MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
Piotr (Peter) Mardziel

2

3

WE OWN YOU 4

WE OWN YOUR DATA 5

6

“IF YOU’RE NOT PAYING, YOU’RE THE PRODUCT.” 7

8

9

DATA MISUSE (intentional or unintentional) 10

ALTERNATIVE? 11

ALTERNATIVE?
- “USEFUL” SOCIAL INTERACTIONS
- ECONOMIC INCENTIVES FOR USEFUL SERVICES

12

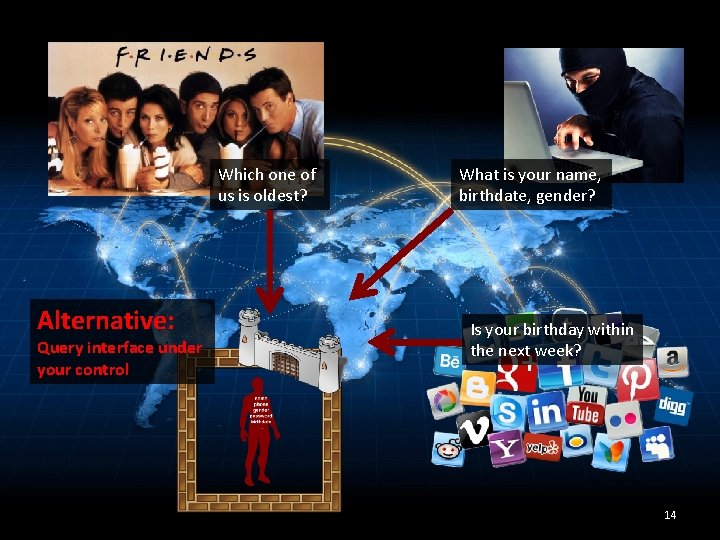

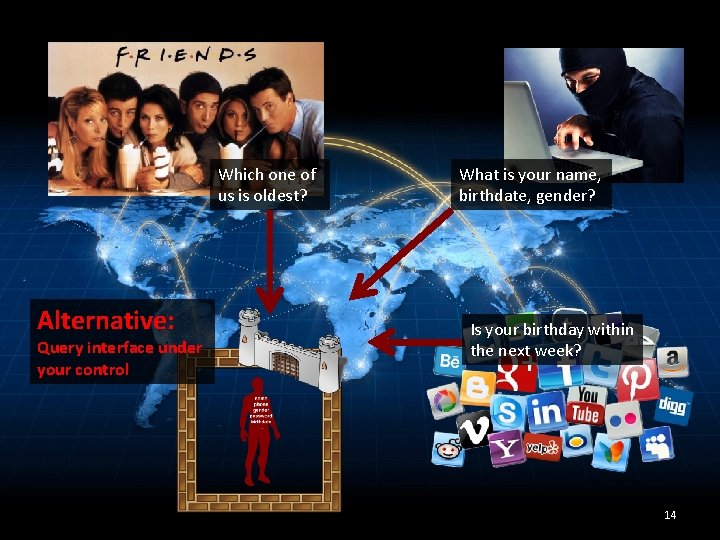

Alternative: Query interface under your control 13

Alternative: Query interface under your control
- “Which one of us is oldest?”
- “What is your name, birthdate, gender?”
- “Is your birthday within the next week?”

14

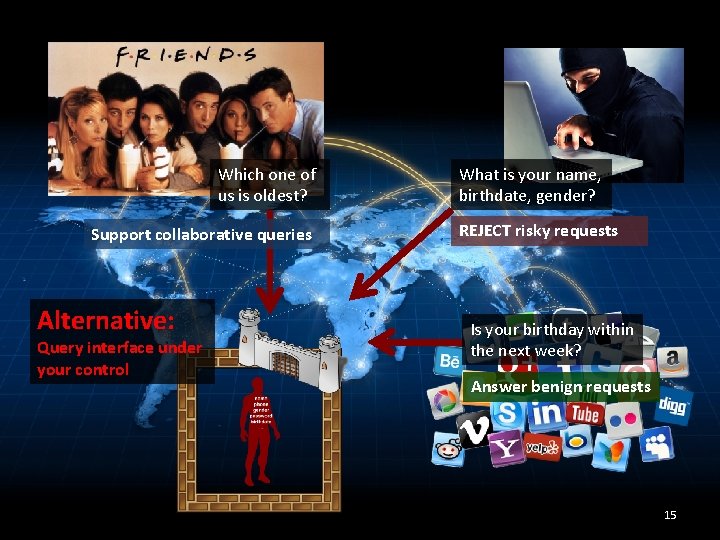

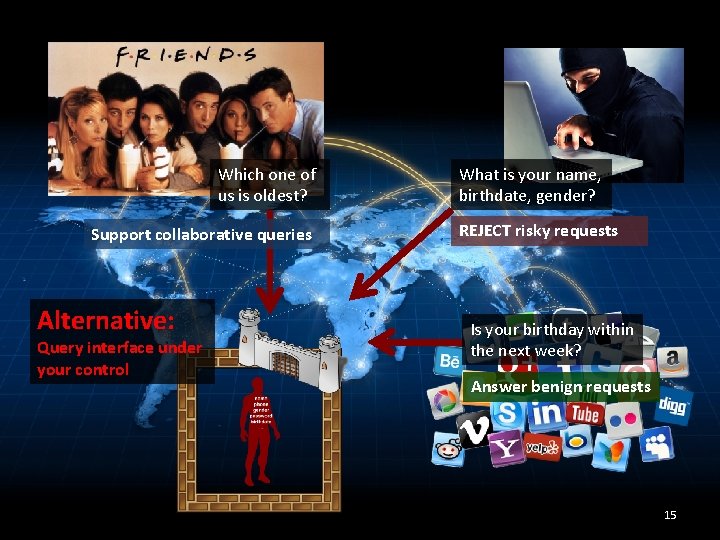

Alternative: Query interface under your control
- “Which one of us is oldest?” Support collaborative queries
- “What is your name, birthdate, gender?” REJECT risky requests
- “Is your birthday within the next week?” Answer benign requests

15

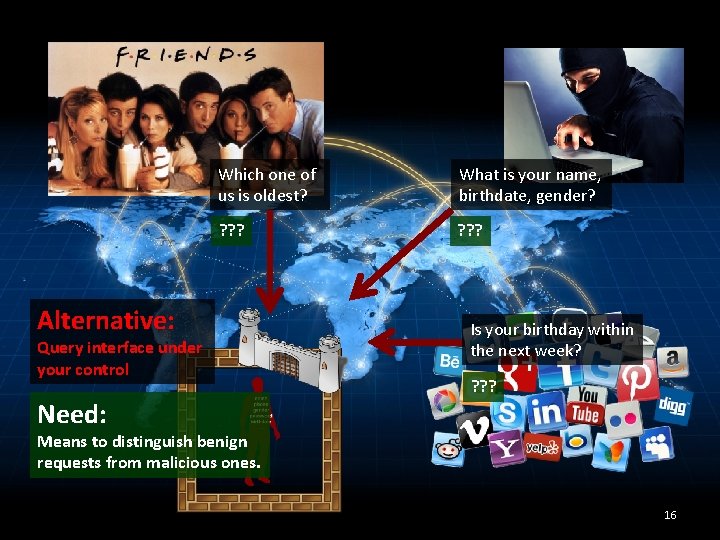

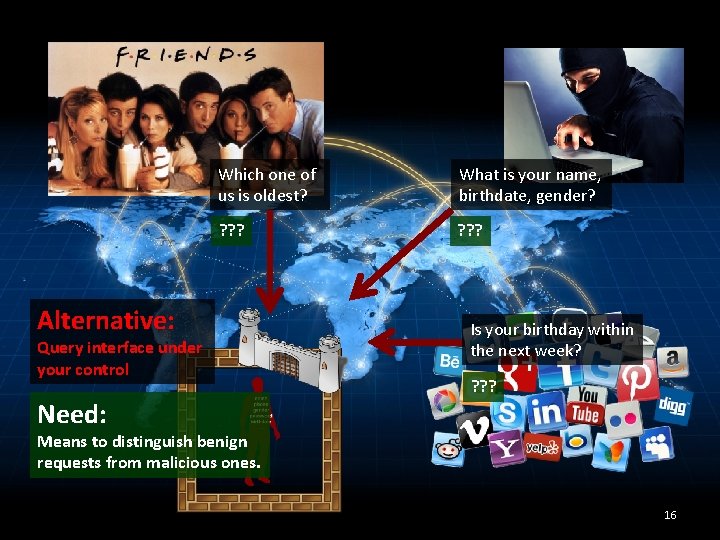

Alternative: Query interface under your control
- “Which one of us is oldest?” ?
- “What is your name, birthdate, gender?” ?
- “Is your birthday within the next week?” ?

Need: Means to distinguish benign requests from malicious ones.

16

SAFE (ONLINE) SHARING PERVASIVE TOPIC 17

sensor network, resource allocation, … 18

INITIAL OBSERVATIONS
- Benign vs. Malicious
- Prior Release
- Automation

21

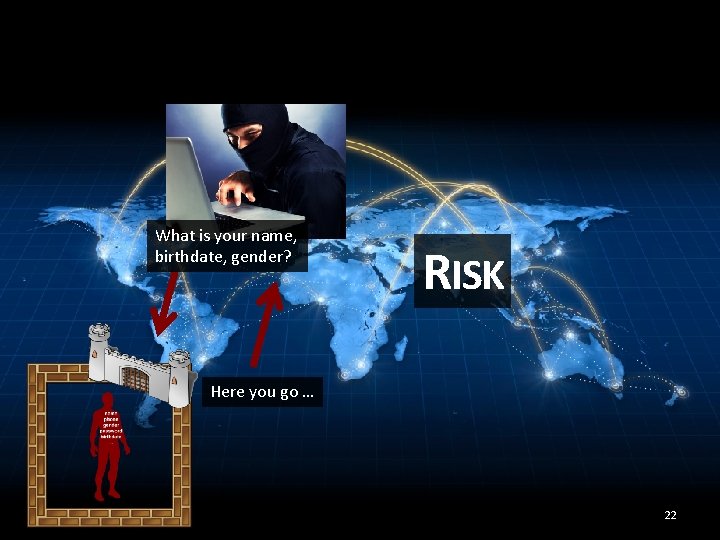

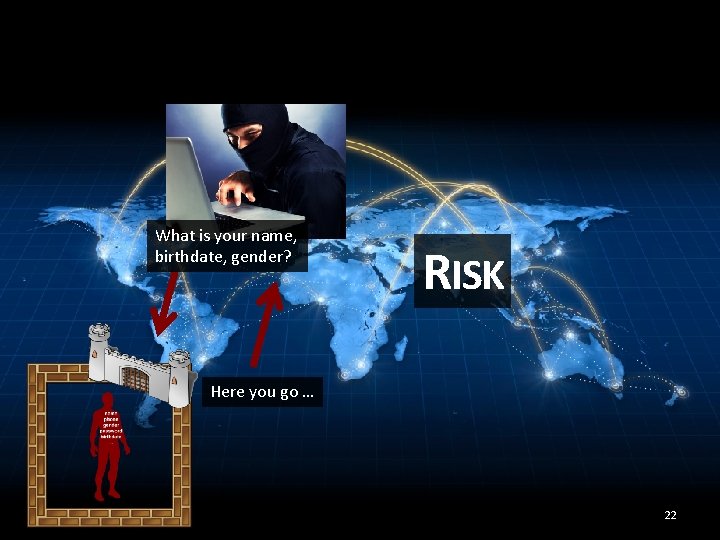

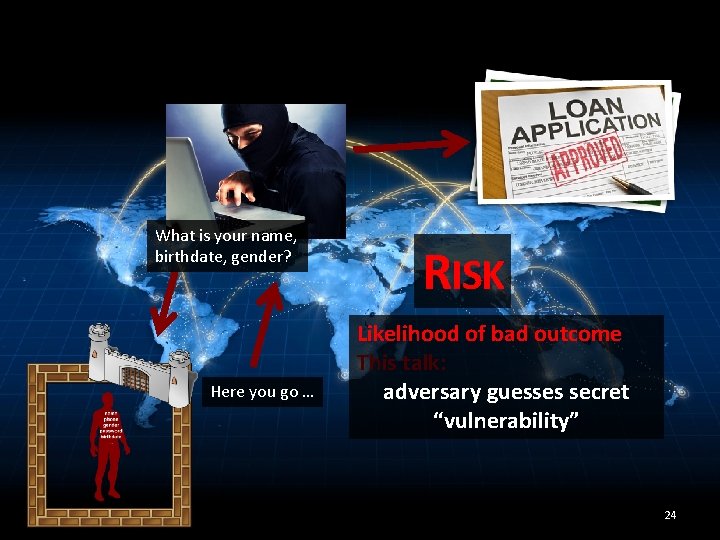

What is your name, birthdate, gender? RISK Here you go … 22

What is your name, birthdate, gender? RISK Likelihood of bad outcome Here you go … 23

“What is your name, birthdate, gender?” “Here you go …”
- RISK: Likelihood of bad outcome
- This talk: adversary guesses secret (“vulnerability”)

24

INITIAL OBSERVATIONS
- Benign vs. Malicious
- Prior Release
- Automation

25

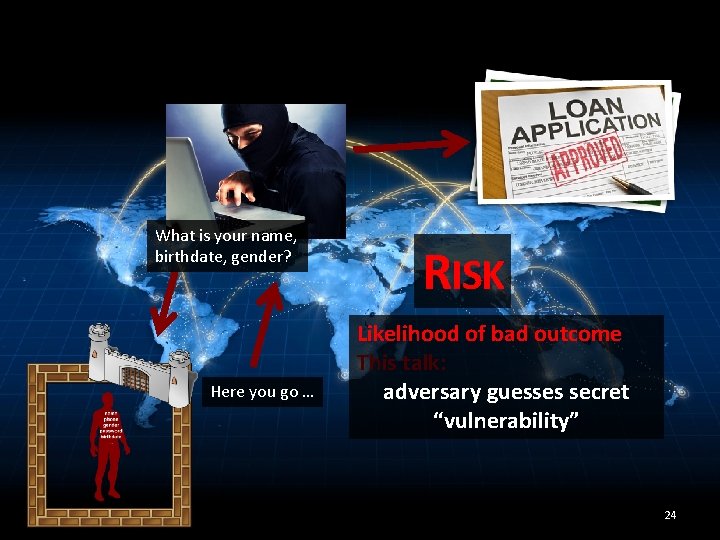

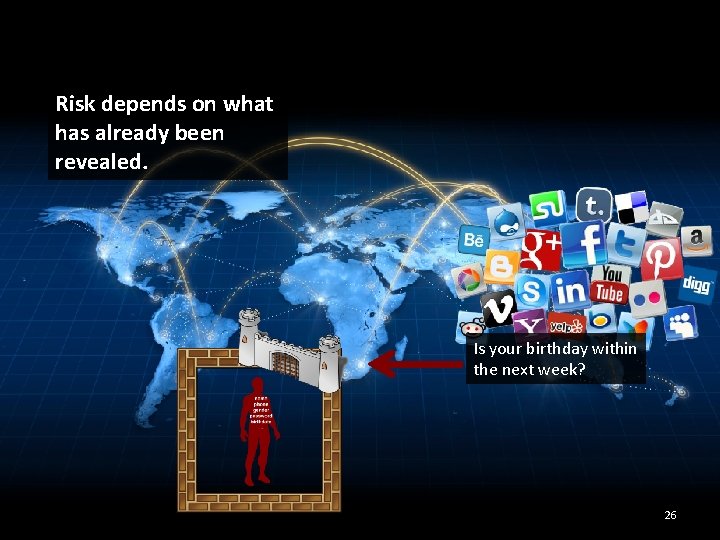

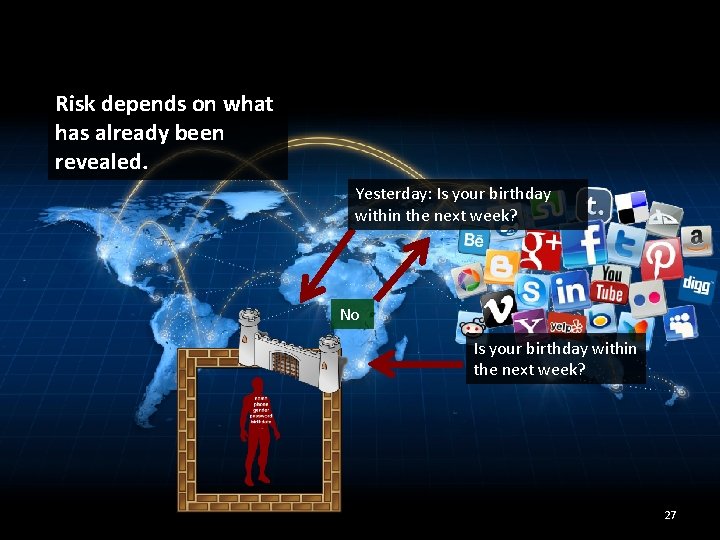

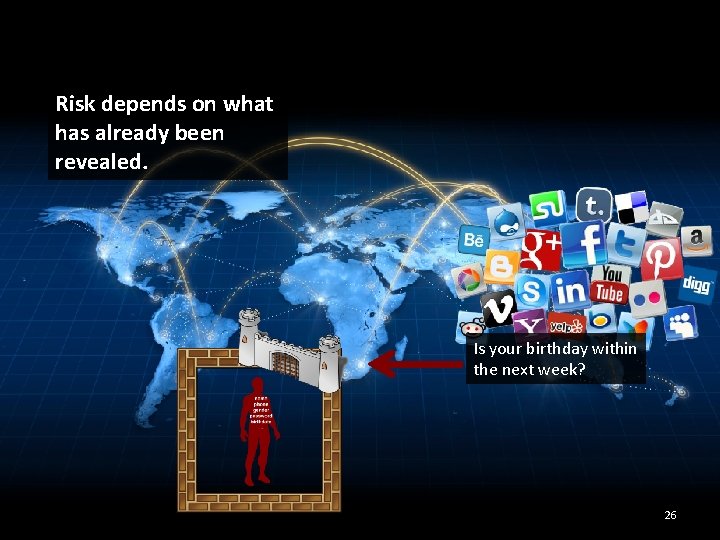

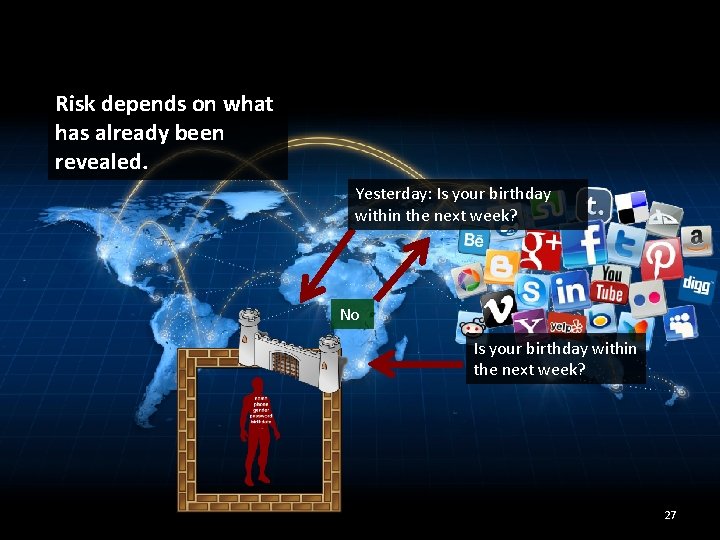

Risk depends on what has already been revealed. Is your birthday within the next week? 26

Risk depends on what has already been revealed.
- Yesterday: “Is your birthday within the next week?” “No”
- Today: “Is your birthday within the next week?”

27

INITIAL OBSERVATIONS
- Benign vs. Malicious
- Prior Release
- Automation

28

Mechanical solution necessary. … Is your birthday within the next week? … 29

Mechanical solution necessary. …… Is your birthday within the next week? …… 30

Mechanical solution necessary. ……… Is your birthday within the next week? …… … 31

5 MONTHS LATER Mechanical solution necessary. Is your birthday within the next week? …… … yes 32

5 MONTHS LATER Mechanical solution necessary. Is your birthday within the next week? …… … yes 33

INITIAL OBSERVATIONS
- Benign vs. Malicious
- Prior Release
- Automation

34

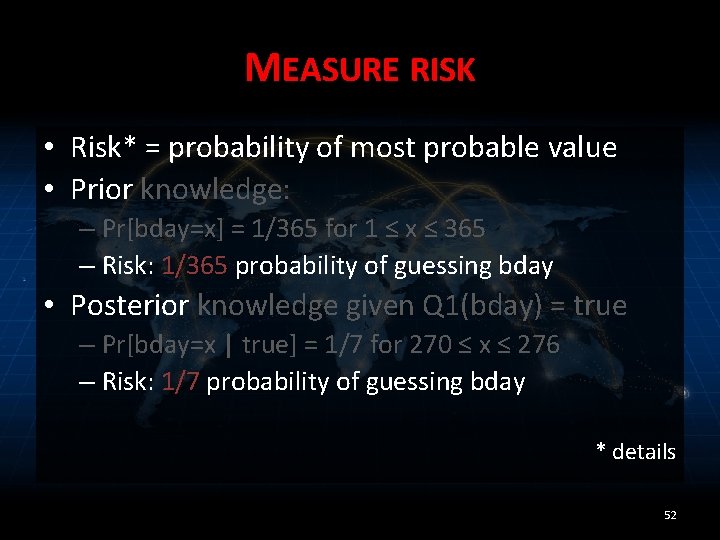

MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
- Model adversary knowledge
  - Integrate prior answers
- Measure risk of bad outcome
  - Vulnerability: probability of guessing
- Limit risk
  - Reject questions with high resulting risk

35

MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
- [CSF 11, JCS 13]
  - Limit risk and computational aspects of approach
- [PLAS 12]
  - Limit risk for collaborative queries
- [S&P 14, FCS 14]
  - Measure risk when secrets change over time

37

MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
- [CSF 11, JCS 13]
  - Limit risk and computational aspects of approach
  - With: Stephen Magill (Galois), Michael Hicks (UMD), Mudhakar Srivatsa (IBM TJ Watson Research Center)
- [PLAS 12]
  - Limit risk for collaborative queries
- [S&P 14, FCS 14]
  - Measure risk when secrets change over time

38

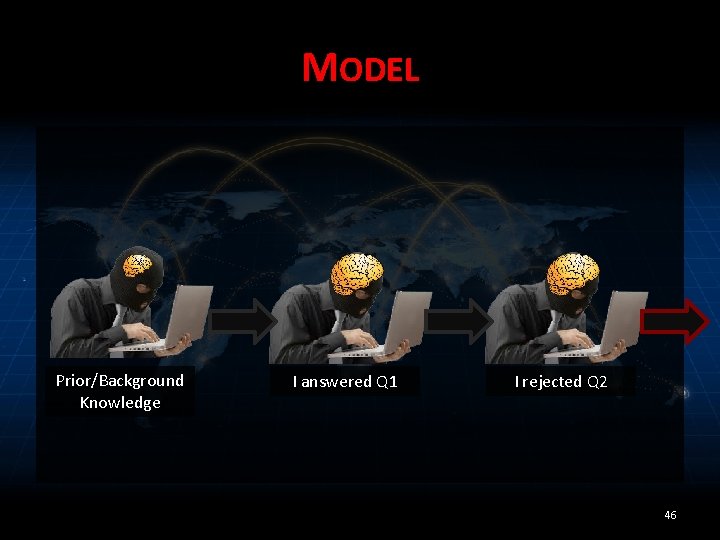

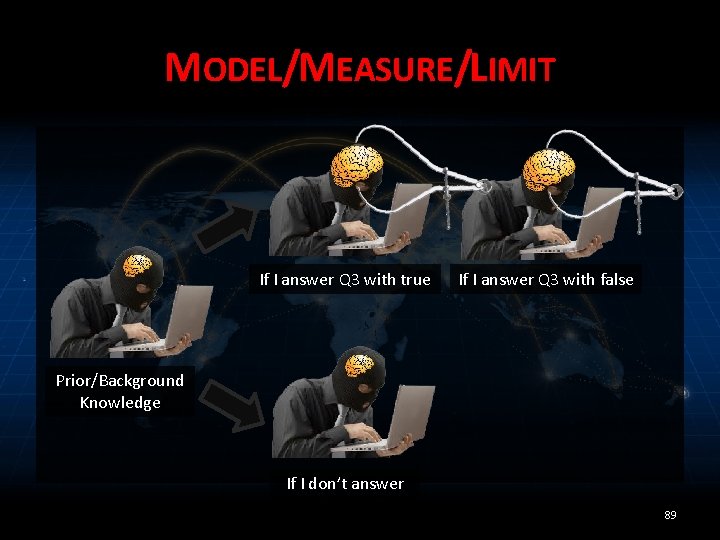

MODEL/MEASURE/LIMIT 39

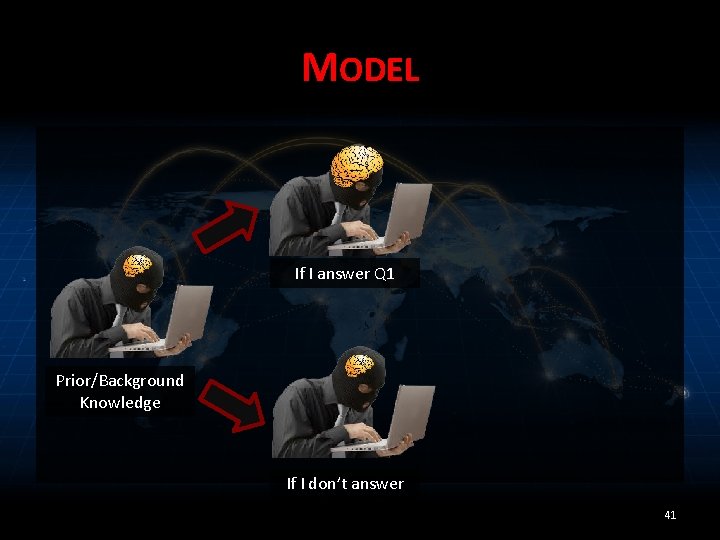

MODEL Prior/Background Knowledge 40

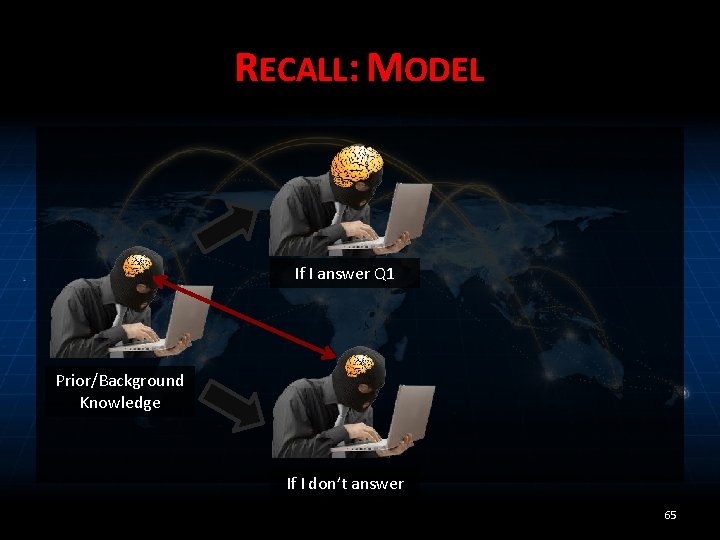

MODEL
- If I answer Q1
- Prior/Background Knowledge
- If I don’t answer

41

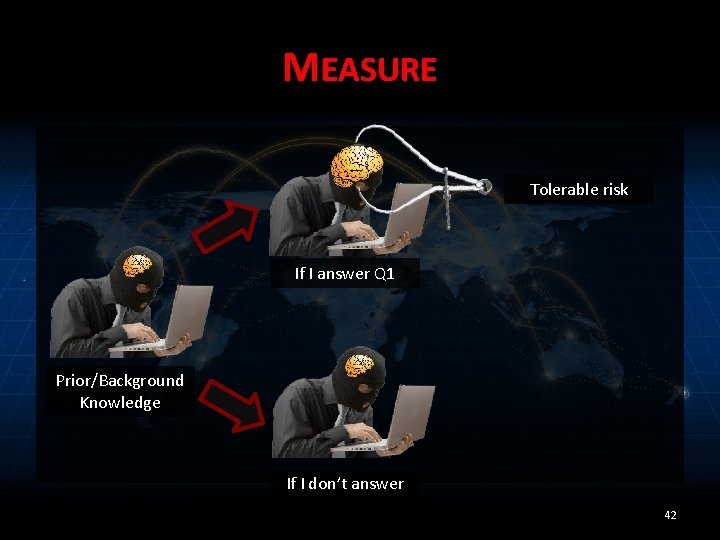

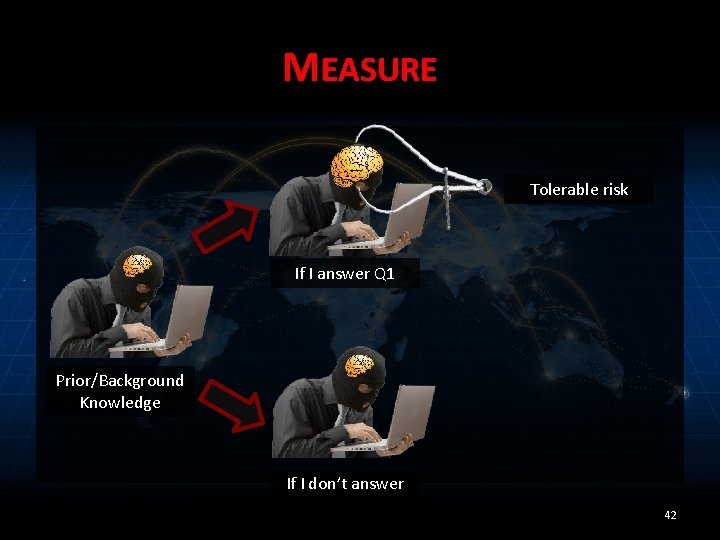

MEASURE
- Tolerable risk
- If I answer Q1
- Prior/Background Knowledge
- If I don’t answer

42

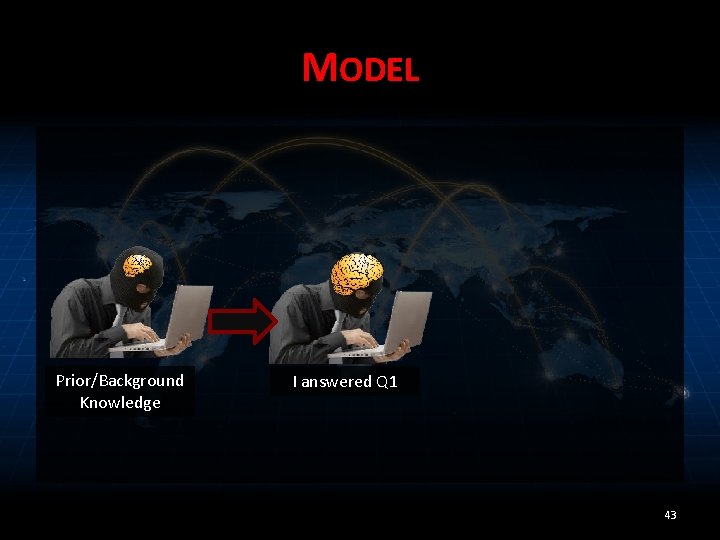

MODEL
- Prior/Background Knowledge
- I answered Q1

43

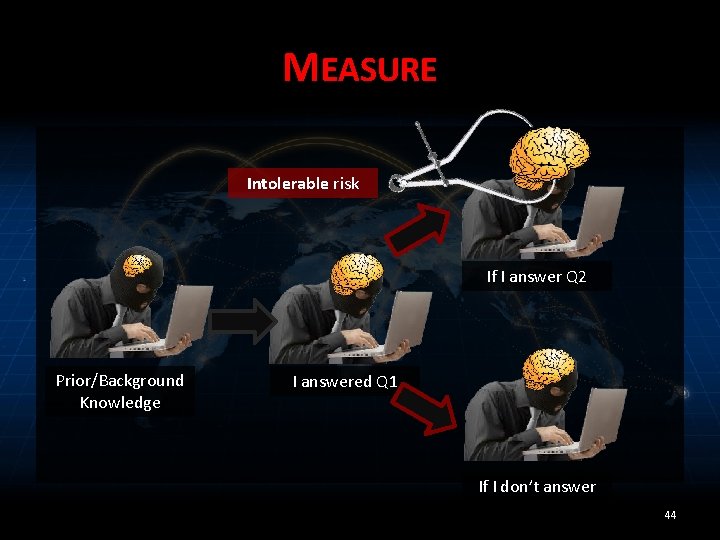

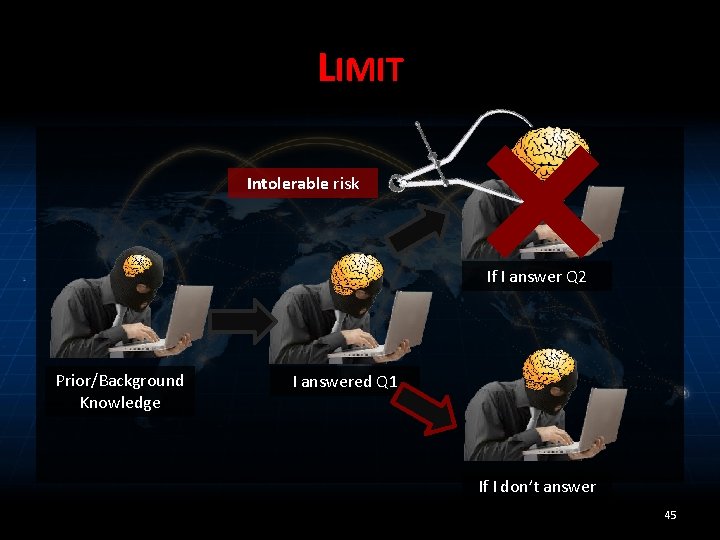

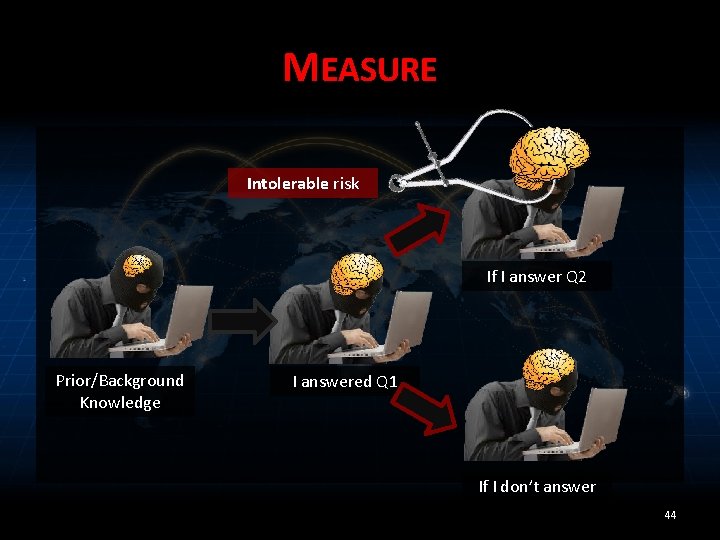

MEASURE
- Intolerable risk
- If I answer Q2
- Prior/Background Knowledge
- I answered Q1
- If I don’t answer

44

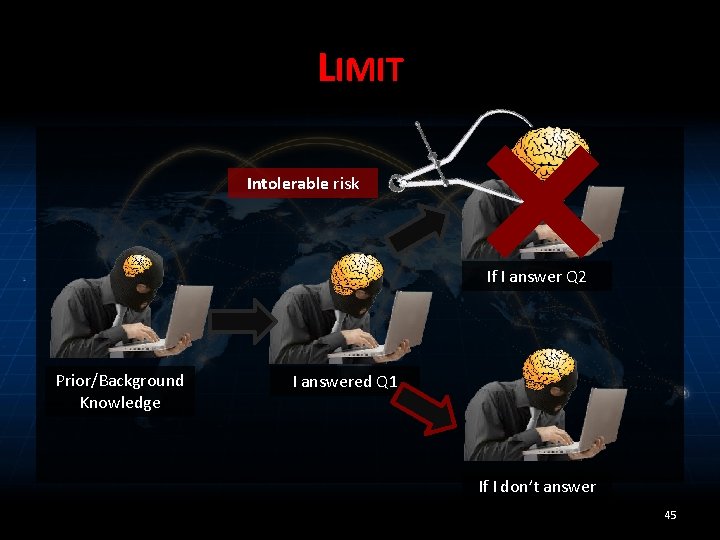

LIMIT
- Intolerable risk
- If I answer Q2
- Prior/Background Knowledge
- I answered Q1
- If I don’t answer

45

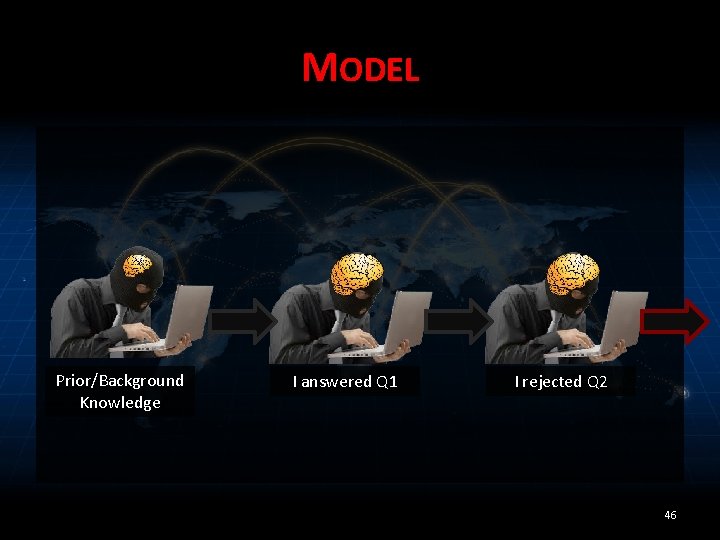

MODEL
- Prior/Background Knowledge
- I answered Q1
- I rejected Q2

46

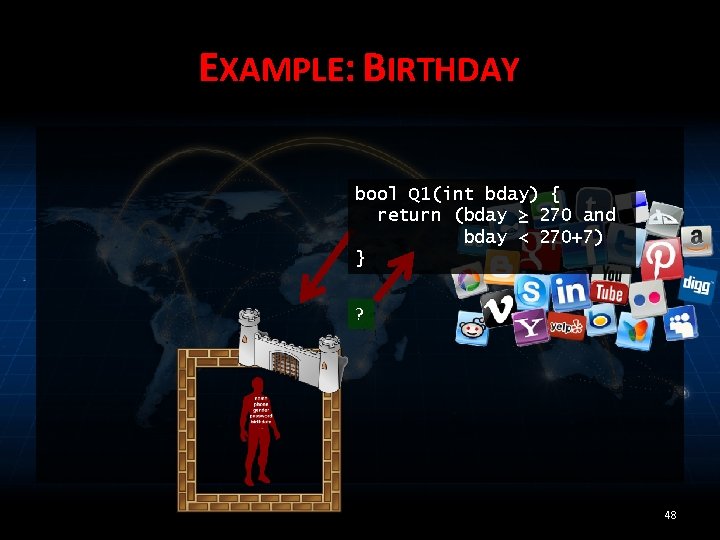

EXAMPLE: BIRTHDAY Is your birthday within the next week? ? 47

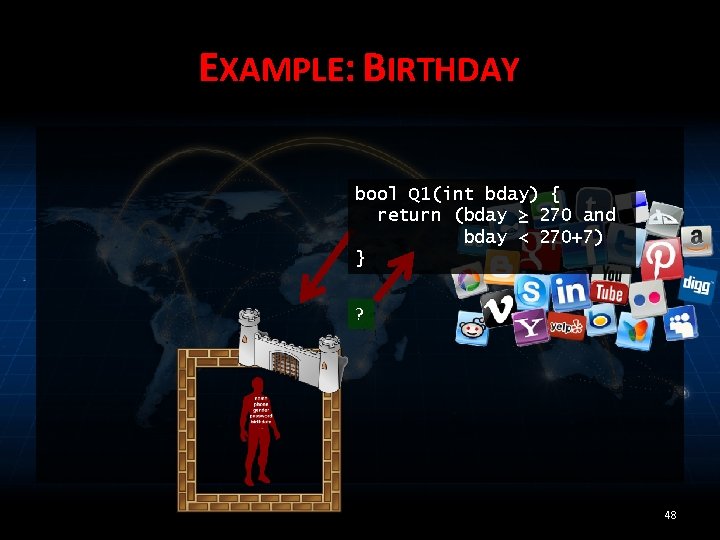

EXAMPLE: BIRTHDAY `bool Q1(int bday) { return bday >= 270 && bday < 270 + 7; }` ? 48

MODELING KNOWLEDGE
- Prior knowledge: a probability distribution
  - Pr[bday = x] = 1/365 for 1 ≤ x ≤ 365

49

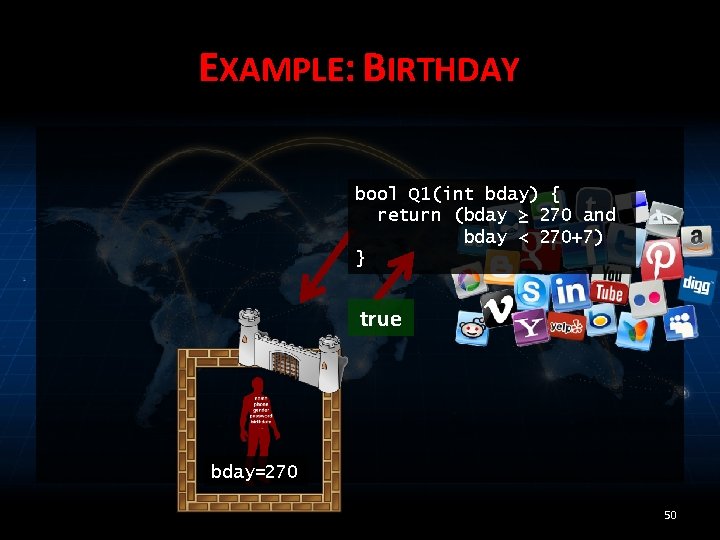

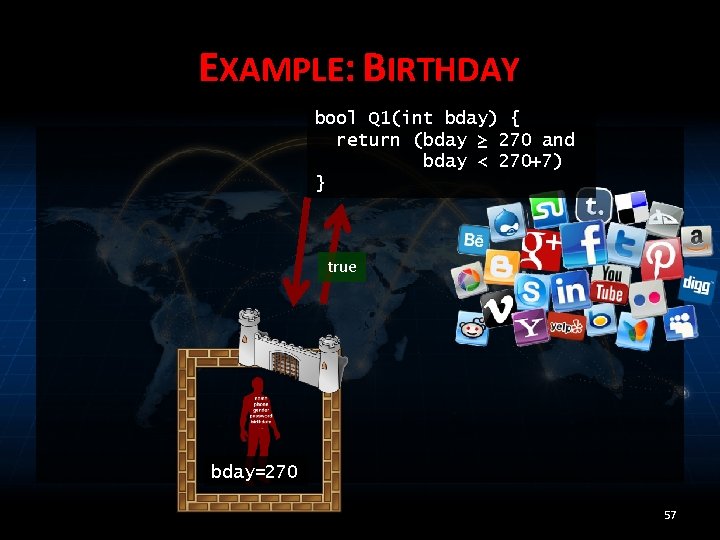

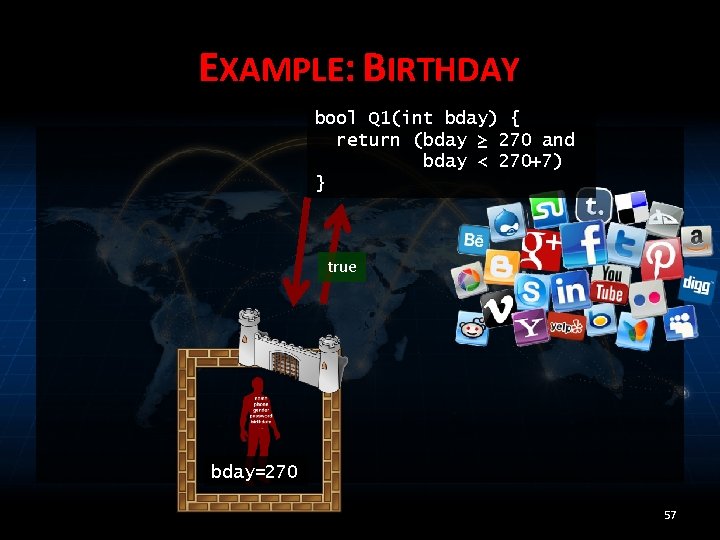

EXAMPLE: BIRTHDAY `bool Q1(int bday) { return bday >= 270 && bday < 270 + 7; }` returns true for bday=270 50

MODELING KNOWLEDGE
- Prior knowledge:
  - Pr[bday=x] = 1/365 for 1 ≤ x ≤ 365
- Posterior knowledge given Q1(bday) = true:
  - Pr[bday=x | true] = 1/7 for 270 ≤ x ≤ 276
  - Elementary* probability

*If you have Pr[Q1(X) | X] and Pr[Q1(bday)]

51
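The conditioning step on this slide can be sketched in a few lines of Python. This is a minimal illustration by exhaustive enumeration, not the talk's implementation: the helper names `uniform_prior` and `condition` are assumptions introduced here, and `q1` simply transcribes the query Q1 from the slides.

```python
from fractions import Fraction


def q1(bday: int) -> bool:
    """Query Q1 from the slides: is bday in the 7-day window starting at day 270?"""
    return 270 <= bday < 270 + 7


def uniform_prior(days=range(1, 366)):
    """Hypothetical helper: uniform belief over the given days."""
    days = list(days)
    return {d: Fraction(1, len(days)) for d in days}


def condition(belief, query, output):
    """Bayes' rule for a deterministic query: keep consistent values, renormalize."""
    consistent = {x: p for x, p in belief.items() if query(x) == output}
    total = sum(consistent.values())
    if total == 0:
        raise ValueError("output is impossible under this belief")
    return {x: p / total for x, p in consistent.items()}


prior = uniform_prior()
posterior = condition(prior, q1, True)
print(len(posterior), posterior[270])  # 7 1/7
```

Exact fractions are used so the result can be compared directly with the 1/7 on the slide.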

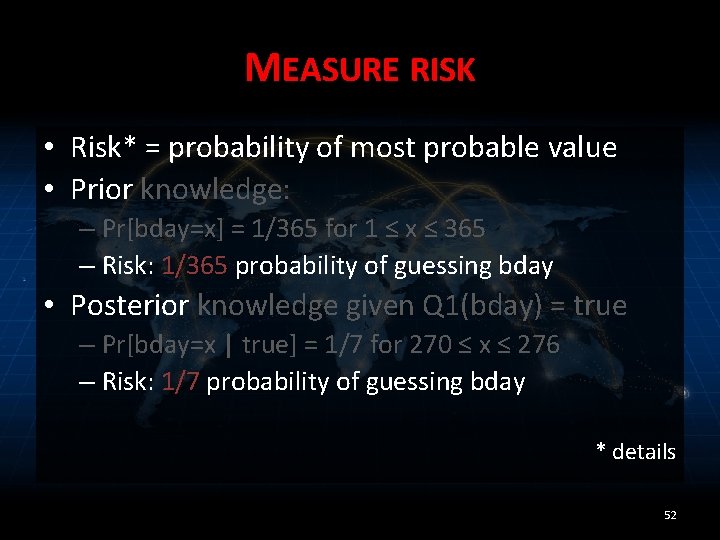

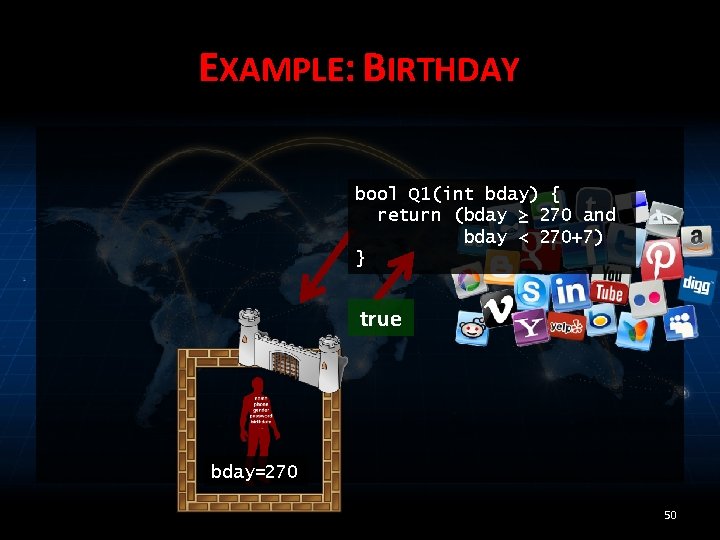

MEASURE RISK
- Risk* = probability of most probable value
- Prior knowledge:
  - Pr[bday=x] = 1/365 for 1 ≤ x ≤ 365
  - Risk: 1/365 probability of guessing bday
- Posterior knowledge given Q1(bday) = true:
  - Pr[bday=x | true] = 1/7 for 270 ≤ x ≤ 276
  - Risk: 1/7 probability of guessing bday

\* details

52
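As a small sketch of the risk measure defined on this slide, the probability of the most probable value is just the maximum of the belief. The function name `vulnerability` and the dictionary representation are assumptions made for illustration.

```python
from fractions import Fraction


def vulnerability(belief):
    """Probability that the adversary's single best guess is correct."""
    return max(belief.values())


# Beliefs taken from the slide: uniform prior, and posterior after Q1 = true.
prior = {day: Fraction(1, 365) for day in range(1, 366)}
posterior = {day: Fraction(1, 7) for day in range(270, 277)}

print(vulnerability(prior))      # 1/365
print(vulnerability(posterior))  # 1/7
```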

LIMIT VIA RISK THRESHOLD
- Answer if risk ≤ t

53

LIMIT VIA RISK THRESHOLD
- Answer if risk ≤ t
  - How to pick threshold t? What is tolerable?

54

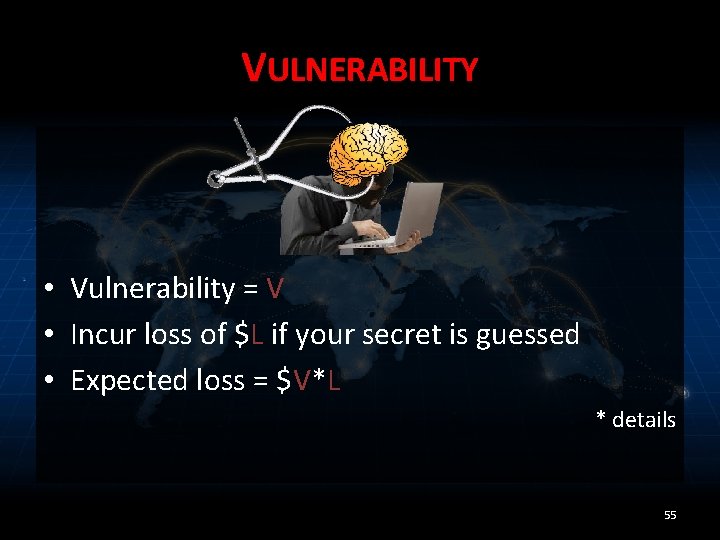

VULNERABILITY
- Vulnerability = V
- Incur loss of $L if your secret is guessed
- Expected loss = $(V × L)

\* details

55
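The expected-loss reading of vulnerability can be worked through numerically. In the sketch below the loss of $700 is a made-up figure chosen only to make the arithmetic easy; it does not come from the talk.

```python
from fractions import Fraction


def expected_loss(vulnerability, loss):
    """Expected loss = V * L, as stated on the slide."""
    return vulnerability * loss


# Hypothetical loss (in dollars) if the birthday is guessed; not from the talk.
LOSS = 700

print(expected_loss(Fraction(1, 365), LOSS))  # 140/73, just under $2
print(expected_loss(Fraction(1, 7), LOSS))    # 100
```

Read this way, a threshold t on vulnerability corresponds to a cap of t × L on expected loss.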

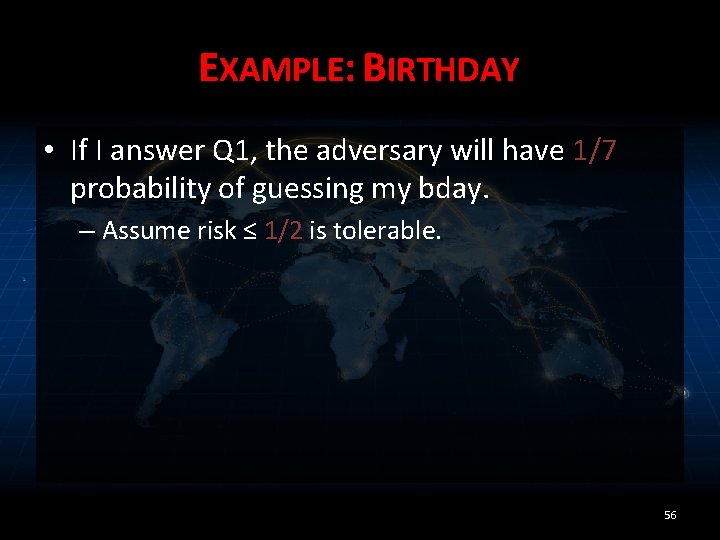

EXAMPLE: BIRTHDAY
- If I answer Q1, the adversary will have 1/7 probability of guessing my bday.
  - Assume risk ≤ 1/2 is tolerable.

56

EXAMPLE: BIRTHDAY `bool Q1(int bday) { return bday >= 270 && bday < 270 + 7; }` returns true for bday=270 57

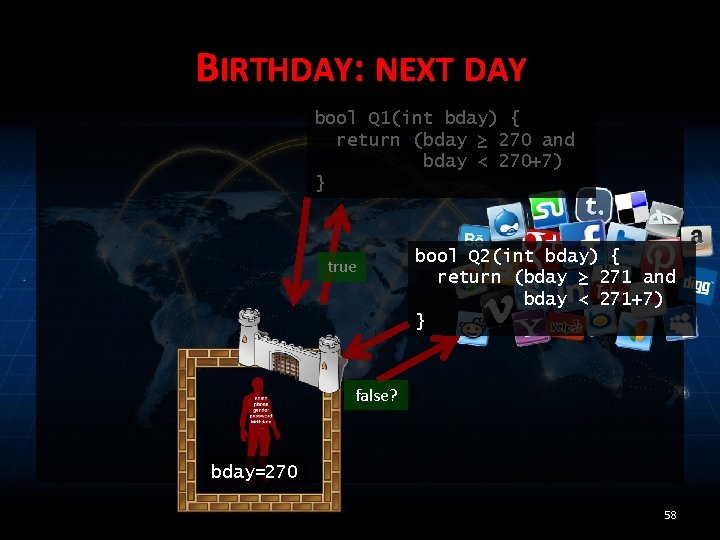

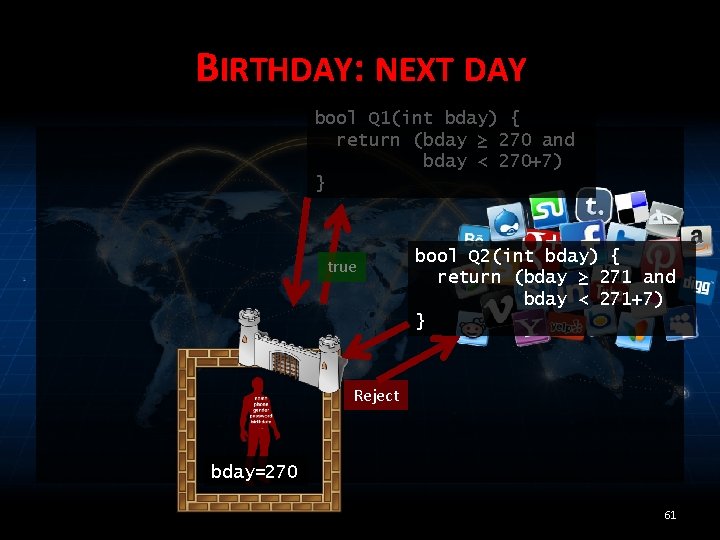

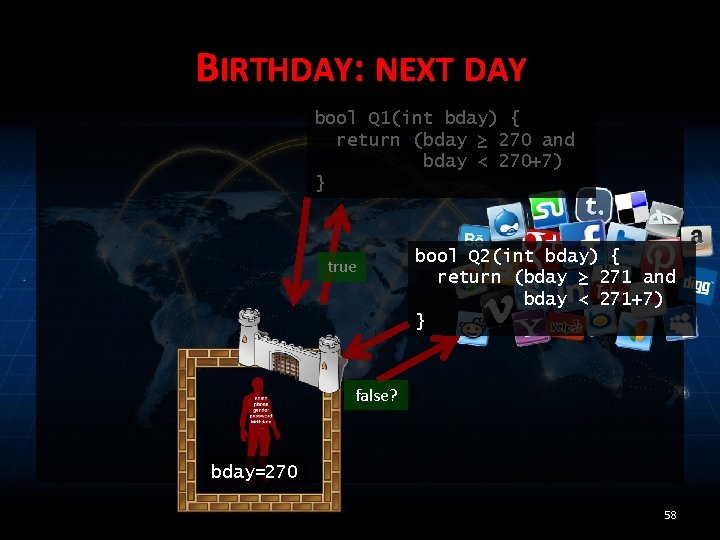

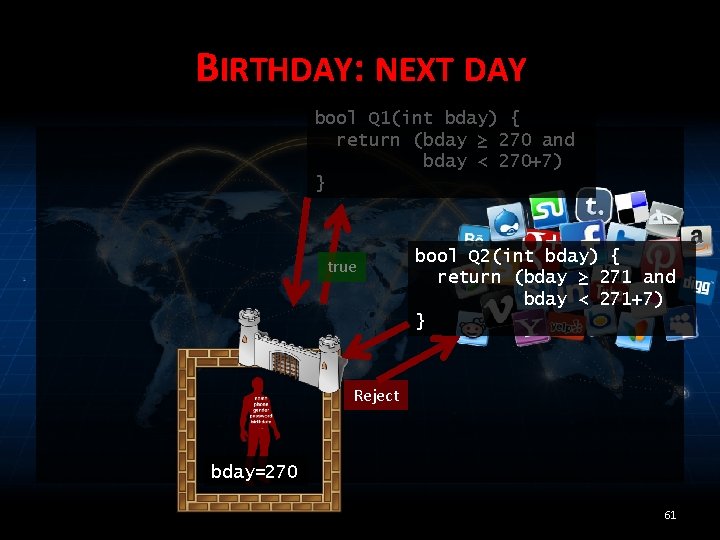

BIRTHDAY: NEXT DAY (bday=270)
- `bool Q1(int bday) { return bday >= 270 && bday < 270 + 7; }` returns true
- `bool Q2(int bday) { return bday >= 271 && bday < 271 + 7; }` returns false?

58

MEASURE RISK
- Prior knowledge (given Q1)
  - Pr[bday=x | true] = 1/7 for 270 ≤ x ≤ 276
  - Risk: 1/7 probability of guessing bday
- Posterior knowledge (given Q1, Q2)
  - Pr[bday=x | true, false] = 1 when x = 270
  - Risk: 100% chance of guessing bday

59
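The jump from 1/7 to certainty can be reproduced by conditioning twice, feeding the posterior from Q1 back in as the prior for Q2. This is a sketch by enumeration; `condition` and `vulnerability` are illustrative helper names, not part of the talk.

```python
from fractions import Fraction


def q1(bday):
    return 270 <= bday < 270 + 7


def q2(bday):
    return 271 <= bday < 271 + 7


def condition(belief, query, output):
    """Keep the values consistent with the observed output and renormalize."""
    consistent = {x: p for x, p in belief.items() if query(x) == output}
    total = sum(consistent.values())
    if total == 0:
        raise ValueError("output is impossible under this belief")
    return {x: p / total for x, p in consistent.items()}


def vulnerability(belief):
    return max(belief.values())


prior = {d: Fraction(1, 365) for d in range(1, 366)}
after_q1 = condition(prior, q1, True)      # adversary saw Q1 = true
after_q2 = condition(after_q1, q2, False)  # then saw Q2 = false

print(vulnerability(after_q1))  # 1/7
print(after_q2)                 # {270: Fraction(1, 1)}
```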

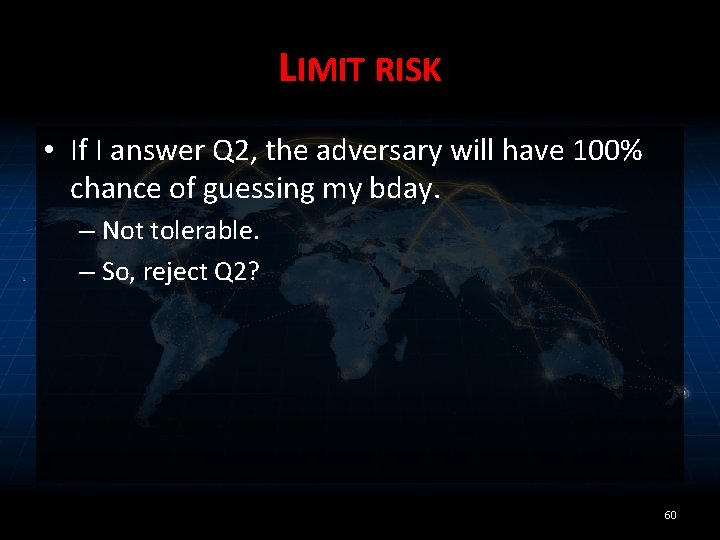

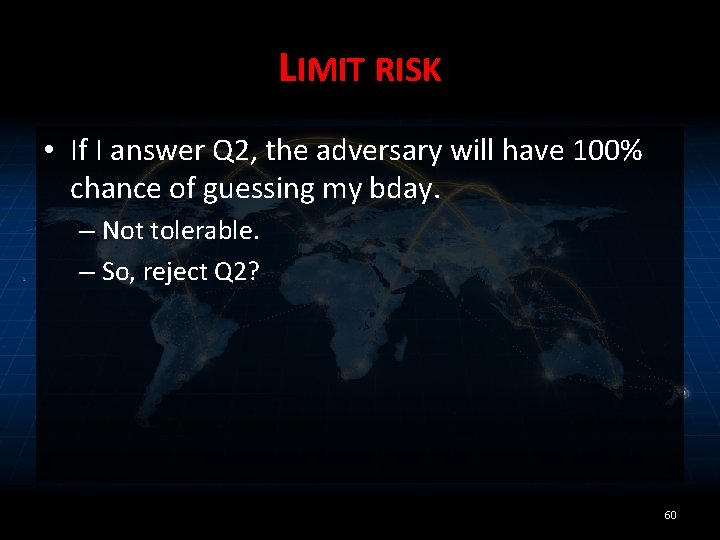

LIMIT RISK
- If I answer Q2, the adversary will have 100% chance of guessing my bday.
  - Not tolerable.
  - So, reject Q2?

60
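A naive version of this rule, sketched below, answers only when the posterior for the actual output stays within the threshold. The name `naive_answer` and the `"reject"` sentinel are assumptions for illustration. Note that the decision itself depends on the secret, which is the weakness the following slides examine.

```python
from fractions import Fraction

THRESHOLD = Fraction(1, 2)  # tolerable risk assumed on the slides


def q2(bday):
    return 271 <= bday < 271 + 7


def condition(belief, query, output):
    consistent = {x: p for x, p in belief.items() if query(x) == output}
    total = sum(consistent.values())
    return {x: p / total for x, p in consistent.items()}


def vulnerability(belief):
    return max(belief.values())


def naive_answer(belief, query, secret, threshold=THRESHOLD):
    """Answer if the posterior for the actual output is tolerable, else reject."""
    output = query(secret)
    if vulnerability(condition(belief, query, output)) <= threshold:
        return output
    return "reject"


# Adversary's belief after Q1 = true: uniform over days 270..276.
after_q1 = {d: Fraction(1, 7) for d in range(270, 277)}

print(naive_answer(after_q1, q2, secret=270))  # reject
print(naive_answer(after_q1, q2, secret=271))  # True
```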

BIRTHDAY: NEXT DAY (bday=270)
- `bool Q1(int bday) { return bday >= 270 && bday < 270 + 7; }` returns true
- `bool Q2(int bday) { return bday >= 271 && bday < 271 + 7; }` Reject

61

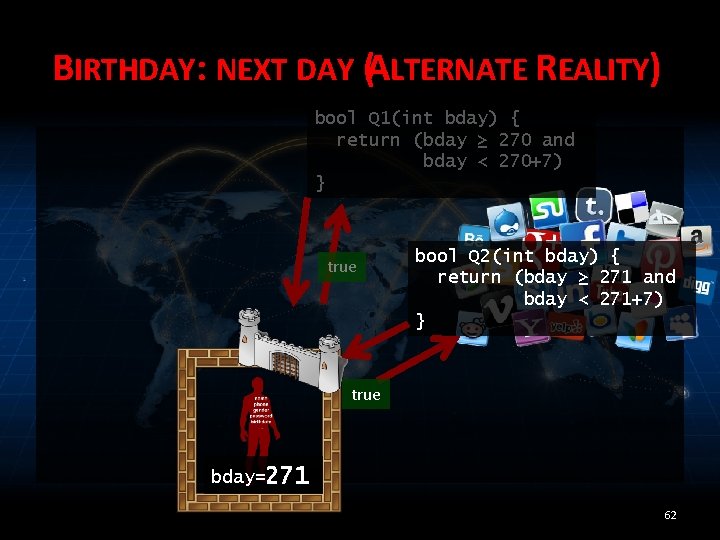

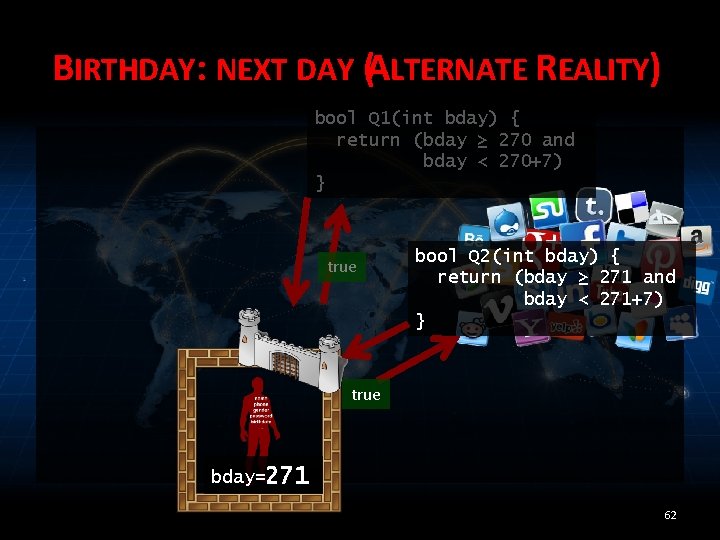

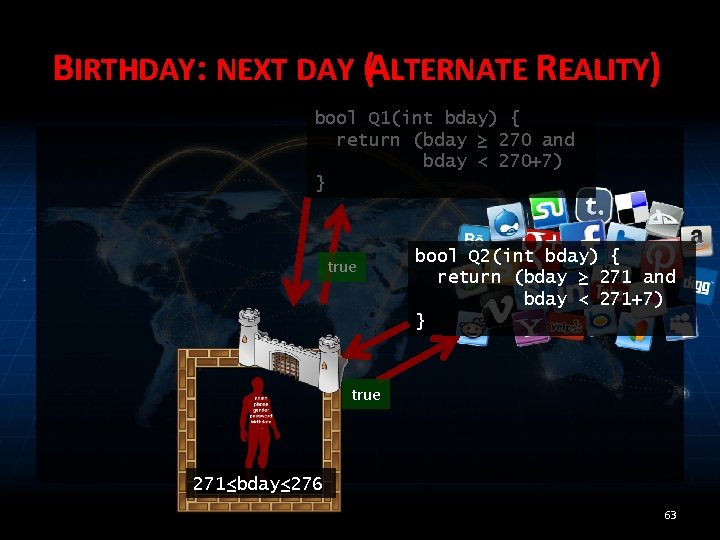

BIRTHDAY: NEXT DAY (ALTERNATE REALITY) (bday=271)
- `bool Q1(int bday) { return bday >= 270 && bday < 270 + 7; }` returns true
- `bool Q2(int bday) { return bday >= 271 && bday < 271 + 7; }` returns true

62

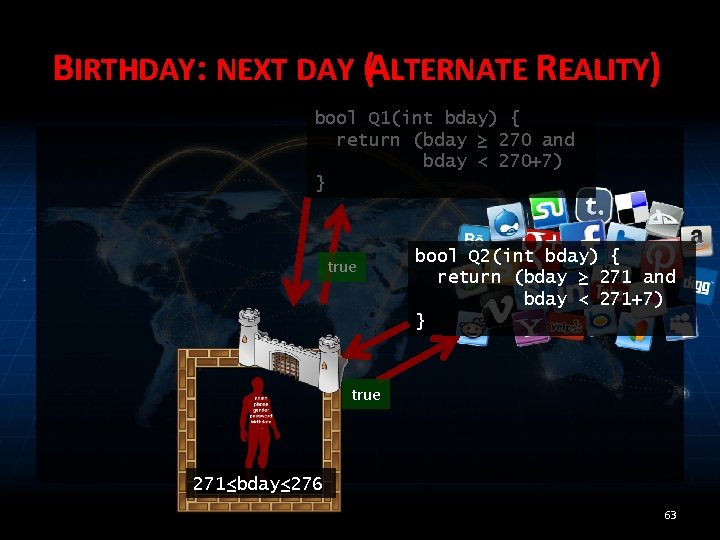

BIRTHDAY: NEXT DAY (ALTERNATE REALITY) (271 ≤ bday ≤ 276)
- `bool Q1(int bday) { return bday >= 270 && bday < 270 + 7; }` returns true
- `bool Q2(int bday) { return bday >= 271 && bday < 271 + 7; }` returns true

63

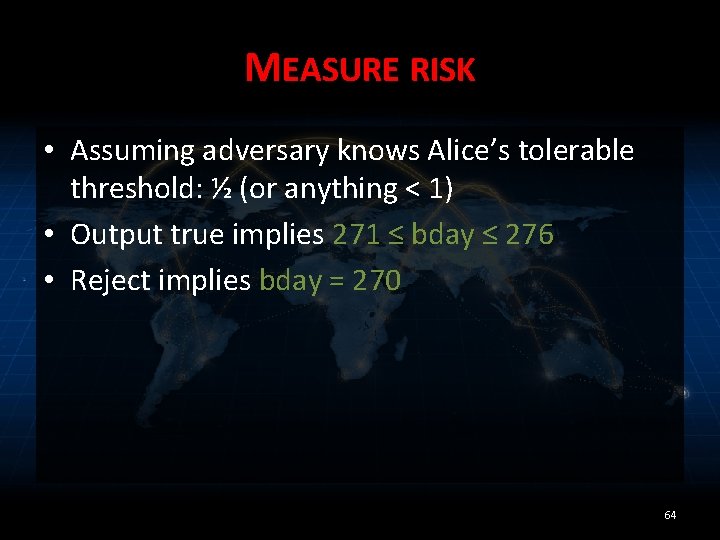

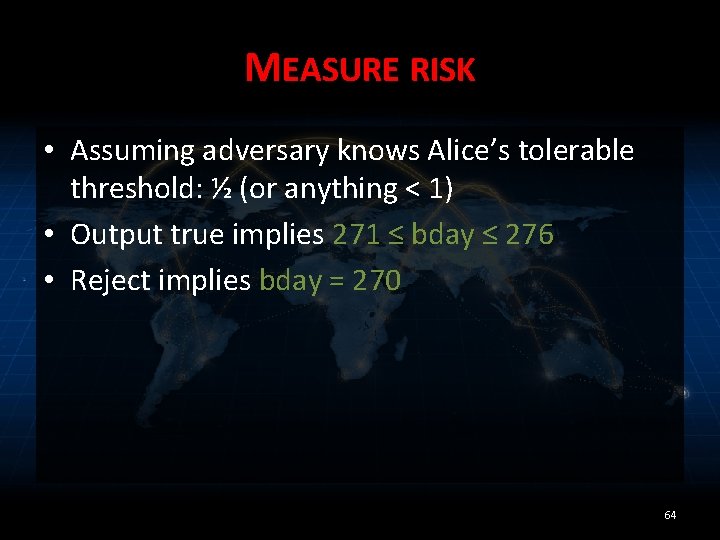

MEASURE RISK
- Assuming adversary knows Alice’s tolerable threshold: ½ (or anything < 1)
- Output true implies 271 ≤ bday ≤ 276
- Reject implies bday = 270

64

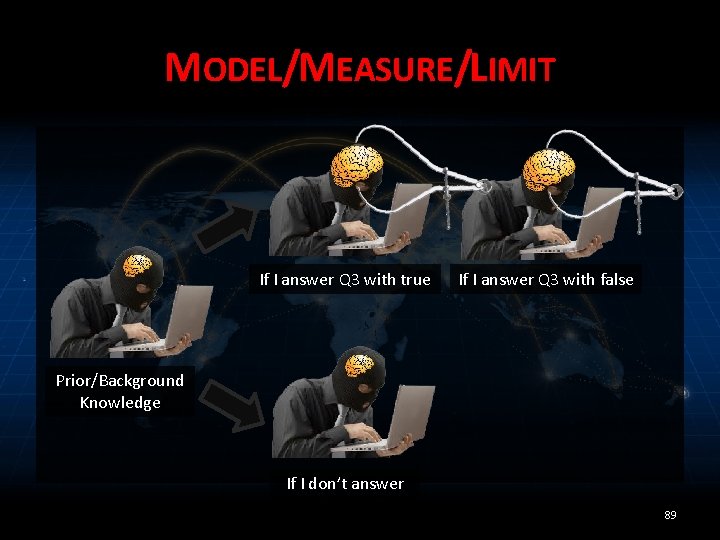

RECALL: MODEL
- If I answer Q1
- Prior/Background Knowledge
- If I don’t answer

65

MEASURE RISK
- Problem: risk measurement depends on the value of the secret

66

RECALL: MODEL
- If I answer Q1 with Q1(bday)
- Prior/Background Knowledge
- If I don’t answer

67

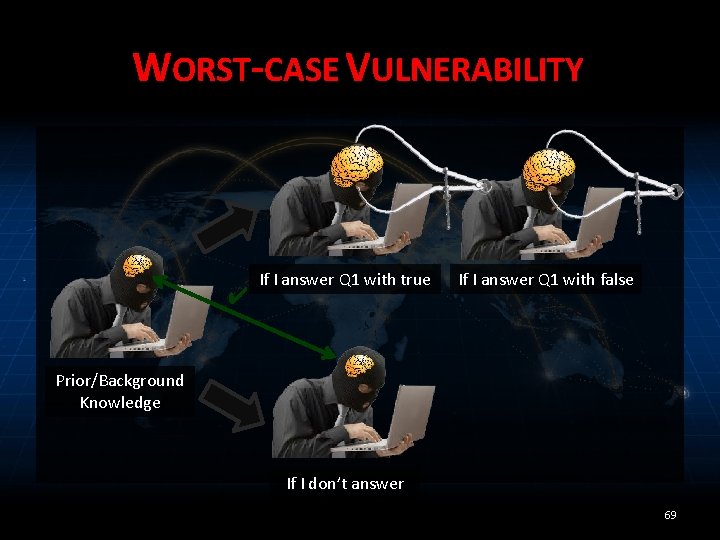

MEASURE RISK
- Problem: risk measurement depends on the value of the secret
- Solution: limit risk over all possible outputs (that are consistent with adversary’s knowledge)
  - “worst-case vulnerability”

68
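The worst-case rule can be sketched as follows: take the maximum posterior vulnerability over every output the adversary considers possible, and decide from that alone. The helper names are illustrative assumptions, and the enumeration is only feasible because the birthday space is tiny.

```python
from fractions import Fraction


def q1(bday):
    return 270 <= bday < 270 + 7


def q2(bday):
    return 271 <= bday < 271 + 7


def condition(belief, query, output):
    consistent = {x: p for x, p in belief.items() if query(x) == output}
    total = sum(consistent.values())
    return {x: p / total for x, p in consistent.items()}


def worst_case_vulnerability(belief, query):
    """Max posterior vulnerability over all outputs consistent with the belief."""
    outputs = {query(x) for x, p in belief.items() if p > 0}
    return max(max(condition(belief, query, o).values()) for o in outputs)


def worst_case_answer(belief, query, secret, threshold):
    """The accept/reject decision never looks at the secret itself."""
    if worst_case_vulnerability(belief, query) <= threshold:
        return query(secret)
    return "reject"


prior = {d: Fraction(1, 365) for d in range(1, 366)}
after_q1 = condition(prior, q1, True)

print(worst_case_vulnerability(prior, q1))     # 1/7
print(worst_case_vulnerability(after_q1, q2))  # 1
```

Because Q2 is rejected for every birthday from 270 to 276, the rejection no longer singles out bday = 270.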

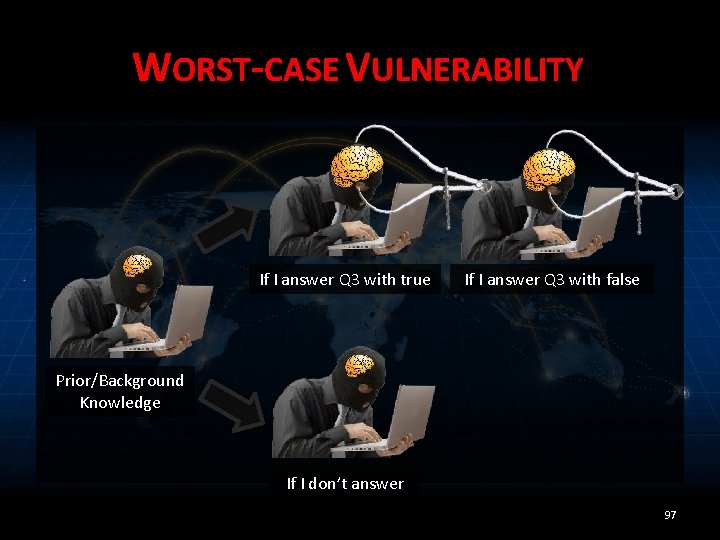

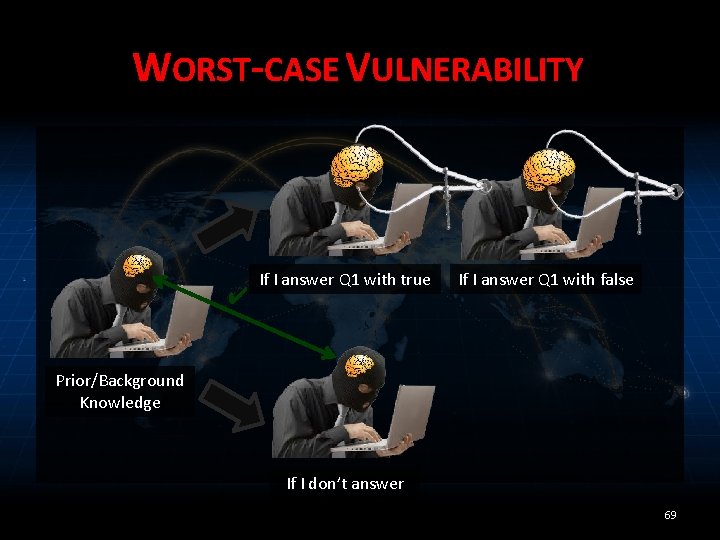

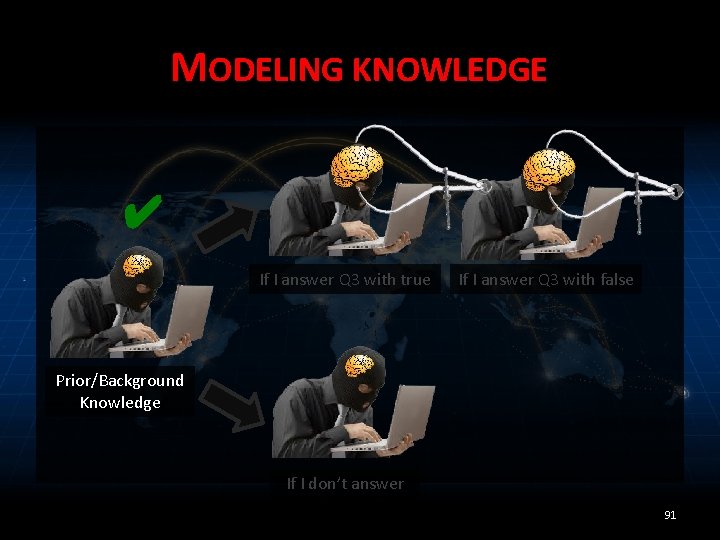

WORST-CASE VULNERABILITY ✔
- If I answer Q1 with true
- If I answer Q1 with false
- Prior/Background Knowledge
- If I don’t answer

69

IMPLEMENTATION
- Probabilistic programming:
  - Given Pr[bday], program Q1
  - Compute Pr[bday | Q1(bday) = yes]
  - Problem: undecidable in general, intractable even when possible

70

IMPLEMENTATION
- Probabilistic programming:
  - Given Pr[bday], program Q1
  - Compute Pr[bday | Q1(bday) = yes]
  - Problem: undecidable in general, intractable even when possible
- Solution: sound approximation

71


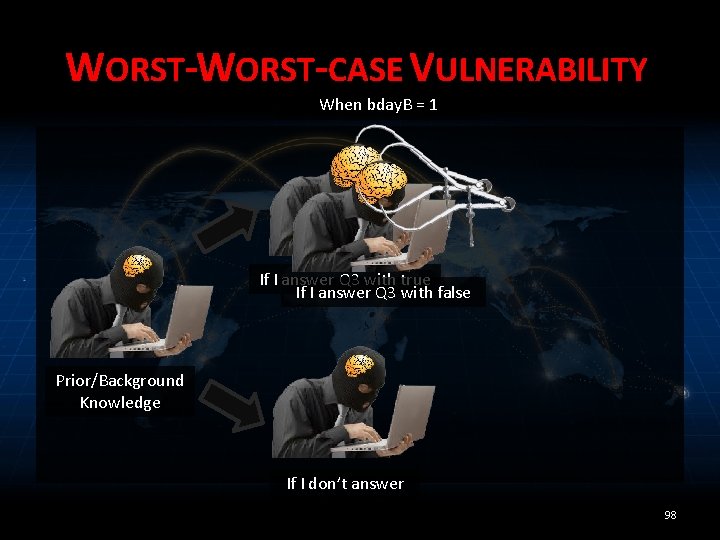

PROBABILITY DISTRIBUTIONS
- Prior knowledge:
  - Pr[bday=1] = 1/365
  - Pr[bday=2] = 1/365
  - Pr[bday=3] = 1/365
  - Pr[bday=4] = 1/365
  - Pr[bday=5] = 1/365
  - …

72

PROBABILITY ABSTRACTION
- Prior knowledge:
  - Pr[bday=x] = 1/365 for 1 ≤ x ≤ 365

[Plot: Pr[bday=x] against x, constant at 1/365 over the interval [1, 365]]

73


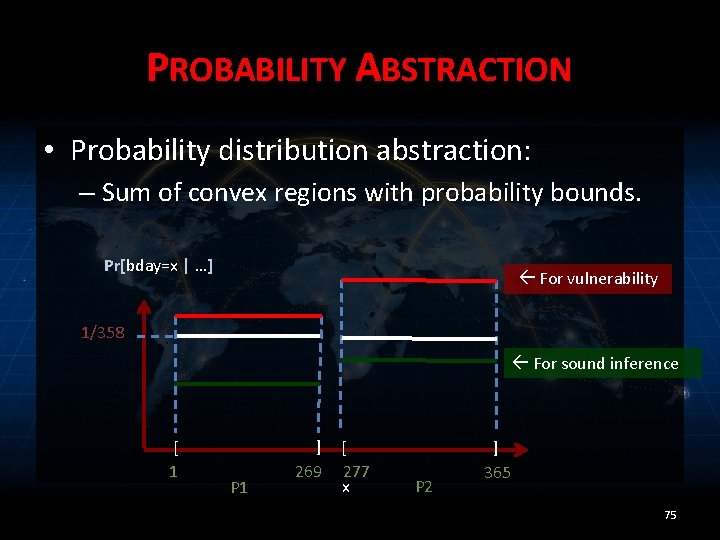

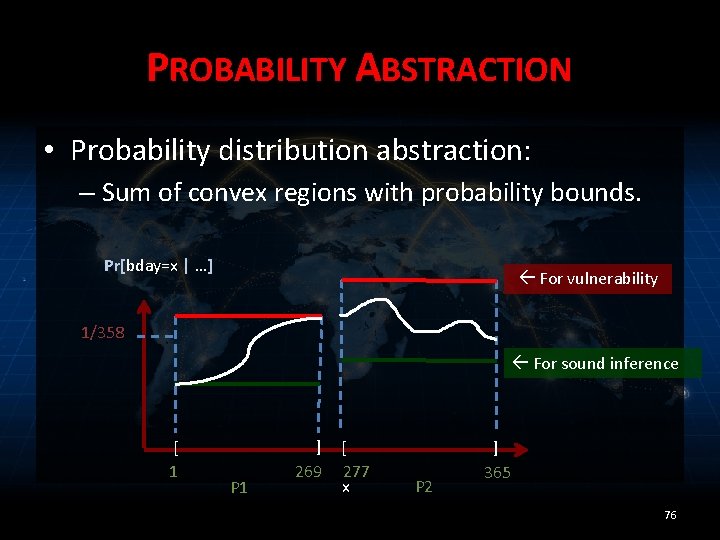

PROBABILITY ABSTRACTION
- Posterior given Q1(bday) = false:
  - Pr[bday=x | …] = 1/358 for 1 ≤ x ≤ 269, 277 ≤ x ≤ 365

[Plot: Pr[bday=x | …] against x, constant at 1/358 over the intervals [1, 269] and [277, 365]]

74
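The interval picture above suggests representing a belief as a handful of regions rather than 365 separate entries. The sketch below is a simplified, exact version of that idea, assuming a list of `(lo, hi, p)` triples where every integer in `[lo, hi]` has probability `p`. It is not the abstraction used in the talk, which tracks probability bounds to stay sound under approximation.

```python
from fractions import Fraction


def split_on_window(belief, start, end):
    """Partition each region into the parts inside and outside [start, end]."""
    inside, outside = [], []
    for lo, hi, p in belief:
        a, b = max(lo, start), min(hi, end)
        if a > b:  # no overlap with the window
            outside.append((lo, hi, p))
            continue
        inside.append((a, b, p))
        if lo < a:
            outside.append((lo, a - 1, p))
        if b < hi:
            outside.append((b + 1, hi, p))
    return inside, outside


def normalize(regions):
    mass = sum((hi - lo + 1) * p for lo, hi, p in regions)
    if mass == 0:
        raise ValueError("output is impossible under this belief")
    return [(lo, hi, p / mass) for lo, hi, p in regions]


def condition_on_window(belief, start, end, output):
    """Posterior after learning whether the secret lies in [start, end]."""
    inside, outside = split_on_window(belief, start, end)
    return normalize(inside if output else outside)


def vulnerability(belief):
    return max(p for _, _, p in belief)


prior = [(1, 365, Fraction(1, 365))]
post_false = condition_on_window(prior, 270, 276, False)
print(post_false)
# [(1, 269, Fraction(1, 358)), (277, 365, Fraction(1, 358))]
```

The cost depends on the number of regions, not on the size of the secret's domain.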

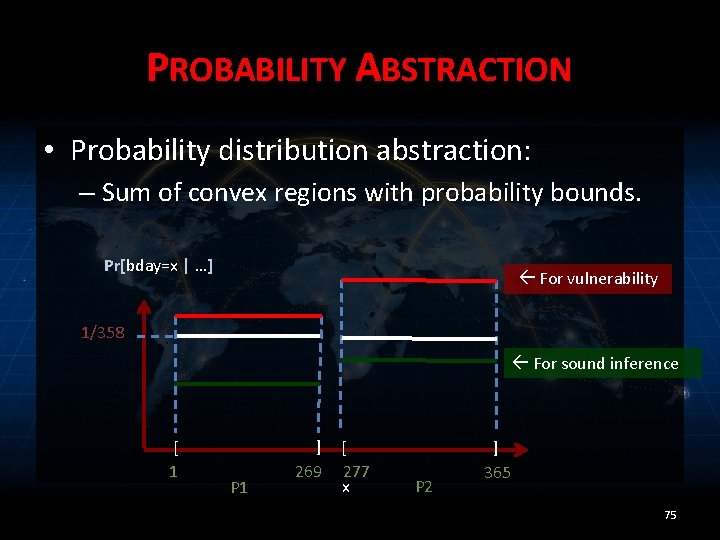

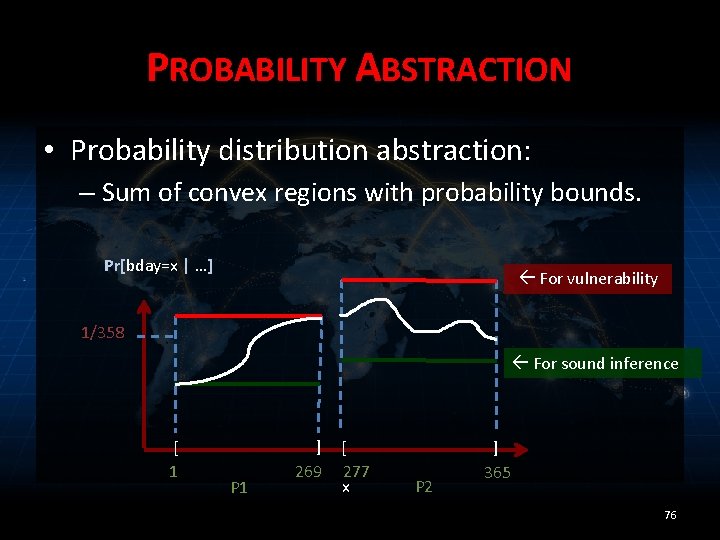

PROBABILITY ABSTRACTION
- Probability distribution abstraction:
  - Sum of convex regions with probability bounds.

[Plot: Pr[bday=x | …] against x, with regions P1 = [1, 269] and P2 = [277, 365] at 1/358; annotations: “For vulnerability”, “For sound inference”]

75-76

PROBABILITY ABSTRACTION
- Probability distribution abstraction:
  - Can represent distributions over large state spaces efficiently
  - Do not need to know exact prior

77

MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
- [CSF 11, JCS 13]
  - Limit risk and computational aspects of approach
    - Simulatable enforcement of risk limit
    - Probabilistic abstract interpreter based on intervals, octagons, and polyhedra
    - Approximation: tradeoff between precision and performance
    - Inference sound relative to vulnerability

78

MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
- [CSF 11, JCS 13]
  - Limit risk and computational aspects of approach
- [PLAS 12]
  - Limit risk for collaborative queries
- [S&P 14, FCS 14]
  - Measure risk when secrets change over time

79

MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
- [CSF 11, JCS 13]
  - Limit risk and computational aspects of approach
- [PLAS 12]
  - Limit risk for collaborative queries
  - With: Michael Hicks (UMD), Jonathan Katz (UMD), Mudhakar Srivatsa (IBM TJ Watson Research Center)
- [S&P 14, FCS 14]
  - Measure risk when secrets change over time

80

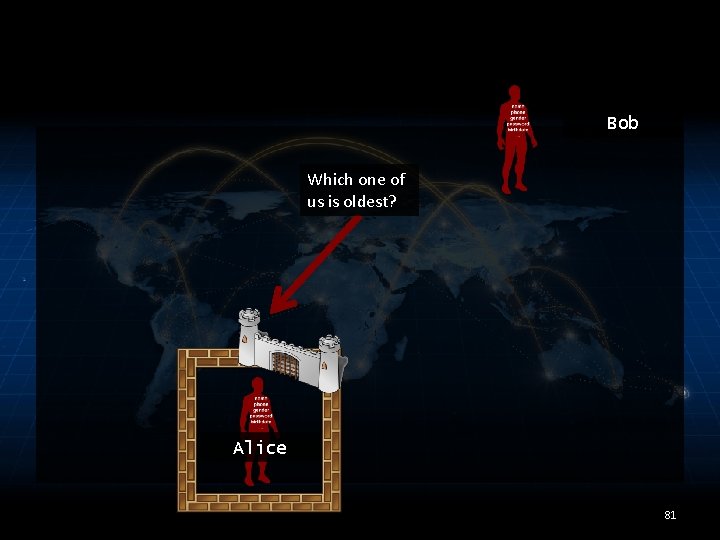

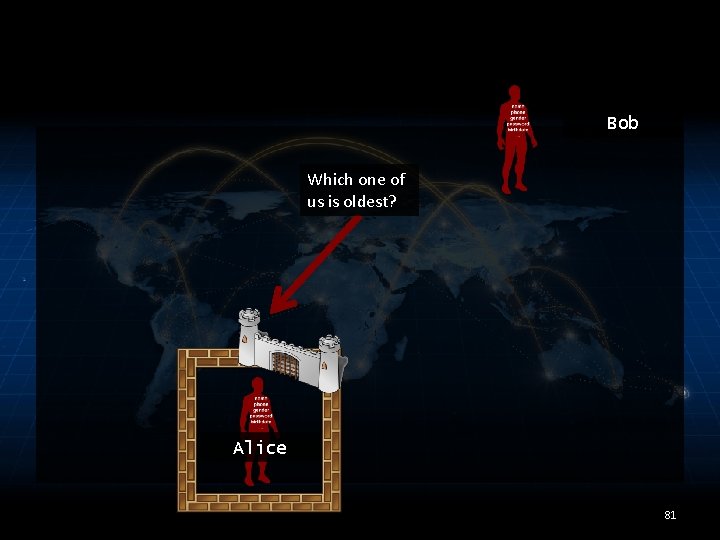

Bob Which one of us is oldest? Alice 81

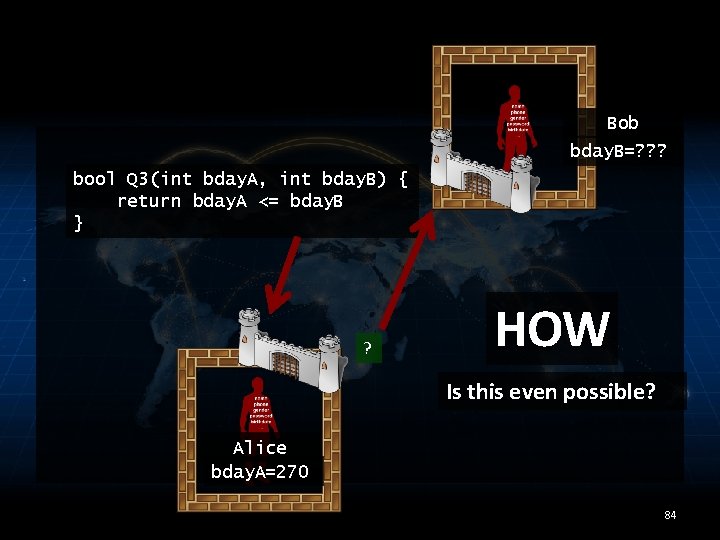

Bob bday_B=300 `bool Q3(int bday_A, int bday_B) { return bday_A <= bday_B; }` ? Alice bday_A=270 82

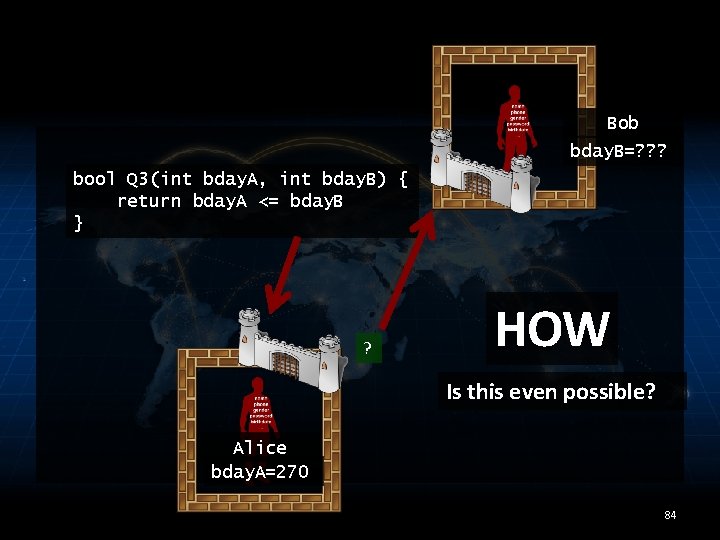

Bob bday_B=??? `bool Q3(int bday_A, int bday_B) { return bday_A <= bday_B; }` ? Alice bday_A=270 83

Bob bday_B=??? `bool Q3(int bday_A, int bday_B) { return bday_A <= bday_B; }` ? HOW? Is this even possible? Alice bday_A=270 84

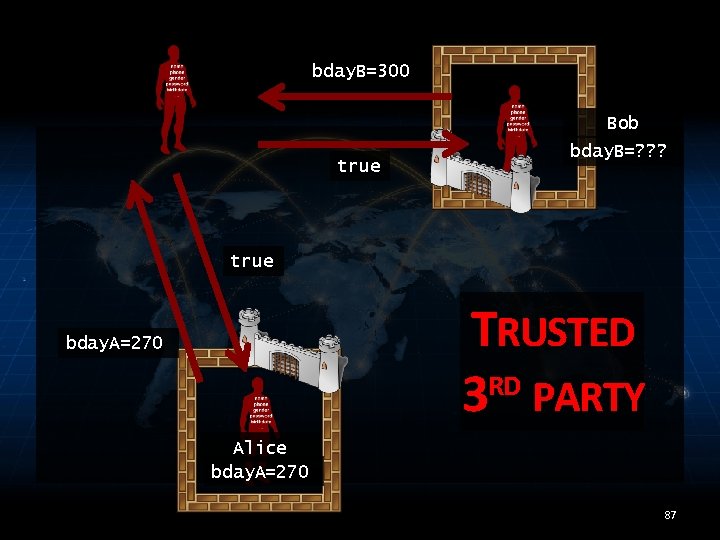

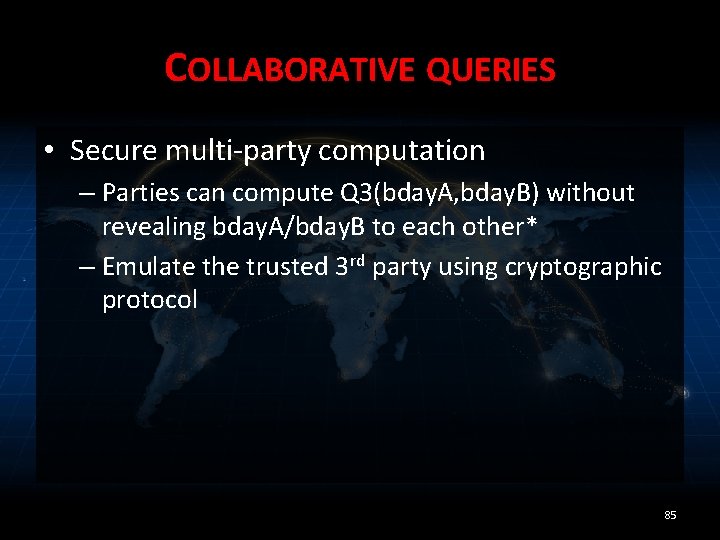

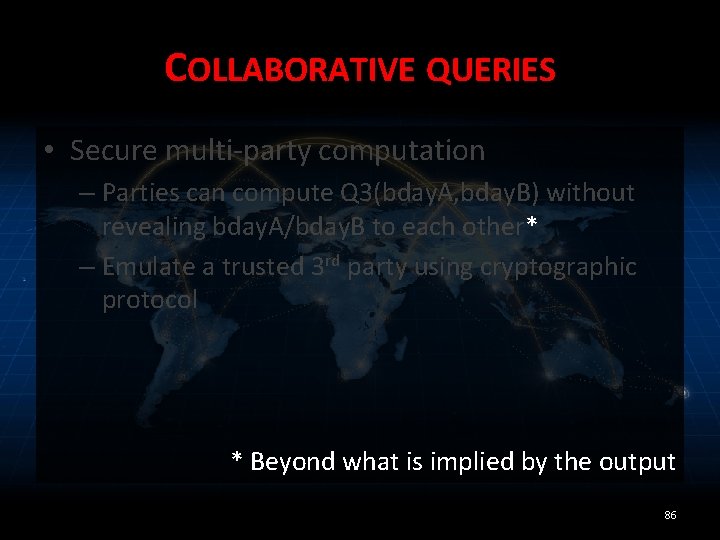

COLLABORATIVE QUERIES
- Secure multi-party computation
  - Parties can compute Q3(bday_A, bday_B) without revealing bday_A/bday_B to each other*
  - Emulate a trusted 3rd party using cryptographic protocol

85

COLLABORATIVE QUERIES
- Secure multi-party computation
  - Parties can compute Q3(bday_A, bday_B) without revealing bday_A/bday_B to each other*
  - Emulate a trusted 3rd party using cryptographic protocol

\* Beyond what is implied by the output

86
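The trusted third party that a secure multi-party protocol emulates can be written down as an ordinary function. The sketch below shows only this ideal behavior and contains no cryptography; the name `trusted_third_party` and the dictionary of per-party results are assumptions for illustration.

```python
def q3(bday_a: int, bday_b: int) -> bool:
    """Query Q3 from the slides."""
    return bday_a <= bday_b


def trusted_third_party(alice_input: int, bob_input: int) -> dict:
    """Ideal functionality: sees both secrets, releases only the query output."""
    output = q3(alice_input, bob_input)
    # Neither party receives the other's input, only the shared result.
    return {"alice": output, "bob": output}


print(trusted_third_party(270, 300))  # {'alice': True, 'bob': True}
```

Each party can still infer whatever the output itself implies about the other's input, as the footnote on the slide notes.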

bday_B=300 Bob true bday_B=??? true TRUSTED 3RD PARTY bday_A=270 Alice bday_A=270 87-88

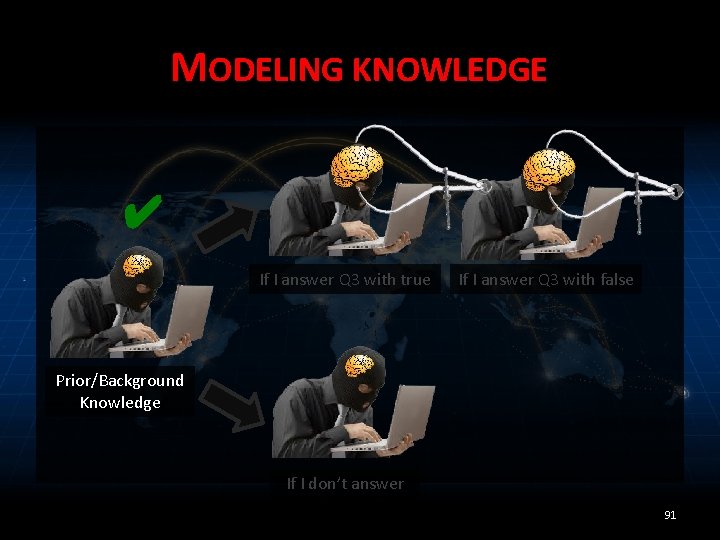

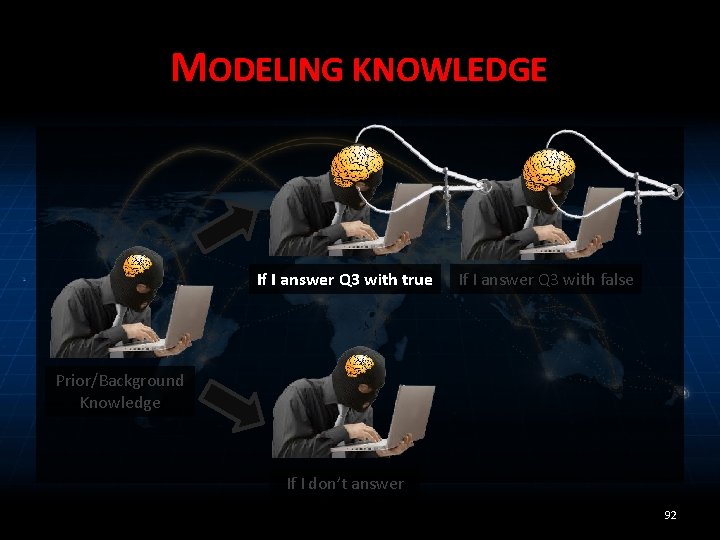

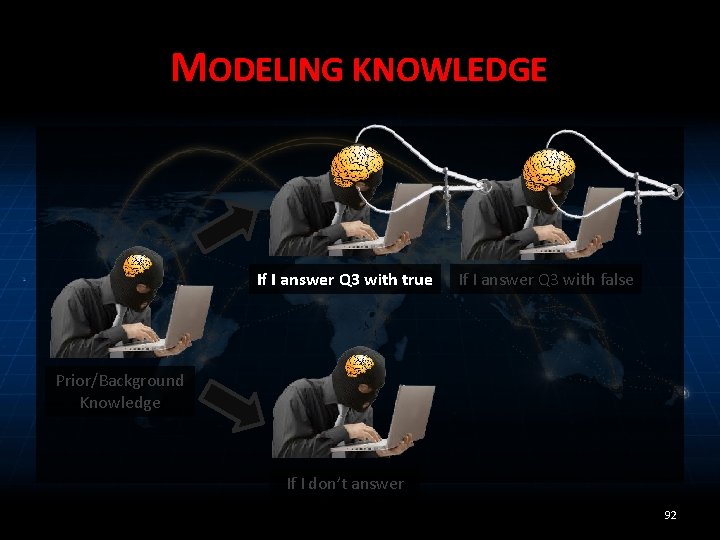

MODEL/MEASURE/LIMIT
- If I answer Q3 with true
- If I answer Q3 with false
- Prior/Background Knowledge
- If I don’t answer

89

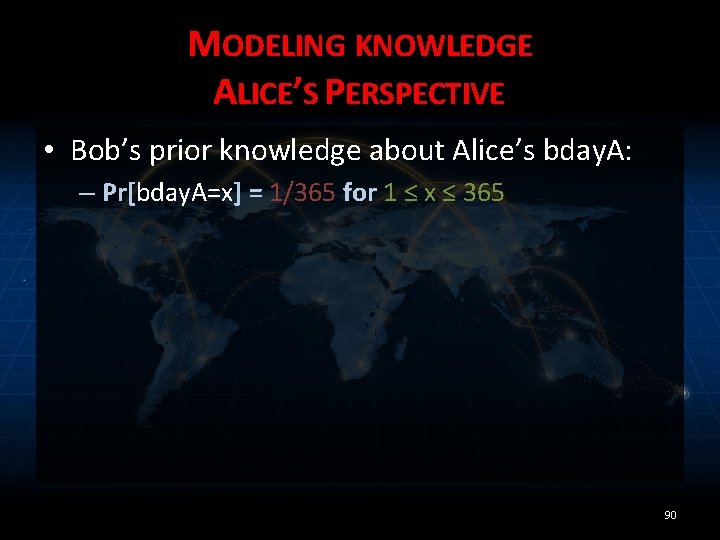

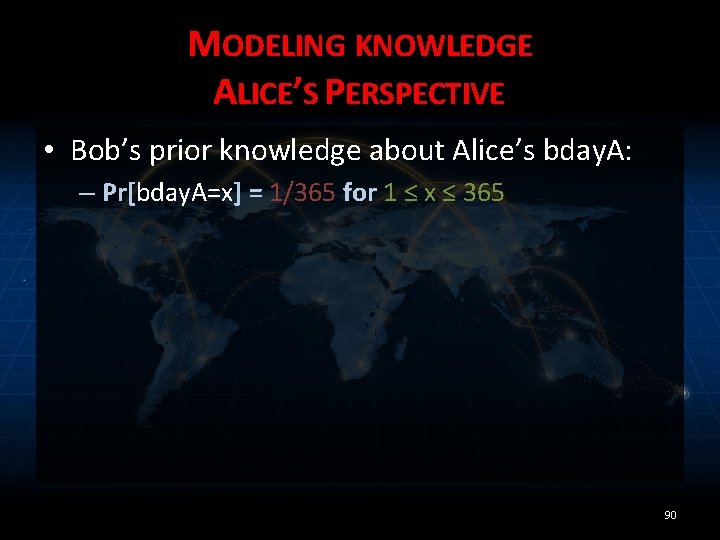

MODELING KNOWLEDGE (ALICE’S PERSPECTIVE)
- Bob’s prior knowledge about Alice’s bday_A:
  - Pr[bday_A=x] = 1/365 for 1 ≤ x ≤ 365

90

MODELING KNOWLEDGE ✔
- If I answer Q3 with true
- If I answer Q3 with false
- Prior/Background Knowledge
- If I don’t answer

91

MODELING KNOWLEDGE
- If I answer Q3 with true
- If I answer Q3 with false
- Prior/Background Knowledge
- If I don’t answer

92


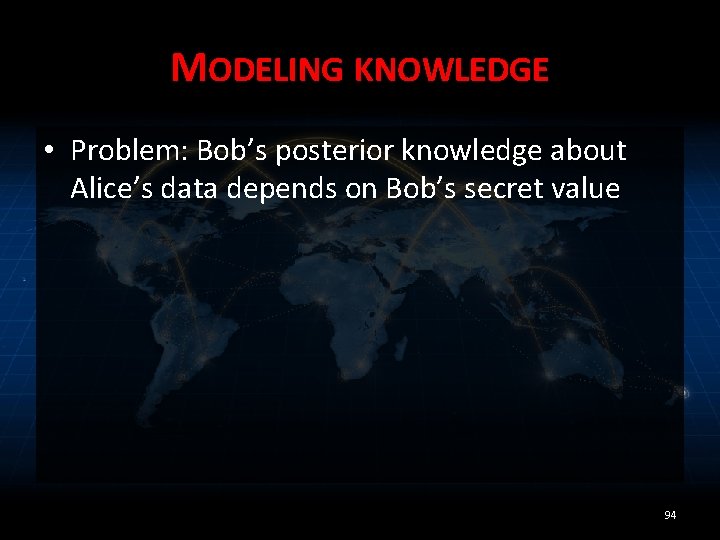

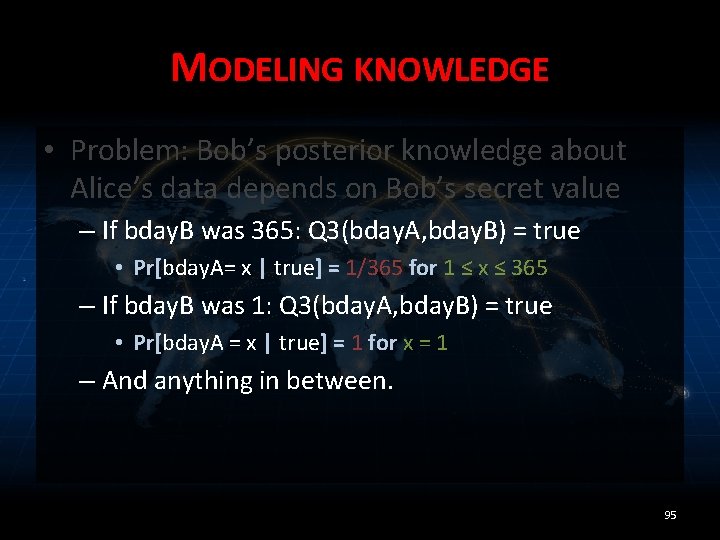

MODELING KNOWLEDGE
- Bob’s prior knowledge about Alice’s bday_A:
  - Pr[bday_A=x] = 1/365 for 1 ≤ x ≤ 365
- Posterior knowledge given Q3(bday_A, bday_B) = true (so bday_A ≤ bday_B)
  - Pr[bday_A=x | true] = ??? for ???

93

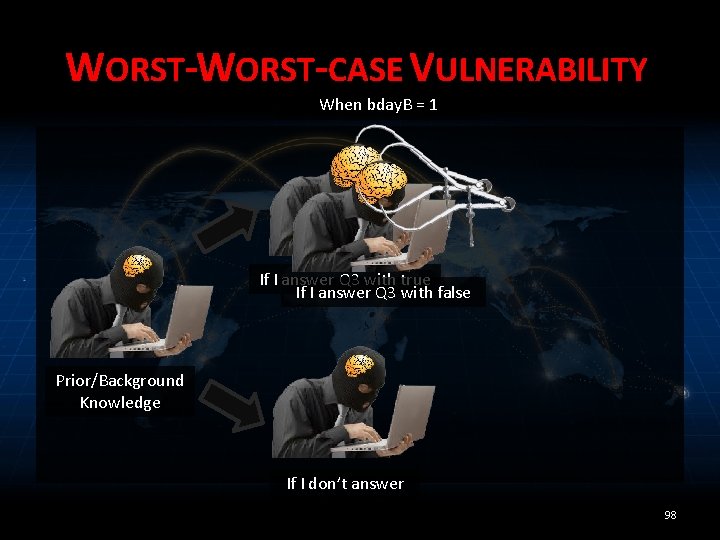

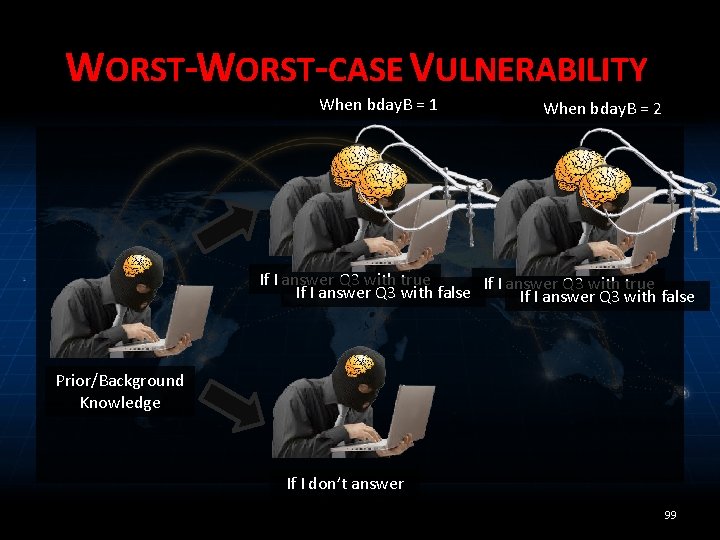

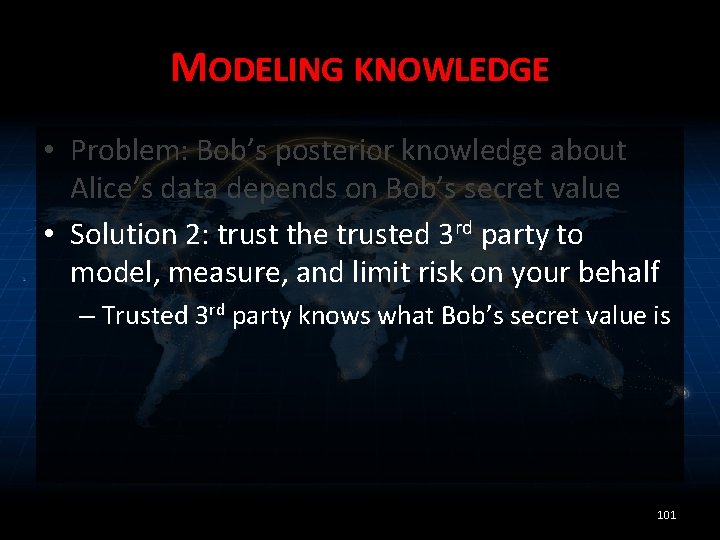

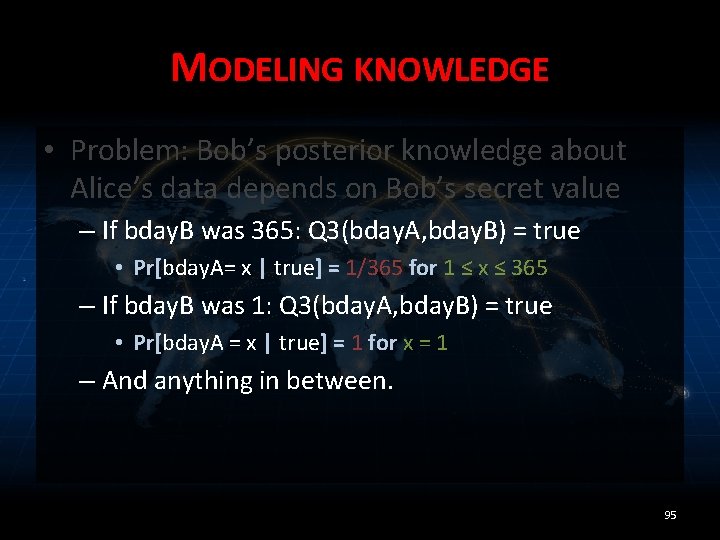

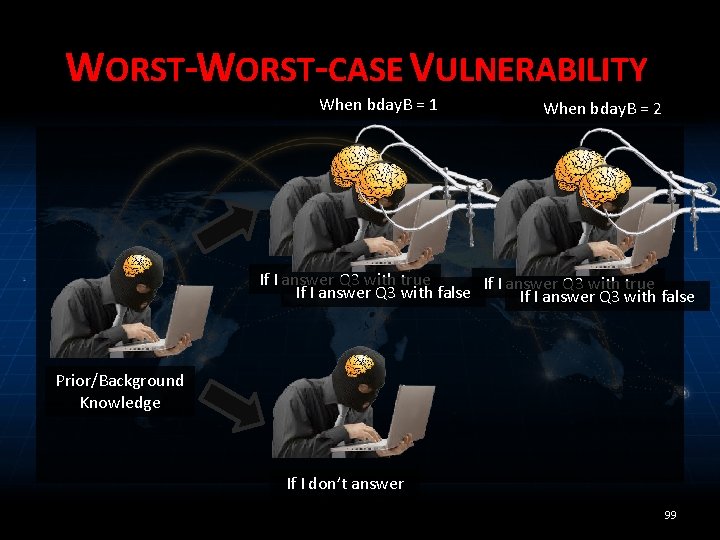

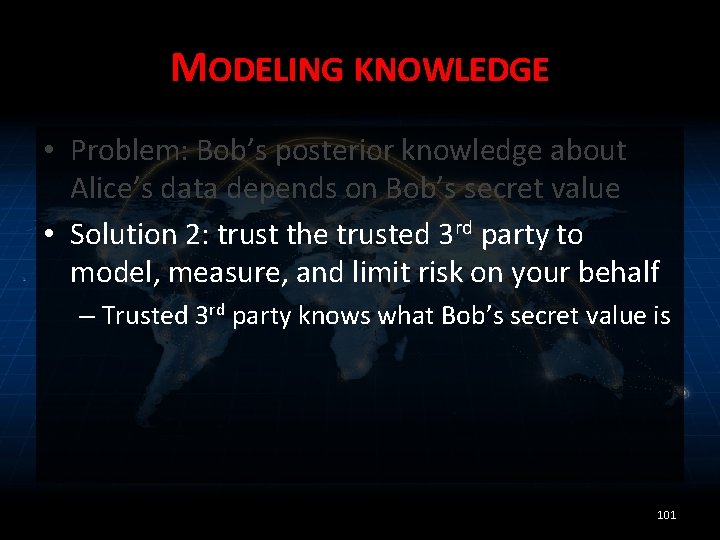

MODELING KNOWLEDGE
- Problem: Bob’s posterior knowledge about Alice’s data depends on Bob’s secret value

94

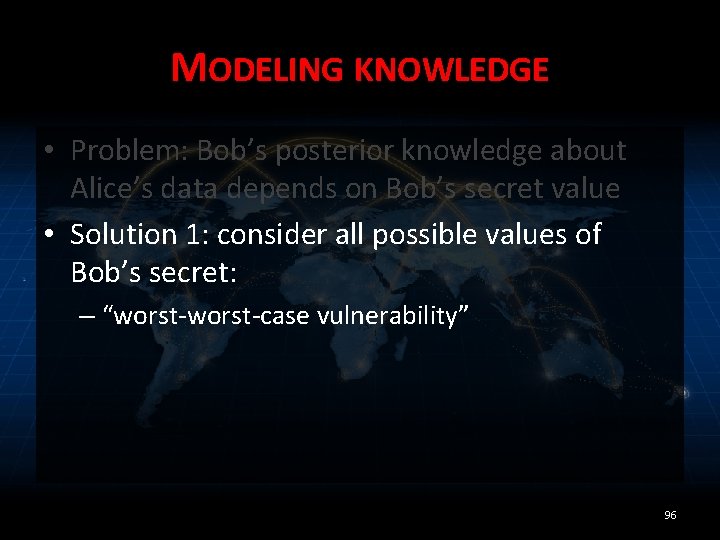

MODELING KNOWLEDGE
- Problem: Bob’s posterior knowledge about Alice’s data depends on Bob’s secret value
  - If bday_B was 365: Q3(bday_A, bday_B) = true
    - Pr[bday_A=x | true] = 1/365 for 1 ≤ x ≤ 365
  - If bday_B was 1: Q3(bday_A, bday_B) = true
    - Pr[bday_A=x | true] = 1 for x = 1
  - And anything in between.

95
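The dependence on Bob's secret can be checked by computing Bob's posterior about bday_A for a few values of bday_B. This is a sketch by enumeration under the uniform prior from the slides; `bob_posterior` is a hypothetical helper name.

```python
from fractions import Fraction


def q3(bday_a, bday_b):
    return bday_a <= bday_b


def bob_posterior(prior_a, bday_b, output):
    """Bob's belief about bday_A after seeing the output, given his own bday_B."""
    consistent = {a: p for a, p in prior_a.items() if q3(a, bday_b) == output}
    total = sum(consistent.values())
    if total == 0:
        raise ValueError("output is impossible for this bday_B")
    return {a: p / total for a, p in consistent.items()}


prior_a = {d: Fraction(1, 365) for d in range(1, 366)}

for bday_b in (365, 300, 1):
    posterior = bob_posterior(prior_a, bday_b, True)
    print(bday_b, max(posterior.values()))
# 365 1/365
# 300 1/300
# 1 1
```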

MODELING KNOWLEDGE
- Problem: Bob’s posterior knowledge about Alice’s data depends on Bob’s secret value
- Solution 1: consider all possible values of Bob’s secret:
  - “worst-case vulnerability”

96

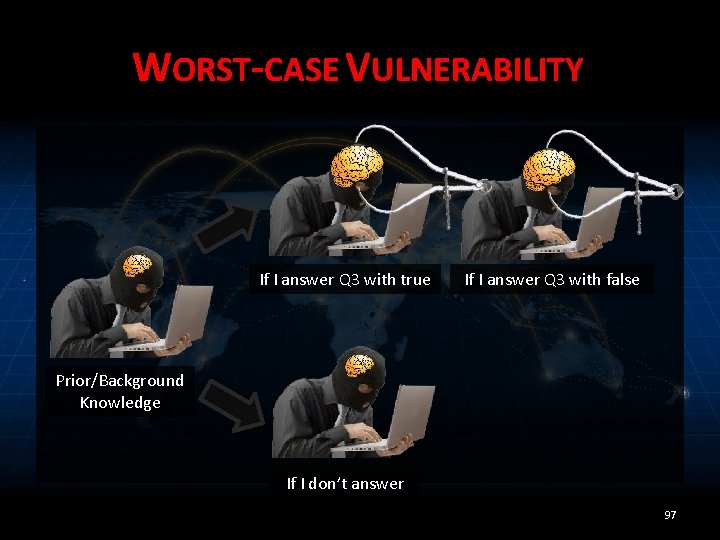

WORST-CASE VULNERABILITY
- If I answer Q3 with true
- If I answer Q3 with false
- Prior/Background Knowledge
- If I don’t answer

97

WORST-CASE VULNERABILITY
- When bday_B = 1
- If I answer Q3 with true
- If I answer Q3 with false
- Prior/Background Knowledge
- If I don’t answer

98

WORST-CASE VULNERABILITY
- When bday_B = 1
- When bday_B = 2
- If I answer Q3 with true
- If I answer Q3 with false
- Prior/Background Knowledge
- If I don’t answer

99

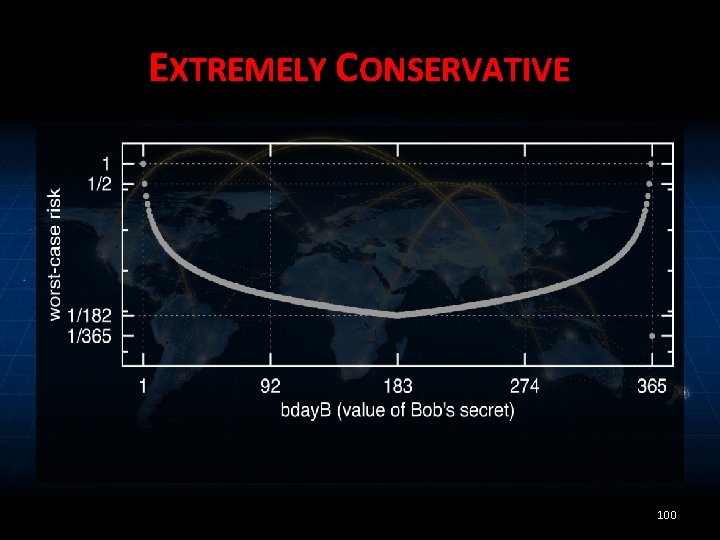

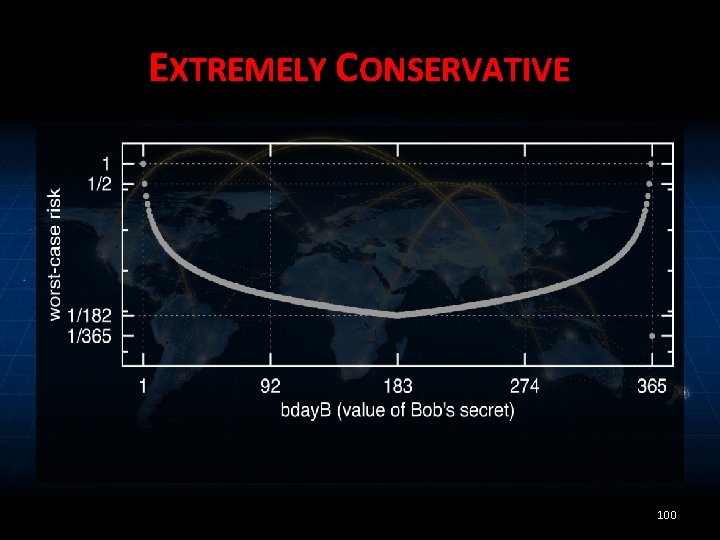

EXTREMELY CONSERVATIVE 100
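The worst-case reasoning on the preceding slides can be sketched by maximizing over every value Bob's secret might take and every output. The function name and the brute-force enumeration are assumptions for illustration only.

```python
from fractions import Fraction


def q3(bday_a, bday_b):
    return bday_a <= bday_b


def worst_case_over_bob(prior_a, bob_values):
    """Max posterior vulnerability of bday_A over all bday_B and all outputs."""
    worst = Fraction(0)
    for bday_b in bob_values:
        for output in (True, False):
            consistent = [p for a, p in prior_a.items() if q3(a, bday_b) == output]
            if not consistent:
                continue  # this output cannot occur for this bday_B
            # Posterior probability of the most likely value after renormalizing.
            worst = max(worst, max(consistent) / sum(consistent))
    return worst


prior_a = {d: Fraction(1, 365) for d in range(1, 366)}
print(worst_case_over_bob(prior_a, range(1, 366)))  # 1
```

Since the worst case is already certainty (for example when bday_B = 1 and the output is true), any threshold below 1 makes Alice reject Q3 outright.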

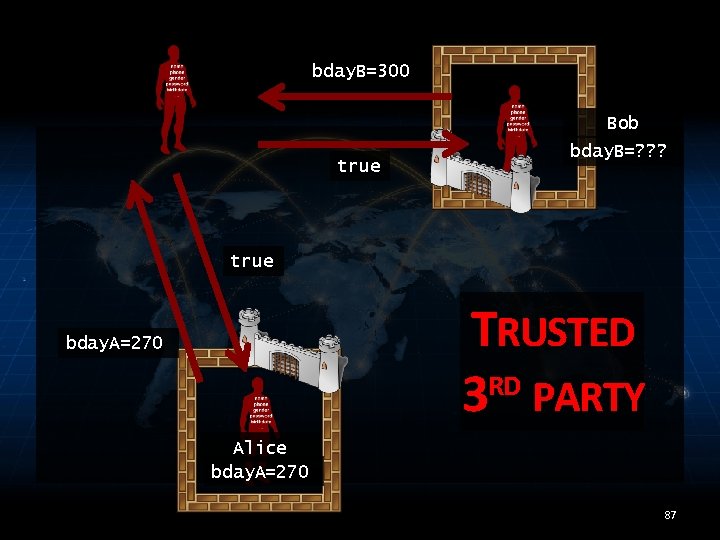

MODELING KNOWLEDGE
- Problem: Bob’s posterior knowledge about Alice’s data depends on Bob’s secret value
- Solution 2: trust the trusted 3rd party to model, measure, and limit risk on your behalf
  - Trusted 3rd party knows what Bob’s secret value is

101

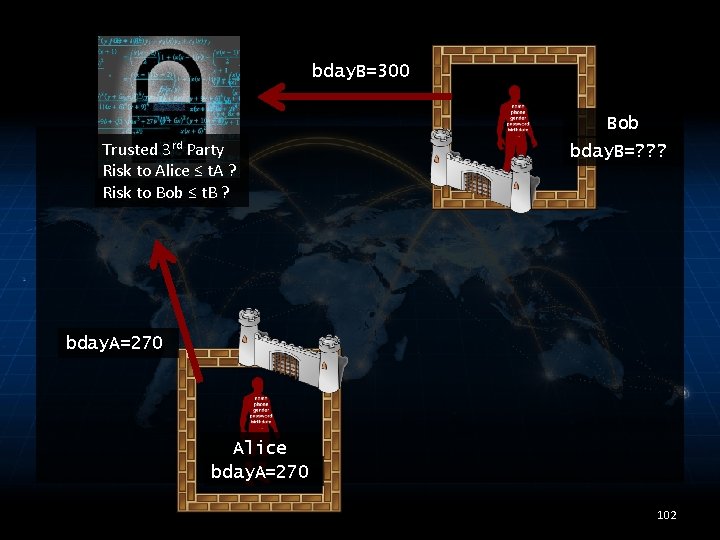

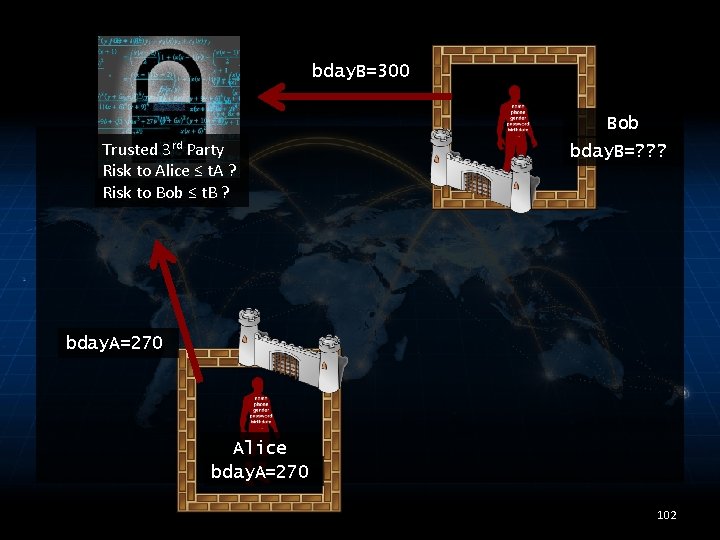

bday_B=300 Bob Trusted 3rd Party Risk to Alice ≤ t_A? Risk to Bob ≤ t_B? bday_B=??? bday_A=270 Alice bday_A=270 102

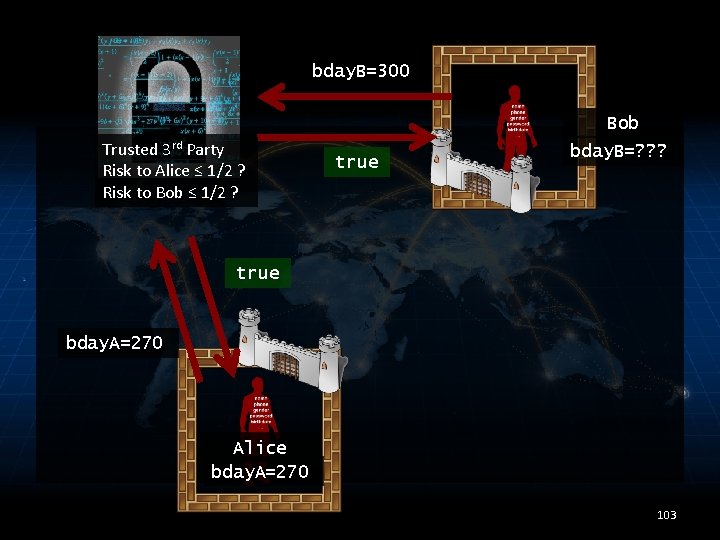

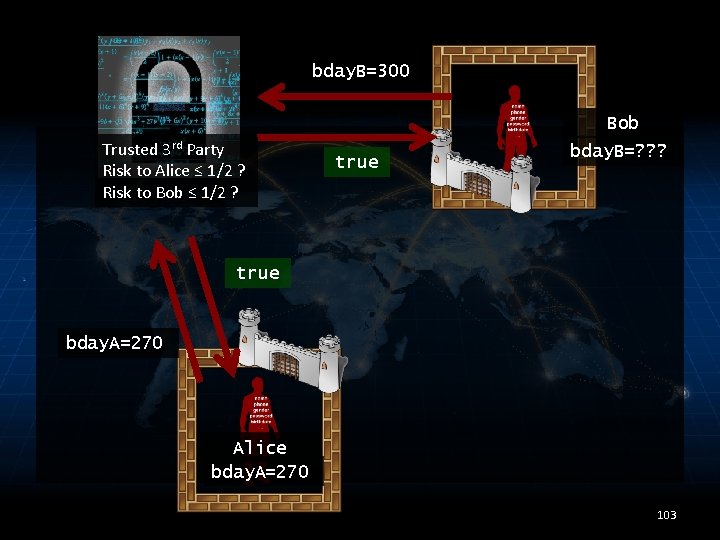

bday_B=300 Bob Trusted 3rd Party Risk to Alice ≤ 1/2? Risk to Bob ≤ 1/2? true bday_B=??? true bday_A=270 Alice bday_A=270 103

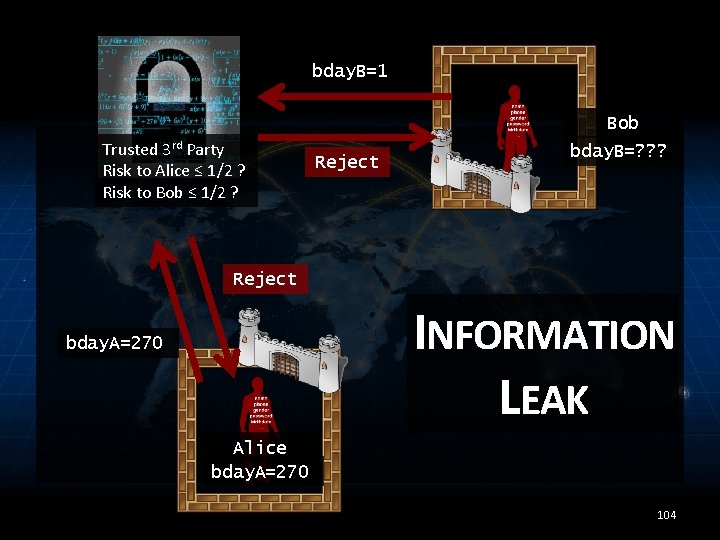

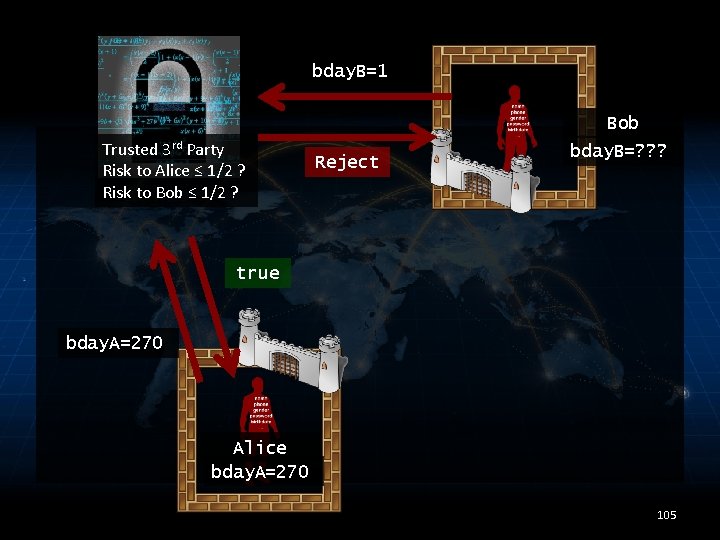

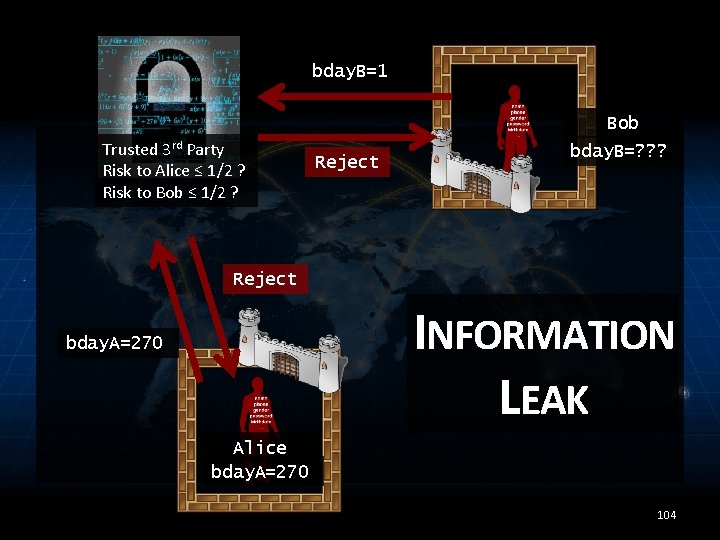

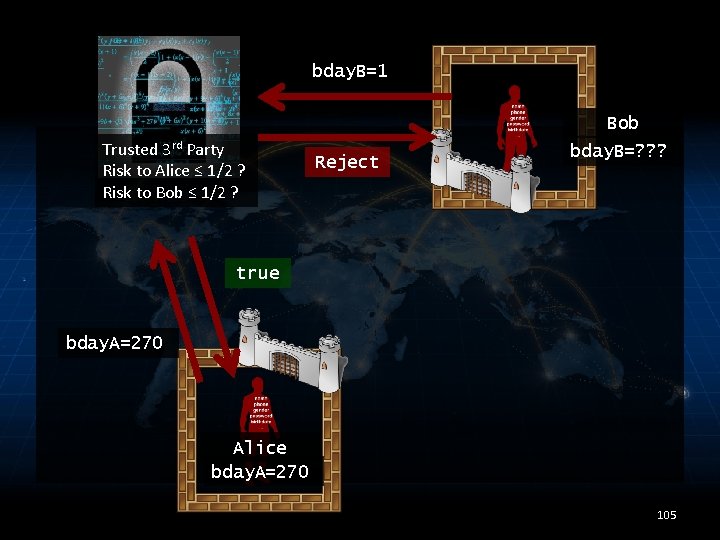

bday_B=1 Bob Trusted 3rd Party Risk to Alice ≤ 1/2? Risk to Bob ≤ 1/2? Reject bday_B=??? Reject INFORMATION LEAK bday_A=270 Alice bday_A=270 104

bday_B=1 Bob Trusted 3rd Party Risk to Alice ≤ 1/2? Risk to Bob ≤ 1/2? Reject bday_B=??? true bday_A=270 Alice bday_A=270 105

MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
- [PLAS 12]
  - Limit risk for collaborative queries
    - Approach 1: Belief sets: tracking possible states of knowledge
    - Approach 2: Knowledge tracking as secure computation
    - Simulatable enforcement of risk limit
    - Experimental comparison of risk measurement for the two approaches.

106

MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
- [CSF 11, JCS 13]
  - Limit risk and computational aspects of approach
- [PLAS 12]
  - Limit risk for collaborative queries
- [S&P 14, FCS 14]
  - Measure risk when secrets change over time

107

MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
- [CSF 11, JCS 13]
  - Limit risk and computational aspects of approach
- [PLAS 12]
  - Limit risk for collaborative queries
- [S&P 14, FCS 14]
  - Measure risk when secrets change over time
  - With: Mário Alvim (Universidade Federal de Minas Gerais), Michael Hicks (UMD), Michael Clarkson (Cornell)

108

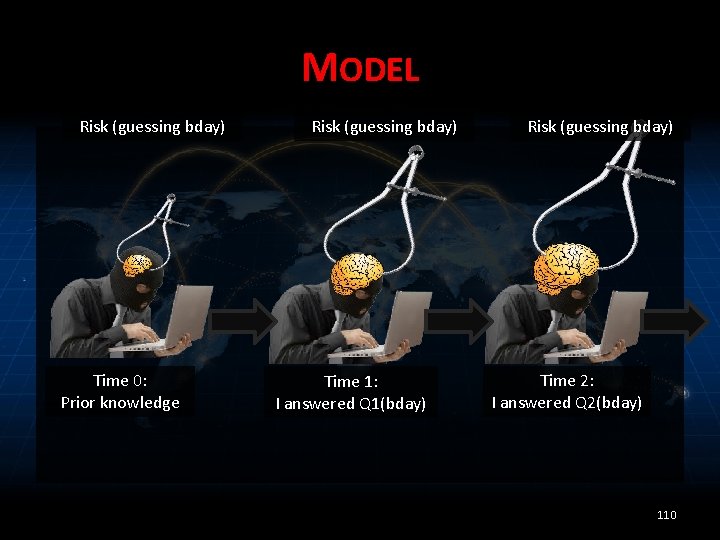

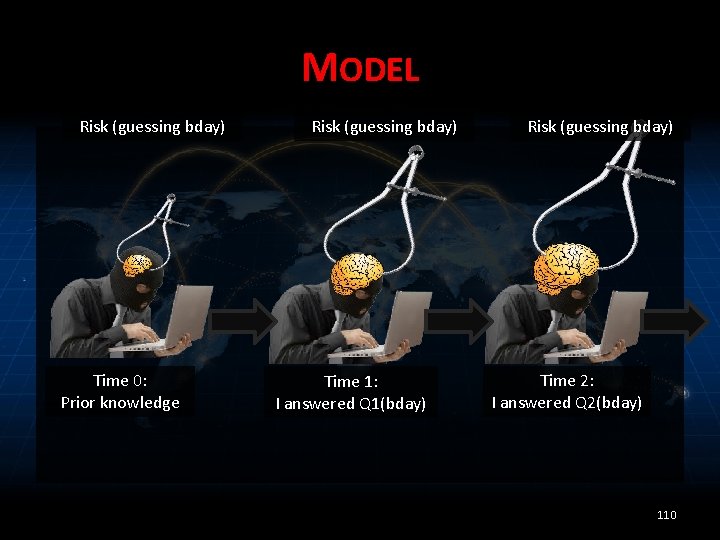

MODEL
- Time 0: Prior knowledge. Risk (guessing bday)
- Time 1: I answered Q1(bday). Risk (guessing bday)
- Time 2: I answered Q2(bday). Risk (guessing bday)

110

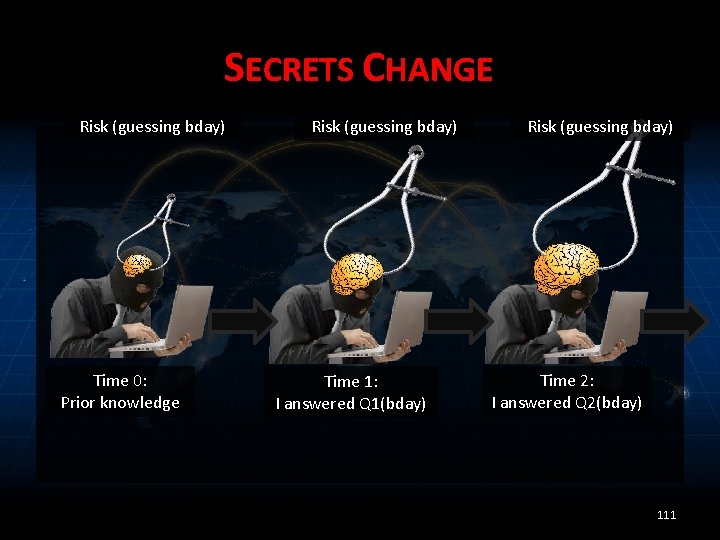

SECRETS CHANGE
- Time 0: Prior knowledge. Risk (guessing bday)
- Time 1: I answered Q1(bday). Risk (guessing bday)
- Time 2: I answered Q2(bday). Risk (guessing bday)

111

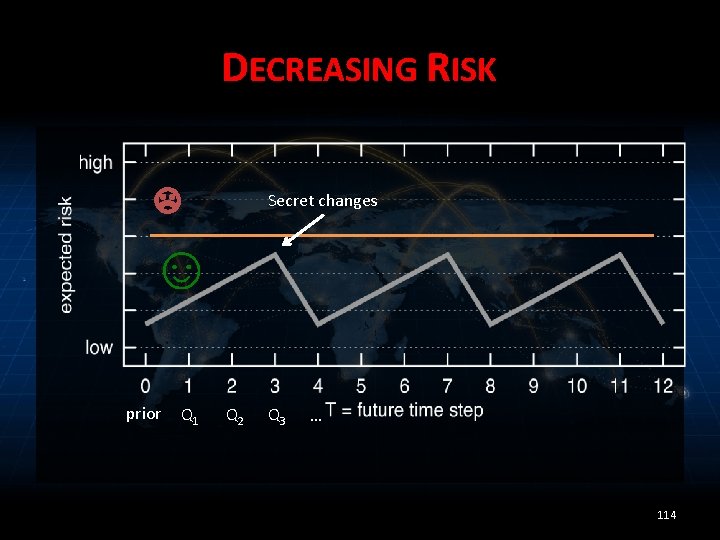

SECRETS CHANGE: MOVING TARGET
- Time 0: Prior knowledge. Risk (guessing loc_0)
- Time 1: I answered Q1(loc_1). Risk (guessing loc_1)
- Time 2: I answered Q2(loc_2). Risk (guessing loc_2)

113

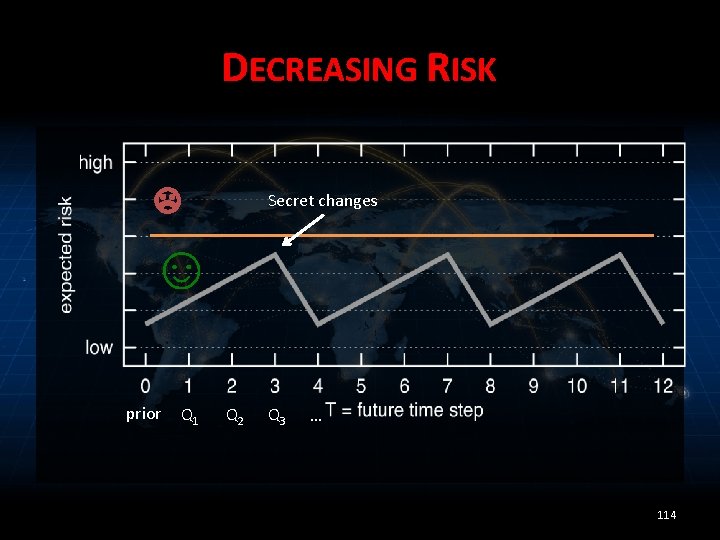

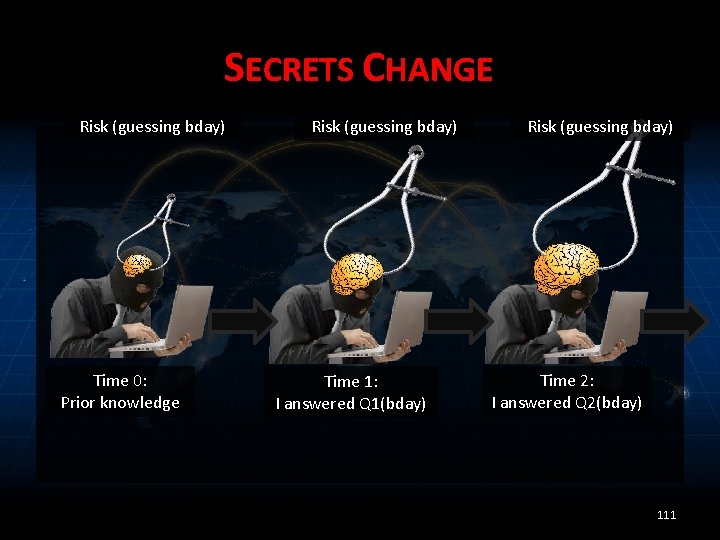

DECREASING RISK

[Plot: risk after prior, Q1, Q2, Q3, …, on a scale from ☺ to ☹; annotation: “Secret changes”]

114

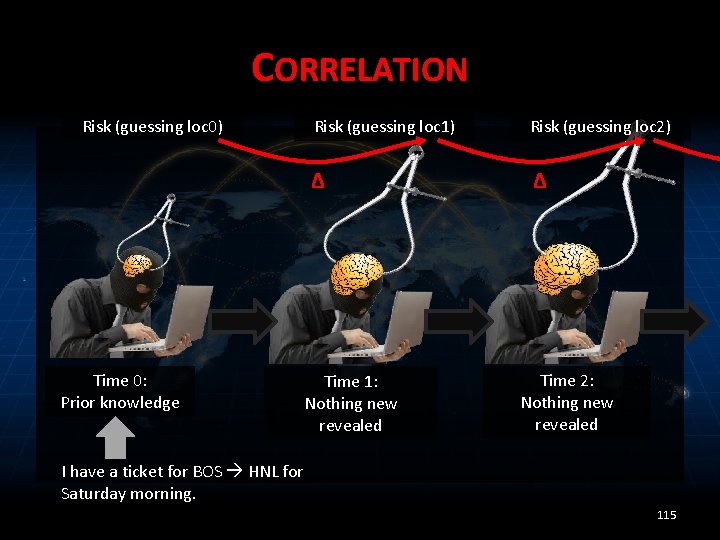

CORRELATION
- Time 0: Prior knowledge. Risk (guessing loc_0)
- Time 1: Nothing new revealed. Risk (guessing loc_1)
- Time 2: Nothing new revealed. Risk (guessing loc_2)
- Δ links consecutive time steps
- “I have a ticket for BOS→HNL for Saturday morning.”

115
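How risk evolves when nothing new is revealed depends on what the adversary knows about the change Δ. The sketch below pushes a belief over locations through one time step under two made-up transition models on a ring of four cells. The models, the ring, and the function names are all assumptions for illustration and do not come from the talk.

```python
from collections import defaultdict
from fractions import Fraction


def step(belief, transition):
    """Advance a belief over locations by one time step.

    transition(loc) returns {next_loc: probability}.
    """
    nxt = defaultdict(Fraction)
    for loc, p in belief.items():
        for new_loc, q in transition(loc).items():
            nxt[new_loc] += p * q
    return dict(nxt)


def vulnerability(belief):
    return max(belief.values())


def random_walk(loc):
    """Hypothetical Δ: stay, or move one cell right, each with probability 1/2."""
    return {loc: Fraction(1, 2), (loc + 1) % 4: Fraction(1, 2)}


def known_itinerary(loc):
    """Hypothetical Δ known exactly to the adversary: always move one cell right."""
    return {(loc + 1) % 4: Fraction(1)}


belief0 = {0: Fraction(1)}  # adversary knows loc_0 for certain

print(vulnerability(step(belief0, random_walk)))      # 1/2
print(vulnerability(step(belief0, known_itinerary)))  # 1
```

With an unpredictable Δ the old knowledge goes stale, while a known Δ lets the adversary carry its knowledge of loc_0 forward to loc_1.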

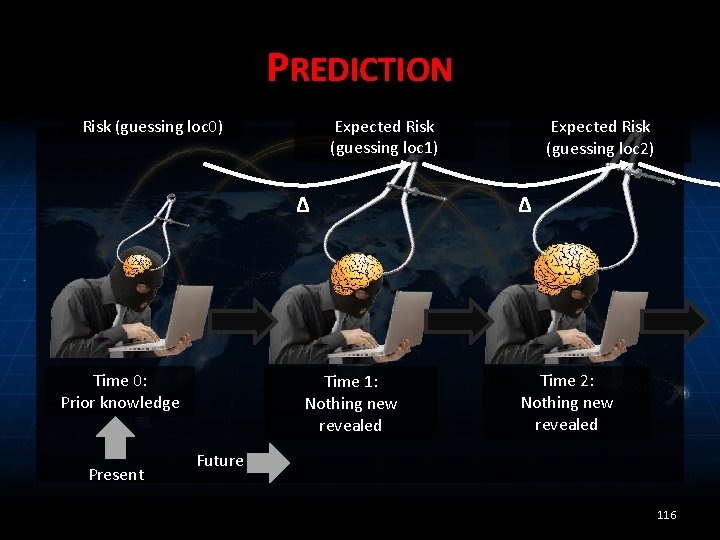

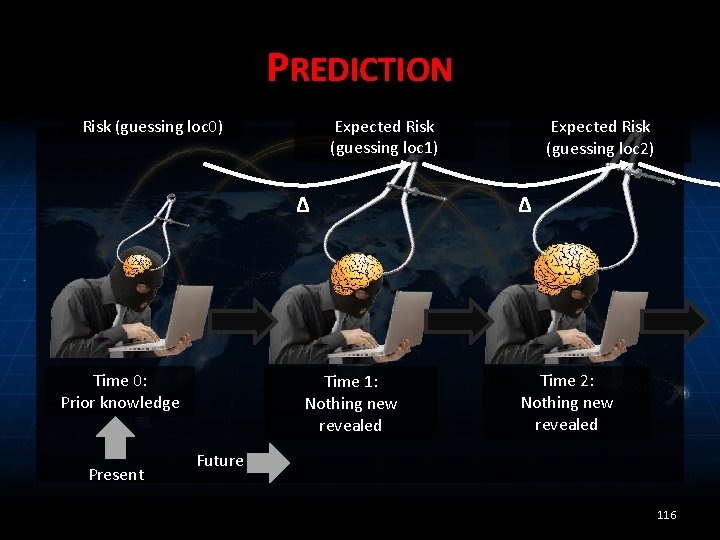

PREDICTION
- Time 0 (present): Prior knowledge. Risk (guessing loc_0)
- Time 1 (future): Nothing new revealed. Expected risk (guessing loc_1)
- Time 2 (future): Nothing new revealed. Expected risk (guessing loc_2)
- Δ links consecutive time steps

116

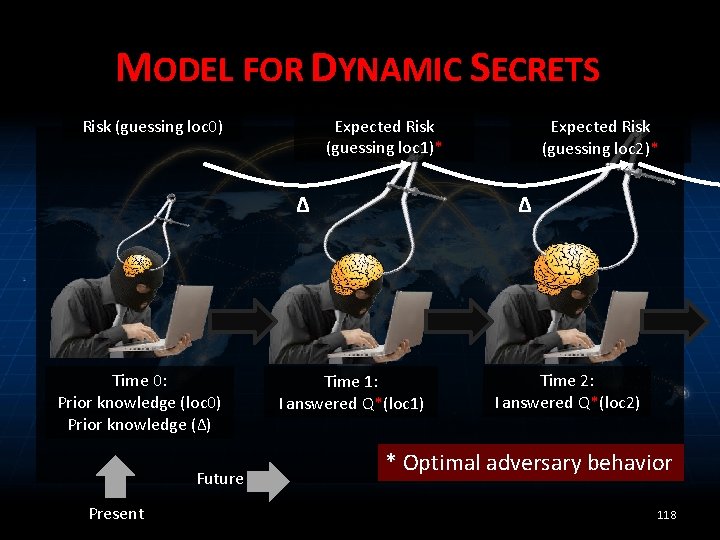

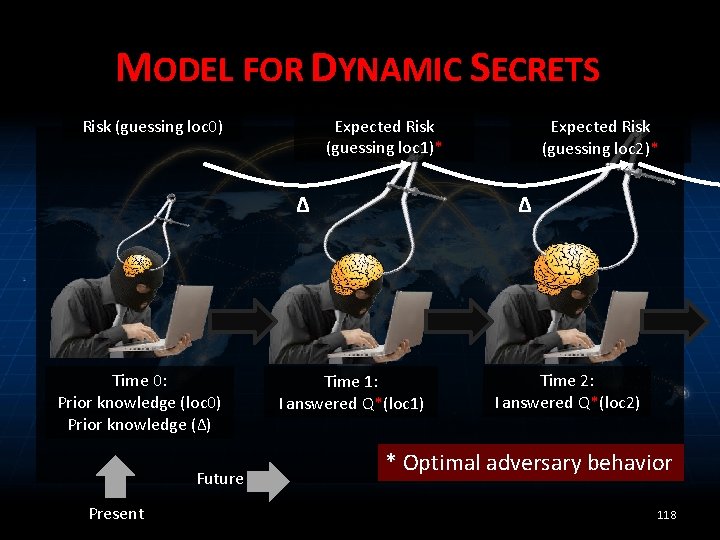

MODEL FOR DYNAMIC SECRETS
- Time 0 (present): Prior knowledge (loc_0), prior knowledge (Δ). Risk (guessing loc_0)
- Time 1 (future): I answered Q*(loc_1). Expected risk (guessing loc_1)*
- Time 2 (future): I answered Q*(loc_2). Expected risk (guessing loc_2)*
- Δ links consecutive time steps

\* Optimal adversary behavior

118

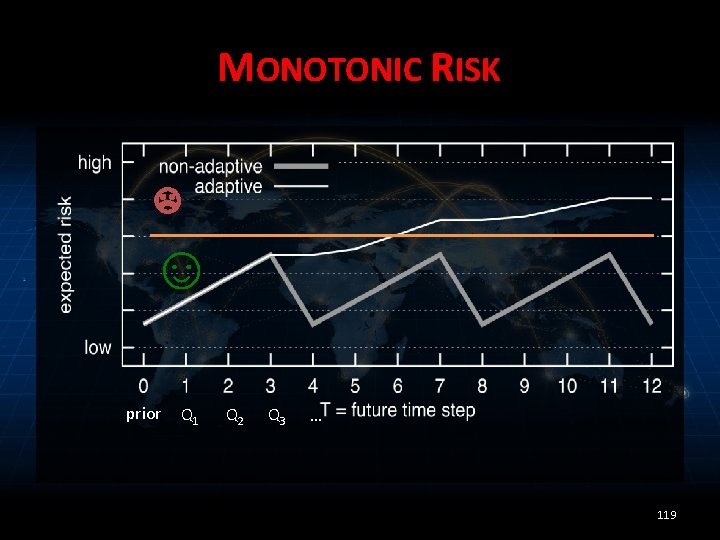

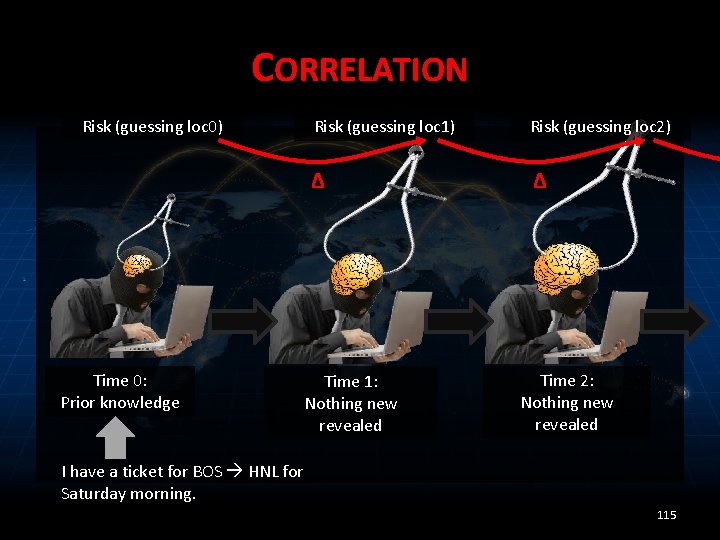

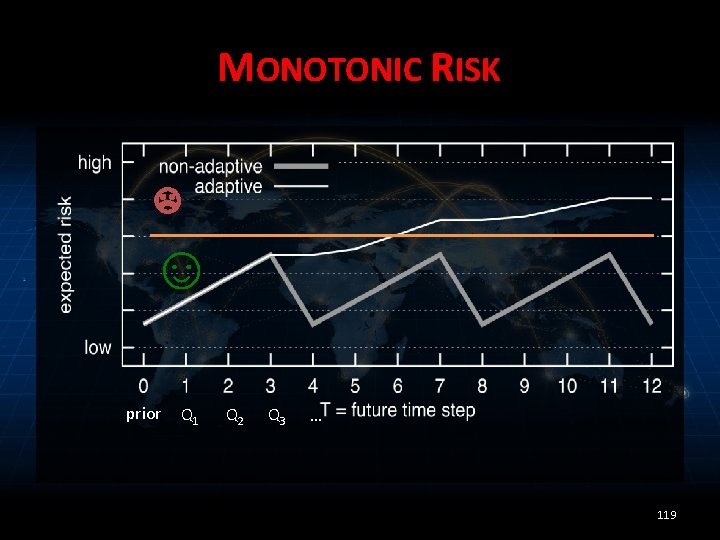

MONOTONIC RISK

[Plot: risk after prior, Q1, Q2, Q3, …, on a scale from ☺ to ☹]

119

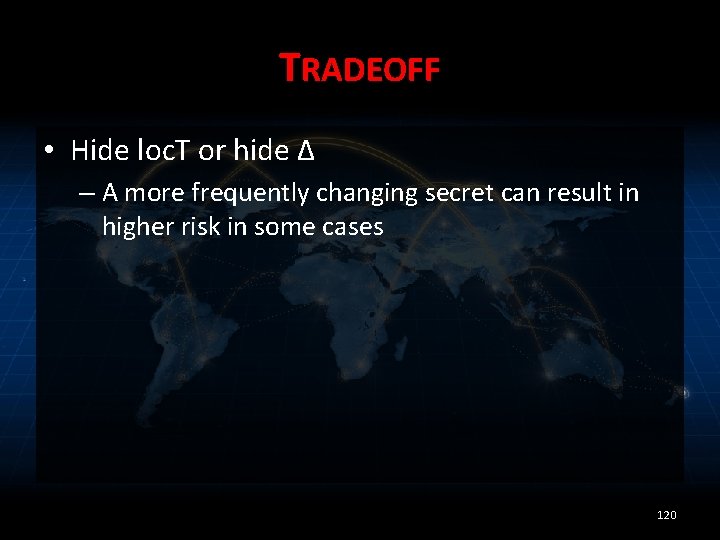

TRADEOFF
- Hide loc_T or hide Δ
  - A more frequently changing secret can result in higher risk in some cases

120

MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
- [S&P 14, FCS 14]
  - Measure risk when secrets change over time
    - Model of risk with dynamic secrets and adaptive adversaries
    - Model separating risk to user from gain of adversary
    - Implementation and experimentation with small scenarios

121

MODELING, MEASURING, AND LIMITING ADVERSARY KNOWLEDGE
- [CSF 11, JCS 13]
  - Limit risk and computational aspects of approach
- [PLAS 12]
  - Limit risk for collaborative queries
- [S&P 14, FCS 14]
  - Measure risk when secrets change over time

122

PRESENT/FUTURE
- Probabilistic computation, SMC (not mentioned)
  - Make go fast(er)
- Changing secrets
  - Characterize/avoid pathological cases
- Active defense
  - Game theory

123

SHARING ON YOUR OWN TERMS Piotr (Peter) Mardziel piotrm@gmail.com 124