KNOWLEDGE-ORIENTED MULTIPARTY COMPUTATION Piotr (Peter) Mardziel, Michael Hicks, Jonathan Katz, Mudhakar Srivatsa (IBM TJ Watson)

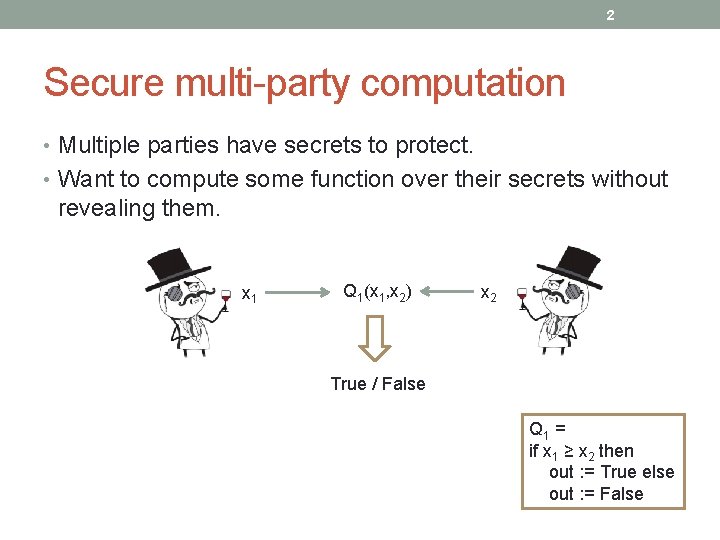

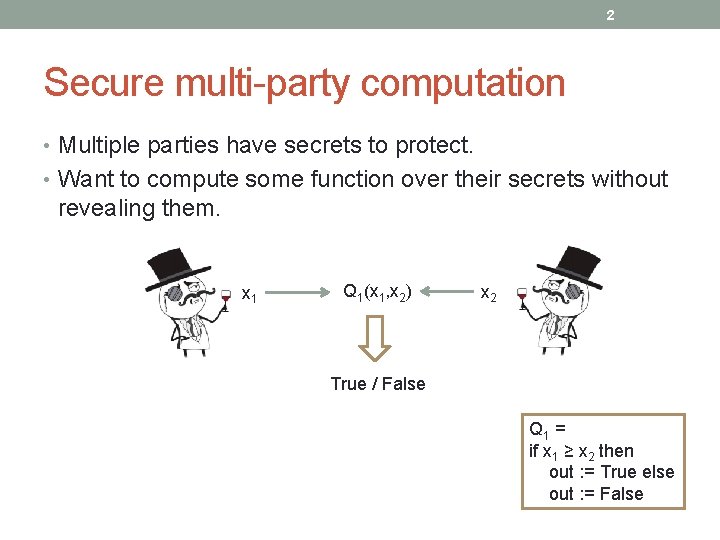

2 Secure multi-party computation
- Multiple parties have secrets to protect.
- They want to compute some function over their secrets without revealing them.
- Diagram: one party holds x₁, the other holds x₂; they compute Q₁(x₁, x₂), whose output is True or False.
- `Q1 = if x1 ≥ x2 then out := True else out := False`

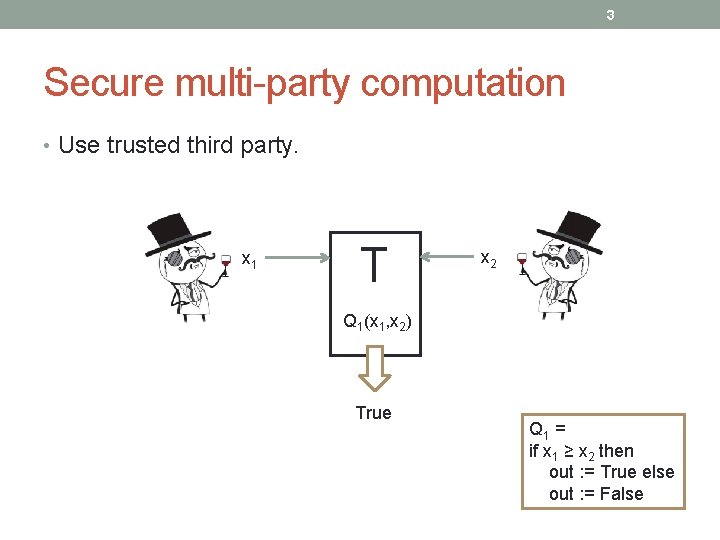

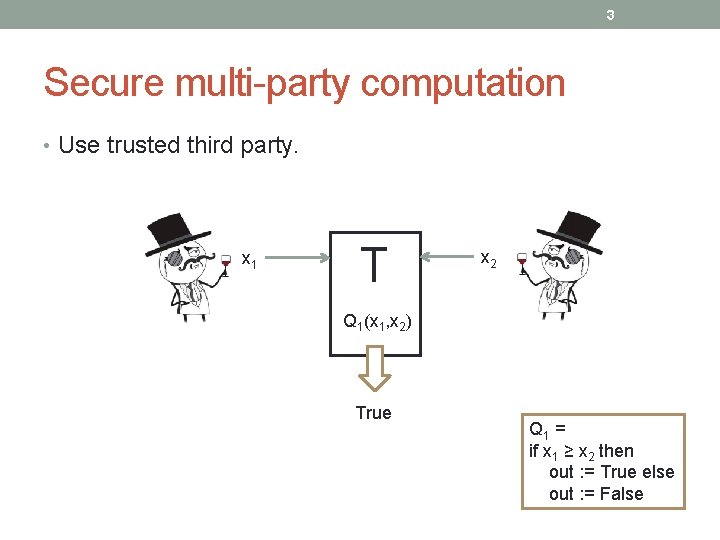

3 Secure multi-party computation
- Use a trusted third party.
- Diagram: x₁ and x₂ are sent to a trusted party T, which computes Q₁(x₁, x₂) and returns True.
- `Q1 = if x1 ≥ x2 then out := True else out := False`

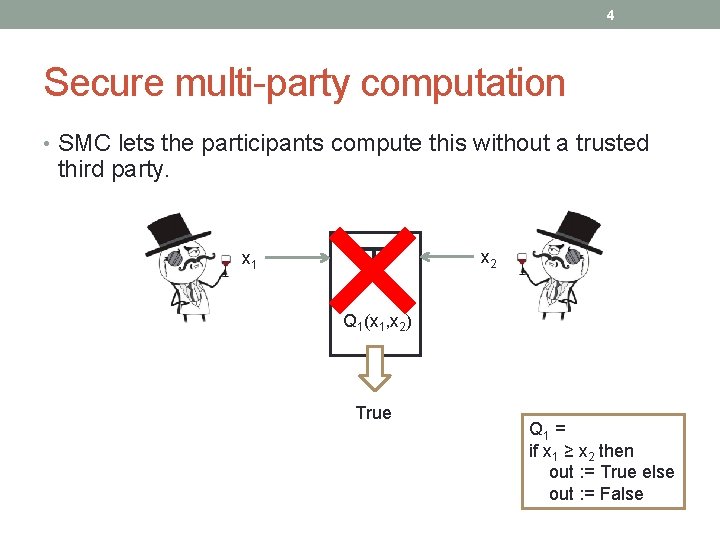

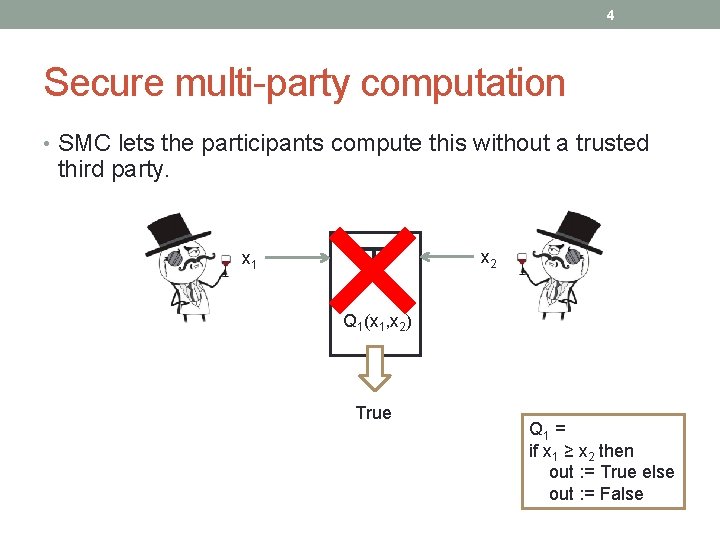

4 Secure multi-party computation
- SMC lets the participants compute this without a trusted third party.
- Diagram: as on the previous slide, with SMC taking the place of T; the output is True.
- `Q1 = if x1 ≥ x2 then out := True else out := False`

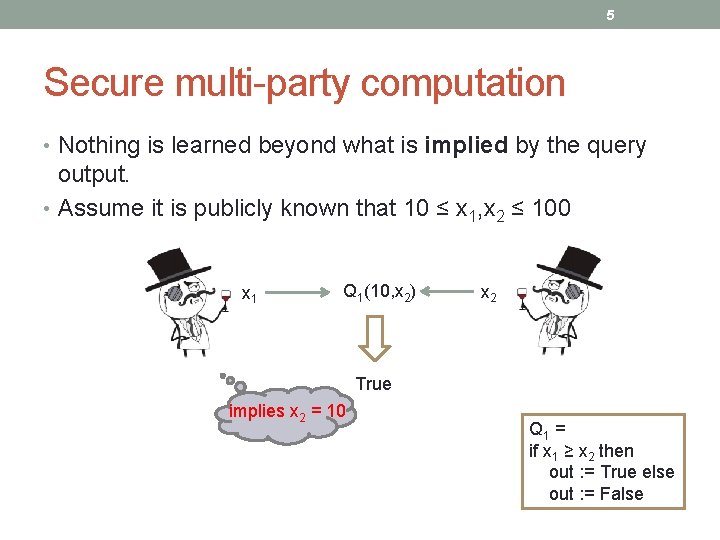

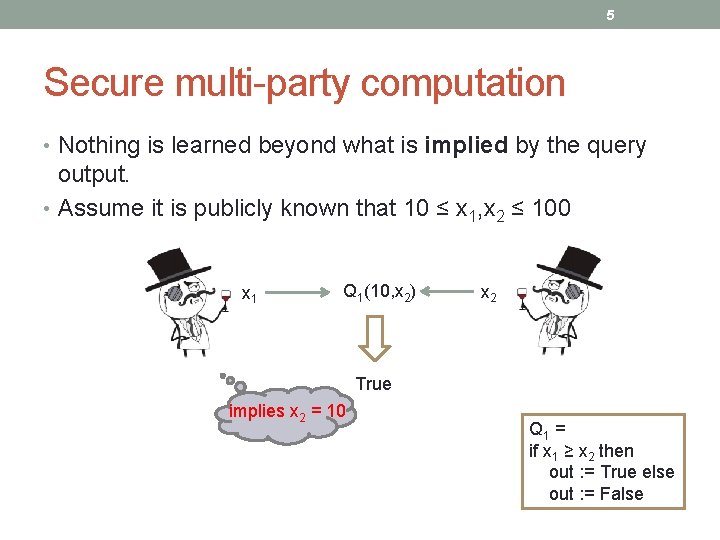

5 Secure multi-party computation
- Nothing is learned beyond what is implied by the query output.
- Assume it is publicly known that 10 ≤ x₁, x₂ ≤ 100.
- Example: if x₁ = 10 and Q₁(10, x₂) = True, then x₂ ≤ 10, which together with the public range implies x₂ = 10.
- `Q1 = if x1 ≥ x2 then out := True else out := False`
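The inference above can be reproduced by brute force: enumerate every x₂ in the public range and keep those consistent with the observed output. This is a minimal sketch assuming the public range 10..100 from the slide; the helper names `q1` and `consistent_x2` are hypothetical.

```python
# Q1 from the slides: True iff x1 >= x2.
def q1(x1: int, x2: int) -> bool:
    return x1 >= x2

# Public knowledge: both secrets lie in 10..100 inclusive.
SECRETS = range(10, 101)

def consistent_x2(x1: int, out: bool) -> list[int]:
    """Values of x2 that A1, knowing x1, cannot rule out after seeing `out`."""
    return [x2 for x2 in SECRETS if q1(x1, x2) == out]

print(consistent_x2(10, True))       # [10]: x2 is revealed exactly
print(len(consistent_x2(80, True)))  # 71 candidates remain
```

SMC guarantees only that nothing beyond this set is learned; it does not stop the set from shrinking to a single value.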

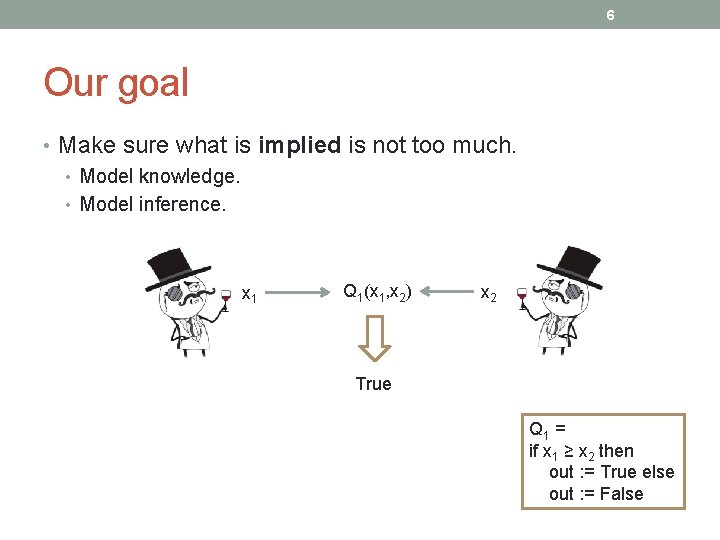

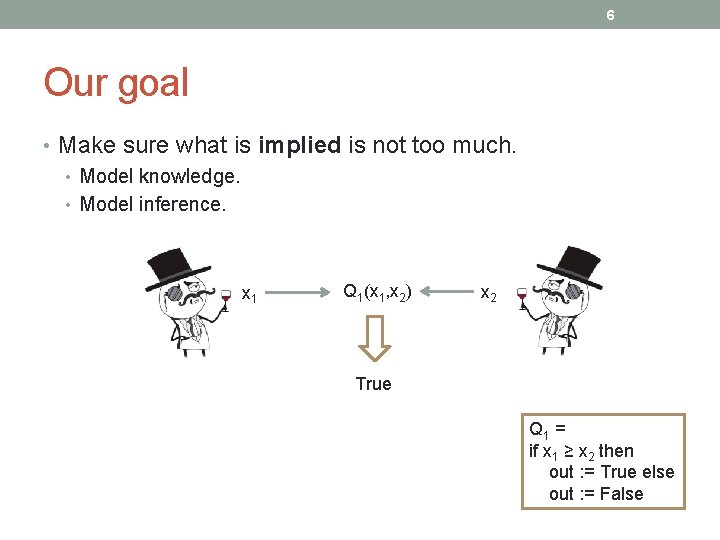

6 Our goal
- Make sure that what is implied is not too much.
- Model knowledge.
- Model inference.
- Diagram: x₁ and x₂ go into Q₁(x₁, x₂), which outputs True.
- `Q1 = if x1 ≥ x2 then out := True else out := False`

7 This talk
- Secure multiparty computation
- Knowledge-based security
  - for a simpler setting
  - for SMC
- Evaluation

8 Knowledge in a simpler setting

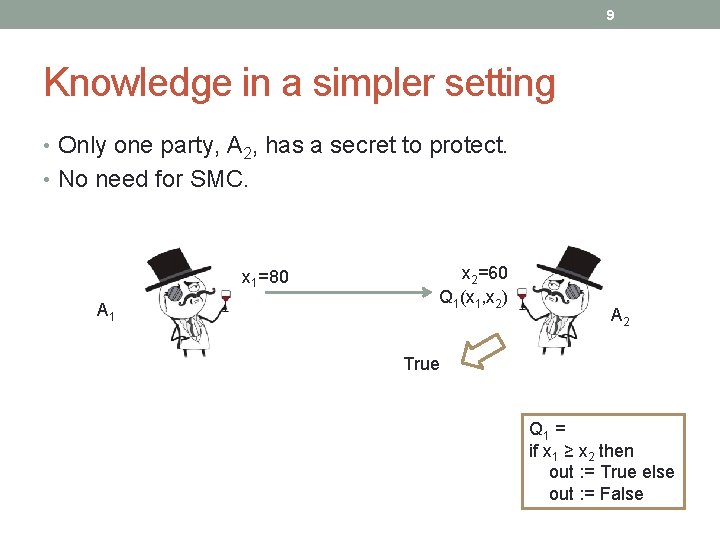

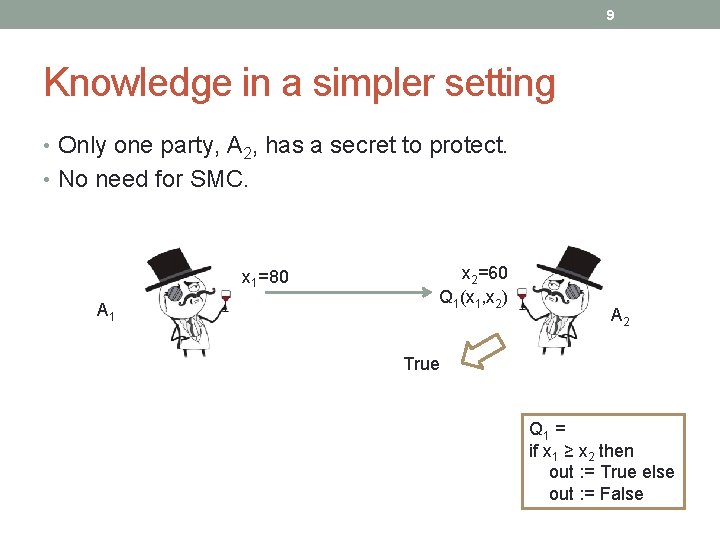

9 Knowledge in a simpler setting
- Only one party, A₂, has a secret to protect.
- No need for SMC.
- Diagram: A₁ (x₁ = 80) asks A₂ (x₂ = 60) to evaluate Q₁(x₁, x₂); the answer is True.
- `Q1 = if x1 ≥ x2 then out := True else out := False`

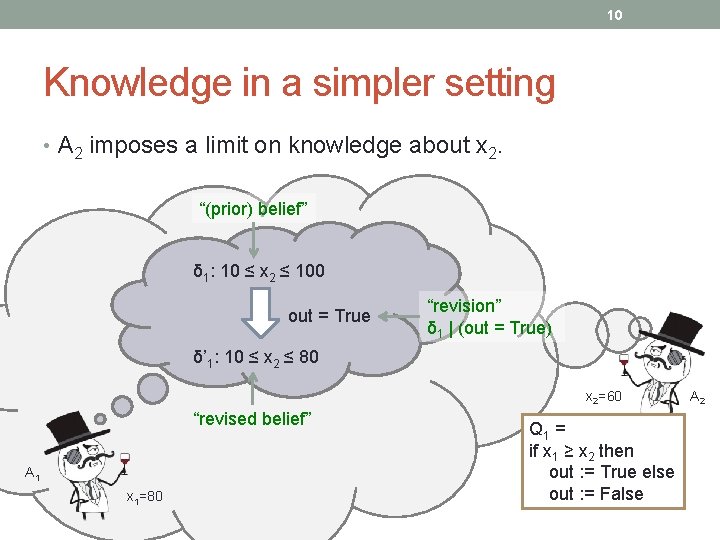

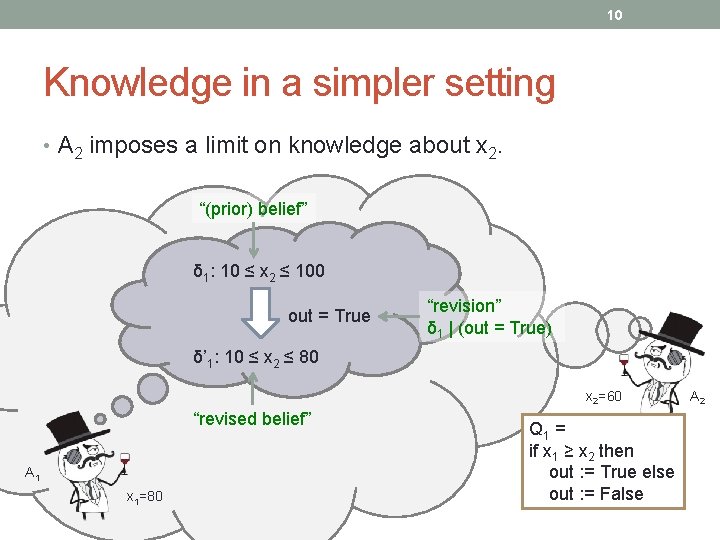

10 Knowledge in a simpler setting
- A₂ imposes a limit on A₁'s knowledge about x₂.
- Prior belief δ₁: 10 ≤ x₂ ≤ 100.
- Revision on observing out = True: δ₁ | (out = True).
- Revised belief δ′₁: 10 ≤ x₂ ≤ 80, since True means x₂ ≤ x₁ = 80 (the actual value is x₂ = 60).
- Setting: A₁ (x₁ = 80), A₂ (x₂ = 60), `Q1 = if x1 ≥ x2 then out := True else out := False`

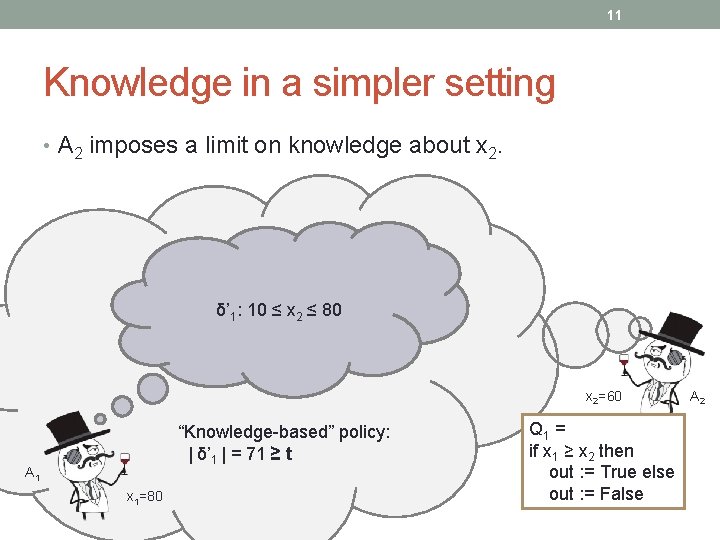

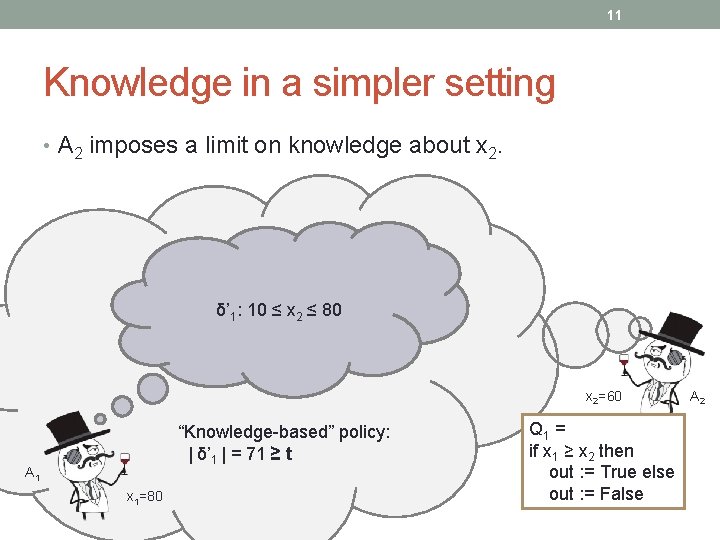

11 Knowledge in a simpler setting
- A₂ imposes a limit on A₁'s knowledge about x₂.
- Revised belief δ′₁: 10 ≤ x₂ ≤ 80 (actual x₂ = 60).
- "Knowledge-based" policy: |δ′₁| = 71 ≥ t, i.e., at least t values of x₂ must remain possible.
- Setting: A₁ (x₁ = 80), A₂ (x₂ = 60), `Q1 = if x1 ≥ x2 then out := True else out := False`
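The revision and the size-based policy can be sketched with exact rational arithmetic. This assumes the uniform prior over 10..100 and represents a belief as a Python dict from x₂ to probability; `uniform`, `revise`, `size_policy`, and the threshold 50 are illustrative choices, not taken from the talk.

```python
from fractions import Fraction

def uniform(values):
    """Uniform distribution over `values`, as a dict value -> probability."""
    values = list(values)
    return {v: Fraction(1, len(values)) for v in values}

def revise(prior, x1, out):
    """δ | (out = ...) for the deterministic Q1: keep consistent x2, renormalize."""
    kept = {x2: p for x2, p in prior.items() if (x1 >= x2) == out}
    total = sum(kept.values())
    if total == 0:
        raise ValueError("output is impossible under this prior")
    return {x2: p / total for x2, p in kept.items()}

def size_policy(belief, t):
    """Knowledge-based policy: at least t values of x2 must remain possible."""
    return len(belief) >= t

prior = uniform(range(10, 101))    # δ1: 10 <= x2 <= 100
revised = revise(prior, 80, True)  # δ'1 = δ1 | (out = True)
print(min(revised), max(revised), len(revised))  # 10 80 71
print(size_policy(revised, 50))                  # True (hypothetical t = 50)
```

Each remaining value ends up with probability 1/71, matching |δ′₁| = 71.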

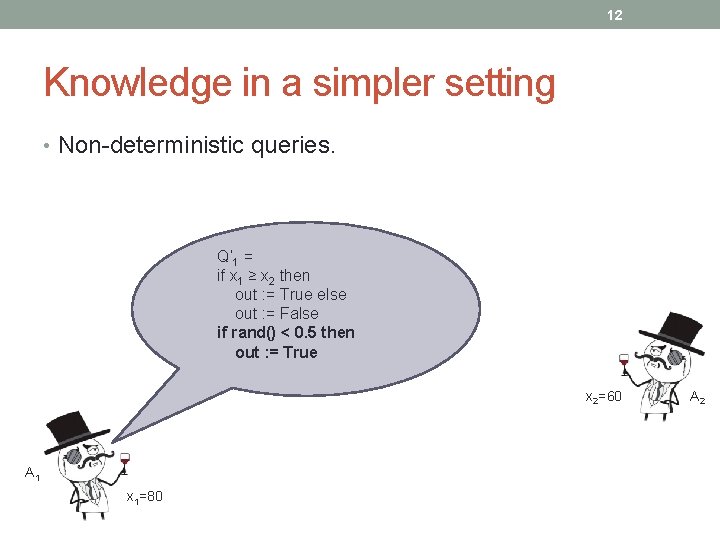

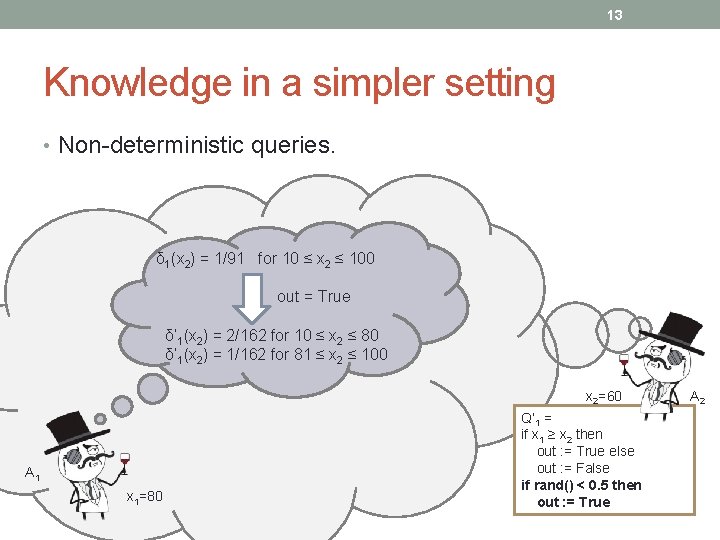

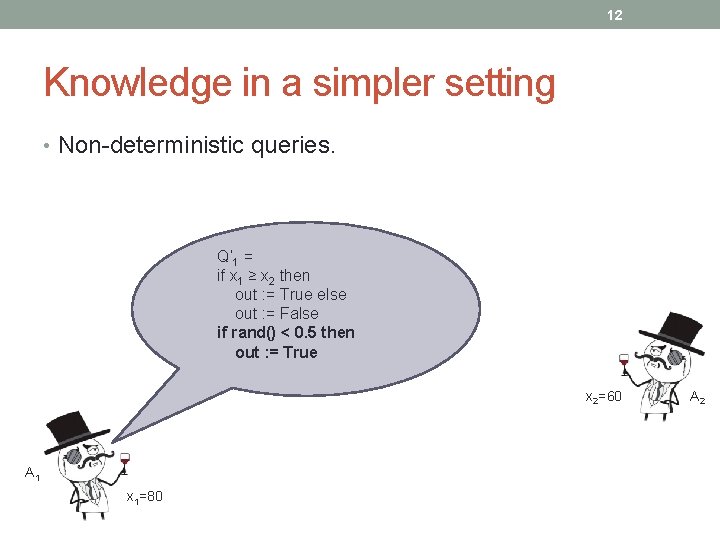

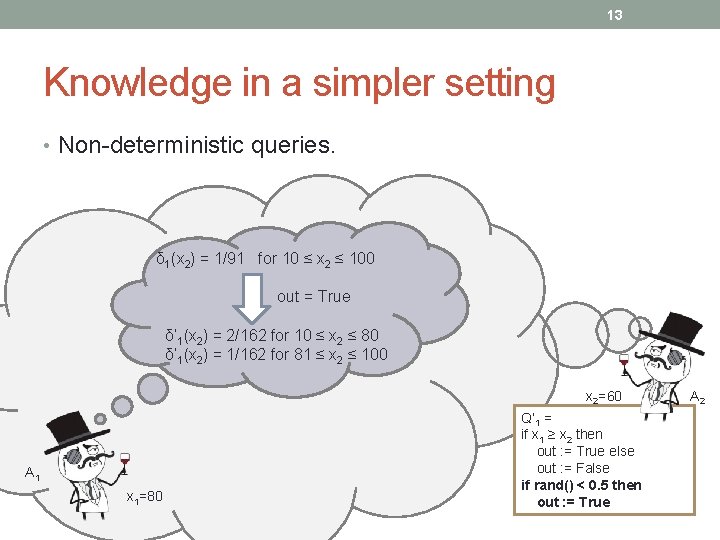

12 Knowledge in a simpler setting
- Non-deterministic queries:
  `Q'1 = if x1 ≥ x2 then out := True else out := False; if rand() < 0.5 then out := True`
- Setting: A₁ (x₁ = 80), A₂ (x₂ = 60).

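13 Knowledge in a simpler setting
- Non-deterministic queries.
- Prior: δ₁(x₂) = 1/91 for 10 ≤ x₂ ≤ 100.
- After out = True: δ′₁(x₂) = 2/162 for 10 ≤ x₂ ≤ 80 and δ′₁(x₂) = 1/162 for 81 ≤ x₂ ≤ 100. Values above 80 remain possible because Q′₁ outputs True with probability 1/2 even when x₁ < x₂, so they keep half the weight.
- Setting: A₁ (x₁ = 80), A₂ (x₂ = 60), `Q'1 = if x1 ≥ x2 then out := True else out := False; if rand() < 0.5 then out := True`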
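The revised probabilities follow from Bayes' rule: weight each x₂ by the probability that Q′₁ produces the observed output, then renormalize. A minimal sketch, assuming the uniform prior and modelling `rand() < 0.5` exactly as probability 1/2; the function names are hypothetical.

```python
from fractions import Fraction

HALF = Fraction(1, 2)

def q1_prime_dist(x1, x2):
    """Exact output distribution of Q'1 for fixed inputs."""
    if x1 >= x2:
        return {True: Fraction(1)}       # True either way
    return {True: HALF, False: HALF}     # False unless rand() < 0.5 forces True

def revise(prior, x1, observed):
    """Bayesian revision δ | (out = observed) for the probabilistic query Q'1."""
    joint = {x2: p * q1_prime_dist(x1, x2).get(observed, Fraction(0))
             for x2, p in prior.items()}
    total = sum(joint.values())
    return {x2: w / total for x2, w in joint.items() if w > 0}

prior = {x2: Fraction(1, 91) for x2 in range(10, 101)}  # δ1
post = revise(prior, 80, True)                          # δ'1
print(post[10], post[80], post[81], post[100])  # 1/81 1/81 1/162 1/162
```

`Fraction` reduces 2/162 to 1/81; the total probability of True is 81/91, which is where the denominator 162 = 2 × 81 comes from.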

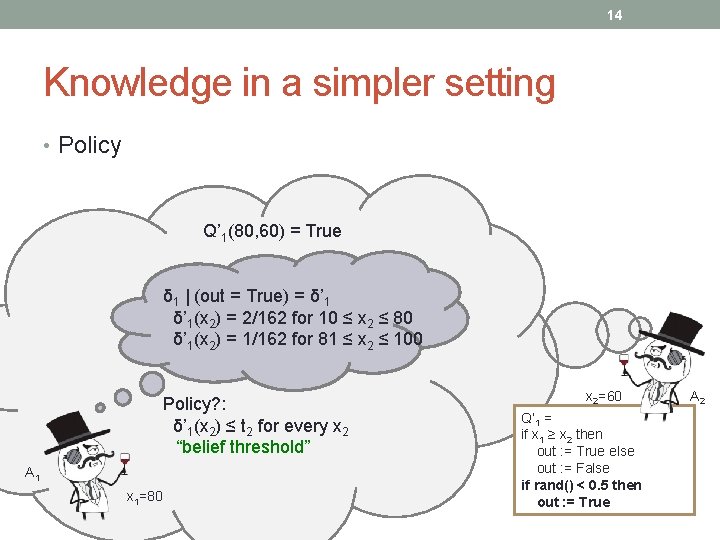

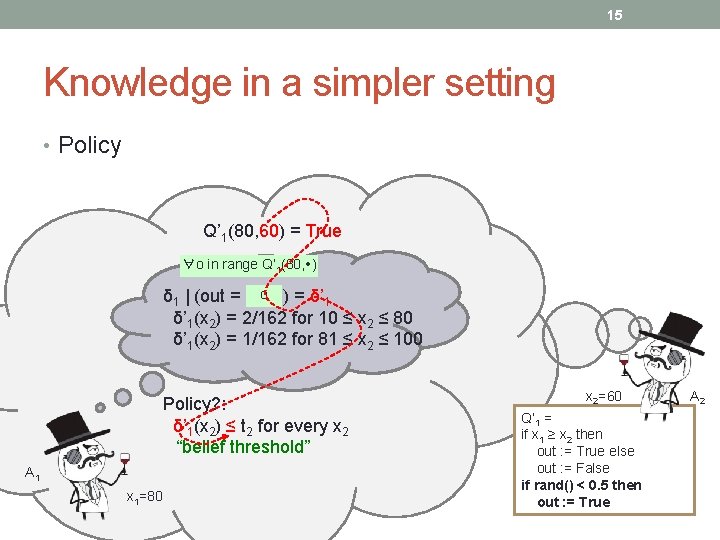

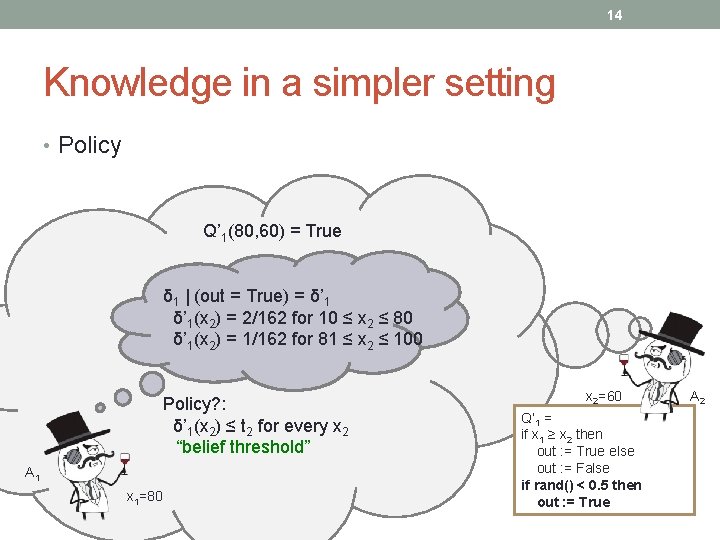

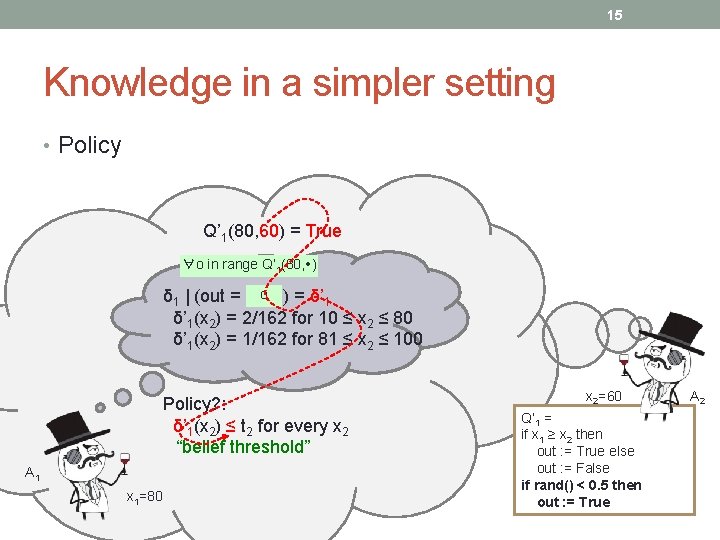

14 Knowledge in a simpler setting
- Policy. Q′₁(80, 60) = True, so δ₁ | (out = True) = δ′₁, with δ′₁(x₂) = 2/162 for 10 ≤ x₂ ≤ 80 and δ′₁(x₂) = 1/162 for 81 ≤ x₂ ≤ 100.
- Policy? δ′₁(x₂) ≤ t₂ for every x₂ (a "belief threshold").
- Setting: A₁ (x₁ = 80), A₂ (x₂ = 60), `Q'1 = if x1 ≥ x2 then out := True else out := False; if rand() < 0.5 then out := True`

15 Knowledge in a simpler setting
- Policy. Q′₁(80, 60) = True, but the check must consider every output o in the range of Q′₁(80, ·), not only the observed one, so that the decision does not depend on the secret x₂. For o = True, δ₁ | (out = True) = δ′₁, with δ′₁(x₂) = 2/162 for 10 ≤ x₂ ≤ 80 and δ′₁(x₂) = 1/162 for 81 ≤ x₂ ≤ 100.
- Policy? δ′₁(x₂) ≤ t₂ for every x₂ (a "belief threshold").
- Setting: A₁ (x₁ = 80), A₂ (x₂ = 60), `Q'1 = if x1 ≥ x2 then out := True else out := False; if rand() < 0.5 then out := True`

16 Knowledge in a simpler setting
- Policy. "Max belief" = max over δ′, x of δ′(x), where δ′ = δ₁ | (out = o) for some output o.
- Policy: P(Q′₁, x₁ = 80, δ₁, t) holds iff max belief ≤ t (the "(max) belief threshold").
- If the check succeeds, run the query: Q′₁(80, 60) = True.
- Track the revised belief for the actual output: δ₁ | (out = True) here, δ₁ | (out = False) had the output been False.
- Setting: A₁ (x₁ = 80), A₂ (x₂ = 60), `Q'1 = if x1 ≥ x2 then out := True else out := False; if rand() < 0.5 then out := True`
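The max-belief policy can be sketched by revising the prior under every output Q′₁ can produce for the given x₁ and taking the largest resulting probability. This assumes the uniform prior from the slides; `max_belief` and `policy` are hypothetical names standing in for "max belief" and P(Q′₁, x₁, δ₁, t).

```python
from fractions import Fraction

def q1_prime_dist(x1, x2):
    """Output distribution of Q'1 (out is forced to True with probability 1/2)."""
    if x1 >= x2:
        return {True: Fraction(1)}
    return {True: Fraction(1, 2), False: Fraction(1, 2)}

def revise(prior, x1, observed):
    """Bayesian revision δ | (out = observed)."""
    joint = {x2: p * q1_prime_dist(x1, x2).get(observed, Fraction(0))
             for x2, p in prior.items()}
    total = sum(joint.values())
    return {x2: w / total for x2, w in joint.items() if w > 0}

def max_belief(prior, x1):
    """Max over every possible output o and every x2 of (δ | out = o)(x2)."""
    outputs = {o for x2 in prior for o in q1_prime_dist(x1, x2)}
    return max(max(revise(prior, x1, o).values()) for o in outputs)

def policy(x1, prior, t):
    """P(Q'1, x1, δ1, t): accept iff max belief <= t. Never looks at the real x2."""
    return max_belief(prior, x1) <= t

prior = {x2: Fraction(1, 91) for x2 in range(10, 101)}
print(max_belief(prior, 80))                # 1/20 (worst case is out = False)
print(policy(80, prior, Fraction(1, 10)))   # True
print(policy(80, prior, Fraction(1, 25)))   # False
```

The worst case here is the output False, which narrows x₂ to 81..100; since the check never consults the actual x₂ = 60, accepting or rejecting reveals nothing about it.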

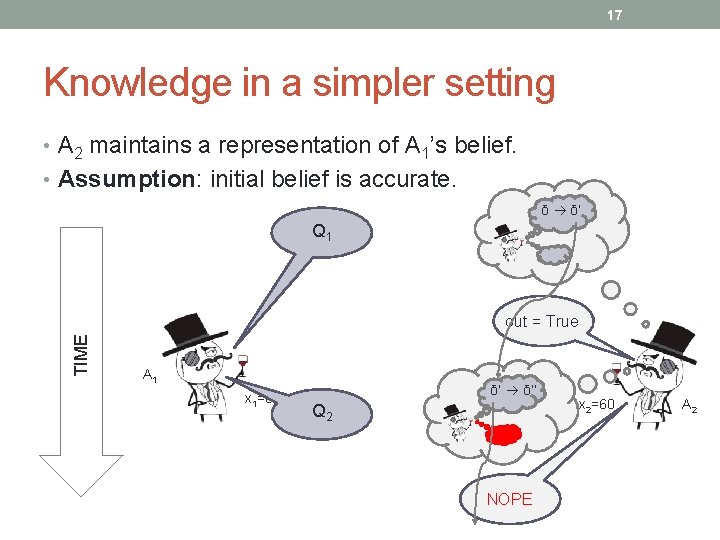

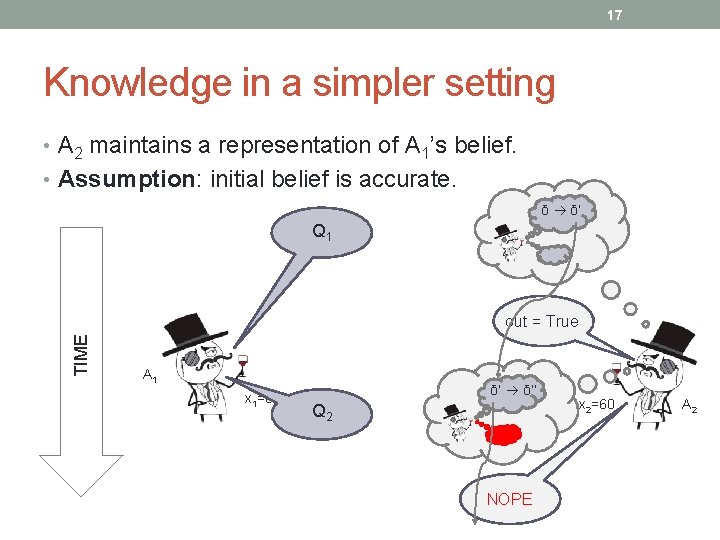

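17 Knowledge in a simpler setting
- A₂ maintains a representation of A₁'s belief.
- Assumption: the initial belief is accurate (A₂'s model matches what A₁ actually believes).
- Timeline: δ is revised to δ′ after Q₁ returns True; for a second query Q₂, A₂ computes the would-be belief δ″ and refuses ("NOPE").
- Setting: A₁ (x₁ = 80), A₂ (x₂ = 60).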
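A sketch of A₂ tracking A₁'s belief across several queries, assuming the deterministic Q₁ and the uniform prior. The slide does not say what Q₂ is, so the example hypothetically reuses Q₁ with x₁ = 11; `BeliefTracker` and the threshold 1/10 are also illustrative.

```python
from fractions import Fraction

def revise(belief, x1, out):
    """δ | (out) for the deterministic Q1 = (x1 >= x2)."""
    kept = {x2: p for x2, p in belief.items() if (x1 >= x2) == out}
    total = sum(kept.values())
    return {x2: p / total for x2, p in kept.items()}

def max_belief(belief, x1):
    """Worst case over the outputs of Q1 that are possible under `belief`."""
    possible = {x1 >= x2 for x2 in belief}
    return max(max(revise(belief, x1, out).values()) for out in possible)

class BeliefTracker:
    """A2's model of A1's belief about x2 (assumes the initial belief is accurate)."""

    def __init__(self, prior, x2, t):
        self.belief = prior
        self.x2 = x2
        self.t = t

    def answer(self, x1):
        """Answer Q1(x1, x2) if the policy allows it; otherwise return None (reject)."""
        if max_belief(self.belief, x1) > self.t:
            return None
        out = x1 >= self.x2
        self.belief = revise(self.belief, x1, out)  # δ -> δ'
        return out

tracker = BeliefTracker({v: Fraction(1, 91) for v in range(10, 101)},
                        x2=60, t=Fraction(1, 10))
print(tracker.answer(80))  # True; the tracked belief is now uniform over 10..80
print(tracker.answer(11))  # None: out = True would leave only {10, 11}
```

The second query is checked against δ′, not the original prior, which is why it is refused.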

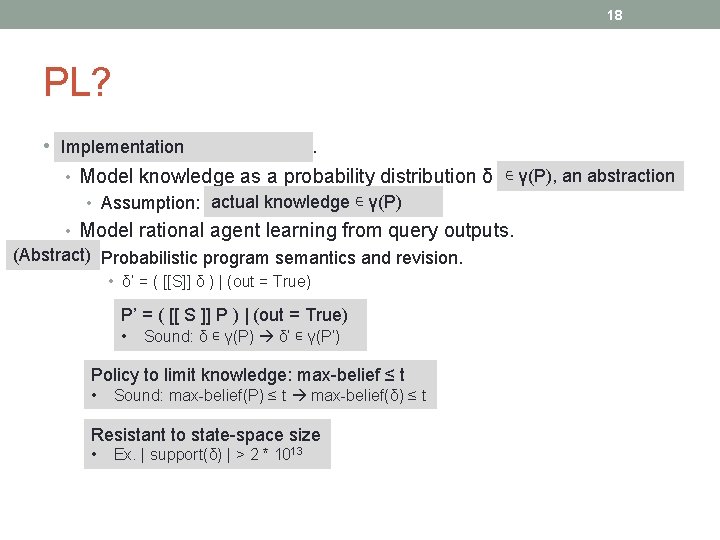

18 PL?

| Theory (Clarkson et al.) | Implementation |
|---|---|
| Model knowledge as a probability distribution δ. | An abstraction P, with knowledge δ ∊ γ(P). |
| Assumption: δ is the agent's actual knowledge. | |
| Model a rational agent learning from query outputs: probabilistic program semantics and revision, δ′ = ([[S]]δ) \| (out = True). | (Abstract) P′ = ([[S]]P) \| (out = True). Sound: δ ∊ γ(P) ⇒ δ′ ∊ γ(P′). |
| Policy to limit knowledge: max-belief ≤ t. | Sound: max-belief(P) ≤ t ⇒ max-belief(δ) ≤ t. Resistant to state-space size, e.g., \|support(δ)\| > 2 × 10¹³. |

19 Knowledge in the SMC setting

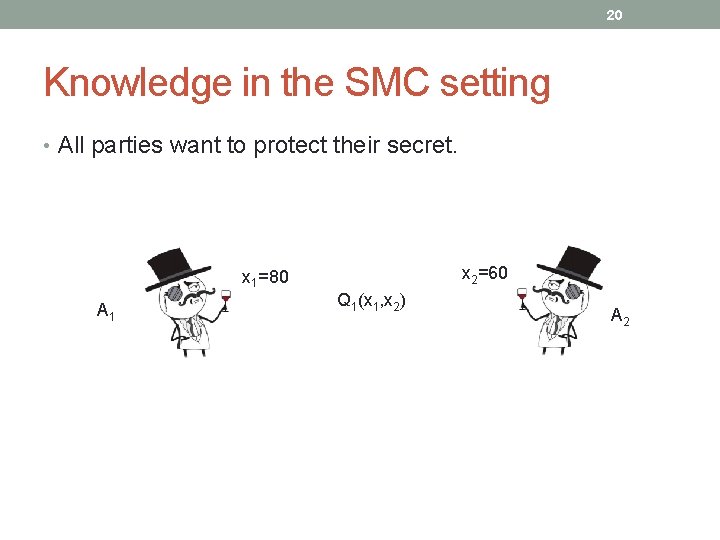

20 Knowledge in the SMC setting
- All parties want to protect their secrets.
- Diagram: A₁ (x₁ = 80) and A₂ (x₂ = 60) jointly compute Q₁(x₁, x₂).

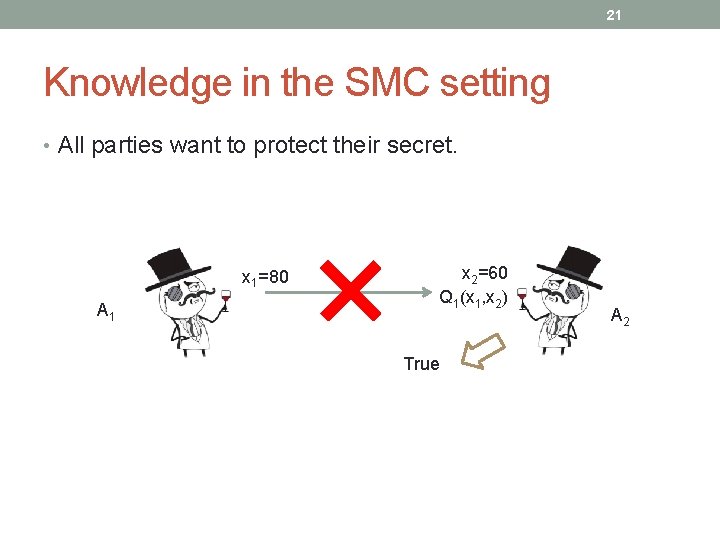

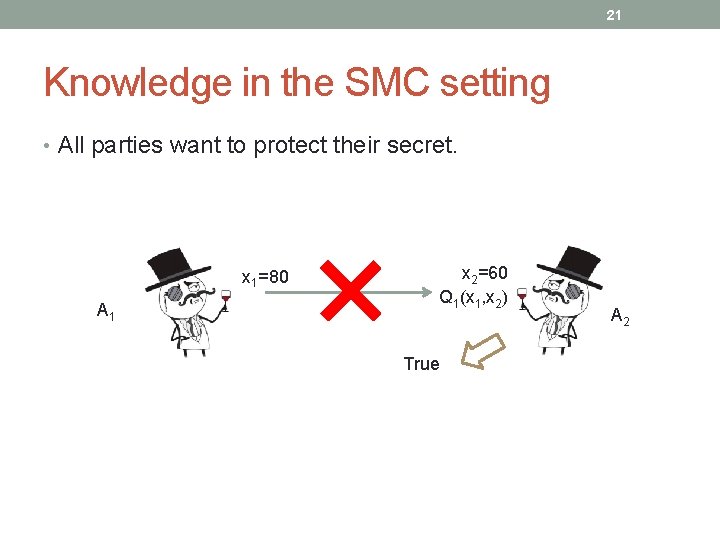

21 Knowledge in the SMC setting
- All parties want to protect their secrets.
- Diagram: A₁ (x₁ = 80) and A₂ (x₂ = 60) jointly compute Q₁(x₁, x₂); the output is True.

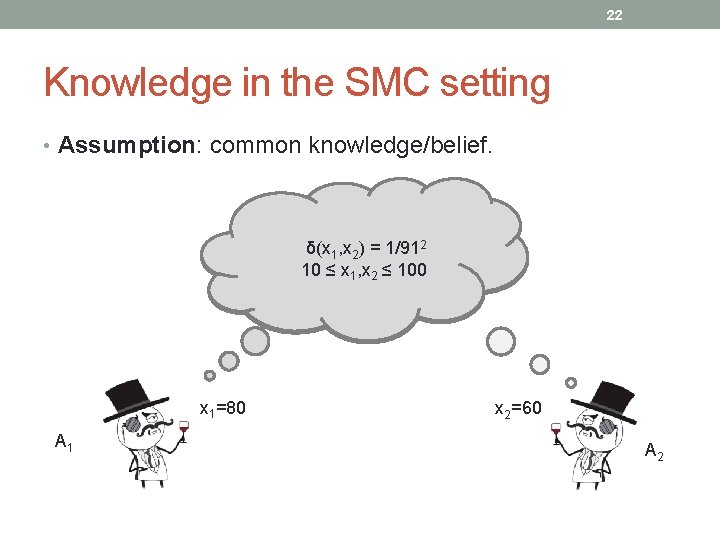

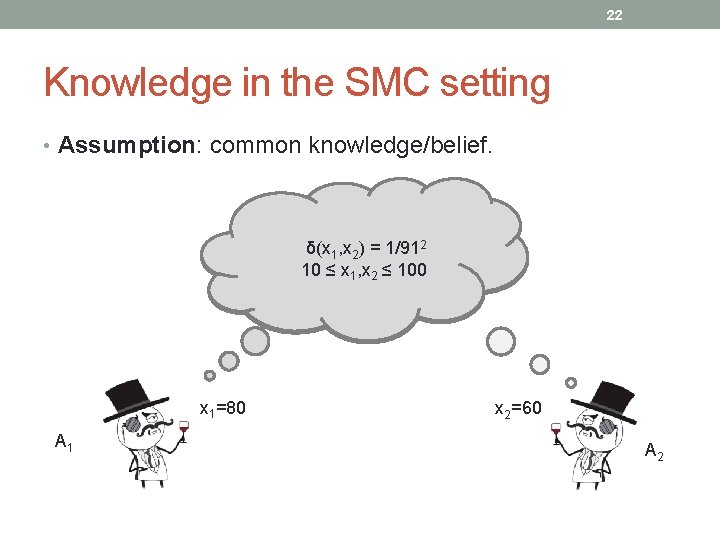

22 Knowledge in the SMC setting
- Assumption: common knowledge (a common belief) δ(x₁, x₂) = 1/91² = 1/8281 for 10 ≤ x₁, x₂ ≤ 100.
- Diagram: A₁ (x₁ = 80), A₂ (x₂ = 60).

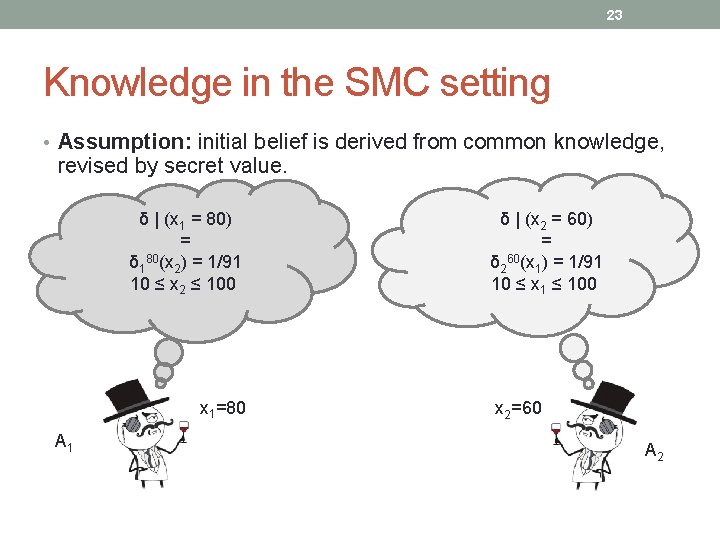

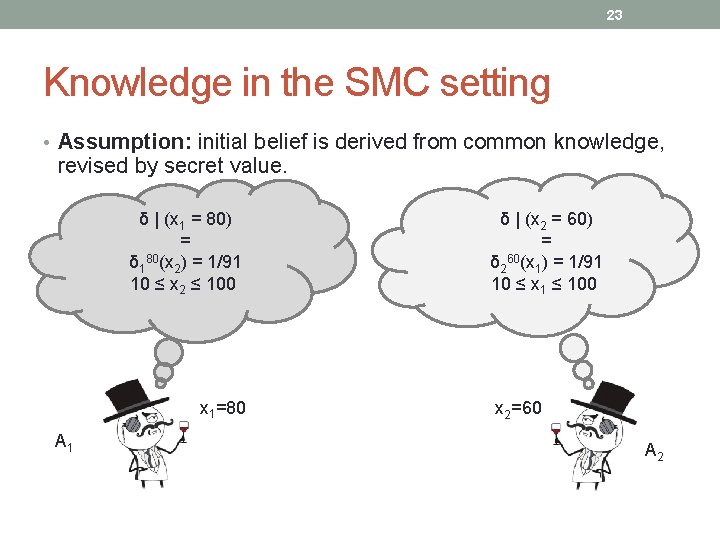

23 Knowledge in the SMC setting
- Assumption: each party's initial belief is derived from the common knowledge, revised by its own secret value.
- A₁ (x₁ = 80): δ | (x₁ = 80) = δ₁⁸⁰, with δ₁⁸⁰(x₂) = 1/91 for 10 ≤ x₂ ≤ 100.
- A₂ (x₂ = 60): δ | (x₂ = 60) = δ₂⁶⁰, with δ₂⁶⁰(x₁) = 1/91 for 10 ≤ x₁ ≤ 100.
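A sketch of deriving each party's initial belief from the common prior: keep the joint states that agree with the party's own secret, renormalize, and read off the other secret. It assumes the uniform joint prior from the previous slide; `condition_on_own_secret` is a hypothetical helper.

```python
from fractions import Fraction
from itertools import product

RANGE = range(10, 101)

# Common knowledge: δ(x1, x2) = 1/91² over the whole square.
common = {(a, b): Fraction(1, 91 ** 2) for a, b in product(RANGE, RANGE)}

def condition_on_own_secret(joint, index, value):
    """δ | (x_index = value), expressed as a belief over the other party's secret."""
    kept = {k: p for k, p in joint.items() if k[index] == value}
    total = sum(kept.values())
    return {k[1 - index]: p / total for k, p in kept.items()}

delta1 = condition_on_own_secret(common, 0, 80)  # δ1^80: A1's belief about x2
delta2 = condition_on_own_secret(common, 1, 60)  # δ2^60: A2's belief about x1
print(len(delta1), delta1[10], delta2[100])      # 91 1/91 1/91
```

Because the common prior is a product of independent uniforms, conditioning on one's own secret leaves a uniform 1/91 belief about the other.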

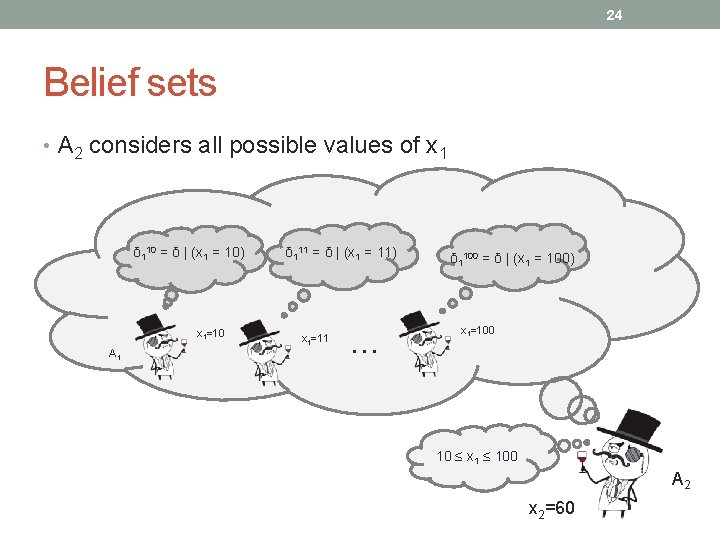

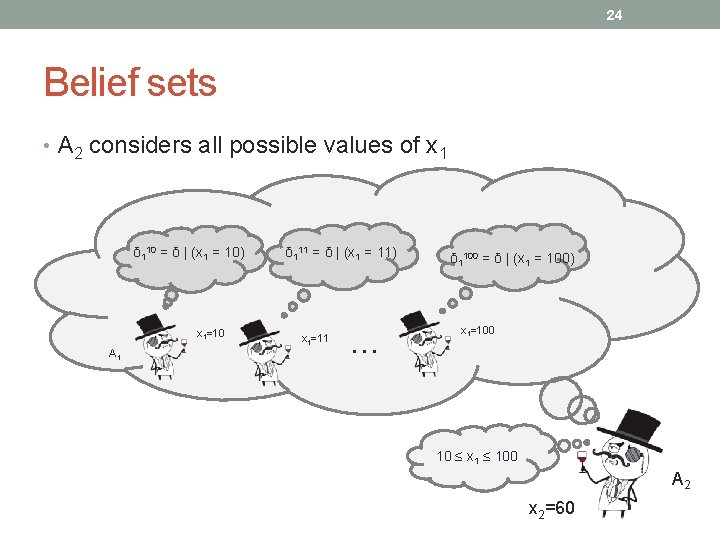

24 Belief sets
- A₂ considers all possible values 10 ≤ x₁ ≤ 100 and the belief A₁ would hold in each case:
  δ₁¹⁰ = δ | (x₁ = 10), δ₁¹¹ = δ | (x₁ = 11), …, δ₁¹⁰⁰ = δ | (x₁ = 100).
- Diagram: A₂ (x₂ = 60) facing candidate copies of A₁ with x₁ = 10, 11, …, 100.

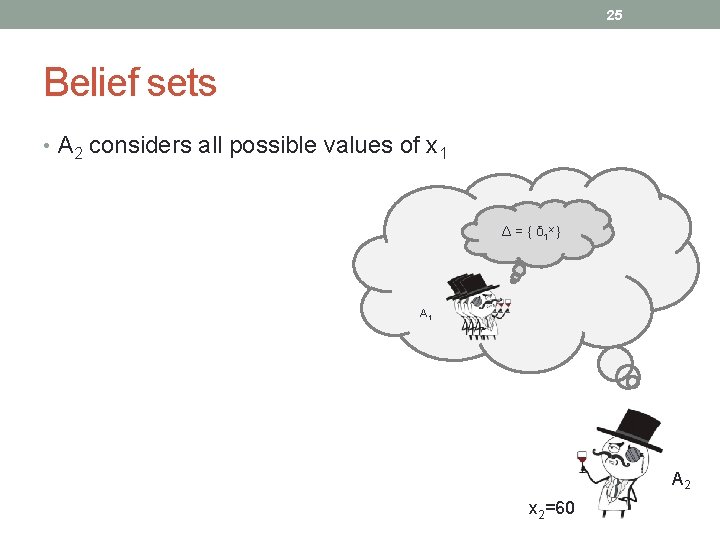

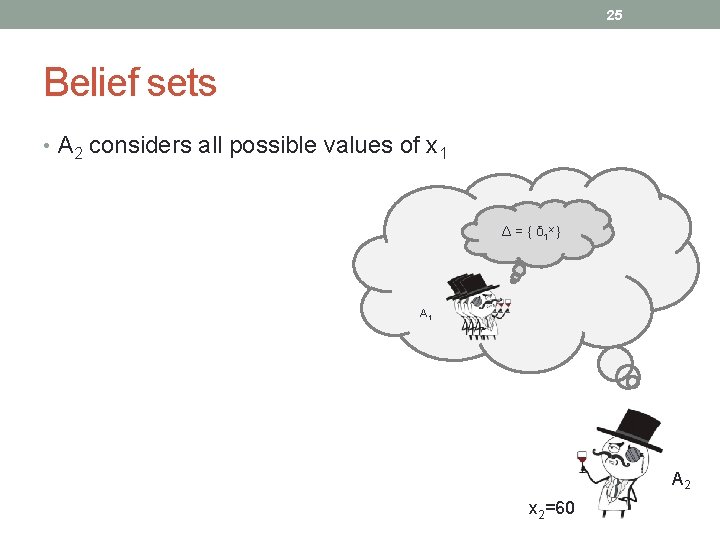

25 Belief sets
- A₂ considers all possible values of x₁, collected into the belief set Δ = { δ₁ˣ } (one belief per candidate x₁ = x).
- Diagram: A₁, A₂ (x₂ = 60).

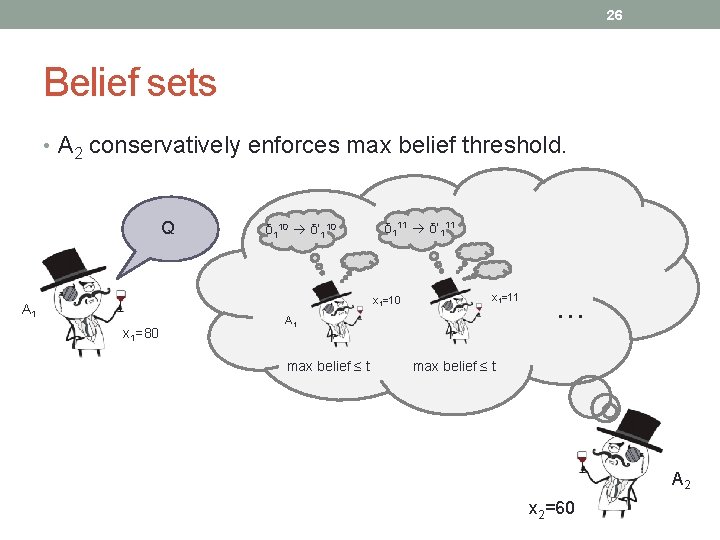

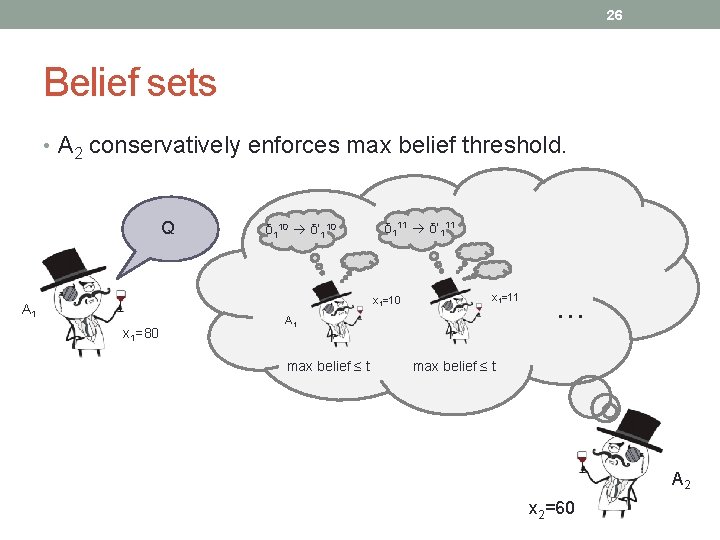

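26 Belief sets
- A₂ conservatively enforces the max-belief threshold: for each candidate x₁ it revises δ₁ˣ by Q to δ′₁ˣ (δ₁¹⁰ to δ′₁¹⁰, δ₁¹¹ to δ′₁¹¹, …) and requires max belief ≤ t for every one of them, even though the actual value is x₁ = 80.
- Diagram: A₂ (x₂ = 60).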

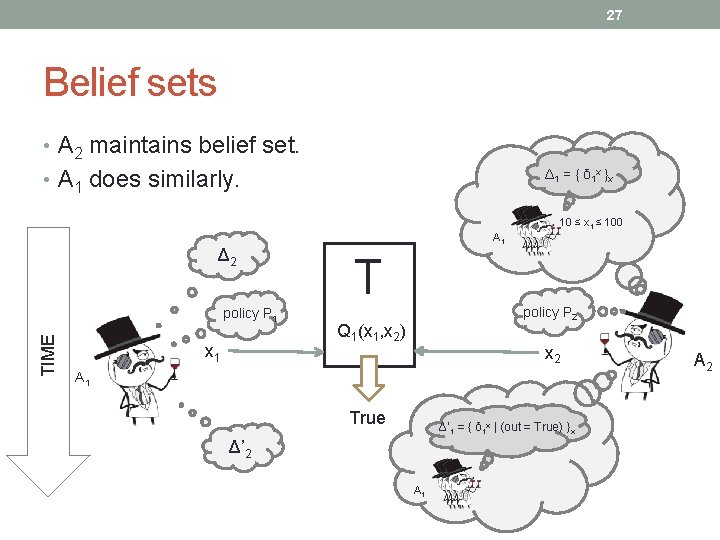

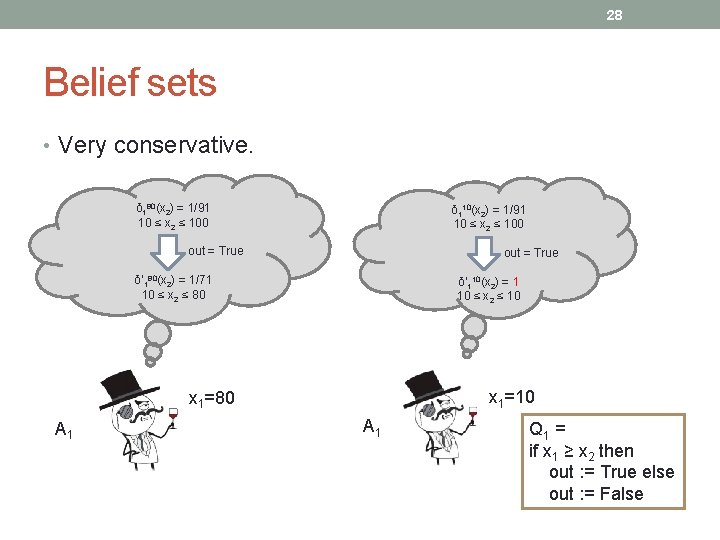

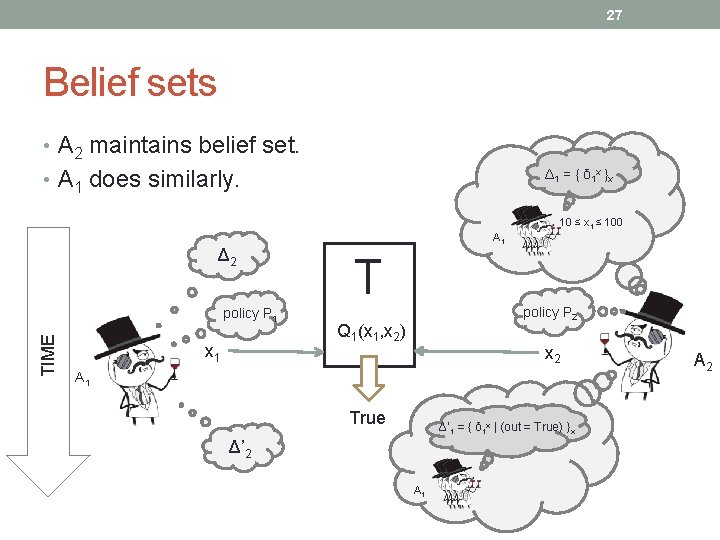

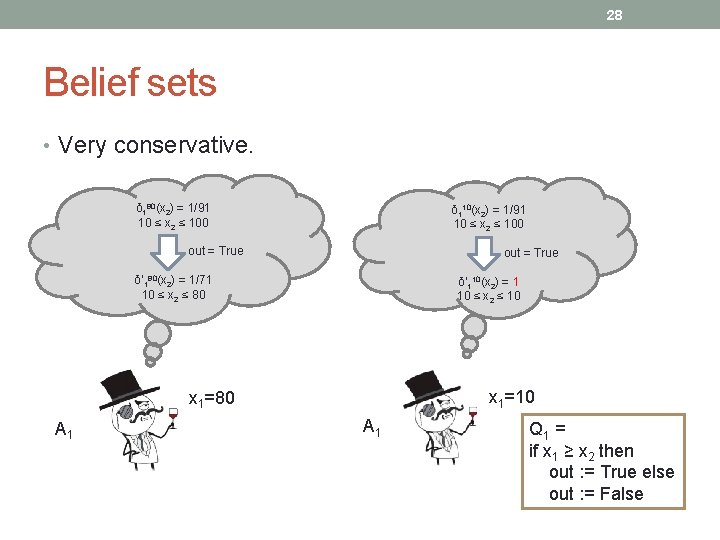

27 Belief sets
- A₂ maintains a belief set Δ₁ = { δ₁ˣ } for 10 ≤ x ≤ 100.
- A₁ does similarly, maintaining Δ₂.
- Timeline: A₁ checks its policy P₁ and A₂ checks P₂; then Q₁(x₁, x₂) is computed via T (x₂ = 60) and the output is True.
- Afterwards: Δ′₁ = { δ₁ˣ | (out = True) } over all x, and likewise Δ′₂.

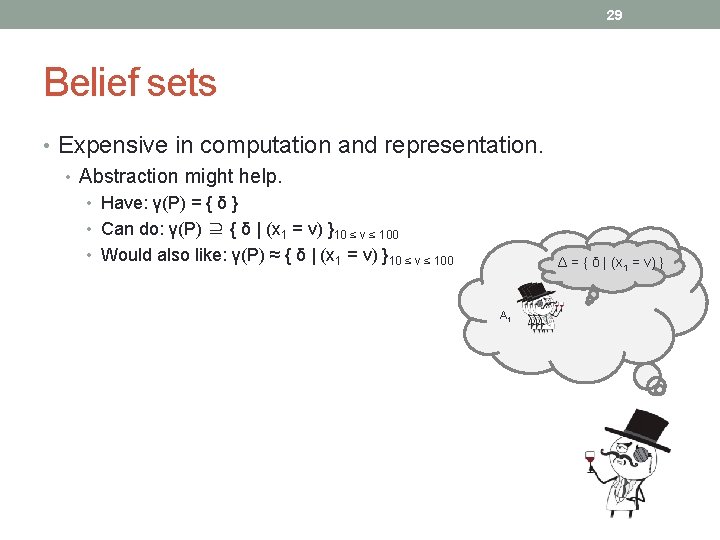

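28 Belief sets
- Very conservative. With Q₁ and output True:
  - δ₁⁸⁰(x₂) = 1/91 for 10 ≤ x₂ ≤ 100 becomes δ′₁⁸⁰(x₂) = 1/71 for 10 ≤ x₂ ≤ 80.
  - δ₁¹⁰(x₂) = 1/91 for 10 ≤ x₂ ≤ 100 becomes δ′₁¹⁰(x₂) = 1 for x₂ = 10.
- The actual A₁ (x₁ = 80) ends up with a max belief of only 1/71, but A₂ must also account for x₁ = 10, where the max belief is 1, so the conservative check rejects the query for any threshold t < 1.
- `Q1 = if x1 ≥ x2 then out := True else out := False`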
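A sketch of conservative enforcement over a belief set, assuming the deterministic Q₁, the uniform common prior (so every δ₁ˣ is uniform over x₂), and a hypothetical threshold of 1/10. `conservative_policy` checks the max-belief condition for every candidate x₁, not just the true one.

```python
from fractions import Fraction

RANGE = range(10, 101)

def revise(belief, x1, out):
    """δ | (out) for the deterministic Q1 = (x1 >= x2)."""
    kept = {x2: p for x2, p in belief.items() if (x1 >= x2) == out}
    total = sum(kept.values())
    return {x2: p / total for x2, p in kept.items()}

def max_belief(belief, x1):
    """Worst case over the outputs of Q1 that are possible under `belief`."""
    possible = {x1 >= x2 for x2 in belief}
    return max(max(revise(belief, x1, out).values()) for out in possible)

# Belief set Δ1 = { δ1^x }: the belief A1 would hold about x2 for each candidate x1 = x.
belief_set = {x: {x2: Fraction(1, 91) for x2 in RANGE} for x in RANGE}

def conservative_policy(belief_set, t):
    """Accept only if the max-belief check passes for every candidate x1."""
    return all(max_belief(b, x) <= t for x, b in belief_set.items())

print(max(revise(belief_set[80], 80, True).values()))    # 1/71
print(max(revise(belief_set[10], 10, True).values()))    # 1
print(max_belief(belief_set[80], 80))                    # 1/20
print(conservative_policy(belief_set, Fraction(1, 10)))  # False
```

The real A₁ (x₁ = 80) would pass a 1/10 threshold, yet the query is still refused because of the hypothetical x₁ = 10.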

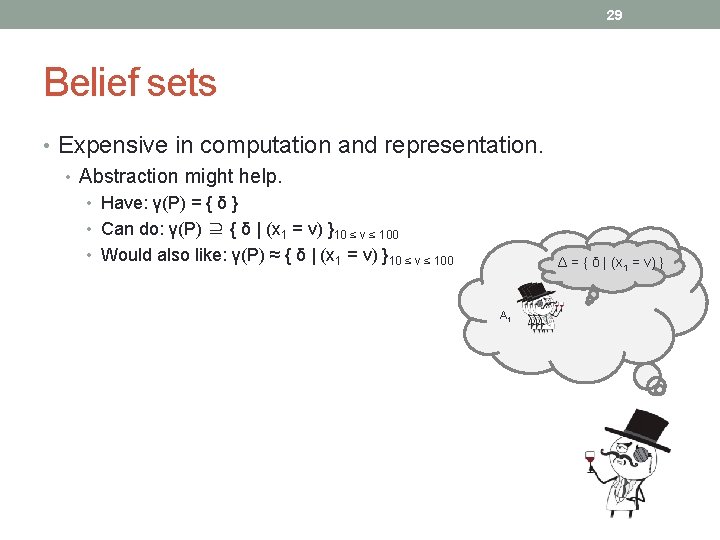

29 Belief sets
- Expensive in computation and representation.
- Abstraction might help:
  - Have: γ(P) = { δ }
  - Can do: γ(P) ⊇ { δ | (x₁ = v) } for 10 ≤ v ≤ 100
  - Would also like: γ(P) ≈ { δ | (x₁ = v) } for 10 ≤ v ≤ 100
- Δ = { δ | (x₁ = v) }

30 Different approach: Knowledge tracking via SMC

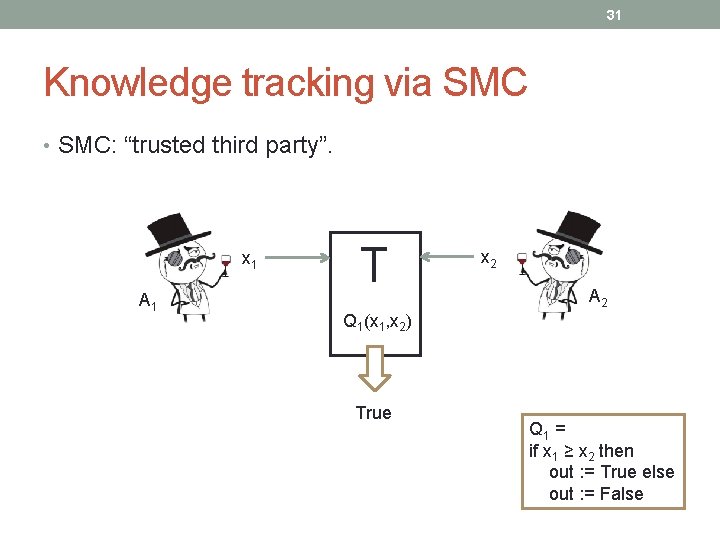

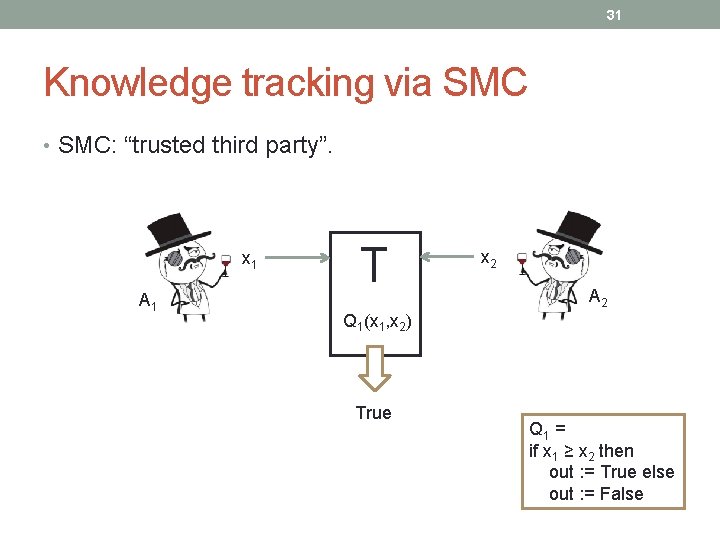

31 Knowledge tracking via SMC
- SMC acts as a "trusted third party" T.
- Diagram: A₁ sends x₁ and A₂ sends x₂ to T, which computes Q₁(x₁, x₂) and returns True.
- `Q1 = if x1 ≥ x2 then out := True else out := False`

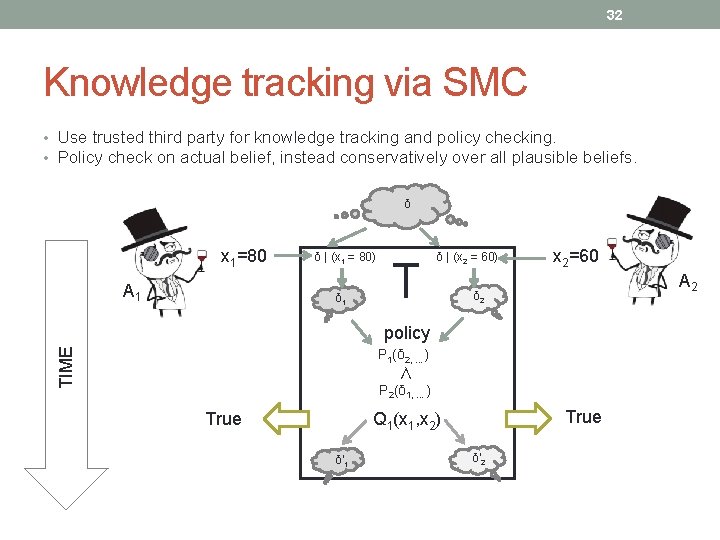

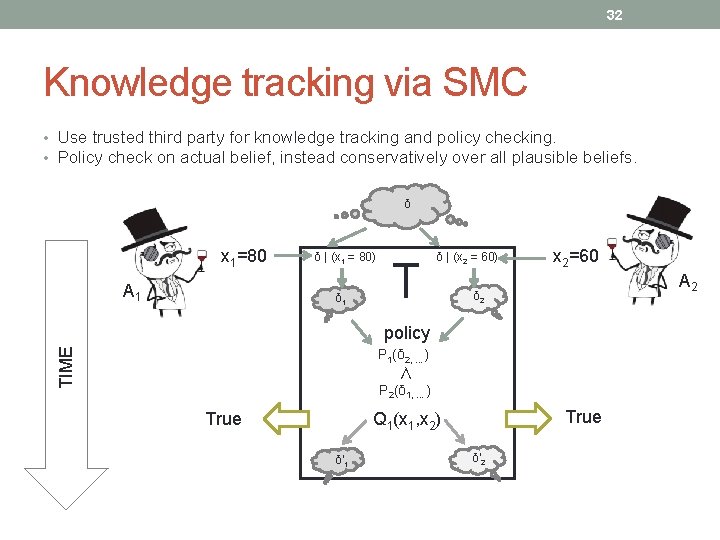

32 Knowledge tracking via SMC
- Use the trusted third party for knowledge tracking and policy checking as well.
- The policy is checked on the actual beliefs, instead of conservatively over all plausible beliefs.
- T starts from the common δ and holds δ₁ = δ | (x₁ = 80) (A₁'s belief) and δ₂ = δ | (x₂ = 60) (A₂'s belief).
- T checks P₁(δ₂, …) ∧ P₂(δ₁, …); if both hold, it evaluates Q₁(x₁, x₂) = True and updates the beliefs to δ′₁ and δ′₂.
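The trusted party's bookkeeping can be simulated in plain Python; real deployments would run this inside SMC, which this sketch does not attempt. It assumes the deterministic Q₁, uniform initial beliefs, and thresholds of 1/10; `TrustedParty`, `run`, and the threshold names `t1`/`t2` are hypothetical.

```python
from fractions import Fraction

RANGE = range(10, 101)

def revise(belief, f, out):
    """Condition a belief over the other party's secret v on f(v) == out."""
    kept = {v: p for v, p in belief.items() if f(v) == out}
    total = sum(kept.values())
    return {v: p / total for v, p in kept.items()}

def max_belief(belief, f):
    """Worst-case max belief over every output f can produce under `belief`."""
    return max(max(revise(belief, f, out).values()) for out in {f(v) for v in belief})

def q1(x1, x2):
    return x1 >= x2

class TrustedParty:
    """Plain-Python stand-in for T: sees both secrets and both beliefs."""

    def __init__(self, x1, x2, t1, t2):
        self.x1, self.x2 = x1, x2
        self.t1, self.t2 = t1, t2
        uniform = {v: Fraction(1, len(RANGE)) for v in RANGE}
        self.delta1 = dict(uniform)  # δ1: A1's belief about x2
        self.delta2 = dict(uniform)  # δ2: A2's belief about x1

    def run(self, query):
        def seen_by_a1(x2):  # A1's view of the output, as a function of x2
            return query(self.x1, x2)

        def seen_by_a2(x1):  # A2's view of the output, as a function of x1
            return query(x1, self.x2)

        p1 = max_belief(self.delta2, seen_by_a2) <= self.t1  # A1 protects x1
        p2 = max_belief(self.delta1, seen_by_a1) <= self.t2  # A2 protects x2
        if not (p1 and p2):
            return "Reject"
        out = query(self.x1, self.x2)
        self.delta1 = revise(self.delta1, seen_by_a1, out)   # δ'1
        self.delta2 = revise(self.delta2, seen_by_a2, out)   # δ'2
        return out

tp = TrustedParty(x1=80, x2=60, t1=Fraction(1, 10), t2=Fraction(1, 10))
print(tp.run(q1))                      # True
print(len(tp.delta1), len(tp.delta2))  # 71 41
```

Unlike the belief-set approach, only the actual beliefs δ₁ and δ₂ are checked, so x₁ = 80 is accepted here.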

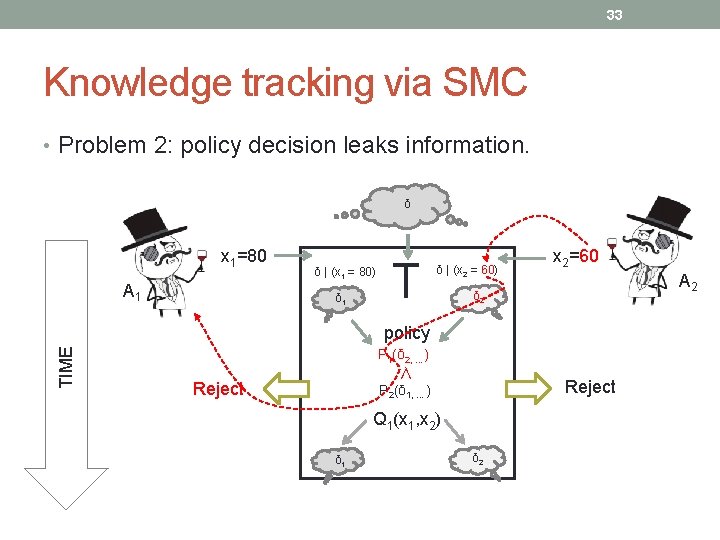

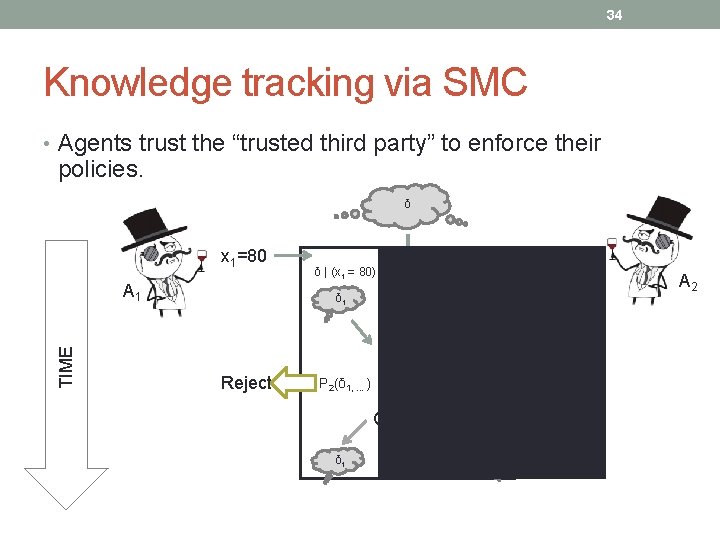

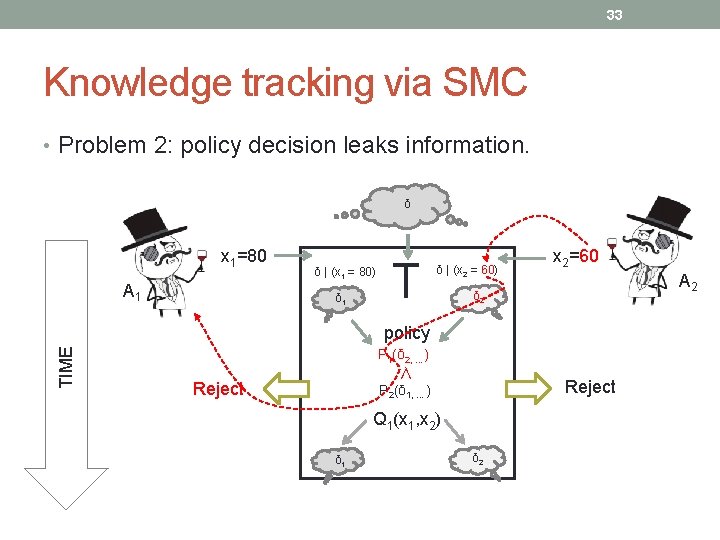

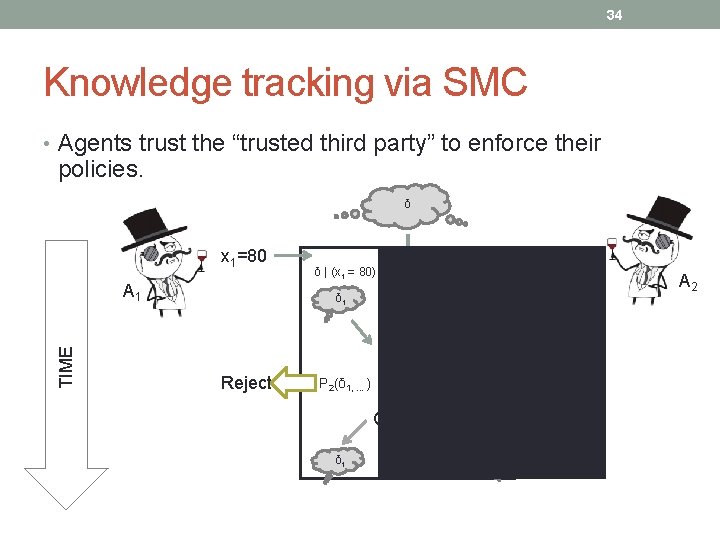

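33 Knowledge tracking via SMC
- Problem 2: the policy decision leaks information.
- Same setup as before, but P₁(δ₂, …) ∧ P₂(δ₁, …) fails, so T returns Reject and the beliefs stay δ₁ and δ₂. The decision depends on δ₁ = δ | (x₁ = 80) and δ₂ = δ | (x₂ = 60), and hence on both secrets, so learning it can reveal something about the other party's secret.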
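The leak can be made concrete by asking which values of x₁ make the joint decision Reject when x₂ = 60. This sketch assumes the deterministic Q₁, uniform initial beliefs, and a hypothetical threshold of 1/10 for both parties; with a uniform prior and a deterministic query, the worst-case belief is 1 divided by the size of the smallest non-empty output class.

```python
from fractions import Fraction

RANGE = range(10, 101)
T = Fraction(1, 10)  # hypothetical threshold used by both parties

def worst_case(true_count):
    """Max belief after Q1 when `true_count` of the candidates would give True."""
    counts = [c for c in (true_count, len(RANGE) - true_count) if c > 0]
    return Fraction(1, min(counts))

def a1_max_belief(x1):
    """A1 knows x1 and is uncertain about x2."""
    return worst_case(sum(1 for x2 in RANGE if x1 >= x2))

def a2_max_belief(x2):
    """A2 knows x2 and is uncertain about x1."""
    return worst_case(sum(1 for x1 in RANGE if x1 >= x2))

def decision(x1, x2):
    p1 = a2_max_belief(x2) <= T  # A1's policy on A2's belief, P1(δ2, ...)
    p2 = a1_max_belief(x1) <= T  # A2's policy on A1's belief, P2(δ1, ...)
    return "Accept" if p1 and p2 else "Reject"

rejecting = [x1 for x1 in RANGE if decision(x1, 60) == "Reject"]
print(rejecting[:3], rejecting[-3:], len(rejecting))  # [10, 11, 12] [97, 98, 99] 18
```

So a Reject tells A₂ (x₂ = 60) that x₁ lies in 10..18 or 91..99, even though Q₁ itself was never answered.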

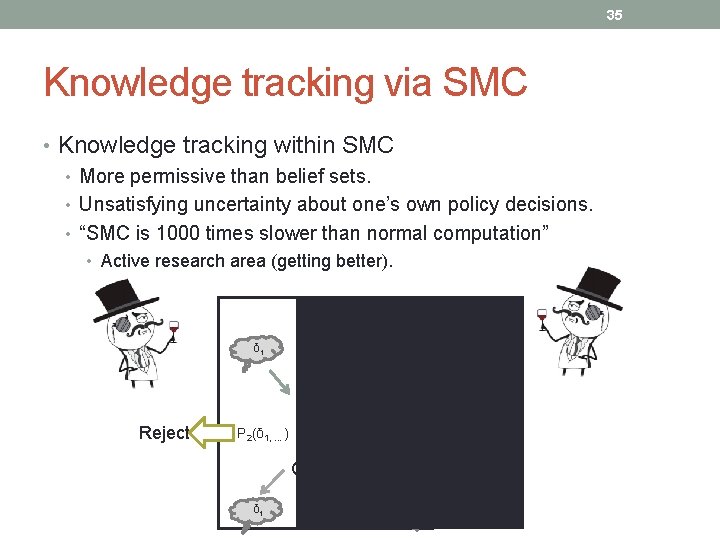

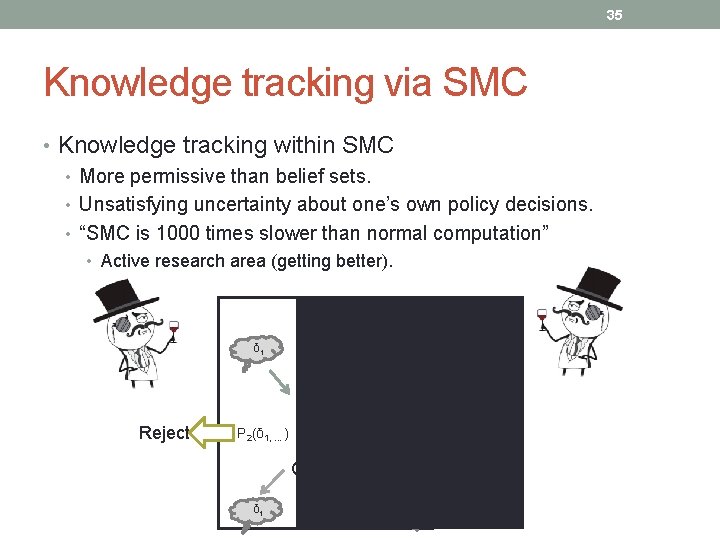

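34 Knowledge tracking via SMC
- Agents trust the "trusted third party" to enforce their policies.
- The two policies are decided separately: P₂(δ₁, …) rejects, so A₁ gets no output and δ₁ is unchanged; P₁(δ₂, …) accepts, so A₂ receives Q₁(x₁, x₂) = True and its belief becomes δ′₂.
- Setting: A₁ (x₁ = 80), A₂ (x₂ = 60), common δ with δ₁ = δ | (x₁ = 80) and δ₂ = δ | (x₂ = 60).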

35 Knowledge tracking via SMC
- Knowledge tracking within SMC:
  - more permissive than belief sets;
  - unsatisfying uncertainty about one's own policy decisions;
  - "SMC is 1000 times slower than normal computation"; this is an active research area (getting better).
- Diagram: repeated from the previous slide (P₂(δ₁, …) rejects, P₁(δ₂, …) accepts, A₂ receives True and δ′₂).

36 Comparison and Examples

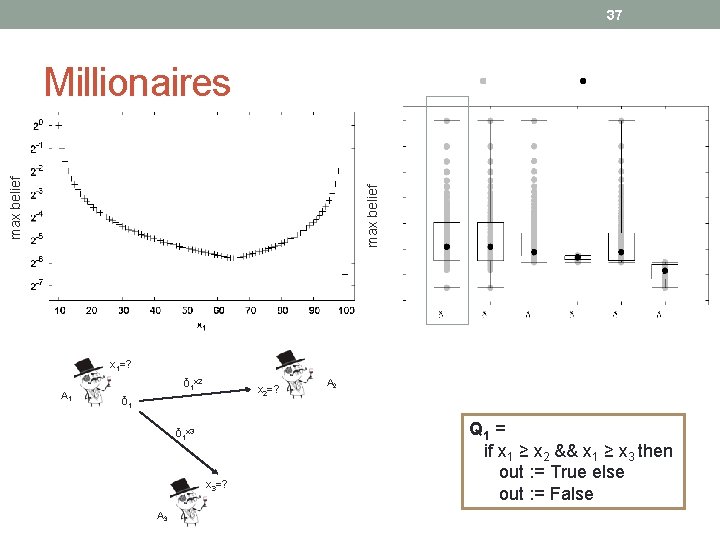

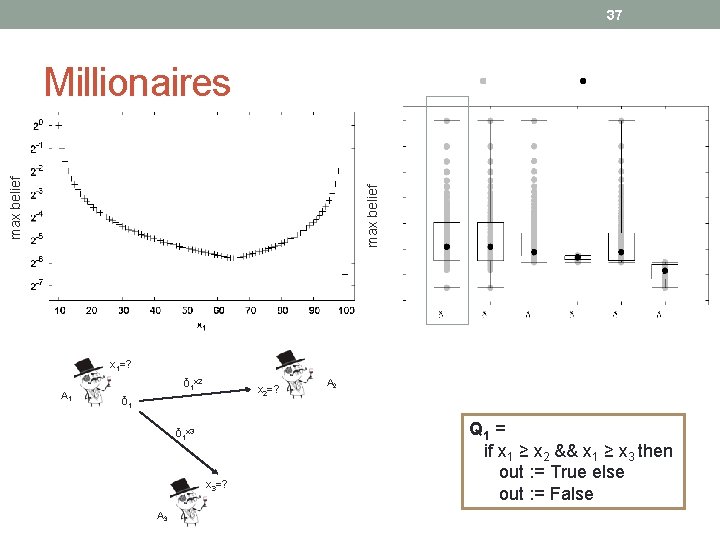

37 Millionaires
- Plot: max belief.
- Three parties A₁, A₂, A₃ with unknown secrets x₁, x₂, x₃.
- `Q1 = if x1 ≥ x2 && x1 ≥ x3 then out := True else out := False`
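A sketch of how a max-belief value could be computed for the three-party query, from A₁'s point of view (A₁ knows x₁ and is uncertain about the pair (x₂, x₃)). It assumes the 10..100 range and a uniform prior carried over from the earlier slides; the plot's actual setup is not recoverable, so the perspective and `max_belief_a1` are illustrative.

```python
from fractions import Fraction
from itertools import product

RANGE = range(10, 101)

def millionaires(x1, x2, x3):
    return x1 >= x2 and x1 >= x3

def max_belief_a1(x1):
    """Worst-case belief A1 (knowing x1) holds about (x2, x3) after any output.

    With a uniform prior and a deterministic query, the posterior is uniform over
    the states consistent with the output, so max belief = 1 / (smallest class).
    """
    counts = {}
    for x2, x3 in product(RANGE, RANGE):
        out = millionaires(x1, x2, x3)
        counts[out] = counts.get(out, 0) + 1
    return Fraction(1, min(counts.values()))

for x1 in (10, 50, 100):
    print(x1, max_belief_a1(x1))  # 10 1 / 50 1/1681 / 100 1/8281
```

As in the two-party case, the extreme input x₁ = 10 lets a True answer pin the other secrets down completely.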

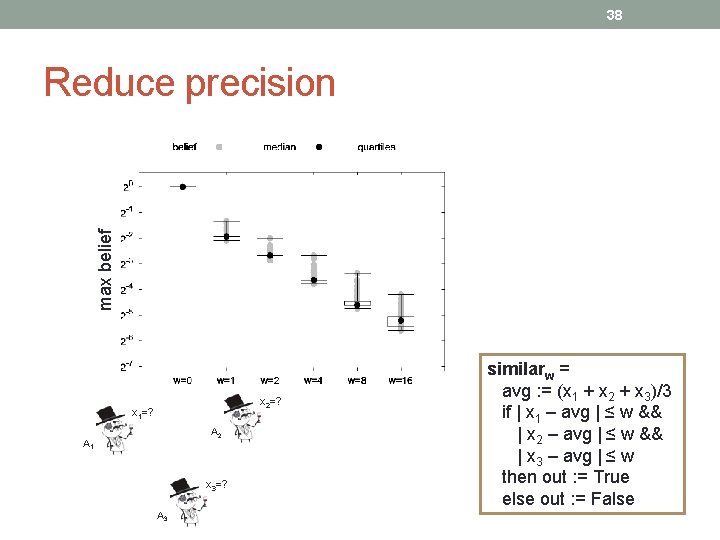

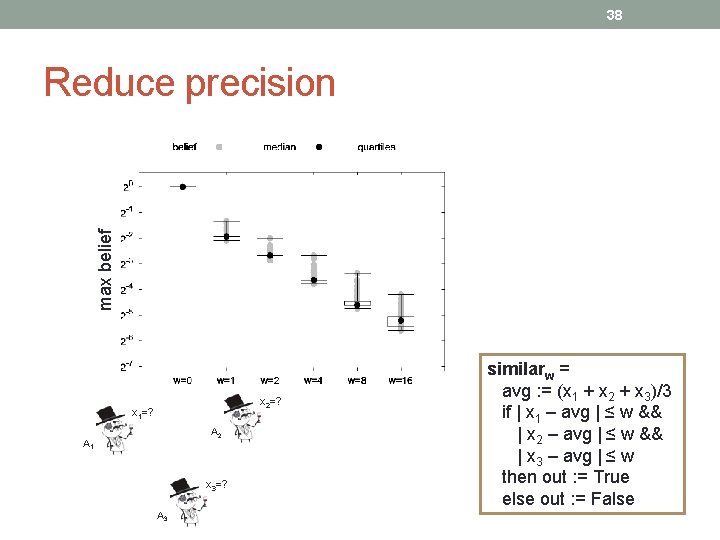

38 Reduce precision
- Plot: max belief.
- Three parties A₁, A₂, A₃ with unknown secrets x₁, x₂, x₃.
- `similar_w = avg := (x1 + x2 + x3)/3; if |x1 - avg| ≤ w && |x2 - avg| ≤ w && |x3 - avg| ≤ w then out := True else out := False`
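A sketch of the same max-belief computation for `similar_w`, where the parameter w controls how precise the answer is. It assumes A₁'s point of view, the 10..100 range, and a uniform prior. To stay in exact integer arithmetic it tests |3·xᵢ − (x₁ + x₂ + x₃)| ≤ 3w, which is equivalent to |xᵢ − avg| ≤ w.

```python
from fractions import Fraction
from itertools import product

RANGE = range(10, 101)

def similar(w, x1, x2, x3):
    """similar_w: every secret is within w of the average (scaled by 3 to avoid division)."""
    s = x1 + x2 + x3
    return all(abs(3 * x - s) <= 3 * w for x in (x1, x2, x3))

def max_belief_a1(w, x1):
    """A1's worst-case belief about (x2, x3) under a uniform prior, over possible outputs."""
    counts = {}
    for x2, x3 in product(RANGE, RANGE):
        out = similar(w, x1, x2, x3)
        counts[out] = counts.get(out, 0) + 1
    return Fraction(1, min(counts.values()))

for w in (0, 5, 20, 100):
    print(w, max_belief_a1(w, 50))
```

At w = 0 a True answer forces x₂ = x₃ = 50 (max belief 1); at w = 100 every input yields True, so A₁ learns nothing (max belief 1/8281).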

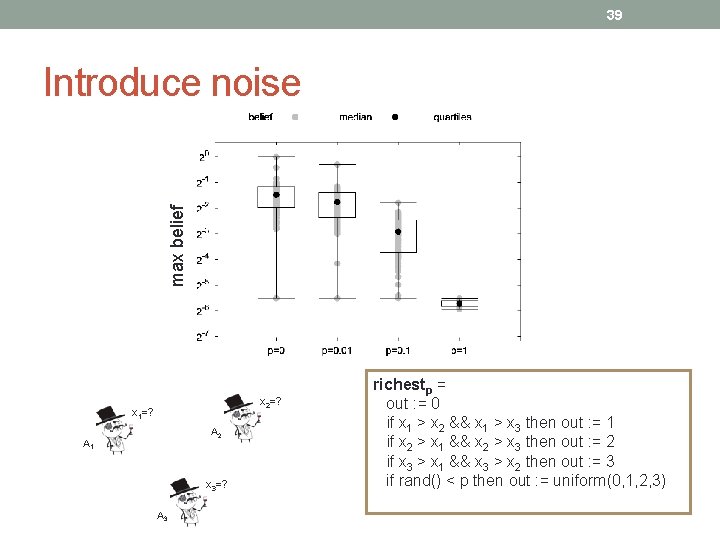

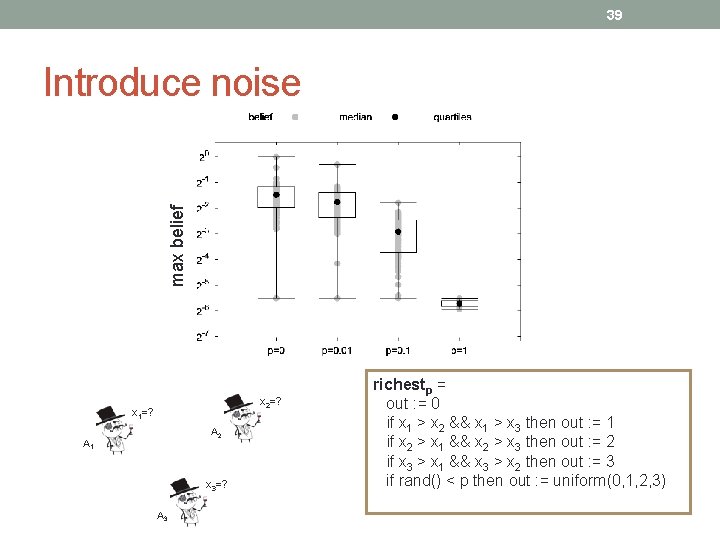

39 Introduce noise
- Plot: max belief.
- Three parties A₁, A₂, A₃ with unknown secrets x₁, x₂, x₃.
- `richest_p = out := 0; if x1 > x2 && x1 > x3 then out := 1; if x2 > x1 && x2 > x3 then out := 2; if x3 > x1 && x3 > x2 then out := 3; if rand() < p then out := uniform(0, 1, 2, 3)`
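A sketch of max belief for the noisy `richest_p` query, again from A₁'s point of view with a uniform prior over (x₂, x₃) in 10..100 (both assumptions). The noise is modelled exactly: with probability p the output is a uniform draw from {0, 1, 2, 3}, otherwise it is the noise-free answer, so P(o | state) = (1 − p)·[o = answer] + p/4.

```python
from fractions import Fraction
from itertools import product

RANGE = range(10, 101)

def richest(x1, x2, x3):
    """Noise-free part: index of the unique richest party, or 0 if nobody is strictly richest."""
    if x1 > x2 and x1 > x3:
        return 1
    if x2 > x1 and x2 > x3:
        return 2
    if x3 > x1 and x3 > x2:
        return 3
    return 0

def output_dist(p, x1, x2, x3):
    """Exact output distribution of richest_p for fixed inputs."""
    p = Fraction(p)
    d = richest(x1, x2, x3)
    return {o: (1 - p if o == d else 0) + p / 4 for o in range(4)}

def max_belief_a1(p, x1):
    """A1's worst-case belief about (x2, x3) over all outputs; the uniform prior cancels."""
    weights = {o: [] for o in range(4)}
    for x2, x3 in product(RANGE, RANGE):
        for o, q in output_dist(p, x1, x2, x3).items():
            weights[o].append(q)
    best = Fraction(0)
    for ws in weights.values():
        total = sum(ws)
        if total > 0:
            best = max(best, max(ws) / total)
    return best

for p in (0, Fraction(1, 2), 1):
    print(p, max_belief_a1(p, 10))  # 0 1/91, 1/2 1/1729, 1 1/8281
```

With x₁ = 10, A₁ is never strictly richest; without noise, output 0 means x₂ = x₃ (91 possibilities), while p = 1 makes the output independent of the secrets.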

40 Summary+conclusions

41 Knowledge-Oriented Multiparty Computation
- SMC: agents do not learn beyond what is implied by the query.
- Our work: agents limit what can be inferred.
- Diagram: x₁ and x₂ go into Q₁(x₁, x₂), which outputs True.
- Two approaches with differing (dis)advantages.
- Ongoing work in PL and crypto for tractability.