
Text Mining Overview

Piotr Gawrysiak, gawrysia@ii.pw.edu.pl
Warsaw University of Technology, Data Mining Group
22 November 2001

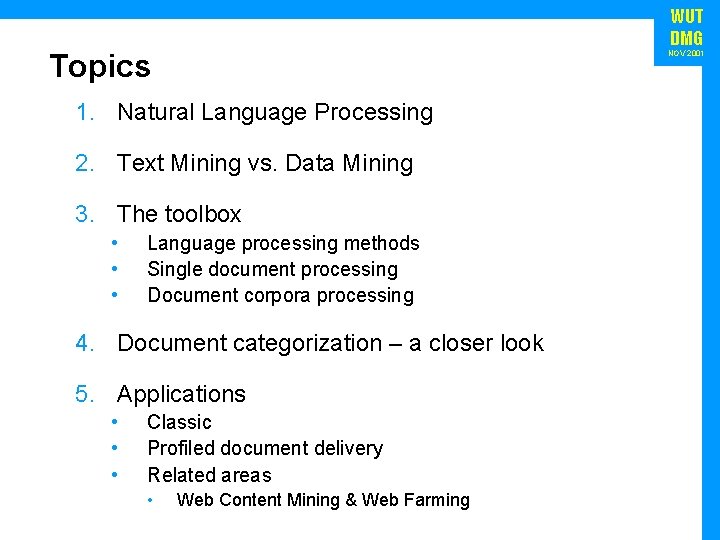

Topics

1. Natural Language Processing
2. Text Mining vs. Data Mining
3. The toolbox
   - Language processing methods
   - Single document processing
   - Document corpora processing
4. Document categorization: a closer look
5. Applications
   - Classic
   - Profiled document delivery
   - Related areas: Web Content Mining & Web Farming

WUT DMG, NOV 2001

Natural Language Processing

- Natural language: a test for Artificial Intelligence
  - Alan Turing
- NLP and NLU
  - Natural language processing (NLP): anything that deals with text content
  - Natural language understanding (NLU): semantics and logic
- Linguistics: exploring the mysteries of a language
  - William Jones
  - Comparative linguistics: Jakob Grimm, Rasmus Rask
  - Noam Chomsky: I-Language and E-Language, poverty of stimulus
- Statistical approaches: Markov and Shannon

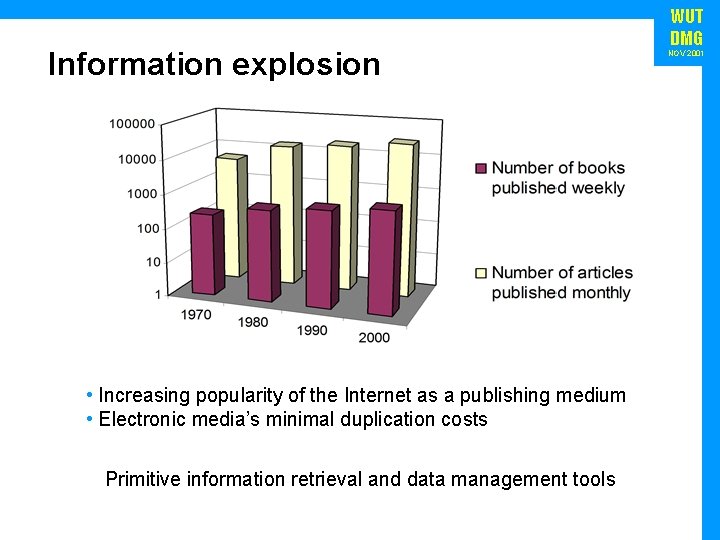

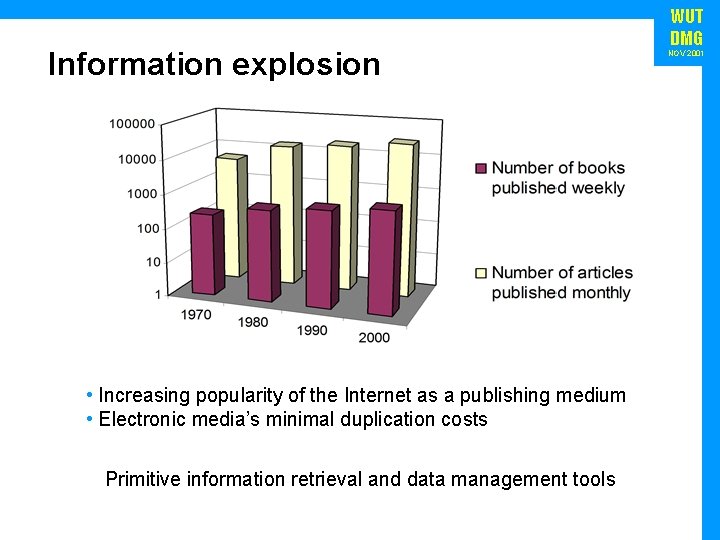

Information explosion

- Increasing popularity of the Internet as a publishing medium
- Electronic media's minimal duplication costs
- Primitive information retrieval and data management tools

Data Mining is understood as a process of automatically extracting meaningful, useful, previously unknown and ultimately comprehensible information from large databases. (Piatetsky-Shapiro)

- Association rule discovery
- Sequential pattern discovery
- Categorization
- Clustering
- Statistics (mostly regression)
- Visualization

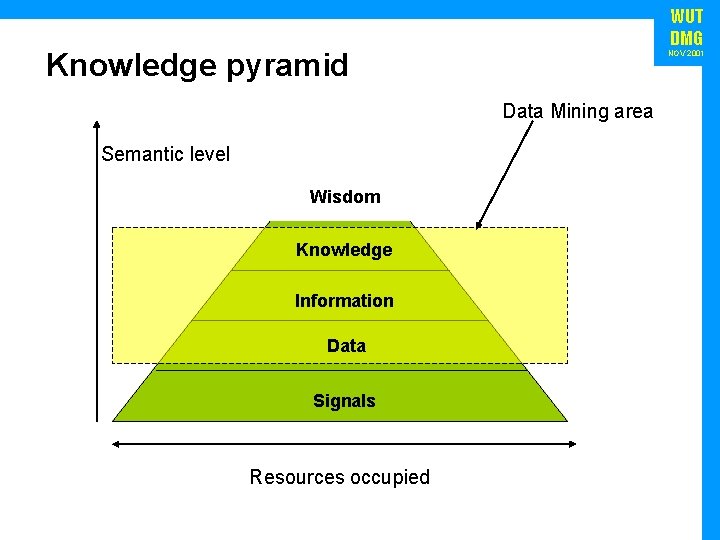

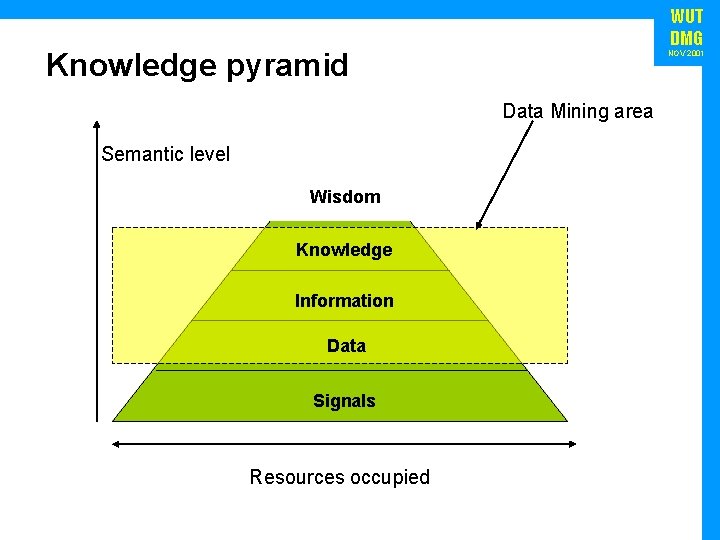

Knowledge pyramid

[Figure: pyramid with the levels Wisdom, Knowledge, Information, Data, Signals; axes labelled "Semantic level" and "Resources occupied"; one region marked "Data Mining area"]

Text Mining: a definition

Text Mining is understood as a process of automatically extracting meaningful, useful, previously unknown and ultimately comprehensible information from textual document repositories.

Text Mining = Data Mining (applied to text data) + basic linguistics

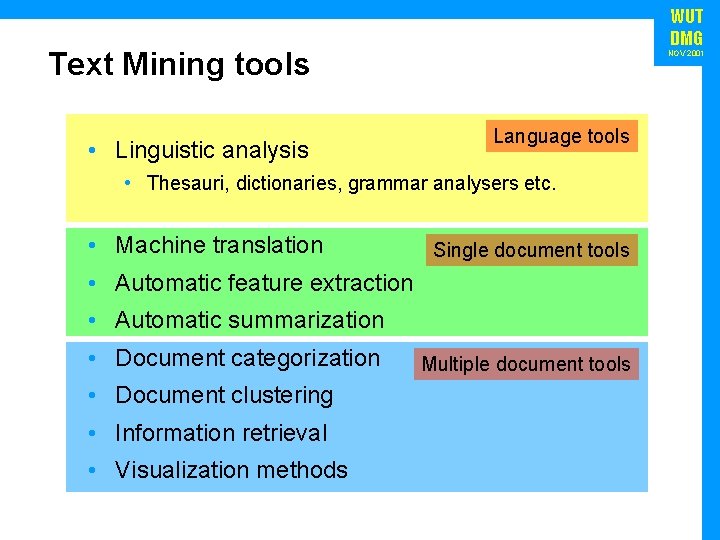

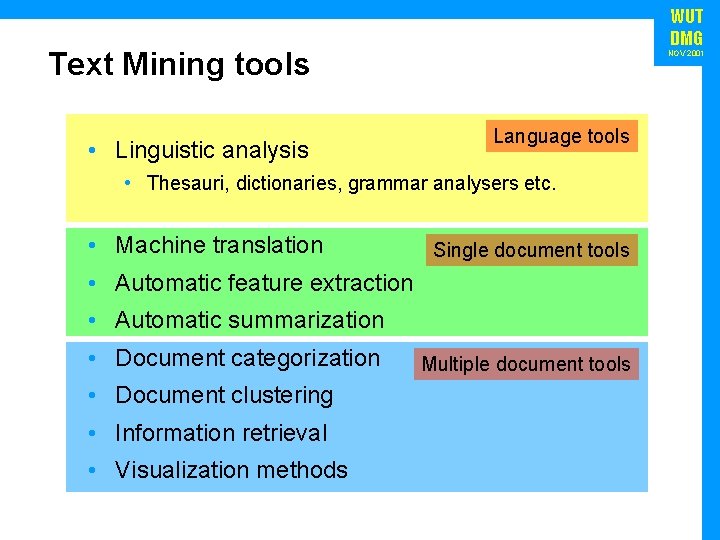

Text Mining tools

- Language tools
  - Linguistic analysis
  - Thesauri, dictionaries, grammar analysers etc.
  - Machine translation
- Single document tools
  - Automatic feature extraction
  - Automatic summarization
- Multiple document tools
  - Document categorization
  - Document clustering
  - Information retrieval
  - Visualization methods

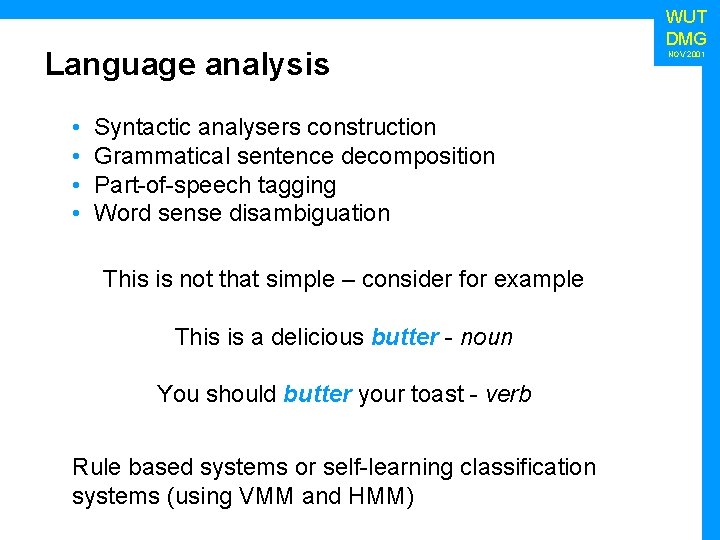

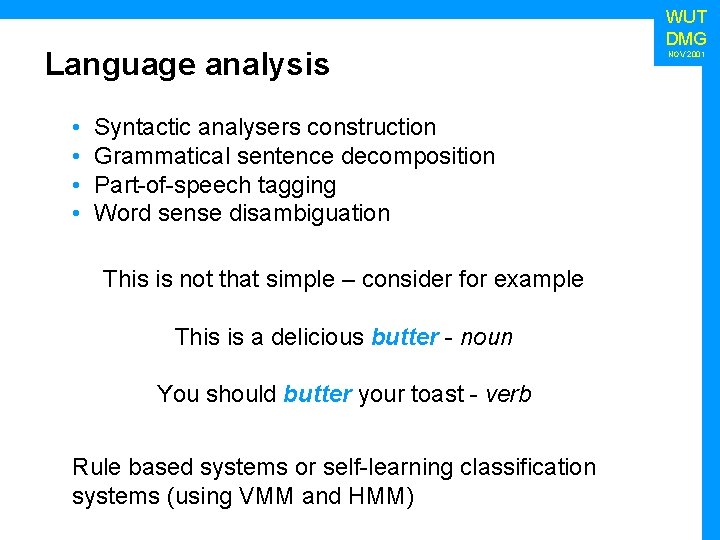

Language analysis

- Syntactic analysers construction
- Grammatical sentence decomposition
- Part-of-speech tagging
- Word sense disambiguation

This is not that simple. Consider for example:

- "This is a delicious butter": butter is a noun
- "You should butter your toast": butter is a verb

Solutions: rule-based systems or self-learning classification systems (using variable memory Markov models, VMM, and hidden Markov models, HMM).

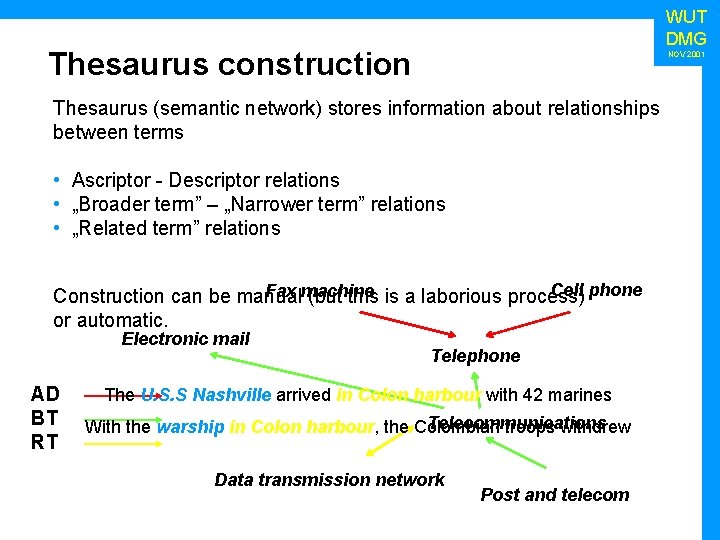

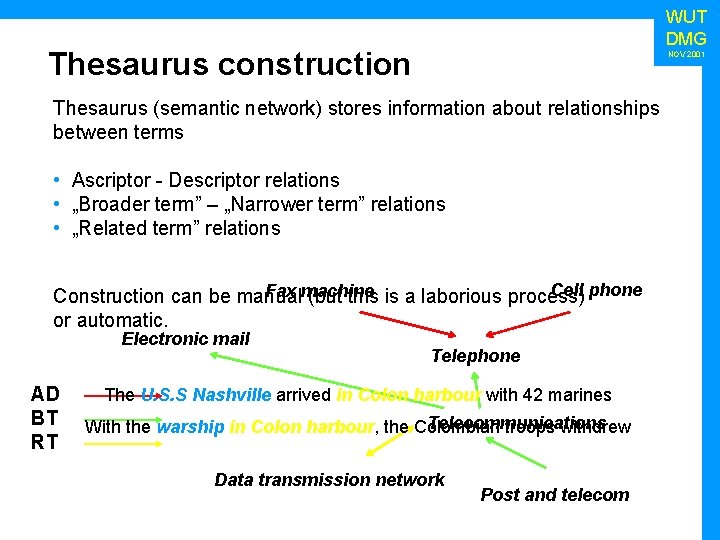

Thesaurus construction

A thesaurus (semantic network) stores information about relationships between terms:

- Ascriptor - Descriptor relations (AD)
- "Broader term" - "Narrower term" relations (BT)
- "Related term" relations (RT)

Construction can be manual (but this is a laborious process) or automatic.

[Figure: example semantic network around the term "Telephone", with AD, BT and RT links to the terms Cell phone, Fax machine, Electronic mail, Telecommunications, Data transmission network, Post and telecom]

Example sentences:

- The U.S.S. Nashville arrived in Colon harbour with 42 marines.
- With the warship in Colon harbour, the Colombian troops withdrew.

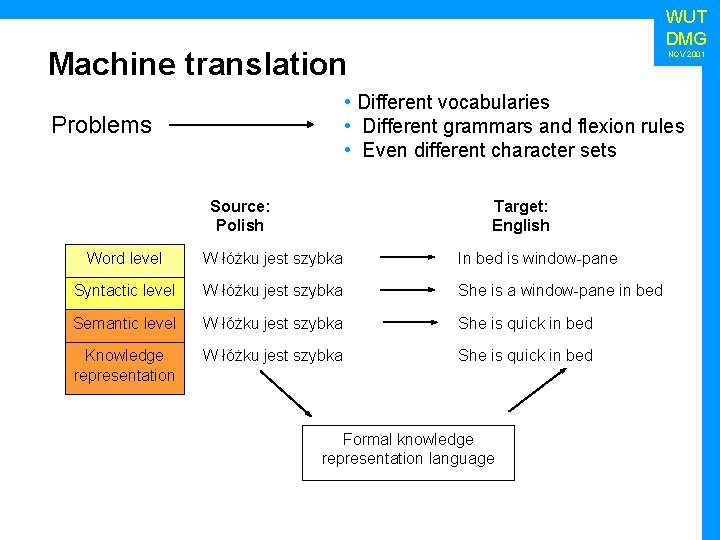

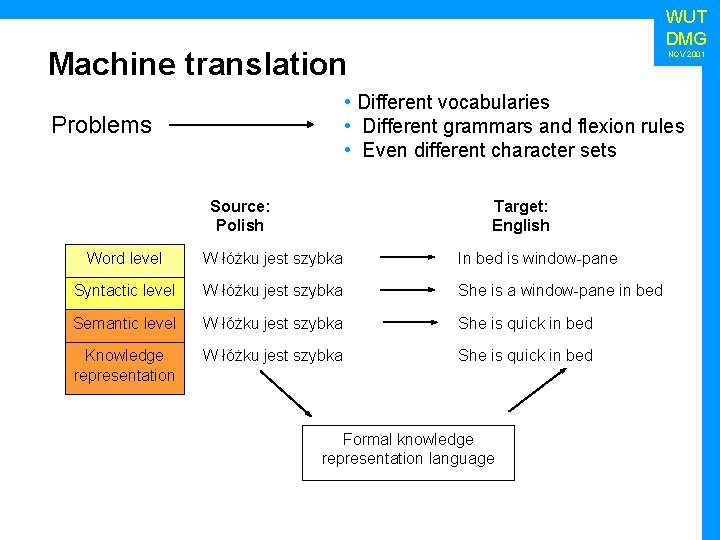

Machine translation

Problems:

- Different vocabularies
- Different grammars and inflection rules
- Even different character sets

Translation levels (source: Polish, target: English):

- Word level: "W łóżku jest szybka" becomes "In bed is window-pane"
- Syntactic level: "W łóżku jest szybka" becomes "She is a window-pane in bed"
- Semantic level: "W łóżku jest szybka" becomes "She is quick in bed"
- Knowledge representation: "W łóżku jest szybka" becomes "She is quick in bed" by way of a formal knowledge representation language

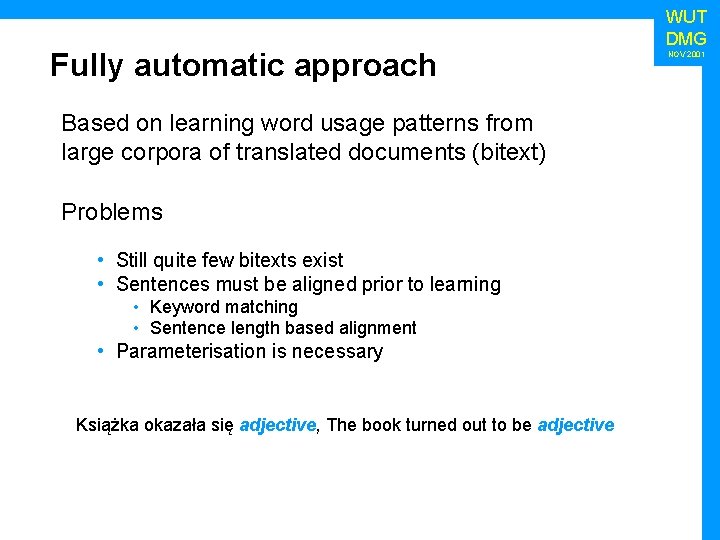

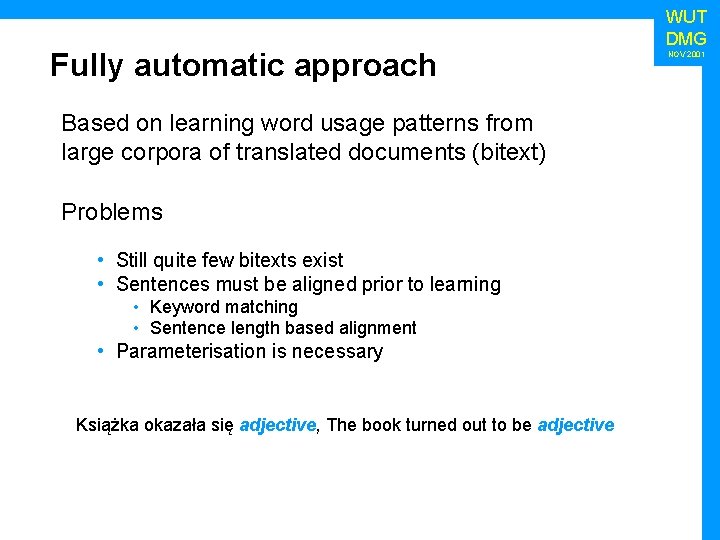

Fully automatic approach

Based on learning word usage patterns from large corpora of translated documents (bitext).

Problems:

- Still quite few bitexts exist
- Sentences must be aligned prior to learning
  - Keyword matching
  - Sentence length based alignment
- Parameterisation is necessary
  - "Książka okazała się [adjective]" corresponds to "The book turned out to be [adjective]"

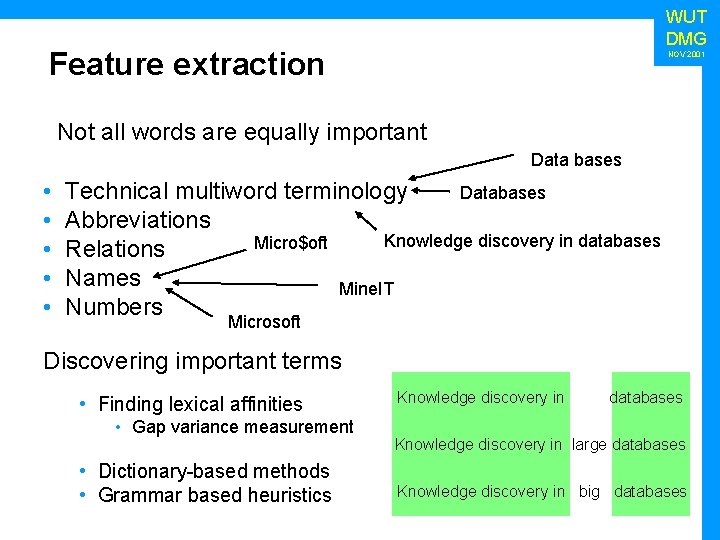

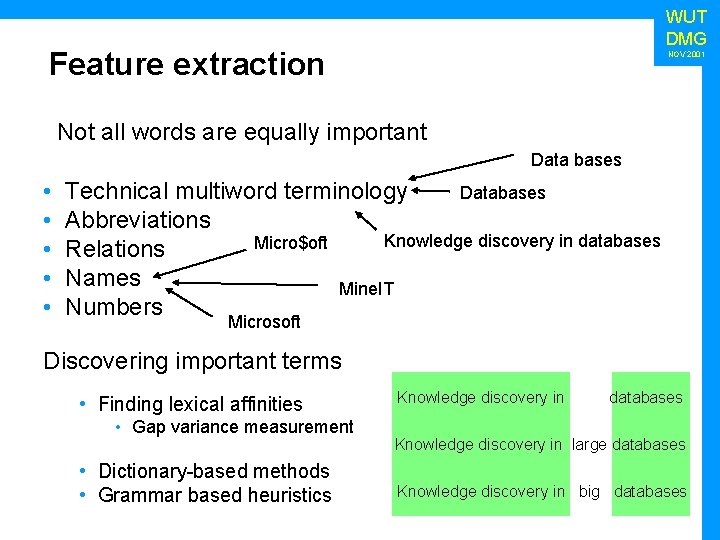

Feature extraction

Not all words are equally important. Important features include:

- Technical multiword terminology (e.g. "Knowledge discovery in databases")
- Abbreviations
- Relations
- Names (e.g. Microsoft, Micro$oft, MineIT)
- Numbers

Spelling variants such as "Data bases" and "Databases" should be recognised as the same term.

Discovering important terms:

- Finding lexical affinities
- Gap variance measurement
- Dictionary-based methods
- Grammar-based heuristics

Example term variants: "Knowledge discovery in databases", "Knowledge discovery in large databases", "Knowledge discovery in big databases".

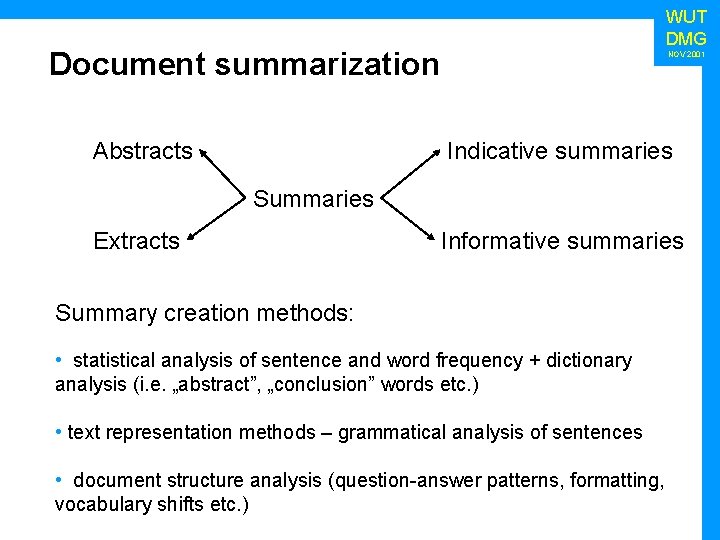

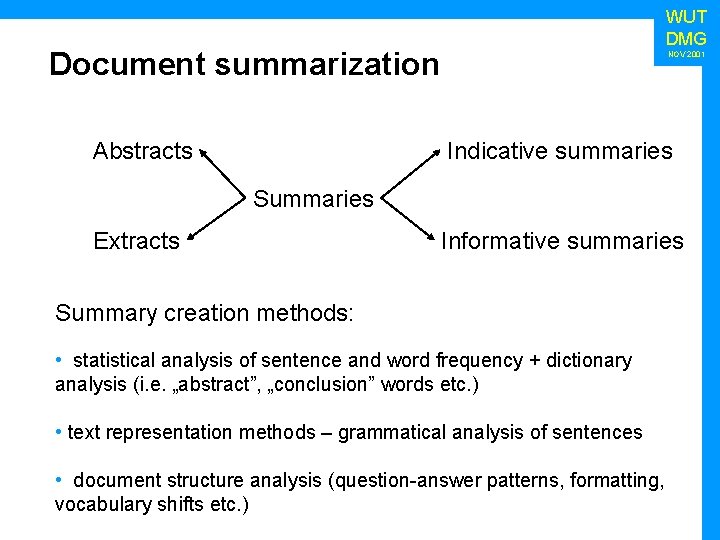

Document summarization

Types of summaries:

- Abstracts or extracts
- Indicative summaries or informative summaries

Summary creation methods:

- statistical analysis of sentence and word frequency plus dictionary analysis (e.g. words such as "abstract", "conclusion" etc.)
- text representation methods: grammatical analysis of sentences
- document structure analysis (question-answer patterns, formatting, vocabulary shifts etc.)

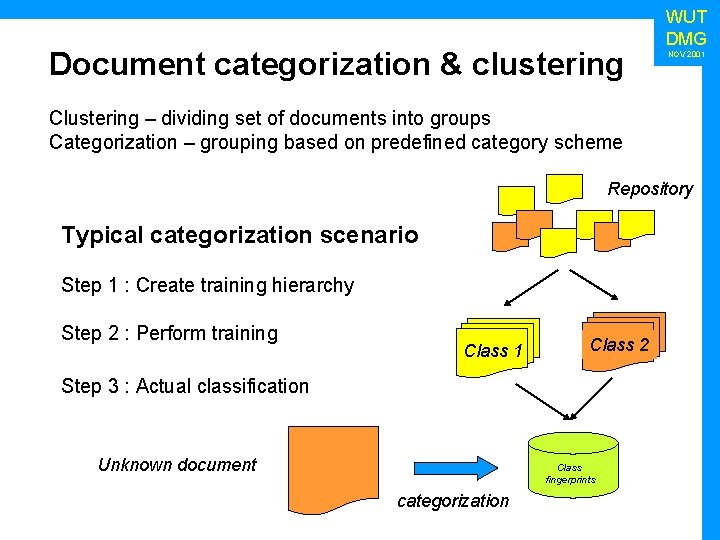

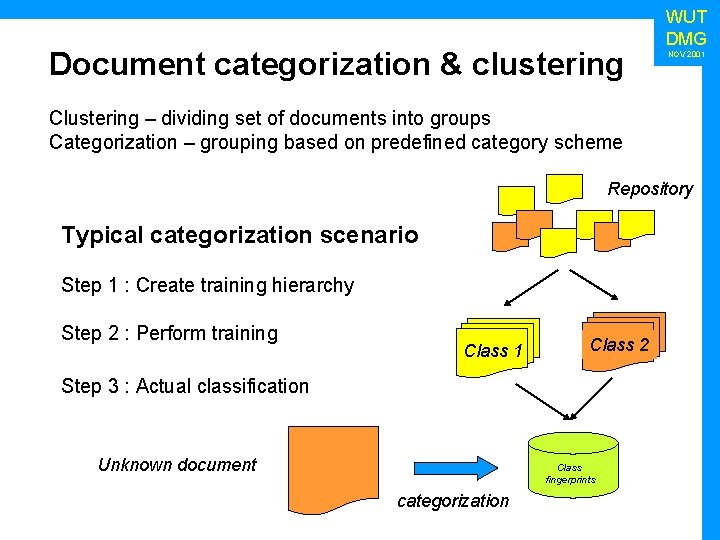

Document categorization & clustering

- Clustering: dividing a set of documents into groups
- Categorization: grouping based on a predefined category scheme

Typical categorization scenario:

- Step 1: Create training hierarchy
- Step 2: Perform training
- Step 3: Actual classification

[Figure: documents from the repository are assigned to Class 1 and Class 2; training produces class fingerprints, which are then used to categorize an unknown document]
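The three steps can be illustrated with a minimal sketch. It assumes that a "class fingerprint" is simply the summed word-count vector of a class's training documents and that an unknown document goes to the class with the most similar fingerprint under cosine similarity; the slides do not specify either choice, and the training texts and the names `train` and `classify` are made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def train(labelled_docs):
    """Step 2: build one fingerprint (summed word counts) per class."""
    fingerprints = {}
    for label, text in labelled_docs:
        fingerprints.setdefault(label, Counter()).update(tokenize(text))
    return fingerprints


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def classify(fingerprints, text):
    """Step 3: assign the unknown document to the most similar fingerprint."""
    doc = Counter(tokenize(text))
    return max(fingerprints, key=lambda label: cosine(fingerprints[label], doc))


# Step 1: a toy training hierarchy (made-up data, for illustration only).
training = [
    ("sports", "the team won the football match"),
    ("sports", "a late goal decided the match"),
    ("finance", "the bank raised interest rates"),
    ("finance", "stock markets fell as rates rose"),
]
fingerprints = train(training)
label = classify(fingerprints, "interest rates and the stock market")
print(label)
```

A real system would insert representation processing (stopword removal, scaling, attribute selection) between tokenization and training.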

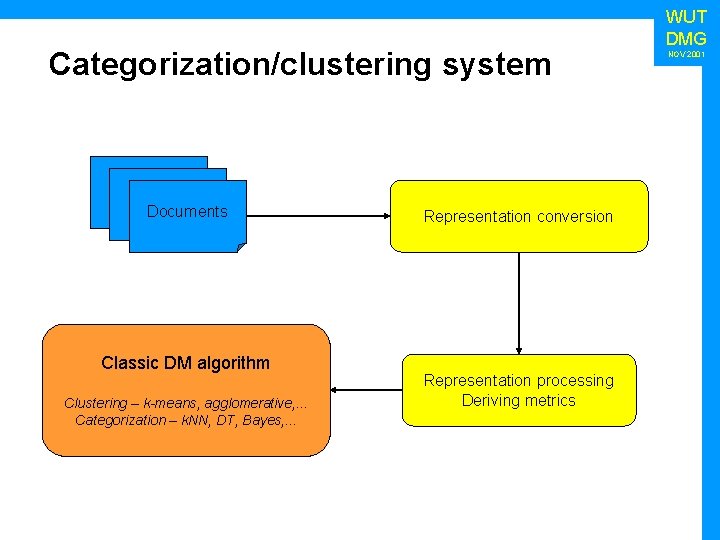

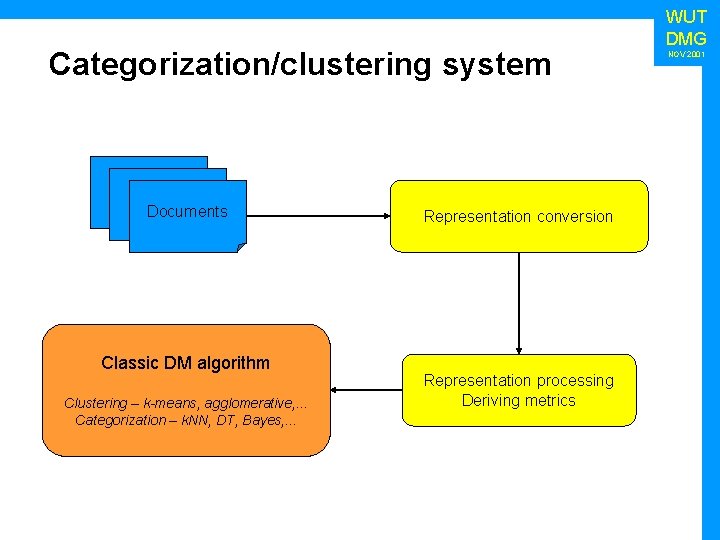

Categorization/clustering system

Components:

- Documents
- Representation conversion
- Representation processing
- Deriving metrics
- Classic DM algorithm
  - Clustering: k-means, agglomerative, ...
  - Categorization: kNN, decision trees (DT), Bayes, ...

Information retrieval

Two types of search methods:

- exact match: in most cases uses a simple Boolean query specification language
- fuzzy: uses statistical methods to estimate the relevance of a document

Modern IR tools seem to be very effective...

- 1999 data: Scooter (AltaVista): 1.5 GB RAM, 30 GB disk, 4 x 533 MHz Alpha, 1 GB/s I/O (crawler); about 1 month needed to recrawl
- 2000 data: 40-50% of the Web indexed at all

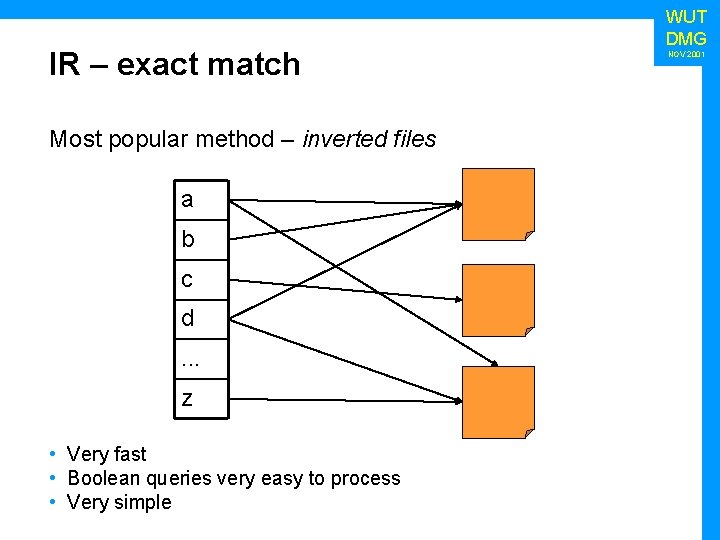

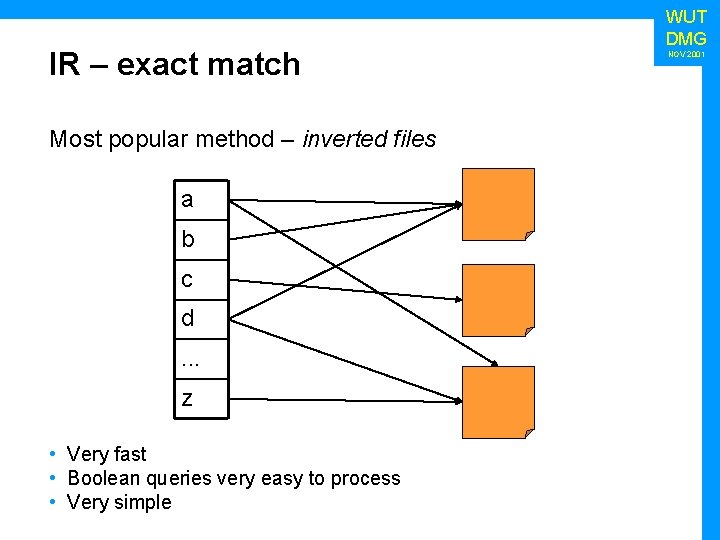

IR: exact match

Most popular method: inverted files

- Very fast
- Boolean queries very easy to process
- Very simple

[Figure: inverted file with index entries a, b, c, d, ..., z]
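A minimal sketch of an inverted file and Boolean query processing, assuming an in-memory index that maps each word to a set of document ids. The function names and the three sample documents are illustrative, not taken from the slides.

```python
import re
from collections import defaultdict


def build_inverted_index(docs):
    """Map each word to the set of ids of the documents that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in re.findall(r"[a-z]+", text.lower()):
            index[word].add(doc_id)
    return index


def query_and(index, *words):
    """Documents containing every given word (Boolean AND)."""
    postings = [index.get(word, set()) for word in words]
    return set.intersection(*postings) if postings else set()


def query_or(index, *words):
    """Documents containing at least one given word (Boolean OR)."""
    return set().union(*(index.get(word, set()) for word in words))


def query_not(index, all_ids, word):
    """Documents that do not contain the given word (Boolean NOT)."""
    return set(all_ids) - index.get(word, set())


# Made-up sample repository.
docs = {
    1: "text mining overview",
    2: "data mining and databases",
    3: "text retrieval",
}
index = build_inverted_index(docs)
and_result = query_and(index, "text", "mining")
or_result = query_or(index, "text", "data")
not_result = query_not(index, docs, "mining")
```

Each Boolean operator reduces to a set operation on posting lists, which is why such queries are cheap to process.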

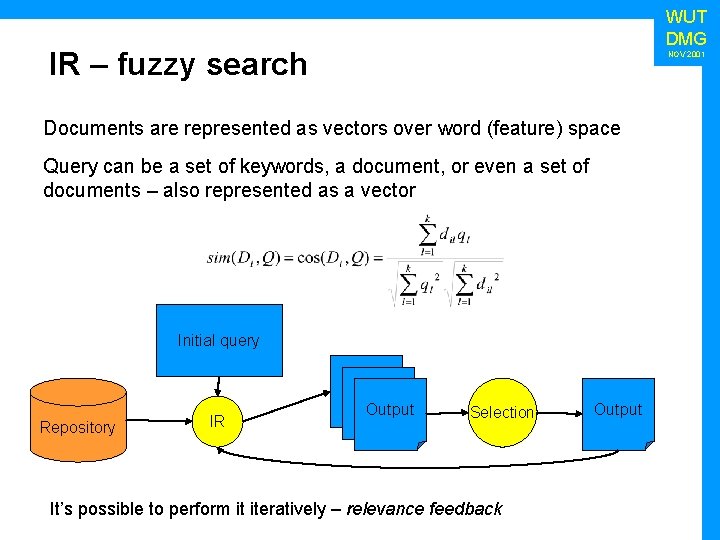

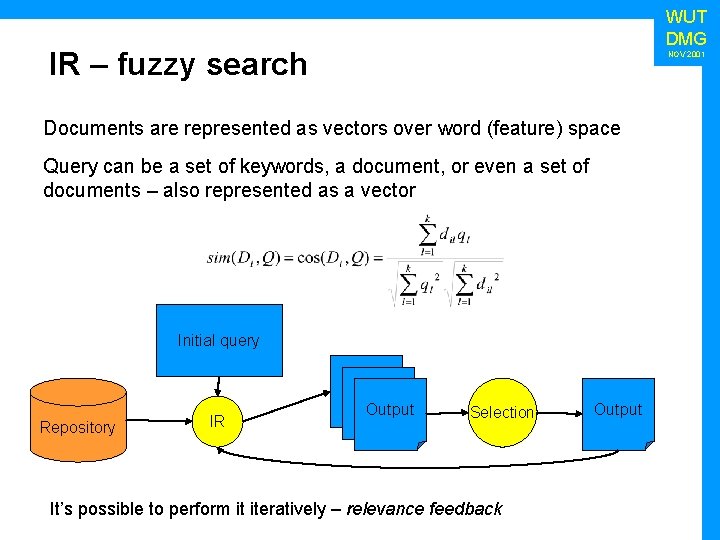

IR: fuzzy search

Documents are represented as vectors over word (feature) space.

A query can be a set of keywords, a document, or even a set of documents, and is also represented as a vector.

It is possible to perform the search iteratively (relevance feedback).

[Figure: the initial query is run by the IR system against the repository; a selection from the output is fed back as a new query, producing the next output]
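The vector model and one relevance feedback iteration can be sketched as follows. Two assumptions are made here that the slides do not state: relevance is estimated with cosine similarity over raw word counts, and feedback is implemented by adding the selected document's vector to the query vector. The repository contents are made up.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Represent a text as a sparse vector of word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank(query_vec, repository):
    """Return document ids ordered by decreasing similarity to the query."""
    scores = {doc_id: cosine(query_vec, vec) for doc_id, vec in repository.items()}
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


repository = {
    "d1": vectorize("text mining extracts information from text documents"),
    "d2": vectorize("data mining finds patterns in large databases"),
    "d3": vectorize("document clustering groups similar text documents"),
}

# Initial query: a set of keywords.
query = vectorize("text mining")
first_pass = rank(query, repository)

# Relevance feedback: the user selects d3, so its vector is added to the query.
query = query + repository["d3"]
second_pass = rank(query, repository)
```

Because queries and documents share one representation, a whole document (or several) can serve as the query without any change to the ranking code.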

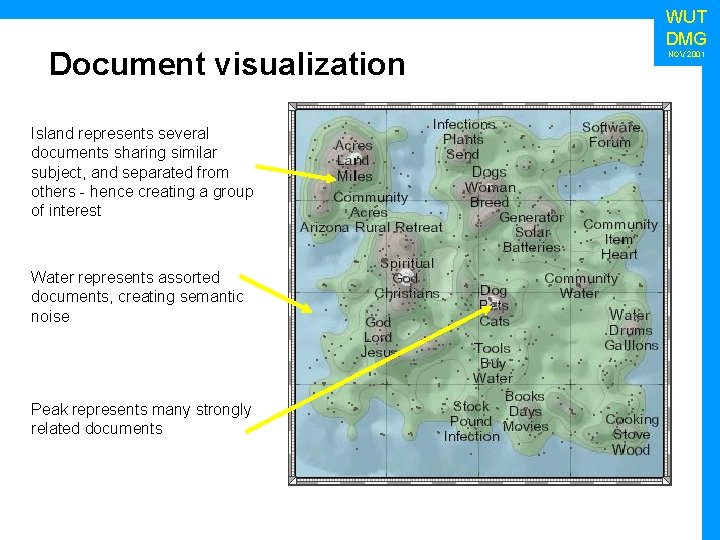

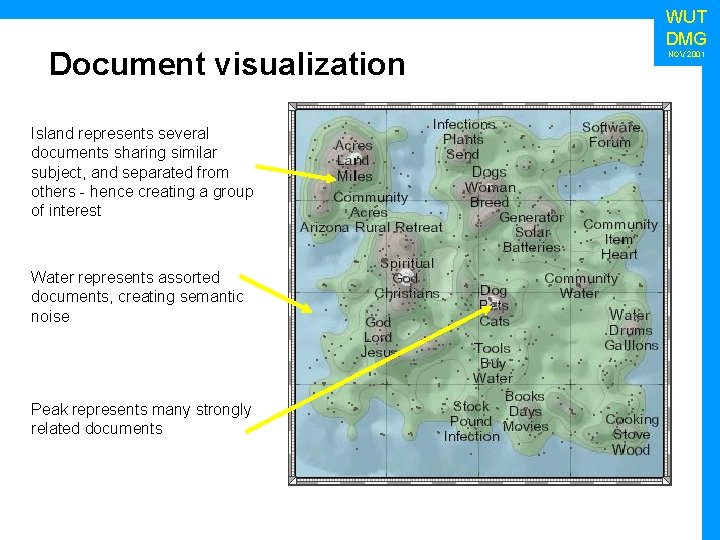

Document visualization

- Island: represents several documents sharing a similar subject and separated from others, hence creating a group of interest
- Water: represents assorted documents, creating semantic noise
- Peak: represents many strongly related documents

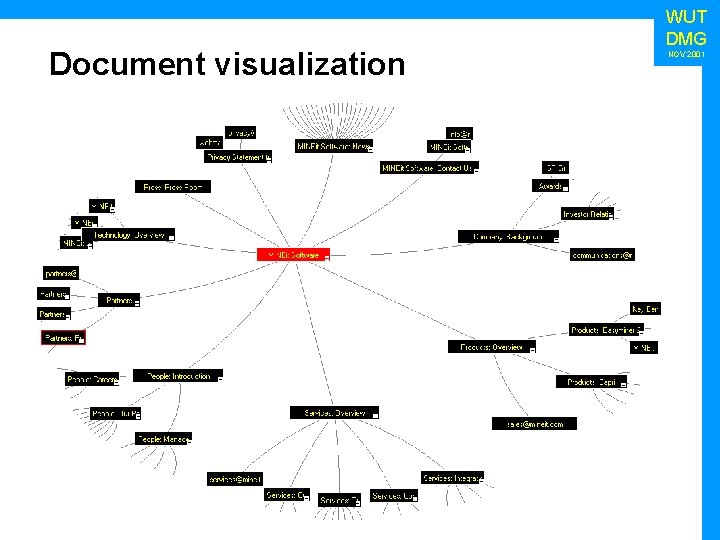

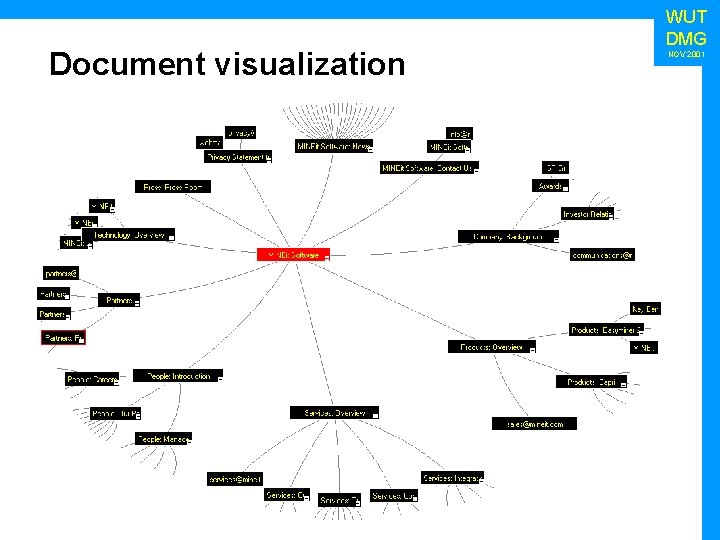

Document visualization

Document categorization: a closer look

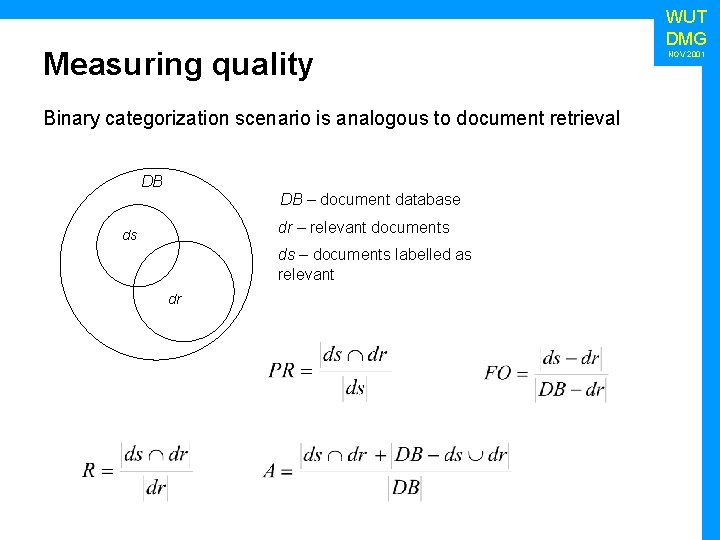

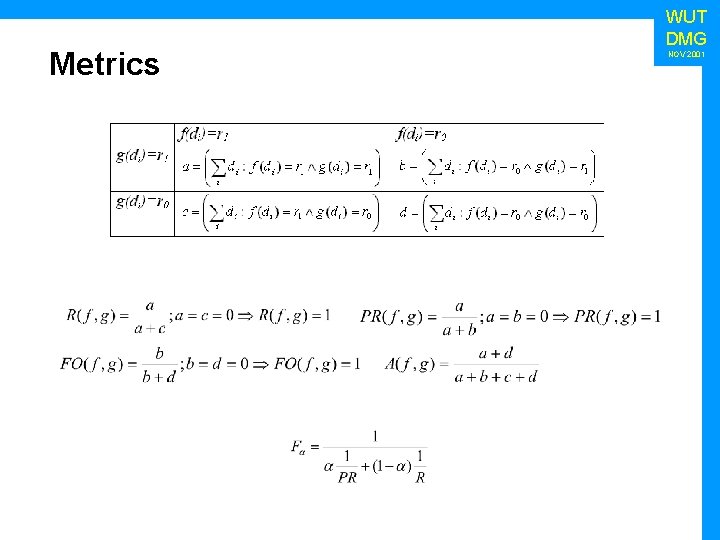

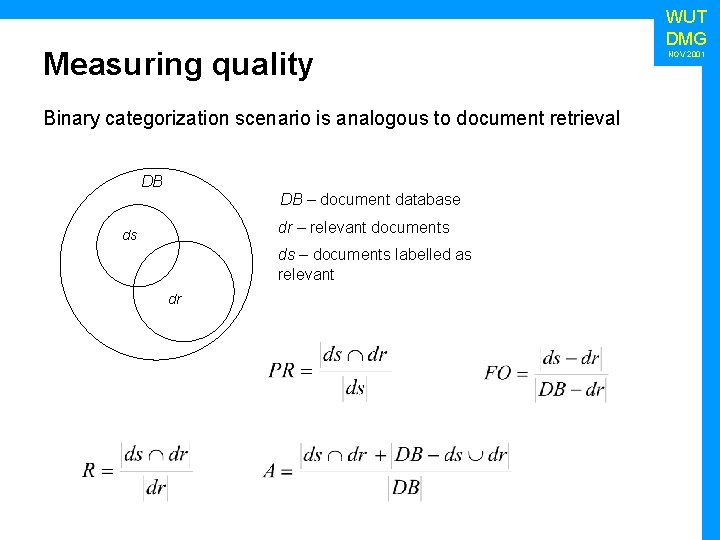

Measuring quality

The binary categorization scenario is analogous to document retrieval.

- $DB$: document database
- $d_r$: relevant documents
- $d_s$: documents labelled as relevant

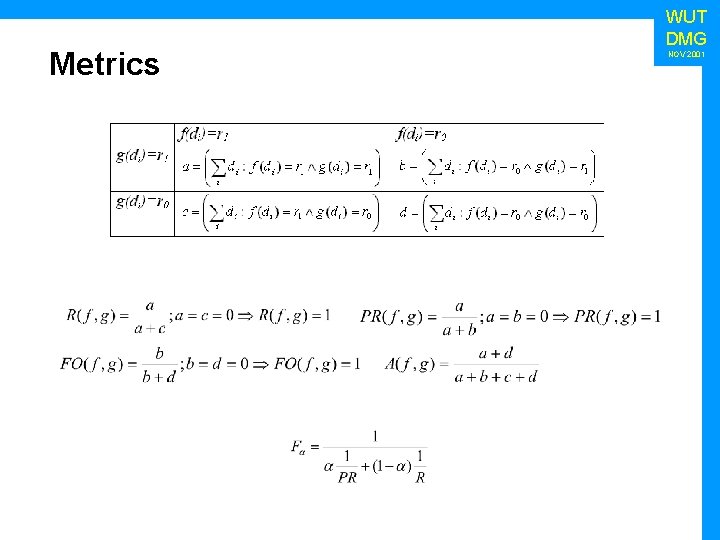

Metrics
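The formulas on this slide did not survive extraction. As a hedged illustration, the sketch below computes the standard textbook precision, recall and F1 from the set of relevant documents (dr) and the set of documents labelled as relevant (ds); these may or may not be the exact metrics the slide showed, and the document ids are made up.

```python
def precision_recall(relevant, selected):
    """Precision and recall from dr (relevant) and ds (labelled as relevant).

    Assumption: standard definitions, precision = |dr & ds| / |ds| and
    recall = |dr & ds| / |dr|.
    """
    relevant, selected = set(relevant), set(selected)
    hits = len(relevant & selected)
    precision = hits / len(selected) if selected else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


dr = {1, 2, 3, 4}   # documents that are truly relevant
ds = {3, 4, 5}      # documents the system labelled as relevant
precision, recall = precision_recall(dr, ds)
f1 = f1_score(precision, recall)
```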

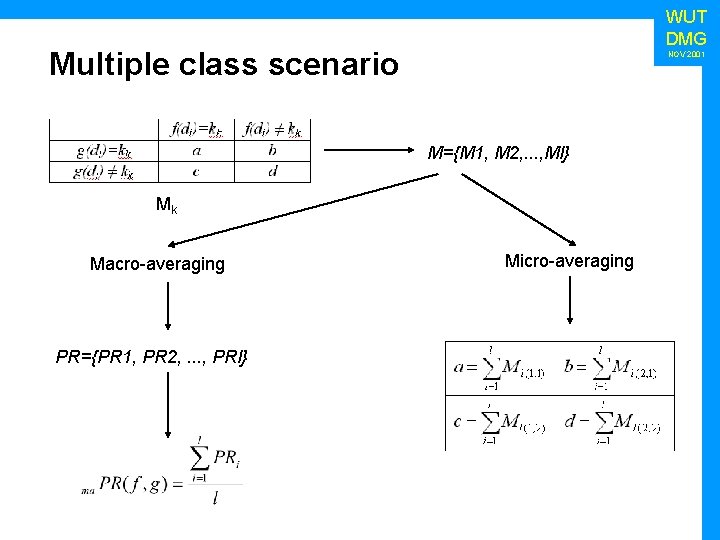

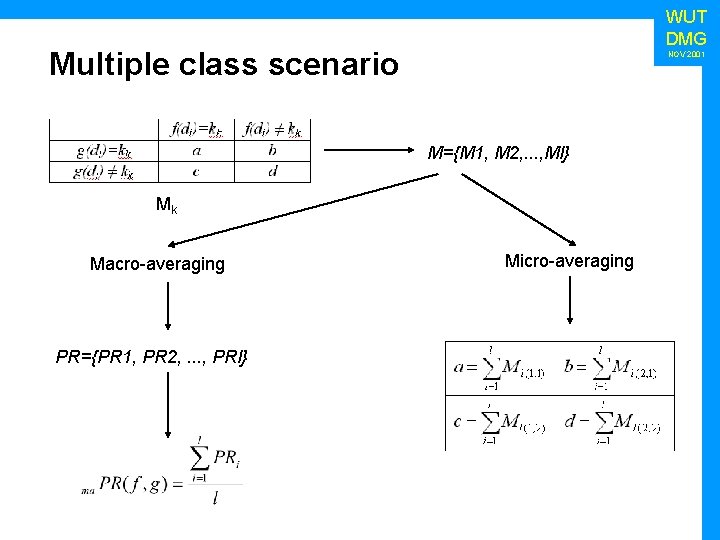

Multiple class scenario

- Classes: $M = \{M_1, M_2, \ldots, M_l\}$, with a single class denoted $M_k$
- Per-class results: $PR = \{PR_1, PR_2, \ldots, PR_l\}$
- Macro-averaging
- Micro-averaging
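The two averaging schemes can be contrasted with a short sketch. It assumes the usual definitions: macro-averaging takes the mean of the per-class scores, while micro-averaging pools the per-class decision counts before computing a single score. Precision is used as the example metric, and the counts are made up.

```python
def precision(tp, fp):
    """Precision from true-positive and false-positive counts."""
    return tp / (tp + fp) if tp + fp else 0.0


def macro_precision(counts):
    """Mean of per-class precision: every class weighs the same."""
    return sum(precision(tp, fp) for tp, fp in counts.values()) / len(counts)


def micro_precision(counts):
    """Precision over pooled counts: every decision weighs the same."""
    total_tp = sum(tp for tp, _ in counts.values())
    total_fp = sum(fp for _, fp in counts.values())
    return precision(total_tp, total_fp)


# Hypothetical (true positives, false positives) per class.
counts = {"M1": (90, 10), "M2": (1, 9)}
macro = macro_precision(counts)
micro = micro_precision(counts)
```

With these counts the large, easy class dominates the micro-average, while the macro-average is pulled down by the small, poorly handled class.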

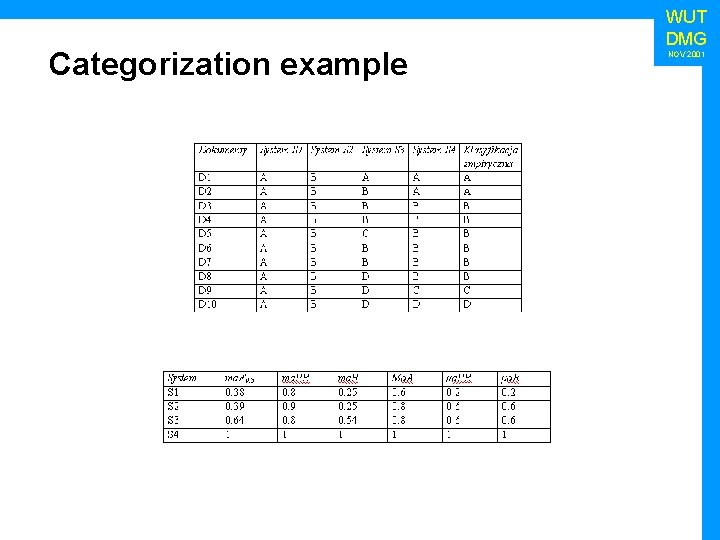

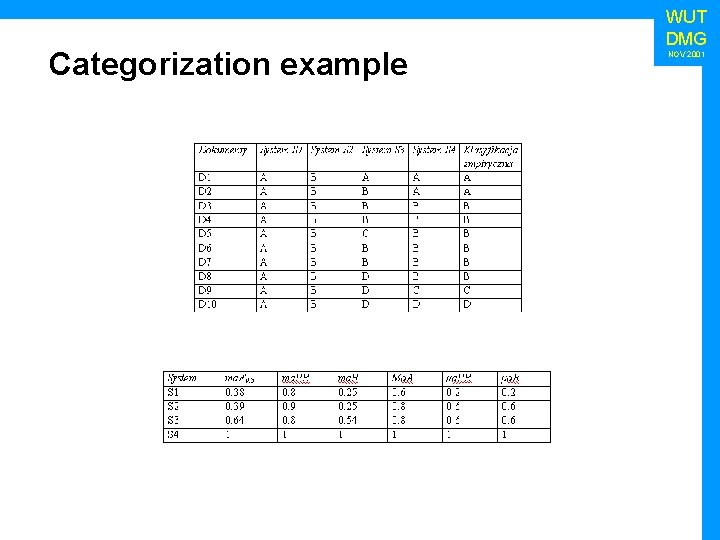

Categorization example

Document representations

- unigram representations (bag-of-words)
  - binary
  - multivariate
- n-gram representations
- -gram representation
- positional representation

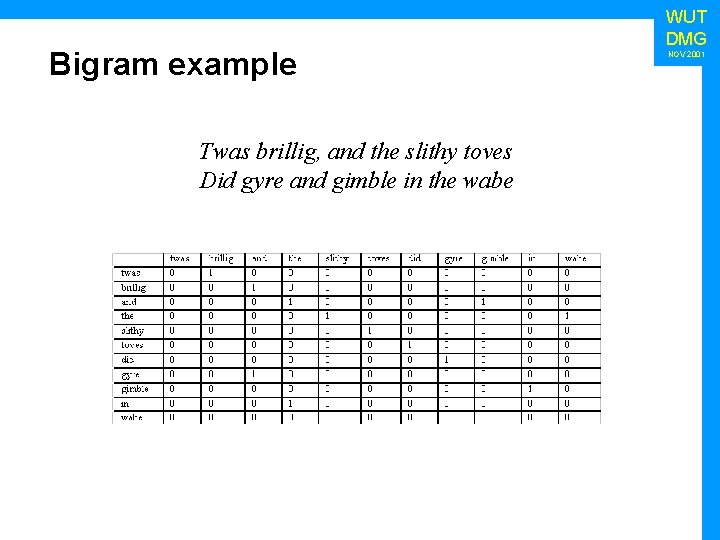

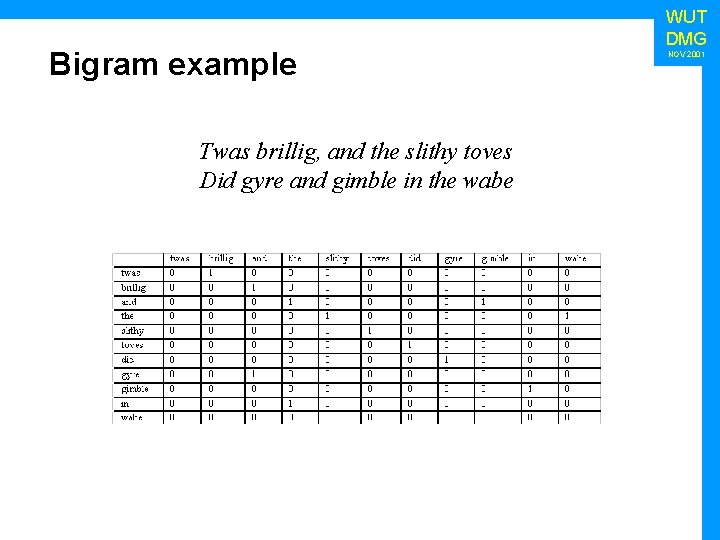

Bigram example

'Twas brillig, and the slithy toves
Did gyre and gimble in the wabe
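A minimal sketch of building a bigram representation for these two lines, assuming lowercased alphabetic tokens and counting each pair of adjacent words. The tokenization rule is an assumption made for illustration.

```python
import re
from collections import Counter


def bigrams(text):
    """Return the list of adjacent word pairs in a text."""
    words = re.findall(r"[a-z]+", text.lower())
    return list(zip(words, words[1:]))


text = "Twas brillig, and the slithy toves Did gyre and gimble in the wabe"
pairs = bigrams(text)
counts = Counter(pairs)

for pair, count in counts.items():
    print(pair, count)
```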

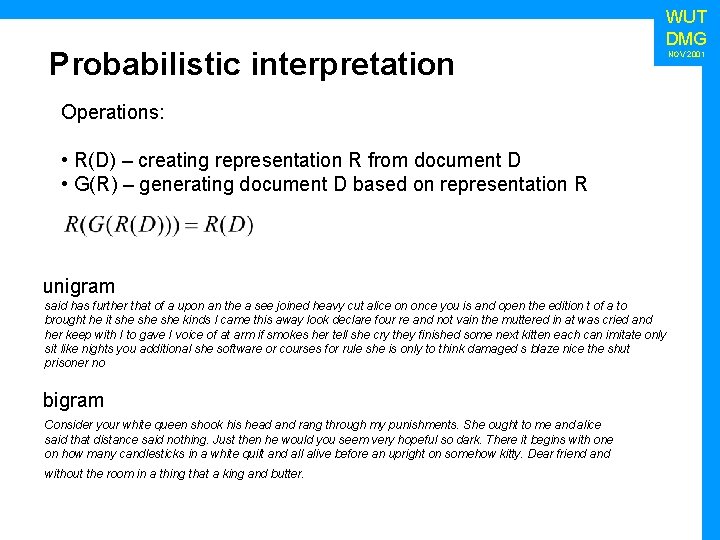

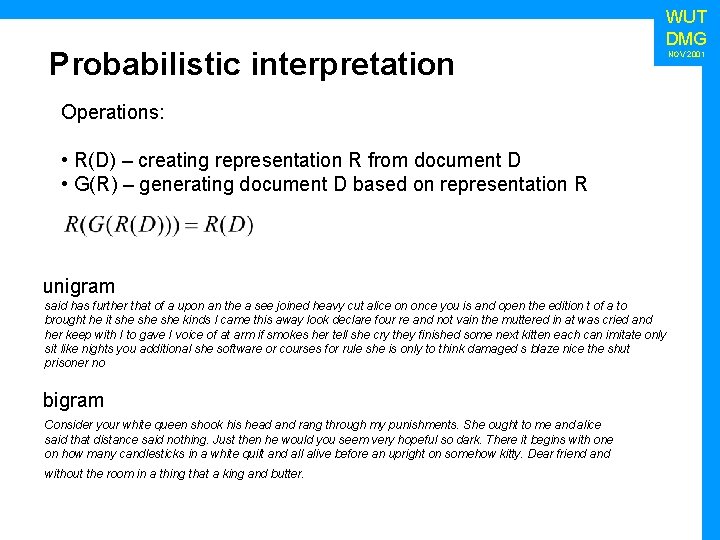

Probabilistic interpretation

Operations:

- R(D): creating representation R from document D
- G(R): generating document D based on representation R

Generated from a unigram representation:

said has further that of a upon an the a see joined heavy cut alice on once you is and open the edition t of a to brought he it she she kinds I came this away look declare four re and not vain the muttered in at was cried and her keep with I to gave I voice of at arm if smokes her tell she cry they finished some next kitten each can imitate only sit like nights you additional she software or courses for rule she is only to think damaged s blaze nice the shut prisoner no

Generated from a bigram representation:

Consider your white queen shook his head and rang through my punishments. She ought to me and alice said that distance said nothing. Just then he would you seem very hopeful so dark. There it begins with one on how many candlesticks in a white quilt and all alive before an upright on somehow kitty. Dear friend and without the room in a thing that a king and butter.
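The two operations can be sketched for the bigram case. This is an illustrative reading, not the author's implementation: `R` stores, for every word, the list of words that follow it in the document, and `G` walks that table by sampling a successor at random, so frequent bigrams are chosen more often. The sample sentence and the `seed` parameter are assumptions.

```python
import random
import re
from collections import defaultdict


def R(document):
    """Create a bigram representation: word -> list of observed successors."""
    words = re.findall(r"[a-z]+", document.lower())
    successors = defaultdict(list)
    for current, following in zip(words, words[1:]):
        successors[current].append(following)
    return dict(successors)


def G(representation, length, seed=0):
    """Generate up to `length` words by sampling successors from R."""
    rng = random.Random(seed)
    word = rng.choice(sorted(representation))
    output = [word]
    # Stop early if the current word was never followed by anything.
    while len(output) < length and word in representation:
        word = rng.choice(representation[word])
        output.append(word)
    return output


document = "the white queen said that the white king said nothing to the queen"
representation = R(document)
generated = G(representation, 8)
print(" ".join(generated))
```

Every adjacent pair in the generated text occurs somewhere in the source document, which is why bigram output looks locally fluent but globally meaningless.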

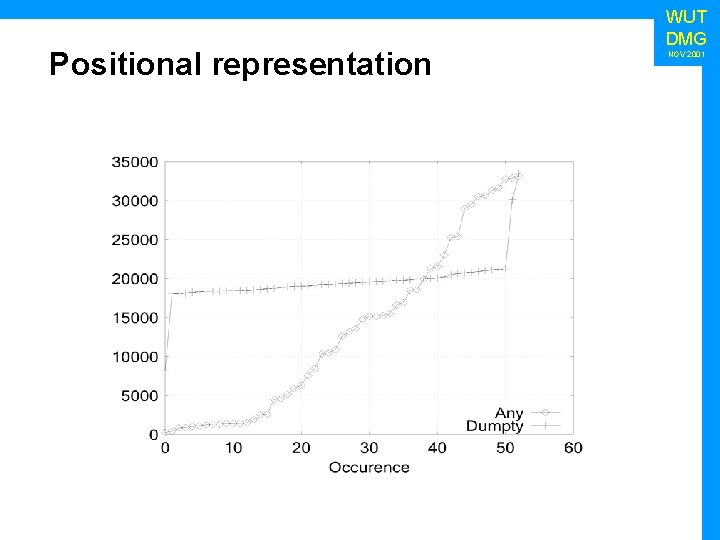

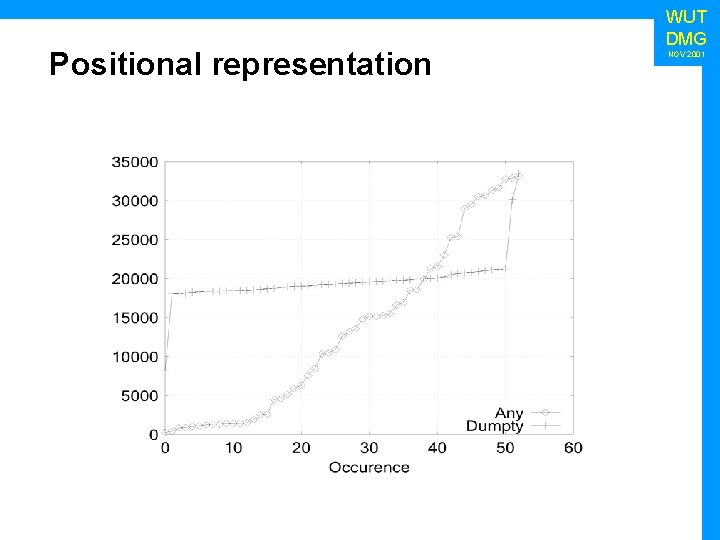

Positional representation

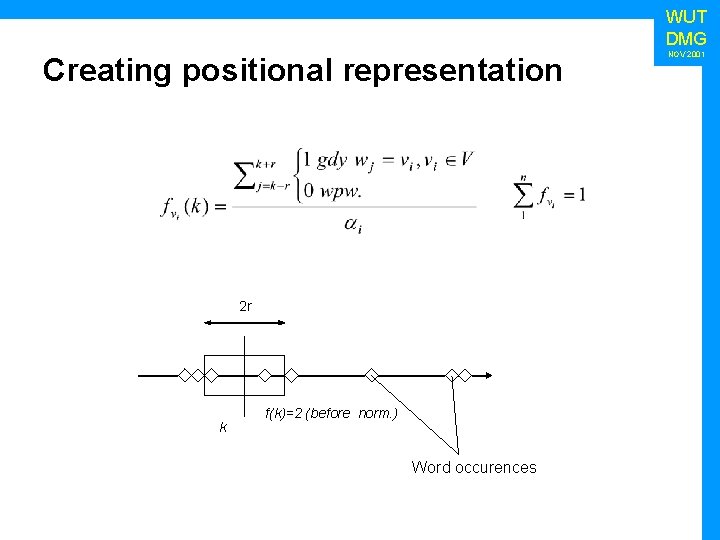

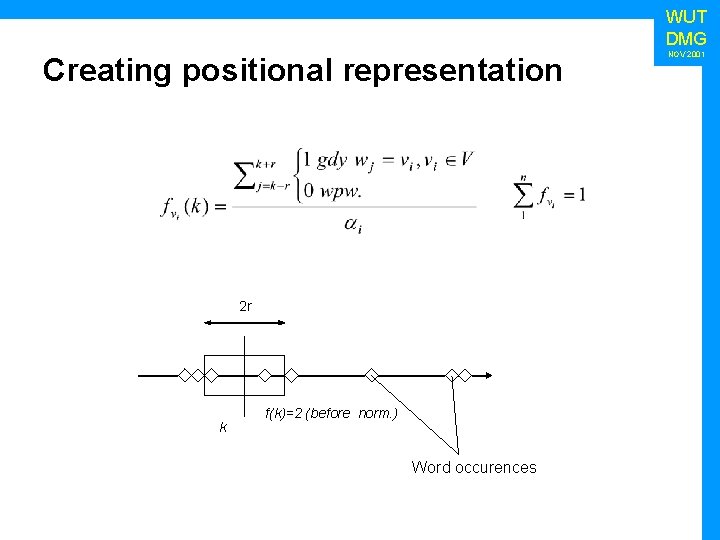

Creating positional representation

[Figure: word occurrences along the document; a window of width 2r around position k; f(k) = 2 (before normalization)]

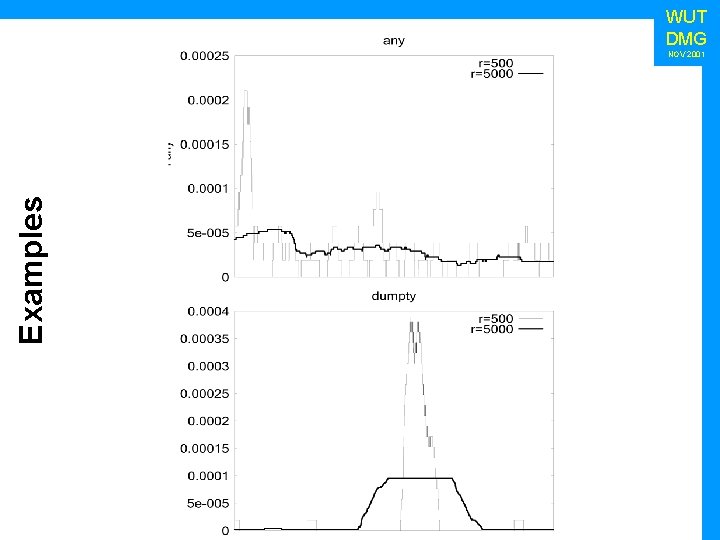

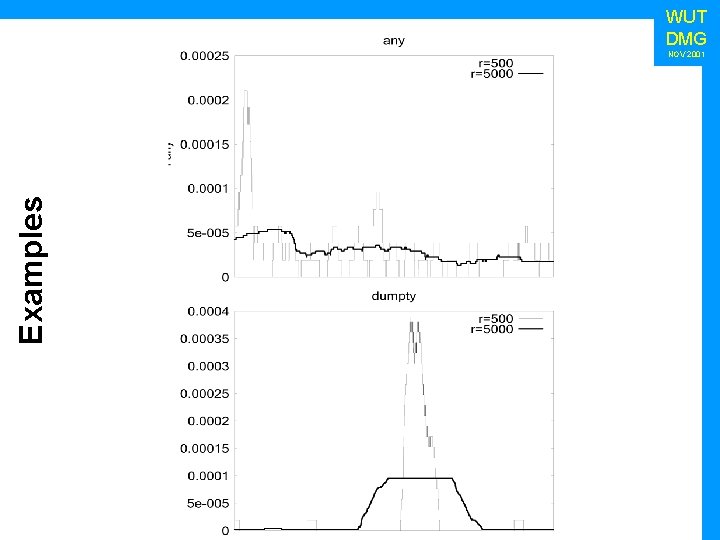

Examples

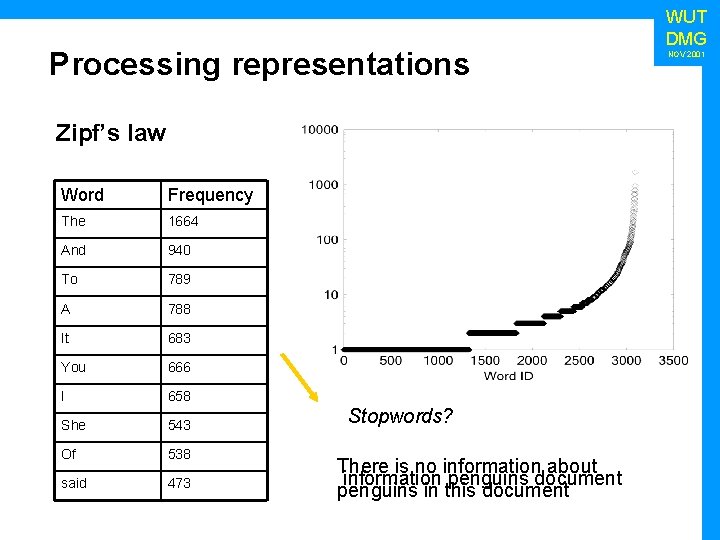

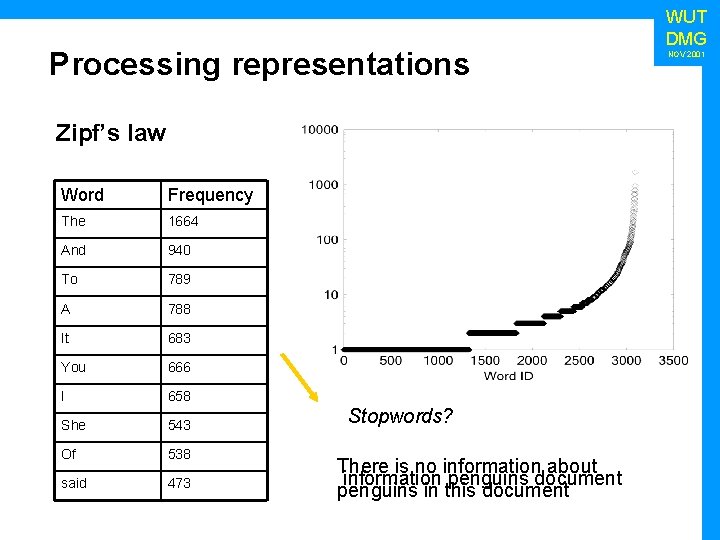

Processing representations

Zipf's law

| Word | Frequency |
|------|-----------|
| The  | 1664 |
| And  | 940 |
| To   | 789 |
| A    | 788 |
| It   | 683 |
| You  | 666 |
| I    | 658 |
| She  | 543 |
| Of   | 538 |
| said | 473 |

Stopwords?

"There is no information about penguins in this document" reduces to "information penguins document".
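A small sketch of both ideas on this slide: counting word frequencies (the raw data behind a Zipf-style ranking) and dropping stopwords. The stopword list below is a tiny hand-picked assumption that covers only the example sentence; real systems use much longer lists.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase a text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def word_frequencies(text):
    """Count how often each word occurs; most_common() gives the ranking."""
    return Counter(tokenize(text))


def remove_stopwords(text, stopwords):
    """Keep only the words that are not on the stopword list."""
    return [word for word in tokenize(text) if word not in stopwords]


# Illustrative stopword list, not a standard one.
STOPWORDS = {"there", "is", "no", "about", "in", "this"}

sentence = "There is no information about penguins in this document"
content_words = remove_stopwords(sentence, STOPWORDS)
top = word_frequencies("the cat and the hat").most_common(1)
```

The example also shows the risk: removing "no" discards the negation, so the remaining words suggest the document is about penguins.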

Expanding and trimming

- Expanding
- Trimming
- Scaling functions
- Attribute selection
- Remapping attribute space

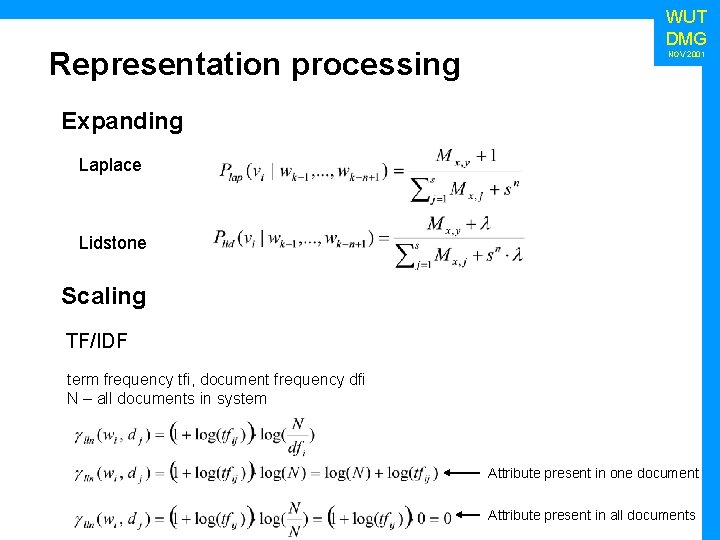

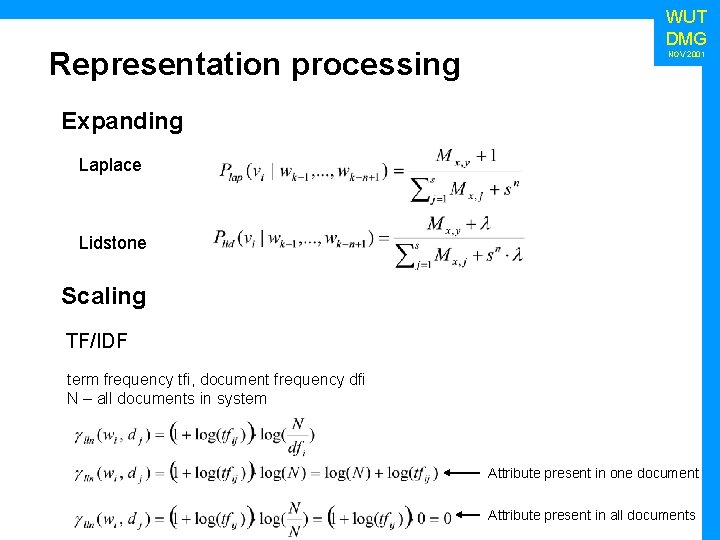

Representation processing

Expanding:

- Laplace
- Lidstone

Scaling: TF/IDF

- term frequency $tf_i$, document frequency $df_i$
- $N$: all documents in the system
- Limiting cases: attribute present in one document; attribute present in all documents
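The slide's formulas were lost in extraction, so the sketch below uses common textbook forms as an assumption: Lidstone smoothing adds a constant `lam` to every count (with `lam = 1` it becomes Laplace smoothing), and TF/IDF multiplies the term frequency by `log(N / df)`. The author's exact variants may differ.

```python
import math


def lidstone(count, total, vocabulary_size, lam=1.0):
    """Smoothed probability estimate; lam=1.0 gives Laplace smoothing.

    Assumed form: (count + lam) / (total + lam * vocabulary_size).
    """
    return (count + lam) / (total + lam * vocabulary_size)


def tf_idf(tf, df, n_docs):
    """Assumed form: tf * log(N / df), with df >= 1."""
    return tf * math.log(n_docs / df)


# An unseen word (count 0) still receives a non-zero probability.
p_laplace = lidstone(0, total=10, vocabulary_size=5)
p_lidstone = lidstone(0, total=10, vocabulary_size=5, lam=0.5)

# The two limiting cases named on the slide, for N = 100 documents.
weight_in_all_docs = tf_idf(tf=3, df=100, n_docs=100)
weight_in_one_doc = tf_idf(tf=1, df=1, n_docs=100)
```

An attribute present in all documents gets weight zero because it cannot discriminate between documents, while an attribute present in a single document gets the maximum IDF factor, log N.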

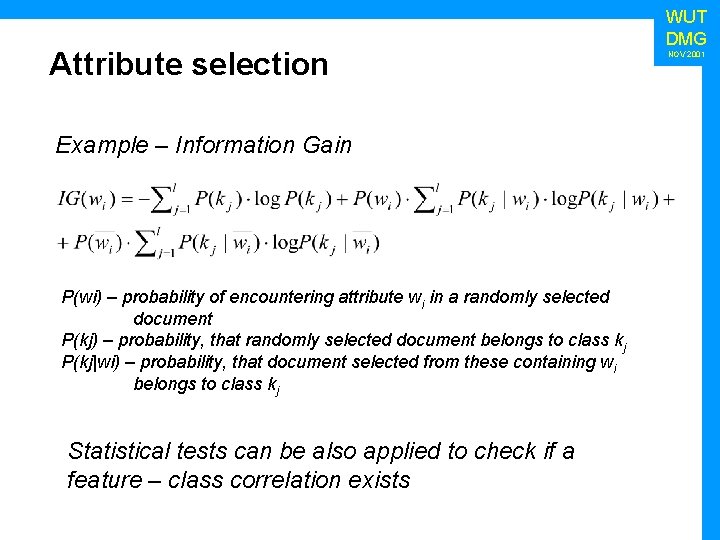

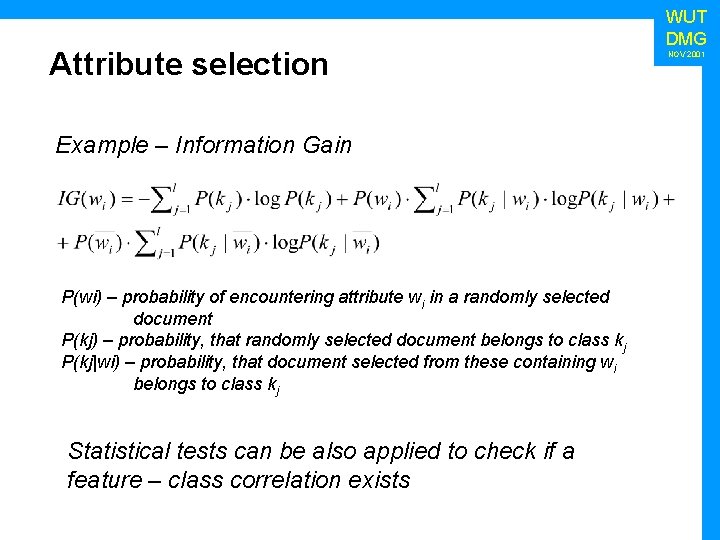

Attribute selection

Example: Information Gain

- $P(w_i)$: probability of encountering attribute $w_i$ in a randomly selected document
- $P(k_j)$: probability that a randomly selected document belongs to class $k_j$
- $P(k_j|w_i)$: probability that a document selected from those containing $w_i$ belongs to class $k_j$

Statistical tests can also be applied to check whether a feature-class correlation exists.
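The Information Gain formula itself is missing from the extracted slide. The sketch below assumes the form commonly used in text categorization, which combines exactly the three probabilities defined above: the class entropy minus the entropy remaining after splitting the documents on the presence of the attribute. The toy corpus is made up.

```python
import math


def information_gain(docs, word):
    """Information gain (in bits) of `word` for docs = [(set_of_words, label)].

    Assumed form:
      IG(w) = -sum_j P(k_j) log P(k_j)
              + P(w)     * sum_j P(k_j | w)     log P(k_j | w)
              + P(not w) * sum_j P(k_j | not w) log P(k_j | not w)
    """
    n = len(docs)
    classes = {label for _, label in docs}
    all_labels = [label for _, label in docs]
    with_w = [label for words, label in docs if word in words]
    without_w = [label for words, label in docs if word not in words]

    def plogp_sum(labels):
        """Sum over classes of p * log2(p), where p = P(class | subset)."""
        total = 0.0
        for k in classes:
            p = labels.count(k) / len(labels) if labels else 0.0
            if p > 0:
                total += p * math.log2(p)
        return total

    gain = -plogp_sum(all_labels)
    gain += (len(with_w) / n) * plogp_sum(with_w)
    gain += (len(without_w) / n) * plogp_sum(without_w)
    return gain


# Made-up corpus: "goal" separates the classes perfectly, "the" not at all.
docs = [
    ({"the", "goal", "match"}, "sports"),
    ({"the", "goal", "team"}, "sports"),
    ({"the", "bank", "rates"}, "finance"),
    ({"the", "stock", "rates"}, "finance"),
]
ig_goal = information_gain(docs, "goal")
ig_the = information_gain(docs, "the")
```

Attributes would then be ranked by their gain and only the top-scoring ones kept in the representation.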

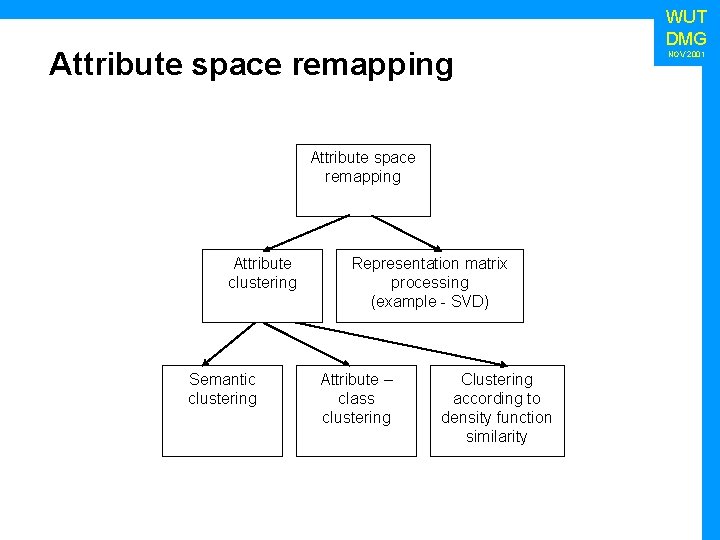

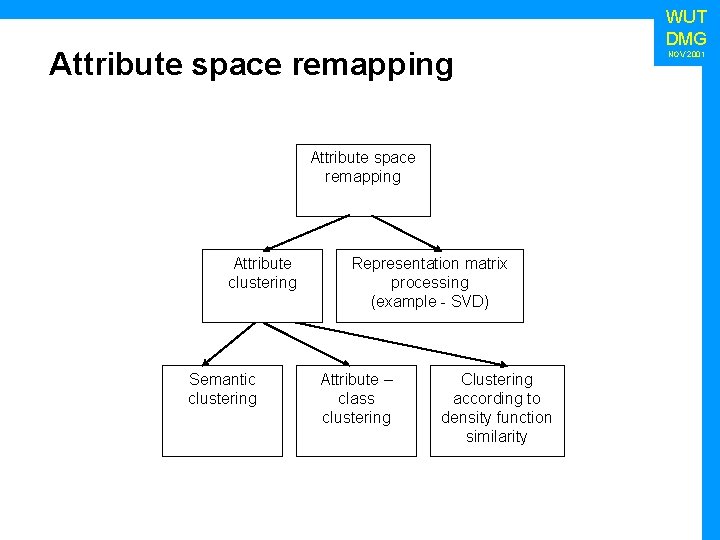

Attribute space remapping

- Attribute clustering
- Semantic clustering
- Representation matrix processing (example: SVD)
- Attribute-class clustering
- Clustering according to density function similarity

Applications

- Classic
  - Mail analysis and mail routing
  - Event tracking
- Internet related
  - Web Content Mining and Web Farming
  - Focused crawling and assisted browsing

Thank you
