Era of Customization and Specialization for Energy-Efficient Computing

- Slides: 66

Era of Customization and Specialization for Energy-Efficient Computing

Jason Cong
Director, Center for Domain-Specific Computing (www.cdsc.ucla.edu)
Chancellor's Professor, UCLA Computer Science Department (cong@cs.ucla.edu)

Is Semiconductor a Sunset Industry?
- Frequency scaling stopped a decade ago because of the power barrier
- CMOS device scaling will come to an end soon, with no sure replacement in sight

Source: Shekhar Borkar, Intel

Current Solution to the Power Barrier: Parallelization
- 10s to 100s of cores in a processor
- 1,000s to 10,000s of servers in a data center

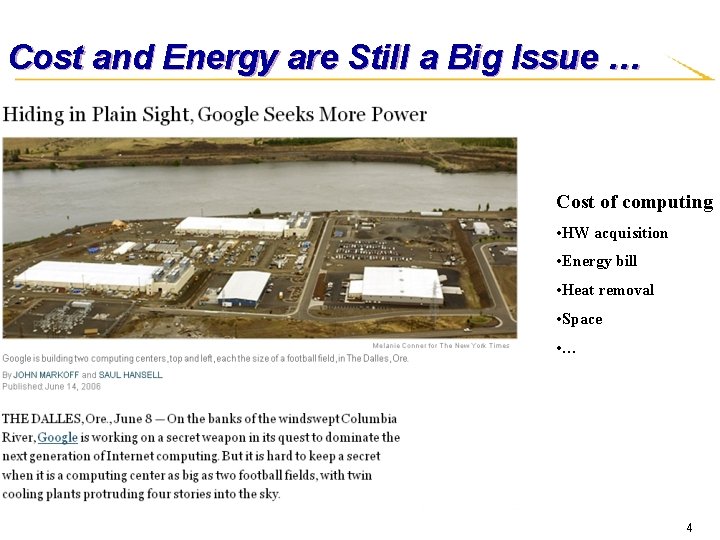

Cost and Energy are Still a Big Issue

Cost of computing:
- HW acquisition
- Energy bill
- Heat removal
- Space
- …

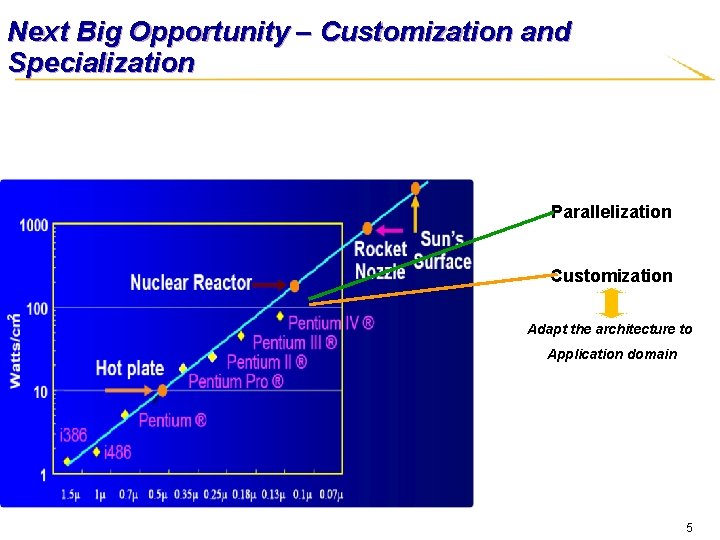

Next Big Opportunity – Customization and Specialization
- Parallelization
- Customization: adapt the architecture to the application domain

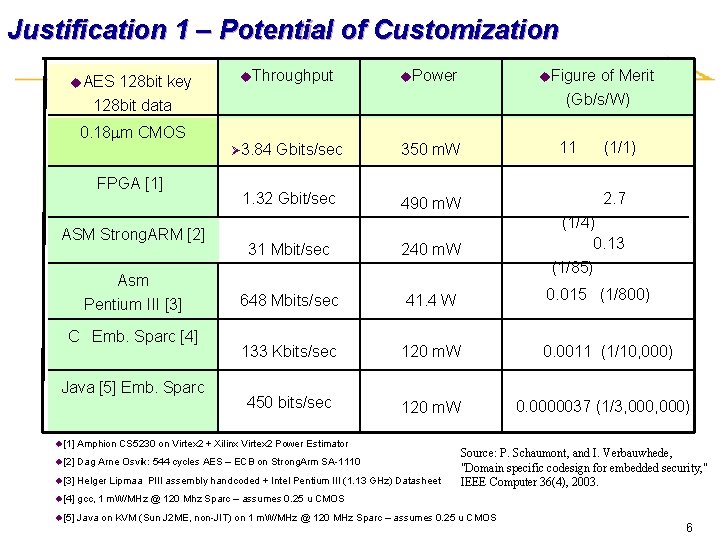

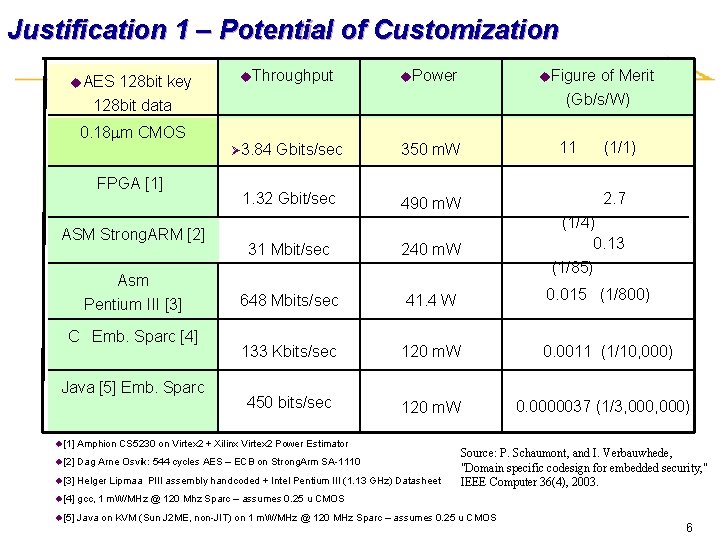

Justification 1 – Potential of Customization

AES, 128-bit key, 128-bit data:

| Platform | Throughput | Power | Figure of merit (Gb/s/W) |
|---|---|---|---|
| 0.18 µm CMOS | 3.84 Gbit/s | 350 mW | 11 (1/1) |
| FPGA [1] | 1.32 Gbit/s | 490 mW | 2.7 (1/4) |
| ASM StrongARM [2] | 31 Mbit/s | 240 mW | 0.13 (1/85) |
| ASM Pentium III [3] | 648 Mbit/s | 41.4 W | 0.015 (1/800) |
| C, embedded SPARC [4] | 133 Kbit/s | 120 mW | 0.0011 (1/10,000) |
| Java, embedded SPARC [5] | 450 bit/s | 120 mW | 0.0000037 (1/3,000,000) |

- [1] Amphion CS5230 on Virtex2 + Xilinx Virtex2 Power Estimator
- [2] Dag Arne Osvik: 544 cycles AES-ECB on StrongARM SA-1110
- [3] Helger Lipmaa PIII assembly, hand-coded + Intel Pentium III (1.13 GHz) datasheet
- [4] gcc, 1 mW/MHz @ 120 MHz SPARC, assumes 0.25 µm CMOS
- [5] Java on KVM (Sun J2ME, non-JIT) on 1 mW/MHz @ 120 MHz SPARC, assumes 0.25 µm CMOS

Source: P. Schaumont and I. Verbauwhede, "Domain specific codesign for embedded security," IEEE Computer 36(4), 2003.
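The figure-of-merit column in the table above is throughput divided by power. As a quick check, the following sketch recomputes it from the slide's throughput and power numbers; the dictionary keys and function names are illustrative labels, not part of the original material.

```python
# Throughput (bits/s) and power (W) as listed on the slide.
PLATFORMS = {
    "0.18 um CMOS ASIC": (3.84e9, 0.350),
    "FPGA": (1.32e9, 0.490),
    "ASM StrongARM": (31e6, 0.240),
    "ASM Pentium III": (648e6, 41.4),
    "C on embedded SPARC": (133e3, 0.120),
    "Java on embedded SPARC": (450.0, 0.120),
}


def figure_of_merit(bits_per_s, watts):
    """Energy efficiency in Gb/s per watt."""
    return bits_per_s / 1e9 / watts


def efficiency_table():
    """Map each platform to (figure of merit, efficiency gap versus the ASIC)."""
    asic = figure_of_merit(*PLATFORMS["0.18 um CMOS ASIC"])
    rows = {}
    for name, (throughput, power) in PLATFORMS.items():
        fom = figure_of_merit(throughput, power)
        rows[name] = (fom, asic / fom)
    return rows


if __name__ == "__main__":
    for name, (fom, gap) in efficiency_table().items():
        print(f"{name:24s} {fom:12.7f} Gb/s/W   1/{gap:,.0f} of the ASIC")
```

The gap between the custom 0.18 µm implementation and interpreted Java on an embedded processor comes out at roughly six orders of magnitude, which is the point the slide makes.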

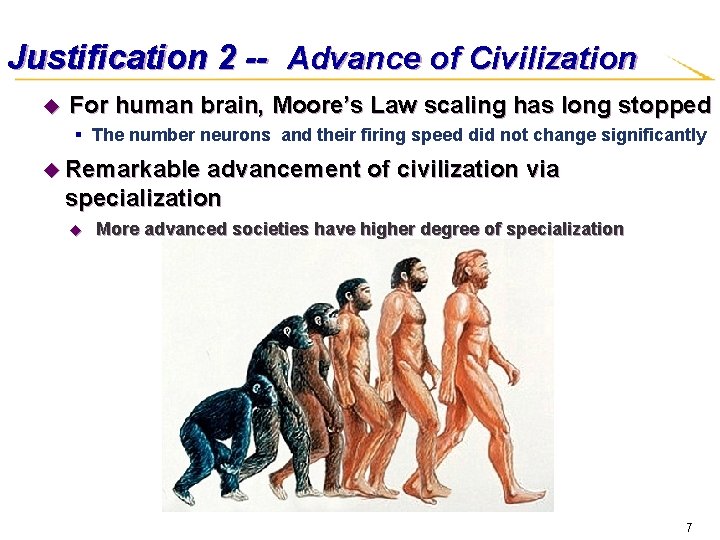

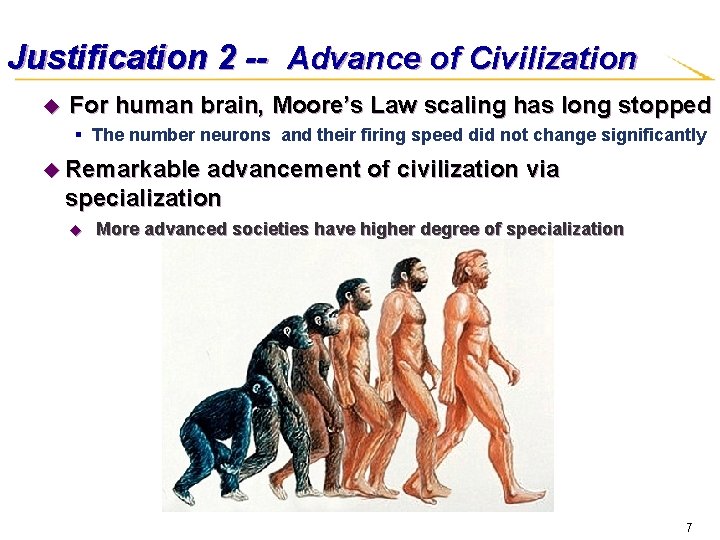

Justification 2 – Advance of Civilization
- For the human brain, Moore's Law scaling stopped long ago
  - The number of neurons and their firing speed have not changed significantly
- Remarkable advancement of civilization via specialization
- More advanced societies have a higher degree of specialization

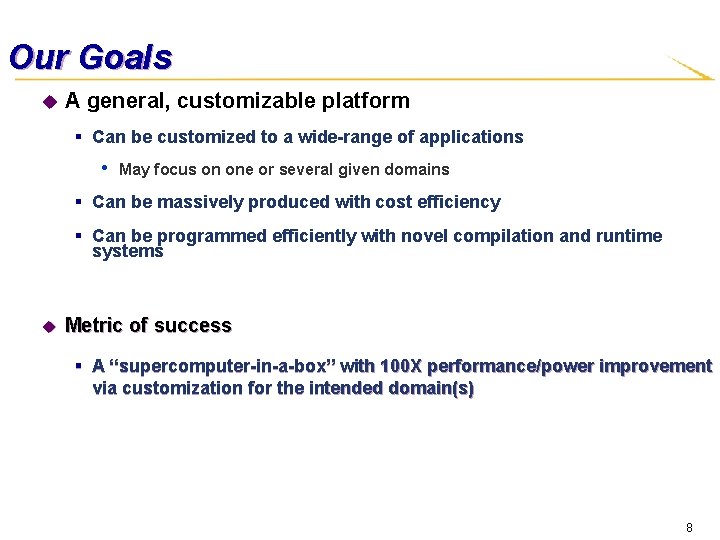

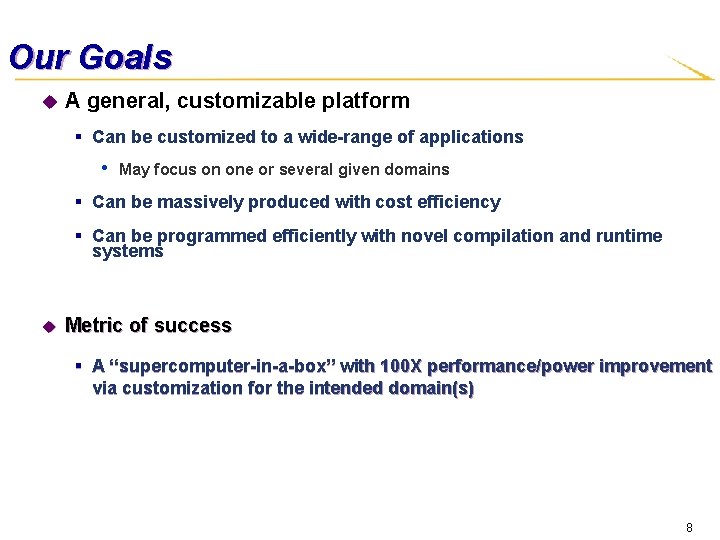

Our Goals
- A general, customizable platform
  - Can be customized to a wide range of applications
    - May focus on one or several given domains
  - Can be mass-produced cost-efficiently
  - Can be programmed efficiently with novel compilation and runtime systems
- Metric of success
  - A "supercomputer-in-a-box" with 100X performance/power improvement via customization for the intended domain(s)

Example of Customizable Platforms: FPGAs
- Configurable logic blocks
- Island-style configurable mesh routing
- Dedicated components
  - Specialization allows optimization
  - Memory/multiplier
  - I/O, processor
  - Anything that the FPGA architect wants to put in!

Source: I. Kuon, R. Tessier, J. Rose. FPGA Architecture: Survey and Challenges. 2008.

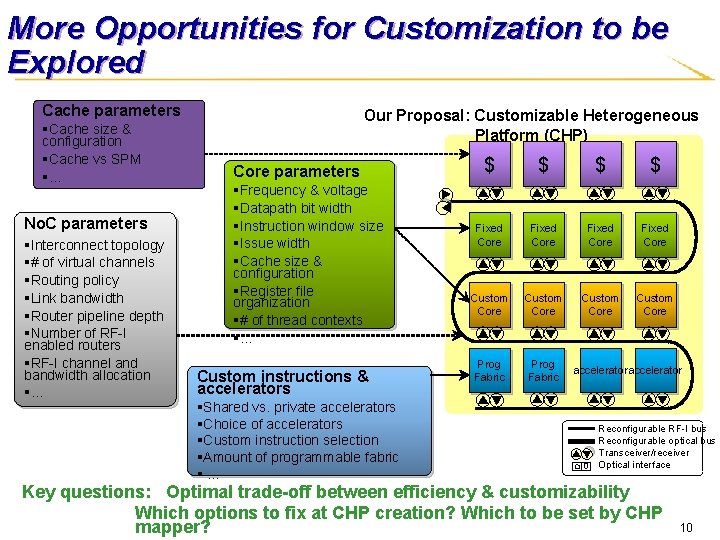

More Opportunities for Customization to be Explored

Our Proposal: Customizable Heterogeneous Platform (CHP)

Core parameters
- Frequency & voltage
- Datapath bit width
- Instruction window size
- Issue width
- Cache size & configuration
- Register file organization
- # of thread contexts
- …

Cache parameters
- Cache size & configuration
- Cache vs. SPM
- …

NoC parameters
- Interconnect topology
- # of virtual channels
- Routing policy
- Link bandwidth
- Router pipeline depth
- Number of RF-I enabled routers
- RF-I channel and bandwidth allocation
- …

Custom instructions & accelerators
- Shared vs. private accelerators
- Choice of accelerators
- Custom instruction selection
- Amount of programmable fabric
- …

Figure components: caches ($), fixed cores, custom cores, programmable fabric, accelerators, reconfigurable RF-I bus, reconfigurable optical bus, transceiver/receiver, optical interface.

Key questions:
- What is the optimal trade-off between efficiency and customizability?
- Which options should be fixed at CHP creation, and which should be set by the CHP mapper?

Examples of Customization
- Customization of processor cores
- Customization of on-chip memory
- Customization of on-chip interconnects

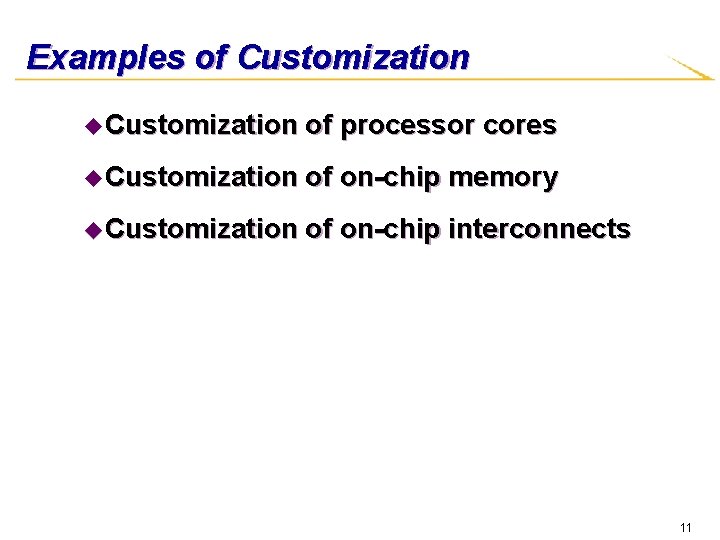

Example 1 – Customization of Cores
- Large cores or small cores?
- How many of each type?

![Core spilling Cong et al Trans on Parallel and Distributed Systems 2007 u Core spilling – [Cong et al Trans. on Parallel and Distributed Systems 2007] u](https://slidetodoc.com/presentation_image/45cc2bb41aab1e797607df6f798973f3/image-13.jpg)

Core spilling [Cong et al., Trans. on Parallel and Distributed Systems 2007]
- CMP systems focus on improving overall throughput
  - Sequential or legacy applications might not see benefits
- Key idea: allow execution to be spilled from one core to the next at run time
  - Simulates an increase in register file, instruction queue, ROB and LSQ size
  - Allocates cores intelligently to the spilling core

![Core spilling Cong et al Trans on Parallel and Distributed Systems 2007 u Core spilling – [Cong et al Trans. on Parallel and Distributed Systems 2007] u](https://slidetodoc.com/presentation_image/45cc2bb41aab1e797607df6f798973f3/image-14.jpg)

Core spilling [Cong et al., Trans. on Parallel and Distributed Systems 2007]
- Results
  - Core spilling achieves more than 50% of the performance of an "ideal" 32-issue core by using 4-issue cores for single applications
  - 39% improvement for multiple-application workloads
  - Up to 40% reduction in latency for changing workloads

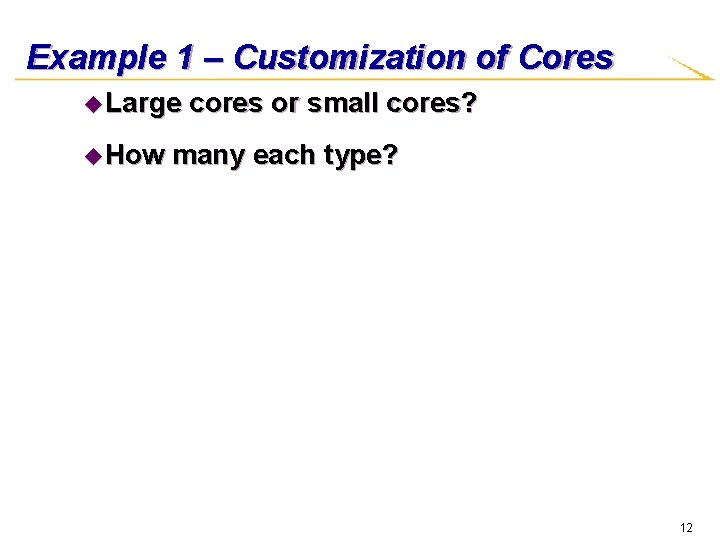

Example 2: Customization of On-Chip Memory
- HW-controlled cache or SW-controlled cache (SPM)?
- How much to allocate for each type?

![Customizable Hybrid L 1 Cache ISLPED 2011 Cache in conjunction with Scratchpad Memory Customizable Hybrid L 1 Cache [ISLPED’ 2011] § Cache in conjunction with Scratchpad Memory](https://slidetodoc.com/presentation_image/45cc2bb41aab1e797607df6f798973f3/image-16.jpg)

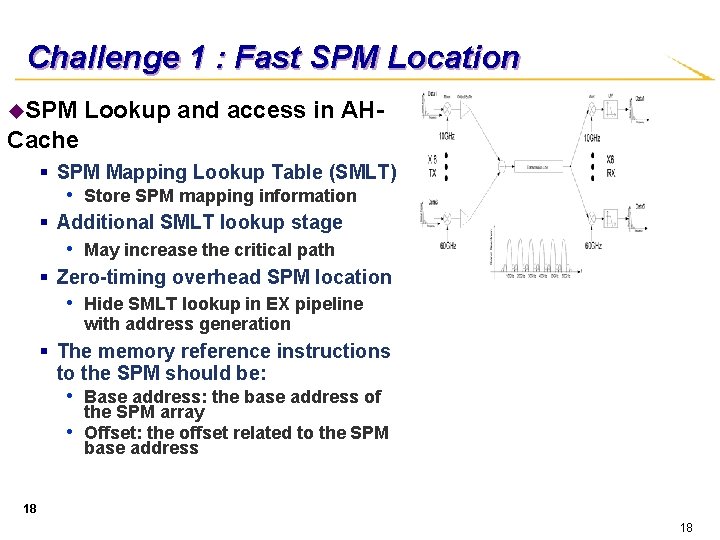

Customizable Hybrid L1 Cache [ISLPED'2011]
- Cache in conjunction with scratchpad memory (SPM) in L1
  - Cache: hardware-controlled; transparent to software: a fast local copy of the global memory address space
  - SPM: software-controlled; not transparent to software: a separate address space from the global address space
- Customizable
  - Flexibly size the cache and SPM based on the application requirements
  - Cache: dynamic/random access
  - SPM: regular data access pattern

Figure: (a) cache memory organization (tag array, decoder unit, data array, column circuitry); (b) SPM organization (decoder unit, data array, column circuitry); (c) comparison (2 KB):

| | Cache | SPM |
|---|---|---|
| Access time | hit: 1 cycle; miss: L cycles | 1 cycle |
| Energy | 4.57 nJ | 1.53 nJ |

How to Customize?
- Way-wise reconfigurable cache
  - Configure several ways of the cache as SPM
  - Column cache [Chiou et al., DAC'00]
- Block-wise reconfigurable cache
  - Virtual local store [Cook et al., UCB TR'09]
  - Unified mapping of SPM blocks onto cache blocks
- Adaptive hybrid cache (AH-Cache)
  - Dynamically remap SPM blocks from high-demand cache sets to low-demand cache sets

Figure: cache/SPM allocation across cache sets over run time for each scheme (benchmark: rician-denoise).

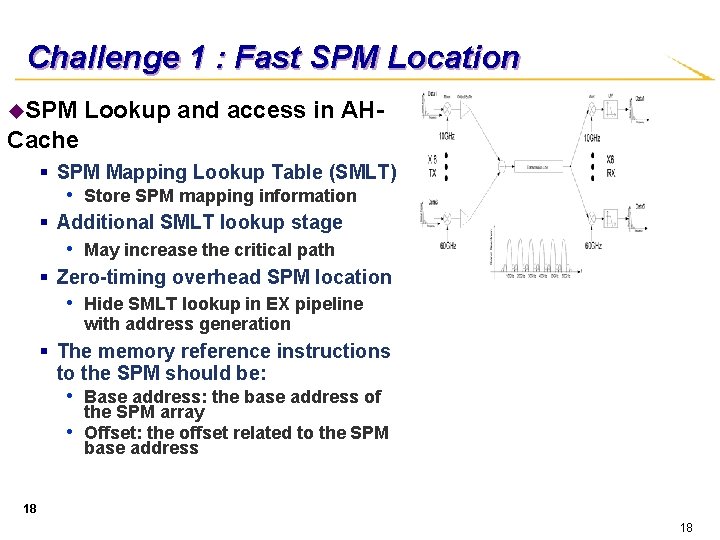

Challenge 1: Fast SPM Location
- SPM lookup and access in AH-Cache
  - SPM Mapping Lookup Table (SMLT): stores SPM mapping information
  - An additional SMLT lookup stage may lengthen the critical path
  - Zero-timing-overhead SPM location: hide the SMLT lookup in the EX pipeline stage, together with address generation
  - Memory reference instructions to the SPM should specify:
    - Base address: the base address of the SPM array
    - Offset: the offset relative to the SPM base address

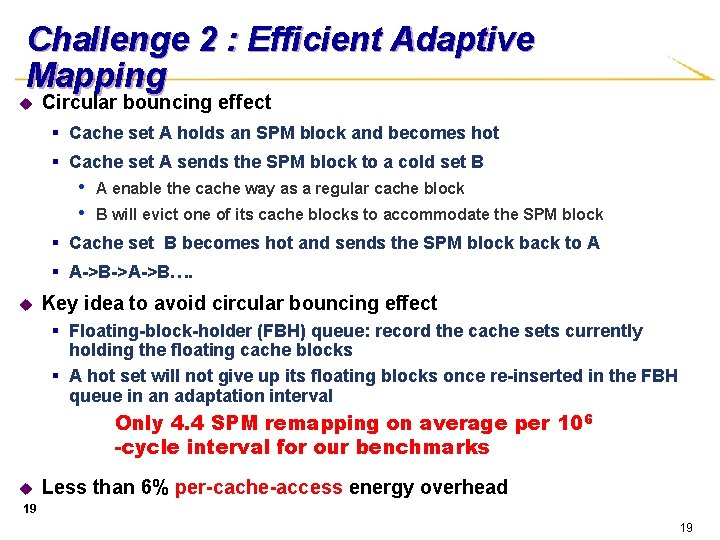

Challenge 2: Efficient Adaptive Mapping
- Circular bouncing effect
  - Cache set A holds an SPM block and becomes hot
  - Cache set A sends the SPM block to a cold set B
    - A re-enables the cache way as a regular cache block
    - B evicts one of its cache blocks to accommodate the SPM block
  - Cache set B becomes hot and sends the SPM block back to A
  - A -> B -> A -> B …
- Key idea to avoid the circular bouncing effect
  - Floating-block-holder (FBH) queue: records the cache sets currently holding the floating cache blocks
  - A hot set will not give up its floating blocks once it has been re-inserted in the FBH queue within an adaptation interval
- Only 4.4 SPM remappings on average per 10^6-cycle interval for our benchmarks
- Less than 6% per-cache-access energy overhead

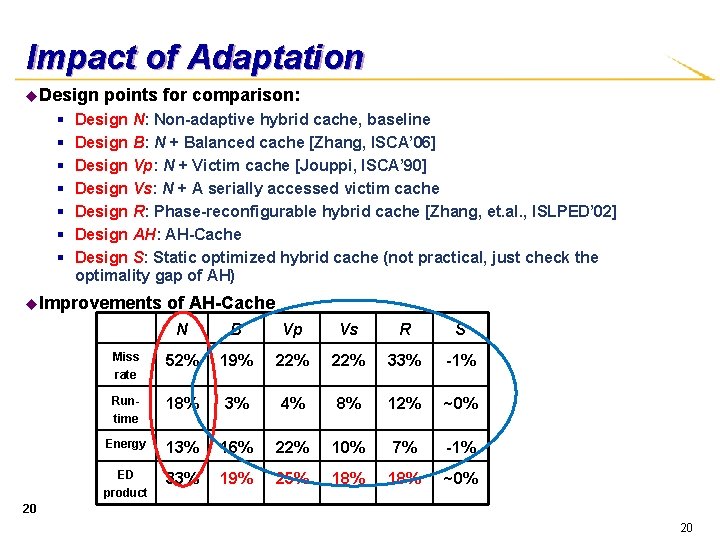

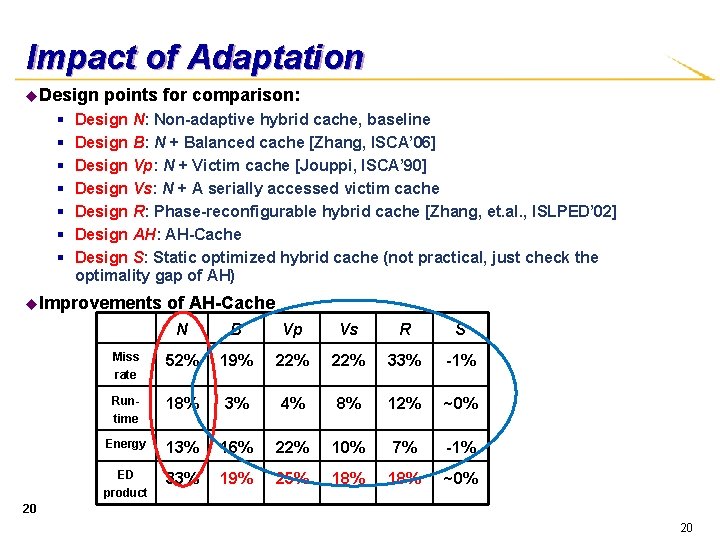

Impact of Adaptation
- Design points for comparison:
  - Design N: non-adaptive hybrid cache (baseline)
  - Design B: N + balanced cache [Zhang, ISCA'06]
  - Design Vp: N + victim cache [Jouppi, ISCA'90]
  - Design Vs: N + a serially accessed victim cache
  - Design R: phase-reconfigurable hybrid cache [Zhang et al., ISLPED'02]
  - Design AH: AH-Cache
  - Design S: static optimized hybrid cache (not practical; used only to check the optimality gap of AH)
- Improvements of AH-Cache over each design:

| Metric | N | B | Vp | Vs | R | S |
|---|---|---|---|---|---|---|
| Runtime | 18% | 3% | 4% | 8% | 12% | ~0% |
| Energy | 13% | 16% | 22% | 10% | 7% | -1% |

- Miss rate: 52%, 19%, 22%, 33%, -1% (five values listed for the six designs)
- ED product: 33%, 19%, 25%, 18%, ~0% (five values listed for the six designs)

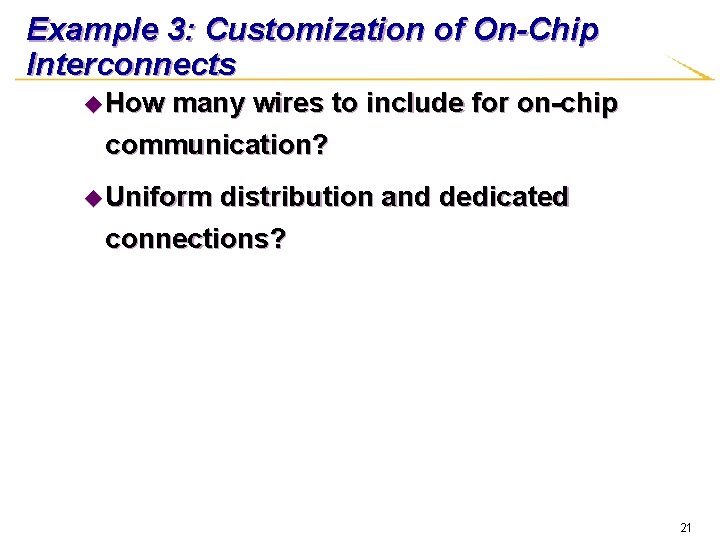

Example 3: Customization of On-Chip Interconnects
- How many wires to include for on-chip communication?
- Uniform distribution and dedicated connections?

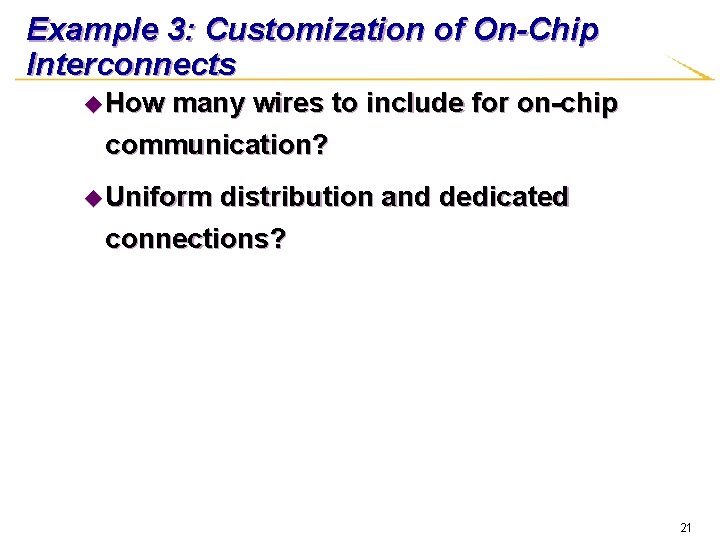

Our Answer: Use of Multiband RF-Interconnect for Customization
- In TX, each mixer up-converts an individual baseband stream into a specific frequency band (or channel)
- N different data streams (N = 6 in the example figure) may transmit simultaneously on the shared transmission medium to achieve higher aggregate data rates
- In RX, individual signals are down-converted by a mixer and recovered after a low-pass filter

Terahertz VCO in 65 nm CMOS
- Demonstrated an ultra-high-frequency, low-power oscillator structure in CMOS by adding a negative-resistance parallel tank, with the fundamental frequency at 217 GHz and 16.8 mW DC power consumption
- Higher harmonics (4th and 6th) may be substantially underestimated due to excessive water and oxygen absorption and setup losses at these frequencies. The measured 4th and 6th harmonics are at about 870 GHz and 1.3 THz, respectively

Figure: measured signal spectrum with uncalibrated power.

"Generating Terahertz Signals in 65 nm CMOS with Negative-Resistance Resonator Boosting and Selective Harmonic Suppression," Symposium on VLSI Technology and Circuits, June 2010

![Mesh Overlaid with RFI HPCA 08 u u u 10 x 10 mesh of Mesh Overlaid with RF-I [HPCA’ 08] u u u 10 x 10 mesh of](https://slidetodoc.com/presentation_image/45cc2bb41aab1e797607df6f798973f3/image-24.jpg)

Mesh Overlaid with RF-I [HPCA'08]
- 10x10 mesh of pipelined routers
  - NoC runs at 2 GHz
  - XY routing
- 64 4 GHz 3-wide processor cores
  - Labeled aqua
  - 8 KB L1 data cache
  - 8 KB L1 instruction cache
- 32 L2 cache banks
  - Labeled pink
  - 256 KB each
  - Organized as shared NUCA cache
- 4 main memory interfaces
  - Labeled green
- RF-I transmission line bundle
  - Black thick line spanning the mesh

RF-I Logical Organization
- Logically:
  - RF-I behaves as a set of N express channels
  - Each channel is assigned to a source/destination router pair (s, d)
- Reconfigured by:
  - Remapping shortcuts to match the needs of different applications

![Power Savings MICRO 08 8 bytes 16 4 bytes Requires high bw to communicate Power Savings [MICRO’ 08] 8 bytes 16 4 bytes Requires high bw to communicate](https://slidetodoc.com/presentation_image/45cc2bb41aab1e797607df6f798973f3/image-26.jpg)

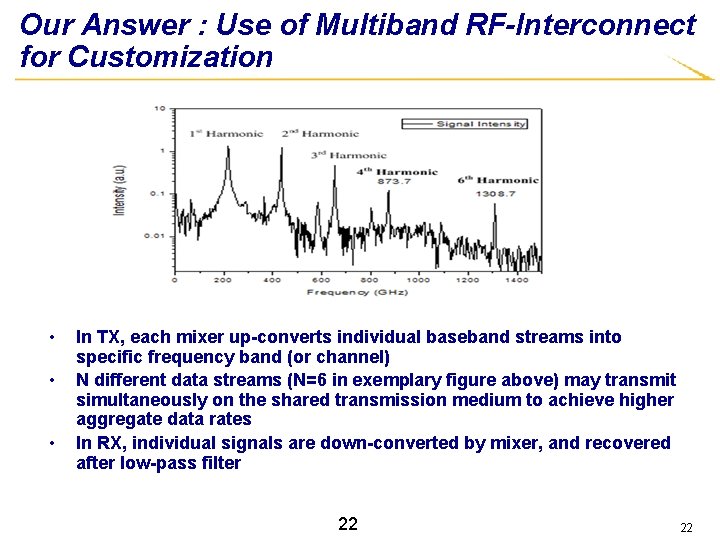

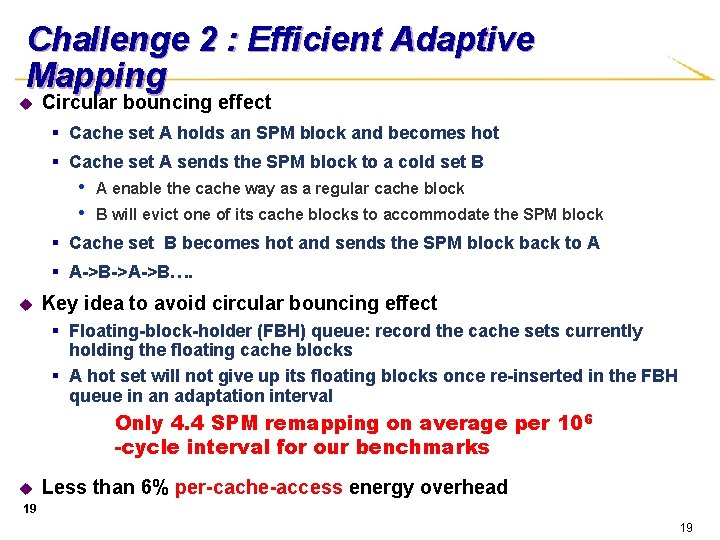

Power Savings [MICRO'08]
- We can thin the baseline mesh links
  - From 16 B…
  - …to 8 B
  - …to 4 B
- RF-I makes up the difference in performance while saving overall power!
  - RF-I provides bandwidth where it is most necessary
  - Baseline RC wires supply the rest

Figure: mesh with 16-, 8- and 4-byte links; router A requires high bandwidth to communicate with router B.

![Impact of Using RFInterconnects MICRO 08 Adaptive RFI enabled No C Cost Impact of Using RF-Interconnects [MICRO’ 08] • Adaptive RF-I enabled No. C - Cost](https://slidetodoc.com/presentation_image/45cc2bb41aab1e797607df6f798973f3/image-27.jpg)

Impact of Using RF-Interconnects [MICRO'08]
- Adaptive RF-I enabled NoC: cost-effective in terms of both power and performance

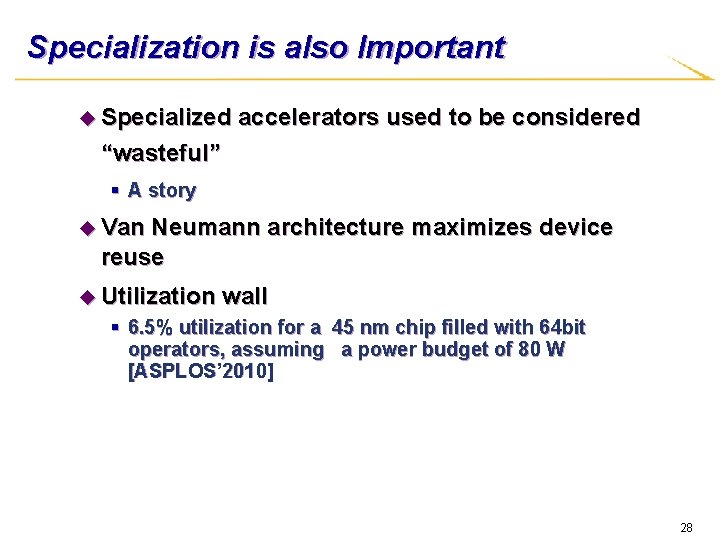

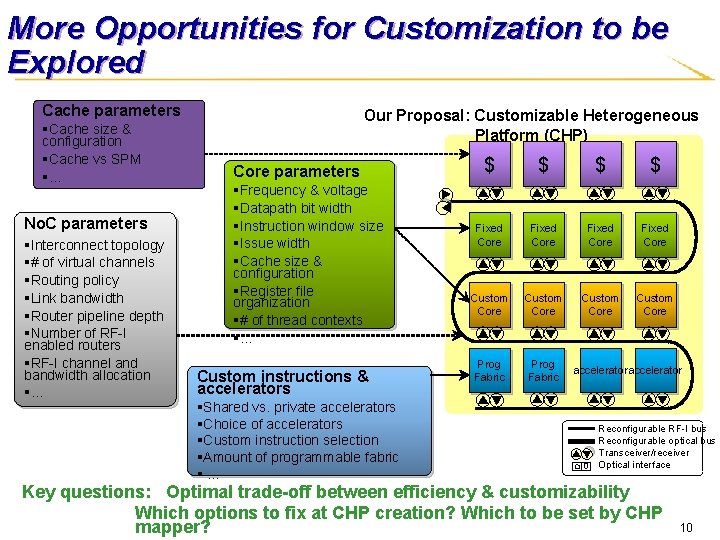

Specialization is also Important
- Specialized accelerators used to be considered "wasteful"
  - A story
- Von Neumann architecture maximizes device reuse
- Utilization wall
  - 6.5% utilization for a 45 nm chip filled with 64-bit operators, assuming a power budget of 80 W [ASPLOS'2010]

![Our Proposal Extensive Use of Accelerators SAW 2011 u Proposed solution extensive use of Our Proposal: Extensive Use of Accelerators [SAW’ 2011] u Proposed solution: extensive use of](https://slidetodoc.com/presentation_image/45cc2bb41aab1e797607df6f798973f3/image-29.jpg)

Our Proposal: Extensive Use of Accelerators [SAW'2011]
- Proposed solution: extensive use of accelerators (customized or implemented using programmable fabric)
  - Sea of accelerators
- Types of accelerators:
  - Tightly vs. loosely coupled
- Benefits
  - Better performance
  - Higher power efficiency
  - It's OK to be "wasteful"
- Critical needs:
  - Efficient accelerator management
    - Scheduling
    - Sharing

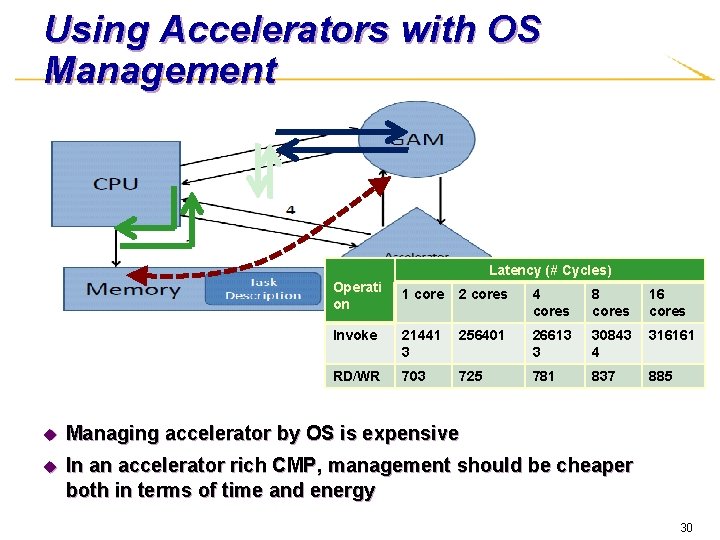

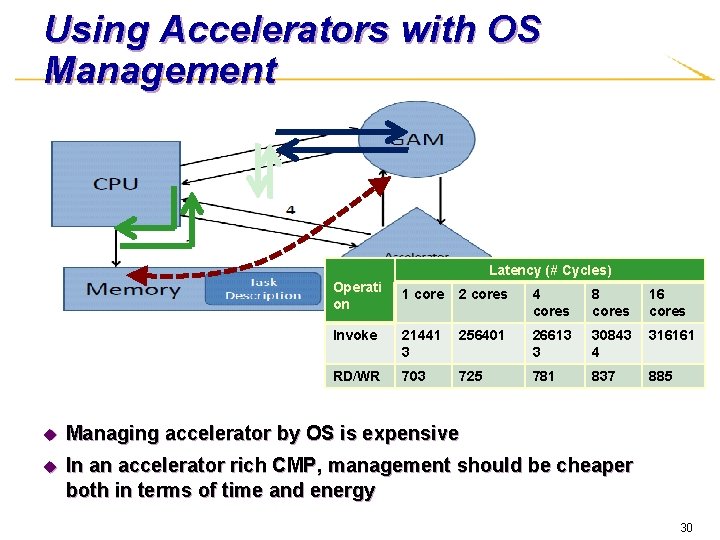

Using Accelerators with OS Management

Latency (# cycles):

| Operation | 1 core | 2 cores | 4 cores | 8 cores | 16 cores |
|---|---|---|---|---|---|
| Invoke | 214413 | 256401 | 266133 | 308434 | 316161 |
| RD/WR | 703 | 725 | 781 | 837 | 885 |

- Managing accelerators through the OS is expensive
- In an accelerator-rich CMP, management should be cheaper in terms of both time and energy

Overall Architecture of AXR-CMP
- Architecture of AXR-CMP:
  - Multiple cores and accelerators
  - Global Accelerator Manager (GAM)
  - Shared L2 cache banks and NoC routers between multiple accelerators

Figure components: GAM, core, shared L2 $, accelerator + DMA, shared router, memory controller.

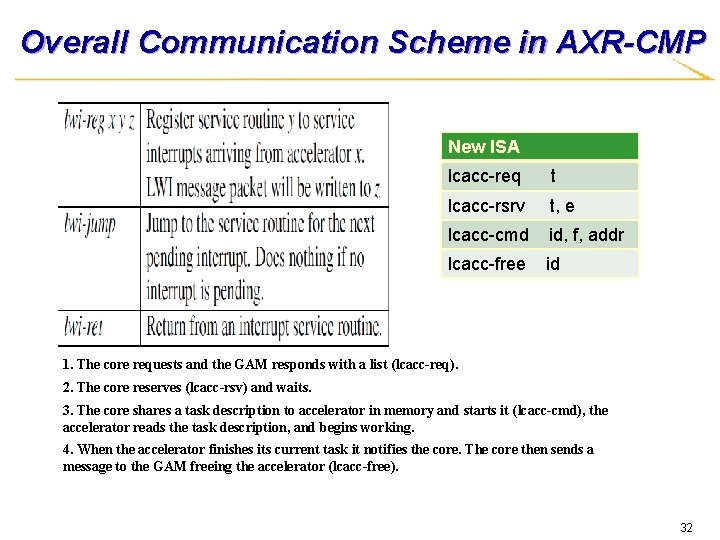

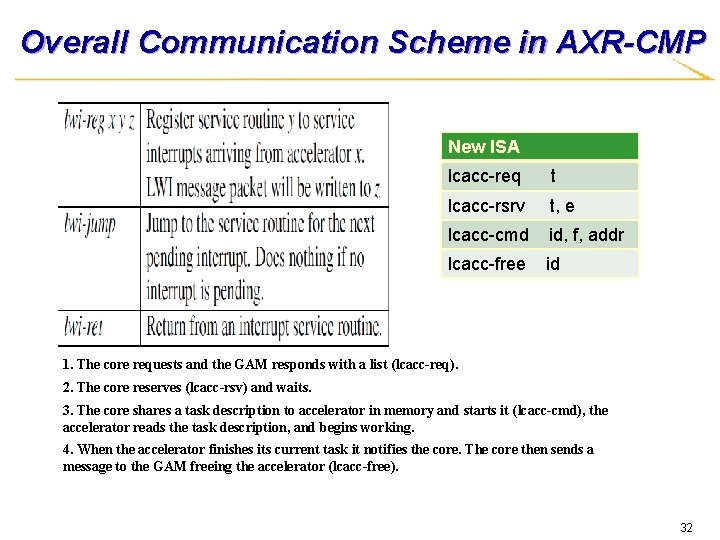

Overall Communication Scheme in AXR-CMP

New ISA:
- lcacc-req t
- lcacc-rsrv t, e
- lcacc-cmd id, f, addr
- lcacc-free id

Steps:
1. The core requests an accelerator and the GAM responds with a list (lcacc-req).
2. The core reserves an accelerator (lcacc-rsrv) and waits.
3. The core shares a task description with the accelerator in memory and starts it (lcacc-cmd); the accelerator reads the task description and begins working.
4. When the accelerator finishes its current task, it notifies the core. The core then sends a message to the GAM freeing the accelerator (lcacc-free).
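The four-step handshake can be mimicked in software to see how a core and the GAM interact. The sketch below is a toy model only: the class, its methods, the first-come-first-served wait queue, and the way a task description is represented are all assumptions made for illustration, and the slide does not define the meaning of the operands `t`, `e`, `f`, and `addr`.

```python
from collections import deque


class GlobalAcceleratorManager:
    """Toy GAM: tracks free accelerators and cores waiting for each type."""

    def __init__(self, accelerators):
        # accelerators: dict of accelerator id -> type, e.g. {0: "fft"}
        self.types = dict(accelerators)
        self.free = set(accelerators)
        self.waiting = {}  # accelerator type -> deque of waiting core ids

    def lcacc_req(self, acc_type):
        """Step 1: list accelerators of a type and whether each is free."""
        return [(acc, acc in self.free)
                for acc, kind in sorted(self.types.items()) if kind == acc_type]

    def lcacc_rsrv(self, core, acc_type):
        """Step 2: reserve a free accelerator, or queue the core and return None."""
        for acc in sorted(self.free):
            if self.types[acc] == acc_type:
                self.free.remove(acc)
                return acc
        self.waiting.setdefault(acc_type, deque()).append(core)
        return None

    def lcacc_free(self, acc):
        """Step 4: release; hand the accelerator to the longest-waiting core."""
        queue = self.waiting.get(self.types[acc])
        if queue:
            return queue.popleft(), acc  # (core that now owns it, accelerator)
        self.free.add(acc)
        return None


def lcacc_cmd(acc, task, memory):
    """Step 3: put a task description in shared memory and run it."""
    memory[acc] = task             # core writes the description
    func, data = memory[acc]       # accelerator reads it back
    return func(data)              # the return value stands in for the notification


if __name__ == "__main__":
    gam = GlobalAcceleratorManager({0: "fft"})
    print(gam.lcacc_req("fft"))
    acc = gam.lcacc_rsrv(core=0, acc_type="fft")
    print(lcacc_cmd(acc, (sum, [1, 2, 3]), memory={}))
    print(gam.lcacc_free(acc))
```

A second core that reserves the same accelerator type while it is busy is queued and receives the accelerator when the first core frees it, which is the sharing behavior the GAM is meant to arbitrate.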

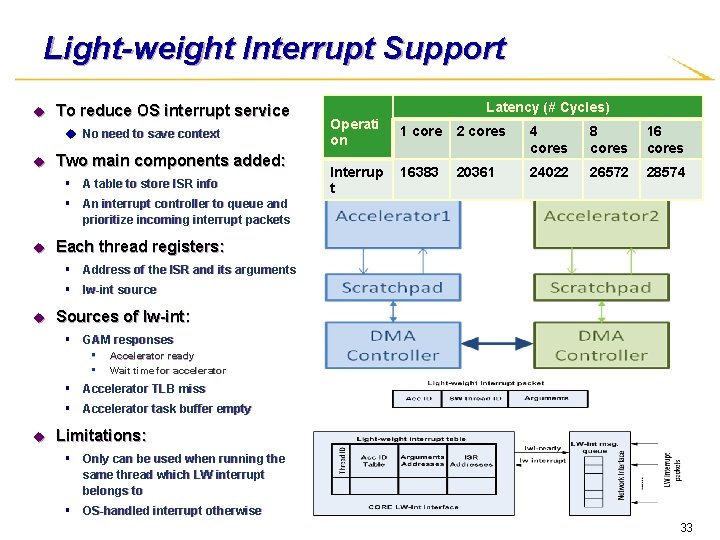

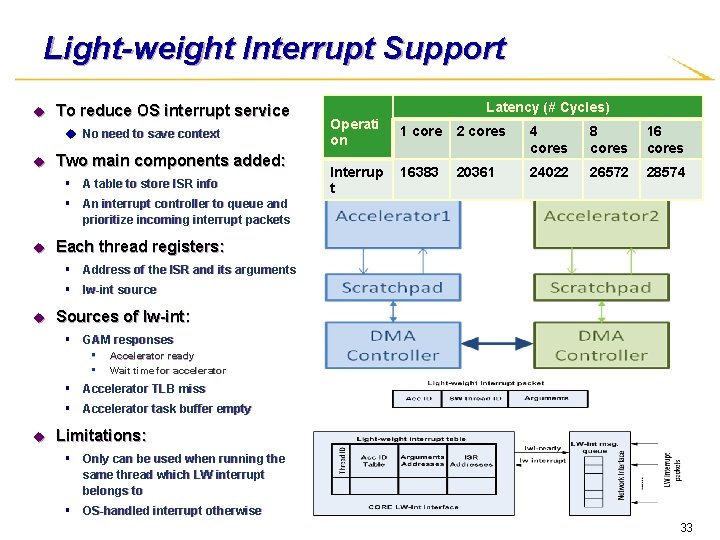

Light-weight Interrupt Support
- Goal: reduce OS interrupt service cost
- No need to save context
- Two main components added:
  - A table to store ISR info
  - An interrupt controller to queue and prioritize incoming interrupt packets
- Each thread registers:
  - Address of the ISR and its arguments
  - lw-int source
- Sources of lw-int:
  - GAM responses
    - Accelerator ready
    - Wait time for accelerator
  - Accelerator TLB miss
  - Accelerator task buffer empty
- Limitations:
  - Can be used only while the thread that the LW interrupt belongs to is running
  - OS-handled interrupt otherwise

Interrupt latency (# cycles): 16383 (1 core), 20361 (2 cores), 24022 (4 cores), 26572 (8 cores), 28574 (fifth column; its header is not preserved).

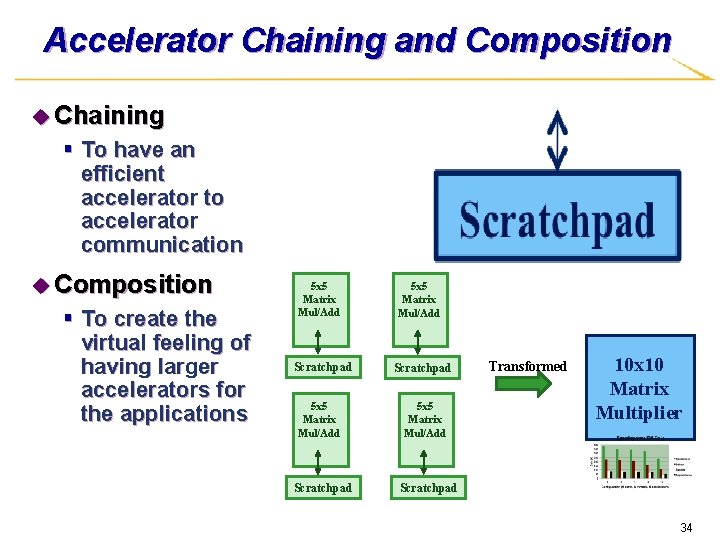

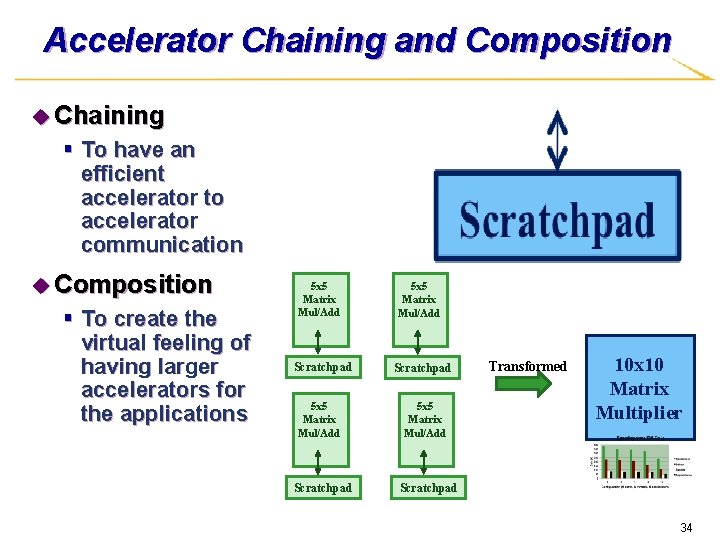

Accelerator Chaining and Composition
- Chaining
  - Provides efficient accelerator-to-accelerator communication
- Composition
  - Gives applications the impression of having larger virtual accelerators

Figure: 5x5 matrix mul/add accelerators with scratchpads, transformed into a 10x10 matrix multiplier.

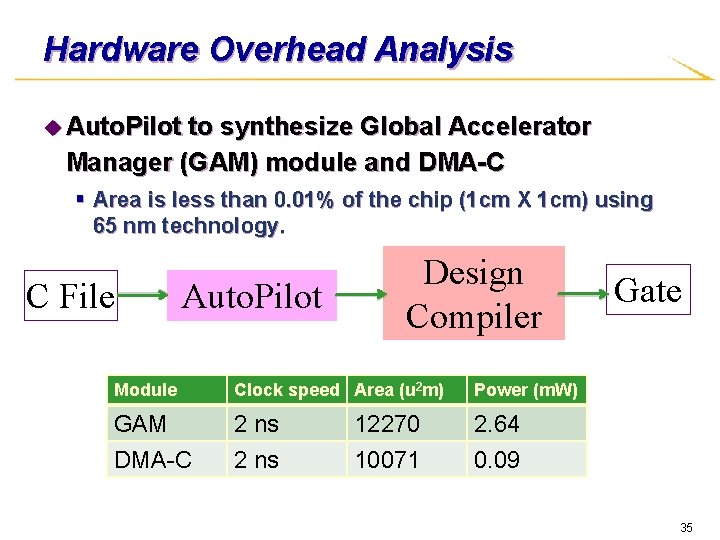

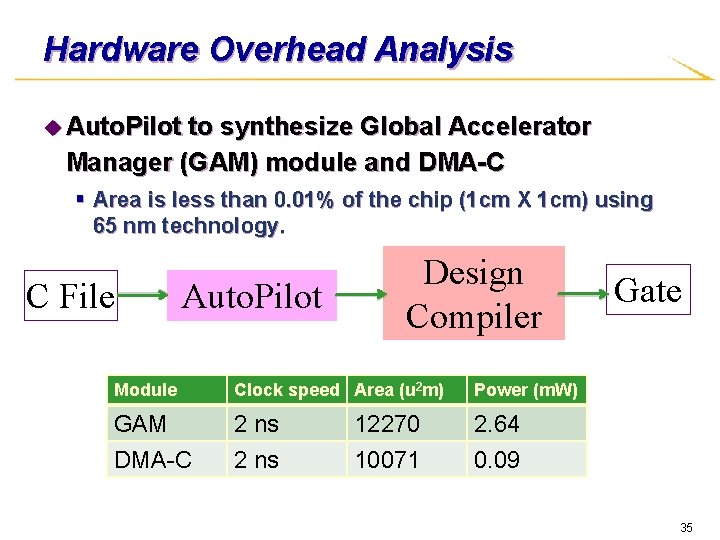

Hardware Overhead Analysis
- AutoPilot is used to synthesize the Global Accelerator Manager (GAM) module and DMA-C
  - Flow: C file -> AutoPilot -> Design Compiler -> gate
  - Area is less than 0.01% of the chip (1 cm x 1 cm) using 65 nm technology

| Module | Clock speed | Area (µm²) | Power (mW) |
|---|---|---|---|
| GAM | 2 ns | 12270 | 2.64 |
| DMA-C | 2 ns | 10071 | 0.09 |

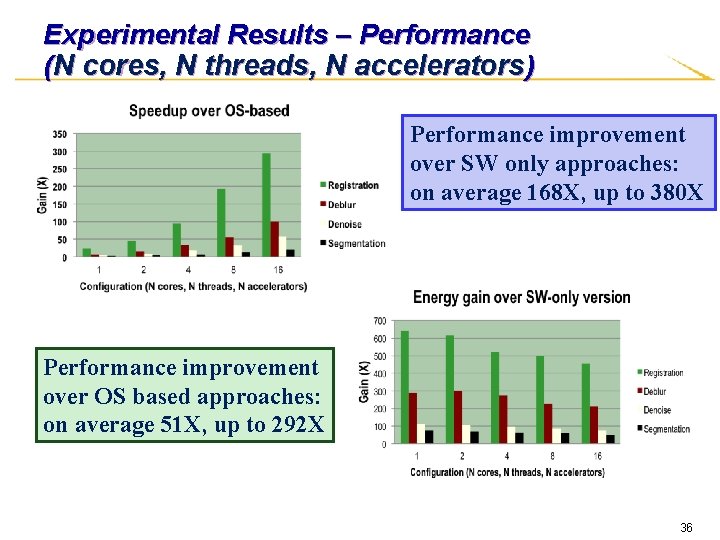

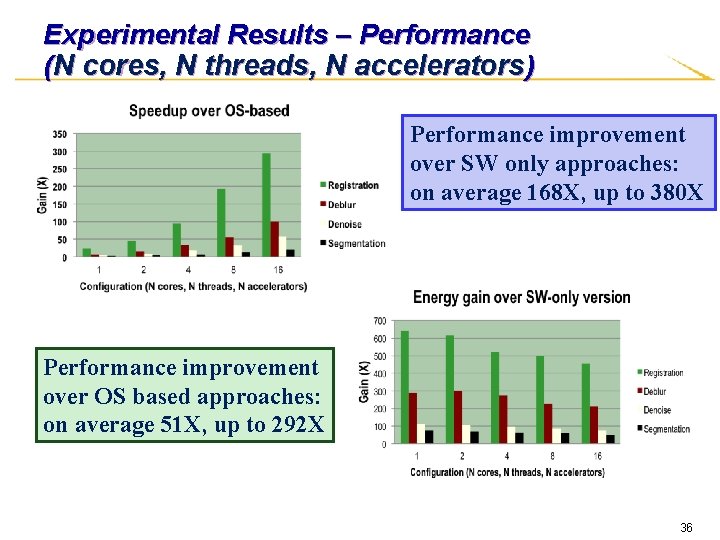

Experimental Results – Performance (N cores, N threads, N accelerators)
- Performance improvement over SW-only approaches: 168X on average, up to 380X
- Performance improvement over OS-based approaches: 51X on average, up to 292X

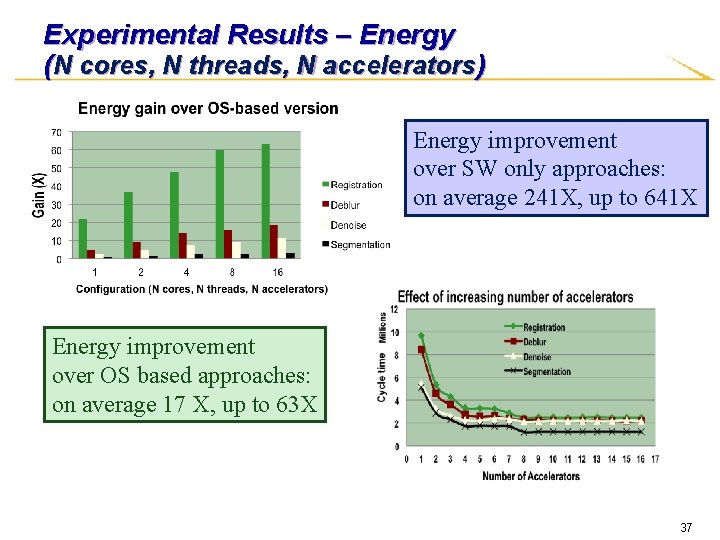

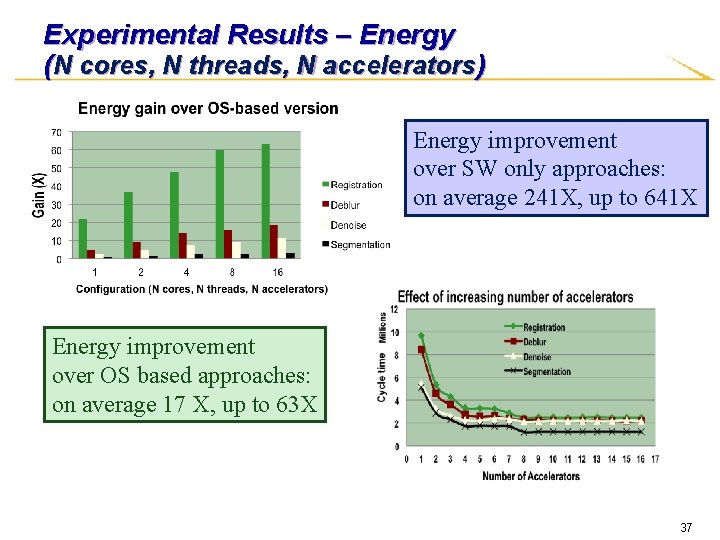

Experimental Results – Energy (N cores, N threads, N accelerators)
- Energy improvement over SW-only approaches: 241X on average, up to 641X
- Energy improvement over OS-based approaches: 17X on average, up to 63X

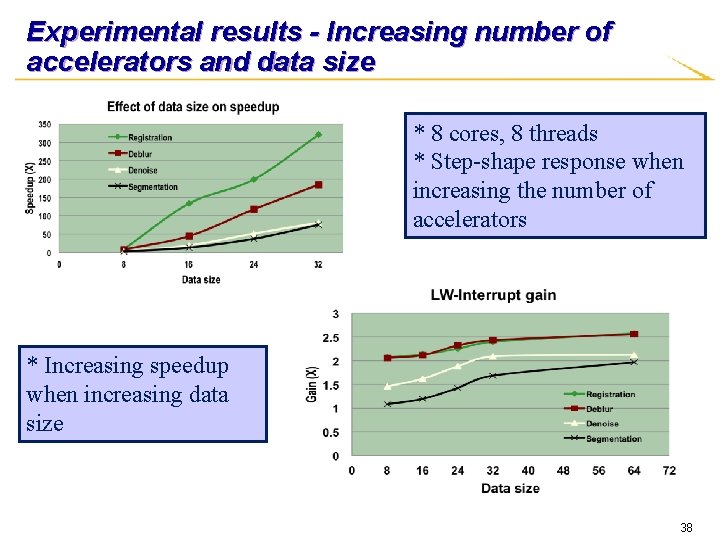

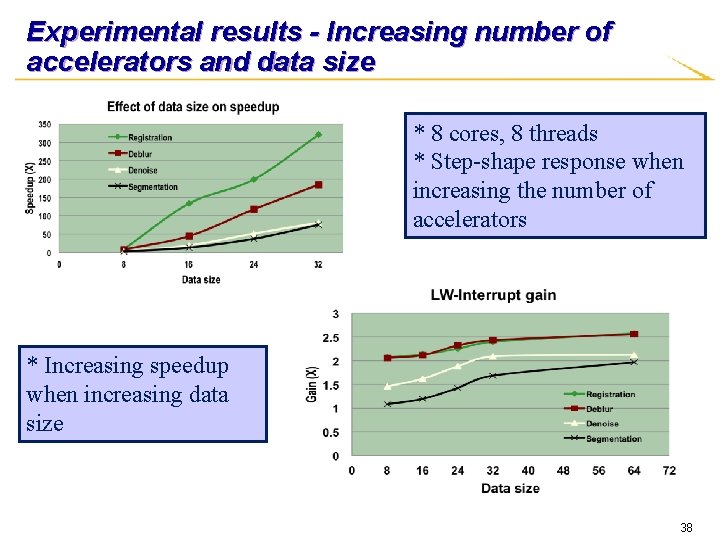

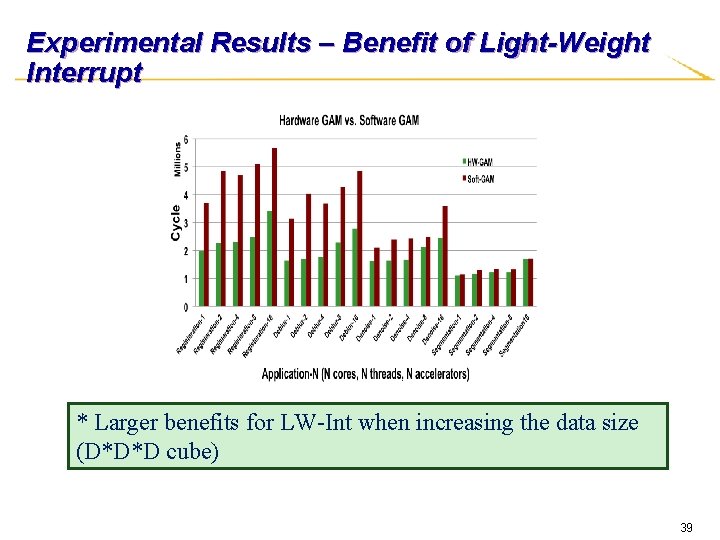

Experimental Results – Increasing Number of Accelerators and Data Size
- 8 cores, 8 threads
- Step-shaped response when increasing the number of accelerators
- Increasing speedup when increasing data size

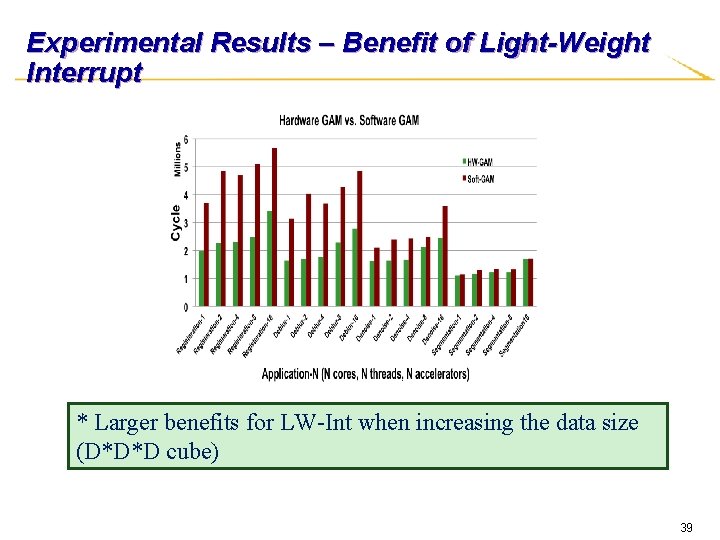

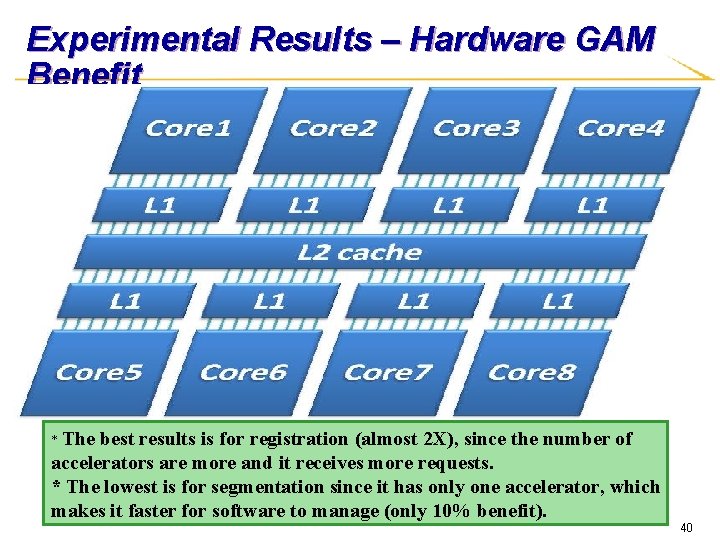

Experimental Results – Benefit of Light-Weight Interrupt
- Larger benefits for LW-Int when increasing the data size (D*D*D cube)

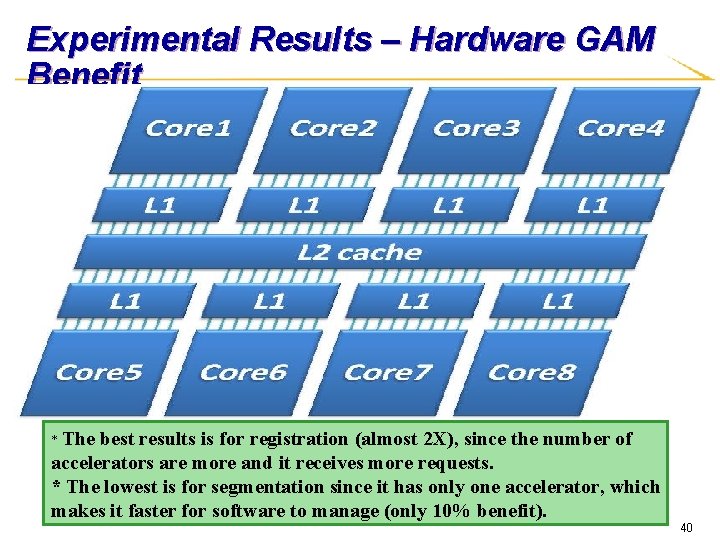

Experimental Results – Hardware GAM Benefit
- The best result is for registration (almost 2X), since it uses more accelerators and the GAM receives more requests
- The lowest is for segmentation (only 10% benefit), since it has only one accelerator, which makes it faster for software to manage

3D Integration for Customization or Specialization
- Vertical integration:
  - CMP layer + customization/acceleration layer
- Accelerators can directly access caches
  - L1 or L2
- Low latency
  - 1-cycle traversal across the TSV bundle
  - 2-3 cycles to get from the TSV bundle to the accelerator/cache controller
- Higher bandwidth
  - Almost equal to the bandwidth of the L1/L2 cache
- No single bottleneck
  - Each cache can have its own TSV bundle
    - Sharing TSV bundles is possible
- Early results: medical imaging benchmarks [ASAP'2011]
  - > 7x performance gain

Figure: FPGA layer stacked on the CMP layer, connected by TSVs.

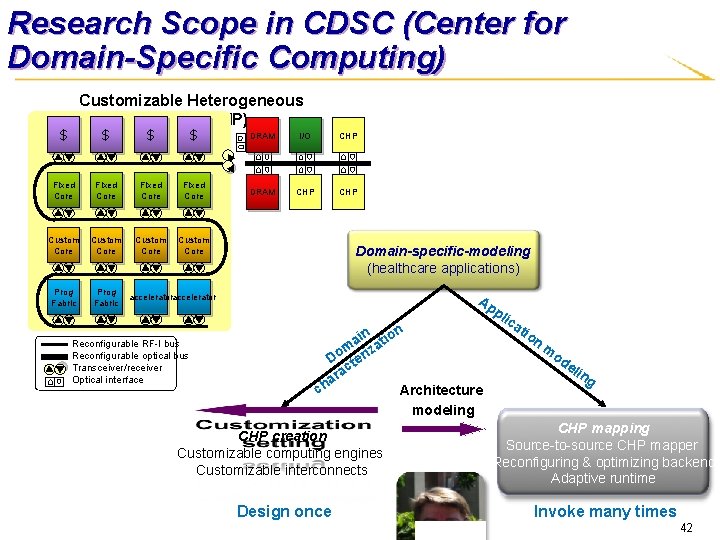

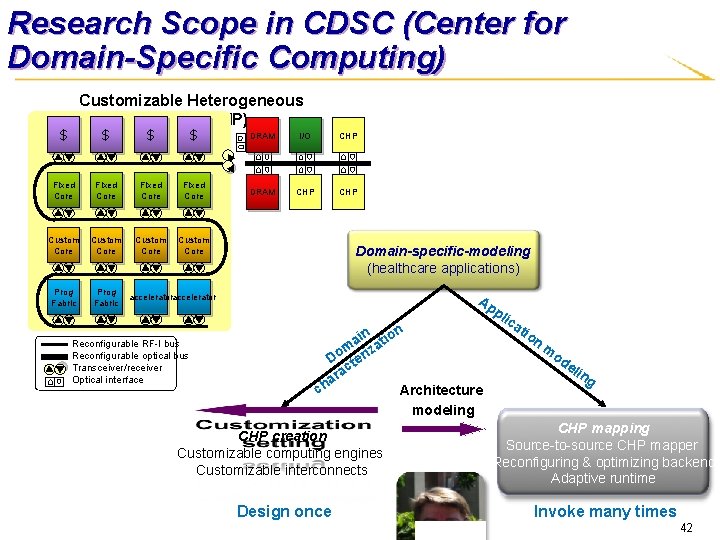

Research Scope in CDSC (Center for Domain-Specific Computing)
- Domain-specific modeling (healthcare applications): application modeling, domain characterization, architecture modeling
- CHP creation: customizable computing engines, customizable interconnects
- CHP mapping: source-to-source CHP mapper, reconfiguring & optimizing backend, adaptive runtime
- Design once, invoke many times

Figure: Customizable Heterogeneous Platform (CHP) with DRAM, I/O, caches ($), fixed cores, custom cores, programmable fabric, accelerators, reconfigurable RF-I bus, reconfigurable optical bus, transceiver/receiver, and optical interface.

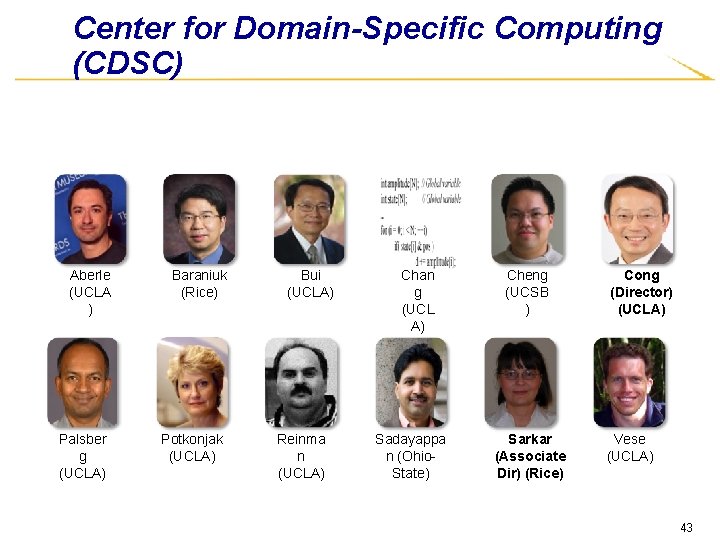

Center for Domain-Specific Computing (CDSC)
- Aberle (UCLA)
- Baraniuk (Rice)
- Bui (UCLA)
- Chang (UCLA)
- Cheng (UCSB)
- Cong (Director) (UCLA)
- Palsberg (UCLA)
- Potkonjak (UCLA)
- Reinman (UCLA)
- Sadayappan (Ohio State)
- Sarkar (Associate Dir) (Rice)
- Vese (UCLA)

CHP Mapping – Compilation and Runtime Software Systems for Customization

Goals: efficient mapping of domain-specific specifications to customizable hardware; adapt the CHP to a given application for drastic performance/power efficiency improvement.

Flow (figure):
- Inputs: domain-specific applications, abstract execution, application characteristics, CHP architecture models
- Programmer: domain-specific programming model (domain-specific coordination graph and domain-specific language extensions)
- Source-to-source CHP mapper: C/C++ code, analysis annotations
- C/C++ front-end and reconfiguring and optimizing back-end: binary code for fixed & customized cores, customized target code
- RTL synthesizer (xPilot): C/SystemC behavioral spec to RTL for programmable fabric
- Adaptive runtime: lightweight threads and adaptive configuration, with performance feedback
- CHP architectural prototypes: CHP hardware testbeds, CHP simulation testbed, full CHP

![x Pilot BehavioraltoRTL Synthesis Flow SOCC 2006 Behavioral spec in CCSystem C Platform description x. Pilot: Behavioral-to-RTL Synthesis Flow [SOCC’ 2006] Behavioral spec. in C/C++/System. C Platform description](https://slidetodoc.com/presentation_image/45cc2bb41aab1e797607df6f798973f3/image-45.jpg)

xPilot: Behavioral-to-RTL Synthesis Flow [SOCC'2006]

Flow: behavioral spec in C/C++/SystemC + platform description -> frontend compiler -> SSDM -> RTL + constraints -> FPGAs/ASICs
- Advanced transformations/optimizations
  - Loop unrolling/shifting/pipelining
  - Strength reduction / tree height reduction
  - Bitwidth analysis
  - Memory analysis …
- Core behavior synthesis optimizations
  - Scheduling
  - Resource binding, e.g., functional unit binding, register/port binding
- Arch-generation & RTL/constraints generation
  - Verilog/VHDL/SystemC
  - FPGAs: Altera, Xilinx
  - ASICs: Magma, Synopsys, …

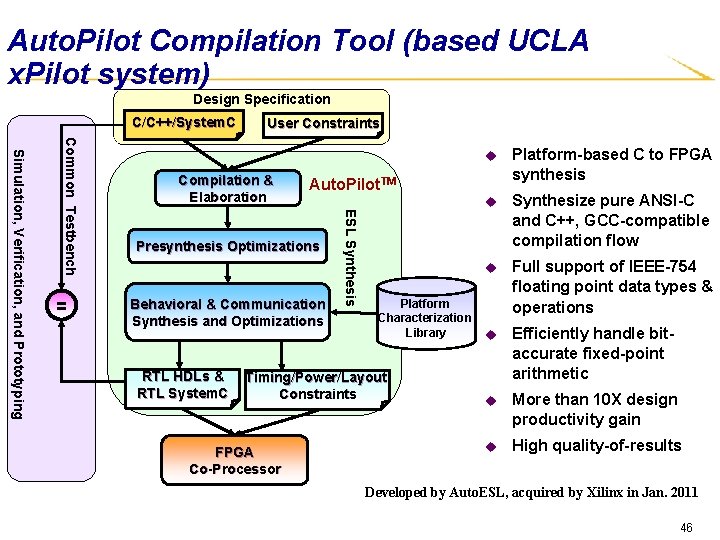

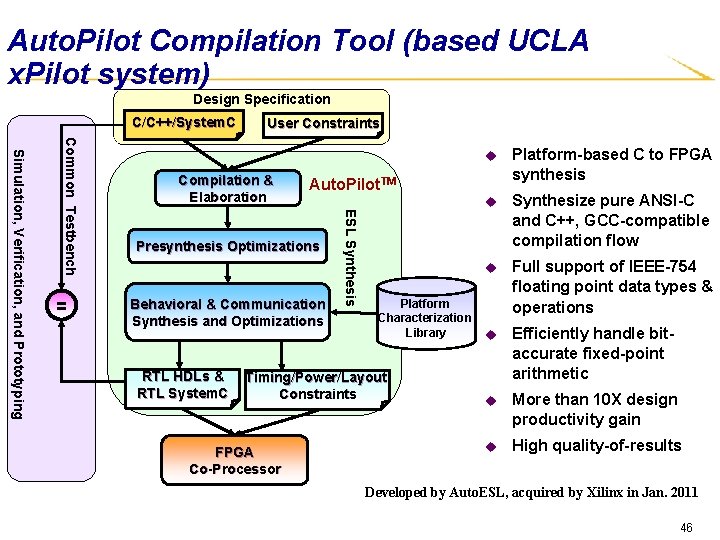

AutoPilot Compilation Tool (based on the UCLA xPilot system)
- Platform-based C-to-FPGA synthesis
- Synthesizes pure ANSI C and C++; GCC-compatible compilation flow
- Full support for IEEE-754 floating-point data types and operations
- Efficiently handles bit-accurate fixed-point arithmetic
- More than 10X design productivity gain
- High quality of results

Flow (figure): design specification in C/C++/SystemC, plus user constraints -> compilation & elaboration -> presynthesis optimizations -> behavioral & communication synthesis and optimizations (AutoPilot ESL synthesis, using the platform characterization library) -> RTL HDLs & RTL SystemC with timing/power/layout constraints -> FPGA co-processor. A common testbench serves simulation, verification, and prototyping.

Developed by AutoESL, acquired by Xilinx in Jan. 2011

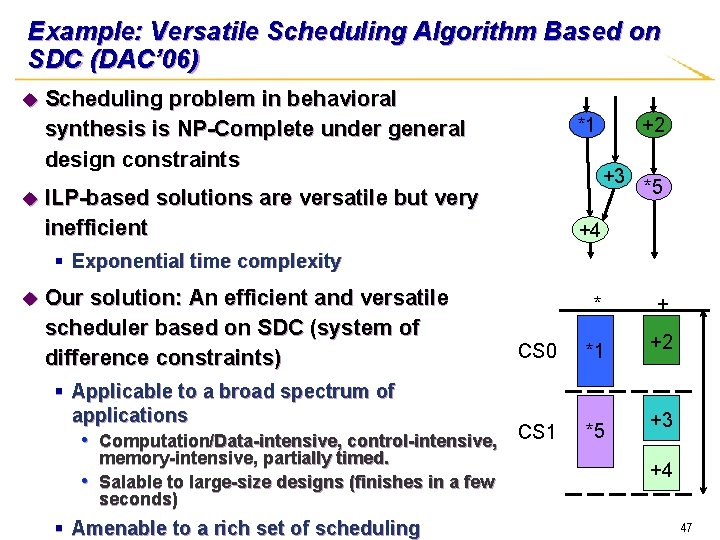

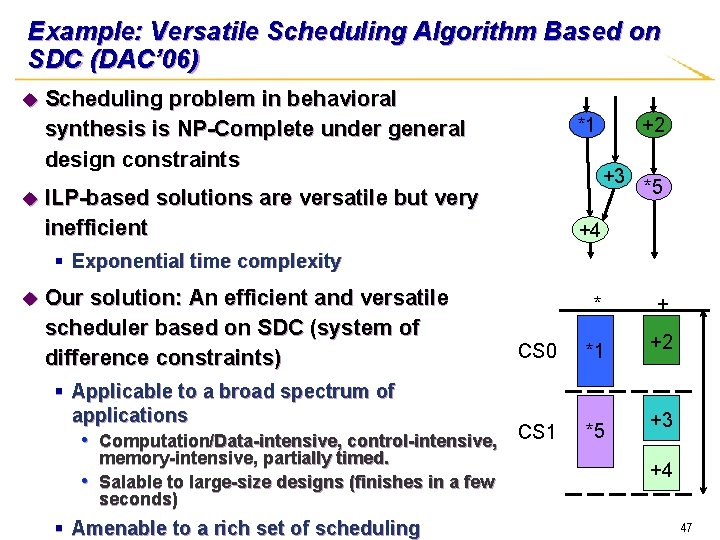

Example: Versatile Scheduling Algorithm Based on SDC (DAC'06)
- The scheduling problem in behavioral synthesis is NP-complete under general design constraints
- ILP-based solutions are versatile but very inefficient
  - Exponential time complexity
- Our solution: an efficient and versatile scheduler based on SDC (system of difference constraints)
  - Applicable to a broad spectrum of applications: computation/data-intensive, control-intensive, memory-intensive, partially timed
  - Scalable to large designs (finishes in a few seconds)
  - Amenable to a rich set of scheduling constraints

Figure: example dataflow graph (*1, +2, +3, +4, *5) scheduled into control steps CS0 and CS1.

Scheduling: Our Approach (DAC'06)
- Overall approach
  - Current objective: high performance
  - Use a system of integer difference constraints to express all kinds of scheduling constraints
  - Represent the design objective as a linear function
- Example with operations v1 to v5, where $x_i$ is the control step assigned to $v_i$
  - Platform characterization: adder (+/–): 2 ns; multiplier (*): 5 ns
  - Target cycle time: 10 ns
  - Resource constraint: only ONE multiplier is available
- Dependency constraints
  - v1 → v3: $x_3 - x_1 \ge 0$
  - v2 → v3: $x_3 - x_2 \ge 0$
  - v3 → v4: $x_4 - x_3 \ge 0$
  - v4 → v5: $x_5 - x_4 \ge 0$
- Frequency constraint
  - <v2, v5>: $x_5 - x_2 \ge 1$
- Resource constraint
  - <v2, v3>: $x_3 - x_2 \ge 1$
- In matrix form the constraints read $A x \le b$; the constraint matrix $A$ is totally unimodular, which guarantees integral solutions
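A system of difference constraints can be solved with a shortest- or longest-path relaxation instead of a general ILP solver. The sketch below applies a Bellman-Ford-style longest-path pass to the six constraints of the slide's example, reading each one as $x_{dst} - x_{src} \ge w$ (the inequality direction is an assumption, since the signs did not survive in the slide text). The function name and the as-soon-as-possible objective are illustrative choices, not the scheduler described in the DAC'06 paper.

```python
def solve_sdc(num_vars, constraints):
    """Earliest schedule for constraints of the form x[dst] - x[src] >= weight.

    Uses Bellman-Ford-style longest-path relaxation with every operation
    initially at control step 0. Returns the list [x1, ..., xn], or None
    if the constraints contradict each other (positive-weight cycle).
    """
    x = [0] * (num_vars + 1)  # index 0 is unused so that x[i] matches v_i
    for _ in range(num_vars):
        changed = False
        for src, dst, weight in constraints:
            if x[dst] < x[src] + weight:
                x[dst] = x[src] + weight
                changed = True
        if not changed:
            return x[1:]
    return None  # still changing after num_vars passes: infeasible


# (src, dst, weight) triples for the example on the slide.
EXAMPLE = [
    (1, 3, 0), (2, 3, 0), (3, 4, 0), (4, 5, 0),  # dependencies
    (2, 5, 1),                                    # frequency constraint
    (2, 3, 1),                                    # resource constraint
]

if __name__ == "__main__":
    print(solve_sdc(5, EXAMPLE))
```

Because every weight is an integer, the relaxation only ever produces integer control steps, which is the practical consequence of the totally unimodular constraint matrix mentioned on the slide.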

![Another Example Efficient Pattern Mining FPGA 08 and DATE 2010 u Programers may contain Another Example: Efficient Pattern Mining [FPGA’ 08 and DATE’ 2010] u Programers may contain](https://slidetodoc.com/presentation_image/45cc2bb41aab1e797607df6f798973f3/image-49.jpg)

Another Example: Efficient Pattern Mining [FPGA'08 and DATE'2010]
- Programs may contain many patterns
- Prior work can only identify exact patterns
- We can efficiently identify "approximate" patterns in large programs
  - Based on the concept of editing distance
  - Use data-mining techniques
  - Efficient subgraph enumeration and pruning
- Highly scalable: can handle programs with 100,000+ lines of code
- Applications:
  - Behavioral synthesis

Figure: approximate pattern examples with structure variation, bitwidth variation (16-bit vs. 32-bit operators), and ports variation.

![Beyond CtoRTL Synthesis Compilation Support for Hybrid Cache DAC 2011 Manually coding RASP Beyond C-to-RTL Synthesis Compilation Support for Hybrid Cache [DAC’ 2011] Manually coding …… RASP](https://slidetodoc.com/presentation_image/45cc2bb41aab1e797607df6f798973f3/image-50.jpg)

Beyond C-to-RTL Synthesis: Compilation Support for Hybrid Cache [DAC'2011]

Figure: manually coded example annotated with the SPM base address, the SPM size, and an SPM access, contrasted with RASP.

Compiler Support for Customizable Hybrid Cache
- RASP (Reuse-Aware SPM Prefetching) flow
  - Prefetch-enabled: hide memory access latency
  - Reuse-enabled: reduce the amount of data transfers

Flow (figure):
- Inputs: C/C++ program; hybrid cache configuration (maximal SPM size); architecture parameters (prefetch latency)
- RASP implementation on LLVM 2.7: reuse analysis -> reuse candidate graphs -> reuse & prefetching co-optimization -> optimized code for hybrid cache
- Co-optimization loop: initiate the SPM buffer size; calculate the SPM utilization ratio r for each inactive reuse dependency; update the local/reuse regions of the downstream vertices of v; check whether the SPM size is exceeded (yes/no)

Reuse Candidate Graph
- Reuse candidate graph construction
  - Each vertex represents one array reference
  - Each edge represents a reuse dependency

Figure: graph over the references u[i+2][j+1], u[i+1][j+2], u[i+1][j+1], u[i+1][j] and u[i][j+1], with edges labeled by reuse distance vectors: (1, -1), (1, 0), (1, 1), (2, 0), (0, 1), (0, 2), (1, 1), (0, 1), (1, 0), (1, -1).
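The edge labels in the figure are consistent with a standard reuse-distance calculation: for two references with constant subscript offsets, the same element is touched again after a number of iterations equal to the difference of the offsets. The following sketch builds such a graph for the five references; it assumes a row-major loop nest over (i, j), and the function and variable names are illustrative rather than taken from the RASP implementation.

```python
from itertools import permutations


def reuse_candidate_graph(offsets):
    """Return edges (src, dst, distance) between references to one array.

    offsets maps a reference name to its constant offset (di, dj) in
    u[i+di][j+dj]. An edge src -> dst with distance d means the element
    read by src in iteration (i, j) is read by dst in iteration (i, j) + d.
    """
    edges = []
    for src, dst in permutations(offsets, 2):
        distance = tuple(a - b for a, b in zip(offsets[src], offsets[dst]))
        # Tuples compare lexicographically, so this keeps only the pairs
        # where dst touches the element in a later iteration than src.
        if distance > (0,) * len(distance):
            edges.append((src, dst, distance))
    return edges


REFERENCES = {
    "u[i+2][j+1]": (2, 1),
    "u[i+1][j+2]": (1, 2),
    "u[i+1][j+1]": (1, 1),
    "u[i+1][j]": (1, 0),
    "u[i][j+1]": (0, 1),
}

if __name__ == "__main__":
    for src, dst, distance in reuse_candidate_graph(REFERENCES):
        print(f"{src} -> {dst}: {distance}")
```

For these five references the sketch yields ten edges whose distance vectors match the ten labels listed for the figure.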

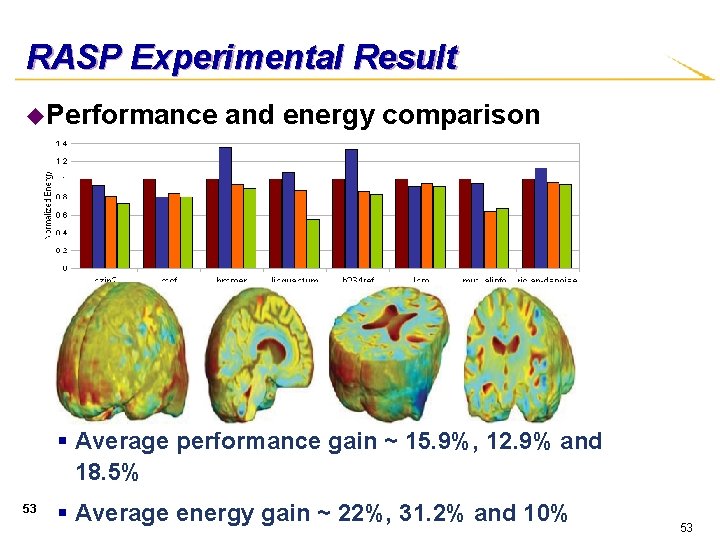

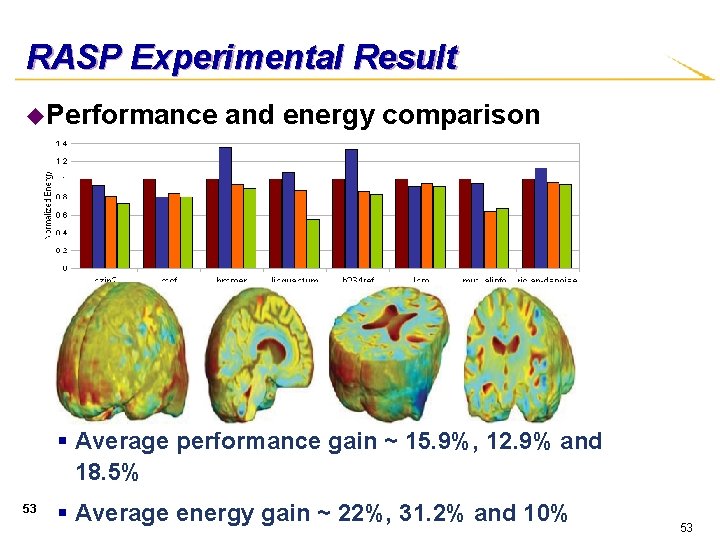

RASP Experimental Result
- Performance and energy comparison
  - Average performance gain: ~15.9%, 12.9% and 18.5%
  - Average energy gain: ~22%, 31.2% and 10%

Application Domain: Medical Image Processing

Pipeline stages and representative algorithms:
- Reconstruction: compressive sensing
- Denoising: total variational algorithm
- Registration: fluid registration
- Segmentation: level set methods
- Analysis: Navier-Stokes equations

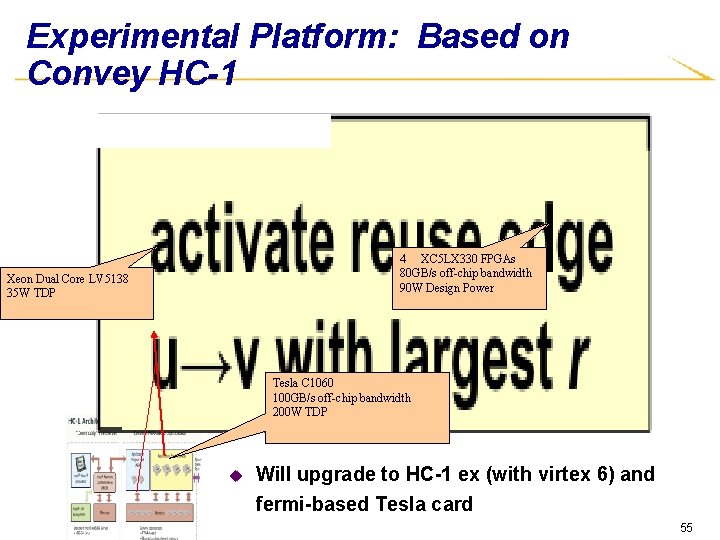

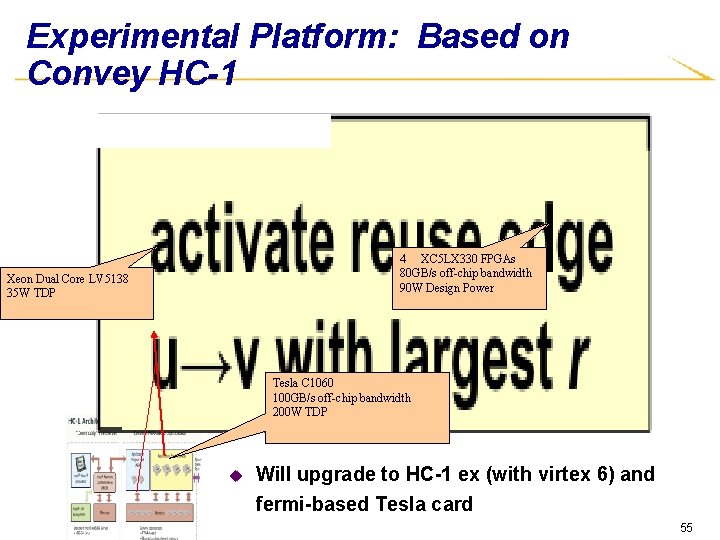

Experimental Platform: Based on Convey HC-1
- FPGA: 4 XC5LX330 FPGAs, 80 GB/s off-chip bandwidth, 90 W design power
- CPU: Xeon Dual Core LV5138, 35 W TDP
- GPU: Tesla C1060, 100 GB/s off-chip bandwidth, 200 W TDP
- Will upgrade to HC-1ex (with Virtex-6) and a Fermi-based Tesla card

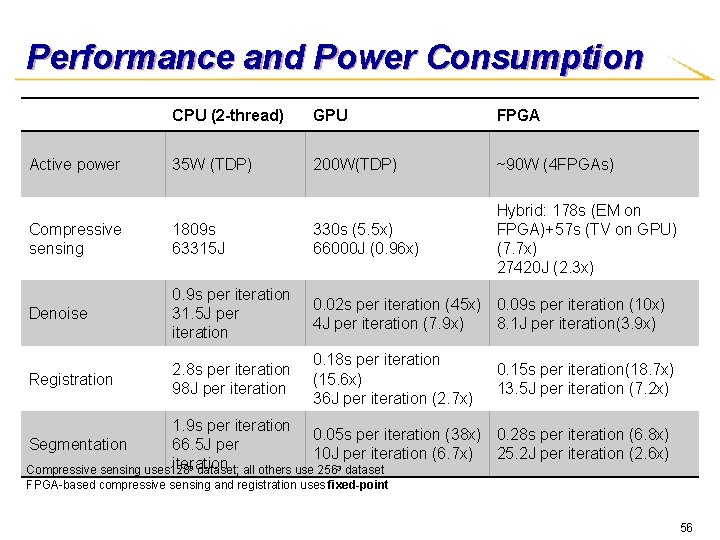

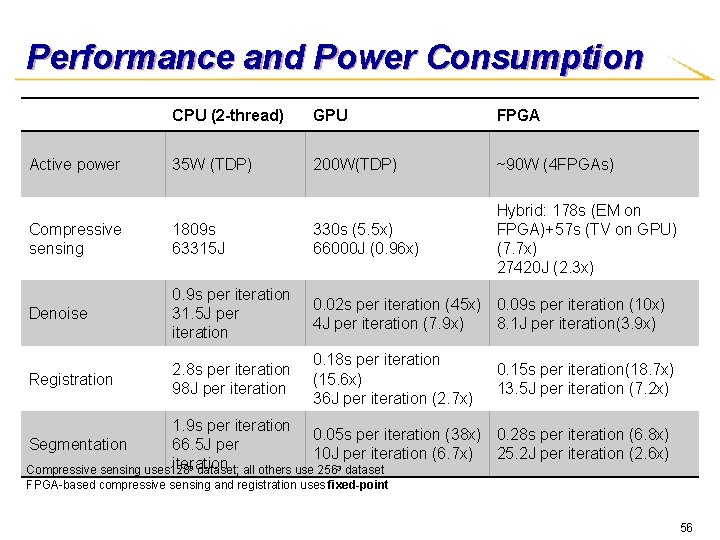

Performance and Power Consumption

| | CPU (2-thread) | GPU | FPGA |
|---|---|---|---|
| Active power | 35 W (TDP) | 200 W (TDP) | ~90 W (4 FPGAs) |
| Compressive sensing | 1809 s; 63315 J | 330 s (5.5x); 66000 J (0.96x) | Hybrid: 178 s (EM on FPGA) + 57 s (TV on GPU) (7.7x); 27420 J (2.3x) |
| Denoise | 0.9 s per iteration; 31.5 J per iteration | 0.02 s per iteration (45x); 4 J per iteration (7.9x) | 0.09 s per iteration (10x); 8.1 J per iteration (3.9x) |
| Registration | 2.8 s per iteration; 98 J per iteration | 0.18 s per iteration (15.6x); 36 J per iteration (2.7x) | 0.15 s per iteration (18.7x); 13.5 J per iteration (7.2x) |
| Segmentation | 1.9 s per iteration; 66.5 J per iteration | 0.05 s per iteration (38x); 10 J per iteration (6.7x) | 0.28 s per iteration (6.8x); 25.2 J per iteration (2.6x) |

- Compressive sensing uses a 128³ dataset; all others use a 256³ dataset
- FPGA-based compressive sensing and registration use fixed-point arithmetic
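The energy figures in this table follow from multiplying each device's active power by its run time. As a worked check for the compressive sensing row, the sketch below recomputes the three energy values and the hybrid speedup; the device keys and the function name are illustrative.

```python
# Active power per device in watts, as listed on the slide.
POWER_W = {"cpu": 35.0, "gpu": 200.0, "fpga": 90.0}


def energy_joules(phases):
    """Energy of a run given as a list of (device, seconds) phases."""
    return sum(POWER_W[device] * seconds for device, seconds in phases)


# Compressive sensing row of the table.
cpu = energy_joules([("cpu", 1809)])
gpu = energy_joules([("gpu", 330)])
hybrid = energy_joules([("fpga", 178), ("gpu", 57)])  # EM on FPGA, TV on GPU

hybrid_speedup = 1809 / (178 + 57)
hybrid_energy_gain = cpu / hybrid

if __name__ == "__main__":
    print(f"CPU {cpu:.0f} J, GPU {gpu:.0f} J, hybrid {hybrid:.0f} J")
    print(f"hybrid speedup {hybrid_speedup:.1f}x, energy gain {hybrid_energy_gain:.1f}x")
```

Note that the GPU finishes 5.5x faster than the CPU but uses slightly more energy, because its active power is about 5.7x higher; the hybrid FPGA+GPU split is the only option in this row that wins on both time and energy.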

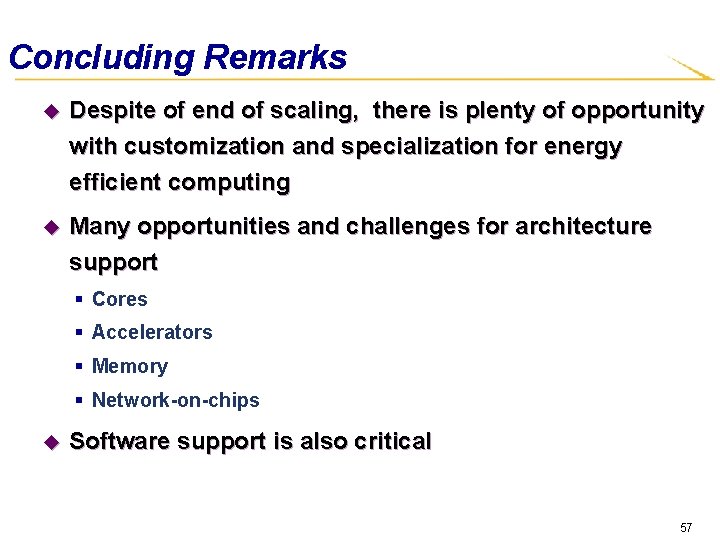

Concluding Remarks
- Despite the end of scaling, there is plenty of opportunity in customization and specialization for energy-efficient computing
- Many opportunities and challenges for architecture support
  - Cores
  - Accelerators
  - Memory
  - Networks-on-chip
- Software support is also critical

Acknowledgements
- A highly collaborative effort
  - Thanks to all my co-PIs in four universities: UCLA, Rice, Ohio State, and UC Santa Barbara
- Thanks for the support from the National Science Foundation, GSRC, Intel, and Xilinx

Co-PIs: Aberle (UCLA), Baraniuk (Rice), Bui (UCLA), Chang (UCLA), Cheng (UCSB), Cong (Director) (UCLA), Palsberg (UCLA), Potkonjak (UCLA), Reinman (UCLA), Sadayappan (Ohio State), Sarkar (Associate Dir) (Rice), Vese (UCLA)

Backup Slides

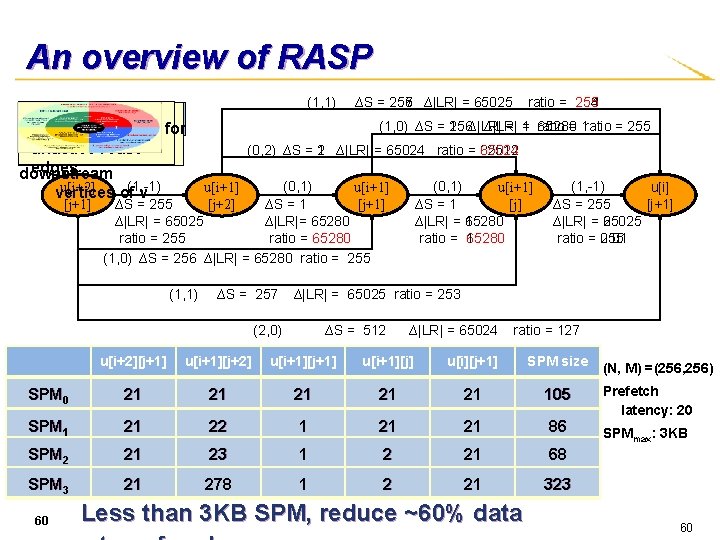

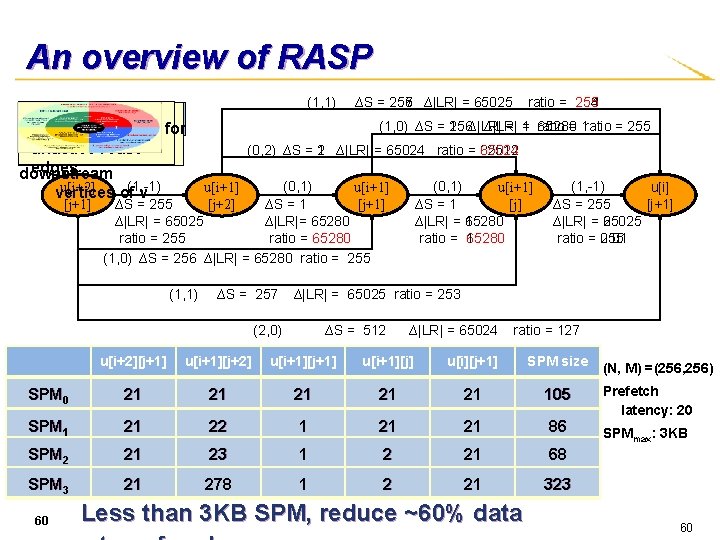

An Overview of RASP
- Steps: initiate the SPM buffer size; calculate the SPM utilization ratio r for inactive reuse edges; update the SPM utilization ratio for the downstream vertices of v
- Setup: (N, M) = (256, 256); prefetch latency: 20; SPMmax: 3 KB
- Result: less than 3 KB of SPM reduces data by ~60%

Figure: reuse candidate graph over u[i+2][j+1], u[i+1][j+2], u[i+1][j+1], u[i+1][j] and u[i][j+1], with each edge annotated by its reuse distance, ∆S, ∆|LR| and utilization ratio.

SPM buffer sizes per reference at each step:

| Step | u[i+2][j+1] | u[i+1][j+2] | u[i+1][j+1] | u[i+1][j] | u[i][j+1] | SPM size |
|---|---|---|---|---|---|---|
| SPM0 | 21 | 21 | 21 | 21 | 21 | 105 |
| SPM1 | 21 | 22 | 1 | 21 | 21 | 86 |
| SPM2 | 21 | 23 | 1 | 2 | 21 | 68 |
| SPM3 | 21 | 278 | 1 | 2 | 21 | 323 |

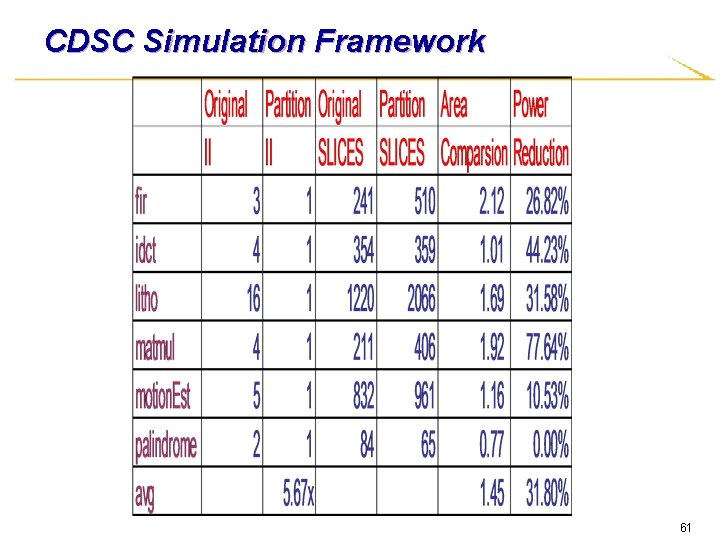

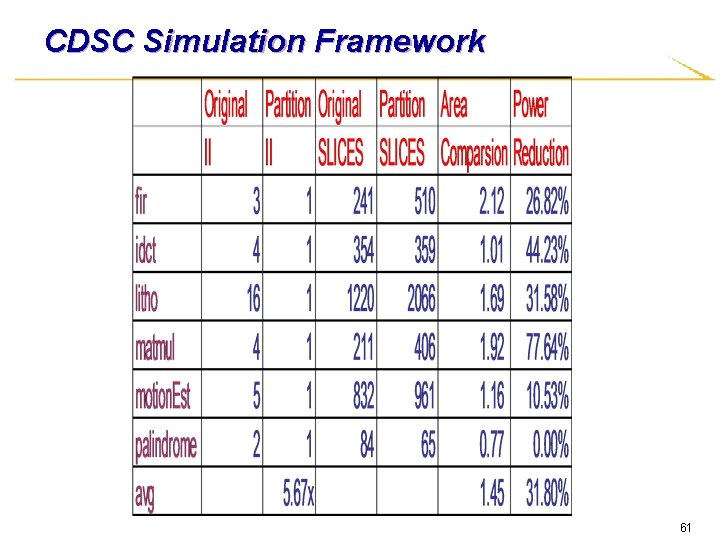

CDSC Simulation Framework

![Automatic Memory Partitioning ICCAD 09 u Memory system is critical for high performance and Automatic Memory Partitioning [ICCAD 09] u Memory system is critical for high performance and](https://slidetodoc.com/presentation_image/45cc2bb41aab1e797607df6f798973f3/image-62.jpg)

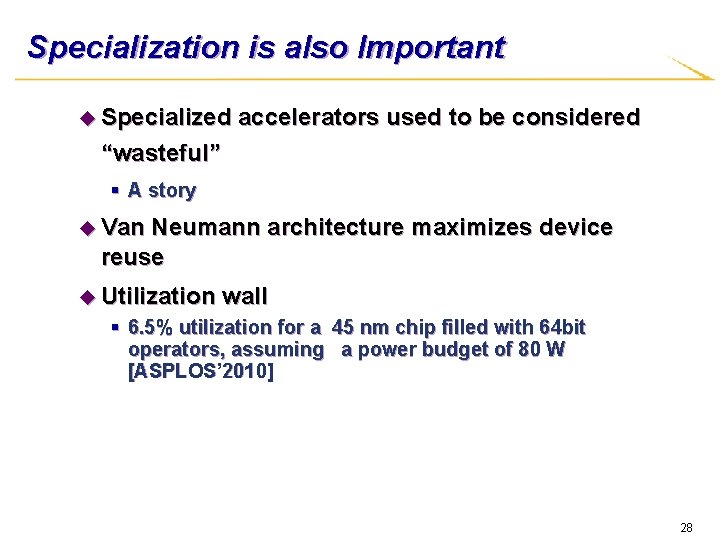

Automatic Memory Partitioning [ICCAD 09]
• The memory system is critical for high-performance and low-power design
  - Memory bottleneck limits maximum parallelism
  - Memory system accounts for a significant portion of total power consumption
• Goal
  - Given platform information (memory ports, power, etc.), a behavioral specification, and throughput constraints:
    - Partition memories automatically
    - Meet throughput constraints
    - Minimize power consumption
• [Figure: (a) C code: for (int i = 0; i < n; i++) … = A[i] + A[i+1]; (b) scheduling of the A[i] and A[i+1] accesses; (c) memory architecture after partitioning (R1, R2, decoder, and two banks holding A[0, 2, 4, …] and A[1, 3, 5, …])]
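The even/odd split in panel (c) can be illustrated with a small sketch. It assumes cyclic partitioning (element k goes to bank k mod N), which matches the A[0, 2, 4, …] / A[1, 3, 5, …] layout on the slide; the function names `bank_of`, `offset_in_bank`, and `accesses_per_bank` are hypothetical and not taken from the original tool.

```python
def bank_of(index, num_banks):
    """Cyclic partitioning: element `index` is stored in bank `index % num_banks`."""
    return index % num_banks


def offset_in_bank(index, num_banks):
    """Address of element `index` inside its bank."""
    return index // num_banks


def accesses_per_bank(i, offsets, num_banks):
    """Count how many of the references A[i + off] hit each bank in iteration i."""
    counts = [0] * num_banks
    for off in offsets:
        counts[bank_of(i + off, num_banks)] += 1
    return counts


# The loop body on the slide reads A[i] and A[i+1].
OFFSETS = [0, 1]

for i in range(4):
    single = accesses_per_bank(i, OFFSETS, 1)  # one memory: both reads collide
    split = accesses_per_bank(i, OFFSETS, 2)   # two banks: one read per bank
    print(i, single, split)
```

With a single memory, every iteration needs two reads from the same bank, so a single-port memory serializes them. With two banks, A[i] and A[i+1] always fall in different banks, so both reads can be issued in the same cycle.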

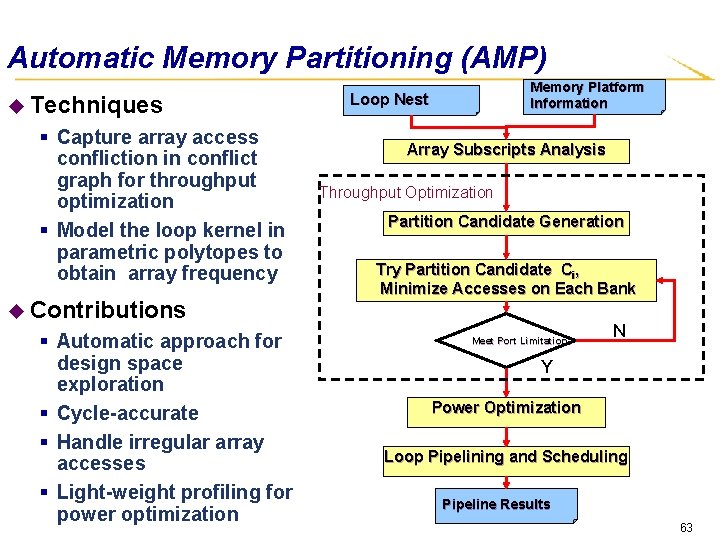

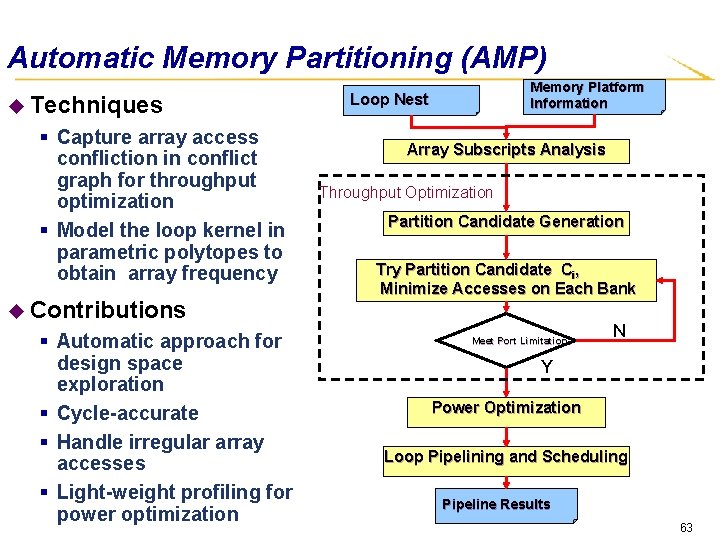

Automatic Memory Partitioning (AMP)
• Techniques
  - Capture array access conflicts in a conflict graph for throughput optimization
  - Model the loop kernel as parametric polytopes to obtain array frequency
• Contributions
  - Automatic approach for design space exploration
  - Cycle-accurate
  - Handles irregular array accesses
  - Lightweight profiling for power optimization
• Flow: memory platform information and loop nest → array subscript analysis → throughput optimization (partition candidate generation; try partition candidate Ci, minimizing accesses on each bank; if the port limitation is not met, try another candidate) → power optimization → loop pipelining and scheduling → pipeline results
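The candidate-search step of the flow can be sketched as follows. This is a simplified illustration, not the AMP algorithm itself: it assumes cyclic partitioning and references of the form A[i + c] with distinct constant offsets c, whereas the slide states that AMP also handles irregular accesses. The names `conflict_graph`, `max_accesses_per_bank`, and `smallest_partition`, and the `max_banks` bound, are hypothetical.

```python
from collections import Counter
from itertools import combinations


def conflict_graph(offsets, num_banks):
    """Edges join references A[i+a] and A[i+b] that always map to the same bank
    under cyclic partitioning, i.e. when (a - b) is a multiple of num_banks."""
    return {(a, b) for a, b in combinations(offsets, 2)
            if (a - b) % num_banks == 0}


def max_accesses_per_bank(offsets, num_banks):
    """Largest number of references that land on one bank in an iteration."""
    return max(Counter(off % num_banks for off in offsets).values())


def smallest_partition(offsets, ports_per_bank, max_banks=16):
    """Try candidates in increasing bank count until the port limit is met."""
    for num_banks in range(1, max_banks + 1):
        if max_accesses_per_bank(offsets, num_banks) <= ports_per_bank:
            return num_banks
    return None  # no candidate within the (assumed) search bound


# References A[i], A[i+2], A[i+4] with single-port banks.
print(conflict_graph([0, 2, 4], 2))      # all three pairs conflict with 2 banks
print(smallest_partition([0, 2, 4], 1))  # first bank count with no conflicts
```

For these offsets, two banks do not help because all three references share the same parity, which is why the search continues to the next candidate instead of stopping at the first split.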

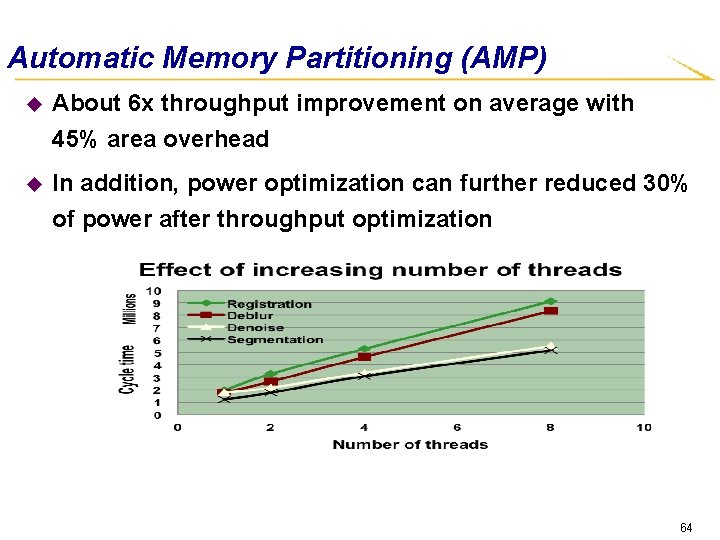

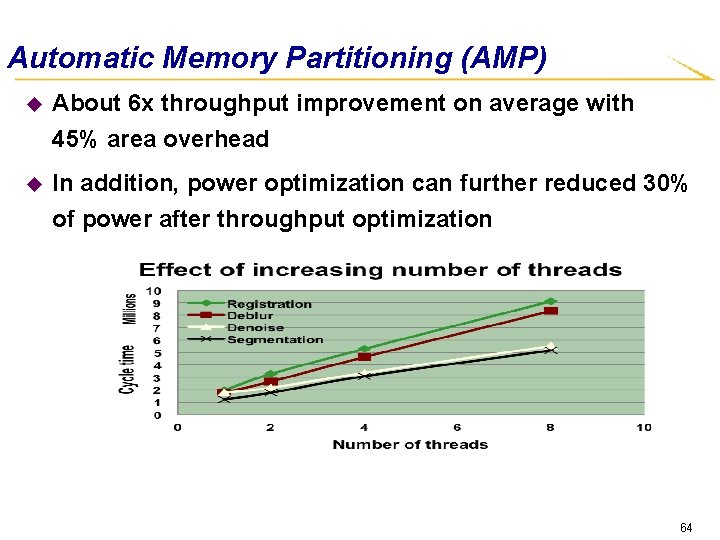

Automatic Memory Partitioning (AMP)
• About 6x throughput improvement on average, with 45% area overhead
• In addition, power optimization can further reduce power by 30% after throughput optimization

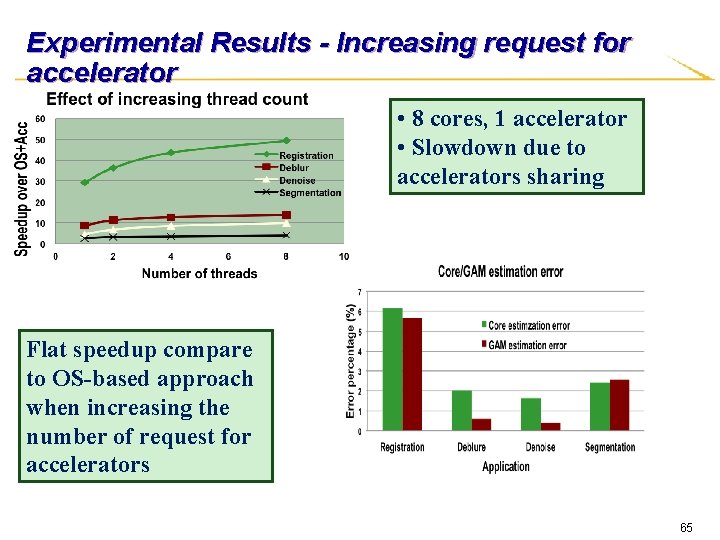

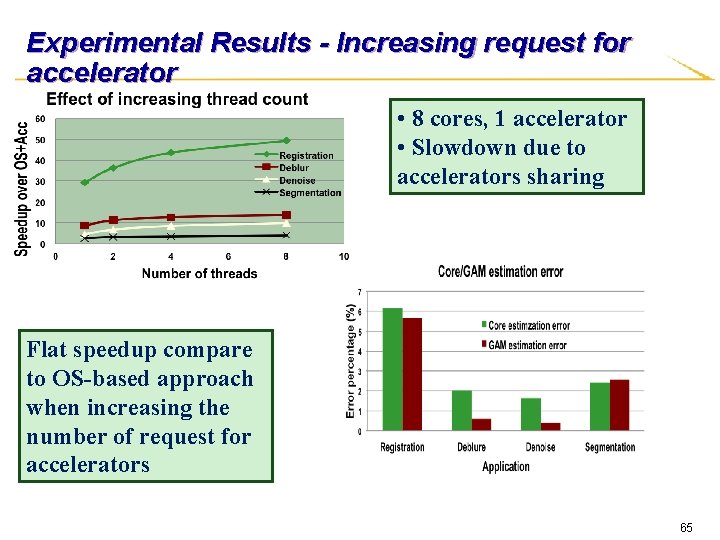

Experimental Results - Increasing requests for accelerators
• 8 cores, 1 accelerator
• Slowdown due to accelerator sharing
• Flat speedup compared to the OS-based approach as the number of requests for accelerators increases

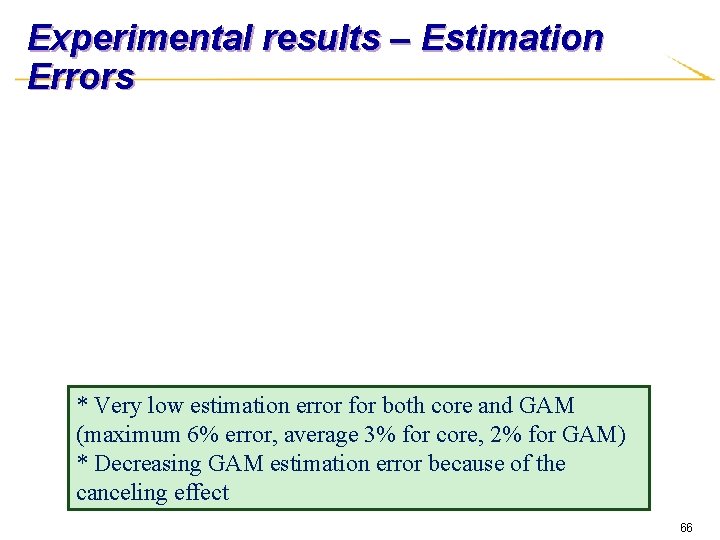

Experimental Results - Estimation errors
• Very low estimation error for both core and GAM (maximum 6% error; average 3% for core, 2% for GAM)
• GAM estimation error decreases because of the canceling effect
