ecs 236 Winter 2006 Intrusion Detection 3 Anomaly


ecs 236 Winter 2006: Intrusion Detection #3: Anomaly Detection

Dr. S. Felix Wu, Computer Science Department, University of California, Davis

http://www.cs.ucdavis.edu/~wu/

sfelixwu@gmail.com

01/04/2006

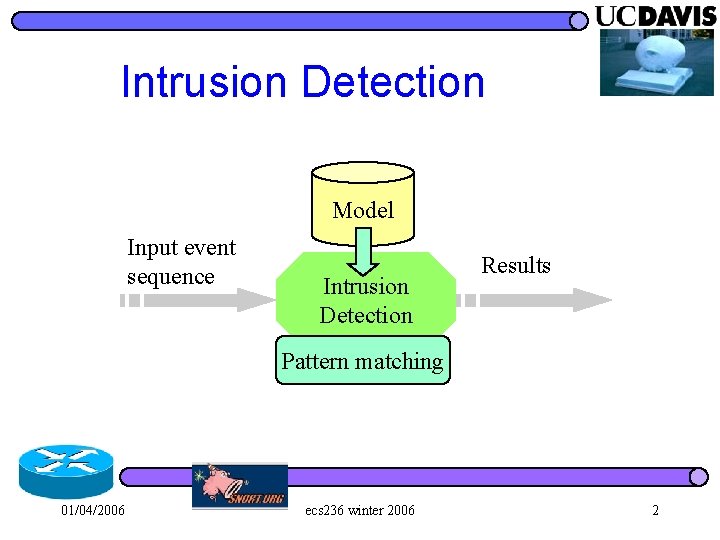

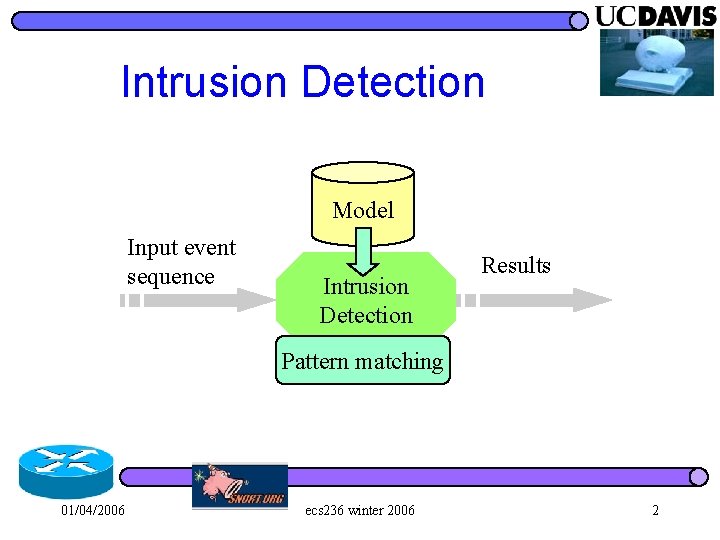

Intrusion Detection Model

[Figure: an input event sequence feeds Intrusion Detection (pattern matching), which produces results.]

Scalability of Detection

- Number of signatures, amount of analysis
- Unknown exploits/vulnerabilities

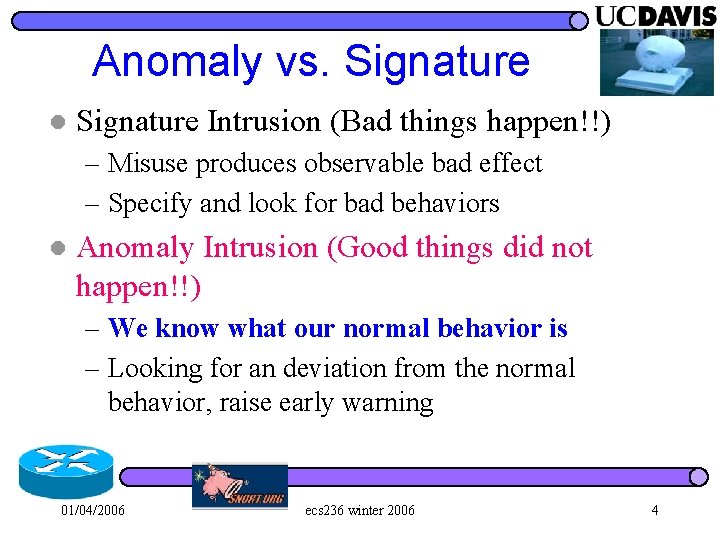

Anomaly vs. Signature

- Signature Intrusion (Bad things happen!!)
  - Misuse produces an observable bad effect
  - Specify and look for bad behaviors
- Anomaly Intrusion (Good things did not happen!!)
  - We know what our normal behavior is
  - Look for a deviation from the normal behavior and raise an early warning

Reasons for “AND”

- Unknown attacks (insider threat)
- Better scalability
  - AND: target/vulnerabilities
  - SD: exploits

Another definition…

- Signature-based detection
  - Predefine signatures of anomalies
  - Pattern matching
  - Convert our limited/partial understanding/modeling of the target system or protocol into detection heuristics (i.e., BUTTERCUP signatures)
- Statistics-based detection
  - Build a statistics profile for expected behaviors
  - Compare testing behaviors with expected behaviors
  - Significant deviation
  - Based on our experience, select a set of “features” that will likely distinguish expected from unexpected behavior

What is “vulnerability”?

What is “vulnerability”?

- Signature Detection: create “effective/strong/scaleable” signatures
- Anomaly Detection: detect/discover “unknown vulnerabilities”

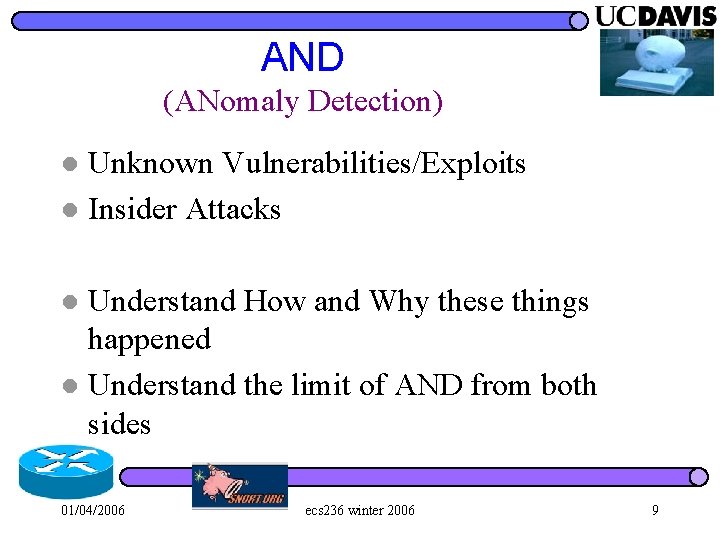

AND (ANomaly Detection)

- Unknown Vulnerabilities/Exploits
- Insider Attacks
- Understand How and Why these things happened
- Understand the limit of AND from both sides

What is an anomaly?

![Input Events: binning each sample of the statistic measure X](https://slidetodoc.com/presentation_image_h2/3979034093228da6320f5d79e6de3510/image-11.jpg)

Input Events (SAND)

For each sample of the statistic measure, X:

| Bin | (0, 1] | (1, 3] | (3, 15] | (15, +∞) |
|---|---|---|---|---|
| Share of samples | 40% | 30% | 20% | 10% |
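The binning step above can be sketched in a few lines. This is only an illustration, assuming positive samples and the right-closed bin edges shown on the slide; the names `EDGES`, `bin_index`, and `empirical_distribution` are hypothetical and not part of the original material.

```python
from bisect import bisect_left

# Upper edges of the right-closed bins (0, 1], (1, 3], (3, 15].
# Anything larger than the last edge falls into (15, +inf).
EDGES = [1, 3, 15]

def bin_index(x, edges=EDGES):
    """Return the index of the right-closed bin (lo, hi] that contains x."""
    if x <= 0:
        raise ValueError("samples are assumed to be positive")
    # bisect_left puts a value equal to an edge into the bin that ends at that edge.
    return bisect_left(edges, x)

def empirical_distribution(samples, edges=EDGES):
    """Return the fraction of samples that falls into each bin."""
    counts = [0] * (len(edges) + 1)
    for x in samples:
        counts[bin_index(x, edges)] += 1
    total = len(samples)
    return [c / total for c in counts]
```

With ten samples of which four fall in (0, 1], three in (1, 3], two in (3, 15], and one above 15, `empirical_distribution` returns the 40/30/20/10 split from the table.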

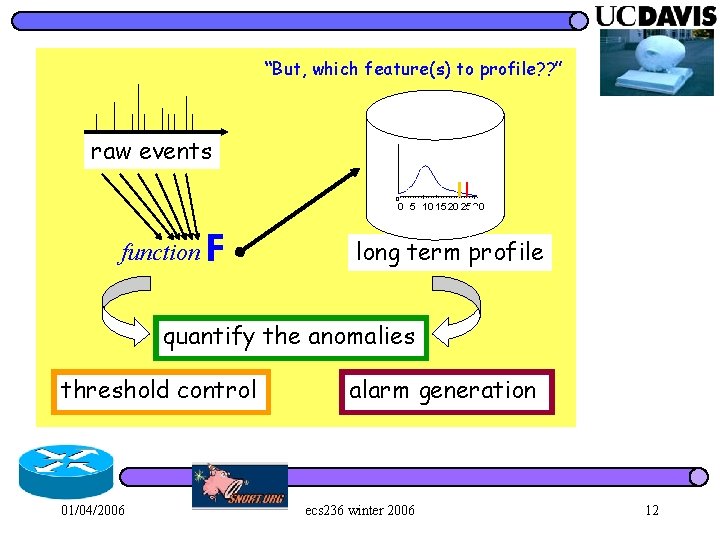

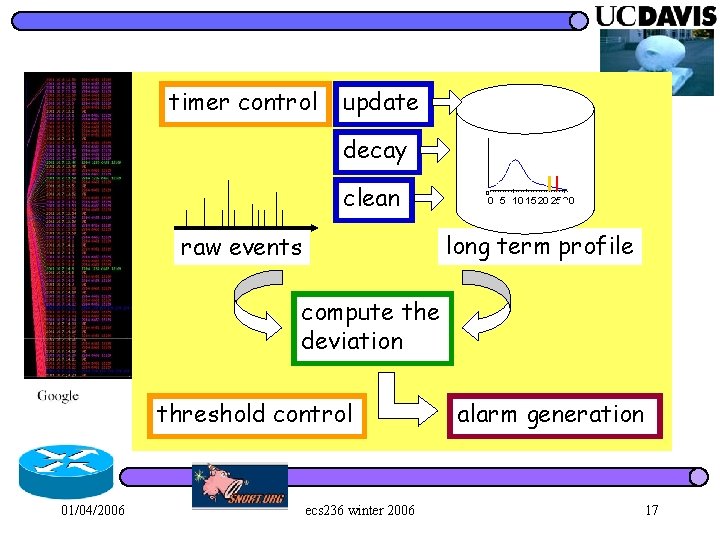

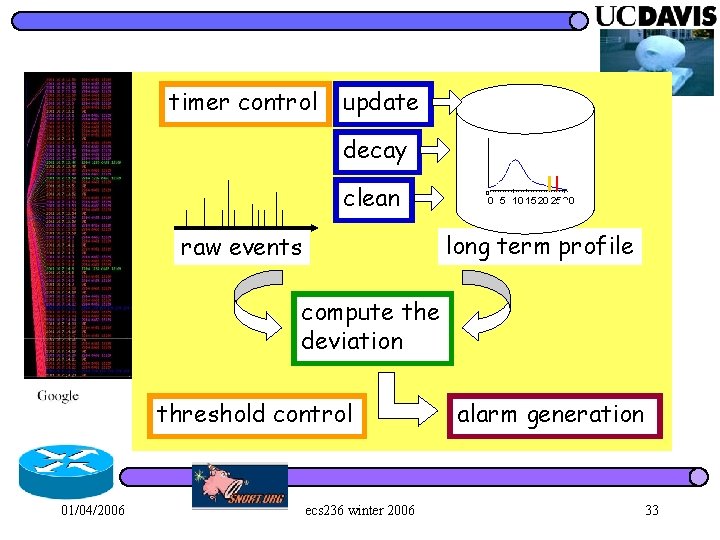

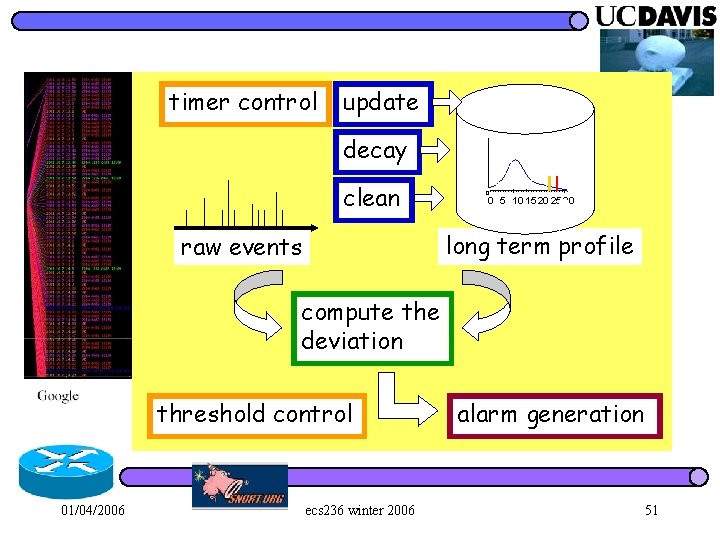

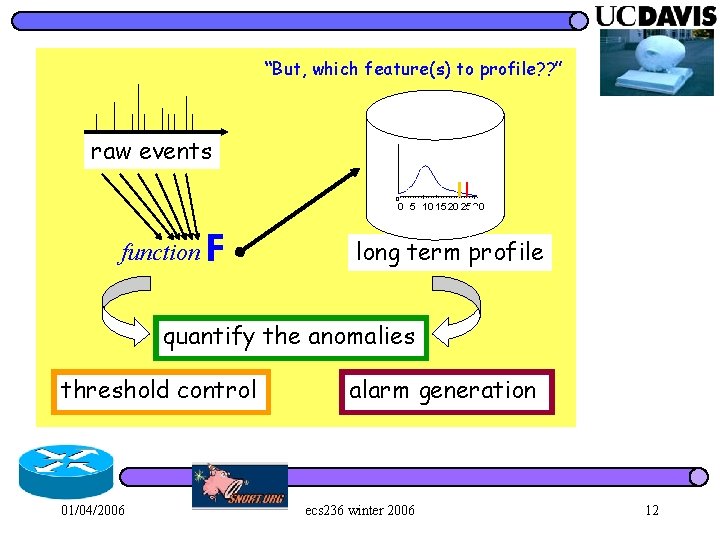

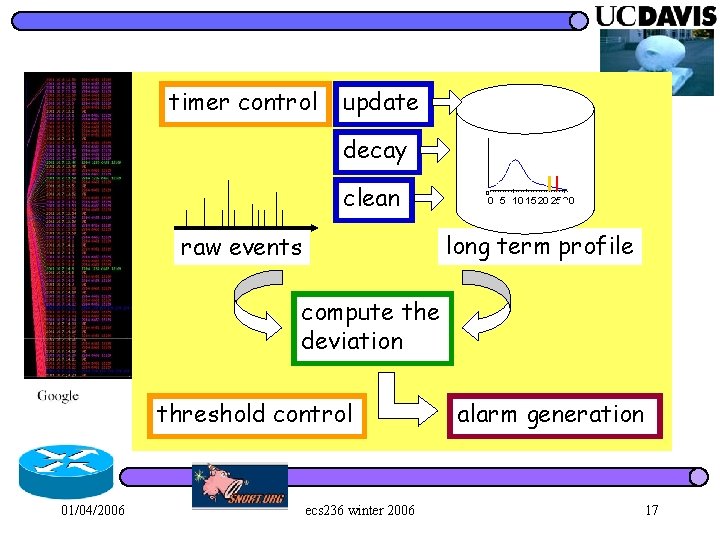

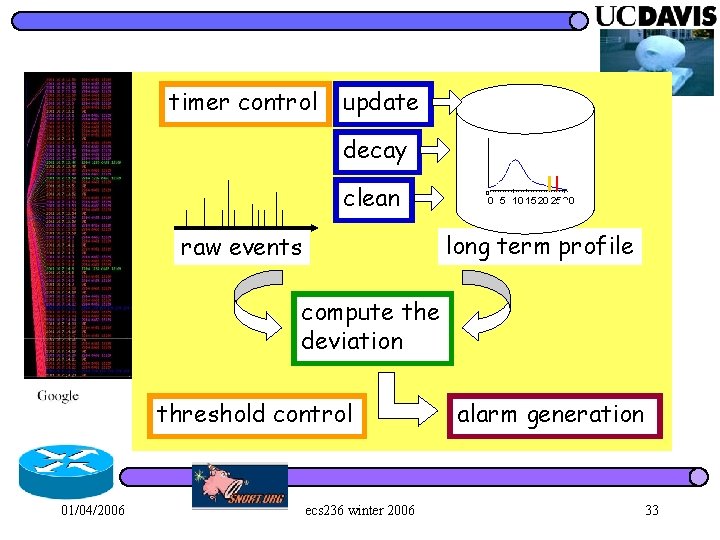

“But, which feature(s) to profile??”

[Figure: raw events pass through a function F into the long-term profile; the anomalies are quantified and passed through threshold control to alarm generation.]

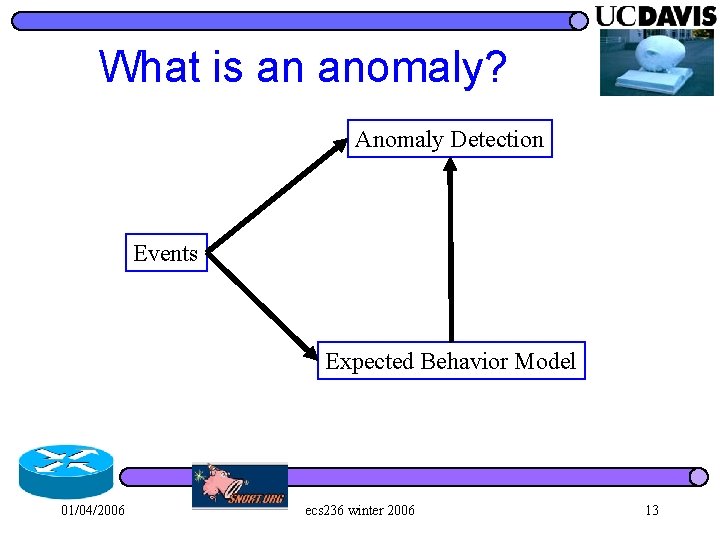

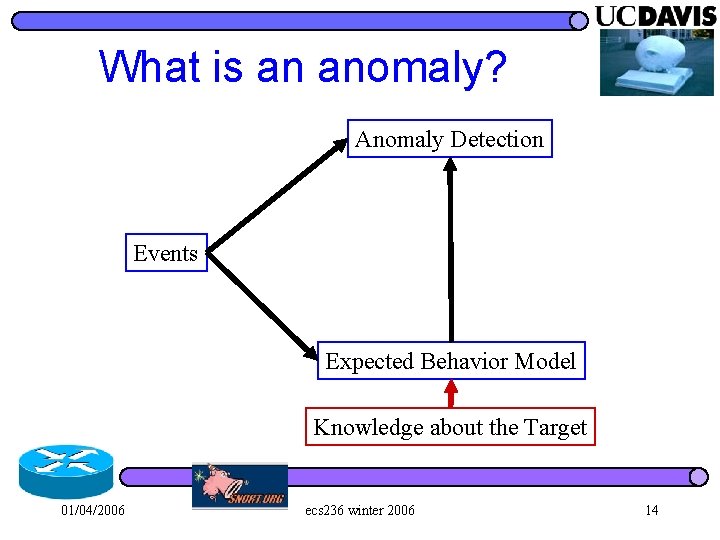

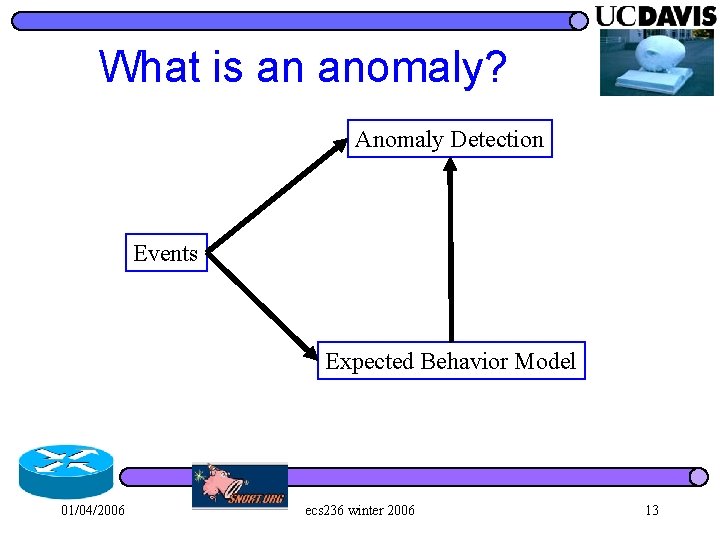

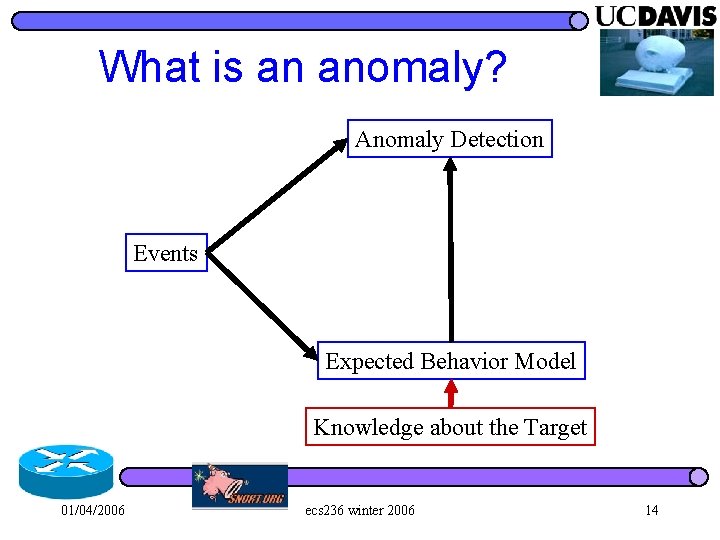

What is an anomaly?

[Figure: Events and an Expected Behavior Model feed Anomaly Detection.]

What is an anomaly?

[Figure: Events and an Expected Behavior Model feed Anomaly Detection; the model is derived from Knowledge about the Target.]

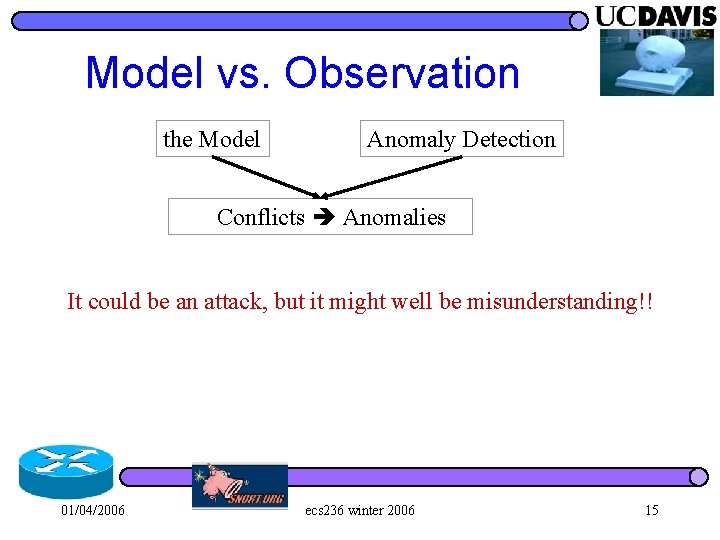

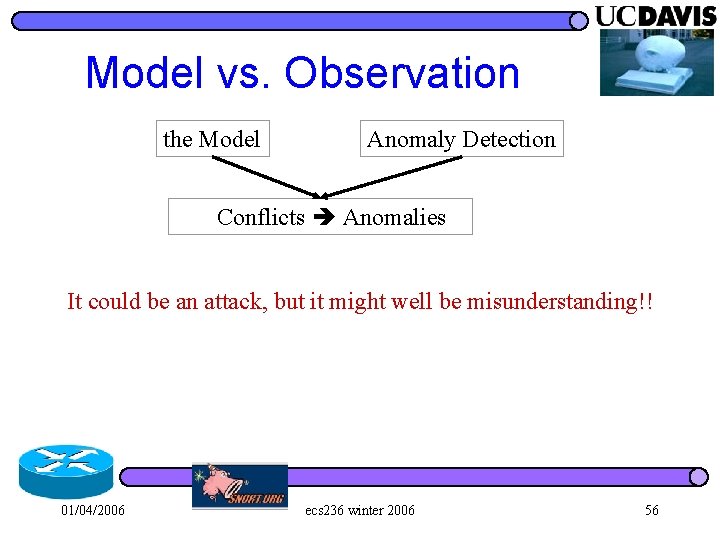

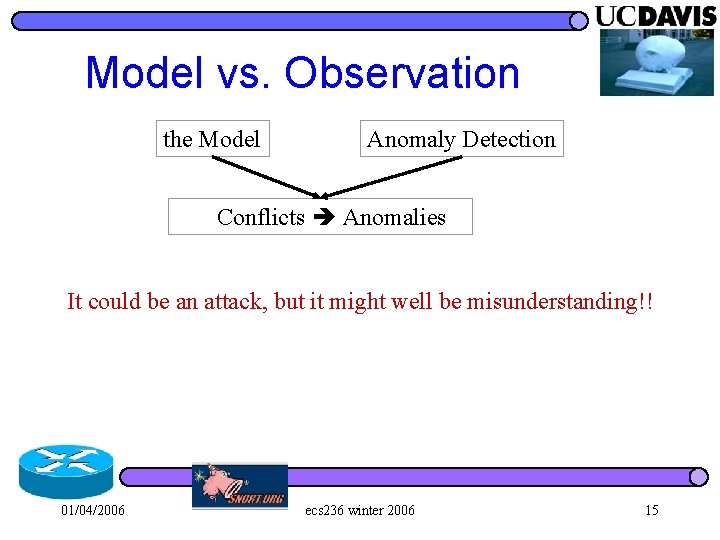

Model vs. Observation

[Figure: Anomaly Detection compares observations against the Model; conflicts are reported as anomalies.]

It could be an attack, but it might well be a misunderstanding!!

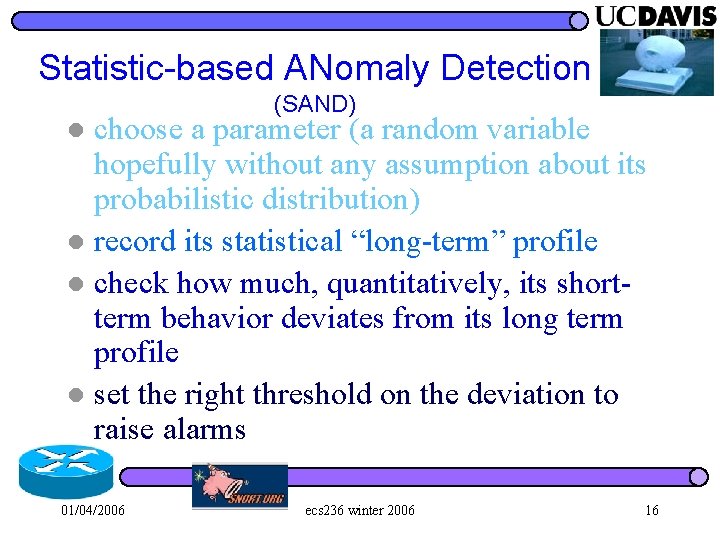

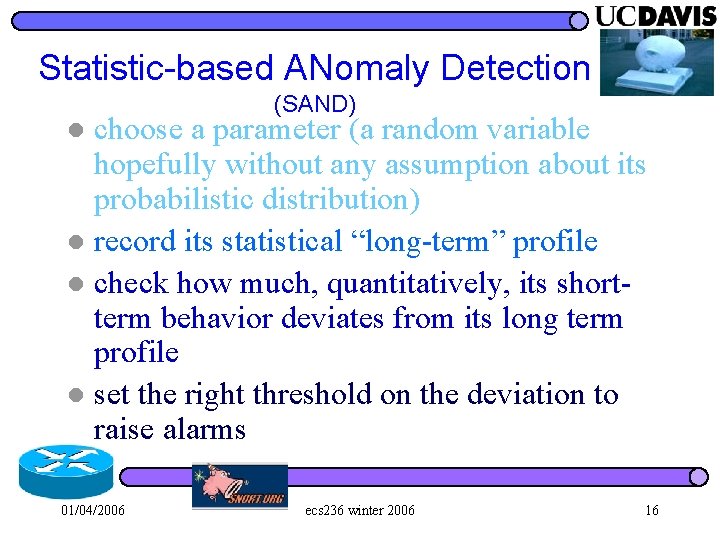

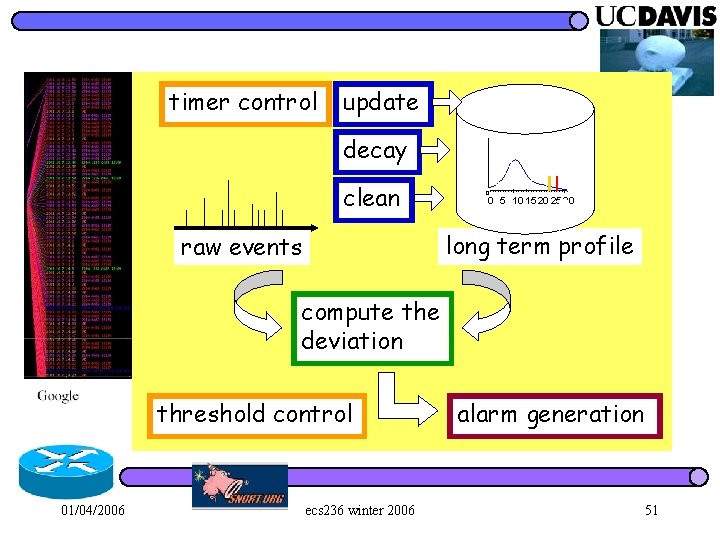

Statistic-based ANomaly Detection (SAND)

- Choose a parameter (a random variable, hopefully without any assumption about its probability distribution)
- Record its statistical “long-term” profile
- Check how much, quantitatively, its short-term behavior deviates from its long-term profile
- Set the right threshold on the deviation to raise alarms

[Figure: SAND pipeline. Raw events feed a long-term profile that is maintained by timer-controlled update, decay, and clean steps; the deviation from the profile is computed and passed through threshold control to alarm generation.]

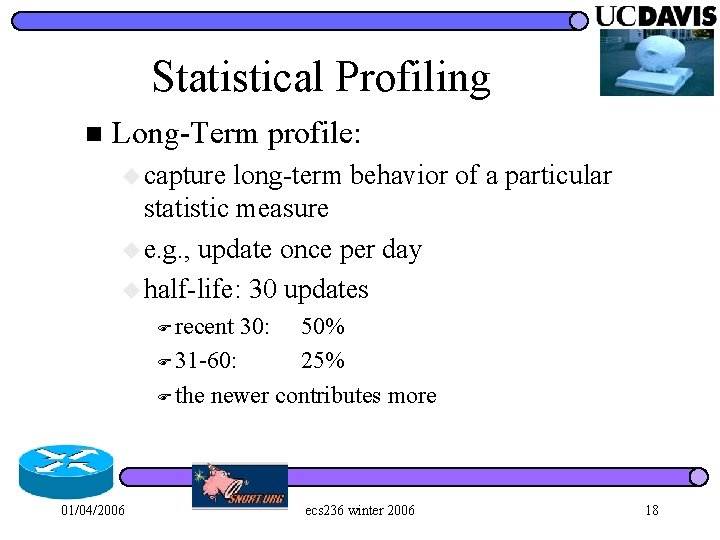

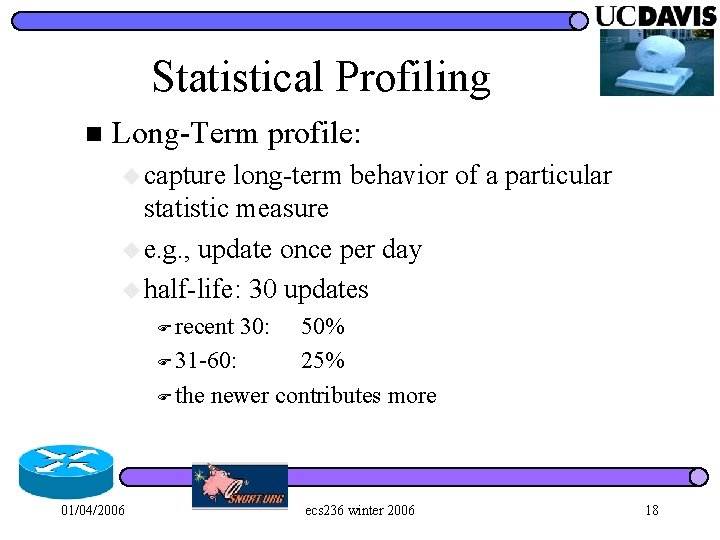

Statistical Profiling

- Long-term profile:
  - captures the long-term behavior of a particular statistic measure
  - e.g., update once per day
  - half-life: 30 updates
    - most recent 30 updates: 50%
    - updates 31-60: 25%
    - the newer contributes more
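The half-life figures above follow from a geometric decay. The sketch below assumes each update multiplies the weight of everything older by a constant factor chosen so that 30 updates halve it; the names `HALF_LIFE`, `DECAY`, and `share` are illustrative, not from the slides.

```python
HALF_LIFE = 30  # updates, as stated on the slide

# Per-update multiplier: applying it HALF_LIFE times halves a weight.
DECAY = 0.5 ** (1 / HALF_LIFE)

def share(first_age, last_age, decay=DECAY):
    """Fraction of the total profile weight carried by updates whose age
    (0 = newest) lies in [first_age, last_age].

    An update of age a has weight decay**a and the total weight is
    1 / (1 - decay), so the normalized sum over the range telescopes.
    """
    return decay ** first_age - decay ** (last_age + 1)
```

Under this assumption `share(0, 29)` is one half and `share(30, 59)` is one quarter, matching the 50% and 25% on the slide.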

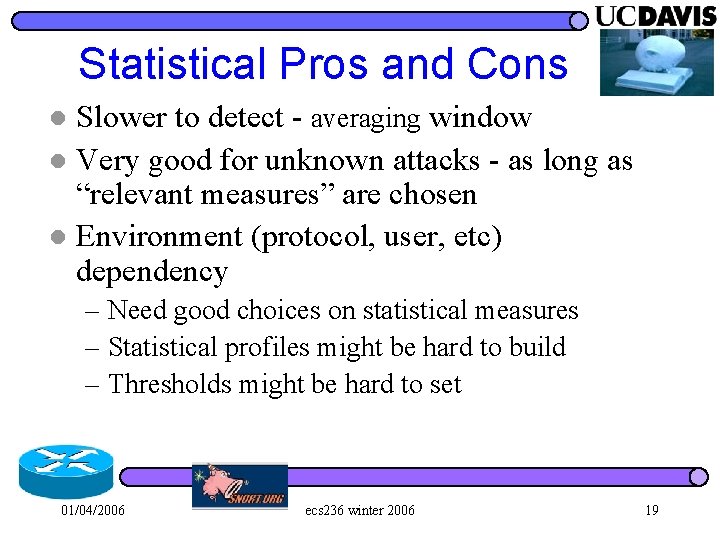

Statistical Pros and Cons

- Slower to detect: averaging window
- Very good for unknown attacks, as long as “relevant measures” are chosen
- Environment (protocol, user, etc.) dependency
  - Need good choices on statistical measures
  - Statistical profiles might be hard to build
  - Thresholds might be hard to set

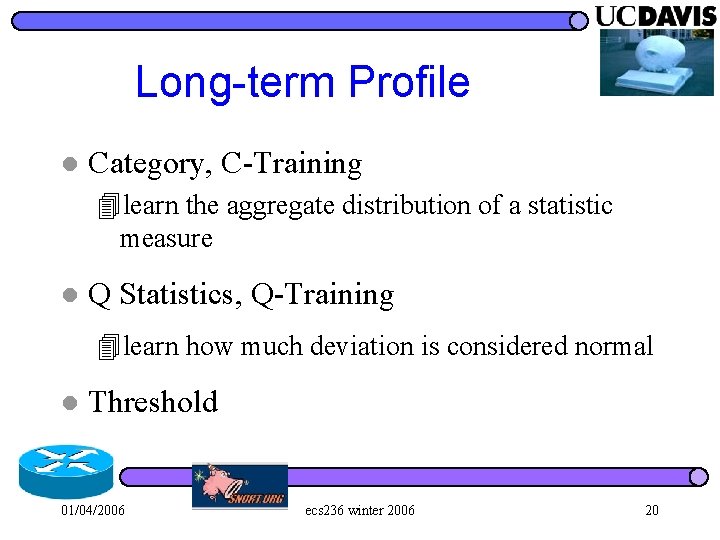

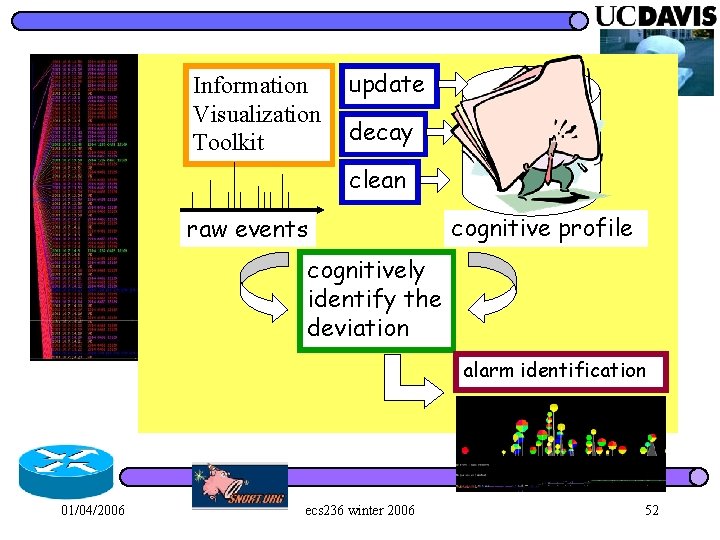

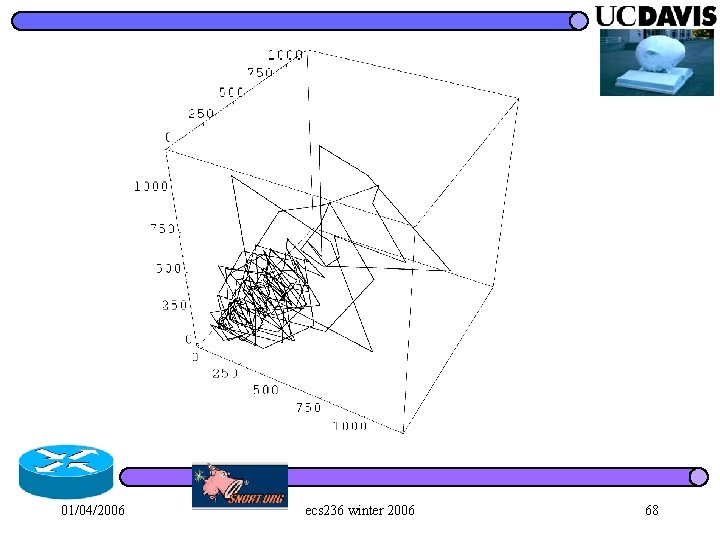

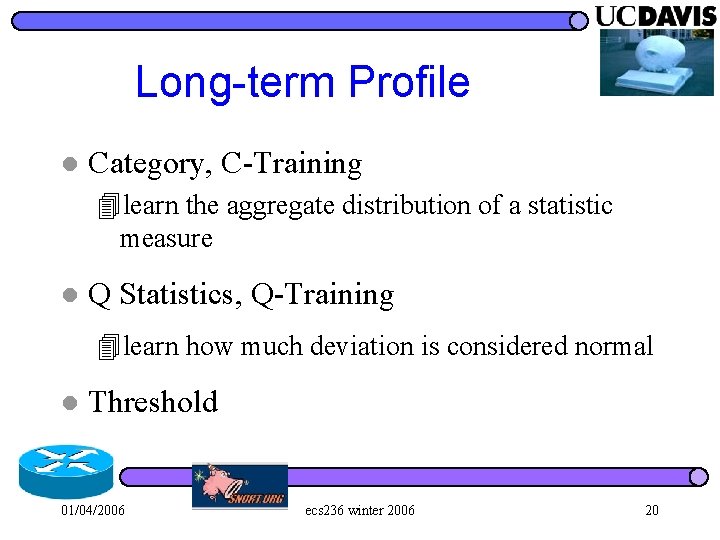

Long-term Profile

- Category, C-Training
  - learn the aggregate distribution of a statistic measure
- Q Statistics, Q-Training
  - learn how much deviation is considered normal
- Threshold

![Long-term Profile: C-Training, binning each sample of the statistic measure X](https://slidetodoc.com/presentation_image_h2/3979034093228da6320f5d79e6de3510/image-21.jpg)

Long-term Profile: C-Training

For each sample of the statistic measure, X:

| Bin | (0, 50] | (50, 75] | (75, 90] | (90, +∞) |
|---|---|---|---|---|
| Share of samples | 20% | 30% | 40% | 10% |

- k bins
- Expected distribution, $P_1, P_2, \ldots, P_k$, where $\sum_{i=1}^{k} P_i = 1$
- Training time: months

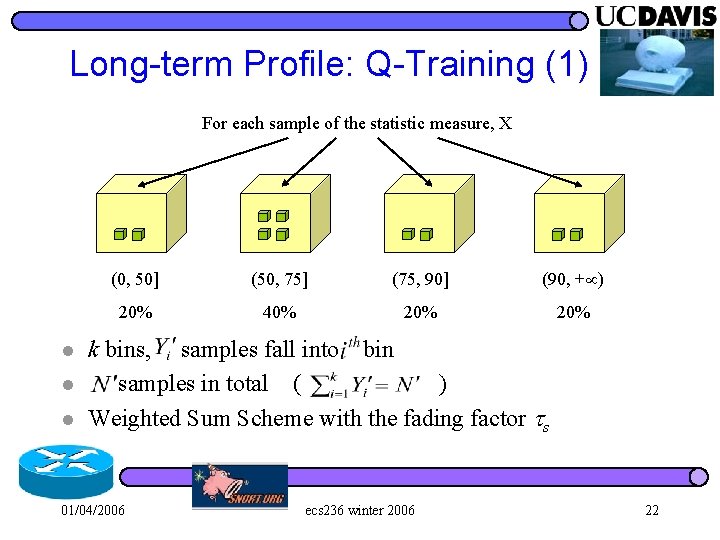

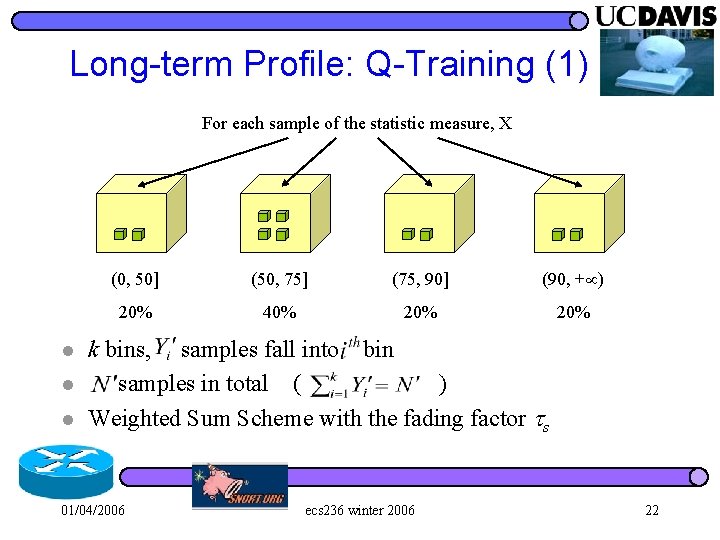

Long-term Profile: Q-Training (1)

For each sample of the statistic measure, X, with bins (0, 50], (50, 75], (75, 90], (90, +∞): 20%, 40%, 20%

- k bins, $Y_i$ samples fall into the $i$th bin
- $N$ samples in total ($N = \sum_{i=1}^{k} Y_i$)
- Weighted Sum Scheme with the fading factor s

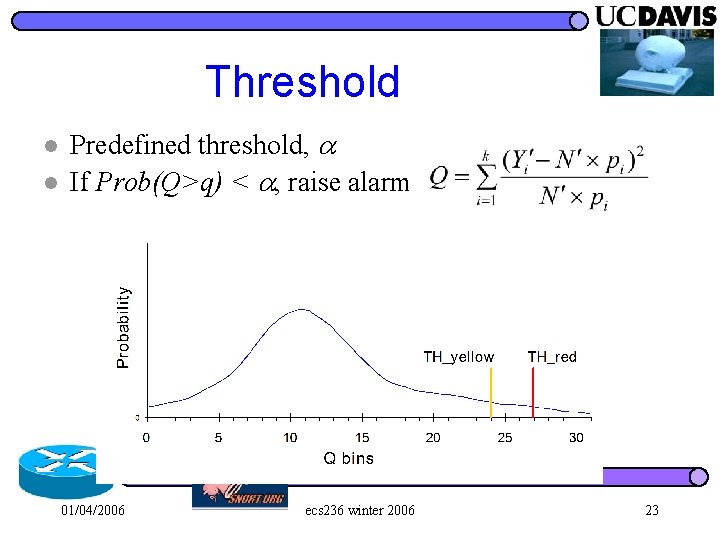

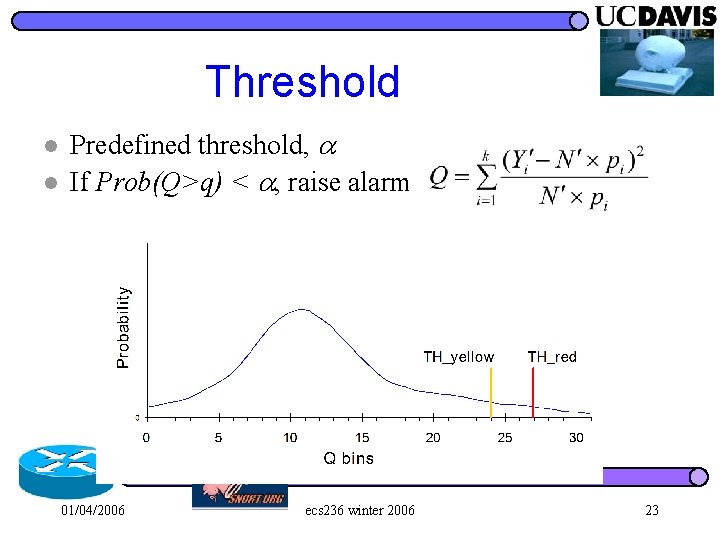

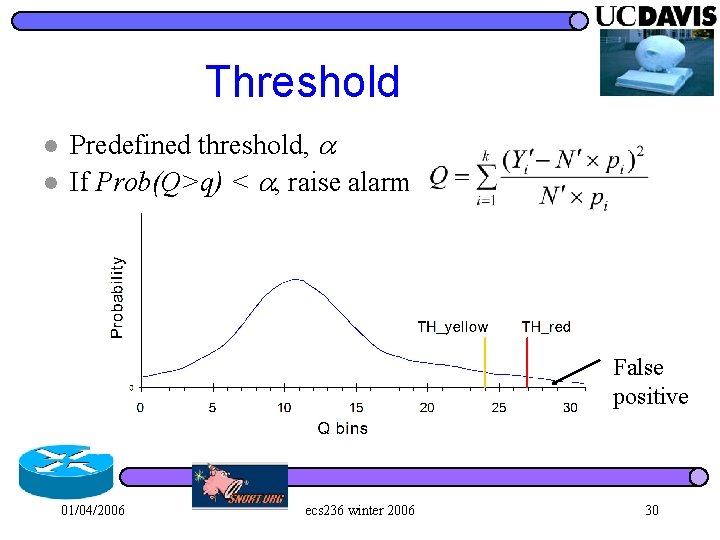

Threshold

- Predefined threshold
- If Prob(Q > q) is below the threshold, raise an alarm

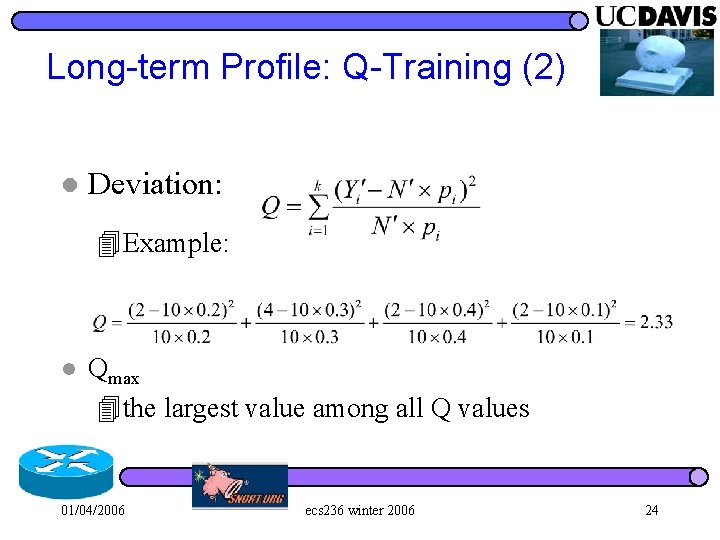

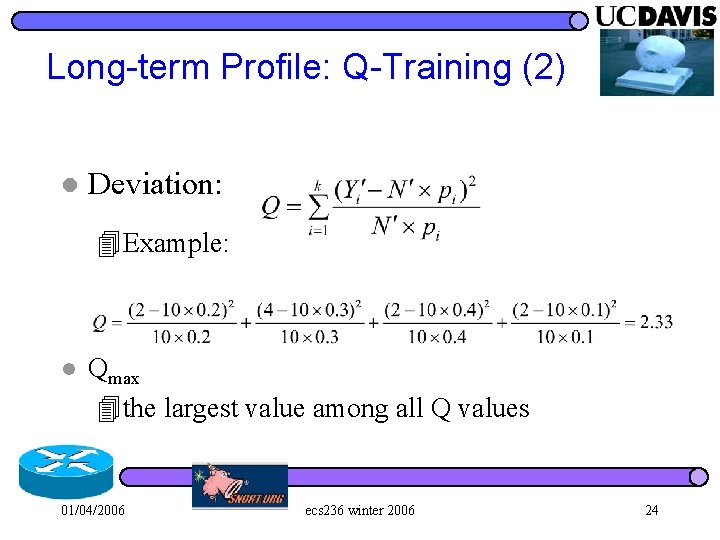

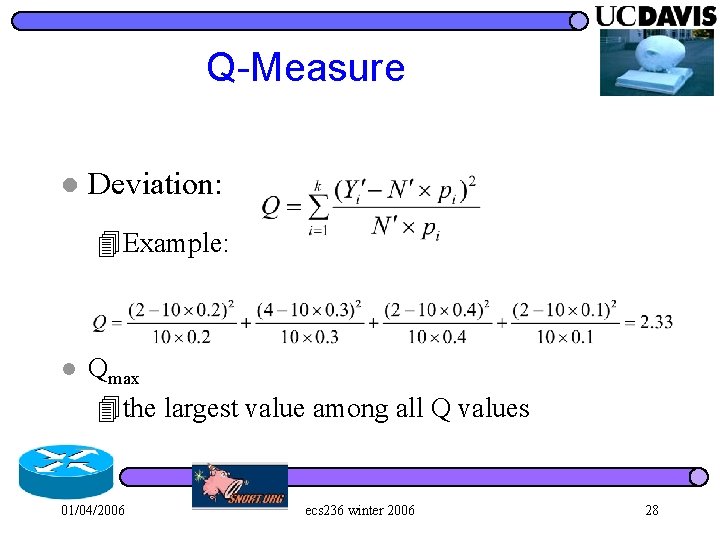

Long-term Profile: Q-Training (2)

- Deviation:
  - Example:
- Qmax
  - the largest value among all Q values
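The deviation formula itself did not survive in the slide text. As a hedged illustration only, the sketch below assumes the chi-square-like statistic commonly used in NIDES-style statistical detectors, $Q = \sum_i (Y_i - N P_i)^2 / (N P_i)$, where $Y_i$ is the observed count in bin $i$, $N$ the total count, and $P_i$ the expected share from C-Training. The function name `q_statistic` is hypothetical.

```python
def q_statistic(counts, expected):
    """Chi-square-like deviation between observed bin counts and an expected
    distribution. This form is an assumption, not taken from the slide text.

    counts   -- observed count per bin (Y_i)
    expected -- expected share per bin (P_i), each > 0, summing to 1
    """
    n = sum(counts)
    return sum((y - n * p) ** 2 / (n * p) for y, p in zip(counts, expected))
```

For example, with the C-Training profile 20/30/40/10 and 100 observed samples split 20/40/20/20, the statistic is 0 + 100/30 + 400/40 + 100/10, roughly 23.3; a sample that matches the profile exactly gives 0.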

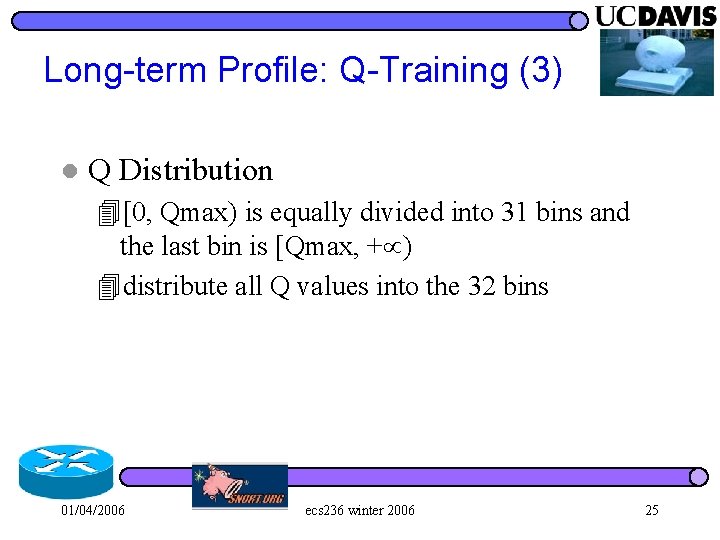

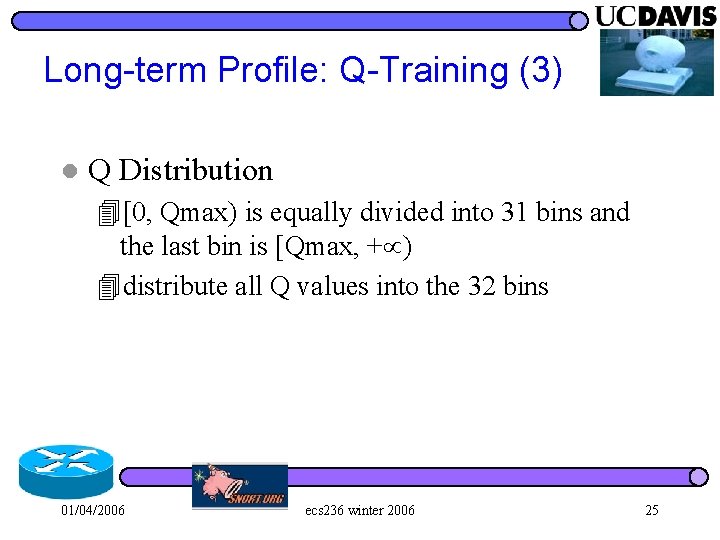

Long-term Profile: Q-Training (3)

- Q Distribution
  - [0, Qmax) is equally divided into 31 bins, and the last bin is [Qmax, +∞)
  - distribute all Q values into the 32 bins
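A minimal sketch of this 32-bin layout, assuming Q values are non-negative and Qmax is taken as the largest training value; `q_bin` and `q_histogram` are illustrative names.

```python
def q_bin(q, q_max, n_bins=32):
    """Map a Q value to its bin: [0, q_max) is split into n_bins - 1 equal
    bins and the last bin is [q_max, +inf)."""
    if q >= q_max:
        return n_bins - 1
    width = q_max / (n_bins - 1)
    # Clamp to guard against floating-point rounding just below q_max.
    return min(int(q / width), n_bins - 2)

def q_histogram(q_values, n_bins=32):
    """Count how many training Q values land in each bin."""
    q_max = max(q_values)
    counts = [0] * n_bins
    for q in q_values:
        counts[q_bin(q, q_max, n_bins)] += 1
    return counts
```

Note that with this choice of Qmax the largest training value itself always lands in the last bin.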

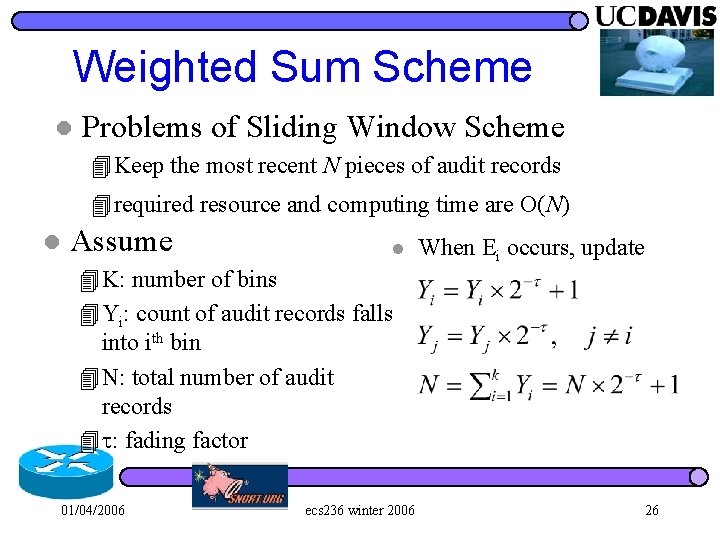

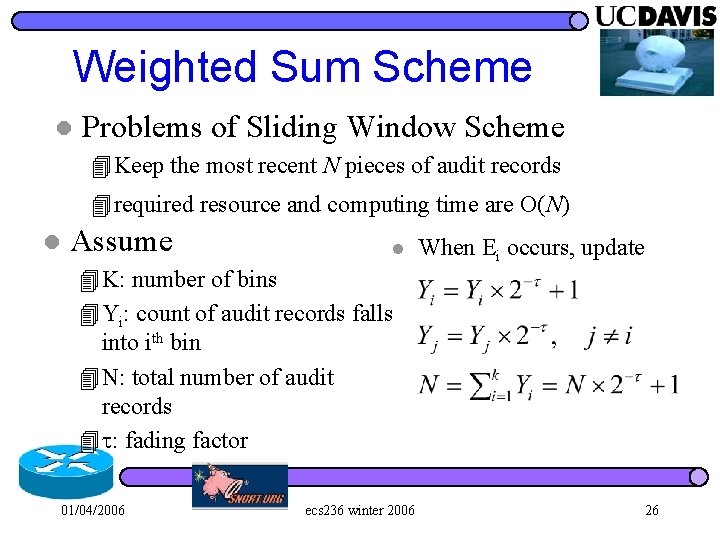

Weighted Sum Scheme

- Problems of Sliding Window Scheme
  - Keep the most recent N audit records
  - required resources and computing time are O(N)
- Assume
  - K: number of bins
  - $Y_i$: count of audit records that fall into the $i$th bin
  - N: total number of audit records
  - the fading factor
- When $E_i$ occurs, update
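The update rule is missing from the slide text, so the following is only one plausible reading: on each event, every bin count and the total are multiplied by a fading multiplier, then the bin that received the event and the total are incremented. The parameter `fade` (a multiplier in (0, 1]) and the function name are assumptions for illustration.

```python
def weighted_sum_update(counts, total, event_bin, fade):
    """Apply one event to exponentially faded bin counts.

    counts    -- list of K faded counts (Y_1..Y_K)
    total     -- faded total count (N)
    event_bin -- index of the bin the new event falls into
    fade      -- assumed multiplier in (0, 1]; smaller values forget faster
    """
    new_counts = [y * fade for y in counts]
    new_counts[event_bin] += 1
    return new_counts, total * fade + 1
```

Unlike a sliding window, this keeps only K counters and one total, so memory no longer grows with the number of retained audit records.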

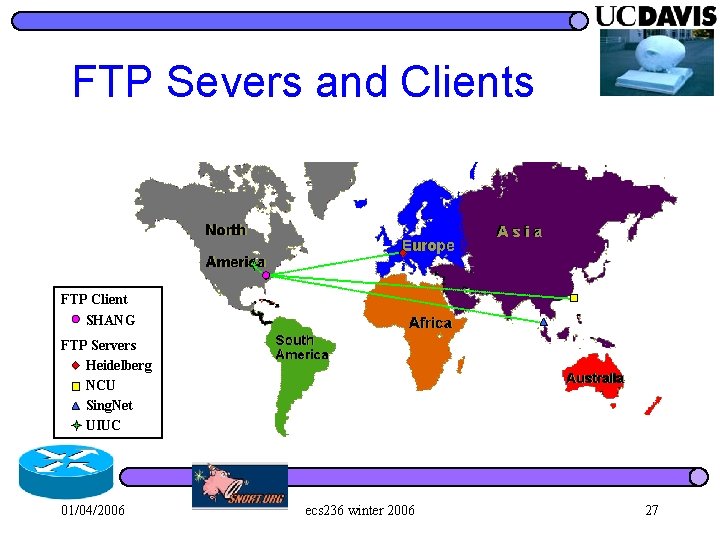

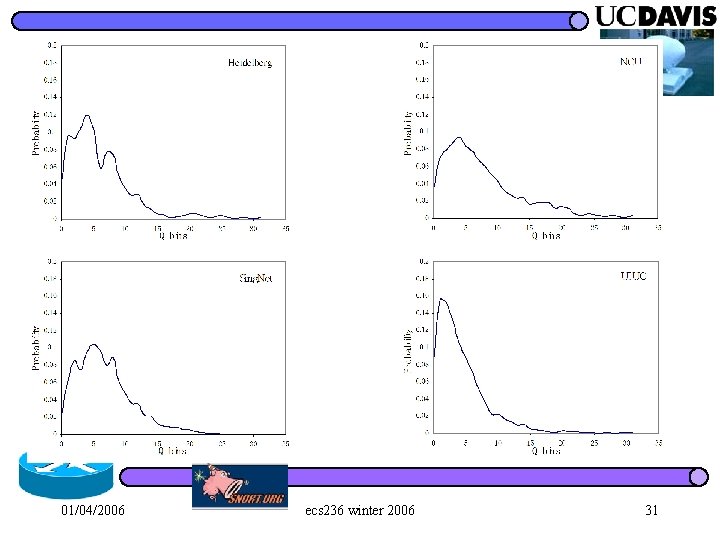

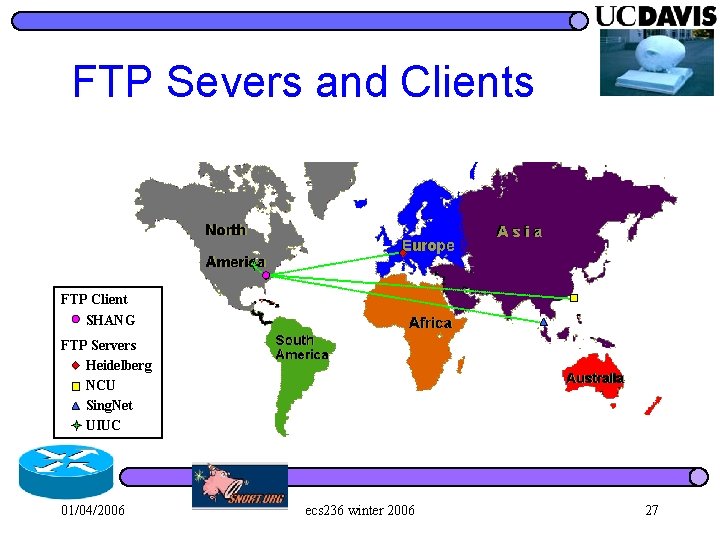

FTP Servers and Clients

- FTP client: SHANG
- FTP servers: Heidelberg, NCU, SingNet, UIUC

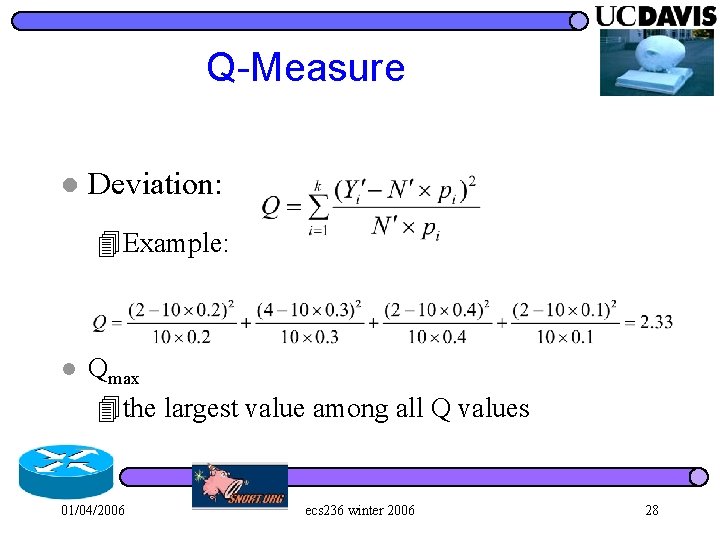

Q-Measure

- Deviation:
  - Example:
- Qmax
  - the largest value among all Q values

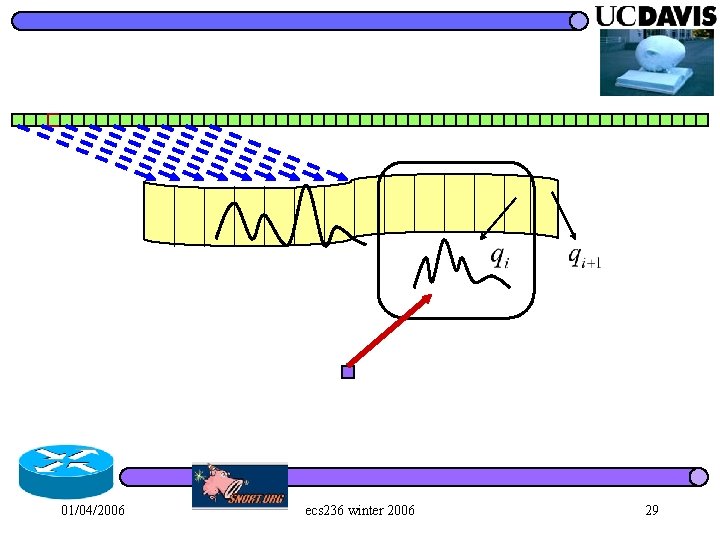


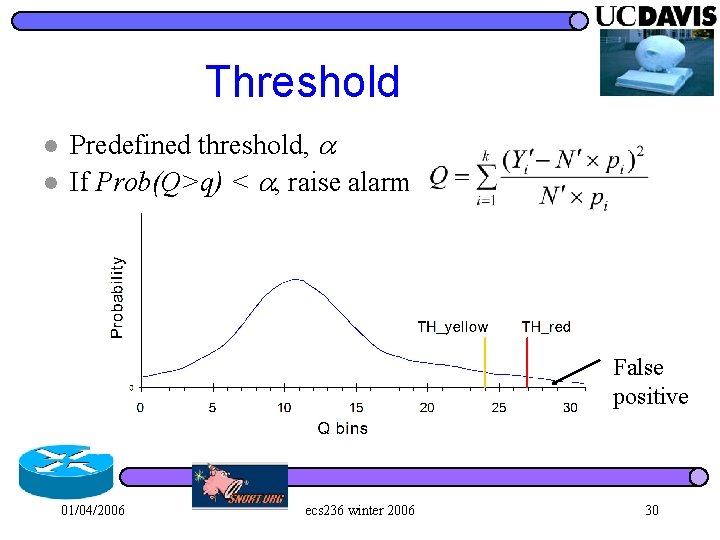

Threshold

- Predefined threshold
- If Prob(Q > q) is below the threshold, raise an alarm
- False positive
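The threshold test can be sketched against an empirical Q distribution. This assumes Prob(Q > q) is estimated as the fraction of training Q values that exceed the observed q; `tail_probability`, `should_alarm`, and the `threshold` parameter are illustrative names.

```python
def tail_probability(q, training_qs):
    """Empirical Prob(Q > q): share of training Q values larger than q."""
    return sum(1 for t in training_qs if t > q) / len(training_qs)

def should_alarm(q, training_qs, threshold):
    """Raise an alarm when the observed deviation is rarer than the threshold."""
    return tail_probability(q, training_qs) < threshold
```

Under this reading the threshold also bounds the false positive rate on traffic that resembles the training data: only about that fraction of normal Q values lies far enough in the tail to trigger an alarm.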

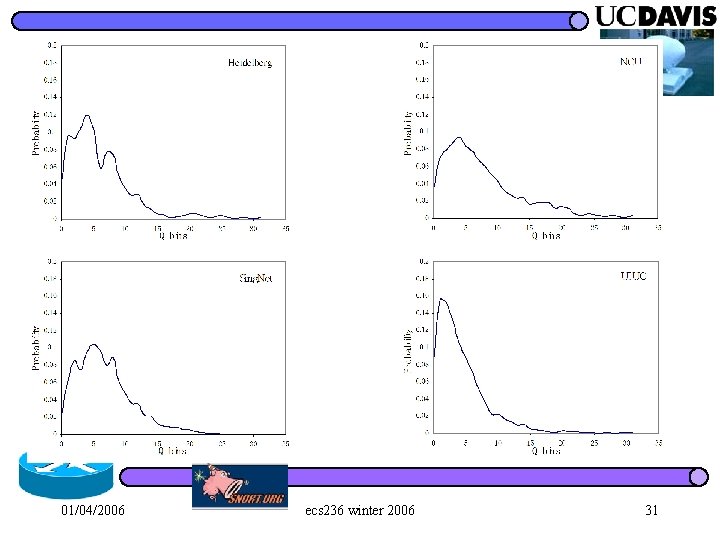


Mathematics

- Many other techniques:
  - Training/learning
  - Detection

[Figure: SAND pipeline. Raw events feed a long-term profile that is maintained by timer-controlled update, decay, and clean steps; the deviation from the profile is computed and passed through threshold control to alarm generation.]

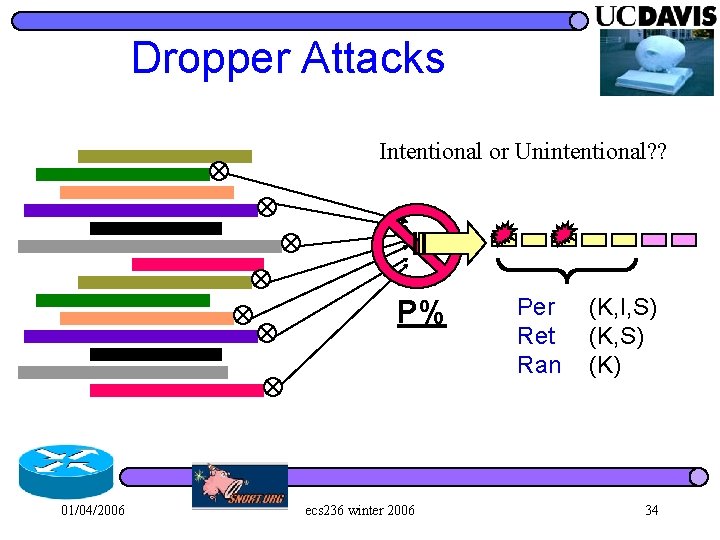

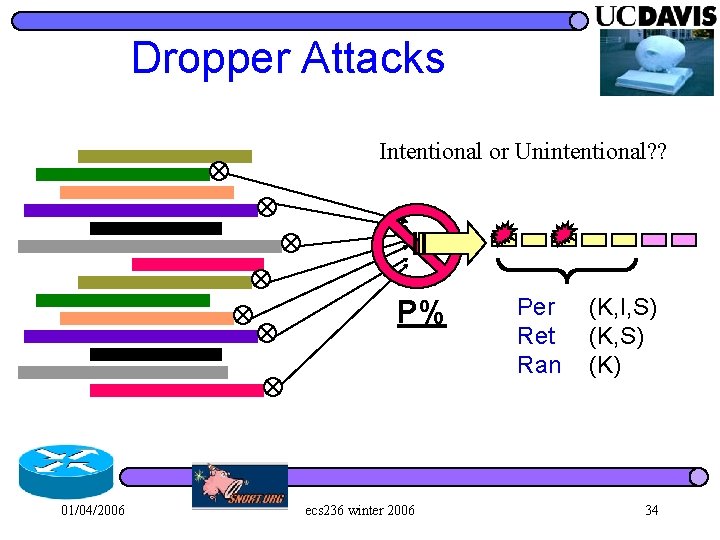

Dropper Attacks

Intentional or Unintentional??

[Figure: P% of packets are dropped; dropping patterns Per (K, I, S), Ret (K, S), and Ran (K).]

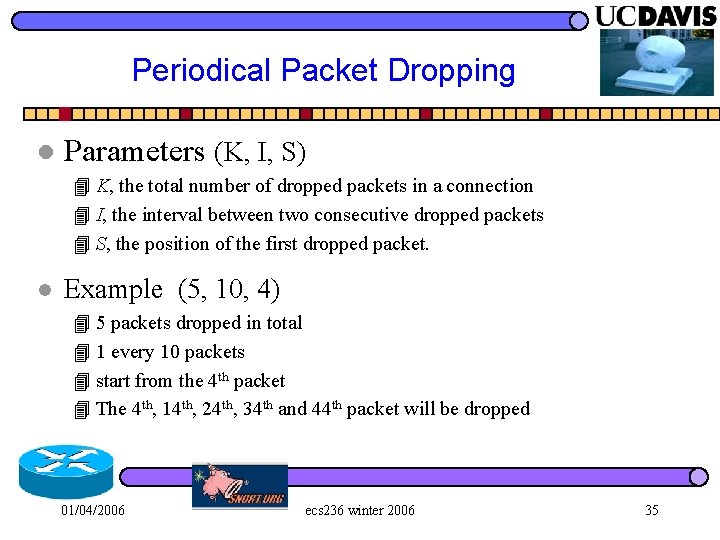

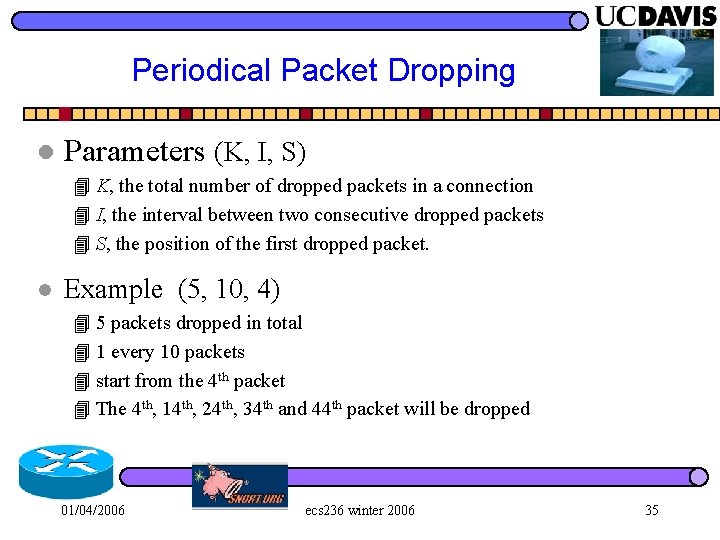

Periodical Packet Dropping

- Parameters (K, I, S)
  - K, the total number of dropped packets in a connection
  - I, the interval between two consecutive dropped packets
  - S, the position of the first dropped packet
- Example (5, 10, 4)
  - 5 packets dropped in total
  - 1 every 10 packets
  - starting from the 4th packet
  - The 4th, 14th, 24th, 34th, and 44th packets will be dropped
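The (K, I, S) pattern above reduces to an arithmetic sequence of packet positions. A small sketch, with the hypothetical helper name `periodic_drop_positions`:

```python
def periodic_drop_positions(k, i, s):
    """Positions (1-based) of the packets dropped by a periodical dropper:
    k drops in total, one every i packets, starting at position s."""
    return [s + j * i for j in range(k)]

print(periodic_drop_positions(5, 10, 4))  # [4, 14, 24, 34, 44]
```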

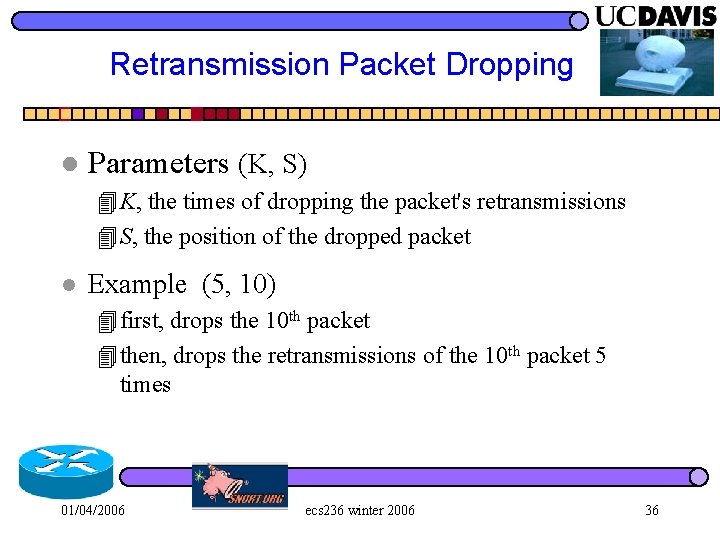

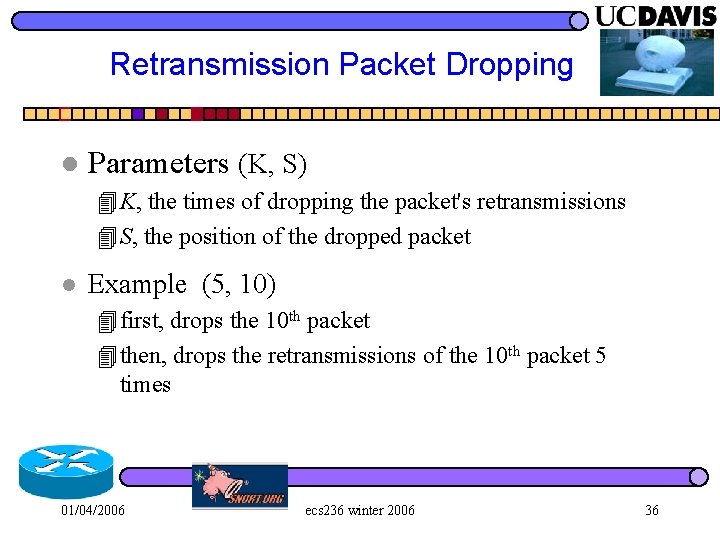

Retransmission Packet Dropping

- Parameters (K, S)
  - K, the number of times the packet's retransmissions are dropped
  - S, the position of the dropped packet
- Example (5, 10)
  - first, drop the 10th packet
  - then drop the retransmissions of the 10th packet 5 times
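One way to picture the (K, S) pattern is as a filter over the sequence numbers seen on the wire. The sketch below is a simplified model, assuming the stream lists sequence numbers in send order, that a repeated number is a retransmission, and that "position S" means the S-th distinct packet; `ret_pd_filter` is a hypothetical name.

```python
def ret_pd_filter(stream, k, s):
    """Return the sequence numbers that get through a retransmission dropper.

    The s-th distinct packet is dropped once, and then its next k
    retransmissions are dropped as well (k + 1 drops in total).
    """
    seen = set()
    target = None
    drops_left = k + 1
    delivered = []
    for seq in stream:
        if seq not in seen:
            seen.add(seq)
            if len(seen) == s:
                target = seq  # this is the packet at position s
        if seq == target and drops_left > 0:
            drops_left -= 1
            continue  # packet is dropped
        delivered.append(seq)
    return delivered
```

For instance, with k = 2 and s = 3, the stream 1, 2, 3, 3, 3, 3, 4 loses the first three copies of packet 3 and delivers 1, 2, 3, 4.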

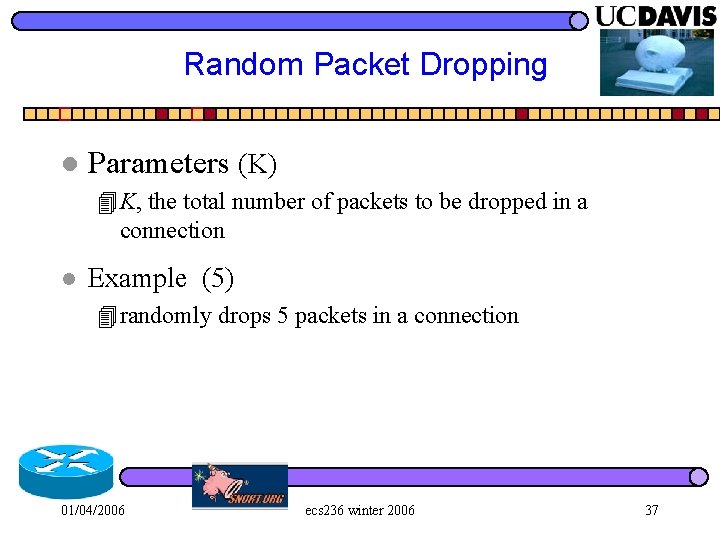

Random Packet Dropping

- Parameters (K)
  - K, the total number of packets to be dropped in a connection
- Example (5)
  - randomly drops 5 packets in a connection
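A random dropper can be modeled by sampling K distinct positions. The sketch assumes the total number of packets in the connection is known in advance, which the slide does not state; `random_drop_positions` and `total_packets` are illustrative names.

```python
import random

def random_drop_positions(k, total_packets, rng=None):
    """Pick k distinct 1-based packet positions to drop, uniformly at random.

    total_packets is an assumed input; a real dropper may not know the
    connection length up front.
    """
    if k > total_packets:
        raise ValueError("cannot drop more packets than the connection has")
    rng = rng or random.Random()
    return sorted(rng.sample(range(1, total_packets + 1), k))
```

Passing a seeded `random.Random` instance makes an experiment repeatable.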

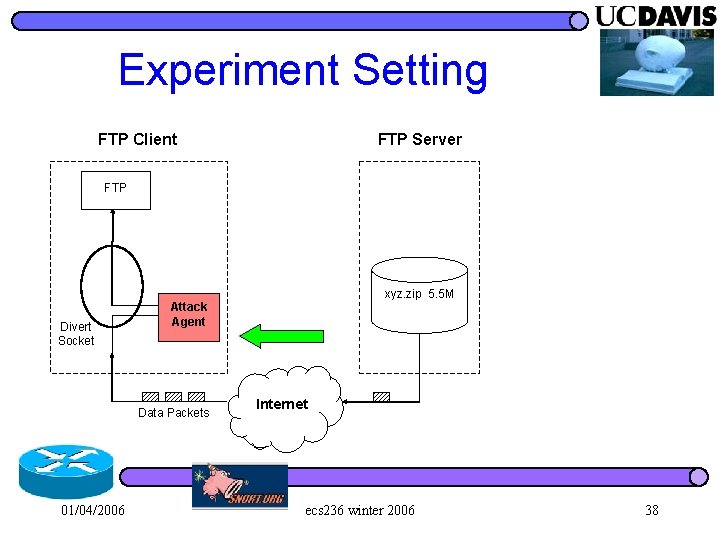

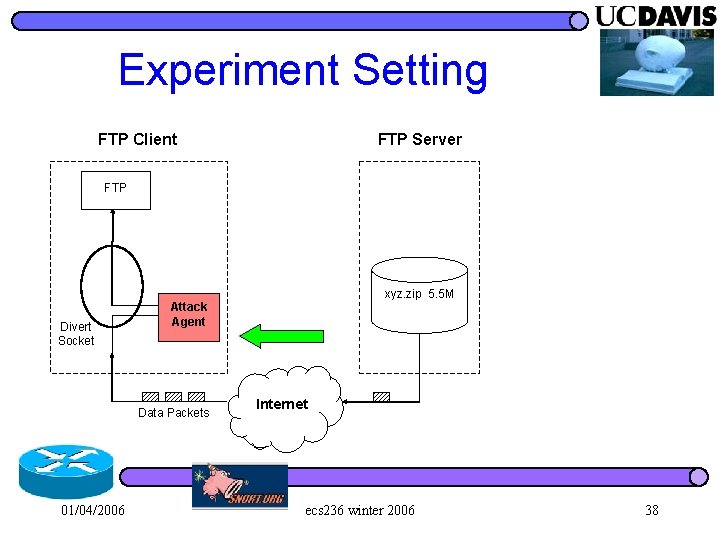

Experiment Setting

[Figure: an FTP client retrieves xyz.zip (5.5 M) from an FTP server over the Internet; an attack agent on the path uses a divert socket to intercept the data packets.]

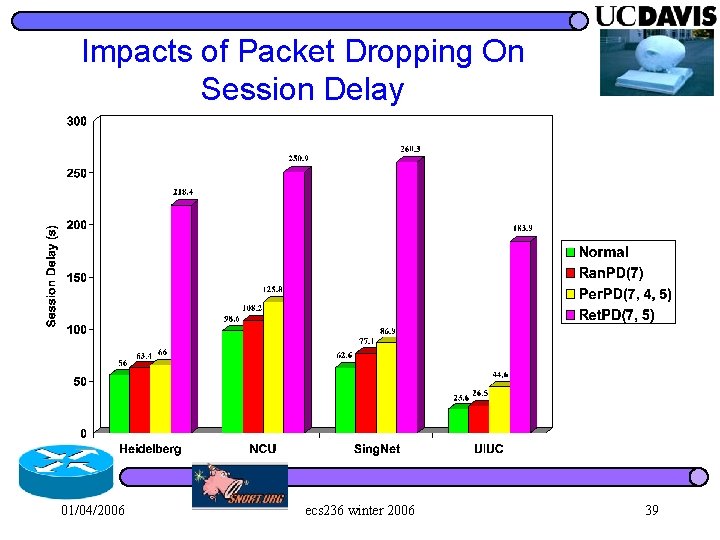

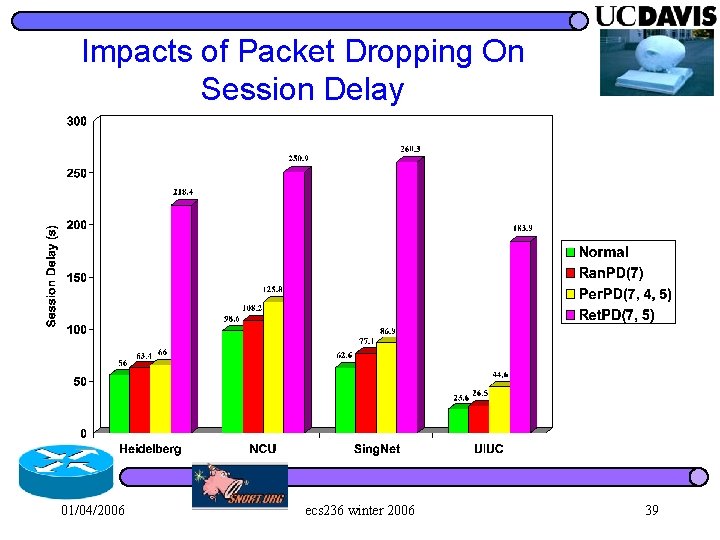

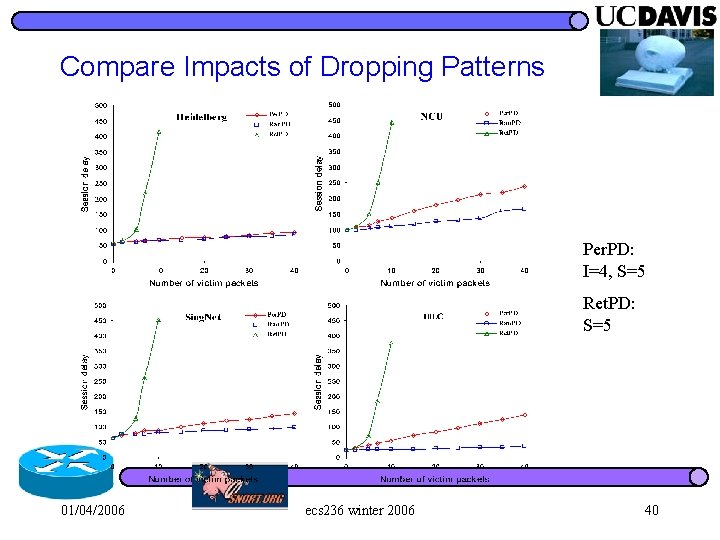

Impacts of Packet Dropping On Session Delay

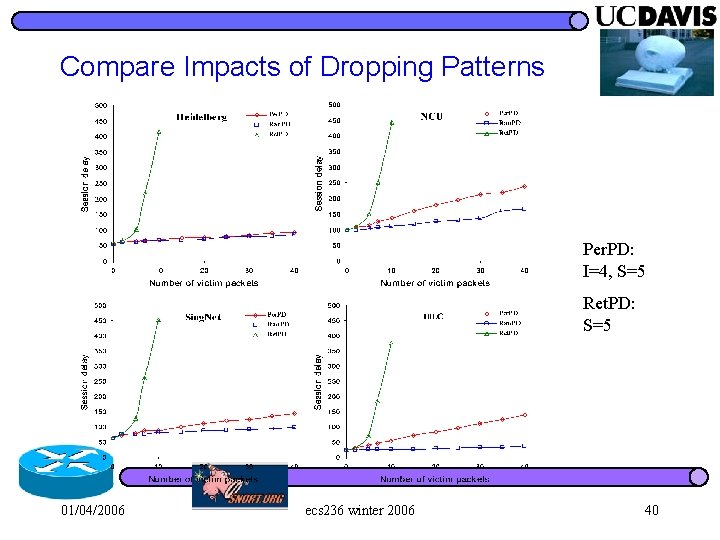

Compare Impacts of Dropping Patterns

- PerPD: I=4, S=5
- RetPD: S=5

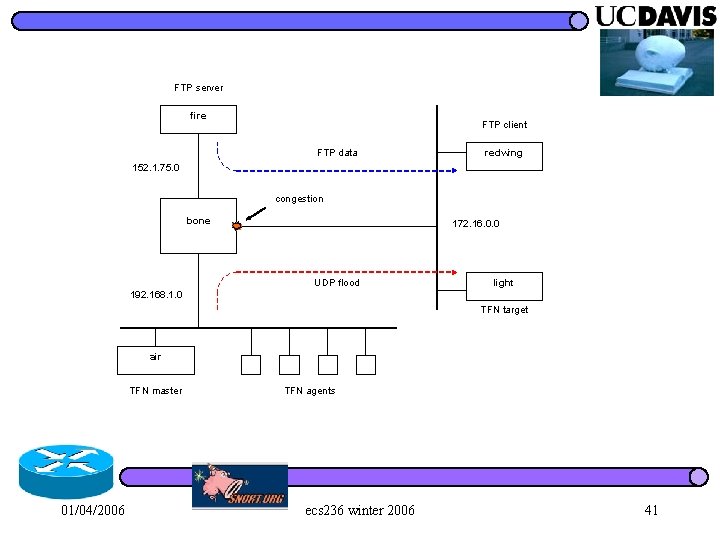

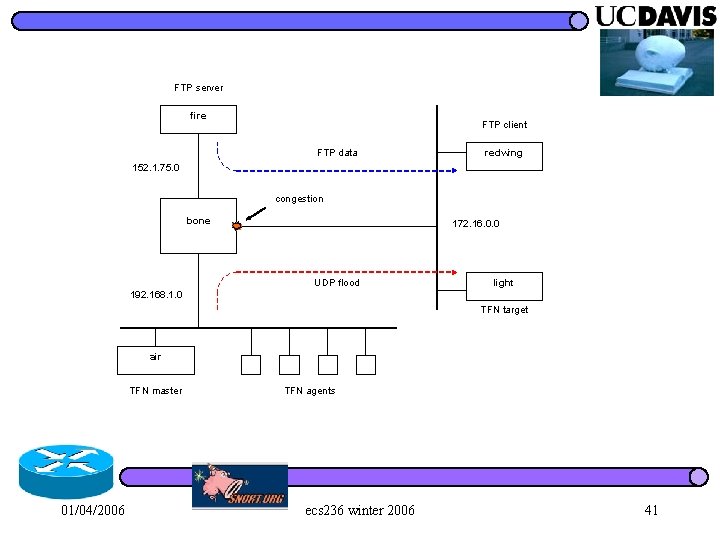

[Figure: testbed topology. Labels: FTP server, FTP client, FTP data, hosts fire, redwing, bone, light, and air, networks 152.1.75.0, 172.16.0.0, and 192.168.1.0, a TFN master, TFN agents, and a TFN target, with a UDP flood causing congestion.]

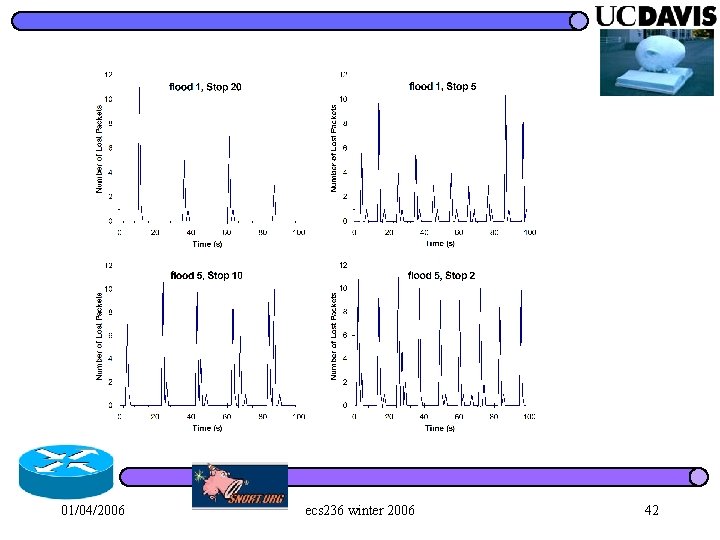

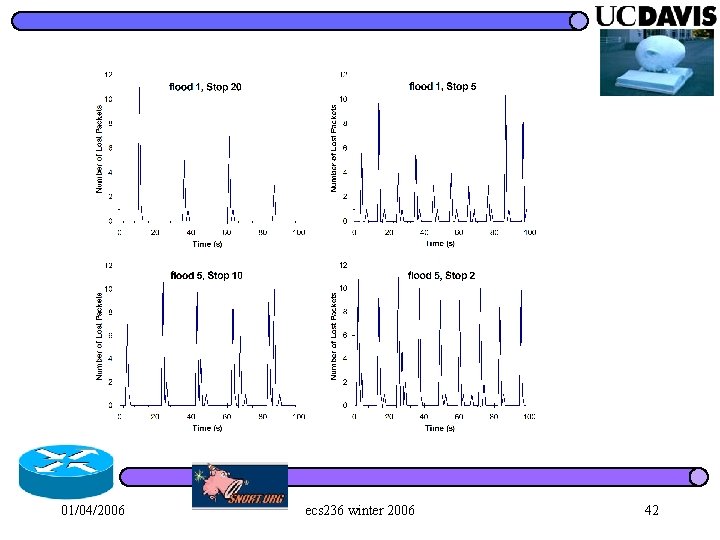


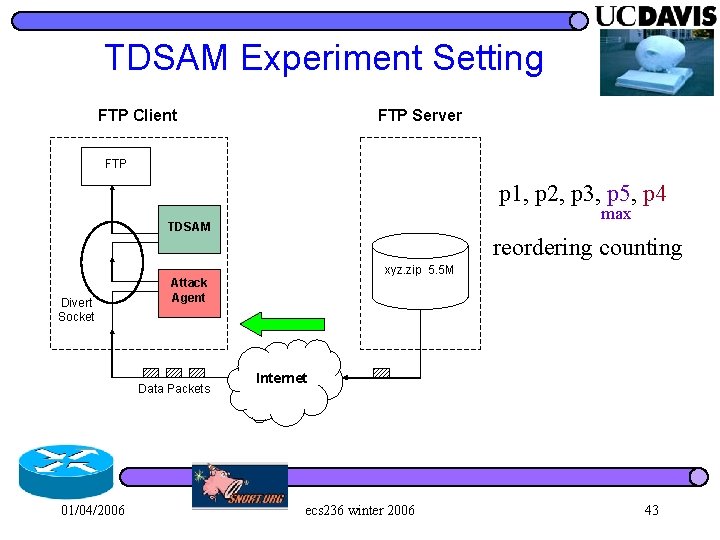

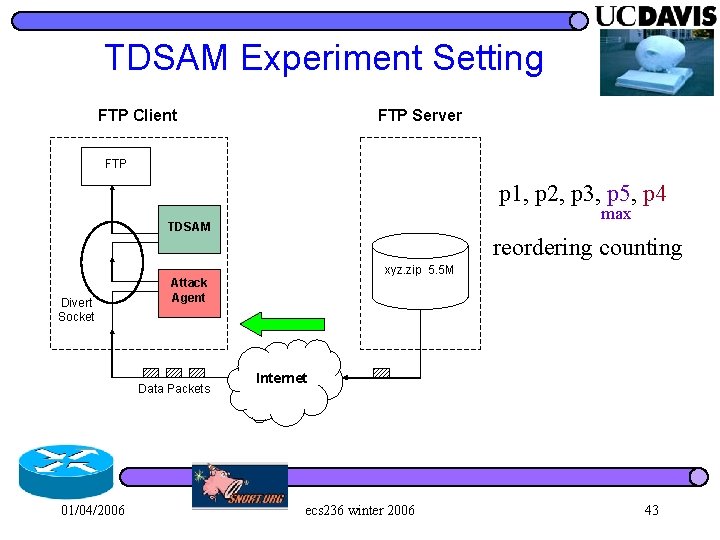

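TDSAM Experiment Setting

[Figure: an FTP client retrieves xyz.zip (5.5 M) from an FTP server over the Internet; an attack agent on the path uses a divert socket to intercept the data packets. TDSAM observes the arriving packets (p1, p2, p3, p5, p4) and performs reordering counting. Additional label: max.]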

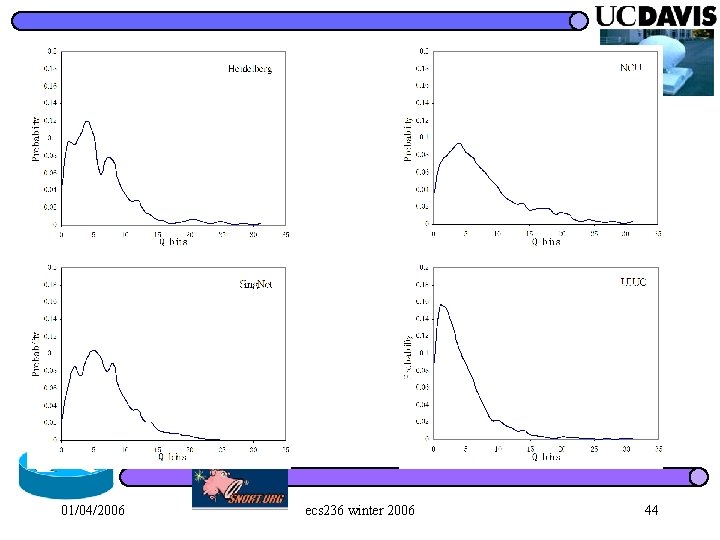

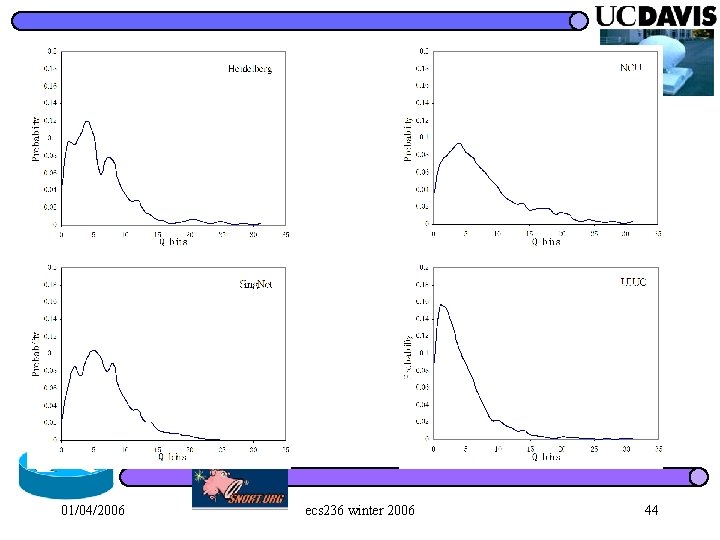


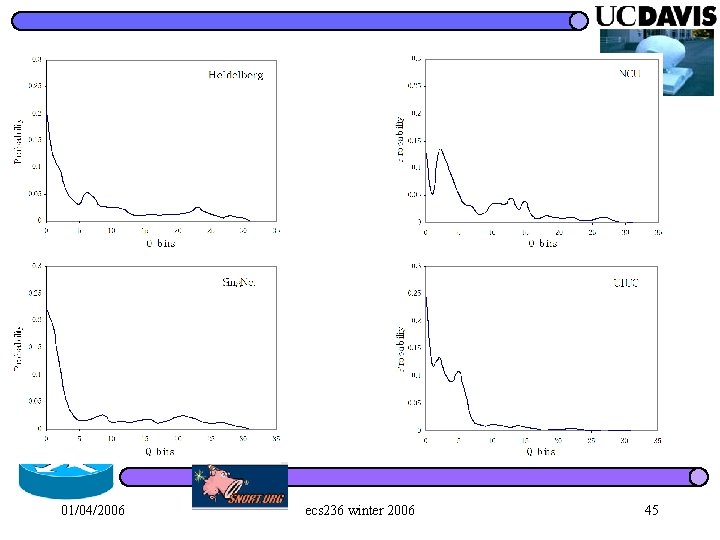

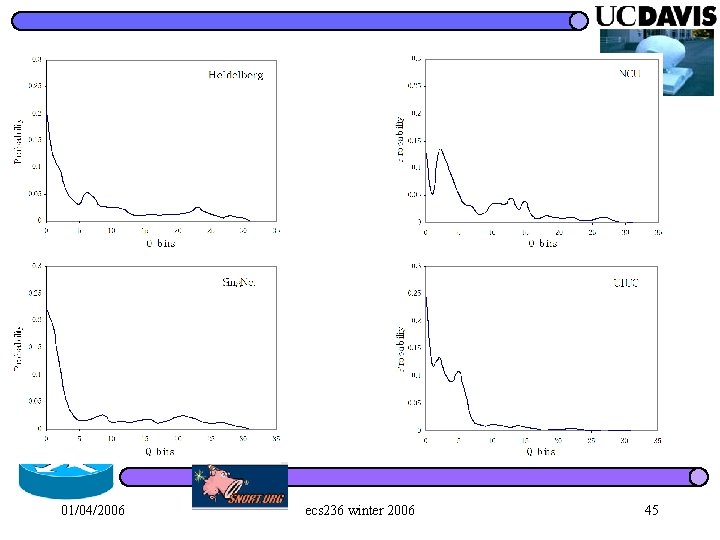


Results: Position Measure

Results: Delay Measure

Results: NPR Measure

Results (good and bad)

- False Alarm Rate
  - less than 10% in most cases; the highest is 17.4%
- Detection Rate
  - Position: good on RetPD and most of PerPD
    - at NCU, 98.7% for PerPD(20, 4, 5), but 0% for PerPD(100, 40, 5), in which dropped packets are evenly distributed
  - Delay: good on attacks that significantly change session delay, e.g., RetPD, or PerPD with a large value of K
    - at SingNet, 100% for RetPD(5, 5), but 67.9% for RanPD(10)
  - NPR: good on attacks that drop many packets
    - at Heidelberg, 0% for RanPD(10), but 100% for RanPD(40)

Performance Analysis

- Good sites correspond to a high detection rate.
  - stable and small session delay or packet reordering
  - e.g., using Delay Measure for RanPD(10): UIUC (99.5%) > Heidelberg (74.5%) > SingNet (67.9%) > NCU (26.8%)
- The best value of nbin is site-specific
  - e.g., using Position Measure, the lowest false alarm rate occurs when nbin = 5 at Heidelberg (4.0%) and NCU (5.4%), 10 at UIUC (4.5%), and 20 at SingNet (1.6%)

[Figure: SAND pipeline. Raw events feed a long-term profile that is maintained by timer-controlled update, decay, and clean steps; the deviation from the profile is computed and passed through threshold control to alarm generation.]

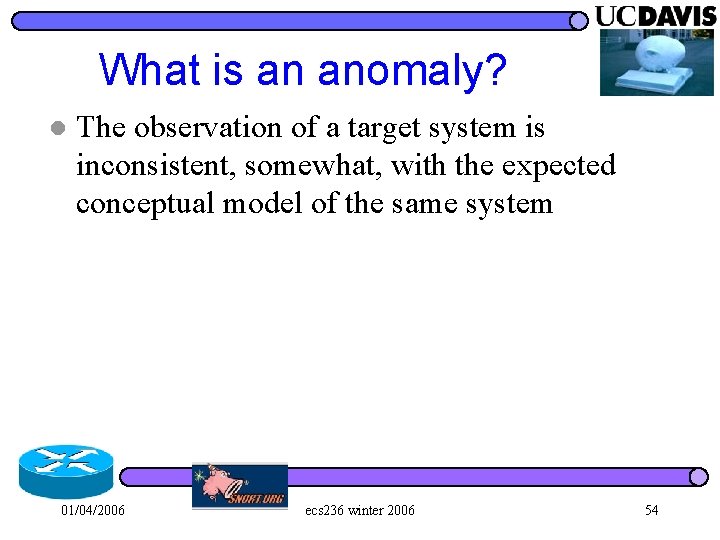

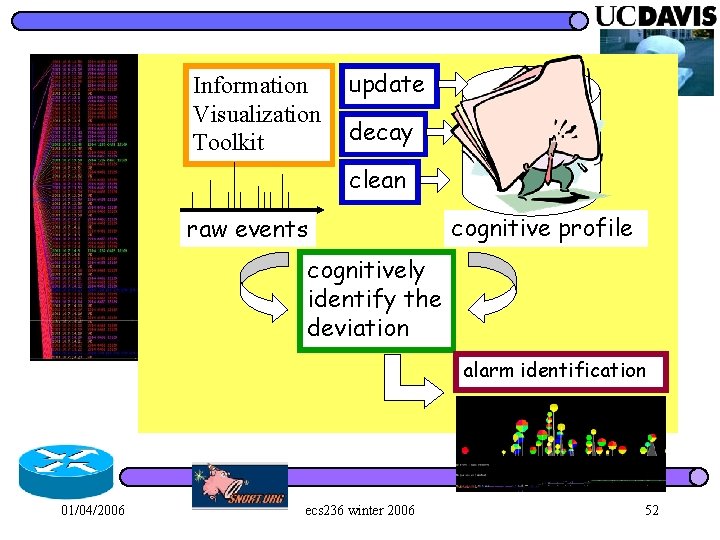

Information Visualization Toolkit

[Figure: raw events feed a cognitive profile that is maintained by update, decay, and clean steps; the deviation is identified cognitively, leading to alarm identification.]

What is an anomaly?

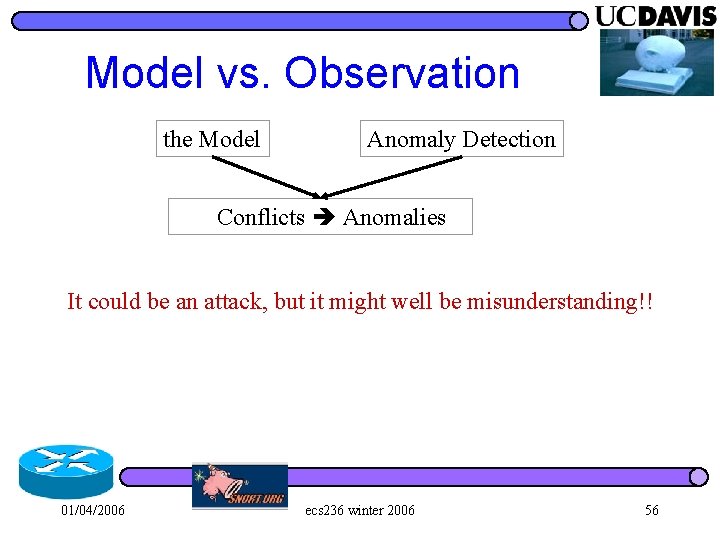

What is an anomaly?

- The observation of a target system is somewhat inconsistent with the expected conceptual model of the same system

What is an anomaly?

- The observation of a target system is somewhat inconsistent with the expected conceptual model of the same system
- And this conceptual model can be ANYTHING.
  - Statistical, logical, or something else

Model vs. Observation

[Figure: Anomaly Detection compares observations against the Model; conflicts are reported as anomalies.]

It could be an attack, but it might well be a misunderstanding!!

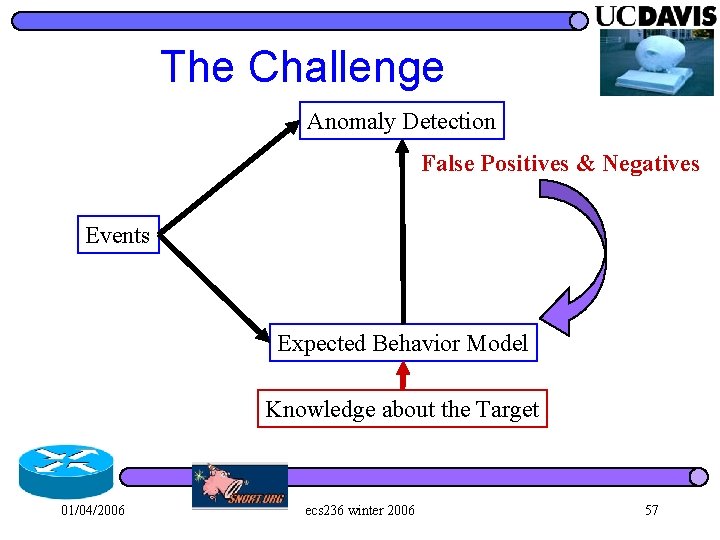

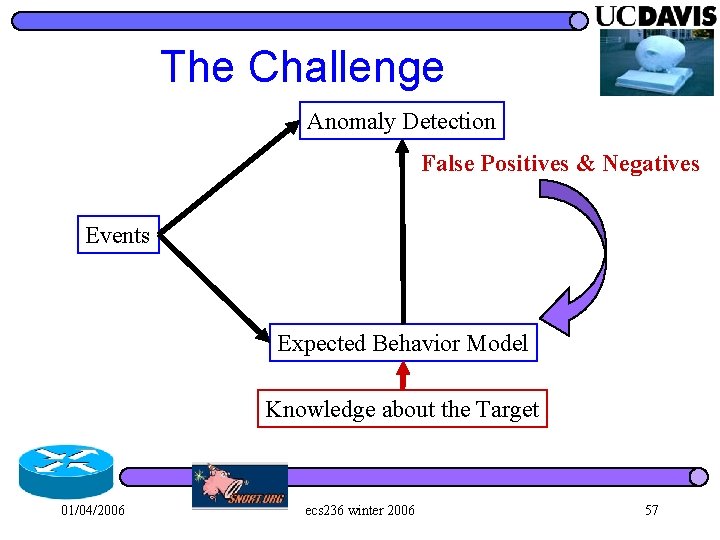

The Challenge

[Figure: Events and an Expected Behavior Model, built from Knowledge about the Target, feed Anomaly Detection, which produces False Positives & Negatives.]

Challenge

- We know that the detected anomalies can be either true positives or false positives.
- We try our best to resolve the puzzle by examining all the information available to us.
- But the “ground truth” of these anomalies is very hard to obtain
  - even with human intelligence

Problems with AND

- We are not sure about whatever we want to detect…
- We are not sure either when something is caught…
- We are still in the dark… at least in many cases…

Anomaly Explanation

- How will a human resolve the conflict?
- The Power of Reasoning and Explanation
  - We detected something we really want to detect: reduces false negatives
  - Our model can be improved: reduces false positives

Without Explanation

- AND is not as useful??
- Knowledge is the power to utilize information!
  - Unknown vulnerabilities
  - Root cause analysis
  - Event correlation

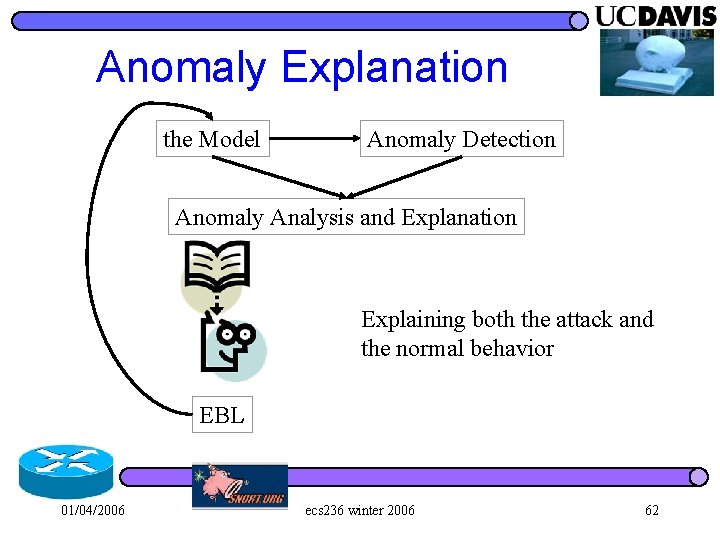

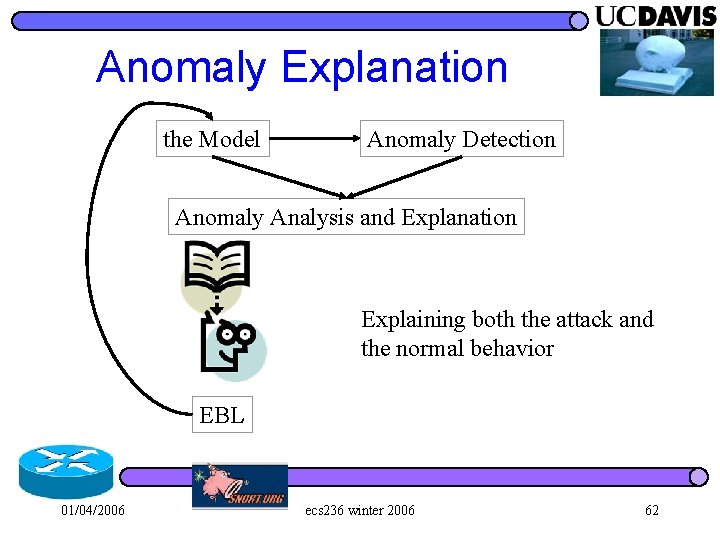

Anomaly Explanation

[Figure: the Model feeds Anomaly Detection, followed by Anomaly Analysis and Explanation (EBL).]

Explaining both the attack and the normal behavior

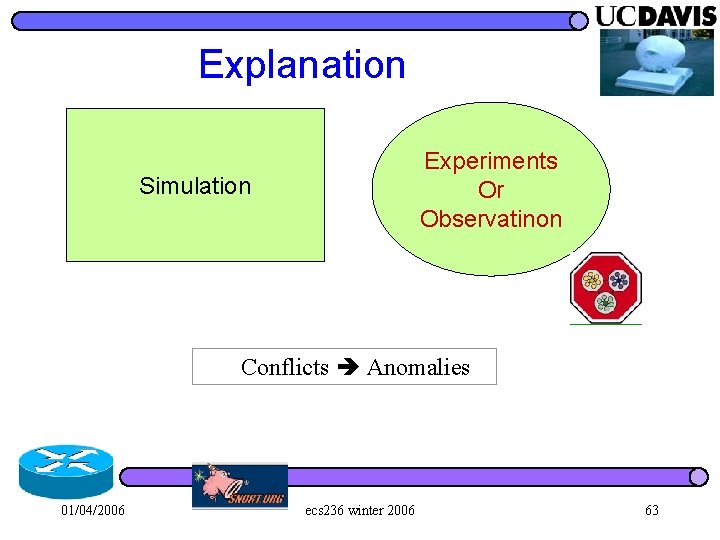

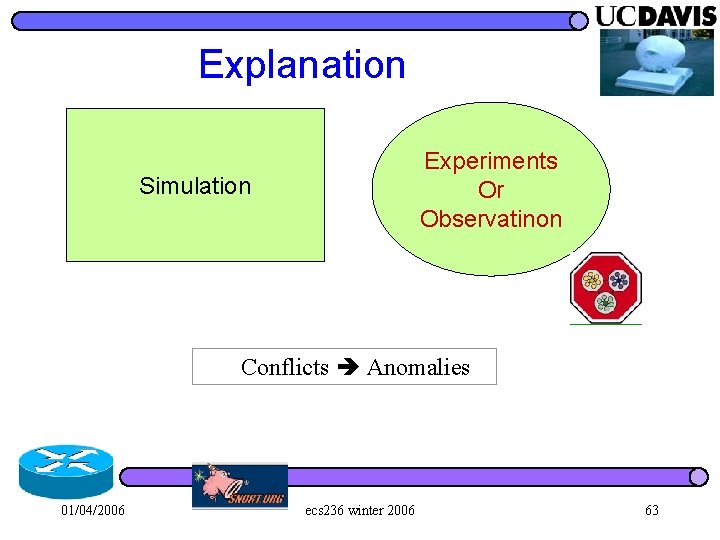

Explanation

[Figure: Simulation, or Experiments, compared with Observation; conflicts are anomalies.]

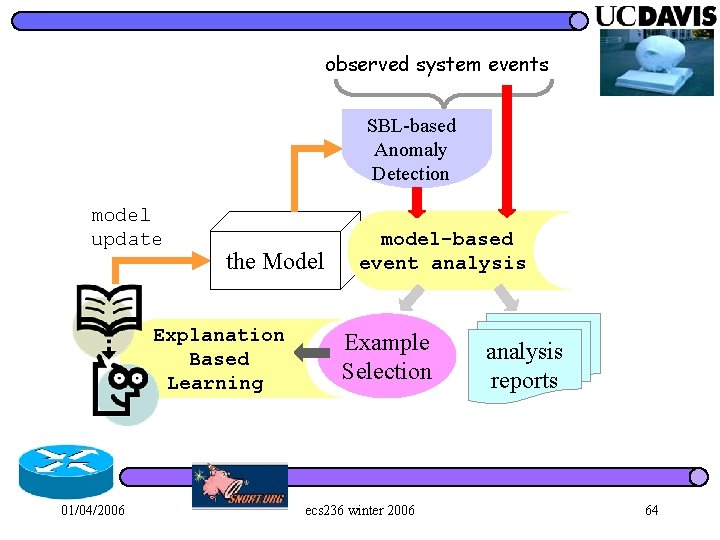

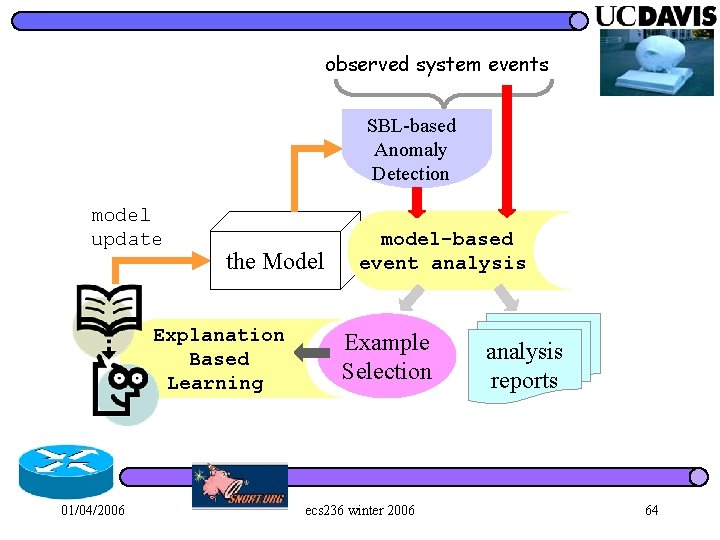

[Figure: architecture diagram. Labels: observed system events, SBL-based Anomaly Detection, the Model, model update, Explanation Based Learning, Example Selection, model-based event analysis, analysis reports.]

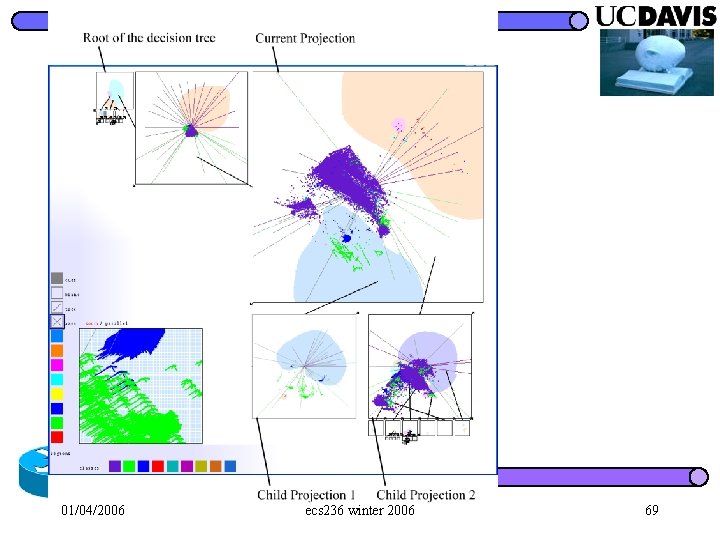

AND EXPAND

- Anomaly Detection
  - Detect
  - Analysis and Explanation
  - Application

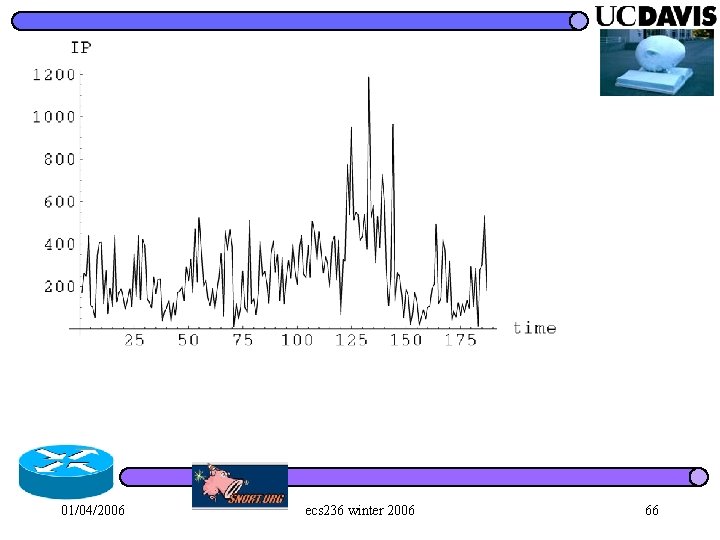

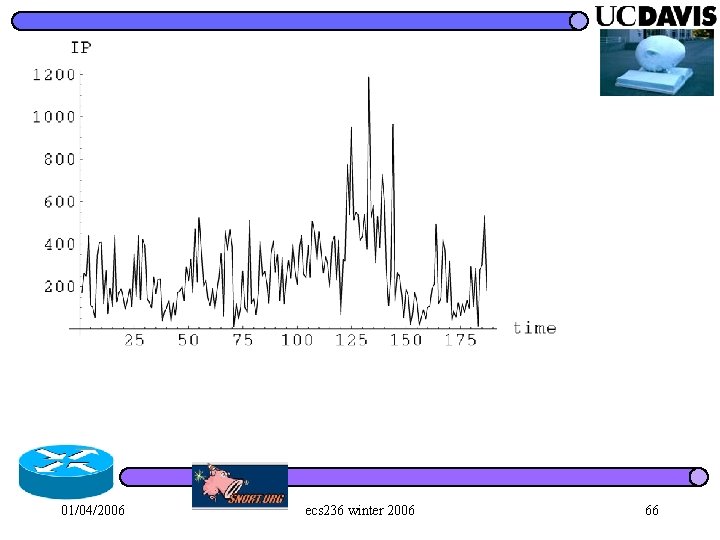


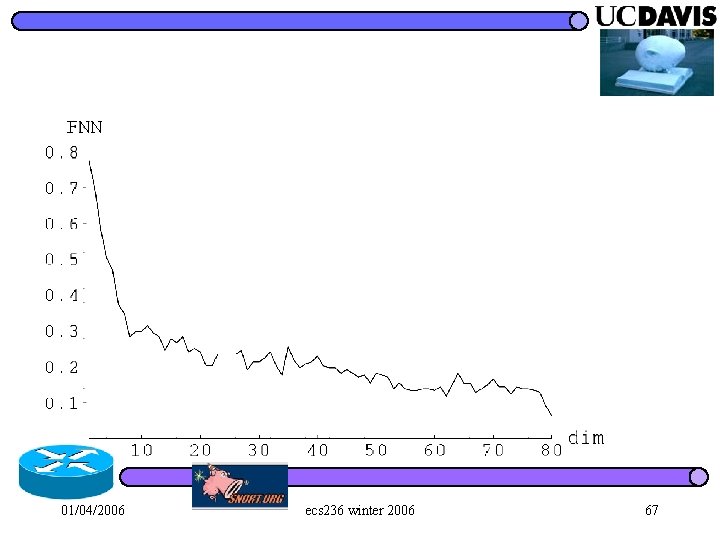
