Lecture 6: Memory Management Part II


- Slides: 75


Lecture Highlights

- Introduction to Paging
- Paging Hardware & Page Tables
- Paging model of memory
- Page Size
- Paging versus Continuous Allocation Scheme
- Multilevel Paging
- Page Replacement & Page Anticipation Algorithms
- Parameters Involved
- Parameter-Performance Relationships
- Sample Results

Introduction to Paging

- In the last lecture, we saw an introduction to memory management and learnt about its related problems of synchronization, redundancy and fragmentation.
- We then looked at one scheme of continuous memory allocation.
- In this lecture, we’ll look at another memory management scheme: paging.

Introduction to Paging

- Paging entails division of physical memory into many small equal-sized frames. Logical memory is also broken into blocks of the same size called pages. When a process is to be executed, some of its pages are loaded into any available memory frames.
- The next slide includes a discussion of paging in relation to synchronization, redundancy and fragmentation.
- Then we’ll draw a comparison with continuous memory allocation, followed by a set of slides that illustrate the hardware support for paging.

Introduction to Paging: Synchronization, Redundancy & Fragmentation

- Synchronization: Since a page/frame has a fixed size, swapping it from RAM to disc and back takes a fixed amount of time. Thus, it is easier to synchronize the spooling time of a page to a time slot.
- Redundancy: Paging does not require entire processes to be swapped in and out; only some pages of a process are present in RAM at any given time, and single pages are swapped when required. Compared to the continuous memory allocation scheme, paging suffers from much less redundancy.

Introduction to Paging: Synchronization, Redundancy & Fragmentation

- Fragmentation: In a paging scheme, external fragmentation is totally eliminated. However, internal fragmentation still exists, and we’ll take a closer look at it in the next slide.

As we’ll see in later slides, paging brings its own set of complications, which include:

- Page table: size and maintenance/update issues
- Page anticipation and replacement
- Page location in memory
- Number of accesses

Introduction to Paging and Fragmentation

- In a paging scheme, we have no external fragmentation.
- However, we may have internal fragmentation, as illustrated below:
  - If the page size is 2 KB and we have a process of size 72,766 bytes, then the process will need 35 pages plus 1086 bytes. It would be allocated 36 pages, with internal fragmentation of 2048 – 1086 = 962 bytes.
  - In the worst case, a process would need n pages plus 1 byte. It would be allocated n + 1 pages, resulting in internal fragmentation of almost an entire frame.
  - If process size is independent of page size, we expect internal fragmentation to average one-half page per process.
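The arithmetic in the example above can be checked with a short sketch. The function name `pages_and_waste` is an illustrative choice, not part of the lecture.

```python
def pages_and_waste(process_bytes: int, page_bytes: int) -> tuple[int, int]:
    """Return (pages allocated, bytes wasted in the last page)."""
    pages = -(-process_bytes // page_bytes)       # ceiling division
    waste = pages * page_bytes - process_bytes    # internal fragmentation
    return pages, waste


# The lecture's example: a 72,766-byte process with 2 KB pages.
print(pages_and_waste(72_766, 2048))        # (36, 962)

# Worst case: n pages plus 1 byte wastes almost a whole page.
print(pages_and_waste(3 * 2048 + 1, 2048))  # (4, 2047)
```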

Paging versus Continuous Allocation Scheme

- A paging scheme has no external fragmentation, whereas a continuous memory allocation scheme does. Paging therefore eliminates the need for expensive compaction.
- However, paging suffers from internal fragmentation. Moreover, page tables need storage themselves, and in realistic systems this storage is quite large (the examples we’ll discuss will make this clear).
- Addressing a memory location in a paging scheme needs two accesses (the first to the page table and the second to the mapped physical memory). However, the time involved does not double; it grows by a factor of about 1.1.

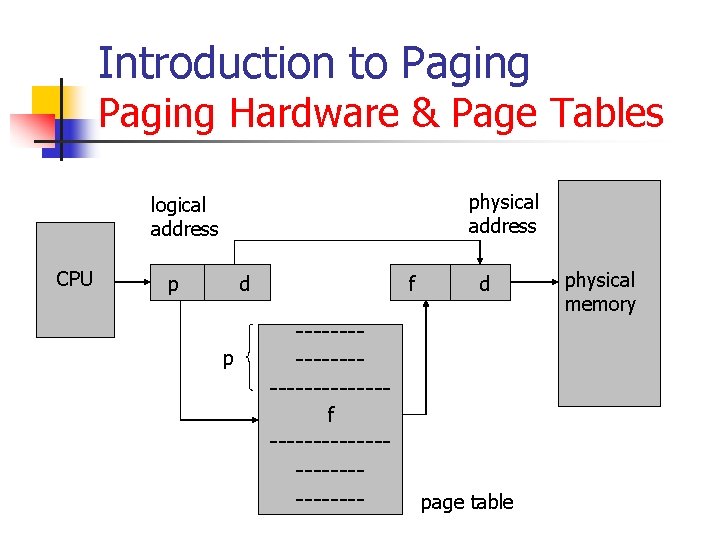

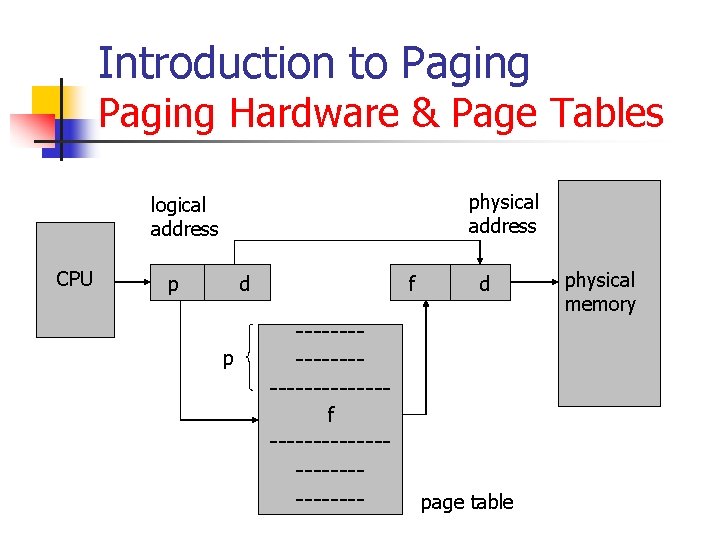

Introduction to Paging Hardware & Page Tables

[Figure: paging hardware. The CPU issues a logical address (p, d); the page table maps page number p to frame number f; the physical address (f, d) is sent to physical memory.]

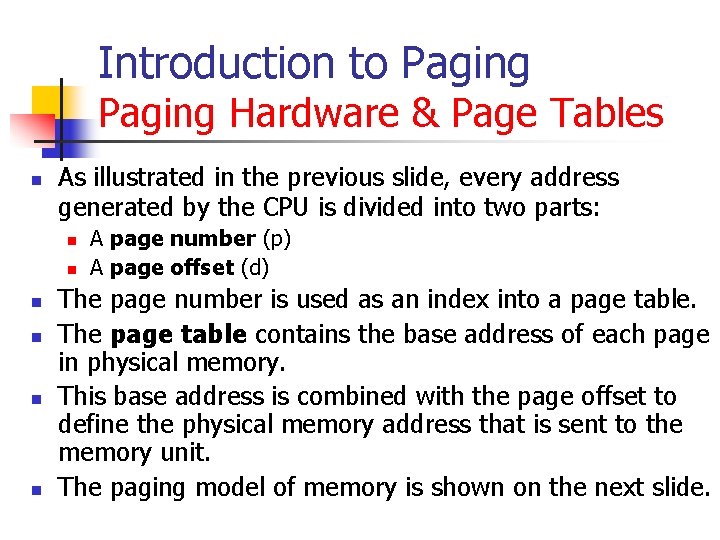

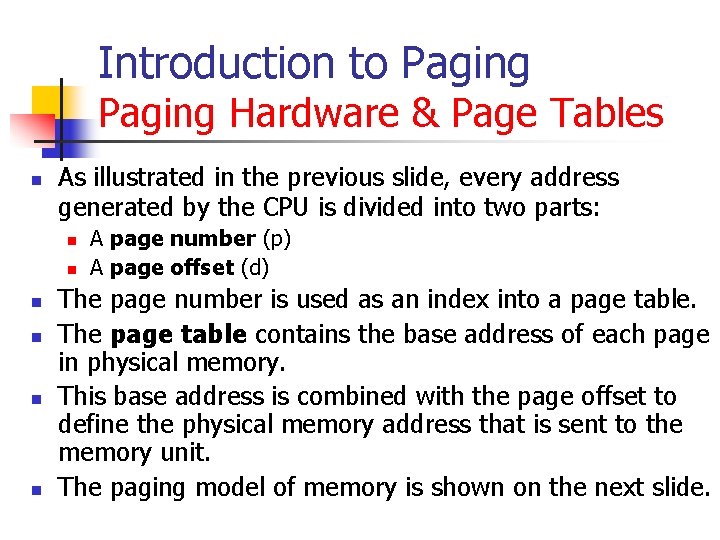

Introduction to Paging Hardware & Page Tables

- As illustrated in the previous slide, every address generated by the CPU is divided into two parts:
  - A page number (p)
  - A page offset (d)
- The page number is used as an index into a page table. The page table contains the base address of each page in physical memory.
- This base address is combined with the page offset to define the physical memory address that is sent to the memory unit. The paging model of memory is shown on the next slide.

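Introduction to Paging Model of Memory

Page table (logical page to frame number):

| Page | Frame number |
|---|---|
| Page 0 | 1 |
| Page 1 | 4 |
| Page 2 | 3 |
| Page 3 | 7 |

Physical memory (frames 0 to 7): frame 1 holds Page 0, frame 3 holds Page 2, frame 4 holds Page 1 and frame 7 holds Page 3.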

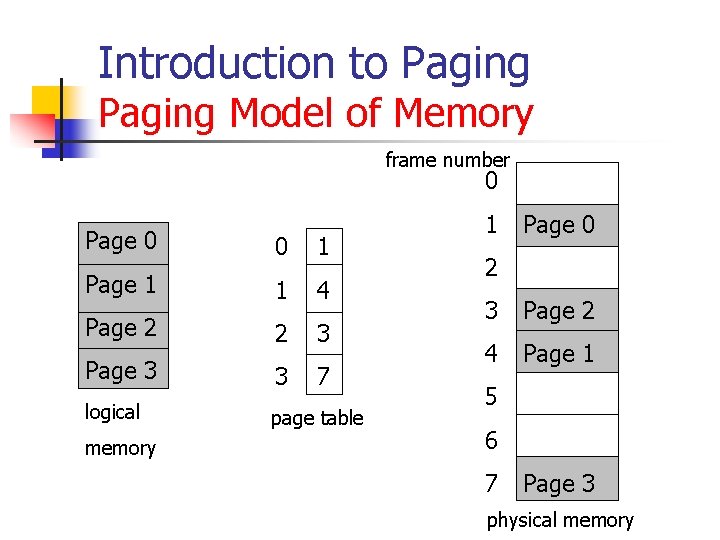

Introduction to Paging Page Size and Paging Model

- The page size (like the frame size) is defined by the hardware. The size of a page is typically a power of 2, between 512 and 8192 bytes per page.
- The selection of a power of 2 as a page size makes the translation of a logical address into a page number and page offset particularly easy.
- If the size of the logical address space is $2^m$ and the page size is $2^n$ addressing units, then the high-order m − n bits of a logical address designate the page number, and the n low-order bits designate the page offset. Thus the logical address is as follows:

| page number | page offset |
|---|---|
| p | d |
| m − n bits | n bits |

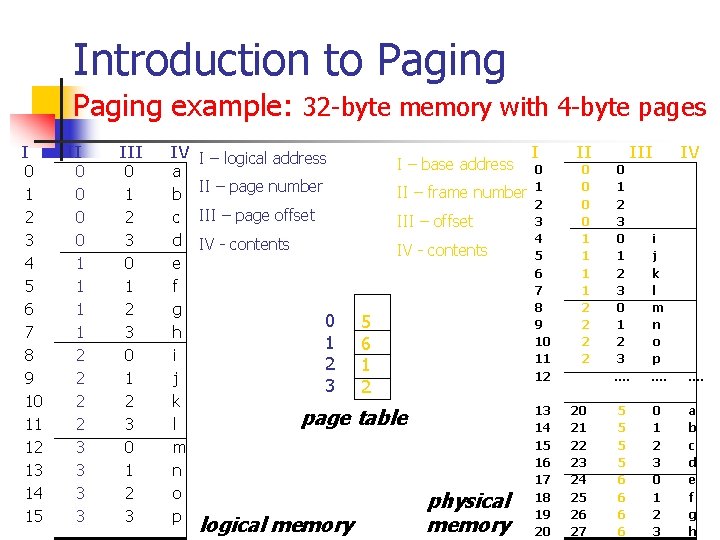

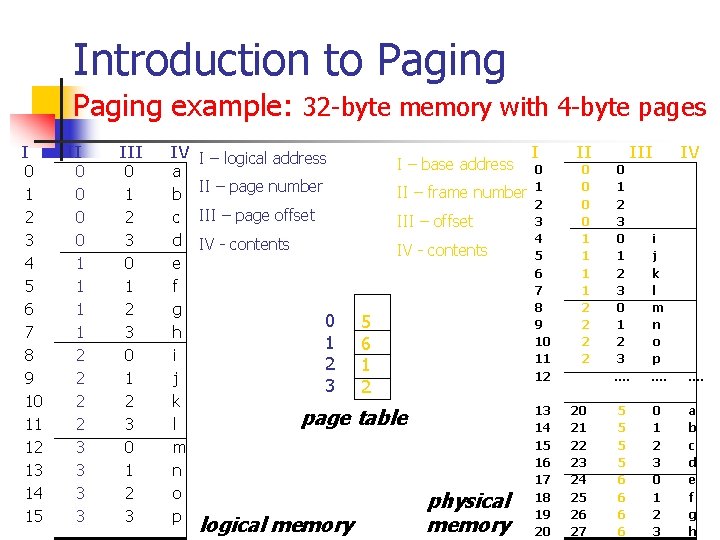

Introduction to Paging example: 32-byte memory with 4-byte pages

Logical memory:

| Logical address | Page number | Page offset | Contents |
|---|---|---|---|
| 0 to 3 | 0 | 0 to 3 | a b c d |
| 4 to 7 | 1 | 0 to 3 | e f g h |
| 8 to 11 | 2 | 0 to 3 | i j k l |
| 12 to 15 | 3 | 0 to 3 | m n o p |

Page table:

| Page | Frame |
|---|---|
| 0 | 5 |
| 1 | 6 |
| 2 | 1 |
| 3 | 2 |

Physical memory (frames holding this process; the remaining frames are not shown):

| Base address | Frame number | Offset | Contents |
|---|---|---|---|
| 4 | 1 | 0 to 3 | i j k l |
| 8 | 2 | 0 to 3 | m n o p |
| 20 | 5 | 0 to 3 | a b c d |
| 24 | 6 | 0 to 3 | e f g h |

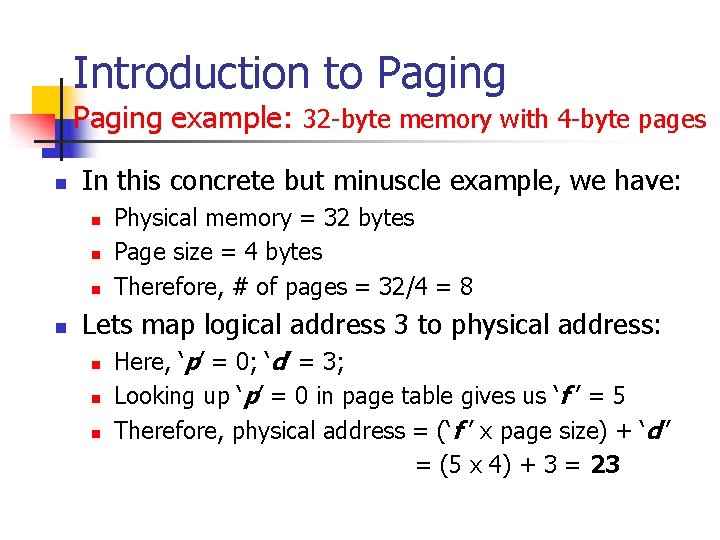

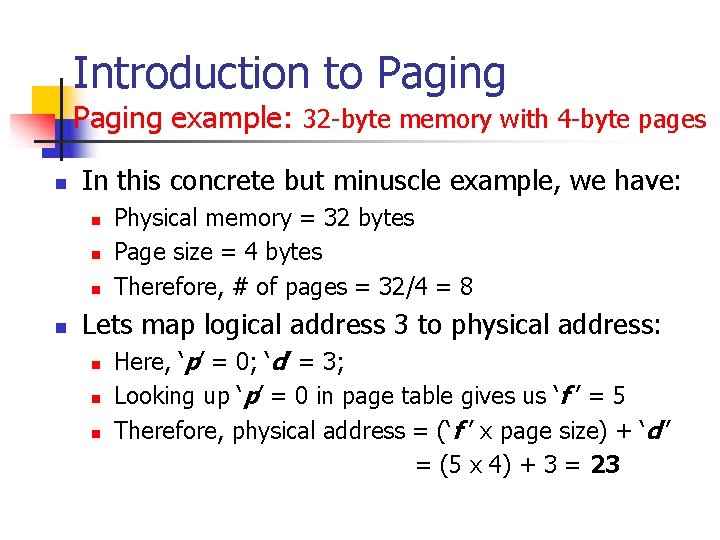

Introduction to Paging example: 32-byte memory with 4-byte pages

- In this concrete but minuscule example, we have:
  - Physical memory = 32 bytes
  - Page size = 4 bytes
  - Therefore, # of pages = 32/4 = 8
- Let’s map logical address 3 to a physical address:
  - Here, ‘p’ = 0 and ‘d’ = 3.
  - Looking up ‘p’ = 0 in the page table gives us ‘f’ = 5.
  - Therefore, physical address = (‘f’ x page size) + ‘d’ = (5 x 4) + 3 = 23.
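The mapping above can be sketched in a few lines. Because the page size is a power of 2, the page number and offset fall out of a shift and a mask. The names `OFFSET_BITS`, `PAGE_TABLE` and `translate` are illustrative; the table contents are the ones from the 32-byte example.

```python
OFFSET_BITS = 2               # 4-byte pages, so 2 offset bits
PAGE_TABLE = [5, 6, 1, 2]     # page number -> frame number (from the example)


def translate(logical: int) -> int:
    """Map a logical address to a physical address via the page table."""
    p = logical >> OFFSET_BITS               # high-order bits: page number
    d = logical & ((1 << OFFSET_BITS) - 1)   # low-order bits: page offset
    f = PAGE_TABLE[p]                        # page-table lookup
    return (f << OFFSET_BITS) | d            # same as f * page_size + d


print(translate(3))    # 23, as worked out on the slide
print(translate(13))   # page 3, offset 1 -> frame 2 -> 9
```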

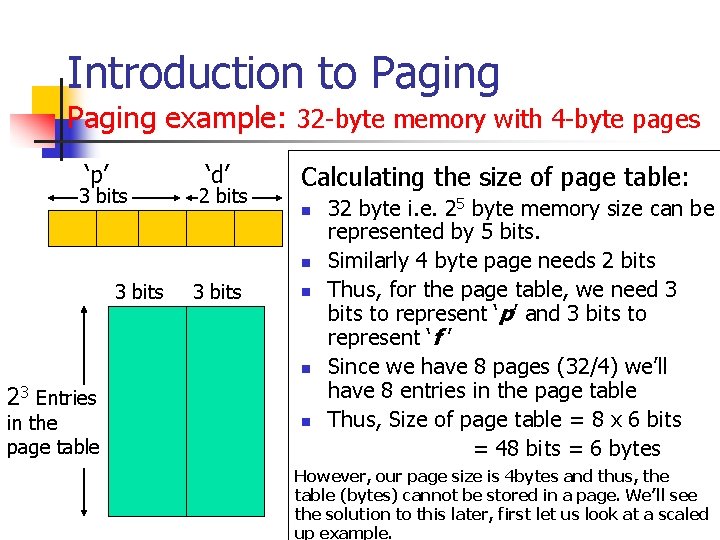

Introduction to Paging example: 32-byte memory with 4-byte pages

Address layout: ‘p’ takes 3 bits and ‘d’ takes 2 bits. The page table has $2^3$ entries.

Calculating the size of the page table:

- A 32-byte (i.e. $2^5$-byte) memory can be addressed with 5 bits. Similarly, a 4-byte page needs 2 bits.
- Thus, for the page table, we need 3 bits to represent ‘p’ and 3 bits to represent ‘f’.
- Since we have 8 pages (32/4), we’ll have 8 entries in the page table.
- Thus, size of page table = 8 x 6 bits = 48 bits = 6 bytes.
- However, our page size is 4 bytes, so the table (6 bytes) cannot be stored in a single page. We’ll see the solution to this later; first let us look at a scaled-up example.

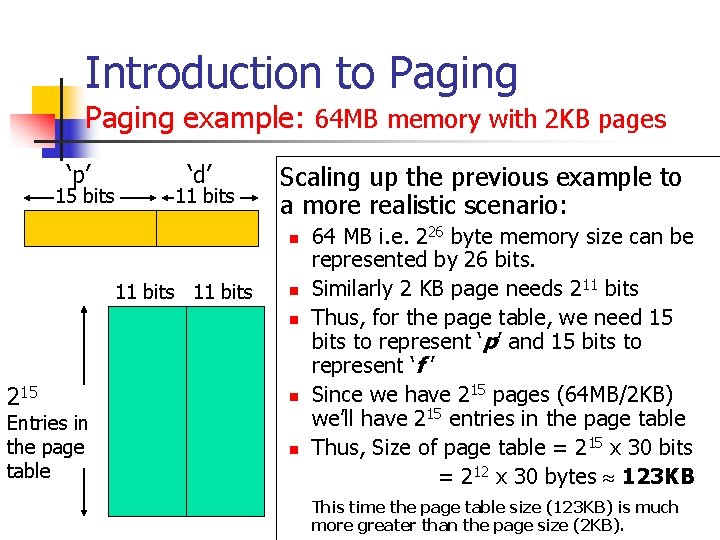

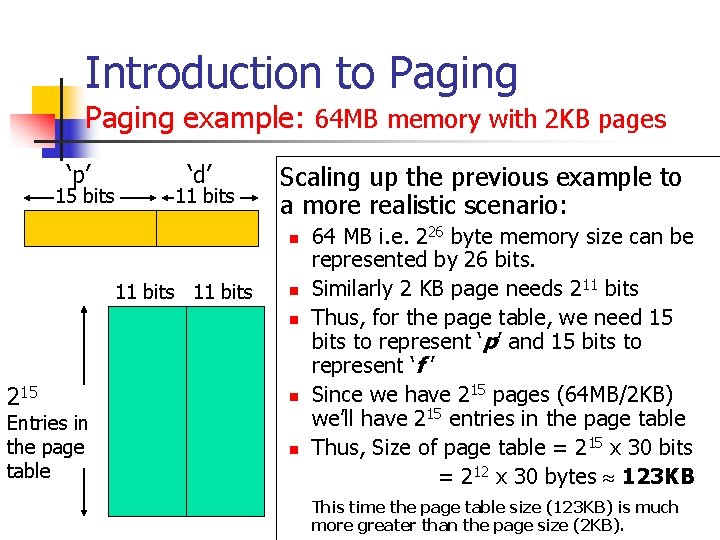

Introduction to Paging example: 64 MB memory with 2 KB pages

Address layout: ‘p’ takes 15 bits and ‘d’ takes 11 bits. The page table has $2^{15}$ entries.

Scaling up the previous example to a more realistic scenario:

- A 64 MB (i.e. $2^{26}$-byte) memory can be addressed with 26 bits. Similarly, a 2 KB ($2^{11}$-byte) page needs 11 bits.
- Thus, for the page table, we need 15 bits to represent ‘p’ and 15 bits to represent ‘f’.
- Since we have $2^{15}$ pages (64 MB / 2 KB), we’ll have $2^{15}$ entries in the page table.
- Thus, size of page table = $2^{15}$ x 30 bits = $2^{12}$ x 30 bytes = 122,880 bytes ≈ 123 KB.
- This time the page table size (123 KB) is much greater than the page size (2 KB).
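Both page-table size calculations follow the same pattern, sketched below. It assumes, as the lecture does, that every entry stores both ‘p’ and ‘f’ and that the logical space is as large as physical memory; the function name is hypothetical.

```python
def single_level_table_bytes(memory_bits: int, offset_bits: int) -> float:
    """Size in bytes of a single-level page table under the lecture's convention."""
    p_bits = memory_bits - offset_bits   # bits of the page number 'p'
    f_bits = memory_bits - offset_bits   # bits of the frame number 'f'
    entries = 2 ** p_bits                # one entry per page
    return entries * (p_bits + f_bits) / 8


print(single_level_table_bytes(5, 2))     # 6.0 bytes (32-byte memory, 4-byte pages)
print(single_level_table_bytes(26, 11))   # 122880.0 bytes (64 MB memory, 2 KB pages)
```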

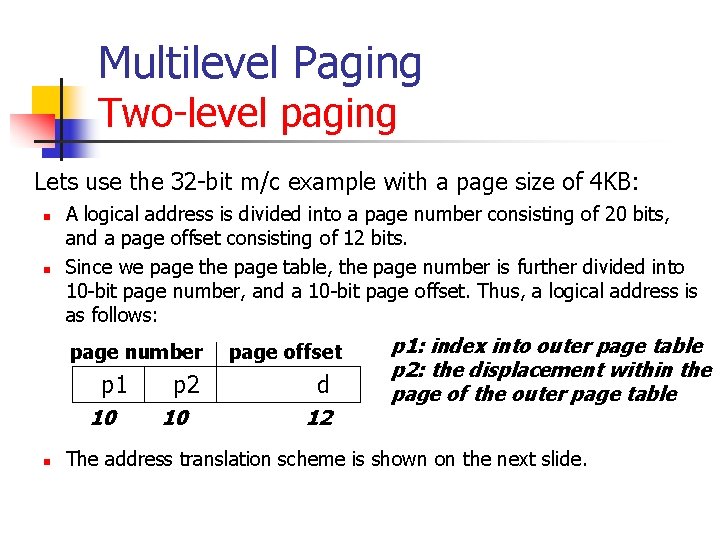

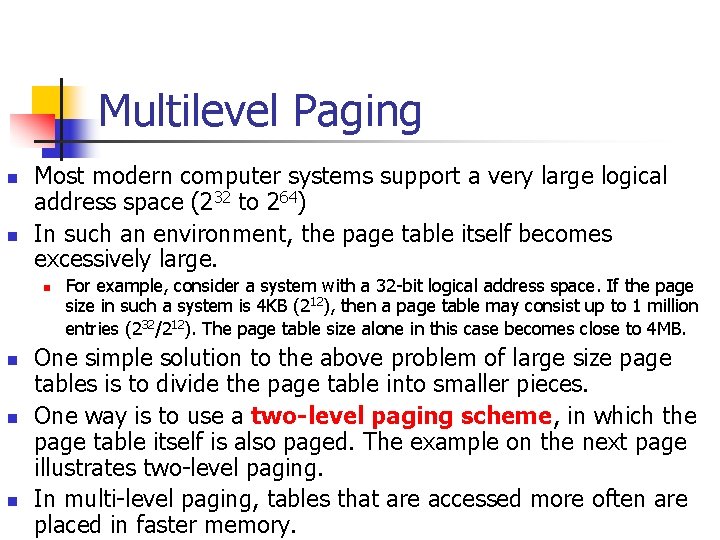

Multilevel Paging

- Most modern computer systems support a very large logical address space ($2^{32}$ to $2^{64}$).
- In such an environment, the page table itself becomes excessively large.
  - For example, consider a system with a 32-bit logical address space. If the page size in such a system is 4 KB ($2^{12}$), then a page table may consist of up to 1 million entries ($2^{32} / 2^{12}$). With 4-byte entries, the page table alone comes close to 4 MB.
- One simple solution to the problem of large page tables is to divide the page table into smaller pieces. One way is to use a two-level paging scheme, in which the page table itself is also paged. The example on the next page illustrates two-level paging.
- In multi-level paging, tables that are accessed more often are placed in faster memory.

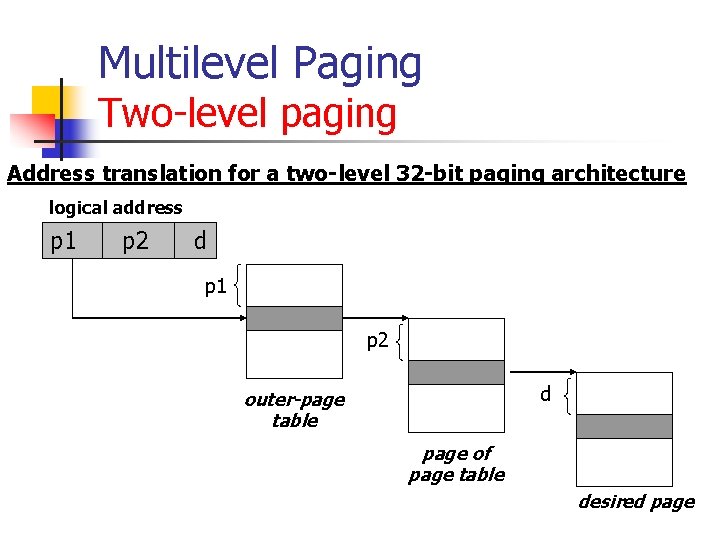

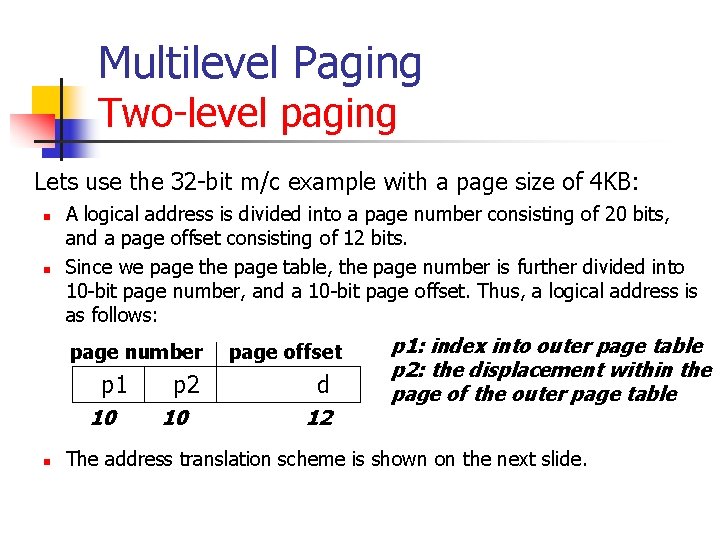

Multilevel Paging: Two-level paging

Let’s use the 32-bit machine example with a page size of 4 KB:

- A logical address is divided into a page number consisting of 20 bits and a page offset consisting of 12 bits.
- Since we page the page table, the page number is further divided into a 10-bit page number and a 10-bit page offset.
- Thus, a logical address is as follows:

| page number | page number | page offset |
|---|---|---|
| p1 | p2 | d |
| 10 bits | 10 bits | 12 bits |

- p1: index into the outer page table
- p2: the displacement within the page of the outer page table

The address translation scheme is shown on the next slide.

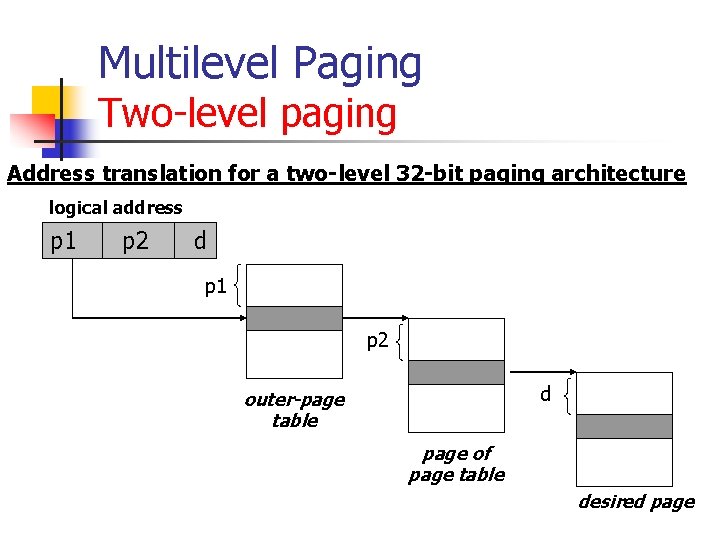

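Multilevel Paging: Two-level paging

[Figure: address translation for a two-level 32-bit paging architecture. The logical address (p1, p2, d) is resolved in three steps: p1 indexes the outer page table, p2 indexes the selected page of the page table, and d is the offset into the desired page.]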
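A minimal sketch of how a 32-bit logical address could be split into the three fields used in the translation above. The function name and default widths are illustrative; the widths match the 10/10/12 layout of this example.

```python
def split_two_level(addr: int, p2_bits: int = 10, d_bits: int = 12) -> tuple[int, int, int]:
    """Split a logical address into (p1, p2, d)."""
    d = addr & ((1 << d_bits) - 1)                  # low 12 bits: page offset
    p2 = (addr >> d_bits) & ((1 << p2_bits) - 1)    # next 10 bits: index within a page of the page table
    p1 = addr >> (d_bits + p2_bits)                 # remaining high bits: index into the outer page table
    return p1, p2, d


addr = (3 << 22) | (5 << 12) | 7
print(split_two_level(addr))          # (3, 5, 7)
print(split_two_level(0xFFFFFFFF))    # (1023, 1023, 4095)
```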

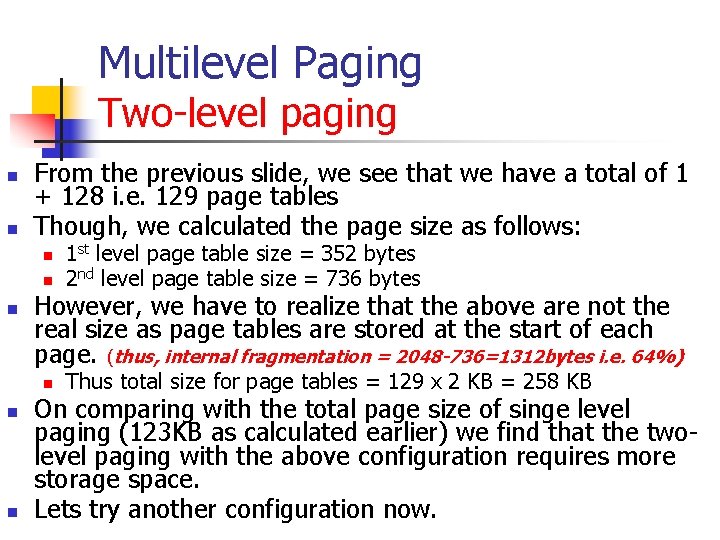

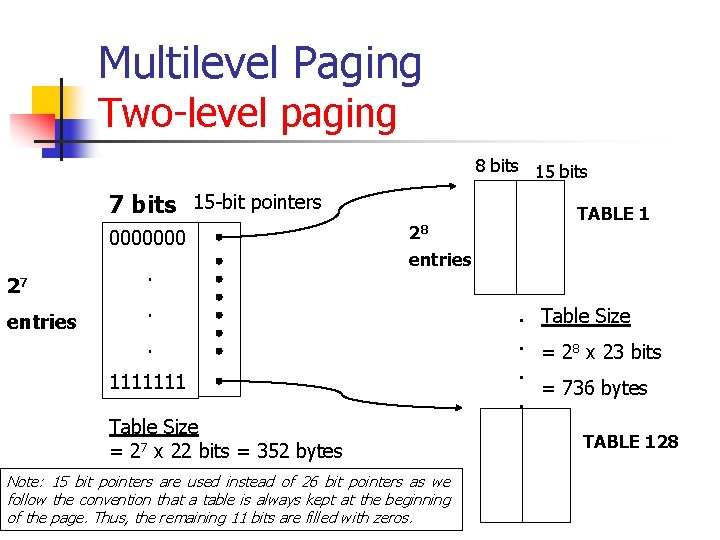

Multilevel Paging: Two-level paging

Let’s now see the real system example of 64 MB RAM with 2 KB page size.

- This system had $2^{15}$ possible entries in the single-level page table, i.e. ‘p’ = 15, ‘d’ = 11, ‘f’ = 15.
- Scaling it to a two-level paging system entails breaking up ‘p’ into ‘p1’ and ‘p2’.
- Let ‘p1’ = 7 and ‘p2’ = 8.
- The figure on the following page illustrates the two levels.

Multilevel Paging: Two-level paging

[Figure: two-level page tables for the configuration <7, 8, 11>]

- First-level table: $2^7$ entries (indices 0000000 to 1111111), each made up of 7 bits plus a 15-bit pointer. Table size = $2^7$ x 22 bits = 352 bytes.
- Second-level tables (TABLE 1 to TABLE 128): $2^8$ entries each, each made up of 8 bits plus a 15-bit pointer. Table size = $2^8$ x 23 bits = 736 bytes.

Note: 15-bit pointers are used instead of 26-bit pointers because we follow the convention that a table is always kept at the beginning of a page. Thus, the remaining 11 bits are filled with zeros.

Multilevel Paging: Two-level paging

- From the previous slide, we see that we have a total of 1 + 128, i.e. 129 page tables.
- We calculated the page table sizes as follows:
  - 1st-level page table size = 352 bytes
  - 2nd-level page table size = 736 bytes
- However, we have to realize that these are not the real sizes, as each page table is stored at the start of its own page (thus, internal fragmentation = 2048 – 736 = 1312 bytes, i.e. 64%).
- Thus, total size for page tables = 129 x 2 KB = 258 KB.
- Comparing with the page table size of single-level paging (123 KB as calculated earlier), we find that two-level paging with the above configuration requires more storage space.
- Let’s try another configuration now.

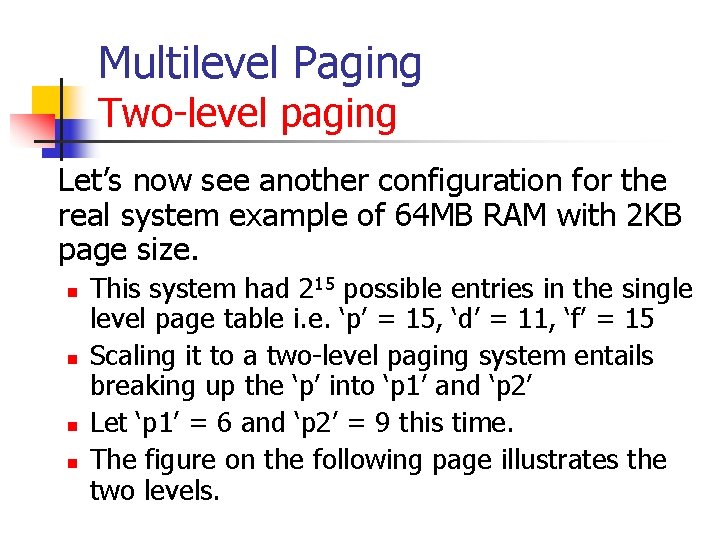

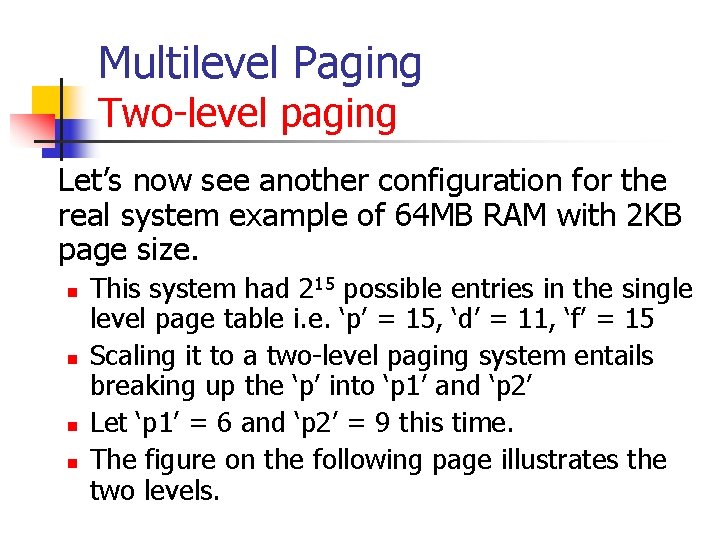

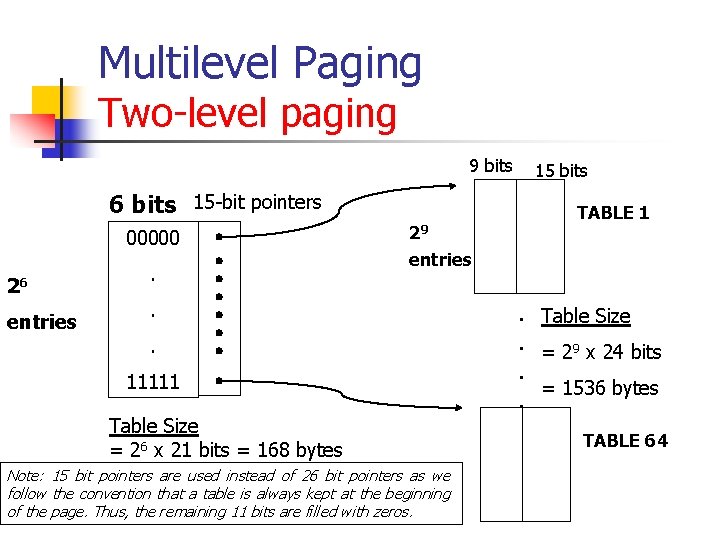

Multilevel Paging: Two-level paging

Let’s now see another configuration for the real system example of 64 MB RAM with 2 KB page size.

- This system had $2^{15}$ possible entries in the single-level page table, i.e. ‘p’ = 15, ‘d’ = 11, ‘f’ = 15.
- Scaling it to a two-level paging system entails breaking up ‘p’ into ‘p1’ and ‘p2’.
- Let ‘p1’ = 6 and ‘p2’ = 9 this time.
- The figure on the following page illustrates the two levels.

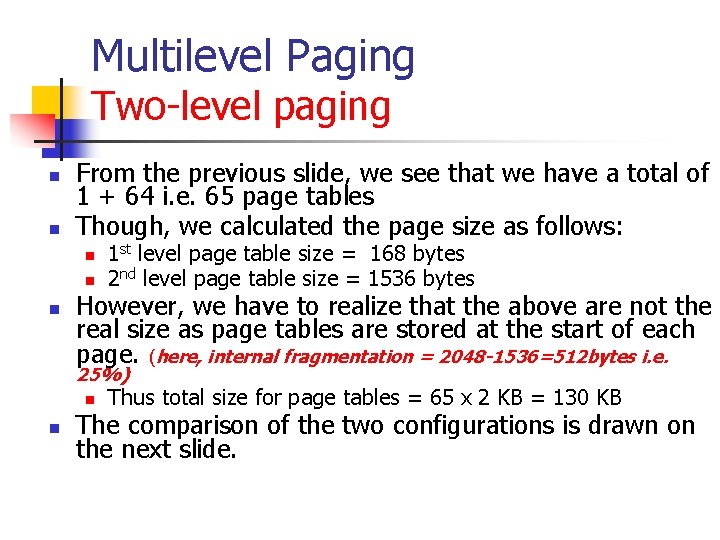

Multilevel Paging: Two-level paging

[Figure: two-level page tables for the configuration <6, 9, 11>]

- First-level table: $2^6$ entries (indices 000000 to 111111), each made up of 6 bits plus a 15-bit pointer. Table size = $2^6$ x 21 bits = 168 bytes.
- Second-level tables (TABLE 1 to TABLE 64): $2^9$ entries each, each made up of 9 bits plus a 15-bit pointer. Table size = $2^9$ x 24 bits = 1536 bytes.

Note: 15-bit pointers are used instead of 26-bit pointers because we follow the convention that a table is always kept at the beginning of a page. Thus, the remaining 11 bits are filled with zeros.

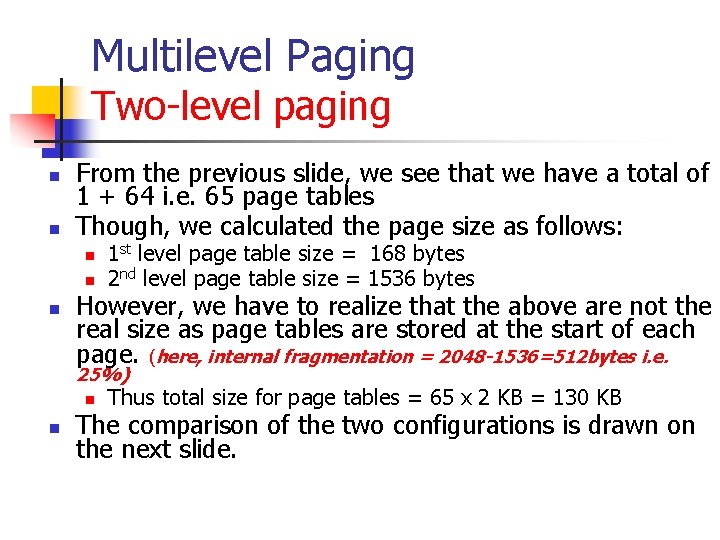

Multilevel Paging: Two-level paging

- From the previous slide, we see that we have a total of 1 + 64, i.e. 65 page tables.
- We calculated the page table sizes as follows:
  - 1st-level page table size = 168 bytes
  - 2nd-level page table size = 1536 bytes
- However, we have to realize that these are not the real sizes, as each page table is stored at the start of its own page (here, internal fragmentation = 2048 – 1536 = 512 bytes, i.e. 25%).
- Thus, total size for page tables = 65 x 2 KB = 130 KB.
- The comparison of the two configurations is drawn on the next slide.

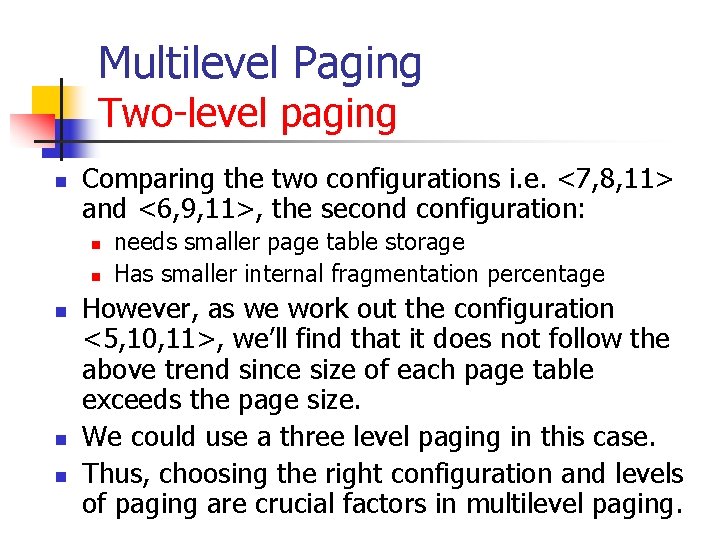

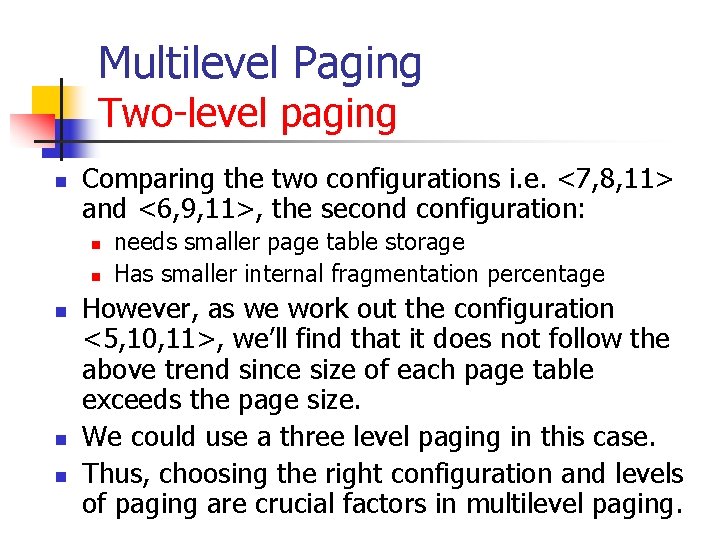

Multilevel Paging: Two-level paging

- Comparing the two configurations, i.e. <7, 8, 11> and <6, 9, 11>, the second configuration:
  - needs smaller page table storage
  - has a smaller internal fragmentation percentage
- However, as we work out the configuration <5, 10, 11>, we’ll find that it does not follow the above trend, since the size of each second-level page table exceeds the page size. We could use three-level paging in this case.
- Thus, choosing the right configuration and the number of paging levels are crucial factors in multilevel paging.

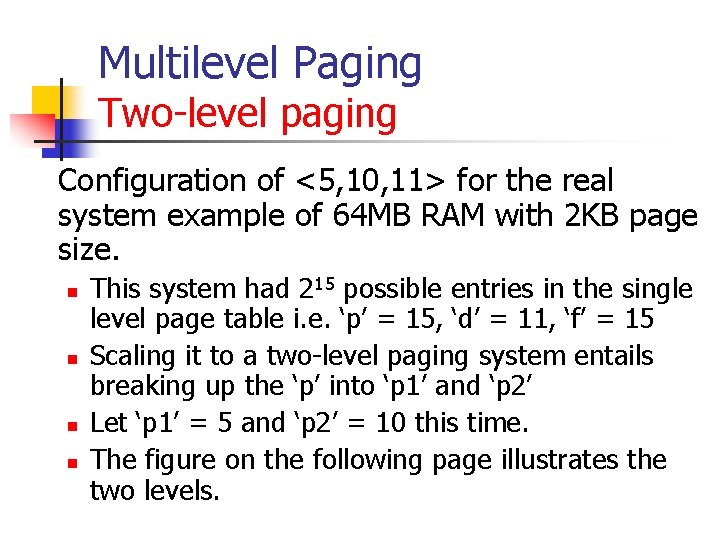

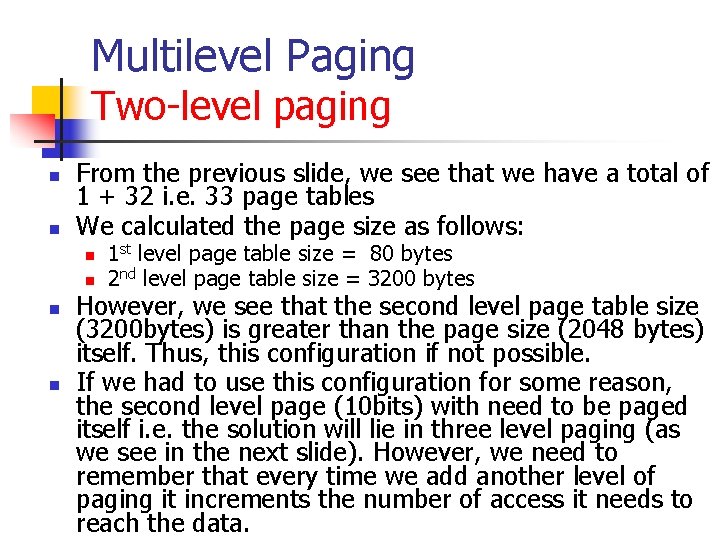

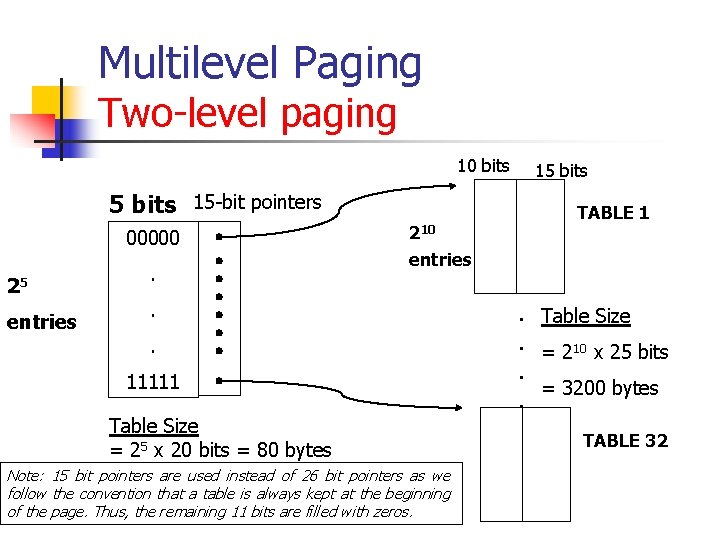

Multilevel Paging: Two-level paging

Configuration of <5, 10, 11> for the real system example of 64 MB RAM with 2 KB page size.

- This system had $2^{15}$ possible entries in the single-level page table, i.e. ‘p’ = 15, ‘d’ = 11, ‘f’ = 15.
- Scaling it to a two-level paging system entails breaking up ‘p’ into ‘p1’ and ‘p2’.
- Let ‘p1’ = 5 and ‘p2’ = 10 this time.
- The figure on the following page illustrates the two levels.

Multilevel Paging: Two-level paging

[Figure: two-level page tables for the configuration <5, 10, 11>]

- First-level table: $2^5$ entries (indices 00000 to 11111), each made up of 5 bits plus a 15-bit pointer. Table size = $2^5$ x 20 bits = 80 bytes.
- Second-level tables (TABLE 1 to TABLE 32): $2^{10}$ entries each, each made up of 10 bits plus a 15-bit pointer. Table size = $2^{10}$ x 25 bits = 3200 bytes.

Note: 15-bit pointers are used instead of 26-bit pointers because we follow the convention that a table is always kept at the beginning of a page. Thus, the remaining 11 bits are filled with zeros.

Multilevel Paging: Two-level paging

- From the previous slide, we see that we have a total of 1 + 32, i.e. 33 page tables.
- We calculated the page table sizes as follows:
  - 1st-level page table size = 80 bytes
  - 2nd-level page table size = 3200 bytes
- However, we see that the second-level page table size (3200 bytes) is greater than the page size (2048 bytes) itself. Thus, this configuration is not possible.
- If we had to use this configuration for some reason, the second-level page number (10 bits) would itself need to be paged, i.e. the solution lies in three-level paging (as we see in the next slide).
- However, we need to remember that every additional level of paging increases the number of accesses needed to reach the data.

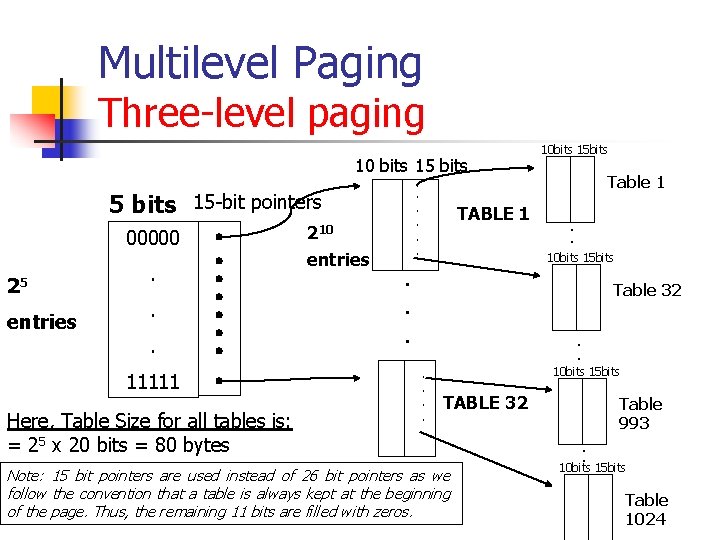

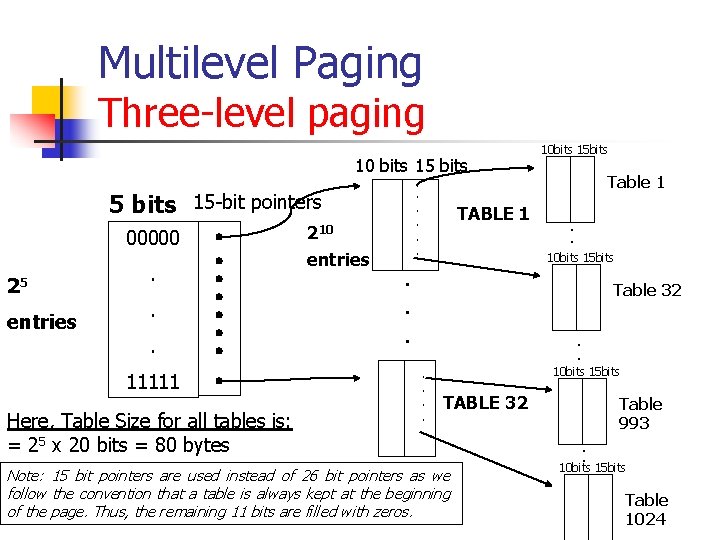

Multilevel Paging: Three-level paging

Configuration of <5, 5, 5, 11> for the real system example of 64 MB RAM with 2 KB page size.

- This system had $2^{15}$ possible entries in the single-level page table, i.e. ‘p’ = 15, ‘d’ = 11, ‘f’ = 15.
- Scaling it to a three-level paging scheme entails breaking up ‘p’ into ‘p1’, ‘p2’ and ‘p3’.
- Let ‘p1’ = 5, ‘p2’ = 5 and ‘p3’ = 5.
- The figure on the following page illustrates the three levels.

Multilevel Paging: Three-level paging

[Figure: three-level page tables for the configuration <5, 5, 5, 11>]

- First-level table: $2^5$ entries (indices 00000 to 11111) with 15-bit pointers.
- Second-level tables: TABLE 1 to TABLE 32.
- Third-level tables: Table 1 to Table 1024 (Tables 1 to 32 hang off TABLE 1, and Tables 993 to 1024 hang off TABLE 32).
- Here, the table size for all tables is $2^5$ x 20 bits = 80 bytes.

Note: 15-bit pointers are used instead of 26-bit pointers because we follow the convention that a table is always kept at the beginning of a page. Thus, the remaining 11 bits are filled with zeros.

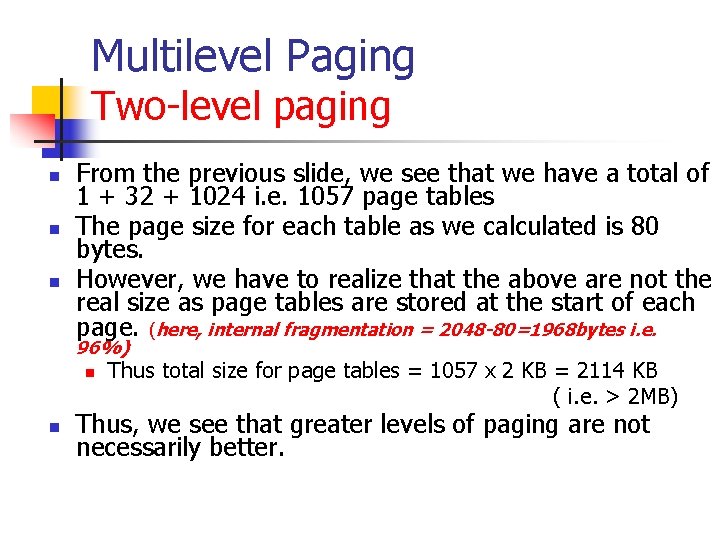

Multilevel Paging: Three-level paging

- From the previous slide, we see that we have a total of 1 + 32 + 1024, i.e. 1057 page tables.
- The size of each page table, as we calculated, is 80 bytes.
- However, we have to realize that this is not the real size, as each page table is stored at the start of its own page (here, internal fragmentation = 2048 – 80 = 1968 bytes, i.e. 96%).
- Thus, total size for page tables = 1057 x 2 KB = 2114 KB (i.e. > 2 MB).
- Thus, we see that more levels of paging are not necessarily better.
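The four configurations worked through above can be compared with one small sketch. It follows the lecture's conventions as assumptions: each entry stores the level's index bits plus a frame pointer, each table occupies a whole page, and a configuration is infeasible when any table is larger than a page. The function name `multilevel_cost` is illustrative.

```python
def multilevel_cost(index_bits: list[int], offset_bits: int):
    """Return (table sizes in bytes per level, number of tables, total KB or None)."""
    frame_bits = sum(index_bits)                  # 15 in the 64 MB / 2 KB example
    page_bytes = 2 ** offset_bits
    # Size of one table at each level: entries x (index bits + pointer bits).
    table_bytes = [2 ** b * (b + frame_bits) / 8 for b in index_bits]
    # One table at level 1, 2^p1 at level 2, 2^(p1+p2) at level 3, ...
    n_tables = sum(2 ** sum(index_bits[:i]) for i in range(len(index_bits)))
    feasible = max(table_bytes) <= page_bytes     # every table must fit in a page
    total_kb = n_tables * page_bytes / 1024 if feasible else None
    return table_bytes, n_tables, total_kb


for config in ([7, 8], [6, 9], [5, 10], [5, 5, 5]):
    print(config, multilevel_cost(config, 11))
```

Under these assumptions the sketch reproduces the slide figures: 258 KB, 130 KB, an infeasible <5, 10, 11>, and 2114 KB for three levels.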

Multilevel Paging

- So far, we have explored different configurations and different levels of paging, and their effects on internal fragmentation, memory requirements, etc.
- Let us now turn our attention to page size.

Multilevel Paging: Page Size

- A large page size causes a lot of internal fragmentation. This means that, with a large page size, the paging scheme tends to degenerate to a continuous memory allocation scheme.
- On the other hand, a small page size requires large amounts of memory space to be allocated for page tables, and potentially more memory accesses.
- Finding an optimal page size for a system is not easy, as it depends heavily on the process mix and the pattern of access.

Multilevel Paging

- In the following examples, we’ll try to do the following:
  - Given a page size, find the minimum number of paging levels
  - Given a fixed number of paging levels, find the page size
- Note that here our page size shall be chosen such that it provides minimum internal fragmentation in terms of storing page tables.

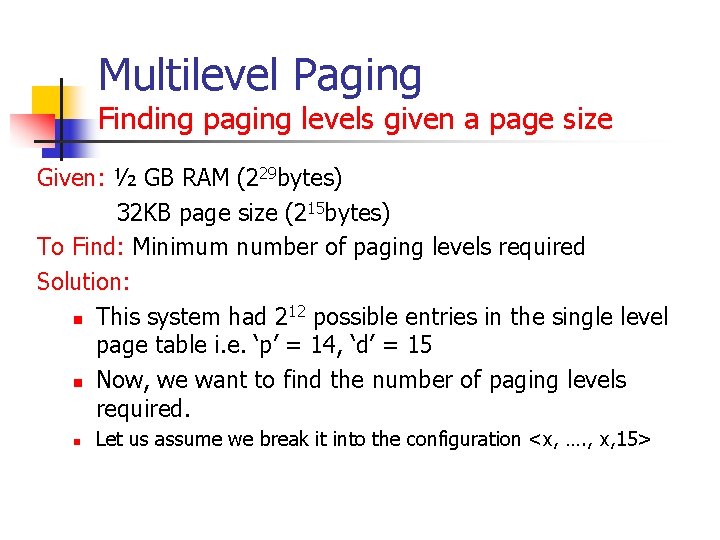

Multilevel Paging: Finding paging levels given a page size

Given: ½ GB RAM ($2^{29}$ bytes), 32 KB page size ($2^{15}$ bytes)

To find: minimum number of paging levels required

Solution:

- This system has $2^{14}$ possible entries in the single-level page table, i.e. ‘p’ = 14, ‘d’ = 15.
- Now, we want to find the number of paging levels required.
- Let us assume we break it into the configuration <x, ..., x, 15>.

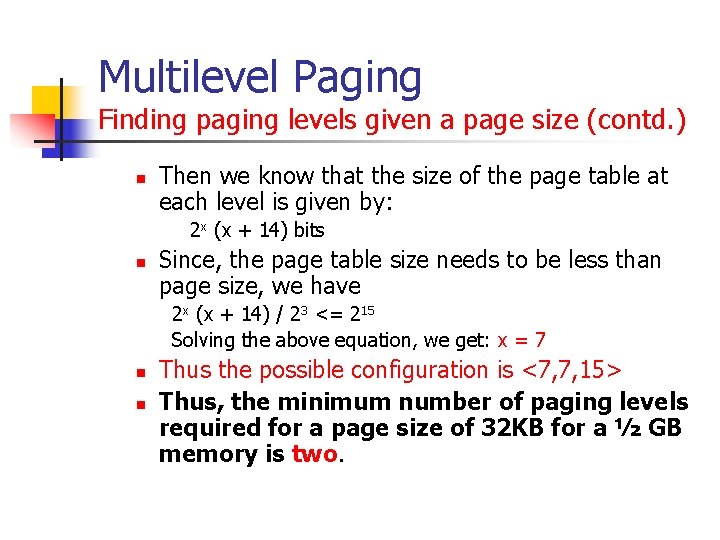

Multilevel Paging: Finding paging levels given a page size (contd.)

- Then we know that the size of the page table at each level is given by $2^x (x + 14)$ bits.
- Since the page table size needs to be no more than the page size, we have $2^x (x + 14) / 2^3 \le 2^{15}$.
- A single level (x = 14) gives 57,344 bytes, which exceeds the 32,768-byte page. Splitting ‘p’ into two equal parts gives x = 7 and a table of 336 bytes, which fits.
- Thus a possible configuration is <7, 7, 15>.
- Thus, the minimum number of paging levels required for a page size of 32 KB and a ½ GB memory is two.
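The search above can be sketched as a loop over the number of levels. This assumes the lecture's entry layout (x index bits plus a pointer as wide as ‘p’) and an even split of ‘p’ across levels; `min_levels` is a hypothetical helper name.

```python
from math import ceil


def min_levels(memory_bits: int, offset_bits: int) -> tuple[int, int]:
    """Return (levels, index bits per level) for the fewest levels whose tables fit in a page."""
    p_bits = memory_bits - offset_bits
    page_bytes = 2 ** offset_bits
    for levels in range(1, p_bits + 1):
        x = ceil(p_bits / levels)                 # index bits per level
        table_bytes = 2 ** x * (x + p_bits) / 8   # 2^x entries of (x + p) bits
        if table_bytes <= page_bytes:
            return levels, x
    raise ValueError("no number of levels makes a table fit in one page")


print(min_levels(29, 15))   # (2, 7): the <7, 7, 15> configuration
```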

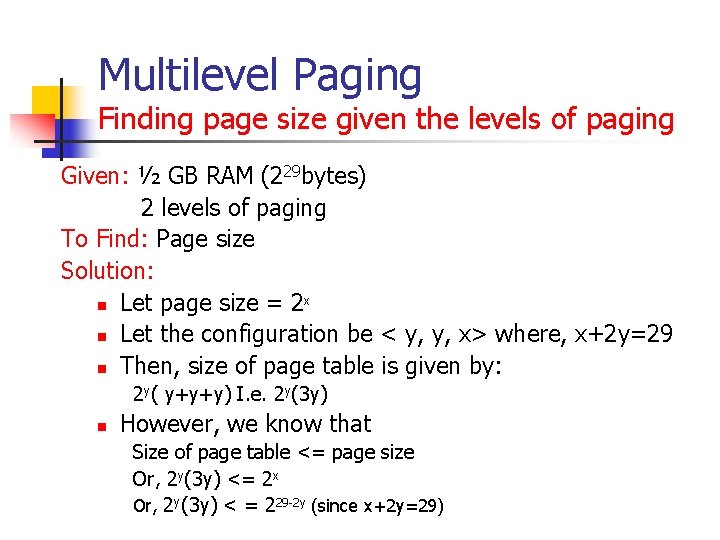

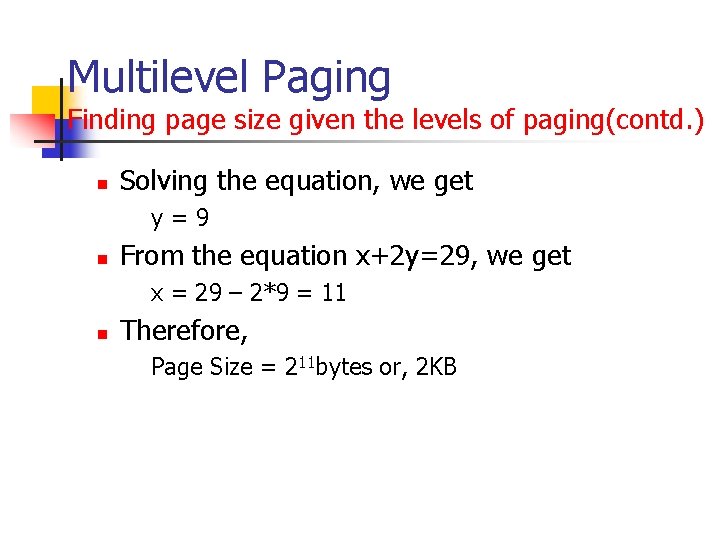

Multilevel Paging: Finding page size given the levels of paging

Given: ½ GB RAM ($2^{29}$ bytes), 2 levels of paging

To find: page size

Solution:

- Let the page size be $2^x$ bytes.
- Let the configuration be <y, y, x>, where $x + 2y = 29$.
- Each table has $2^y$ entries of $y + y + y = 3y$ bits, so the size of a page table is $2^y \cdot 3y$ bits, i.e. $2^y \cdot 3y / 2^3$ bytes.
- However, we know that size of page table ≤ page size, so $2^y \cdot 3y / 2^3 \le 2^x$, or $2^y \cdot 3y / 2^3 \le 2^{29 - 2y}$ (since $x + 2y = 29$).

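Multilevel Paging: Finding page size given the levels of paging (contd.)

- Solving for the largest y that satisfies the inequality, we get y = 9 (a 1728-byte table in a 2048-byte page; y = 10 would need a 3840-byte table in a 512-byte page).
- From the equation $x + 2y = 29$, we get x = 29 – 2 x 9 = 11.
- Therefore, page size = $2^{11}$ bytes, or 2 KB.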
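As a rough check of this result, the sketch below tries every y and keeps the largest one whose table still fits in a page, which gives the smallest usable page size. It assumes 3y-bit entries for two levels (y index bits plus a 2y-bit pointer) and converts bits to bytes before comparing; the function name is illustrative.

```python
def page_size_for_levels(memory_bits: int, levels: int = 2) -> tuple[int, int]:
    """Return (y, x): index bits per level and offset bits, for the smallest feasible page."""
    best = None
    for y in range(1, memory_bits // levels + 1):
        x = memory_bits - levels * y              # offset bits left over
        if x <= 0:
            break
        entry_bits = y + levels * y               # index bits + frame-pointer bits
        table_bytes = 2 ** y * entry_bits / 8
        if table_bytes <= 2 ** x:                 # table must fit in one page
            best = (y, x)
    if best is None:
        raise ValueError("no feasible split")
    return best


y, x = page_size_for_levels(29)
print(y, x, 2 ** x)   # 9 11 2048
```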

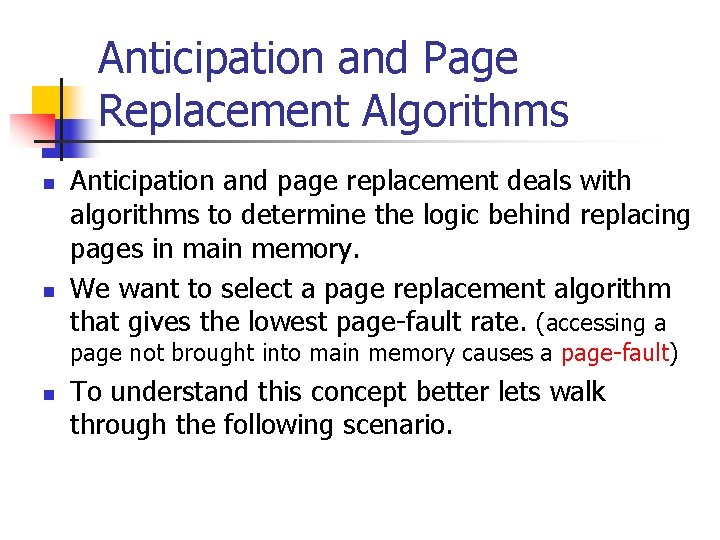

Anticipation and Page Replacement Algorithms

- Anticipation and page replacement deal with algorithms that determine the logic behind replacing pages in main memory.
- We want to select a page replacement algorithm that gives the lowest page-fault rate (accessing a page not brought into main memory causes a page fault).
- To understand this concept better, let’s walk through the following scenario.

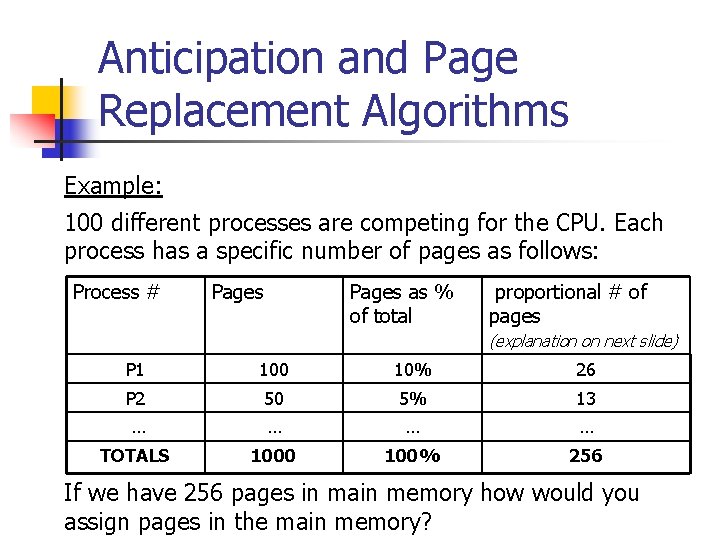

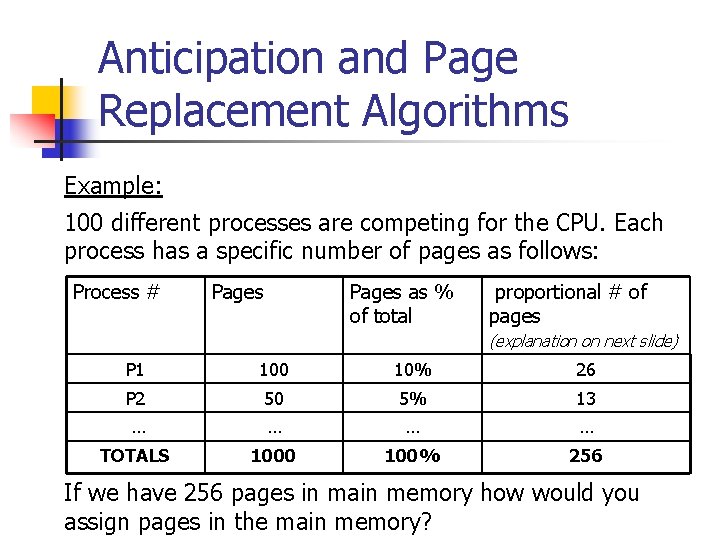

Anticipation and Page Replacement Algorithms

Example: 100 different processes are competing for the CPU. Each process has a specific number of pages as follows:

| Process | # Pages | Pages as % of total | Proportional # of pages (explanation on next slide) |
|---|---|---|---|
| P1 | 100 | 10% | 26 |
| P2 | 50 | 5% | 13 |
| … | … | … | … |
| TOTALS | 1000 | 100% | 256 |

If we have 256 pages in main memory, how would you assign pages in the main memory?

Anticipation and Page Replacement Algorithms

- As depicted in the last column of the table on the previous slide, we might initially assign memory pages to processes in proportion to their total number of pages.
- However, it’s not a good idea to keep it that way for the entire time, since the usage pattern of each process may vary drastically. For example, small processes might need more memory accesses.
- Page allocation may be changed dynamically using any of the following algorithms, discussed one at a time.
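A minimal sketch of the initial proportional assignment. The process names and the lumped `"others"` entry are placeholders for the 98 processes the table elides; only P1 and P2 come from the slide.

```python
def proportional_frames(process_pages: dict[str, int], total_frames: int) -> dict[str, int]:
    """Give each process a share of frames proportional to its page count."""
    total_pages = sum(process_pages.values())
    return {
        name: round(pages / total_pages * total_frames)
        for name, pages in process_pages.items()
    }


# P1 and P2 are from the slide; "others" stands in for the remaining 850 pages.
shares = proportional_frames({"P1": 100, "P2": 50, "others": 850}, 256)
print(shares["P1"], shares["P2"])   # 26 13
```

Because each share is rounded independently, the shares need not sum to exactly 256; a real allocator would have to hand out or reclaim the remainder.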

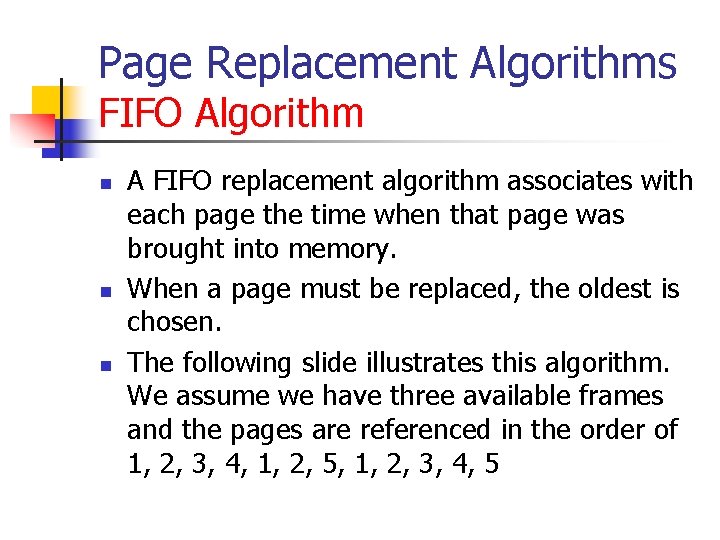

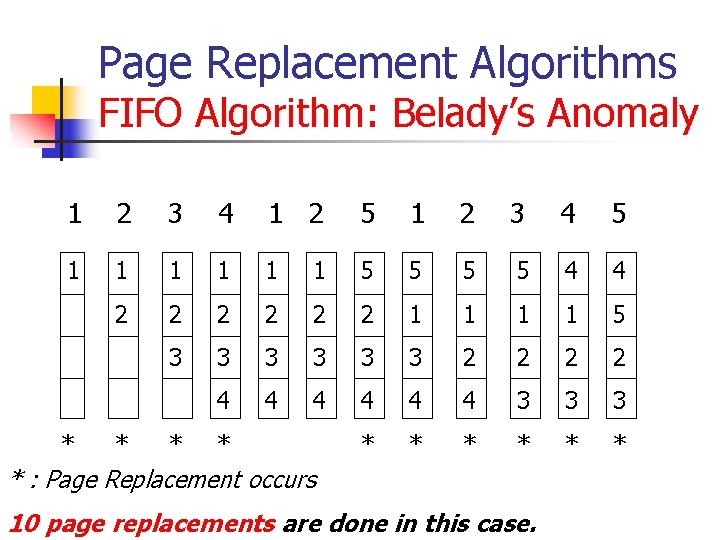

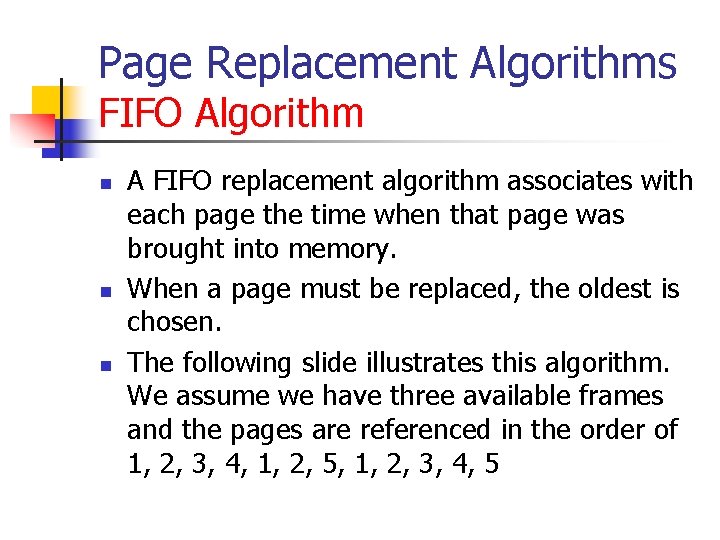

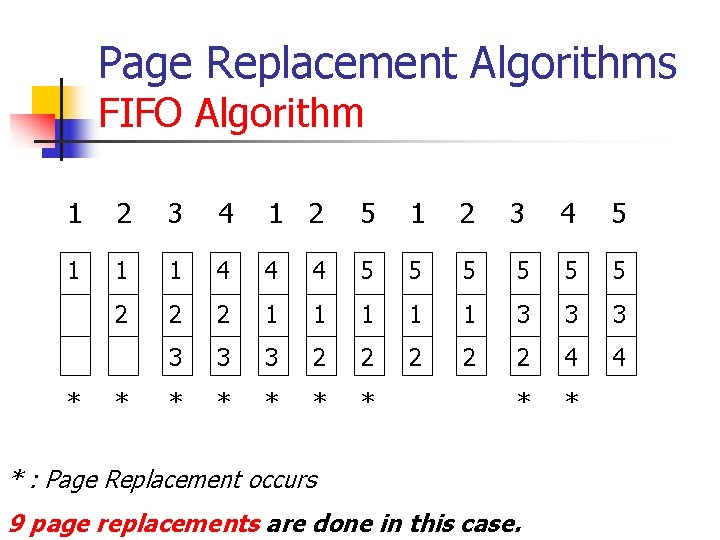

Page Replacement Algorithms: FIFO Algorithm

- A FIFO replacement algorithm associates with each page the time when that page was brought into memory.
- When a page must be replaced, the oldest is chosen.
- The following slide illustrates this algorithm. We assume we have three available frames and the pages are referenced in the order 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5.

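Page Replacement Algorithms: FIFO Algorithm

| Reference | 1 | 2 | 3 | 4 | 1 | 2 | 5 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Frame 1 | 1 | 1 | 1 | 4 | 4 | 4 | 5 | 5 | 5 | 5 | 5 | 5 |
| Frame 2 |   | 2 | 2 | 2 | 1 | 1 | 1 | 1 | 1 | 3 | 3 | 3 |
| Frame 3 |   |   | 3 | 3 | 3 | 2 | 2 | 2 | 2 | 2 | 4 | 4 |
| Fault | * | * | * | * | * | * | * |   |   | * | * |   |

\* marks a page fault (a page is brought into a frame). 9 page faults occur in this case: the first 3 fill empty frames and the other 6 replace a resident page.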

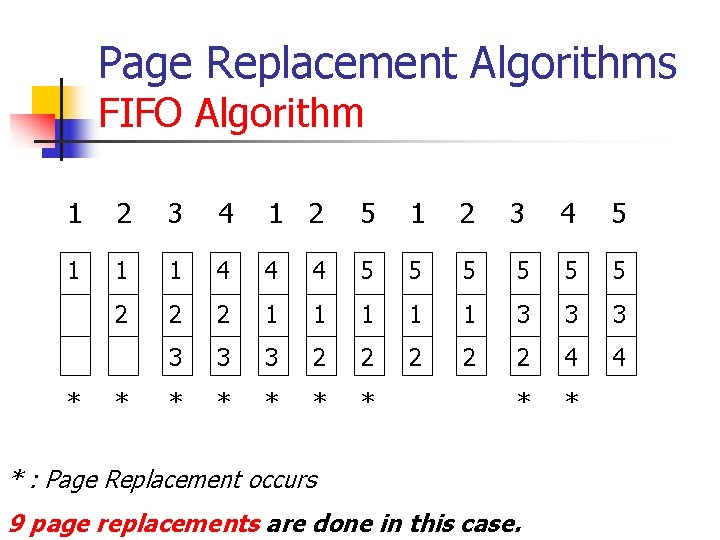

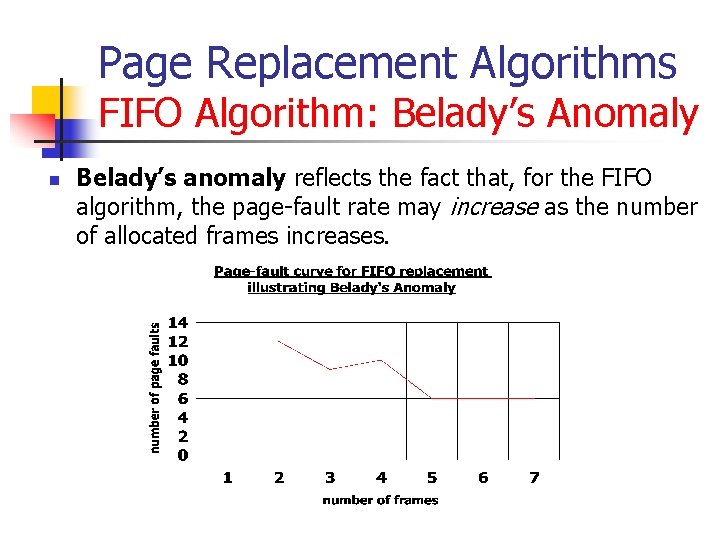

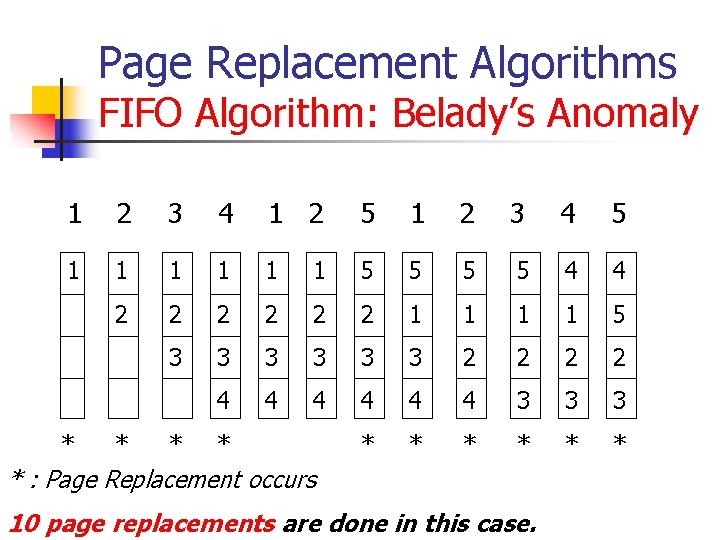

Page Replacement Algorithms: FIFO Algorithm: Belady’s Anomaly

- One would expect that as the number of frames increases, the number of page faults decreases, as depicted in the graph on the side.
- However, for the FIFO algorithm this is not always so. We’ll first rework the previous example with four frames available instead of three, and then look at the graph and discuss the anomaly.

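Page Replacement Algorithms: FIFO Algorithm: Belady’s Anomaly

| Reference | 1 | 2 | 3 | 4 | 1 | 2 | 5 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Frame 1 | 1 | 1 | 1 | 1 | 1 | 1 | 5 | 5 | 5 | 5 | 4 | 4 |
| Frame 2 |   | 2 | 2 | 2 | 2 | 2 | 2 | 1 | 1 | 1 | 1 | 5 |
| Frame 3 |   |   | 3 | 3 | 3 | 3 | 3 | 3 | 2 | 2 | 2 | 2 |
| Frame 4 |   |   |   | 4 | 4 | 4 | 4 | 4 | 4 | 3 | 3 | 3 |
| Fault | * | * | * | * |   |   | * | * | * | * | * | * |

\* marks a page fault. 10 page faults occur in this case: the first 4 fill empty frames and the other 6 replace a resident page.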

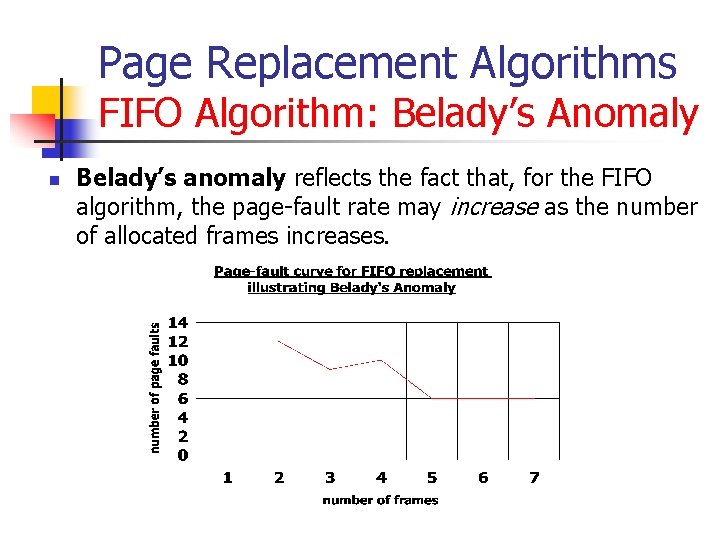

Page Replacement Algorithms: FIFO Algorithm: Belady’s Anomaly

- Belady’s anomaly reflects the fact that, for the FIFO algorithm, the page-fault rate may increase as the number of allocated frames increases.
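The anomaly can be reproduced with a minimal FIFO simulation. This is an illustrative sketch, not the lecture's implementation; it counts every page fault, including the initial loads into empty frames.

```python
from collections import deque


def fifo_faults(refs: list[int], n_frames: int) -> int:
    """Count page faults for FIFO replacement."""
    frames: deque[int] = deque()   # oldest resident page sits at the left
    faults = 0
    for page in refs:
        if page in frames:
            continue               # hit: FIFO order is not affected by use
        faults += 1
        if len(frames) == n_frames:
            frames.popleft()       # evict the page that arrived first
        frames.append(page)
    return faults


REFS = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(fifo_faults(REFS, 3))   # 9
print(fifo_faults(REFS, 4))   # 10: more frames, more faults
```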

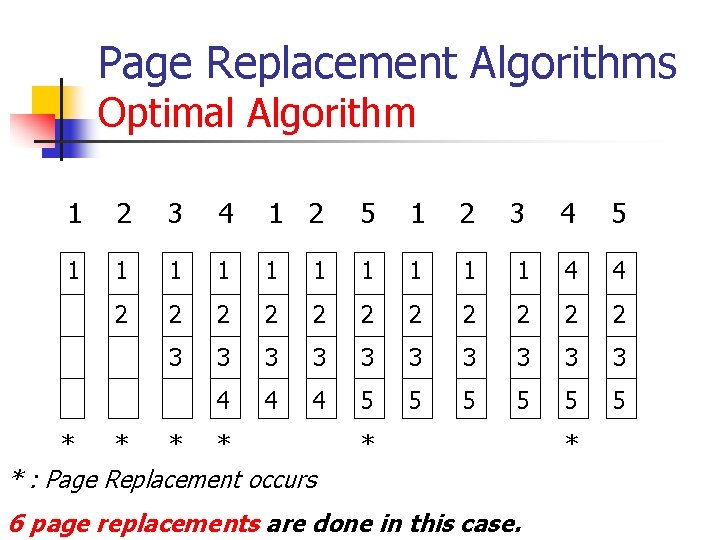

Page Replacement Algorithms: Optimal Algorithm

- One result of the discovery of Belady’s anomaly was the search for an optimal page-replacement algorithm.
- An optimal page-replacement algorithm has the lowest page-fault rate of all algorithms. An optimal algorithm will never suffer from Belady’s anomaly.
- An optimal page-replacement algorithm exists, and has been called OPT or MIN.

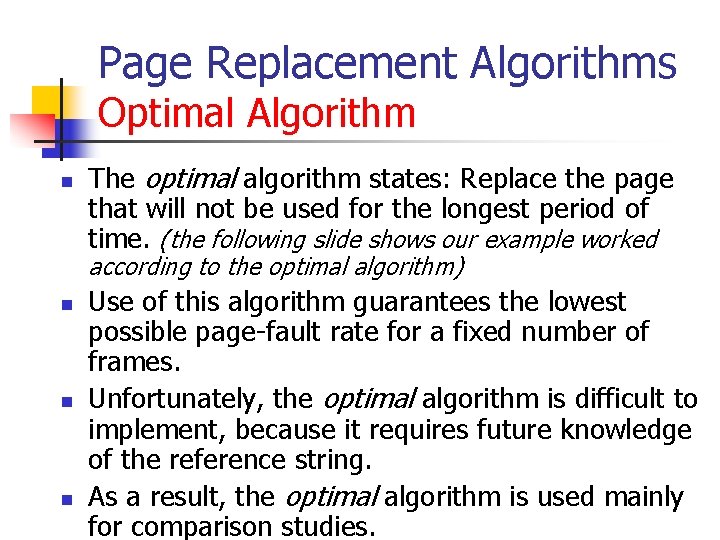

Page Replacement Algorithms: Optimal Algorithm

- The optimal algorithm states: replace the page that will not be used for the longest period of time (the following slide shows our example worked according to the optimal algorithm).
- Use of this algorithm guarantees the lowest possible page-fault rate for a fixed number of frames.
- Unfortunately, the optimal algorithm is difficult to implement, because it requires future knowledge of the reference string.
- As a result, the optimal algorithm is used mainly for comparison studies.

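Page Replacement Algorithms: Optimal Algorithm

The example worked with four frames:

| Reference | 1 | 2 | 3 | 4 | 1 | 2 | 5 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Frame 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 4 | 4 |
| Frame 2 |   | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| Frame 3 |   |   | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 |
| Frame 4 |   |   |   | 4 | 4 | 4 | 5 | 5 | 5 | 5 | 5 | 5 |
| Fault | * | * | * | * |   |   | * |   |   |   | * |   |

\* marks a page fault. 6 page faults occur in this case: the first 4 fill empty frames and the other 2 replace a resident page.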
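A small sketch of the optimal policy for comparison studies. It needs the whole reference string up front, which is exactly why it cannot be used online. Ties between pages that are never used again are broken arbitrarily here (first such frame), an assumption that does not change the fault count.

```python
def opt_faults(refs: list[int], n_frames: int) -> int:
    """Count page faults for the optimal (OPT/MIN) replacement policy."""
    frames: list[int] = []
    faults = 0
    for i, page in enumerate(refs):
        if page in frames:
            continue
        faults += 1
        if len(frames) < n_frames:
            frames.append(page)        # free frame available
            continue
        future = refs[i + 1:]

        def next_use(p: int) -> int:
            # Distance to the next reference; pages never used again rank farthest.
            return future.index(p) if p in future else len(future)

        victim = max(frames, key=next_use)
        frames[frames.index(victim)] = page
    return faults


REFS = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(opt_faults(REFS, 4))   # 6
print(opt_faults(REFS, 3))   # 7
```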

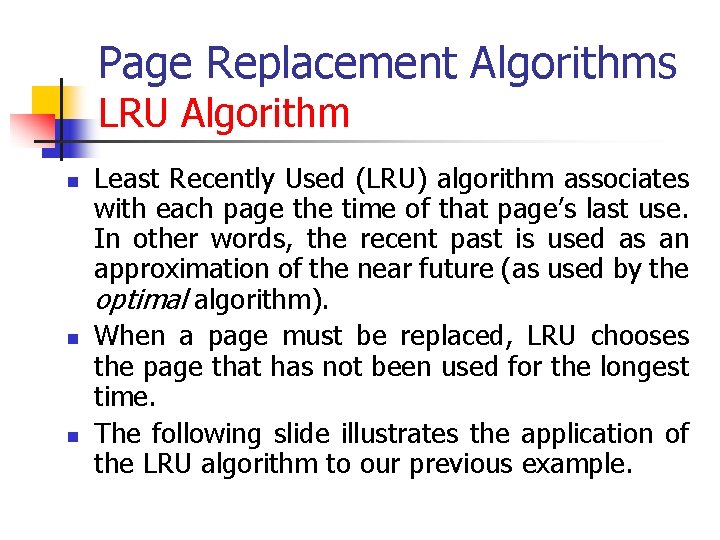

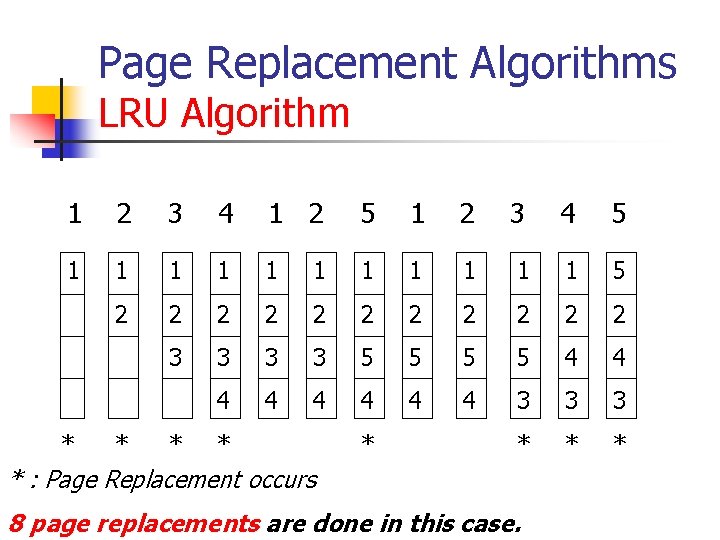

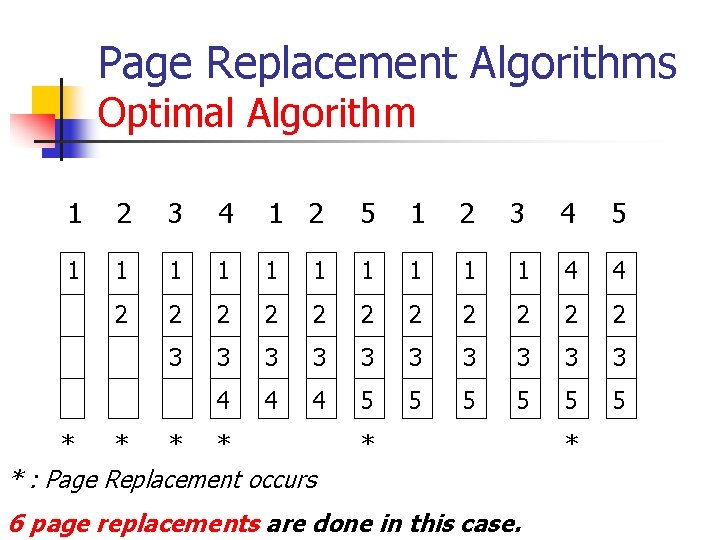

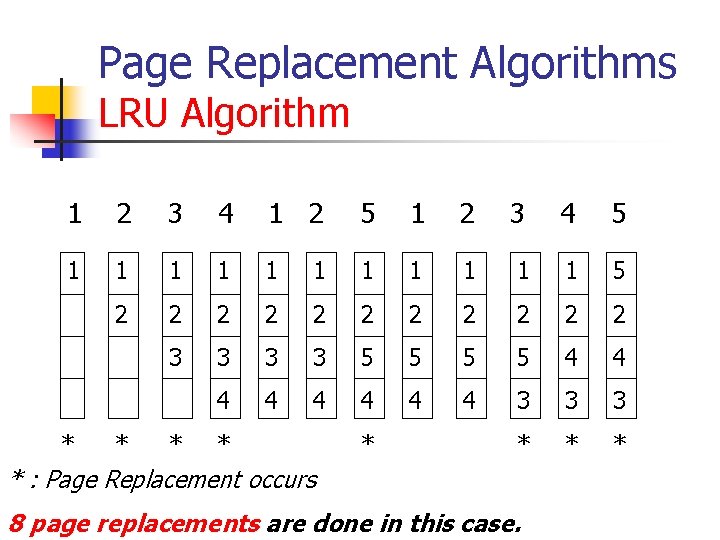

Page Replacement Algorithms: LRU Algorithm

- The Least Recently Used (LRU) algorithm associates with each page the time of that page’s last use.
- In other words, the recent past is used as an approximation of the near future (which is what the optimal algorithm uses).
- When a page must be replaced, LRU chooses the page that has not been used for the longest time.
- The following slide illustrates the application of the LRU algorithm to our previous example.

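Page Replacement Algorithms: LRU Algorithm

The example worked with four frames:

| Reference | 1 | 2 | 3 | 4 | 1 | 2 | 5 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Frame 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 5 |
| Frame 2 |   | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| Frame 3 |   |   | 3 | 3 | 3 | 3 | 5 | 5 | 5 | 5 | 4 | 4 |
| Frame 4 |   |   |   | 4 | 4 | 4 | 4 | 4 | 4 | 3 | 3 | 3 |
| Fault | * | * | * | * |   |   | * |   |   | * | * | * |

\* marks a page fault. 8 page faults occur in this case: the first 4 fill empty frames and the other 4 replace a resident page.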
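In a software simulation, LRU is easy to sketch with an ordered dictionary that keeps pages in order of last use. This illustrates the policy only; it says nothing about how hardware would track recency.

```python
from collections import OrderedDict


def lru_faults(refs: list[int], n_frames: int) -> int:
    """Count page faults for LRU replacement."""
    frames: OrderedDict[int, bool] = OrderedDict()   # least recently used page first
    faults = 0
    for page in refs:
        if page in frames:
            frames.move_to_end(page)       # a hit makes the page most recently used
            continue
        faults += 1
        if len(frames) == n_frames:
            frames.popitem(last=False)     # evict the least recently used page
        frames[page] = True
    return faults


REFS = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(lru_faults(REFS, 4))   # 8
print(lru_faults(REFS, 3))   # 10
```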

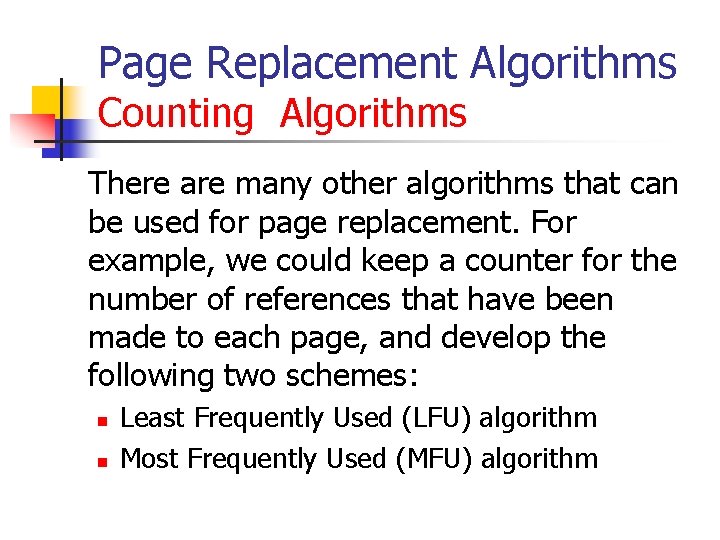

Page Replacement Algorithms: LRU Algorithm

- LRU is often used as a page-replacement algorithm and is considered to be quite good.
- The major problem is how to implement LRU replacement.
- An LRU page-replacement algorithm may require substantial hardware assistance.

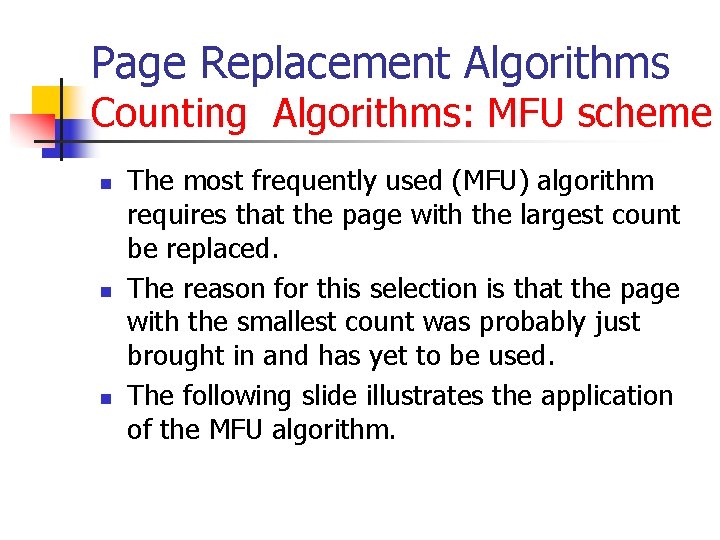

Page Replacement Algorithms: Counting Algorithms

There are many other algorithms that can be used for page replacement. For example, we could keep a counter of the number of references that have been made to each page, and develop the following two schemes:

- Least Frequently Used (LFU) algorithm
- Most Frequently Used (MFU) algorithm

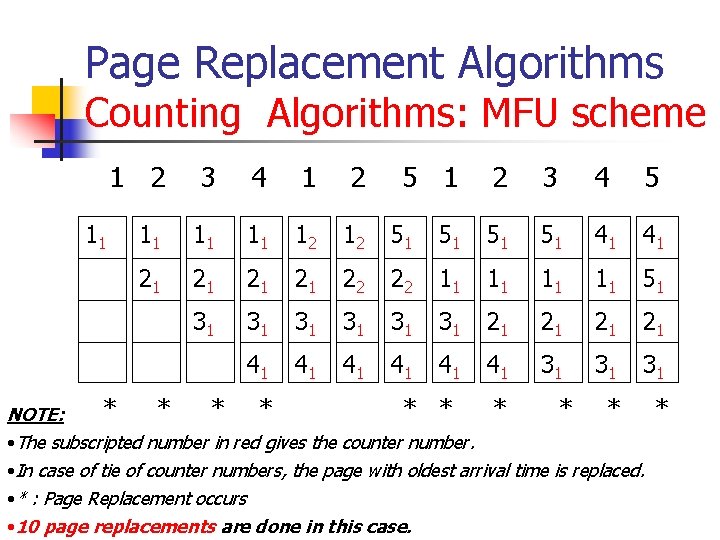

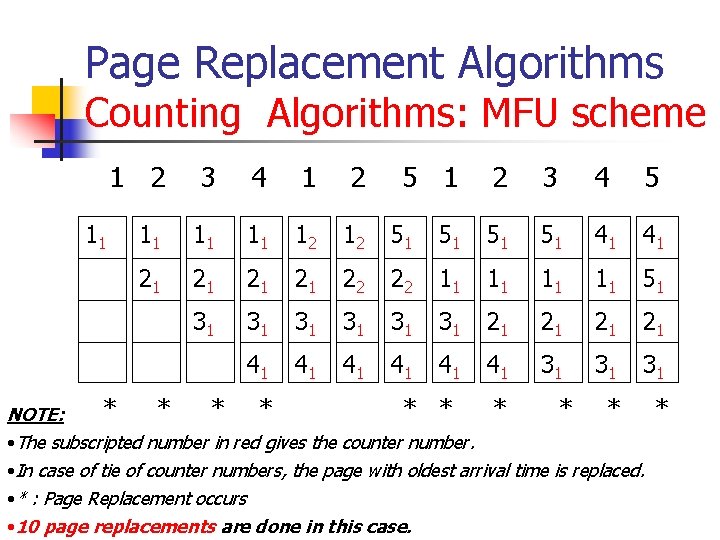

Page Replacement Algorithms: Counting Algorithms: MFU scheme

- The most frequently used (MFU) algorithm requires that the page with the largest count be replaced.
- The reason for this selection is that the page with the smallest count was probably just brought in and has yet to be used.
- The following slide illustrates the application of the MFU algorithm.

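Page Replacement Algorithms: Counting Algorithms: MFU scheme

The example worked with four frames; each cell shows the page with its counter in parentheses:

| Reference | 1 | 2 | 3 | 4 | 1 | 2 | 5 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Frame 1 | 1(1) | 1(1) | 1(1) | 1(1) | 1(2) | 1(2) | 5(1) | 5(1) | 5(1) | 5(1) | 4(1) | 4(1) |
| Frame 2 |   | 2(1) | 2(1) | 2(1) | 2(1) | 2(2) | 2(2) | 1(1) | 1(1) | 1(1) | 1(1) | 5(1) |
| Frame 3 |   |   | 3(1) | 3(1) | 3(1) | 3(1) | 3(1) | 3(1) | 2(1) | 2(1) | 2(1) | 2(1) |
| Frame 4 |   |   |   | 4(1) | 4(1) | 4(1) | 4(1) | 4(1) | 4(1) | 3(1) | 3(1) | 3(1) |
| Fault | * | * | * | * |   |   | * | * | * | * | * | * |

NOTE:

- The number in parentheses gives the counter value.
- In case of a tie between counters, the page with the oldest arrival time is replaced.
- \* marks a page fault.
- 10 page faults occur in this case: the first 4 fill empty frames and the other 6 replace a resident page.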

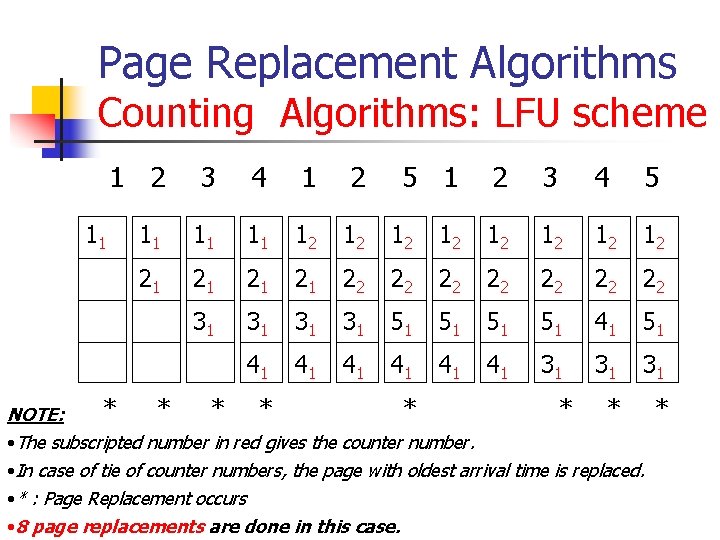

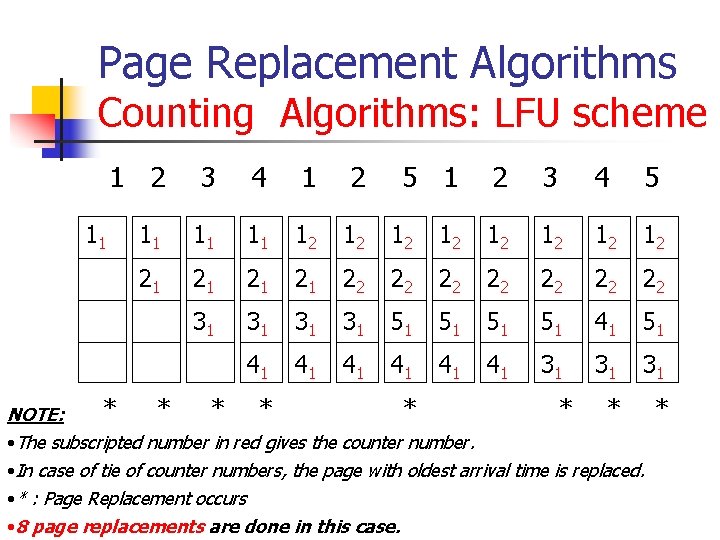

Page Replacement Algorithms: Counting Algorithms: LFU scheme

- The least frequently used (LFU) algorithm requires that the page with the smallest count be replaced.
- The reason for this selection is that an actively used page should have a large reference count.
- The following slide illustrates the application of the LFU algorithm.

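Page Replacement Algorithms: Counting Algorithms: LFU scheme

The example worked with four frames; each cell shows the page with its counter in parentheses:

| Reference | 1 | 2 | 3 | 4 | 1 | 2 | 5 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Frame 1 | 1(1) | 1(1) | 1(1) | 1(1) | 1(2) | 1(2) | 1(2) | 1(3) | 1(3) | 1(3) | 1(3) | 1(3) |
| Frame 2 |   | 2(1) | 2(1) | 2(1) | 2(1) | 2(2) | 2(2) | 2(2) | 2(3) | 2(3) | 2(3) | 2(3) |
| Frame 3 |   |   | 3(1) | 3(1) | 3(1) | 3(1) | 5(1) | 5(1) | 5(1) | 5(1) | 4(1) | 4(1) |
| Frame 4 |   |   |   | 4(1) | 4(1) | 4(1) | 4(1) | 4(1) | 4(1) | 3(1) | 3(1) | 5(1) |
| Fault | * | * | * | * |   |   | * |   |   | * | * | * |

NOTE:

- The number in parentheses gives the counter value.
- In case of a tie between counters, the page with the oldest arrival time is replaced.
- \* marks a page fault.
- 8 page faults occur in this case: the first 4 fill empty frames and the other 4 replace a resident page.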
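Both counting schemes can be sketched with one function that differs only in how the victim is picked. The sketch assumes, as the traces above suggest, that a page's counter starts at 1 when it is loaded, is lost when the page is evicted, and that ties go to the page with the oldest arrival time. The function name and `policy` strings are illustrative.

```python
def counting_faults(refs: list[int], n_frames: int, policy: str) -> int:
    """Count page faults for the LFU or MFU counting schemes (policy is "LFU" or "MFU")."""
    frames: dict[int, list[int]] = {}   # page -> [reference count, arrival time]
    faults = 0
    for t, page in enumerate(refs):
        if page in frames:
            frames[page][0] += 1        # hit: bump the reference counter
            continue
        faults += 1
        if len(frames) == n_frames:
            sign = -1 if policy == "MFU" else 1
            # Smallest (LFU) or largest (MFU) count; oldest arrival breaks ties.
            victim = min(frames, key=lambda p: (sign * frames[p][0], frames[p][1]))
            del frames[victim]
        frames[page] = [1, t]
    return faults


REFS = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(counting_faults(REFS, 4, "MFU"))   # 10
print(counting_faults(REFS, 4, "LFU"))   # 8
```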

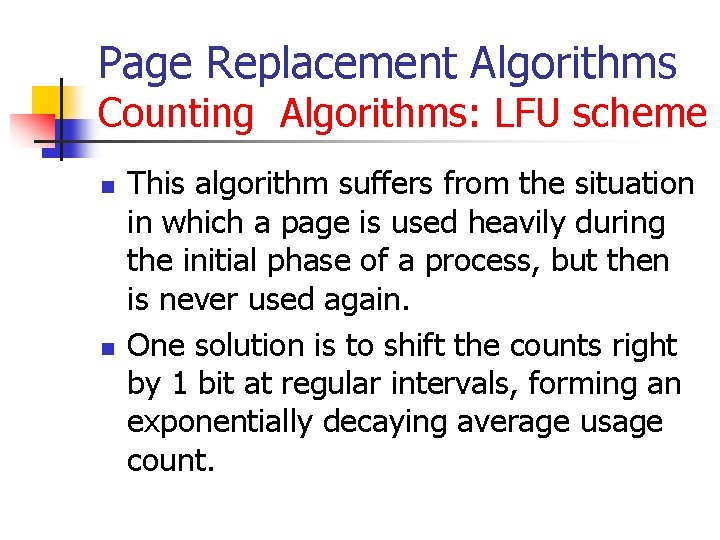

Page Replacement Algorithms: Counting Algorithms: LFU scheme

- This algorithm suffers in the situation where a page is used heavily during the initial phase of a process but is then never used again.
- One solution is to shift the counts right by 1 bit at regular intervals, forming an exponentially decaying average usage count.

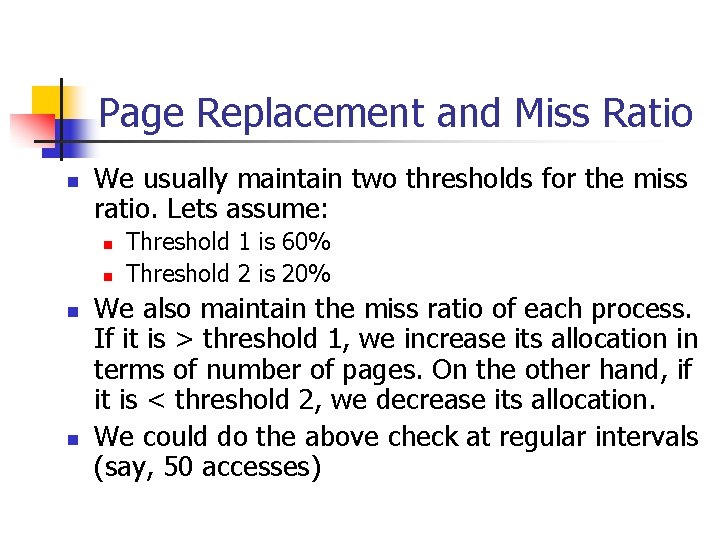

Page Replacement and Miss Ratio

- We usually maintain two thresholds for the miss ratio. Let’s assume:
  - Threshold 1 is 60%
  - Threshold 2 is 20%
- We also maintain the miss ratio of each process. If it is > threshold 1, we increase its allocation in terms of number of pages. On the other hand, if it is < threshold 2, we decrease its allocation.
- We could do the above check at regular intervals (say, every 50 accesses).
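The two-threshold rule could look like the sketch below. The step size of one frame and the floor of one frame are assumptions added for illustration; the lecture only specifies the two thresholds and the periodic check.

```python
def adjust_allocation(frames: int, misses: int, accesses: int,
                      high: float = 0.60, low: float = 0.20, step: int = 1) -> int:
    """Return the new frame allocation for a process after one check interval."""
    miss_ratio = misses / accesses
    if miss_ratio > high:
        return frames + step             # too many misses: grow the allocation
    if miss_ratio < low:
        return max(1, frames - step)     # very few misses: shrink, but keep at least one frame
    return frames                        # between the thresholds: leave unchanged


# Checks after an interval of 50 accesses.
print(adjust_allocation(10, 35, 50))   # 11 (miss ratio 70%)
print(adjust_allocation(10, 5, 50))    # 9  (miss ratio 10%)
print(adjust_allocation(10, 20, 50))   # 10 (miss ratio 40%)
```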

Paging: Parameters Involved

The new parameters involved in this memory management scheme are:

- Page Size
- Page Replacement Algorithms

Paging: Effect of Page Size

- A large page size causes a lot of internal fragmentation. This means that, with a large page size, the paging scheme tends to degenerate to a continuous memory allocation scheme.
- On the other hand, a small page size requires large amounts of memory space to be allocated for page tables, and potentially more memory accesses.
- Finding an optimal page size for a system is not easy, as it depends heavily on the process mix and the pattern of access.

Paging: Effect of Page Replacement Algorithms

- Least-recently used, first-in-first-out, least-frequently used and random replacement are four of the more common schemes in use.
- LRU is often used as a page-replacement algorithm and is considered to be quite good.
- However, an LRU page-replacement algorithm may require substantial hardware assistance.

Paging: Performance Measures

- Average waiting time
- Average turnaround time
- CPU utilization
- CPU throughput
- Replacement ratio (in percentage)
  - This is a new performance measure, and it quantifies page replacements.
  - The ratio of the number of page replacements to the total number of page accesses gives this measure.

Paging: Implementation

- As part of Assignment 4, you’ll implement a memory manager system within an operating system satisfying the given requirements. (For complete details refer to Assignment 4.)
- We’ll see a brief explanation of the assignment in the following slides.

Paging Mechanism Implementation Details

Following are some specifications of the system you’ll implement:

- You’ll use the memory and job mix description in Assignment 3.
- Implement the above using a paging mechanism (1 or more levels), i.e. divide each job into a number of pages, given a fixed page size.
- Implement a page replacement algorithm (or more) to decide which pages to replace and to anticipate page usage throughout the simulation.
- You have to implement randomizers to simulate page access patterns for different processes.
- Try different dynamic algorithms for deciding on the number of memory pages to be assigned to a process through the life of a

Paging Mechanism Implementation Details

After completing the implementation and doing a few sample runs, start thinking of this problem from an algorithmic design point of view. The algorithm/hardware implementation of the memory manager/disc manager involves many parameters, some of which are:

- Memory size.
- Disc transfer speed.
- Time slot for RR.
- Page size.
- Page replacement and paging anticipation algorithm choice.
- Two miss thresholds (percentages over time) for deciding to reallocate or de-allocate empty pages to a process.
- Waiting time for decision making regarding allocation.

Paging Mechanism Implementation Details

The eventual goal (as usual) could be to optimize several (or some) performance measures (criteria) such as:

- Average waiting time.
- Average turnaround time.
- CPU utilization.
- Maximum turnaround time.
- Maximum wait time.
- CPU throughput.

Paging Mechanism Implementation Details

- Perform several test runs and write a summary indicating how sensitive some of the performance measures are to some of the above parameters (or a combination of them).
- Include some of the results (time lines and/or graphs) with your observations and conclusions regarding the effect of changing the values of different parameters on the performance measures.

![Paging: Specifics of a sample implementation](https://slidetodoc.com/presentation_image_h/afcf5b3401f115ca5ba15f58bf3af0dc/image-70.jpg)

Paging: Specifics of a sample implementation

The implementation specifics of Zhao’s study [6] are included here to illustrate one sample implementation.

Zhao, in his study, simulated an operating system with a multilevel feedback queue scheduler, a demand paging scheme for memory management and a disc scheduler. A set of generic processes was created by a random generator. Ranges were set for various PCB parameters as follows:

- Process size: 100 KB to 3 MB
- Estimated execution time: 5 to 35 ms
- Priority: 1 to 4

Paging: Specifics of a sample implementation (contd.)

- A single-level paging scheme was implemented. A memory size of 16 MB was chosen, and a disc drive configuration of 8 surfaces, 64 sectors and 1000 tracks was used.
- The independent variables in the context of paging were the page replacement algorithm (LRU, LFU, FIFO or random replacement) and the page size.
- The dependent variables for the study were average turnaround time and replacement percentage.

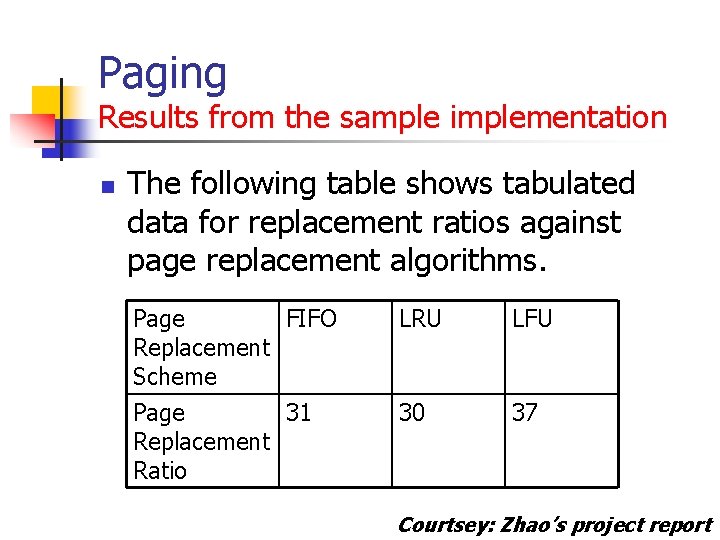

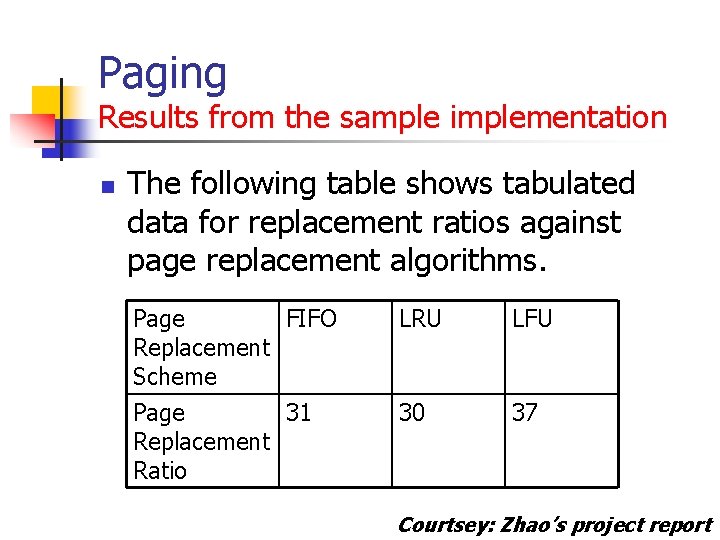

Paging: Results from the sample implementation

- The following table shows tabulated data for replacement ratios against page replacement algorithms.

| Page replacement scheme | FIFO | LRU | LFU |
|---|---|---|---|
| Page replacement ratio (%) | 31 | 30 | 37 |

Courtesy: Zhao’s project report

Paging: Conclusions from the above study

- After finding the optimal values of all studied parameters except page size, Zhao used those values in 1000 simulations each for page sizes of 4 KB and 8 KB. The latter emerged as the better choice.
- In his work, Zhao concludes that an 8 KB page size and the LRU replacement algorithm are the optimal paging parameters for the specified process mix.

Lecture Summary

- Introduction to Paging
- Paging Hardware & Page Tables
- Paging model of memory
- Page Size
- Paging versus Continuous Allocation Scheme
- Multilevel Paging
- Page Replacement and Page Anticipation Algorithms
- Parameters Involved
- Parameter-Performance Relationships
- Sample Results

Preview of next lecture

The following topics shall be covered in the next lecture:

- Introduction to Disc Scheduling
- Need for disc scheduling
- Disc structure
- Details of disc speed
- Disc Scheduling Algorithms
- Parameters Involved
- Parameter-Performance Relationships
- Some Sample Results