Lecture 5: Memory Management Part I

- Slides: 62


Lecture Highlights

- Introduction to Memory Management
- What is memory management
- Related Problems of Redundancy, Fragmentation and Synchronization
- Memory Placement Algorithms
- Continuous Memory Allocation Scheme
- Parameters Involved
- Parameter-Performance Relationships
- Some Sample Results

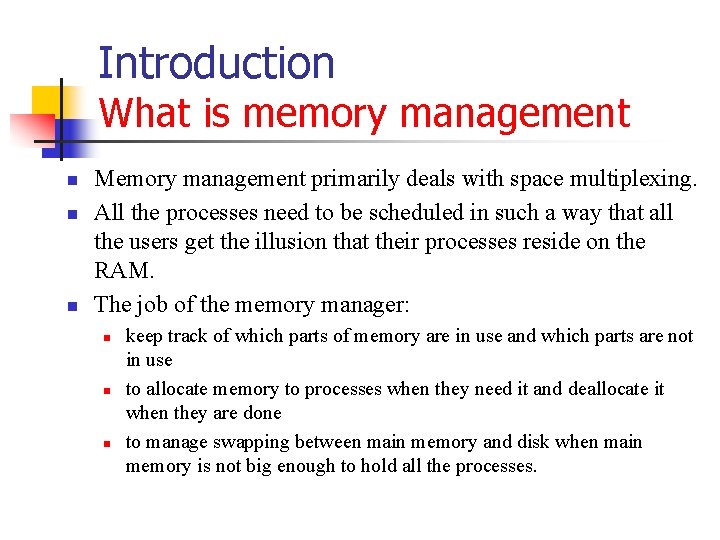

Introduction: What is memory management

- Memory management primarily deals with space multiplexing: several processes share main memory at the same time.
- Processes need to be scheduled so that every user has the illusion that their processes reside in RAM.
- The job of the memory manager is to:
  - keep track of which parts of memory are in use and which are not,
  - allocate memory to processes when they need it and deallocate it when they are done, and
  - manage swapping between main memory and disk when main memory is not big enough to hold all the processes.

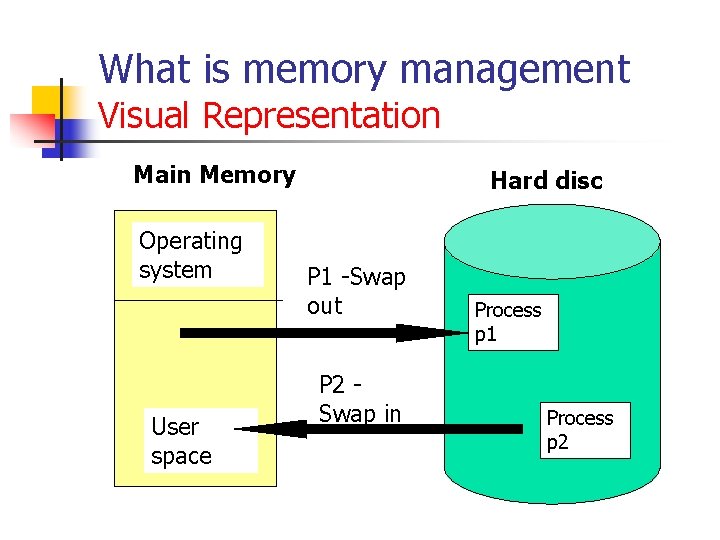

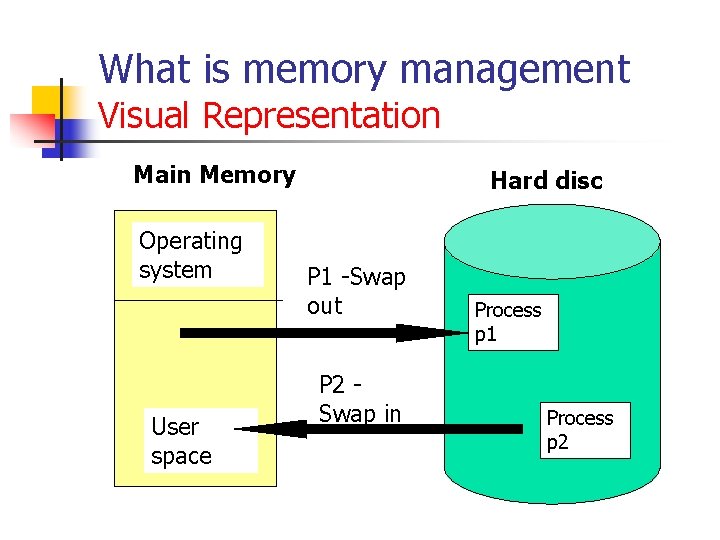

What is memory management: Visual Representation

Figure: main memory, divided into the operating system and user space, alongside the hard disc; process P1 is swapped out to the disc while process P2 is swapped in.

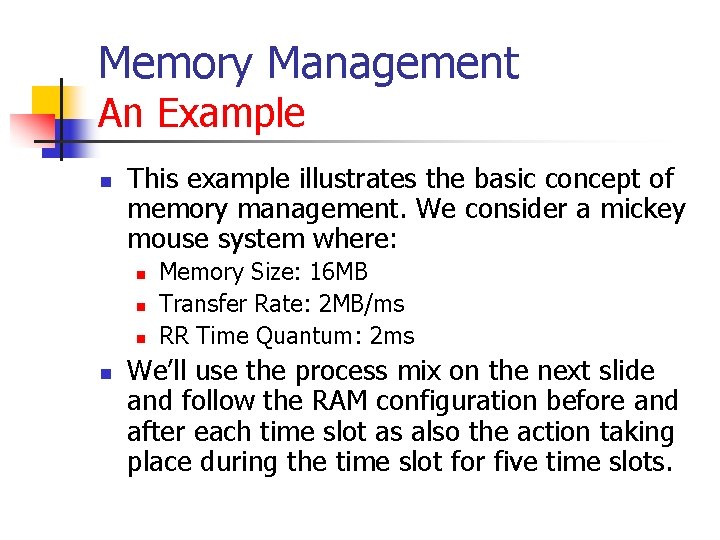

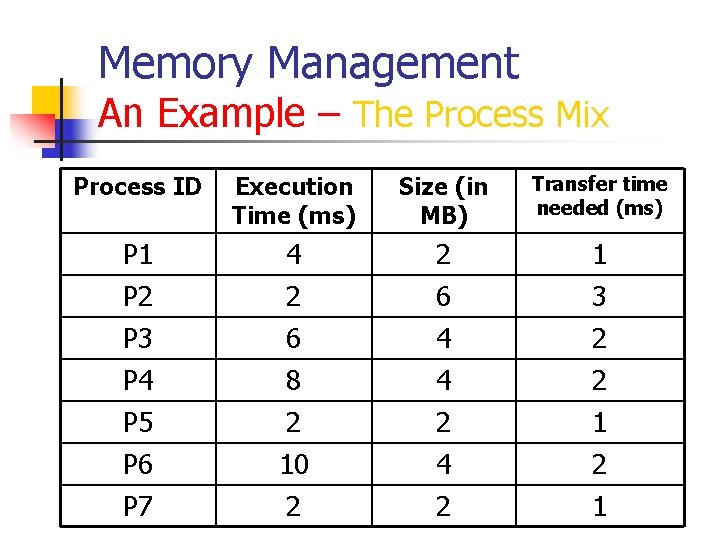

Memory Management: An Example

This example illustrates the basic concept of memory management. We consider a toy ("mickey mouse") system with:

- Memory size: 16 MB
- Transfer rate: 2 MB/ms
- RR time quantum: 2 ms

We'll use the process mix on the next slide and, for five time slots, follow the RAM configuration before and after each slot as well as the actions taking place during it.

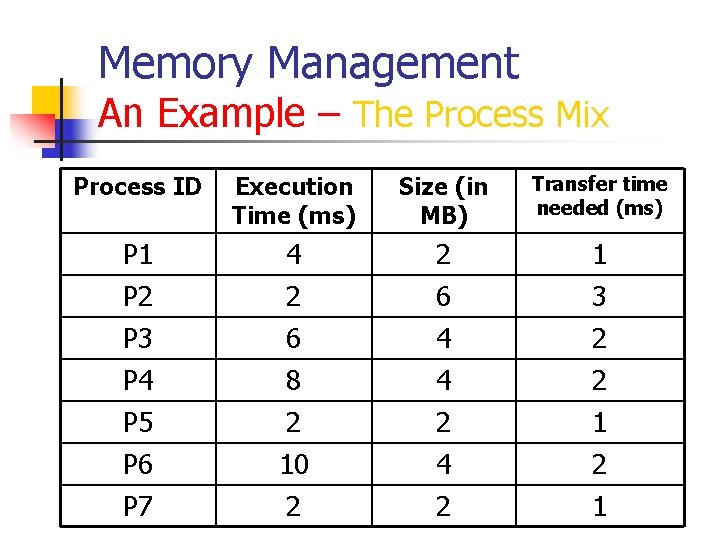

Memory Management: An Example – The Process Mix

| Process ID | Execution Time (ms) | Size (MB) | Transfer Time Needed (ms) |
|---|---|---|---|
| P1 | 4 | 2 | 1 |
| P2 | 2 | 6 | 3 |
| P3 | 6 | 4 | 2 |
| P4 | 8 | 4 | 2 |
| P5 | 2 | 2 | 1 |
| P6 | 10 | 4 | 2 |
| P7 | 2 | 2 | 1 |
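As a quick check on the table, each transfer time is simply the process size divided by the 2 MB/ms transfer rate. This sketch assumes the sizes in the table, with P6 (4 MB) and P7 (2 MB) inferred from the walk-through (P6 needs two 1 ms spooling steps, P7 one); `PROCESS_MIX` and `transfer_time_ms` are illustrative names.

```python
# Process mix from the example: process ID -> (execution time in ms, size in MB).
# P6 and P7 sizes are inferred from the time-slot walk-through.
PROCESS_MIX = {
    "P1": (4, 2),
    "P2": (2, 6),
    "P3": (6, 4),
    "P4": (8, 4),
    "P5": (2, 2),
    "P6": (10, 4),
    "P7": (2, 2),
}
MEMORY_MB = 16
TRANSFER_RATE_MB_PER_MS = 2


def transfer_time_ms(size_mb, rate=TRANSFER_RATE_MB_PER_MS):
    """Time needed to move a process between disc and RAM at a fixed rate."""
    return size_mb / rate


transfer_times = {pid: transfer_time_ms(size) for pid, (_, size) in PROCESS_MIX.items()}

# P1-P4 start in RAM; together they fill the 16 MB memory exactly.
initial_load_mb = sum(PROCESS_MIX[pid][1] for pid in ("P1", "P2", "P3", "P4"))

for pid, t in transfer_times.items():
    print(f"{pid}: {t:g} ms")
```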

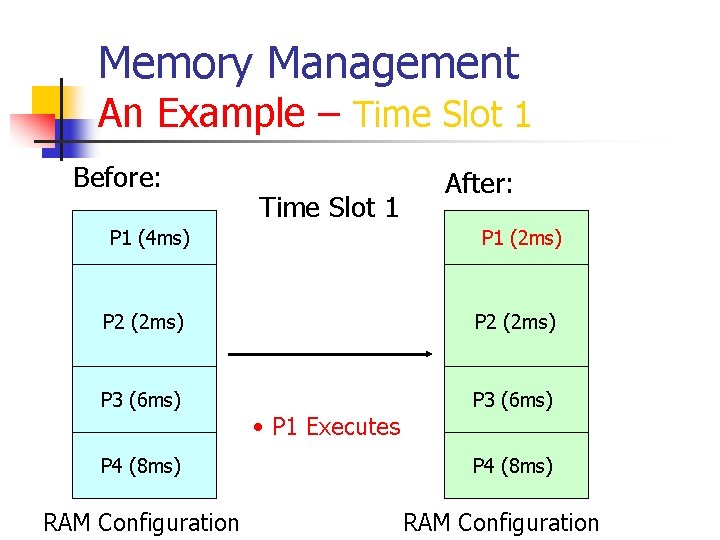

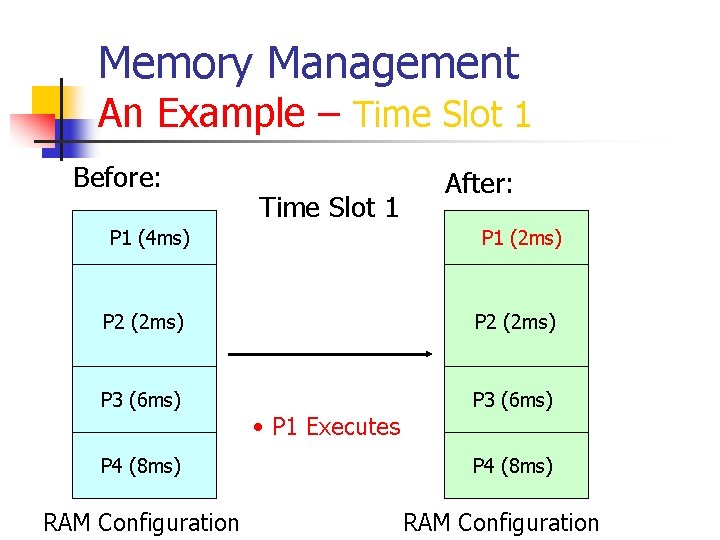

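Memory Management: An Example – Time Slot 1

Times in parentheses are each process's remaining execution time.

- Before (RAM configuration): P1 (4 ms), P2 (2 ms), P3 (6 ms), P4 (8 ms)
- During time slot 1: P1 executes.
- After (RAM configuration): P1 (2 ms), P2 (2 ms), P3 (6 ms), P4 (8 ms)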

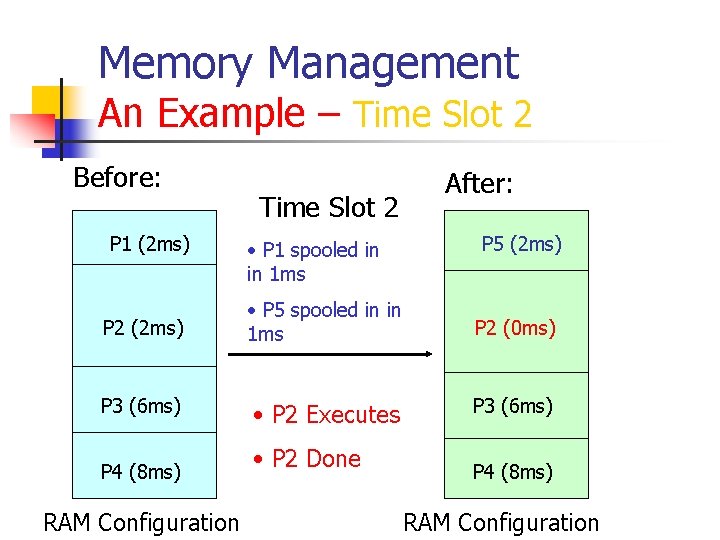

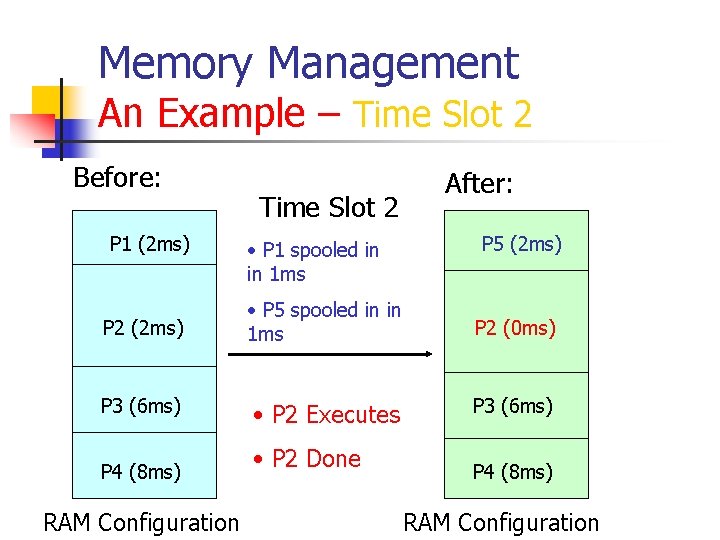

Memory Management: An Example – Time Slot 2

- Before (RAM configuration): P1 (2 ms), P2 (2 ms), P3 (6 ms), P4 (8 ms)
- During time slot 2:
  - P1 is spooled out (1 ms).
  - P5 is spooled in (1 ms).
  - P2 executes.
  - P2 is done.
- After (RAM configuration): P5 (2 ms), P2 (0 ms), P3 (6 ms), P4 (8 ms)

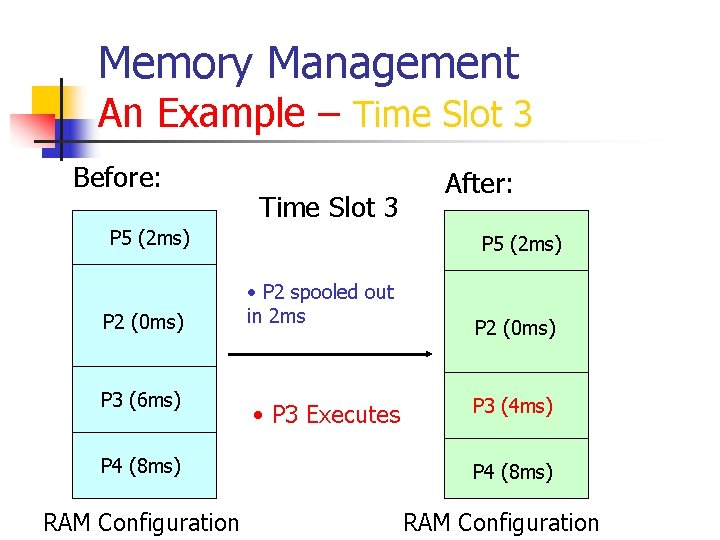

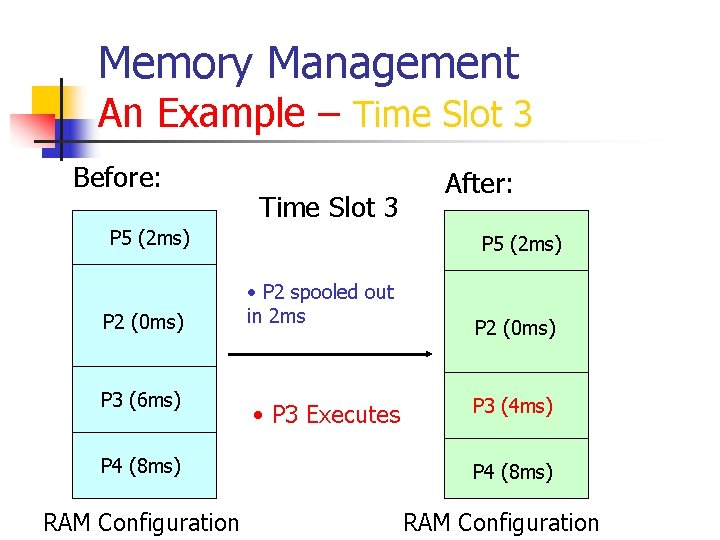

Memory Management: An Example – Time Slot 3

- Before (RAM configuration): P5 (2 ms), P2 (0 ms), P3 (6 ms), P4 (8 ms)
- During time slot 3:
  - P2 is spooled out for 2 ms (its full transfer takes 3 ms).
  - P3 executes.
- After (RAM configuration): P5 (2 ms), P2 (0 ms), P3 (4 ms), P4 (8 ms)

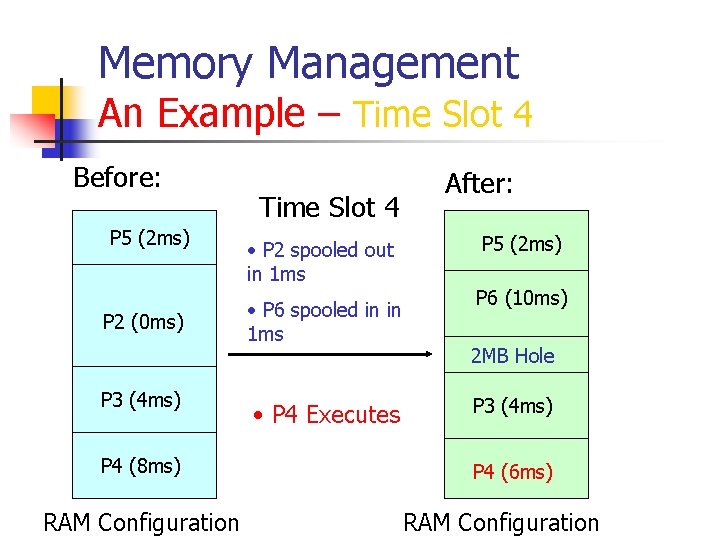

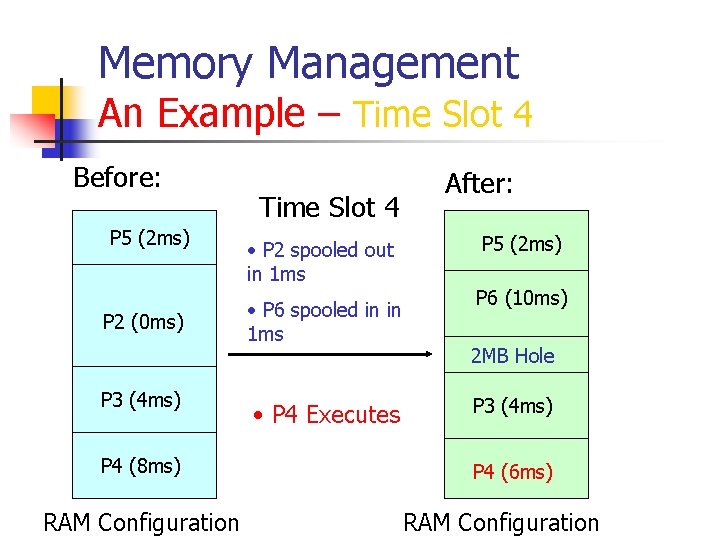

Memory Management: An Example – Time Slot 4

- Before (RAM configuration): P5 (2 ms), P2 (0 ms), P3 (4 ms), P4 (8 ms)
- During time slot 4:
  - Spooling out of P2 finishes (its remaining 1 ms).
  - P6 is spooled in for 1 ms.
  - P4 executes.
- After (RAM configuration): P5 (2 ms), P6 (10 ms), 2 MB hole, P3 (4 ms), P4 (6 ms)

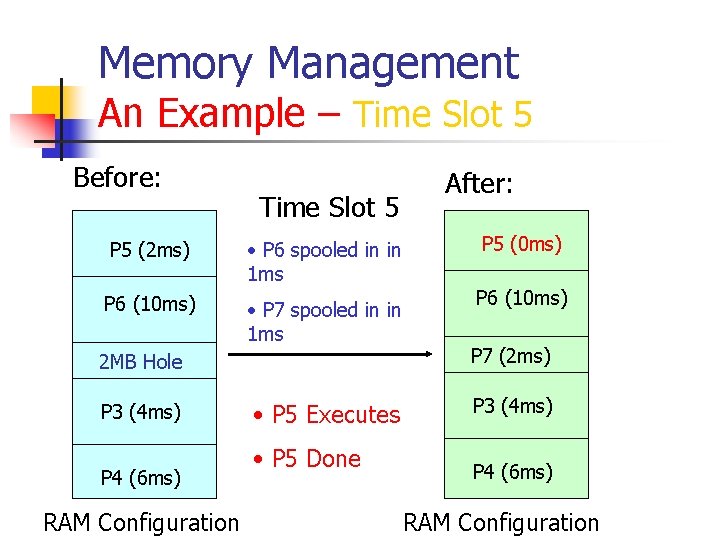

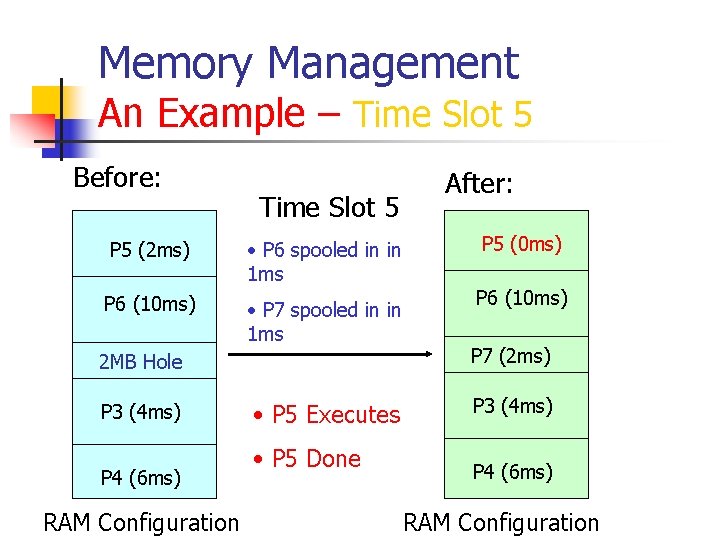

Memory Management: An Example – Time Slot 5

- Before (RAM configuration): P5 (2 ms), P6 (10 ms), 2 MB hole, P3 (4 ms), P4 (6 ms)
- During time slot 5:
  - P6 is spooled in for its remaining 1 ms.
  - P7 is spooled in (1 ms).
  - P5 executes.
  - P5 is done.
- After (RAM configuration): P5 (0 ms), P6 (10 ms), P7 (2 ms), P3 (4 ms), P4 (6 ms)

Memory Management: An Example

- The previous slides started a stepwise walk-through of the mickey mouse system.
- Try to complete the walk-through from this point on.

Related Problems: Synchronization Problem in Spooling

- Spooling enables a process to be transferred while another process is executing. Its aim is to keep the CPU from sitting idle, and thus to use the CPU more efficiently.
- The processes being transferred to main memory can be of different sizes. When a very large process is transferred, the transfer time can exceed the combined execution time of the processes already in RAM. The CPU then sits idle, which is exactly the problem spooling was invented to solve.
- This is termed the synchronization problem. It arises because the variance in process sizes means that transfers and execution are not guaranteed to stay synchronized.
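The condition behind the synchronization problem can be written down directly. This sketch is a simplification that assumes the CPU can only run processes already resident in RAM while a transfer is in progress, so it stays busy for at most their combined remaining execution time; `cpu_idle_time_ms` is a hypothetical helper.

```python
def cpu_idle_time_ms(transfer_ms, resident_remaining_ms):
    """Estimate how long the CPU sits idle while one process is spooled in.

    transfer_ms: time needed to transfer the incoming process.
    resident_remaining_ms: remaining execution times of the processes in RAM.
    Simplification: the CPU can keep busy only with resident processes, so any
    transfer time beyond their combined execution time is idle time.
    """
    busy_ms = sum(resident_remaining_ms)
    return max(0, transfer_ms - busy_ms)


# A small transfer is hidden behind the work already in RAM ...
print(cpu_idle_time_ms(2, [4, 6]))    # 0
# ... but a very large one is not: the CPU idles for the difference.
print(cpu_idle_time_ms(10, [2, 3]))   # 5
```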

Related Problems: Redundancy Problem

- Usually the combined size of all processes is much larger than the RAM, so processes are swapped in and out continuously.
- One issue with this is: what is the use of transferring an entire process when only part of its code is executed in a given time slot?
- This is termed the redundancy problem.

Related Problems: Fragmentation

- Fragmentation occurs when free memory is broken into little pieces as processes are loaded into and removed from memory.
- Fragmentation is of two types:
  - External fragmentation
  - Internal fragmentation
- In the present context we are mainly concerned with external fragmentation, which we explore in greater detail in the following slides.

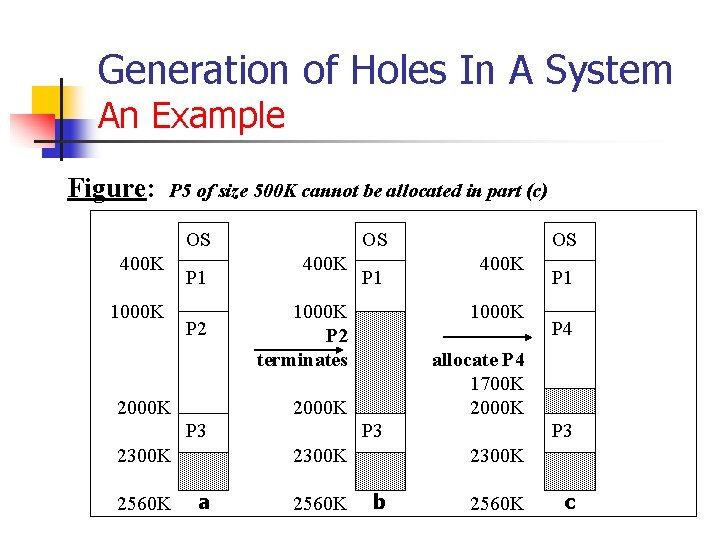

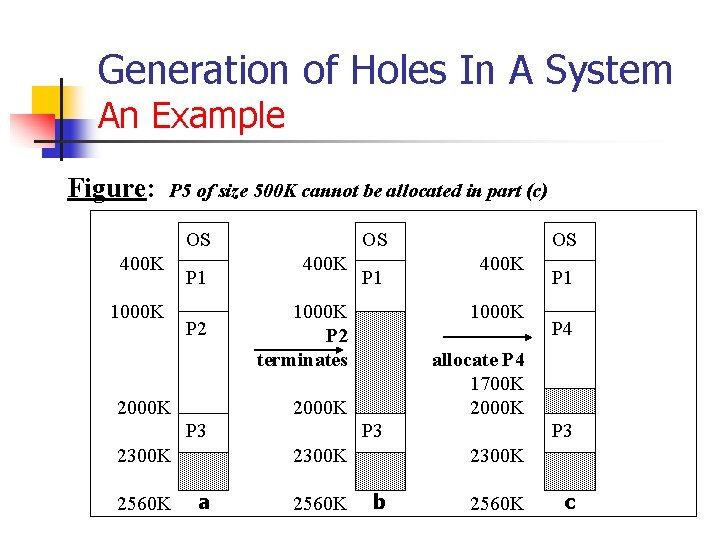

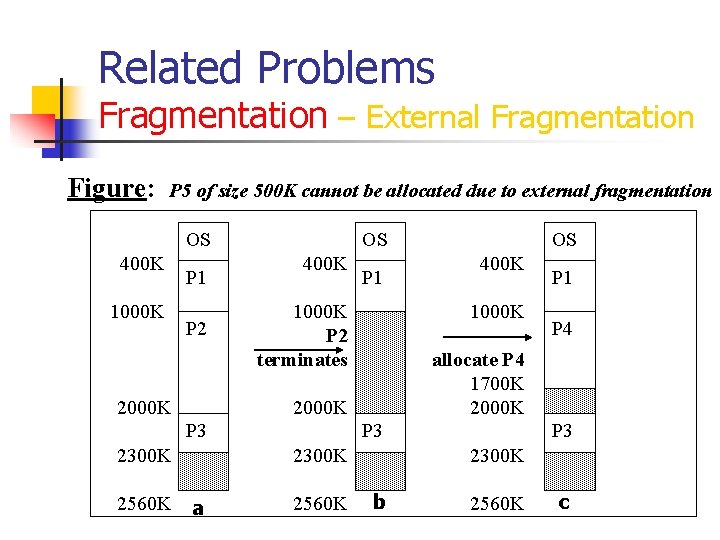

Generation of Holes in a System: An Example

Figure: P5 of size 500 K cannot be allocated in part (c). Memory is 2560 K in total, with the OS in 0-400 K. (a) P1 occupies 400-1000 K, P2 1000-2000 K and P3 2000-2300 K, leaving 2300-2560 K free. (b) P2 terminates, freeing 1000-2000 K. (c) P4 is allocated at 1000-1700 K, leaving holes at 1700-2000 K and 2300-2560 K.

Generation of Holes in a System: An Example

- In the previous figure, P1, P2 and P3 are initially in RAM, and the remaining 260 K is not enough for P4 (700 K) (part a).
- When P2 terminates, it is spooled out, leaving behind a hole of 1000 K. We now have two holes, of 1000 K and 260 K (part b).
- The 1000 K hole is big enough to spool in P4, which leaves two holes of 300 K and 260 K (part c).
- Thus, holes are generated because the size of the process spooled out is not the same as the size of the process waiting to be spooled in.
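The three steps can be replayed with a minimal hole-tracking sketch. It assumes addresses in K, a 2560 K memory with the layout from the figure, and that P4 is placed at the start of the first hole that fits; `find_holes` and the `memory` dictionary are illustrative, not a prescribed design.

```python
MEMORY_K = 2560  # total memory in the example, in K


def find_holes(segments, total=MEMORY_K):
    """Return the free (start, size) gaps between allocated segments, in K.

    segments maps a name to its (start, size) region.
    """
    holes, cursor = [], 0
    for start, size in sorted(segments.values()):
        if start > cursor:
            holes.append((cursor, start - cursor))
        cursor = start + size
    if cursor < total:
        holes.append((cursor, total - cursor))
    return holes


# (a) OS, P1, P2 and P3 are resident; only 260 K is free, too little for P4 (700 K).
memory = {"OS": (0, 400), "P1": (400, 600), "P2": (1000, 1000), "P3": (2000, 300)}
holes_a = find_holes(memory)

# (b) P2 terminates and is spooled out, leaving a 1000 K hole.
del memory["P2"]
holes_b = find_holes(memory)

# (c) P4 is placed at the start of the first hole big enough for it.
p4_start = next(start for start, size in holes_b if size >= 700)
memory["P4"] = (p4_start, 700)
holes_c = find_holes(memory)

print(holes_a)  # [(2300, 260)]
print(holes_b)  # [(1000, 1000), (2300, 260)]
print(holes_c)  # [(1700, 300), (2300, 260)]
```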

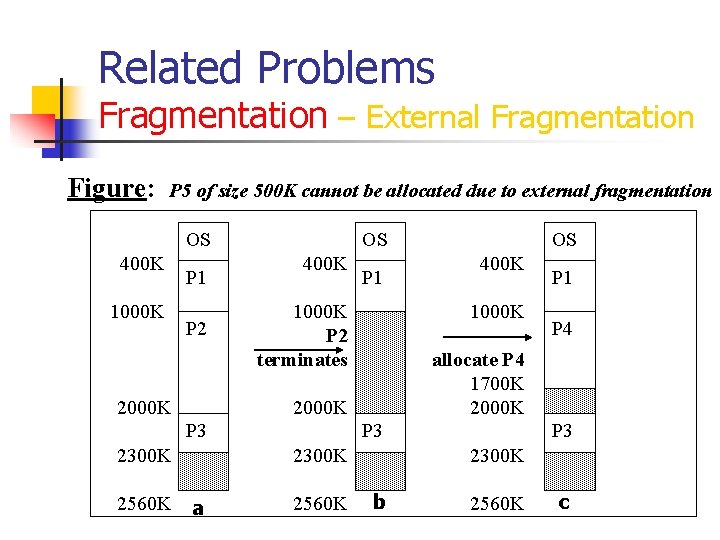

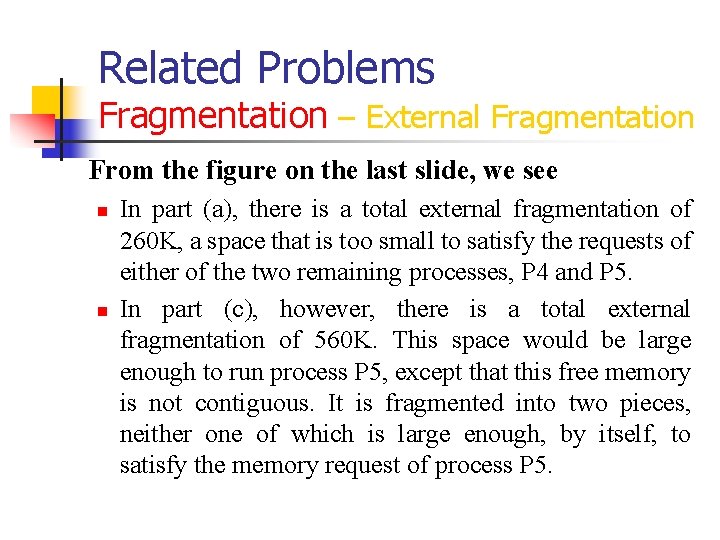

Related Problems: Fragmentation – External Fragmentation

- External fragmentation exists when enough total memory space exists to satisfy a request, but it is not contiguous; storage is fragmented into a large number of small holes.
- Two such cases can be observed in the figure of the scheduling example on the next slide.

Related Problems: Fragmentation – External Fragmentation

Figure: P5 of size 500 K cannot be allocated due to external fragmentation. The memory maps (a)-(c) are the same as in the hole-generation example: in (c), P4 occupies 1000-1700 K and the free memory is split into holes of 300 K (1700-2000 K) and 260 K (2300-2560 K).

Related Problems: Fragmentation – External Fragmentation

From the figure on the last slide, we see that:

- In part (a), there is a total external fragmentation of 260 K, a space too small to satisfy the requests of either of the two remaining processes, P4 and P5.
- In part (c), however, there is a total external fragmentation of 560 K. This space would be large enough to run process P5, except that this free memory is not contiguous. It is fragmented into two pieces, neither of which is large enough, by itself, to satisfy the memory request of P5.
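The difference between parts (a) and (c) can be expressed as a small predicate. This is an illustrative sketch (the name `is_externally_fragmented` is hypothetical) that treats free memory as nothing more than a list of hole sizes in K.

```python
def is_externally_fragmented(hole_sizes, request):
    """True when the holes add up to enough space but no single hole fits.

    hole_sizes: sizes of the free holes (same unit as request).
    """
    return sum(hole_sizes) >= request and max(hole_sizes, default=0) < request


print(is_externally_fragmented([260], 500))       # False: not enough memory at all
print(is_externally_fragmented([300, 260], 500))  # True: 560 K free, but split
```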

Related Problems: Fragmentation – External Fragmentation

This fragmentation problem can be severe. In the worst case, there could be a block of free (wasted) memory between every two processes. If all this memory were in one big free block, a few more processes could be run. Depending on the total amount of memory storage and the average process size, external fragmentation may be either a minor or a major problem.

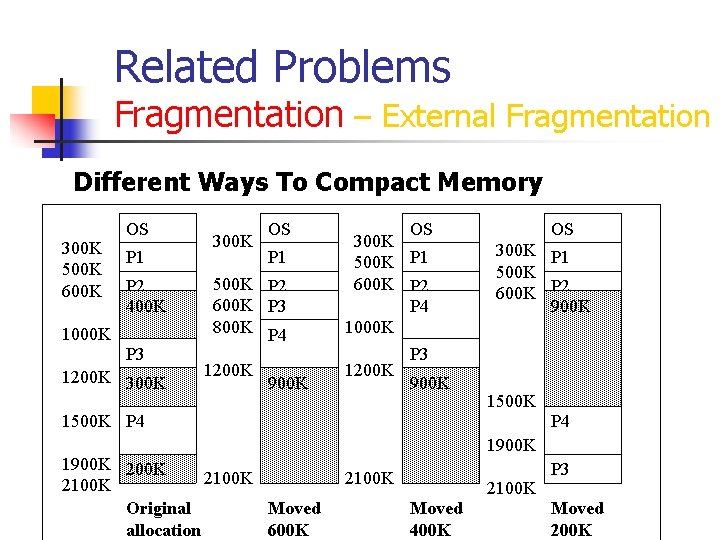

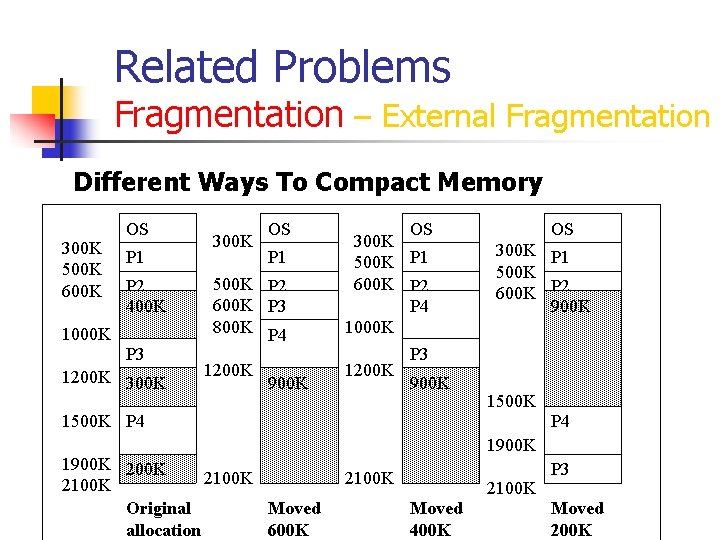

Related Problems: Fragmentation – External Fragmentation

- One solution to external fragmentation is compaction: shuffling the memory contents so that all free memory is placed together in one large block.
- The simplest compaction algorithm moves all processes toward one end of memory, so that all holes move toward the other end, producing one large hole of available memory. This scheme can be quite expensive.
- The figure on the following slide shows different ways to compact memory. Selecting an optimal compaction strategy is quite difficult.

Related Problems: Fragmentation – External Fragmentation

Figure: Different ways to compact memory (2100 K of memory). Original allocation: OS 0-300 K, P1 300-500 K, P2 500-600 K, P3 1000-1200 K, P4 1500-1900 K, with holes of 400 K, 300 K and 200 K. Moving P3 and P4 down to 600-800 K and 800-1200 K moves 600 K; moving only P4 to 600-1000 K moves 400 K; moving only P3 to 1900-2100 K moves 200 K. Each option leaves a single 900 K hole.
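The simplest strategy, moving every process toward the low end of memory, can be sketched as follows. It assumes addresses and sizes in K and the original allocation from the figure; on that layout it reproduces the first option (P3 and P4 moved, 600 K in total), which shows why the simplest strategy is not always the cheapest. The function name `compact` is illustrative.

```python
MEMORY_K = 2100  # total memory in the figure, in K


def compact(segments):
    """Slide every allocated segment toward address 0 (simplest compaction).

    segments: list of (name, start, size) tuples, in K, in any order.
    Returns the relocated segments and the total amount of memory moved.
    """
    relocated, cursor, moved = [], 0, 0
    for name, start, size in sorted(segments, key=lambda seg: seg[1]):
        if start != cursor:
            moved += size  # this segment has to be copied to its new address
        relocated.append((name, cursor, size))
        cursor += size
    return relocated, moved


original = [
    ("OS", 0, 300), ("P1", 300, 200), ("P2", 500, 100),
    ("P3", 1000, 200), ("P4", 1500, 400),
]
relocated, moved = compact(original)
free_start = relocated[-1][1] + relocated[-1][2]  # end of the last segment

print(moved)                   # 600
print(MEMORY_K - free_start)   # 900
```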

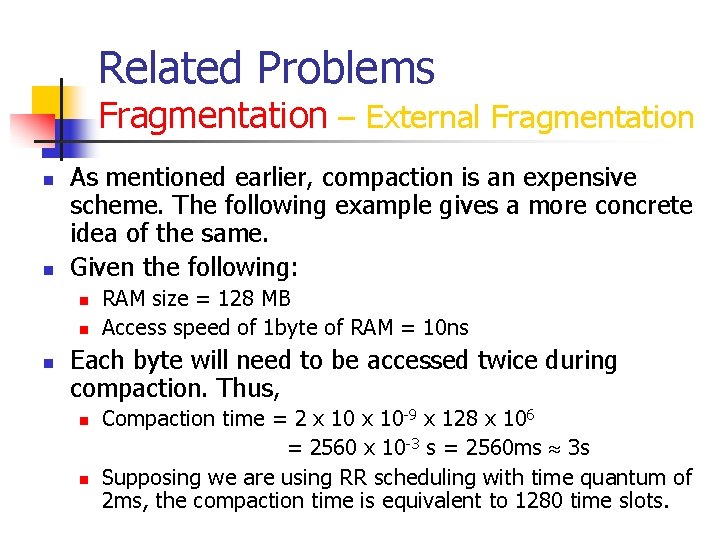

Related Problems: Fragmentation – External Fragmentation

As mentioned earlier, compaction is an expensive scheme. The following example gives a more concrete idea of the cost. Given:

- RAM size = 128 MB
- Access time for 1 byte of RAM = 10 ns
- Each byte needs to be accessed twice during compaction.

Thus,

$$\text{Compaction time} = 2 \times (10 \times 10^{-9}\ \text{s}) \times (128 \times 10^{6}) = 2560 \times 10^{-3}\ \text{s} = 2560\ \text{ms} \approx 3\ \text{s}$$

Supposing we use RR scheduling with a time quantum of 2 ms, the compaction time is equivalent to 2560 / 2 = 1280 time slots.
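The same arithmetic as a short script, handy for trying other RAM sizes or access times (for example, the 32 MB memory and 14 ns access time of the assignment). Variable names are illustrative, and 1 MB is taken as 10^6 bytes, as in the calculation above.

```python
RAM_BYTES = 128 * 10**6      # 128 MB, with 1 MB = 10^6 bytes as in the calculation
ACCESS_TIME_S = 10 * 10**-9  # 10 ns per byte access
ACCESSES_PER_BYTE = 2        # each byte is accessed twice during compaction
TIME_QUANTUM_MS = 2          # RR time quantum

compaction_time_s = ACCESSES_PER_BYTE * ACCESS_TIME_S * RAM_BYTES
compaction_time_ms = compaction_time_s * 1000
equivalent_slots = compaction_time_ms / TIME_QUANTUM_MS

print(f"{compaction_time_ms:.0f} ms")          # 2560 ms
print(f"{equivalent_slots:.0f} time slots")    # 1280 time slots
```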

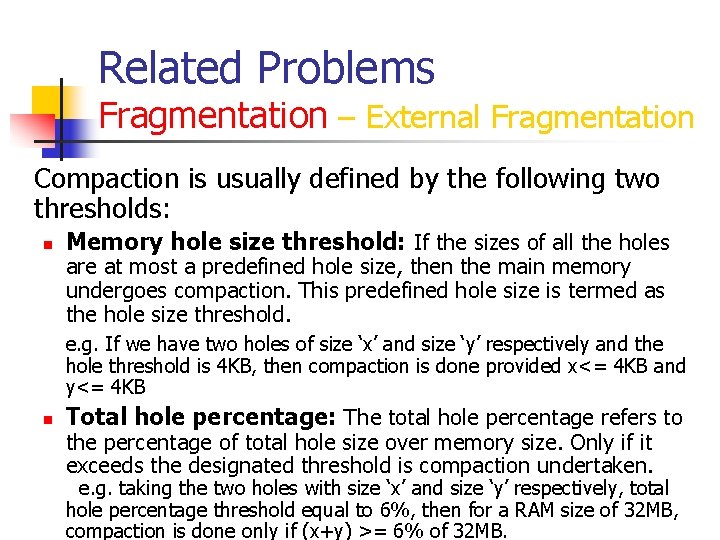

Related Problems: Fragmentation – External Fragmentation

Compaction is usually governed by the following two thresholds:

- Memory hole size threshold: if the sizes of all the holes are at most a predefined hole size, the main memory undergoes compaction. This predefined hole size is termed the hole size threshold. E.g., if we have two holes of sizes x and y and the hole size threshold is 4 KB, compaction is done provided x ≤ 4 KB and y ≤ 4 KB.
- Total hole percentage: the percentage of total hole size over memory size. Compaction is undertaken only if it exceeds the designated threshold. E.g., for the same two holes, a total hole percentage threshold of 6% and a RAM size of 32 MB, compaction is done only if x + y ≥ 6% of 32 MB.
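A sketch of a compaction check that combines the two thresholds. Whether they are combined with AND (as in the assignment's rule of more than 6% fragmentation and holes of 50 KB or less) or used separately is a design choice; this version assumes AND, follows the ≥ comparison of the example above, works in KB with 1 MB = 1024 KB, and uses the hypothetical name `should_compact`.

```python
def should_compact(hole_sizes_kb, memory_kb, hole_size_threshold_kb, total_hole_pct_threshold):
    """Apply both compaction thresholds.

    Assumption: compaction is triggered only when every hole is at most the
    hole size threshold AND the holes together make up at least the given
    percentage of memory.
    """
    if not hole_sizes_kb:
        return False
    all_holes_small = max(hole_sizes_kb) <= hole_size_threshold_kb
    total_hole_pct = 100 * sum(hole_sizes_kb) / memory_kb
    return all_holes_small and total_hole_pct >= total_hole_pct_threshold


MEMORY_KB = 32 * 1024  # 32 MB
print(should_compact([4, 8], MEMORY_KB, 4, 6))      # False: the 8 KB hole is too big
print(should_compact([4, 4], MEMORY_KB, 4, 6))      # False: only 8 KB free in total
print(should_compact([4] * 500, MEMORY_KB, 4, 6))   # True: 2000 KB is about 6.1% of 32 MB
```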

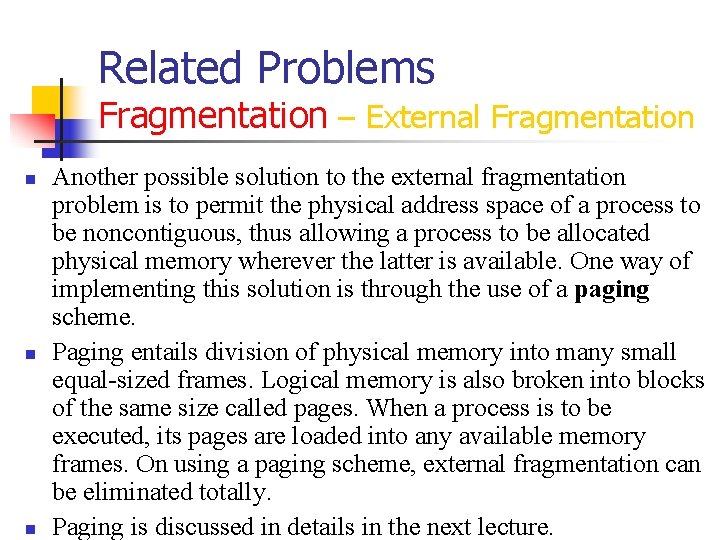

Related Problems: Fragmentation – External Fragmentation

- Another possible solution to external fragmentation is to permit the physical address space of a process to be noncontiguous, so that a process can be allocated physical memory wherever it is available.
- One way of implementing this is a paging scheme. Paging divides physical memory into many small, equal-sized blocks called frames; logical memory is broken into blocks of the same size called pages. When a process is to be executed, its pages are loaded into any available frames.
- A paging scheme eliminates external fragmentation entirely. Paging is discussed in detail in the next lecture.
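A minimal sketch of the idea: a process's pages go into whichever frames are free, wherever they are, so no contiguous hole is needed. The frame numbers, the 4 KB page size and the `load_pages` helper are assumptions for illustration; paging hardware and page tables are covered properly in the next lecture.

```python
import math


def load_pages(process_size, page_size, free_frames):
    """Place each page of a process into any free frame.

    free_frames: list of free frame numbers; frames are taken from the front
    and removed from the list. The frames need not be adjacent.
    Returns the page table as a list: index = page number, value = frame number.
    """
    n_pages = math.ceil(process_size / page_size)
    if n_pages > len(free_frames):
        raise MemoryError("not enough free frames")
    return [free_frames.pop(0) for _ in range(n_pages)]


free = [3, 7, 8, 12]
page_table = load_pages(10 * 1024, 4 * 1024, free)
print(page_table)  # [3, 7, 8]
print(free)        # [12]
```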

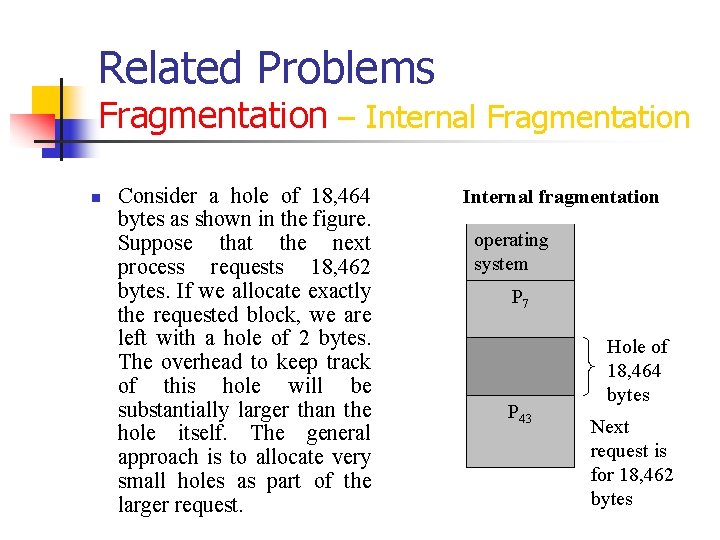

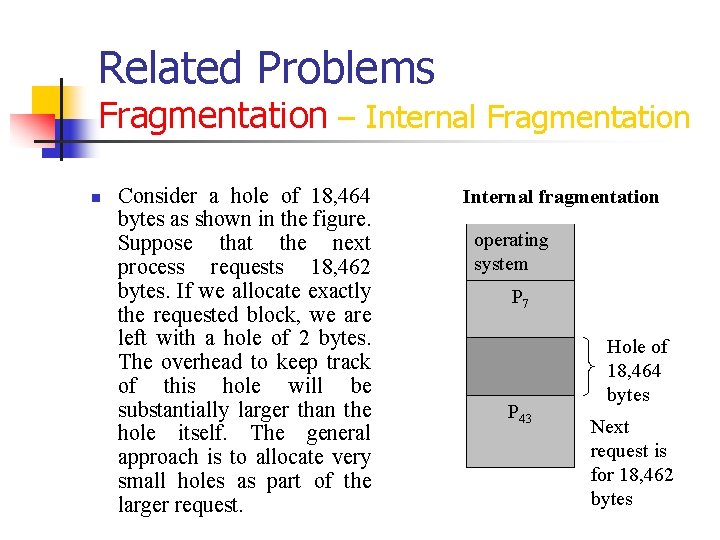

Related Problems: Fragmentation – Internal Fragmentation

- Consider a hole of 18,464 bytes, as shown in the figure. Suppose that the next process requests 18,462 bytes. If we allocate exactly the requested block, we are left with a hole of 2 bytes. The overhead of keeping track of this hole would be substantially larger than the hole itself. The general approach is to allocate such very small holes as part of the larger request.

Figure: Internal fragmentation. Memory holds the operating system, P7, P43 and a hole of 18,464 bytes; the next request is for 18,462 bytes.
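The rule of folding a tiny leftover into the allocation can be sketched as below. The cut-off `min_leftover` (16 bytes here) and the function name are hypothetical; in practice the cut-off would be chosen with the cost of tracking a hole in mind.

```python
def allocate_from_hole(hole_size, request, min_leftover):
    """Allocate `request` bytes from a hole of `hole_size` bytes.

    If the piece left over would be smaller than `min_leftover` (too small to be
    worth tracking), the whole hole is given to the process instead.
    Returns (allocated, remaining_hole, internal_fragmentation).
    """
    if request > hole_size:
        raise ValueError("request does not fit in this hole")
    leftover = hole_size - request
    if leftover < min_leftover:
        return hole_size, 0, leftover  # leftover becomes internal fragmentation
    return request, leftover, 0


print(allocate_from_hole(18_464, 18_462, min_leftover=16))  # (18464, 0, 2)
print(allocate_from_hole(18_464, 10_000, min_leftover=16))  # (10000, 8464, 0)
```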

Related Problems: Fragmentation – Internal Fragmentation

- As illustrated on the previous slide, the allocated memory may be slightly larger than the requested memory. The difference between these two numbers is internal fragmentation: memory that is internal to a partition but is not being used.
- In other words, unused memory within allocated memory is called internal fragmentation.

Memory Placement Algorithms

- As seen earlier, holes are created while processes are swapped in and out of RAM. In general, at any time there is a set of holes of various sizes scattered throughout memory.
- When a process arrives and needs memory, we search this set for the hole best suited to the process.
- The following slide describes three algorithms used to select a free hole.

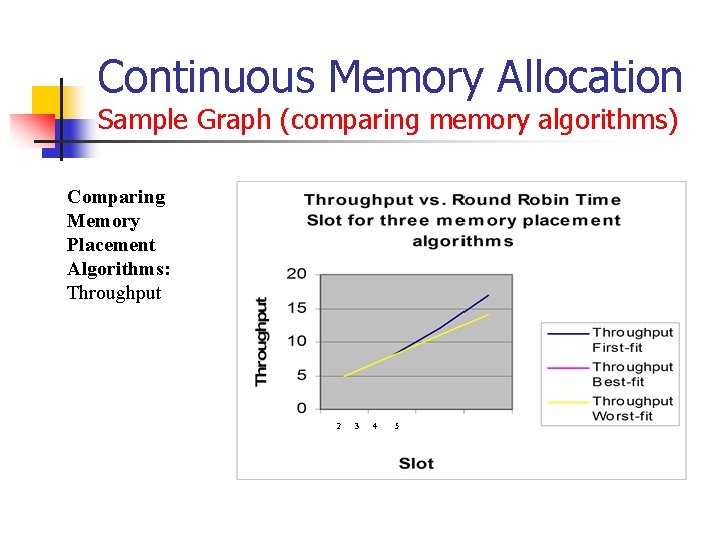

Memory Placement Algorithms

The three placement algorithms are:

- First-fit: allocate the first hole that is big enough.
- Best-fit: allocate the smallest hole that is big enough.
- Worst-fit: allocate the largest hole.

Simulations have shown that both first-fit and best-fit are better than worst-fit in terms of decreasing both time and storage utilization. Neither first-fit nor best-fit is clearly best in terms of storage utilization, but first-fit is usually faster.
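The three placement rules differ only in which candidate hole they pick. This sketch assumes free memory is represented as a list of hole sizes in address order and returns the index of the chosen hole; splitting the hole and updating the list are left out. The function names are illustrative.

```python
def first_fit(holes, request):
    """Index of the first hole big enough for the request, or None."""
    for i, size in enumerate(holes):
        if size >= request:
            return i
    return None


def best_fit(holes, request):
    """Index of the smallest hole big enough for the request, or None."""
    fits = [i for i, size in enumerate(holes) if size >= request]
    return min(fits, key=lambda i: holes[i], default=None)


def worst_fit(holes, request):
    """Index of the largest hole, or None if even that is too small."""
    fits = [i for i, size in enumerate(holes) if size >= request]
    return max(fits, key=lambda i: holes[i], default=None)


holes = [100, 500, 200, 300, 600]  # hole sizes in K, in address order
print(first_fit(holes, 212))  # 1 (the 500 K hole)
print(best_fit(holes, 212))   # 3 (the 300 K hole)
print(worst_fit(holes, 212))  # 4 (the 600 K hole)
```

`first_fit` can stop at the first match, whereas `best_fit` and `worst_fit` examine every hole, which is one reason first-fit is usually faster.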

Continuous Memory Allocation Scheme

- The continuous memory allocation scheme loads processes into memory in sequential order.
- When a process is removed from main memory, new processes are loaded if there is a hole big enough to hold them.
- This algorithm is easy to implement, but it suffers from external fragmentation. Compaction consequently becomes an inevitable part of the scheme.

Continuous Memory Allocation Scheme: Parameters Involved

- Memory size
- RAM access time
- Disc access time
- Compaction thresholds
  - Memory hole-size threshold
  - Total hole percentage
- Memory placement algorithms
- Round robin time slot

Continuous Memory Allocation Scheme: Effect of Memory Size

- As expected, the greater the amount of memory available, the higher the system performance.

Continuous Memory Allocation Scheme: Effect of RAM and Disc Access Times

- RAM access time and disc access time together define the transfer rate of a system.
- A higher transfer rate means less time is needed to move processes between main memory and secondary memory, which increases the efficiency of the operating system.
- Since compaction involves accessing the entire RAM twice, a lower RAM access time translates into lower compaction times.

Continuous Memory Allocation Scheme: Effect of Compaction Thresholds

- Optimal values of the hole size threshold largely depend on the size of the processes, since it is these processes that have to fit in the holes.
- Thresholds that lead to frequent compaction can degrade performance at an accelerating rate, since compaction is quite expensive in terms of time.
- Threshold values also play a key role in determining the degree of fragmentation present. Their effect on system performance is not straightforward and has seldom been the focus of studies in this field.

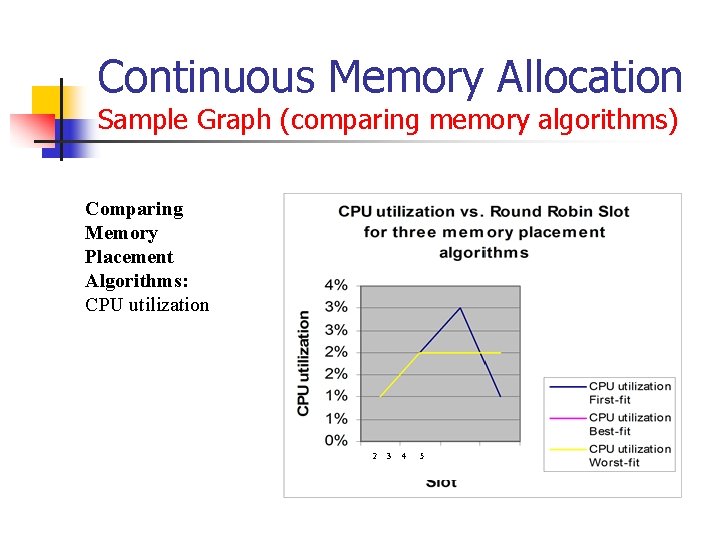

Continuous Memory Allocation Scheme: Effect of Memory Placement Algorithms

- Simulations have shown that both first-fit and best-fit are better than worst-fit in terms of decreasing both time and storage utilization.
- Neither first-fit nor best-fit is clearly best in terms of storage utilization, but first-fit is generally faster.

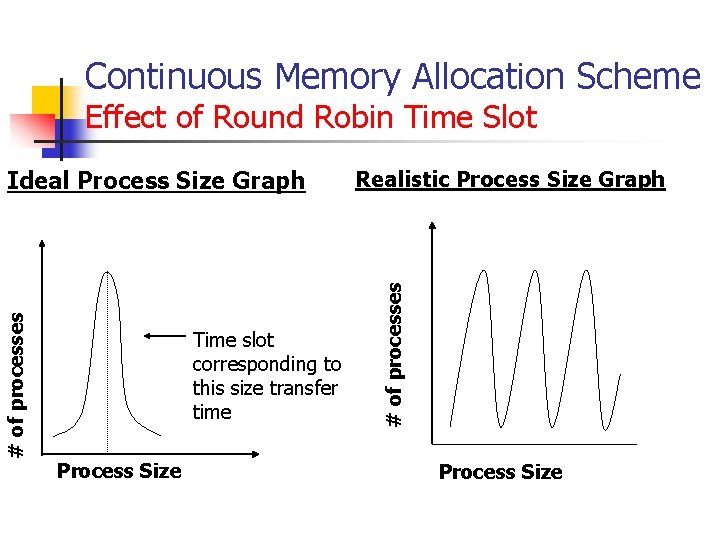

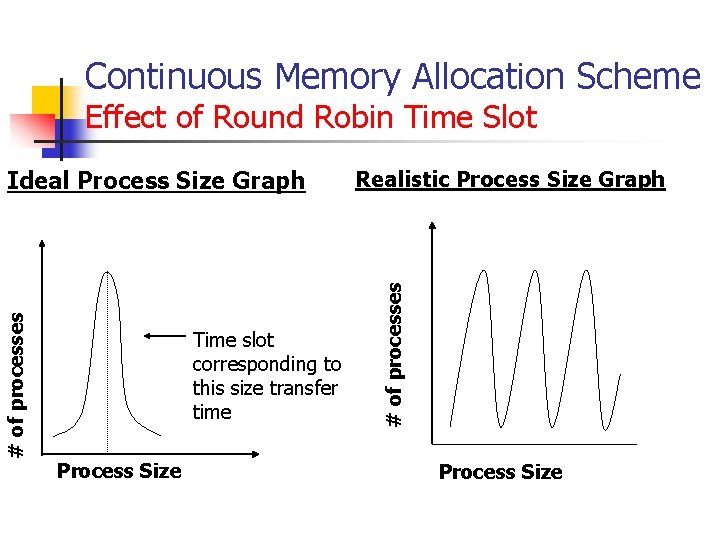

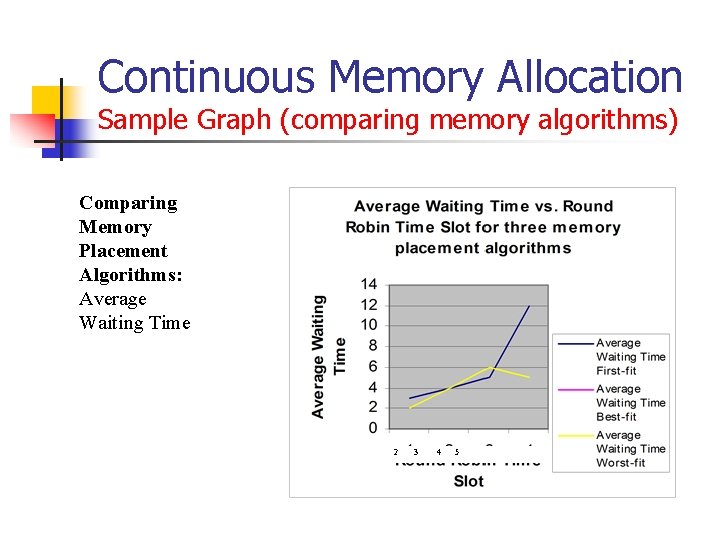

Continuous Memory Allocation Scheme: Effect of Round Robin Time Slot

- As depicted in the figures on the next slide, the best choice of time slot would be the one corresponding to the transfer time of a single process. For example, if most processes required 2 ms to be transferred, a time slot of 2 ms would be ideal: while one process completes execution, another can be transferred.
- However, the transfer times of the processes under consideration seldom follow a normal or uniform distribution, because a system runs many different types of processes. The variance depicted in the figure is too large in a real system, which makes the choice of time slot a difficult decision.
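Following the rule of thumb above, one simple heuristic is to set the time slot to the most common transfer time. This is an illustrative sketch rather than a method from the lecture: it assumes transfer times are known in advance and rounds them up to whole milliseconds, and with the wide spread of a realistic process mix the most common value may still describe only a few processes. `suggest_time_slot` is a hypothetical name.

```python
import math
from collections import Counter


def suggest_time_slot(transfer_times_ms):
    """Suggest an RR time slot equal to the most common transfer time.

    Transfer times are rounded up to whole milliseconds, since the time slot
    is assumed to be a whole number of ms.
    """
    rounded = [math.ceil(t) for t in transfer_times_ms]
    return Counter(rounded).most_common(1)[0][0]


print(suggest_time_slot([1.2, 2.0, 1.8, 2.0, 3.5]))  # 2
```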

Continuous Memory Allocation Scheme: Effect of Round Robin Time Slot

Figure: Ideal vs. realistic process-size graphs (number of processes against process size). In the ideal graph, the time slot is chosen to correspond to the transfer time of the most common process size.

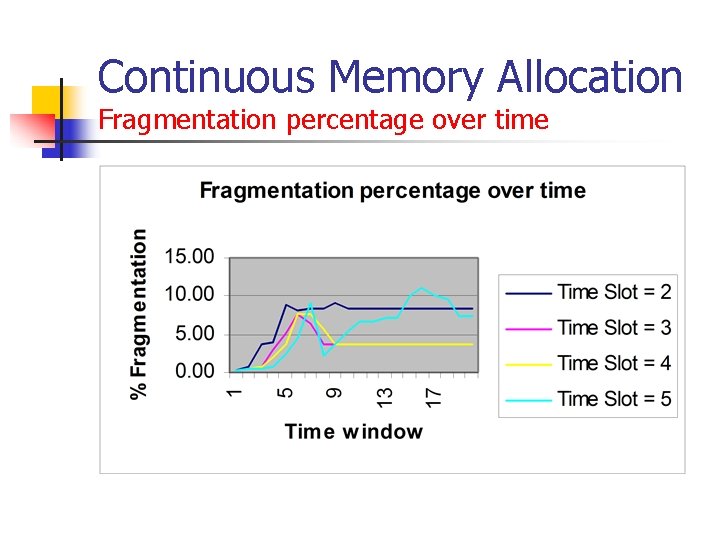

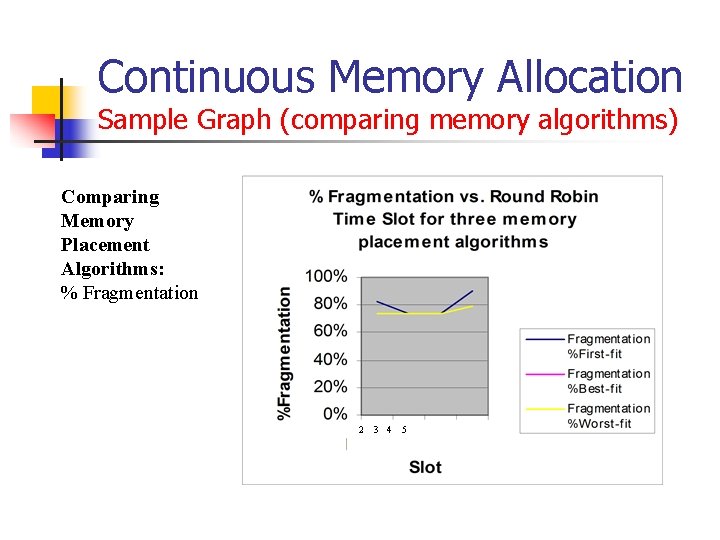

Continuous Memory Allocation Scheme: Performance Measures

- Average waiting time
- Average turnaround time
- CPU utilization
- CPU throughput
- Memory fragmentation percentage over time
  - This is a new performance measure that quantifies the cost of compaction.
  - It is calculated as the percentage of the total time spent on compaction.
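A sketch of how these measures might be computed at the end of a simulation run. It assumes the simulator records each job's arrival, CPU burst and completion time, plus the total CPU-busy and compaction time; waiting time is taken as turnaround minus CPU burst, and throughput as completed jobs per ms. `Job` and `performance_measures` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Job:
    arrival_ms: float     # when the job entered the system
    burst_ms: float       # total CPU time the job needed
    completion_ms: float  # when the job finished


def performance_measures(jobs, total_ms, cpu_busy_ms, compaction_ms):
    """Compute the performance measures for one simulation run."""
    n = len(jobs)
    turnaround = [j.completion_ms - j.arrival_ms for j in jobs]
    waiting = [t - j.burst_ms for t, j in zip(turnaround, jobs)]
    return {
        "avg_waiting_ms": sum(waiting) / n,
        "avg_turnaround_ms": sum(turnaround) / n,
        "cpu_utilization_pct": 100 * cpu_busy_ms / total_ms,
        "throughput_jobs_per_ms": n / total_ms,
        "compaction_time_pct": 100 * compaction_ms / total_ms,
    }


jobs = [Job(0, 4, 6), Job(0, 2, 4)]
measures = performance_measures(jobs, total_ms=10, cpu_busy_ms=6, compaction_ms=1)
print(measures)
```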

Continuous Memory Allocation: Implementation

- As part of Assignment 3, you'll implement a memory manager within an operating system, satisfying the given requirements (for complete details, refer to Assignment 3).
- The following slides give a brief explanation of the assignment.

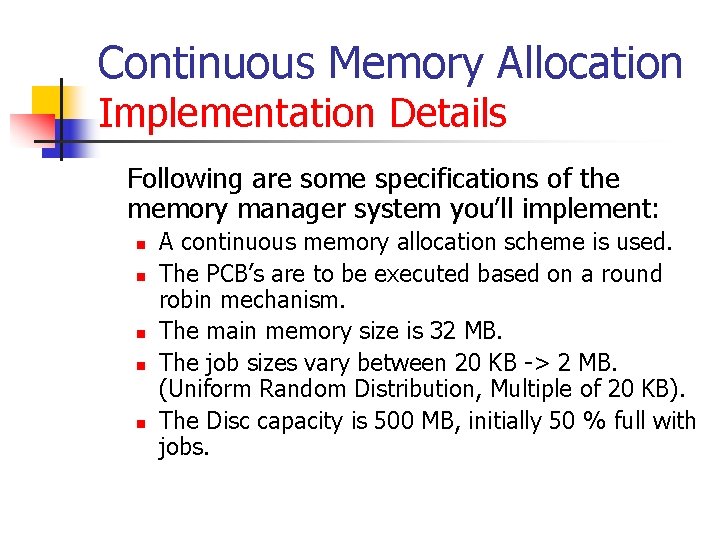

Continuous Memory Allocation: Implementation Details

The following are some specifications of the memory manager you'll implement:

- A continuous memory allocation scheme is used.
- The PCBs are executed using a round robin mechanism.
- The main memory size is 32 MB.
- Job sizes vary between 20 KB and 2 MB (uniform random distribution, multiples of 20 KB).
- The disc capacity is 500 MB, initially 50% full with jobs.

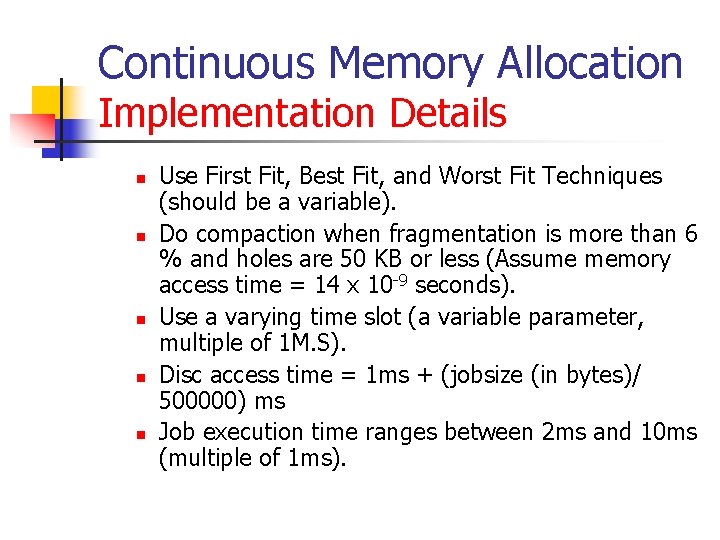

Continuous Memory Allocation: Implementation Details

- Use first fit, best fit and worst fit techniques (the technique should be a variable parameter).
- Do compaction when fragmentation is more than 6% and holes are 50 KB or less (assume a memory access time of 14 ns, i.e. 14 × 10^-9 seconds).
- Use a varying time slot (a variable parameter, a multiple of 1 ms).
- Disc access time = 1 ms + (job size in bytes / 500,000) ms.
- Job execution time ranges between 2 ms and 10 ms (multiples of 1 ms).
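A sketch of the workload side of the specification: random job sizes and execution times, the disc access time formula, and an initial disc filled to about 50%. It assumes decimal units (1 KB = 1000 bytes) so that 2 MB is a whole multiple of 20 KB, and that filling stops at the first job that would push the disc past half full; `random_job`, `disc_access_time_ms` and `initial_disc_jobs` are hypothetical names, not part of the assignment handout.

```python
import random

KB = 1000        # assumption: decimal units, so 2 MB is exactly 100 x 20 KB
MB = 1000 * KB


def random_job(rng):
    """Draw (size in bytes, execution time in ms) for one job."""
    size = rng.randint(1, 100) * 20 * KB  # 20 KB .. 2 MB, uniform, multiple of 20 KB
    exec_ms = rng.randint(2, 10)          # 2 .. 10 ms, multiple of 1 ms
    return size, exec_ms


def disc_access_time_ms(job_size_bytes):
    """Disc access time = 1 ms + (job size in bytes / 500000) ms."""
    return 1 + job_size_bytes / 500_000


def initial_disc_jobs(rng, capacity=500 * MB, fill=0.5):
    """Generate jobs until the next one would push the disc past `fill`."""
    jobs, used = [], 0
    while True:
        size, exec_ms = random_job(rng)
        if used + size > capacity * fill:
            return jobs
        jobs.append((size, exec_ms))
        used += size


rng = random.Random(42)
jobs = initial_disc_jobs(rng)
print(len(jobs), sum(size for size, _ in jobs) / MB)
print(disc_access_time_ms(2 * MB))  # 5.0
```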

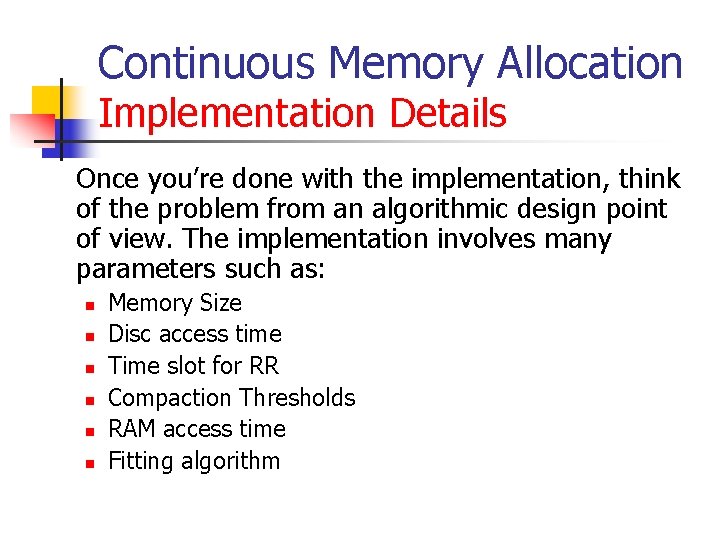

Continuous Memory Allocation: Implementation Details

Once you're done with the implementation, think of the problem from an algorithmic design point of view. The implementation involves many parameters, such as:

- Memory size
- Disc access time
- Time slot for RR
- Compaction thresholds
- RAM access time
- Fitting algorithm

Continuous Memory Allocation: Implementation Details

- The eventual goal is to optimize several of the performance measures listed earlier.
- Perform several test runs and write a summary indicating how sensitive some of the performance measures are to some of the above parameters.

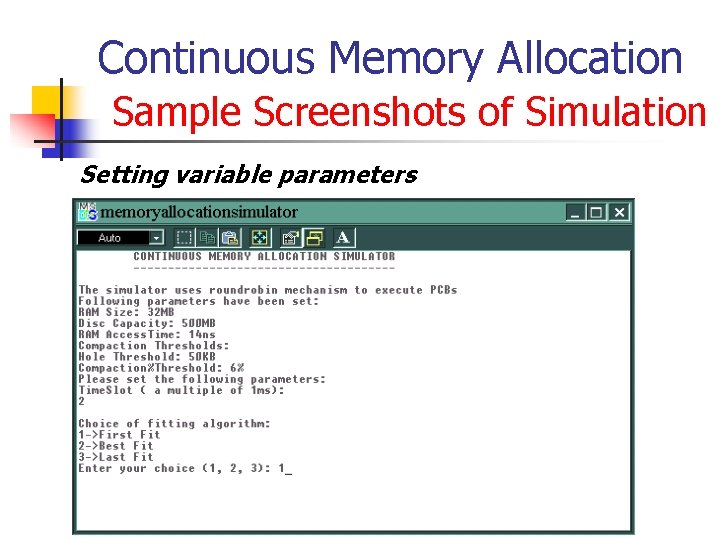

Continuous Memory Allocation: Sample Screenshots of Simulation – Setting Variable Parameters

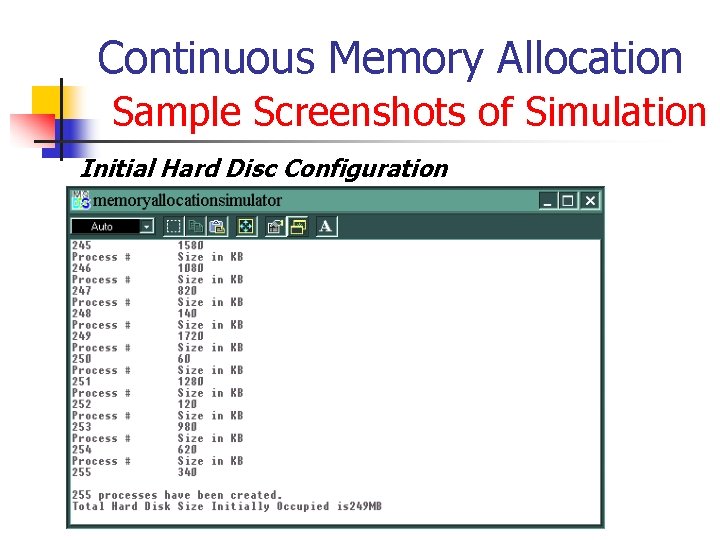

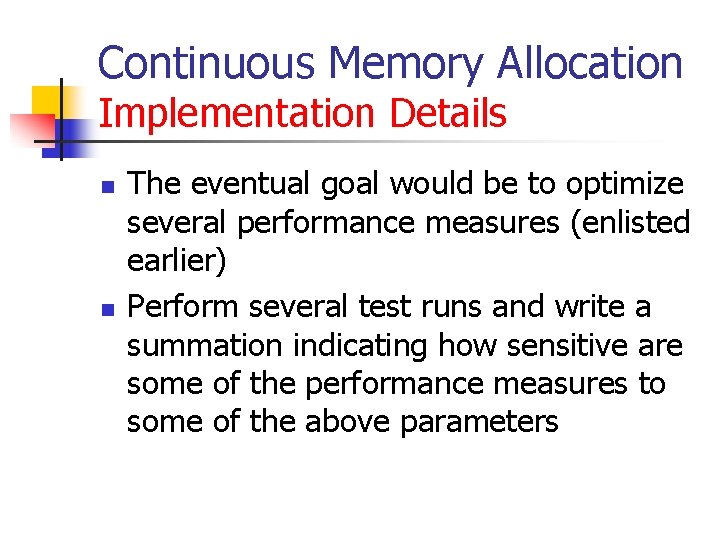

Continuous Memory Allocation: Sample Screenshots of Simulation – Initial Hard Disc Configuration

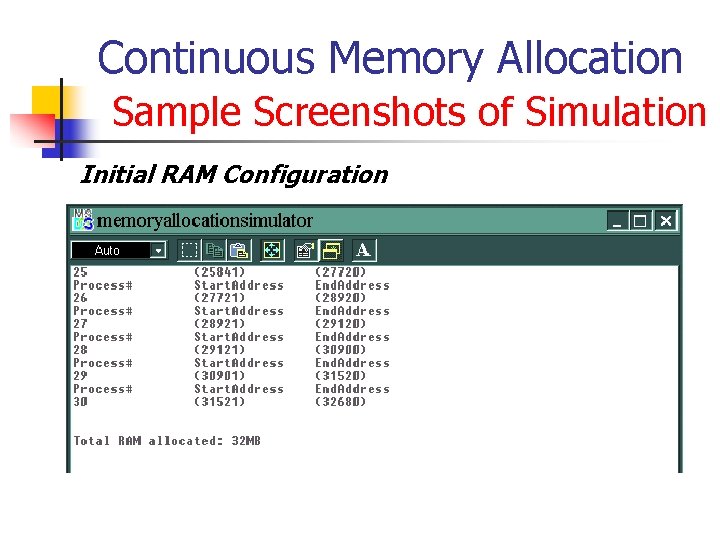

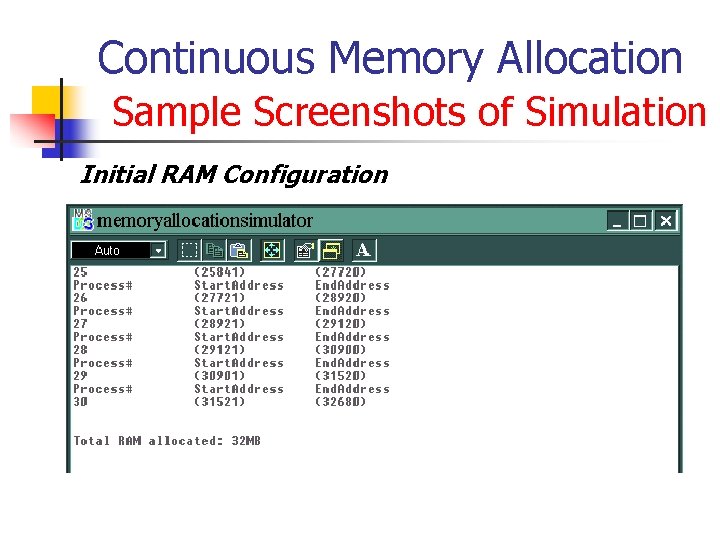

Continuous Memory Allocation: Sample Screenshots of Simulation – Initial RAM Configuration

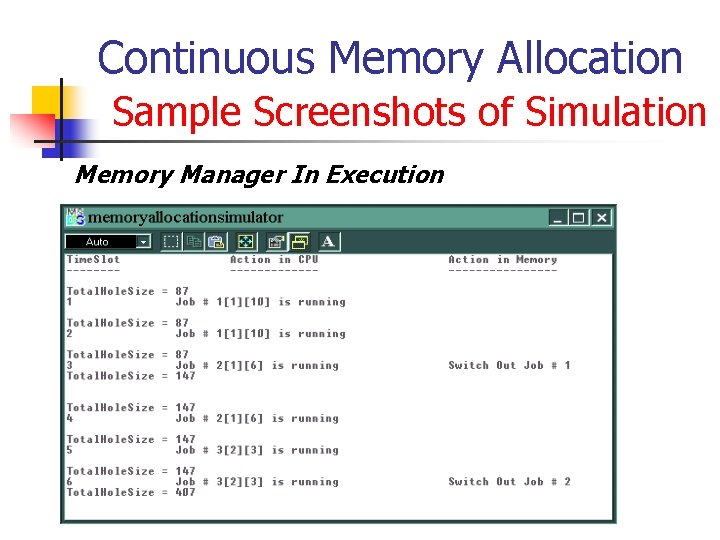

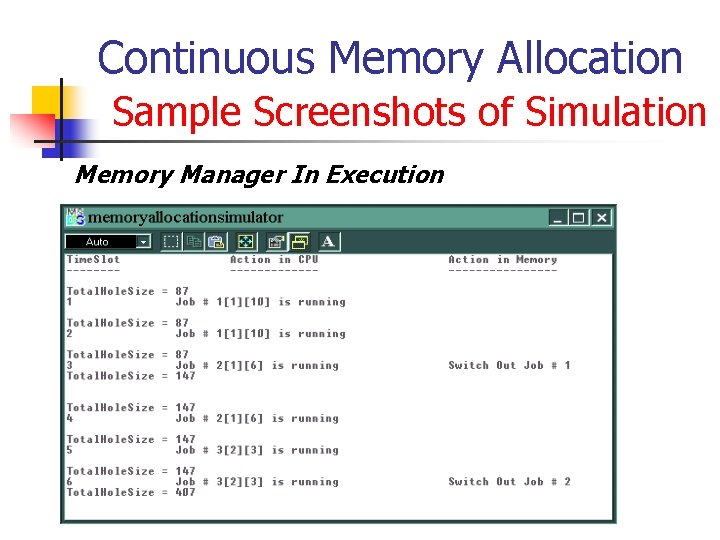

Continuous Memory Allocation: Sample Screenshots of Simulation – Memory Manager in Execution

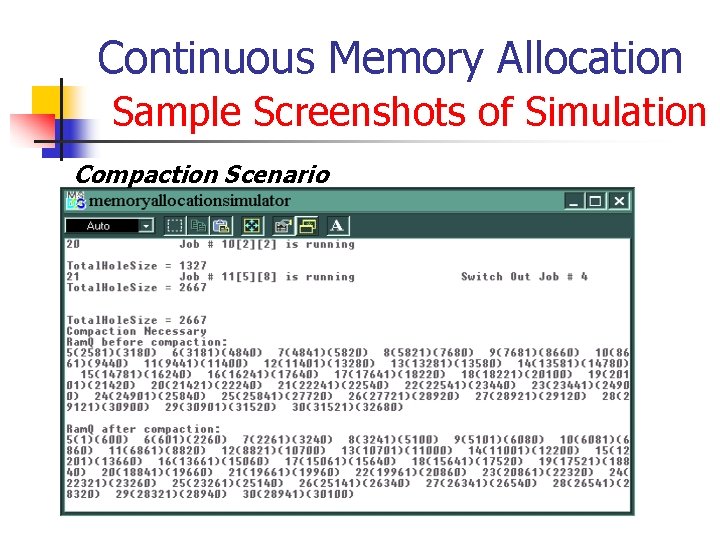

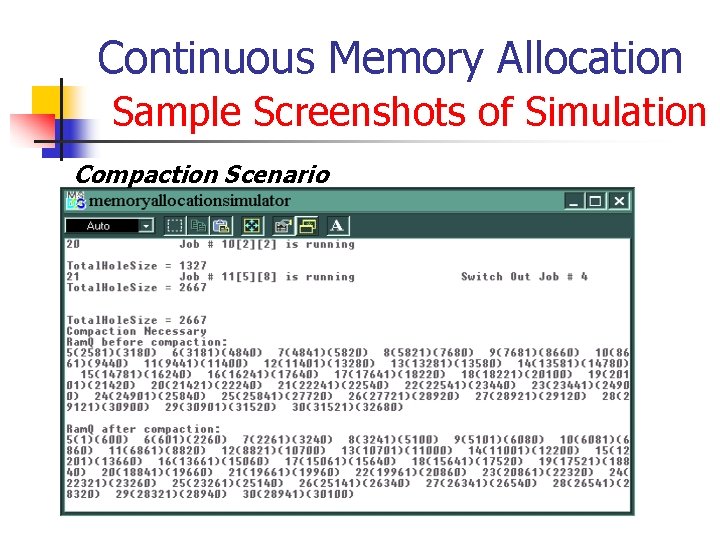

Continuous Memory Allocation: Sample Screenshots of Simulation – Compaction Scenario

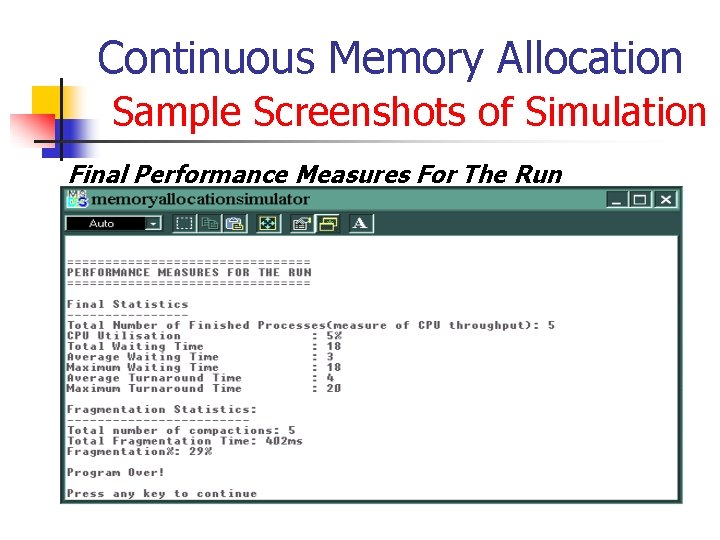

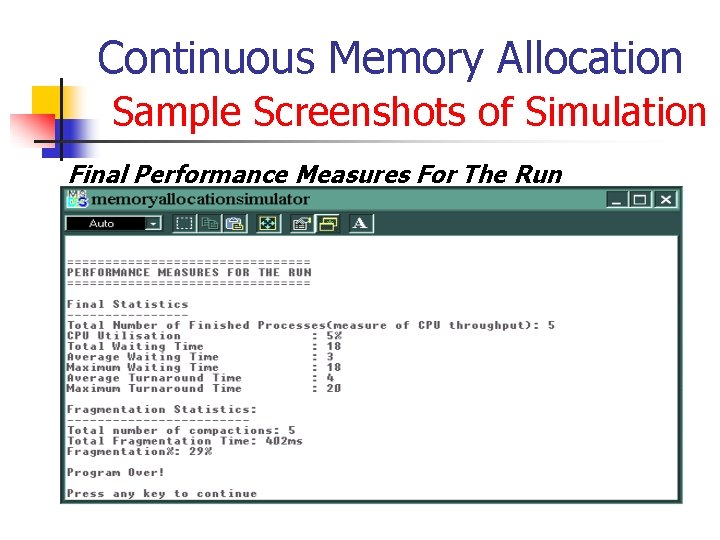

Continuous Memory Allocation: Sample Screenshots of Simulation – Final Performance Measures for the Run

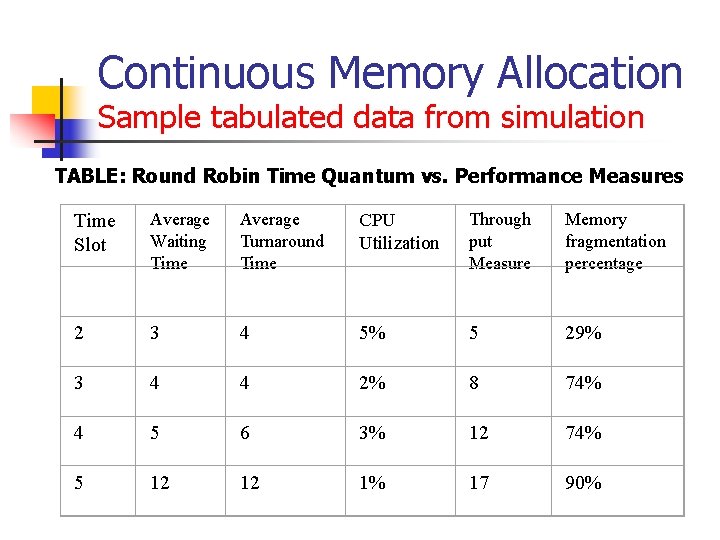

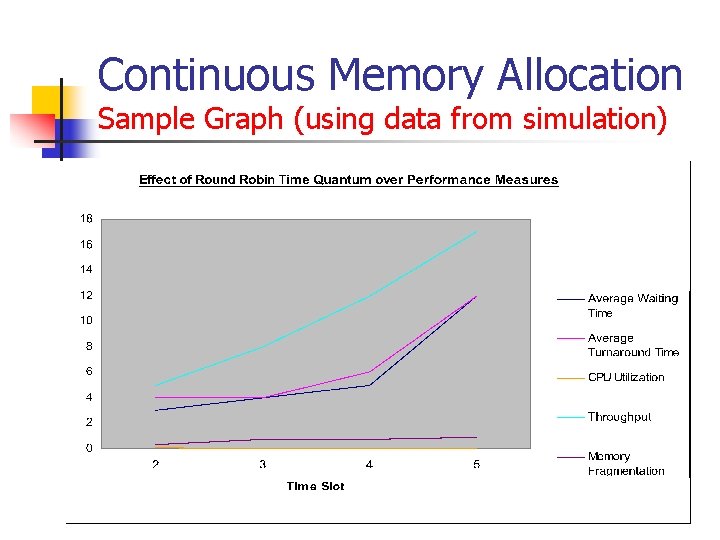

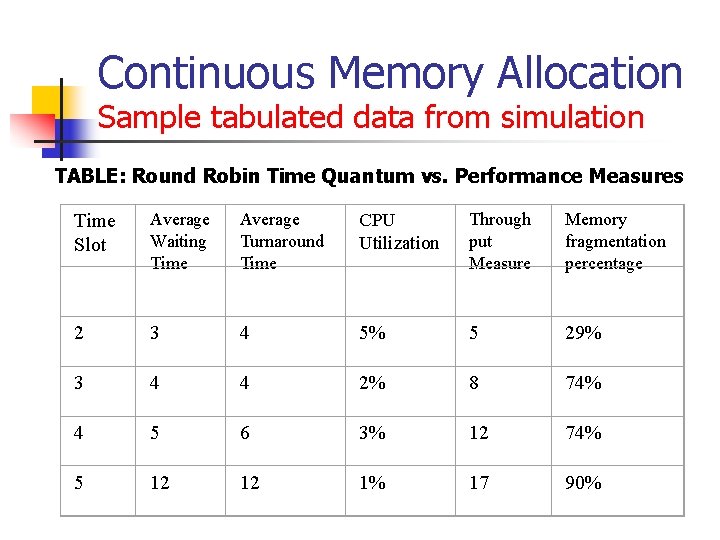

Continuous Memory Allocation: Sample Tabulated Data from Simulation

Table: Round Robin Time Quantum vs. Performance Measures

| Time Slot | Average Waiting Time | Average Turnaround Time | CPU Utilization | Throughput Measure | Memory Fragmentation Percentage |
|---|---|---|---|---|---|
| 2 | 3 | 4 | 5% | 5 | 29% |
| 3 | 4 | 4 | 2% | 8 | 74% |
| 4 | 5 | 6 | 3% | 12 | 74% |
| 5 | 12 | 12 | 1% | 17 | 90% |

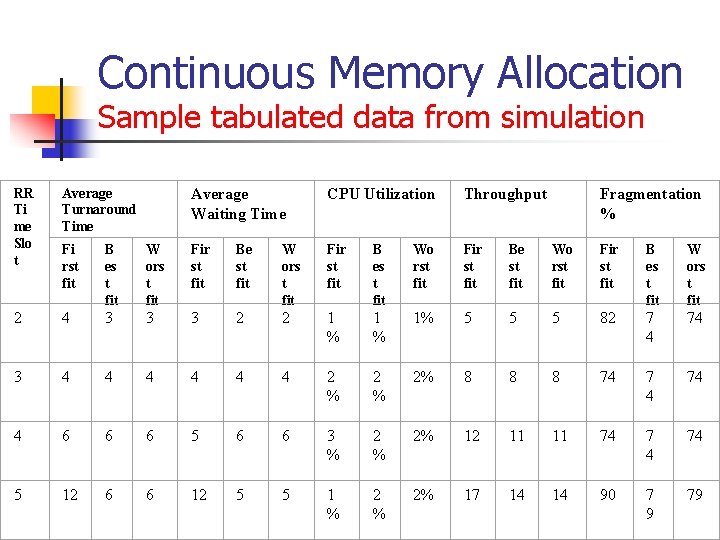

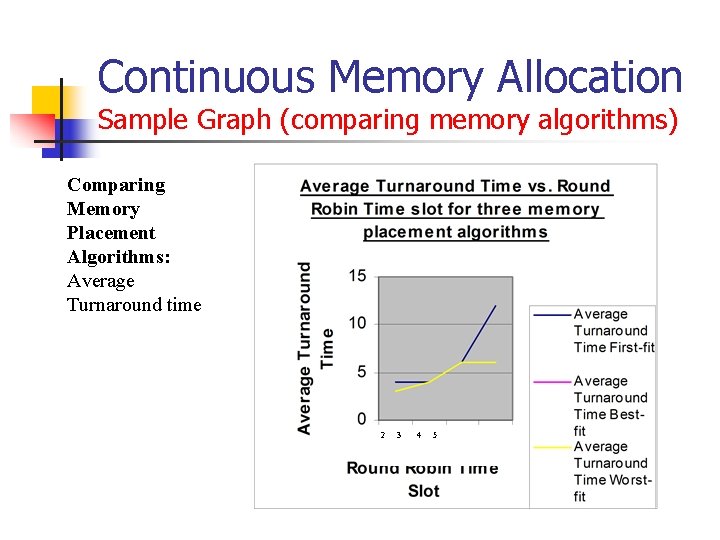

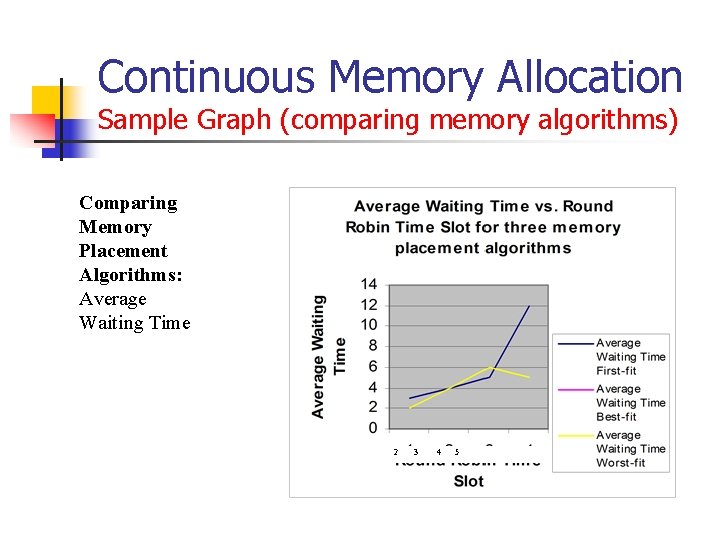

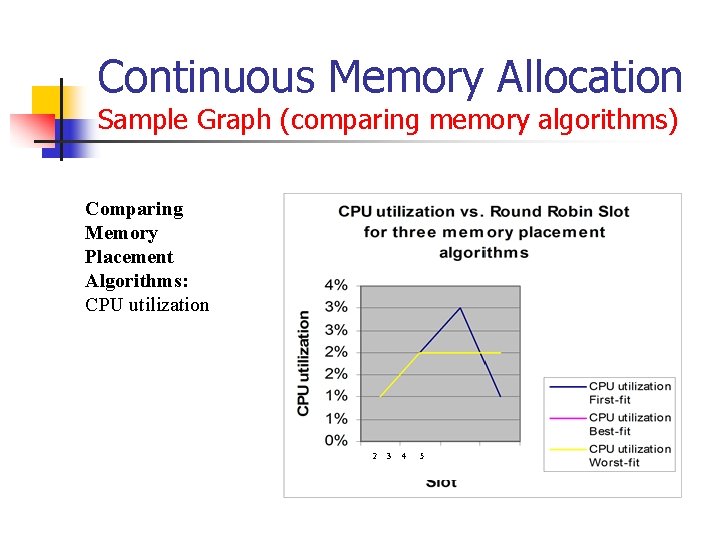

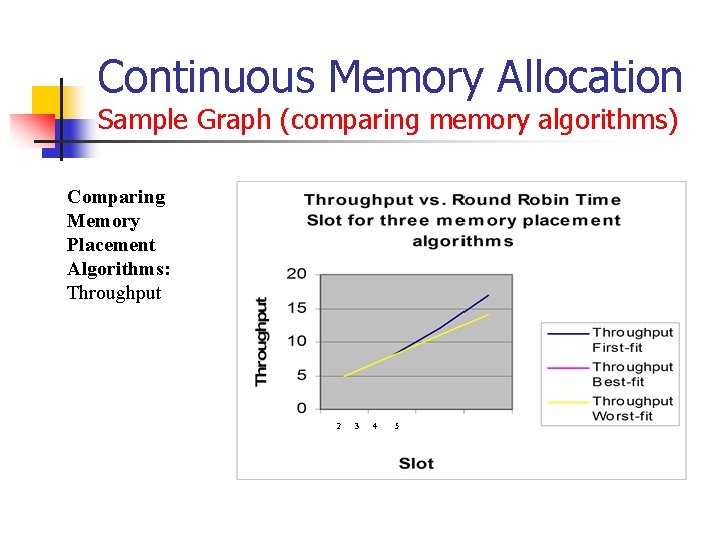

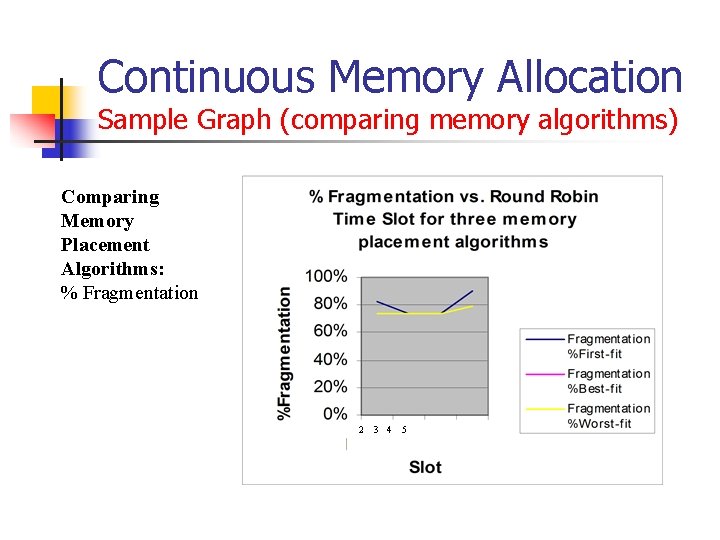

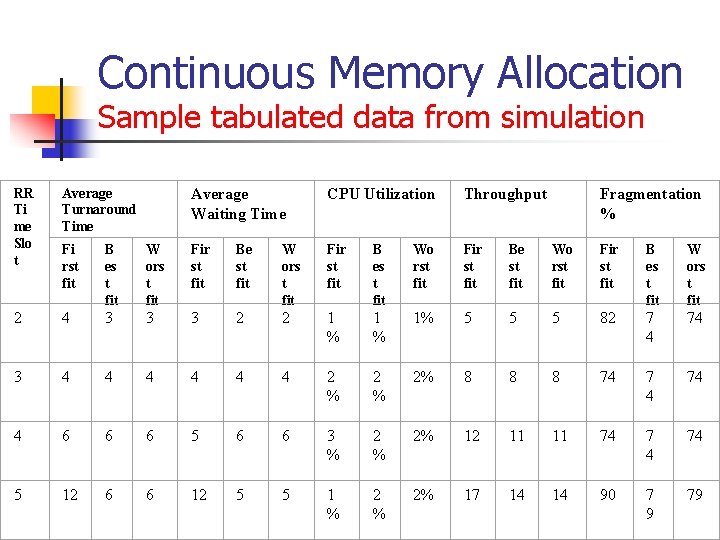

Continuous Memory Allocation: Sample Tabulated Data from Simulation

Columns: RR Time Slot; then Average Turnaround Time, Average Waiting Time, CPU Utilization, Throughput and Fragmentation %, each reported for first fit, best fit and worst fit.

- 2: 4 3 3 3 2 2 1% 1% 5 5 5 82 74 74
- 3: 4 4 4 2% 2% 8 8 8 74 74 74
- 4: 6 6 6 5 6 6 3% 2% 12 11 11 74 74 74
- 5: 12 6 6 12 5 5 1% 2% 17 14 14 90 79 79

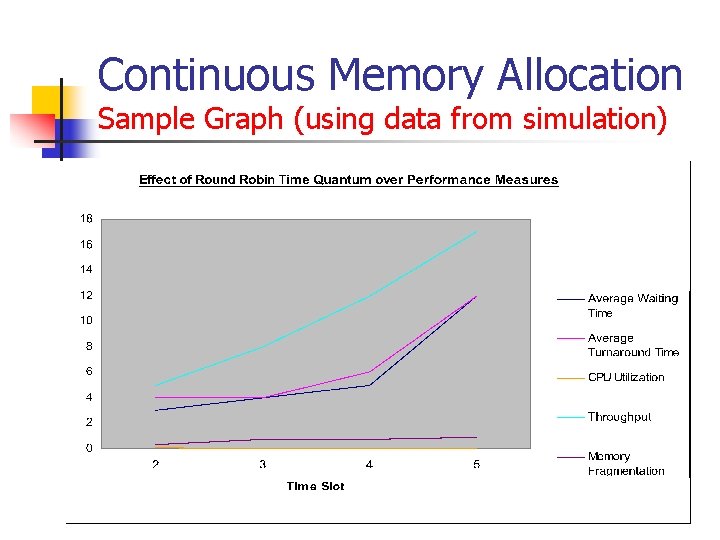

Continuous Memory Allocation: Sample Graph (Using Data from Simulation)

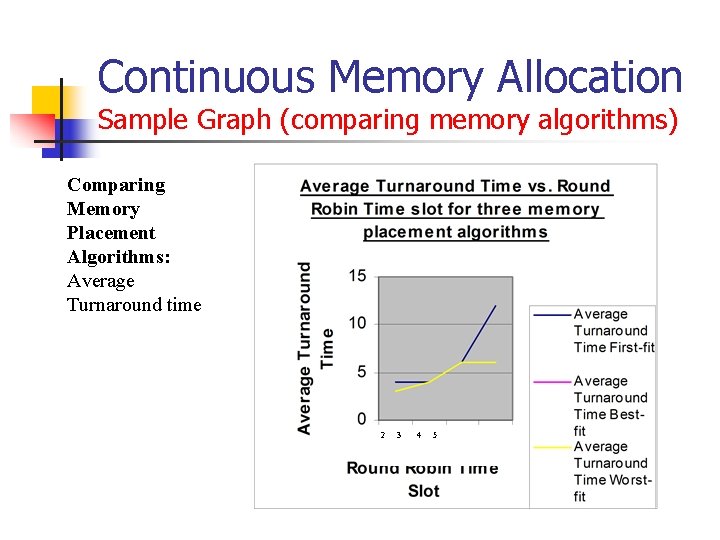

Continuous Memory Allocation: Sample Graphs (Comparing Memory Placement Algorithms)

Figures comparing the memory placement algorithms over RR time slots 2 to 5:

- Average turnaround time
- Average waiting time
- CPU utilization
- Throughput
- % fragmentation

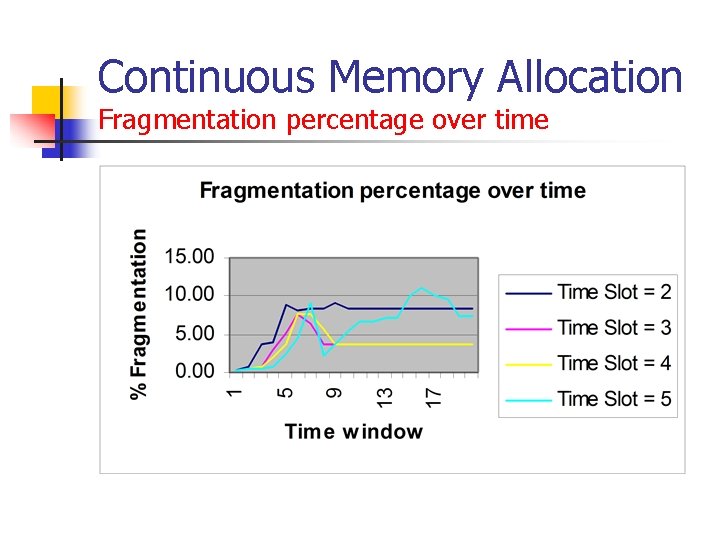

Continuous Memory Allocation: Fragmentation Percentage over Time

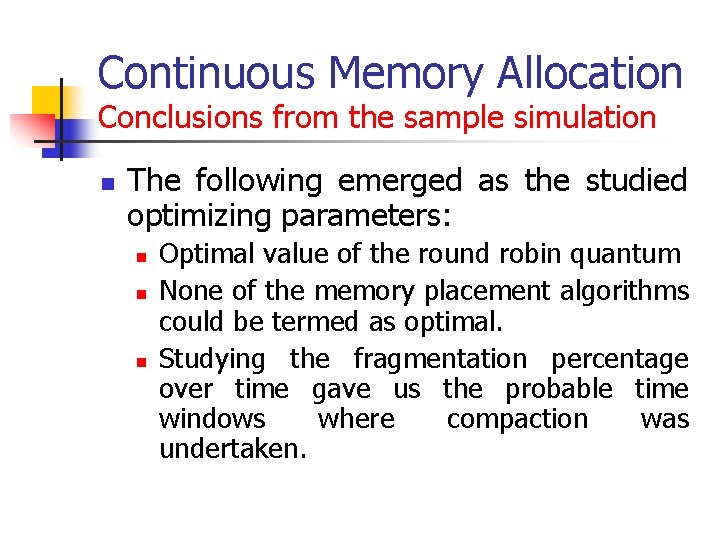

Continuous Memory Allocation: Conclusions from the Sample Simulation

The following emerged from studying the optimizing parameters:

- The optimal value of the round robin quantum.
- None of the memory placement algorithms could be termed optimal.
- Studying the fragmentation percentage over time revealed the probable time windows in which compaction was undertaken.

Lecture Summary

- Introduction to Memory Management
- What is memory management
- Related Problems of Redundancy, Fragmentation and Synchronization
- Memory Placement Algorithms
- Continuous Memory Allocation Scheme
- Parameters Involved
- Parameter-Performance Relationships
- Some Sample Results

Preview of Next Lecture

The following topics will be covered in the next lecture:

- Introduction to Paging
- Paging Hardware & Page Tables
- Paging Model of Memory
- Page Size
- Paging versus Continuous Allocation Scheme
- Multilevel Paging
- Page Replacement & Page Anticipation Algorithms
- Parameters Involved
- Parameter-Performance Relationships
- Sample Results