FYP Final Presentation: External Data Correlation System

- Slides: 70

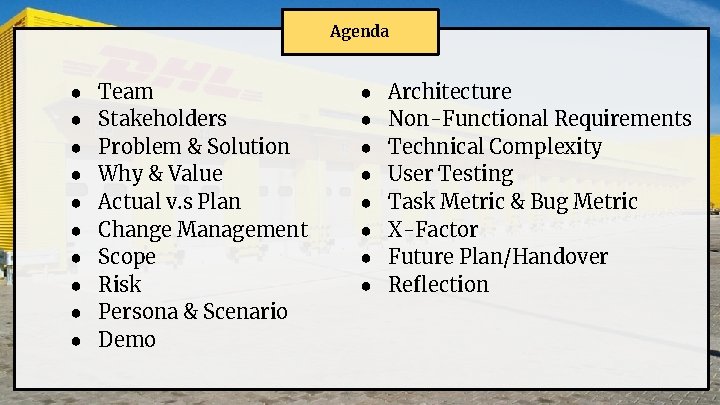

Agenda

- Team
- Stakeholders
- Problem & Solution
- Why & Value
- Actual vs. Plan
- Change Management
- Scope
- Risk
- Persona & Scenario
- Demo
- Architecture
- Non-Functional Requirements
- Technical Complexity
- User Testing
- Task Metric & Bug Metric
- X-Factor
- Future Plan/Handover
- Reflection

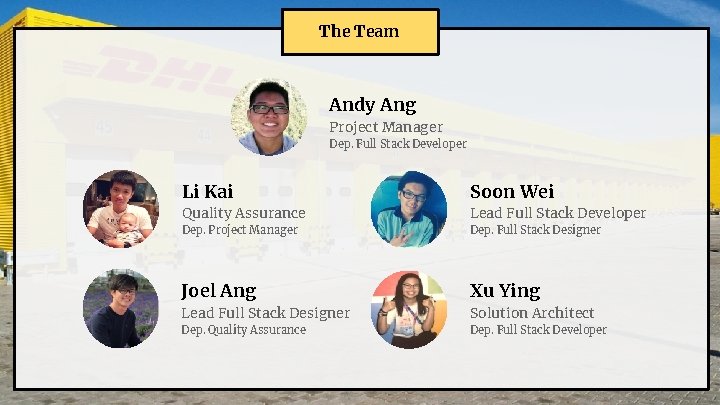

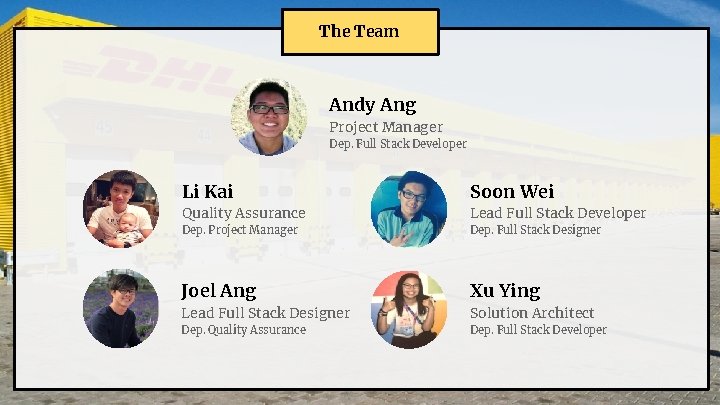

The Team Andy Ang Project Manager Dep. Full Stack Developer Li Kai Soon Wei Quality Assurance Lead Full Stack Developer Dep. Project Manager Dep. Full Stack Designer Joel Ang Xu Ying Lead Full Stack Designer Solution Architect Dep. Quality Assurance Dep. Full Stack Developer

Stakeholders

- DHL-SMU Analytics Lab (Project Sponsor): A collaboration between DHL and SMU to develop innovative concepts for consumer- and business-centric supply chain management through capabilities in big data analytics.
- DHL Supply Chain Department (End User): DHL provides international courier, parcel and express mail services. DHL is the owner of the web application.

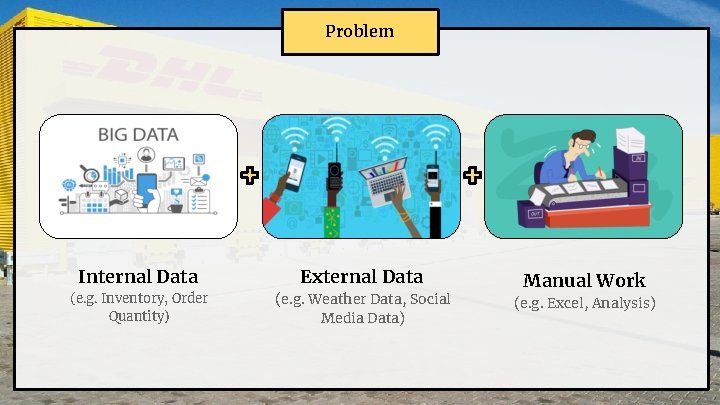

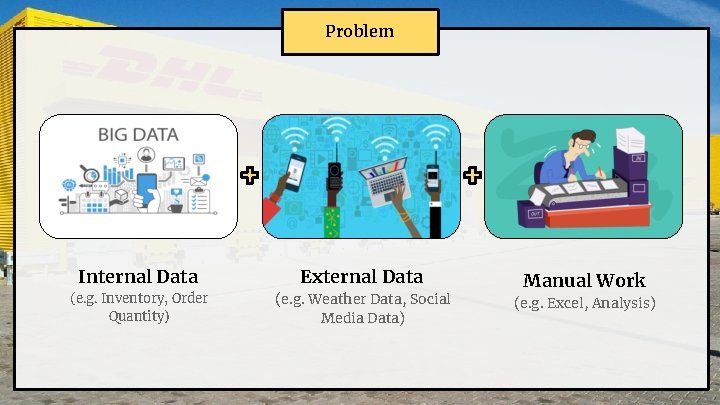

Problem

- Internal Data (e.g. Inventory, Order Quantity)
- External Data (e.g. Weather Data, Social Media Data)
- Manual Work (e.g. Excel, Analysis)

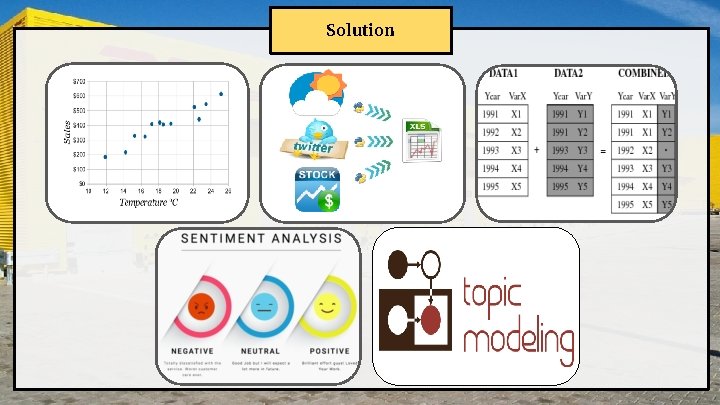

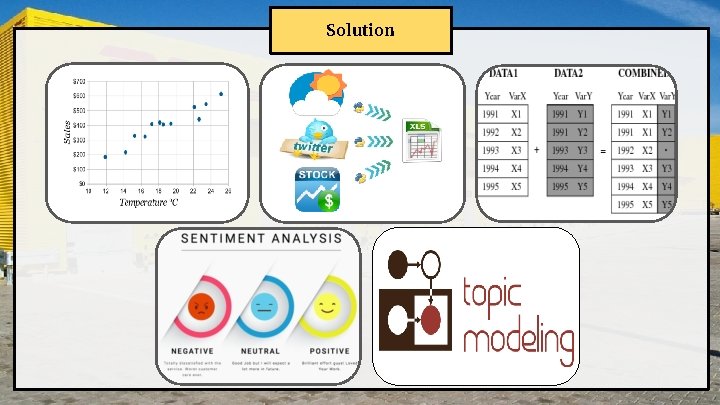

Solution

Why & Value

- Identify Hidden Patterns
- Reduce Manual Work
- Functions that are Relevant

Changes Made After Midterm

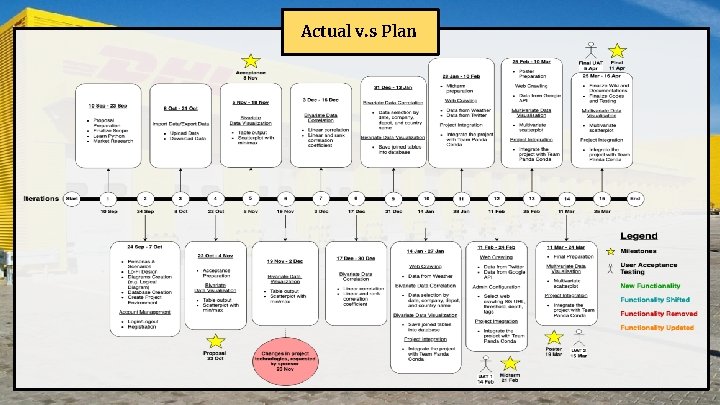

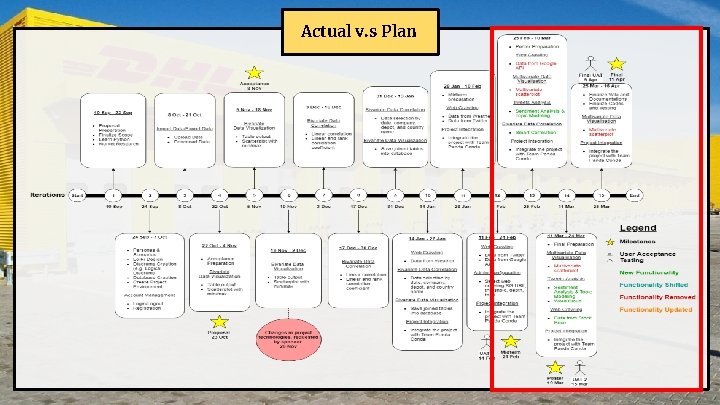

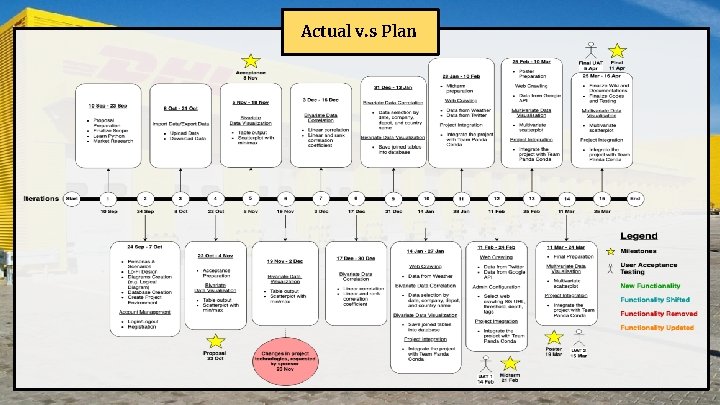

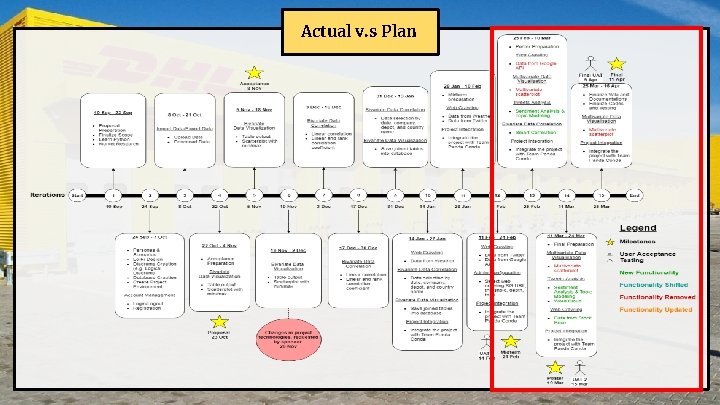

Actual vs. Plan

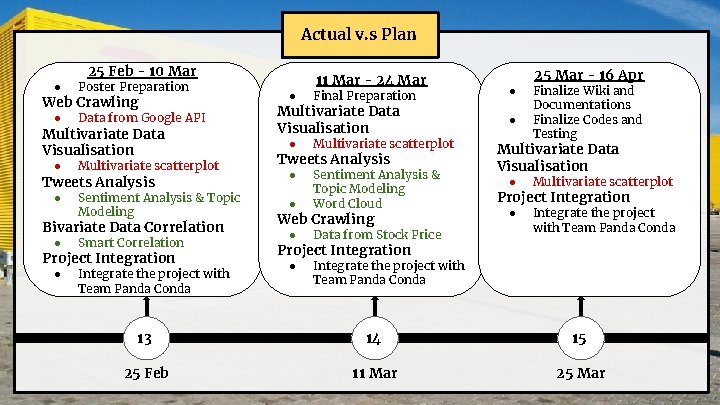

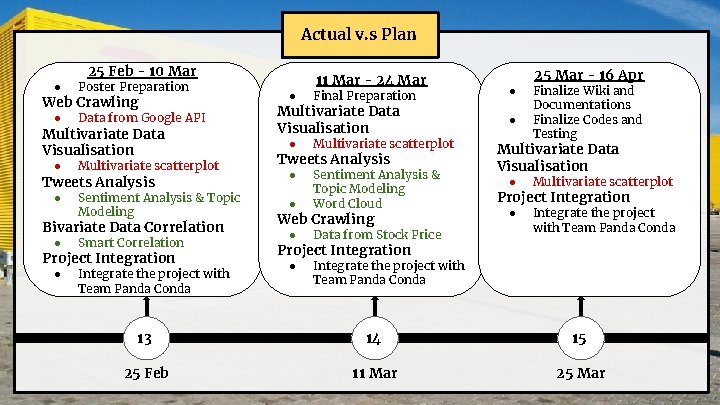

Actual vs. Plan

13: 25 Feb - 10 Mar
- Poster Preparation
- Web Crawling: Data from Google API
- Multivariate Data Visualisation: Multivariate scatterplot
- Tweets Analysis: Sentiment Analysis & Topic Modeling
- Bivariate Data Correlation: Smart Correlation
- Project Integration: Integrate the project with Team Panda Conda

14: 11 Mar - 24 Mar
- Final Preparation
- Multivariate Data Visualisation: Multivariate scatterplot
- Tweets Analysis: Sentiment Analysis & Topic Modeling, Word Cloud
- Web Crawling: Data from Stock Price
- Project Integration: Integrate the project with Team Panda Conda

15: 25 Mar - 16 Apr
- Finalize Wiki and Documentation
- Finalize Code and Testing
- Multivariate Data Visualisation: Multivariate scatterplot
- Project Integration: Integrate the project with Team Panda Conda

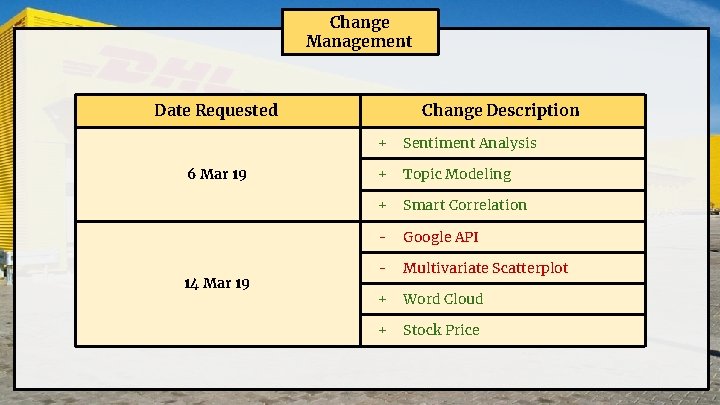

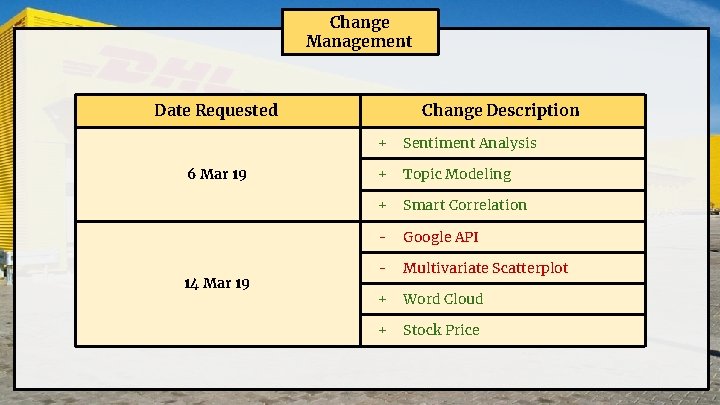

Change Management

- Date Requested: 6 Mar 19, 14 Mar 19
- Change Description (+ added, - removed): + Sentiment Analysis, + Topic Modeling, + Smart Correlation, - Google API, - Multivariate Scatterplot, + Word Cloud, + Stock Price

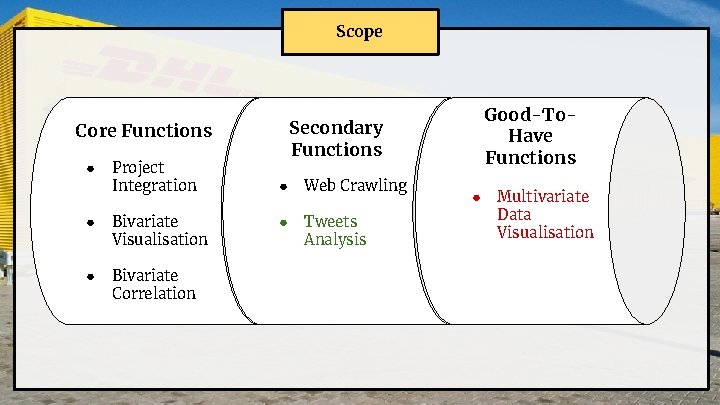

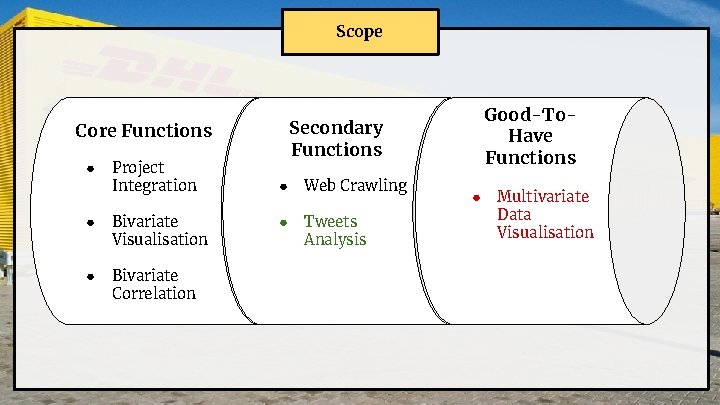

Scope

Core Functions
- Project Integration
- Bivariate Visualisation
- Bivariate Correlation

Secondary and Good-To-Have Functions
- Web Crawling
- Tweets Analysis
- Multivariate Data Visualisation

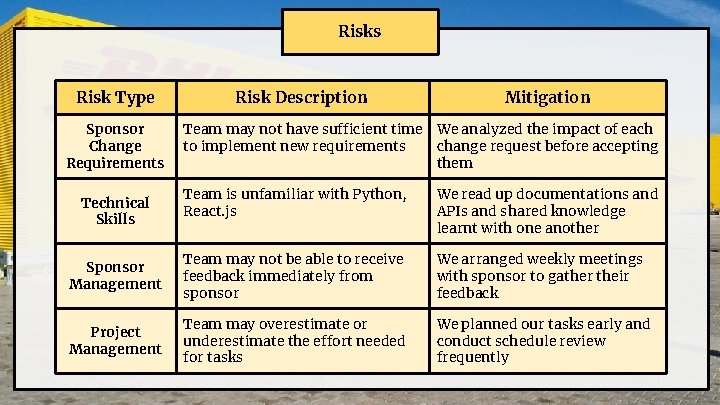

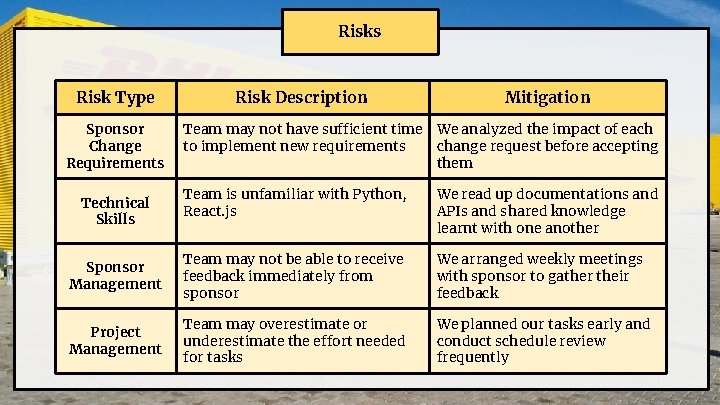

Risks

| Risk Type | Risk Description | Mitigation |
| --- | --- | --- |
| Sponsor Change Requirements | Team may not have sufficient time to implement new requirements | We analyzed the impact of each change request before accepting it |
| Technical Skills | Team is unfamiliar with Python, React.js | We read up on documentation and APIs and shared the knowledge learnt with one another |
| Sponsor Management | Team may not be able to receive feedback immediately from sponsor | We arranged weekly meetings with sponsor to gather their feedback |
| Project Management | Team may overestimate or underestimate the effort needed for tasks | We planned our tasks early and conducted schedule reviews frequently |

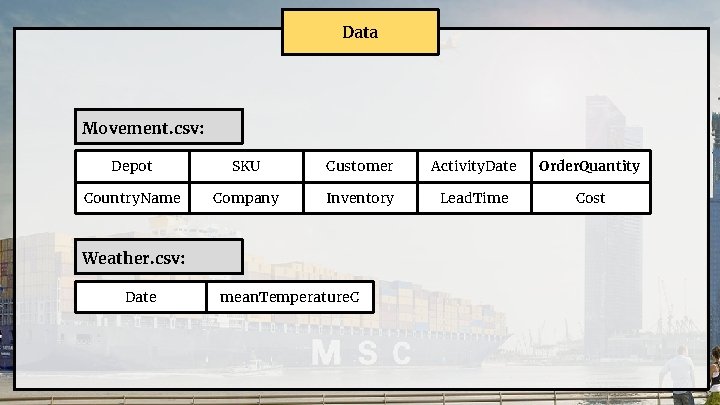

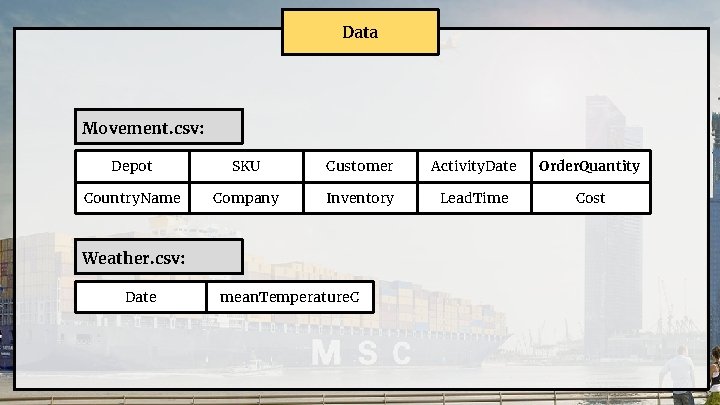

Data

- Movement.csv: Depot, SKU, Customer, Activity.Date, Order.Quantity, Country.Name, Company, Inventory, Lead.Time, Cost
- Weather.csv: Date, mean.Temperature.C
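To illustrate how the two files above could feed a bivariate correlation, here is a minimal sketch that joins them on date and computes a Pearson coefficient. The column names come from the slide; the inline rows, the date format, the helper names and the choice of Pearson are assumptions made for illustration, not the team's actual implementation.

```python
import csv
import io
from math import sqrt

# Tiny inline stand-ins for Movement.csv and Weather.csv (values are made up).
MOVEMENT_CSV = """Activity.Date,Order.Quantity
2019-01-01,120
2019-01-02,135
2019-01-03,150
2019-01-04,160
"""
WEATHER_CSV = """Date,mean.Temperature.C
2019-01-01,26.0
2019-01-02,27.0
2019-01-03,29.5
2019-01-04,30.0
"""


def read_column(text, key_col, value_col):
    """Return {key: float(value)} for one key/value column pair of a CSV."""
    reader = csv.DictReader(io.StringIO(text))
    return {row[key_col]: float(row[value_col]) for row in reader}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


orders = read_column(MOVEMENT_CSV, "Activity.Date", "Order.Quantity")
temps = read_column(WEATHER_CSV, "Date", "mean.Temperature.C")

# Join the internal and external data on the dates both files share.
shared_dates = sorted(orders.keys() & temps.keys())
r = pearson([orders[d] for d in shared_dates], [temps[d] for d in shared_dates])
print(f"Pearson r over {len(shared_dates)} days: {r:.3f}")
```

Joining on the shared key first matters because the coefficient is only meaningful for observations taken on the same day.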

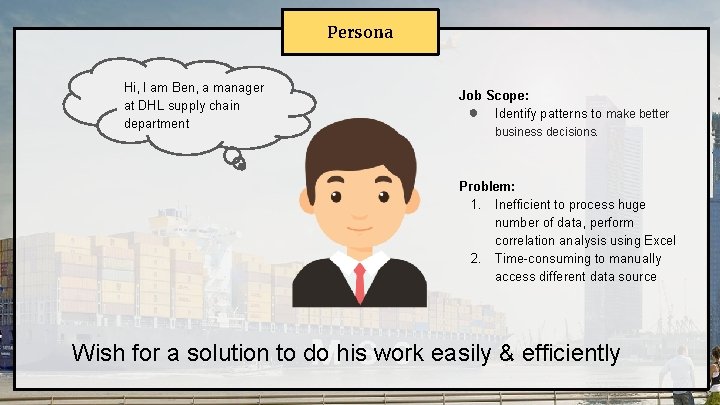

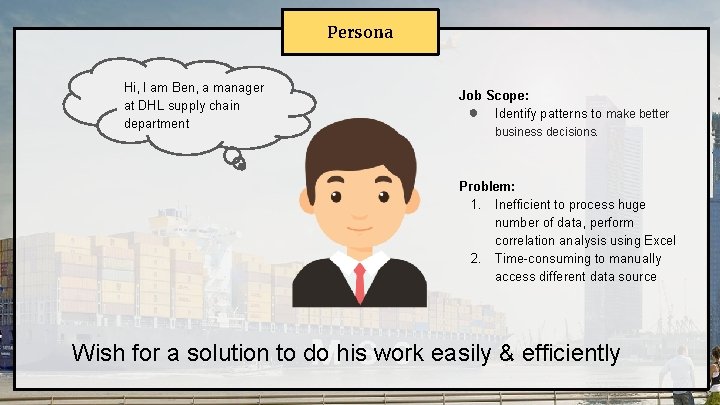

Persona

Hi, I am Ben, a manager at the DHL supply chain department.

Job Scope:
- Identify patterns to make better business decisions.

Problem:
1. Inefficient to process huge amounts of data and perform correlation analysis using Excel
2. Time-consuming to manually access different data sources

Wish: a solution to do his work easily and efficiently.

DEMO

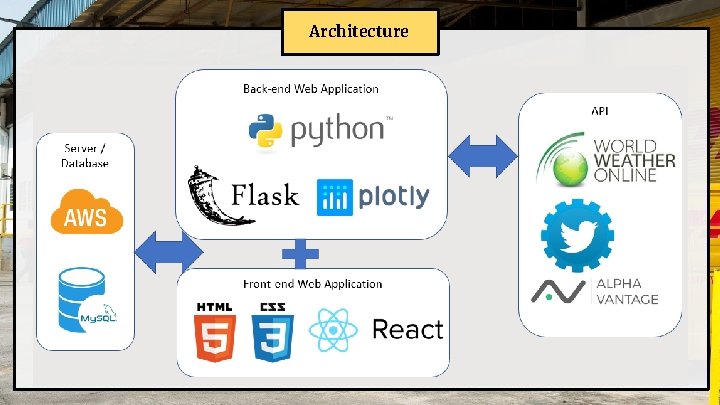

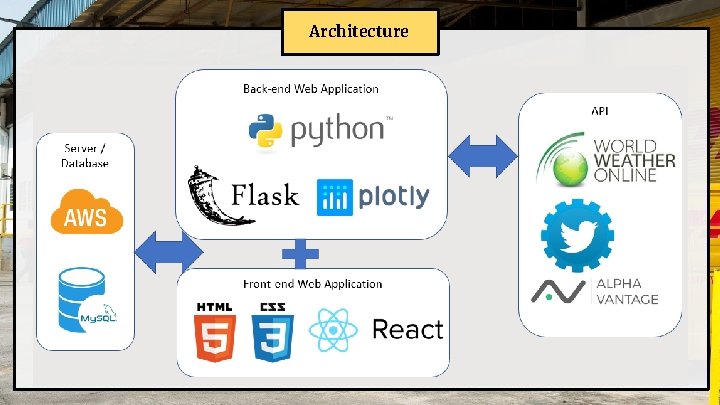

Architecture

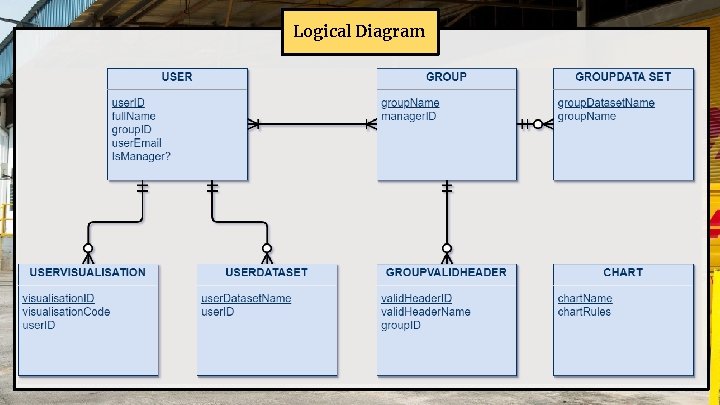

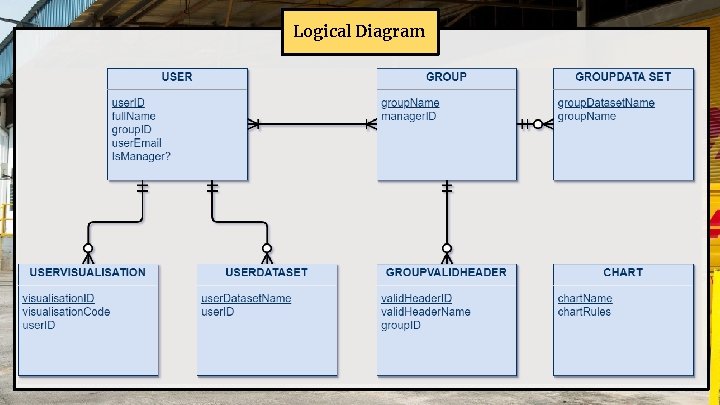

Logical Diagram

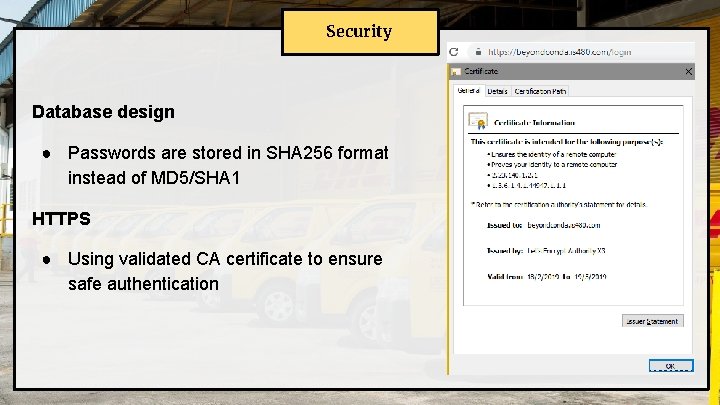

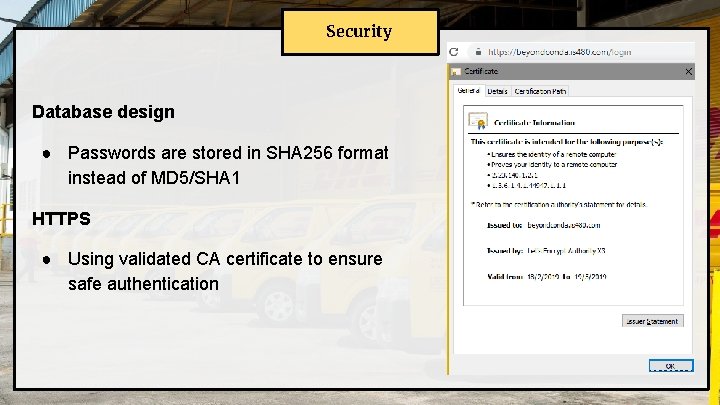

Security

Database design
- Passwords are stored as SHA-256 hashes instead of MD5/SHA-1

HTTPS
- Using a validated CA certificate to ensure safe authentication
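As a rough illustration of SHA-256-based password storage, the sketch below derives a salted hash with PBKDF2-HMAC-SHA256 from Python's standard library. The slide only states that SHA-256 is used; the per-user salt, the iteration count and the function names are assumptions added here, so this should not be read as the system's actual scheme.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 100_000  # assumed work factor, not stated on the slide


def hash_password(password, salt=None):
    """Return (salt_hex, digest_hex), the two values a user table would store."""
    if salt is None:
        salt = secrets.token_bytes(16)  # fresh random salt per user (assumption)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt.hex(), digest.hex()


def verify_password(password, salt_hex, digest_hex):
    """Re-derive the hash with the stored salt and compare in constant time."""
    _, candidate = hash_password(password, bytes.fromhex(salt_hex))
    return hmac.compare_digest(candidate, digest_hex)


stored_salt, stored_digest = hash_password("correct horse")
print(verify_password("correct horse", stored_salt, stored_digest))
```

Only the salt and digest are stored, so a login check repeats the derivation rather than decrypting anything.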

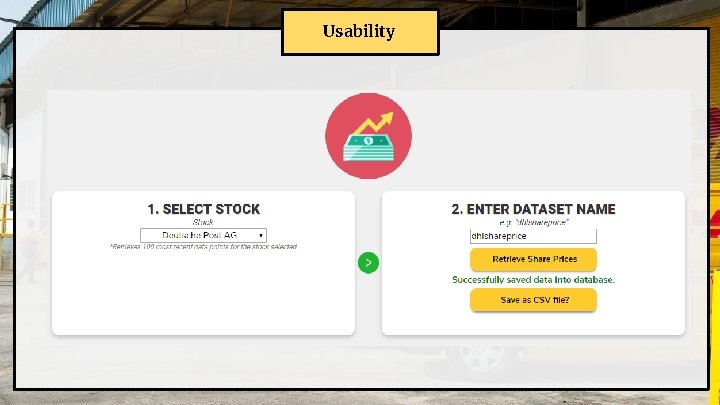

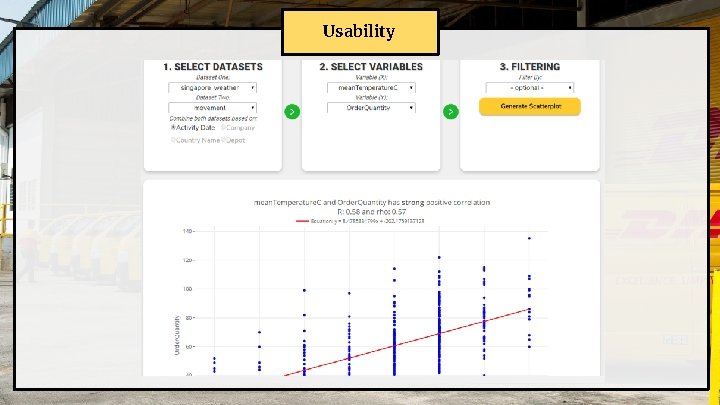

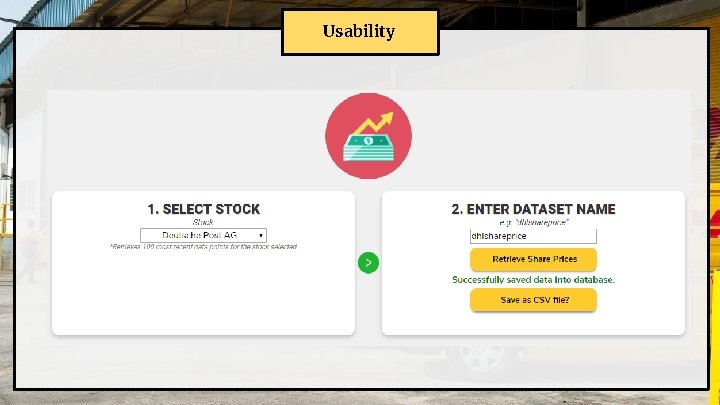

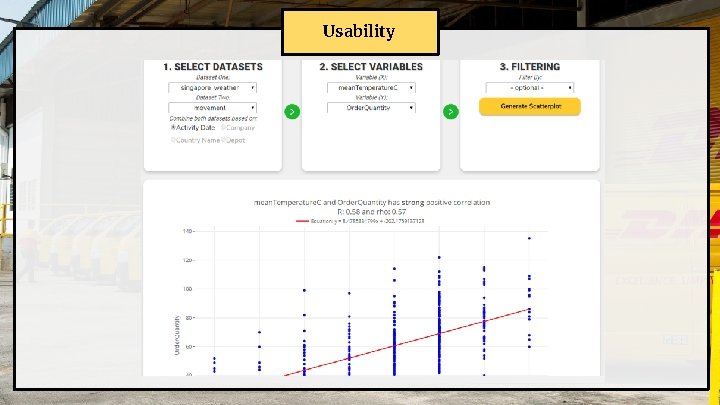

Usability

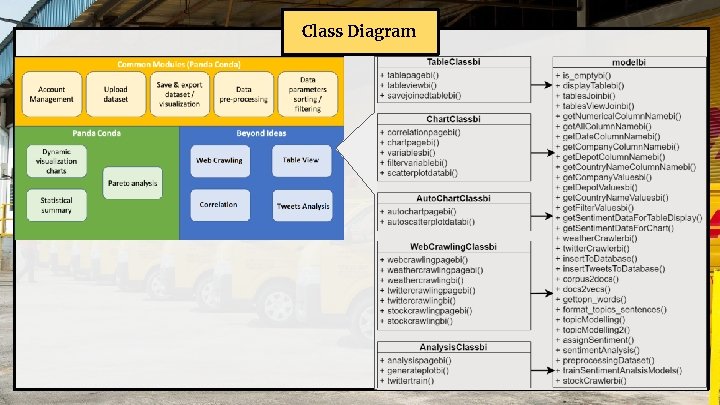

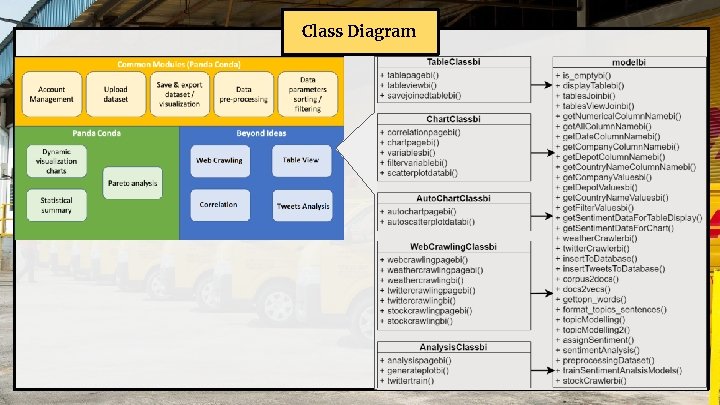

Class Diagram

Technical Complexity: Sentiment Analysis

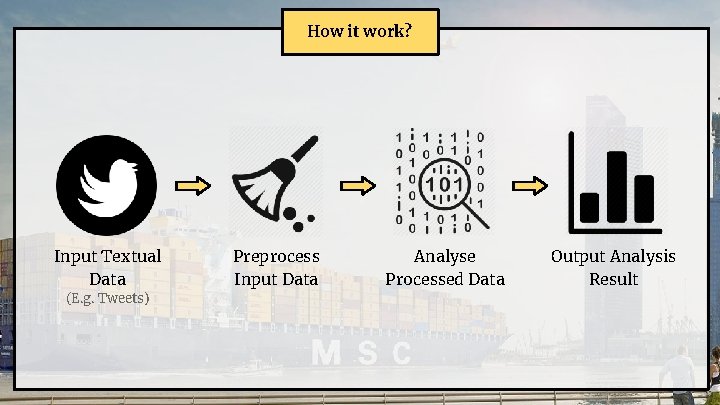

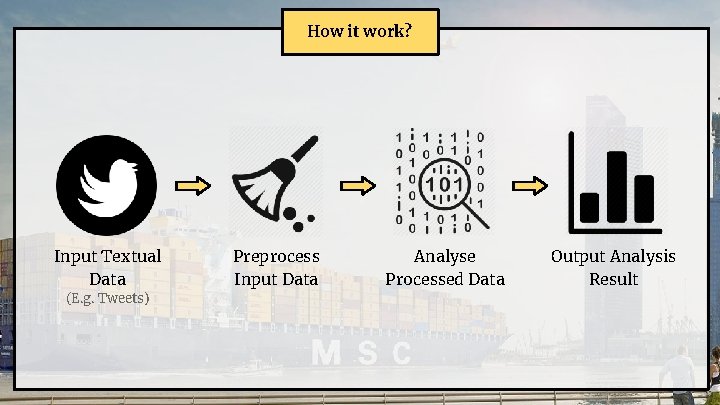

How does it work?

Input Textual Data (e.g. Tweets) → Preprocess Input Data → Analyse Processed Data → Output Analysis Result

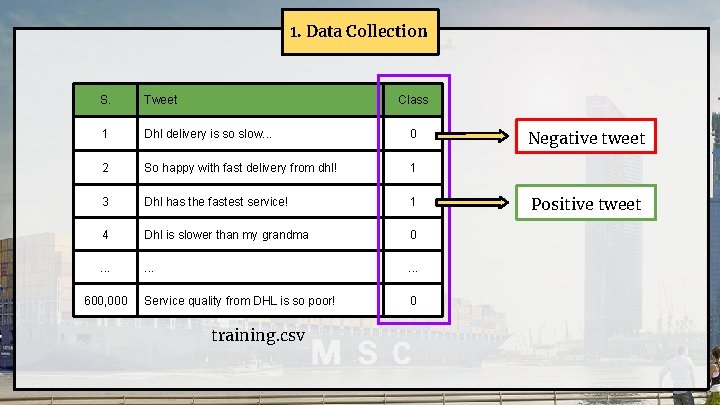

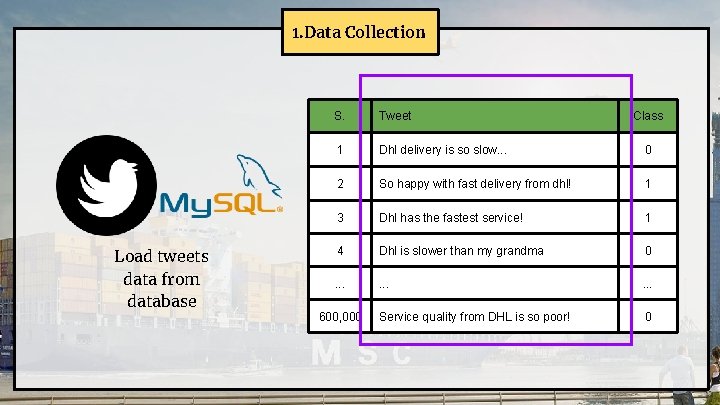

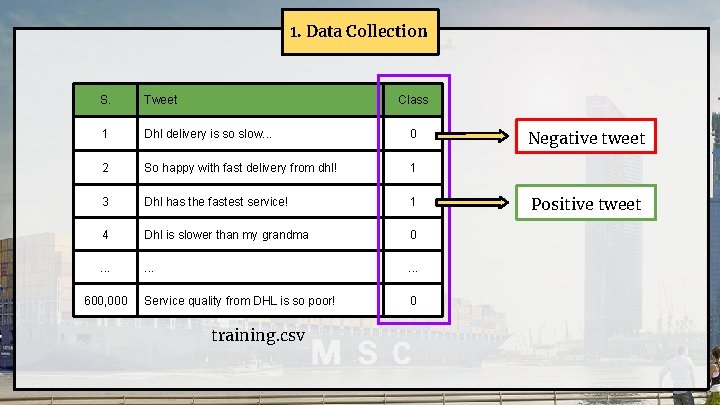

1. Data Collection

training.csv:

| S/N | Tweet | Class |
| --- | --- | --- |
| 1 | Dhl delivery is so slow... | 0 |
| 2 | So happy with fast delivery from dhl! | 1 |
| 3 | Dhl has the fastest service! | 1 |
| 4 | Dhl is slower than my grandma | 0 |
| ... | ... | ... |
| 600,000 | Service quality from DHL is so poor! | 0 |

Class 0 = Negative tweet, Class 1 = Positive tweet.

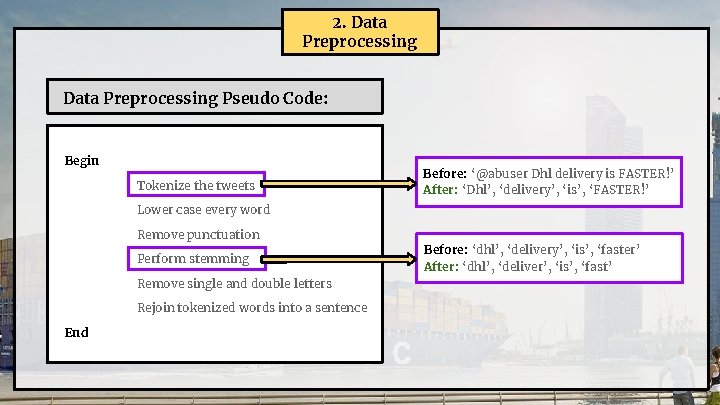

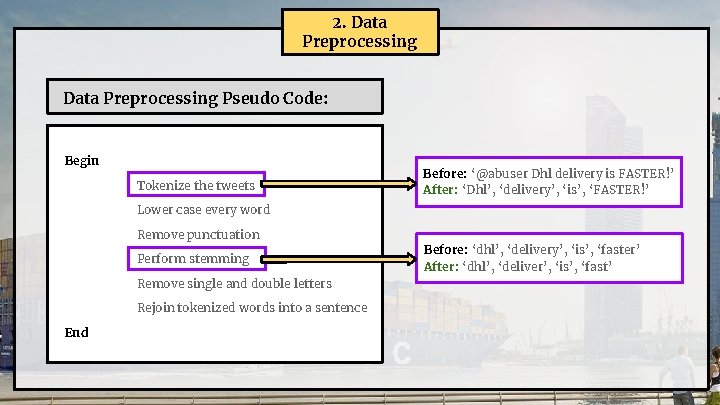

2. Data Preprocessing

Pseudo Code:

Begin
- Tokenize the tweets
- Lower case every word
- Remove punctuation
- Perform stemming
- Remove single and double letters
- Rejoin tokenized words into a sentence

End

Tokenization example. Before: ‘@abuser Dhl delivery is FASTER!’ After: ‘Dhl’, ‘delivery’, ‘is’, ‘FASTER!’

Stemming example. Before: ‘dhl’, ‘delivery’, ‘is’, ‘faster’ After: ‘dhl’, ‘deliver’, ‘is’, ‘fast’
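The preprocessing steps above can be sketched in a few lines of standard-library Python. The `toy_stem` function is a deliberately crude suffix stripper standing in for a real stemmer (the slides do not name the one used), and dropping `@user` mentions during tokenization is inferred from the slide's before/after example; both are assumptions.

```python
import re


def toy_stem(word):
    """Crude suffix stripper; a stand-in for a real stemmer (assumption)."""
    for suffix in ("ing", "est", "er", "y", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(tweet):
    """Apply the slide's steps in order and return the rejoined sentence."""
    # Tokenize on whitespace and drop @mentions, as in the slide's example.
    tokens = [t for t in tweet.split() if not t.startswith("@")]
    # Lower case every word.
    tokens = [t.lower() for t in tokens]
    # Remove punctuation, discarding tokens that become empty.
    tokens = [re.sub(r"[^\w\s]", "", t) for t in tokens]
    tokens = [t for t in tokens if t]
    # Perform stemming.
    tokens = [toy_stem(t) for t in tokens]
    # Remove single and double letters.
    tokens = [t for t in tokens if len(t) > 2]
    # Rejoin tokenized words into a sentence.
    return " ".join(tokens)


print(preprocess("@abuser Dhl delivery is FASTER!"))
```

In this sketch the word "is" survives stemming, as in the slide's stemming example, and is only dropped by the later single/double-letter filter.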

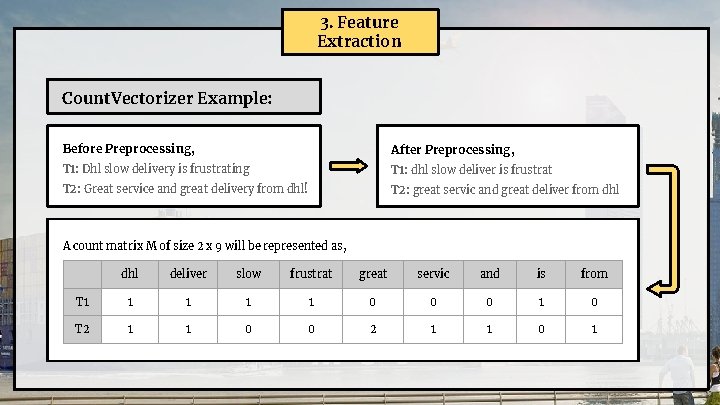

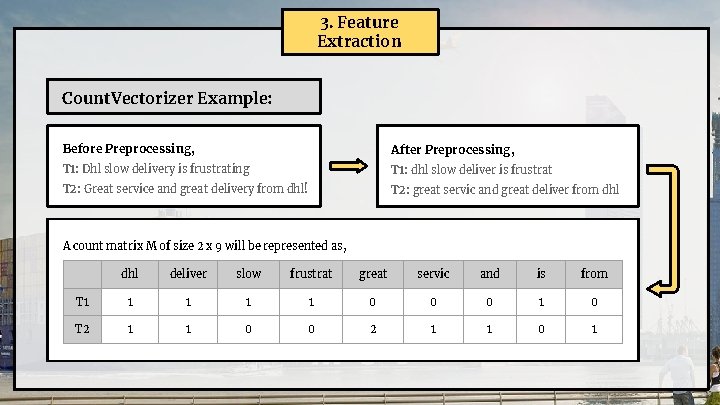

3. Feature Extraction

CountVectorizer example:

| Tweet | Before Preprocessing | After Preprocessing |
| --- | --- | --- |
| T1 | Dhl slow delivery is frustrating | dhl slow deliver is frustrat |
| T2 | Great service and great delivery from dhl! | great servic and great deliver from dhl |

A count matrix M of size 2 x 9 will be represented as:

| | dhl | deliver | slow | frustrat | great | servic | and | is | from |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| T1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 0 |
| T2 | 1 | 1 | 0 | 0 | 2 | 1 | 1 | 0 | 1 |
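The count matrix for the two example tweets can be reproduced with a small pure-Python sketch. It fixes the vocabulary to the column order shown on the slide; scikit-learn's `CountVectorizer`, which the slide names, would order its columns alphabetically instead, so the function below is an illustration rather than the library call itself.

```python
from collections import Counter

# Preprocessed tweets T1 and T2 from the slide.
docs = [
    "dhl slow deliver is frustrat",
    "great servic and great deliver from dhl",
]

# Column order copied from the slide (an illustrative choice).
vocabulary = ["dhl", "deliver", "slow", "frustrat", "great", "servic", "and", "is", "from"]


def count_matrix(documents, vocab):
    """Return one row per document with the count of each vocabulary word."""
    rows = []
    for doc in documents:
        counts = Counter(doc.split())
        rows.append([counts[word] for word in vocab])
    return rows


matrix = count_matrix(docs, vocabulary)
for label, row in zip(("T1", "T2"), matrix):
    print(label, row)
```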

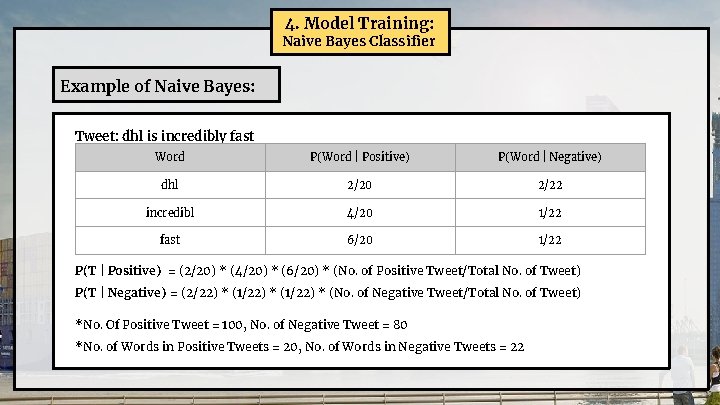

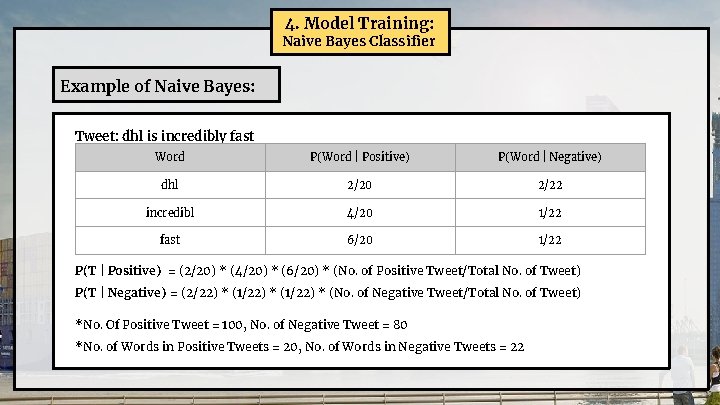

4. Model Training: Naive Bayes Classifier

Example of Naive Bayes for the tweet "dhl is incredibly fast" (after preprocessing: dhl, incredibl, fast):

| Word | P(Word \| Positive) | P(Word \| Negative) |
| --- | --- | --- |
| dhl | 2/20 | 2/22 |
| incredibl | 4/20 | 1/22 |
| fast | 6/20 | 1/22 |

P(Positive | T) ∝ (2/20) × (4/20) × (6/20) × (No. of Positive Tweets / Total No. of Tweets)

P(Negative | T) ∝ (2/22) × (1/22) × (1/22) × (No. of Negative Tweets / Total No. of Tweets)

where No. of Positive Tweets = 100, No. of Negative Tweets = 80, No. of Words in Positive Tweets = 20 and No. of Words in Negative Tweets = 22. The class with the larger score is the prediction.
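The arithmetic in this example can be checked with exact fractions. The sketch below uses only the numbers given on the slide (word likelihoods, 100 positive and 80 negative tweets) and assumes the negative score multiplies one likelihood per word, as the positive score does; the variable names are illustrative.

```python
from fractions import Fraction
from math import prod

# Per-word likelihoods for the tweet "dhl incredibl fast", from the slide.
likelihoods = {
    "positive": [Fraction(2, 20), Fraction(4, 20), Fraction(6, 20)],
    "negative": [Fraction(2, 22), Fraction(1, 22), Fraction(1, 22)],
}
tweet_counts = {"positive": 100, "negative": 80}
total_tweets = sum(tweet_counts.values())

# Score = product of word likelihoods x class prior (proportional to the posterior).
scores = {
    label: prod(likelihoods[label]) * Fraction(tweet_counts[label], total_tweets)
    for label in likelihoods
}
predicted = max(scores, key=scores.get)

for label, score in scores.items():
    print(label, score, float(score))
print("predicted:", predicted)
```

The positive score works out to 1/300 and the negative score to 1/11979, so the tweet is classified as positive.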

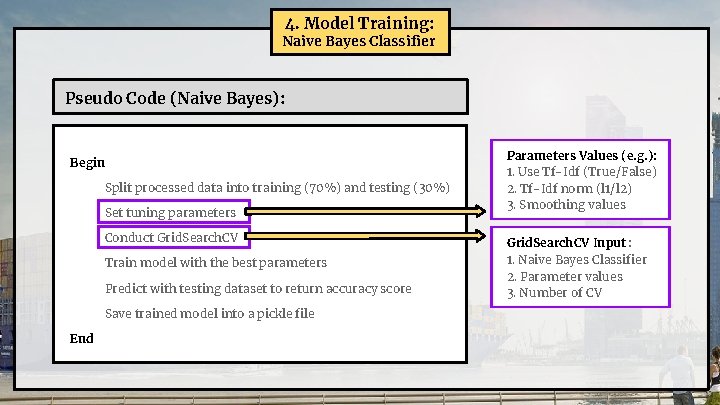

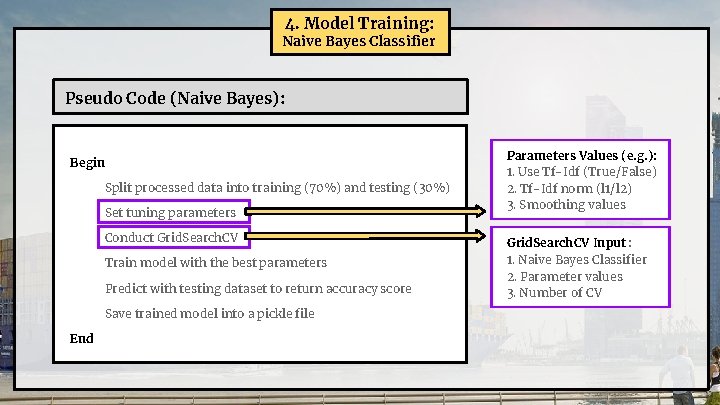

4. Model Training: Naive Bayes Classifier

Pseudo Code (Naive Bayes):

Begin
- Split processed data into training (70%) and testing (30%)
- Set tuning parameters
- Conduct GridSearchCV
- Train model with the best parameters
- Predict with testing dataset to return accuracy score
- Save trained model into a pickle file

End

Parameter values (e.g.):
1. Use Tf-Idf (True/False)
2. Tf-Idf norm (l1/l2)
3. Smoothing values

GridSearchCV input:
1. Naive Bayes Classifier
2. Parameter values
3. Number of CV folds

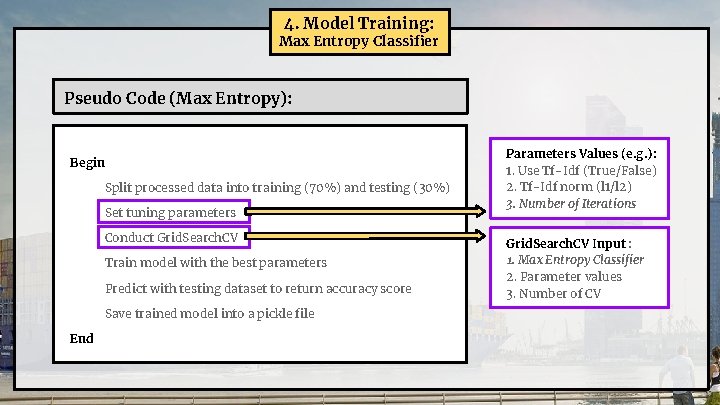

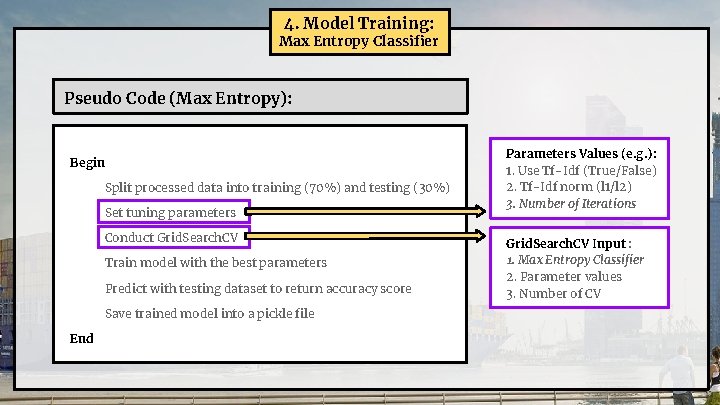

4. Model Training: Max Entropy Classifier

Pseudo Code (Max Entropy):

Begin
- Split processed data into training (70%) and testing (30%)
- Set tuning parameters
- Conduct GridSearchCV
- Train model with the best parameters
- Predict with testing dataset to return accuracy score
- Save trained model into a pickle file

End

Parameter values (e.g.):
1. Use Tf-Idf (True/False)
2. Tf-Idf norm (l1/l2)
3. Number of Iterations

GridSearchCV input:
1. Max Entropy Classifier
2. Parameter values
3. Number of CV folds
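The tuning loop shared by the Naive Bayes and Max Entropy slides (70/30 split, grid search with cross-validation, retrain with the best parameters, score on the test set, pickle the model) can be sketched without third-party libraries. This is a pure-Python stand-in for scikit-learn's `GridSearchCV`, tuning only the Naive Bayes smoothing value; the toy tweets, function names and candidate values are assumptions, and the Tf-Idf options from the slides are left out for brevity.

```python
import pickle
import random
from collections import Counter
from math import log


def train_nb(texts, labels, alpha):
    """Fit a multinomial Naive Bayes model with additive smoothing alpha."""
    class_counts = Counter(labels)
    word_counts = {c: Counter() for c in class_counts}
    for text, label in zip(texts, labels):
        word_counts[label].update(text.split())
    vocab = set().union(*word_counts.values())
    return {"alpha": alpha, "class_counts": class_counts,
            "word_counts": word_counts, "vocab": vocab}


def predict(model, text):
    """Return the class with the highest log score for one text."""
    def log_score(label):
        counts = model["word_counts"][label]
        denom = sum(counts.values()) + model["alpha"] * len(model["vocab"])
        score = log(model["class_counts"][label])
        for word in text.split():
            if word in model["vocab"]:
                score += log((counts[word] + model["alpha"]) / denom)
        return score
    return max(model["class_counts"], key=log_score)


def accuracy(model, texts, labels):
    return sum(predict(model, t) == y for t, y in zip(texts, labels)) / len(labels)


def grid_search_cv(texts, labels, alphas, n_folds):
    """Return (best_alpha, mean cross-validated accuracy)."""
    best = None
    for alpha in alphas:
        fold_scores = []
        for k in range(n_folds):
            val = [i for i in range(len(texts)) if i % n_folds == k]
            trn = [i for i in range(len(texts)) if i % n_folds != k]
            model = train_nb([texts[i] for i in trn], [labels[i] for i in trn], alpha)
            fold_scores.append(
                accuracy(model, [texts[i] for i in val], [labels[i] for i in val]))
        mean_score = sum(fold_scores) / n_folds
        if best is None or mean_score > best[1]:
            best = (alpha, mean_score)
    return best


# Made-up, already preprocessed tweets (1 = positive, 0 = negative).
positive = ["dhl deliver fast", "happi with fast deliver", "dhl fastest servic",
            "great servic from dhl", "love dhl quick deliver", "parcel arriv earli great",
            "dhl staff friendli help", "fast and reliabl courier",
            "great track quick servic", "deliver earli happi custom"]
negative = ["dhl deliver slow", "dhl slower than grandma", "servic qualiti poor",
            "parcel lost poor servic", "late deliver again dhl", "slow respons bad support",
            "packag damag bad courier", "dhl late and rude",
            "wait week slow parcel", "poor track lost packag"]
data = [(t, 1) for t in positive] + [(t, 0) for t in negative]
random.Random(0).shuffle(data)

split = int(0.7 * len(data))  # 70% training, 30% testing
train_texts, train_labels = zip(*data[:split])
test_texts, test_labels = zip(*data[split:])

best_alpha, cv_score = grid_search_cv(train_texts, train_labels, [0.1, 0.5, 1.0], n_folds=3)
final_model = train_nb(train_texts, train_labels, best_alpha)
test_accuracy = accuracy(final_model, test_texts, test_labels)

# pickle.dump(final_model, open_file) would write the same bytes to disk.
blob = pickle.dumps(final_model)
print(best_alpha, round(cv_score, 2), round(test_accuracy, 2))
```

Cross-validation runs on the training portion only, so the 30% test set stays unseen until the final accuracy check.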

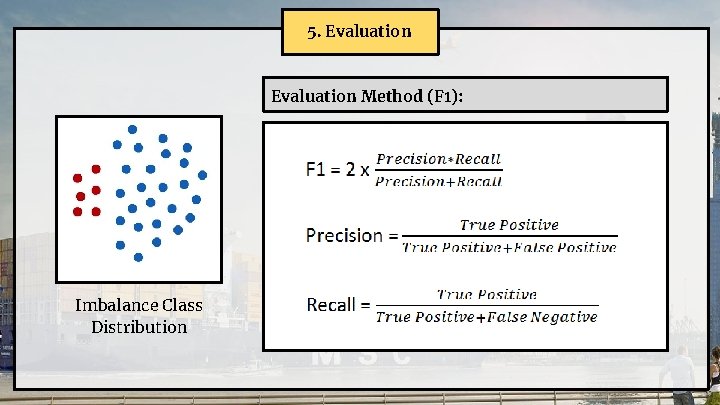

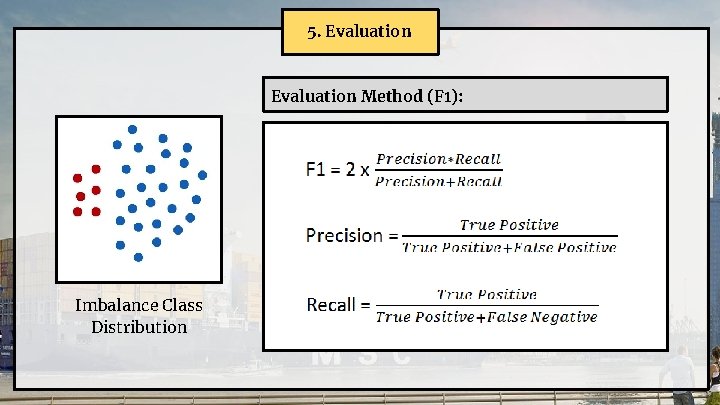

5. Evaluation Method (F1): Imbalanced Class Distribution

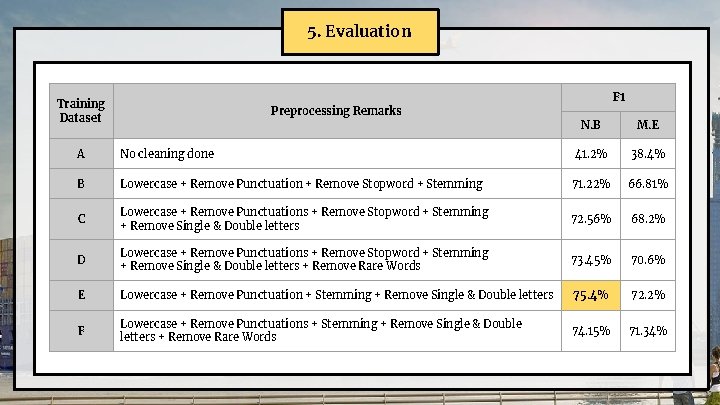

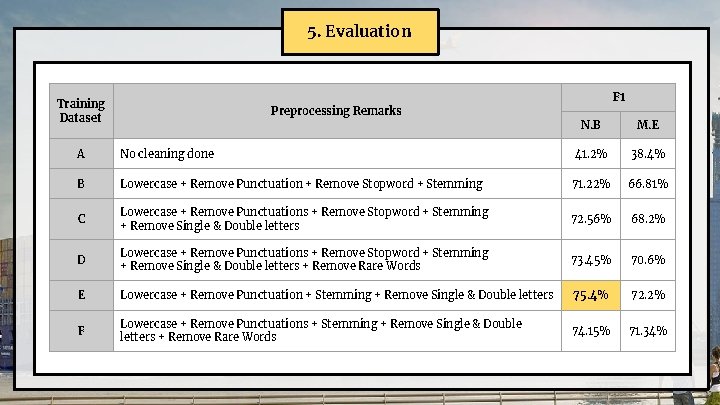

5. Evaluation

| Training Dataset | Preprocessing Remarks | F1 (N.B) | F1 (M.E) |
| --- | --- | --- | --- |
| A | No cleaning done | 41.2% | 38.4% |
| B | Lowercase + Remove Punctuation + Remove Stopword + Stemming | 71.22% | 66.81% |
| C | Lowercase + Remove Punctuation + Remove Stopword + Stemming + Remove Single & Double letters | 72.56% | 68.2% |
| D | Lowercase + Remove Punctuation + Remove Stopword + Stemming + Remove Single & Double letters + Remove Rare Words | 73.45% | 70.6% |
| E | Lowercase + Remove Punctuation + Stemming + Remove Single & Double letters | 75.4% | 72.2% |
| F | Lowercase + Remove Punctuation + Stemming + Remove Single & Double letters + Remove Rare Words | 74.15% | 71.34% |
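To show why F1 is preferred over accuracy when classes are imbalanced, the sketch below scores a classifier that always predicts the majority class. The 90/10 split and the choice of class 1 as the "positive" class for F1 are assumptions for illustration; the slides do not state the actual class ratio.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall and F1 for the chosen positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Imbalanced toy labels: 90 negative tweets, 10 positive tweets.
y_true = [0] * 90 + [1] * 10
always_negative = [0] * 100

acc = sum(t == p for t, p in zip(y_true, always_negative)) / len(y_true)
_, _, f1 = precision_recall_f1(y_true, always_negative)
print(f"accuracy={acc:.2f}, F1={f1:.2f}")
```

The always-negative classifier reaches 90% accuracy yet scores an F1 of 0, because it never finds a single positive tweet.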

Challenges

- Memory Error
- Text Mining
- No Neutral Class

Technical Complexity: Topic Modeling

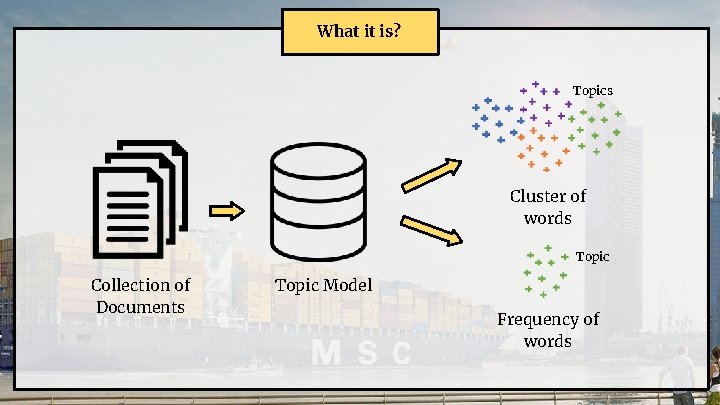

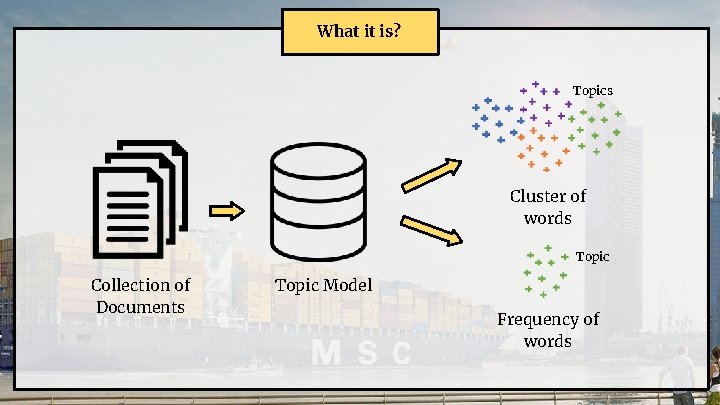

What is it?

Collection of Documents → Topic Model (frequency of words) → Topics (each topic is a cluster of words)

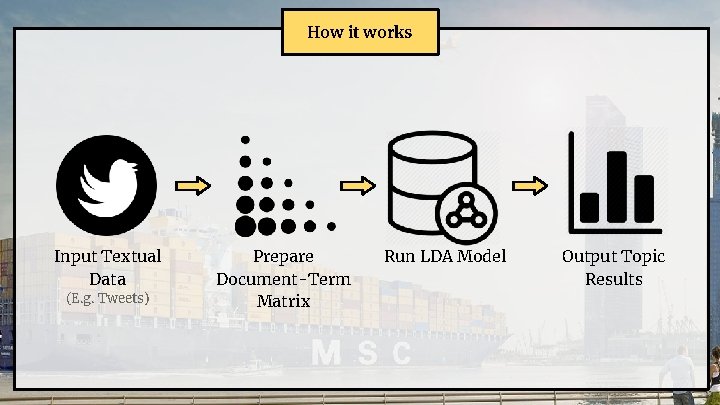

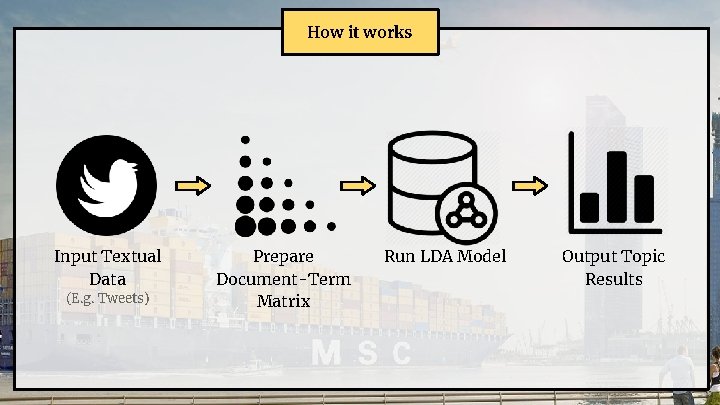

How it works

Input Textual Data (e.g. Tweets) → Prepare Document-Term Matrix → Run LDA Model → Output Topic Results

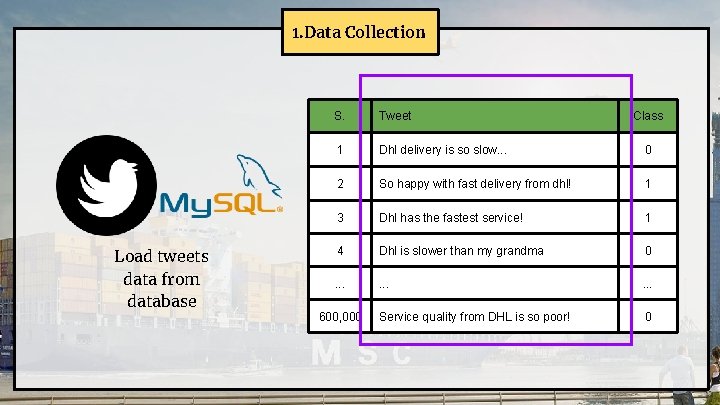

1. Data Collection

Load tweets data from database:

| S/N | Tweet | Class |
| --- | --- | --- |
| 1 | Dhl delivery is so slow... | 0 |
| 2 | So happy with fast delivery from dhl! | 1 |
| 3 | Dhl has the fastest service! | 1 |
| 4 | Dhl is slower than my grandma | 0 |
| ... | ... | ... |
| 600,000 | Service quality from DHL is so poor! | 0 |

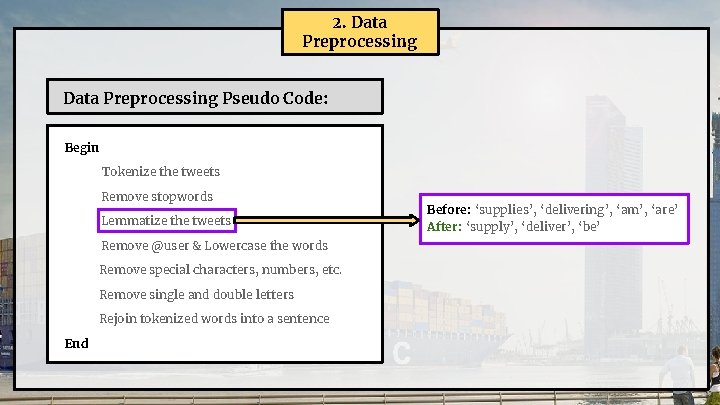

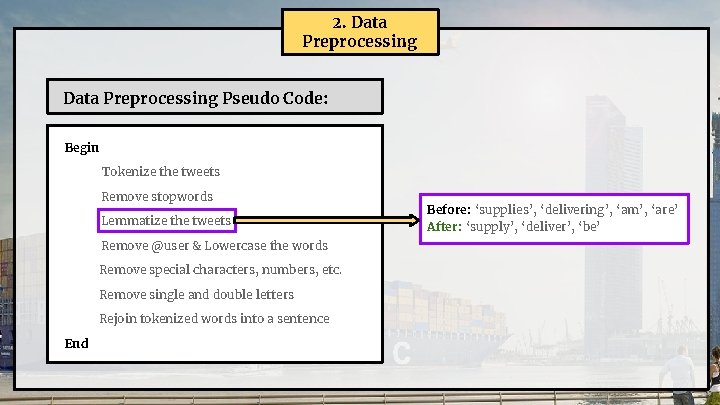

2. Data Preprocessing

Pseudo Code:

Begin
- Tokenize the tweets
- Remove stopwords
- Lemmatize the tweets
- Remove @user & lowercase the words
- Remove special characters, numbers, etc.
- Remove single and double letters
- Rejoin tokenized words into a sentence

End

Lemmatization example. Before: ‘supplies’, ‘delivering’, ‘am’, ‘are’ After: ‘supply’, ‘deliver’, ‘be’

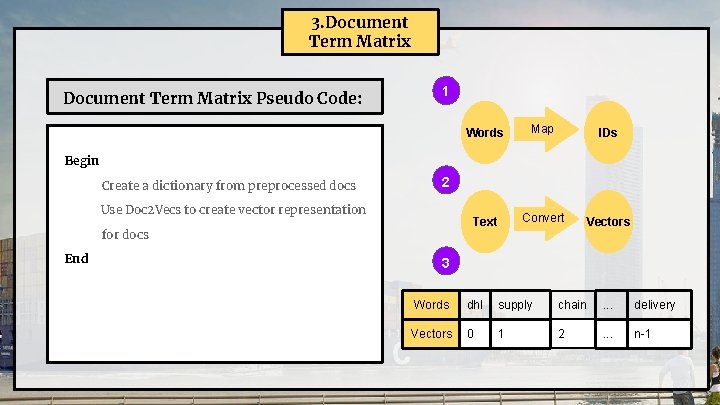

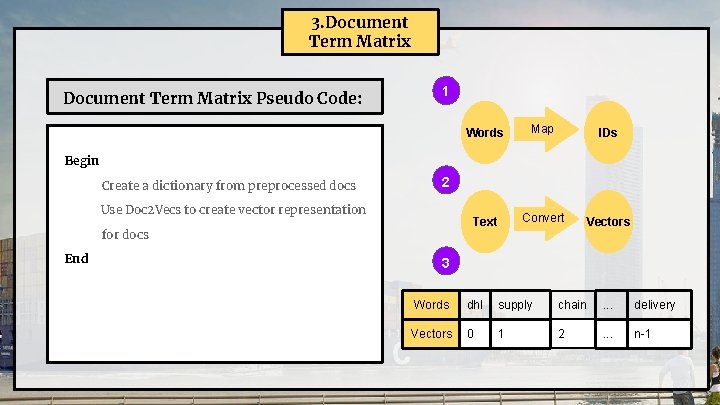

3. Document Term Matrix

Steps: (1) Text to Words, (2) Map IDs, (3) Convert Vectors

Pseudo Code:

Begin
- Create a dictionary from preprocessed docs
- Use Doc2Vecs to create vector representation for docs

End

| Words | Vectors |
| --- | --- |
| dhl | 0 |
| supply | 1 |
| chain | 2 |
| ... | ... |
| delivery | n-1 |
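The two steps in this pseudo code (build a word-to-ID dictionary, then turn each document into a vector) can be sketched in plain Python. The `(word ID, count)` pair format is an assumption modelled on the bag-of-words output of gensim's `Dictionary.doc2bow`; the slides do not show the exact call used, and the function names and sample documents here are illustrative.

```python
from collections import Counter

# Already preprocessed, tokenized documents (made-up examples).
docs = [
    ["dhl", "supply", "chain"],
    ["dhl", "delivery", "delivery"],
]


def build_dictionary(tokenized_docs):
    """Assign each distinct word the next free integer ID, in order of appearance."""
    word_to_id = {}
    for doc in tokenized_docs:
        for word in doc:
            word_to_id.setdefault(word, len(word_to_id))
    return word_to_id


def to_bow(doc, word_to_id):
    """Convert one document into a sorted list of (word ID, count) pairs."""
    counts = Counter(word_to_id[w] for w in doc if w in word_to_id)
    return sorted(counts.items())


word_to_id = build_dictionary(docs)
corpus = [to_bow(doc, word_to_id) for doc in docs]
print(word_to_id)
print(corpus)
```

Storing only the non-zero counts keeps the document-term matrix sparse, which helps when the vocabulary is much larger than any single tweet.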

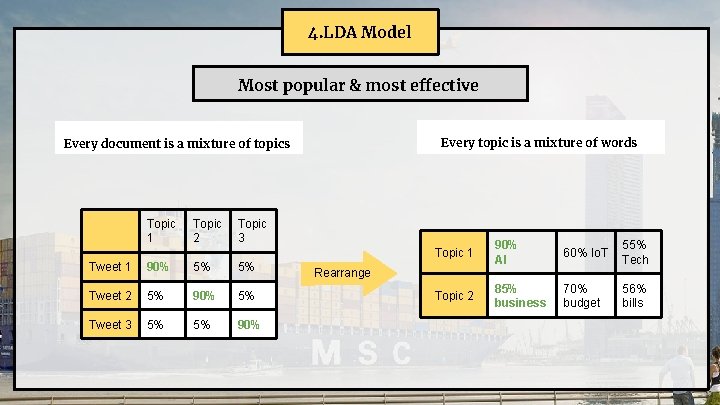

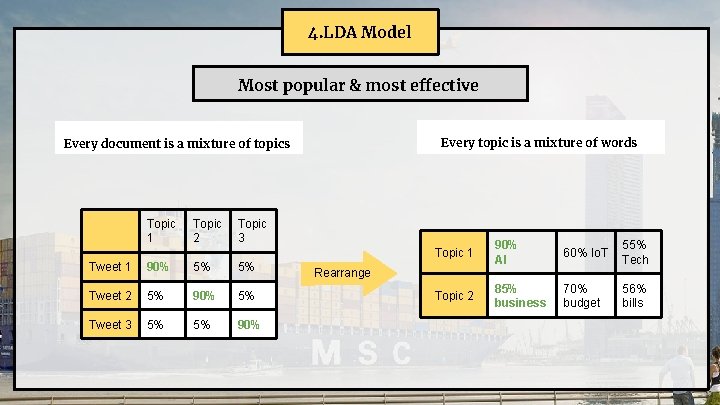

4. LDA Model

- Most popular & most effective
- Every topic is a mixture of words
- Every document is a mixture of topics

| | Topic 1 | Topic 2 | Topic 3 |
| --- | --- | --- | --- |
| Tweet 1 | 90% | 5% | 5% |
| Tweet 2 | 5% | 90% | 5% |
| Tweet 3 | 5% | 5% | 90% |

After rearranging:
- Topic 1: 90% AI, 60% IoT, 55% Tech
- Topic 2: 85% business, 70% budget, 56% bills
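To make the "mixture of topics" and "mixture of words" idea concrete, here is a compact LDA sketch using collapsed Gibbs sampling. The sampler, the hyperparameters `alpha` and `beta`, the iteration count and the toy documents are all choices made for this illustration; the slides do not say which LDA implementation or inference method the team used.

```python
import random


def lda_gibbs(docs, n_topics, n_iters=200, alpha=0.1, beta=0.01, seed=0):
    """Fit LDA by collapsed Gibbs sampling; return (theta, phi, vocab)."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    n_words = len(vocab)

    doc_topic = [[0] * n_topics for _ in docs]               # tokens per (doc, topic)
    topic_word = [[0] * n_words for _ in range(n_topics)]    # tokens per (topic, word)
    topic_total = [0] * n_topics                             # tokens per topic

    # Random initial topic assignment for every token.
    assignments = []
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(n_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][word_id[w]] += 1
            topic_total[k] += 1
        assignments.append(z_doc)

    for _ in range(n_iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k, v = assignments[d][i], word_id[w]
                # Remove the token's current assignment from the counts.
                doc_topic[d][k] -= 1
                topic_word[k][v] -= 1
                topic_total[k] -= 1
                # Resample its topic from the conditional distribution.
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][v] + beta) / (topic_total[t] + n_words * beta)
                    for t in range(n_topics)
                ]
                k = rng.choices(range(n_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][v] += 1
                topic_total[k] += 1

    # theta: topic mixture per document; phi: word mixture per topic.
    theta = [[(c + alpha) / (len(doc) + n_topics * alpha) for c in doc_topic[d]]
             for d, doc in enumerate(docs)]
    phi = [[(c + beta) / (topic_total[k] + n_words * beta) for c in topic_word[k]]
           for k in range(n_topics)]
    return theta, phi, vocab


docs = [["ai", "iot", "tech", "ai"], ["business", "budget", "bills"],
        ["ai", "tech", "iot"], ["budget", "bills", "business", "budget"]]
theta, phi, vocab = lda_gibbs(docs, n_topics=2)
for d, mixture in enumerate(theta):
    print(f"doc {d}:", [round(p, 2) for p in mixture])
```

Each row of `theta` sums to 1 (a document's topic mixture) and each row of `phi` sums to 1 (a topic's word mixture), matching the two bullet points on the slide.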

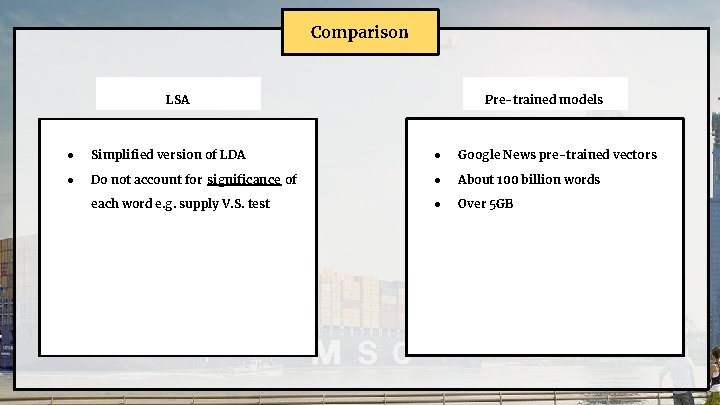

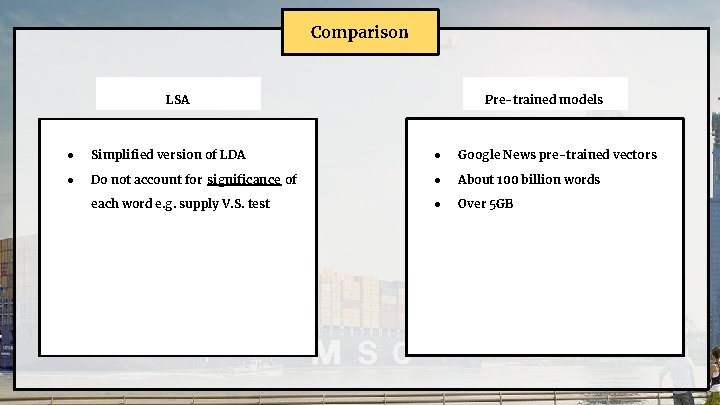

Comparison

LSA
- Simplified version of LDA
- Does not account for the significance of each word, e.g. supply vs. test

Pre-trained models
- Google News pre-trained vectors
- About 100 billion words
- Over 5 GB

Challenges

- Number of topics
- Trial & Error

User Testing

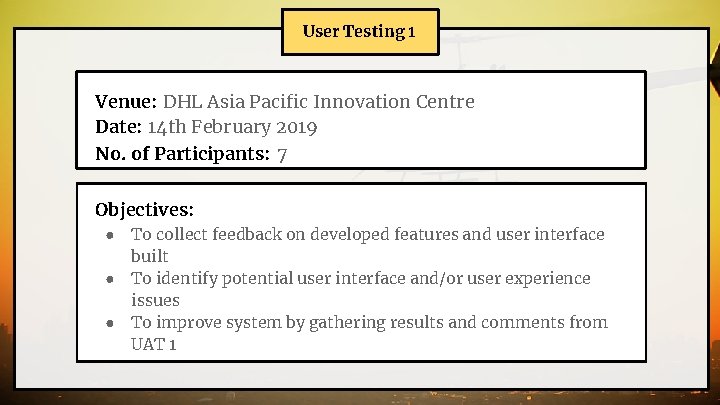

User Testing 1

- Venue: DHL Asia Pacific Innovation Centre
- Date: 14th February 2019
- No. of Participants: 7

Objectives:
- To collect feedback on the developed features and the user interface built
- To identify potential user interface and/or user experience issues
- To improve the system by gathering results and comments from UAT 1

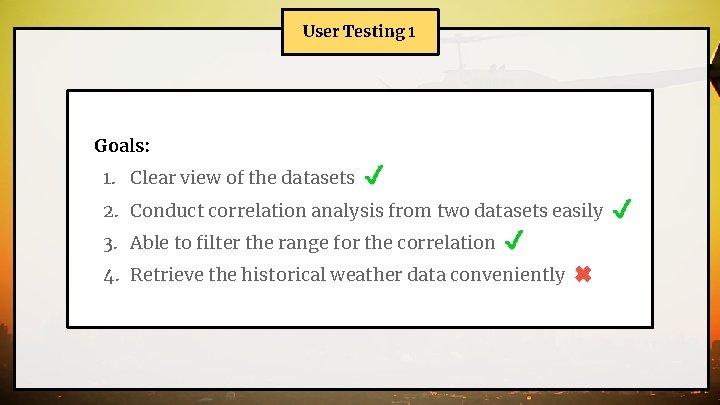

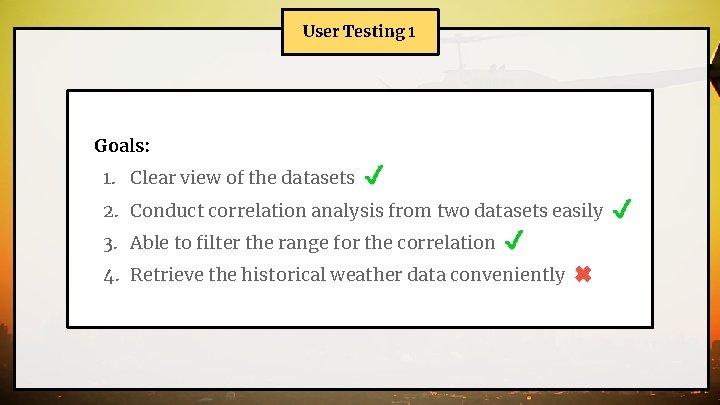

User Testing 1

Goals:
1. Clear view of the datasets
2. Conduct correlation analysis from two datasets easily
3. Able to filter the range for the correlation
4. Retrieve the historical weather data conveniently

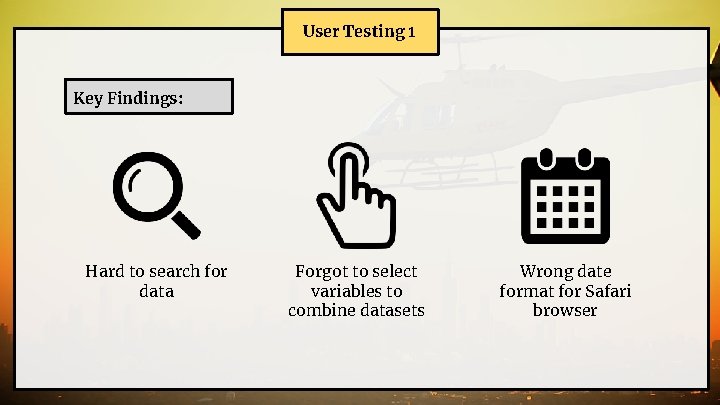

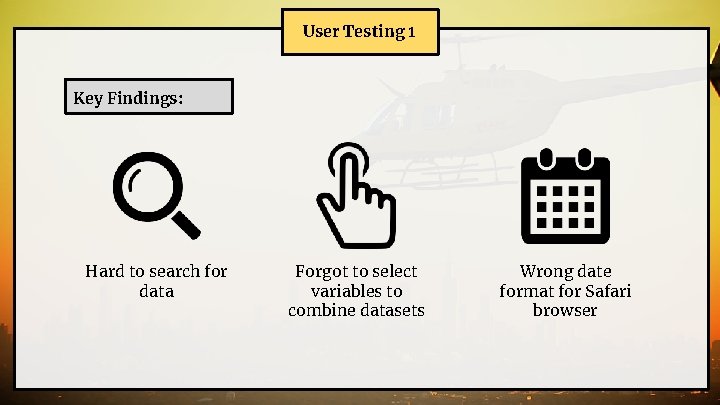

User Testing 1

Key Findings:
- Hard to search for data
- Forgot to select variables to combine datasets
- Wrong date format for Safari browser

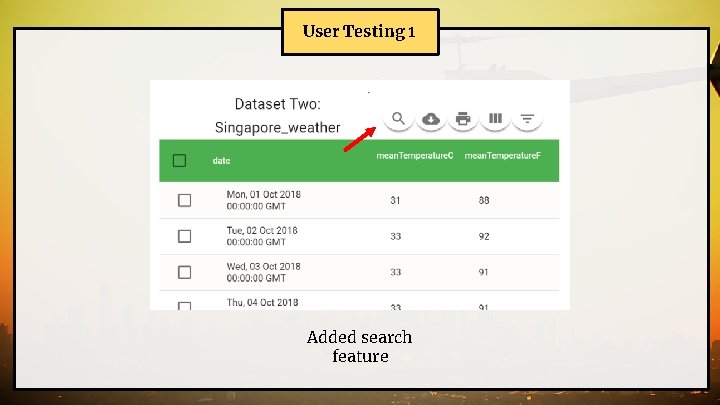

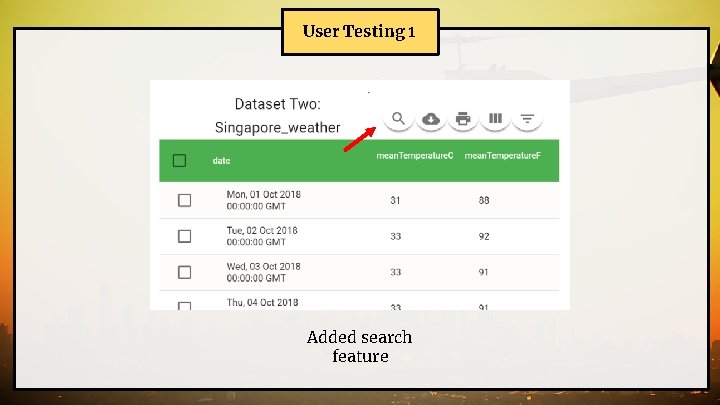

User Testing 1 Added search feature

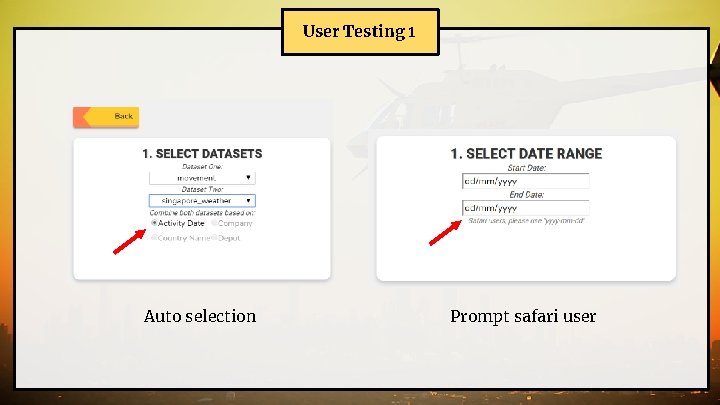

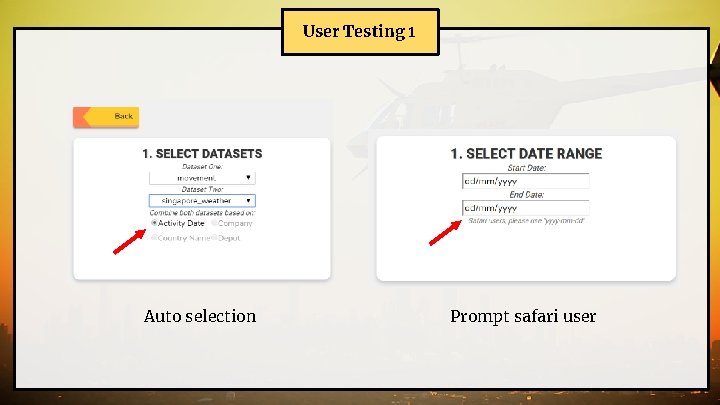

User Testing 1

- Auto selection
- Prompt Safari user

User Testing 2

- Venue: DHL Asia Pacific Innovation Centre
- Date: 15th March 2019
- No. of Participants: 5

Objectives:
- To collect users' feedback on the updated functions based on input from UAT 1
- To collect feedback on newly developed functions
- To identify potential user interface and/or user experience issues
- To improve the web application system based on the feedback and comments from the UAT

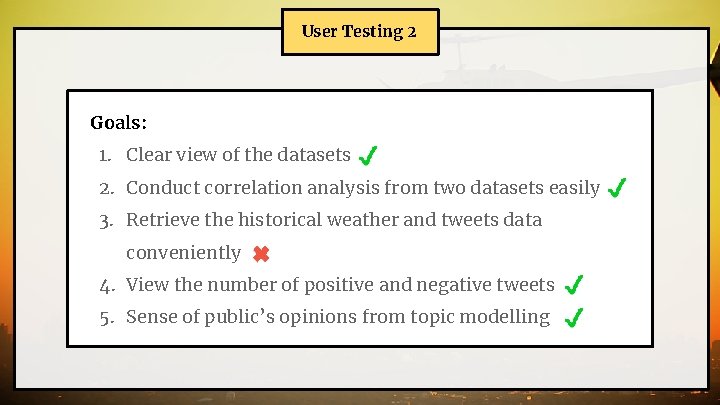

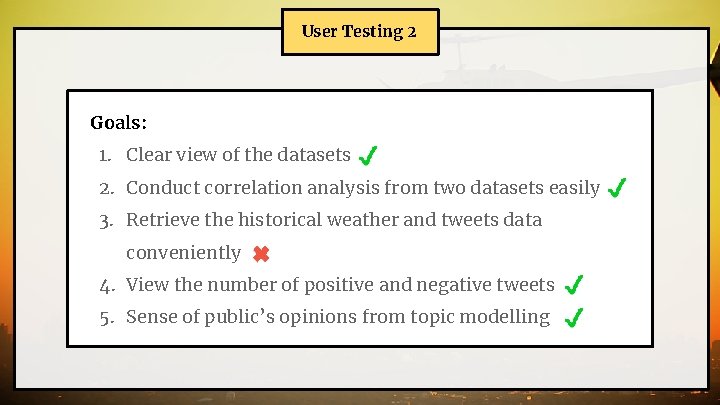

User Testing 2

Goals:
1. Clear view of the datasets
2. Conduct correlation analysis from two datasets easily
3. Retrieve the historical weather and tweets data conveniently
4. View the number of positive and negative tweets
5. Get a sense of the public’s opinions from topic modelling

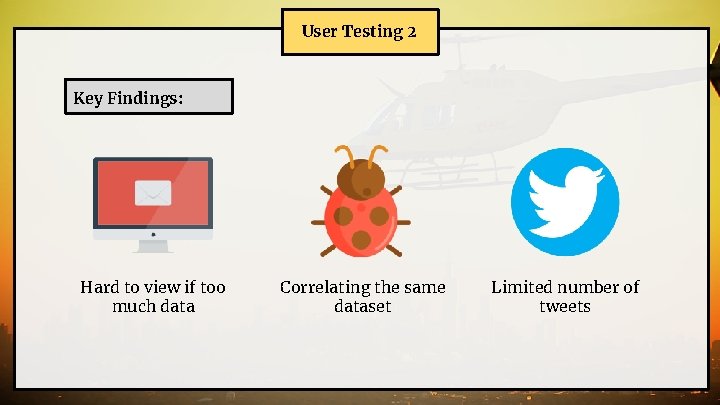

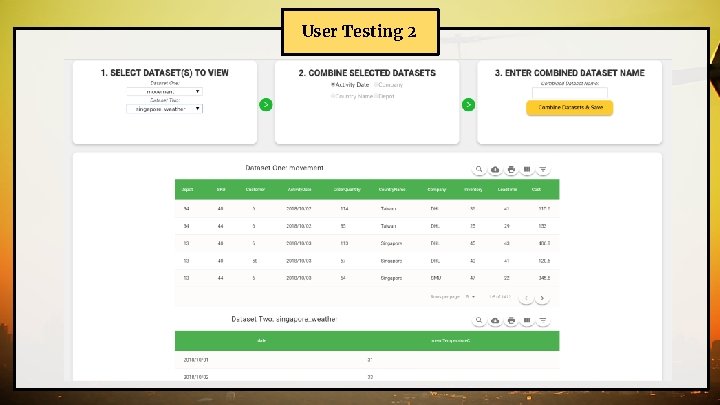

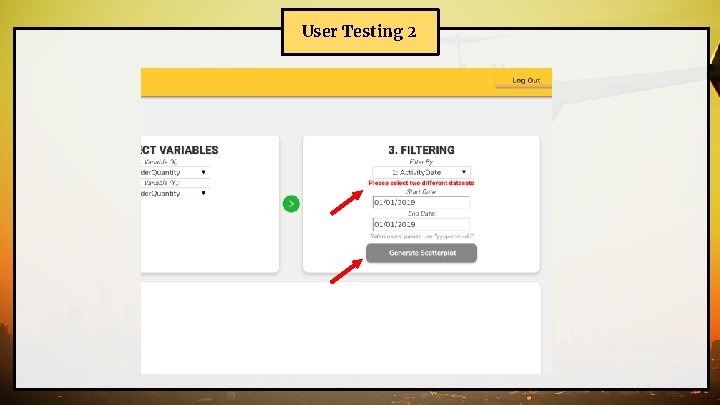

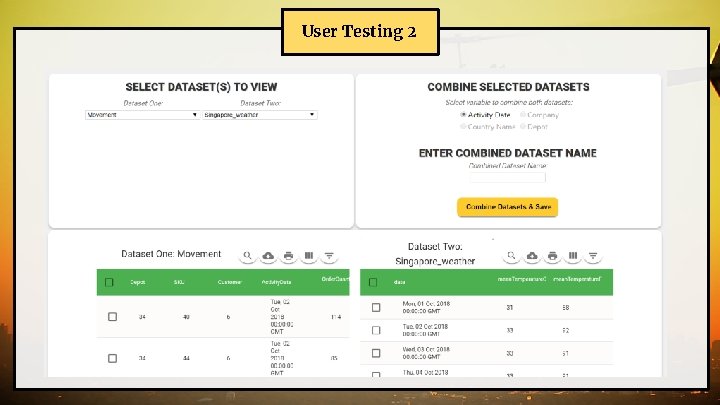

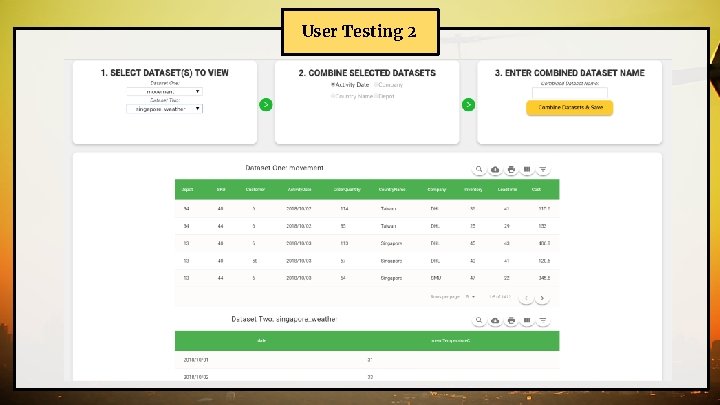

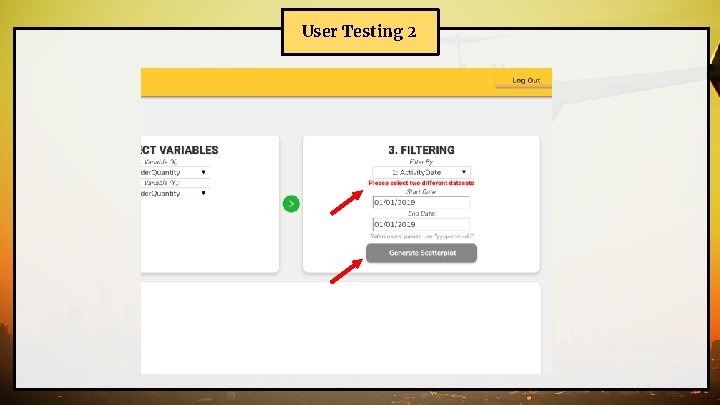

User Testing 2

Key Findings:
- Hard to view if too much data
- Correlating the same dataset
- Limited number of tweets

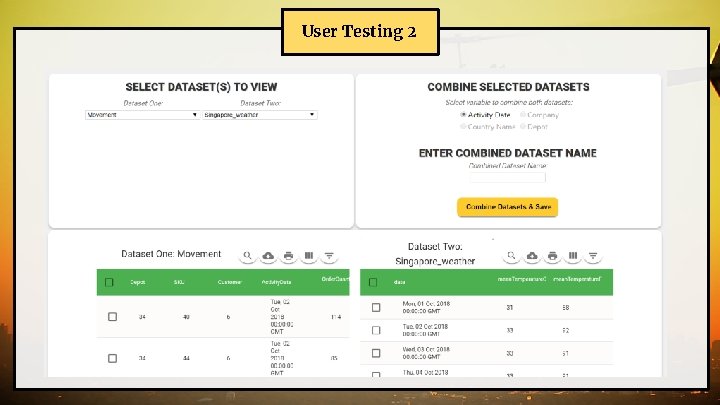

User Testing 2

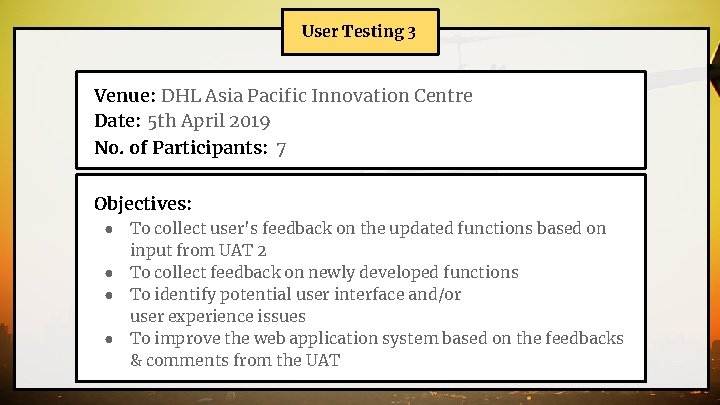

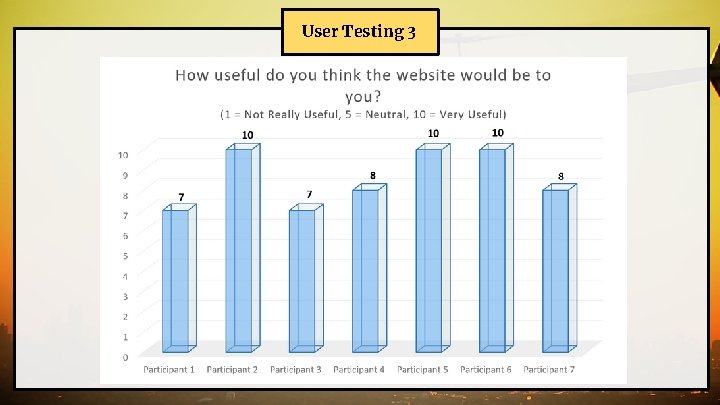

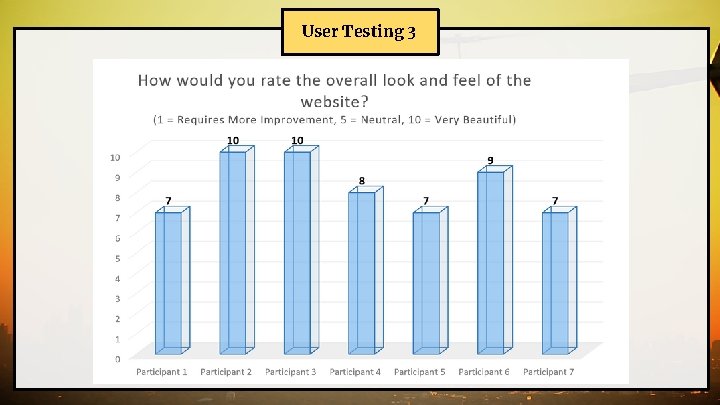

User Testing 3

- Venue: DHL Asia Pacific Innovation Centre
- Date: 5th April 2019
- No. of Participants: 7

Objectives:
- To collect users' feedback on the updated functions based on input from UAT 2
- To collect feedback on newly developed functions
- To identify potential user interface and/or user experience issues
- To improve the web application system based on the feedback and comments from the UAT

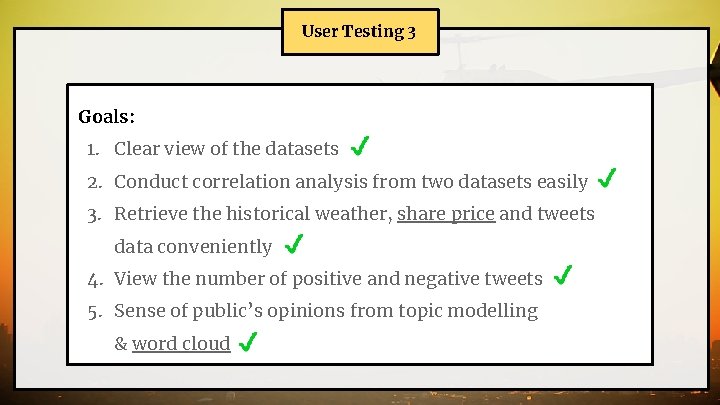

User Testing 3

Goals:
1. Clear view of the datasets
2. Conduct correlation analysis from two datasets easily
3. Retrieve the historical weather, share price and tweets data conveniently
4. View the number of positive and negative tweets
5. Get a sense of the public’s opinions from topic modelling & word cloud

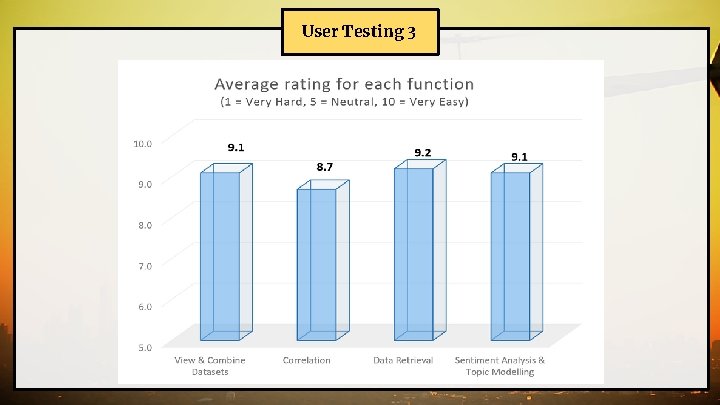

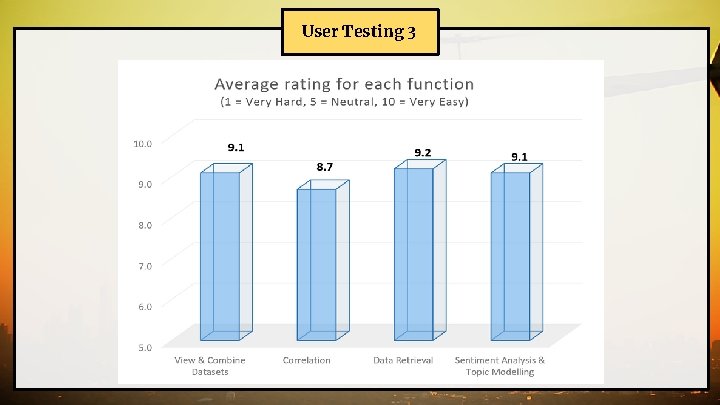

User Testing 3

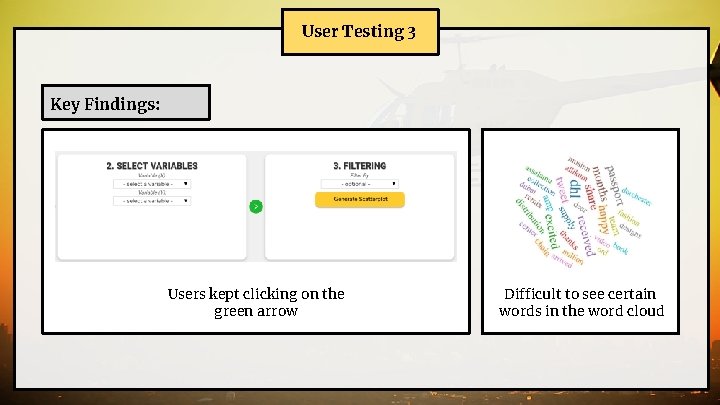

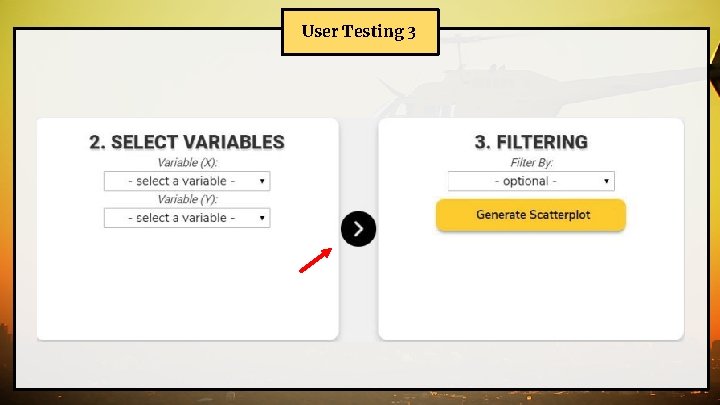

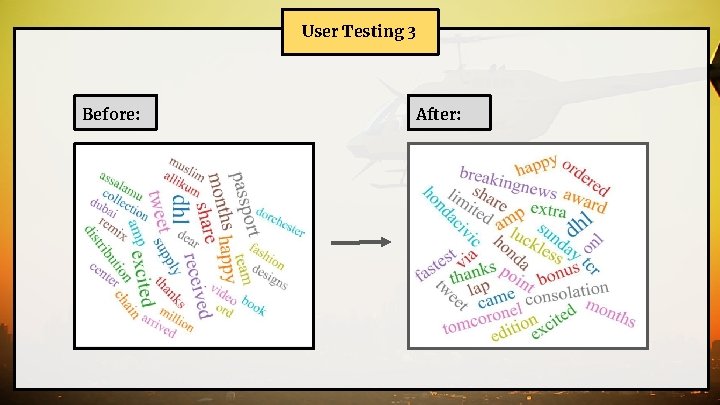

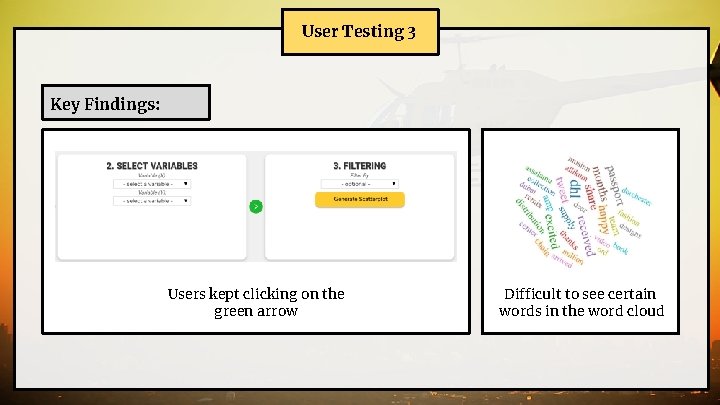

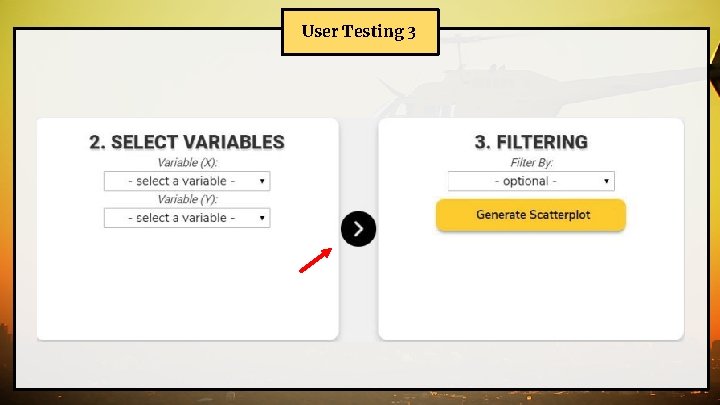

User Testing 3

Key Findings:
- Users kept clicking on the green arrow
- Difficult to see certain words in the word cloud

User Testing 3

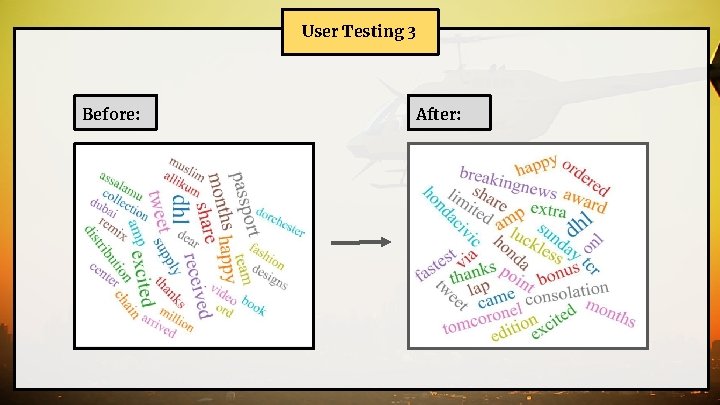

User Testing 3 Before: After:

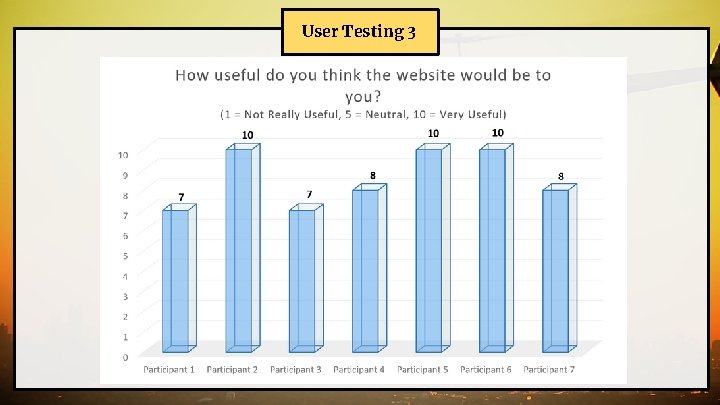

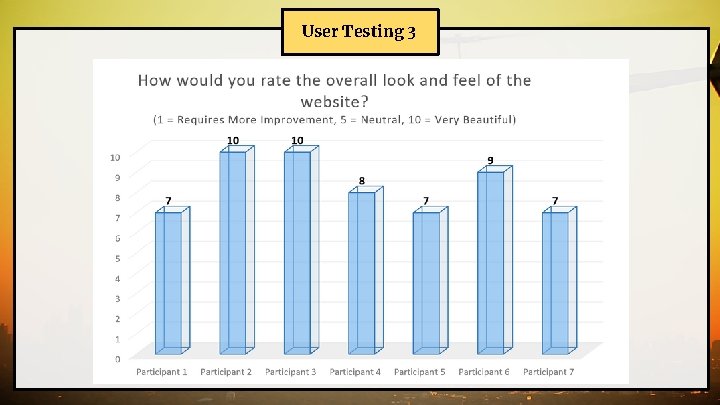

User Testing 3

Overall Comments

- Overall, the functions in the web application were clear and easy to use.
- The user interface is simple and intuitive, which gave our client a very good user experience.
- The client was satisfied with the functionalities provided and felt that they are useful and would help in conducting various analyses.

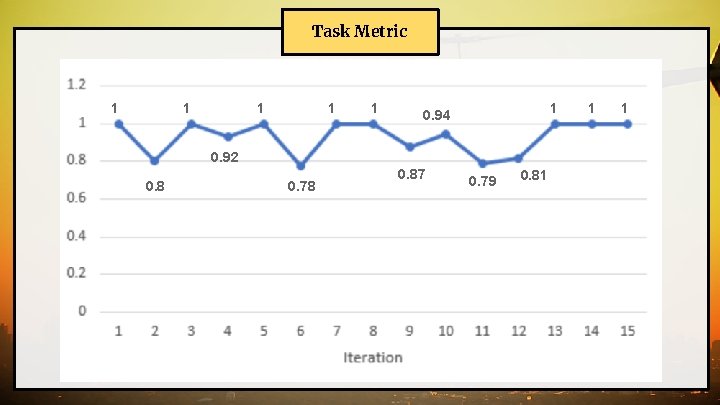

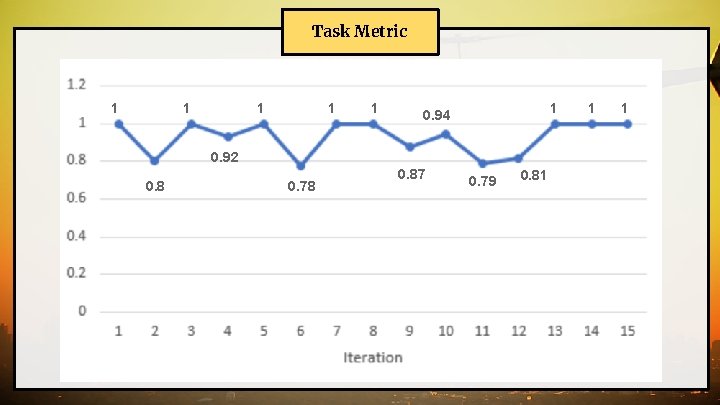

Task Metric (chart values): 1, 1, 1, 0.94, 0.92, 0.8, 0.78, 0.87, 0.79, 0.81, 1, 1

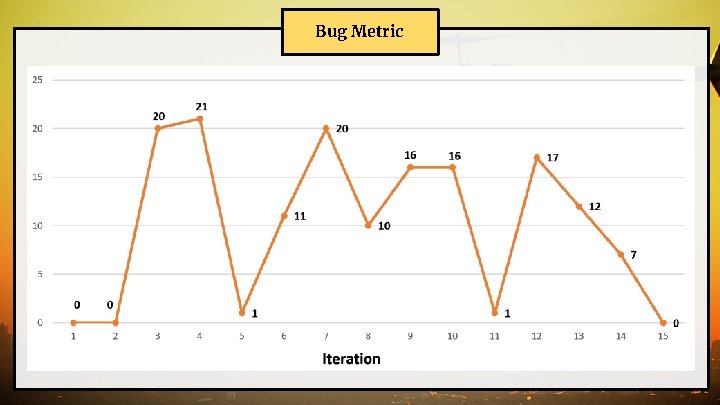

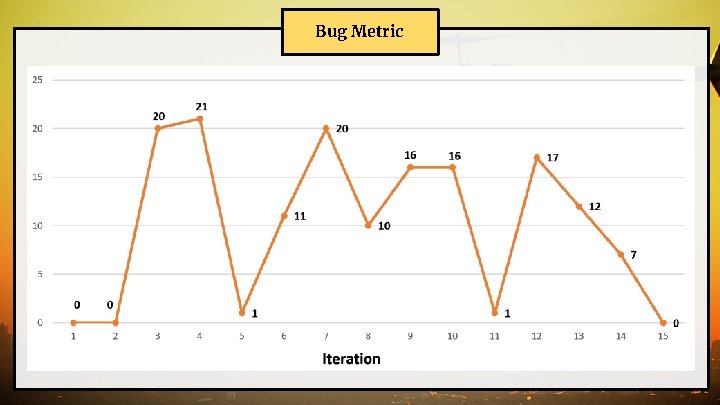

Bug Metric

X-Factor

Mid-Term X Factors:
- Aim to have 1 real user in DHL that uses actual data in our system

Final X Factors:
- Have over 90% satisfaction rate by clients
- Received recognition from Vice-President, Head of Innovation, Asia Pacific, Customer Solutions & Innovation, DHL
- Created an analytics platform for DHL to build on

Future Plans/Handover

- Completed our handover on 9 April 2019
  - Source code
  - User Manual
  - Documentation
  - Test Results
- DHL will be maintaining and building on to our application

Team Reflection

End Thank You