Data Virtualization SQL Server 2019 Enhanced Polybase Kimberly

Data Virtualization SQL Server 2019 Enhanced Polybase Kimberly St. Jacques Michael Grayson

Agenda About us Enhanced Poly. Base overview Enhanced Poly. Base Installation Connecting to Data Sources Querying Troubleshooting Questions?

About Paychex is a leading provider of integrated human capital management solutions for payroll, HR, retirement, and insurance services. Backed by 47 years of industry expertise, Paychex serves approximately 605, 000 payroll client as of May 31, 2017, across more than 100 locations and pays one out of every 12 American private sector employees.

About the Speakers: Kim ● ● ● DBA since 2009 Currently working at Paychex Have worked at Xerox, Fuji. Film, and Wegmans Have administered SQL Server, Oracle, Mongo. DB, and Netezza Have also touched Informix, Maria. DB, and Postgres Graduated from RIT Married, 2 kids (both in college) Member of PASS Summit Program Committee - Served 4 Years Twitter: @kimstjacques Linked. In: www. linkedin. com/in/kimberly-stjacques Blog: http: //www. DBObserver. com

About the Speakers: Mike ● ● ● ● ● DBA since 2010 Currently working at Paychex Have worked at Paetec, Windstream, Thomson Reuters previously Have administered Mongo. DB, Oracle, My. SQL, Maria. DB, Cassandra, DB 2, Kafka Mongo. DB Master 2016 -present Graduated from Drexel University in Philadelphia Married, 4 kids Twitter: @mikegray 831 Linked. In: https: //www. linkedin. com/in/mikegrayson/ Blog: https: //mongomike. wordpress. com/blog

Data Virtualization

Data Virtualization Defined “Data virtualization offers techniques to abstract the way we handle and access data. It allows you to manage and work with data across heterogenous streams and systems, regardless of their physical location or format. Data virtualization can be defined as a set of tools, techniques and methods that let you access and interact with data without worrying about its physical location and what compute is done on it. For instance, say you have tons of data spread across disparate systems and want to query it all in a unified manner, but without moving the data around. That’s when you would want to leverage data virtualization techniques. ” - Tech. Net

For instance, say you have tons of data spread across disparate systems and want to query it all in a unified manner, but without moving the data around. That’s when you would want to leverage data virtualization techniques. ” - Tech. Net

Poly. Base

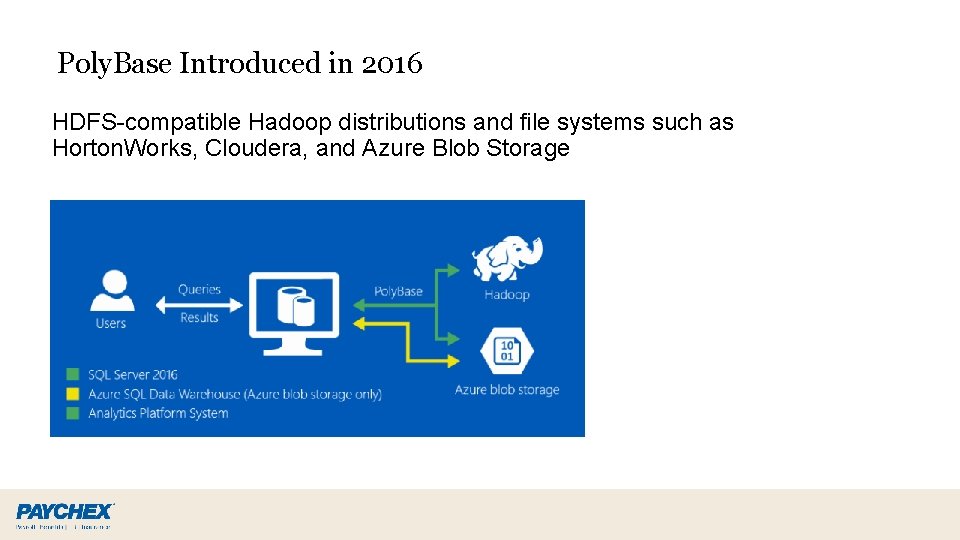

Poly. Base Introduced in 2016 HDFS-compatible Hadoop distributions and file systems such as Horton. Works, Cloudera, and Azure Blob Storage

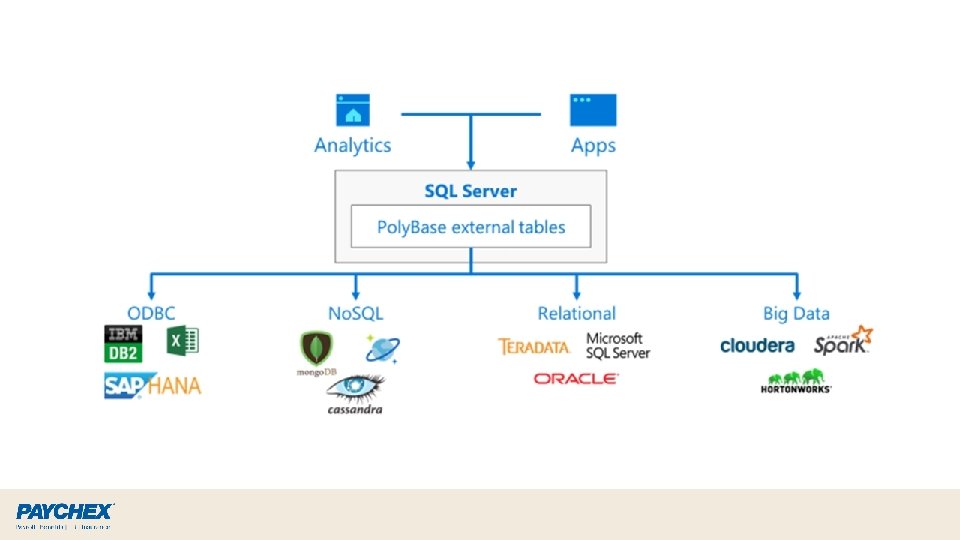

What is Enhanced Poly. Base? ● Combines many disparate data sources for reporting and analysis inside SQL Server, without the need to develop and run ETL processes. ● Use T-SQL to query data located on a wide array of data sources. ● HDFS-compatible Hadoop distributions and file systems such as Horton. Works, Cloudera, and Azure Blob Storage, SQL Server, Oracle, Teradata, Mongo. DB, or any data source with an ODBC driver ● Scalable with compute instances ● Secured with Active Directory Authentication ● Microsoft SQL Server 2019 (CTP 2. 2) - 15. 0. 1200. 24

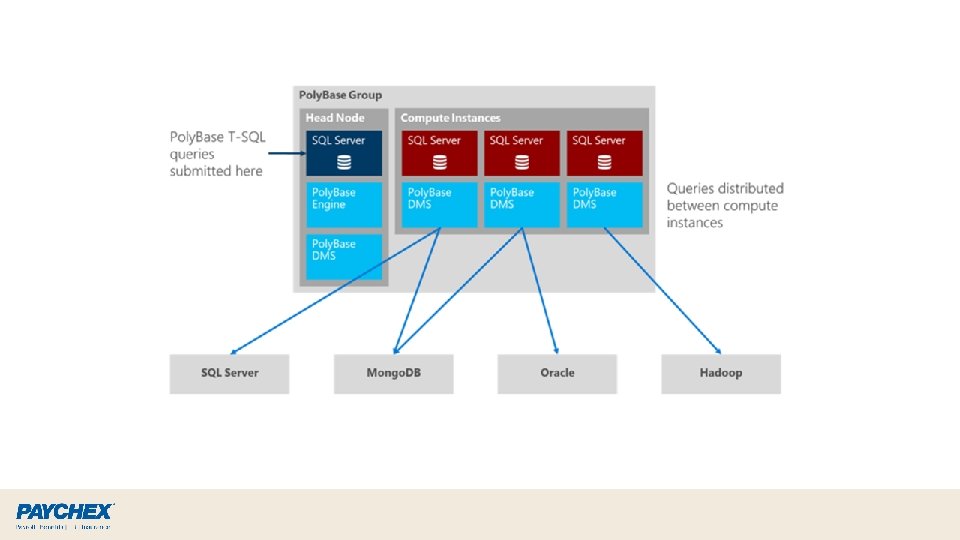

Head Node

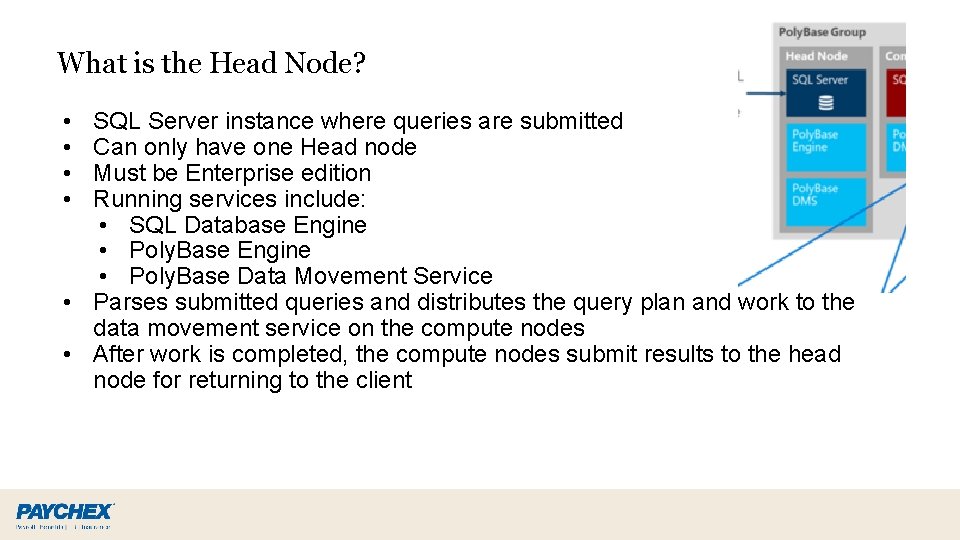

What is the Head Node? • • SQL Server instance where queries are submitted Can only have one Head node Must be Enterprise edition Running services include: • SQL Database Engine • Poly. Base Data Movement Service • Parses submitted queries and distributes the query plan and work to the data movement service on the compute nodes • After work is completed, the compute nodes submit results to the head node for returning to the client

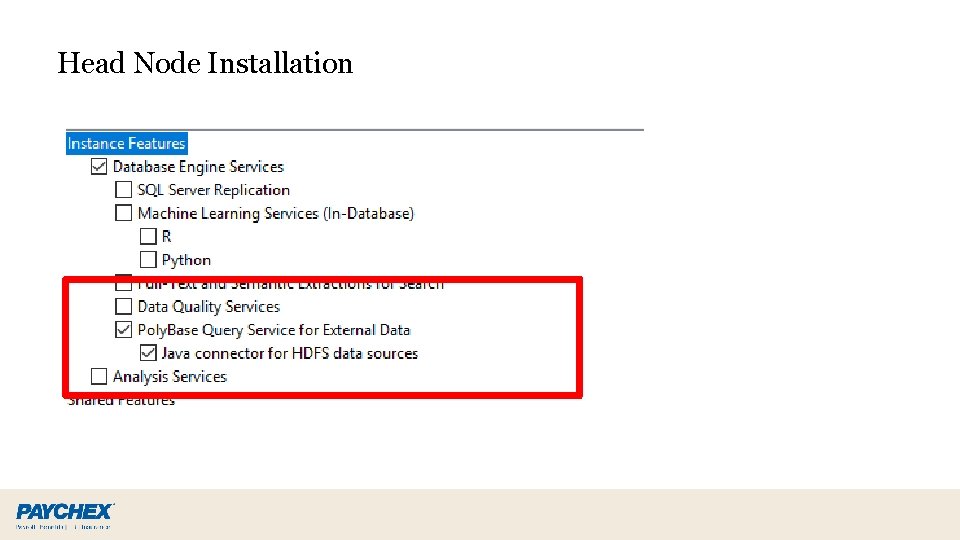

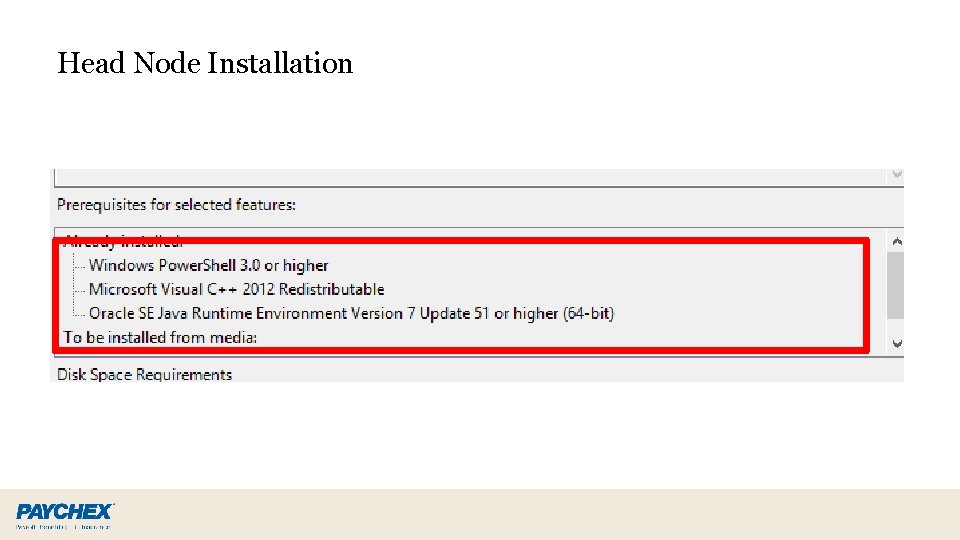

Head Node Installation

Head Node Installation

Head Node Installation

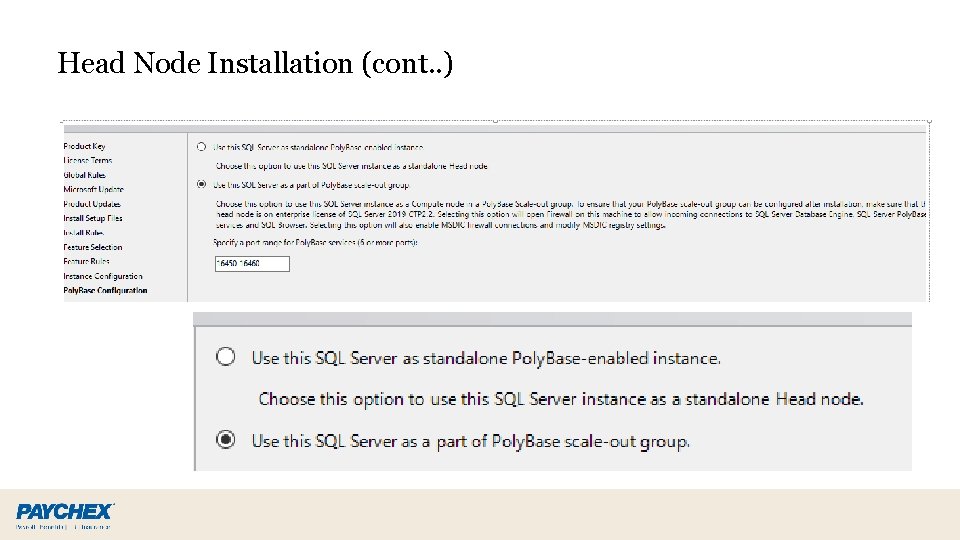

Head Node Installation (cont. . )

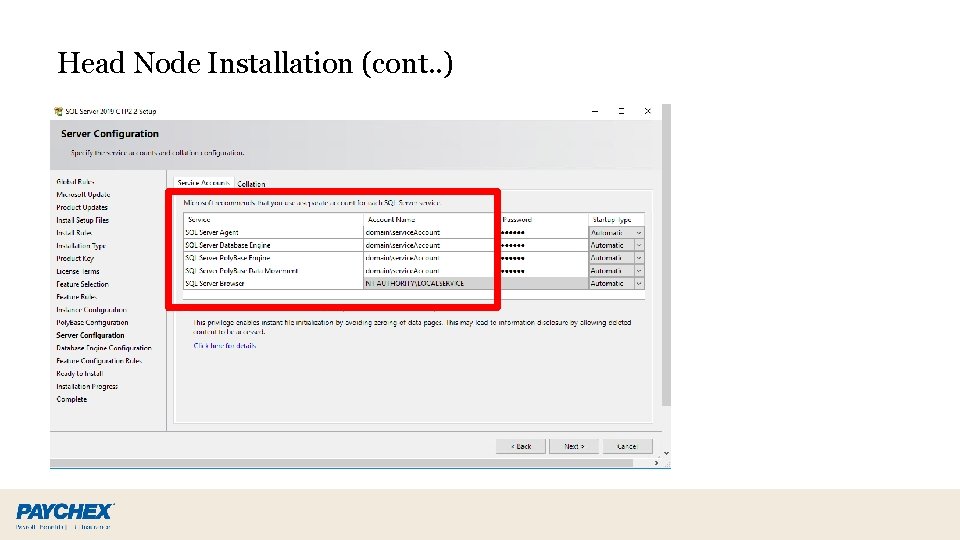

Head Node Installation (cont. . )

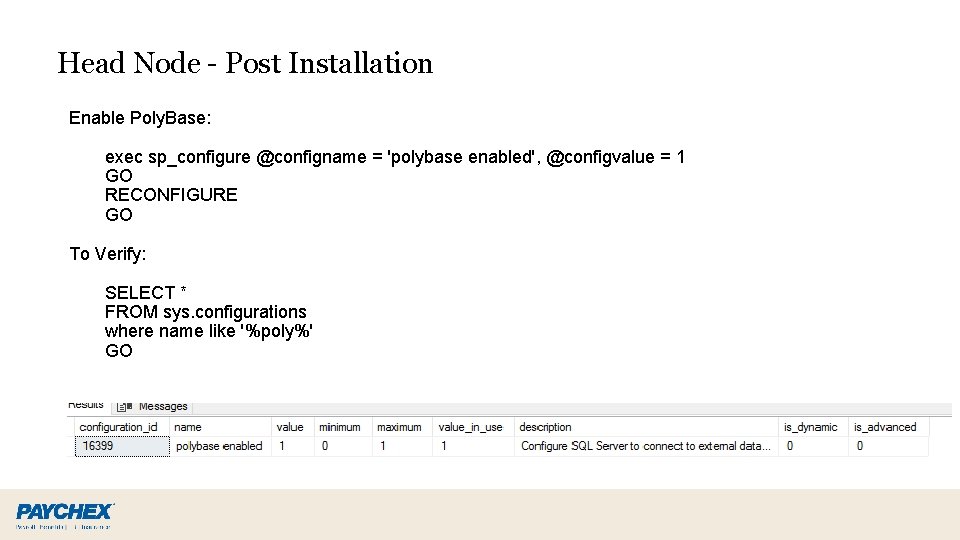

Head Node - Post Installation Enable Poly. Base: exec sp_configure @configname = 'polybase enabled', @configvalue = 1 GO RECONFIGURE GO To Verify: SELECT * FROM sys. configurations where name like '%poly%' GO

Compute Nodes

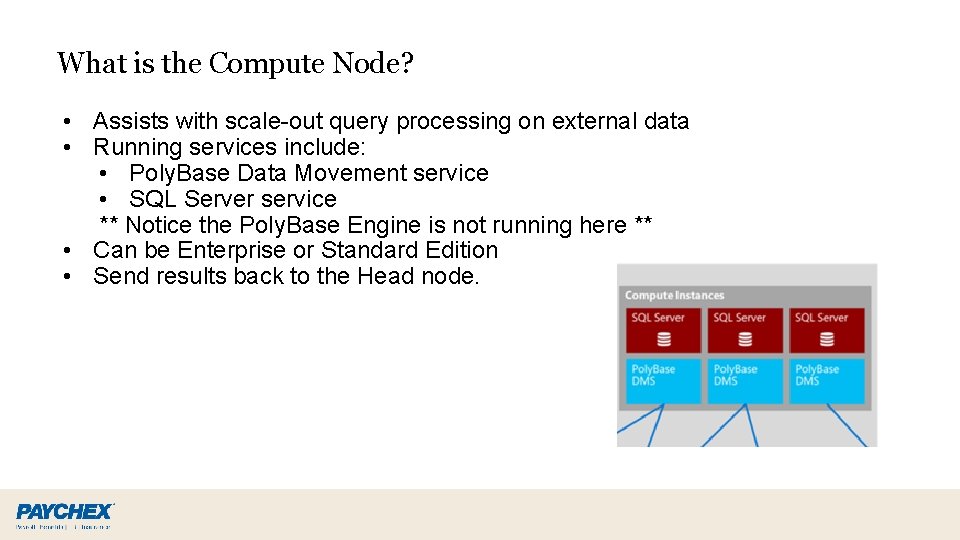

What is the Compute Node? • Assists with scale-out query processing on external data • Running services include: • Poly. Base Data Movement service • SQL Server service ** Notice the Poly. Base Engine is not running here ** • Can be Enterprise or Standard Edition • Send results back to the Head node.

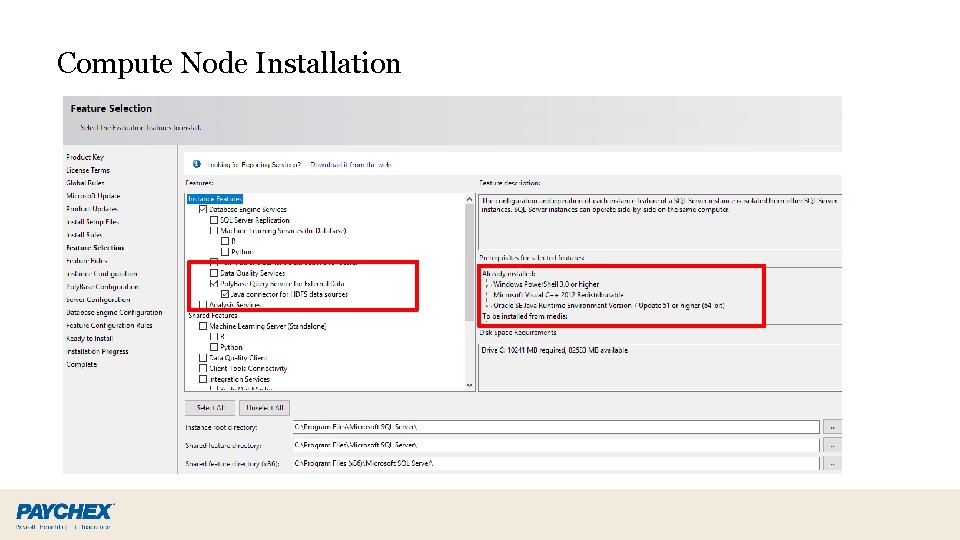

Compute Node Installation

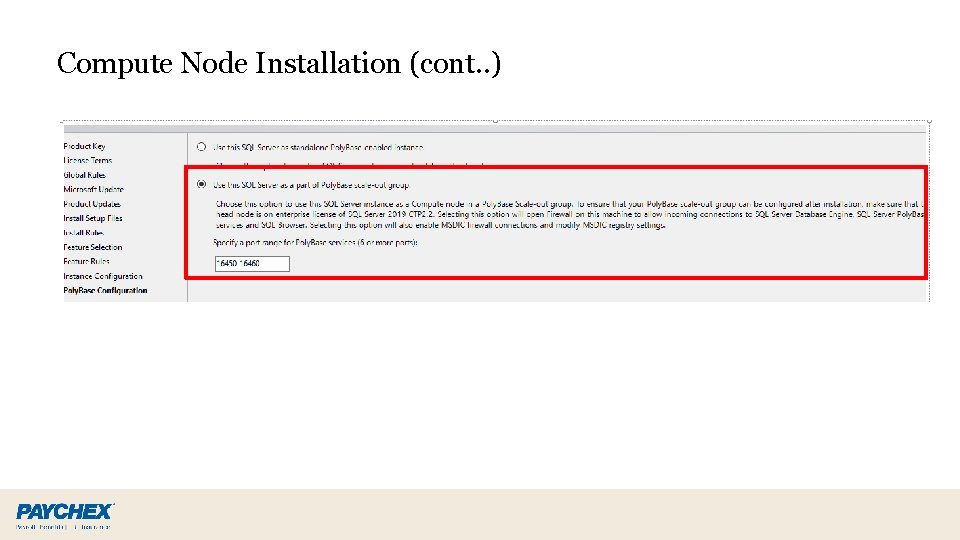

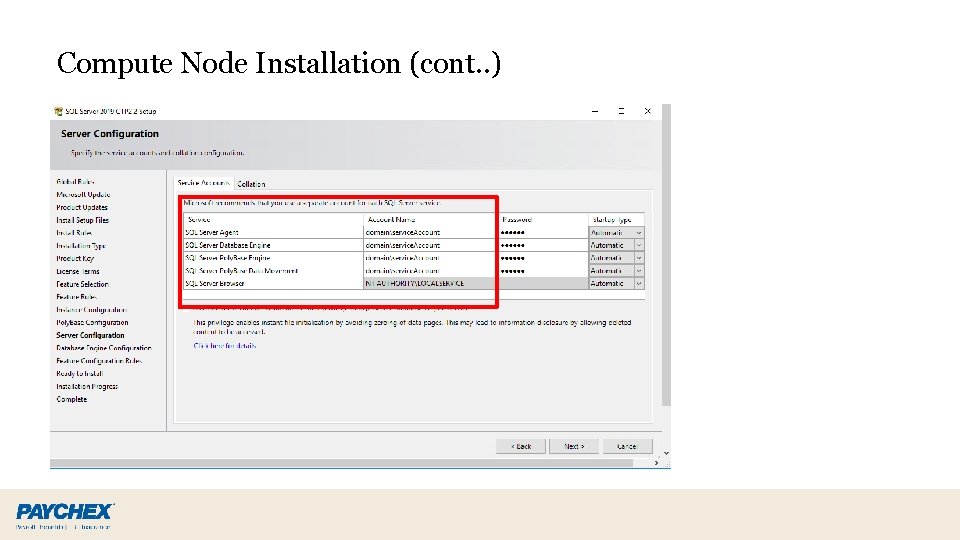

Compute Node Installation (cont. . )

Compute Node Installation (cont. . )

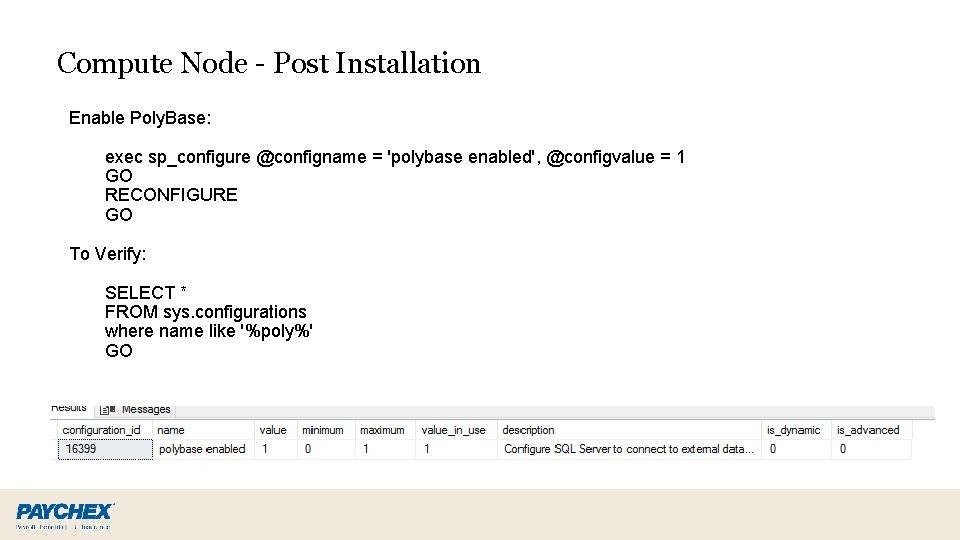

Compute Node - Post Installation Enable Poly. Base: exec sp_configure @configname = 'polybase enabled', @configvalue = 1 GO RECONFIGURE GO To Verify: SELECT * FROM sys. configurations where name like '%poly%' GO

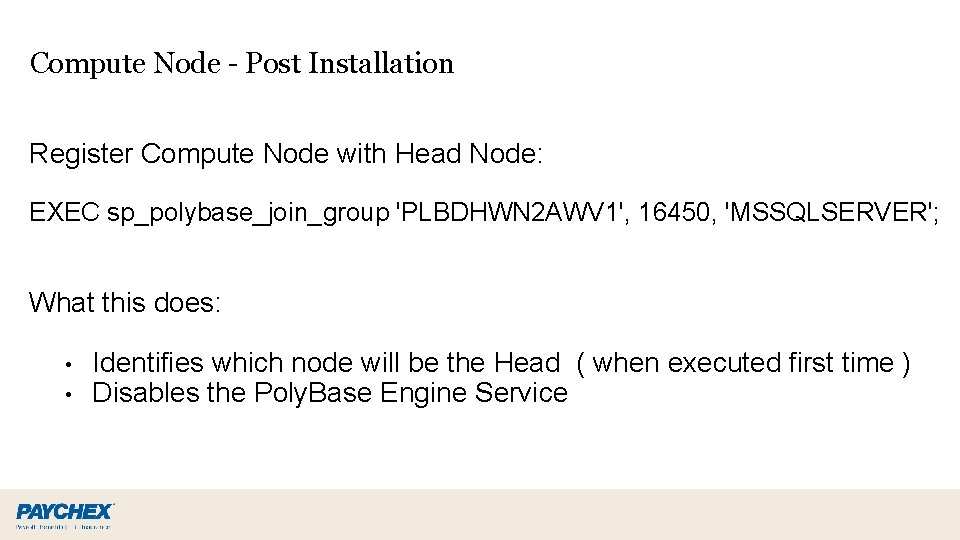

Compute Node - Post Installation Register Compute Node with Head Node: EXEC sp_polybase_join_group 'PLBDHWN 2 AWV 1', 16450, 'MSSQLSERVER'; What this does: • • Identifies which node will be the Head ( when executed first time ) Disables the Poly. Base Engine Service

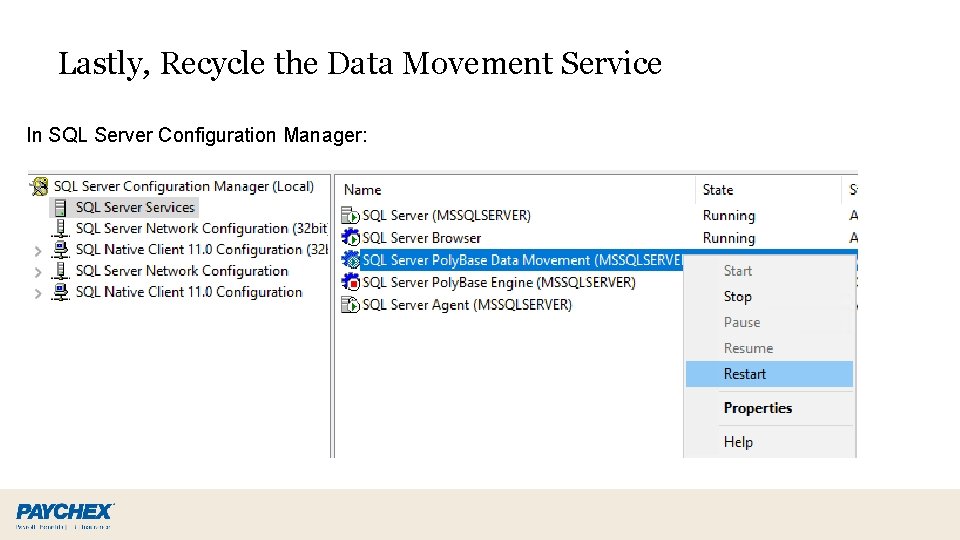

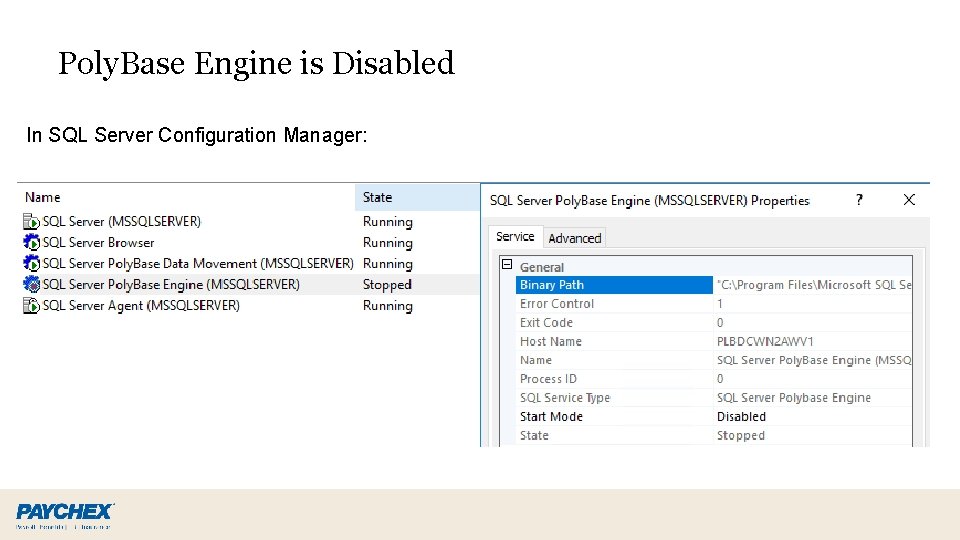

Lastly, Recycle the Data Movement Service In SQL Server Configuration Manager:

Poly. Base Engine is Disabled In SQL Server Configuration Manager:

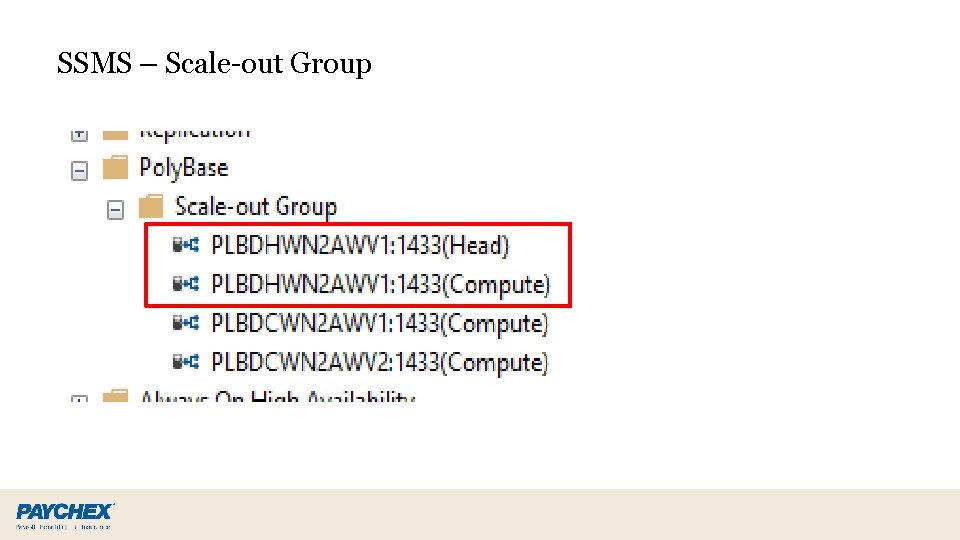

SSMS – Scale-out Group

Create Database

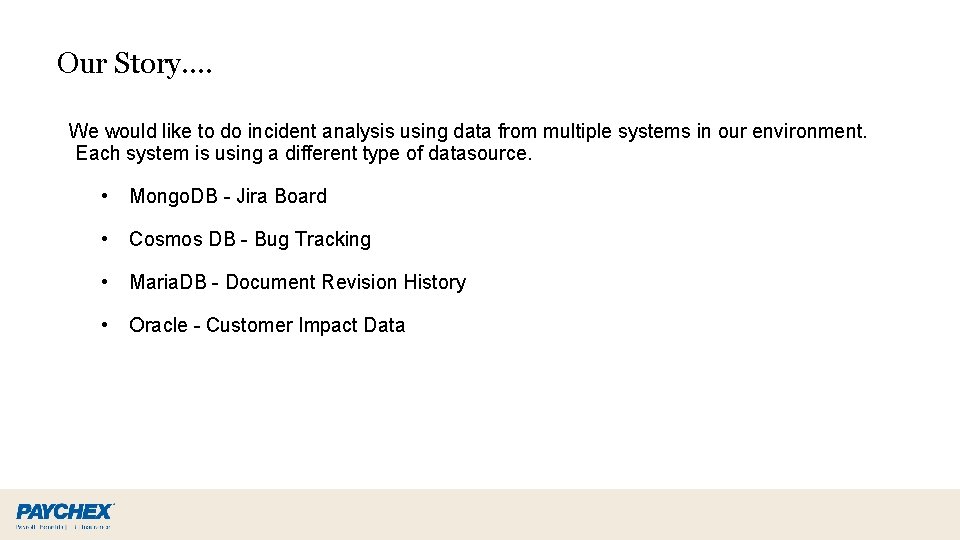

Our Story…. We would like to do incident analysis using data from multiple systems in our environment. Each system is using a different type of datasource. • Mongo. DB - Jira Board • Cosmos DB - Bug Tracking • Maria. DB - Document Revision History • Oracle - Customer Impact Data

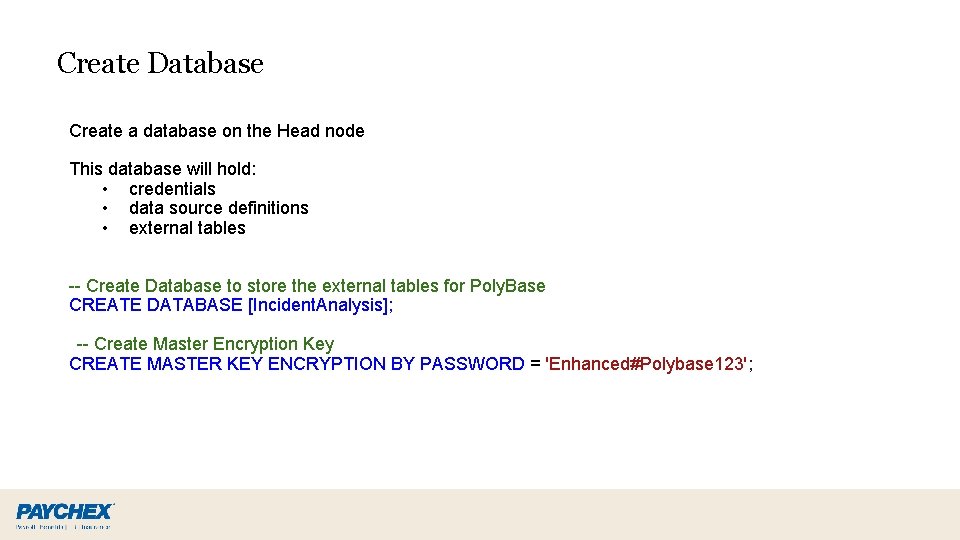

Create Database Create a database on the Head node This database will hold: • credentials • data source definitions • external tables -- Create Database to store the external tables for Poly. Base CREATE DATABASE [Incident. Analysis]; -- Create Master Encryption Key CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'Enhanced#Polybase 123';

Connecting to Oracle

Configuring Oracle for Polybase On the Oracle source: • Create Oracle user for Poly. Base to authenticate with. • Grant the account access to the tables Poly. Base will need to extract data from. On the Poly. Base Head node: • Install the appropriate Oracle client and ODBC drivers.

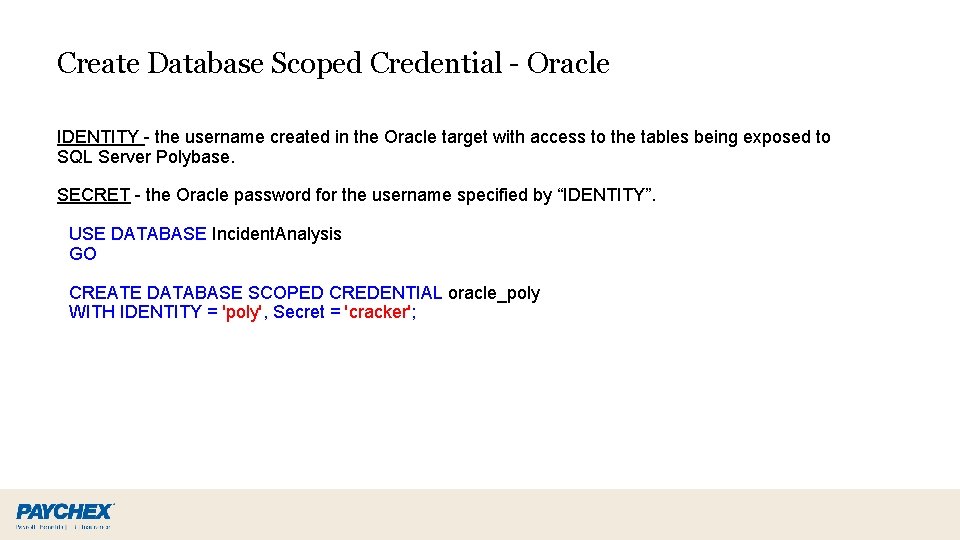

Create Database Scoped Credential - Oracle IDENTITY - the username created in the Oracle target with access to the tables being exposed to SQL Server Polybase. SECRET - the Oracle password for the username specified by “IDENTITY”. USE DATABASE Incident. Analysis GO CREATE DATABASE SCOPED CREDENTIAL oracle_poly WITH IDENTITY = 'poly', Secret = 'cracker';

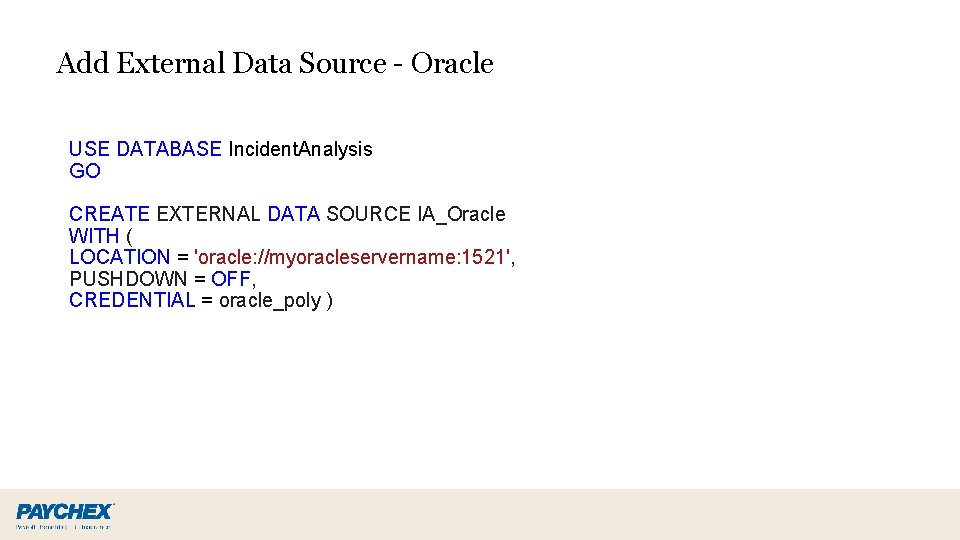

Add External Data Source - Oracle USE DATABASE Incident. Analysis GO CREATE EXTERNAL DATA SOURCE IA_Oracle WITH ( LOCATION = 'oracle: //myoracleservername: 1521', PUSHDOWN = OFF, CREDENTIAL = oracle_poly )

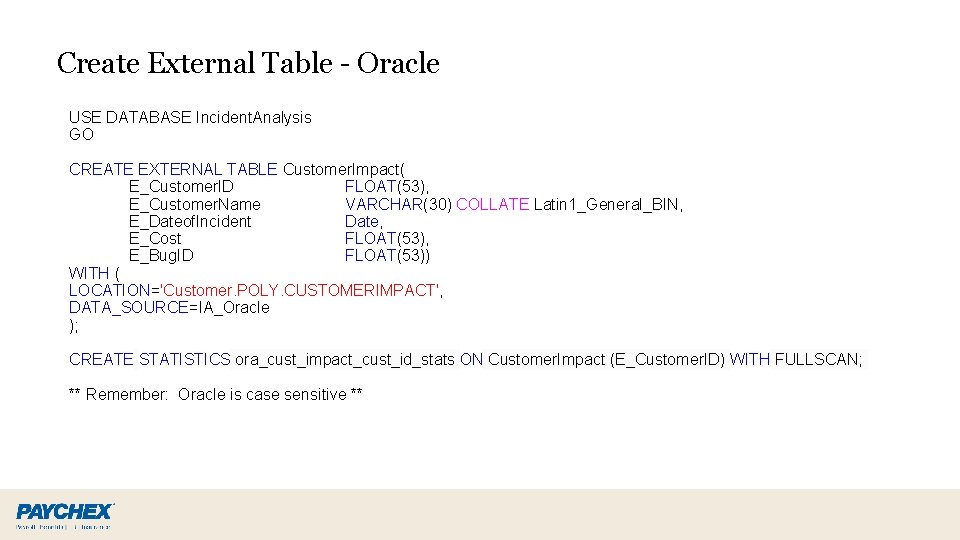

Create External Table - Oracle USE DATABASE Incident. Analysis GO CREATE EXTERNAL TABLE Customer. Impact( E_Customer. ID FLOAT(53), E_Customer. Name VARCHAR(30) COLLATE Latin 1_General_BIN, E_Dateof. Incident Date, E_Cost FLOAT(53), E_Bug. ID FLOAT(53)) WITH ( LOCATION='Customer. POLY. CUSTOMERIMPACT', DATA_SOURCE=IA_Oracle ); CREATE STATISTICS ora_cust_impact_cust_id_stats ON Customer. Impact (E_Customer. ID) WITH FULLSCAN; ** Remember: Oracle is case sensitive **

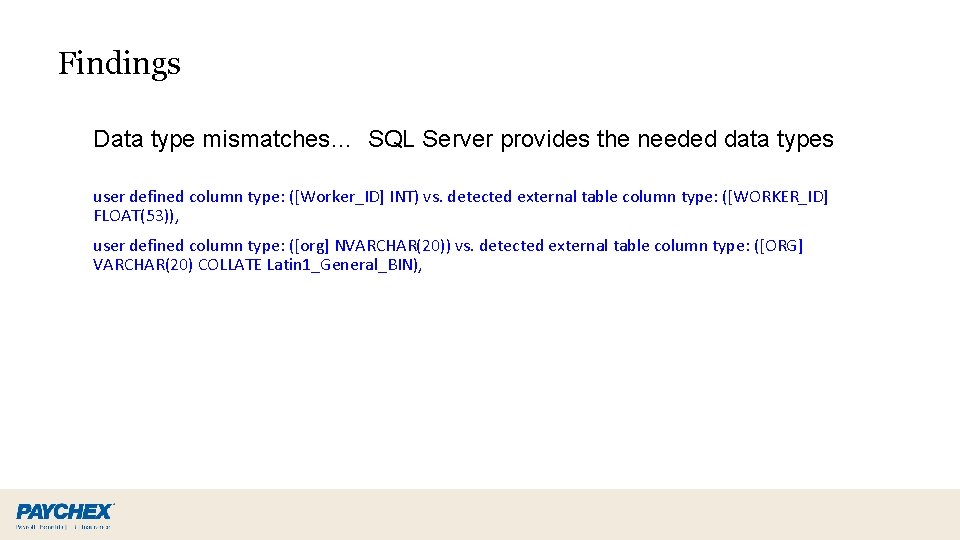

Findings Data type mismatches… SQL Server provides the needed data types user defined column type: ([Worker_ID] INT) vs. detected external table column type: ([WORKER_ID] FLOAT(53)), user defined column type: ([org] NVARCHAR(20)) vs. detected external table column type: ([ORG] VARCHAR(20) COLLATE Latin 1_General_BIN),

Connecting to Mongo. DB

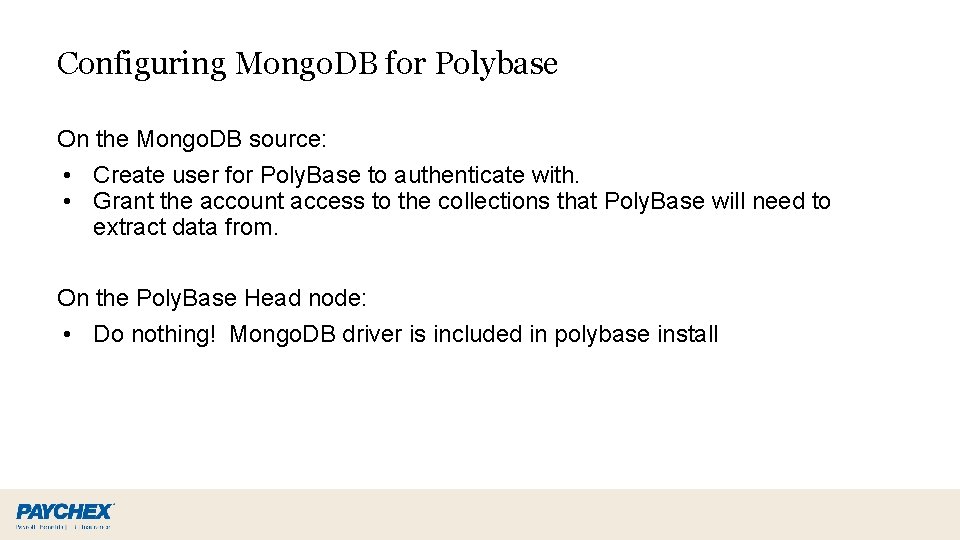

Configuring Mongo. DB for Polybase On the Mongo. DB source: • Create user for Poly. Base to authenticate with. • Grant the account access to the collections that Poly. Base will need to extract data from. On the Poly. Base Head node: • Do nothing! Mongo. DB driver is included in polybase install

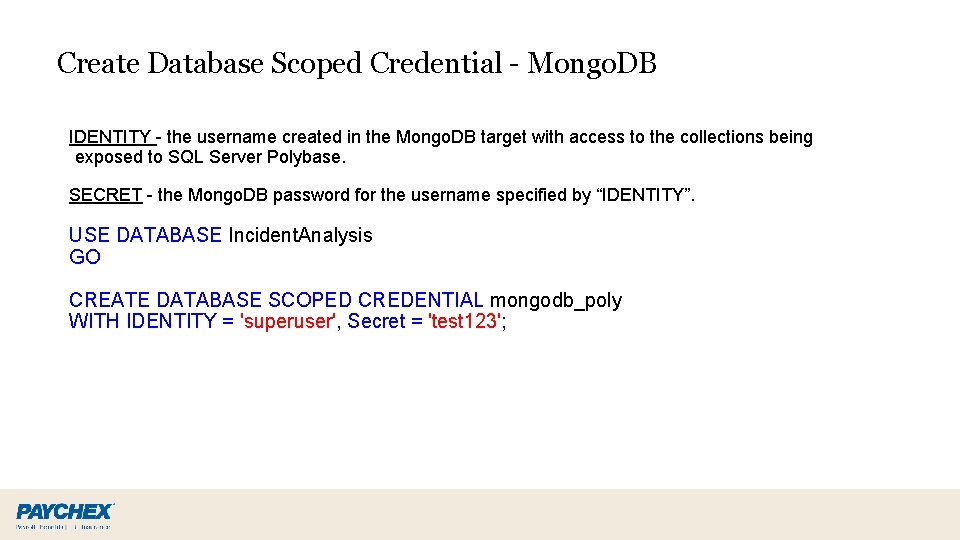

Create Database Scoped Credential - Mongo. DB IDENTITY - the username created in the Mongo. DB target with access to the collections being exposed to SQL Server Polybase. SECRET - the Mongo. DB password for the username specified by “IDENTITY”. USE DATABASE Incident. Analysis GO CREATE DATABASE SCOPED CREDENTIAL mongodb_poly WITH IDENTITY = 'superuser', Secret = 'test 123';

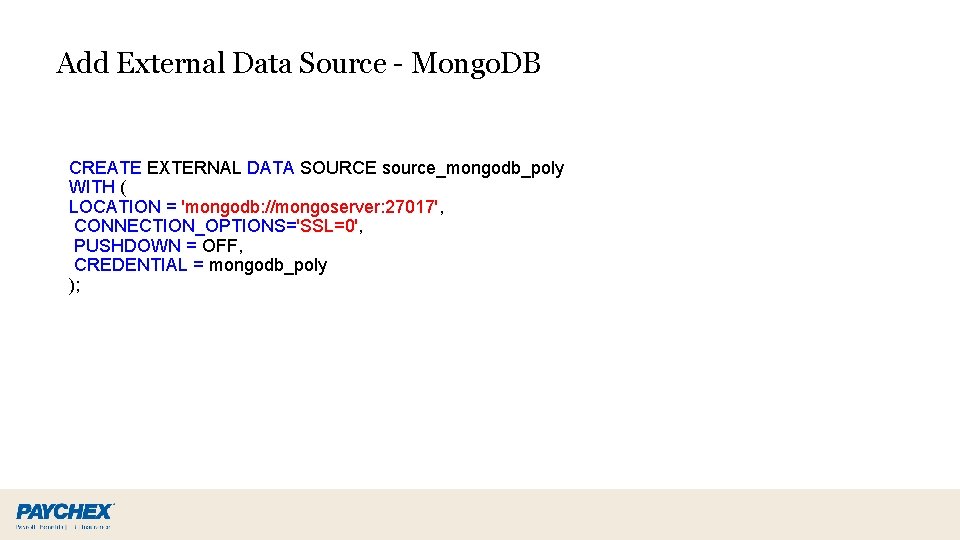

Add External Data Source - Mongo. DB CREATE EXTERNAL DATA SOURCE source_mongodb_poly WITH ( LOCATION = 'mongodb: //mongoserver: 27017', CONNECTION_OPTIONS='SSL=0', PUSHDOWN = OFF, CREDENTIAL = mongodb_poly );

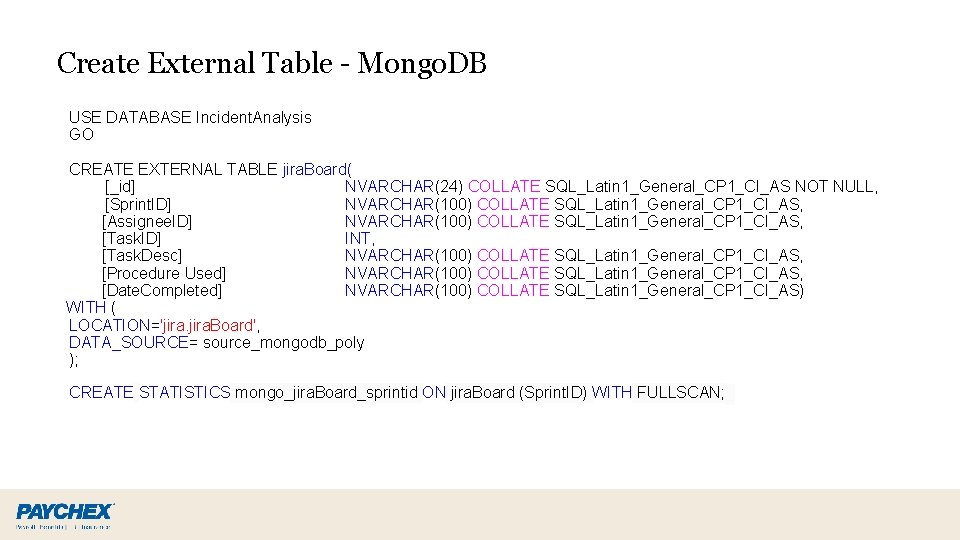

Create External Table - Mongo. DB USE DATABASE Incident. Analysis GO CREATE EXTERNAL TABLE jira. Board( [_id] NVARCHAR(24) COLLATE SQL_Latin 1_General_CP 1_CI_AS NOT NULL, [Sprint. ID] NVARCHAR(100) COLLATE SQL_Latin 1_General_CP 1_CI_AS, [Assignee. ID] NVARCHAR(100) COLLATE SQL_Latin 1_General_CP 1_CI_AS, [Task. ID] INT, [Task. Desc] NVARCHAR(100) COLLATE SQL_Latin 1_General_CP 1_CI_AS, [Procedure Used] NVARCHAR(100) COLLATE SQL_Latin 1_General_CP 1_CI_AS, [Date. Completed] NVARCHAR(100) COLLATE SQL_Latin 1_General_CP 1_CI_AS) WITH ( LOCATION='jira. Board', DATA_SOURCE= source_mongodb_poly ); CREATE STATISTICS mongo_jira. Board_sprintid ON jira. Board (Sprint. ID) WITH FULLSCAN;

Findings • Had to add “CONNECTION_OPTIONS='SSL=0', ” to turn off SSL (turned on by default)

Connecting to Maria. DB

Configuring Maria. DB for Polybase On the Maria. DB source: • Create Maria. DB user for Poly. Base to authenticate with. • Grant the account access to the tables Poly. Base will need to extract data from. On the Poly. Base Head node: • Install the appropriate Maria. DB ODBC drivers.

Create Database Scoped Credential - Maria. DB IDENTITY - the username created in the Oracle target with access to the tables being exposed to SQL Server Polybase. SECRET - the Oracle password for the username specified by “IDENTITY”. USE DATABASE Incident. Analysis GO CREATE DATABASE SCOPED CREDENTIAL mariadb_poly WITH IDENTITY = 'poly', Secret = 'cracker';

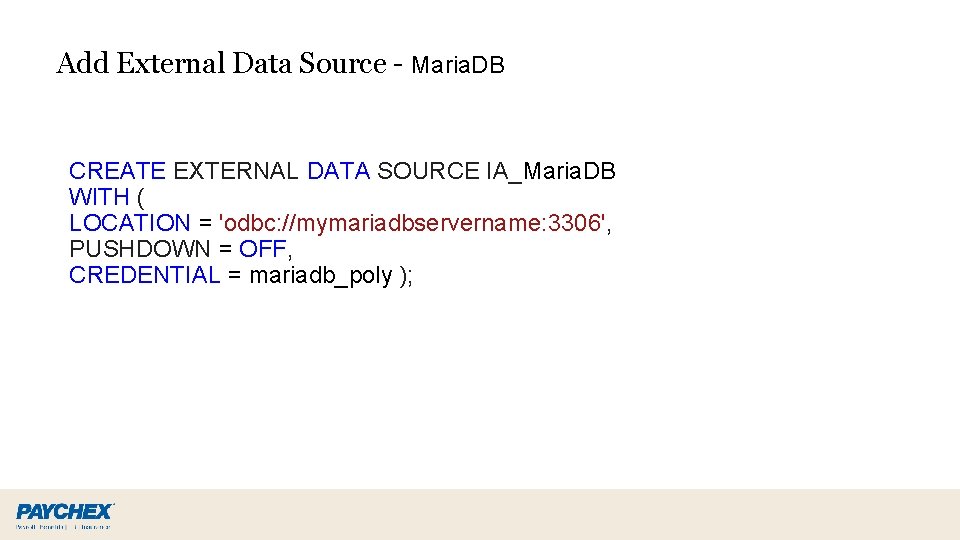

Add External Data Source - Maria. DB CREATE EXTERNAL DATA SOURCE IA_Maria. DB WITH ( LOCATION = 'odbc: //mymariadbservername: 3306', PUSHDOWN = OFF, CREDENTIAL = mariadb_poly );

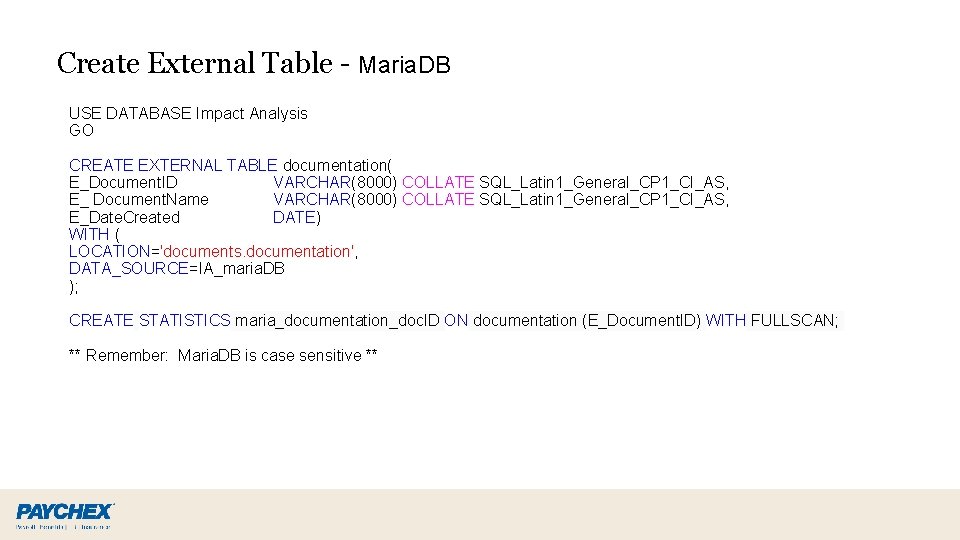

Create External Table - Maria. DB USE DATABASE Impact Analysis GO CREATE EXTERNAL TABLE documentation( E_Document. ID VARCHAR(8000) COLLATE SQL_Latin 1_General_CP 1_CI_AS, E_ Document. Name VARCHAR(8000) COLLATE SQL_Latin 1_General_CP 1_CI_AS, E_Date. Created DATE) WITH ( LOCATION='documents. documentation', DATA_SOURCE=IA_maria. DB ); CREATE STATISTICS maria_documentation_doc. ID ON documentation (E_Document. ID) WITH FULLSCAN; ** Remember: Maria. DB is case sensitive **

Connecting to Cosmos DB

Cosmos DB – Well Documented ? ?

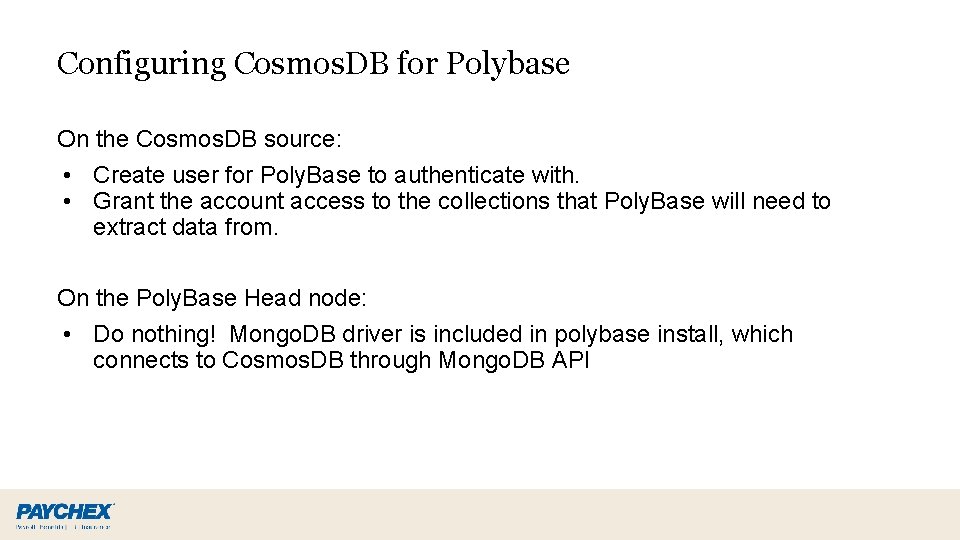

Configuring Cosmos. DB for Polybase On the Cosmos. DB source: • Create user for Poly. Base to authenticate with. • Grant the account access to the collections that Poly. Base will need to extract data from. On the Poly. Base Head node: • Do nothing! Mongo. DB driver is included in polybase install, which connects to Cosmos. DB through Mongo. DB API

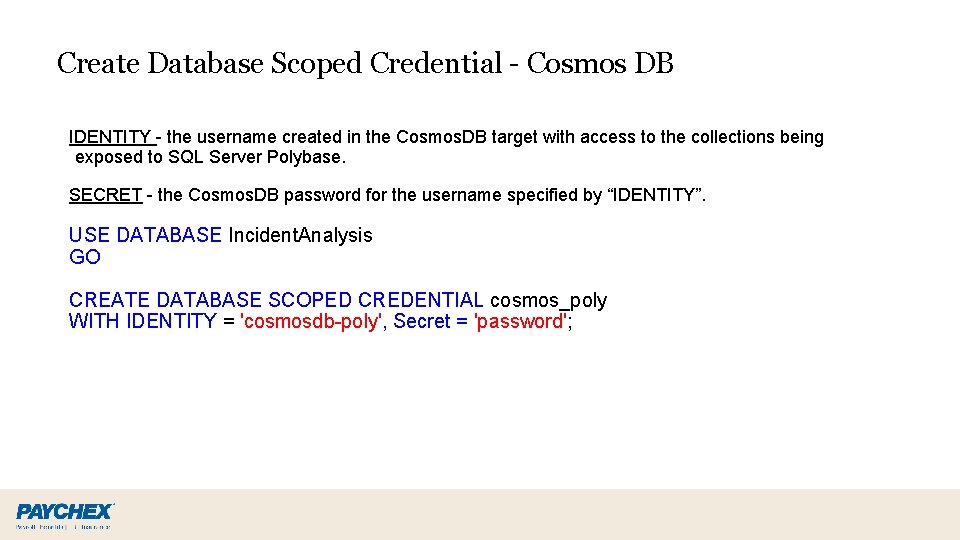

Create Database Scoped Credential - Cosmos DB IDENTITY - the username created in the Cosmos. DB target with access to the collections being exposed to SQL Server Polybase. SECRET - the Cosmos. DB password for the username specified by “IDENTITY”. USE DATABASE Incident. Analysis GO CREATE DATABASE SCOPED CREDENTIAL cosmos_poly WITH IDENTITY = 'cosmosdb-poly', Secret = 'password';

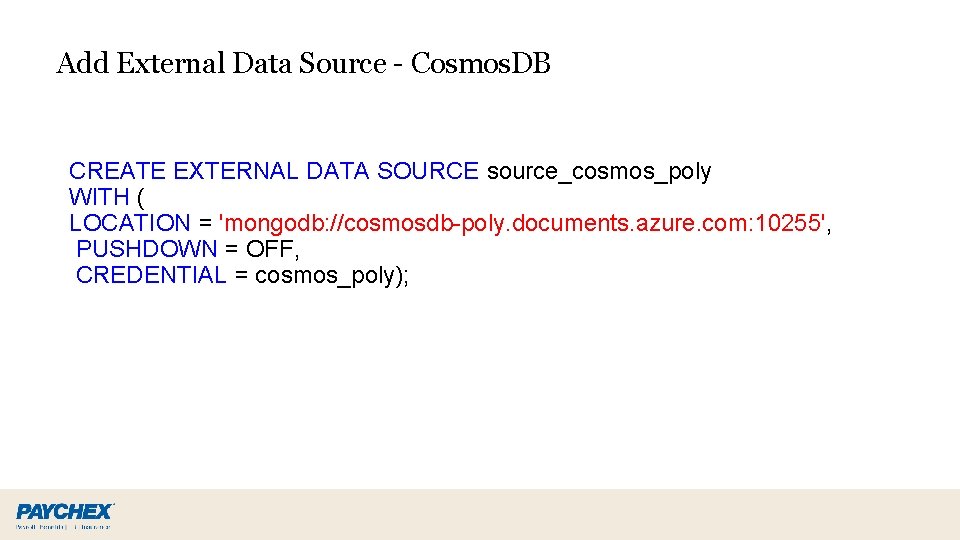

Add External Data Source - Cosmos. DB CREATE EXTERNAL DATA SOURCE source_cosmos_poly WITH ( LOCATION = 'mongodb: //cosmosdb-poly. documents. azure. com: 10255', PUSHDOWN = OFF, CREDENTIAL = cosmos_poly);

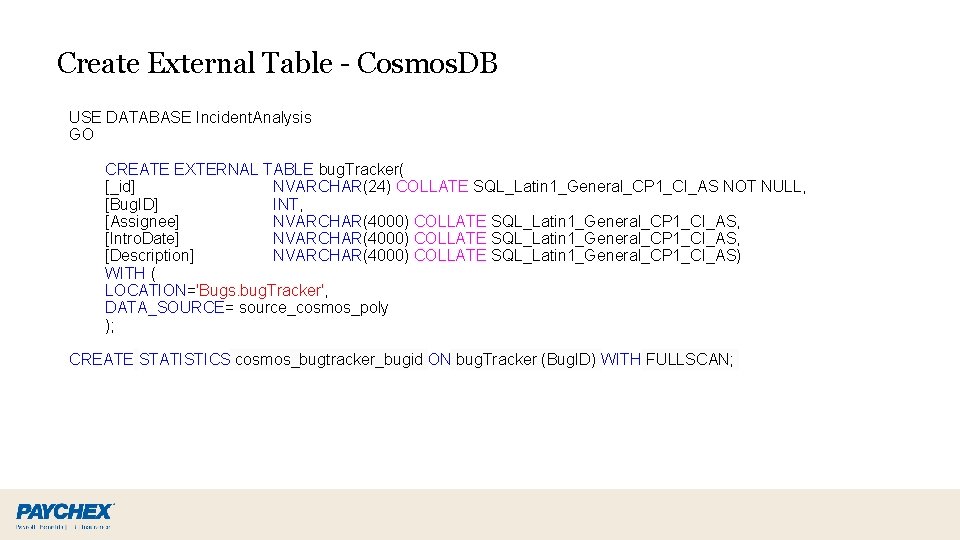

Create External Table - Cosmos. DB USE DATABASE Incident. Analysis GO CREATE EXTERNAL TABLE bug. Tracker( [_id] NVARCHAR(24) COLLATE SQL_Latin 1_General_CP 1_CI_AS NOT NULL, [Bug. ID] INT, [Assignee] NVARCHAR(4000) COLLATE SQL_Latin 1_General_CP 1_CI_AS, [Intro. Date] NVARCHAR(4000) COLLATE SQL_Latin 1_General_CP 1_CI_AS, [Description] NVARCHAR(4000) COLLATE SQL_Latin 1_General_CP 1_CI_AS) WITH ( LOCATION='Bugs. bug. Tracker', DATA_SOURCE= source_cosmos_poly ); CREATE STATISTICS cosmos_bugtracker_bugid ON bug. Tracker (Bug. ID) WITH FULLSCAN;

Findings • needed to use the Cosmos. DB emulator on-premise • needed to import documents via the Cosmos. DB SQL API

Query the Data

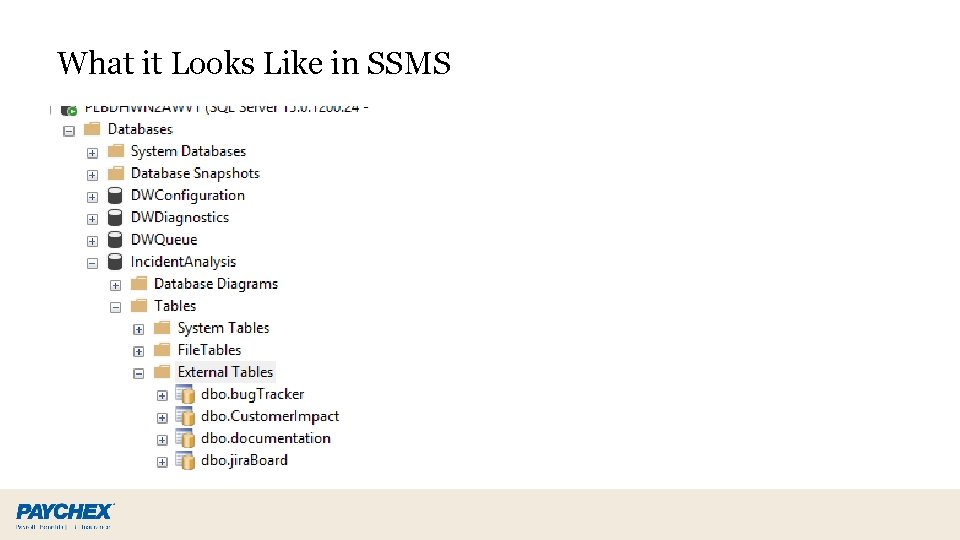

What it Looks Like in SSMS

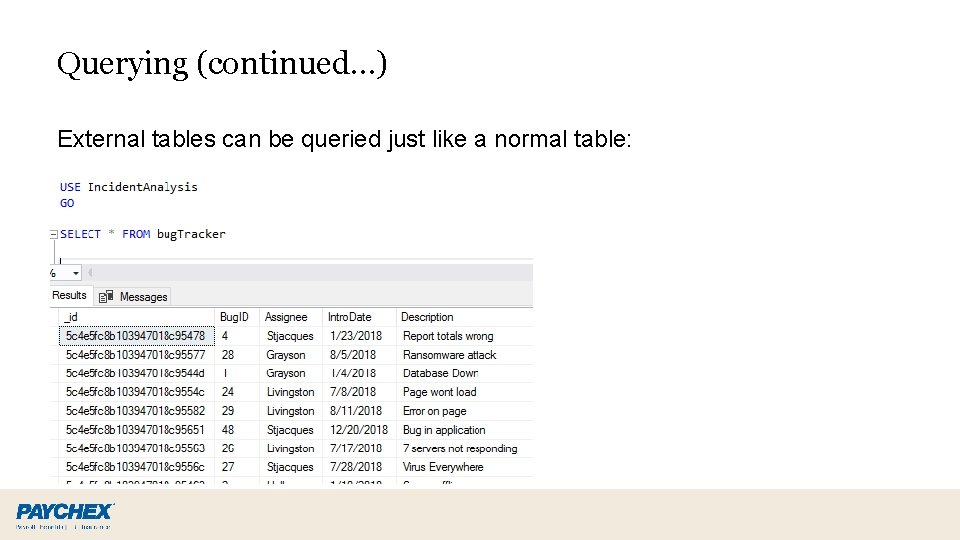

Querying (continued…) External tables can be queried just like a normal table:

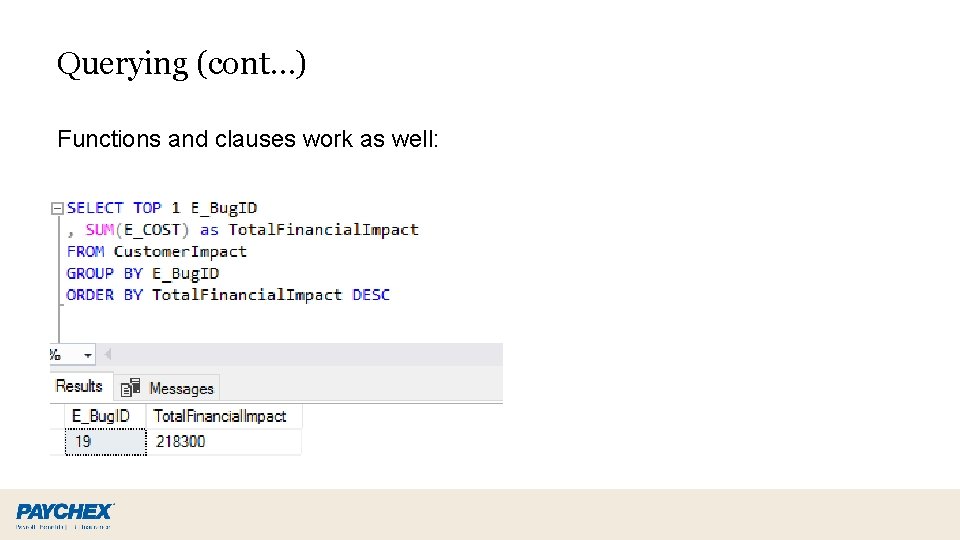

Querying (cont…) Functions and clauses work as well:

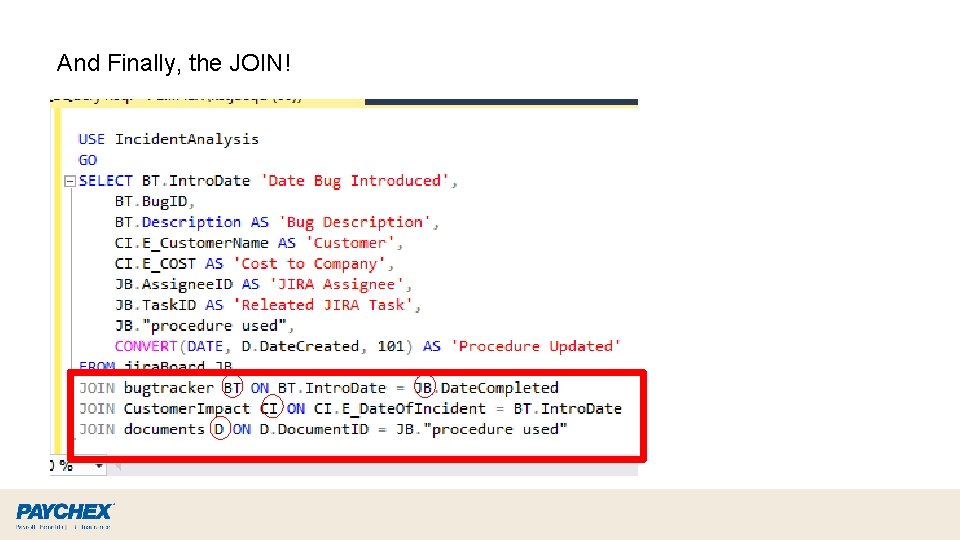

And Finally, the JOIN!

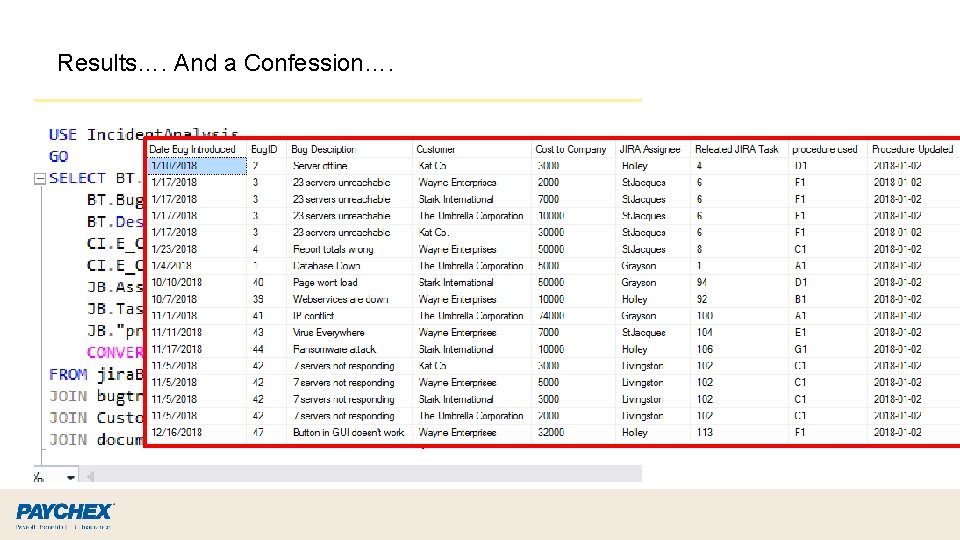

Results…. And a Confession….

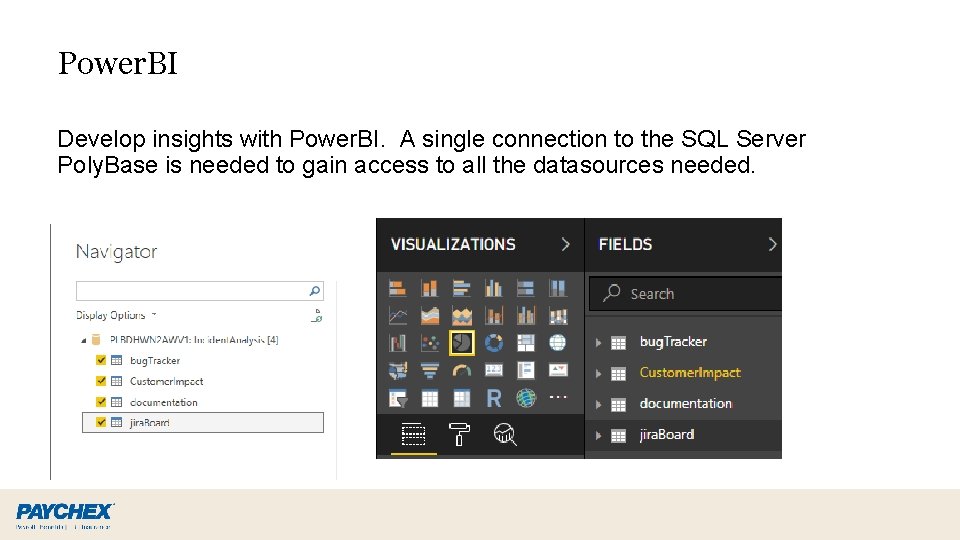

Power. BI Develop insights with Power. BI. A single connection to the SQL Server Poly. Base is needed to gain access to all the datasources needed.

Import/Export Data

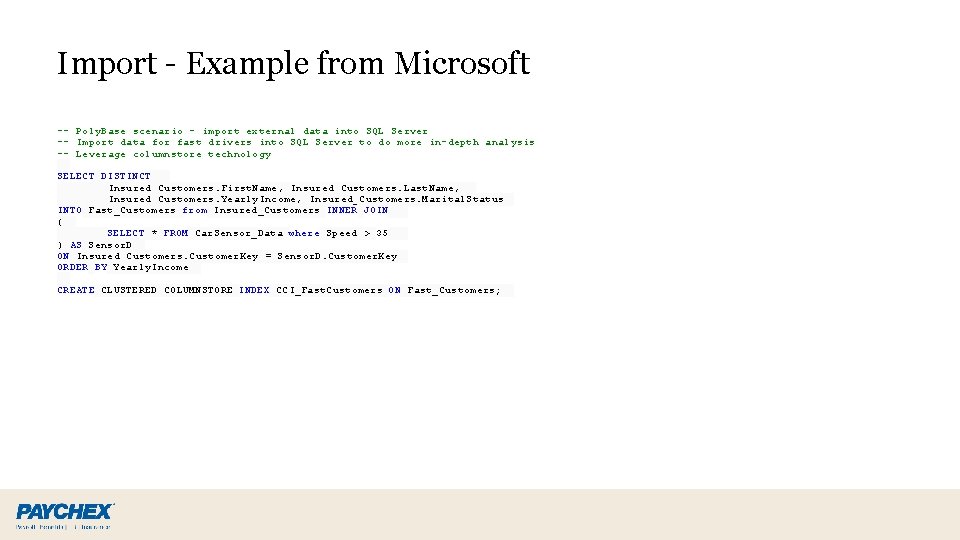

Import - Example from Microsoft -- Poly. Base scenario - import external data into SQL Server -- Import data for fast drivers into SQL Server to do more in-depth analysis -- Leverage columnstore technology SELECT DISTINCT Insured_Customers. First. Name, Insured_Customers. Last. Name, Insured_Customers. Yearly. Income, Insured_Customers. Marital. Status INTO Fast_Customers from Insured_Customers INNER JOIN ( SELECT * FROM Car. Sensor_Data where Speed > 35 ) AS Sensor. D ON Insured_Customers. Customer. Key = Sensor. D. Customer. Key ORDER BY Yearly. Income CREATE CLUSTERED COLUMNSTORE INDEX CCI_Fast. Customers ON Fast_Customers;

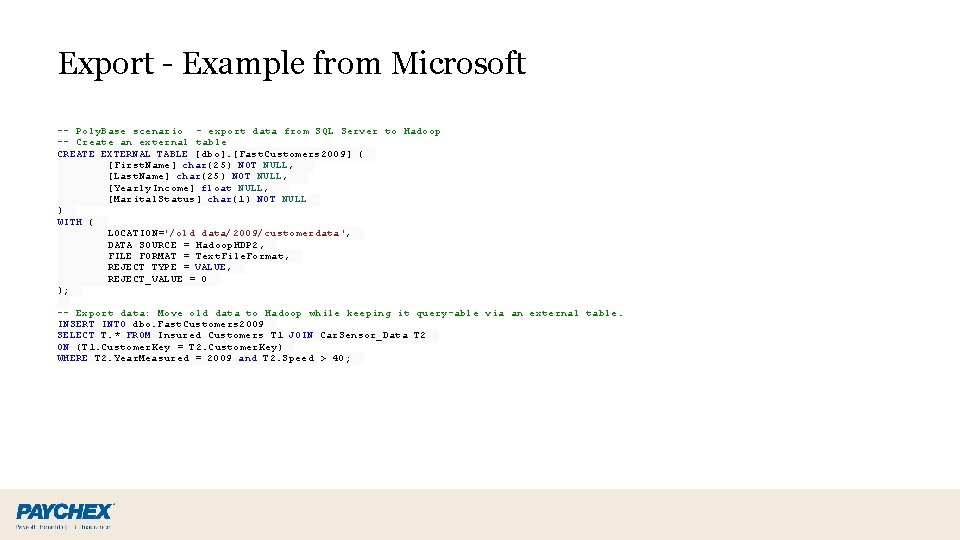

Export - Example from Microsoft -- Poly. Base scenario - export data from SQL Server to Hadoop -- Create an external table CREATE EXTERNAL TABLE [dbo]. [Fast. Customers 2009] ( [First. Name] char(25) NOT NULL, [Last. Name] char(25) NOT NULL, [Yearly. Income] float NULL, [Marital. Status] char(1) NOT NULL ) WITH ( LOCATION='/old_data/2009/customerdata', DATA_SOURCE = Hadoop. HDP 2, FILE_FORMAT = Text. File. Format, REJECT_TYPE = VALUE, REJECT_VALUE = 0 ); -- Export data: Move old data to Hadoop while keeping it query-able via an external table. INSERT INTO dbo. Fast. Customers 2009 SELECT T. * FROM Insured_Customers T 1 JOIN Car. Sensor_Data T 2 ON (T 1. Customer. Key = T 2. Customer. Key) WHERE T 2. Year. Measured = 2009 and T 2. Speed > 40;

Troubleshooting

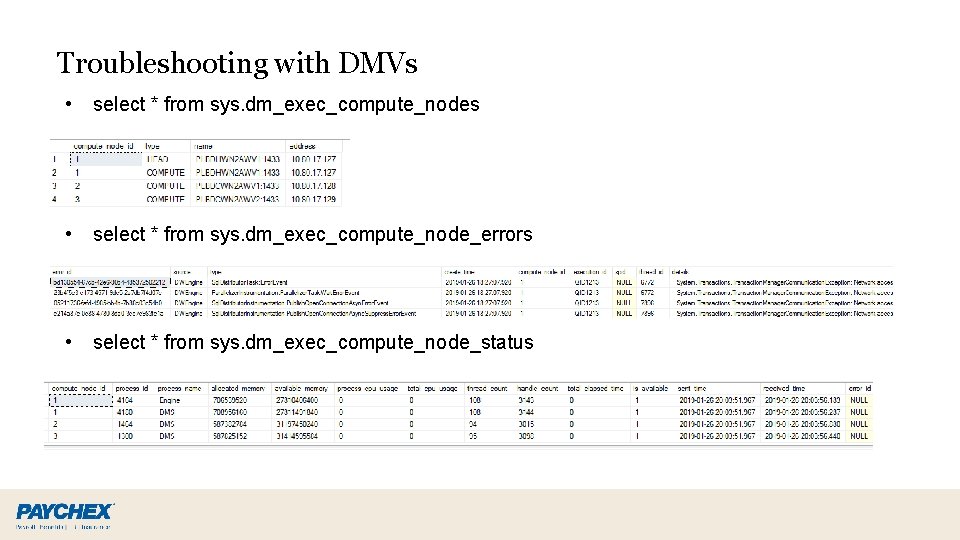

Troubleshooting with DMVs • select * from sys. dm_exec_compute_nodes • select * from sys. dm_exec_compute_node_errors • select * from sys. dm_exec_compute_node_status

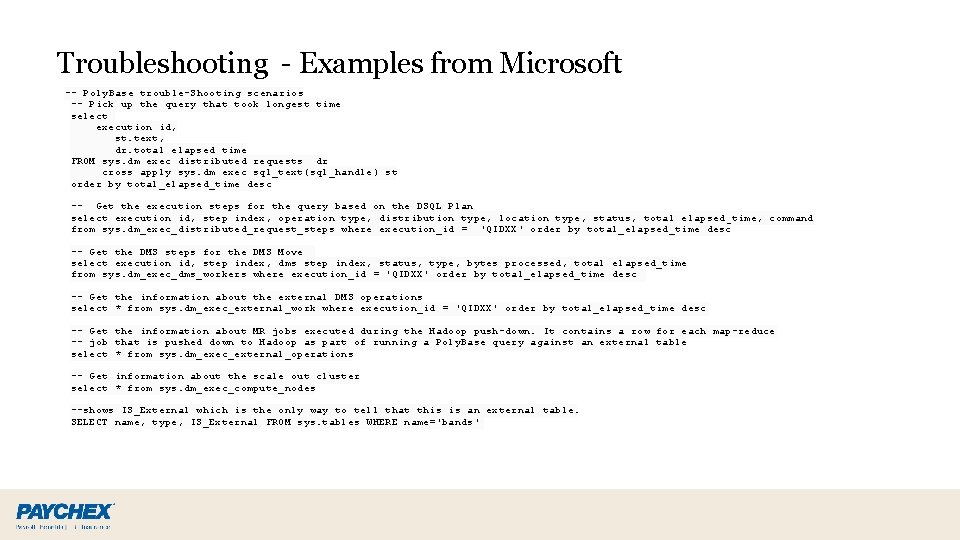

Troubleshooting - Examples from Microsoft -- Poly. Base trouble-Shooting scenarios -- Pick up the query that took longest time select execution_id, st. text, dr. total_elapsed_time FROM sys. dm_exec_distributed_requests dr cross apply sys. dm_exec_sql_text(sql_handle) st order by total_elapsed_time desc -- Get the execution steps for the query based on the DSQL Plan select execution_id, step_index, operation_type, distribution_type, location_type, status, total_elapsed_time, command from sys. dm_exec_distributed_request_steps where execution_id = 'QIDXX' order by total_elapsed_time desc -- Get the DMS steps for the DMS Move select execution_id, step_index, dms_step_index, status, type, bytes_processed, total_elapsed_time from sys. dm_exec_dms_workers where execution_id = 'QIDXX' order by total_elapsed_time desc -- Get the information about the external DMS operations select * from sys. dm_exec_external_work where execution_id = 'QIDXX' order by total_elapsed_time desc -- Get the information about MR jobs executed during the Hadoop push-down. It contains a row for each map-reduce -- job that is pushed down to Hadoop as part of running a Poly. Base query against an external table select * from sys. dm_exec_external_operations -- Get information about the scale out cluster select * from sys. dm_exec_compute_nodes --shows IS_External which is the only way to tell that this is an external table. SELECT name, type, IS_External FROM sys. tables WHERE name='bands'

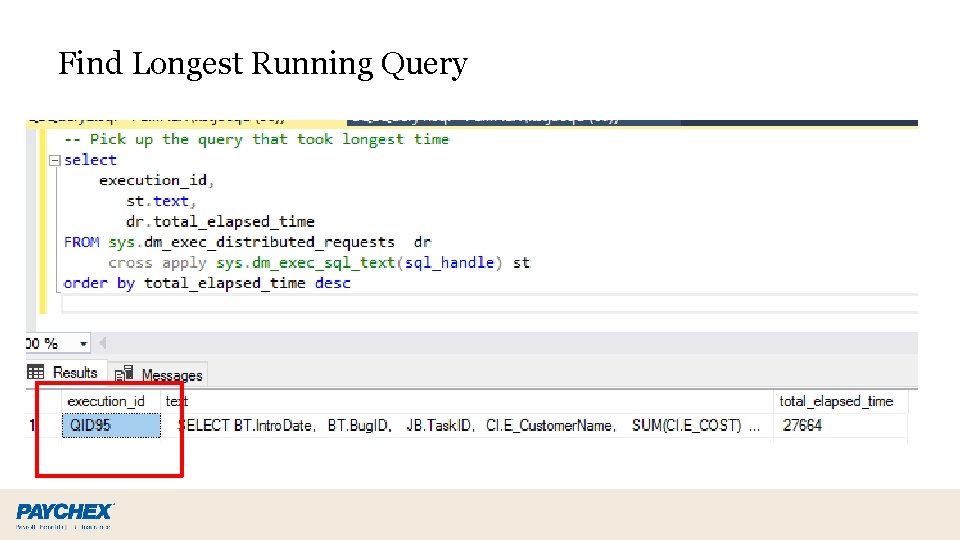

Find Longest Running Query

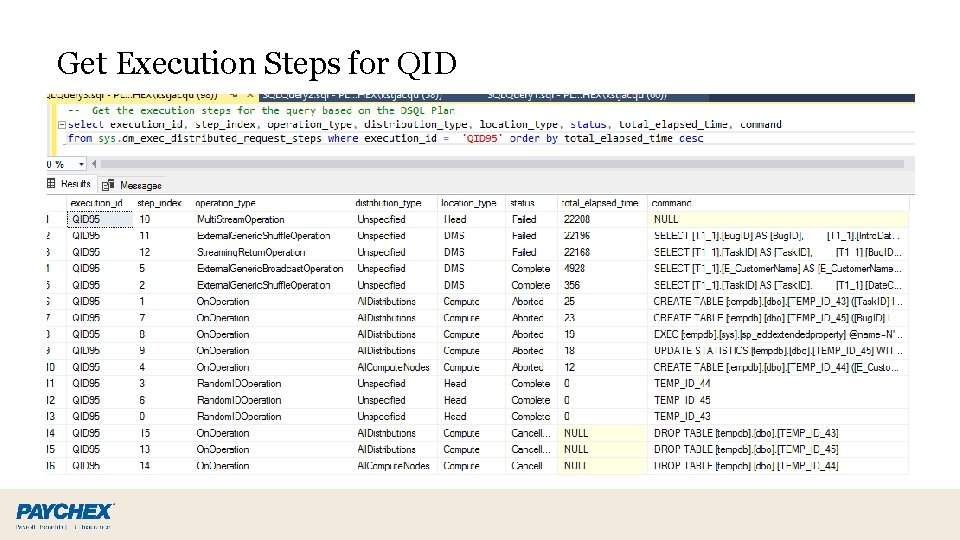

Get Execution Steps for QID

What’s Next?

What’s Next Today was a 101 level intro to Enhanced Poly. Base. In the future we hope to bring you more information on: • • • Performance / stress testing More data source examples More complex use cases Testing out new preview versions as released and eventually the GA Live Demos Import/Export in action

Wrapping Up. . . Today we covered: • • • Benefits of Virtualizing your Data What is SQL Server 2019 Enhanced Poly. Base Installation/Configuration of Head and Compute Nodes Adding Oracle, Mongo. DB, Cosmos DB, and Maria. DB as Data Sources Pulling the data all together through a single interface Tools for Troubleshooting

Useful Links More Info on Poly. Base: https: //docs. microsoft. com/en-us/sql/relational-databases/polybase-guide? view=sql-server-ver 15 https: //docs. microsoft. com/en-us/sql/relational-databases/polybase-scale-out-groups? view=sql-server-2017 https: //docs. microsoft. com/en-us/sql/relational-databases/polybase/configure-scale-out-groups-windows? view=sql-server-2017 https: //docs. microsoft. com/en-us/sql/relational-databases/polybase-configure-oracle? view=sql-server-ver 15 List of DMVs: https: //docs. microsoft. com/en-us/previous-versions/sql-server-2016/mt 146389(v=sql. 130)

Mind Blown? !? !? ! ** courtesy of James Livingston **

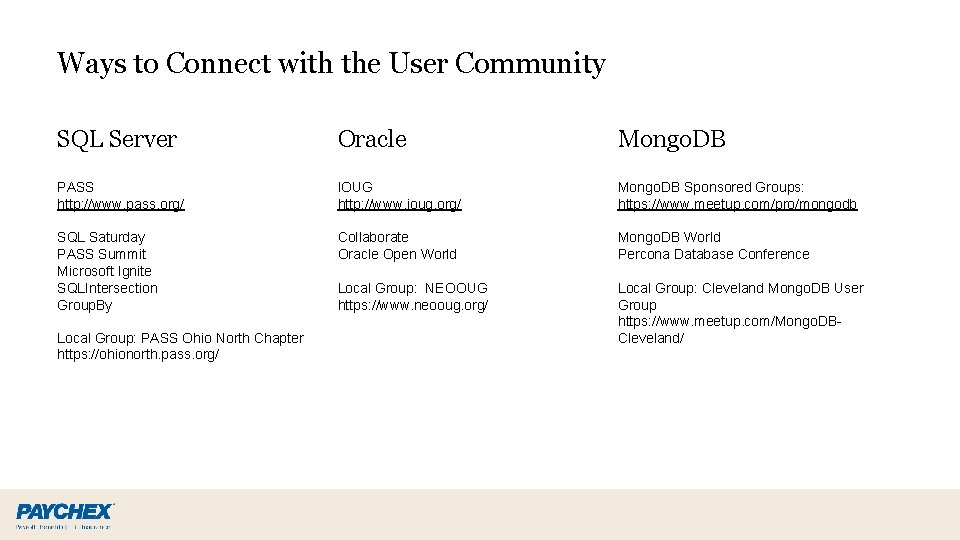

Ways to Connect with the User Community SQL Server Oracle Mongo. DB PASS http: //www. pass. org/ IOUG http: //www. ioug. org/ Mongo. DB Sponsored Groups: https: //www. meetup. com/pro/mongodb SQL Saturday PASS Summit Microsoft Ignite SQLIntersection Group. By Collaborate Oracle Open World Mongo. DB World Percona Database Conference Local Group: NEOOUG https: //www. neooug. org/ Local Group: Cleveland Mongo. DB User Group https: //www. meetup. com/Mongo. DBCleveland/ Local Group: PASS Ohio North Chapter https: //ohionorth. pass. org/

Questions? Kimberly St. Jacques ● Twitter: @kimstjacques ● Linked. In: www. linkedin. com/in/kimberly-stjacques ● Blog: http: //www. DBObserver. com Michael Grayson ● Twitter: @mikegray 831 ● Linked. In: https: //www. linkedin. com/in/mikegrayson/ ● Blog: https: //mongomike. wordpress. com/blog

Thank You!

- Slides: 81