Event correlation and data mining for event logs



- Slides: 39

Event correlation and data mining for event logs

Risto Vaarandi, SEB Eesti Ühispank, risto.vaarandi@seb.ee

Outline

- Event logging and event log monitoring
- Event correlation: concept and existing solutions
- Simple Event Correlator (SEC)
- Frequent itemset mining for event logs
- Data clustering for event logs
- Discussion

Event logging

- Event: a change in the system state, e.g., a disk failure. When a system component (application, network device, etc.) encounters an event, it can emit an event message that describes the event.
- Event logging: the procedure of storing event messages to a local or remote (usually flat-file) event log.
- Event logs play an important role in modern IT systems:
  - many system components, such as applications, servers, and network devices, have built-in support for event logging (with the BSD syslog protocol being a widely accepted standard);
  - since in most cases event messages are appended to event logs in real time, event logs are an excellent source of information for monitoring the system (a number of tools like Swatch and Logsurfer have been developed for log monitoring);
  - information stored in event logs can be useful for later analysis, e.g., for audit procedures.

Centralized logging infrastructure

[Diagram: applications, servers, and network devices send events over the network to the syslog server on the central log server; a log file monitor reads the event logs there and sends notifications to the monitoring console.]

Applications, servers, and network devices use the syslog protocol to log their events to the central log server, which runs a syslog server. Log monitoring takes place on the central log server, and alerts are sent to the monitoring console.

![Event log monitoring](https://slidetodoc.com/presentation_image_h/83fb097edc10d05a013137bfc27ac848/image-5.jpg)

Event log monitoring

    Dec 18 08:47:26 myhost [daemon.info] sshd[15172]: log: Connection from 10.2.211.19 port 1304
    Dec 18 08:47:39 myhost [daemon.info] sshd[15172]: log: Password authentication for alex accepted.
    Dec 18 08:50:09 myhost [kern.crit] vmunix: /var/tmp: file system full
    Dec 18 08:50:10 myhost [mail.debug] imapd[15399]: imap service init from 10.2.213.2
    Dec 18 08:50:10 myhost [mail.info] imapd[15399]: Login user=risto host=risto2 [10.2.213.2]

- Commonly used log monitoring tools: Swatch, Logsurfer, etc. (see http://www.loganalysis.org for more information).
- Current log monitoring practice: match logged lines in real time with regular expressions and perform an action (e.g., send an alert) when a matching line is observed.
- Open issues: in order to write rules (regexp → action) for log monitoring tools, one must have good knowledge of the IT system and its log messages; existing tools don't support event correlation well.
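The "regexp → action" practice described above can be sketched in a few lines of Python. This is only an illustration, not how Swatch or Logsurfer are implemented; the `RULES` table, the `monitor` function, and the message texts are hypothetical names chosen for this sketch.

```python
import re

# Hypothetical rule table: each entry pairs a regular expression with an action.
RULES = [
    (re.compile(r"(\S+) \[kern\.crit\] vmunix: (\S+): [fF]ile system full"),
     lambda m: f"ALERT: file system {m.group(2)} full on {m.group(1)}"),
    (re.compile(r"sshd\[\d+\]: log: Password authentication for (\S+) accepted"),
     lambda m: f"INFO: ssh login by {m.group(1)}"),
]


def monitor(lines):
    """Match every line against every rule and collect the resulting messages."""
    messages = []
    for line in lines:
        for pattern, action in RULES:
            match = pattern.search(line)
            if match:
                messages.append(action(match))
    return messages


sample = [
    "Dec 18 08:47:39 myhost [daemon.info] sshd[15172]: log: Password authentication for alex accepted.",
    "Dec 18 08:50:09 myhost [kern.crit] vmunix: /var/tmp: file system full",
]
for message in monitor(sample):
    print(message)
```

Each line is judged in isolation here, which is exactly the limitation the slide points out: nothing in this loop relates one event to another.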

Event correlation

- Event correlation: a conceptual interpretation procedure where new meaning is assigned to a set of events that happen within a predefined time interval [Jakobson and Weissman, 1995]. During the event correlation process, new events might be inserted into the event stream and original events might be removed.
- Examples:
  - if 10 "login failure" events occur for a user within 5 minutes, generate a "security attack" event;
  - if both "device internal temperature too high" and "device not responding" events have been observed within 5 seconds, replace them with the event "device down due to overheating".
- A number of approaches have been proposed for event correlation (rule-based, codebook-based, neural-network-based, etc.), and a number of event correlation products are available on the market (HP ECS, SMARTS, NerveCenter, RuleCore, LOGEC, etc.).
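The first example above (10 login failures for one user within 5 minutes) can be sketched as a per-user sliding window. This is a minimal illustration under stated assumptions: events arrive sorted by time as `(timestamp_in_seconds, user)` pairs, and `detect_attacks` is a hypothetical name, not part of any product listed here.

```python
from collections import defaultdict, deque


def detect_attacks(events, threshold=10, window=300):
    """Return (timestamp, user) pairs at which `threshold` failures fell within `window` seconds.

    events: iterable of (timestamp_seconds, user) login failures, sorted by time.
    """
    recent = defaultdict(deque)  # user -> timestamps of failures still inside the window
    alerts = []
    for ts, user in events:
        failures = recent[user]
        failures.append(ts)
        # Drop failures that are no longer within the window ending at ts.
        while ts - failures[0] >= window:
            failures.popleft()
        if len(failures) >= threshold:
            alerts.append((ts, user))  # synthetic "security attack" event
            failures.clear()           # start counting afresh after the alert
    return alerts


burst = [(i * 20, "alex") for i in range(10)]  # 10 failures in 180 seconds
slow = [(i * 60, "alex") for i in range(10)]   # 10 failures spread over 9 minutes
print(detect_attacks(burst))
print(detect_attacks(slow))
```

The correlation key here is the user name; keeping one counter for all users would change the meaning of the rule.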

Event correlation approaches

- Rule-based (HP ECS, IMPACT, RuleCore, etc.): events are correlated according to rules of the form condition → action that are specified by the human analyst.
- Codebook-based (SMARTS): if a set of events $e_1, \dots, e_k$ must be interpreted as event A, then $e_1, \dots, e_k$ are stored in the codebook as a bit vector pointing to A. In order to correlate a set of events, look for the most closely matching vector in the codebook, and report the interpretation that corresponds to the vector.
- Graph-based: find all dependencies between system components (network devices, hosts, services, etc.) and construct a graph with each node representing a system component and each edge a dependency between two components. When a set of fault events occurs, use the graph to find the possible root cause(s) of the fault events (e.g., 10 "HTTP server not responding" events were caused by the failure of a single network link).
- Neural-network-based: a neural net is trained to detect anomalies in the event stream, root cause(s) of fault events, etc.

Motivation for developing SEC

- Existing event correlation products have the following drawbacks:
  - Complex design and resource requirements:
    - they are mostly heavyweight solutions that are difficult to deploy and maintain, and that require extensive user training;
    - they are not well suited to nodes with limited computing resources (e.g., nodes in sensor and ad hoc networks);
    - many products are based on the client-server model, which is inconvenient for fully distributed event correlation.
  - Platform and domain dependence:
    - they are usually distributed in binary form for a limited number of OS platforms;
    - some products are designed for one system management platform only;
    - some products have been designed for network fault management, and their application in other domains (including event log monitoring) is cumbersome.
  - Pricing: they are quite expensive (at the present time, SEC is the only freely available event correlation engine).
- Summary: there is a need for lightweight, platform-independent, and open-source event correlation solutions, since heavyweight proprietary systems are infeasible for many tasks and environments.

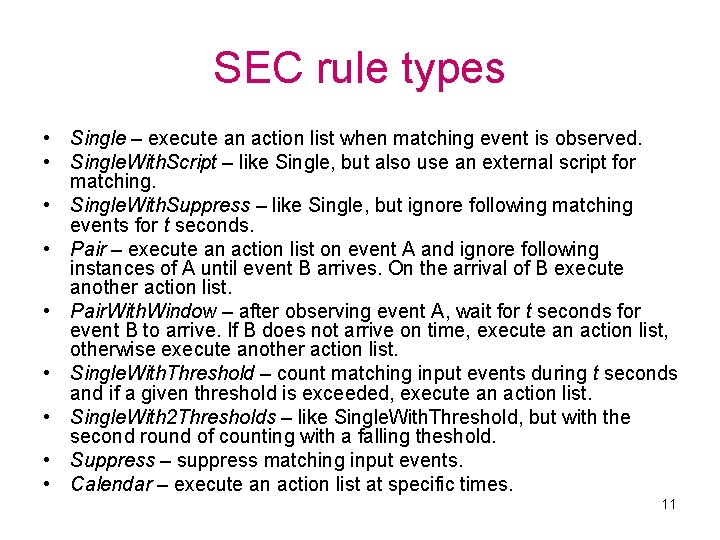

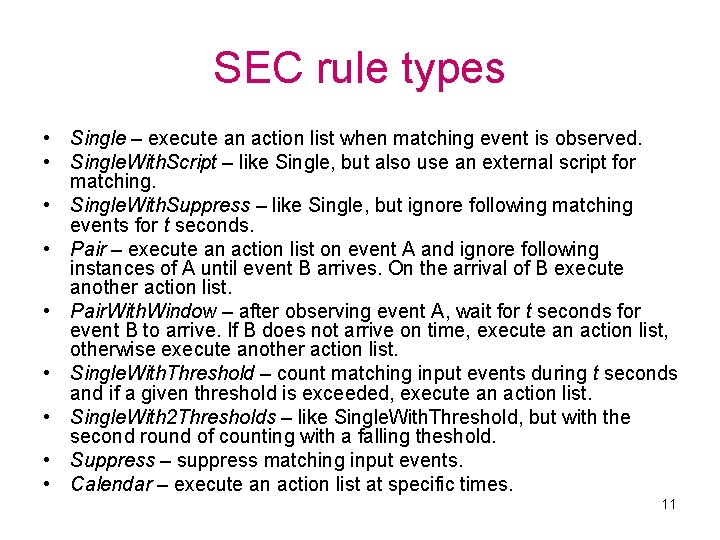

Key features of SEC

- Uses a rule-based approach for event correlation; this approach was chosen because of its natural knowledge representation and the transparency of the event correlation process to the end user.
- Written in Perl, thus open-source and cross-platform.
- Licensed under the terms of the GNU GPL.
- Easy to install and configure: no need for compiling and linking the source, no dependencies on other software, and configuration files can be edited with any text editor such as vi.
- Small in size and doesn't consume much system resources (CPU time and memory).
- Reads input from log files, named pipes, and standard input (an arbitrary number of input sources can be specified); employs regular expression patterns, Perl subroutines, substrings, and truth values for matching input events.
- Can be used as a standalone event log monitoring solution, but can also be integrated with other applications through a file/named pipe interface.

SEC configuration

- SEC event correlation rules are stored in regular text files.
- Rules from one configuration file are applied sequentially, in the order in which they are given in the file; rule sets from different configuration files are applied virtually in parallel.
- Most rules have the following components:
  - event matching pattern
  - optional Boolean expression of contexts
  - event correlation key
  - correlation information (e.g., event counting threshold and window)
  - list of actions
- With appropriate patterns, context expressions, and action lists, several rules can be combined into one event correlation scheme.
- When an event matches a rule, the event correlation key is calculated, and if an event correlation operation with the same key exists, the event is correlated by that operation. If there is no such operation and the rule specifies a correlation of events over time, the rule starts a new operation with the calculated key.

SEC rule types

- Single: execute an action list when a matching event is observed.
- SingleWithScript: like Single, but also use an external script for matching.
- SingleWithSuppress: like Single, but ignore following matching events for t seconds.
- Pair: execute an action list on event A and ignore following instances of A until event B arrives. On the arrival of B, execute another action list.
- PairWithWindow: after observing event A, wait t seconds for event B to arrive. If B does not arrive in time, execute an action list; otherwise execute another action list.
- SingleWithThreshold: count matching input events during t seconds, and if a given threshold is exceeded, execute an action list.
- SingleWith2Thresholds: like SingleWithThreshold, but with a second round of counting with a falling threshold.
- Suppress: suppress matching input events.
- Calendar: execute an action list at specific times.

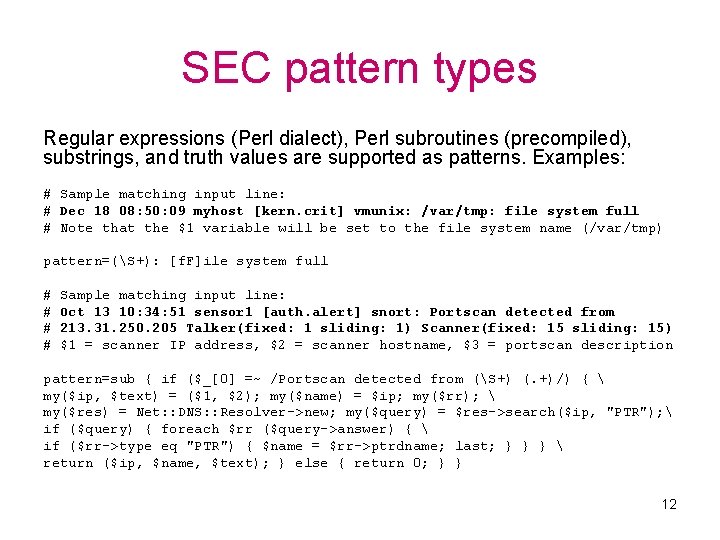

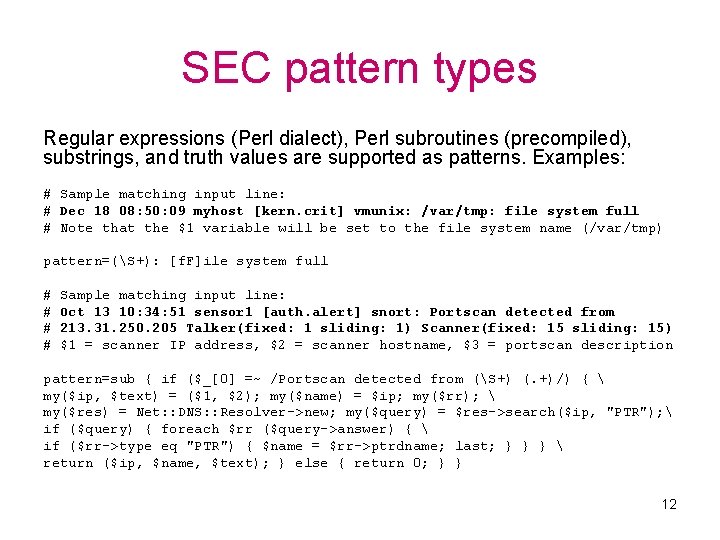

SEC pattern types

Regular expressions (Perl dialect), Perl subroutines (precompiled), substrings, and truth values are supported as patterns. Examples:

    # Sample matching input line:
    # Dec 18 08:50:09 myhost [kern.crit] vmunix: /var/tmp: file system full
    # Note that the $1 variable will be set to the file system name (/var/tmp)
    pattern=(\S+): [fF]ile system full

    # Sample matching input line:
    # Oct 13 10:34:51 sensor1 [auth.alert] snort: Portscan detected from
    #   213.31.250.205 Talker(fixed: 1 sliding: 1) Scanner(fixed: 15 sliding: 15)
    # $1 = scanner IP address, $2 = scanner hostname, $3 = portscan description
    pattern=sub { if ($_[0] =~ /Portscan detected from (\S+) (.+)/) { \
        my($ip, $text) = ($1, $2); \
        my($name) = $ip; \
        my($rr); \
        my($res) = Net::DNS::Resolver->new; \
        my($query) = $res->search($ip, "PTR"); \
        if ($query) { \
          foreach $rr ($query->answer) { \
            if ($rr->type eq "PTR") { $name = $rr->ptrdname; last; } \
          } \
        } \
        return ($ip, $name, $text); \
      } else { return 0; } }

SEC contexts and actions

- SEC context: a logical entity that can be created or deleted from a rule (or internally by SEC for tagging input). At creation, the context lifetime can be set to a certain finite value (e.g., 20 seconds).
- The presence or absence of a context can decide whether a rule is applicable or not (e.g., if a rule definition has A OR B specified for its context expression, and neither context A nor B exists, the rule will not be applied).
- A context can act as an event store: events can be associated with a context, and all the collected events can be supplied for external processing at a later time (e.g., collect events from a suspicious FTP session, and mail them to the security administrator at the end of the session).
- SEC actions: invoke external programs (shellcmd, spawn, etc.), generate synthetic events (event), reset event correlation operations (reset), perform context operations (create, report, etc.), set user-defined variables (assign, etc.), write to files or FIFOs (write), execute Perl programs or precompiled subroutines (eval, call).

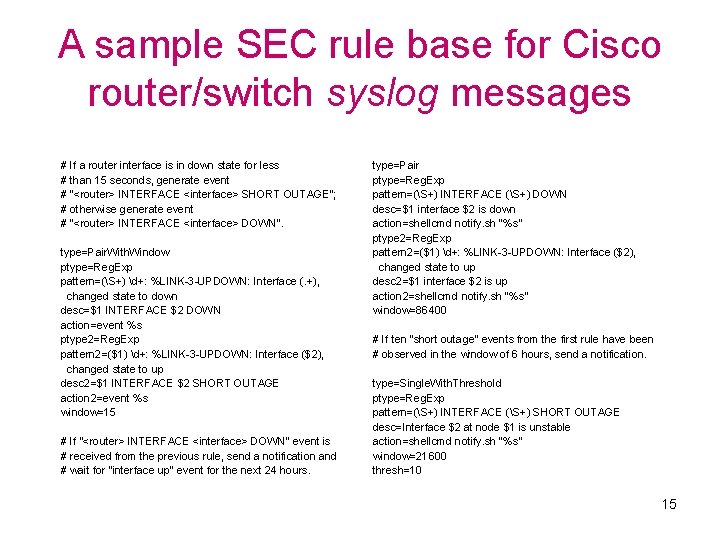

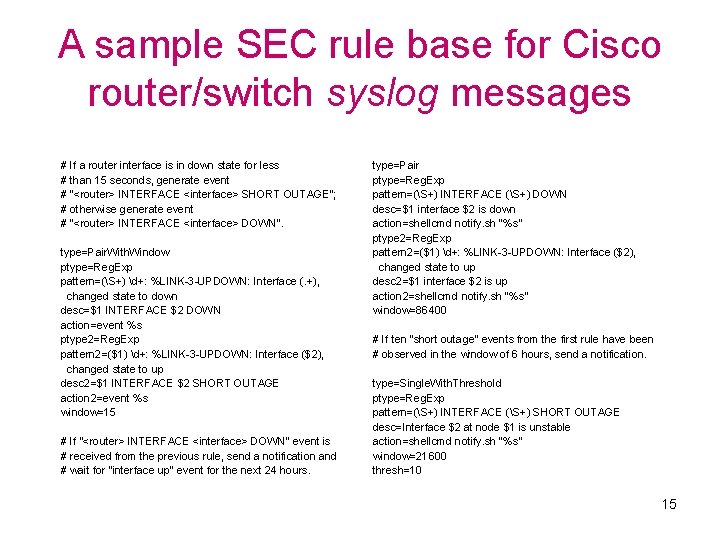

A sample SEC rule

    # This example assumes that SEC has been started with the -intcontexts option
    type=SingleWithSuppress
    ptype=RegExp
    pattern=(\S+) \[kern\.crit\] vmunix: (\S+): [fF]ile system full
    context=_FILE_EVENT_/logs/srv1.messages || _FILE_EVENT_/logs/srv2.messages
    desc=$1:$2 file system full
    action=pipe 'File system $2 at host $1 full' mail -s 'FS full' root
    window=900

When a "file system full" message is logged to either /logs/srv1.messages or /logs/srv2.messages, SEC will send an e-mail alert to the local root user, and ignore repeated "file system full" messages for the same host and same file system for 900 seconds.

The desc parameter defines the event correlation key and the scope of event correlation. If we replaced the key `$1:$2 file system full` with just `file system full`, we would get only one alert (at 12:30) for the following messages:

    Oct 13 12:30:00 srv1 [kern.crit] vmunix: /tmp: file system full
    Oct 13 12:35:00 srv2 [kern.crit] vmunix: /home: file system full
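The effect of the correlation key on suppression can be sketched as follows. This is a simplified Python model of the behaviour described above, not SEC's implementation; `single_with_suppress` and the tuple layout `(timestamp, host, filesystem)` are assumptions made for the sketch, with timestamps in seconds relative to 12:30:00.

```python
def single_with_suppress(events, window, key):
    """Alert on the first event per correlation key, then ignore that key for `window` seconds.

    events: (timestamp_seconds, host, filesystem) tuples sorted by time.
    key:    function building the correlation key (the role of `desc` in the rule).
    """
    suppressed_until = {}  # correlation key -> time at which suppression ends
    alerts = []
    for ts, host, fs in events:
        k = key(host, fs)
        if ts < suppressed_until.get(k, float("-inf")):
            continue  # an operation with this key is still active
        alerts.append((ts, host, fs))
        suppressed_until[k] = ts + window
    return alerts


# The two messages from the slide: 12:30:00 on srv1 and 12:35:00 on srv2.
events = [(0, "srv1", "/tmp"), (300, "srv2", "/home")]

per_fs = single_with_suppress(events, 900, key=lambda host, fs: f"{host}:{fs} file system full")
shared = single_with_suppress(events, 900, key=lambda host, fs: "file system full")
print(len(per_fs), len(shared))  # 2 1
```

With the host and file system in the key, each file system gets its own suppression window; with the shared key, the second message falls into the window opened by the first.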

A sample SEC rule base for Cisco router/switch syslog messages

    # If a router interface is in down state for less
    # than 15 seconds, generate event
    # "<router> INTERFACE <interface> SHORT OUTAGE";
    # otherwise generate event
    # "<router> INTERFACE <interface> DOWN".
    type=PairWithWindow
    ptype=RegExp
    pattern=(\S+) \d+: %LINK-3-UPDOWN: Interface (.+), changed state to down
    desc=$1 INTERFACE $2 DOWN
    action=event %s
    ptype2=RegExp
    pattern2=($1) \d+: %LINK-3-UPDOWN: Interface ($2), changed state to up
    desc2=$1 INTERFACE $2 SHORT OUTAGE
    action2=event %s
    window=15

    # If "<router> INTERFACE <interface> DOWN" event is
    # received from the previous rule, send a notification and
    # wait for "interface up" event for the next 24 hours.
    type=Pair
    ptype=RegExp
    pattern=(\S+) INTERFACE (\S+) DOWN
    desc=$1 interface $2 is down
    action=shellcmd notify.sh "%s"
    ptype2=RegExp
    pattern2=($1) \d+: %LINK-3-UPDOWN: Interface ($2), changed state to up
    desc2=$1 interface $2 is up
    action2=shellcmd notify.sh "%s"
    window=86400

    # If ten "short outage" events from the first rule have been
    # observed in the window of 6 hours, send a notification.
    type=SingleWithThreshold
    ptype=RegExp
    pattern=(\S+) INTERFACE (\S+) SHORT OUTAGE
    desc=Interface $2 at node $1 is unstable
    action=shellcmd notify.sh "%s"
    window=21600
    thresh=10

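The work of the sample rule base

[Diagram: event flow through the three rules of the sample rule base]

- "Interface A@B down" starts a PairWithWindow operation (win=15 s).
- If "Interface A@B up" arrives within the window (+), the operation generates the event "B INTERFACE A SHORT OUTAGE", which is counted by SingleWithThreshold (win=21600 s, thresh=10); when the threshold is reached: shellcmd notify.sh "Interface A at node B is unstable".
- Otherwise (-), the operation generates the event "B INTERFACE A DOWN", which is handled by Pair: shellcmd notify.sh "B interface A is down", and, once "Interface A@B up" arrives, shellcmd notify.sh "B interface A is up".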

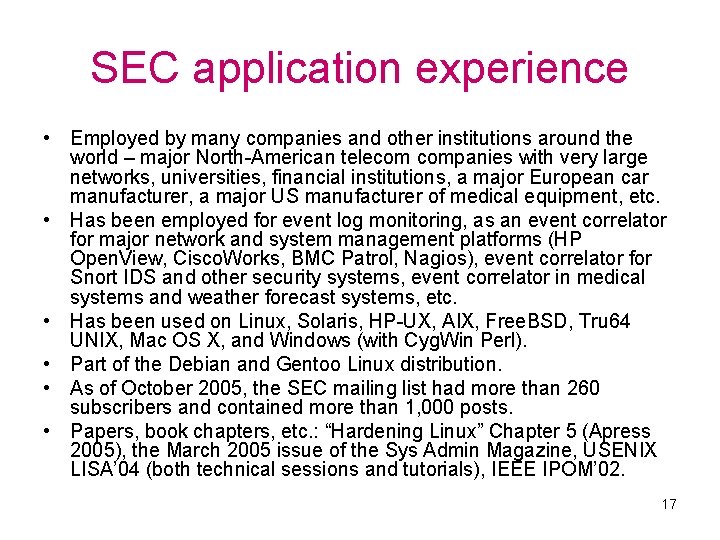

SEC application experience

- Employed by many companies and other institutions around the world: major North American telecom companies with very large networks, universities, financial institutions, a major European car manufacturer, a major US manufacturer of medical equipment, etc.
- Has been employed for event log monitoring, as an event correlator for major network and system management platforms (HP OpenView, CiscoWorks, BMC Patrol, Nagios), as an event correlator for Snort IDS and other security systems, as an event correlator in medical systems and weather forecast systems, etc.
- Has been used on Linux, Solaris, HP-UX, AIX, FreeBSD, Tru64 UNIX, Mac OS X, and Windows (with CygWin Perl).
- Part of the Debian and Gentoo Linux distributions.
- As of October 2005, the SEC mailing list had more than 260 subscribers and contained more than 1,000 posts.
- Papers, book chapters, etc.: "Hardening Linux" Chapter 5 (Apress 2005), the March 2005 issue of Sys Admin Magazine, USENIX LISA'04 (both technical sessions and tutorials), IEEE IPOM'02.

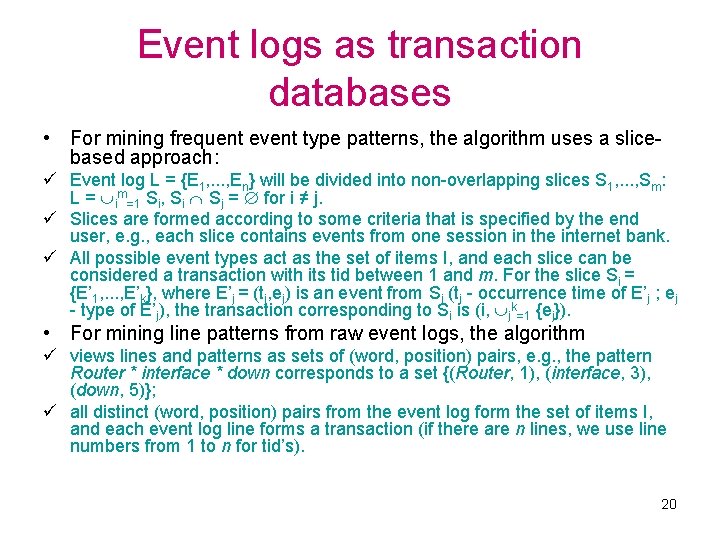

Data mining for event logs

- Data mining for event logs has been identified as an important system management task: the detected knowledge can be used for building rules for event correlation systems or event log monitoring tools, improving the design of web sites, etc.
- Recently proposed mining algorithms are mostly based on the Apriori algorithm for mining frequent itemsets and are designed for mining frequent patterns of event types. An event log is viewed as a sequence $\{E_1, \dots, E_n\}$, where $E_i = (t_i, e_i)$, $t_i$ is the time of occurrence of $E_i$, $e_i$ is the type of $E_i$, and if $i < j$ then $t_i \le t_j$. A frequent pattern can be defined in several ways, the most common definitions being window-based and slice-based.
- Shortcomings of existing mining approaches:
  - Apriori is known to be inefficient for mining longer patterns;
  - infrequent events remain undetected but are often interesting (e.g., fault events are normally infrequent but highly interesting);
  - they focus on mining event type patterns from preprocessed event logs, ignoring patterns of other sorts (in particular, line patterns from raw event logs help one to find event types or write rules for log monitoring tools).

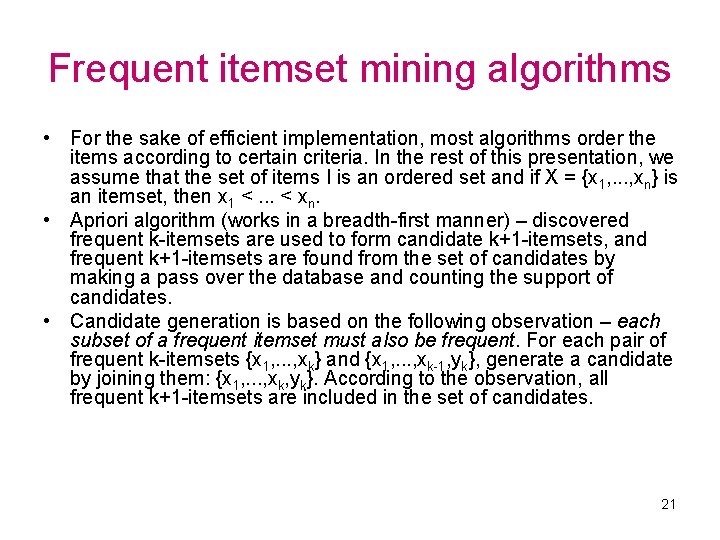

Frequent itemset mining problem

- In this talk, an efficient frequent itemset mining algorithm will be presented that can be employed for mining both line and event type patterns from event logs.
- Let $I = \{i_1, \dots, i_n\}$ be a set of items. If $X \subseteq I$, X is called an itemset, and if $|X| = k$, X is called a k-itemset.
- A transaction is a tuple $(tid, X)$, where tid is a transaction identifier and X is an itemset. A transaction database D is a set of transactions (with each transaction having a unique id).
- The cover of an itemset X is the set of identifiers of transactions that contain X: $\mathrm{cover}(X) = \{tid \mid (tid, Y) \in D,\ X \subseteq Y\}$
- The support of an itemset X is the number of elements in its cover: $\mathrm{supp}(X) = |\mathrm{cover}(X)|$
- The frequent itemset mining problem: given the transaction database D and the support threshold s, find the set of frequent itemsets $\{X \subseteq I \mid \mathrm{supp}(X) \ge s\}$ and the supports of the frequent itemsets.
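The definitions of cover and support translate almost directly into code. The sketch below uses the five-transaction example database that appears on the trie slides of this talk; the dictionary layout and the function names `cover` and `supp` are choices made for this illustration.

```python
# Transaction database: transaction id -> itemset (items are single letters here).
D = {
    1: set("abcde"),
    2: set("abc"),
    3: set("bcd"),
    4: set("abc"),
    5: set("ab"),
}


def cover(X, db):
    """Identifiers of the transactions that contain every item of X."""
    return {tid for tid, Y in db.items() if set(X) <= Y}


def supp(X, db):
    """Support of X: the number of transactions in its cover."""
    return len(cover(X, db))


print(sorted(cover("bc", D)))  # [1, 2, 3, 4]
print(supp("d", D))            # 2
print(supp("e", D))            # 1
```

With the support threshold s = 2, the itemset {d} is frequent and {e} is not.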

Event logs as transaction databases

- For mining frequent event type patterns, the algorithm uses a slice-based approach:
  - The event log $L = \{E_1, \dots, E_n\}$ is divided into non-overlapping slices $S_1, \dots, S_m$: $L = \bigcup_{i=1}^{m} S_i$, $S_i \cap S_j = \emptyset$ for $i \ne j$.
  - Slices are formed according to a criterion specified by the end user, e.g., each slice contains the events of one session in the internet bank.
  - All possible event types act as the set of items I, and each slice can be considered a transaction with its tid between 1 and m. For the slice $S_i = \{E'_1, \dots, E'_k\}$, where $E'_j = (t_j, e_j)$ is an event from $S_i$ ($t_j$ is the occurrence time of $E'_j$ and $e_j$ is the type of $E'_j$), the transaction corresponding to $S_i$ is $(i, \bigcup_{j=1}^{k} \{e_j\})$.
- For mining line patterns from raw event logs, the algorithm
  - views lines and patterns as sets of (word, position) pairs, e.g., the pattern `Router * interface * down` corresponds to the set {(Router, 1), (interface, 3), (down, 5)};
  - treats all distinct (word, position) pairs from the event log as the set of items I, with each event log line forming a transaction (if there are n lines, the line numbers 1 to n are used as tids).
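A short sketch of the (word, position) view of raw log lines follows. The helper names and the three sample lines are hypothetical; words are split on whitespace, which is an assumption of this sketch.

```python
def line_to_items(line):
    """A log line as a set of (word, position) pairs, positions starting at 1."""
    return {(word, pos) for pos, word in enumerate(line.split(), start=1)}


def pattern_to_items(pattern):
    """A line pattern as a set of (word, position) pairs; '*' positions are left out."""
    return {(word, pos) for pos, word in enumerate(pattern.split(), start=1) if word != "*"}


# Hypothetical raw log lines; line numbers 1..n serve as transaction ids.
log = [
    "Router myrouter1 interface 192.168.13.1 down",
    "Router myrouter2 interface 10.10.10.12 down",
    "Router myrouter2 interface 10.10.10.12 up",
]
transactions = {tid: line_to_items(line) for tid, line in enumerate(log, start=1)}

pattern = pattern_to_items("Router * interface * down")
support = sum(1 for items in transactions.values() if pattern <= items)
print(sorted(pattern, key=lambda pair: pair[1]))
print(support)  # 2
```

Because positions are part of each item, the word `down` in position 5 and the same word in another position count as different items.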

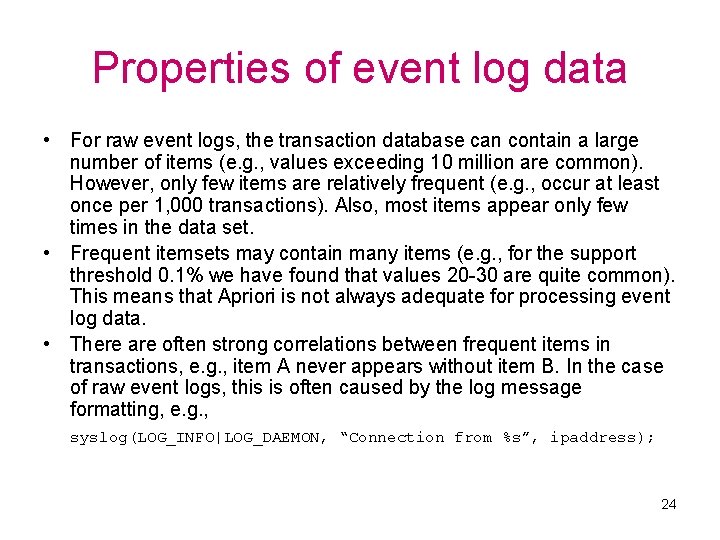

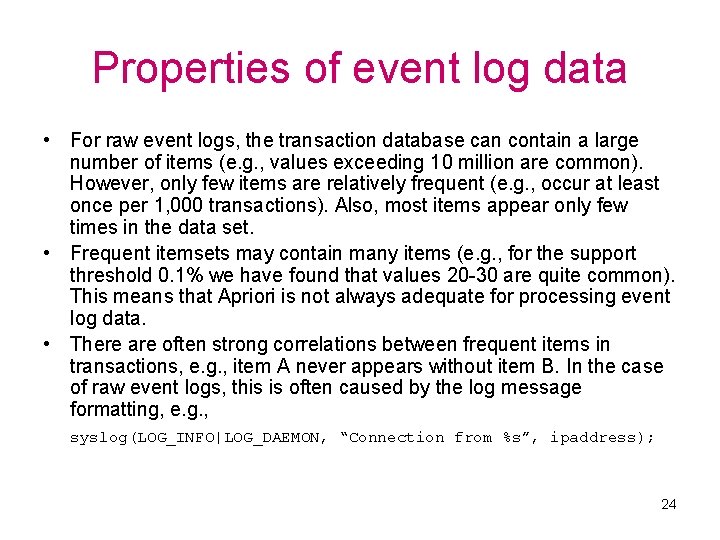

Frequent itemset mining algorithms

- For the sake of efficient implementation, most algorithms order the items according to certain criteria. In the rest of this presentation, we assume that the set of items I is an ordered set, and that if $X = \{x_1, \dots, x_n\}$ is an itemset, then $x_1 < \dots < x_n$.
- Apriori algorithm (works in a breadth-first manner): discovered frequent k-itemsets are used to form candidate (k+1)-itemsets, and frequent (k+1)-itemsets are found from the set of candidates by making a pass over the database and counting the support of the candidates.
- Candidate generation is based on the following observation: each subset of a frequent itemset must also be frequent. For each pair of frequent k-itemsets $\{x_1, \dots, x_k\}$ and $\{x_1, \dots, x_{k-1}, y_k\}$, generate a candidate by joining them: $\{x_1, \dots, x_k, y_k\}$. According to the observation, all frequent (k+1)-itemsets are included in the set of candidates.

Apriori itemset trie

[Figure: the Apriori itemset trie built for the transaction database below; each node holds the support counter of its itemset, and candidates with insufficient support are removed.]

- Transaction db: (1, abcde), (2, abc), (3, bcd), (4, abc), (5, ab)
- Support threshold: 2
- Item ordering: a < b < c < d < e

Each edge in the trie is labeled with the name of an item. Apriori builds the itemset trie layer by layer: when the node layer at depth k is complete, each node at depth k represents a frequent k-itemset, where the path to the node identifies the items in the set and the counter in the node gives the support of the set.

Candidate generation: for each node N at depth k, create candidate child nodes by inspecting the sibling nodes of N.
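The layer-by-layer procedure can be sketched without an explicit trie: sorted tuples stand in for root-to-node paths, and two k-itemsets that share their first k-1 items play the role of sibling nodes. This is a plain, unoptimized illustration of Apriori on the example database above; the function name and the data layout are assumptions of the sketch.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {itemset as sorted tuple: support} for all frequent itemsets."""

    def count(candidates):
        # One pass over the database per layer.
        return {c: sum(1 for t in transactions if set(c) <= t) for c in candidates}

    items = sorted({item for t in transactions for item in t})
    frequent = {c: s for c, s in count([(i,) for i in items]).items() if s >= min_support}
    result = dict(frequent)
    while frequent:
        layer = sorted(frequent)
        # Join step: two frequent k-itemsets with the same first k-1 items
        # (siblings in the trie) yield one candidate (k+1)-itemset.
        candidates = [a + (b[-1],) for a, b in combinations(layer, 2) if a[:-1] == b[:-1]]
        frequent = {c: s for c, s in count(candidates).items() if s >= min_support}
        result.update(frequent)
    return result


db = [set("abcde"), set("abc"), set("bcd"), set("abc"), set("ab")]
for itemset, support in sorted(apriori(db, 2).items(), key=lambda kv: (len(kv[0]), kv[0])):
    print("".join(itemset), support)
```

On this database the sketch finds eleven frequent itemsets; item e and the candidate {a, d} are dropped because their support is 1.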

Breadth-first vs. depth-first

- Shortcomings of Apriori: when the database contains larger frequent itemsets (e.g., containing 30-40 items), the trie will become very large (there will be $2^k - 1$ nodes in the trie for each frequent k-itemset). As a result, the runtime and memory cost of the algorithm will be prohibitive.
- Eclat and FP-growth algorithms: the algorithms first load the transaction database into main memory. At each step they search for frequent itemsets $\{p_1, \dots, p_{k-1}, x\}$ with a certain prefix $P = \{p_1, \dots, p_{k-1}\}$, where P is a previously detected frequent itemset. The in-memory representation of the database allows the algorithm to search only the transactions that contain P. After the search, the prefix for the next step is chosen from the detected frequent itemsets (or found by backtracking).
- Drawback: the transaction database must fit into main memory, which is not always the case (including for many event log data sets).
- Proposed solution: use the breadth-first approach and the itemset trie data structure, with special techniques for speeding up the mining process and reducing its memory consumption.

Properties of event log data

- For raw event logs, the transaction database can contain a large number of items (e.g., values exceeding 10 million are common). However, only a few items are relatively frequent (e.g., occur at least once per 1,000 transactions), and most items appear only a few times in the data set.
- Frequent itemsets may contain many items (e.g., for the support threshold 0.1% we have found that values of 20-30 are quite common). This means that Apriori is not always adequate for processing event log data.
- There are often strong correlations between frequent items in transactions, e.g., item A never appears without item B. In the case of raw event logs, this is often caused by the log message formatting, e.g., `syslog(LOG_INFO|LOG_DAEMON, "Connection from %s", ipaddress);`

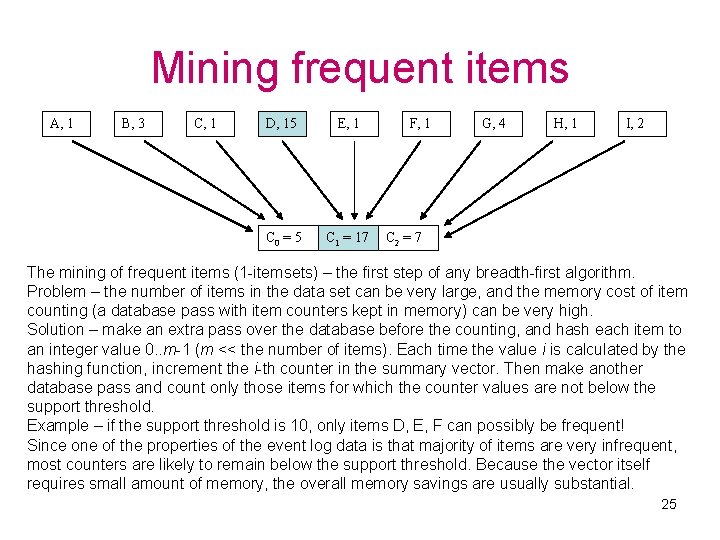

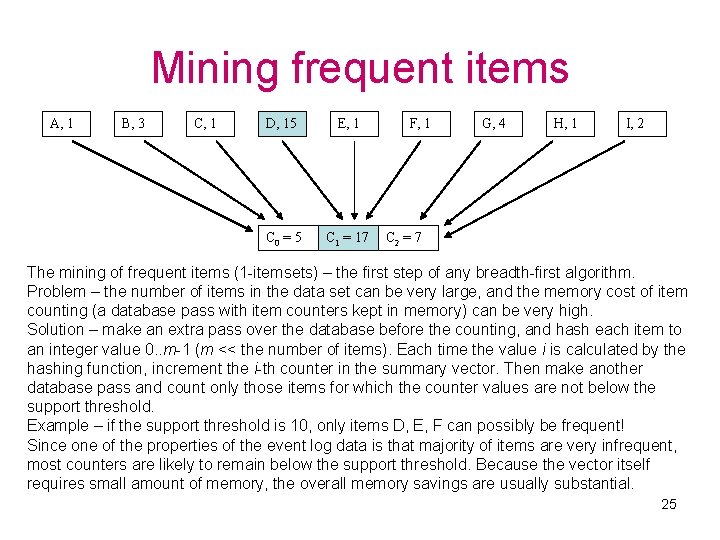

Mining frequent items

[Figure: nine items with their occurrence counts, hashed into a summary vector of three counters]

- $C_0 = 5$: A (1), B (3), C (1)
- $C_1 = 17$: D (15), E (1), F (1)
- $C_2 = 7$: G (4), H (1), I (2)

The mining of frequent items (1-itemsets) is the first step of any breadth-first algorithm.

Problem: the number of items in the data set can be very large, and the memory cost of item counting (a database pass with item counters kept in memory) can be very high.

Solution: make an extra pass over the database before the counting, and hash each item to an integer value 0..m-1 (m << the number of items). Each time the value i is calculated by the hashing function, increment the i-th counter in the summary vector. Then make another database pass and count only those items for which the counter values are not below the support threshold.

Example: if the support threshold is 10, only items D, E, and F can possibly be frequent.

Since one of the properties of event log data is that the majority of items are very infrequent, most counters are likely to remain below the support threshold. Because the vector itself requires a small amount of memory, the overall memory savings are usually substantial.
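The two-pass summary vector technique can be sketched as below. The function `frequent_items`, the `read_items` callable (standing in for a pass over the database), and the toy `bucket` function are assumptions of this sketch; the toy hash simply maps A-C, D-F, and G-I to counters 0, 1, and 2 to reproduce the numbers in the example, whereas a real implementation would use a general string hash.

```python
from collections import Counter


def frequent_items(read_items, threshold, m, bucket):
    """Two-pass frequent item detection with a summary vector of m counters.

    read_items: callable returning a fresh iterator over all item occurrences
                (each call models one pass over the database).
    bucket:     hash function mapping an item to an integer in 0..m-1.
    """
    # Pass 1: only m counters are kept in memory.
    summary = [0] * m
    for item in read_items():
        summary[bucket(item)] += 1
    # Pass 2: exact counters only for items whose bucket reached the threshold.
    counts = Counter(item for item in read_items() if summary[bucket(item)] >= threshold)
    return summary, {item: c for item, c in counts.items() if c >= threshold}


occurrences = {"A": 1, "B": 3, "C": 1, "D": 15, "E": 1, "F": 1, "G": 4, "H": 1, "I": 2}
data = [item for item, n in occurrences.items() for _ in range(n)]


def toy_bucket(item):
    return (ord(item) - ord("A")) // 3


summary, frequent = frequent_items(lambda: iter(data), threshold=10, m=3, bucket=toy_bucket)
print(summary)   # [5, 17, 7]
print(frequent)  # {'D': 15}
```

In the second pass, exact counters exist only for D, E, and F; the other six items are never counted individually.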

Transaction cache

- Motivation: keep the most frequently used transaction data in memory to speed up the algorithm.
- Observation: if F is the set of frequent items and $(tid, X)$ is a transaction, we only need to consider the items $X \cap F$ (the frequent items of the transaction) during the mining process.
- Transaction cache: use the summary vector technique to detect which sets $X \cap F$ have a chance of corresponding to C or more transactions, and load them into main memory (identical sets are stored as a single record with an occurrence counter); write the rest of the sets to disk. The value of C is given by the user.
- Result: the cache is guaranteed to contain the most frequently used transaction data ($\{Y \subseteq I \mid |\{(tid, X) \in D \mid X \cap F = Y\}| \ge C\}$), and the amount of data stored in the cache is controlled by the user. There is no dependency on the amount of main memory as with depth-first algorithms, while the cache hit ratio is likely to be quite high.

Reducing the size of the itemset trie

- Motivation: with a smaller trie, less memory and CPU time are consumed.
- Observation: when there are many strong correlations between frequent items in transactions, many parts of the Apriori itemset trie contain redundant information.
- Let $F = \{f_1, \dots, f_n\}$ be the set of all frequent items. We call the set $\mathrm{dep}(f_i) = \{f_j \mid f_i \ne f_j,\ \mathrm{cover}(f_i) \subseteq \mathrm{cover}(f_j)\}$ the dependency set of $f_i$, and say that $f_i$ has m dependencies if $|\mathrm{dep}(f_i)| = m$.
- Dependency prefix of item $f_i$: $\mathrm{pr}(f_i) = \{f_j \mid f_j \in \mathrm{dep}(f_i),\ f_j < f_i\}$
- Dependency prefix of itemset $\{f_{i_1}, \dots, f_{i_k}\}$: $\mathrm{pr}(\{f_{i_1}, \dots, f_{i_k}\}) = \bigcup_{j=1}^{k} \mathrm{pr}(f_{i_j})$
- The trie reduction technique: if $\mathrm{pr}(X) \not\subseteq X$, don't create a node for X in the trie. In order to maximize the efficiency of the technique, reorder the frequent items so that if $f_i < f_j$, then $|\mathrm{dep}(f_i)| \le |\mathrm{dep}(f_j)|$.
- Note that we can't generate candidates as a separate step like Apriori, since some nodes needed for that step could be missing from the trie due to the trie reduction technique. Thus, we generate candidates on the fly during support counting.

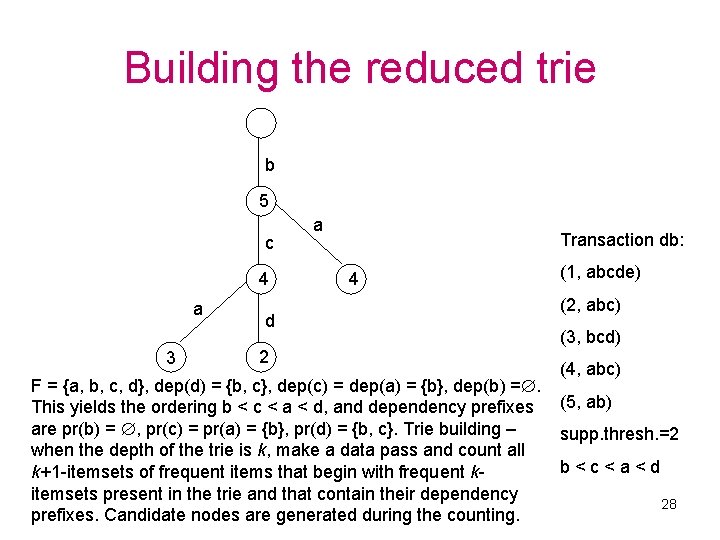

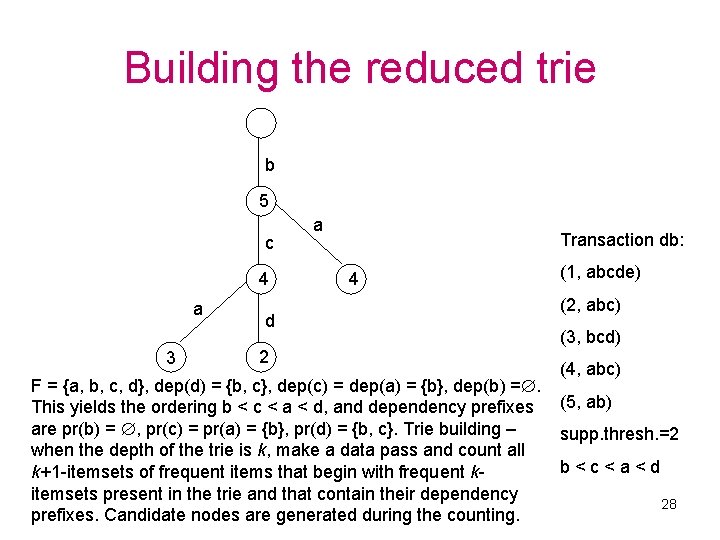

Building the reduced trie

[Figure: the reduced itemset trie for the transaction database below, with nodes b (5), b-c (4), b-a (4), b-c-a (3), and b-c-d (2).]

- Transaction db: (1, abcde), (2, abc), (3, bcd), (4, abc), (5, ab)
- Support threshold: 2

$F = \{a, b, c, d\}$, $\mathrm{dep}(d) = \{b, c\}$, $\mathrm{dep}(c) = \mathrm{dep}(a) = \{b\}$, $\mathrm{dep}(b) = \emptyset$. This yields the ordering b < c < a < d, and the dependency prefixes are $\mathrm{pr}(b) = \emptyset$, $\mathrm{pr}(c) = \mathrm{pr}(a) = \{b\}$, $\mathrm{pr}(d) = \{b, c\}$.

Trie building: when the depth of the trie is k, make a data pass and count all (k+1)-itemsets of frequent items that begin with frequent k-itemsets present in the trie and that contain their dependency prefixes. Candidate nodes are generated during the counting.
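The dependency sets and prefixes quoted above can be recomputed from the example database. In this sketch the item ordering b < c < a < d is taken from the slide rather than derived (items c and a both have one dependency, so their relative order is a tie); all function and variable names are choices made for the illustration.

```python
# Example database from the slide: transaction id -> set of items.
D = {1: set("abcde"), 2: set("abc"), 3: set("bcd"), 4: set("abc"), 5: set("ab")}
THRESHOLD = 2


def cover(item):
    """Identifiers of the transactions that contain the item."""
    return {tid for tid, items in D.items() if item in items}


all_items = set().union(*D.values())
F = sorted(i for i in all_items if len(cover(i)) >= THRESHOLD)  # frequent items

# dep(f): the other frequent items that occur in every transaction containing f.
dep = {f: {g for g in F if g != f and cover(f) <= cover(g)} for f in F}

# Dependency-ascending ordering, as given on the slide.
order = ["b", "c", "a", "d"]
rank = {f: i for i, f in enumerate(order)}

# pr(f): the dependencies of f that precede f in the ordering.
pr = {f: {g for g in dep[f] if rank[g] < rank[f]} for f in F}


def itemset_prefix(itemset):
    """Dependency prefix of an itemset: the union of its items' prefixes."""
    return set().union(*(pr[i] for i in itemset))


def gets_node(itemset):
    """Trie reduction rule: a node is created only if the itemset contains its prefix."""
    return itemset_prefix(itemset) <= set(itemset)


print(dep)
print(gets_node("bcd"), gets_node("bd"), gets_node("a"))  # True False False
```

Itemsets such as {b, d} or {a} get no node of their own; their supports follow from nodes that do contain the dependency prefix.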

Deriving all itemsets from the trie and further optimizations

- It can be shown that the non-root nodes of the trie represent all frequent itemsets that contain their dependency prefixes, and that all frequent itemsets can be derived from the non-root nodes: if a node represents an itemset X, we can derive the frequent itemsets $\{X \setminus Y \mid Y \subseteq \mathrm{pr}(X)\}$ from X, with all such itemsets having the support $\mathrm{supp}(X)$.
- Observation: if the trie reduction technique was not applied at node N for reducing the number of its child nodes, and M is a child of N, then the sibling nodes of M contain all the nodes necessary for candidate generation in Apriori fashion.
- If we augment the algorithm with such an optimization, the algorithm becomes a generalization of Apriori:
  - if at node N the algorithm discovers that the trie reduction technique is no longer effective, it switches to Apriori for the subtrie that starts from N;
  - if there are no dependencies between frequent items (i.e., frequent items are weakly correlated), the algorithm switches to Apriori at the root node, i.e., it behaves like Apriori from the start.
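The derivation rule can be checked on the running example. The node supports below are an assumption of this sketch: they were computed by hand from the example database (1, abcde), (2, abc), (3, bcd), (4, abc), (5, ab) with threshold 2 and ordering b < c < a < d, and the names `pr_item`, `nodes`, and `derive` are hypothetical.

```python
from itertools import combinations

# Dependency prefixes of the frequent items in the running example.
pr_item = {"b": set(), "c": {"b"}, "a": {"b"}, "d": {"b", "c"}}

# Non-root nodes of the reduced trie (itemset -> support), computed by hand
# from the example database.
nodes = {
    frozenset("b"): 5,
    frozenset("bc"): 4,
    frozenset("ba"): 4,
    frozenset("bca"): 3,
    frozenset("bcd"): 2,
}


def derive(trie_nodes):
    """Expand every node X into the itemsets X minus Y, for all subsets Y of pr(X)."""
    derived = {}
    for itemset, support in trie_nodes.items():
        prefix = set().union(*(pr_item[i] for i in itemset))
        for size in range(len(prefix) + 1):
            for removed in combinations(sorted(prefix), size):
                derived[itemset - set(removed)] = support
    return derived


all_frequent = derive(nodes)
for itemset, support in sorted(all_frequent.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print("".join(sorted(itemset)), support)
```

Five trie nodes expand into eleven frequent itemsets; for instance, the node {b, c, d} alone yields {d}, {b, d}, {c, d}, and {b, c, d}, all with support 2.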

The summary of the algorithm

1. Make a pass over the database, detect frequent items, and order them in lexicographic order (if the number of items is very large, the summary vector technique can be used for filtering out irrelevant items). If no frequent items were found, terminate.
2. Make a pass over the database in order to calculate dependency sets for frequent items and to build the transaction summary vector.
3. Reorder frequent items in dependency-ascending order and find their dependency prefixes.
4. Make a pass over the database in order to create the cache tree and the out-of-cache file.
5. Create the root node of the itemset trie and attach nodes for frequent items with empty dependency prefixes to the root node. If all frequent items have empty dependency prefixes, set the APR-flag in the root node.
6. Let k := 1.
7. Check all nodes in the trie at depth k. If the parent of a node N has the APR-flag set, generate candidate child nodes for the node N in Apriori fashion (node counters are set to zero), and set the APR-flag in the node N.
8. Build the next layer of nodes in the trie using the trie reduction technique with the following additional condition: if the APR-flag is set in a node at depth k, don't attach any additional candidate nodes to that node.
9. Remove the candidate nodes (nodes at depth k+1) with counter values below the support threshold. If all candidate nodes were removed, output frequent itemsets and terminate.
10. Find the nodes at depth k for which the trie reduction technique was not applied during step 8 for reducing the number of their child nodes, and set the APR-flag in these nodes. Then let k := k + 1 and go to step 7.

LogHound and examples of detected patterns

- The frequent itemset mining algorithm for event logs has been implemented in a tool called LogHound (written in C, distributed under the terms of the GNU GPL).
- LogHound includes several features for preprocessing raw event logs (support for regular expression filters, line conversion templates, etc.).
- Sample frequent line patterns detected with LogHound (the patterns begin with timestamp and host fields such as `Dec 18 * myhost *`; the message parts are listed here):
  - `connect from`
  - `log: Connection from * port`
  - `fatal: Did not receive ident string.`
  - `log: * authentication for * accepted.`
  - `fatal: Connection closed by remote host.`
- Sample frequent event type pattern detected with LogHound (the CodeRed worm footprint from the Snort IDS log):
  - WEB-IIS CodeRed v2 root.exe access
  - WEB-IIS cmd.exe access
  - HTTP Double Decoding Attack
  - WEB-IIS unicode directory traversal attempt

Data clustering for event logs

- The data clustering problem: divide a set of data points into groups (clusters), where the points within each cluster are similar to each other. Points that do not fit well into any of the detected clusters are considered to form a special cluster of outliers.
- The data clustering algorithm presented in this talk has been designed for dividing event log lines into clusters, so that:
  - each regular cluster corresponds to a certain frequently occurring line pattern (e.g., `Interface * down`);
  - the cluster of outliers contains rare lines (which possibly represent fault conditions or unexpected behavior of the system).
- Traditional clustering methods assume that data points belong to the space $\mathbb{R}^n$, and similarity between data points is measured in terms of the distance between points. Many algorithms use a variant of the $L_p$ norm as the distance function: $L_p(x, y) = \left(\sum_{i=1}^{n} |x_i - y_i|^p\right)^{1/p}$ ($L_1$ is the Manhattan distance, $L_2$ the Euclidean distance).
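As a quick numeric illustration of the $L_p$ distance, a direct transcription into Python is shown below; `lp_distance` is a hypothetical helper name. The function only accepts numeric coordinates, which is why it cannot be applied to the words of a log line.

```python
def lp_distance(x, y, p):
    """L_p distance between two points given as equal-length numeric sequences."""
    return sum(abs(a - b) ** p for a, b in zip(x, y)) ** (1 / p)


print(lp_distance((0, 0), (3, 4), 1))  # 7.0 (Manhattan distance)
print(lp_distance((0, 0), (3, 4), 2))  # 5.0 (Euclidean distance)
```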

Traditional clustering methods

Weaknesses of traditional data clustering methods:

- they are unable to handle non-numerical (categorical) data;
- they don't work well in high-dimensional data spaces (n > 10);
- they are unable to detect clusters in subspaces of the original data space.

When we view event log lines as data points with categorical attributes, where the m-th word of the line is the value of the m-th attribute, e.g., ("Password", "authentication", "for", "john", "accepted"), all the weaknesses listed above are also relevant for event log data sets.

Recent clustering algorithms that address the problems

- CLIQUE and MAFIA: employ an Apriori-like algorithm for detecting clusters. Instead of measuring the distance between individual points, the algorithms identify dense regions in the data space and form clusters from these regions. First, clusters in 1-dimensional subspaces are detected. After clusters $C_1, \dots, C_m$ in (k-1)-dimensional subspaces have been detected, cluster candidates for k-dimensional subspaces are formed from $C_1, \dots, C_m$. The algorithm then checks which candidates are actual clusters, etc. Unfortunately, Apriori's performance deteriorates as k increases (for detecting a cluster in k-dimensional space, $2^k - 2$ of its superclusters must be produced first).
- CACTUS: makes a pass over the data and builds a data summary, and then makes another pass over the data to find clusters using the summary. Although fast, CACTUS generates clusters with stretched shapes, which is undesirable for log file data clustering.
- PROCLUS: uses the K-medoid method to partition the data space into K clusters. However, it is not obvious what the right value for K is.

The algorithm – features and definitions • The algorithm views every event log line as a data point with categorical attributes, where the m-th word of the line is the value for the m-th attribute. • Makes only a few passes over the data (like the CACTUS algorithm). • Uses a density-based approach for clustering (like the CLIQUE and MAFIA algorithms): it identifies dense regions in the data space and forms clusters from them. • Region S – a subset of the data space, where certain attributes $i_1, \dots, i_k$ of all points that belong to the region S have identical values $v_1, \dots, v_k$: $\forall x \in S:\ x_{i_1} = v_1, \dots, x_{i_k} = v_k$. (Note that each region corresponds to a line pattern, e.g., Password authentication for * accepted.) • 1-region – a region with one fixed attribute $i_1$ (i.e., k=1). • Dense region – a region that contains at least N points, where N is the support threshold value given by the user. 35
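The definitions above can be expressed directly in code. In this sketch a region is represented as a mapping from 1-based attribute positions to required words; that representation and the function names are assumptions made for illustration.

```python
def in_region(point, region):
    """True if the point has value v at every fixed attribute i of the region."""
    return all(i <= len(point) and point[i - 1] == v
               for i, v in region.items())


def is_dense(region, points, support_threshold):
    """A region is dense if it contains at least support_threshold points."""
    return sum(in_region(p, region) for p in points) >= support_threshold


def region_pattern(region, length):
    """Render a region as a line pattern, with * for non-fixed attributes."""
    return " ".join(region.get(i, "*") for i in range(1, length + 1))


lines = [
    "Password authentication for john accepted",
    "Password authentication for mary accepted",
    "Interface eth0 down",
]
points = [tuple(line.split()) for line in lines]

region = {1: "Password", 2: "authentication", 3: "for", 5: "accepted"}
one_region = {3: "down"}  # a 1-region: exactly one fixed attribute

pattern = region_pattern(region, 5)
dense = is_dense(region, points, 2)
one_region_dense = is_dense(one_region, points, 2)
```

With a support threshold of 2, the four-attribute region is dense because two lines fall into it, while the 1-region for the word down in position 3 contains only one line and is not.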

The algorithm – basic steps
1. Make a pass over the data, and identify all dense 1-regions (note that dense 1-regions correspond to frequent words in the data set).
2. Make another pass over the data, and generate cluster candidates. For every line that contains dense 1-regions, create a cluster candidate by combining the fixed attributes of these regions. (For example, if the line is Password authentication for john accepted, and the words Password, authentication, for, and accepted are frequent, then the candidate is Password authentication for * accepted.) If the candidate is not present in the candidate table, it will be put there with a support value of 1; otherwise its support value will be incremented.
3. Optional step: for each candidate C, find all candidates representing more specific patterns, and add their support values to the value of C.
4. Find which candidates in the candidate table have support values equal to or greater than the support threshold, and output them as clusters.
5. Detect outliers during a separate data pass.
36
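The steps above can be sketched in a few lines of Python. This is a simplified illustration and not the SLCT implementation: it keeps everything in memory, splits words on whitespace, omits the optional step 3, and does not print wildcards that trail the last fixed word. All names are chosen for this example.

```python
from collections import Counter


def cluster_lines(lines, support):
    """Return (clusters, outliers) for a list of log lines."""
    points = [tuple(line.split()) for line in lines]

    # Step 1: count (position, word) pairs; frequent pairs are dense 1-regions.
    word_counts = Counter()
    for point in points:
        word_counts.update(enumerate(point, start=1))
    frequent = {pw for pw, n in word_counts.items() if n >= support}

    def candidate_of(point):
        # Combine the fixed attributes of all dense 1-regions found in the line.
        return tuple(pw for pw in enumerate(point, start=1) if pw in frequent)

    # Step 2: build the candidate table with support values.
    candidates = Counter()
    for point in points:
        candidate = candidate_of(point)
        if candidate:
            candidates[candidate] += 1

    # Step 4: candidates that reach the support threshold become clusters.
    clusters = {c: n for c, n in candidates.items() if n >= support}

    # Step 5: a separate pass collects lines that belong to no cluster.
    outliers = [line for line, point in zip(lines, points)
                if candidate_of(point) not in clusters]
    return clusters, outliers


def format_pattern(candidate):
    """Render a candidate as a line pattern, with * for variable words."""
    fixed = dict(candidate)
    return " ".join(fixed.get(i, "*") for i in range(1, max(fixed) + 1))


log = [
    "Password authentication for john accepted",
    "Password authentication for mary accepted",
    "Password authentication for alice accepted",
    "Interface eth0 down",
    "Interface eth1 down",
    "Fatal disk error",
]
clusters, outliers = cluster_lines(log, support=2)
patterns = {format_pattern(c): n for c, n in clusters.items()}
```

On this sample, `patterns` holds Password authentication for * accepted with support 3 and Interface * down with support 2, and the single rare line ends up in `outliers`. A production tool would avoid holding the whole log in memory, which this sketch makes no attempt to do.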

SLCT – Simple Logfile Clustering Tool • The event log clustering algorithm has been implemented in a tool called SLCT (written in C, distributed under the terms of the GNU GPL). • Supports regular expression filters, so that particular lines (or a particular cluster) can be inspected more closely. • Supports line conversion templates (i.e., before inspection, certain parts of a line are assembled into a new line), e.g., with the filter ‘sshd\[[0-9]+\]: (.+)’ and the template ‘$1’ the line sshd[2781]: connect from 10.1.1.1 will be converted to connect from 10.1.1.1 • A custom word delimiter can be specified (the default is whitespace, but a custom regular expression can be given). • SLCT can refine variable parts of patterns by looking for constant heads and tails, e.g., the pattern connect from * is converted to connect from 192.168.* • After the first run, SLCT can be applied iteratively to the file of outliers, until the file is relatively small and can be inspected manually for unknown faults and anomalies. 37
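The filter/template conversion and the head refinement described above can be imitated in Python as follows. This only mirrors the behaviour described on the slide and says nothing about how SLCT implements it; the function names and the sample addresses are assumptions, and the filter is written with escaped brackets.

```python
import os
import re


def convert_line(line, line_filter, template):
    """Apply a regex filter; on a match, fill $1, $2, ... in the template."""
    match = re.search(line_filter, line)
    if match is None:
        return None  # the line does not pass the filter
    return re.sub(r"\$(\d+)",
                  lambda m: match.group(int(m.group(1))),
                  template)


def refine_head(values):
    """Replace a wildcard by the constant head shared by all observed values."""
    # os.path.commonprefix compares strings character by character,
    # so it works for arbitrary strings, not only for paths.
    return os.path.commonprefix(values) + "*"


converted = convert_line("sshd[2781]: connect from 10.1.1.1",
                         r"sshd\[[0-9]+\]: (.+)", "$1")
rejected = convert_line("imapd[31]: Login user=john",
                        r"sshd\[[0-9]+\]: (.+)", "$1")
refined = "connect from " + refine_head(
    ["192.168.1.5", "192.168.20.7", "192.168.3.9"])
```

Looking for constant tails could be done the same way by reversing the strings before taking the common prefix.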

![Examples of patterns detected with SLCT Sample clusters detected with SLCT sshd connect Examples of patterns detected with SLCT • Sample clusters detected with SLCT: sshd[*]: connect](https://slidetodoc.com/presentation_image_h/83fb097edc10d05a013137bfc27ac848/image-38.jpg)

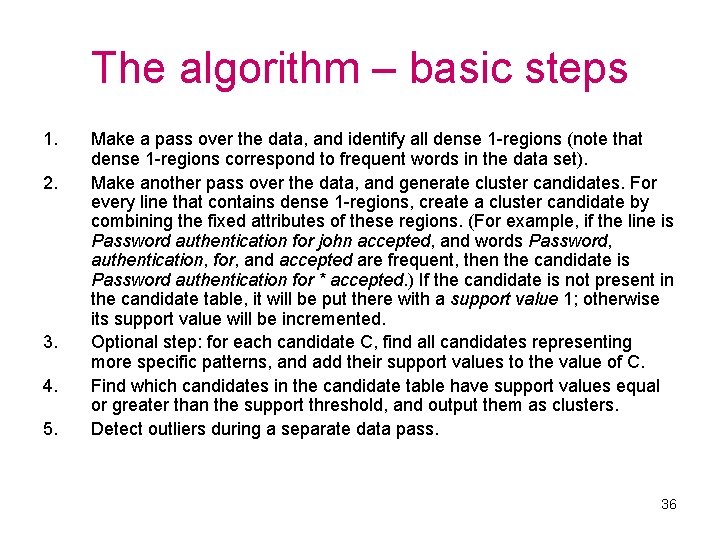

Examples of patterns detected with SLCT
• Sample clusters detected with SLCT:
- sshd[*]: connect from 1*
- log: Connection from 1* port *
- log: * authentication for * accepted.
- log: Closing connection to 1*
• Sample outliers detected with SLCT:
- sendmail[***]: NOQUEUE: SYSERR(***): can not chdir(/var/spool/mqueue/): Permission denied
- sendmail[***]: ***: SYSERR(root): collect: I/O error on connection from ***, from=<***>
- sendmail[***]: ***: SYSERR(root): putbody: write error: Input/output error
- login[***]: FAILED LOGIN 1 FROM (null) FOR root, Authentication failure
- sshd[***]: Failed password for root from *** port *** ssh2
- imapd[***]: Unable to load certificate from ***, host=*** [***]
- imapd[***]: Fatal disk error user=*** host=*** [***] mbx=***: Disk quota exceeded
38

References • SEC home page – http://simple-evcorr.sourceforge.net • LogHound home page – http://kodu.neti.ee/~risto/loghound/ • SLCT home page – http://kodu.neti.ee/~risto/slct/ • My publications – see http://kodu.neti.ee/~risto 39