COMP 3500 Introduction to Operating Systems Final Exam


COMP 3500 Introduction to Operating Systems Final Exam Review, Dr. Xiao Qin, Auburn University, http://www.eng.auburn.edu/~xqin, xqin@auburn.edu

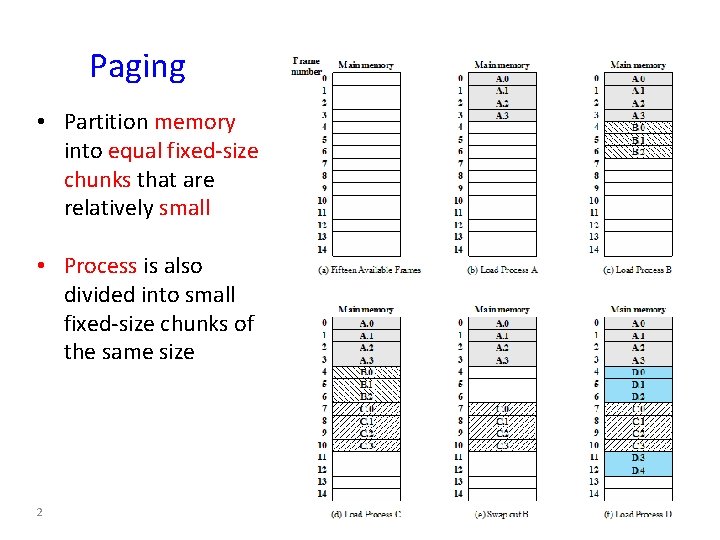

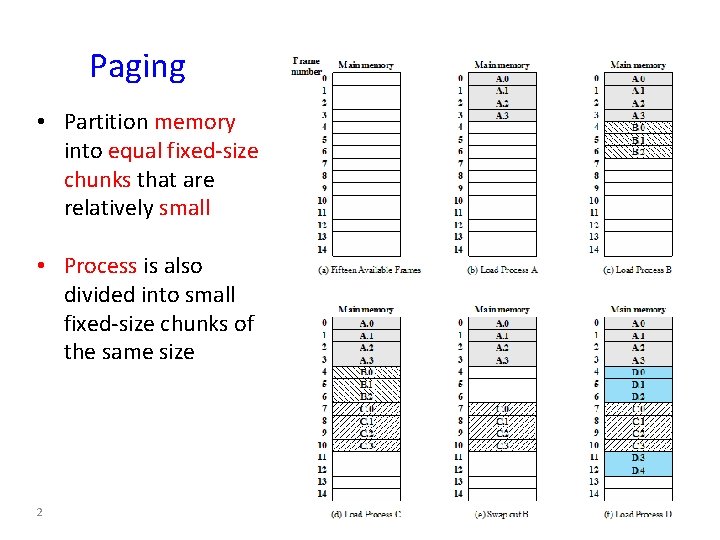

Paging

- Partition memory into equal, fixed-size chunks (frames) that are relatively small.
- Each process is also divided into small, fixed-size chunks (pages) of the same size.

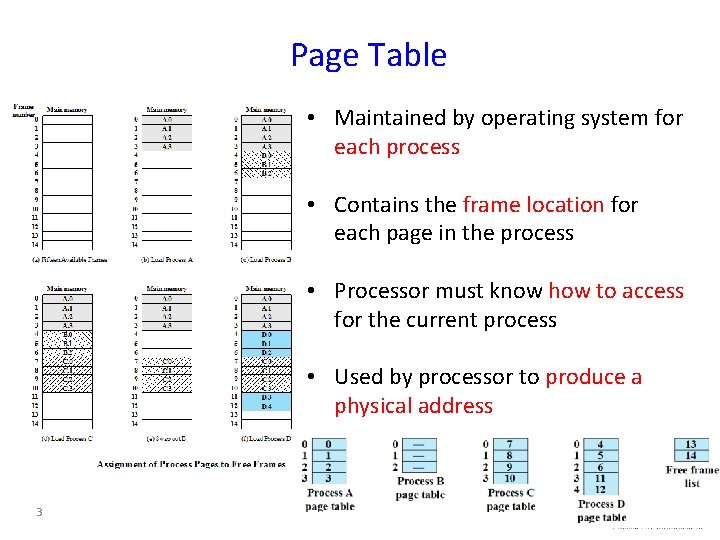

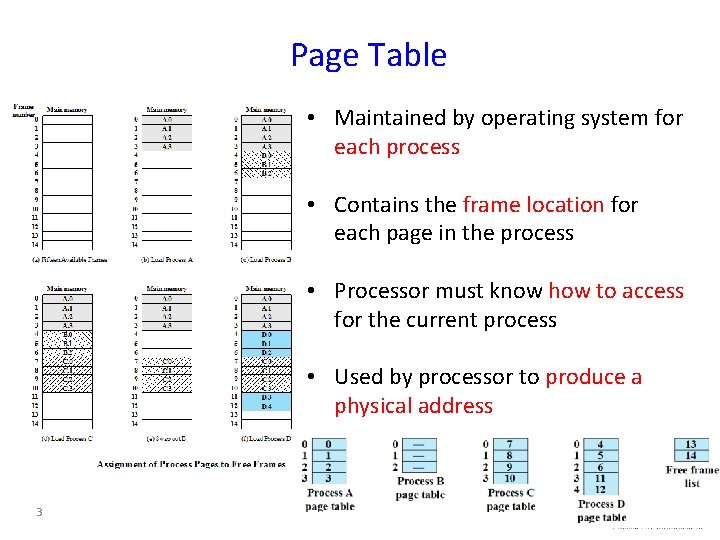

Page Table

- Maintained by the operating system for each process.
- Contains the frame location for each page in the process.
- The processor must know how to access the page table of the current process.
- Used by the processor to produce a physical address.

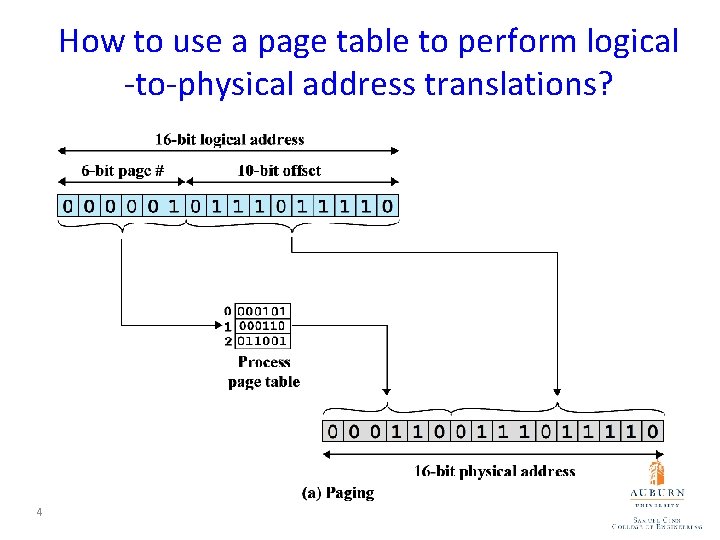

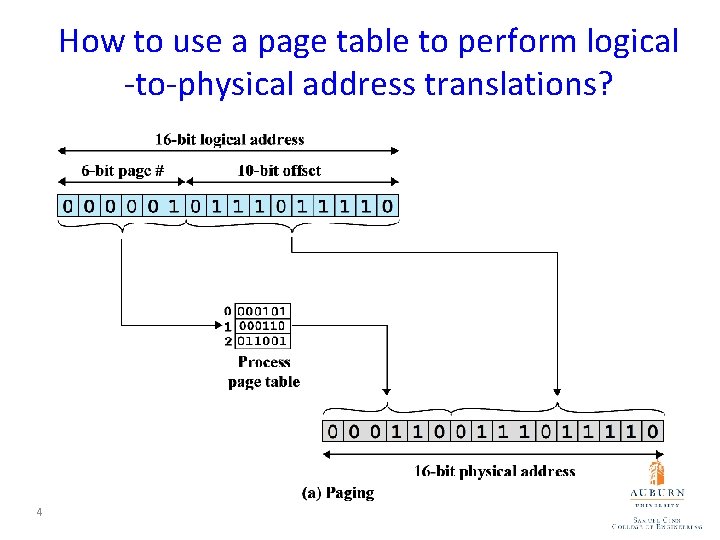

How do we use a page table to translate logical addresses into physical addresses?

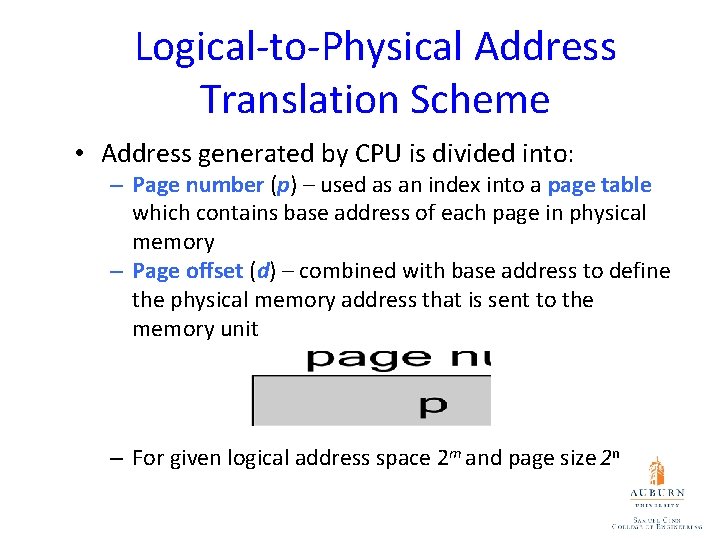

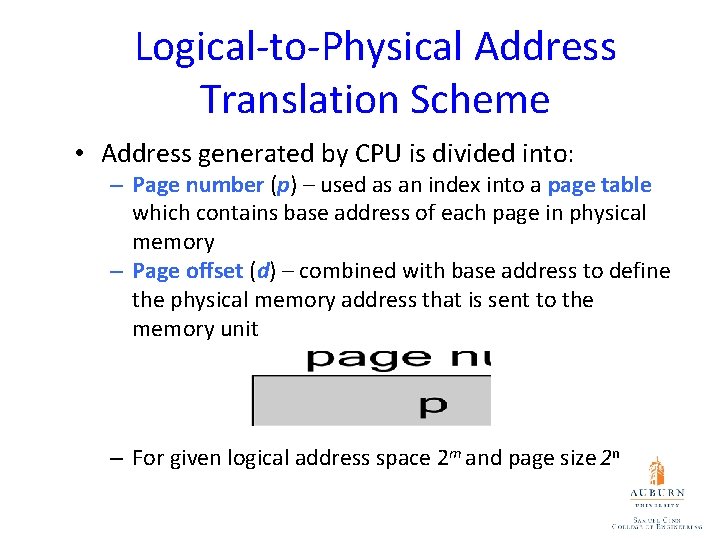

Logical-to-Physical Address Translation Scheme

- An address generated by the CPU is divided into:
  - Page number (p): used as an index into a page table, which contains the base address of each page in physical memory.
  - Page offset (d): combined with the base address to define the physical memory address that is sent to the memory unit.
- For a logical address space of $2^m$ bytes and a page size of $2^n$ bytes, the high-order $m - n$ bits of a logical address give the page number $p$, and the low-order $n$ bits give the offset $d$.

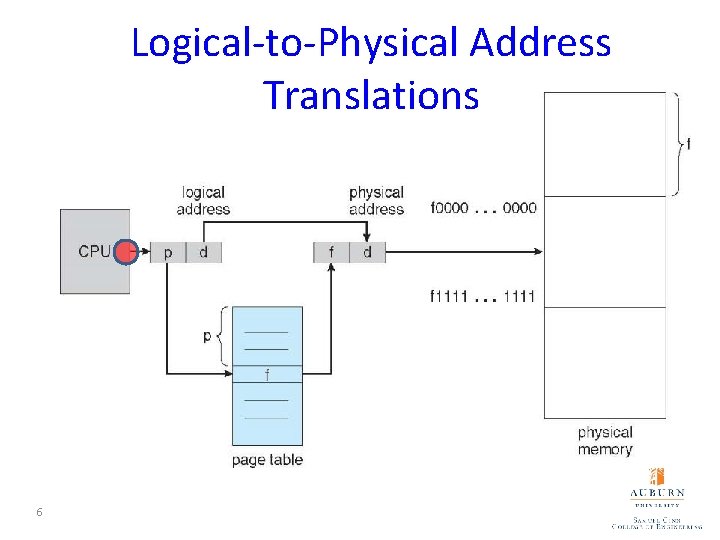

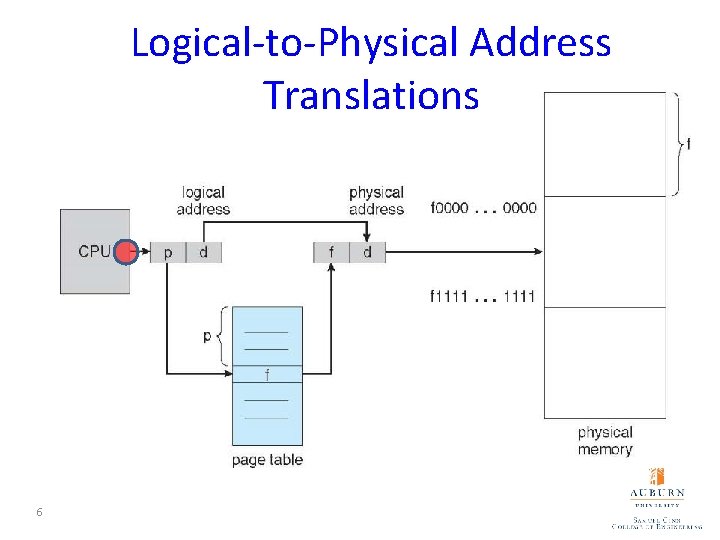

Logical-to-Physical Address Translations

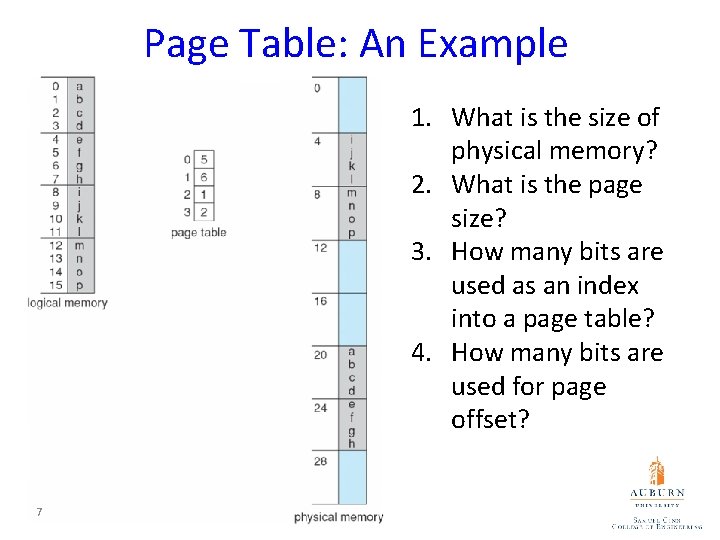

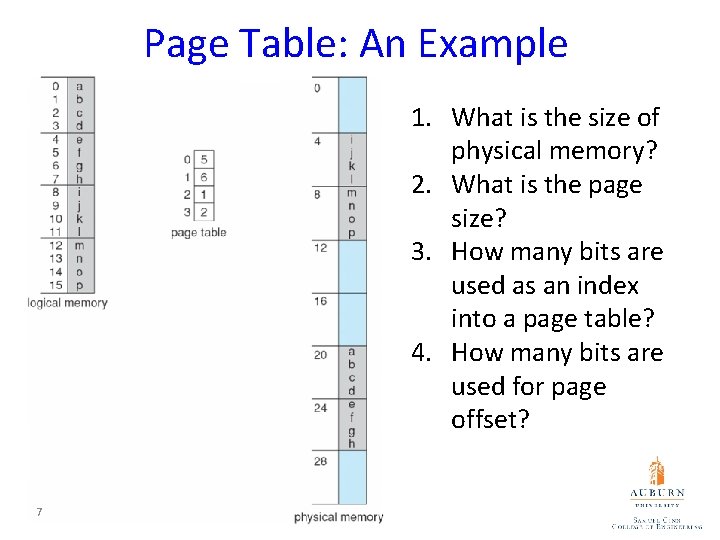

Page Table: An Example

1. What is the size of physical memory?
2. What is the page size?
3. How many bits are used as an index into a page table?
4. How many bits are used for the page offset?

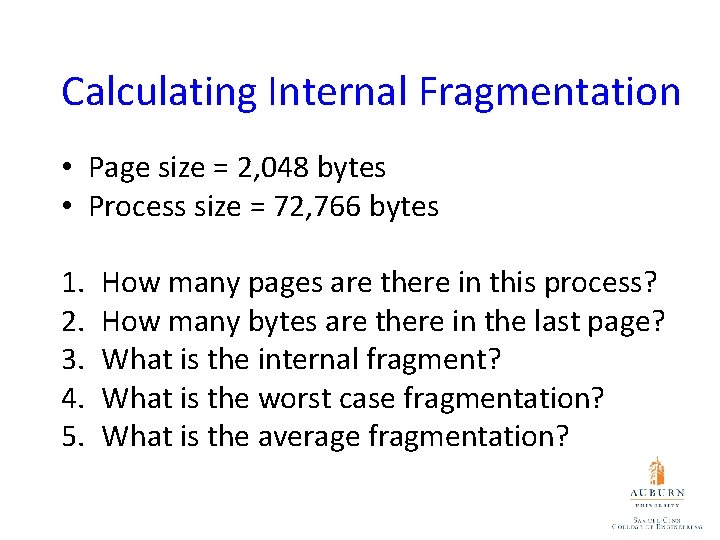

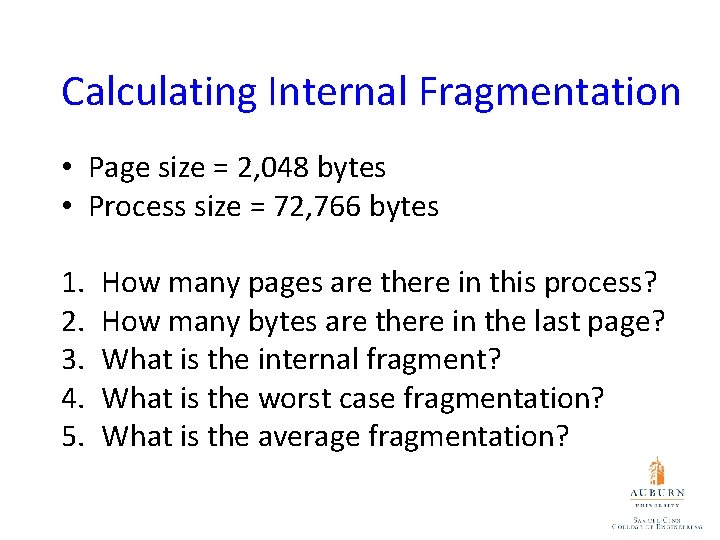

Calculating Internal Fragmentation

- Page size = 2,048 bytes
- Process size = 72,766 bytes

1. How many pages are there in this process?
2. How many bytes are there in the last page?
3. What is the internal fragmentation?
4. What is the worst-case fragmentation?
5. What is the average fragmentation?

Are small frame sizes desirable?

- Small frame size: small fragment (less internal fragmentation).
- Small frame size: large page table, since each page table entry takes memory to track.
- Page sizes have been growing over time; for example, Solaris supports two page sizes, 8 KB and 4 MB.

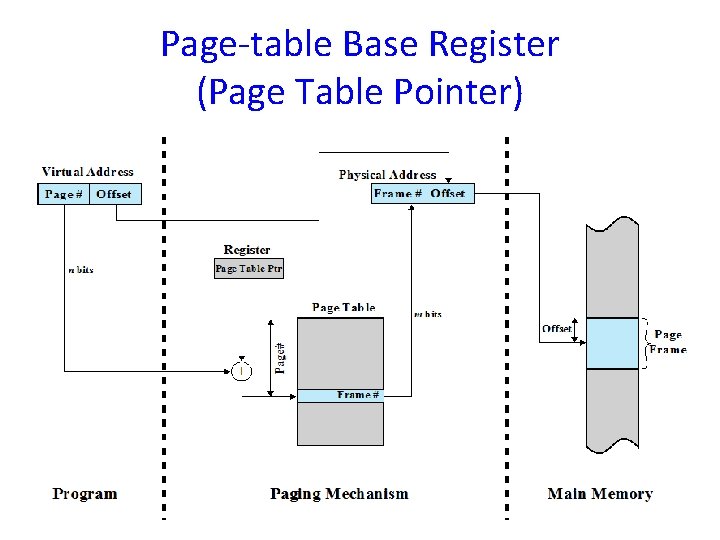

Two registers to support paging

1. Where should we keep page tables?
2. Where does the page-table base register (PTBR) point?
3. The page-table length register (PTLR) indicates the size of the page table. Why is the PTLR important?

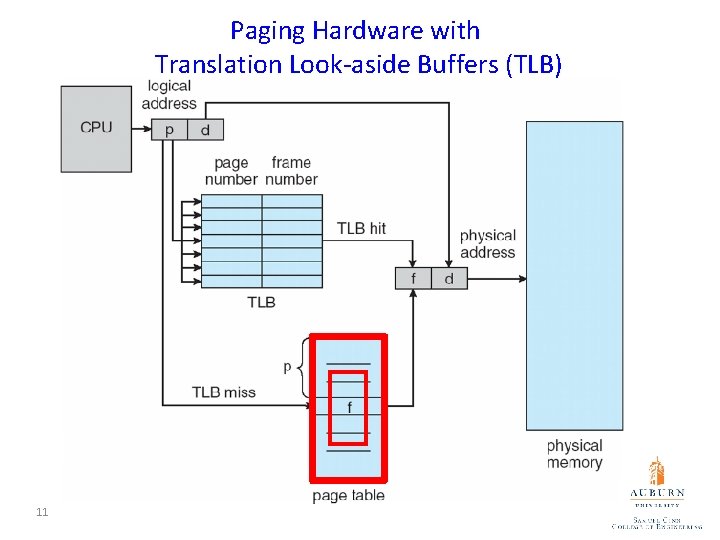

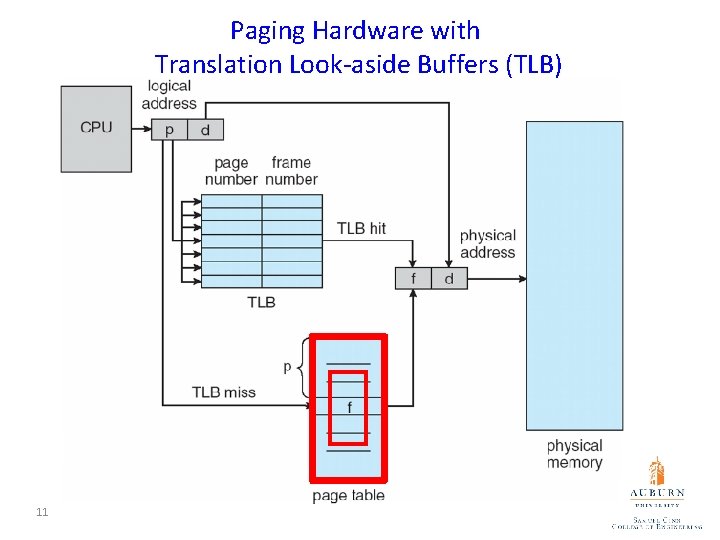

Paging Hardware with Translation Look-aside Buffers (TLB)

Parallel Searching the TLB

- Associative memory: all entries are searched in parallel.
- Address translation (p, d):
  - If p is in an associative register, get the frame number out.
  - Otherwise, get the frame number from the page table in memory.

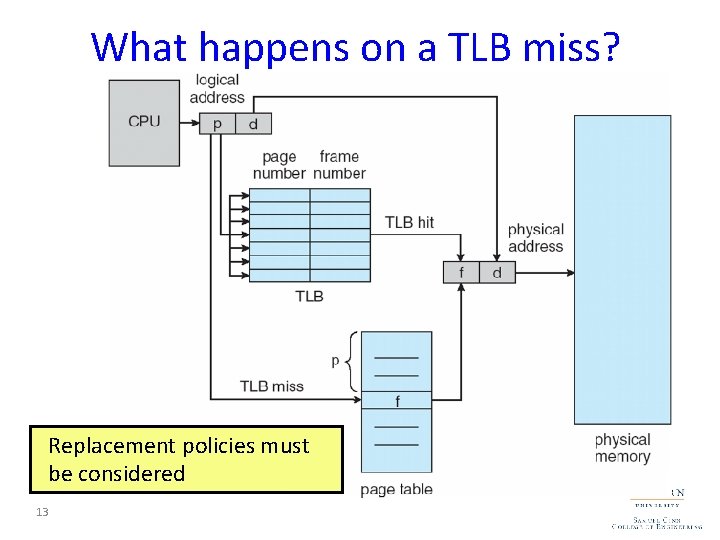

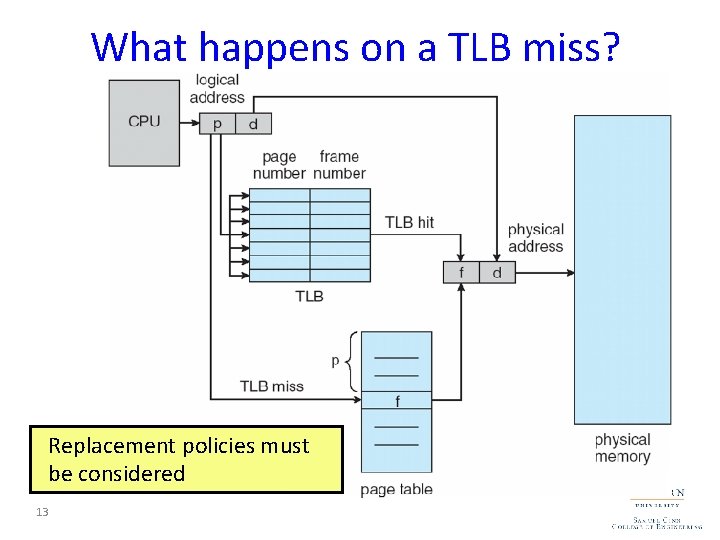

What happens on a TLB miss? The value (?) is loaded into the TLB for faster access next time, and replacement policies must be considered.

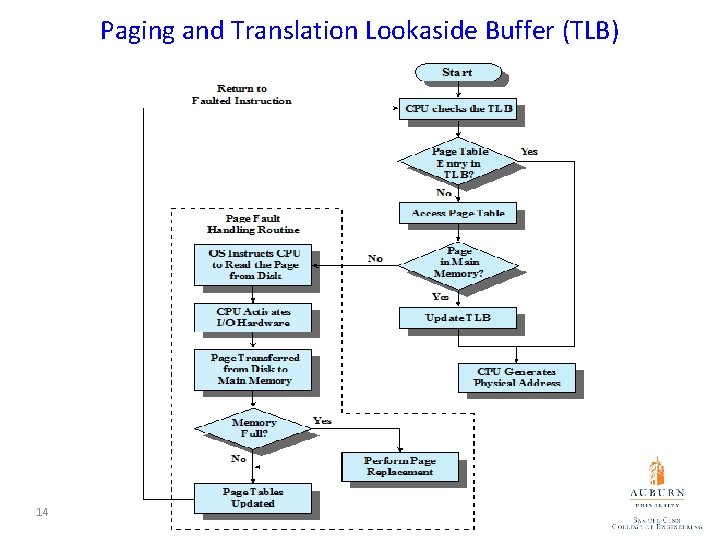

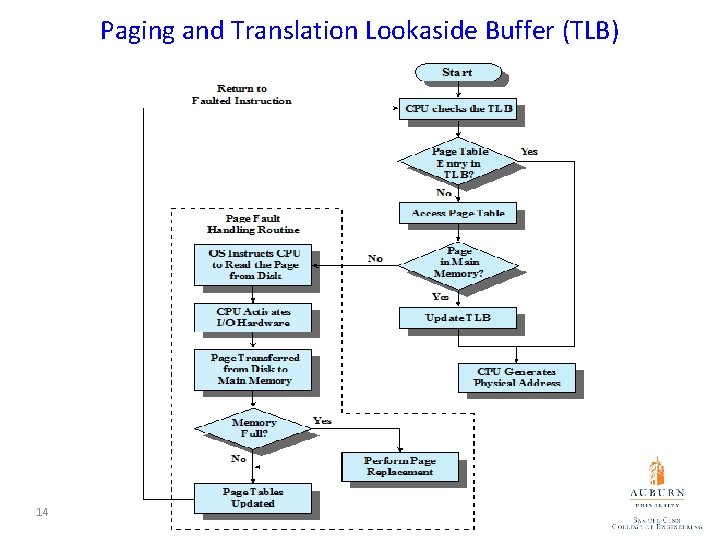

Paging and Translation Lookaside Buffer (TLB)

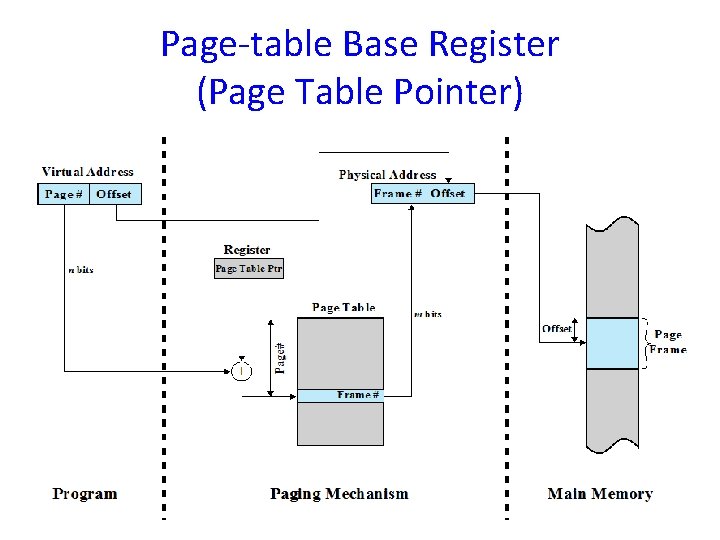

Page-table Base Register (Page Table Pointer)

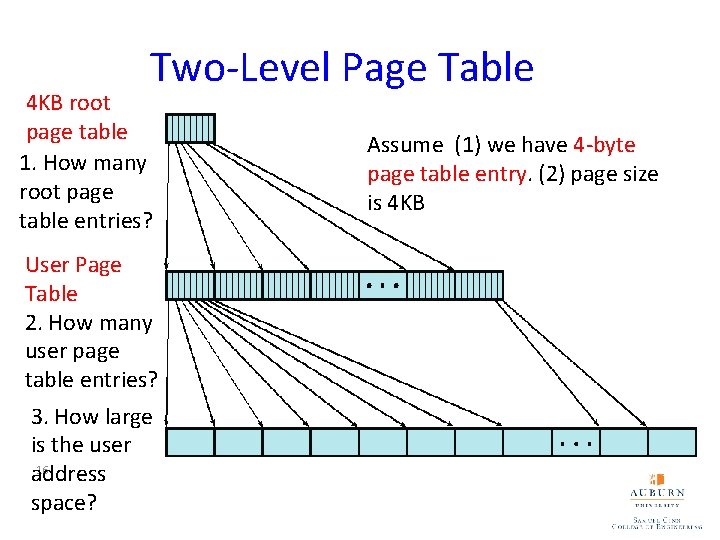

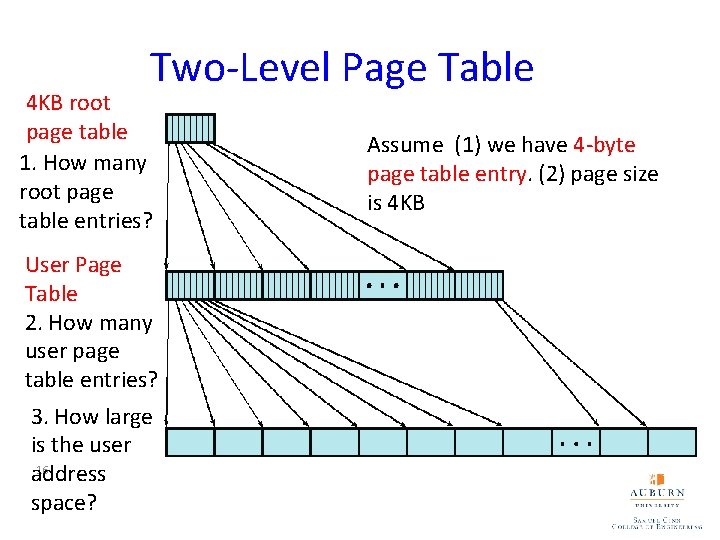

Two-Level Page Table (figure: a 4 KB root page table whose entries point to the pages of a user page table)

Assume (1) each page table entry is 4 bytes and (2) the page size is 4 KB.

1. How many root page table entries are there?
2. How many user page table entries are there?
3. How large is the user address space?
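One way to work through the three questions, assuming the root table fills exactly one 4 KB page and each root entry points to one 4 KB page of the user page table:

$$\text{root entries} = \frac{4\text{ KB}}{4\text{ B}} = \frac{2^{12}}{2^{2}} = 2^{10} = 1024$$

$$\text{user page table entries} = 2^{10} \times \frac{2^{12}}{2^{2}} = 2^{20} \quad (2^{20} \times 4\text{ B} = 4\text{ MB of entries})$$

$$\text{user address space} = 2^{20} \text{ pages} \times 2^{12}\text{ B} = 2^{32}\text{ B} = 4\text{ GB}$$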

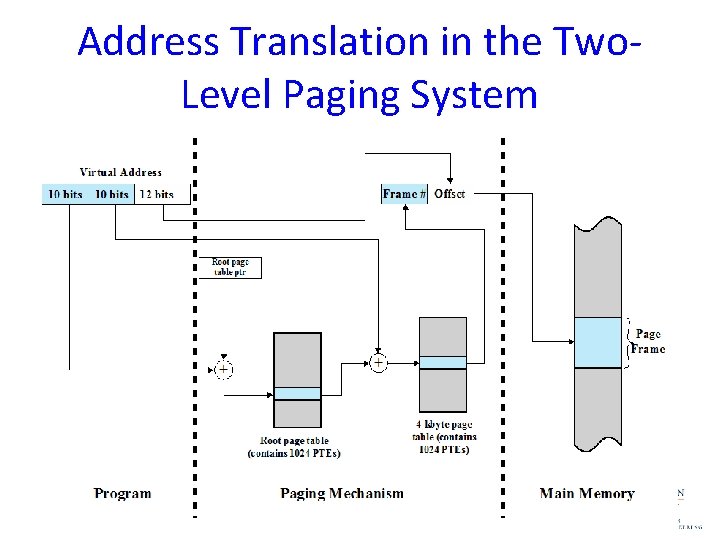

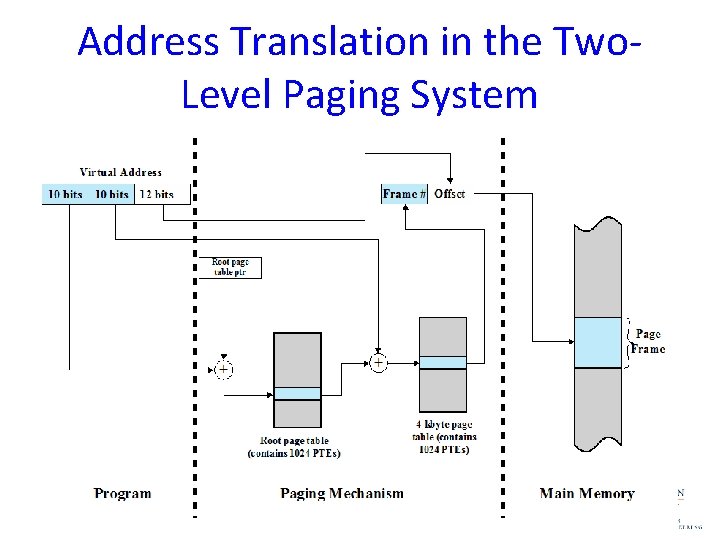

Address Translation in the Two-Level Paging System

Design a virtual address format for a two-level paging system

- Suppose you design a two-level page translation scheme where the page size is 16 MB and the page table entry size is 16 bytes.
- What is the format of a 64-bit virtual address?

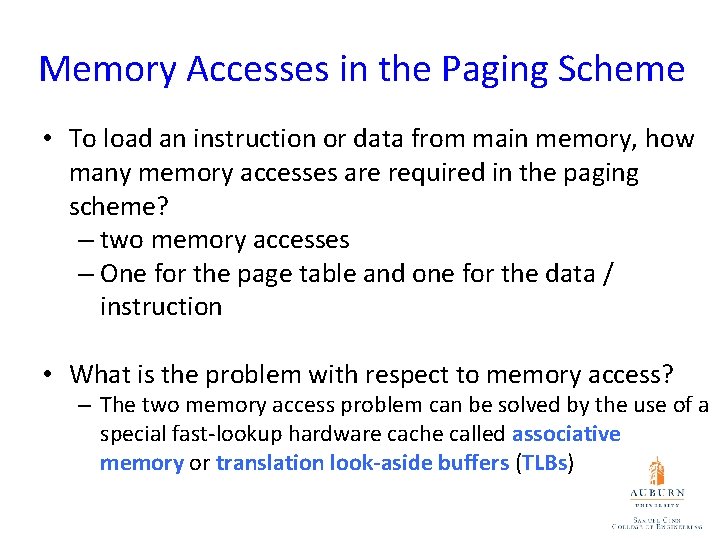

Memory Accesses in the Paging Scheme

- To load an instruction or data from main memory, how many memory accesses are required in the paging scheme?
  - Two memory accesses: one for the page table and one for the data/instruction.
- What is the problem with respect to memory access?
  - Every reference costs two memory accesses, which roughly doubles access time. This two-memory-access problem can be solved with a special fast-lookup hardware cache called associative memory or a translation look-aside buffer (TLB).

Address Space ID in TLBs

- Why do some TLBs store an address-space identifier (ASID), which uniquely identifies each process, in each TLB entry?
  - To provide address-space protection for that process.

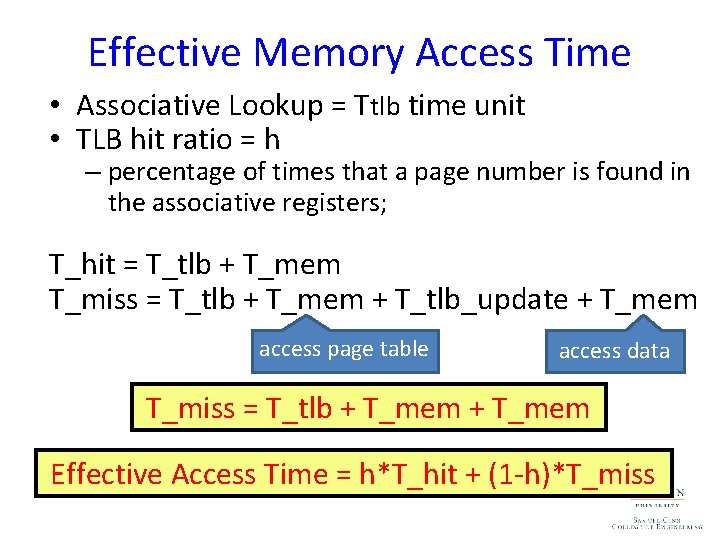

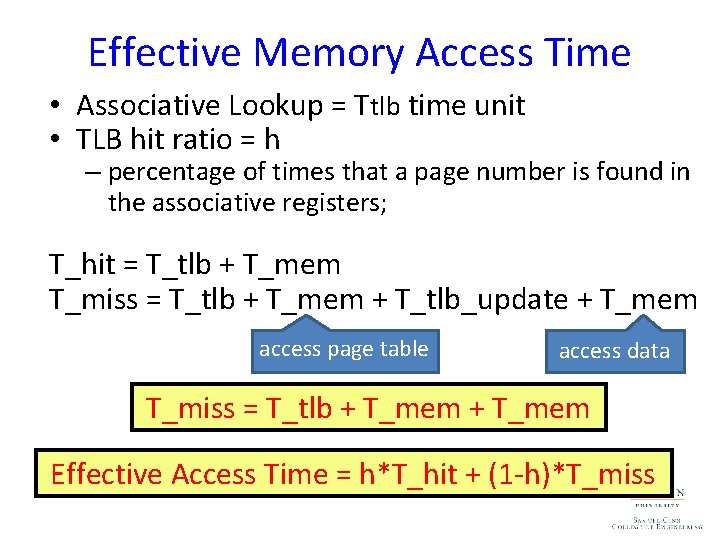

Effective Memory Access Time

- Associative lookup = $T_{tlb}$ time units
- TLB hit ratio = $h$: the fraction of times that a page number is found in the associative registers

$$T_{hit} = T_{tlb} + T_{mem}$$

$$T_{miss} = T_{tlb} + \underbrace{T_{mem}}_{\text{access page table}} + T_{tlb\_update} + \underbrace{T_{mem}}_{\text{access data}}$$

Neglecting $T_{tlb\_update}$:

$$T_{miss} = T_{tlb} + 2T_{mem}$$

$$\text{Effective Access Time} = h \cdot T_{hit} + (1 - h) \cdot T_{miss}$$

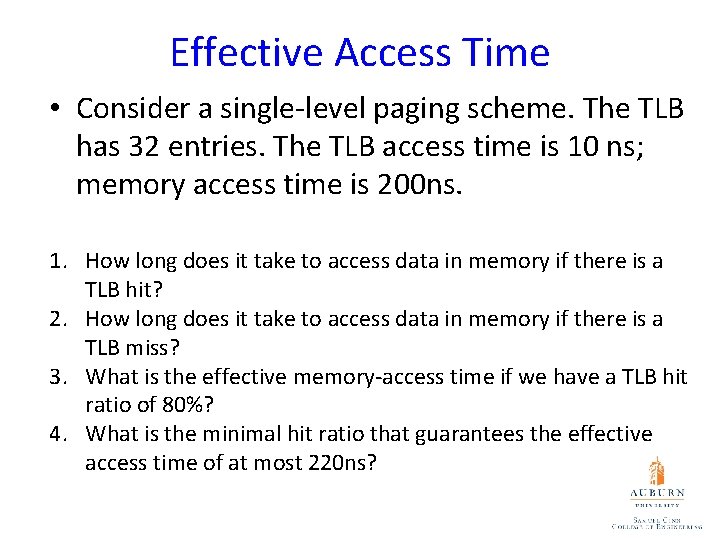

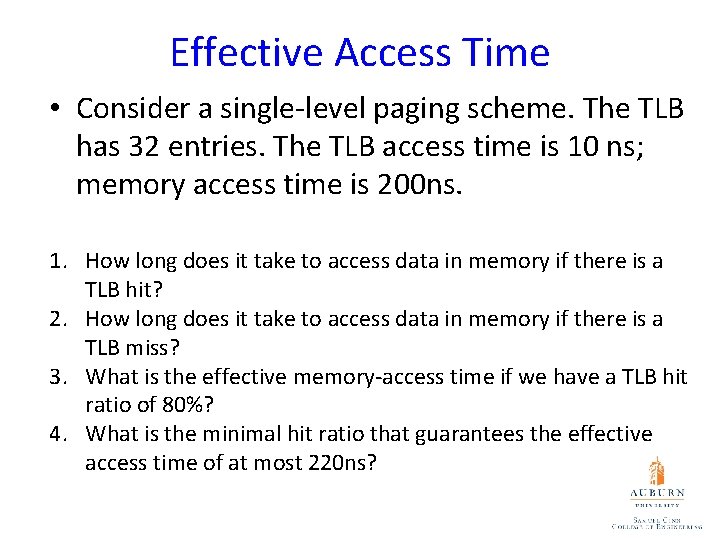

Effective Access Time

Consider a single-level paging scheme. The TLB has 32 entries. The TLB access time is 10 ns, and the memory access time is 200 ns.

1. How long does it take to access data in memory if there is a TLB hit?
2. How long does it take to access data in memory if there is a TLB miss?
3. What is the effective memory-access time if the TLB hit ratio is 80%?
4. What is the minimal hit ratio that guarantees an effective access time of at most 220 ns?

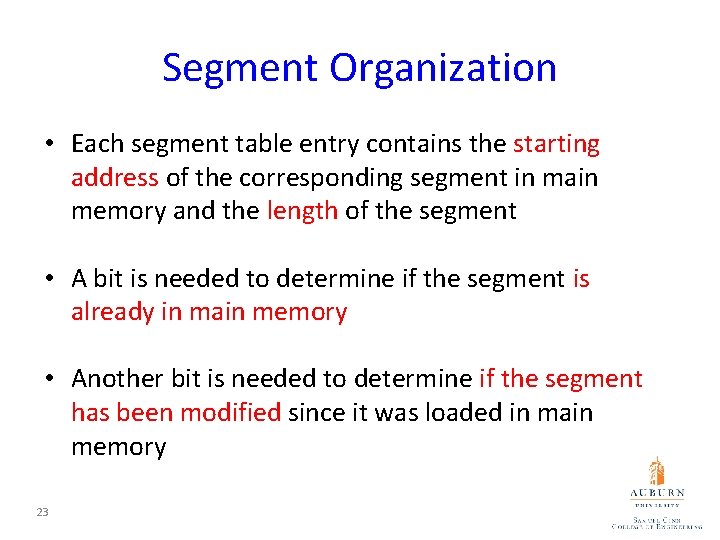

Segment Organization

- Each segment table entry contains the starting address of the corresponding segment in main memory and the length of the segment.
- A bit is needed to determine whether the segment is already in main memory.
- Another bit is needed to determine whether the segment has been modified since it was loaded into main memory.

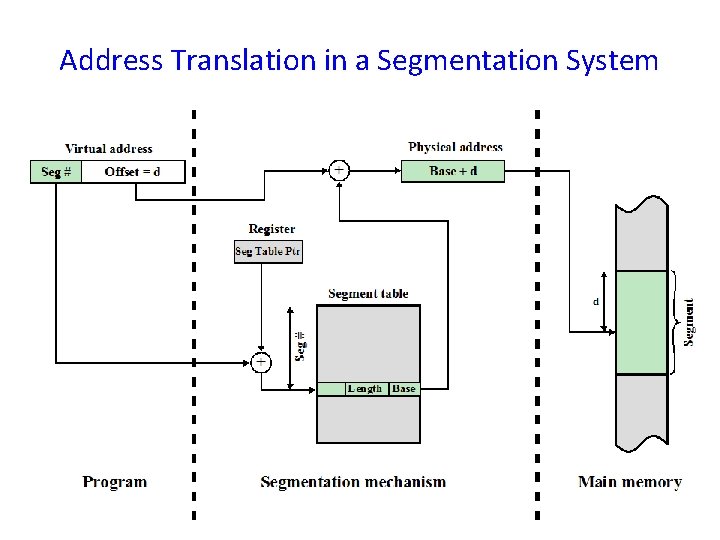

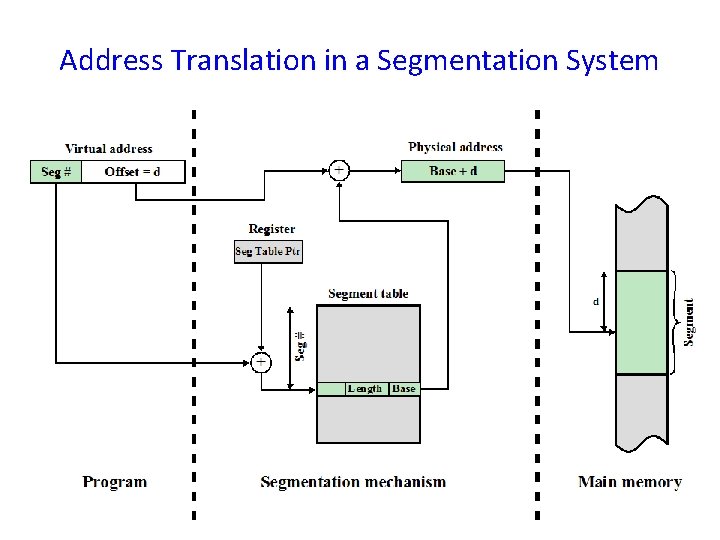

Address Translation in a Segmentation System

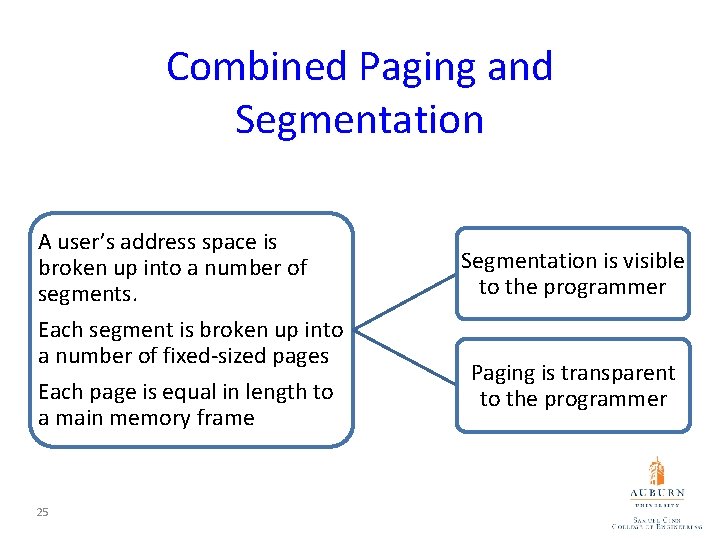

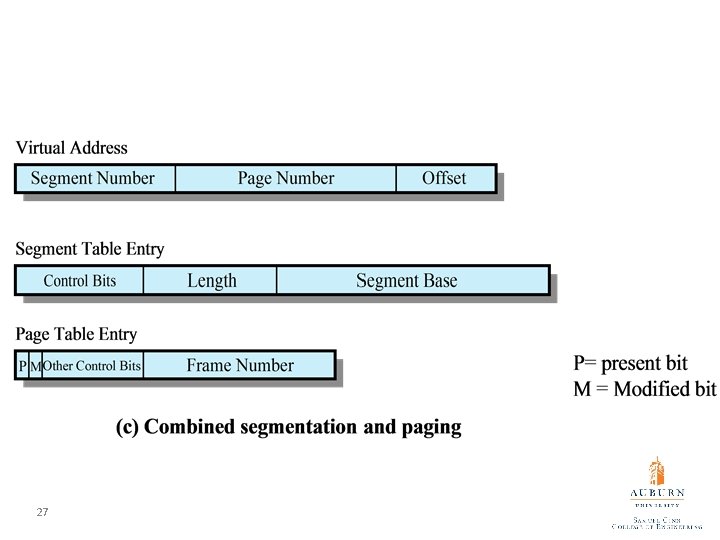

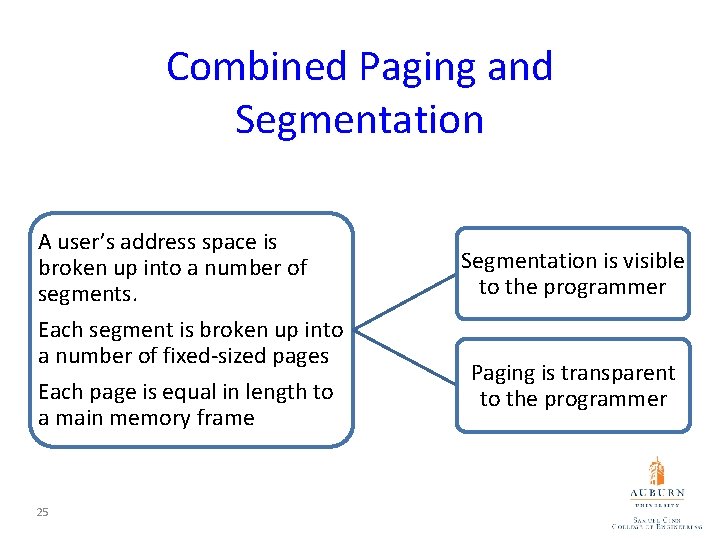

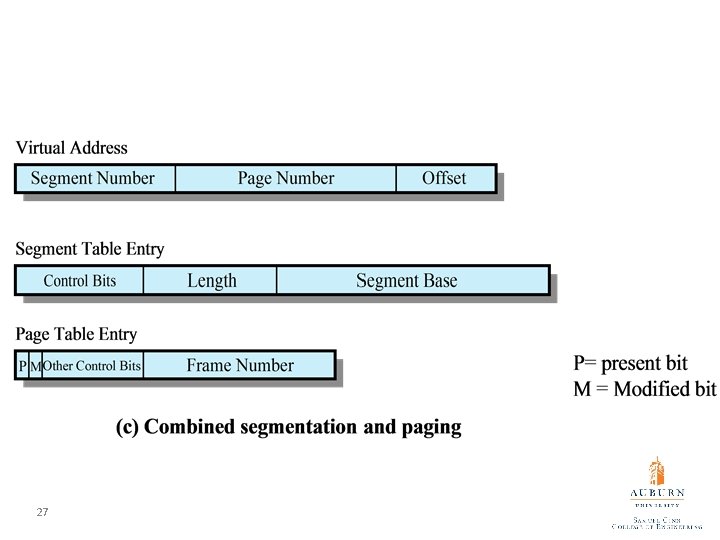

Combined Paging and Segmentation

- A user's address space is broken up into a number of segments.
- Each segment is broken up into a number of fixed-size pages.
- Each page is equal in length to a main memory frame.
- Segmentation is visible to the programmer; paging is transparent to the programmer.

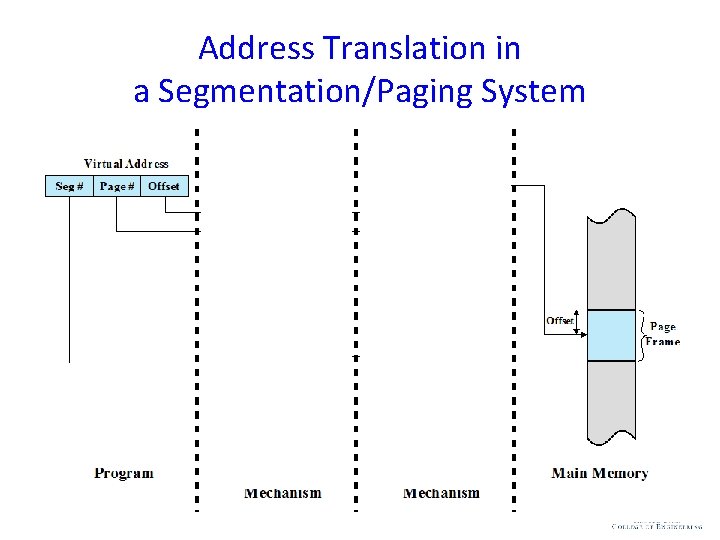

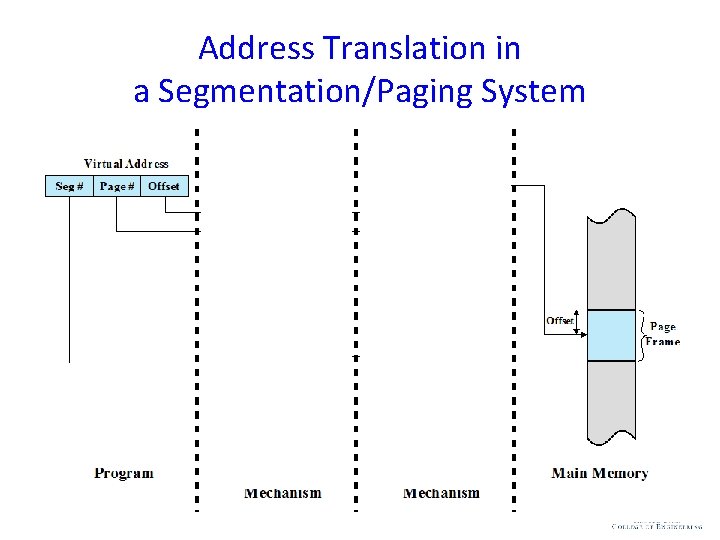

Address Translation in a Segmentation/Paging System


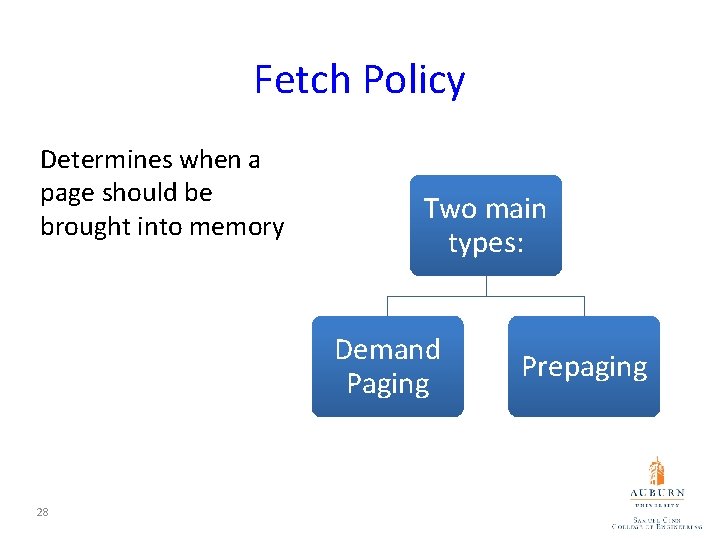

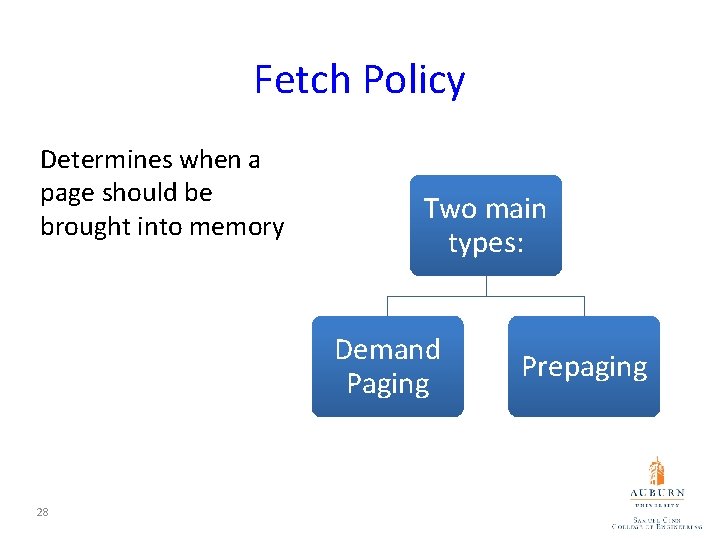

Fetch Policy

- Determines when a page should be brought into memory.
- Two main types: demand paging and prepaging.

Demand Paging

- Brings a page into main memory only when a reference is made to a location on that page.
- Are there many or few page faults when a process is first started? What happens after more and more pages are brought in?
- The principle of locality suggests that, as more and more pages are brought in, most future references will be to pages that have recently been brought in, so page faults should drop to a very low level.

Prepaging

- Pages other than the one demanded by a page fault are brought in.
- Exploits the characteristics of most secondary memory devices.
- If the pages of a process are stored contiguously in secondary memory, it is more efficient to bring in a number of pages at one time.
- What is the downside? Prepaging is ineffective if the extra pages are not referenced.

Placement Policy

- Determines where in real memory a process piece is to reside.
- An important design issue in a segmentation system.
- For paging, or combined paging with segmentation, placement is irrelevant because the address translation hardware performs its functions with equal efficiency for any frame.
- For NUMA (nonuniform memory access) systems, an automatic placement strategy is desirable.

Replacement Policy

- Deals with the selection of a page in main memory to be replaced when a new page must be brought in.
- Objective: the page that is removed should be the page least likely to be referenced in the near future.
- The more elaborate the replacement policy, the greater the hardware and software overhead to implement it.

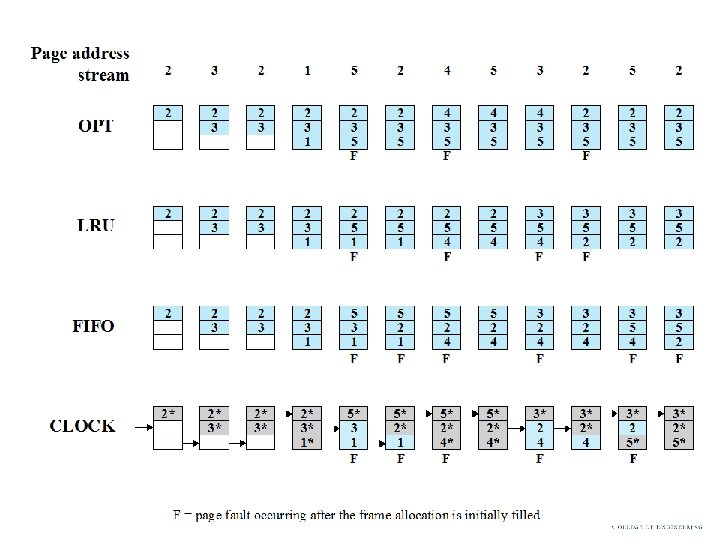

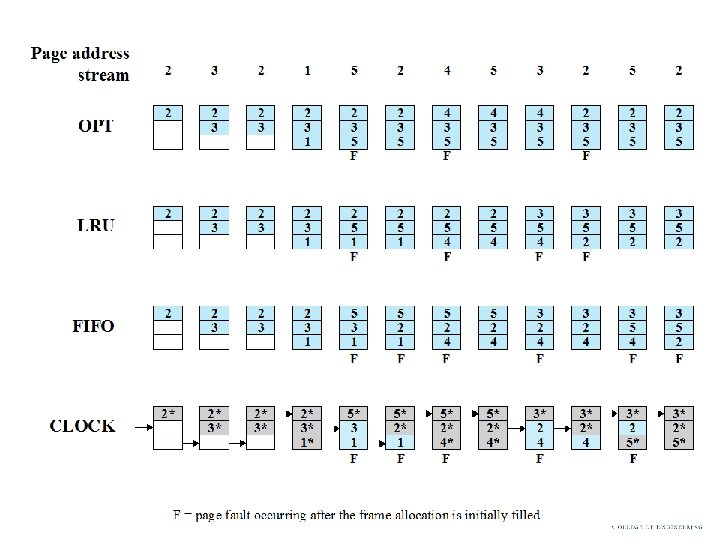

Basic algorithms used for the selection of a page to replace:

- Optimal
- Least recently used (LRU)
- First-in-first-out (FIFO)
- Clock

Least Recently Used (LRU)

- Replaces the page that has not been referenced for the longest time.
- By the principle of locality, this should be the page least likely to be referenced in the near future.

Amazon Interview Question for Software Engineers/Developers

- Implement an LRU cache.
- How do we implement LRU?
  - One approach is to tag each page with the time of its last reference.
  - This requires a great deal of overhead.

First-in-First-out (FIFO)

- Treats the page frames allocated to a process as a circular buffer.
- Pages are removed in round-robin style.
- A simple replacement policy to implement.
- The page that has been in memory the longest is replaced.


File Management

- A file is a named, ordered collection of information.
- What are a file manager's responsibilities?
  - Storing the information on a device
  - Mapping the block storage to a logical view
  - Allocating/deallocating storage
  - Providing file directories

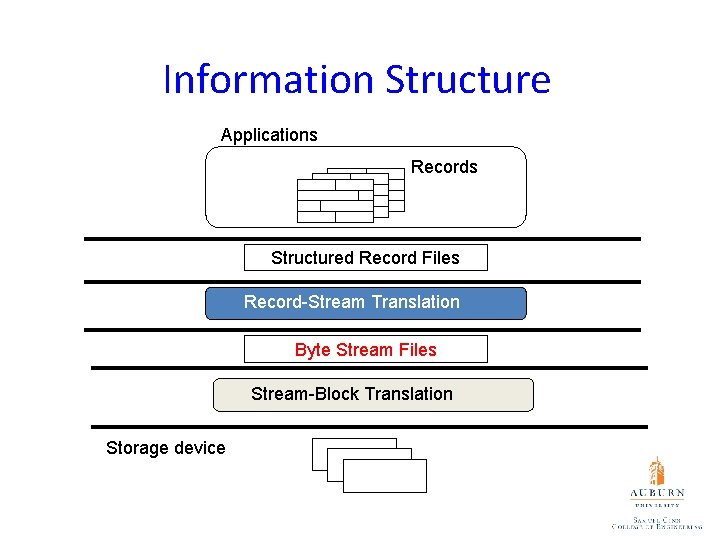

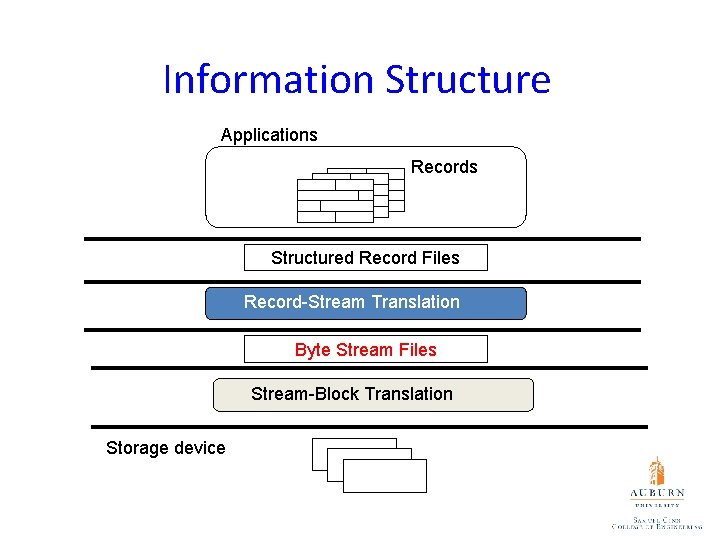

Information Structure (figure, top to bottom: Applications → Records → Structured Record Files → Record-Stream Translation → Byte Stream Files → Stream-Block Translation → Storage device)

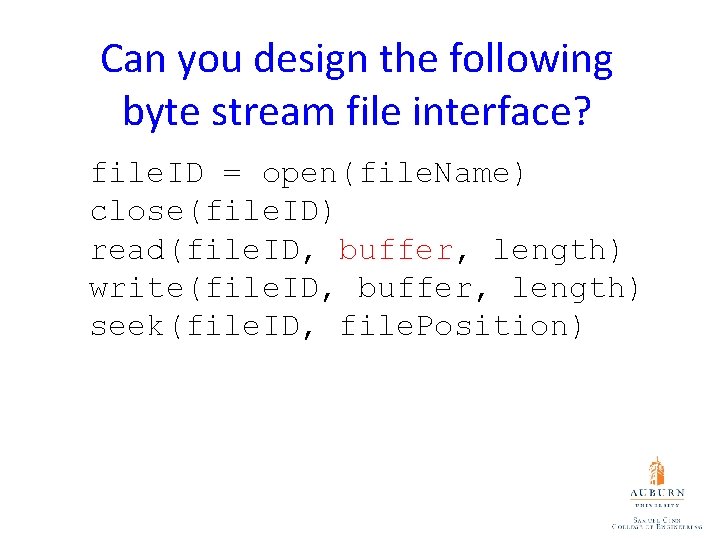

Can you design the following byte stream file interface?

- `fileID = open(fileName)`
- `close(fileID)`
- `read(fileID, buffer, length)`
- `write(fileID, buffer, length)`
- `seek(fileID, filePosition)`

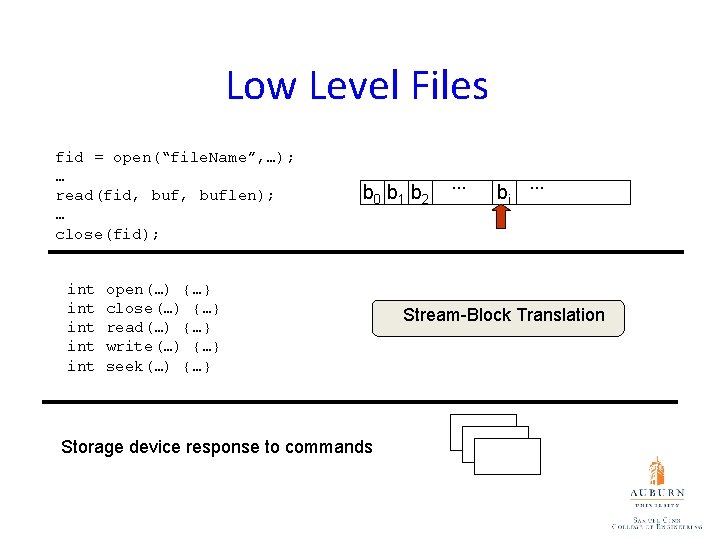

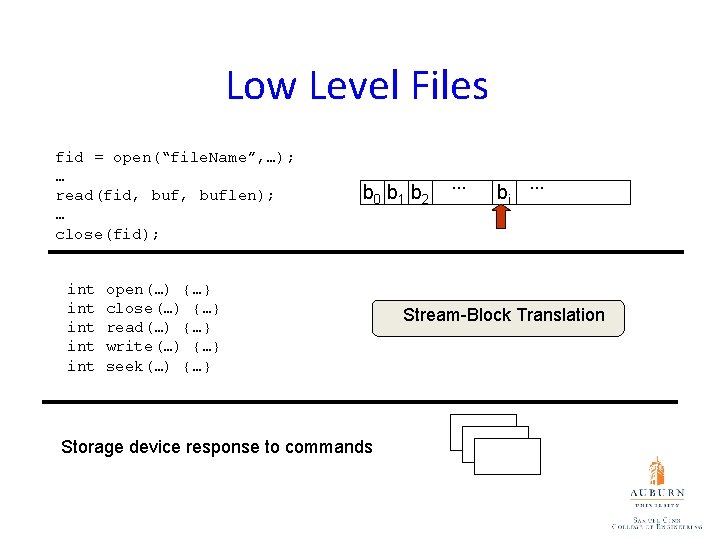

Low Level Files (figure: an application calls `fid = open("fileName", …)`, `read(fid, buflen)`, and `close(fid)`; the file manager's `open(…)`, `close(…)`, `read(…)`, `write(…)`, and `seek(…)` routines perform stream-block translation between the byte stream and device blocks b0, b1, b2, …, bi, …; the storage device responds to commands)

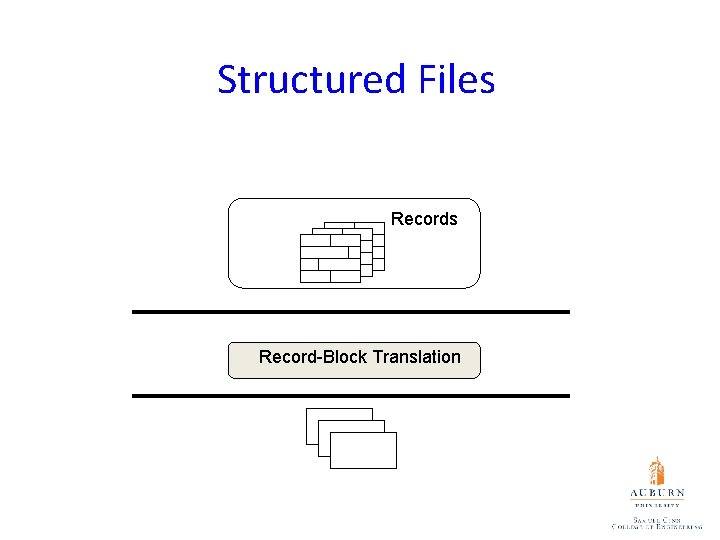

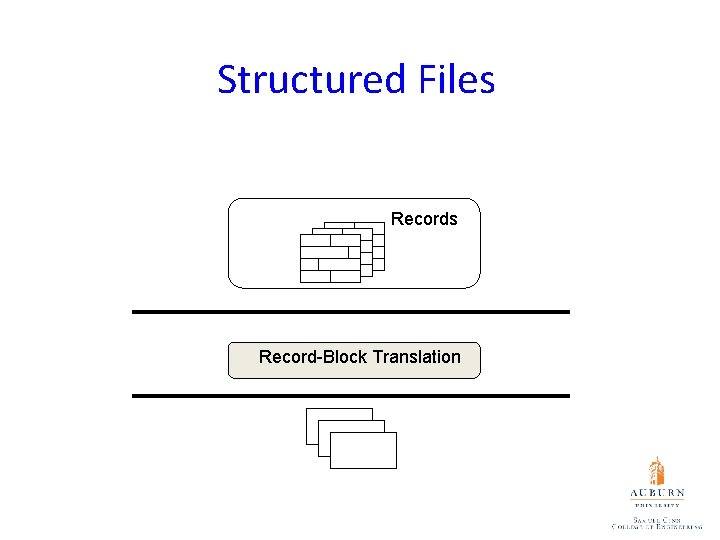

Structured Files Record-Block Translation

Record-Oriented Sequential Files

Logical record interface:

- `fileID = open(fileName)`
- `close(fileID)`
- `getRecord(fileID, record)`
- `putRecord(fileID, record)`
- `seek(fileID, position)`

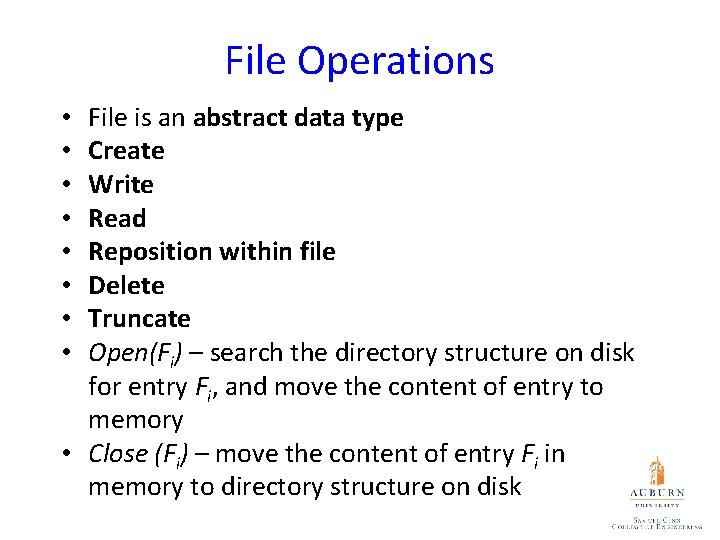

File Operations

- A file is an abstract data type with these operations: create, write, read, reposition within file, delete, truncate.
- Open(Fi): search the directory structure on disk for entry Fi, and move the content of the entry to memory.
- Close(Fi): move the content of entry Fi in memory to the directory structure on disk.

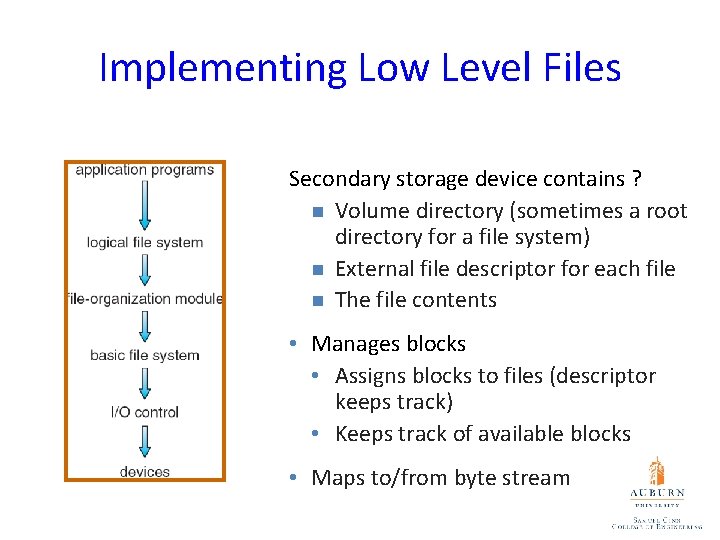

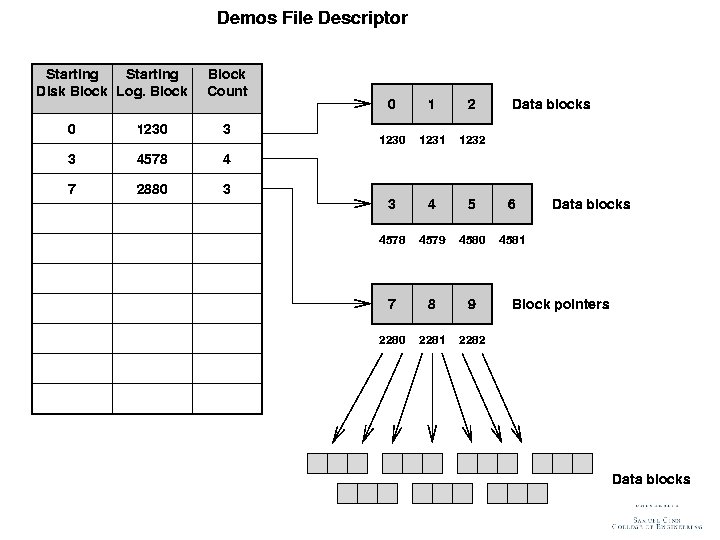

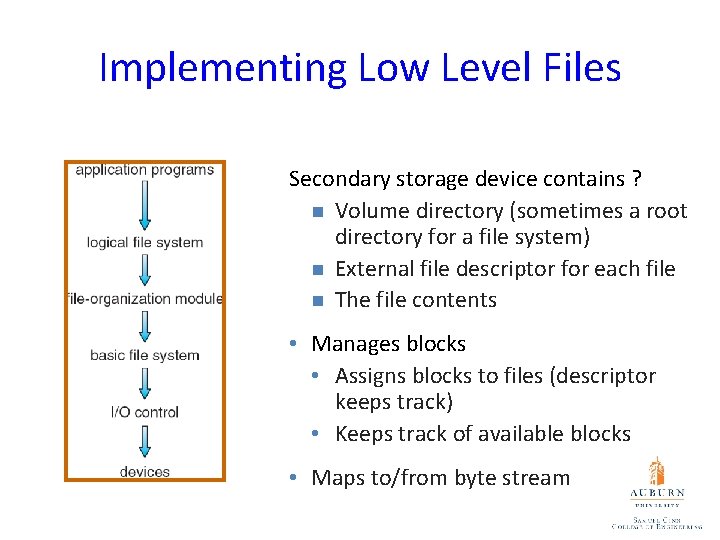

Implementing Low Level Files

- What does a secondary storage device contain?
  - A volume directory (sometimes a root directory for a file system)
  - An external file descriptor for each file
  - The file contents
- The low-level file implementation:
  - Manages blocks
  - Assigns blocks to files (the descriptor keeps track)
  - Keeps track of available blocks
  - Maps to/from the byte stream

File Descriptors

- External name
- Current state
- Sharable
- Owner
- User
- Locks
- Protection settings
- Length
- Time of creation
- Time of last modification
- Time of last access
- Reference count
- Storage device details

How do we design a file control block (a.k.a. file descriptor)? Go from "file system requirements" to "file attributes", and from "file attributes" to "a file control block".

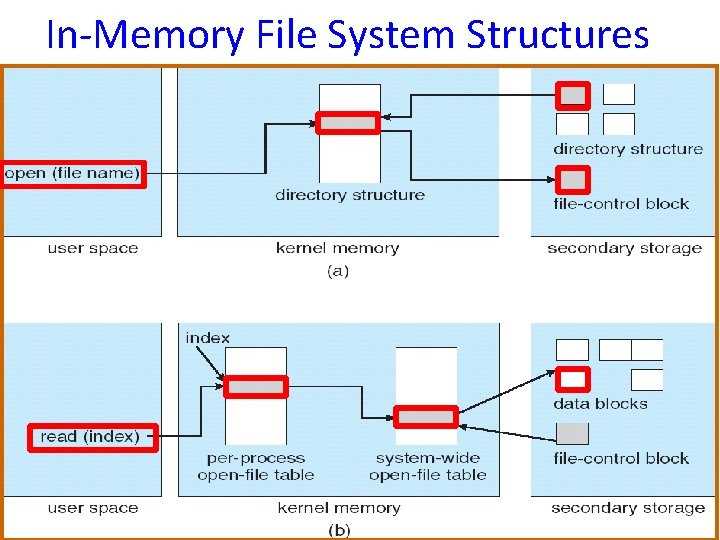

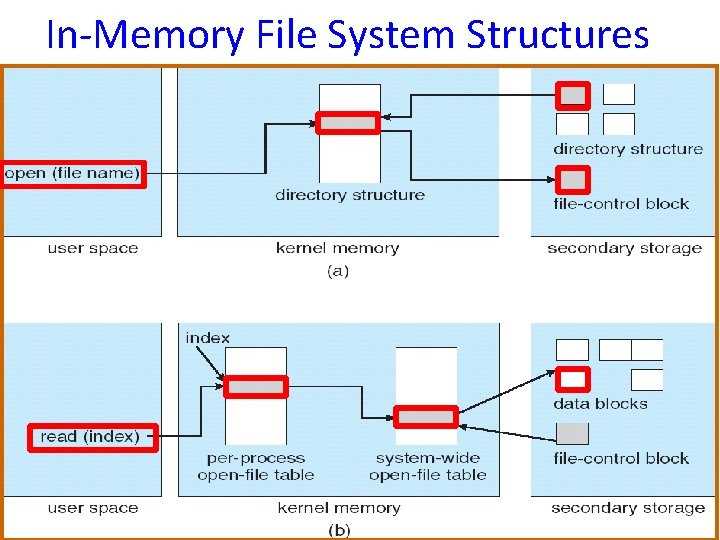

In-Memory File System Structures

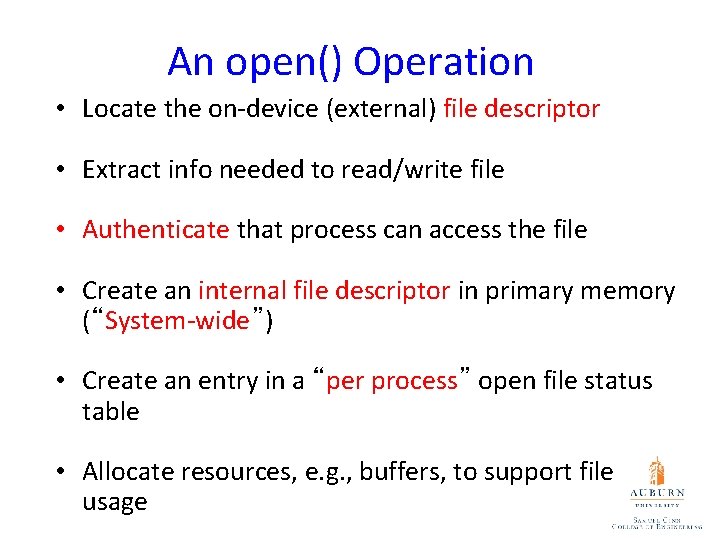

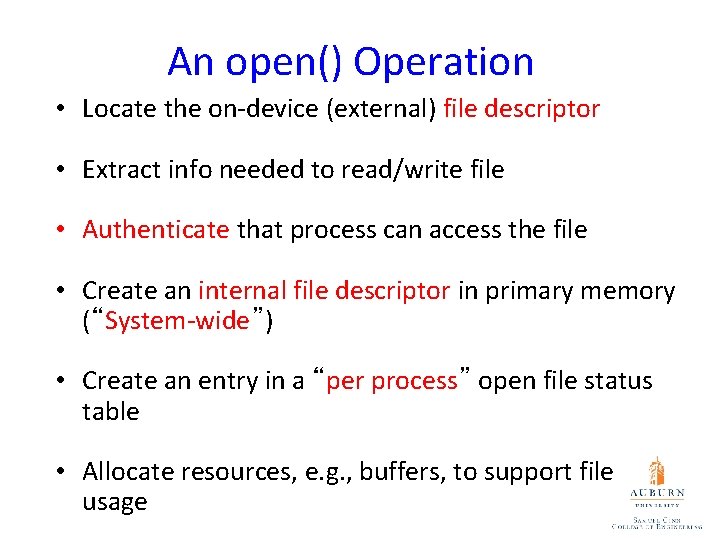

An open() Operation

- Locate the on-device (external) file descriptor.
- Extract the information needed to read/write the file.
- Authenticate that the process can access the file.
- Create an internal file descriptor in primary memory ("system-wide").
- Create an entry in a "per-process" open file status table.
- Allocate resources, e.g., buffers, to support file usage.

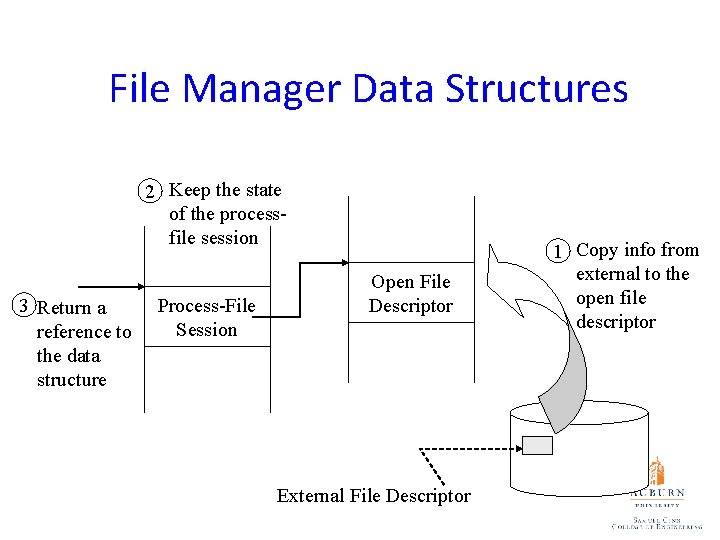

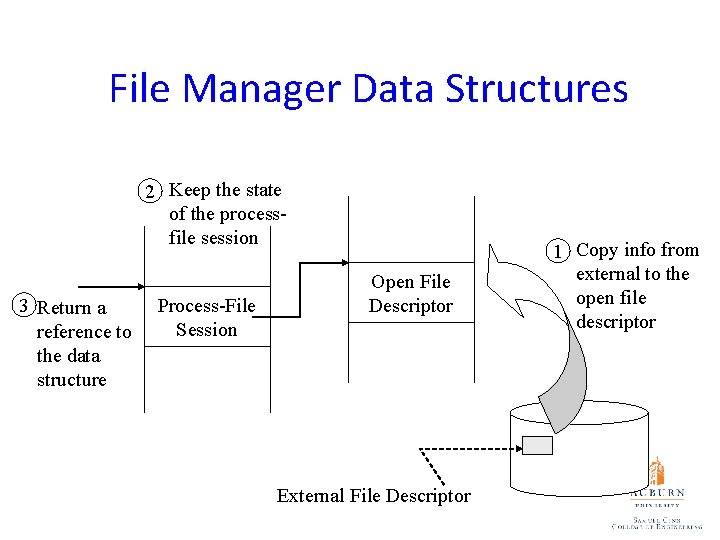

File Manager Data Structures (figure: Process-File Session → Open File Descriptor → External File Descriptor)

1. Copy information from the external file descriptor to the open file descriptor.
2. Keep the state of the process-file session.
3. Return a reference to the data structure.

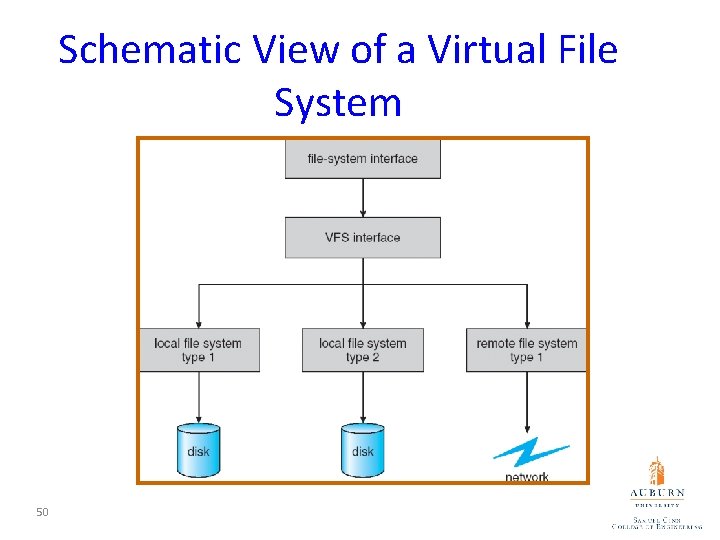

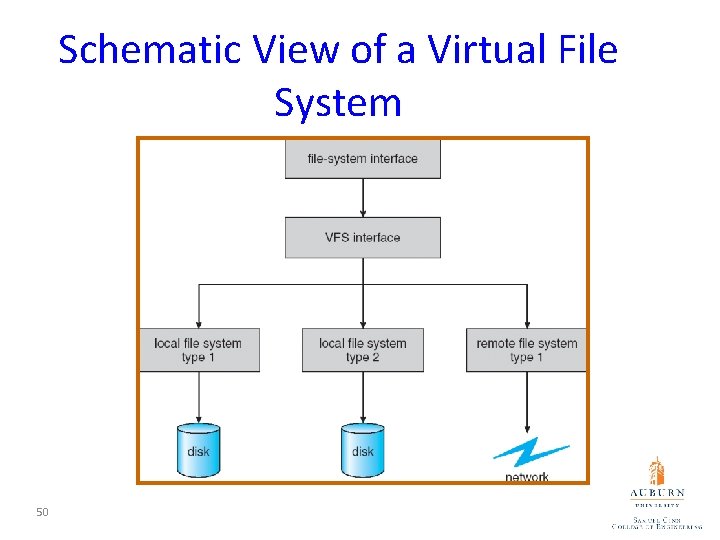

Schematic View of a Virtual File System

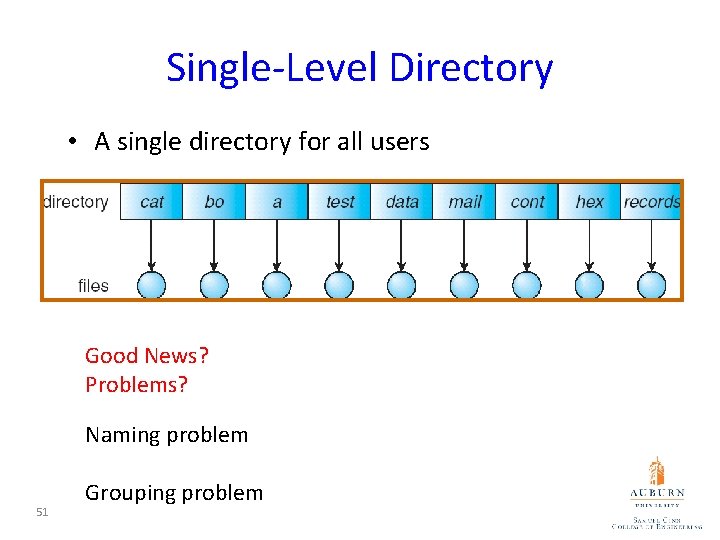

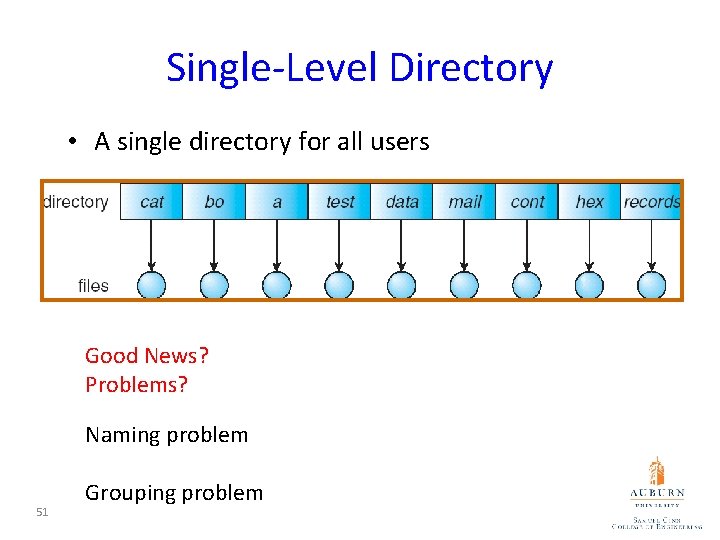

Single-Level Directory

- A single directory for all users.
- Good news?
- Problems? The naming problem and the grouping problem.

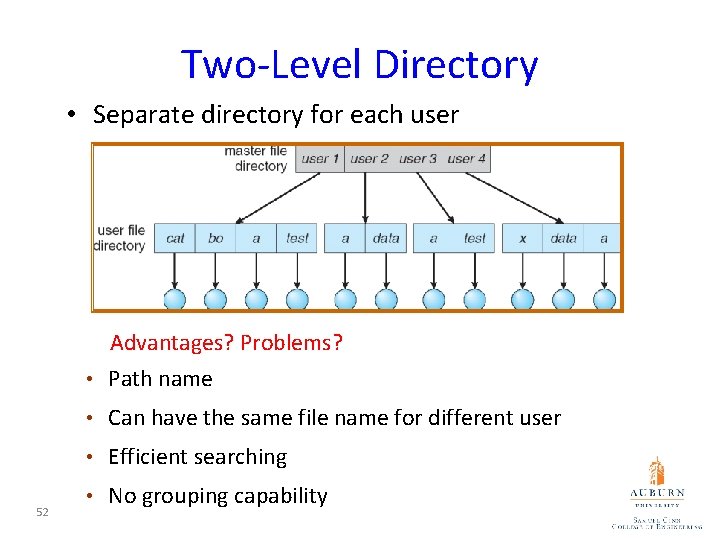

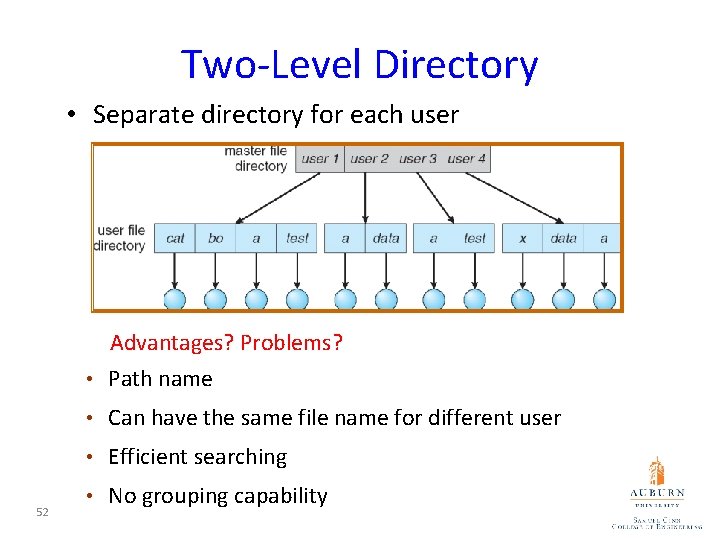

Two-Level Directory

- A separate directory for each user.
- Advantages?
  - Path names
  - The same file name can be used by different users
  - Efficient searching
- Problems?
  - No grouping capability

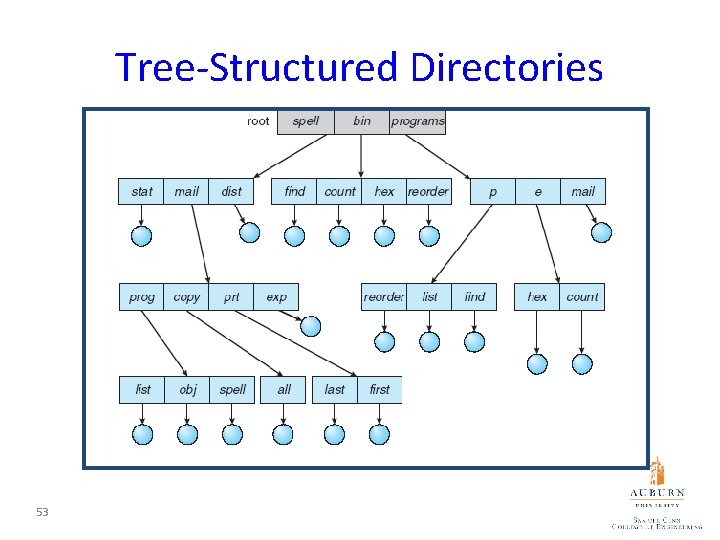

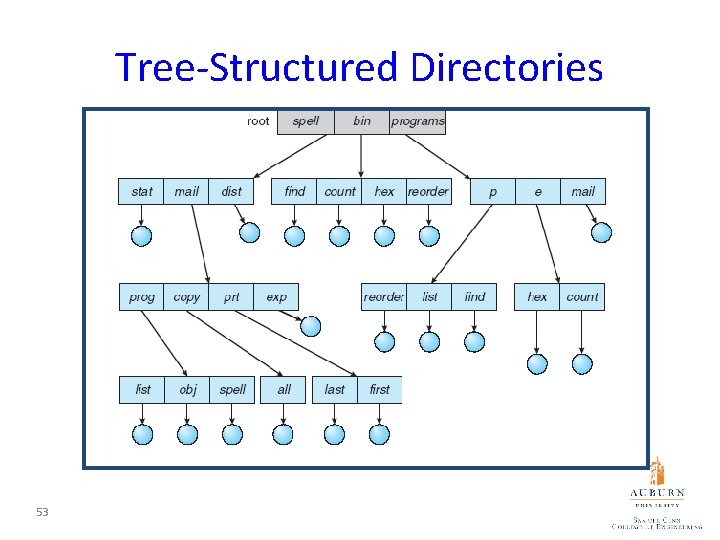

Tree-Structured Directories

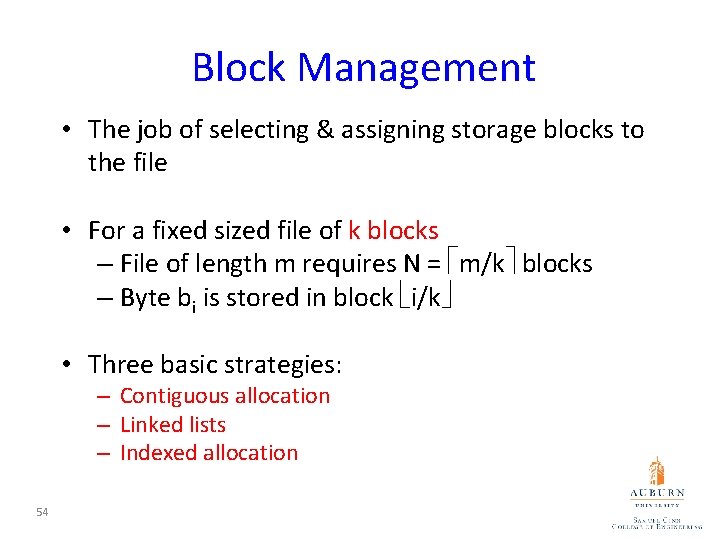

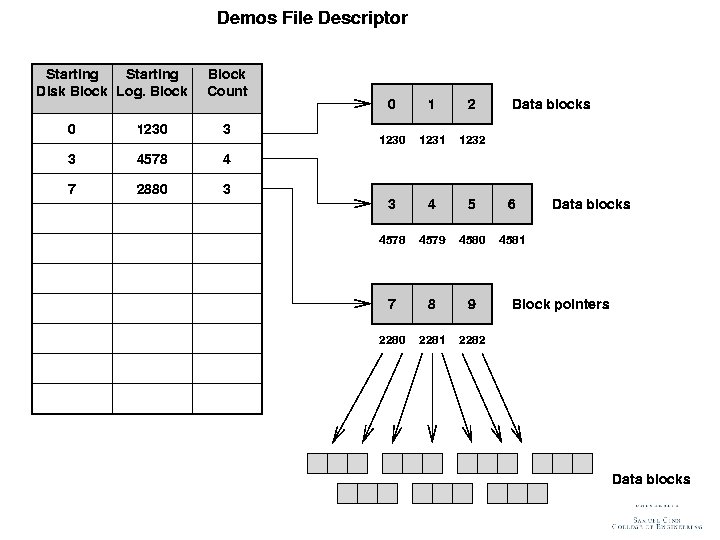

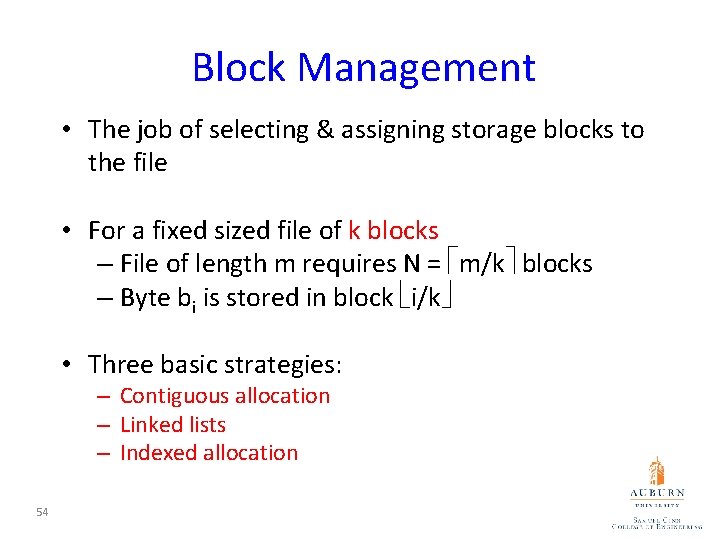

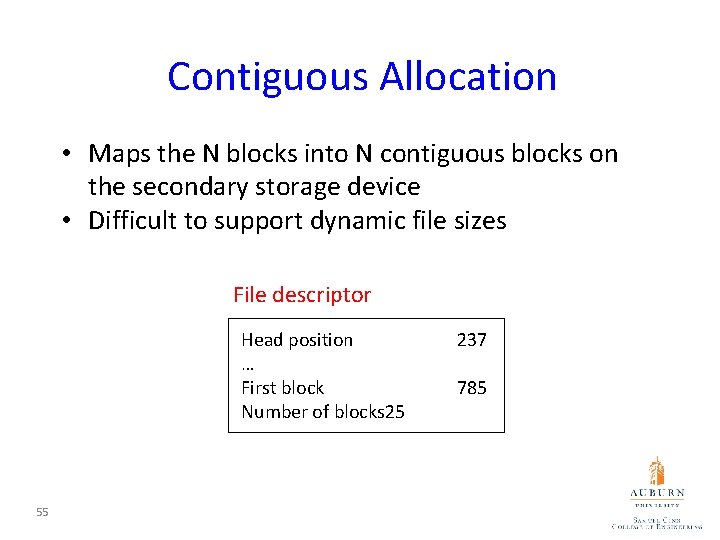

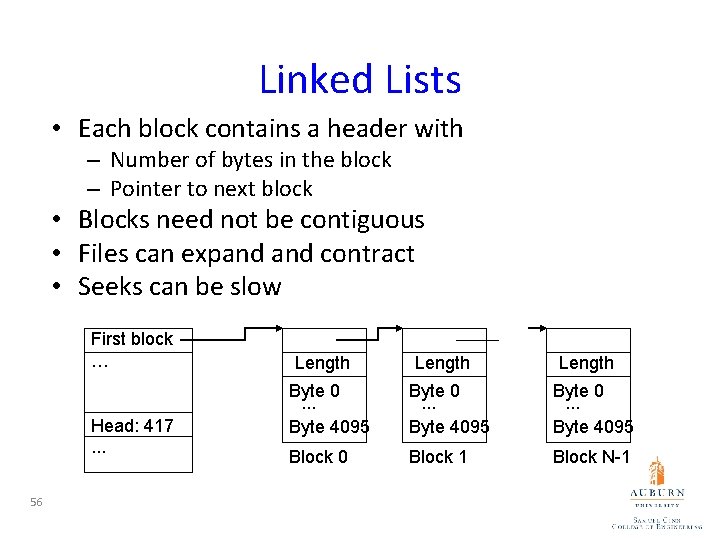

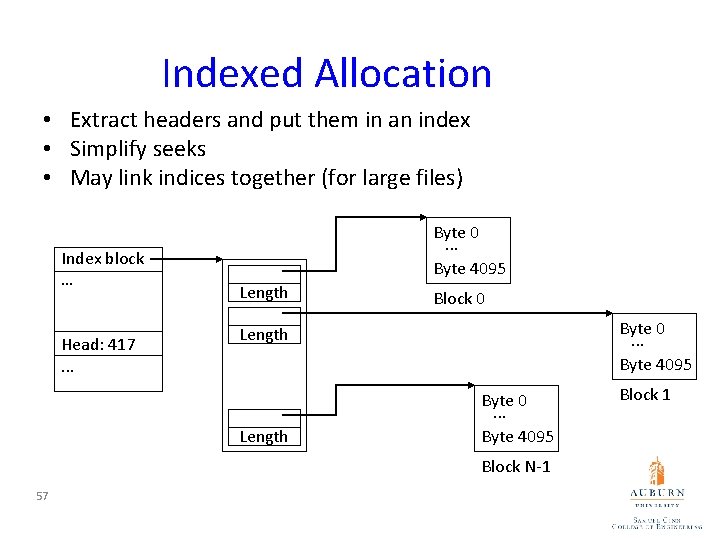

Block Management

- The job of selecting and assigning storage blocks to the file.
- For fixed-size blocks of k bytes:
  - A file of length m requires $N = \lceil m/k \rceil$ blocks.
  - Byte $b_i$ is stored in block $\lfloor i/k \rfloor$.
- Three basic strategies:
  - Contiguous allocation
  - Linked lists
  - Indexed allocation

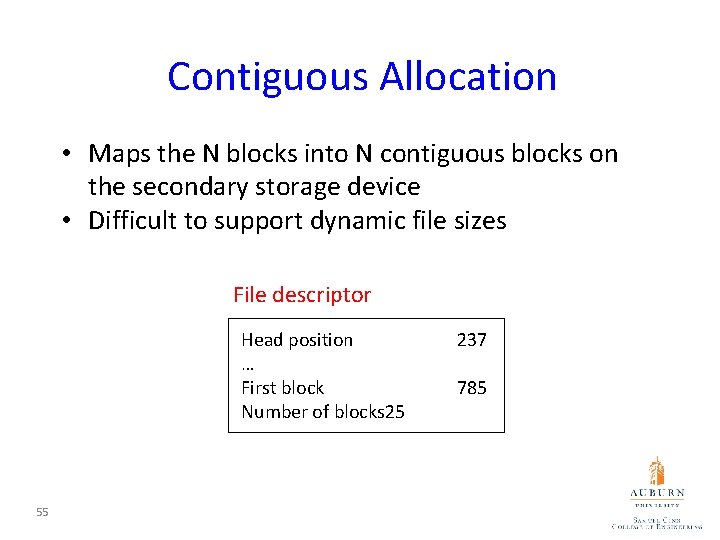

Contiguous Allocation

- Maps the N blocks of a file into N contiguous blocks on the secondary storage device.
- Difficult to support dynamic file sizes.

(figure: the file descriptor records the head position, …, the first block, and the number of blocks)

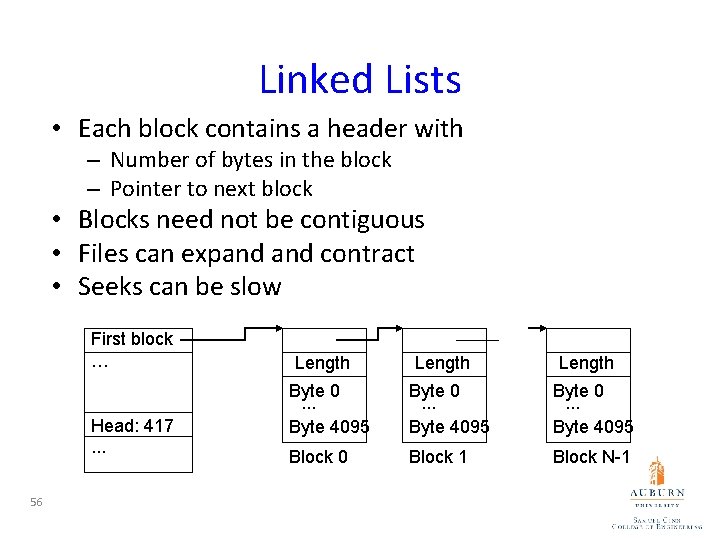

Linked Lists

- Each block contains a header with:
  - The number of bytes in the block
  - A pointer to the next block
- Blocks need not be contiguous.
- Files can expand and contract.
- Seeks can be slow.

(figure: file descriptor with head 417 and a first-block pointer; Block 0, Block 1, …, Block N-1, each holding a length field and bytes 0 … 4095)

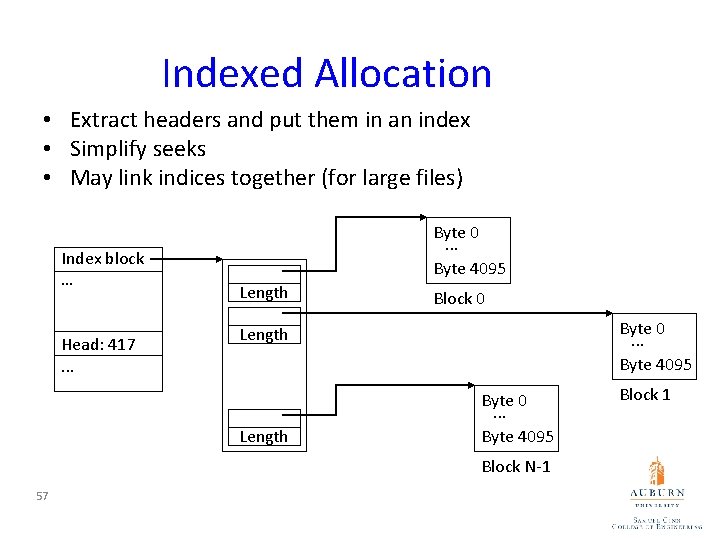

Indexed Allocation

- Extract the headers and put them in an index.
- Simplifies seeks.
- Indices may be linked together (for large files).

(figure: file descriptor with head 417 pointing to an index block whose entries point to Block 0, Block 1, …, Block N-1, each holding a length field and bytes 0 … 4095)

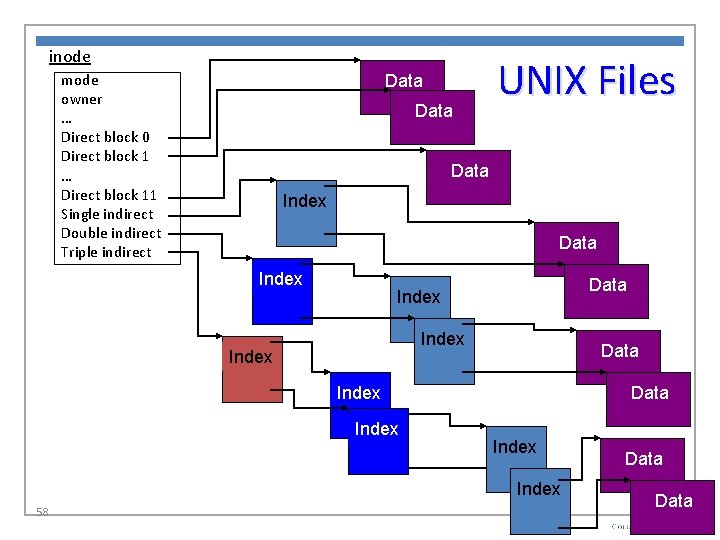

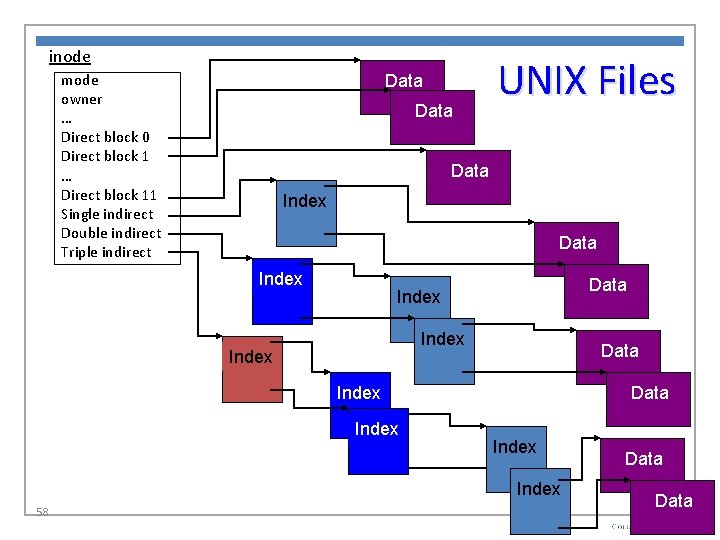

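UNIX Files (figure: an inode holding mode, owner, …, direct blocks 0 through 11, and single, double, and triple indirect pointers; direct pointers reference data blocks, while indirect pointers reference index blocks that lead to data blocks or further index blocks)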

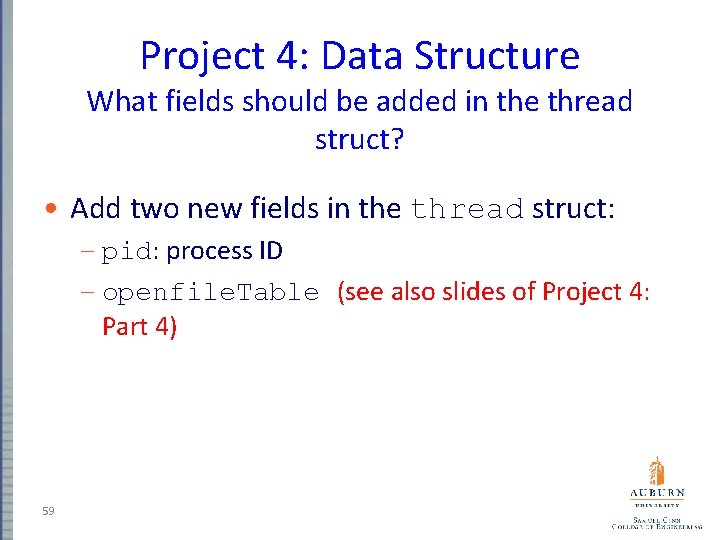

Project 4: Data Structure

What fields should be added to the thread struct? Add two new fields:

- pid: process ID
- openfileTable (see also the slides of Project 4: Part 4)

Project 4: Design

How do we allocate (i.e., assign) PIDs?

- Assign PIDs sequentially.
- Loop back to PID_MIN when we reach PID_MAX.
- Do not reuse the same PID quickly (no identical PIDs within a few minutes).

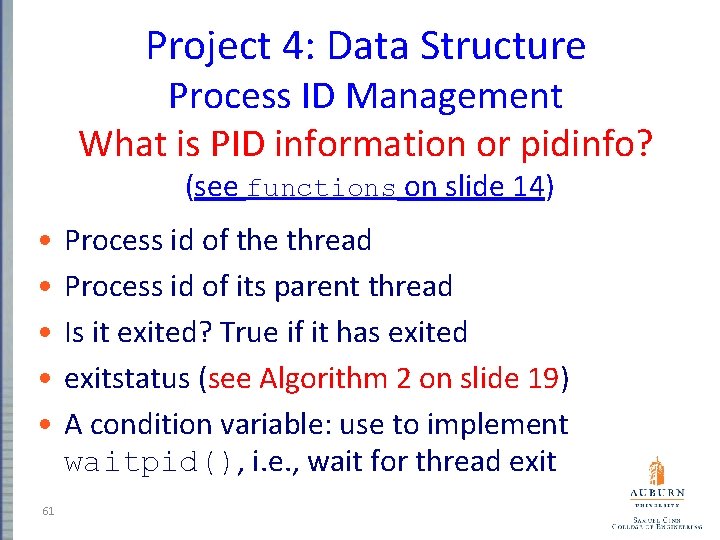

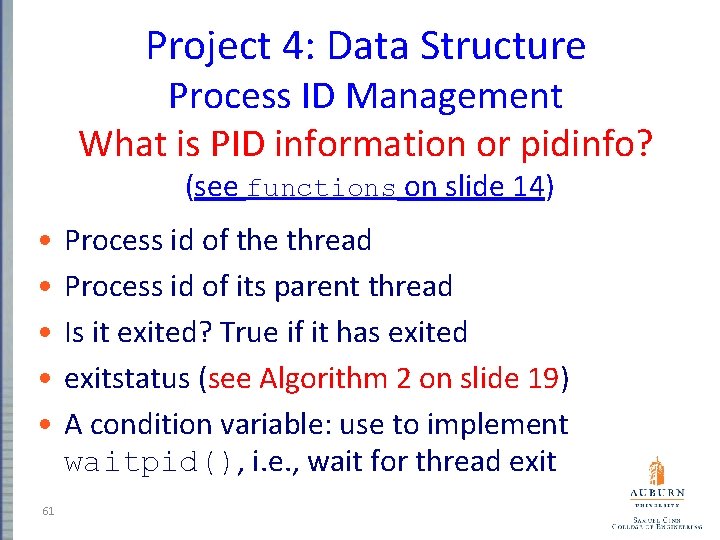

Project 4: Data Structure: Process ID Management

What is PID information, or pidinfo? (see functions on slide 14)

- Process ID of the thread
- Process ID of its parent thread
- Is it exited? True if it has exited
- exitstatus (see Algorithm 2 on slide 19)
- A condition variable: used to implement waitpid(), i.e., to wait for thread exit

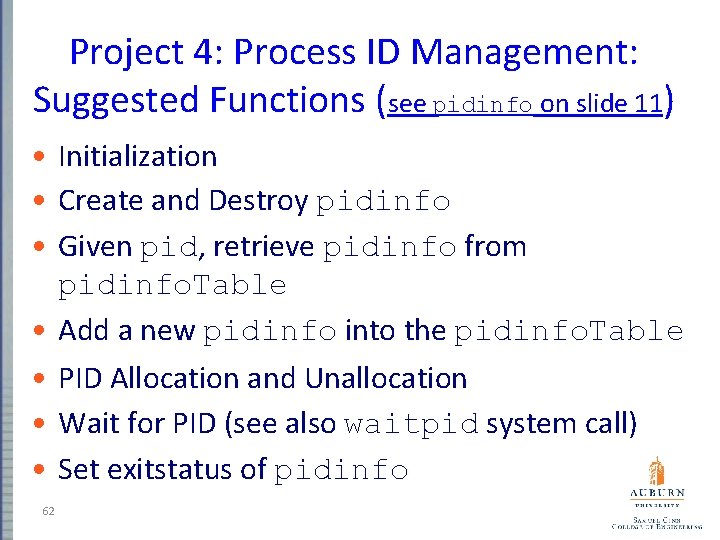

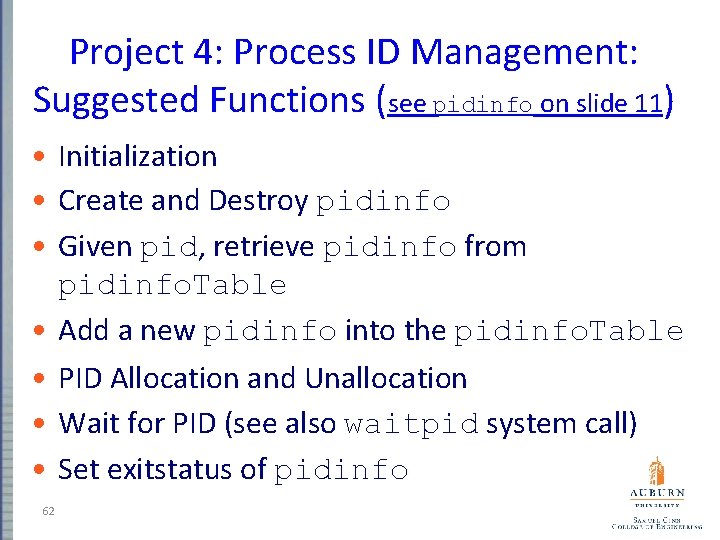

Project 4: Process ID Management: Suggested Functions (see pidinfo on slide 11)

- Initialization
- Create and destroy a pidinfo
- Given a pid, retrieve its pidinfo from pidinfoTable
- Add a new pidinfo into pidinfoTable
- PID allocation and unallocation
- Wait for a PID (see also the waitpid system call)
- Set the exitstatus of a pidinfo

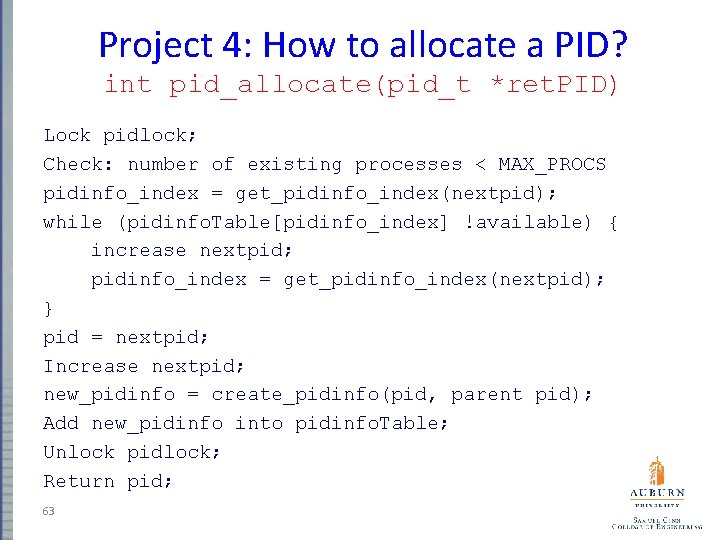

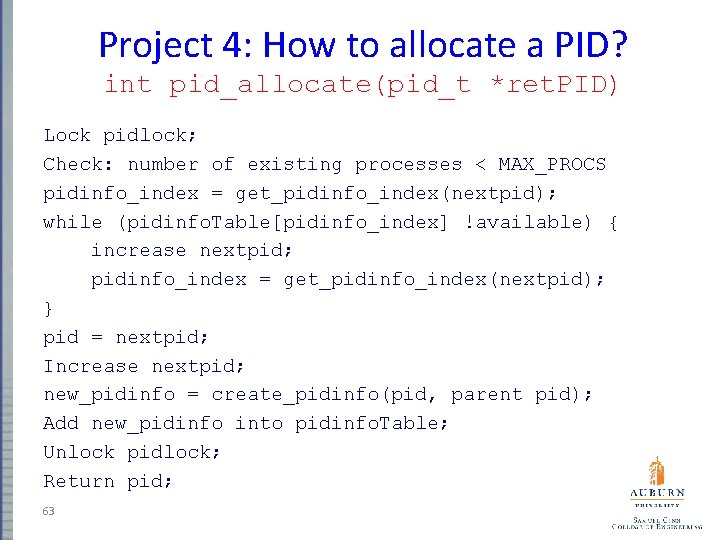

Project 4: How to allocate a PID?

`int pid_allocate(pid_t *retPID)`

1. Lock pidlock.
2. Check that the number of existing processes < MAX_PROCS; if not, unlock pidlock and return an error.
3. `pidinfo_index = get_pidinfo_index(nextpid);`
4. While `pidinfoTable[pidinfo_index]` is not available: increase nextpid and recompute `pidinfo_index = get_pidinfo_index(nextpid);`
5. `pid = nextpid;` then increase nextpid.
6. `new_pidinfo = create_pidinfo(pid, parent pid);`
7. Add new_pidinfo into pidinfoTable.
8. Unlock pidlock.
9. Store pid in `*retPID` and return.

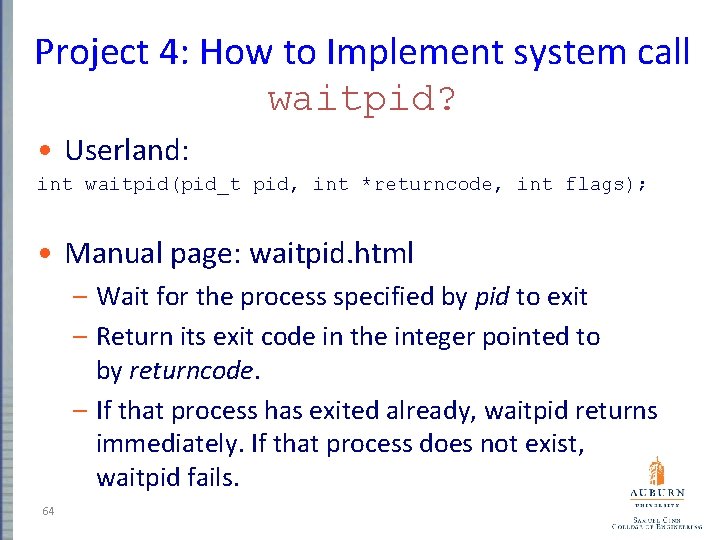

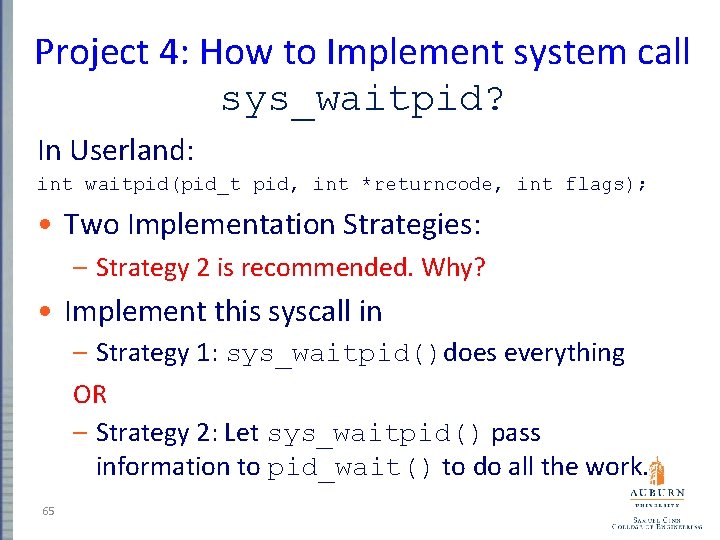

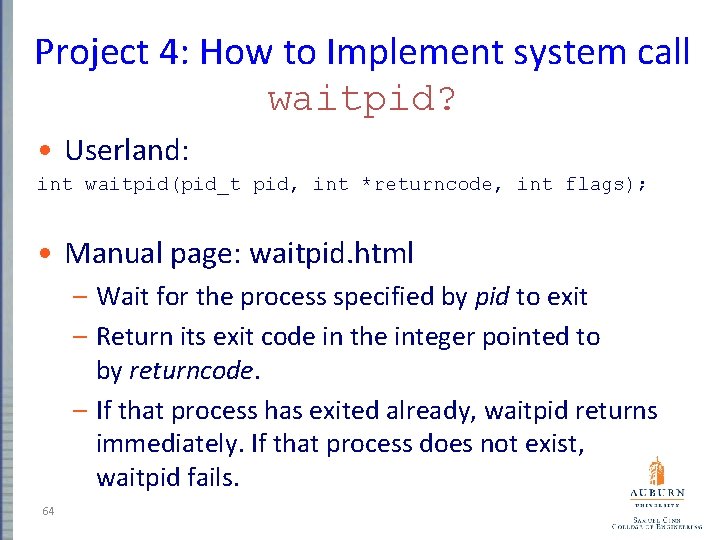

Project 4: How to Implement the waitpid System Call?

- Userland: `int waitpid(pid_t pid, int *returncode, int flags);`
- Manual page: waitpid.html
  - Wait for the process specified by pid to exit.
  - Return its exit code in the integer pointed to by returncode.
  - If that process has exited already, waitpid returns immediately. If that process does not exist, waitpid fails.

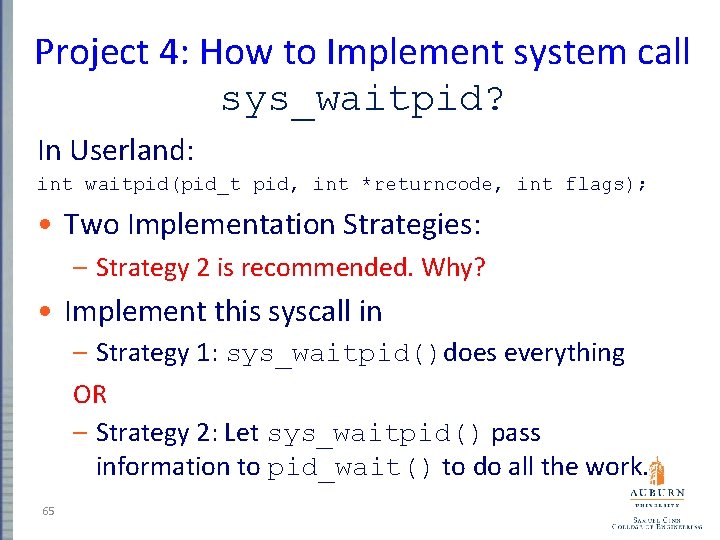

Project 4: How to Implement the System Call sys_waitpid?

In userland: `int waitpid(pid_t pid, int *returncode, int flags);`

- Two implementation strategies:
  - Strategy 1: sys_waitpid() does everything, OR
  - Strategy 2: sys_waitpid() passes information to pid_wait(), which does all the work.
- Strategy 2 is recommended. Why?

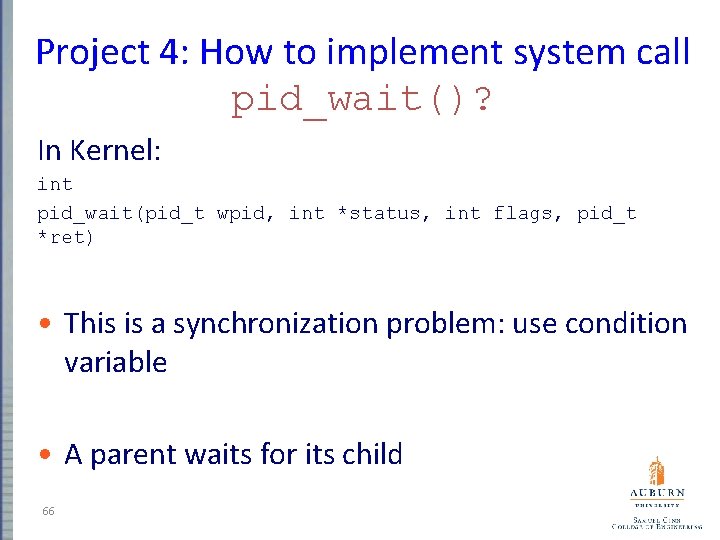

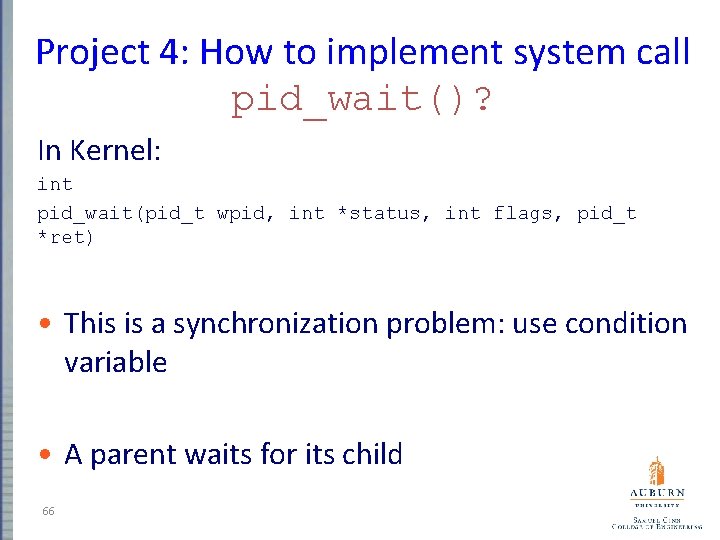

Project 4: How to implement pid_wait()?

In the kernel: `int pid_wait(pid_t wpid, int *status, int flags, pid_t *ret)`

- This is a synchronization problem: use a condition variable.
- A parent waits for its child.

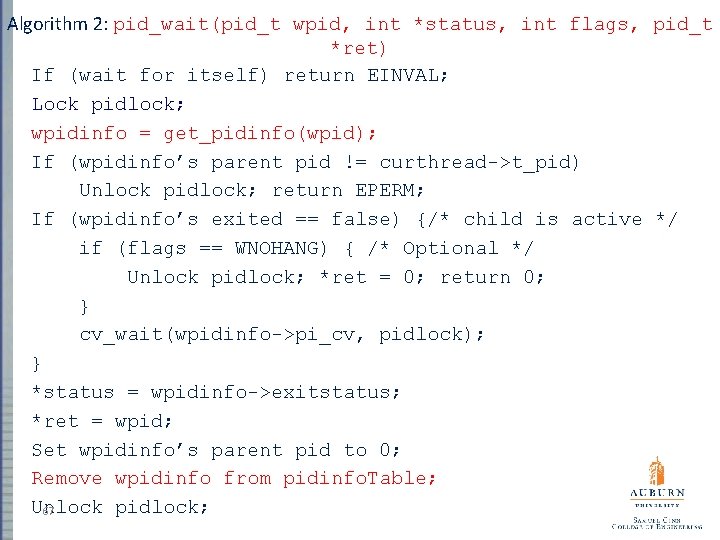

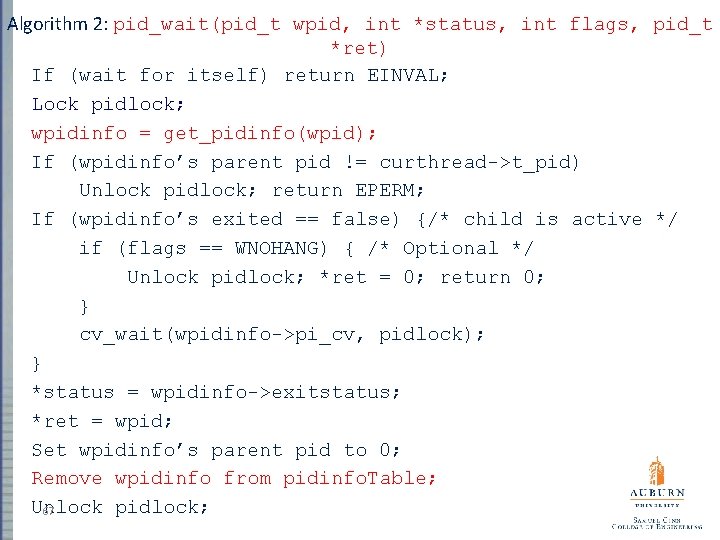

Algorithm 2: `pid_wait(pid_t wpid, int *status, int flags, pid_t *ret)`

1. If the caller is waiting for itself, return EINVAL.
2. Lock pidlock; `wpidinfo = get_pidinfo(wpid);`
3. If wpidinfo does not exist, unlock pidlock and return ESRCH.
4. If wpidinfo's parent pid != `curthread->t_pid`, unlock pidlock and return EPERM.
5. If wpidinfo's exited == false (the child is still active):
   - (Optional) If flags == WNOHANG, unlock pidlock, set `*ret = 0`, and return 0.
   - Otherwise, call `cv_wait(wpidinfo->pi_cv, pidlock);` and recheck exited after every wakeup.
6. `*status = wpidinfo->exitstatus; *ret = wpid;`
7. Set wpidinfo's parent pid to 0 and remove wpidinfo from pidinfoTable.
8. Unlock pidlock and return 0.

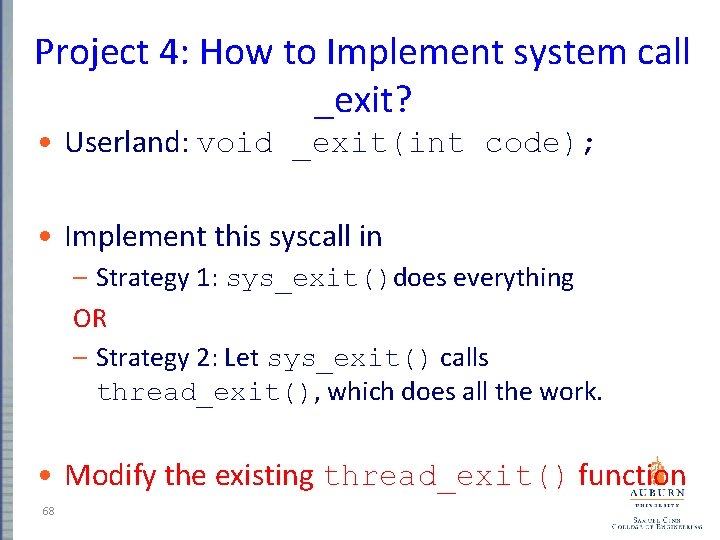

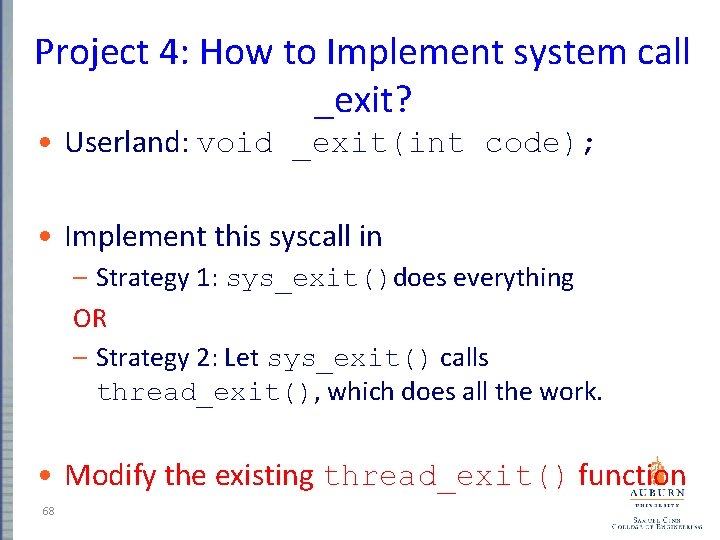

Project 4: How to Implement the System Call _exit?

- Userland: `void _exit(int code);`
- Implement this syscall in one of two ways:
  - Strategy 1: sys_exit() does everything, OR
  - Strategy 2: sys_exit() calls thread_exit(), which does all the work.
- Modify the existing thread_exit() function.

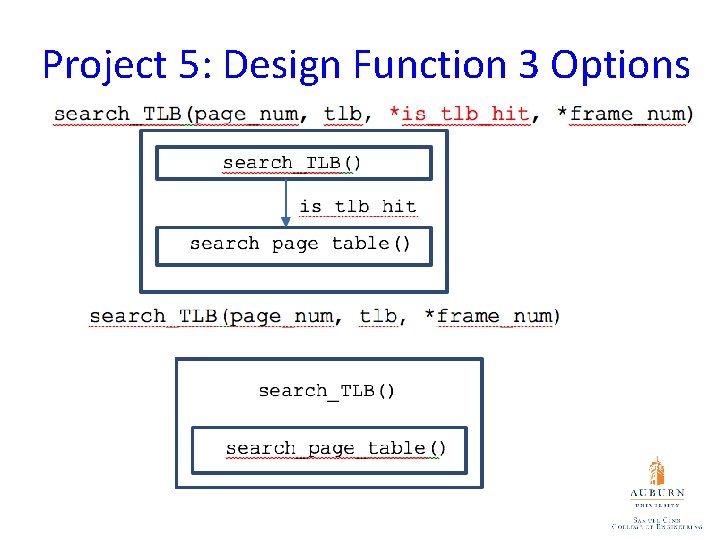

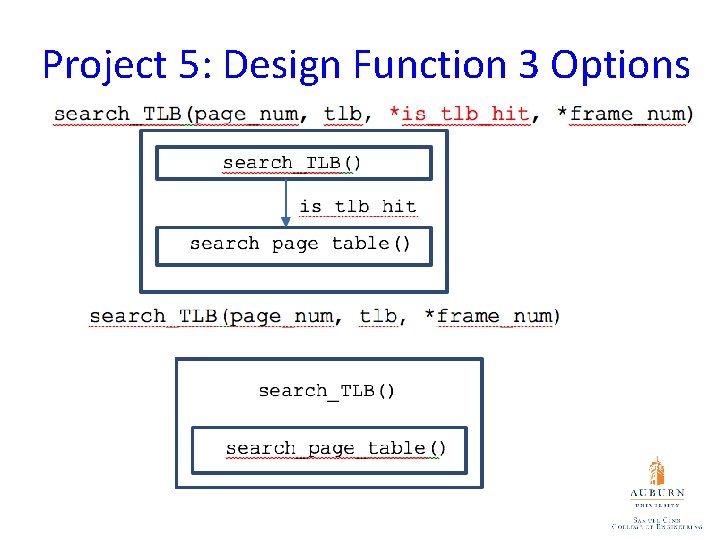

Project 5: Design Function 3 Options

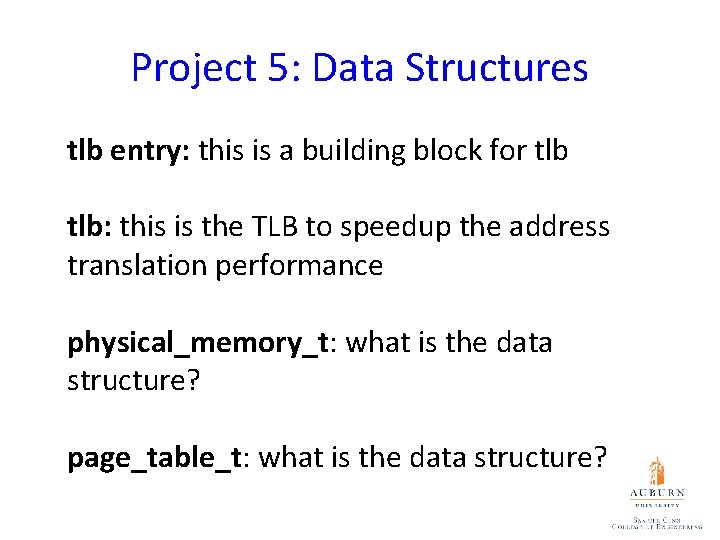

Project 5: Data Structures

- tlb entry: a building block for the tlb
- tlb: the TLB that speeds up address translation
- physical_memory_t: what is the data structure?
- page_table_t: what is the data structure?