COMP 3500 Introduction to Operating Systems Midterm Exam

- Slides: 83

COMP 3500 Introduction to Operating Systems Midterm Exam 2 Review Dr. Xiao Qin Auburn University http://www.eng.auburn.edu/~xqin xqin@auburn.edu

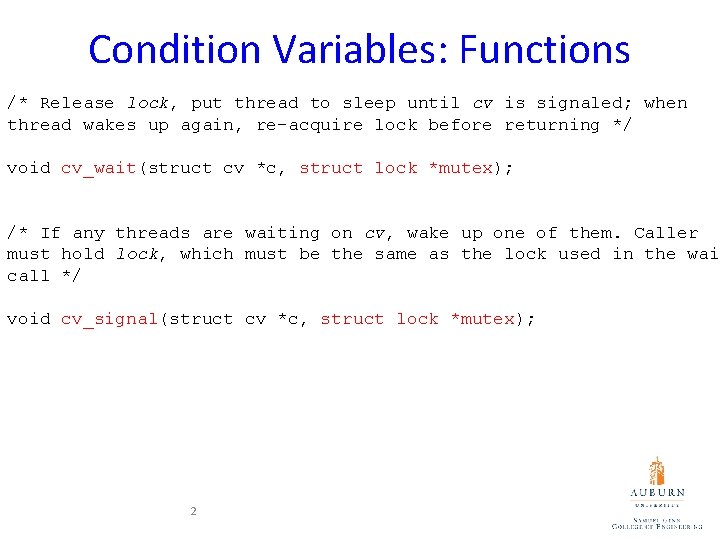

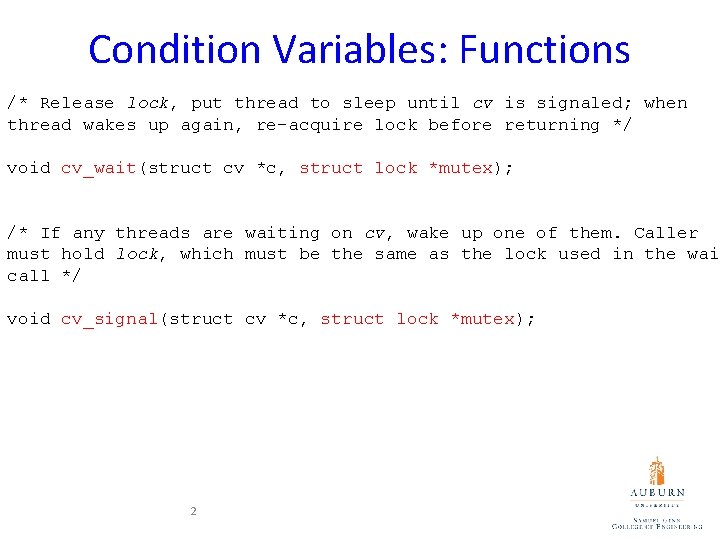

Condition Variables: Functions

    /* Release lock, put thread to sleep until cv is signaled; when thread
       wakes up again, re-acquire lock before returning */
    void cv_wait(struct cv *c, struct lock *mutex);

    /* If any threads are waiting on cv, wake up one of them. Caller must
       hold lock, which must be the same as the lock used in the wait call */
    void cv_signal(struct cv *c, struct lock *mutex);

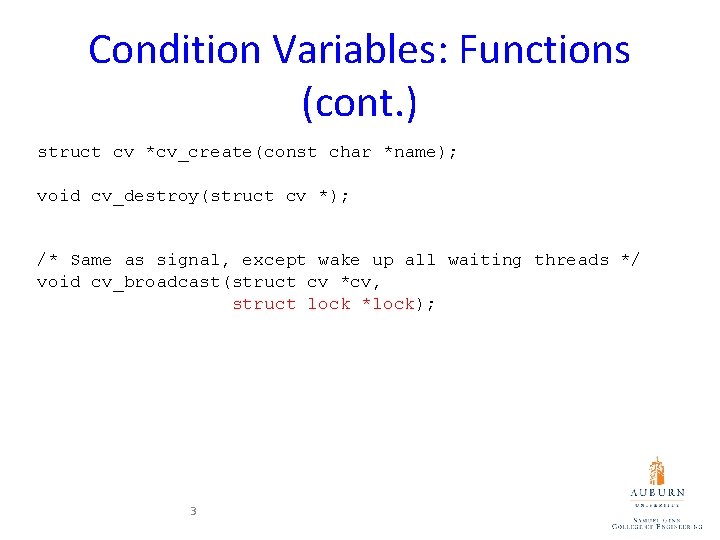

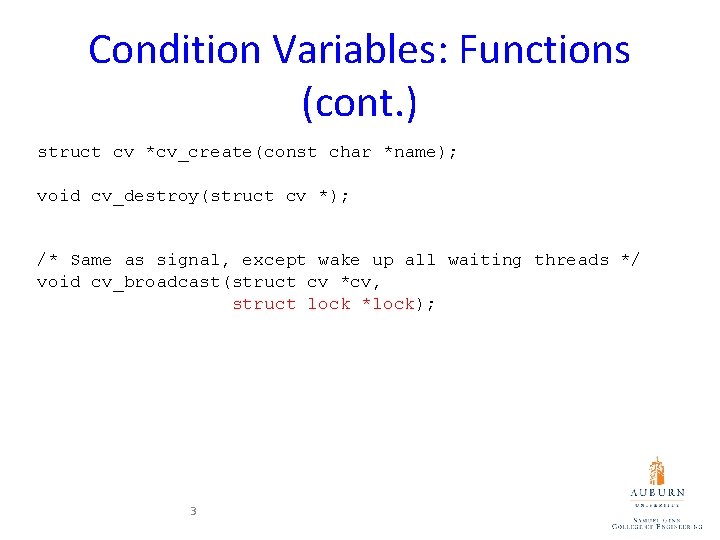

Condition Variables: Functions (cont.)

    struct cv *cv_create(const char *name);
    void cv_destroy(struct cv *);

    /* Same as signal, except wake up all waiting threads */
    void cv_broadcast(struct cv *cv, struct lock *lock);

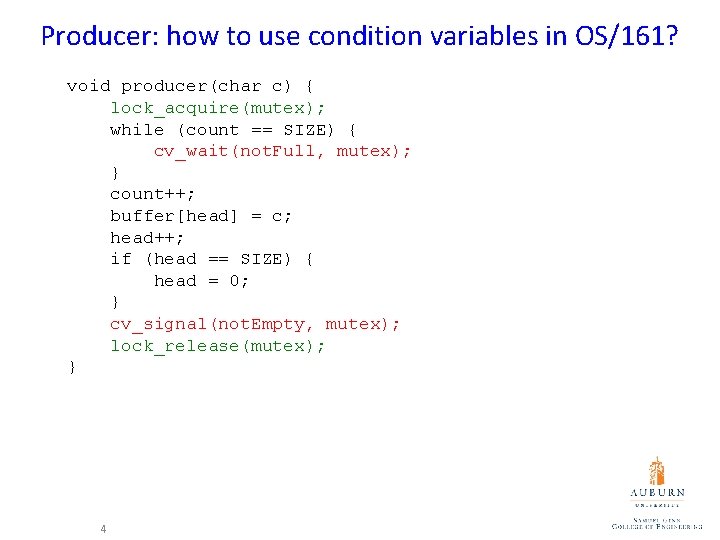

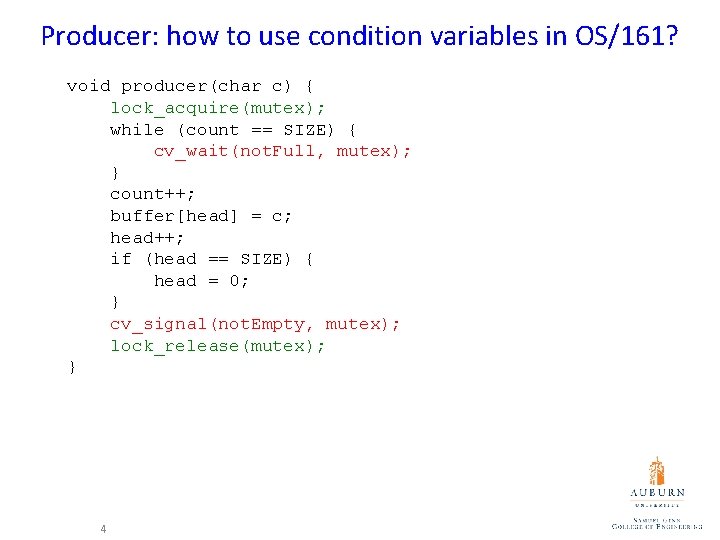

Producer: how to use condition variables in OS/161?

    void producer(char c) {
        lock_acquire(mutex);
        /* Re-check the condition after every wakeup */
        while (count == SIZE) {
            cv_wait(notFull, mutex);
        }
        count++;
        buffer[head] = c;
        head++;
        if (head == SIZE) {
            head = 0;
        }
        cv_signal(notEmpty, mutex);
        lock_release(mutex);
    }

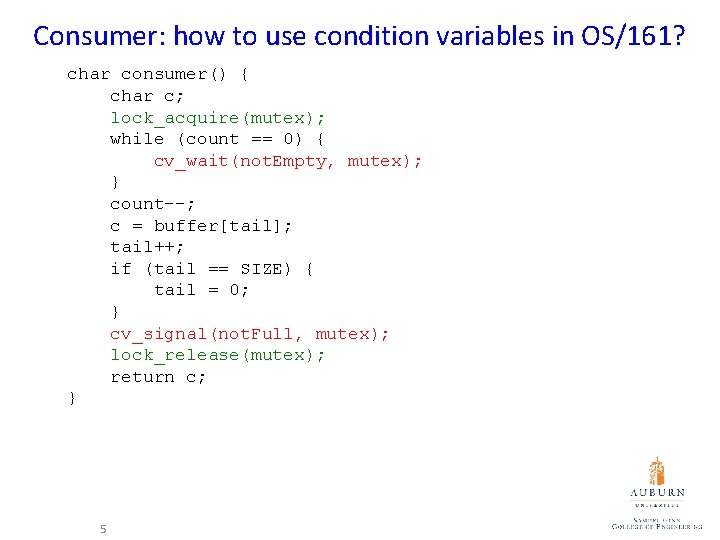

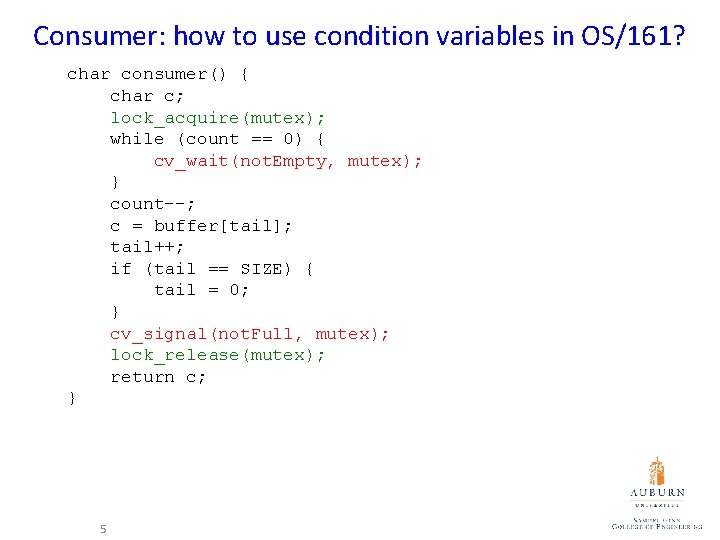

Consumer: how to use condition variables in OS/161?

    char consumer() {
        char c;
        lock_acquire(mutex);
        /* Re-check the condition after every wakeup */
        while (count == 0) {
            cv_wait(notEmpty, mutex);
        }
        count--;
        c = buffer[tail];
        tail++;
        if (tail == SIZE) {
            tail = 0;
        }
        cv_signal(notFull, mutex);
        lock_release(mutex);
        return c;
    }

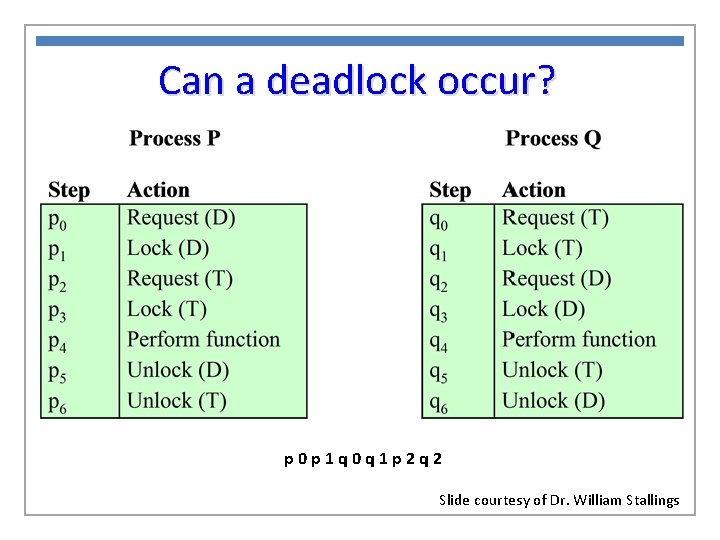

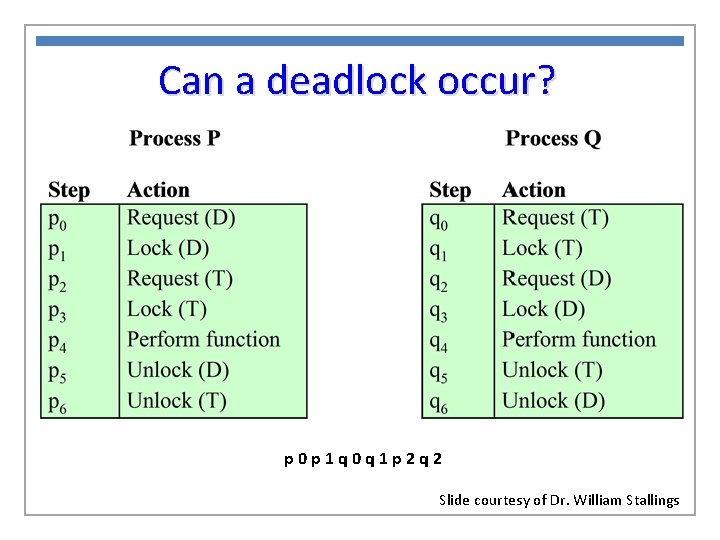

Can a deadlock occur?
[Figure: two processes, one executing steps p0, p1, p2 and the other executing steps q0, q1, q2.]
Slide courtesy of Dr. William Stallings

Deadlock Characterization
Deadlock can arise if four conditions hold simultaneously.
1. Mutual exclusion: only one process at a time can use a resource

2. Hold and wait: a process holding at least one resource is waiting to acquire additional resources held by other processes

3. No preemption: a resource can be released only voluntarily by the process holding it, after that process has completed its task

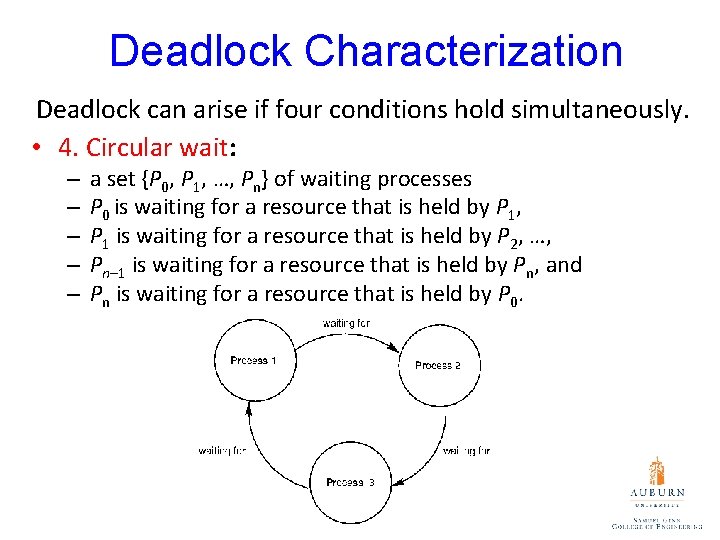

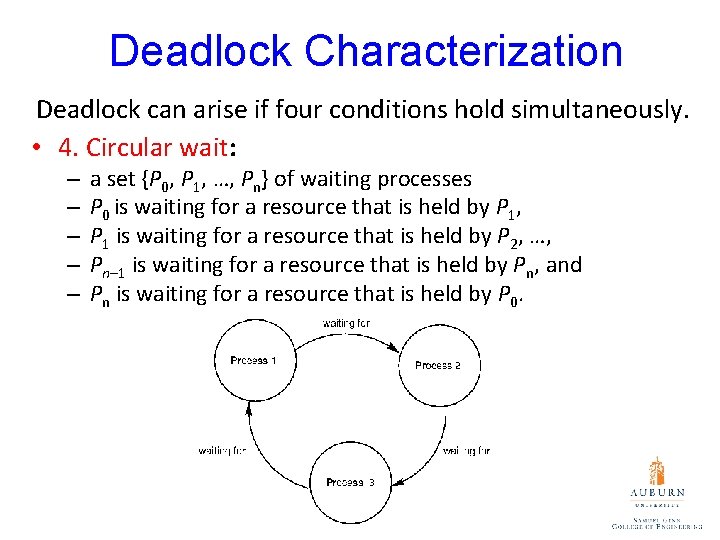

4. Circular wait: there exists a set {P0, P1, …, Pn} of waiting processes such that P0 is waiting for a resource that is held by P1, P1 is waiting for a resource that is held by P2, …, Pn-1 is waiting for a resource that is held by Pn, and Pn is waiting for a resource that is held by P0.
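The circular-wait condition can be checked mechanically. The following is a minimal sketch in C, assuming each blocked process waits on exactly one other process; the names `has_circular_wait`, `waits_for`, and `NO_WAIT` are hypothetical and do not come from OS/161.

```c
#include <assert.h>
#include <stdbool.h>

#define NO_WAIT (-1)

/* waits_for[i] is the index of the process holding the resource that
 * process i is waiting for, or NO_WAIT if process i is not blocked.
 * Returns true if following the chain from some process leads back to it. */
bool has_circular_wait(const int *waits_for, int n)
{
    assert(n >= 0);
    for (int start = 0; start < n; start++) {
        int cur = start;
        /* A cycle through `start` must close within n hops. */
        for (int hops = 0; hops < n && cur != NO_WAIT; hops++) {
            cur = waits_for[cur];
            if (cur == start) {
                return true;
            }
        }
    }
    return false;
}
```

For example, with `waits_for = {1, 2, 0}` (P0 waits for P1, P1 for P2, P2 for P0) the function reports a circular wait; replacing the last entry with `NO_WAIT` breaks the cycle.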

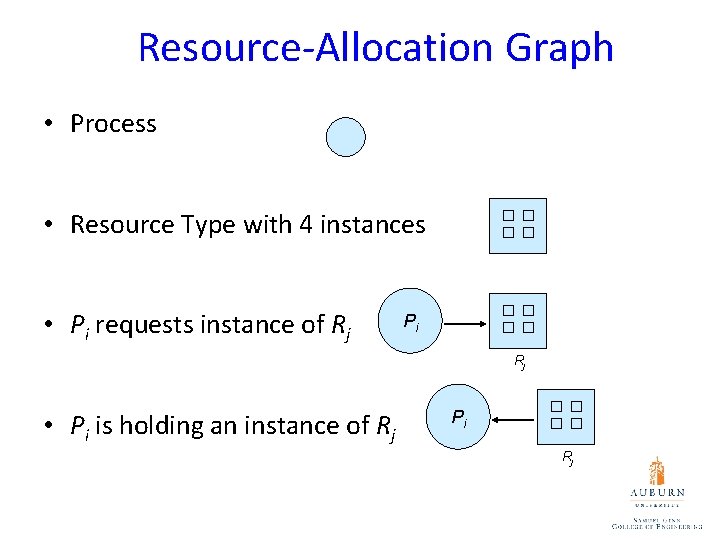

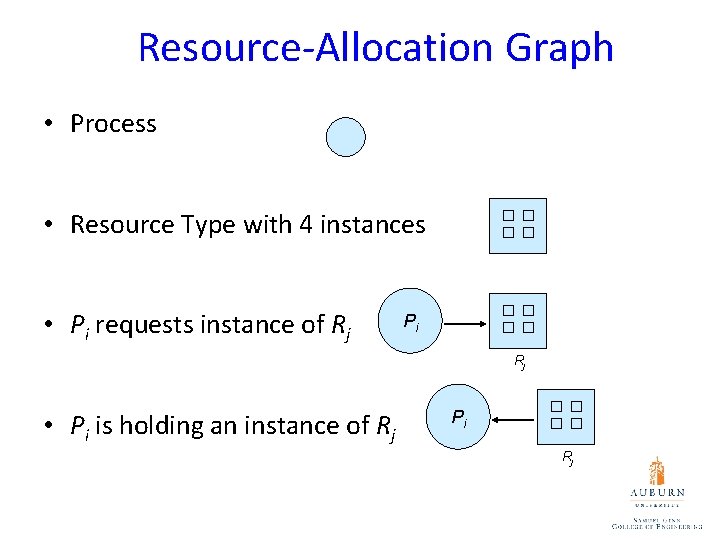

Resource-Allocation Graph
- Process
- Resource type with 4 instances
- Pi requests an instance of Rj: request edge Pi → Rj
- Pi is holding an instance of Rj: assignment edge Rj → Pi

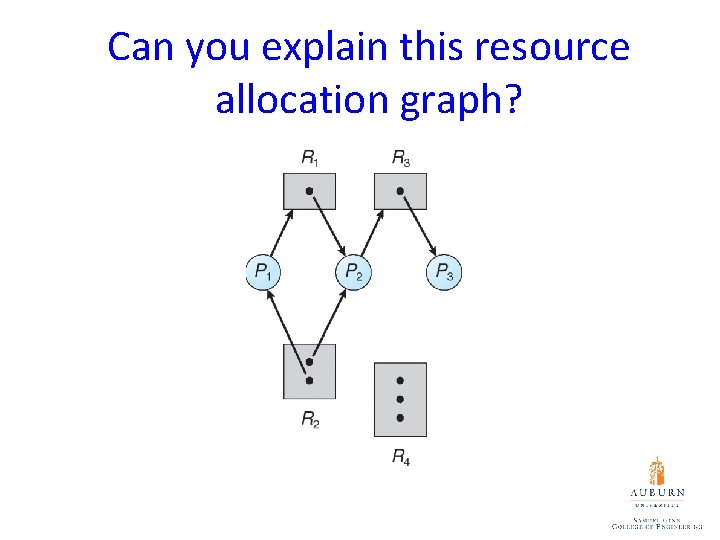

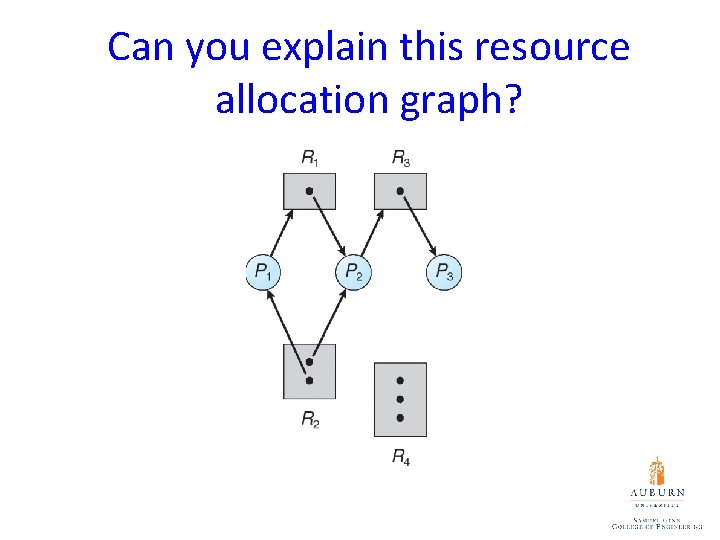

Can you explain this resource allocation graph?

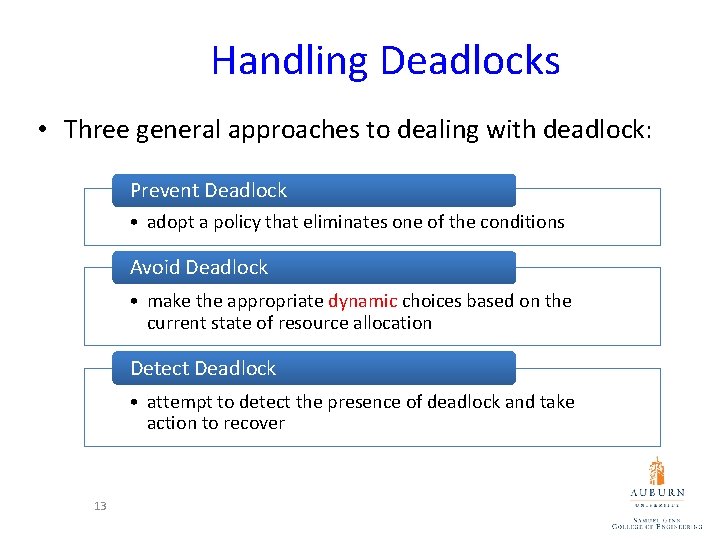

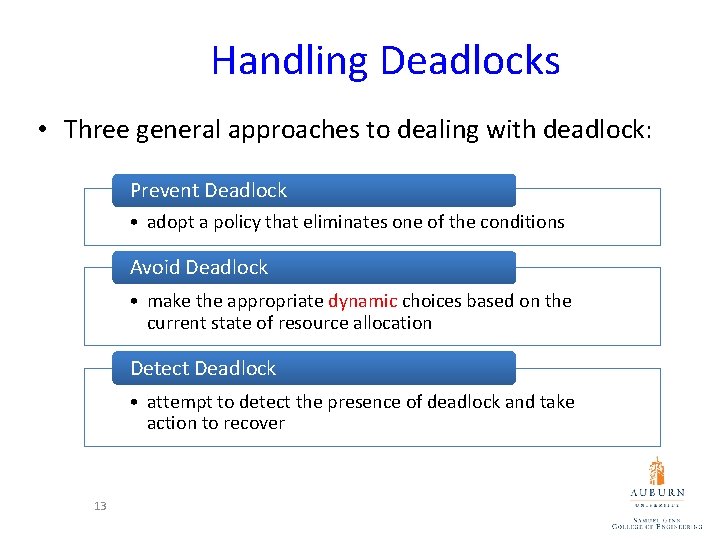

Handling Deadlocks
Three general approaches to dealing with deadlock:
- Prevent deadlock: adopt a policy that eliminates one of the conditions
- Avoid deadlock: make the appropriate dynamic choices based on the current state of resource allocation
- Detect deadlock: attempt to detect the presence of deadlock and take action to recover

Deadlock Prevention (1)
Can you propose the first deadlock prevention approach?
- Mutual exclusion: not required for sharable resources (e.g., read-only files); must hold for non-sharable resources

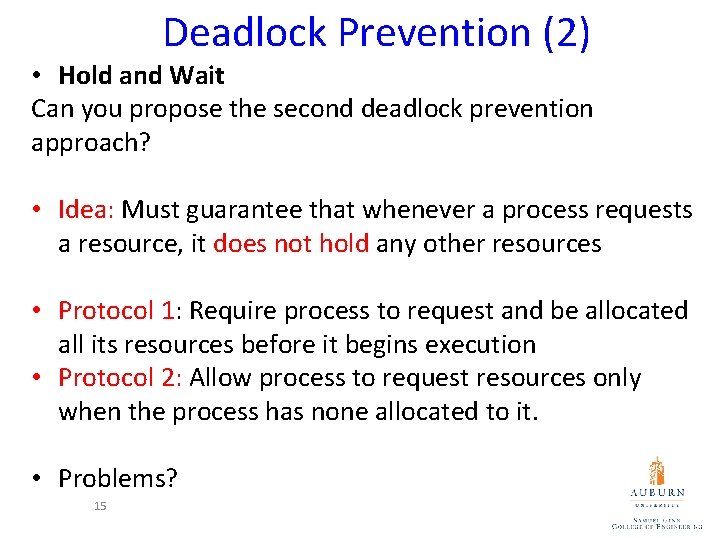

Deadlock Prevention (2)
Can you propose the second deadlock prevention approach?
- Hold and wait
- Idea: must guarantee that whenever a process requests a resource, it does not hold any other resources
- Protocol 1: require a process to request and be allocated all its resources before it begins execution
- Protocol 2: allow a process to request resources only when the process has none allocated to it
- Problems? Low resource utilization; starvation possible

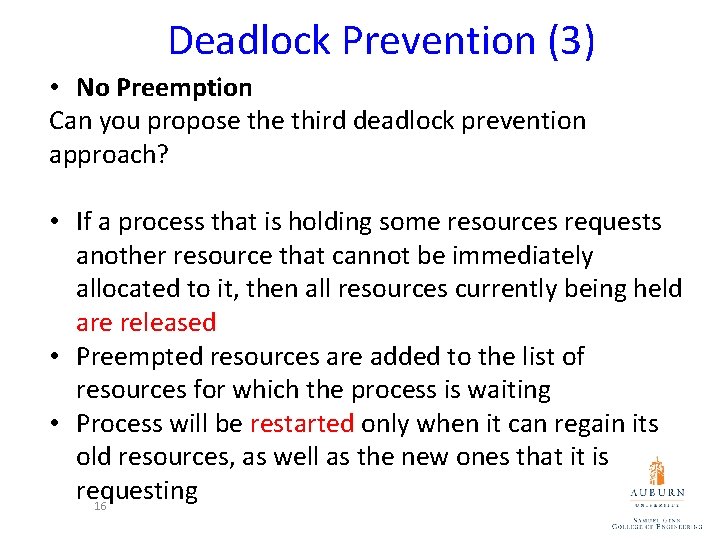

Deadlock Prevention (3)
Can you propose the third deadlock prevention approach?
- No preemption
- If a process that is holding some resources requests another resource that cannot be immediately allocated to it, then all resources it currently holds are released
- Preempted resources are added to the list of resources for which the process is waiting
- The process will be restarted only when it can regain its old resources, as well as the new ones that it is requesting

Deadlock Prevention (Cont.)
Can you propose the fourth deadlock prevention approach? (Difficult)
- Circular wait: impose a total ordering of all resource types, and require that each process requests resources in an increasing order of enumeration

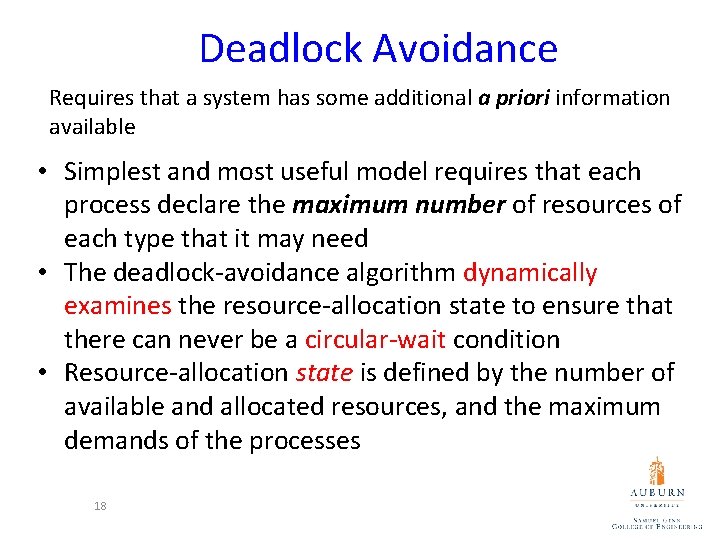

Deadlock Avoidance
Requires that a system has some additional a priori information available.
- Simplest and most useful model requires that each process declare the maximum number of resources of each type that it may need
- The deadlock-avoidance algorithm dynamically examines the resource-allocation state to ensure that there can never be a circular-wait condition
- Resource-allocation state is defined by the number of available and allocated resources, and the maximum demands of the processes

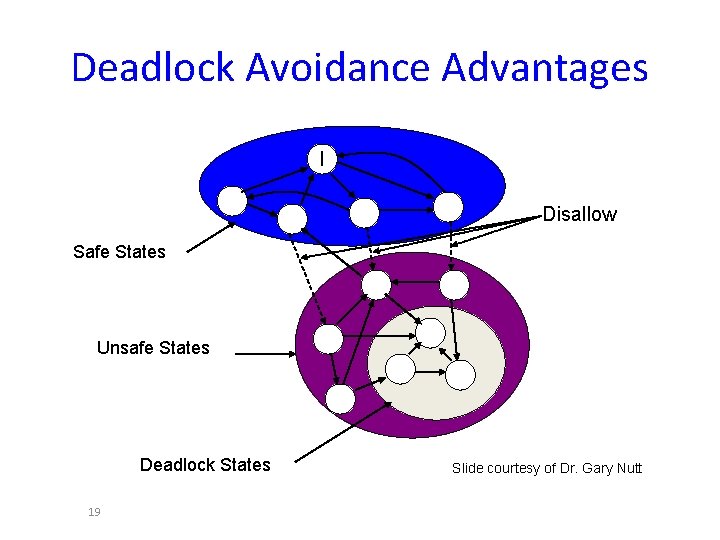

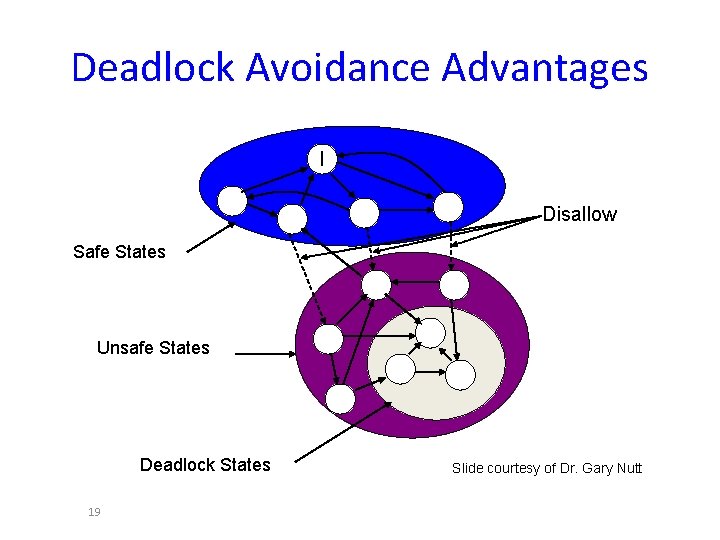

Deadlock Avoidance
[Figure: the set of system states divided into safe states, unsafe states, and deadlock states; avoidance disallows unsafe states.]
Slide courtesy of Dr. Gary Nutt

Safe State
- When a process requests a resource, the system must decide if the allocation leaves the system in a safe state
- The system is in a safe state if there exists a sequence <P1, P2, …, Pn> of ALL the processes in the system such that for each Pi, the resources that Pi can still request can be satisfied by the currently available resources plus the resources held by all the Pj, with j < i

Avoidance Algorithms
- Single instance of a resource type: use a resource-allocation graph
- Multiple instances of a resource type: use the banker’s algorithm

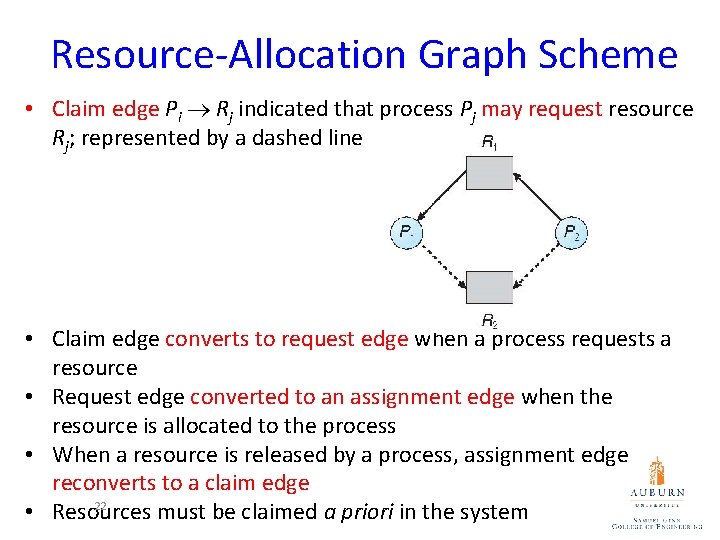

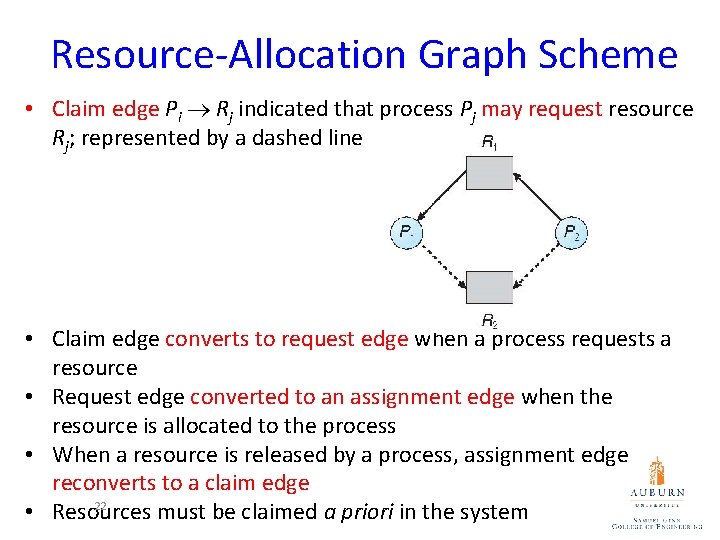

Resource-Allocation Graph Scheme
- Claim edge Pi → Rj indicates that process Pi may request resource Rj; represented by a dashed line
- Claim edge converts to request edge when a process requests a resource
- Request edge is converted to an assignment edge when the resource is allocated to the process
- When a resource is released by a process, assignment edge reconverts to a claim edge
- Resources must be claimed a priori in the system

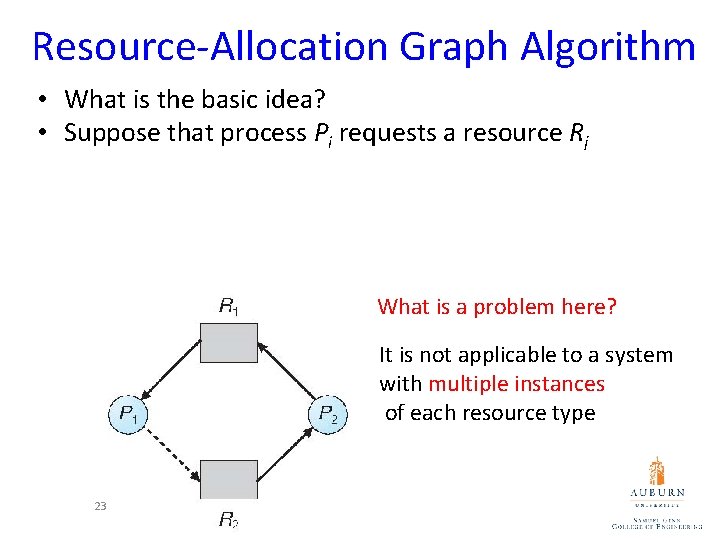

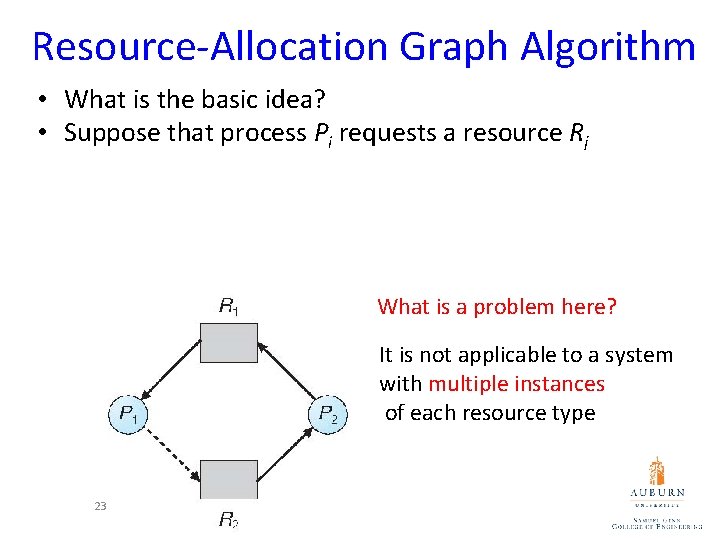

Resource-Allocation Graph Algorithm
What is the basic idea?
- Suppose that process Pi requests a resource Rj
- The request can be granted only if converting the request edge to an assignment edge does not result in the formation of a cycle in the resource-allocation graph

What is a problem here? It is not applicable to a system with multiple instances of each resource type.

Banker’s Algorithm
- Multiple instances
- Each process must declare maximum use
- When a process requests a resource it may have to wait
- When a process gets all its resources it must return them in a finite amount of time

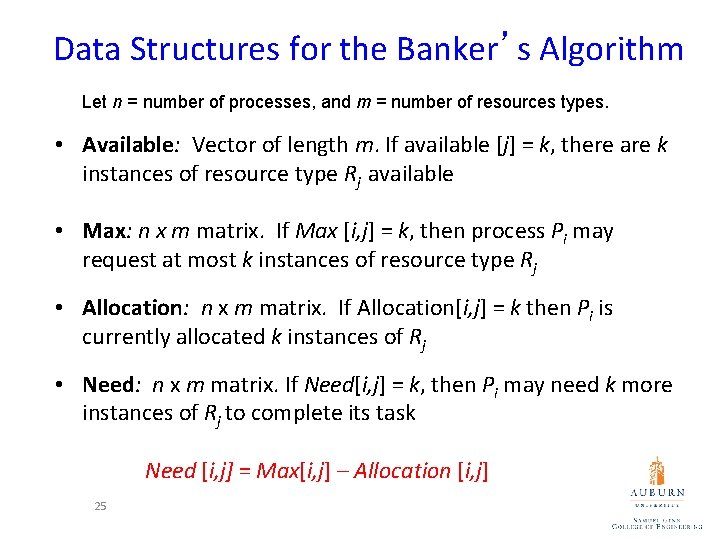

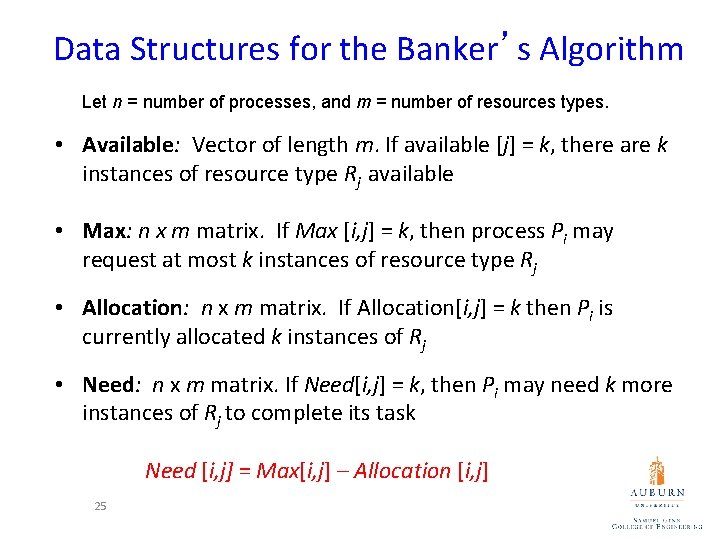

Data Structures for the Banker’s Algorithm
Let n = number of processes, and m = number of resource types.
- Available: vector of length m. If Available[j] = k, there are k instances of resource type Rj available
- Max: n x m matrix. If Max[i, j] = k, then process Pi may request at most k instances of resource type Rj
- Allocation: n x m matrix. If Allocation[i, j] = k, then Pi is currently allocated k instances of Rj
- Need: n x m matrix. If Need[i, j] = k, then Pi may need k more instances of Rj to complete its task

Need[i, j] = Max[i, j] - Allocation[i, j]

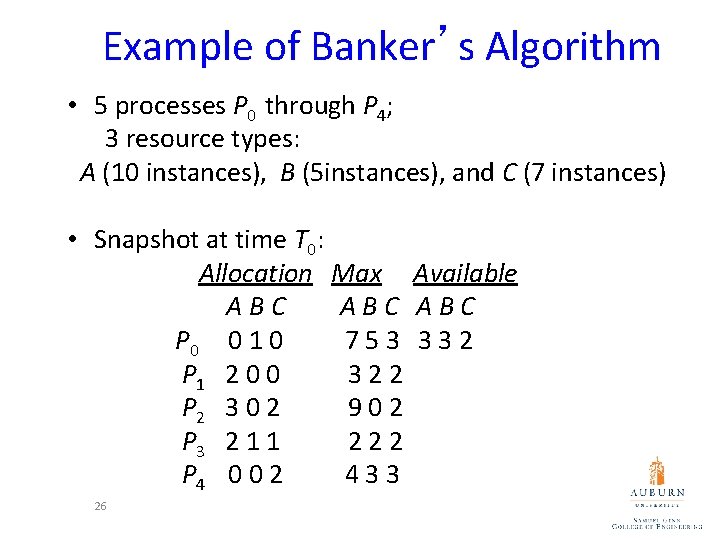

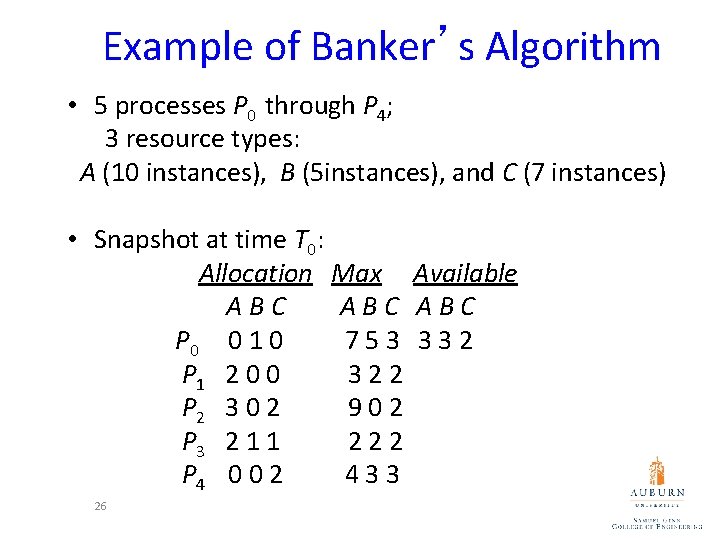

Example of Banker’s Algorithm
- 5 processes P0 through P4; 3 resource types: A (10 instances), B (5 instances), and C (7 instances)
- Snapshot at time T0:

| Process | Allocation (A B C) | Max (A B C) | Available (A B C) |
|---|---|---|---|
| P0 | 0 1 0 | 7 5 3 | 3 3 2 |
| P1 | 2 0 0 | 3 2 2 | |
| P2 | 3 0 2 | 9 0 2 | |
| P3 | 2 1 1 | 2 2 2 | |
| P4 | 0 0 2 | 4 3 3 | |

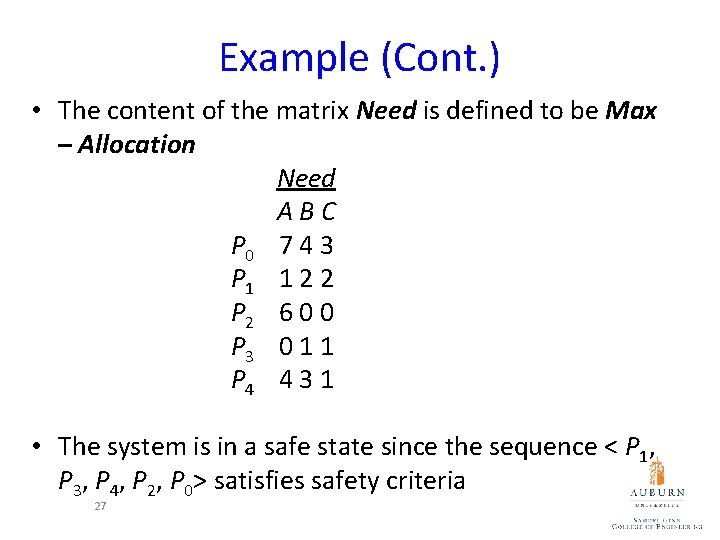

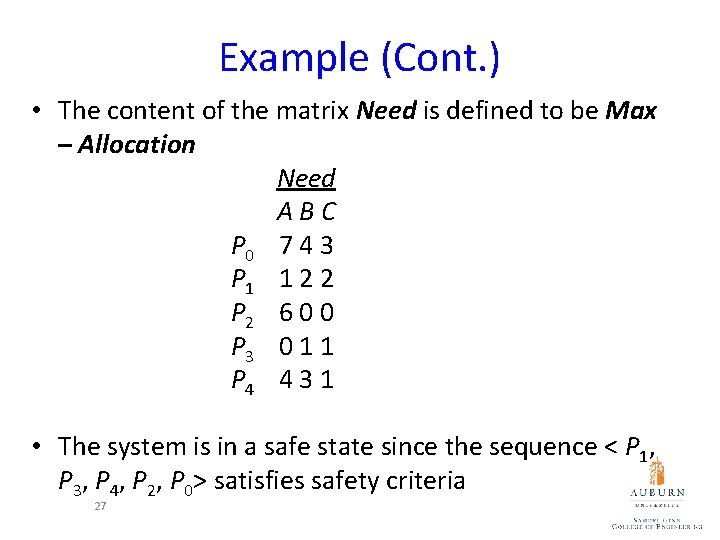

Example (Cont.)
- The content of the matrix Need is defined to be Max - Allocation

| Process | Need (A B C) |
|---|---|
| P0 | 7 4 3 |
| P1 | 1 2 2 |
| P2 | 6 0 0 |
| P3 | 0 1 1 |
| P4 | 4 3 1 |

- The system is in a safe state since the sequence <P1, P3, P4, P2, P0> satisfies the safety criteria

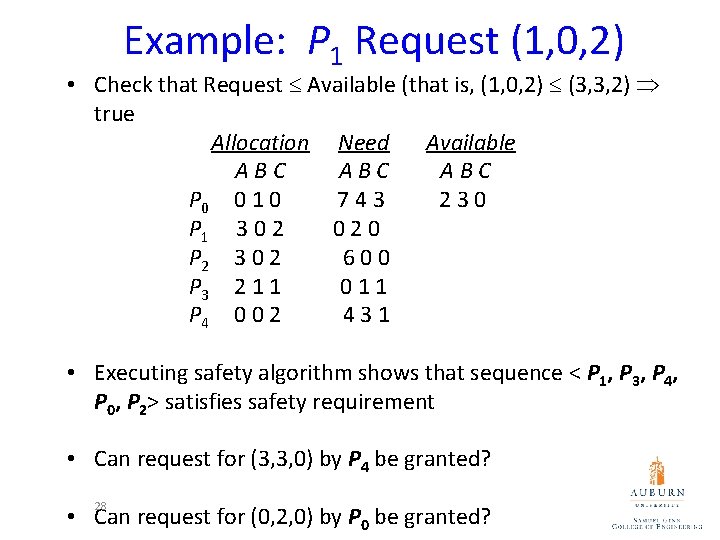

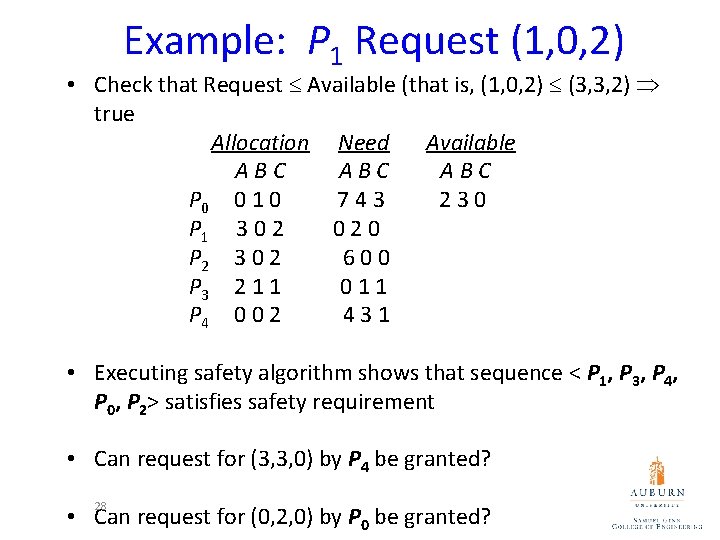

Example: P1 Request (1, 0, 2)
- Check that Request ≤ Available, that is, (1, 0, 2) ≤ (3, 3, 2), which is true

| Process | Allocation (A B C) | Need (A B C) | Available (A B C) |
|---|---|---|---|
| P0 | 0 1 0 | 7 4 3 | 2 3 0 |
| P1 | 3 0 2 | 0 2 0 | |
| P2 | 3 0 2 | 6 0 0 | |
| P3 | 2 1 1 | 0 1 1 | |
| P4 | 0 0 2 | 4 3 1 | |

- Executing the safety algorithm shows that the sequence <P1, P3, P4, P0, P2> satisfies the safety requirement
- Can request for (3, 3, 0) by P4 be granted?
- Can request for (0, 2, 0) by P0 be granted?

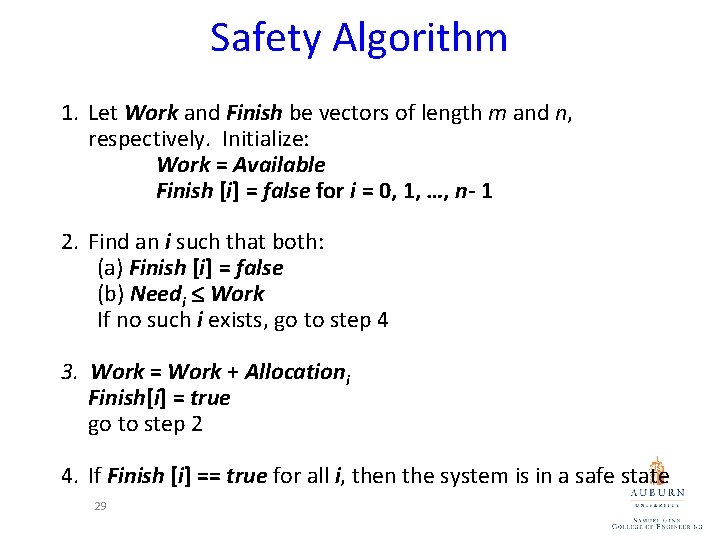

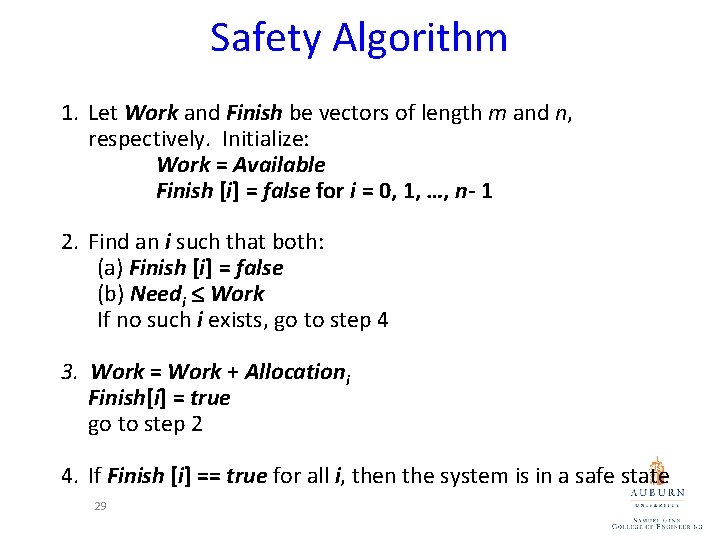

Safety Algorithm
1. Let Work and Finish be vectors of length m and n, respectively. Initialize: Work = Available; Finish[i] = false for i = 0, 1, …, n-1
2. Find an i such that both: (a) Finish[i] = false and (b) Need_i ≤ Work. If no such i exists, go to step 4
3. Work = Work + Allocation_i; Finish[i] = true; go to step 2
4. If Finish[i] == true for all i, then the system is in a safe state
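The four steps above can be sketched in C as follows. This is an illustrative sketch, not OS/161 code: the sizes `N` and `M` are fixed to the 5-process, 3-resource example, and the names `is_safe`, `fits`, and `order` are assumptions made for the illustration. Need is computed on the fly as Max - Allocation.

```c
#include <assert.h>
#include <stdbool.h>

#define N 5  /* processes, as in the slide example */
#define M 3  /* resource types A, B, C */

/* Returns true if need <= work component-wise. */
static bool fits(const int need[M], const int work[M])
{
    for (int j = 0; j < M; j++) {
        if (need[j] > work[j]) return false;
    }
    return true;
}

/* Safety algorithm. If the state is safe, order[] receives one safe
 * sequence of process indices (safe sequences are not unique). */
bool is_safe(const int available[M], int max[N][M],
             int allocation[N][M], int order[N])
{
    int work[M];
    bool finish[N] = { false };
    int count = 0;

    for (int j = 0; j < M; j++) work[j] = available[j];    /* step 1 */

    bool progress = true;
    while (progress) {
        progress = false;
        for (int i = 0; i < N; i++) {
            int need[M];
            if (finish[i]) continue;
            for (int j = 0; j < M; j++) {
                need[j] = max[i][j] - allocation[i][j];
                assert(need[j] >= 0);   /* Allocation must not exceed Max */
            }
            if (!fits(need, work)) continue;                /* step 2 */
            for (int j = 0; j < M; j++) {
                work[j] += allocation[i][j];                /* step 3 */
            }
            finish[i] = true;
            order[count++] = i;
            progress = true;
        }
    }
    return count == N;                                      /* step 4 */
}
```

On the T0 snapshot from the example, this scan order yields the sequence P1, P3, P4, P0, P2, which differs from <P1, P3, P4, P2, P0> but is equally valid: any sequence that passes the test shows the state is safe.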

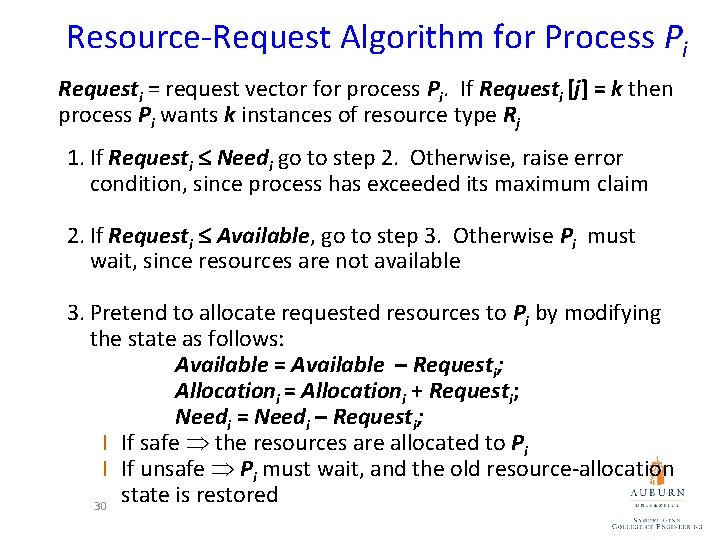

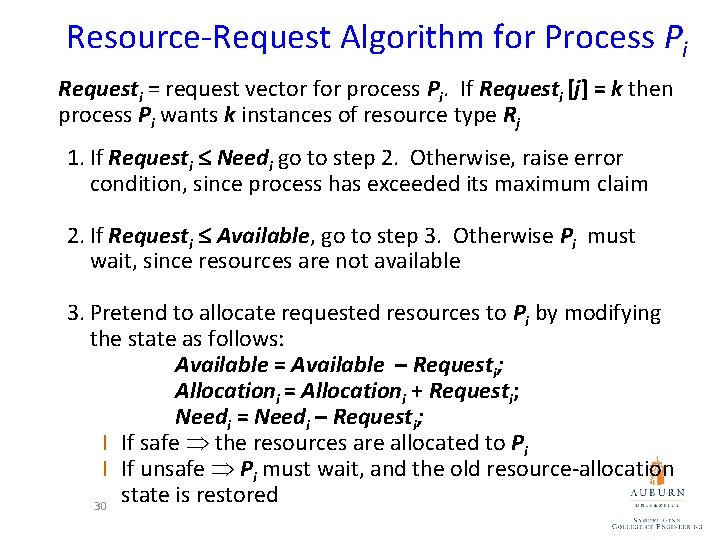

Resource-Request Algorithm for Process Pi
Request_i = request vector for process Pi. If Request_i[j] = k, then process Pi wants k instances of resource type Rj.
1. If Request_i ≤ Need_i, go to step 2. Otherwise, raise an error condition, since the process has exceeded its maximum claim
2. If Request_i ≤ Available, go to step 3. Otherwise Pi must wait, since resources are not available
3. Pretend to allocate the requested resources to Pi by modifying the state as follows: Available = Available - Request_i; Allocation_i = Allocation_i + Request_i; Need_i = Need_i - Request_i
   - If safe, the resources are allocated to Pi
   - If unsafe, Pi must wait, and the old resource-allocation state is restored
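A minimal C sketch of the resource-request steps, under the same assumptions as the example (5 processes, 3 resource types). The `struct state`, the `request_result` codes, and the function names are hypothetical. Instead of modifying the state and restoring it, this sketch tries the allocation on a copy and commits only if the copy is safe.

```c
#include <assert.h>
#include <stdbool.h>

#define N 5
#define M 3

enum request_result { GRANTED, MUST_WAIT, EXCEEDS_CLAIM };

struct state {
    int available[M];
    int allocation[N][M];
    int need[N][M];
};

/* Safety algorithm on a given state. */
static bool is_safe(const struct state *s)
{
    int work[M];
    bool finish[N] = { false };
    int done = 0;

    for (int j = 0; j < M; j++) work[j] = s->available[j];
    for (bool progress = true; progress; ) {
        progress = false;
        for (int i = 0; i < N; i++) {
            bool ok = !finish[i];
            for (int j = 0; ok && j < M; j++) ok = s->need[i][j] <= work[j];
            if (!ok) continue;
            for (int j = 0; j < M; j++) work[j] += s->allocation[i][j];
            finish[i] = true;
            done++;
            progress = true;
        }
    }
    return done == N;
}

enum request_result request_resources(struct state *s, int i,
                                      const int request[M])
{
    assert(i >= 0 && i < N);
    for (int j = 0; j < M; j++)                  /* step 1 */
        if (request[j] > s->need[i][j]) return EXCEEDS_CLAIM;
    for (int j = 0; j < M; j++)                  /* step 2 */
        if (request[j] > s->available[j]) return MUST_WAIT;

    struct state trial = *s;                     /* step 3, on a copy */
    for (int j = 0; j < M; j++) {
        trial.available[j] -= request[j];
        trial.allocation[i][j] += request[j];
        trial.need[i][j] -= request[j];
    }
    if (!is_safe(&trial)) return MUST_WAIT;      /* old state is untouched */
    *s = trial;                                  /* commit the allocation */
    return GRANTED;
}
```

Starting from the T0 snapshot, calling `request_resources` for P1 with (1, 0, 2) returns `GRANTED` and leaves Available at (2, 3, 0), matching the example.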

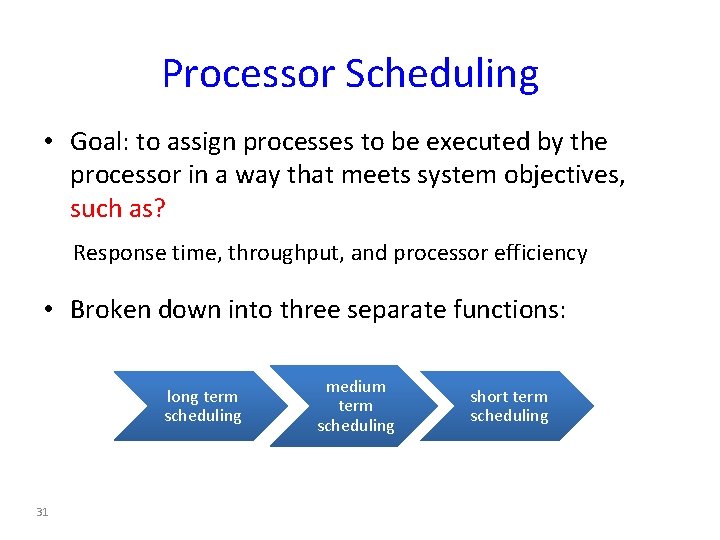

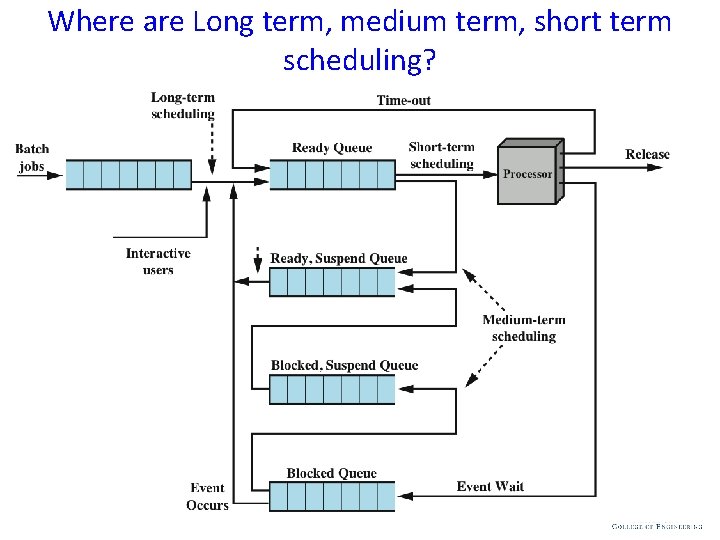

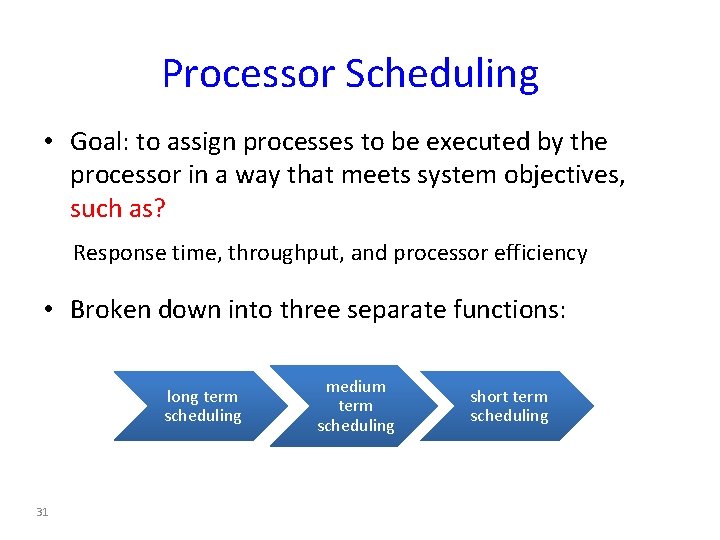

Processor Scheduling
- Goal: to assign processes to be executed by the processor in a way that meets system objectives, such as? Response time, throughput, and processor efficiency
- Broken down into three separate functions: long-term scheduling, medium-term scheduling, and short-term scheduling

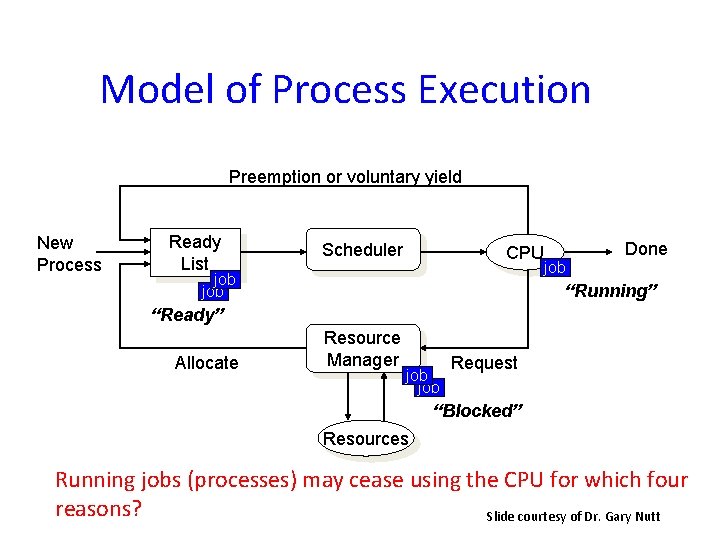

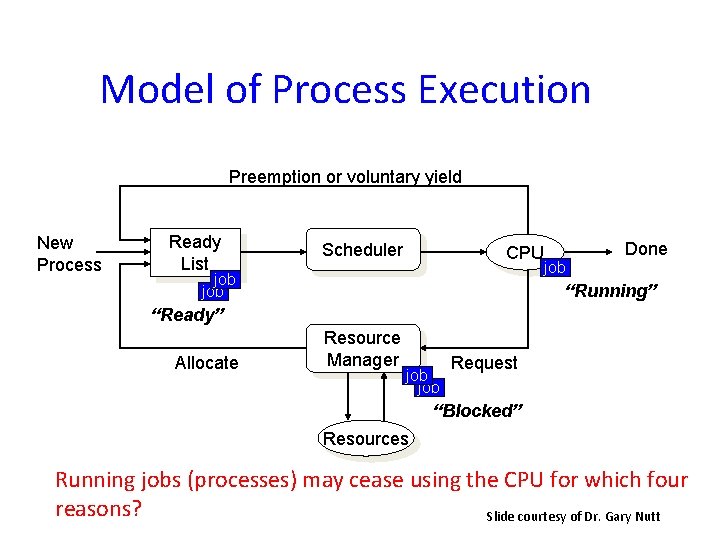

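Model of Process Execution
[Figure: a new process enters the ready list ("Ready"); the scheduler dispatches a job to the CPU ("Running"); preemption or voluntary yield returns it to the ready list; a resource request sends it to the resource manager ("Blocked") until the resource is allocated; a finished job leaves the system (Done).]
Running jobs (processes) may cease using the CPU for which four reasons?
Slide courtesy of Dr. Gary Nutt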

How many different potential schedules?
- A CPU scheduler determines an order for the execution of its scheduled processes.
- Given n processes to be scheduled, there are n! (n factorial) possible schedules.

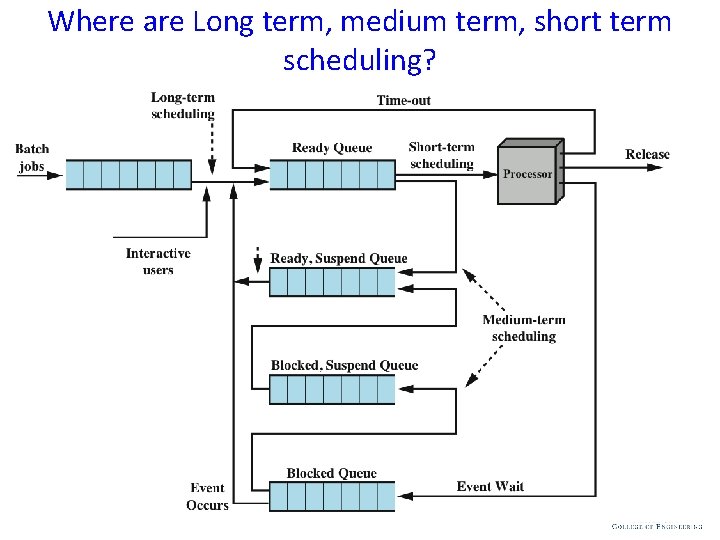

Where are long-term, medium-term, and short-term scheduling?

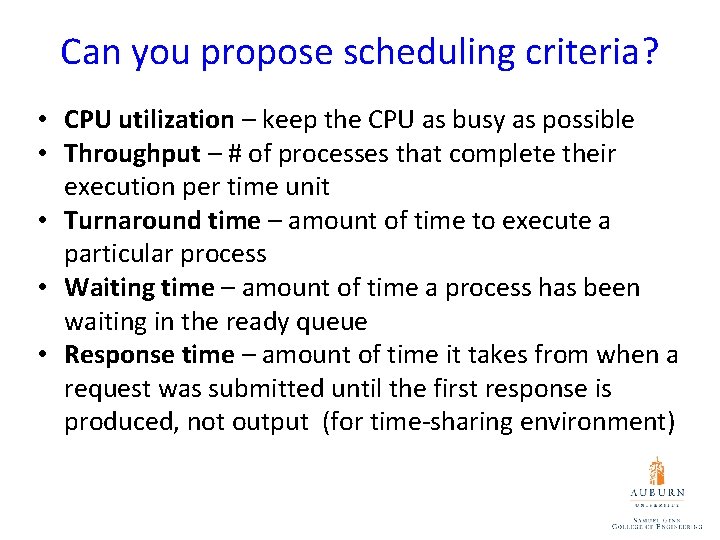

Can you propose scheduling criteria?
- CPU utilization: keep the CPU as busy as possible
- Throughput: number of processes that complete their execution per time unit
- Turnaround time: amount of time to execute a particular process
- Waiting time: amount of time a process has been waiting in the ready queue
- Response time: amount of time from when a request was submitted until the first response is produced, not until output is complete (for time-sharing environments)

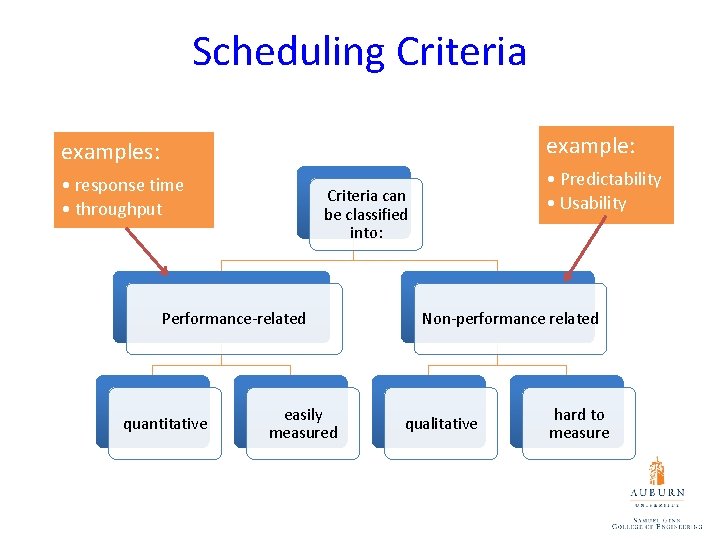

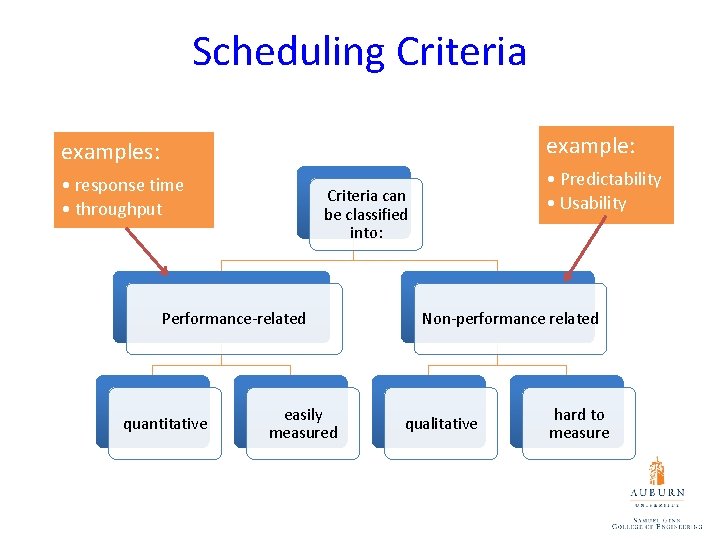

Scheduling Criteria
Criteria can be classified into:
- Performance-related: quantitative, easily measured (examples: response time, throughput)
- Non-performance-related: qualitative, hard to measure (examples: predictability, usability)

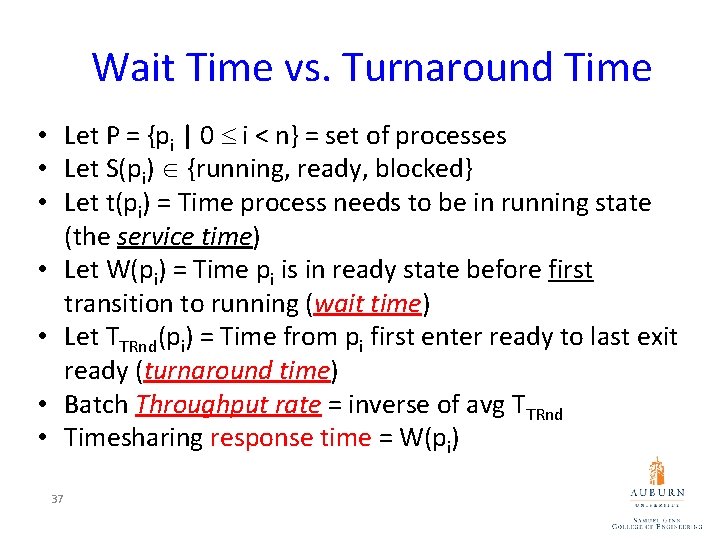

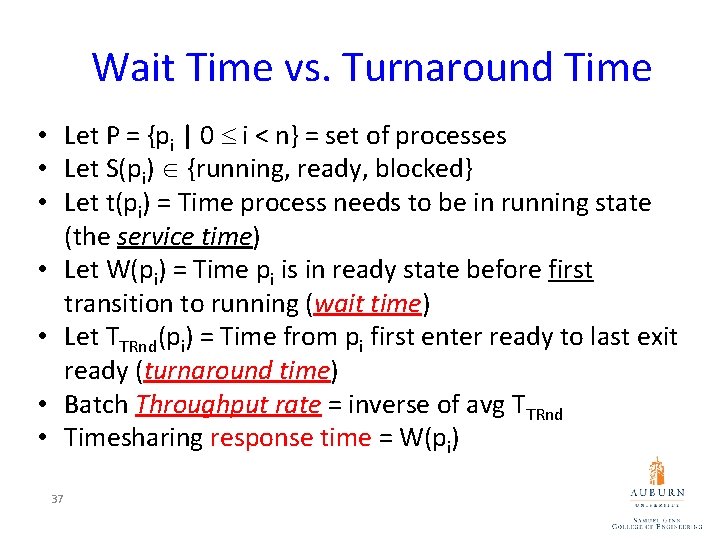

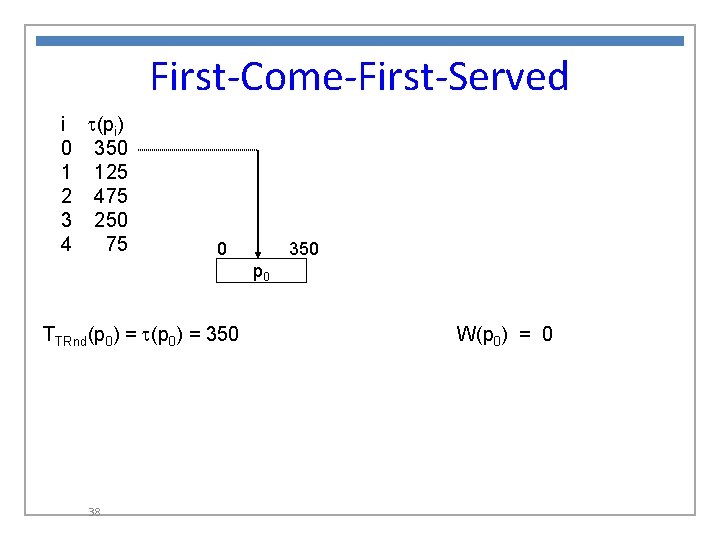

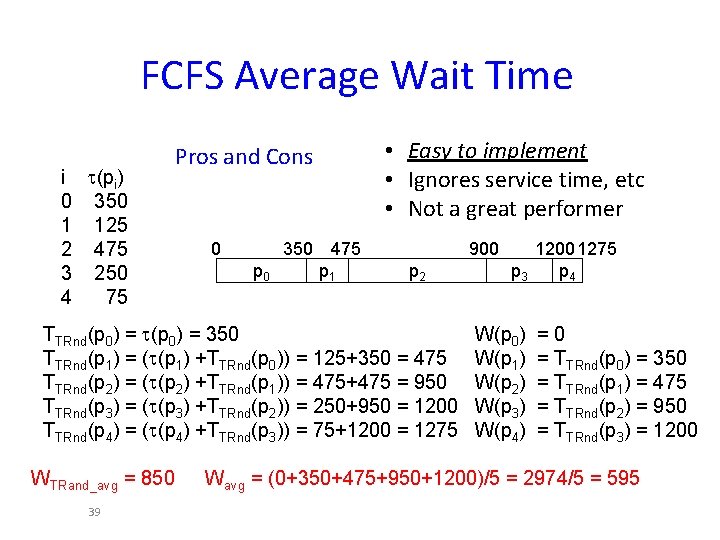

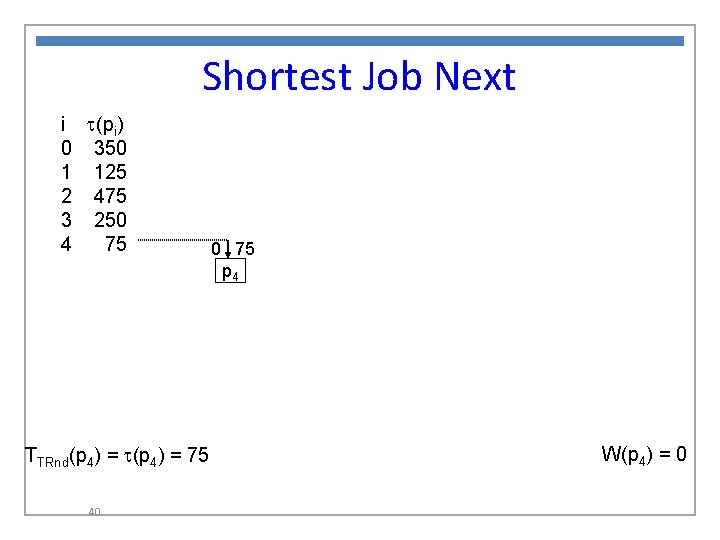

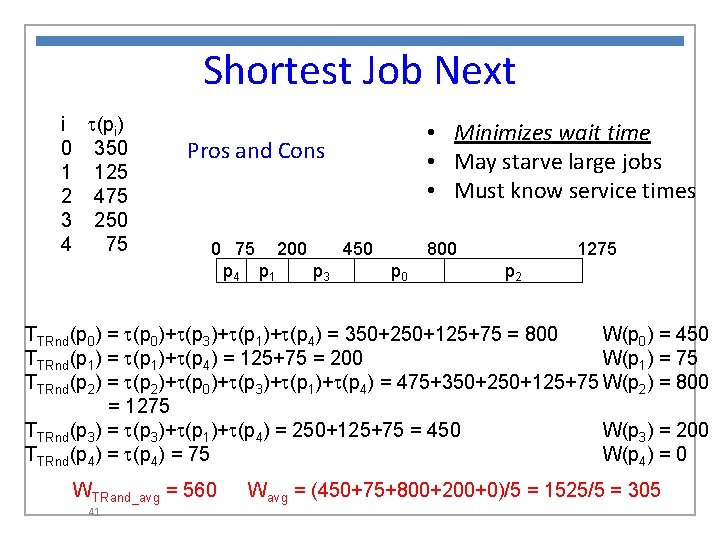

Wait Time vs. Turnaround Time
- Let P = {pi | 0 ≤ i < n} = set of processes
- Let S(pi) ∈ {running, ready, blocked}
- Let t(pi) = time the process needs to be in the running state (the service time)
- Let W(pi) = time pi is in the ready state before its first transition to running (wait time)
- Let TTRnd(pi) = time from pi first entering ready to its last exit from ready (turnaround time)
- Batch throughput rate = inverse of average TTRnd
- Timesharing response time = W(pi)

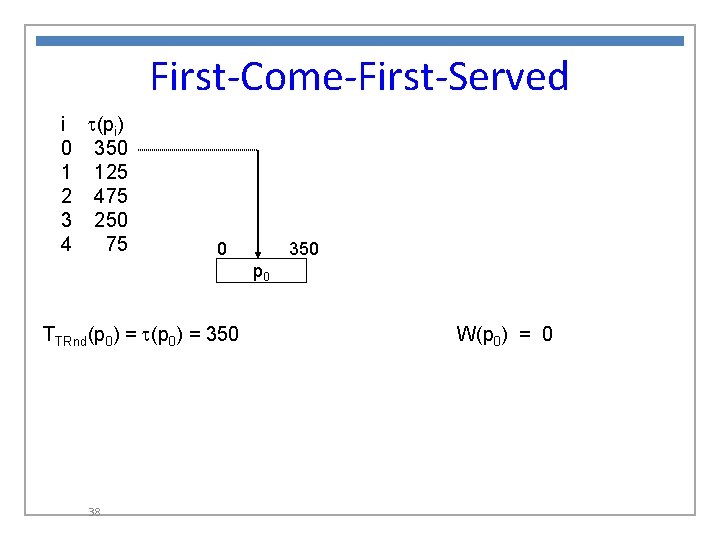

First-Come-First-Served

| i | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| t(pi) | 350 | 125 | 475 | 250 | 75 |

Gantt chart: p0 runs from 0 to 350.
- TTRnd(p0) = t(p0) = 350
- W(p0) = 0

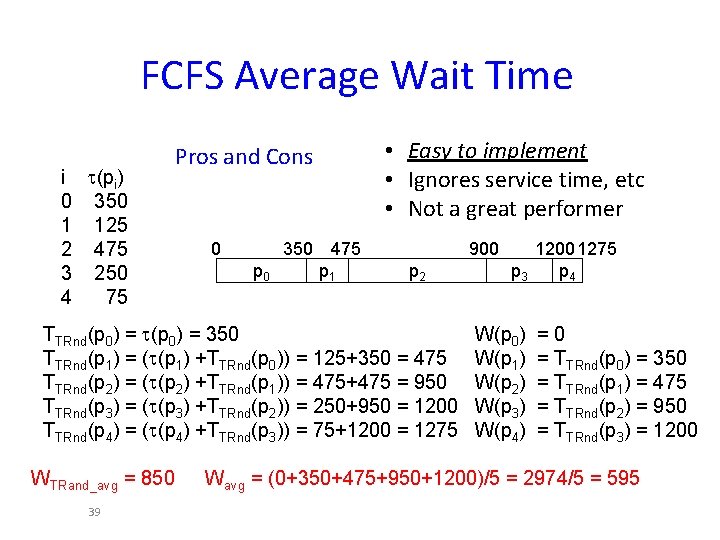

FCFS Average Wait Time

| i | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| t(pi) | 350 | 125 | 475 | 250 | 75 |

Gantt chart: p0 runs from 0 to 350, p1 from 350 to 475, p2 from 475 to 950, p3 from 950 to 1200, p4 from 1200 to 1275.

- TTRnd(p0) = t(p0) = 350
- TTRnd(p1) = t(p1) + TTRnd(p0) = 125 + 350 = 475
- TTRnd(p2) = t(p2) + TTRnd(p1) = 475 + 475 = 950
- TTRnd(p3) = t(p3) + TTRnd(p2) = 250 + 950 = 1200
- TTRnd(p4) = t(p4) + TTRnd(p3) = 75 + 1200 = 1275
- TTRnd_avg = (350+475+950+1200+1275)/5 = 4250/5 = 850

- W(p0) = 0
- W(p1) = TTRnd(p0) = 350
- W(p2) = TTRnd(p1) = 475
- W(p3) = TTRnd(p2) = 950
- W(p4) = TTRnd(p3) = 1200
- Wavg = (0+350+475+950+1200)/5 = 2975/5 = 595

Pros and cons:
- Easy to implement
- Ignores service time, etc.
- Not a great performer
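The FCFS arithmetic can be reproduced with a short C sketch. It assumes, as the slide does, that all processes arrive at time 0 and run in index order; the function names `fcfs` and `average` are hypothetical.

```c
#include <assert.h>

/* Fills turnaround[] and wait[] for jobs that all arrive at time 0
 * and run to completion in index order. */
void fcfs(const int service[], int n, int turnaround[], int wait[])
{
    int clock = 0;

    assert(n >= 0);
    for (int i = 0; i < n; i++) {
        wait[i] = clock;           /* time spent in the ready list */
        clock += service[i];
        turnaround[i] = clock;     /* completion time, since arrival is 0 */
    }
}

/* Arithmetic mean of n integer values (0.0 for an empty array). */
double average(const int values[], int n)
{
    long sum = 0;

    for (int i = 0; i < n; i++) sum += values[i];
    return n > 0 ? (double)sum / n : 0.0;
}
```

With the service times 350, 125, 475, 250, and 75, the averages come out to 850 for turnaround time and 595 for wait time.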

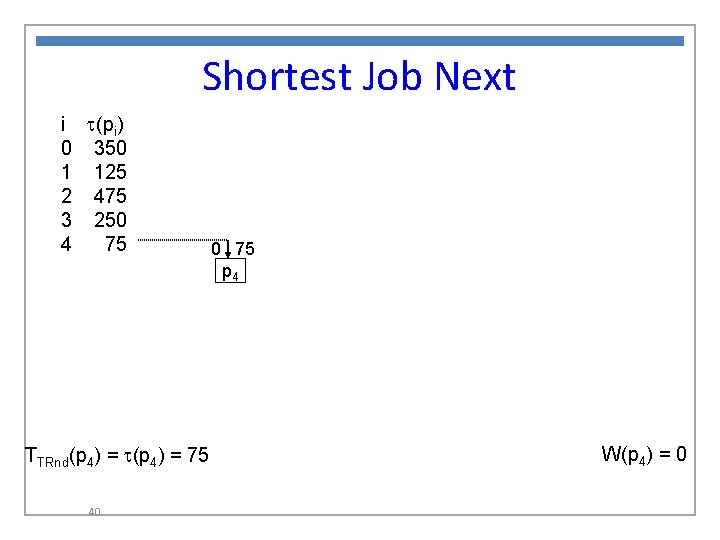

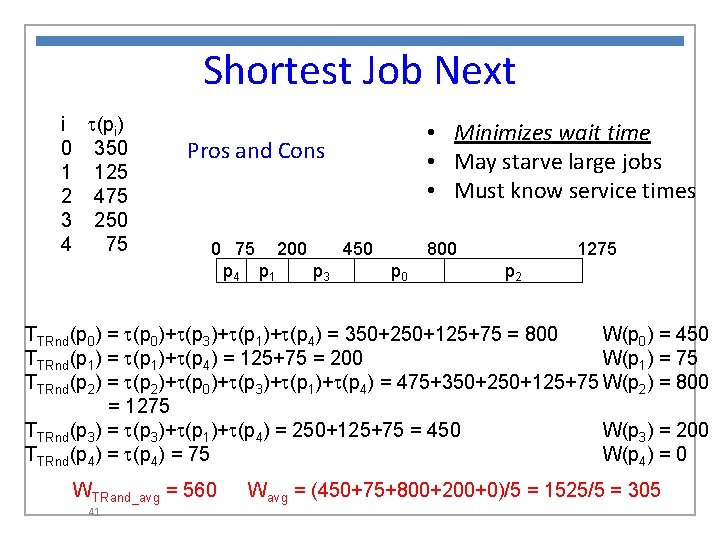

Shortest Job Next

| i | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| t(pi) | 350 | 125 | 475 | 250 | 75 |

Gantt chart: p4 runs from 0 to 75.
- TTRnd(p4) = t(p4) = 75
- W(p4) = 0

Shortest Job Next

| i | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| t(pi) | 350 | 125 | 475 | 250 | 75 |

Gantt chart: p4 runs from 0 to 75, p1 from 75 to 200, p3 from 200 to 450, p0 from 450 to 800, p2 from 800 to 1275.

- TTRnd(p0) = t(p0)+t(p3)+t(p1)+t(p4) = 350+250+125+75 = 800; W(p0) = 450
- TTRnd(p1) = t(p1)+t(p4) = 125+75 = 200; W(p1) = 75
- TTRnd(p2) = t(p2)+t(p0)+t(p3)+t(p1)+t(p4) = 475+350+250+125+75 = 1275; W(p2) = 800
- TTRnd(p3) = t(p3)+t(p1)+t(p4) = 250+125+75 = 450; W(p3) = 200
- TTRnd(p4) = t(p4) = 75; W(p4) = 0
- TTRnd_avg = 560
- Wavg = (450+75+800+200+0)/5 = 1525/5 = 305

Pros and cons:
- Minimizes wait time
- May starve large jobs
- Must know service times
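A minimal C sketch of non-preemptive Shortest Job Next, assuming all jobs arrive at time 0 and service times are known in advance. The `struct job`, the tie-break by id, and the function names are assumptions made for this illustration.

```c
#include <assert.h>
#include <stdlib.h>

struct job {
    int id;        /* index i of process pi */
    int service;   /* service time t(pi) */
};

/* Orders jobs by service time; ties are broken by id for determinism. */
static int by_service(const void *a, const void *b)
{
    const struct job *x = a, *y = b;

    if (x->service != y->service)
        return (x->service > y->service) - (x->service < y->service);
    return (x->id > y->id) - (x->id < y->id);
}

/* Non-preemptive SJN with all jobs arriving at time 0.
 * jobs[] is reordered into dispatch order; results are indexed by job id,
 * so ids must be 0..n-1. */
void sjn(struct job jobs[], int n, int turnaround[], int wait[])
{
    int clock = 0;

    assert(n >= 0);
    qsort(jobs, (size_t)n, sizeof jobs[0], by_service);
    for (int k = 0; k < n; k++) {
        assert(jobs[k].id >= 0 && jobs[k].id < n);
        wait[jobs[k].id] = clock;
        clock += jobs[k].service;
        turnaround[jobs[k].id] = clock;
    }
}
```

Sorting on a different key, such as a priority field, would give a non-preemptive priority schedule with the same loop.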

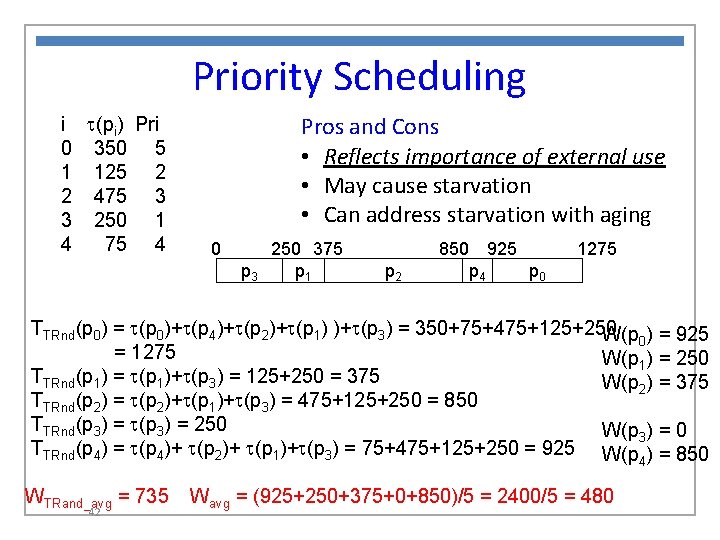

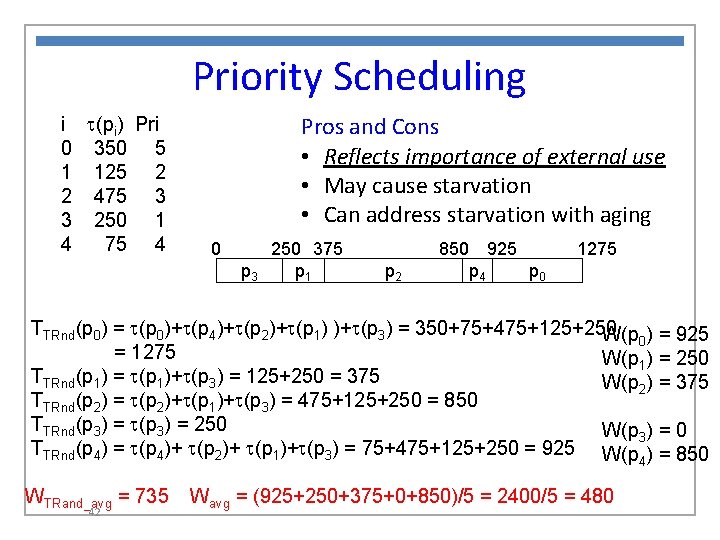

Priority Scheduling

| i | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| t(pi) | 350 | 125 | 475 | 250 | 75 |
| Pri | 5 | 2 | 3 | 1 | 4 |

Gantt chart (a smaller number means higher priority): p3 runs from 0 to 250, p1 from 250 to 375, p2 from 375 to 850, p4 from 850 to 925, p0 from 925 to 1275.

- TTRnd(p0) = t(p0)+t(p4)+t(p2)+t(p1)+t(p3) = 350+75+475+125+250 = 1275; W(p0) = 925
- TTRnd(p1) = t(p1)+t(p3) = 125+250 = 375; W(p1) = 250
- TTRnd(p2) = t(p2)+t(p1)+t(p3) = 475+125+250 = 850; W(p2) = 375
- TTRnd(p3) = t(p3) = 250; W(p3) = 0
- TTRnd(p4) = t(p4)+t(p2)+t(p1)+t(p3) = 75+475+125+250 = 925; W(p4) = 850
- TTRnd_avg = 735
- Wavg = (925+250+375+0+850)/5 = 2400/5 = 480

Pros and cons:
- Reflects importance of external use
- May cause starvation
- Can address starvation with aging

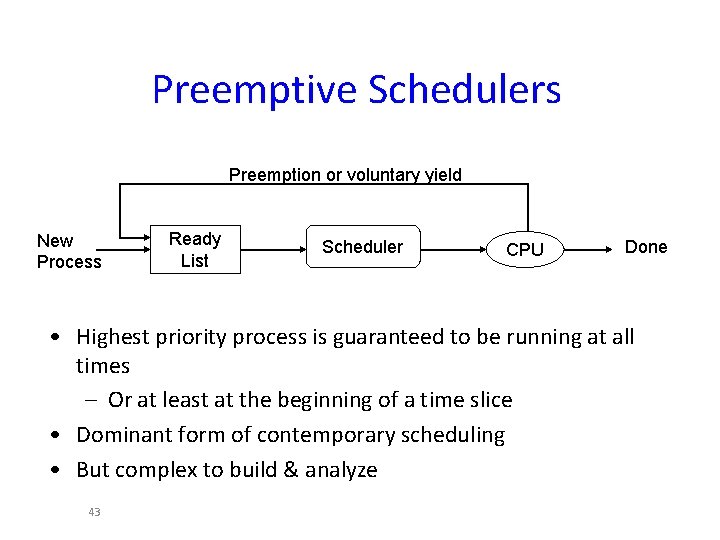

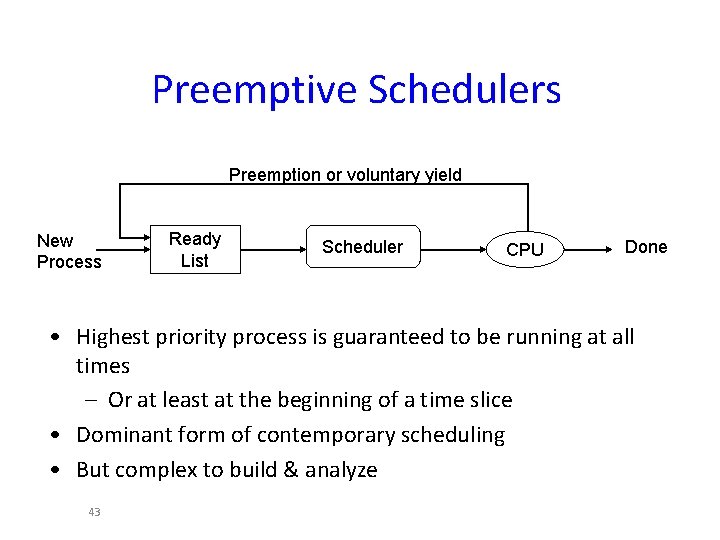

Preemptive Schedulers
[Figure: a new process enters the ready list; the scheduler dispatches it to the CPU; preemption or voluntary yield returns it to the ready list; a finished process leaves (Done).]
- Highest priority process is guaranteed to be running at all times, or at least at the beginning of a time slice
- Dominant form of contemporary scheduling
- But complex to build and analyze

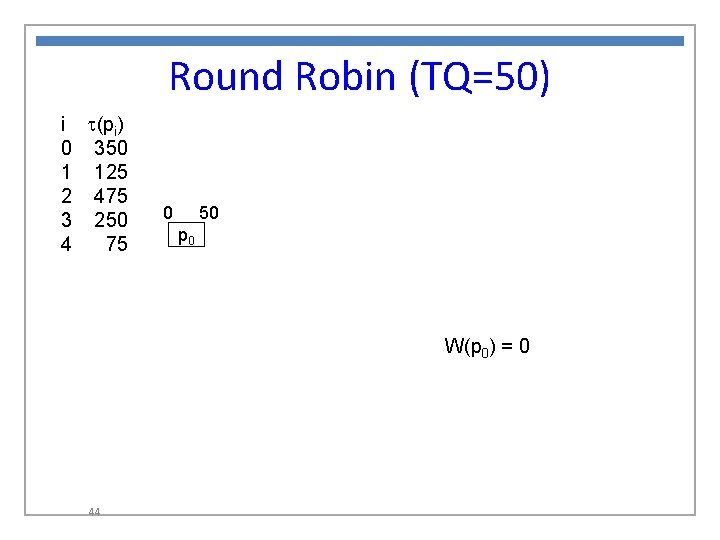

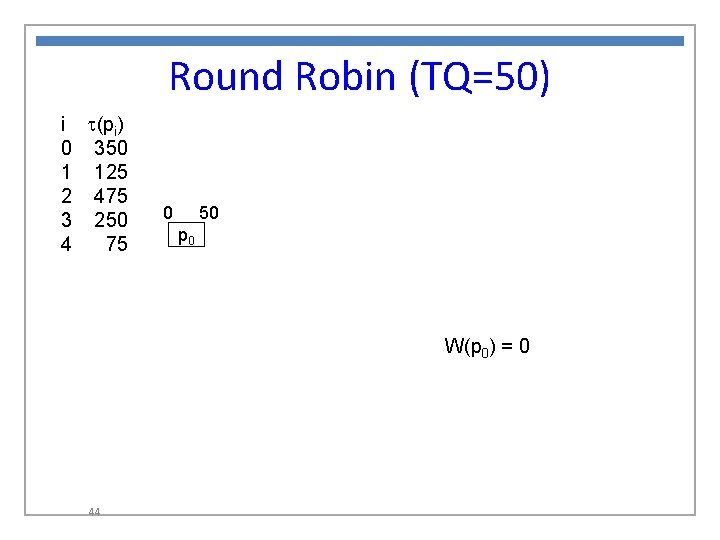

Round Robin (TQ=50)

| i | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| t(pi) | 350 | 125 | 475 | 250 | 75 |

Gantt chart: p0 runs first, from 0 to 50.
- W(p0) = 0

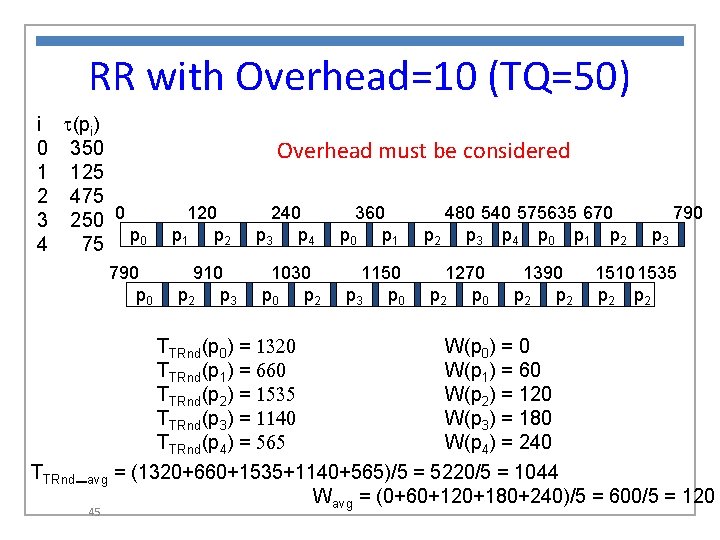

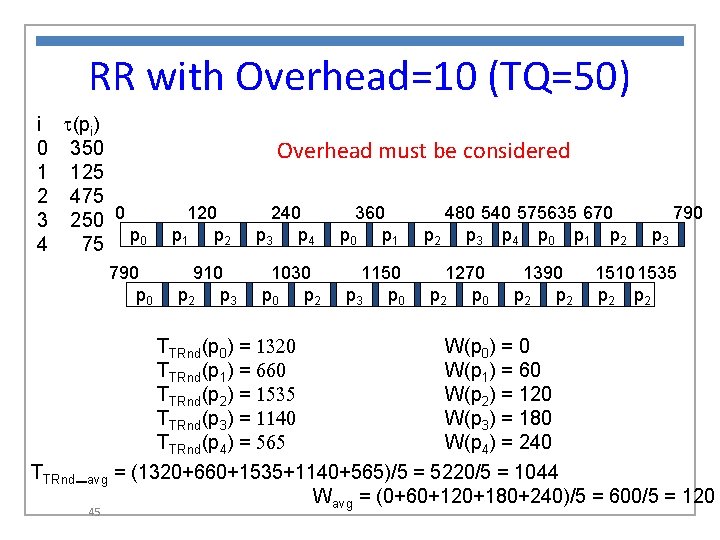

RR with Overhead=10 (TQ=50)

| i | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| t(pi) | 350 | 125 | 475 | 250 | 75 |

Overhead must be considered.
[Gantt chart: 50-unit time slices separated by 10 units of overhead, running from time 0 to 1535.]

- TTRnd(p0) = 1320; W(p0) = 0
- TTRnd(p1) = 660; W(p1) = 60
- TTRnd(p2) = 1535; W(p2) = 120
- TTRnd(p3) = 1140; W(p3) = 180
- TTRnd(p4) = 565; W(p4) = 240
- TTRnd_avg = (1320+660+1535+1140+565)/5 = 5220/5 = 1044
- Wavg = (0+60+120+180+240)/5 = 600/5 = 120
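The round-robin numbers can be checked with a small simulation. The sketch below makes two assumptions that are consistent with the wait times on the slide: all jobs arrive at time 0 in index order, and the overhead is charged between consecutive time slices but not before the very first one. The function name `round_robin` and the `MAX_JOBS` bound are hypothetical.

```c
#include <assert.h>

#define MAX_JOBS 16

/* Round robin over jobs that all arrive at time 0 in index order.
 * `overhead` is added between consecutive time slices. */
void round_robin(const int service[], int n, int quantum, int overhead,
                 int turnaround[], int wait[])
{
    int remaining[MAX_JOBS];
    int started[MAX_JOBS] = { 0 };
    int left = n, clock = 0, first_slice = 1;

    assert(n >= 0 && n <= MAX_JOBS && quantum > 0 && overhead >= 0);
    for (int i = 0; i < n; i++) {
        assert(service[i] > 0);
        remaining[i] = service[i];
    }

    while (left > 0) {
        /* One pass over the jobs in cyclic order, skipping finished ones. */
        for (int i = 0; i < n; i++) {
            if (remaining[i] == 0) continue;
            if (!first_slice) clock += overhead;   /* context-switch cost */
            first_slice = 0;
            if (!started[i]) {                     /* first time on the CPU */
                wait[i] = clock;
                started[i] = 1;
            }
            int slice = remaining[i] < quantum ? remaining[i] : quantum;
            clock += slice;
            remaining[i] -= slice;
            if (remaining[i] == 0) {
                turnaround[i] = clock;
                left--;
            }
        }
    }
}
```

Calling it with a quantum of 50 and an overhead of 10 on the five service times gives the turnaround and wait values listed above; a quantum larger than every service time with zero overhead degenerates to FCFS.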

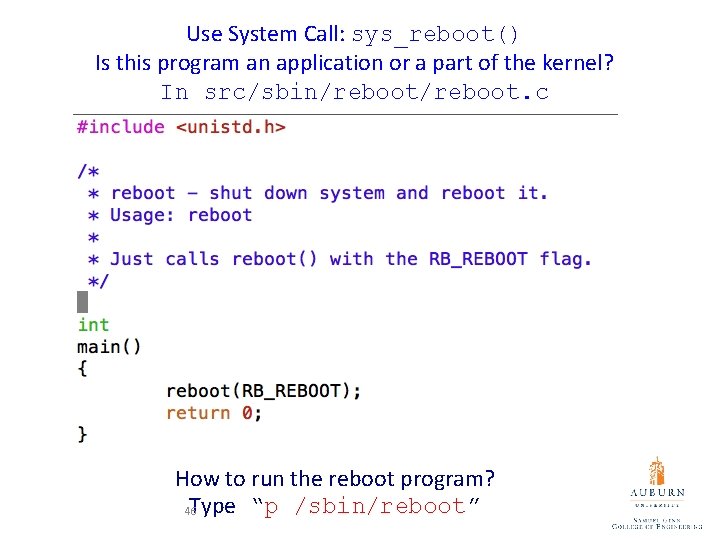

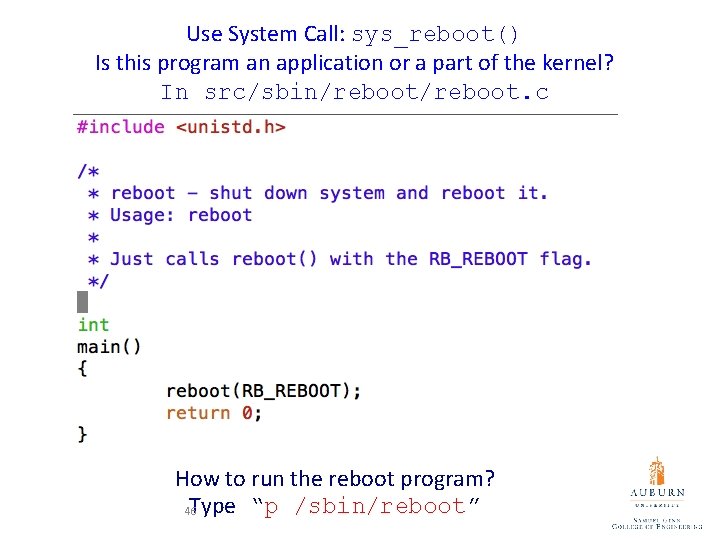

Use System Call: sys_reboot()
- Is this program an application or a part of the kernel?
- In src/sbin/reboot.c
- How to run the reboot program? Type “p /sbin/reboot”

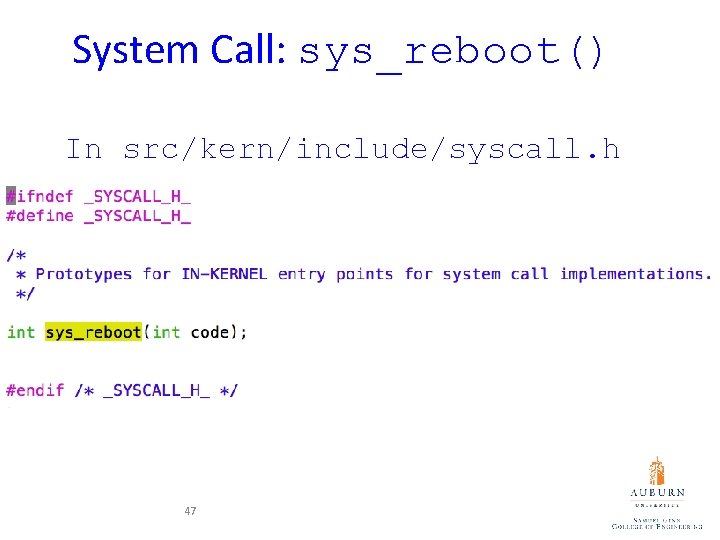

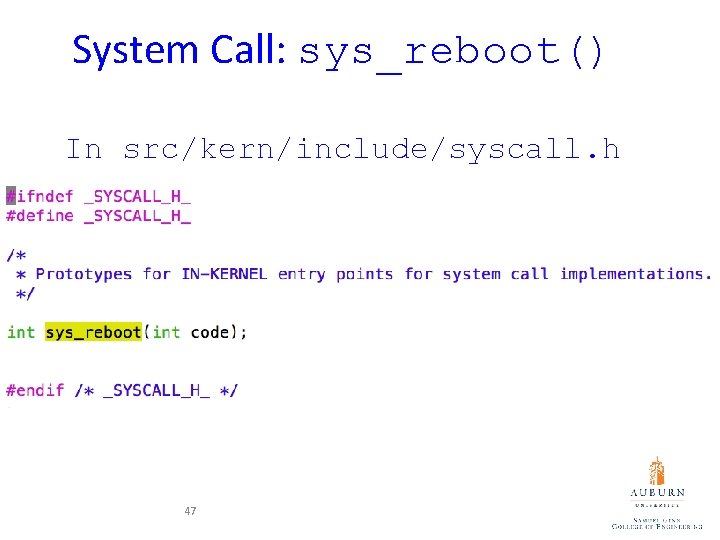

System Call: sys_reboot()
In src/kern/include/syscall.h

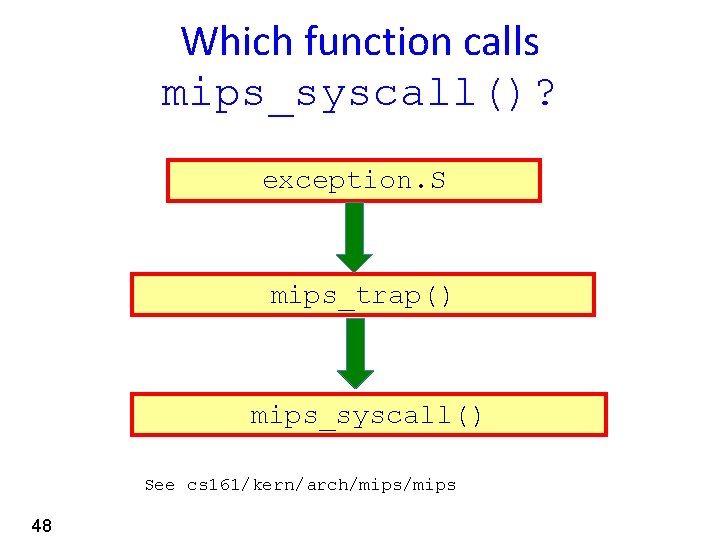

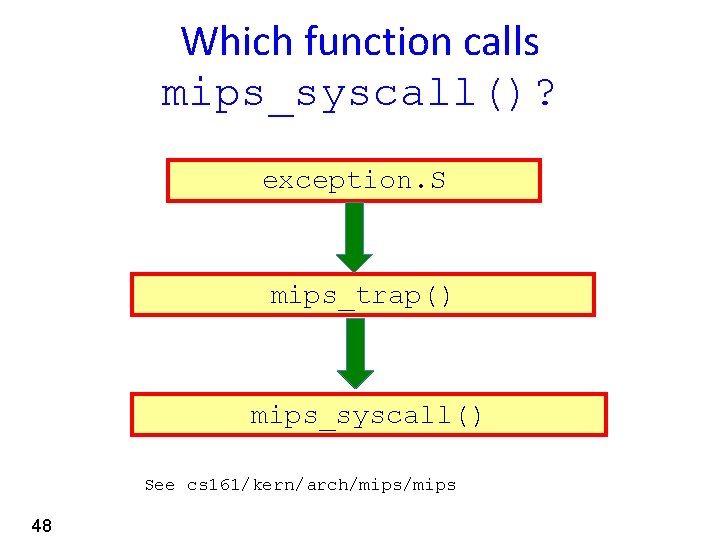

Which function calls mips_syscall()?
exception.S → mips_trap() → mips_syscall()
See cs161/kern/arch/mips

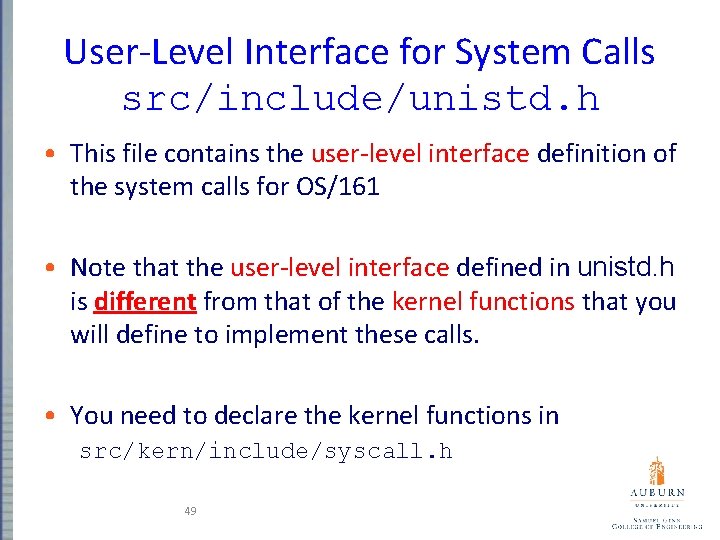

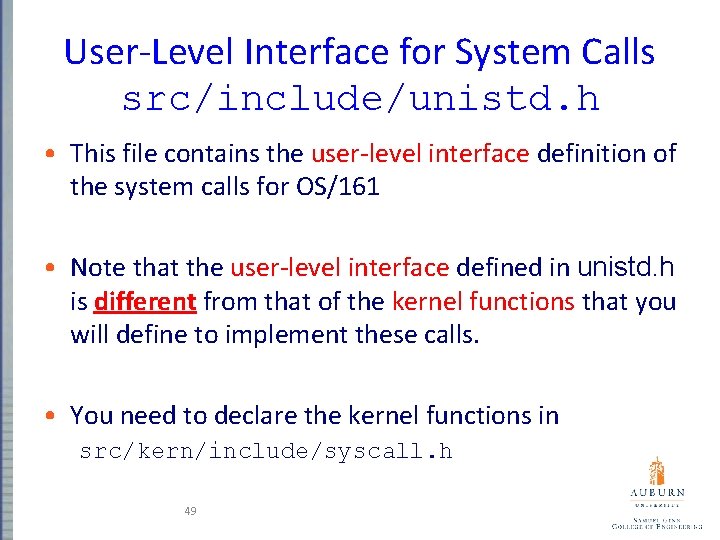

User-Level Interface for System Calls
src/include/unistd.h
- This file contains the user-level interface definition of the system calls for OS/161
- Note that the user-level interface defined in unistd.h is different from that of the kernel functions that you will define to implement these calls
- You need to declare the kernel functions in src/kern/include/syscall.h

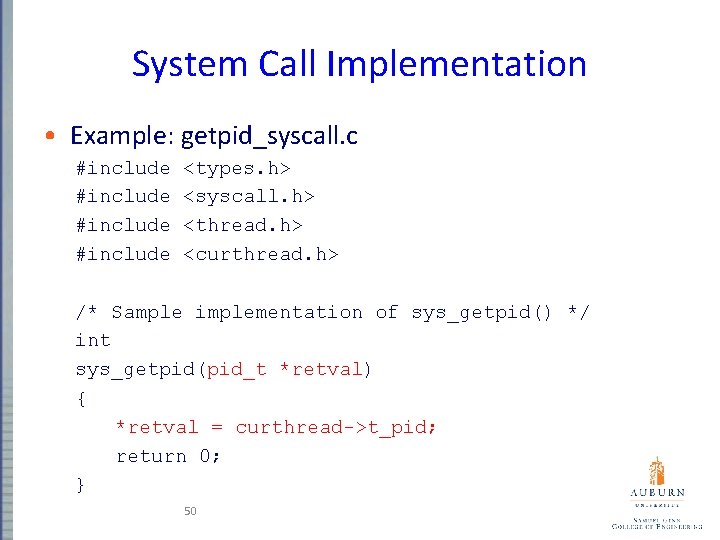

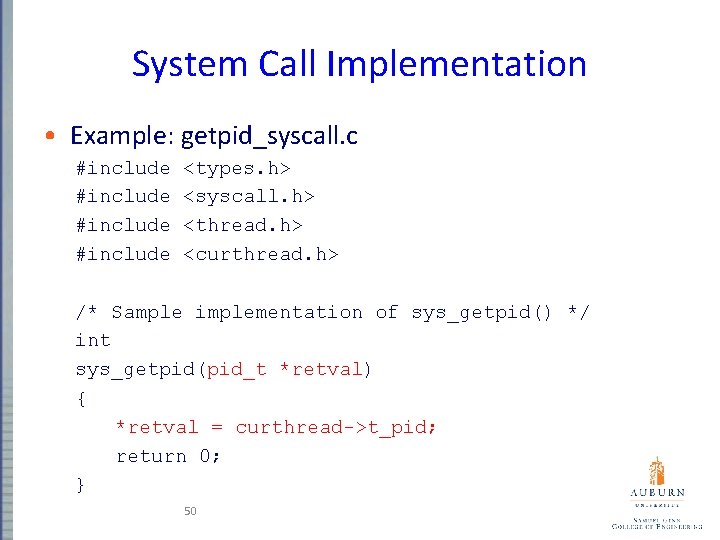

System Call Implementation
Example: getpid_syscall.c

    #include <types.h>
    #include <syscall.h>
    #include <thread.h>
    #include <curthread.h>

    /* Sample implementation of sys_getpid() */
    int sys_getpid(pid_t *retval)
    {
        /* Report the pid of the calling thread through retval */
        *retval = curthread->t_pid;
        return 0;
    }

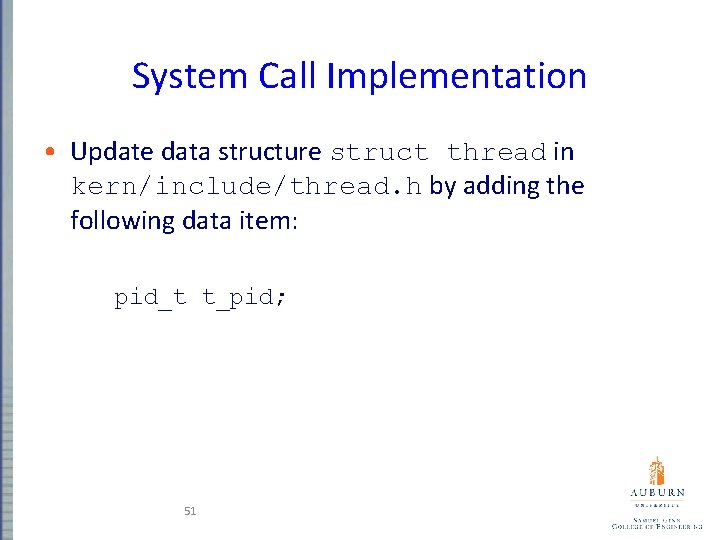

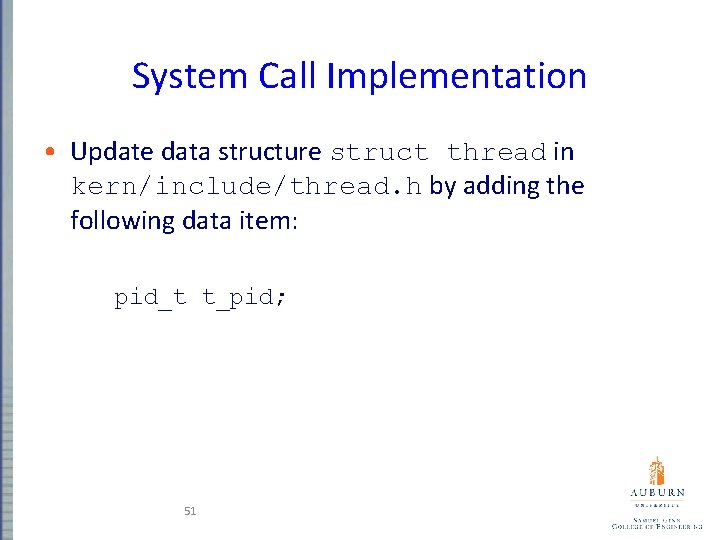

System Call Implementation
Update the data structure struct thread in kern/include/thread.h by adding the following data item:

    pid_t t_pid;

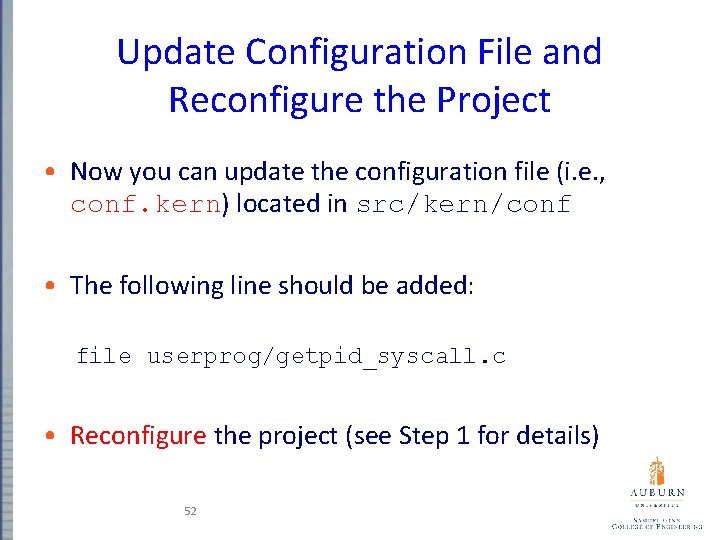

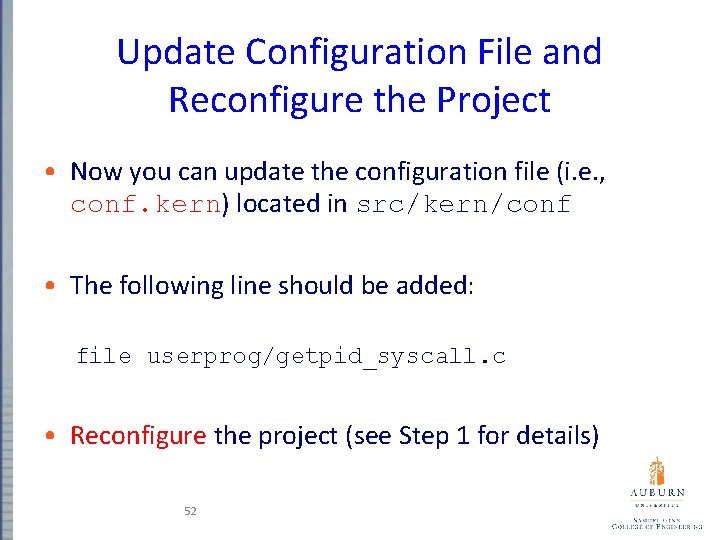

Update Configuration File and Reconfigure the Project
- Now you can update the configuration file (i.e., conf.kern) located in src/kern/conf
- The following line should be added:

      file userprog/getpid_syscall.c

- Reconfigure the project (see Step 1 for details)

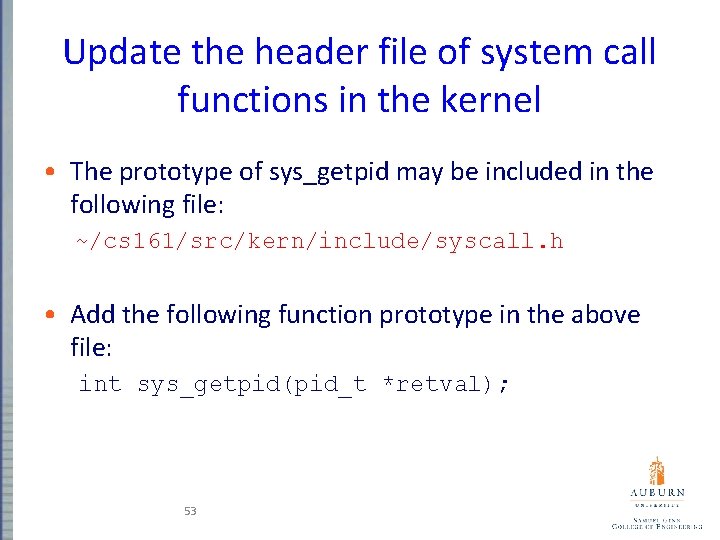

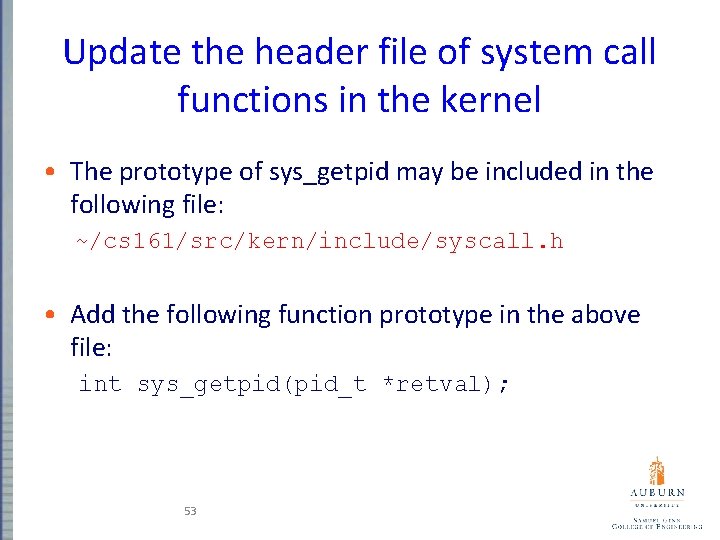

Update the header file of system call functions in the kernel
- The prototype of sys_getpid may be included in the following file: ~/cs161/src/kern/include/syscall.h
- Add the following function prototype to the above file:

      int sys_getpid(pid_t *retval);

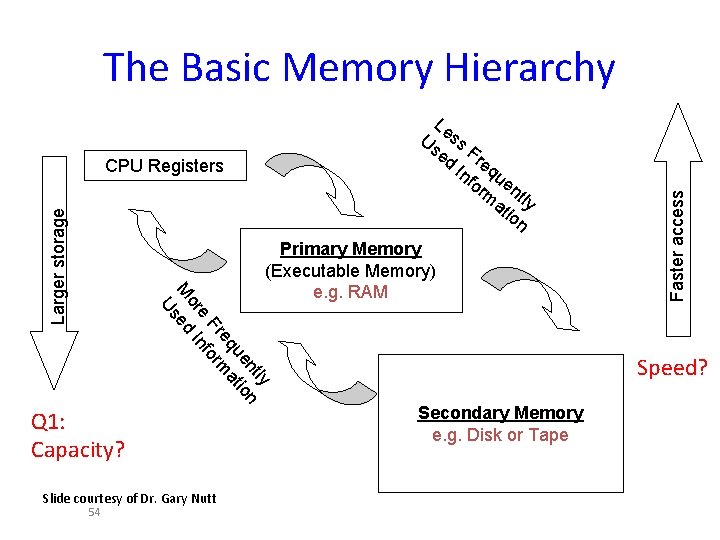

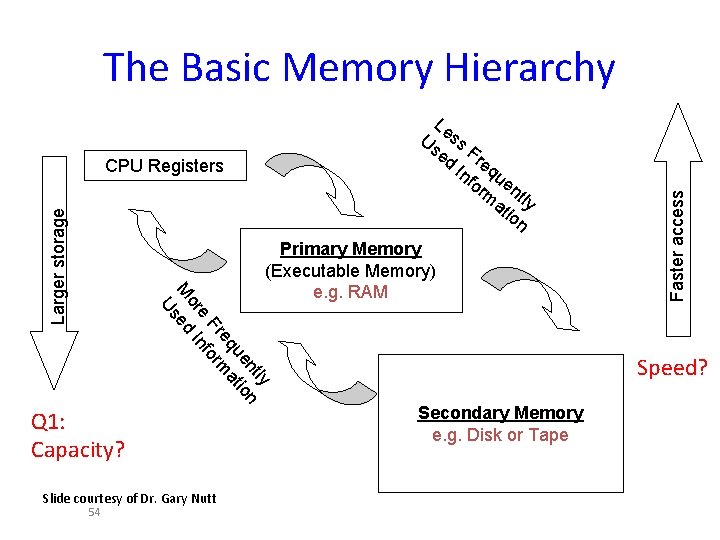

The Basic Memory Hierarchy
[Figure: memory hierarchy from CPU registers, through primary memory (executable memory, e.g. RAM), down to secondary memory (e.g. disk or tape). Moving up gives faster access and holds more frequently used information; moving down gives larger storage and holds less frequently used information.]
Q1: Capacity? Speed?
Slide courtesy of Dr. Gary Nutt

Memory Management Requirements
Can you list two functionalities?
- Relocation
- Protection
- Sharing
- Logical organization
- Physical organization

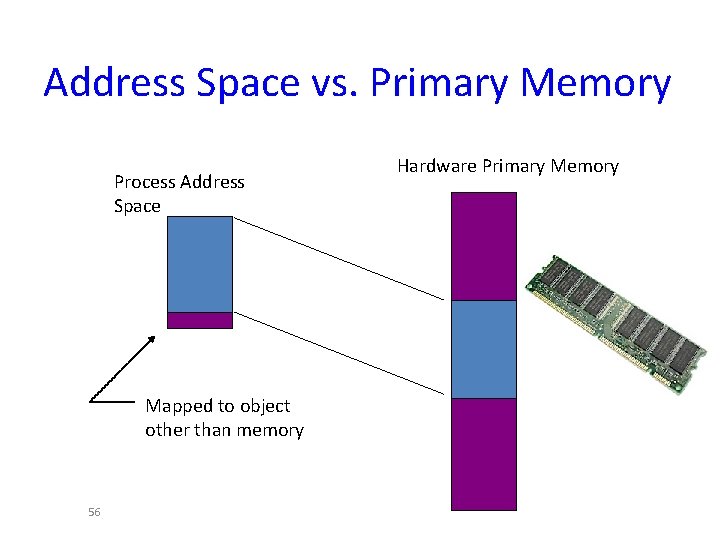

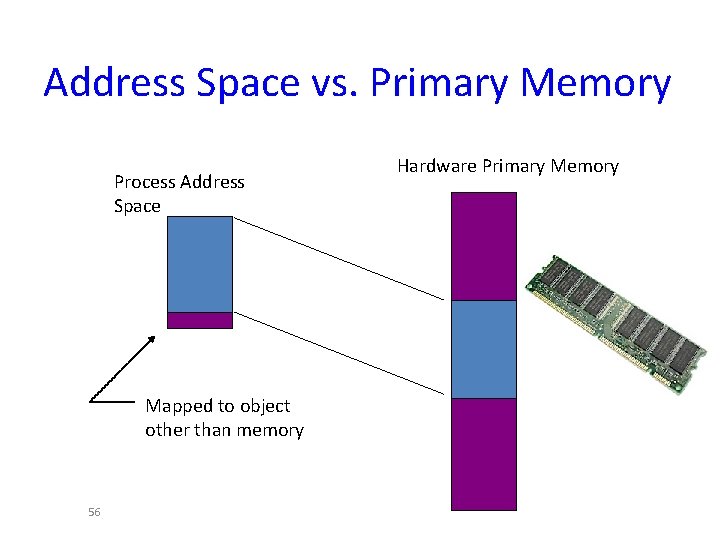

Address Space vs. Primary Memory
[Figure: a process address space mapped by hardware onto primary memory; part of the address space is mapped to objects other than memory.]

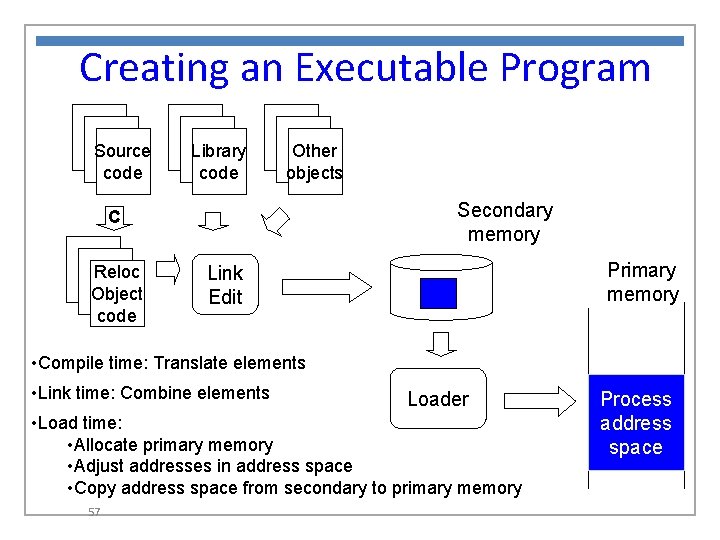

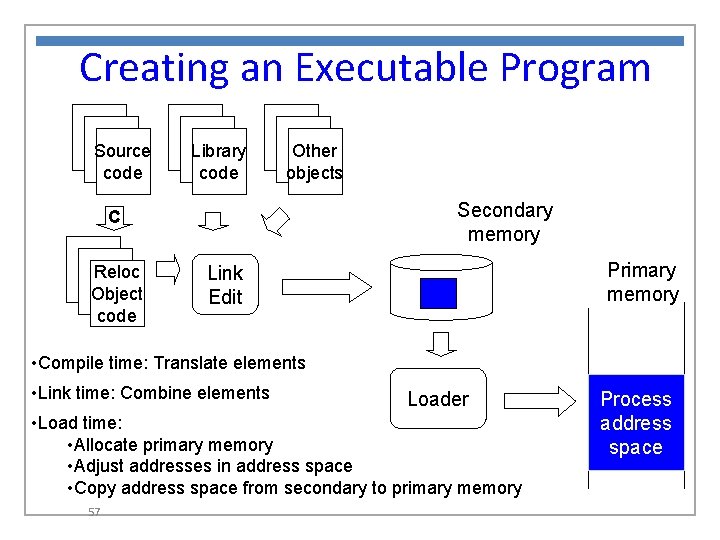

Creating an Executable Program
[Figure: source code is compiled into relocatable object code, link-edited with library code and other objects, stored in secondary memory, and then brought by the loader into primary memory as the process address space.]
- Compile time: translate elements
- Link time: combine elements
- Load time: allocate primary memory; adjust addresses in the address space; copy the address space from secondary to primary memory

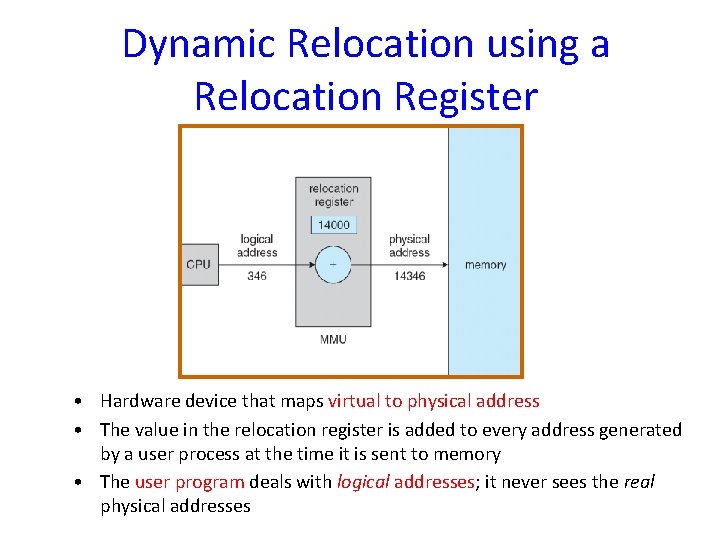

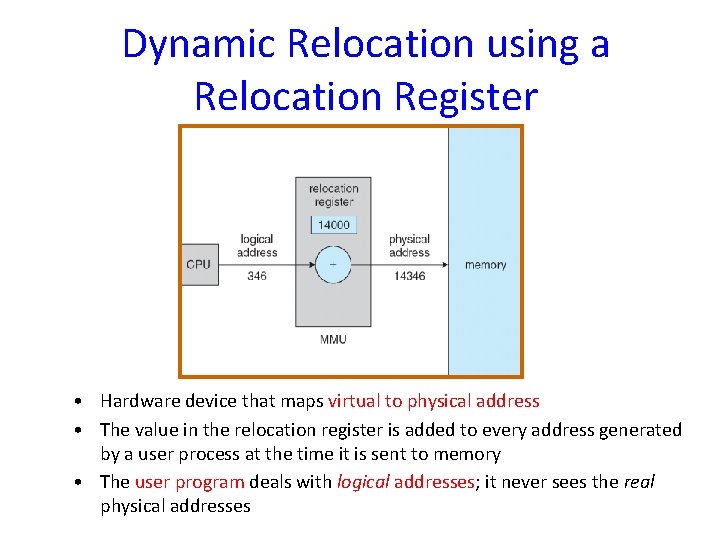

Dynamic Relocation using a Relocation Register
- Hardware device that maps virtual to physical address
- The value in the relocation register is added to every address generated by a user process at the time it is sent to memory
- The user program deals with logical addresses; it never sees the real physical addresses
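The mapping amounts to a single addition. The sketch below models it in C; the `limit` field and its bounds check are an added assumption (a limit register is commonly paired with the relocation register for protection, but the slide does not mention one), and the names `relocation_unit` and `relocate` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

struct relocation_unit {
    uint32_t relocation;  /* value of the relocation register */
    uint32_t limit;       /* size of the logical address space (assumed) */
};

/* Returns true and stores the physical address if `logical` is in range;
 * returns false where real hardware would raise a protection fault. */
bool relocate(const struct relocation_unit *mmu, uint32_t logical,
              uint32_t *physical)
{
    assert(mmu != NULL && physical != NULL);
    if (logical >= mmu->limit) return false;
    *physical = mmu->relocation + logical;   /* added on every access */
    return true;
}
```

With a relocation value of 14000, logical address 346 maps to physical address 14346; the user program only ever sees 346.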

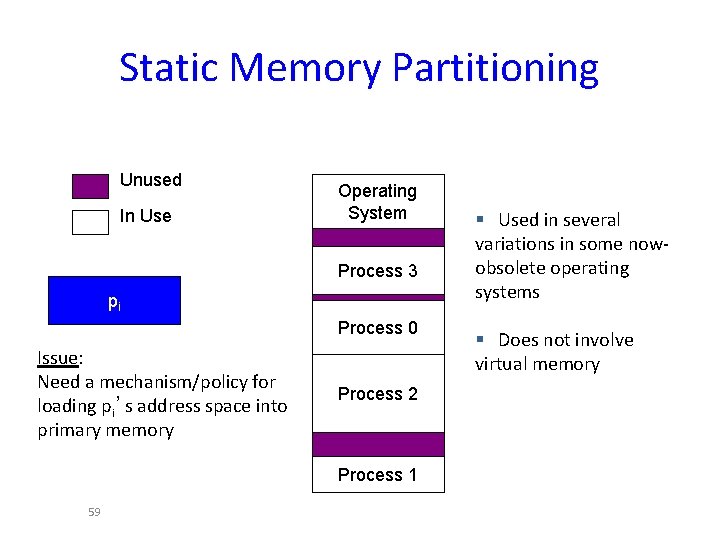

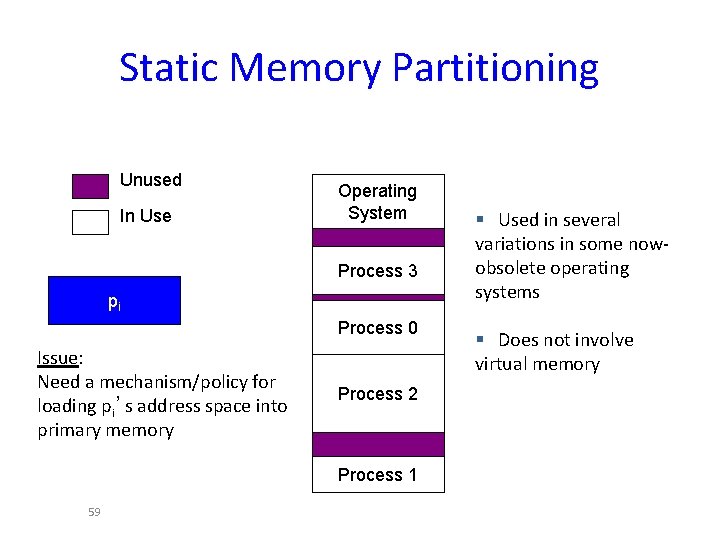

Static Memory Partitioning
[Figure: primary memory divided into the operating system, partitions in use by Process 0 to Process 3, and unused space; a new process pi is waiting to be loaded.]
Issue: need a mechanism/policy for loading pi’s address space into primary memory
- Used in several variations in some now-obsolete operating systems
- Does not involve virtual memory

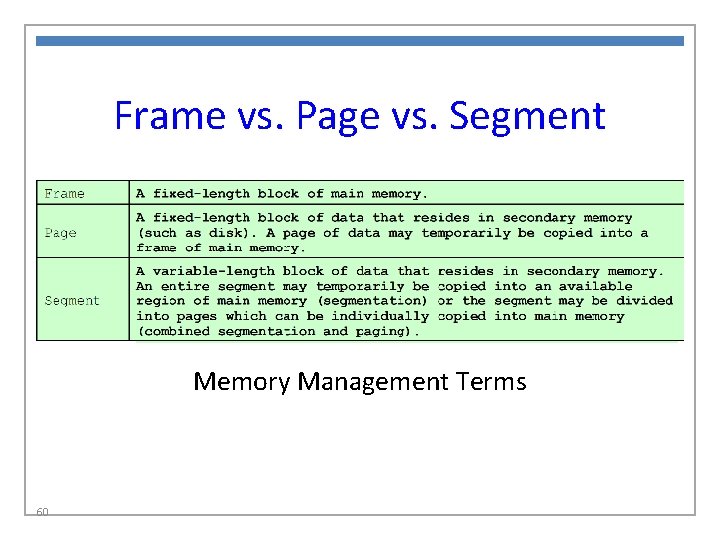

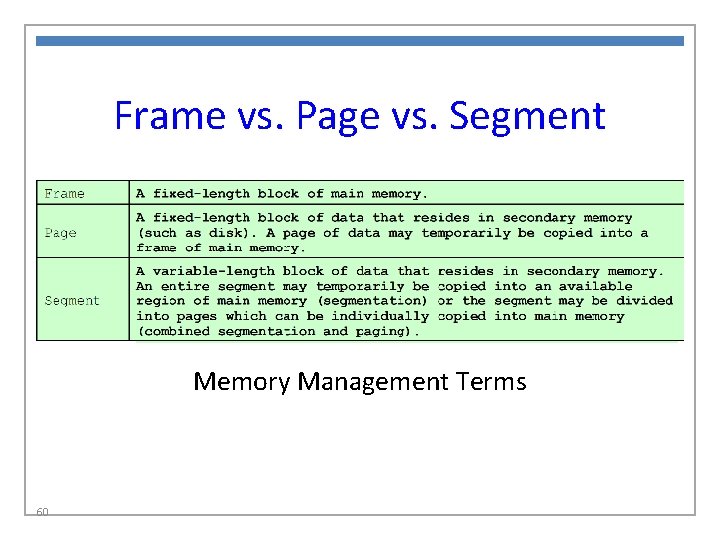

Frame vs. Page vs. Segment
Memory Management Terms

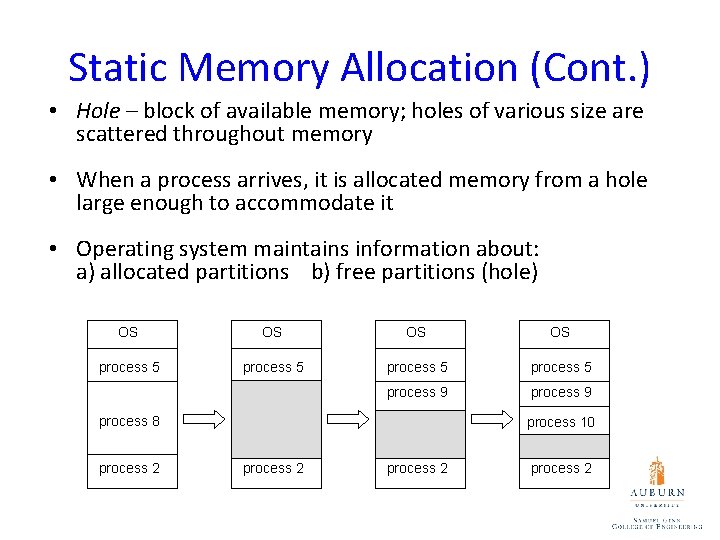

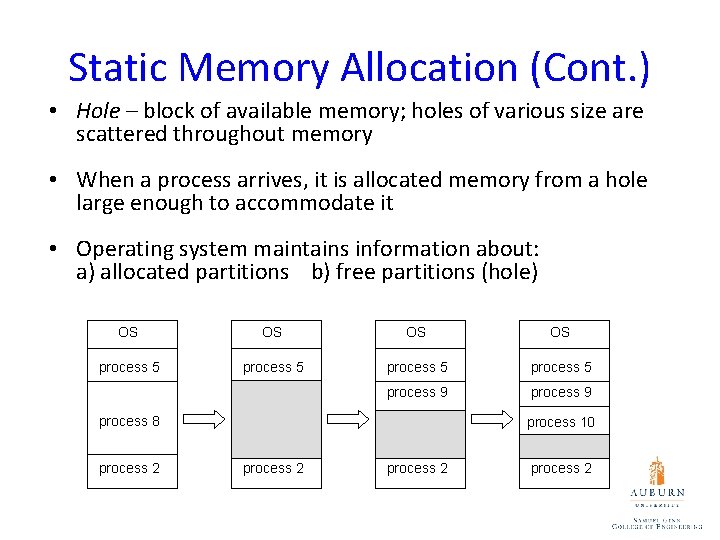

Static Memory Allocation (Cont.)
- Hole: block of available memory; holes of various sizes are scattered throughout memory
- When a process arrives, it is allocated memory from a hole large enough to accommodate it
- Operating system maintains information about: a) allocated partitions, b) free partitions (holes)

[Figure: memory maps showing the OS and processes 5, 8, 9, 10, and 2.]

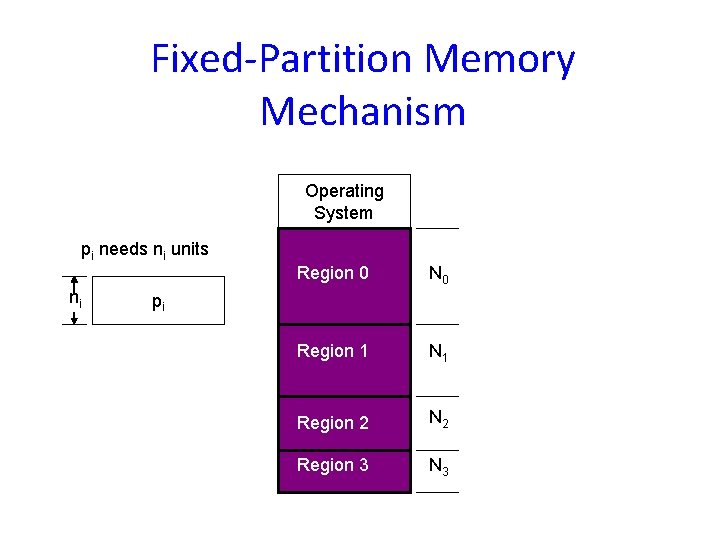

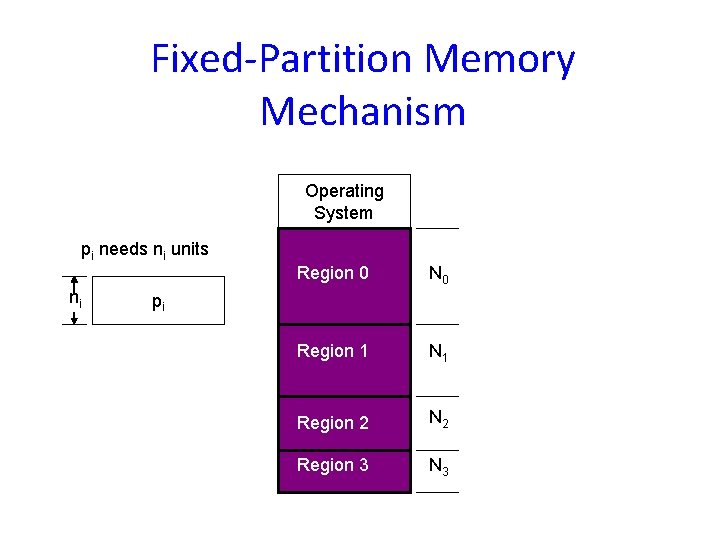

Fixed-Partition Memory Mechanism
[Figure: primary memory holds the operating system and four fixed regions, Region 0 to Region 3, of sizes N0 to N3; process pi needs ni units.]

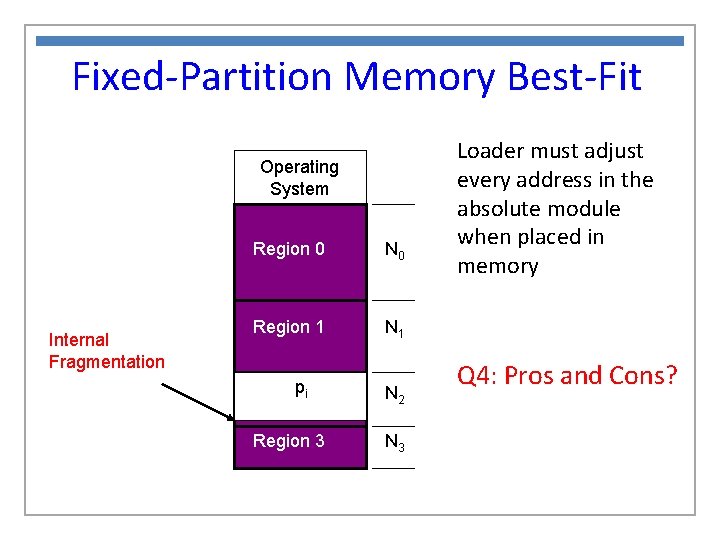

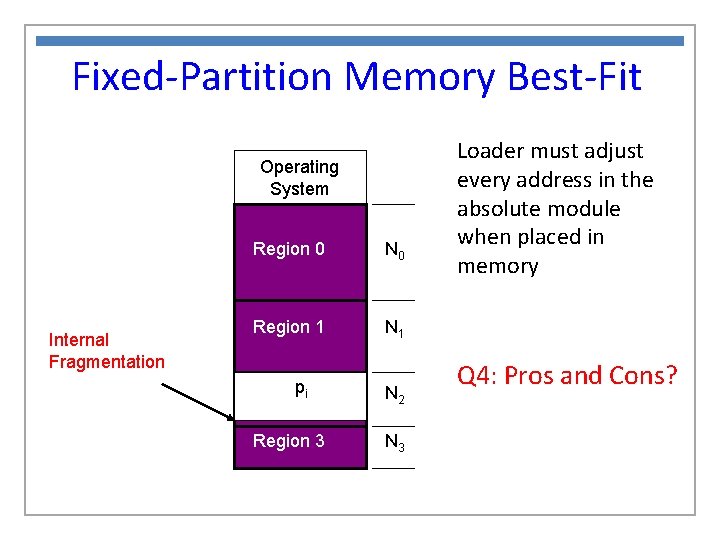

Fixed-Partition Memory Best-Fit
[Figure: operating system and Regions 0 to 3 (sizes N0 to N3), with pi placed by best-fit; the unused part of the chosen region is internal fragmentation.]
Loader must adjust every address in the absolute module when placed in memory
Q4: Pros and Cons?

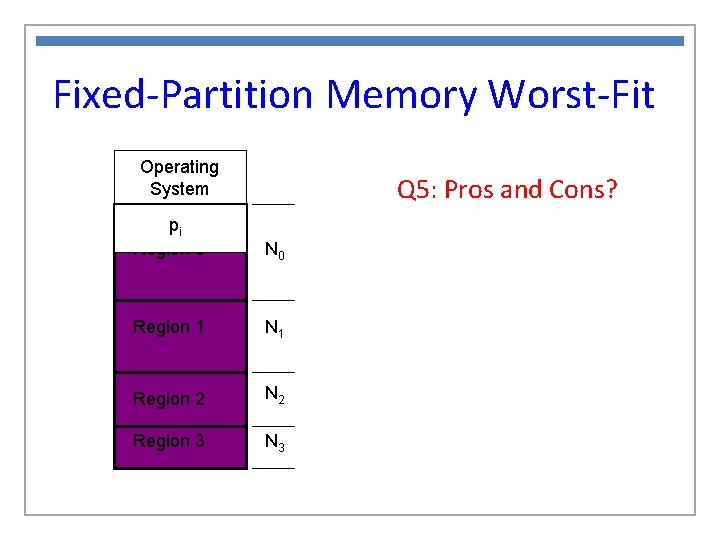

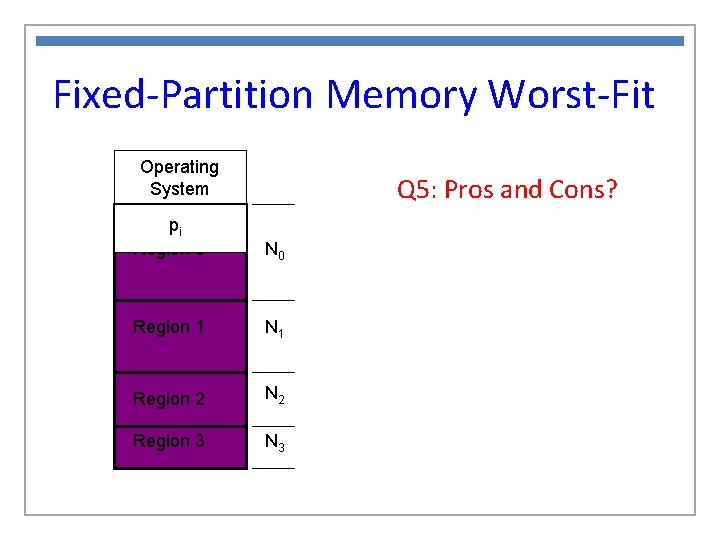

Fixed-Partition Memory Worst-Fit
[Figure: operating system and Regions 0 to 3 (sizes N0 to N3), with pi placed by worst-fit.]
Q5: Pros and Cons?

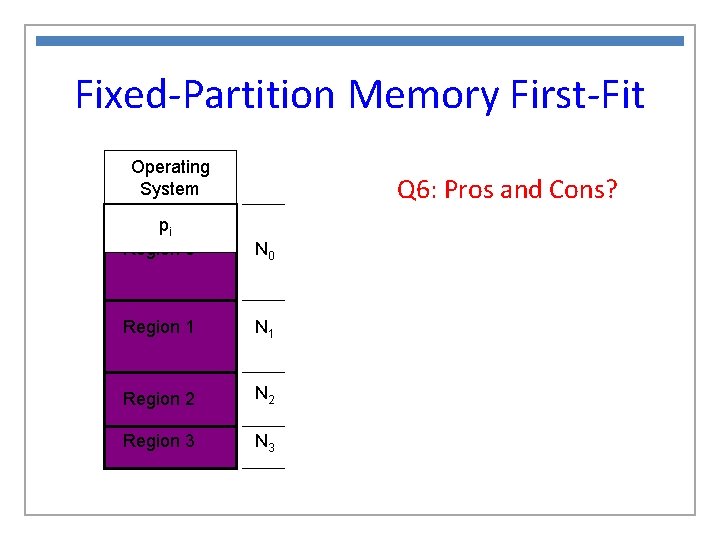

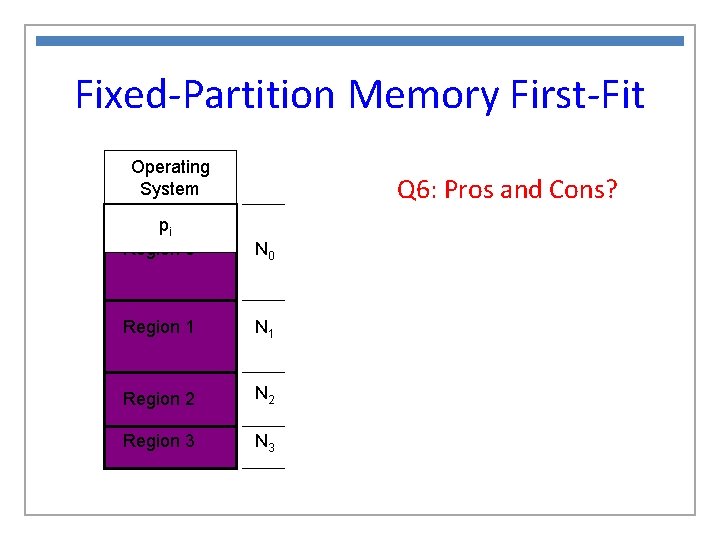

Fixed-Partition Memory First-Fit
[Figure: operating system and Regions 0 to 3 (sizes N0 to N3), with pi placed by first-fit.]
Q6: Pros and Cons?

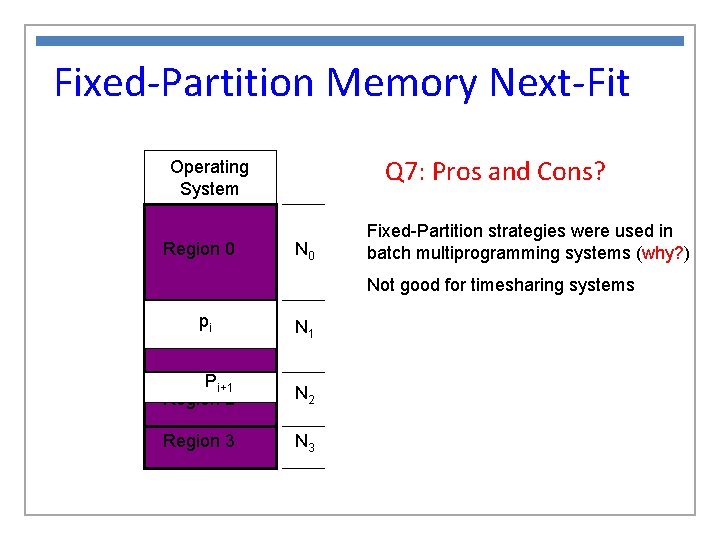

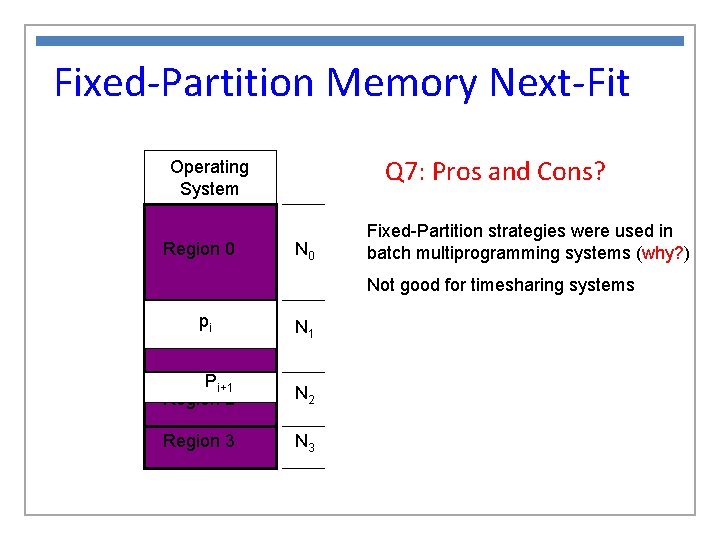

Fixed-Partition Memory Next-Fit
[Figure: operating system and Regions 0 to 3 (sizes N0 to N3), with pi and pi+1 placed by next-fit.]
Q7: Pros and Cons?
- Fixed-partition strategies were used in batch multiprogramming systems (why?)
- Not good for timesharing systems

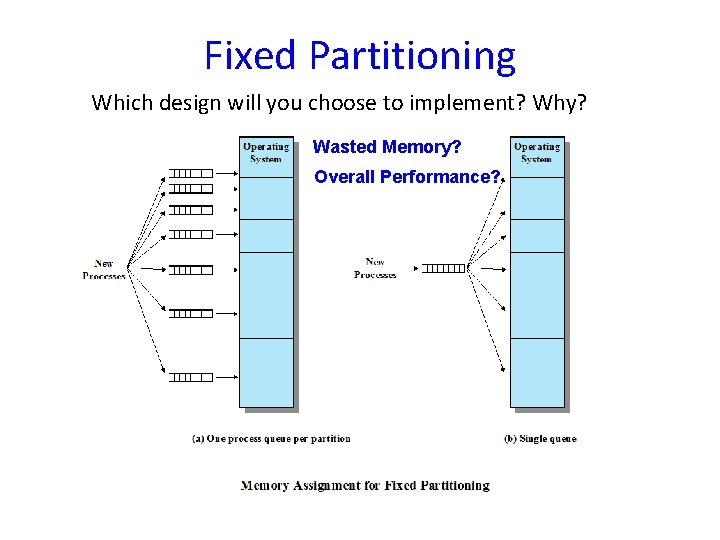

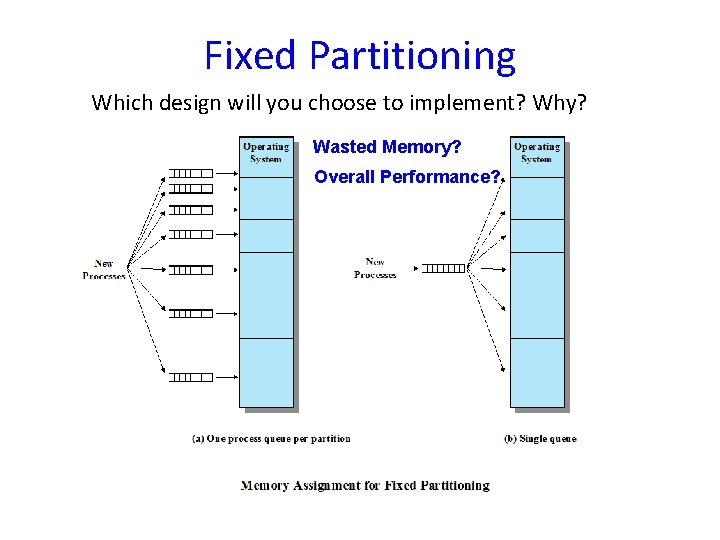

Fixed Partitioning
Which design will you choose to implement? Why? Wasted memory? Overall performance?

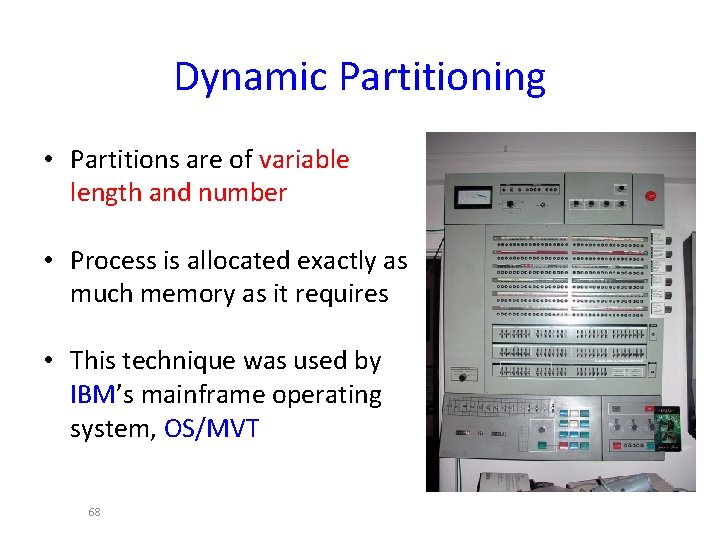

Dynamic Partitioning
- Partitions are of variable length and number
- Process is allocated exactly as much memory as it requires
- This technique was used by IBM’s mainframe operating system, OS/MVT

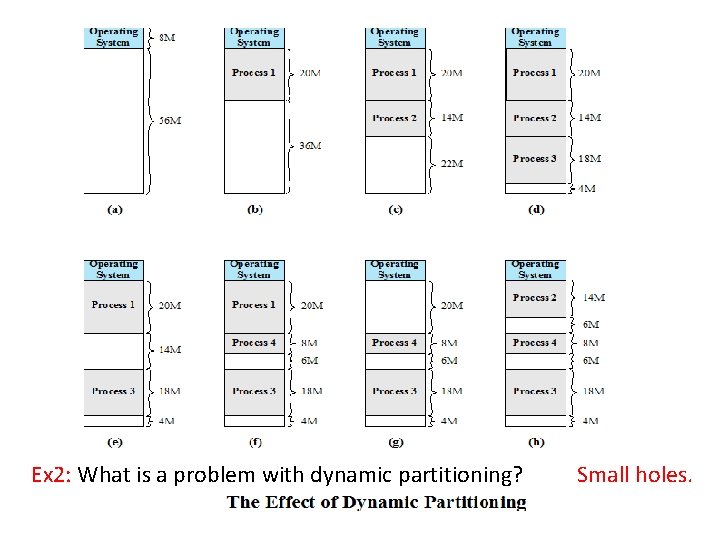

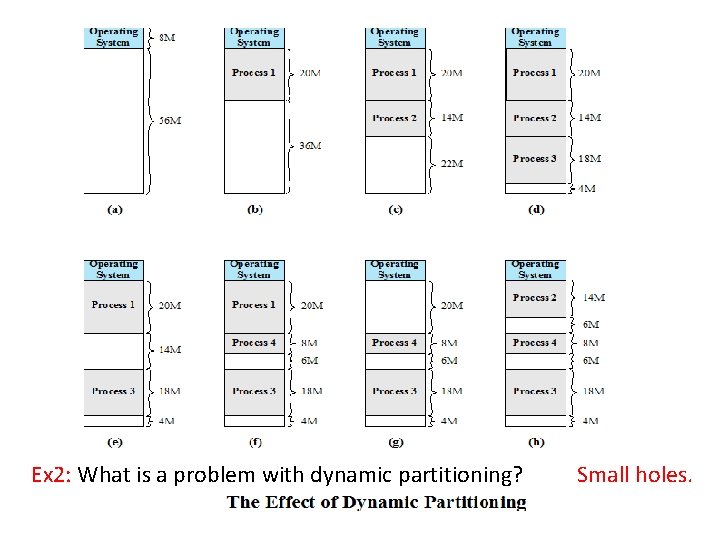

Ex 2: What is a problem with dynamic partitioning? Small holes.

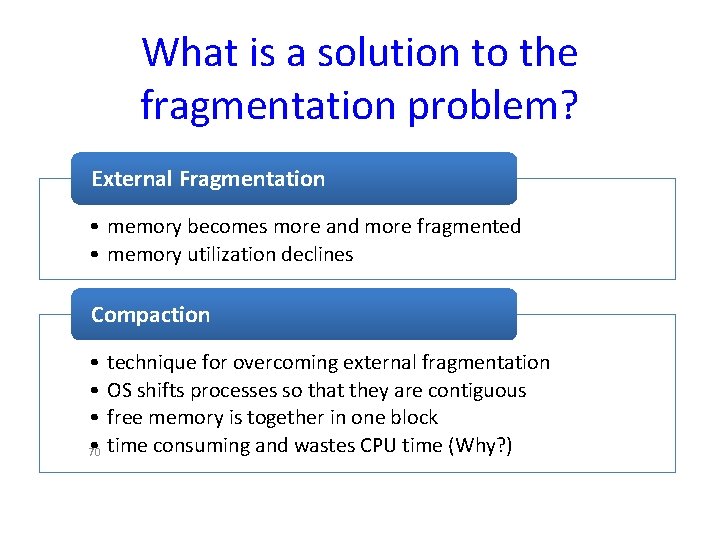

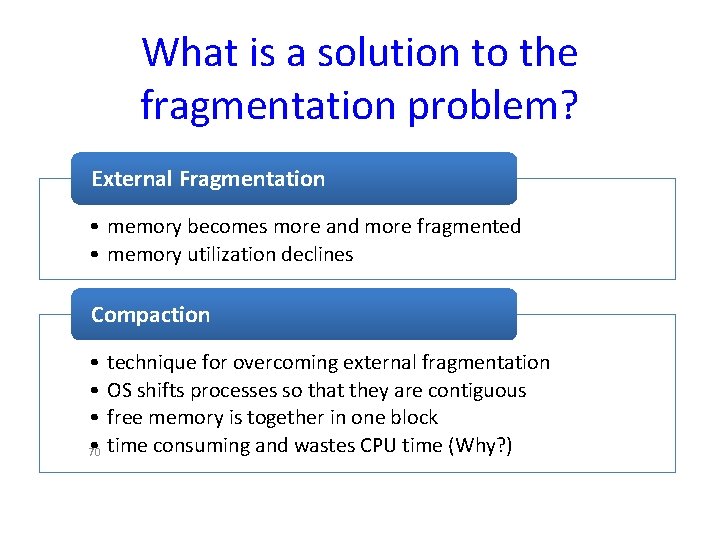

What is a solution to the fragmentation problem?
External fragmentation
- memory becomes more and more fragmented
- memory utilization declines

Compaction
- technique for overcoming external fragmentation
- OS shifts processes so that they are contiguous
- free memory is together in one block
- time consuming and wastes CPU time (Why?)

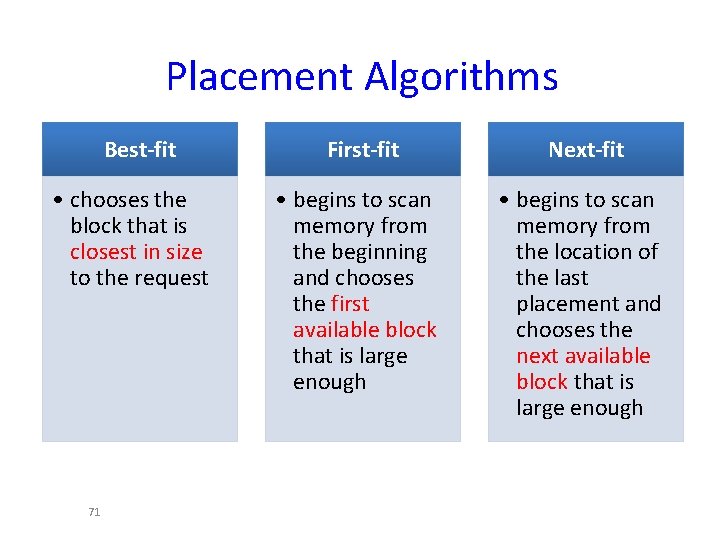

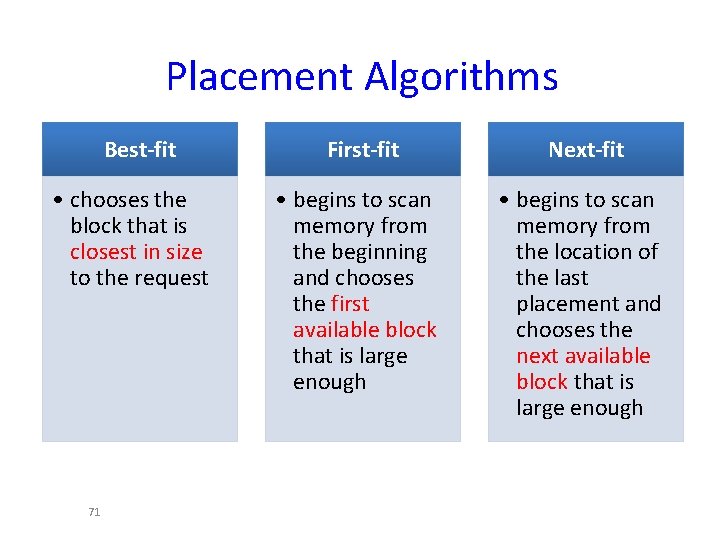

Placement Algorithms
- Best-fit: chooses the block that is closest in size to the request
- First-fit: begins to scan memory from the beginning and chooses the first available block that is large enough
- Next-fit: begins to scan memory from the location of the last placement and chooses the next available block that is large enough
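The three placement policies differ only in how they scan the free list. A minimal C sketch, assuming the free blocks are given as an array of sizes in address order; the function names, `NO_BLOCK`, and the `last` parameter (index of the most recent placement) are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define NO_BLOCK (-1)

/* free_size[i] is the size of free block i, listed in address order.
 * Each function returns the index of the chosen block, or NO_BLOCK. */

int first_fit(const size_t free_size[], int n, size_t request)
{
    for (int i = 0; i < n; i++)
        if (free_size[i] >= request) return i;
    return NO_BLOCK;
}

int best_fit(const size_t free_size[], int n, size_t request)
{
    int best = NO_BLOCK;

    for (int i = 0; i < n; i++)
        if (free_size[i] >= request &&
            (best == NO_BLOCK || free_size[i] < free_size[best]))
            best = i;          /* smallest block that is still large enough */
    return best;
}

/* The scan starts at block `last` and wraps around to the beginning. */
int next_fit(const size_t free_size[], int n, int last, size_t request)
{
    assert(n == 0 || (last >= 0 && last < n));
    for (int k = 0; k < n; k++) {
        int i = (last + k) % n;
        if (free_size[i] >= request) return i;
    }
    return NO_BLOCK;
}
```

For a hypothetical free list of 8, 12, 22, 18, 8, 6, 14, and 36 MB and a 16-MB request, first-fit picks the 22-MB block, best-fit the 18-MB block, and next-fit (with the last placement at index 4) the 36-MB block.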

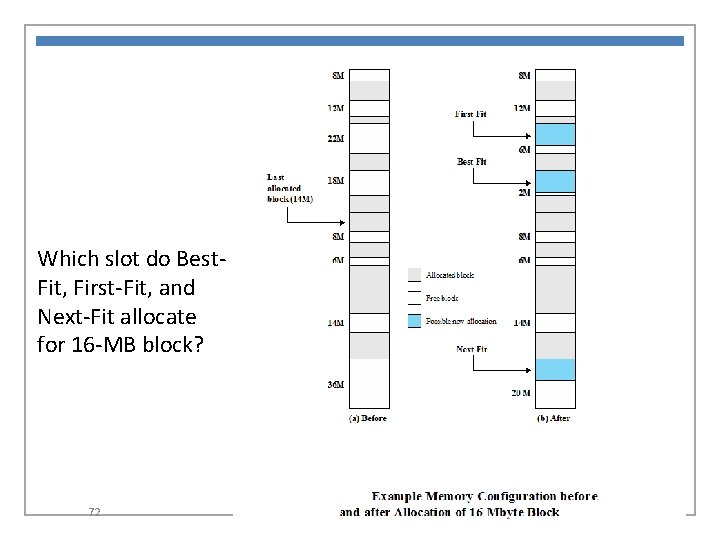

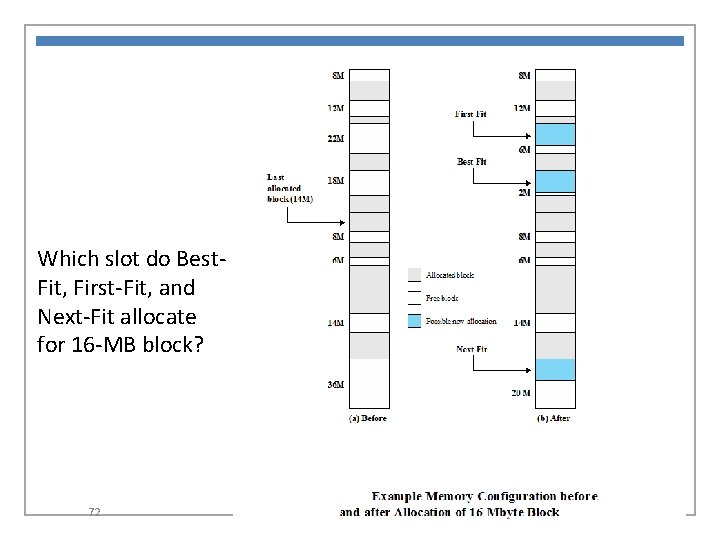

Which slot do Best-Fit, First-Fit, and Next-Fit allocate for a 16-MB block?

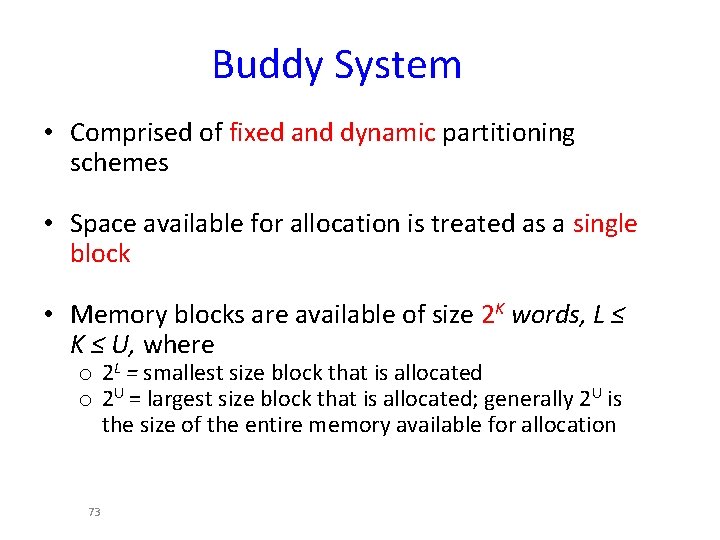

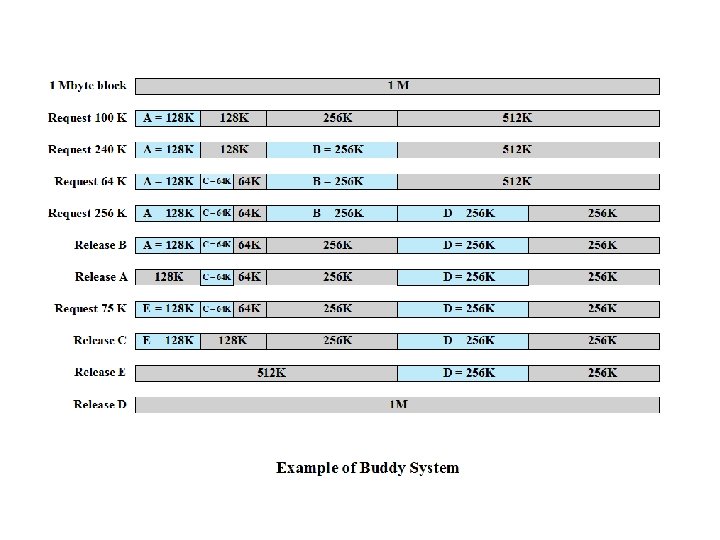

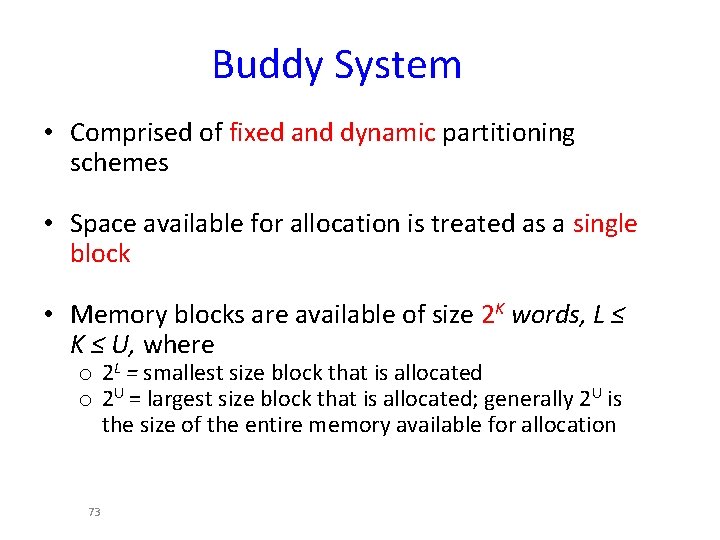

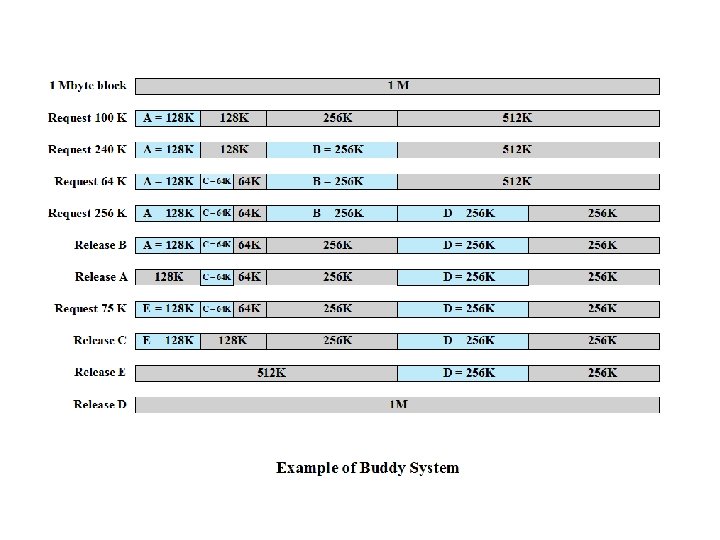

Buddy System
- Comprised of fixed and dynamic partitioning schemes
- Space available for allocation is treated as a single block
- Memory blocks are available of size 2^K words, L ≤ K ≤ U, where
  - 2^L = smallest size block that is allocated
  - 2^U = largest size block that is allocated; generally 2^U is the size of the entire memory available for allocation
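Two small calculations sit at the heart of a buddy allocator: rounding a request up to a block size 2^K, and locating a block's buddy. The C sketch below shows both; the function names are hypothetical, and the XOR trick assumes block offsets are measured from the start of the managed region.

```c
#include <assert.h>

/* Returns the exponent K (L <= K <= U) of the smallest block 2^K that
 * holds `words`, or -1 if the request is larger than 2^U. */
int buddy_exponent(unsigned long words, int L, int U)
{
    assert(0 <= L && L <= U && U < 31);
    for (int k = L; k <= U; k++)
        if ((1UL << k) >= words) return k;
    return -1;
}

/* Offset of the buddy of the 2^k-sized block that starts at `offset`.
 * A block and its buddy differ only in bit k of their offsets. */
unsigned long buddy_of(unsigned long offset, int k)
{
    assert(k >= 0 && k < 31);
    assert(offset % (1UL << k) == 0);   /* blocks are aligned to their size */
    return offset ^ (1UL << k);
}
```

For instance, with L = 4 and U = 10 a request for 100 words is served from a 2^7 = 128-word block, and the 128-word block at offset 384 has its buddy at offset 256.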


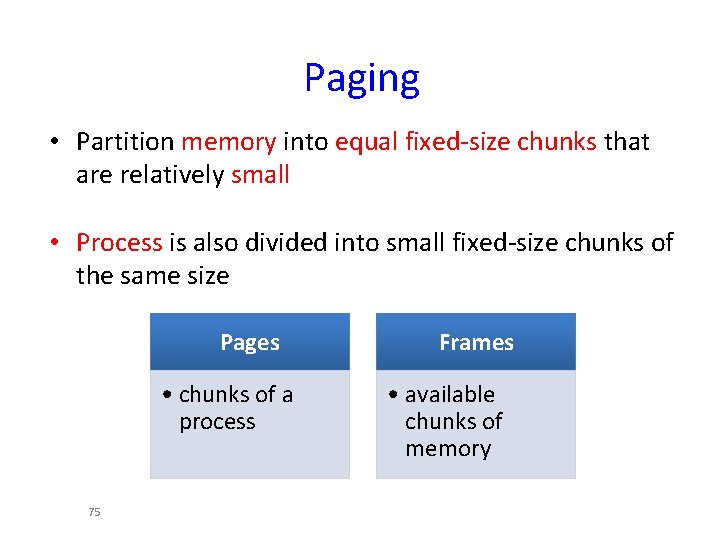

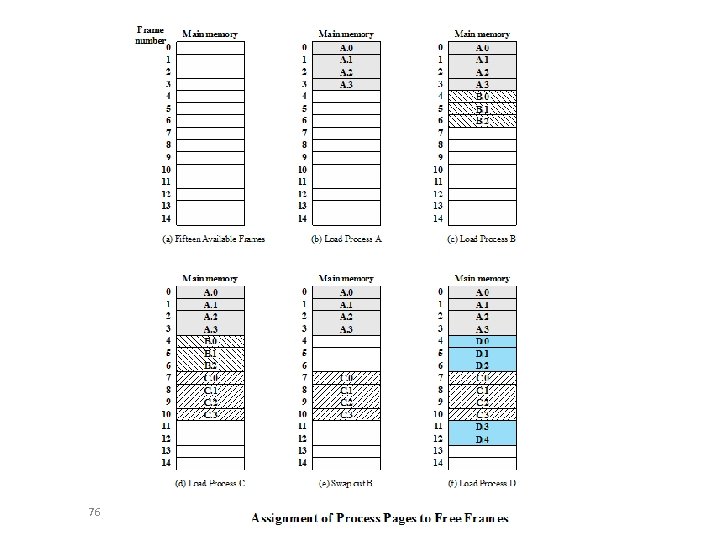

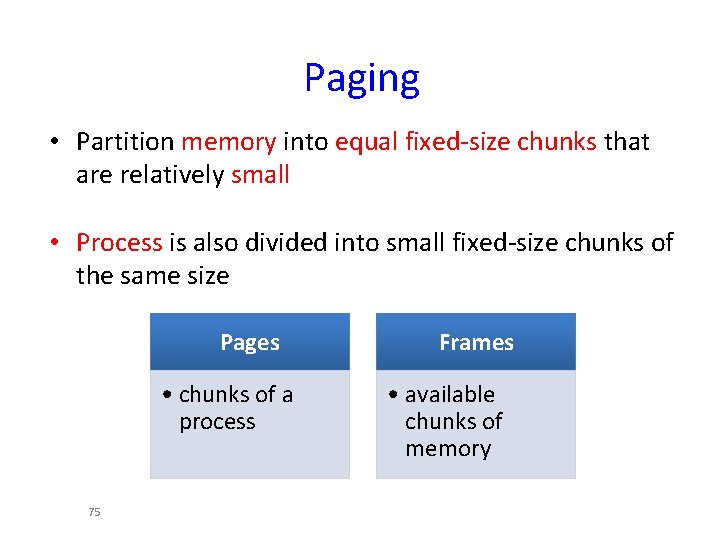

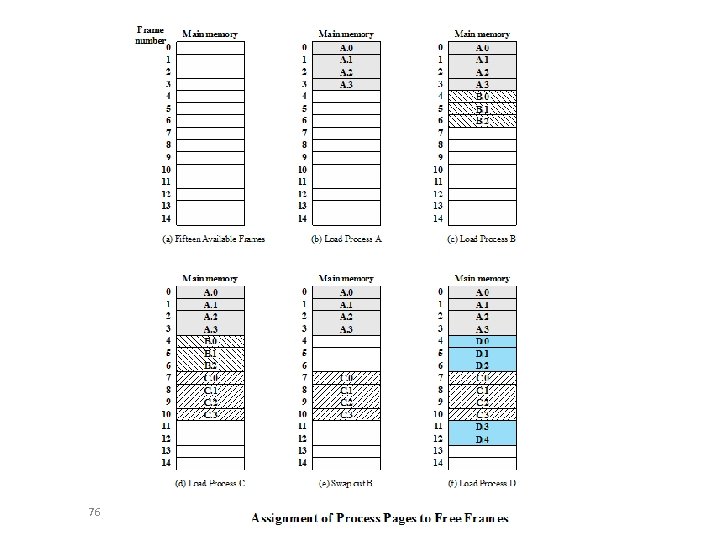

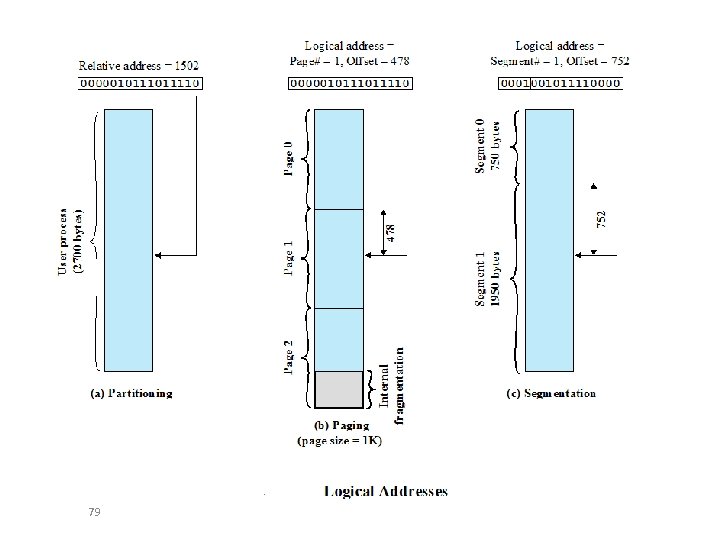

Paging
- Partition memory into equal fixed-size chunks that are relatively small
- Process is also divided into small fixed-size chunks of the same size
- Pages: chunks of a process
- Frames: available chunks of memory


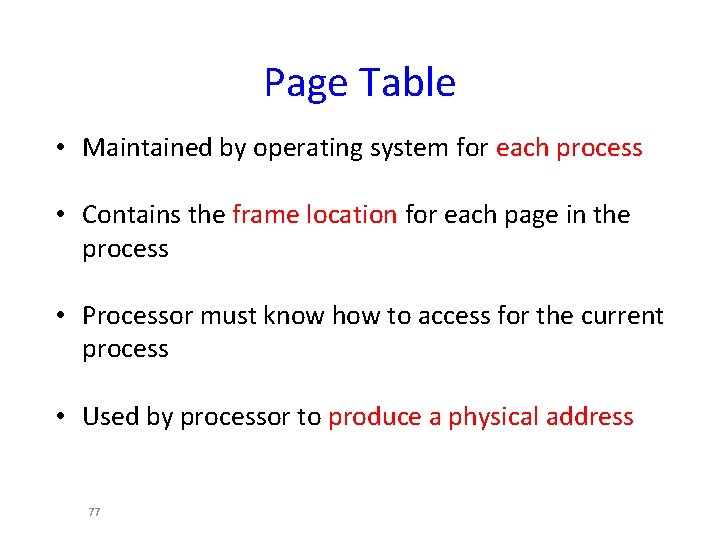

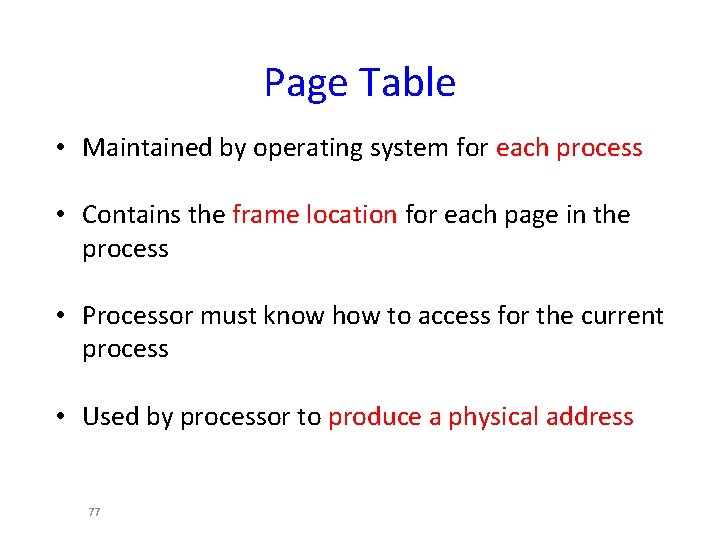

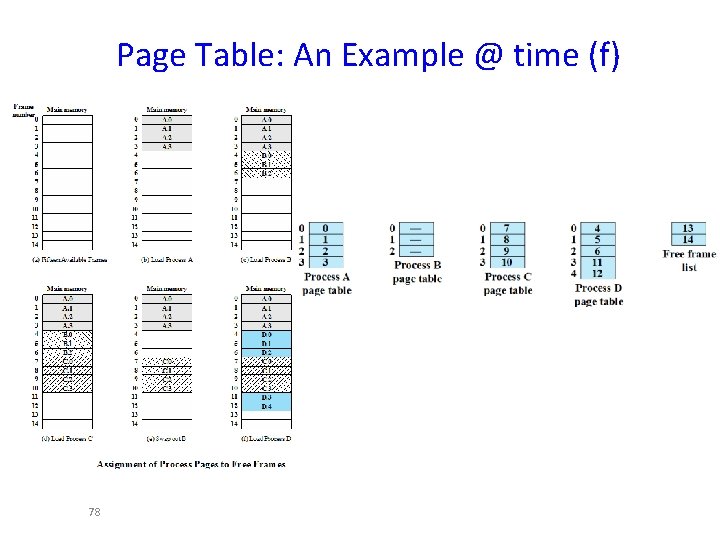

Page Table
- Maintained by operating system for each process
- Contains the frame location for each page in the process
- Processor must know how to access the page table of the current process
- Used by processor to produce a physical address
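A page-table lookup splits the logical address into a page number and an offset, then substitutes the frame number for the page number. The C sketch below assumes 1 KB pages and a flat array as the page table; `PAGE_BITS`, `frame_of_page`, and `page_translate` are hypothetical names chosen for the illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_BITS 10u                    /* assumed page size: 1 KB */
#define PAGE_SIZE (1u << PAGE_BITS)

/* frame_of_page[p] is the frame that holds page p of the current process.
 * Returns false if the logical address lies beyond the last page. */
bool page_translate(const uint32_t frame_of_page[], uint32_t num_pages,
                    uint32_t logical, uint32_t *physical)
{
    uint32_t page = logical >> PAGE_BITS;            /* high-order bits */
    uint32_t offset = logical & (PAGE_SIZE - 1u);    /* low-order bits */

    assert(frame_of_page != NULL && physical != NULL);
    if (page >= num_pages) return false;
    *physical = (frame_of_page[page] << PAGE_BITS) | offset;
    return true;
}
```

As a usage example, with page 1 stored in frame 6, logical address 1502 (page 1, offset 478) translates to physical address 6 * 1024 + 478 = 6622.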

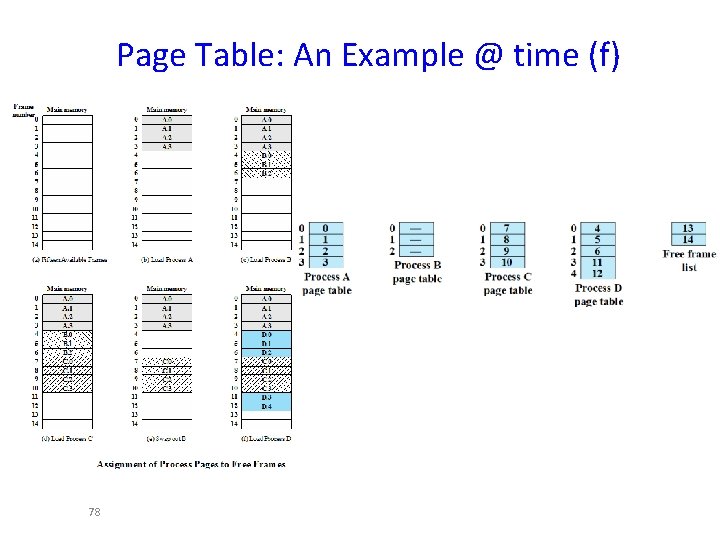

Page Table: An Example @ time (f)

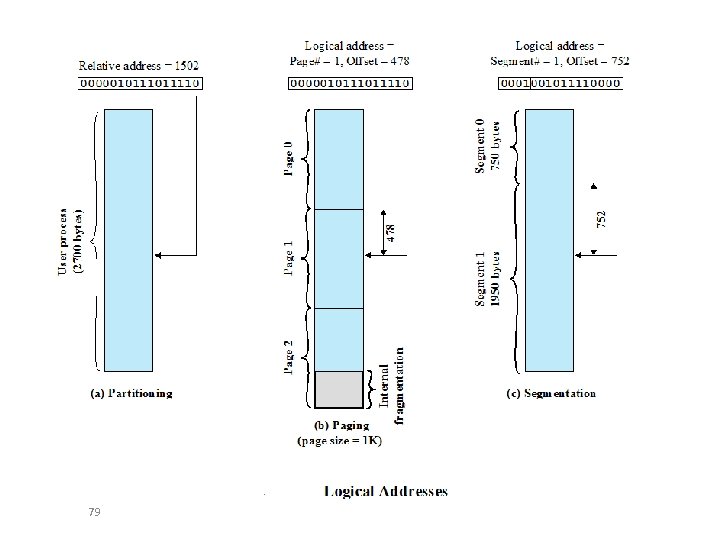


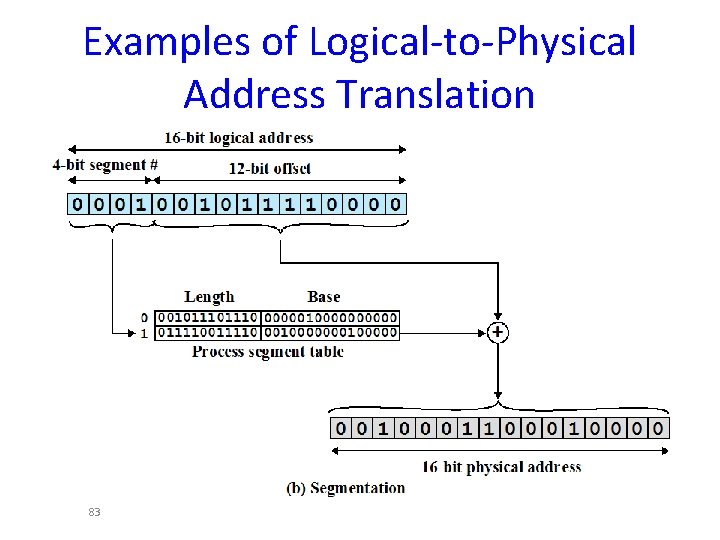

Examples of Logical-to-Physical Address Translation

Segmentation
- A program can be subdivided into segments
  - may vary in length
  - there is a maximum length
- Addressing consists of two parts:
  - segment number
  - an offset
- Similar to dynamic partitioning
- Eliminates internal fragmentation
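Segment translation looks up the segment's base address and checks the offset against the segment's length before adding. A minimal C sketch, with a hypothetical `struct segment` table entry and function name:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

struct segment {
    uint32_t base;     /* physical address where the segment starts */
    uint32_t length;   /* segment length in bytes */
};

/* Translates (segment number, offset) to a physical address.
 * Returns false for an invalid segment or an offset past its end. */
bool segment_translate(const struct segment table[], uint32_t num_segments,
                       uint32_t seg, uint32_t offset, uint32_t *physical)
{
    assert(table != NULL && physical != NULL);
    if (seg >= num_segments) return false;
    if (offset >= table[seg].length) return false;   /* segment overflow */
    *physical = table[seg].base + offset;
    return true;
}
```

Unlike paging, the offset must be compared with a per-segment length because segments vary in size. For example, if segment 1 starts at 8224 and is 1200 bytes long, offset 752 in that segment maps to 8224 + 752 = 8976.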

Segmentation
- Usually visible to the programmer
- Provided as a convenience for organizing programs and data
- Typically the programmer will assign programs and data to different segments
- For purposes of modular programming the program or data may be further broken down into multiple segments
- Question: Why must the programmer be aware of the maximum segment size limitation?

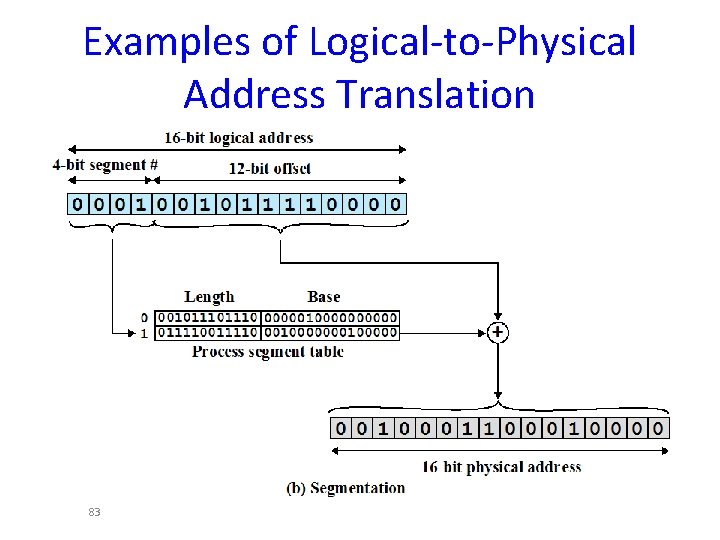

Examples of Logical-to-Physical Address Translation