Fundamentals of Relevance Computation, Sergey Chernov, L3S

- Slides: 112

Fundamentals of Relevance Computation
Sergey Chernov, L3S Research Center
Sergey Chernov, Fundamentals of Relevance Computation, Pharos Summer School 2009

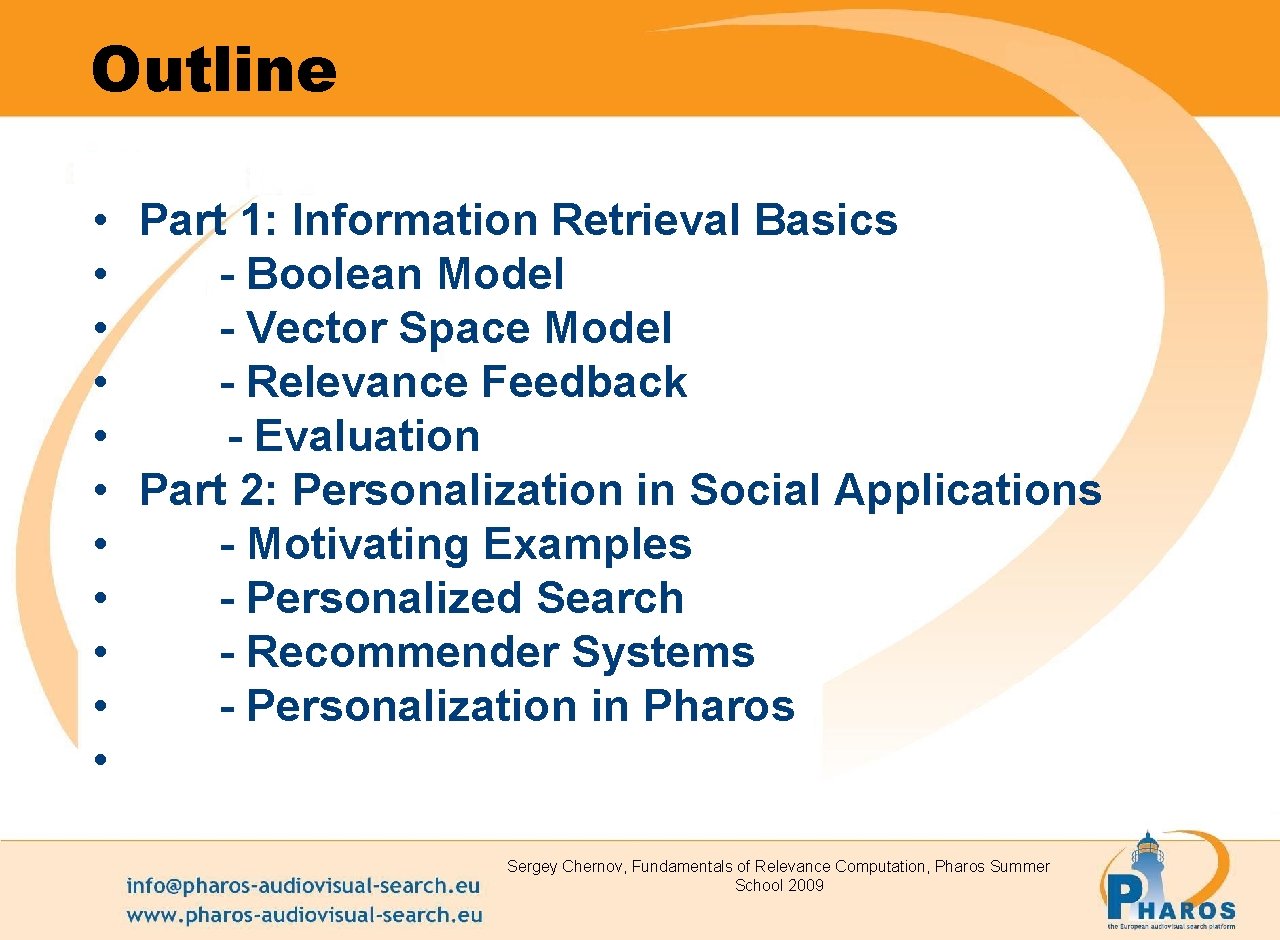

Outline
• Part 1: Information Retrieval Basics
  – Boolean Model
  – Vector Space Model
  – Relevance Feedback
  – Evaluation
• Part 2: Personalization in Social Applications
  – Motivating Examples
  – Personalized Search
  – Recommender Systems
  – Personalization in Pharos

Part 1: Information Retrieval Basics
Slides are taken from the Introduction to Information Retrieval course by Christopher D. Manning, Prabhakar Raghavan and Hinrich Schütze.

Information Retrieval
• Information Retrieval (IR) is finding material (usually documents) of an unstructured nature (usually text) that satisfies an information need from within large collections (usually stored on computers).

Basic assumptions of Information Retrieval (Sec. 1.1)
• Collection: a fixed set of documents
• Goal: retrieve documents with information that is relevant to the user’s information need and helps the user complete a task

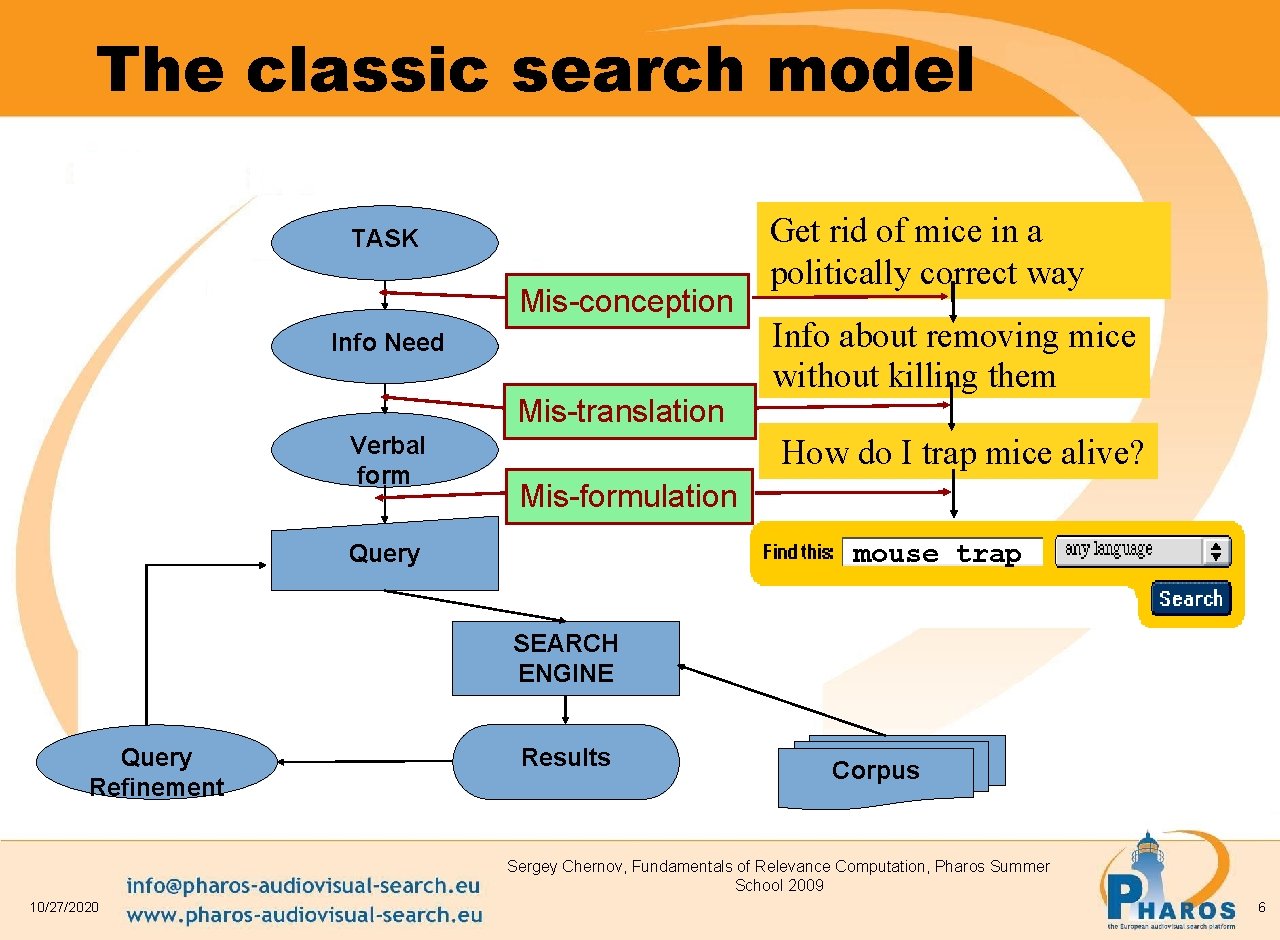

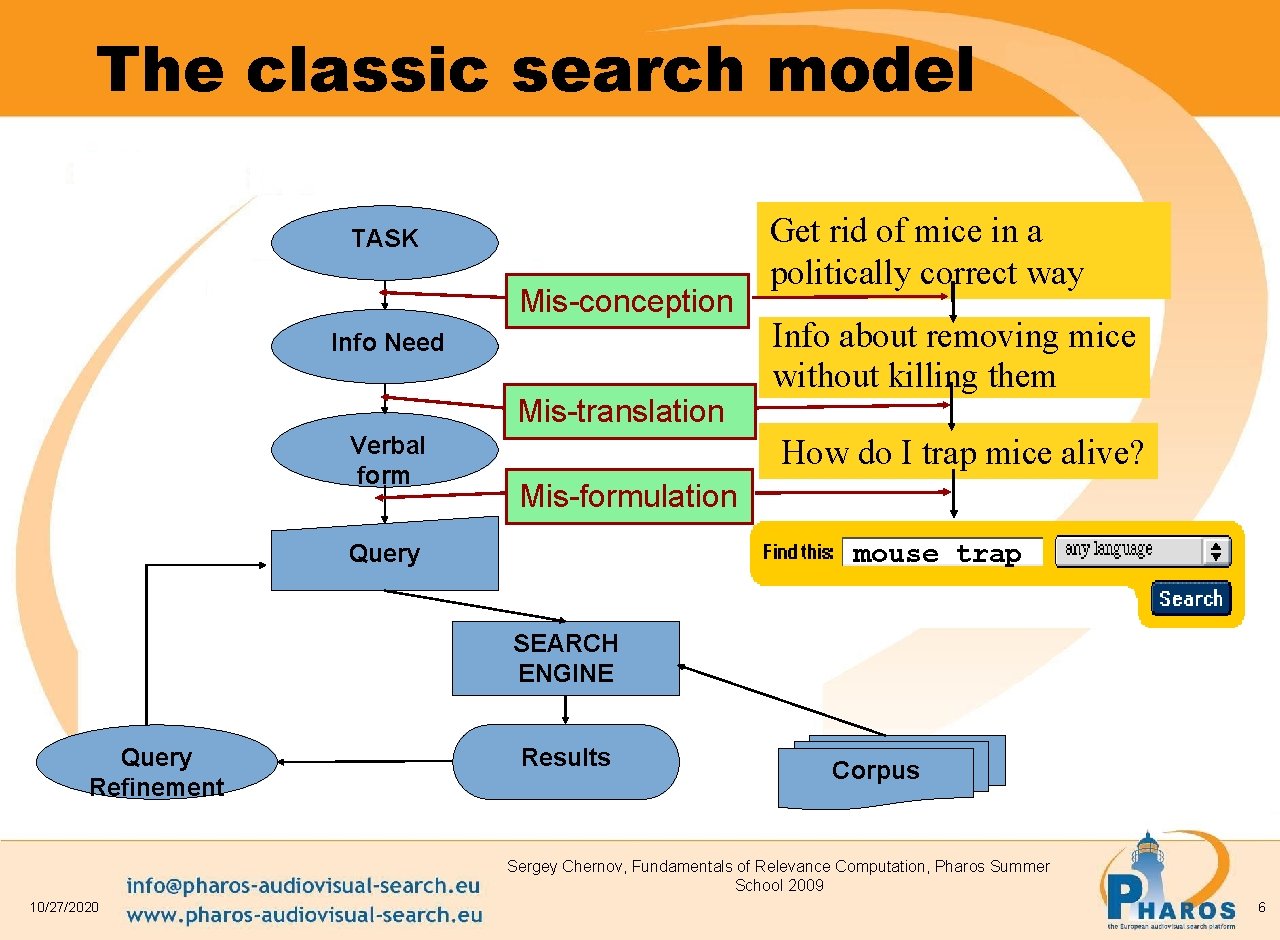

The classic search model
[Figure: Task (get rid of mice in a politically correct way) → Info need (info about removing mice without killing them) → Verbal form (How do I trap mice alive?) → Query (mouse trap) → Search engine over the corpus → Results, with query refinement looping back to the query. Each arrow before the query marks where things can go wrong: misconception, mistranslation, misformulation.]

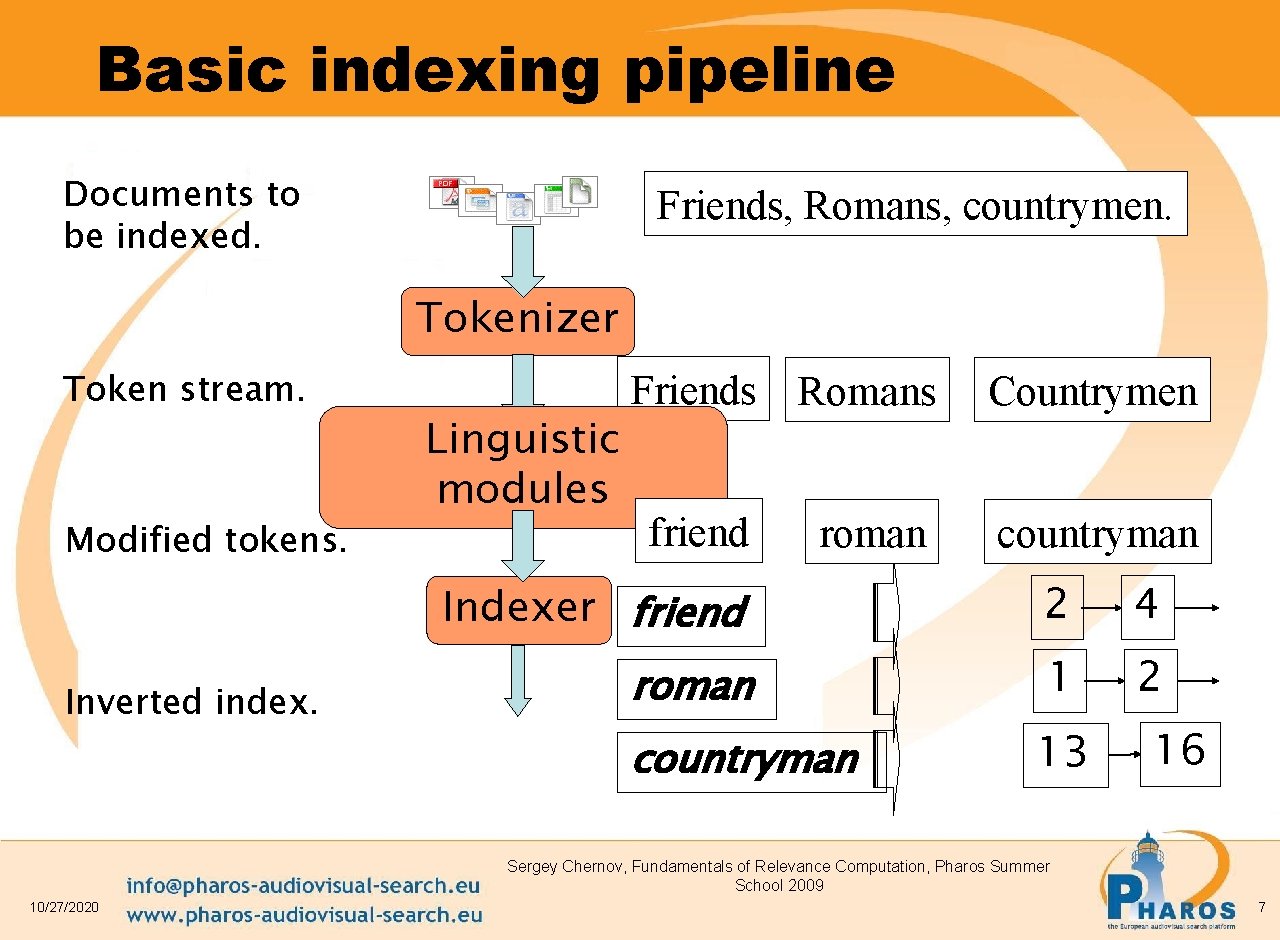

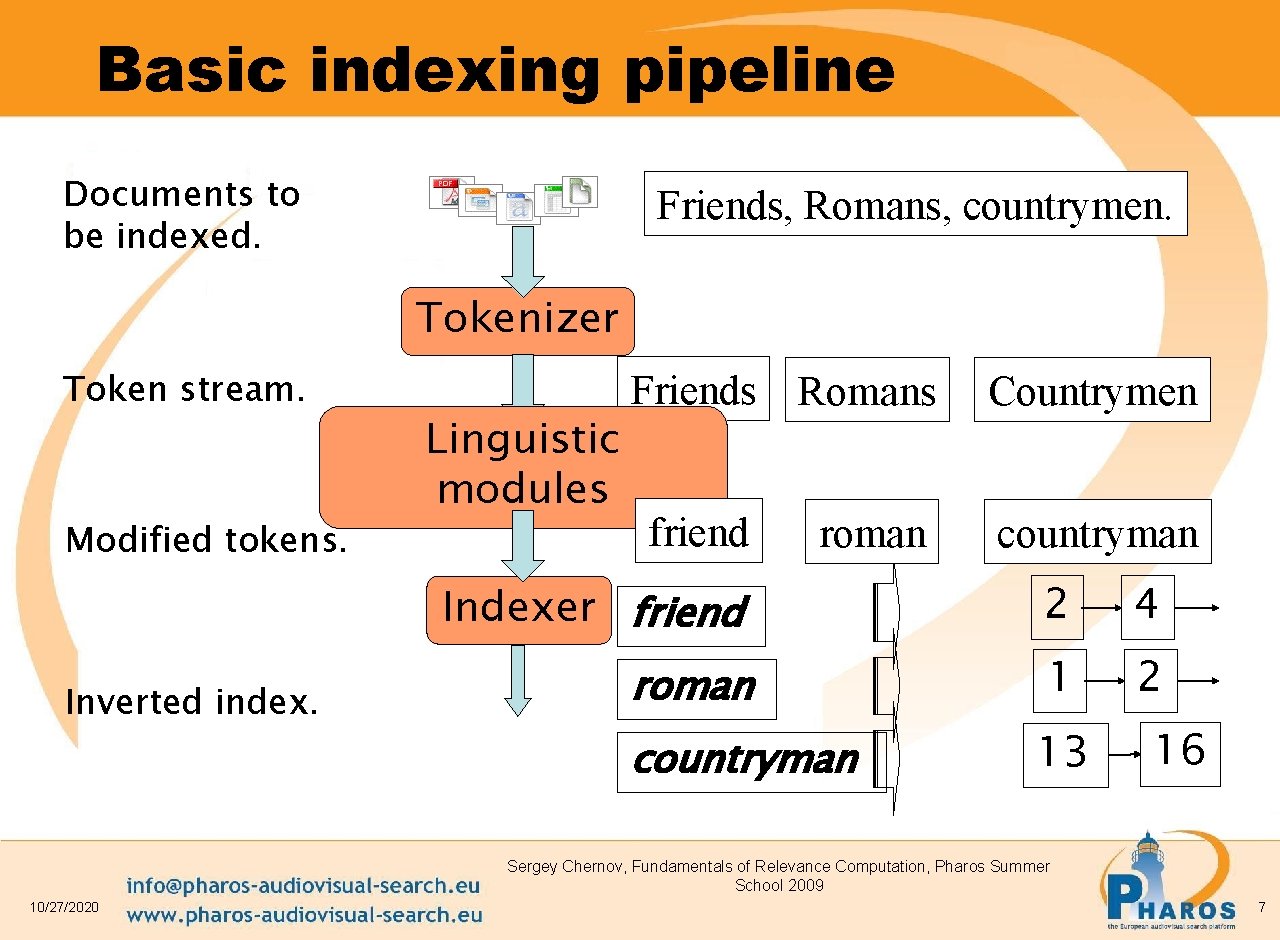

Basic indexing pipeline
[Figure: Documents to be indexed (“Friends, Romans, countrymen.”) → Tokenizer → token stream (Friends, Romans, Countrymen) → Linguistic modules → modified tokens (friend, roman, countryman) → Indexer → inverted index (friend → 2, 4; roman → 1, 2; countryman → 13, 16).]
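The pipeline in the figure can be sketched in a few lines of Python. This is a minimal illustration, not the course’s implementation: the regex tokenizer, the hypothetical `LEMMAS` table standing in for the linguistic modules, and the toy documents and IDs are all assumptions made for the example.

```python
import re
from collections import defaultdict

# Hypothetical stand-in for the "linguistic modules": case-fold each token,
# then map a few inflected forms to a base form via a tiny lookup table.
LEMMAS = {"friends": "friend", "romans": "roman", "countrymen": "countryman"}


def tokenize(text):
    """Tokenizer: turn raw text into a stream of word tokens."""
    return re.findall(r"[A-Za-z']+", text)


def normalize(token):
    """Linguistic modules: produce the modified token that gets indexed."""
    lowered = token.lower()
    return LEMMAS.get(lowered, lowered)


def build_index(docs):
    """Indexer: map each term to a sorted postings list of document IDs."""
    postings = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            postings[normalize(token)].add(doc_id)
    return {term: sorted(ids) for term, ids in sorted(postings.items())}


# Toy collection (hypothetical document IDs and texts).
docs = {
    1: "Friends, Romans, countrymen, lend me your ears.",
    2: "Romans and friends alike.",
    4: "A friend to all countrymen.",
}
index = build_index(docs)
print(index["friend"])      # [1, 2, 4]
print(index["countryman"])  # [1, 4]
```

Keeping postings lists sorted by document ID is what makes the linear-time merge used for Boolean AND possible.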

Parsing a document
• What format is it in? (pdf/word/excel/html?)
• What language is it in?
• What character set is in use?
Each of these is a classification problem, but these tasks are often done heuristically …

Stop words
• With a stop list, you exclude the commonest words from the dictionary entirely.
• Intuition:
  – They have little semantic content: the, a, and, to, be
  – There are a lot of them: ~30% of postings for the top 30 words

Normalization
• Need to “normalize” terms in the indexed text as well as query terms into the same form
  – We want to match U.S.A. and USA
• We most commonly implicitly define equivalence classes of terms
  – e.g., by deleting periods in a term
• An alternative is asymmetric expansion:
  – Enter: window → Search: window, windows
  – Enter: windows → Search: Windows, window
  – Enter: Windows → Search: Windows
• Potentially more powerful, but less efficient
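Both strategies can be sketched as follows, as a minimal illustration: `equivalence_class` implements period deletion, and the hypothetical `EXPANSIONS` table copies the asymmetric expansion rules listed on the slide. A real system would derive such a table rather than hand-write it.

```python
def equivalence_class(term):
    """Implicit equivalence classing: delete periods so U.S.A. matches USA."""
    return term.replace(".", "")


# Asymmetric expansion, copied from the slide: the term the user enters
# maps to the list of index terms that are actually searched.
EXPANSIONS = {
    "window": ["window", "windows"],
    "windows": ["Windows", "window"],
    "Windows": ["Windows"],
}


def expand(query_term):
    """Return the index terms to look up; unknown terms search only themselves."""
    return EXPANSIONS.get(query_term, [query_term])


print(equivalence_class("U.S.A."))  # USA
print(expand("window"))             # ['window', 'windows']
```

Every extra term returned by `expand` is another postings lookup at query time, which is where the loss of efficiency comes from.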

Stemming
• Reduce terms to their “roots” before indexing
• “Stemming” suggests crude affix chopping
  – language dependent
  – e.g., automate(s), automatic, automation all reduced to automat.
• Example: “for example compressed and compression are both accepted as equivalent to compress.” becomes “for exampl compress and compress ar both accept as equival to compress”
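A deliberately crude suffix stripper illustrates what “affix chopping” means. This is not the Porter stemmer that produced the example sentence above; the suffix list and the minimum stem length of 3 are hypothetical choices that happen to conflate the slide’s example words.

```python
# Hypothetical suffix list, checked in order; the first match is removed.
SUFFIXES = ("ion", "ic", "ed", "es", "e", "s")


def crude_stem(word, min_stem=3):
    """Chop one suffix off a word, keeping a stem of at least min_stem letters."""
    w = word.lower()
    if w.endswith("ss"):
        # Do not treat the final "s" of "compress", "class", ... as a plural.
        return w
    for suffix in SUFFIXES:
        if w.endswith(suffix) and len(w) - len(suffix) >= min_stem:
            return w[: -len(suffix)]
    return w


for w in ["automate", "automates", "automatic", "automation"]:
    print(w, "->", crude_stem(w))  # each maps to "automat"
print({crude_stem(w) for w in ["compressed", "compression", "compress"]})  # {'compress'}
```

Hand-written rules like these both overstem and understem on real vocabularies, which is why production systems use a tested algorithm such as Porter’s.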

Query (Sec. 1.1)
• Which plays of Shakespeare contain the words Brutus AND Caesar but NOT Calpurnia?
• One could grep all of Shakespeare’s plays for Brutus and Caesar, then strip out lines containing Calpurnia?
  – Slow (for large corpora)
  – NOT Calpurnia is non-trivial
  – Other operations (e.g., find the word Romans near countrymen) not feasible
  – No ranked retrieval (returning the best documents)

Term-document incidence (Sec. 1.1)
Query: Brutus AND Caesar but NOT Calpurnia
Entry is 1 if the play contains the word, 0 otherwise

Incidence vectors (Sec. 1.1)
• So we have a 0/1 vector for each term.
• To answer the query: take the vectors for Brutus, Caesar and Calpurnia (complemented) and combine them with a bitwise AND.
• 110100 AND 110111 AND 101111 = 100100.
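The bitwise evaluation can be reproduced with Python integers used as bit vectors. The column order of the plays is an assumption taken from the matching example in the Manning, Raghavan and Schütze textbook (the slide’s table itself is an image); with that order, the result 100100 selects Antony and Cleopatra and Hamlet.

```python
# Column order assumed from the textbook example; the leftmost bit is PLAYS[0].
PLAYS = ["Antony and Cleopatra", "Julius Caesar", "The Tempest",
         "Hamlet", "Othello", "Macbeth"]
N = len(PLAYS)
MASK = (1 << N) - 1  # keeps the complement within N bits

incidence = {
    "Brutus": 0b110100,
    "Caesar": 0b110111,
    "Calpurnia": 0b010000,
}


def plays_for(bits):
    """Decode a bit vector back into play titles."""
    return [p for i, p in enumerate(PLAYS) if (bits >> (N - 1 - i)) & 1]


# Brutus AND Caesar AND NOT Calpurnia
answer = incidence["Brutus"] & incidence["Caesar"] & (~incidence["Calpurnia"] & MASK)
print(format(answer, "06b"))  # 100100
print(plays_for(answer))      # ['Antony and Cleopatra', 'Hamlet']
```

The `MASK` is needed because Python’s `~` on an int yields a negative number; masking restores the 6-bit complement 101111.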

Answers to query (Sec. 1.1)
• Antony and Cleopatra, Act III, Scene ii
  – Agrippa [Aside to DOMITIUS ENOBARBUS]: Why, Enobarbus, When Antony found Julius Caesar dead, He cried almost to roaring; and he wept When at Philippi he found Brutus slain.
• Hamlet, Act III, Scene ii
  – Lord Polonius: I did enact Julius Caesar: I was killed i’ the Capitol; Brutus killed me.

Boolean queries: Exact match (Sec. 1.3)
• The Boolean retrieval model lets you pose a query that is a Boolean expression:
  – Boolean queries use AND, OR and NOT to join query terms
• Views each document as a set of words
• Is precise: a document either matches the condition or not.
• Primary commercial retrieval tool for 3 decades.
• Professional searchers (e.g., lawyers) still like Boolean queries:
  – You know exactly what you’re getting.
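The slides evaluate AND with bit vectors; on a real inverted index the standard technique (described in the same textbook) is a linear merge of sorted postings lists. A minimal sketch, with hypothetical postings lists as input:

```python
def intersect(p1, p2):
    """AND: merge two sorted postings lists in O(len(p1) + len(p2)) time."""
    answer, i, j = [], 0, 0
    while i < len(p1) and j < len(p2):
        if p1[i] == p2[j]:
            answer.append(p1[i])
            i += 1
            j += 1
        elif p1[i] < p2[j]:
            i += 1
        else:
            j += 1
    return answer


def and_not(p1, p2):
    """AND NOT: keep the doc IDs of p1 that do not appear in p2."""
    answer, i, j = [], 0, 0
    while i < len(p1):
        if j < len(p2) and p2[j] < p1[i]:
            j += 1
        elif j < len(p2) and p2[j] == p1[i]:
            i += 1
            j += 1
        else:
            answer.append(p1[i])
            i += 1
    return answer


# Hypothetical postings lists (sorted document IDs).
brutus = [1, 2, 4, 11, 31, 45, 173, 174]
caesar = [1, 2, 4, 5, 6, 16, 57, 132]
calpurnia = [2, 31, 54, 101]
print(and_not(intersect(brutus, caesar), calpurnia))  # [1, 4]
```

Because each list is walked once, NOT no longer requires scanning the whole collection, unlike the grep approach.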

Example: WestLaw (Sec. 1.4) http://www.westlaw.com/
• Largest commercial (paying subscribers) legal search service (started 1975; ranking added 1992)
• Tens of terabytes of data; 700,000 users
• Majority of users still use Boolean queries
• Example query:
  – What is the statute of limitations in cases involving the federal tort claims act?
  – LIMIT! /3 STATUTE ACTION /S FEDERAL /2 TORT /3 CLAIM
• /3 = within 3 words, /S = in same sentence

Problem with Boolean search: feast or famine
• Boolean queries often result in either too few (=0) or too many (1000s) results.
• Query 1: “standard user dlink 650” → 200,000 hits
• Query 2: “standard user dlink 650 no card found” → 0 hits
• It takes skill to come up with a query that produces a manageable number of hits.
• With a ranked list of documents, it does not matter how large the retrieved set is.

Scoring as the basis of ranked retrieval
• We wish to return, in order, the documents most likely to be useful to the searcher
• How can we rank-order the documents in the collection with respect to a query?
• Assign a score, say in [0, 1], to each document
• This score measures how well document and query “match”.

Recall: Binary term-document incidence matrix
Each document is represented by a binary vector $\in \{0, 1\}^{|V|}$

Term-document count matrices
• Consider the number of occurrences of a term in a document:
  – Each document is a count vector in $\mathbb{N}^{|V|}$: a column below

Bag of words model
• Vector representation doesn’t consider the ordering of words in a document
• “John is quicker than Mary” and “Mary is quicker than John” have the same vectors
• This is called the bag of words model.
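A count vector can be sketched with `collections.Counter`; the lowercase-and-split tokenization is a simplifying assumption. The two sentences from the slide produce identical bags even though they mean opposite things.

```python
from collections import Counter


def bag_of_words(text):
    """Map a text to term counts, discarding word order."""
    return Counter(text.lower().split())


a = bag_of_words("John is quicker than Mary")
b = bag_of_words("Mary is quicker than John")
print(a == b)  # True: same counts, different meaning
```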

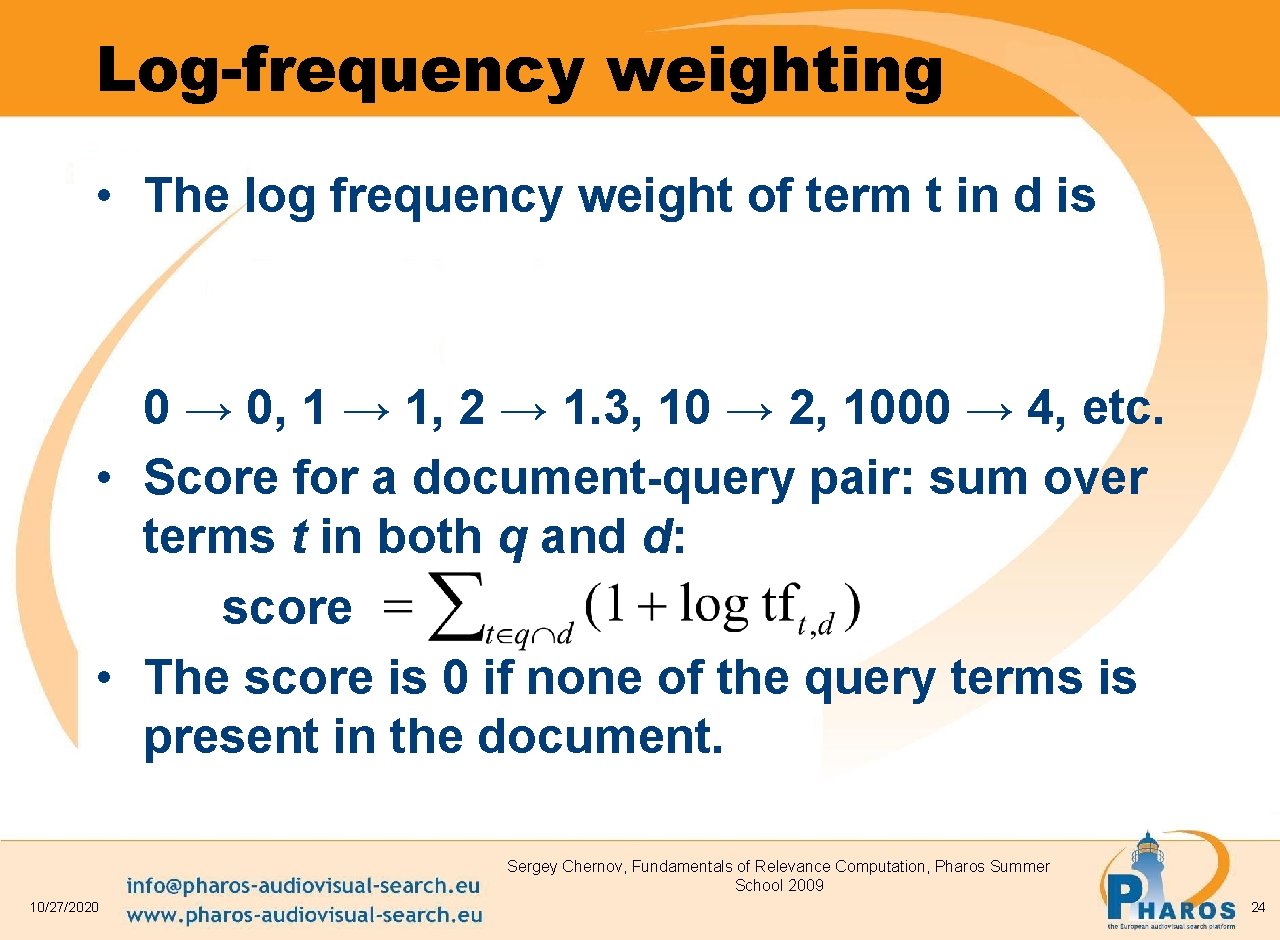

Term frequency tf
• The term frequency $\mathrm{tf}_{t,d}$ of term t in document d is defined as the number of times that t occurs in d.
• We want to use tf when computing query-document match scores. But how?
• Raw term frequency is not what we want:
  – A document with 10 occurrences of the term is more relevant than a document with one occurrence of the term.
  – But not 10 times more relevant.
• Relevance does not increase proportionally with term frequency.

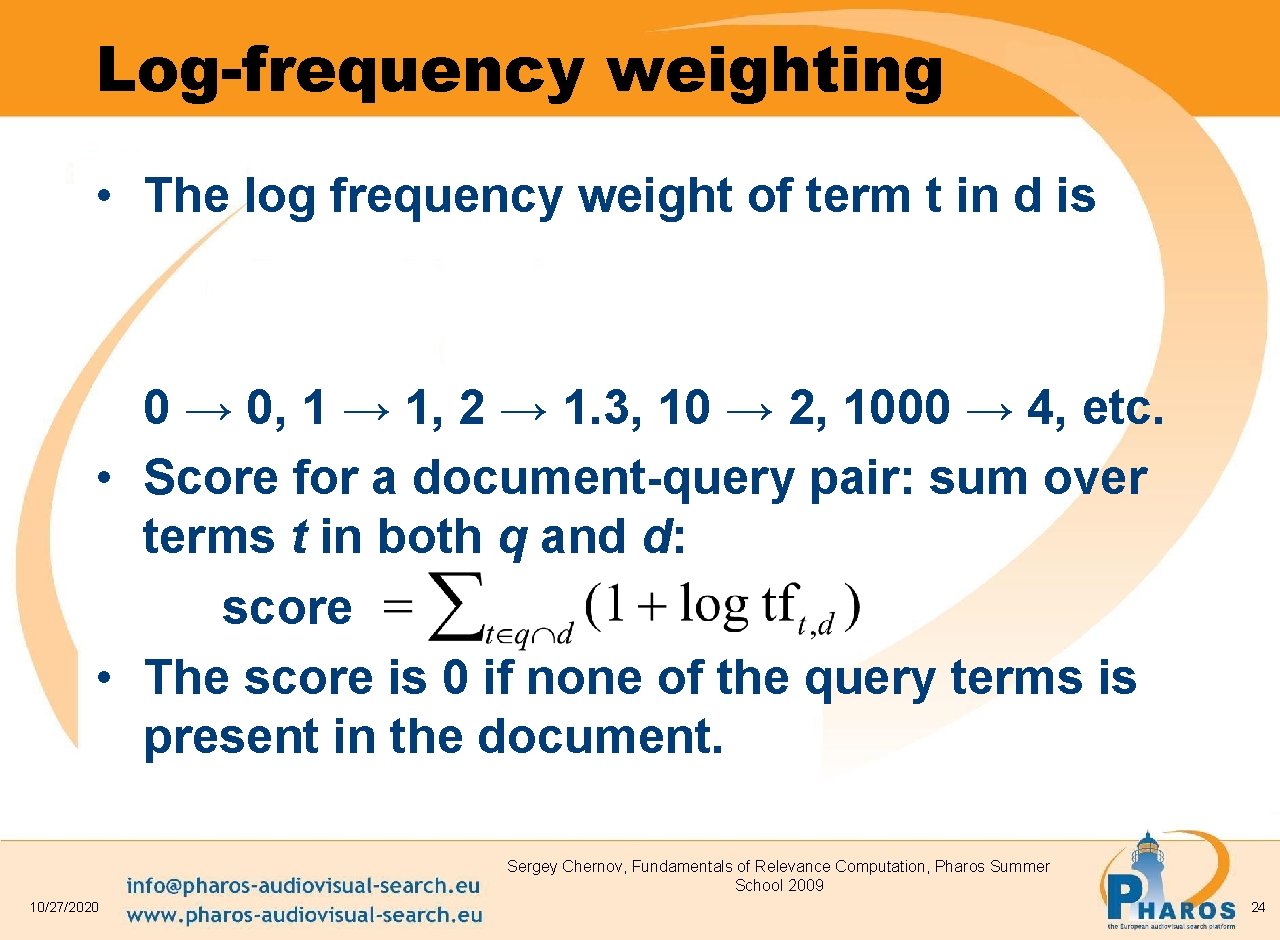

Log-frequency weighting
• The log frequency weight of term t in d is
$$w_{t,d} = \begin{cases} 1 + \log_{10} \mathrm{tf}_{t,d} & \text{if } \mathrm{tf}_{t,d} > 0 \\ 0 & \text{otherwise} \end{cases}$$
• 0 → 0, 1 → 1, 2 → 1.3, 10 → 2, 1000 → 4, etc.
• Score for a document-query pair: sum over terms t in both q and d:
$$\mathrm{score}(q, d) = \sum_{t \in q \cap d} \left(1 + \log_{10} \mathrm{tf}_{t,d}\right)$$
• The score is 0 if none of the query terms is present in the document.
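The log-frequency weight (1 + log10 tf, or 0 when tf = 0) and the summed overlap score can be sketched as below. The document’s term counts are hypothetical; terms missing from the document simply contribute 0, which is the same as summing only over terms in both q and d.

```python
import math


def log_tf_weight(tf):
    """Log-frequency weight: 1 + log10(tf) if tf > 0, else 0."""
    return 1 + math.log10(tf) if tf > 0 else 0.0


def score(query_terms, doc_tf):
    """Sum log-tf weights over query terms; terms absent from the doc add 0."""
    return sum(log_tf_weight(doc_tf.get(t, 0)) for t in set(query_terms))


for tf in (0, 1, 2, 10, 1000):
    print(tf, "->", round(log_tf_weight(tf), 1))  # 0.0, 1.0, 1.3, 2.0, 4.0

doc = {"brutus": 10, "caesar": 1}  # hypothetical term counts
print(score(["brutus", "caesar", "calpurnia"], doc))  # 3.0
```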

Document frequency
• Rare terms are more informative than frequent terms
  – Recall stop words
• Consider a term in the query that is rare in the collection (e.g., arachnocentric)
• A document containing this term is very likely to be relevant to the query arachnocentric
• → We want a high weight for rare terms like arachnocentric.

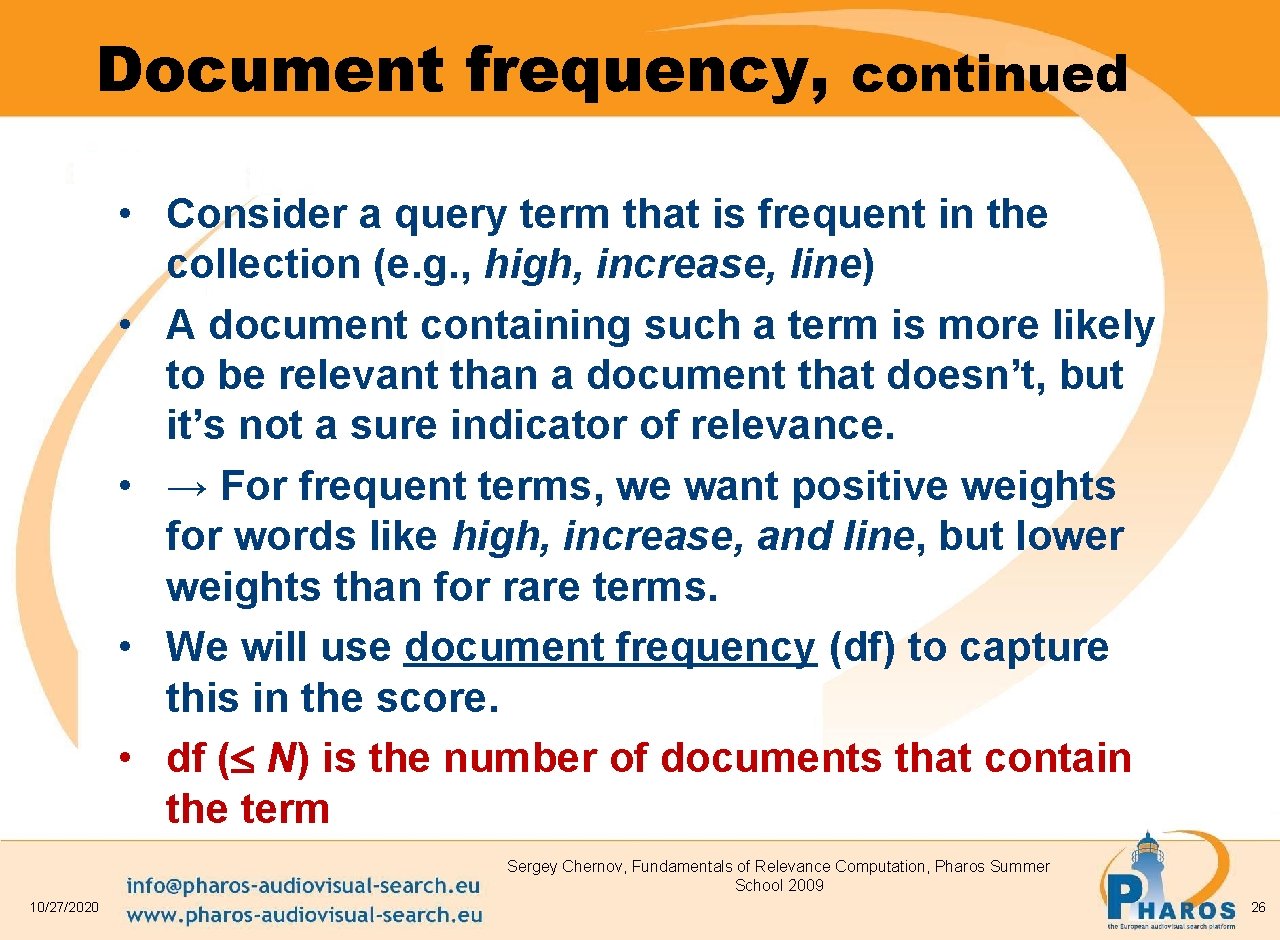

Document frequency, continued
• Consider a query term that is frequent in the collection (e.g., high, increase, line)
• A document containing such a term is more likely to be relevant than a document that doesn’t, but it’s not a sure indicator of relevance.
• → For frequent terms, we want positive weights for words like high, increase, and line, but lower weights than for rare terms.
• We will use document frequency (df) to capture this in the score.
• df (≤ N) is the number of documents that contain the term

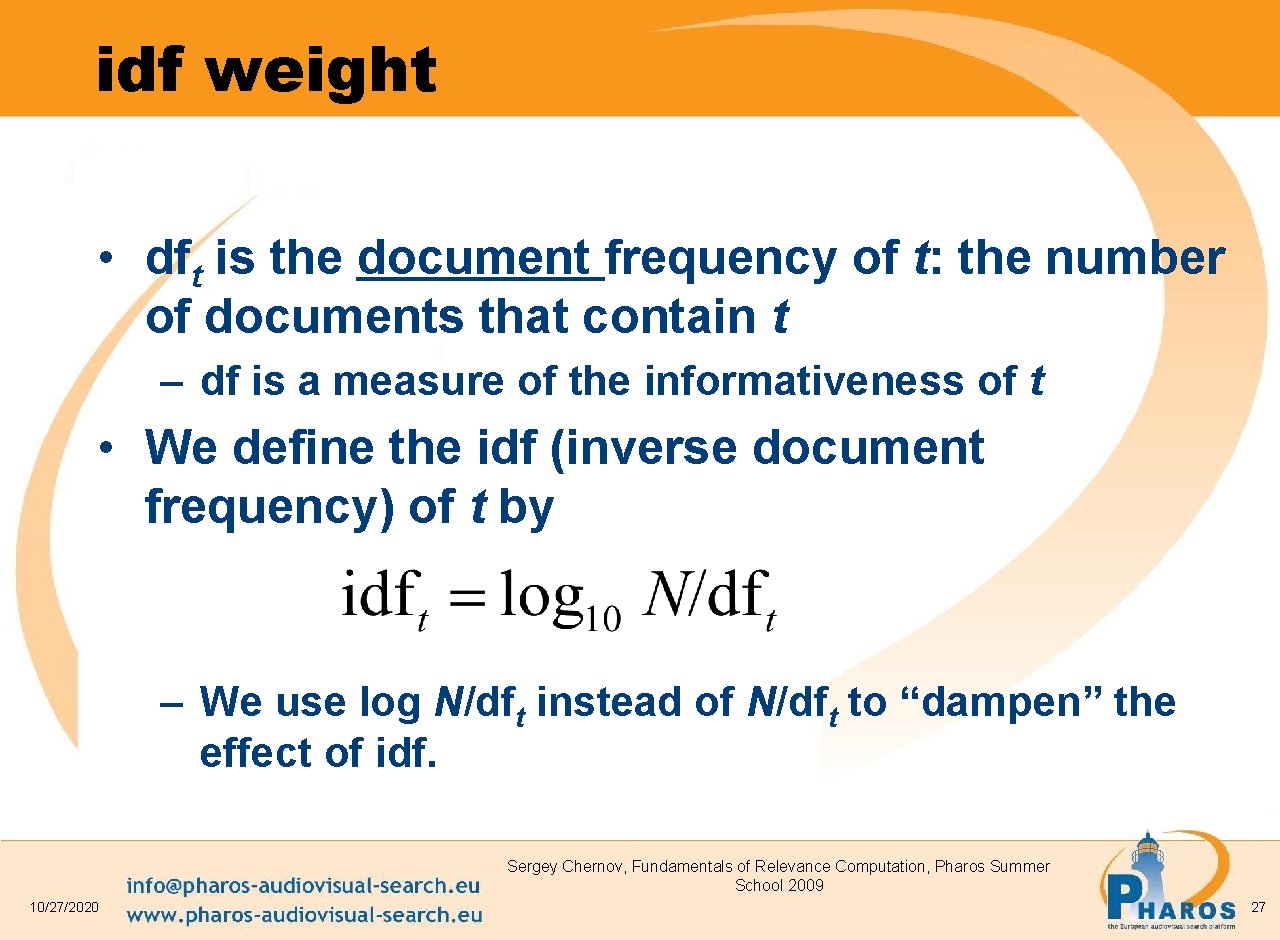

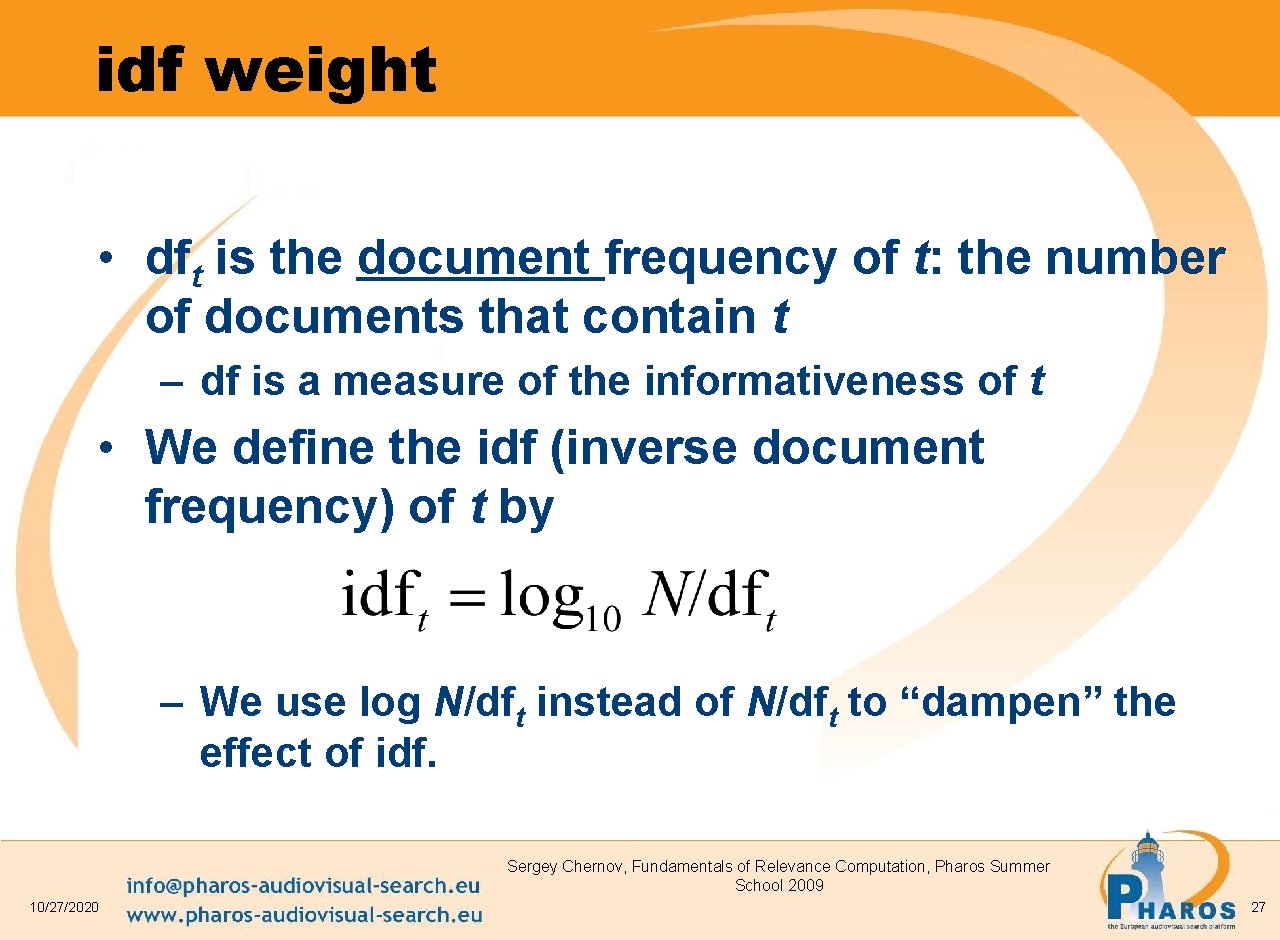

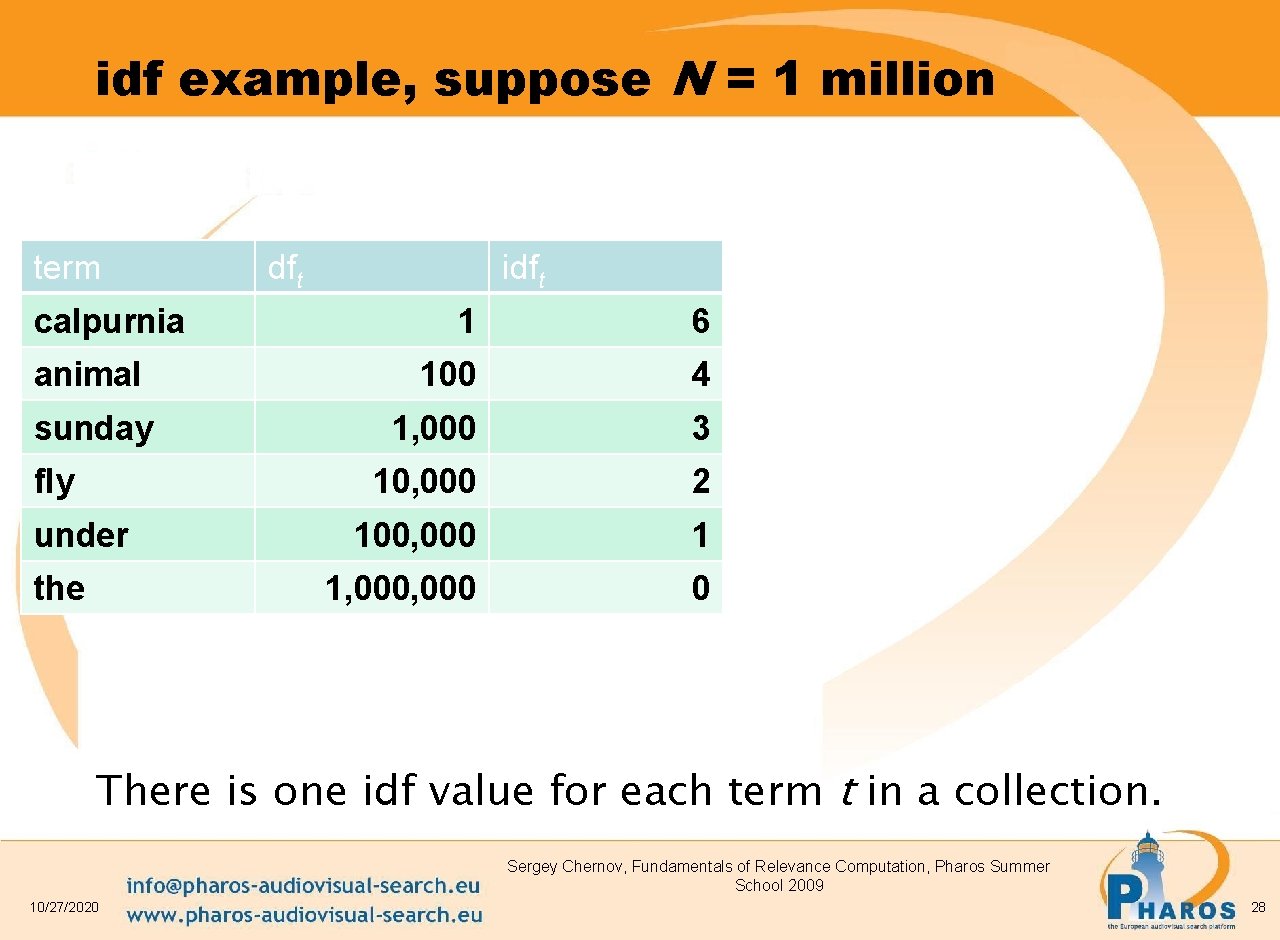

idf weight
• $\mathrm{df}_t$ is the document frequency of t: the number of documents that contain t
  – $\mathrm{df}_t$ is an inverse measure of the informativeness of t
• We define the idf (inverse document frequency) of t by
$$\mathrm{idf}_t = \log_{10}\left(N / \mathrm{df}_t\right)$$
  – We use $\log(N/\mathrm{df}_t)$ instead of $N/\mathrm{df}_t$ to “dampen” the effect of idf.

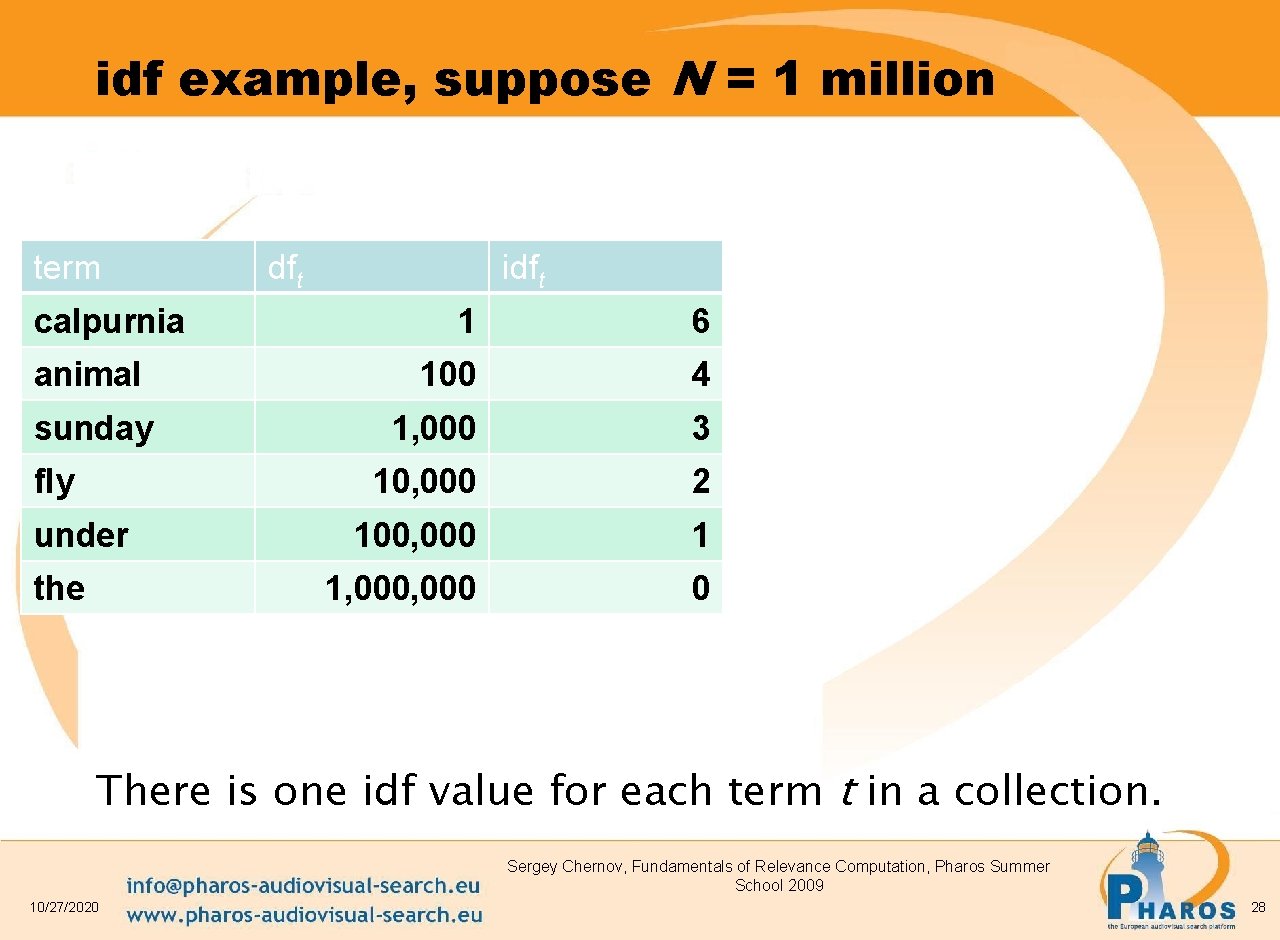

idf example, suppose N = 1 million

| term | $\mathrm{df}_t$ | $\mathrm{idf}_t$ |
|---|---|---|
| calpurnia | 1 | 6 |
| animal | 100 | 4 |
| sunday | 1,000 | 3 |
| fly | 10,000 | 2 |
| under | 100,000 | 1 |
| the | 1,000,000 | 0 |

There is one idf value for each term t in a collection.
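The idf column of the table can be recomputed directly from $\log_{10}(N/\mathrm{df}_t)$; this short sketch uses the table’s own N and df values and prints one row per term.

```python
import math

N = 1_000_000  # collection size from the slide
df = {"calpurnia": 1, "animal": 100, "sunday": 1_000,
      "fly": 10_000, "under": 100_000, "the": 1_000_000}


def idf(df_t, n_docs=N):
    """Inverse document frequency: log10(N / df_t)."""
    return math.log10(n_docs / df_t)


for term, df_t in df.items():
    print(f"{term:<10}{df_t:>10,}{idf(df_t):>4.0f}")
```

A term that occurs in every document (“the”) gets idf 0, so it contributes nothing to tf-idf scores.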

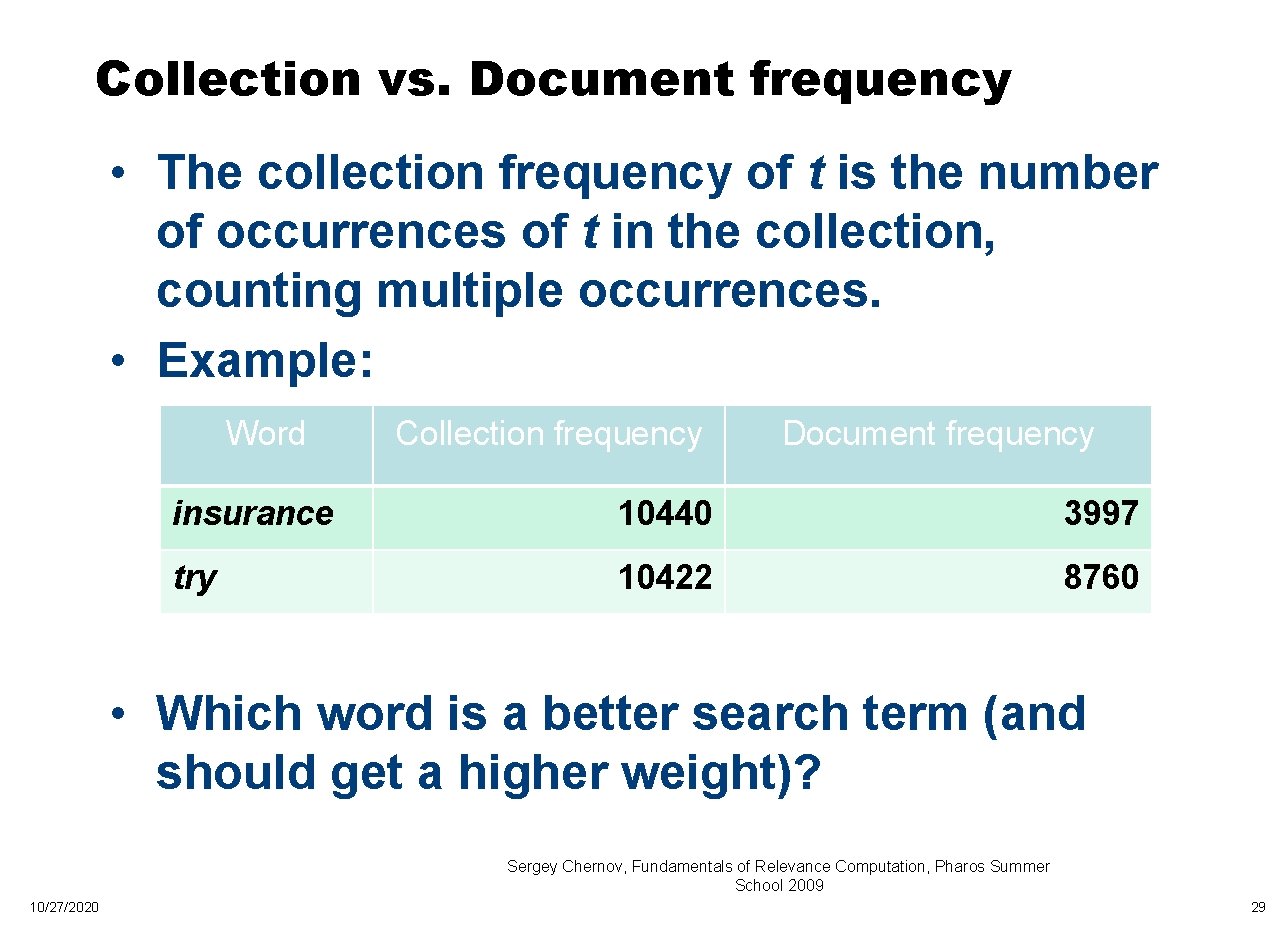

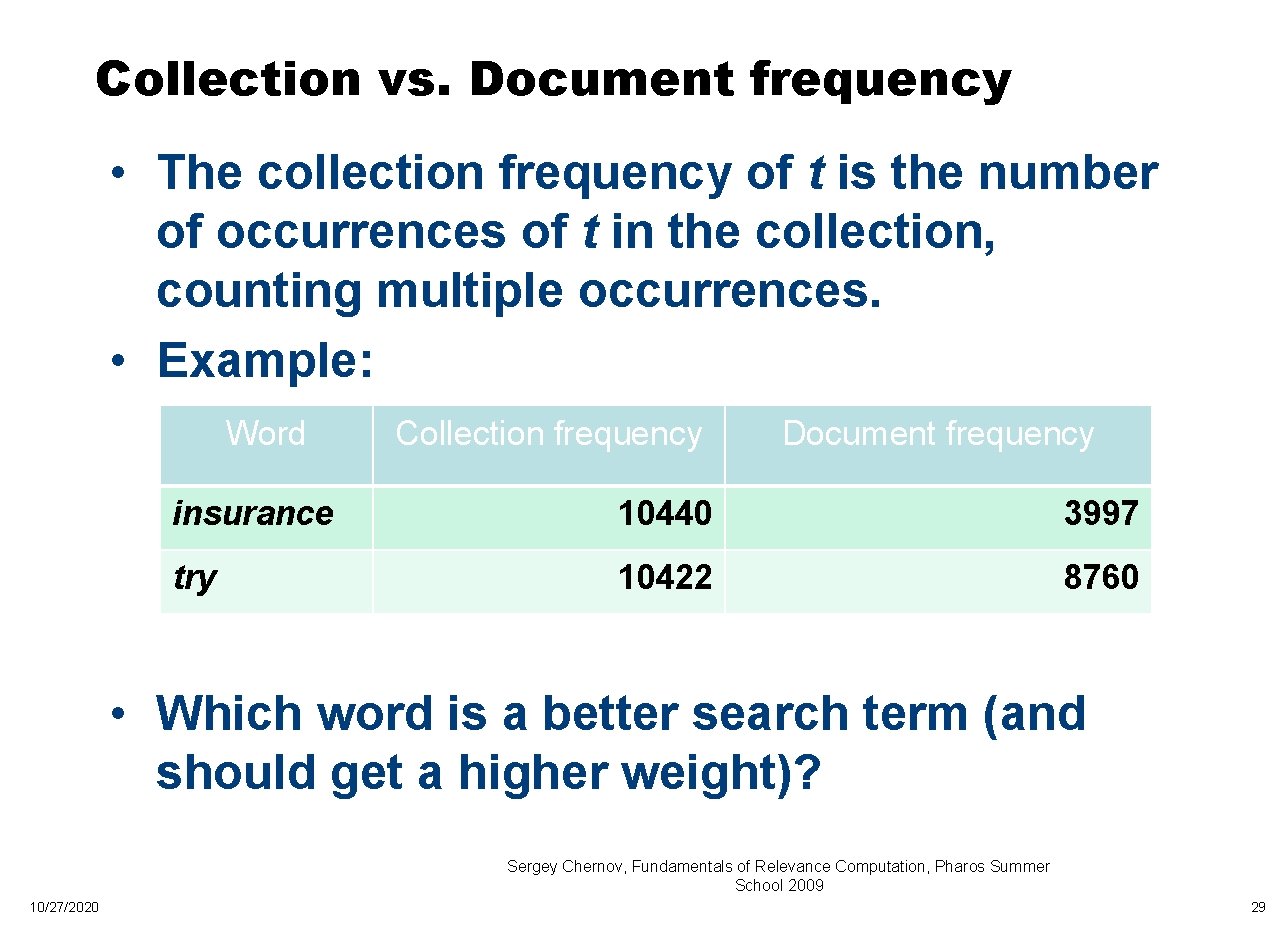

Collection vs. Document frequency
• The collection frequency of t is the number of occurrences of t in the collection, counting multiple occurrences.
• Example:

| Word | Collection frequency | Document frequency |
|---|---|---|
| insurance | 10440 | 3997 |
| try | 10422 | 8760 |

• Which word is a better search term (and should get a higher weight)?

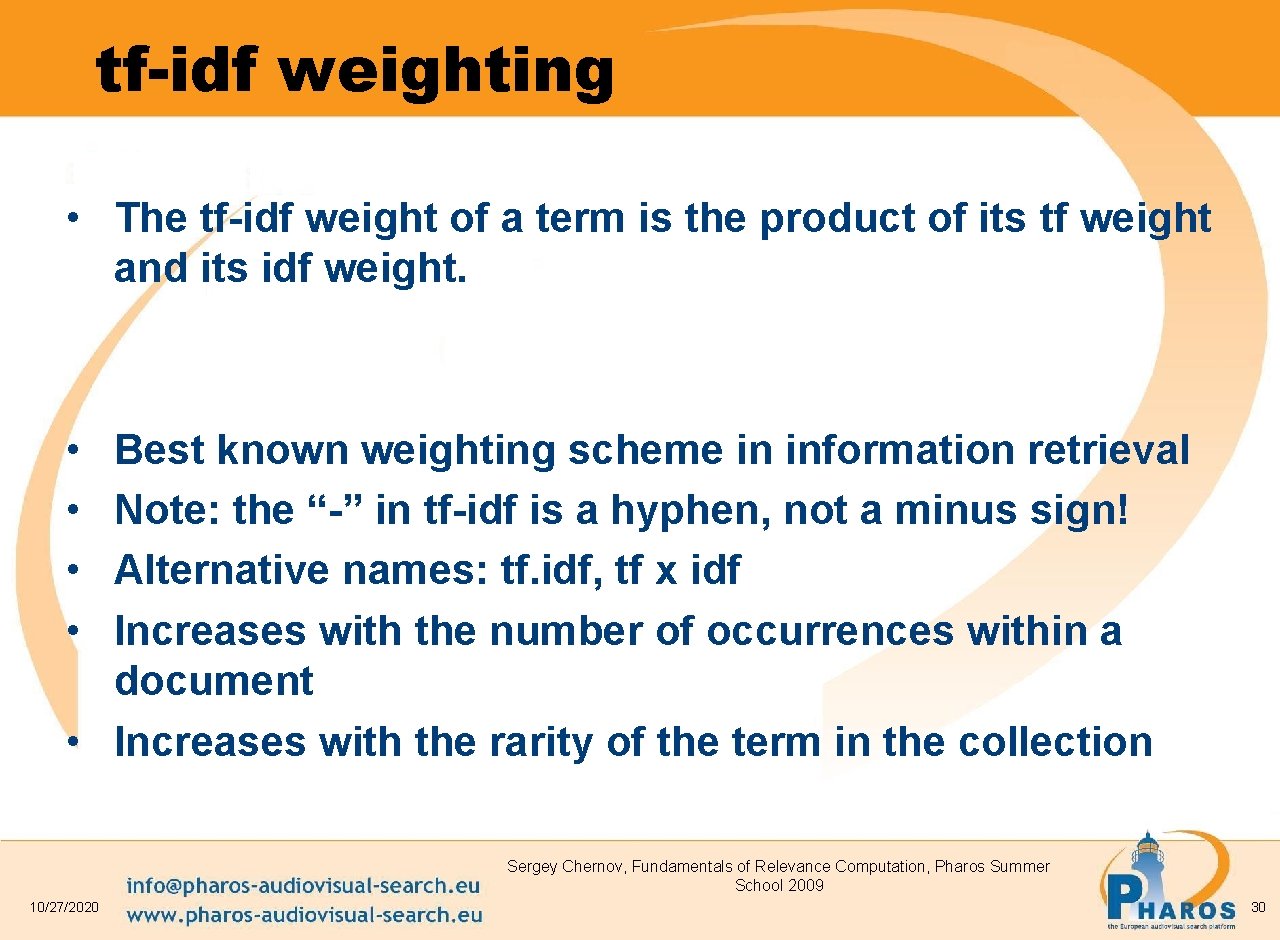

tf-idf weighting
• The tf-idf weight of a term is the product of its tf weight and its idf weight:
$$w_{t,d} = \left(1 + \log_{10} \mathrm{tf}_{t,d}\right) \times \log_{10}\left(N / \mathrm{df}_t\right)$$
• Best known weighting scheme in information retrieval
  – Note: the “-” in tf-idf is a hyphen, not a minus sign!
  – Alternative names: tf.idf, tf x idf
• Increases with the number of occurrences within a document
• Increases with the rarity of the term in the collection
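A minimal sketch of the tf-idf weight, (1 + log10 tf) × log10(N / df), with hypothetical counts chosen to show the two monotonicity properties listed above.

```python
import math


def tf_idf(tf, df, n_docs):
    """tf-idf weight: (1 + log10 tf) * log10(N / df); 0 if the term is absent."""
    if tf == 0 or df == 0:
        return 0.0
    return (1 + math.log10(tf)) * math.log10(n_docs / df)


N = 1_000_000
print(tf_idf(10, 1_000, N))   # (1 + 1) * 3, i.e. about 6
print(tf_idf(100, 1_000, N))  # more occurrences -> higher weight
print(tf_idf(10, 10, N))      # rarer term -> higher weight
print(tf_idf(10, N, N))       # term in every document -> 0.0
```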

Binary → count → weight matrix
Each document is now represented by a real-valued vector of tf-idf weights $\in \mathbb{R}^{|V|}$

Documents as vectors
• So we have a |V|-dimensional vector space
• Terms are axes of the space
• Documents are points or vectors in this space
• Very high-dimensional: hundreds of millions of dimensions when you apply this to a web search engine
• These are very sparse vectors: most entries are zero.

Queries as vectors
• Key idea 1: Do the same for queries: represent them as vectors in the space
• Key idea 2: Rank documents according to their proximity to the query in this space
  – proximity = similarity of vectors
  – proximity ≈ inverse of distance
• Recall: We do this because we want to get away from the you’re-either-in-or-out Boolean model.
• Instead: rank more relevant documents higher than less relevant documents

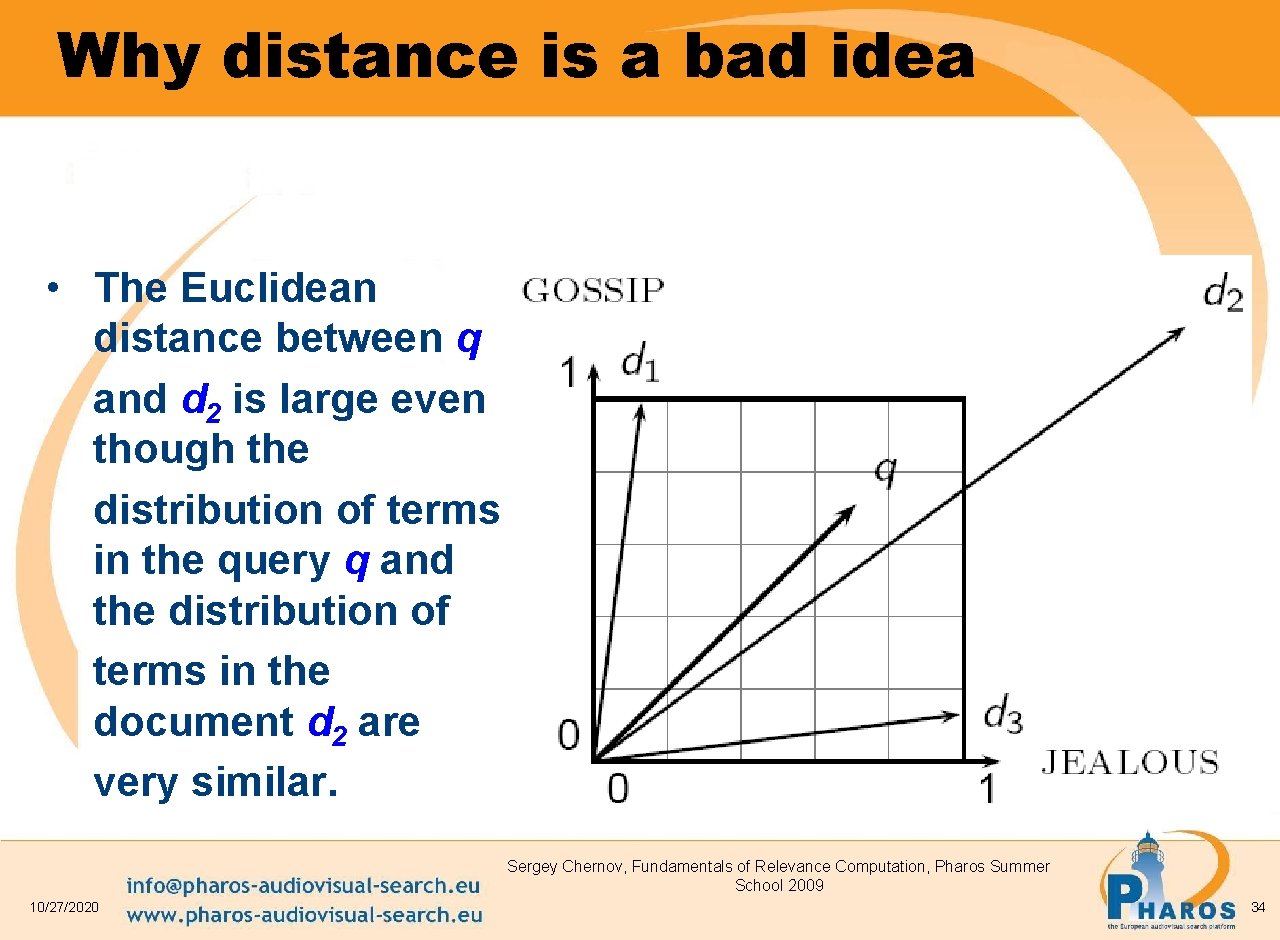

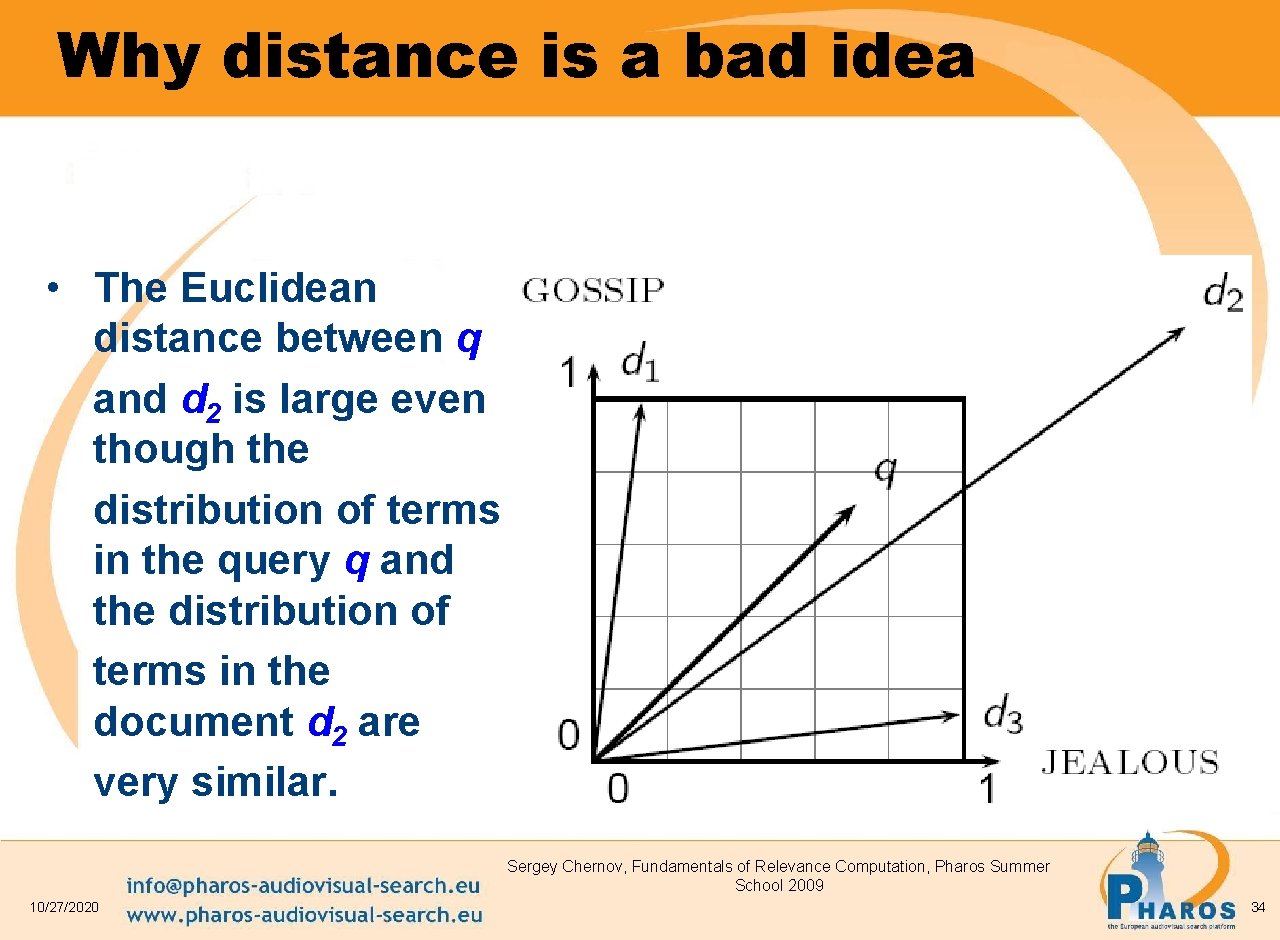

Why distance is a bad idea
• The Euclidean distance between q and d2 is large even though the distribution of terms in the query q and the distribution of terms in the document d2 are very similar.

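cosine(query, document)
$$\cos(\vec q, \vec d) = \frac{\vec q \cdot \vec d}{|\vec q|\,|\vec d|} = \frac{\vec q}{|\vec q|} \cdot \frac{\vec d}{|\vec d|} = \frac{\sum_{i=1}^{|V|} q_i d_i}{\sqrt{\sum_{i=1}^{|V|} q_i^2}\,\sqrt{\sum_{i=1}^{|V|} d_i^2}}$$
• The numerator is the dot product of $\vec q$ and $\vec d$; the denominator is the product of their lengths
• $q_i$ is the tf-idf weight of term i in the query
• $d_i$ is the tf-idf weight of term i in the document
• $\cos(\vec q, \vec d)$ is the cosine similarity of q and d … or, equivalently, the cosine of the angle between q and d.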
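Cosine similarity over sparse vectors (Python dicts mapping term → weight) can be sketched as follows. The usage example, with hypothetical weights, also illustrates the previous slide: a document whose term distribution matches the query but is ten times longer has cosine 1 yet a large Euclidean distance.

```python
import math


def cosine(q, d):
    """Cosine similarity of two sparse vectors {term: weight}."""
    dot = sum(w * d.get(t, 0.0) for t, w in q.items())
    norm_q = math.sqrt(sum(w * w for w in q.values()))
    norm_d = math.sqrt(sum(w * w for w in d.values()))
    if norm_q == 0 or norm_d == 0:
        return 0.0
    return dot / (norm_q * norm_d)


def euclidean(q, d):
    """Euclidean distance between two sparse vectors."""
    terms = set(q) | set(d)
    return math.sqrt(sum((q.get(t, 0.0) - d.get(t, 0.0)) ** 2 for t in terms))


q = {"jealous": 2.0, "gossip": 1.0}     # hypothetical weights
d2 = {t: 10 * w for t, w in q.items()}  # same term distribution, 10x longer
print(round(cosine(q, d2), 6))     # 1.0: the angle is zero
print(round(euclidean(q, d2), 2))  # 20.12: yet the distance is large
```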

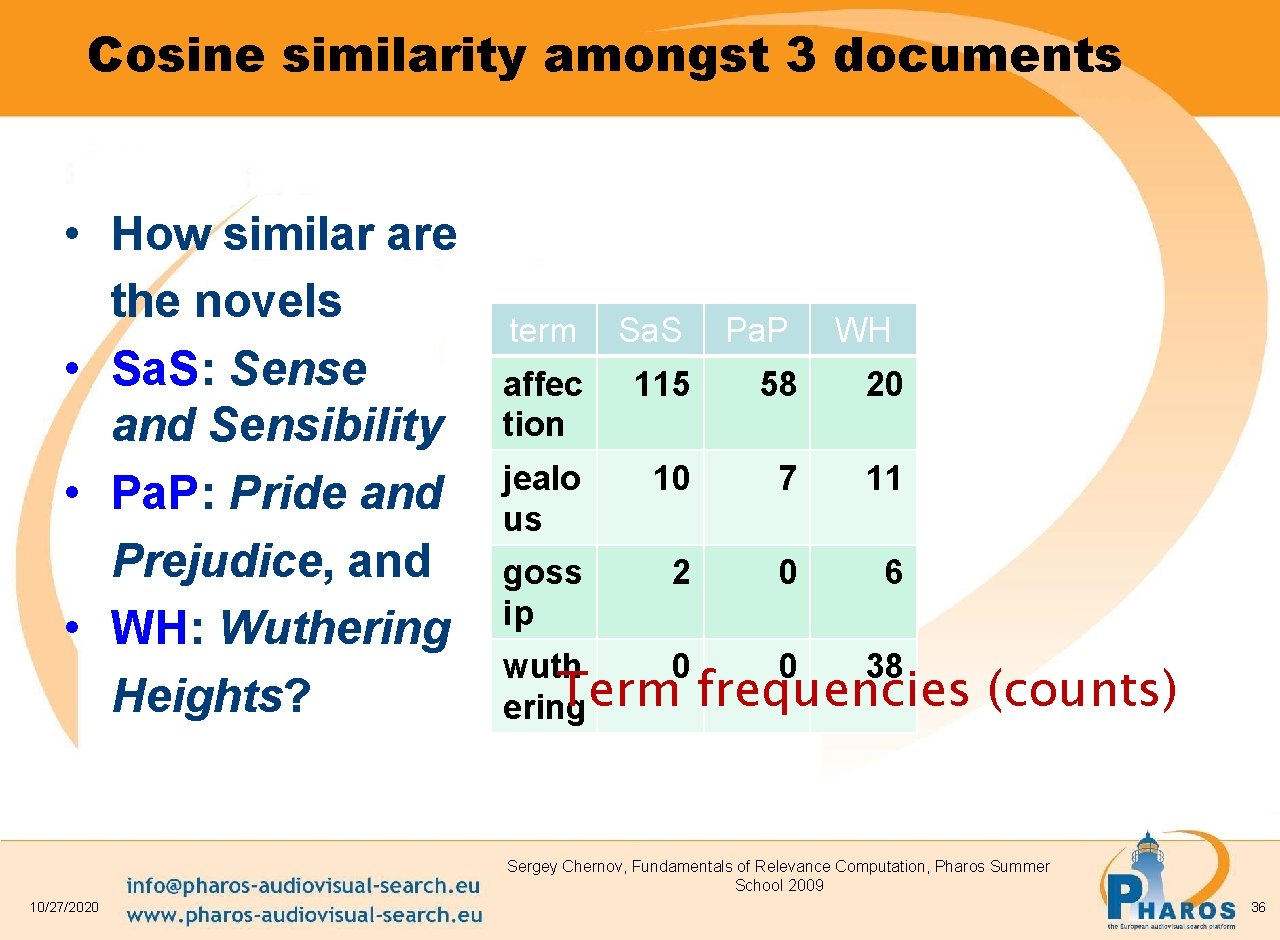

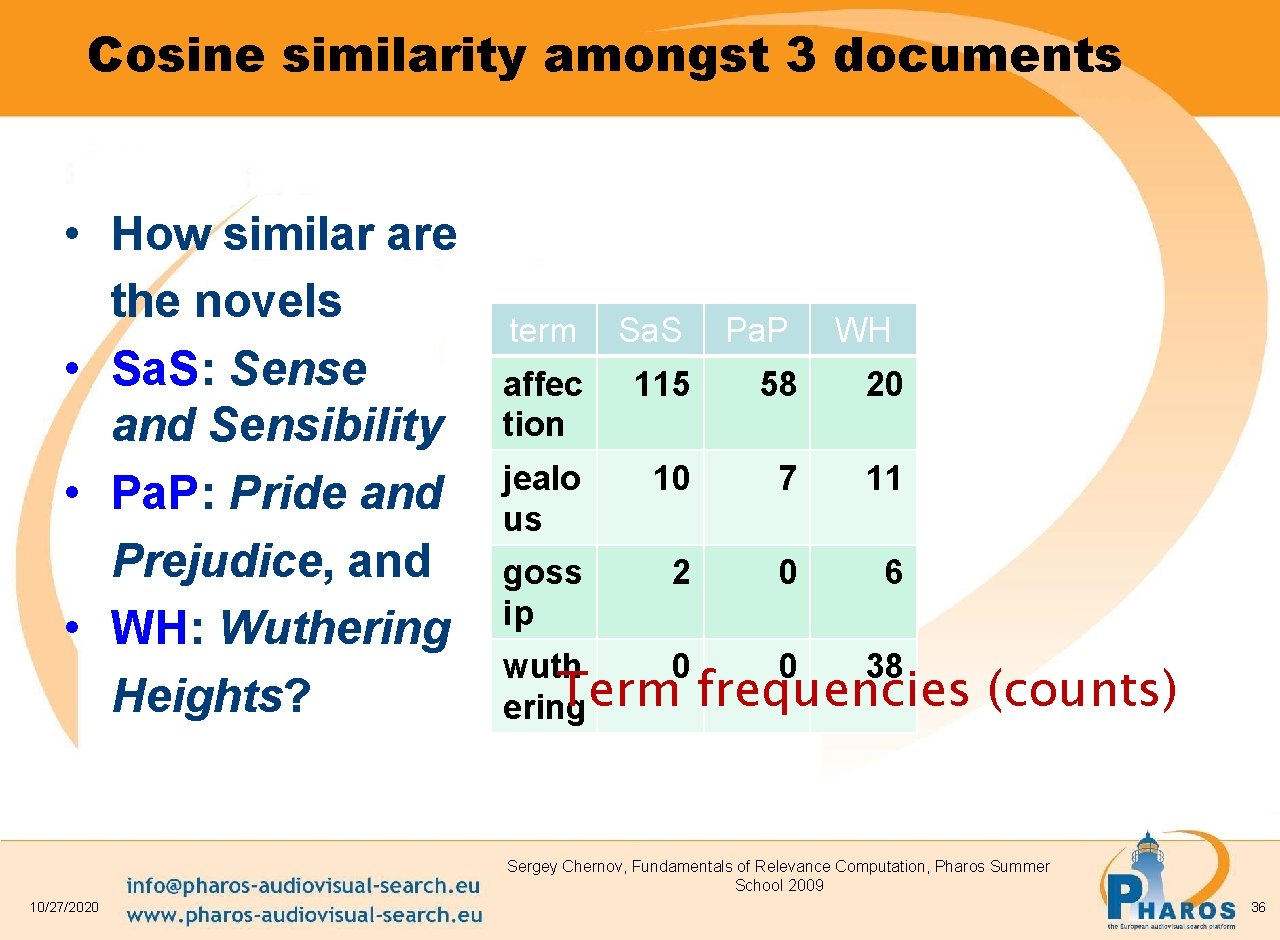

Cosine similarity amongst 3 documents
How similar are the novels
• SaS: Sense and Sensibility
• PaP: Pride and Prejudice, and
• WH: Wuthering Heights?

Term frequencies (counts):

| term | SaS | PaP | WH |
|---|---|---|---|
| affection | 115 | 58 | 20 |
| jealous | 10 | 7 | 11 |
| gossip | 2 | 0 | 6 |
| wuthering | 0 | 0 | 38 |

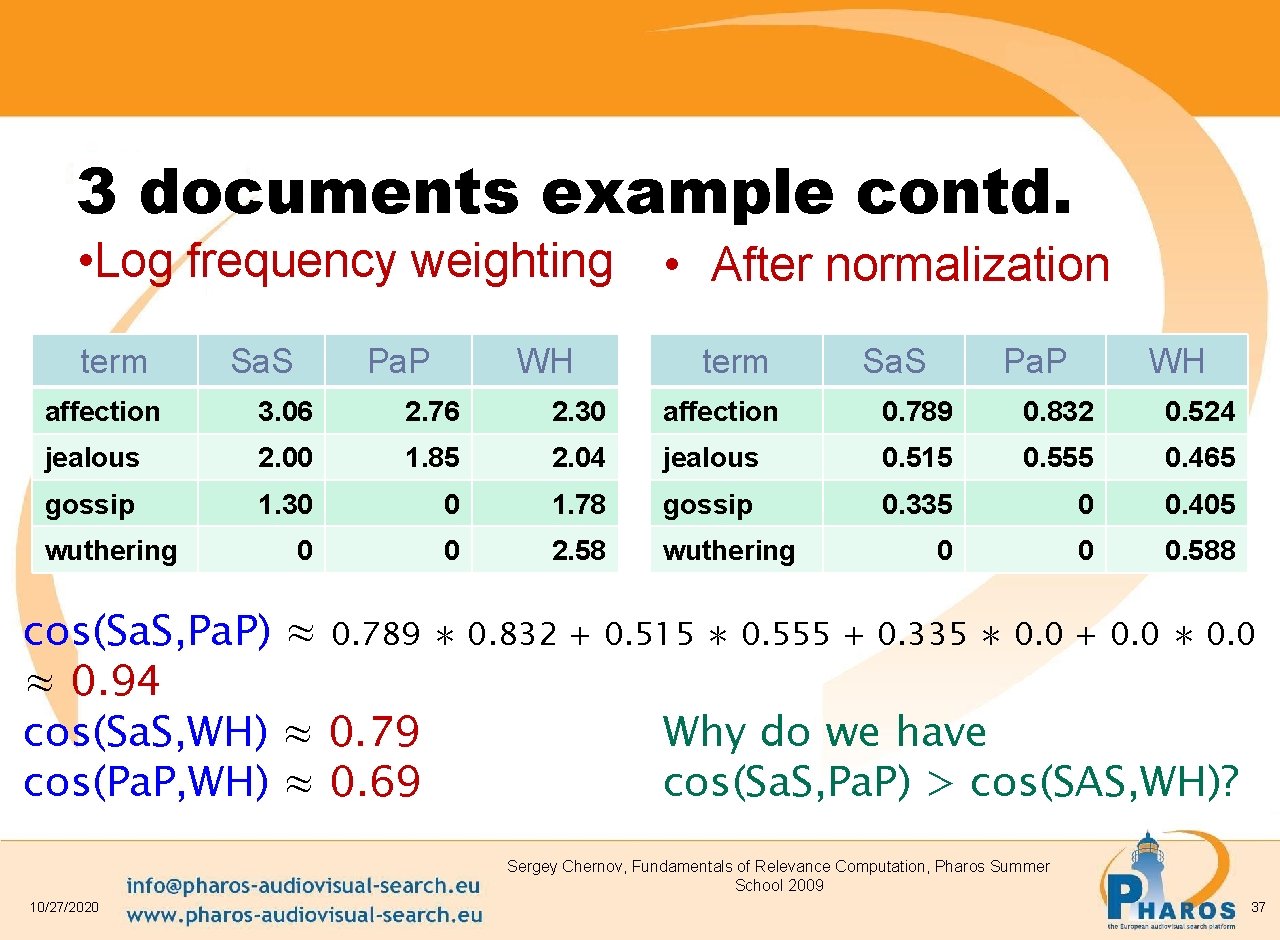

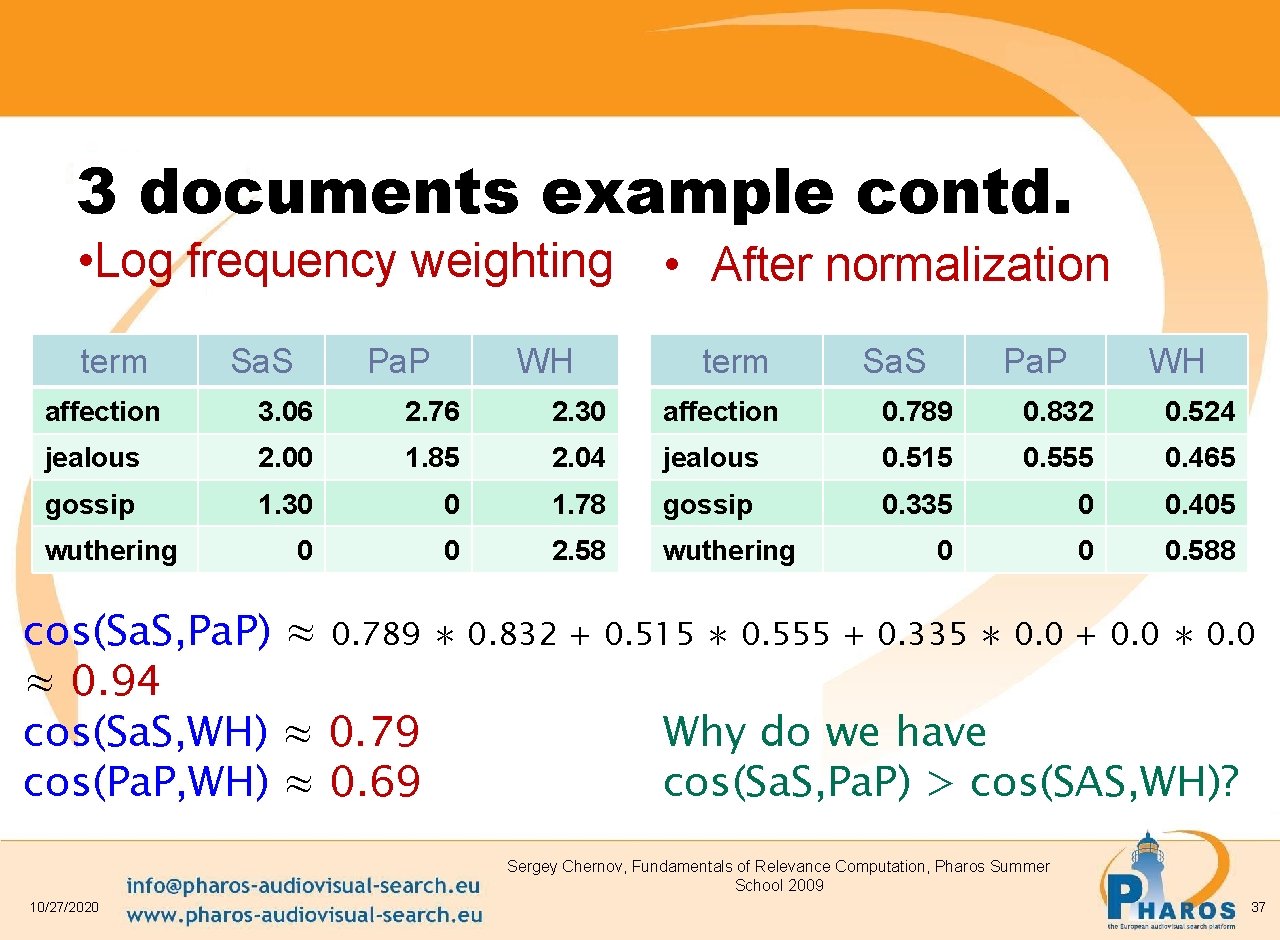

3 documents example contd.

Log frequency weighting:

| term | SaS | PaP | WH |
|---|---|---|---|
| affection | 3.06 | 2.76 | 2.30 |
| jealous | 2.00 | 1.85 | 2.04 |
| gossip | 1.30 | 0 | 1.78 |
| wuthering | 0 | 0 | 2.58 |

After length normalization:

| term | SaS | PaP | WH |
|---|---|---|---|
| affection | 0.789 | 0.832 | 0.524 |
| jealous | 0.515 | 0.555 | 0.465 |
| gossip | 0.335 | 0 | 0.405 |
| wuthering | 0 | 0 | 0.588 |

cos(SaS, PaP) ≈ 0.789 × 0.832 + 0.515 × 0.555 + 0.335 × 0.0 + 0.0 × 0.0 ≈ 0.94
cos(SaS, WH) ≈ 0.79
cos(PaP, WH) ≈ 0.69
Why do we have cos(SaS, PaP) > cos(SaS, WH)?
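The numbers in both tables can be reproduced with the sketch below. As on the slide, it uses log-frequency weighting only (no idf), normalizes each novel’s vector to unit length, and then takes dot products.

```python
import math

counts = {  # term frequencies from the previous slide
    "SaS": {"affection": 115, "jealous": 10, "gossip": 2, "wuthering": 0},
    "PaP": {"affection": 58, "jealous": 7, "gossip": 0, "wuthering": 0},
    "WH": {"affection": 20, "jealous": 11, "gossip": 6, "wuthering": 38},
}


def log_weight(tf):
    return 1 + math.log10(tf) if tf > 0 else 0.0


def unit_vector(tf_counts):
    """Log-weight every term, then divide by the vector's Euclidean length."""
    w = {t: log_weight(c) for t, c in tf_counts.items()}
    norm = math.sqrt(sum(x * x for x in w.values()))
    return {t: x / norm for t, x in w.items()}


vecs = {name: unit_vector(c) for name, c in counts.items()}


def cosine_between(a, b):
    """Dot product of two unit vectors = their cosine similarity."""
    return sum(vecs[a][t] * vecs[b][t] for t in vecs[a])


for a, b in [("SaS", "PaP"), ("SaS", "WH"), ("PaP", "WH")]:
    print(a, b, round(cosine_between(a, b), 2))  # 0.94, 0.79, 0.69
```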

Summary – vector space ranking
• Represent the query as a weighted tf-idf vector
• Represent each document as a weighted tf-idf vector
• Compute the cosine similarity score for the query vector and each document vector
• Rank documents with respect to the query by score
• Return the top K (e.g., K = 10) to the user
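The five summary steps fit in a short end-to-end sketch. Assumptions: documents are already tokenized, df is computed from the toy collection itself, and the query gets the same (1 + log tf) × idf weighting as the documents (real systems often weight queries and documents differently); `heapq.nlargest` selects the top K.

```python
import heapq
import math
from collections import Counter


def tfidf_vector(tokens, df, n_docs):
    """Sparse tf-idf vector {term: (1 + log10 tf) * log10(N / df)}."""
    tf = Counter(tokens)
    return {t: (1 + math.log10(c)) * math.log10(n_docs / df[t])
            for t, c in tf.items() if df.get(t)}


def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def rank(query_tokens, docs, k=10):
    """Return the top-k (score, doc_id) pairs by cosine similarity."""
    n_docs = len(docs)
    df = Counter(t for tokens in docs.values() for t in set(tokens))
    doc_vecs = {d: tfidf_vector(tokens, df, n_docs) for d, tokens in docs.items()}
    q = tfidf_vector(query_tokens, df, n_docs)
    return heapq.nlargest(k, ((cosine(q, v), d) for d, v in doc_vecs.items()))


docs = {  # hypothetical toy collection, already tokenized
    "d1": "brutus killed caesar".split(),
    "d2": "caesar crossed the rubicon".split(),
    "d3": "the rain in spain".split(),
}
for s, d in rank("brutus caesar".split(), docs, k=3):
    print(d, round(s, 3))
```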

Relevance Feedback
• Relevance feedback: user feedback on relevance of docs in an initial set of results
  – User issues a (short, simple) query
  – The user marks some results as relevant or nonrelevant.
  – The system computes a better representation of the information need based on feedback.
  – Relevance feedback can go through one or more iterations.
• Idea: it may be difficult to formulate a good query when you don’t know the collection well, so iterate

Similar pages

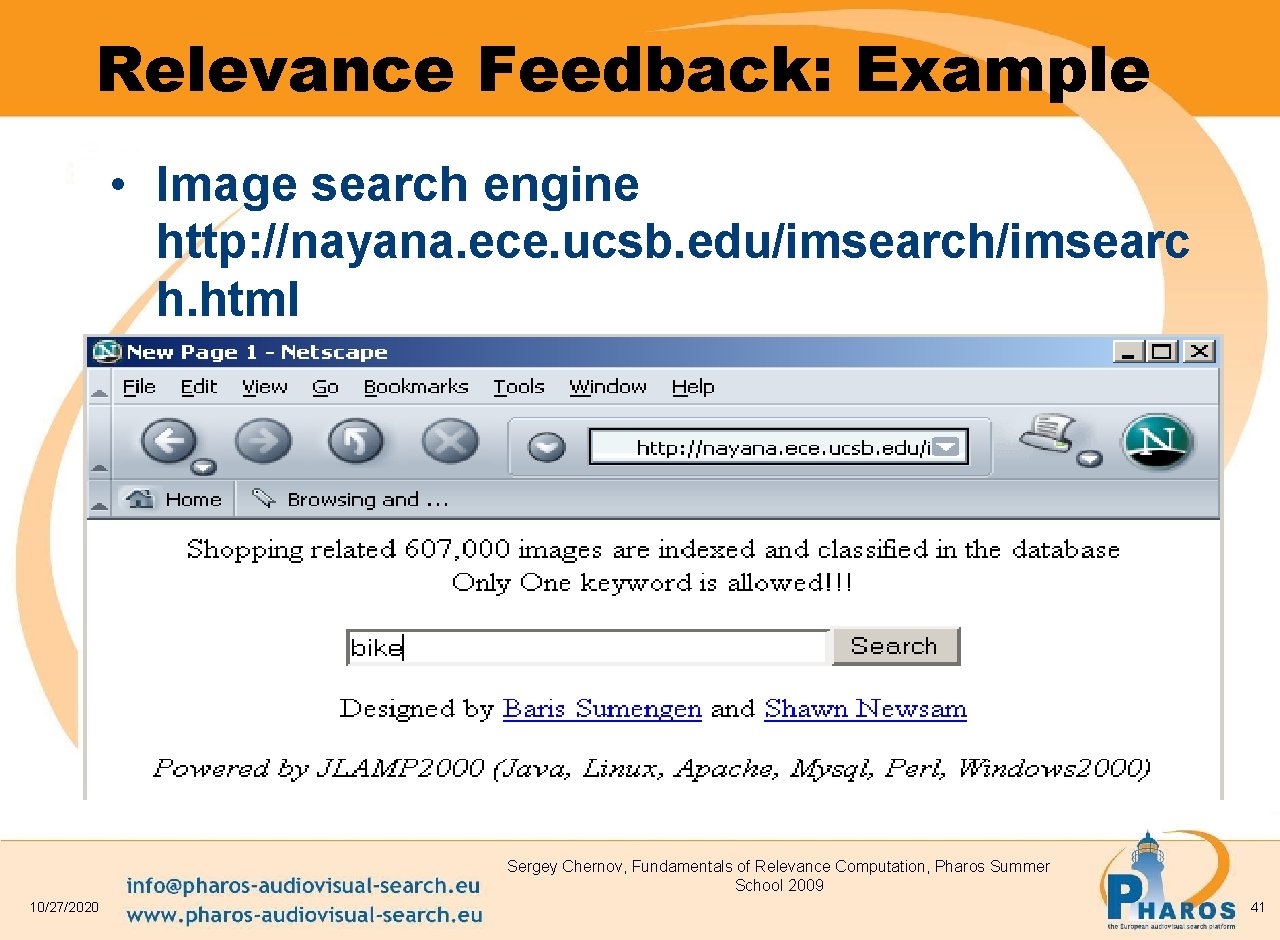

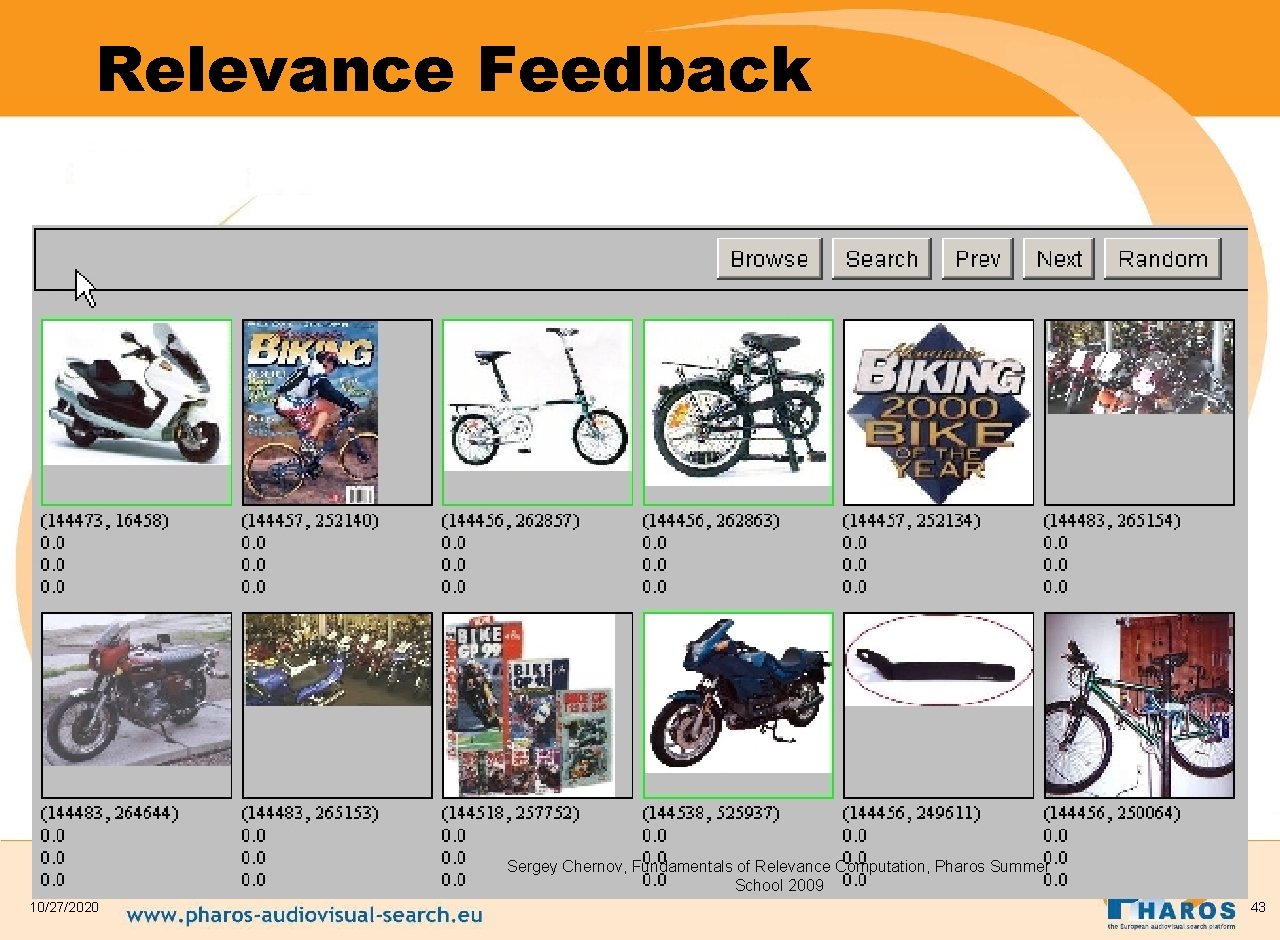

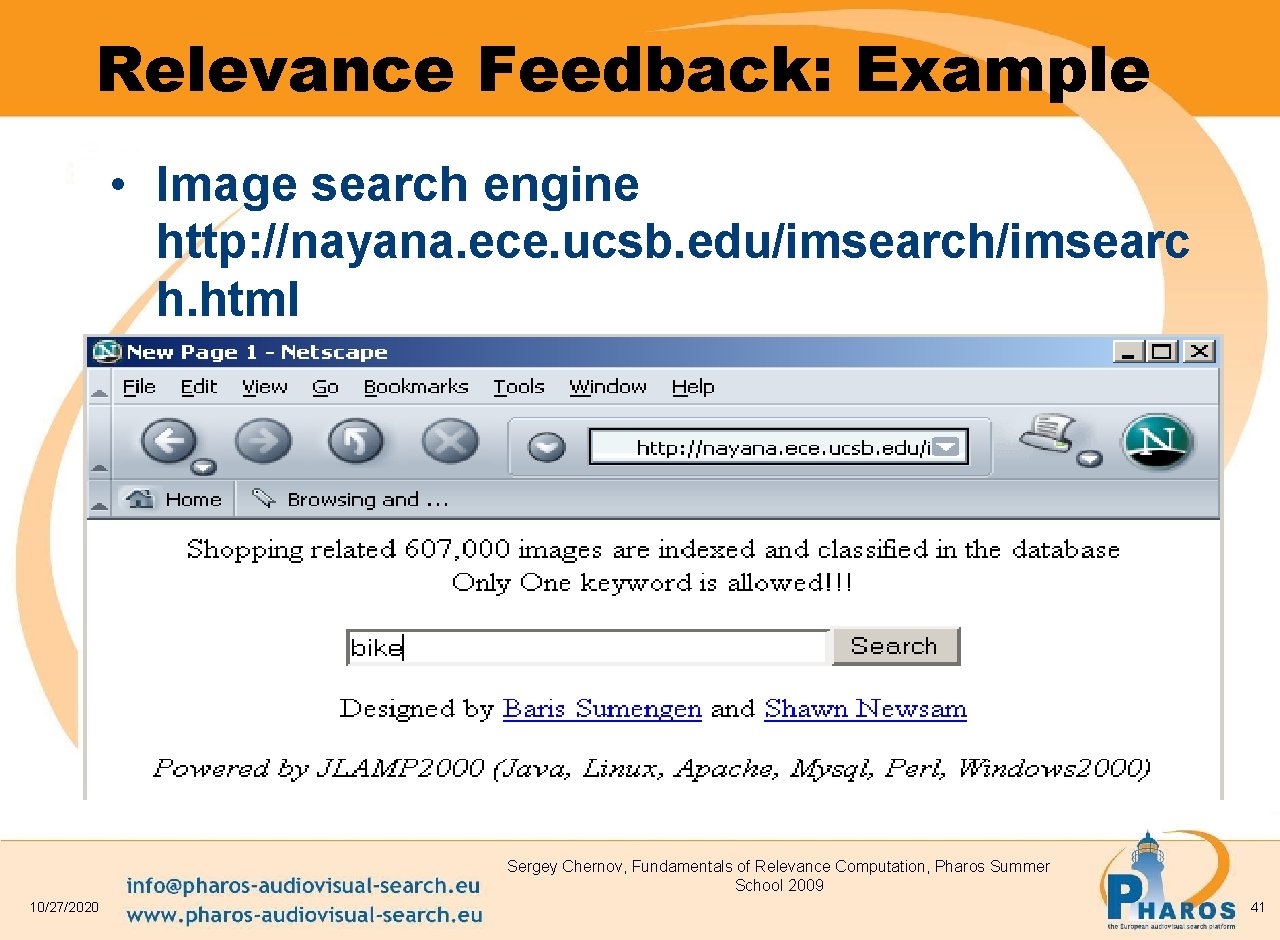

Relevance Feedback: Example
• Image search engine http://nayana.ece.ucsb.edu/imsearch/imsearch.html

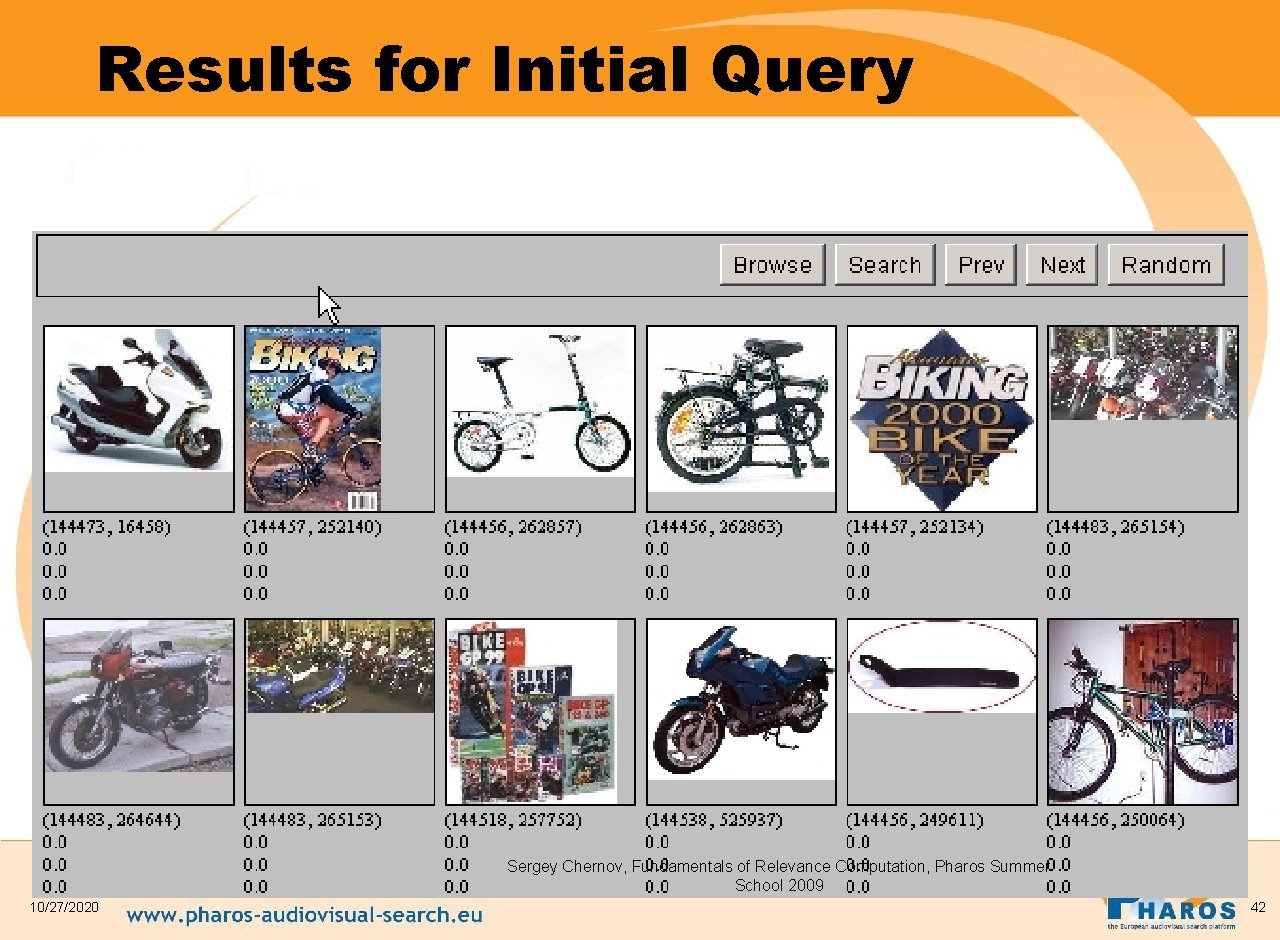

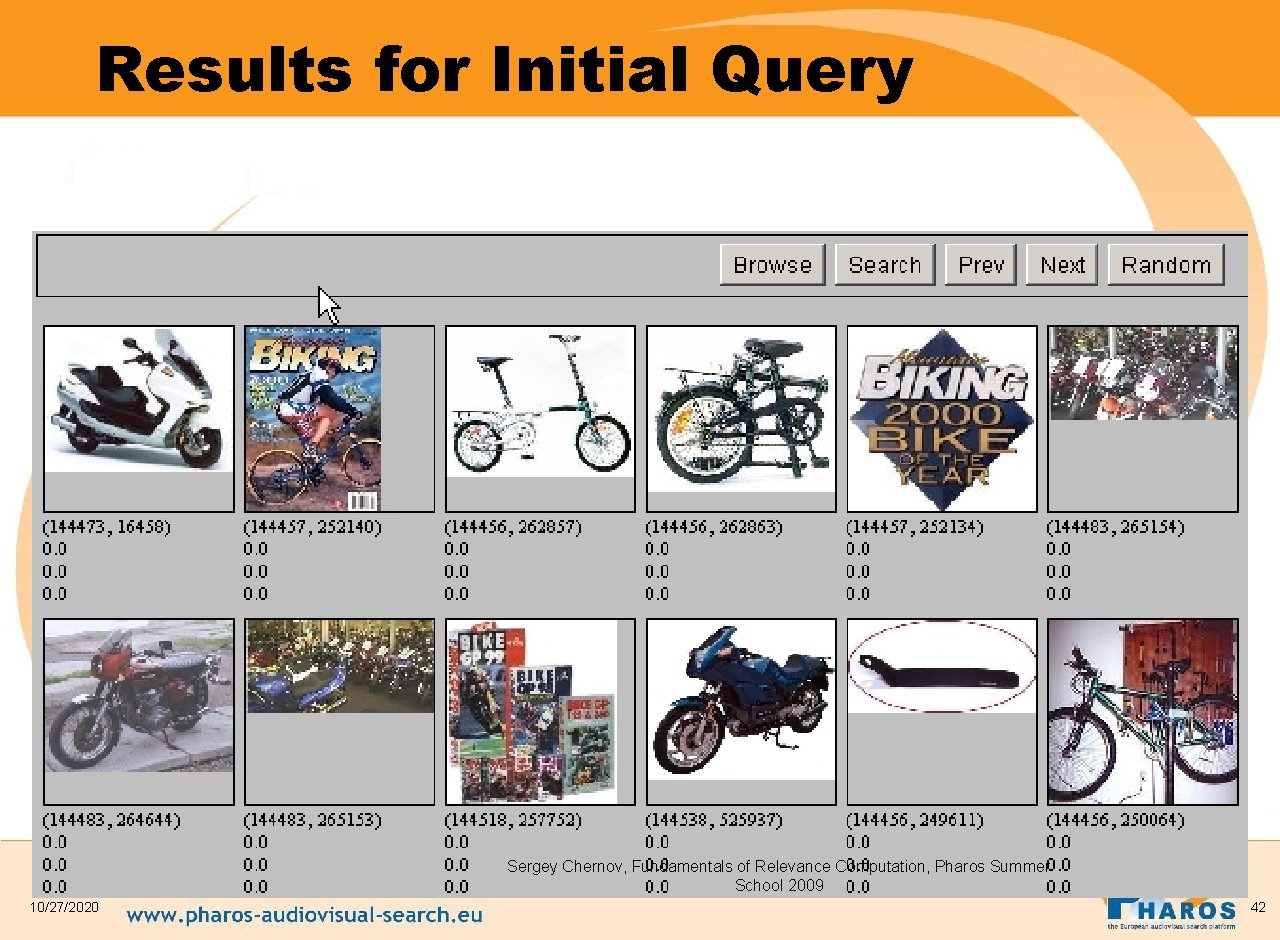

Results for Initial Query

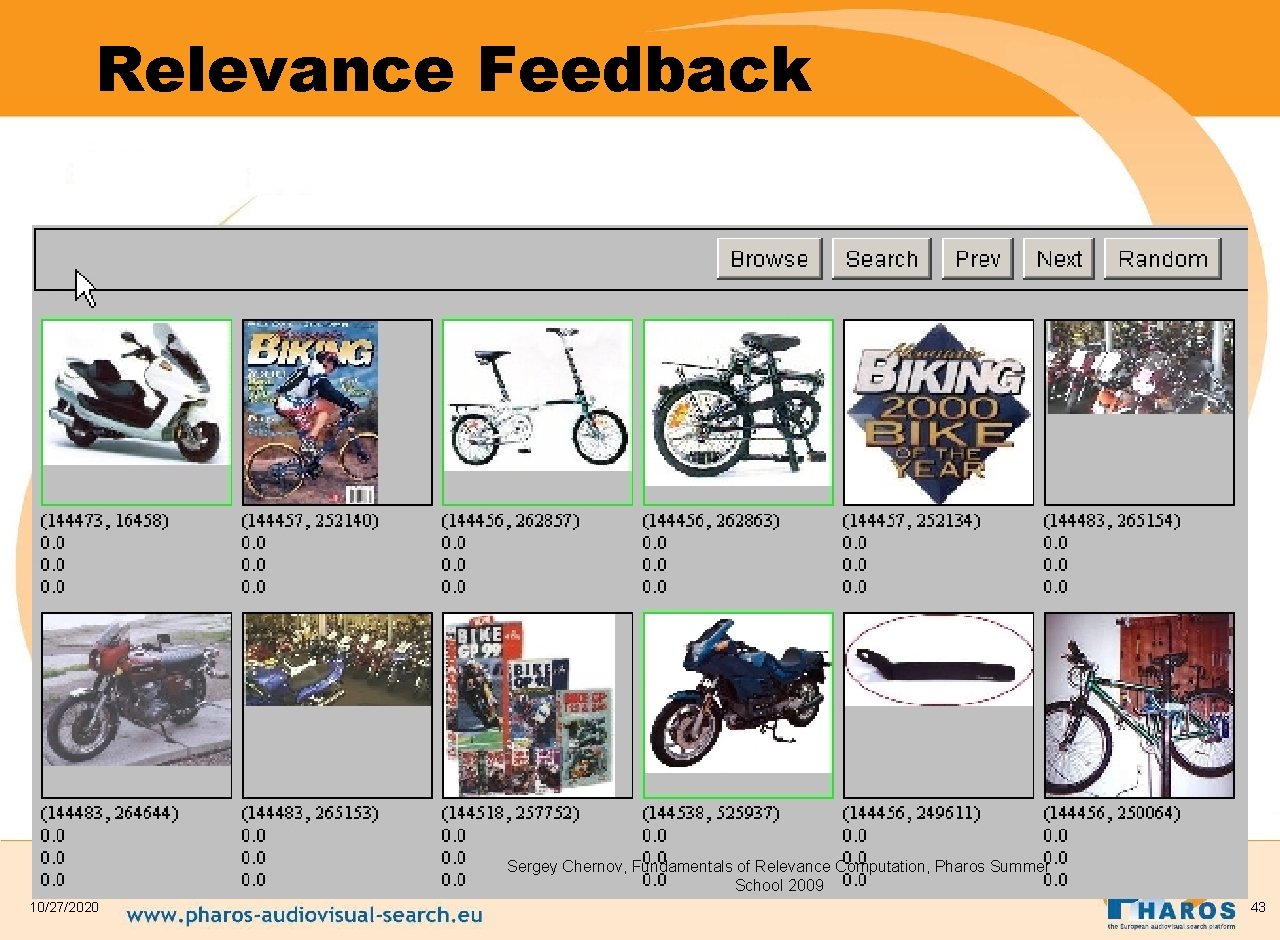

Relevance Feedback

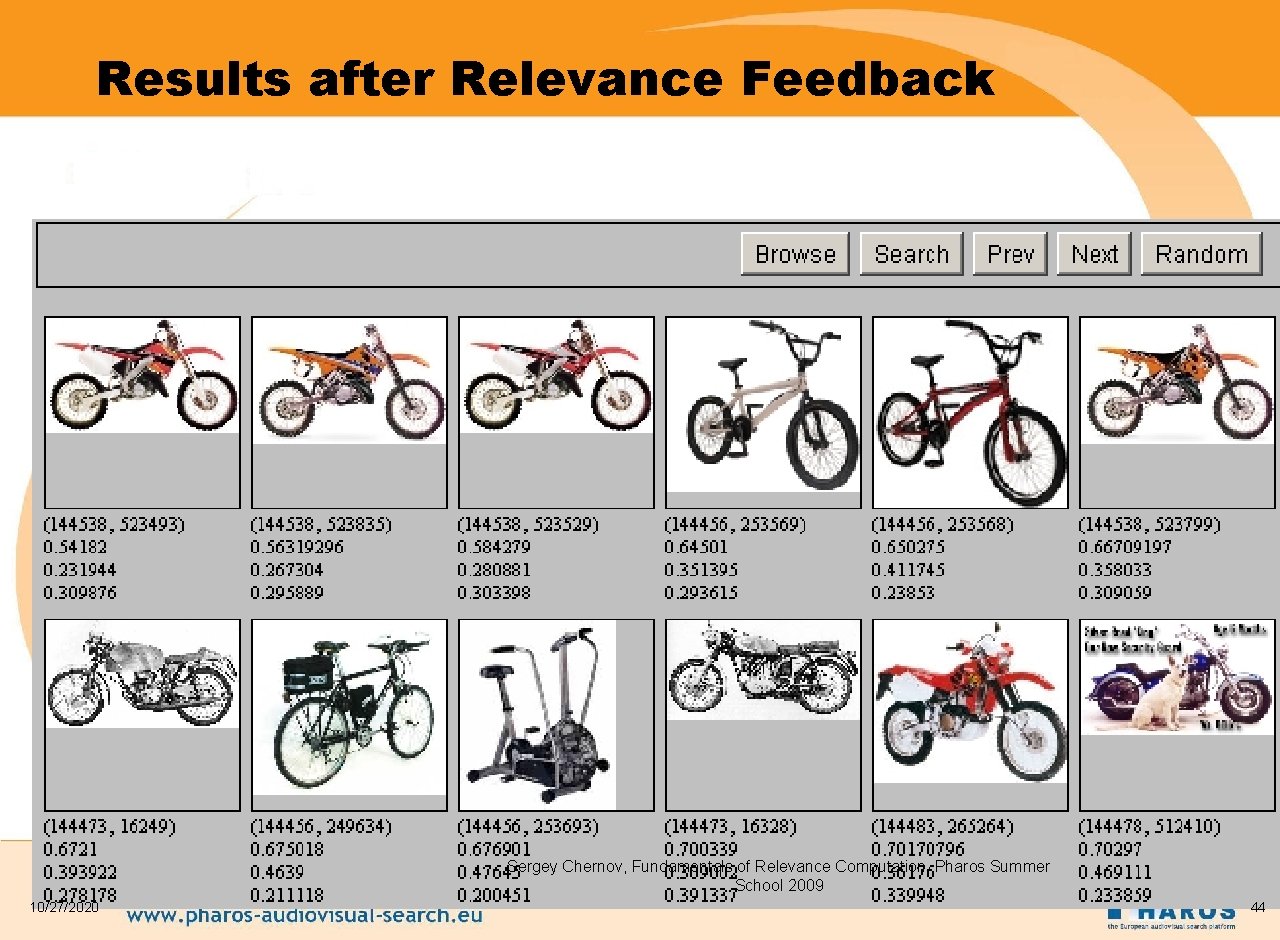

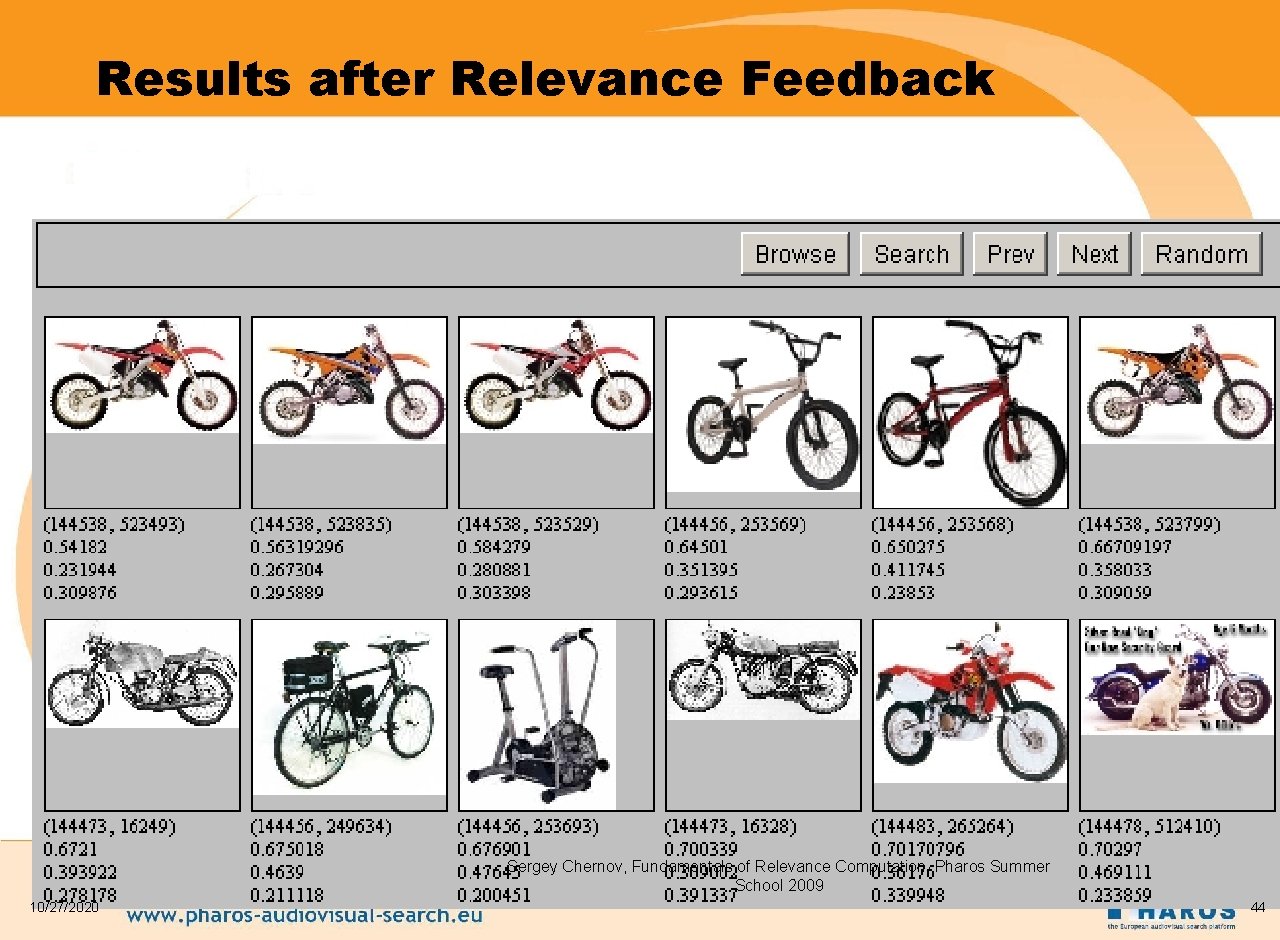

Results after Relevance Feedback

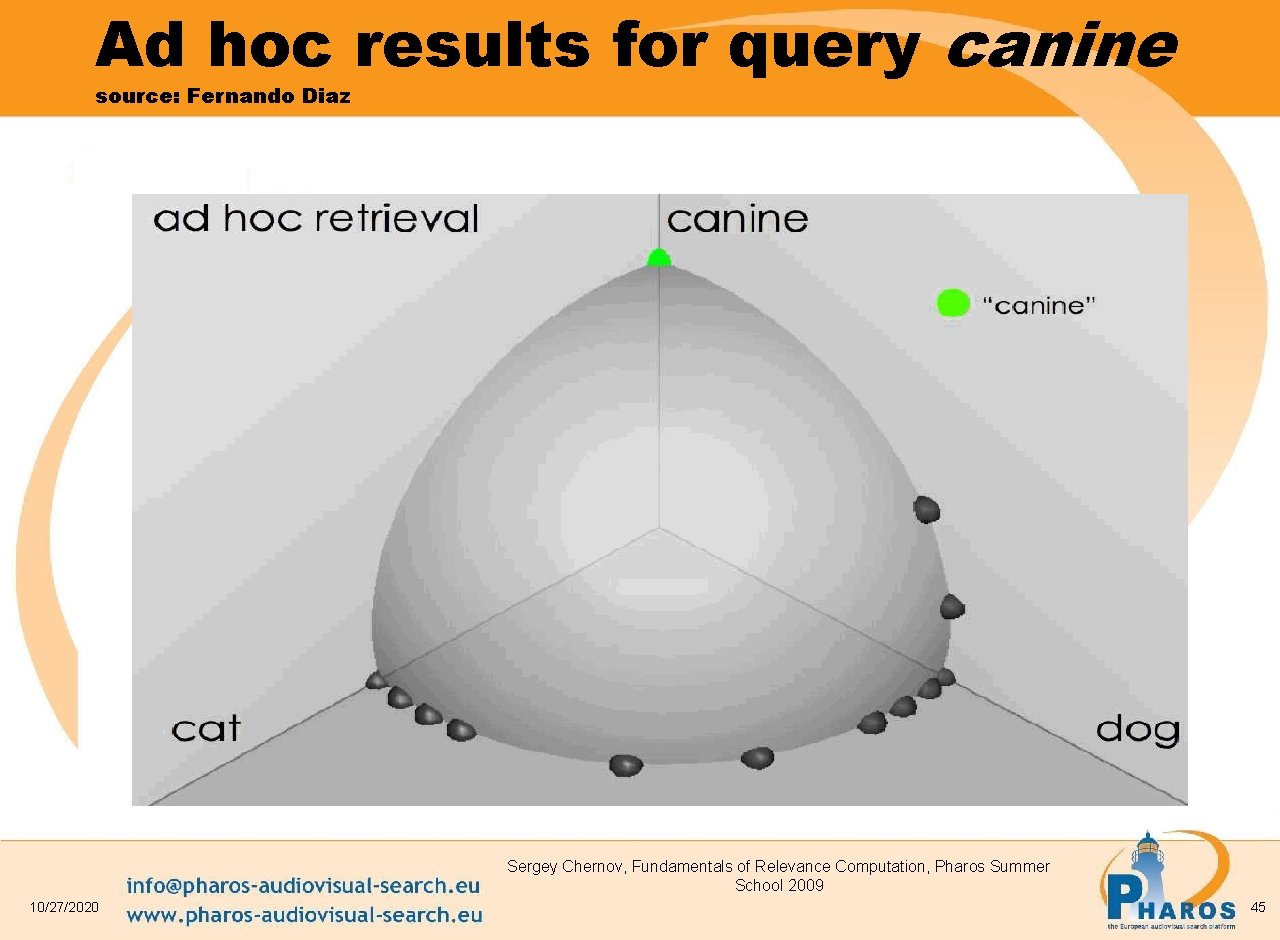

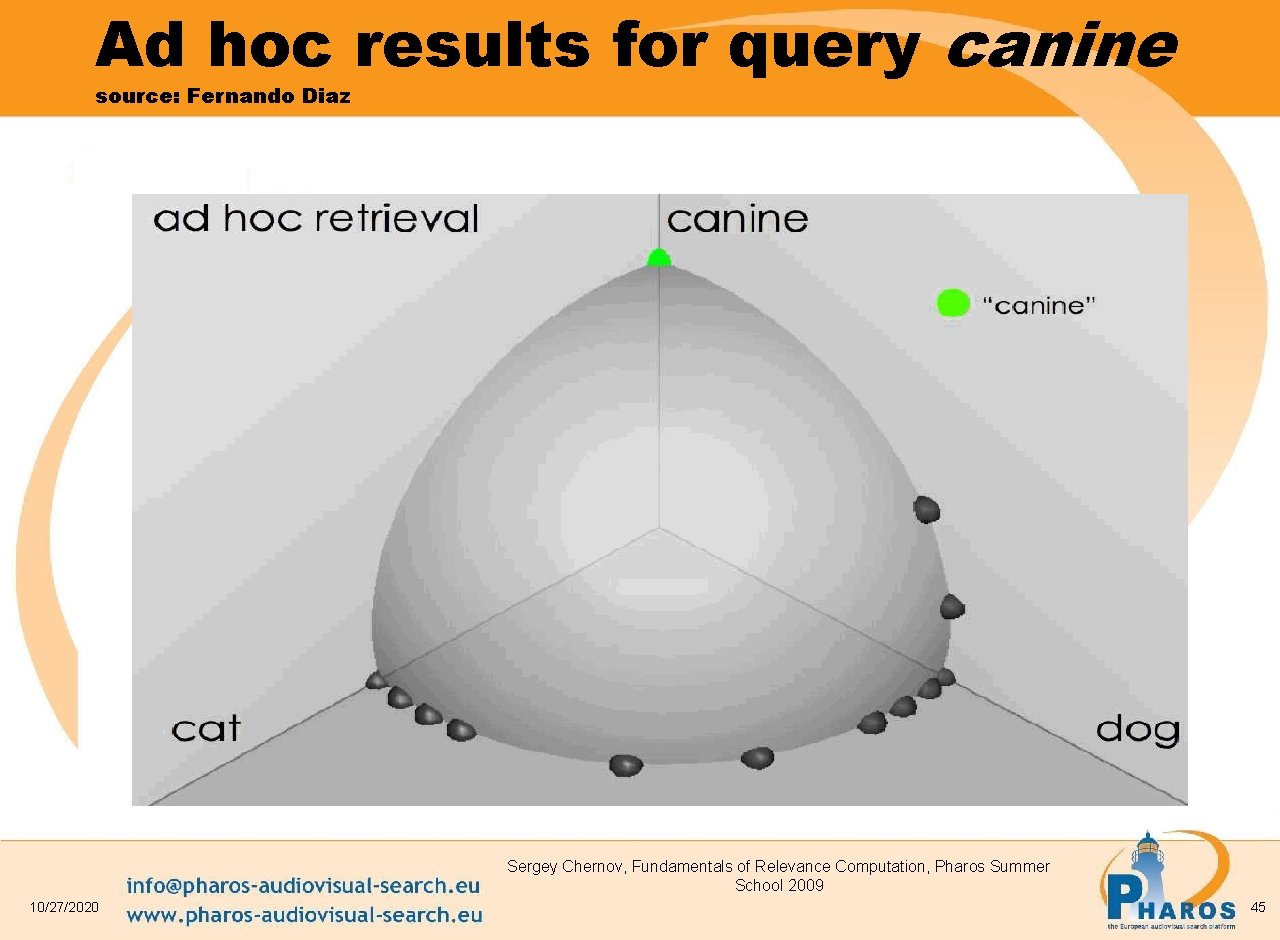

Ad hoc results for query canine (source: Fernando Diaz)

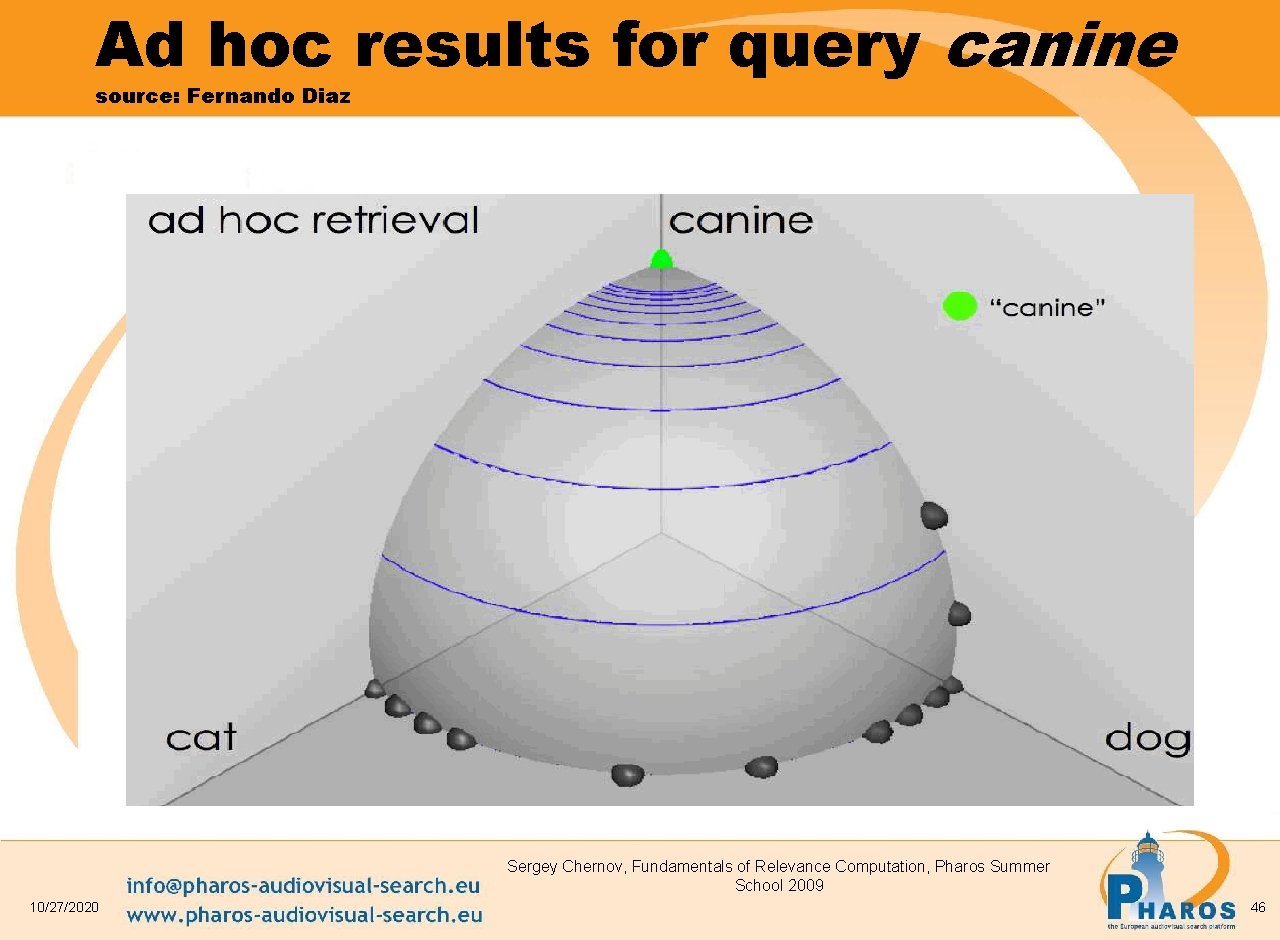

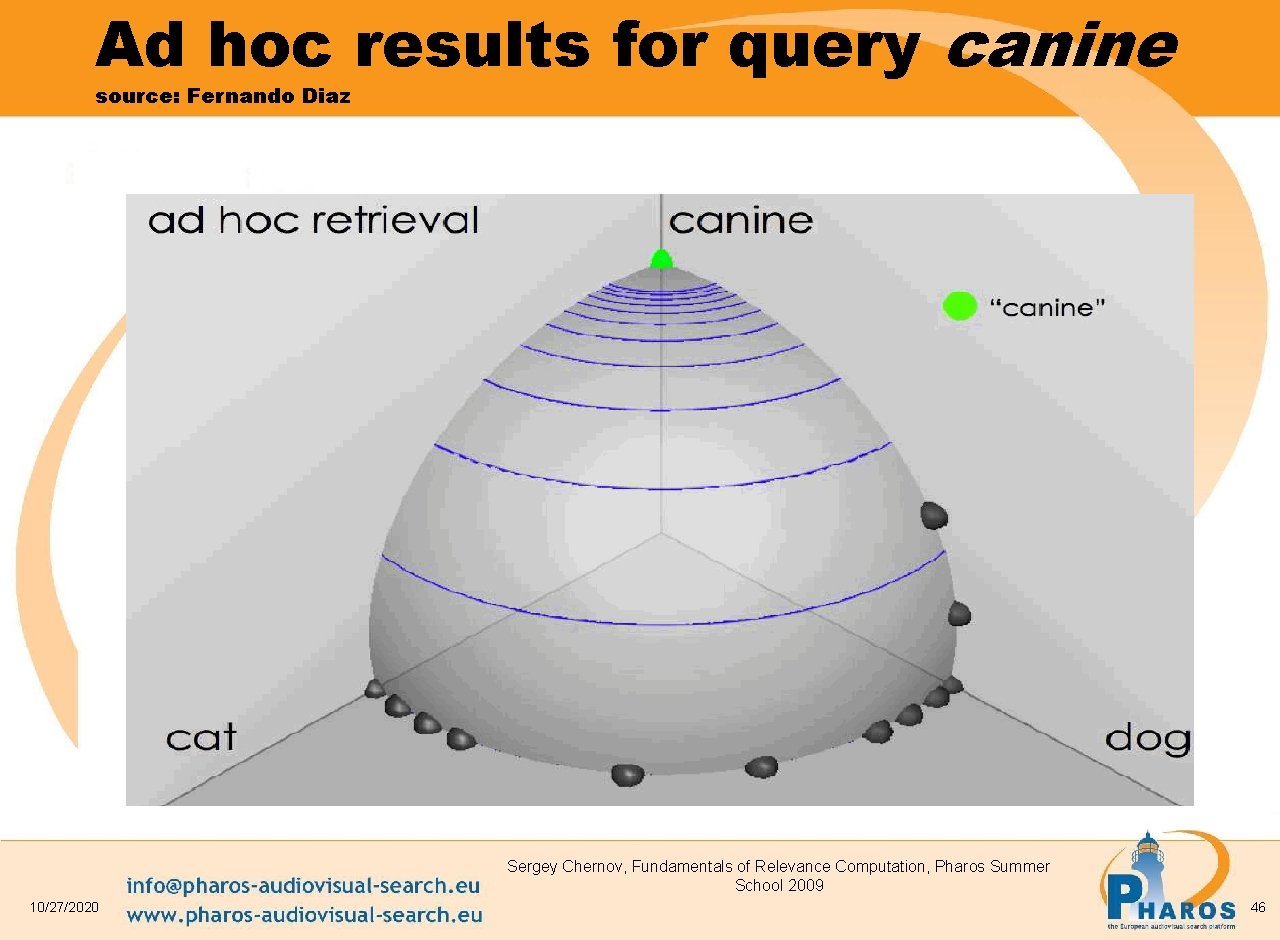

Ad hoc results for query canine (source: Fernando Diaz)

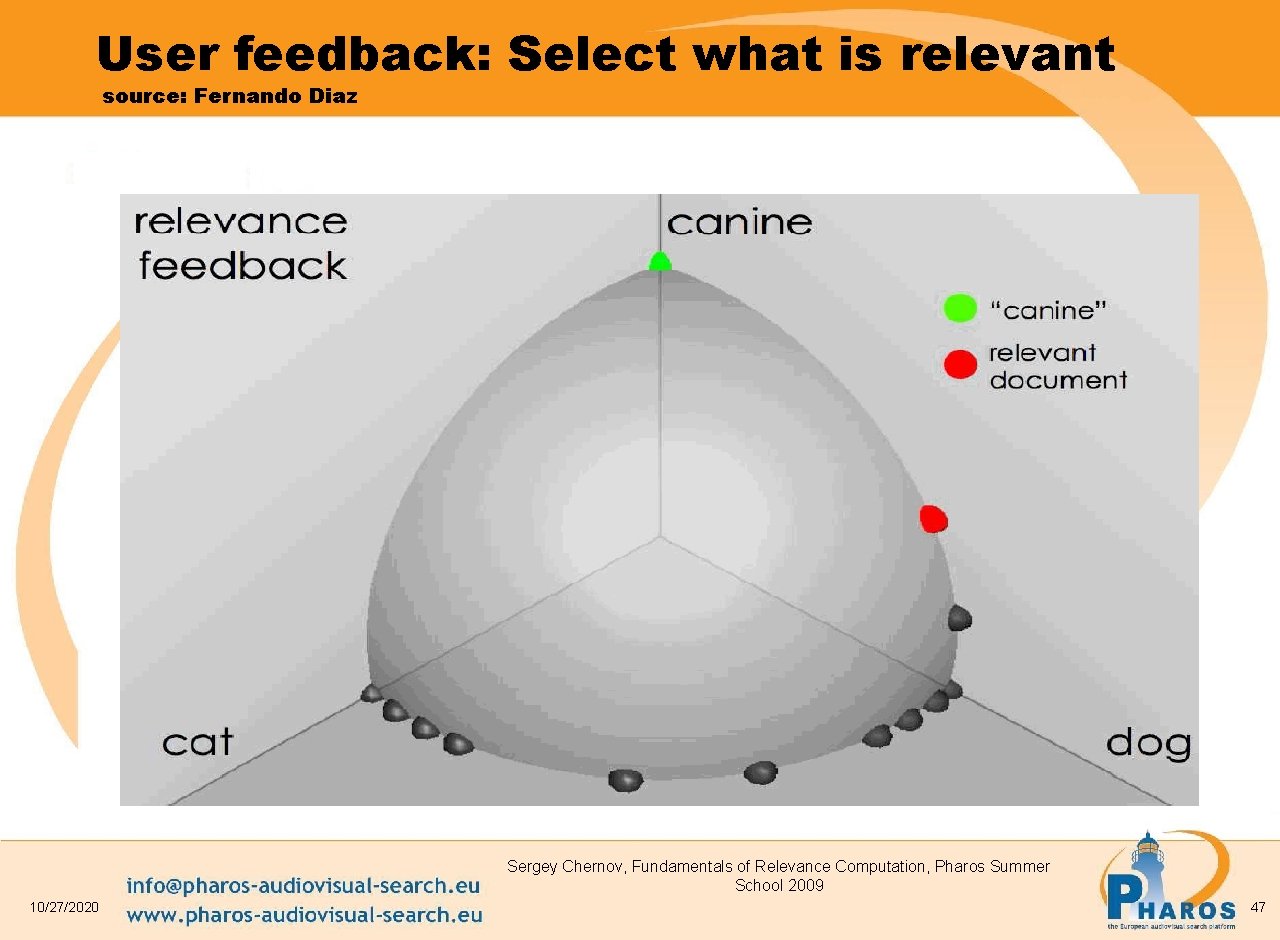

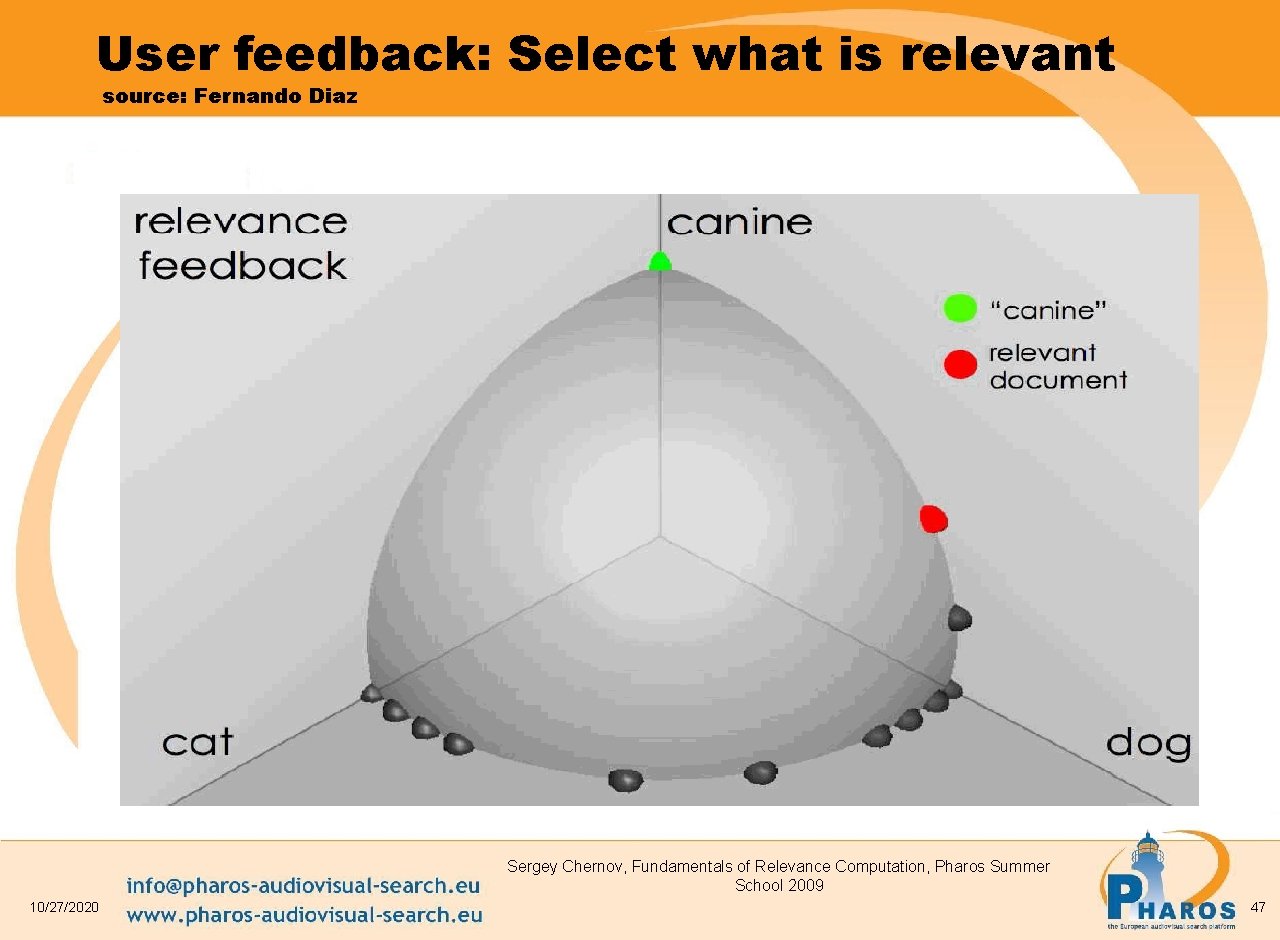

User feedback: Select what is relevant (source: Fernando Diaz)

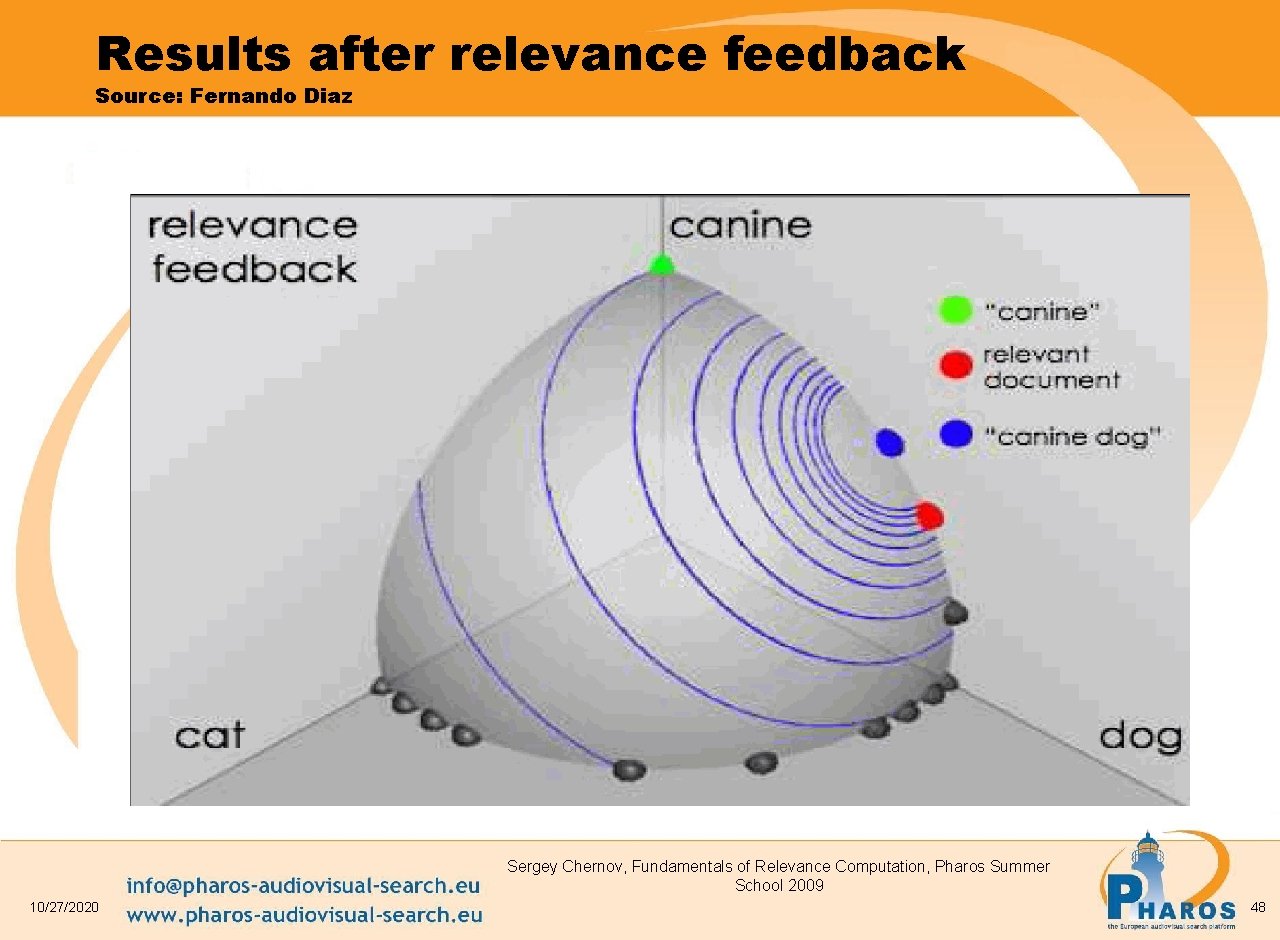

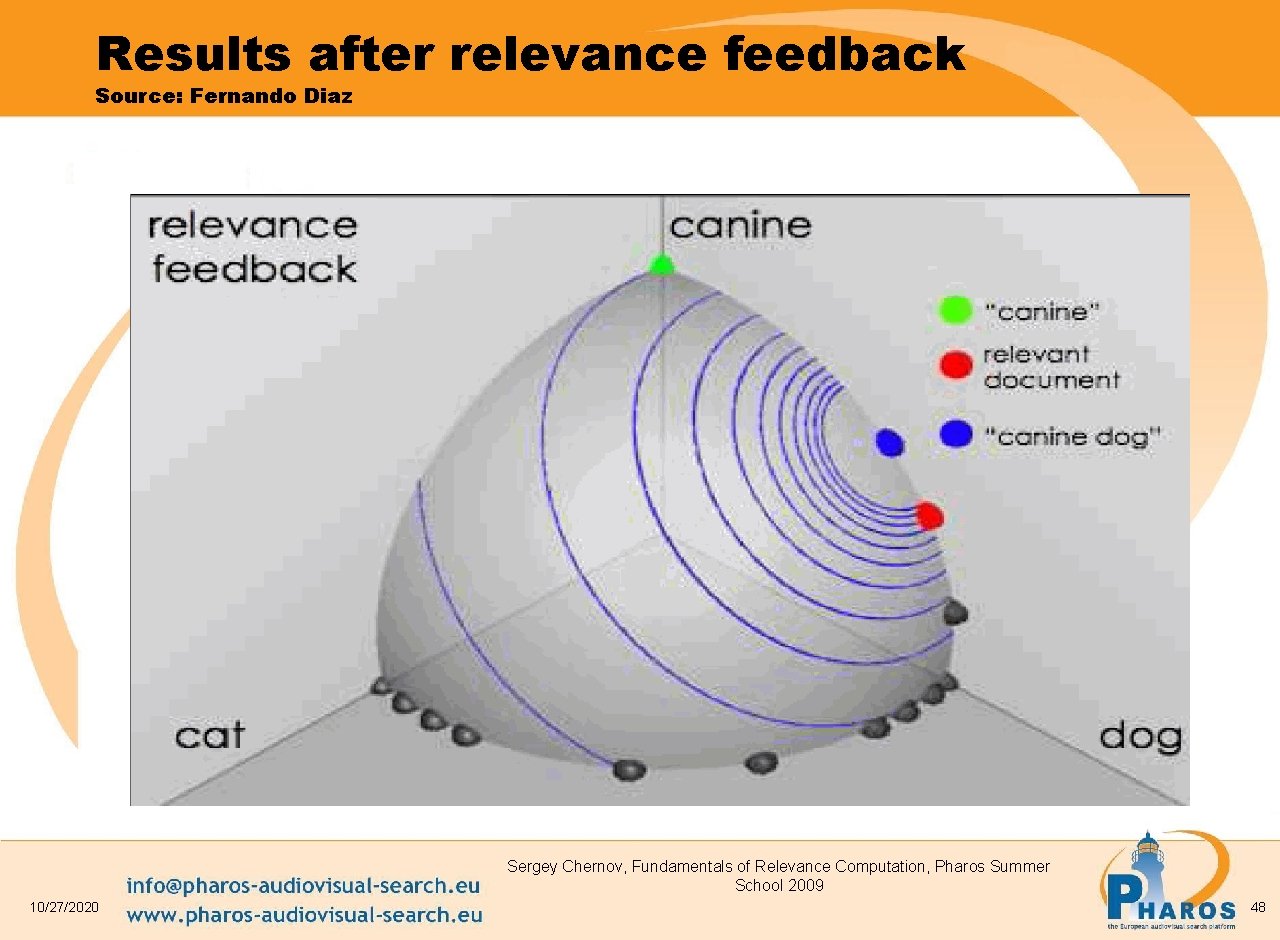

Results after relevance feedback (source: Fernando Diaz)

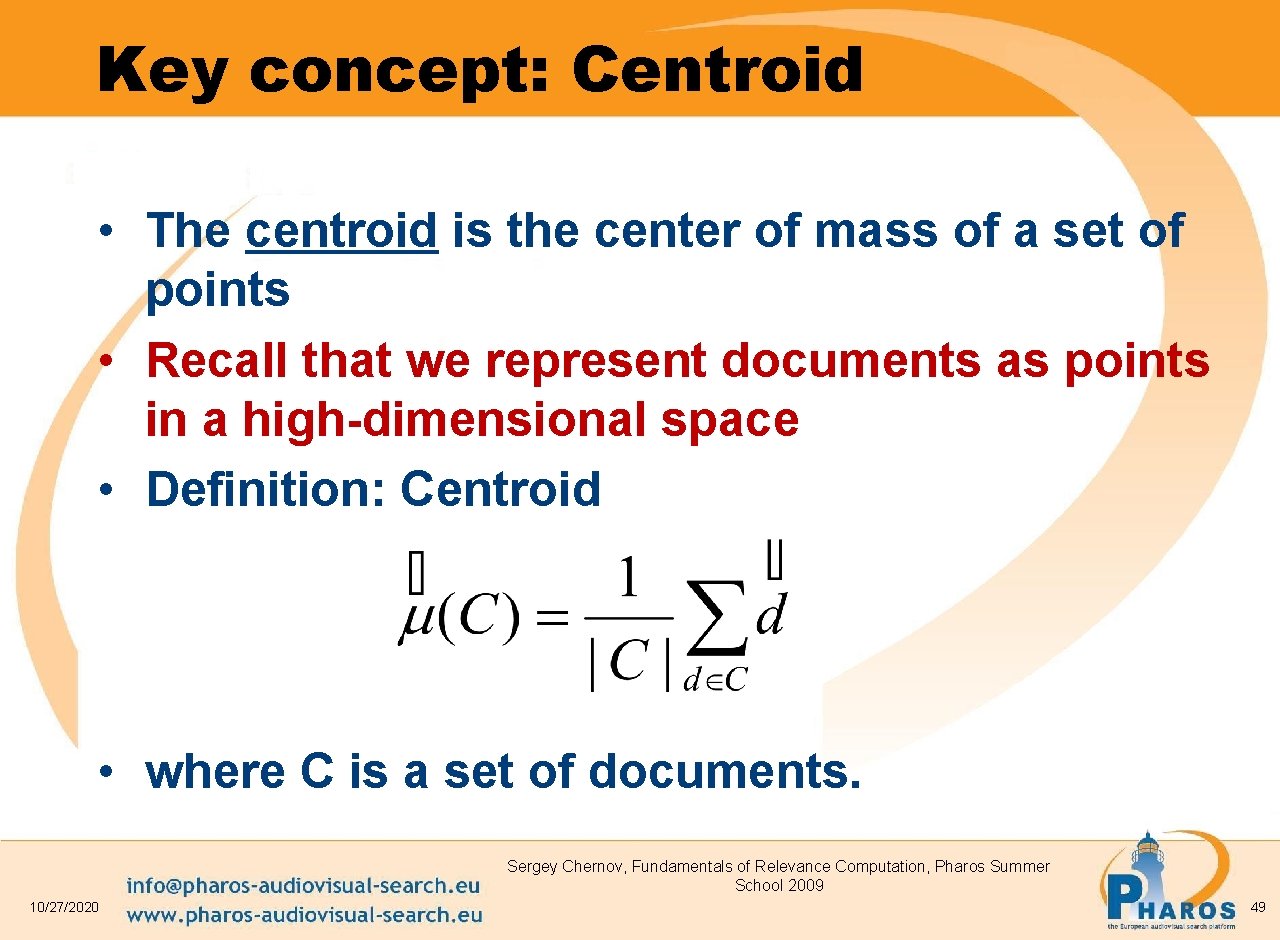

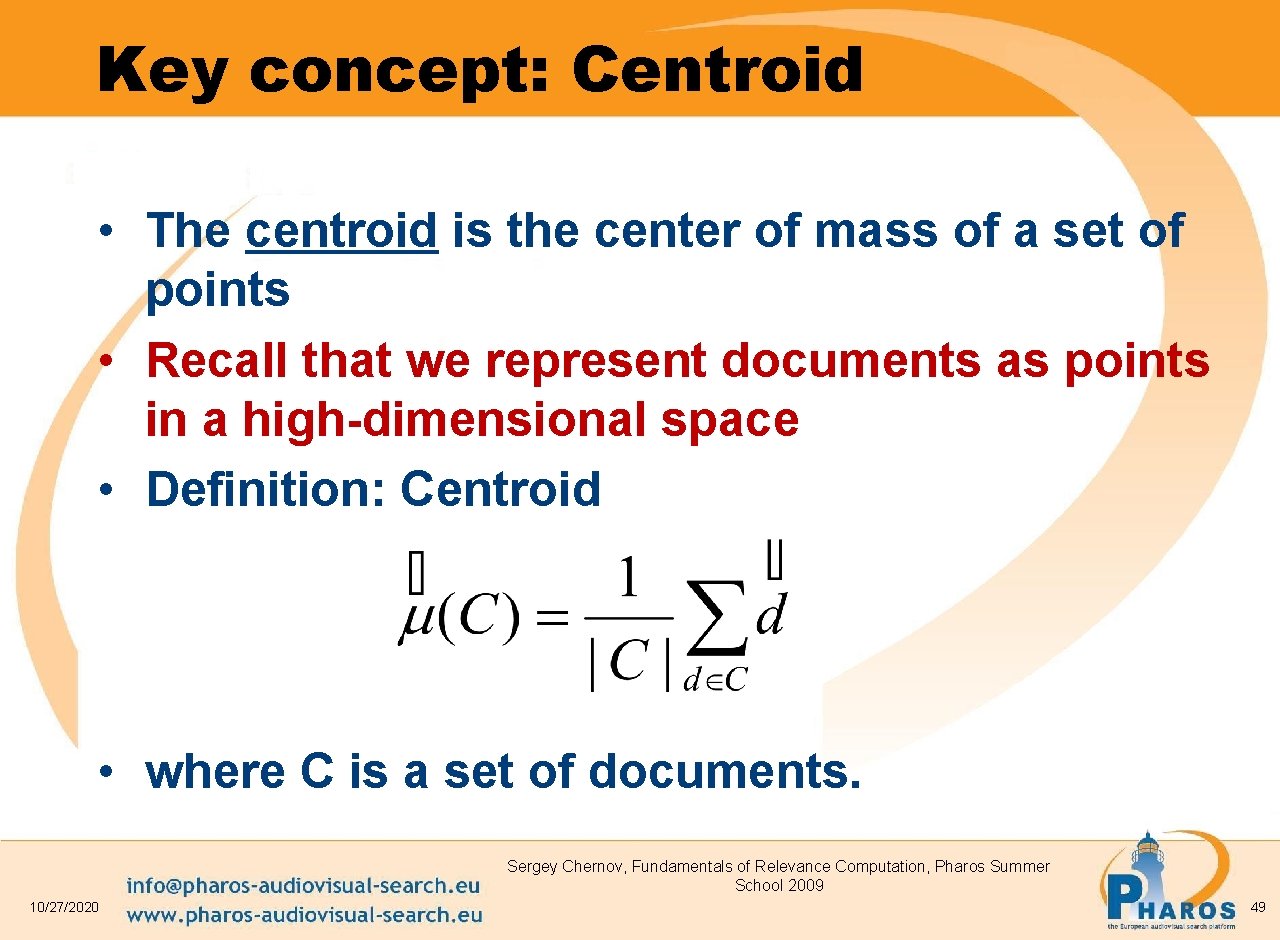

Key concept: Centroid
• The centroid is the center of mass of a set of points
• Recall that we represent documents as points in a high-dimensional space
• Definition: Centroid
$$\vec\mu(C) = \frac{1}{|C|} \sum_{\vec d \in C} \vec d$$
• where C is a set of documents.

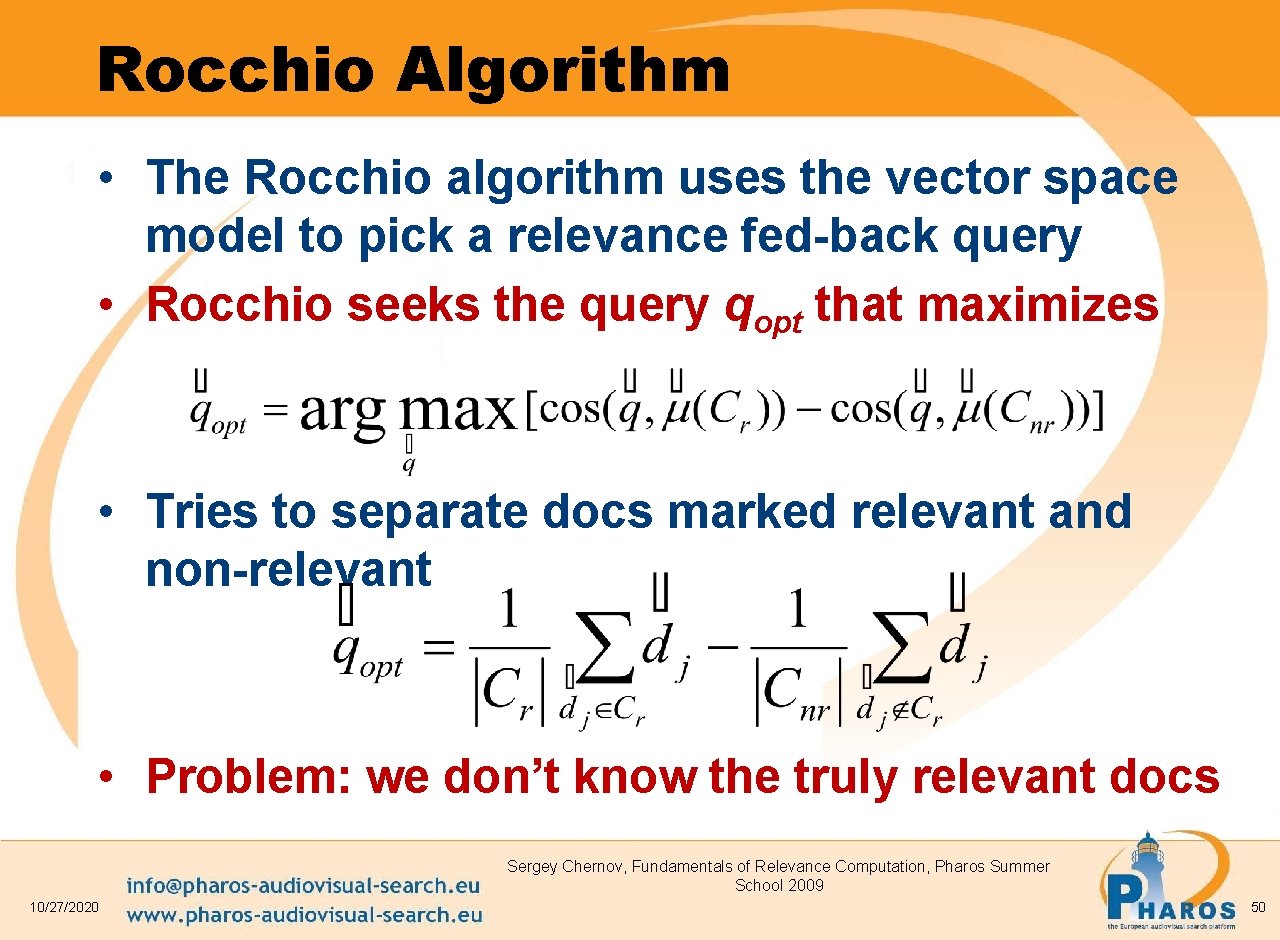

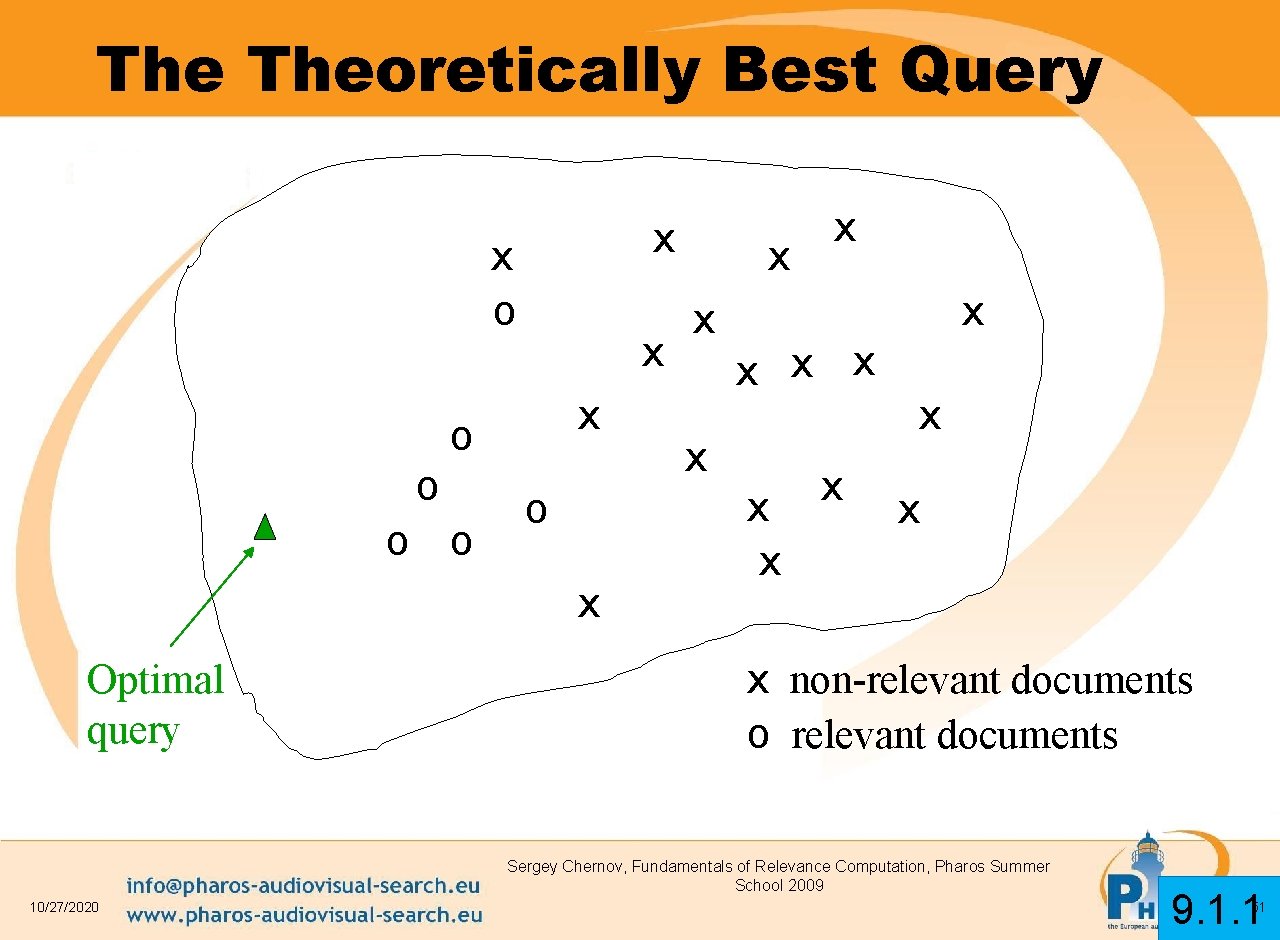

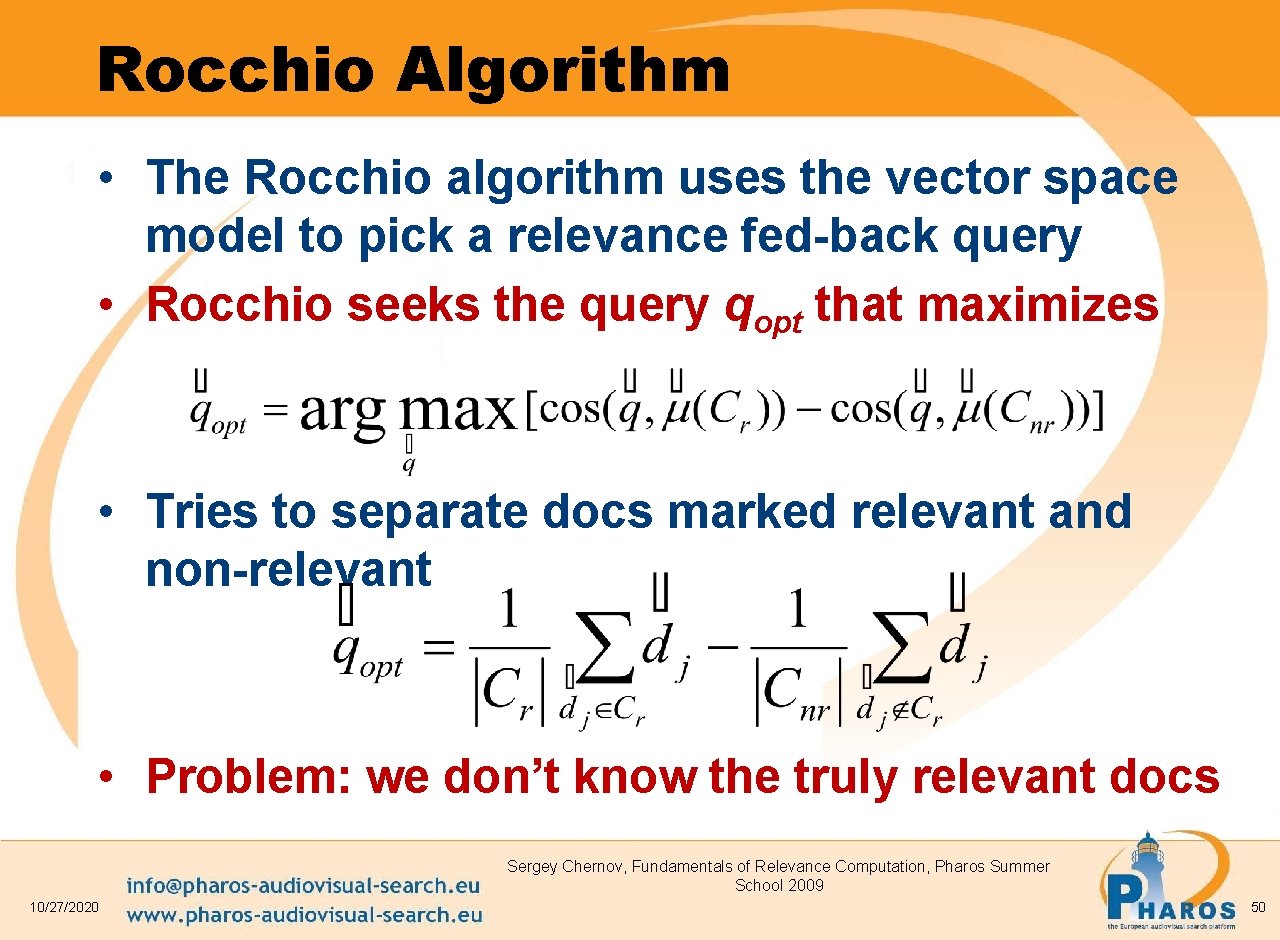

Rocchio Algorithm
• The Rocchio algorithm uses the vector space model to pick a relevance fed-back query
• Rocchio seeks the query $\vec q_{opt}$ that maximizes
$$\vec q_{opt} = \arg\max_{\vec q} \left[\cos\left(\vec q, \vec\mu(C_r)\right) - \cos\left(\vec q, \vec\mu(C_{nr})\right)\right]$$
  where $C_r$ and $C_{nr}$ are the sets of relevant and non-relevant documents
• Tries to separate docs marked relevant and non-relevant
• Problem: we don’t know the truly relevant docs

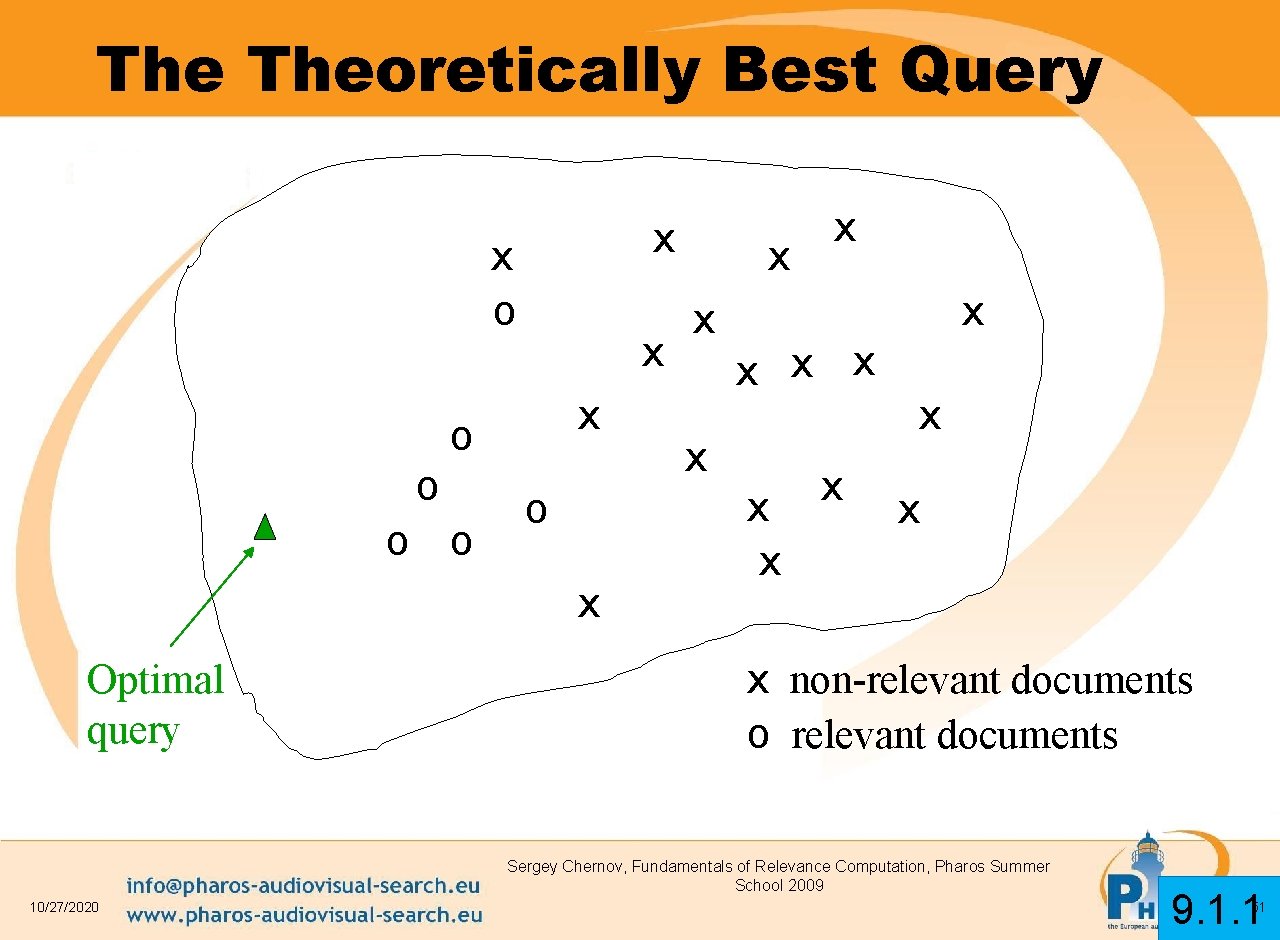

The Theoretically Best Query (Sec. 9.1.1)
[Figure: the optimal query vector separating the relevant documents (o) from the non-relevant documents (x).]

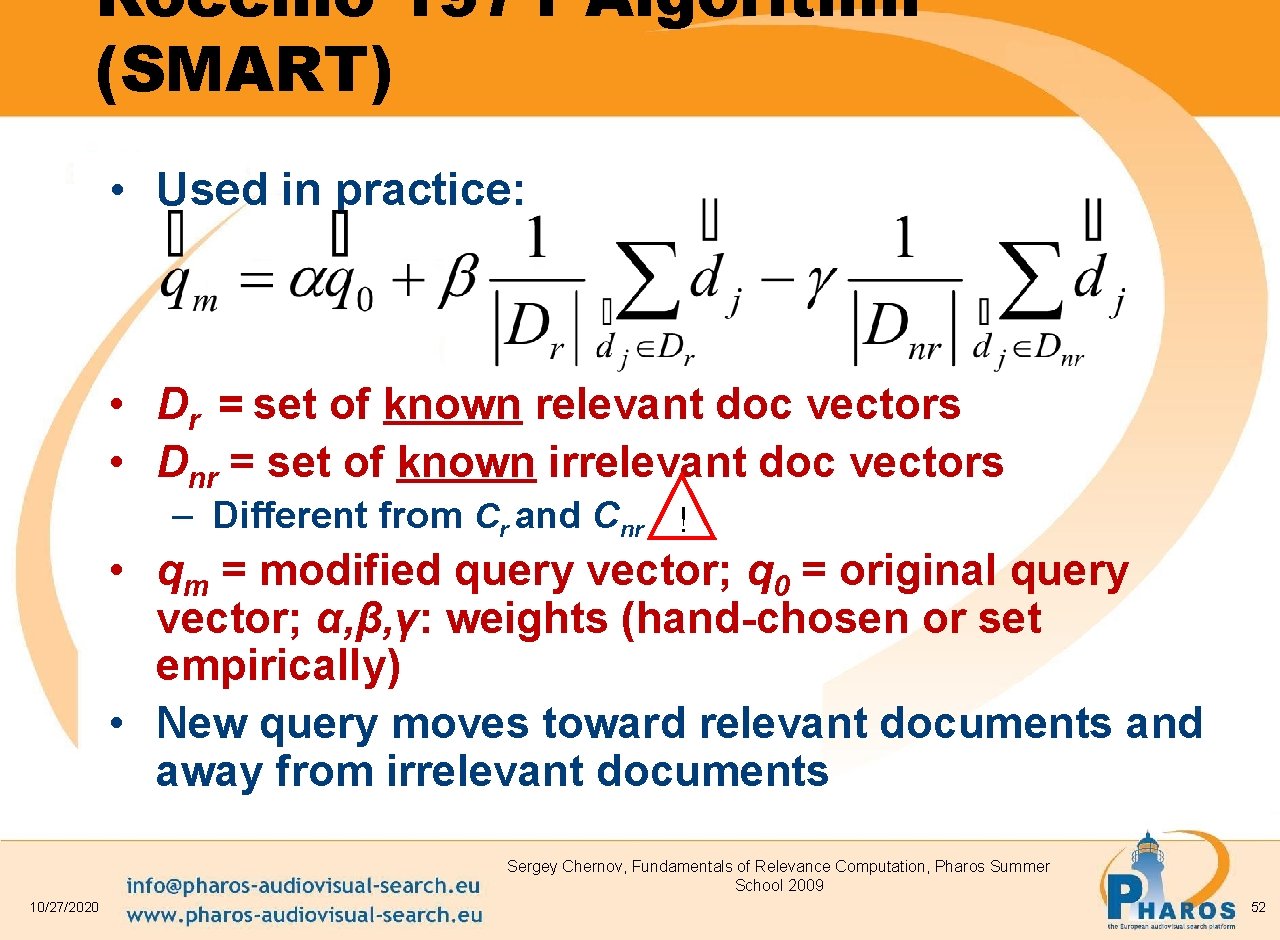

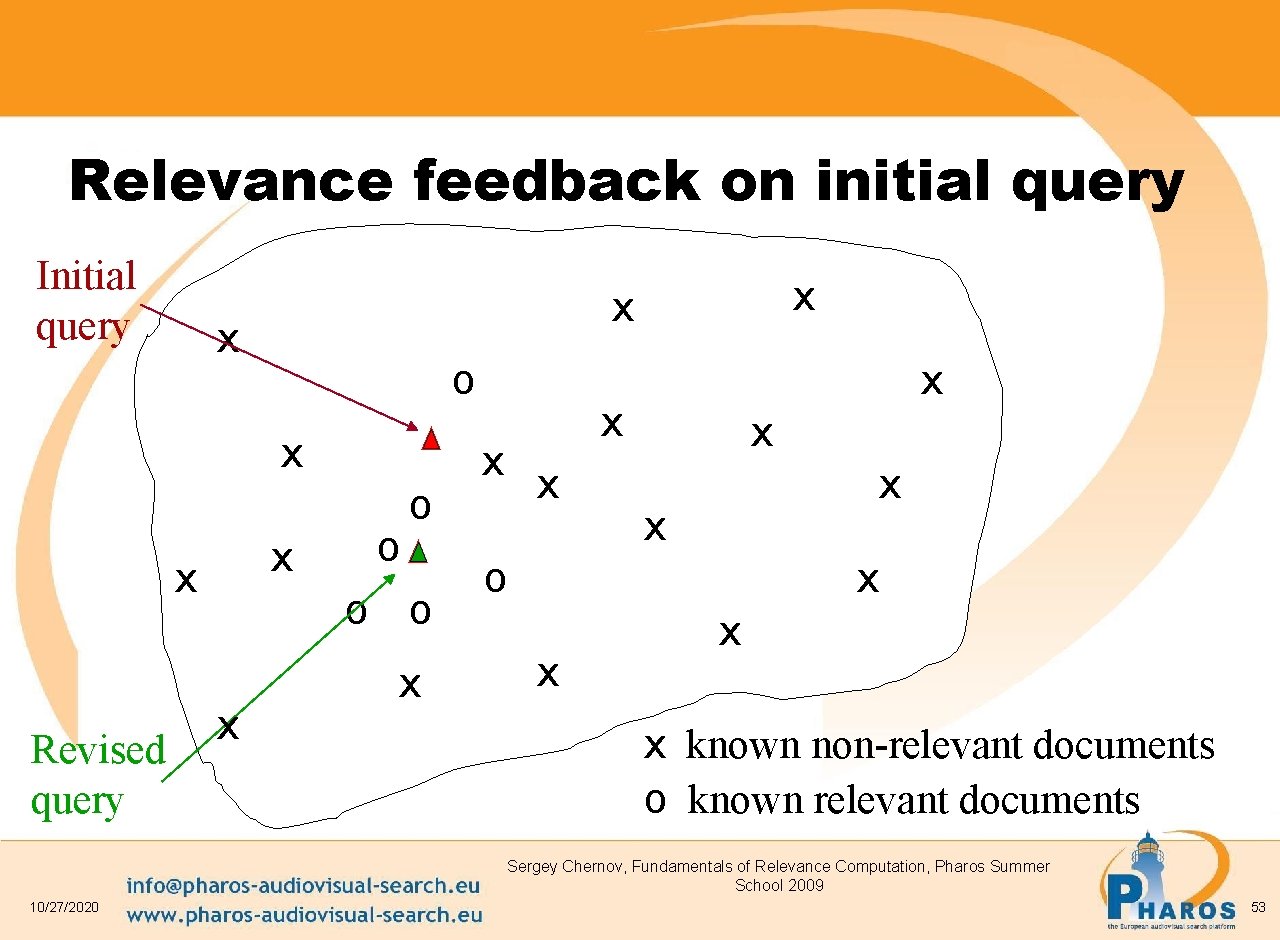

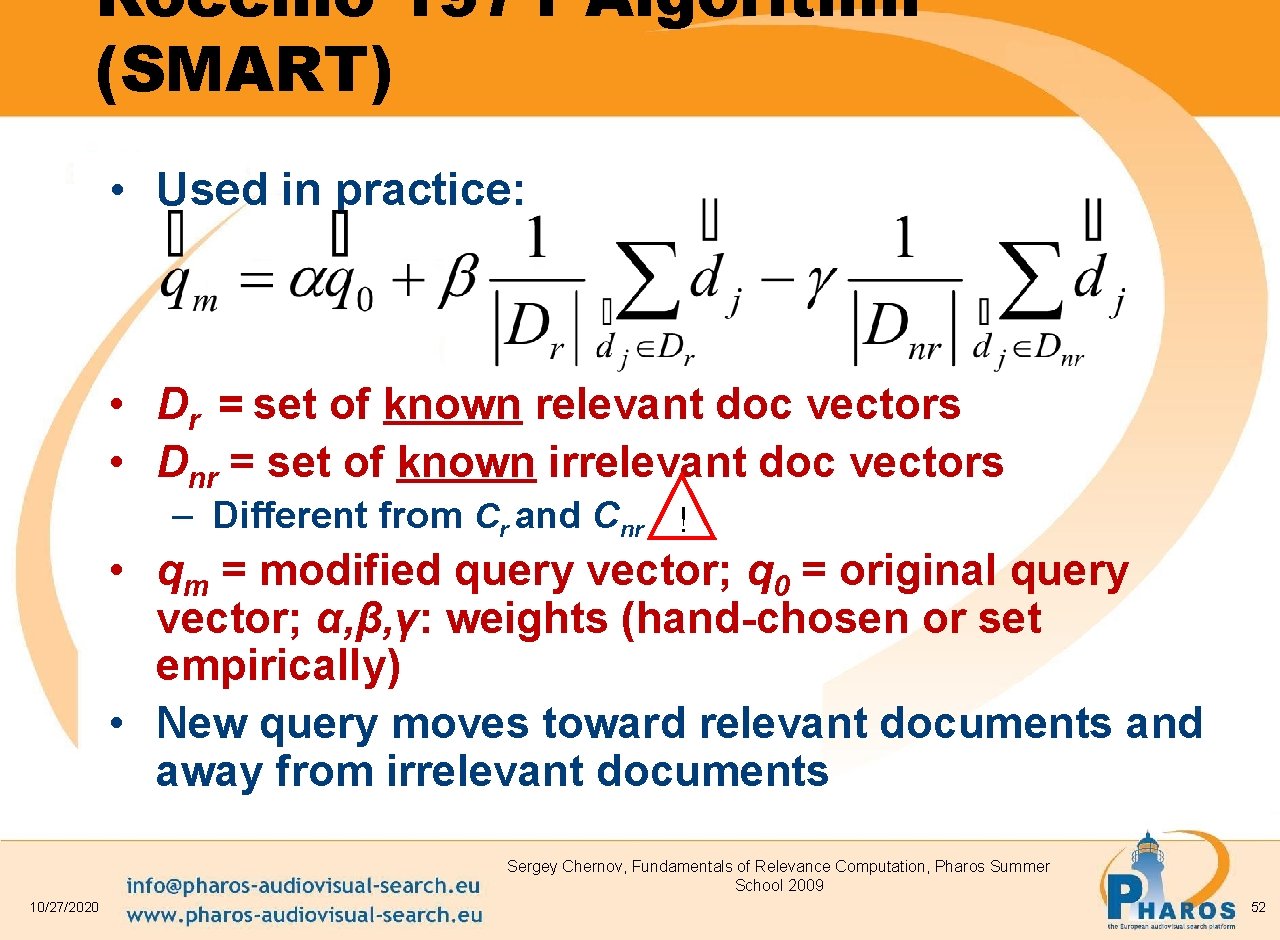

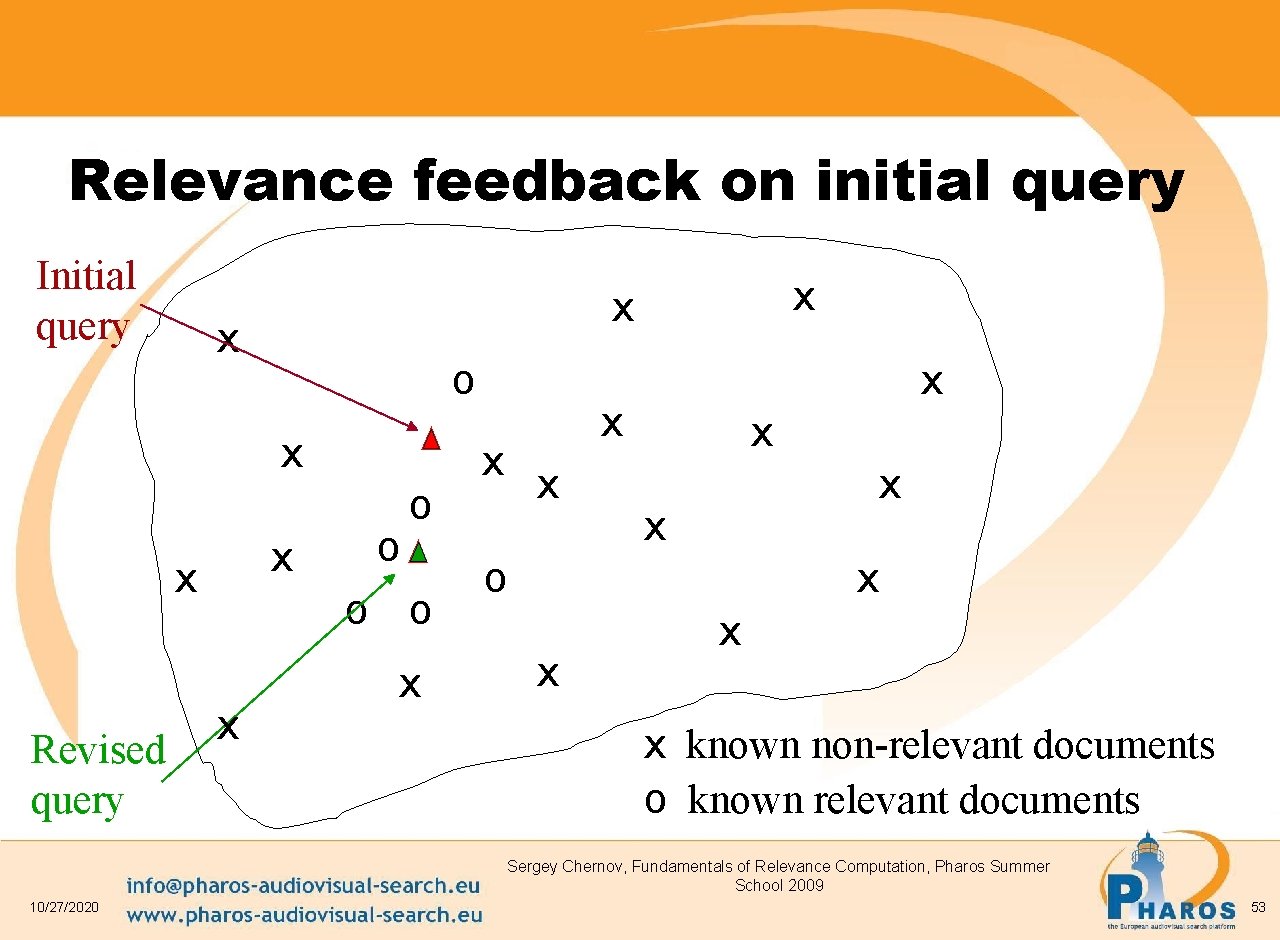

Rocchio 1971 Algorithm (SMART)
• Used in practice:
$$\vec q_m = \alpha \vec q_0 + \beta \frac{1}{|D_r|} \sum_{\vec d_j \in D_r} \vec d_j - \gamma \frac{1}{|D_{nr}|} \sum_{\vec d_j \in D_{nr}} \vec d_j$$
• $D_r$ = set of known relevant doc vectors
• $D_{nr}$ = set of known irrelevant doc vectors
  – Different from $C_r$ and $C_{nr}$!
• $\vec q_m$ = modified query vector; $\vec q_0$ = original query vector; α, β, γ: weights (hand-chosen or set empirically)
• New query moves toward relevant documents and away from irrelevant documents
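The SMART update can be sketched over sparse vectors as follows: the two normalized sums are just the centroids of $D_r$ and $D_{nr}$. Assumptions: the defaults α = 1, β = 0.75, γ = 0.15 are the “reasonable values” suggested in the Manning, Raghavan and Schütze textbook, negative term weights are dropped (a common convention, not stated on the slide), and the query and judged documents are hypothetical.

```python
from collections import defaultdict


def centroid(vectors):
    """Component-wise mean of sparse vectors {term: weight}."""
    if not vectors:
        return {}
    total = defaultdict(float)
    for v in vectors:
        for t, w in v.items():
            total[t] += w
    return {t: w / len(vectors) for t, w in total.items()}


def rocchio(q0, relevant, nonrelevant, alpha=1.0, beta=0.75, gamma=0.15):
    """q_m = alpha*q0 + beta*centroid(D_r) - gamma*centroid(D_nr).

    Terms whose resulting weight is not positive are dropped.
    """
    mu_r, mu_nr = centroid(relevant), centroid(nonrelevant)
    qm = {}
    for t in set(q0) | set(mu_r) | set(mu_nr):
        w = alpha * q0.get(t, 0.0) + beta * mu_r.get(t, 0.0) - gamma * mu_nr.get(t, 0.0)
        if w > 0:
            qm[t] = w
    return qm


q0 = {"jaguar": 1.0}
relevant = [{"jaguar": 1.0, "cat": 1.0}, {"cat": 1.0, "jungle": 1.0}]
nonrelevant = [{"jaguar": 1.0, "car": 1.0}]
print(sorted(rocchio(q0, relevant, nonrelevant).items()))
```

In the example, “cat” and “jungle” enter the query from the relevant documents, while “car”, seen only in the non-relevant document, ends up with a negative weight and is dropped.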

Relevance feedback on initial query
[Figure: the initial query is moved to a revised query that lies closer to the known relevant documents (o) and farther from the known non-relevant documents (x).]

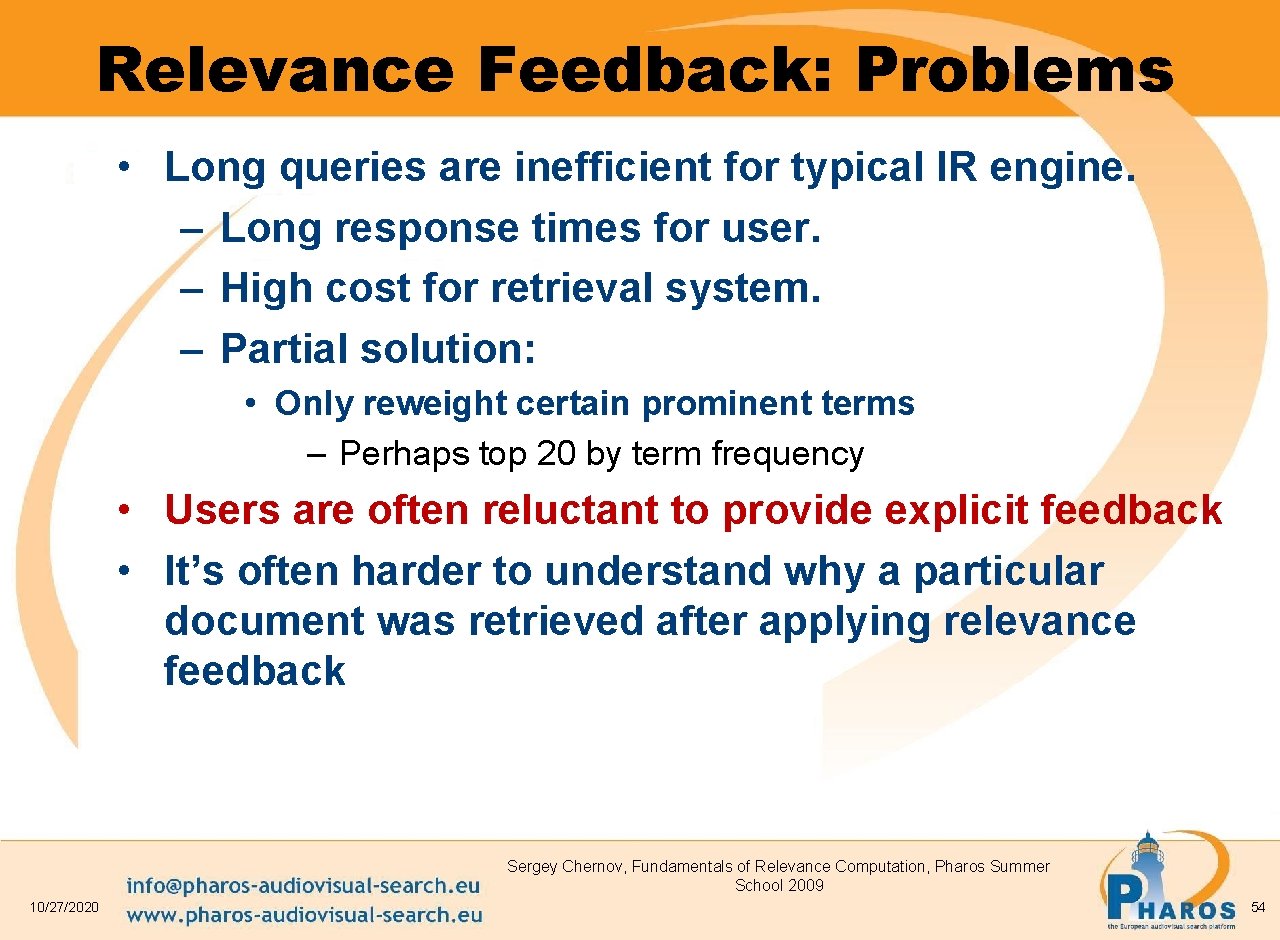

Relevance Feedback: Problems
• Long queries are inefficient for a typical IR engine.
  – Long response times for the user.
  – High cost for the retrieval system.
  – Partial solution:
    • Only reweight certain prominent terms
    • Perhaps top 20 by term frequency
• Users are often reluctant to provide explicit feedback
• It’s often harder to understand why a particular document was retrieved after applying relevance feedback

Relevance Feedback on the Web
• Some search engines offer a similar/related pages feature (this is a trivial form of relevance feedback)
  – Google (link-based)
  – AltaVista
  – Stanford WebBase
  (α/β/γ ??)
• But some don’t because it’s hard to explain to the average user:
  – Alltheweb
  – MSN live.com
  – Yahoo
• Excite initially had true relevance feedback, but abandoned it due to lack of use.

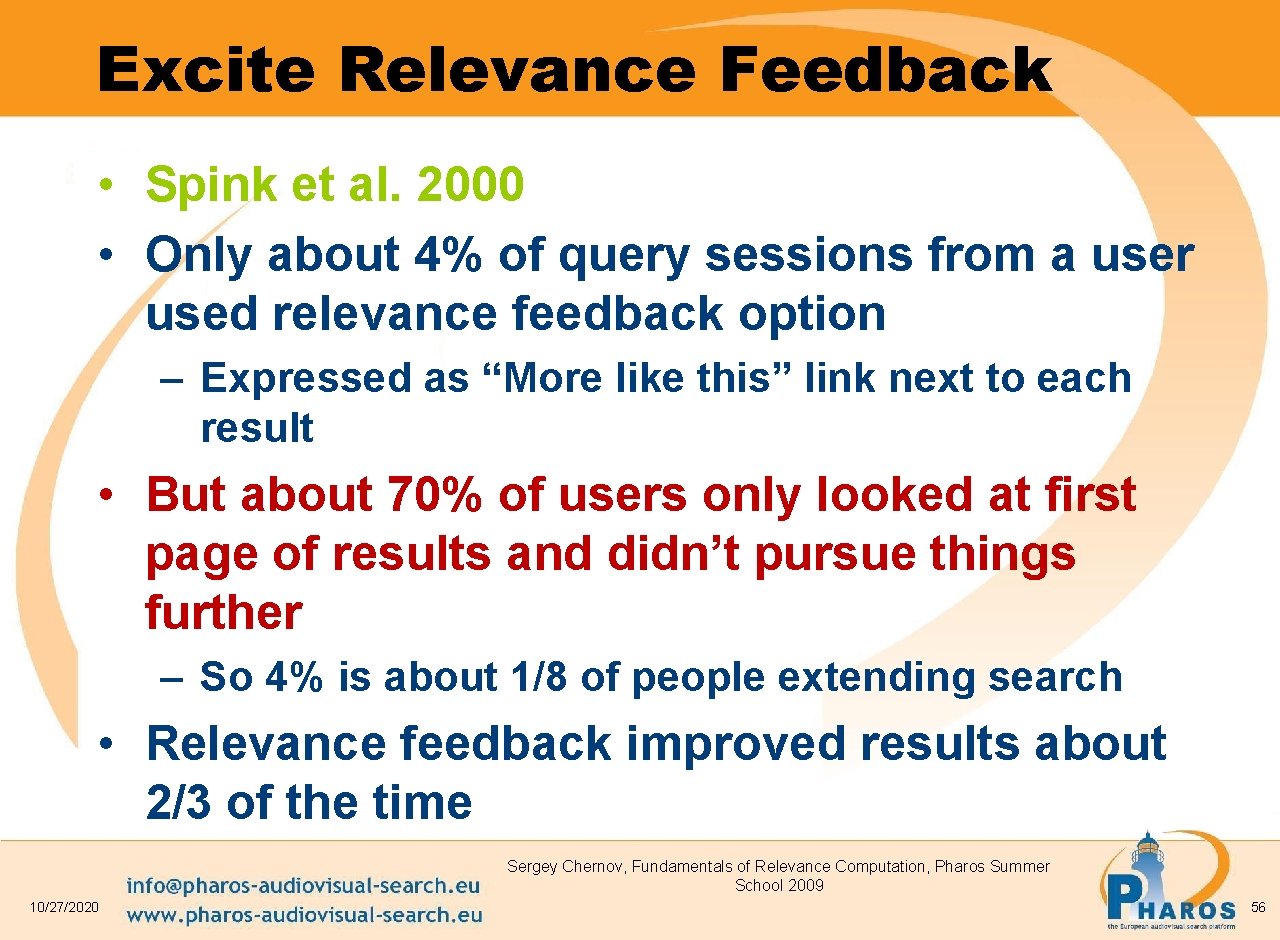

Excite Relevance Feedback
• Spink et al. 2000
• Only about 4% of query sessions from a user used the relevance feedback option
  – Expressed as a “More like this” link next to each result
• But about 70% of users only looked at the first page of results and didn’t pursue things further
  – So 4% is about 1/8 of people extending search
• Relevance feedback improved results about 2/3 of the time

Pseudo relevance feedback (Sec. 9.1.6)
• Pseudo-relevance feedback automates the “manual” part of true relevance feedback.
• Pseudo-relevance algorithm:
  – Retrieve a ranked list of hits for the user’s query
  – Assume that the top k documents are relevant.
  – Do relevance feedback (e.g., Rocchio)
• Works very well on average
• But can go horribly wrong for some queries.
• Several iterations can cause query drift.
• Why?
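One round of the pseudo-relevance algorithm can be sketched as below. Assumptions: ranking is by cosine over hypothetical sparse vectors, k = 2, and the Rocchio step keeps only the α and β terms (γ is omitted because no document is judged non-relevant).

```python
import math
from collections import defaultdict


def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def centroid(vectors):
    total = defaultdict(float)
    for v in vectors:
        for t, w in v.items():
            total[t] += w
    return {t: w / len(vectors) for t, w in total.items()} if vectors else {}


def pseudo_relevance_feedback(q0, doc_vecs, k=2, alpha=1.0, beta=0.75):
    """Rank, assume the top k are relevant, and move the query toward them."""
    ranked = sorted(doc_vecs, key=lambda d: cosine(q0, doc_vecs[d]), reverse=True)
    mu = centroid([doc_vecs[d] for d in ranked[:k]])
    q1 = {t: alpha * q0.get(t, 0.0) + beta * mu.get(t, 0.0) for t in set(q0) | set(mu)}
    return q1, ranked


doc_vecs = {  # hypothetical document vectors
    "d1": {"mouse": 1.0, "trap": 1.0},
    "d2": {"mouse": 1.0, "humane": 1.0},
    "d3": {"keyboard": 1.0},
}
q1, ranked = pseudo_relevance_feedback({"mouse": 1.0}, doc_vecs)
print(ranked, sorted(q1.items()))
```

Every term in the top k documents enters the new query without anyone checking it. If those documents are off-topic, their vocabulary is added anyway, and repeating the process compounds the error: this is query drift.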

Query Expansion
• In relevance feedback, users give additional input (relevant/non-relevant) on documents, which is used to reweight terms in the documents
• In query expansion, users give additional input (good/bad search term) on words or phrases

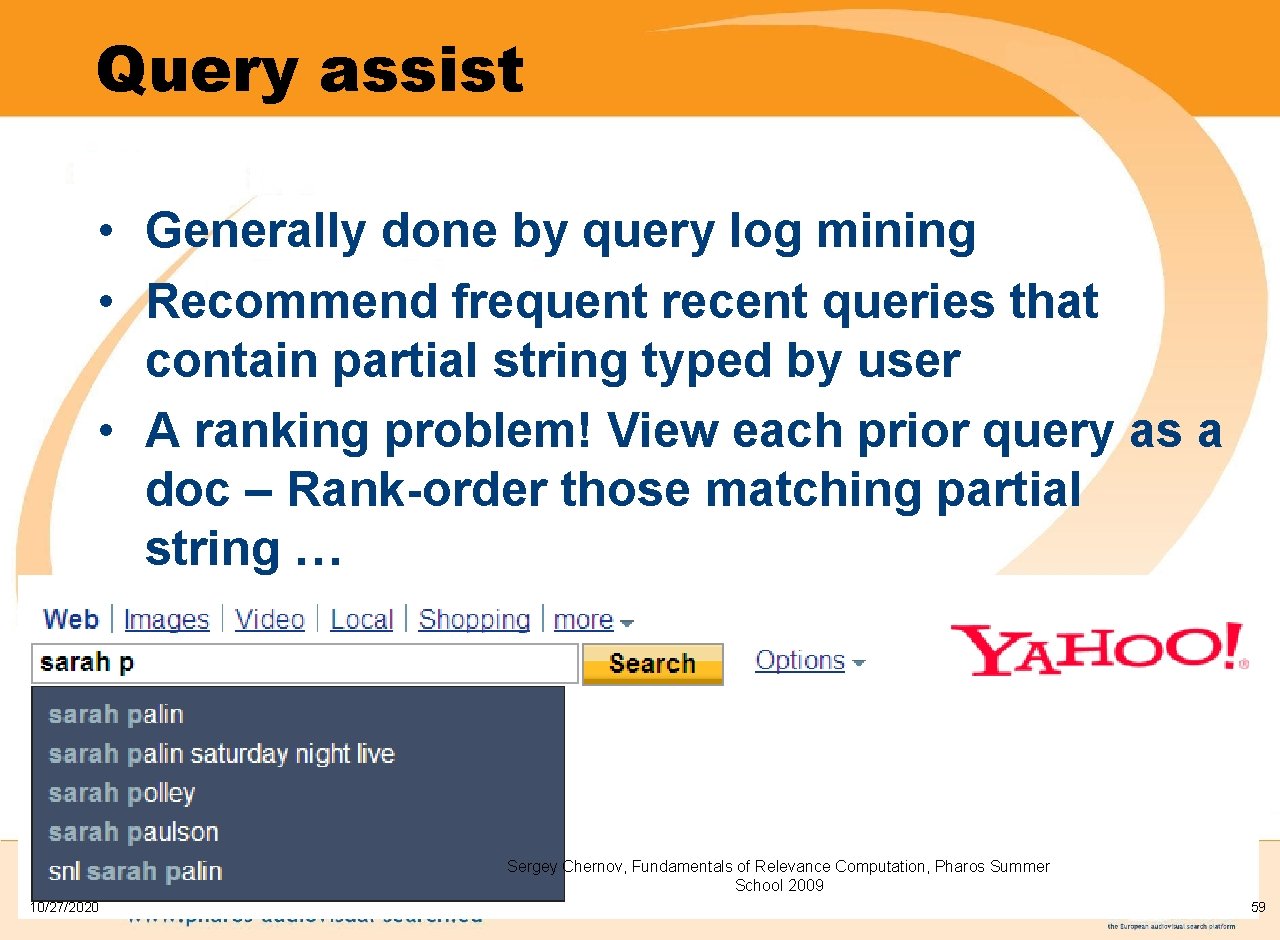

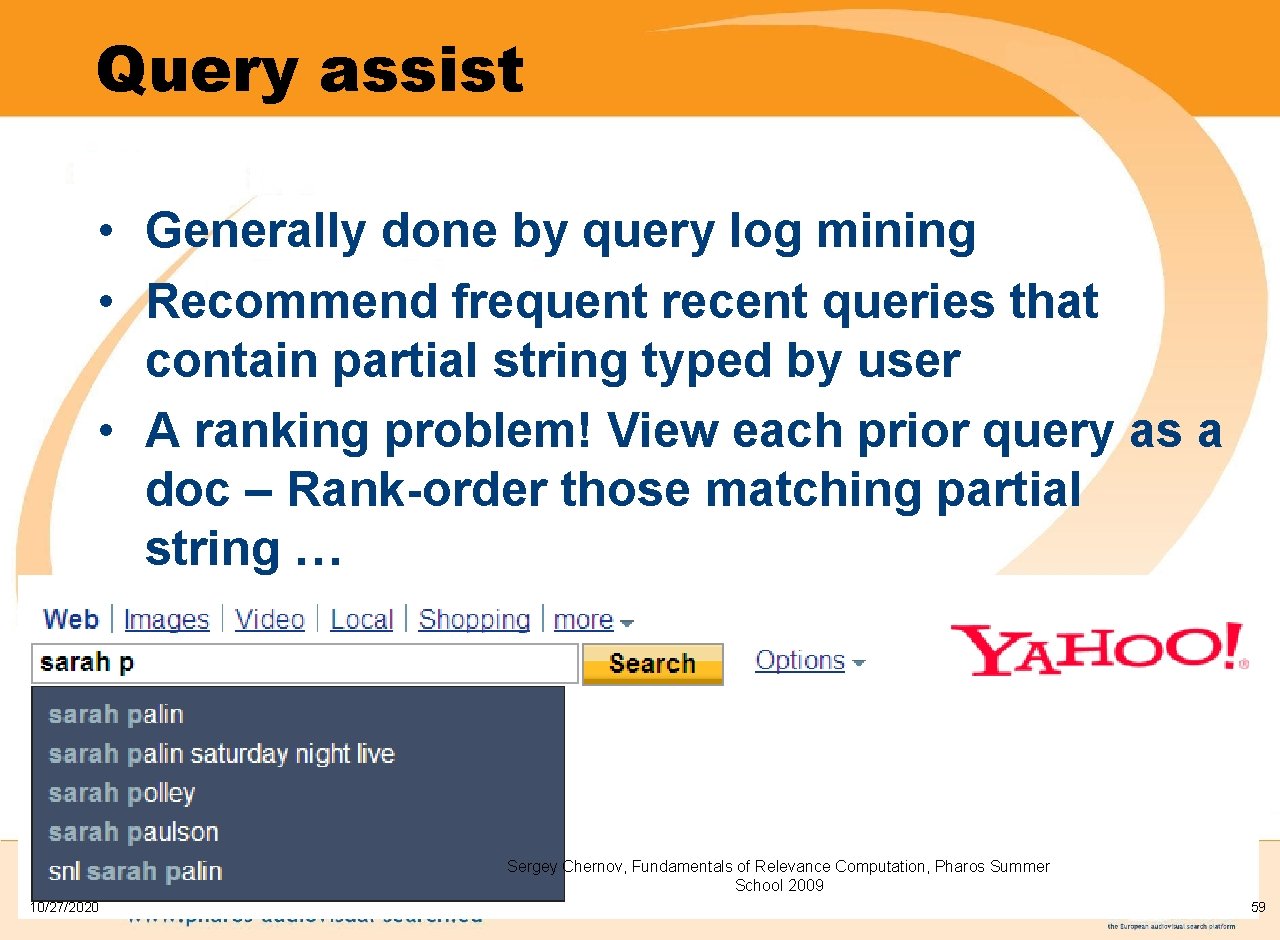

Query assist
• Generally done by query log mining
• Recommend frequent recent queries that contain the partial string typed by the user
• A ranking problem! View each prior query as a doc
  – Rank-order those matching the partial string …
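Treating each logged query as a document can be sketched as follows. Assumptions: ranking is by raw frequency with alphabetical tie-breaking, and “recent” is handled by passing in only a recent slice of the log (for example `query_log[-100_000:]`). The log itself is hypothetical.

```python
from collections import Counter


def suggest(partial, query_log, n=3):
    """Rank logged queries containing `partial` by how often they occur."""
    counts = Counter(q.strip().lower() for q in query_log)
    needle = partial.lower()
    matches = [(q, c) for q, c in counts.items() if needle in q]
    matches.sort(key=lambda qc: (-qc[1], qc[0]))  # most frequent first, then A-Z
    return [q for q, _ in matches[:n]]


query_log = ["mouse trap", "Mouse trap", "humane mouse trap", "mouse pad", "trap music"]
print(suggest("mouse t", query_log))  # ['mouse trap', 'humane mouse trap']
```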

Indirect relevance feedback
• On the web, DirectHit introduced a form of indirect relevance feedback.
• DirectHit ranked higher the documents that users look at more often.
  – Clicked-on links are assumed likely to be relevant
    • Assuming the displayed summaries are good, etc.
• Globally: not necessarily user or query specific.
  – This is the general area of clickstream mining
• Today: handled as part of machine-learned ranking
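A toy version of global click-based reranking is sketched below. This is a hypothetical illustration of the idea, not DirectHit’s actual method: results are reordered by their aggregate click count across all users, and Python’s stable sort keeps the original engine order for ties.

```python
from collections import Counter


def rerank_by_clicks(results, click_log):
    """Order results by aggregate click count; ties keep the original order."""
    clicks = Counter(click_log)
    return sorted(results, key=lambda doc: -clicks[doc])  # sorted() is stable


results = ["a", "b", "c"]       # hypothetical engine ranking
click_log = ["c", "c", "b"]     # hypothetical clicks pooled over all users
print(rerank_by_clicks(results, click_log))  # ['c', 'b', 'a']
```

Raw click counts favor documents that were already ranked high (and therefore seen more), which is one reason modern systems feed clicks into machine-learned ranking instead of sorting on them directly.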

Happiness: elusive to measure
• Most common proxy: relevance of search results
• But how do you measure relevance?
• We will detail a methodology here, then examine its issues
• Relevance measurement requires 3 elements:
  1. A benchmark document collection
  2. A benchmark suite of queries
  3. A usually binary assessment of either Relevant or Nonrelevant for each query and each document
• Some work on more-than-binary, but not the standard

Evaluating an IR system
• Note: the information need is translated into a query
• Relevance is assessed relative to the information need, not the query
• E.g., Information need: I’m looking for information on whether drinking red wine is more effective at reducing your risk of heart attacks than white wine.
• Query: wine red white heart attack effective
• You evaluate whether the doc addresses the information need, not whether it has these words

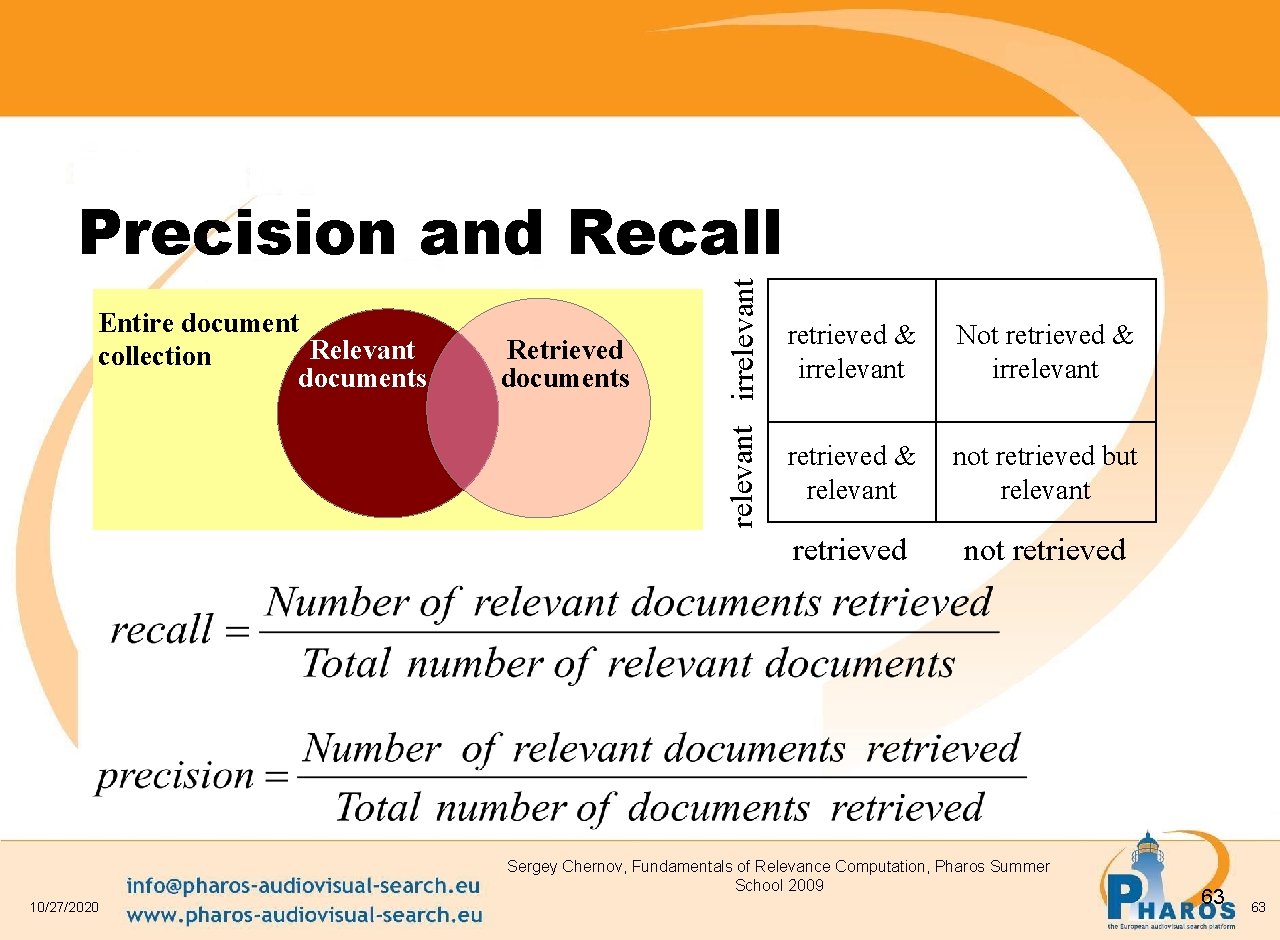

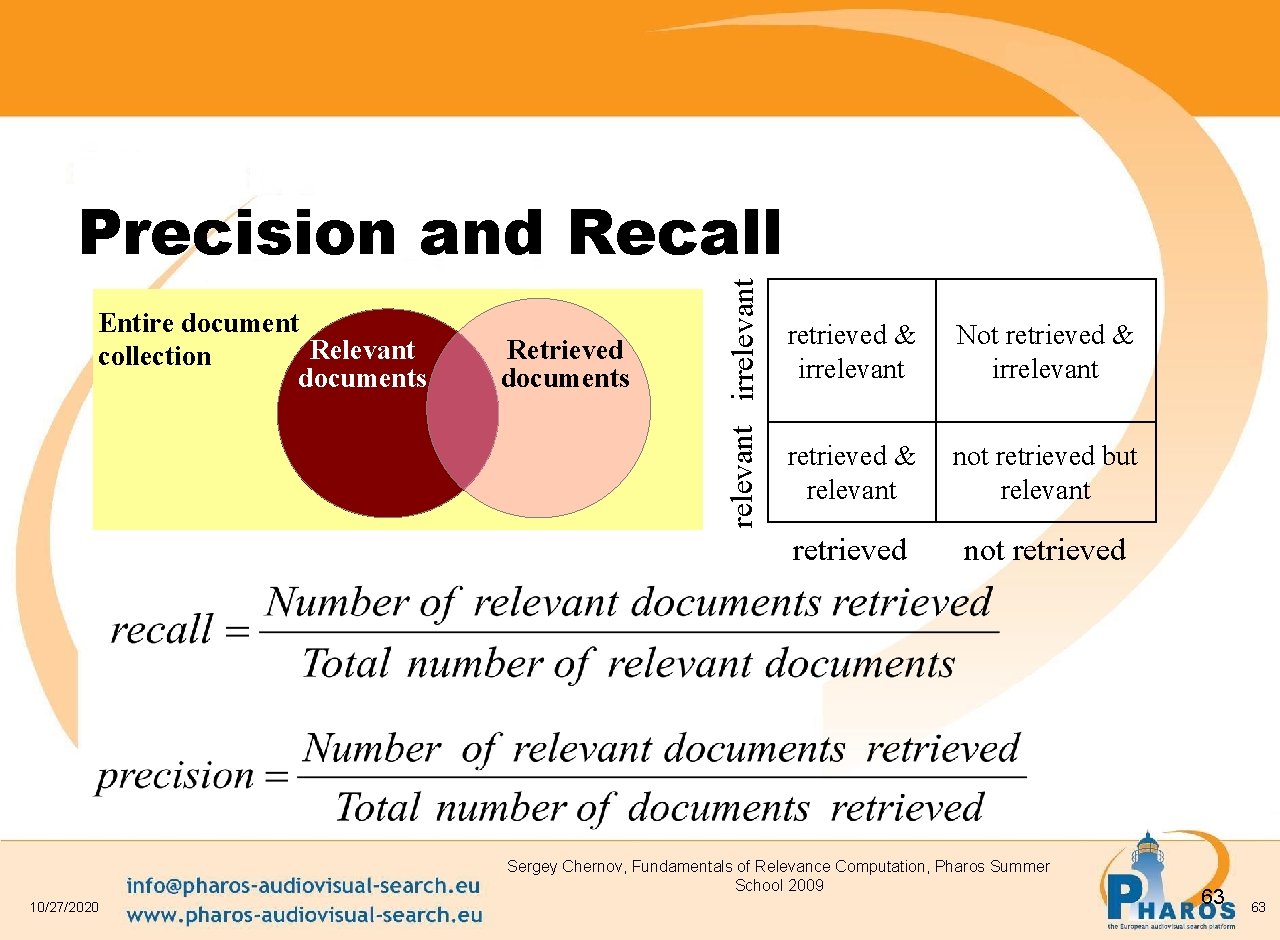

Precision and Recall
[Figure: the entire document collection split into relevant vs. irrelevant and retrieved vs. not retrieved documents, giving four regions: retrieved & relevant, retrieved & irrelevant, not retrieved but relevant, not retrieved & irrelevant.]

Precision and Recall
• Precision: the ability to retrieve top-ranked documents that are mostly relevant.
$$\text{Precision} = \frac{|\text{relevant} \cap \text{retrieved}|}{|\text{retrieved}|}$$
• Recall: the ability of the search to find all of the relevant items in the corpus.
$$\text{Recall} = \frac{|\text{relevant} \cap \text{retrieved}|}{|\text{relevant}|}$$
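Set-based precision and recall follow directly from the two ratios; the document IDs below are hypothetical.

```python
def precision_recall(retrieved, relevant):
    """Set-based precision and recall of one retrieved set."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# 4 docs retrieved, 2 of them among the 3 relevant ones
print(precision_recall(["d1", "d2", "d3", "d4"], ["d1", "d3", "d7"]))  # (0.5, 0.666...)
```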

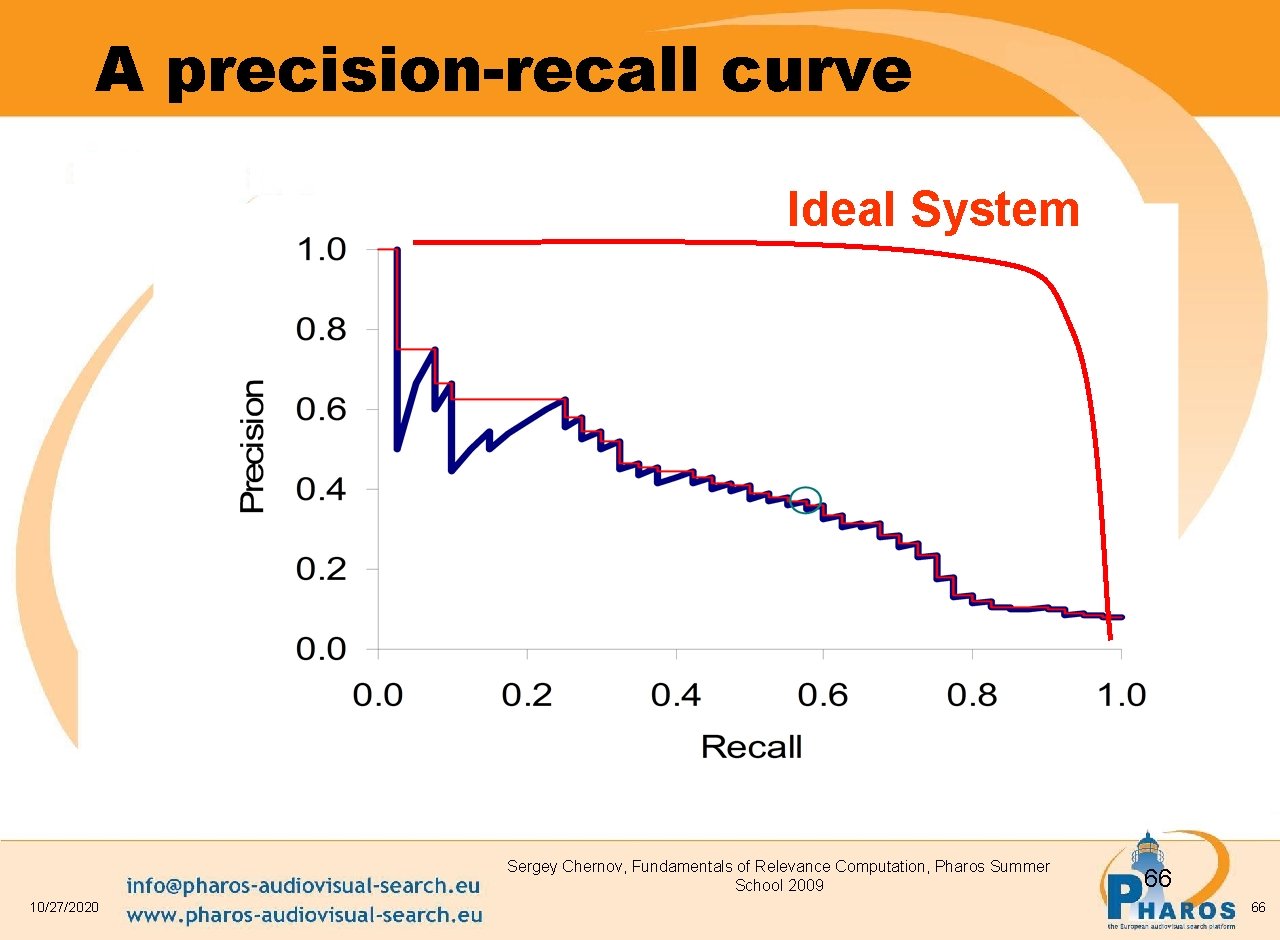

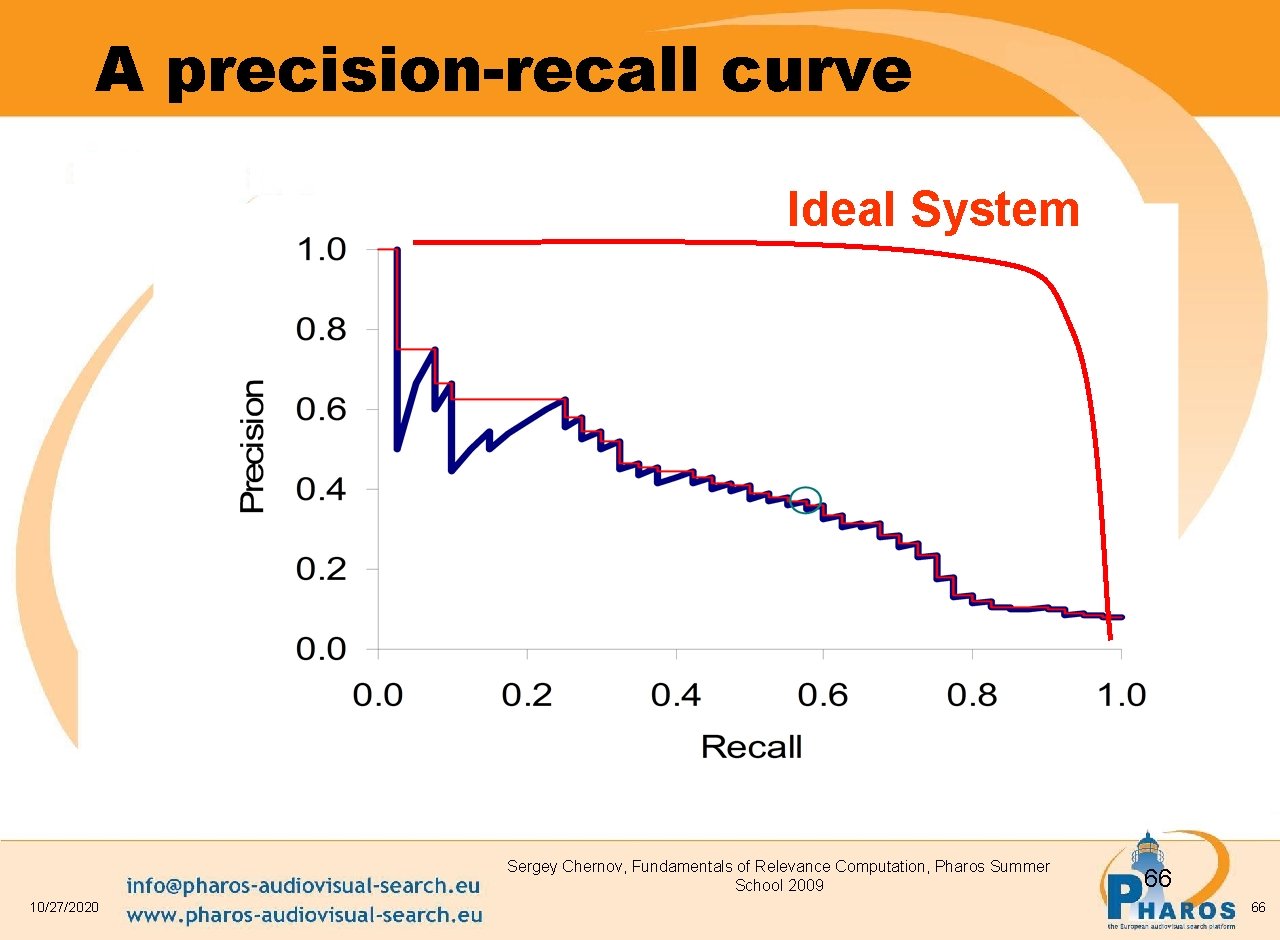

Precision/Recall
• You can get high recall (but low precision) by retrieving all docs for all queries!
• Recall is a non-decreasing function of the number of docs retrieved
• In a good system, precision decreases as either the number of docs retrieved or recall increases
• Evaluation of ranked results:
  – The system can return any number of results
  – By taking various numbers of the top returned documents (levels of recall), the evaluator can produce a precision-recall curve
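The points of a precision-recall curve come from cutting the ranked list after 1, 2, 3, … results. A minimal sketch, assuming a non-empty relevant set and a hypothetical ranking; recall never decreases as the cutoff grows, while precision can go up or down.

```python
def pr_points(ranking, relevant):
    """(recall, precision) after each cutoff k = 1..len(ranking)."""
    relevant = set(relevant)
    points, hits = [], 0
    for k, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
        points.append((hits / len(relevant), hits / k))
    return points


ranking = ["d1", "d5", "d3", "d9"]  # hypothetical system output
relevant = {"d1", "d3"}             # hypothetical judgments
for recall, precision in pr_points(ranking, relevant):
    print(f"recall={recall:.2f} precision={precision:.2f}")
```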

A precision-recall curve
[Figure: precision-recall curve, with the ideal system marked.]

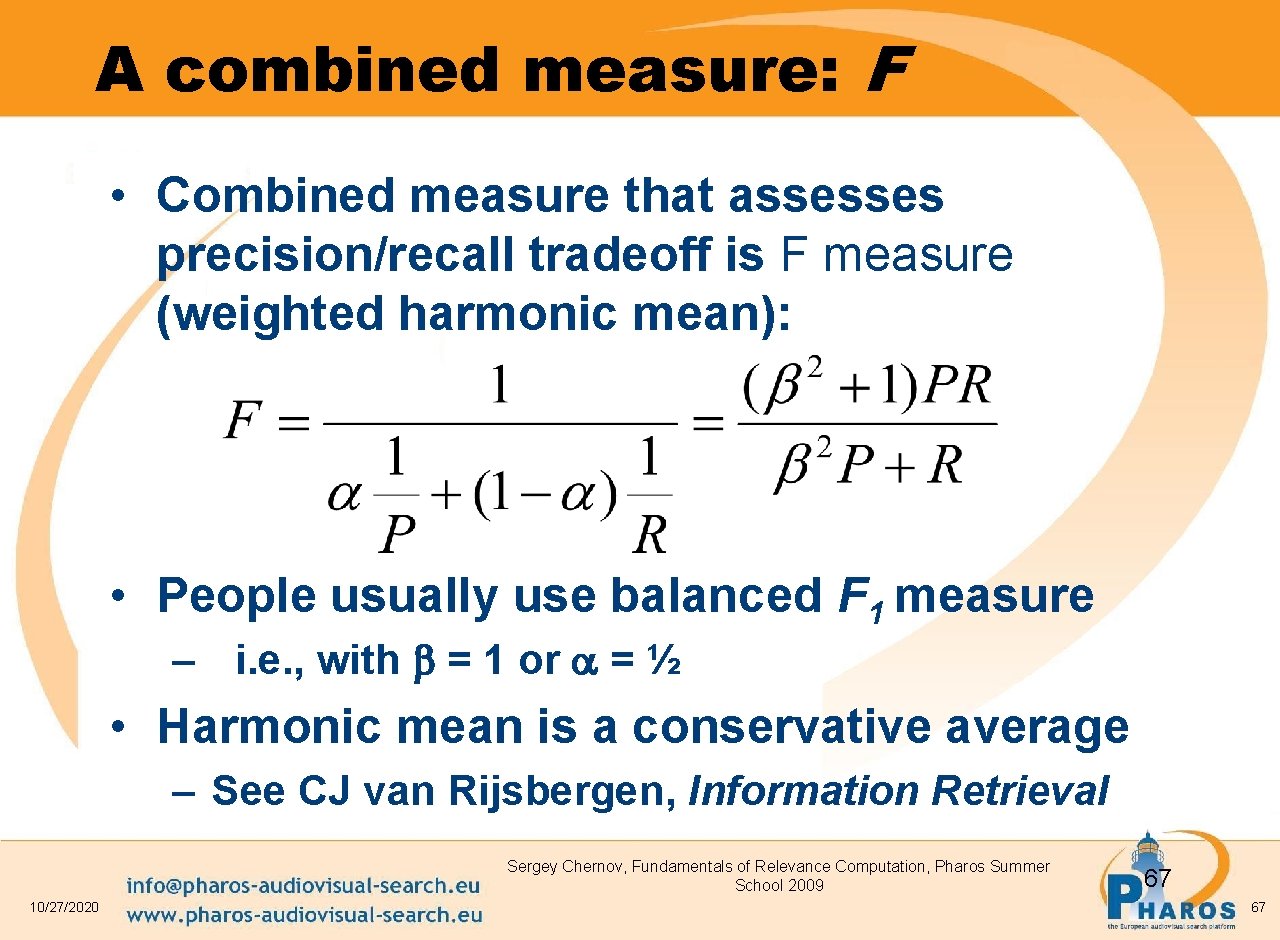

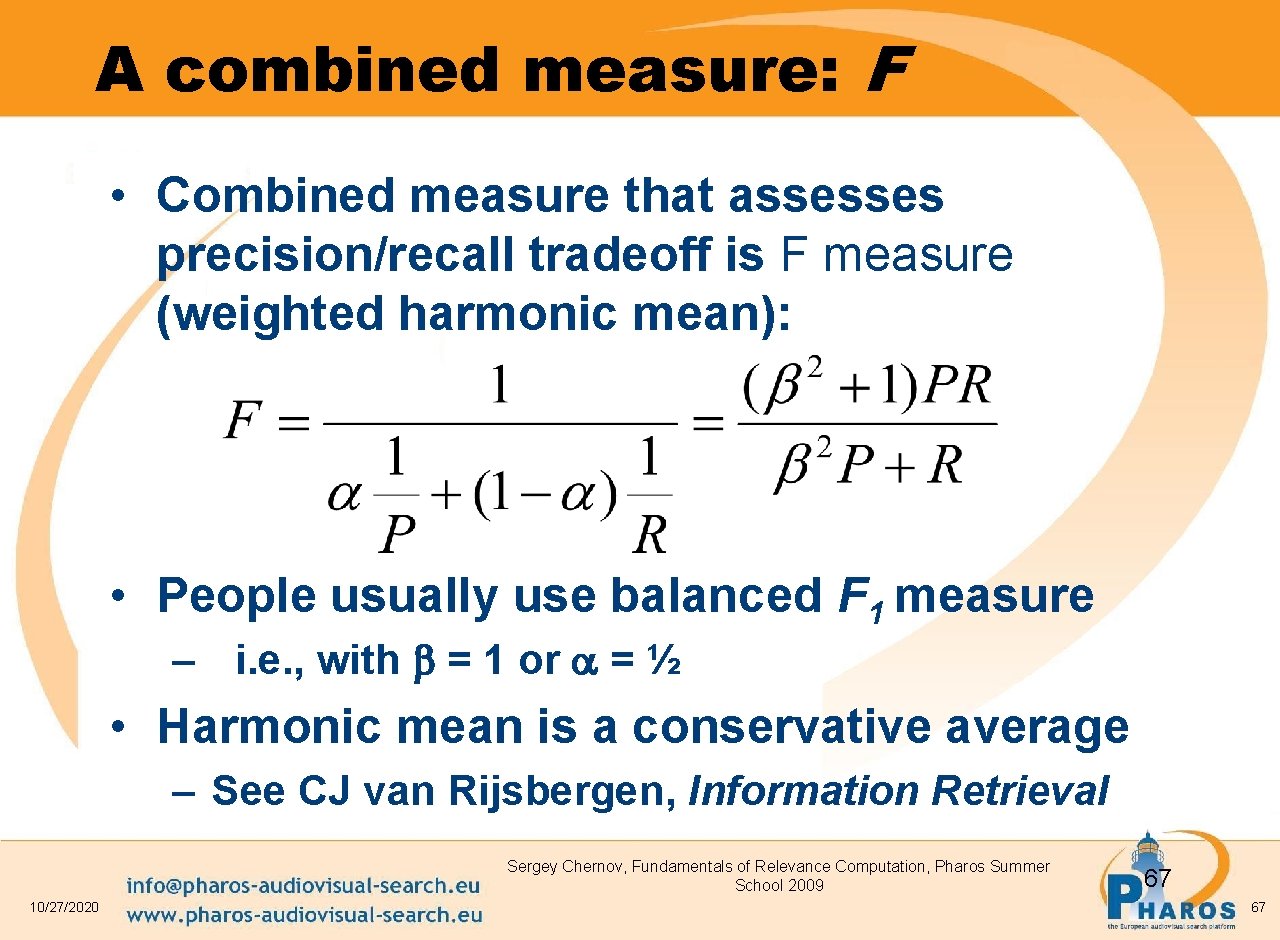

A combined measure: F
• A combined measure that assesses the precision/recall tradeoff is the F measure (weighted harmonic mean):
$$F = \frac{1}{\alpha \frac{1}{P} + (1 - \alpha) \frac{1}{R}} = \frac{(\beta^2 + 1) P R}{\beta^2 P + R}, \qquad \beta^2 = \frac{1 - \alpha}{\alpha}$$
• People usually use the balanced F1 measure
  – i.e., with β = 1 or α = ½
• Harmonic mean is a conservative average
  – See CJ van Rijsbergen, Information Retrieval
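A sketch of the F measure in its β form, (β² + 1)PR / (β²P + R); β = 1 gives the balanced F1. The second example shows why the harmonic mean is called conservative: with P = 0.9 and R = 0.1 the arithmetic mean is 0.5, but F1 is only 0.18.

```python
def f_measure(p, r, beta=1.0):
    """Weighted harmonic mean of precision p and recall r."""
    if p == 0 and r == 0:
        return 0.0
    b2 = beta * beta
    return (b2 + 1) * p * r / (b2 * p + r)


print(f_measure(0.5, 0.5))  # 0.5
print(f_measure(0.9, 0.1))  # about 0.18, far below the arithmetic mean 0.5
```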

Evaluation at large search engines
• Search engines have test collections of queries and hand-ranked results
• Recall is difficult to measure on the web
• Search engines often use precision at top k, e.g., k = 10
• . . . or measures that reward you more for getting rank 1 right than for getting rank 10 right.
  – NDCG (Normalized Discounted Cumulative Gain)
• Search engines also use non-relevance-based measures.
  – Clickthrough on first result
    • Not very reliable if you look at a single clickthrough … but pretty reliable in the aggregate.
  – Studies of user behavior in the lab
  – A/B testing
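Precision at k and NDCG can be sketched as follows. NDCG has several variants; this one assumes graded relevance used directly as gain and a log2(rank + 1) discount, normalized by the DCG of the ideal ordering. The grades are hypothetical.

```python
import math


def precision_at_k(ranking, relevant, k=10):
    """Fraction of the top k results that are relevant."""
    return sum(1 for d in ranking[:k] if d in relevant) / k


def dcg(gains):
    """Discounted cumulative gain: gain at rank i is divided by log2(i + 1)."""
    return sum(g / math.log2(i + 1) for i, g in enumerate(gains, start=1))


def ndcg_at_k(ranking, grades, k=10):
    """DCG of the ranking divided by the DCG of the ideal ordering."""
    gains = [grades.get(d, 0) for d in ranking[:k]]
    best = dcg(sorted(grades.values(), reverse=True)[:k])
    return dcg(gains) / best if best > 0 else 0.0


grades = {"a": 3, "b": 2, "c": 0}  # hypothetical graded judgments
print(ndcg_at_k(["a", "b", "c"], grades))  # 1.0: ideal order
print(ndcg_at_k(["c", "b", "a"], grades))  # lower: best document at rank 3
print(precision_at_k(["a", "x", "b", "y"], {"a", "b"}, k=4))  # 0.5
```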

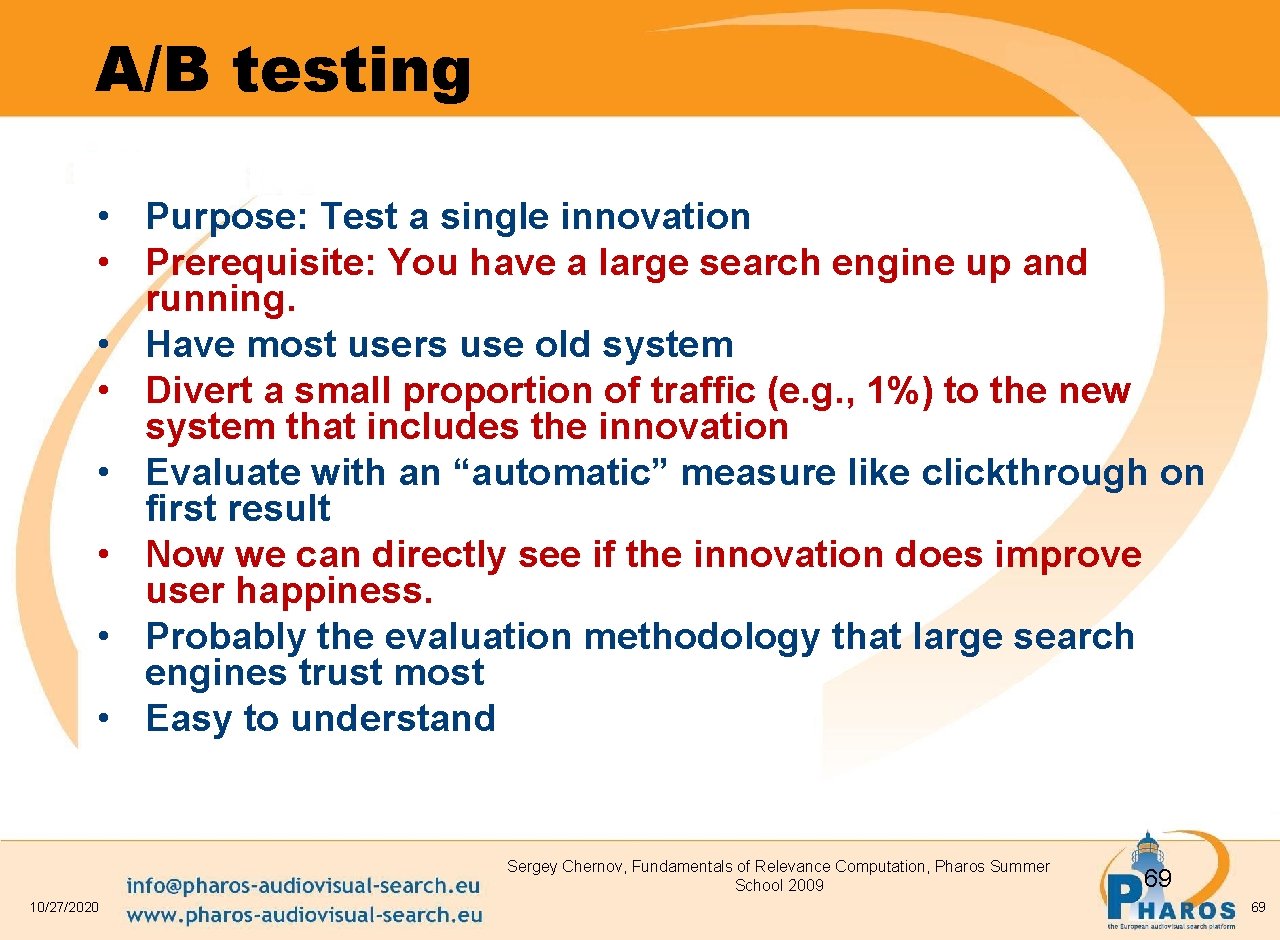

A/B testing
• Purpose: Test a single innovation
• Prerequisite: You have a large search engine up and running.
• Have most users use the old system
• Divert a small proportion of traffic (e.g., 1%) to the new system that includes the innovation
• Evaluate with an “automatic” measure like clickthrough on first result
• Now we can directly see if the innovation does improve user happiness.
• Probably the evaluation methodology that large search engines trust most
• Easy to understand
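Diverting a fixed share of traffic is often done by hashing a stable user identifier into buckets, so each user consistently sees the same system. The sketch below is one hypothetical way to do this (SHA-256 over the user ID, 10,000 buckets), plus the clickthrough-rate comparison; the user IDs are made up.

```python
import hashlib


def in_treatment(user_id, percent=1.0):
    """Deterministically divert about `percent`% of users to the new system."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # buckets 0..9999
    return bucket < percent * 100


def clickthrough_rate(clicks, impressions):
    """Fraction of result pages whose first result was clicked."""
    return clicks / impressions if impressions else 0.0


users = [f"user{i}" for i in range(100_000)]  # hypothetical user IDs
treated = sum(in_treatment(u) for u in users)
print(treated / len(users))  # roughly 0.01
```

Comparing `clickthrough_rate` between the treated and untreated groups then shows whether the innovation helps; with 1% of a large engine’s traffic, even small differences become measurable.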

Part II: Personalization: Techniques and Applications
Slides are taken from the Personalization: Techniques and Applications course by Krishnan Ramanathan, Geetha Manjunath, Somnath Banerjee, HP Labs, Bangalore

A Demonstration: What do you see? (from Susan Dumais)

Why Personalization?
Scale of the web is limiting its utility
• There is too much information
• Consumer has to do all the work to use the web
• Search engines and portals provide the same results for different personalities, intentions and contexts
• Personalization can be the solution
• Customize the web for individuals by
  – Filtering out irrelevant information
  – Identifying relevant information

Some quotes from NY bits
• “I am married with a house. Why do I see so many ads for online dating sites and cheap mortgages?”
• “Should I be happy that I see those ads? It means Internet advertisers still have no idea who I am.”

Personalization
• Goal
  – Provide users what they need without requiring them to ask for it explicitly
• Steps
  – Generate useful, actionable knowledge about users
  – Use this knowledge for personalizing an application
• User-centric data model
  – Data must be attributable to a specific user
• Personalization requires user profiling

Applications of Personalization
• Interface personalization
  – E.g., go directly to the web page of interest instead of the site home page
• Content personalization
  – Filtering (news, blog articles, videos etc.)
  – Ratings-based recommendations
    • Amazon, StumbleUpon
  – Search
    • Text, images, stories, research papers
  – Ads
• Service personalization

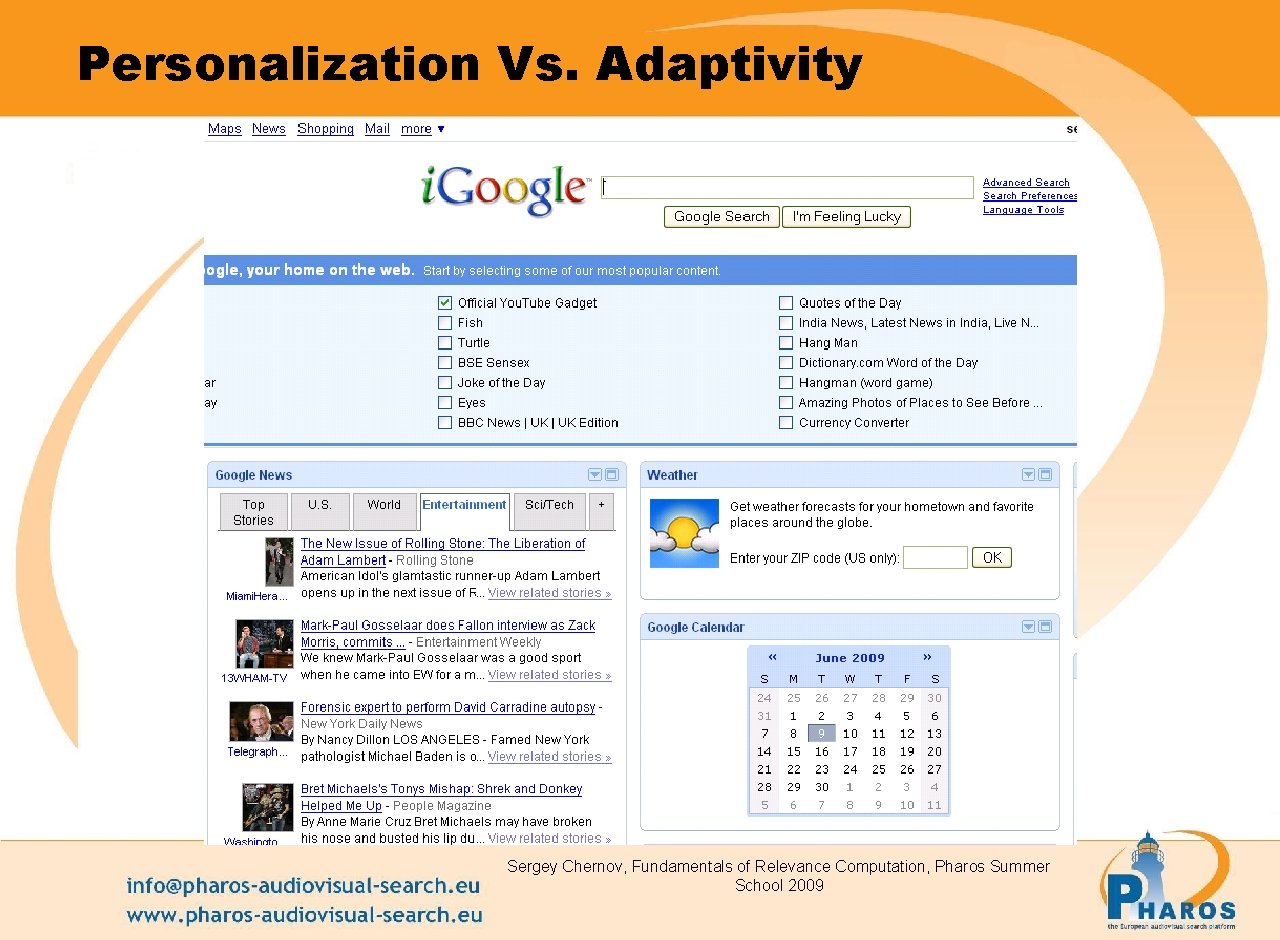

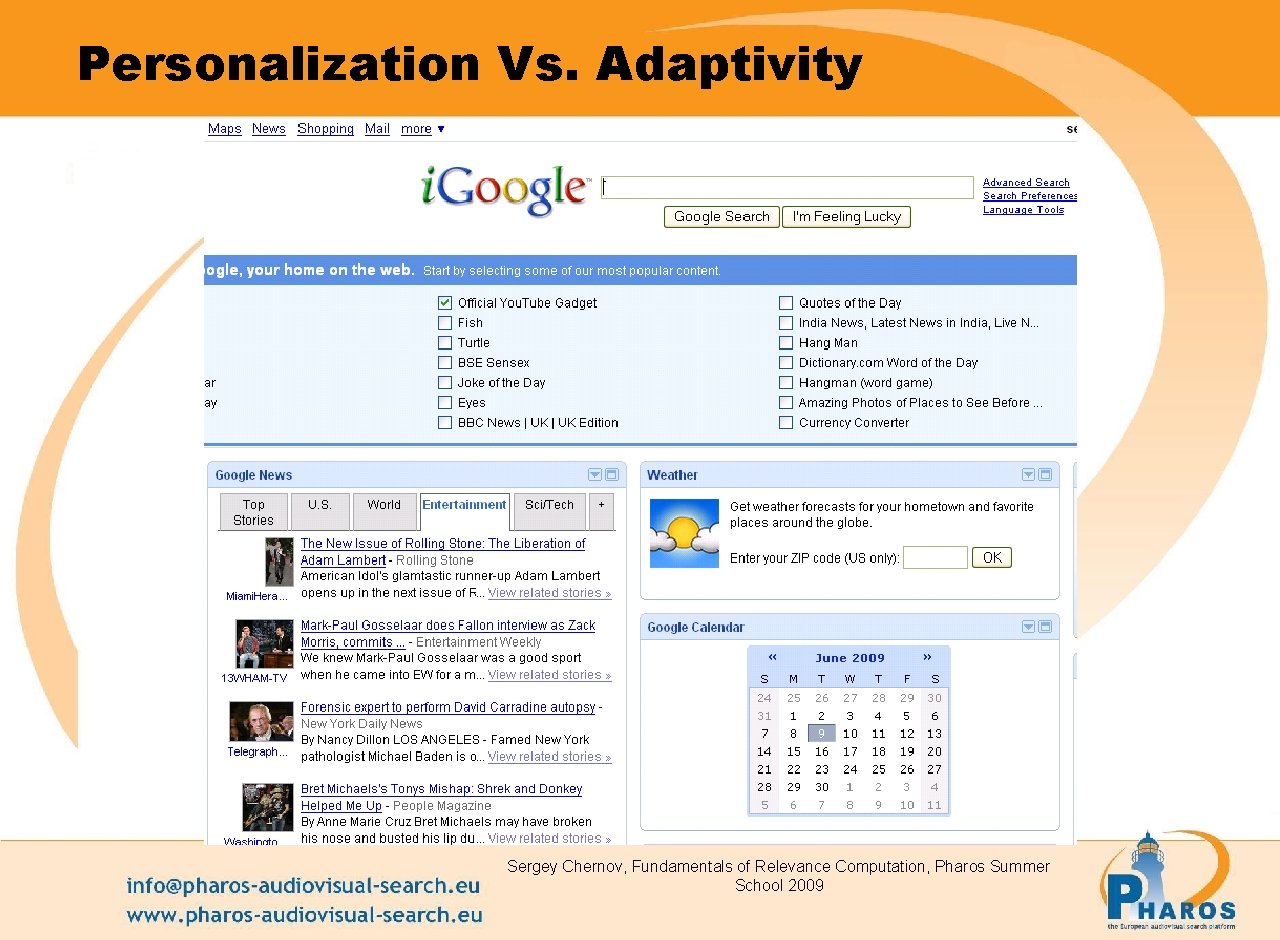

Personalization vs. Adaptivity

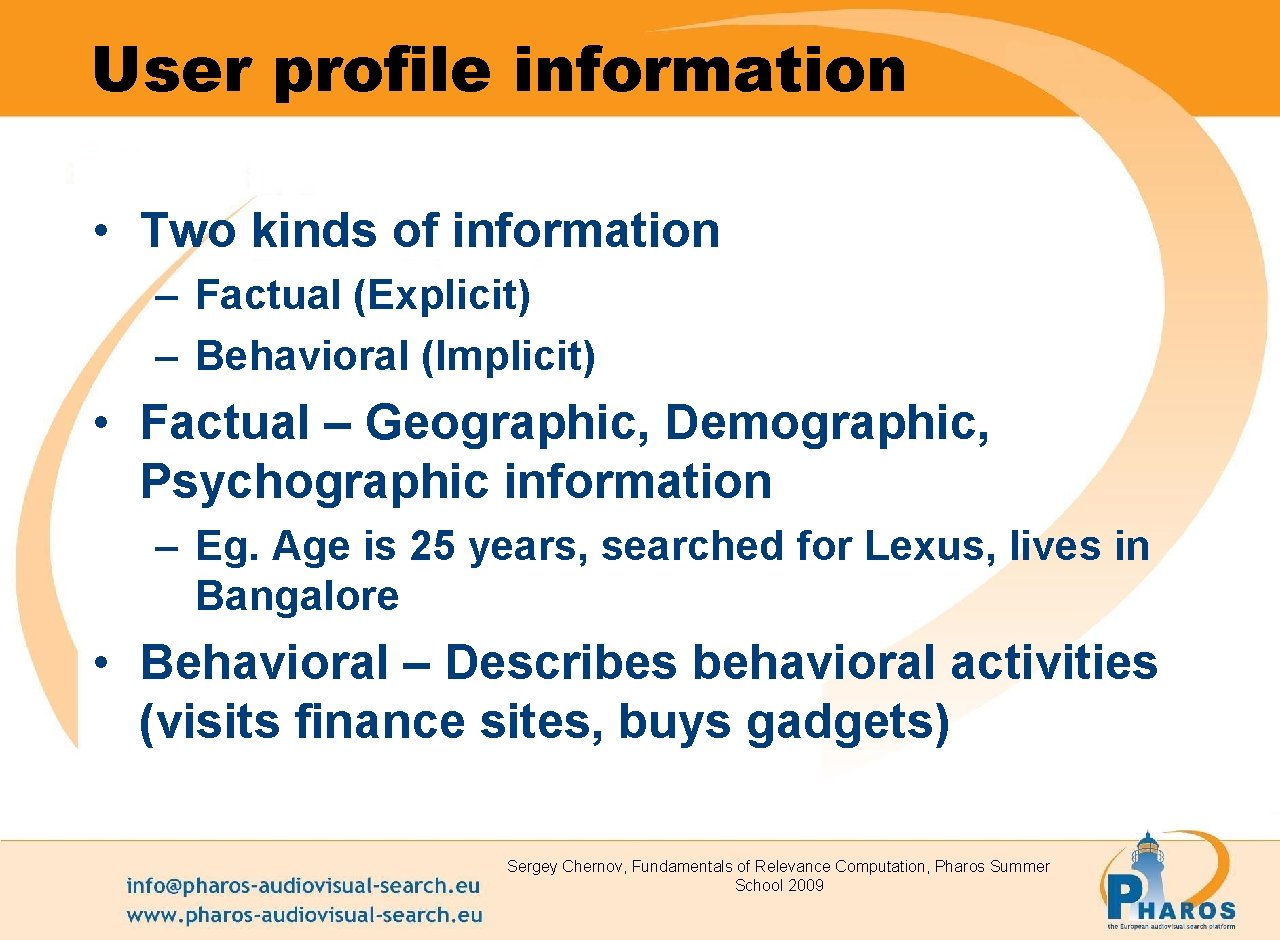

User profile information
• Two kinds of information
  – Factual (explicit)
  – Behavioral (implicit)
• Factual
  – Geographic, demographic, psychographic information
  – E.g., age is 25 years, searched for Lexus, lives in Bangalore
• Behavioral
  – Describes behavioral activities (visits finance sites, buys gadgets)

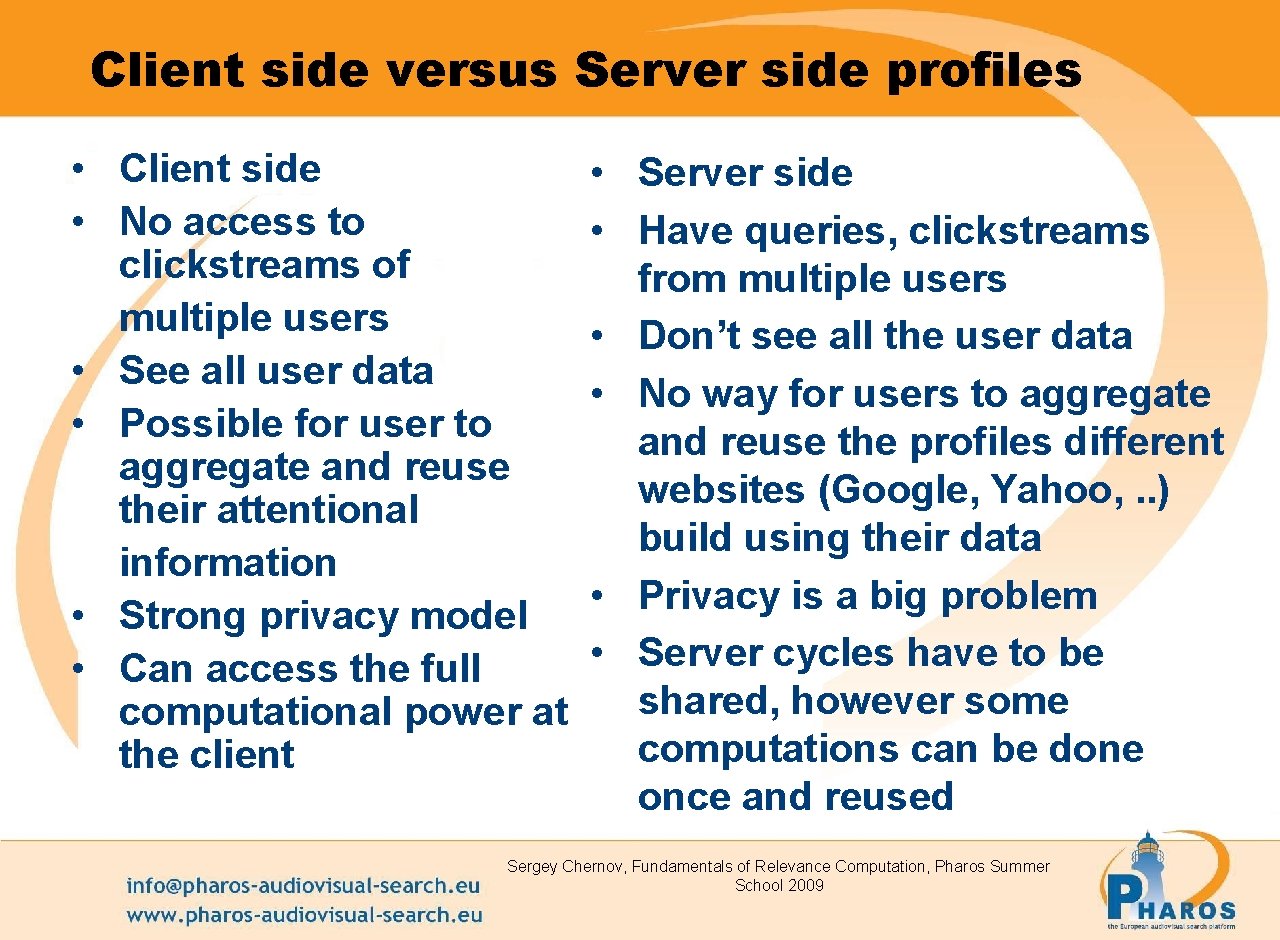

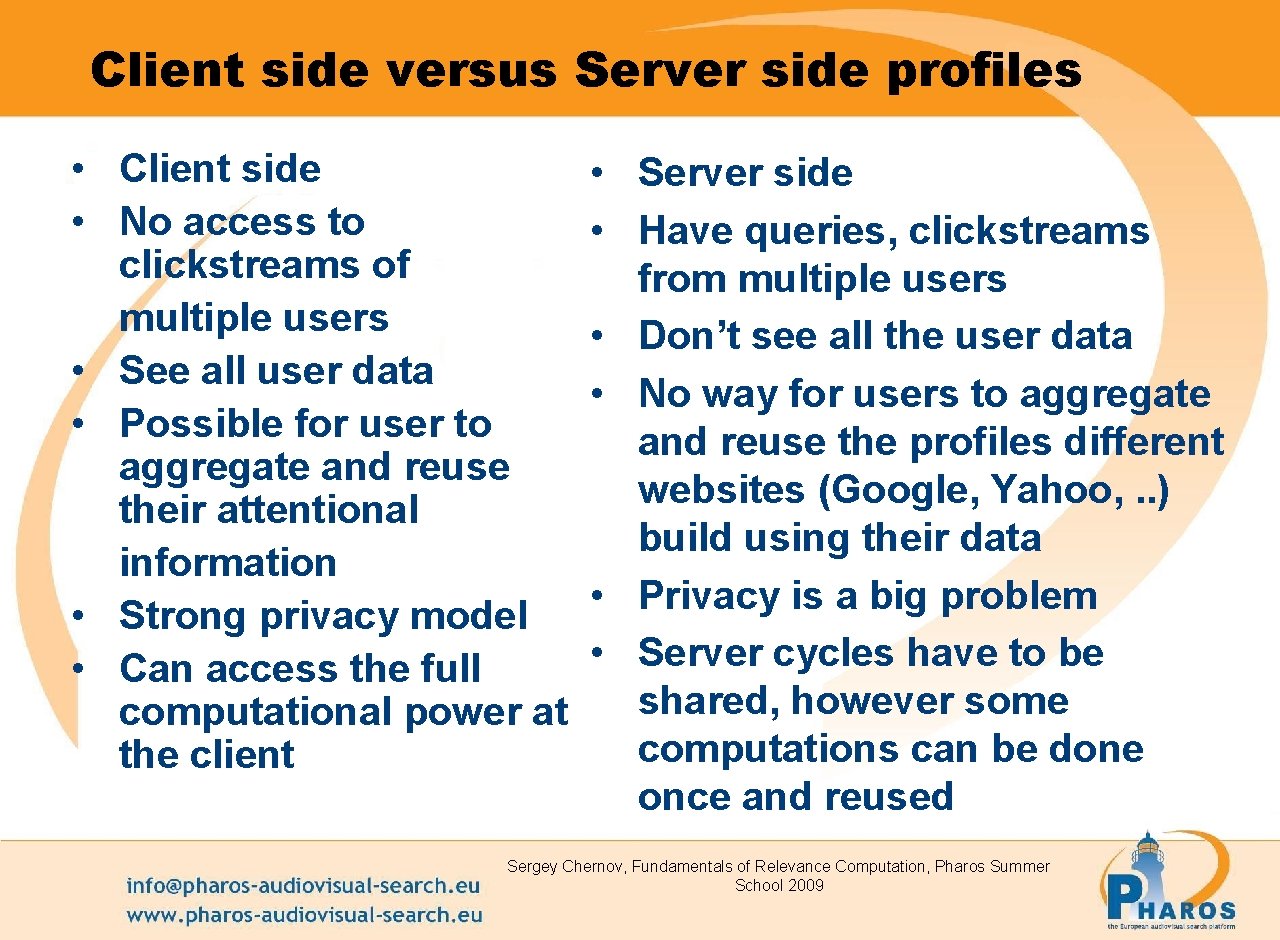

Client side versus Server side profiles
• Client side
  – No access to clickstreams of multiple users
  – See all user data
  – Possible for users to aggregate and reuse their attentional information
  – Strong privacy model
  – Can access the full computational power at the client
• Server side
  – Have queries and clickstreams from multiple users
  – Don’t see all the user data
  – No way for users to aggregate and reuse the profiles that different websites (Google, Yahoo, ...) build using their data
  – Privacy is a big problem
  – Server cycles have to be shared; however, some computations can be done once and reused

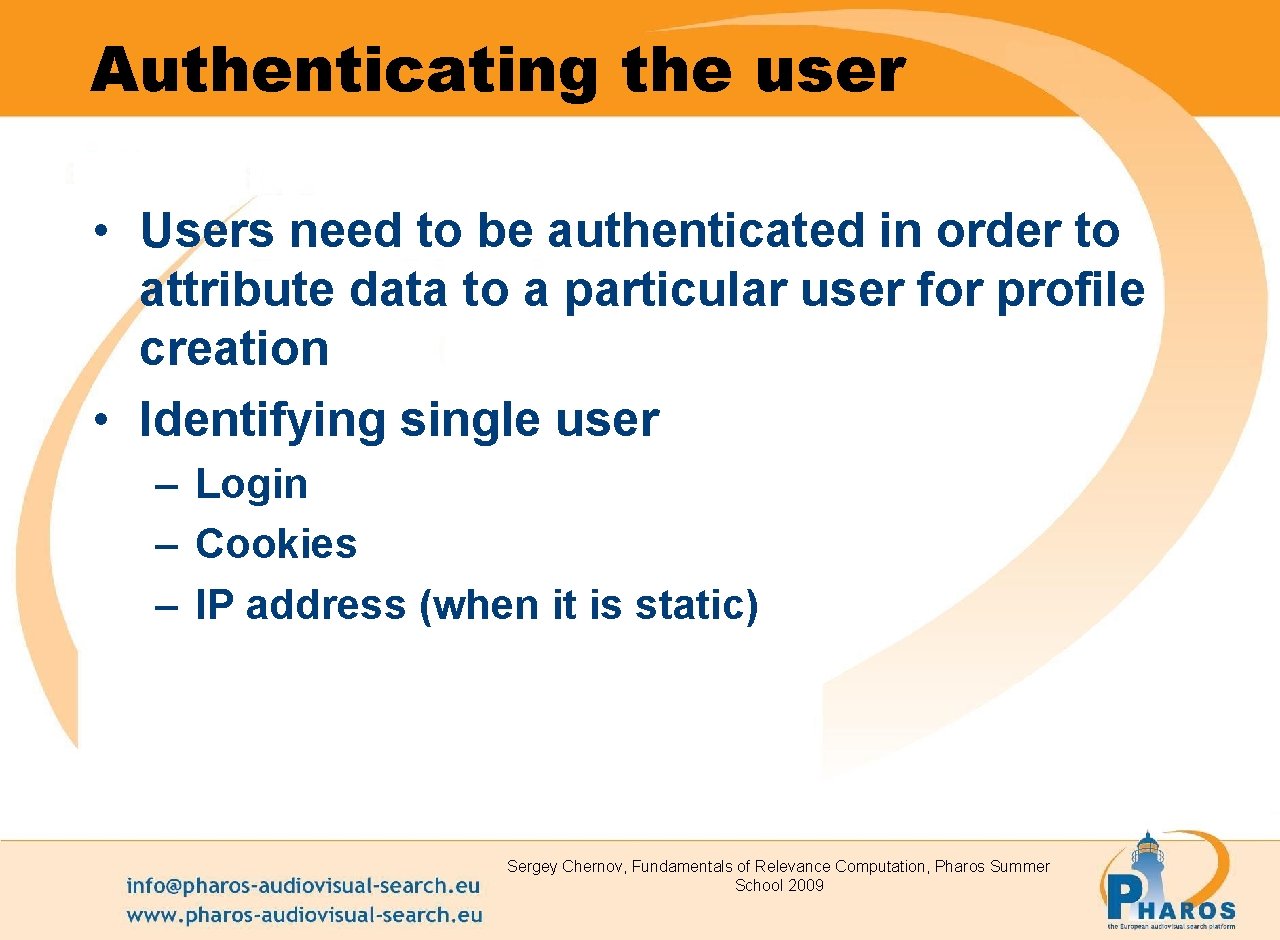

Authenticating the user
• Users need to be authenticated in order to attribute data to a particular user for profile creation
• Identifying a single user
  – Login
  – Cookies
  – IP address (when it is static)

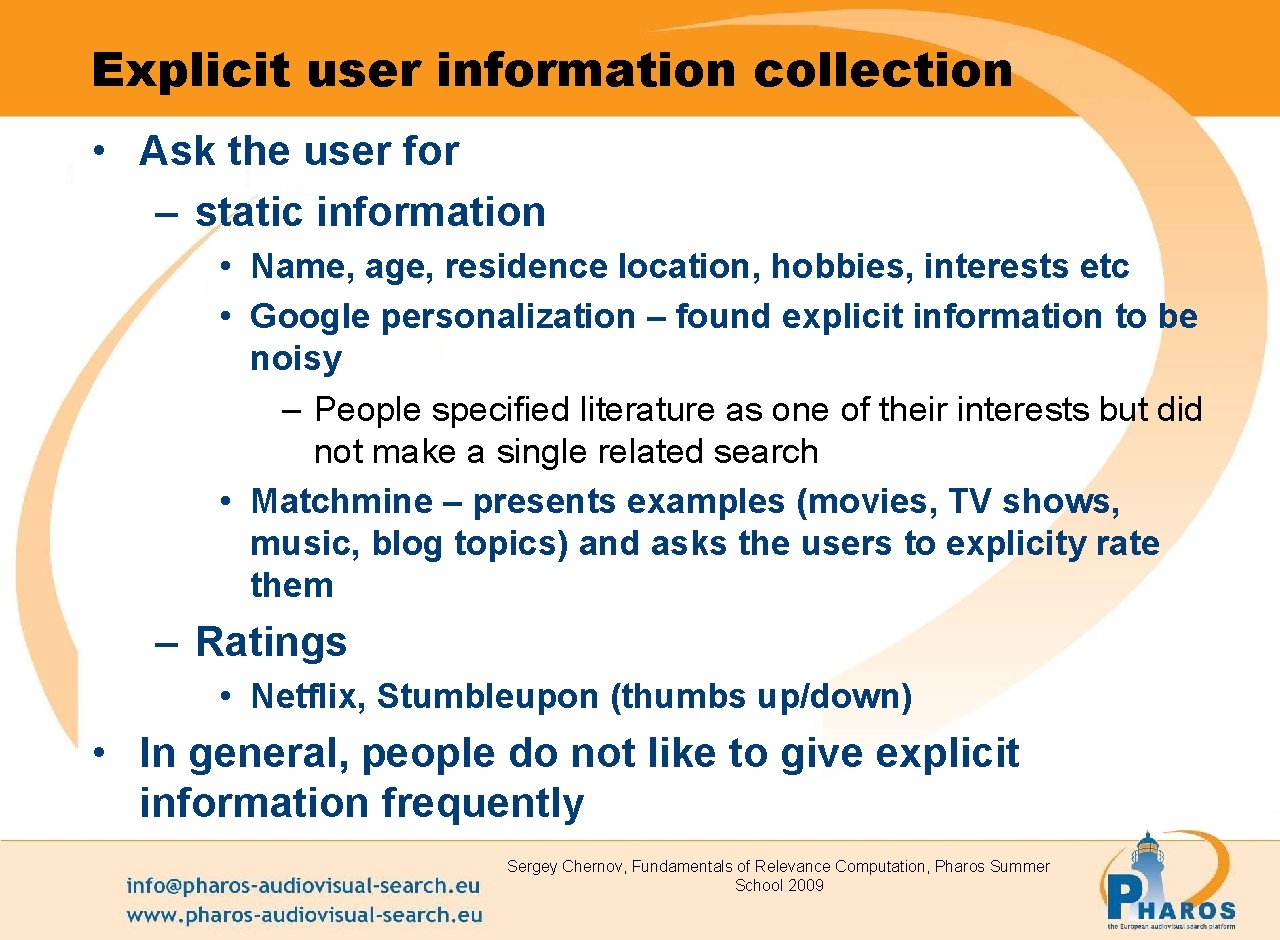

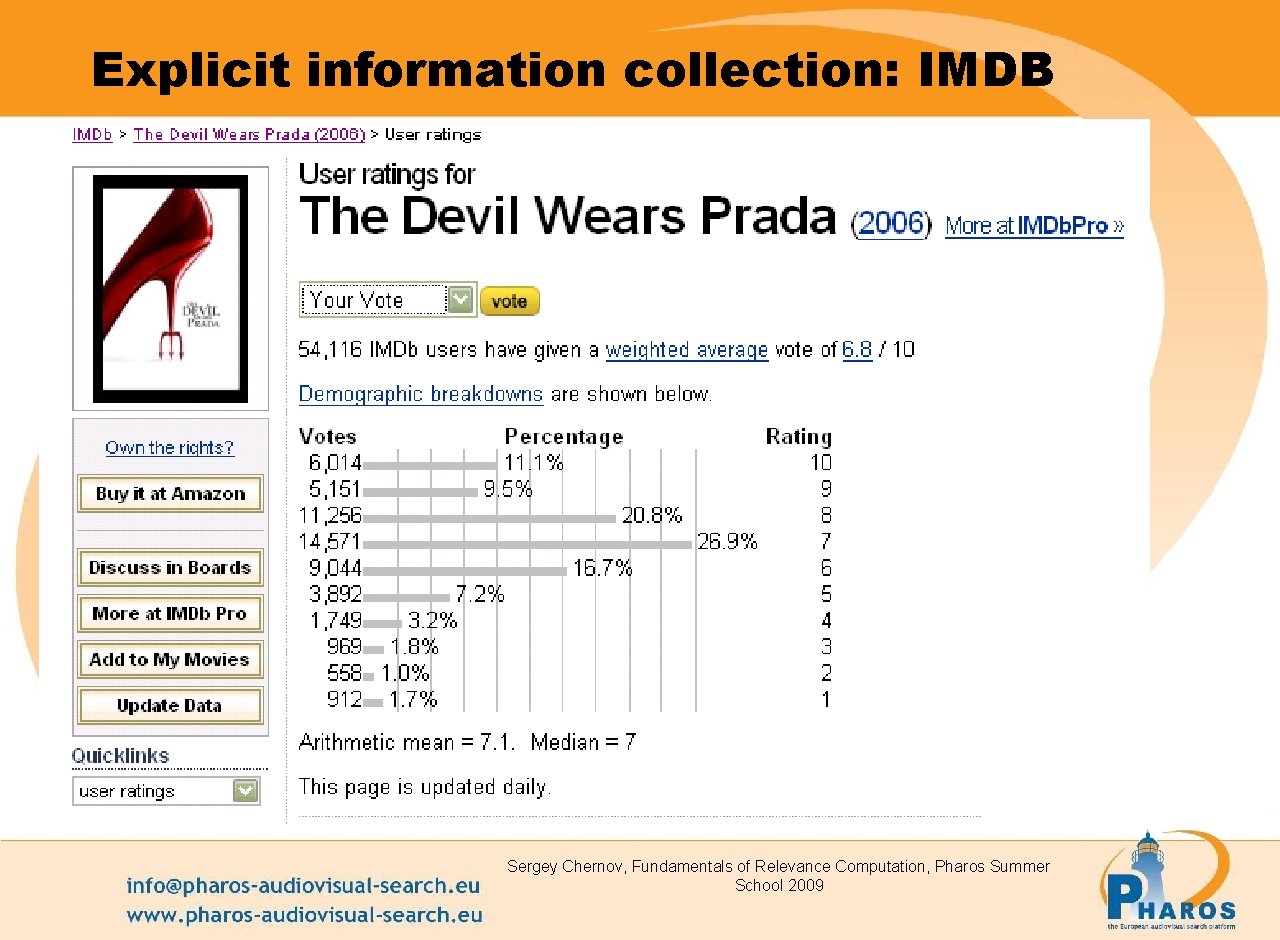

Explicit user information collection
• Ask the user for
  – static information
    • Name, age, residence location, hobbies, interests etc.
• Google personalization
  – found explicit information to be noisy
  – People specified literature as one of their interests but did not make a single related search
• Matchmine
  – presents examples (movies, TV shows, music, blog topics) and asks the users to explicitly rate them
  – Ratings
    • Netflix, StumbleUpon (thumbs up/down)
• In general, people do not like to give explicit information frequently

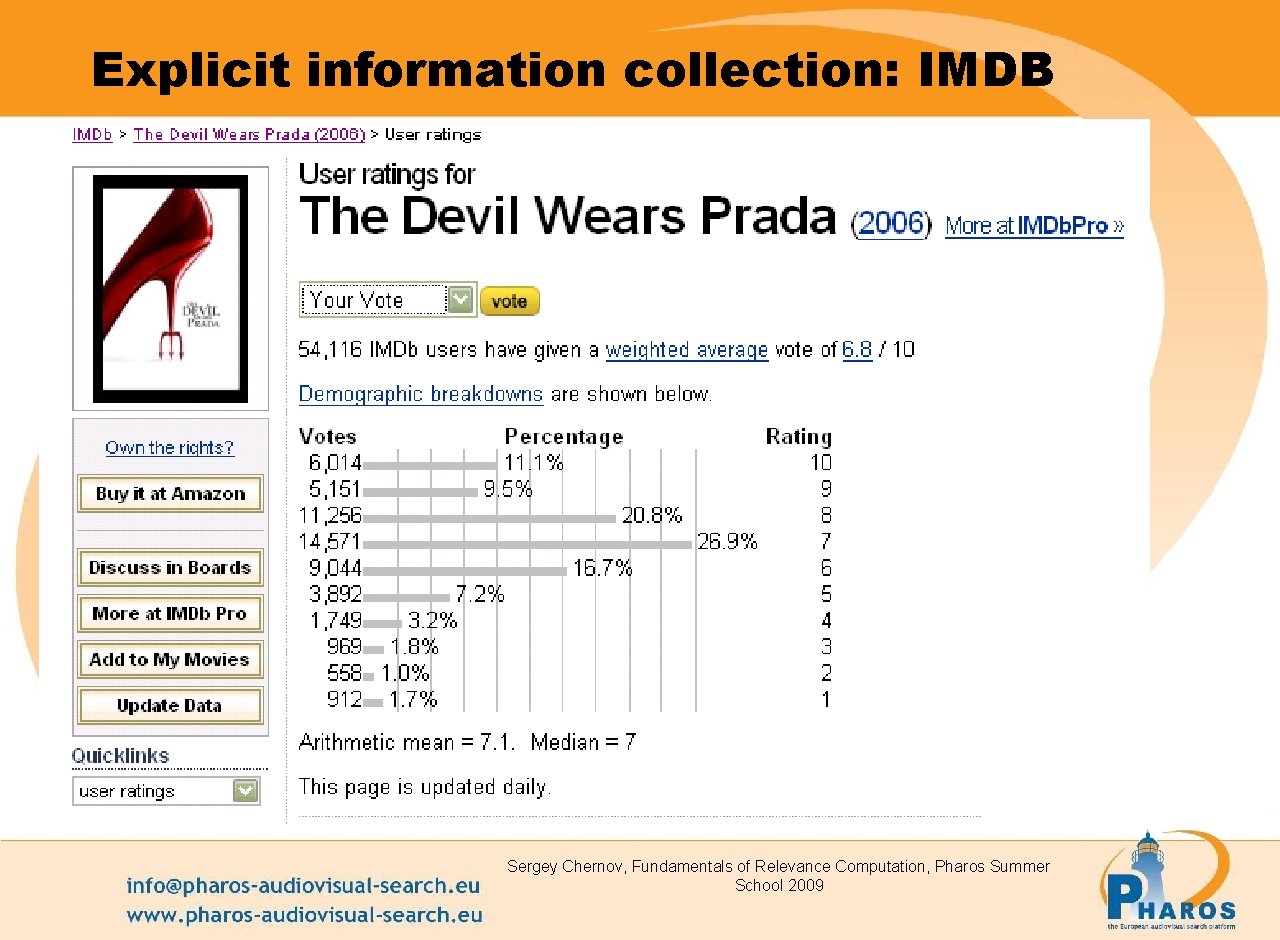

Explicit information collection: IMDB

Implicit user information collection
• Data sources
  – Web pages, documents, search queries, location
  – Information from applications (media players, games)
• Data collection techniques
  – Desktop based
    • Browser cache
    • Proxy servers
    • Browser plugins
  – Server side
    • Web logs
    • Search logs

Stereotypes
• Generalizations from communities of users
  – Characteristics of a group of users
• Stereotypes alleviate the bootstrap problem
• Construction of stereotypes
  – Manual
    • e.g., a student will be interested in lectures
  – Automatic method
    • Clustering: similar profiles are clustered and common characteristics extracted

Profile representation • Bag of words (BOW) – Use the words in user documents to represent user interests – Issues • Some words appear regardless of page content (“Home”, “page”) • Polysemy (a word has multiple meanings, e.g., bank) • Synonymy (multiple words have the same meaning, e.g., joy, happiness) • Large profile sizes • Concepts (e.g., DMOZ) – Use an existing ontology maintained for free – Issues • Too large (about 600k DMOZ nodes); the ontology has to be drastically pruned for use • Classifiers need to be built for each DMOZ node

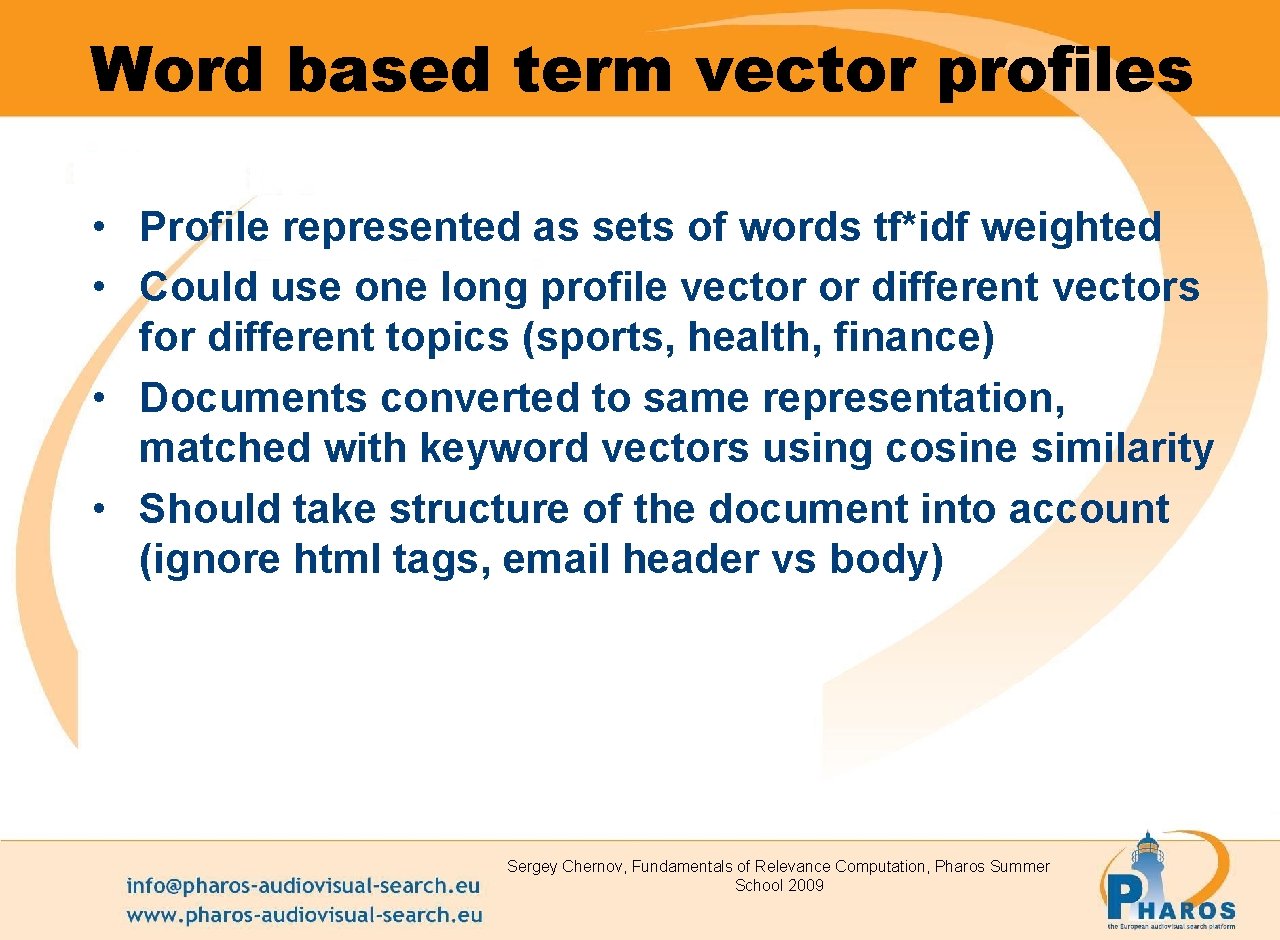

Word-based term vector profiles • Profile represented as sets of tf*idf-weighted words • Could use one long profile vector or different vectors for different topics (sports, health, finance) • Documents are converted to the same representation and matched against the keyword vectors using cosine similarity • Should take the structure of the document into account (ignore HTML tags, distinguish email header from body)
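As a minimal sketch of the term-vector profile described above, the Python below weights terms by raw tf times log(N/df), builds one long profile vector by summing the vectors of the documents a user has read, and matches documents with cosine similarity. The toy corpus, the function names (`tfidf_vectors`, `build_profile`, `cosine`) and the choice of summing document vectors are illustrative assumptions, not the method of any particular system; documents are assumed to be already tokenized with HTML tags stripped.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Map each tokenized document to a sparse {term: tf*idf} vector."""
    n = len(docs)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for doc in docs for term in set(doc))
    return [{t: c * math.log(n / df[t]) for t, c in Counter(doc).items()}
            for doc in docs]

def cosine(u, v):
    """Cosine similarity of two sparse vectors (0.0 if either has zero length)."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    nu = math.sqrt(sum(w * w for w in u.values()))
    nv = math.sqrt(sum(w * w for w in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0

def build_profile(vectors):
    """One long profile vector: the sum of the user's document vectors."""
    profile = Counter()
    for vec in vectors:
        profile.update(vec)  # adds the float weights term by term
    return dict(profile)

docs = [
    ["soccer", "match", "goal", "league"],  # read by the user
    ["soccer", "league", "transfer"],       # read by the user
    ["stock", "market", "finance"],         # candidate document
    ["soccer", "goal", "highlights"],       # candidate document
    ["health", "diet", "exercise"],
]
vecs = tfidf_vectors(docs)
profile = build_profile(vecs[:2])
for i in (2, 3):
    print(i, round(cosine(profile, vecs[i]), 3))
```

The soccer candidate shares weighted terms with the profile and gets a positive score, while the finance candidate shares none and scores 0. Per-topic profiles (sports, health, finance) would simply be several such vectors.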

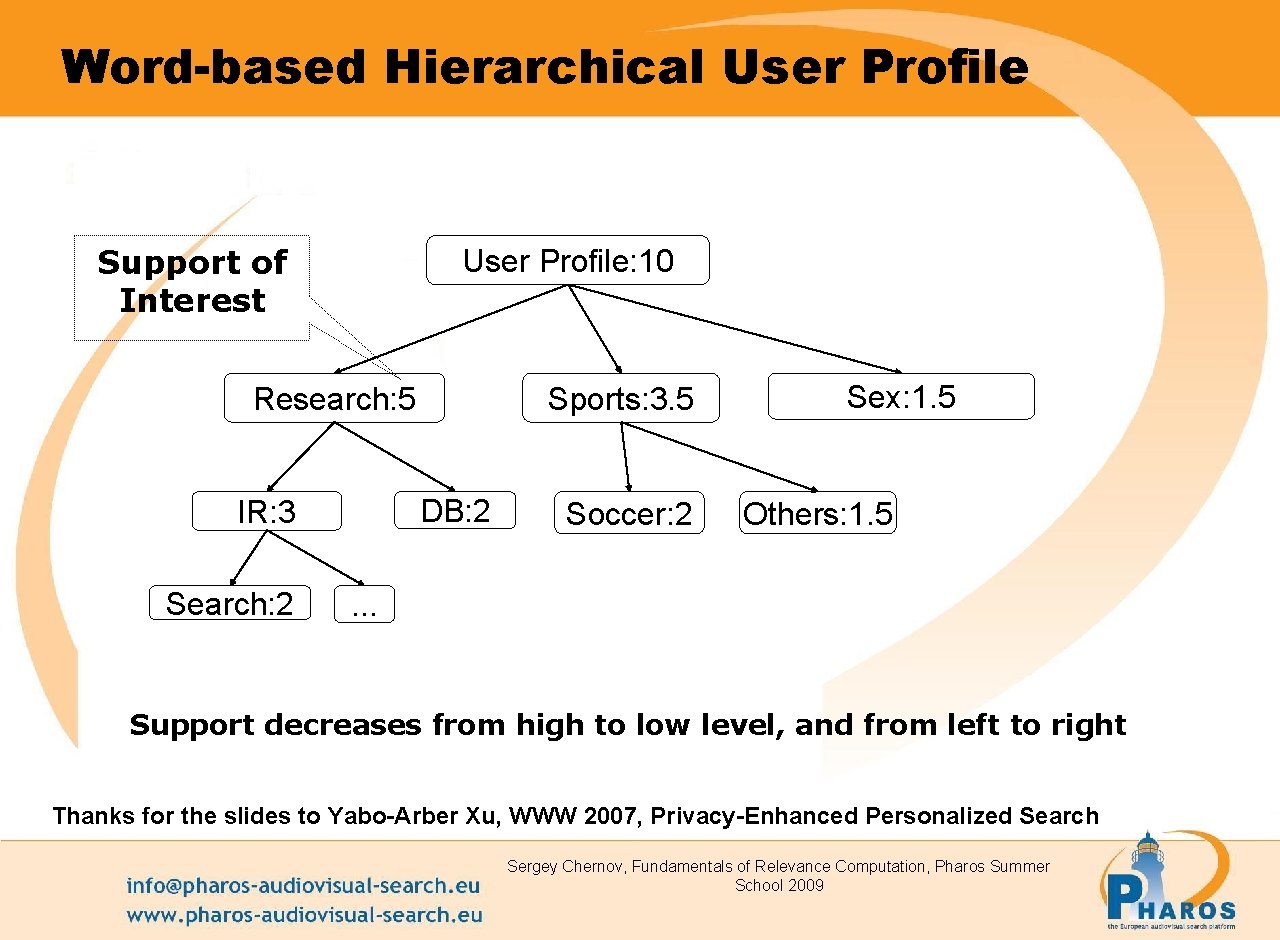

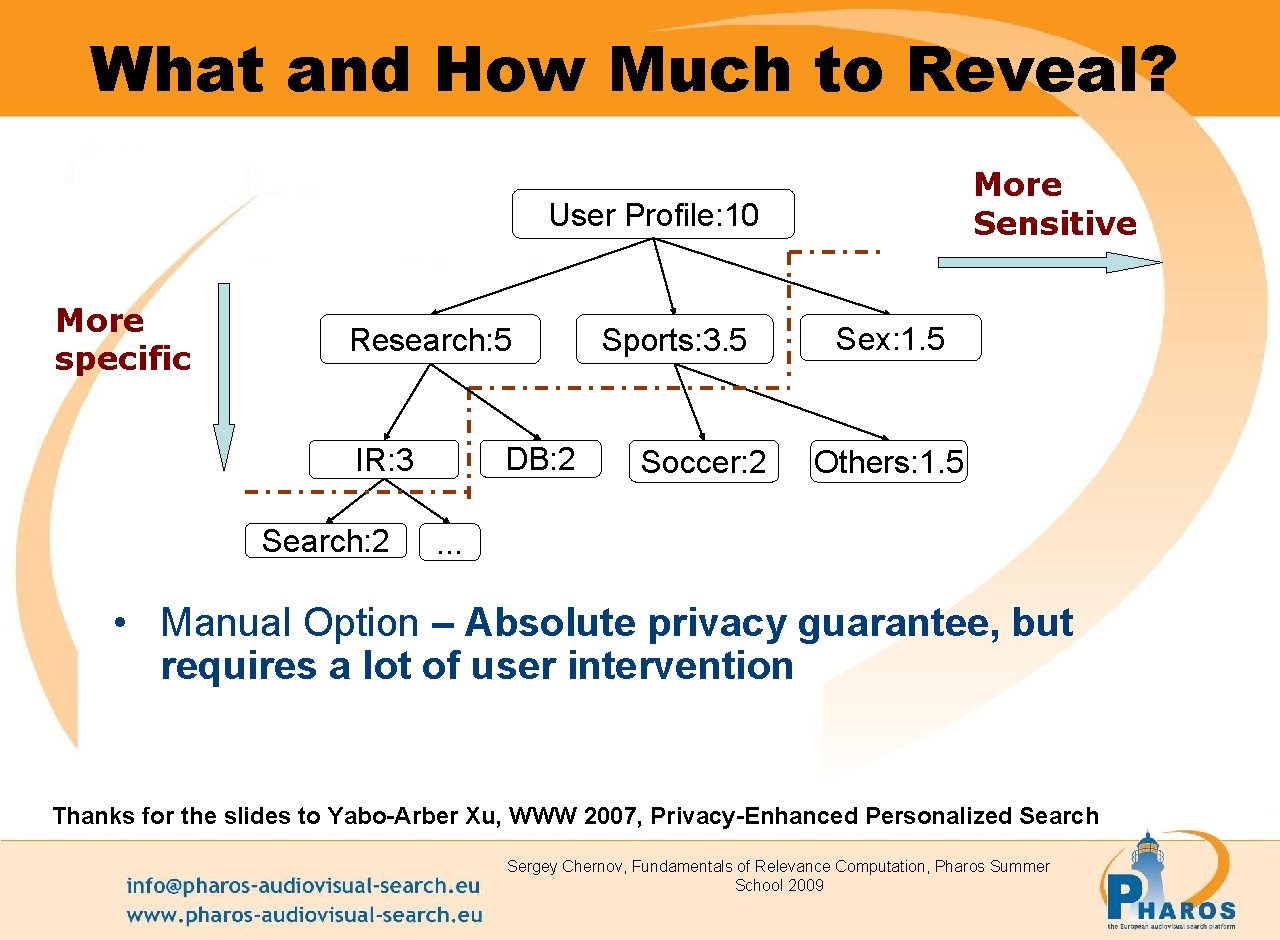

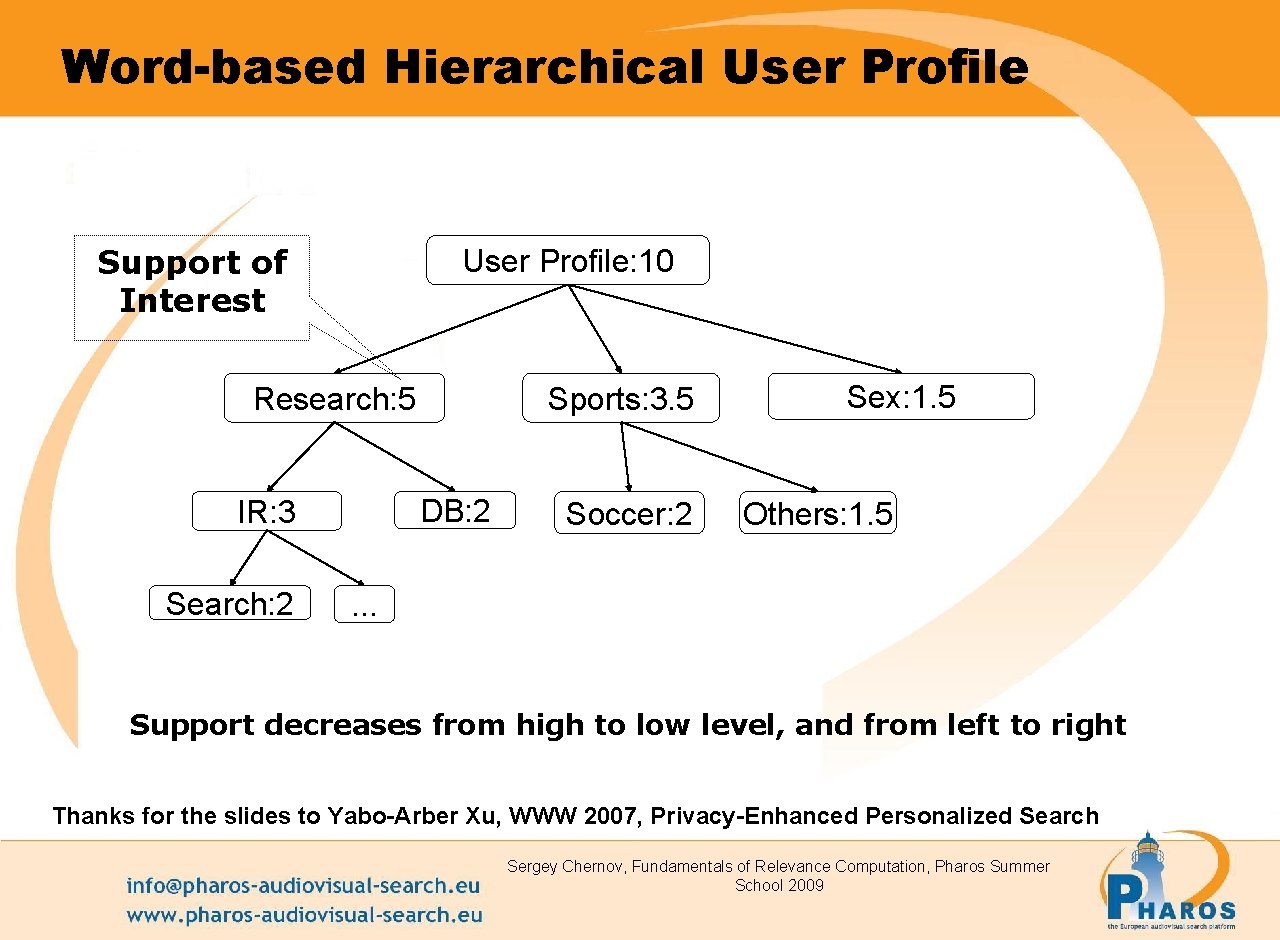

Word-based Hierarchical User Profile • Support of interest: User Profile: 10 – Research: 5 (DB: 2; IR: 3, with child Search: 2) – Sports: 3.5 (Soccer: 2; Sex: 1.5) – Others: 1.5 – ... • Support decreases from high to low level, and from left to right • Slides courtesy of Yabo-Arber Xu (WWW 2007, Privacy-Enhanced Personalized Search)
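The support values in the example are consistent with each node's support being its own support plus that of its descendants (Research 5 = DB 2 + IR 3, Sports 3.5 = Soccer 2 + Sex 1.5, User Profile 10 = 5 + 3.5 + 1.5). A minimal sketch of that reading follows; the `Node` class is hypothetical, and the split of IR's 3 into 1 of its own plus 2 from Search is an assumption, since the slide gives only the totals.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    """Topic in a hierarchical interest profile (hypothetical structure)."""
    name: str
    own: float = 0.0                      # support attributed directly to this topic
    children: list["Node"] = field(default_factory=list)

    def support(self):
        """Total support: own support plus the support of all descendants."""
        return self.own + sum(c.support() for c in self.children)

def show(node, depth=0):
    """Print the tree, one indented line per topic with its total support."""
    print("  " * depth + f"{node.name}: {node.support():g}")
    for child in node.children:
        show(child, depth + 1)

profile = Node("User Profile", children=[
    Node("Research", children=[
        Node("DB", own=2),
        Node("IR", own=1, children=[Node("Search", own=2)]),  # assumed split of IR's 3
    ]),
    Node("Sports", children=[Node("Soccer", own=2), Node("Sex", own=1.5)]),
    Node("Others", own=1.5),
])
show(profile)
```

Run as-is, this prints the slide's totals (User Profile: 10, Research: 5, DB: 2, IR: 3, Search: 2, Sports: 3.5, Soccer: 2, Sex: 1.5, Others: 1.5).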

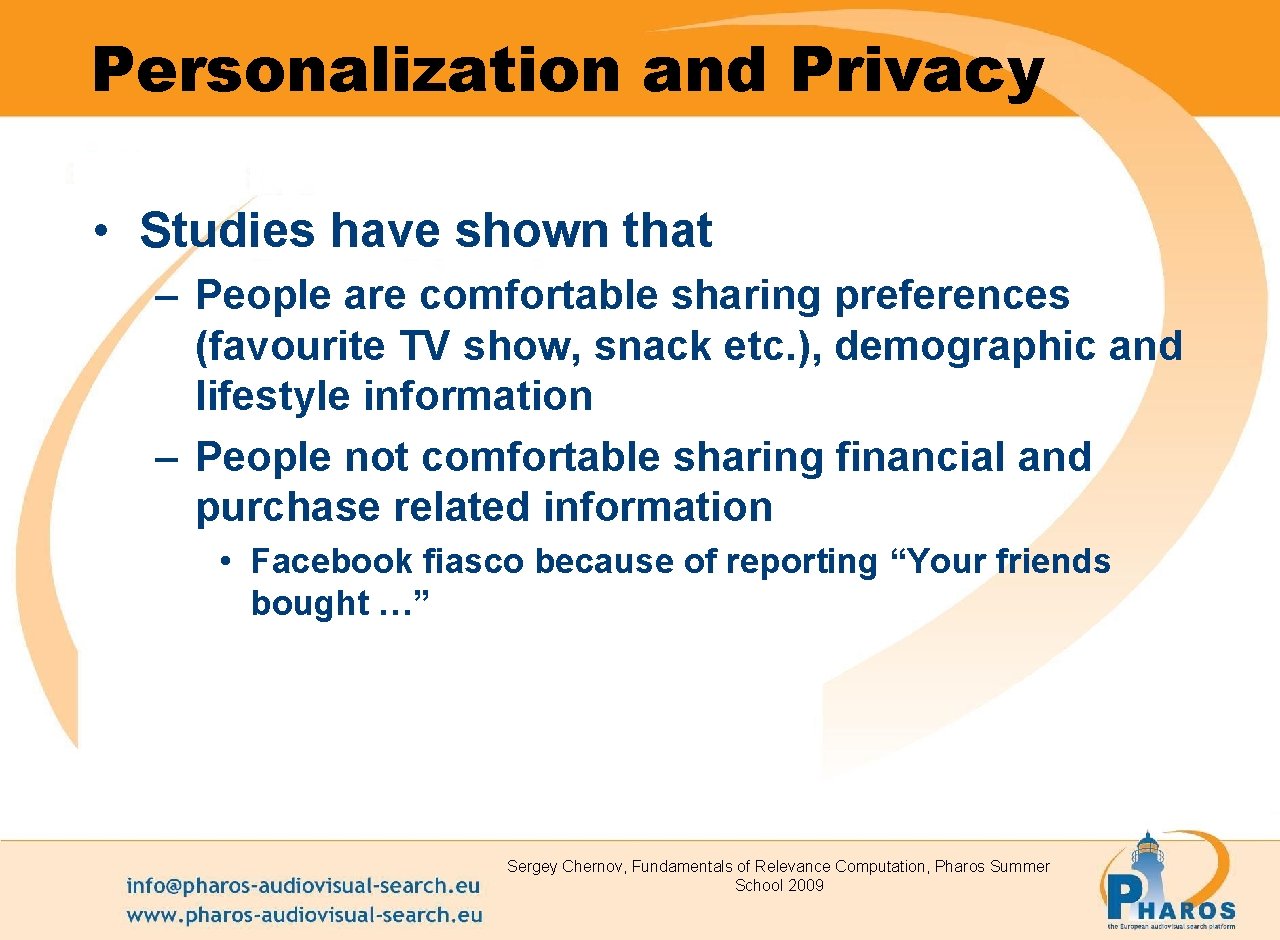

Personalization and Privacy • Studies have shown that – People are comfortable sharing preferences (favourite TV show, snack, etc.), demographic and lifestyle information – People are not comfortable sharing financial and purchase-related information • Facebook fiasco caused by reporting “Your friends bought …”

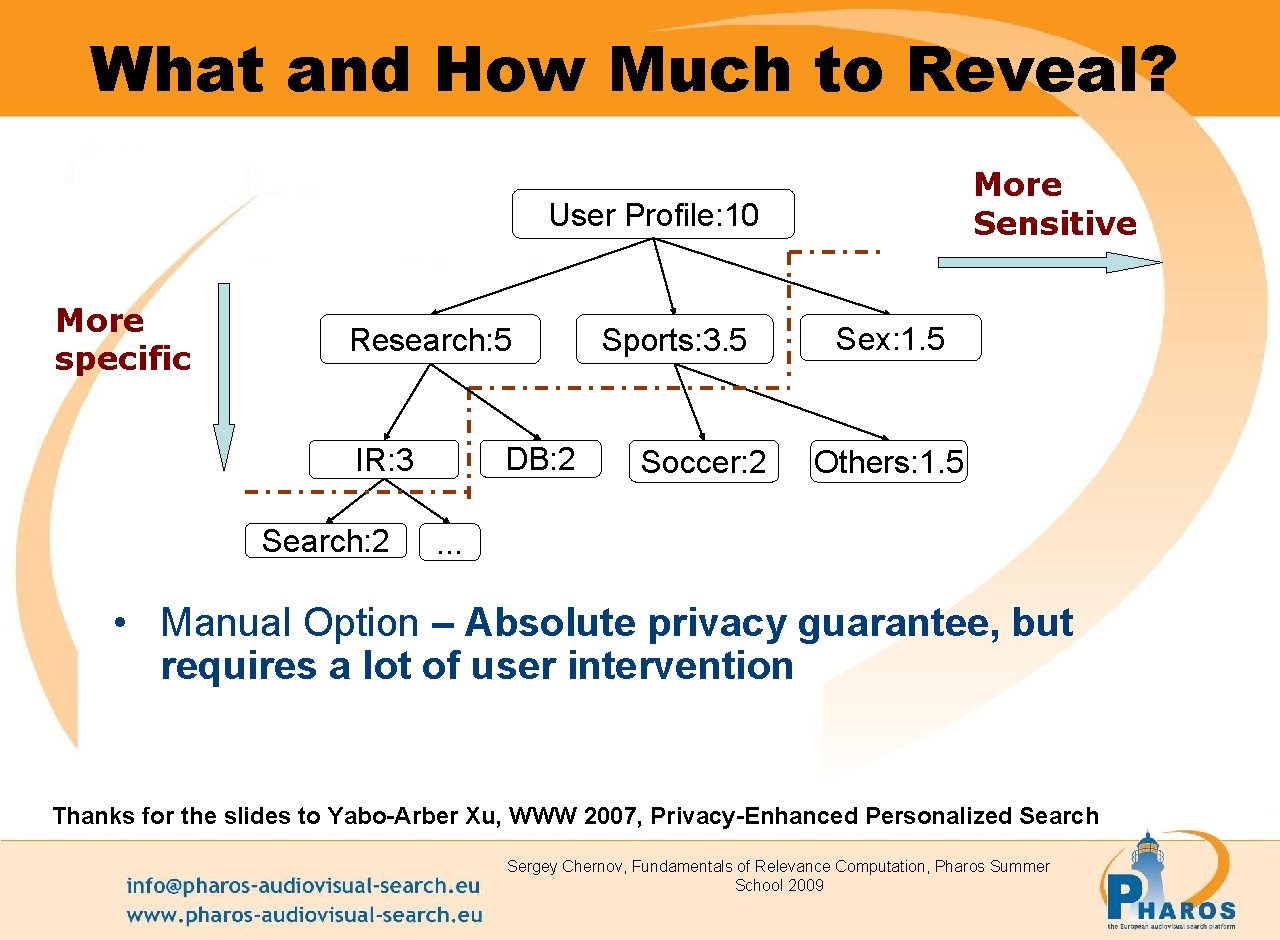

What and How Much to Reveal? • In the hierarchical profile (User Profile: 10 – Research: 5 (DB: 2; IR: 3, with child Search: 2) – Sports: 3.5 (Soccer: 2; Sex: 1.5) – Others: 1.5 – ...), lower levels are more specific and more sensitive • Manual option – Absolute privacy guarantee, but requires a lot of user intervention • Slides courtesy of Yabo-Arber Xu (WWW 2007, Privacy-Enhanced Personalized Search)
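To illustrate the manual option, the sketch below lets the user name topics to keep private and returns a copy of the profile with those topics and their whole subtrees removed, recomputing support from what remains. The `Node` class, the `reveal` function and the choice to recompute ancestors' support (so hidden support is not leaked through the totals) are assumptions for illustration, not the automatic method of the cited WWW 2007 work.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    """Topic in a hierarchical interest profile (hypothetical structure)."""
    name: str
    own: float = 0.0                      # support attributed directly to this topic
    children: list["Node"] = field(default_factory=list)

    def support(self):
        """Own support plus the support of all descendants."""
        return self.own + sum(c.support() for c in self.children)

def reveal(node, private):
    """Copy of the profile without user-marked private topics and their subtrees.

    Returns None when the node itself is private.
    """
    if node.name in private:
        return None
    kept = []
    for child in node.children:
        copy = reveal(child, private)
        if copy is not None:
            kept.append(copy)
    return Node(node.name, node.own, kept)

profile = Node("User Profile", children=[
    Node("Research", children=[Node("DB", own=2),
                               Node("IR", own=1, children=[Node("Search", own=2)])]),
    Node("Sports", children=[Node("Soccer", own=2), Node("Sex", own=1.5)]),
    Node("Others", own=1.5),
])
public = reveal(profile, private={"Sex", "Search"})
print(profile.support(), public.support())  # prints 10.0 6.5
```

The guarantee is absolute only because the user decides every node, which is exactly why this option demands so much user intervention.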

Attention Profiling Markup Language (APML) (http://www.apml.org)

Personalized search • Search can be personalized based on – User profile – Current working context – Past search queries – Server-side clickstreams – Personalized PageRank • Determining user intent is hard (e.g., does the query “Visa” refer to the credit card or to a travel visa?)

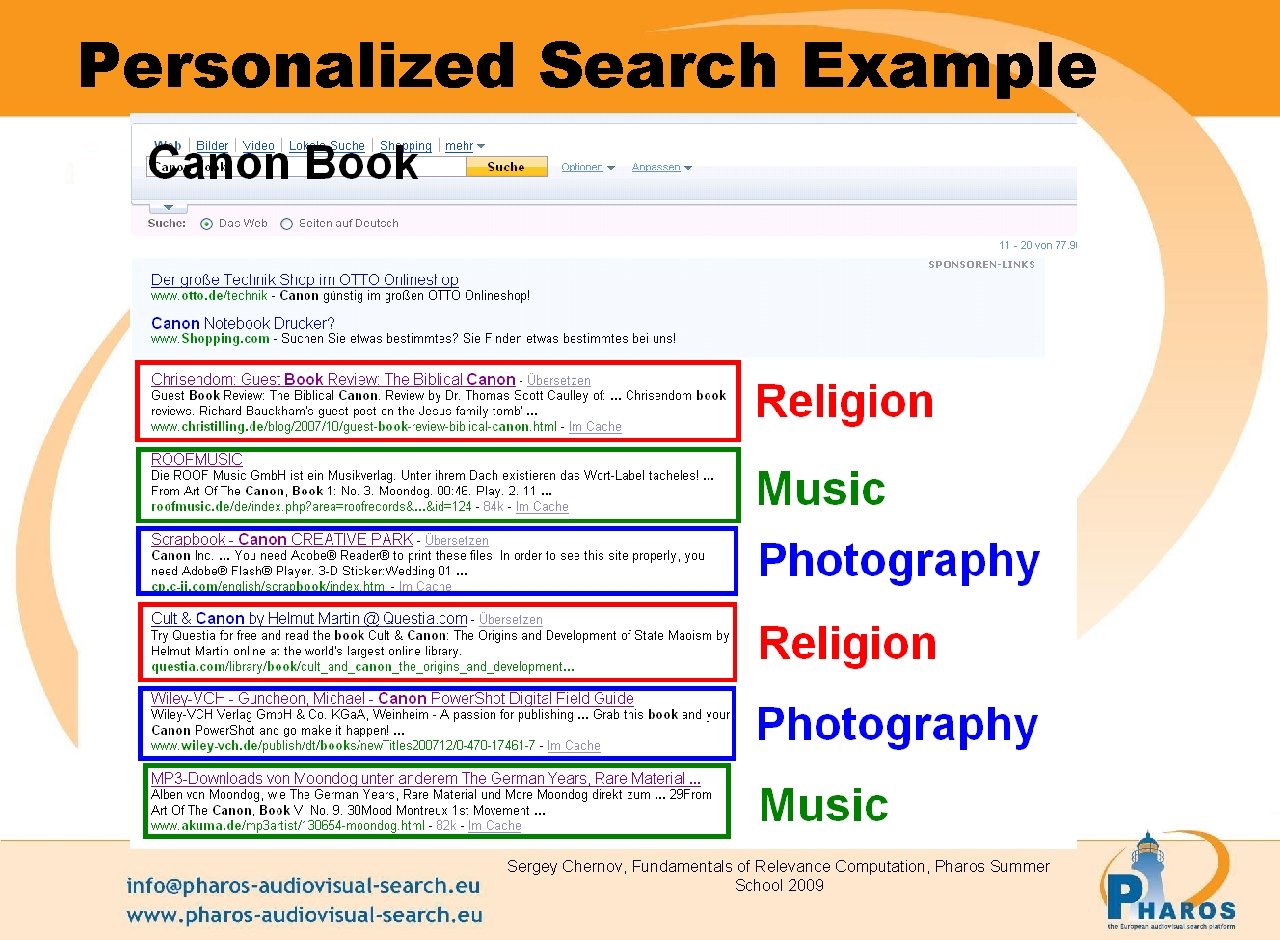

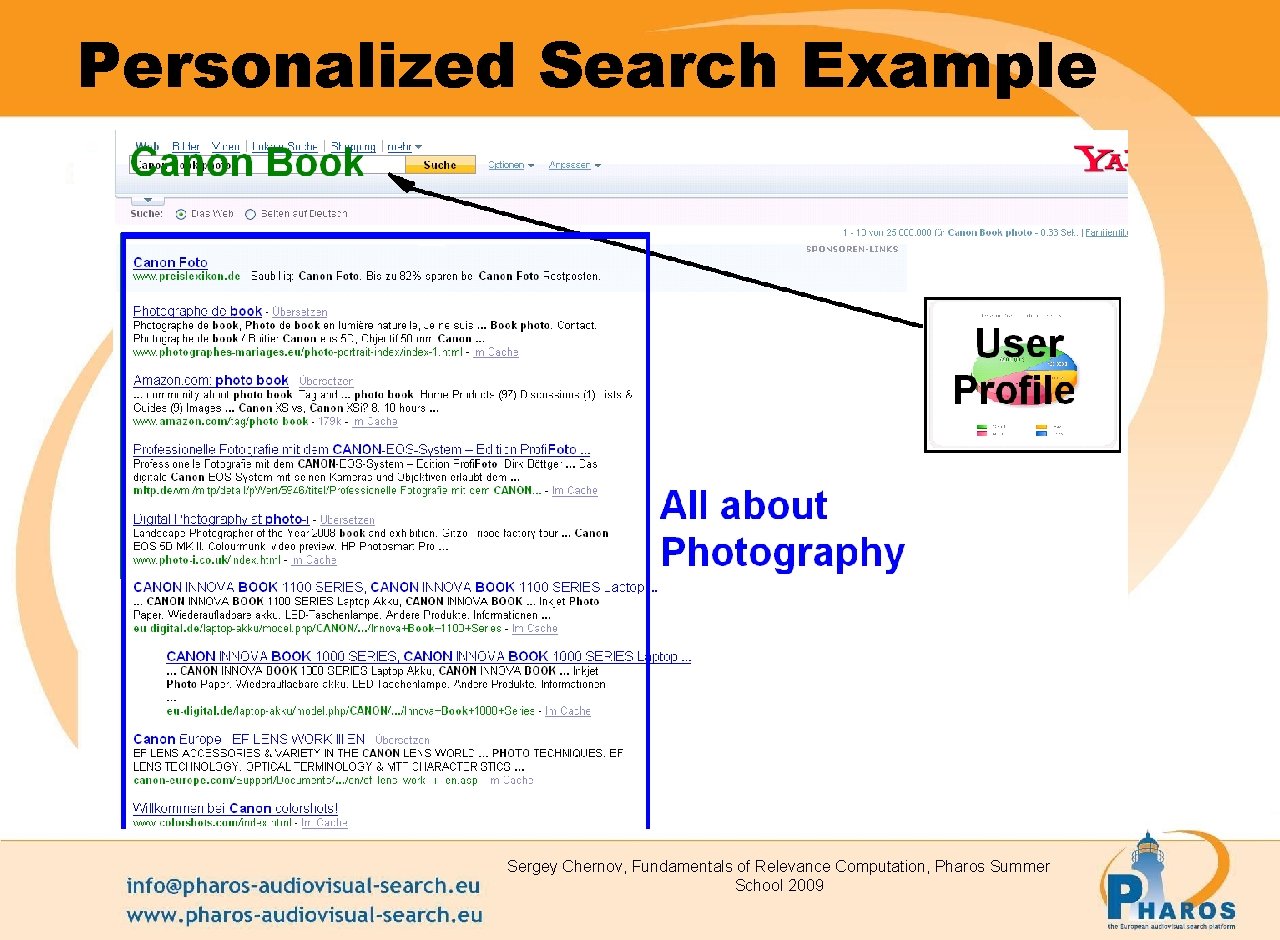

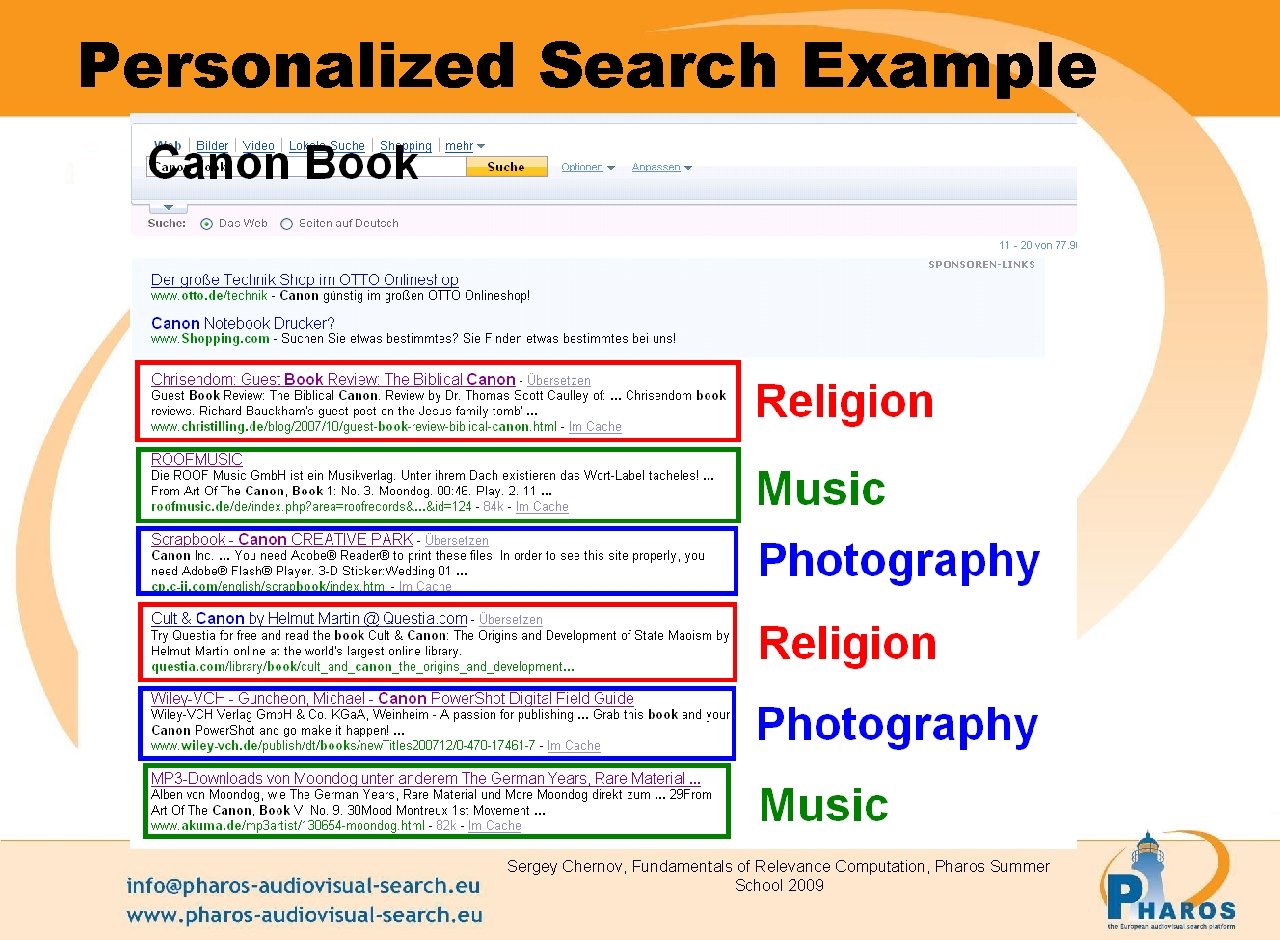

Personalized Search Example

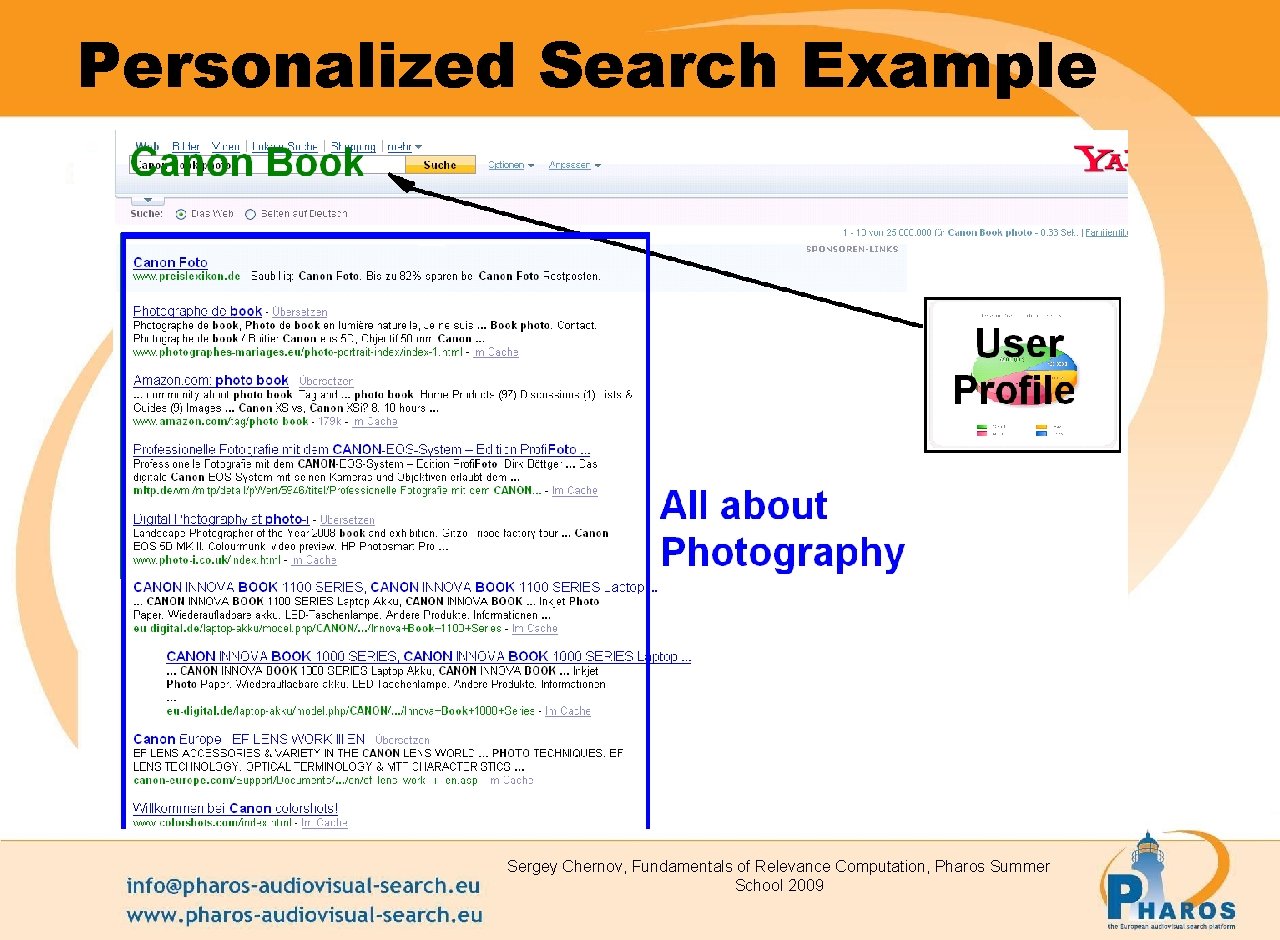

Personalized Search Example

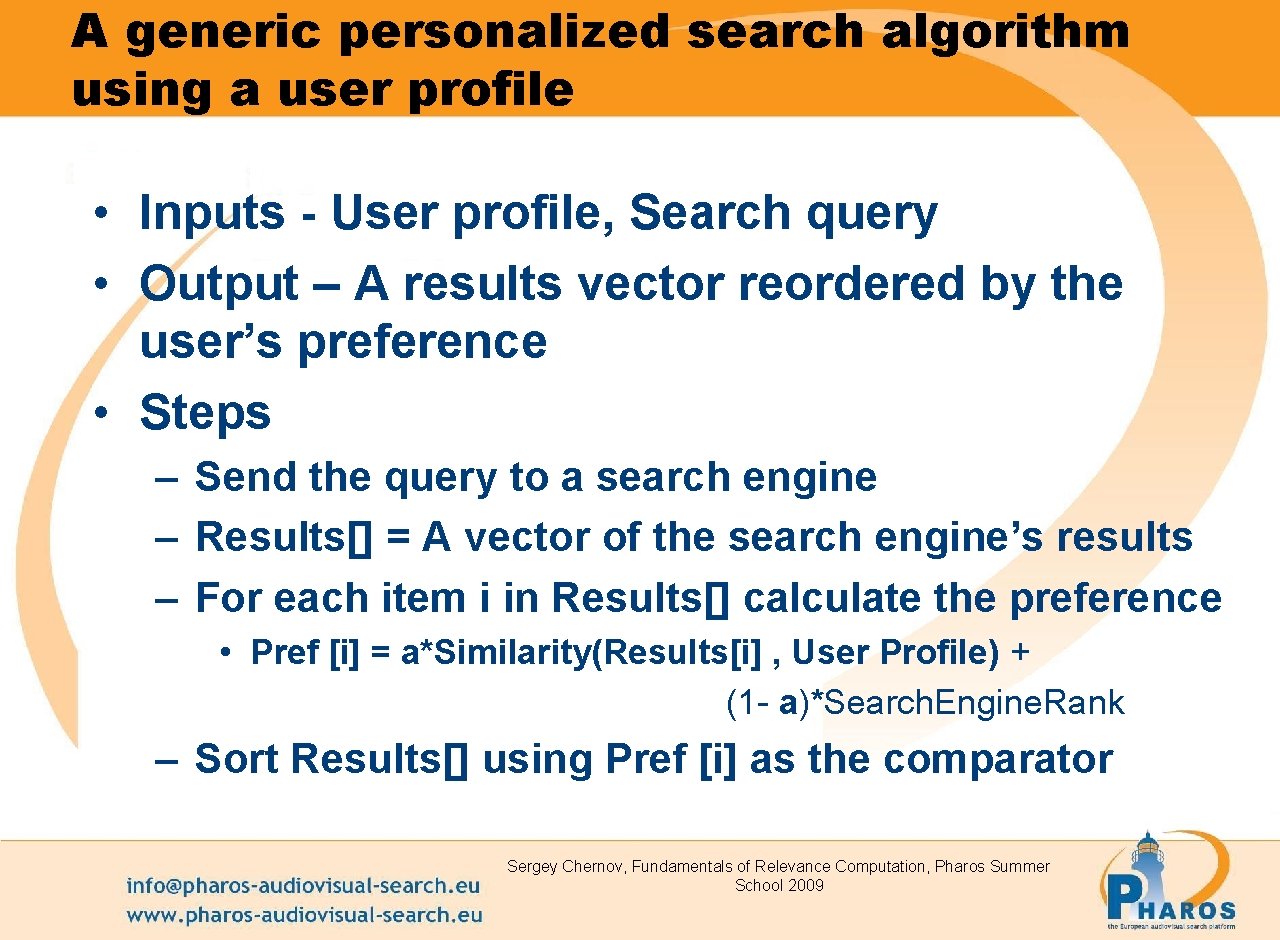

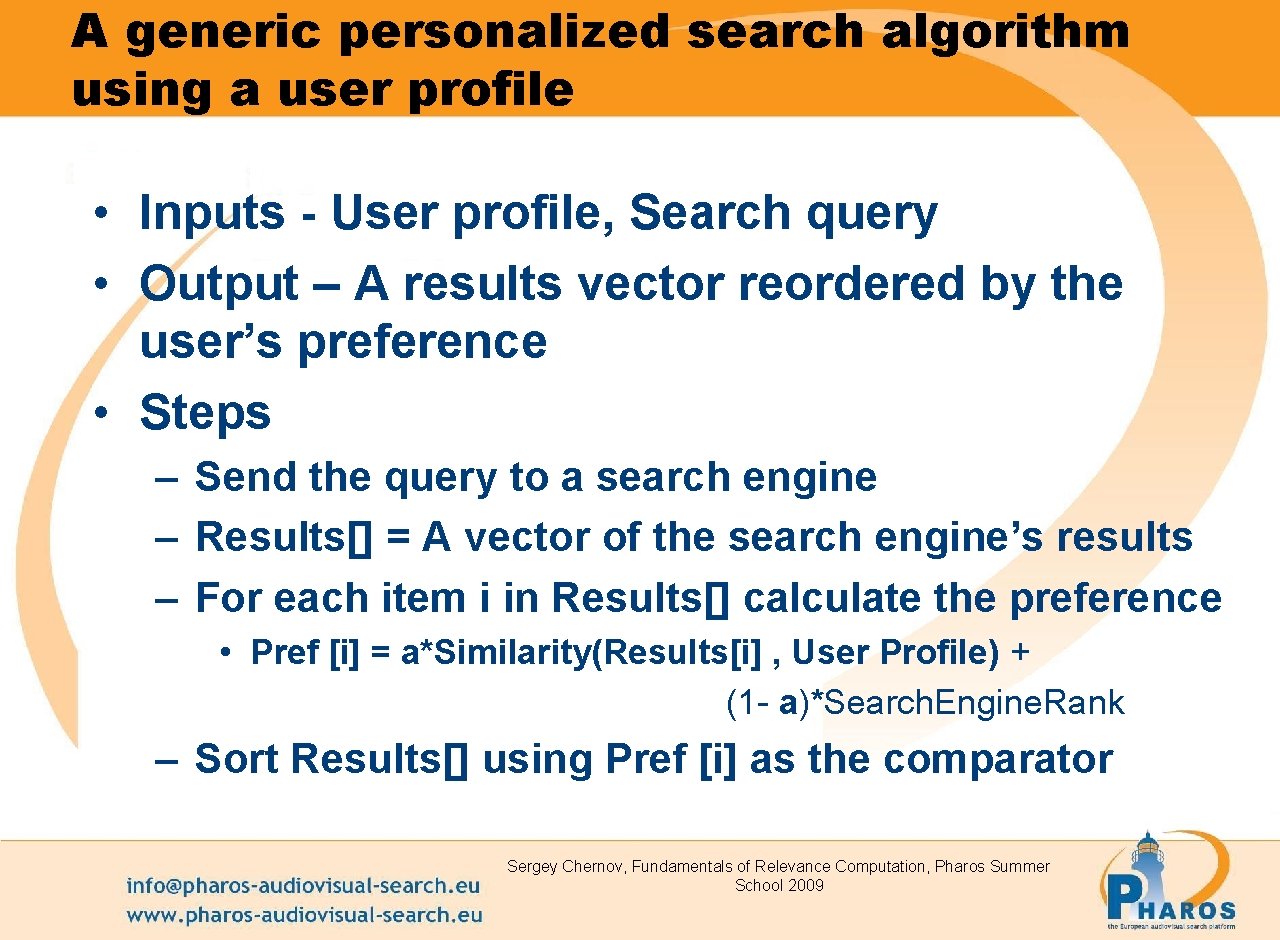

A generic personalized search algorithm using a user profile • Inputs – User profile, search query • Output – The results vector reordered by the user’s preference • Steps – Send the query to a search engine – Results[] = a vector of the search engine’s results – For each item i in Results[], calculate the preference: Pref[i] = a × Similarity(Results[i], UserProfile) + (1 − a) × SearchEngineRank(Results[i]), with 0 ≤ a ≤ 1 – Sort Results[] by Pref[i] in descending order
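A minimal Python sketch of the generic algorithm above. Two assumptions are not on the slide: the engine's rank is turned into a score with 1/rank so that higher means better, and `Similarity` is a hypothetical Jaccard overlap between a result's terms and a term-set profile. The result titles are made up.

```python
def jaccard(a, b):
    """Hypothetical Similarity(): size of intersection over size of union of two term sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def personalize(results, profile, a=0.5):
    """Re-order results by Pref[i] = a*Similarity(Results[i], profile) + (1 - a)*engine score.

    results: list of (title, terms) in the search engine's original order.
    """
    if not 0.0 <= a <= 1.0:
        raise ValueError("a must be between 0 and 1")
    prefs = []
    for rank, (title, terms) in enumerate(results, start=1):
        engine_score = 1.0 / rank  # assumption: rank 1 -> 1.0, rank 2 -> 0.5, ...
        prefs.append((a * jaccard(terms, profile) + (1 - a) * engine_score, title))
    prefs.sort(key=lambda p: p[0], reverse=True)  # stable sort: ties keep engine order
    return [title for _, title in prefs]

results = [
    ("jaguar car dealer", {"jaguar", "car", "dealer"}),
    ("jaguar habitat", {"jaguar", "cat", "habitat"}),
    ("jaguar os", {"jaguar", "os", "apple"}),
]
profile = {"jaguar", "cat", "habitat", "wildlife"}
print(personalize(results, profile, a=0.0))
print(personalize(results, profile, a=0.5))
```

With `a = 0` the engine's order is returned unchanged; with `a = 0.5` the result that matches the wildlife-oriented profile moves to the top.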

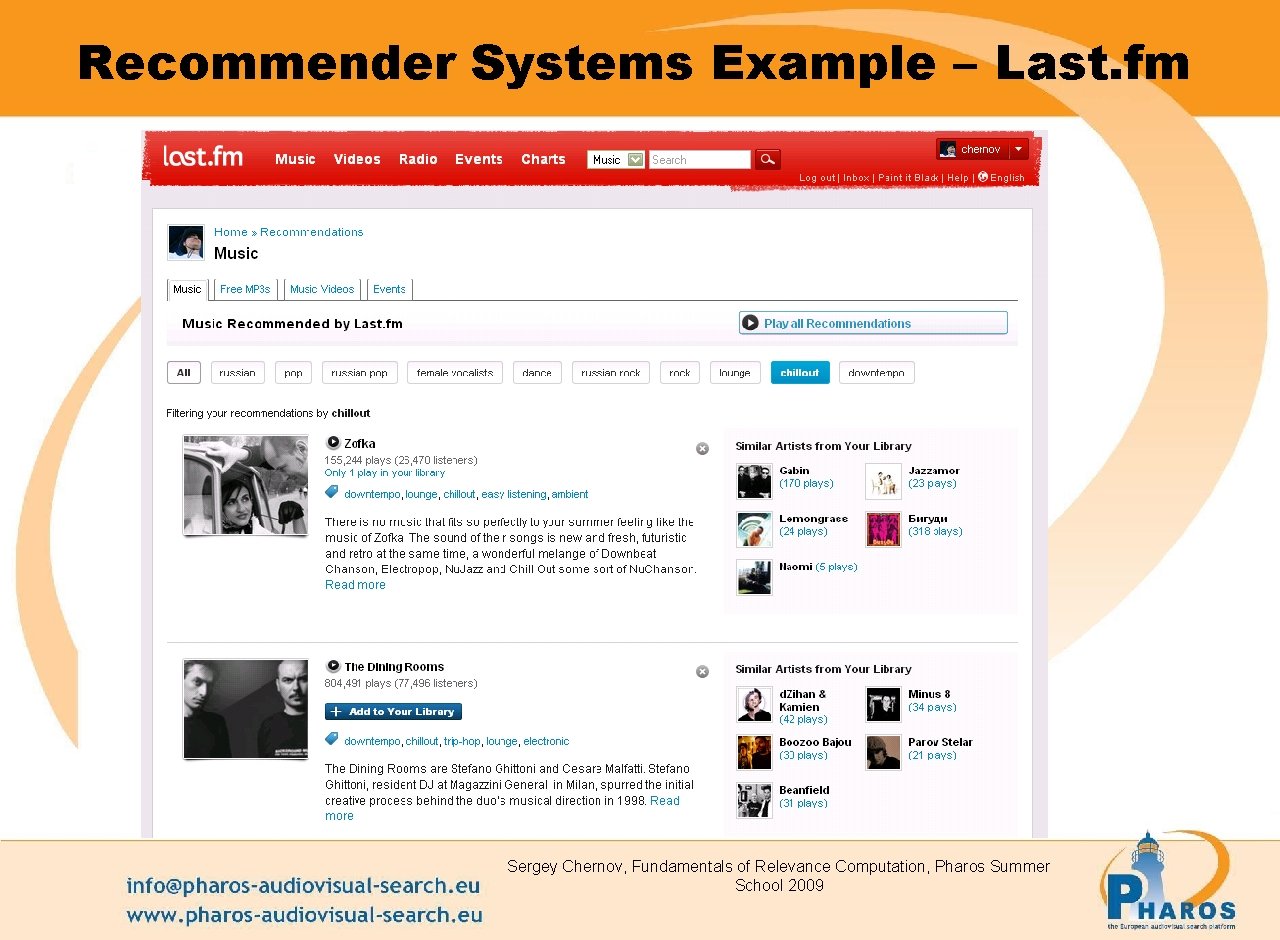

Recommender Systems • Lots of information on the Web: which items should be picked? – Movie recommendation – Music recommendation – Product recommendation – Keyword recommendation

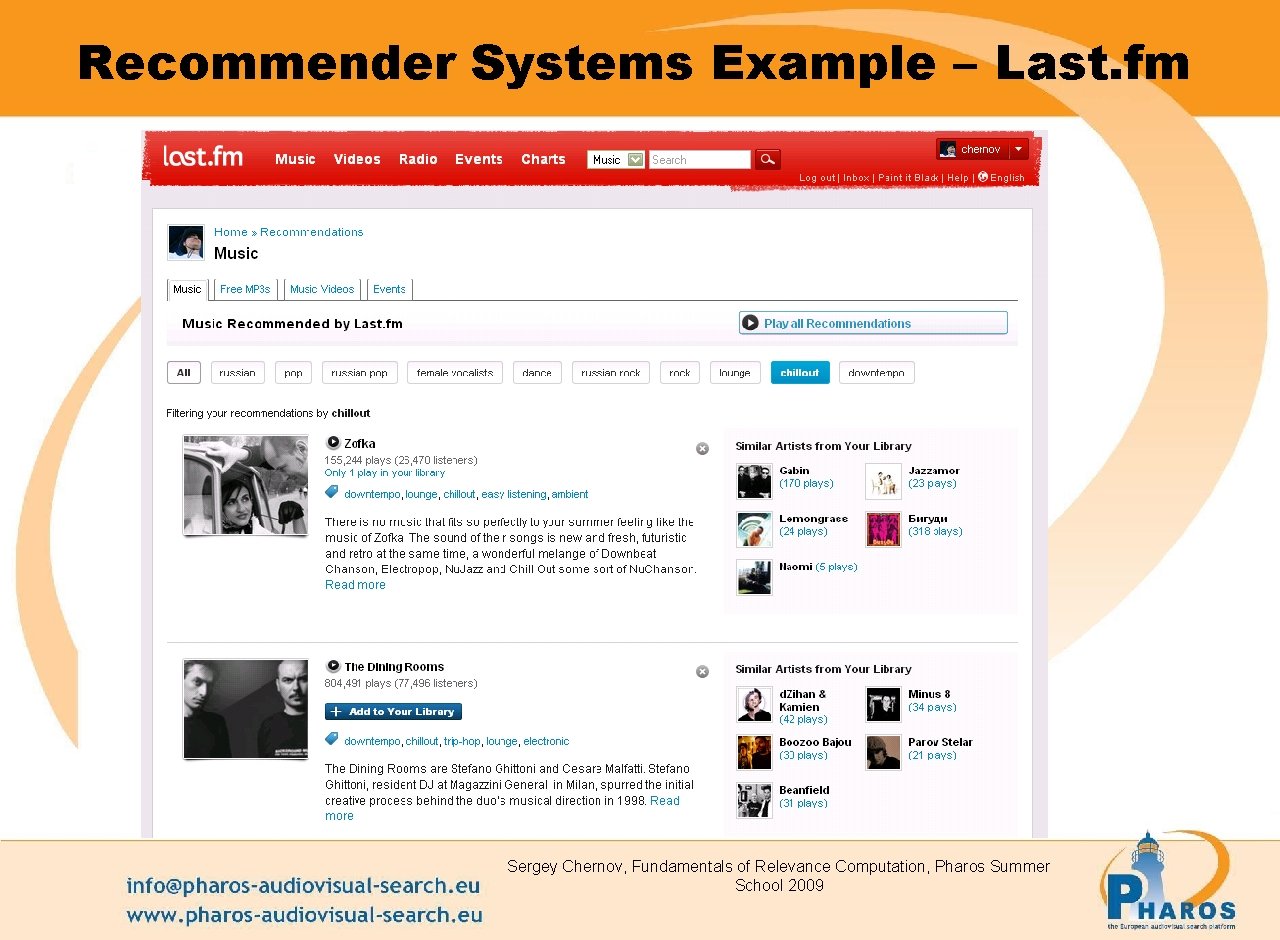

Recommender Systems Example – Last.fm

Classification • Content-based recommendation • Collaborative filtering • Hybrid approach

Content-based recommendation • The utility of an item for a user is determined from the items the user preferred in the past

Basic Approach • Create and represent the user profile from the items the user rated in the past – A popular choice of profile representation is a vector of terms weighted by TF*IDF • Represent the item in the same format – A news item can be represented as a TF*IDF term vector – For movies and books, one needs sufficient metadata to represent the item in vector format • Define a similarity measure between the profile and the item – A popular choice is cosine similarity – Advanced machine learning techniques can also be applied to do the matching • Recommend the most similar items
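The four steps can be sketched as follows, under stated assumptions: items are described by metadata keywords (as suggested for movies), the profile is the sum of the rated items' TF*IDF vectors weighted by each rating minus the user's mean rating (so disliked items push the profile away), and unrated items are ranked by cosine similarity. Item names, keywords and ratings are hypothetical.

```python
import math
from collections import Counter

def tfidf(items):
    """{item: keywords} -> {item: {term: tf*idf}}, with idf computed over the catalogue."""
    n = len(items)
    df = Counter(t for terms in items.values() for t in set(terms))
    return {name: {t: c * math.log(n / df[t]) for t, c in Counter(terms).items()}
            for name, terms in items.items()}

def cosine(u, v):
    """Cosine similarity of two sparse vectors (0.0 if either has zero length)."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    nu = math.sqrt(sum(w * w for w in u.values()))
    nv = math.sqrt(sum(w * w for w in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0

def recommend(items, ratings, k=2):
    """Top-k unrated items by cosine similarity to a rating-weighted profile."""
    vecs = tfidf(items)
    mean = sum(ratings.values()) / len(ratings)
    # Profile: rated items' vectors weighted by (rating - mean rating).
    profile = Counter()
    for name, r in ratings.items():
        for t, w in vecs[name].items():
            profile[t] += (r - mean) * w
    unrated = [name for name in items if name not in ratings]
    unrated.sort(key=lambda name: cosine(profile, vecs[name]), reverse=True)
    return unrated[:k]

items = {
    "Alien": ["sci-fi", "horror", "space"],
    "Aliens": ["sci-fi", "action", "space"],
    "Notting Hill": ["romance", "comedy", "london"],
    "Gravity": ["sci-fi", "space", "drama"],
    "Love Actually": ["romance", "comedy", "christmas"],
}
ratings = {"Alien": 5, "Notting Hill": 1}
print(recommend(items, ratings, k=3))
```

Here the highly rated science-fiction film pulls the two other space films to the top, and the romantic comedy lands last because it resembles the disliked item.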

Problems with content-based recommendation • Knowledge engineering problem – How do you describe multimedia, graphics, movies, songs? • Recommendations show limited diversity • New user problem – A large number of ratings from the user is required to generate quality recommendations

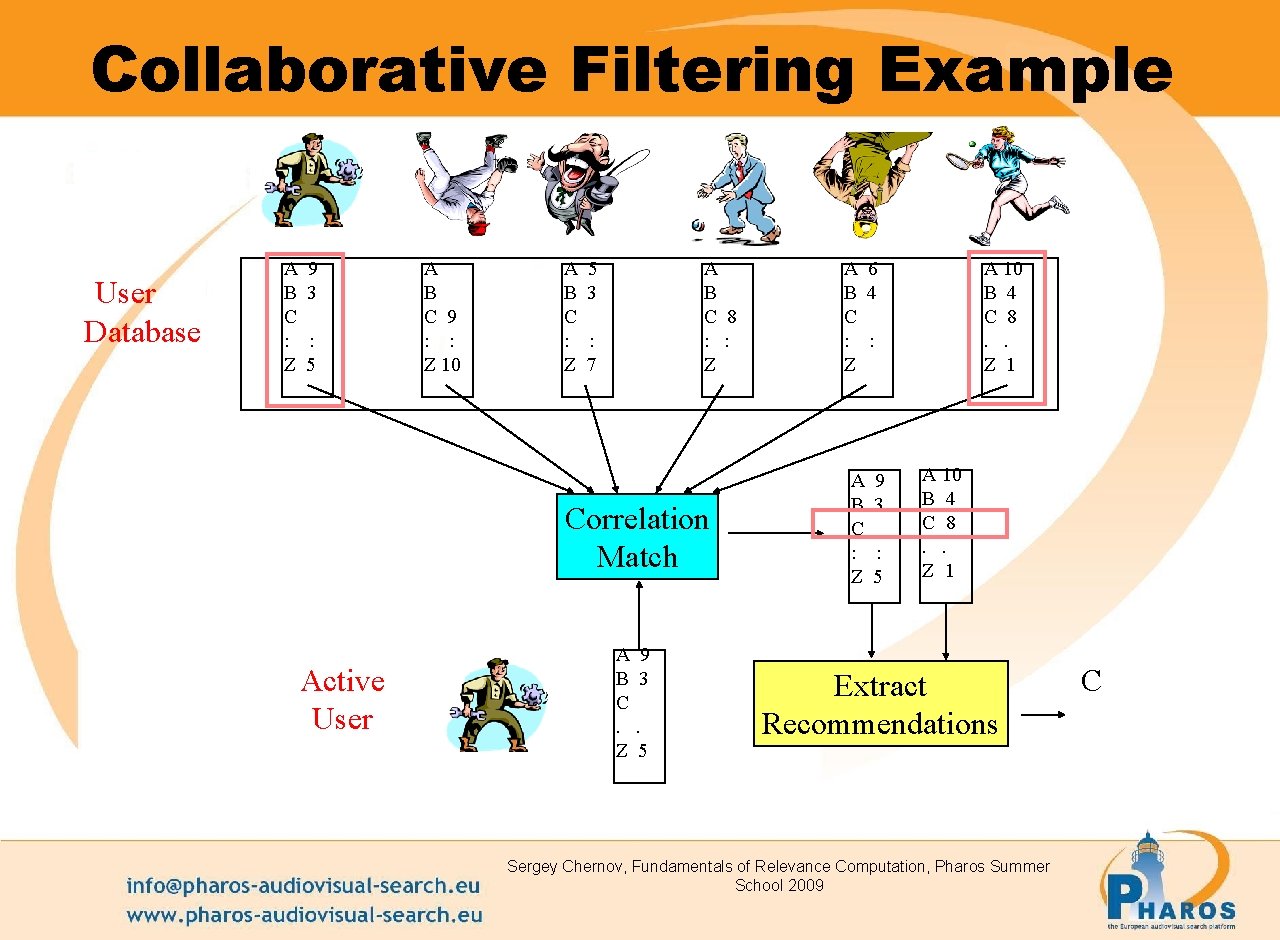

Collaborative filtering • Recommends items that were liked in the past by other users with similar tastes • Quite popular on e-commerce sites like Amazon and eBay • Can recommend various media types: text, video, audio, ads, products

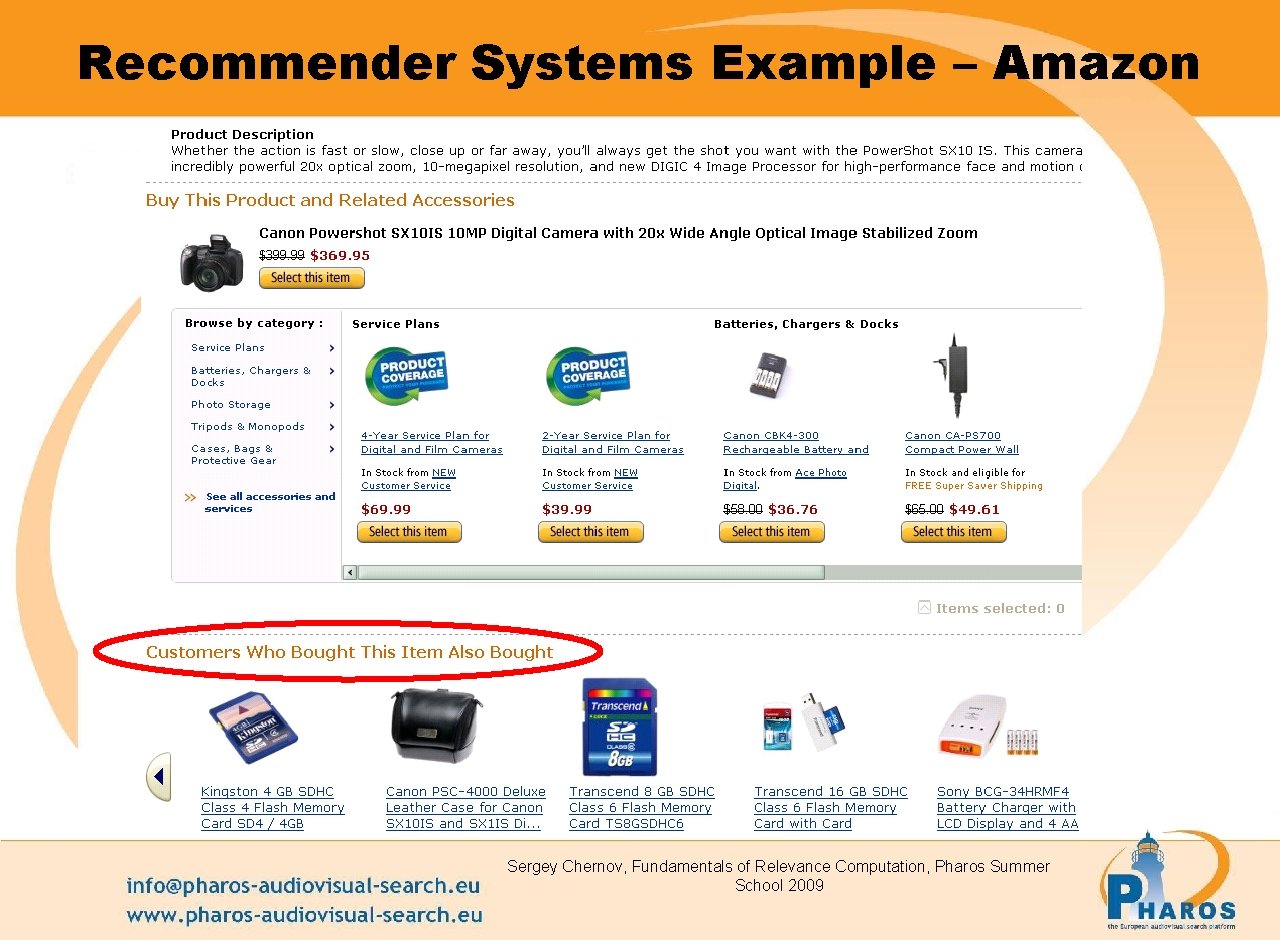

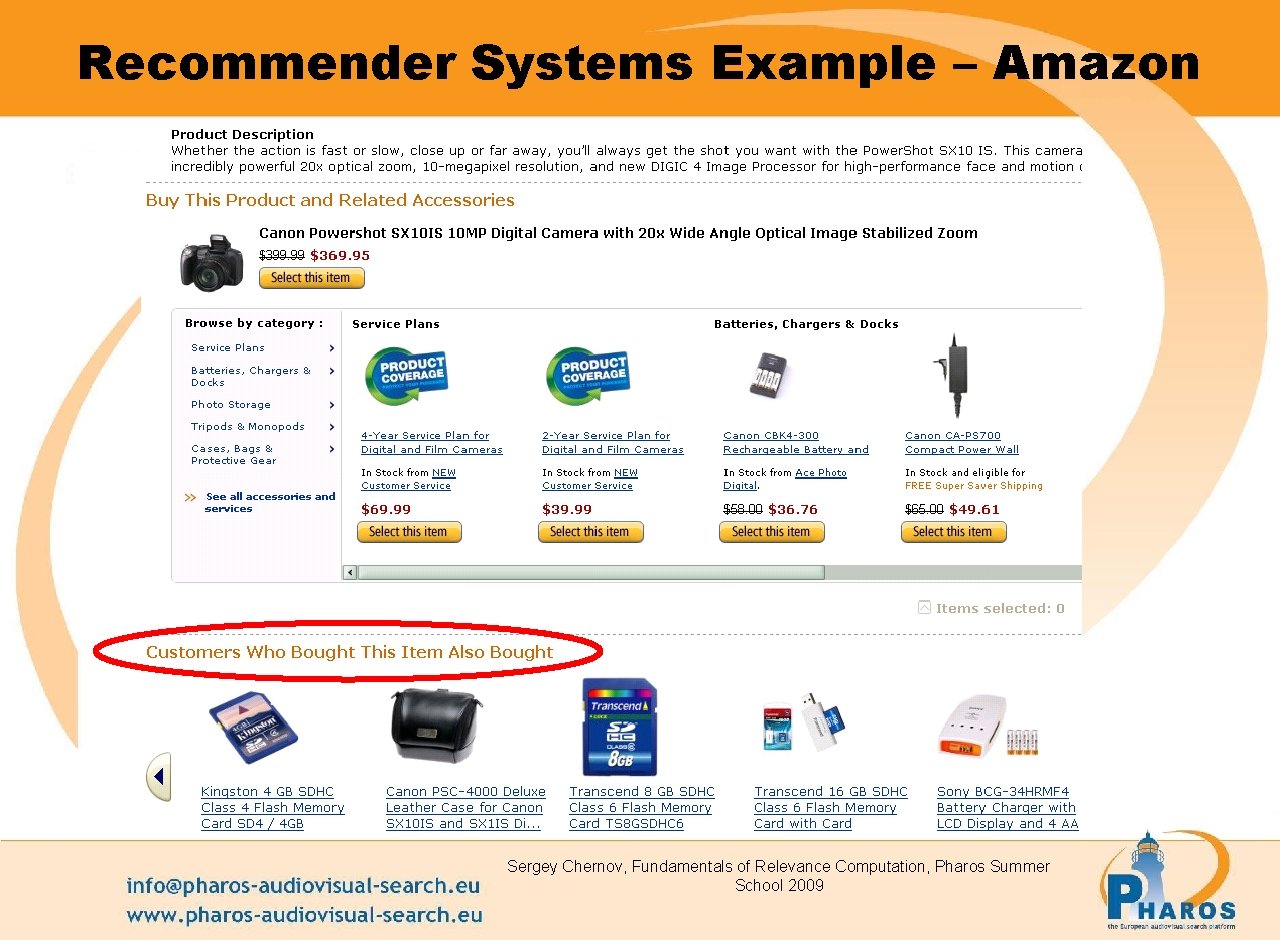

Recommender Systems Example – Amazon

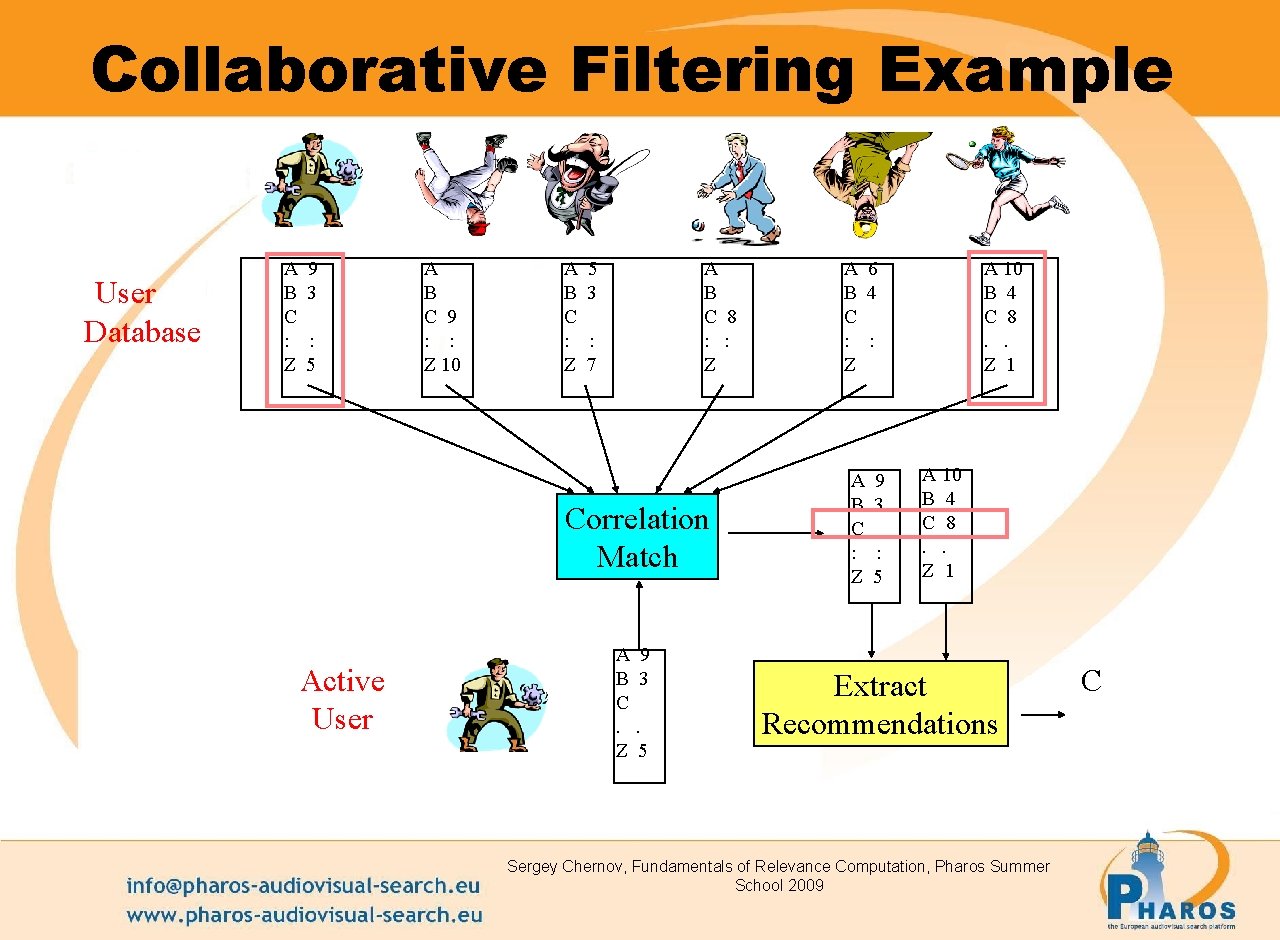

Collaborative Filtering Example • Figure: the active user’s rating vector (A: 9, B: 3, ..., Z: 5) is matched by correlation against the rating vectors in the user database; recommendations (here item C, which the active user has not rated) are extracted from the best-matching users
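The slide does not say which correlation is used; a common choice is Pearson correlation over co-rated items. The sketch below assumes it, lets only positively correlated users vote, and predicts a mean-centred, correlation-weighted rating for each item the active user has not rated. The ratings are invented and only borrow the slide's item letters.

```python
import math

def pearson(a, b):
    """Pearson correlation of two users' ratings over their co-rated items."""
    common = [i for i in a if i in b]
    if len(common) < 2:
        return 0.0  # not enough overlap to estimate a correlation
    ma = sum(a[i] for i in common) / len(common)
    mb = sum(b[i] for i in common) / len(common)
    num = sum((a[i] - ma) * (b[i] - mb) for i in common)
    den = math.sqrt(sum((a[i] - ma) ** 2 for i in common)
                    * sum((b[i] - mb) ** 2 for i in common))
    return num / den if den else 0.0

def predict(active, others, item):
    """Active user's mean plus the correlation-weighted deviations of neighbours."""
    mean_a = sum(active.values()) / len(active)
    num = den = 0.0
    for other in others:
        w = pearson(active, other)
        if item not in other or w <= 0:
            continue  # assumption: only positively correlated users who rated the item vote
        mean_o = sum(other.values()) / len(other)
        num += w * (other[item] - mean_o)
        den += w
    return mean_a + num / den if den else mean_a

def recommend(active, others, n=1):
    """Top-n items the active user has not rated, by predicted rating."""
    candidates = {i for other in others for i in other if i not in active}
    return sorted(candidates, key=lambda i: predict(active, others, i), reverse=True)[:n]

active = {"A": 9, "B": 3, "Z": 5}
others = [
    {"A": 10, "B": 4, "C": 8, "Z": 6},         # same taste as the active user
    {"A": 2, "B": 9, "C": 1, "D": 9, "Z": 5},  # opposite taste
]
print(recommend(active, others))
```

The first user ranks A, B and Z exactly like the active user (correlation 1), so their above-average rating of C makes C the recommendation; the second user is negatively correlated and is ignored.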

Advantages • No knowledge engineering problem – Both users and items can be represented using just IDs • Recommendations often show a good amount of diversity

Problems of Collaborative Filtering • New user problem • New item problem • Sparsity problem – A user rates only a few items • Unusual user – A user whose tastes are unusual compared to the rest of the population

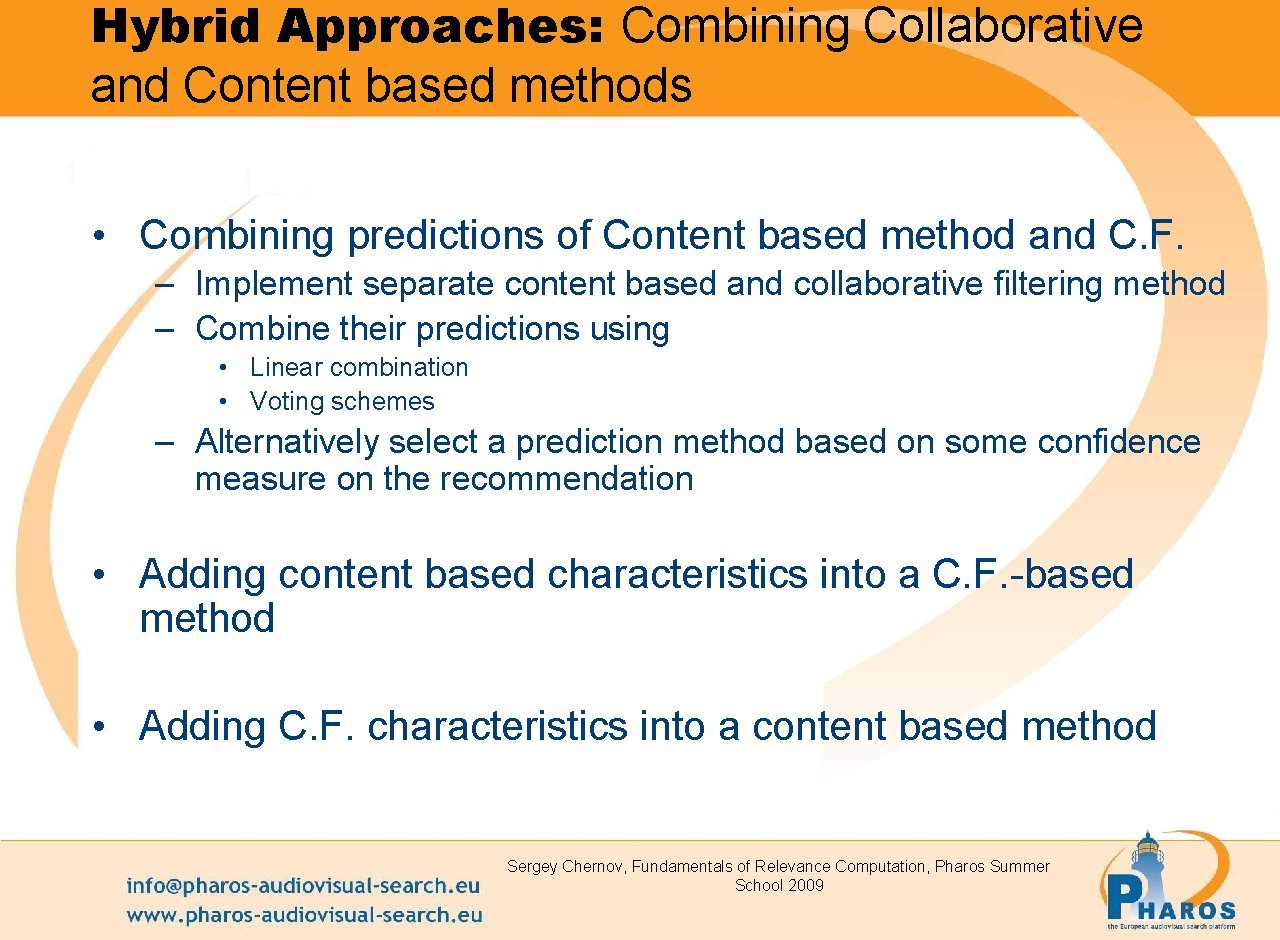

Hybrid Approaches: Combining Collaborative and Content-based Methods • Combining predictions of content-based methods and CF – Implement separate content-based and collaborative filtering methods – Combine their predictions using • Linear combination • Voting schemes – Alternatively, select a prediction method based on some confidence measure for the recommendation • Adding content-based characteristics to a CF-based method • Adding CF characteristics to a content-based method
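Minimal sketches of the three ways of combining predictions listed above, assuming both recommenders predict ratings on the same scale. The default weight, the 3.5 "like" threshold and the use of the number of CF neighbours as the confidence measure are hypothetical choices.

```python
def linear_combination(content_pred, cf_pred, weight=0.5):
    """Weighted average of the two predictions; weight applies to the content-based one."""
    return weight * content_pred + (1 - weight) * cf_pred

def vote(predictions, like_threshold=3.5):
    """Majority vote: recommend if more than half of the methods predict a 'like'."""
    likes = sum(p >= like_threshold for p in predictions)
    return 2 * likes > len(predictions)

def select_by_confidence(content_pred, cf_pred, cf_neighbours, min_neighbours=3):
    """Use CF only when enough similar users rated the item; otherwise use content."""
    return cf_pred if cf_neighbours >= min_neighbours else content_pred

print(linear_combination(4.0, 2.0, weight=0.25))
print(vote([4.0, 3.0, 5.0]))
print(select_by_confidence(4.0, 2.0, cf_neighbours=1))
```

For a new item with only one CF neighbour, the confidence switch falls back to the content-based prediction, which is one way a hybrid can soften CF's new-item problem.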

Personalization in Pharos

Search in Pharos • Search scenario – Q: “alternative” (Raluca Paiu)

Tags in Pharos • Why research collaborative tagging? (Kerstin Bischoff)

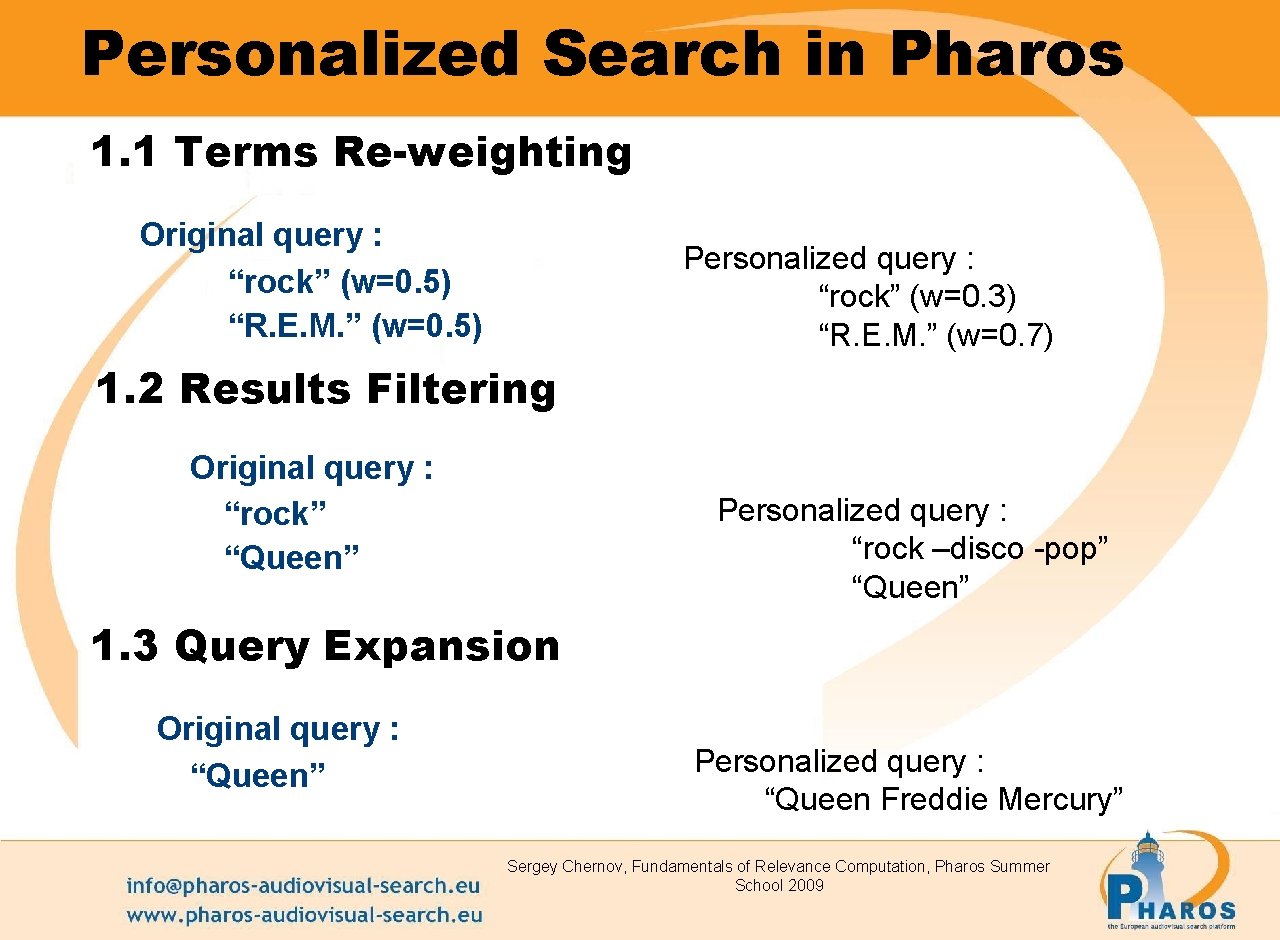

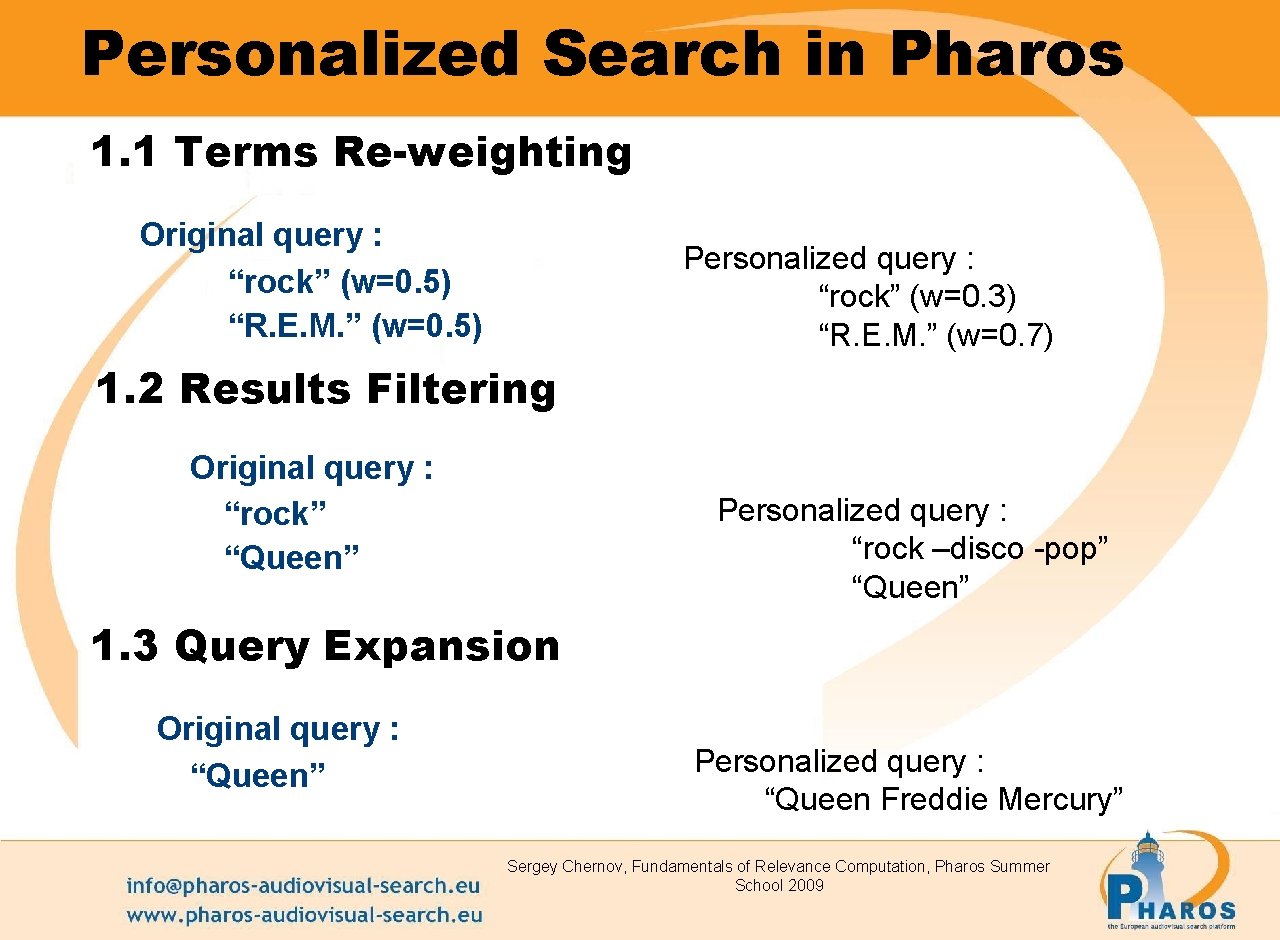

Personalized Search in Pharos • 1.1 Term Re-weighting – Original query: “rock” (w=0.5) “R.E.M.” (w=0.5) – Personalized query: “rock” (w=0.3) “R.E.M.” (w=0.7) • 1.2 Results Filtering – Original query: “rock” “Queen” – Personalized query: “rock -disco -pop” “Queen” • 1.3 Query Expansion – Original query: “Queen” – Personalized query: “Queen Freddie Mercury”
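The three personalization steps can be mimicked with simple dictionary and string operations. In this hypothetical sketch, re-weighting mixes the original weights with the user's interest in each term (normalized over the query terms) using a mixing factor `lam`; with the made-up interests below it happens to reproduce the slide's 0.3/0.7 weights, but the slide does not say how Pharos computes them. The disliked-genre list and the related-terms table are likewise invented.

```python
def reweight(query, interest, lam=0.5):
    """Mix original term weights with the user's normalized interest in each term.

    query: {term: weight}; interest: {term: interest score} (hypothetical profile data).
    """
    total = sum(interest.get(t, 0.0) for t in query)
    if total == 0:
        return dict(query)  # no profile evidence: keep the original weights
    return {t: (1 - lam) * w + lam * interest.get(t, 0.0) / total
            for t, w in query.items()}

def filter_results(query, disliked):
    """Exclude disliked terms with the common '-term' search operator."""
    return " ".join([query] + ["-" + t for t in disliked])

def expand(query, related):
    """Append terms the profile associates with the query (none if unknown)."""
    return " ".join([query] + related.get(query, []))

weights = reweight({"rock": 0.5, "R.E.M.": 0.5}, {"rock": 0.1, "R.E.M.": 0.9})
print({t: round(w, 2) for t, w in weights.items()})
print(filter_results("rock", ["disco", "pop"]))
print(expand("Queen", {"Queen": ["Freddie", "Mercury"]}))
```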

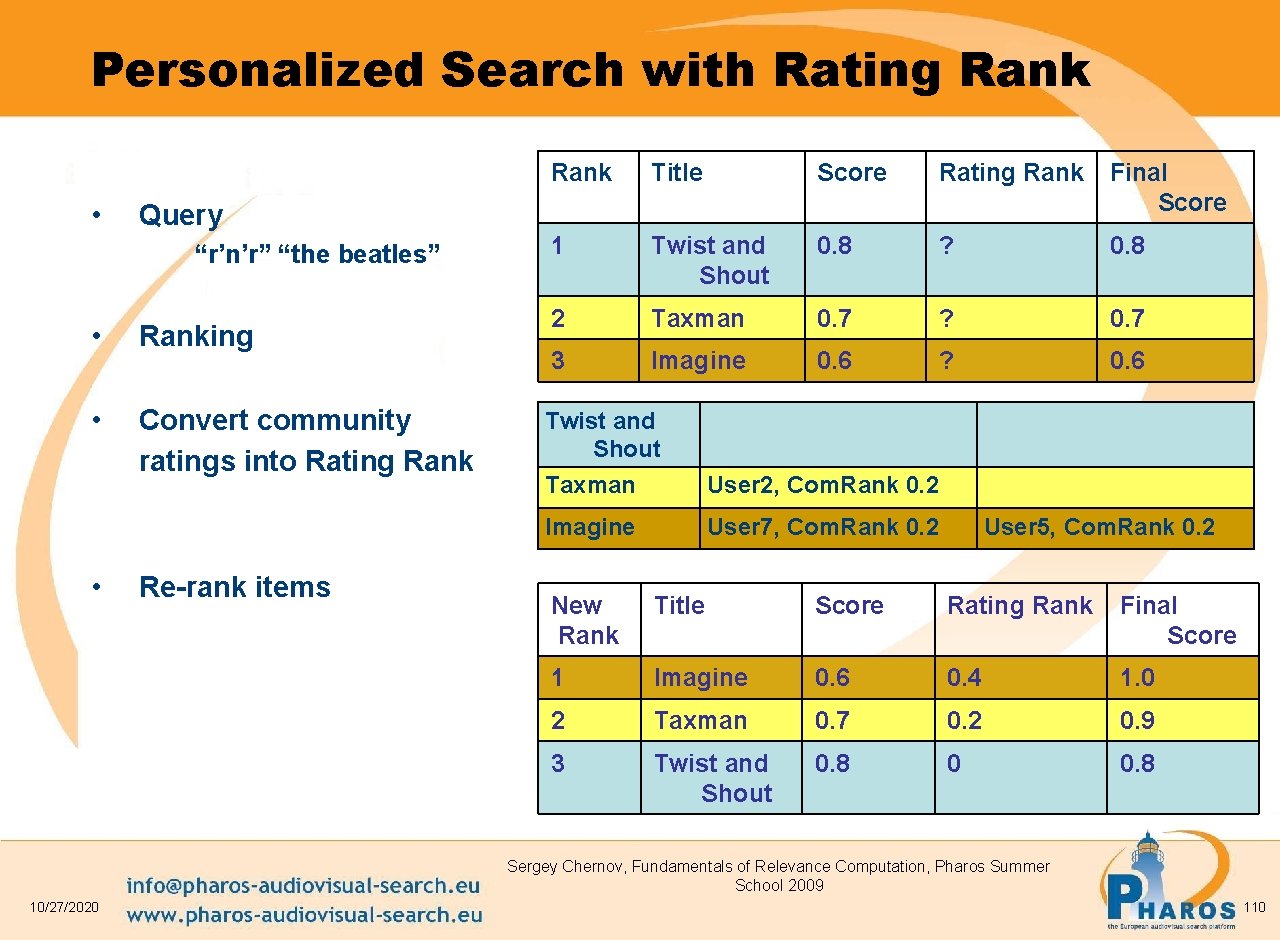

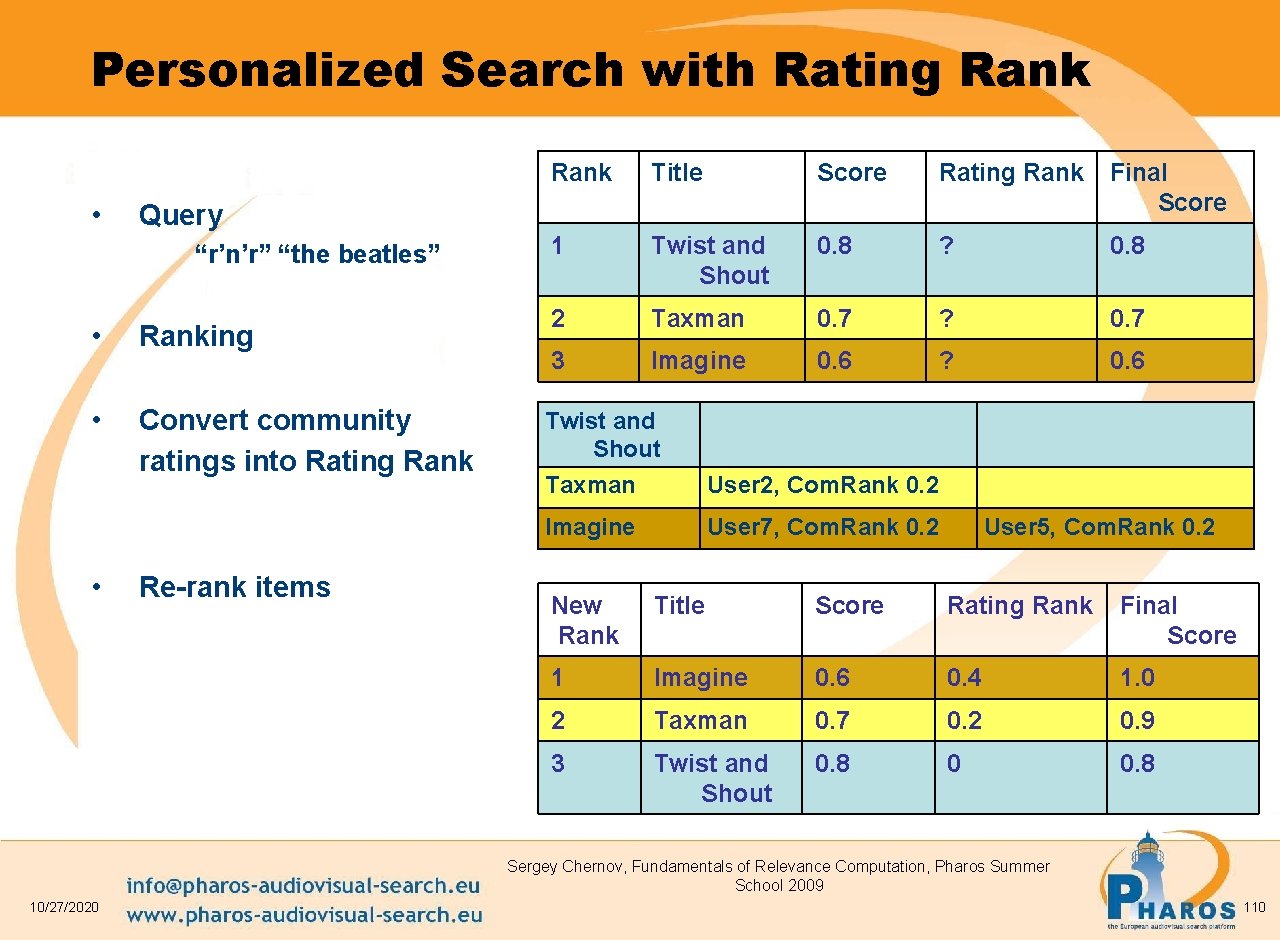

Personalized Search with Rating Rank • Query: “r’n’r” “the beatles” • Initial ranking:

| Rank | Title | Score | Rating Rank | Final Score |
|---|---|---|---|---|
| 1 | Twist and Shout | 0.8 | ? | 0.8 |
| 2 | Taxman | 0.7 | ? | 0.7 |
| 3 | Imagine | 0.6 | ? | 0.6 |

• Convert community ratings into a Rating Rank: Taxman is rated by User 2 and Imagine by Users 7 and 5, each with community rank 0.2, so Taxman gets 0.2, Imagine 0.4 and Twist and Shout 0 • Re-rank items by Final Score = Score + Rating Rank:

| New Rank | Title | Score | Rating Rank | Final Score |
|---|---|---|---|---|
| 1 | Imagine | 0.6 | 0.4 | 1.0 |
| 2 | Taxman | 0.7 | 0.2 | 0.9 |
| 3 | Twist and Shout | 0.8 | 0 | 0.8 |
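The re-ranking in the table adds the Rating Rank to the original score. A minimal sketch, assuming (as the slide appears to show) that an item's Rating Rank is the sum of the community ranks of the users who rated it; the user-to-item assignment is read from the slide's figure, and `community_ratings`, `rating_rank` and `rerank` are hypothetical names.

```python
# Hypothetical reading of the slide: who rated what, with each user's community rank.
community_ratings = {
    "Taxman": [("User 2", 0.2)],
    "Imagine": [("User 7", 0.2), ("User 5", 0.2)],
}

def rating_rank(title):
    """Sum of the community ranks of the users who rated the item (0 if none)."""
    return sum(rank for _, rank in community_ratings.get(title, []))

def rerank(results):
    """results: [(title, score)] in the engine's order; sort by score + rating rank."""
    rows = [(title, score, rating_rank(title)) for title, score in results]
    rows.sort(key=lambda row: row[1] + row[2], reverse=True)
    return rows

results = [("Twist and Shout", 0.8), ("Taxman", 0.7), ("Imagine", 0.6)]
for new_rank, (title, score, rr) in enumerate(rerank(results), start=1):
    print(new_rank, title, score, rr, round(score + rr, 2))
```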

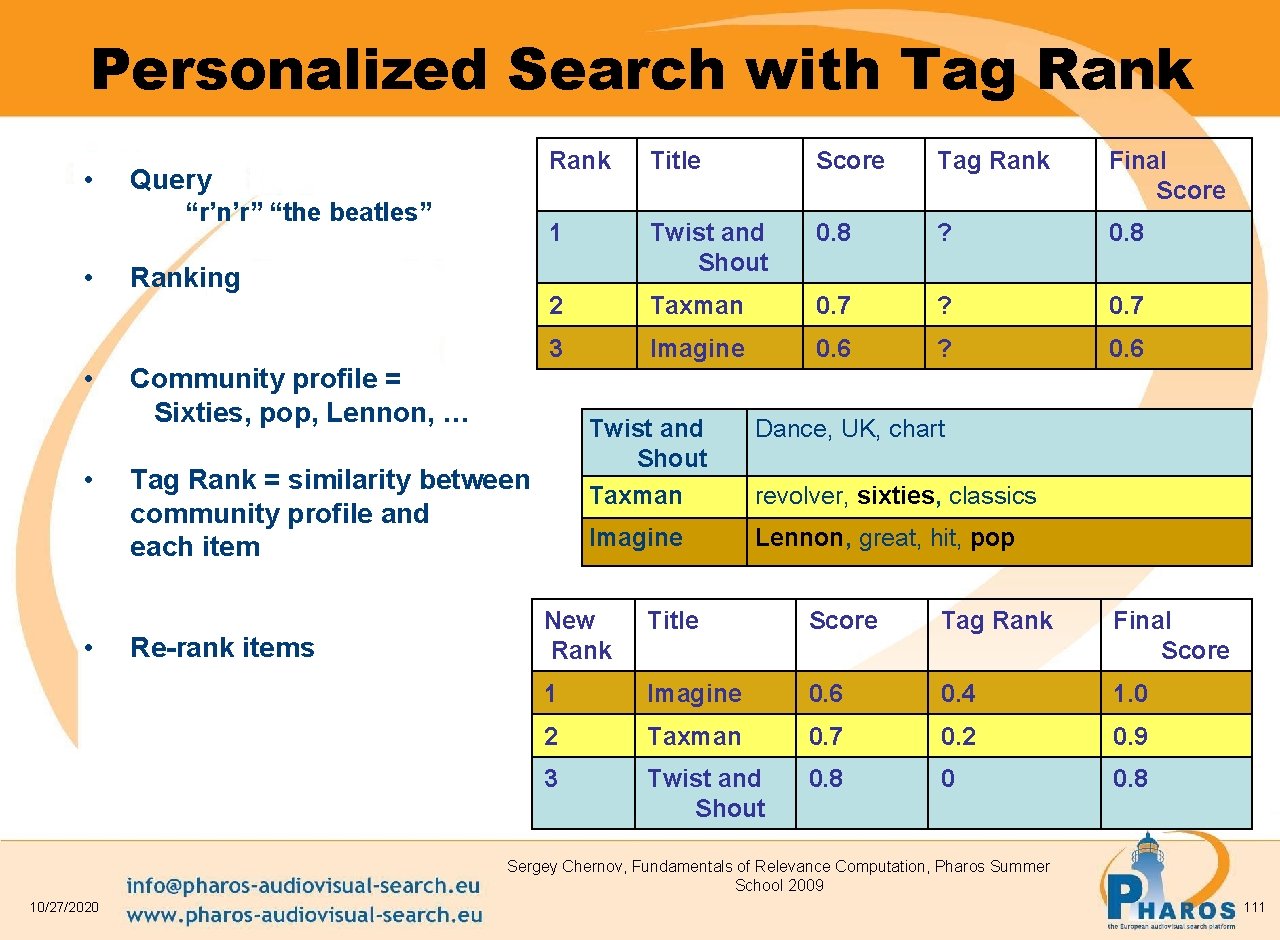

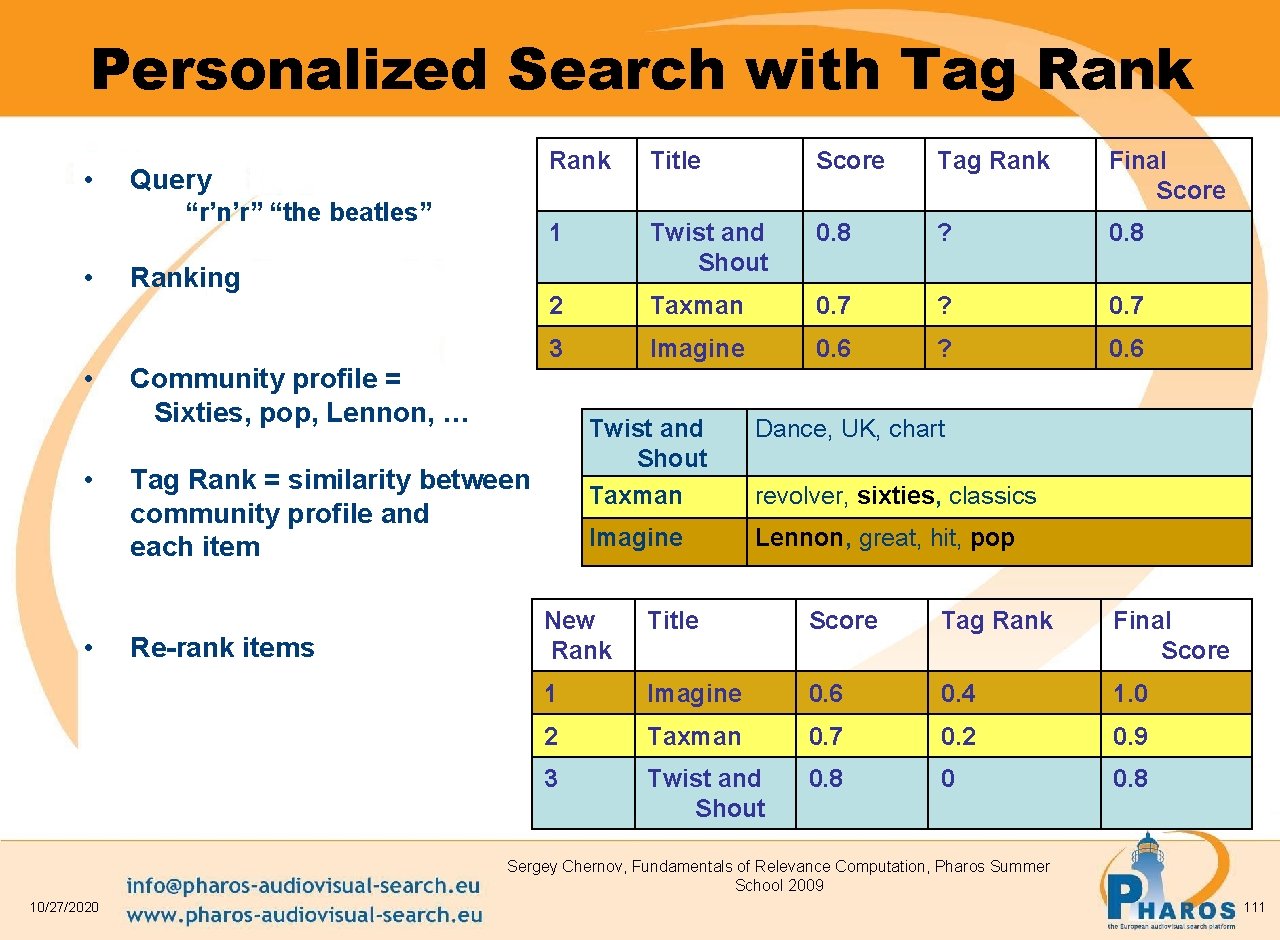

Personalized Search with Tag Rank • Query: “r’n’r” “the beatles” • Initial ranking:

| Rank | Title | Score | Tag Rank | Final Score |
|---|---|---|---|---|
| 1 | Twist and Shout | 0.8 | ? | 0.8 |
| 2 | Taxman | 0.7 | ? | 0.7 |
| 3 | Imagine | 0.6 | ? | 0.6 |

• Community profile = Sixties, pop, Lennon, … • Tag Rank = similarity between the community profile and each item’s tags: Twist and Shout (Dance, UK, chart), Taxman (revolver, sixties, classics), Imagine (Lennon, great, hit, pop) • Re-rank items:

| New Rank | Title | Score | Tag Rank | Final Score |
|---|---|---|---|---|
| 1 | Imagine | 0.6 | 0.4 | 1.0 |
| 2 | Taxman | 0.7 | 0.2 | 0.9 |
| 3 | Twist and Shout | 0.8 | 0 | 0.8 |
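The slide defines Tag Rank only as a similarity between the community profile and an item's tags. Assuming Jaccard similarity over lowercased tags and using only the three profile tags that are listed (the slide's profile continues with "…"), the sketch below happens to reproduce the Tag Rank values 0.4, 0.2 and 0; Pharos may well use a different measure. The tag-to-item assignment follows the slide's figure as read here.

```python
def jaccard(a, b):
    """Size of the intersection over size of the union of two tag sets (0.0 if both empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

# Only the profile tags listed on the slide; the real profile continues with "...".
community_profile = {"sixties", "pop", "lennon"}
item_tags = {
    "Twist and Shout": {"dance", "uk", "chart"},
    "Taxman": {"revolver", "sixties", "classics"},
    "Imagine": {"lennon", "great", "hit", "pop"},
}

def tag_rank(title):
    """Hypothetical Tag Rank: Jaccard similarity of the item's tags to the community profile."""
    return jaccard(community_profile, item_tags[title])

results = [("Twist and Shout", 0.8), ("Taxman", 0.7), ("Imagine", 0.6)]
reranked = sorted(results, key=lambda r: r[1] + tag_rank(r[0]), reverse=True)
for title, score in reranked:
    print(title, score, tag_rank(title), round(score + tag_rank(title), 2))
```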

Concluding Remarks • Personalization: an emerging area of technology • Personalization aims at faster access to information to improve user productivity • Challenges – Understanding user behaviour, intentions, likes, … – Relating human-edited content to the profile

Chernov

Chernov Sergey alyaev

Sergey alyaev Sergey kononov

Sergey kononov Sergey barsuk

Sergey barsuk Sergey bogomolov

Sergey bogomolov Sergey fomel

Sergey fomel Sergey bravyi

Sergey bravyi Sergey afonin

Sergey afonin Fomels

Fomels Sergey makarychev cern

Sergey makarychev cern Interlingual translation

Interlingual translation Lhcb

Lhcb Importance of aromatic plants

Importance of aromatic plants Connective words

Connective words Clinical relevance

Clinical relevance Relevance information retrieval

Relevance information retrieval Rigor relevance framework

Rigor relevance framework Types of relevance

Types of relevance Importance of entrepreneurship

Importance of entrepreneurship Relevance lost the rise and fall of management accounting

Relevance lost the rise and fall of management accounting Relevance of note making

Relevance of note making 401 relevance

401 relevance What is ethical issue intensity

What is ethical issue intensity Relevance information retrieval

Relevance information retrieval Relevance theory in pragmatics

Relevance theory in pragmatics Rigor relevance and relationships in action

Rigor relevance and relationships in action Example of attacking the motive fallacy

Example of attacking the motive fallacy Quadrant d learners

Quadrant d learners Relevance in education

Relevance in education Pseudo relevance feedback

Pseudo relevance feedback Flouting of maxims

Flouting of maxims Ethical decision making and ethical leadership

Ethical decision making and ethical leadership Rigor relevance and relationships in action

Rigor relevance and relationships in action Relevance information retrieval

Relevance information retrieval Chris cuozzo

Chris cuozzo Importance of directive principles of state policy

Importance of directive principles of state policy Improving search relevance

Improving search relevance Fallacies of relevance examples

Fallacies of relevance examples Relevance feedback example

Relevance feedback example What is concept description in data mining

What is concept description in data mining Fallacies of relevance

Fallacies of relevance Rigor relevance and relationships in action

Rigor relevance and relationships in action Esg relevance score

Esg relevance score Cs 276

Cs 276 Counsell 5 rs

Counsell 5 rs Example of fallacies of relevance

Example of fallacies of relevance Rigor relevance relationships

Rigor relevance relationships Rigor and relevance checklist

Rigor and relevance checklist Relevance

Relevance Relevance information retrieval

Relevance information retrieval Luis von ahn human computation

Luis von ahn human computation Media computation

Media computation Nolco and mcit illustration

Nolco and mcit illustration Positional system

Positional system Income tax computation format

Income tax computation format Bir 2316 cd

Bir 2316 cd Computation history

Computation history Nfa theory of computation

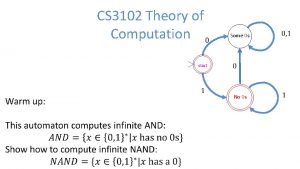

Nfa theory of computation Time hierarchy theorem proof

Time hierarchy theorem proof Generalized transition graph

Generalized transition graph Data cube computation

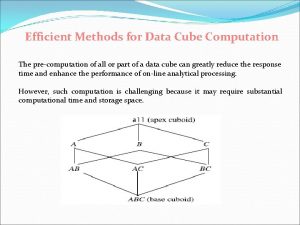

Data cube computation Computation examples

Computation examples Binary search in secure computation

Binary search in secure computation Fertilizer computation examples

Fertilizer computation examples Distributed computing paradigm

Distributed computing paradigm Computation history

Computation history Pmpl cis

Pmpl cis Theory of computation quiz

Theory of computation quiz 2307 rental sample

2307 rental sample Privacy-enhancing computation

Privacy-enhancing computation Dqage

Dqage Efficient methods for data cube computation

Efficient methods for data cube computation Uva lookup computing id

Uva lookup computing id Theory of computation

Theory of computation Income tax computation format

Income tax computation format Mcit tax

Mcit tax Expanded withholding tax computation

Expanded withholding tax computation Computation examples

Computation examples Computation structures

Computation structures Common lisp a gentle introduction to symbolic computation

Common lisp a gentle introduction to symbolic computation Types of languages in theory of computation

Types of languages in theory of computation 7 tuples of pda

7 tuples of pda How to calculate drop rate

How to calculate drop rate Computation symbol

Computation symbol Eecs 1019

Eecs 1019 Differential leveling

Differential leveling Hypertensive crisis

Hypertensive crisis Multiparty computation

Multiparty computation Automata calculator

Automata calculator Computation of wealth tax

Computation of wealth tax Ram model of computation

Ram model of computation Drug calculation formula

Drug calculation formula Parabon computation

Parabon computation Types of errors in numerical computation

Types of errors in numerical computation Crystalloid fluids examples

Crystalloid fluids examples The theory of computation

The theory of computation Fertilizer computation

Fertilizer computation Expanded withholding tax computation

Expanded withholding tax computation Nutrition29

Nutrition29 Umut acar

Umut acar Ucl bsc computer science

Ucl bsc computer science The pagerank citation ranking bringing order to the web

The pagerank citation ranking bringing order to the web Theory of computation

Theory of computation Cuts of a distributed computation

Cuts of a distributed computation Computation of machine hour rate

Computation of machine hour rate Union set operation

Union set operation Dumas method formula

Dumas method formula Verifiable computation

Verifiable computation Two round multiparty computation via multi-key fhe

Two round multiparty computation via multi-key fhe Cosc 3340

Cosc 3340 Board feet calculator

Board feet calculator Theory of computation

Theory of computation Data cube computation

Data cube computation