


15-381 Artificial Intelligence: Information Retrieval (How to Power a Search Engine)

Jaime Carbonell, 20 September 2001

Topics Covered:

- “Bag of Words” Hypothesis
- Vector Space Model & Cosine Similarity
- Query Expansion Methods

Information Retrieval: The Challenge (1)

Text DB includes:

1. Rainfall measurements in the Sahara continue to show a steady decline starting from the first measurements in 1961. In 1996 only 12 mm of rain were recorded in upper Sudan, and 1 mm in Southern Algiers...
2. Dan Marino states that professional football risks losing the number one position in the hearts of fans across this land. Declines in TV audience ratings are cited...
3. Alarming reductions in precipitation in desert regions are blamed for desert encroachment of previously fertile farmland in Northern Africa. Scientists measured both yearly precipitation and groundwater levels...

Information Retrieval: The Challenge (2)

User query states: "Decline in rainfall and impact on farms near Sahara"

Challenges

- How to retrieve (1) and (3) and not (2)?
- How to rank (3) as best?
- How to cope with no shared words?

Information Retrieval Assumptions (1)

Basic IR task

- There exists a document collection $\{D_j\}$
- User enters an ad hoc query $Q$
- $Q$ correctly states the user’s interest
- User wants the subset $\{D_i\} \subset \{D_j\}$ most relevant to $Q$

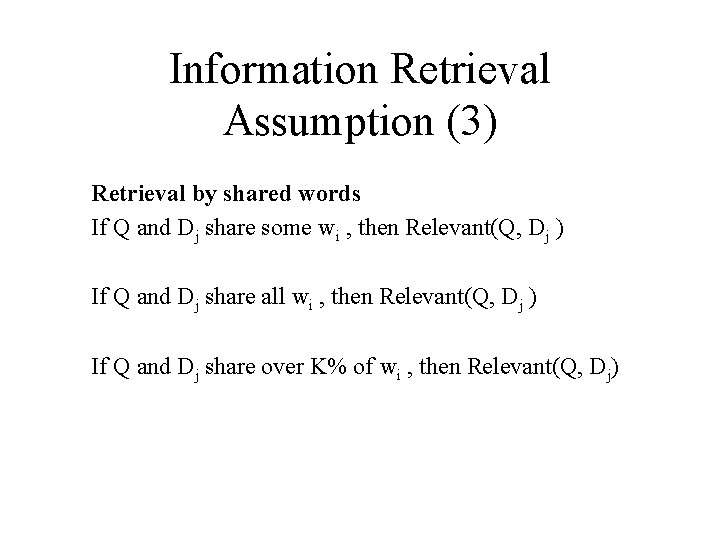

Information Retrieval Assumption (2)

"Shared Bag of Words" assumption

- Every query = $\{w_i\}$
- Every document = $\{w_k\}$
- ...where $w_i$ and $w_k$ are in the same $\Sigma$
- All syntax is irrelevant (e.g. word order)
- All document structure is irrelevant
- All meta-information is irrelevant (e.g. author, source, genre)

=> Words suffice for relevance assessment

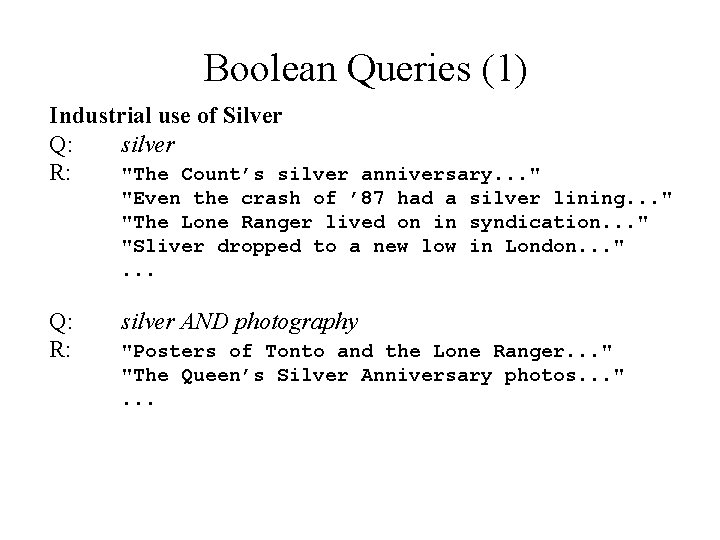

Information Retrieval Assumption (3)

Retrieval by shared words

- If $Q$ and $D_j$ share some $w_i$, then Relevant($Q$, $D_j$)
- If $Q$ and $D_j$ share all $w_i$, then Relevant($Q$, $D_j$)
- If $Q$ and $D_j$ share over K% of $w_i$, then Relevant($Q$, $D_j$)
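The three shared-word criteria above can be sketched as a single predicate. This is only an illustration: the function name `shared_word_relevant`, the `mode` switch, and whitespace tokenization are assumptions, not part of the slides.

```python
def shared_word_relevant(query, doc, mode="some", k_percent=50.0):
    """Judge relevance purely by word overlap (bag-of-words assumption).

    mode="some":    relevant if at least one query word appears in the document
    mode="all":     relevant if every query word appears in the document
    mode="percent": relevant if more than k_percent of query words appear
    """
    # Hypothetical tokenizer: lowercase and split on whitespace.
    q_words = set(query.lower().split())
    d_words = set(doc.lower().split())
    if not q_words:
        return False
    shared = q_words & d_words
    if mode == "some":
        return len(shared) > 0
    if mode == "all":
        return shared == q_words
    if mode == "percent":
        return 100.0 * len(shared) / len(q_words) > k_percent
    raise ValueError(f"unknown mode: {mode}")


query = "decline in rainfall"
doc_a = "rainfall in the sahara shows a steady decline"
doc_b = "football ratings decline"
print(shared_word_relevant(query, doc_a, mode="all"))
print(shared_word_relevant(query, doc_b, mode="percent", k_percent=50.0))
```

The "some" rule favors recall and the "all" rule favors precision; the K% threshold sits between the two.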

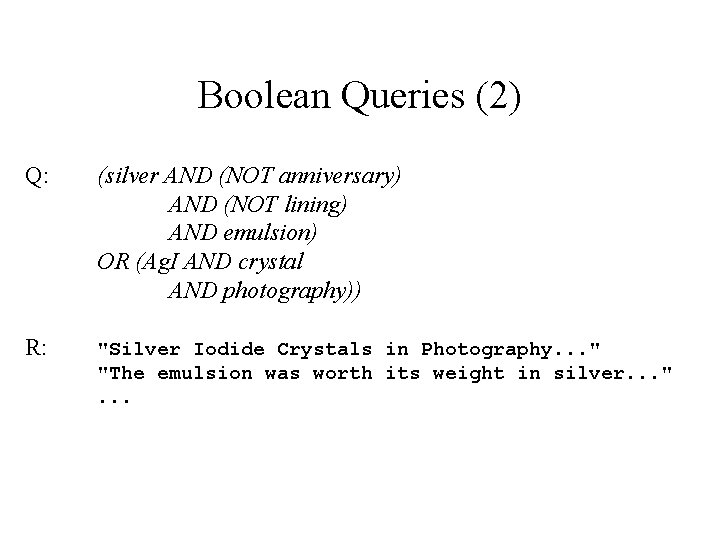

Boolean Queries (1)

Industrial use of Silver

Q: silver

R:

- "The Count’s silver anniversary..."
- "Even the crash of ’87 had a silver lining..."
- "The Lone Ranger lived on in syndication..."
- "Silver dropped to a new low in London..."
- ...

Q: silver AND photography

R:

- "Posters of Tonto and the Lone Ranger..."
- "The Queen’s Silver Anniversary photos..."
- ...

Boolean Queries (2)

Q: (silver AND (NOT anniversary) AND (NOT lining) AND emulsion) OR (AgI AND crystal AND photography)

R:

- "Silver Iodide Crystals in Photography..."
- "The emulsion was worth its weight in silver..."
- ...
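As a rough sketch of how such a Boolean query could be evaluated, the example below maps each word to the set of documents containing it and combines those sets with intersection, union, and complement. The tiny collection and the helper names (`build_postings`, `has`, `NOT`) are made up for illustration, and matching is exact on lowercase whitespace tokens.

```python
# Hypothetical four-document collection, keyed by document id.
docs = {
    1: "silver iodide crystals in photography",
    2: "the emulsion was worth its weight in silver",
    3: "the queen's silver anniversary photos",
    4: "even the crash had a silver lining",
}


def build_postings(docs):
    """Map each word to the set of ids of documents that contain it."""
    postings = {}
    for doc_id, text in docs.items():
        for word in set(text.lower().split()):
            postings.setdefault(word, set()).add(doc_id)
    return postings


postings = build_postings(docs)
all_ids = set(docs)


def has(term):
    """Documents containing the exact term (empty set if the term is unseen)."""
    return postings.get(term, set())


def NOT(ids):
    """Complement with respect to the whole collection."""
    return all_ids - ids


result = (
    has("silver") & NOT(has("anniversary")) & NOT(has("lining")) & has("emulsion")
) | (has("agi") & has("crystal") & has("photography"))
print(sorted(result))
```

With exact matching, document 1 is not retrieved in this toy collection: it contains "crystals" rather than "crystal" and never mentions "AgI". That is one way Boolean queries end up with low recall.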

Boolean Queries (3)

Boolean queries are:

- a) easy to implement
- b) confusing to compose
- c) seldom used (except by librarians)
- d) prone to low recall
- e) all of the above

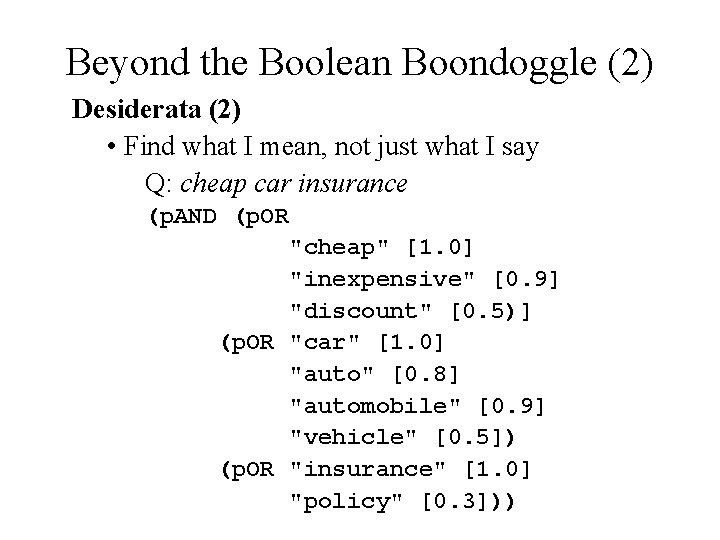

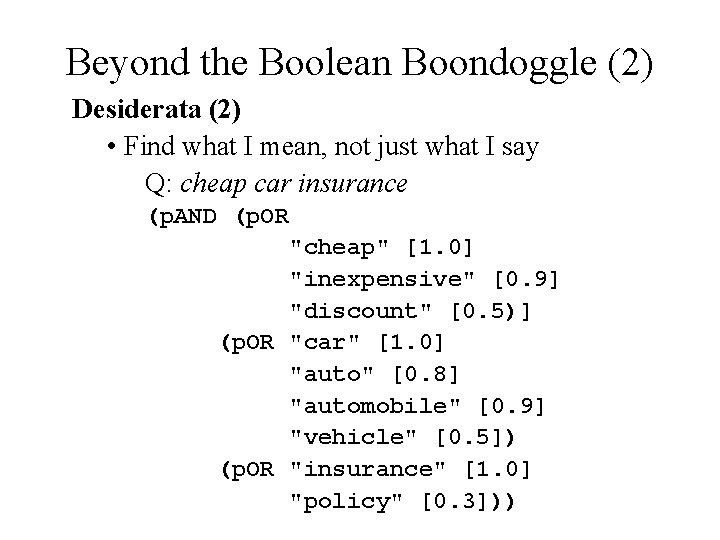

Beyond the Boolean Boondoggle (1)

Desiderata (1)

- Query must be natural for all users
- Sentence, phrase, or word(s)
- No ANDs, ORs, NOTs, ...
- No parentheses (no structure)
- System focuses on important words
- Q: I want laser printers now

Beyond the Boolean Boondoggle (2)

Desiderata (2)

- Find what I mean, not just what I say

Q: cheap car insurance

(p.AND (p.OR "cheap" [1.0] "inexpensive" [0.9] "discount" [0.5]) (p.OR "car" [1.0] "auto" [0.8] "automobile" [0.9] "vehicle" [0.5]) (p.OR "insurance" [1.0] "policy" [0.3]))

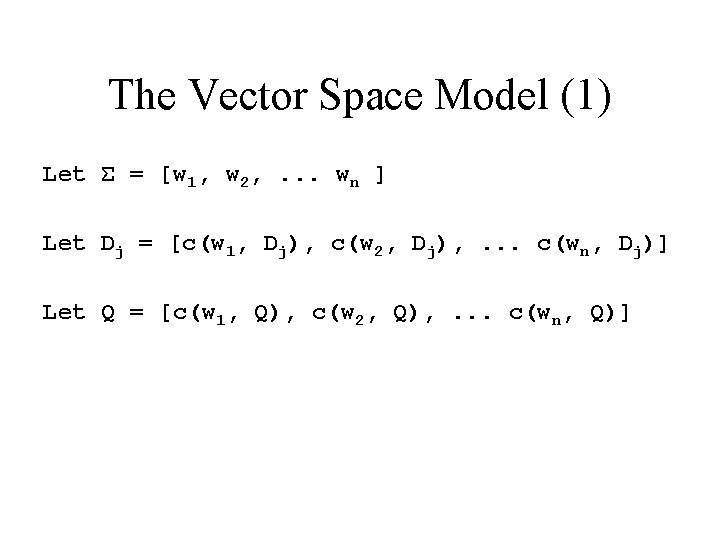

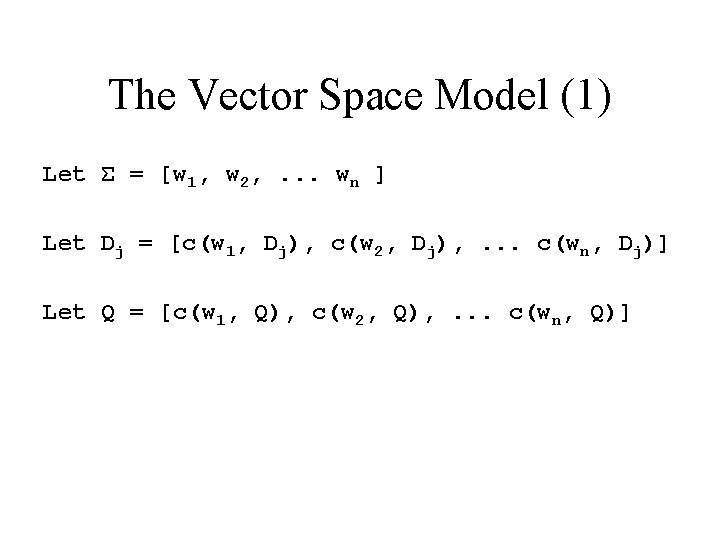

The Vector Space Model (1)

Let $\Sigma = [w_1, w_2, \ldots, w_n]$

Let $D_j = [c(w_1, D_j), c(w_2, D_j), \ldots, c(w_n, D_j)]$

Let $Q = [c(w_1, Q), c(w_2, Q), \ldots, c(w_n, Q)]$

The Vector Space Model (2)

Initial Definition of Similarity:

$S_I(Q, D_j) = Q \cdot D_j$

Normalized Definition of Similarity:

$S_N(Q, D_j) = \dfrac{Q \cdot D_j}{|Q| \times |D_j|} = \cos(Q, D_j)$
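A minimal sketch of both similarity definitions, assuming the vector entries are raw word counts and words are obtained by lowercasing and splitting on whitespace. The names `count_vector`, `dot`, and `cosine` are illustrative only.

```python
import math
from collections import Counter


def count_vector(text):
    """Sparse count vector: word -> number of occurrences in the text."""
    return Counter(text.lower().split())


def dot(u, v):
    """Unnormalized similarity S_I: dot product of two sparse count vectors."""
    # Counter returns 0 for missing words, so only words in u contribute.
    return sum(count * v[word] for word, count in u.items())


def cosine(u, v):
    """Normalized similarity S_N: dot product divided by both vector lengths."""
    norm_u = math.sqrt(dot(u, u))
    norm_v = math.sqrt(dot(v, v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot(u, v) / (norm_u * norm_v)


q = count_vector("silver photography")
short_doc = count_vector("silver photography")
long_doc = count_vector("silver silver photography photography")
print(dot(q, short_doc), dot(q, long_doc))
print(cosine(q, short_doc), cosine(q, long_doc))
```

The dot product rewards the longer document simply for repeating the same words, while the cosine treats both documents as pointing in the same direction as the query.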

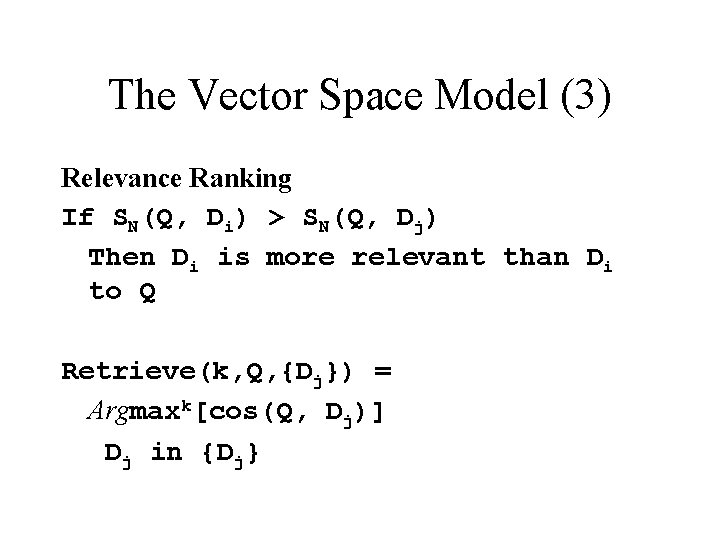

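The Vector Space Model (3)

Relevance Ranking

If $S_N(Q, D_i) > S_N(Q, D_j)$, then $D_i$ is more relevant than $D_j$ to $Q$.

$\mathrm{Retrieve}(k, Q, \{D_j\}) = \underset{D_j \in \{D_j\}}{\operatorname{Argmax}_k} [\cos(Q, D_j)]$

That is, return the $k$ documents with the highest cosine similarity to $Q$.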
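One possible reading of Retrieve(k, Q, {Dj}) is a top-k selection over cosine scores. The sketch below assumes raw count vectors and whitespace tokenization; the function names and the three-document collection (loosely paraphrasing the Sahara example) are hypothetical.

```python
import heapq
import math
from collections import Counter


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * v[word] for word, count in u.items())
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def retrieve(k, query, docs):
    """Return the ids of the k documents most similar to the query, best first."""
    q_vec = Counter(query.lower().split())
    scored = [
        (cosine(q_vec, Counter(text.lower().split())), doc_id)
        for doc_id, text in docs.items()
    ]
    return [doc_id for _score, doc_id in heapq.nlargest(k, scored)]


docs = {
    1: "rainfall measurements in the sahara show a steady decline",
    2: "football ratings show a decline in tv audience",
    3: "reductions in precipitation in desert regions near farmland",
}
print(retrieve(2, "decline in rainfall near sahara", docs))
```

In this toy run, document 3 outranks document 2 largely because it repeats the word "in", which hints at why stop words are usually removed before scoring.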

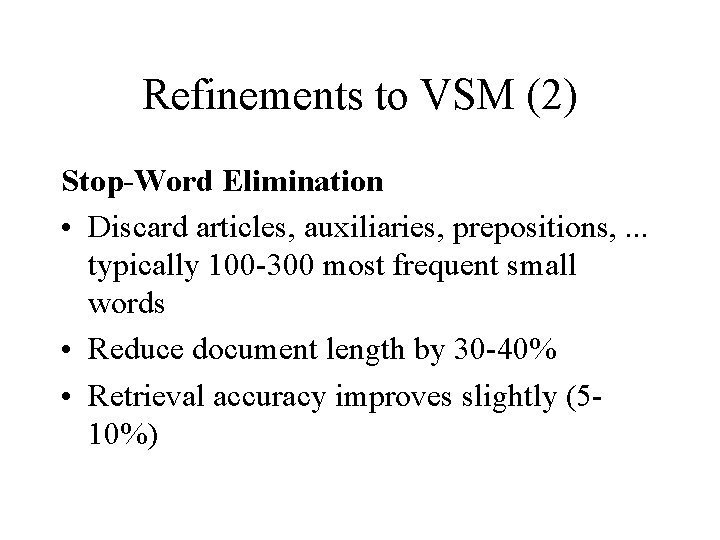

Refinements to VSM (2)

Stop-Word Elimination

- Discard articles, auxiliaries, prepositions, ...: typically the 100-300 most frequent small words
- Reduces document length by 30-40%
- Retrieval accuracy improves slightly (5-10%)

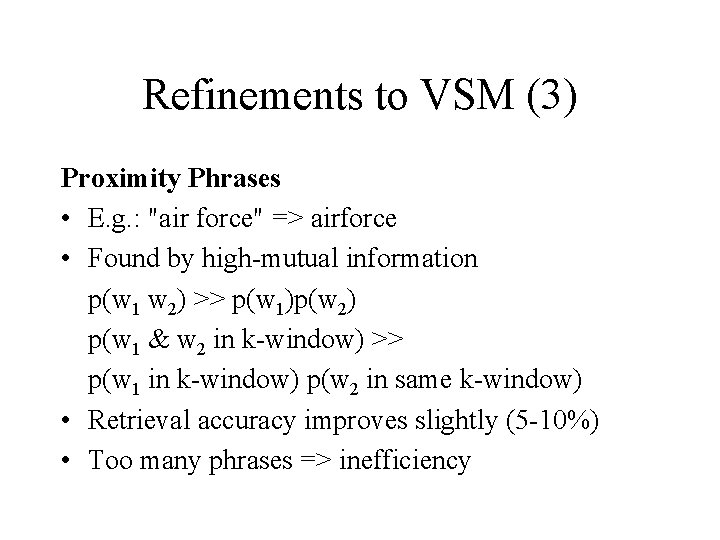

Refinements to VSM (3)

Proximity Phrases

- E.g.: "air force" => airforce
- Found by high mutual information:
  - $p(w_1 w_2) \gg p(w_1)\,p(w_2)$
  - $p(w_1 \,\&\, w_2 \text{ in } k\text{-window}) \gg p(w_1 \text{ in } k\text{-window})\, p(w_2 \text{ in same } k\text{-window})$
- Retrieval accuracy improves slightly (5-10%)
- Too many phrases => inefficiency
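The first mutual-information test above, p(w1 w2) >> p(w1)p(w2), can be approximated with pointwise mutual information over adjacent word pairs. This is a sketch under assumptions: the function name `phrase_candidates`, the `min_count` and `min_pmi` thresholds, and the maximum-likelihood probability estimates are illustrative choices, not values from the slides.

```python
import math
from collections import Counter


def phrase_candidates(tokens, min_count=2, min_pmi=1.0):
    """Score adjacent word pairs by PMI = log2(p(w1 w2) / (p(w1) p(w2)))."""
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    n_uni = len(tokens)
    n_bi = max(len(tokens) - 1, 1)
    scored = {}
    for (w1, w2), count in bigrams.items():
        if count < min_count:
            continue  # rare pairs give unreliable estimates
        p_pair = count / n_bi
        p_w1 = unigrams[w1] / n_uni
        p_w2 = unigrams[w2] / n_uni
        pmi = math.log2(p_pair / (p_w1 * p_w2))
        if pmi >= min_pmi:
            scored[(w1, w2)] = pmi
    return scored


tokens = "the air force said the air force base was near the old base".split()
for pair, pmi in sorted(phrase_candidates(tokens).items(), key=lambda x: -x[1]):
    print(pair, round(pmi, 2))
```

Raising `min_pmi` or `min_count` keeps the phrase list short, which matters given the slide's warning that too many phrases hurt efficiency.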

Refinements to VSM (4)

Words => Terms

- term = word | stemmed word | phrase
- Use exactly the same VSM method on terms (vs words)

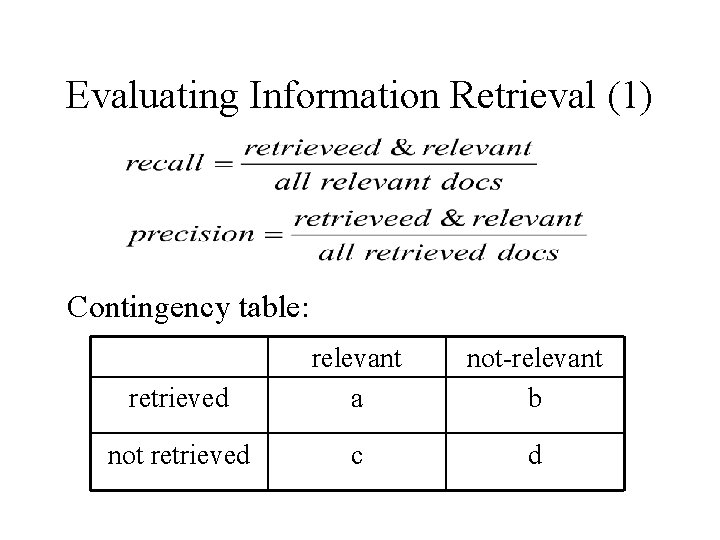

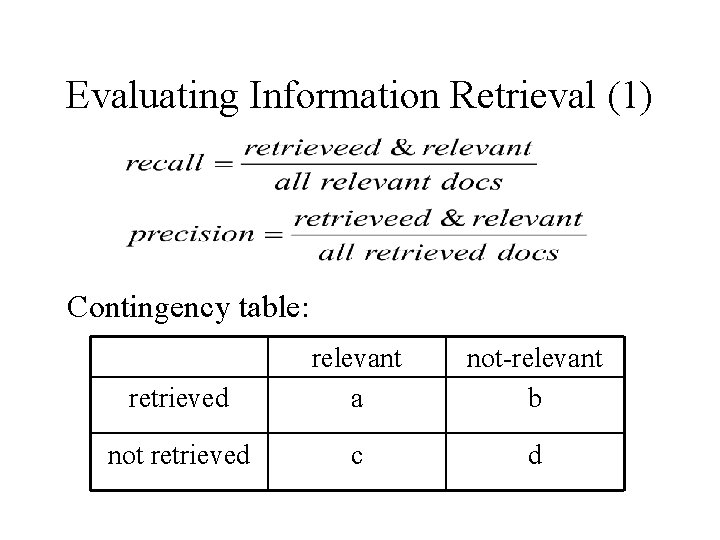

Evaluating Information Retrieval (1)

Contingency table:

| | relevant | not-relevant |
|---|---|---|
| retrieved | a | b |
| not retrieved | c | d |

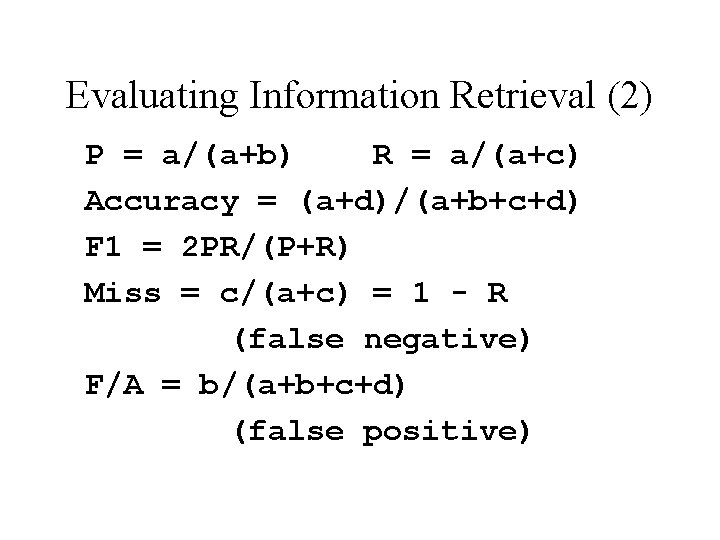

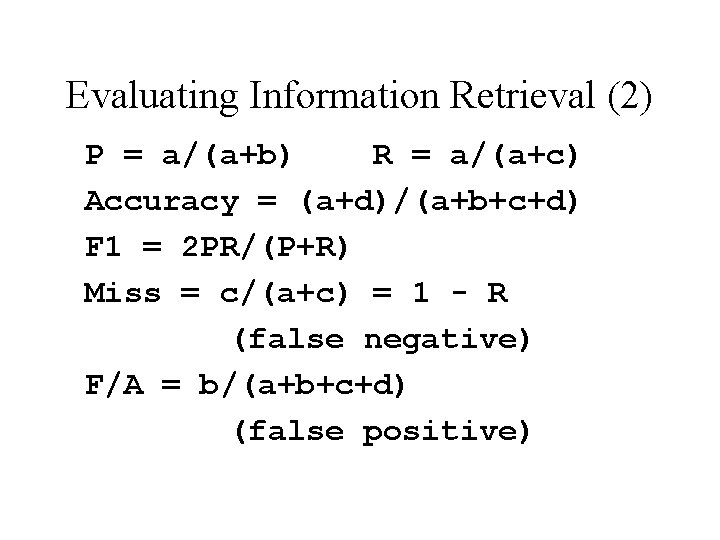

Evaluating Information Retrieval (2)

- Precision: $P = a/(a+b)$
- Recall: $R = a/(a+c)$
- Accuracy $= (a+d)/(a+b+c+d)$
- $F_1 = 2PR/(P+R)$
- Miss $= c/(a+c) = 1 - R$ (false negative)
- F/A $= b/(a+b+c+d)$ (false positive)
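The formulas above translate directly into code. In this sketch, `a`, `b`, `c`, `d` are the contingency-table cells (retrieved relevant, retrieved not-relevant, not-retrieved relevant, not-retrieved not-relevant); the function name `ir_metrics` and the choice to return 0.0 when a denominator is zero are assumptions.

```python
def ir_metrics(a, b, c, d):
    """Compute the evaluation measures from the contingency-table counts."""
    total = a + b + c + d
    precision = a / (a + b) if (a + b) else 0.0
    recall = a / (a + c) if (a + c) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "precision": precision,
        "recall": recall,
        "accuracy": (a + d) / total if total else 0.0,
        "f1": f1,
        "miss": c / (a + c) if (a + c) else 0.0,
        "false_alarm": b / total if total else 0.0,
    }


# Hypothetical counts: 10 documents retrieved, 8 of them relevant,
# 8 relevant documents missed, 82 irrelevant documents correctly left out.
metrics = ir_metrics(a=8, b=2, c=8, d=82)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this example accuracy is high mostly because of the large `d` cell, which is one reason precision and recall are usually more informative for retrieval.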

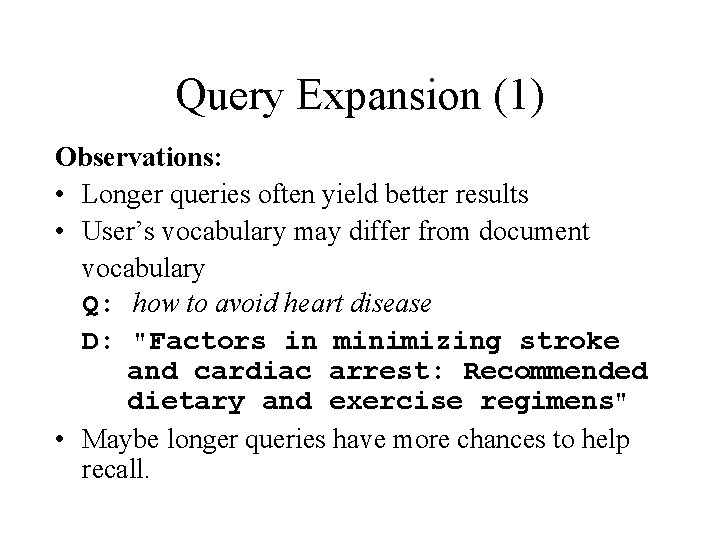

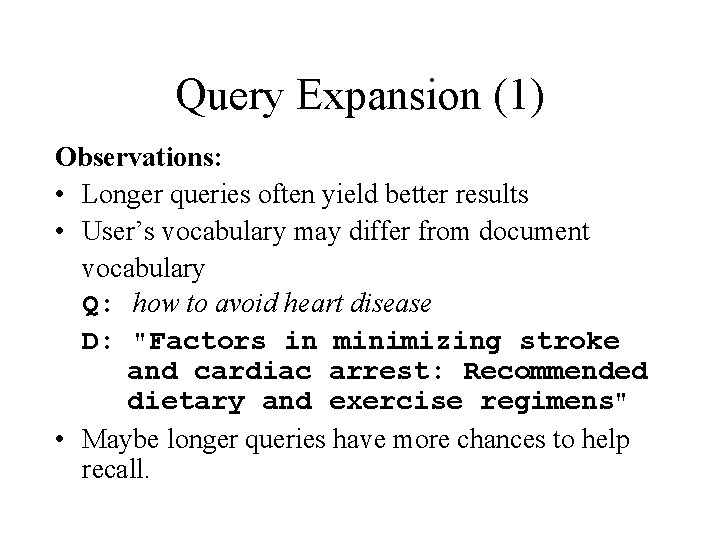

Query Expansion (1)

Observations:

- Longer queries often yield better results
- User’s vocabulary may differ from document vocabulary
  - Q: how to avoid heart disease
  - D: "Factors in minimizing stroke and cardiac arrest: Recommended dietary and exercise regimens"
- Maybe longer queries have more chances to help recall.

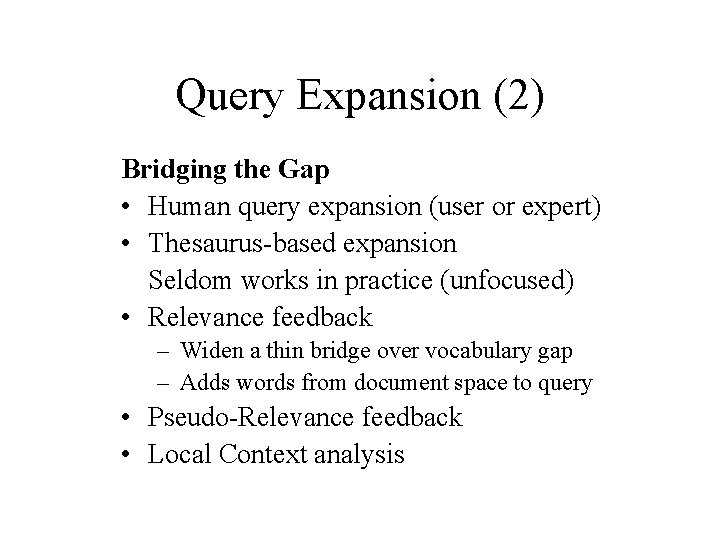

Query Expansion (2)

Bridging the Gap

- Human query expansion (user or expert)
- Thesaurus-based expansion
  - Seldom works in practice (unfocused)
- Relevance feedback
  - Widens a thin bridge over the vocabulary gap
  - Adds words from document space to query
- Pseudo-relevance feedback
- Local context analysis

![Relevance Feedback: Rocchio Formula](https://slidetodoc.com/presentation_image/cf355cd594aa68cad5cf4441d8cd1701/image-22.jpg)

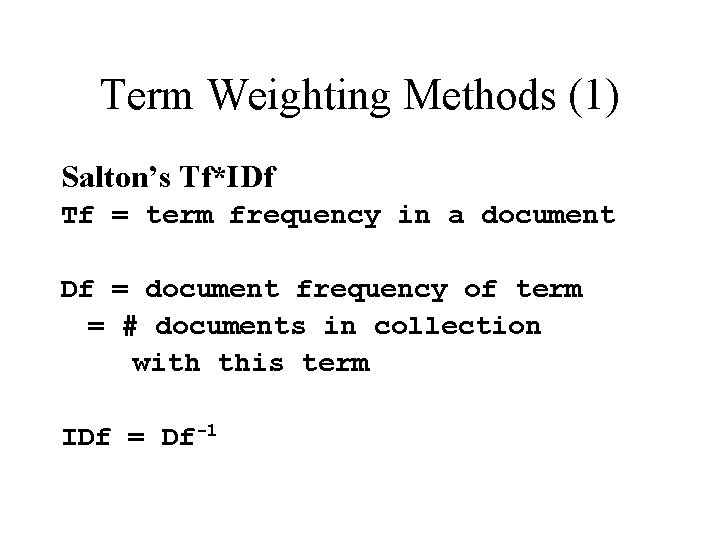

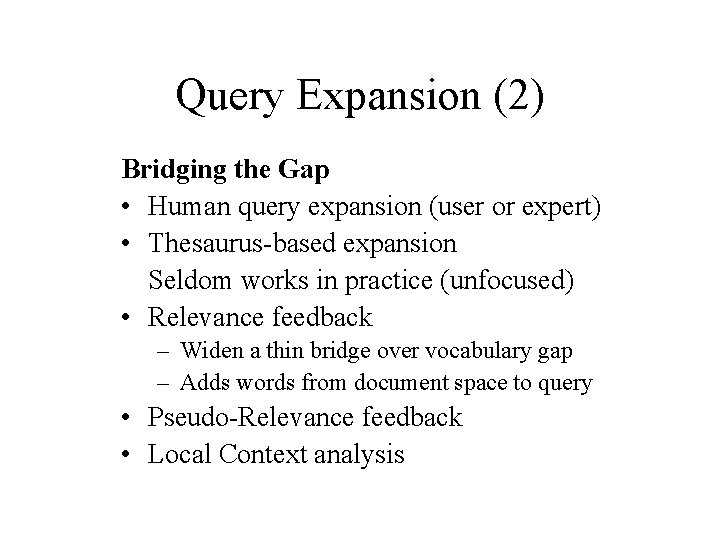

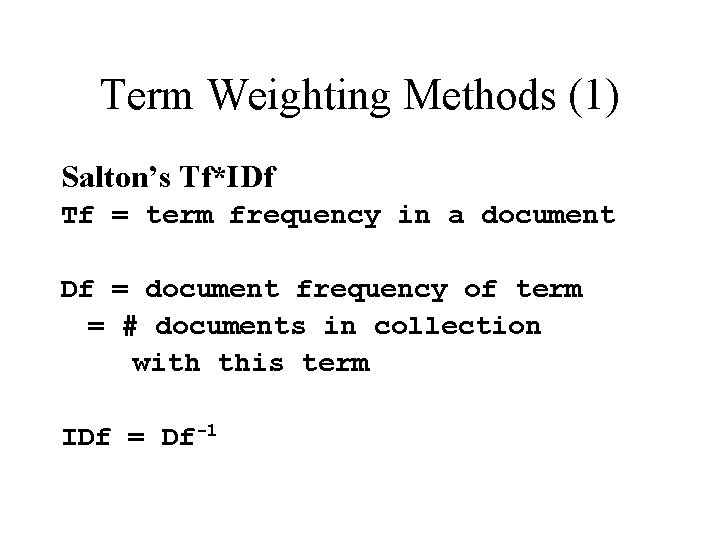

Relevance Feedback

Rocchio Formula

$Q' = F[Q, D_{ret}]$

$F$ = weighted vector sum, such as:

$W(t, Q') = \alpha W(t, Q) + \beta W(t, D_{rel}) - \gamma W(t, D_{irr})$
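A small sketch of the weighted vector sum, under several assumptions not stated on the slide: W(t, Drel) and W(t, Dirr) are taken to be the average weight of term t over the retrieved documents judged relevant and irrelevant, the default values of `alpha`, `beta`, and `gamma` are arbitrary illustrative choices, and terms whose new weight is not positive are dropped. The names `centroid` and `rocchio` are hypothetical.

```python
def centroid(doc_vectors):
    """Average term weight over a list of sparse vectors (term -> weight)."""
    if not doc_vectors:
        return {}
    totals = {}
    for vec in doc_vectors:
        for term, weight in vec.items():
            totals[term] = totals.get(term, 0.0) + weight
    return {term: total / len(doc_vectors) for term, total in totals.items()}


def rocchio(query, relevant, irrelevant, alpha=1.0, beta=0.75, gamma=0.15):
    """W(t, Q') = alpha*W(t, Q) + beta*W(t, Drel) - gamma*W(t, Dirr)."""
    rel = centroid(relevant)
    irr = centroid(irrelevant)
    new_query = {}
    for term in set(query) | set(rel) | set(irr):
        weight = (
            alpha * query.get(term, 0.0)
            + beta * rel.get(term, 0.0)
            - gamma * irr.get(term, 0.0)
        )
        if weight > 0:  # assumption: discard terms pushed to zero or below
            new_query[term] = weight
    return new_query


query = {"heart": 1.0, "disease": 1.0}
relevant = [{"cardiac": 2.0, "stroke": 1.0}, {"cardiac": 1.0, "heart": 1.0}]
irrelevant = [{"football": 3.0, "heart": 1.0}]
expanded = rocchio(query, relevant, irrelevant)
print(sorted(expanded.items(), key=lambda item: -item[1]))
```

The expanded query picks up "cardiac" and "stroke" from the relevant documents, which is how relevance feedback adds words from the document space to the query.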

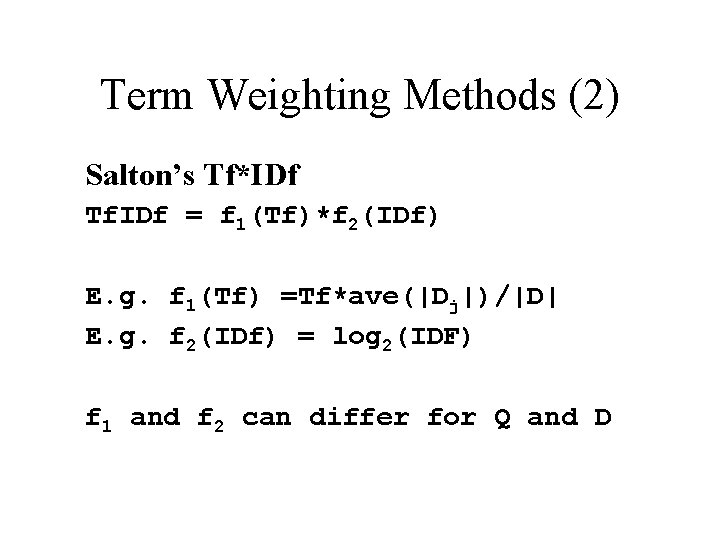

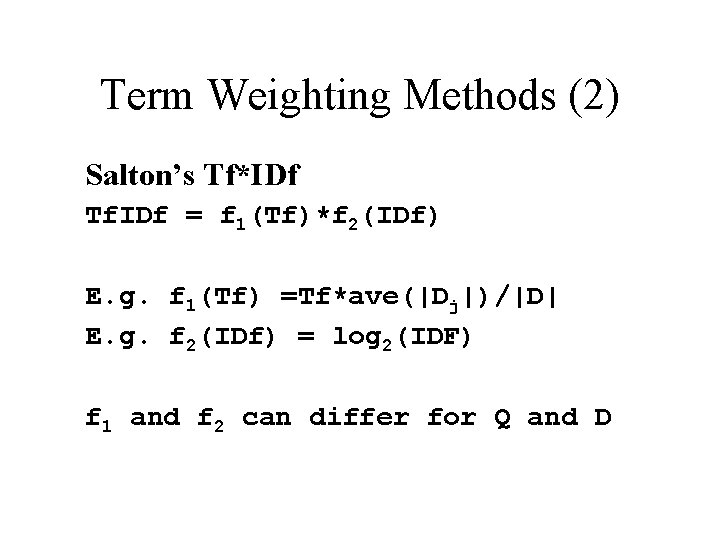

Term Weighting Methods (1)

Salton’s Tf*IDf

- Tf = term frequency in a document
- Df = document frequency of term = # documents in collection with this term
- IDf = $Df^{-1}$

Term Weighting Methods (2)

Salton’s Tf*IDf

$TfIDf = f_1(Tf) \cdot f_2(IDf)$

- E.g. $f_1(Tf) = Tf \cdot \mathrm{ave}(|D_j|) / |D|$
- E.g. $f_2(IDf) = \log_2(IDf)$
- $f_1$ and $f_2$ can differ for $Q$ and $D$
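A sketch of the example weighting, with one loudly flagged assumption: the slides define IDf as 1/Df, whose logarithm would be negative, so this code uses the common scaled form log2(N/Df), where N is the number of documents in the collection. The function name `tfidf_vectors` and whitespace tokenization are also illustrative.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Weight each term by f1(Tf) * f2(IDf) for every document in a list of texts.

    f1(Tf)  = Tf * ave(|Dj|) / |D|   (length-normalized term frequency)
    f2(IDf) = log2(N / Df)           (assumption: IDf scaled by collection size N)
    """
    tokenized = [text.lower().split() for text in docs]
    n_docs = len(tokenized)
    if n_docs == 0:
        return []
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))  # each document counts once per term
    vectors = []
    for tokens in tokenized:
        if not tokens:
            vectors.append({})
            continue
        tf = Counter(tokens)
        vectors.append({
            term: (count * avg_len / len(tokens)) * math.log2(n_docs / df[term])
            for term, count in tf.items()
        })
    return vectors


docs = ["silver silver mine", "silver anniversary", "gold mine"]
vectors = tfidf_vectors(docs)
for text, vec in zip(docs, vectors):
    print(text, {term: round(weight, 3) for term, weight in vec.items()})
```

Terms that appear in fewer documents, such as "anniversary" here, receive a larger weight than terms spread across the collection, such as "silver".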

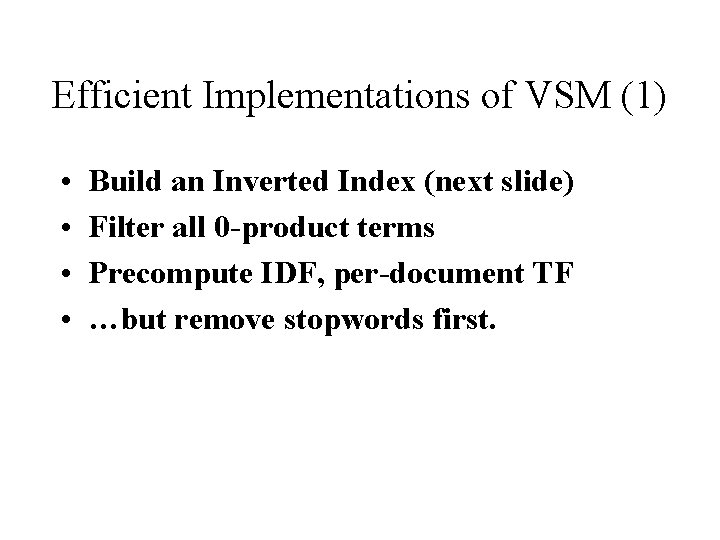

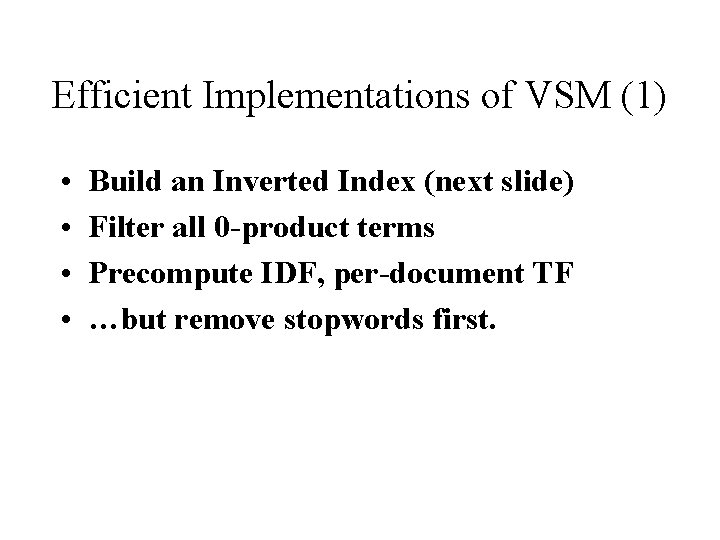

Efficient Implementations of VSM (1)

- Build an Inverted Index (next slide)
- Filter all 0-product terms
- Precompute IDF, per-document TF
- ...but remove stopwords first.

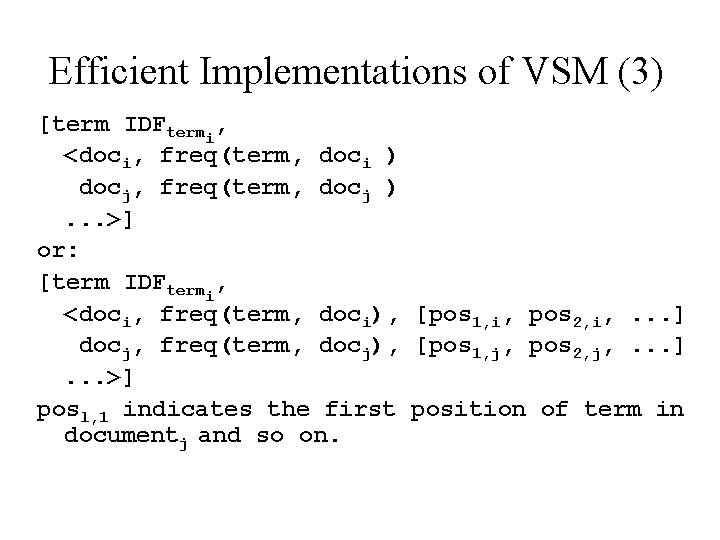

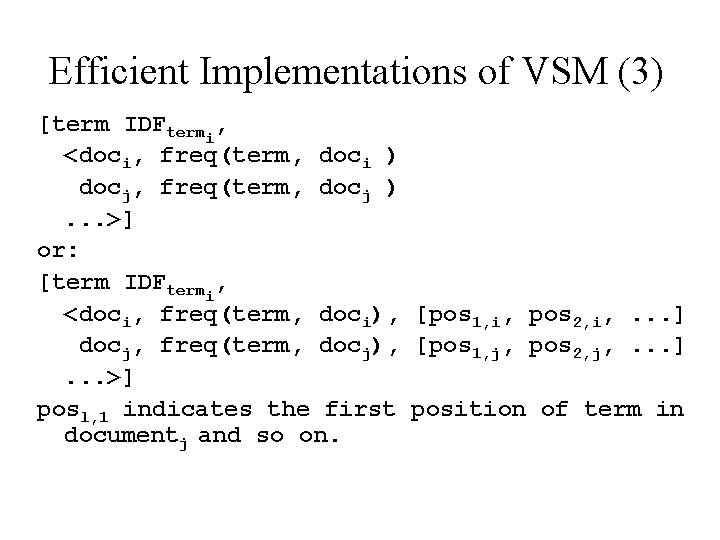

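Efficient Implementations of VSM (3)

$[\mathrm{term},\ \mathrm{IDF}_{\mathrm{term}_i},\ \langle \mathrm{doc}_i, \mathrm{freq}(\mathrm{term}, \mathrm{doc}_i),\ \mathrm{doc}_j, \mathrm{freq}(\mathrm{term}, \mathrm{doc}_j), \ldots \rangle]$

or:

$[\mathrm{term},\ \mathrm{IDF}_{\mathrm{term}_i},\ \langle \mathrm{doc}_i, \mathrm{freq}(\mathrm{term}, \mathrm{doc}_i), [\mathrm{pos}_{1,i}, \mathrm{pos}_{2,i}, \ldots],\ \mathrm{doc}_j, \mathrm{freq}(\mathrm{term}, \mathrm{doc}_j), [\mathrm{pos}_{1,j}, \mathrm{pos}_{2,j}, \ldots], \ldots \rangle]$

$\mathrm{pos}_{1,j}$ indicates the first position of the term in $\mathrm{doc}_j$, and so on.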
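The positional entry format above could be built roughly as follows. This is a sketch, not the slides' implementation: the function name `build_inverted_index`, the tuple layout, zero-based positions, and the IDF form log2(N/Df) are all assumptions.

```python
import math
from collections import defaultdict


def build_inverted_index(docs):
    """Map term -> (idf, [(doc_id, freq, [positions...]), ...])."""
    positions = defaultdict(lambda: defaultdict(list))
    for doc_id, text in docs.items():
        for pos, term in enumerate(text.lower().split()):
            positions[term][doc_id].append(pos)  # zero-based word offsets
    n_docs = len(docs)
    index = {}
    for term, by_doc in positions.items():
        idf = math.log2(n_docs / len(by_doc))  # assumed IDF form, precomputed once
        postings = [
            (doc_id, len(pos_list), pos_list)
            for doc_id, pos_list in sorted(by_doc.items())
        ]
        index[term] = (idf, postings)
    return index


docs = {1: "silver mine silver", 2: "gold mine"}
index = build_inverted_index(docs)
for term in sorted(index):
    print(term, index[term])
```

At query time only the posting lists of the query terms need to be read, so documents sharing no term with the query (zero dot product) are never touched.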

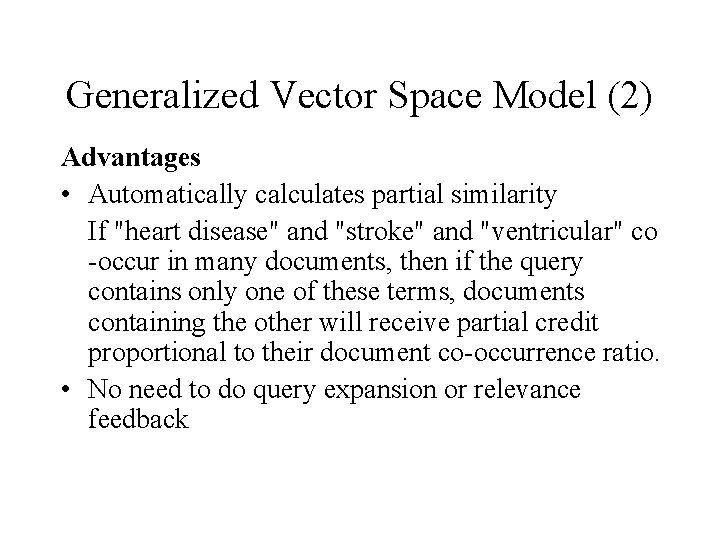

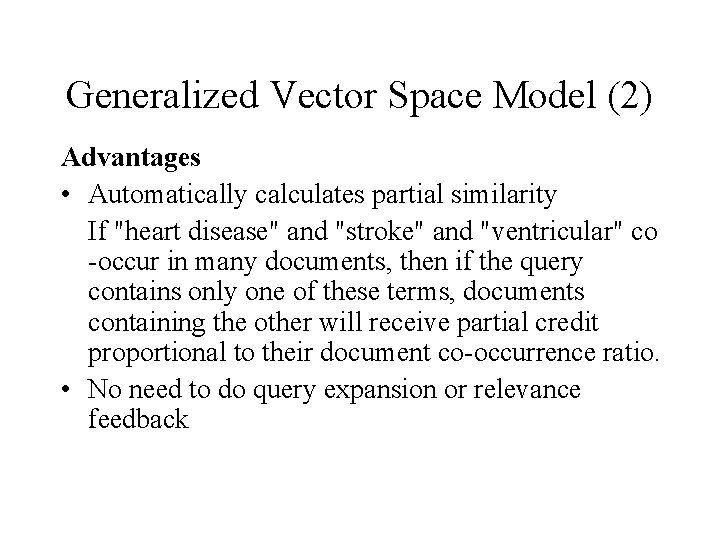

Generalized Vector Space Model (1)

Principles

- Define terms by their occurrence patterns in documents
- Define query terms in the same way
- Compute similarity by document-pattern overlap for terms in D and Q
- Use standard cosine similarity and either binary or Tf*IDf weights

Generalized Vector Space Model (2)

Advantages

- Automatically calculates partial similarity: if "heart disease", "stroke" and "ventricular" co-occur in many documents, then if the query contains only one of these terms, documents containing the others will receive partial credit proportional to their document co-occurrence ratio.
- No need to do query expansion or relevance feedback

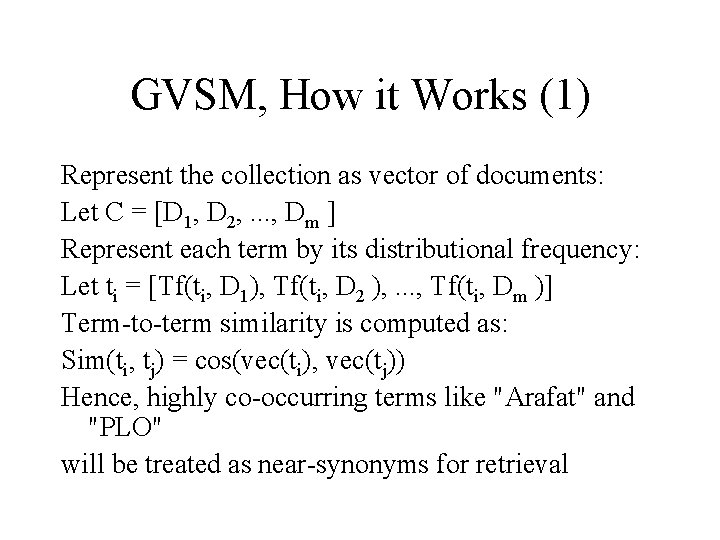

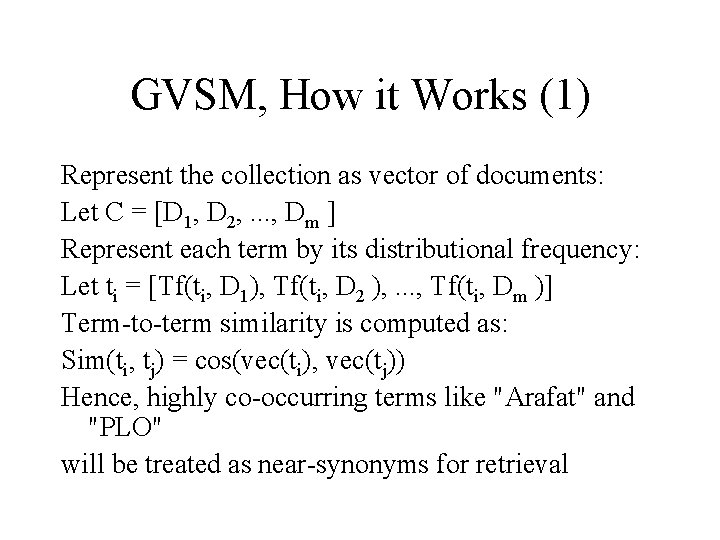

GVSM, How it Works (1)

Represent the collection as a vector of documents:

Let $C = [D_1, D_2, \ldots, D_m]$

Represent each term by its distributional frequency:

Let $t_i = [Tf(t_i, D_1), Tf(t_i, D_2), \ldots, Tf(t_i, D_m)]$

Term-to-term similarity is computed as:

$Sim(t_i, t_j) = \cos(vec(t_i), vec(t_j))$

Hence, highly co-occurring terms like "Arafat" and "PLO" will be treated as near-synonyms for retrieval.

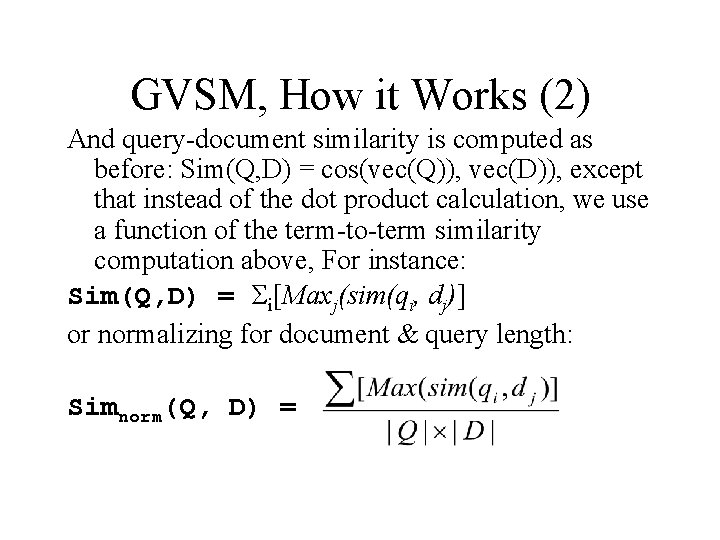

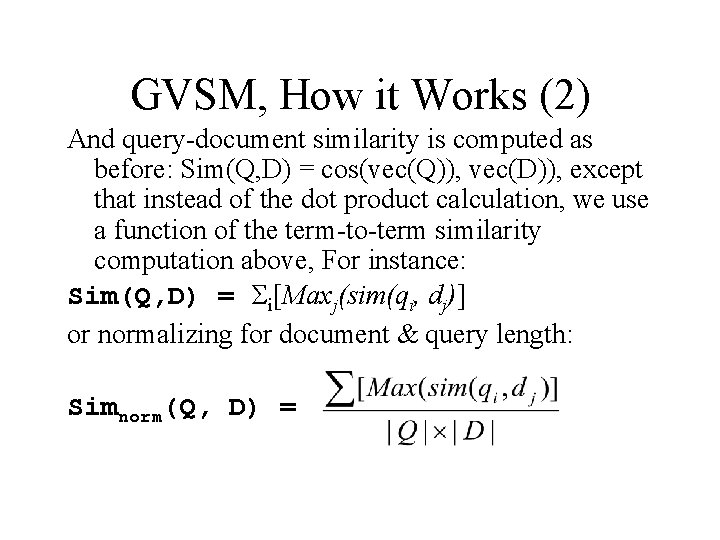

GVSM, How it Works (2)

And query-document similarity is computed as before: $Sim(Q, D) = \cos(vec(Q), vec(D))$, except that instead of the dot product calculation, we use a function of the term-to-term similarity computation above. For instance:

$Sim(Q, D) = \sum_i [\max_j (sim(q_i, d_j))]$

or, normalizing for document & query length:

$Sim_{norm}(Q, D) =$
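The unnormalized GVSM similarity, the sum over query terms of the best term-to-term match in the document, can be sketched as below. Term vectors hold raw term frequencies per document; the function names and the four-document collection are invented for illustration, and the length-normalized variant is not attempted.

```python
import math
from collections import Counter


def term_vectors(collection):
    """Represent each term by its frequency in every document of the collection."""
    counts = [Counter(text.lower().split()) for text in collection]
    vocab = set().union(*counts)
    return {term: [c[term] for c in counts] for term in vocab}


def cos(u, v):
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def gvsm_sim(query, doc, vectors):
    """Sim(Q, D) = sum over query terms q_i of max over doc terms d_j of sim(q_i, d_j)."""
    q_terms = [t for t in query.lower().split() if t in vectors]
    d_terms = [t for t in set(doc.lower().split()) if t in vectors]
    if not d_terms:
        return 0.0
    return sum(max(cos(vectors[q], vectors[d]) for d in d_terms) for q in q_terms)


collection = [
    "arafat plo talks",
    "arafat plo meeting",
    "football ratings decline",
    "plo statement",
]
vectors = term_vectors(collection)
print(gvsm_sim("arafat", "plo statement", vectors))
print(gvsm_sim("arafat", "football ratings decline", vectors))
```

The document "plo statement" shares no word with the query "arafat", yet it receives partial credit because the two terms co-occur elsewhere in the collection.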

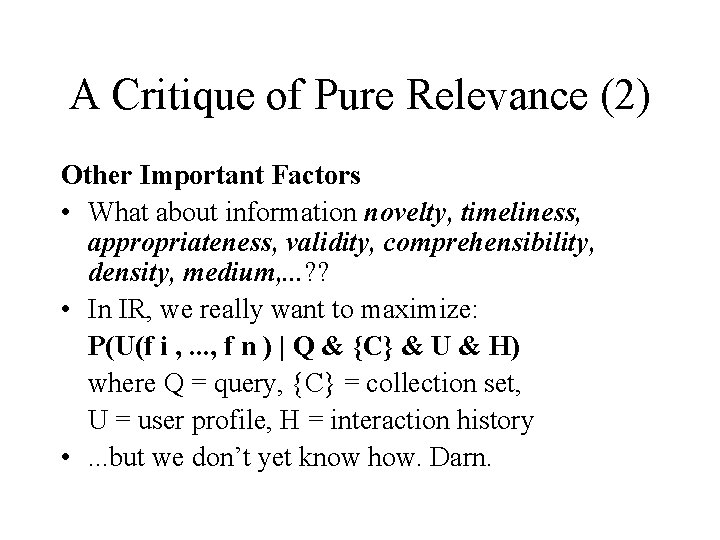

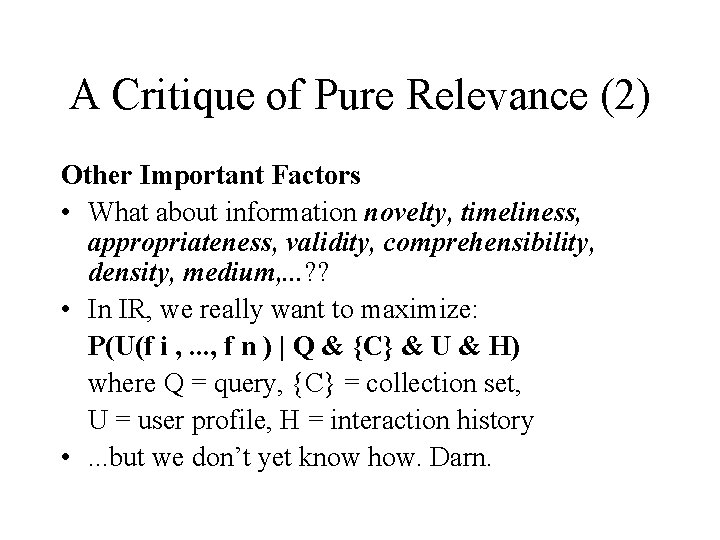

A Critique of Pure Relevance (1)

IR Maximizes Relevance

- Precision and recall are relevance measures
- Quality of documents retrieved is ignored

A Critique of Pure Relevance (2)

Other Important Factors

- What about information novelty, timeliness, appropriateness, validity, comprehensibility, density, medium, ...?
- In IR, we really want to maximize: $P(U(f_i, \ldots, f_n) \mid Q \,\&\, \{C\} \,\&\, U \,\&\, H)$, where $Q$ = query, $\{C\}$ = collection set, $U$ = user profile, $H$ = interaction history
- ...but we don’t yet know how. Darn.

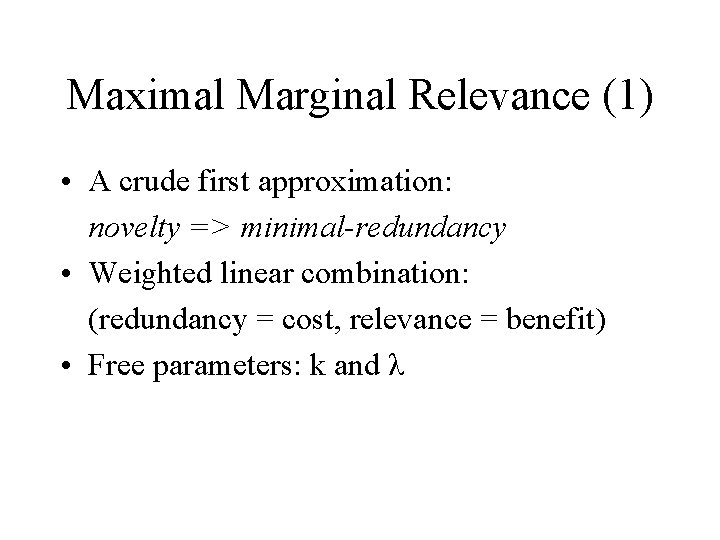

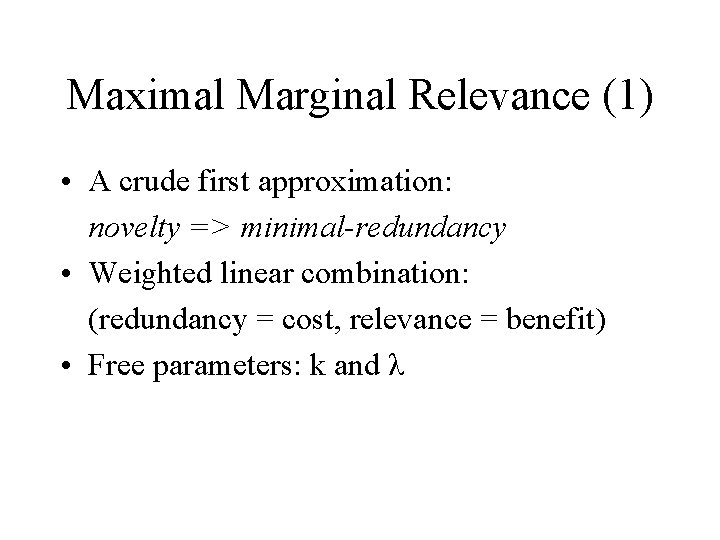

Maximal Marginal Relevance (1)

- A crude first approximation: novelty => minimal redundancy
- Weighted linear combination (redundancy = cost, relevance = benefit)
- Free parameters: $k$ and $\lambda$

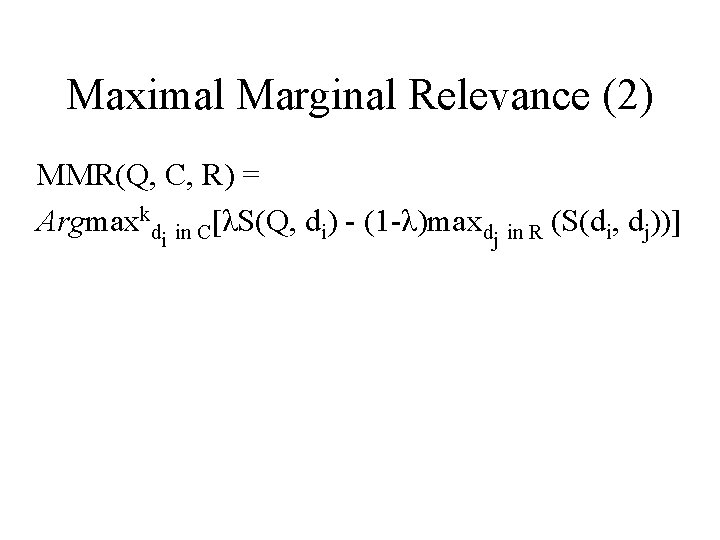

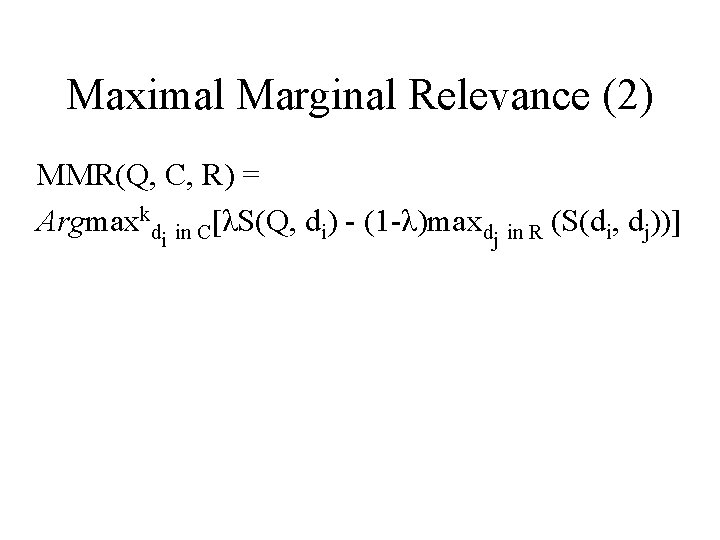

Maximal Marginal Relevance (2)

$MMR(Q, C, R) = \underset{d_i \in C}{\operatorname{Argmax}_k} \left[ \lambda S(Q, d_i) - (1 - \lambda) \max_{d_j \in R} S(d_i, d_j) \right]$

Maximal Marginal Relevance (MMR) (3)

COMPUTATION OF MMR RERANKING

1. Standard IR retrieval of top-N docs: Let $D_r = IR(D, Q, N)$
2. Rank the $d_i \in D_r$ with max $sim(d_i, Q)$ as top doc, i.e. Let Ranked = $\{d_i\}$
3. Let $D_r = D_r \setminus \{d_i\}$
4. While $D_r$ is not empty, do:
   - a. Find $d_i$ with max MMR($D_r$, $Q$, Ranked)
   - b. Let Ranked = Ranked.$d_i$ (append $d_i$ to Ranked)
   - c. Let $D_r = D_r \setminus \{d_i\}$
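The reranking loop above can be sketched in a few lines. The similarity function is left as a parameter; the Jaccard word-overlap `jaccard` used in the demo, the default `lam=0.7`, and the candidate strings are assumptions chosen only to make the example self-contained.

```python
def jaccard(a, b):
    """Hypothetical similarity: word-set overlap between two strings."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 0.0


def mmr_rerank(query, candidates, sim, lam=0.7):
    """Greedy MMR: repeatedly pick the candidate with the best
    lam * relevance - (1 - lam) * redundancy with already ranked items."""
    remaining = list(candidates)
    ranked = []
    while remaining:
        def mmr_score(doc):
            # Redundancy is 0 while nothing is ranked, so the first pick
            # is simply the document most similar to the query.
            redundancy = max((sim(doc, r) for r in ranked), default=0.0)
            return lam * sim(query, doc) - (1 - lam) * redundancy

        best = max(remaining, key=mmr_score)
        ranked.append(best)
        remaining.remove(best)
    return ranked


query = "silver photography"
candidates = [
    "silver photography emulsion",
    "silver photography emulsion film",
    "silver crystals photography history",
]
print(mmr_rerank(query, candidates, jaccard, lam=0.7))
print(mmr_rerank(query, candidates, jaccard, lam=1.0))
```

With `lam=1.0` the ranking reduces to plain relevance order; lowering it pushes the near-duplicate second candidate below the more novel third one.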

Maximal Marginal Relevance (MMR) (4)

Applications:

- Ranking retrieved documents from an IR engine
- Ranking passages for inclusion in summaries

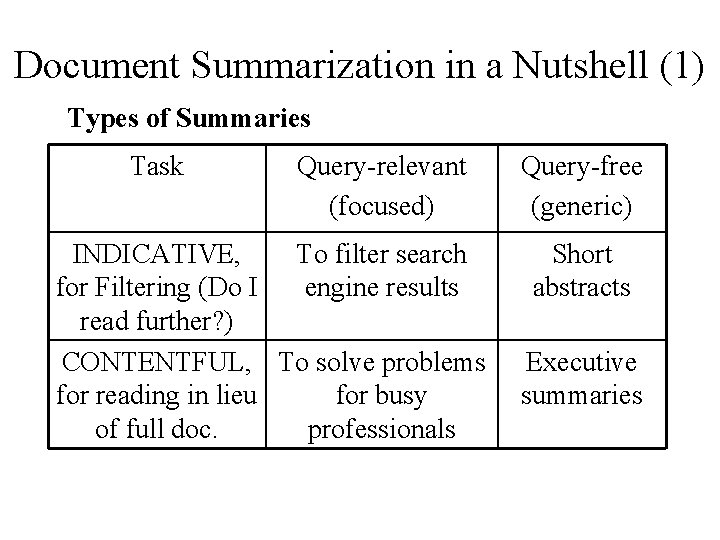

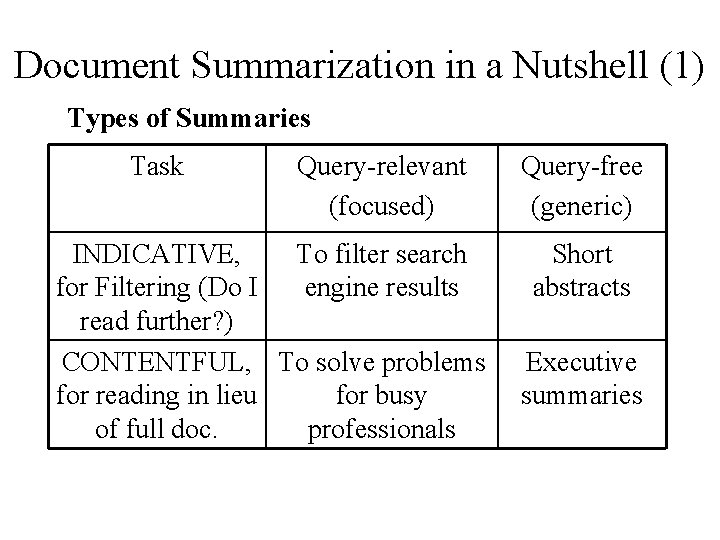

Document Summarization in a Nutshell (1)

Types of Summaries

| Task | Query-relevant (focused) | Query-free (generic) |
|---|---|---|
| INDICATIVE, for Filtering (Do I read further?) | To filter search engine results | Short abstracts |
| CONTENTFUL, for reading in lieu of full doc. | To solve problems for busy professionals | Executive summaries |

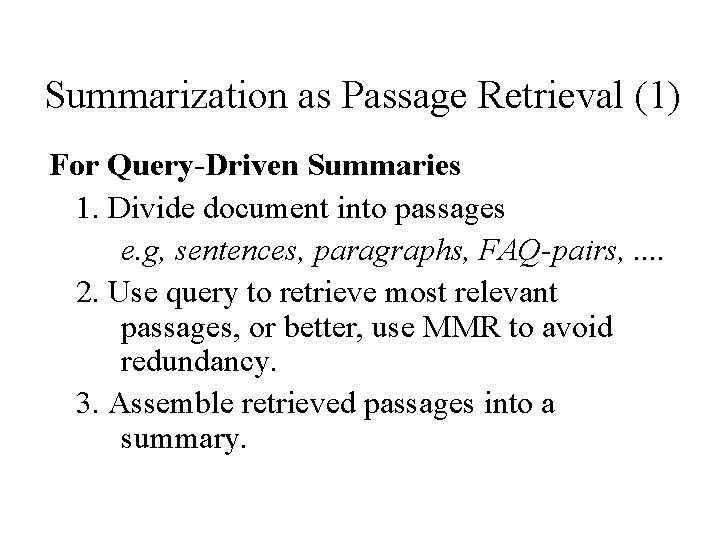

Summarization as Passage Retrieval (1)

For Query-Driven Summaries

1. Divide document into passages, e.g., sentences, paragraphs, FAQ-pairs, ...
2. Use query to retrieve most relevant passages, or better, use MMR to avoid redundancy.
3. Assemble retrieved passages into a summary.
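The three steps above can be sketched with sentences as passages and plain relevance ranking in step 2. Everything specific here is an assumption: the function name `summarize`, the regex-based sentence splitter, the word-overlap score, and the choice to present selected passages in their original document order. An MMR-style reranker could replace the simple sort to reduce redundancy.

```python
import re


def summarize(document, query, n_passages=2):
    """Query-driven extractive summary: split, rank by overlap, reassemble."""
    # Step 1: divide the document into passages (here: sentences).
    passages = [s.strip() for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]
    q_words = set(re.findall(r"\w+", query.lower()))

    def score(passage):
        words = set(re.findall(r"\w+", passage.lower()))
        union = q_words | words
        return len(q_words & words) / len(union) if union else 0.0

    # Step 2: keep the indices of the most query-relevant passages.
    top = sorted(range(len(passages)), key=lambda i: score(passages[i]), reverse=True)
    top = top[:n_passages]
    # Step 3: assemble the selected passages in document order.
    return " ".join(passages[i] for i in sorted(top))


document = (
    "Rainfall in the Sahara is in decline. "
    "Football ratings fell this year. "
    "Farms near the Sahara suffer as rainfall drops."
)
print(summarize(document, "decline in rainfall near Sahara farms"))
```

Keeping document order in the final step tends to read more naturally than relevance order, though either is a defensible design choice.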