- Slides: 30

FD.io: The Universal Dataplane

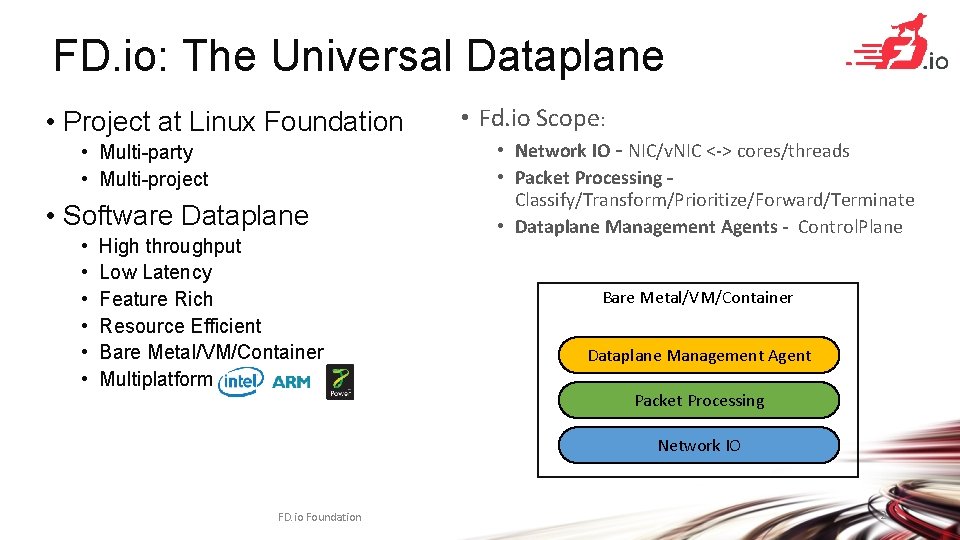

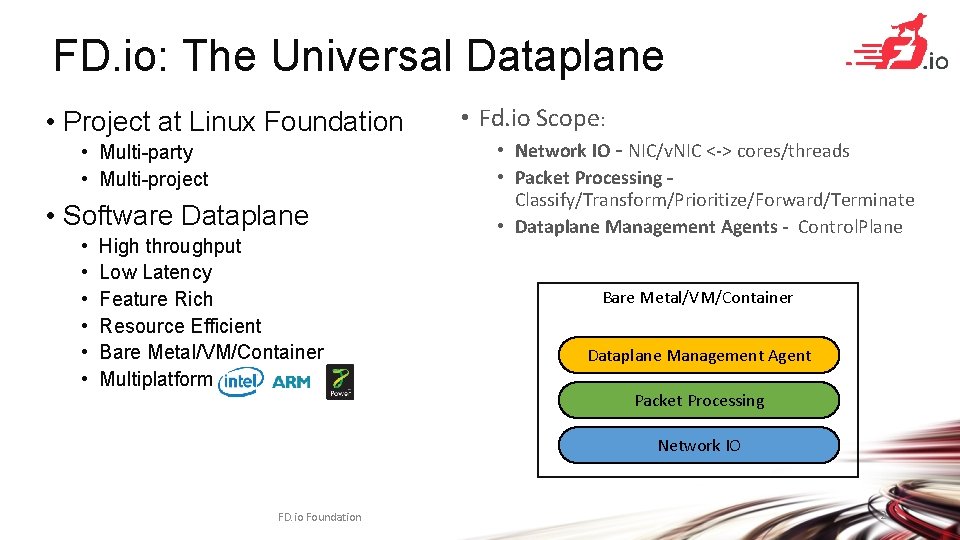

FD.io: The Universal Dataplane

- Project at Linux Foundation
  - Multi-party
  - Multi-project
- Software Dataplane
  - High throughput
  - Low latency
  - Feature rich
  - Resource efficient
  - Bare metal/VM/container
  - Multiplatform
- FD.io scope:
  - Network IO: NIC/vNIC <-> cores/threads
  - Packet Processing: classify/transform/prioritize/forward/terminate
  - Dataplane Management Agents: control plane

Diagram (bare metal/VM/container): Dataplane Management Agent, Packet Processing, Network IO.

FD.io Foundation

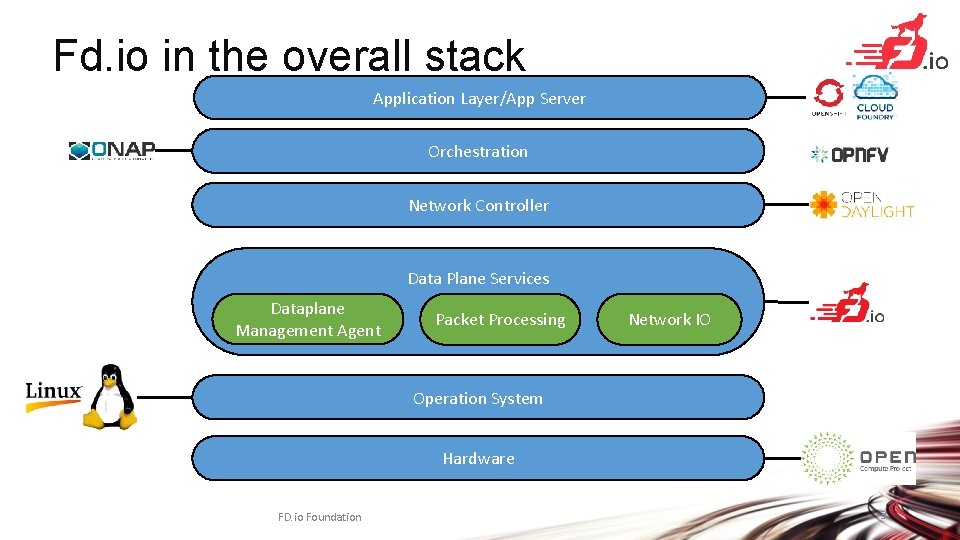

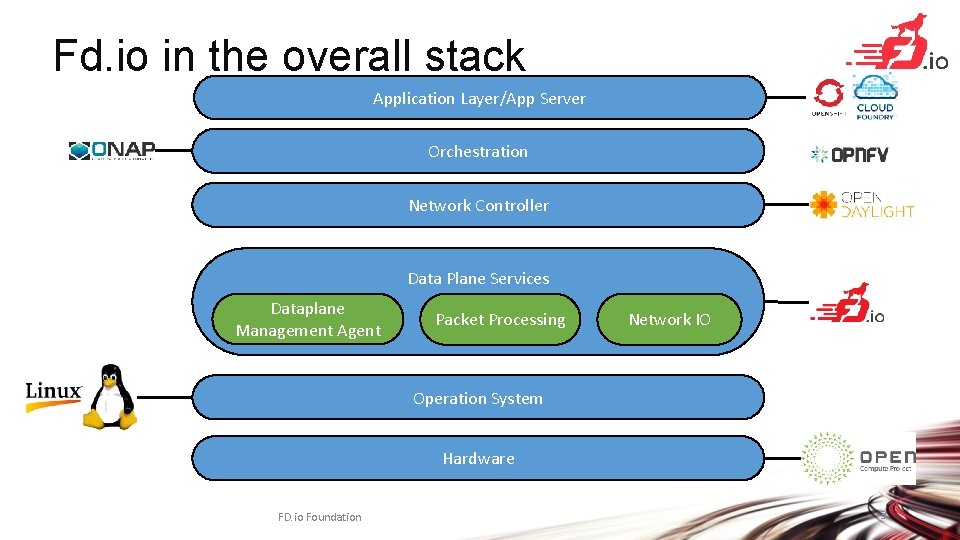

FD.io in the overall stack

- Application Layer/App Server
- Orchestration
- Network Controller
- Data Plane Services
  - Dataplane Management Agent
  - Packet Processing
  - Network IO
- Operating System
- Hardware

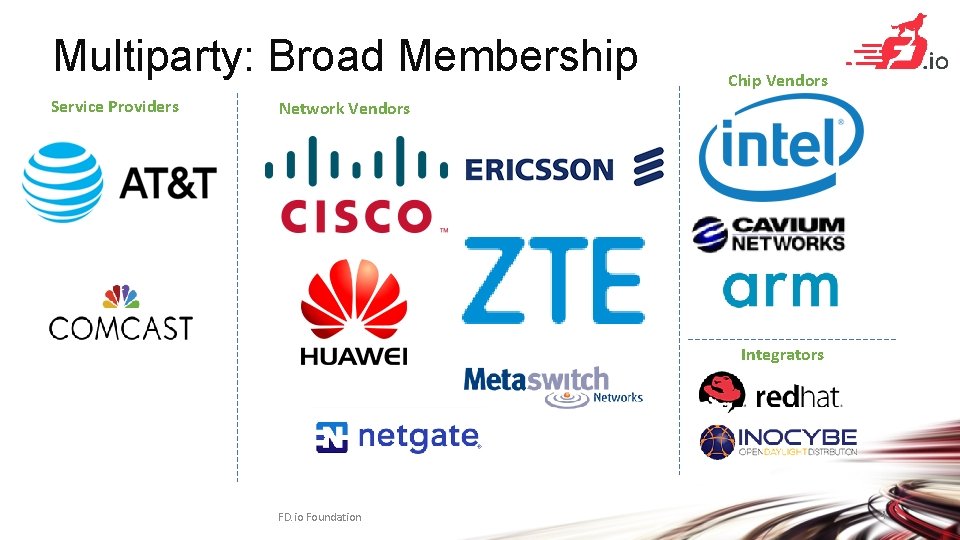

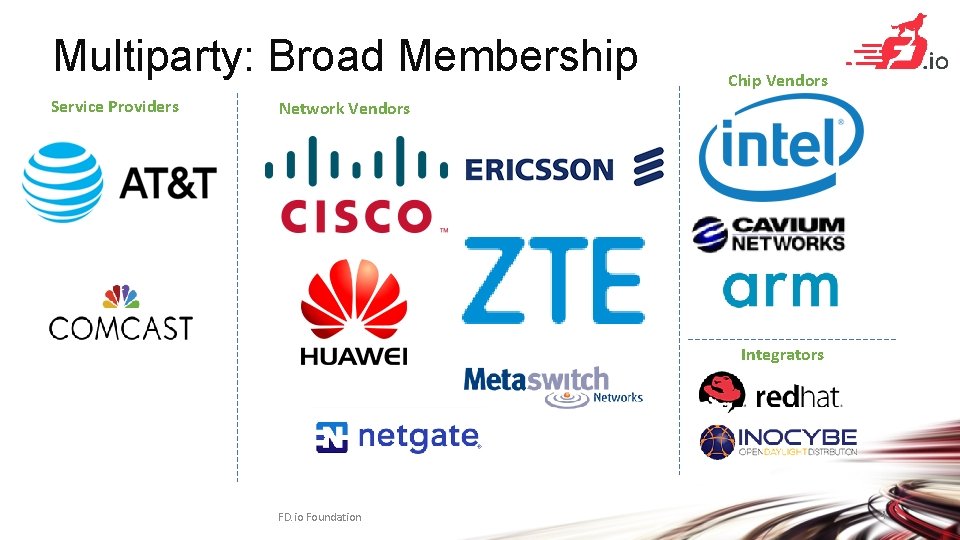

Multiparty: Broad Membership

- Service Providers
- Chip Vendors
- Network Vendors
- Integrators

Multiparty: Broad Contribution

- Qiniu
- Yandex
- Universitat Politècnica de Catalunya (UPC)

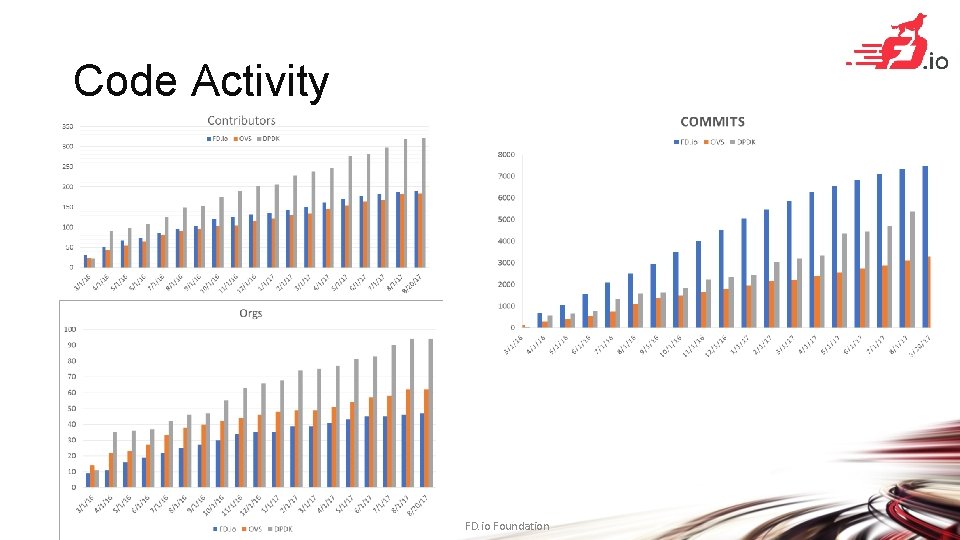

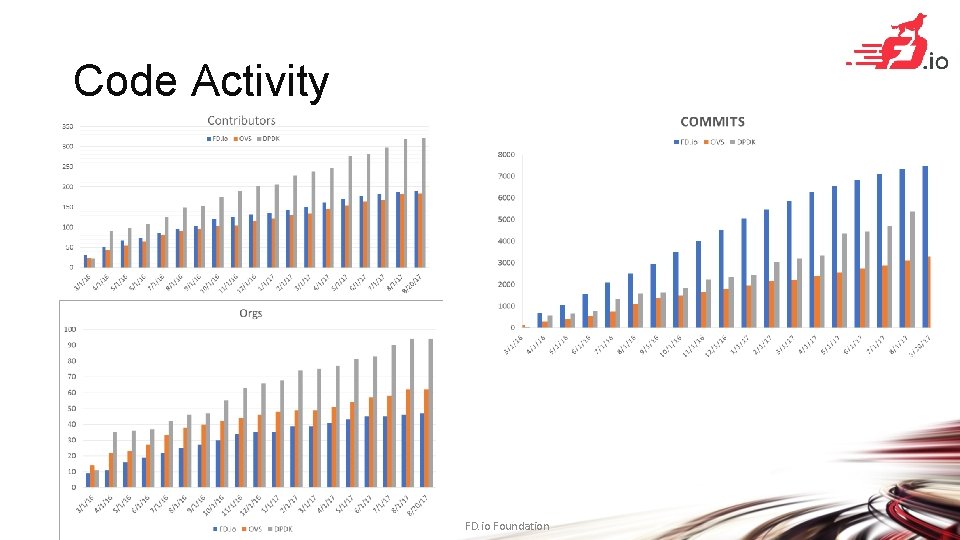

Code Activity

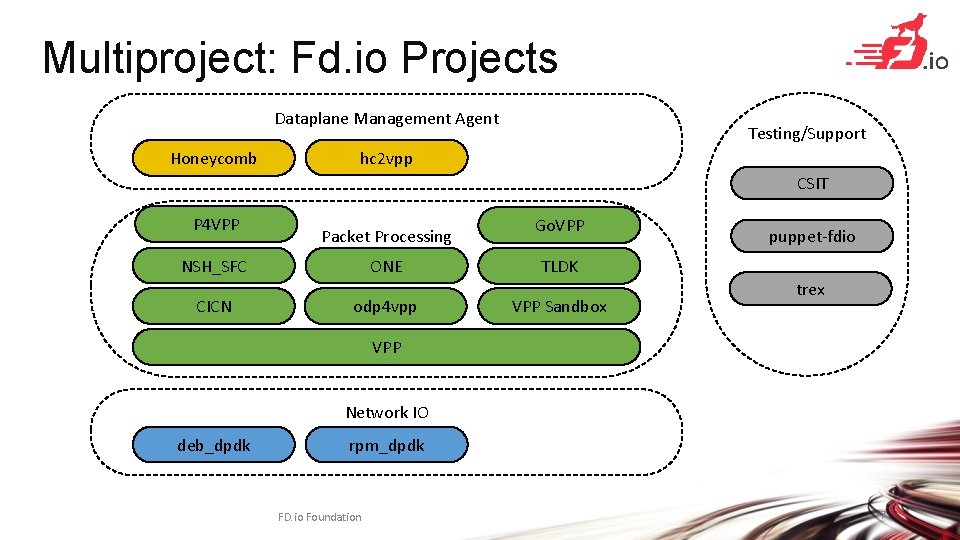

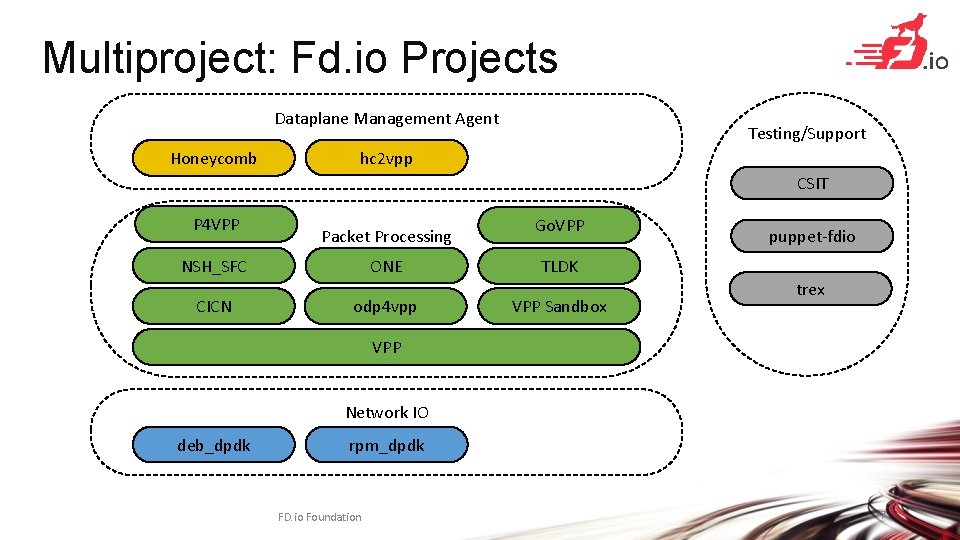

Multiproject: FD.io Projects

- Dataplane Management Agent: Honeycomb, hc2vpp, GoVPP
- Packet Processing: VPP, NSH_SFC, CICN, ONE, odp4vpp, TLDK, VPP Sandbox, P4VPP
- Network IO: deb_dpdk, rpm_dpdk
- Testing/Support: CSIT, puppet-fdio, trex

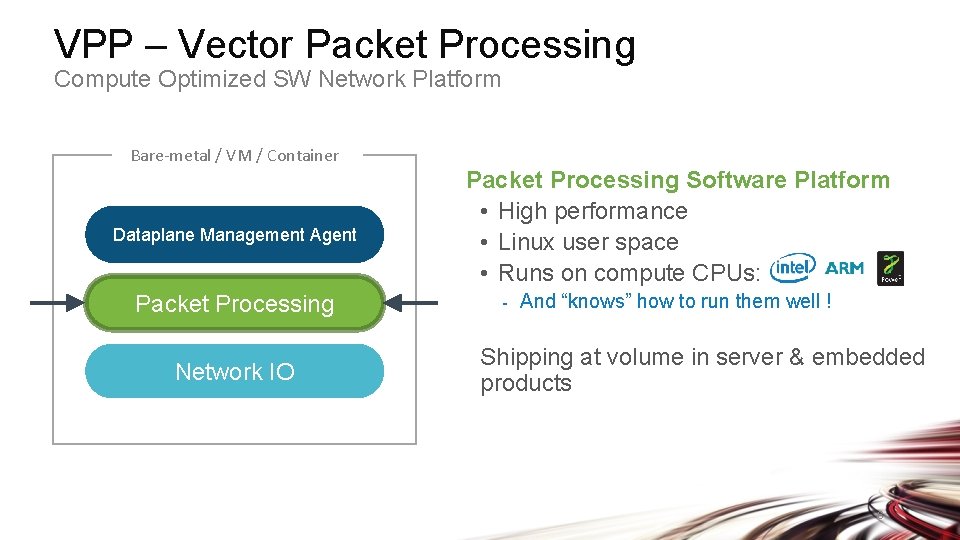

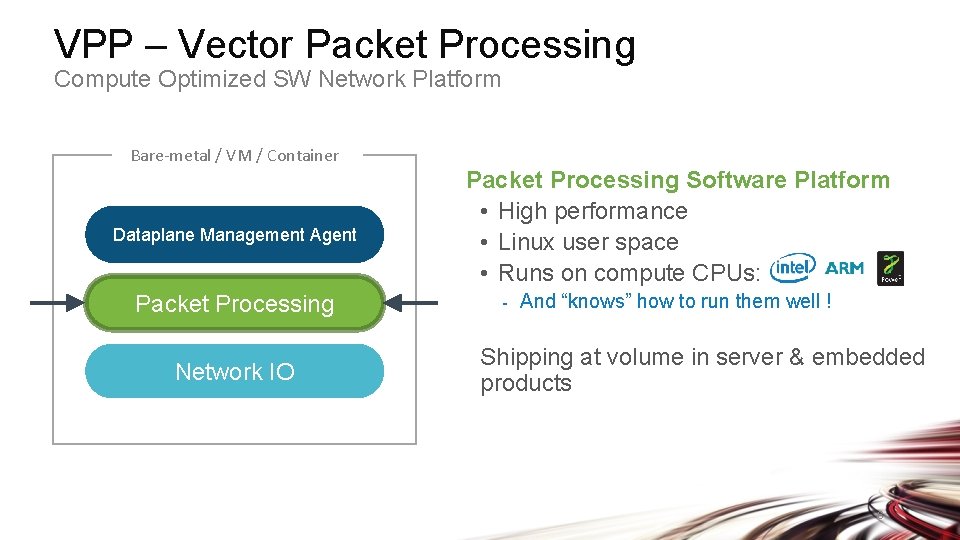

VPP: Vector Packet Processing

Compute Optimized SW Network Platform

Packet Processing Software Platform

- High performance
- Linux user space
- Runs on compute CPUs, and “knows” how to run them well!

Shipping at volume in server & embedded products.

Diagram (bare-metal / VM / container): Dataplane Management Agent, Packet Processing, Network IO.

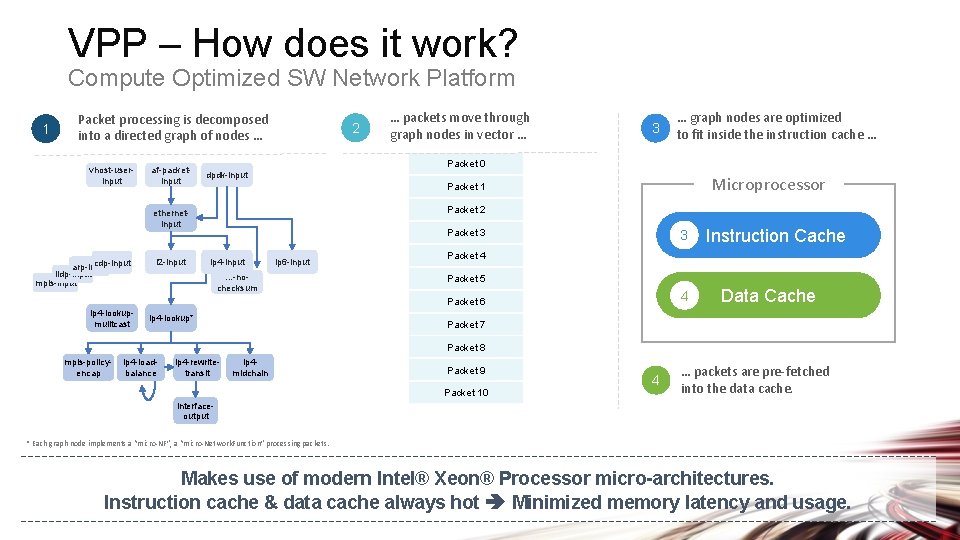

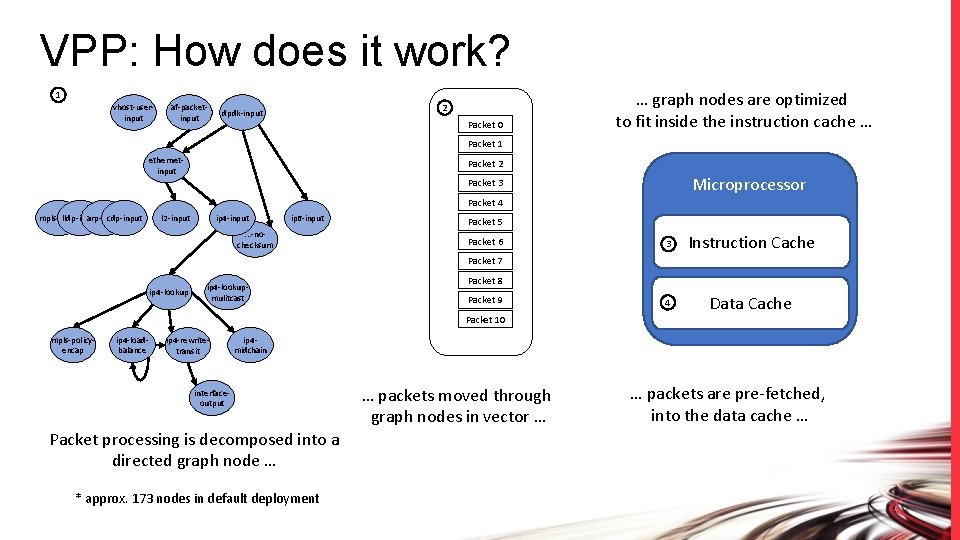

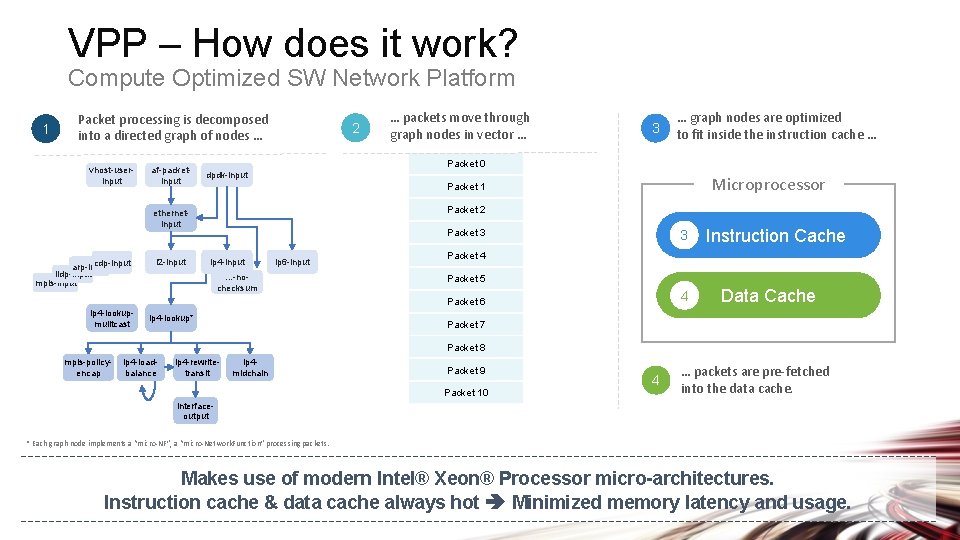

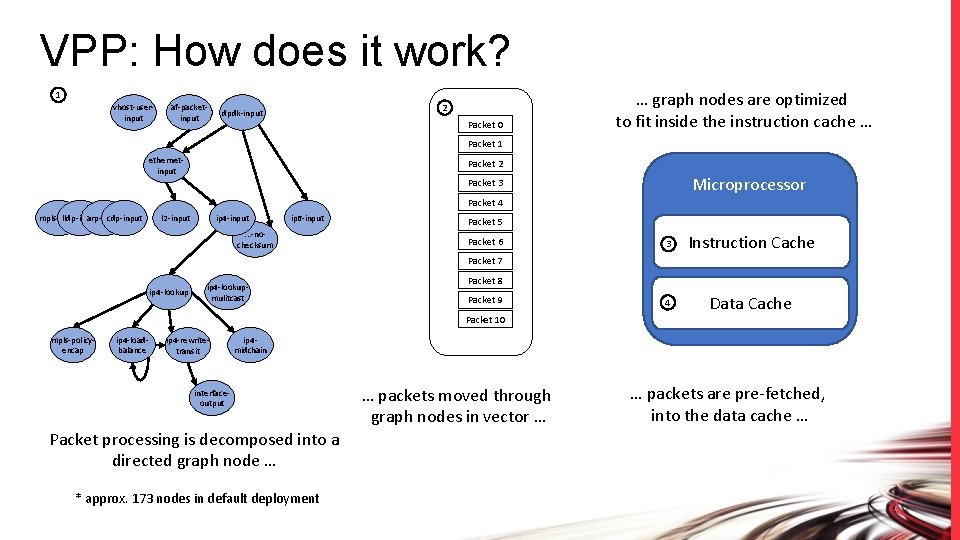

VPP: How does it work?

Compute Optimized SW Network Platform

1. Packet processing is decomposed into a directed graph of nodes …
2. … packets move through graph nodes in vector …
3. … graph nodes are optimized to fit inside the instruction cache …
4. … packets are pre-fetched into the data cache.

Graph nodes shown: dpdk-input, vhost-user-input, af-packet-input, ethernet-input, cdp-input, arp-input, lldp-input, mpls-input, l2-input, ip4-input, ip6-input, ...-nochecksum, ip4-lookup*, ip4-lookup-multicast, mpls-policy-encap, ip4-load-balance, ip4-rewrite-transit, ip4-midchain, interface-output.

Diagram: a vector of packets (Packet 0 to Packet 10) moving through the graph on a microprocessor with its instruction cache and data cache.

\* Each graph node implements a “micro-NF”, a “micro-NetworkFunction” processing packets.

- Makes use of modern Intel® Xeon® Processor micro-architectures.
- Instruction cache & data cache always hot.
- Minimized memory latency and usage.
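The effect of moving packets through the graph "in vector" can be illustrated with a small sketch. It is not VPP code: the node path is one possible route through the nodes named on the slide, and `node_switches` is a hypothetical stand-in for how often the CPU has to change which node's instructions it is running.

```python
# One possible path through the graph, using node names from the slide.
PATH = ["ethernet-input", "ip4-input", "ip4-lookup", "ip4-rewrite"]

def scalar_order(packets):
    """Packet-at-a-time: each packet walks the whole path before the next one."""
    return [(node, pkt) for pkt in packets for node in PATH]

def vector_order(packets):
    """Vector-at-a-time: each node handles every packet before the next node runs."""
    return [(node, pkt) for node in PATH for pkt in packets]

def node_switches(order):
    """Count how often consecutive work items belong to different nodes."""
    return sum(1 for a, b in zip(order, order[1:]) if a[0] != b[0])

packets = [0, 1, 2, 3]
print(node_switches(scalar_order(packets)))  # 15
print(node_switches(vector_order(packets)))  # 3
```

Both orders do the same 16 units of work, but the vector order changes node only three times, which is the intuition behind keeping the instruction cache warm.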

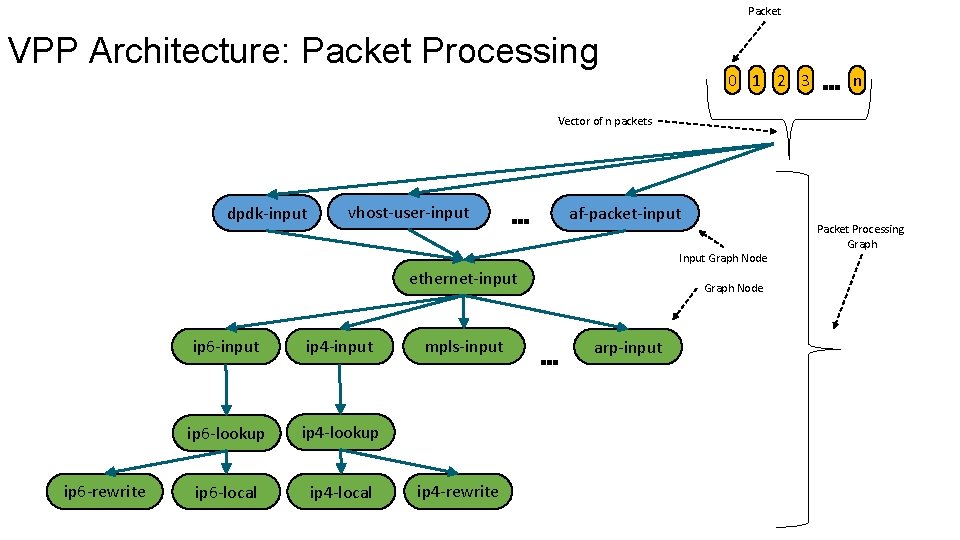

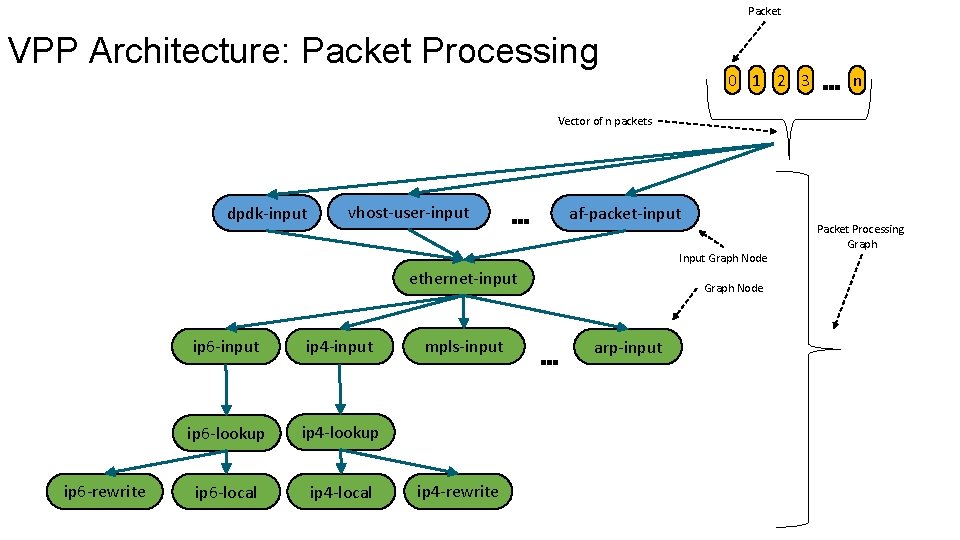

VPP Architecture: Packet Processing

A vector of n packets (Packet 0, 1, 2, 3, … n) enters the Packet Processing Graph through an input graph node.

- Input graph nodes: dpdk-input, vhost-user-input, …, af-packet-input
- Graph nodes: ethernet-input; ip6-input, ip4-input, mpls-input, …, arp-input; ip6-lookup, ip4-lookup; ip6-rewrite, ip6-local, ip4-local, ip4-rewrite

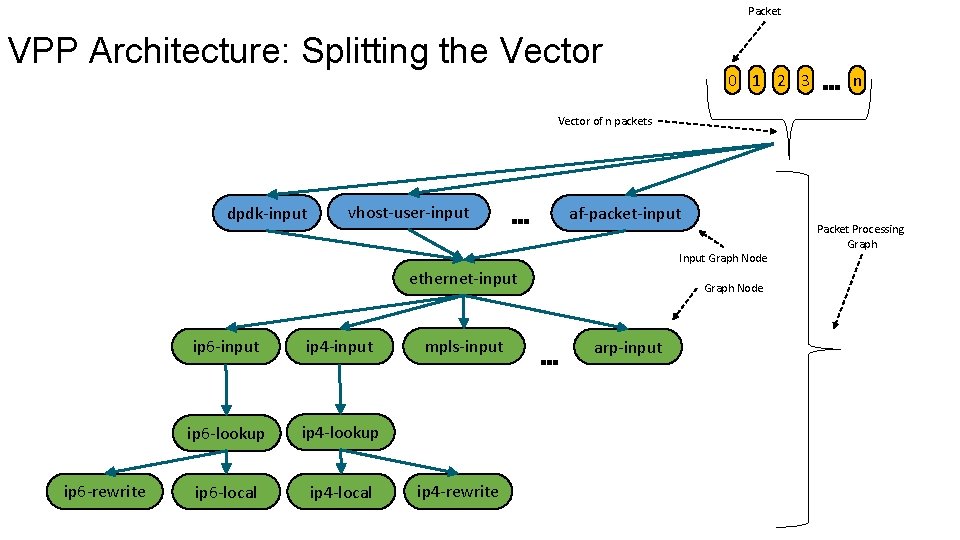

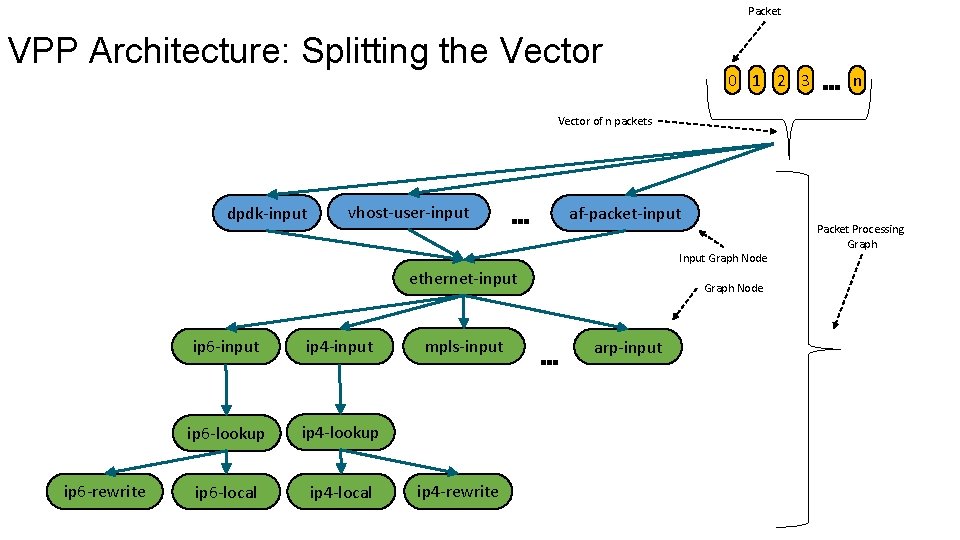

VPP Architecture: Splitting the Vector

A vector of n packets (Packet 0, 1, 2, 3, … n) enters the Packet Processing Graph through an input graph node and is split as packets take different paths through the graph.

- Input graph nodes: dpdk-input, vhost-user-input, …, af-packet-input
- Graph nodes: ethernet-input; ip6-input, ip4-input, mpls-input, …, arp-input; ip6-lookup, ip4-lookup; ip6-rewrite, ip6-local, ip4-local, ip4-rewrite
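A minimal sketch of splitting a vector, assuming a toy graph in which every node simply names the next node for each packet. The node names come from the slide; the packet representation (a dict with an `ethertype` field), the `NODES` table and both helper functions are illustrative assumptions, not VPP's data structures.

```python
from collections import deque

IPV4, IPV6 = 0x0800, 0x86DD  # standard EtherType values

# Toy node functions (not VPP code): each returns the name of the next node
# for one packet, or None when the packet leaves this toy graph.
NODES = {
    "ethernet-input": lambda pkt: "ip4-input" if pkt["ethertype"] == IPV4 else "ip6-input",
    "ip4-input": lambda pkt: "ip4-lookup",
    "ip4-lookup": lambda pkt: "ip4-rewrite",
    "ip4-rewrite": lambda pkt: None,
    "ip6-input": lambda pkt: "ip6-lookup",
    "ip6-lookup": lambda pkt: "ip6-rewrite",
    "ip6-rewrite": lambda pkt: None,
}

def split_by_next(node, frame):
    """Run one node over a whole frame and group packets by their next node."""
    groups = {}
    for pkt in frame:
        nxt = NODES[node](pkt)
        if nxt is not None:
            groups.setdefault(nxt, []).append(pkt)
    return groups

def run_graph(vector, first_node="ethernet-input"):
    """Process frames node by node; return the (node, packet count) visits."""
    pending = deque([(first_node, list(vector))])
    visits = []
    while pending:
        node, frame = pending.popleft()
        visits.append((node, len(frame)))
        pending.extend(split_by_next(node, frame).items())
    return visits

vector = [{"ethertype": IPV4}] * 3 + [{"ethertype": IPV6}]
for node, count in run_graph(vector):
    print(node, count)
```

With three IPv4 packets and one IPv6 packet, `ethernet-input` sees all four, after which the vector continues as a frame of three on the `ip4-*` nodes and a frame of one on the `ip6-*` nodes.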

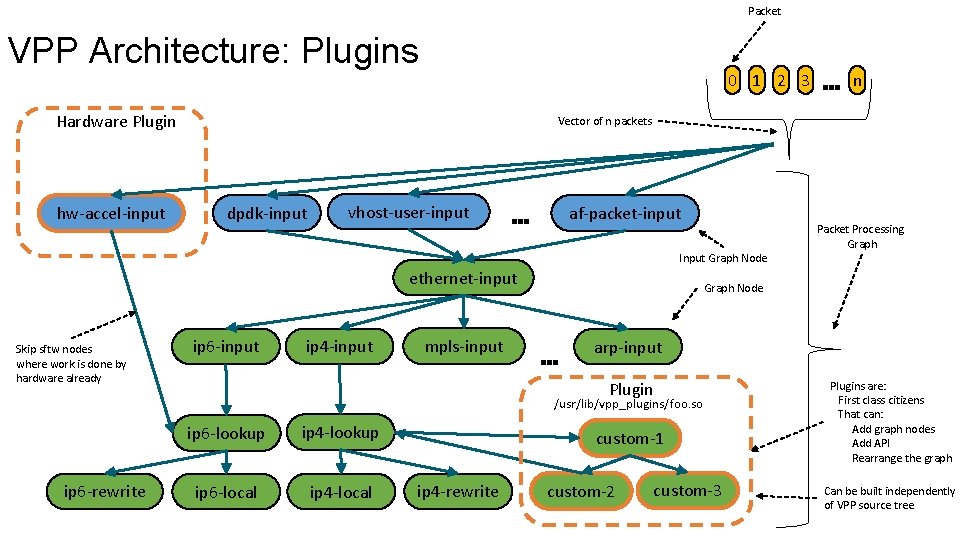

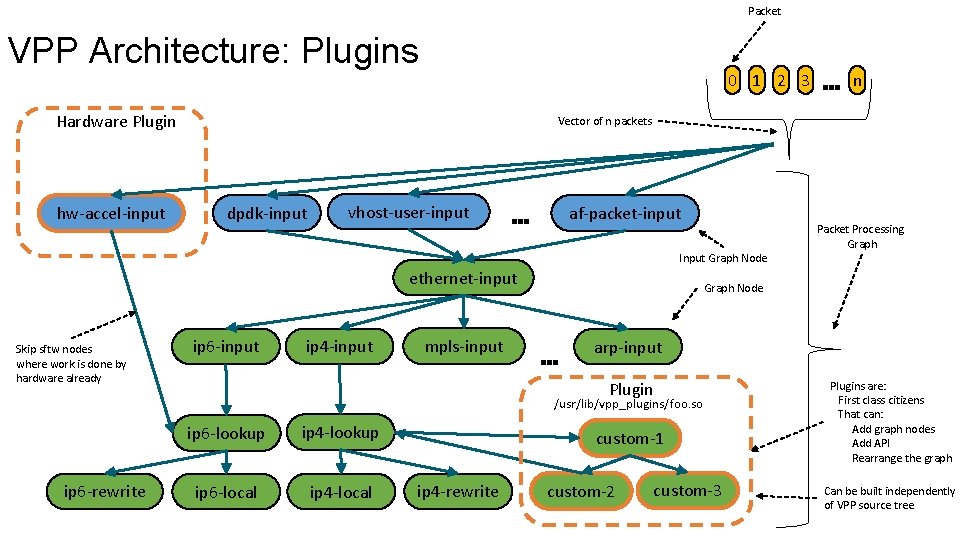

VPP Architecture: Plugins

A vector of n packets (Packet 0, 1, 2, 3, … n) enters the Packet Processing Graph through an input graph node.

- Input graph nodes: hw-accel-input (hardware plugin), dpdk-input, vhost-user-input, …, af-packet-input
- Graph nodes: ethernet-input; ip6-input, ip4-input, mpls-input, …, arp-input; ip6-lookup, ip4-lookup; ip6-rewrite, ip6-local, ip4-local, ip4-rewrite
- Plugin nodes: custom-1, custom-2, custom-3 (plugin loaded from /usr/lib/vpp_plugins/foo.so)
- Hardware plugin: skip software nodes where work is done by hardware already

Plugins are first class citizens that can:

- Add graph nodes
- Add API
- Rearrange the graph

Plugins can be built independently of the VPP source tree.
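As a conceptual sketch only, the two graph-related plugin capabilities (adding a node and rearranging the graph) can be modelled with a tiny graph class. The `Graph` class, its methods and `custom_plugin_init` are hypothetical names invented for this illustration; real VPP plugins are shared objects written in C and register themselves through VPP's own mechanisms.

```python
class Graph:
    """Toy linear graph: each node name maps to the node that follows it."""

    def __init__(self):
        self.next_of = {}

    def add_node(self, name, next_node=None):
        self.next_of[name] = next_node

    def insert_after(self, existing, new_node):
        """Add new_node and splice it in directly after an existing node."""
        self.add_node(new_node, self.next_of[existing])
        self.next_of[existing] = new_node

    def path_from(self, start):
        path, node = [], start
        while node is not None:
            path.append(node)
            node = self.next_of[node]
        return path

def custom_plugin_init(graph):
    """Hypothetical plugin entry point: adds custom-1 after ip4-lookup."""
    graph.insert_after("ip4-lookup", "custom-1")

graph = Graph()
graph.add_node("ip4-rewrite")
graph.add_node("ip4-lookup", "ip4-rewrite")
graph.add_node("ip4-input", "ip4-lookup")

custom_plugin_init(graph)
print(graph.path_from("ip4-input"))
# ['ip4-input', 'ip4-lookup', 'custom-1', 'ip4-rewrite']
```

The base graph is built without any knowledge of `custom-1`; the plugin changes the path after the fact, which mirrors the slide's point that plugins can be developed outside the main source tree.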

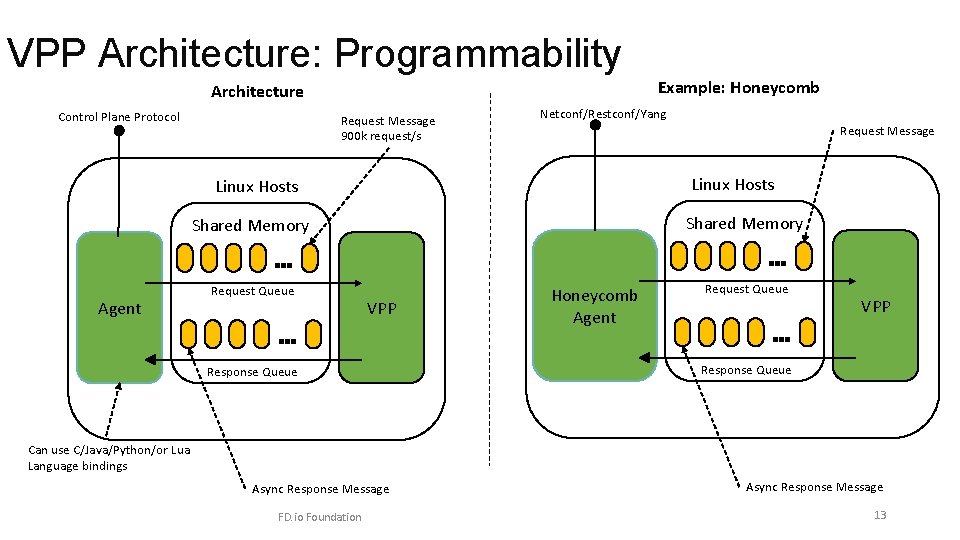

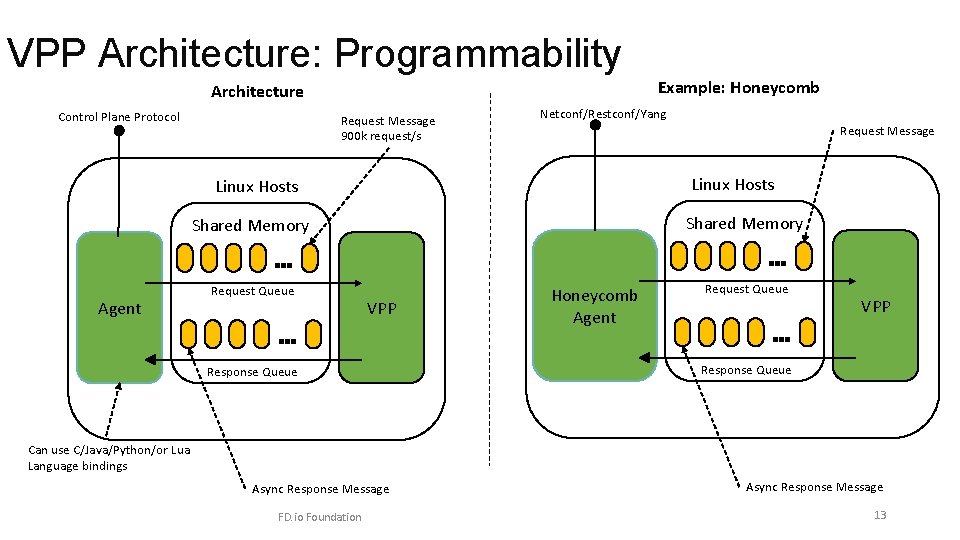

VPP Architecture: Programmability

Architecture

- Control plane protocol runs on Linux hosts and talks to an agent
- Agent and VPP exchange messages over shared memory: request messages go onto a request queue, async response messages come back on a response queue
- 900k request/s
- Can use C/Java/Python/Lua language bindings

Example: Honeycomb

- Control plane protocol: Netconf/Restconf/Yang
- Honeycomb Agent places request messages on the request queue and receives async response messages from VPP on the response queue
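The request queue / response queue pattern can be sketched as follows. This is a single-process model with ordinary Python deques standing in for the shared-memory queues; the `Channel` class, the message fields (`context`, `name`, `retval`) and the message name are assumptions made for illustration, not the VPP binary API.

```python
from collections import deque

class Channel:
    """Toy model of an agent <-> VPP message channel."""

    def __init__(self):
        self.request_queue = deque()
        self.response_queue = deque()

    def agent_send(self, context, name, **args):
        """Agent side: enqueue a request and return without waiting."""
        self.request_queue.append({"context": context, "name": name, "args": args})

    def vpp_poll(self):
        """VPP side: drain pending requests and enqueue one reply per request."""
        while self.request_queue:
            req = self.request_queue.popleft()
            self.response_queue.append(
                {"context": req["context"], "name": req["name"] + "_reply", "retval": 0}
            )

    def agent_collect(self):
        """Agent side: match replies to requests by their context value."""
        replies = {}
        while self.response_queue:
            reply = self.response_queue.popleft()
            replies[reply["context"]] = reply
        return replies

chan = Channel()
for ctx in range(3):
    chan.agent_send(ctx, "hypothetical_set_flag", enabled=True)  # no blocking
chan.vpp_poll()
replies = chan.agent_collect()
print(sorted(replies))  # [0, 1, 2]
```

Because the agent does not wait for each reply before sending the next request, many requests can be in flight at once, which is what makes a high request rate plausible.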

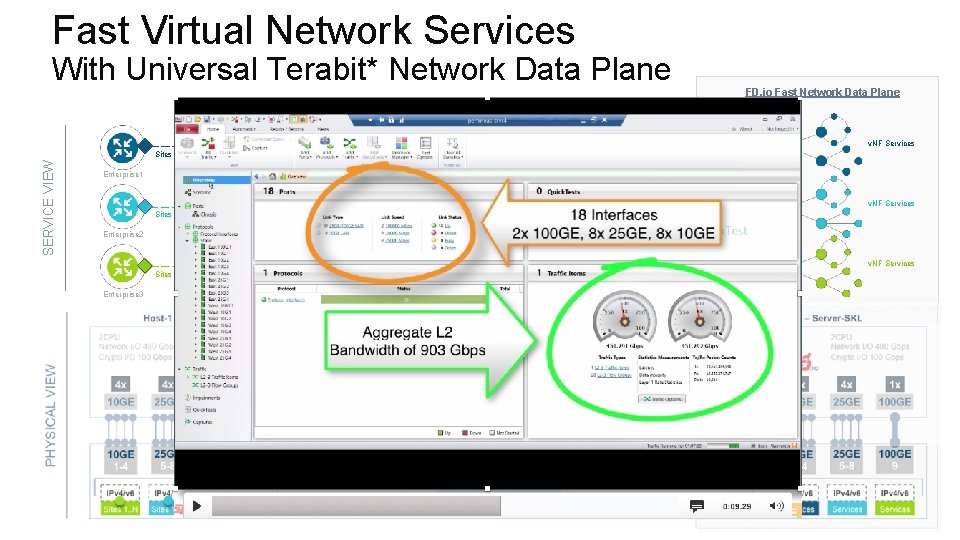

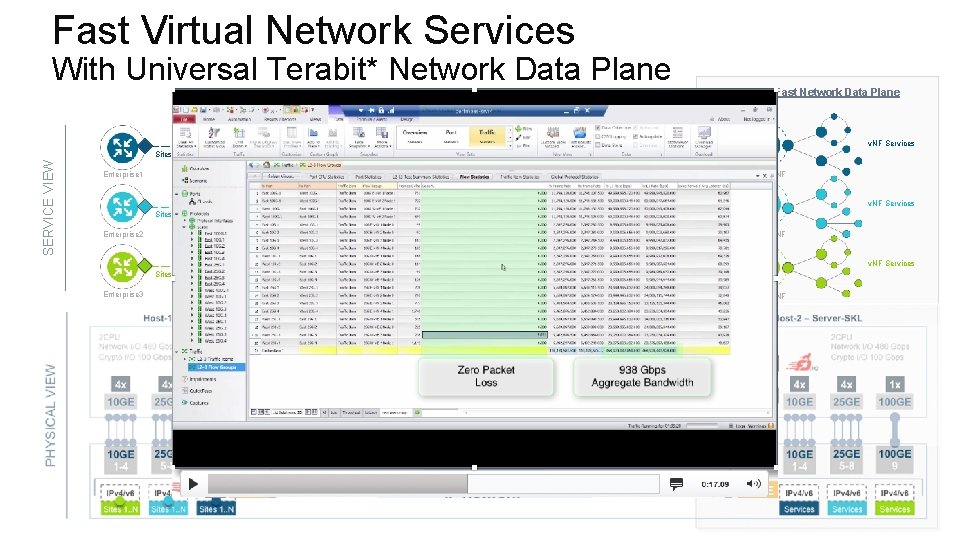

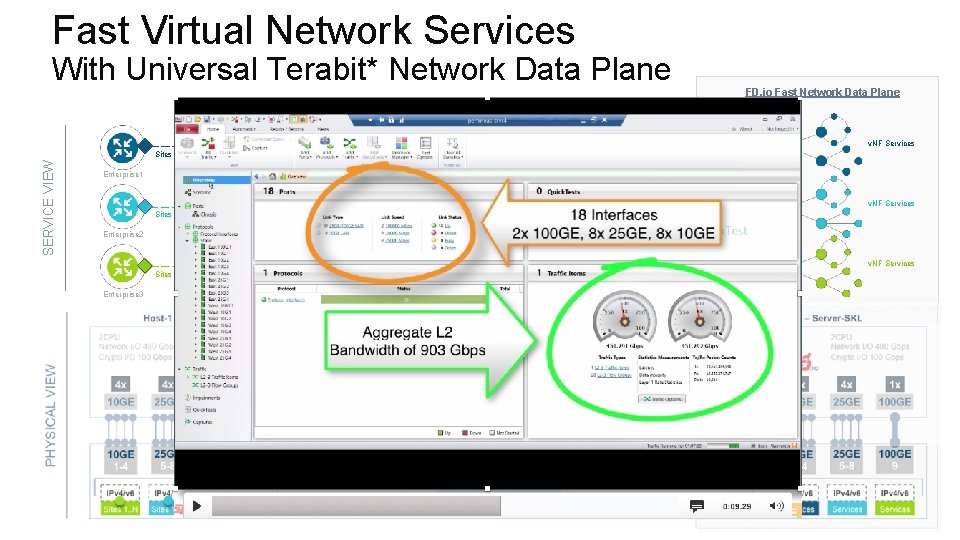

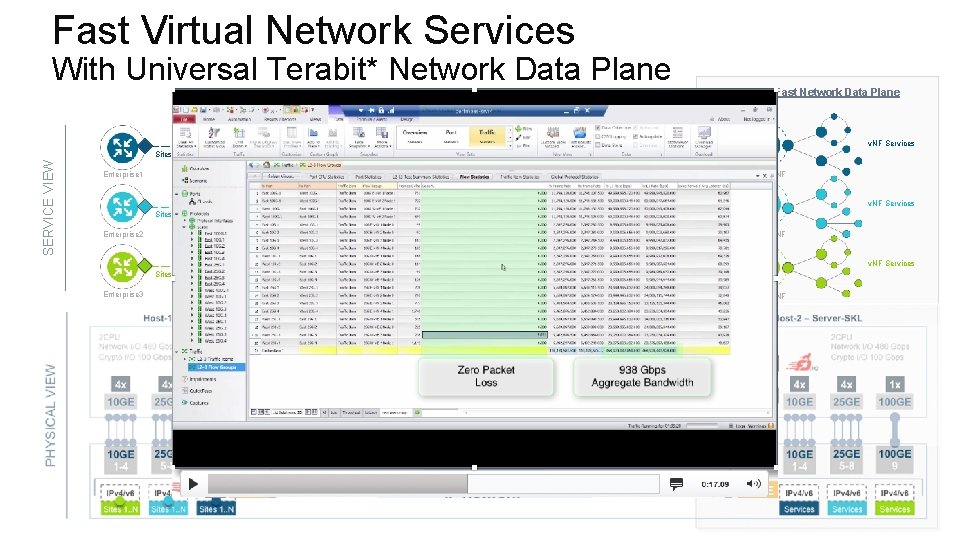

Fast Virtual Network Services With Universal Terabit* Network Data Plane

FD.io Fast Network Data Plane: Software Defined Network for Cloud Network Services, IPVPN and Internet Security

Service view

- Enterprise 1, Enterprise 2, Enterprise 3: each with Sites 1..N, a vRouter, vNF Services, IPVPN and L2VPN Overlays*, IPSec/SSL Crypto
- IP Network (private or public), IPVPN Service

Physical view

- Host-1 and Host-2 (Server-SKL): 2 CPU each, Network I/O 480 Gbps, Crypto I/O 100 Gbps
- Port types shown: 10 GE, 25 GE, 100 GE
- Traffic Simulator and Traffic Generator attached for IPv4/v6 Sites 1..N and IPv4/v6 Services

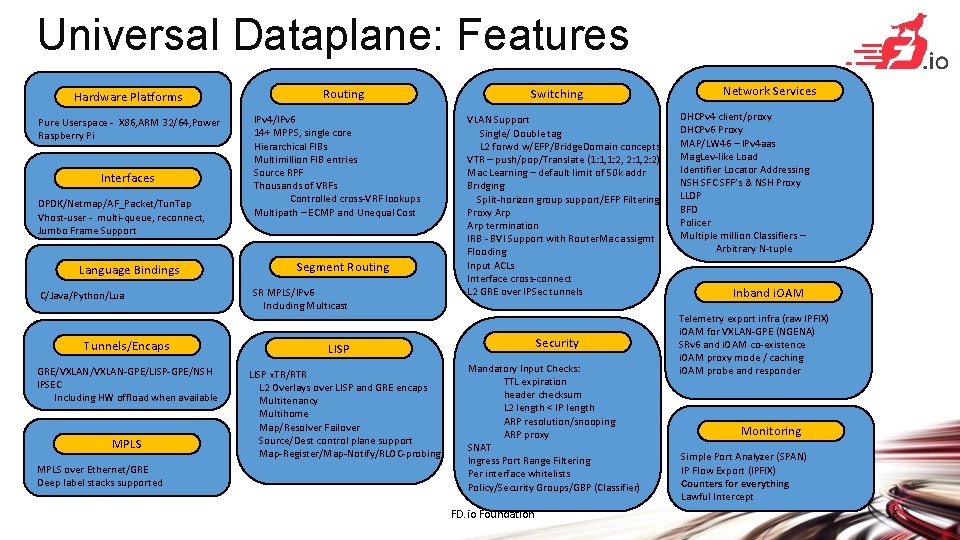

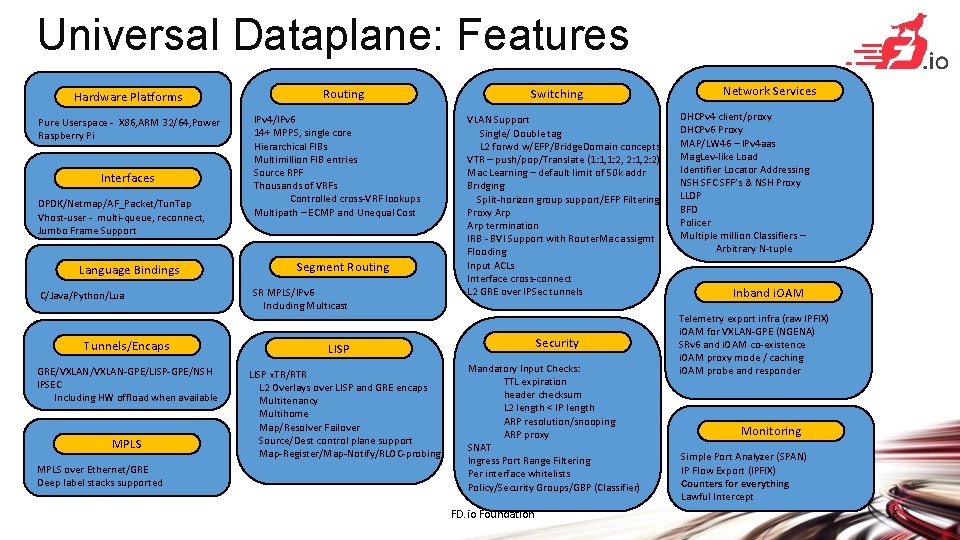

Universal Dataplane: Features

- Hardware Platforms
  - Pure userspace: x86, ARM 32/64, Power
  - Raspberry Pi
- Interfaces
  - DPDK/Netmap/AF_Packet/TunTap
  - Vhost-user: multi-queue, reconnect, jumbo frame support
- Language Bindings
  - C/Java/Python/Lua
- Tunnels/Encaps
  - GRE/VXLAN-GPE/LISP-GPE/NSH
  - IPSEC, including HW offload when available
- MPLS
  - MPLS over Ethernet/GRE
  - Deep label stacks supported
- Routing
  - IPv4/IPv6: 14+ MPPS, single core
  - Hierarchical FIBs
  - Multimillion FIB entries
  - Source RPF
  - Thousands of VRFs
  - Controlled cross-VRF lookups
  - Multipath: ECMP and unequal cost
- Segment Routing
  - SR MPLS/IPv6, including multicast
- Switching
  - VLAN support: single/double tag
  - L2 forwarding with EFP/BridgeDomain concepts
  - VTR: push/pop/translate (1:1, 1:2, 2:1, 2:2)
  - MAC learning: default limit of 50k addresses
  - Bridging: split-horizon group support/EFP filtering
  - Proxy ARP
  - ARP termination
  - IRB: BVI support with RouterMac assignment
  - Flooding
  - Input ACLs
  - Interface cross-connect
  - L2 GRE over IPSec tunnels
- LISP
  - LISP xTR/RTR
  - L2 overlays over LISP and GRE encaps
  - Multitenancy
  - Multihome
  - Map/Resolver failover
  - Source/Dest control plane support
  - Map-Register/Map-Notify/RLOC-probing
- Security
  - Mandatory input checks: TTL expiration, header checksum, L2 length < IP length, ARP resolution/snooping, ARP proxy
  - SNAT
  - Ingress port range filtering
  - Per interface whitelists
  - Policy/Security Groups/GBP (Classifier)
- Network Services
  - DHCPv4 client/proxy
  - DHCPv6 proxy
  - MAP/LW46: IPv4aas
  - MagLev-like Load
  - Identifier Locator Addressing
  - NSH SFC SFF’s & NSH Proxy
  - LLDP
  - BFD
  - Policer
  - Multiple million classifiers: arbitrary N-tuple
- Inband iOAM
  - Telemetry export infra (raw IPFIX)
  - iOAM for VXLAN-GPE (NGENA)
  - SRv6 and iOAM co-existence
  - iOAM proxy mode / caching
  - iOAM probe and responder
- Monitoring
  - Simple Port Analyzer (SPAN)
  - IP Flow Export (IPFIX)
  - Counters for everything
  - Lawful Intercept

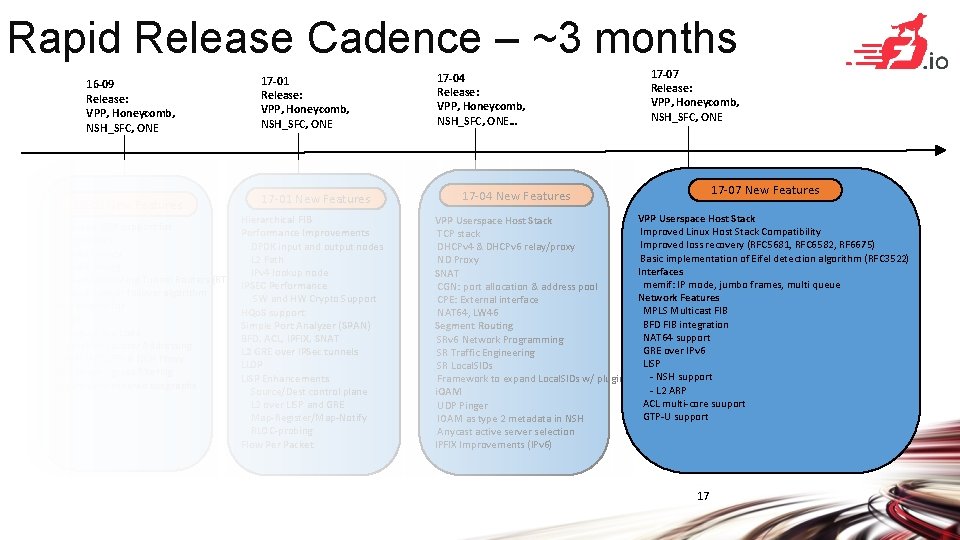

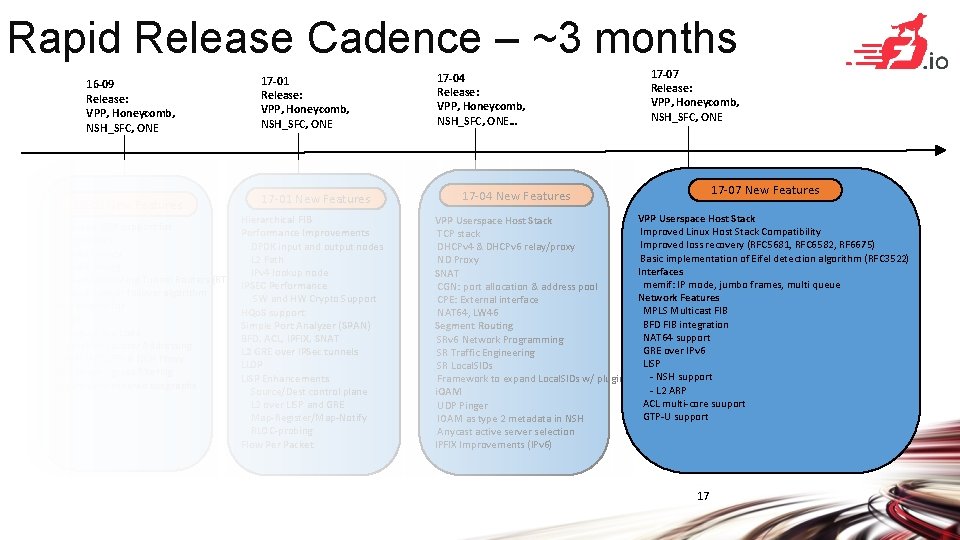

Rapid Release Cadence: ~3 months

16-09 Release: VPP, Honeycomb, NSH_SFC, ONE

16-09 New Features

- Enhanced LISP support for
  - L2 overlays
  - Multitenancy
  - Multihoming
  - Re-encapsulating Tunnel Routers (RTR) support
  - Map-Resolver failover algorithm
- New plugins for
  - SNAT
  - MagLev-like Load
  - Identifier Locator Addressing
  - NSH SFC SFF’s & NSH Proxy
- Port range ingress filtering
- Dynamically ordered subgraphs

17-01 Release: VPP, Honeycomb, NSH_SFC, ONE

17-01 New Features

- Hierarchical FIB
- Performance improvements
  - DPDK input and output nodes
  - L2 path
  - IPv4 lookup node
- IPSEC performance
  - SW and HW crypto support
- HQoS support
- Simple Port Analyzer (SPAN)
- BFD, ACL, IPFIX, SNAT
- L2 GRE over IPSec tunnels
- LLDP
- LISP enhancements
  - Source/Dest control plane
  - L2 over LISP and GRE
  - Map-Register/Map-Notify
  - RLOC-probing
- Flow Per Packet

17-04 Release: VPP, Honeycomb, NSH_SFC, ONE…

17-04 New Features

- VPP Userspace Host Stack
  - TCP stack
  - DHCPv4 & DHCPv6 relay/proxy
  - ND Proxy
- SNAT
  - CGN: port allocation & address pool
  - CPE: external interface
  - NAT64, LW46
- Segment Routing
  - SRv6 Network Programming
  - SR Traffic Engineering
  - SR LocalSIDs
  - Framework to expand LocalSIDs w/ plugins
- iOAM
  - UDP Pinger
  - IOAM as type 2 metadata in NSH
  - Anycast active server selection
- IPFIX improvements (IPv6)

17-07 Release: VPP, Honeycomb, NSH_SFC, ONE

17-07 New Features

- Improved Linux Host Stack compatibility
  - Improved loss recovery (RFC 5681, RFC 6582, RFC 6675)
  - Basic implementation of Eifel detection algorithm (RFC 3522)
- Interfaces
  - memif: IP mode, jumbo frames, multi queue
- Network Features
  - MPLS Multicast FIB
  - BFD FIB integration
  - NAT64 support
  - GRE over IPv6
  - LISP: NSH support, L2 ARP
  - ACL multi-core support
  - GTP-U support

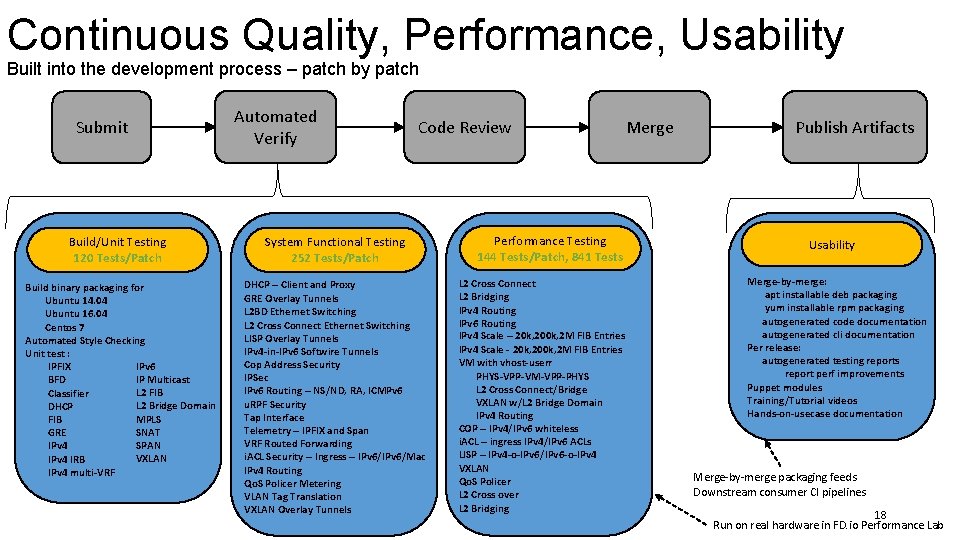

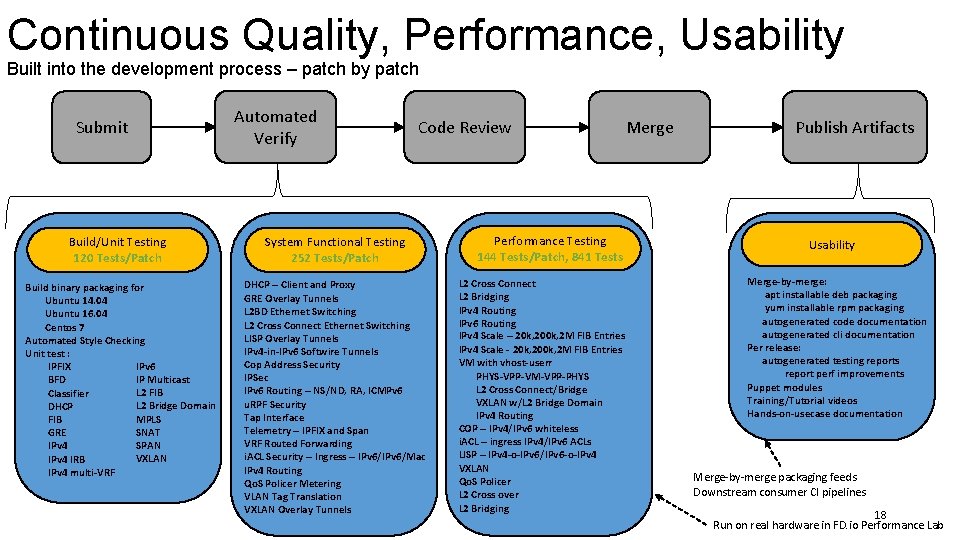

Continuous Quality, Performance, Usability

Built into the development process, patch by patch.

Pipeline: Submit, Automated Verify, Code Review, Merge, Publish Artifacts

- Build/Unit Testing: 120 Tests/Patch
  - Build binary packaging for Ubuntu 14.04, Ubuntu 16.04, CentOS 7
  - Automated style checking
  - Unit tests: IPv6, IPFIX, IP Multicast, BFD, L2 FIB, Classifier, L2 Bridge Domain, DHCP, MPLS, FIB, SNAT, GRE, SPAN, IPv4, VXLAN, IPv4 IRB, IPv4 multi-VRF
- System Functional Testing: 252 Tests/Patch
  - DHCP: client and proxy
  - GRE overlay tunnels
  - L2BD Ethernet switching
  - L2 cross connect Ethernet switching
  - LISP overlay tunnels
  - IPv4-in-IPv6 softwire tunnels
  - Cop address security
  - IPSec
  - IPv6 routing: NS/ND, RA, ICMPv6
  - uRPF security
  - Tap interface
  - Telemetry: IPFIX and SPAN
  - VRF routed forwarding
  - iACL security: ingress, IPv6/MAC
  - IPv4 routing
  - QoS policer metering
  - VLAN tag translation
  - VXLAN overlay tunnels
- Performance Testing: 144 Tests/Patch, 841 Tests (run on real hardware in the FD.io Performance Lab)
  - L2 cross connect
  - L2 bridging
  - IPv4 routing
  - IPv6 routing
  - IPv4 scale: 20k, 200k, 2M FIB entries
  - IPv4 scale: 20k, 200k, 2M FIB entries
  - VM with vhost-user: PHYS-VPP-VM-VPP-PHYS
    - L2 cross connect/bridge
    - VXLAN w/ L2 bridge domain
    - IPv4 routing
  - COP: IPv4/IPv6 whitelist
  - iACL: ingress IPv4/IPv6 ACLs
  - LISP: IPv4-o-IPv6/IPv6-o-IPv4
  - VXLAN
  - QoS policer
  - L2 cross over
  - L2 bridging
- Usability
  - Merge-by-merge: apt installable deb packaging, yum installable rpm packaging, autogenerated code documentation, autogenerated CLI documentation
  - Per release: autogenerated testing reports, report perf improvements
  - Puppet modules
  - Training/tutorial videos
  - Hands-on use case documentation
- Publish Artifacts
  - Merge-by-merge packaging feeds
  - Downstream consumer CI pipelines

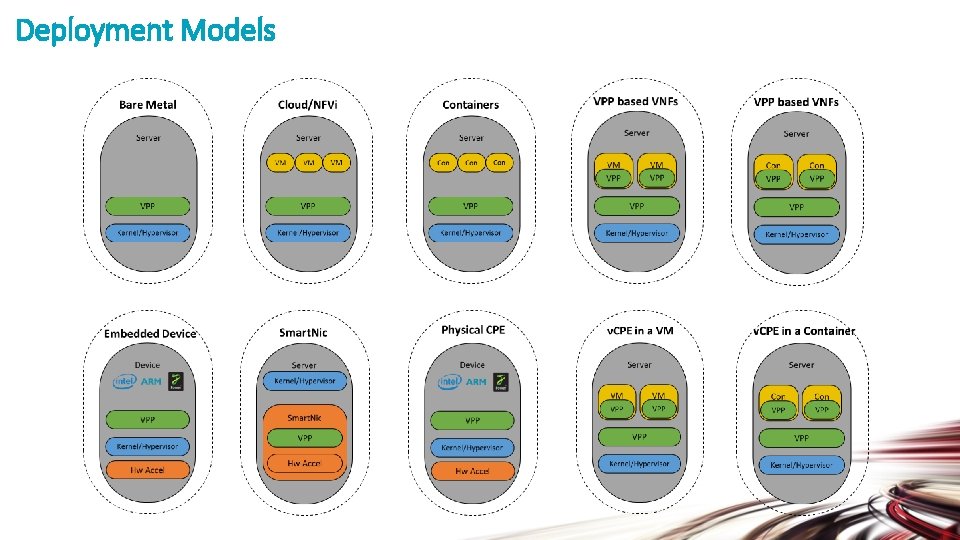

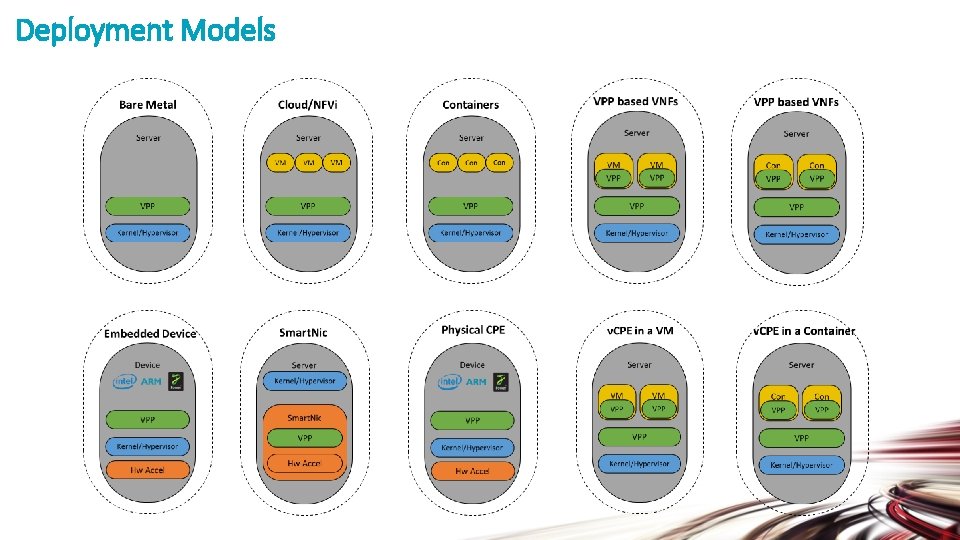

Deployment Models

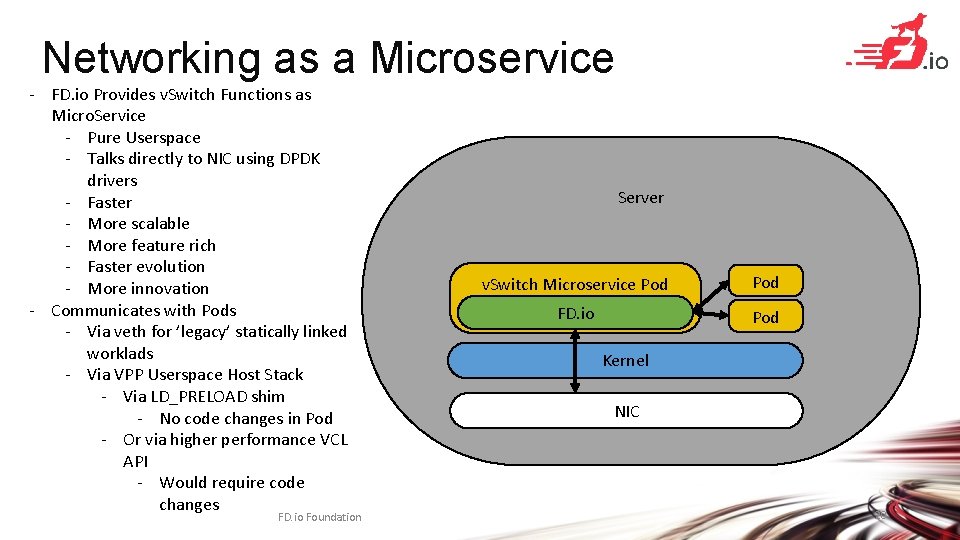

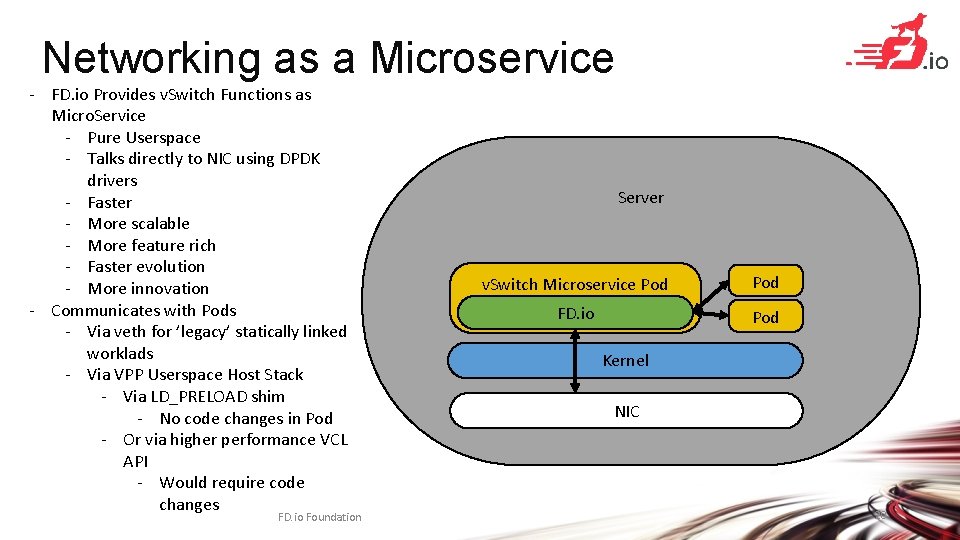

Networking as a Microservice

- FD.io provides vSwitch functions as a microservice
  - Pure userspace
  - Talks directly to NIC using DPDK drivers
  - Faster
  - More scalable
  - More feature rich
  - Faster evolution
  - More innovation
- Communicates with Pods
  - Via veth for 'legacy' statically linked workloads
  - Via VPP Userspace Host Stack
    - Via LD_PRELOAD shim: no code changes in Pod
    - Or via higher performance VCL API: would require code changes

Diagram: a server with Pods, the vSwitch microservice (FD.io Pod), the kernel and the NIC.

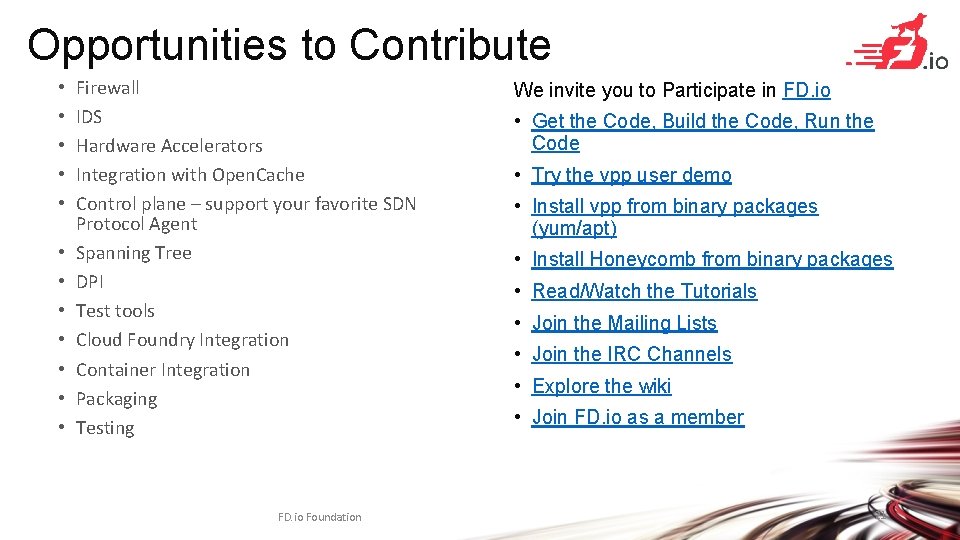

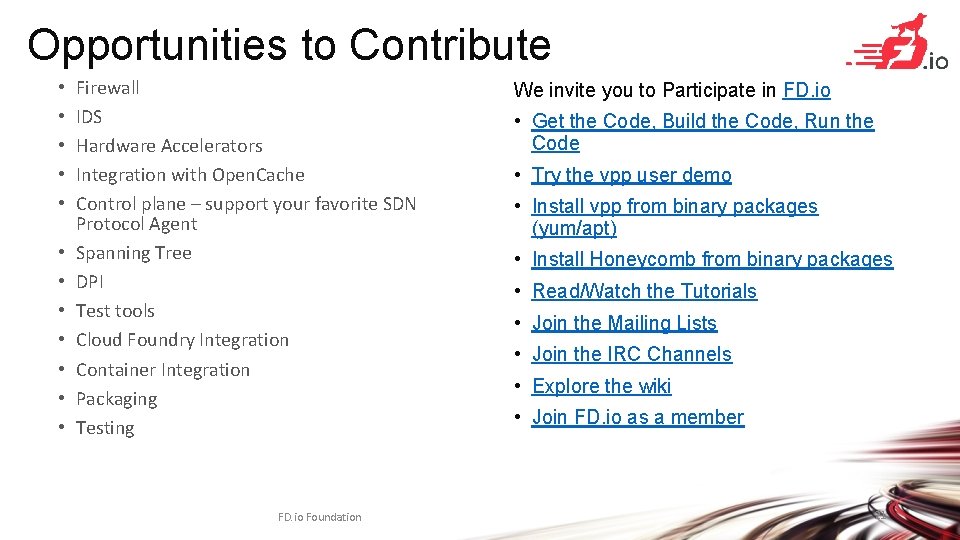

Opportunities to Contribute

- Firewall
- IDS
- Hardware accelerators
- Integration with OpenCache
- Control plane: support your favorite SDN protocol agent
- Spanning Tree
- DPI
- Test tools
- Cloud Foundry integration
- Container integration
- Packaging
- Testing

We invite you to participate in FD.io

- Get the code, build the code, run the code
- Try the vpp user demo
- Install vpp from binary packages (yum/apt)
- Install Honeycomb from binary packages
- Read/watch the tutorials
- Join the mailing lists
- Join the IRC channels
- Explore the wiki
- Join FD.io as a member

Backup

VPP: How does it work?

1. Packet processing is decomposed into a directed graph of nodes … (* approx. 173 nodes in default deployment)
2. … packets move through graph nodes in vector …
3. … graph nodes are optimized to fit inside the instruction cache …
4. … packets are pre-fetched into the data cache …

Graph nodes shown: vhost-user-input, af-packet-input, dpdk-input, ethernet-input, mpls-input, lldp-input, arp-input, cdp-input, ip4-input, l2-input, ip6-input, ...-nochecksum, ip4-lookup-multicast, mpls-policy-encap, ip4-load-balance, ip4-rewrite-transit, ip4-midchain, interface-output.

Diagram: a vector of packets (Packet 0 to Packet 10) on a microprocessor with its instruction cache and data cache.

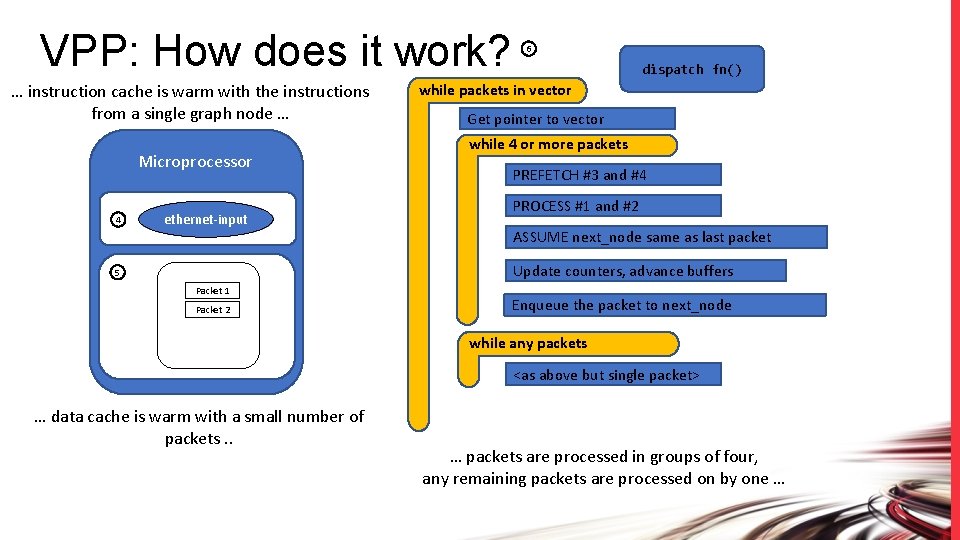

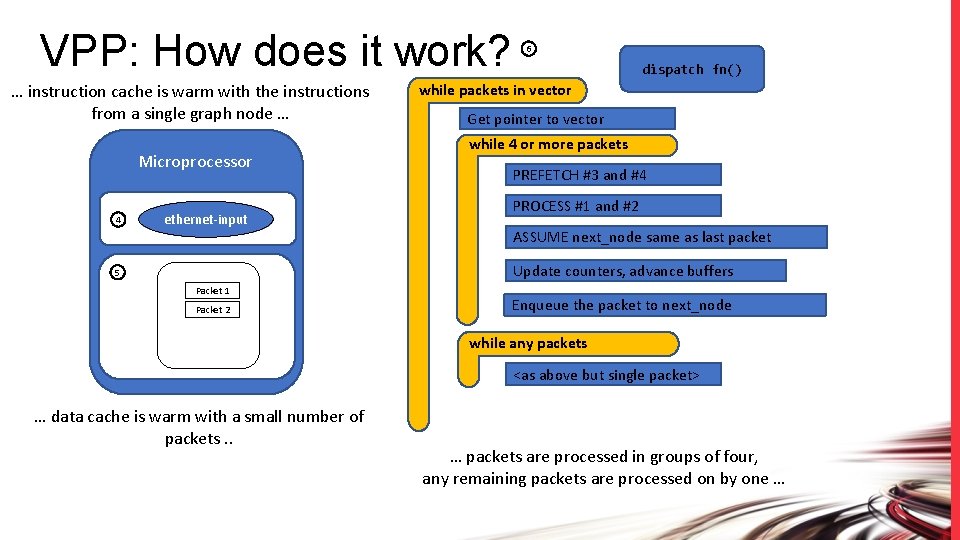

VPP: How does it work?

Node shown on the microprocessor: ethernet-input, with Packet 1 and Packet 2 in the data cache.

dispatch fn()

- while packets in vector
  - Get pointer to vector
  - while 4 or more packets
    - PREFETCH #3 and #4
    - PROCESS #1 and #2
    - ASSUME next_node same as last packet
    - Update counters, advance buffers
    - Enqueue the packet to next_node
  - while any packets
    - (as above but single packet)

4. … instruction cache is warm with the instructions from a single graph node …
5. … data cache is warm with a small number of packets …
6. … packets are processed in groups of four, any remaining packets are processed one by one …
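The loop structure in the pseudocode can be sketched in Python as below. Python cannot issue cache prefetches, so `prefetch` is only a callback that records which packet would be prefetched; `dispatch`, `process` and `prefetch` are names assumed for this illustration. Following the pseudocode literally, each pass of the main loop requires at least four packets to remain, prefetches the next two and processes the current two.

```python
def dispatch(vector, process, prefetch):
    """Dual-loop dispatch modelled on the slide's pseudocode."""
    i, n = 0, len(vector)
    # Main loop: runs while at least 4 packets remain, handles 2 per pass.
    while n - i >= 4:
        prefetch(vector[i + 2])  # warm the cache for the next pass
        prefetch(vector[i + 3])
        process(vector[i])
        process(vector[i + 1])
        i += 2
    # Tail loop: remaining packets are handled one at a time, no prefetch.
    while i < n:
        process(vector[i])
        i += 1

log = []
dispatch(
    list(range(7)),
    process=lambda p: log.append(("process", p)),
    prefetch=lambda p: log.append(("prefetch", p)),
)
print([p for kind, p in log if kind == "process"])   # [0, 1, 2, 3, 4, 5, 6]
print([p for kind, p in log if kind == "prefetch"])  # [2, 3, 4, 5]
```

Every packet is processed exactly once and in order; packets 2 to 5 are prefetched one pass before they are processed, while the last packets fall through to the single-packet loop.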

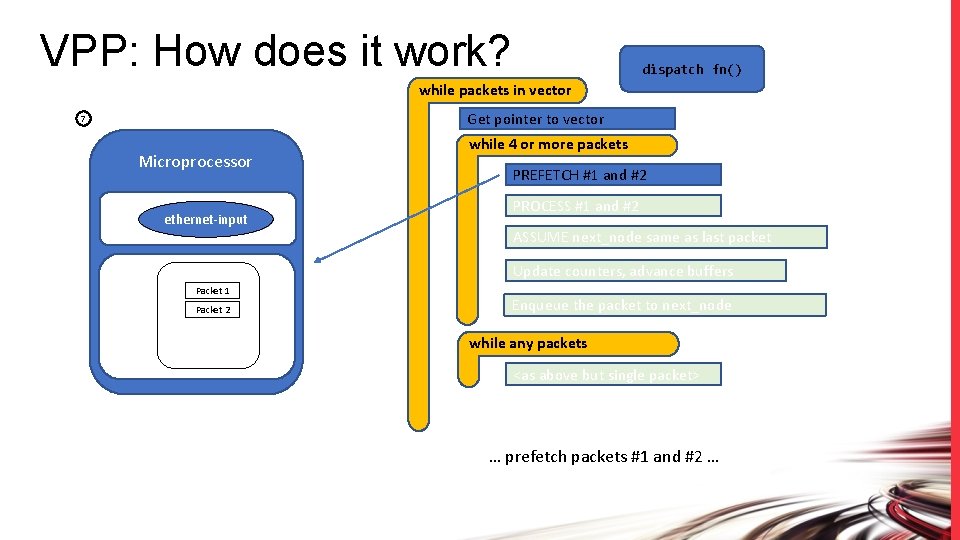

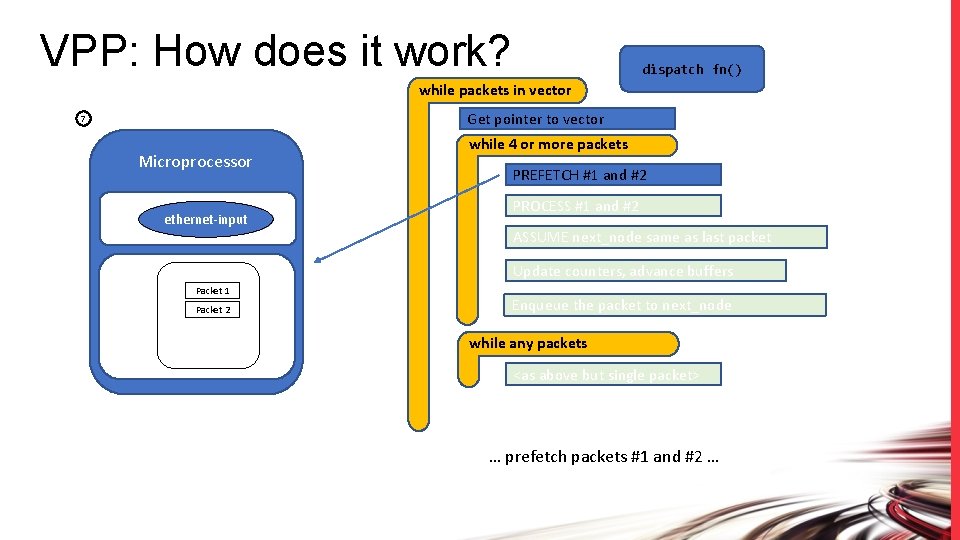

VPP: How does it work?

Node shown on the microprocessor: ethernet-input, with Packet 1 and Packet 2 in the data cache.

dispatch fn()

- while packets in vector
  - Get pointer to vector
  - while 4 or more packets
    - PREFETCH #1 and #2
    - PROCESS #1 and #2
    - ASSUME next_node same as last packet
    - Update counters, advance buffers
    - Enqueue the packet to next_node
  - while any packets
    - (as above but single packet)

7. … prefetch packets #1 and #2 …

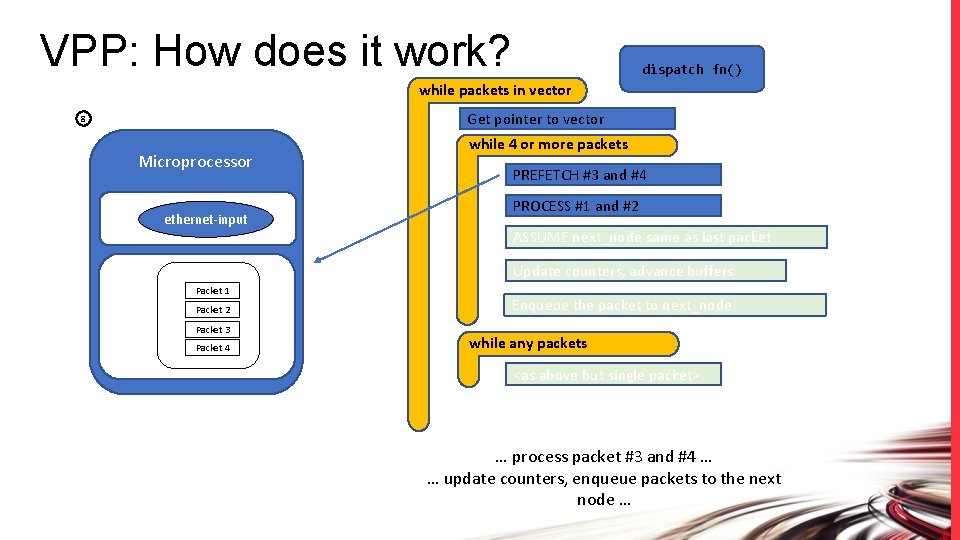

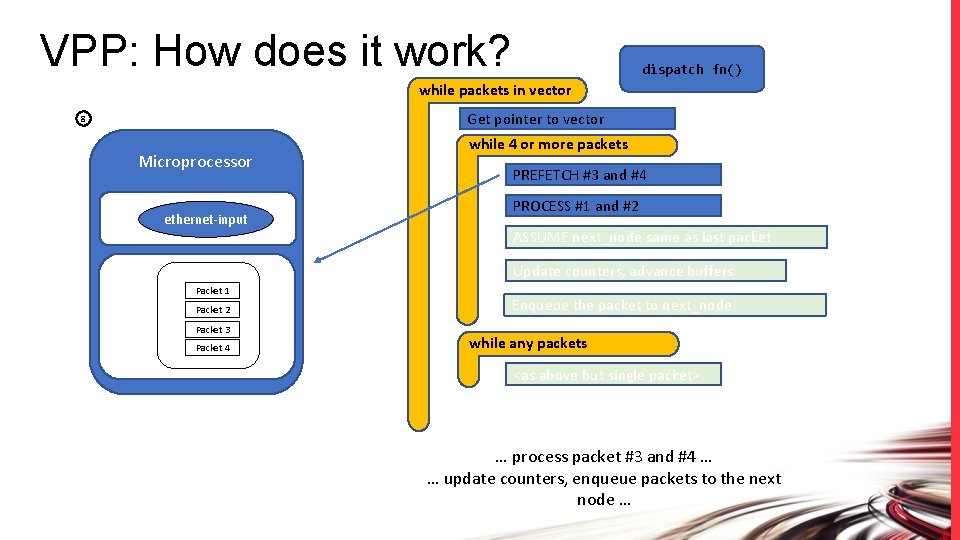

VPP: How does it work?

Node shown on the microprocessor: ethernet-input, with Packet 1, Packet 2, Packet 3 and Packet 4 in the data cache.

dispatch fn()

- while packets in vector
  - Get pointer to vector
  - while 4 or more packets
    - PREFETCH #3 and #4
    - PROCESS #1 and #2
    - ASSUME next_node same as last packet
    - Update counters, advance buffers
    - Enqueue the packet to next_node
  - while any packets
    - (as above but single packet)

8. … process packets #3 and #4 … update counters, enqueue packets to the next node …

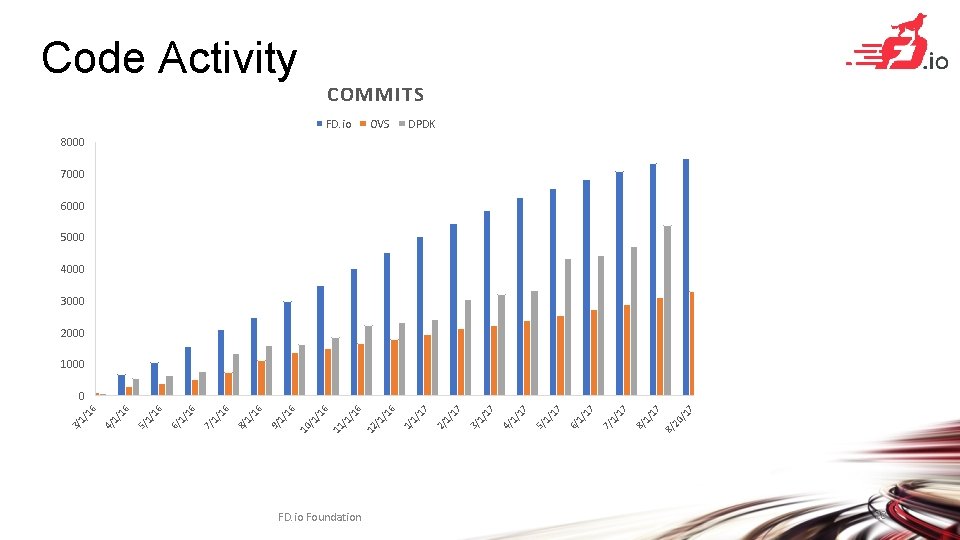

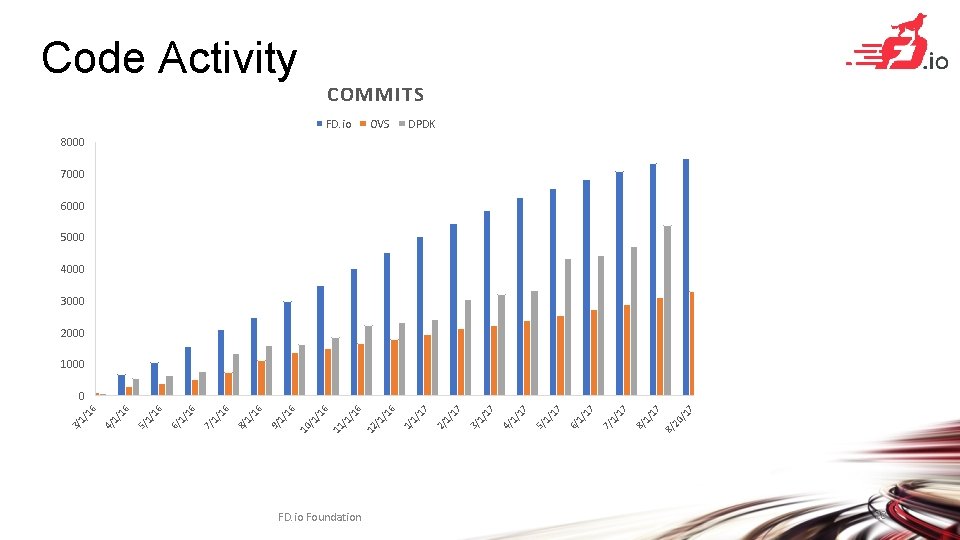

Code Activity: Commits. Chart comparing FD.io, OVS and DPDK over monthly dates from 3/1/16 into August 2017; y-axis 0 to 8000 commits.

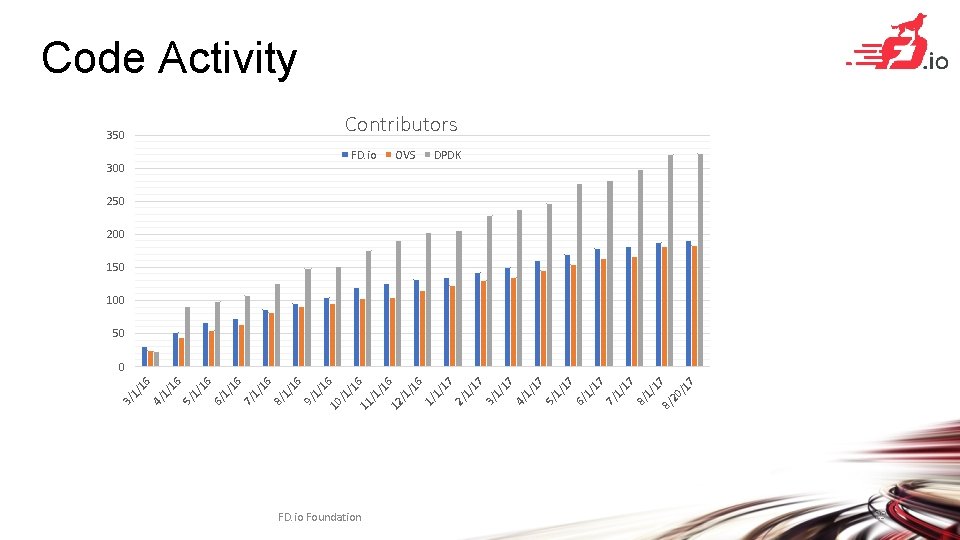

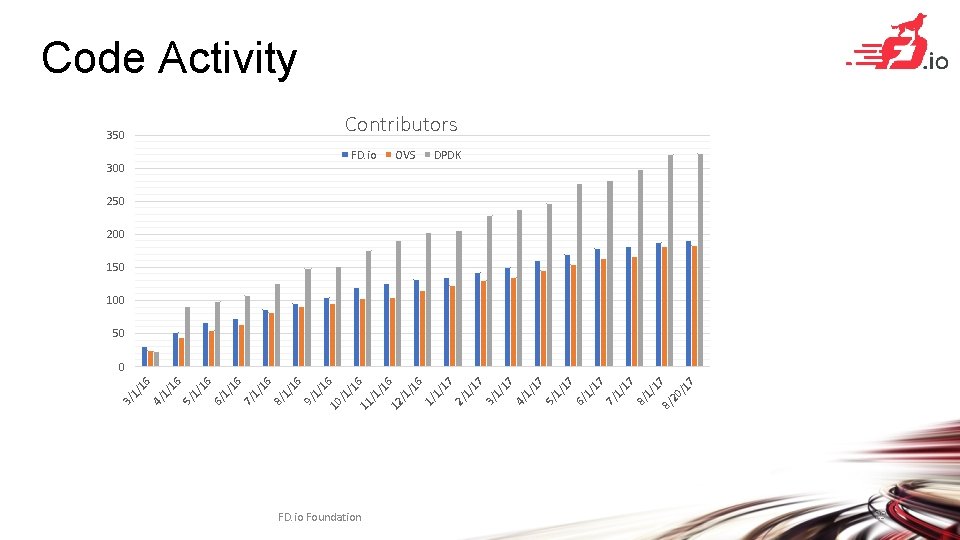

Code Activity: Contributors. Chart comparing FD.io, OVS and DPDK over monthly dates from 3/1/16 into August 2017; y-axis 0 to 350 contributors.

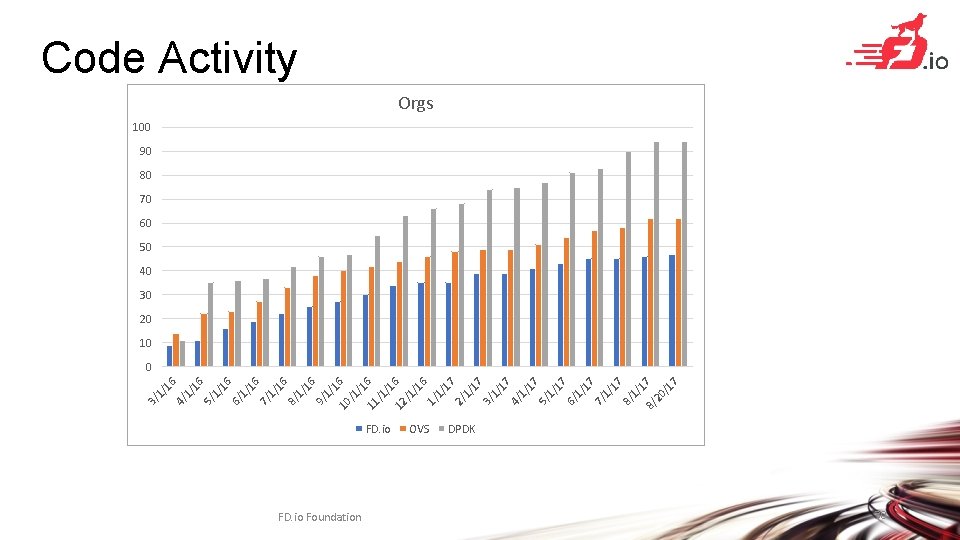

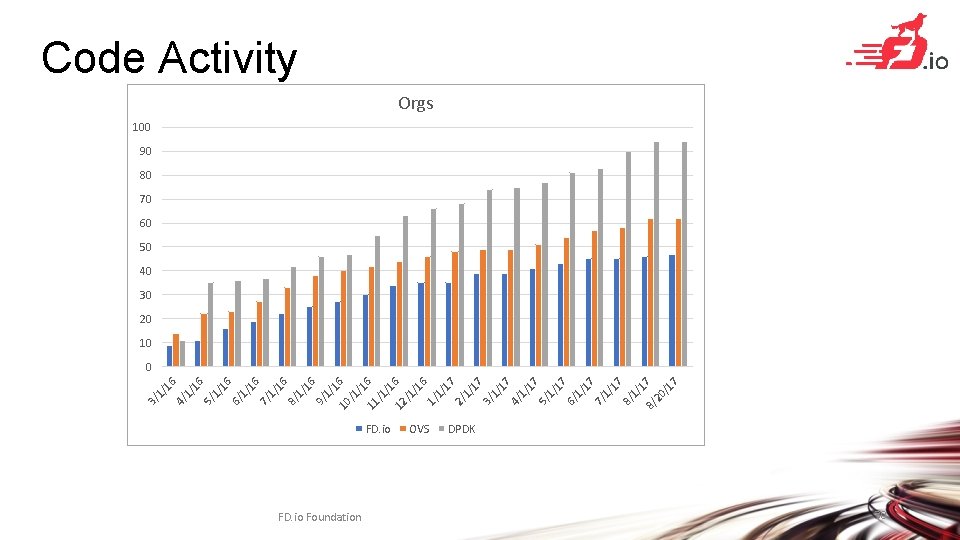

Code Activity: Orgs. Chart comparing FD.io, OVS and DPDK over monthly dates from 3/1/16 into August 2017; y-axis 0 to 100 organizations.