Clustering: the unsupervised classification of patterns



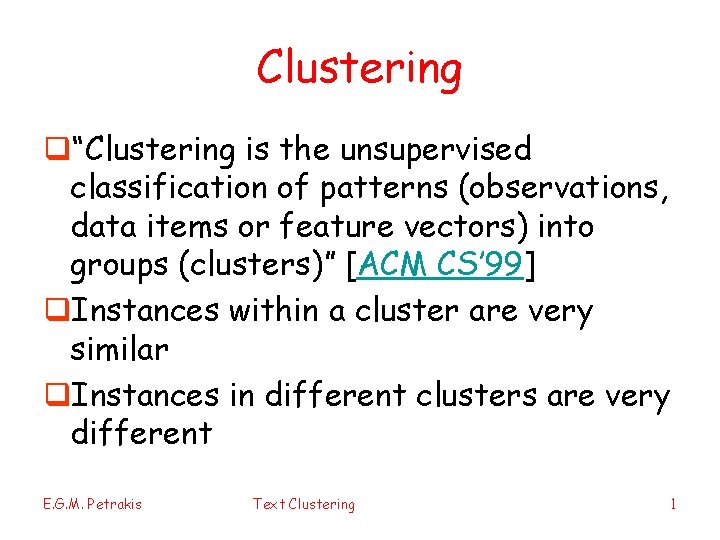

Clustering

- "Clustering is the unsupervised classification of patterns (observations, data items or feature vectors) into groups (clusters)" [ACM CS'99]
- Instances within a cluster are very similar
- Instances in different clusters are very different

E. G. M. Petrakis, Text Clustering

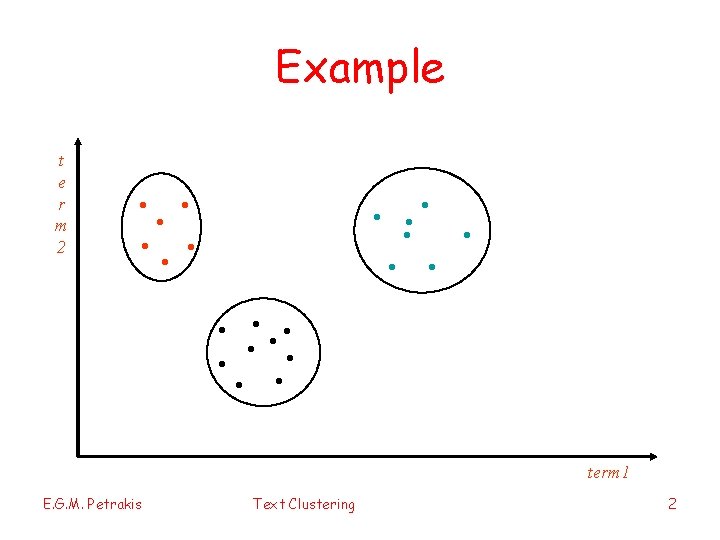

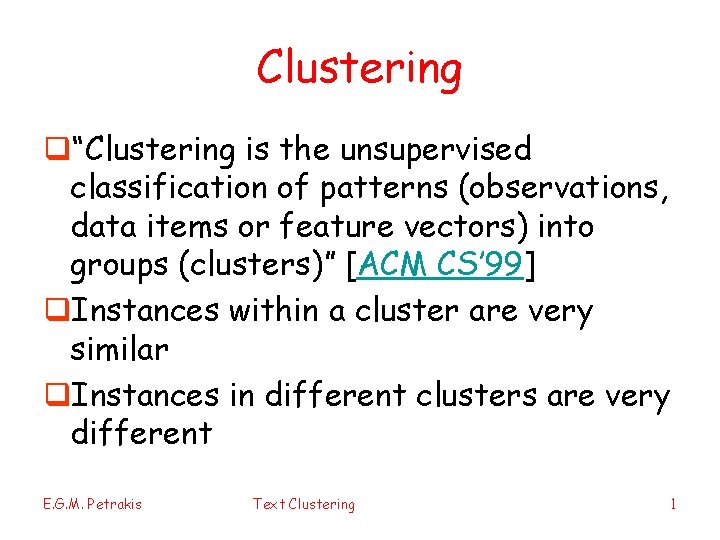

Example (figure: instances plotted in a two-dimensional space with axes term 1 and term 2)

Problems

- How many clusters?
- Complexity? N is usually large
- Quality of clustering
- When is one method better than another?
- Overlapping clusters
- Sensitivity to outliers

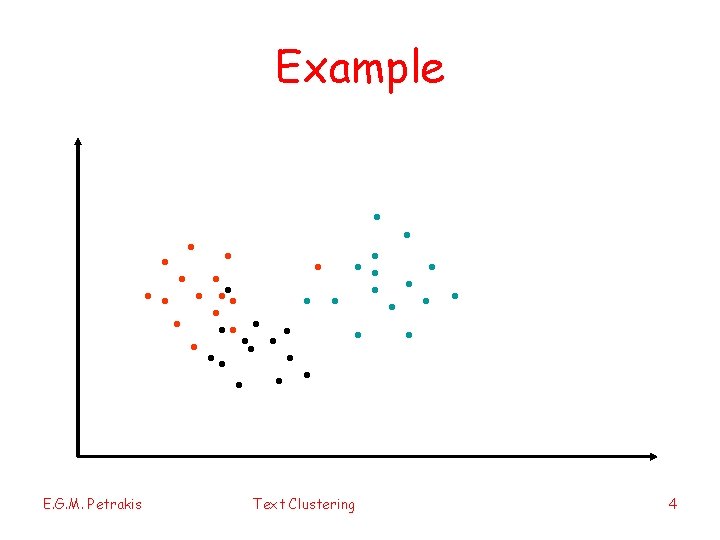

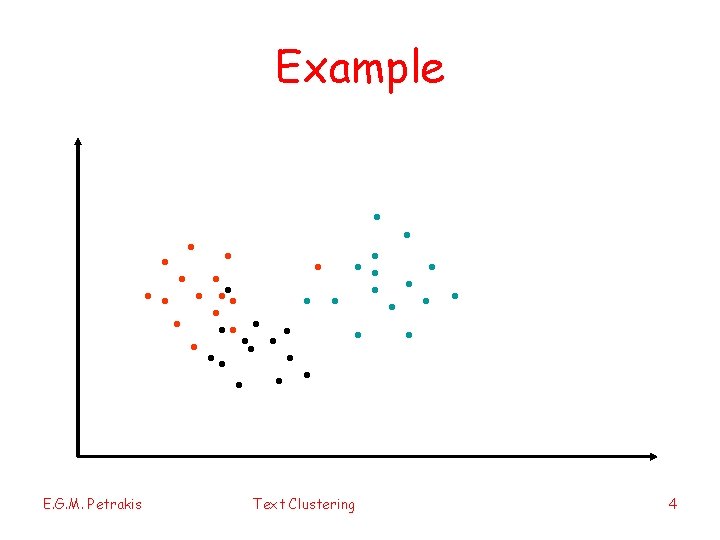

Example (figure)

Soft Clustering

- Hard clustering: each instance belongs to exactly one cluster
  - Does not allow for uncertainty
  - Yet an instance may belong to two or more clusters
- Soft clustering is based on the probabilities that an instance belongs to each of a set of clusters
  - The probabilities over all clusters must sum to 1
- Expectation Maximization (EM) is the most popular approach
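To make the soft-clustering idea concrete, here is a minimal sketch of EM for a one-dimensional Gaussian mixture. The function name `em_gmm_1d`, the unit initial variances, the uniform initial weights and the fixed iteration count are all assumptions for illustration, not details from the slides. Each E-step produces, for every point, a probability per cluster (summing to 1); each M-step re-estimates the clusters from those fractional memberships. It does no log-space arithmetic, so it is only meant for small, well-scaled toy data.

```python
import math

def em_gmm_1d(xs, init_means, n_iter=50):
    """Soft-cluster 1-D points with a Gaussian mixture fitted by EM.

    Returns the final means and, for every point, its membership
    probabilities over the clusters (each row sums to 1)."""
    k = len(init_means)
    means = list(init_means)
    variances = [1.0] * k      # assumption: unit initial variances
    weights = [1.0 / k] * k    # assumption: uniform initial mixing weights
    resp = []
    for _ in range(n_iter):
        # E-step: P(cluster j | x) is proportional to weight_j * N(x; mean_j, var_j)
        resp = []
        for x in xs:
            dens = [w * math.exp(-(x - m) ** 2 / (2 * v)) / math.sqrt(2 * math.pi * v)
                    for w, m, v in zip(weights, means, variances)]
            total = sum(dens)
            resp.append([d / total for d in dens])
        # M-step: re-estimate each component from the soft (fractional) counts
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / len(xs)
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj
            variances[j] = max(var, 1e-6)  # keep a component from collapsing to zero width
    return means, resp

# toy data: two well-separated groups
xs = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]
means, resp = em_gmm_1d(xs, [0.0, 6.0])
```

On well-separated data the memberships end up close to 0 or 1; points lying between the groups would receive intermediate probabilities, which is exactly what hard clustering cannot express.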

Applications

- Faster retrieval
- Faster and better browsing
- Structuring of search results
- Revealing classes and other data regularities
- Directory construction
- Better data organization in general

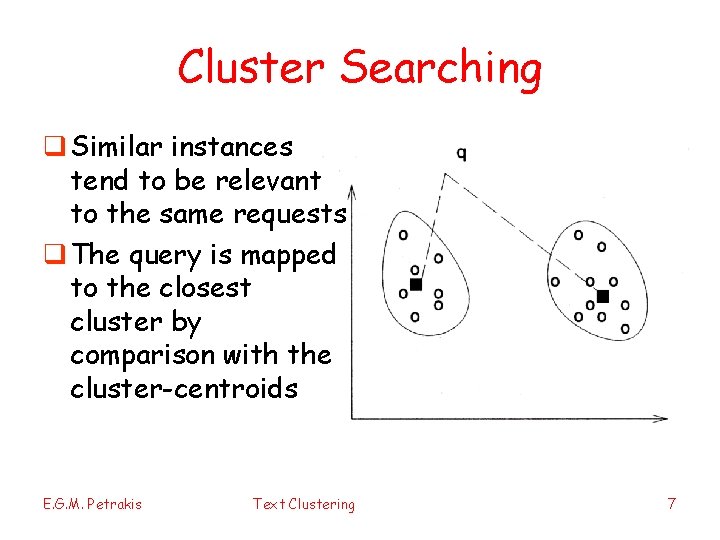

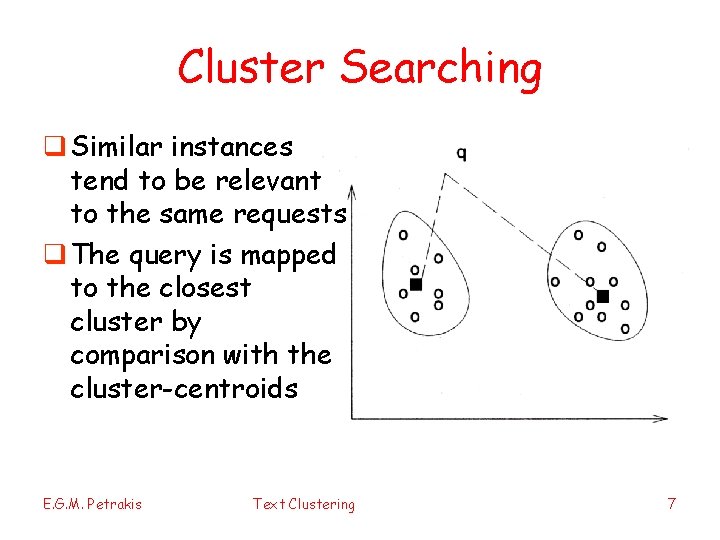

Cluster Searching

- Similar instances tend to be relevant to the same requests
- The query is mapped to the closest cluster by comparison with the cluster centroids
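A minimal sketch of cluster searching, assuming cosine similarity on plain Python lists; `cosine` and `cluster_search` are hypothetical helpers and the vectors are toy data. The query is compared only with the centroids, and then only the documents of the winning cluster are ranked, instead of comparing the query with every document.

```python
import math

def cosine(u, v):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norms if norms else 0.0

def cluster_search(query, centroids, clusters):
    """Map the query to its closest centroid, then rank only that cluster's documents.

    clusters[j] is the list of document vectors represented by centroids[j]."""
    j = max(range(len(centroids)), key=lambda c: cosine(query, centroids[c]))
    ranked = sorted(clusters[j], key=lambda d: cosine(query, d), reverse=True)
    return j, ranked

# toy centroids and clusters over a three-term vocabulary
centroids = [[1, 0, 0], [0, 1, 1]]
clusters = [[[1, 0, 0], [2, 0, 1]], [[0, 1, 1], [0, 1, 0], [0, 3, 1]]]
best, ranked = cluster_search([0, 2, 1], centroids, clusters)
```

With K clusters of roughly N/K documents each, a query costs about K + N/K similarity computations instead of N.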

Notation

- N: number of elements
- Class: real-world grouping (ground truth)
- Cluster: grouping produced by the algorithm
- The ideal clustering algorithm would produce clusters equivalent to the real-world classes, with exactly the same members

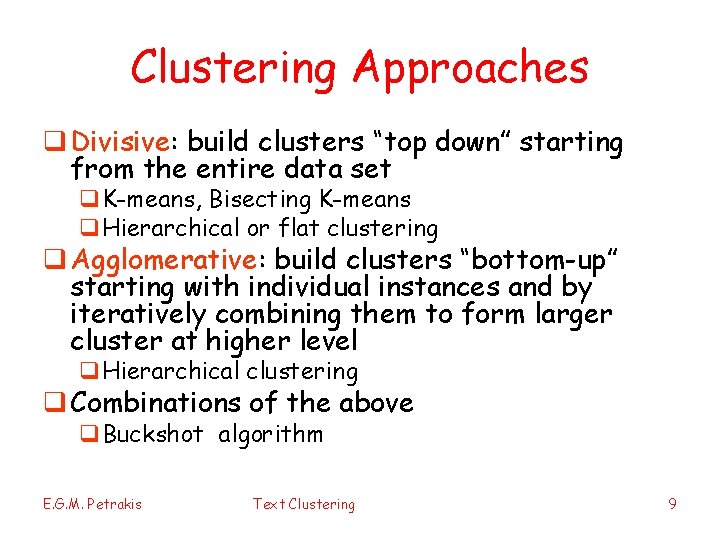

Clustering Approaches

- Divisive: build clusters "top down", starting from the entire data set
  - K-means, Bisecting K-means
  - Hierarchical or flat clustering
- Agglomerative: build clusters "bottom up", starting with individual instances and iteratively combining them to form larger clusters at higher levels
  - Hierarchical clustering
- Combinations of the above
  - Buckshot algorithm

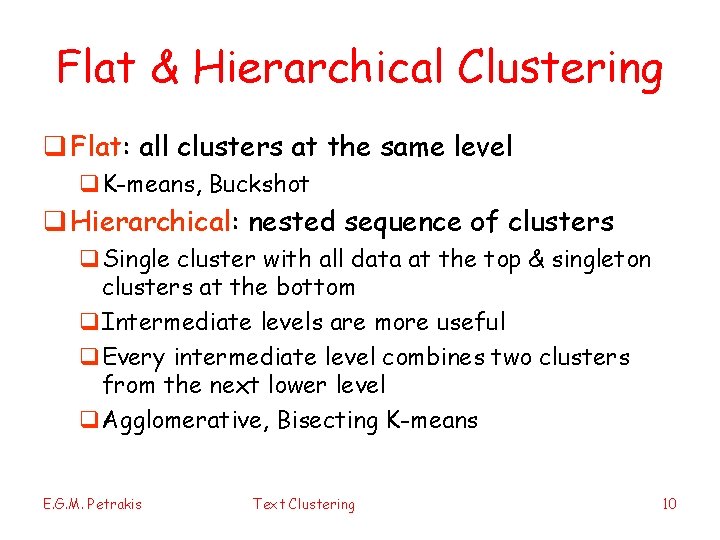

Flat & Hierarchical Clustering

- Flat: all clusters at the same level
  - K-means, Buckshot
- Hierarchical: nested sequence of clusters
  - A single cluster with all data at the top and singleton clusters at the bottom
  - Intermediate levels are more useful
  - Every intermediate level combines two clusters from the next lower level
  - Agglomerative, Bisecting K-means

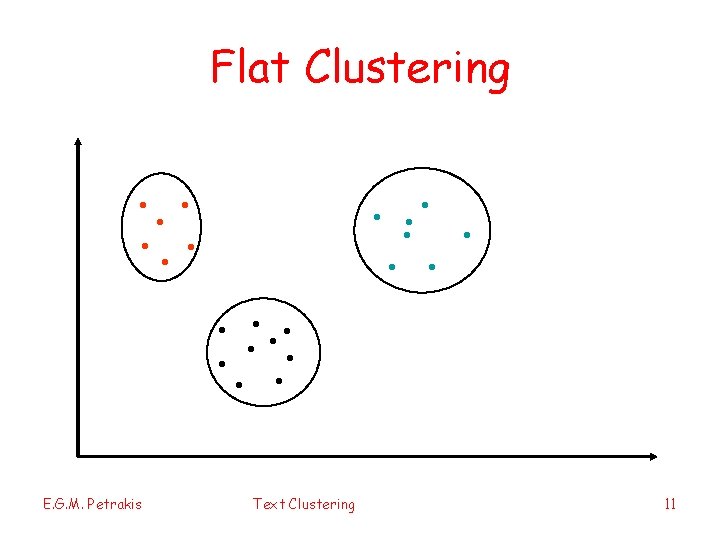

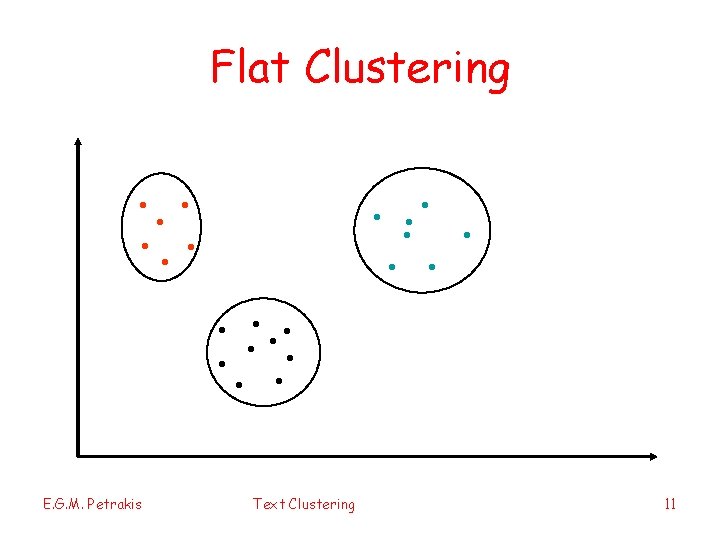

Flat Clustering (figure)

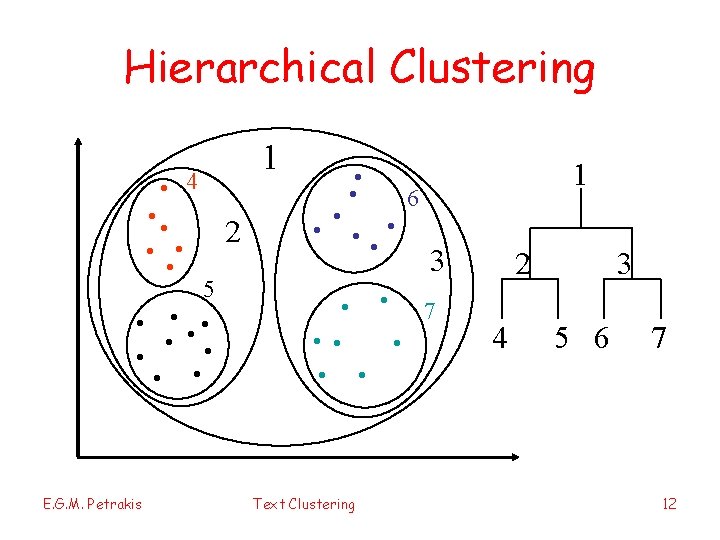

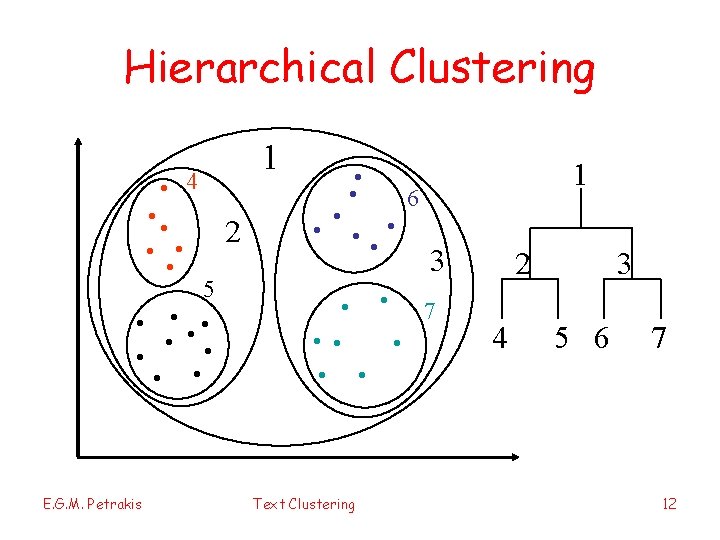

Hierarchical Clustering (figure: nested clusters over seven numbered instances)

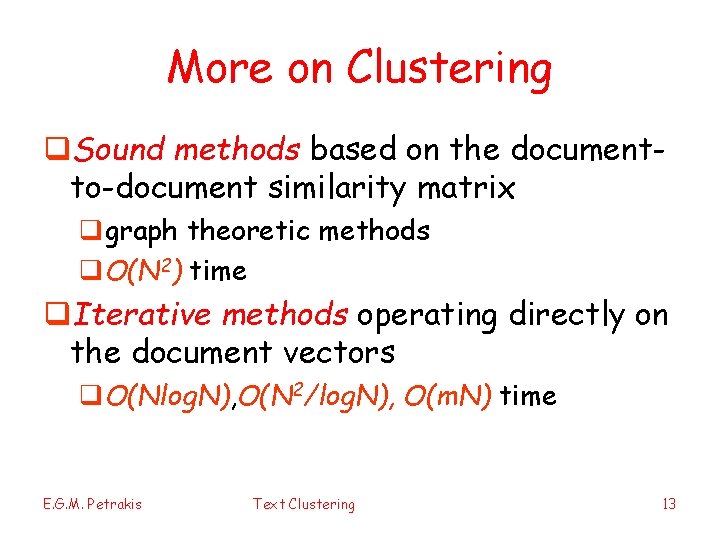

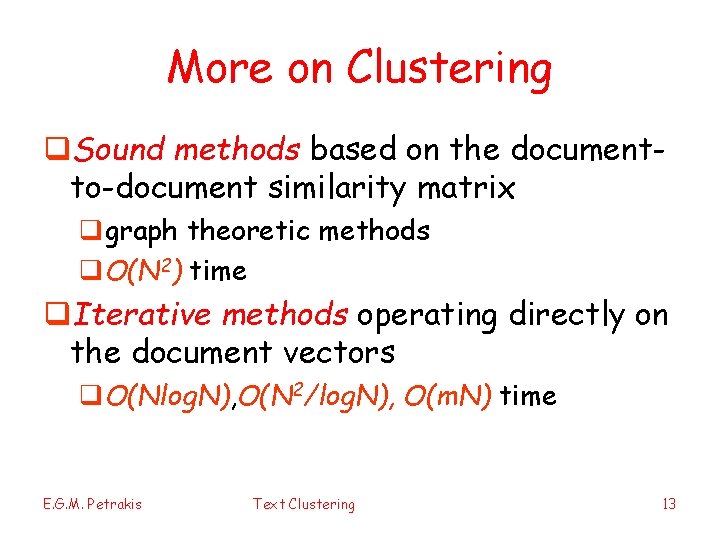

More on Clustering

- Sound methods based on the document-to-document similarity matrix
  - Graph-theoretic methods
  - O(N²) time
- Iterative methods operating directly on the document vectors
  - O(N log N), O(N²/log N), O(mN) time

Text Clustering

- Finds overall similarities among documents or groups of documents
  - Faster searching, browsing, etc.
- Requires a way to compute the similarity (or, equivalently, the distance) between documents

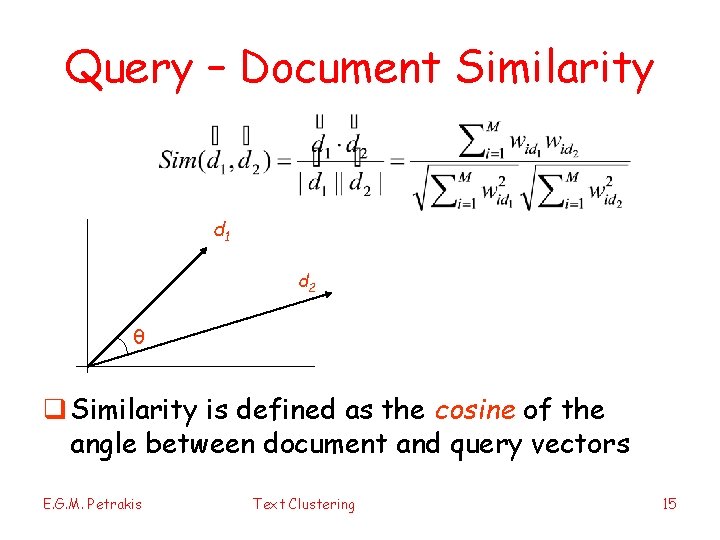

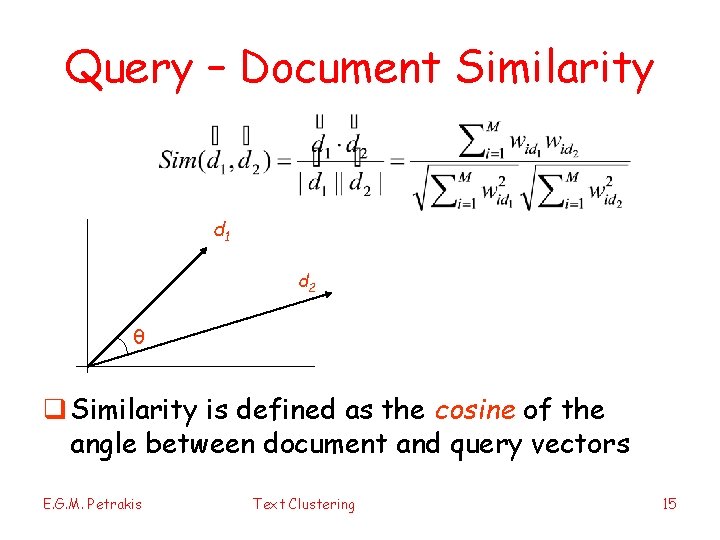

Query-Document Similarity (figure: document vectors d1, d2, query vector q and the angle θ)

- Similarity is defined as the cosine of the angle between the document and query vectors
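Written out for a document vector $\mathbf{d}$ and a query vector $\mathbf{q}$ over the same term vocabulary (the symbols $d_t$, $q_t$ for the weight of term $t$ are introduced here for illustration), the cosine measure is:

```latex
\cos\theta = \frac{\mathbf{d}\cdot\mathbf{q}}{\|\mathbf{d}\|\,\|\mathbf{q}\|}
           = \frac{\sum_{t} d_t\, q_t}{\sqrt{\sum_{t} d_t^{2}}\;\sqrt{\sum_{t} q_t^{2}}}
```

As a toy check, $\mathbf{d} = (1, 1)$ and $\mathbf{q} = (1, 0)$ give $\cos\theta = 1/\sqrt{2} \approx 0.707$, i.e. $\theta = 45^\circ$. Because the lengths are divided out, the measure depends only on the direction of the vectors.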

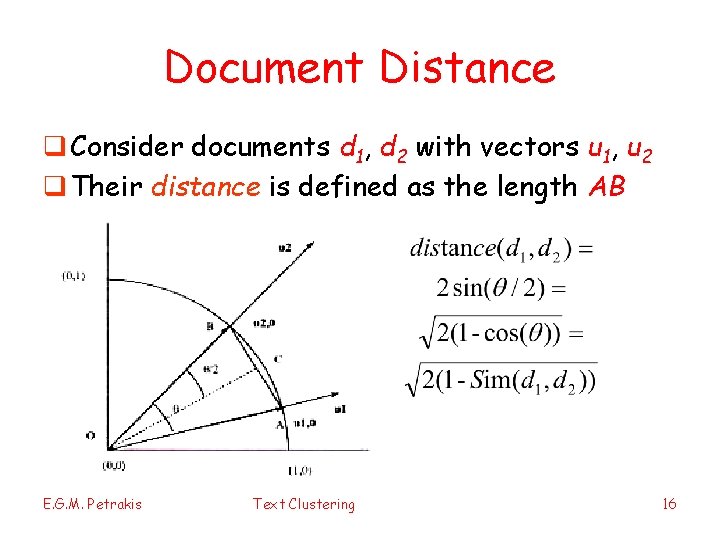

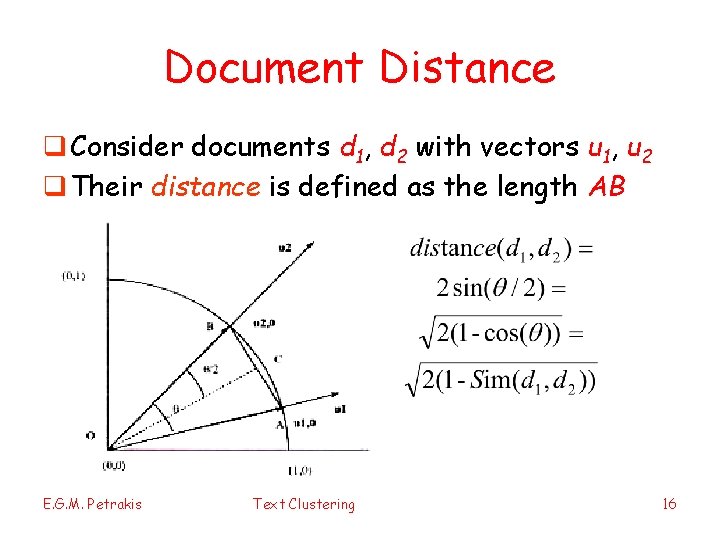

Document Distance

- Consider documents d1, d2 with vectors u1, u2
- Their distance is defined as the length AB
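Assuming A and B are the tips of $\mathbf{u}_1$ and $\mathbf{u}_2$ and both vectors have unit length (as with length-normalized documents), the distance AB is tied directly to the cosine similarity:

```latex
|AB|^2 = \|\mathbf{u}_1 - \mathbf{u}_2\|^2
       = \|\mathbf{u}_1\|^2 + \|\mathbf{u}_2\|^2 - 2\,\mathbf{u}_1\cdot\mathbf{u}_2
       = 2\,(1 - \cos\theta)
```

So, under that assumption, a smaller distance always means a larger cosine, and ranking documents by either measure gives the same order.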

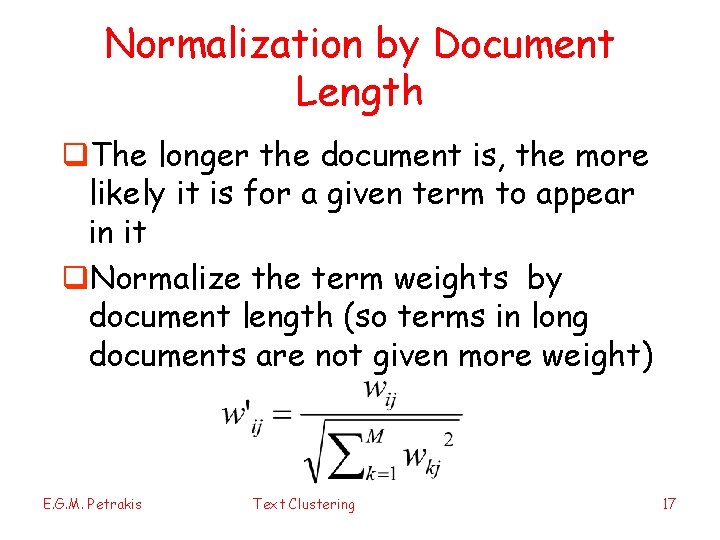

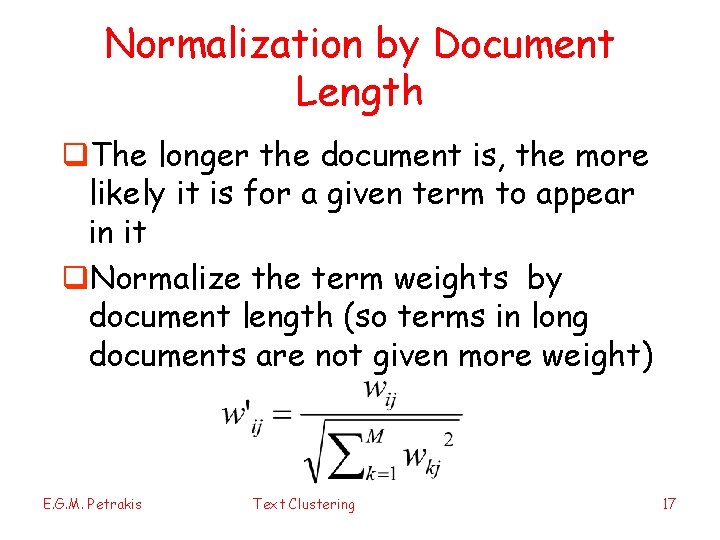

Normalization by Document Length

- The longer a document is, the more likely it is that a given term appears in it
- Normalize the term weights by document length, so that terms in long documents are not given more weight
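A minimal sketch of length normalization, assuming "length" means the Euclidean (L2) length of the term-weight vector; `l2_normalize` is a hypothetical helper and the weights are toy values. A document that repeats the same terms ten times as often ends up with exactly the same normalized vector as the short one.

```python
import math

def l2_normalize(weights):
    """Divide every term weight by the vector's Euclidean length.

    All-zero vectors are returned unchanged."""
    length = math.sqrt(sum(w * w for w in weights))
    return [w / length for w in weights] if length else list(weights)

short_doc = [1, 2, 0]    # toy term weights
long_doc = [10, 20, 0]   # same term proportions, ten times the raw weights
normalized_short = l2_normalize(short_doc)
normalized_long = l2_normalize(long_doc)
```

After this step every document has unit length, which is the setting assumed when documents "have unit length" later in the slides.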

Evaluation of Cluster Quality

- Clusters can be evaluated using internal or external knowledge
- Internal measures: intra-cluster cohesion and cluster separability
  - Intra-cluster similarity
  - Inter-cluster similarity
- External measures: quality of clusters compared to real classes
  - Entropy (E), Harmonic Mean (F)

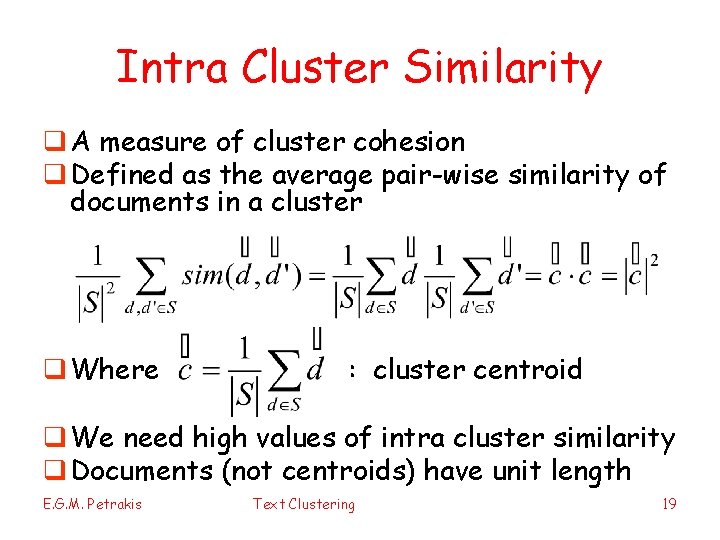

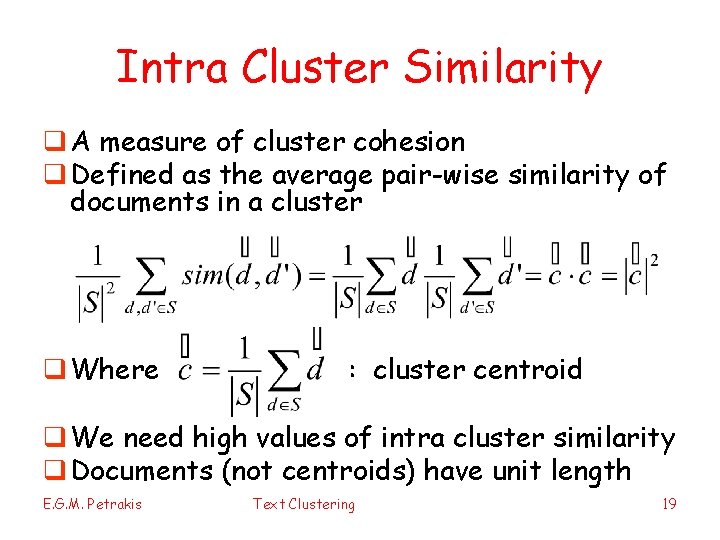

Intra Cluster Similarity

- A measure of cluster cohesion
- Defined as the average pairwise similarity of the documents in a cluster
- Expressed in terms of the cluster centroid
- High values of intra-cluster similarity are desirable
- Documents (not centroids) have unit length
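A short sketch of why the centroid is enough, assuming the measure averages the cosine over all ordered pairs $(d, d')$ of a cluster $S$, self-pairs included, which is the form used by Steinbach et al. (listed in the references). For unit-length documents the cosine is the dot product, and $\frac{1}{|S|^2}\sum_{d}\sum_{d'} d\cdot d' = \|c\|^2$, where $c$ is the centroid. The helpers `dot`, `centroid` and `intra_similarity` are hypothetical names; the vectors are toy data.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def centroid(docs):
    """Component-wise mean of the document vectors (not unit length in general)."""
    return [sum(col) / len(docs) for col in zip(*docs)]

def intra_similarity(docs):
    """Average cosine over all ordered pairs (d, d'), self-pairs included.

    For unit-length documents the cosine is just the dot product."""
    return sum(dot(d, e) for d in docs for e in docs) / len(docs) ** 2

docs = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]  # toy unit-length document vectors
c = centroid(docs)
# intra_similarity(docs) and dot(c, c) agree (both about 0.644)
```

The identity turns an O(n²) pairwise computation into an O(n) one: compute the centroid once and take its squared length.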

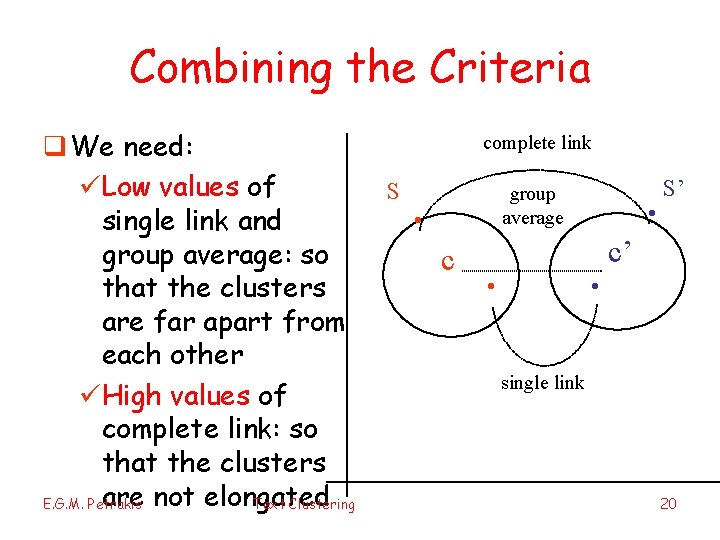

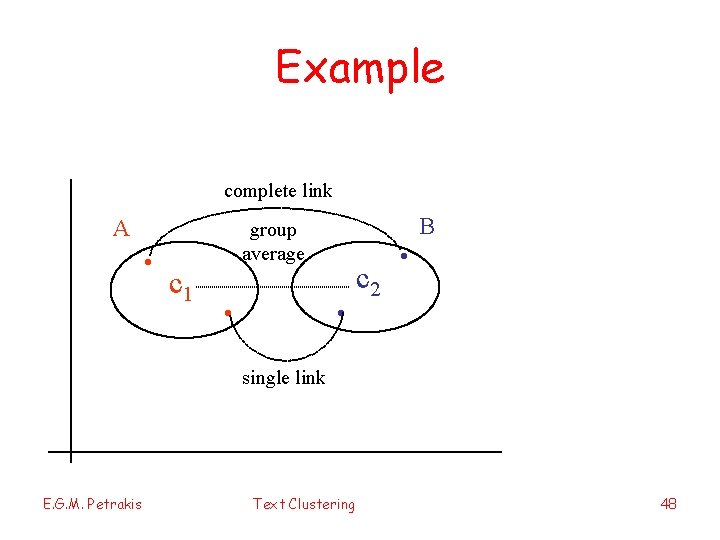

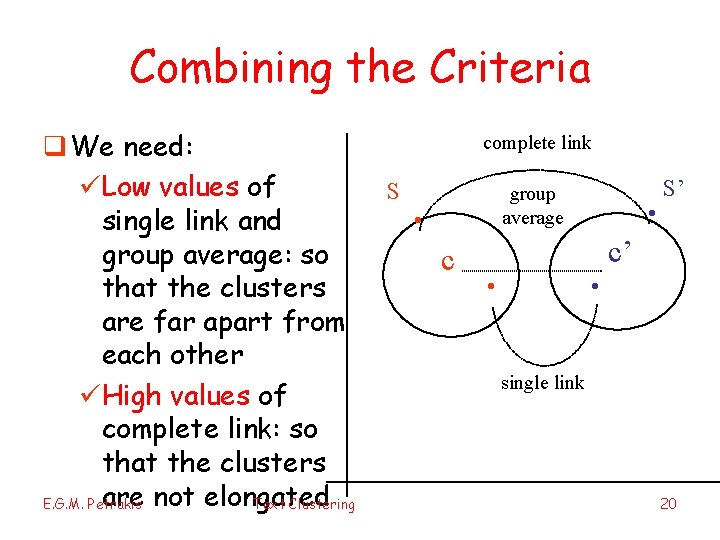

Combining the Criteria (figure: clusters S and S′ with centroids c and c′, showing complete-link, group-average and single-link similarities between them)

- We need:
  - Low values of single link and group average, so that the clusters are far apart from each other
  - High values of complete link, so that the clusters are not elongated

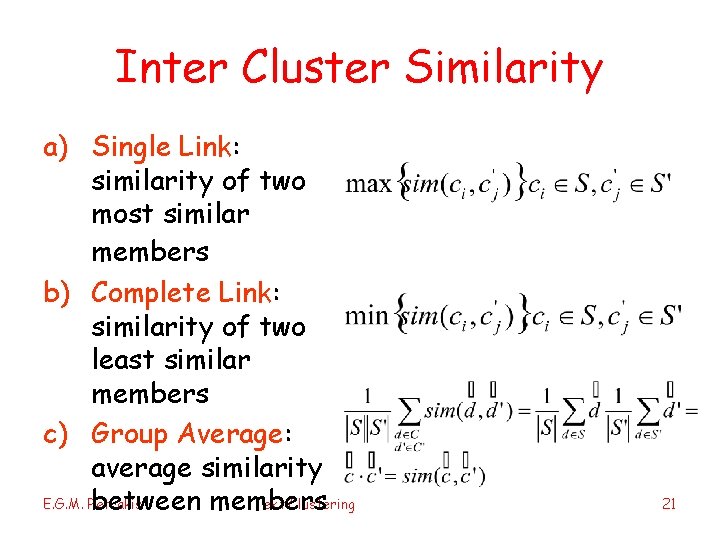

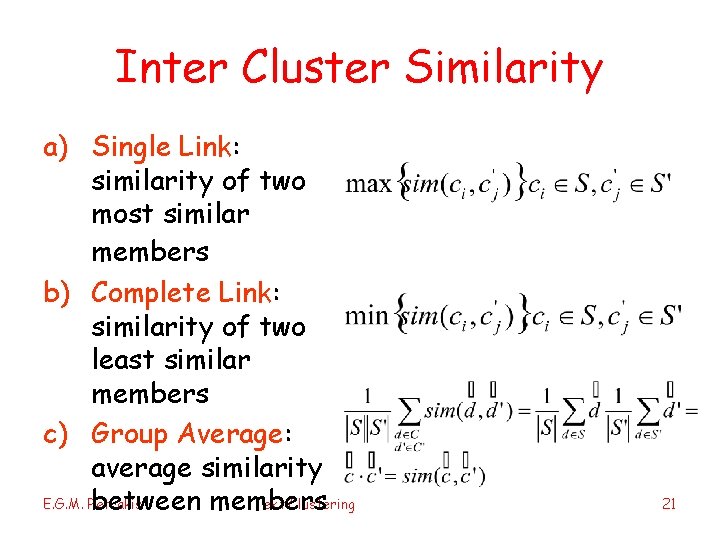

Inter Cluster Similarity

- a) Single link: similarity of the two most similar members
- b) Complete link: similarity of the two least similar members
- c) Group average: average similarity between members
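The three definitions above can be sketched directly, assuming cosine similarity between non-zero member vectors and "members" meaning one document from each cluster; the function names and the toy clusters are illustrative only.

```python
import math

def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def single_link(A, B):
    """Similarity of the two most similar members, one from each cluster."""
    return max(cosine(a, b) for a in A for b in B)

def complete_link(A, B):
    """Similarity of the two least similar members, one from each cluster."""
    return min(cosine(a, b) for a in A for b in B)

def group_average(A, B):
    """Average similarity over all cross-cluster pairs of members."""
    return sum(cosine(a, b) for a in A for b in B) / (len(A) * len(B))

# toy clusters of unit-length vectors
A = [[1, 0], [0.8, 0.6]]
B = [[0, 1], [0.6, 0.8]]
```

Here the cross-cluster cosines are 0, 0.6, 0.6 and 0.96, so single link gives 0.96, complete link gives 0 and group average gives 0.54: the same pair of clusters looks close, far, or in between depending on the criterion.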

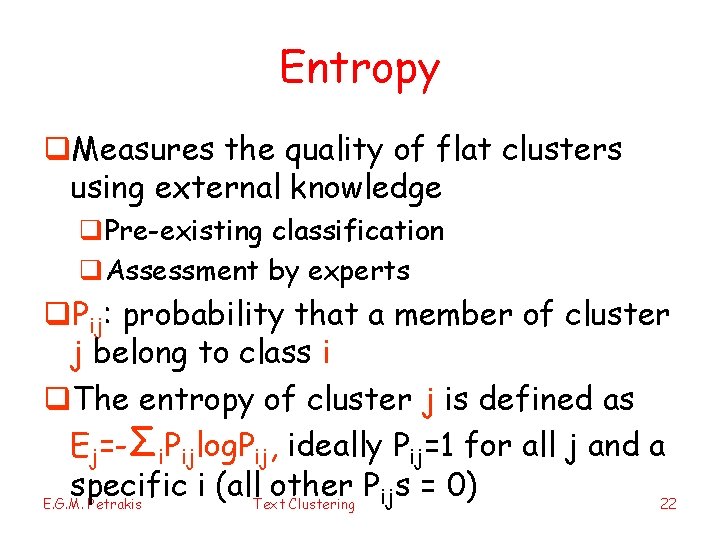

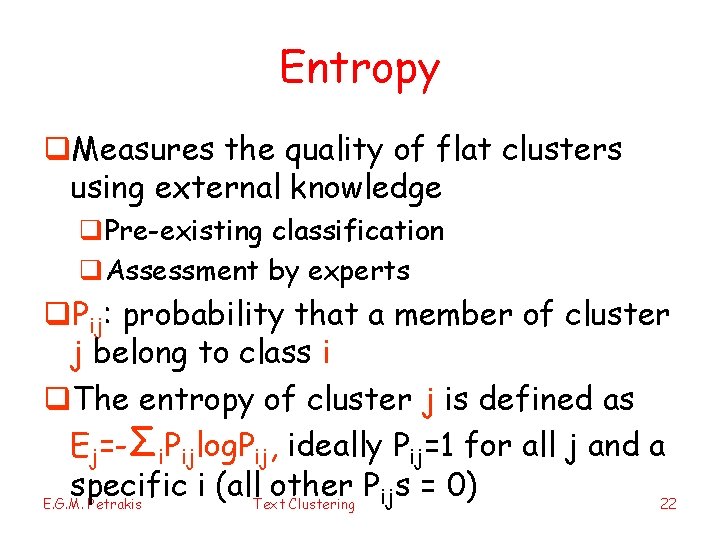

Entropy

- Measures the quality of flat clusters using external knowledge
  - A pre-existing classification
  - Assessment by experts
- $P_{ij}$: probability that a member of cluster $j$ belongs to class $i$
- The entropy of cluster $j$ is defined as $E_j = -\sum_i P_{ij} \log P_{ij}$
- Ideally, for each cluster $j$, $P_{ij} = 1$ for one specific class $i$ (and all other $P_{ij} = 0$)

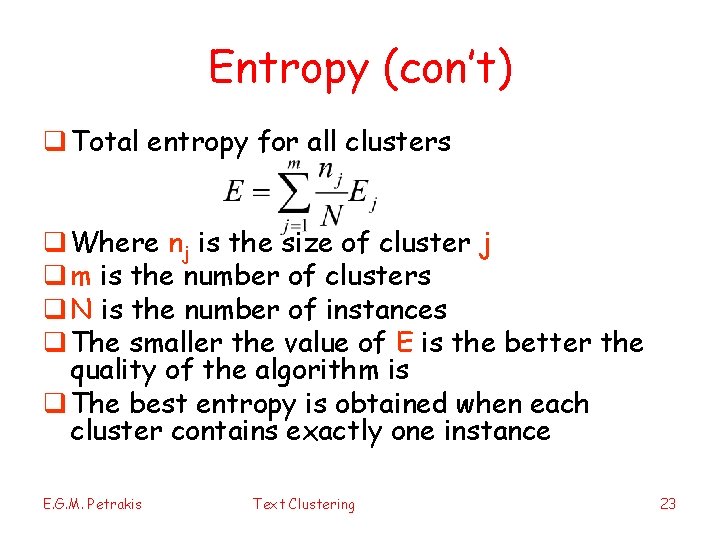

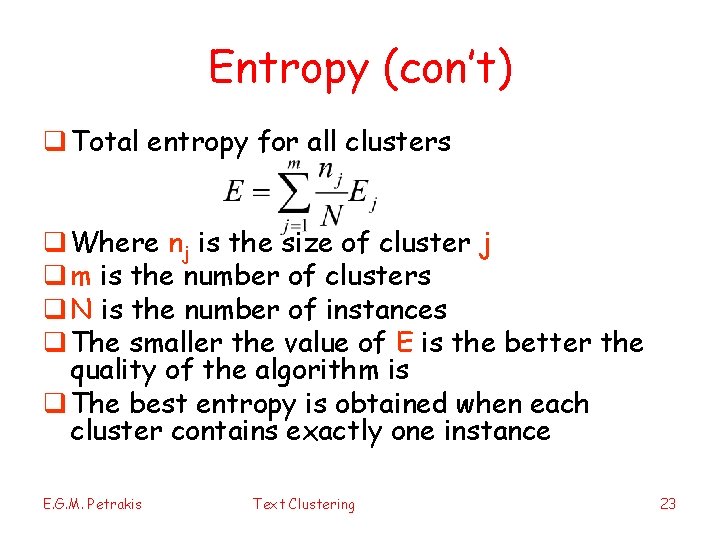

Entropy (cont.)

- Total entropy for all clusters: $E = \sum_{j=1}^{m} \frac{n_j}{N} E_j$
  - $n_j$ is the size of cluster $j$
  - $m$ is the number of clusters
  - $N$ is the number of instances
- The smaller the value of $E$, the better the quality of the algorithm
- The best entropy is obtained when each cluster contains exactly one instance (then $E = 0$ trivially)
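A minimal sketch of the total entropy $E = \sum_j \frac{n_j}{N} E_j$, assuming clusters are given as lists of instance ids and the ground-truth class of each id is known. The natural logarithm is an assumption (the slides do not fix the base; base 2 is also common), and `clustering_entropy` is a hypothetical name. Empty clusters are not handled.

```python
import math
from collections import Counter

def clustering_entropy(clusters, labels):
    """Size-weighted total entropy of a flat clustering.

    clusters: list of non-empty lists of instance ids
    labels:   mapping from instance id to its real class"""
    N = sum(len(members) for members in clusters)
    total = 0.0
    for members in clusters:
        nj = len(members)
        counts = Counter(labels[x] for x in members)  # n_ij for each class i
        # E_j = -sum_i P_ij log P_ij, with P_ij = n_ij / n_j
        ej = -sum((n / nj) * math.log(n / nj) for n in counts.values())
        total += nj / N * ej
    return total
```

For example, two clusters `[[0, 1], [2, 3]]` with classes `{0: "a", 1: "b", 2: "a", 3: "a"}` give $E = \frac{2}{4}\ln 2 + \frac{2}{4}\cdot 0 \approx 0.347$, while a clustering that matches the classes exactly gives $E = 0$.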

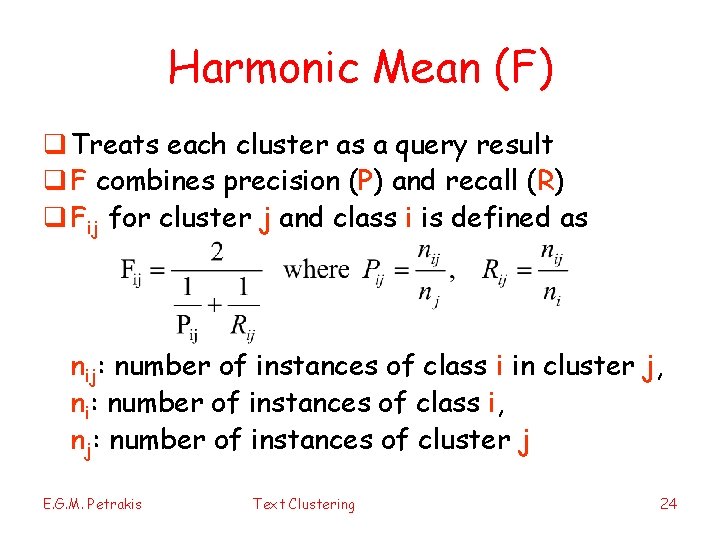

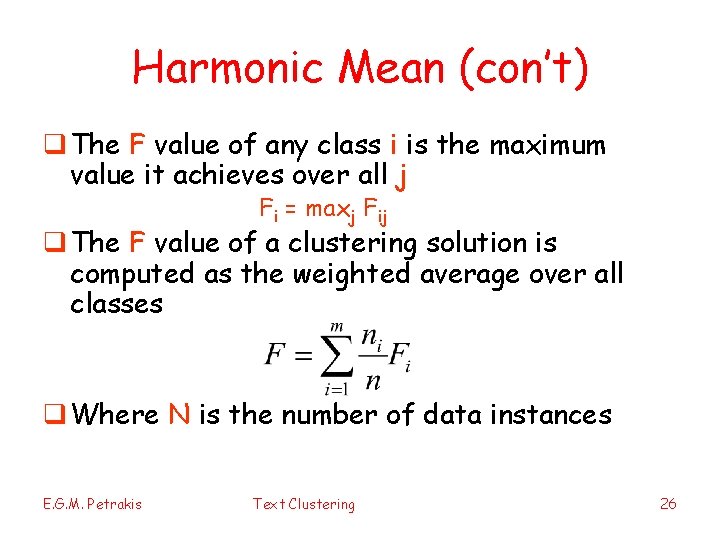

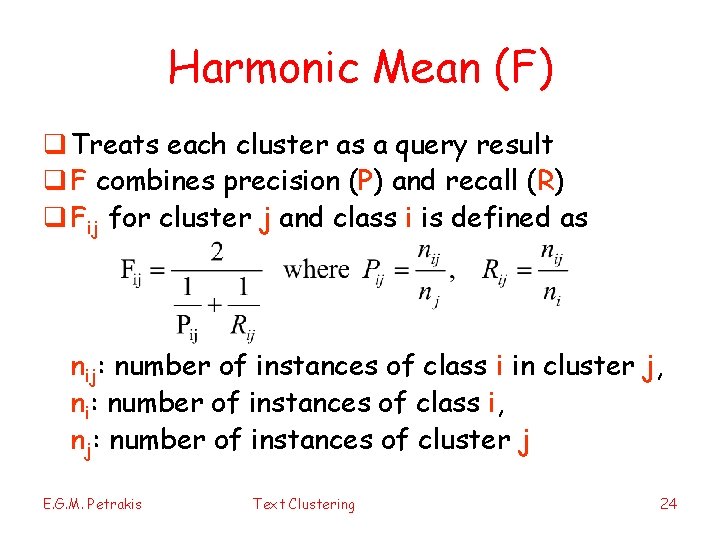

Harmonic Mean (F)

- Treats each cluster as a query result
- F combines precision (P) and recall (R)
- $F_{ij}$ for cluster $j$ and class $i$ is defined as $F_{ij} = \frac{2 P_{ij} R_{ij}}{P_{ij} + R_{ij}}$, with $P_{ij} = n_{ij}/n_j$ and $R_{ij} = n_{ij}/n_i$
  - $n_{ij}$: number of instances of class $i$ in cluster $j$
  - $n_i$: number of instances of class $i$
  - $n_j$: number of instances of cluster $j$

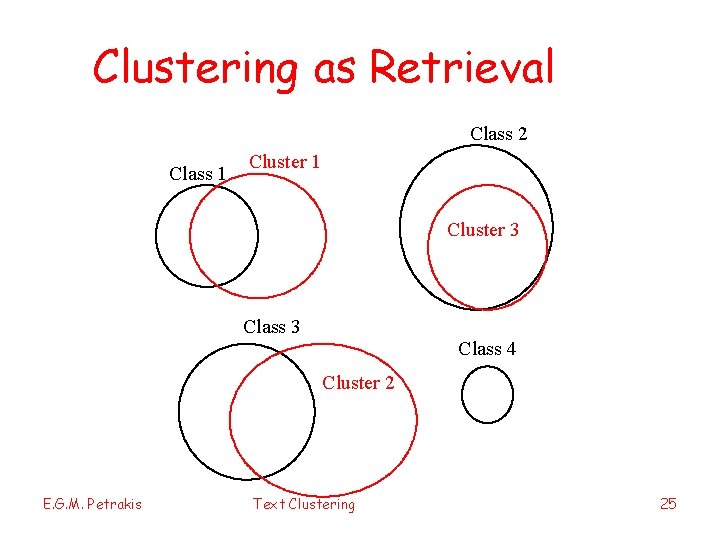

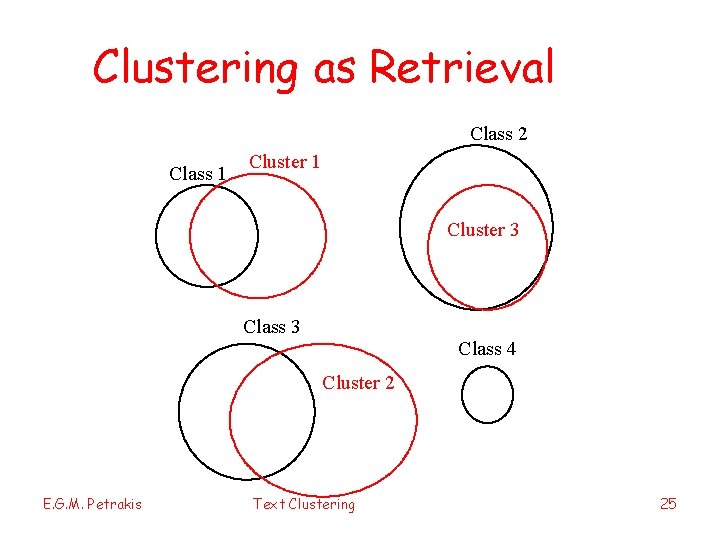

Clustering as Retrieval (figure: clusters overlaid on the real classes)

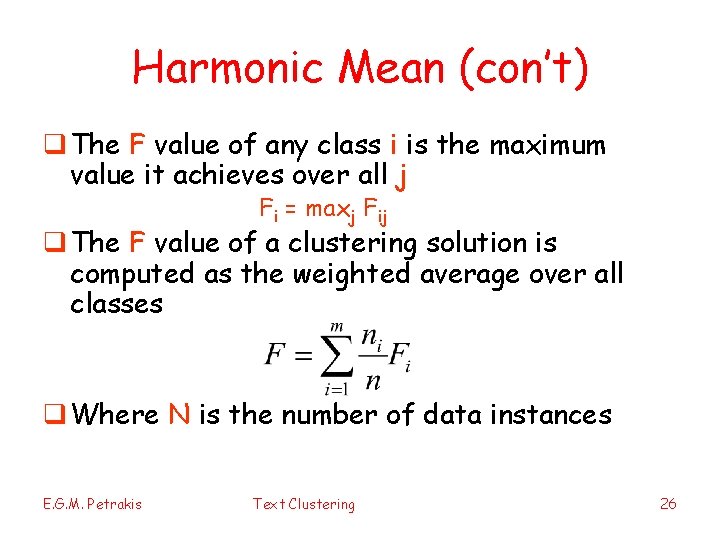

Harmonic Mean (cont.)

- The F value of any class $i$ is the maximum value it achieves over all clusters $j$: $F_i = \max_j F_{ij}$
- The F value of a clustering solution is computed as the weighted average over all classes: $F = \sum_i \frac{n_i}{N} F_i$
  - $N$ is the number of data instances
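A minimal sketch of the overall F value, assuming the same inputs as for entropy (clusters as lists of instance ids, a mapping from id to real class) and that every instance appears in some cluster; `f_measure` is a hypothetical name. Pairs $(i, j)$ with $n_{ij} = 0$ have $F_{ij} = 0$, so only classes actually present in a cluster are examined.

```python
from collections import Counter

def f_measure(clusters, labels):
    """Class-size-weighted average of each class's best F over all clusters."""
    N = sum(len(members) for members in clusters)
    class_sizes = Counter(labels[x] for members in clusters for x in members)  # n_i
    best = {i: 0.0 for i in class_sizes}                                       # F_i so far
    for members in clusters:
        nj = len(members)
        for i, nij in Counter(labels[x] for x in members).items():
            p, r = nij / nj, nij / class_sizes[i]  # precision and recall of cluster j for class i
            best[i] = max(best[i], 2 * p * r / (p + r))
    return sum(class_sizes[i] / N * best[i] for i in class_sizes)
```

For clusters `[[0, 1], [2, 3]]` with classes `{0: "a", 1: "b", 2: "a", 3: "a"}`, class a's best match is the second cluster ($P = 1$, $R = 2/3$, $F = 0.8$) and class b's is the first ($P = 0.5$, $R = 1$, $F = 2/3$), giving $F = \frac{3}{4}\cdot 0.8 + \frac{1}{4}\cdot\frac{2}{3} = 23/30 \approx 0.767$. Unlike entropy, higher is better, and a perfect match with the classes gives 1.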

Quality of Clustering

- A good clustering method
  - Maximizes intra-cluster similarity
  - Minimizes inter-cluster similarity
  - Minimizes entropy
  - Maximizes the harmonic mean
- These goals are difficult to achieve simultaneously
  - Maximize some objective function of the above
- An algorithm is better than another if it has better values on most of these measures

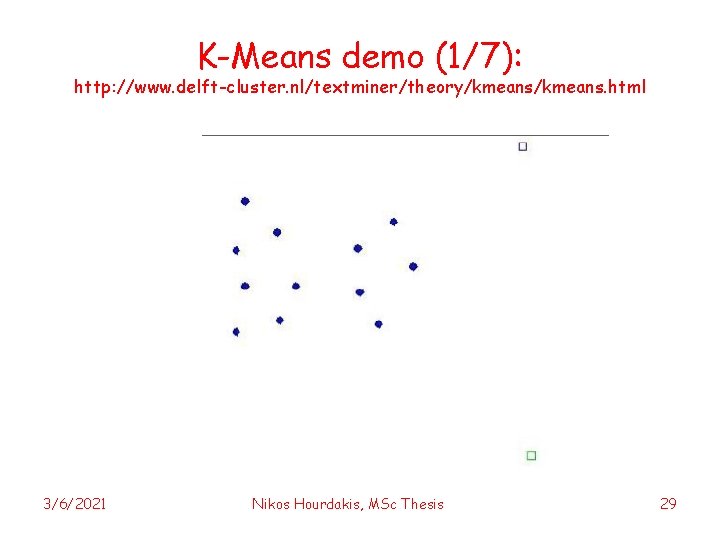

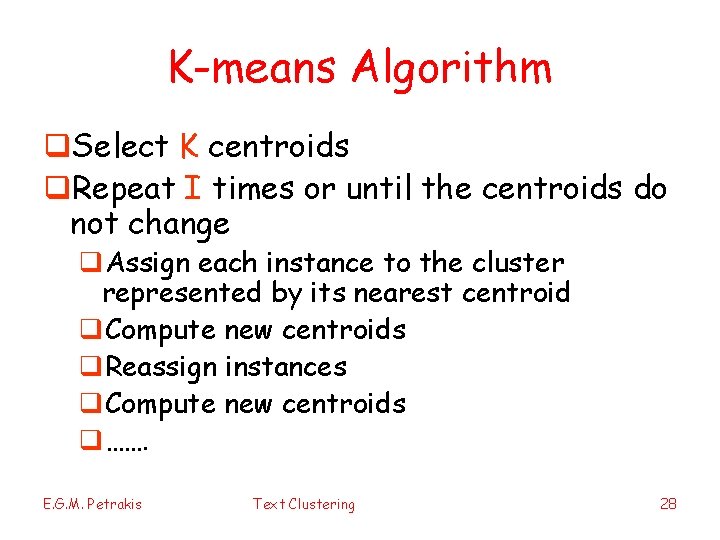

K-means Algorithm

- Select K centroids
- Repeat I times or until the centroids do not change:
  - Assign each instance to the cluster represented by its nearest centroid
  - Compute new centroids
  - Reassign instances
  - Compute new centroids
  - …
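The loop above can be sketched as follows, assuming K randomly chosen instances as initial centroids, squared Euclidean distance, and a fixed random seed for reproducibility; `kmeans`, `sq_dist` and `mean` are hypothetical helpers. For unit-length document vectors, the nearest centroid by Euclidean distance is also the most similar one by cosine, because $\|u - v\|^2 = 2(1 - \cos\theta)$ when $u$ and $v$ both have unit length (centroids themselves are not unit length, so this equivalence is only approximate for the centroid step).

```python
import random

def sq_dist(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def mean(points):
    """Component-wise mean (centroid) of a non-empty list of vectors."""
    return [sum(col) / len(points) for col in zip(*points)]

def kmeans(points, k, max_iter=10, seed=0):
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # K random instances as seeds
    assign = None
    for _ in range(max_iter):
        # assign each instance to its nearest centroid
        new_assign = [min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in points]
        if new_assign == assign:
            break  # nothing moved, so the centroids would not change either
        assign = new_assign
        # recompute each centroid as the mean of its members
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = mean(members)
    return assign, centroids

pts = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]  # toy data
labels, cents = kmeans(pts, 2)
```

Each iteration costs O(KN) distance computations, which with at most I iterations gives the O(IKN) bound quoted on the next slides.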

K-Means demo (1/7): http://www.delft-cluster.nl/textminer/theory/kmeans.html

3/6/2021 Nikos Hourdakis, MSc Thesis

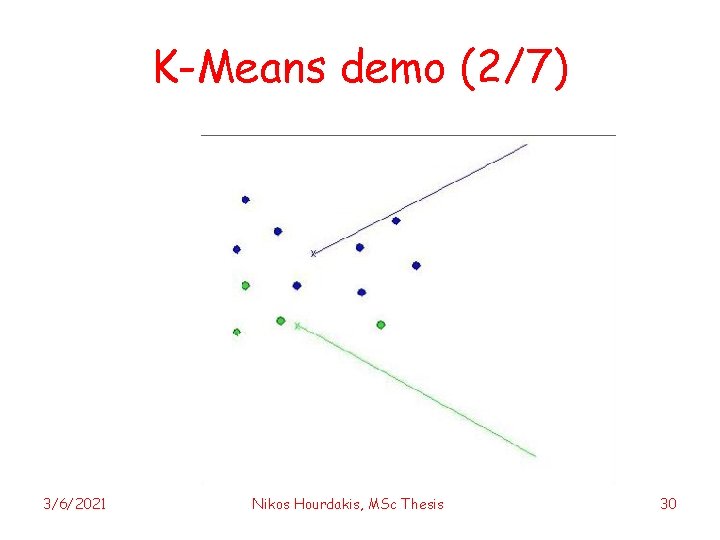

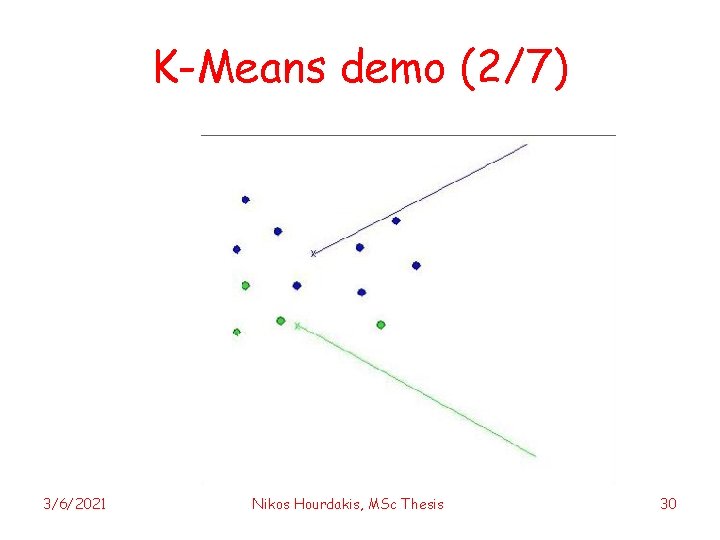

K-Means demo (2/7)

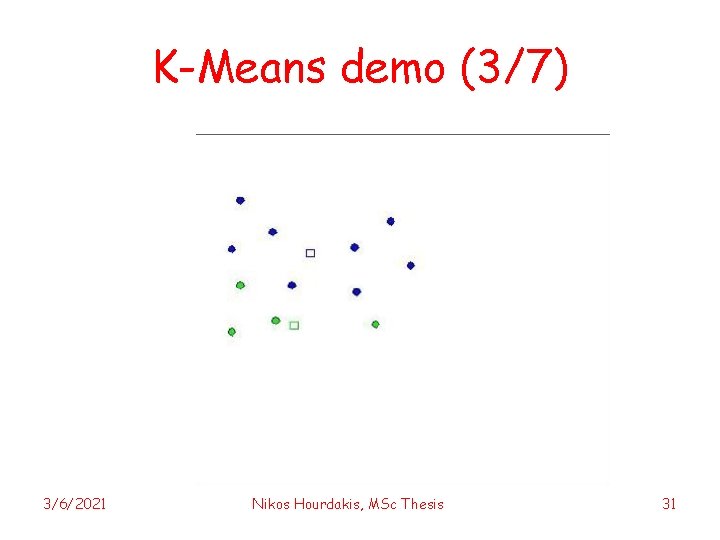

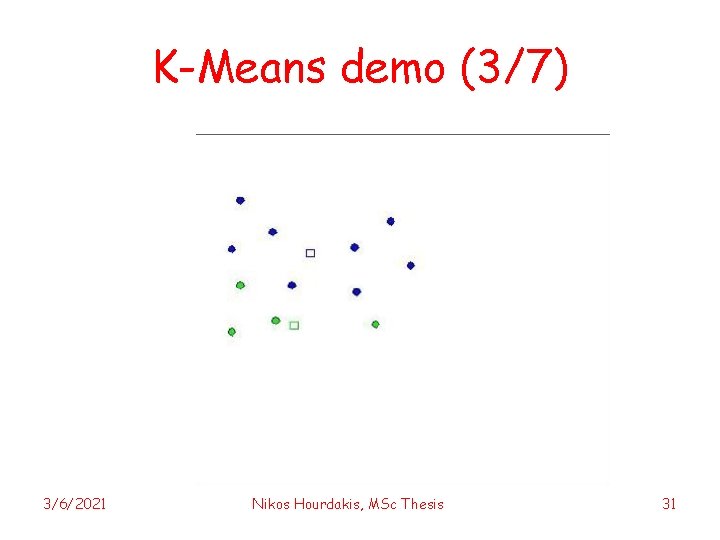

K-Means demo (3/7)

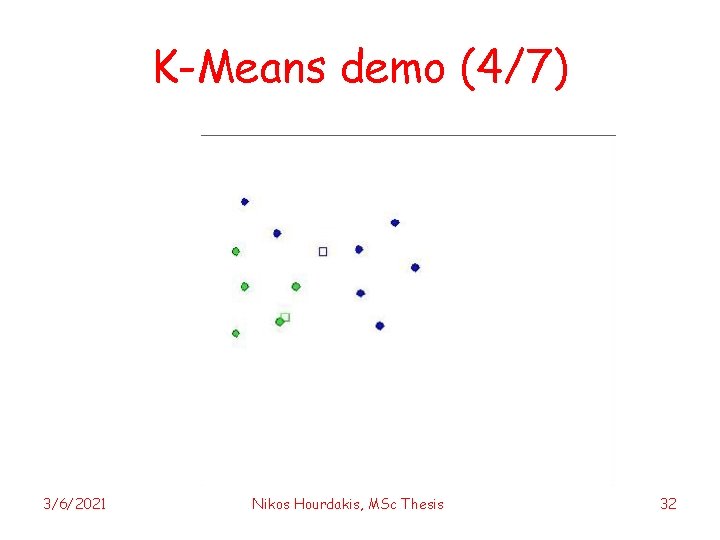

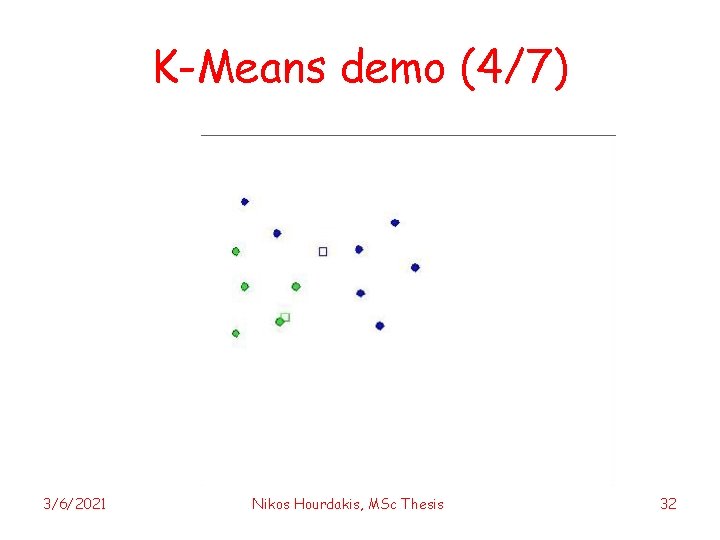

K-Means demo (4/7)

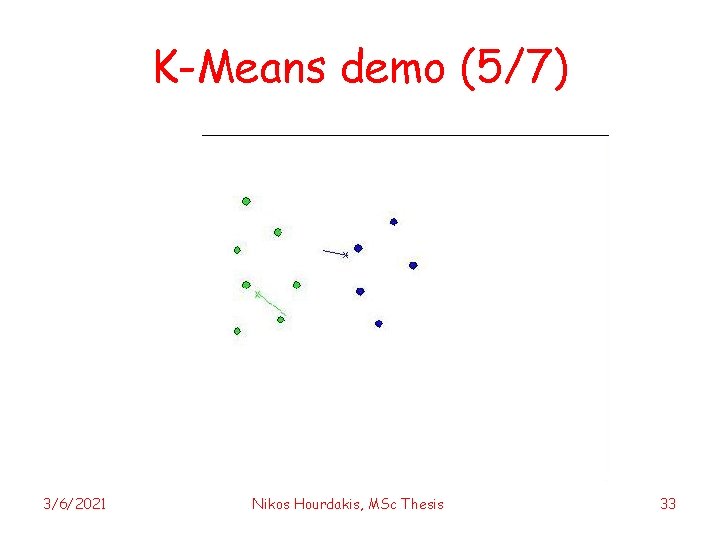

K-Means demo (5/7)

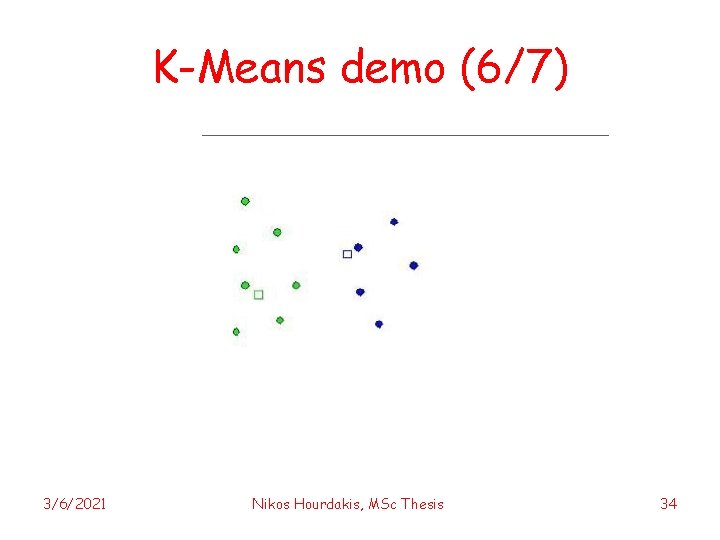

K-Means demo (6/7)

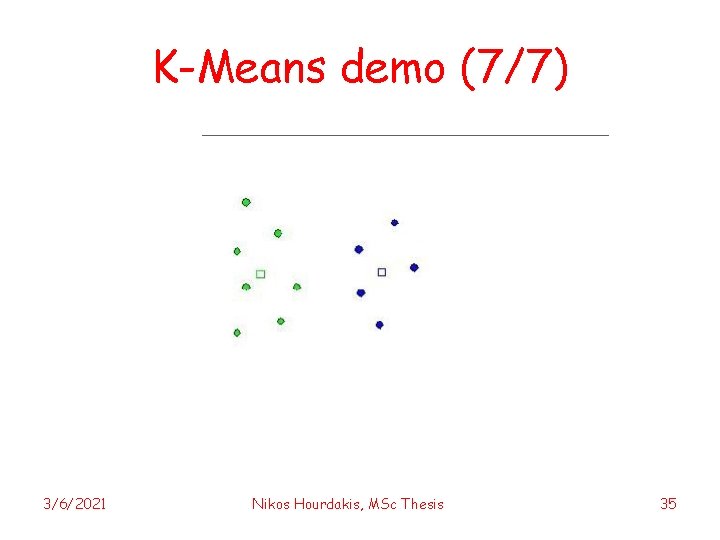

K-Means demo (7/7)

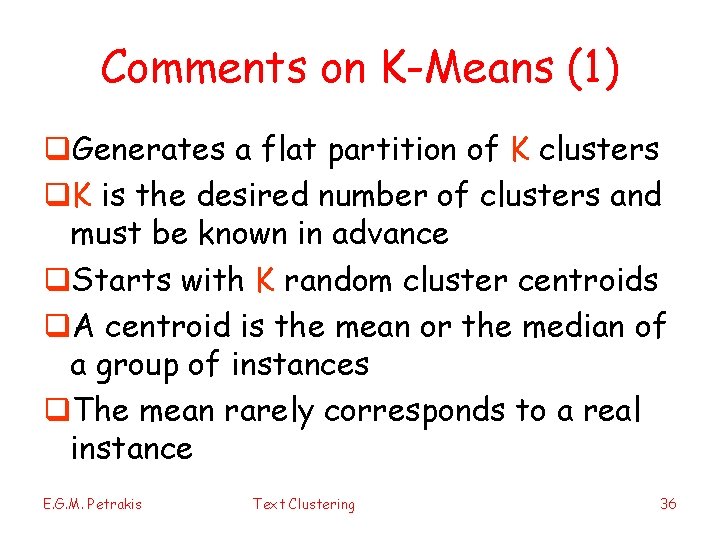

Comments on K-Means (1)

- Generates a flat partition of K clusters
- K is the desired number of clusters and must be known in advance
- Starts with K random cluster centroids
- A centroid is the mean or the median of a group of instances
- The mean rarely corresponds to a real instance

Comments on K-Means (2)

- Up to I = 10 iterations
- Keep the clustering that yields the best inter/intra similarity, or the final clusters after I iterations
- Complexity O(IKN)
- Repeated application of K-Means for K = 2, 4, … can produce a hierarchical clustering

Choosing Centroids for K-means

- The quality of the clustering depends on the selection of the initial centroids
- Random selection may result in a poor convergence rate, or in convergence to suboptimal clusterings
- Select good initial centroids using a heuristic or the results of another method
  - Buckshot algorithm

Incremental K-Means

- Update each centroid during each iteration, right after each point is assigned to a cluster, rather than at the end of the iteration
- Reassign instances to clusters at the end of each iteration
- Converges faster than simple K-means
  - Usually 2-5 iterations
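One way to realize the incremental update is sketched below, assuming given initial centroids and squared Euclidean distance; `incremental_kmeans` is a hypothetical name. Each cluster keeps a running sum and count, so when a point joins (or leaves) a cluster the affected centroids are recomputed immediately, and later points in the same pass already see the updated centroids. A centroid that loses all its members keeps its last value.

```python
def sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def incremental_kmeans(points, init_centroids, max_iter=5):
    k, dim = len(init_centroids), len(points[0])
    centroids = [list(c) for c in init_centroids]
    sums = [[0.0] * dim for _ in range(k)]  # running sum of each cluster's members
    counts = [0] * k
    assign = [None] * len(points)
    for _ in range(max_iter):
        moved = False
        for i, p in enumerate(points):
            j = min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            if j == assign[i]:
                continue
            old = assign[i]
            if old is not None:  # remove p from its old cluster and update that centroid now
                counts[old] -= 1
                sums[old] = [s - x for s, x in zip(sums[old], p)]
                if counts[old]:
                    centroids[old] = [s / counts[old] for s in sums[old]]
            # add p to its new cluster and update that centroid now, not at the end of the pass
            counts[j] += 1
            sums[j] = [s + x for s, x in zip(sums[j], p)]
            centroids[j] = [s / counts[j] for s in sums[j]]
            assign[i] = j
            moved = True
        if not moved:
            break  # a full pass with no reassignment: converged
    return assign, centroids

pts = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]  # toy data
labels, cents = incremental_kmeans(pts, [[0, 0], [10, 10]])
```

Because centroids move during the pass instead of once per pass, fewer passes are usually needed, which matches the faster convergence noted on the slide.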

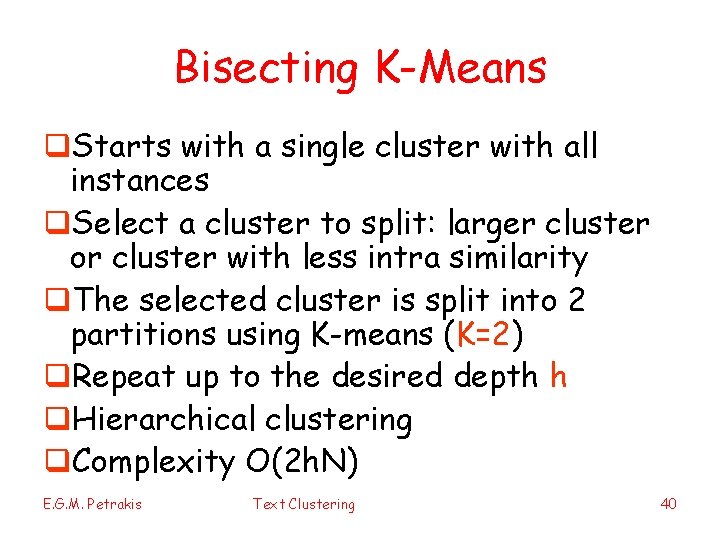

Bisecting K-Means

- Start with a single cluster containing all instances
- Select a cluster to split: the largest cluster, or the cluster with the lowest intra-cluster similarity
- Split the selected cluster into 2 partitions using K-means (K = 2)
- Repeat up to the desired depth h
- Produces a hierarchical clustering
- Complexity O(2hN)
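A minimal sketch of bisecting K-means, assuming the "largest cluster" selection rule and squared Euclidean distance. As a labeled simplification it stops when a requested number of clusters exists rather than at a depth h; `two_means` and `bisecting_kmeans` are hypothetical names.

```python
import random

def sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def mean(points):
    return [sum(col) / len(points) for col in zip(*points)]

def two_means(points, rng, n_iter=10):
    """Split points into two groups with plain K-means (K = 2)."""
    c = [list(p) for p in rng.sample(points, 2)]
    groups = (points, [])
    for _ in range(n_iter):
        groups = ([], [])
        for p in points:
            groups[0 if sq_dist(p, c[0]) <= sq_dist(p, c[1]) else 1].append(p)
        if not groups[0] or not groups[1]:
            break  # degenerate split; the caller decides what to do
        c = [mean(groups[0]), mean(groups[1])]
    return groups

def bisecting_kmeans(points, n_clusters, seed=0):
    """Repeatedly bisect the largest cluster until n_clusters clusters exist."""
    rng = random.Random(seed)
    clusters = [list(points)]
    while len(clusters) < n_clusters:
        target = max(clusters, key=len)  # selection rule: largest cluster
        if len(target) < 2:
            break
        left, right = two_means(target, rng)
        if not left or not right:
            break  # cannot split further (e.g. identical points)
        clusters.remove(target)
        clusters += [left, right]
    return clusters

pts = [[0, 0], [0, 1], [10, 10], [10, 11], [20, 0], [21, 0]]  # toy data
parts = bisecting_kmeans(pts, 3)
```

Recording which cluster each split came from would give the hierarchy; the sketch only returns the final leaves. Each split runs K-means with K = 2 on one cluster only, so it is much cheaper than one K-means run with a large K.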

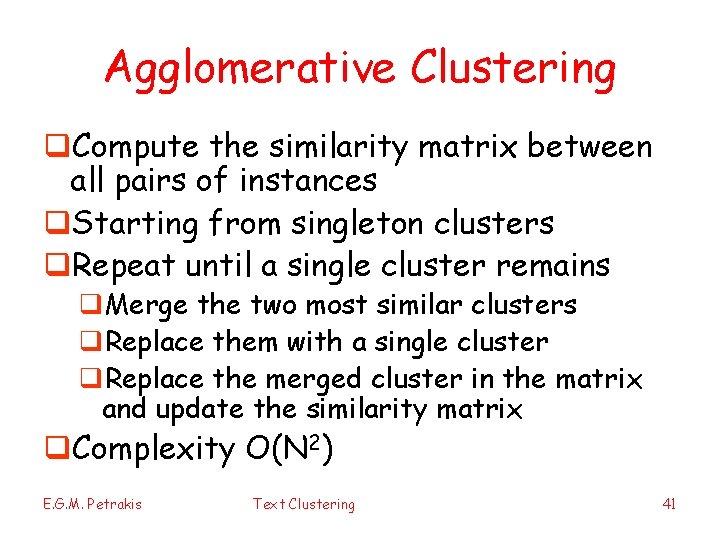

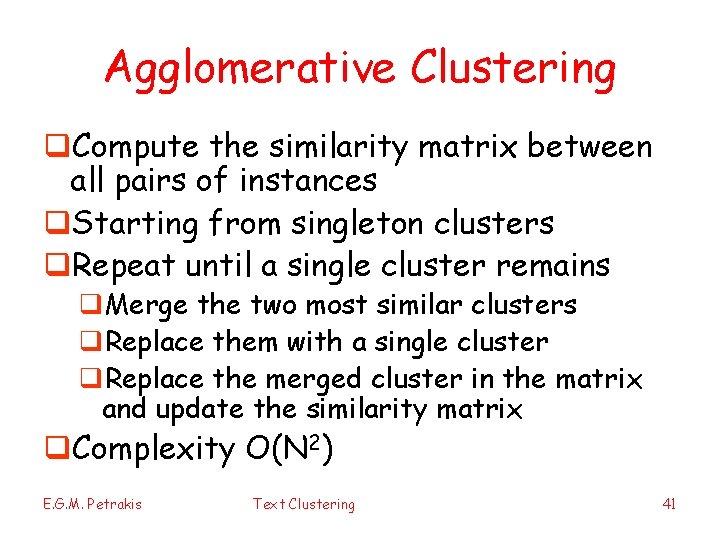

Agglomerative Clustering

- Compute the similarity matrix between all pairs of instances
- Start from singleton clusters
- Repeat until a single cluster remains:
  - Merge the two most similar clusters
  - Replace them with a single cluster
  - Replace the merged clusters in the matrix by the new cluster and update the similarity matrix
- Complexity O(N²)
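A minimal sketch of the loop above, assuming cosine similarity on non-zero vectors; `agglomerative` is a hypothetical name. After each merge, the row of the surviving cluster `a` is updated in place with the single-link (max), complete-link (min) or size-weighted group-average rule, so the original documents are never revisited. It stops at `n_clusters` (with `n_clusters=1` giving the full run). The pair search scans all current pairs every round, so this naive version is O(N³) overall; reaching the quoted bound needs extra bookkeeping not shown here.

```python
import math

def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def agglomerative(docs, n_clusters=1, linkage="group"):
    n = len(docs)
    # similarity matrix between all pairs of instances (the singleton clusters)
    sim = {(i, j): cosine(docs[i], docs[j]) for i in range(n) for j in range(n) if i != j}
    members = {i: [i] for i in range(n)}  # cluster id -> instance indices
    while len(members) > n_clusters:
        # merge the two most similar clusters
        a, b = max(((i, j) for i in members for j in members if i < j), key=lambda p: sim[p])
        na, nb = len(members[a]), len(members[b])
        for k in members:
            if k in (a, b):
                continue
            s_a, s_b = sim[a, k], sim[b, k]
            if linkage == "single":
                s = max(s_a, s_b)
            elif linkage == "complete":
                s = min(s_a, s_b)
            else:  # group average: size-weighted mean of the two old rows
                s = (na * s_a + nb * s_b) / (na + nb)
            sim[a, k] = sim[k, a] = s
        members[a] += members.pop(b)  # cluster a now stands for the merged cluster
    return sorted(members.values())

docs = [[1, 0], [0.9, 0.1], [0, 1], [0.1, 0.9]]  # toy document vectors
```

The group-average update works because the average over all cross pairs of $(A \cup B, K)$ equals $(|A|\,\mathrm{avg}(A,K) + |B|\,\mathrm{avg}(B,K)) / (|A| + |B|)$.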

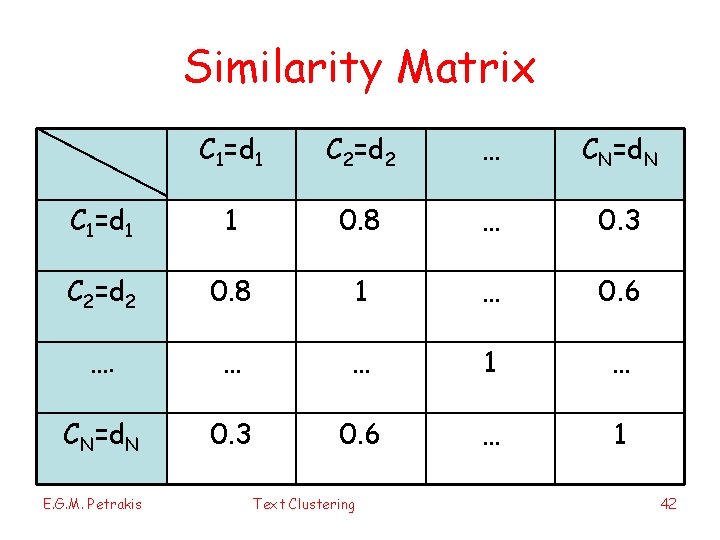

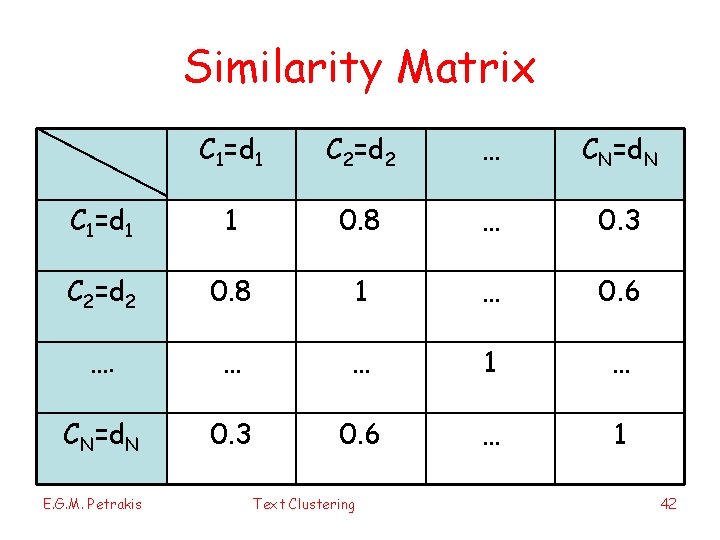

Similarity Matrix

| | C1=d1 | C2=d2 | … | CN=dN |
|---|---|---|---|---|
| C1=d1 | 1 | 0.8 | … | 0.3 |
| C2=d2 | 0.8 | 1 | … | 0.6 |
| … | … | … | 1 | … |
| CN=dN | 0.3 | 0.6 | … | 1 |

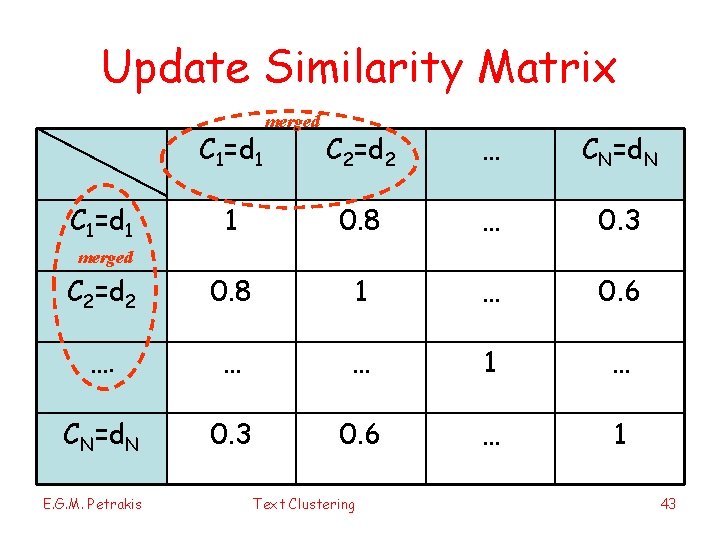

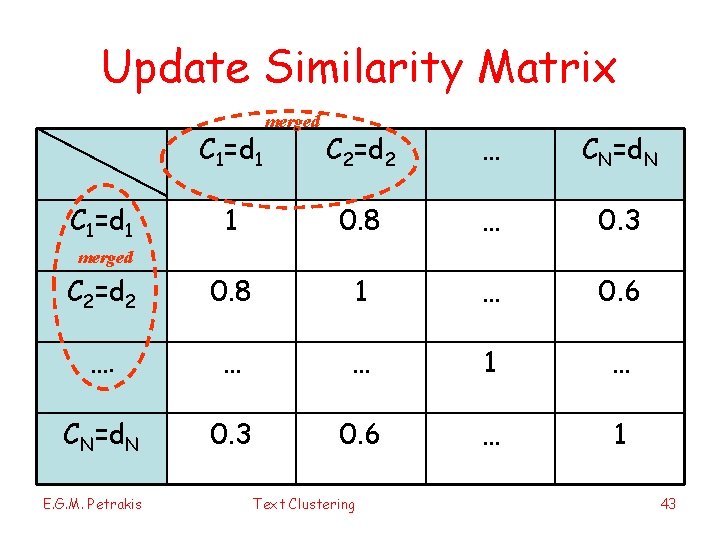

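Update Similarity Matrix (clusters C1 and C2, with similarity 0.8, are merged)

| | C1=d1 (merged) | C2=d2 (merged) | … | CN=dN |
|---|---|---|---|---|
| C1=d1 (merged) | 1 | 0.8 | … | 0.3 |
| C2=d2 (merged) | 0.8 | 1 | … | 0.6 |
| … | … | … | 1 | … |
| CN=dN | 0.3 | 0.6 | … | 1 |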

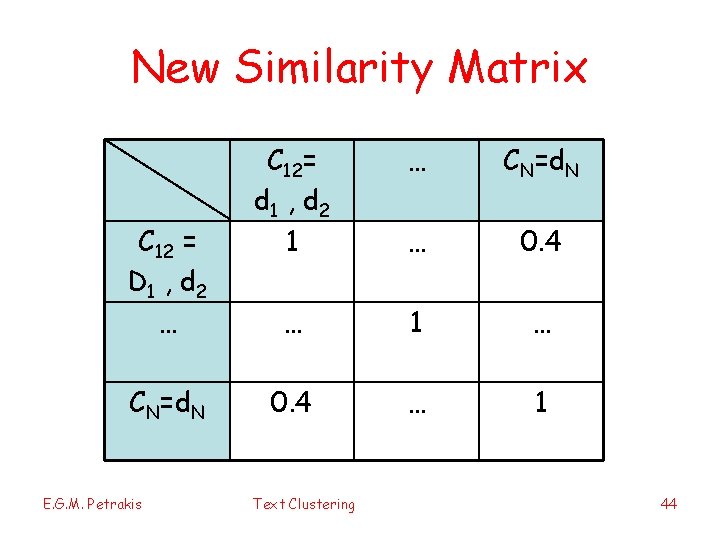

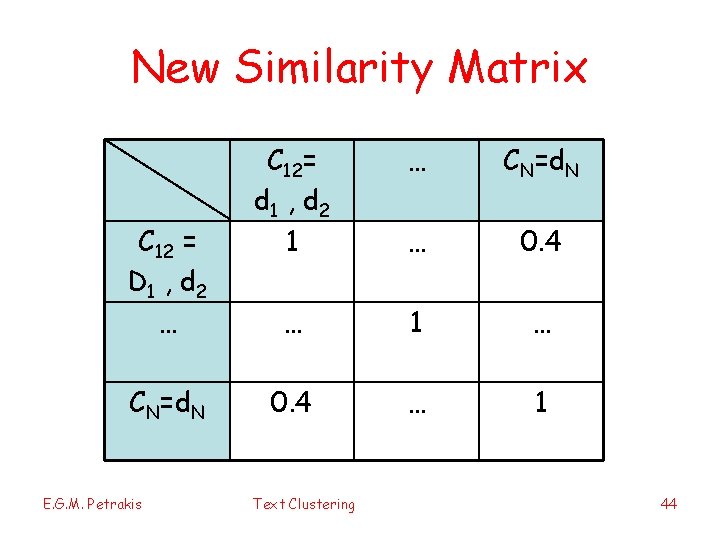

New Similarity Matrix

| | C12 = {d1, d2} | … | CN=dN |
|---|---|---|---|
| C12 = {d1, d2} | 1 | … | 0.4 |
| … | … | 1 | … |
| CN=dN | 0.4 | … | 1 |

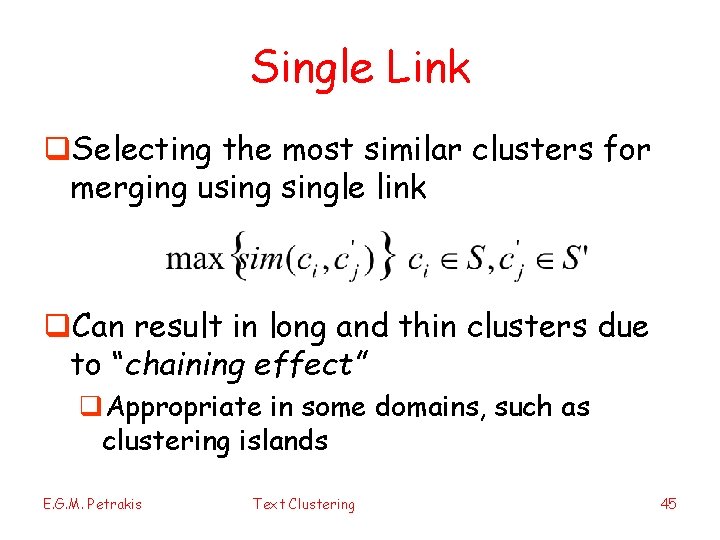

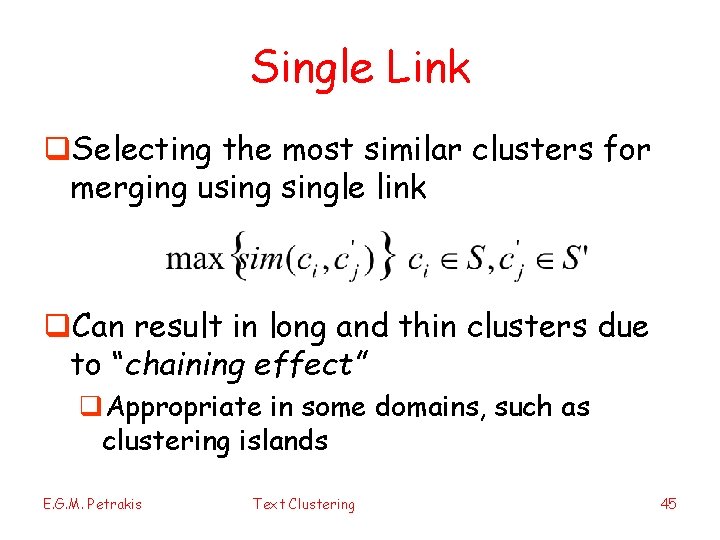

Single Link

- Select the most similar clusters for merging using single link
- Can result in long, thin clusters due to the "chaining effect"
- Appropriate in some domains, such as clustering islands

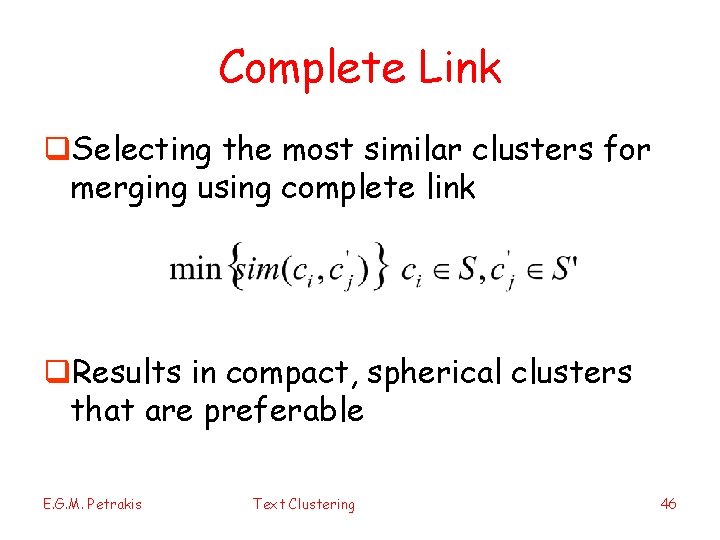

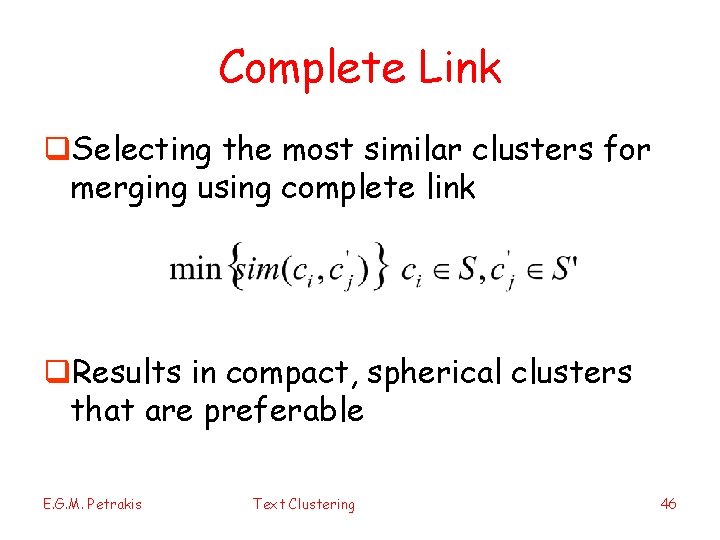

Complete Link

- Select the most similar clusters for merging using complete link
- Results in compact, spherical clusters, which are preferable

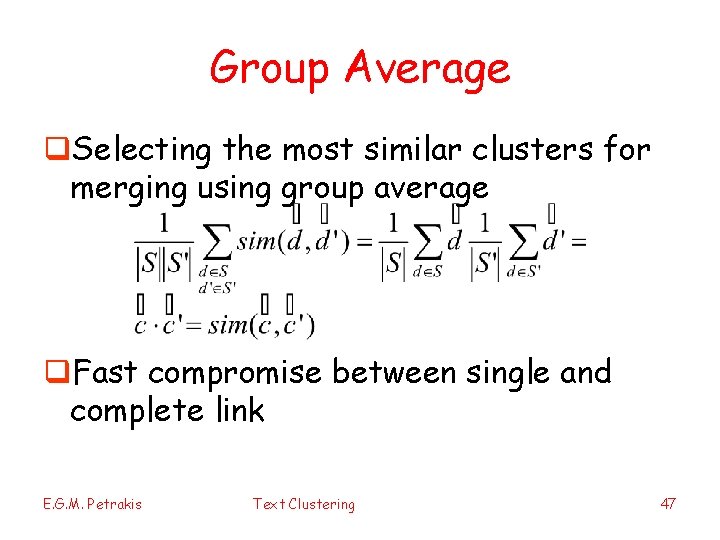

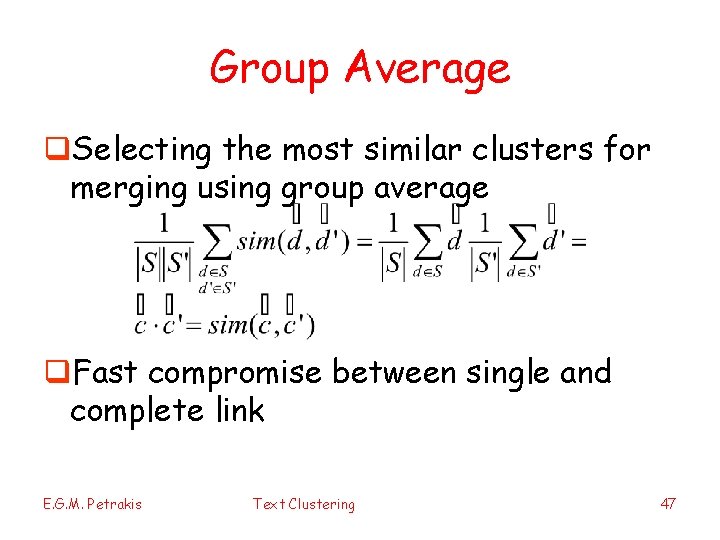

Group Average

- Select the most similar clusters for merging using group average
- A fast compromise between single and complete link

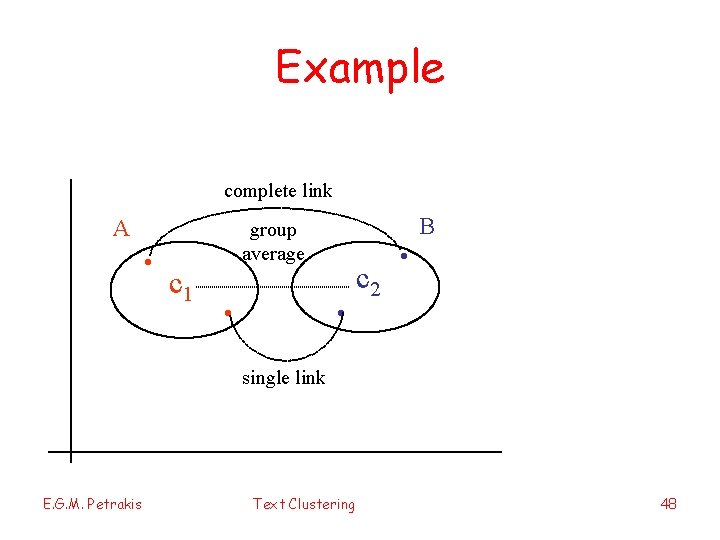

Example (figure: clusters A and B with centroids c1 and c2, showing complete-link, group-average and single-link similarities between them)

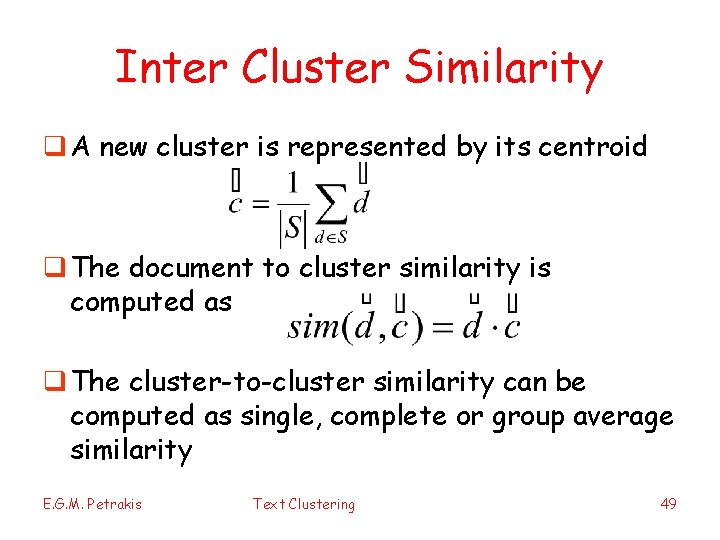

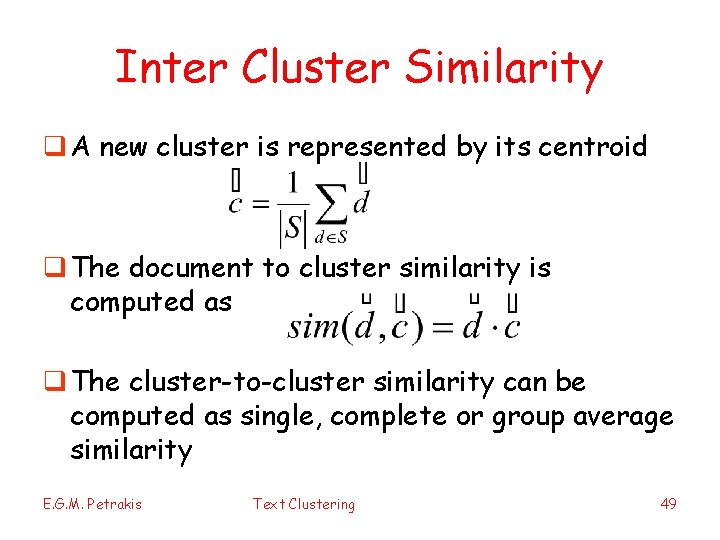

Inter Cluster Similarity

- A new cluster is represented by its centroid
- The document-to-cluster similarity is computed by comparing the document with the cluster centroid
- The cluster-to-cluster similarity can be computed as single-link, complete-link or group-average similarity

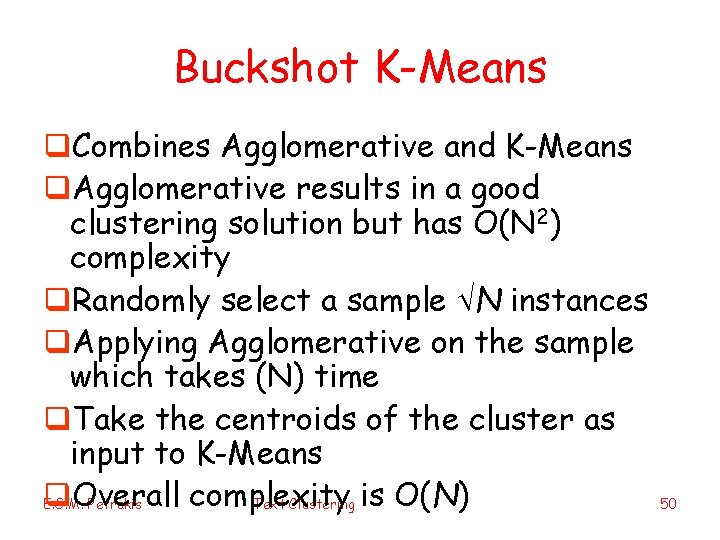

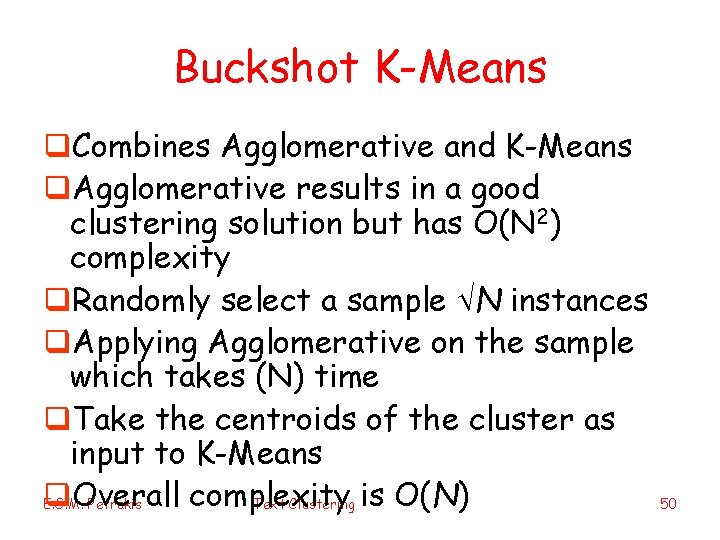

Buckshot K-Means

- Combines Agglomerative and K-Means
- Agglomerative produces a good clustering solution but has O(N²) complexity
- Randomly select a sample of √N instances
- Applying Agglomerative to the sample takes O(N) time
- Take the centroids of the resulting clusters as input to K-Means
- Overall complexity is O(N)
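A minimal sketch of Buckshot under stated assumptions: a random sample of about √N instances (never fewer than K), naive group-average agglomerative clustering on that sample, and plain K-means on all instances started from the sample's cluster centroids. For brevity it uses squared Euclidean distance instead of cosine similarity, and all function names are hypothetical.

```python
import math
import random

def sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def mean(points):
    return [sum(col) / len(points) for col in zip(*points)]

def group_average_agglomerative(points, k):
    """Merge the pair of clusters with the smallest average pairwise
    (squared Euclidean) distance until k clusters remain."""
    clusters = [[p] for p in points]
    while len(clusters) > k:
        def avg_dist(pair):
            A, B = clusters[pair[0]], clusters[pair[1]]
            return sum(sq_dist(a, b) for a in A for b in B) / (len(A) * len(B))
        i, j = min(((i, j) for i in range(len(clusters))
                    for j in range(i + 1, len(clusters))), key=avg_dist)
        clusters[i] += clusters.pop(j)  # j > i, so index i is unaffected by the pop
    return clusters

def kmeans_from(points, centroids, n_iter=10):
    """Plain K-means started from the given centroids."""
    groups = []
    for _ in range(n_iter):
        groups = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda c: sq_dist(p, centroids[c]))
            groups[nearest].append(p)
        centroids = [mean(g) if g else c for g, c in zip(groups, centroids)]
    return groups, centroids

def buckshot(points, k, seed=0):
    rng = random.Random(seed)
    sample = rng.sample(points, max(k, math.isqrt(len(points))))  # about sqrt(N) instances
    seeds = [mean(c) for c in group_average_agglomerative(sample, k)]
    return kmeans_from(points, seeds)

pts = [[0, 0], [0, 1], [1, 0], [1, 1], [10, 10], [10, 11],
       [11, 10], [11, 11], [20, 0], [21, 0]]  # toy data
groups, cents = buckshot(pts, 3)
```

The quadratic agglomerative step only ever sees √N instances, so it costs O(N); the K-means pass over all instances is linear in N for fixed K and I.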

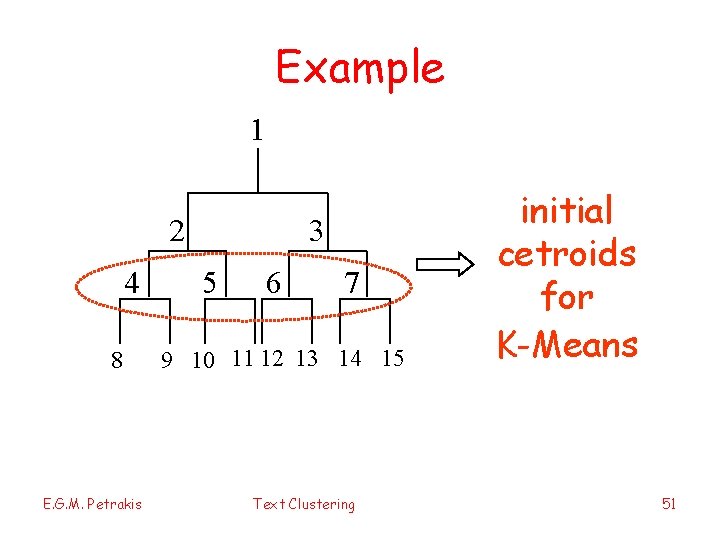

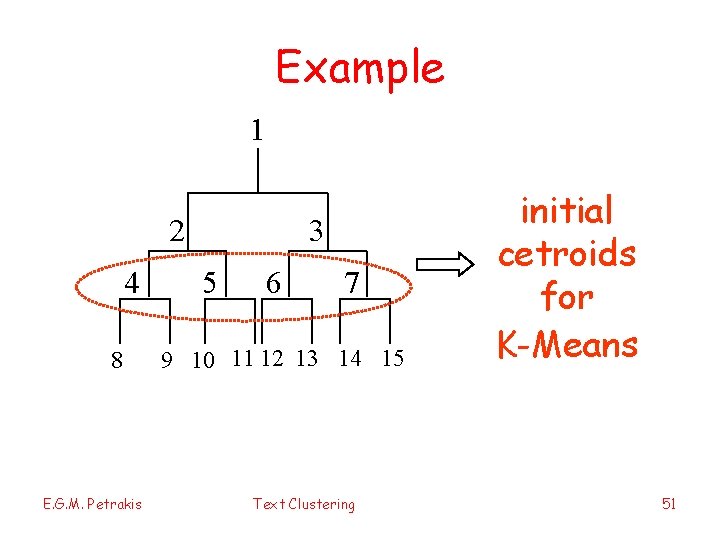

Example (figure: agglomerative clustering of 15 numbered sample instances; the resulting clusters give the initial centroids for K-Means)

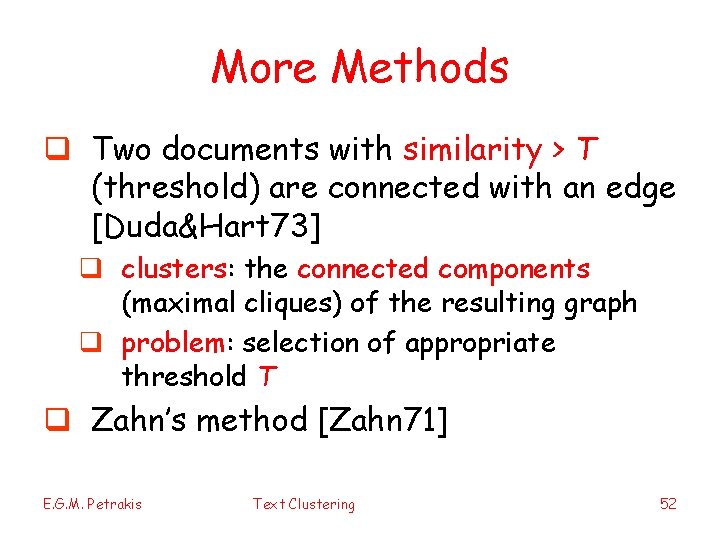

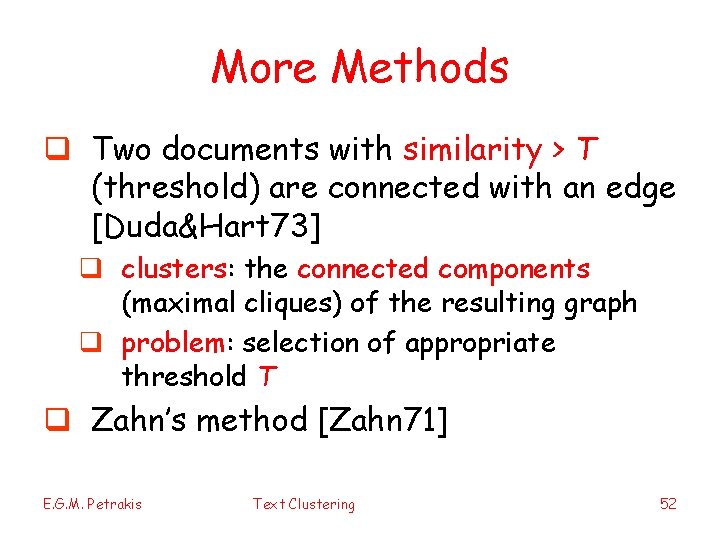

More Methods

- Two documents with similarity > T (threshold) are connected with an edge [Duda&Hart 73]
  - Clusters: the connected components (or maximal cliques) of the resulting graph
  - Problem: selection of an appropriate threshold T
- Zahn's method [Zahn 71]
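A minimal sketch of the threshold-graph method in its connected-components form, assuming cosine similarity on non-zero vectors; `threshold_clusters` is a hypothetical name. A small union-find structure merges the components of any two documents whose similarity exceeds T, so the graph is never stored explicitly. The maximal-clique variant is not shown.

```python
import math

def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def threshold_clusters(docs, T):
    """Connect documents whose similarity exceeds T; return the connected components."""
    parent = list(range(len(docs)))  # union-find forest over document indices

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for i in range(len(docs)):
        for j in range(i + 1, len(docs)):
            if cosine(docs[i], docs[j]) > T:
                parent[find(i)] = find(j)  # edge i-j merges their components
    components = {}
    for i in range(len(docs)):
        components.setdefault(find(i), []).append(i)
    return sorted(components.values())

docs = [[1, 0], [0.9, 0.1], [0, 1], [0.1, 0.9]]  # toy document vectors
```

On these toy vectors, T = 0.8 yields two clusters, while T = 0.1 connects everything into one, which illustrates the threshold-selection problem noted on the slide.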

![Zahn's method (Zahn 71): the dashed edge is inconsistent and is deleted](https://slidetodoc.com/presentation_image_h2/4f5eb1ddfa277deeb31549f79cbfc284/image-53.jpg)

Zahn's method [Zahn 71] (figure: the dashed edge is inconsistent and is deleted)

1. Find the minimum spanning tree
2. For each document, delete incident edges with length $l > l_{avg}$
   - $l_{avg}$: the average length of its incident edges
3. Clusters: the connected components of the graph
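A minimal sketch of the three steps as stated on the slide, assuming Euclidean distances between points and Prim's algorithm for the minimum spanning tree. The `factor` parameter is a hypothetical addition: `factor=1` reproduces the slide's rule $l > l_{avg}$, while a larger value deletes only edges that are much longer than their neighbours. The function names are illustrative only.

```python
import math

def mst_edges(points):
    """Prim's algorithm on the complete Euclidean graph; returns (length, i, j) edges."""
    n = len(points)
    in_tree = [False] * n
    best = [math.inf] * n  # cheapest known edge length from each vertex to the tree
    link = [-1] * n        # tree vertex at the other end of that edge
    best[0] = 0.0
    edges = []
    for _ in range(n):
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        if link[u] >= 0:
            edges.append((best[u], link[u], u))
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best[v]:
                    best[v], link[v] = d, u
    return edges

def zahn_clusters(points, factor=1.0):
    edges = mst_edges(points)
    incident = [[] for _ in points]
    for l, i, j in edges:
        incident[i].append(l)
        incident[j].append(l)
    avg = [sum(ls) / len(ls) if ls else 0.0 for ls in incident]
    # an edge survives only if it is not longer than factor * l_avg at either endpoint
    kept = [(i, j) for l, i, j in edges if l <= factor * avg[i] and l <= factor * avg[j]]
    parent = list(range(len(points)))  # union-find for the connected components

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for i, j in kept:
        parent[find(i)] = find(j)
    groups = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())

pts = [[0], [1], [2], [10], [11], [12]]  # toy 1-D points
```

For these points the tree edge between 2 and 10 (length 8) exceeds the average of 4.5 at both of its endpoints and is deleted, leaving the components `[[0, 1, 2], [3, 4, 5]]`.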

References

- C. Faloutsos, "Searching Multimedia Databases by Content", Kluwer Academic Publishers, 1996.
- M. Steinbach, G. Karypis, V. Kumar, "A Comparison of Document Clustering Techniques", KDD Workshop on Text Mining, 2000.
- A. K. Jain, M. N. Murty, P. J. Flynn, "Data Clustering: A Review", ACM Computing Surveys, Vol. 31, No. 3, Sept. 1999.
- A. K. Jain, R. C. Dubes, "Algorithms for Clustering Data", Prentice-Hall, 1988, ISBN 0-13-022278-X.
- G. Salton, "Automatic Text Processing: The Transformation, Analysis, and Retrieval of Information by Computer", Addison-Wesley, 1989.