Clustering: K-Means Clustering, 2-Means Clustering Curve

- Slides: 77

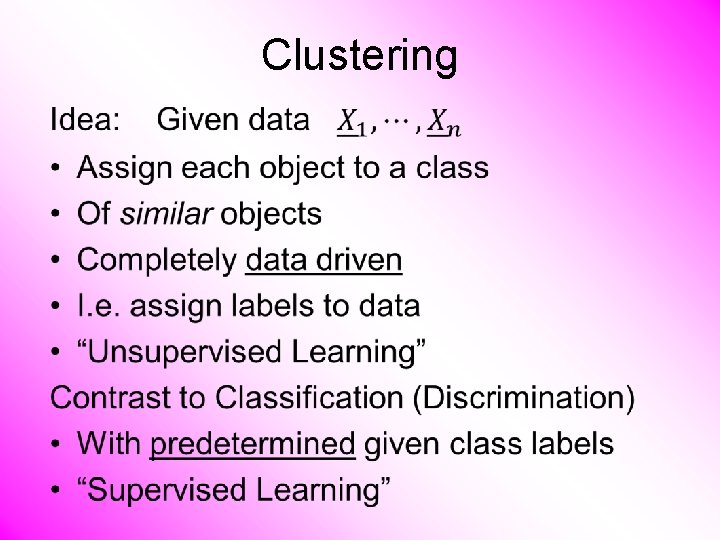

Clustering

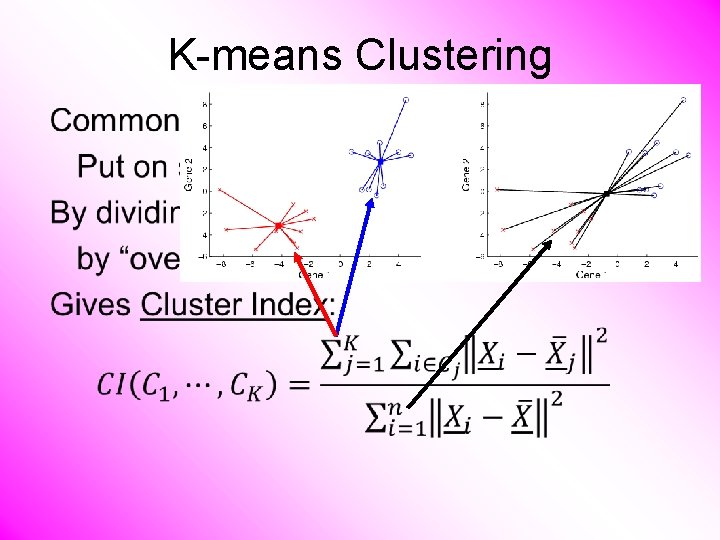

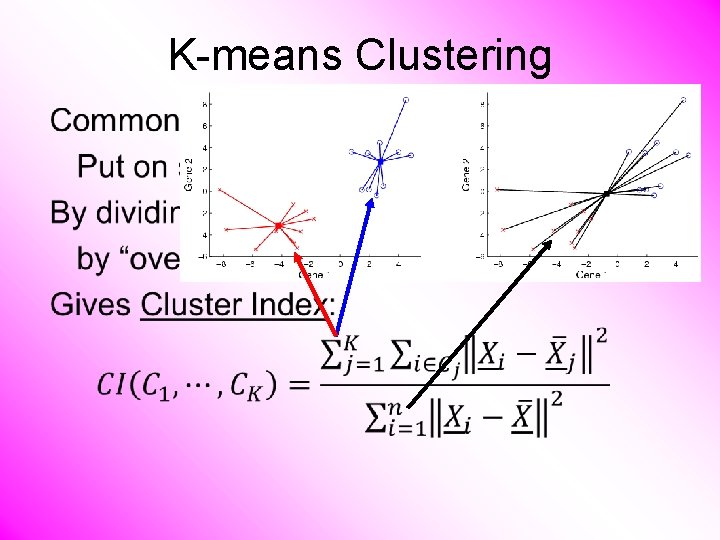

K-means Clustering

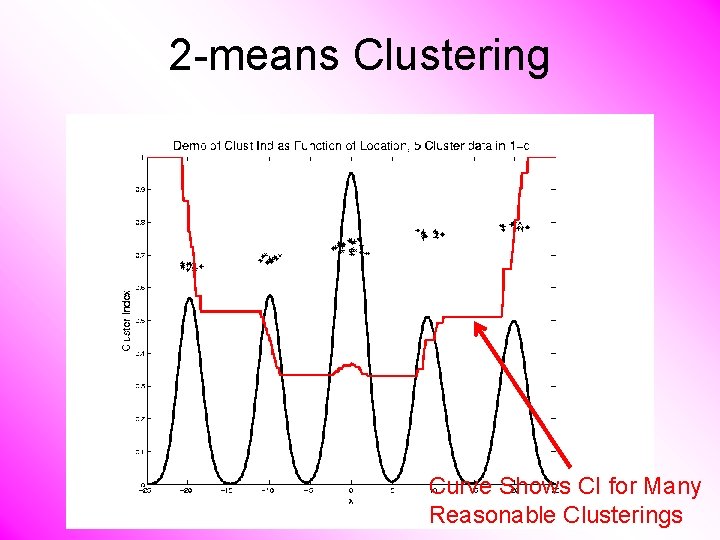

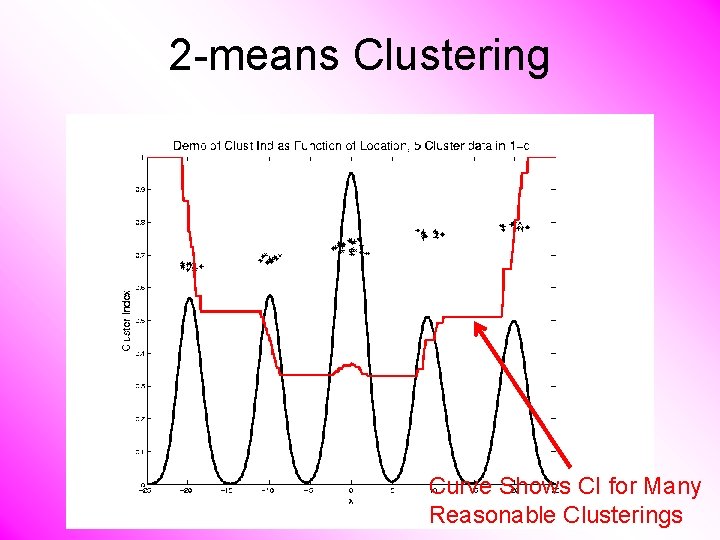

2-means Clustering

Curve Shows CI for Many Reasonable Clusterings

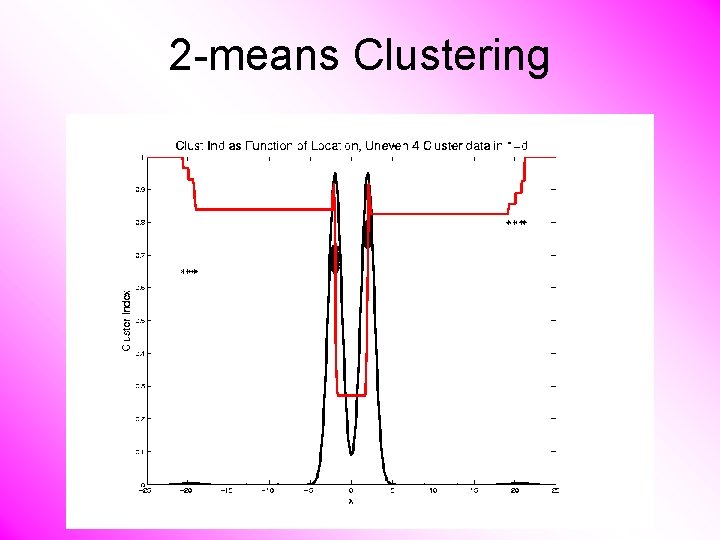

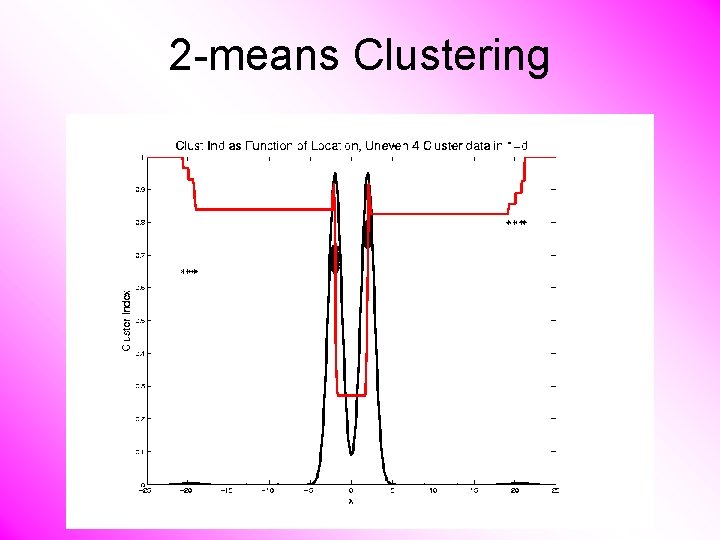

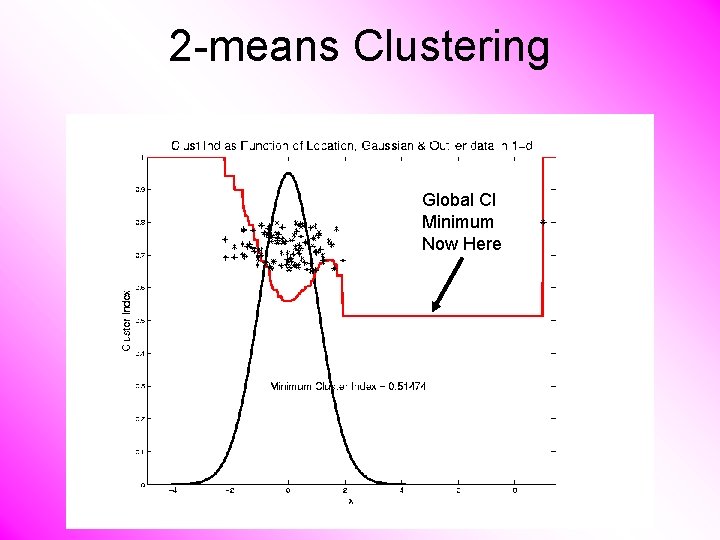

2-means Clustering

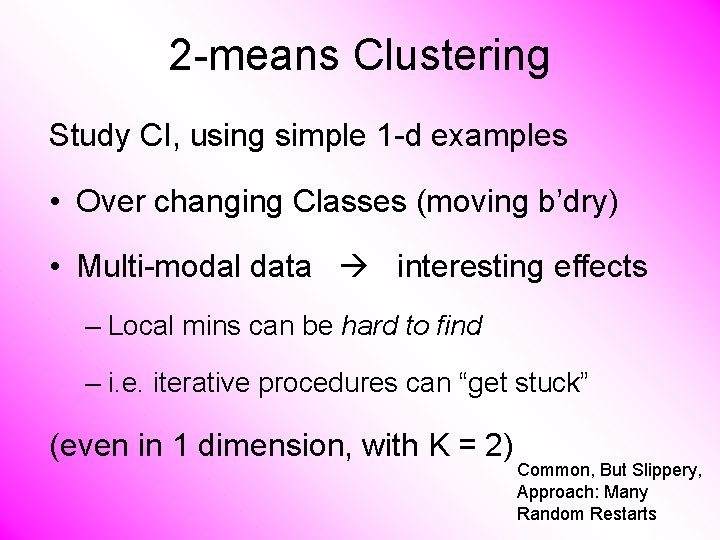

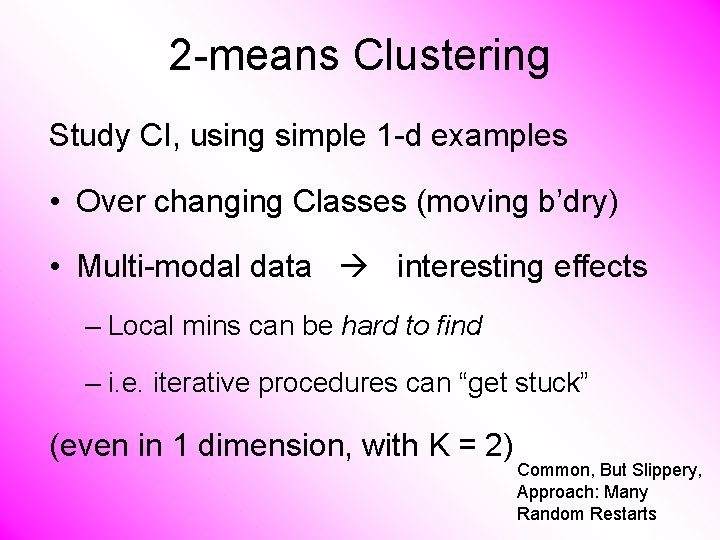

2-means Clustering

Study CI, using simple 1-d examples:

- Over changing Classes (moving boundary)
- Multi-modal data: interesting effects
  - Local mins can be hard to find
  - i.e. iterative procedures can “get stuck” (even in 1 dimension, with K = 2)

Common, But Slippery, Approach: Many Random Restarts
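The "get stuck" effect and the random-restart remedy can be sketched in a few lines. This is a minimal illustration, not the code behind the slides: the function names (`cluster_index`, `lloyd_2means_1d`, `best_of_restarts`) are hypothetical, the iteration is the standard assign/update (Lloyd) scheme, and CI is taken to be the within-class sum of squares divided by the total sum of squares.

```python
import random


def cluster_index(xs, labels):
    """2-means Cluster Index: within-class sum of squares / total sum of squares."""
    overall_mean = sum(xs) / len(xs)
    total_ss = sum((x - overall_mean) ** 2 for x in xs)
    within_ss = 0.0
    for k in (0, 1):
        members = [x for x, lab in zip(xs, labels) if lab == k]
        if members:
            mean_k = sum(members) / len(members)
            within_ss += sum((x - mean_k) ** 2 for x in members)
    return within_ss / total_ss


def lloyd_2means_1d(xs, centers, max_iter=100):
    """Alternate assignment and mean-update steps from two starting centers."""
    c0, c1 = centers
    labels = None
    for _ in range(max_iter):
        new_labels = [0 if abs(x - c0) <= abs(x - c1) else 1 for x in xs]
        if new_labels == labels:  # assignments stopped changing: converged
            break
        labels = new_labels
        g0 = [x for x, lab in zip(xs, labels) if lab == 0]
        g1 = [x for x, lab in zip(xs, labels) if lab == 1]
        if g0:
            c0 = sum(g0) / len(g0)
        if g1:
            c1 = sum(g1) / len(g1)
    return labels


def best_of_restarts(xs, n_restarts=20, seed=0):
    """Run Lloyd's iteration from random starts; return the smallest CI and all CIs."""
    rng = random.Random(seed)
    all_ci = []
    for _ in range(n_restarts):
        centers = rng.sample(xs, 2)  # two data points as starting centers
        all_ci.append(cluster_index(xs, lloyd_2means_1d(xs, centers)))
    return min(all_ci), all_ci


# Three-mode 1-d data: different starts can converge to different splits.
three_modes = [0.0, 0.1, 0.2, 5.0, 5.1, 5.2, 9.0, 9.1, 9.2]
best_ci, all_ci = best_of_restarts(three_modes)
print(best_ci, sorted(set(round(ci, 4) for ci in all_ci)))
```

Restarts only report the best local minimum that was visited, which is why the approach is "slippery": nothing guarantees that the global CI minimum was among the runs.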

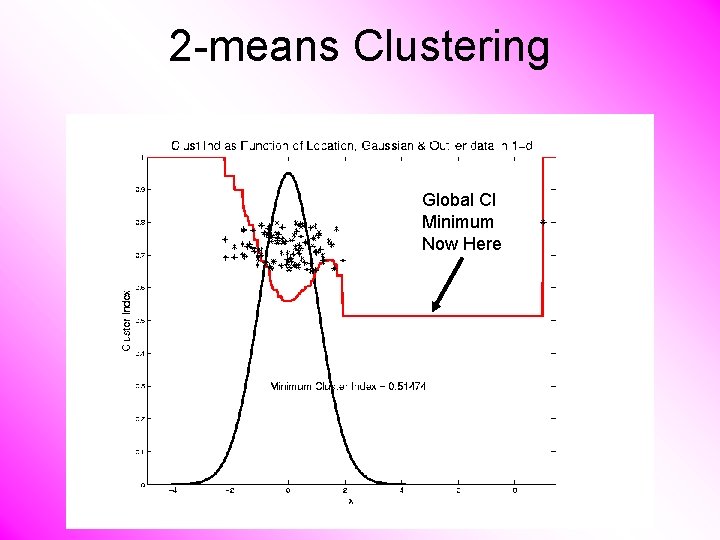

2-means Clustering

Global CI Minimum Now Here

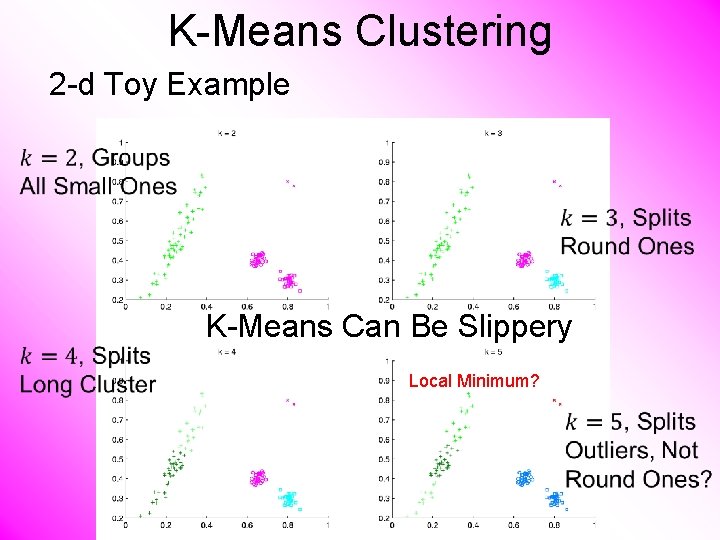

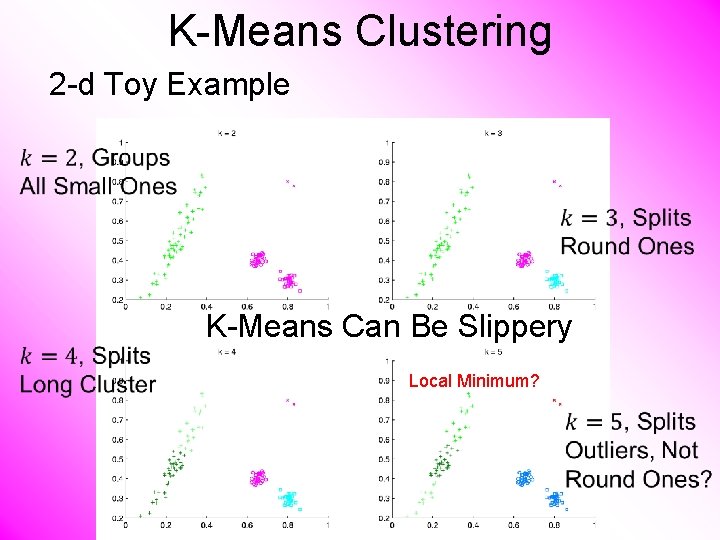

K-Means Clustering: 2-d Toy Example

K-Means Can Be Slippery: Local Minimum?

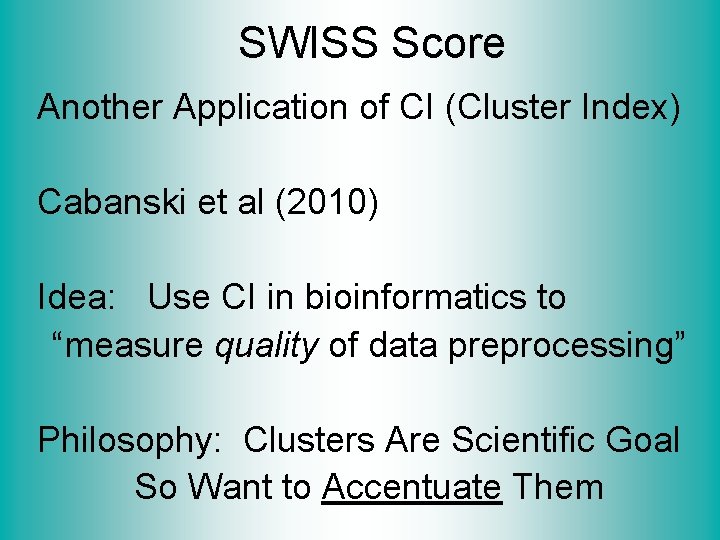

SWISS Score

Another Application of CI (Cluster Index), Cabanski et al (2010)

- Idea: Use CI in bioinformatics to “measure quality of data preprocessing”
- Philosophy: Clusters Are Scientific Goal, So Want to Accentuate Them

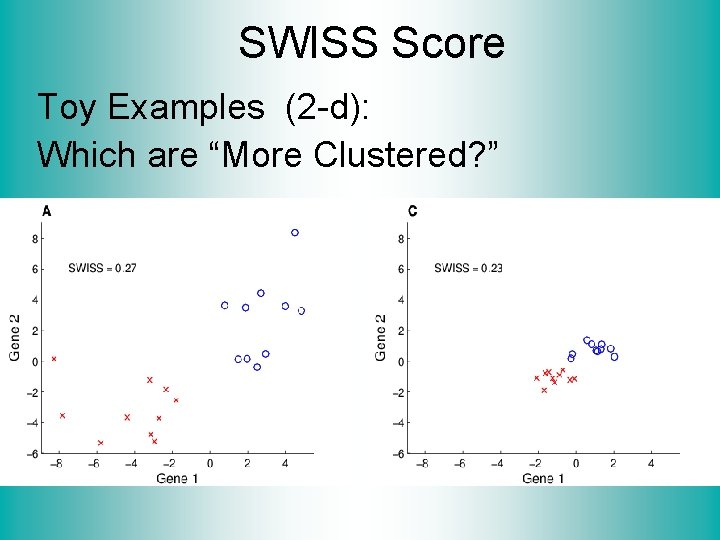

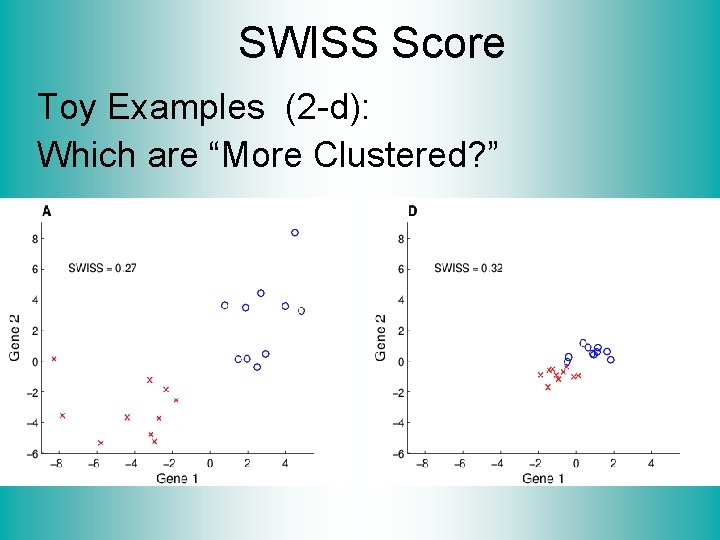

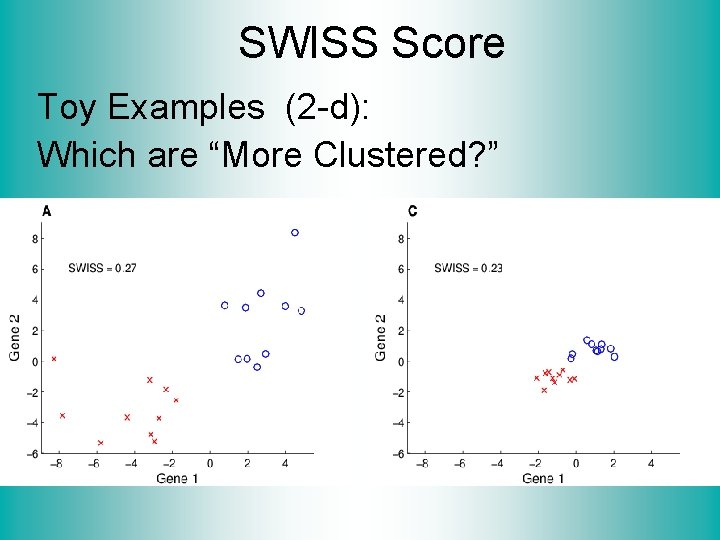

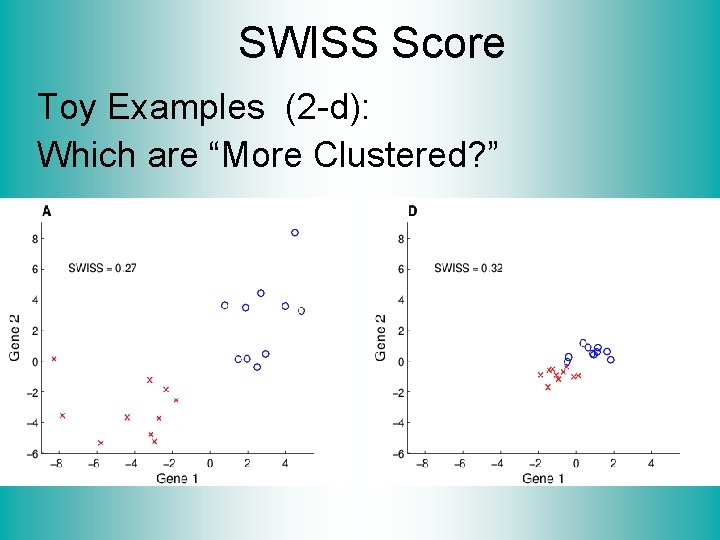

SWISS Score

Toy Examples (2-d): Which are “More Clustered”?

SWISS Score

K-Class SWISS: Instead of using K-Class CI, Use Average of Pairwise SWISS Scores (Preserves [0, 1] Range)
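A minimal sketch of the averaging idea follows. It assumes that each pairwise score is computed like the two-class CI (within-class sum of squares over total sum of squares) using only the points from the two classes in question; the exact definition should be checked against Cabanski et al (2010). The function names are hypothetical.

```python
from itertools import combinations


def swiss(points, labels):
    """Within-class sum of squares divided by total sum of squares."""
    d = len(points[0])

    def mean(rows):
        return [sum(r[j] for r in rows) / len(rows) for j in range(d)]

    def sum_sq(rows, center):
        return sum((r[j] - center[j]) ** 2 for r in rows for j in range(d))

    total = sum_sq(points, mean(points))
    within = 0.0
    for lab in set(labels):
        rows = [p for p, l in zip(points, labels) if l == lab]
        within += sum_sq(rows, mean(rows))
    return within / total


def average_pairwise_swiss(points, labels):
    """Average the two-class score over all pairs of classes."""
    scores = []
    for a, b in combinations(sorted(set(labels)), 2):
        idx = [i for i, l in enumerate(labels) if l in (a, b)]
        scores.append(swiss([points[i] for i in idx], [labels[i] for i in idx]))
    return sum(scores) / len(scores)


# Three tight, well-separated classes give a score close to 0.
pts = [(0, 0), (0, 1), (10, 0), (10, 1), (0, 10), (1, 10)]
labs = [0, 0, 1, 1, 2, 2]
print(average_pairwise_swiss(pts, labs))
```

Because each pairwise score is a ratio of a within-class sum of squares to the corresponding total, every term lies in [0, 1], and so does their average.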

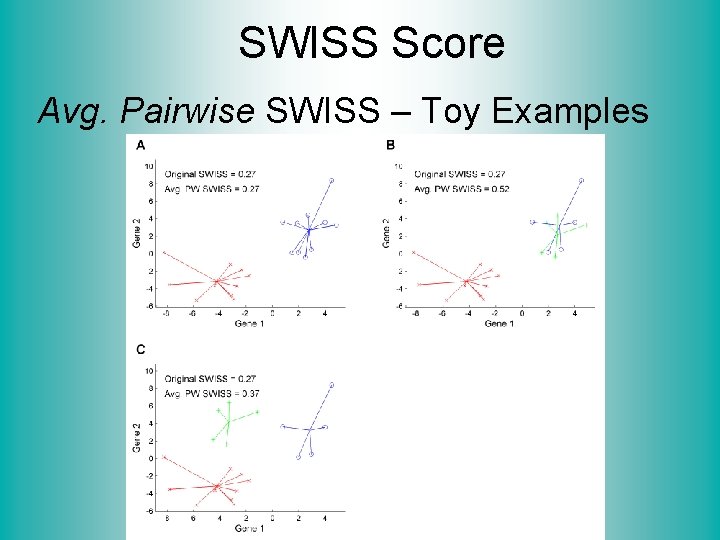

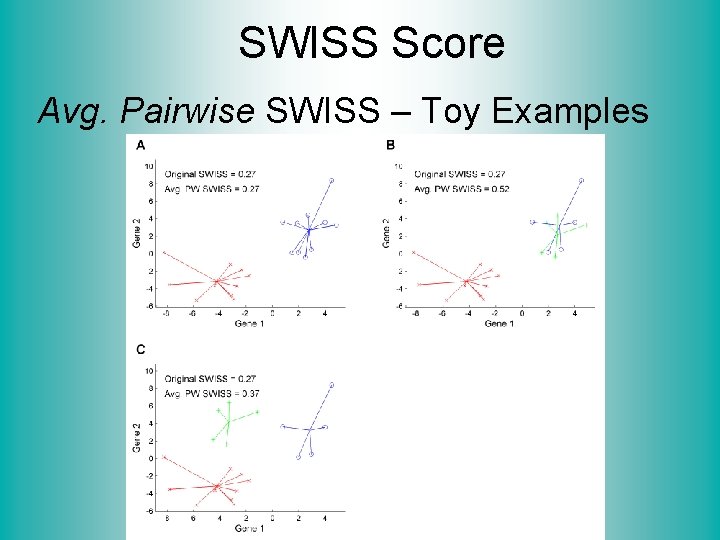

SWISS Score

Avg. Pairwise SWISS – Toy Examples

SWISS Score

Additional Feature: ∃ Hypothesis Tests (Permutation Based):

- H_1: SWISS_1 < 1
- H_1: SWISS_1 < SWISS_2

See Cabanski et al (2010)

Clustering

- A Very Large Area
- K-Means is Only One Approach
- Has its Drawbacks (Many Toy Examples of This)
- ∃ Many Other Approaches
- Important (And Broad) Class: Hierarchical Clustering

Hierarchical Clustering

Idea: Consider Either:

- Bottom Up Aggregation: One by One Combine Data
- Top Down Splitting: All Data in One Cluster & Split

Through Entire Data Set, to get Dendrogram
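The bottom-up variant can be sketched as a naive loop that starts from singletons and repeatedly merges the two closest clusters, recording the merge heights that a dendrogram would display. This is an illustrative, unoptimized sketch; `agglomerate` and its `linkage` parameter are hypothetical names, and production code would normally use a library routine instead.

```python
import math


def agglomerate(points, linkage=min):
    """Bottom-up aggregation.

    `linkage` reduces the list of pairwise distances between two clusters
    to one number (min = single linkage, max = complete linkage).
    Returns the merges in order as (cluster_a, cluster_b, height).
    """
    clusters = [[i] for i in range(len(points))]  # start with singletons
    merges = []
    while len(clusters) > 1:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                pairwise = [math.dist(points[i], points[j])
                            for i in clusters[a] for j in clusters[b]]
                height = linkage(pairwise)
                if best is None or height < best[0]:
                    best = (height, a, b)
        height, a, b = best
        merges.append((sorted(clusters[a]), sorted(clusters[b]), height))
        clusters[a] = clusters[a] + clusters[b]  # combine the closest pair
        del clusters[b]
    return merges


pts = [(0, 0), (0, 1), (5, 0), (5, 1)]
for left, right, height in agglomerate(pts):
    print(left, right, round(height, 3))
```

Each recorded height is the vertical position of the corresponding join in the dendrogram; cutting the tree at a given height gives the clusters that have not yet been merged at that level.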

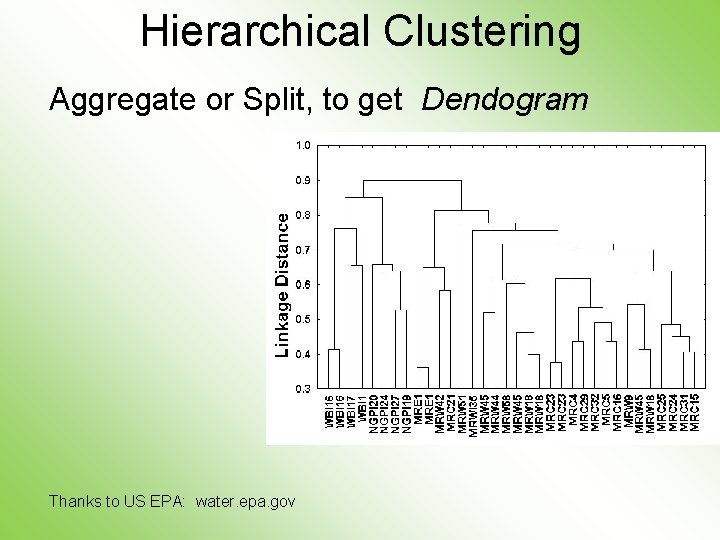

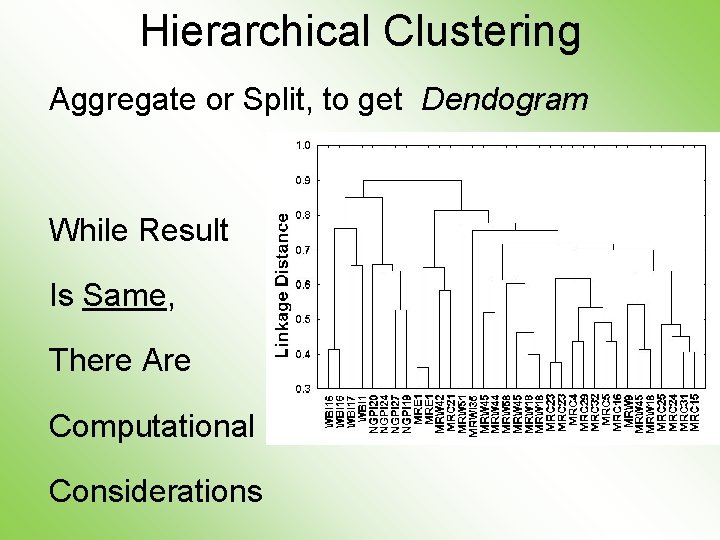

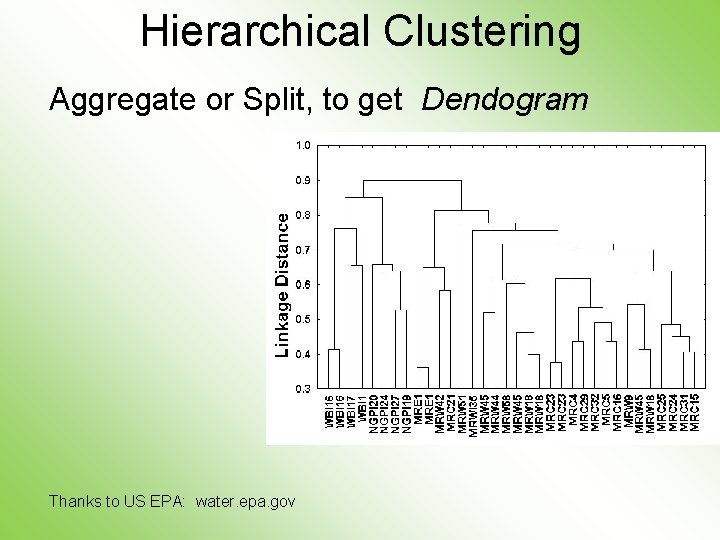

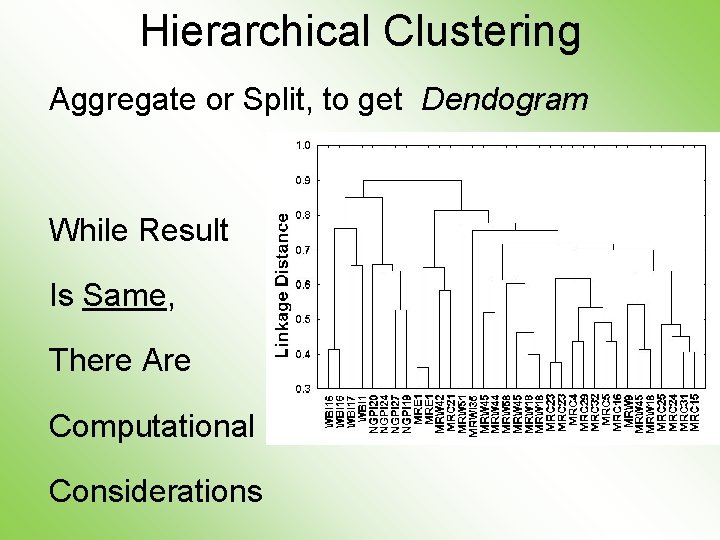

Hierarchical Clustering

Aggregate or Split, to get Dendrogram

Thanks to US EPA: water.epa.gov

Hierarchical Clustering

Aggregate or Split, to get Dendrogram

While Result Is Same, There Are Computational Considerations

Hierarchical Clustering • A Lot of “Art” Involved

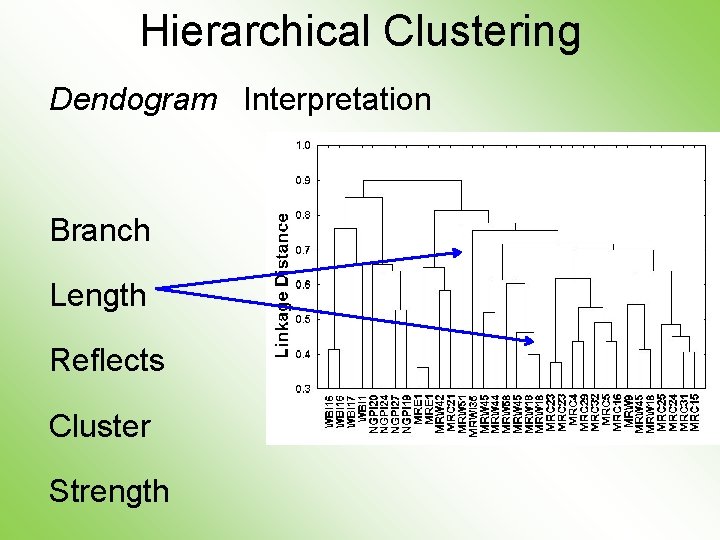

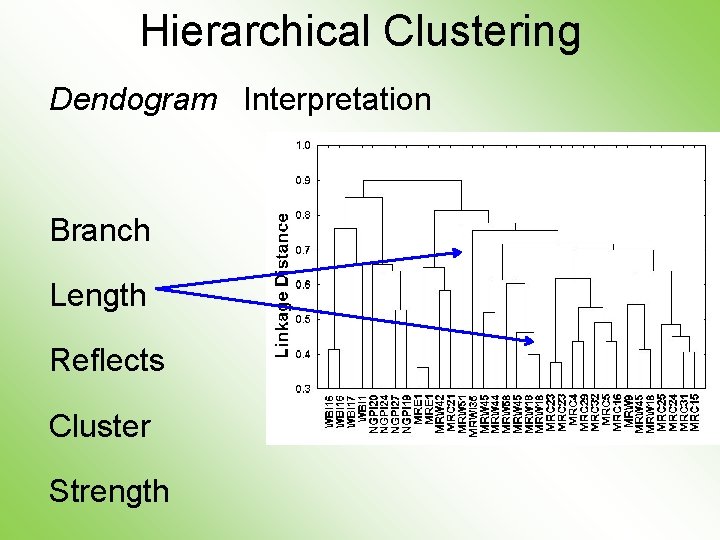

Hierarchical Clustering

Dendrogram Interpretation: Branch Length Reflects Cluster Strength

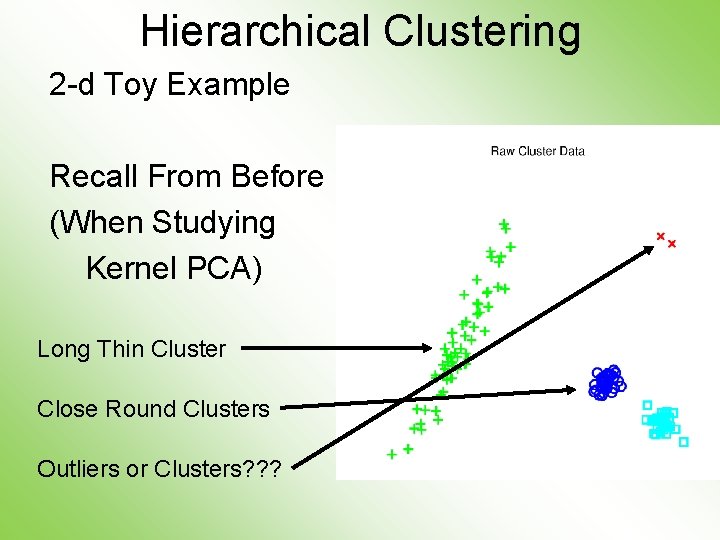

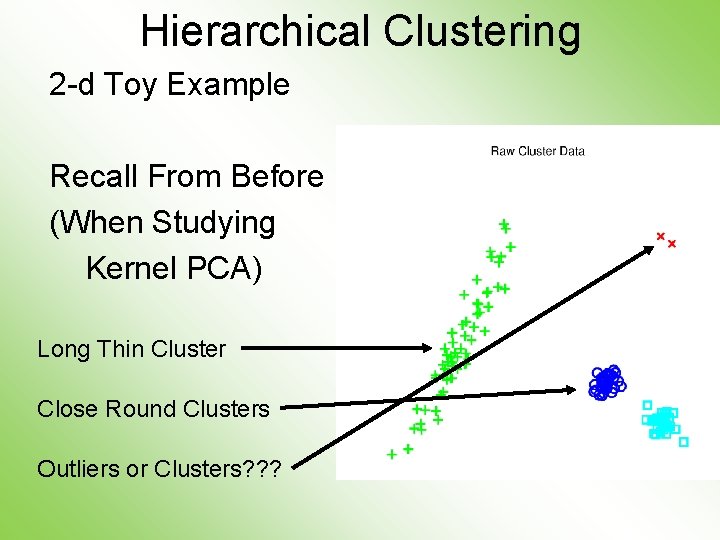

Hierarchical Clustering: 2-d Toy Example

Recall From Before (When Studying Kernel PCA):

- Long Thin Cluster
- Close Round Clusters
- Outliers or Clusters???

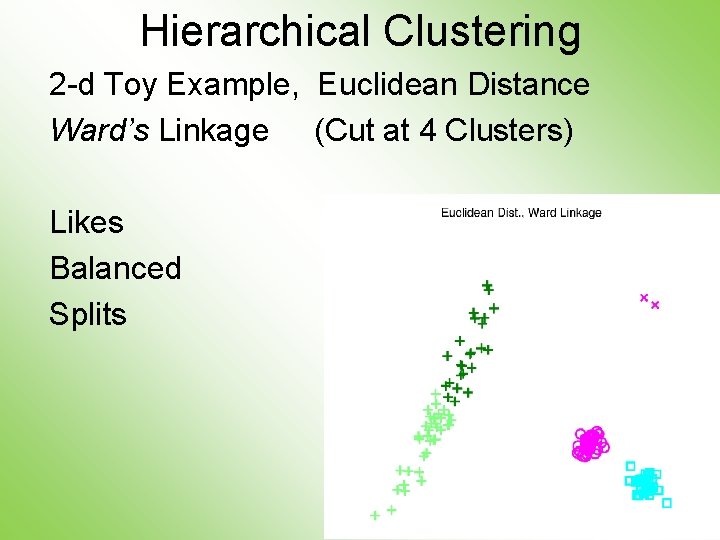

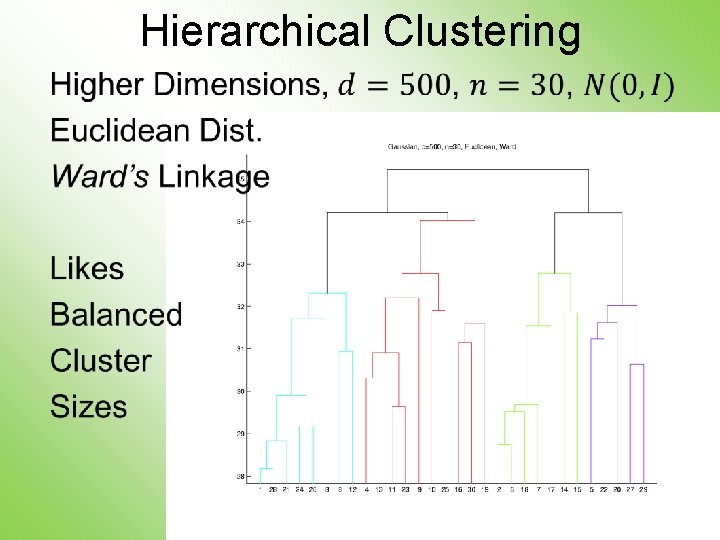

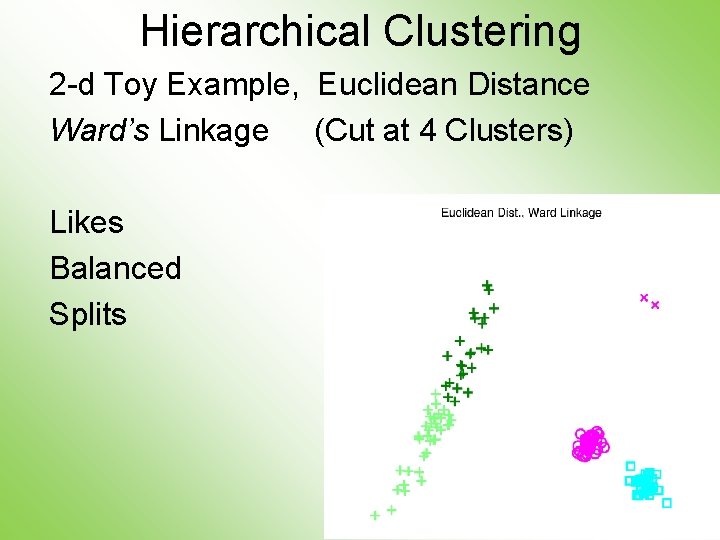

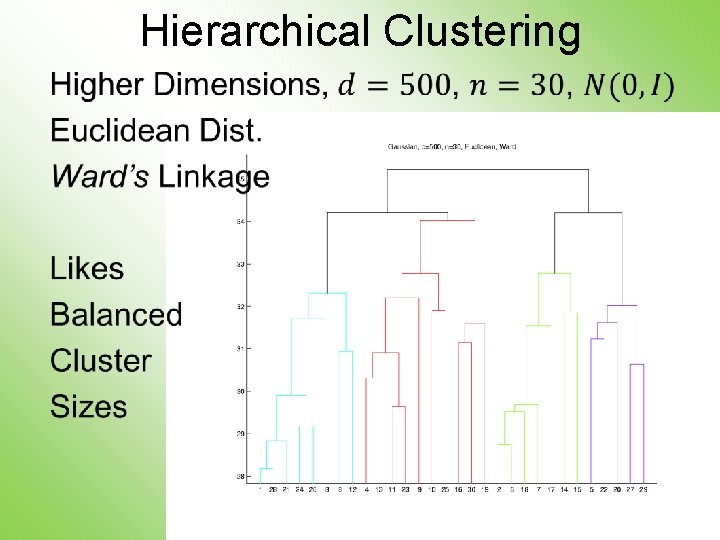

Hierarchical Clustering: 2-d Toy Example, Euclidean Distance

Ward’s Linkage (Cut at 4 Clusters): Likes Balanced Splits

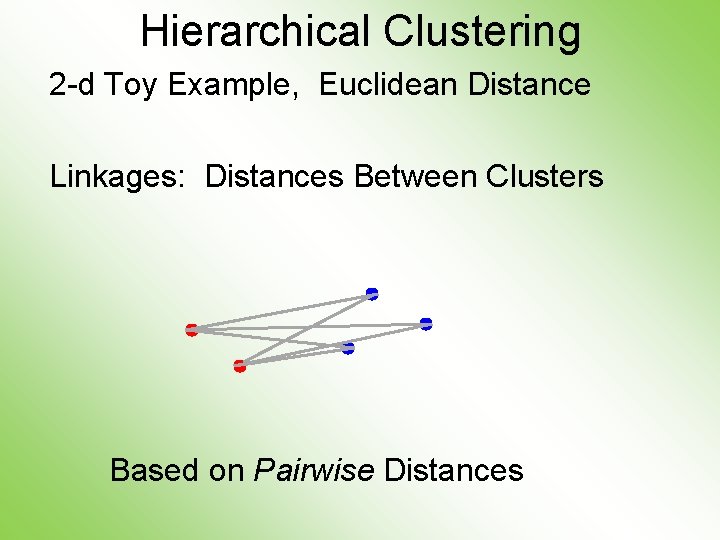

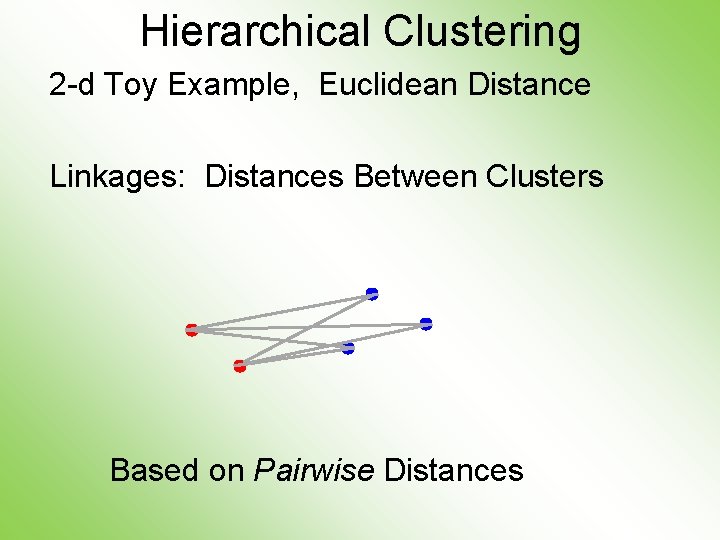

Hierarchical Clustering: 2-d Toy Example, Euclidean Distance

Linkages: Distances Between Clusters, Based on Pairwise Distances

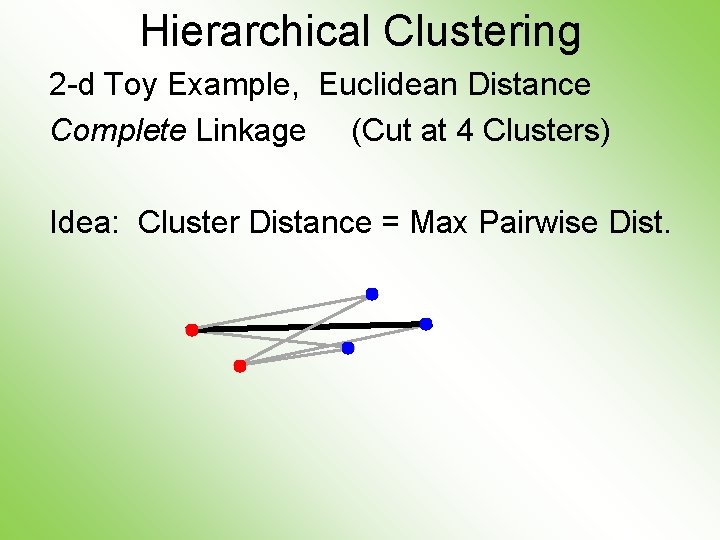

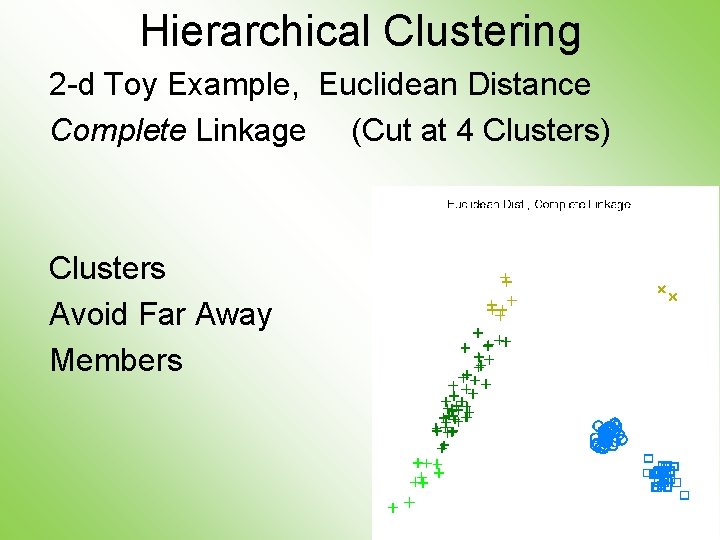

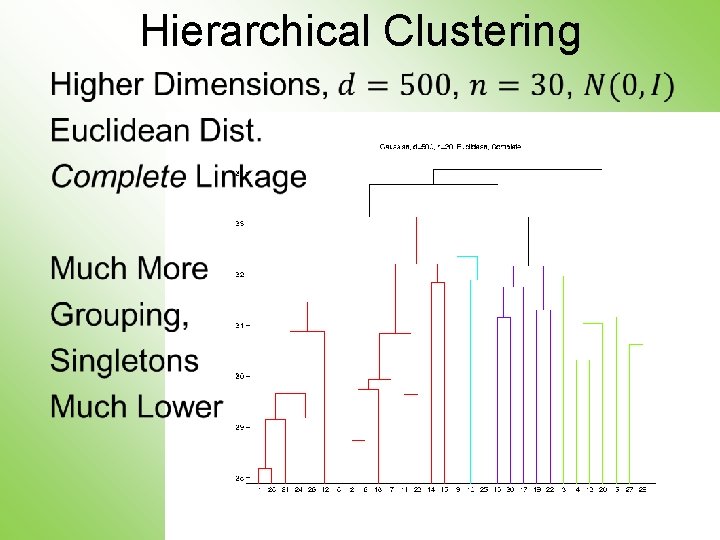

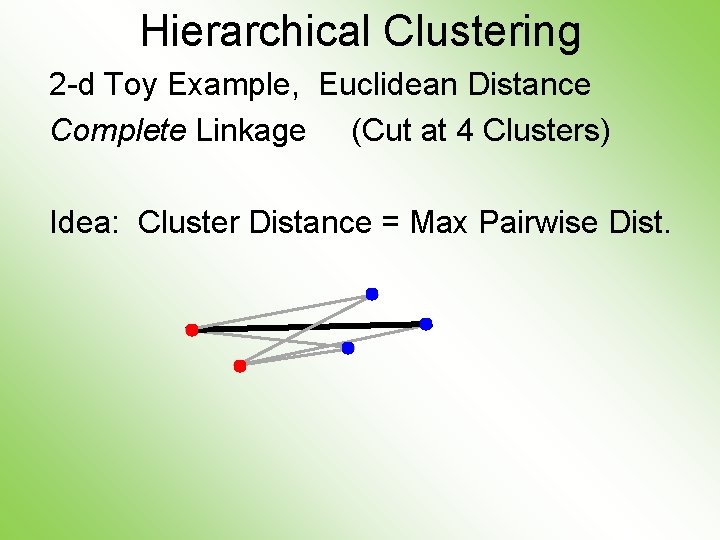

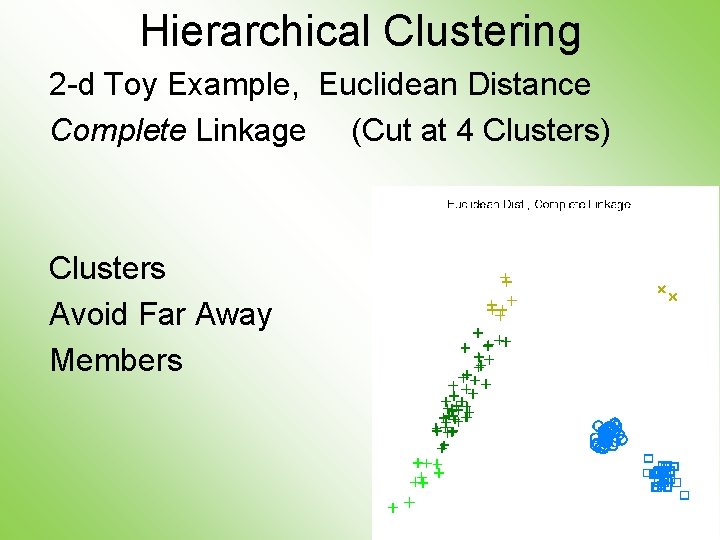

Hierarchical Clustering: 2-d Toy Example, Euclidean Distance

Complete Linkage (Cut at 4 Clusters)

- Idea: Cluster Distance = Max Pairwise Dist.
- Clusters Avoid Far Away Members

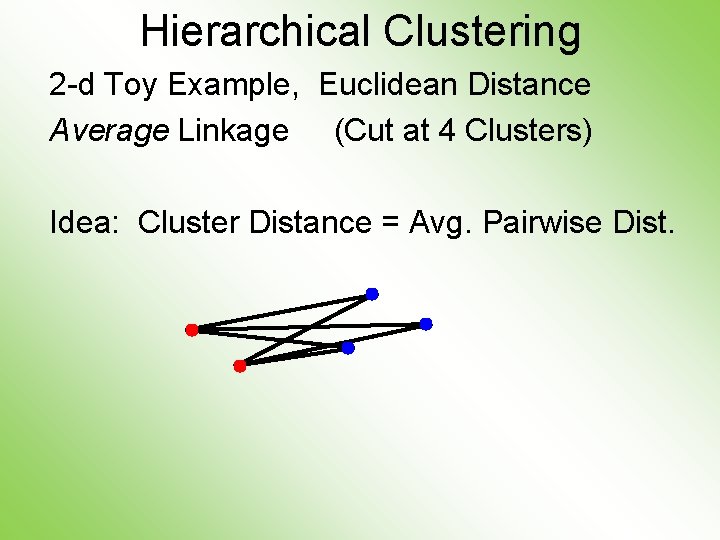

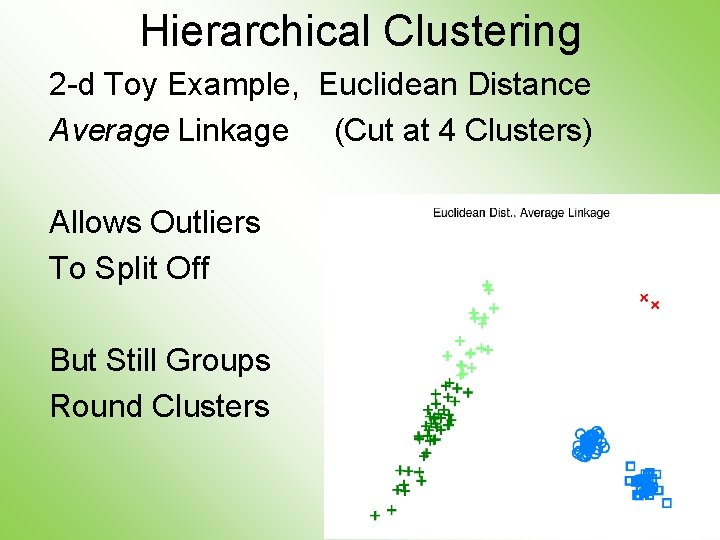

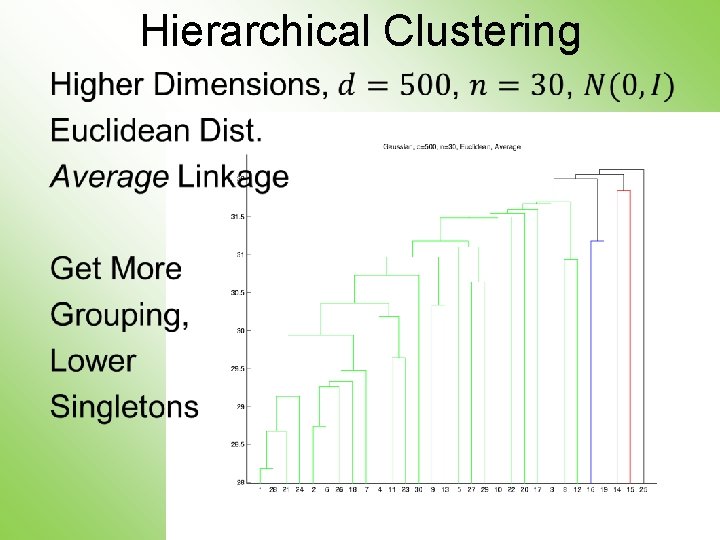

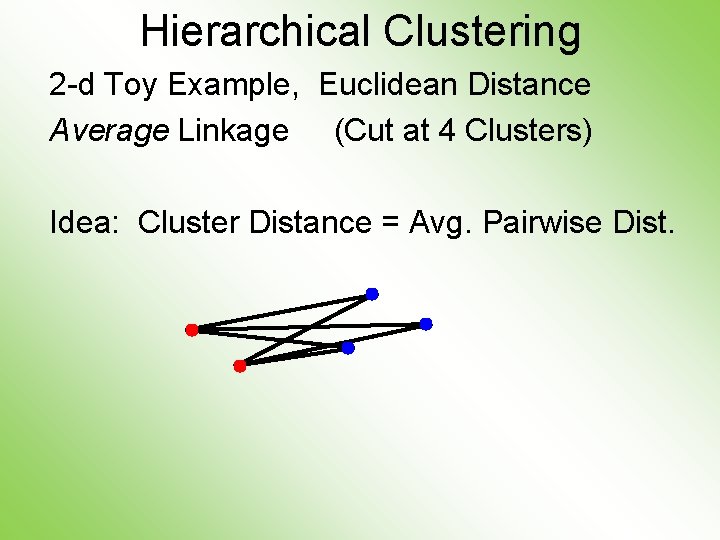

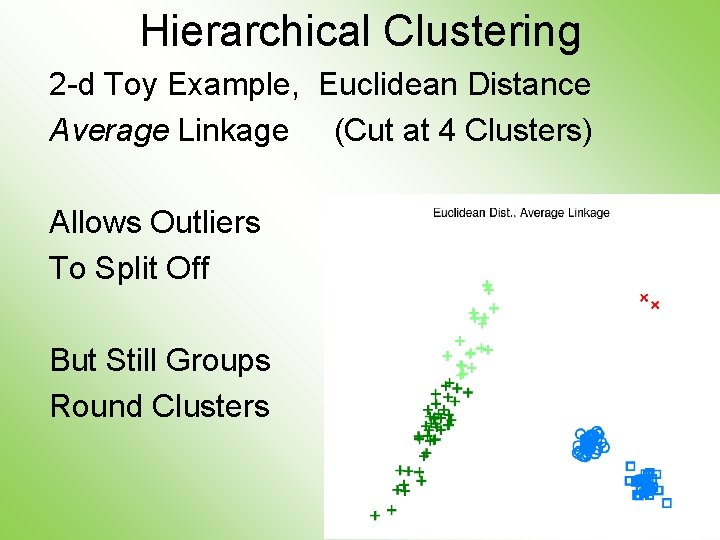

Hierarchical Clustering: 2-d Toy Example, Euclidean Distance

Average Linkage (Cut at 4 Clusters)

- Idea: Cluster Distance = Avg. Pairwise Dist.
- Allows Outliers To Split Off, But Still Groups Round Clusters

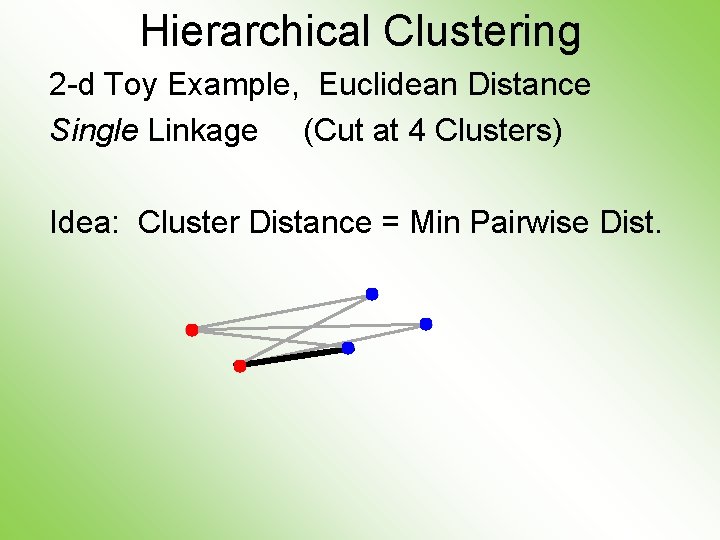

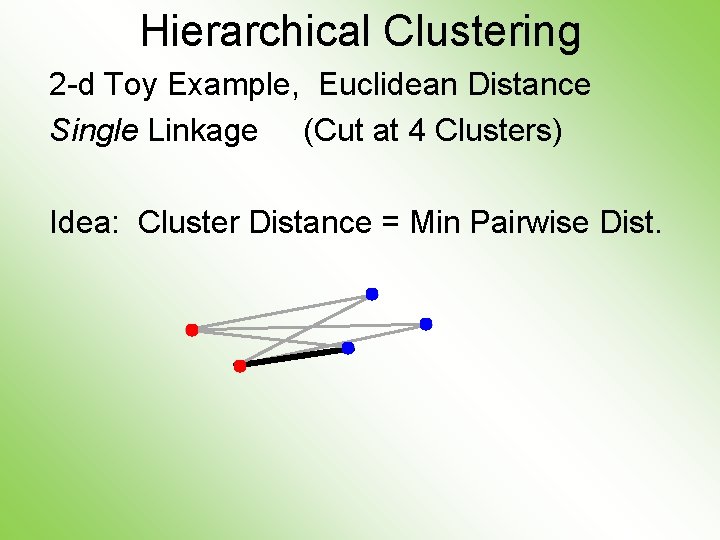

Hierarchical Clustering: 2-d Toy Example, Euclidean Distance

Single Linkage (Cut at 4 Clusters). Idea: Cluster Distance = Min Pairwise Dist.
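Written out as formulas, the three pairwise-distance linkages named on these slides are as follows. The notation is an assumption added for illustration: $A$ and $B$ are two clusters, $|A|$ is the number of points in $A$, and $d(a, b)$ is the underlying point distance (Euclidean in this toy example).

```latex
\begin{aligned}
d_{\text{complete}}(A, B) &= \max_{a \in A,\; b \in B} d(a, b) \\
d_{\text{average}}(A, B)  &= \frac{1}{|A|\,|B|} \sum_{a \in A} \sum_{b \in B} d(a, b) \\
d_{\text{single}}(A, B)   &= \min_{a \in A,\; b \in B} d(a, b)
\end{aligned}
```

For any two clusters these satisfy $d_{\text{single}} \le d_{\text{average}} \le d_{\text{complete}}$, since an average always lies between the minimum and the maximum of the values being averaged.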

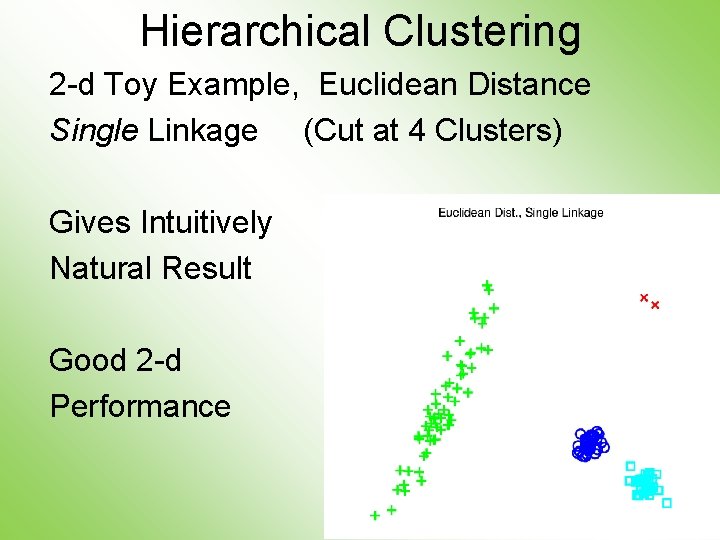

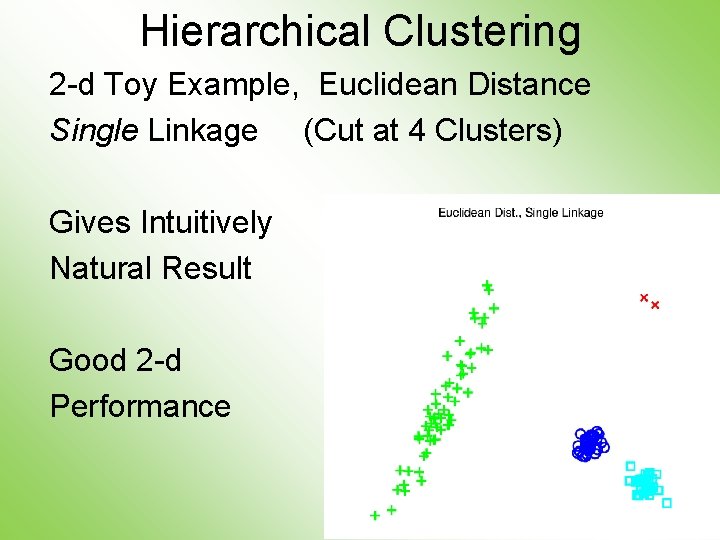

Hierarchical Clustering: 2-d Toy Example, Euclidean Distance

Single Linkage (Cut at 4 Clusters): Gives Intuitively Natural Result, Good 2-d Performance

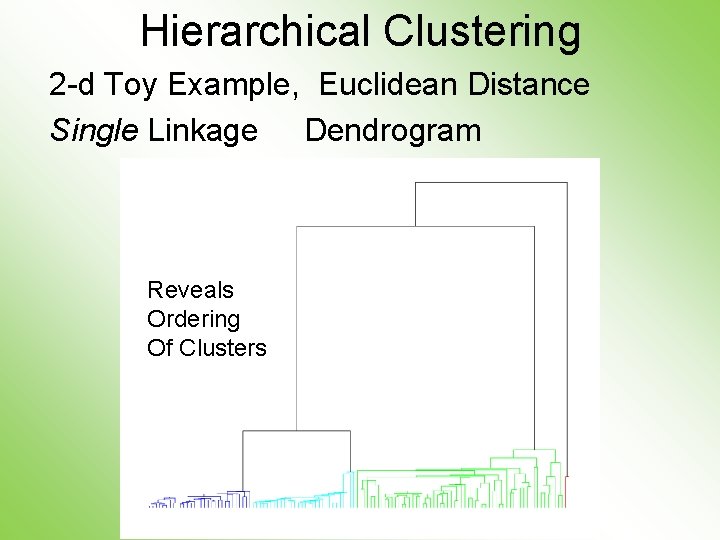

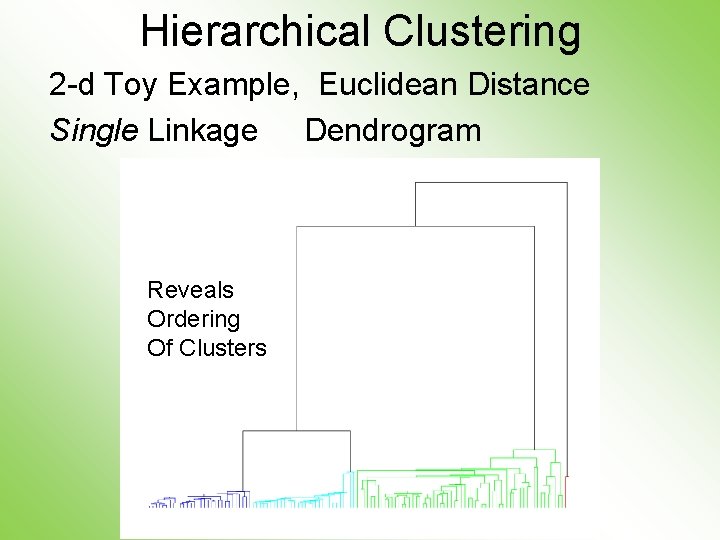

Hierarchical Clustering: 2-d Toy Example, Euclidean Distance

Single Linkage: Dendrogram Reveals Ordering Of Clusters

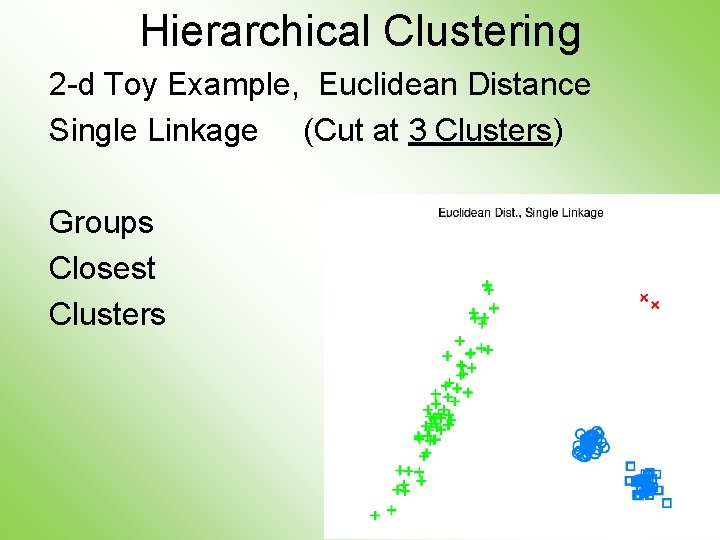

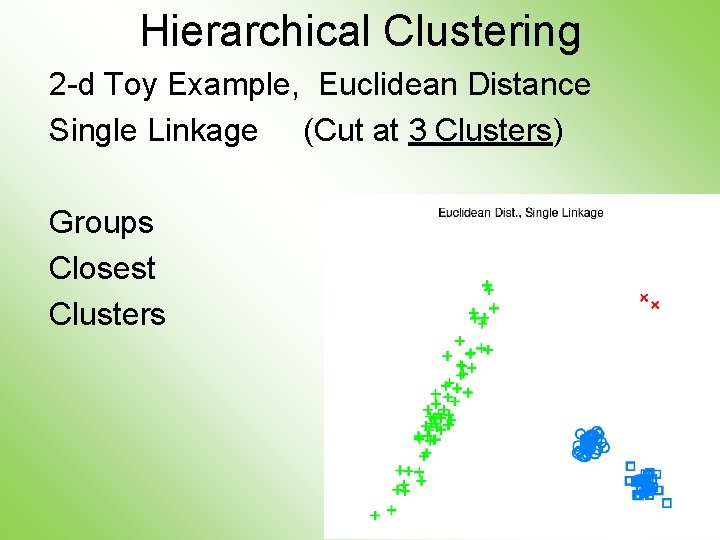

Hierarchical Clustering: 2-d Toy Example, Euclidean Distance

Single Linkage (Cut at 3 Clusters): Groups Closest Clusters

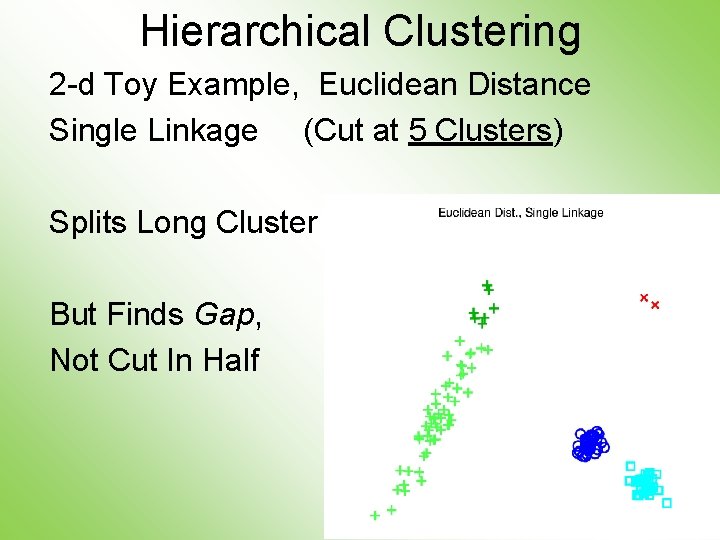

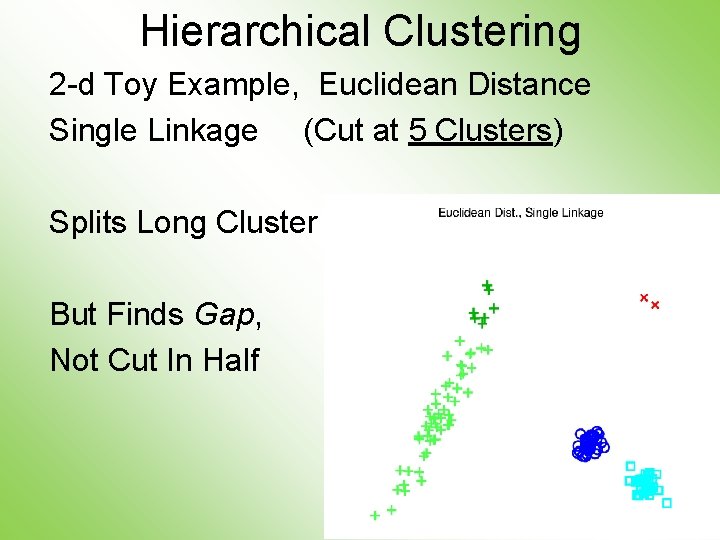

Hierarchical Clustering: 2-d Toy Example, Euclidean Distance

Single Linkage (Cut at 5 Clusters): Splits Long Cluster, But Finds Gap, Not Cut In Half

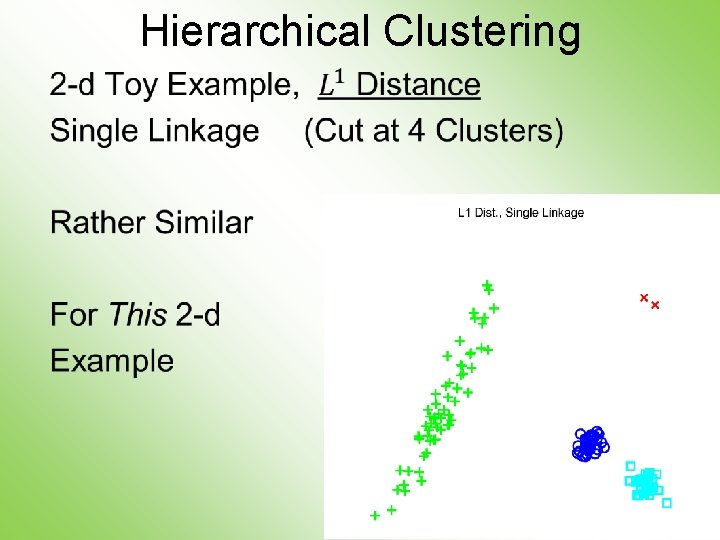

Hierarchical Clustering

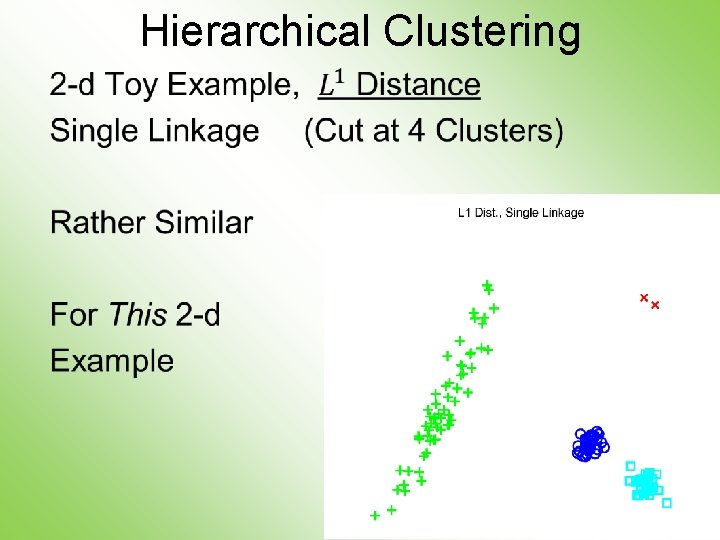

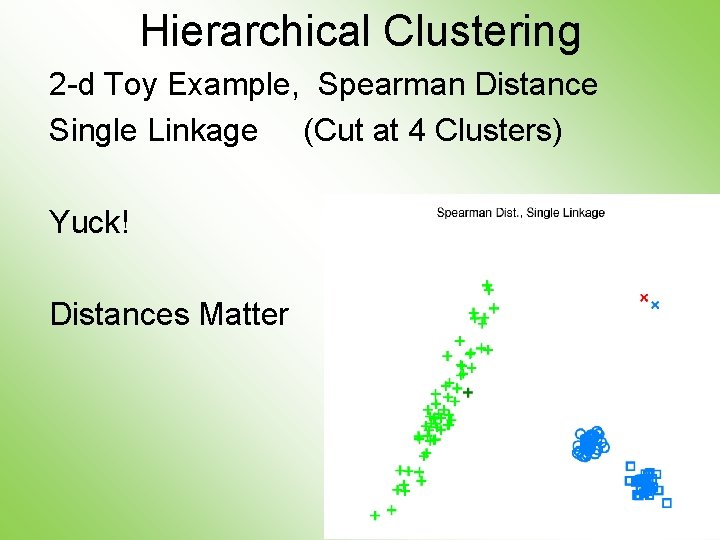

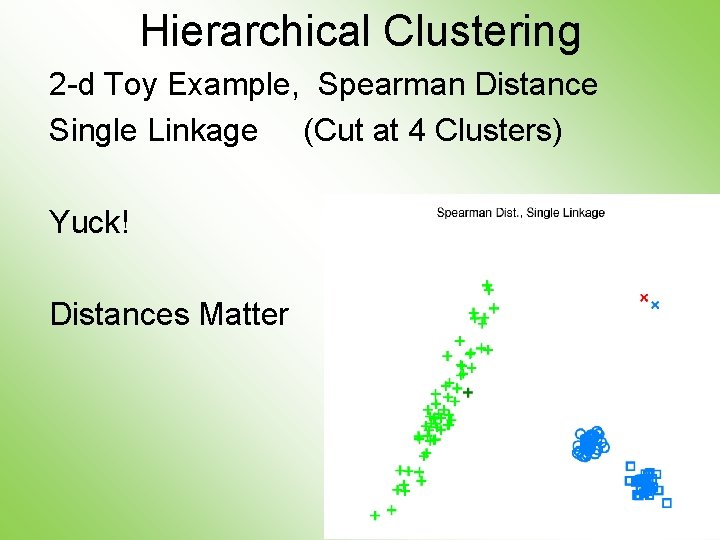

Hierarchical Clustering: 2-d Toy Example, Spearman Distance

Single Linkage (Cut at 4 Clusters): Yuck! Distances Matter

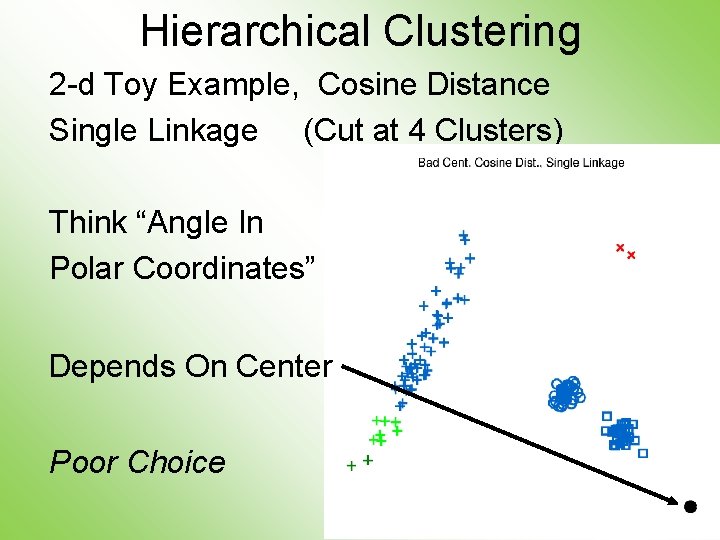

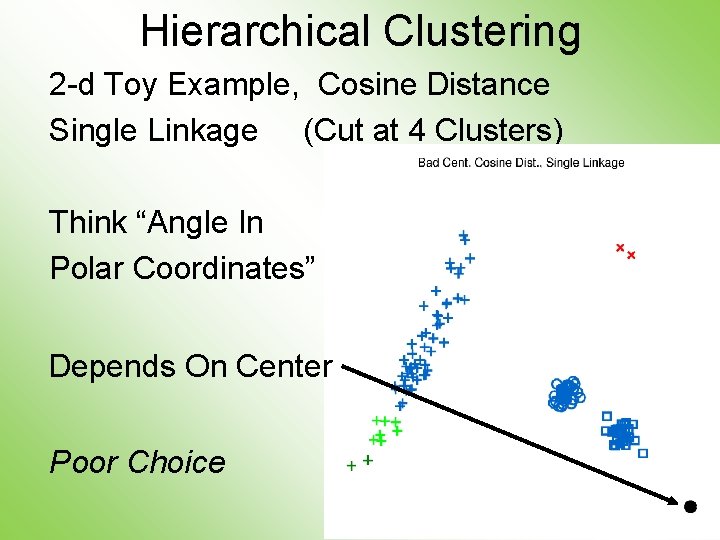

Hierarchical Clustering: 2-d Toy Example, Cosine Distance

Single Linkage (Cut at 4 Clusters)

- Think “Angle In Polar Coordinates”
- Depends On Center
- Poor Choice

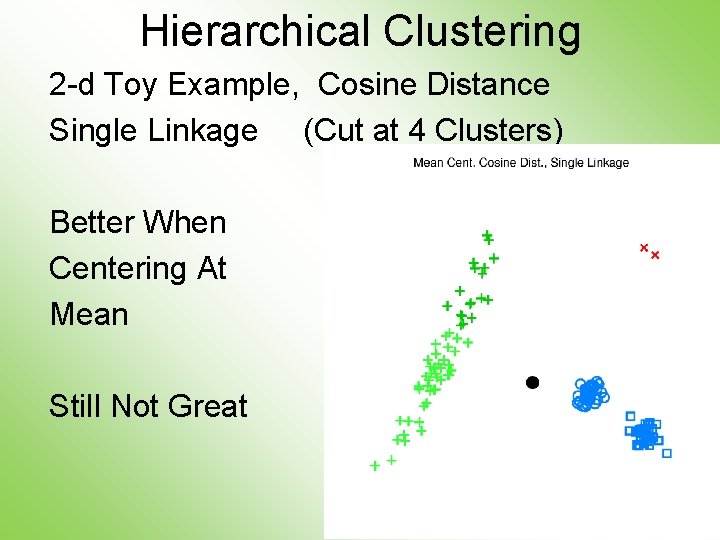

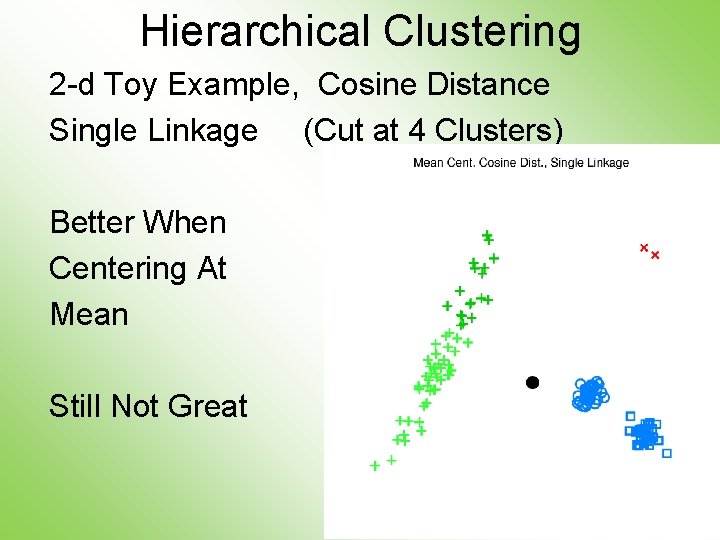

Hierarchical Clustering: 2-d Toy Example, Cosine Distance

Single Linkage (Cut at 4 Clusters)

- Better When Centering At Mean
- Still Not Great
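The dependence of cosine distance on the choice of center can be checked directly. The sketch below uses hypothetical helper names and a made-up four-point data set: it measures the same two points once from the origin and once after centering at the data mean.

```python
import math


def cosine_distance(u, v):
    """1 minus the cosine of the angle between u and v, seen from the origin."""
    dot = sum(a * b for a, b in zip(u, v))
    return 1.0 - dot / (math.hypot(*u) * math.hypot(*v))


def recenter(points, center):
    """Shift all points so that `center` becomes the origin."""
    return [tuple(p_j - c_j for p_j, c_j in zip(p, center)) for p in points]


# Illustrative data: four corners of a small square far from the origin.
pts = [(10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0)]
mean = tuple(sum(p[j] for p in pts) / len(pts) for j in range(2))

d_raw = cosine_distance(pts[0], pts[3])           # same direction from (0, 0)
centered = recenter(pts, mean)
d_centered = cosine_distance(centered[0], centered[3])  # opposite directions

print(d_raw, d_centered)
```

Seen from the origin, the two opposite corners lie along the same ray, so their cosine distance is essentially 0; after centering at the mean they point in opposite directions and the distance is the maximum possible value of 2. The points have not moved relative to each other, only the center has changed.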

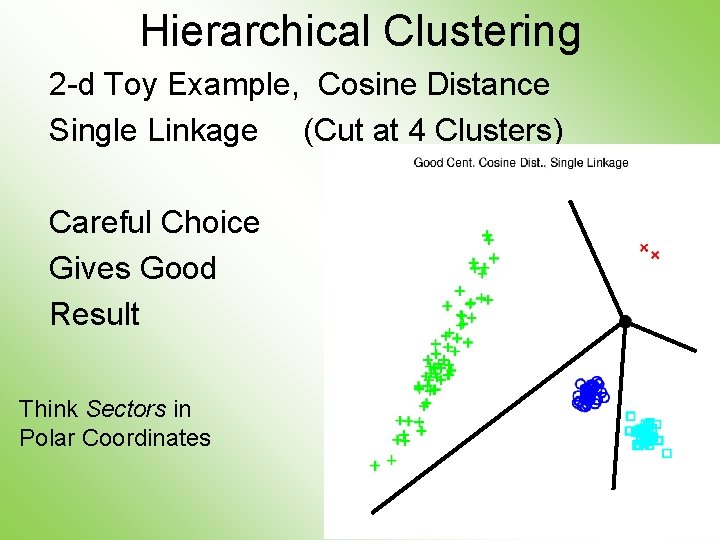

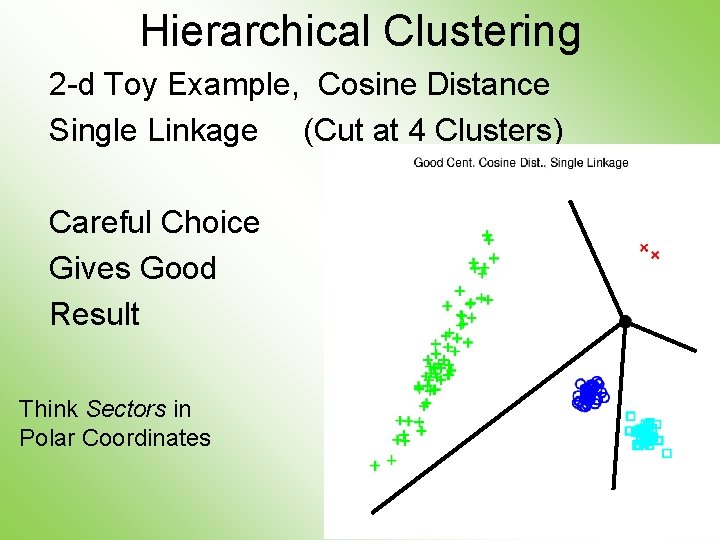

Hierarchical Clustering: 2-d Toy Example, Cosine Distance

Single Linkage (Cut at 4 Clusters)

- Careful Choice Gives Good Result
- Think Sectors in Polar Coordinates

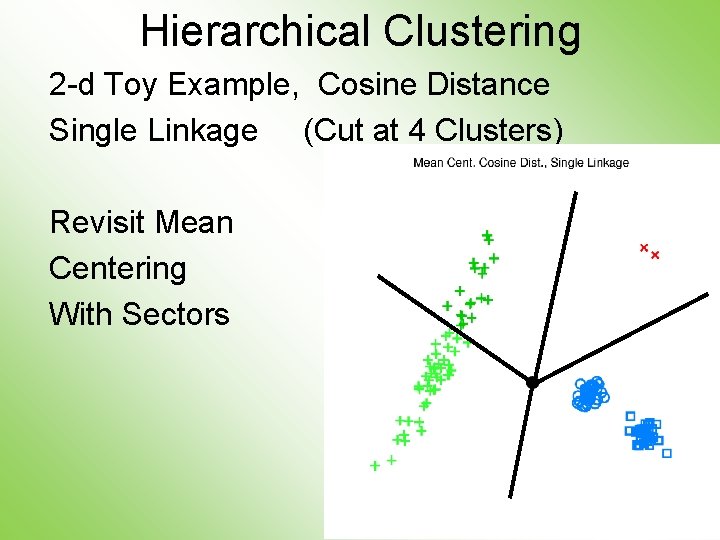

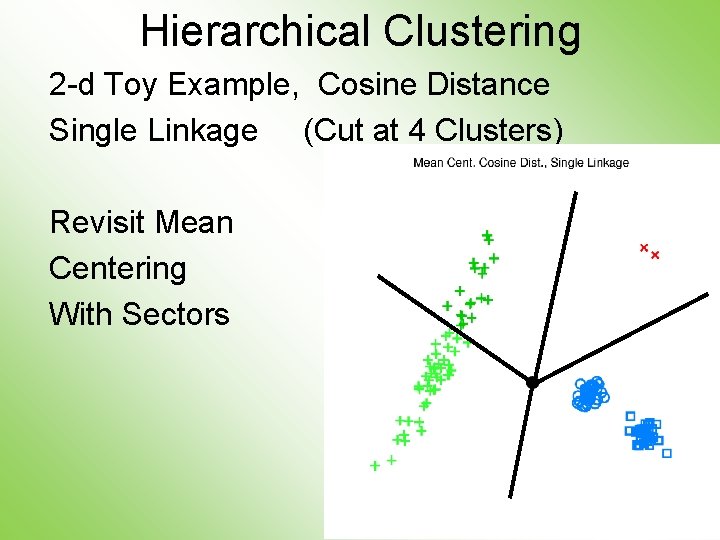

Hierarchical Clustering: 2-d Toy Example, Cosine Distance

Single Linkage (Cut at 4 Clusters): Revisit Mean Centering, With Sectors

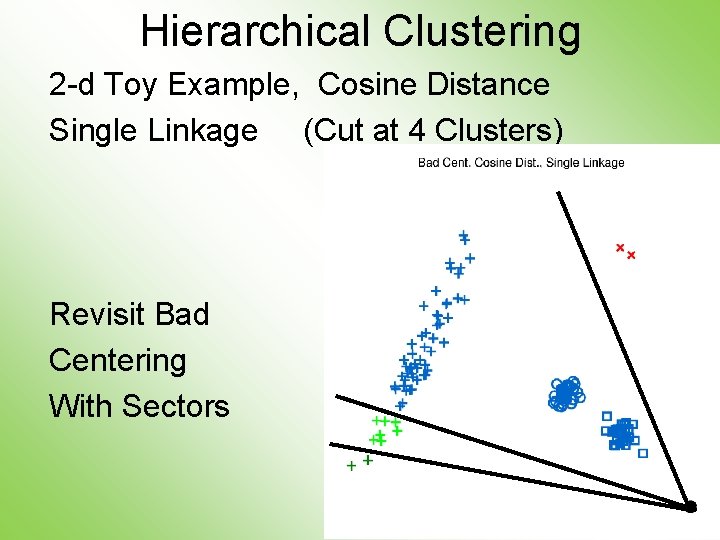

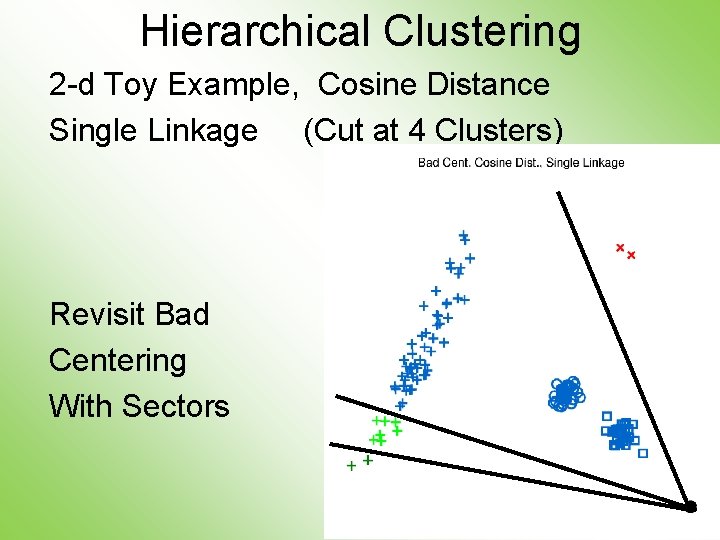

Hierarchical Clustering: 2-d Toy Example, Cosine Distance

Single Linkage (Cut at 4 Clusters): Revisit Bad Centering, With Sectors

Hierarchical Clustering

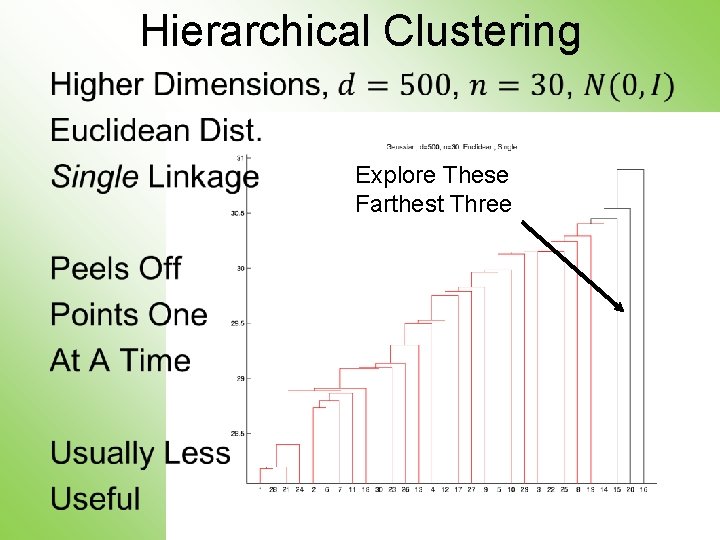

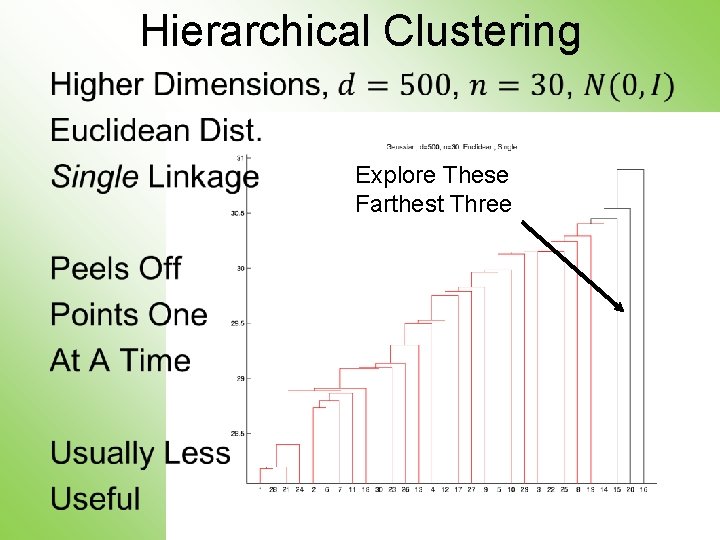

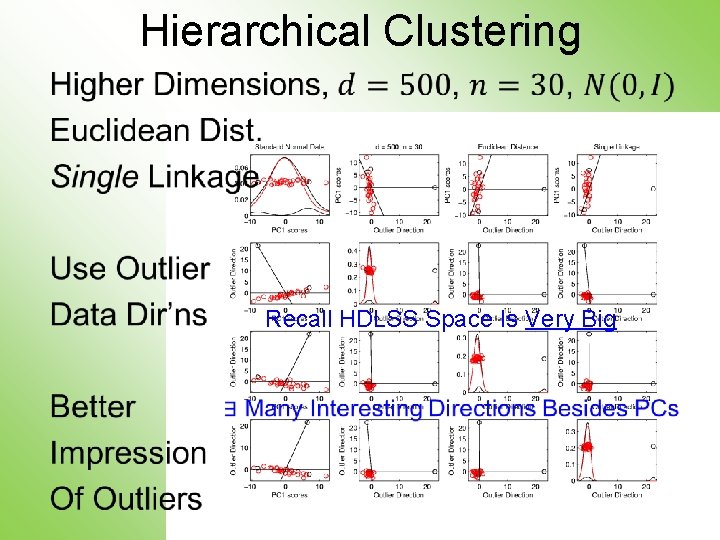

Hierarchical Clustering • Explore These Farthest Three

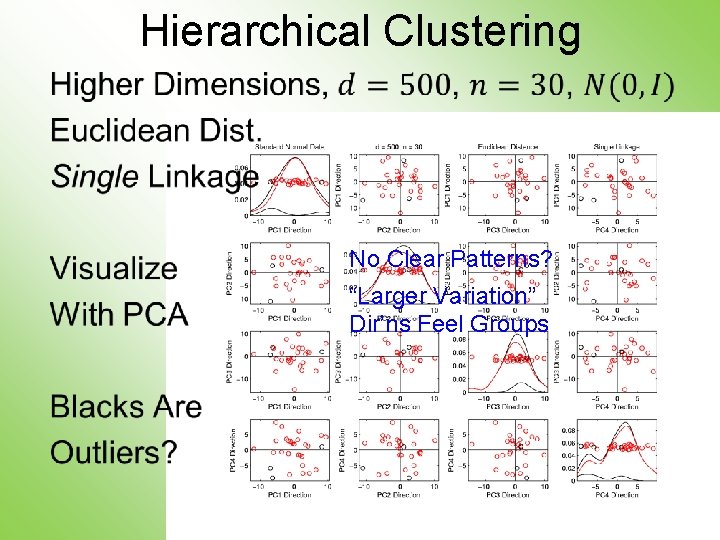

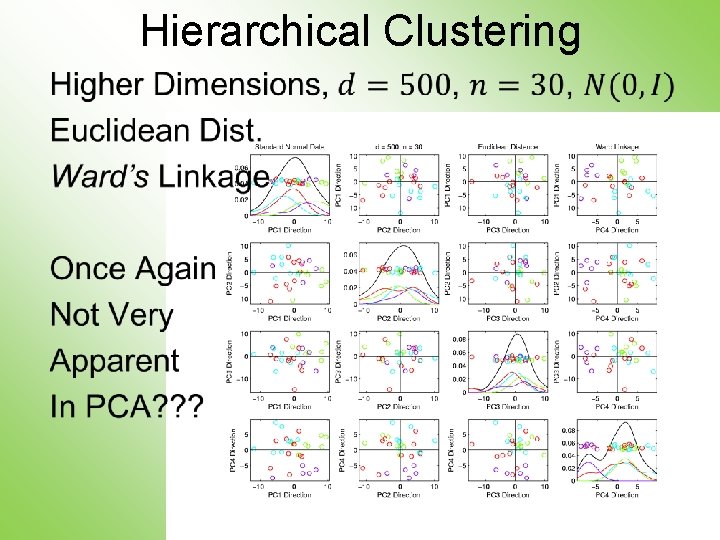

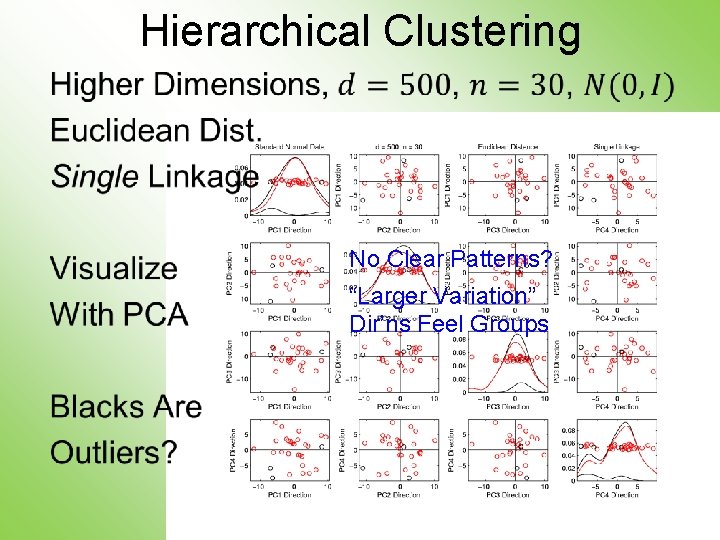

Hierarchical Clustering • No Clear Patterns? “Larger Variation” Dir’ns Feel Groups

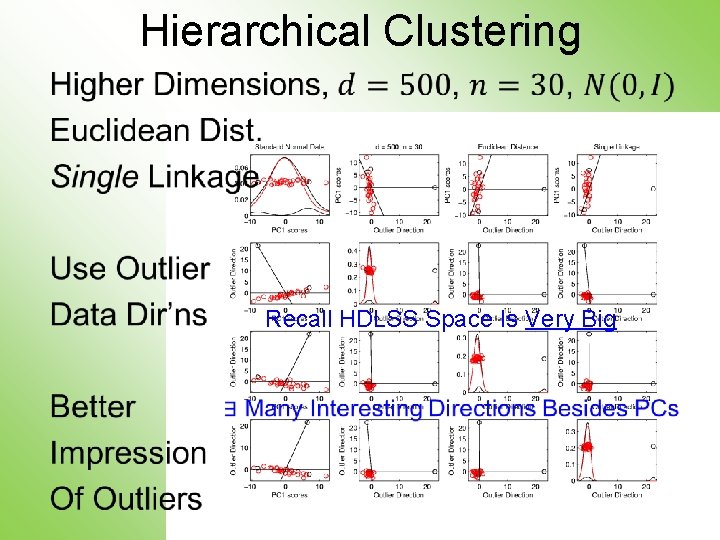

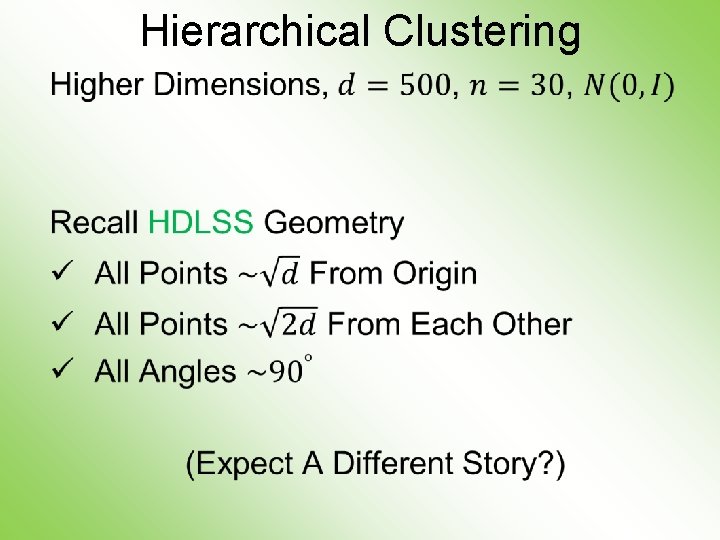

Hierarchical Clustering • Recall HDLSS Space Is Very Big

Hierarchical Clustering

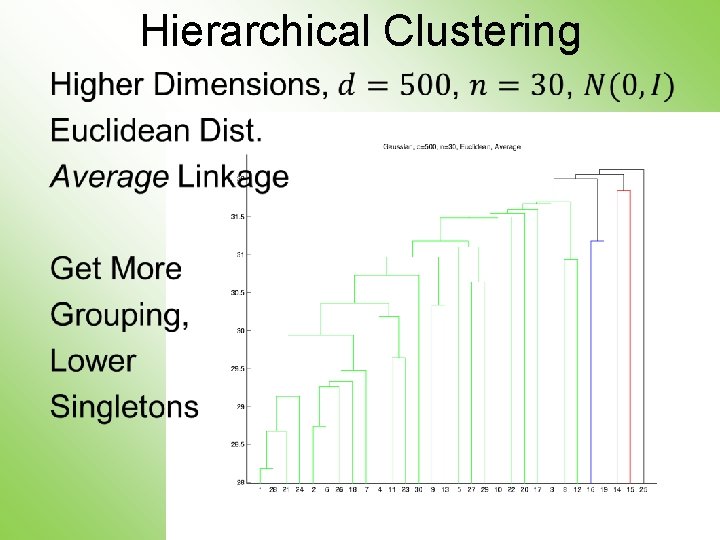

Hierarchical Clustering

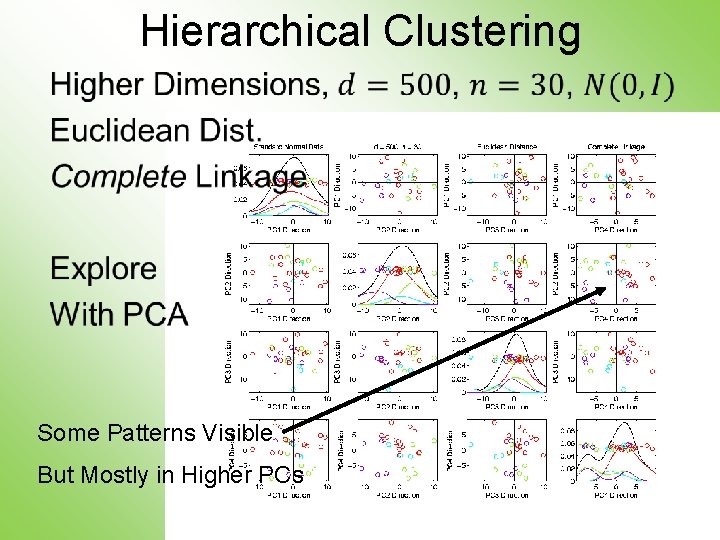

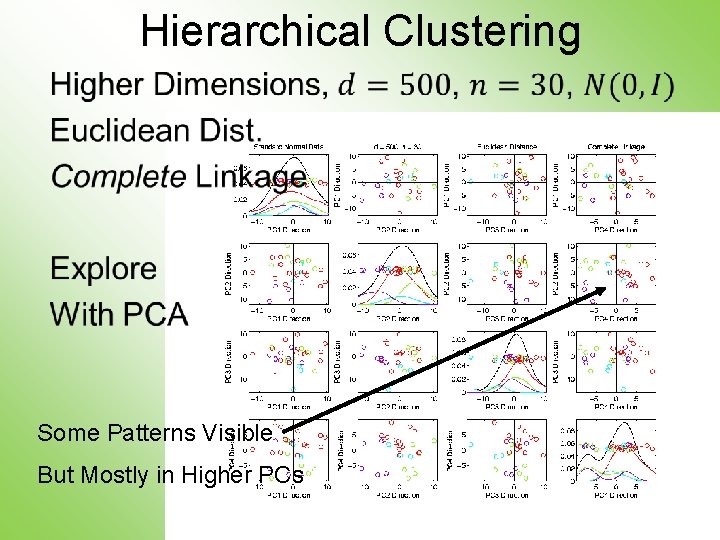

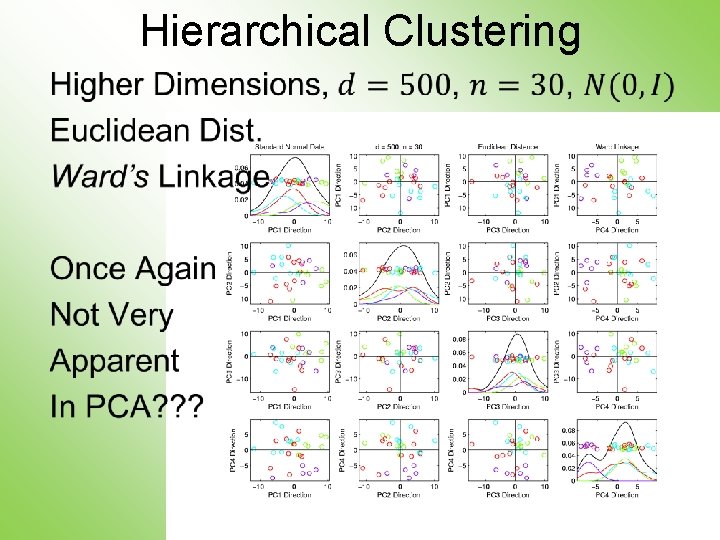

Hierarchical Clustering • Some Patterns Visible But Mostly in Higher PCs

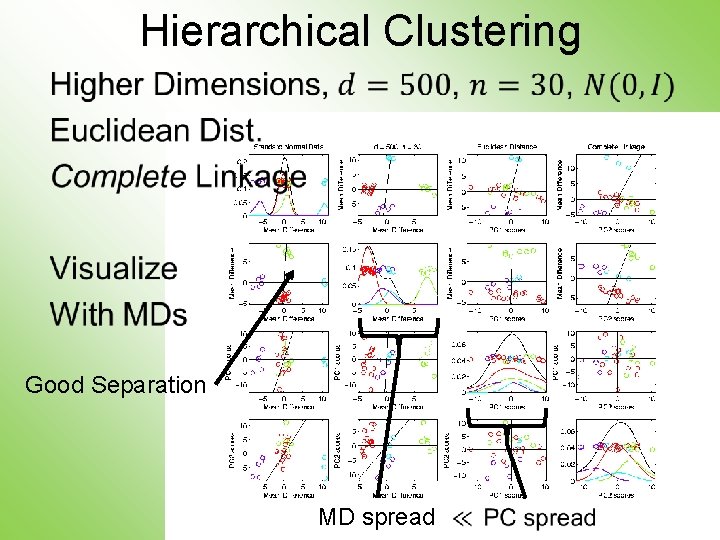

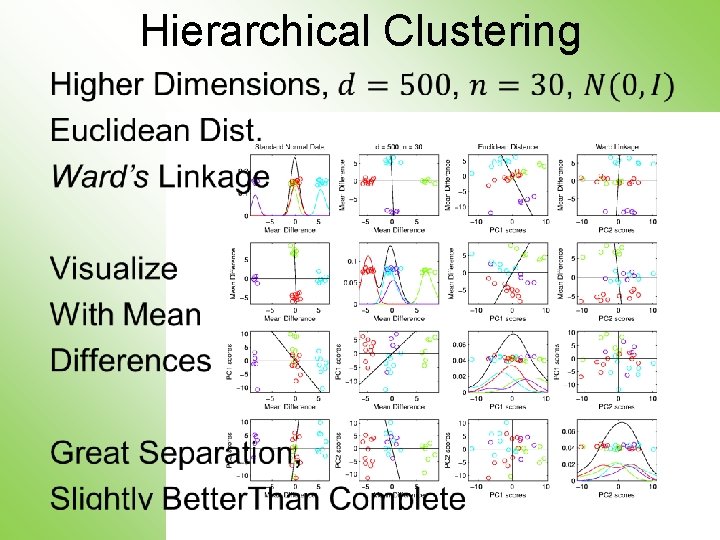

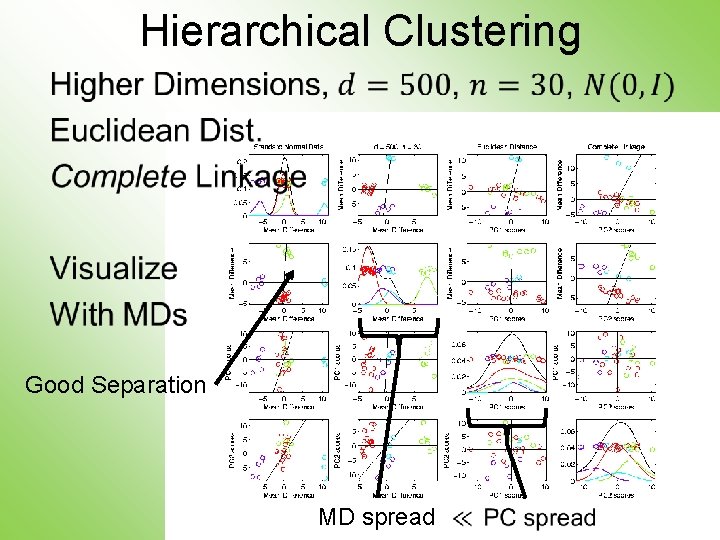

Hierarchical Clustering • Good Separation MD spread

Hierarchical Clustering

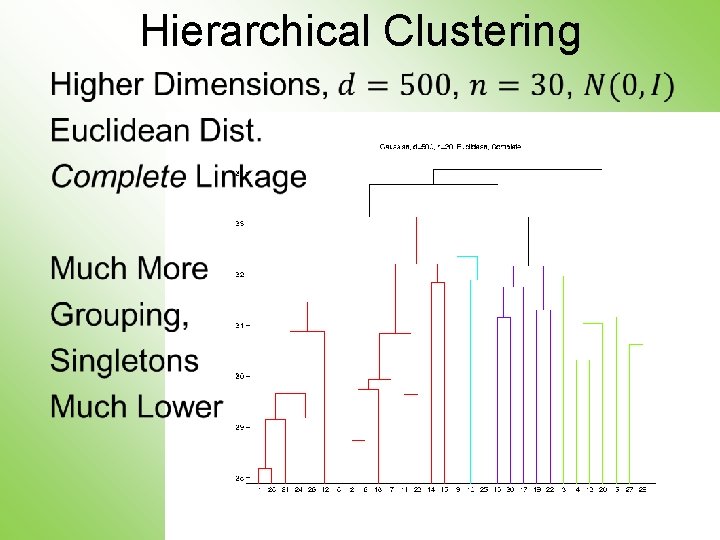

Hierarchical Clustering

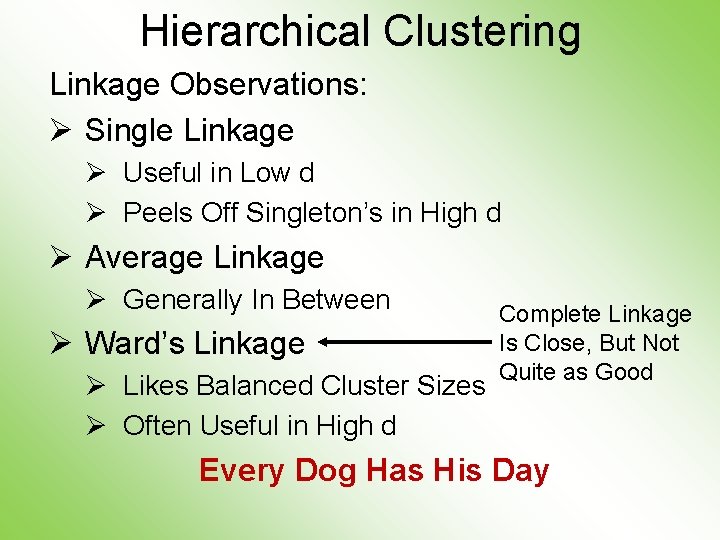

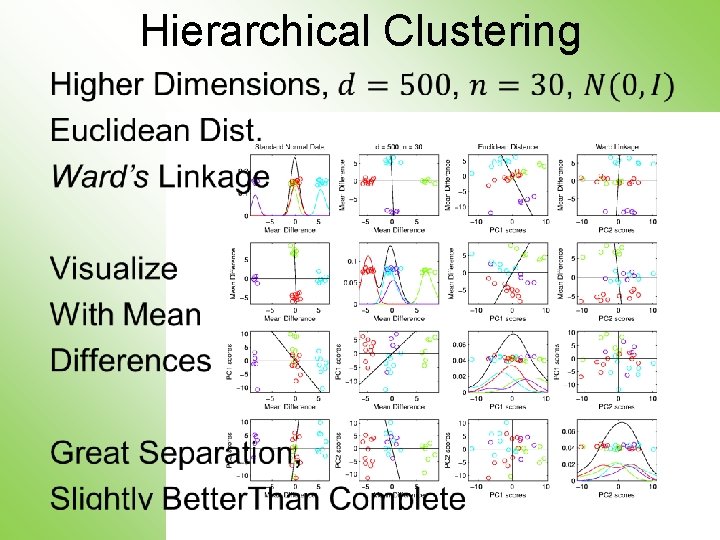

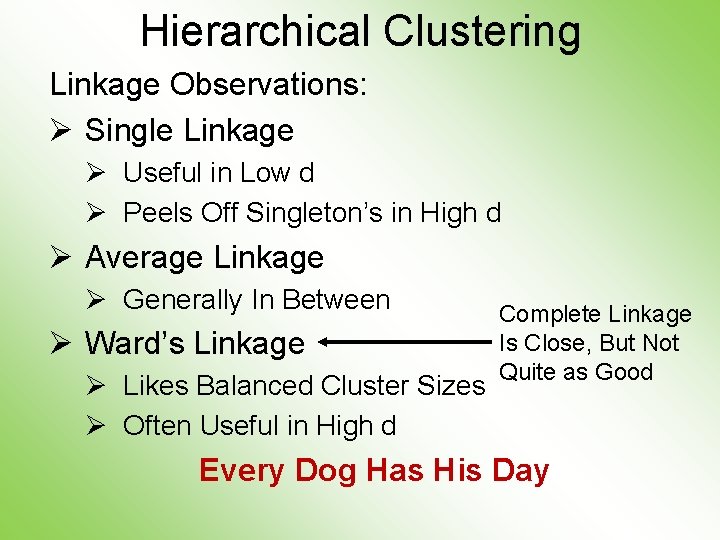

Hierarchical Clustering

Linkage Observations:

- Single Linkage
  - Useful in Low d
  - Peels Off Singletons in High d
- Average Linkage
  - Generally In Between
- Ward’s Linkage
  - Likes Balanced Cluster Sizes
  - Often Useful in High d

Complete Linkage Is Close, But Not Quite as Good

Every Dog Has His Day

SigClust

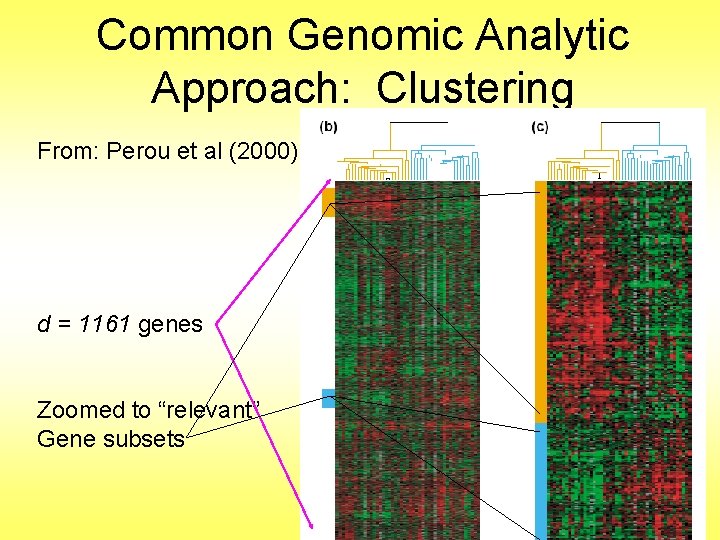

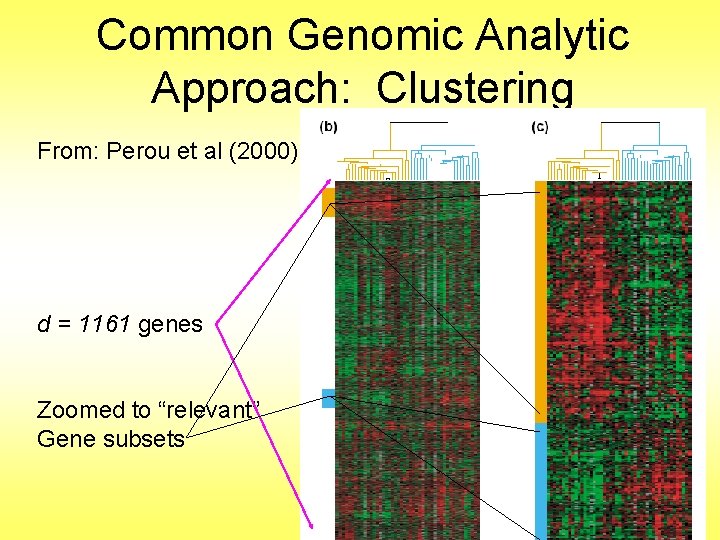

Common Genomic Analytic Approach: Clustering

From: Perou et al (2000)

- d = 1161 genes
- Zoomed to “relevant” Gene subsets

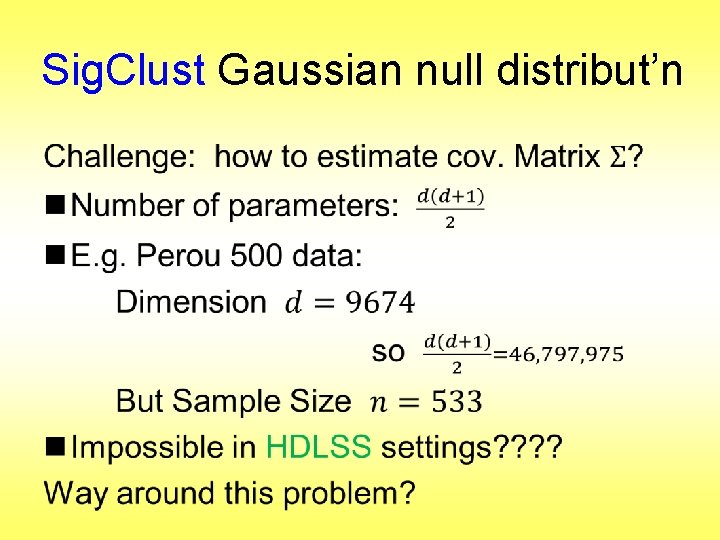

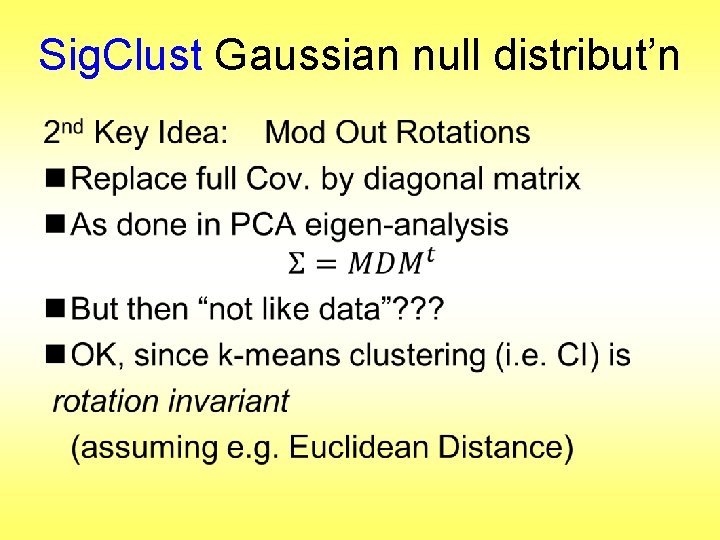

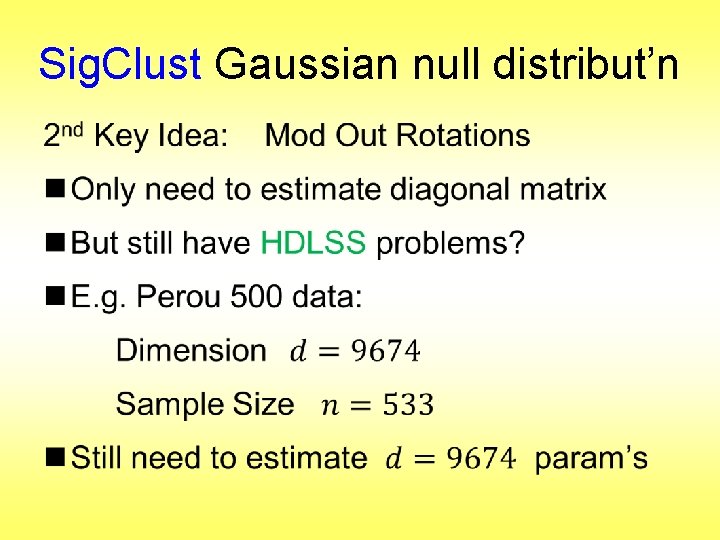

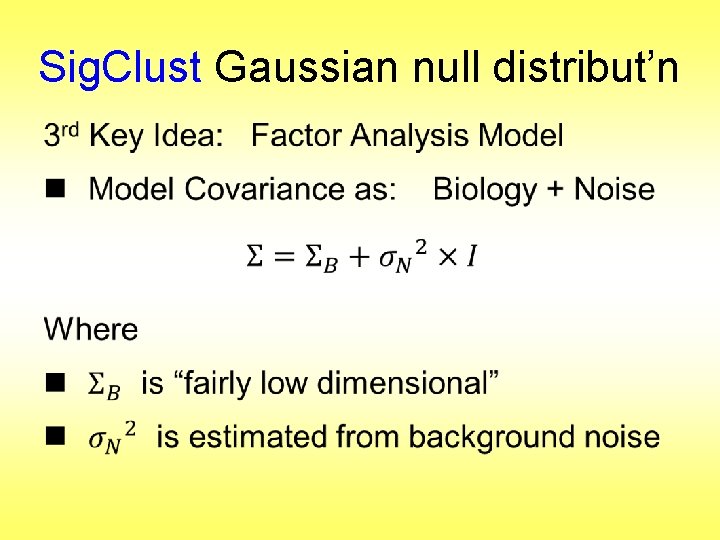

Interesting Statistical Problem

For HDLSS data:

- When clusters seem to appear
- E.g. found by clustering method
- How do we know they are really there?
- Question asked by Neil Hayes
- Define appropriate statistical significance?
- Can we calculate it?

First Approaches: Hypo Testing

e.g. Direction, Projection, Permutation

Hypothesis test of: Significant difference between sub-populations

Recall from 8/29/19

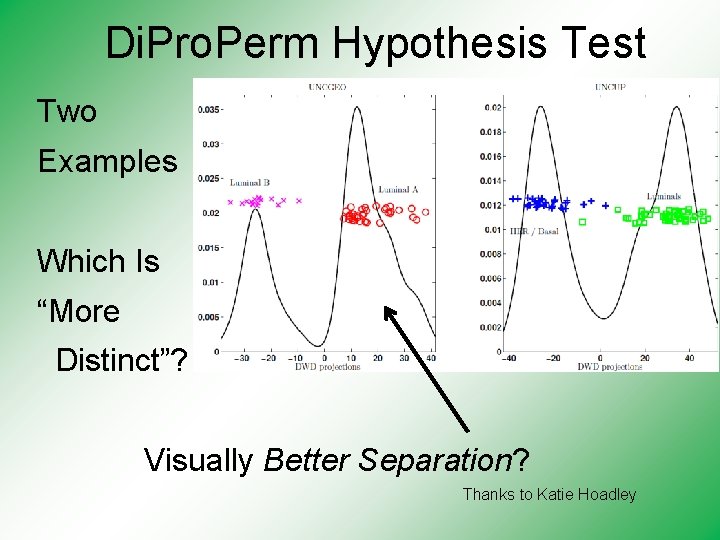

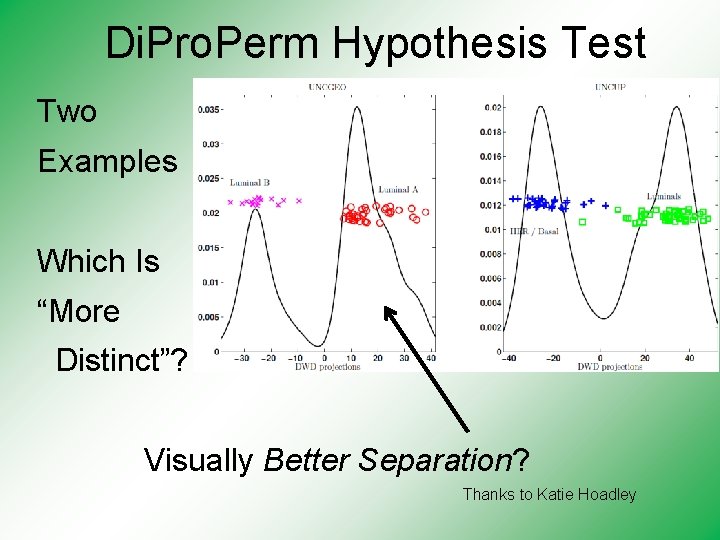

DiProPerm Hypothesis Test

Two Examples: Which Is “More Distinct”?

Visually Better Separation?

Thanks to Katie Hoadley

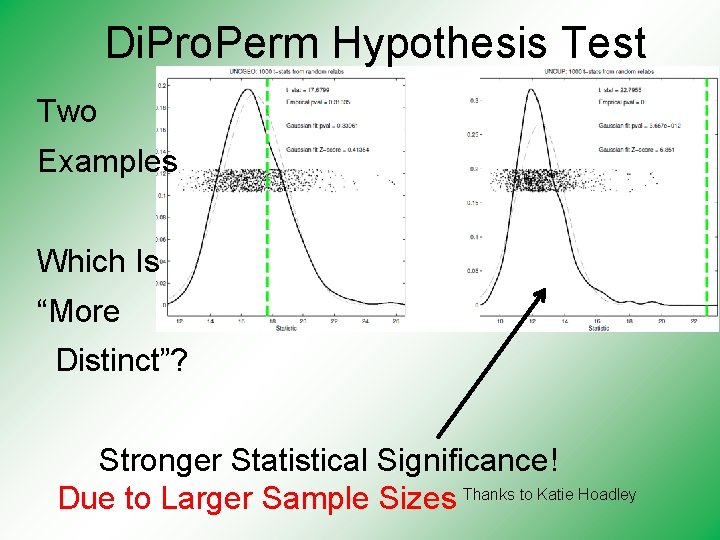

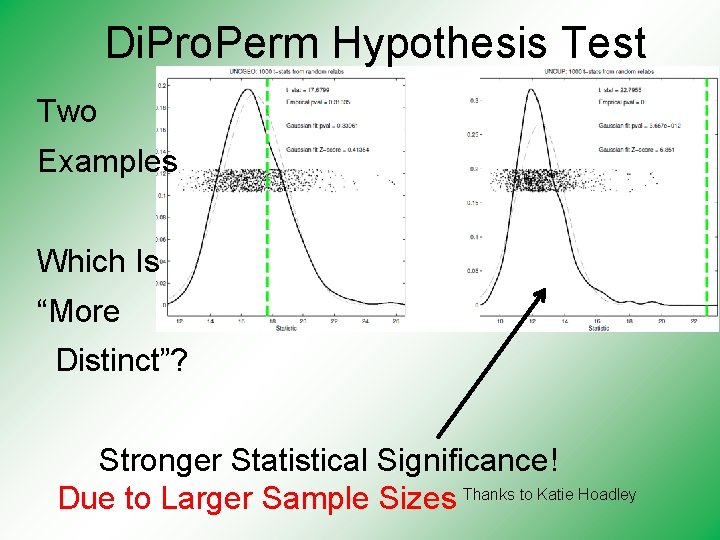

DiProPerm Hypothesis Test

Two Examples: Which Is “More Distinct”?

Stronger Statistical Significance! (Due to Larger Sample Sizes)

Thanks to Katie Hoadley

First Approaches: Hypo Testing

e.g. Direction, Projection, Permutation

Hypothesis test of: Significant difference between sub-populations

- Effective and Accurate
- I.e. Sensitive and Specific
- There exist several such tests
- But critical point is: What result implies about clusters

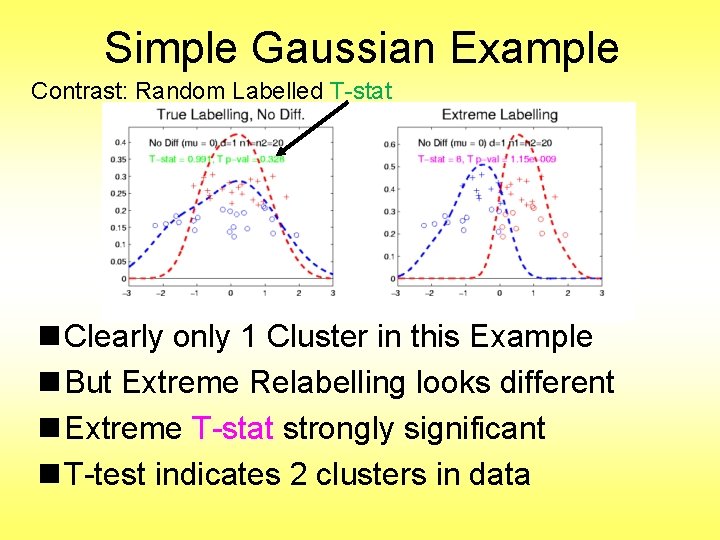

Clarifying Simple Example

Why Population Difference Tests cannot indicate clustering:

- Andrew Nobel Observation
- For Gaussian Data (Clearly 1 Cluster!)
- Assign Extreme Labels (e.g. by clustering)
- Subpopulations are significantly different

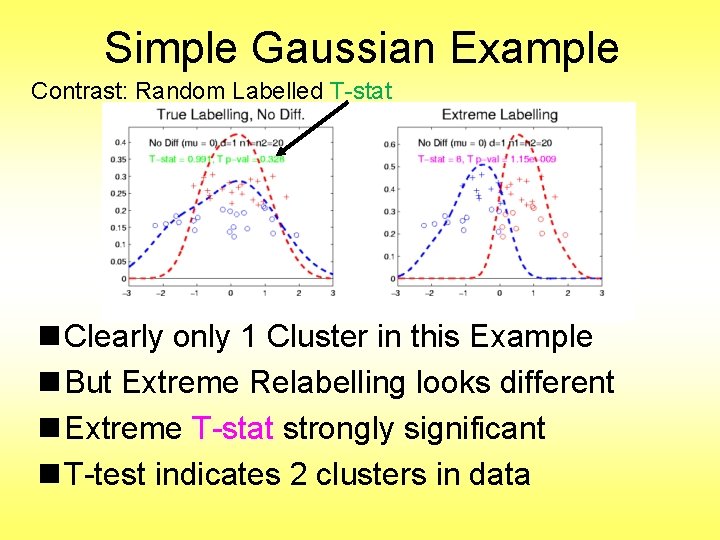

Simple Gaussian Example

Contrast: Random Labelled T-stat

- Clearly only 1 Cluster in this Example
- But Extreme Relabelling looks different
- Extreme T-stat strongly significant
- T-test indicates 2 clusters in data

Simple Gaussian Example

Results:

- Random relabelling T-stat is not significant
- But extreme T-stat is strongly significant
- This comes from clustering operation
- Conclude sub-populations are different
- Now see that: Not the same as clusters really there
- Need a new approach to study clusters
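The Gaussian example can be reproduced with a small simulation. The sketch below makes several assumptions for illustration: a 1-d standard Gaussian sample of size 200, "extreme" labels obtained by splitting at the sample median (roughly what 2-means does on symmetric 1-d data), and a pooled-variance two-sample t statistic.

```python
import math
import random


def t_stat(a, b):
    """Two-sample t statistic with pooled variance."""
    na, nb = len(a), len(b)
    mean_a, mean_b = sum(a) / na, sum(b) / nb
    var_a = sum((x - mean_a) ** 2 for x in a) / (na - 1)
    var_b = sum((x - mean_b) ** 2 for x in b) / (nb - 1)
    pooled = ((na - 1) * var_a + (nb - 1) * var_b) / (na + nb - 2)
    return (mean_a - mean_b) / math.sqrt(pooled * (1 / na + 1 / nb))


rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(200)]  # one Gaussian: a single cluster

# Random labels: shuffle, then split into two arbitrary halves.
shuffled = data[:]
rng.shuffle(shuffled)
t_random = t_stat(shuffled[:100], shuffled[100:])

# Extreme labels: upper half versus lower half of the sorted sample.
ordered = sorted(data)
t_extreme = t_stat(ordered[100:], ordered[:100])

print("random labels  t =", round(t_random, 2))
print("extreme labels t =", round(t_extreme, 2))
```

The extreme labelling produces a very large t statistic even though the data come from a single Gaussian, because the labels were chosen by looking at the data. A significant subpopulation difference is therefore not evidence that clusters are really there.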

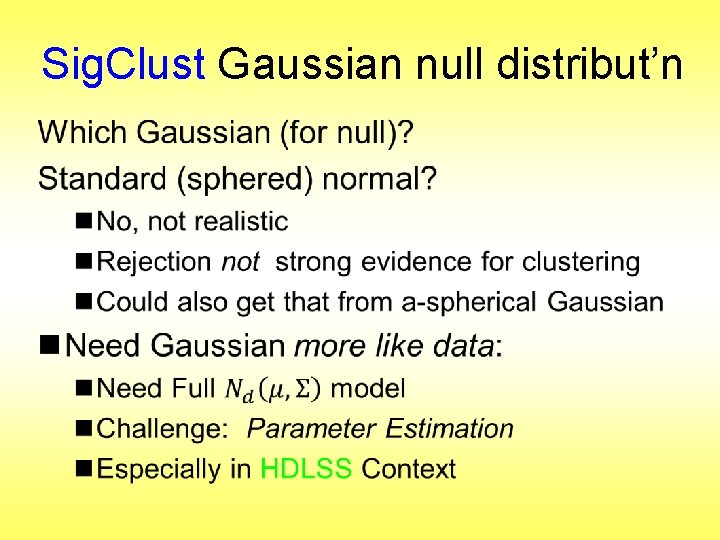

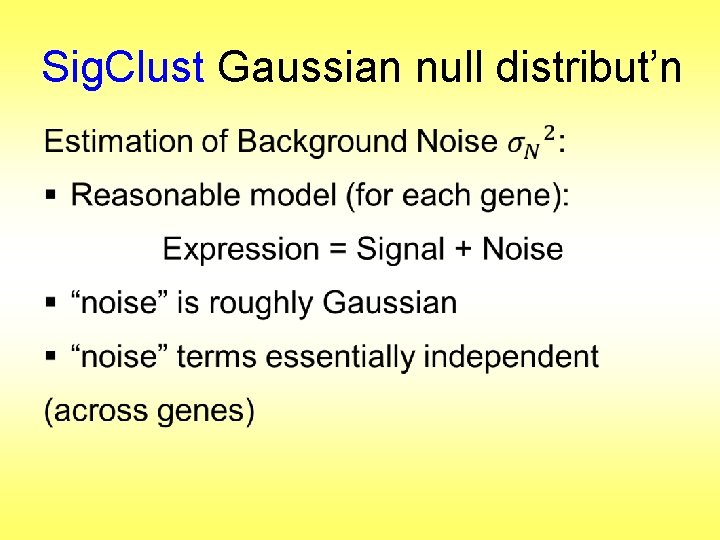

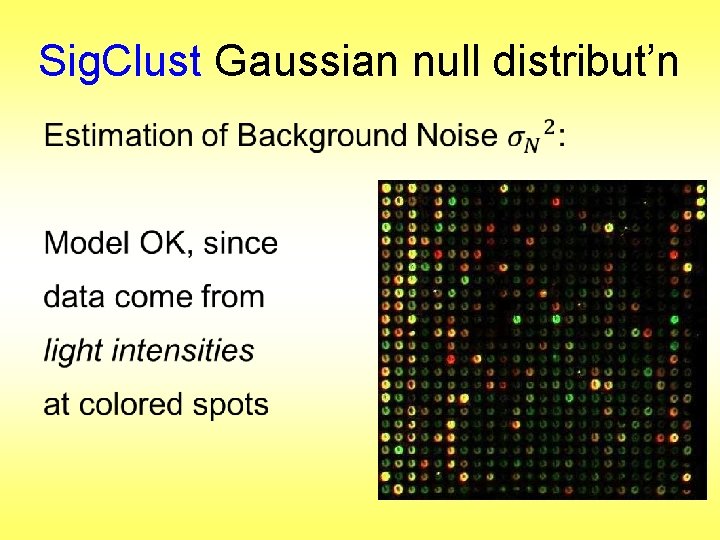

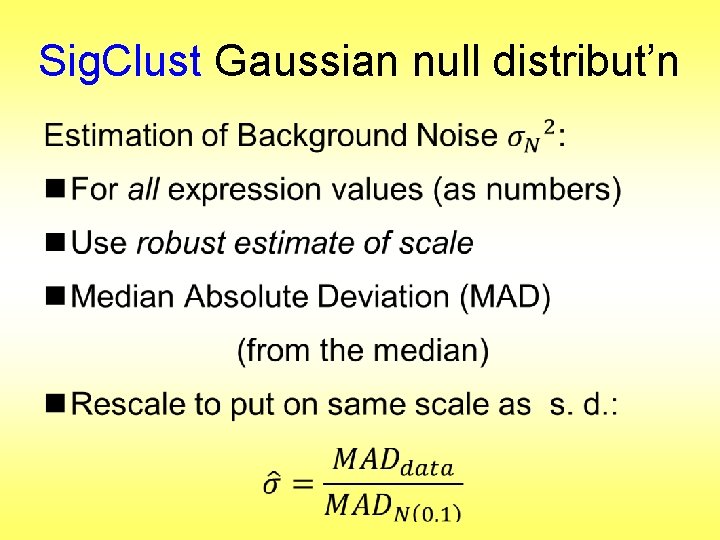

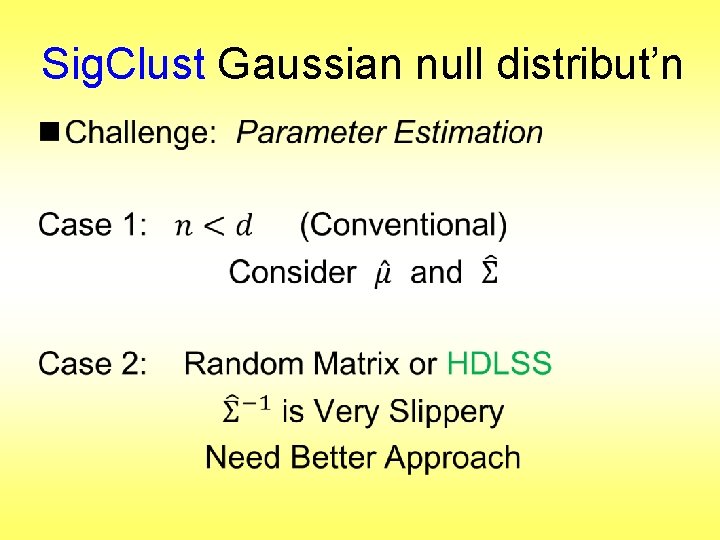

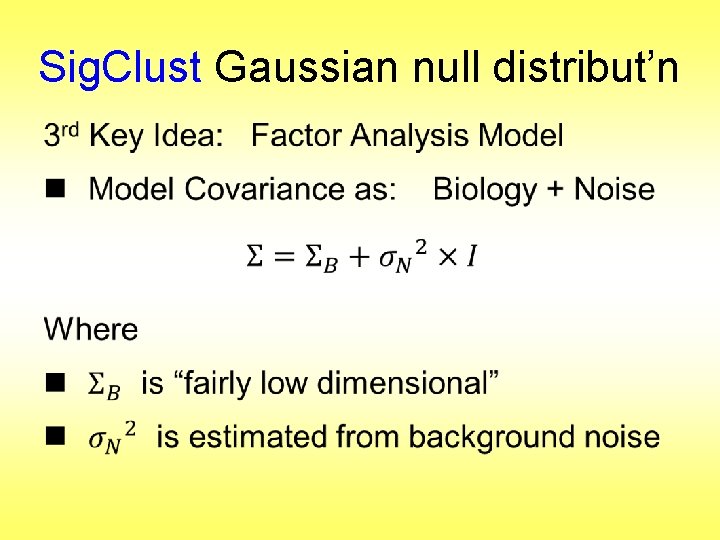

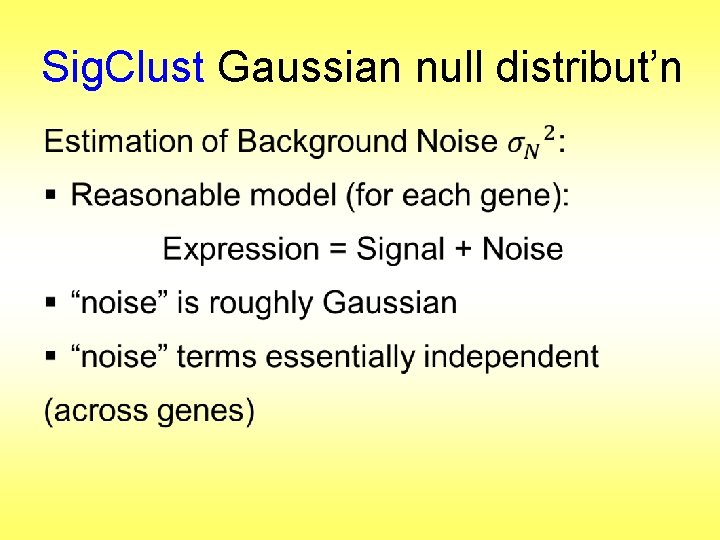

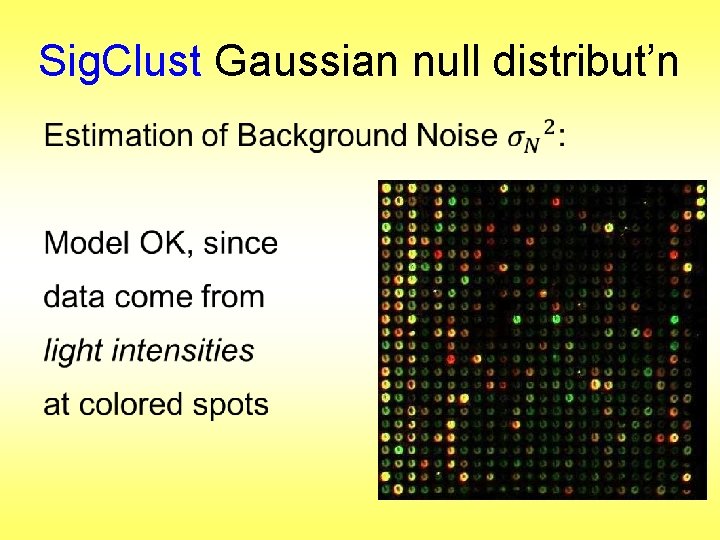

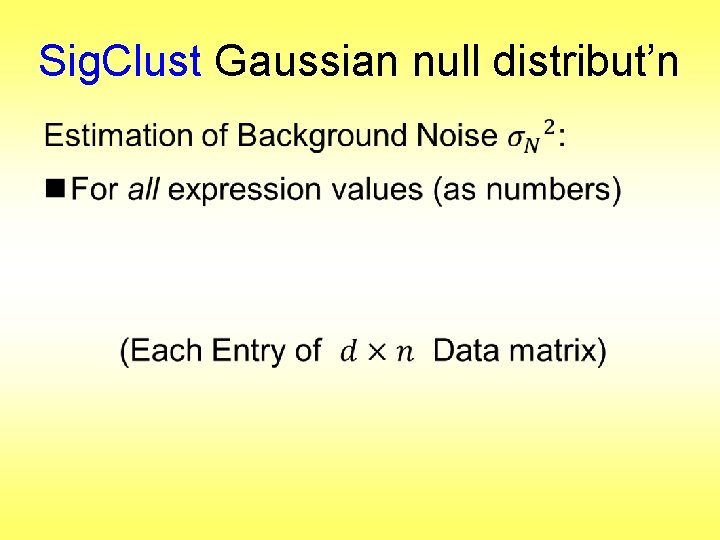

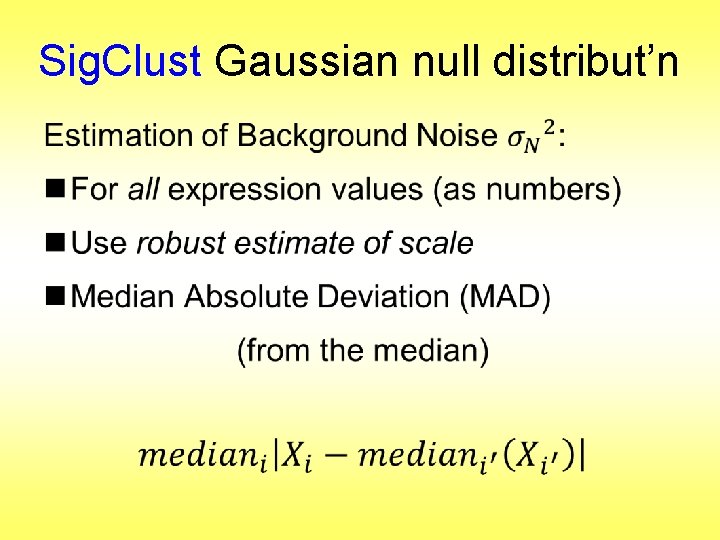

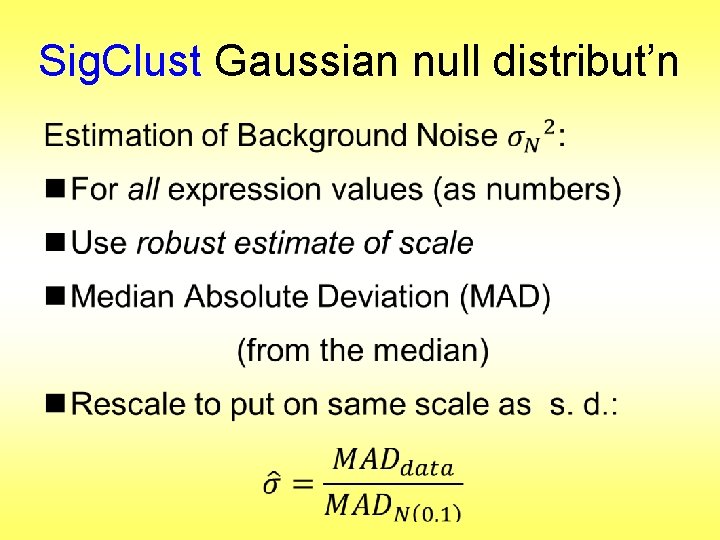

Statistical Significance of Clusters

Basis of SigClust Approach:

- What defines: A Single Cluster?
  - A Gaussian distribution (Sarle & Kou 1993)
- So define SigClust test based on:
  - 2-means cluster index (measure) as statistic
  - Gaussian null distribution
  - Currently compute by simulation
  - Possible to do this analytically???
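The "statistic plus simulated Gaussian null" recipe can be illustrated in one dimension, where the minimum 2-means cluster index can be found exactly by scanning the splits of the sorted data. This is only a toy sketch with hypothetical function names: in 1-d the CI does not change under shifts or rescaling of the data, so a standard normal null suffices, whereas the high-dimensional method must also estimate the null covariance, which is not attempted here.

```python
import random


def min_two_means_ci_1d(xs):
    """Exact minimum 2-means CI in 1-d, scanning splits of the sorted data."""
    s = sorted(xs)
    n = len(s)
    total_sum = sum(s)
    total_sq = sum(x * x for x in s)
    total_ss = total_sq - total_sum ** 2 / n
    best_within = total_ss
    left_sum = left_sq = 0.0
    for i in range(1, n):  # the left class is s[:i], the right class is s[i:]
        left_sum += s[i - 1]
        left_sq += s[i - 1] ** 2
        right_sum = total_sum - left_sum
        right_sq = total_sq - left_sq
        within = (left_sq - left_sum ** 2 / i) + (right_sq - right_sum ** 2 / (n - i))
        best_within = min(best_within, within)
    return best_within / total_ss


def sigclust_pvalue_1d(xs, n_sim=200, seed=0):
    """Share of simulated Gaussian samples whose CI is at most the observed CI."""
    rng = random.Random(seed)
    observed = min_two_means_ci_1d(xs)
    n = len(xs)
    null_ci = [min_two_means_ci_1d([rng.gauss(0.0, 1.0) for _ in range(n)])
               for _ in range(n_sim)]
    return sum(ci <= observed for ci in null_ci) / n_sim


# Two well-separated groups: the observed CI is far below the Gaussian null.
bimodal = [0.1 * i for i in range(20)] + [10 + 0.1 * i for i in range(20)]
print(min_two_means_ci_1d(bimodal), sigclust_pvalue_1d(bimodal))
```

A small CI means that the two-class split explains most of the variation. The simulated p-value asks how often a single Gaussian cluster, split in the best possible way, would look at least that clustered.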

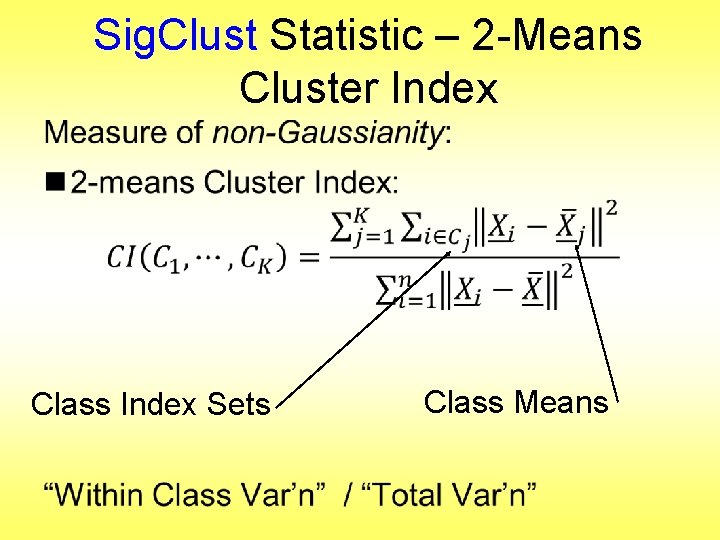

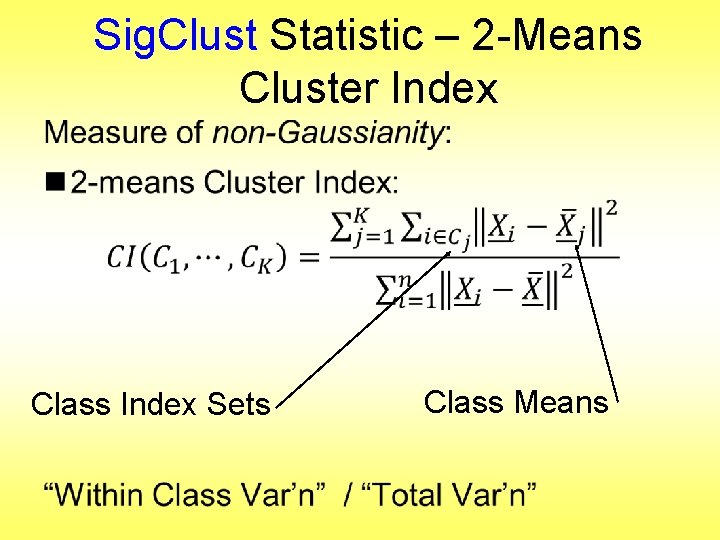

SigClust Statistic – 2-Means Cluster Index

Measure of non-Gaussianity:

- 2-means Cluster Index
- Familiar Criterion from k-means Clustering
- Within Class Sum of Squared Distances to Class Means
- Prefer to divide (normalize) by Overall Sum of Squared Distances to Mean
- Puts on scale of proportions

SigClust Statistic – 2-Means Cluster Index

- Class Index Sets
- Class Means
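The verbal description of the 2-means Cluster Index (within-class sum of squared distances to class means, normalized by the overall sum of squared distances to the mean) can be written as a formula. The symbols are assumed notation for illustration: $C_1, C_2$ are the class index sets, $\bar{x}_k$ is the mean of class $k$, and $\bar{x}$ is the overall mean of the $n$ data points $x_1, \dots, x_n$.

```latex
CI = \frac{\displaystyle\sum_{k=1}^{2} \sum_{j \in C_k} \left\| x_j - \bar{x}_k \right\|^2}
          {\displaystyle\sum_{j=1}^{n} \left\| x_j - \bar{x} \right\|^2},
\qquad
\bar{x}_k = \frac{1}{|C_k|} \sum_{j \in C_k} x_j,
\qquad
\bar{x} = \frac{1}{n} \sum_{j=1}^{n} x_j
```

The numerator can never exceed the denominator, so $CI$ lies in $[0, 1]$, with values near 0 indicating two tight, well-separated classes.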

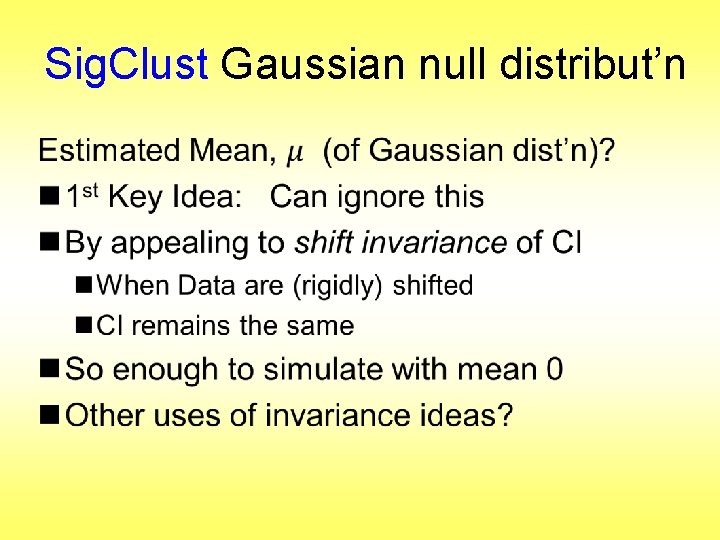

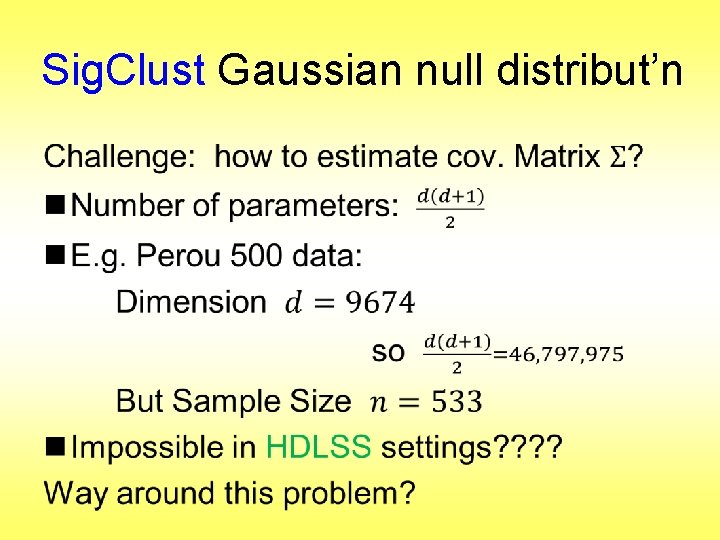

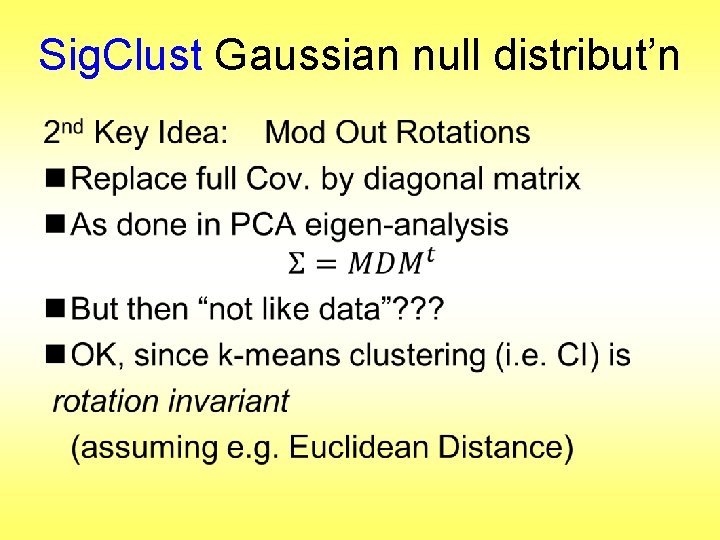

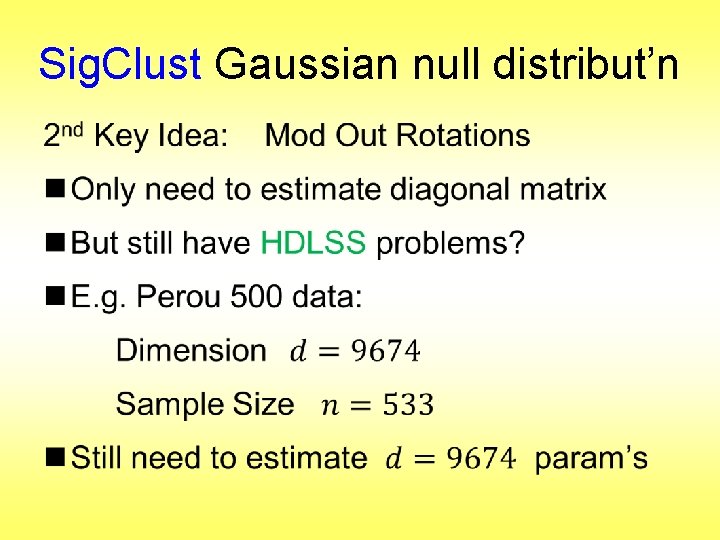

SigClust Gaussian null distribution

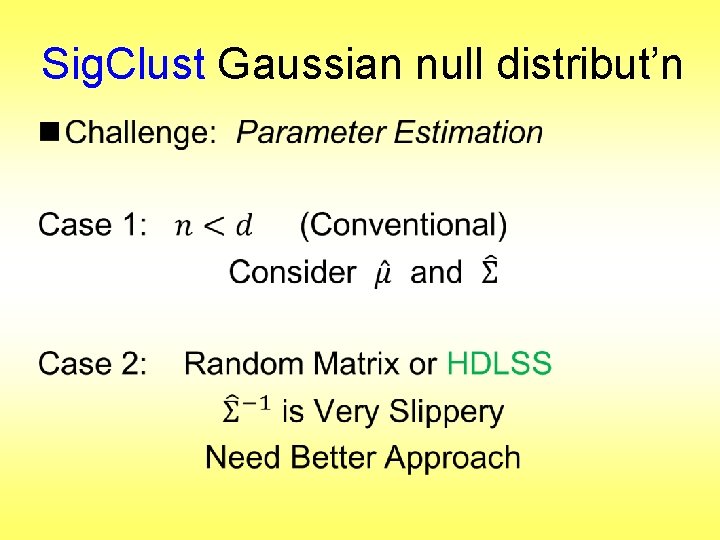

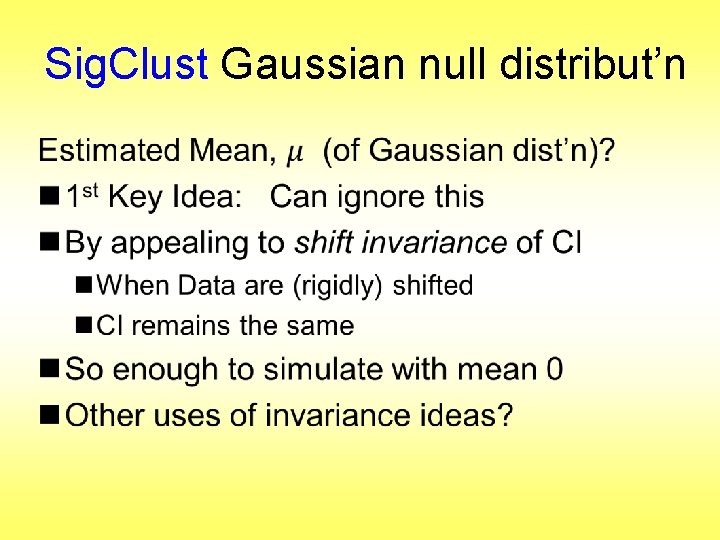

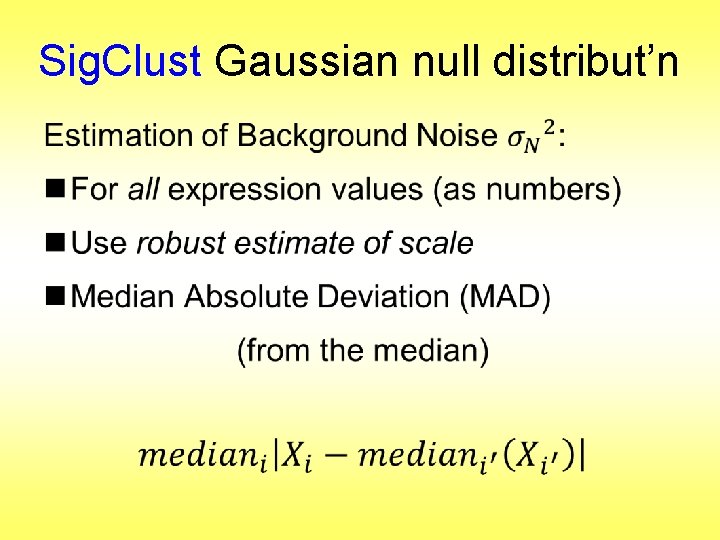

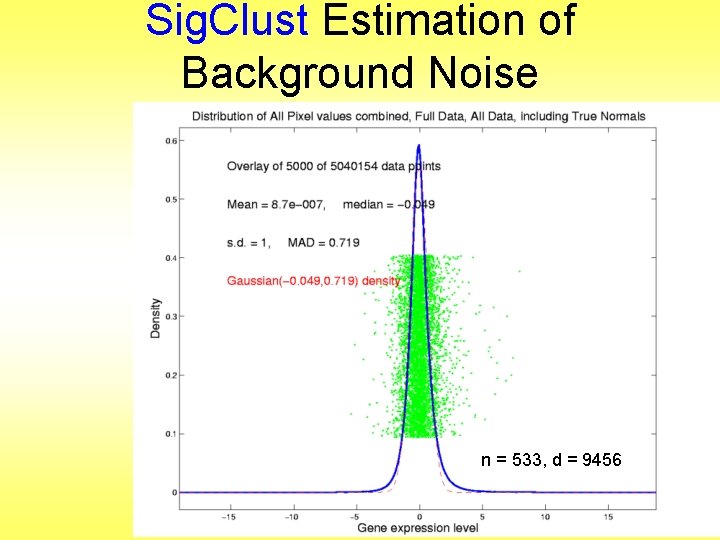

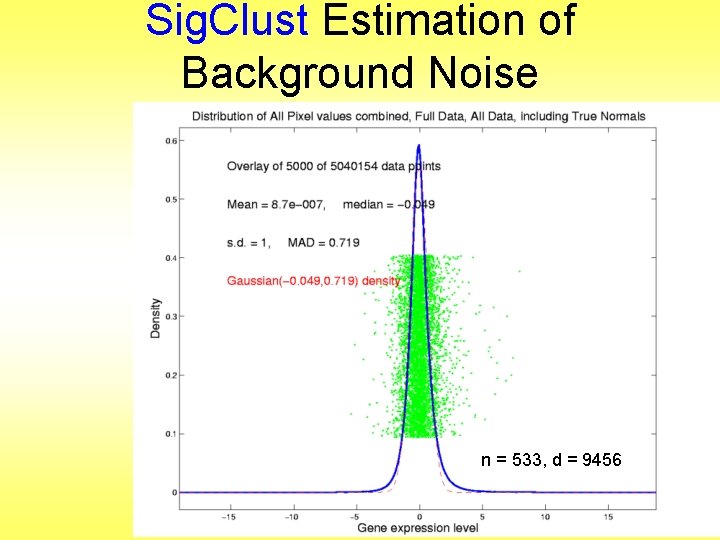

SigClust: Estimation of Background Noise (n = 533, d = 9456)

Participant Presentation

- Pavlos Zoubouloglou: Geodesic PCA in the Wasserstein Space
- Taylor Petty: Forensic DNA Testing

Som vs kmeans

Som vs kmeans Javascript kmeans

Javascript kmeans Thrust kmeans

Thrust kmeans Flat and hierarchical clustering

Flat and hierarchical clustering Partitional clustering vs hierarchical clustering

Partitional clustering vs hierarchical clustering Rumus euclidean

Rumus euclidean When god shows up he shows off

When god shows up he shows off Disadvantages of k means clustering

Disadvantages of k means clustering Billenko

Billenko Disadvantages of k means clustering

Disadvantages of k means clustering K means clustering

K means clustering K-means clustering algorithm in data mining

K-means clustering algorithm in data mining The ebbinghaus forgetting curve shows that:

The ebbinghaus forgetting curve shows that: Is curve shows

Is curve shows S curve and j curve

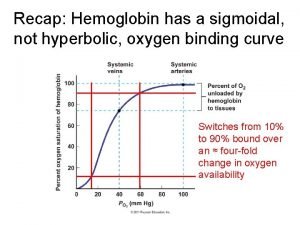

S curve and j curve Sigmoidal hyperbolic curve

Sigmoidal hyperbolic curve Centric and eccentric occlusion

Centric and eccentric occlusion S curve and j curve

S curve and j curve Triangle quadrilateral pentagon hexagon octagon

Triangle quadrilateral pentagon hexagon octagon Life bio

Life bio Meta means morphe means

Meta means morphe means Meta means in metamorphism

Meta means in metamorphism Define biodiversity conservation

Define biodiversity conservation Global clustering coefficient

Global clustering coefficient Bioinformatics unisannio

Bioinformatics unisannio Clustering vs classification

Clustering vs classification Text clustering

Text clustering Parallel social media

Parallel social media Cure: an efficient clustering algorithm for large databases

Cure: an efficient clustering algorithm for large databases Hierarchical clustering in data mining

Hierarchical clustering in data mining Graclus

Graclus Tmax jeus

Tmax jeus Classification regression clustering

Classification regression clustering Rto rpo

Rto rpo Ncut

Ncut Clustering slides

Clustering slides Fuzzy clustering tutorial

Fuzzy clustering tutorial Clustering ideas

Clustering ideas Clustering what is

Clustering what is Hcs clustering

Hcs clustering What is clustering in writing

What is clustering in writing Primary clustering

Primary clustering Manifold clustering

Manifold clustering A set of nested clusters organized as a hierarchical tree

A set of nested clusters organized as a hierarchical tree Bayesian hierarchical clustering

Bayesian hierarchical clustering Birch clustering

Birch clustering Global clustering coefficient

Global clustering coefficient Jvm clustering

Jvm clustering Bi clustering

Bi clustering Sql server geo clustering

Sql server geo clustering Rock clustering algorithm

Rock clustering algorithm Clustering slides

Clustering slides Hydrophobic clustering

Hydrophobic clustering Fuzzy clustering tutorial

Fuzzy clustering tutorial Clustering outline

Clustering outline A framework for clustering evolving data streams

A framework for clustering evolving data streams Clustering non numeric data

Clustering non numeric data The clustering clouds poem

The clustering clouds poem Distance matrices

Distance matrices Flat clustering

Flat clustering Point assignment clustering

Point assignment clustering Example of group technology layout

Example of group technology layout Hierarchical clustering spss

Hierarchical clustering spss Clustroid

Clustroid Clustering vs classification

Clustering vs classification Group technology and cellular manufacturing

Group technology and cellular manufacturing Clustering slides

Clustering slides Global clustering coefficient

Global clustering coefficient Find centroid of tree

Find centroid of tree Jarvis patrick clustering

Jarvis patrick clustering Agglomerative clustering

Agglomerative clustering Spectral clustering

Spectral clustering Real application clustering

Real application clustering Tableau clustering algorithm

Tableau clustering algorithm Grid-based clustering

Grid-based clustering Cmu machine learning

Cmu machine learning Zhluková analýza

Zhluková analýza Quest stat

Quest stat