FLAT CLUSTERING HIERARCHICAL CLUSTERING What is clustering Grouping

- Slides: 34

FLAT CLUSTERING & HIERARCHICAL CLUSTERING

What is clustering? • Grouping set of documents into subsets or clusters. • The Goal of clustering algorithm is: To create clusters that are coherent internally, but clearly different from each other

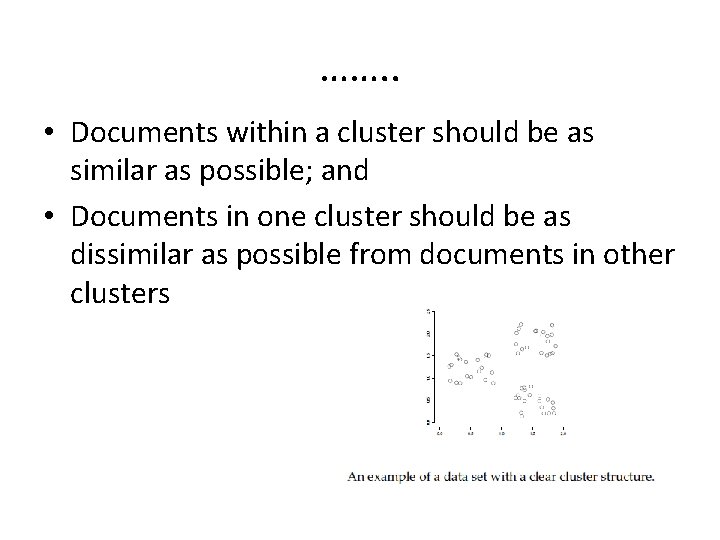

……. . • Documents within a cluster should be as similar as possible; and • Documents in one cluster should be as dissimilar as possible from documents in other clusters

……. • Clustering is the most common form of unsupervised learning. • The key input to a clustering algorithm is the distance measure. In document clustering, the distance measure is often also Euclidean distance. • Different distance measures give rise to different clustering's.

Why cluster documents? • Better user interface • Better search results • Effective “user recall”(relevant documents retrieved) will be higher • Faster search

Clustering in information retrieval • Cluster hypothesis : The hypothesis states that if there is a document from a cluster that is relevant to a search request, then it is likely that other documents from the same cluster are also relevant.

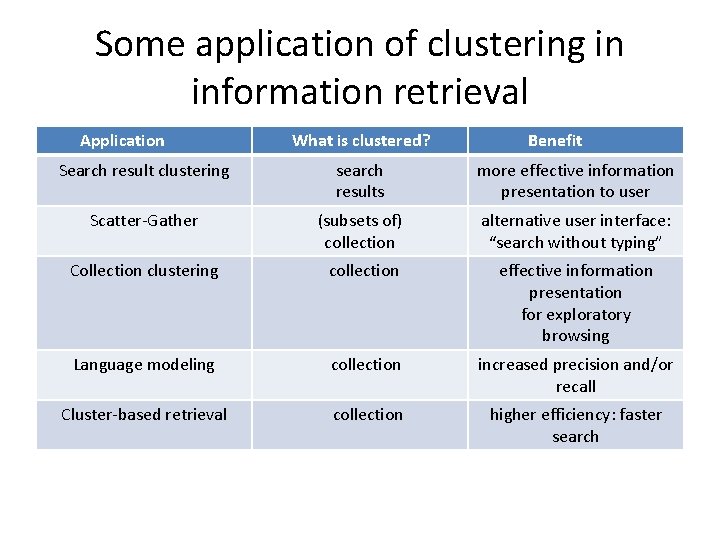

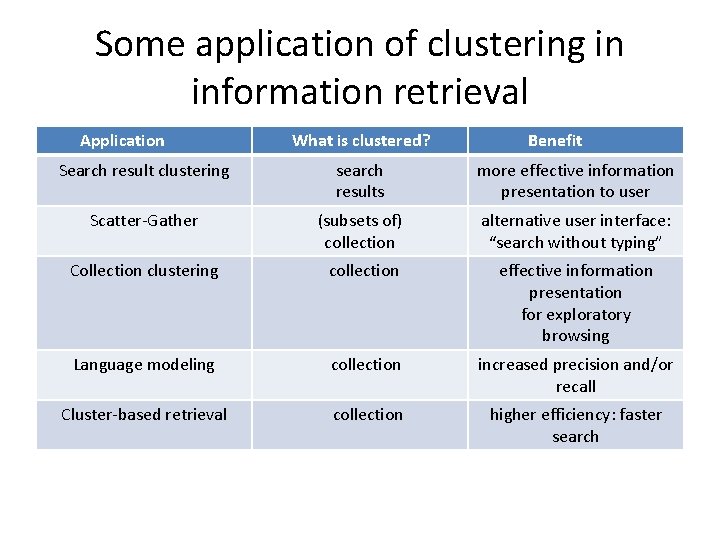

Some application of clustering in information retrieval Application What is clustered? Benefit Search result clustering search results more effective information presentation to user Scatter-Gather (subsets of) collection alternative user interface: “search without typing” Collection clustering collection effective information presentation for exploratory browsing Language modeling collection increased precision and/or recall Cluster-based retrieval collection higher efficiency: faster search

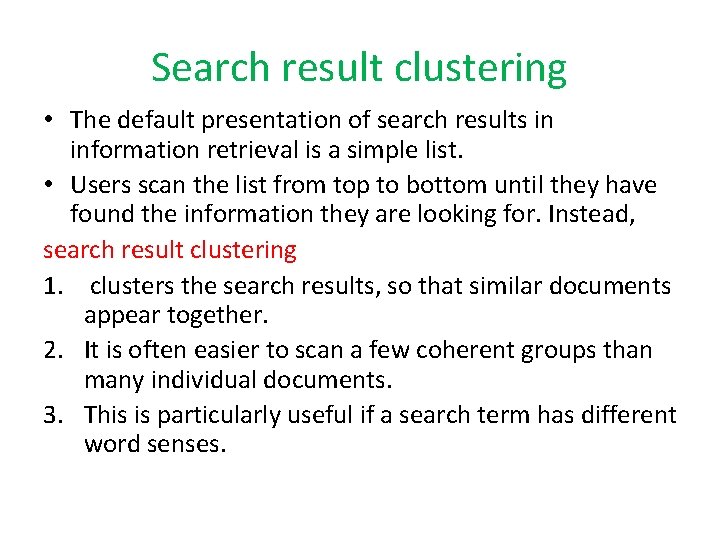

Search result clustering • The default presentation of search results in information retrieval is a simple list. • Users scan the list from top to bottom until they have found the information they are looking for. Instead, search result clustering 1. clusters the search results, so that similar documents appear together. 2. It is often easier to scan a few coherent groups than many individual documents. 3. This is particularly useful if a search term has different word senses.

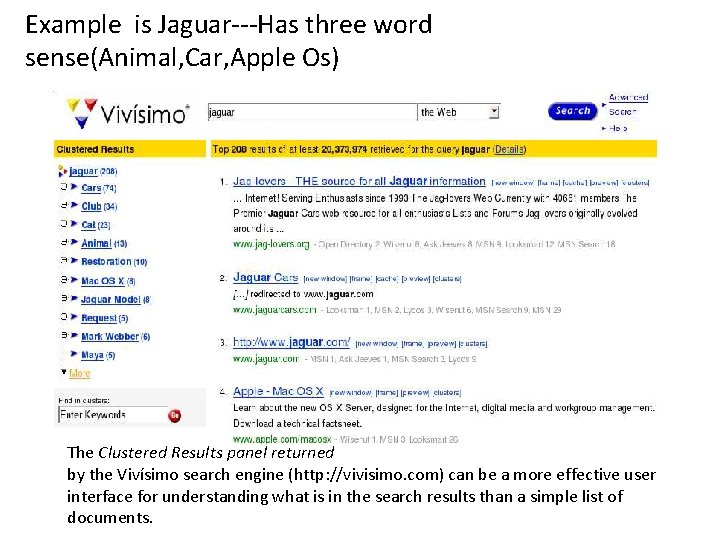

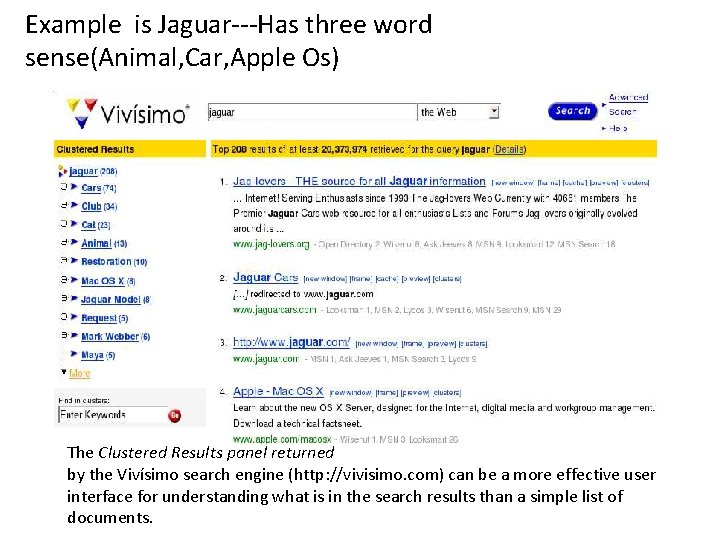

Example is Jaguar---Has three word sense(Animal, Car, Apple Os) The Clustered Results panel returned by the Vivísimo search engine (http: //vivisimo. com) can be a more effective user interface for understanding what is in the search results than a simple list of documents.

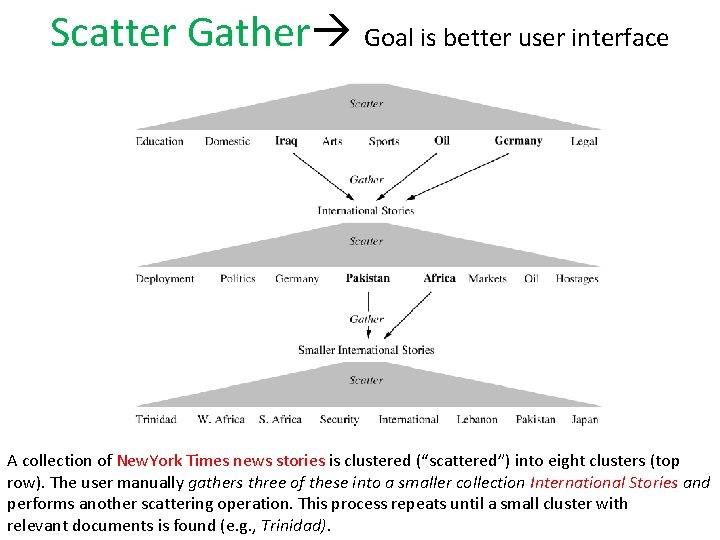

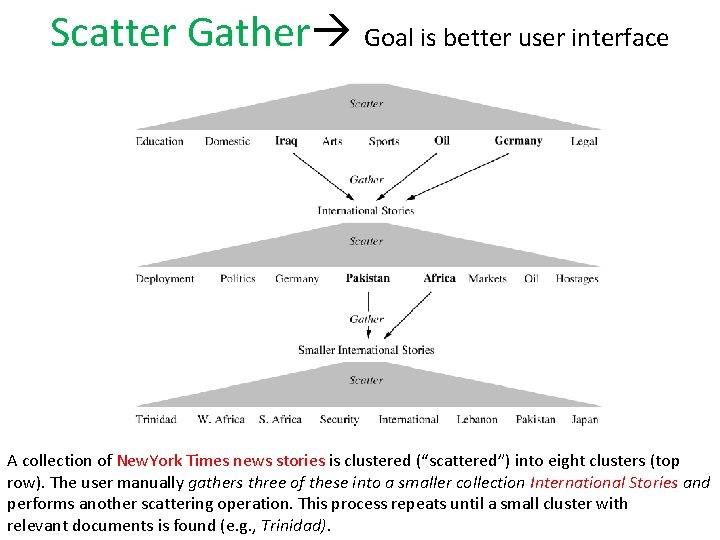

Scatter Gather Goal is better user interface A collection of New. York Times news stories is clustered (“scattered”) into eight clusters (top row). The user manually gathers three of these into a smaller collection International Stories and performs another scattering operation. This process repeats until a small cluster with relevant documents is found (e. g. , Trinidad).

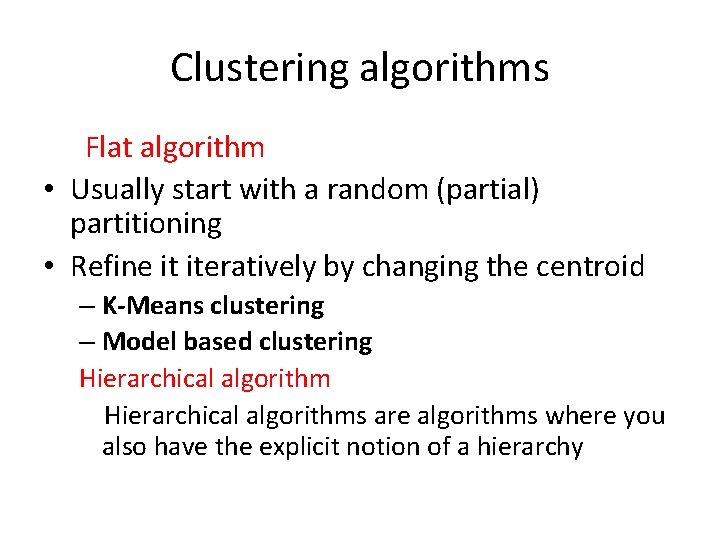

Clustering algorithms Flat algorithm • Usually start with a random (partial) partitioning • Refine it iteratively by changing the centroid – K-Means clustering – Model based clustering Hierarchical algorithms are algorithms where you also have the explicit notion of a hierarchy

…. • In hierarchical we can cluster our documents into certain no of clusters and then we can group together those clusters in turn into larger clusters and so on to finally have a hierarchy. Bottom up, aglomerative Top down , Divisive

Hard vs soft clustering Another way to classify clustering is: • Hard clustering: Each documents belong to exactly one cluster. • Soft clustering: A document can belongs to more than one cluster. Ex—You may want to put a pair of sneakers in two clusters (i) sports apparels (ii) shoes Soft clustering is not used only hard clustering is used today.

Partitioning algorithm • Given: A set of documents and the number K • Partitioning method: Construct a partition of n documents into a set of K clusters. • Find: A partition of K clusters that optimizes the chosen partitioning criterion. Example: Try to minimize the average distance between the documents with in the same cluster is one measure we could try to optimize. Any way this are mathematical objective that take more time. K-Means and K-Medoids is a effective heuristic method(methods are not guarantee to be optimal but they run very fast) of partitioning.

K-Means • K-Means is the important flat clustering algorithm. • Its objective is to minimize the average squared Euclidean distance of documents from their cluster centers. • where a cluster center is defined as the mean or centroid.

… • The first step of K-Means and our goal is to select seeds- is a initial cluster centers K randomly selected documents. • The algorithm then moves the cluster centers around in space to minimize RSS(residual sum of squares) • Rss- The square distance of each vector from its centroid summed over all the vectors.

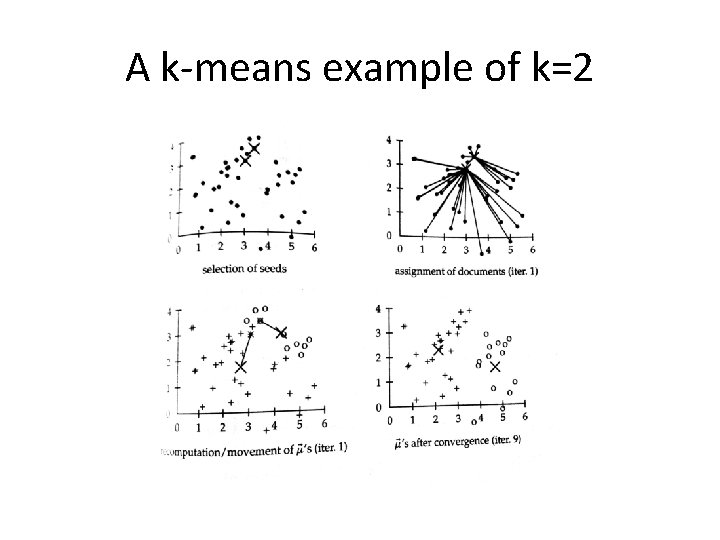

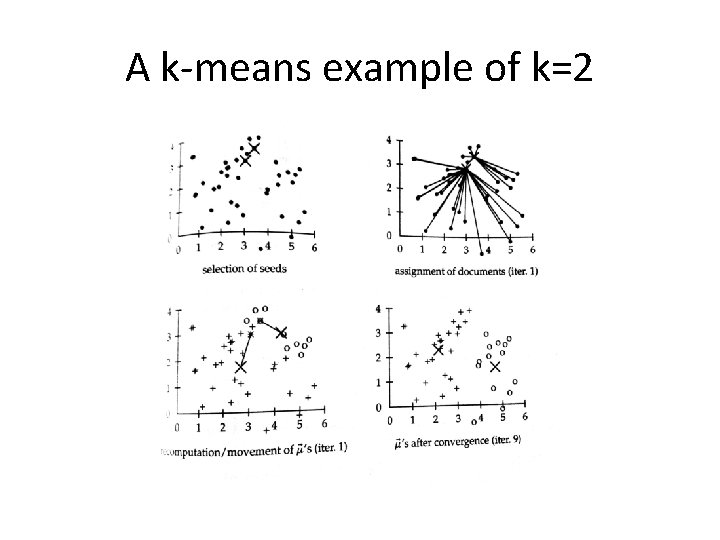

A k-means example of k=2

…… • Rss is equivalent to minimizing the average squared distance, a measure of how well centroids represent their documents. This is done iteratively by repeating two steps until a stopping criterion is met. Step-1: Reassigning documents to the cluster with the closest centroid Step-2: Recomputing each centroid based on the current members of its cluster

………. . Termination: • K-means converges by proving that RSS monotonically decreases in each iteration. • RSS decreases in both reassignment and recomputation step… The outcome of the clustering in K-Means depends on the initial seeds. Document that are far from any other document and therefore do not fit well into any cluster is called outlier. If an outlier is chosen as an initial seed , then no other vector is assigned to it during subsequent iterations. Thus we ended up with a singleton cluster(a cluster with only one document)

Drawback in flat clustering • The drawback in flat clustering is flat unstructured set of clusters, require a prespecified number of clusters as input and are nondeterministic. (for same input different outputs in different runs)

Hierarchy clustering • Hierarchical clustering outputs a hierarchy , a structure that is more informative than the unstructured set of clusters returned by flat clustering. • Hierarchical clustering does not require us to prespecify the number of clusters and most hierarchical algorithm are deterministic. • Hierarchical clustering produce better result than flat clustering

Hierarchical Agglomerative clustering • Hierarchical clustering algorithm are either topdown or bottom-up. • Bottom-up algorithms treat each document as a singleton clusters at the outset and then successively merge pairs of clusters until all clusters have been merged into a single cluster that contains all documents. • Bottom up hierarchical clustering is therefore called hierarchical agglomerative clustering(HAC)

……. • Agglomerative hierarchical clustering presents four different agglomerative algorithms. 1. Single link 2. Complete link 3. Group-average 4. Centroid similarity.

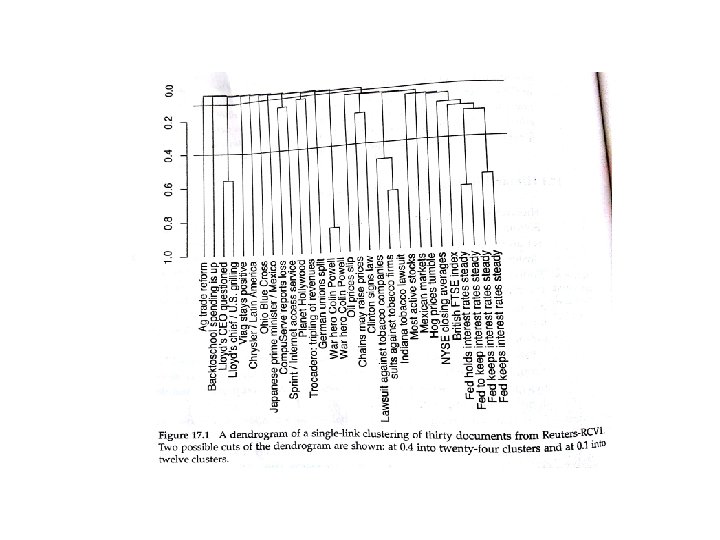

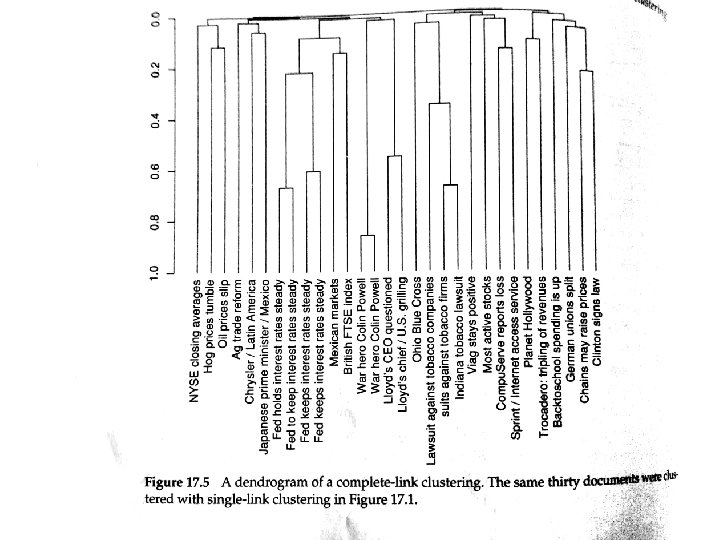

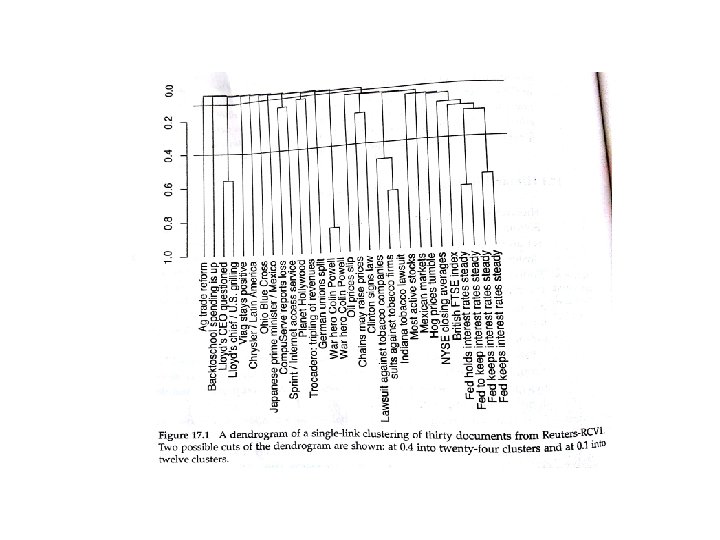

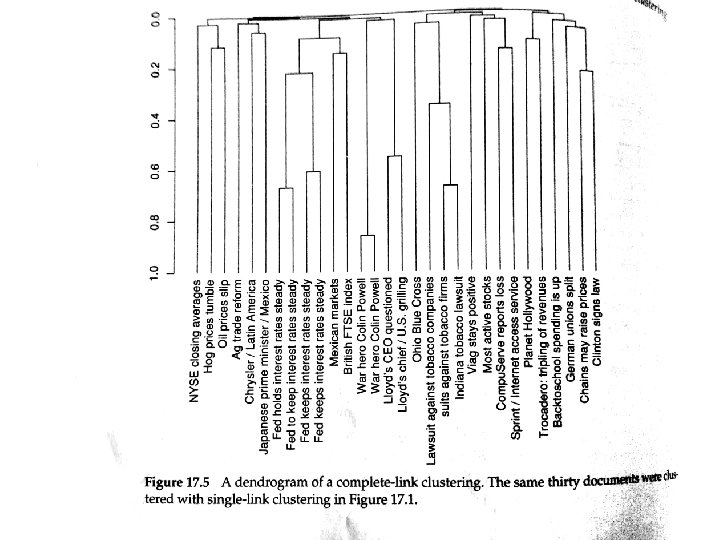

Dendogram • An HAC clustering is typically visualized as a dendogram. • Each merge is represented by a horizontal line. • The y coordinate of the horizontal line is the similarity of the two clusters that were merged. • We call this similarity the combination similarity of the merged cluster.

Combination similarity The combination similarity of the merged cluster. Example: Cluster consisting of Loyd’s CEO questioned Lloyd’s chief/U. S. grilling Is 0. 56

……. • By moving up from the bottom layer to the top node, a dendogram allows to reconstruct the history of merges that resulted in the depicted clustering • Example: we see two documents War hero and Colin powell were merged first. And last merge to a cluster Ag trade reform consisting of other twenty nine documents

………… • The hierarchy needs to be cut at some prespecified point. • For example: We cut the dendogram at 0. 4 if we want clusters with a minimum combination similarity. • Y=0. 4 yields 24 clusters • Y=0. 1 yields 12 clusters.

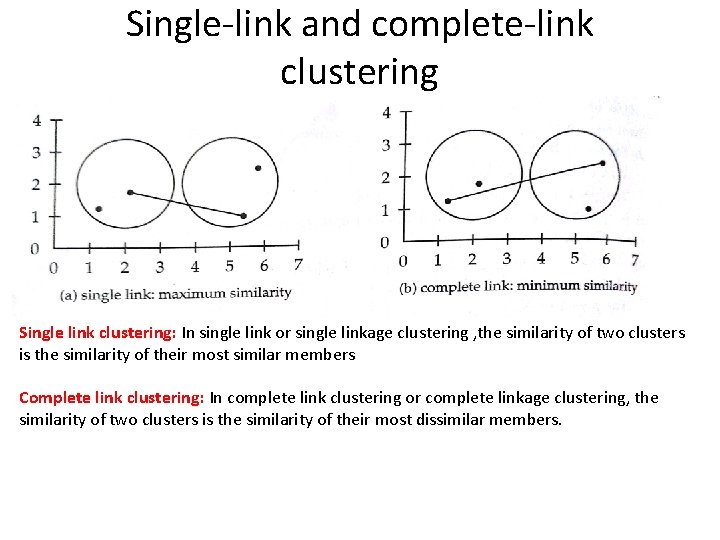

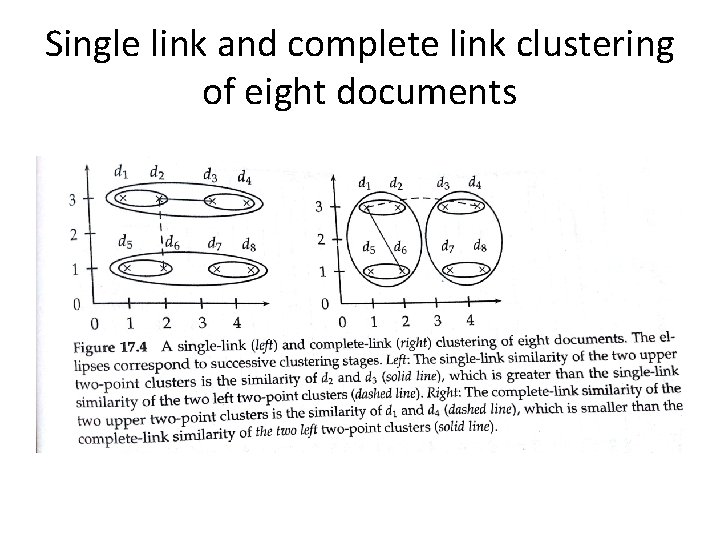

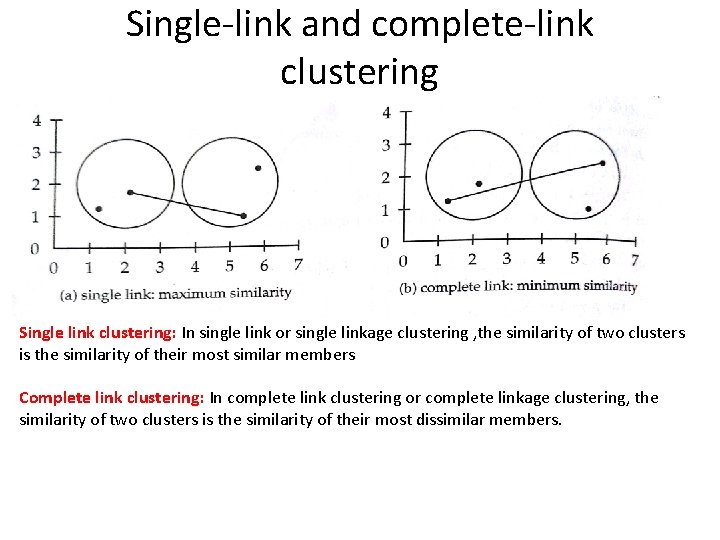

Single-link and complete-link clustering Single link clustering: In single link or single linkage clustering , the similarity of two clusters is the similarity of their most similar members Complete link clustering: In complete link clustering or complete linkage clustering, the similarity of two clusters is the similarity of their most dissimilar members.

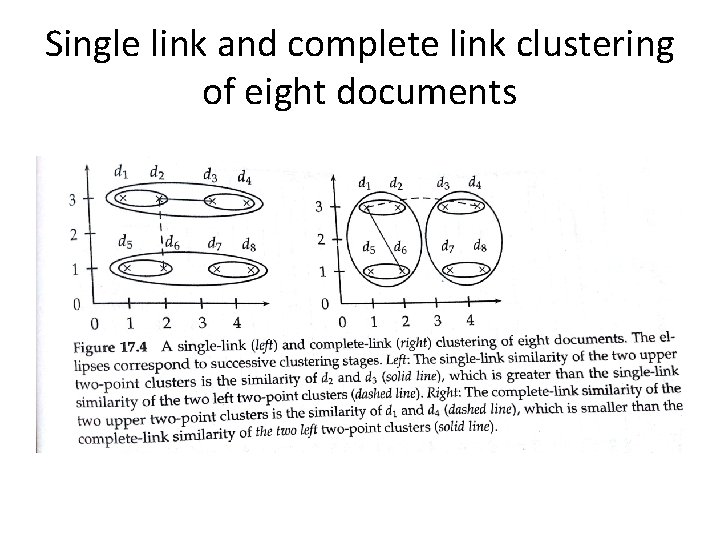

Single link and complete link clustering of eight documents

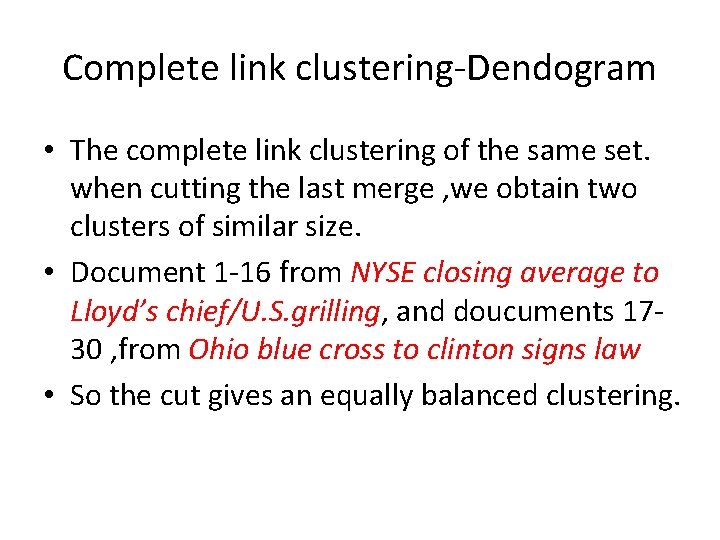

Complete link clustering-Dendogram • The complete link clustering of the same set. when cutting the last merge , we obtain two clusters of similar size. • Document 1 -16 from NYSE closing average to Lloyd’s chief/U. S. grilling, and doucuments 1730 , from Ohio blue cross to clinton signs law • So the cut gives an equally balanced clustering.

Divisive clustering • This variant of hierarchical clustering is called top-down clustering or divisive clustering. • We start at the top with all documents in one cluster. the cluster is split using a flat clustering algorithm. • This procedure is applied recursively until each document is in its own singleton cluster.

Thank you