CIS 501 Computer Architecture Unit 9: Static & Dynamic Scheduling

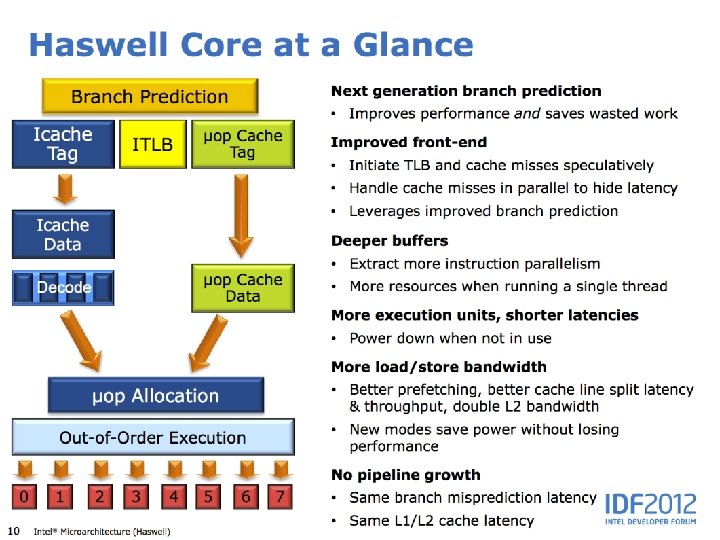

CIS 501: Computer Architecture Unit 9: Static & Dynamic Scheduling Slides originally developed by Drew Hilton, Amir Roth, Milo Martin and Joe Devietti at University of Pennsylvania CIS 501: Comp. Arch. | Prof. Joe Devietti | Scheduling 1

This Unit: Static & Dynamic Scheduling

- Code scheduling
  - To reduce pipeline stalls
  - To increase ILP (instruction-level parallelism)
- Static scheduling by the compiler
  - Approach & limitations
- Dynamic scheduling in hardware
  - Register renaming
  - Instruction selection
  - Handling memory operations

Readings

- Textbook (MA: FSPTCM)
  - Sections 3.3.1–3.3.4 (but not “Sidebar:”)
  - Sections 5.0–5.2, 5.3.3, 5.4, 5.5
- Paper for group discussion and questions:
  - “Memory Dependence Prediction using Store Sets” by Chrysos & Emer
- Suggested reading:
  - “The MIPS R10000 Superscalar Microprocessor” by Kenneth Yeager

Code Scheduling & Limitations

Code Scheduling

- Scheduling: the act of finding independent instructions
  - “Static”: done at compile time by the compiler (software)
  - “Dynamic”: done at runtime by the processor (hardware)
- Why schedule code?
  - Scalar pipelines: fill in load-to-use delay slots to improve CPI
  - Superscalar: place independent instructions together
    - As above, fill load-to-use delay slots
    - Allow multiple-issue decode logic to let them execute at the same time

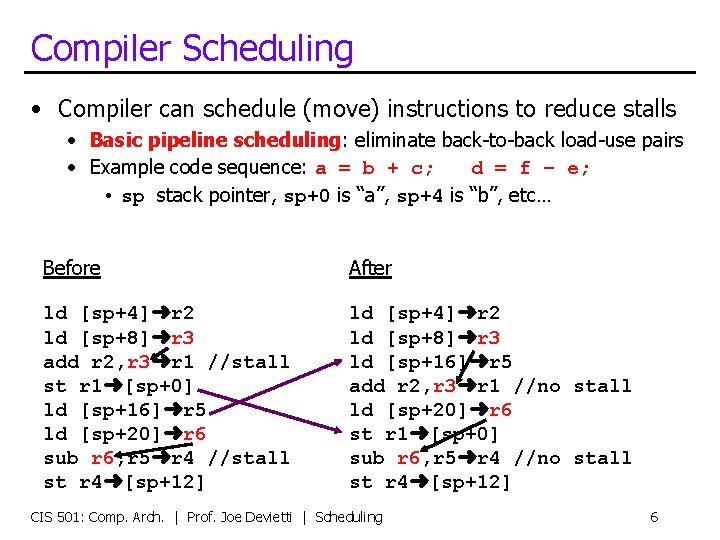

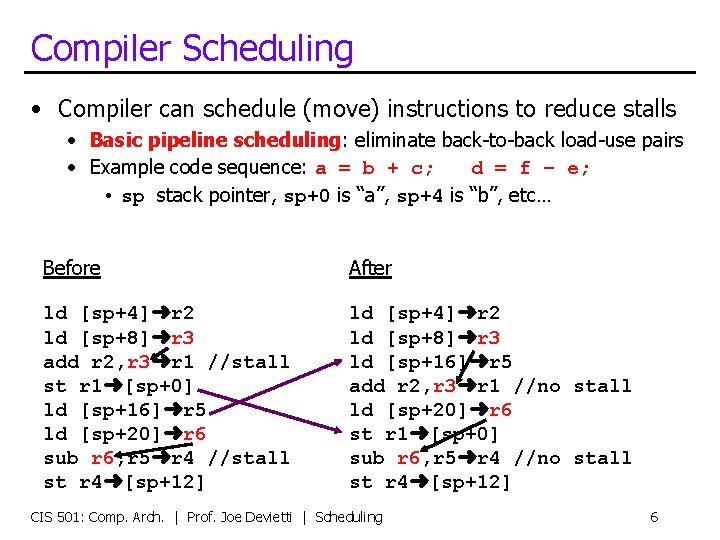

Compiler Scheduling

- The compiler can schedule (move) instructions to reduce stalls
- Basic pipeline scheduling: eliminate back-to-back load-use pairs
- Example code sequence: `a = b + c; d = f - e;`
  - `sp` is the stack pointer; `sp+0` is “a”, `sp+4` is “b”, etc.

| Before | After |
|---|---|
| `ld [sp+4]➜r2` | `ld [sp+4]➜r2` |
| `ld [sp+8]➜r3` | `ld [sp+8]➜r3` |
| `add r2, r3➜r1 //stall` | `ld [sp+16]➜r5` |
| `st r1➜[sp+0]` | `add r2, r3➜r1 //no stall` |
| `ld [sp+16]➜r5` | `ld [sp+20]➜r6` |
| `ld [sp+20]➜r6` | `st r1➜[sp+0]` |
| `sub r6, r5➜r4 //stall` | `sub r6, r5➜r4 //no stall` |
| `st r4➜[sp+12]` | `st r4➜[sp+12]` |
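To make the “stall / no stall” annotations concrete, here is a minimal Python sketch that counts back-to-back load-use pairs. It assumes the classic single-cycle load-use penalty of a simple scalar in-order pipeline (one stall whenever an instruction reads the result of the load immediately before it); the tuple encoding `(opcode, sources, destination)` and the function name are hypothetical, and memory offsets are dropped because only register names matter here.

```python
# Hypothetical encoding: (opcode, source registers, destination or None).
def count_load_use_stalls(program):
    """Count 1-cycle stalls in a scalar in-order pipeline, assuming a load's
    result is usable no earlier than two instructions after the load."""
    stalls = 0
    for prev, curr in zip(program, program[1:]):
        prev_op, _, prev_dst = prev
        _, curr_srcs, _ = curr
        if prev_op == "ld" and prev_dst in curr_srcs:
            stalls += 1  # back-to-back load-use pair
    return stalls

before = [("ld", ["sp"], "r2"), ("ld", ["sp"], "r3"),
          ("add", ["r2", "r3"], "r1"), ("st", ["r1", "sp"], None),
          ("ld", ["sp"], "r5"), ("ld", ["sp"], "r6"),
          ("sub", ["r6", "r5"], "r4"), ("st", ["r4", "sp"], None)]
after = [("ld", ["sp"], "r2"), ("ld", ["sp"], "r3"), ("ld", ["sp"], "r5"),
         ("add", ["r2", "r3"], "r1"), ("ld", ["sp"], "r6"),
         ("st", ["r1", "sp"], None), ("sub", ["r6", "r5"], "r4"),
         ("st", ["r4", "sp"], None)]

print(count_load_use_stalls(before), count_load_use_stalls(after))  # 2 0
```

The reordered sequence separates every load from its first consumer by at least one independent instruction, which is exactly what the compiler is looking for.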

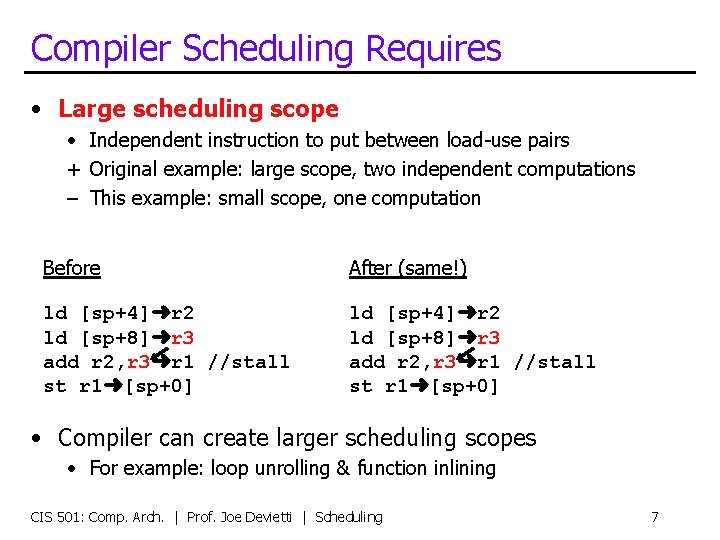

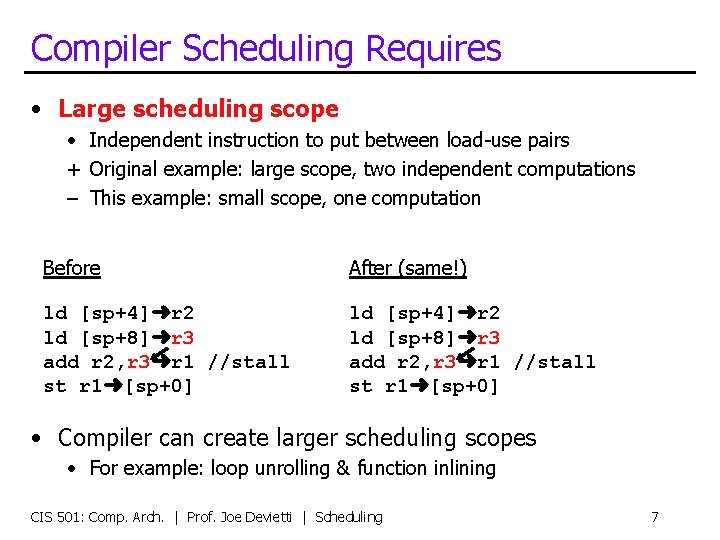

Compiler Scheduling Requires

- Large scheduling scope
  - Independent instructions to put between load-use pairs
  - (+) Original example: large scope, two independent computations
  - (–) This example: small scope, one computation
    - Before and after (same!): `ld [sp+4]➜r2`; `ld [sp+8]➜r3`; `add r2, r3➜r1 //stall`; `st r1➜[sp+0]`
- The compiler can create larger scheduling scopes
  - For example: loop unrolling & function inlining

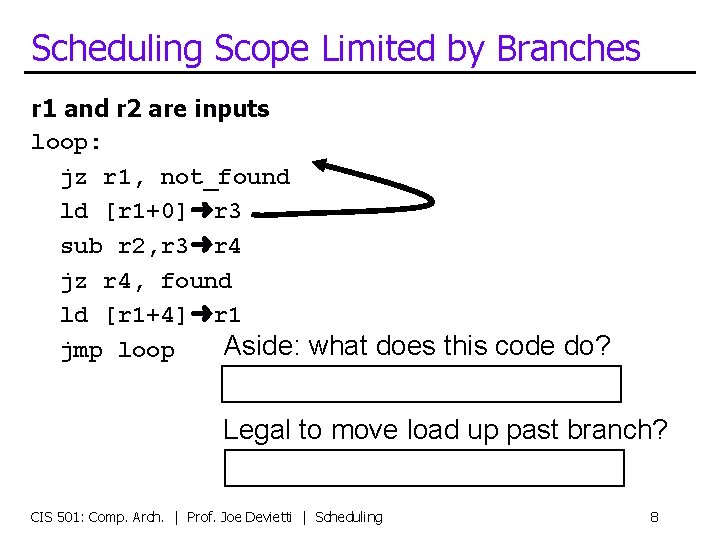

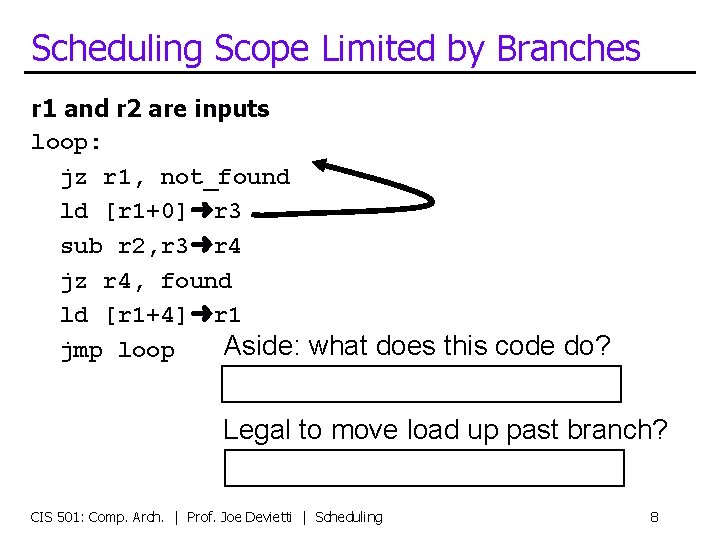

Scheduling Scope Limited by Branches

`r1` and `r2` are inputs:

| Code |
|---|
| `loop: jz r1, not_found` |
| `ld [r1+0]➜r3` |
| `sub r2, r3➜r4` |
| `jz r4, found` |
| `ld [r1+4]➜r1` |
| `jmp loop` |

- Aside: what does this code do? It searches a linked list for an element.
- Is it legal to move a load up past a branch? No: if `r1` is null, the load will cause a fault.
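A high-level Python equivalent of the loop may help answer the “aside”. This is a sketch under the assumption that each list node stores its value at offset 0 and its next pointer at offset 4; the `Node` class and `search` function are illustrative names, not part of the original code. Reading `node.next` before the `None` check would raise `AttributeError`, the software analogue of the fault that makes hoisting the load illegal.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Node:
    value: int               # the word at [r1+0]
    next: Optional["Node"]   # the pointer at [r1+4]

def search(node: Optional[Node], key: int) -> bool:
    """r1 = node, r2 = key; True corresponds to `found`, False to `not_found`."""
    while node is not None:        # loop: jz r1, not_found
        if key - node.value == 0:  # ld [r1+0]➜r3; sub r2, r3➜r4; jz r4, found
            return True
        node = node.next           # ld [r1+4]➜r1; jmp loop
    return False

lst = Node(3, Node(5, Node(8, None)))
print(search(lst, 5), search(lst, 7))  # True False
```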

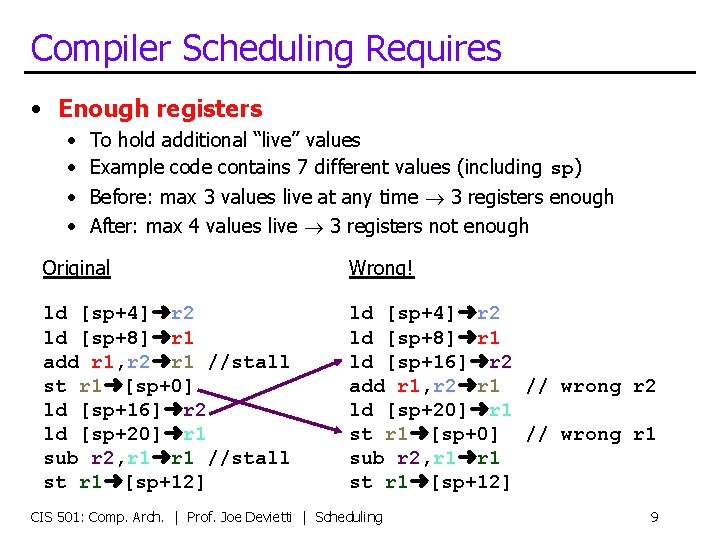

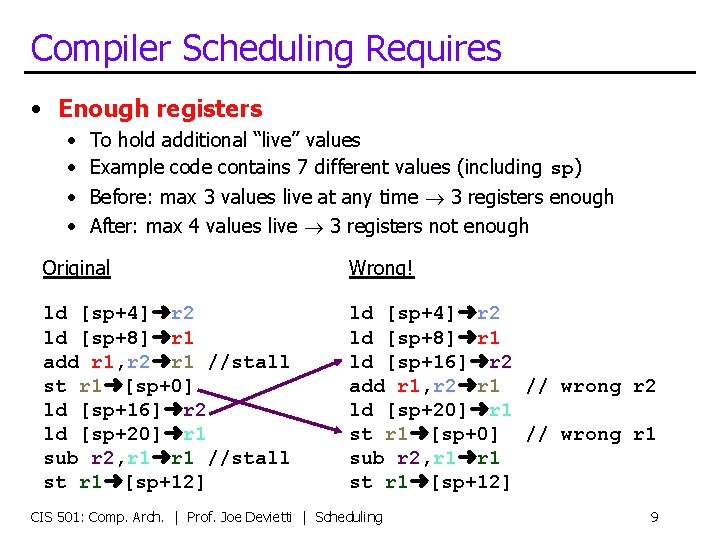

Compiler Scheduling Requires

- Enough registers
  - To hold additional “live” values
  - Example code contains 7 different values (including `sp`)
  - Before: at most 3 values live at any time, so 3 registers are enough
  - After: up to 4 values live, so 3 registers are not enough

| Original | Wrong! |
|---|---|
| `ld [sp+4]➜r2` | `ld [sp+4]➜r2` |
| `ld [sp+8]➜r1` | `ld [sp+8]➜r1` |
| `add r1, r2➜r1 //stall` | `ld [sp+16]➜r2` |
| `st r1➜[sp+0]` | `add r1, r2➜r1 // wrong r2` |
| `ld [sp+16]➜r2` | `ld [sp+20]➜r1` |
| `ld [sp+20]➜r1` | `st r1➜[sp+0] // wrong r1` |
| `sub r1, r2➜r1 //stall` | `sub r1, r2➜r1` |
| `st r1➜[sp+12]` | `st r1➜[sp+12]` |
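The “max values live” counts can be checked mechanically. This minimal Python sketch assumes each destination names a brand-new value (as in the version of the example with distinct registers r1–r6) and that `sp` is live on entry; a value counts as live after an instruction if it has been defined and some later instruction reads it. The encoding and the `max_live` name are hypothetical.

```python
def max_live(program, inputs=("sp",)):
    """Peak number of simultaneously live values.
    program: list of (sources, destination); destination may be None (stores)."""
    defined = set(inputs)
    peak = 0
    for i, (_, dst) in enumerate(program):
        if dst is not None:
            defined.add(dst)
        later_reads = {s for srcs, _ in program[i + 1:] for s in srcs}
        peak = max(peak, len(defined & later_reads))  # defined and still needed
    return peak

before = [(["sp"], "r2"), (["sp"], "r3"), (["r2", "r3"], "r1"), (["r1", "sp"], None),
          (["sp"], "r5"), (["sp"], "r6"), (["r6", "r5"], "r4"), (["r4", "sp"], None)]
after = [(["sp"], "r2"), (["sp"], "r3"), (["sp"], "r5"), (["r2", "r3"], "r1"),
         (["sp"], "r6"), (["r1", "sp"], None), (["r6", "r5"], "r4"), (["r4", "sp"], None)]

print(max_live(before), max_live(after))  # 3 4
```

With only three registers available (counting `sp`), the scheduled order needs one more register than exists, which is why the “Wrong!” column clobbers live values.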

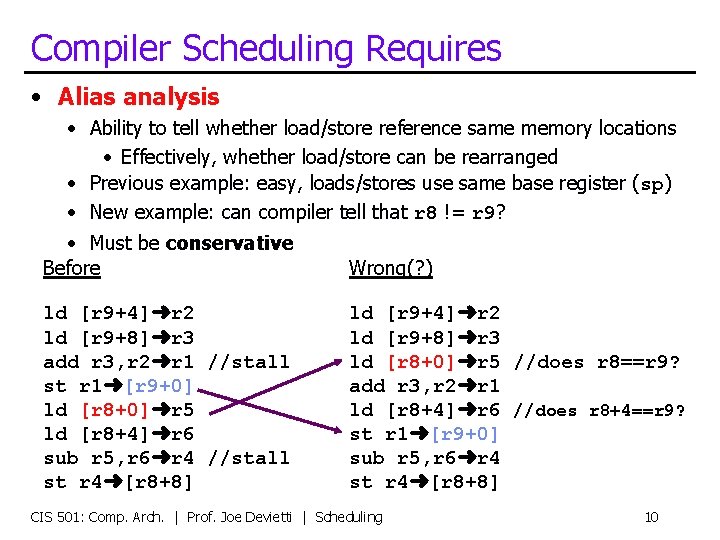

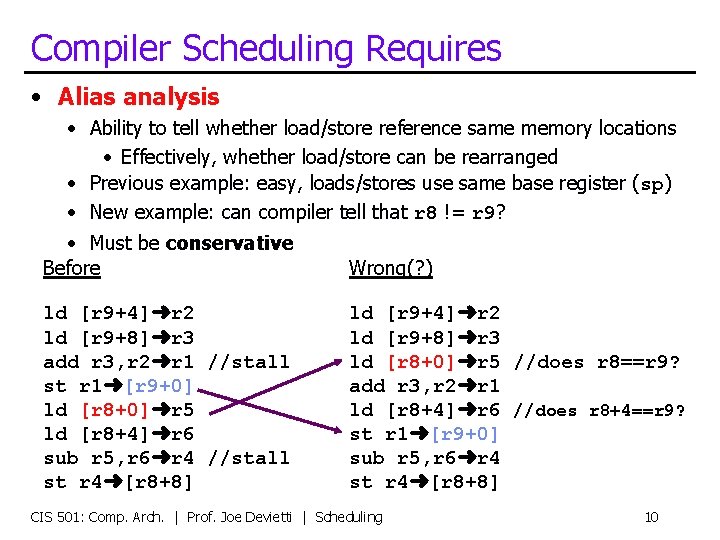

Compiler Scheduling Requires

- Alias analysis
  - The ability to tell whether loads and stores reference the same memory locations
    - Effectively, whether a load and a store can be reordered
  - Previous example: easy, since all loads/stores use the same base register (`sp`)
  - New example: can the compiler tell that `r8 != r9`?
  - It must be conservative

| Before | Wrong(?) |
|---|---|
| `ld [r9+4]➜r2` | `ld [r9+4]➜r2` |
| `ld [r9+8]➜r3` | `ld [r9+8]➜r3` |
| `add r3, r2➜r1 //stall` | `ld [r8+0]➜r5 //does r8==r9?` |
| `st r1➜[r9+0]` | `add r3, r2➜r1` |
| `ld [r8+0]➜r5` | `ld [r8+4]➜r6 //does r8+4==r9?` |
| `ld [r8+4]➜r6` | `st r1➜[r9+0]` |
| `sub r5, r6➜r4 //stall` | `sub r5, r6➜r4` |
| `st r4➜[r8+8]` | `st r4➜[r8+8]` |
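A conservative alias test of the kind the compiler must apply can be sketched in a few lines of Python. The sketch assumes 4-byte accesses of the form `[base+offset]`, assumes the base registers are not modified between the two accesses, and gives the compiler no other knowledge about register values; `may_alias` is a hypothetical helper, not a real compiler API.

```python
def may_alias(base_a, off_a, base_b, off_b, size=4):
    """Conservative compile-time check for two `size`-byte accesses
    [base_a+off_a] and [base_b+off_b]."""
    if base_a == base_b:
        # Same (unchanged) base register: the byte ranges can be compared exactly.
        return abs(off_a - off_b) < size
    # Different base registers: the compiler cannot prove r8 != r9,
    # so it must assume the accesses might overlap.
    return True

# Previous example: same base, different offsets, so reordering is safe.
print(may_alias("sp", 0, "sp", 4))   # False
# New example: can ld [r8+0] move above st r1➜[r9+0]? Not provably.
print(may_alias("r8", 0, "r9", 0))   # True
```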

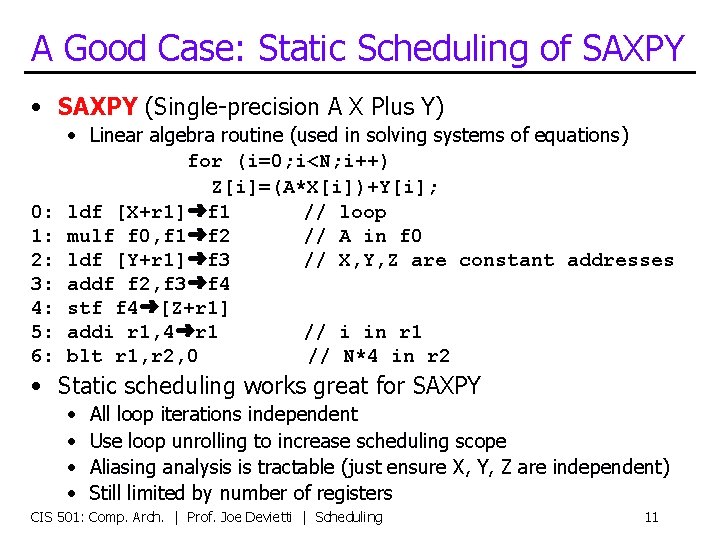

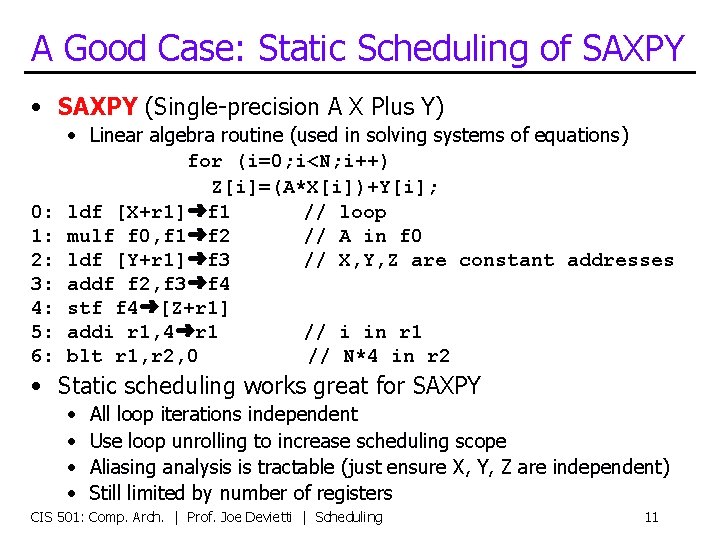

A Good Case: Static Scheduling of SAXPY

- SAXPY (Single-precision A X Plus Y)
  - Linear algebra routine (used in solving systems of equations)
  - `for (i=0; i<N; i++) Z[i]=(A*X[i])+Y[i];`

| # | Instruction | Comment |
|---|---|---|
| 0: | `ldf [X+r1]➜f1` | loop |
| 1: | `mulf f0, f1➜f2` | A in f0 |
| 2: | `ldf [Y+r1]➜f3` | X, Y, Z are constant addresses |
| 3: | `addf f2, f3➜f4` | |
| 4: | `stf f4➜[Z+r1]` | |
| 5: | `addi r1, 4➜r1` | i in r1 |
| 6: | `blt r1, r2, 0` | N*4 in r2 |

- Static scheduling works great for SAXPY
  - All loop iterations are independent
  - Use loop unrolling to increase scheduling scope
  - Aliasing analysis is tractable (just ensure X, Y, Z are independent)
  - Still limited by the number of registers

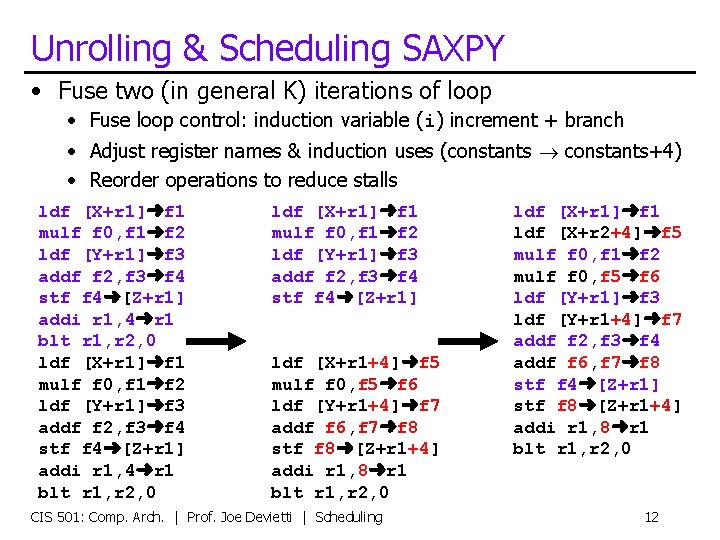

Unrolling & Scheduling SAXPY

- Fuse two (in general K) iterations of the loop
  - Fuse loop control: induction variable (`i`) increment + branch
  - Adjust register names & induction uses (constants → constants+4)
  - Reorder operations to reduce stalls

| Original | Unrolled | Unrolled & scheduled |
|---|---|---|
| `ldf [X+r1]➜f1` | `ldf [X+r1]➜f1` | `ldf [X+r1]➜f1` |
| `mulf f0, f1➜f2` | `mulf f0, f1➜f2` | `ldf [X+r1+4]➜f5` |
| `ldf [Y+r1]➜f3` | `ldf [Y+r1]➜f3` | `mulf f0, f1➜f2` |
| `addf f2, f3➜f4` | `addf f2, f3➜f4` | `mulf f0, f5➜f6` |
| `stf f4➜[Z+r1]` | `stf f4➜[Z+r1]` | `ldf [Y+r1]➜f3` |
| `addi r1, 4➜r1` | `ldf [X+r1+4]➜f5` | `ldf [Y+r1+4]➜f7` |
| `blt r1, r2, 0` | `mulf f0, f5➜f6` | `addf f2, f3➜f4` |
| | `ldf [Y+r1+4]➜f7` | `addf f6, f7➜f8` |
| | `addf f6, f7➜f8` | `stf f4➜[Z+r1]` |
| | `stf f8➜[Z+r1+4]` | `stf f8➜[Z+r1+4]` |
| | `addi r1, 8➜r1` | `addi r1, 8➜r1` |
| | `blt r1, r2, 0` | `blt r1, r2, 0` |
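The same transformation can be mirrored at source level. This Python sketch is only an analogy for what the compiler does to the assembly: it unrolls by K = 2, performs one induction-variable increment and one loop test per two elements, and groups the two independent loads and multiplies together as in the scheduled column. It assumes an even element count (a real compiler would add a cleanup loop for the leftover iteration); both function names are hypothetical.

```python
def saxpy(a, x, y):
    """Reference loop: Z[i] = (A * X[i]) + Y[i]."""
    z = [0.0] * len(x)
    for i in range(len(x)):
        z[i] = a * x[i] + y[i]
    return z

def saxpy_unrolled2(a, x, y):
    """Same computation unrolled by K = 2; assumes len(x) is even."""
    n = len(x)
    assert n % 2 == 0, "sketch handles even lengths only"
    z = [0.0] * n
    for i in range(0, n, 2):             # one increment + one test per 2 elements
        x0, x1 = x[i], x[i + 1]          # both X loads first (f1, f5)
        m0, m1 = a * x0, a * x1          # independent multiplies (f2, f6)
        y0, y1 = y[i], y[i + 1]          # both Y loads (f3, f7)
        z[i], z[i + 1] = m0 + y0, m1 + y1  # adds (f4, f8), then both stores
    return z

x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
print(saxpy_unrolled2(2.0, x, y))  # [12.0, 24.0, 36.0, 48.0]
```

Because every iteration is independent, regrouping the operations never changes a result; it only exposes more independent work to fill load-use and multiply latencies.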

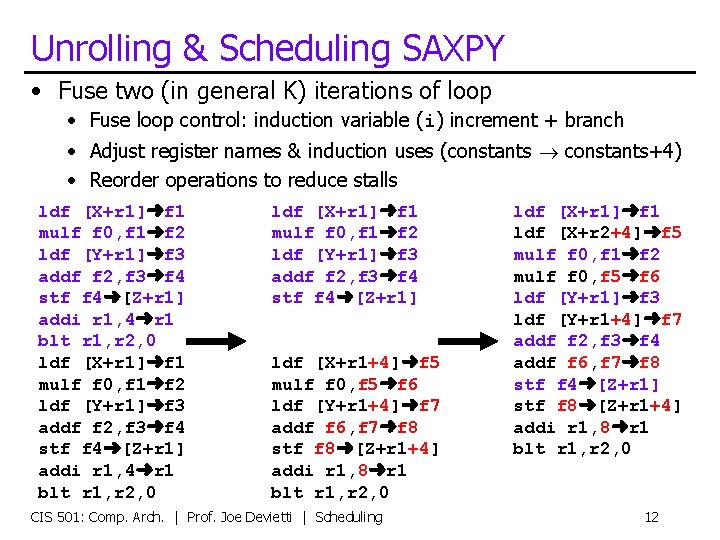

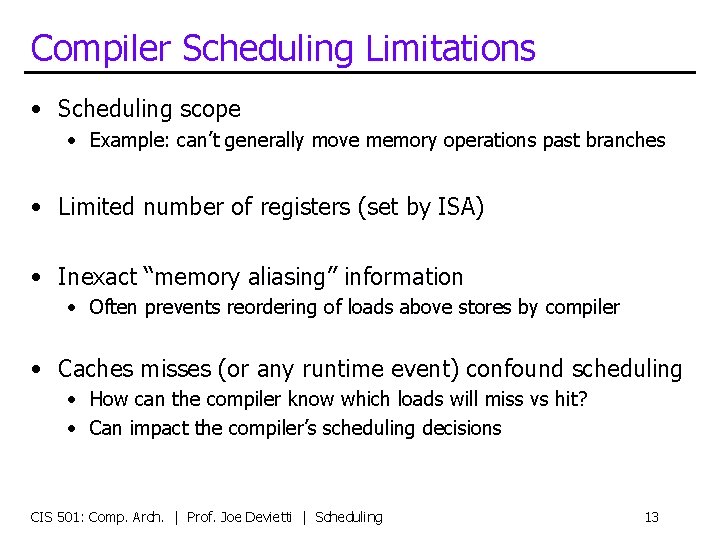

Compiler Scheduling Limitations

- Scheduling scope
  - Example: can’t generally move memory operations past branches
- Limited number of registers (set by the ISA)
- Inexact “memory aliasing” information
  - Often prevents the compiler from reordering loads above stores
- Cache misses (or any runtime event) confound scheduling
  - How can the compiler know which loads will miss vs. hit?
  - This can impact the compiler’s scheduling decisions

501 News

- Paper Review #5 out
  - Due on Wed 20 Nov
- Only 2 homework assignments to go!

Dynamic (Hardware) Scheduling

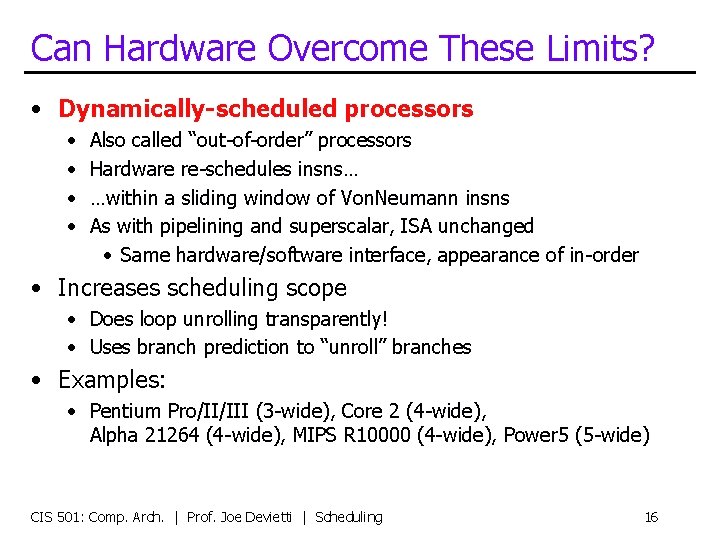

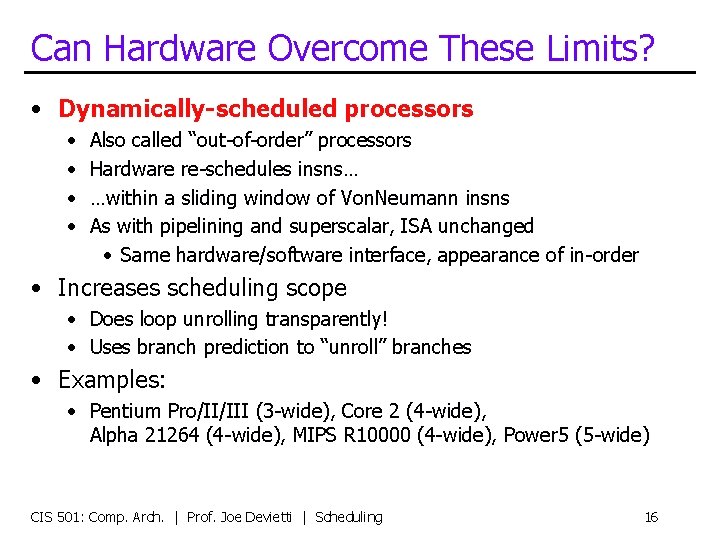

Can Hardware Overcome These Limits?

- Dynamically-scheduled processors
  - Also called “out-of-order” processors
  - Hardware re-schedules instructions…
  - …within a sliding window of von Neumann instructions
  - As with pipelining and superscalar, the ISA is unchanged
    - Same hardware/software interface, appearance of in-order execution
- Increases scheduling scope
  - Does loop unrolling transparently!
  - Uses branch prediction to “unroll” branches
- Examples:
  - Pentium Pro/II/III (3-wide), Core 2 (4-wide), Alpha 21264 (4-wide), MIPS R10000 (4-wide), Power5 (5-wide)

![Example: In-Order Limitations #1](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-17.jpg)

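Example: In-Order Limitations #1

| Insn | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| `Ld [r1] ➜ r2` | F | D | X | M1 | M2 | W | | | | |
| `add r2 + r3 ➜ r4` | F | D | `d*` | `d*` | `d*` | X | M1 | M2 | W | |
| `xor r4 ^ r5 ➜ r6` | | F | D | `d*` | `d*` | `d*` | X | M1 | M2 | W |
| `ld [r7] ➜ r4` | | F | D | `p*` | `p*` | `p*` | X | M1 | M2 | W |

- In-order pipeline, three-cycle load-use penalty
- 2-wide
- Why not the following?

| Insn | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| `Ld [r1] ➜ r2` | F | D | X | M1 | M2 | W | | | | |
| `add r2 + r3 ➜ r4` | F | D | `d*` | `d*` | `d*` | X | M1 | M2 | W | |
| `xor r4 ^ r5 ➜ r6` | | F | D | `d*` | `d*` | `d*` | X | M1 | M2 | W |
| `ld [r7] ➜ r4` | | F | D | X | M1 | M2 | W | | | |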

![Example: In-Order Limitations #2](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-18.jpg)

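Example: In-Order Limitations #2

| Insn | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| `Ld [p1] ➜ p2` | F | D | X | M1 | M2 | W | | | | |
| `add p2 + p3 ➜ p4` | F | D | `d*` | `d*` | `d*` | X | M1 | M2 | W | |
| `xor p4 ^ p5 ➜ p6` | | F | D | `d*` | `d*` | `d*` | X | M1 | M2 | W |
| `ld [p7] ➜ p8` | | F | D | `p*` | `p*` | `p*` | X | M1 | M2 | W |

- In-order pipeline, three-cycle load-use penalty
- 2-wide
- Why not the following?

| Insn | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| `Ld [p1] ➜ p2` | F | D | X | M1 | M2 | W | | | | |
| `add p2 + p3 ➜ p4` | F | D | `d*` | `d*` | `d*` | X | M1 | M2 | W | |
| `xor p4 ^ p5 ➜ p6` | | F | D | `d*` | `d*` | `d*` | X | M1 | M2 | W |
| `ld [p7] ➜ p8` | | F | D | X | M1 | M2 | W | | | |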
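The difference between the two diagrams can be reproduced with a rough timing model. This Python sketch assumes the front end of the diagrams (instruction `i` is fetched in cycle `i // width`, decoded one cycle later, so it can execute no earlier than cycle `i // width + 2`), a 3-cycle load-to-use latency and a 1-cycle ALU latency, and at most `width` instructions entering X per cycle. The in-order variant adds the rule that an instruction may not enter X before an older one; the out-of-order variant drops that rule. `issue_cycles` is a hypothetical name, and the model ignores the extra Di/I/RR stages of a real out-of-order pipeline.

```python
from collections import Counter

def issue_cycles(program, width=2, in_order=True):
    """program: list of (sources, destination, latency) in program order.
    Returns the cycle in which each instruction enters X."""
    ready_at = {}        # register -> first cycle a consumer may enter X
    busy = Counter()     # cycle -> number of insns entering X then
    x_cycles = []
    for i, (srcs, dst, latency) in enumerate(program):
        cycle = max([i // width + 2] + [ready_at.get(s, 0) for s in srcs])
        if in_order and x_cycles:
            cycle = max(cycle, x_cycles[-1])  # may not pass an older insn
        while busy[cycle] >= width:           # at most `width` insns per cycle
            cycle += 1
        busy[cycle] += 1
        x_cycles.append(cycle)
        ready_at[dst] = cycle + latency
    return x_cycles

example2 = [
    (["p1"], "p2", 3),        # ld [p1] -> p2
    (["p2", "p3"], "p4", 1),  # add p2 + p3 -> p4
    (["p4", "p5"], "p6", 1),  # xor p4 ^ p5 -> p6
    (["p7"], "p8", 3),        # ld [p7] -> p8
]
print(issue_cycles(example2, in_order=True))   # [2, 5, 6, 6]
print(issue_cycles(example2, in_order=False))  # [2, 5, 6, 3]
```

Only the independent `ld [p7]` moves: from cycle 6 (stuck behind `xor`) to cycle 3, as in the “why not the following?” diagram. With the unrenamed code of example #1 the same move would be illegal, because `ld [r7] ➜ r4` overwrites a register that `add` writes and `xor` reads.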

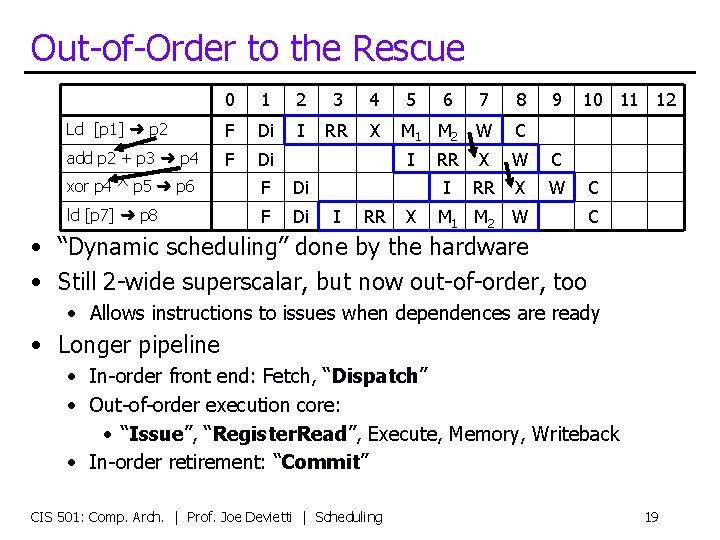

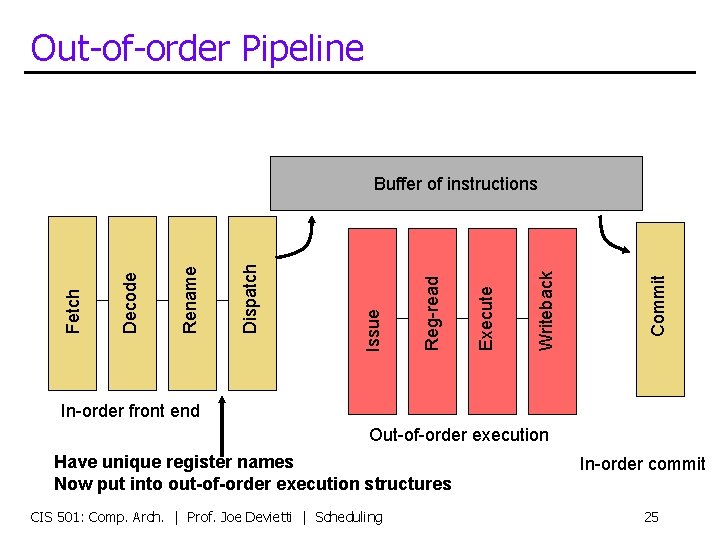

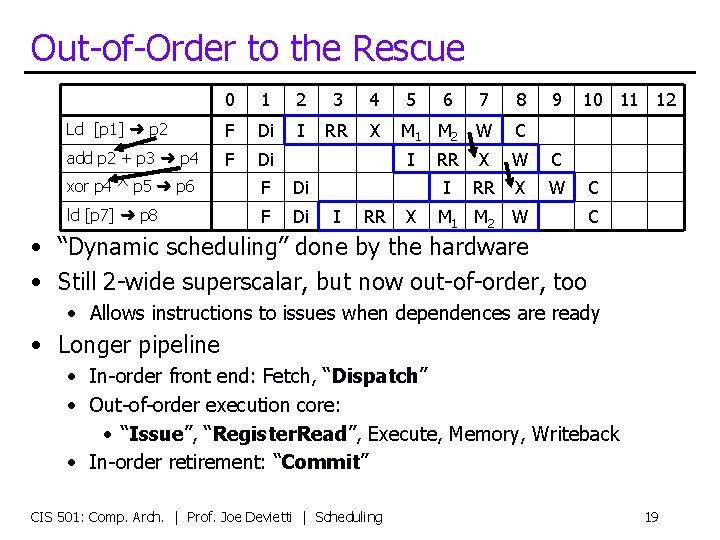

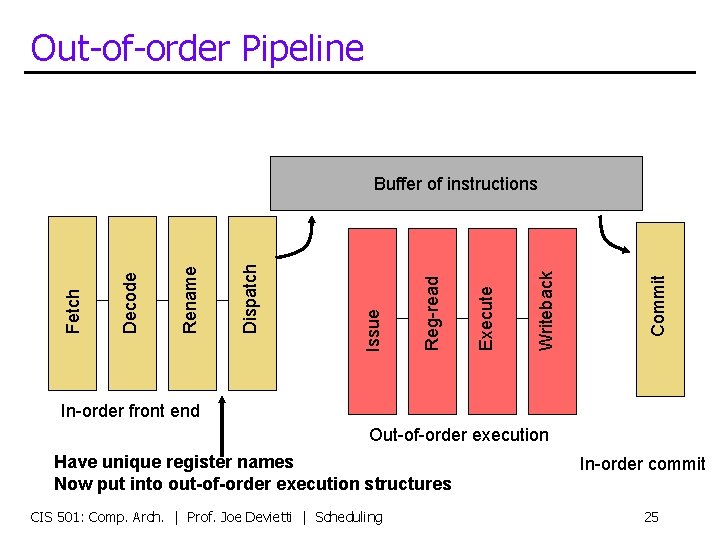

Out-of-Order to the Rescue

| Insn | Stages |
|---|---|
| `Ld [p1] ➜ p2` | F, Di, I, RR, X, M1, M2, W, C (cycles 0–8) |
| `add p2 + p3 ➜ p4` | F, Di, then I, RR, X, W once `p2` is available, then C |
| `xor p4 ^ p5 ➜ p6` | F, Di, then I, RR, X, W once `p4` is available, then C |
| `ld [p7] ➜ p8` | F, Di, I, RR, X, M1, M2, W without waiting for `add` or `xor`; C in program order |

- “Dynamic scheduling” done by the hardware
- Still 2-wide superscalar, but now out-of-order, too
  - Allows instructions to issue when their dependences are ready
- Longer pipeline
  - In-order front end: Fetch, “Dispatch”
  - Out-of-order execution core: “Issue”, “Register Read”, Execute, Memory, Writeback
  - In-order retirement: “Commit”

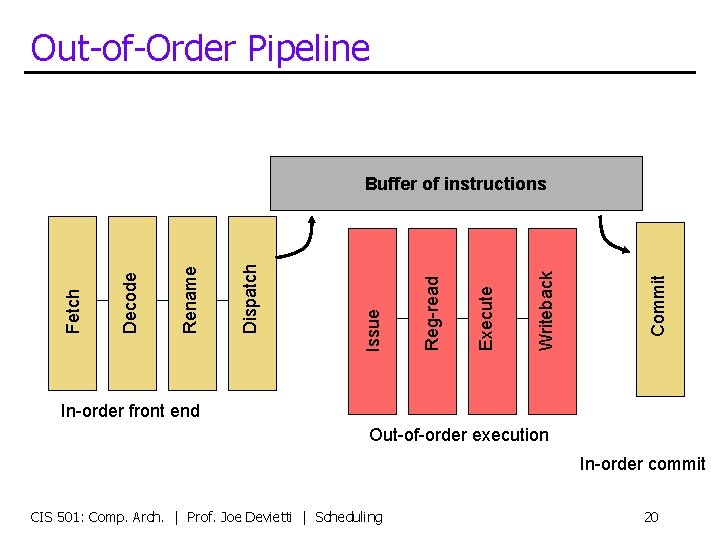

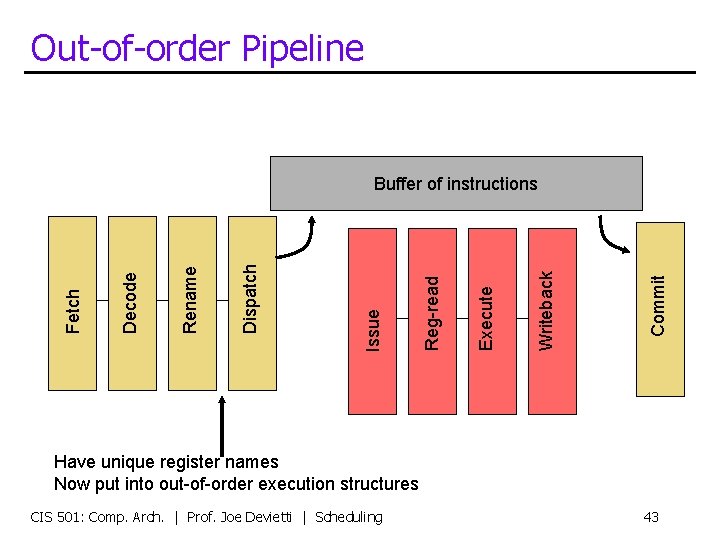

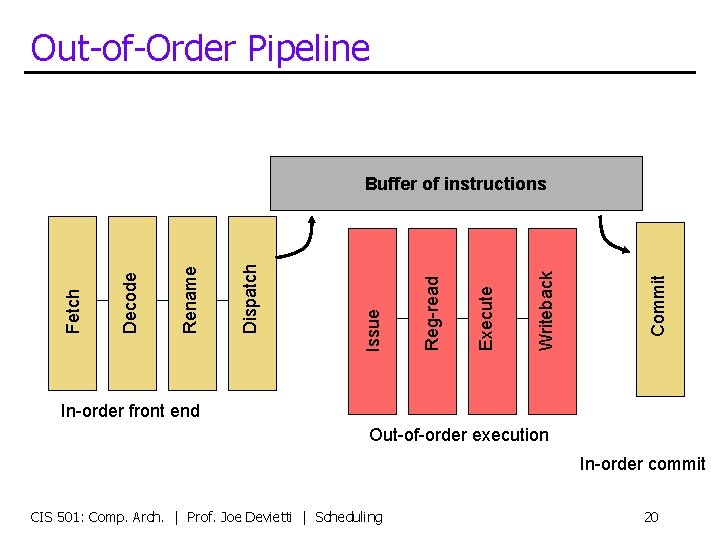

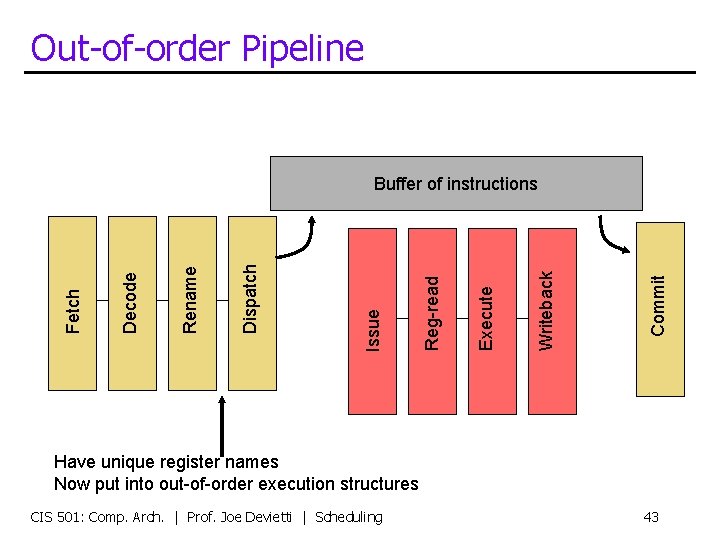

Out-of-Order Pipeline: Fetch → Decode → Rename → Dispatch (in-order front end) → buffer of instructions → Issue → Reg-read → Execute → Writeback (out-of-order execution) → Commit (in-order commit)

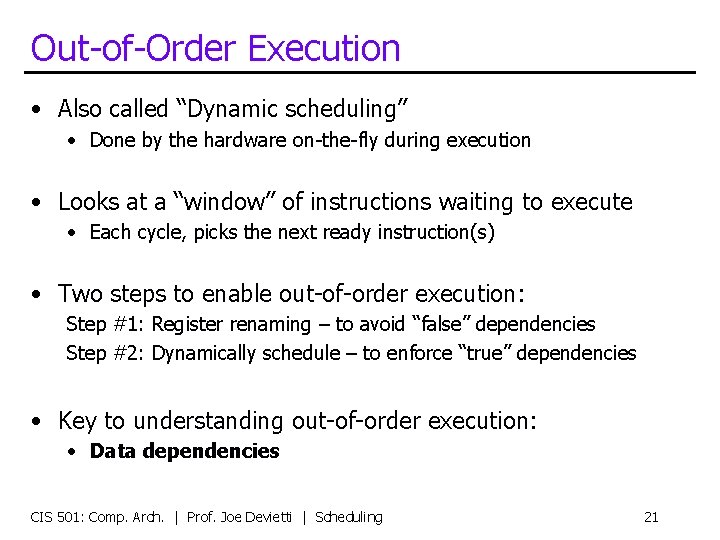

Out-of-Order Execution

- Also called “dynamic scheduling”
  - Done by the hardware on the fly during execution
- Looks at a “window” of instructions waiting to execute
  - Each cycle, picks the next ready instruction(s)
- Two steps to enable out-of-order execution:
  - Step #1: Register renaming, to avoid “false” dependencies
  - Step #2: Dynamic scheduling, to enforce “true” dependencies
- Key to understanding out-of-order execution:
  - Data dependencies

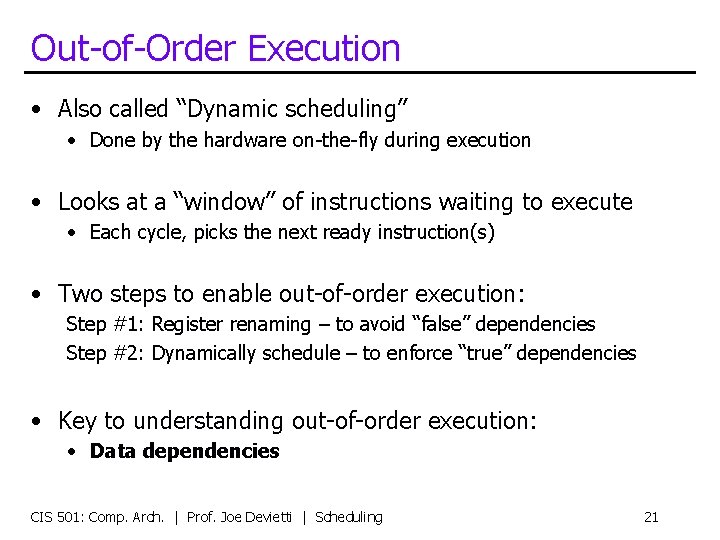

Dependence types

- RAW (Read After Write) = “true dependence” (true)
  - `mul r0 * r1 ➜ r2` … `add r2 + r3 ➜ r4`
- WAW (Write After Write) = “output dependence” (false)
  - `mul r0 * r1 ➜ r2` … `add r1 + r3 ➜ r2`
- WAR (Write After Read) = “anti-dependence” (false)
  - `mul r0 * r1 ➜ r2` … `add r3 + r4 ➜ r1`
- WAW & WAR are “false” and can be totally eliminated by “renaming”
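The three definitions reduce to three register comparisons between an older and a younger instruction. A minimal Python sketch, assuming each instruction is encoded as a hypothetical `(sources, destination)` pair and ignoring any instructions in between (the “…” on the slide):

```python
def classify(older, younger):
    """Dependence types from `older` to `younger`; each is (sources, destination)."""
    o_srcs, o_dst = older
    y_srcs, y_dst = younger
    deps = set()
    if o_dst is not None and o_dst in y_srcs:
        deps.add("RAW")  # true: younger reads older's result
    if o_dst is not None and o_dst == y_dst:
        deps.add("WAW")  # output: both write the same register
    if y_dst is not None and y_dst in o_srcs:
        deps.add("WAR")  # anti: younger overwrites an input older still reads
    return deps

mul = (["r0", "r1"], "r2")                # mul r0 * r1 ➜ r2
print(classify(mul, (["r2", "r3"], "r4")))  # {'RAW'}
print(classify(mul, (["r1", "r3"], "r2")))  # {'WAW'}
print(classify(mul, (["r3", "r4"], "r1")))  # {'WAR'}
```

Only the RAW check involves a value flowing between the two instructions; the WAW and WAR checks compare register *names*, which is why giving each write a fresh name removes them.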

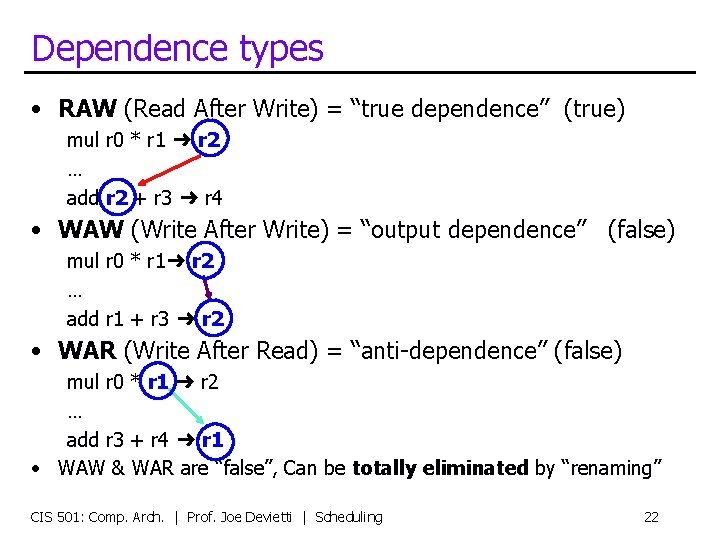

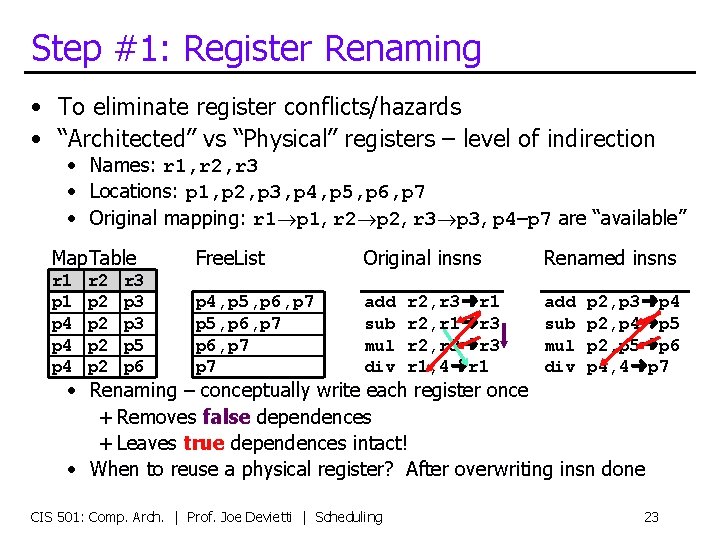

Step #1: Register Renaming

- To eliminate register conflicts/hazards
- “Architected” vs “physical” registers: a level of indirection
  - Names: r1, r2, r3
  - Locations: p1, p2, p3, p4, p5, p6, p7
  - Original mapping: r1→p1, r2→p2, r3→p3; p4–p7 are “available”

| Original insn | Renamed insn | MapTable after (r1, r2, r3) | FreeList after |
|---|---|---|---|
| (initial) | | p1, p2, p3 | p4, p5, p6, p7 |
| `add r2, r3➜r1` | `add p2, p3➜p4` | p4, p2, p3 | p5, p6, p7 |
| `sub r2, r1➜r3` | `sub p2, p4➜p5` | p4, p2, p5 | p6, p7 |
| `mul r2, r3➜r3` | `mul p2, p5➜p6` | p4, p2, p6 | p7 |
| `div r1, 4➜r1` | `div p4, 4➜p7` | p7, p2, p6 | (empty) |

- Renaming: conceptually, write each register once
  - (+) Removes false dependences
  - (+) Leaves true dependences intact!
- When to reuse a physical register? After the overwriting instruction is done

![Register Renaming Algorithm](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-24.jpg)

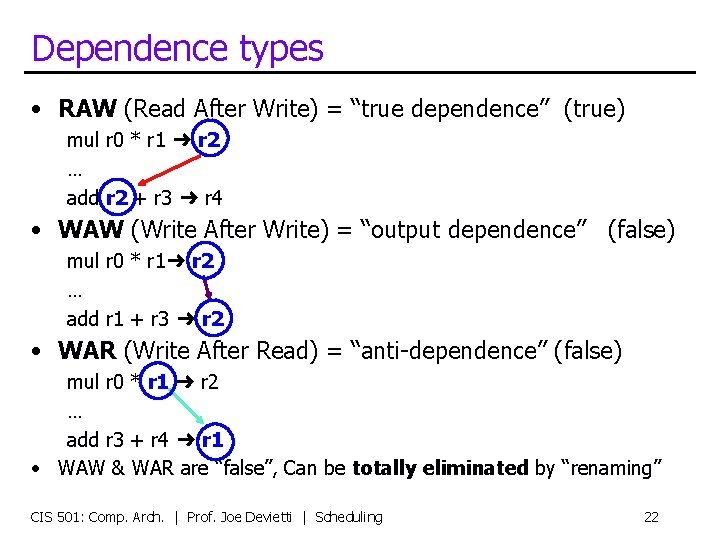

Register Renaming Algorithm

- Two key data structures:
  - `maptable[architectural_reg]` → `physical_reg`
  - Free list: allocate (new) & free registers (implemented as a queue)
- Algorithm: at the “decode” stage, for each instruction:
  - `insn.phys_input1 = maptable[insn.arch_input1]`
  - `insn.phys_input2 = maptable[insn.arch_input2]`
  - `insn.old_phys_output = maptable[insn.arch_output]`
  - `new_reg = new_phys_reg()`
  - `maptable[insn.arch_output] = new_reg`
  - `insn.phys_output = new_reg`
- At “commit”:
  - Once all older instructions have committed, free the register: `free_phys_reg(insn.old_phys_output)`
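The pseudocode translates almost line for line into Python. This sketch assumes architectural registers `r1..rN` initially map to `p1..pN`, that the remaining physical registers start on a FIFO free list (a `collections.deque`), and that a free register is always available (a real design stalls rename otherwise). The `Renamer` class and method names are hypothetical; the usage replays the four-instruction example from the “Register Renaming” slide and then commits them in order.

```python
from collections import deque

class Renamer:
    """Map table + FIFO free list, following the slide's pseudocode."""

    def __init__(self, num_arch, num_phys):
        self.maptable = {f"r{i}": f"p{i}" for i in range(1, num_arch + 1)}
        self.free_list = deque(f"p{i}" for i in range(num_arch + 1, num_phys + 1))

    def rename(self, arch_inputs, arch_output):
        phys_inputs = [self.maptable[r] for r in arch_inputs]  # read inputs first
        old_phys_output = self.maptable[arch_output]
        new_reg = self.free_list.popleft()                     # new_phys_reg()
        self.maptable[arch_output] = new_reg
        return phys_inputs, new_reg, old_phys_output

    def commit(self, old_phys_output):
        # By the time this insn commits, every older insn (the only ones that
        # could read the old mapping) has committed too, so reuse is safe.
        self.free_list.append(old_phys_output)                 # free_phys_reg()

rn = Renamer(num_arch=3, num_phys=7)
log = [rn.rename(["r2", "r3"], "r1"),   # add r2, r3➜r1
       rn.rename(["r2", "r1"], "r3"),   # sub r2, r1➜r3
       rn.rename(["r2", "r3"], "r3"),   # mul r2, r3➜r3
       rn.rename(["r1"], "r1")]         # div r1, 4➜r1 (immediate not renamed)
for _, _, old in log:                   # commit in program order
    rn.commit(old)
print(rn.maptable, list(rn.free_list))
```

Reading the inputs before updating the map table matters for instructions like `sub r2, r1➜r3` that read one register and overwrite another; for `add r3 + r4 ➜ r4` it is what makes the input refer to the *old* r4.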

Out-of-order Pipeline: Fetch → Decode → Rename → Dispatch (in-order front end) → buffer of instructions → Issue → Reg-read → Execute → Writeback (out-of-order execution) → Commit (in-order commit)

- After renaming, instructions have unique register names
- Now put them into out-of-order execution structures

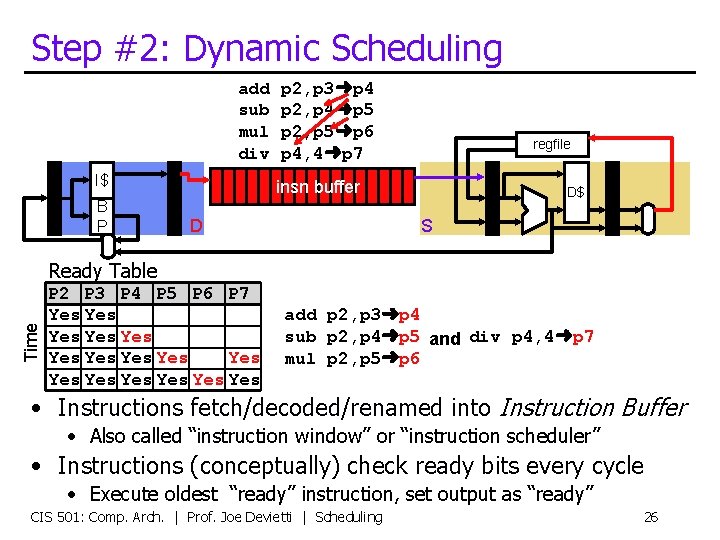

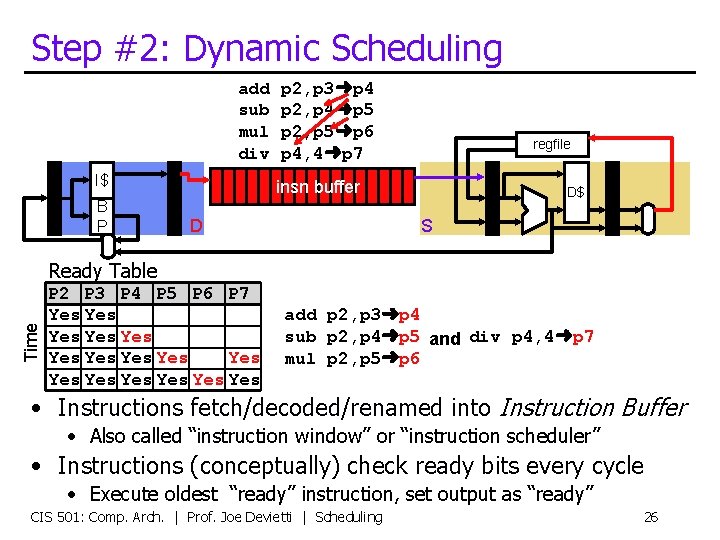

Step #2: Dynamic Scheduling

Renamed instructions: `add p2, p3➜p4`, `sub p2, p4➜p5`, `mul p2, p5➜p6`, `div p4, 4➜p7`

Ready table over time, and what executes at each step:

| Time | P2 | P3 | P4 | P5 | P6 | P7 | Executes |
|---|---|---|---|---|---|---|---|
| 1 | Yes | Yes | | | | | `add p2, p3➜p4` |
| 2 | Yes | Yes | Yes | | | | `sub p2, p4➜p5` and `div p4, 4➜p7` |
| 3 | Yes | Yes | Yes | Yes | | Yes | `mul p2, p5➜p6` |
| 4 | Yes | Yes | Yes | Yes | Yes | Yes | |

- Instructions are fetched/decoded/renamed into the instruction buffer
  - Also called the “instruction window” or “instruction scheduler”
- Instructions (conceptually) check ready bits every cycle
  - Execute the oldest “ready” instruction, set its output as “ready”

![Dynamic Scheduling/Issue Algorithm](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-27.jpg)

Dynamic Scheduling/Issue Algorithm

- Data structures:
  - Ready `table[phys_reg]` → yes/no (part of the “issue queue”)
- Algorithm at the “issue” stage (prior to reading registers):
  - `foreach instruction:`
    - `if table[insn.phys_input1] == ready && table[insn.phys_input2] == ready then insn is "ready"`
  - `select the oldest "ready" instruction`
  - `table[insn.phys_output] = ready`
- Multiple-cycle instructions (such as loads)?
  - For an insn with latency N, set the “ready” bit N-1 cycles in the future
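A cycle-by-cycle Python sketch of this loop, assuming an unbounded instruction window and a configurable issue width. Instead of literally scheduling a future bit flip, the ready table stores the first cycle in which each physical register can be consumed: an instruction with latency N issued in cycle t makes its output usable by instructions issuing in cycle t + N, which is equivalent to setting the ready bit N-1 cycles after issue. The `schedule` function and its tuple format are hypothetical.

```python
def schedule(insns, width=1, initially_ready=(), max_cycles=1000):
    """insns: list of (name, phys_inputs, phys_output, latency) in program order.
    Returns {name: issue cycle}."""
    ready_from = {p: 0 for p in initially_ready}  # phys reg -> first usable cycle
    waiting = list(range(len(insns)))             # indices, oldest first
    issued = {}
    cycle = 0
    while waiting:
        if cycle >= max_cycles:
            raise RuntimeError("some instruction never became ready")
        ready = [i for i in waiting
                 if all(ready_from.get(p, float("inf")) <= cycle for p in insns[i][1])]
        for i in ready[:width]:                   # select the oldest ready insns
            name, _, dst, latency = insns[i]
            ready_from[dst] = cycle + latency     # wakeup, deferred for latency > 1
            issued[name] = cycle
            waiting.remove(i)
        cycle += 1
    return issued

example = [("xor", ["p1", "p2"], "p6", 1),
           ("add", ["p6", "p4"], "p7", 1),
           ("sub", ["p5", "p2"], "p8", 1),
           ("addi", ["p8"], "p9", 1)]             # immediate needs no ready bit
regs = ["p1", "p2", "p3", "p4", "p5"]
print(schedule(example, width=1, initially_ready=regs))
# {'xor': 0, 'add': 1, 'sub': 2, 'addi': 3}
print(schedule([("ld", ["p1"], "p2", 3), ("add", ["p2", "p3"], "p4", 1)],
               initially_ready=["p1", "p3"]))
# {'ld': 0, 'add': 3}
```

With `width=1`, the older `add` wins the select in cycle 1 even though `sub` is also ready; the load example shows the deferred wakeup for a multi-cycle operation.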

Register Renaming

![Register Renaming Algorithm (Simplified)](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-29.jpg)

Register Renaming Algorithm (Simplified)

- Two key data structures:
  - `maptable[architectural_reg]` → `physical_reg`
  - Free list: allocate (new) & free registers (implemented as a queue)
- Algorithm: at the “decode” stage, for each instruction:
  - `insn.phys_input1 = maptable[insn.arch_input1]`
  - `insn.phys_input2 = maptable[insn.arch_input2]`
  - `new_reg = new_phys_reg()`
  - `maptable[insn.arch_output] = new_reg`
  - `insn.phys_output = new_reg`

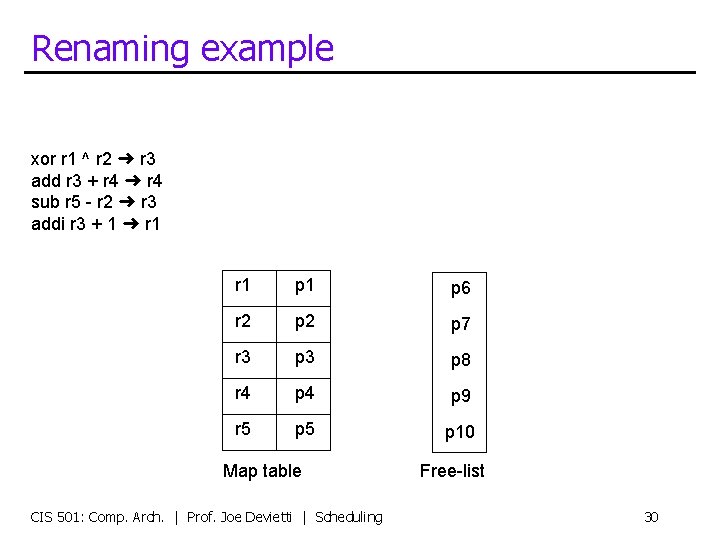

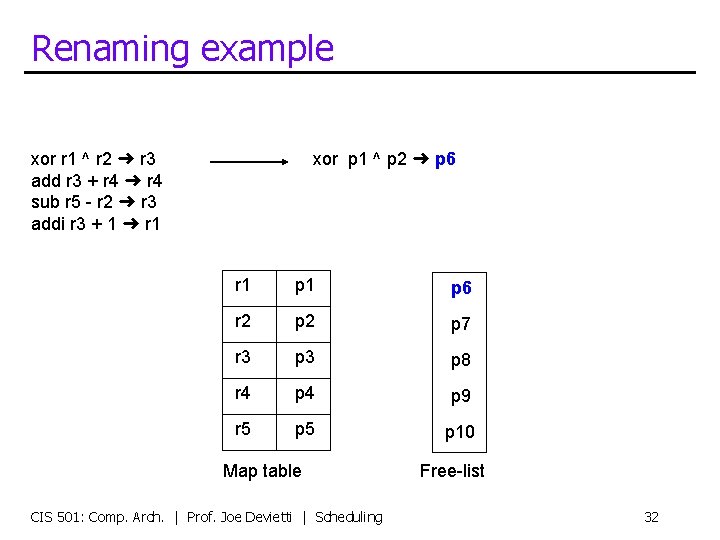

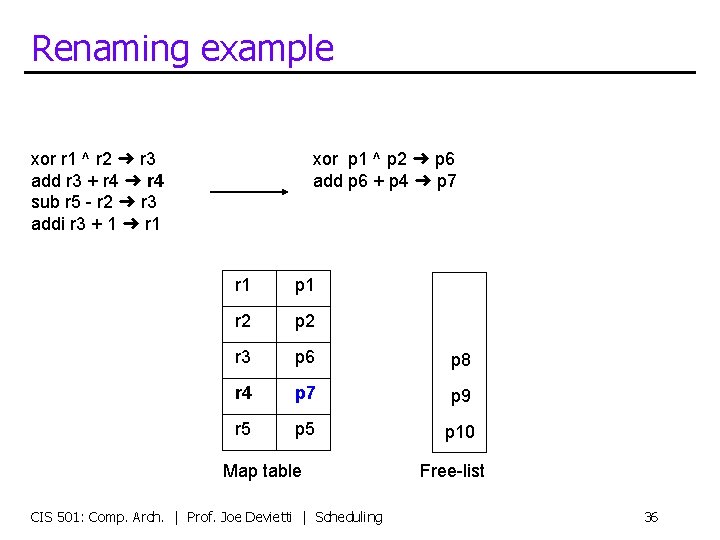

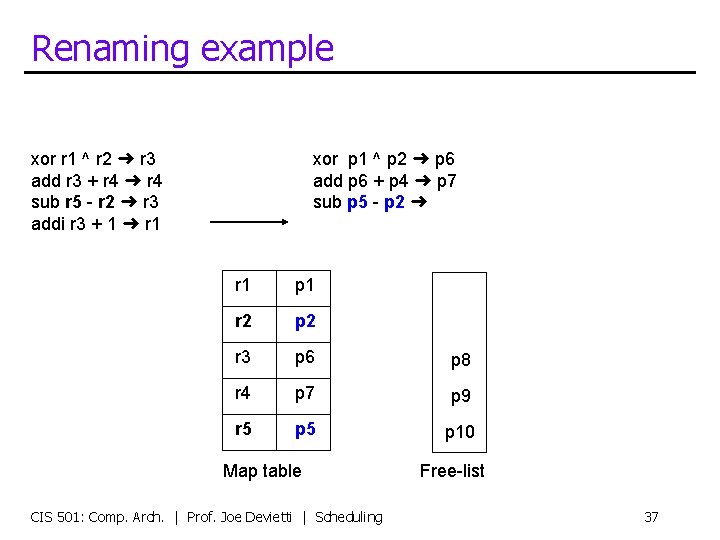

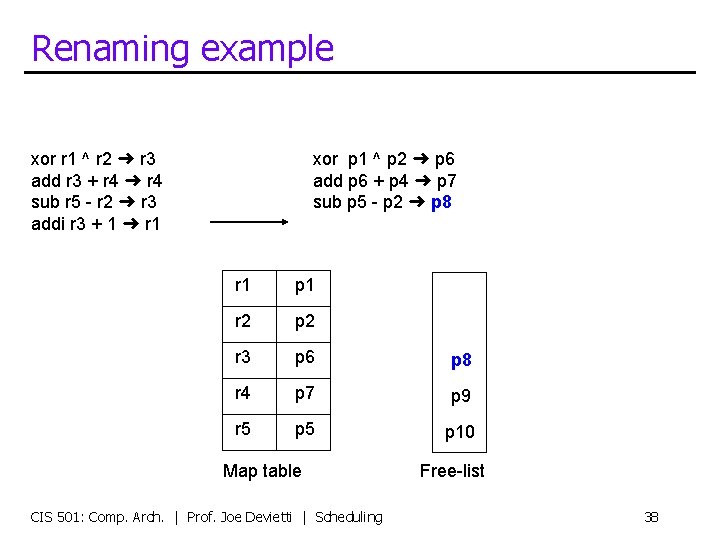

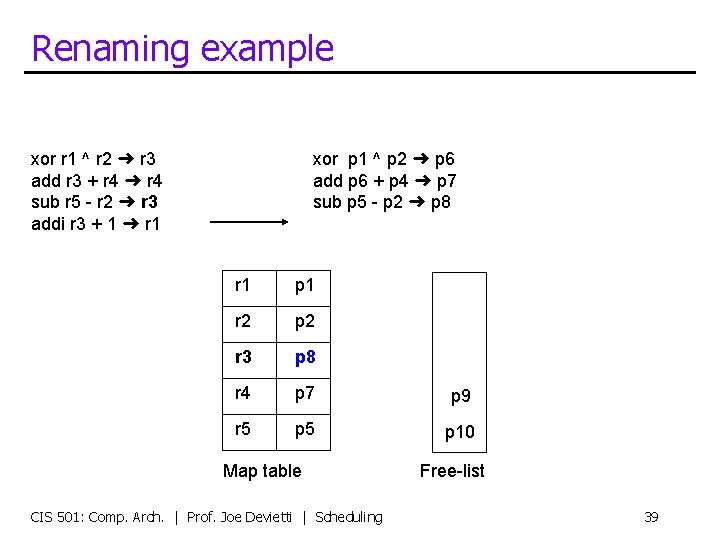

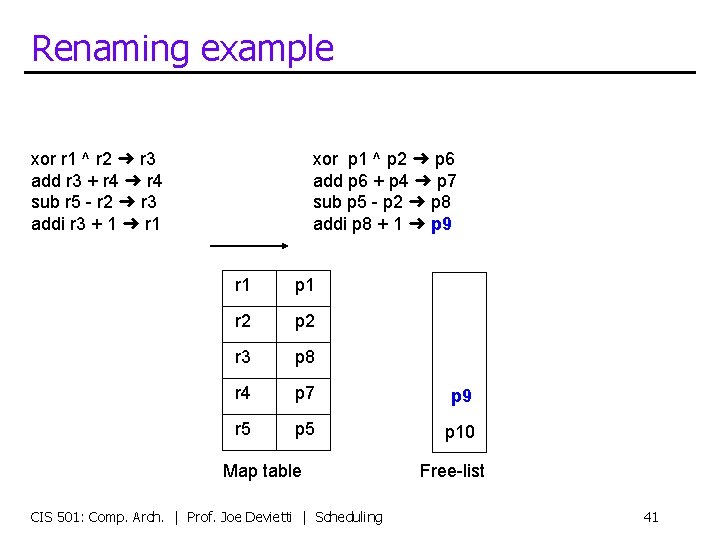

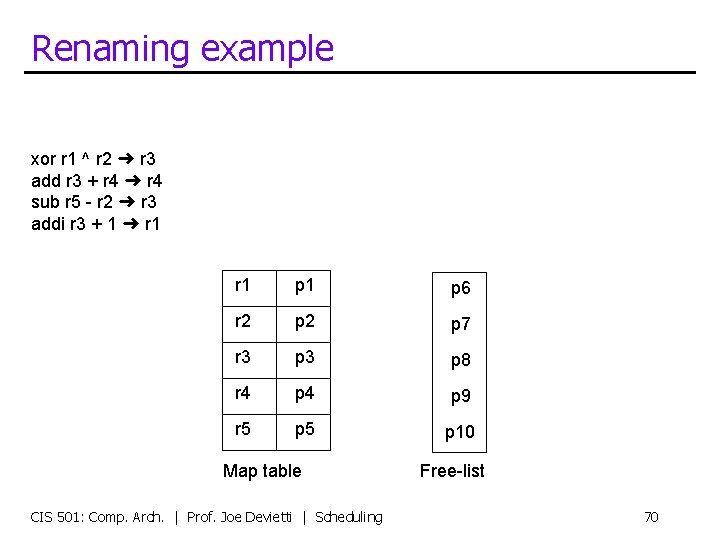

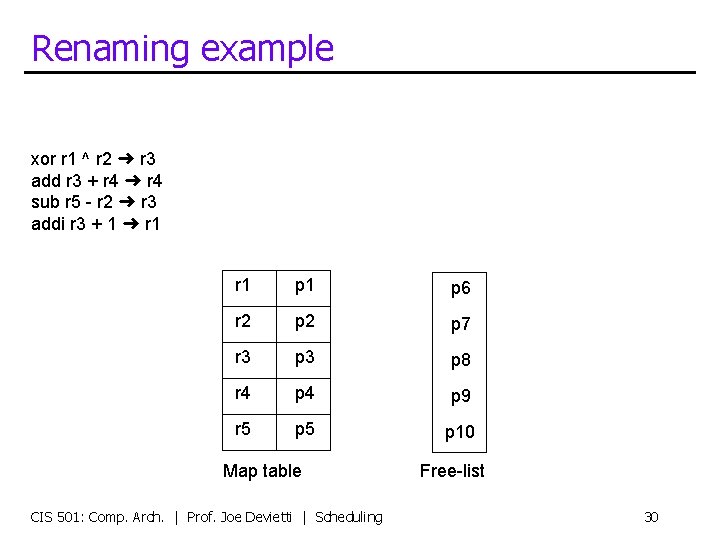

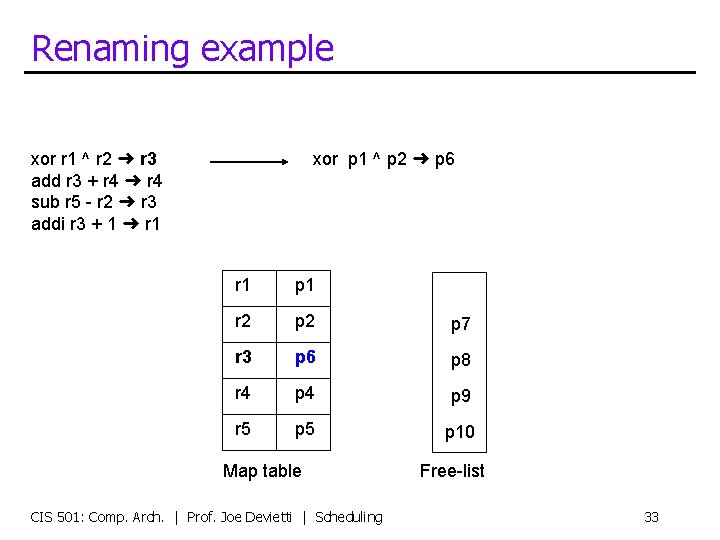

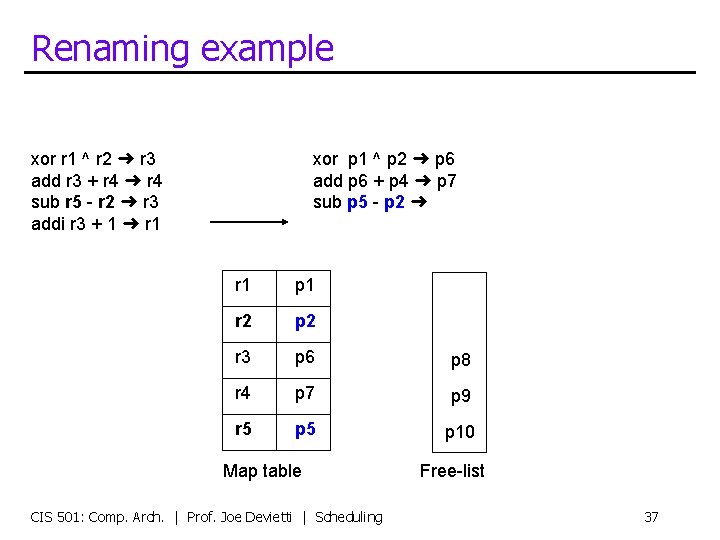

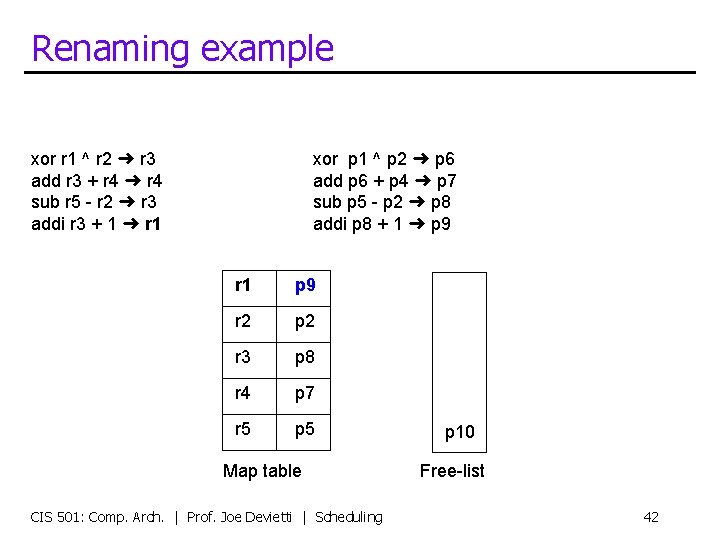

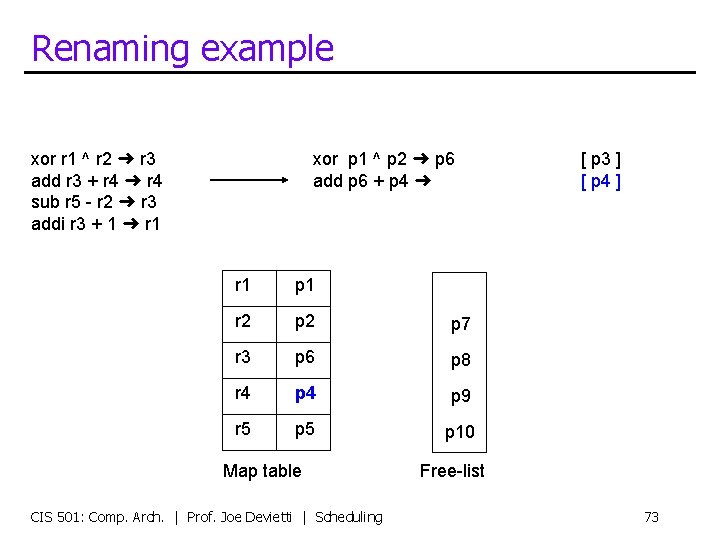

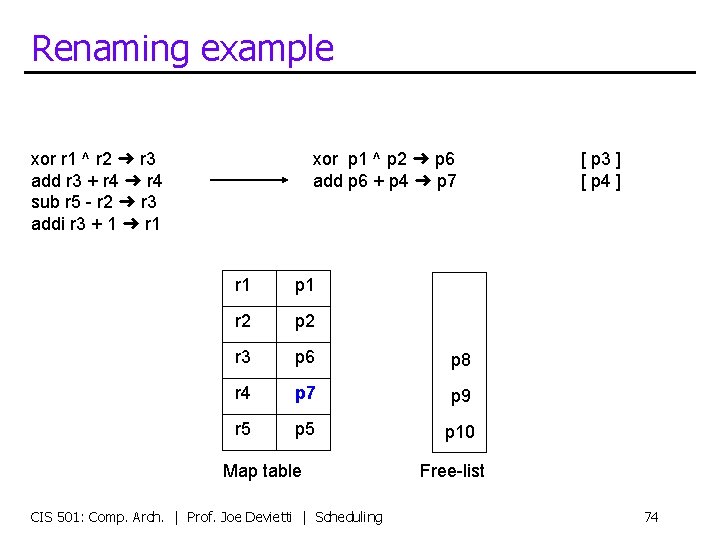

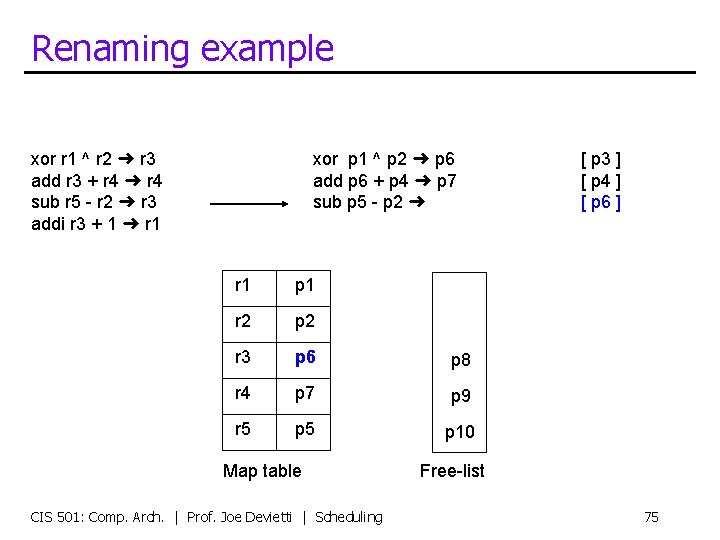

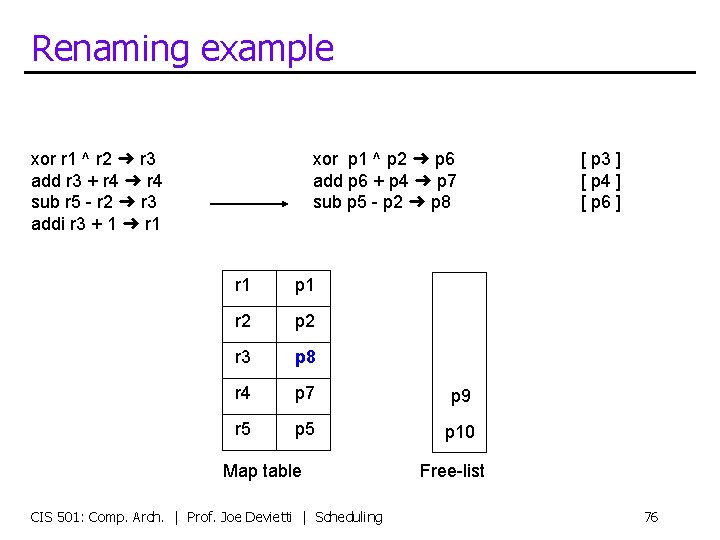

Renaming example. Original code: `xor r1 ^ r2 ➜ r3`; `add r3 + r4 ➜ r4`; `sub r5 - r2 ➜ r3`; `addi r3 + 1 ➜ r1`. Initial map table: r1→p1, r2→p2, r3→p3, r4→p4, r5→p5. Free list: p6, p7, p8, p9, p10.

Renaming example: `xor r1 ^ r2 ➜ r3` looks up its inputs in the map table: `xor p1 ^ p2 ➜ ?`. Map table: r1→p1, r2→p2, r3→p3, r4→p4, r5→p5. Free list: p6, p7, p8, p9, p10.

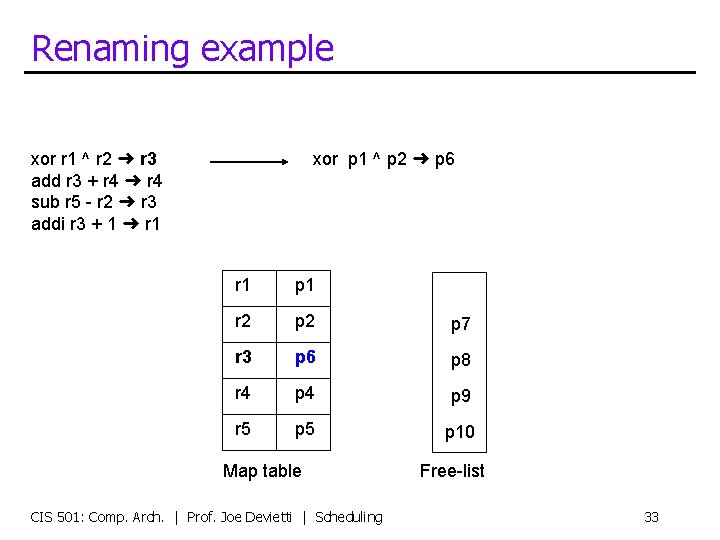

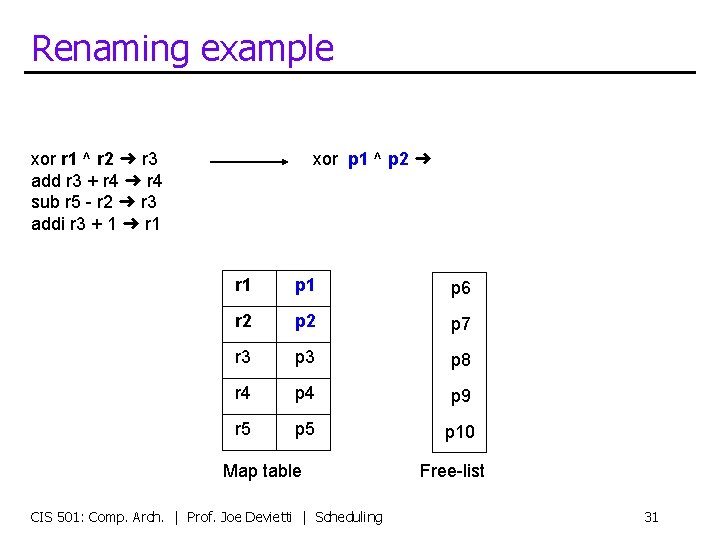

Renaming example: the output gets the next free register: `xor p1 ^ p2 ➜ p6`. Map table (unchanged so far): r1→p1, r2→p2, r3→p3, r4→p4, r5→p5. Free list: p6, p7, p8, p9, p10.

Renaming example: the map table is updated (r3→p6) and p6 leaves the free list. Renamed so far: `xor p1 ^ p2 ➜ p6`. Map table: r1→p1, r2→p2, r3→p6, r4→p4, r5→p5. Free list: p7, p8, p9, p10.

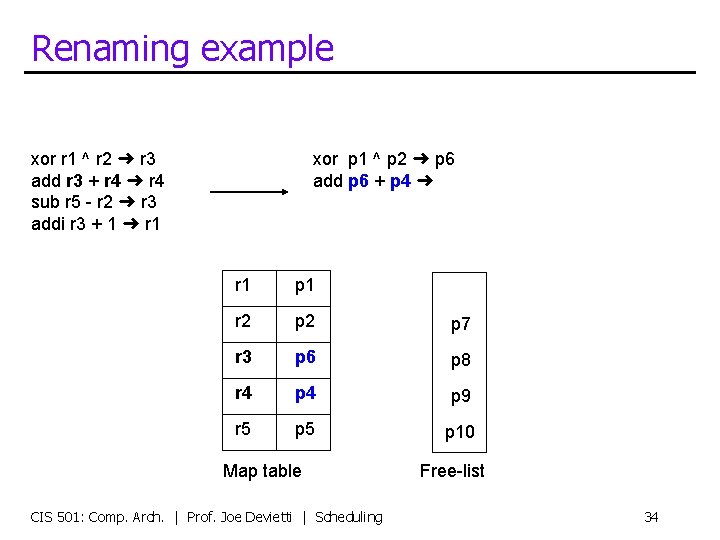

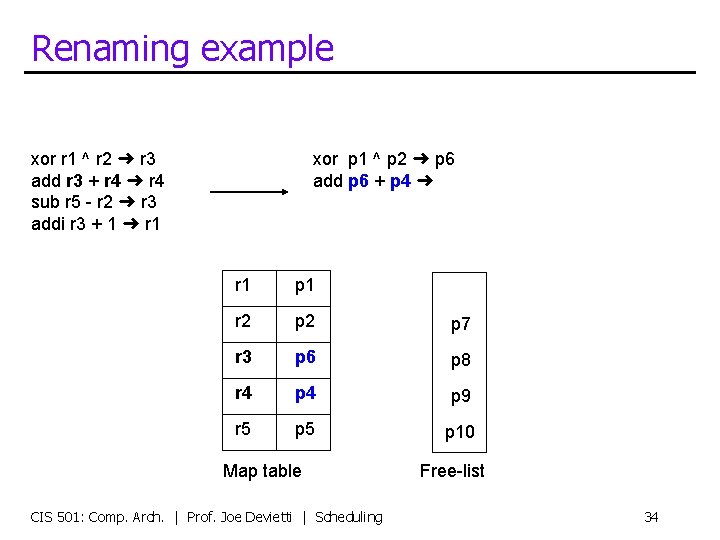

Renaming example: `add r3 + r4 ➜ r4` looks up its inputs: `add p6 + p4 ➜ ?`. Renamed so far: `xor p1 ^ p2 ➜ p6`. Map table: r1→p1, r2→p2, r3→p6, r4→p4, r5→p5. Free list: p7, p8, p9, p10.

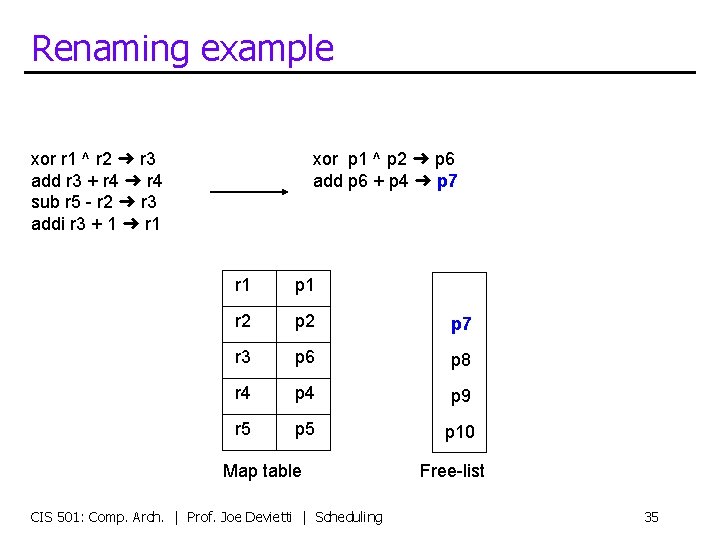

Renaming example: the output gets the next free register: `add p6 + p4 ➜ p7`. Renamed so far: `xor p1 ^ p2 ➜ p6`. Map table (unchanged so far): r1→p1, r2→p2, r3→p6, r4→p4, r5→p5. Free list: p7, p8, p9, p10.

Renaming example: the map table is updated (r4→p7) and p7 leaves the free list. Renamed so far: `xor p1 ^ p2 ➜ p6`; `add p6 + p4 ➜ p7`. Map table: r1→p1, r2→p2, r3→p6, r4→p7, r5→p5. Free list: p8, p9, p10.

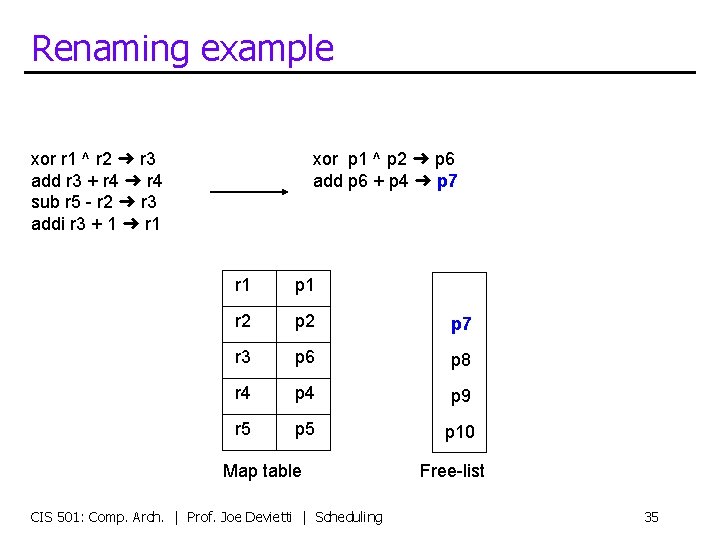

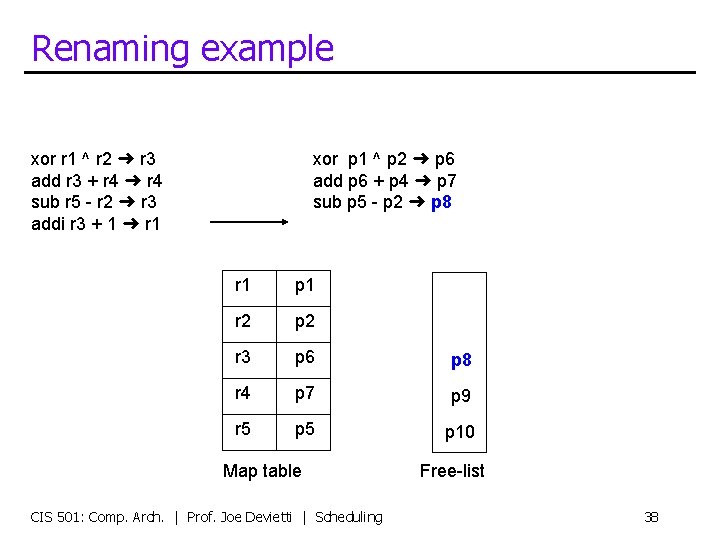

Renaming example: `sub r5 - r2 ➜ r3` looks up its inputs: `sub p5 - p2 ➜ ?`. Renamed so far: `xor p1 ^ p2 ➜ p6`; `add p6 + p4 ➜ p7`. Map table: r1→p1, r2→p2, r3→p6, r4→p7, r5→p5. Free list: p8, p9, p10.

Renaming example: the output gets the next free register: `sub p5 - p2 ➜ p8`. Renamed so far: `xor p1 ^ p2 ➜ p6`; `add p6 + p4 ➜ p7`. Map table (unchanged so far): r1→p1, r2→p2, r3→p6, r4→p7, r5→p5. Free list: p8, p9, p10.

Renaming example: the map table is updated (r3→p8, replacing r3→p6) and p8 leaves the free list. Renamed so far: `xor p1 ^ p2 ➜ p6`; `add p6 + p4 ➜ p7`; `sub p5 - p2 ➜ p8`. Map table: r1→p1, r2→p2, r3→p8, r4→p7, r5→p5. Free list: p9, p10.

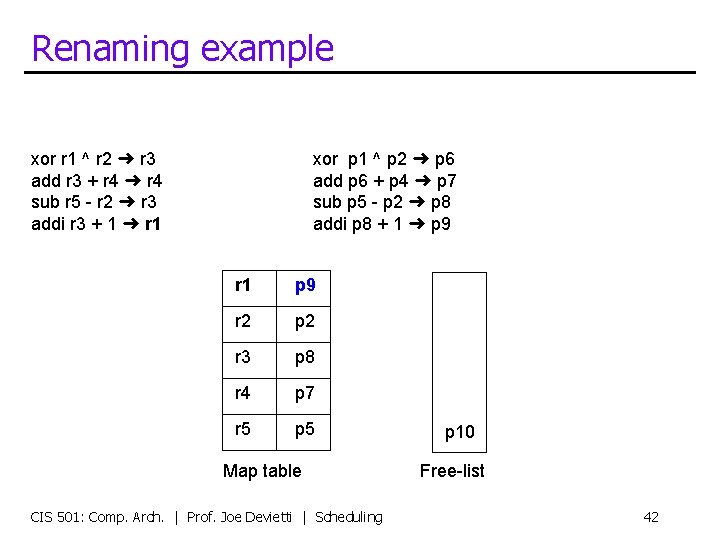

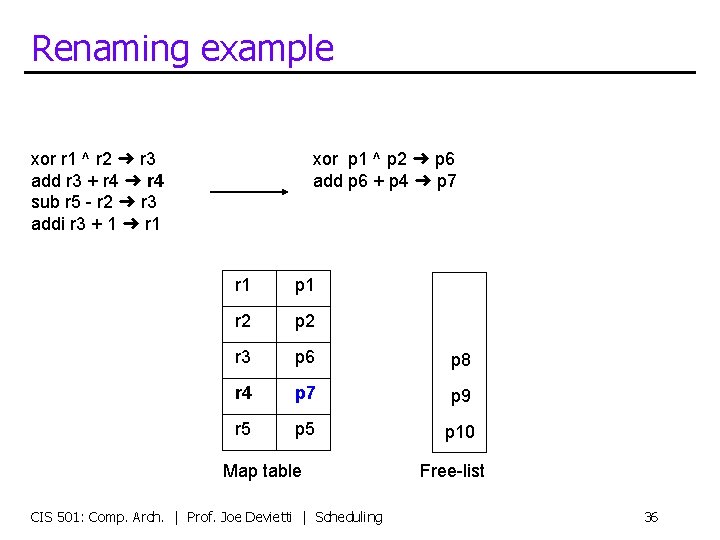

Renaming example: `addi r3 + 1 ➜ r1` looks up its input: `addi p8 + 1 ➜ ?`. Renamed so far: `xor p1 ^ p2 ➜ p6`; `add p6 + p4 ➜ p7`; `sub p5 - p2 ➜ p8`. Map table: r1→p1, r2→p2, r3→p8, r4→p7, r5→p5. Free list: p9, p10.

Renaming example: the output gets the next free register: `addi p8 + 1 ➜ p9`. Renamed so far: `xor p1 ^ p2 ➜ p6`; `add p6 + p4 ➜ p7`; `sub p5 - p2 ➜ p8`. Map table (unchanged so far): r1→p1, r2→p2, r3→p8, r4→p7, r5→p5. Free list: p9, p10.

Renaming example: the map table is updated (r1→p9) and p9 leaves the free list. Final renamed code: `xor p1 ^ p2 ➜ p6`; `add p6 + p4 ➜ p7`; `sub p5 - p2 ➜ p8`; `addi p8 + 1 ➜ p9`. Map table: r1→p9, r2→p2, r3→p8, r4→p7, r5→p5. Free list: p10.
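The whole walkthrough can be replayed with the simplified algorithm. This Python sketch assumes the same initial state as the example (r1–r5 mapped to p1–p5, free list p6–p10 in FIFO order) and passes non-register operands such as the immediate `"1"` through unchanged; `rename_program` is a hypothetical helper.

```python
from collections import deque

def rename_program(program, maptable, free_list):
    """Simplified renaming (no old_phys_output tracking).
    program: list of (op, sources, destination)."""
    renamed = []
    for op, srcs, dst in program:
        phys_srcs = [maptable.get(s, s) for s in srcs]  # look up inputs first
        new_reg = free_list.popleft()                   # new_phys_reg()
        maptable[dst] = new_reg
        renamed.append((op, phys_srcs, new_reg))
    return renamed

maptable = {f"r{i}": f"p{i}" for i in range(1, 6)}      # r1->p1 ... r5->p5
free_list = deque(f"p{i}" for i in range(6, 11))        # p6 ... p10
program = [("xor", ["r1", "r2"], "r3"), ("add", ["r3", "r4"], "r4"),
           ("sub", ["r5", "r2"], "r3"), ("addi", ["r3", "1"], "r1")]
renamed = rename_program(program, maptable, free_list)
for insn in renamed:
    print(insn)
print(maptable, list(free_list))
```

The printed map table and free list match the last step above: r1→p9, r2→p2, r3→p8, r4→p7, r5→p5, with only p10 left free.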

Out-of-order Pipeline: Fetch → Decode → Rename → Dispatch → buffer of instructions → Issue → Reg-read → Execute → Writeback → Commit

- After renaming, instructions have unique register names
- Now put them into out-of-order execution structures

Dynamic Scheduling Mechanisms

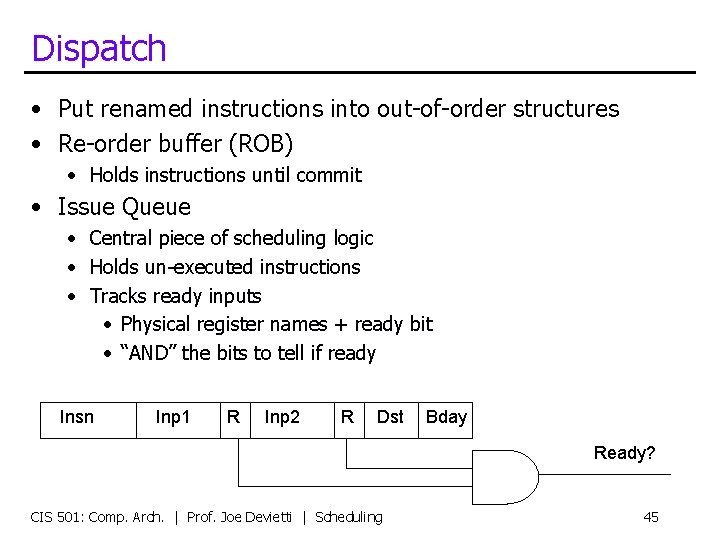

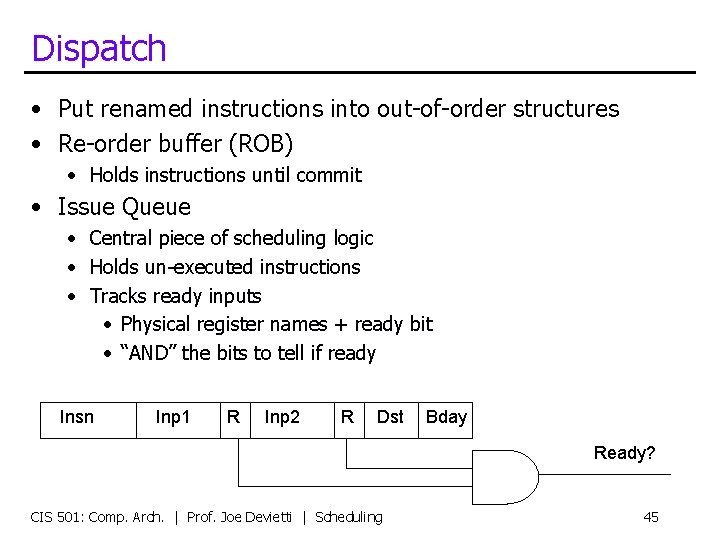

Dispatch

- Put renamed instructions into out-of-order structures
- Re-order buffer (ROB)
  - Holds instructions until commit
- Issue Queue
  - Central piece of scheduling logic
  - Holds un-executed instructions
  - Tracks ready inputs
    - Physical register names + ready bit
    - “AND” the bits to tell if ready

Issue queue entry format (R = ready bit of the preceding input; Bday = “birthday”, the instruction's age in program order):

| Insn | Inp1 | R | Inp2 | R | Dst | Bday | Ready? |
|---|---|---|---|---|---|---|---|

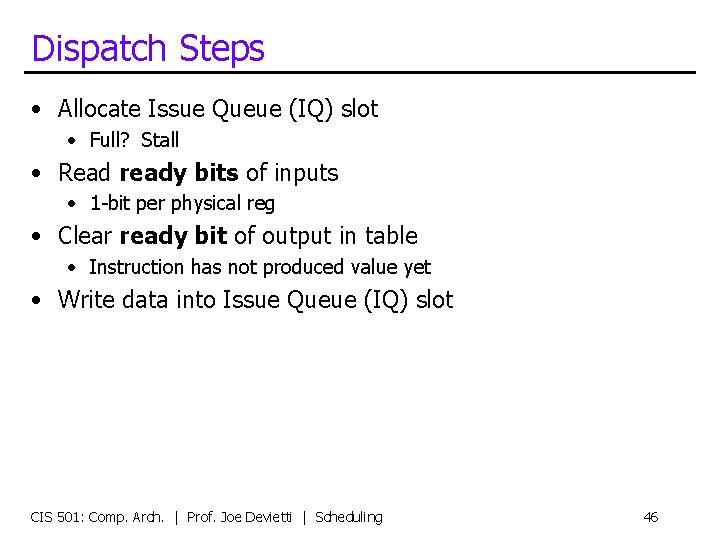

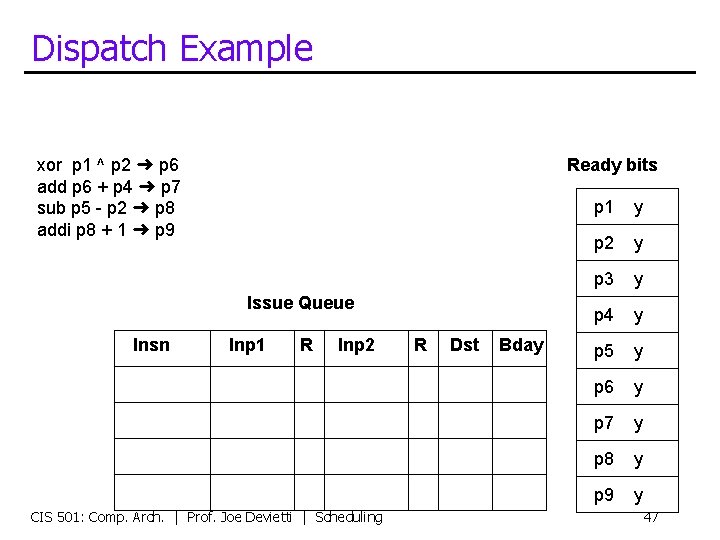

Dispatch Steps

- Allocate an Issue Queue (IQ) slot
  - Full? Stall
- Read the ready bits of the inputs
  - 1 bit per physical register
- Clear the ready bit of the output in the table
  - The instruction has not produced its value yet
- Write data into the Issue Queue (IQ) slot

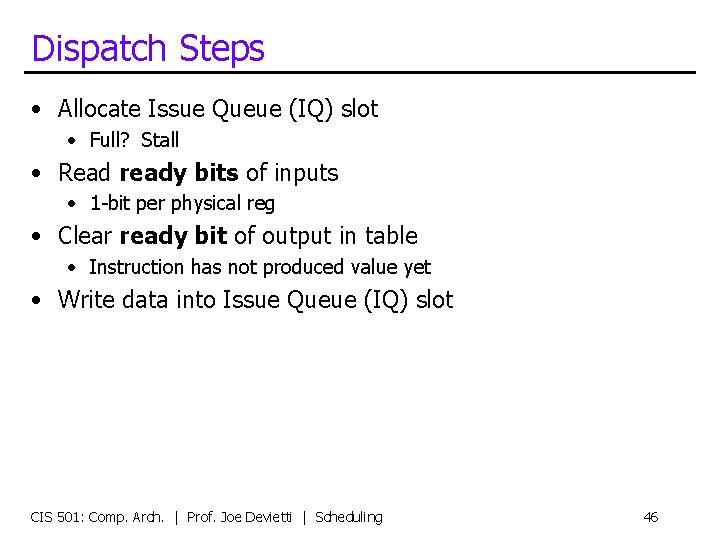

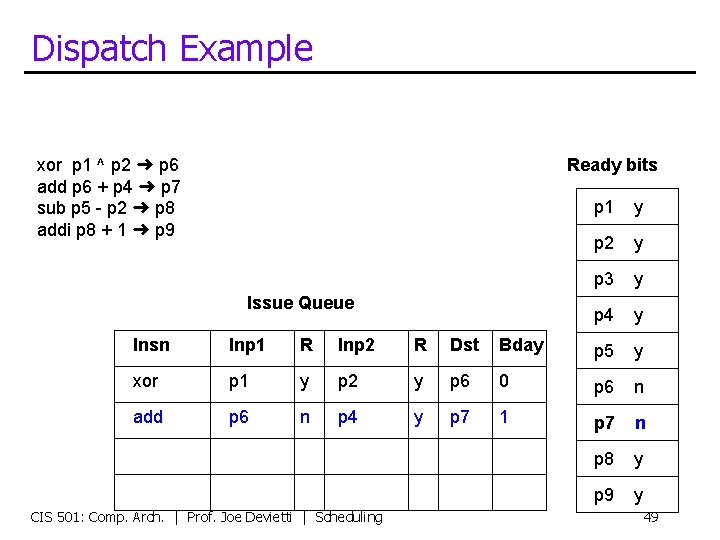

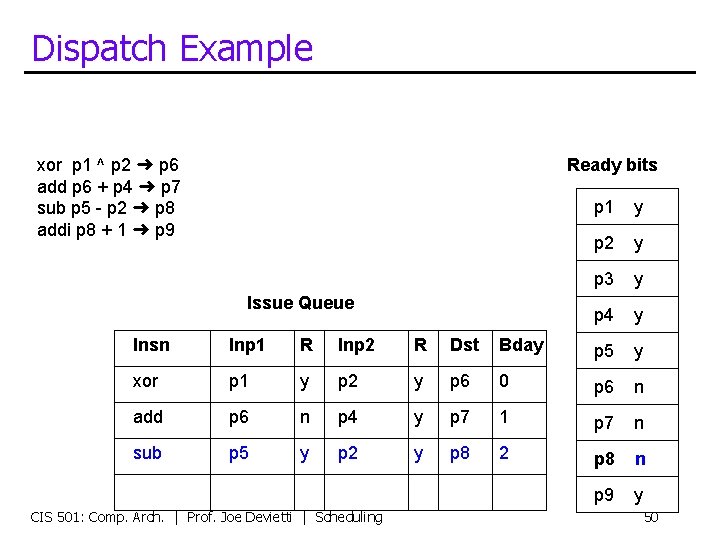

Dispatch Example: `xor p1 ^ p2 ➜ p6`; `add p6 + p4 ➜ p7`; `sub p5 - p2 ➜ p8`; `addi p8 + 1 ➜ p9`

Issue Queue (empty):

| Insn | Inp1 | R | Inp2 | R | Dst | Bday |
|---|---|---|---|---|---|---|

Ready bits: p1 y, p2 y, p3 y, p4 y, p5 y, p6 y, p7 y, p8 y, p9 y

Dispatch Example: `xor p1 ^ p2 ➜ p6` is dispatched

| Insn | Inp1 | R | Inp2 | R | Dst | Bday |
|---|---|---|---|---|---|---|
| xor | p1 | y | p2 | y | p6 | 0 |

Ready bits: p1 y, p2 y, p3 y, p4 y, p5 y, p6 n, p7 y, p8 y, p9 y

Dispatch Example: `add p6 + p4 ➜ p7` is dispatched

| Insn | Inp1 | R | Inp2 | R | Dst | Bday |
|---|---|---|---|---|---|---|
| xor | p1 | y | p2 | y | p6 | 0 |
| add | p6 | n | p4 | y | p7 | 1 |

Ready bits: p1 y, p2 y, p3 y, p4 y, p5 y, p6 n, p7 n, p8 y, p9 y

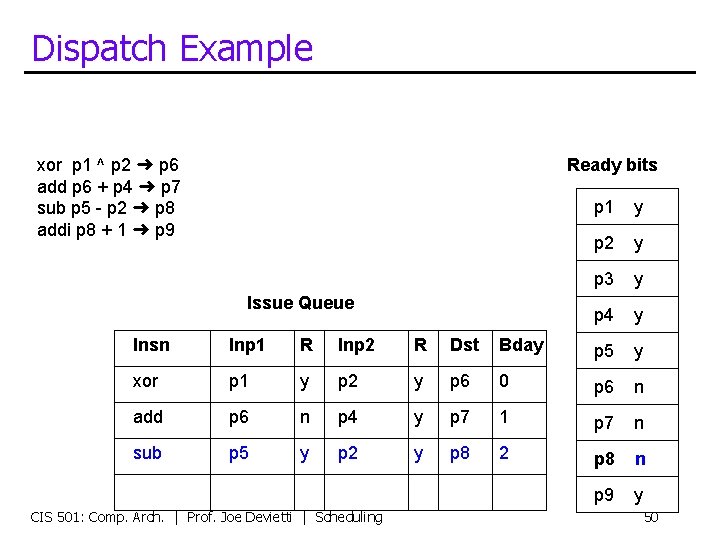

Dispatch Example: `sub p5 - p2 ➜ p8` is dispatched

| Insn | Inp1 | R | Inp2 | R | Dst | Bday |
|---|---|---|---|---|---|---|
| xor | p1 | y | p2 | y | p6 | 0 |
| add | p6 | n | p4 | y | p7 | 1 |
| sub | p5 | y | p2 | y | p8 | 2 |

Ready bits: p1 y, p2 y, p3 y, p4 y, p5 y, p6 n, p7 n, p8 n, p9 y

Dispatch Example: `addi p8 + 1 ➜ p9` is dispatched

| Insn | Inp1 | R | Inp2 | R | Dst | Bday |
|---|---|---|---|---|---|---|
| xor | p1 | y | p2 | y | p6 | 0 |
| add | p6 | n | p4 | y | p7 | 1 |
| sub | p5 | y | p2 | y | p8 | 2 |
| addi | p8 | n | --- | y | p9 | 3 |

Ready bits: p1 y, p2 y, p3 y, p4 y, p5 y, p6 n, p7 n, p8 n, p9 n
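The four dispatch steps can be sketched in Python, assuming a hypothetical dictionary-per-entry issue queue with a fixed capacity (8 here, an arbitrary choice) and a ready-bit table keyed by physical register. An unused operand (`None`, the `---` above) is treated as always ready. The order of steps matters: because `xor` clears p6's ready bit before `add` is dispatched, `add` records p6 as not ready.

```python
def dispatch(iq, ready_bits, insn, inputs, dst, bday, capacity=8):
    """Insert one renamed insn into the issue queue `iq` (a list of dicts).
    inputs: two physical register names, None for an unused operand.
    Returns False ('stall') if the queue is full."""
    if len(iq) >= capacity:
        return False                                      # allocate slot: full? stall
    entry = {"insn": insn, "dst": dst, "bday": bday}
    for k, reg in enumerate(inputs, start=1):
        entry[f"inp{k}"] = reg
        entry[f"r{k}"] = reg is None or ready_bits[reg]   # read input ready bits
    ready_bits[dst] = False                               # output not produced yet
    iq.append(entry)                                      # write the IQ slot
    return True

ready_bits = {f"p{i}": True for i in range(1, 10)}        # p1..p9 all ready
iq = []
for bday, (insn, inputs, dst) in enumerate([
        ("xor", ("p1", "p2"), "p6"), ("add", ("p6", "p4"), "p7"),
        ("sub", ("p5", "p2"), "p8"), ("addi", ("p8", None), "p9")]):
    dispatch(iq, ready_bits, insn, inputs, dst, bday)
for e in iq:
    print(e["insn"], e["inp1"], e["r1"], e["inp2"], e["r2"], e["dst"], e["bday"])
```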

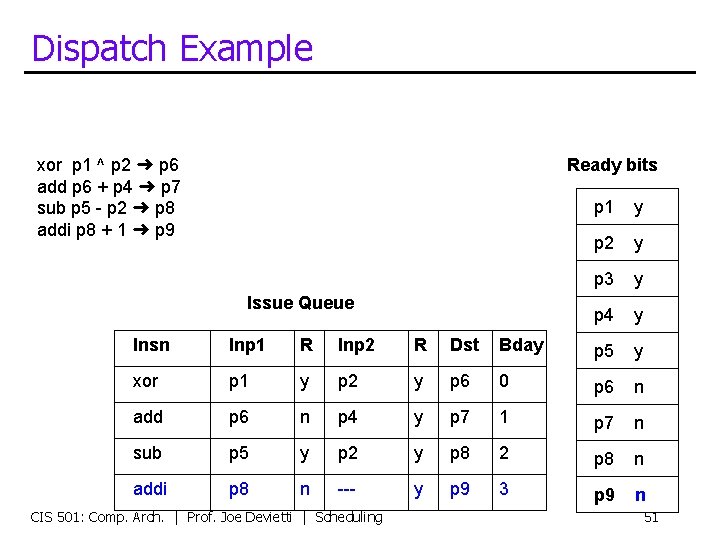

Out-of-order pipeline: Issue → Reg-read → Execute → Writeback

- Execution (out-of-order) stages
  - Select ready instructions
  - Send for execution
  - Wake up dependents

![Dynamic Scheduling/Issue Algorithm](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-53.jpg)

Dynamic Scheduling/Issue Algorithm

- Data structures:
  - Ready `table[phys_reg]` → yes/no (part of the issue queue)
- Algorithm at the “schedule” stage (prior to reading registers):
  - `foreach instruction:`
    - `if table[insn.phys_input1] == ready && table[insn.phys_input2] == ready then insn is "ready"`
  - `select the oldest "ready" instruction`
  - `table[insn.phys_output] = ready`

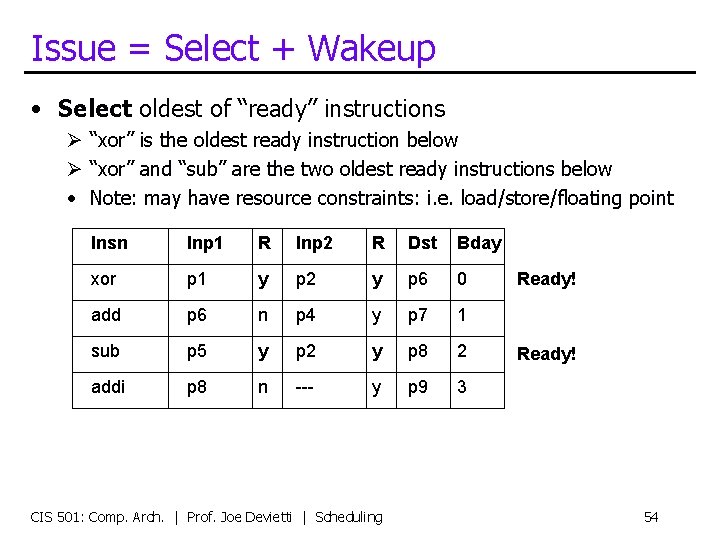

Issue = Select + Wakeup

- Select the oldest of the “ready” instructions
  - ➜ “xor” is the oldest ready instruction below
  - ➜ “xor” and “sub” are the two oldest ready instructions below
  - Note: there may be resource constraints, e.g., load/store/floating point

| Insn | Inp1 | R | Inp2 | R | Dst | Bday | Ready? |
|---|---|---|---|---|---|---|---|
| xor | p1 | y | p2 | y | p6 | 0 | Ready! |
| add | p6 | n | p4 | y | p7 | 1 | |
| sub | p5 | y | p2 | y | p8 | 2 | Ready! |
| addi | p8 | n | --- | y | p9 | 3 | |

Issue = Select + Wakeup

- Wake up dependent instructions
  - Search for the destination (Dst) in inputs & set the “ready” bit
    - Implemented with a special memory array circuit called a Content Addressable Memory (CAM)
  - Also update the ready-bit table for future instructions

| Insn | Inp1 | R | Inp2 | R | Dst | Bday |
|---|---|---|---|---|---|---|
| xor | p1 | y | p2 | y | p6 | 0 |
| add | p6 | y | p4 | y | p7 | 1 |
| sub | p5 | y | p2 | y | p8 | 2 |
| addi | p8 | y | --- | y | p9 | 3 |

Ready bits: p1 y, p2 y, p3 y, p4 y, p5 y, p6 y, p7 n, p8 y, p9 n

- For multi-cycle operations (loads, floating point)
  - Wakeup deferred a few cycles
  - Include checks to avoid structural hazards

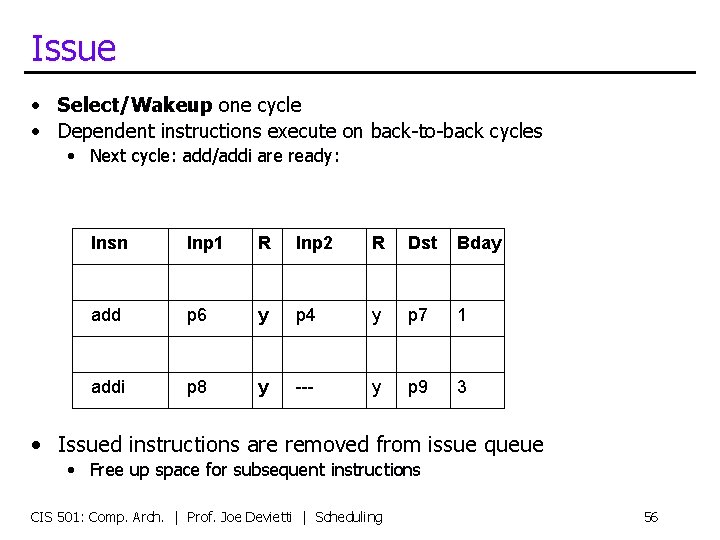

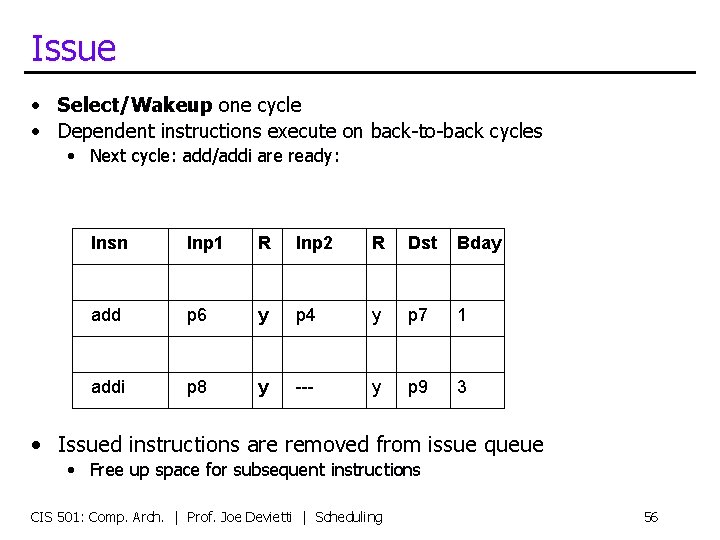

Issue

- Select/Wakeup in one cycle
  - Dependent instructions execute on back-to-back cycles
  - Next cycle: add/addi are ready:

| Insn | Inp1 | R | Inp2 | R | Dst | Bday |
|---|---|---|---|---|---|---|
| add | p6 | y | p4 | y | p7 | 1 |
| addi | p8 | y | --- | y | p9 | 3 |

- Issued instructions are removed from the issue queue
  - Frees up space for subsequent instructions
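Select and wakeup can be sketched together in Python. The assumptions: a hypothetical `Entry` dataclass mirroring the issue-queue columns, single-cycle operations (so a dependent can be selected the very next cycle), and a 2-wide select. The hardware CAM compares the broadcast tag against every input field in parallel; the `wakeup` loop below performs the same comparisons sequentially.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Entry:
    insn: str
    inp1: Optional[str]   # physical register tag (None = unused operand)
    r1: bool
    inp2: Optional[str]
    r2: bool
    dst: str
    bday: int             # program-order age: smaller is older

def select(iq: List[Entry], width: int) -> List[Entry]:
    """Pick up to `width` of the oldest entries with both inputs ready."""
    ready = [e for e in iq if e.r1 and e.r2]
    return sorted(ready, key=lambda e: e.bday)[:width]

def wakeup(iq: List[Entry], ready_bits: Dict[str, bool], tag: str) -> None:
    """Broadcast a destination tag and mark every matching input ready."""
    for e in iq:
        if e.inp1 == tag:
            e.r1 = True
        if e.inp2 == tag:
            e.r2 = True
    ready_bits[tag] = True    # insns dispatched later see the value as ready

iq = [Entry("xor", "p1", True, "p2", True, "p6", 0),
      Entry("add", "p6", False, "p4", True, "p7", 1),
      Entry("sub", "p5", True, "p2", True, "p8", 2),
      Entry("addi", "p8", False, None, True, "p9", 3)]
ready_bits = {f"p{i}": i <= 5 for i in range(1, 10)}   # p1..p5 ready

issued = []
for cycle in range(2):
    chosen = select(iq, width=2)
    for e in chosen:
        iq.remove(e)                    # issued insns leave the issue queue
        wakeup(iq, ready_bits, e.dst)   # single-cycle op: wake dependents now
    issued.append([e.insn for e in chosen])
print(issued)  # [['xor', 'sub'], ['add', 'addi']]
```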

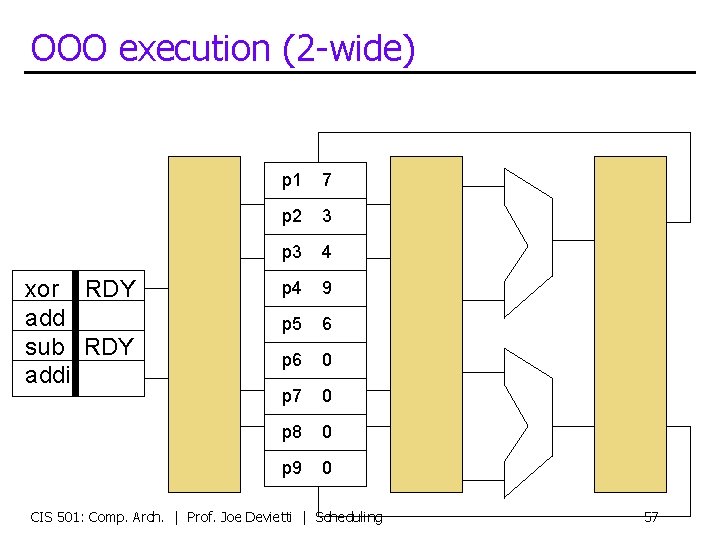

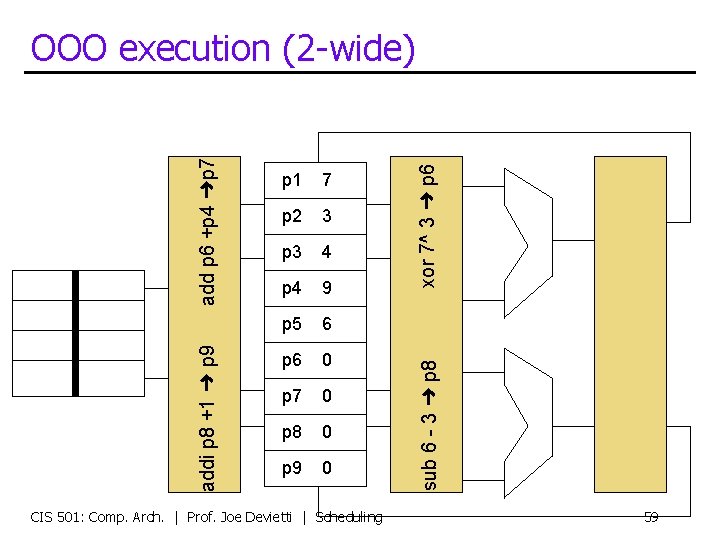

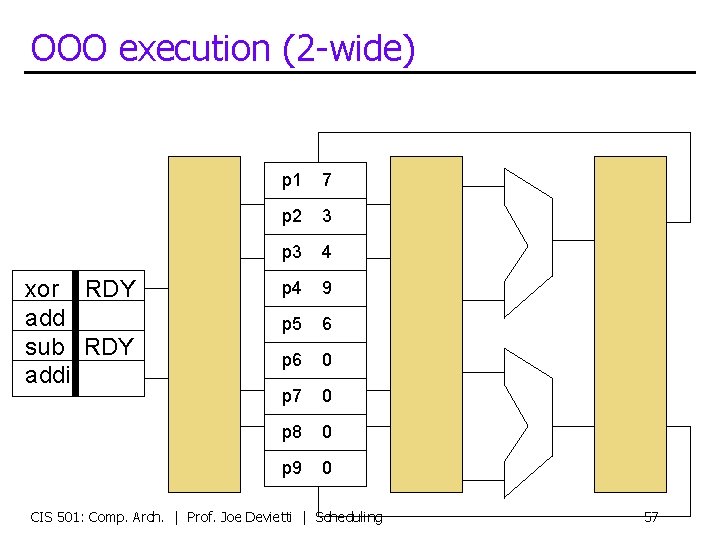

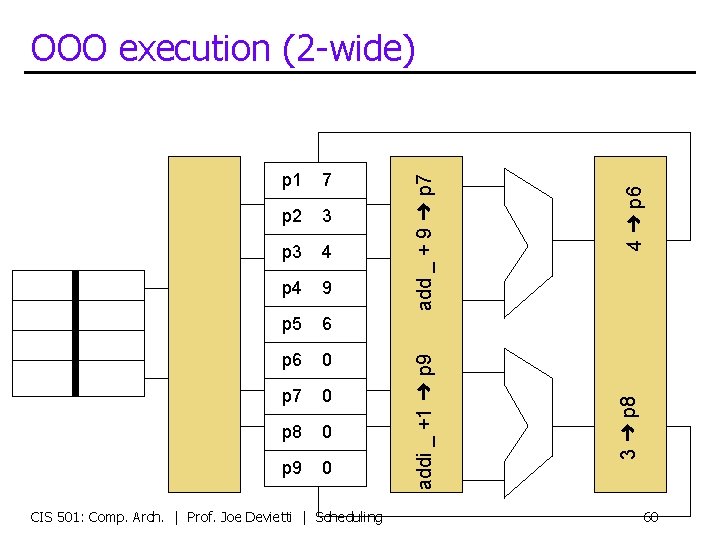

OOO execution (2-wide): the issue queue holds `xor` (RDY), `add`, `sub` (RDY), and `addi`. Register file: p1 7, p2 3, p3 4, p4 9, p5 6, p6 0, p7 0, p8 0, p9 0.

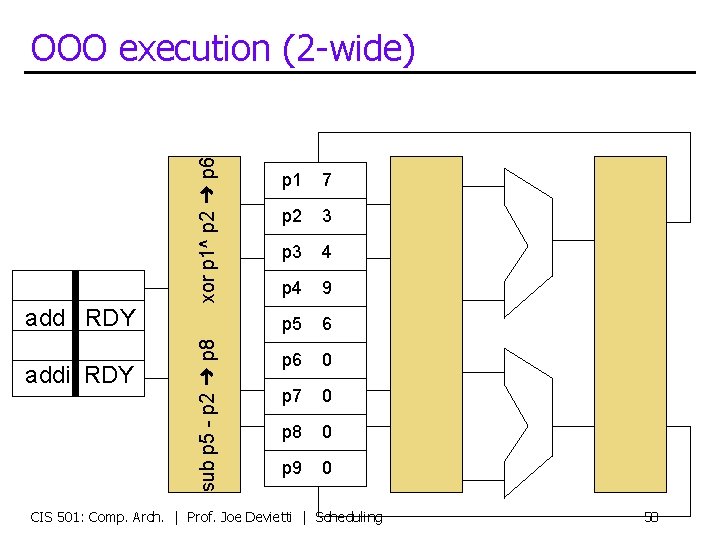

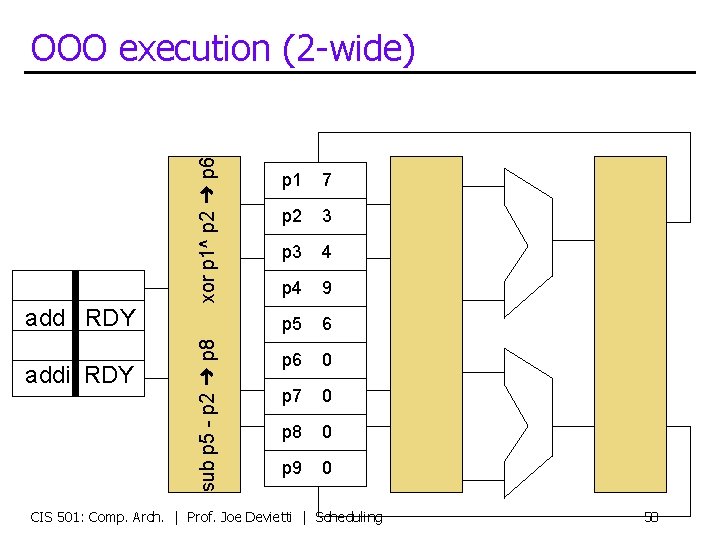

OOO execution (2-wide): `xor p1 ^ p2 ➜ p6` and `sub p5 - p2 ➜ p8` are selected; `add` and `addi` are now RDY. Register file: p1 7, p2 3, p3 4, p4 9, p5 6, p6 0, p7 0, p8 0, p9 0.

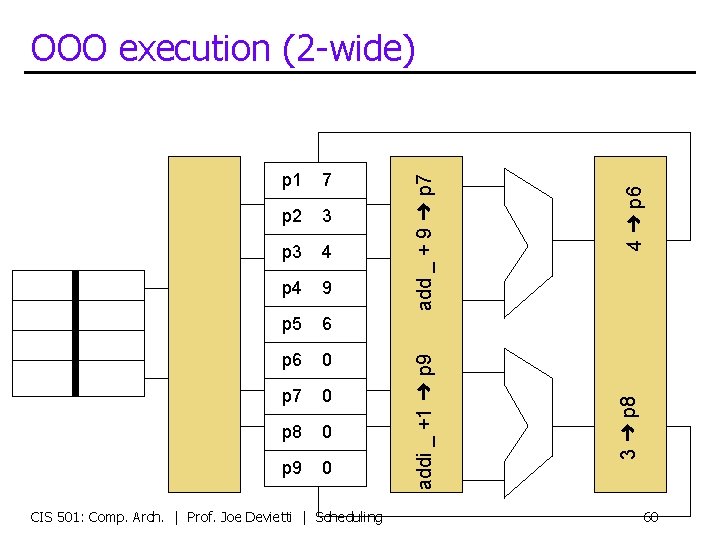

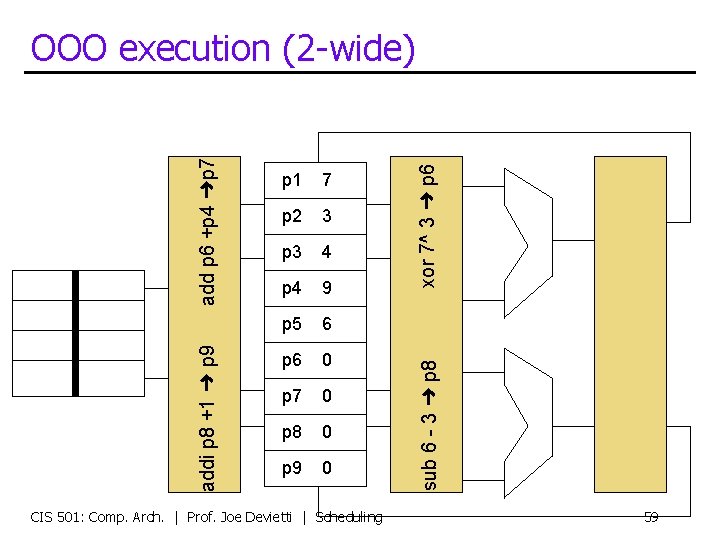

OOO execution (2-wide): `xor` and `sub` read their operands (`xor 7 ^ 3 ➜ p6`, `sub 6 - 3 ➜ p8`), while `addi p8 + 1 ➜ p9` and `add p6 + p4 ➜ p7` are selected. Register file: p1 7, p2 3, p3 4, p4 9, p5 6, p6 0, p7 0, p8 0, p9 0.

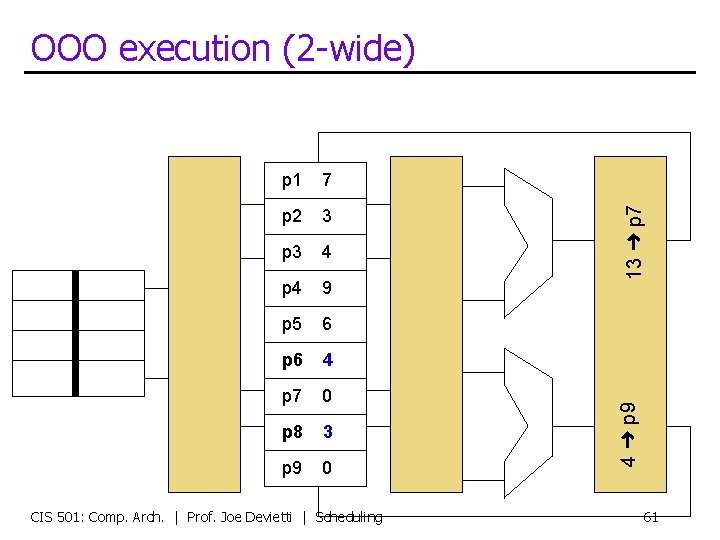

OOO execution (2-wide): `xor` and `sub` produce 4 ➜ p6 and 3 ➜ p8; `addi _ + 1 ➜ p9` and `add _ + 9 ➜ p7` read their operands (`_` marks a value not yet in the register file). Register file: p1 7, p2 3, p3 4, p4 9, p5 6, p6 0, p7 0, p8 0, p9 0.

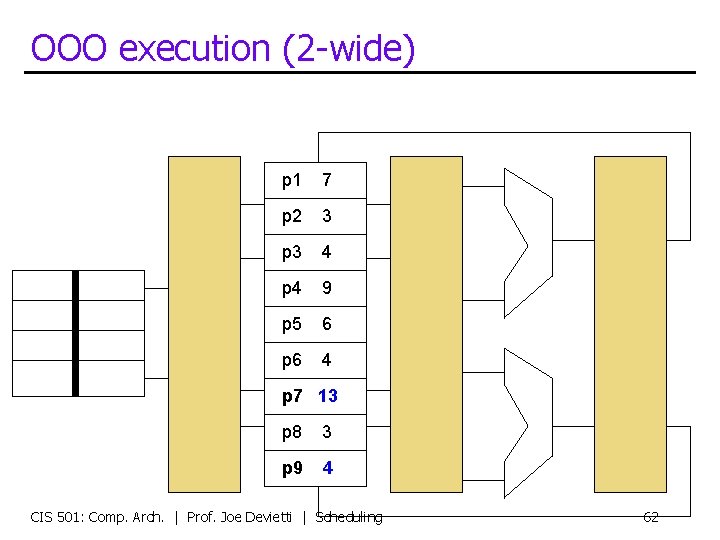

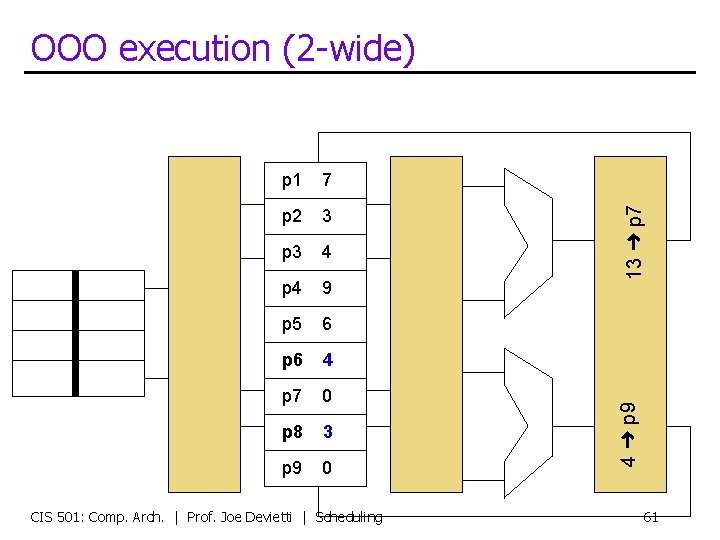

OOO execution (2-wide): p6 = 4 and p8 = 3 are written back; `addi` and `add` produce 4 ➜ p9 and 13 ➜ p7. Register file: p1 7, p2 3, p3 4, p4 9, p5 6, p6 4, p7 0, p8 3, p9 0.

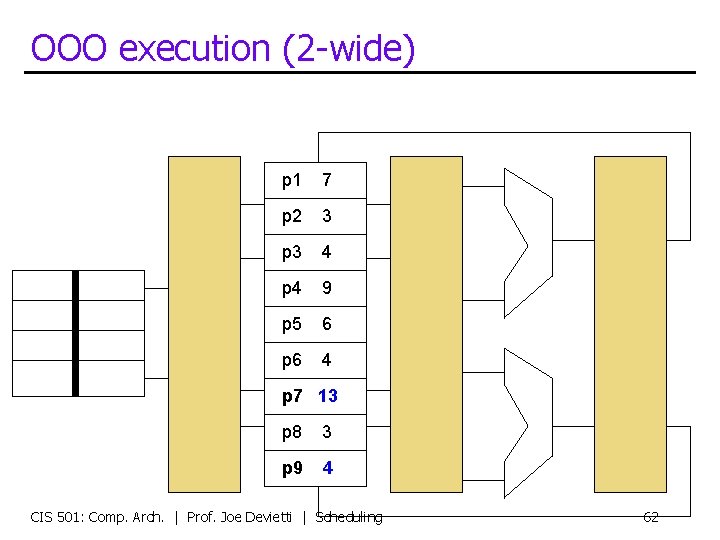

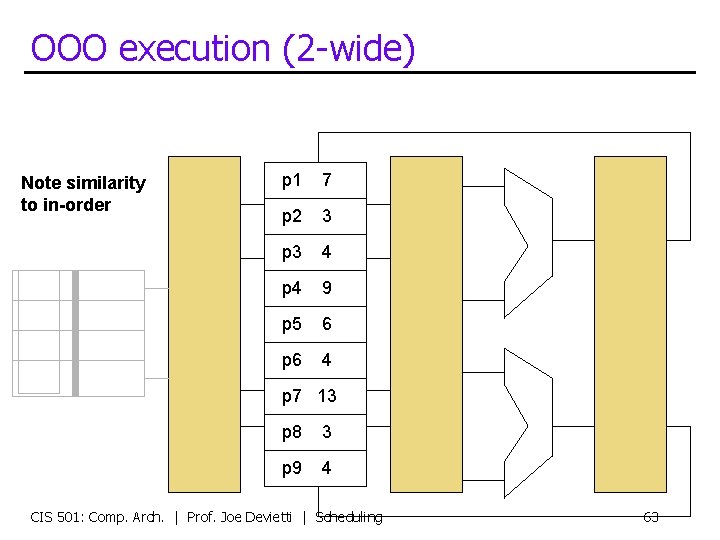

OOO execution (2-wide): all results written back. Register file: p1 7, p2 3, p3 4, p4 9, p5 6, p6 4, p7 13, p8 3, p9 4.

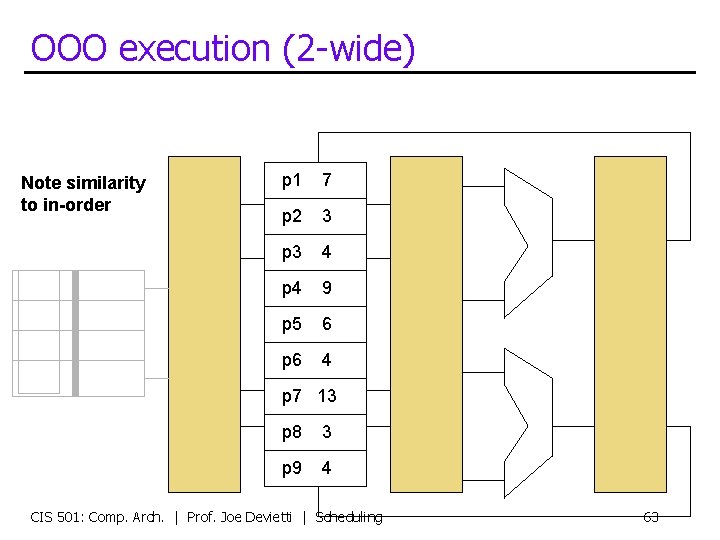

OOO execution (2-wide)
• Note similarity to in-order
• Register file: p1 = 7, p2 = 3, p3 = 4, p4 = 9, p5 = 6, p6 = 4, p7 = 13, p8 = 3, p9 = 4
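The value-level behavior of these slides can be replayed with a small Python sketch. It is an illustration under assumptions, not the slides' pipeline: each loop iteration is one "cycle" that executes up to two of the oldest instructions whose inputs are available, and stage timing (RR, X, W) and bypassing are abstracted away. The names `PROGRAM` and `run_ooo` are hypothetical.

```python
import operator

# Renamed program from the slides: (name, operation, src1, src2 or immediate, dst)
PROGRAM = [
    ("xor", operator.xor, "p1", "p2", "p6"),
    ("add", operator.add, "p6", "p4", "p7"),
    ("sub", operator.sub, "p5", "p2", "p8"),
    ("addi", operator.add, "p8", 1, "p9"),
]


def run_ooo(program, regs, width=2):
    """Each 'cycle', execute up to `width` oldest instructions whose inputs exist."""
    pending = list(program)
    # Registers that no instruction in the window writes are ready from the start.
    ready = set(regs) - {insn[4] for insn in program}

    def have(operand):
        return isinstance(operand, int) or operand in ready

    cycles = []
    while pending:
        batch = [i for i in pending if have(i[2]) and have(i[3])][:width]
        if not batch:
            raise RuntimeError("no instruction is ready")
        for name, op, a, b, dst in batch:
            regs[dst] = op(regs[a], b if isinstance(b, int) else regs[b])
        for insn in batch:  # results become visible in the next cycle
            ready.add(insn[4])
            pending.remove(insn)
        cycles.append([insn[0] for insn in batch])
    return cycles


regs = {"p1": 7, "p2": 3, "p3": 4, "p4": 9, "p5": 6,
        "p6": 0, "p7": 0, "p8": 0, "p9": 0}
print(run_ooo(PROGRAM, regs))                          # [['xor', 'sub'], ['add', 'addi']]
print(regs["p6"], regs["p7"], regs["p8"], regs["p9"])  # 4 13 3 4
```

The final values match the last register-file snapshot (p6 = 4, p7 = 13, p8 = 3, p9 = 4); only the order of execution differs from a strictly in-order machine.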

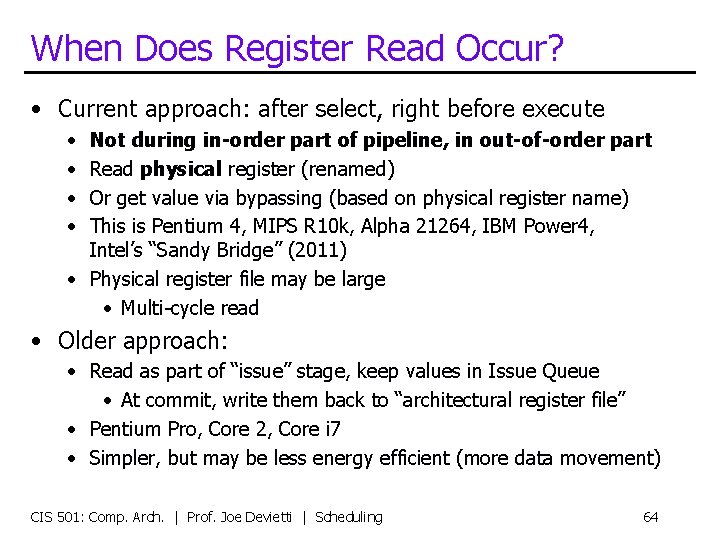

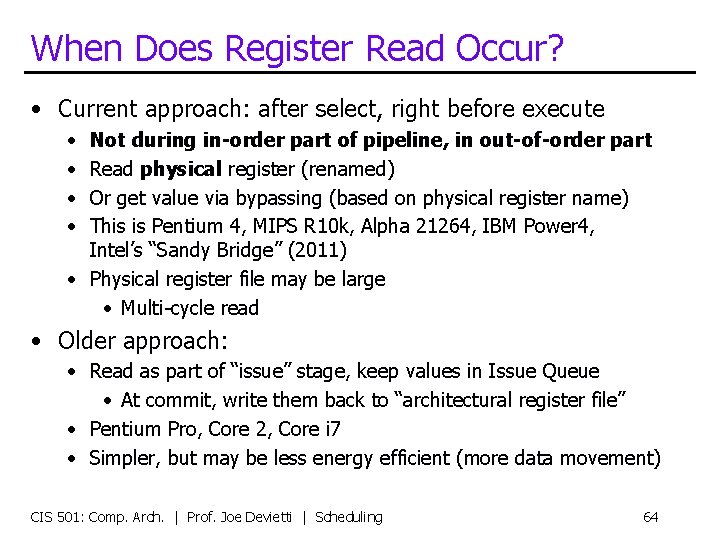

When Does Register Read Occur?
• Current approach: after select, right before execute
  • Not during the in-order part of the pipeline, but in the out-of-order part
    • Read physical register (renamed)
    • Or get value via bypassing (based on physical register name)
  • This is Pentium 4, MIPS R10k, Alpha 21264, IBM Power4, Intel’s “Sandy Bridge” (2011)
  • Physical register file may be large
    • Multi-cycle read
• Older approach:
  • Read as part of “issue” stage, keep values in Issue Queue
    • At commit, write them back to “architectural register file”
  • Pentium Pro, Core 2, Core i7
  • Simpler, but may be less energy efficient (more data movement)

Renaming Revisited

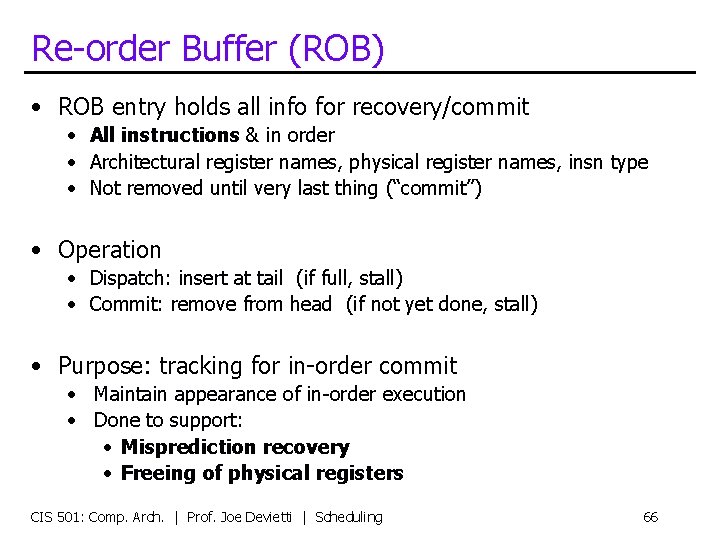

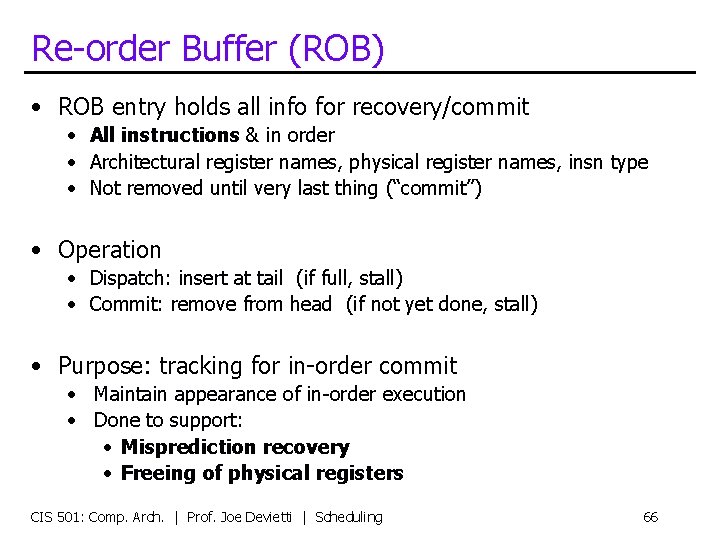

Re-order Buffer (ROB)
• ROB entry holds all info for recovery/commit
  • All instructions & in order
  • Architectural register names, physical register names, insn type
  • Not removed until very last thing (“commit”)
• Operation
  • Dispatch: insert at tail (if full, stall)
  • Commit: remove from head (if not yet done, stall)
• Purpose: tracking for in-order commit
  • Maintain appearance of in-order execution
  • Done to support:
    • Misprediction recovery
    • Freeing of physical registers
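The two ROB operations and their stall conditions can be sketched as a FIFO. This Python sketch is an assumption-laden illustration: `ROBEntry` and `ReorderBuffer` are hypothetical names, a `deque` stands in for the hardware circular buffer, and a `False`/`None` return value is how the sketch signals "stall".

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class ROBEntry:
    insn: str
    arch_dst: Optional[str]      # architectural register name
    phys_dst: Optional[str]      # physical register allocated at rename
    old_phys_dst: Optional[str]  # overwritten mapping, freed at commit
    done: bool = False


class ReorderBuffer:
    def __init__(self, capacity: int):
        self.capacity = capacity
        self.entries = deque()  # left end = head (oldest), right end = tail

    def dispatch(self, entry: ROBEntry) -> bool:
        """Insert at the tail; returning False means dispatch must stall."""
        if len(self.entries) == self.capacity:
            return False
        self.entries.append(entry)
        return True

    def commit(self) -> Optional[ROBEntry]:
        """Remove from the head; returning None means commit must stall."""
        if not self.entries or not self.entries[0].done:
            return None
        return self.entries.popleft()


rob = ReorderBuffer(capacity=2)
xor = ROBEntry("xor", "r3", "p6", "p3")
add = ROBEntry("add", "r4", "p7", "p4")
rob.dispatch(xor)
rob.dispatch(add)
print(rob.dispatch(ROBEntry("sub", "r3", "p8", "p6")))  # False: ROB full
add.done = True       # add finishes first (out of order)
print(rob.commit())   # None: the head (xor) is not done yet
```

Even though add finished first, it cannot leave the ROB until xor does, which is what keeps the appearance of in-order execution.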

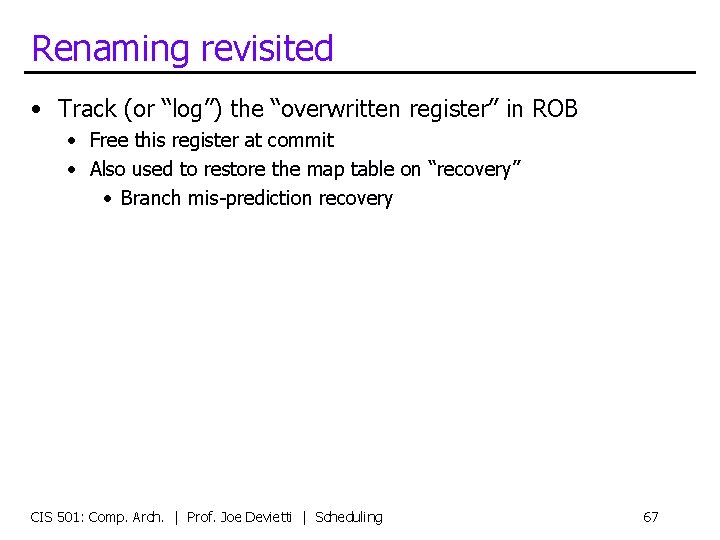

Renaming revisited
• Track (or “log”) the “overwritten register” in the ROB
  • Free this register at commit
  • Also used to restore the map table on “recovery”
    • Branch misprediction recovery

![Register Renaming Algorithm Full Two key data structures maptablearchitecturalreg physicalreg Free Register Renaming Algorithm (Full) • Two key data structures: • maptable[architectural_reg] physical_reg • Free](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-68.jpg)

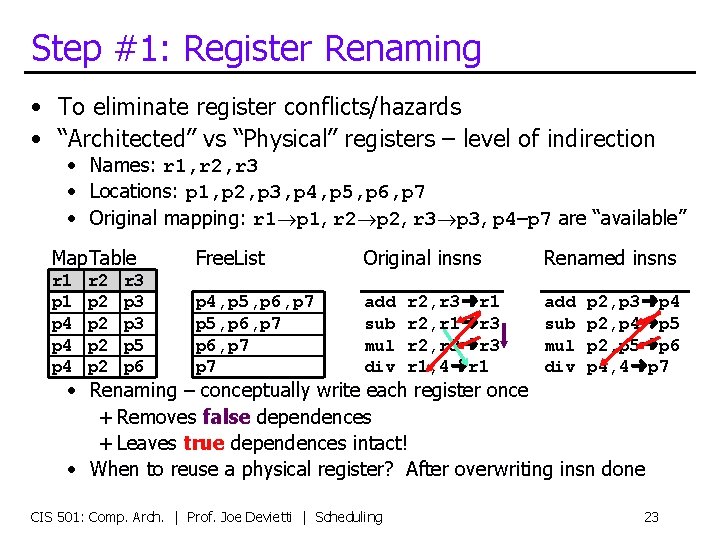

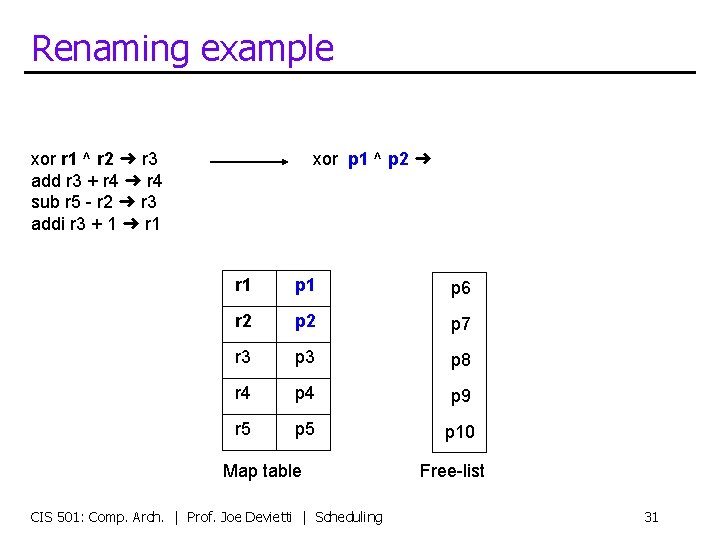

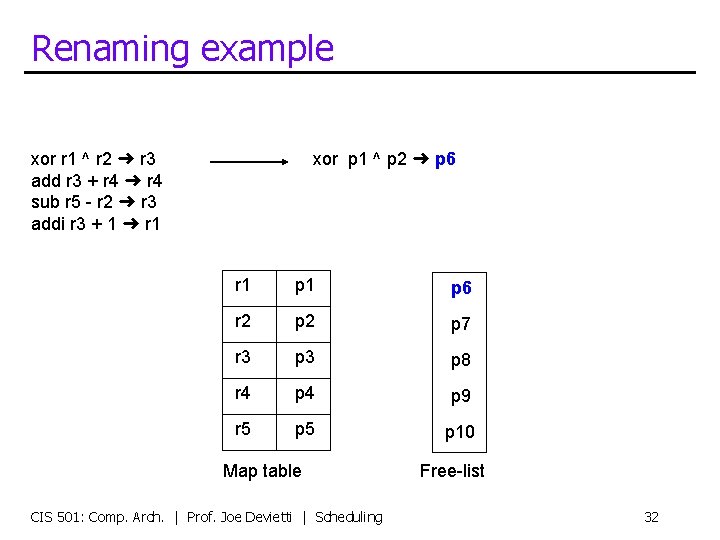

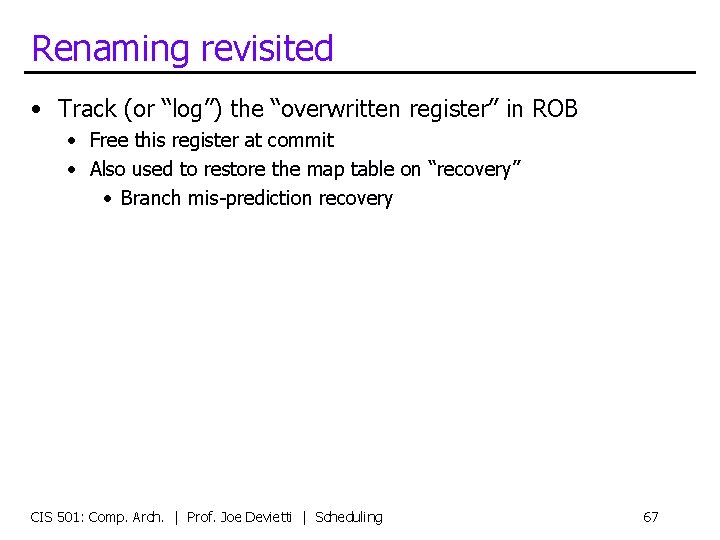

Register Renaming Algorithm (Full)
• Two key data structures:
  • maptable[architectural_reg] ➜ physical_reg
  • Free list: allocate (new) & free registers (implemented as a queue)
• Algorithm: at “decode” stage for each instruction:
  `insn.phys_input1 = maptable[insn.arch_input1]`
  `insn.phys_input2 = maptable[insn.arch_input2]`
  `insn.old_phys_output = maptable[insn.arch_output]`
  `new_reg = new_phys_reg()`
  `maptable[insn.arch_output] = new_reg`
  `insn.phys_output = new_reg`
• At “commit”
  • Once all older instructions have committed, free register
    `free_phys_reg(insn.old_phys_output)`
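The pseudocode above can be made runnable as the Python sketch below. The class names `Insn` and `Renamer` are hypothetical; `None` marks an absent register operand (for example, an immediate), and an empty free list raises an error where real hardware would stall rename. Note that inputs are looked up before the output mapping changes, so `add r3 + r4 ➜ r4` still reads the old r4.

```python
from collections import deque
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass
class Insn:
    op: str
    arch_input1: Optional[str]
    arch_input2: Optional[str]   # None for an immediate / absent input
    arch_output: Optional[str]
    phys_input1: Optional[str] = None
    phys_input2: Optional[str] = None
    phys_output: Optional[str] = None
    old_phys_output: Optional[str] = None


class Renamer:
    def __init__(self, maptable: Dict[str, str], free_regs: Iterable[str]):
        self.maptable = dict(maptable)
        self.free_list = deque(free_regs)  # queue of free physical registers

    def new_phys_reg(self) -> str:
        if not self.free_list:
            raise RuntimeError("no free physical register: rename must stall")
        return self.free_list.popleft()

    def free_phys_reg(self, reg: str) -> None:
        self.free_list.append(reg)

    def rename(self, insn: Insn) -> None:
        """At decode, in program order: read inputs first, then remap the output."""
        if insn.arch_input1 is not None:
            insn.phys_input1 = self.maptable[insn.arch_input1]
        if insn.arch_input2 is not None:
            insn.phys_input2 = self.maptable[insn.arch_input2]
        if insn.arch_output is not None:
            insn.old_phys_output = self.maptable[insn.arch_output]
            new_reg = self.new_phys_reg()
            self.maptable[insn.arch_output] = new_reg
            insn.phys_output = new_reg

    def commit(self, insn: Insn) -> None:
        """Call only once all older instructions have committed."""
        if insn.old_phys_output is not None:
            self.free_phys_reg(insn.old_phys_output)


renamer = Renamer({f"r{i}": f"p{i}" for i in range(1, 6)},
                  [f"p{i}" for i in range(6, 11)])
program = [
    Insn("xor", "r1", "r2", "r3"),
    Insn("add", "r3", "r4", "r4"),
    Insn("sub", "r5", "r2", "r3"),
    Insn("addi", "r3", None, "r1"),  # immediate operand: no second register
]
for insn in program:
    renamer.rename(insn)
print(renamer.maptable)  # {'r1': 'p9', 'r2': 'p2', 'r3': 'p8', 'r4': 'p7', 'r5': 'p5'}
print([i.old_phys_output for i in program])  # ['p3', 'p4', 'p6', 'p1']
```

The usage reproduces the renaming example that follows in these slides: the same final map table and the same logged registers [p3], [p4], [p6], [p1].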

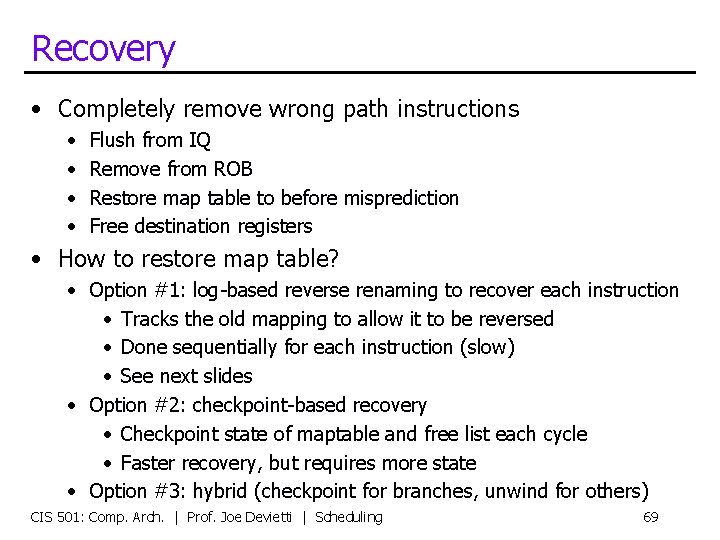

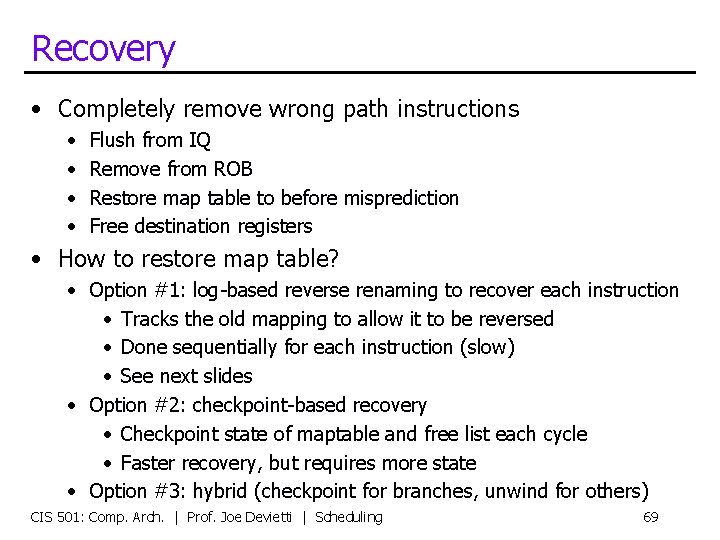

Recovery
• Completely remove wrong-path instructions
  • Flush from IQ
  • Remove from ROB
  • Restore map table to before misprediction
  • Free destination registers
• How to restore map table?
  • Option #1: log-based reverse renaming to recover each instruction
    • Tracks the old mapping to allow it to be reversed
    • Done sequentially for each instruction (slow)
    • See next slides
  • Option #2: checkpoint-based recovery
    • Checkpoint state of maptable and free list each cycle
    • Faster recovery, but requires more state
  • Option #3: hybrid (checkpoint for branches, unwind for others)
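Checkpoint-based recovery can be sketched in Python as below. Two assumptions are worth stating loudly: the sketch takes a checkpoint at each branch (closer to the hybrid option than to "every cycle"), and it models the free list as an array consumed from a `head` index, so only the map table and that index need saving. Registers freed by commits after the checkpoint are appended at the tail and therefore survive recovery. `CheckpointingRenamer` is a hypothetical name; discarding checkpoints of younger branches is not modeled.

```python
from typing import Dict, List, Tuple


class CheckpointingRenamer:
    """Map table plus a free list read from a moving head index."""

    def __init__(self, maptable: Dict[str, str], free_regs: List[str]):
        self.maptable = dict(maptable)
        self.free_list = list(free_regs)  # entries before `head` are allocated
        self.head = 0
        self.checkpoints: Dict[str, Tuple[Dict[str, str], int]] = {}

    def allocate(self, arch_reg: str) -> str:
        if self.head == len(self.free_list):
            raise RuntimeError("free list empty: rename must stall")
        new_reg = self.free_list[self.head]
        self.head += 1
        self.maptable[arch_reg] = new_reg
        return new_reg

    def free(self, reg: str) -> None:
        self.free_list.append(reg)  # commit returns registers at the tail

    def checkpoint(self, branch: str) -> None:
        self.checkpoints[branch] = (dict(self.maptable), self.head)

    def recover(self, branch: str) -> None:
        # One step, however many instructions were renamed after the branch.
        self.maptable, self.head = self.checkpoints.pop(branch)


rn = CheckpointingRenamer({f"r{i}": f"p{i}" for i in range(1, 6)},
                          [f"p{i}" for i in range(6, 11)])
rn.checkpoint("bnz")                  # taken when the branch is renamed
for dst in ["r3", "r4", "r3", "r1"]:  # xor, add, sub, addi (wrong path)
    rn.allocate(dst)
rn.recover("bnz")                     # misprediction detected
print(rn.maptable["r3"], rn.allocate("r3"))  # p3 p6
```

Compared with unwinding the log one instruction at a time, recovery here is a single restore, paid for by storing a copy of the map table per checkpoint.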

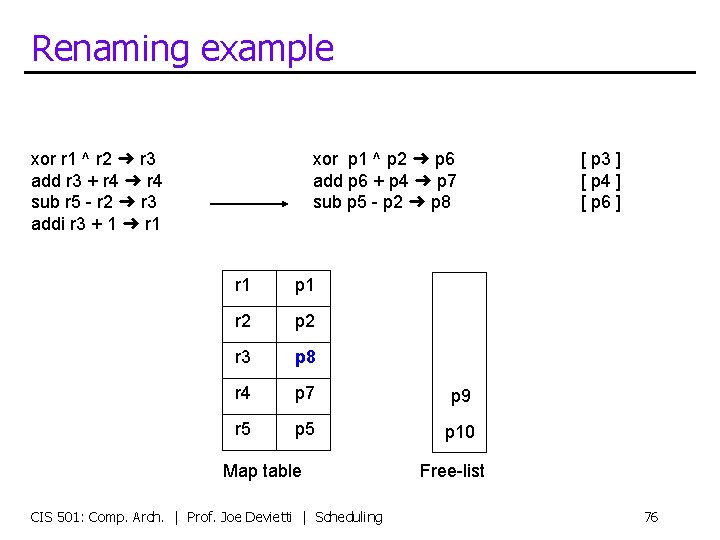

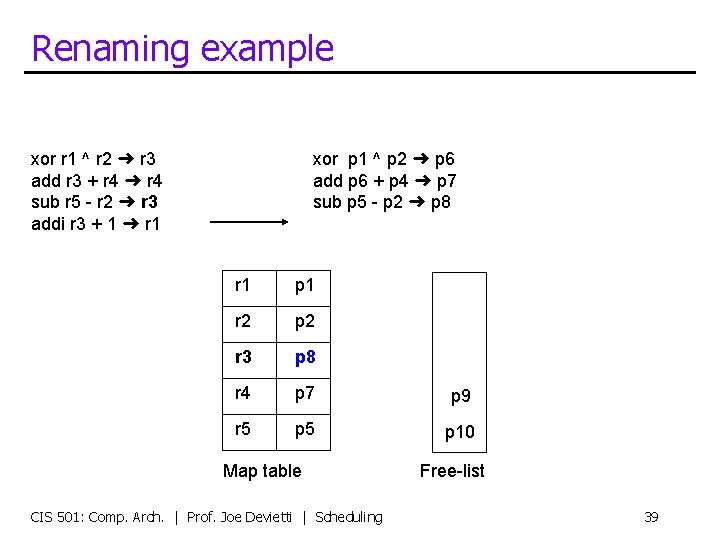

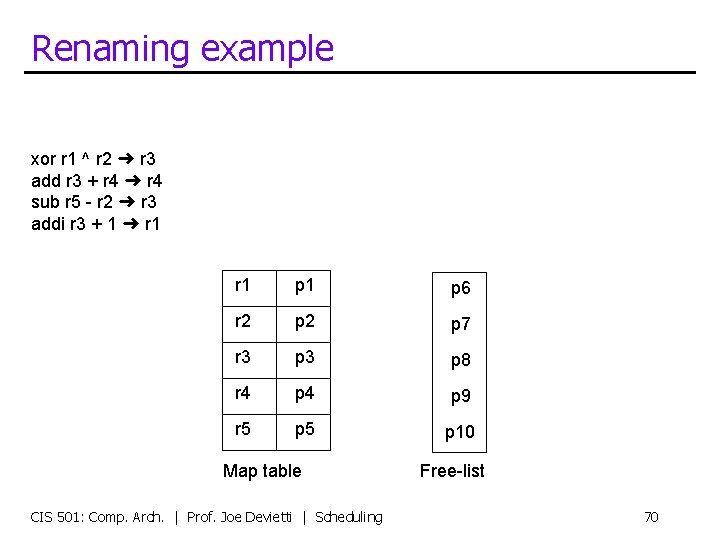

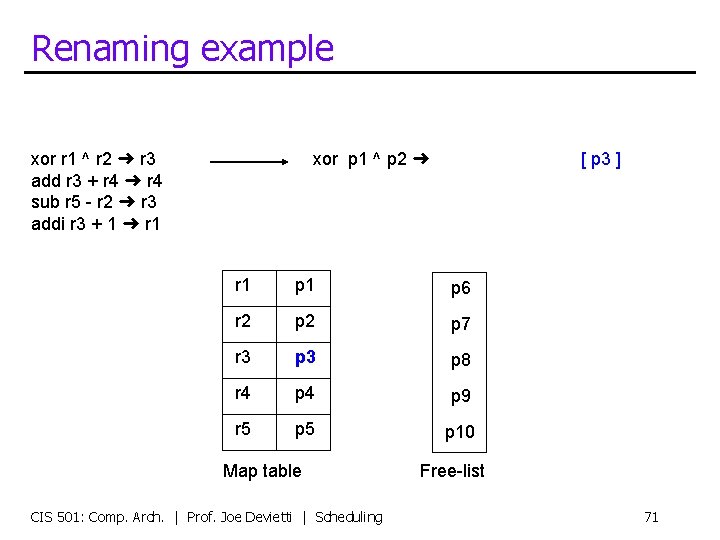

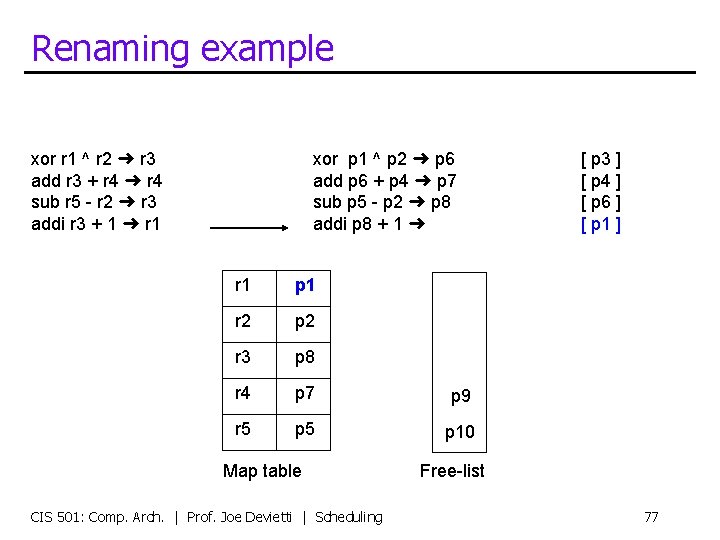

Renaming example
• Code: xor r1 ^ r2 ➜ r3; add r3 + r4 ➜ r4; sub r5 - r2 ➜ r3; addi r3 + 1 ➜ r1
• Map table: r1 p1, r2 p2, r3 p3, r4 p4, r5 p5
• Free list: p6, p7, p8, p9, p10

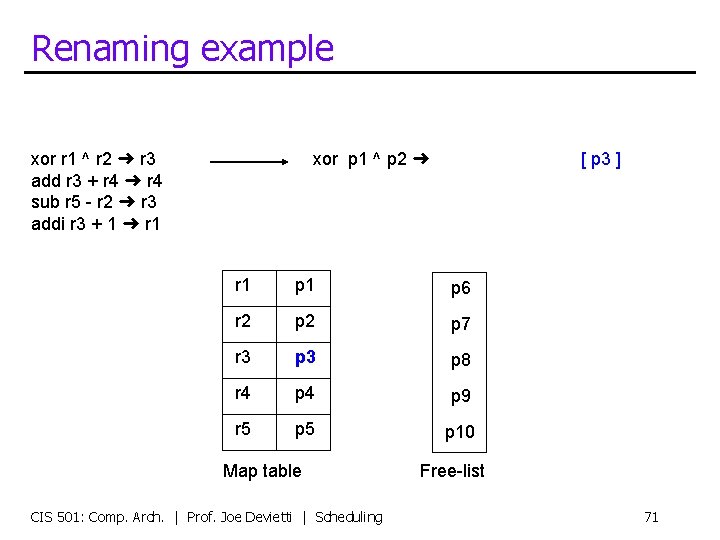

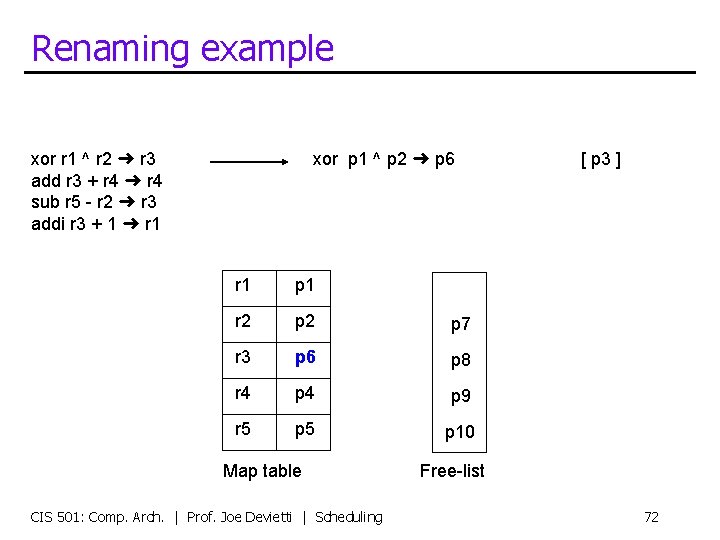

Renaming example
• Code: xor r1 ^ r2 ➜ r3; add r3 + r4 ➜ r4; sub r5 - r2 ➜ r3; addi r3 + 1 ➜ r1
• Renaming xor: inputs read the map table (r1 ➜ p1, r2 ➜ p2); the current mapping of its output r3 is logged as the overwritten register [p3]
• Map table: r1 p1, r2 p2, r3 p3, r4 p4, r5 p5
• Free list: p6, p7, p8, p9, p10

Renaming example
• Code: xor r1 ^ r2 ➜ r3; add r3 + r4 ➜ r4; sub r5 - r2 ➜ r3; addi r3 + 1 ➜ r1
• Renamed: xor p1 ^ p2 ➜ p6 [p3]
• Map table: r1 p1, r2 p2, r3 p6, r4 p4, r5 p5
• Free list: p7, p8, p9, p10

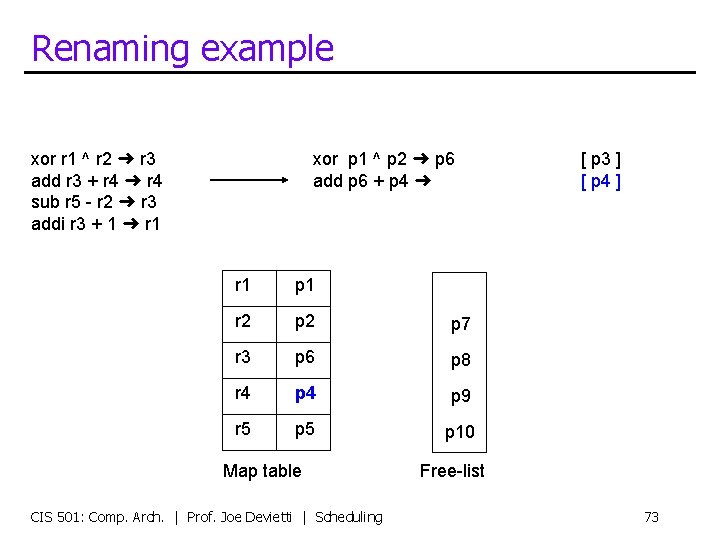

Renaming example
• Code: xor r1 ^ r2 ➜ r3; add r3 + r4 ➜ r4; sub r5 - r2 ➜ r3; addi r3 + 1 ➜ r1
• Renamed: xor p1 ^ p2 ➜ p6 [p3]; add p6 + p4 ➜ (not yet assigned) [p4]
• Map table: r1 p1, r2 p2, r3 p6, r4 p4, r5 p5
• Free list: p7, p8, p9, p10

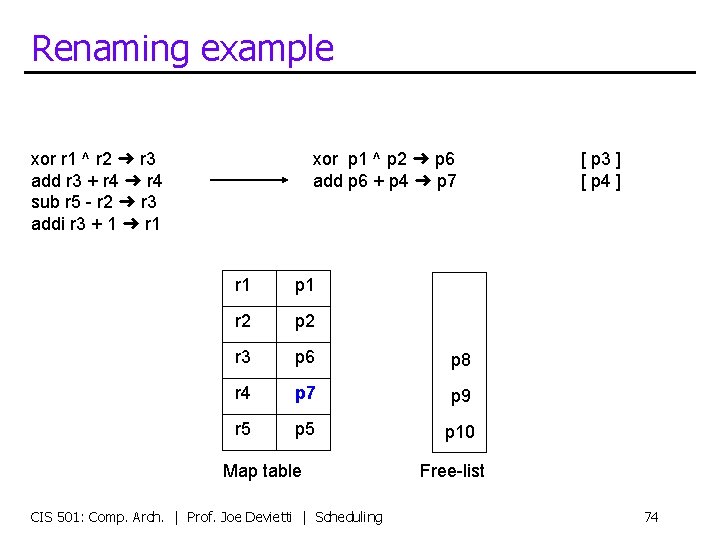

Renaming example
• Code: xor r1 ^ r2 ➜ r3; add r3 + r4 ➜ r4; sub r5 - r2 ➜ r3; addi r3 + 1 ➜ r1
• Renamed: xor p1 ^ p2 ➜ p6 [p3]; add p6 + p4 ➜ p7 [p4]
• Map table: r1 p1, r2 p2, r3 p6, r4 p7, r5 p5
• Free list: p8, p9, p10

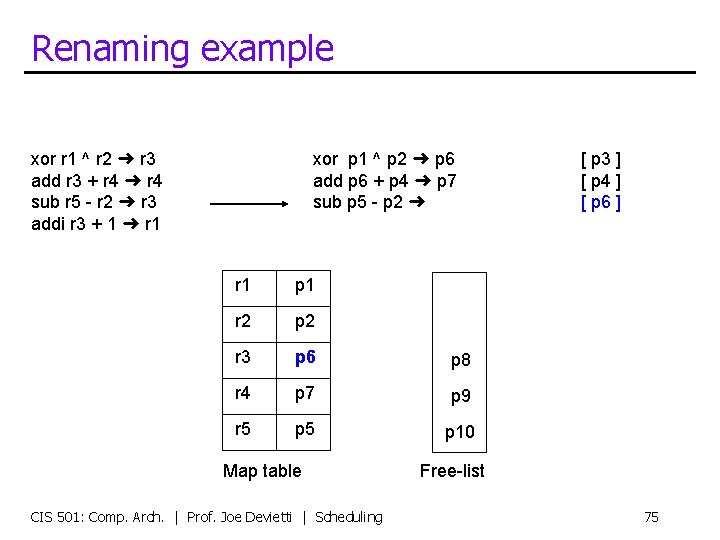

Renaming example
• Code: xor r1 ^ r2 ➜ r3; add r3 + r4 ➜ r4; sub r5 - r2 ➜ r3; addi r3 + 1 ➜ r1
• Renamed: xor p1 ^ p2 ➜ p6 [p3]; add p6 + p4 ➜ p7 [p4]; sub p5 - p2 ➜ (not yet assigned) [p6]
• Map table: r1 p1, r2 p2, r3 p6, r4 p7, r5 p5
• Free list: p8, p9, p10

Renaming example
• Code: xor r1 ^ r2 ➜ r3; add r3 + r4 ➜ r4; sub r5 - r2 ➜ r3; addi r3 + 1 ➜ r1
• Renamed: xor p1 ^ p2 ➜ p6 [p3]; add p6 + p4 ➜ p7 [p4]; sub p5 - p2 ➜ p8 [p6]
• Map table: r1 p1, r2 p2, r3 p8, r4 p7, r5 p5
• Free list: p9, p10

Renaming example
• Code: xor r1 ^ r2 ➜ r3; add r3 + r4 ➜ r4; sub r5 - r2 ➜ r3; addi r3 + 1 ➜ r1
• Renamed: xor p1 ^ p2 ➜ p6 [p3]; add p6 + p4 ➜ p7 [p4]; sub p5 - p2 ➜ p8 [p6]; addi p8 + 1 ➜ (not yet assigned) [p1]
• Map table: r1 p1, r2 p2, r3 p8, r4 p7, r5 p5
• Free list: p9, p10

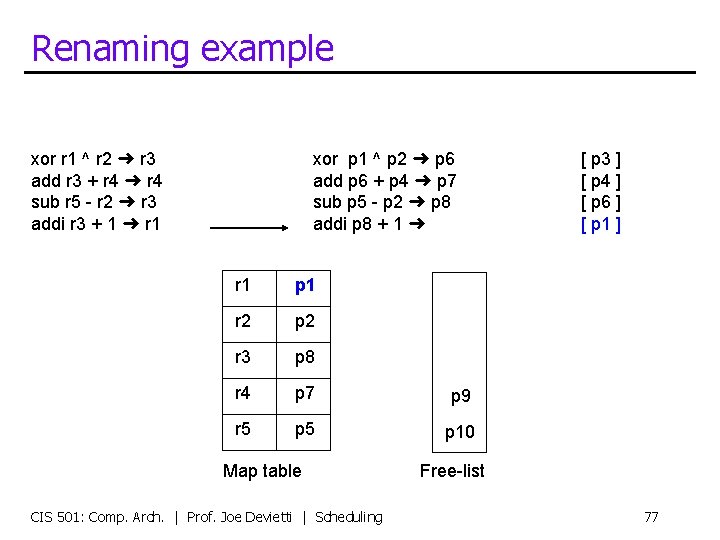

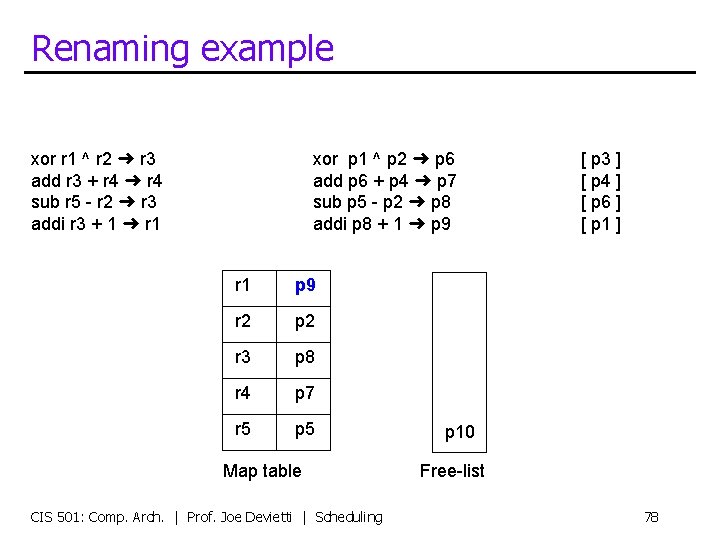

Renaming example
• Code: xor r1 ^ r2 ➜ r3; add r3 + r4 ➜ r4; sub r5 - r2 ➜ r3; addi r3 + 1 ➜ r1
• Renamed: xor p1 ^ p2 ➜ p6 [p3]; add p6 + p4 ➜ p7 [p4]; sub p5 - p2 ➜ p8 [p6]; addi p8 + 1 ➜ p9 [p1]
• Map table: r1 p9, r2 p2, r3 p8, r4 p7, r5 p5
• Free list: p10

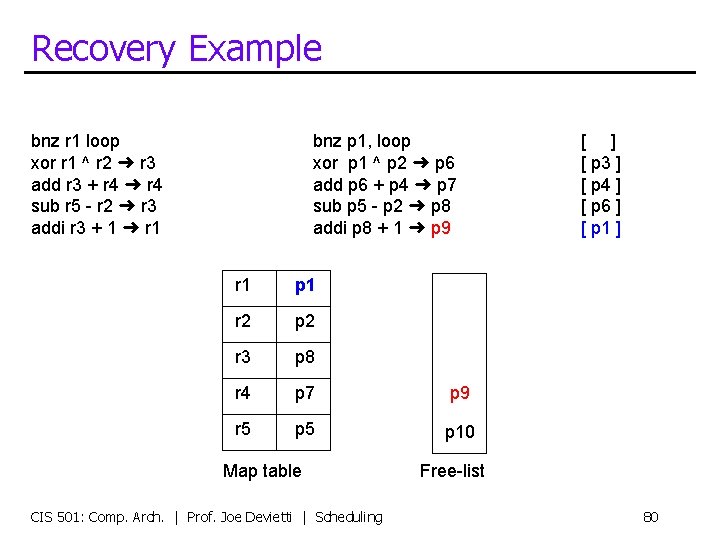

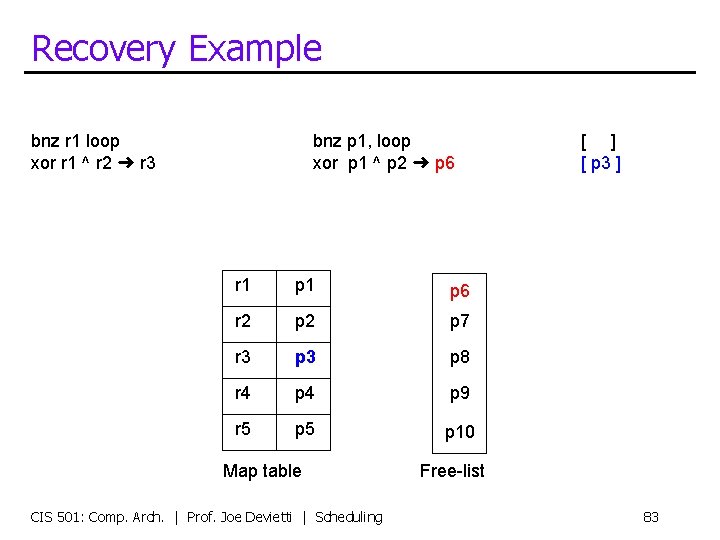

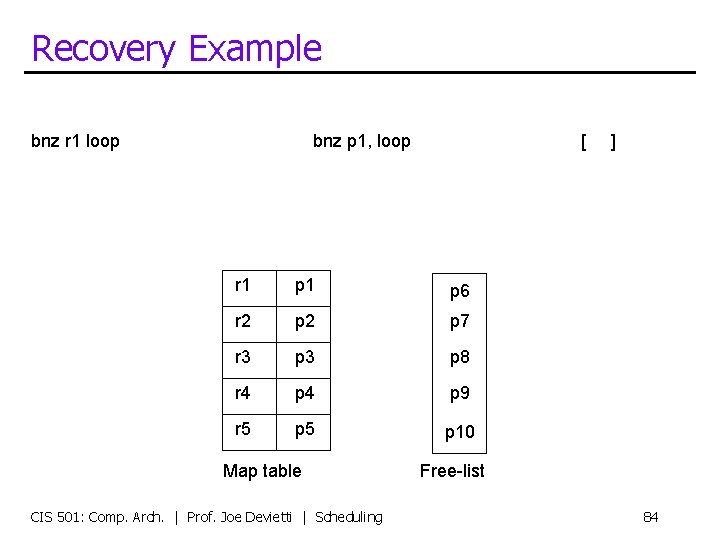

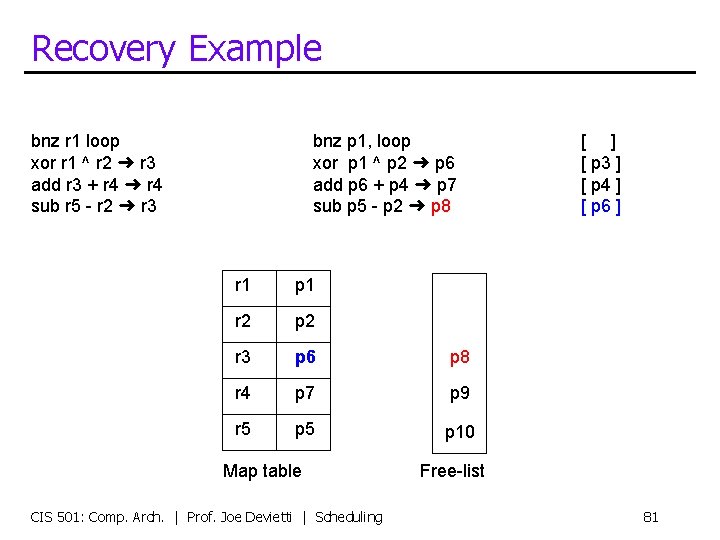

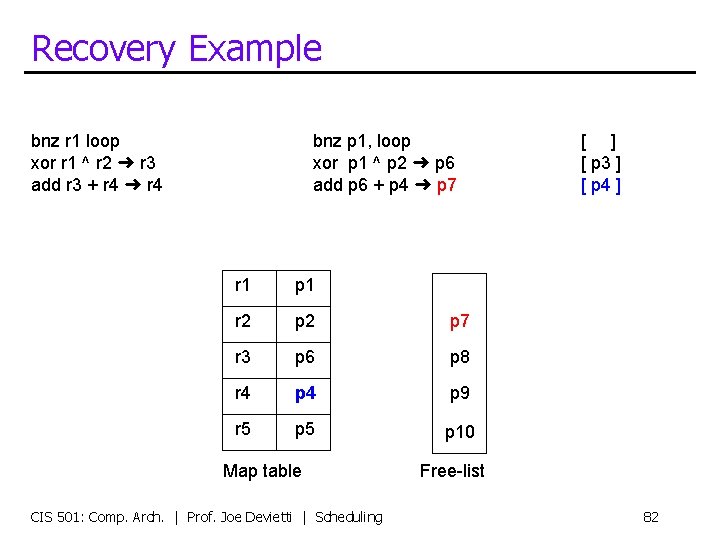

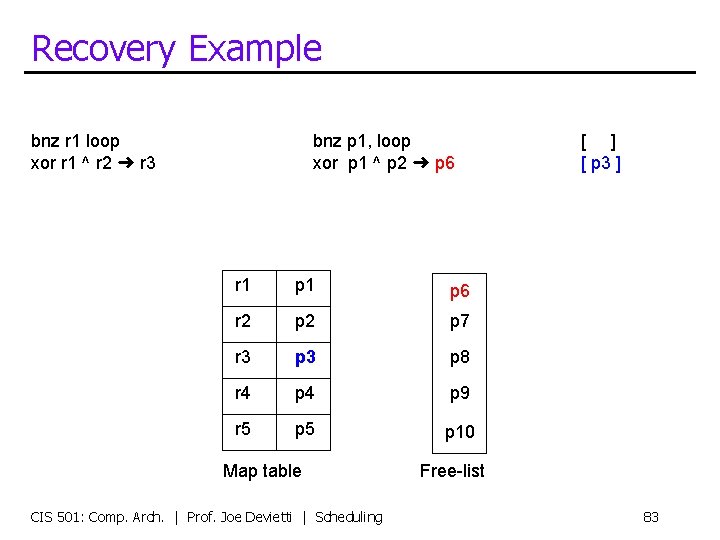

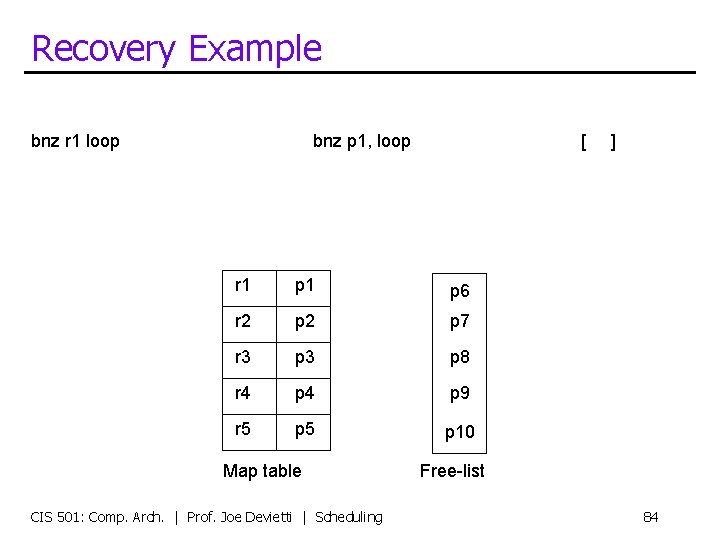

Recovery Example
• Now, let’s use this info to recover from a branch misprediction
• Code: bnz r1 loop; xor r1 ^ r2 ➜ r3; add r3 + r4 ➜ r4; sub r5 - r2 ➜ r3; addi r3 + 1 ➜ r1
• Renamed: bnz p1, loop [ ]; xor p1 ^ p2 ➜ p6 [p3]; add p6 + p4 ➜ p7 [p4]; sub p5 - p2 ➜ p8 [p6]; addi p8 + 1 ➜ p9 [p1]
• Map table: r1 p9, r2 p2, r3 p8, r4 p7, r5 p5
• Free list: p10

Recovery Example
• Undo addi r3 + 1 ➜ r1 (addi p8 + 1 ➜ p9), youngest first: restore r1 to its logged mapping p1 and return p9 to the free list
• Remaining: bnz p1, loop [ ]; xor p1 ^ p2 ➜ p6 [p3]; add p6 + p4 ➜ p7 [p4]; sub p5 - p2 ➜ p8 [p6]
• Map table: r1 p1, r2 p2, r3 p8, r4 p7, r5 p5
• Free list: p9, p10

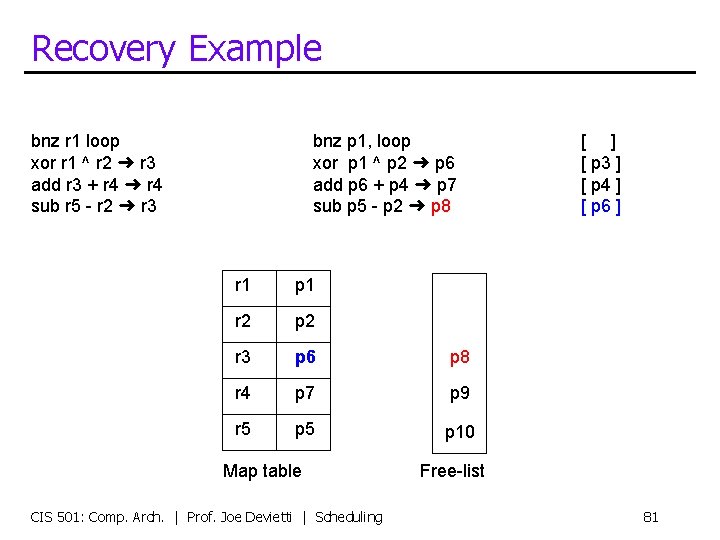

Recovery Example
• Undo sub r5 - r2 ➜ r3 (sub p5 - p2 ➜ p8): restore r3 to p6 and return p8 to the free list
• Remaining: bnz p1, loop [ ]; xor p1 ^ p2 ➜ p6 [p3]; add p6 + p4 ➜ p7 [p4]
• Map table: r1 p1, r2 p2, r3 p6, r4 p7, r5 p5
• Free list: p8, p9, p10

Recovery Example
• Undo add r3 + r4 ➜ r4 (add p6 + p4 ➜ p7): restore r4 to p4 and return p7 to the free list
• Remaining: bnz p1, loop [ ]; xor p1 ^ p2 ➜ p6 [p3]
• Map table: r1 p1, r2 p2, r3 p6, r4 p4, r5 p5
• Free list: p7, p8, p9, p10

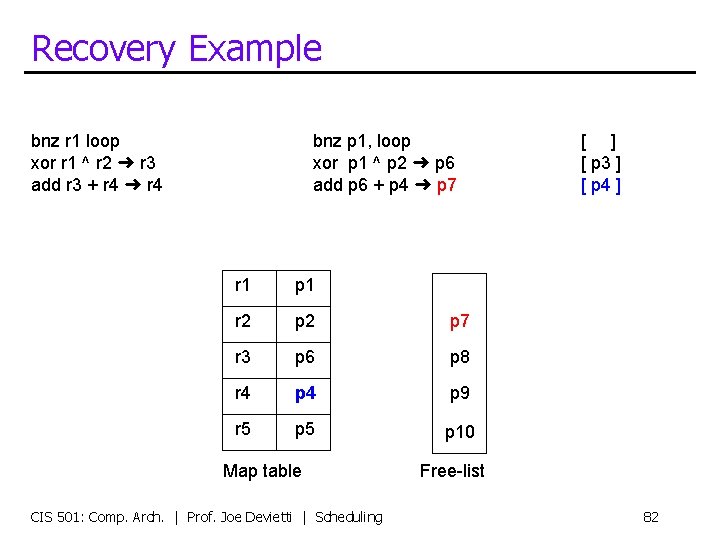

Recovery Example
• Undo xor r1 ^ r2 ➜ r3 (xor p1 ^ p2 ➜ p6): restore r3 to p3 and return p6 to the free list
• Remaining: bnz p1, loop [ ]
• Map table: r1 p1, r2 p2, r3 p3, r4 p4, r5 p5
• Free list: p6, p7, p8, p9, p10

Recovery Example
• Only bnz p1, loop remains; the map table and free list are back to their state before the wrong-path instructions were renamed
• Map table: r1 p1, r2 p2, r3 p3, r4 p4, r5 p5
• Free list: p6, p7, p8, p9, p10
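The log-based unwinding just walked through (Option #1) can be sketched in Python. Assumptions: the ROB is a plain list ordered oldest to youngest, `RobEntry` and `unwind` are hypothetical names, and the free list is a `deque` whose head is rewound with `appendleft`, which reproduces the free-list order shown on the slides. Flushing the issue queue is not modeled.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List, Optional


@dataclass
class RobEntry:
    insn: str
    arch_output: Optional[str]      # None for branches (no destination)
    phys_output: Optional[str]
    old_phys_output: Optional[str]  # the logged, overwritten mapping


def unwind(rob: List[RobEntry], maptable: Dict[str, str],
           free_list: Deque[str], branch_index: int) -> None:
    """Undo every ROB entry younger than the mispredicted branch, youngest first."""
    while len(rob) > branch_index + 1:
        entry = rob.pop()  # squash the youngest instruction
        if entry.arch_output is not None:
            maptable[entry.arch_output] = entry.old_phys_output  # reverse the rename
            free_list.appendleft(entry.phys_output)  # rewind the free-list head


rob = [
    RobEntry("bnz", None, None, None),
    RobEntry("xor", "r3", "p6", "p3"),
    RobEntry("add", "r4", "p7", "p4"),
    RobEntry("sub", "r3", "p8", "p6"),
    RobEntry("addi", "r1", "p9", "p1"),
]
maptable = {"r1": "p9", "r2": "p2", "r3": "p8", "r4": "p7", "r5": "p5"}
free_list = deque(["p10"])
unwind(rob, maptable, free_list, branch_index=0)
print(maptable)         # {'r1': 'p1', 'r2': 'p2', 'r3': 'p3', 'r4': 'p4', 'r5': 'p5'}
print(list(free_list))  # ['p6', 'p7', 'p8', 'p9', 'p10']
```

Each loop iteration corresponds to one of the slides above, which is why this option is described as sequential and slow.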

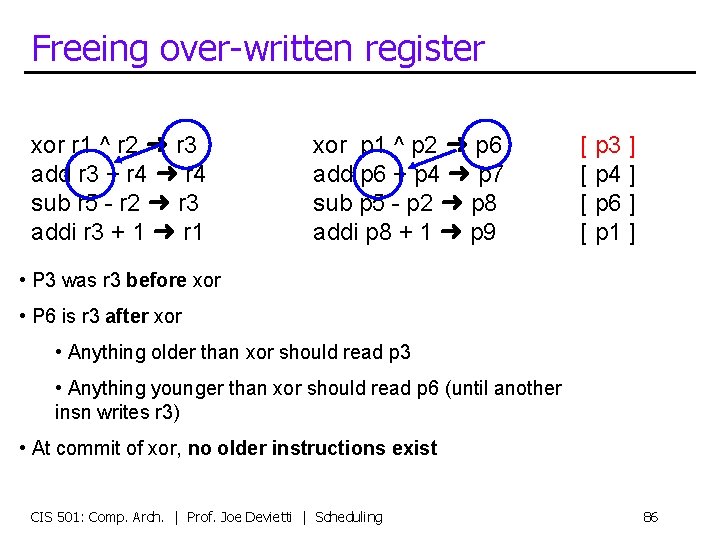

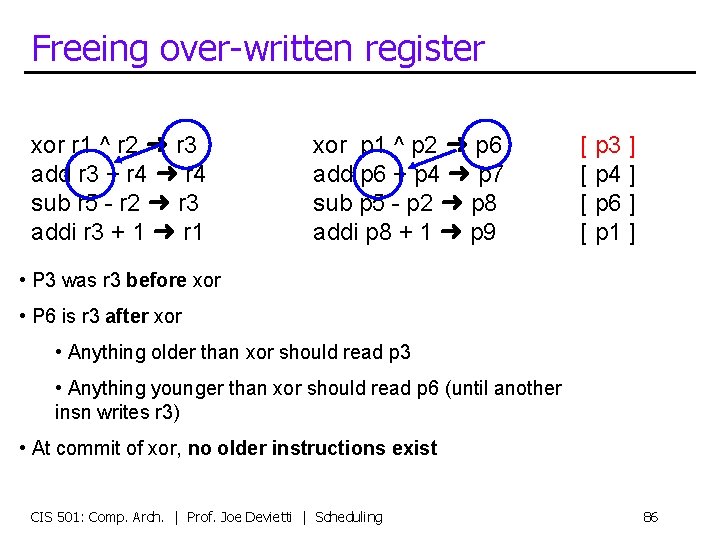

Commit

| Original | Renamed | Overwritten |
|---|---|---|
| xor r1 ^ r2 ➜ r3 | xor p1 ^ p2 ➜ p6 | [p3] |
| add r3 + r4 ➜ r4 | add p6 + p4 ➜ p7 | [p4] |
| sub r5 - r2 ➜ r3 | sub p5 - p2 ➜ p8 | [p6] |
| addi r3 + 1 ➜ r1 | addi p8 + 1 ➜ p9 | [p1] |

• Commit: instruction becomes architected state
  • In-order, only when instructions are finished
  • Free overwritten register (why?)

Freeing over-written register
• Code: xor p1 ^ p2 ➜ p6 [p3]; add p6 + p4 ➜ p7 [p4]; sub p5 - p2 ➜ p8 [p6]; addi p8 + 1 ➜ p9 [p1]
• p3 was r3 before xor
• p6 is r3 after xor
  • Anything older than xor should read p3
  • Anything younger than xor should read p6 (until another insn writes r3)
  • At commit of xor, no older instructions exist, so nothing can still read p3 and it can be freed

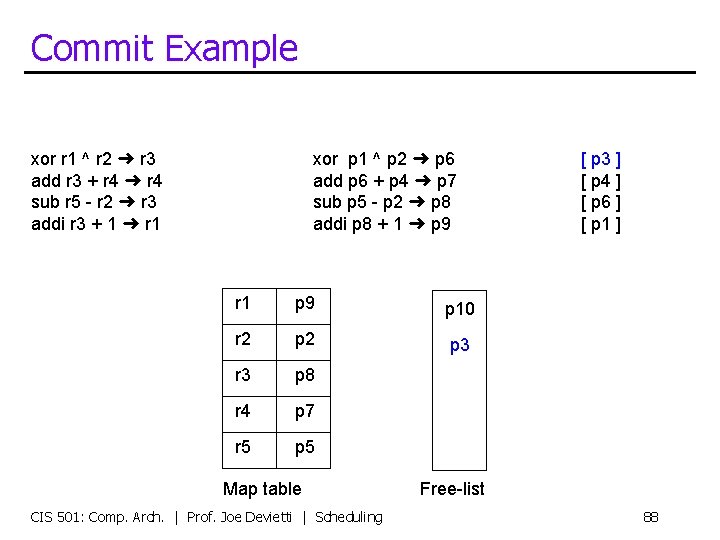

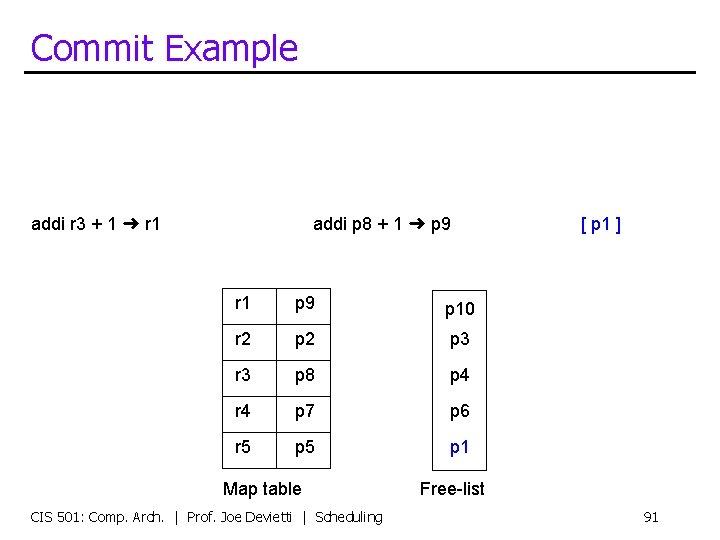

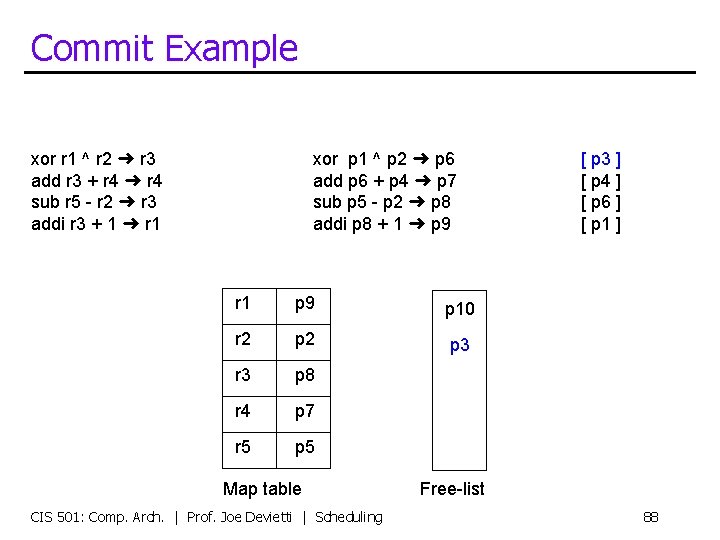

Commit Example
• Code: xor r1 ^ r2 ➜ r3; add r3 + r4 ➜ r4; sub r5 - r2 ➜ r3; addi r3 + 1 ➜ r1
• Renamed: xor p1 ^ p2 ➜ p6 [p3]; add p6 + p4 ➜ p7 [p4]; sub p5 - p2 ➜ p8 [p6]; addi p8 + 1 ➜ p9 [p1]
• Map table: r1 p9, r2 p2, r3 p8, r4 p7, r5 p5
• Free list: p10

Commit Example
• xor r1 ^ r2 ➜ r3 (xor p1 ^ p2 ➜ p6) commits: its overwritten register p3 goes to the free list
• Still in the ROB: add p6 + p4 ➜ p7 [p4]; sub p5 - p2 ➜ p8 [p6]; addi p8 + 1 ➜ p9 [p1]
• Map table (unchanged): r1 p9, r2 p2, r3 p8, r4 p7, r5 p5
• Free list: p10, p3

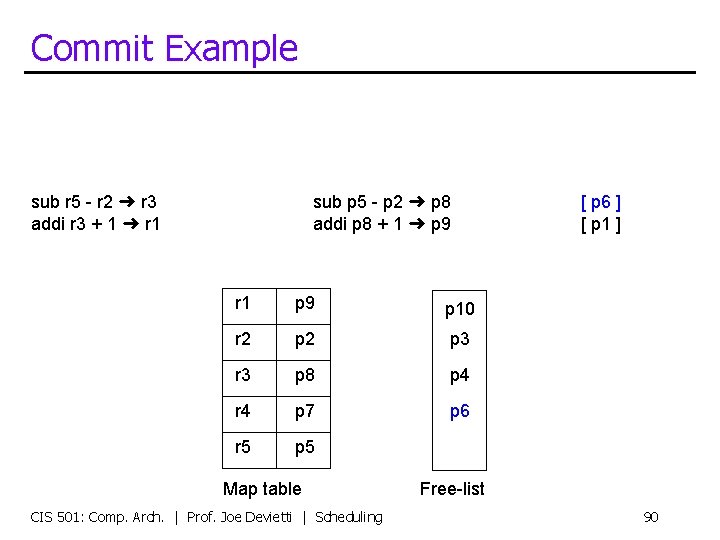

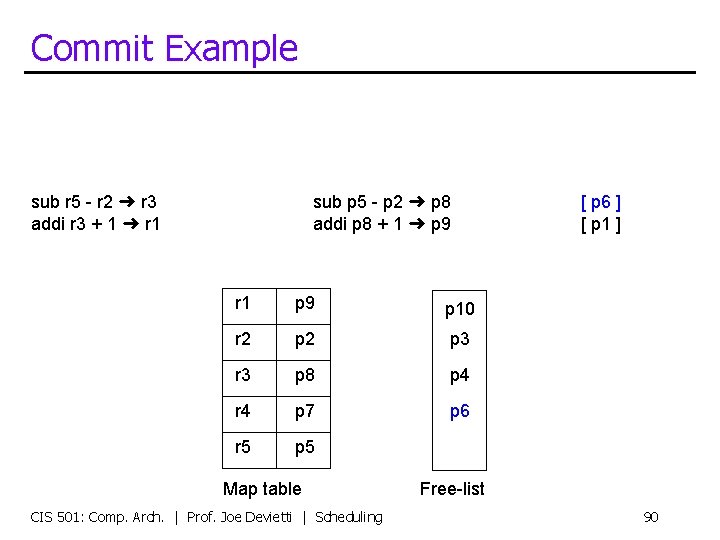

Commit Example
• add r3 + r4 ➜ r4 (add p6 + p4 ➜ p7) commits: p4 is freed
• Still in the ROB: sub p5 - p2 ➜ p8 [p6]; addi p8 + 1 ➜ p9 [p1]
• Map table (unchanged): r1 p9, r2 p2, r3 p8, r4 p7, r5 p5
• Free list: p10, p3, p4

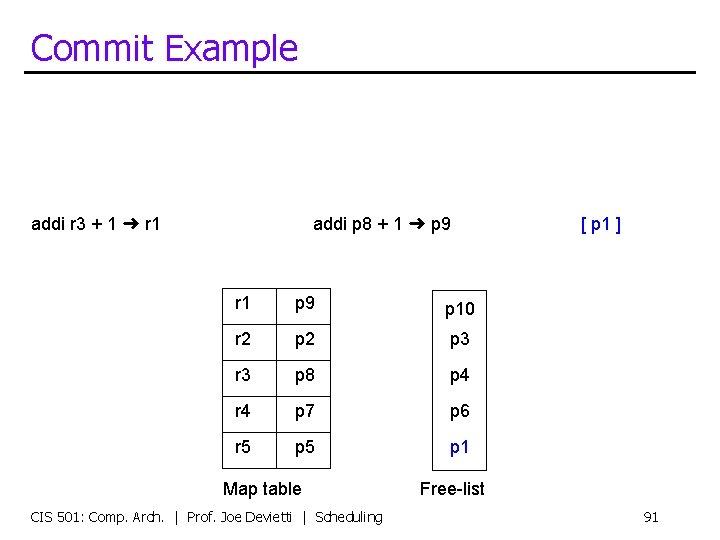

Commit Example
• sub r5 - r2 ➜ r3 (sub p5 - p2 ➜ p8) commits: p6 is freed
• Still in the ROB: addi p8 + 1 ➜ p9 [p1]
• Map table (unchanged): r1 p9, r2 p2, r3 p8, r4 p7, r5 p5
• Free list: p10, p3, p4, p6

Commit Example
• addi r3 + 1 ➜ r1 (addi p8 + 1 ➜ p9) commits: p1 is freed
• Map table (unchanged): r1 p9, r2 p2, r3 p8, r4 p7, r5 p5
• Free list: p10, p3, p4, p6, p1

Commit Example
• All instructions have committed
• Map table: r1 p9, r2 p2, r3 p8, r4 p7, r5 p5
• Free list: p10, p3, p4, p6, p1
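The commit step can be sketched in Python as below: retire finished instructions only from the ROB head and return each one's overwritten register to the tail of the free list. `RobEntry` and `commit` are hypothetical names; `width` defaults to 1 to mirror one commit per slide, and an unfinished head simply makes `commit` return an empty list (a stall).

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional


@dataclass
class RobEntry:
    insn: str
    old_phys_output: Optional[str]  # register overwritten at rename
    done: bool = False


def commit(rob: Deque[RobEntry], free_list: Deque[str], width: int = 1) -> List[str]:
    """Retire up to `width` finished instructions from the head, in program order."""
    retired = []
    while rob and rob[0].done and len(retired) < width:
        entry = rob.popleft()
        if entry.old_phys_output is not None:
            # No older instruction remains, so nothing can still read this register.
            free_list.append(entry.old_phys_output)
        retired.append(entry.insn)
    return retired


rob = deque(RobEntry(n, old, done=True) for n, old in
            [("xor", "p3"), ("add", "p4"), ("sub", "p6"), ("addi", "p1")])
free_list = deque(["p10"])
while rob:
    print(commit(rob, free_list), list(free_list))
```

The final free list comes out as p10, p3, p4, p6, p1, the same order as the last commit slide.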

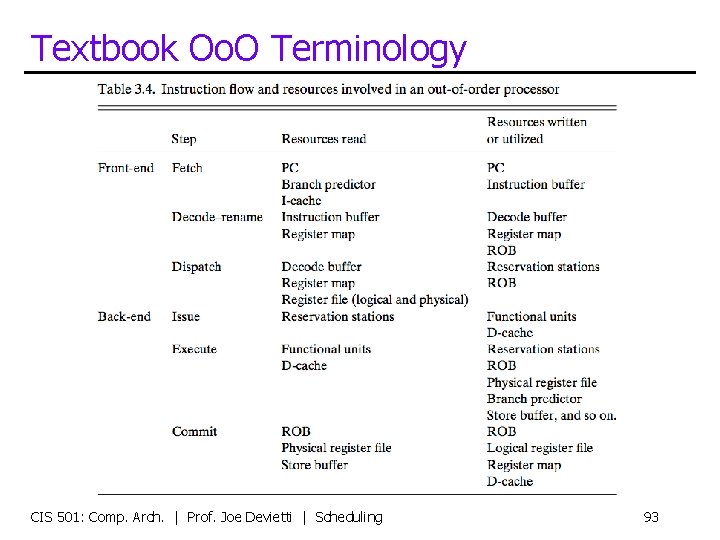

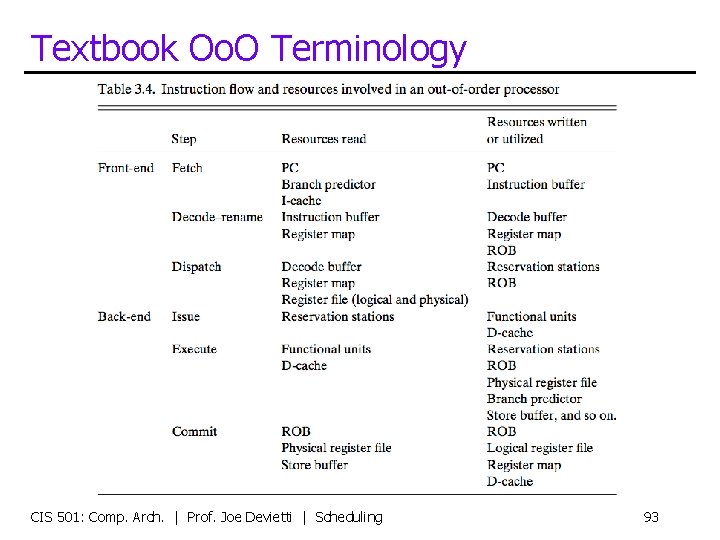

Textbook OoO Terminology

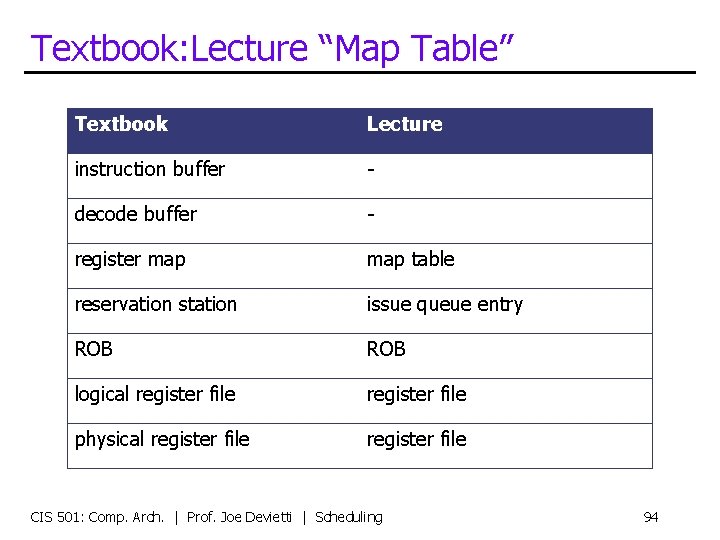

Textbook: Lecture “Map Table” Textbook Lecture instruction buffer - decode buffer - register map table reservation station issue queue entry ROB logical register file physical register file

Lecture version of Textbook Table 3.4 (Step/Stage: resources read; resources written/utilized)
• Fetch: read PC, branch predictor, I$; written PC
• Decode-rename: map table, ROB
• Dispatch: read ready table; written ROB, issue queue
• Issue: read issue queue, regfile; utilized functional units
• Execute: read D$; written/utilized functional units, issue queue, ROB, D$, branch predictor, regfile
• Commit: ROB, map table, D$

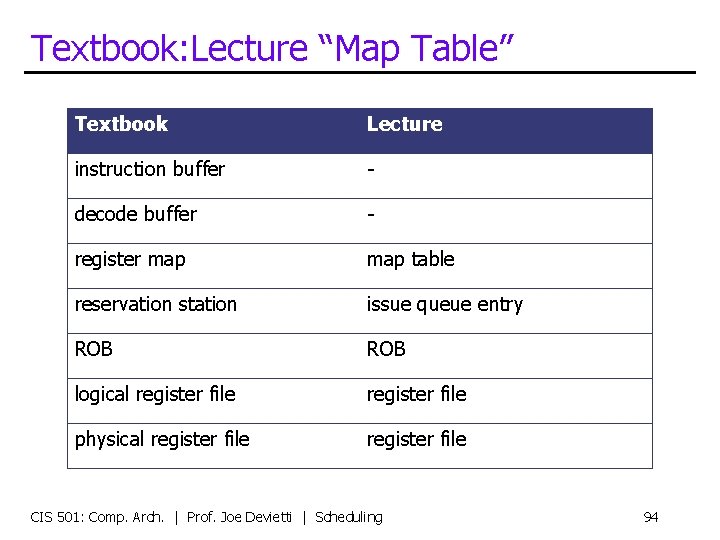

Dynamic Scheduling Example

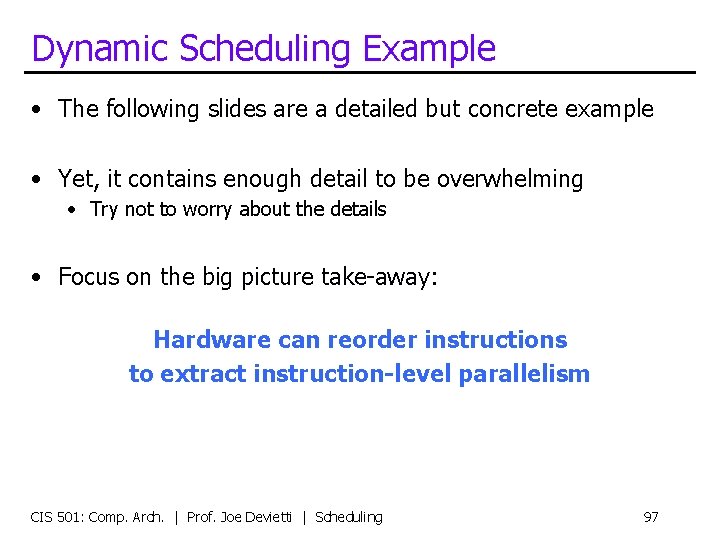

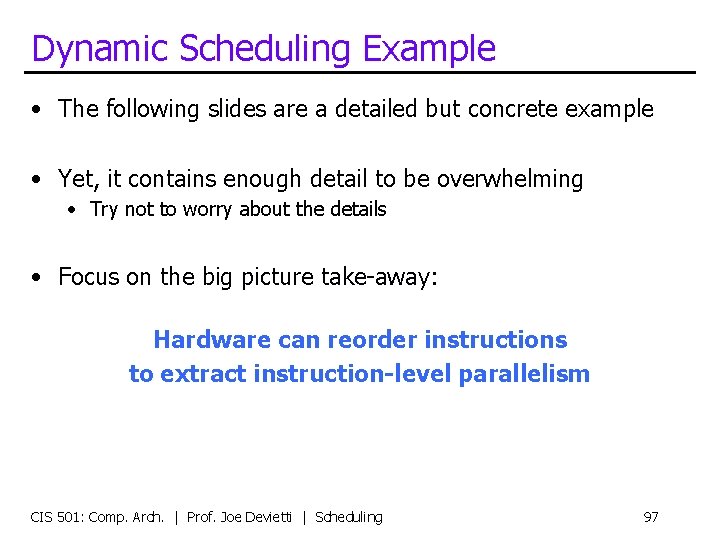

Dynamic Scheduling Example
• The following slides are a detailed but concrete example
• It contains enough detail to be overwhelming
  • Try not to worry about the details
• Focus on the big-picture takeaway: hardware can reorder instructions to extract instruction-level parallelism

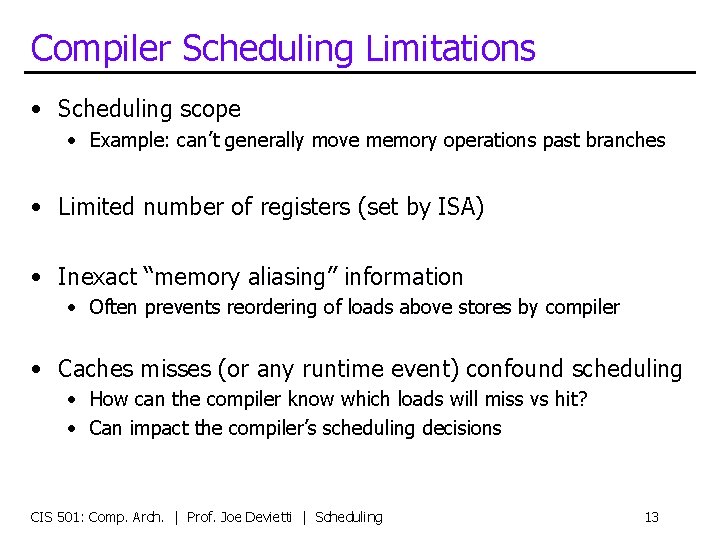

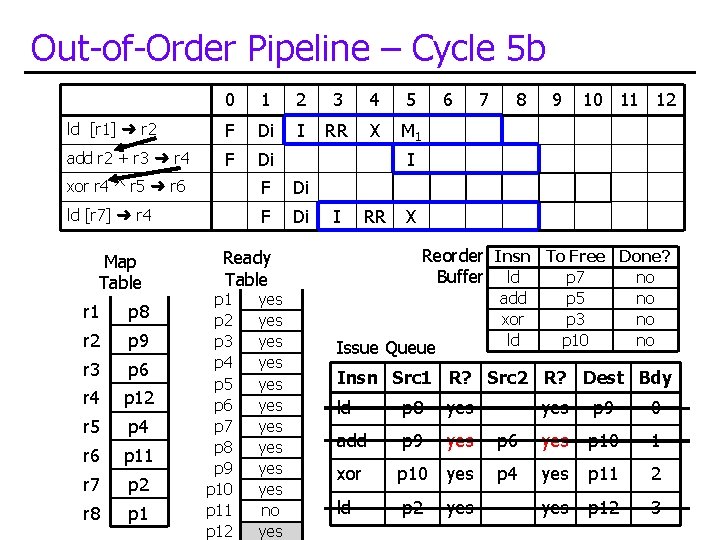

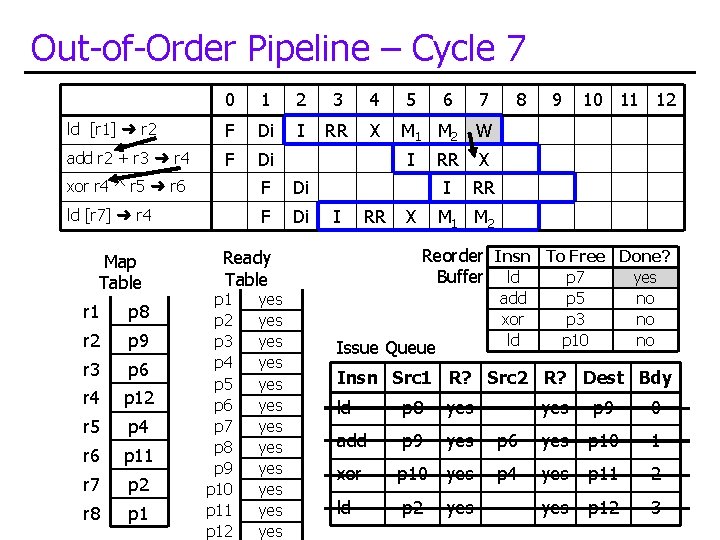

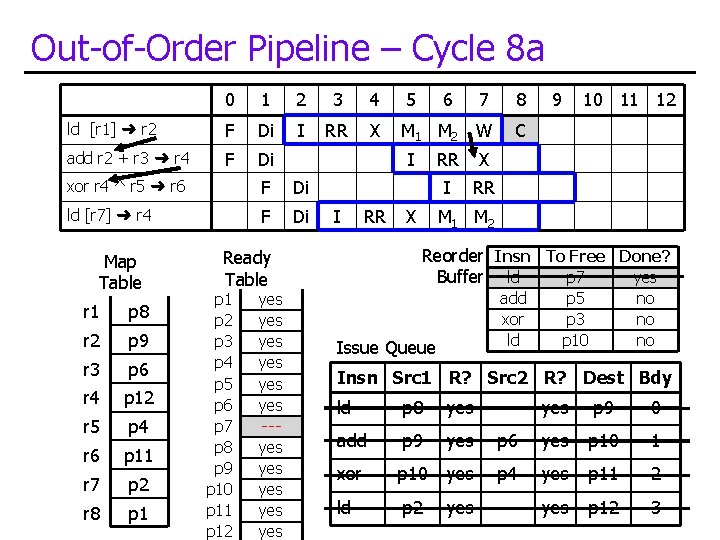

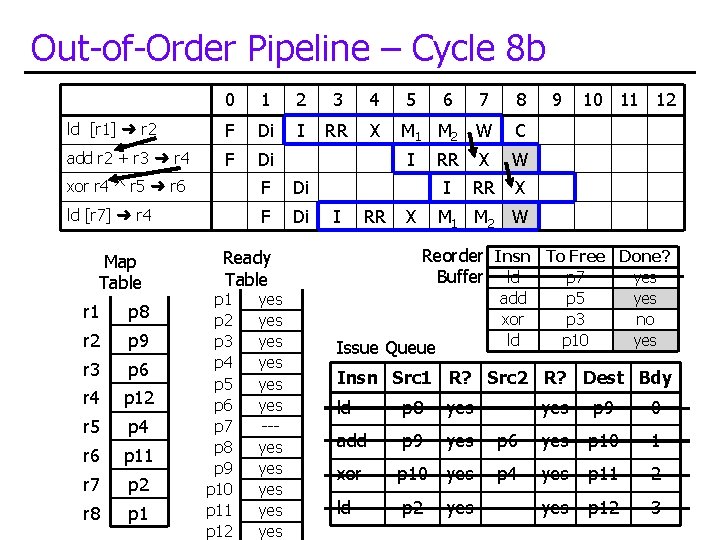

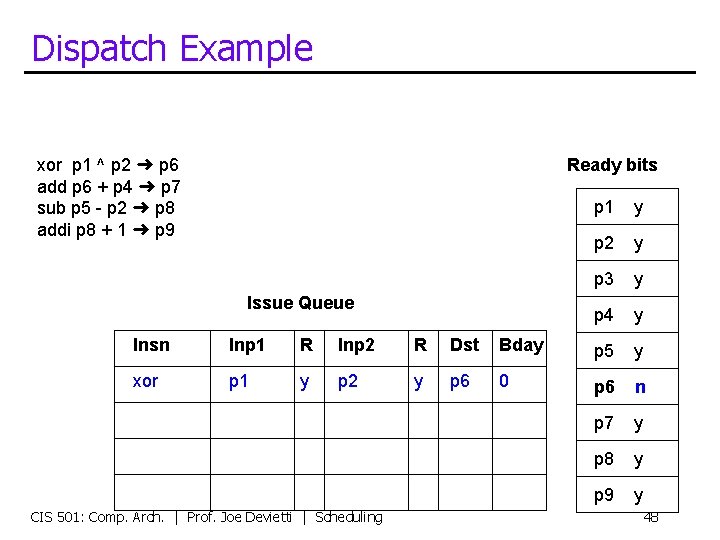

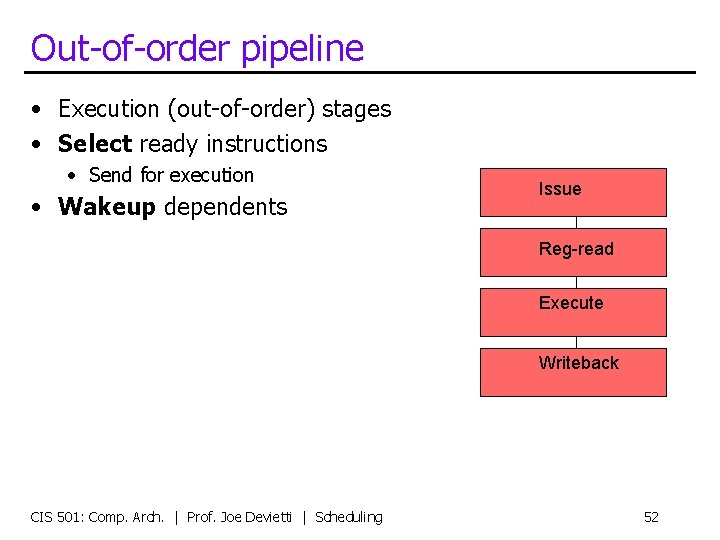

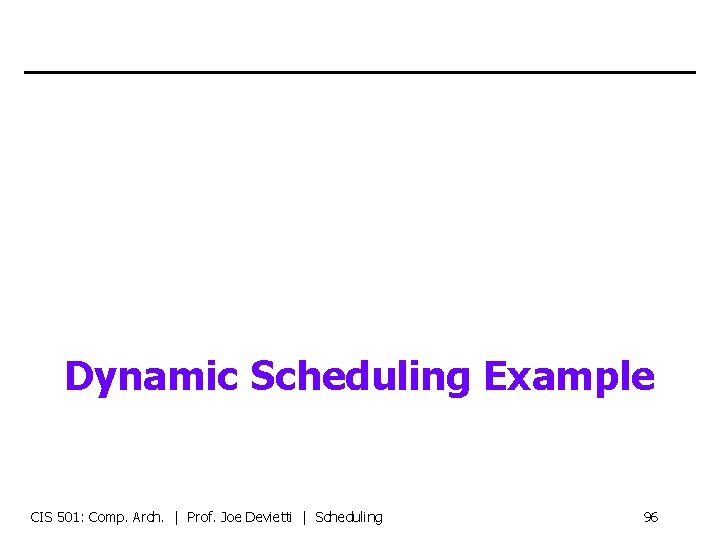

Recall: Motivating Example

| Insn | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ld [p1] ➜ p2 | F | Di | I | RR | X | M1 | M2 | W | C | | |
| add p2 + p3 ➜ p4 | F | Di | | | | I | RR | X | W | C | |
| xor p4 ^ p5 ➜ p6 | | F | Di | | | | I | RR | X | W | C |
| ld [p7] ➜ p8 | | F | Di | I | RR | X | M1 | M2 | W | | C |

• How would this execution occur cycle-by-cycle?
• Execution latencies assumed in this example:
  • Loads have a two-cycle load-to-use penalty
    • Three-cycle total execution latency
  • All other instructions have single-cycle execution latency
• “Issue queue”: holds all waiting (un-executed) instructions
  • Holds ready/not-ready status
  • Faster than looking up in the ready table each cycle
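The cycle numbers in this table can be re-derived from the stated latencies with a short Python sketch. Assumptions, all hypothetical modeling choices rather than the slides' method: dispatch cycles are taken from the table; each instruction has at most one in-flight producer; a consumer may issue 3 cycles after a load issues (two-cycle load-to-use penalty) or 1 cycle after a single-cycle op; the 2-wide issue limit is not modeled (it is never hit here); and commit is in order, at most two per cycle.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Op:
    name: str
    dispatch: int            # cycle of the Di stage
    producer: Optional[int]  # index of the older op this one waits on, if any
    is_load: bool


def schedule(ops: List[Op], commit_width: int = 2) -> Tuple[List[int], List[int], List[int]]:
    """Return the I, W, and C cycles of each op."""
    issue, writeback, commit = [], [], []
    for op in ops:
        cycle = op.dispatch + 1  # earliest: the cycle after dispatch
        if op.producer is not None:
            # Issue-to-issue distance: 3 after a load, 1 after a single-cycle op.
            lat = 3 if ops[op.producer].is_load else 1
            cycle = max(cycle, issue[op.producer] + lat)
        issue.append(cycle)
        # Stages after I: RR, X, (M1, M2 for loads), then W
        writeback.append(cycle + (5 if op.is_load else 3))
    for w in writeback:
        c = w + 1  # commit no earlier than the cycle after writeback
        if commit:
            c = max(c, commit[-1])  # in order: never before an older op
            if commit.count(c) >= commit_width:
                c += 1
        commit.append(c)
    return issue, writeback, commit


ops = [
    Op("ld [p1] -> p2", dispatch=1, producer=None, is_load=True),
    Op("add p2 + p3 -> p4", dispatch=1, producer=0, is_load=False),
    Op("xor p4 ^ p5 -> p6", dispatch=2, producer=1, is_load=False),
    Op("ld [p7] -> p8", dispatch=2, producer=None, is_load=True),
]
print(schedule(ops))  # ([2, 5, 6, 3], [7, 8, 9, 8], [8, 9, 10, 10])
```

The second load issues in cycle 3, ahead of the older add and xor, and finishes in cycle 8 but still commits in cycle 10, together with xor, because commit stays in program order.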

![OutofOrder Pipeline Cycle 0 0 ld r 1 r 2 F add Out-of-Order Pipeline – Cycle 0 0 ld [r 1] ➜ r 2 F add](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-99.jpg)

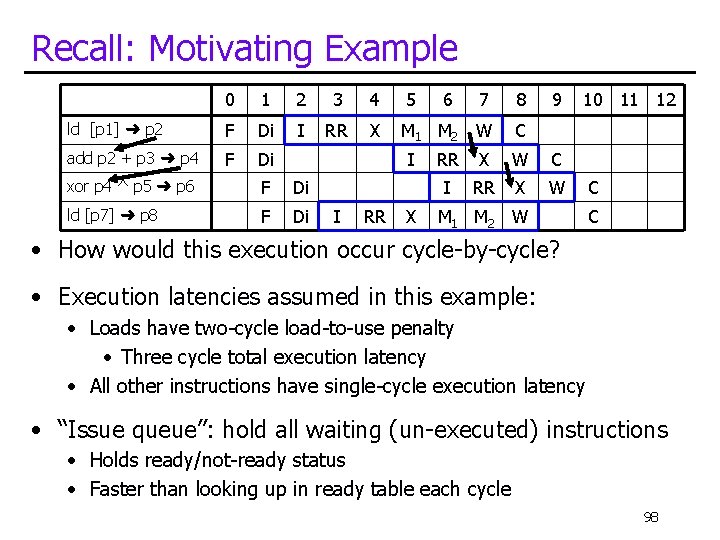

Out-of-Order Pipeline – Cycle 0
• Code: ld [r1] ➜ r2; add r2 + r3 ➜ r4; xor r4 ^ r5 ➜ r6; ld [r7] ➜ r4
• ld [r1] ➜ r2 and add r2 + r3 ➜ r4 are fetched (F)
• Map table: r1 p8, r2 p7, r3 p6, r4 p5, r5 p4, r6 p3, r7 p2, r8 p1
• Ready table: p1–p8 yes; p9–p12 --- (not allocated)
• Reorder buffer (Insn | To Free | Done?) and issue queue (Insn | Src1 | R? | Src2 | R? | Dest | Bdy): nothing dispatched yet

![OutofOrder Pipeline Cycle 1 a 0 1 ld r 1 r 2 Out-of-Order Pipeline – Cycle 1 a 0 1 ld [r 1] ➜ r 2](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-100.jpg)

Out-of-Order Pipeline – Cycle 1a
• ld [r1] ➜ r2 dispatches (Di): its source r1 maps to p8 (ready); r2 gets the new register p9, and the overwritten mapping p7 is recorded in the ROB
• Map table: r1 p8, r2 p9, r3 p6, r4 p5, r5 p4, r6 p3, r7 p2, r8 p1
• Ready table: p1–p8 yes; p9 no; p10–p12 ---
• Reorder buffer (Insn | To Free | Done?): ld | p7 | no
• Issue queue (Insn | Src1 | R? | Src2 | R? | Dest | Bdy): ld | p8 | yes | --- | yes | p9 | 0

![OutofOrder Pipeline Cycle 1 b 0 1 ld r 1 r 2 Out-of-Order Pipeline – Cycle 1 b 0 1 ld [r 1] ➜ r 2](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-101.jpg)

Out-of-Order Pipeline – Cycle 1b
• add r2 + r3 ➜ r4 dispatches: sources p9 (not ready) and p6 (ready); r4 gets p10, and the old mapping p5 is recorded in the ROB
• Map table: r1 p8, r2 p9, r3 p6, r4 p10, r5 p4, r6 p3, r7 p2, r8 p1
• Ready table: p1–p8 yes; p9, p10 no; p11, p12 ---
• Reorder buffer: ld | p7 | no; add | p5 | no
• Issue queue: ld | p8 | yes | --- | yes | p9 | 0; add | p9 | no | p6 | yes | p10 | 1

![OutofOrder Pipeline Cycle 1 c 0 1 ld r 1 r 2 Out-of-Order Pipeline – Cycle 1 c 0 1 ld [r 1] ➜ r 2](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-102.jpg)

Out-of-Order Pipeline – Cycle 1c
• xor r4 ^ r5 ➜ r6 and ld [r7] ➜ r4 are fetched (F)
• Map table, ready table, reorder buffer, and issue queue are unchanged from cycle 1b

![OutofOrder Pipeline Cycle 2 a 0 1 2 ld r 1 r Out-of-Order Pipeline – Cycle 2 a 0 1 2 ld [r 1] ➜ r](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-103.jpg)

Out-of-Order Pipeline – Cycle 2a
• ld [r1] ➜ r2 issues (I): both of its inputs are ready
• Map table, ready table, reorder buffer, and issue queue are unchanged from cycle 1b

![OutofOrder Pipeline Cycle 2 b 0 1 2 ld r 1 r Out-of-Order Pipeline – Cycle 2 b 0 1 2 ld [r 1] ➜ r](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-104.jpg)

Out-of-Order Pipeline – Cycle 2b
• xor r4 ^ r5 ➜ r6 dispatches: sources p10 (not ready) and p4 (ready); r6 gets p11, and the old mapping p3 is recorded in the ROB
• Map table: r1 p8, r2 p9, r3 p6, r4 p10, r5 p4, r6 p11, r7 p2, r8 p1
• Ready table: p1–p8 yes; p9–p11 no; p12 ---
• Reorder buffer: ld | p7 | no; add | p5 | no; xor | p3 | no
• Issue queue: ld | p8 | yes | --- | yes | p9 | 0; add | p9 | no | p6 | yes | p10 | 1; xor | p10 | no | p4 | yes | p11 | 2

![OutofOrder Pipeline Cycle 2 c 0 1 2 ld r 1 r Out-of-Order Pipeline – Cycle 2 c 0 1 2 ld [r 1] ➜ r](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-105.jpg)

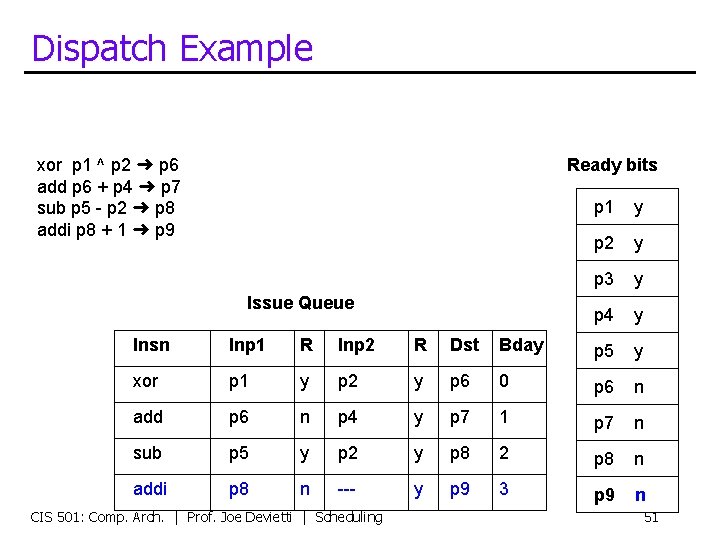

Out-of-Order Pipeline – Cycle 2c
• ld [r7] ➜ r4 dispatches: source p2 (ready); r4 is renamed again, to p12, and its old mapping p10 is recorded in the ROB
• Map table: r1 p8, r2 p9, r3 p6, r4 p12, r5 p4, r6 p11, r7 p2, r8 p1
• Ready table: p1–p8 yes; p9–p12 no
• Reorder buffer: ld | p7 | no; add | p5 | no; xor | p3 | no; ld | p10 | no
• Issue queue: ld | p8 | yes | --- | yes | p9 | 0; add | p9 | no | p6 | yes | p10 | 1; xor | p10 | no | p4 | yes | p11 | 2; ld | p2 | yes | --- | yes | p12 | 3

![OutofOrder Pipeline Cycle 3 0 1 2 3 ld r 1 r Out-of-Order Pipeline – Cycle 3 0 1 2 3 ld [r 1] ➜ r](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-106.jpg)

Out-of-Order Pipeline – Cycle 3
• ld [r1] ➜ r2 reads registers (RR)
• ld [r7] ➜ r4 issues (I): its input is ready, even though the older add and xor are still waiting
• Map table, ready table, reorder buffer, and issue queue are unchanged from cycle 2c

![OutofOrder Pipeline Cycle 4 0 1 2 3 4 ld r 1 Out-of-Order Pipeline – Cycle 4 0 1 2 3 4 ld [r 1] ➜](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-107.jpg)

Out-of-Order Pipeline – Cycle 4
• ld [r1] ➜ r2 executes (X); ld [r7] ➜ r4 reads registers (RR)
• Deferred wakeup for the first load: p9 becomes ready, so add r2 + r3 ➜ r4 now has both inputs
• Ready table: p1–p9 yes; p10–p12 no
• Issue queue: add | p9 | yes | p6 | yes | p10 | 1 (other entries unchanged)

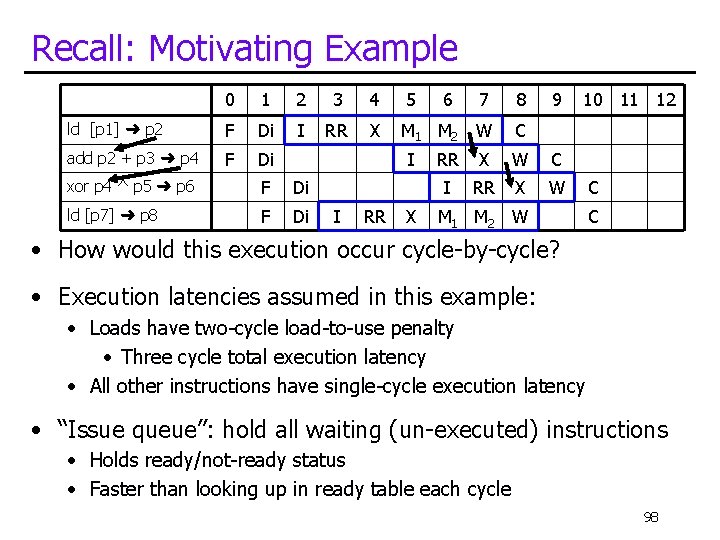

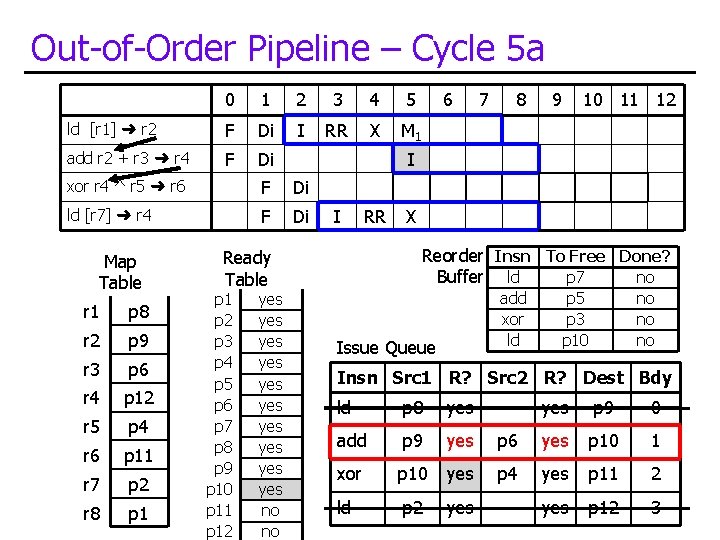

Out-of-Order Pipeline – Cycle 5a
• ld [r1] ➜ r2 is in M1; add issues (I); ld [r7] ➜ r4 executes (X)
• add’s issue wakes up p10, so xor r4 ^ r5 ➜ r6 now has both inputs
• Ready table: p1–p10 yes; p11, p12 no
• Issue queue: xor | p10 | yes | p4 | yes | p11 | 2 (other entries unchanged)

Out-of-Order Pipeline – Cycle 5b
• Deferred wakeup for the second load: p12 becomes ready
• Ready table: p1–p10 yes; p11 no; p12 yes

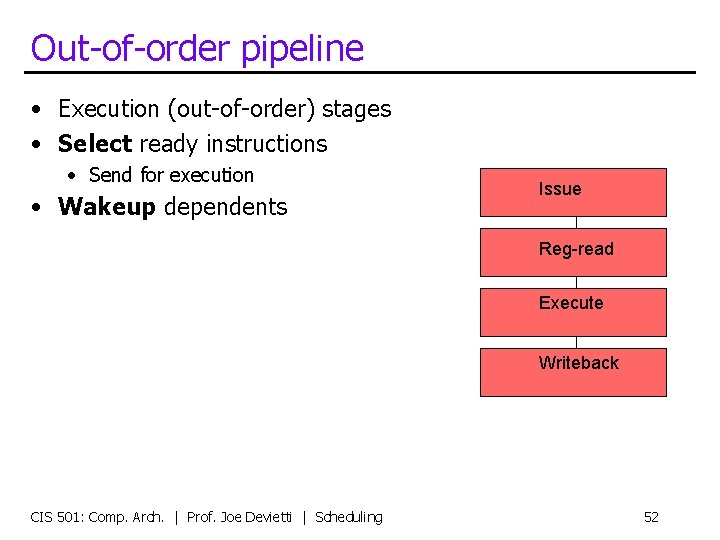

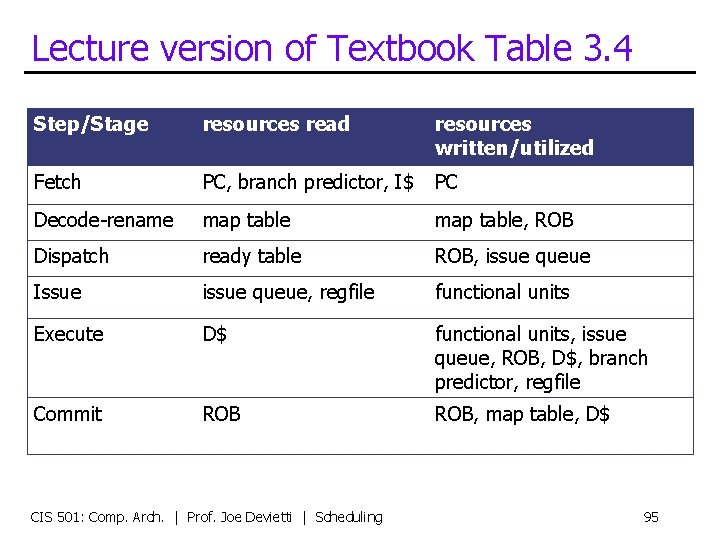

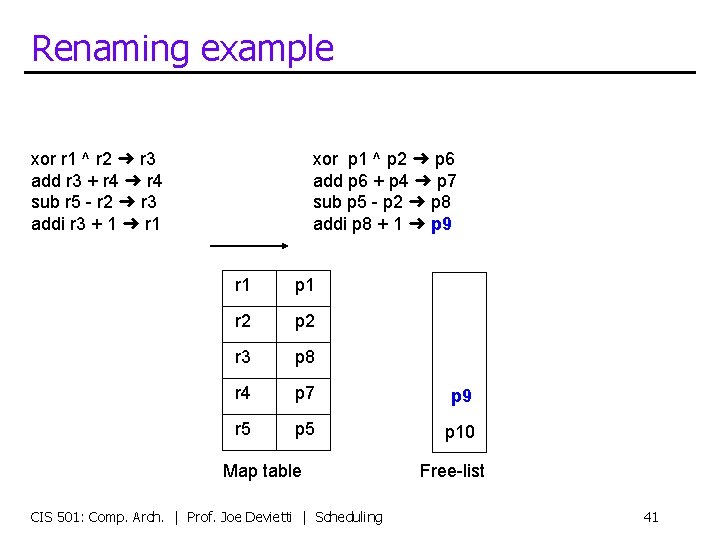

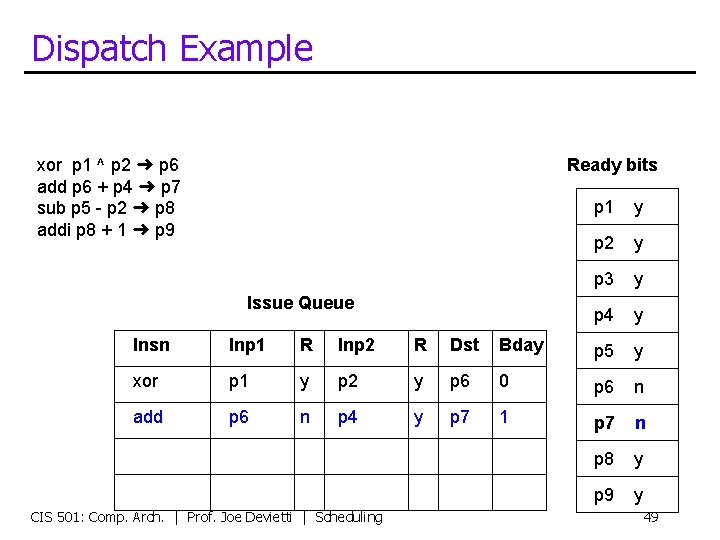

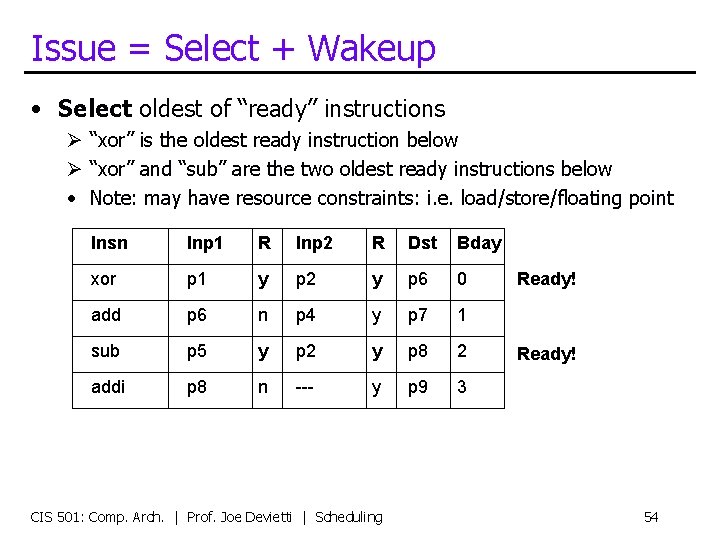

![OutofOrder Pipeline Cycle 6 0 1 2 3 4 5 ld r 1 Out-of-Order Pipeline – Cycle 6 0 1 2 3 4 5 ld [r 1]](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-110.jpg)

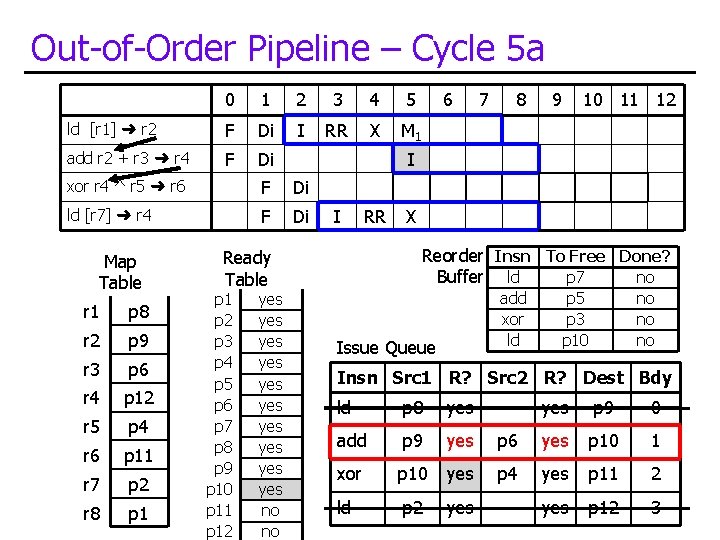

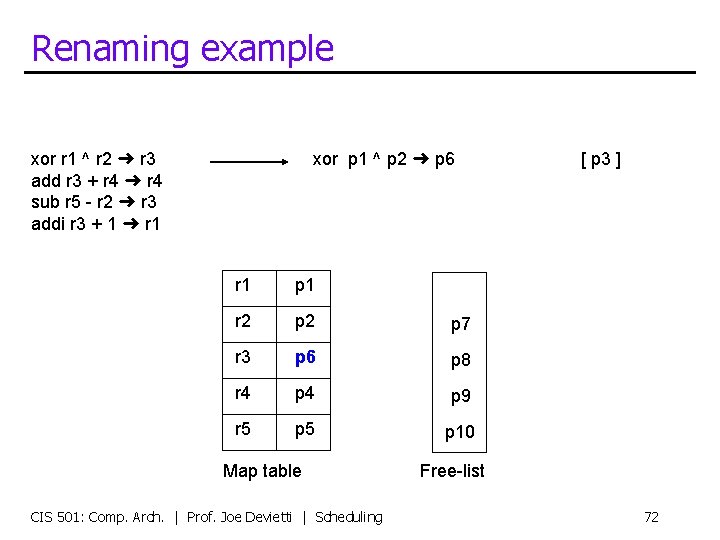

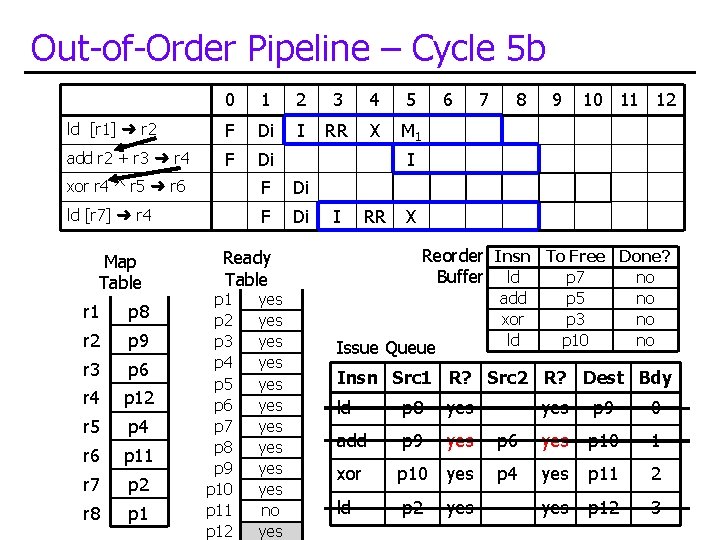

Out-of-Order Pipeline – Cycle 6
• ld [r1] ➜ r2 is in M2; add reads registers (RR); xor issues (I); ld [r7] ➜ r4 is in M1
• xor’s issue marks p11 ready: p1–p12 are now all yes

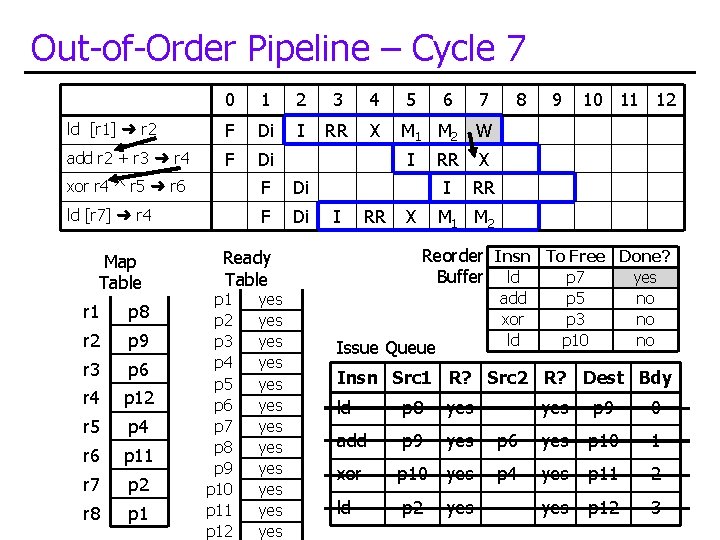

Out-of-Order Pipeline – Cycle 7
• ld [r1] ➜ r2 writes back (W) and is marked done in the ROB
• add executes (X); xor reads registers (RR); ld [r7] ➜ r4 is in M2
• Reorder buffer: ld | p7 | yes; add | p5 | no; xor | p3 | no; ld | p10 | no

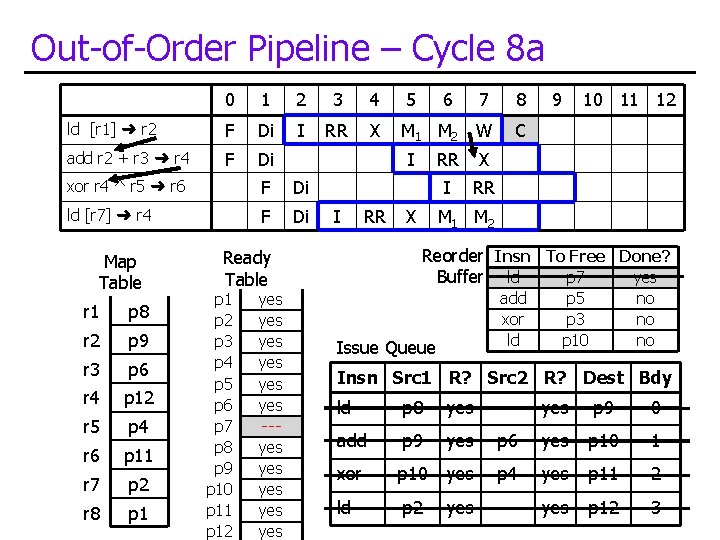

Out-of-Order Pipeline – Cycle 8a
• ld [r1] ➜ r2 commits (C) from the ROB head and frees p7 (its ready-table entry becomes ---)

Out-of-Order Pipeline – Cycle 8b
• add writes back (W); xor executes (X); ld [r7] ➜ r4 writes back (W)
• Reorder buffer Done?: add yes; xor no; ld [r7] ➜ r4 yes

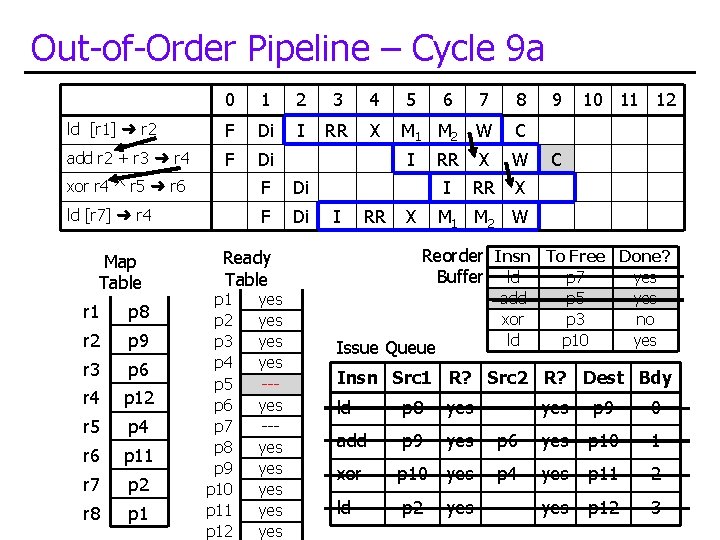

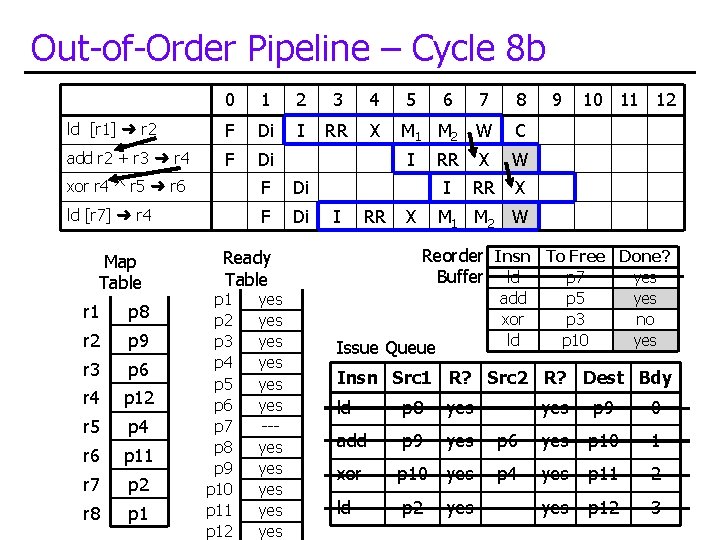

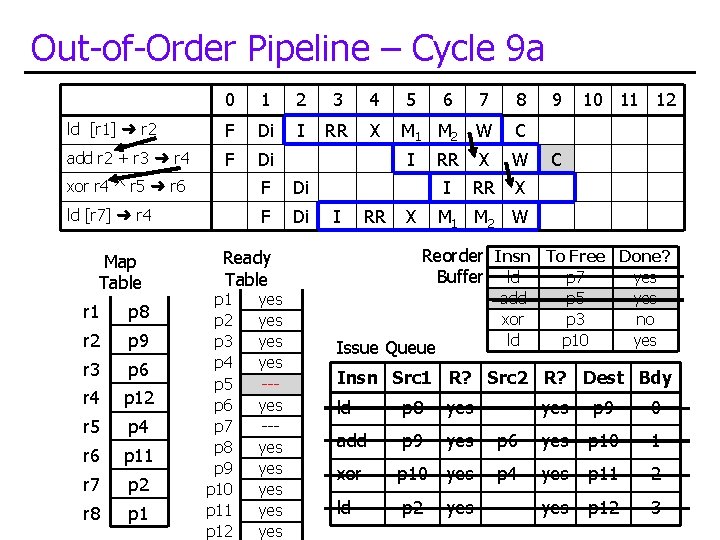

Out-of-Order Pipeline – Cycle 9a
• add commits (C) and frees p5
• ld [r7] ➜ r4 is done but cannot commit yet: the older xor is not done

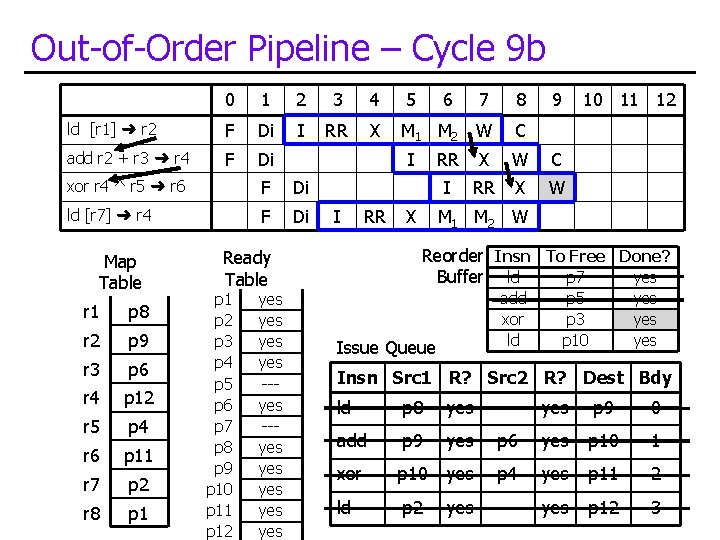

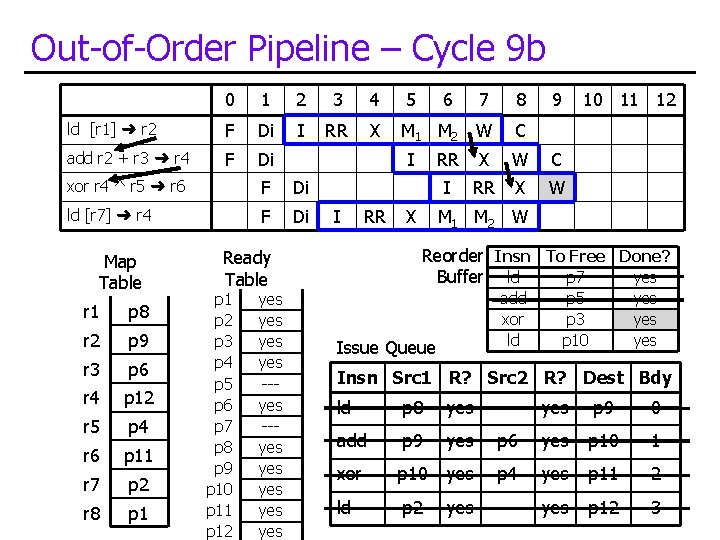

Out-of-Order Pipeline – Cycle 9b
• xor writes back (W) and is marked done: every remaining ROB entry is now done

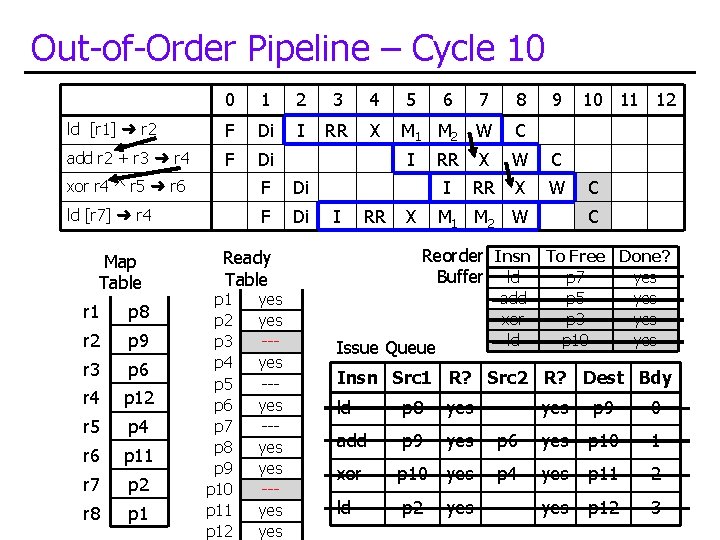

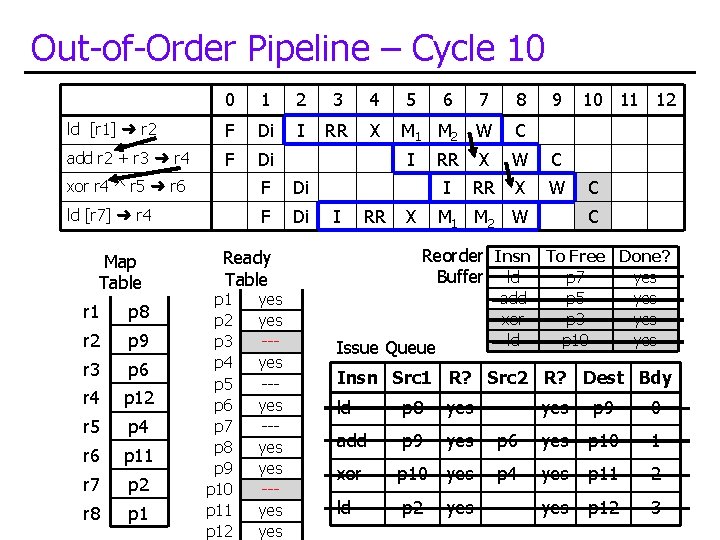

Out-of-Order Pipeline – Cycle 10
• xor and ld [r7] ➜ r4 both commit (C) in the same cycle, freeing p3 and p10

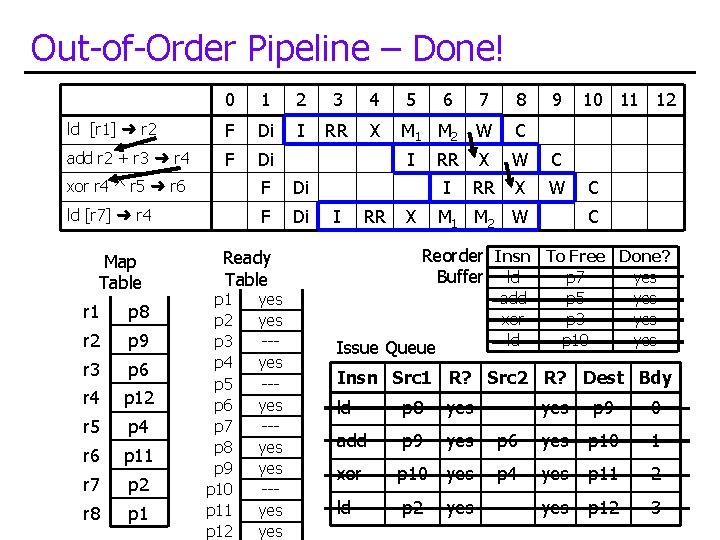

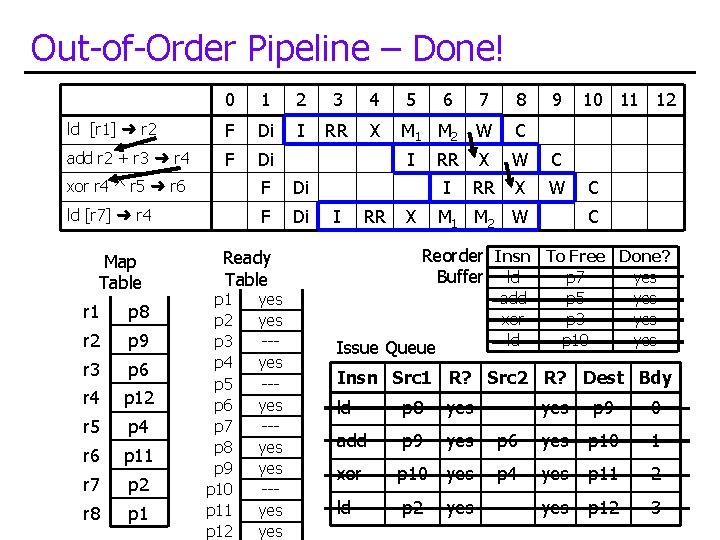

Out-of-Order Pipeline – Done!

| Insn | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ld [r1] ➜ r2 | F | Di | I | RR | X | M1 | M2 | W | C | | |
| add r2 + r3 ➜ r4 | F | Di | | | | I | RR | X | W | C | |
| xor r4 ^ r5 ➜ r6 | | F | Di | | | | I | RR | X | W | C |
| ld [r7] ➜ r4 | | F | Di | I | RR | X | M1 | M2 | W | | C |

• Map table: r1 p8, r2 p9, r3 p6, r4 p12, r5 p4, r6 p11, r7 p2, r8 p1
• Freed at commit: p7, p5, p3, p10

Handling Memory Operations

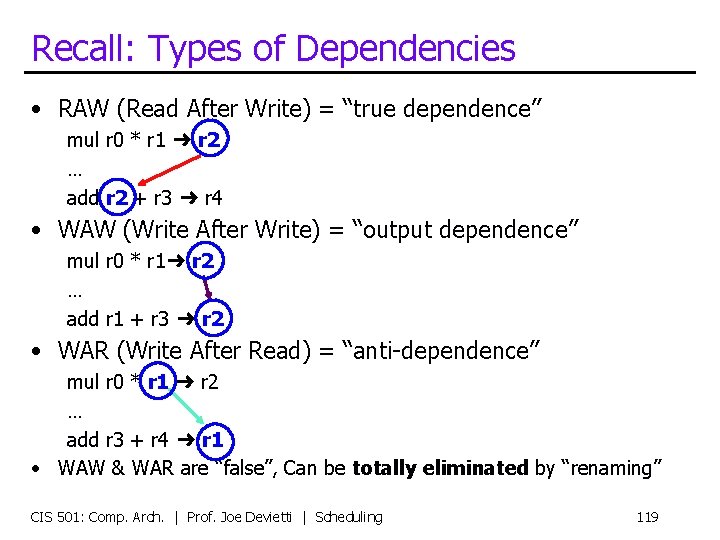

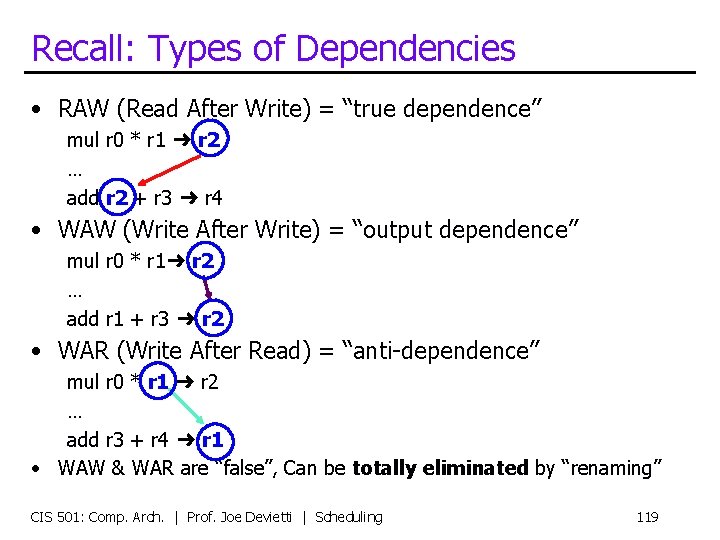

Recall: Types of Dependencies
• RAW (Read After Write) = “true dependence”
  mul r0 * r1 ➜ r2
  …
  add r2 + r3 ➜ r4
• WAW (Write After Write) = “output dependence”
  mul r0 * r1 ➜ r2
  …
  add r1 + r3 ➜ r2
• WAR (Write After Read) = “anti-dependence”
  mul r0 * r1 ➜ r2
  …
  add r3 + r4 ➜ r1
• WAW & WAR are “false” dependences and can be totally eliminated by “renaming”
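The three cases can be checked mechanically with the Python sketch below. `classify` is a hypothetical helper. It compares only the two instructions shown, so it assumes nothing in the elided “…” writes the registers involved.

```python
from typing import Optional, Set, Tuple

# (source registers, destination register) in architectural names
Insn = Tuple[Tuple[str, ...], Optional[str]]


def classify(older: Insn, younger: Insn) -> Set[str]:
    """Register dependences the younger instruction has on the older one."""
    older_srcs, older_dst = older
    younger_srcs, younger_dst = younger
    deps = set()
    if older_dst is not None and older_dst in younger_srcs:
        deps.add("RAW")  # true: younger reads what older writes
    if older_dst is not None and older_dst == younger_dst:
        deps.add("WAW")  # output: both write the same register
    if younger_dst is not None and younger_dst in older_srcs:
        deps.add("WAR")  # anti: younger overwrites what older reads
    return deps


mul = (("r0", "r1"), "r2")
print(classify(mul, (("r2", "r3"), "r4")))  # {'RAW'}
print(classify(mul, (("r1", "r3"), "r2")))  # {'WAW'}
print(classify(mul, (("r3", "r4"), "r1")))  # {'WAR'}
```

Only the RAW case carries a value from one instruction to the other; the WAW and WAR cases disappear once each write gets its own physical register.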

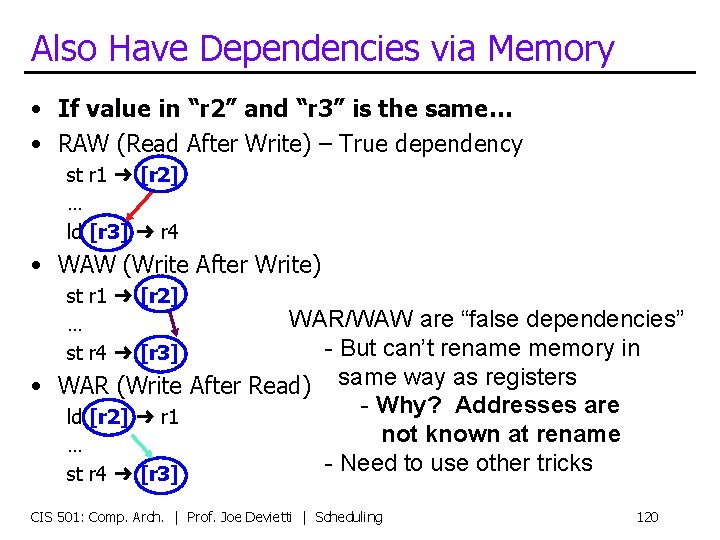

Also Have Dependencies via Memory
• If the values in “r2” and “r3” are the same…
• RAW (Read After Write): true dependency
  st r1 ➜ [r2]
  …
  ld [r3] ➜ r4
• WAW (Write After Write)
  st r1 ➜ [r2]
  …
  st r4 ➜ [r3]
• WAR (Write After Read)
  ld [r2] ➜ r1
  …
  st r4 ➜ [r3]
• WAR/WAW are “false dependencies”
  • But can’t rename memory in the same way as registers
    • Why? Addresses are not known at rename
  • Need to use other tricks

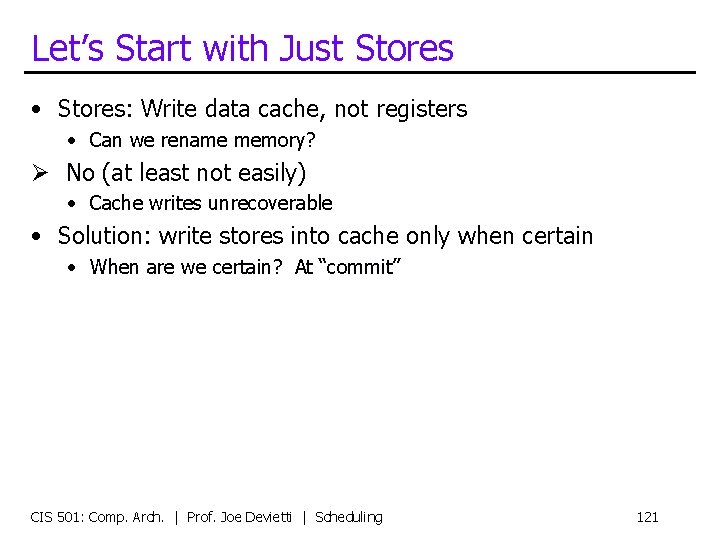

Let’s Start with Just Stores
• Stores: write the data cache, not registers
  • Can we rename memory? No (at least not easily)
  • Cache writes are unrecoverable
• Solution: write stores into the cache only when certain
  • When are we certain? At “commit”

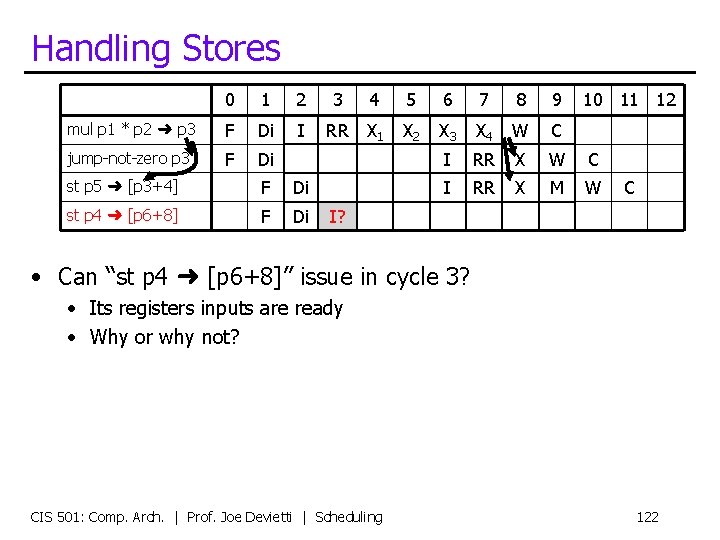

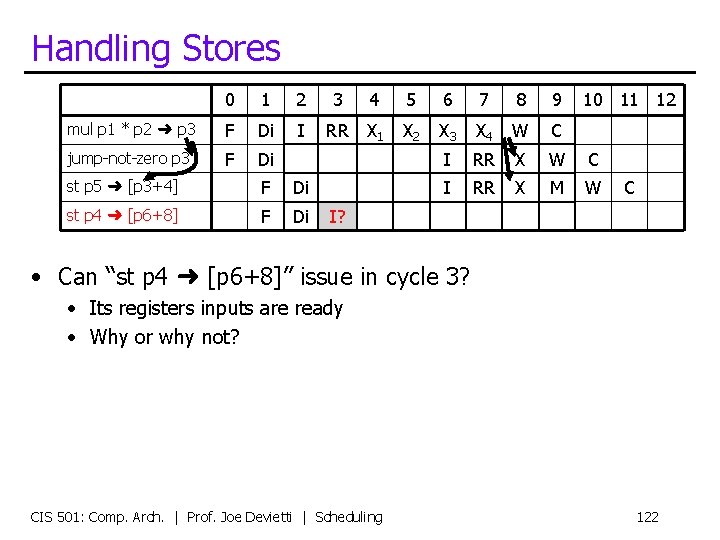

Handling Stores
• Code (pipeline diagram over cycles 0–12):
  mul p1 * p2 ➜ p3: F Di I RR X1 X2 X3 X4 W C (cycles 0–9)
  jump-not-zero p3
  st p5 ➜ [p3+4]
  st p4 ➜ [p6+8]: I? in cycle 3
• Can “st p4 ➜ [p6+8]” issue in cycle 3?
  • Its register inputs are ready
  • Why or why not?

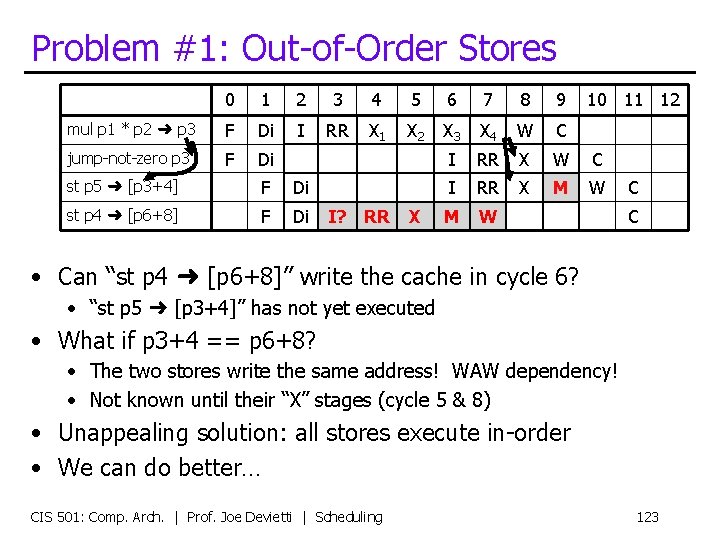

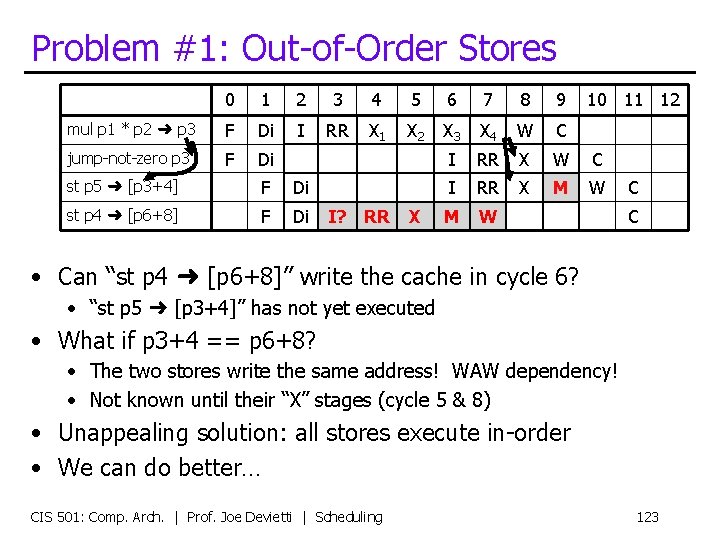

Problem #1: Out-of-Order Stores
• Code (pipeline diagram over cycles 0–12): mul p1 * p2 ➜ p3; jump-not-zero p3; st p5 ➜ [p3+4]; st p4 ➜ [p6+8]
• Can “st p4 ➜ [p6+8]” write the cache in cycle 6?
  • “st p5 ➜ [p3+4]” has not yet executed
• What if p3+4 == p6+8?
  • The two stores write the same address! WAW dependency!
  • Not known until their “X” stages (cycles 5 and 8)
• Unappealing solution: all stores execute in-order
• We can do better…

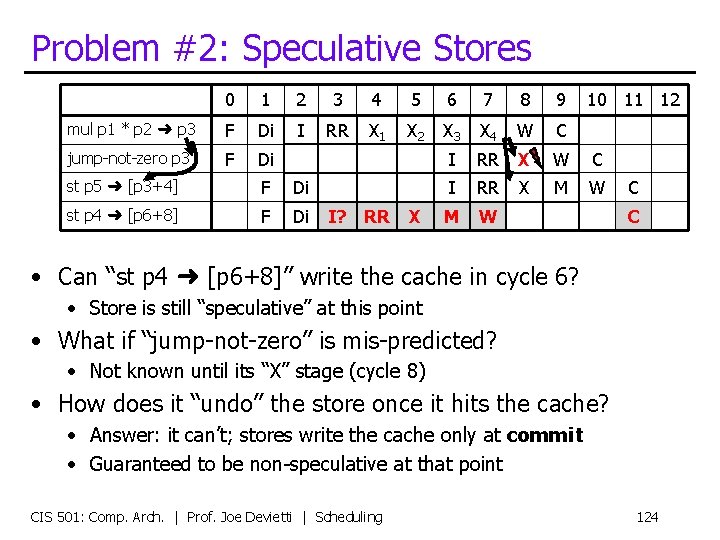

Problem #2: Speculative Stores
• Code (pipeline diagram over cycles 0–12): mul p1 * p2 ➜ p3; jump-not-zero p3; st p5 ➜ [p3+4]; st p4 ➜ [p6+8]
• Can “st p4 ➜ [p6+8]” write the cache in cycle 6?
  • Store is still “speculative” at this point
• What if “jump-not-zero” is mispredicted?
  • Not known until its “X” stage (cycle 8)
• How does it “undo” the store once it hits the cache?
  • Answer: it can’t; stores write the cache only at commit
  • Guaranteed to be non-speculative at that point

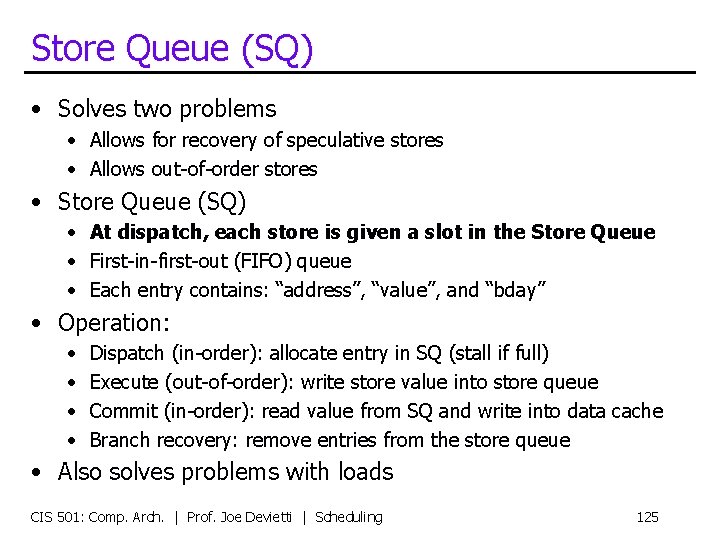

Store Queue (SQ)
• Solves two problems
  • Allows for recovery of speculative stores
  • Allows out-of-order stores
• Store Queue (SQ)
  • At dispatch, each store is given a slot in the Store Queue
  • First-in-first-out (FIFO) queue
  • Each entry contains: “address”, “value”, and “bday”
• Operation:
  • Dispatch (in-order): allocate entry in SQ (stall if full)
  • Execute (out-of-order): write store value into store queue
  • Commit (in-order): read value from SQ and write into data cache
  • Branch recovery: remove entries from the store queue
• Also solves problems with loads
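The four SQ operations can be sketched in Python as below. It is a minimal illustration, not a hardware description: `SQEntry`, `StoreQueue`, and `squash_younger_than` are hypothetical names, a `dict` stands in for the data cache, and the addresses and values in the usage are made up. Load forwarding is left out here.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, Optional


@dataclass
class SQEntry:
    bday: int
    address: Optional[int] = None  # filled in when the store executes
    value: Optional[int] = None


class StoreQueue:
    def __init__(self, capacity: int):
        self.capacity = capacity
        self.entries: Deque[SQEntry] = deque()  # head (left) = oldest store

    def dispatch(self, bday: int) -> Optional[SQEntry]:
        """In order: allocate a slot, or return None so dispatch stalls."""
        if len(self.entries) == self.capacity:
            return None
        entry = SQEntry(bday)
        self.entries.append(entry)
        return entry

    def execute(self, entry: SQEntry, address: int, value: int) -> None:
        """Out of order: record address and value; the cache is not touched."""
        entry.address, entry.value = address, value

    def commit(self, cache: Dict[int, int]) -> None:
        """In order: the oldest store finally writes the data cache."""
        entry = self.entries.popleft()
        assert entry.address is not None, "a store commits only after executing"
        cache[entry.address] = entry.value

    def squash_younger_than(self, bday: int) -> None:
        """Branch recovery: drop stores younger than the mispredicted branch."""
        while self.entries and self.entries[-1].bday > bday:
            self.entries.pop()


cache: Dict[int, int] = {}
sq = StoreQueue(capacity=4)
st_a = sq.dispatch(bday=2)              # st p5 -> [p3+4]
st_b = sq.dispatch(bday=3)              # st p4 -> [p6+8]
sq.execute(st_b, address=108, value=9)  # younger store executes first
print(cache)                            # {}: nothing written speculatively
sq.execute(st_a, address=104, value=6)
sq.commit(cache)
sq.commit(cache)
print(cache)                            # {104: 6, 108: 9}
```

Because the cache is written only from the head of the FIFO, out-of-order execution of the stores never reorders their cache writes, and a squash only has to trim the tail.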

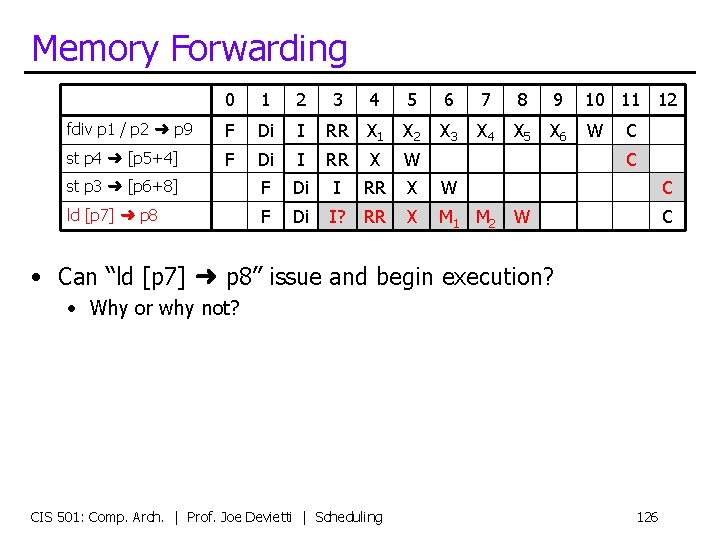

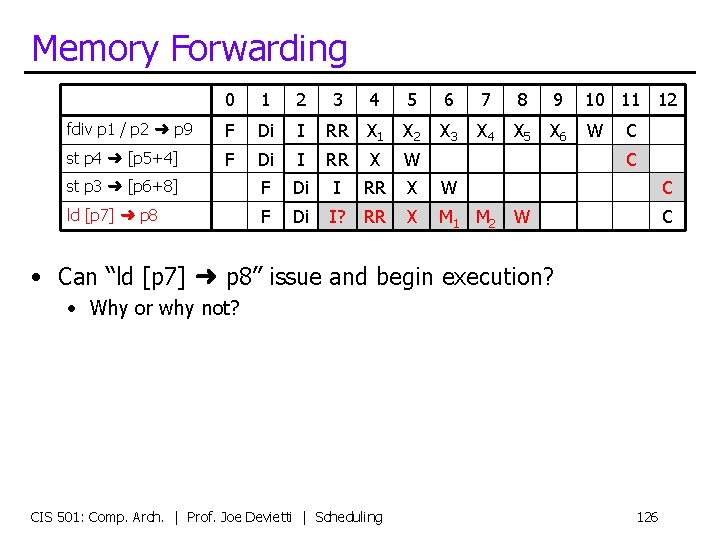

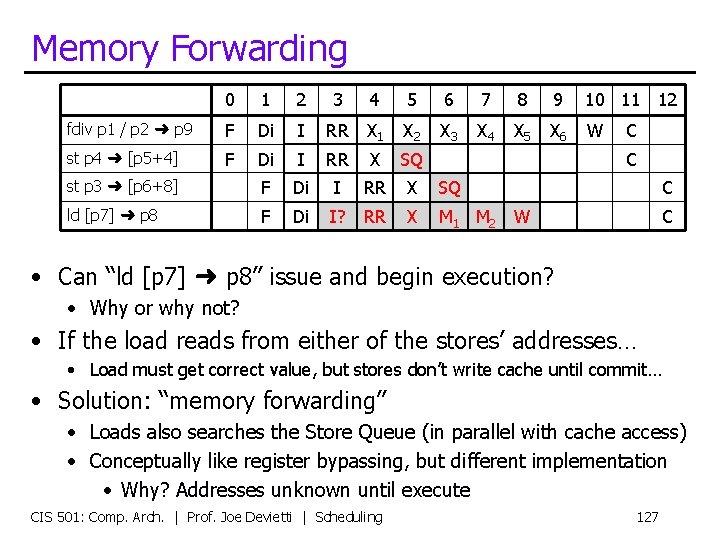

Memory Forwarding
• Code (pipeline diagram over cycles 0–12):
  fdiv p1 / p2 ➜ p9: F Di I RR X1 X2 X3 X4 X5 X6 W, then C
  st p4 ➜ [p5+4]
  st p3 ➜ [p6+8]
  ld [p7] ➜ p8: I? (can it issue?)
• Can “ld [p7] ➜ p8” issue and begin execution?
  • Why or why not?

Memory Forwarding
• Code (pipeline diagram over cycles 0–12): fdiv p1 / p2 ➜ p9; st p4 ➜ [p5+4]; st p3 ➜ [p6+8]; ld [p7] ➜ p8 (the two stores now write the SQ instead of the cache when they execute)
• Can “ld [p7] ➜ p8” issue and begin execution?
  • Why or why not?
• If the load reads from either of the stores’ addresses…
  • Load must get the correct value, but stores don’t write the cache until commit…
• Solution: “memory forwarding”
  • Loads also search the Store Queue (in parallel with cache access)
  • Conceptually like register bypassing, but a different implementation
    • Why? Addresses unknown until execute

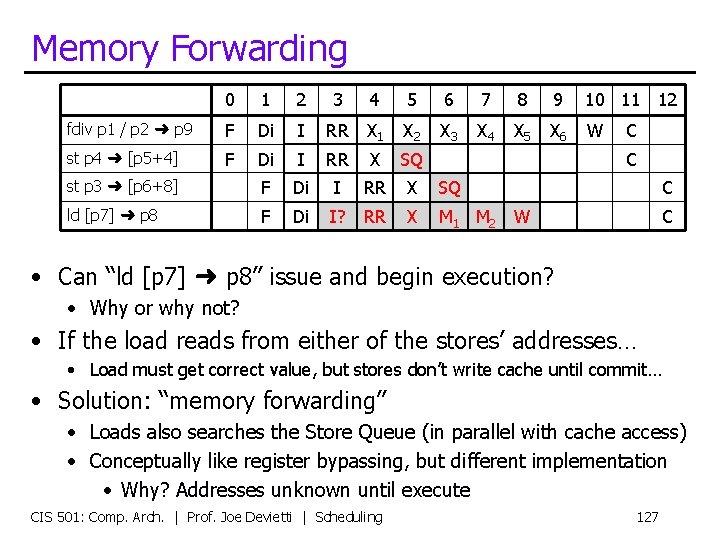

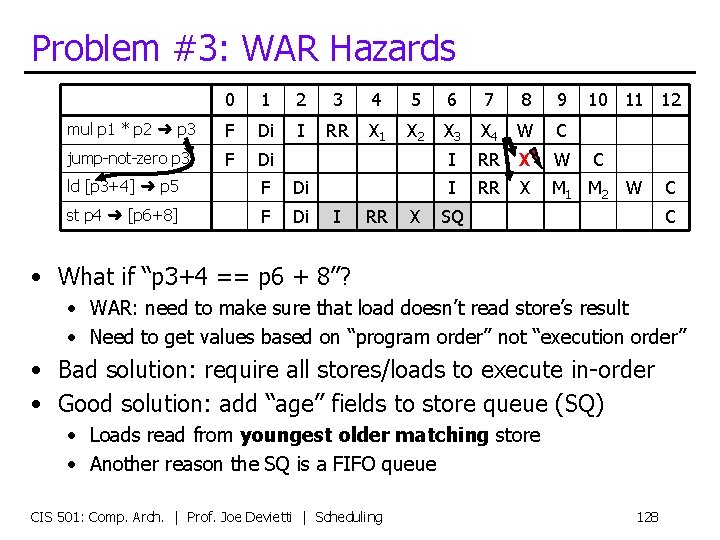

Problem #3: WAR Hazards
• Code (pipeline diagram over cycles 0–12): mul p1 * p2 ➜ p3; jump-not-zero p3; ld [p3+4] ➜ p5; st p4 ➜ [p6+8] (the store writes the SQ)
• What if “p3+4 == p6+8”?
  • WAR: need to make sure that the load doesn’t read the store’s result
  • Need to get values based on “program order”, not “execution order”
• Bad solution: require all stores/loads to execute in-order
• Good solution: add “age” fields to store queue (SQ)
  • Loads read from youngest older matching store
  • Another reason the SQ is a FIFO queue

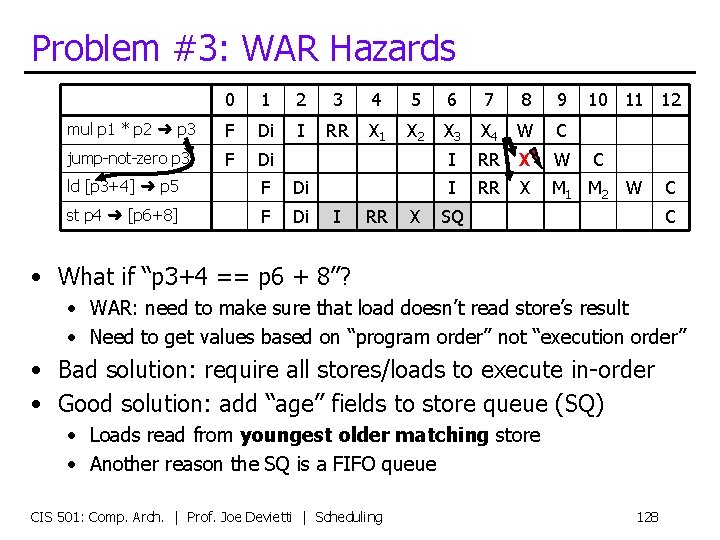

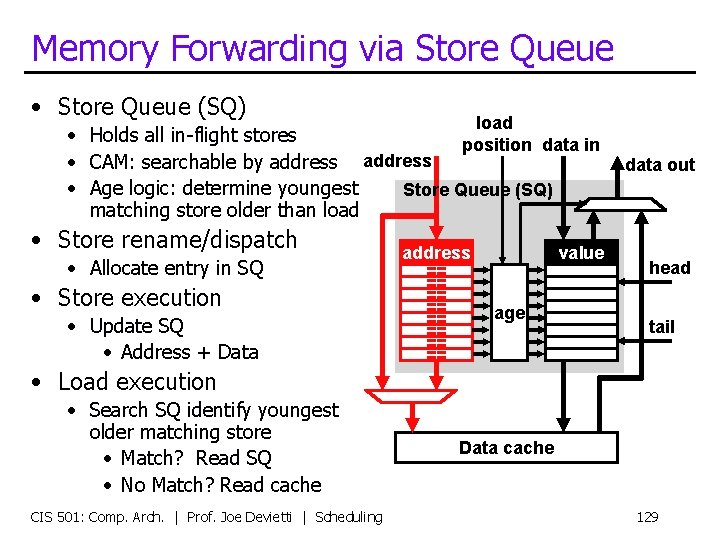

Memory Forwarding via Store Queue
• Store Queue (SQ)
  • Holds all in-flight stores
  • CAM: searchable by address
  • Age logic: determine youngest matching store older than load
• Store rename/dispatch
  • Allocate entry in SQ
• Store execution
  • Update SQ
    • Address + Data
• Load execution
  • Search SQ to identify youngest older matching store
  • Match? Read SQ
  • No match? Read cache
[Figure: SQ entries between head and tail, each holding address, value, and age; the load’s address and position are compared (==) against the entries, and data out comes from the matching SQ entry or from the data cache]

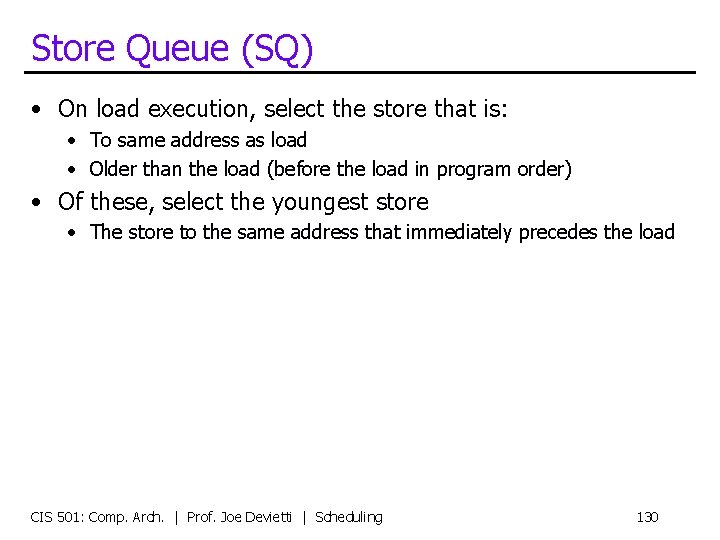

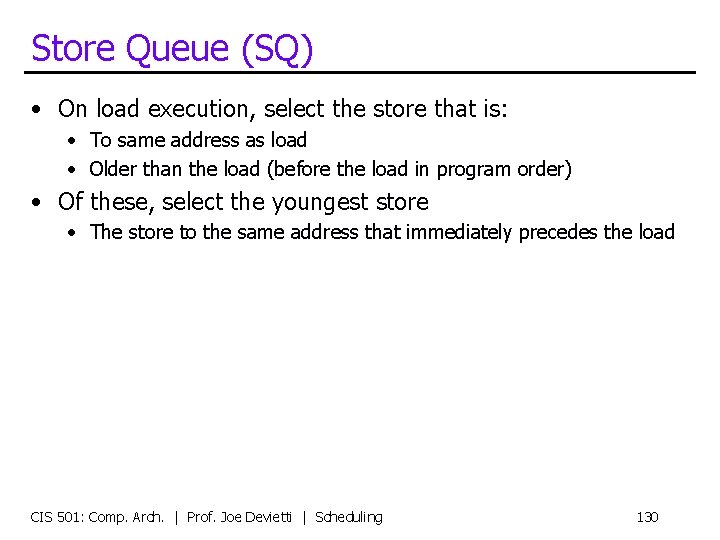

Store Queue (SQ) • On load execution, select the store that is: • To the same address as the load • Older than the load (before the load in program order) • Of these, select the youngest store • That is, the store to the same address that immediately precedes the load
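In hardware, “older than the load” usually falls out of queue position rather than a stored age. The sketch below assumes a fixed-size circular SQ in which each store gets a monotonically increasing store sequence number (SSN) and a load records the tail SSN at dispatch (its position); the SSN scheme, buffer size, and names are assumptions for illustration.

```python
class CircularSQ:
    """FIFO store queue in a fixed-size circular buffer. Each store gets a
    monotonically increasing store sequence number (SSN); its slot is SSN % size.
    A load records the tail SSN at dispatch: smaller SSNs are older stores."""

    def __init__(self, size):
        self.size = size
        self.addr = [None] * size
        self.data = [None] * size
        self.head_ssn = 0  # SSN of the oldest uncommitted store
        self.tail_ssn = 0  # SSN the next dispatched store will receive

    def dispatch_store(self):
        if self.tail_ssn - self.head_ssn == self.size:
            raise RuntimeError("SQ full: dispatch would stall")
        ssn = self.tail_ssn
        slot = ssn % self.size
        self.addr[slot] = None  # address and data arrive at execute
        self.data[slot] = None
        self.tail_ssn += 1
        return ssn

    def dispatch_load(self):
        return self.tail_ssn  # the load's position relative to the stores

    def execute_store(self, ssn, addr, data):
        slot = ssn % self.size
        self.addr[slot] = addr
        self.data[slot] = data

    def search(self, load_pos, addr):
        # Walk from the youngest older store back toward the head; the first
        # match is the youngest older matching store. (Hardware compares all
        # entries at once and uses a priority selector instead of a loop.)
        for ssn in range(load_pos - 1, self.head_ssn - 1, -1):
            if self.addr[ssn % self.size] == addr:
                return self.data[ssn % self.size]
        return None  # no older match: the load reads the cache

    def commit_store(self, cache):
        slot = self.head_ssn % self.size
        cache[self.addr[slot]] = self.data[slot]
        self.head_ssn += 1


sq = CircularSQ(4)
s1 = sq.dispatch_store()
s2 = sq.dispatch_store()
ld_pos = sq.dispatch_load()    # the load follows s1 and s2 in program order
s3 = sq.dispatch_store()       # s3 is younger than the load
sq.execute_store(s3, 100, 42)
sq.execute_store(s1, 100, 5)
sq.execute_store(s2, 100, 9)
print(sq.search(ld_pos, 100))  # 9: s2, not the younger s3
```

Using ever-increasing sequence numbers (rather than raw slot indices) avoids the ambiguity between an empty and a full circular buffer when comparing positions.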

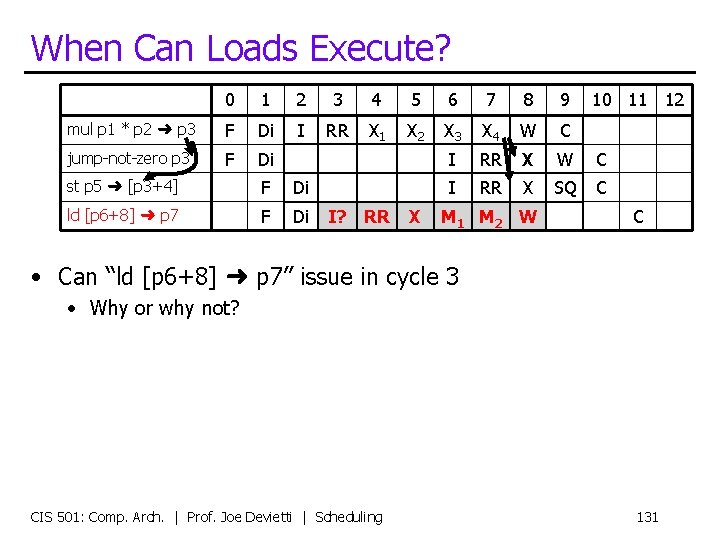

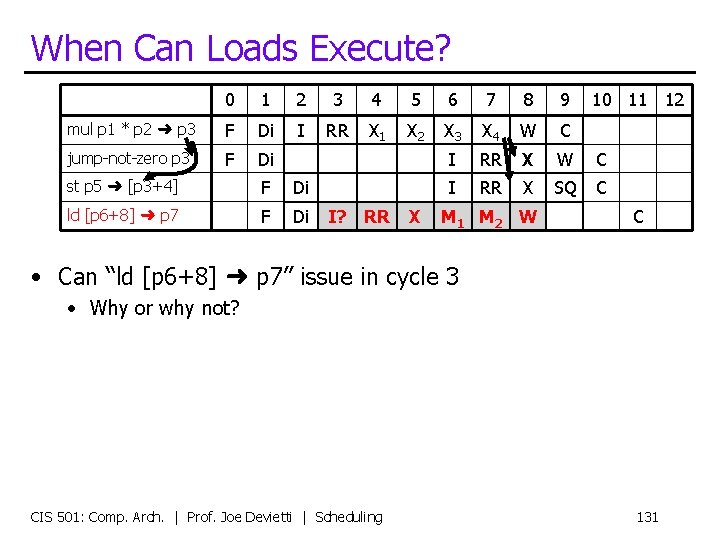

When Can Loads Execute?

| Instruction | Pipeline stages (in order) |
|---|---|
| mul p1 * p2 ➜ p3 | F Di I RR X1 X2 X3 X4 W C |
| jump-not-zero p3 | F Di I RR X W C |
| st p5 ➜ [p3+4] | F Di I RR X SQ C |
| ld [p6+8] ➜ p7 | F Di I? RR X M1 M2 W C |

• Can “ld [p6+8] ➜ p7” issue in cycle 3? • Why or why not?

When Can Loads Execute?

| Instruction | Pipeline stages (in order) |
|---|---|
| mul p1 * p2 ➜ p3 | F Di I RR X1 X2 X3 X4 W C |
| jump-not-zero p3 | F Di I RR X W C |
| st p5 ➜ [p3+4] | F Di I RR X SQ C |
| ld [p6+8] ➜ p7 | F Di I? RR X M1 M2 W C |

• Aliasing! Does p3+4 == p6+8? • If no, the load should get its value from memory • Can it start to execute? • If yes, the load should get its value from the store • By reading the store queue? • But the value isn’t put into the store queue until cycle 9 • Key challenge: addresses aren’t known until execution! • One solution: require all loads to wait for all earlier (prior) stores

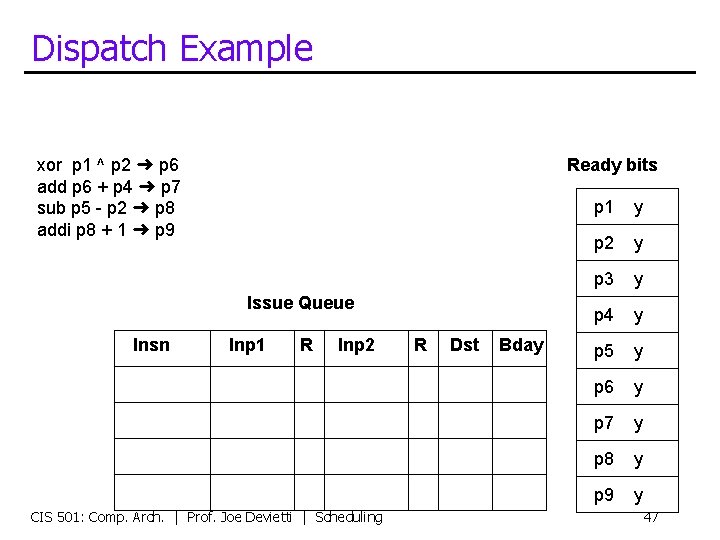

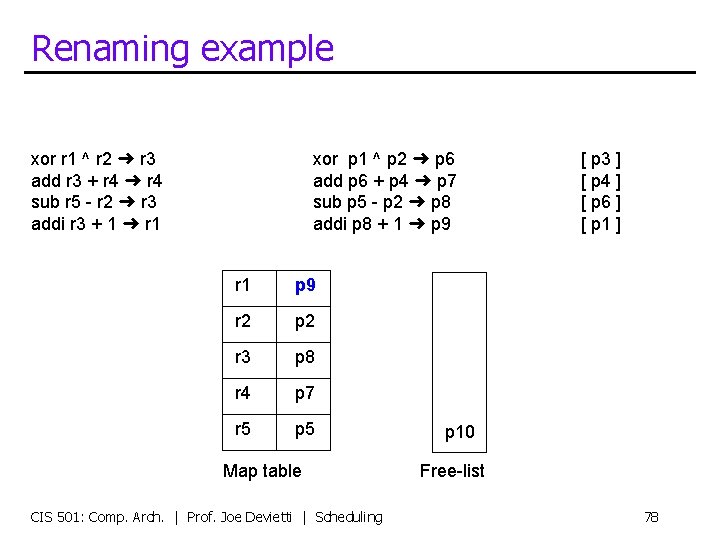

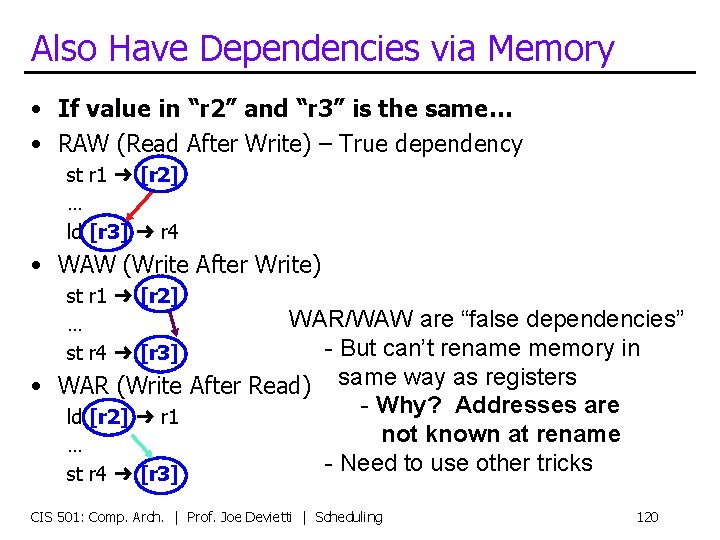

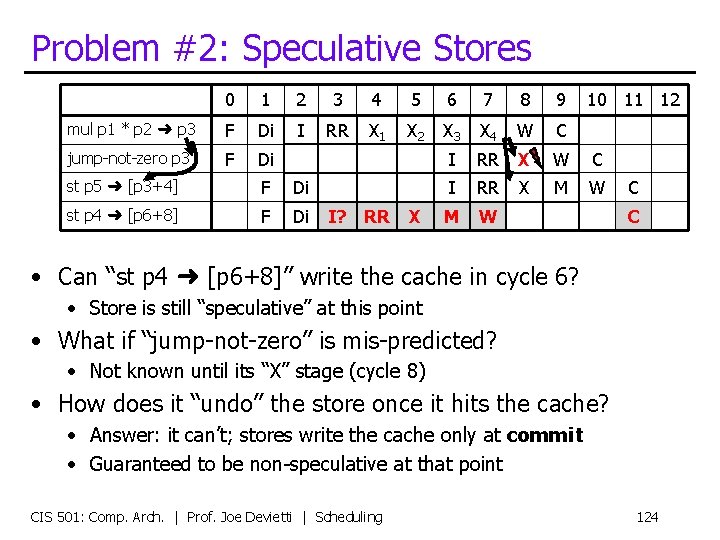

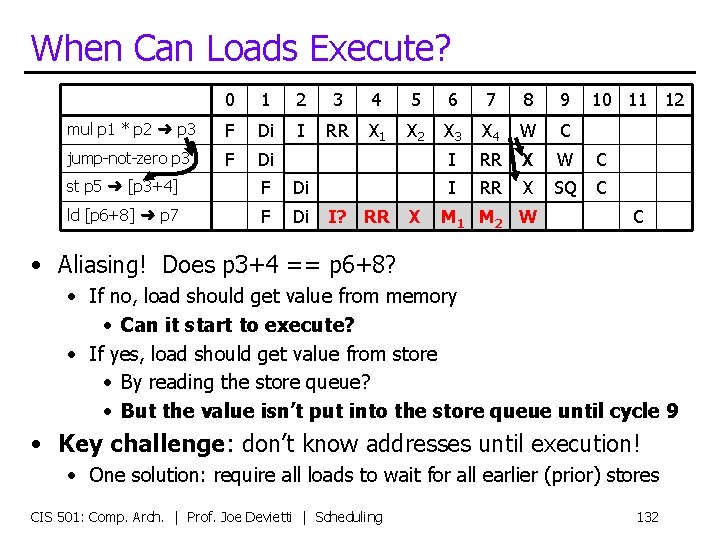

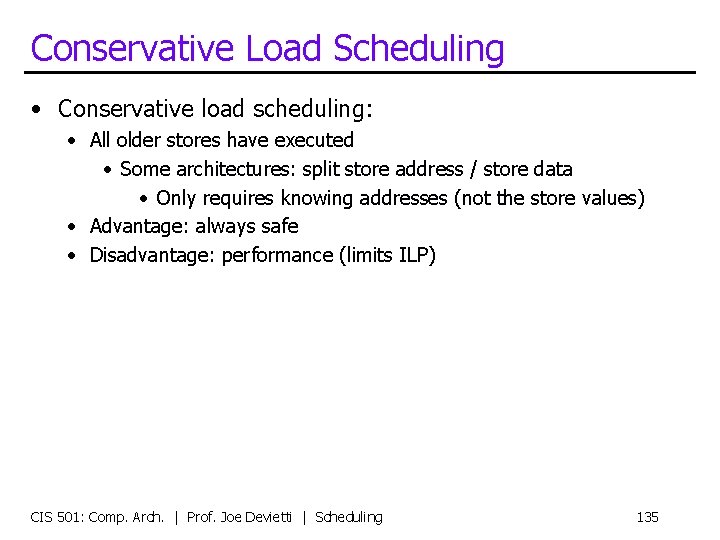

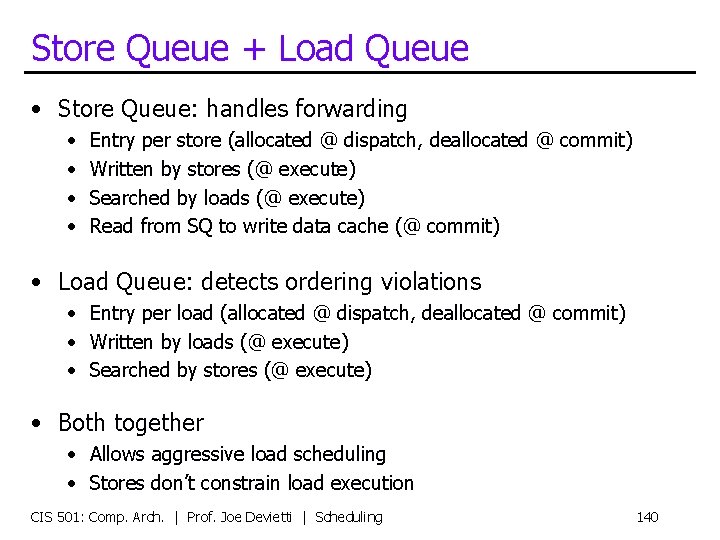

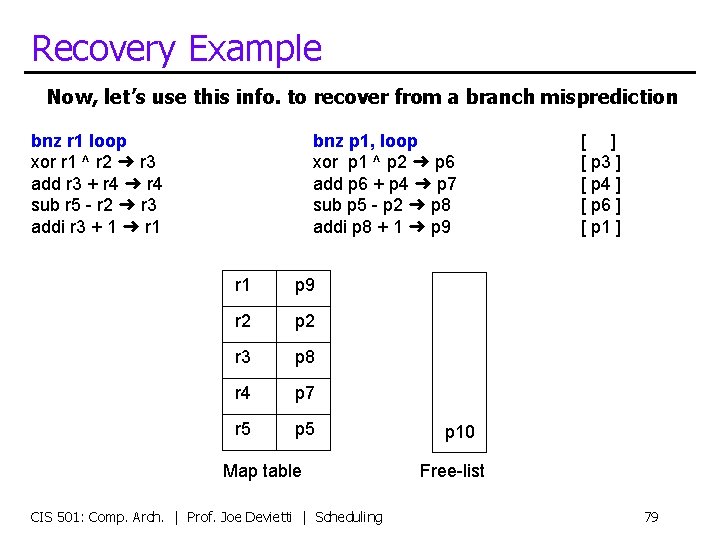

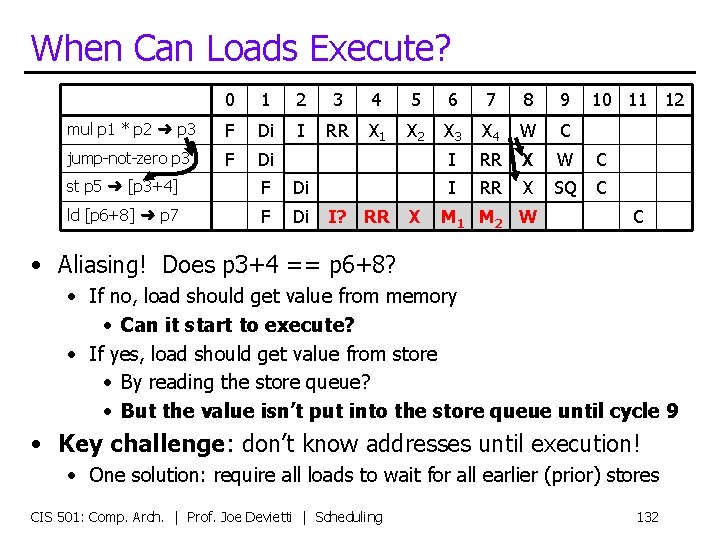

Conservative Load Scheduling • Conservative load scheduling: • A load issues only after all older stores have executed • Some architectures: split store address / store data • Then only the older stores’ addresses must be known (not the store values) • Advantage: always safe • Disadvantage: performance (limits ILP)
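A sketch of the conservative issue check, assuming each in-flight store tracks whether its address and its data are ready, and borrowing ages from the conservative-scheduling example that follows (`st p6 ➜ [p3]` is age 4, waiting on the add for its data; `ld [p1+4] ➜ p7` is age 5). The function and field names are hypothetical.

```python
def can_issue_conservative(load_age, stores, split_addr_data):
    """Conservative rule: a load issues only when every OLDER store has
    executed. With split store-address/store-data ops, only the older
    stores' addresses must be known."""
    for st in stores:
        if st["age"] > load_age:
            continue  # younger stores never hold up the load
        if not st["addr_ready"]:
            return False
        if not split_addr_data and not st["data_ready"]:
            return False  # a unified store op cannot execute without its data
    return True


# st p6 -> [p3]: address p3 is known, but p6 still waits on the add.
stores = [{"age": 4, "addr_ready": True, "data_ready": False}]
print(can_issue_conservative(5, stores, split_addr_data=False))  # False
print(can_issue_conservative(5, stores, split_addr_data=True))   # True
```

Splitting the store lets the load's check succeed as soon as the address is computed, which is exactly the case where the store's data is on a long dependence chain.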


Conservative Load Scheduling

| Instruction | Pipeline stages (in order) |
|---|---|
| ld [p1] ➜ p4 | F Di I Rr X M1 M2 W C |
| ld [p2] ➜ p5 | F Di I Rr X M1 M2 W C |
| add p4, p5 ➜ p6 | F Di I Rr X W C |
| st p6 ➜ [p3] | F Di I Rr X SQ C |
| ld [p1+4] ➜ p7 | F Di I Rr X M1 M2 W C |
| ld [p2+4] ➜ p8 | F Di I Rr X M1 M2 W C |
| add p7, p8 ➜ p9 | F Di I Rr X W C |
| st p9 ➜ [p3+4] | F Di I Rr X SQ C |

Conservative load scheduling: “ld [p1+4] ➜ p7” can’t issue until cycle 7! On this example, the machine might as well be in-order. Can we do better? How?

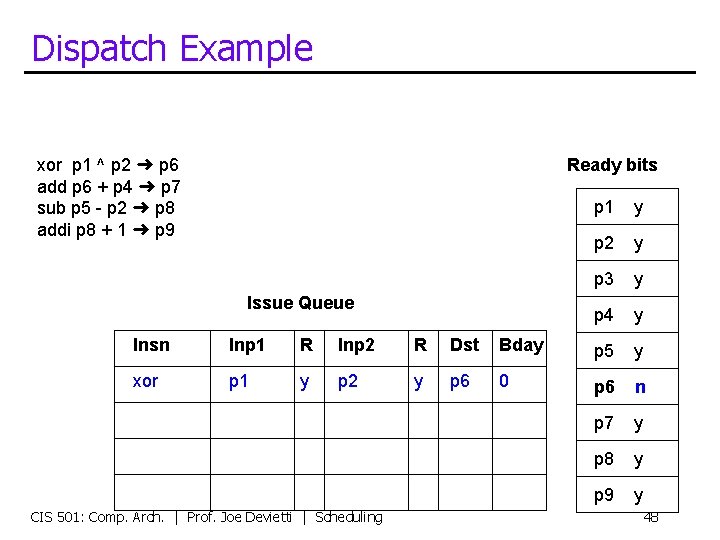

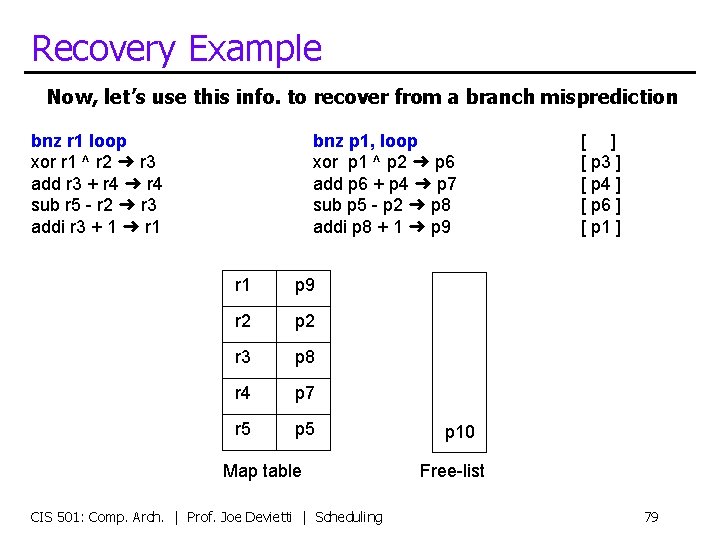

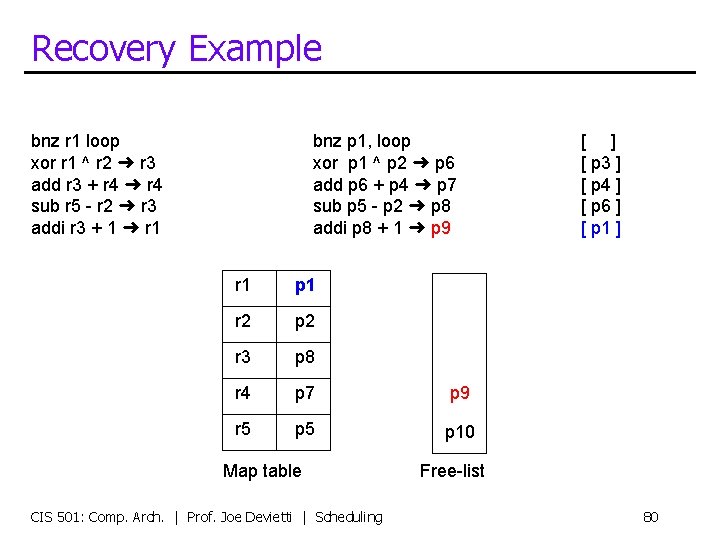

![Optimistic Load Scheduling 0 1 2 3 4 5 ld p 1 p Optimistic Load Scheduling 0 1 2 3 4 5 ld [p 1] ➜ p](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-135.jpg)

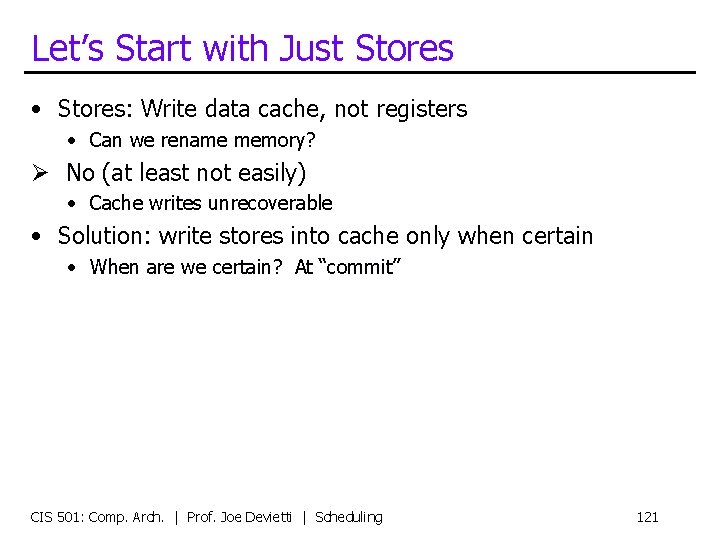

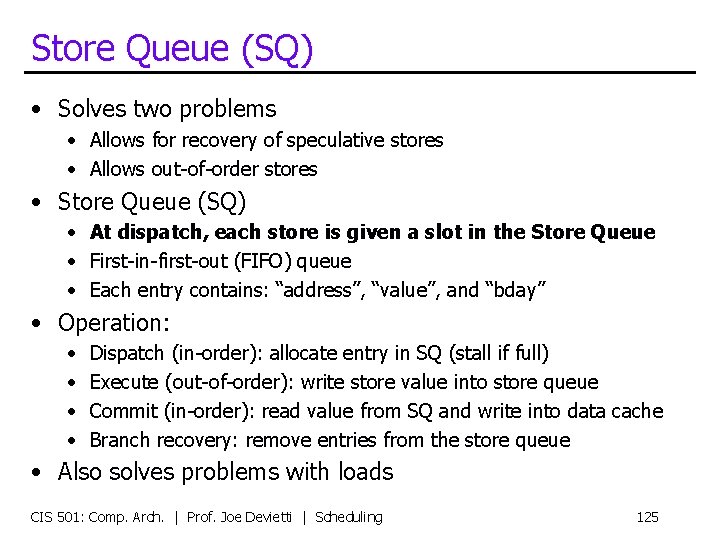

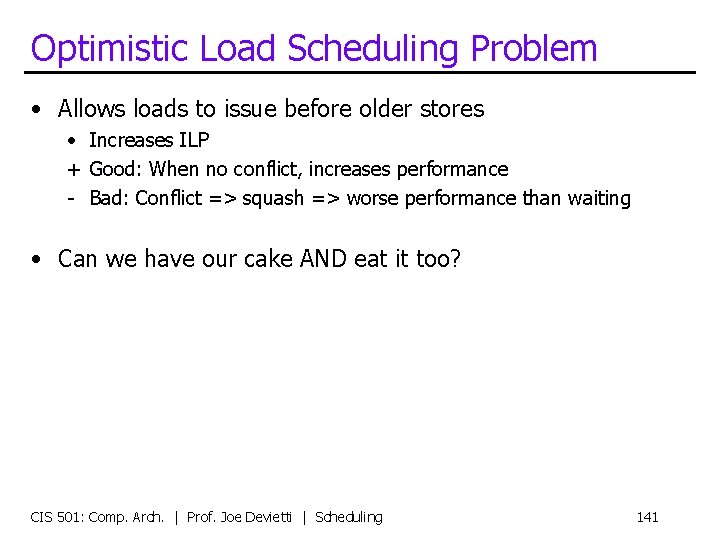

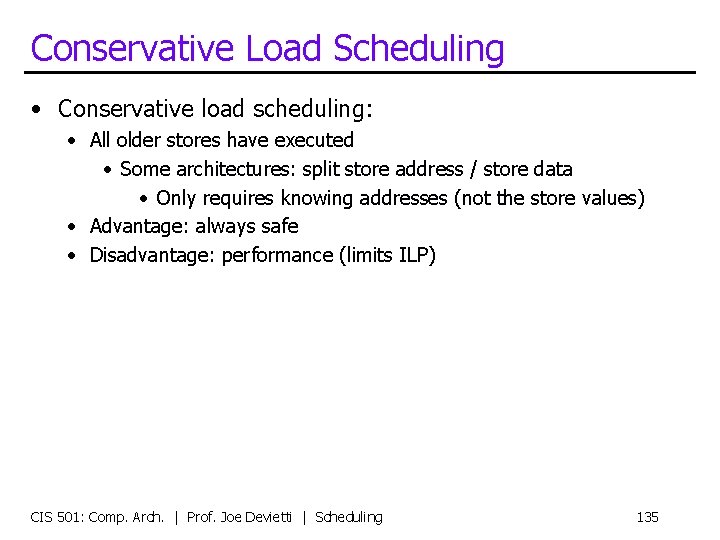

Optimistic Load Scheduling

| Instruction | Pipeline stages (in order) |
|---|---|
| ld [p1] ➜ p4 | F Di I Rr X M1 M2 W C |
| ld [p2] ➜ p5 | F Di I Rr X M1 M2 W C |
| add p4, p5 ➜ p6 | F Di I Rr X W C |
| st p6 ➜ [p3] | F Di I Rr X SQ C |
| ld [p1+4] ➜ p7 | F Di I Rr X M1 M2 W C |
| ld [p2+4] ➜ p8 | F Di I Rr X M1 M2 W C |
| add p7, p8 ➜ p9 | F Di I Rr X W C |
| st p9 ➜ [p3+4] | F Di I Rr X SQ C |

Optimistic load scheduling: the machine can actually benefit from out-of-order execution! But how do we know when our speculation (optimism) fails?

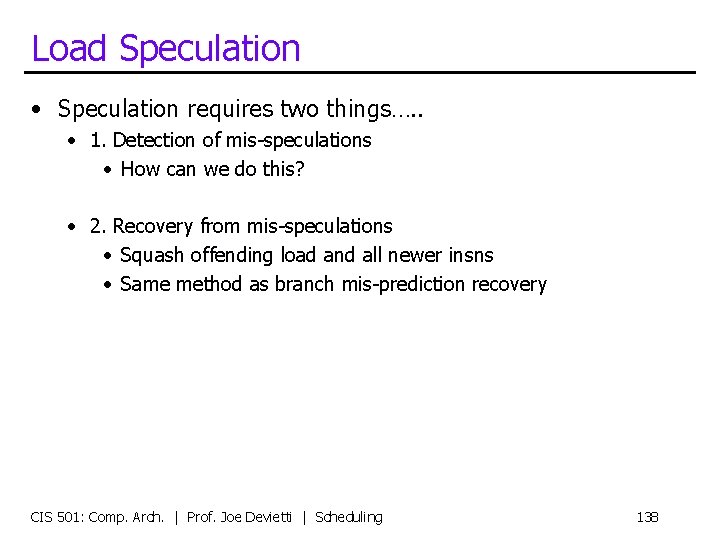

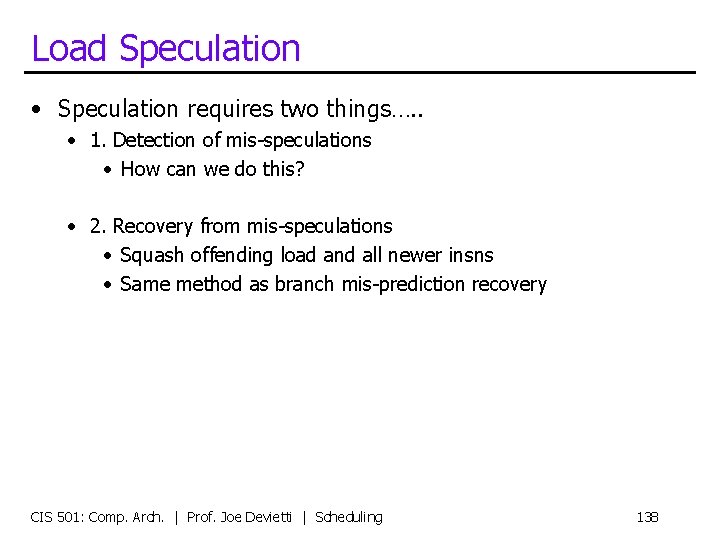

Load Speculation • Speculation requires two things: • 1. Detection of mis-speculations • How can we do this? • 2. Recovery from mis-speculations • Squash the offending load and all newer insns • Same method as branch misprediction recovery
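Recovery can be sketched as a filter over the reorder buffer, assuming entries are tagged with program-order ages. This only shows which instructions survive; restoring the map table and free list and refetching from the load's PC are left out. Names are hypothetical.

```python
def squash_from(rob, offending_age):
    """Recovery sketch: keep instructions older than the mis-speculated load,
    squash the load and everything younger (as for a branch misprediction)."""
    kept = [insn for insn in rob if insn[0] < offending_age]
    squashed = [insn for insn in rob if insn[0] >= offending_age]
    return kept, squashed


rob = [(1, "ld [p1] -> p4"), (2, "ld [p2] -> p5"), (3, "add p4, p5 -> p6"),
       (4, "st p6 -> [p3]"), (5, "ld [p1+4] -> p7"), (6, "ld [p2+4] -> p8")]
kept, squashed = squash_from(rob, 5)
print([age for age, _ in kept])      # [1, 2, 3, 4]
print([age for age, _ in squashed])  # [5, 6]
```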

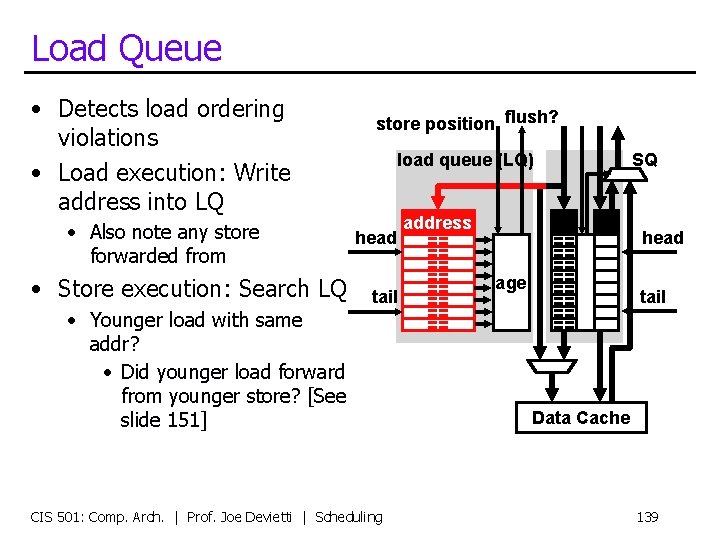

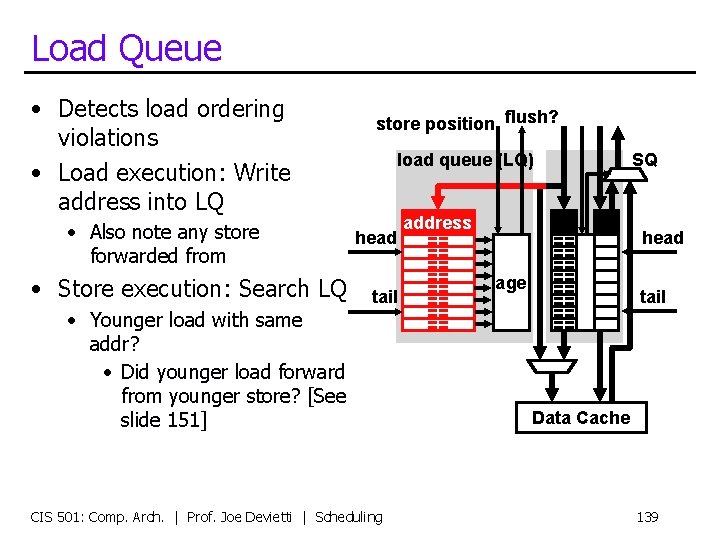

Load Queue • Detects load ordering violations • Load execution: write address into LQ • Also note any store it forwarded from • Store execution: search LQ • Younger load with the same address? • Did the younger load forward from a younger store? [See the “Bad/Good Interleaving” example]

[Figure: Load Queue (head/tail) whose entries are compared (==) against the executing store’s address and position to raise a flush signal, shown beside the Store Queue (with its age logic) and the data cache.]
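The store-side LQ search can be sketched as follows, assuming each LQ entry records the load's age, address, and the age of the store it forwarded from (`None` if it read the cache). The squash condition follows the slide's two questions; the function and field names are hypothetical.

```python
def store_execute_check(store_age, store_addr, load_queue):
    """Search the LQ when a store executes; return ages of loads to squash."""
    violators = []
    for ld in load_queue:
        if ld["age"] <= store_age or ld["addr"] != store_addr:
            continue  # older load, or a different address: no conflict
        src = ld["forwarded_from"]
        if src is not None and src > store_age:
            continue  # forwarded from a store younger than this one: value is correct
        violators.append(ld["age"])  # read the cache or an older store: stale value
    return violators


# Load 3 to address 100, three different histories:
print(store_execute_check(2, 100, [{"age": 3, "addr": 100, "forwarded_from": None}]))  # [3]
print(store_execute_check(2, 100, [{"age": 3, "addr": 100, "forwarded_from": 1}]))     # [3]
print(store_execute_check(1, 100, [{"age": 3, "addr": 100, "forwarded_from": 2}]))     # []
```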

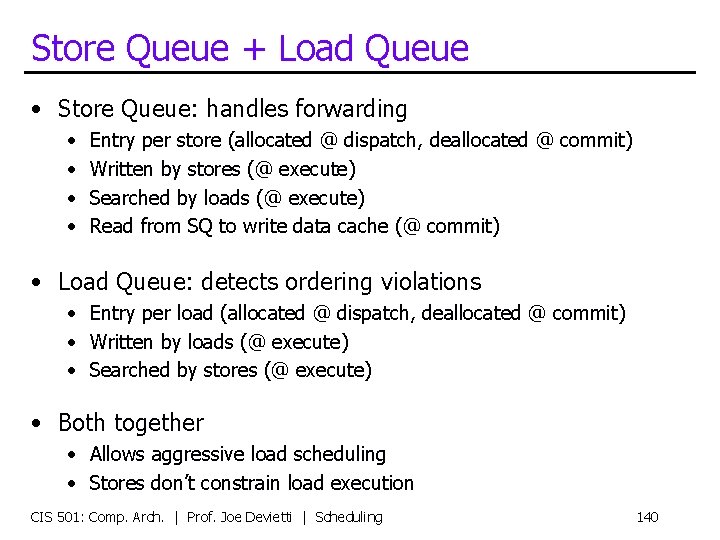

Store Queue + Load Queue • Store Queue: handles forwarding • Entry per store (allocated @ dispatch, deallocated @ commit) • Written by stores (@ execute) • Searched by loads (@ execute) • Read from SQ to write data cache (@ commit) • Load Queue: detects ordering violations • Entry per load (allocated @ dispatch, deallocated @ commit) • Written by loads (@ execute) • Searched by stores (@ execute) • Both together • Allow aggressive load scheduling • Stores don’t constrain load execution

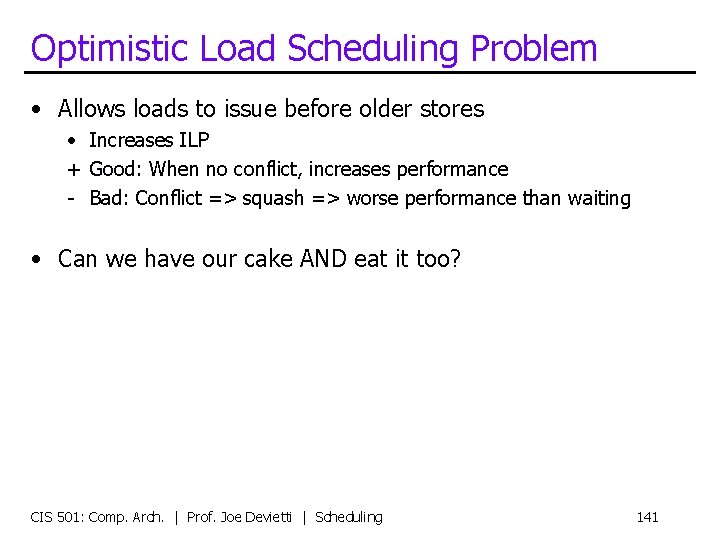

Optimistic Load Scheduling Problem • Allows loads to issue before older stores • Increases ILP + Good: when there is no conflict, increases performance - Bad: conflict => squash => worse performance than waiting • Can we have our cake AND eat it too?

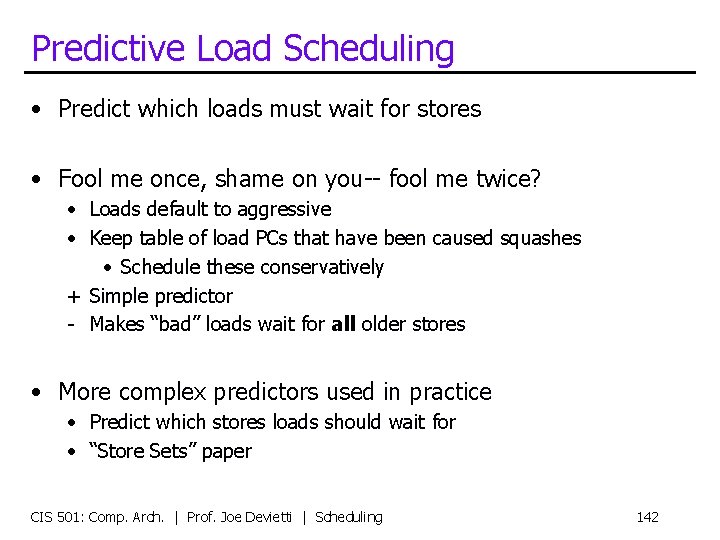

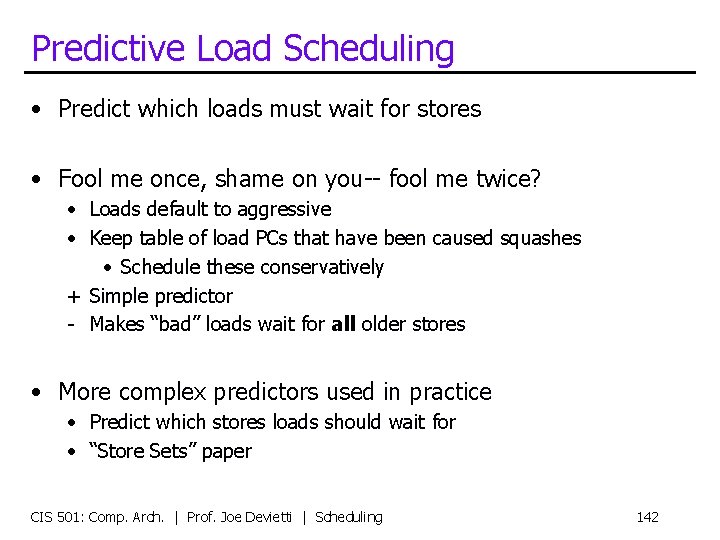

Predictive Load Scheduling • Predict which loads must wait for stores • Fool me once, shame on you; fool me twice? • Loads default to aggressive • Keep a table of load PCs that have caused squashes • Schedule these conservatively + Simple predictor - Makes “bad” loads wait for all older stores • More complex predictors used in practice • Predict which stores loads should wait for • “Store Sets” paper
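The simple predictor described above can be sketched as a set of load PCs. The unbounded Python set is a simplification (a real predictor would be a finite, PC-indexed table), and the PC value and names are made up for illustration.

```python
class SquashHistoryPredictor:
    """Loads default to aggressive; a load PC that has caused a squash is
    scheduled conservatively from then on."""

    def __init__(self):
        self.bad_load_pcs = set()  # unbounded here; hardware uses a finite table

    def on_squash(self, load_pc):
        # "Fool me once": remember the load that caused an ordering violation.
        self.bad_load_pcs.add(load_pc)

    def schedule_conservatively(self, load_pc):
        # Remembered loads wait for all older stores; others issue aggressively.
        return load_pc in self.bad_load_pcs


pred = SquashHistoryPredictor()
print(pred.schedule_conservatively(0x400))  # False: first time, be aggressive
pred.on_squash(0x400)
print(pred.schedule_conservatively(0x400))  # True: wait for older stores next time
```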

Load/Store Queue Examples


Initial State (Stores to different addresses) • Program: 1. St p1 ➜ [p2] 2. St p3 ➜ [p4] 3. Ld [p5] ➜ p6 • Registers: p1 = 5, p2 = 100, p3 = 9, p4 = 200, p5 = 100; p6, p7, p8 unassigned • Load Queue: empty • Store Queue: empty • Cache: [100] = 13, [200] = 17


Good Interleaving (Shows importance of address check) • Program: 1. St p1 ➜ [p2] 2. St p3 ➜ [p4] 3. Ld [p5] ➜ p6, with p4 = 200 • Execution order: 1, 2, 3 • After St 1: SQ = {store 1: [100] = 5} • After St 2: SQ = {store 1: [100] = 5, store 2: [200] = 9} • After Ld 3: LQ = {load 3: [100]}; only store 1 matches address 100, so the load forwards p6 = 5 • Cache unchanged ([100] = 13, [200] = 17): stores write the cache only at commit


Different Initial State (All to same address) • Program: 1. St p1 ➜ [p2] 2. St p3 ➜ [p4] 3. Ld [p5] ➜ p6 • Registers: p1 = 5, p2 = 100, p3 = 9, p4 = 100, p5 = 100; p6, p7, p8 unassigned • Load Queue: empty • Store Queue: empty • Cache: [100] = 13, [200] = 17


Good Interleaving #1 (Program Order) • Program: 1. St p1 ➜ [p2] 2. St p3 ➜ [p4] 3. Ld [p5] ➜ p6, with all addresses = 100 • Execution order: 1, 2, 3 • After St 1: SQ = {store 1: [100] = 5} • After St 2: SQ = {store 1: [100] = 5, store 2: [100] = 9} • After Ld 3: LQ = {load 3: [100]}; both stores match, and the youngest older one is store 2, so p6 = 9 • Cache unchanged: [100] = 13, [200] = 17


Good Interleaving #2 (Stores reordered) • Program: 1. St p1 ➜ [p2] 2. St p3 ➜ [p4] 3. Ld [p5] ➜ p6, with all addresses = 100 • Execution order: 2, 1, 3 • After St 2: SQ = {store 2: [100] = 9} • After St 1: SQ = {store 1: [100] = 5, store 2: [100] = 9} • After Ld 3: LQ = {load 3: [100]}; store 2 is still the youngest older matching store, so p6 = 9 • Cache unchanged: [100] = 13, [200] = 17


Bad Interleaving #1 (Load reads the cache) • Program: 1. St p1 ➜ [p2] 2. St p3 ➜ [p4] 3. Ld [p5] ➜ p6, with all addresses = 100 • Execution order: 3, 2 • After Ld 3: LQ = {load 3: [100]}; the SQ holds no matching store, so the load reads the cache: p6 = 13 • After St 2: SQ = {store 2: [100] = 9}; its LQ search finds the younger load 3 to the same address, which should have received 9 • Cache unchanged: [100] = 13, [200] = 17


Bad Interleaving #2 (Load gets value from wrong store) • Program: 1. St p1 ➜ [p2] 2. St p3 ➜ [p4] 3. Ld [p5] ➜ p6, with all addresses = 100 • Execution order: 1, 3, 2 • After St 1: SQ = {store 1: [100] = 5} • After Ld 3: LQ = {load 3: [100]}; the load forwards from store 1: p6 = 5 • After St 2: SQ = {store 1: [100] = 5, store 2: [100] = 9}; its LQ search finds the younger load 3, which forwarded from the older store 1 but should have received 9 from store 2 • Cache unchanged: [100] = 13, [200] = 17

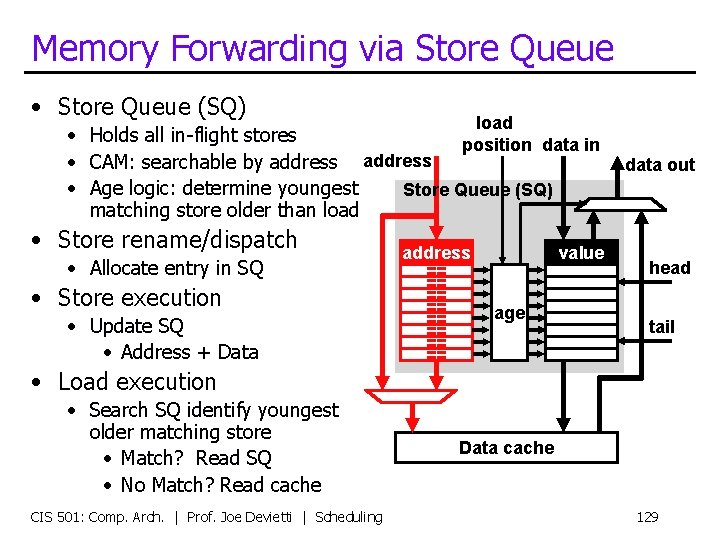

![1 St p 1 p 2 BadGood Interleaving 2 St p 3 1. St p 1 ➜ [p 2] Bad/Good Interleaving 2. St p 3 ➜](https://slidetodoc.com/presentation_image_h2/7d953ba5a8867ad793e4aed8af594e9c/image-149.jpg)

Bad/Good Interleaving (Load gets value from correct store, but does it work?) • Program: 1. St p1 ➜ [p2] 2. St p3 ➜ [p4] 3. Ld [p5] ➜ p6, with all addresses = 100 • Execution order: 2, 3, 1 • After St 2: SQ = {store 2: [100] = 9} • After Ld 3: LQ = {load 3: [100]}; the load forwards from store 2: p6 = 9 • After St 1: SQ = {store 1: [100] = 5, store 2: [100] = 9}; its LQ search finds the younger load 3 to the same address. Is this a violation? • Cache unchanged: [100] = 13, [200] = 17
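The example slides can be replayed with a small Python model of the three-instruction program. It is a sketch under stated assumptions: instruction numbers serve as program-order ages, nothing commits (so the cache never changes), and the squash rule is the Load Queue check described earlier (younger load, same address, and the load did not forward from a store younger than the executing store). `replay` and its data layout are hypothetical.

```python
def replay(order, regs, cache):
    """Replay one execution order of:
         1. St p1 -> [p2]   2. St p3 -> [p4]   3. Ld [p5] -> p6
    Instruction numbers double as program-order ages. Returns (p6, squashed),
    where squashed lists loads flagged by a store's LQ search."""
    stores = {1: ("p1", "p2"), 2: ("p3", "p4")}  # number -> (data reg, addr reg)
    sq = {}  # store number -> (addr, value)
    lq = {}  # load number -> (addr, store number it forwarded from, or None)
    squashed = []
    p6 = None
    for insn in order:
        if insn in stores:
            data_reg, addr_reg = stores[insn]
            addr = regs[addr_reg]
            sq[insn] = (addr, regs[data_reg])
            # Store execute: flag younger loads to the same address that did
            # not forward from a store younger than this one.
            for ld, (ld_addr, src) in lq.items():
                if ld > insn and ld_addr == addr and (src is None or src < insn):
                    squashed.append(ld)
        else:
            # Load execute: youngest older matching store, else the cache.
            addr = regs["p5"]
            older = [s for s, (a, _) in sq.items() if s < insn and a == addr]
            src = max(older) if older else None
            p6 = sq[src][1] if src is not None else cache[addr]
            lq[insn] = (addr, src)
    return p6, squashed


cache = {100: 13, 200: 17}  # stores never commit here, so the cache is unchanged
diff = {"p1": 5, "p2": 100, "p3": 9, "p4": 200, "p5": 100}
same = {"p1": 5, "p2": 100, "p3": 9, "p4": 100, "p5": 100}
for name, regs, order in [("good, different addresses", diff, [1, 2, 3]),
                          ("good #1, program order", same, [1, 2, 3]),
                          ("good #2, stores reordered", same, [2, 1, 3]),
                          ("bad #1, load reads cache", same, [3, 2]),
                          ("bad #2, wrong store", same, [1, 3, 2]),
                          ("bad/good", same, [2, 3, 1])]:
    print(name, replay(order, regs, cache))
```

Under this forwarding-source check, the bad/good order is not flagged, because load 3 already took its value from store 2, which is younger than store 1; a design that compared addresses only would squash it anyway.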

Out-of-Order: Benefits & Challenges

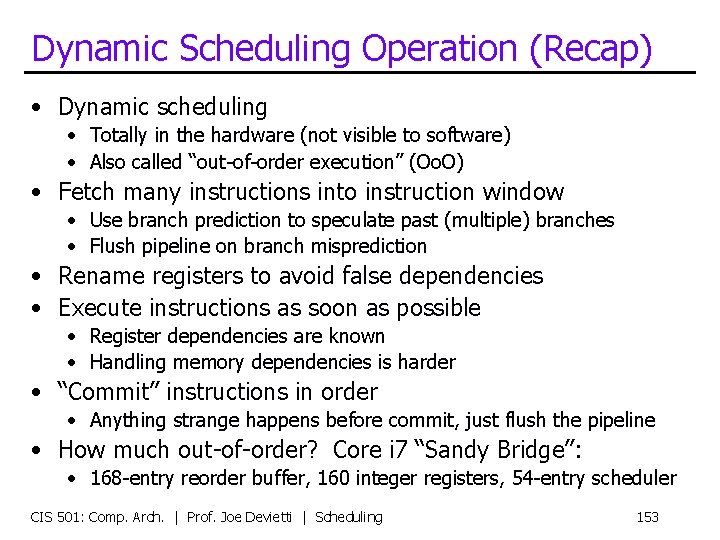

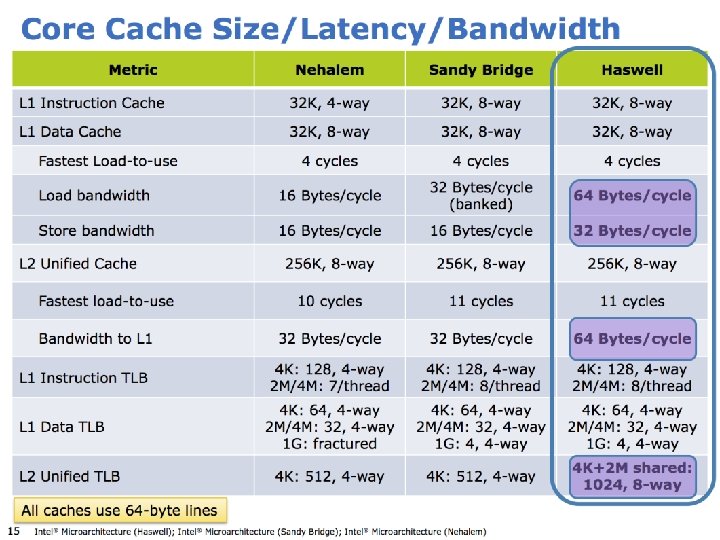

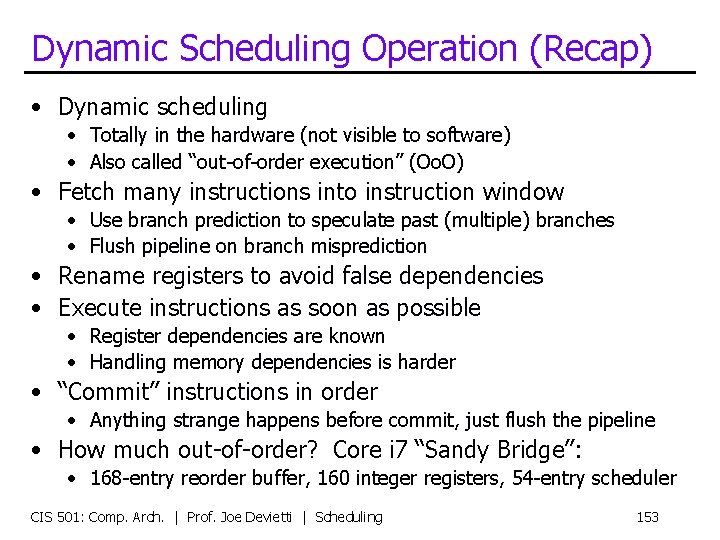

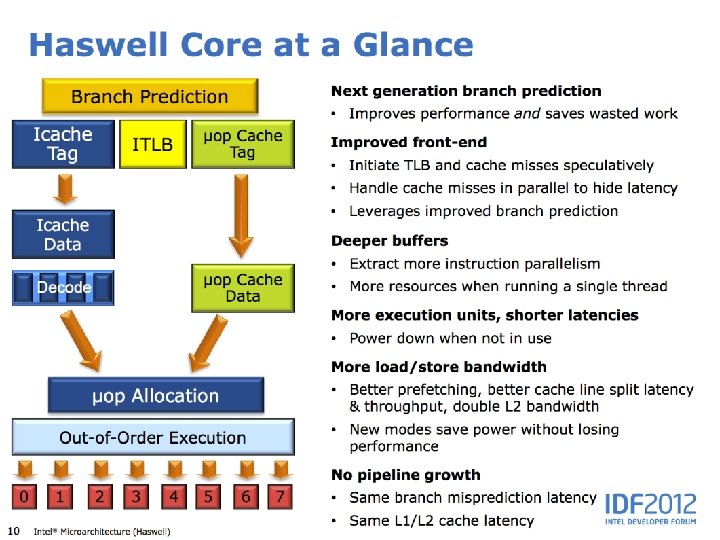

Dynamic Scheduling Operation (Recap) • Dynamic scheduling • Totally in the hardware (not visible to software) • Also called “out-of-order execution” (OoO) • Fetch many instructions into the instruction window • Use branch prediction to speculate past (multiple) branches • Flush pipeline on branch misprediction • Rename registers to avoid false dependencies • Execute instructions as soon as possible • Register dependencies are known • Handling memory dependencies is harder • “Commit” instructions in order • If anything strange happens before commit, just flush the pipeline • How much out-of-order? Core i7 “Sandy Bridge”: • 168-entry reorder buffer, 160 integer registers, 54-entry scheduler

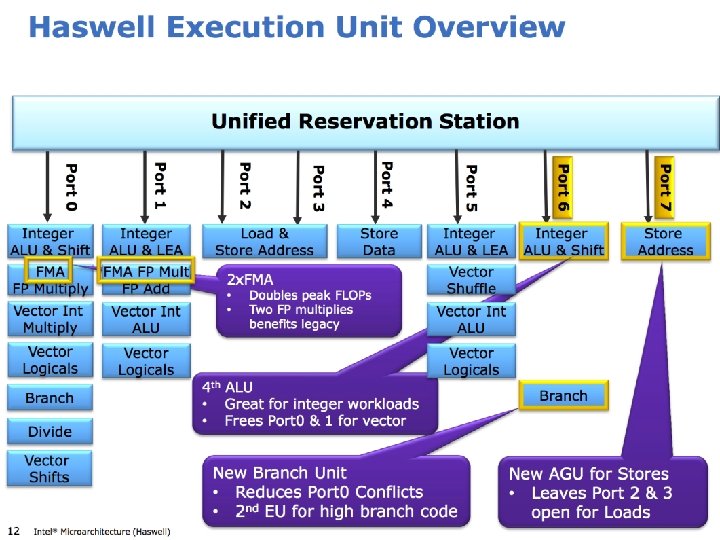

CIS 501: Comp. Arch. | Prof. Joe Devietti | Scheduling 154

CIS 501: Comp. Arch. | Prof. Joe Devietti | Scheduling 155

CIS 501: Comp. Arch. | Prof. Joe Devietti | Scheduling 156

CIS 501: Comp. Arch. | Prof. Joe Devietti | Scheduling 157

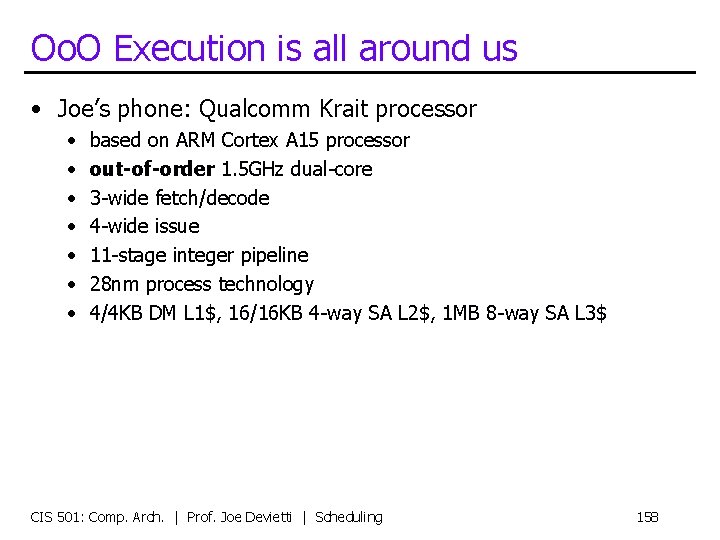

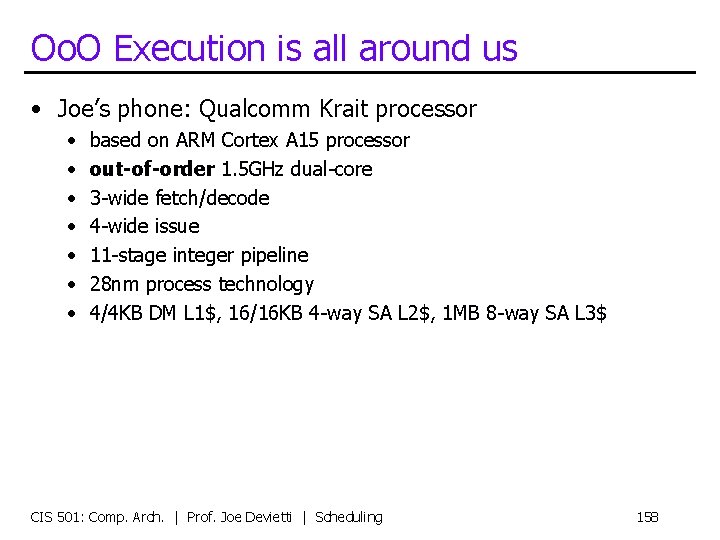

OoO Execution is all around us • Joe’s phone: Qualcomm Krait processor • similar to the ARM Cortex A15 • out-of-order • 1.5 GHz • dual-core • 3-wide fetch/decode • 4-wide issue • 11-stage integer pipeline • 28 nm process technology • 4/4 KB DM L1$, 16/16 KB 4-way SA L2$, 1 MB 8-way SA L3$

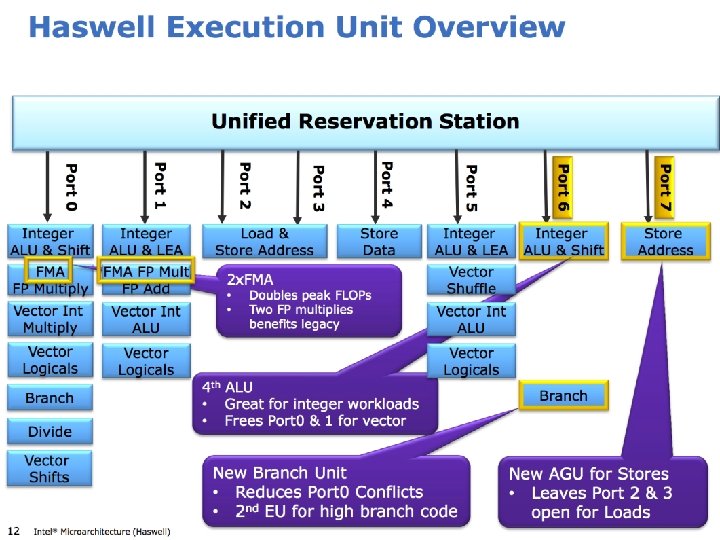

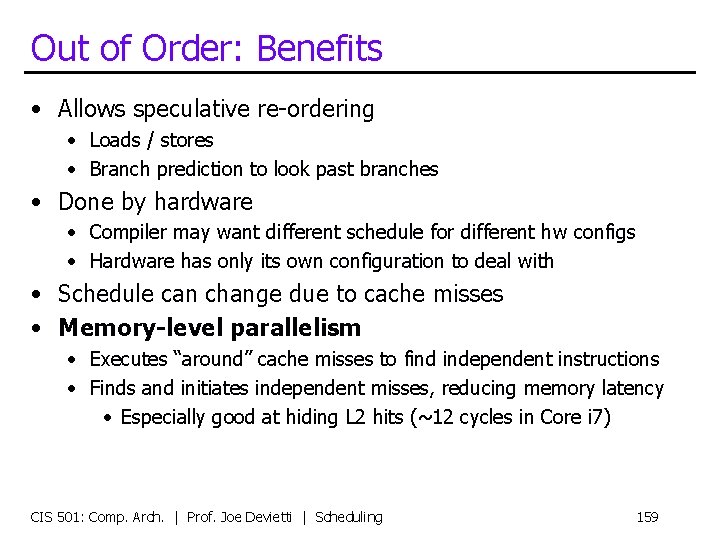

Out of Order: Benefits • Allows speculative re-ordering • Loads / stores • Branch prediction to look past branches • Done by hardware • Compiler may want a different schedule for different hw configs • Hardware has only its own configuration to deal with • Schedule can change due to cache misses • Memory-level parallelism • Executes “around” cache misses to find independent instructions • Finds and initiates independent misses, overlapping them to reduce effective memory latency • Especially good at hiding L2 hits (~12 cycles in Core i7)

Challenges for Out-of-Order Cores • Design complexity • More complicated than in-order? Certainly! • But we have managed to overcome the design complexity • Clock frequency • Can we build a “high ILP” machine at high clock frequency? • Yes, with some additional pipe stages and clever design • Limits to (efficiently) scaling the window and ILP • Large physical register file • Fast register renaming/wakeup/select/load queue/store queue • Active areas of micro-architectural research • Branch & memory dependence prediction (limits effective window size) • 95% branch prediction accuracy: 1 in 20 branches mispredicted, or about 1 in 100 insns • Plus all the issues of building a “wide” in-order superscalar • Power efficiency • Today, even mobile phone chips are out-of-order cores
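The two misprediction rates on the slide are consistent if roughly one instruction in five is a branch, an assumption implied by the numbers rather than stated on the slide:

$$
\frac{\text{mispredictions}}{\text{insn}}
= \frac{\text{branches}}{\text{insn}} \times \frac{\text{mispredictions}}{\text{branch}}
= \frac{1}{5} \times \frac{1}{20} = \frac{1}{100}
$$

Roughly speaking, a window of about 100 instructions then holds one misprediction on average, and instructions fetched beyond it are likely to be on the wrong path, which is why prediction accuracy limits the effective window size.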

Redux: HW vs. SW Scheduling • Static scheduling • Performed by the compiler, limited in several ways • Dynamic scheduling • Performed by the hardware, overcomes limitations • Static limitation ➜ dynamic mitigation • Number of registers in the ISA ➜ register renaming • Scheduling scope ➜ branch prediction & speculation • Inexact memory aliasing information ➜ speculative memory ops • Unknown latencies of cache misses ➜ execute when ready • Which to do? Compiler does what it can, hardware the rest • Why? Dynamic scheduling is needed to sustain more than 2-way issue • Helps with hiding memory latency (execute around misses) • Intel Core i7 is four-wide execute w/ a scheduling window of 100+ • Even mobile phones have dynamically scheduled cores (ARM A9, A15)

Summary: Scheduling • Code scheduling • To reduce pipeline stalls • To increase ILP (insn-level parallelism) • Static scheduling by the compiler • Approach & limitations • Dynamic scheduling in hardware • Register renaming • Instruction selection • Handling memory operations • Up next: multicore