Math & Stat Department Colloquium Georgetown University March 20, 2020

Security and Privacy for Distributed Optimization and Learning

Nitin Vaidya, Georgetown University

Goals
- Background
- Problem formulation
- Intuition

No theorems/proofs

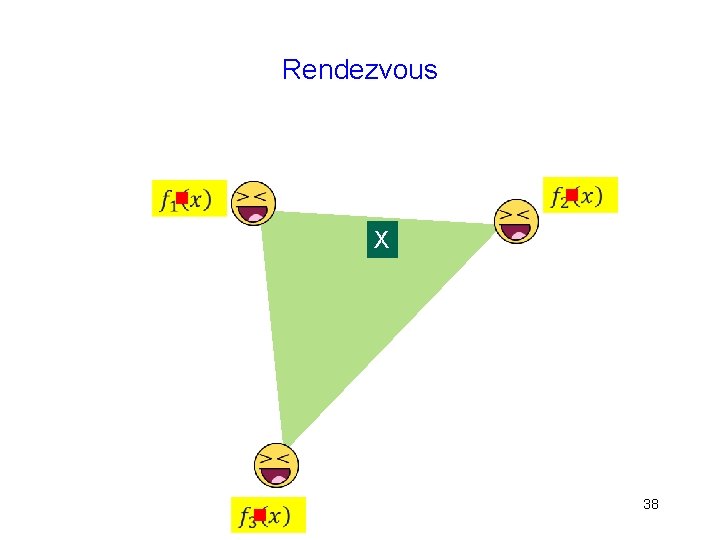

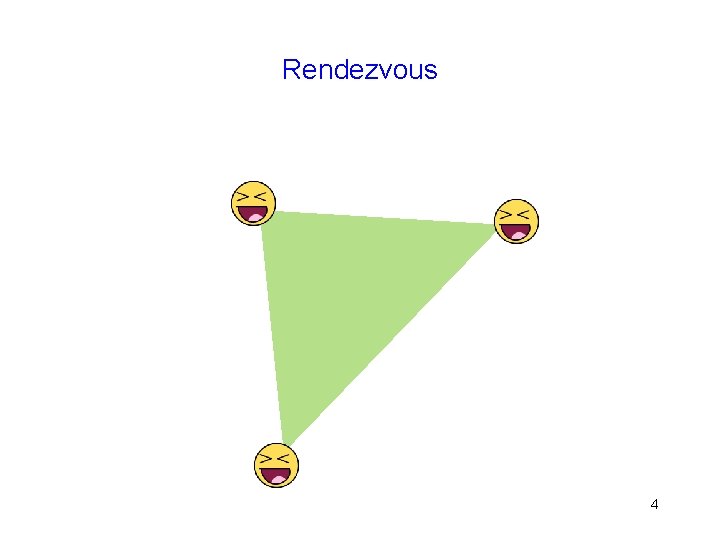

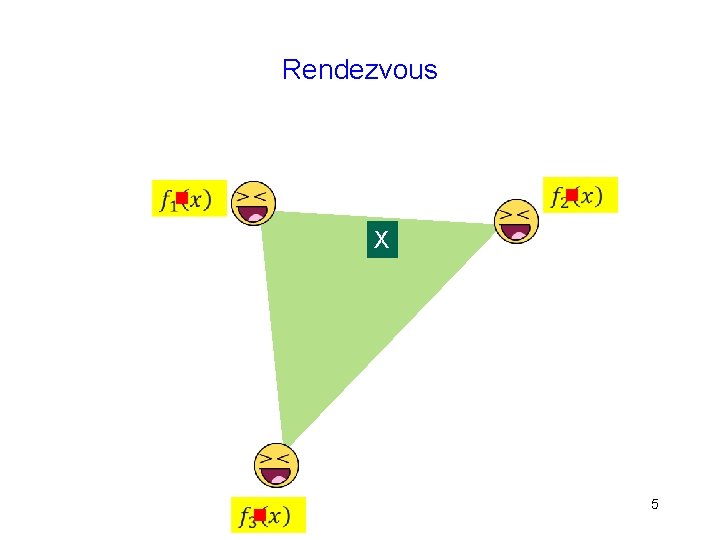

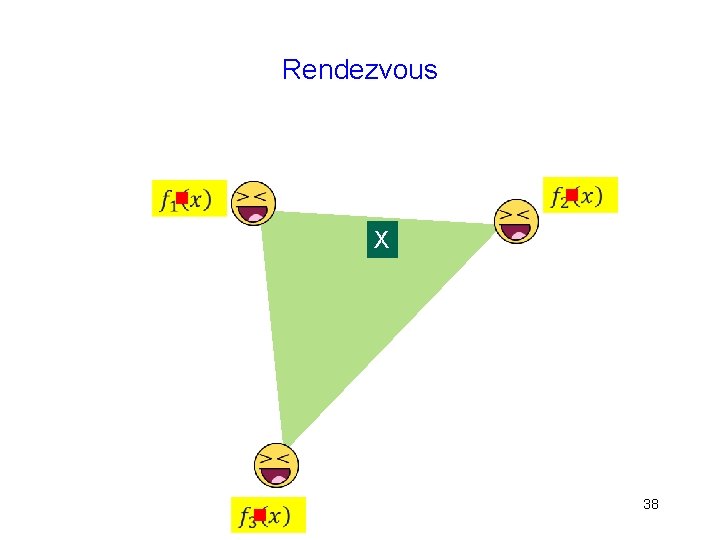

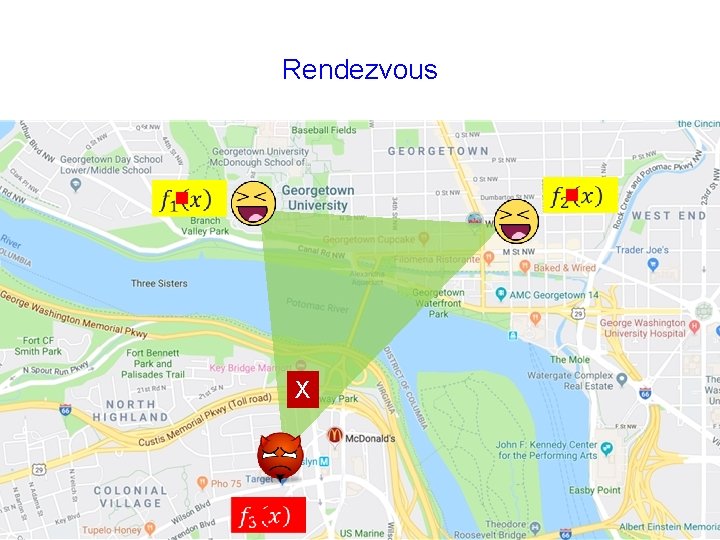

Rendezvous

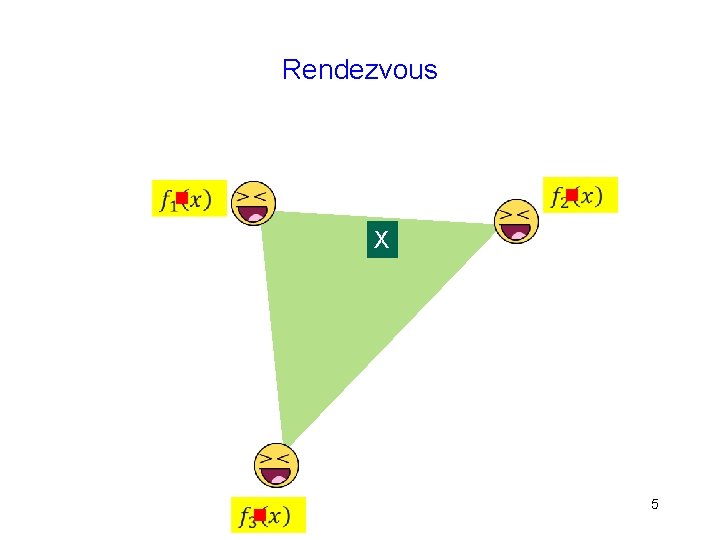

Rendezvous

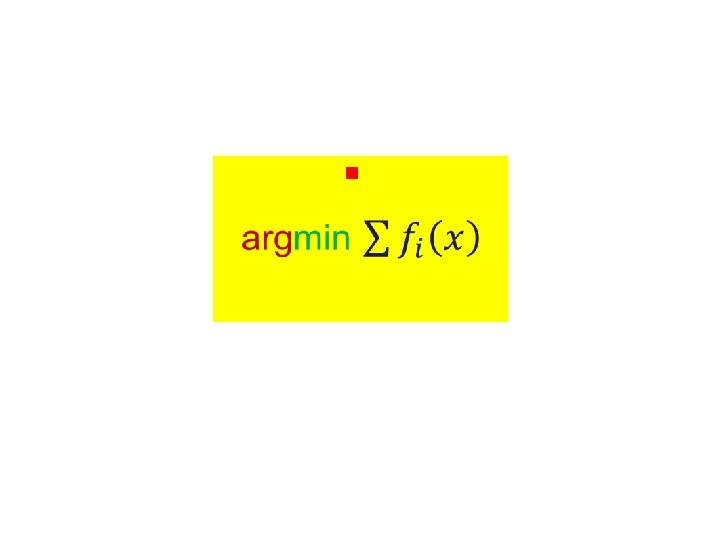

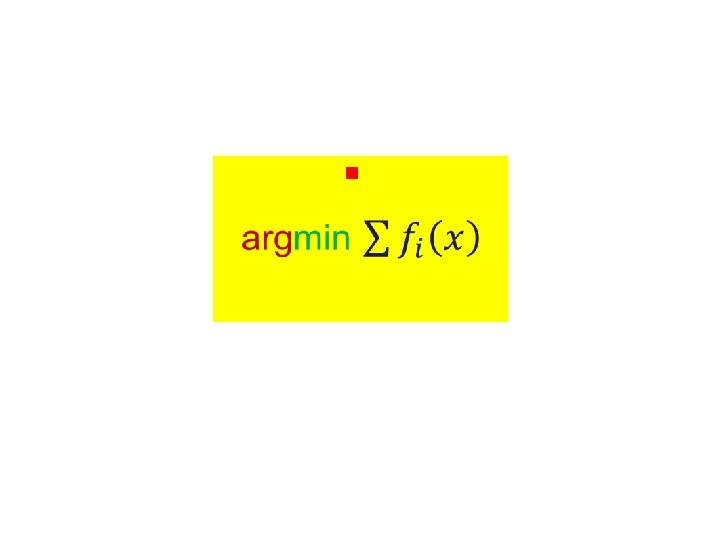

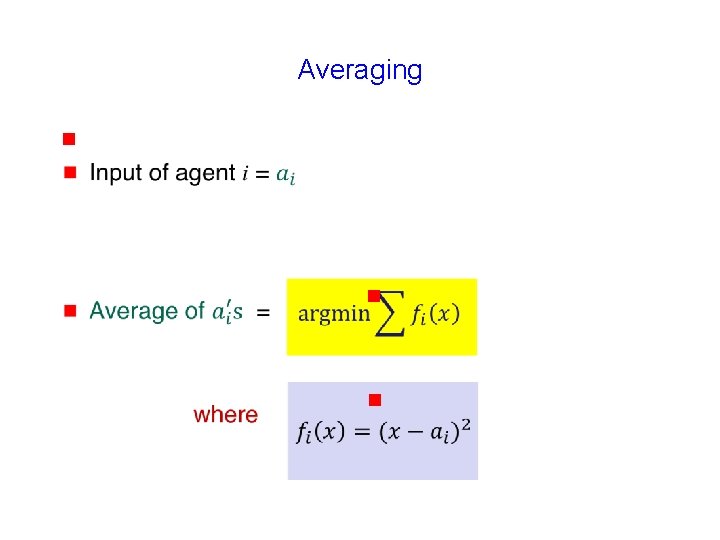

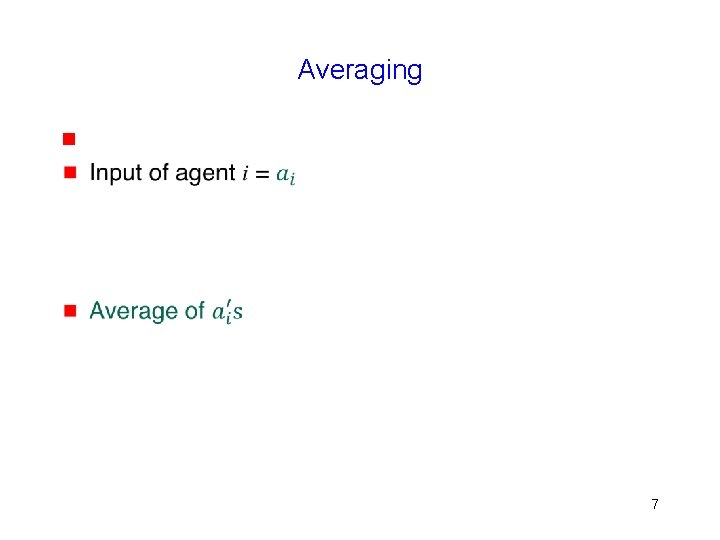

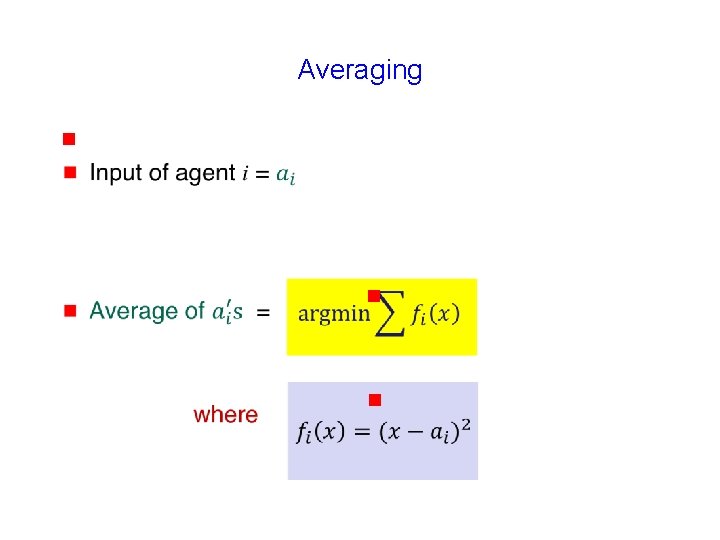

Averaging

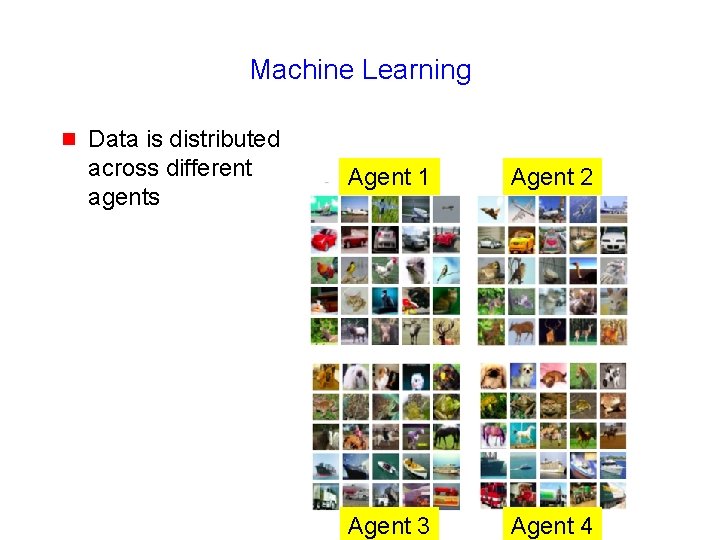

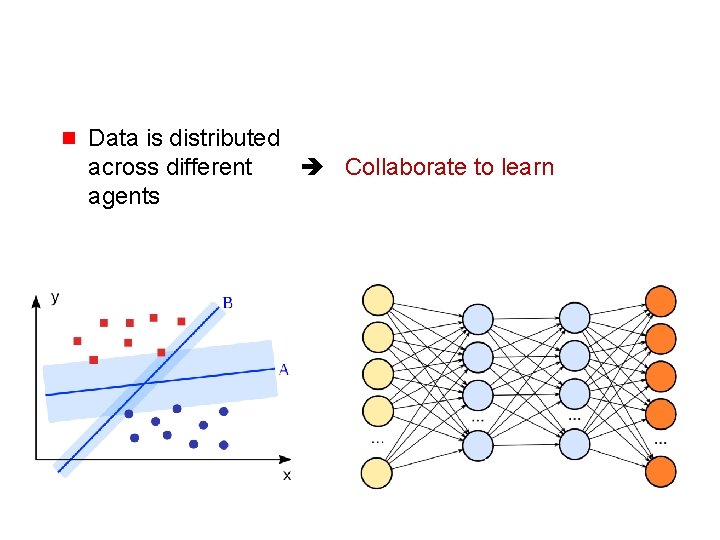

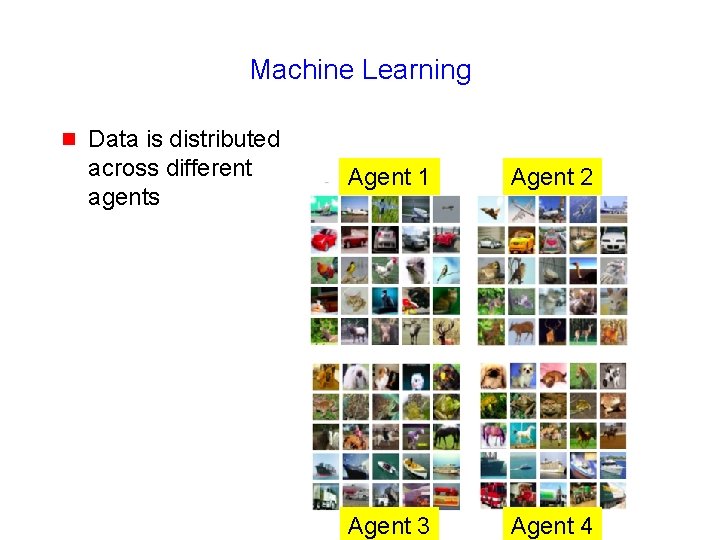

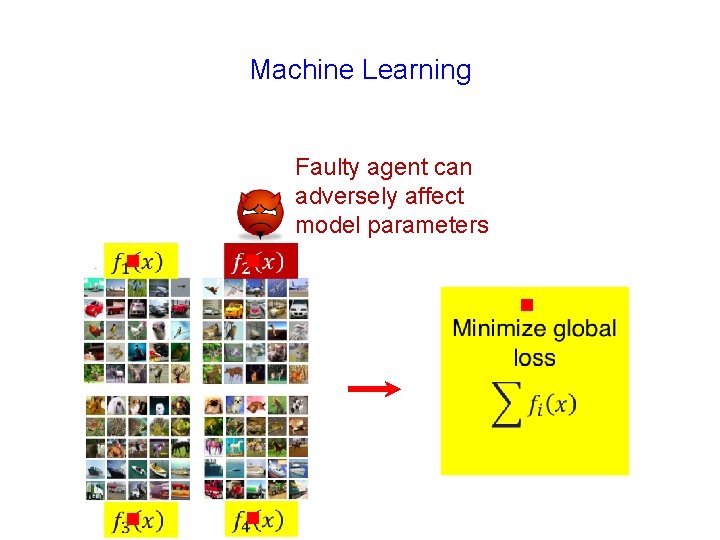

Machine Learning: data is distributed across different agents (Agent 1, Agent 2, Agent 3, Agent 4)

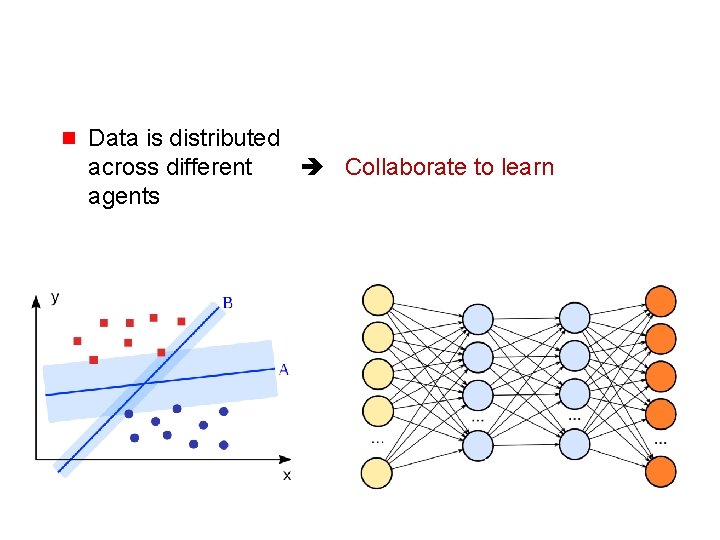

Data is distributed across different agents: collaborate to learn

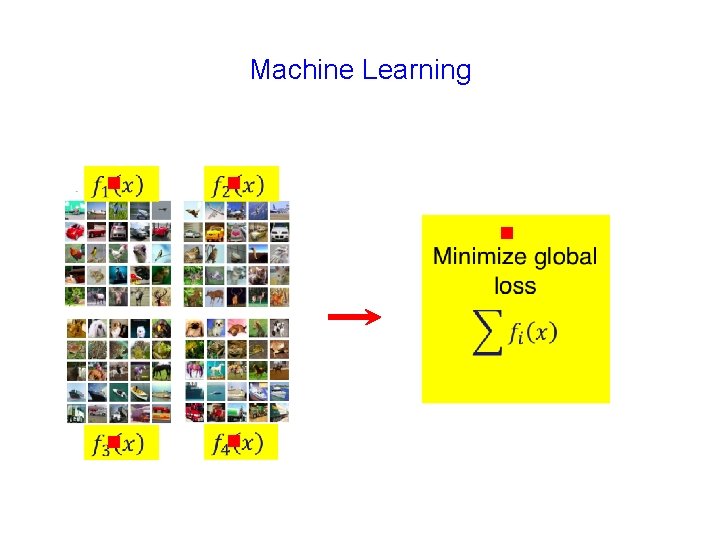

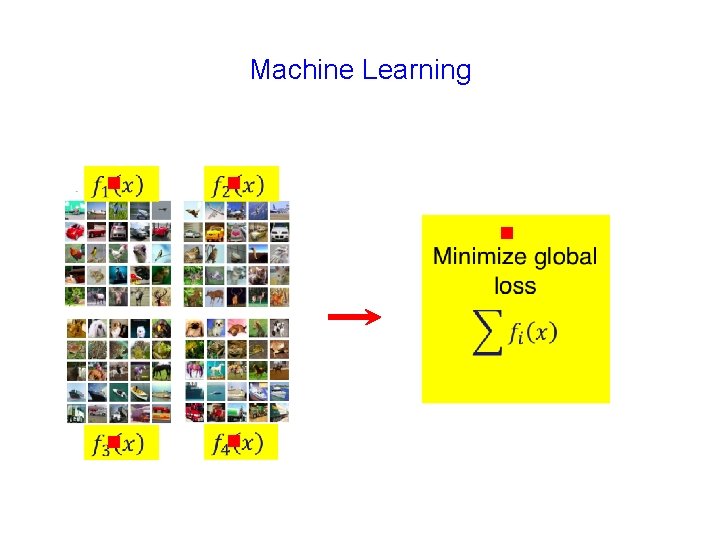

Machine Learning

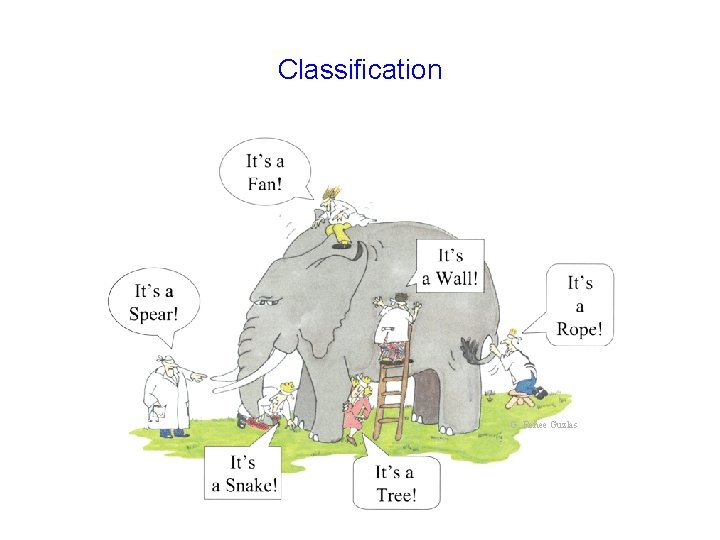

Classification (image: G. Renee Guzlas)

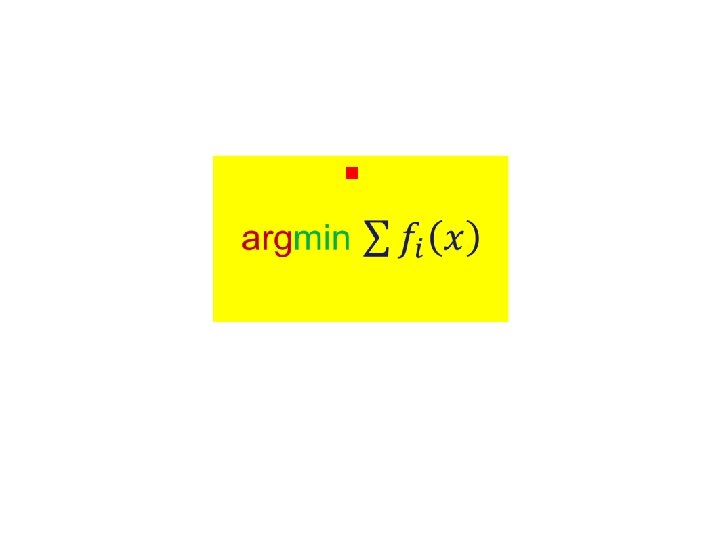

![Gradient Method](https://slidetodoc.com/presentation_image_h2/c129dae9b7aba0f61d22548687347d08/image-14.jpg)

Gradient Method: iterates x[0], x[1], x[2], x[3]

![Gradient Method](https://slidetodoc.com/presentation_image_h2/c129dae9b7aba0f61d22548687347d08/image-15.jpg)

![Gradient Method](https://slidetodoc.com/presentation_image_h2/c129dae9b7aba0f61d22548687347d08/image-16.jpg)
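The sequence of iterates x[0], x[1], x[2], x[3] on these slides can be illustrated with a minimal gradient-descent sketch. The cost function, the step size, and the function name `gradient_descent` are assumptions chosen for illustration; the slides do not specify them.

```python
def gradient_descent(grad, x0, step, iterations):
    """Return the list of iterates x[0], x[1], ..., x[iterations]."""
    xs = [x0]
    for _ in range(iterations):
        x = xs[-1]
        # Move against the gradient, scaled by the step size.
        xs.append(x - step * grad(x))
    return xs


# Hypothetical cost f(x) = (x - 2)^2, whose gradient is 2 (x - 2); minimum at x = 2.
iterates = gradient_descent(lambda x: 2.0 * (x - 2.0), x0=0.0, step=0.25, iterations=3)
print(iterates)  # x[0], x[1], x[2], x[3] move toward 2
```

Each iterate halves the remaining distance to the minimum for this particular choice of cost and step size.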

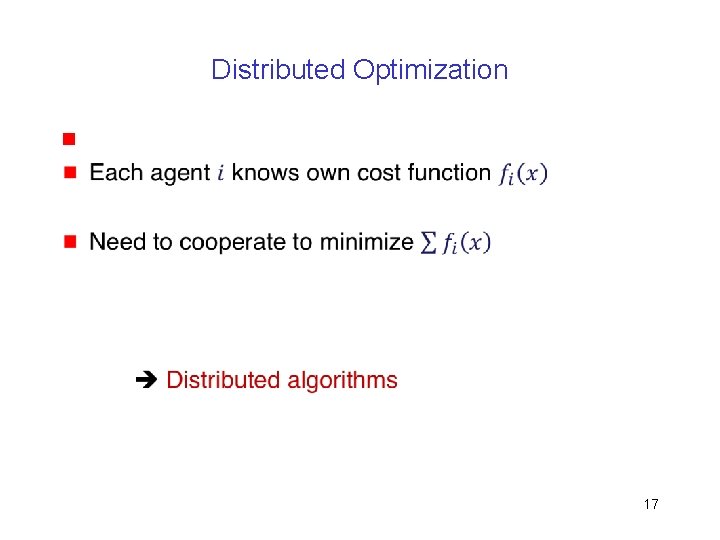

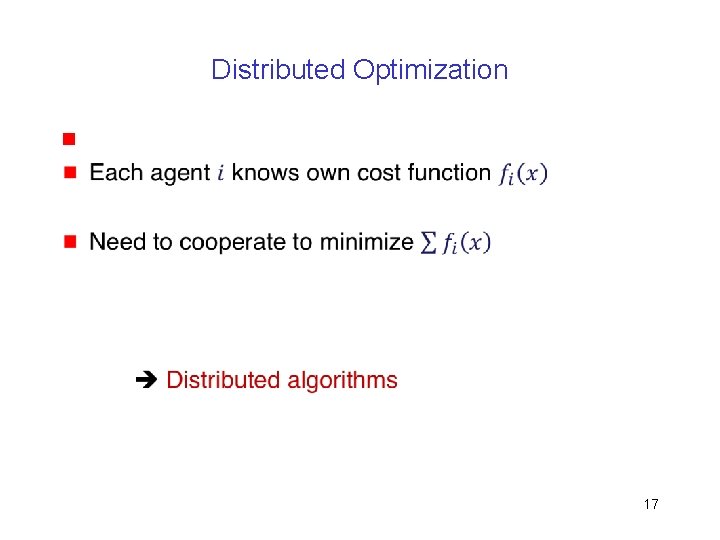

Distributed Optimization

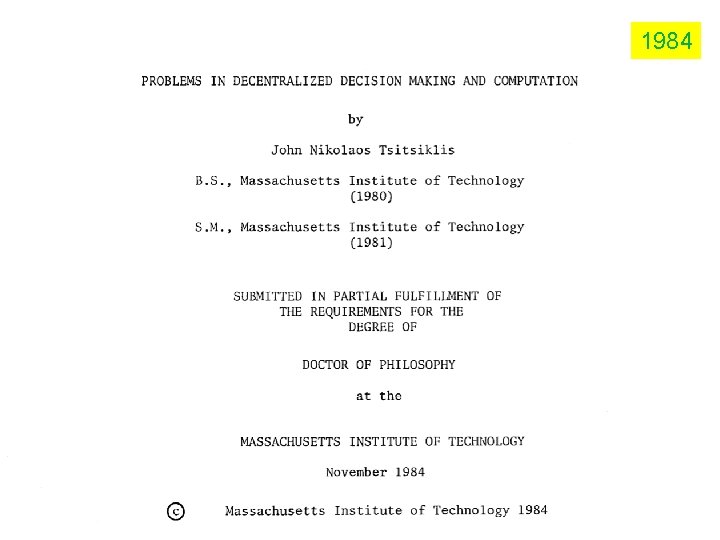

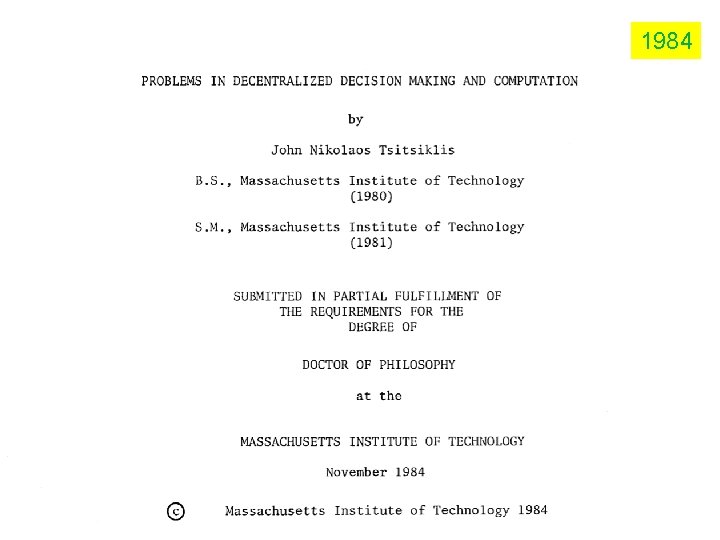

1984

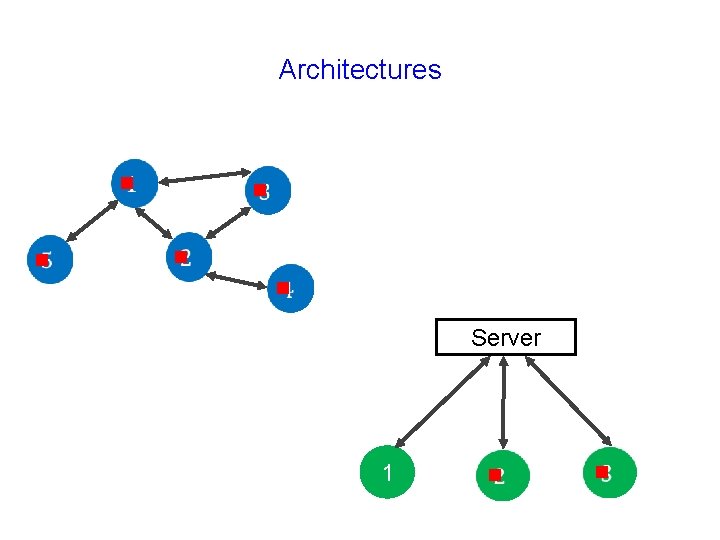

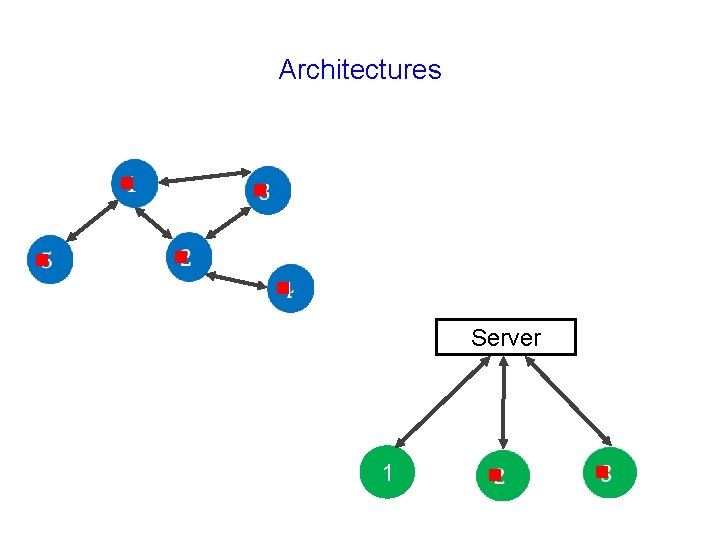

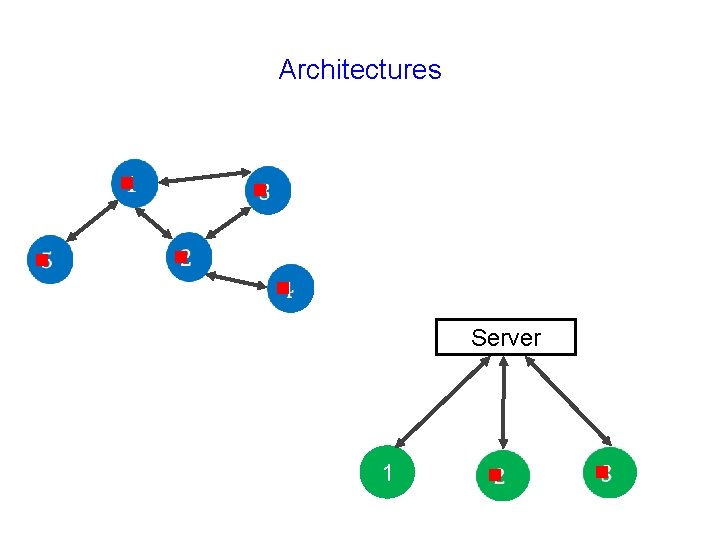

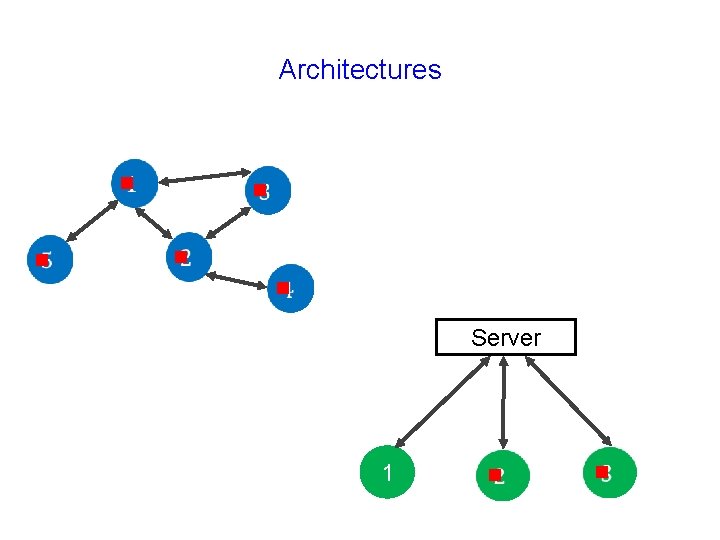

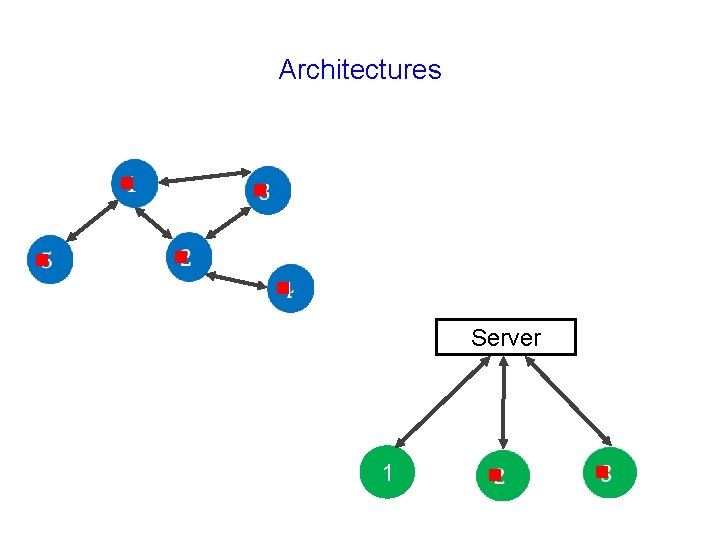

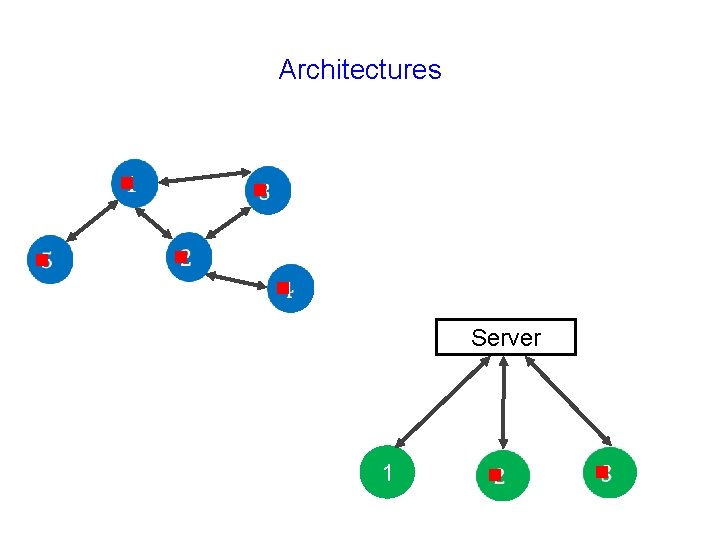

Architectures

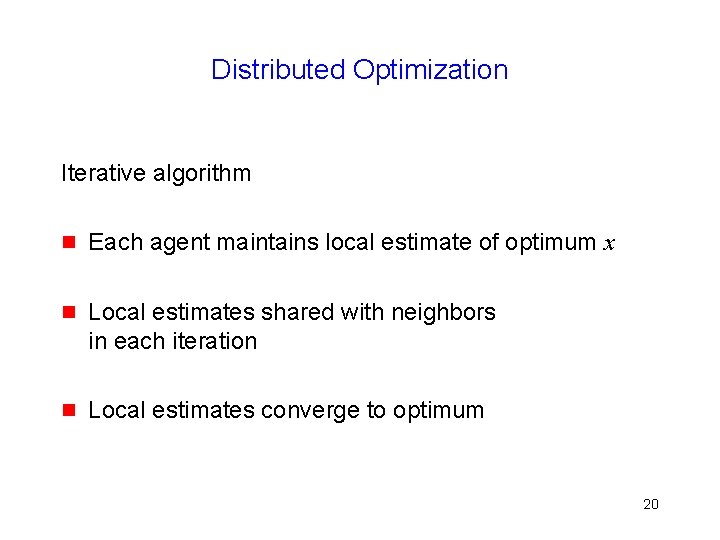

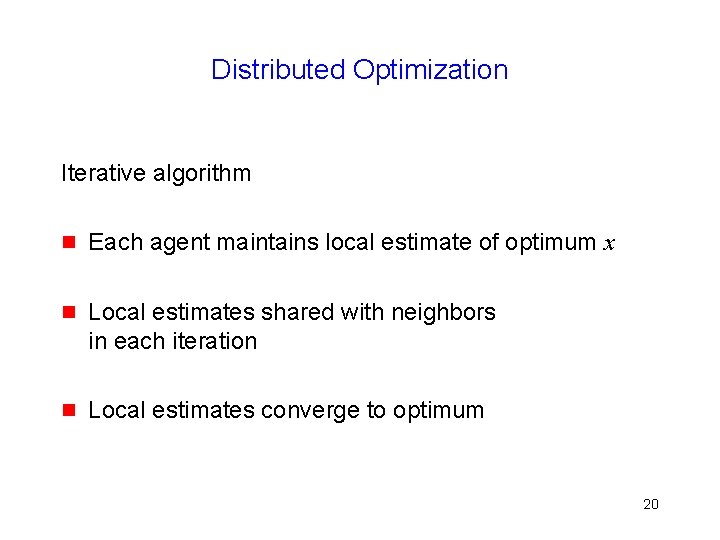

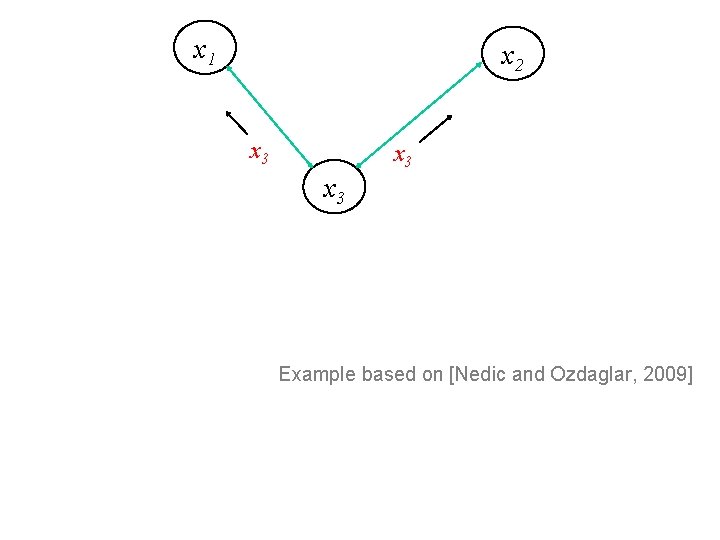

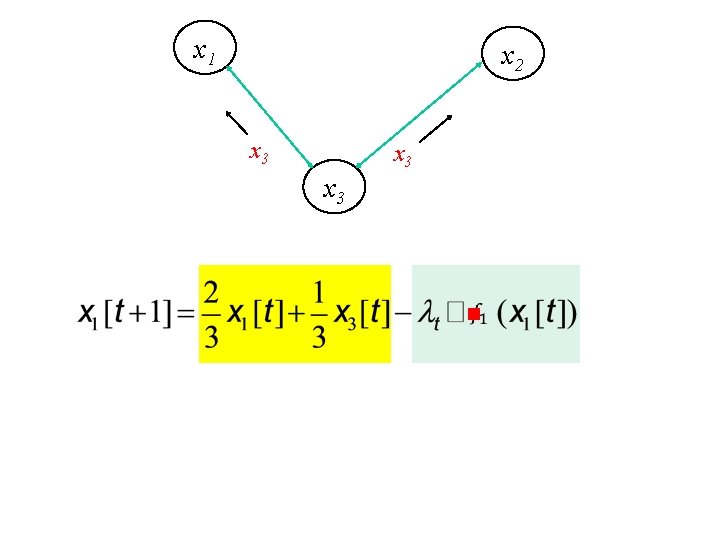

Distributed Optimization: iterative algorithm
- Each agent maintains a local estimate of the optimum x
- Local estimates are shared with neighbors in each iteration
- Local estimates converge to the optimum
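The iteration described above can be sketched as a consensus step followed by a local gradient step, in the spirit of distributed (sub)gradient methods. The three quadratic costs, the mixing weights, and the 1/t step size are assumptions for illustration, not values from the talk.

```python
def distributed_gradient_step(estimates, weights, grads, step):
    """One iteration: mix neighbors' estimates, then take a local gradient step."""
    n = len(estimates)
    updated = []
    for i in range(n):
        # Consensus part: weighted average of the estimates agent i receives.
        mixed = sum(weights[i][j] * estimates[j] for j in range(n))
        # Optimization part: gradient of agent i's own cost at its own estimate.
        updated.append(mixed - step * grads[i](estimates[i]))
    return updated


# Hypothetical local costs f_i(x) = (x - c_i)^2; their sum is minimized at mean(c) = 3.
targets = [1.0, 2.0, 6.0]
grads = [lambda x, c=c: 2.0 * (x - c) for c in targets]

# Assumed doubly stochastic mixing weights for a fully connected 3-agent network.
weights = [
    [0.50, 0.25, 0.25],
    [0.25, 0.50, 0.25],
    [0.25, 0.25, 0.50],
]

estimates = [0.0, 0.0, 0.0]
for t in range(1, 5001):
    estimates = distributed_gradient_step(estimates, weights, grads, step=1.0 / t)
print(estimates)  # all three estimates end up close to 3
```

The diminishing step size is what lets the agents both agree with each other and approach the optimum of the sum; with a constant step the estimates would stay slightly apart.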

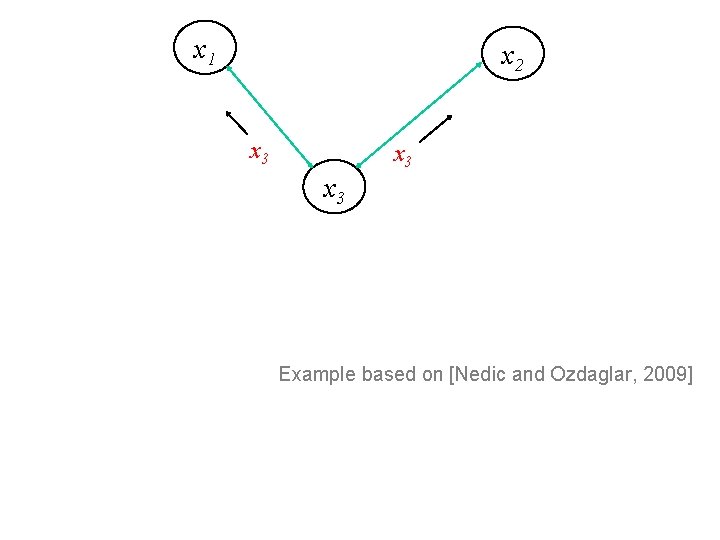

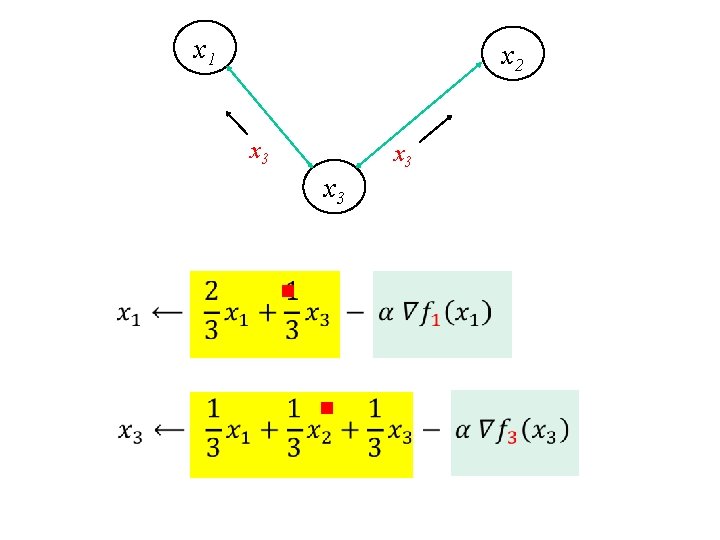

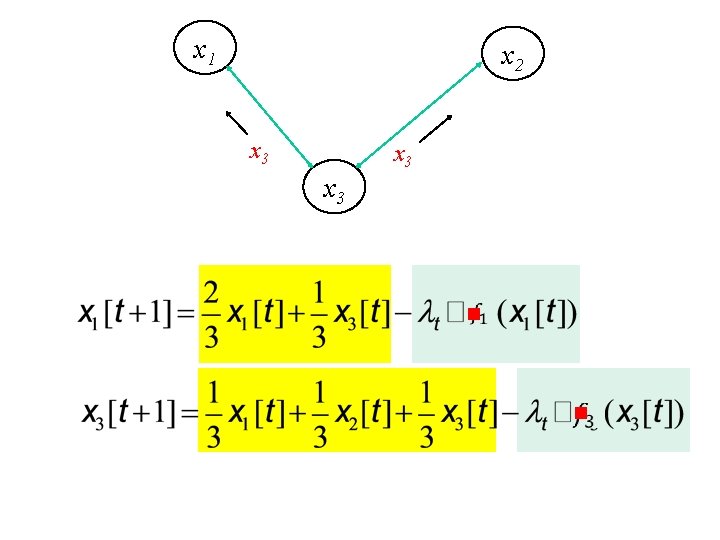

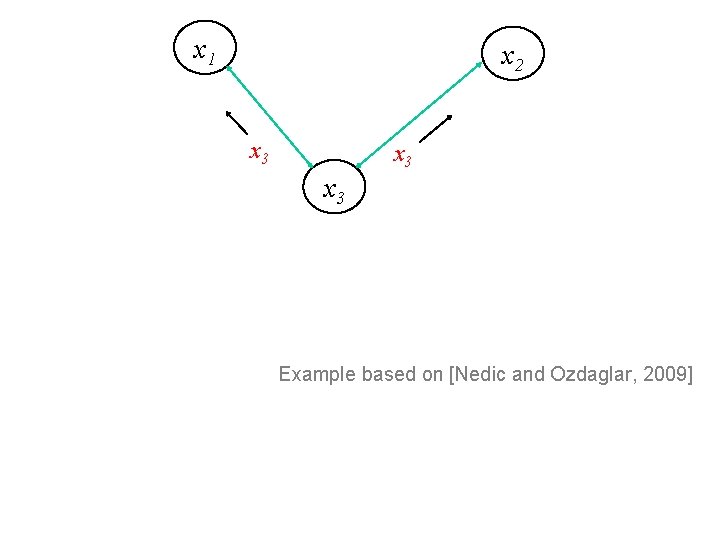

Estimates x1, x2, x3: example based on [Nedic and Ozdaglar, 2009]

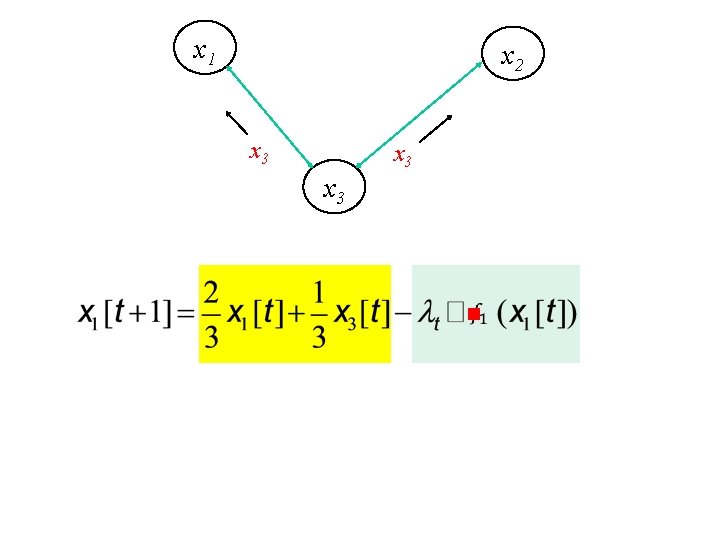

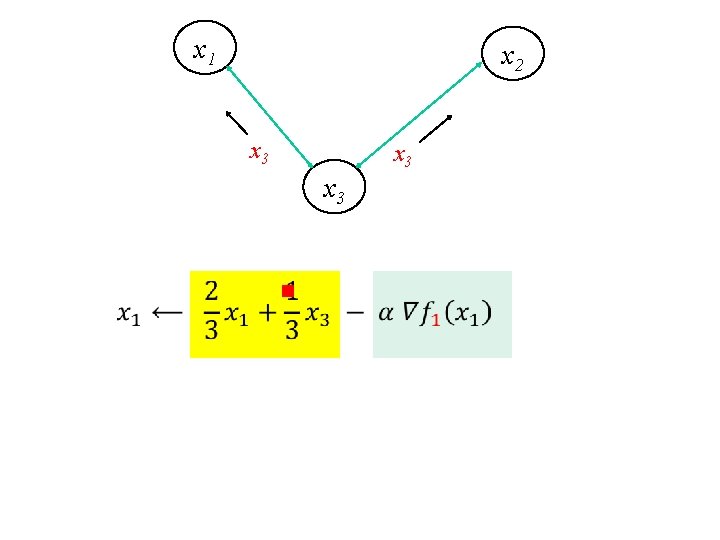

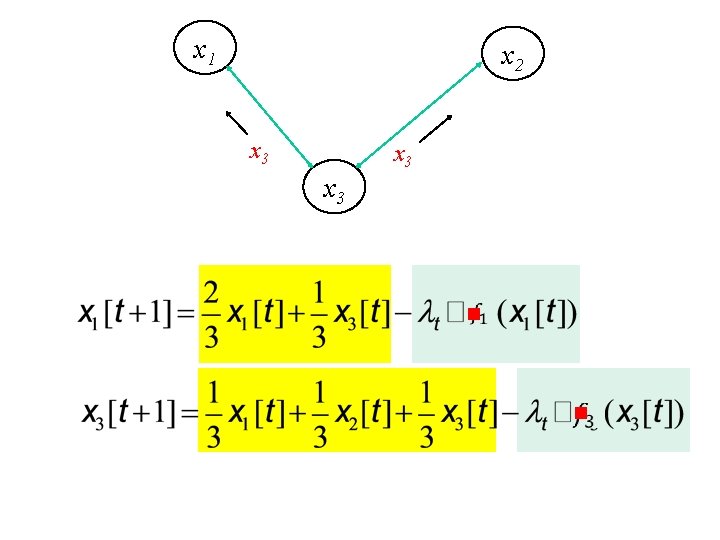

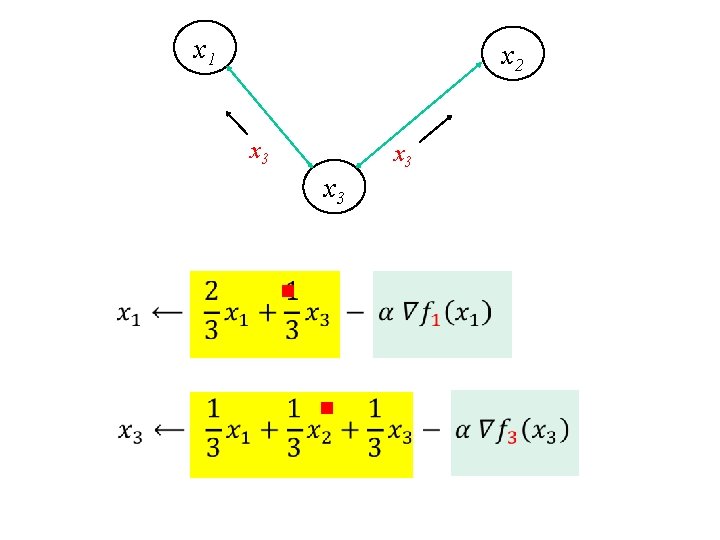

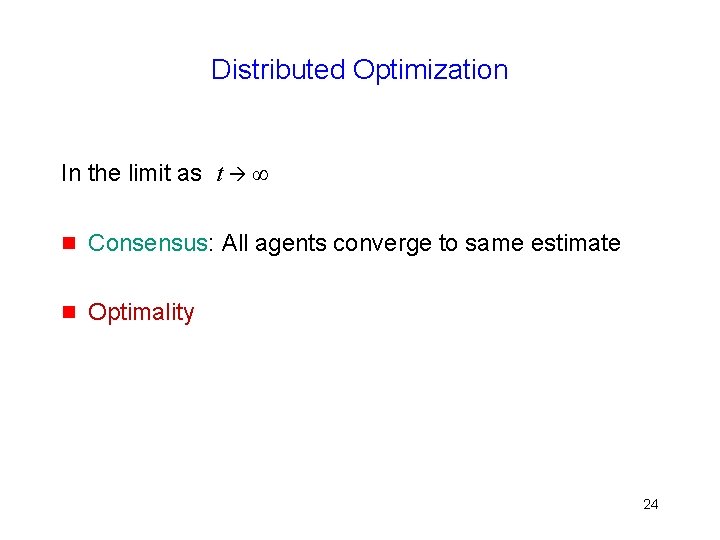

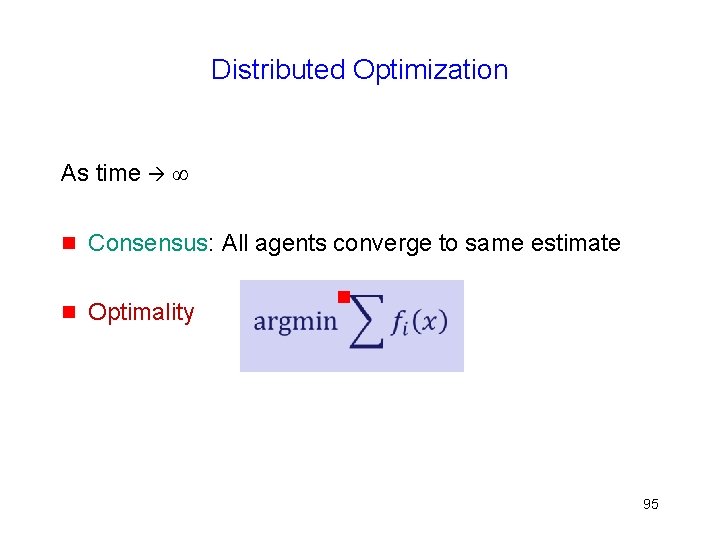

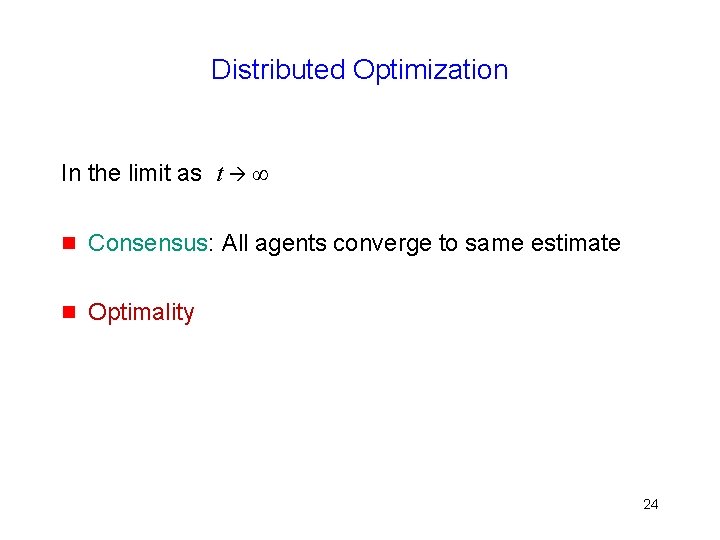

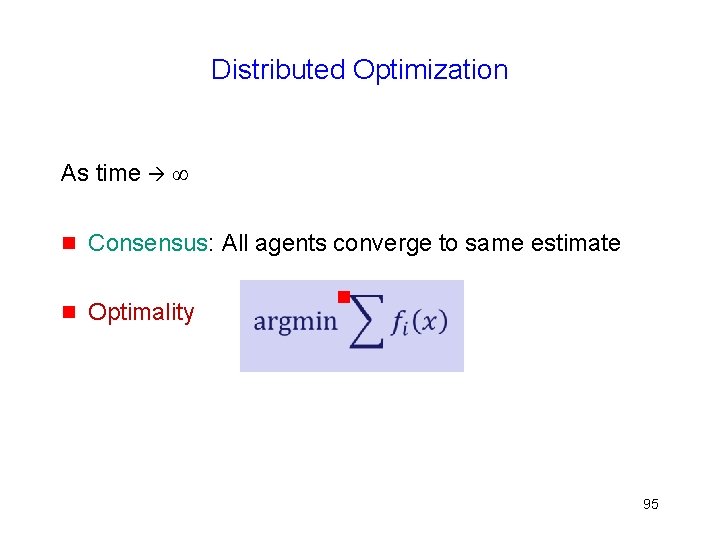

Distributed Optimization: in the limit as t → ∞
- Consensus: all agents converge to the same estimate
- Optimality

Why does this work?

Architectures

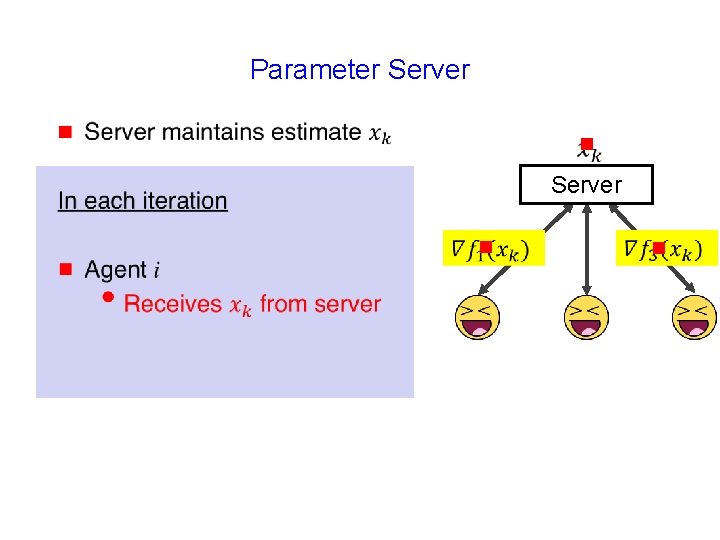

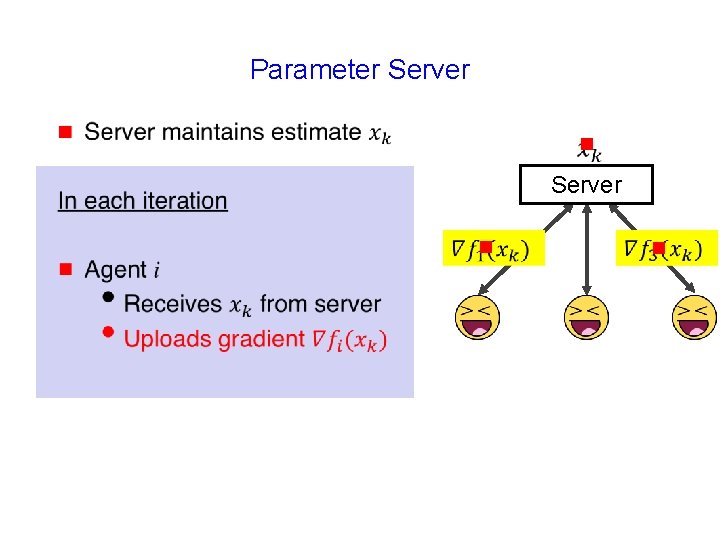

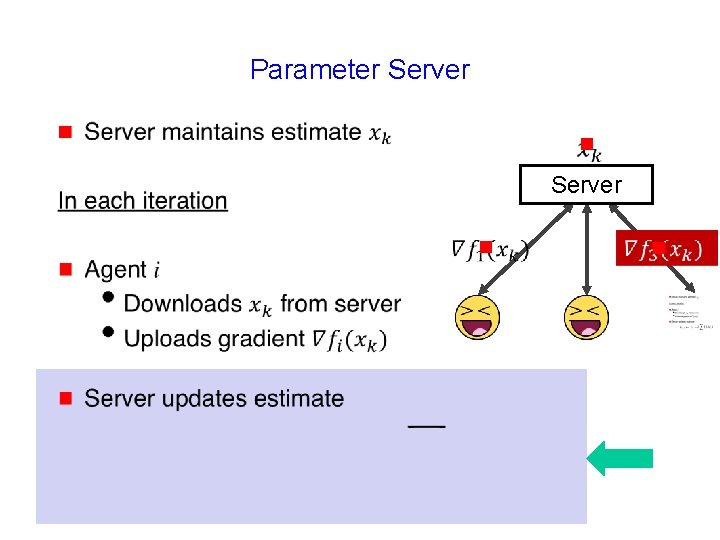

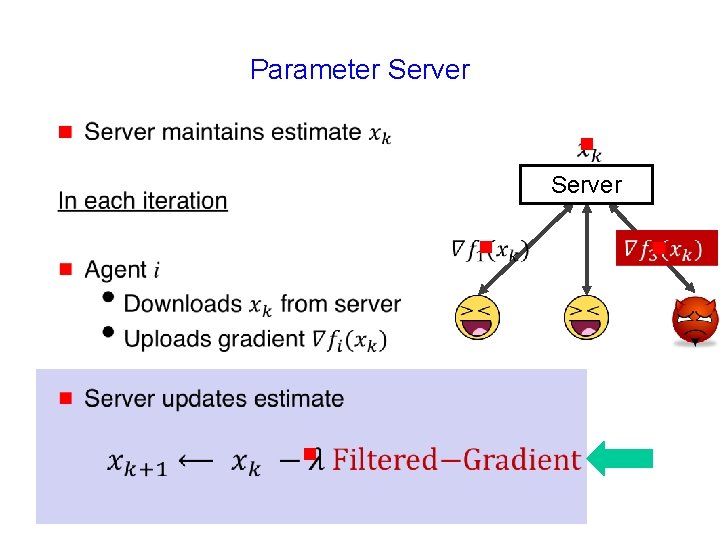

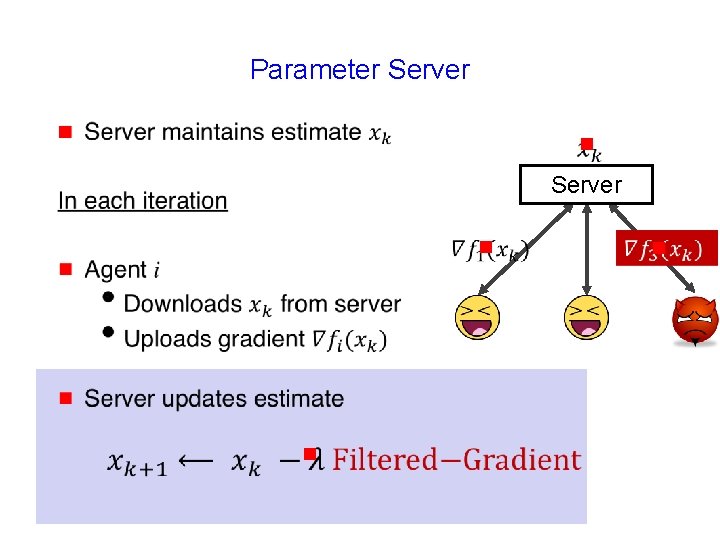

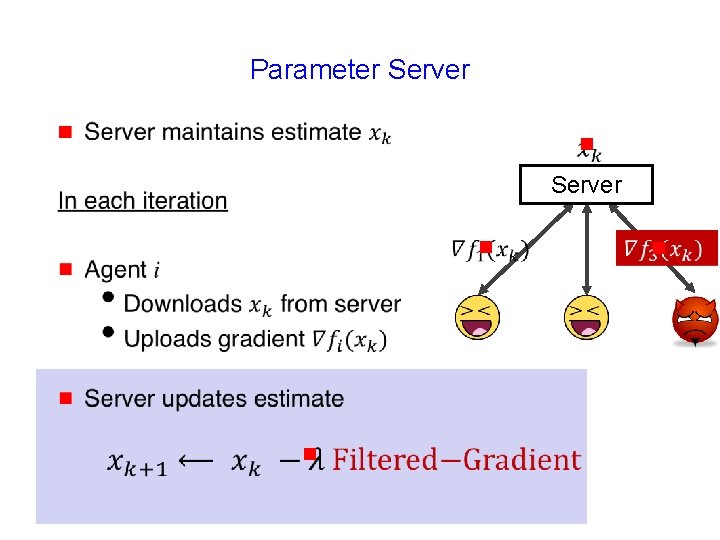

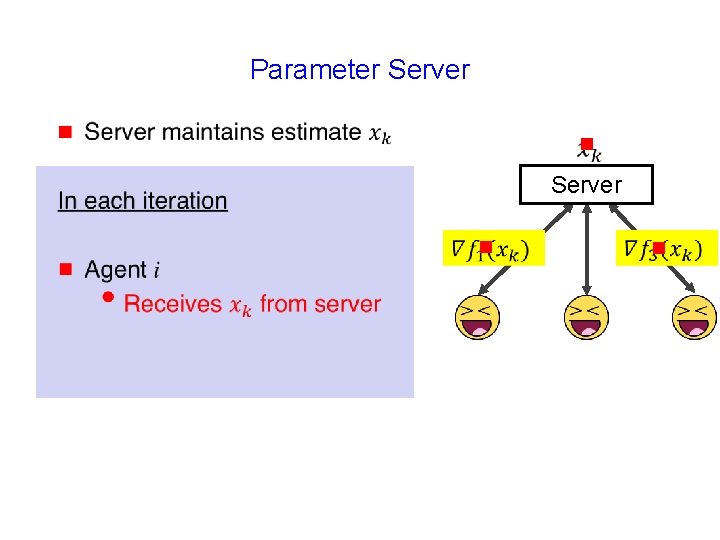

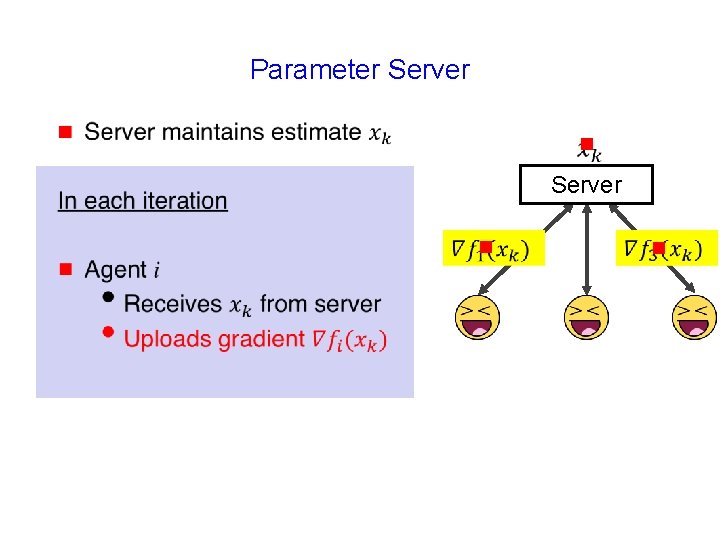

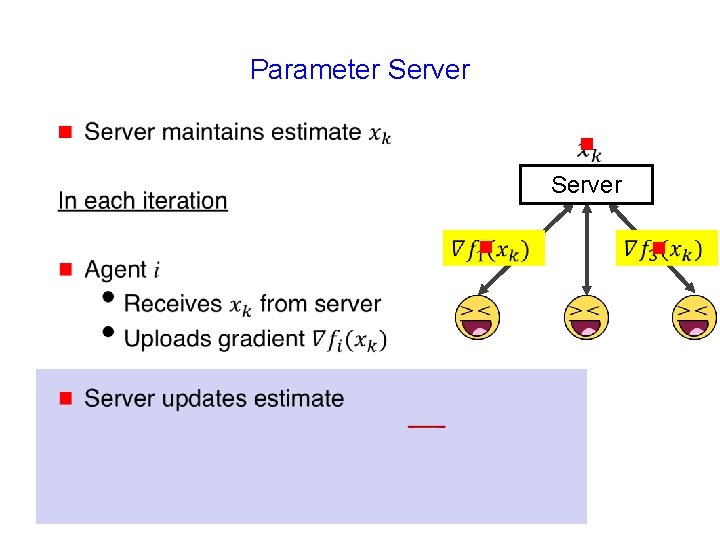

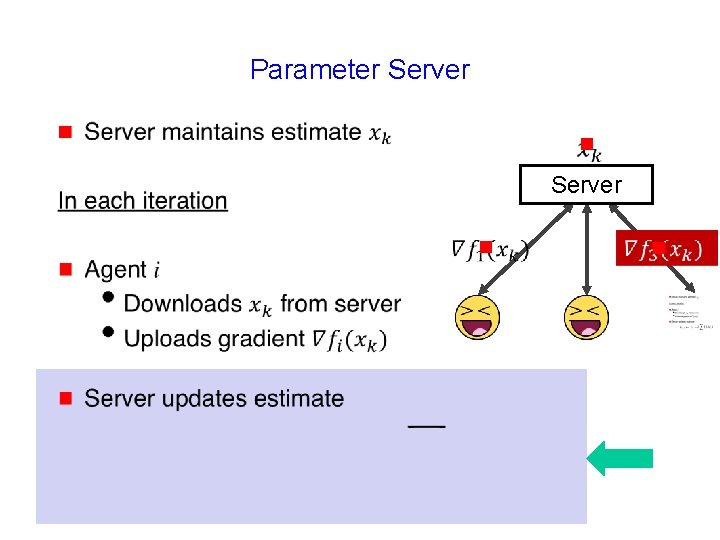

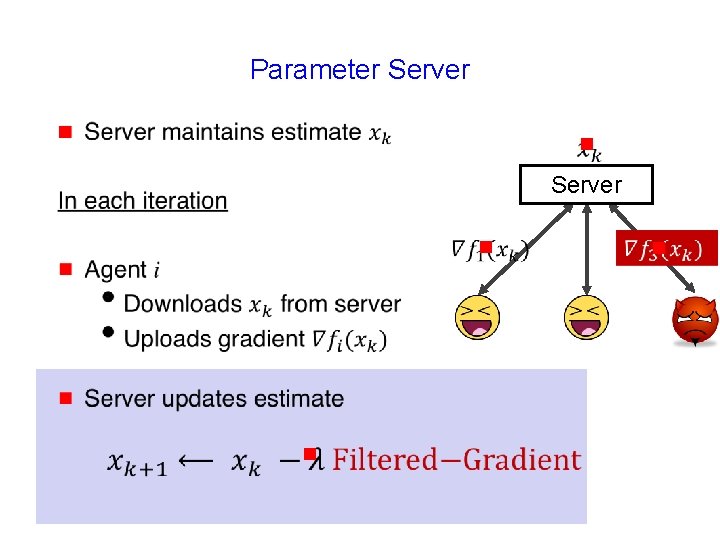

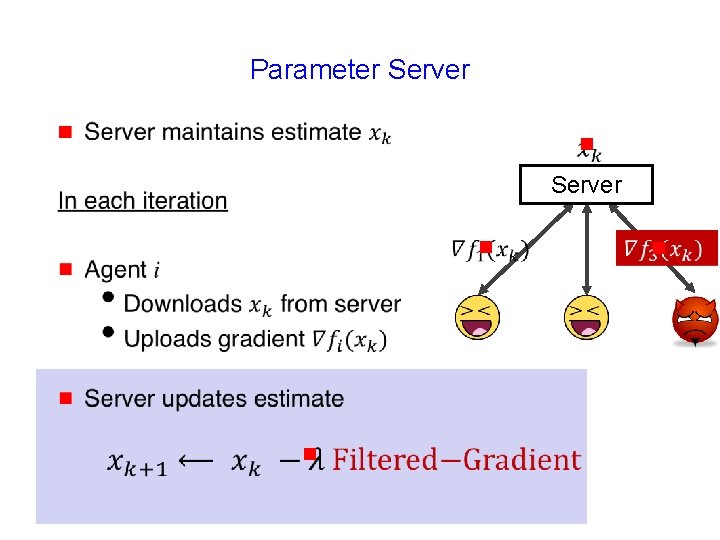

Parameter Server

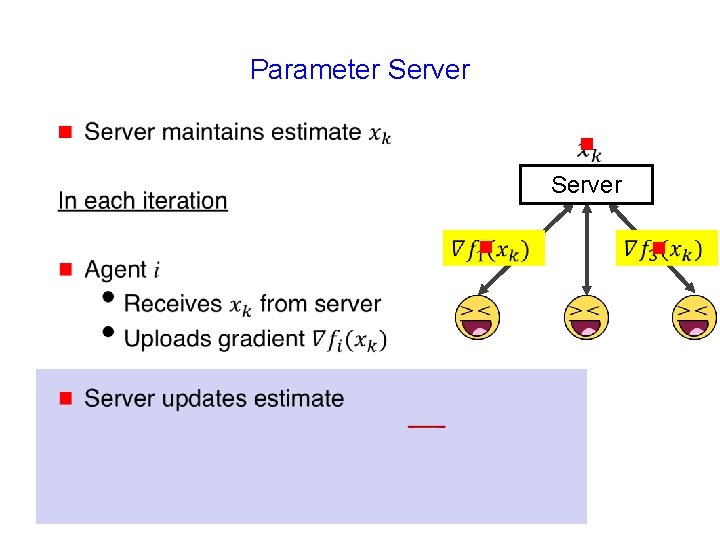

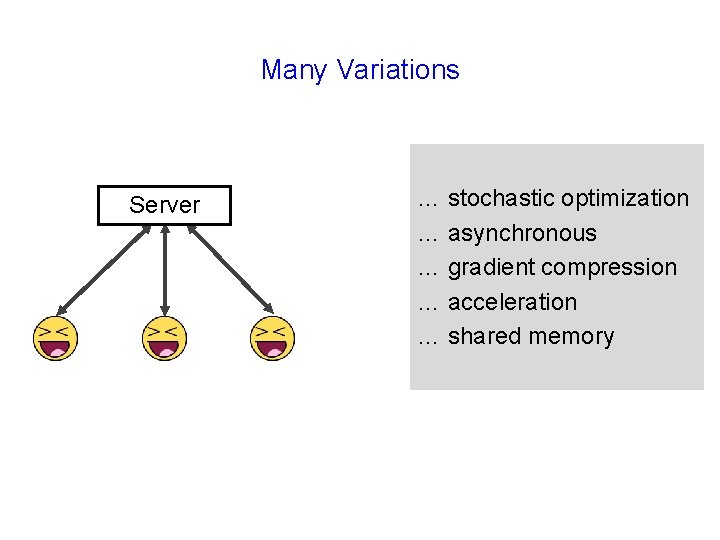

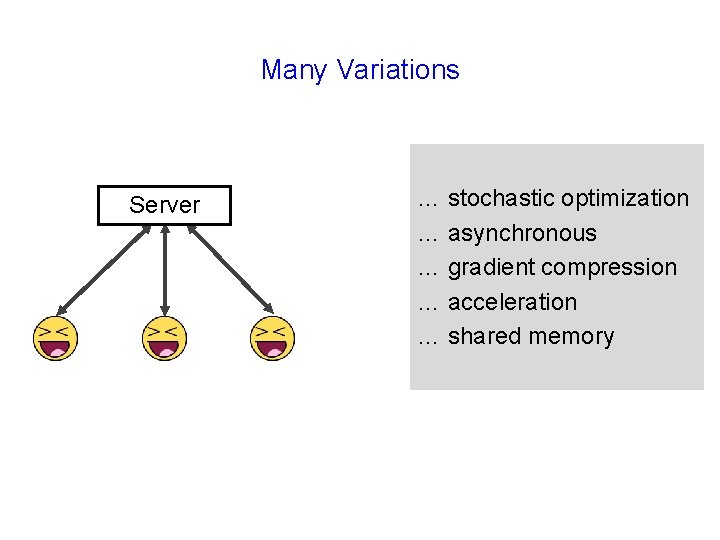

Many Variations
- stochastic optimization
- asynchronous
- gradient compression
- acceleration
- shared memory

Architectures

Challenges

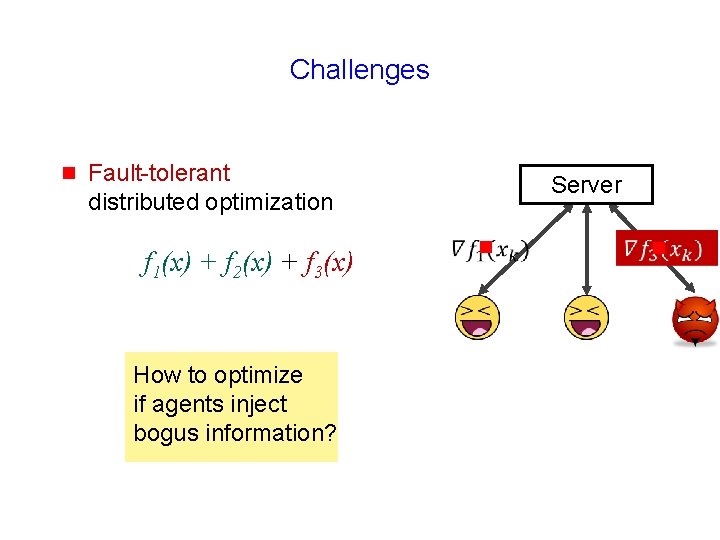

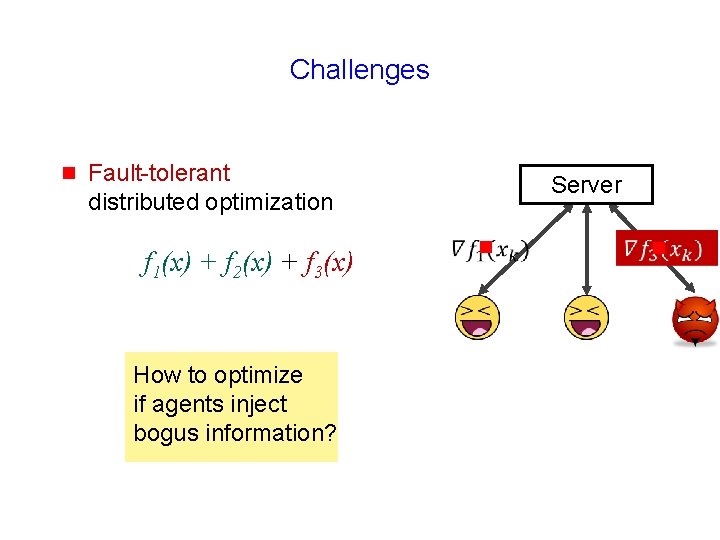

Challenges
- Fault-tolerant distributed optimization of f1(x) + f2(x) + f3(x): how to optimize if agents inject bogus information?

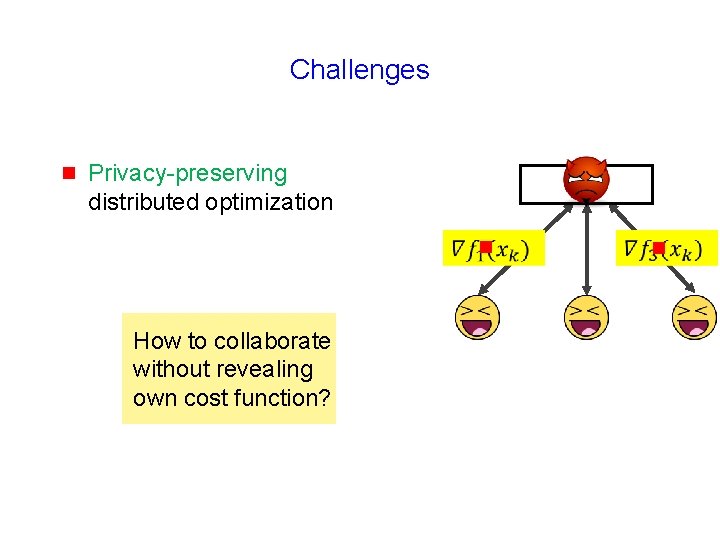

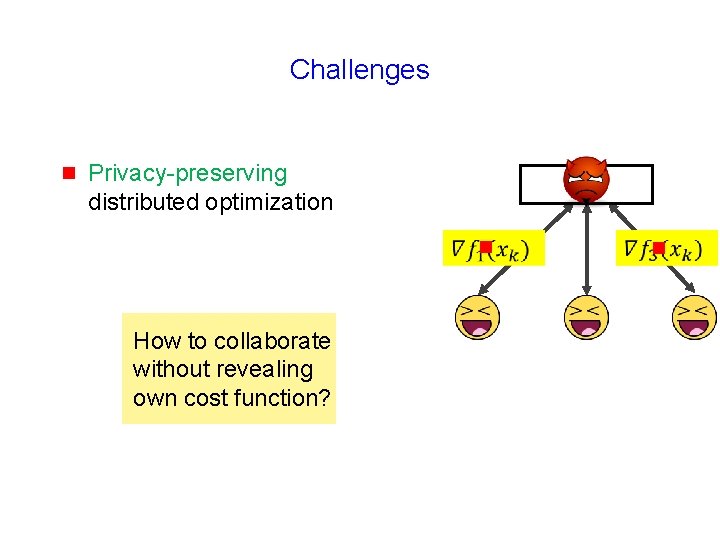

Challenges
- Privacy-preserving distributed optimization: how to collaborate without revealing one's own cost function?

Fault-Tolerant Optimization 2015 …

Byzantine Fault Model: no constraint on the misbehavior of faulty agents

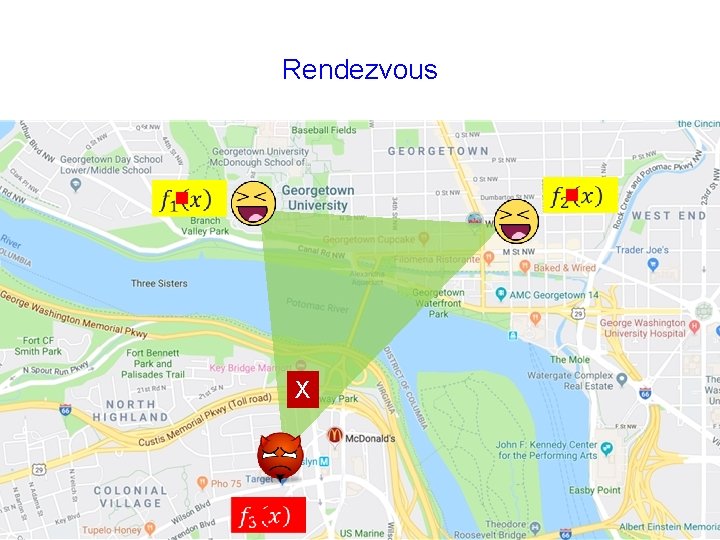

Rendezvous

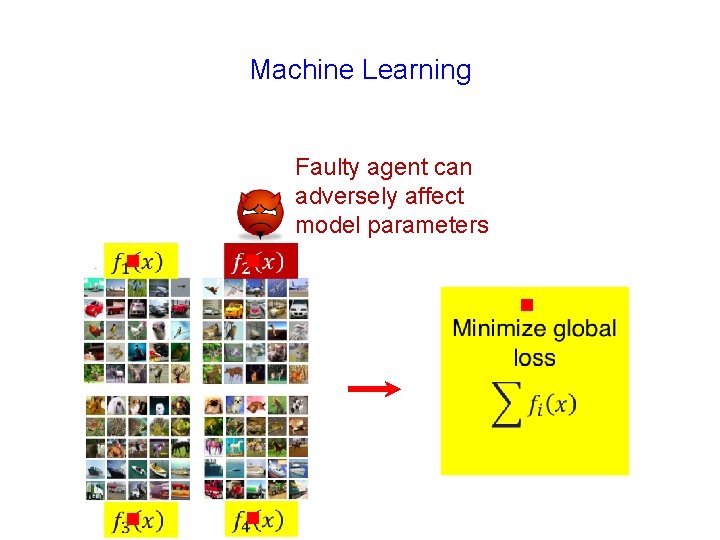

Machine Learning: a faulty agent can adversely affect the model parameters

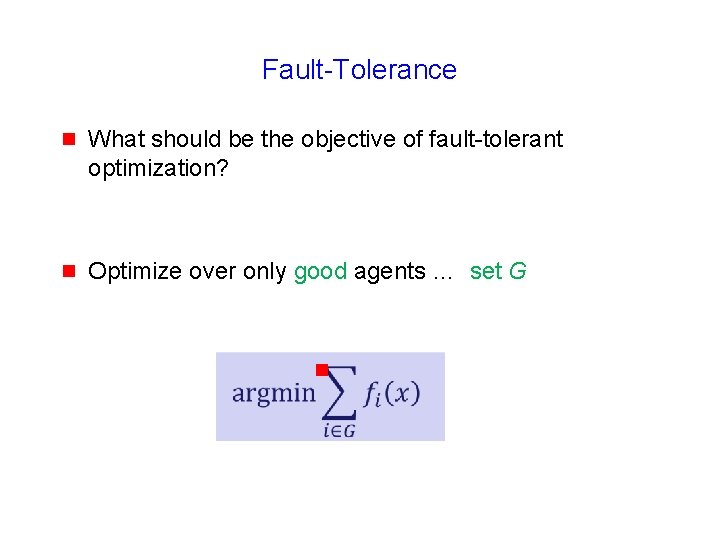

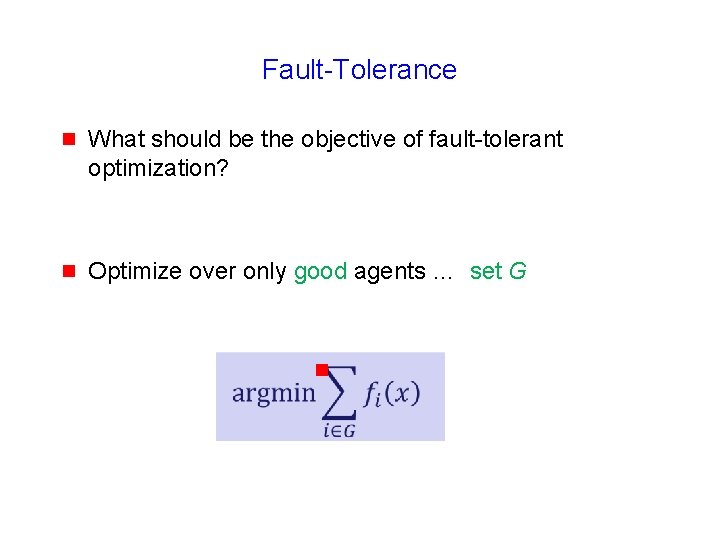

Fault-Tolerance: what should be the objective of fault-tolerant optimization?

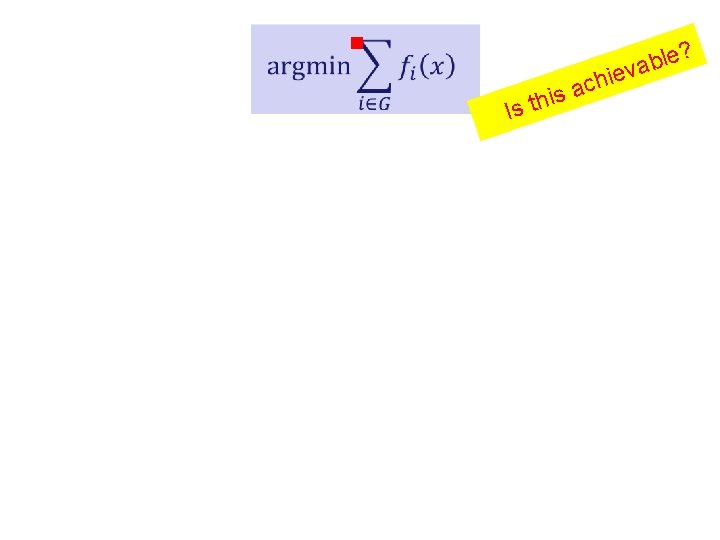

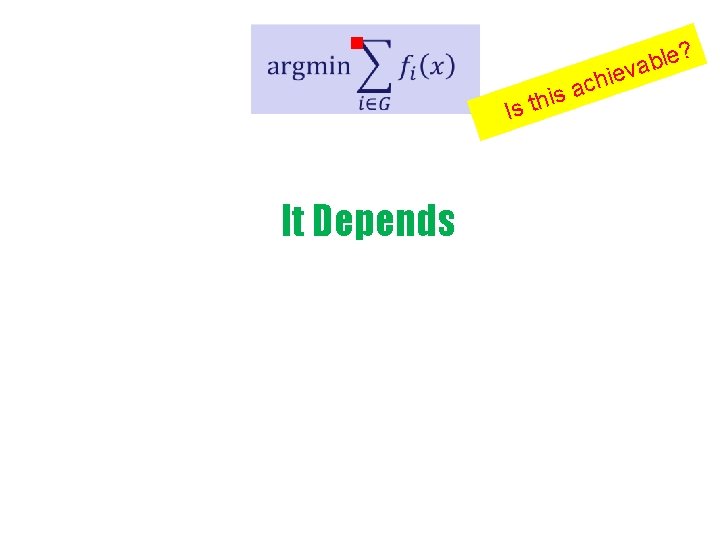

Fault-Tolerance: what should be the objective of fault-tolerant optimization?
- Optimize over only the good agents … set G

Parameter Server

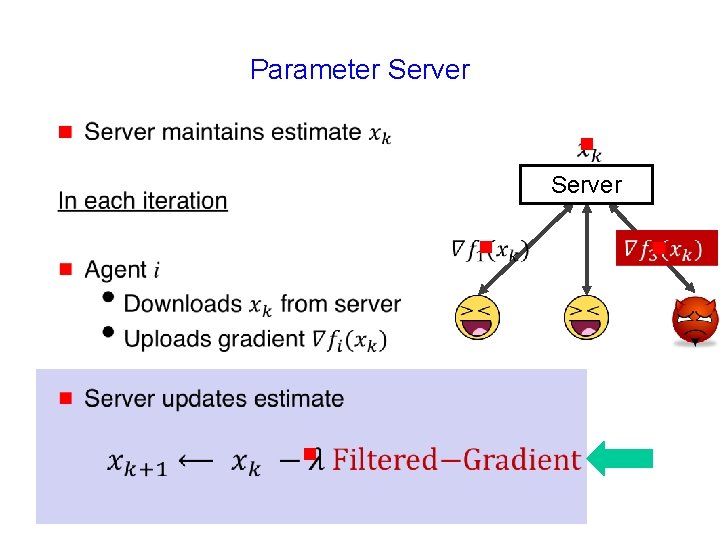

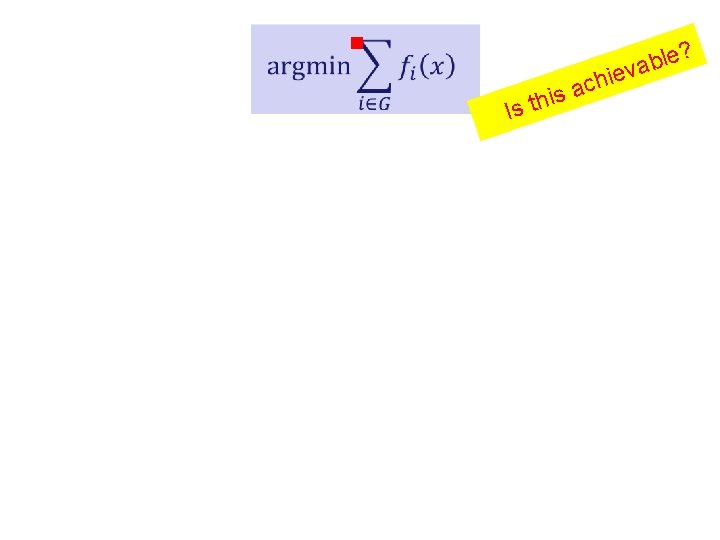

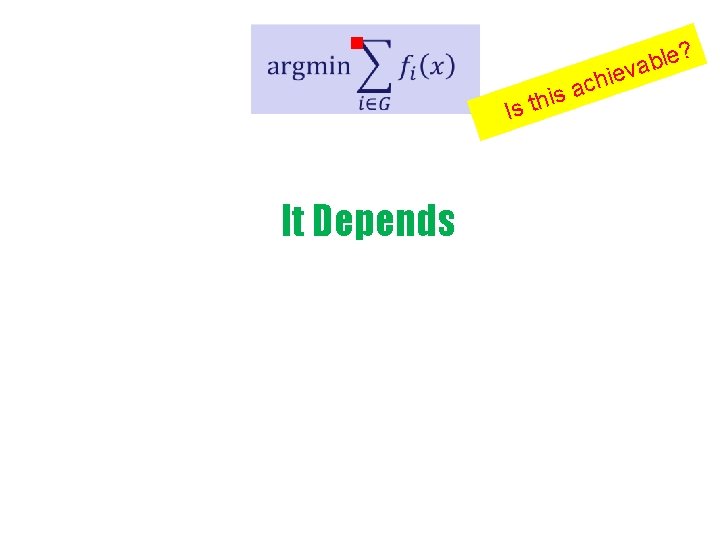

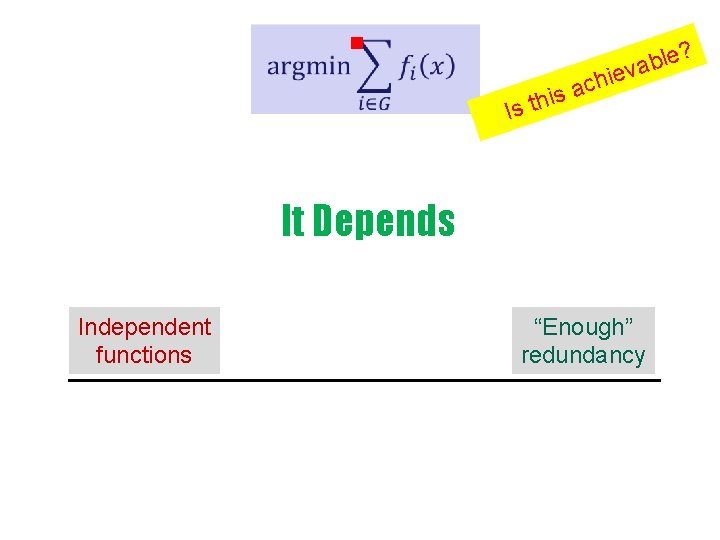

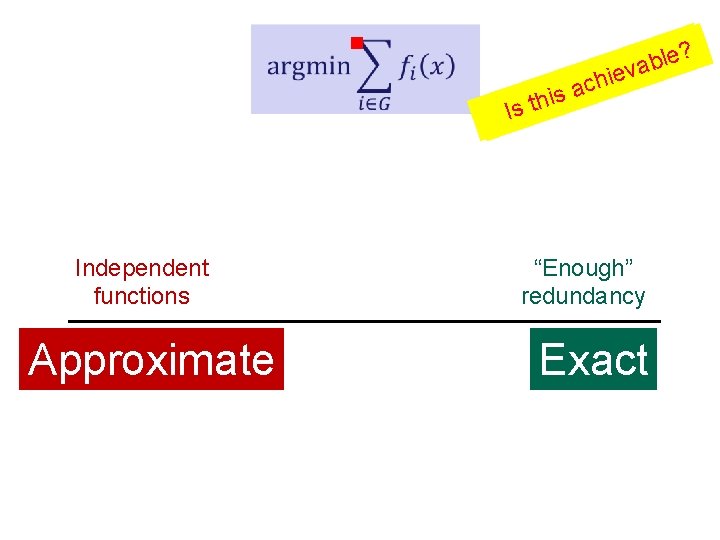

Is this achievable?

Is this achievable? It depends.

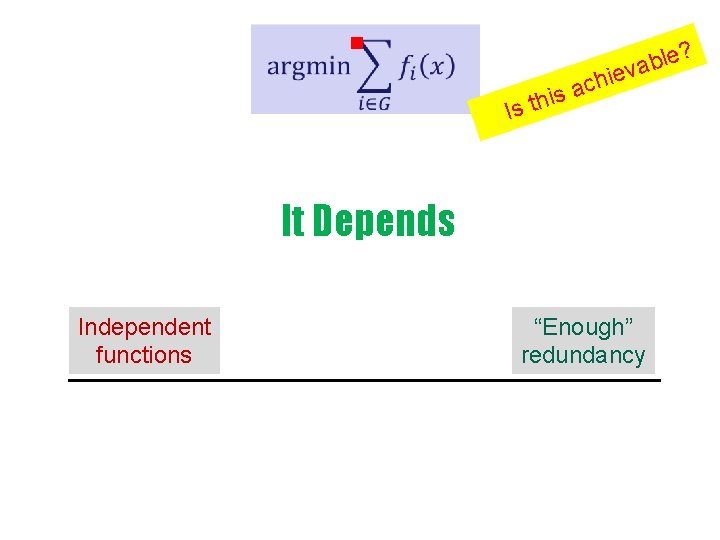

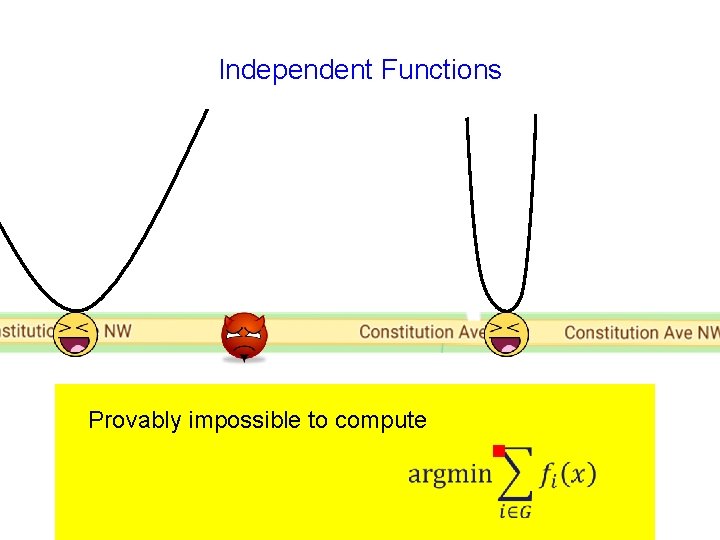

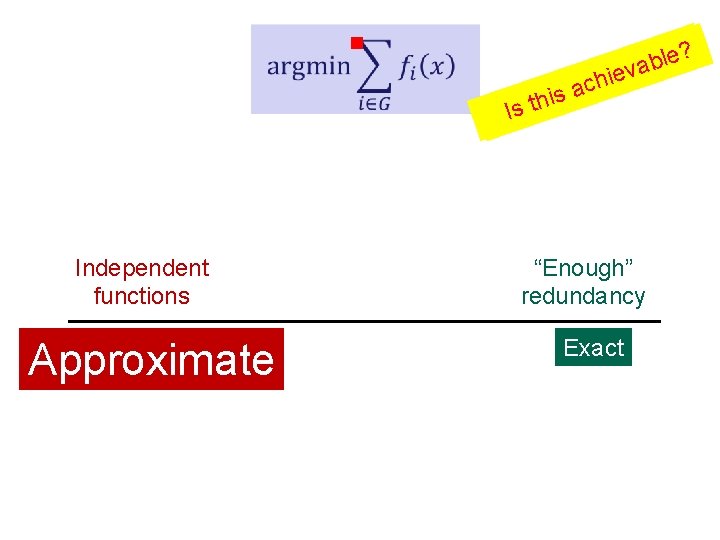

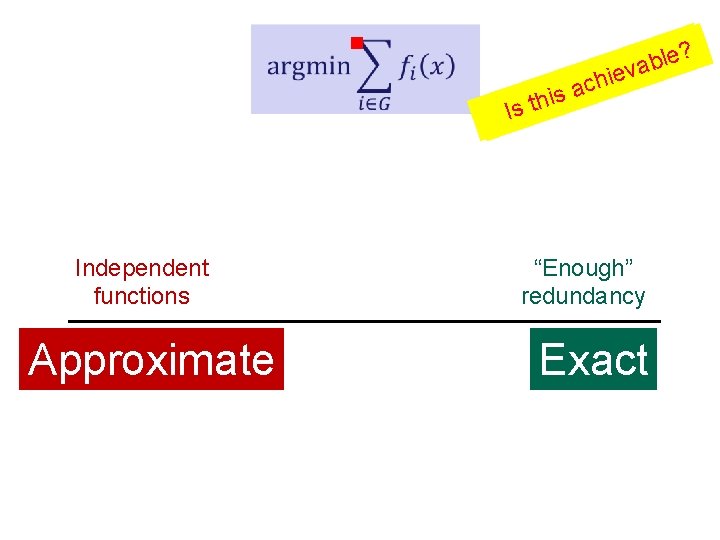

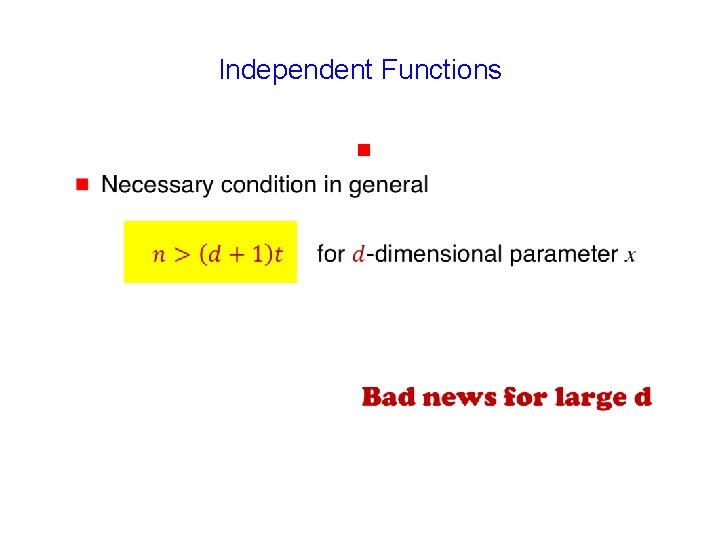

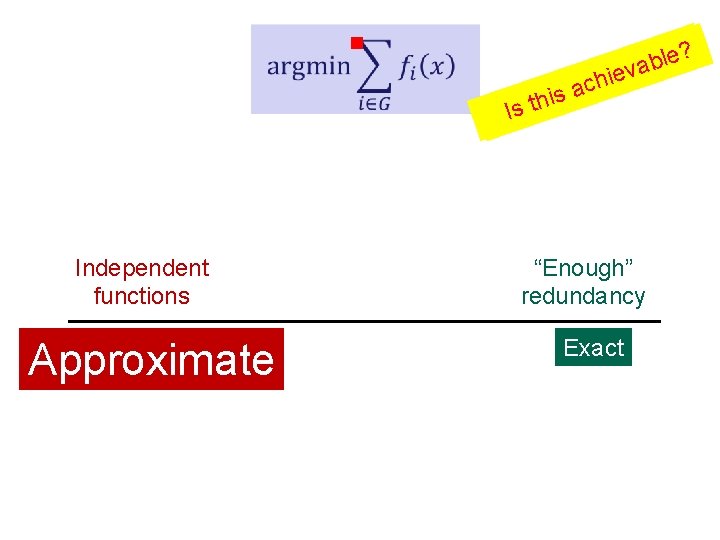

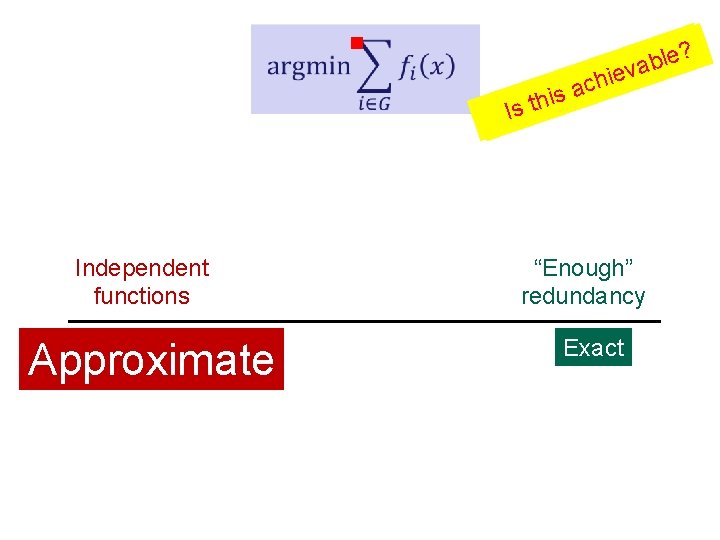

Is this achievable? It depends: independent functions vs. “enough” redundancy

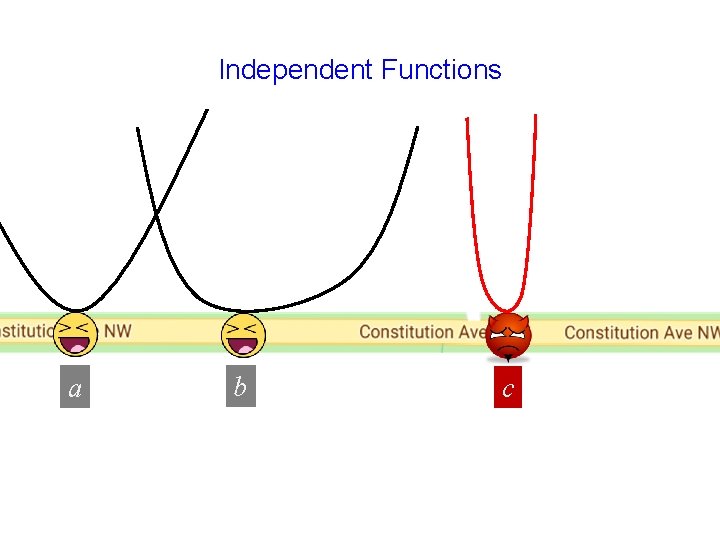

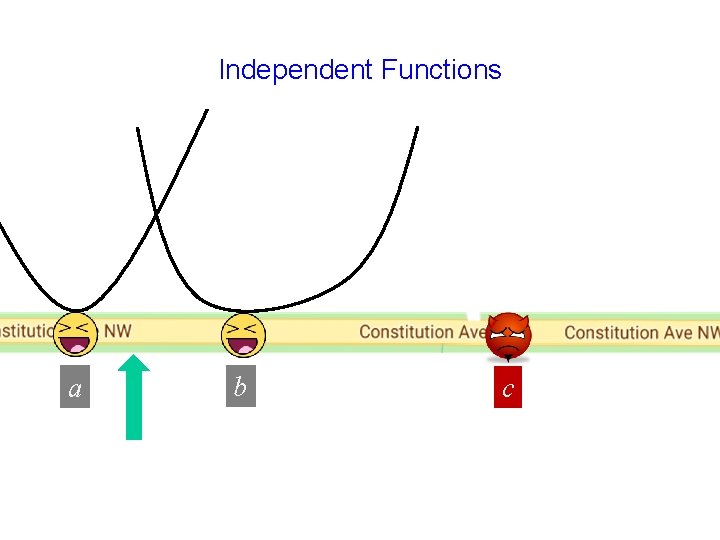

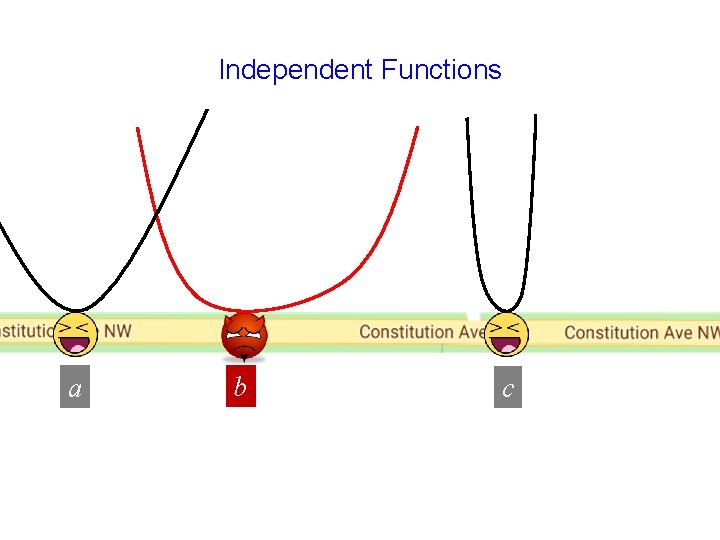

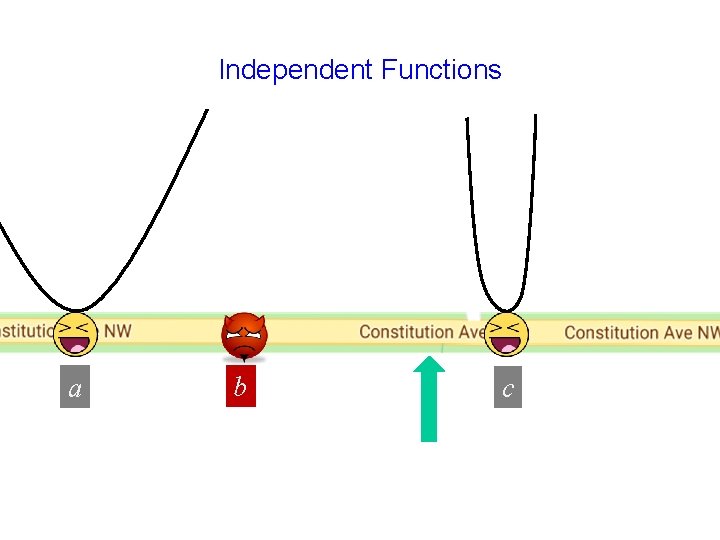

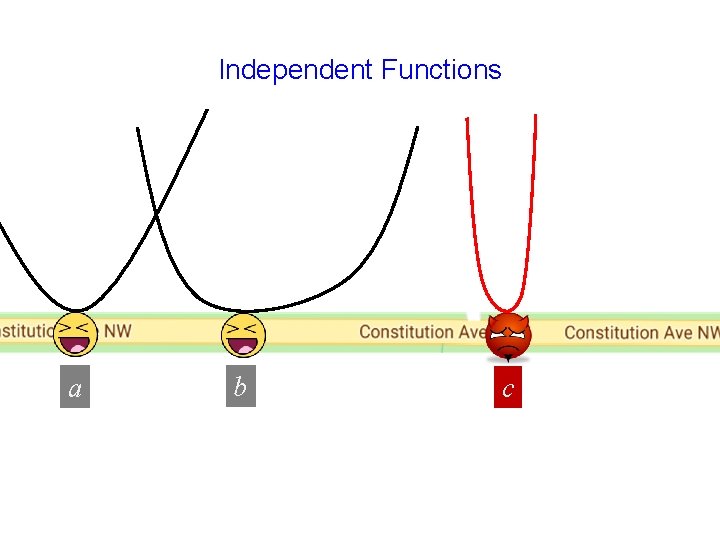

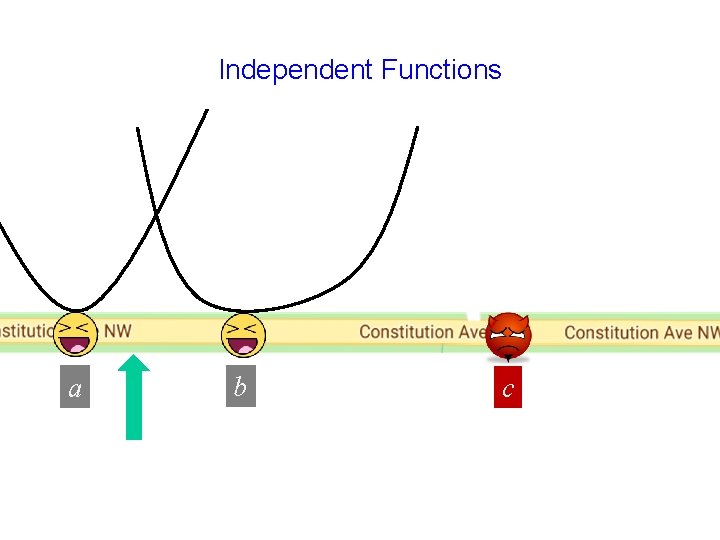

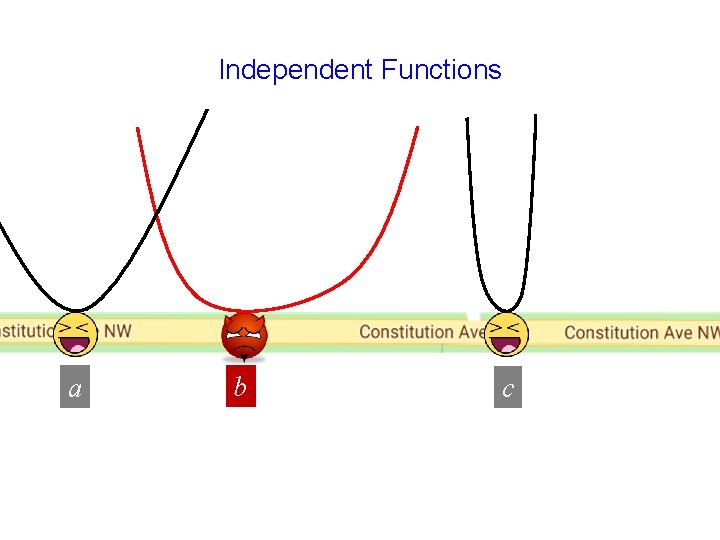

Independent Functions (figure labels: a, b, c)

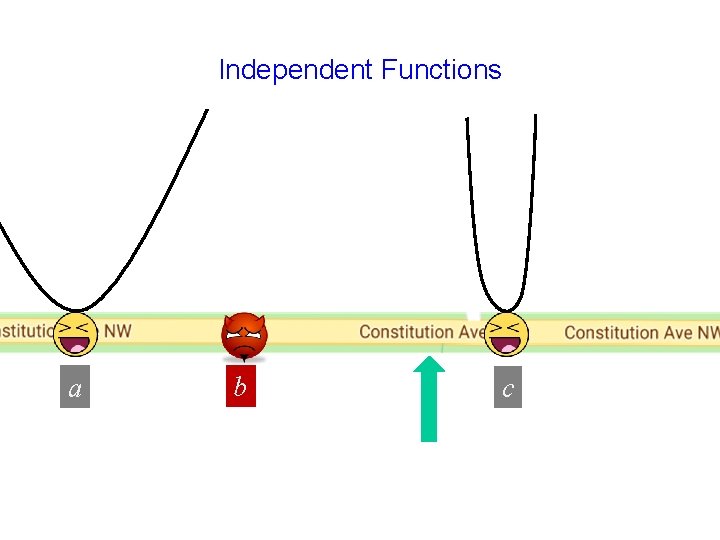

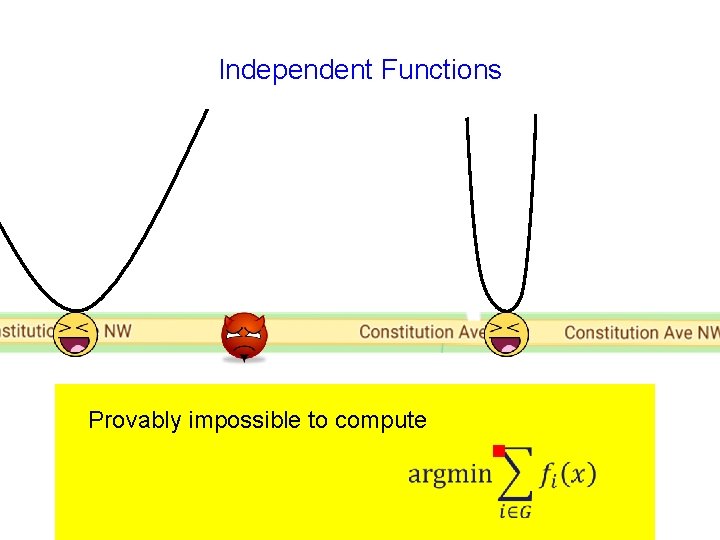

Independent Functions: provably impossible to compute the optimum over only the good agents

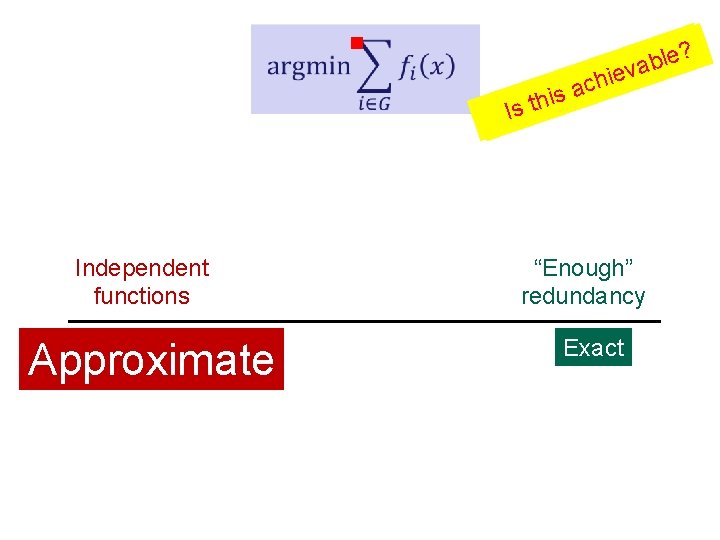

Is this achievable? Independent functions: approximate. “Enough” redundancy: exact.

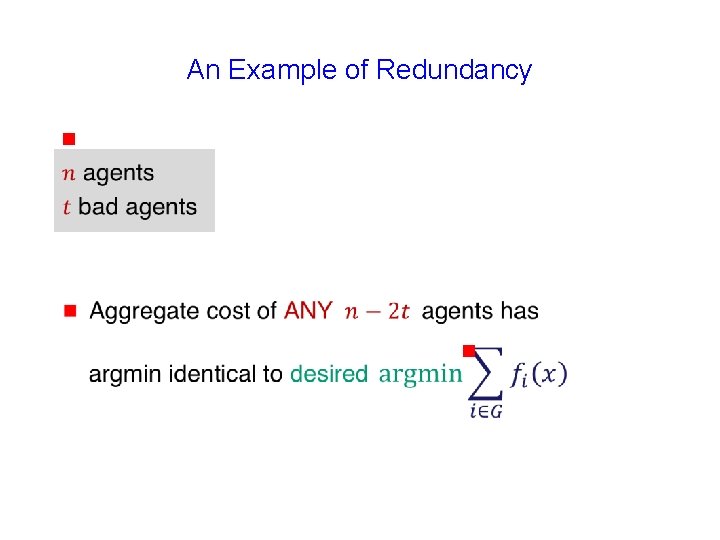

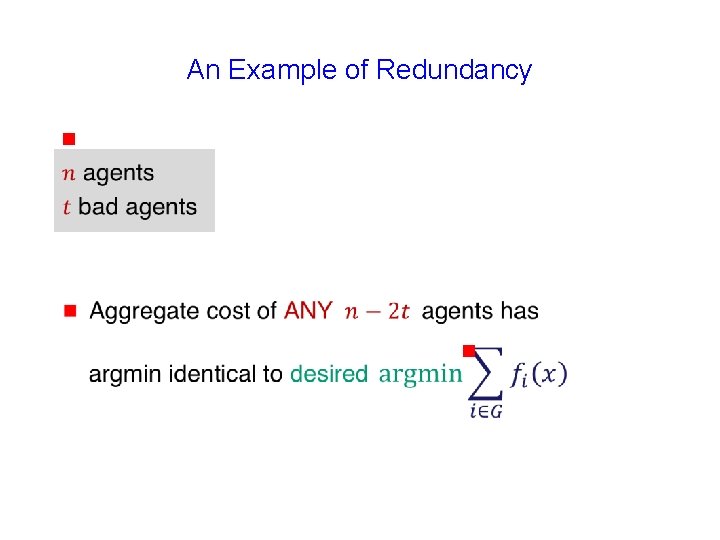

An Example of Redundancy

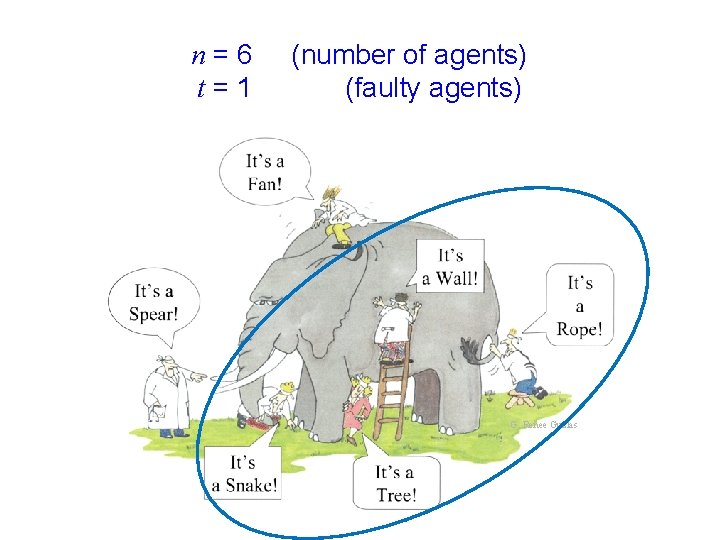

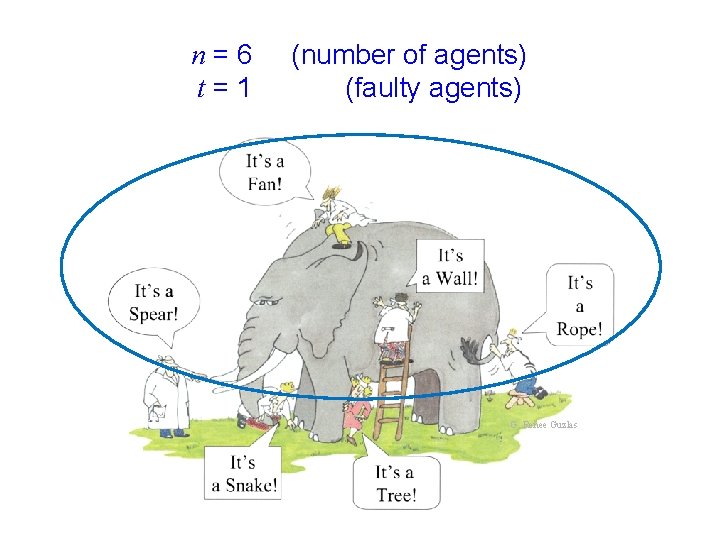

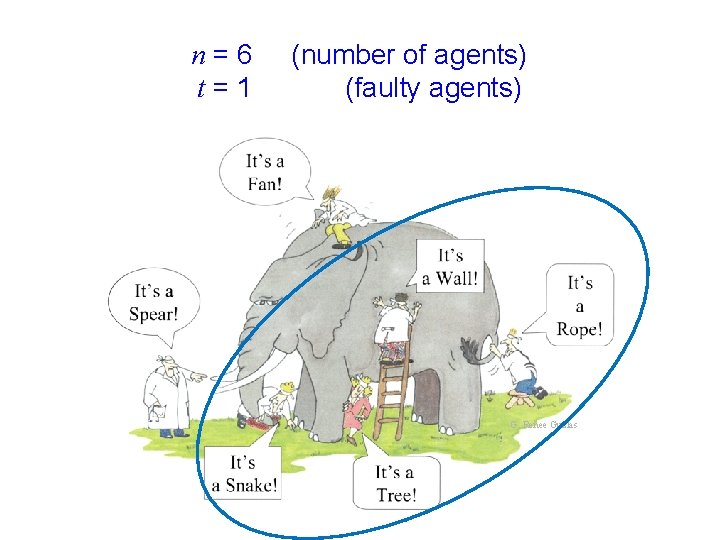

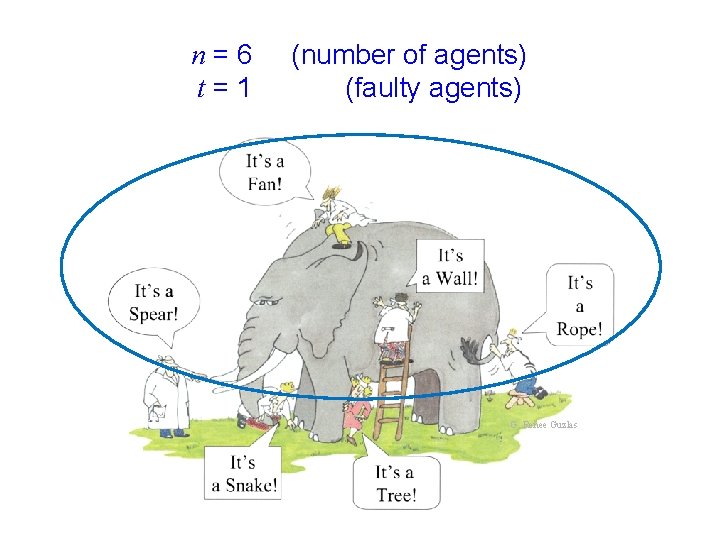

n = 6 (number of agents), t = 1 (faulty agents). Image: G. Renee Guzlas

Parameter Server

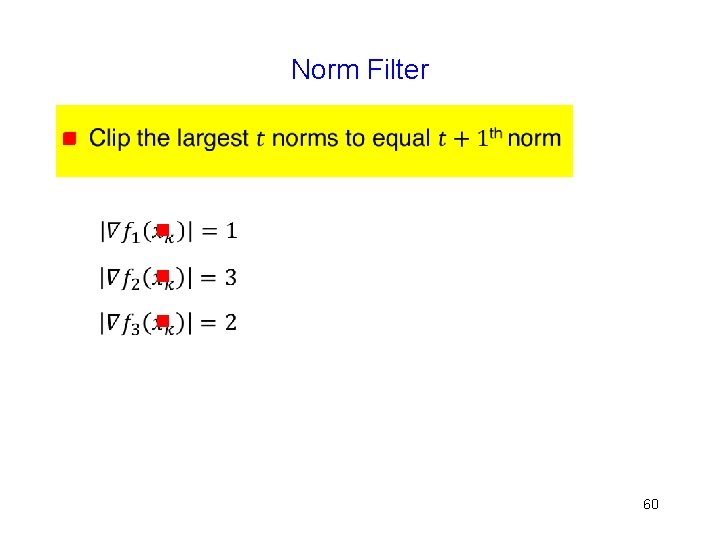

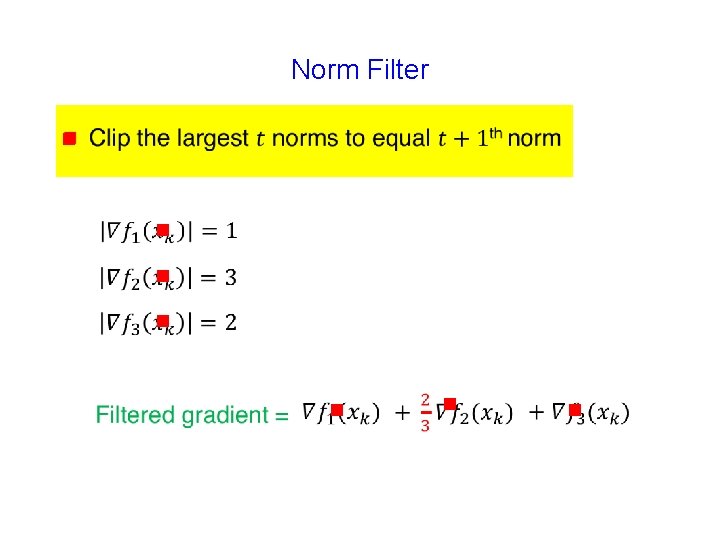

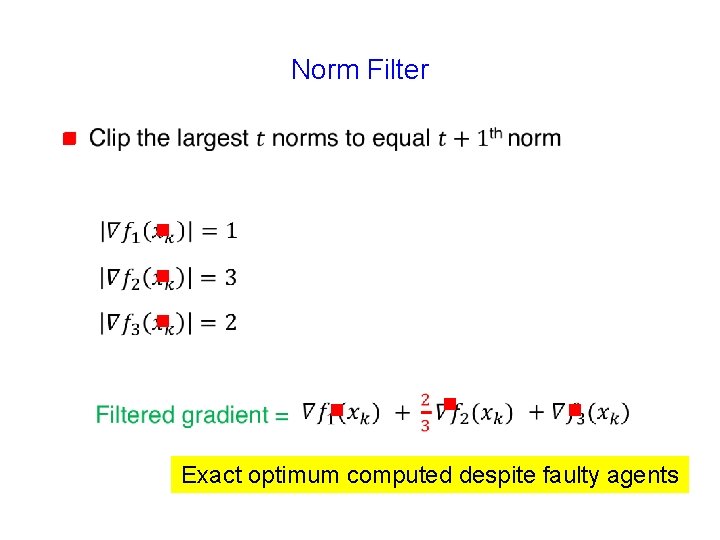

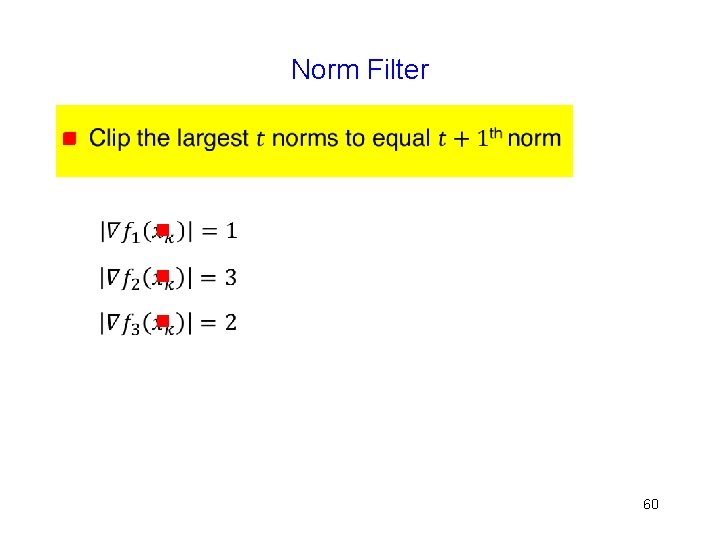

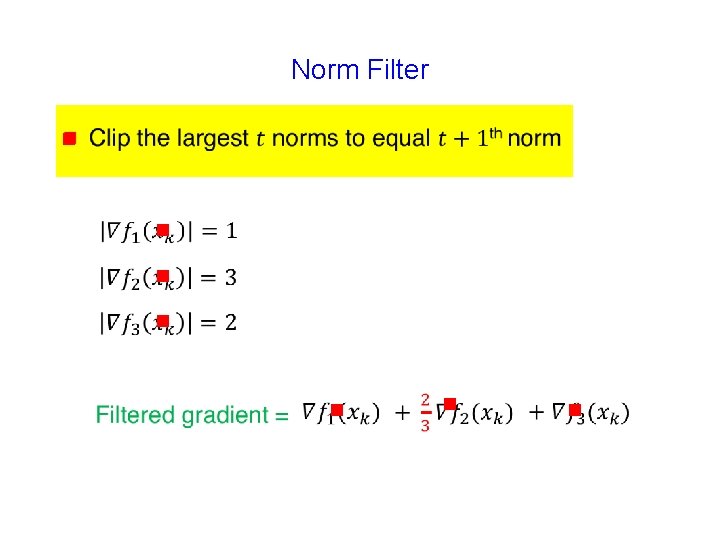

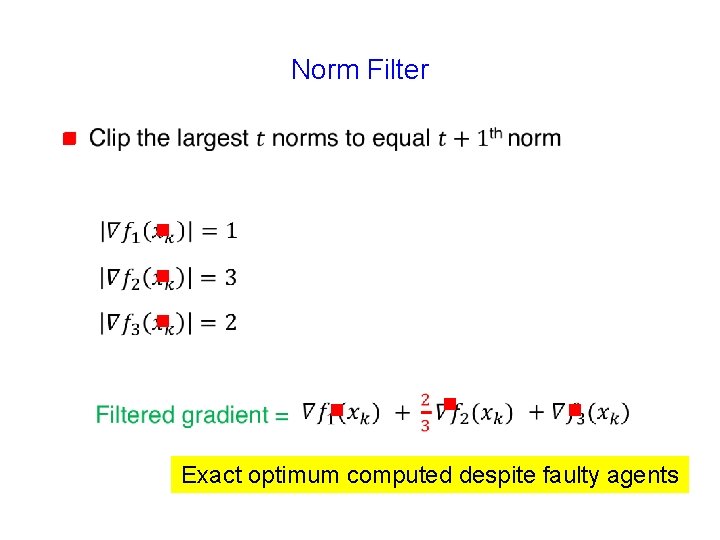

Norm Filter: exact optimum computed despite faulty agents
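The slide text does not spell out the filtering rule. One plausible reading, assumed here, is that the server discards the t received gradients with the largest norms and sums the rest. The sketch below uses scalar gradients (so the norm is the absolute value), n = 6 agents with t = 1 faulty, and hypothetical good costs that all share the minimizer x = 2, which is one simple form of redundancy.

```python
def norm_filter(gradients, t):
    """Assumed rule: drop the t gradients of largest magnitude, sum the rest."""
    kept = sorted(gradients, key=abs)[: len(gradients) - t]
    return sum(kept)


def run_server(grad_fns, t, use_filter, x0=0.0, step=0.05, iterations=100):
    """Parameter-server loop: collect gradients, aggregate, update the estimate."""
    x = x0
    for _ in range(iterations):
        received = [g(x) for g in grad_fns]
        update = norm_filter(received, t) if use_filter else sum(received)
        x -= step * update
    return x


# Five good agents with hypothetical costs a_i (x - 2)^2: same minimizer, different curvature.
curvatures = [1.0, 2.0, 3.0, 1.0, 2.0]
good = [lambda x, a=a: 2.0 * a * (x - 2.0) for a in curvatures]
# One faulty agent that always reports a bogus gradient.
faulty = [lambda x: 100.0]
agents = good + faulty  # n = 6, t = 1

x_filtered = run_server(agents, t=1, use_filter=True)
x_unfiltered = run_server(agents, t=1, use_filter=False)
print(x_filtered, x_unfiltered)
```

With the filter, the bogus gradient is always the largest in magnitude and is dropped, so the server reaches the shared minimizer; without it, the estimate settles far from 2.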

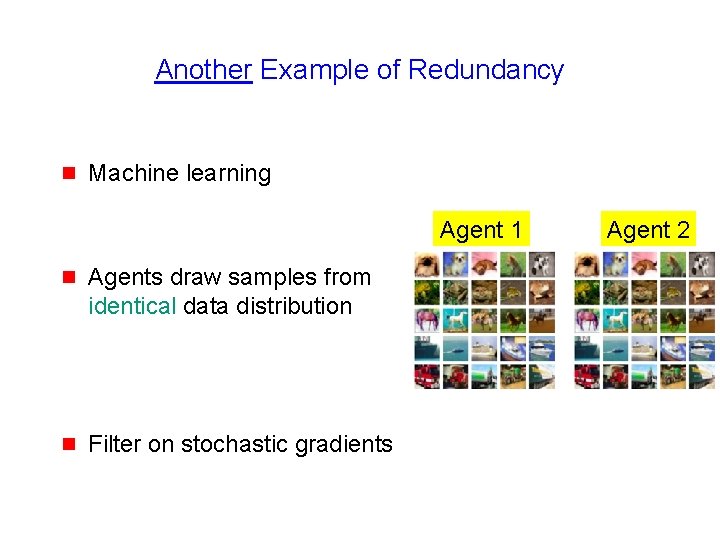

Another Example of Redundancy

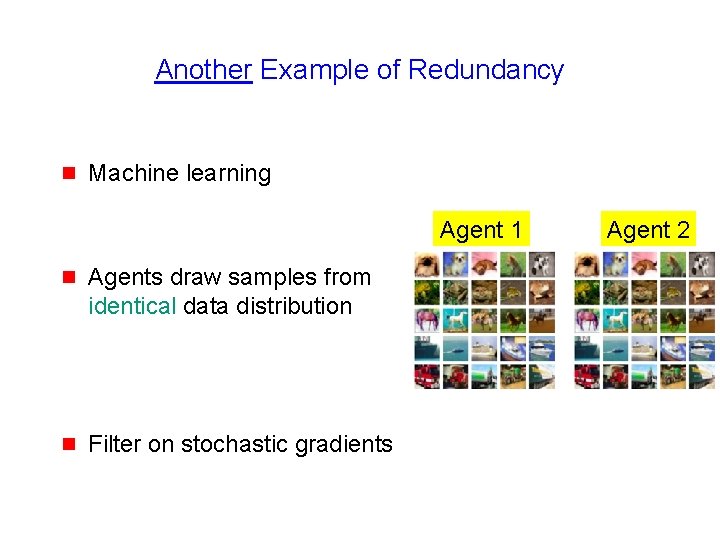

Another Example of Redundancy
- Machine learning
- Agents draw samples from an identical data distribution
- Filter on stochastic gradients

Is this achievable? Independent functions: approximate. “Enough” redundancy: exact.

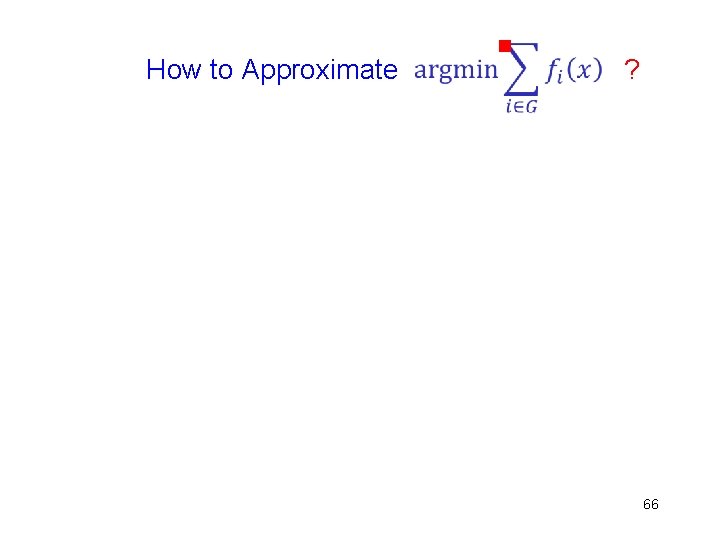

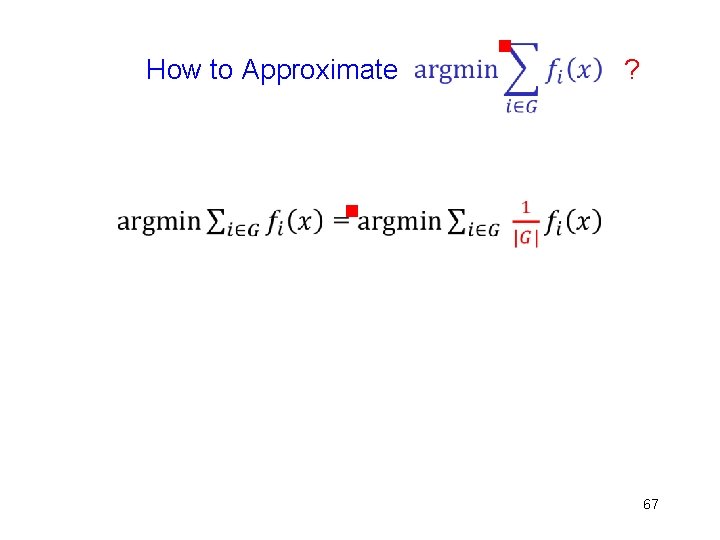

How to Approximate?

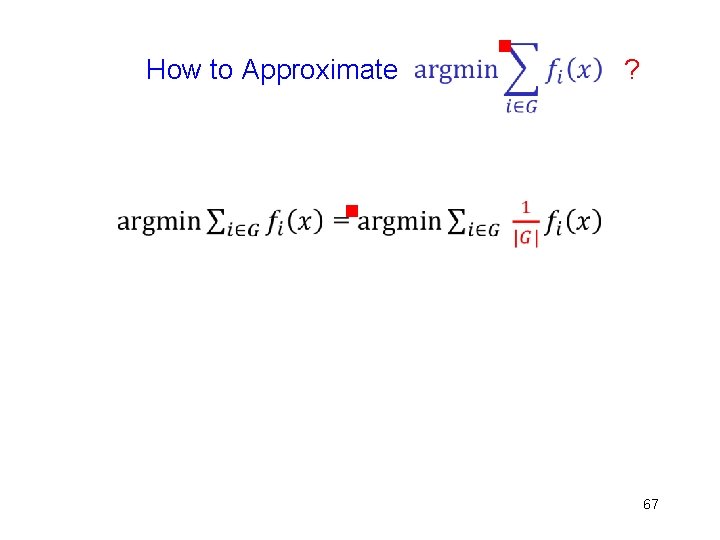

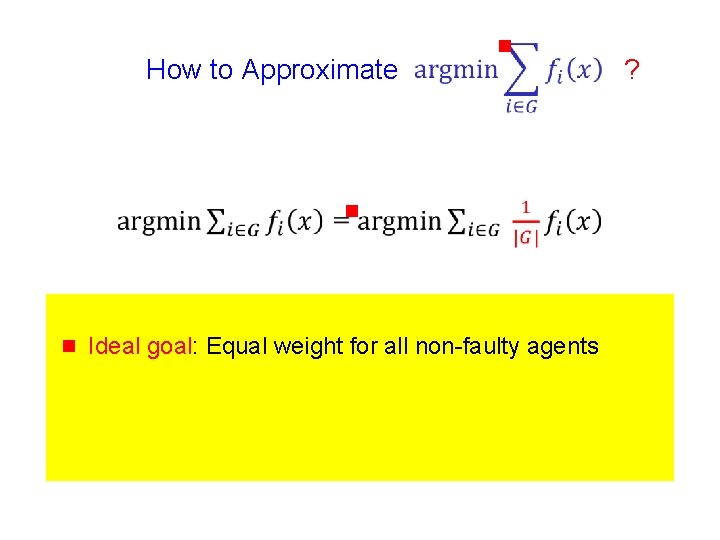

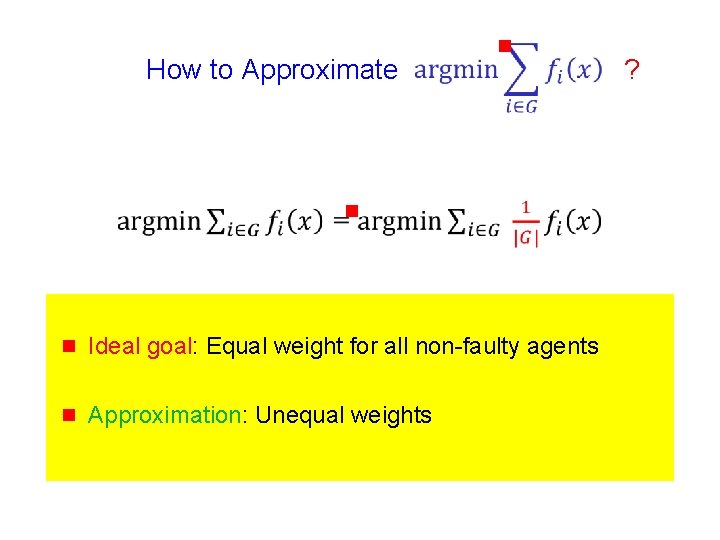

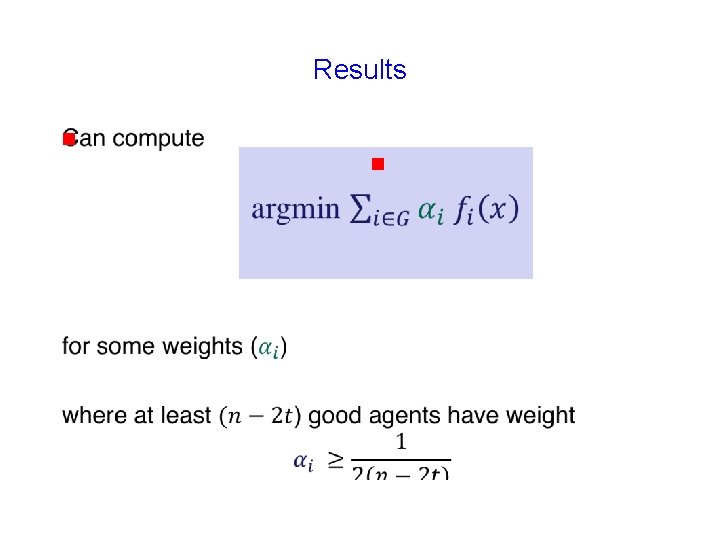

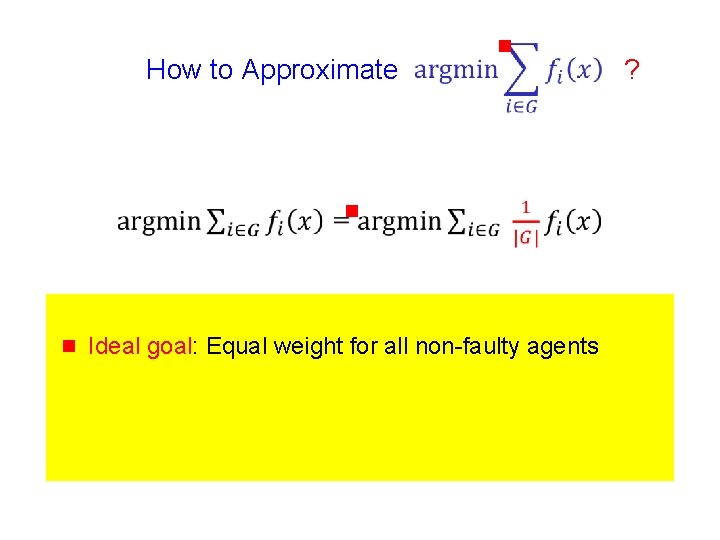

How to Approximate?
- Ideal goal: equal weight for all non-faulty agents
- Approximation: unequal weights

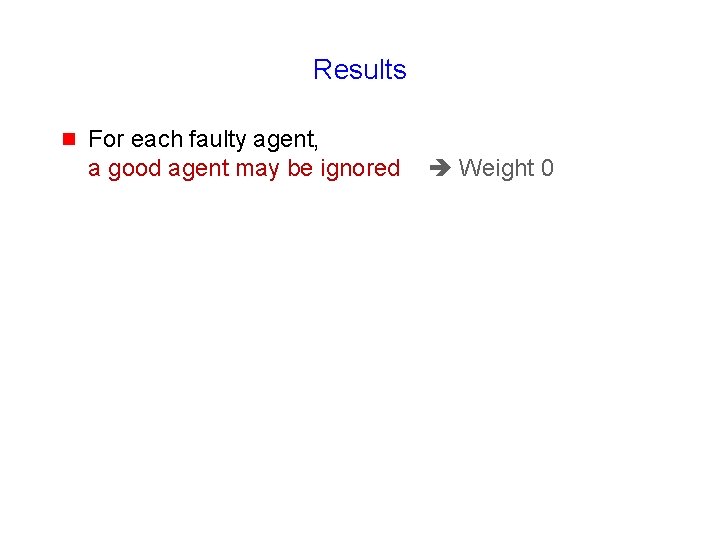

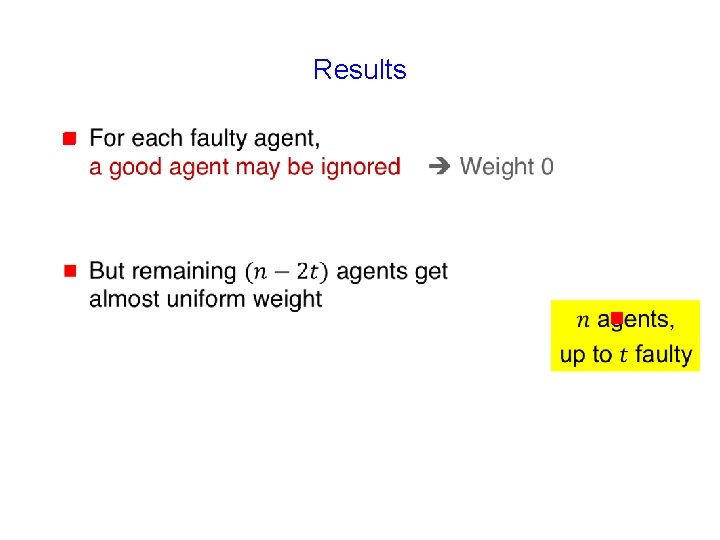

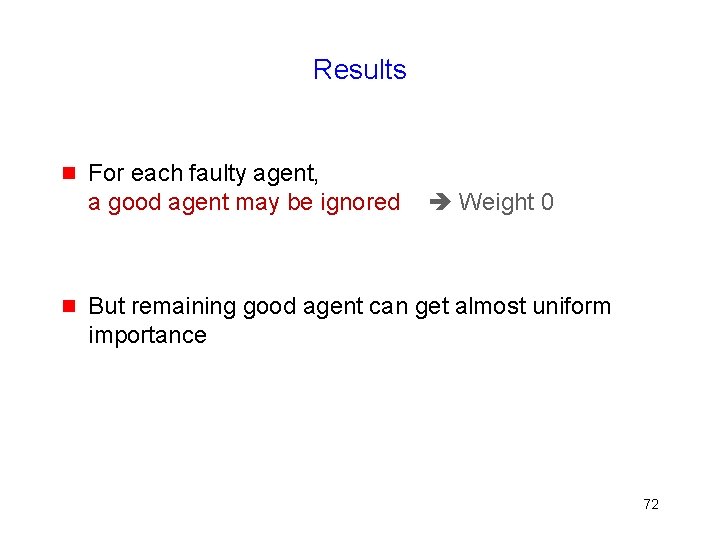

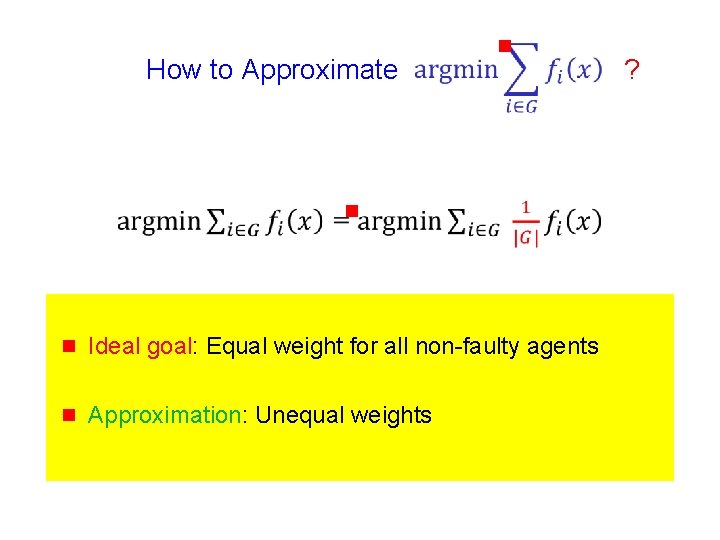

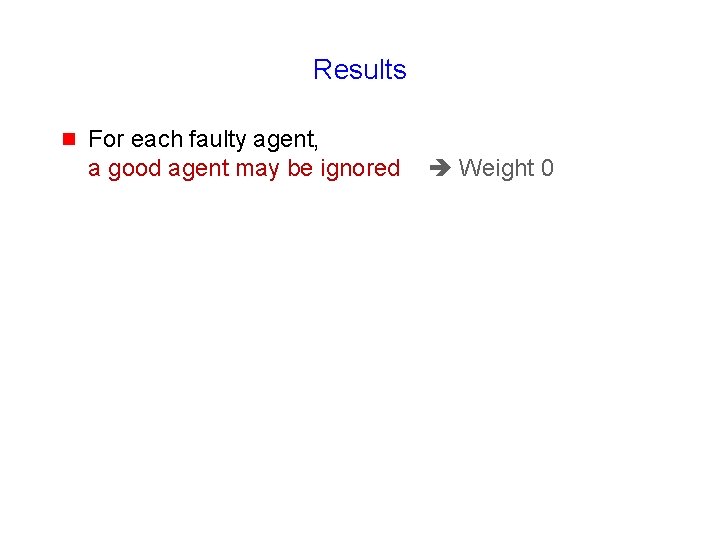

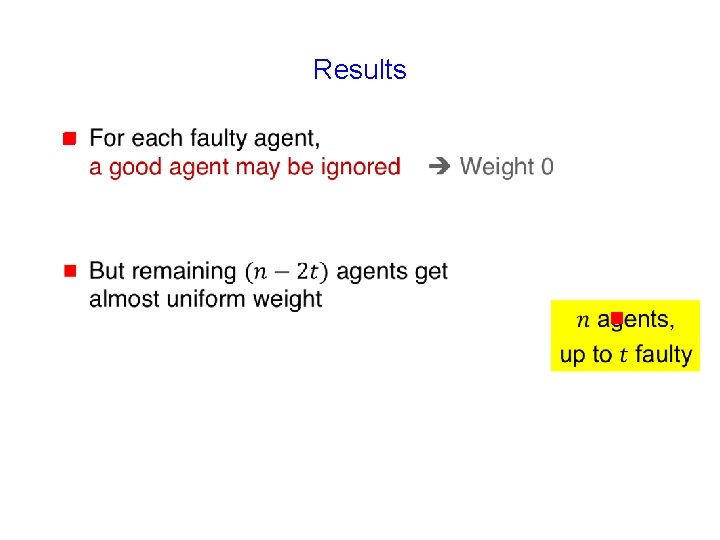

Results
- For each faulty agent, a good agent may be ignored (weight 0)

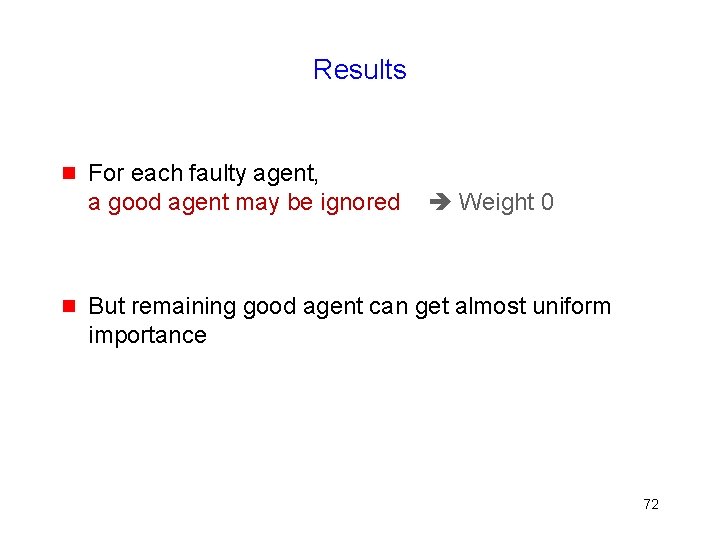

Results
- For each faulty agent, a good agent may be ignored (weight 0)
- But the remaining good agents can get almost uniform importance

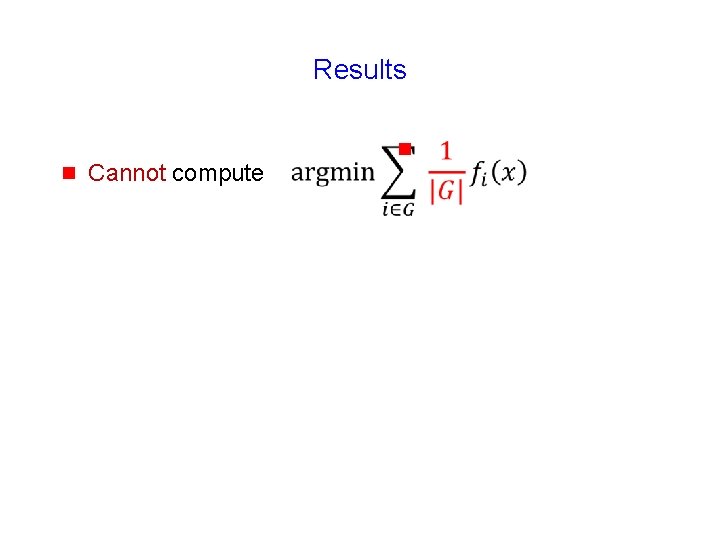

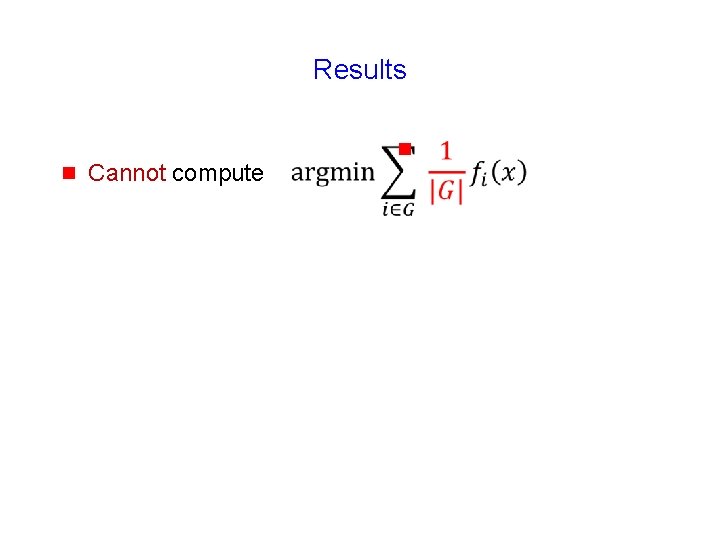

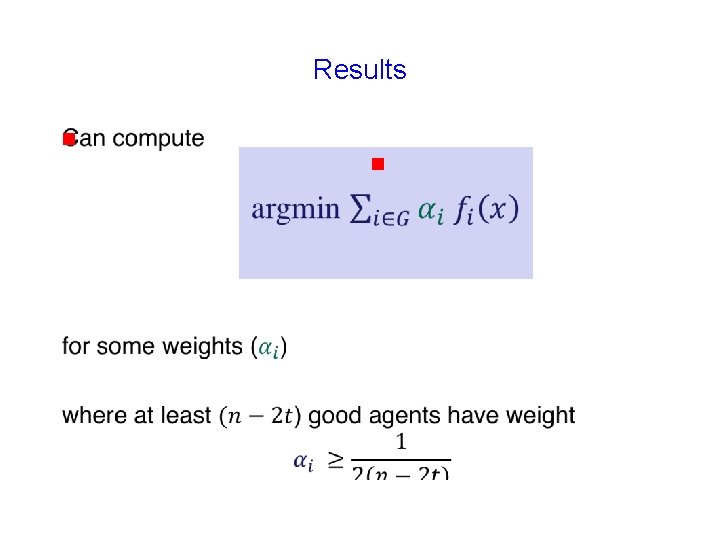

Results: the ideal equal-weight optimum cannot be computed

Parameter Server

Privacy-Preserving Optimization 2016 …

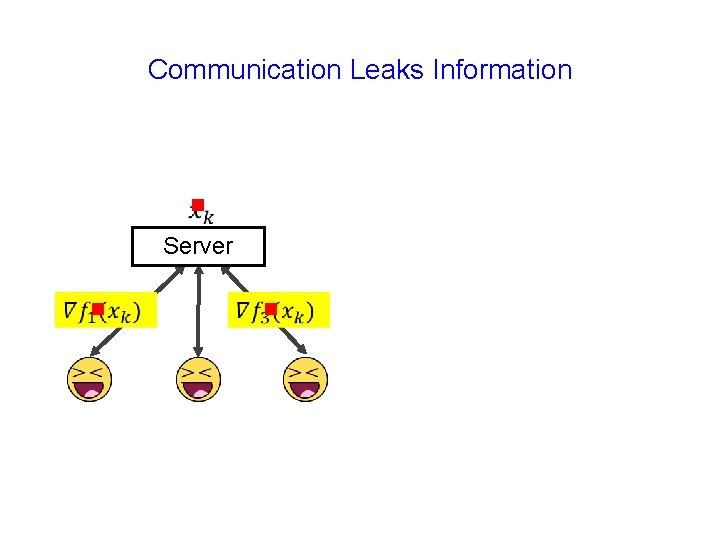

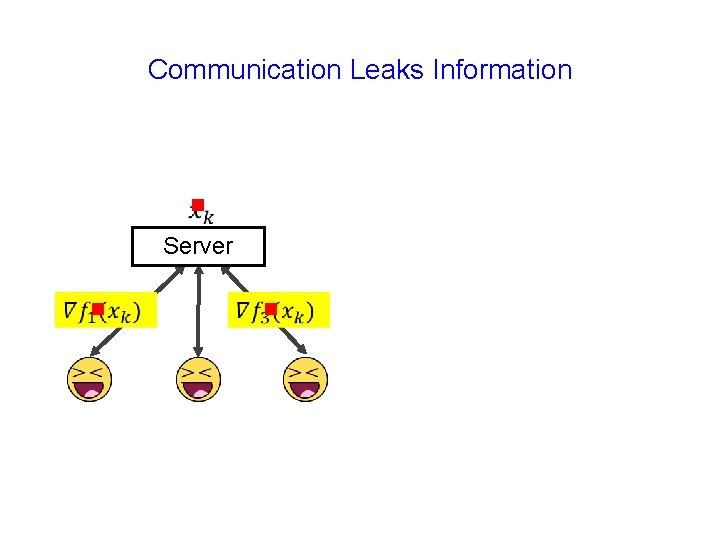

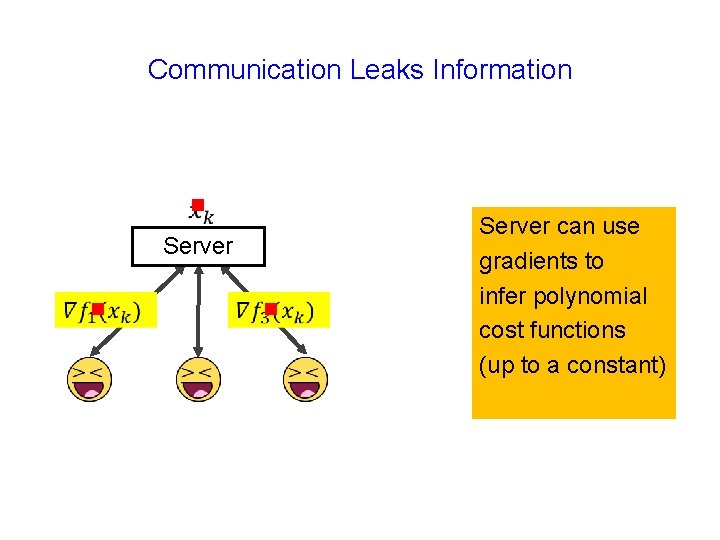

Communication Leaks Information

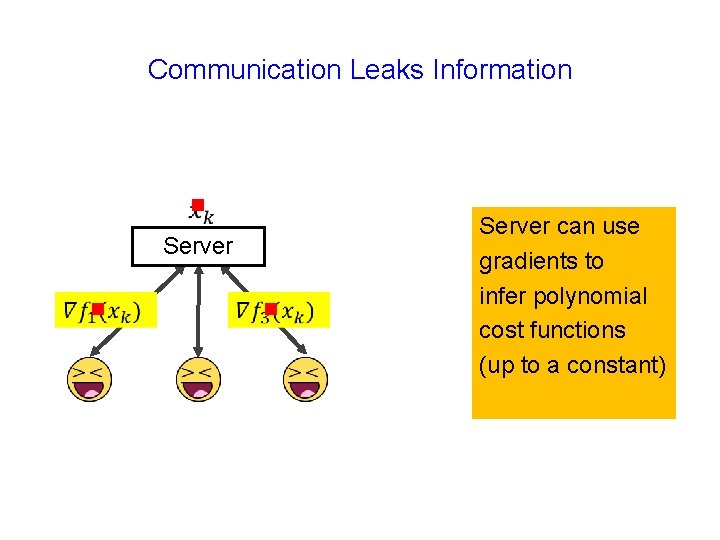

Communication Leaks Information: the server can use gradients to infer polynomial cost functions (up to a constant)
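A small worked example makes the leak concrete. For a quadratic cost f(x) = a x^2 + b x + c the gradient is 2 a x + b, so two observed gradients are enough to recover a and b, while c never appears in any gradient. The specific cost and the helper name `infer_quadratic` below are hypothetical.

```python
def infer_quadratic(x1, g1, x2, g2):
    """Recover a and b of f(x) = a x^2 + b x + c from gradients at two points."""
    # g(x) = 2 a x + b, so the slope between two observations is 2 a.
    a = (g1 - g2) / (2.0 * (x1 - x2))
    b = g1 - 2.0 * a * x1
    return a, b


def private_gradient(x):
    """Gradient an agent would send for its private cost f(x) = 3 x^2 - 4 x + 7."""
    return 2.0 * 3.0 * x - 4.0


a, b = infer_quadratic(0.0, private_gradient(0.0), 1.0, private_gradient(1.0))
print(a, b)  # the constant term 7 is not recoverable from gradients
```

The same idea extends to higher-degree polynomials: a degree-d cost needs gradients at d distinct points.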

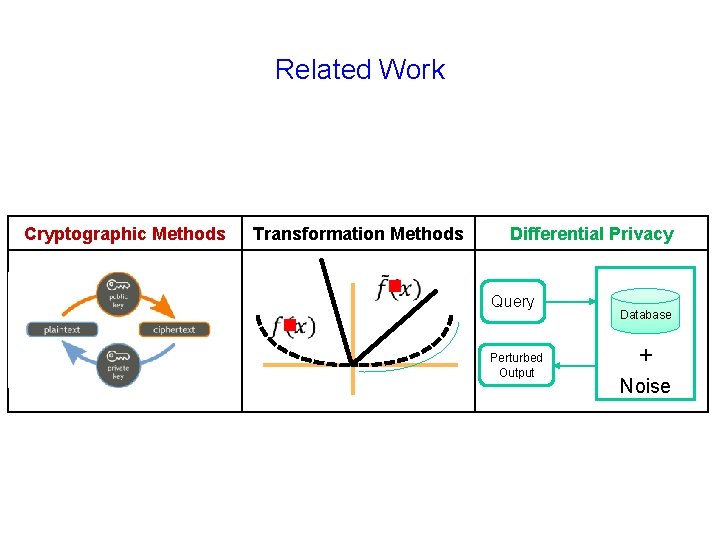

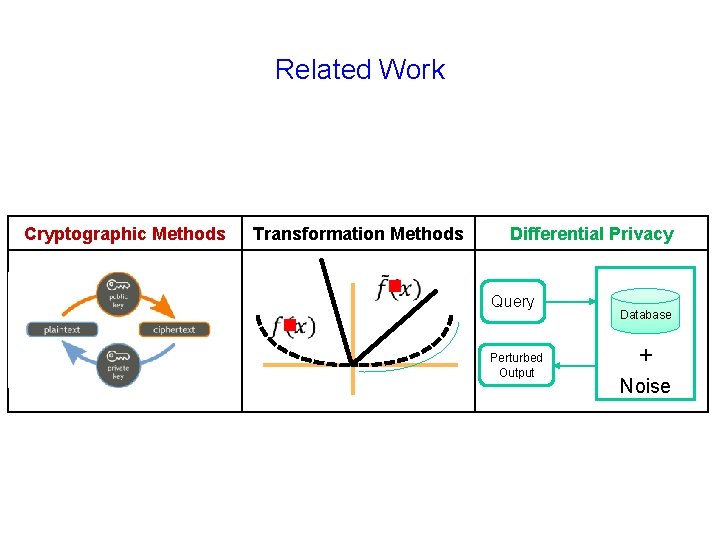

Related Work
- Cryptographic methods
- Transformation methods
- Differential privacy (figure labels: query, database + noise, perturbed output)

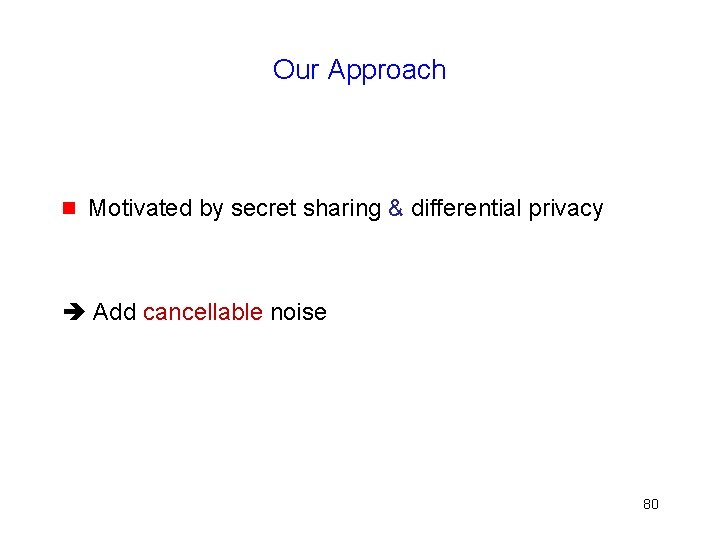

Our Approach
- Motivated by secret sharing & differential privacy
- Add cancellable noise

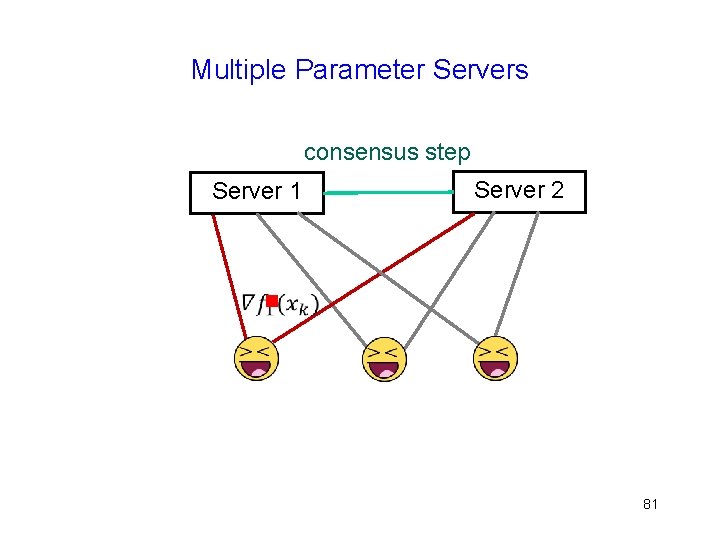

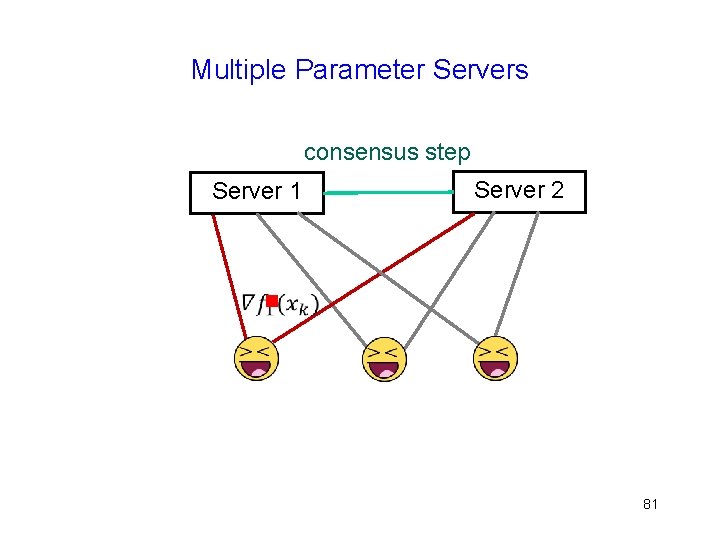

Multiple Parameter Servers (Server 1, Server 2) with a consensus step

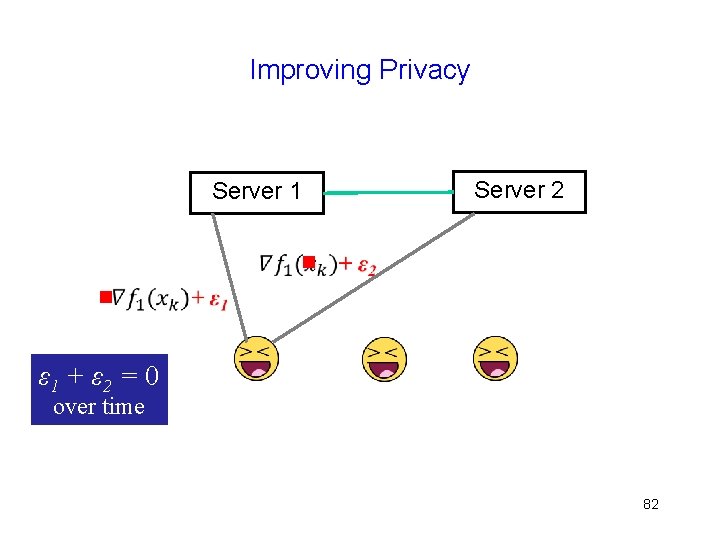

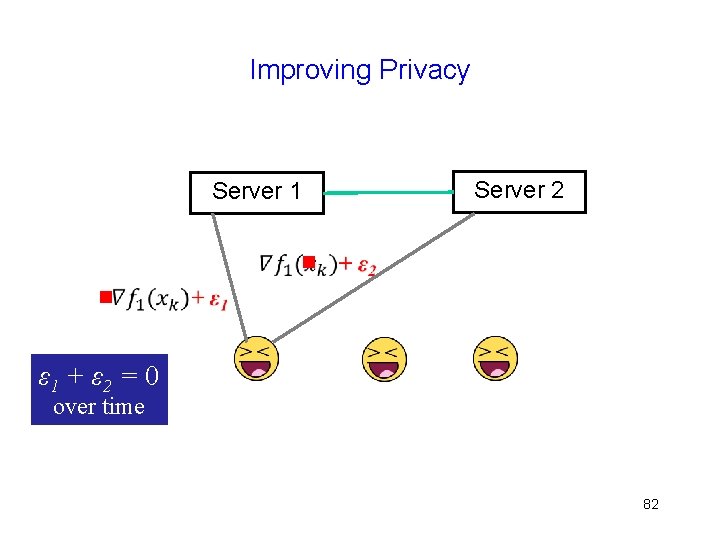

Improving Privacy (Server 1, Server 2): ε1 + ε2 = 0 over time
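The slide only states the cancellation condition ε1 + ε2 = 0. As a simplified sketch, assume the noise cancels within a single round: the agent sends its gradient plus ε1 to Server 1 and plus ε2 to Server 2, and the servers' consensus step averages the two reports. The function name, the uniform noise, and the averaging rule are all assumptions for illustration.

```python
import random


def noisy_shares(gradient, rng, scale=10.0):
    """Return the two perturbed reports, one per server, with eps1 + eps2 = 0."""
    eps1 = rng.uniform(-scale, scale)
    eps2 = -eps1  # cancellable noise
    return gradient + eps1, gradient + eps2


rng = random.Random(0)
true_gradient = 1.5
to_server1, to_server2 = noisy_shares(true_gradient, rng)

# Assumed consensus step: the servers average what they received.
recovered = (to_server1 + to_server2) / 2.0
print(to_server1, to_server2, recovered)
```

Each server alone sees only a perturbed value, yet the combined update is unaffected by the noise, which is the contrast with plain noise addition.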

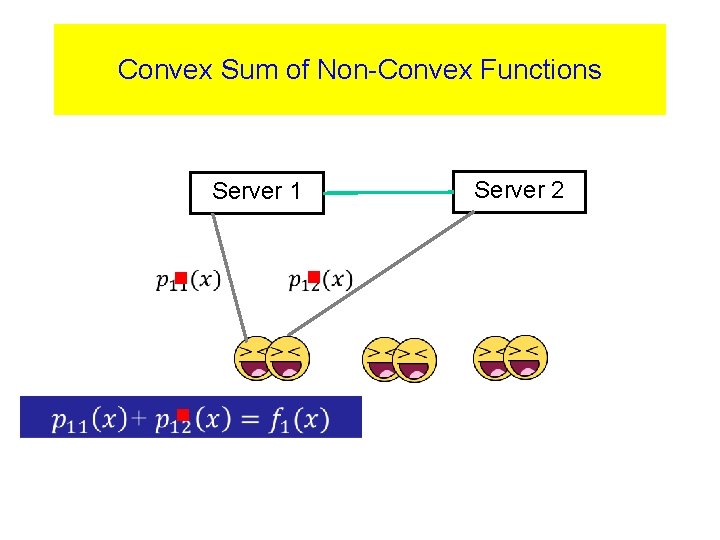

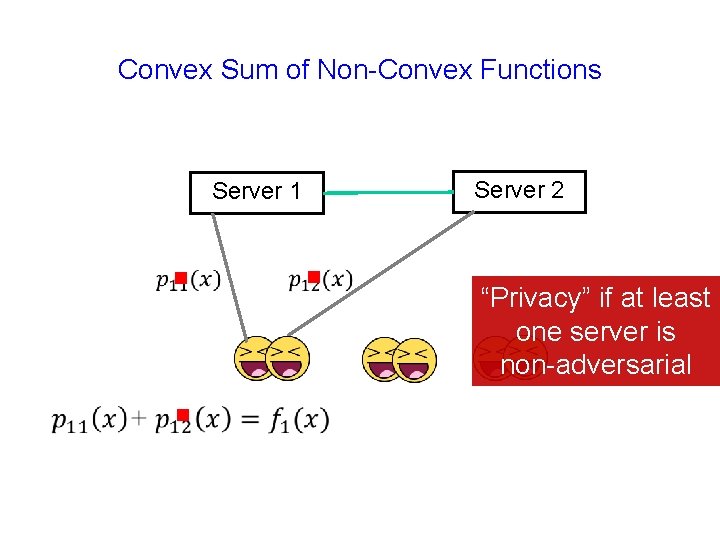

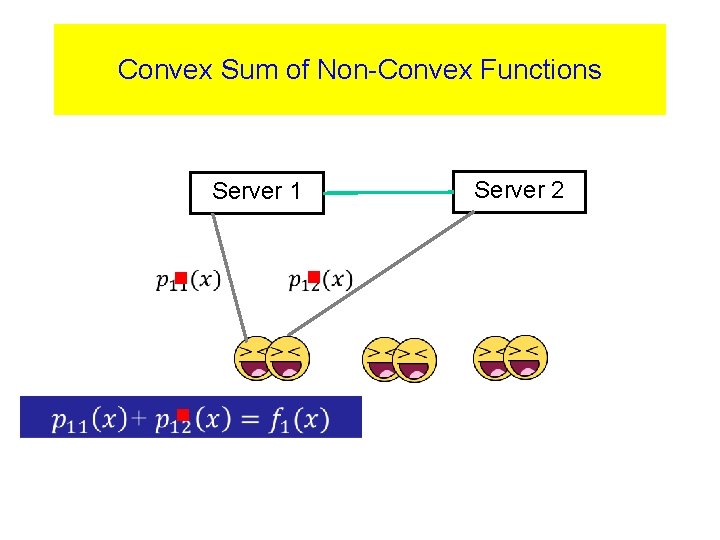

Convex Sum of Non-Convex Functions (Server 1, Server 2)

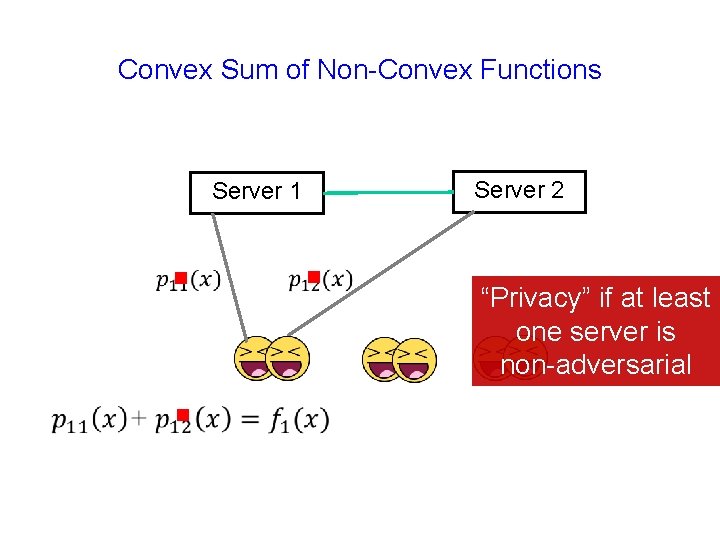

Convex Sum of Non-Convex Functions: “privacy” if at least one server is non-adversarial

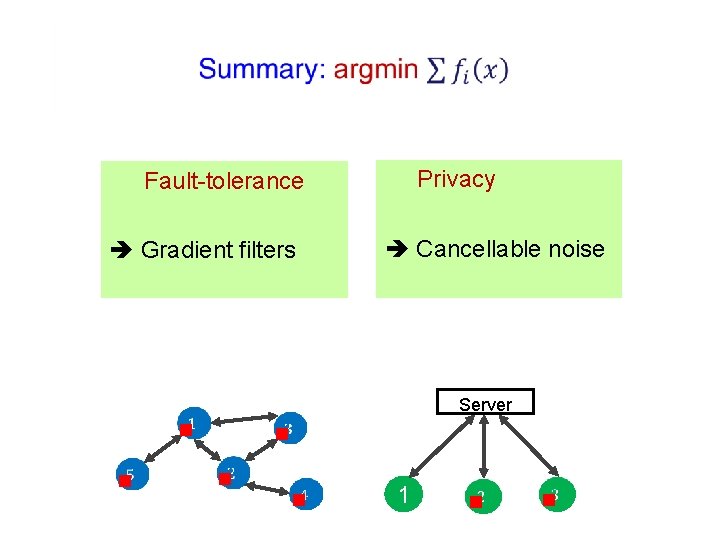

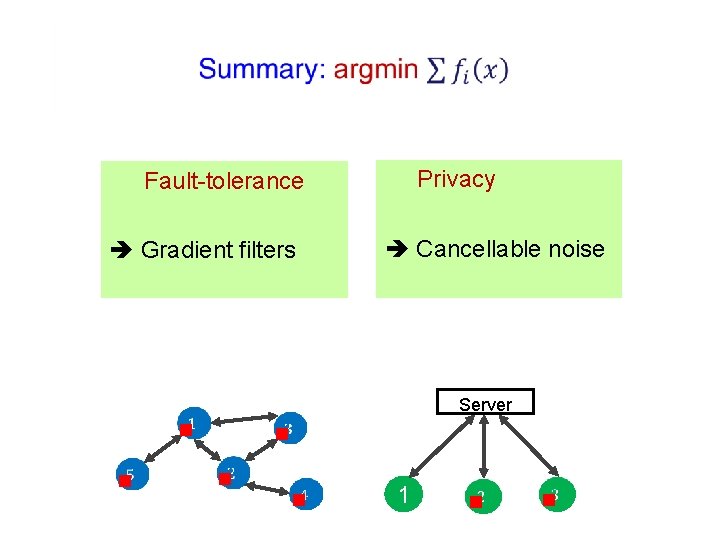

Fault-tolerance: gradient filters. Privacy: cancellable noise.

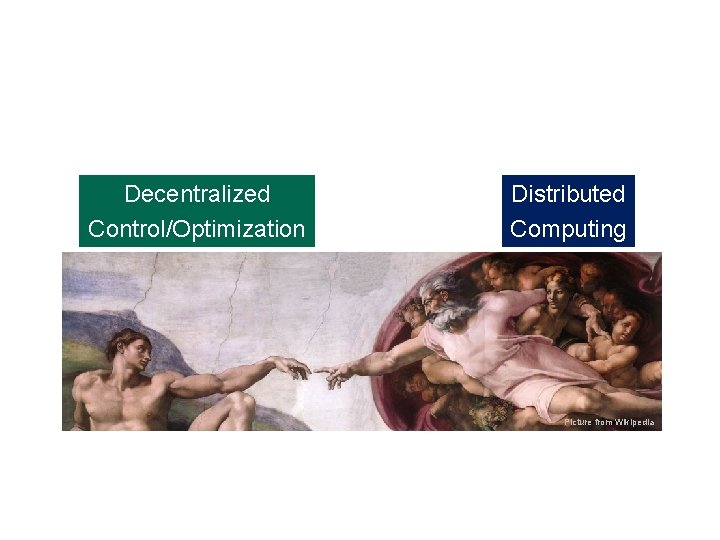

Decentralized Control/Optimization, Distributed Computing (picture from Wikipedia)

Lili Su, Shripad Gade, Dimitrios Pylorof, Nirupam Gupta, Shuo Liu

Thanks! disc.georgetown.domains

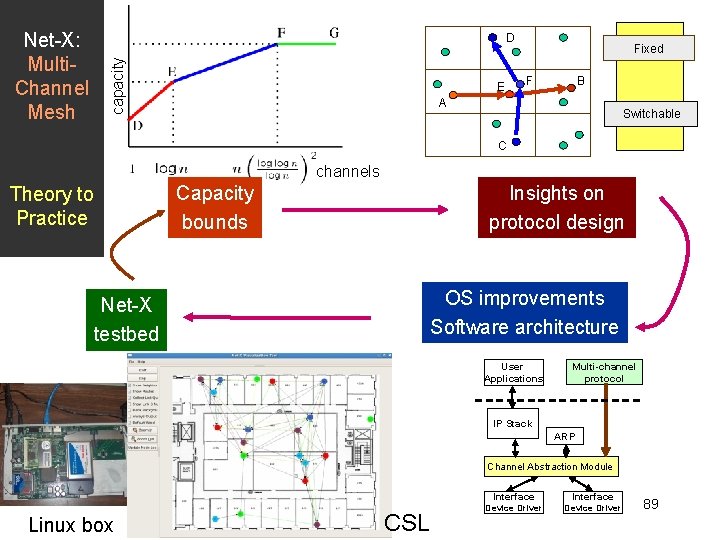

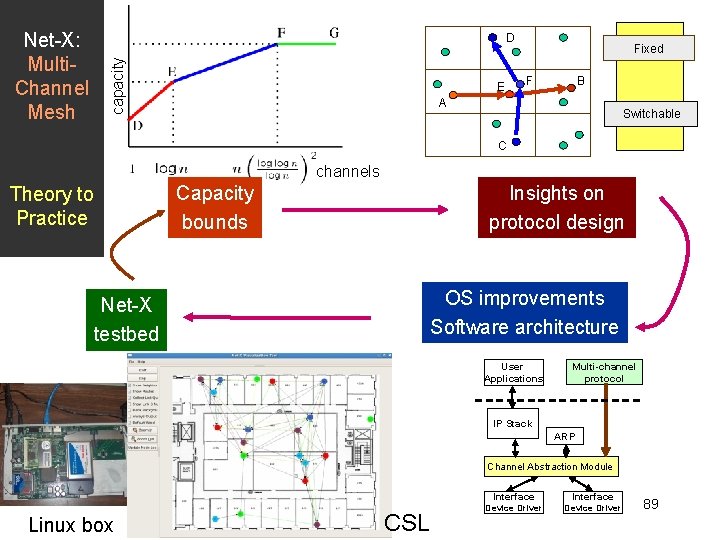

Net-X: Multi-Channel Mesh. Theory to practice: capacity bounds, insights on protocol design, OS improvements, software architecture, Net-X testbed. Figure labels: fixed and switchable channels (nodes A to F); user applications, multi-channel protocol, IP stack, ARP, channel abstraction module, interface device driver (Linux box); CSL.

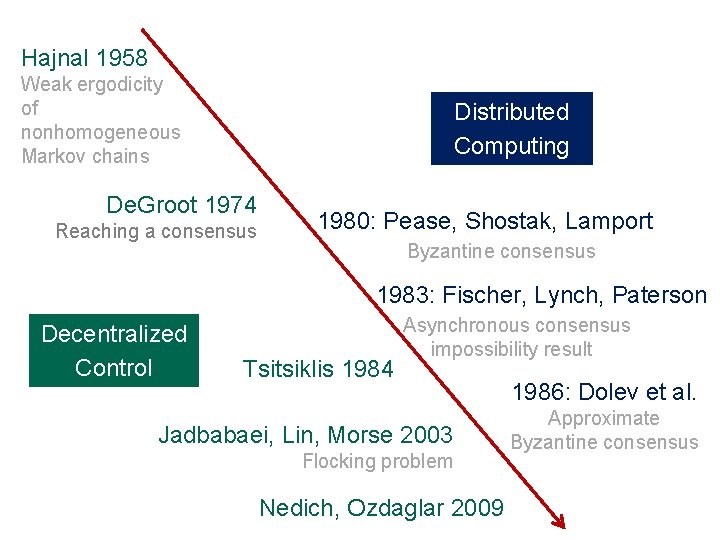

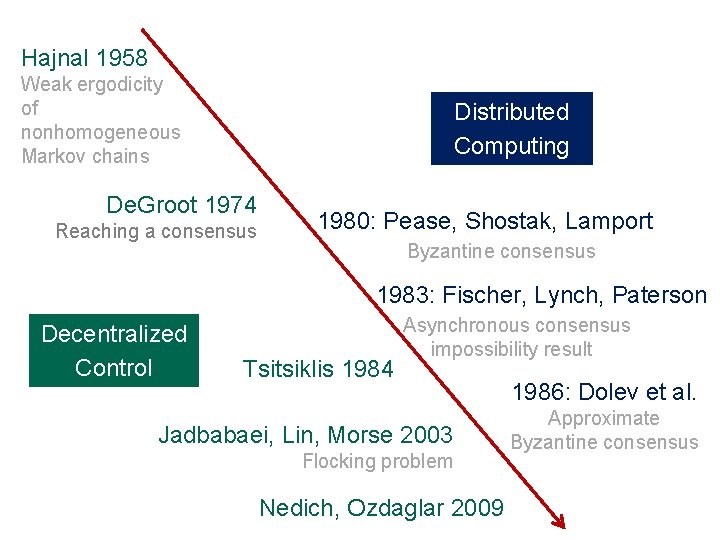

Decentralized Control
- Hajnal 1958: weak ergodicity of nonhomogeneous Markov chains
- DeGroot 1974: reaching a consensus
- Tsitsiklis 1984
- Jadbabaie, Lin, Morse 2003: flocking problem
- Nedic, Ozdaglar 2009

Distributed Computing
- 1980: Pease, Shostak, Lamport: Byzantine consensus
- 1983: Fischer, Lynch, Paterson: asynchronous consensus impossibility result
- 1986: Dolev et al.: approximate Byzantine consensus

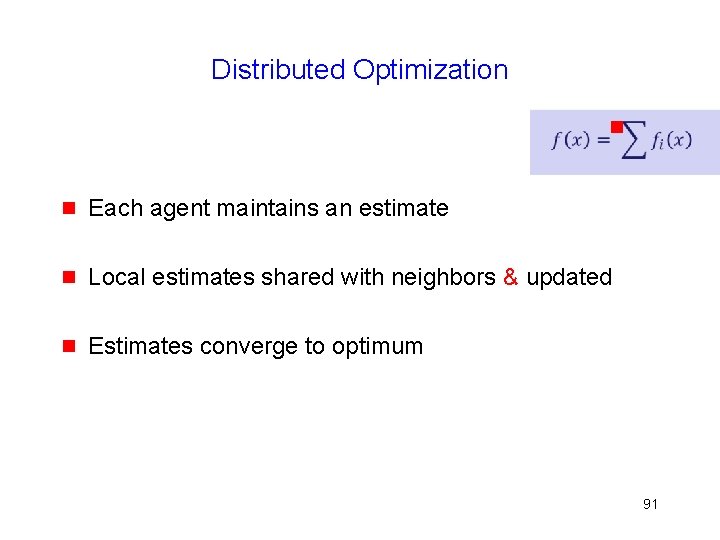

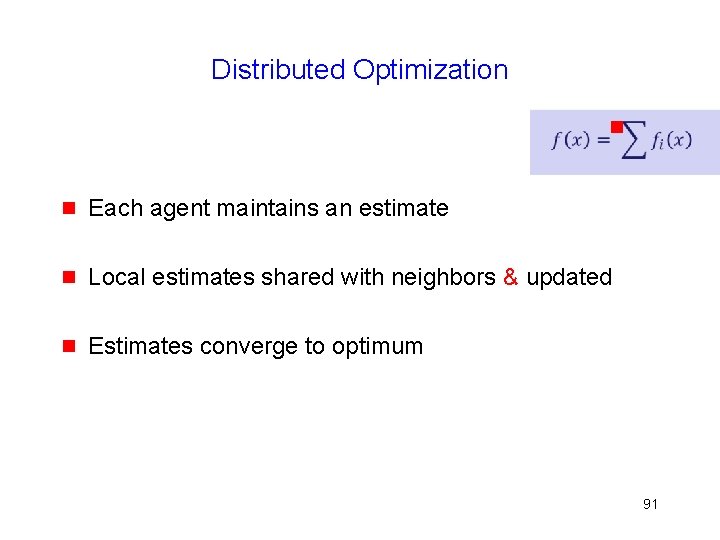

Distributed Optimization
- Each agent maintains an estimate
- Local estimates are shared with neighbors & updated
- Estimates converge to the optimum

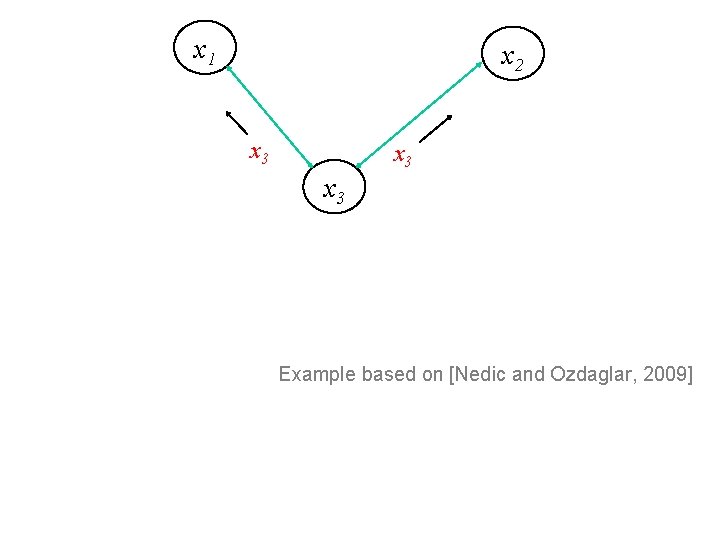

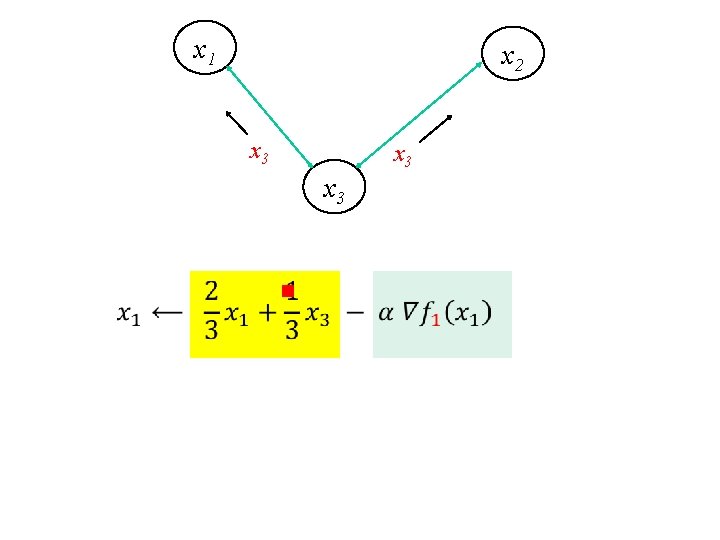

Estimates x1, x2, x3: example based on [Nedic and Ozdaglar, 2009]

Distributed Optimization: as time → ∞
- Consensus: all agents converge to the same estimate
- Optimality

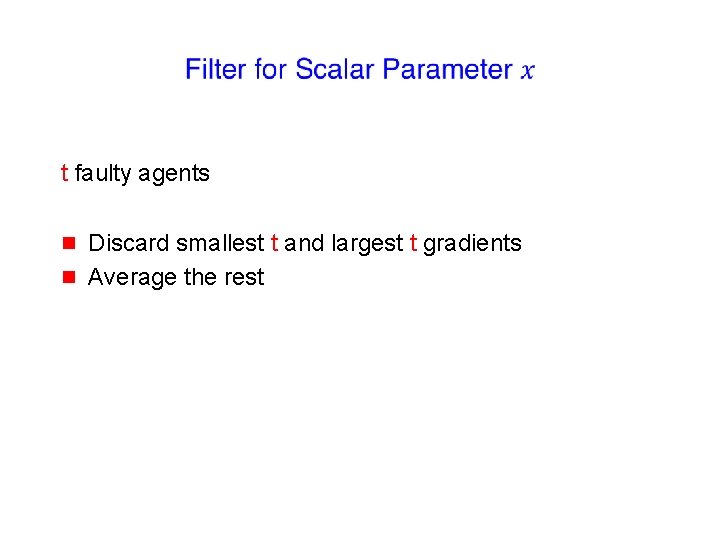

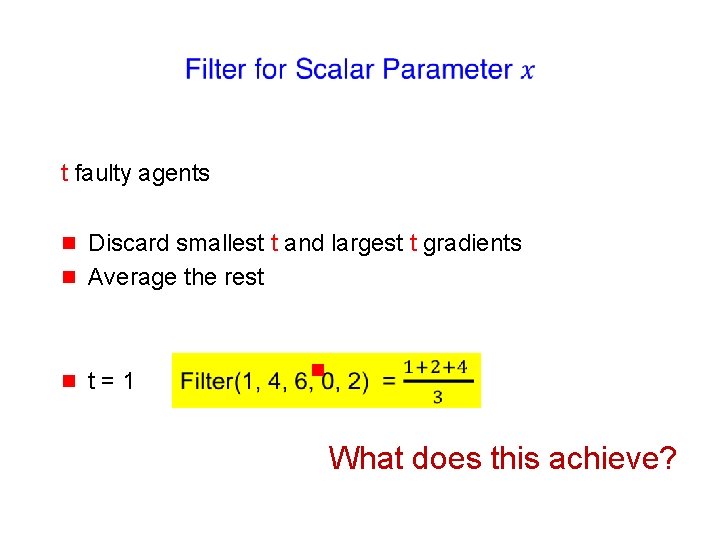

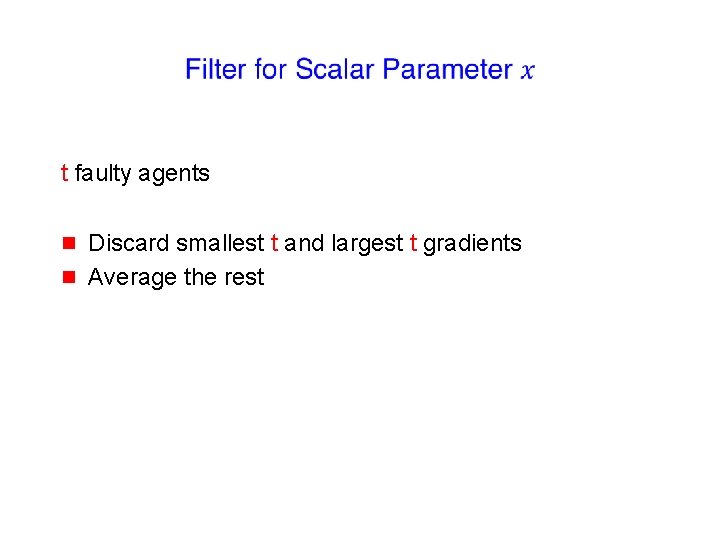

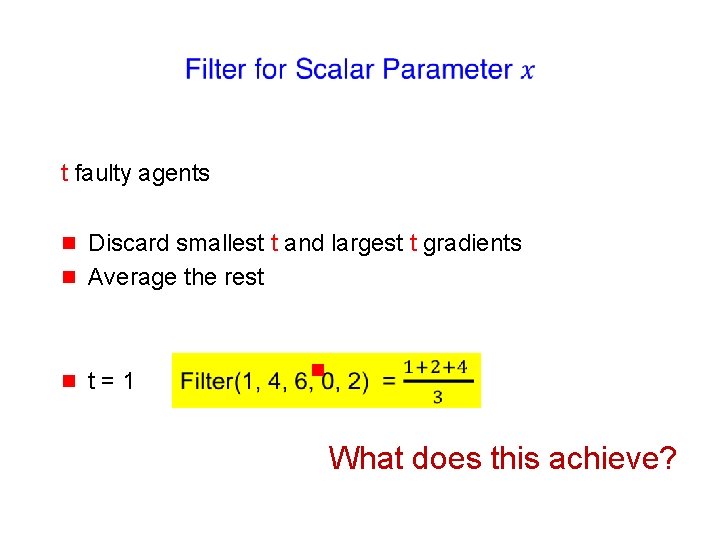

t faulty agents
- Discard the smallest t and largest t gradients
- Average the rest
- Example: t = 1

What does this achieve?
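The filter on this slide (discard the smallest t and largest t gradients, average the rest) can be sketched for scalar gradients as follows. The function name `trimmed_mean` and the sample values are illustrative assumptions.

```python
def trimmed_mean(gradients, t):
    """Discard the t smallest and t largest values, then average the remainder."""
    if len(gradients) <= 2 * t:
        raise ValueError("need more than 2t gradients to trim t from each end")
    ordered = sorted(gradients)
    kept = ordered[t : len(ordered) - t]
    return sum(kept) / len(kept)


# Five plausible gradients and one bogus report (1000.0), with t = 1.
result = trimmed_mean([0.9, 1.1, 1.0, 1.2, 0.8, 1000.0], t=1)
print(result)
```

In this example the bogus value 1000.0 is removed, but so is the honest value 0.8: trimming can also drop a good agent's contribution.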

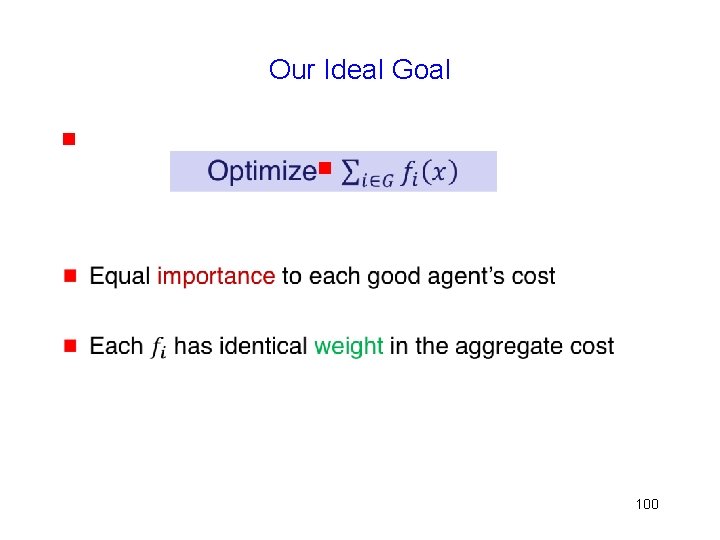

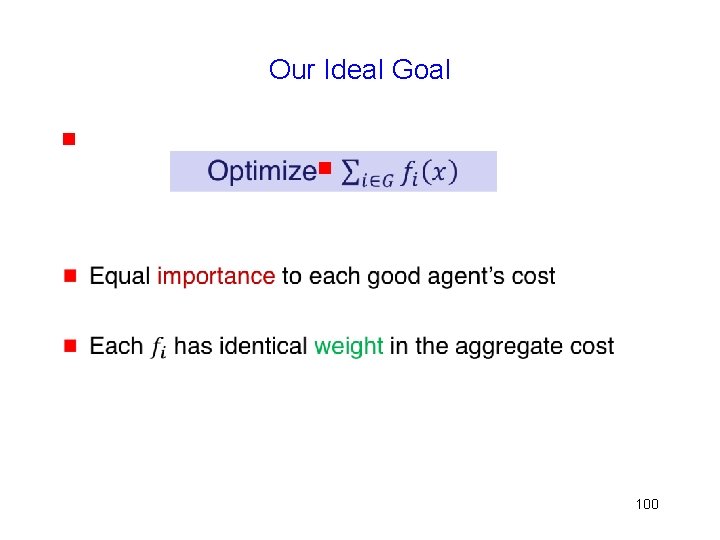

Our Ideal Goal

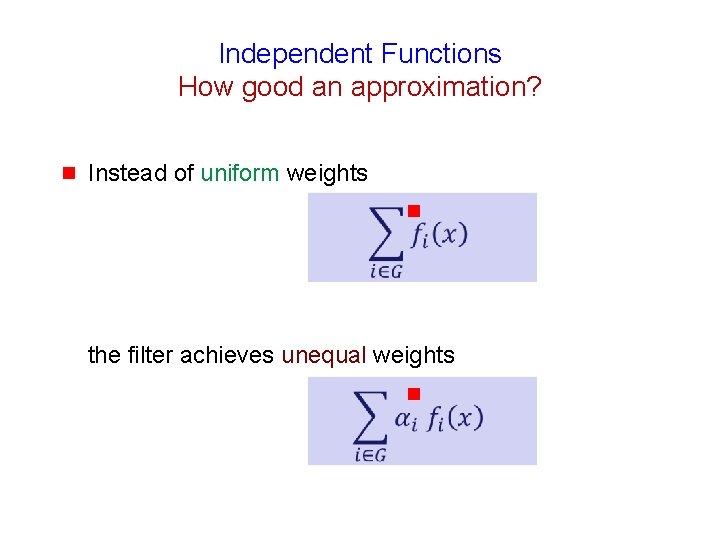

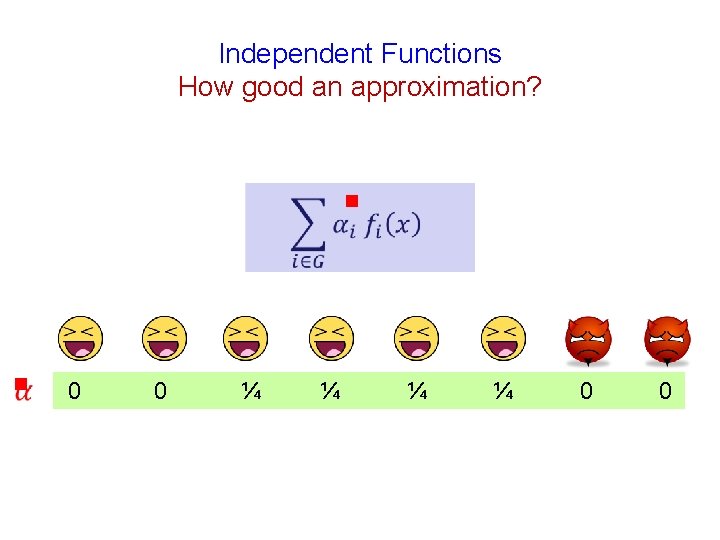

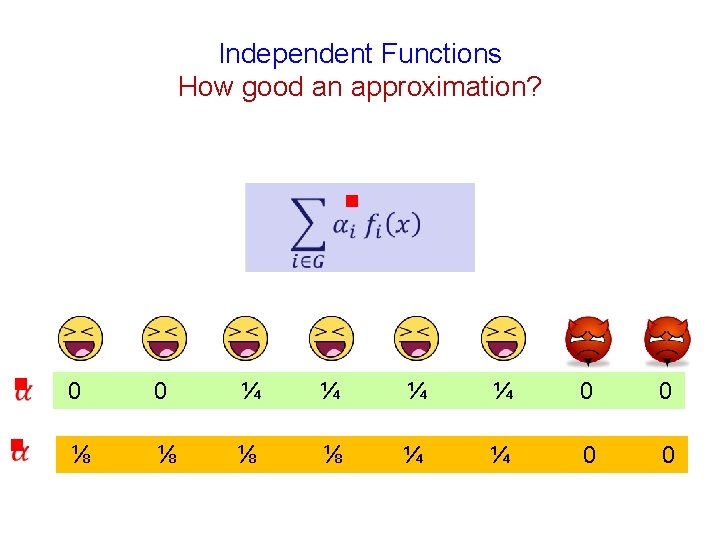

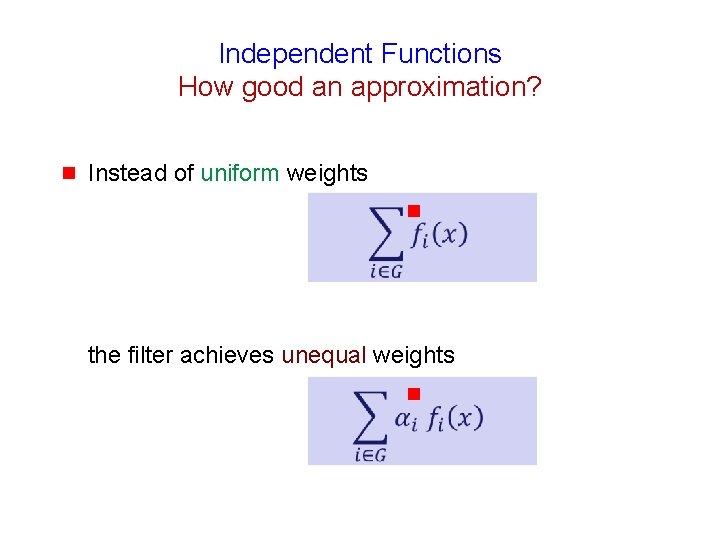

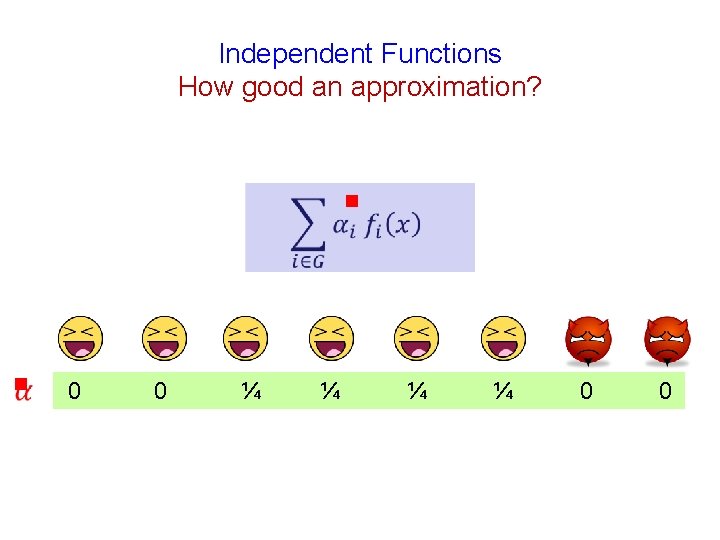

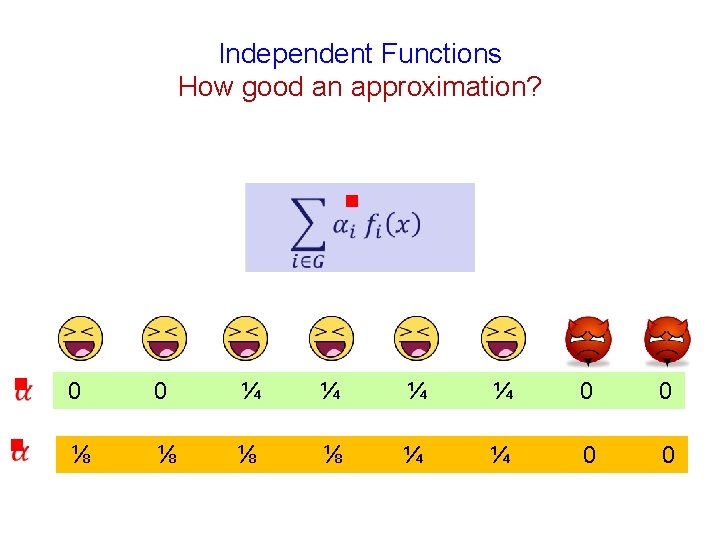

Independent Functions: how good an approximation? Instead of uniform weights, the filter achieves unequal weights.

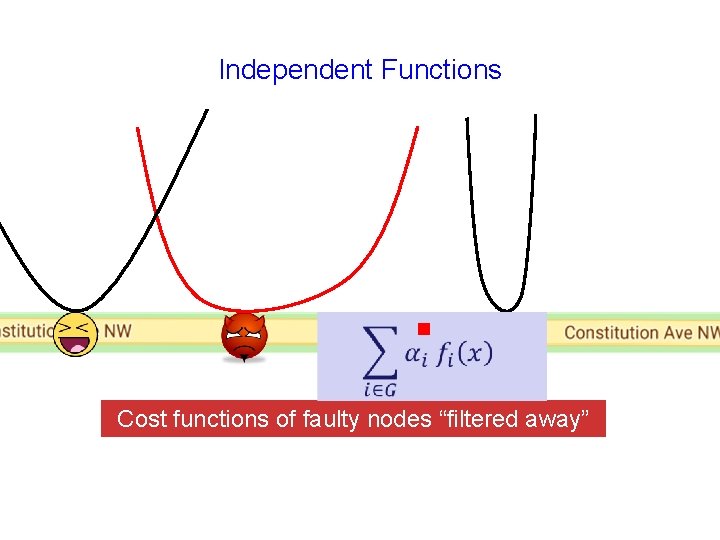

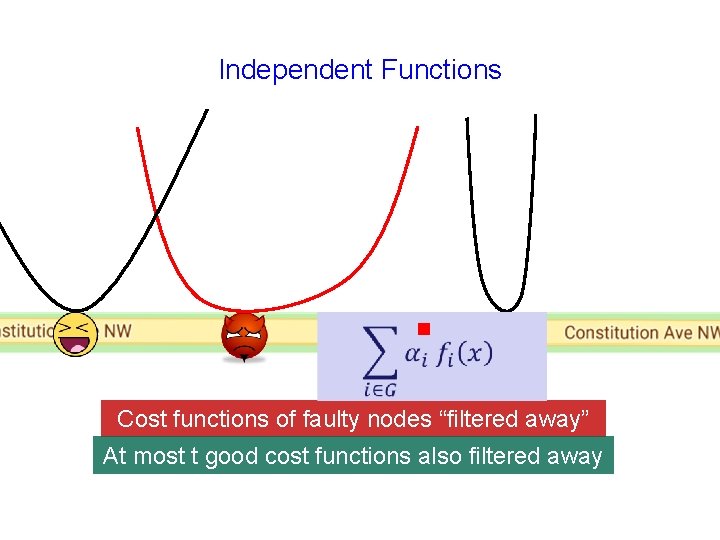

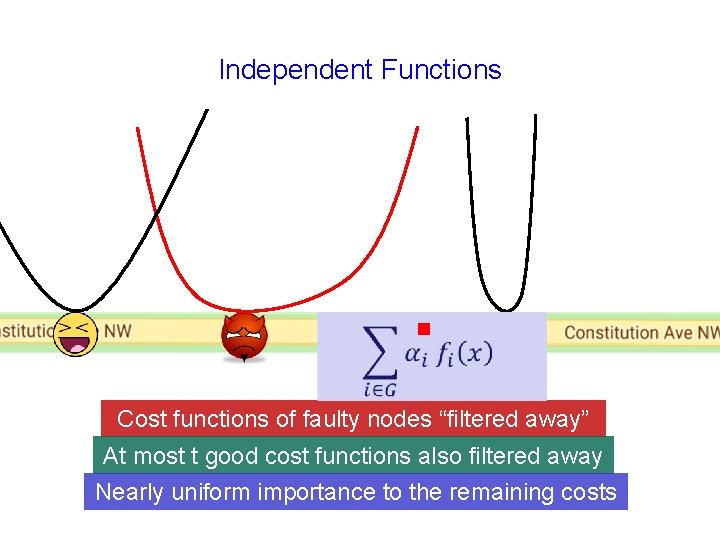

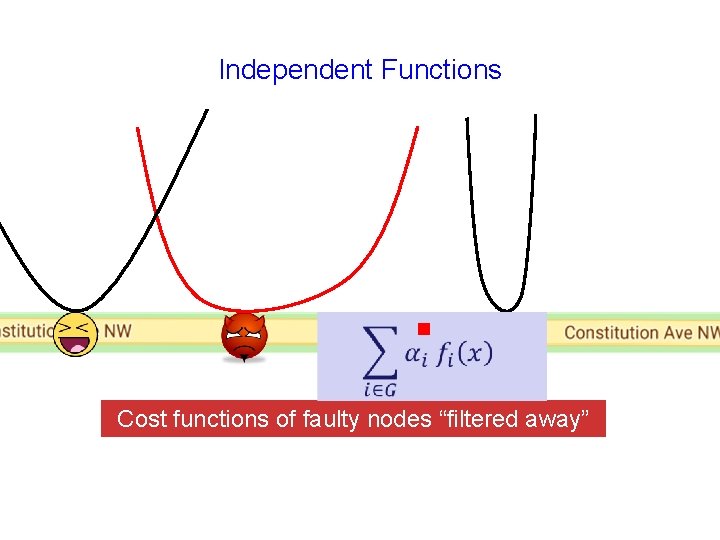

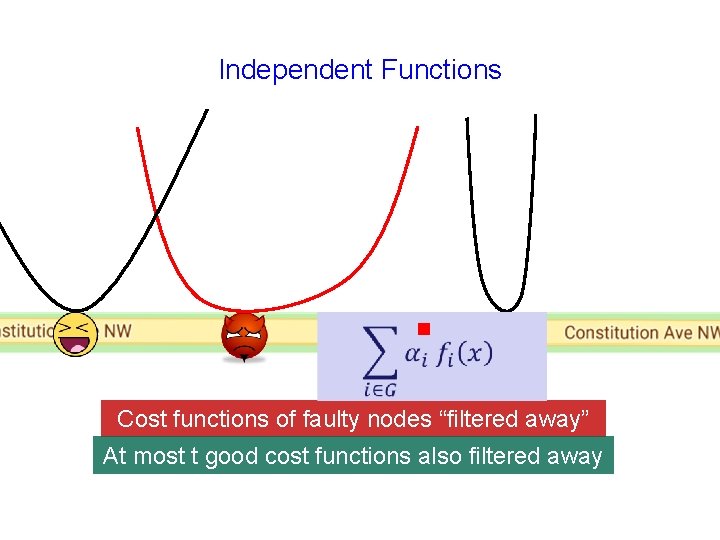

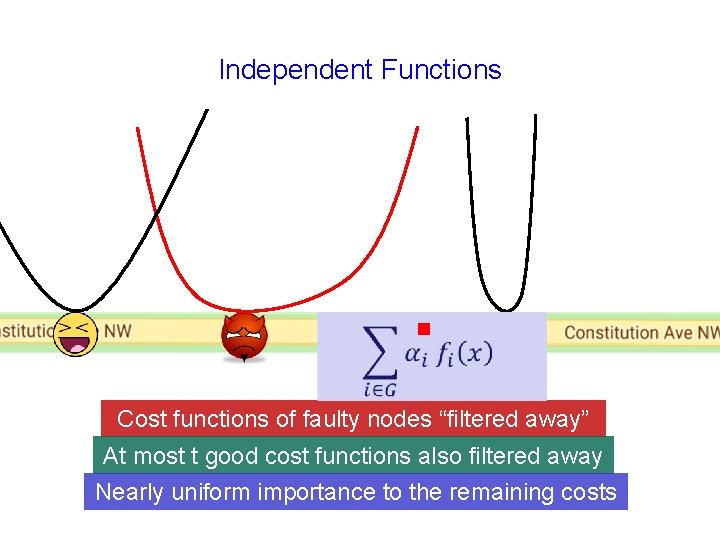

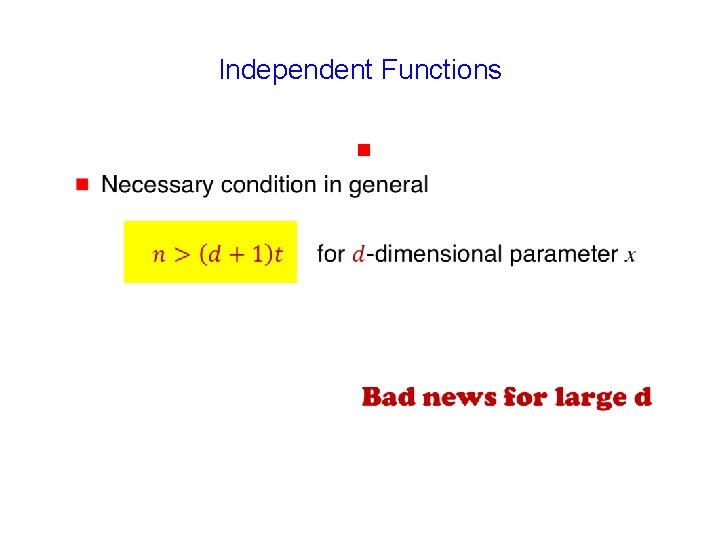

Independent Functions
- Cost functions of faulty nodes are “filtered away”
- At most t good cost functions are also filtered away
- Nearly uniform importance is given to the remaining costs

Independent Functions: how good an approximation? (Weights shown: 0, 0, ¼, ¼, 0, 0 and ⅛, ⅛, ¼, ¼, 0, 0)

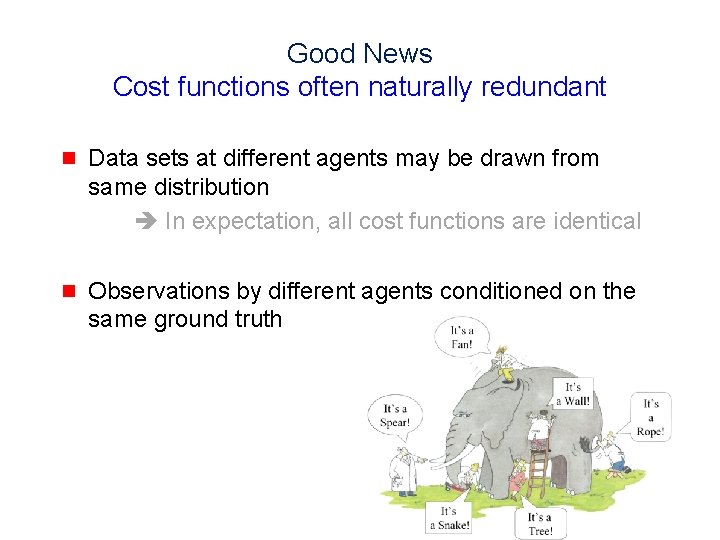

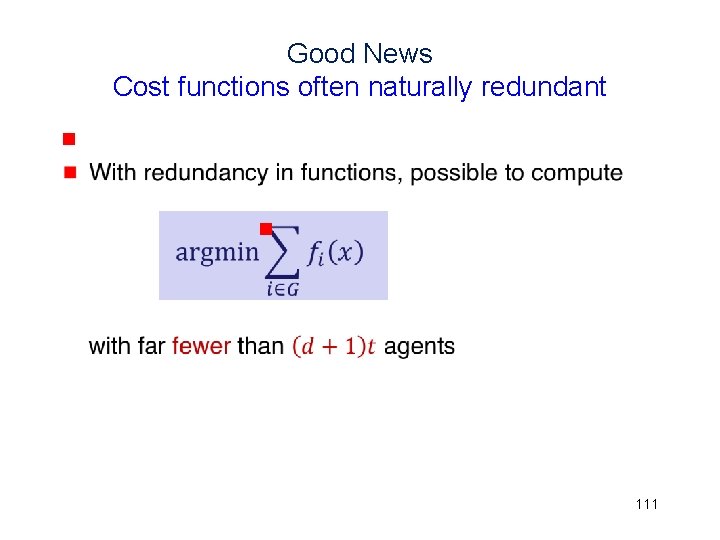

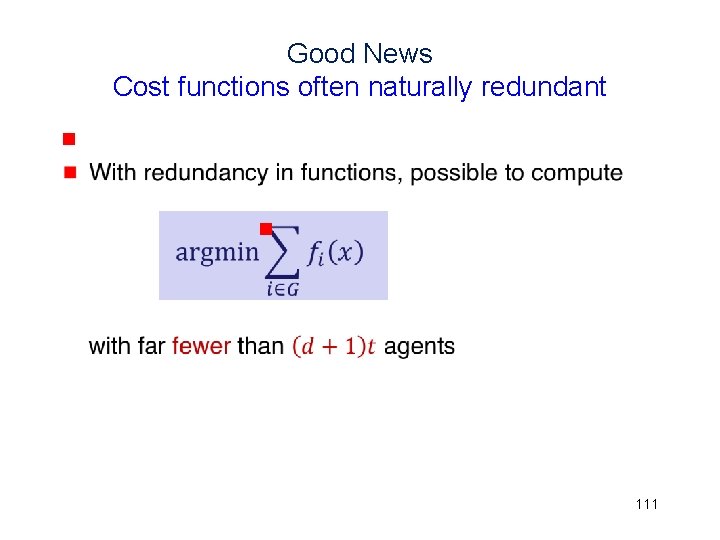

Good News: cost functions are often naturally redundant
- Data sets at different agents may be drawn from the same distribution; in expectation, all cost functions are identical
- Observations by different agents are conditioned on the same ground truth

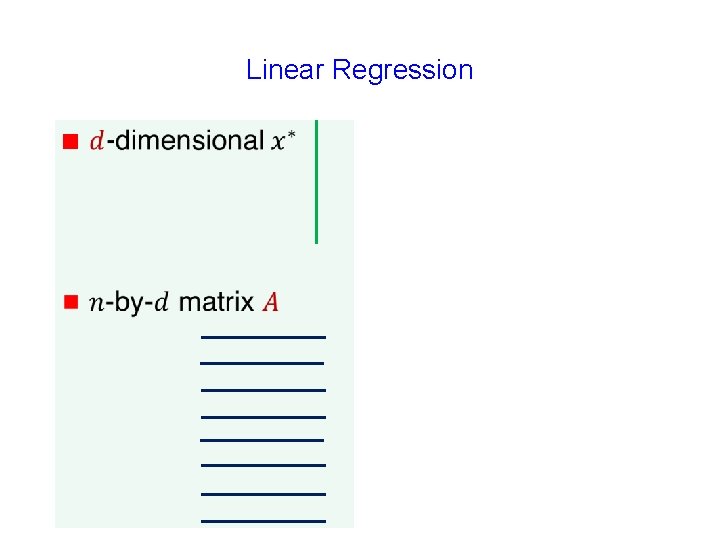

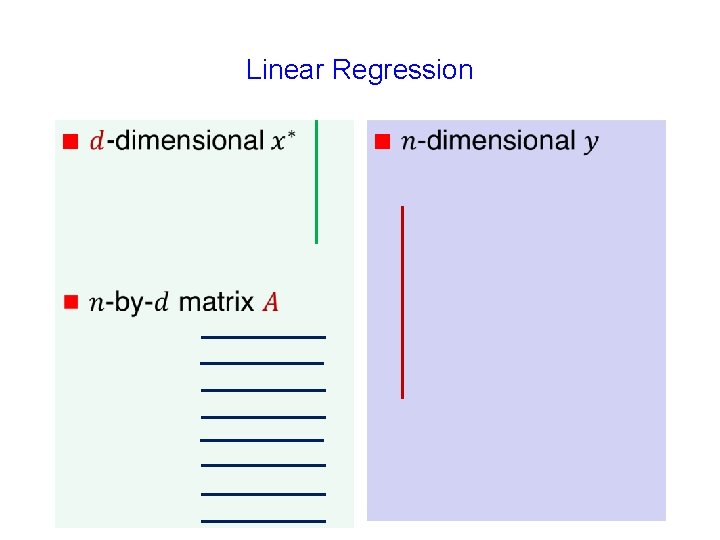

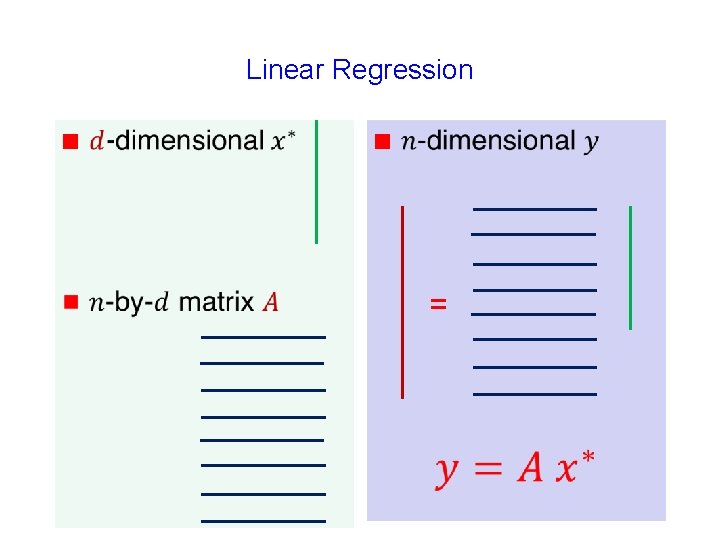

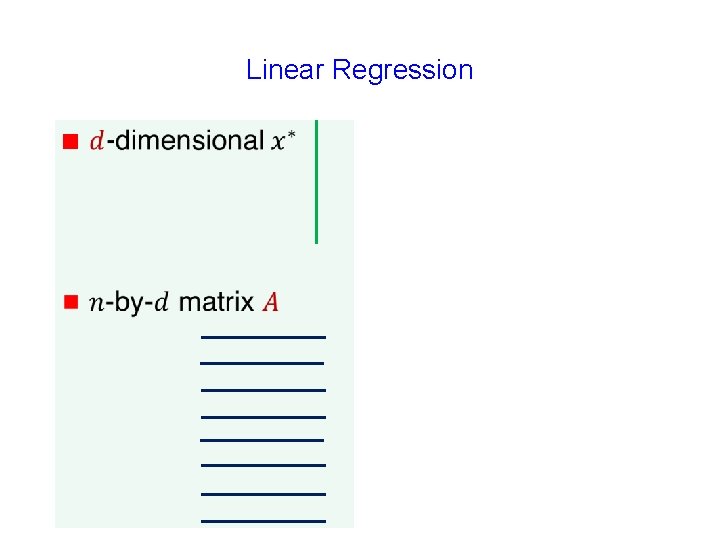

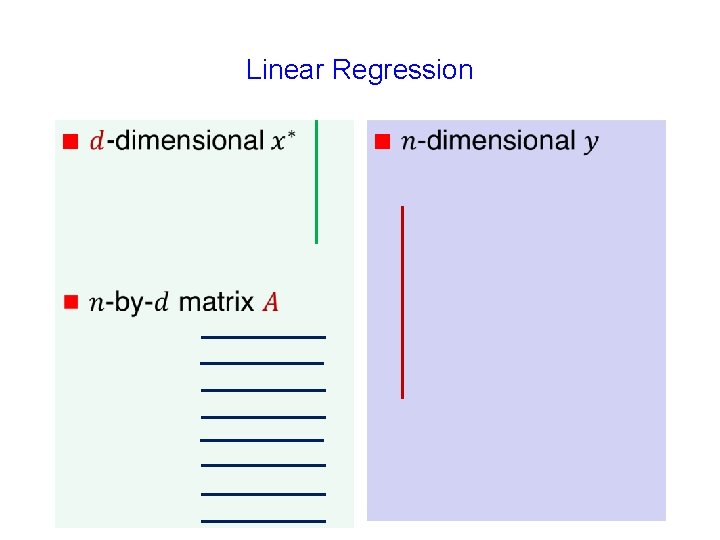

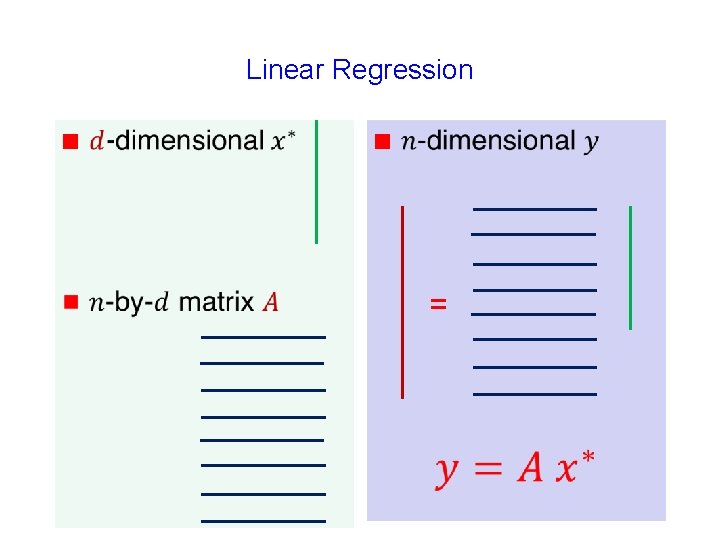

Linear Regression

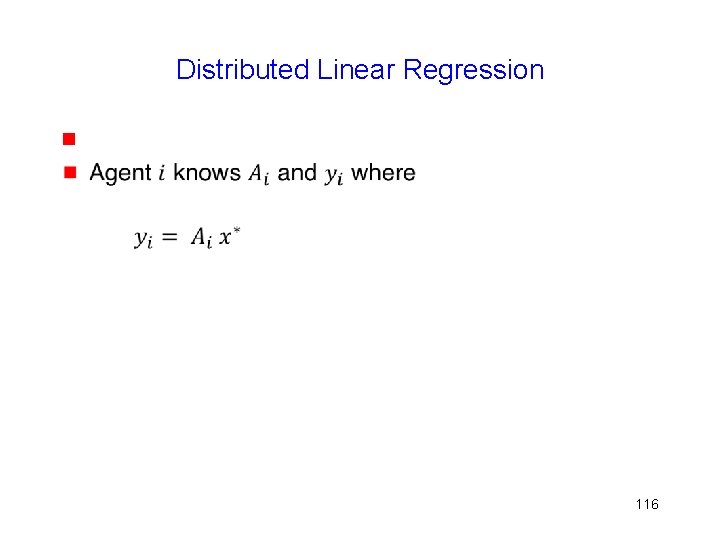

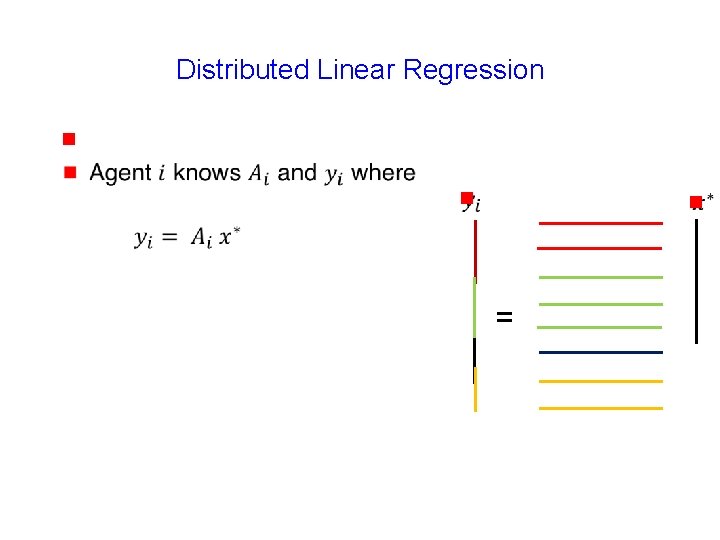

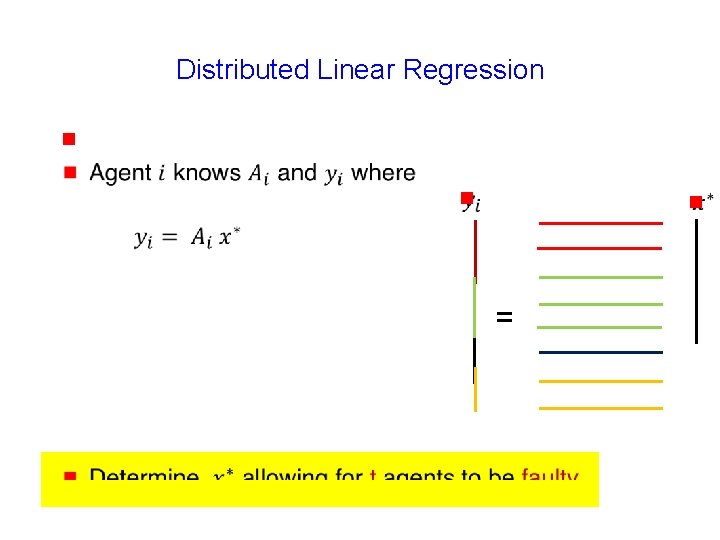

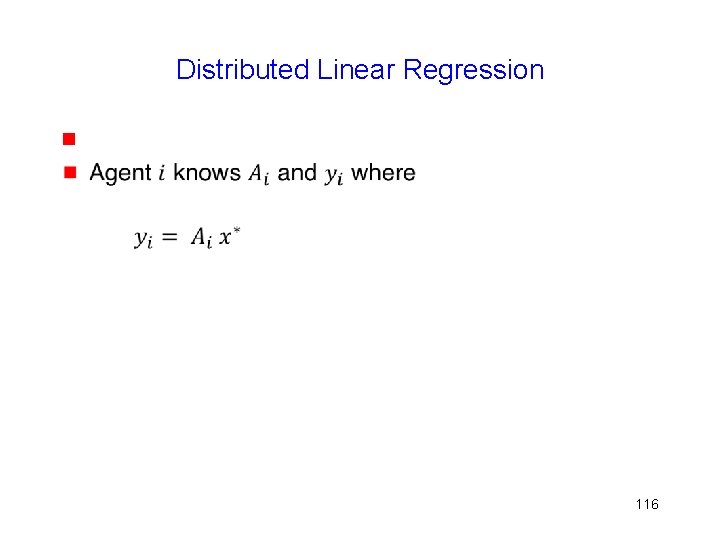

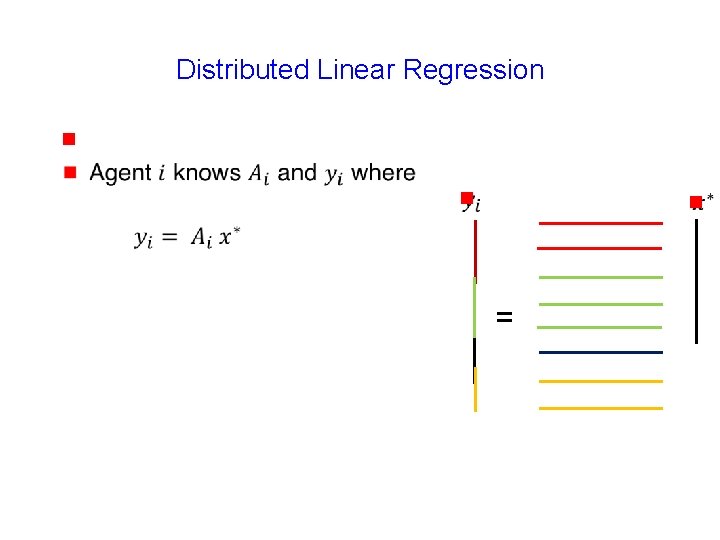

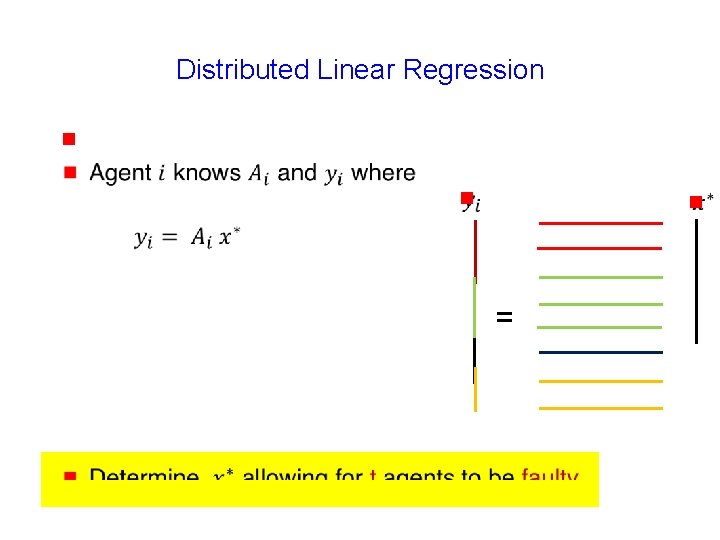

Distributed Linear Regression

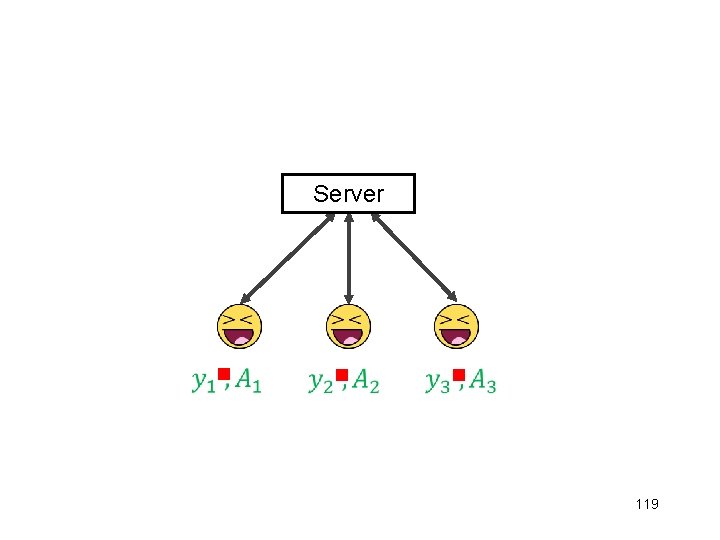

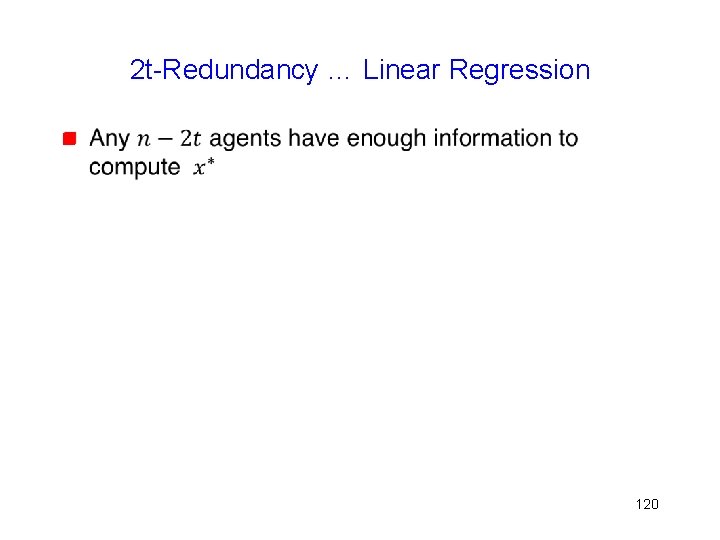

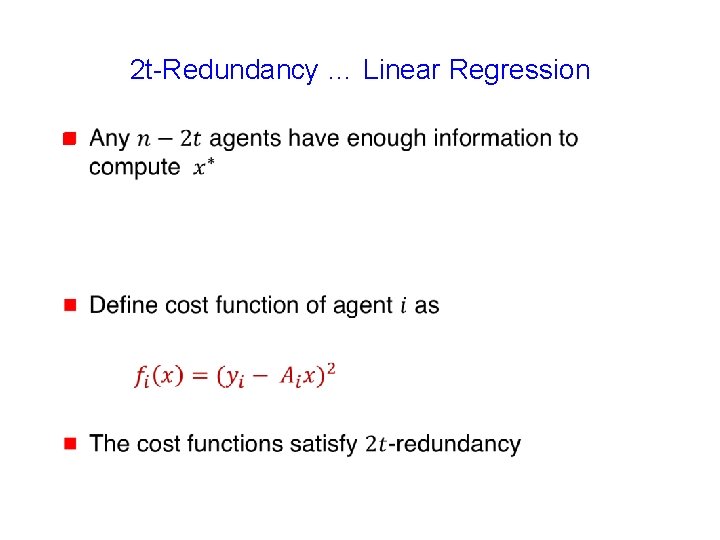

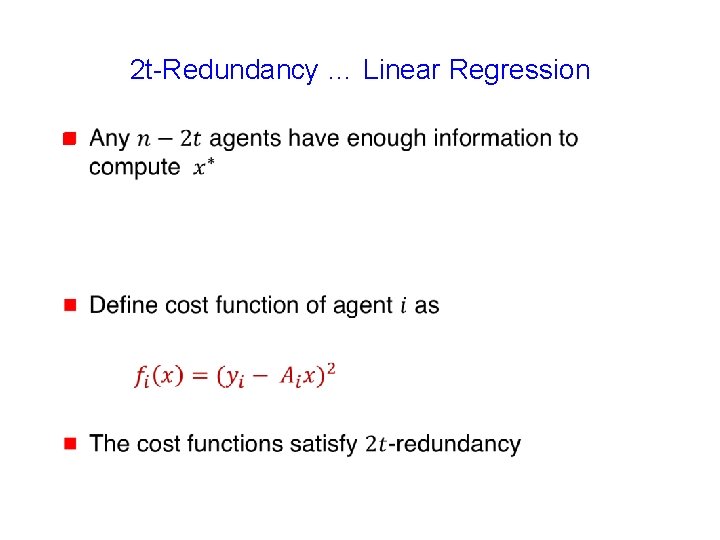

2t-Redundancy … Linear Regression

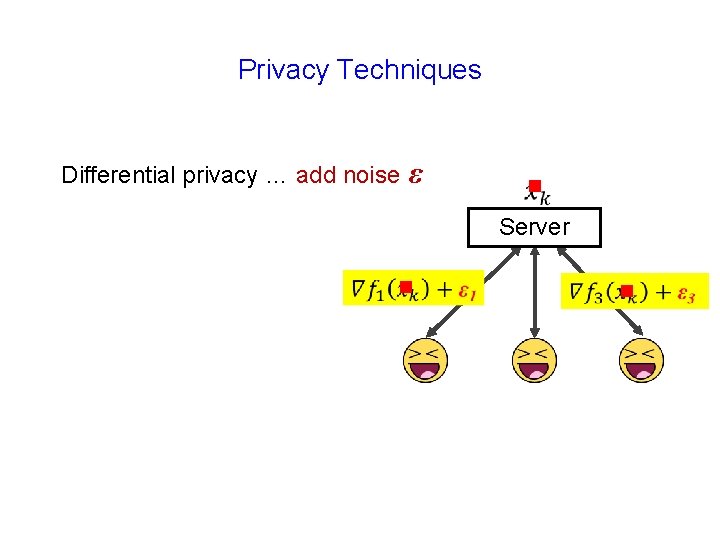

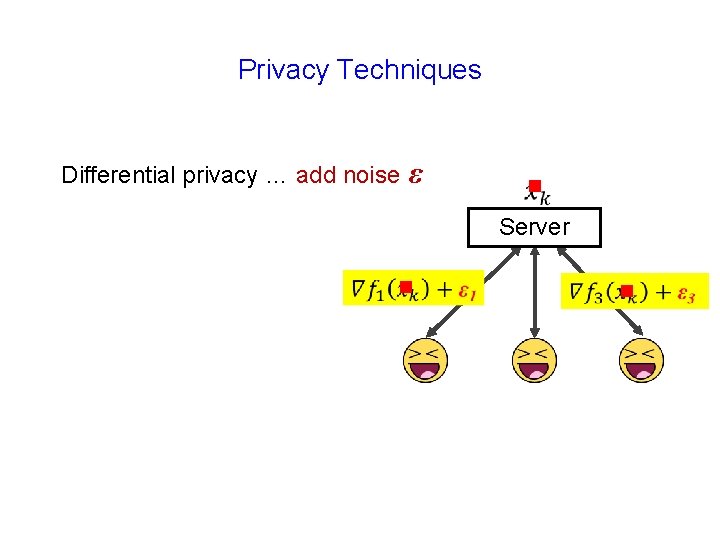

Privacy Techniques
- Differential privacy … add noise ε
- Optimality is compromised due to the noise
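The effect of non-cancelling noise on optimality can be seen in a small simulation. This is only an illustration of the trade-off, not a calibrated differentially private mechanism: the cost, the Gaussian noise, and the step size are assumptions.

```python
import random


def descend(noise_scale, iterations=300, step=0.25, seed=0):
    """Gradient descent on f(x) = (x - 2)^2 with noise added to each reported gradient.

    Returns the mean squared distance to the optimum over the last 100 iterations.
    """
    rng = random.Random(seed)
    x = 0.0
    squared_errors = []
    for _ in range(iterations):
        gradient = 2.0 * (x - 2.0)
        reported = gradient + rng.gauss(0.0, noise_scale)  # privacy noise
        x -= step * reported
        squared_errors.append((x - 2.0) ** 2)
    tail = squared_errors[-100:]
    return sum(tail) / len(tail)


clean_error = descend(noise_scale=0.0)
noisy_error = descend(noise_scale=1.0)
print(clean_error, noisy_error)
```

Without noise the iterate settles on the optimum; with noise it keeps fluctuating around it, so the residual error does not vanish.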

Privacy Techniques
- Homomorphic encryption: expensive