GPUTeraSort: High Performance Graphics Co-processor Sorting for Large Data Management

- Slides: 55

GPUTeraSort: High Performance Graphics Co-processor Sorting for Large Data Management
- Naga K. Govindaraju, Jim Gray, Ritesh Kumar, Dinesh Manocha
- http://gamma.cs.unc.edu/GPUTERASORT
- The UNIVERSITY of NORTH CAROLINA at CHAPEL HILL & MICROSOFT RESEARCH

Sorting: “I believe that virtually every important aspect of programming arises somewhere in the context of sorting or searching!” (Don Knuth)

Sorting
- Well studied
  - High performance computing
  - Databases
  - Computer graphics
  - Programming languages
  - ...
- Google MapReduce algorithm
- SPEC benchmark routine!

Massive Databases
- Terabyte data sets are common
  - Google sorts more than 100 billion terms in its index
  - > 1 trillion records in the indexed web!
- Database sizes are rapidly increasing!
  - Max DB sizes increase 3x per year (http://www.wintercorp.com)
  - Processor improvements are not matching the information explosion

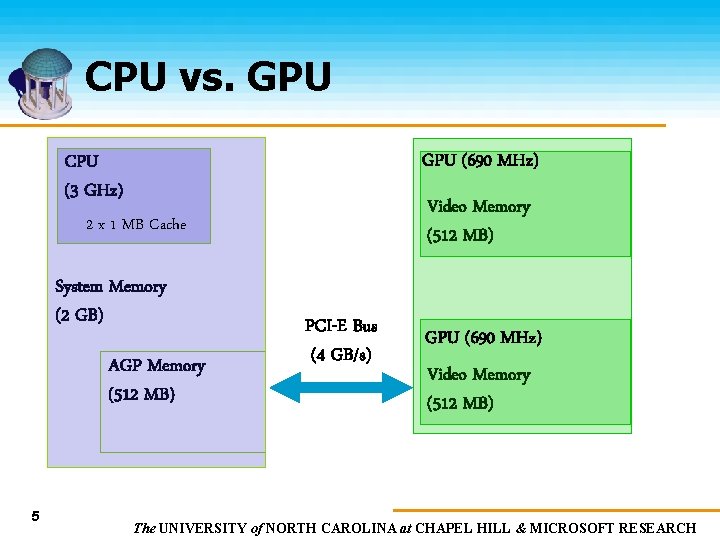

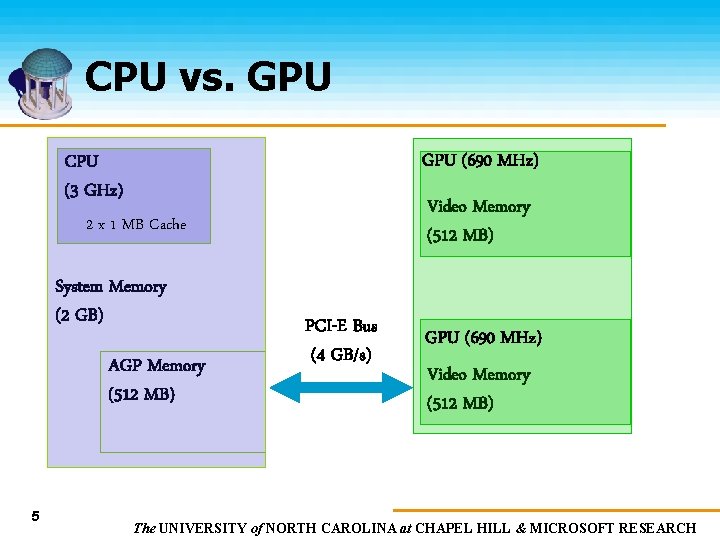

CPU vs. GPU
- CPU (3 GHz) with 2 x 1 MB cache
- System memory (2 GB)
- AGP memory (512 MB)
- PCI-E bus (4 GB/s)
- GPU (690 MHz)
- Video memory (512 MB)

External Memory Sorting
- Performed on terabyte-scale databases
- Two-phase algorithm [Vitter 01, Salzberg 90, Nyberg 94, Nyberg 95]
  - Limited main memory
  - First phase: partitions the input file into large data chunks and writes sorted chunks known as “Runs”
  - Second phase: merges the “Runs” to generate the sorted file
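The two phases can be sketched in a few lines of Python. This is a minimal CPU-only illustration, not the GPUTeraSort implementation: the function name `external_sort`, the `run_size` parameter (counted in lines rather than bytes), and the use of newline-terminated text records are all assumptions made for the example.

```python
import heapq
import itertools
import os
import tempfile


def external_sort(input_path, output_path, run_size):
    """Sort newline-terminated records, holding at most `run_size` records in memory.

    Hypothetical helper for illustration; returns the number of runs written.
    """
    run_paths = []

    # Phase 1: read the input in chunks, sort each chunk in memory,
    # and write it to disk as a sorted run.
    with open(input_path) as src:
        while True:
            chunk = list(itertools.islice(src, run_size))
            if not chunk:
                break
            chunk.sort()
            fd, path = tempfile.mkstemp(suffix=".run")
            with os.fdopen(fd, "w") as run:
                run.writelines(chunk)
            run_paths.append(path)

    # Phase 2: merge all sorted runs into the final sorted file.
    runs = [open(p) for p in run_paths]
    try:
        with open(output_path, "w") as dst:
            dst.writelines(heapq.merge(*runs))
    finally:
        for f in runs:
            f.close()
        for p in run_paths:
            os.remove(p)
    return len(run_paths)
```

`heapq.merge` reads the runs lazily, so phase 2 keeps only one pending record per run in memory, which mirrors the small per-run read buffers of a real merge phase.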

External Memory Sorting
- Performance mainly governed by I/O
- Salzberg Analysis: Given the main memory size $M$ and the file size $N$, if the I/O read size per run is $T$ in phase 2, external memory sorting achieves efficient I/O performance if the run size $R$ in phase 1 is given by $R \approx \sqrt{TN}$
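As a rough numerical illustration of the rule R ≈ √(TN), the sketch below evaluates it for made-up values (a 1 TB file and a 1 MB read size, using decimal units). The function name and the values are assumptions chosen for round numbers, not figures from the talk. With this choice the number of runs is N/R = √(N/T), so the phase 2 read buffers (one of size T per run) together occupy about R bytes, the same memory as a single phase 1 run.

```python
import math


def phase1_run_size(file_size_bytes, read_size_bytes):
    """Run size R ~ sqrt(T * N), with N the file size and T the phase 2 read size."""
    return math.sqrt(read_size_bytes * file_size_bytes)


MB = 10**6
GB = 10**9
TB = 10**12

# Illustrative values only (not taken from the slides).
n, t = 1 * TB, 1 * MB
r = phase1_run_size(n, t)
num_runs = n / r

print(f"run size R: {r / GB:.1f} GB")
print(f"number of runs: {num_runs:.0f}")
print(f"phase 2 buffer memory: {num_runs * t / GB:.1f} GB")
```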

Salzberg Analysis
- If N = 100 GB and T = 2 MB, then R ≈ 230 MB
- Large data sorting on CPUs can achieve high I/O performance by sorting large runs

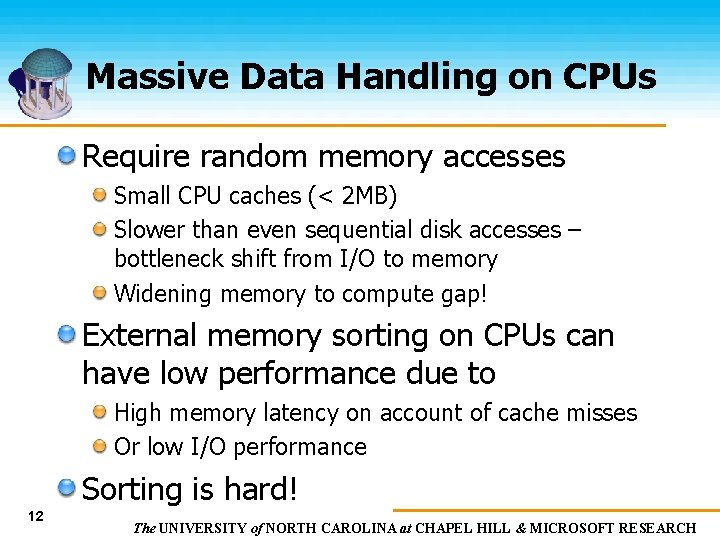

Massive Data Handling on CPUs
- Requires random memory accesses
  - Small CPU caches (< 2 MB)
  - Slower than even sequential disk accesses: the bottleneck shifts from I/O to memory
  - Widening memory-to-compute gap!
- External memory sorting on CPUs can have low performance due to
  - High memory latency on account of cache misses
  - Or low I/O performance
- Sorting is hard!

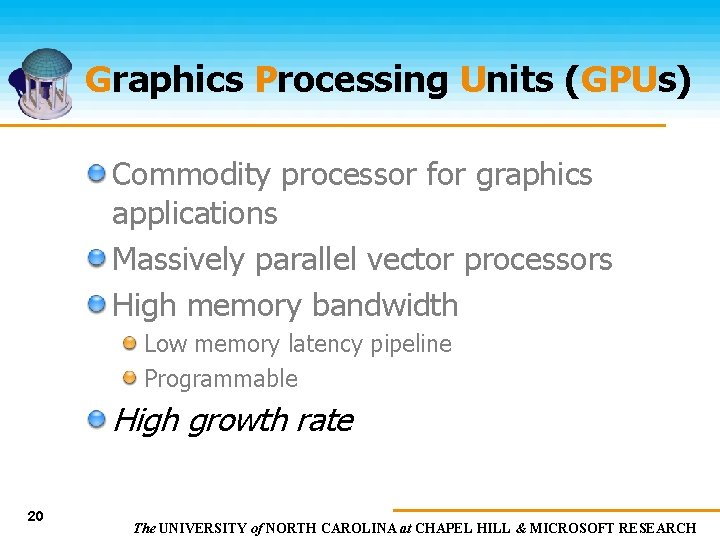

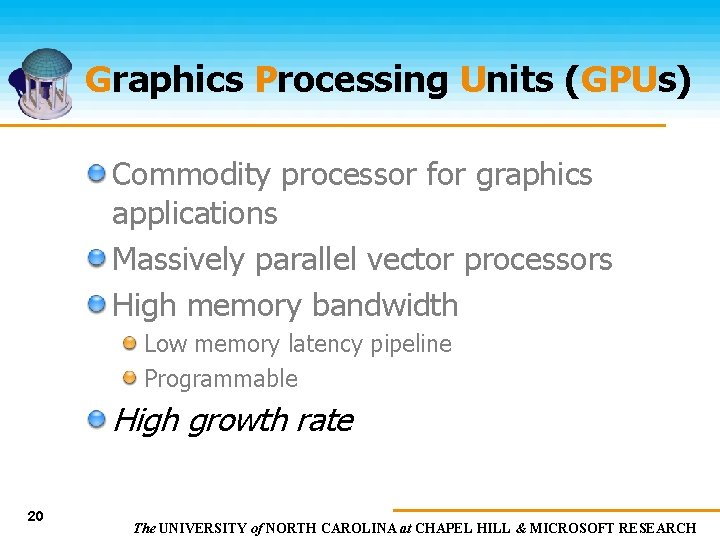

Graphics Processing Units (GPUs)
- Commodity processor for graphics applications
- Massively parallel vector processors
- High memory bandwidth
- Low memory latency pipeline
- Programmable
- High growth rate

GPU: Commodity Processor
- Cell phones
- Desktops
- Laptops
- Consoles
- PSP

Graphics Processing Units (GPUs)
- Commodity processor for graphics applications
- Massively parallel vector processors
  - 10x more operations per second than CPUs
- High memory bandwidth
- Low memory latency pipeline
- Programmable
- High growth rate

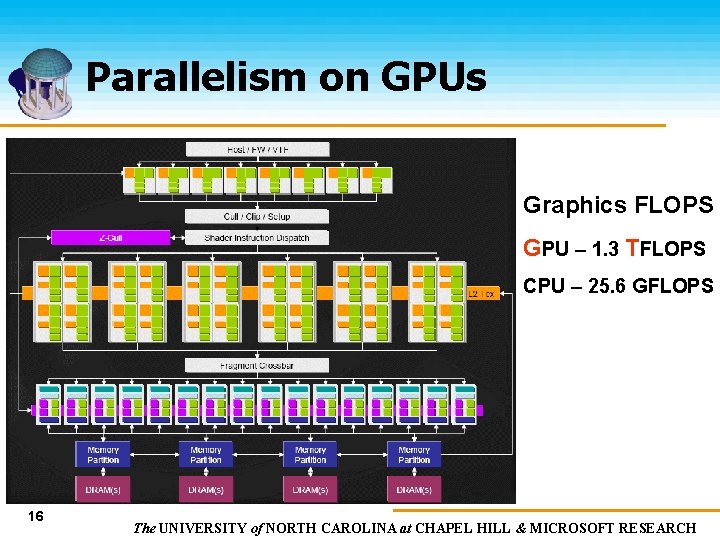

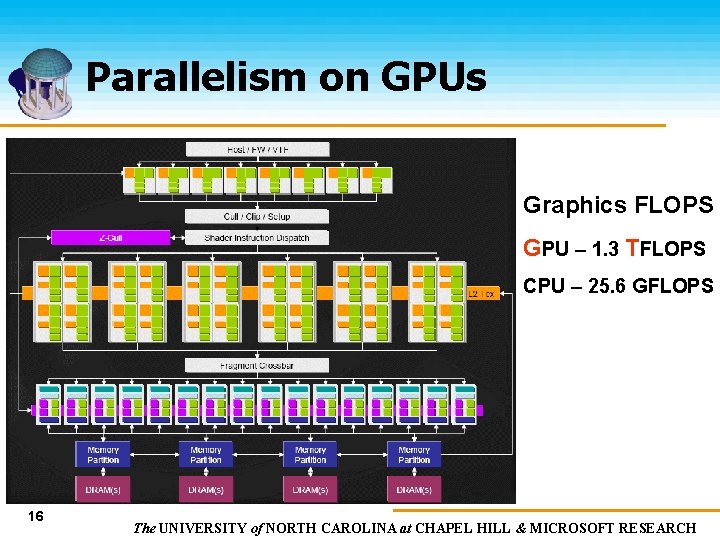

Parallelism on GPUs
- Graphics FLOPS
  - GPU: 1.3 TFLOPS
  - CPU: 25.6 GFLOPS

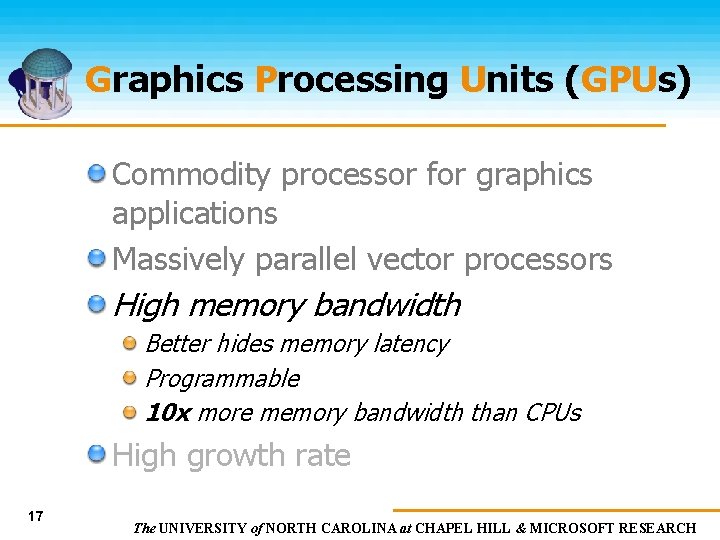

Graphics Processing Units (GPUs)
- Commodity processor for graphics applications
- Massively parallel vector processors
- High memory bandwidth
  - 10x more memory bandwidth than CPUs
- Better hides memory latency
- Programmable
- High growth rate

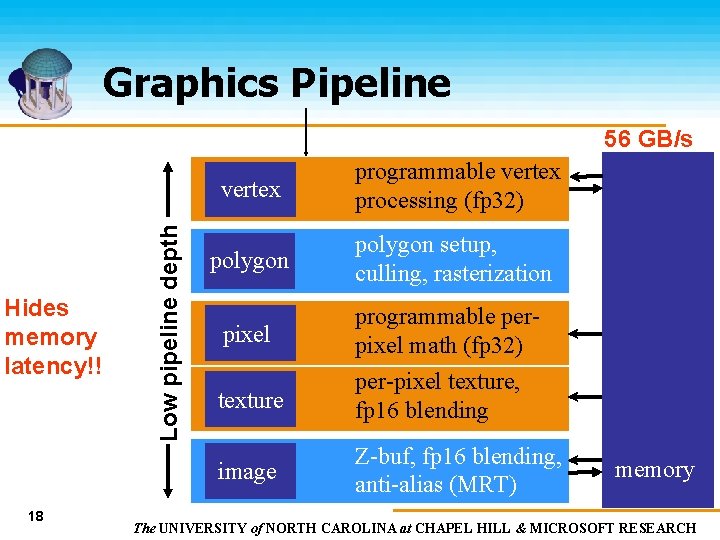

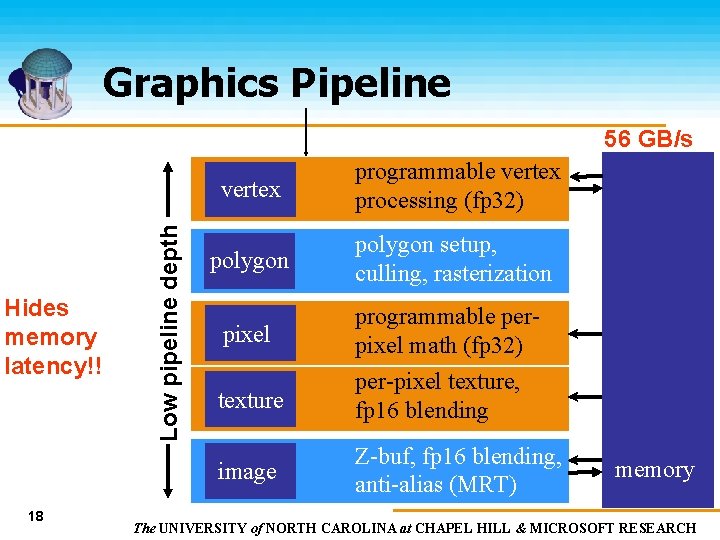

Graphics Pipeline
- Hides memory latency!!
- Low pipeline depth
- 56 GB/s
- Pipeline stages (from the diagram):
  - vertex: programmable vertex processing (fp32)
  - setup: polygon setup, culling, rasterization
  - rasterizer
  - pixel: programmable per-pixel math (fp32)
  - texture: per-pixel texture, fp16 blending
  - image: Z-buf, fp16 blending, anti-alias (MRT)
  - memory

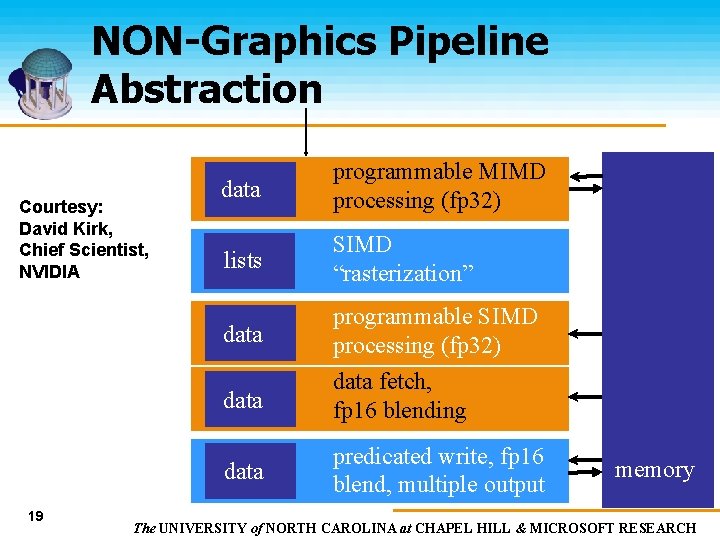

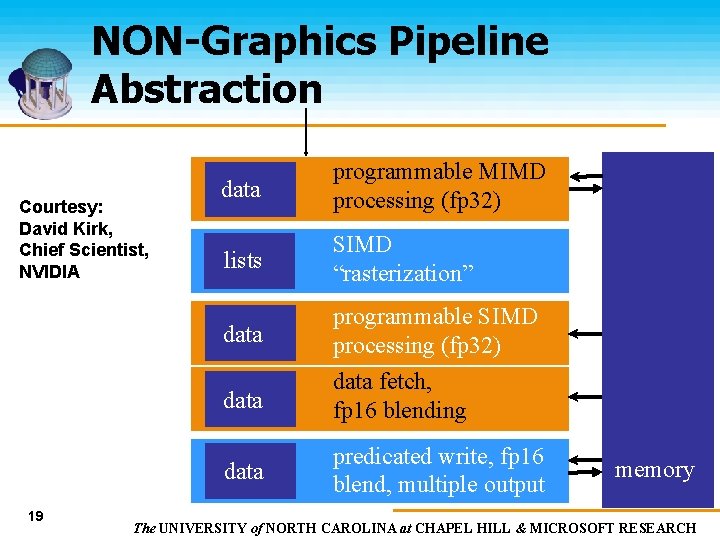

NON-Graphics Pipeline Abstraction
- Courtesy: David Kirk, Chief Scientist, NVIDIA
- Stage labels in the diagram: data, setup, lists, rasterizer, data
- Stage descriptions (from the diagram):
  - programmable MIMD processing (fp32)
  - SIMD “rasterization”
  - programmable SIMD processing (fp32)
  - data fetch, fp16 blending
  - predicated write, fp16 blend, multiple output
  - memory

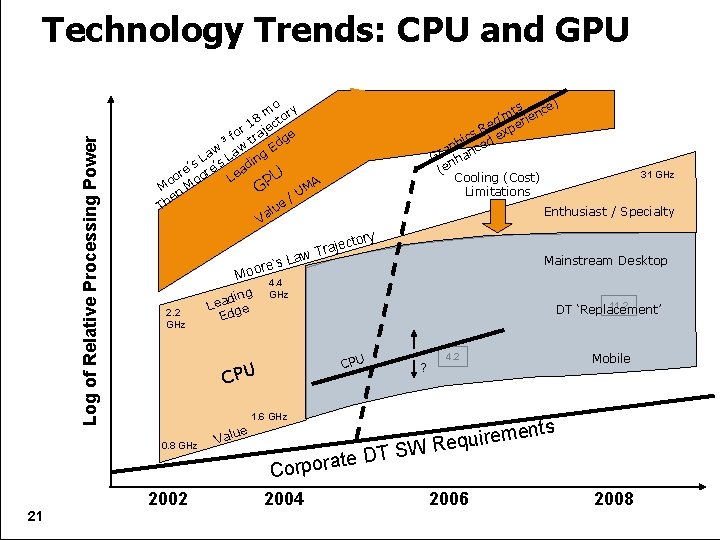

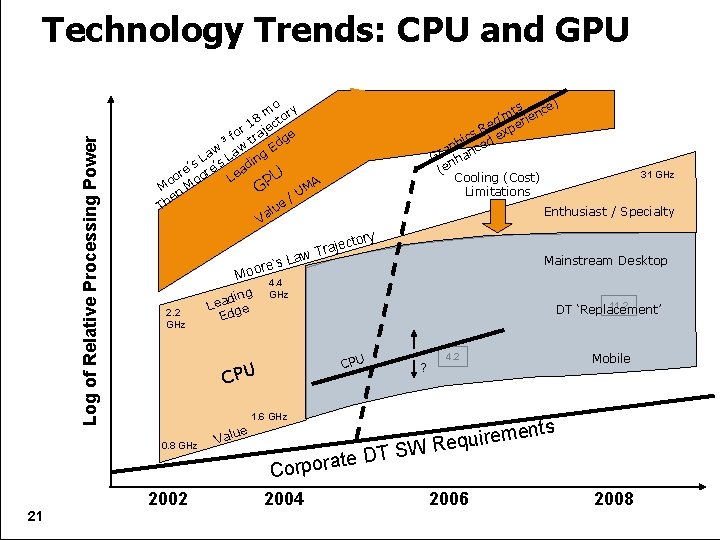

Technology Trends: CPU and GPU
- [Figure: log of relative processing power versus year (2002, 2004, 2006, 2008). CPU clock rates follow the Moore's Law trajectory, while GPU performance and graphics requirements (enhanced experience) grow faster. Market segments shown: Enthusiast / Specialty, Leading Edge, Mainstream Desktop, Corporate, Value / UMA, Mobile. Cooling (cost) limitations are marked on the chart.]

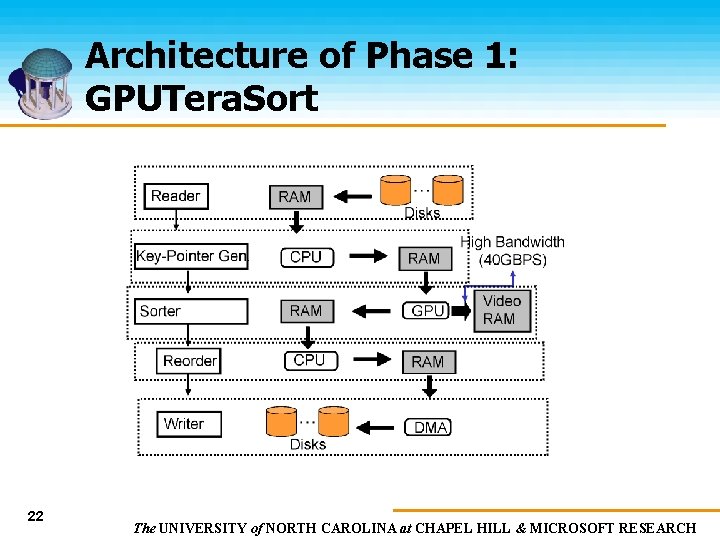

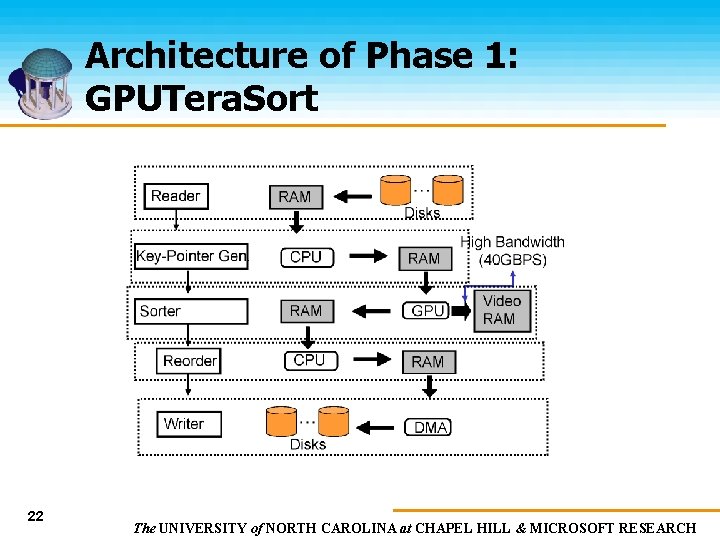

Architecture of Phase 1: GPUTeraSort

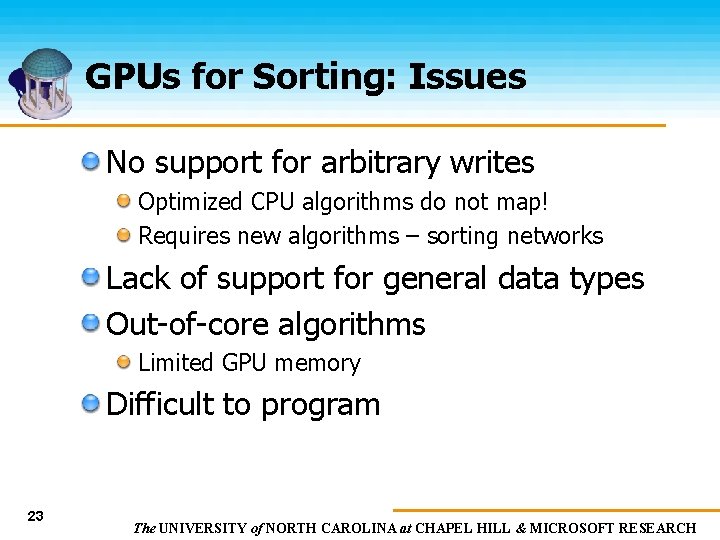

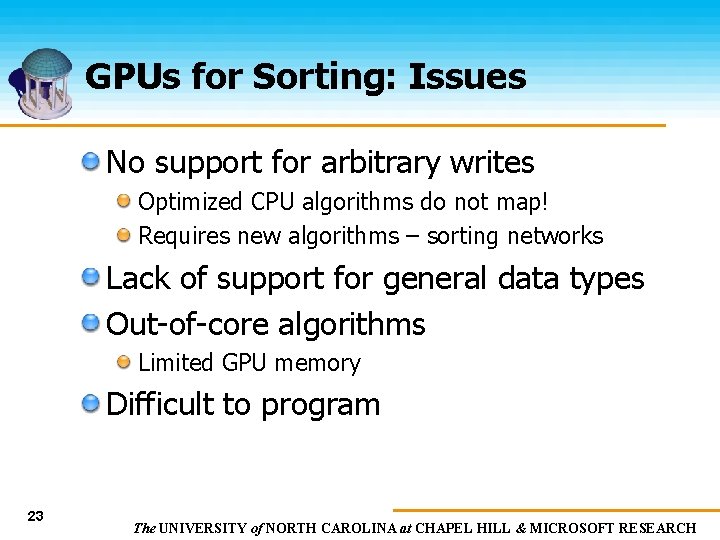

GPUs for Sorting: Issues
- No support for arbitrary writes
  - Optimized CPU algorithms do not map!
  - Requires new algorithms: sorting networks
- Lack of support for general data types
- Out-of-core algorithms
  - Limited GPU memory
- Difficult to program

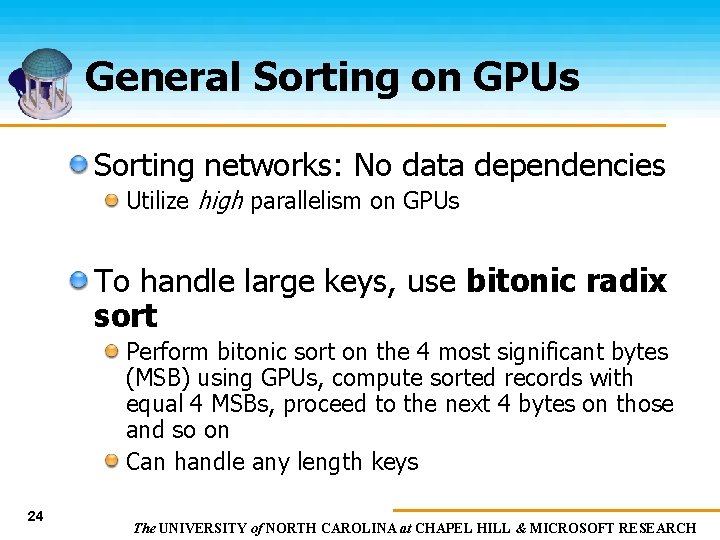

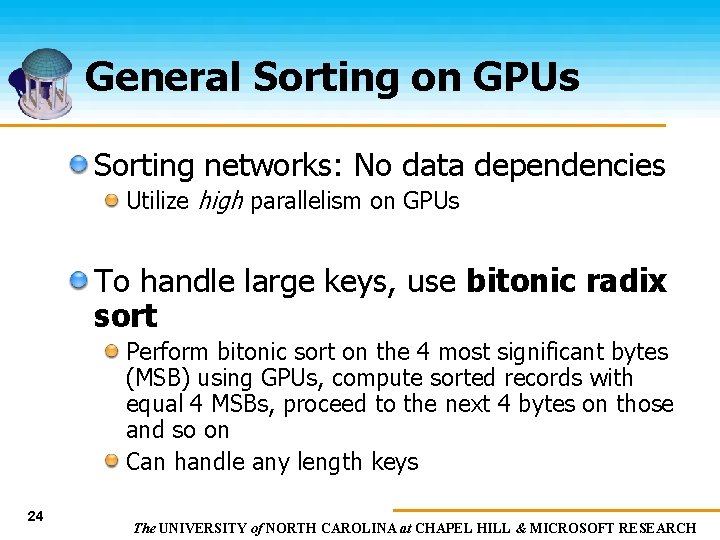

General Sorting on GPUs
- Sorting networks: no data dependencies
  - Utilize high parallelism on GPUs
- To handle large keys, use bitonic radix sort
  - Perform bitonic sort on the 4 most significant bytes (MSB) using GPUs, compute sorted records with equal 4 MSBs, proceed to the next 4 bytes on those, and so on
  - Can handle keys of any length
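The wide-key scheme described above can be sketched on the CPU as follows. This is only an illustration of the control flow: Python's built-in `sorted` stands in for the GPU bitonic sort on each 4-byte slice, and the function name `sort_wide_keys` and its parameters are hypothetical.

```python
def sort_wide_keys(records, key_len, chunk=4, offset=0):
    """Sort byte-string records by their first `key_len` bytes, `chunk` bytes at a time."""
    if len(records) < 2 or offset >= key_len:
        return list(records)
    end = min(offset + chunk, key_len)

    # Stand-in for the GPU bitonic sort on a fixed-width slice of the key.
    ordered = sorted(records, key=lambda r: r[offset:end])

    result = []
    start = 0
    for i in range(1, len(ordered) + 1):
        # Close a group when the key slice changes or the input ends.
        if i == len(ordered) or ordered[i][offset:end] != ordered[start][offset:end]:
            group = ordered[start:i]
            # Only records that tie on this slice need the next slice of the key.
            result.extend(sort_wide_keys(group, key_len, chunk, end))
            start = i
    return result


records = [b"AAAABBBBCC-x", b"AAAABBBBCA-y", b"AAAAABBBZZ-z", b"ZZZZ000000-w"]
print(sort_wide_keys(records, key_len=10))
```

For random keys most ties are resolved by the first 4 bytes, so the later passes touch only small groups.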

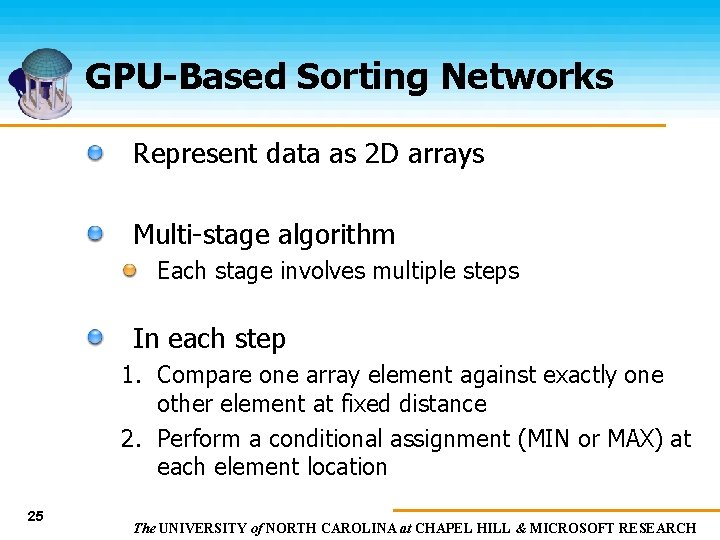

GPU-Based Sorting Networks
- Represent data as 2D arrays
- Multi-stage algorithm
  - Each stage involves multiple steps
- In each step
  1. Compare one array element against exactly one other element at a fixed distance
  2. Perform a conditional assignment (MIN or MAX) at each element location
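A bitonic sorting network has exactly this stage and step structure. The sketch below is a sequential Python rendering for illustration, assuming a power-of-two input length; on a GPU the inner loop over `i` would run in parallel, one output location per fragment. The function name is hypothetical.

```python
def bitonic_sort(values):
    """Sort a list whose length is a power of two with a bitonic sorting network."""
    n = len(values)
    assert n > 0 and n & (n - 1) == 0, "length must be a power of two"
    data = list(values)

    stage = 2
    while stage <= n:                  # each stage merges bitonic runs of length `stage`
        dist = stage // 2
        while dist >= 1:               # each step compares elements `dist` apart
            out = data[:]
            for i in range(n):         # every location is independent of the others
                partner = i ^ dist     # exactly one partner at a fixed distance
                ascending = (i & stage) == 0
                keep_min = (i < partner) == ascending
                a, b = data[i], data[partner]
                # Conditional assignment: each location keeps MIN or MAX.
                out[i] = min(a, b) if keep_min else max(a, b)
            data = out
            dist //= 2
        stage *= 2
    return data


print(bitonic_sort([7, 3, 6, 1, 8, 2, 5, 4]))
```

Each location reads two inputs and writes only to itself, so no scattered writes are needed, which suits hardware without support for arbitrary writes.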

2D Memory Addressing
- GPUs are optimized for 2D representations
  - Map 1D arrays to 2D arrays
  - Minimum and maximum regions are mapped to row-aligned or column-aligned quads
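One way to see why the regions line up with rows or columns is the small sketch below. It assumes a row-major layout, a power-of-two array width, and the bitonic convention that an element's partner is `index ^ dist`; the helper names are hypothetical. Under those assumptions a partner is always in the same row (when `dist` is smaller than the width) or in the same column (otherwise), so the MIN and MAX locations form rectangular blocks.

```python
def to_2d(index, width):
    """Map a 1D array index to (column, row) in a row-major 2D array."""
    return index % width, index // width


def partner_direction(dist, width):
    """Report whether elements `dist` apart share a row or a column.

    Assumes `dist` and `width` are powers of two and partner = index ^ dist.
    """
    return "same row" if dist < width else "same column"


width = 4
for dist in (1, 2, 4, 8):
    x, y = to_2d(5, width)
    px, py = to_2d(5 ^ dist, width)
    print(dist, (x, y), (px, py), partner_direction(dist, width))
```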

1D-2D Mapping
- Effectively reduces instructions per element
- [Figure: MIN and MAX regions of the 2D array]

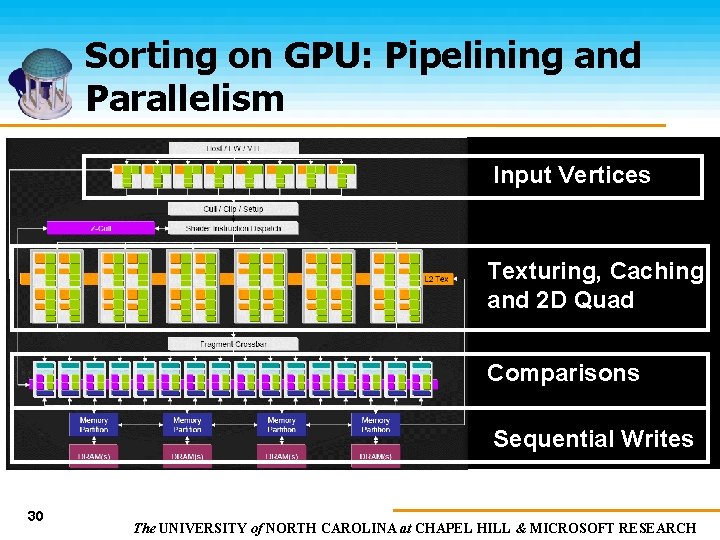

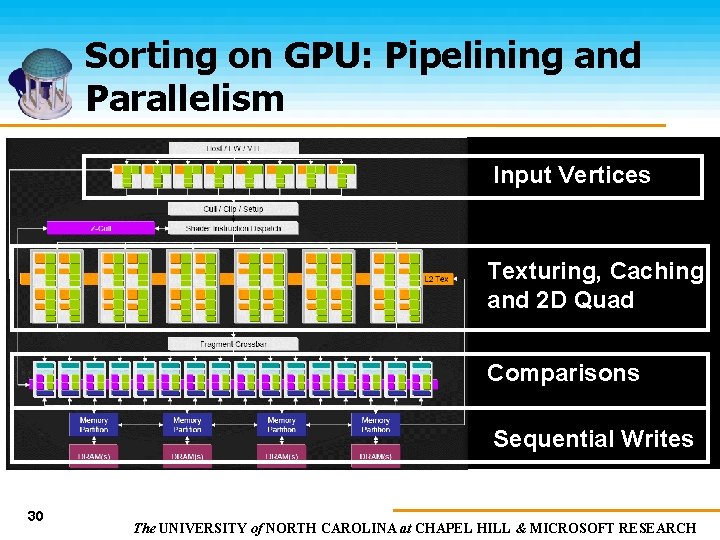

Sorting on GPU: Pipelining and Parallelism
- Input vertices
- Texturing, caching and 2D quad comparisons
- Sequential writes

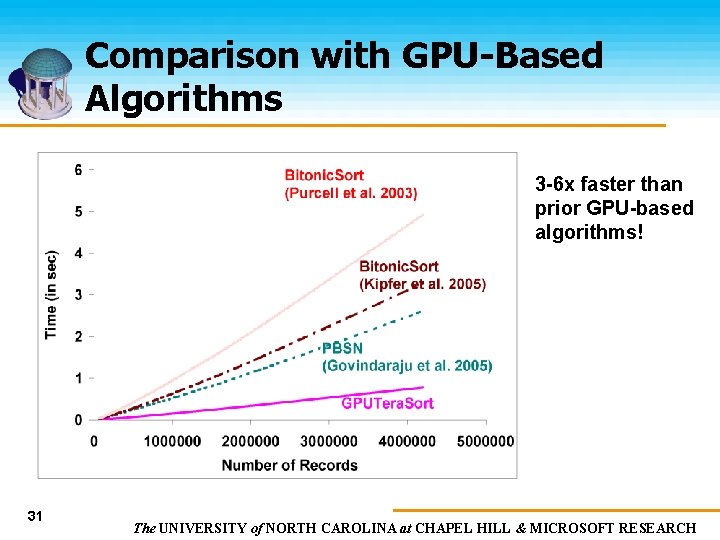

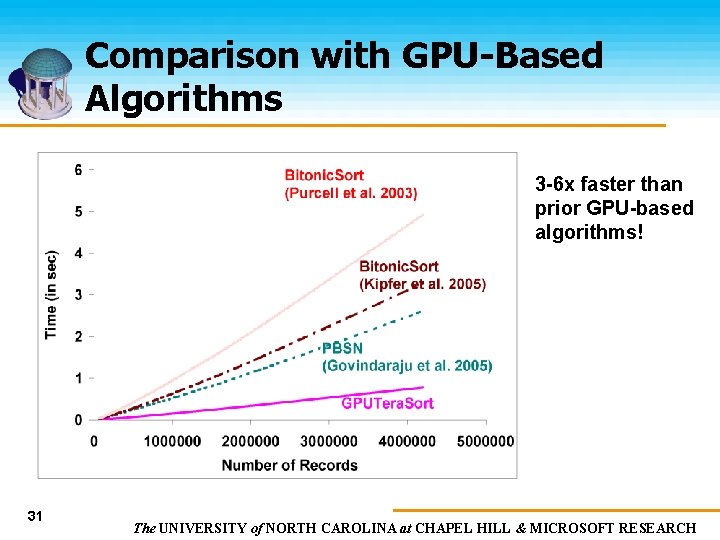

Comparison with GPU-Based Algorithms
- 3-6x faster than prior GPU-based algorithms!

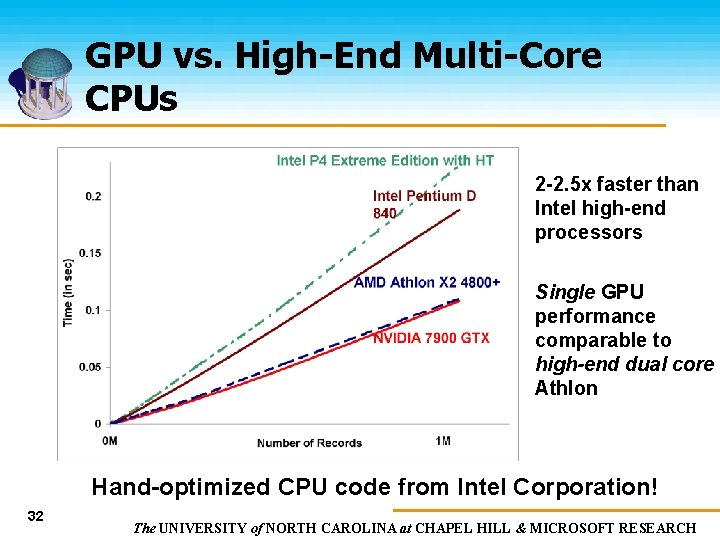

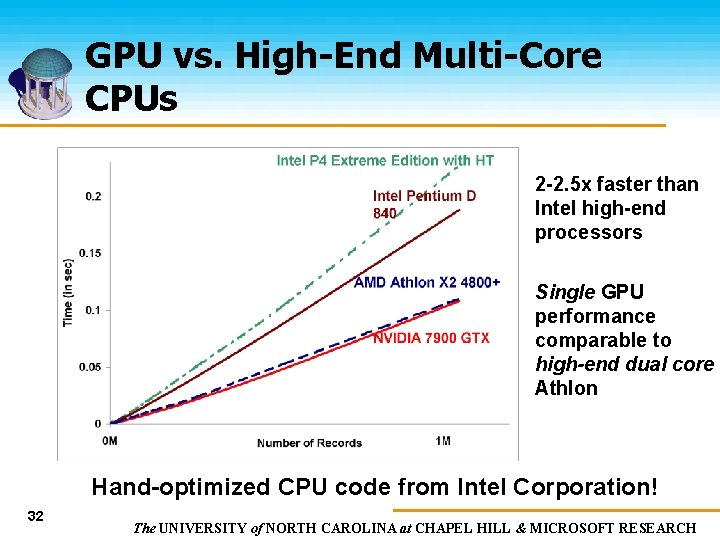

GPU vs. High-End Multi-Core CPUs
- 2-2.5x faster than Intel high-end processors
- Single GPU performance comparable to a high-end dual-core Athlon
- Hand-optimized CPU code from Intel Corporation!

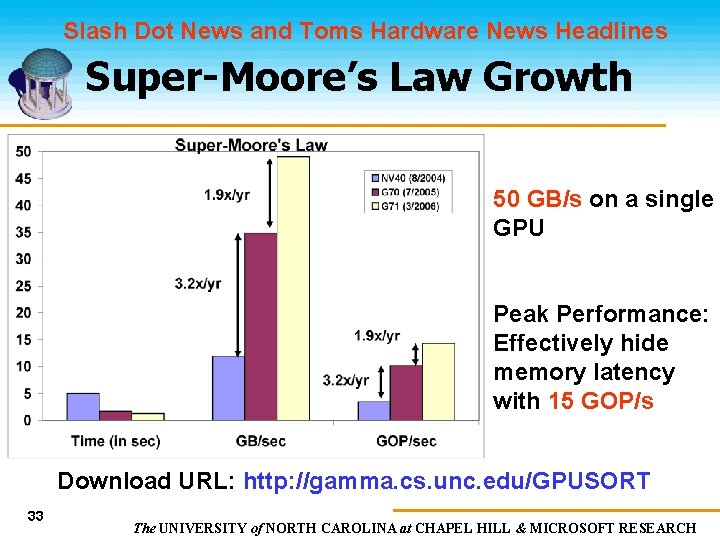

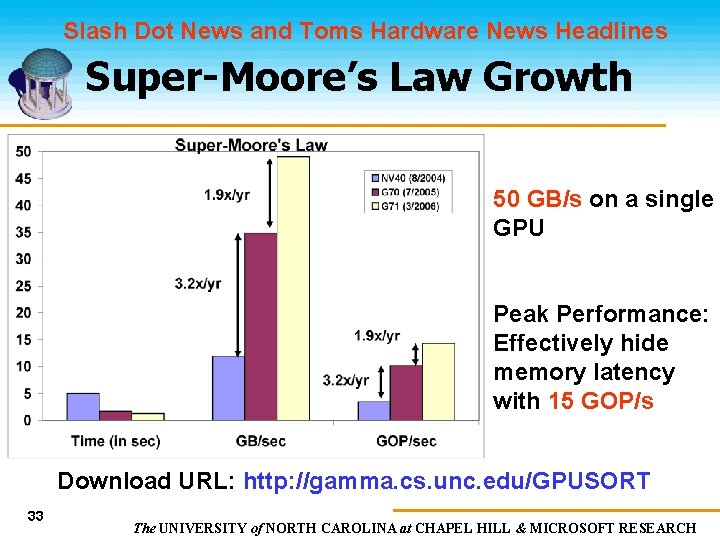

Slashdot News and Tom's Hardware News Headlines
- Super-Moore’s Law growth
- 50 GB/s on a single GPU
- Peak performance: effectively hides memory latency with 15 GOP/s
- Download URL: http://gamma.cs.unc.edu/GPUSORT

Implementation & Results
- Pentium IV PC ($170)
- NVIDIA 7800 GT ($270)
- 2 GB RAM ($152)
- 9 x 80 GB SATA disks ($477)
- SuperMicro motherboard & SATA controller ($325)
- Windows XP
- PC costs $1469

Implementation & Results
- Indy SortBenchmark
  - 10-byte random string keys
  - 100-byte long records
  - Sort the maximum amount in 644 seconds

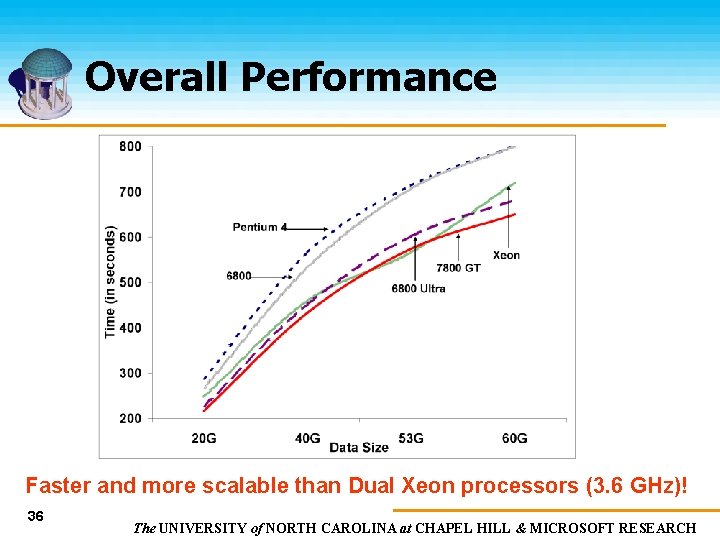

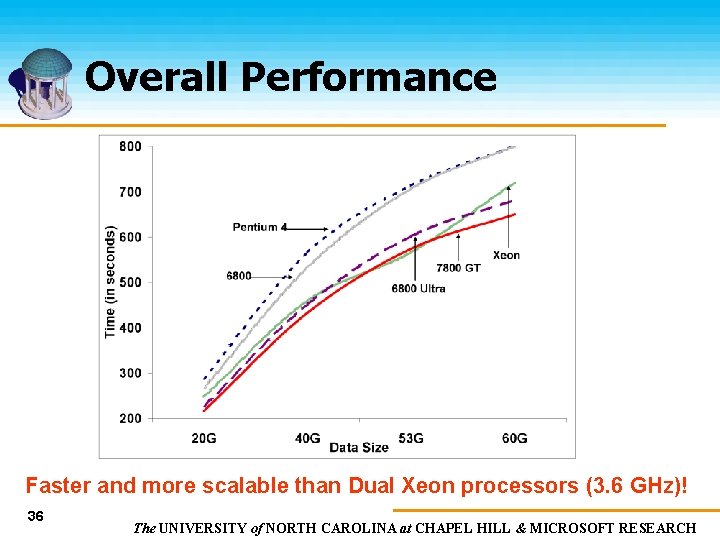

Overall Performance
- Faster and more scalable than Dual Xeon processors (3.6 GHz)!

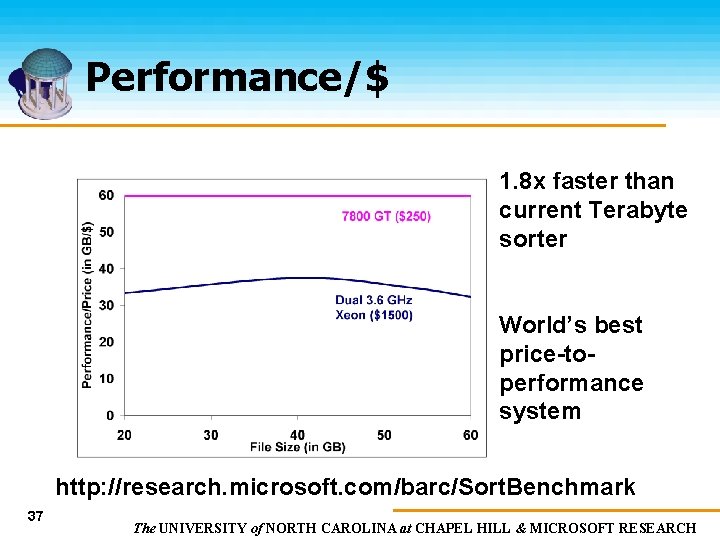

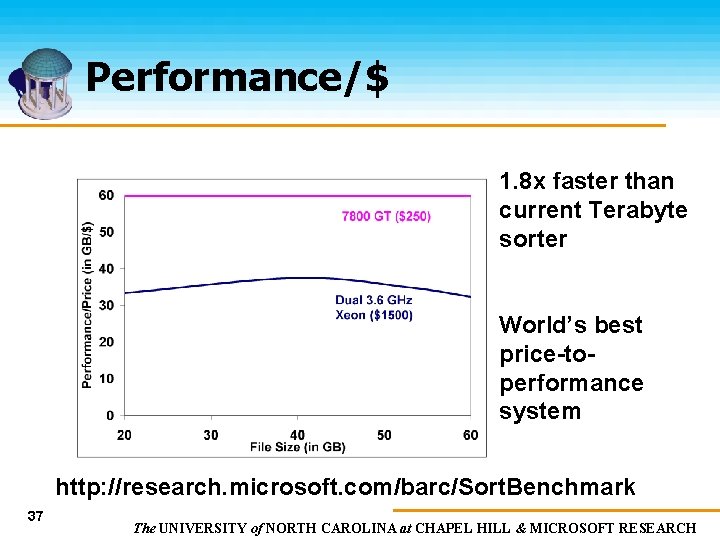

Performance/$
- 1.8x faster than the current Terabyte sorter
- World’s best price-to-performance system
- http://research.microsoft.com/barc/SortBenchmark

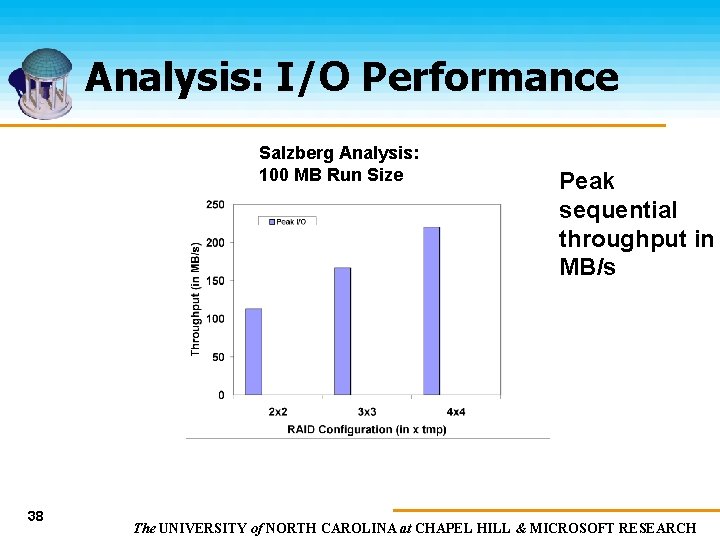

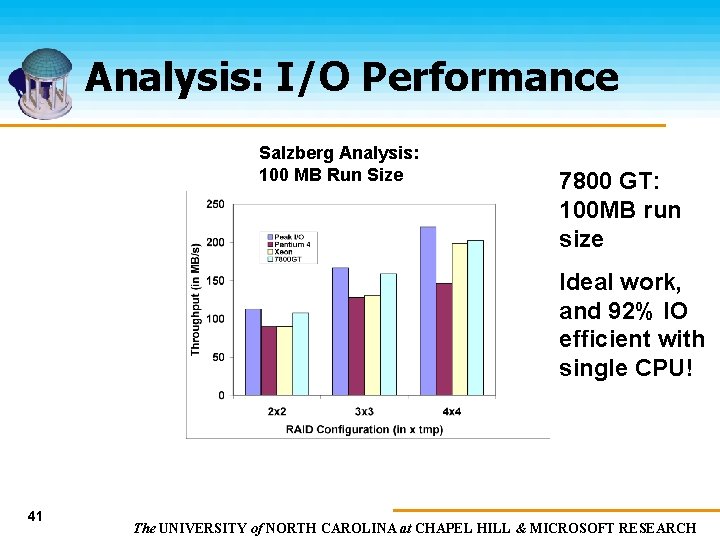

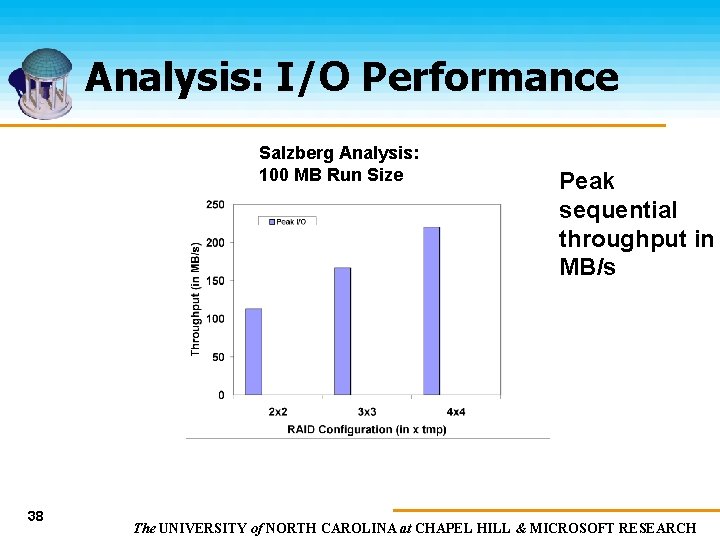

Analysis: I/O Performance
- Salzberg Analysis: 100 MB run size
- [Figure: peak sequential throughput in MB/s]

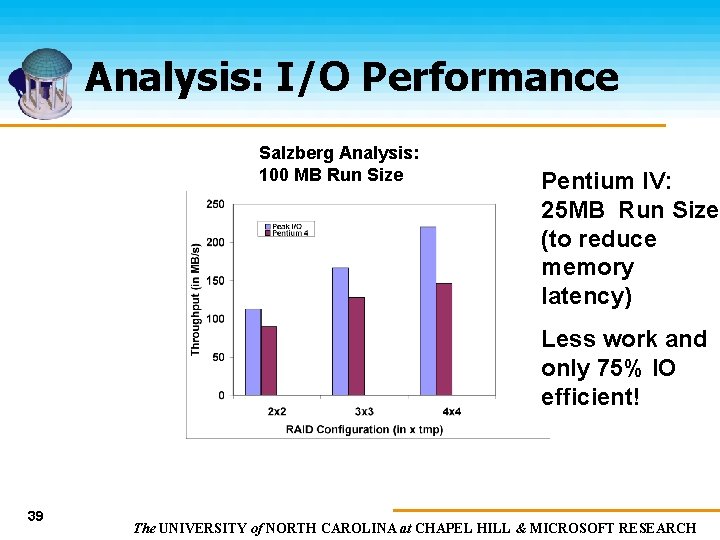

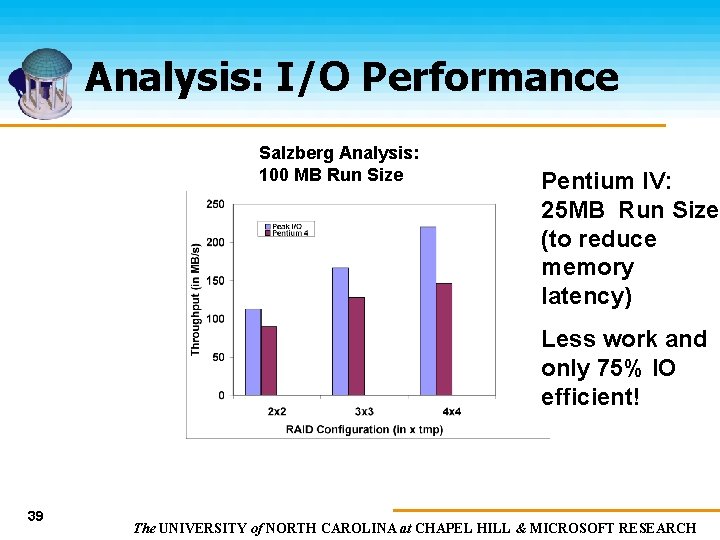

Analysis: I/O Performance
- Salzberg Analysis: 100 MB run size
- Pentium IV: 25 MB run size (to reduce memory latency)
- Less work and only 75% I/O efficient!

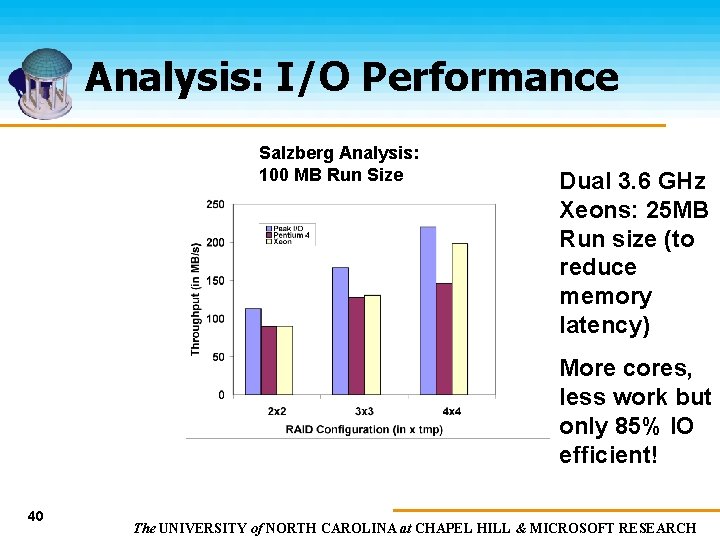

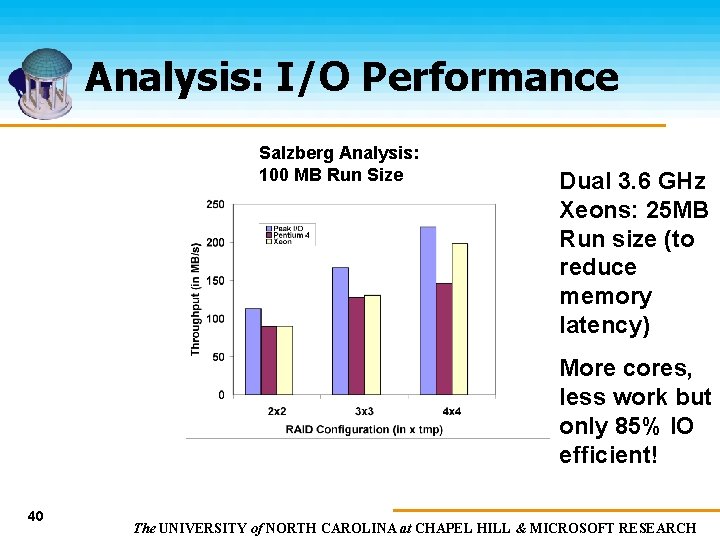

Analysis: I/O Performance
- Salzberg Analysis: 100 MB run size
- Dual 3.6 GHz Xeons: 25 MB run size (to reduce memory latency)
- More cores, less work but only 85% I/O efficient!

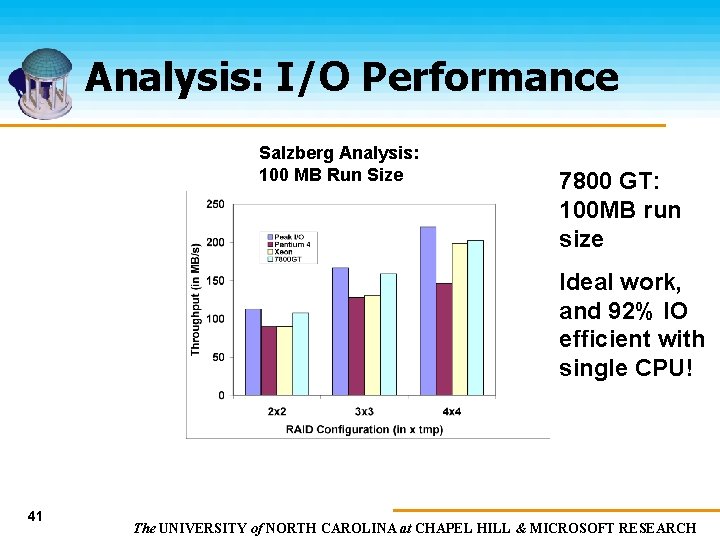

Analysis: I/O Performance
- Salzberg Analysis: 100 MB run size
- 7800 GT: 100 MB run size
- Ideal work, and 92% I/O efficient with a single CPU!

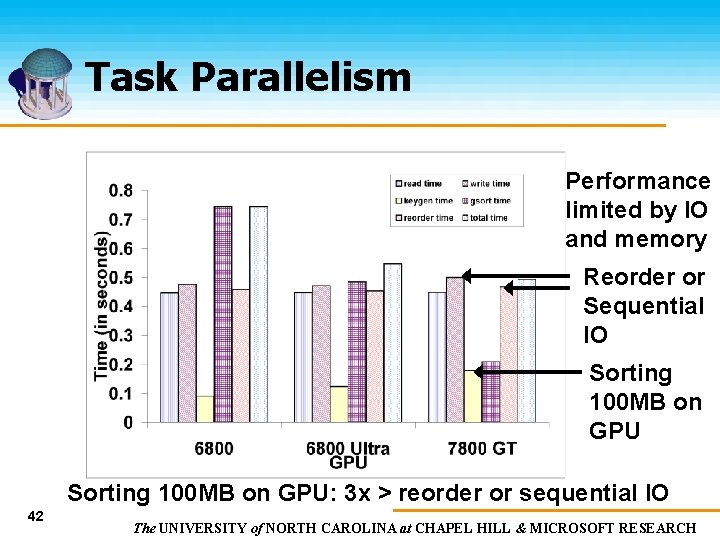

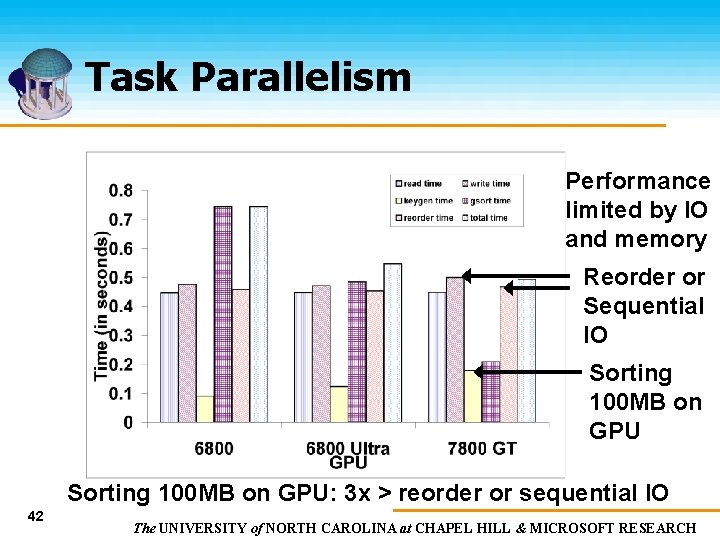

Task Parallelism
- Performance limited by I/O and memory
- Reorder or sequential I/O
- Sorting 100 MB on GPU: 3x > reorder or sequential I/O

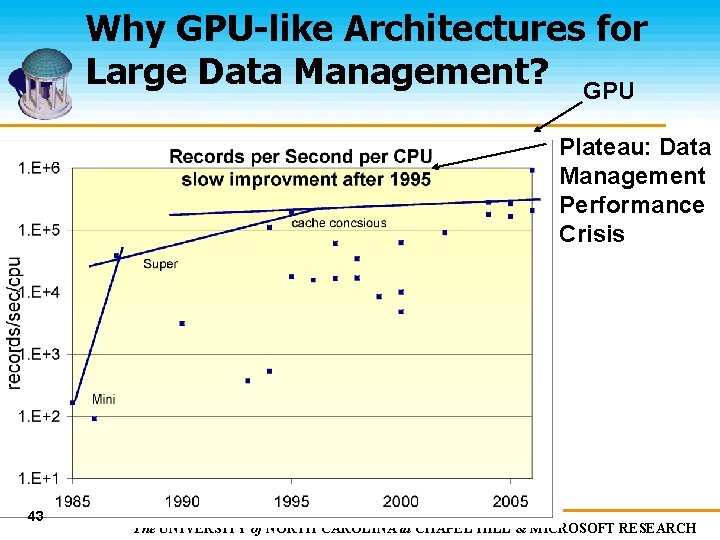

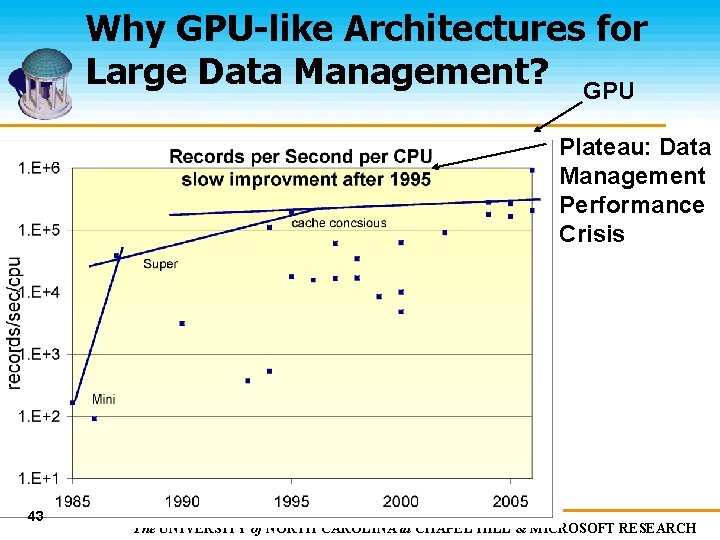

Why GPU-like Architectures for Large Data Management? GPU Plateau: Data Management Performance Crisis

Advantages
- Exploit high memory bandwidth on GPUs
  - Higher memory performance than CPU-based algorithms
- High I/O performance due to large run sizes

Advantages
- Offload work from CPUs
  - CPU cycles well-utilized for resource management
- Scalable solution for large databases
- Best performance/price solution for terabyte sorting

Limitations
- May not work well on variable-sized keys and almost-sorted databases
- Requires programmable GPUs (GPUs manufactured after 2003)

Conclusions
- Designed new sorting algorithms on GPUs
  - Handles wide keys and long records
- Achieves 10x higher memory performance
  - Memory-efficient sorting algorithm with peak memory performance of 50 GB/s on GPUs
  - 15 GOP/s on a single GPU

Conclusions
- Novel external memory sorting algorithm as a scalable solution
  - Achieves peak I/O performance on CPUs
  - Best performance/price solution: world’s fastest sorting system
- High performance growth rate characteristics
  - Improves 2-3 times/yr

Future Work
- Designed high performance/price solutions
  - High wattage and cooling requirements of CPUs and GPUs
- To exploit GPUs, we need easy-to-use programming APIs
  - Promising directions: BrookGPU, Microsoft Accelerator, Sh, etc.
- Scientific libraries utilizing high parallelism and memory bandwidth
  - Scientific routines on LU, QR, SVD, FFT, etc.
  - BLAS library on GPUs
  - Eventually, build GPU-LAPACK and Matlab routines

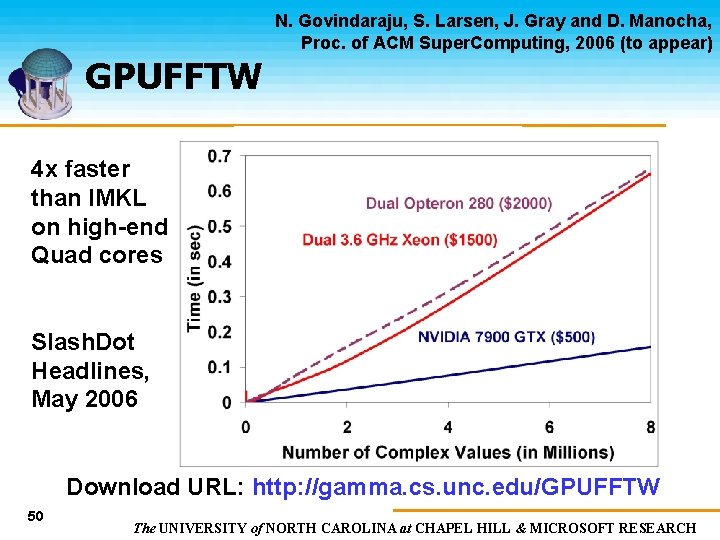

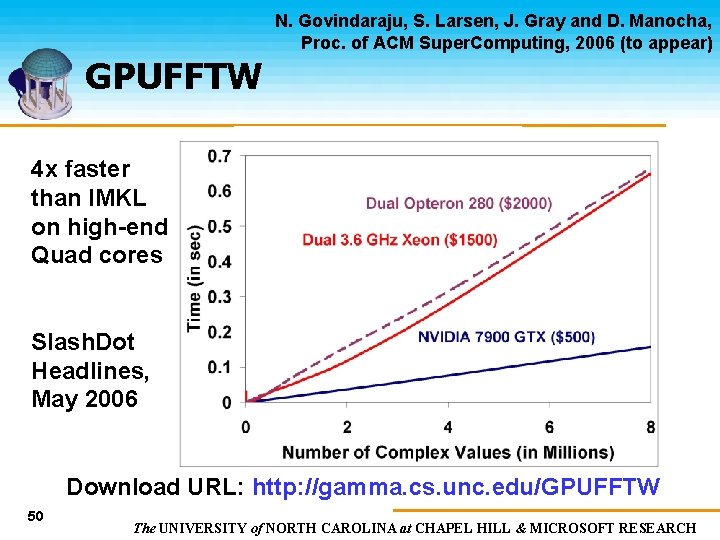

GPUFFTW
- N. Govindaraju, S. Larsen, J. Gray and D. Manocha, Proc. of ACM SuperComputing, 2006 (to appear)
- 4x faster than IMKL on high-end quad cores
- Slashdot headlines, May 2006
- Download URL: http://gamma.cs.unc.edu/GPUFFTW

GPU Roadmap
- GPUs are becoming more general purpose
  - Fewer limitations in the Microsoft DirectX 10 API
    - Better and consistent floating point support
    - Integer instruction support
    - More programmable stages, etc.
  - Significant advance in performance
- GPUs are being widely adopted in commercial applications
  - E.g. Microsoft Vista

Call to Action
- Don’t put all your eggs in the multi-core basket
- If you want TeraOps, go where they are
- If you want memory bandwidth, go where the memory bandwidth is
- CPU-GPU gap is widening
- Microsoft Xbox is ½ TeraOP today
- 40 gops, 40 gBps

Acknowledgements
- Research sponsors:
  - Army Research Office
  - Defense Advanced Research Projects Agency
  - National Science Foundation
  - Naval Research Laboratory
  - Intel Corporation
  - Microsoft Corporation
    - Craig Peeper, Peter-Pike Sloan, David Blythe, Jingren Zhou
  - NVIDIA Corporation
  - RDECOM

Acknowledgements
- David Tuft (UNC)
- UNC Systems, GAMMA and Walkthrough groups

Thank You
- Questions or comments?
- {naga, ritesh, dm}@cs.unc.edu
- Jim.Gray@microsoft.com
- http://www.cs.unc.edu/~naga
- http://research.microsoft.com/~Gray