
- Slides: 83

CPE 619 Introduction to Simulation
Aleksandar Milenković
The LaCASA Laboratory
Electrical and Computer Engineering Department
The University of Alabama in Huntsville
http://www.ece.uah.edu/~milenka
http://www.ece.uah.edu/~lacasa

Overview
- Simulation: Key Questions
- Introduction to Simulation
- Common Mistakes in Simulation
- Other Causes of Simulation Analysis Failure
- Checklist for Simulations
- Terminology
- Types of Models

Simulation: Key Questions
- What are the common mistakes in simulation, and why do most simulations fail?
- What language should be used for developing a simulation model?
- What are the different types of simulations?
- How to schedule events in a simulation?
- How to verify and validate a model?
- How to determine that the simulation has reached a steady state?
- How long to run a simulation?

Simulation: Key Questions (cont’d)
- How to generate uniform random numbers?
- How to verify that a given random number generator is good?
- How to select seeds for random number generators?
- How to generate random variables with a given distribution?
- What distributions should be used and when?

Introduction to Simulation
The best advice to those about to embark on a very large simulation is often the same as Punch's famous advice to those about to marry: Don't!
- Bratley, Fox, and Schrage (1987)

Common Mistakes in Simulation
1. Inappropriate Level of Detail: More detail ⇒ More time ⇒ More bugs ⇒ More CPU ⇒ More parameters ≠ More accurate
2. Improper Language: General purpose ⇒ More portable, more efficient, more time
3. Unverified Models: Bugs
4. Invalid Models: Model vs. reality
5. Improperly Handled Initial Conditions
6. Too Short Simulations: Need confidence intervals
7. Poor Random Number Generators: Safer to use a well-known generator
8. Improper Selection of Seeds: Zero seeds, same seeds for all streams

Other Causes of Simulation Analysis Failure
1. Inadequate Time Estimate
2. No Achievable Goal
3. Incomplete Mix of Essential Skills: (a) Project Leadership, (b) Modeling and Statistics, (c) Programming, (d) Knowledge of the Modeled System
4. Inadequate Level of User Participation
5. Obsolete or Nonexistent Documentation
6. Inability to Manage the Development of a Large Complex Computer Program: Need software engineering tools
7. Mysterious Results

Checklist for Simulations
1. Checks before developing a simulation:
  (a) Is the goal of the simulation properly specified?
  (b) Is the level of detail in the model appropriate for the goal?
  (c) Does the simulation team include personnel with project leadership, modeling, programming, and computer systems backgrounds?
  (d) Has sufficient time been planned for the project?
2. Checks during development:
  (a) Has the random number generator used in the simulation been tested for uniformity and independence?
  (b) Is the model reviewed regularly with the end user?
  (c) Is the model documented?

Checklist for Simulations (cont’d)
3. Checks after the simulation is running:
  (a) Is the simulation length appropriate?
  (b) Are the initial transients removed before computation?
  (c) Has the model been verified thoroughly?
  (d) Has the model been validated before using its results?
  (e) If there are any surprising results, have they been validated?
  (f) Are all seeds such that the random number streams will not overlap?

Terminology
- Introduce terms using an example of simulating CPU scheduling
  - Study various scheduling techniques given job characteristics, ignoring disks, display…
- State Variables: Define the state of the system
  - Can restart simulation from state variables
  - E.g., length of the job queue
- Event: Change in the system state
  - E.g., arrival, beginning of a new execution, departure

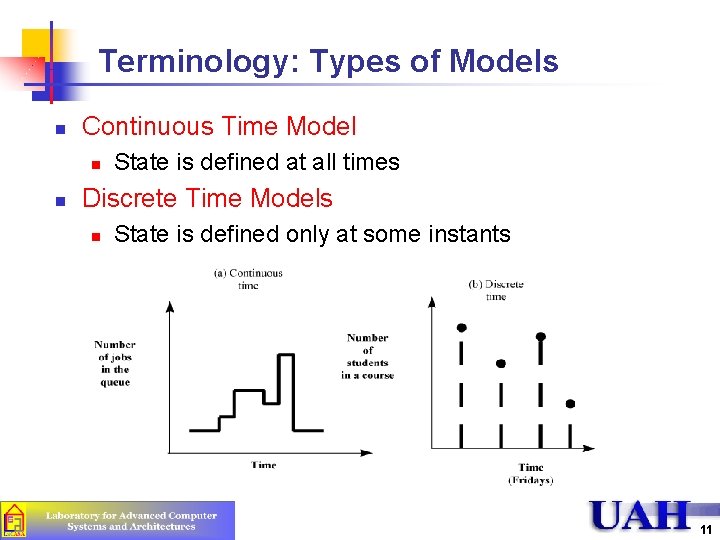

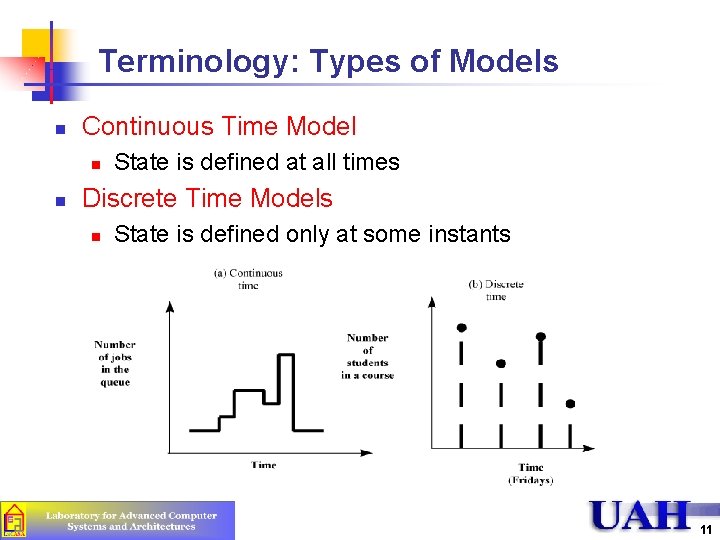

Terminology: Types of Models
- Continuous Time Model
  - State is defined at all times
- Discrete Time Model
  - State is defined only at some instants

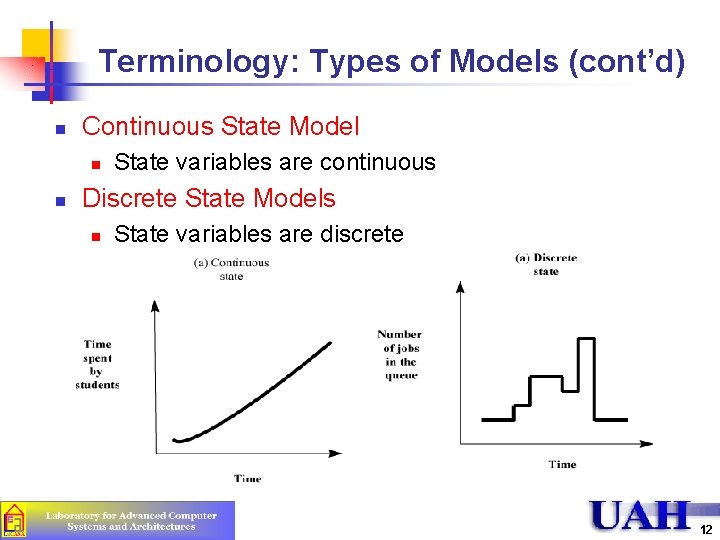

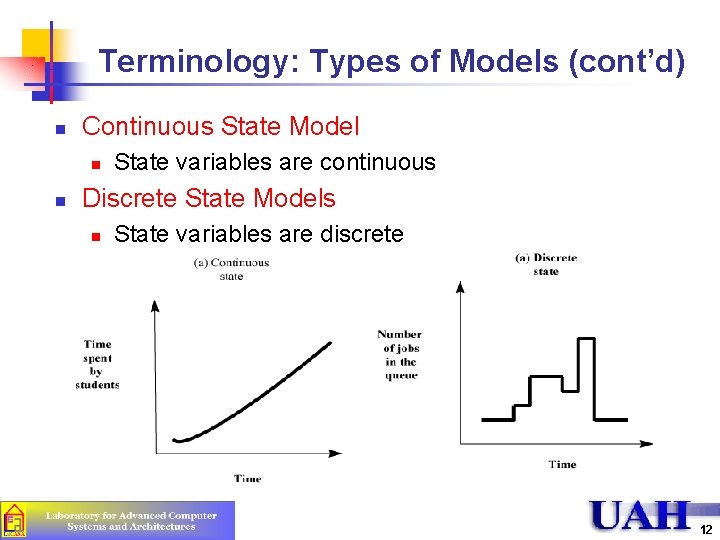

Terminology: Types of Models (cont’d)
- Continuous State Model
  - State variables are continuous
- Discrete State Model
  - State variables are discrete

Terminology: Types of Models (cont’d)
- Discrete state = Discrete event model
- Continuous state = Continuous event model
- Continuity of time ≠ Continuity of state
- Four possible combinations
  1. discrete state/discrete time
  2. discrete state/continuous time
  3. continuous state/discrete time
  4. continuous state/continuous time

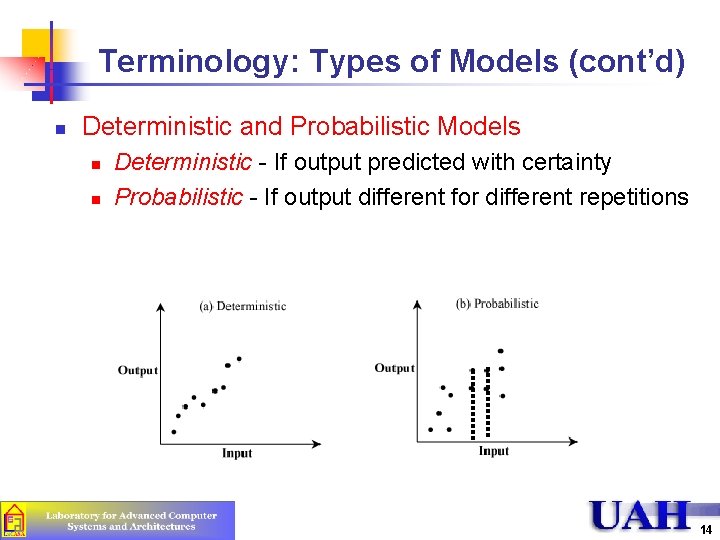

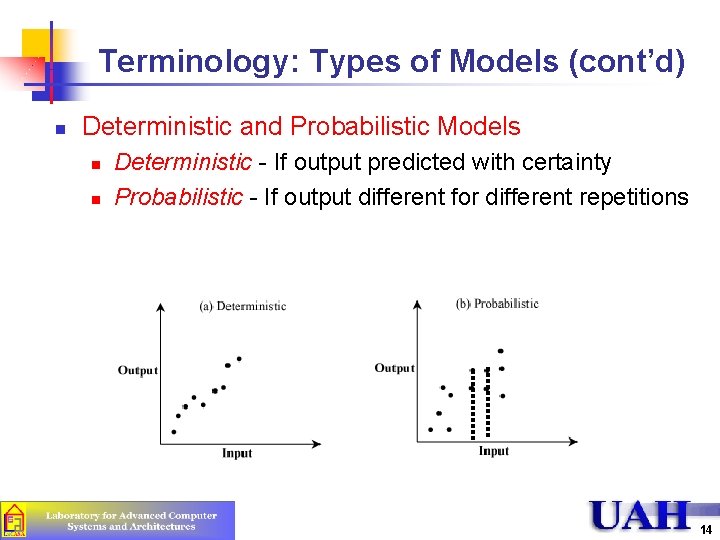

Terminology: Types of Models (cont’d)
- Deterministic and Probabilistic Models
  - Deterministic: output can be predicted with certainty
  - Probabilistic: output differs for different repetitions

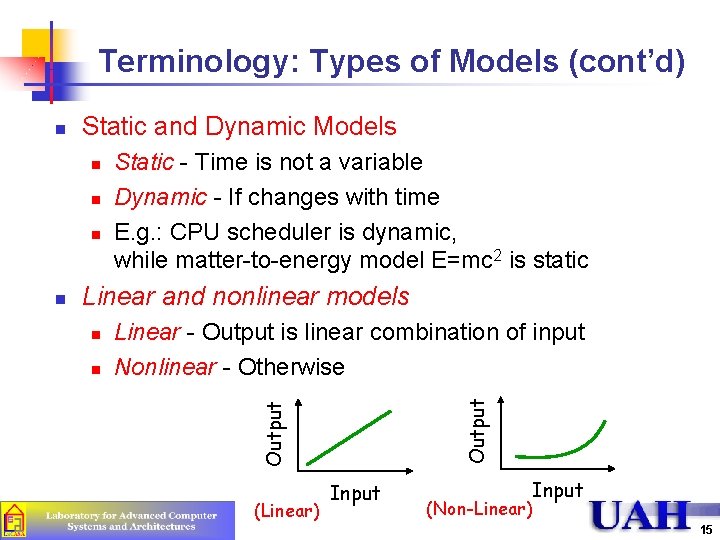

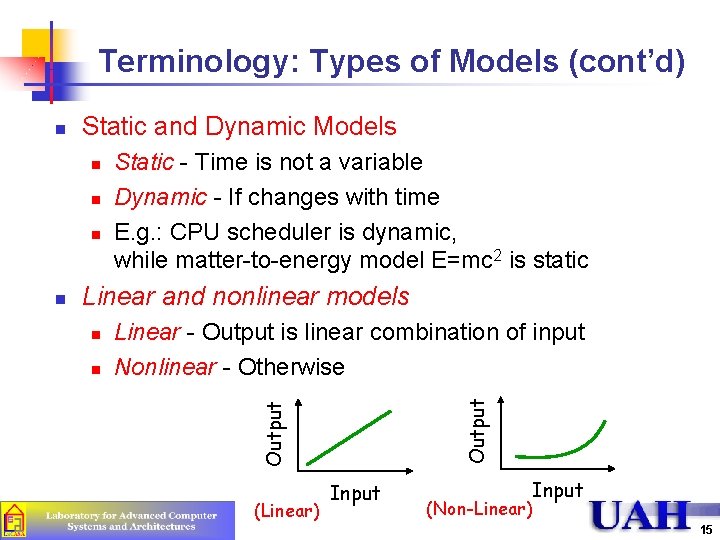

Terminology: Types of Models (cont’d)
- Static and Dynamic Models
  - Static: Time is not a variable
  - Dynamic: Output changes with time
  - E.g.: a CPU scheduler is dynamic, while the matter-to-energy model E = mc² is static
- Linear and nonlinear models
  - Linear: Output is a linear combination of input
  - Nonlinear: Otherwise
[Figure: output versus input for a linear and a nonlinear model]

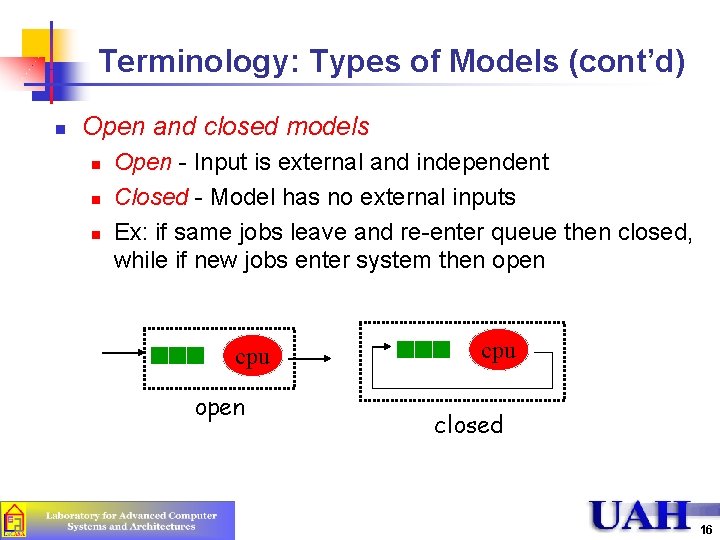

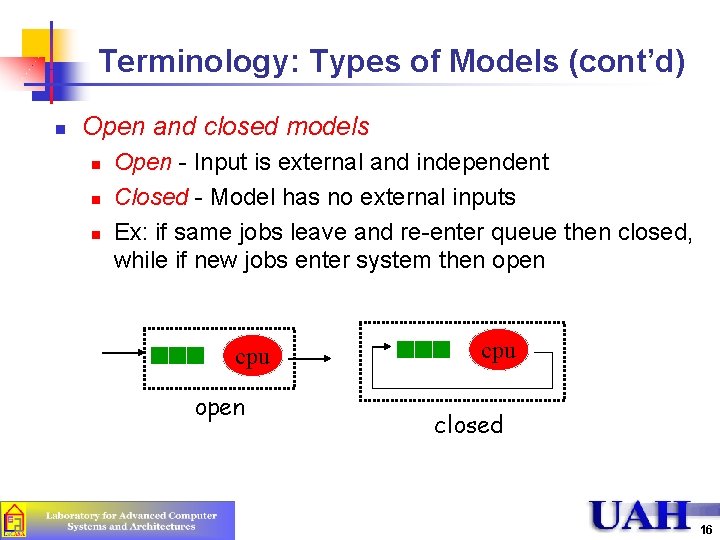

Terminology: Types of Models (cont’d)
- Open and closed models
  - Open: Input is external and independent
  - Closed: Model has no external inputs
  - Ex: if the same jobs leave and re-enter the queue, the model is closed; if new jobs enter the system, it is open
[Figure: CPU queue, closed model]

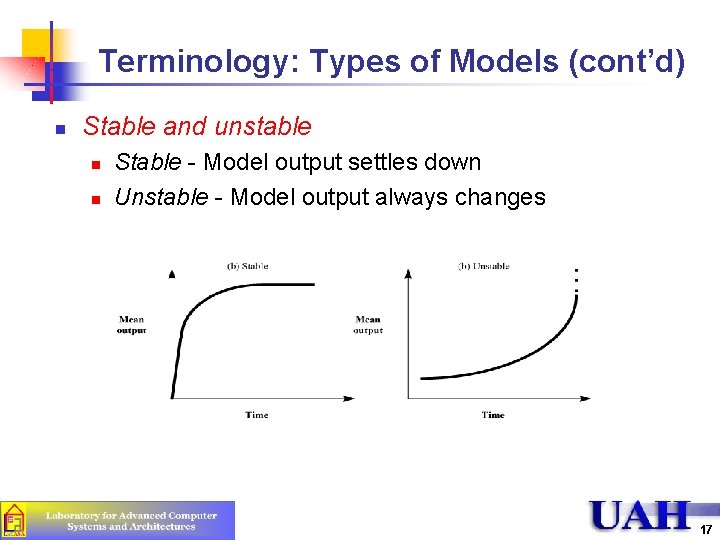

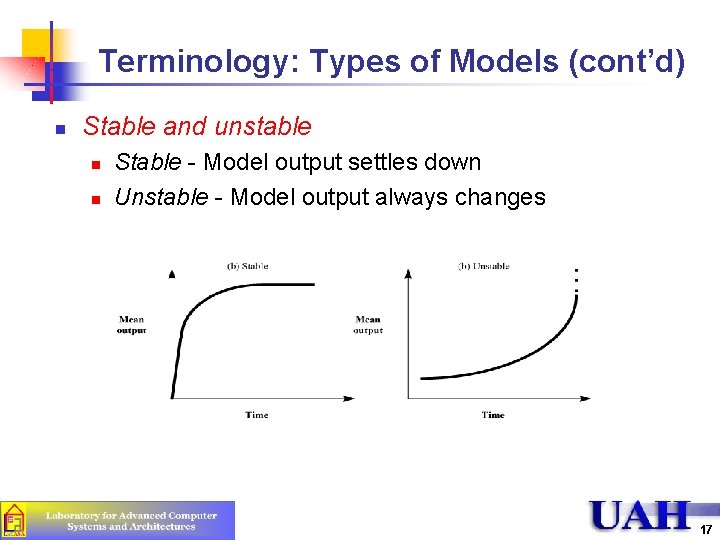

Terminology: Types of Models (cont’d)
- Stable and unstable
  - Stable: Model output settles down
  - Unstable: Model output always changes

Computer System Models
- Continuous time
- Discrete state
- Probabilistic
- Dynamic
- Nonlinear
- Open or closed
- Stable or unstable

Selecting a Language for Simulation
- Four choices
  1. Simulation language
  2. General-purpose language
  3. Extension of a general-purpose language
  4. Simulation package

Selecting a Language for Simulation (cont’d)
- Simulation language (SL): built-in facilities for time steps, event scheduling, data collection, reporting
- General-purpose language (GPL): known to the developer, available on more systems, flexible
- The major difference is the cost tradeoff (SL vs. GPL)
  - SL+: saves development time (if you know it), leaves more time for system-specific issues, more readable code
  - SL-: requires startup time to learn
  - GPL+: analyst's familiarity, availability, quick startup, flexibility, portability
  - GPL-: may require more time to add simulation facilities
- A possible recommendation: every analyst should learn one simulation language, to understand those “costs” and be able to compare

Selecting a Language for Simulation (cont’d)
- Extension of a general-purpose language: a collection of routines for commonly used tasks. Often a base language with extra libraries that can be called
  - Example: GASP (for FORTRAN)
  - Collection of routines to handle simulation tasks
  - Compromise for efficiency, flexibility, and portability
- Simulation packages: allow the model to be defined interactively. Get results in one day
  - Examples: QNET4 and RESQ
  - Input dialog
  - Library of data structures, routines, and algorithms
  - Big time savings
  - Inflexible ⇒ Simplification
- The tradeoff is in flexibility: a package can only do what its developer envisioned, but if that is what is needed, it is the quicker route

Types of Simulation Languages
- Continuous Simulation Languages
  - CSMP, DYNAMO
  - Differential equations
  - Used in chemical engineering
- Discrete-event Simulation Languages
  - SIMULA and GPSS
- Combined
  - SIMSCRIPT and GASP
  - Allow discrete, continuous, as well as combined simulations

Types of Simulations
1. Emulation: Using hardware or firmware
2. Monte Carlo Simulation
3. Trace-Driven Simulation
4. Discrete Event Simulation

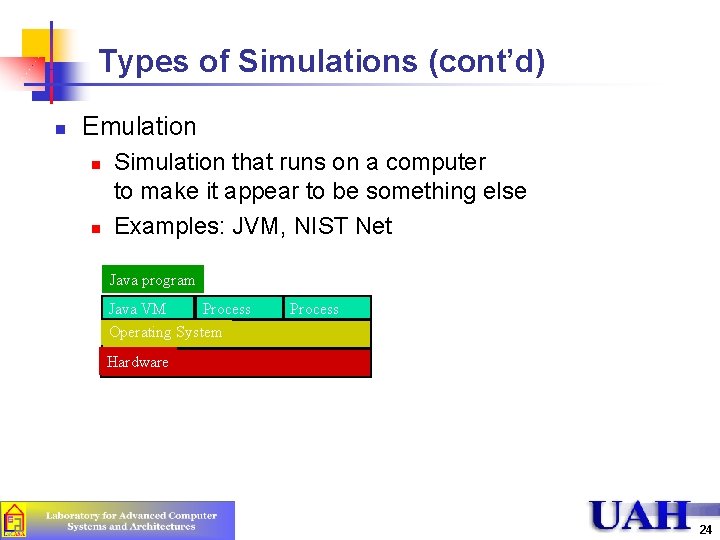

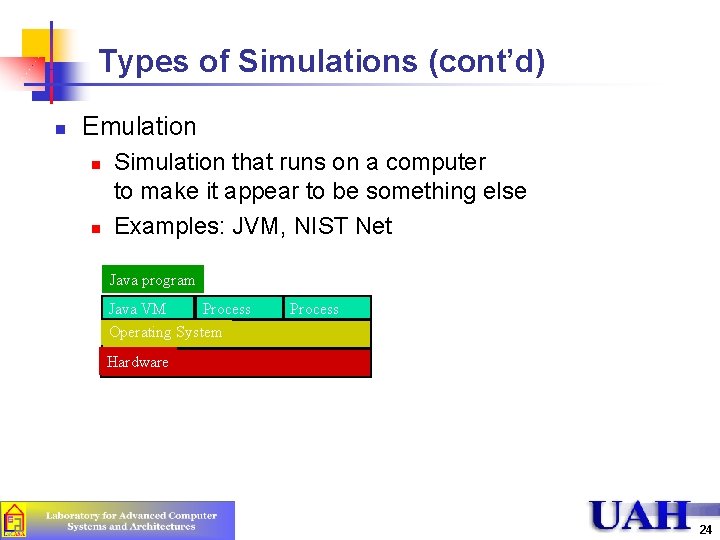

Types of Simulations (cont’d)
- Emulation
  - Simulation that runs on a computer to make it appear to be something else
  - Examples: JVM, NIST Net
[Figure: layered stack with a Java program on the Java VM, processes on the operating system, and the hardware underneath]

Types of Simulation (cont’d)
Monte Carlo method [Origin: after Count Montgomery de Carlo, Italian gambler and random-number generator (1792-1838).] A method of jazzing up the action in certain statistical and number-analytic environments by setting up a book and inviting bets on the outcome of a computation.
- The Devil's DP Dictionary, McGraw-Hill (1981)

Monte Carlo Simulation
- A static simulation has no time parameter
  - Runs until some equilibrium state is reached
- Used to model physical phenomena, evaluate probabilistic systems, and numerically estimate complex mathematical expressions
- Driven with a random number generator
  - Hence “Monte Carlo” (after casinos) simulation
- Example: consider numerically determining the value of π
  - Area of circle = πr² = π for radius 1

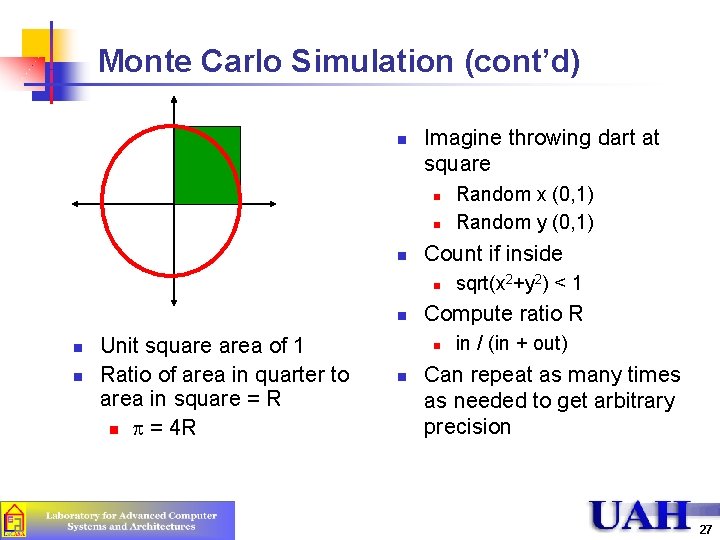

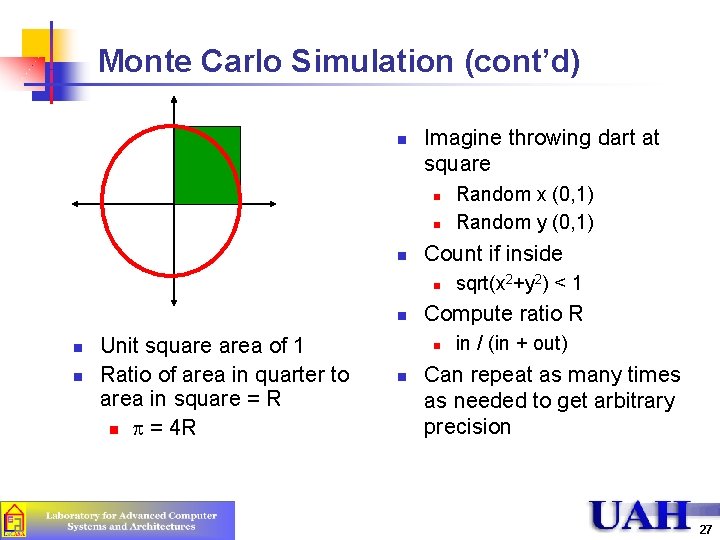

Monte Carlo Simulation (cont’d)
- Imagine throwing a dart at a square
  - Random x in (0, 1)
  - Random y in (0, 1)
- Count if inside the quarter circle
  - sqrt(x² + y²) < 1
- Compute ratio R
  - in / (in + out)
- Can repeat as many times as needed to get arbitrary precision
- Unit square has area 1
- Ratio of area in quarter circle to area in square = R
  - π = 4R
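The dart-throwing procedure above can be sketched in a few lines of Python. This is only an illustration: the function name `estimate_pi`, the seed, and the use of Python's standard `random` module are assumptions, not part of the slides.

```python
import random


def estimate_pi(num_darts, seed=1):
    """Estimate pi by throwing darts at the unit square (illustrative sketch)."""
    rng = random.Random(seed)          # private generator so runs are repeatable
    inside = 0
    for _ in range(num_darts):
        x = rng.random()               # random x in [0, 1)
        y = rng.random()               # random y in [0, 1)
        if x * x + y * y < 1.0:        # same test as sqrt(x^2 + y^2) < 1
            inside += 1
    ratio = inside / num_darts         # R = in / (in + out)
    return 4.0 * ratio                 # pi = 4R
```

The error shrinks roughly with the square root of the number of darts, so each extra digit of precision costs about 100 times more samples.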

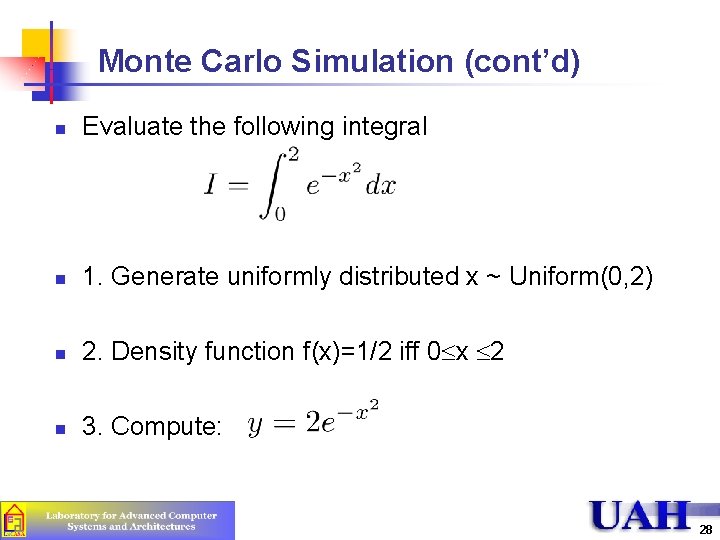

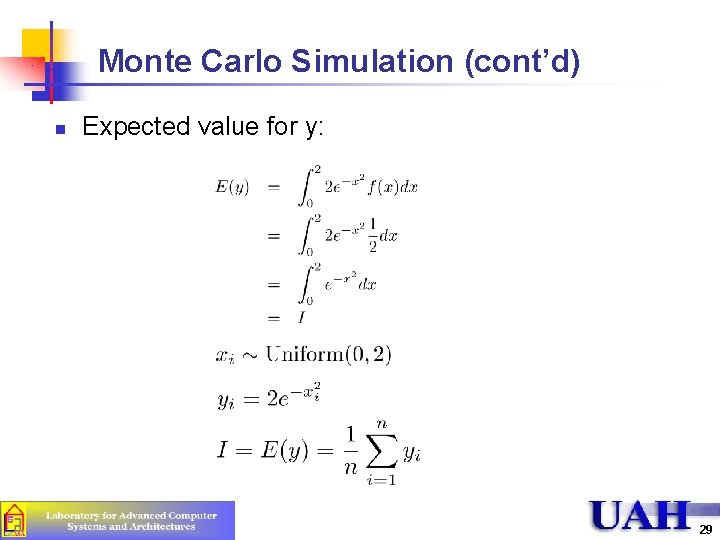

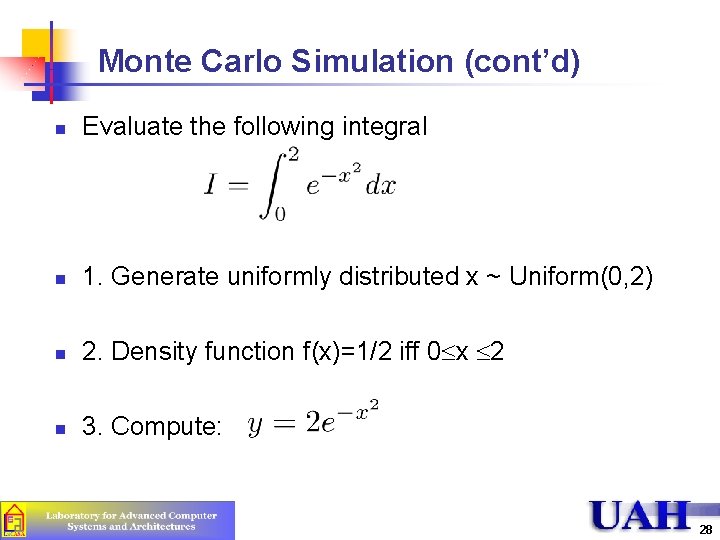

Monte Carlo Simulation (cont’d)
- Evaluate the following integral
1. Generate uniformly distributed x ~ Uniform(0, 2)
2. Density function f(x) = 1/2 iff 0 ≤ x ≤ 2
3. Compute:

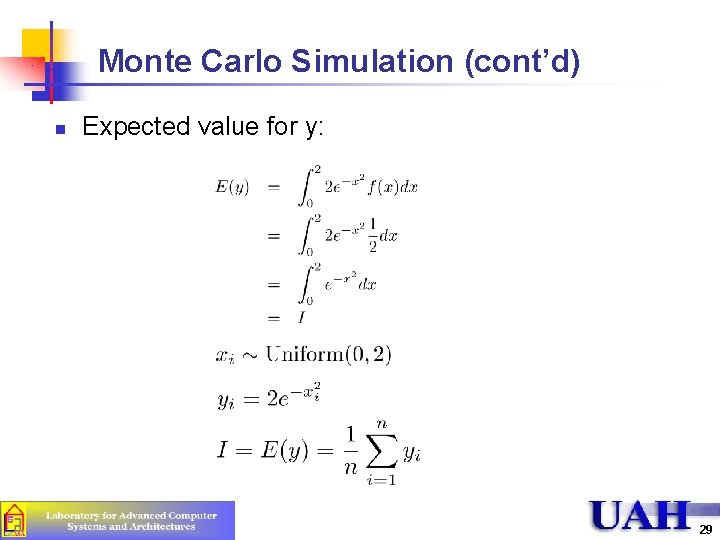

Monte Carlo Simulation (cont’d)
- Expected value for y:
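A minimal sketch of Monte Carlo integration with x ~ Uniform(0, 2) follows. The slide's integral is not reproduced in the text, so the integrand `g` below (exp(-x²)) is a hypothetical stand-in, and `mc_integral` is an illustrative name. The idea is that for y = g(x)/f(x), the expected value of y equals the integral of g over the interval.

```python
import math
import random


def g(x):
    """Hypothetical integrand, chosen only for illustration."""
    return math.exp(-x * x)


def mc_integral(integrand, a, b, num_samples, seed=1):
    """Estimate the integral of `integrand` over [a, b] by sampling."""
    rng = random.Random(seed)
    density = 1.0 / (b - a)            # f(x) = 1/(b - a) on [a, b]; 1/2 for (0, 2)
    total = 0.0
    for _ in range(num_samples):
        x = rng.uniform(a, b)          # x ~ Uniform(a, b)
        total += integrand(x) / density  # y = g(x) / f(x)
    return total / num_samples         # sample mean of y estimates E[y]
```

For this particular stand-in integrand the exact value is (√π/2)·erf(2), which gives a convenient check on the estimate.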

Trace-Driven Simulation
- Uses a time-ordered record of events on a real system as input
  - Example: to compare memory management schemes, use a trace of page reference patterns as input, then model and simulate page replacement algorithms
- Note: the trace needs to be independent of the system under study
  - Example: a trace of disk events could not be used to study page replacement, since those events depend on the current algorithm
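As an illustration of the page-replacement example, the sketch below drives two replacement policies with the same page-reference trace and counts page faults. The policies (FIFO and LRU), the function names, and the tiny trace are assumptions picked for the example; the slides do not name specific algorithms.

```python
from collections import OrderedDict, deque


def fifo_faults(trace, frames):
    """Count page faults for first-in-first-out replacement."""
    resident = set()
    arrival_order = deque()
    faults = 0
    for page in trace:
        if page in resident:
            continue                       # hit: FIFO order is unchanged
        faults += 1
        if len(resident) == frames:
            resident.remove(arrival_order.popleft())  # evict the oldest page
        resident.add(page)
        arrival_order.append(page)
    return faults


def lru_faults(trace, frames):
    """Count page faults for least-recently-used replacement."""
    resident = OrderedDict()               # ordered from least to most recent
    faults = 0
    for page in trace:
        if page in resident:
            resident.move_to_end(page)     # hit: mark as most recently used
            continue
        faults += 1
        if len(resident) == frames:
            resident.popitem(last=False)   # evict the least recently used page
        resident[page] = True
    return faults


# Hypothetical page-reference trace; a real study would read a measured one.
example_trace = [1, 2, 3, 1, 4, 1]
```

Because both policies see the identical trace, the difference in fault counts comes from the algorithms alone, which is the fair-comparison property of trace-driven simulation.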

Advantages of Trace-Driven Simulations
1. Credibility
2. Easy Validation: Compare simulation with measured
3. Accurate Workload: Models correlation and interference
4. Detailed Trade-Offs: Detailed workload ⇒ Can study small changes in algorithms
5. Less Randomness: Trace ⇒ deterministic input ⇒ Fewer repetitions
6. Fair Comparison: Better than random input
7. Similarity to the Actual Implementation: Trace-driven model is similar to the system ⇒ Can understand complexity of implementation

Disadvantages of Trace-Driven Simulations
1. Complexity: More detailed
2. Representativeness: Workload changes with time, equipment
3. Finiteness: Few minutes fill up a disk
4. Single Point of Validation: One trace = one point
5. Detail
6. Trade-Off: Difficult to change workload

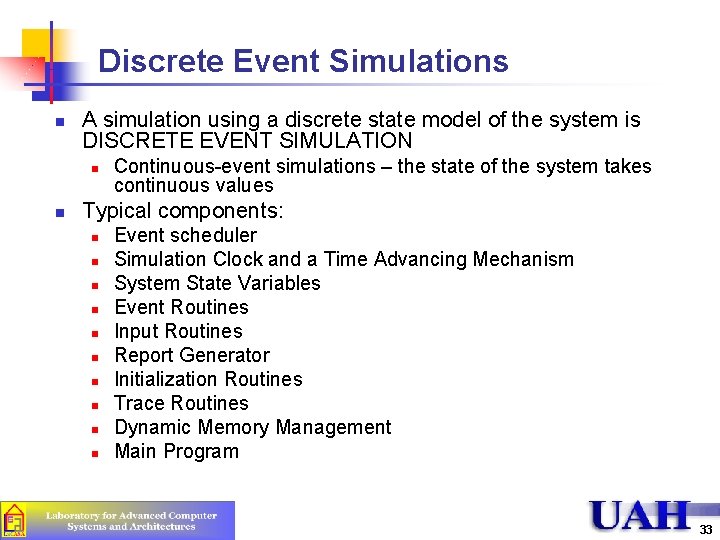

Discrete Event Simulations
- A simulation using a discrete state model of the system is a DISCRETE EVENT SIMULATION
  - Continuous-event simulations: the state of the system takes continuous values
- Typical components:
  - Event Scheduler
  - Simulation Clock and a Time Advancing Mechanism
  - System State Variables
  - Event Routines
  - Input Routines
  - Report Generator
  - Initialization Routines
  - Trace Routines
  - Dynamic Memory Management
  - Main Program

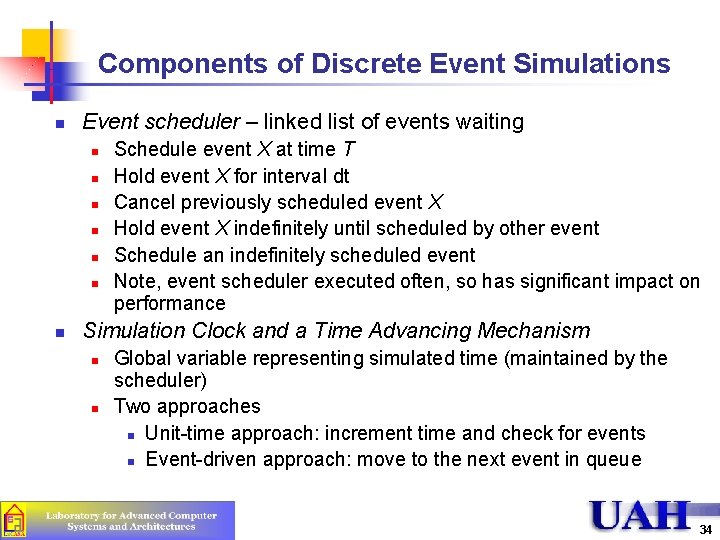

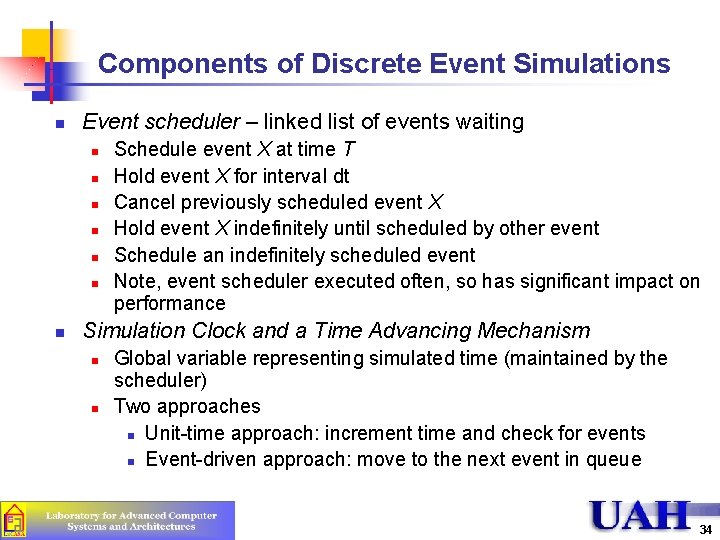

Components of Discrete Event Simulations
- Event scheduler: linked list of events waiting
  - Schedule event X at time T
  - Hold event X for interval dt
  - Cancel previously scheduled event X
  - Hold event X indefinitely until scheduled by other event
  - Schedule an indefinitely scheduled event
  - Note: the event scheduler is executed often, so it has a significant impact on performance
- Simulation Clock and a Time Advancing Mechanism
  - Global variable representing simulated time (maintained by the scheduler)
  - Two approaches
    - Unit-time approach: increment time and check for events
    - Event-driven approach: move to the next event in queue
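The scheduler operations and the event-driven clock advance described above can be sketched as follows. This is an assumption-laden illustration: the class name `EventScheduler` and its method names are invented for the example, and the event set is kept in a binary heap (Python's `heapq`) rather than the linked list mentioned on the slide.

```python
import heapq
import itertools


class EventScheduler:
    """Minimal event-driven scheduler (illustrative sketch, not a full simulator)."""

    def __init__(self):
        self.clock = 0.0                     # simulated time, owned by the scheduler
        self._queue = []                     # heap of (time, event_id, action)
        self._ids = itertools.count()        # unique ids also break ties on time
        self._cancelled = set()

    def schedule(self, time, action):
        """Schedule `action` at absolute simulated time `time`."""
        event_id = next(self._ids)
        heapq.heappush(self._queue, (time, event_id, action))
        return event_id

    def hold(self, dt, action):
        """Schedule `action` after an interval `dt` from the current clock."""
        return self.schedule(self.clock + dt, action)

    def cancel(self, event_id):
        """Cancel a previously scheduled event (it is skipped when popped)."""
        self._cancelled.add(event_id)

    def run(self, until=float("inf")):
        """Event-driven time advance: jump the clock to each next event."""
        while self._queue and self._queue[0][0] <= until:
            time, event_id, action = heapq.heappop(self._queue)
            if event_id in self._cancelled:
                self._cancelled.discard(event_id)
                continue
            self.clock = time
            action(self)                     # event routine may schedule more events
```

Each event routine receives the scheduler itself, so an arrival routine can, for instance, call `hold` to schedule the matching departure.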

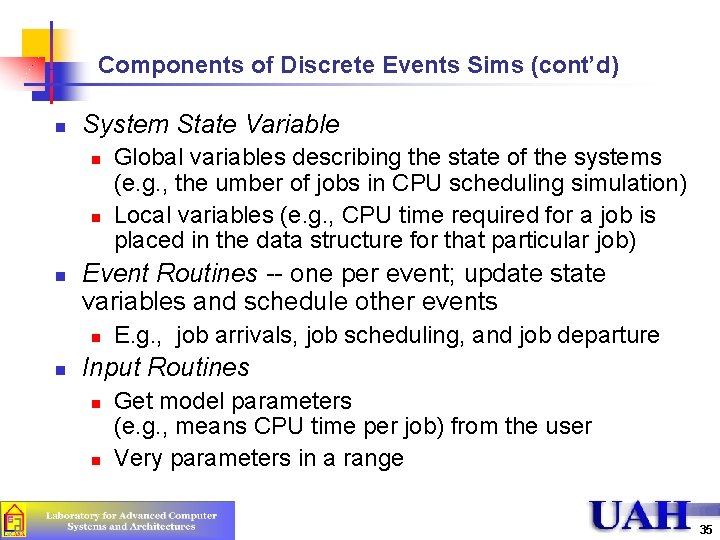

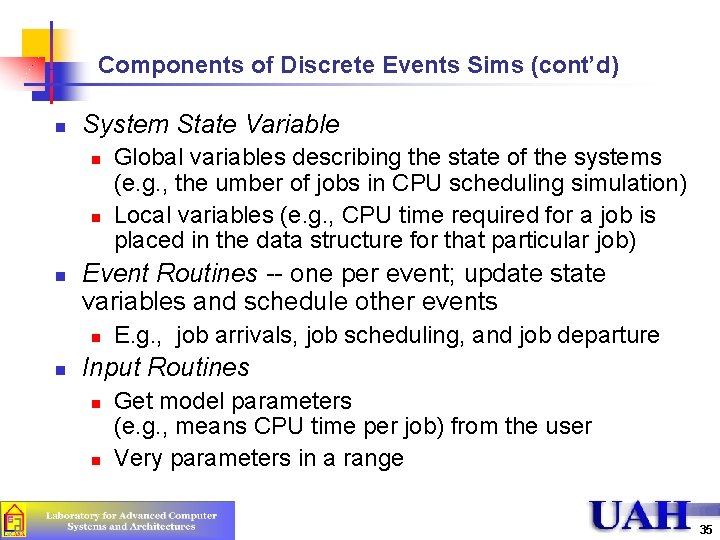

Components of Discrete Event Simulations (cont’d)
- System State Variables
  - Global variables describing the state of the system (e.g., the number of jobs in a CPU scheduling simulation)
  - Local variables (e.g., the CPU time required for a job is placed in the data structure for that particular job)
- Event Routines: one per event; update state variables and schedule other events
  - E.g., job arrivals, job scheduling, and job departure
- Input Routines
  - Get model parameters (e.g., mean CPU time per job) from the user
  - Vary parameters in a range

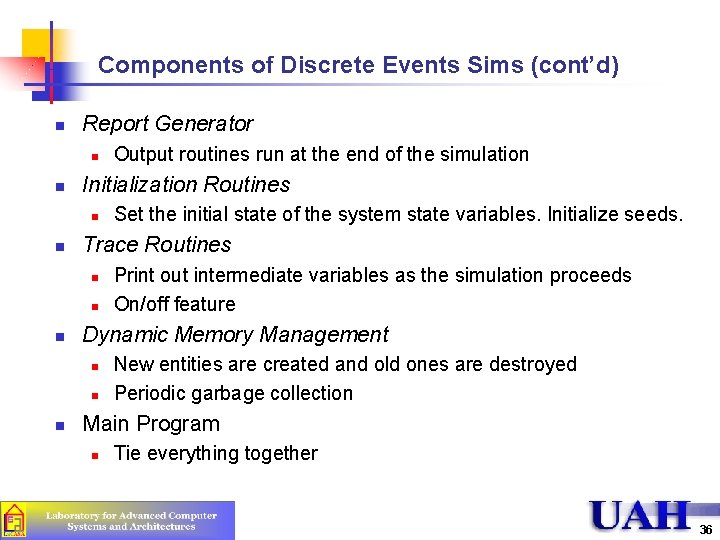

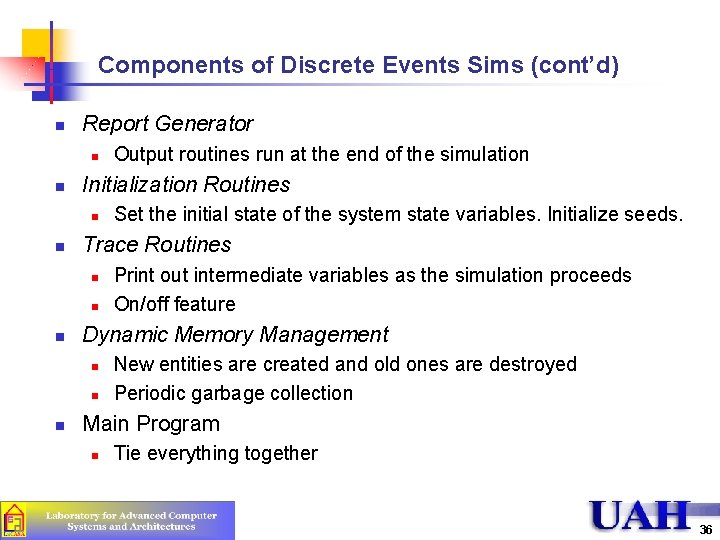

Components of Discrete Event Simulations (cont’d)
- Report Generator
  - Output routines run at the end of the simulation
- Initialization Routines
  - Set the initial state of the system state variables. Initialize seeds.
- Trace Routines
  - Print out intermediate variables as the simulation proceeds
  - On/off feature
- Dynamic Memory Management
  - New entities are created and old ones are destroyed
  - Periodic garbage collection
- Main Program
  - Tie everything together

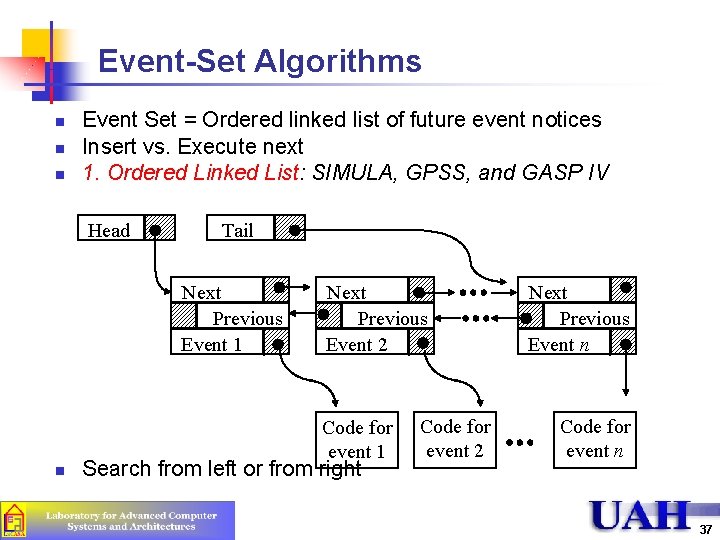

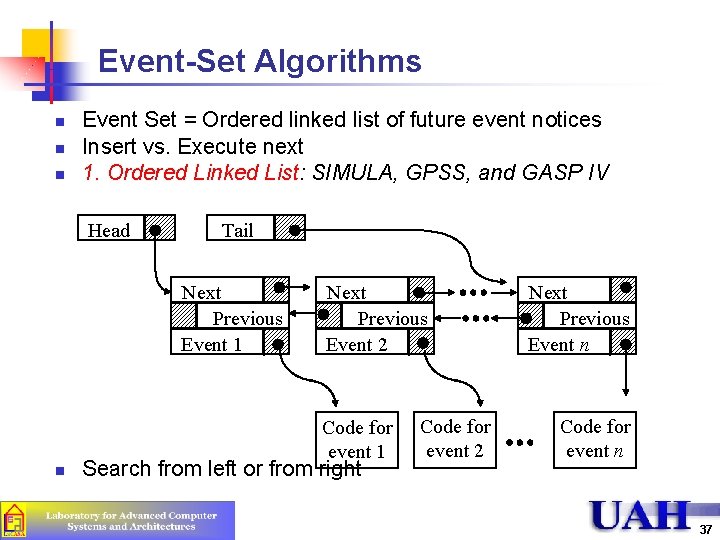

Event-Set Algorithms
- Event Set = Ordered linked list of future event notices
- Insert vs. Execute next
- 1. Ordered Linked List: SIMULA, GPSS, and GASP IV
  - Search from left or from right
[Figure: doubly linked list from Head to Tail; each node (Event 1, Event 2, …, Event n) holds Next and Previous pointers and points to the code for its event]

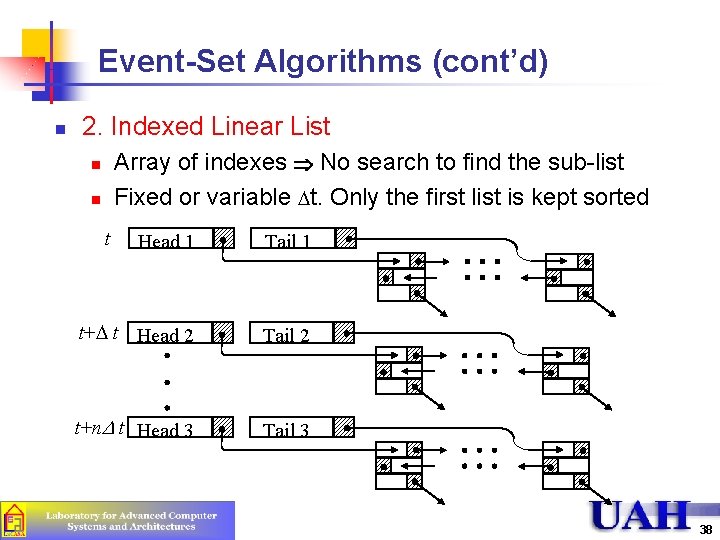

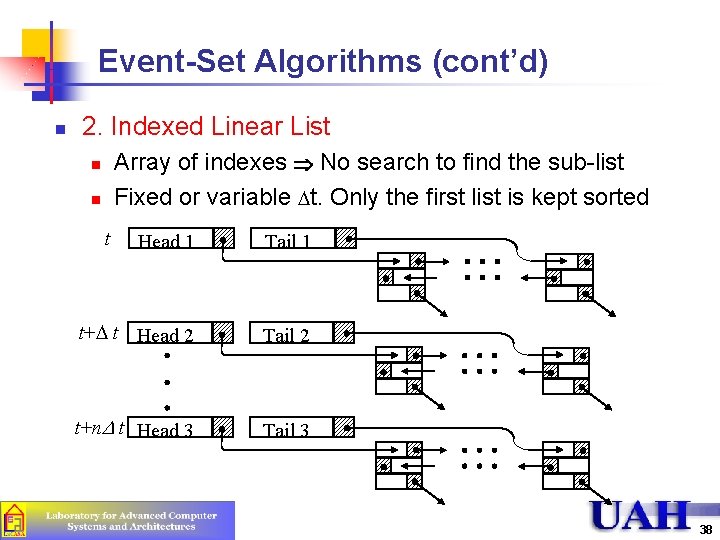

Event-Set Algorithms (cont’d)
- 2. Indexed Linear List
  - Array of indexes ⇒ No search to find the sub-list
  - Fixed or variable Δt
  - Only the first list is kept sorted
[Figure: index array with entries t, t+Δt, …, t+nΔt, each pointing to the Head and Tail of its sub-list]

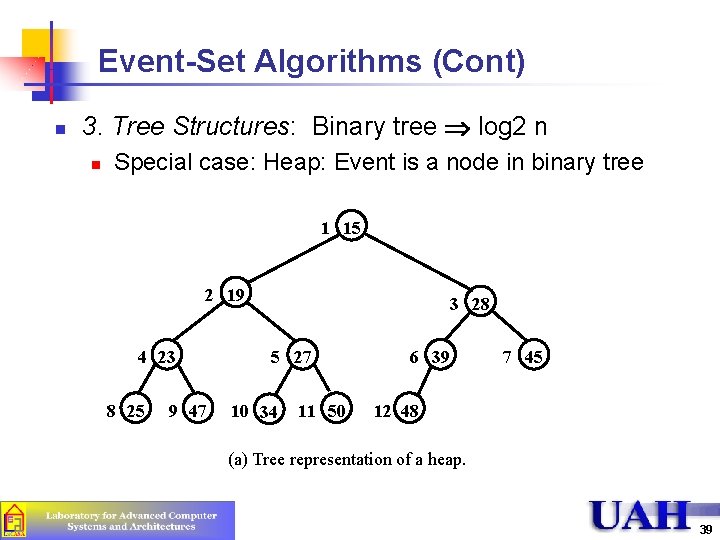

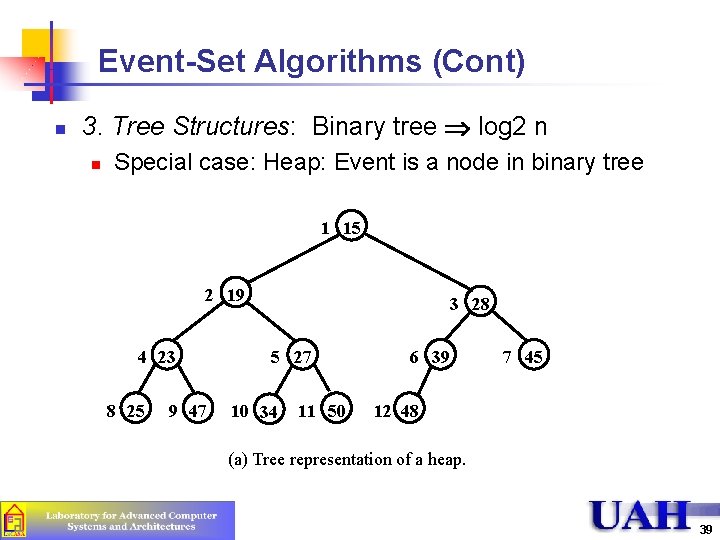

Event-Set Algorithms (cont’d)
- 3. Tree Structures: Binary tree ⇒ log₂ n
  - Special case: Heap: Event is a node in binary tree
[Figure (a): Tree representation of a heap. Node position: value pairs are 1: 15, 2: 19, 3: 28, 4: 23, 5: 27, 6: 39, 7: 45, 8: 25, 9: 47, 10: 34, 11: 50, 12: 48]
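The heap in the figure can be stored as a plain array, with the node at 1-based position i having its parent at position ⌊i/2⌋. The sketch below loads the figure's values into a Python list and uses the standard `heapq` module for insert and execute-next; the helper names are assumptions made for the example.

```python
import heapq

# Event times from the heap figure, listed by node position 1..12.
event_times = [15, 19, 28, 23, 27, 39, 45, 25, 47, 34, 50, 48]


def is_min_heap(items):
    """Check that every node is no smaller than its parent (0-based indexing)."""
    return all(items[(i - 1) // 2] <= items[i] for i in range(1, len(items)))


def insert_event(heap, time):
    """Insert a new event time; costs O(log n) comparisons."""
    heapq.heappush(heap, time)


def next_event(heap):
    """Remove and return the earliest event time; also O(log n)."""
    return heapq.heappop(heap)
```

Both operations touch only one root-to-leaf path, which is where the log₂ n cost on the slide comes from.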

Summary
- Common Mistakes: Detail, Invalid, Short
- Discrete event, continuous time, nonlinear models
- Monte Carlo Simulation: Static models
- Trace-driven simulation: Credibility, difficult trade-offs
- Event-Set Algorithms: Linked list, indexed linear list, heaps

Analysis of Simulation Results

Overview
- Analysis of Simulation Results
- Model Verification Techniques
- Model Validation Techniques
- Transient Removal
- Terminating Simulations
- Stopping Criteria: Variance Estimation
- Variance Reduction

Model Verification vs. Validation
- The model output should be close to that of the real system
  - Make assumptions about behavior of real systems
- 1st step: test whether the assumptions are reasonable
  - Validation, or representativeness of assumptions
- 2nd step: test whether the model implements the assumptions
  - Verification, or correctness
- Four Possibilities
  1. Unverified, Invalid
  2. Unverified, Valid
  3. Verified, Invalid
  4. Verified, Valid

Model Verification Techniques
1. Top Down Modular Design
2. Anti-bugging
3. Structured Walk-Through
4. Deterministic Models
5. Run Simplified Cases
6. Trace
7. On-Line Graphic Displays
8. Continuity Test
9. Degeneracy Tests
10. Consistency Tests
11. Seed Independence

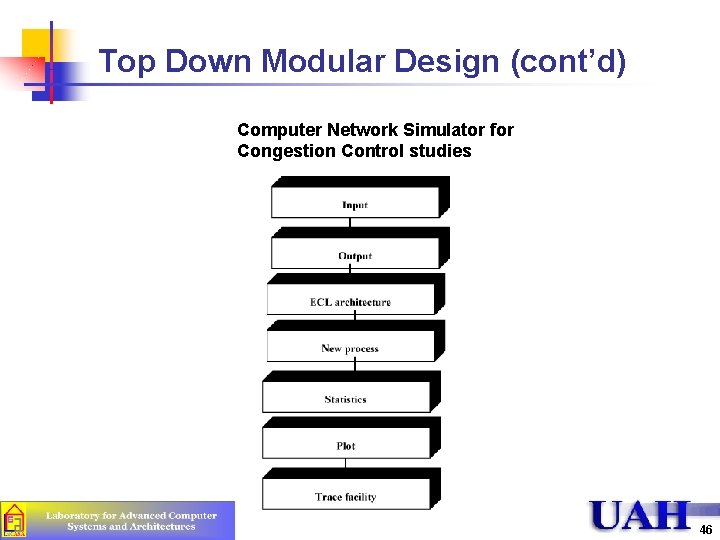

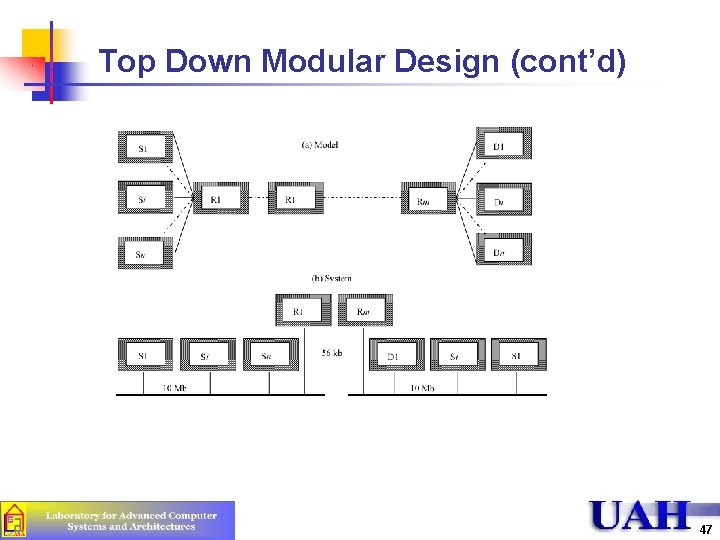

Top Down Modular Design
- Divide and Conquer
- Modules = Subroutines, Subprograms, Procedures
  - Modules have well-defined interfaces
  - Can be independently developed, debugged, and maintained
- Top-down design
  - Hierarchical structure
  - Modules and sub-modules

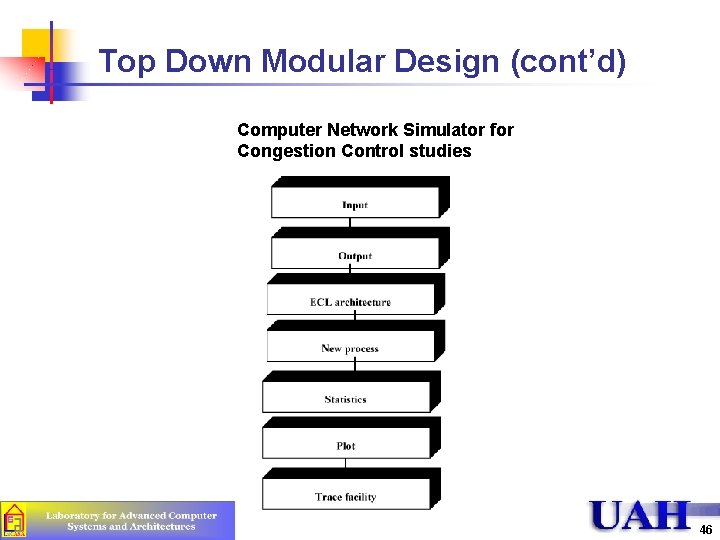

Top Down Modular Design (cont’d)
[Figure: Computer Network Simulator for Congestion Control studies]

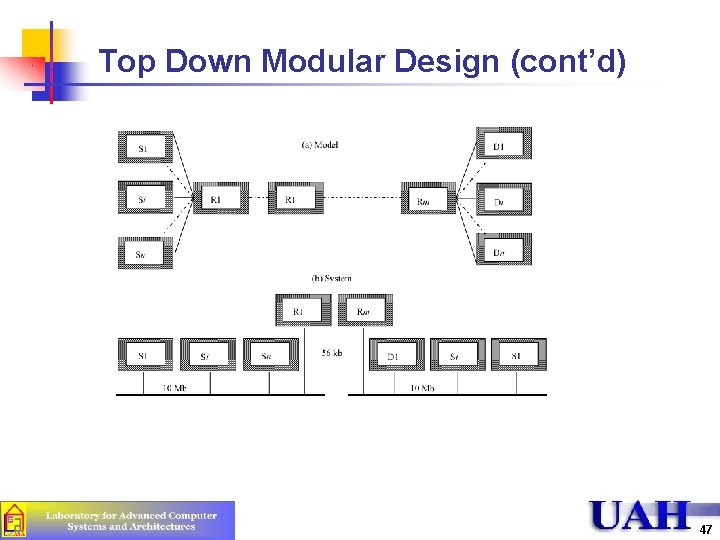

Top Down Modular Design (cont’d)

Verification Techniques
- Anti-bugging: Include self-checks
  - Σ Probabilities = 1
  - Jobs left = Generated - Serviced
- Structured Walk-Through
  - Explain the code to another person or group
  - Works even if the person is sleeping
- Deterministic Models: Use constant values
- Run Simplified Cases
  - Only one packet
  - Only one source
  - Only one intermediate node
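The two anti-bugging self-checks listed above could be coded roughly as shown below. The function names and the tolerance are assumptions for illustration; a real simulator would call such checks at convenient points, for example at the end of each run.

```python
import math


def check_probabilities(probabilities, tolerance=1e-9):
    """Self-check: the probabilities of all alternatives must sum to 1."""
    total = sum(probabilities)
    if not math.isclose(total, 1.0, abs_tol=tolerance):
        raise AssertionError(f"probabilities sum to {total}, expected 1")


def check_job_conservation(generated, serviced, jobs_left):
    """Self-check: jobs left = generated - serviced."""
    if jobs_left != generated - serviced:
        raise AssertionError(
            f"{jobs_left} jobs left, expected {generated - serviced}"
        )
```

Raising an exception explicitly, instead of using a bare `assert` statement, keeps the checks active even when Python runs with optimizations enabled.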

Verification Techniques (cont’d)
- Trace = Time-ordered list of events and variables
- Several levels of detail
  - Events trace
  - Procedure trace
  - Variables trace
- User selects the detail
  - Include on and off

Verification Techniques (cont’d)
- On-Line Graphic Displays
  - Make simulation interesting
  - Help sell the results
  - More comprehensive than trace

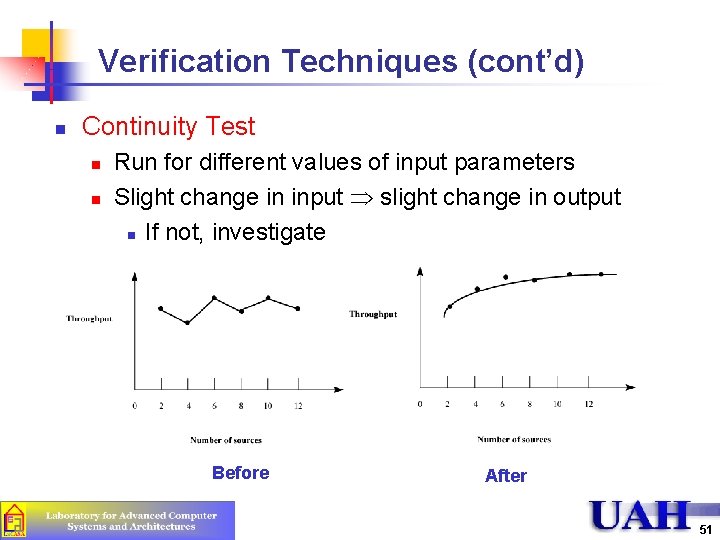

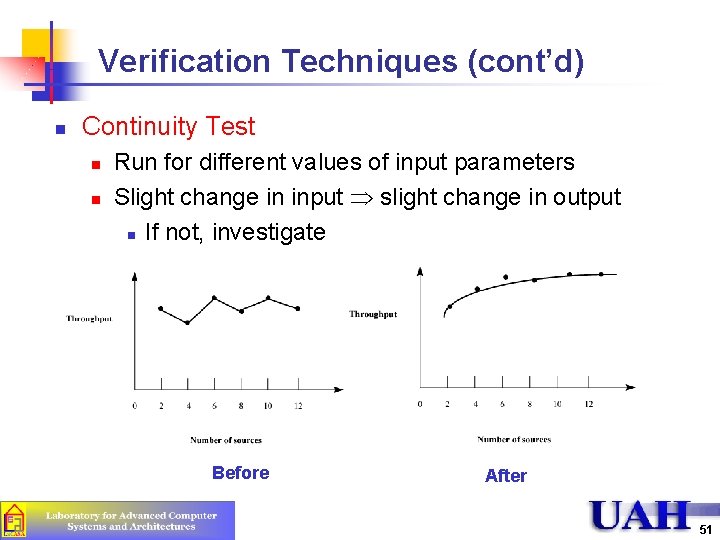

Verification Techniques (cont’d)
- Continuity Test
  - Run for different values of input parameters
  - Slight change in input ⇒ slight change in output
  - If not, investigate
[Figure: output curves before and after]

Verification Techniques (cont’d)
- Degeneracy Tests: Try extreme configurations and workloads
  - One CPU, zero disks
- Consistency Tests
  - Similar results for inputs that have the same effect
    - Four users at 100 Mbps vs. two at 200 Mbps
  - Build a test library of continuity, degeneracy, and consistency tests
- Seed Independence: Similar results for different seeds

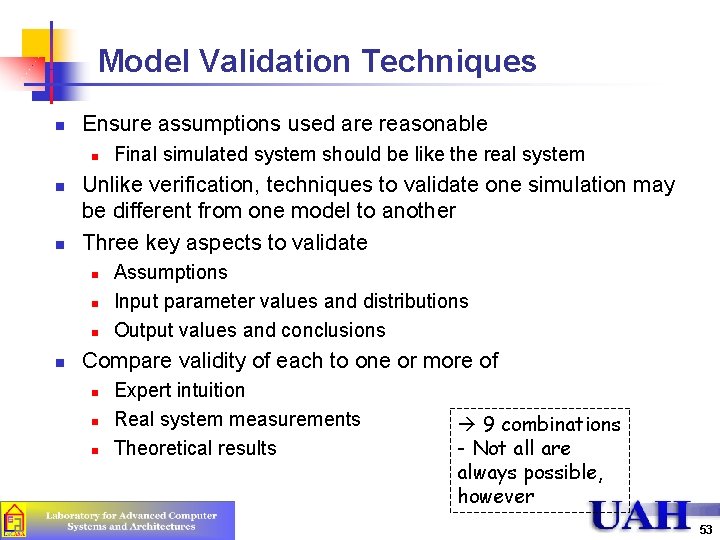

Model Validation Techniques
- Ensure assumptions used are reasonable
  - Final simulated system should be like the real system
- Unlike verification, the techniques used to validate may differ from one model to another
- Three key aspects to validate
  - Assumptions
  - Input parameter values and distributions
  - Output values and conclusions
- Compare validity of each to one or more of
  - Expert intuition
  - Real system measurements
  - Theoretical results
- 9 combinations; not all are always possible, however

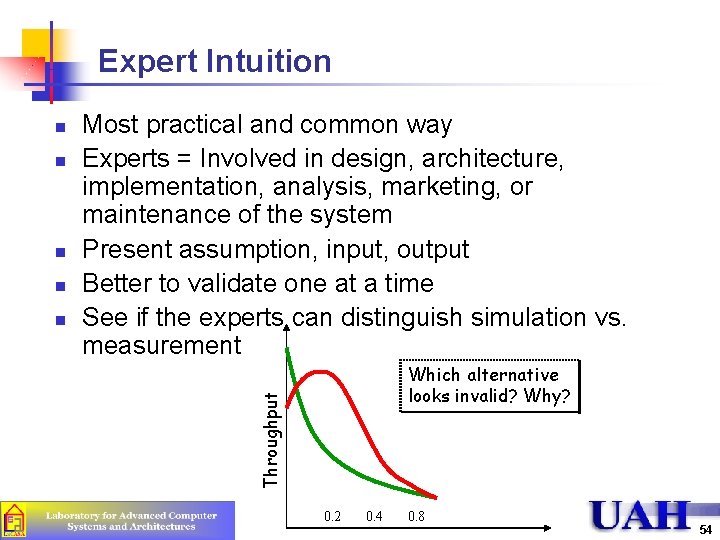

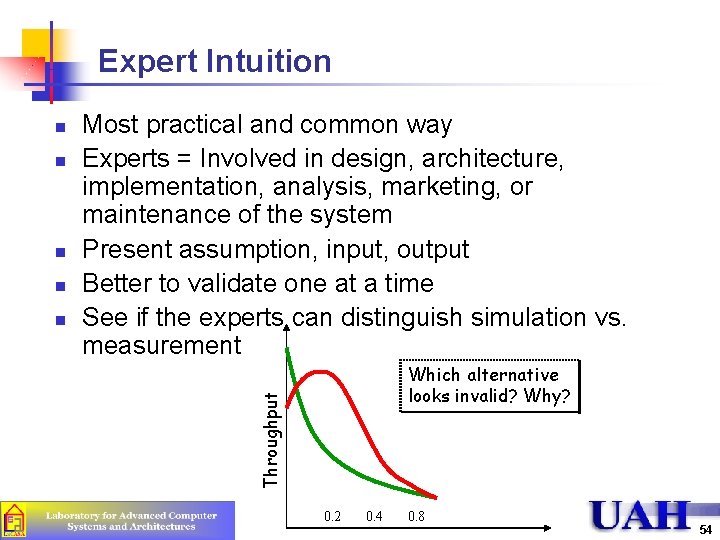

Expert Intuition
- Most practical and common way
- Experts = Involved in design, architecture, implementation, analysis, marketing, or maintenance of the system
- Present assumption, input, output
- Better to validate one at a time
- See if the experts can distinguish simulation vs. measurement
- Which alternative looks invalid? Why?
[Figure: throughput plot; labels 0.2, 0.4, 0.8]

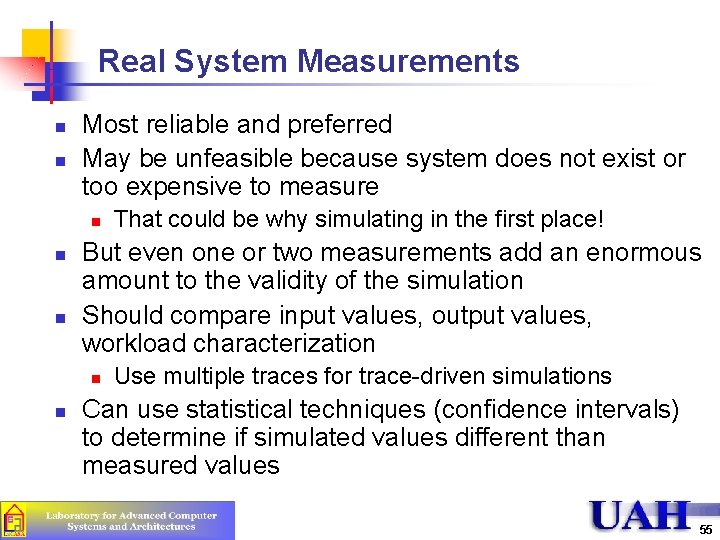

Real System Measurements
- Most reliable and preferred
- May be infeasible because the system does not exist or is too expensive to measure
  - That could be why we are simulating in the first place!
- But even one or two measurements add an enormous amount to the validity of the simulation
- Should compare input values, output values, workload characterization
  - Use multiple traces for trace-driven simulations
- Can use statistical techniques (confidence intervals) to determine whether simulated values differ from measured values

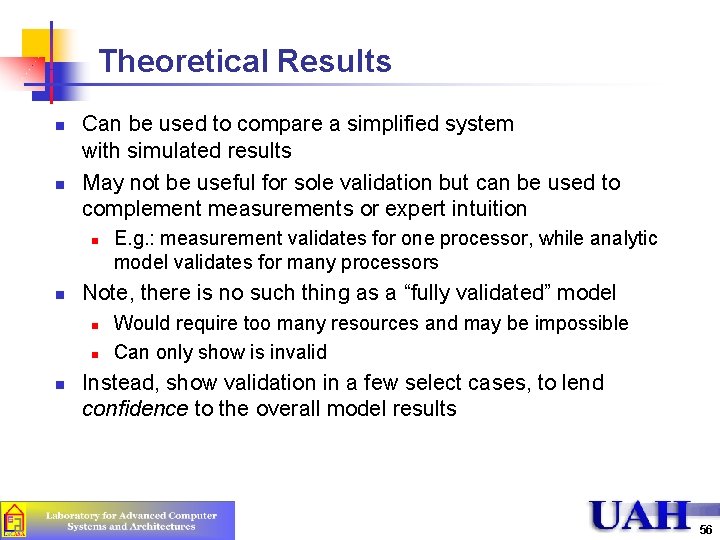

Theoretical Results
- Can be used to compare a simplified system with simulated results
- May not be useful for sole validation but can be used to complement measurements or expert intuition
  - E.g.: measurement validates for one processor, while an analytic model validates for many processors
- Note: there is no such thing as a “fully validated” model
  - Would require too many resources and may be impossible
  - Can only show that a model is invalid
- Instead, show validation in a few select cases, to lend confidence to the overall model results

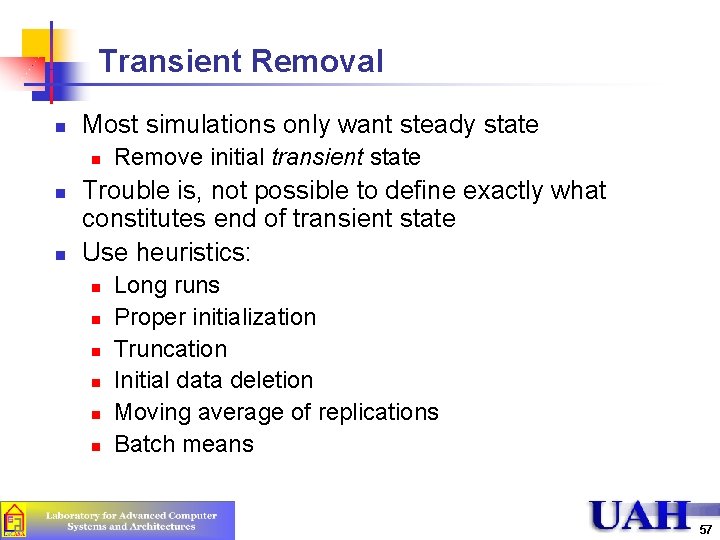

Transient Removal
- Most simulations only want steady state
  - Remove initial transient state
- Trouble is, it is not possible to define exactly what constitutes the end of the transient state
- Use heuristics:
  - Long runs
  - Proper initialization
  - Truncation
  - Initial data deletion
  - Moving average of replications
  - Batch means

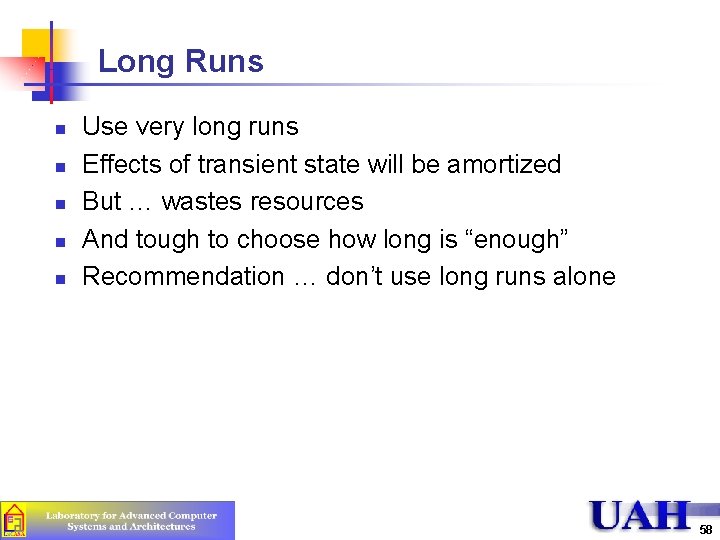

Long Runs
- Use very long runs
- Effects of transient state will be amortized
- But … wastes resources
- And tough to choose how long is “enough”
- Recommendation … don’t use long runs alone

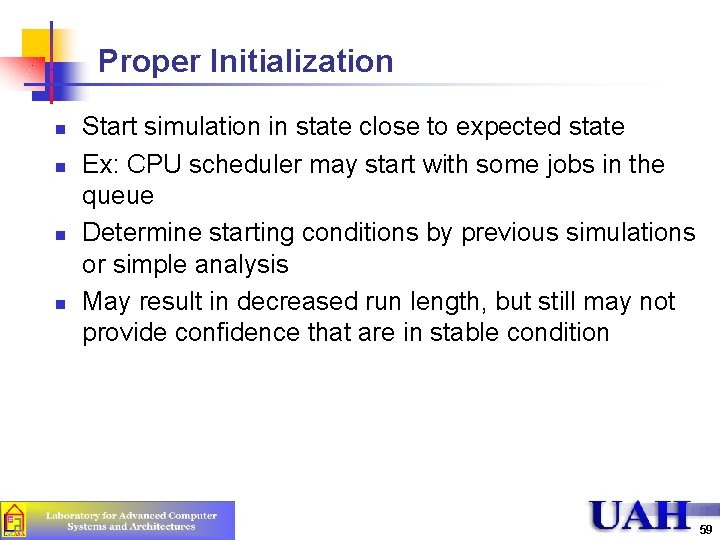

Proper Initialization
- Start the simulation in a state close to the expected steady state
- Ex: a CPU scheduler may start with some jobs in the queue
- Determine starting conditions from previous simulations or simple analysis
- May decrease the run length, but still may not provide confidence that the system is in a stable condition

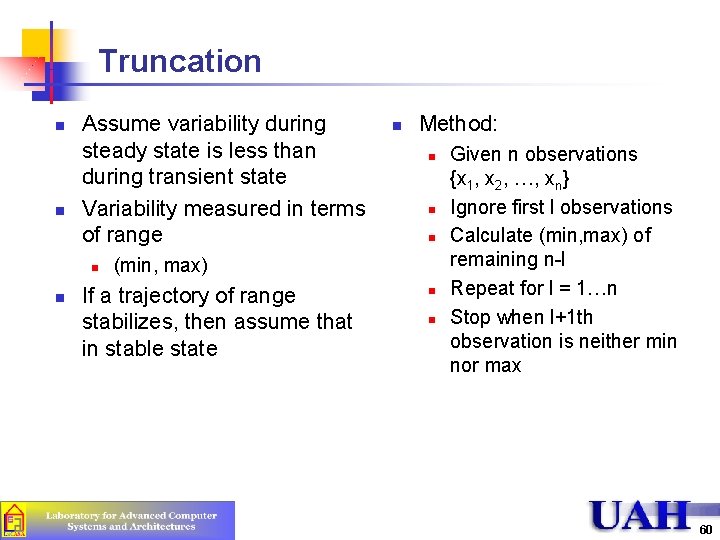

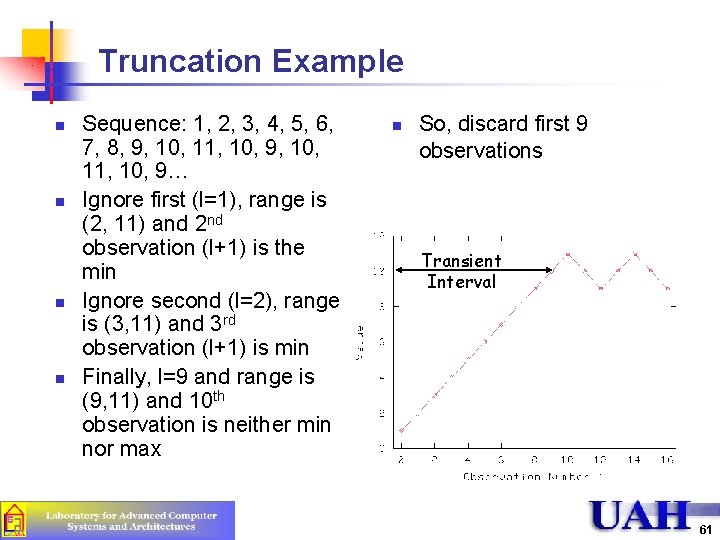

Truncation
- Assume variability during steady state is less than during transient state
- Variability measured in terms of range
  - (min, max)
- If the trajectory of the range stabilizes, assume the system is in a stable state
- Method:
  - Given n observations {x1, x2, …, xn}
  - Ignore first l observations
  - Calculate (min, max) of remaining n-l
  - Repeat for l = 1…n
  - Stop when the (l+1)th observation is neither min nor max

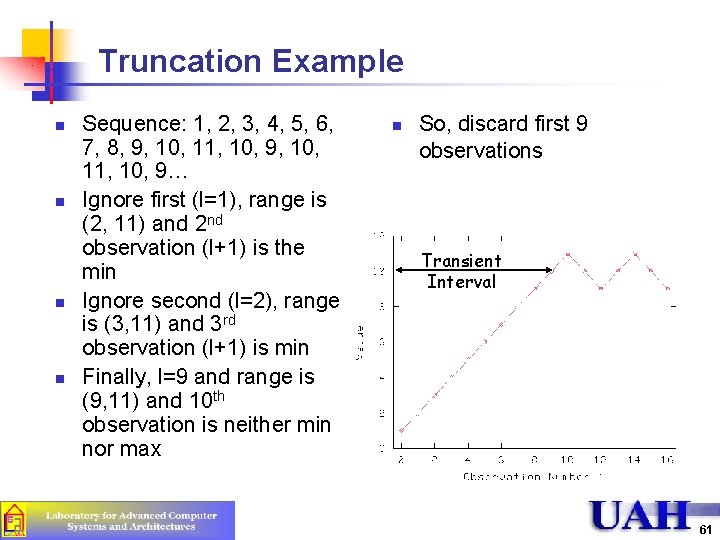

Truncation Example
- Sequence: 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 10, 9…
- Ignore first (l=1): range is (2, 11) and the 2nd observation (l+1) is the min
- Ignore second (l=2): range is (3, 11) and the 3rd observation (l+1) is the min
- Finally, l=9: range is (9, 11) and the 10th observation is neither min nor max
- So, discard first 9 observations
[Figure: transient interval]
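The truncation method, as stated in the steps above, can be sketched directly in Python. The function name `truncation_length` is an assumption, and the sketch scans l upward from 1 exactly as the worked example does.

```python
def truncation_length(observations):
    """Return the smallest l >= 1 for which the (l+1)-th observation is
    neither the minimum nor the maximum of the remaining observations.
    Returns None if no such l exists (illustrative sketch)."""
    n = len(observations)
    for l in range(1, n - 1):
        remaining = observations[l:]       # drop the first l observations
        candidate = observations[l]        # the (l+1)-th observation, 1-based
        if min(remaining) < candidate < max(remaining):
            return l
    return None


# The sequence from the example above.
example_sequence = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 10, 9]
```

On `example_sequence` the scan stops at l = 9, matching the slide: the remaining range is (9, 11) and the 10th observation, 10, lies strictly inside it.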

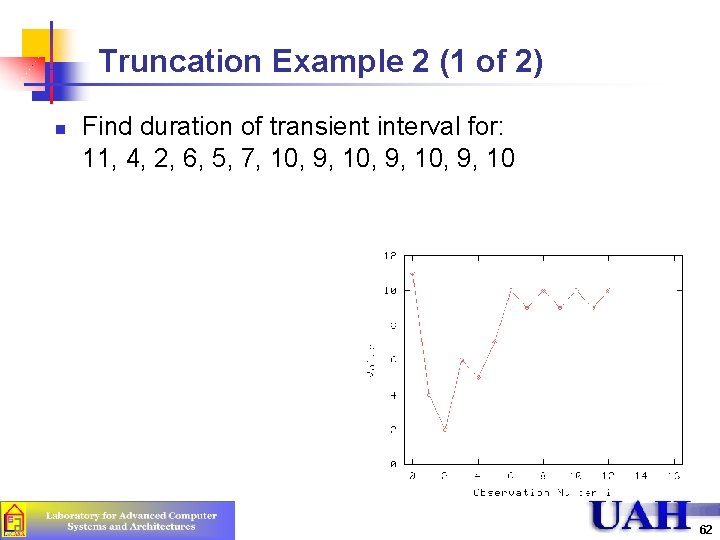

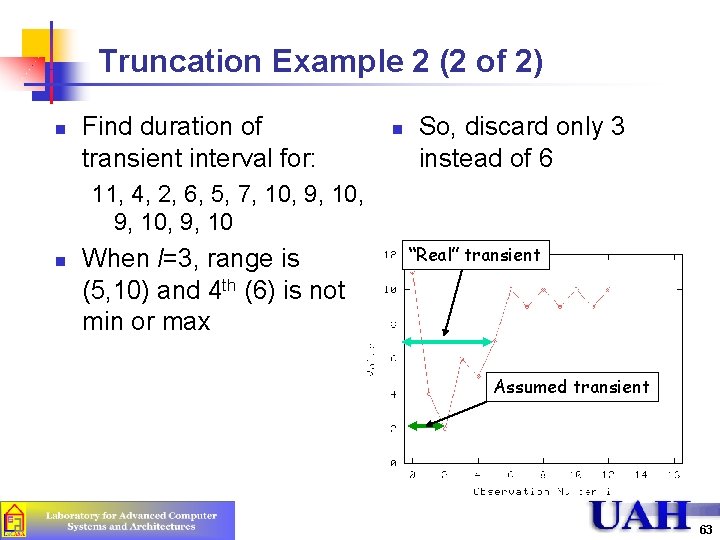

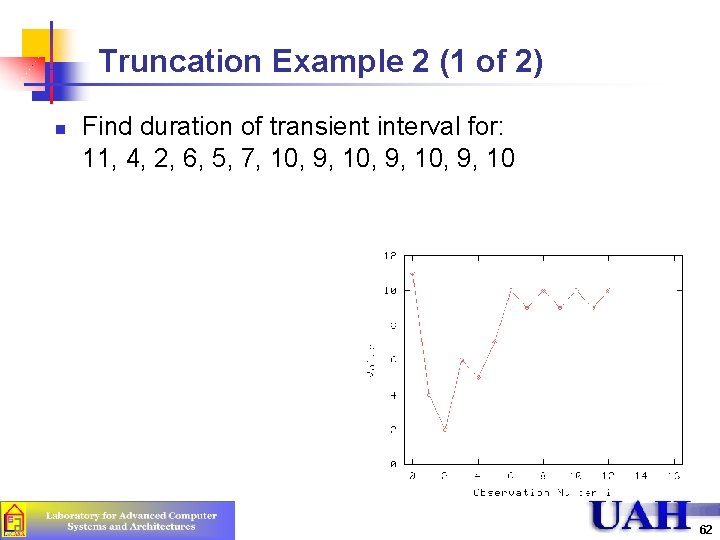

Truncation Example 2 (1 of 2)
- Find duration of transient interval for: 11, 4, 2, 6, 5, 7, 10, 9, 10

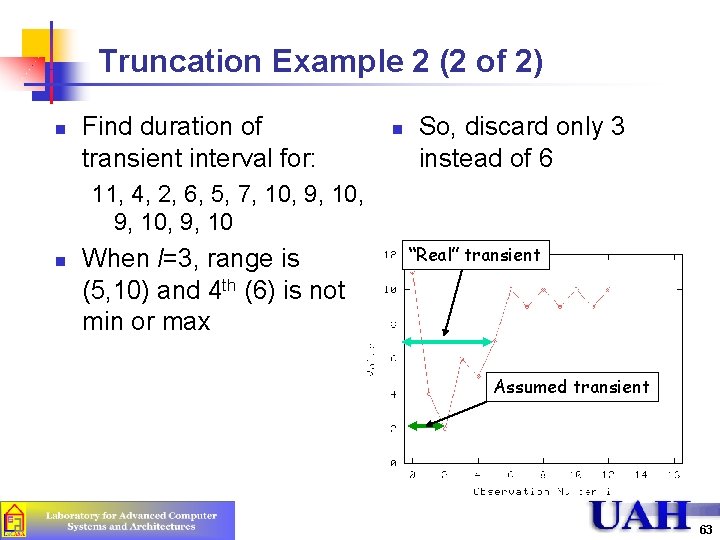

Truncation Example 2 (2 of 2)
- Find duration of transient interval for: 11, 4, 2, 6, 5, 7, 10, 9, 10
- When l=3, range is (5, 10) and the 4th observation (6) is neither min nor max
- So, discard only 3 instead of 6
[Figure: “real” transient versus assumed transient]

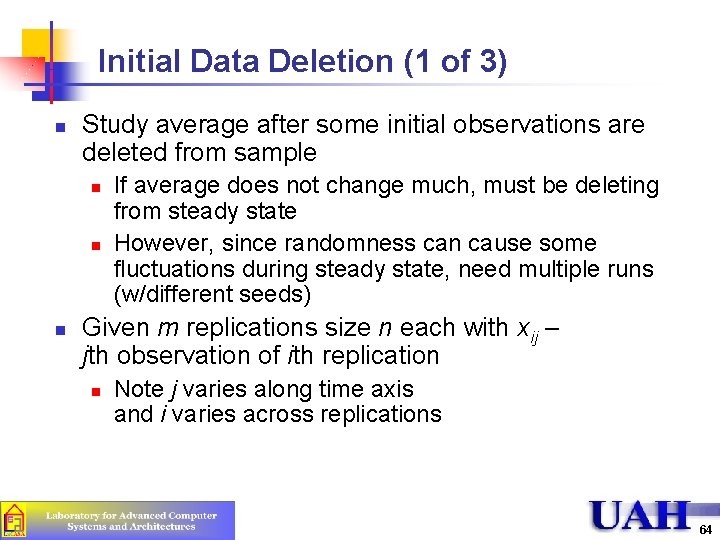

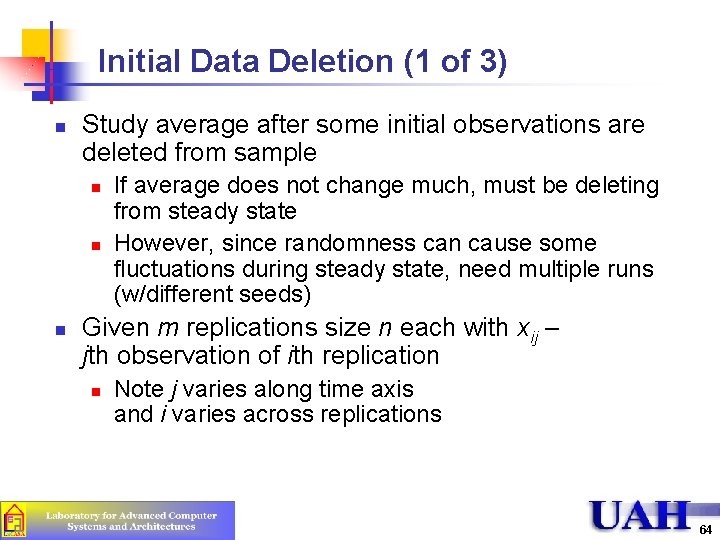

Initial Data Deletion (1 of 3)
- Study the average after some initial observations are deleted from the sample
  - If the average does not change much, we must be deleting from steady state
  - However, since randomness can cause some fluctuations during steady state, multiple runs are needed (with different seeds)
- Given m replications of size n each, with $x_{ij}$ the jth observation of the ith replication
  - Note: j varies along the time axis and i varies across replications

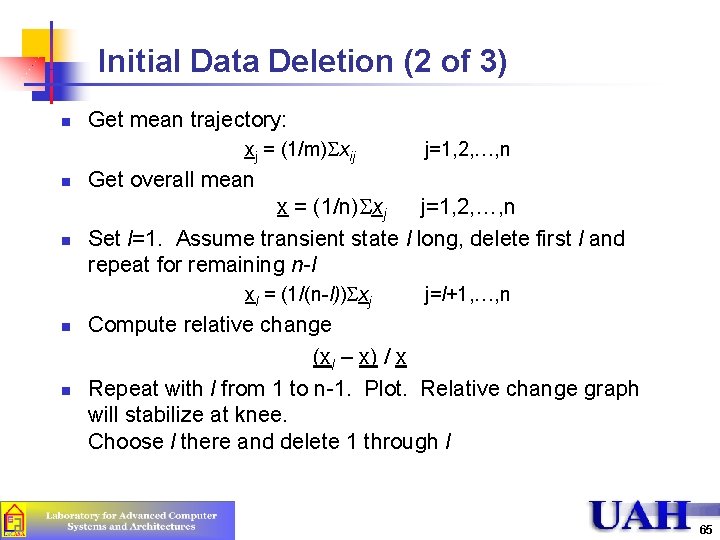

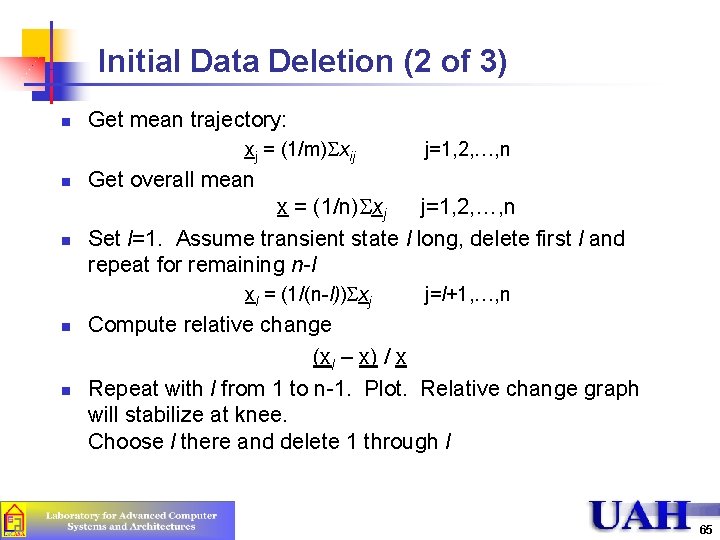

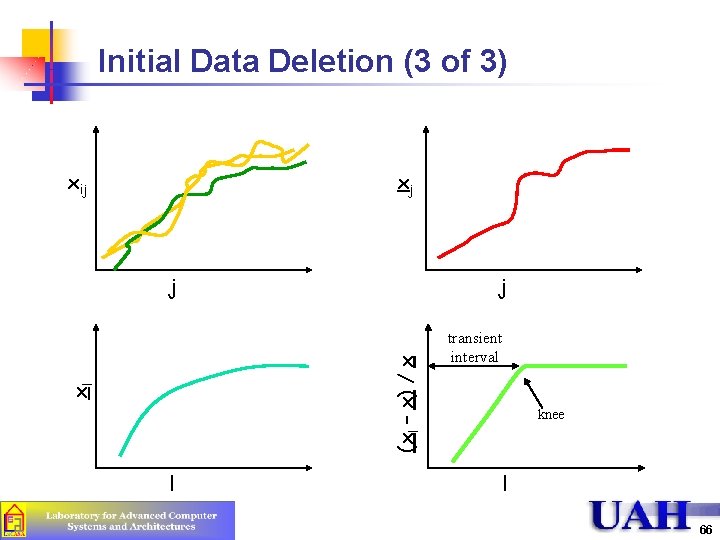

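Initial Data Deletion (2 of 3)
- Get mean trajectory: $\bar{x}_j = \frac{1}{m} \sum_{i=1}^{m} x_{ij}$, $j = 1, 2, \ldots, n$
- Get overall mean: $\bar{\bar{x}} = \frac{1}{n} \sum_{j=1}^{n} \bar{x}_j$
- Set $l = 1$. Assume the transient state is $l$ long, delete the first $l$ observations, and repeat for the remaining $n - l$: $\bar{\bar{x}}_l = \frac{1}{n-l} \sum_{j=l+1}^{n} \bar{x}_j$
- Compute relative change: $(\bar{\bar{x}}_l - \bar{\bar{x}}) / \bar{\bar{x}}$
- Repeat with $l$ from 1 to $n-1$. Plot. The relative change graph will stabilize at the knee. Choose $l$ there and delete observations 1 through $l$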
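A small Python sketch of the computation above follows, assuming the replications are given as a list of m equal-length lists. The function names are illustrative, and locating the knee is left to visual inspection of the returned values, as the slide suggests plotting.

```python
def mean_trajectory(replications):
    """Average across replications at each time index j."""
    m = len(replications)
    n = len(replications[0])
    return [sum(rep[j] for rep in replications) / m for j in range(n)]


def relative_changes(replications):
    """Relative change in the mean after deleting the first l observations,
    for l = 1 .. n-1 (entry 0 of the result corresponds to l = 1)."""
    trajectory = mean_trajectory(replications)
    n = len(trajectory)
    overall = sum(trajectory) / n                  # overall mean
    changes = []
    for l in range(1, n):
        mean_after = sum(trajectory[l:]) / (n - l)  # mean of the remaining n - l
        changes.append((mean_after - overall) / overall)
    return changes
```

Once the deleted prefix covers the whole transient, successive entries stop changing, which is the knee in the plot.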

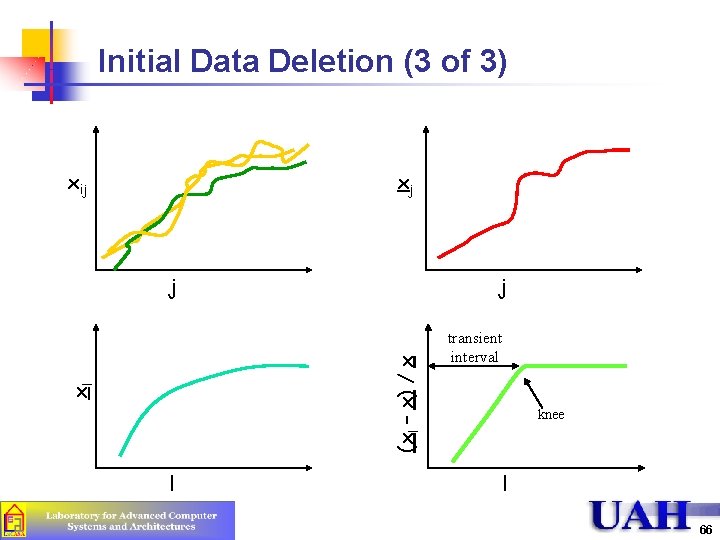

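Initial Data Deletion (3 of 3)
[Figure: observations x_ij and the mean trajectory versus j; mean after deletion and relative change versus l, with the knee marking the end of the transient interval]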

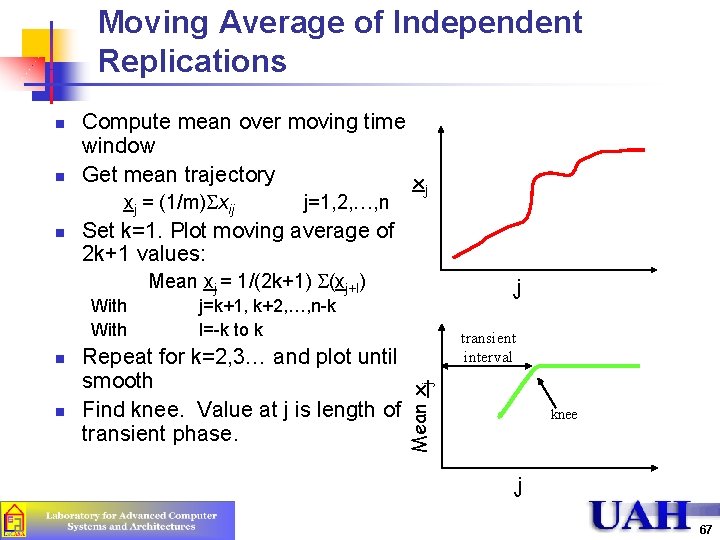

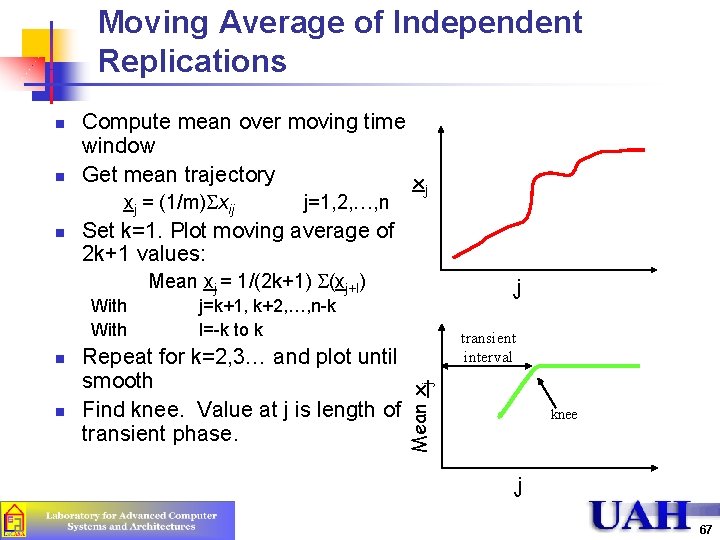

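Moving Average of Independent Replications
- Compute mean over a moving time window
- Get mean trajectory: $\bar{x}_j = \frac{1}{m} \sum_{i=1}^{m} x_{ij}$, $j = 1, 2, \ldots, n$
- Set $k = 1$. Plot the moving average of $2k+1$ values: $\bar{\bar{x}}_j = \frac{1}{2k+1} \sum_{l=-k}^{k} \bar{x}_{j+l}$, $j = k+1, k+2, \ldots, n-k$
- Repeat for $k = 2, 3, \ldots$ and plot until smooth
- Find the knee. The value of $j$ at the knee is the length of the transient phase
[Figure: mean trajectory versus j, and moving average versus j with the transient interval ending at the knee]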
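The moving-average step can be sketched as below, taking an already computed mean trajectory as input. The function name and the 0-based indexing are assumptions of the example; the 1-based range j = k+1, …, n-k on the slide corresponds to list indices k through n-k-1.

```python
def moving_average(trajectory, k):
    """Centered moving average over windows of 2k + 1 values."""
    n = len(trajectory)
    width = 2 * k + 1
    return [
        sum(trajectory[j - k : j + k + 1]) / width   # values j-k .. j+k
        for j in range(k, n - k)
    ]
```

Larger k gives a smoother curve but shortens it by k points at each end, so k is increased only until the plot is smooth enough to locate the knee.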

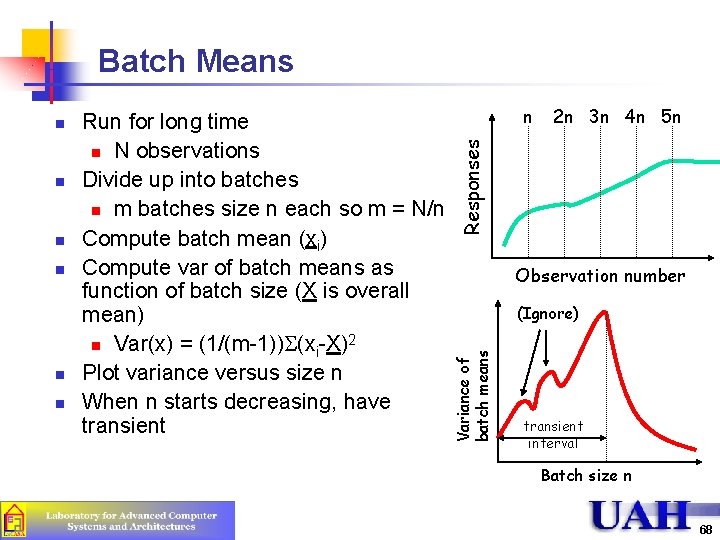

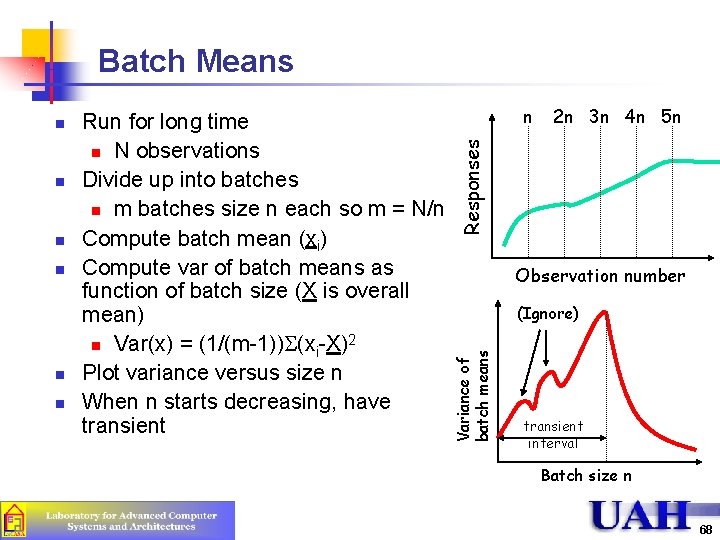

Batch Means
- Run for a long time: N observations
- Divide up into batches: m batches of size n each, so m = N/n
- Compute batch means $\bar{x}_i$
- Compute the variance of batch means as a function of batch size ($X$ is the overall mean): $\mathrm{Var}(\bar{x}) = \frac{1}{m-1} \sum_{i=1}^{m} (\bar{x}_i - X)^2$
- Plot variance versus batch size n
- The batch size n at which the variance starts decreasing gives the transient interval
[Figure: responses versus observation number, split into batches at n, 2n, 3n, 4n, 5n; variance of batch means versus batch size n, with the initial part ignored and the transient interval marked]

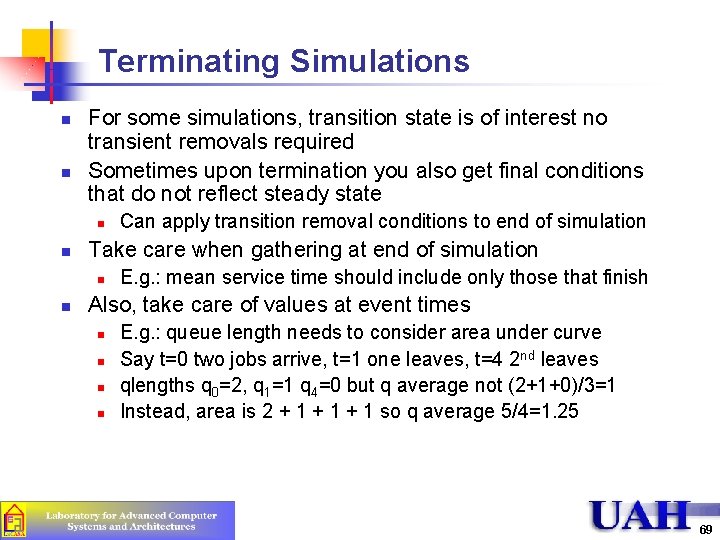

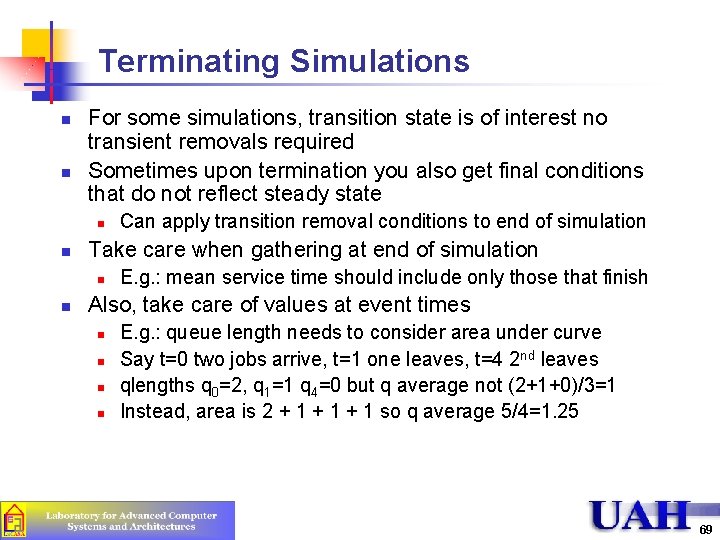

Terminating Simulations
- For some simulations, the transient state is of interest ⇒ no transient removal required
- Sometimes upon termination you also get final conditions that do not reflect steady state
  - Can apply transient removal conditions to the end of the simulation
- Take care when gathering statistics at the end of the simulation
  - E.g.: mean service time should include only those jobs that finish
- Also, take care of values at event times
  - E.g.: queue length needs to consider the area under the curve
  - Say at t=0 two jobs arrive, at t=1 one leaves, and at t=4 the 2nd leaves
  - Queue lengths are q0=2, q1=1, q4=0, but the average is not (2+1+0)/3 = 1
  - Instead, the area is 2·1 + 1·3 = 5 over 4 time units, so the average is 5/4 = 1.25
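The area-under-the-curve calculation for the queue-length example can be sketched as follows. The function name and the input format, a time-sorted list of (time, queue length after the event) pairs, are assumptions for illustration.

```python
def time_average(events, end_time):
    """Time-weighted average of a piecewise-constant quantity.

    `events` is a time-sorted list of (time, value_after_event) pairs;
    each value holds until the next event or until `end_time`."""
    area = 0.0
    following = events[1:] + [(end_time, None)]
    for (time, value), (next_time, _) in zip(events, following):
        area += value * (next_time - time)   # rectangle under the curve
    return area / (end_time - events[0][0])


# Two jobs arrive at t=0, one leaves at t=1, the second leaves at t=4.
queue_events = [(0, 2), (1, 1), (4, 0)]
```

For `queue_events` with an end time of 4, the area is 2·1 + 1·3 = 5 and the average is 1.25, whereas a plain average of the three sampled lengths would give 1.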

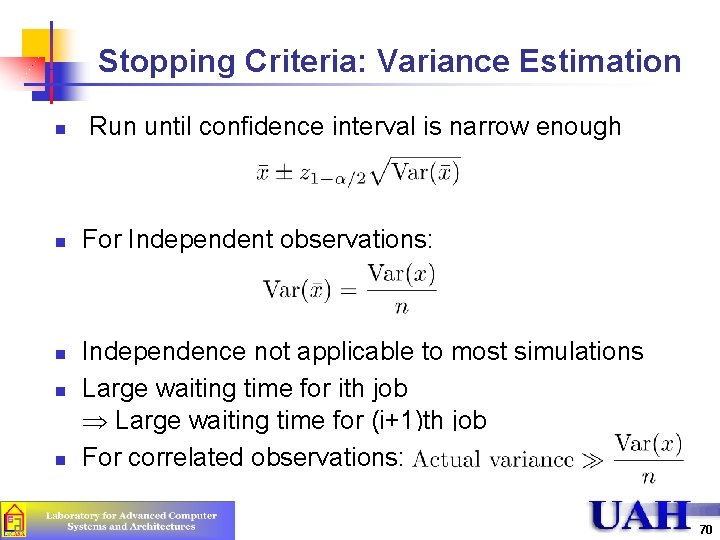

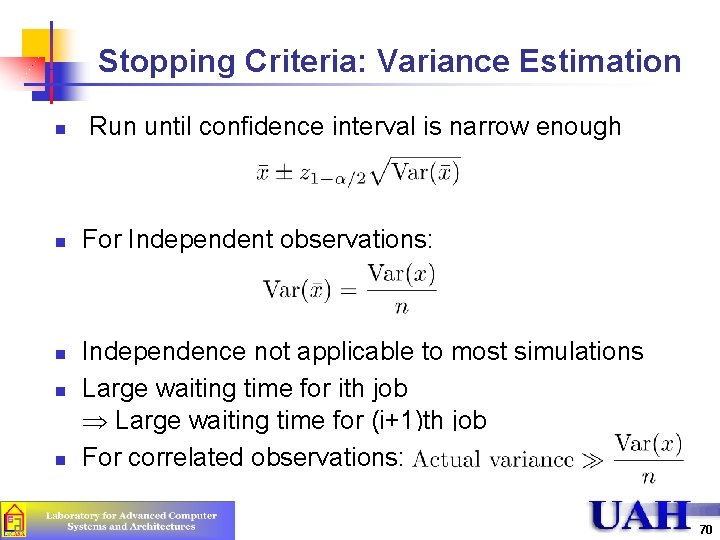

Stopping Criteria: Variance Estimation
- Run until the confidence interval is narrow enough
- For independent observations:
- Independence is not applicable to most simulations
  - Large waiting time for ith job ⇒ Large waiting time for (i+1)th job
- For correlated observations:

Variance Estimation Methods
1. Independent Replications
2. Batch Means
3. Method of Regeneration

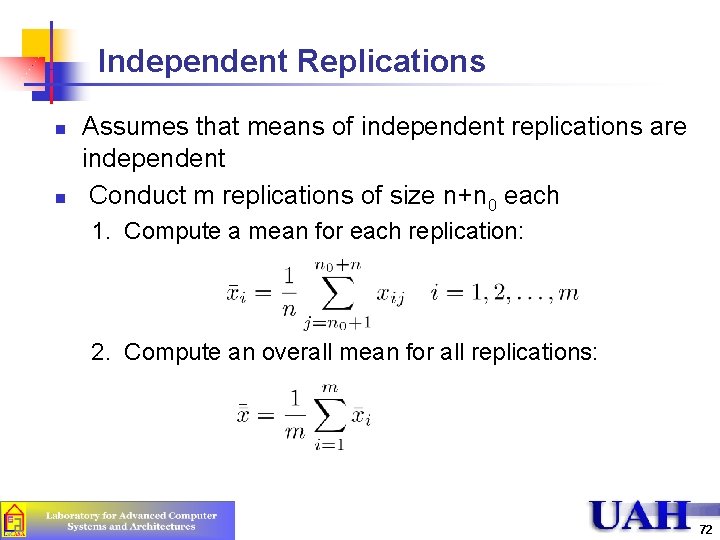

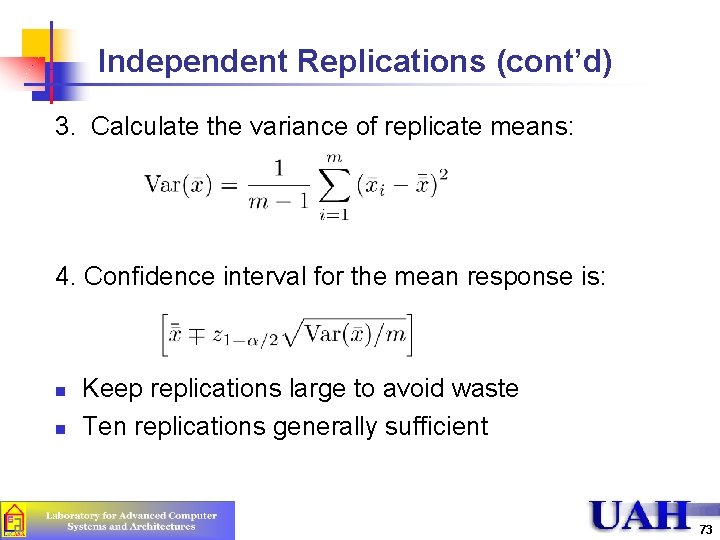

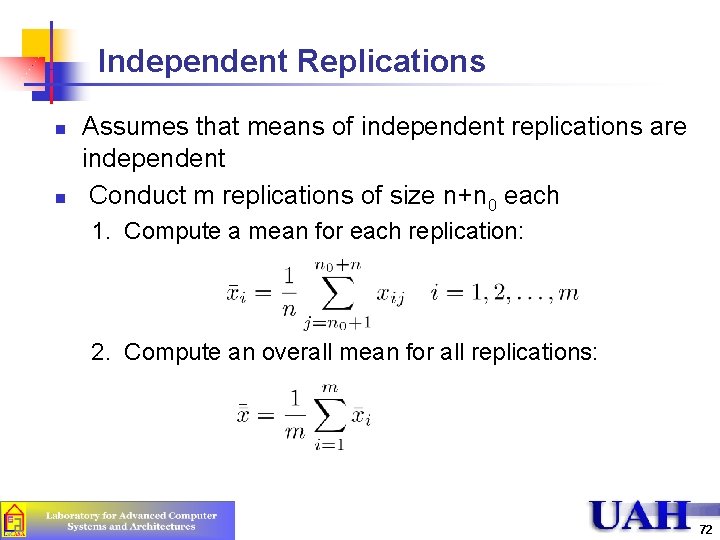

Independent Replications
- Assumes that means of independent replications are independent
- Conduct m replications of size n + n₀ each
1. Compute a mean for each replication:
2. Compute an overall mean for all replications:

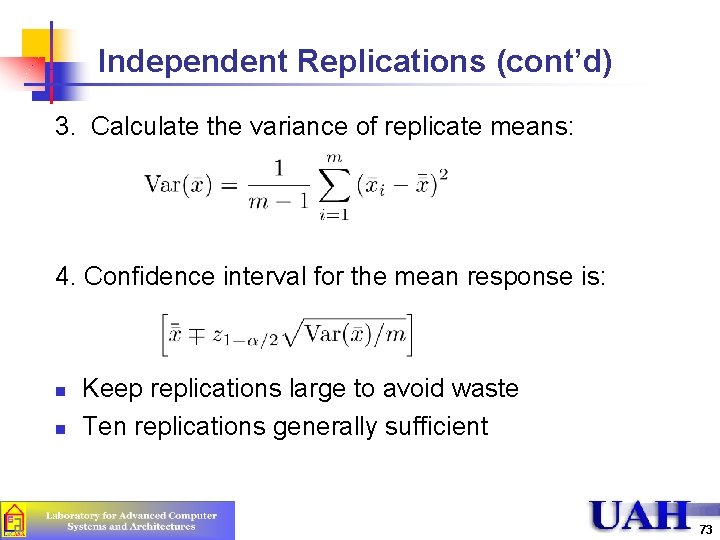

Independent Replications (cont’d)
3. Calculate the variance of replicate means:
4. Confidence interval for the mean response is:
- Keep replications large to avoid waste
- Ten replications generally sufficient
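The four steps can be sketched in Python as shown below. The slide's formulas are not reproduced in the text, so this sketch assumes the usual form: discard the first n₀ observations of each replication, average the rest, and build a normal-quantile interval from the variance of the replicate means. The function name, the 90% default, and the use of a z quantile (rather than a t quantile, which may be preferable for few replications) are all assumptions.

```python
import math
from statistics import NormalDist, mean, variance


def replication_confidence_interval(replications, n0, confidence=0.90):
    """Confidence interval for the mean response from independent replications."""
    # Step 1: mean of each replication after discarding the first n0 values.
    replicate_means = [mean(rep[n0:]) for rep in replications]
    m = len(replicate_means)
    # Step 2: overall mean of the replicate means.
    overall = mean(replicate_means)
    # Step 3: sample variance of the replicate means (divides by m - 1).
    var_of_means = variance(replicate_means)
    # Step 4: normal-quantile interval around the overall mean (assumed form).
    z = NormalDist().inv_cdf(1.0 - (1.0 - confidence) / 2.0)
    half_width = z * math.sqrt(var_of_means / m)
    return overall - half_width, overall + half_width
```

Each replication throws away n₀ transient observations, so m replications waste m·n₀ observations; that is why the slide recommends keeping replications long.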

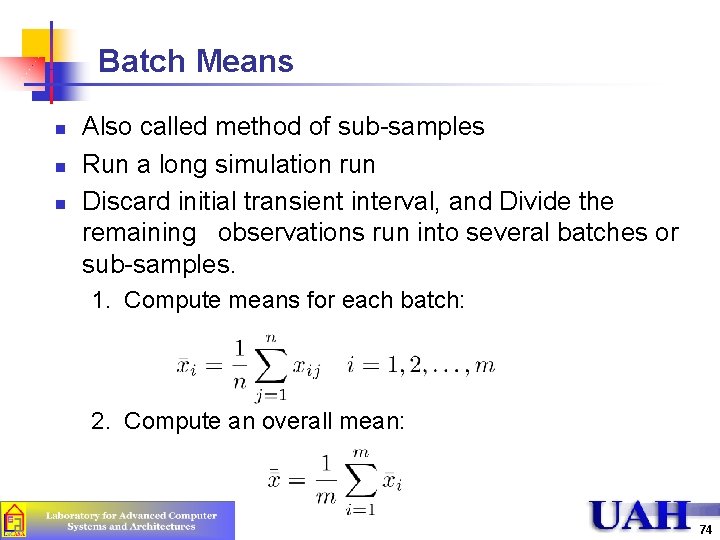

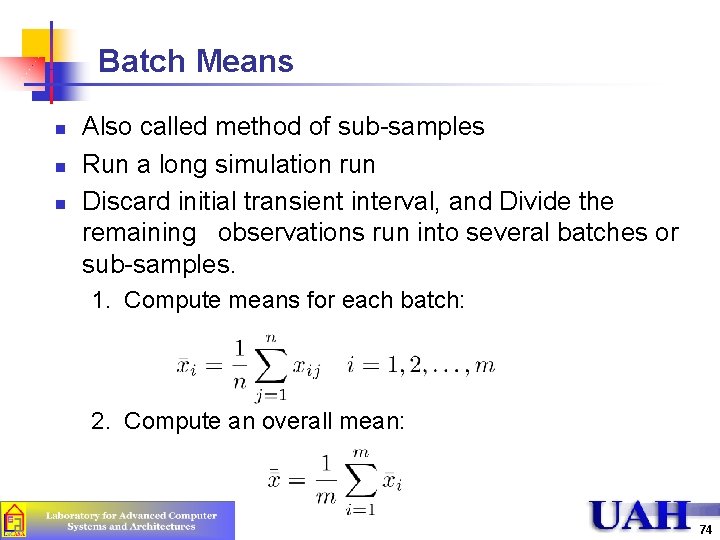

Batch Means
- Also called method of sub-samples
- Run a long simulation run
- Discard the initial transient interval, and divide the remaining observations into several batches or sub-samples
1. Compute means for each batch:
2. Compute an overall mean:

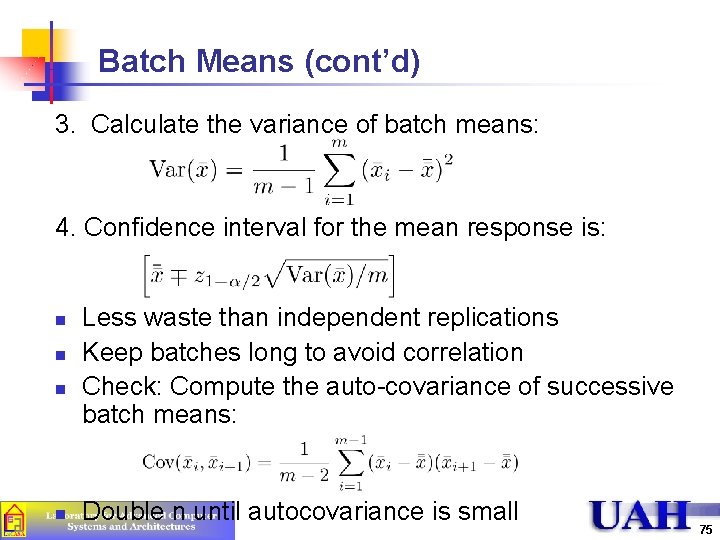

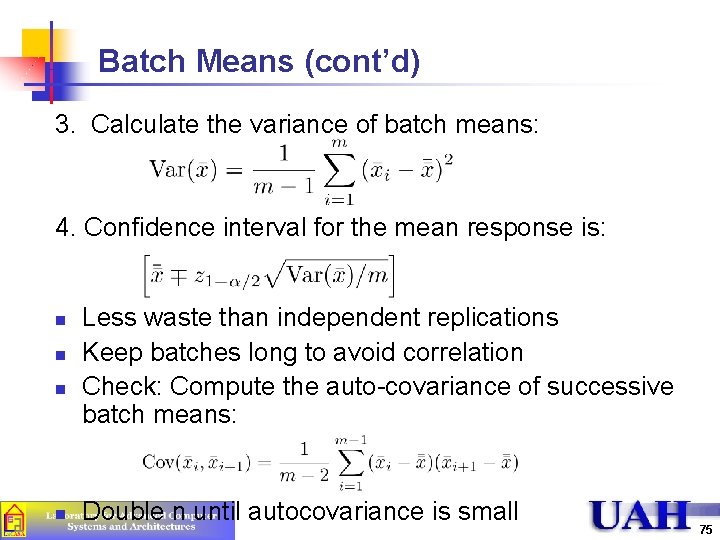

Batch Means (cont’d)
3. Calculate the variance of batch means:
4. Confidence interval for the mean response is:
- Less waste than independent replications
- Keep batches long to avoid correlation
- Check: Compute the auto-covariance of successive batch means:
- Double n until autocovariance is small
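A rough sketch of the batch-means check follows. The slide's formulas are not in the text, so the lag-1 autocovariance below uses an assumed common form (sum of products of successive deviations divided by m - 2), and "small" is taken to mean below 1% of the variance of the batch means. All function names and the threshold are illustrative.

```python
from statistics import mean, variance


def batch_means(observations, batch_size):
    """Means of consecutive, non-overlapping batches (leftovers are dropped)."""
    m = len(observations) // batch_size
    return [
        mean(observations[i * batch_size : (i + 1) * batch_size])
        for i in range(m)
    ]


def lag1_autocovariance(values):
    """Autocovariance of successive values (assumed form, needs m >= 3)."""
    m = len(values)
    center = mean(values)
    products = (
        (values[i] - center) * (values[i + 1] - center) for i in range(m - 1)
    )
    return sum(products) / (m - 2)


def choose_batch_size(observations, start_size, threshold=0.01):
    """Double the batch size until the autocovariance of batch means is small
    relative to their variance. Returns None if the data run out first."""
    size = start_size
    while len(observations) // size >= 3:
        means = batch_means(observations, size)
        spread = variance(means)
        # Identical batch means carry no correlation, so accept immediately.
        if spread == 0 or abs(lag1_autocovariance(means)) < threshold * spread:
            return size
        size *= 2
    return None
```

The transient interval is assumed to have been discarded before `observations` is passed in.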

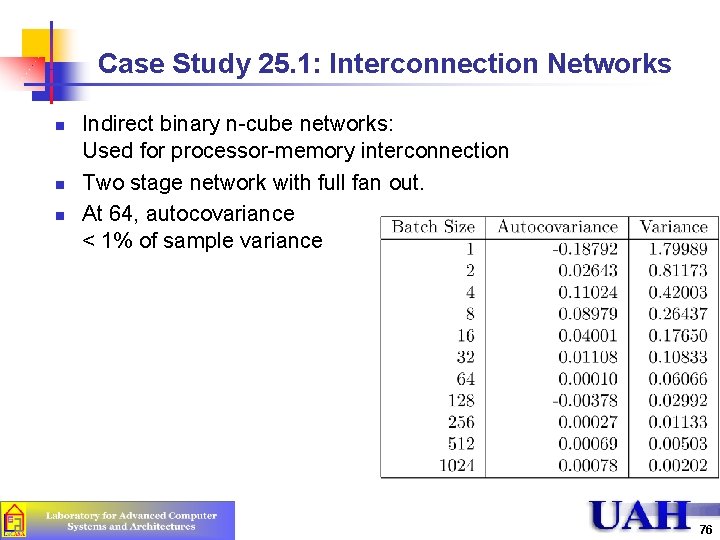

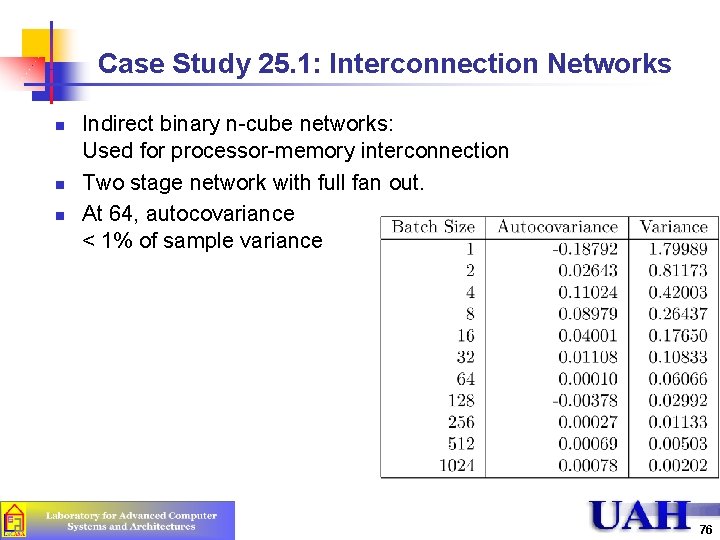

Case Study 25.1: Interconnection Networks
- Indirect binary n-cube networks: Used for processor-memory interconnection
- Two-stage network with full fan-out
- At batch size 64, autocovariance < 1% of sample variance

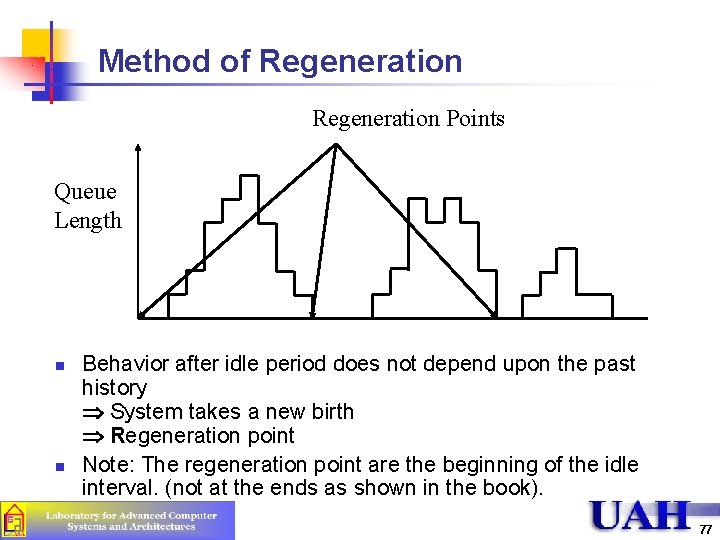

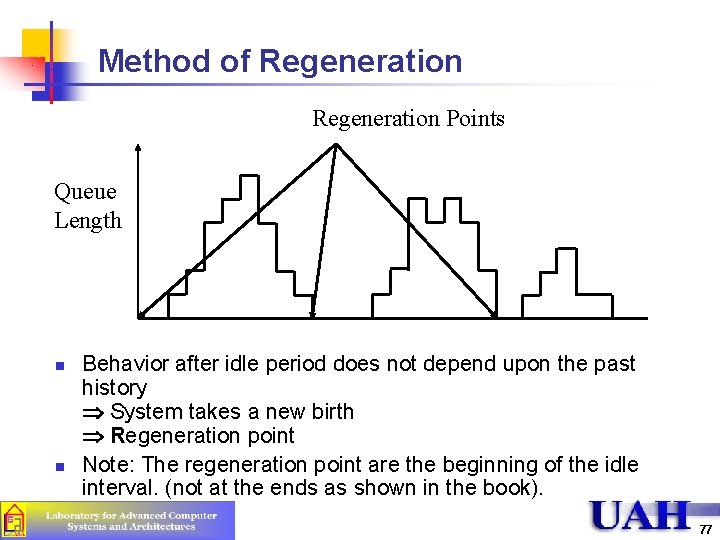

Method of Regeneration
- Behavior after an idle period does not depend upon the past history ⇒ System takes a new birth ⇒ Regeneration point
- Note: The regeneration points are the beginnings of the idle intervals (not the ends, as shown in the book)
[Figure: queue length over time, with regeneration points marked]

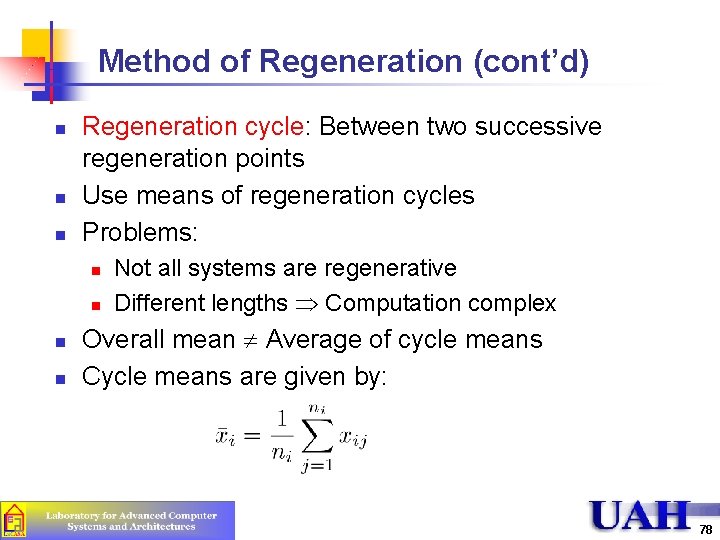

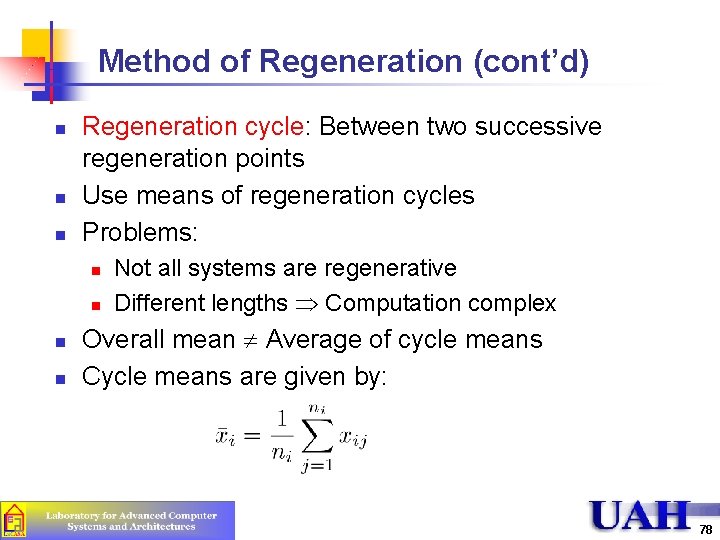

Method of Regeneration (cont’d)
- Regeneration cycle: Between two successive regeneration points
- Use means of regeneration cycles
- Problems:
  - Not all systems are regenerative
  - Different lengths ⇒ Computation complex
- Overall mean ≠ Average of cycle means
- Cycle means are given by:

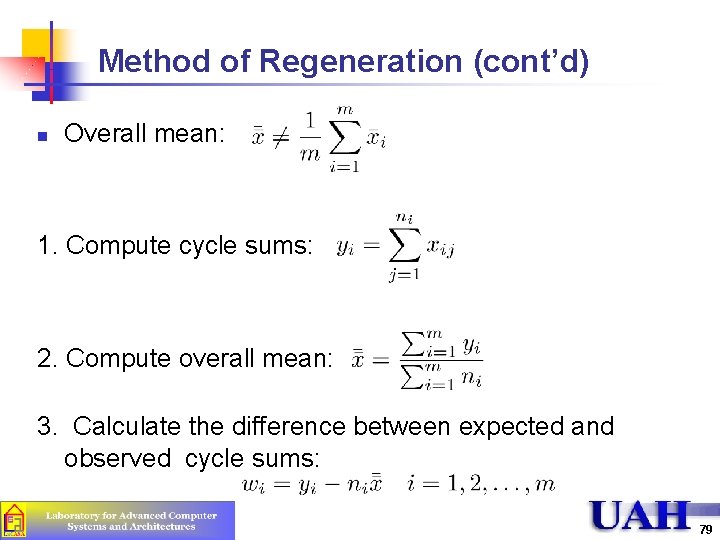

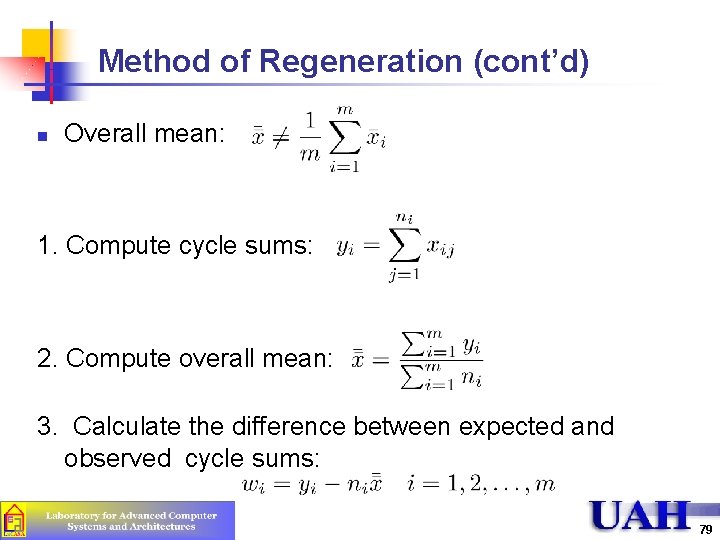

Method of Regeneration (cont’d)
- Overall mean:
1. Compute cycle sums:
2. Compute overall mean:
3. Calculate the difference between expected and observed cycle sums:

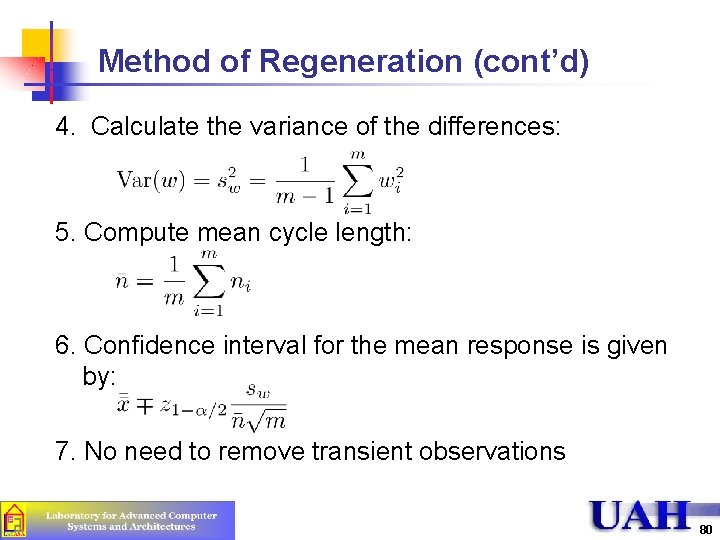

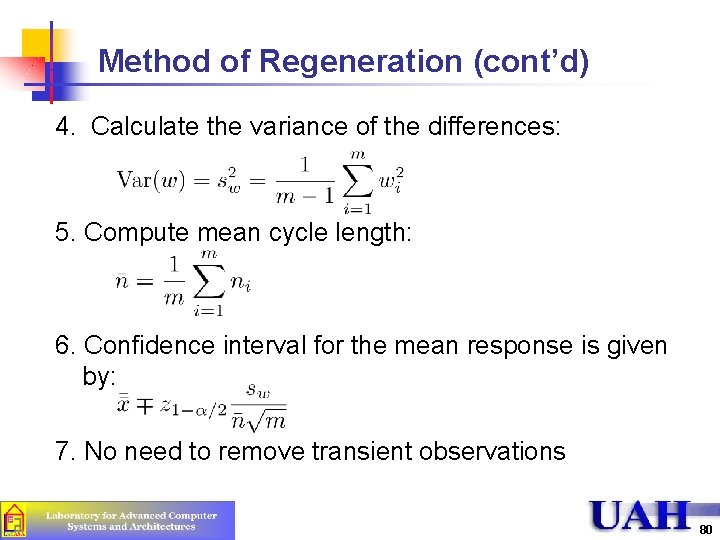

Method of Regeneration (cont’d)
4. Calculate the variance of the differences:
5. Compute mean cycle length:
6. Confidence interval for the mean response is given by:
7. No need to remove transient observations
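Steps 1 through 6 can be sketched as below. The slide's formulas are not reproduced in the text, so the sketch assumes the standard form of the method: cycle sums y_i, overall mean Σy_i / Σn_i, differences w_i = y_i - n_i·(overall mean), variance of the w_i with divisor m - 1, and a normal-quantile interval of half-width z·s_w / (mean cycle length·√m). Function and variable names are illustrative.

```python
import math
from statistics import NormalDist


def regeneration_confidence_interval(cycles, confidence=0.90):
    """Overall mean and confidence interval from regeneration cycles.

    `cycles` is a list of lists, one list of observations per cycle."""
    m = len(cycles)
    cycle_sums = [sum(cycle) for cycle in cycles]             # step 1
    cycle_lengths = [len(cycle) for cycle in cycles]
    overall = sum(cycle_sums) / sum(cycle_lengths)            # step 2
    differences = [                                           # step 3
        y - n_i * overall for y, n_i in zip(cycle_sums, cycle_lengths)
    ]
    var_w = sum(w * w for w in differences) / (m - 1)         # step 4
    mean_length = sum(cycle_lengths) / m                      # step 5
    z = NormalDist().inv_cdf(1.0 - (1.0 - confidence) / 2.0)
    half_width = z * math.sqrt(var_w) / (mean_length * math.sqrt(m))  # step 6
    return overall, (overall - half_width, overall + half_width)
```

With cycles of unequal length the overall mean differs from the plain average of the cycle means, which is why the cycle sums rather than the cycle means are used.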

Method of Regeneration: Problems
1. The cycle lengths are unpredictable. Can't plan the simulation time beforehand.
2. Finding the regeneration point may require a lot of checking after every event.
3. Many of the variance reduction techniques cannot be used due to the variable length of the cycles.
4. The mean and variance estimators are biased.

Variance Reduction
- Reduce variance by controlling random number streams
- Introduce correlation in successive observations
- Problem: Careless use may backfire and lead to increased variance
- For statistically sophisticated analysts only
- Not recommended for beginners

Summary
- Verification = Debugging ⇒ Software development techniques
- Validation ⇒ Simulation = Real ⇒ Experts involvement
- Transient Removal: Initial data deletion, batch means
- Terminating Simulations = Transients are of interest
- Stopping Criteria: Independent replications, batch means, method of regeneration
- Variance reduction is not for novices

Milenkovi

Milenkovi Popcvm

Popcvm Milenkovi

Milenkovi Milenkovi

Milenkovi Milenkovi

Milenkovi Milenkovi

Milenkovi Requisitos de los títulos valores

Requisitos de los títulos valores Xkcd pointers

Xkcd pointers Comp309

Comp309 Swe 619 gmu

Swe 619 gmu Lesson 1 skills practice circumference

Lesson 1 skills practice circumference Swe 619

Swe 619 Swe 619

Swe 619 Jeff offutt

Jeff offutt Sf 619

Sf 619 Idea part b section 619

Idea part b section 619 Round 2 617 to the nearest ten

Round 2 617 to the nearest ten Scalameter

Scalameter Aleksandar kupusinac

Aleksandar kupusinac Kupusinac

Kupusinac Aleksandar plamenac

Aleksandar plamenac Aleksandar rakicevic

Aleksandar rakicevic Aleksandar kuzmanovic flashback

Aleksandar kuzmanovic flashback Aleksandar tatalovic

Aleksandar tatalovic Aleksandar nikcevic

Aleksandar nikcevic Aleksandar baucal

Aleksandar baucal Abx aleksandar settings

Abx aleksandar settings Kartelj matf

Kartelj matf Hefestion i aleksandar

Hefestion i aleksandar Aleksandar prokopec

Aleksandar prokopec Aleksandar.krizo

Aleksandar.krizo Aleksandar stefanovic sorbonne

Aleksandar stefanovic sorbonne Primjeri franšize u hrvatskoj

Primjeri franšize u hrvatskoj Peritoneoskopija

Peritoneoskopija Introduction to modeling and simulation

Introduction to modeling and simulation Contoh model simulasi

Contoh model simulasi Business analytics simulation

Business analytics simulation Tr069 adalah

Tr069 adalah Cpe wan management protocol cwmp

Cpe wan management protocol cwmp Listado rama media neuquen

Listado rama media neuquen Jb nagar study circle

Jb nagar study circle Cpe vpn

Cpe vpn Bisk cpe

Bisk cpe Unr 365

Unr 365 Kaj je cpe

Kaj je cpe Cpe 426

Cpe 426 Tcp/ip

Tcp/ip Tr069 protocol stack

Tr069 protocol stack Leamos la cpe

Leamos la cpe Cpe

Cpe Cpe

Cpe 23 ku

23 ku Calendrier cpe 2021

Calendrier cpe 2021 Telindus modem

Telindus modem Cpe risk assessment

Cpe risk assessment Ucf cpe flowchart

Ucf cpe flowchart Cpe rama media

Cpe rama media Cpe

Cpe Cpe lifecycle management

Cpe lifecycle management Multi-vendor deployment

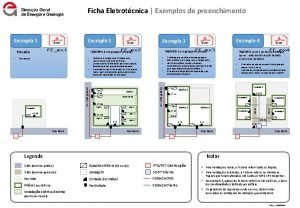

Multi-vendor deployment Ficha eletrotécnica com nip e cpe

Ficha eletrotécnica com nip e cpe Tcp flow control

Tcp flow control Cpe426

Cpe426 Cpe 426

Cpe 426 Cpe 426

Cpe 426 What is the probability cpe

What is the probability cpe Jicpa cpe

Jicpa cpe Hát kết hợp bộ gõ cơ thể

Hát kết hợp bộ gõ cơ thể Lp html

Lp html Bổ thể

Bổ thể Tỉ lệ cơ thể trẻ em

Tỉ lệ cơ thể trẻ em Gấu đi như thế nào

Gấu đi như thế nào Tư thế worm breton là gì

Tư thế worm breton là gì Bài hát chúa yêu trần thế alleluia

Bài hát chúa yêu trần thế alleluia Các môn thể thao bắt đầu bằng tiếng đua

Các môn thể thao bắt đầu bằng tiếng đua Thế nào là hệ số cao nhất

Thế nào là hệ số cao nhất Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Công thức tính thế năng

Công thức tính thế năng Trời xanh đây là của chúng ta thể thơ

Trời xanh đây là của chúng ta thể thơ Mật thư tọa độ 5x5

Mật thư tọa độ 5x5 101012 bằng

101012 bằng độ dài liên kết

độ dài liên kết Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Thơ thất ngôn tứ tuyệt đường luật

Thơ thất ngôn tứ tuyệt đường luật