CPE 619 Selection of Techniques and Metrics

- Slides: 38

CPE 619 Selection of Techniques and Metrics

Aleksandar Milenković
The LaCASA Laboratory
Electrical and Computer Engineering Department
The University of Alabama in Huntsville
http://www.ece.uah.edu/~milenka
http://www.ece.uah.edu/~lacasa

Overview

- One or more systems, real or hypothetical
- You want to evaluate their performance
- What technique do you choose?
  - Analytic modeling?
  - Simulation?
  - Measurement?
- What metrics do you use?

Outline

- Selecting an Evaluation Technique
- Selecting Performance Metrics
  - Case Study
- Commonly Used Performance Metrics
- Setting Performance Requirements
  - Case Study

Selecting an Evaluation Technique (1 of 4)

- Which life-cycle stage is the system in?
  - Measurement is possible only when something exists
  - If the system is new, analytic modeling or simulation are the only options
- When are results needed? (often, yesterday!)
  - If results are needed immediately, analytic modeling is the only choice
  - Simulation and measurement can take about the same time
    - But Murphy's Law strikes measurement more often ("If anything can go wrong, it will.")
- What tools and skills are available?
  - Maybe languages to support simulation
  - Tools to support measurement (e.g., packet sniffers, source code to add monitoring hooks)
  - Skills in analytic modeling (e.g., queuing theory)

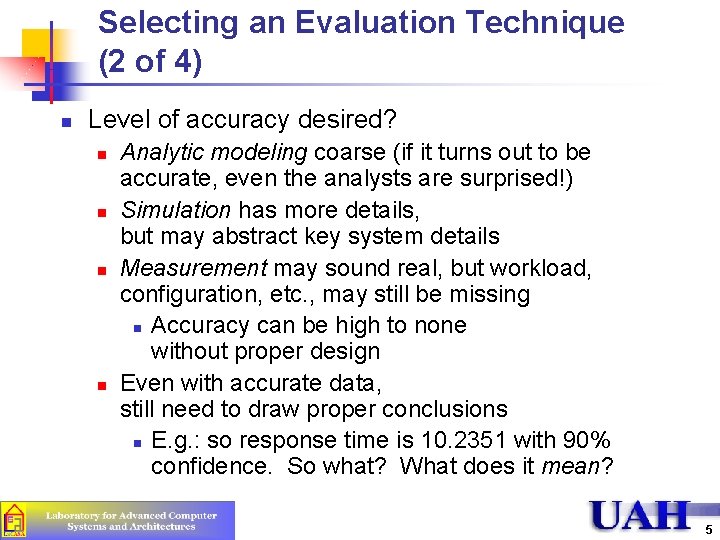

Selecting an Evaluation Technique (2 of 4)

- Level of accuracy desired?
  - Analytic modeling is coarse (if it turns out to be accurate, even the analysts are surprised!)
  - Simulation has more details, but may abstract away key system details
  - Measurement may sound real, but workload, configuration, etc., may still be missing
    - Accuracy can range from high to none without proper design
  - Even with accurate data, you still need to draw proper conclusions
    - E.g., so response time is 10.2351 with 90% confidence. So what? What does it mean?

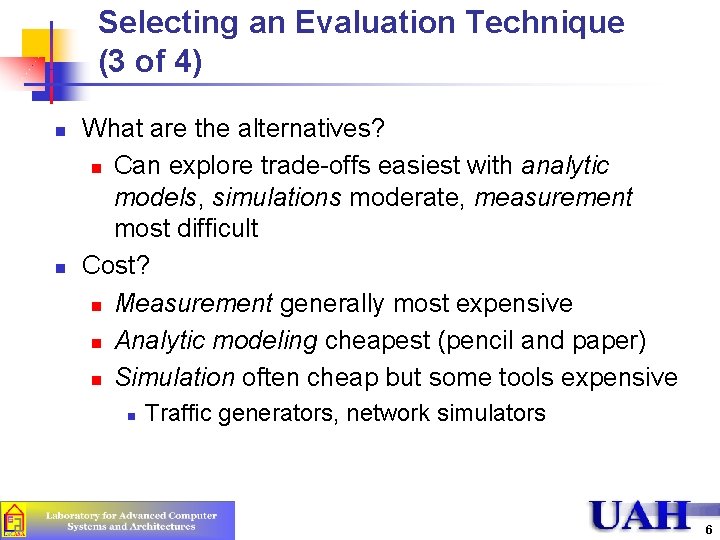

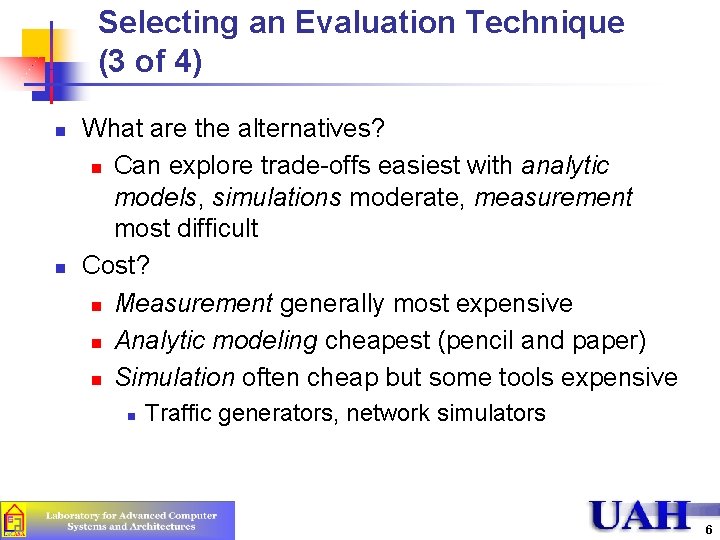

Selecting an Evaluation Technique (3 of 4)

- What are the alternatives?
  - Trade-offs are easiest to explore with analytic models, moderately easy with simulations, and most difficult with measurement
- Cost?
  - Measurement is generally the most expensive
  - Analytic modeling is the cheapest (pencil and paper)
  - Simulation is often cheap, but some tools are expensive
    - Traffic generators, network simulators

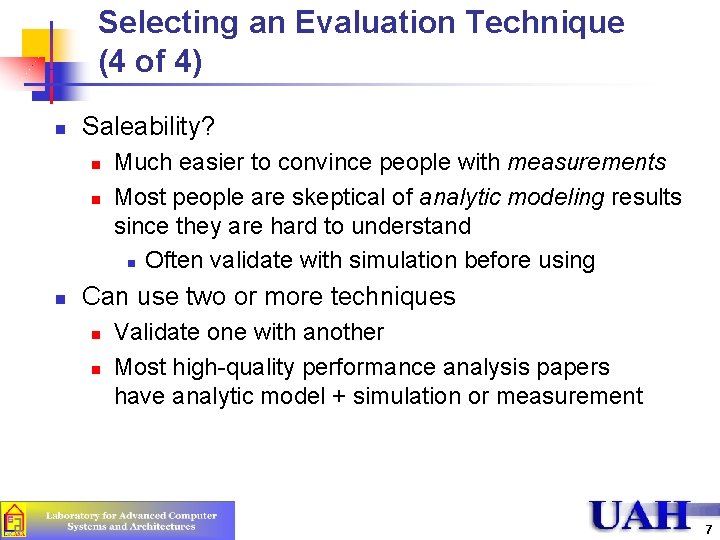

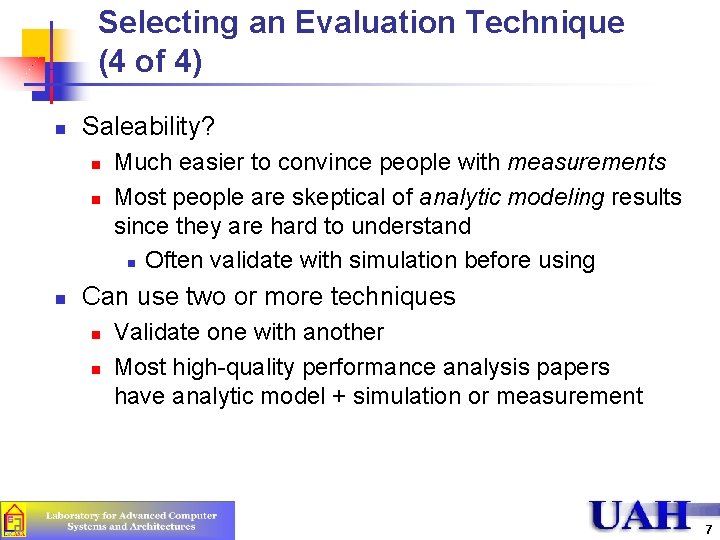

Selecting an Evaluation Technique (4 of 4)

- Saleability?
  - It is much easier to convince people with measurements
  - Most people are skeptical of analytic modeling results since they are hard to understand
    - Often validate with simulation before using
- Can use two or more techniques
  - Validate one with another
  - Most high-quality performance analysis papers have an analytic model plus simulation or measurement

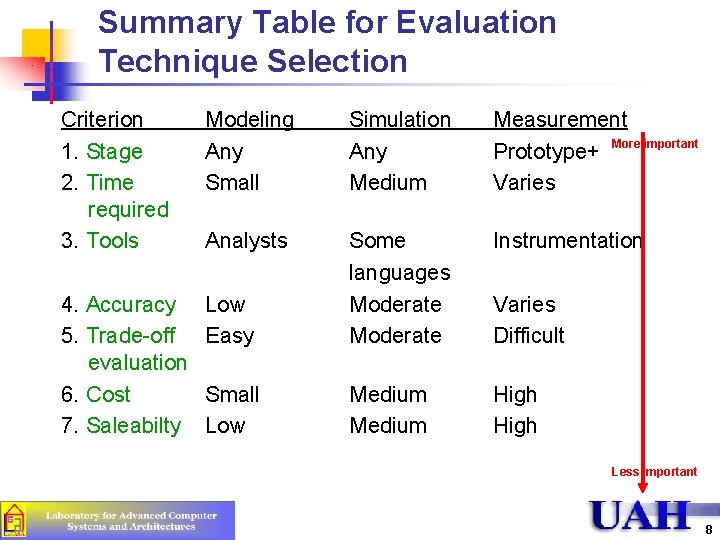

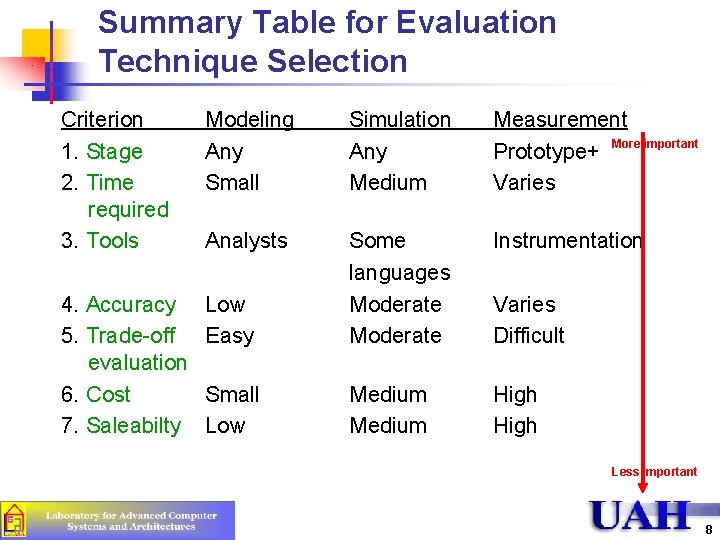

Summary Table for Evaluation Technique Selection

| Criterion | Analytic modeling | Simulation | Measurement |
|---|---|---|---|
| 1. Stage | Any | Any | Prototype+ |
| 2. Time required | Small | Medium | Varies |
| 3. Tools | Analysts | Some languages | Instrumentation |
| 4. Accuracy | Low | Moderate | Varies |
| 5. Trade-off evaluation | Easy | Moderate | Difficult |
| 6. Cost | Small | Medium | High |
| 7. Saleability | Low | Medium | High |

Criteria are listed from more important (top) to less important (bottom).


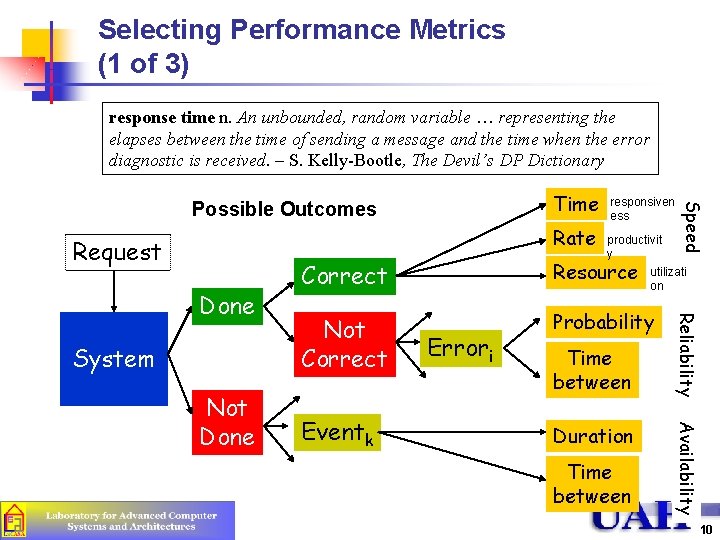

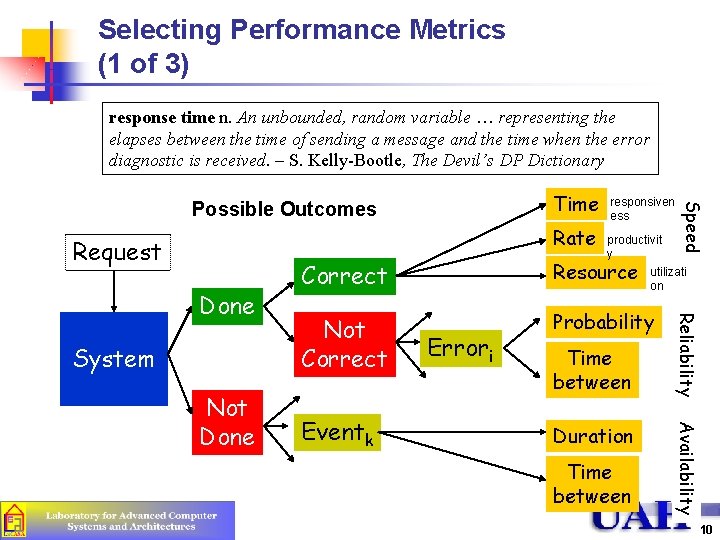

Selecting Performance Metrics (1 of 3)

"response time n. An unbounded, random variable … representing the [time which] elapses between the time of sending a message and the time when the error diagnostic is received." (S. Kelly-Bootle, The Devil's DP Dictionary)

Figure: possible outcomes of a request made to the system, with the metrics for each outcome.

- Done, correct (speed)
  - Time (responsiveness)
  - Rate (productivity)
  - Resource (utilization)
- Done, not correct (reliability): for each error i
  - Probability
  - Time between errors
- Not done (availability): for each event k
  - Duration
  - Time between events

Selecting Performance Metrics (2 of 3)

- Mean is what usually matters
  - But do not overlook the effect of variability
- Individual vs. global (systems shared by many users)
  - May be at odds
  - Increasing individual performance may decrease global performance
    - E.g., response time at the cost of throughput
  - Increasing global performance may not be the most fair
    - E.g., throughput of cross traffic
- Performance optimizations of the bottleneck have the most impact
  - E.g., response time of a Web request
  - Client processing 1 s, latency 500 ms, server processing 10 s
  - Total is 11.5 s
  - Improve client 50%? 11 s
  - Improve server 50%? 6.5 s
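The bottleneck arithmetic on this slide can be reproduced in a few lines. The sketch below is only an illustration: the function names and the dictionary layout are assumptions, while the three component times are the ones given on the slide.

```python
def total_response_time(components):
    """Sum the per-component times (in seconds) of one request."""
    return sum(components.values())


def improve(components, name, fraction):
    """Return a copy in which component `name` takes `fraction` less time."""
    improved = dict(components)
    improved[name] = components[name] * (1.0 - fraction)
    return improved


# Times from the slide: client 1 s, latency 500 ms, server 10 s.
baseline = {"client": 1.0, "latency": 0.5, "server": 10.0}
faster_client = improve(baseline, "client", 0.5)
faster_server = improve(baseline, "server", 0.5)

print(total_response_time(baseline))       # 11.5
print(total_response_time(faster_client))  # 11.0
print(total_response_time(faster_server))  # 6.5
```

Halving the server time saves 5 s, while halving the client time saves only 0.5 s, which is why the bottleneck is the place to optimize.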

Selecting Performance Metrics (3 of 3)

- May be more than one set of metrics
  - Resources: queue size, CPU utilization, memory use …
- Criteria for selecting a subset; choose for:
  - Low variability: need fewer repetitions
  - Non-redundancy: don't use 2 if 1 will do
    - E.g., queue size and delay may provide identical information
  - Completeness: should capture trade-offs
    - E.g., one disk may be faster but may return more errors, so add a reliability measure


Case Study (1 of 5)

- Computer system of end-hosts sending packets through routers
  - Congestion occurs when the number of packets at a router exceeds its buffering capacity
- Goal: compare two congestion control algorithms
- User sends a block of packets to a destination; four possible outcomes:
  - A) Some delivered in order
  - B) Some delivered out of order
  - C) Some delivered more than once
  - D) Some dropped

Case Study (2 of 5)

- For A), straightforward metrics exist:
  - 1) Response time: delay for an individual packet
  - 2) Throughput: number of packets per unit time
  - 3) Processor time per packet at source
  - 4) Processor time per packet at destination
  - 5) Processor time per packet at router
- Since large response times can cause extra (unnecessary) retransmissions, variability is also important:
  - 6) Variability in response time

Case Study (3 of 5)

- For B), out-of-order packets cannot be delivered to the user immediately
  - They are often discarded (considered dropped)
  - Alternatively, they are stored in destination buffers awaiting arrival of intervening packets
  - 7) Probability of out-of-order arrivals
- For C), duplicate packets consume resources without any use
  - 8) Probability of duplicate packets
- For D), lost packets are undesirable for many reasons
  - 9) Probability of lost packets
- Also, excessive loss can cause disconnection
  - 10) Probability of disconnect

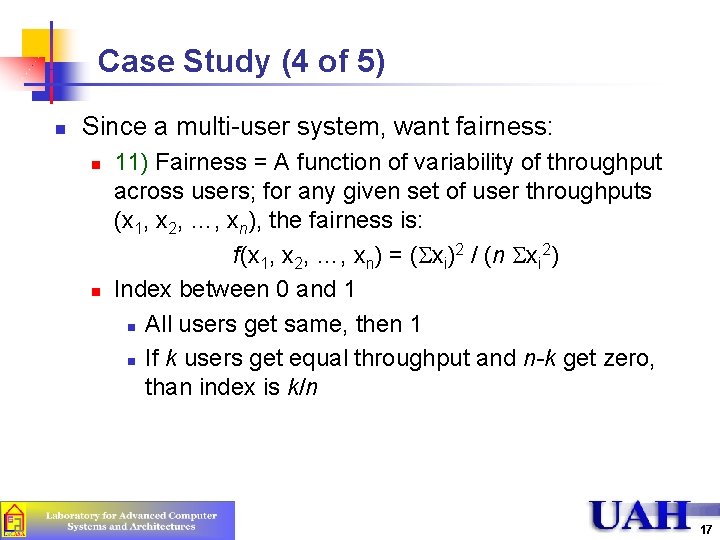

Case Study (4 of 5)

- Since this is a multi-user system, we want fairness:
  - 11) Fairness: a function of the variability of throughput across users; for any given set of user throughputs $(x_1, x_2, \ldots, x_n)$, the fairness is:

    $$f(x_1, x_2, \ldots, x_n) = \frac{\left(\sum_{i=1}^{n} x_i\right)^2}{n \sum_{i=1}^{n} x_i^2}$$

- The index is between 0 and 1
  - If all users get the same throughput, the index is 1
  - If k users get equal throughput and n-k get zero, then the index is k/n
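The fairness index on this slide is easy to compute directly. The following is a minimal sketch, assuming throughputs are given as a list of non-negative numbers; the function name `fairness_index` and the sample values are illustrative only.

```python
def fairness_index(throughputs):
    """Fairness index: (sum of x_i)^2 / (n * sum of x_i^2)."""
    n = len(throughputs)
    if n == 0:
        raise ValueError("need at least one user throughput")
    sum_of_squares = sum(x * x for x in throughputs)
    if sum_of_squares == 0:
        raise ValueError("index is undefined when every throughput is zero")
    total = sum(throughputs)
    return (total * total) / (n * sum_of_squares)


# All users get the same throughput: index is 1.
print(fairness_index([5, 5, 5, 5]))  # 1.0

# k = 2 of n = 4 users share equally, the rest get zero: index is k/n.
print(fairness_index([3, 3, 0, 0]))  # 0.5
```

The two calls check the special cases listed on the slide: equal shares give 1, and k equal shares out of n give k/n.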

Case Study (5 of 5)

- After a few experiments (pilot tests)
  - Found throughput and delay redundant
    - Higher throughput had higher delay
    - Instead, combine them as power = throughput/delay
  - Found variance in response time redundant with probability of duplication and probability of disconnection
    - Drop variance in response time
- Thus, left with nine metrics
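As a small illustration of the combined metric, the sketch below computes power = throughput/delay for two algorithms. The measurements are hypothetical values chosen for the example, not results from the case study, and the function name `power` is an assumption.

```python
def power(throughput, delay):
    """Power metric from the slide: throughput divided by delay."""
    if delay <= 0:
        raise ValueError("delay must be positive")
    return throughput / delay


# Hypothetical measurements (packets/s, seconds), for illustration only.
algorithm_a = {"throughput": 100.0, "delay": 0.5}
algorithm_b = {"throughput": 120.0, "delay": 0.75}

power_a = power(algorithm_a["throughput"], algorithm_a["delay"])
power_b = power(algorithm_b["throughput"], algorithm_b["delay"])

# Higher power is better: more throughput per unit of delay.
print(power_a, power_b)
```

With these made-up numbers, algorithm B has the higher throughput but the lower power, because its delay grows faster than its throughput.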


Commonly Used Performance Metrics

- Response Time
  - Turnaround time
  - Reaction time
  - Stretch factor
- Throughput
  - Operations/second
  - Capacity
  - Efficiency
  - Utilization
- Reliability
  - Uptime
  - MTTF

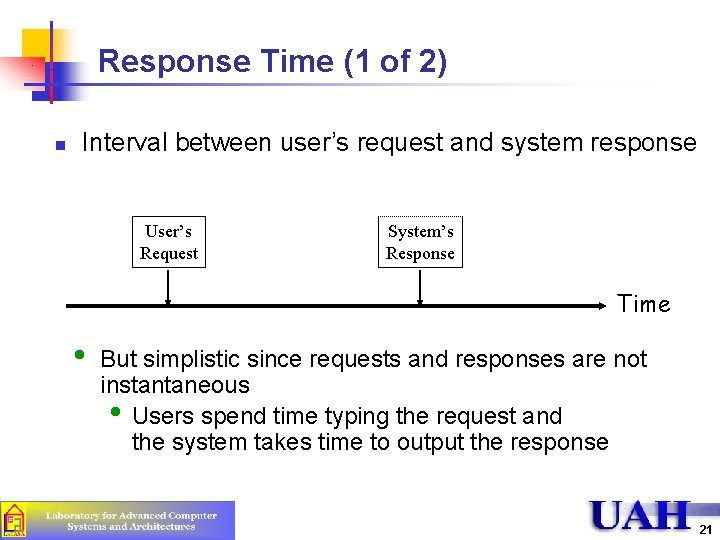

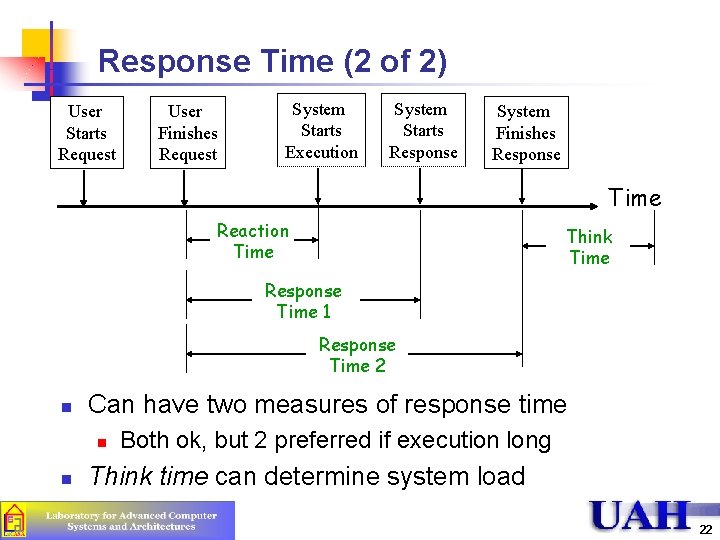

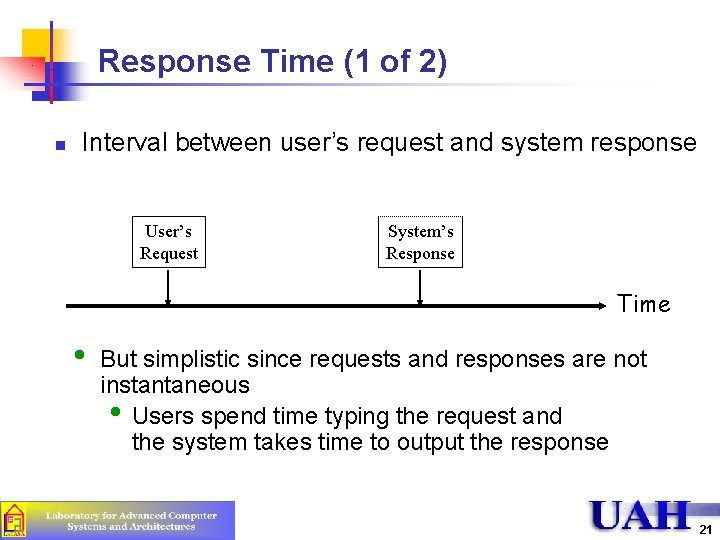

Response Time (1 of 2)

- Interval between the user's request and the system's response

Figure: time axis showing the user's request followed by the system's response.

- But this is simplistic, since requests and responses are not instantaneous
- Users spend time typing the request, and the system takes time to output the response

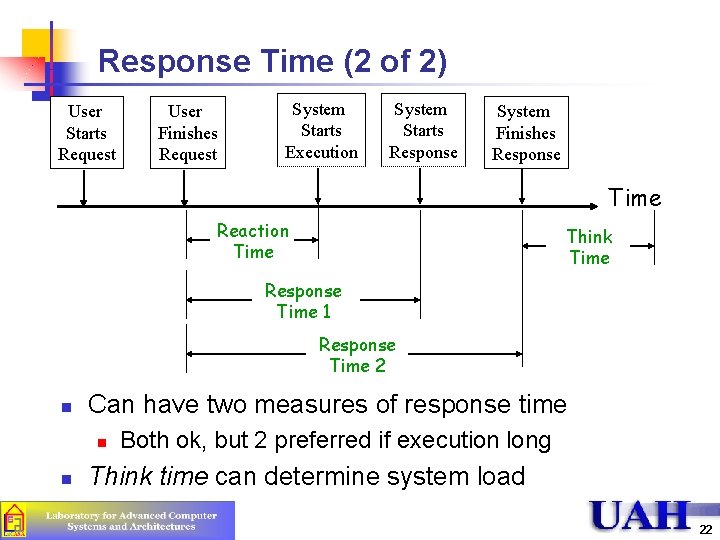

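Response Time (2 of 2)

Figure: time axis with five events (user starts request, user finishes request, system starts execution, system starts response, system finishes response) and four labeled intervals (reaction time, response time 1, response time 2, think time).

- Can have two measures of response time, both starting when the user finishes the request
  - Response time 1 ends when the system starts its response; response time 2 ends when the system finishes its response
  - Both are ok, but 2 is preferred if execution is long
- Think time can determine system load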
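The intervals in the timeline can be derived from the five event timestamps. The sketch below assumes the usual reading of the figure: reaction time and both response times start when the user finishes the request, and think time runs from the end of one response to the start of the next request. The class name, field names, and timestamps are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RequestTimeline:
    """Timestamps (in seconds) of the five events for one request."""
    user_starts_request: float
    user_finishes_request: float
    system_starts_execution: float
    system_starts_response: float
    system_finishes_response: float

    def reaction_time(self):
        return self.system_starts_execution - self.user_finishes_request

    def response_time_1(self):
        # Ends when the system starts its response.
        return self.system_starts_response - self.user_finishes_request

    def response_time_2(self):
        # Ends when the system finishes its response.
        return self.system_finishes_response - self.user_finishes_request


def think_time(previous, following):
    """Gap between the end of one response and the start of the next request."""
    return following.user_starts_request - previous.system_finishes_response


# Hypothetical timestamps for two consecutive requests.
first = RequestTimeline(0, 2, 3, 7, 9)
second = RequestTimeline(15, 16, 17, 20, 21)

print(first.reaction_time(), first.response_time_1(), first.response_time_2())
print(think_time(first, second))
```

When execution is long, the gap between the two response-time measures widens, which is why the second measure is preferred in that case.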

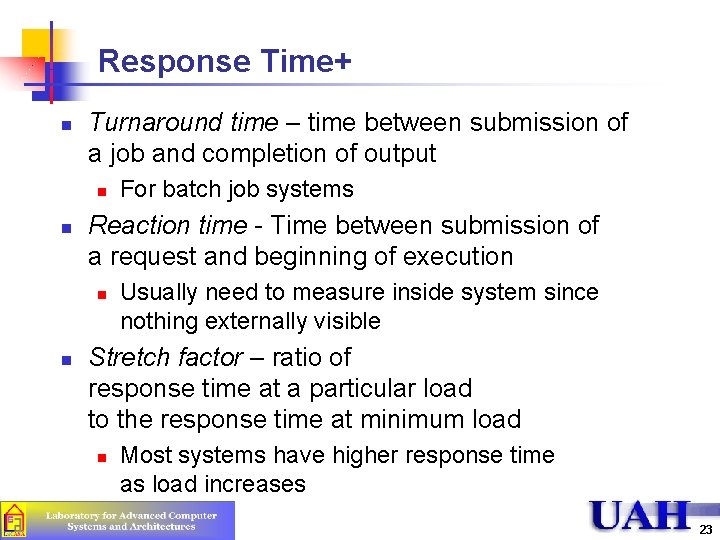

Response Time+

- Turnaround time: time between submission of a job and completion of output
  - For batch job systems
- Reaction time: time between submission of a request and beginning of execution
  - Usually need to measure inside the system, since nothing is externally visible
- Stretch factor: ratio of response time at a particular load to the response time at minimum load
  - Most systems have higher response time as load increases
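The stretch factor is a simple ratio, sketched below for a table of response times indexed by load. The load levels and response times are hypothetical, and the helper name `stretch_factors` is an assumption made for the example.

```python
def stretch_factors(response_time_by_load):
    """Map each load to response_time(load) / response_time(minimum load)."""
    minimum_load = min(response_time_by_load)
    base = response_time_by_load[minimum_load]
    if base <= 0:
        raise ValueError("response time at minimum load must be positive")
    return {load: rt / base for load, rt in response_time_by_load.items()}


# Hypothetical measurements: load in concurrent users, response time in seconds.
measured = {1: 0.5, 10: 1.0, 50: 4.0}
factors = stretch_factors(measured)

for load in sorted(factors):
    print(load, factors[load])
```

A stretch factor of 1 at minimum load is true by construction; values that climb quickly with load indicate a system that degrades badly under contention.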

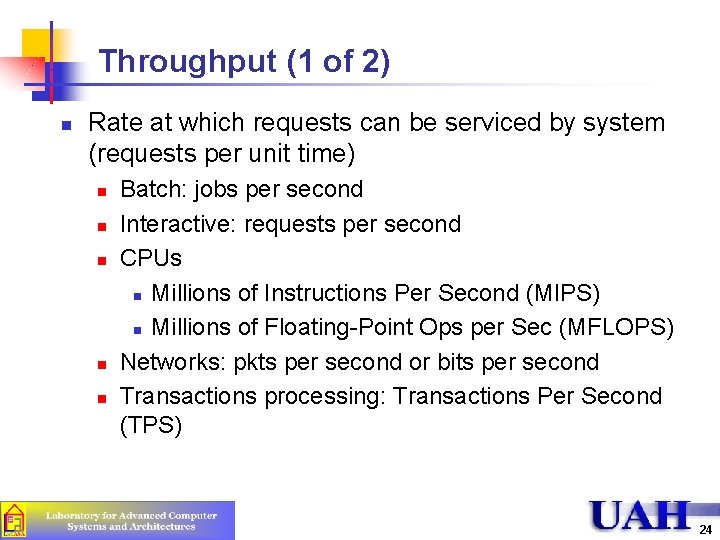

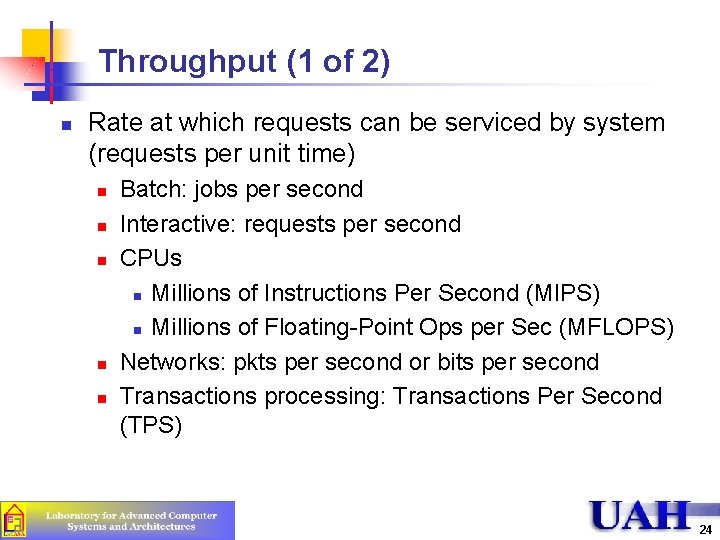

Throughput (1 of 2)

- Rate at which requests can be serviced by the system (requests per unit time)
  - Batch: jobs per second
  - Interactive: requests per second
  - CPUs
    - Millions of Instructions Per Second (MIPS)
    - Millions of Floating-Point Operations Per Second (MFLOPS)
  - Networks: packets per second or bits per second
  - Transaction processing: Transactions Per Second (TPS)

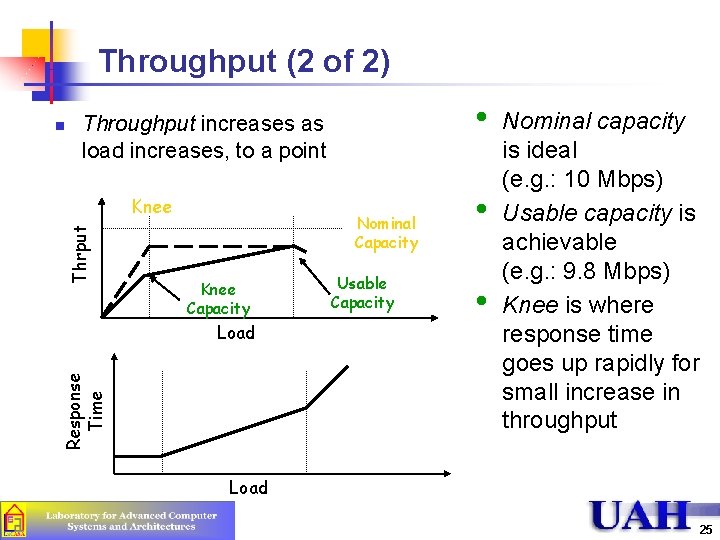

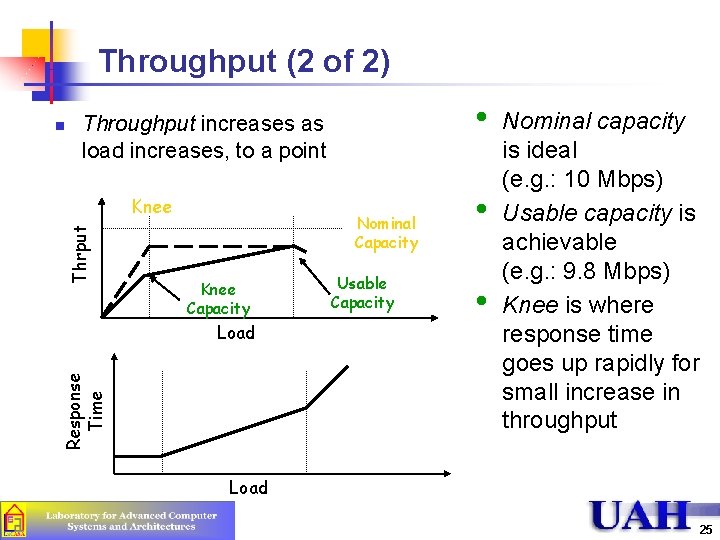

Throughput (2 of 2)

Figure: two plots against load. The throughput plot marks the knee, the knee capacity, the usable capacity, and the nominal capacity; the response time plot shows response time versus load.

- Throughput increases as load increases, up to a point
- Nominal capacity is ideal (e.g., 10 Mbps)
- Usable capacity is achievable (e.g., 9.8 Mbps)
- Knee is where response time goes up rapidly for a small increase in throughput

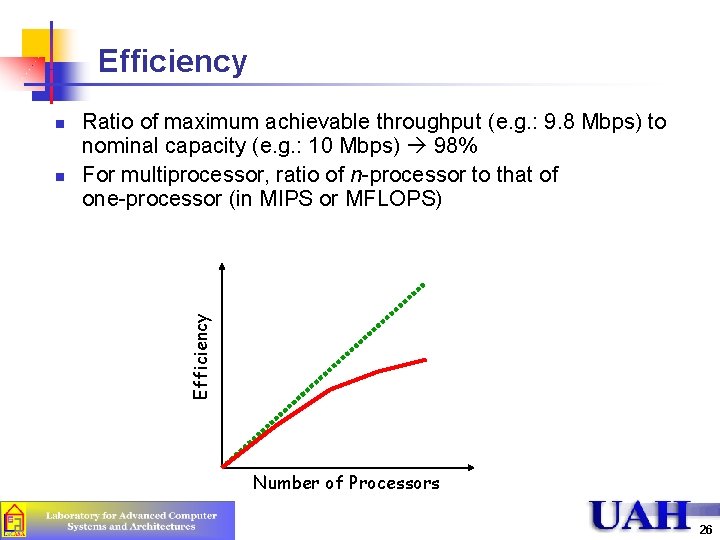

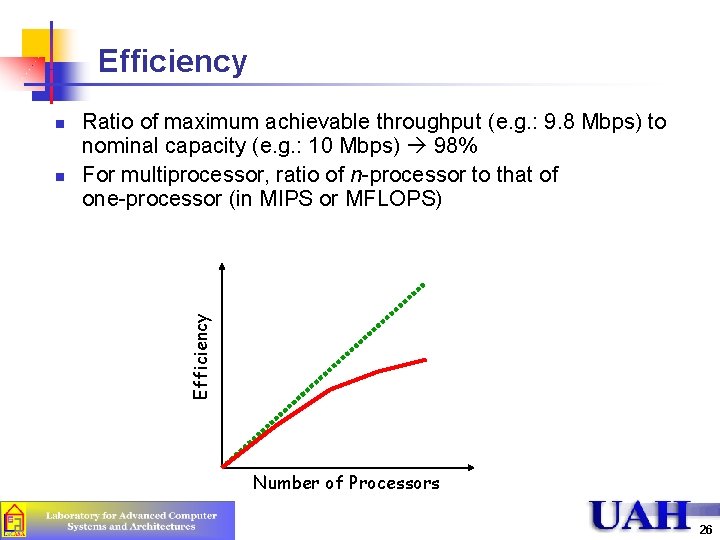

Efficiency

- Ratio of maximum achievable throughput (e.g., 9.8 Mbps) to nominal capacity (e.g., 10 Mbps): 98%
- For a multiprocessor, ratio of n-processor performance to that of one processor (in MIPS or MFLOPS)

Figure: efficiency versus number of processors.

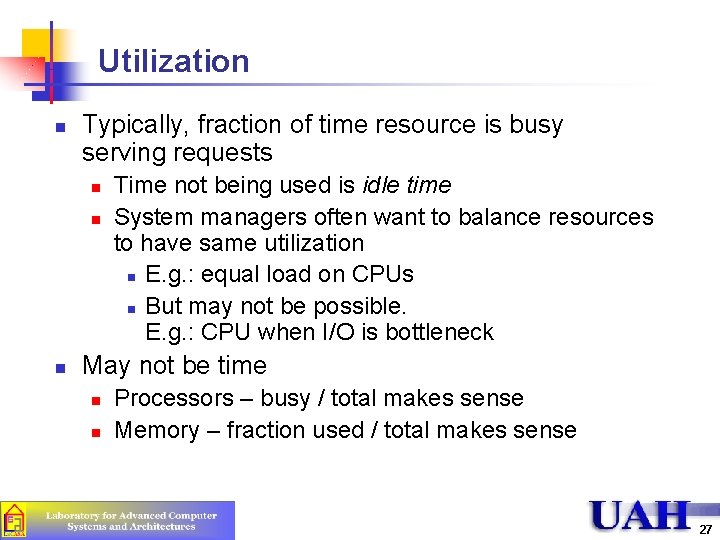

Utilization

- Typically, the fraction of time a resource is busy serving requests
  - Time not being used is idle time
  - System managers often want to balance resources to have the same utilization
    - E.g., equal load on CPUs
    - But this may not be possible, e.g., CPU when I/O is the bottleneck
- May not be time
  - Processors: busy / total makes sense
  - Memory: fraction used / total makes sense
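Both forms of utilization on this slide reduce to a used-over-total ratio. The sketch below is a minimal illustration with hypothetical numbers; the function name and the sample values are assumptions.

```python
def utilization(used, total):
    """Fraction of a resource in use: busy time / total time, or used / total size."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= used <= total:
        raise ValueError("used must lie between 0 and total")
    return used / total


# Processor: busy for 45 s of a 60 s observation window (hypothetical).
cpu_utilization = utilization(45.0, 60.0)
cpu_idle_fraction = 1.0 - cpu_utilization

# Memory: 6 GiB in use out of 8 GiB (hypothetical); not a time-based measure.
memory_utilization = utilization(6.0, 8.0)

print(cpu_utilization, cpu_idle_fraction, memory_utilization)
```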

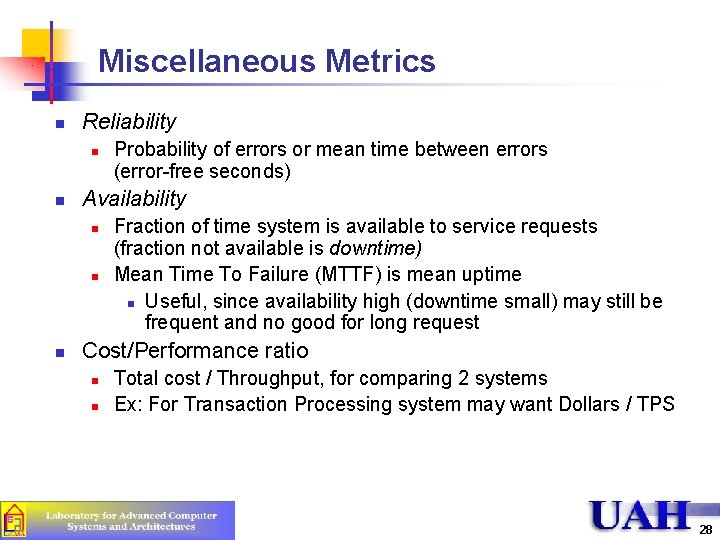

Miscellaneous Metrics

- Reliability
  - Probability of errors or mean time between errors (error-free seconds)
- Availability
  - Fraction of time the system is available to service requests (the fraction not available is downtime)
  - Mean Time To Failure (MTTF) is the mean uptime
    - Useful, since even with high availability (small downtime), failures may still be frequent, which is no good for long requests
- Cost/performance ratio
  - Total cost / throughput, for comparing 2 systems
  - E.g., for a transaction processing system, may want dollars / TPS
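The point that availability alone can hide frequent failures is easier to see with numbers. The sketch below compares two hypothetical systems with identical availability but very different MTTF; the uptime and downtime logs are invented for illustration, and the function names are assumptions.

```python
def availability(uptimes, downtimes):
    """Fraction of total time the system was available."""
    up = sum(uptimes)
    down = sum(downtimes)
    return up / (up + down)


def mean_time_to_failure(uptimes):
    """MTTF as defined on the slide: the mean uptime."""
    return sum(uptimes) / len(uptimes)


# Hypothetical logs, in hours. System A fails once; system B fails ten times.
a_uptimes, a_downtimes = [990], [10]
b_uptimes, b_downtimes = [99] * 10, [1] * 10

print(availability(a_uptimes, a_downtimes), mean_time_to_failure(a_uptimes))
print(availability(b_uptimes, b_downtimes), mean_time_to_failure(b_uptimes))
```

Both systems are available 99% of the time, but a request that needs 200 uninterrupted hours can complete on system A and would never complete on system B, whose mean uptime is only 99 hours.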

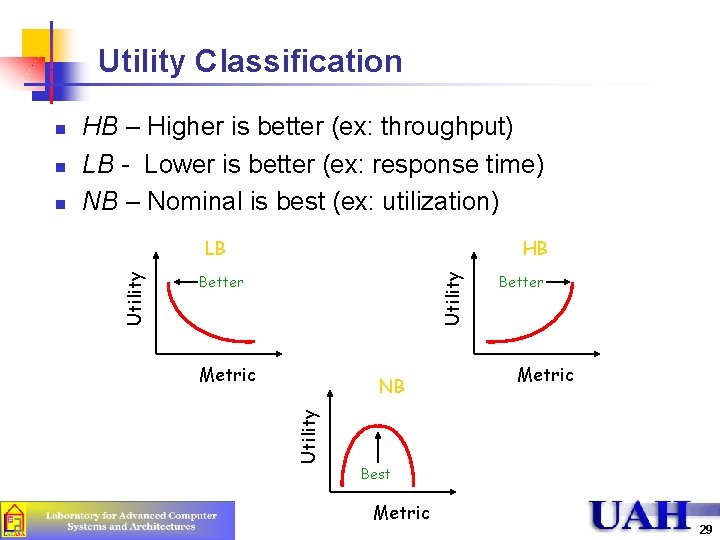

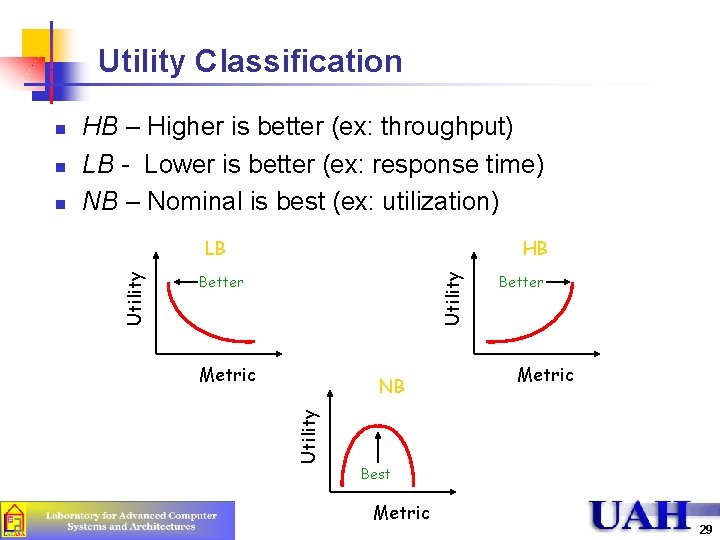

Utility Classification

- HB: higher is better (e.g., throughput)
- LB: lower is better (e.g., response time)
- NB: nominal is best (e.g., utilization)

Figure: three plots of utility versus metric value, one each for LB, HB, and NB; the LB and HB plots mark the direction labeled "Better" and the NB plot marks the "Best" value.


Setting Performance Requirements (1 of 2)

- Consider these typical requirement statements
  - The system should be both processing and memory efficient. It should not create excessive overhead
  - There should be an extremely low probability that the network will duplicate a packet, deliver it to a wrong destination, or change the data
- What's wrong?

Setting Performance Requirements (2 of 2)

- General problems
  - Nonspecific: no numbers, only qualitative words (rare, low, high, extremely small)
  - Nonmeasurable: no way to measure and verify that the system meets the requirements
  - Nonacceptable: numerical values of requirements are set based upon what can be achieved or on what looks good; if set on what can be achieved, they may turn out to be too low
  - Nonrealizable: numbers are based on what sounds good, but once the work has started they turn out to be too high
  - Nonthorough: no attempt is made to specify all outcomes


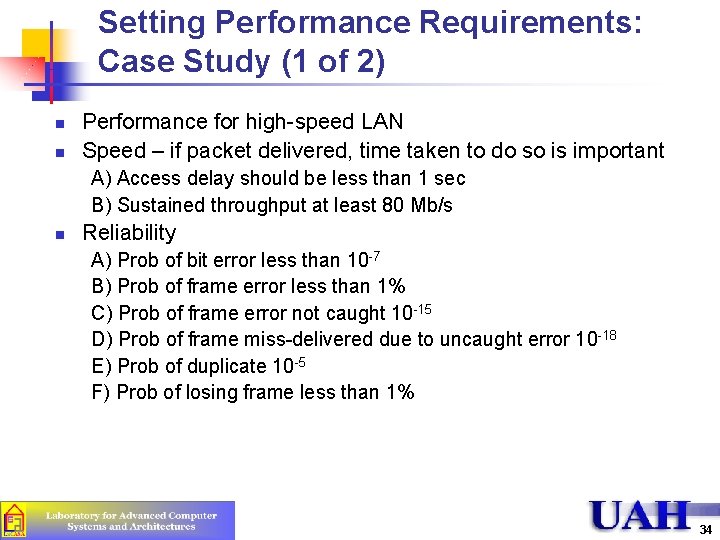

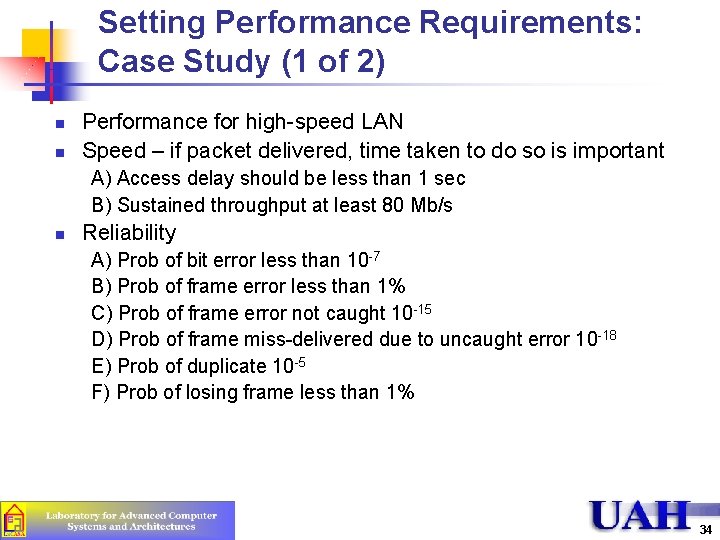

Setting Performance Requirements: Case Study (1 of 2)

- Performance for a high-speed LAN
- Speed: if a packet is delivered, the time taken to do so is important
  - A) Access delay should be less than 1 sec
  - B) Sustained throughput at least 80 Mb/s
- Reliability
  - A) Probability of bit error less than $10^{-7}$
  - B) Probability of frame error less than 1%
  - C) Probability of frame error not caught less than $10^{-15}$
  - D) Probability of frame misdelivered due to uncaught error less than $10^{-18}$
  - E) Probability of duplicate less than $10^{-5}$
  - F) Probability of losing frame less than 1%

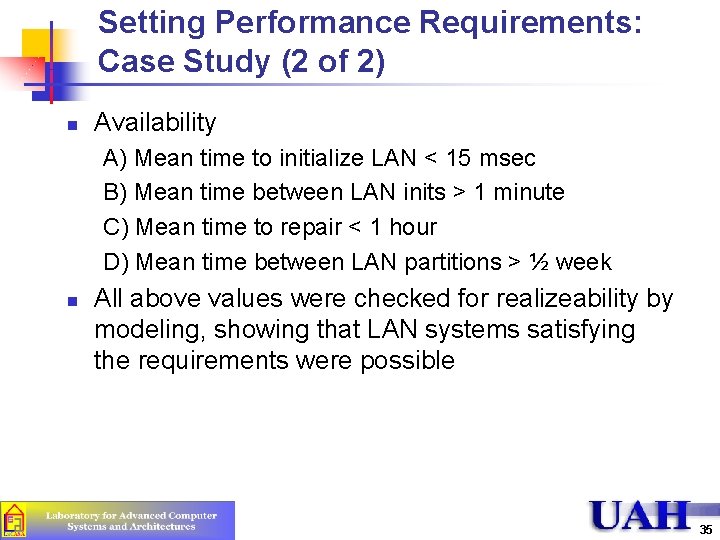

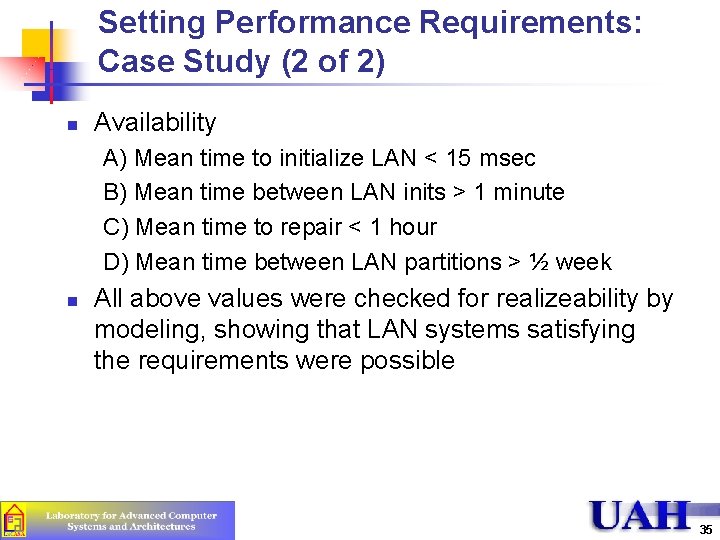

Setting Performance Requirements: Case Study (2 of 2)

- Availability
  - A) Mean time to initialize LAN < 15 msec
  - B) Mean time between LAN initializations > 1 minute
  - C) Mean time to repair < 1 hour
  - D) Mean time between LAN partitions > ½ week
- All of the above values were checked for realizability by modeling, which showed that LAN systems satisfying the requirements were possible

Part I: Things to Remember

- Systematic approach
  - Define the system; list its services, metrics, and parameters; decide on factors, evaluation technique, workload, and experimental design; analyze the data; and present the results
- Selecting an evaluation technique
  - The life-cycle stage is the key. Other considerations are: time available, tools available, accuracy required, trade-offs to be evaluated, cost, and saleability of results.

Part I: Things to Remember (continued)

- Selecting metrics
  - For each service, list time, rate, and resource consumption
  - For each undesirable outcome, measure the frequency and duration of the outcome
  - Check for low variability, non-redundancy, and completeness
- Performance requirements
  - Should be SMART: specific, measurable, acceptable, realizable, and thorough

Homework #1

- Read Chapters #1, #2, #3
- Submit answers to exercises
  - 2.2 (assume the system is a personal computer)
  - 3.1 and 3.2
- Due: Monday, August 31, 2009
- Submit by email to the instructor with subject “CPE 619 -HW 1”