CPE 631 Lecture 05: CPU Caches, Aleksandar Milenković


- Slides: 60

CPE 631 Lecture 05: CPU Caches

Aleksandar Milenković, milenka@ece.uah.edu

Electrical and Computer Engineering, University of Alabama in Huntsville

CPE 631 AM Outline

- Memory Hierarchy
- Four Questions for Memory Hierarchy
- Cache Performance

10/20/2021 UAH-CPE 631 2

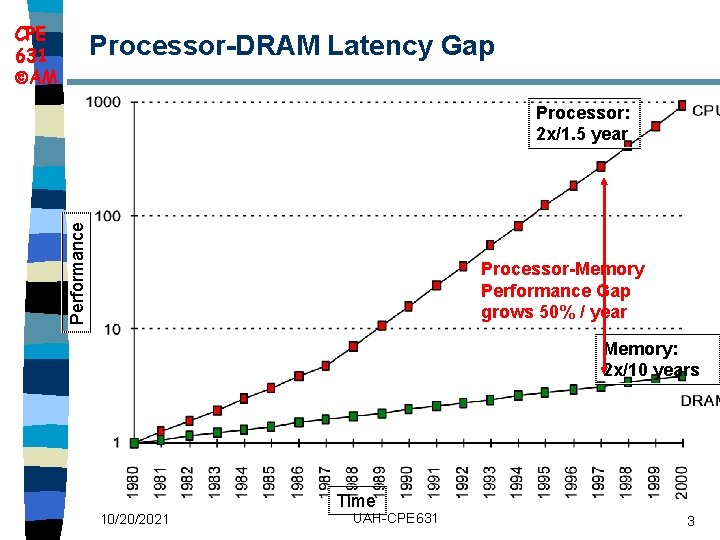

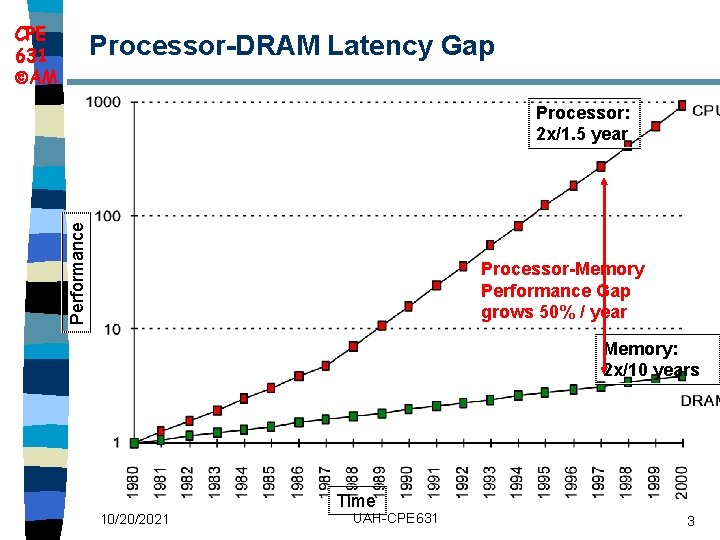

Processor-DRAM Latency Gap

[Figure: performance vs. time for processor and memory]

- Processor: 2x every 1.5 years
- Memory: 2x every 10 years
- The processor-memory performance gap grows 50% per year

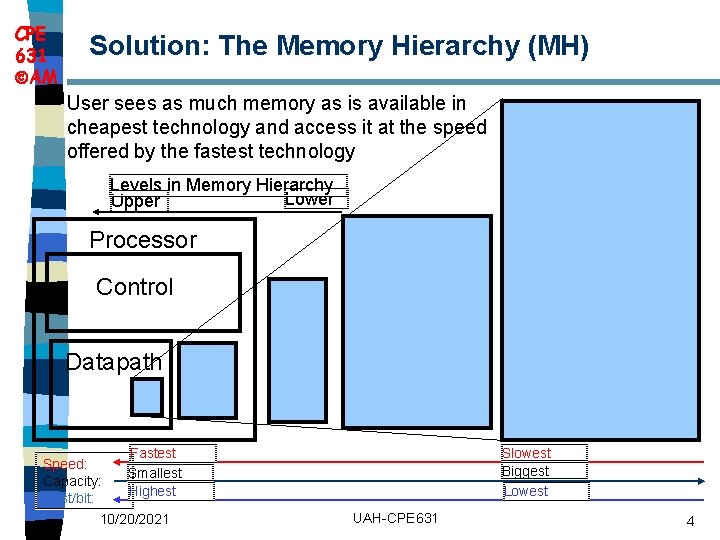

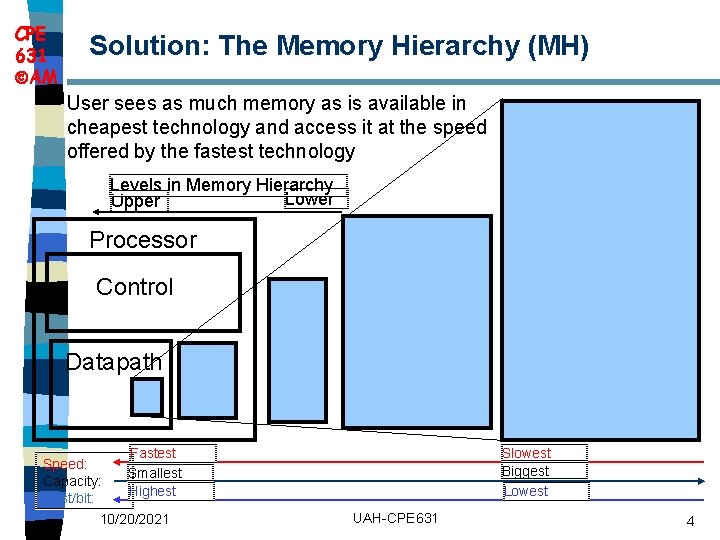

Solution: The Memory Hierarchy (MH)

The user sees as much memory as is available in the cheapest technology and accesses it at the speed offered by the fastest technology.

[Figure: levels in the memory hierarchy, starting at the processor (control, datapath)]

- Upper levels: fastest, smallest capacity, highest cost per bit
- Lower levels: slowest, biggest capacity, lowest cost per bit

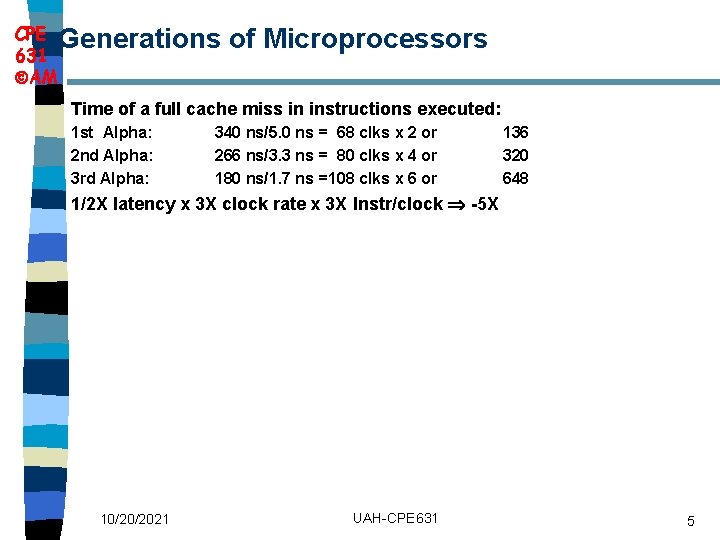

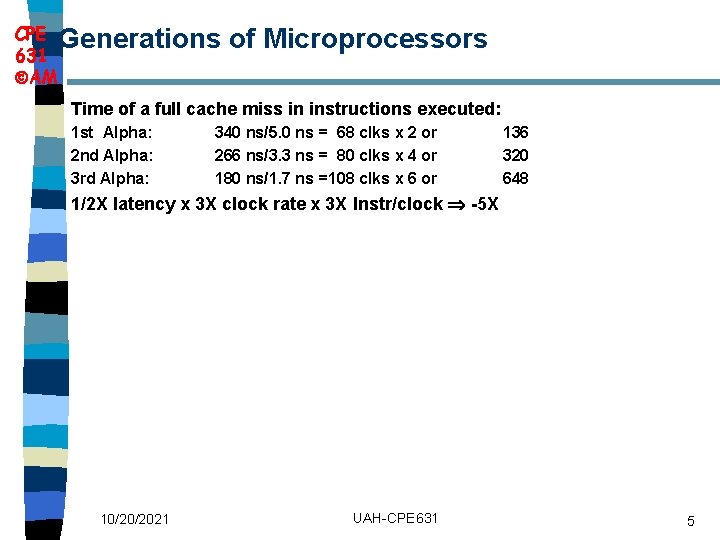

Generations of Microprocessors

Time of a full cache miss in instructions executed:

- 1st Alpha: 340 ns / 5.0 ns = 68 clks x 2, or 136 instructions
- 2nd Alpha: 266 ns / 3.3 ns = 80 clks x 4, or 320 instructions
- 3rd Alpha: 180 ns / 1.7 ns = 108 clks x 6, or 648 instructions
- 1/2X latency x 3X clock rate x 3X instr/clock => 5X

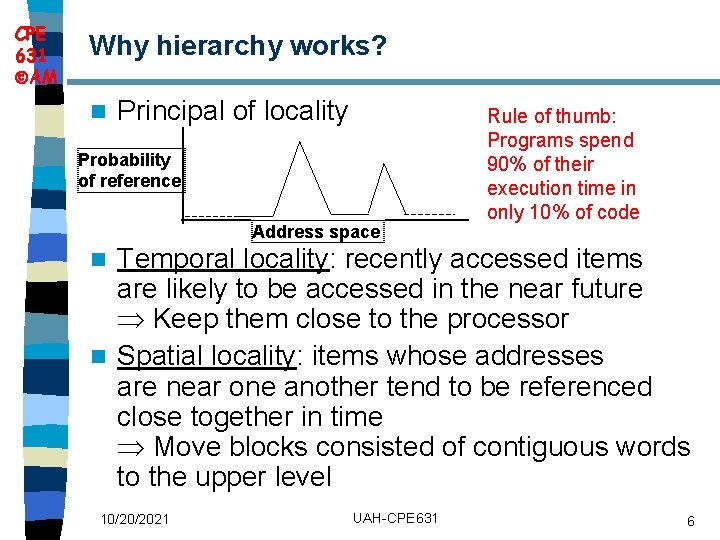

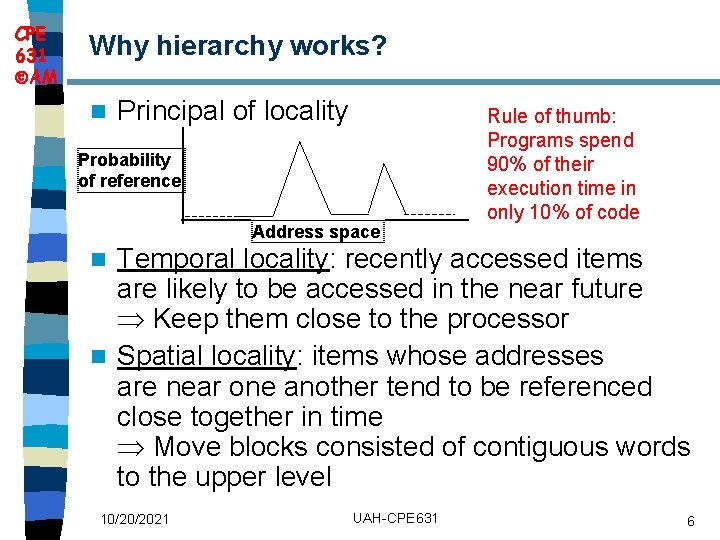

Why hierarchy works?

- Principle of locality [Figure: probability of reference vs. address space]
- Rule of thumb: programs spend 90% of their execution time in only 10% of the code
- Temporal locality: recently accessed items are likely to be accessed again in the near future
  - Keep them close to the processor
- Spatial locality: items whose addresses are near one another tend to be referenced close together in time
  - Move blocks consisting of contiguous words to the upper level

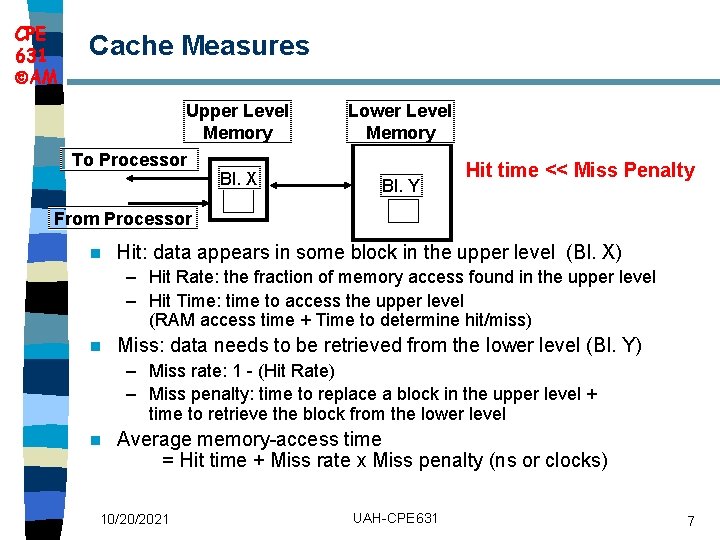

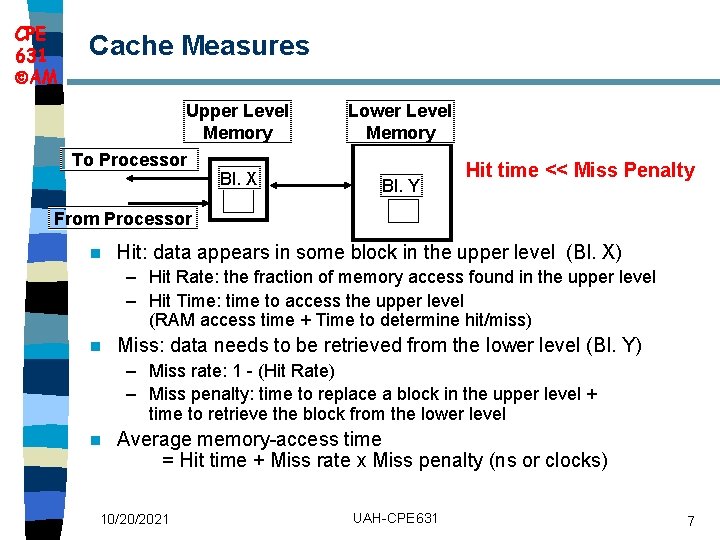

Cache Measures

[Figure: the processor exchanges data with the upper-level memory (Bl. X), which exchanges blocks with the lower-level memory (Bl. Y)]

- Hit: data appears in some block in the upper level (Bl. X)
  - Hit rate: the fraction of memory accesses found in the upper level
  - Hit time: time to access the upper level (RAM access time + time to determine hit/miss)
- Miss: data needs to be retrieved from the lower level (Bl. Y)
  - Miss rate: 1 - (hit rate)
  - Miss penalty: time to replace a block in the upper level + time to retrieve the block from the lower level
- Hit time is much smaller than the miss penalty
- Average memory-access time = Hit time + Miss rate x Miss penalty (ns or clocks)
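
The average memory-access time formula can be written as a one-line function. This is a minimal sketch; the function name `amat` and the 5% miss rate used in the usage note are illustrative assumptions, not values from the slides.

```c
#include <assert.h>

/* Average memory-access time, in the same unit as hit_time and
 * miss_penalty (ns or clock cycles). miss_rate is a fraction in [0, 1].
 * The name amat is a hypothetical helper, not part of the lecture. */
double amat(double hit_time, double miss_rate, double miss_penalty)
{
    assert(miss_rate >= 0.0 && miss_rate <= 1.0);
    return hit_time + miss_rate * miss_penalty;
}
```

For example, `amat(1.0, 0.05, 100.0)` evaluates 1 + 0.05 x 100, so even a small miss rate dominates the result when the miss penalty is large.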

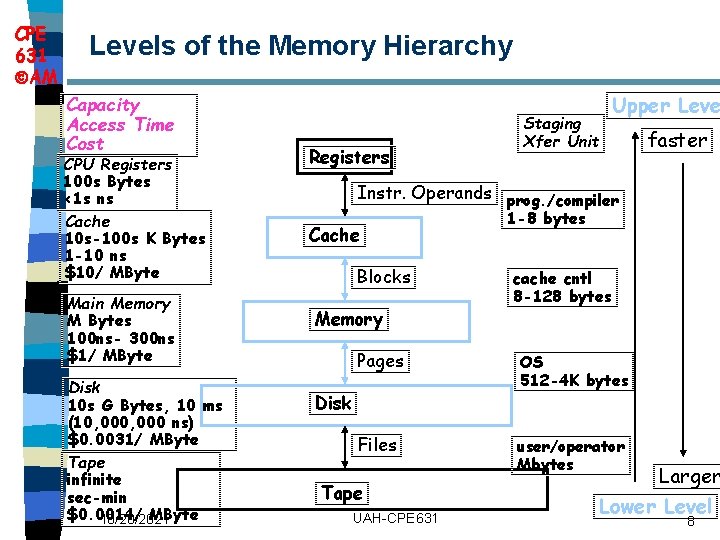

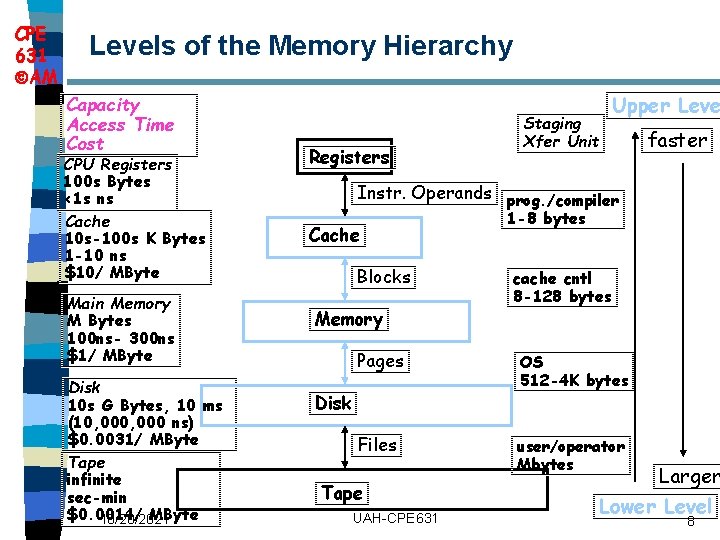

Levels of the Memory Hierarchy

| Level | Capacity | Access time | Cost | Transfer unit to the next lower level | Managed by |
|---|---|---|---|---|---|
| CPU registers | 100s bytes | <1s ns | | instr. operands, 1-8 bytes | prog./compiler |
| Cache | 10s-100s KBytes | 1-10 ns | $10/MByte | blocks, 8-128 bytes | cache cntl |
| Main memory | MBytes | 100 ns-300 ns | $1/MByte | pages, 512-4K bytes | OS |
| Disk | 10s GBytes | 10 ms (10,000,000 ns) | $0.0031/MByte | files, MBytes | user/operator |
| Tape | infinite | sec-min | $0.0014/MByte | | |

Upper levels are faster; lower levels are larger.

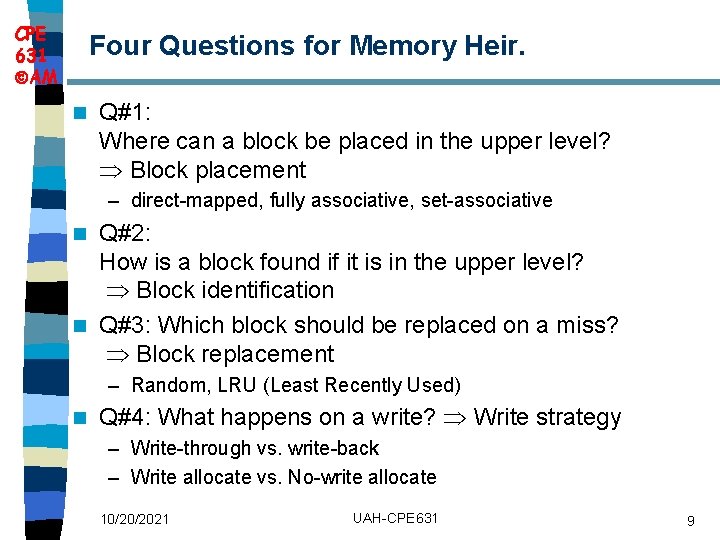

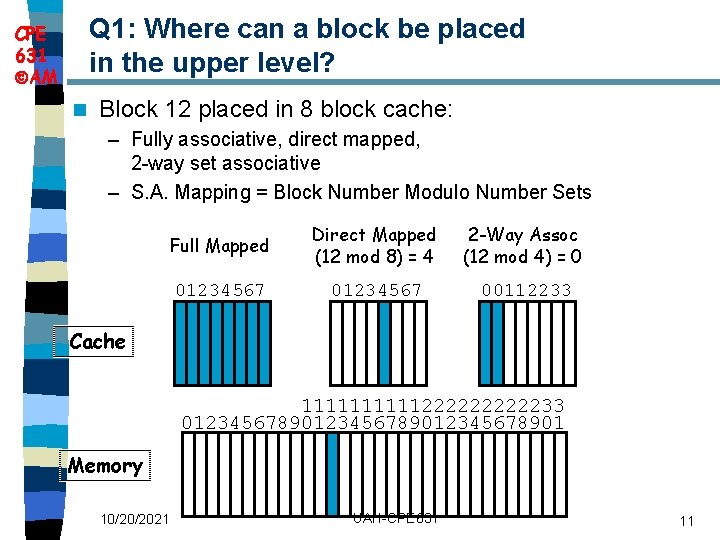

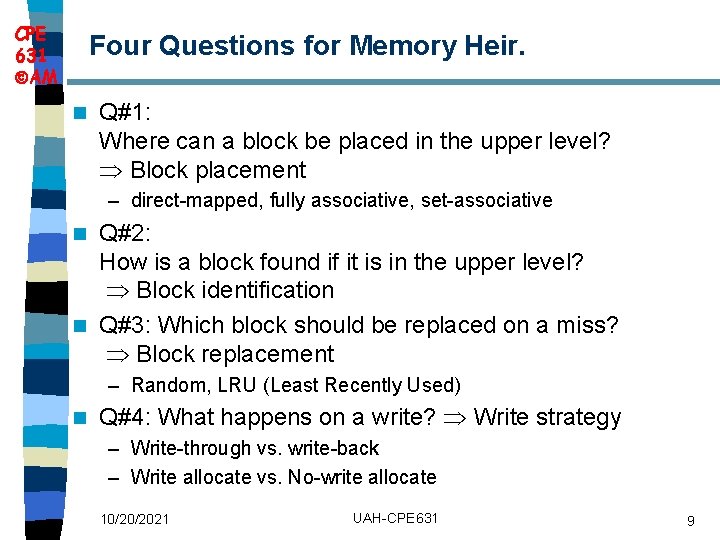

Four Questions for Memory Hierarchy

- Q#1: Where can a block be placed in the upper level? (Block placement)
  - Direct-mapped, fully associative, set-associative
- Q#2: How is a block found if it is in the upper level? (Block identification)
- Q#3: Which block should be replaced on a miss? (Block replacement)
  - Random, LRU (Least Recently Used)
- Q#4: What happens on a write? (Write strategy)
  - Write-through vs. write-back
  - Write allocate vs. no-write allocate

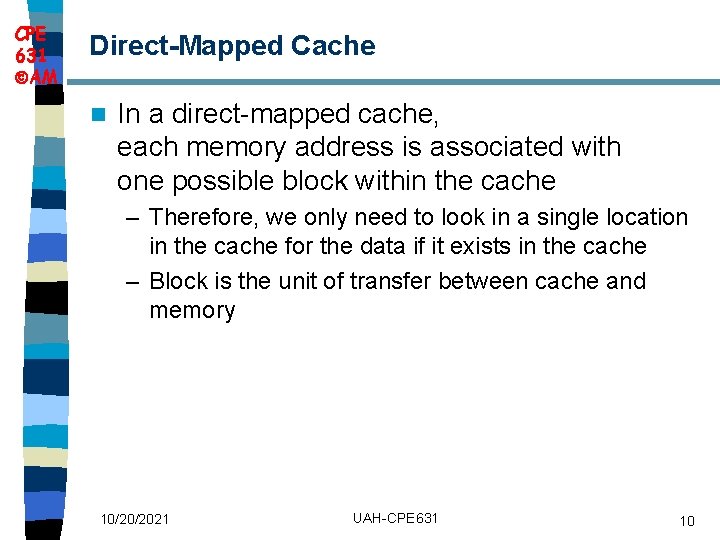

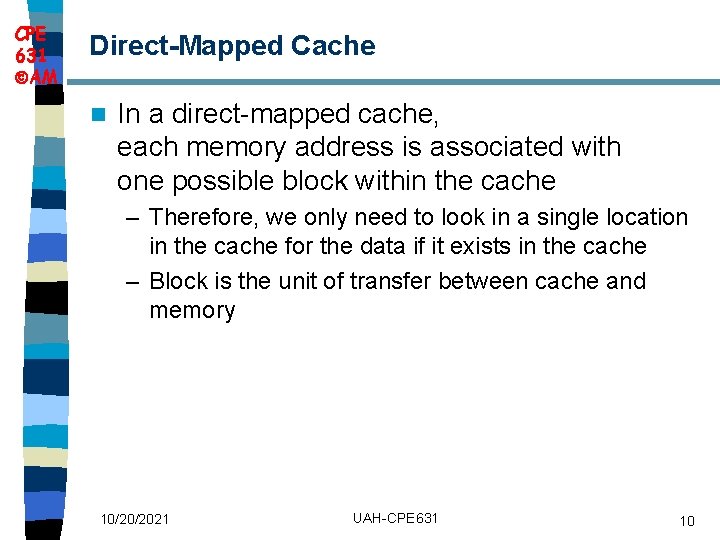

Direct Mapped Cache

- In a direct-mapped cache, each memory address is associated with one possible block within the cache
  - Therefore, we only need to look in a single location in the cache to see whether the data is there
  - A block is the unit of transfer between cache and memory

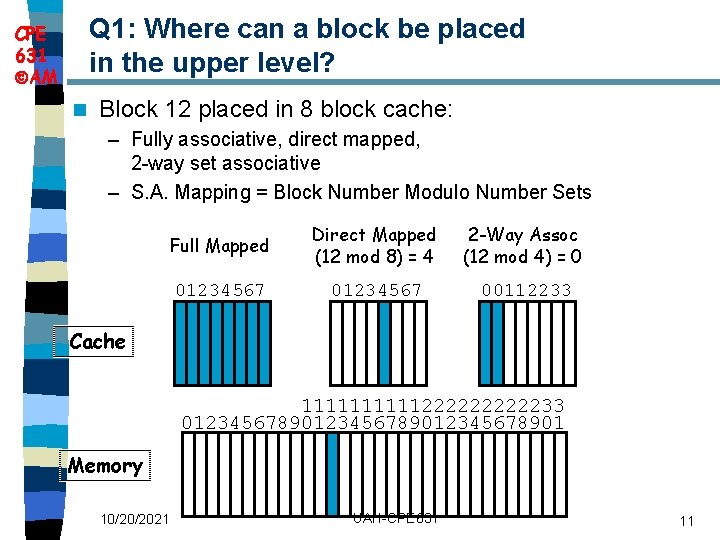

Q1: Where can a block be placed in the upper level?

- Block 12 placed in an 8-block cache: fully associative, direct mapped, 2-way set associative
- Set-associative mapping = block number modulo number of sets
  - Fully associative: any block in the cache
  - Direct mapped: (12 mod 8) = 4
  - 2-way set associative: (12 mod 4) = 0

[Figure: an 8-block cache (blocks 0-7; sets 0-3 in the 2-way case) and a 32-block memory (blocks 0-31)]
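
The modulo mapping can be sketched in a few lines. The function name `set_index` and its parameters are hypothetical; direct mapped is treated as 1-way and fully associative as a single set holding every block.

```c
#include <assert.h>

/* Set that a memory block maps to: (block number) mod (number of sets).
 * num_blocks is the cache size in blocks; ways is the associativity
 * (1 = direct mapped, num_blocks = fully associative). */
unsigned set_index(unsigned block_number, unsigned num_blocks, unsigned ways)
{
    assert(ways > 0 && num_blocks % ways == 0);
    unsigned num_sets = num_blocks / ways;
    return block_number % num_sets;
}
```

With the slide's numbers, `set_index(12, 8, 1)` gives block 4 and `set_index(12, 8, 2)` gives set 0; in the fully associative case there is only one set, so the index carries no information.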

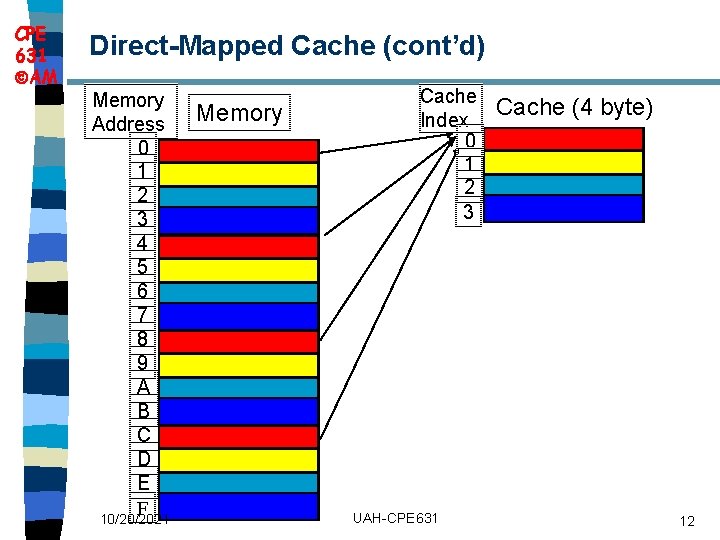

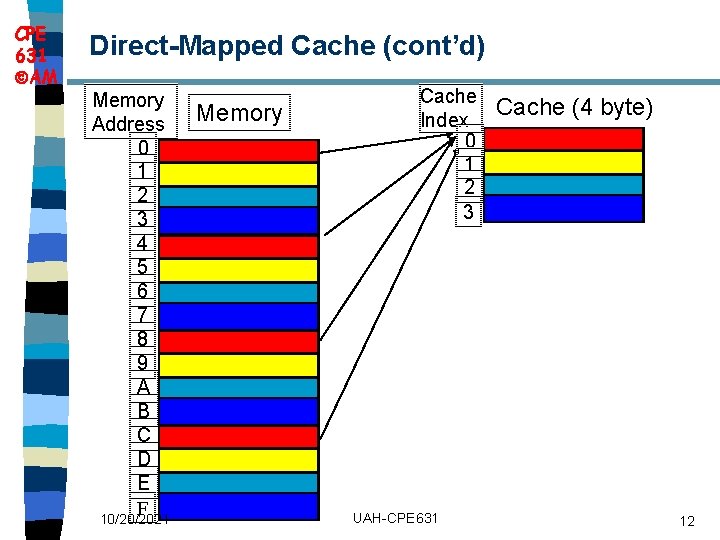

Direct Mapped Cache (cont’d)

[Figure: a 16-byte memory (addresses 0 through F) mapped onto a 4-byte direct-mapped cache (cache indices 0 through 3)]

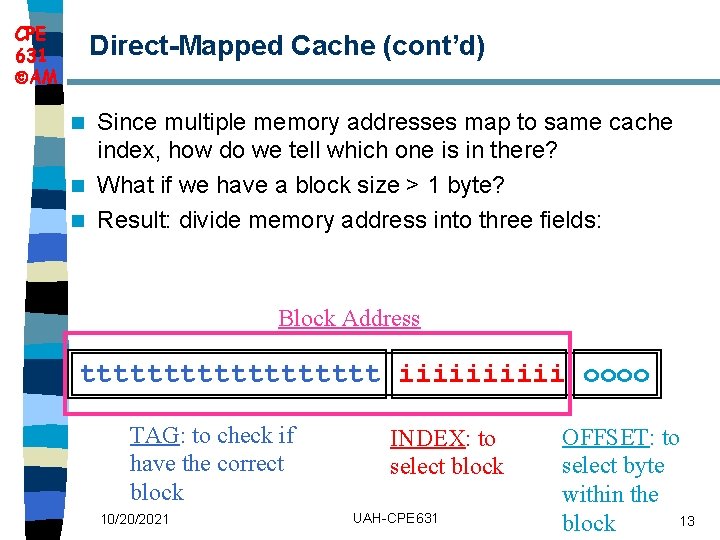

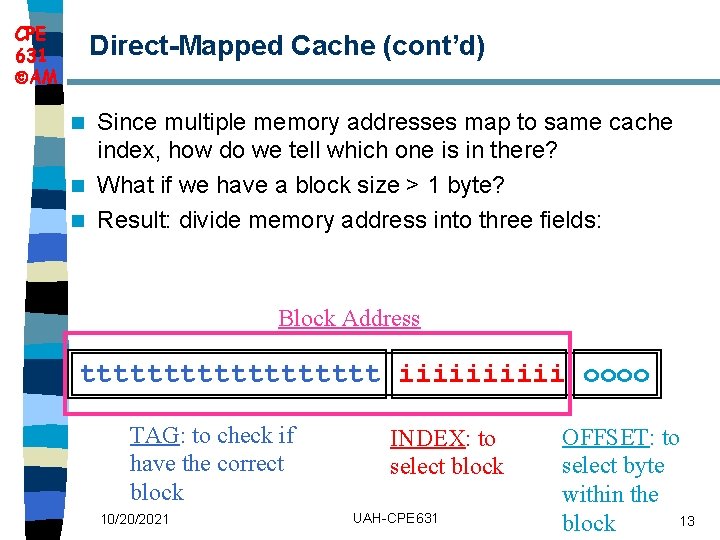

Direct Mapped Cache (cont’d)

- Since multiple memory addresses map to the same cache index, how do we tell which one is in there?
- What if we have a block size > 1 byte?
- Result: divide the memory address into three fields, ttttttttt iiiii oooo
  - TAG: to check if we have the correct block
  - INDEX: to select the block
  - OFFSET: to select the byte within the block
  - TAG and INDEX together form the block address

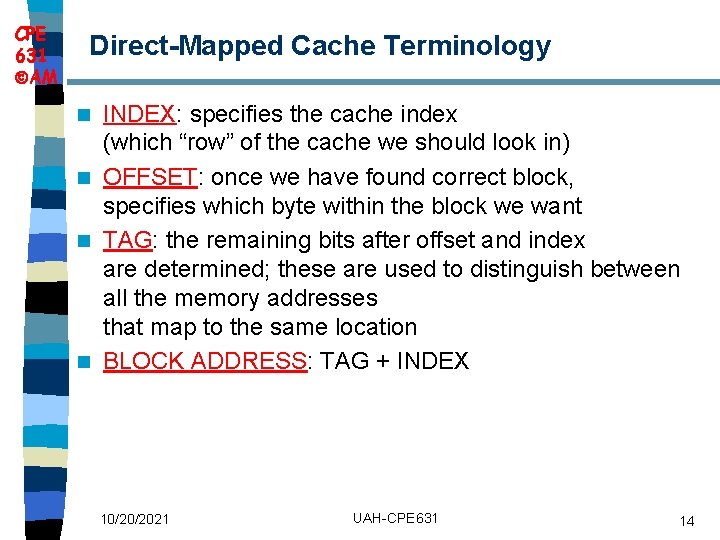

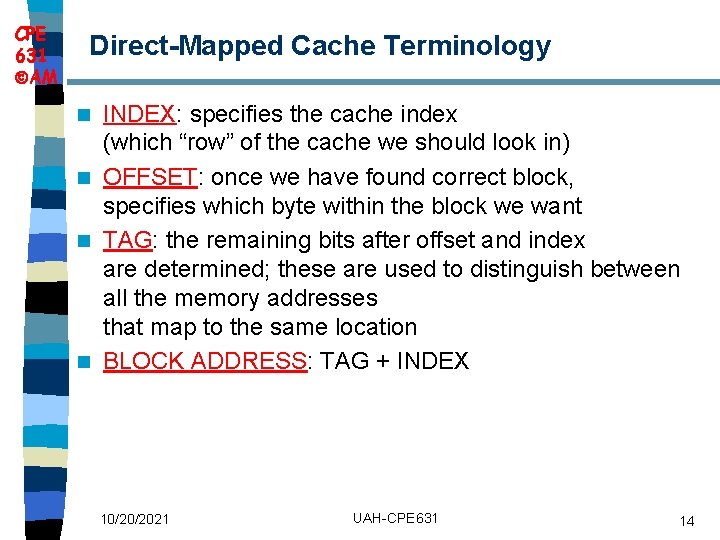

Direct Mapped Cache Terminology

- INDEX: specifies the cache index (which “row” of the cache we should look in)
- OFFSET: once we have found the correct block, specifies which byte within the block we want
- TAG: the remaining bits after offset and index are determined; these are used to distinguish between all the memory addresses that map to the same location
- BLOCK ADDRESS: TAG + INDEX
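
Splitting an address into these fields is a matter of shifts and masks. The sketch below assumes 32-bit addresses; the type `addr_fields` and the function `split_address` are hypothetical names introduced for illustration.

```c
#include <assert.h>
#include <stdint.h>

struct addr_fields {
    uint32_t tag;     /* distinguishes addresses that share an index */
    uint32_t index;   /* selects the cache row */
    uint32_t offset;  /* selects the byte within the block */
};

/* Split a 32-bit address given the widths of the offset and index fields. */
struct addr_fields split_address(uint32_t addr, unsigned offset_bits,
                                 unsigned index_bits)
{
    assert(offset_bits + index_bits < 32);
    struct addr_fields f;
    f.offset = addr & ((1u << offset_bits) - 1u);
    f.index  = (addr >> offset_bits) & ((1u << index_bits) - 1u);
    f.tag    = addr >> (offset_bits + index_bits);
    return f;
}
```

For a cache with 5 offset bits and 5 index bits, `split_address(0x14020, 5, 5)` yields tag 0x50, index 0x01, and offset 0x00.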

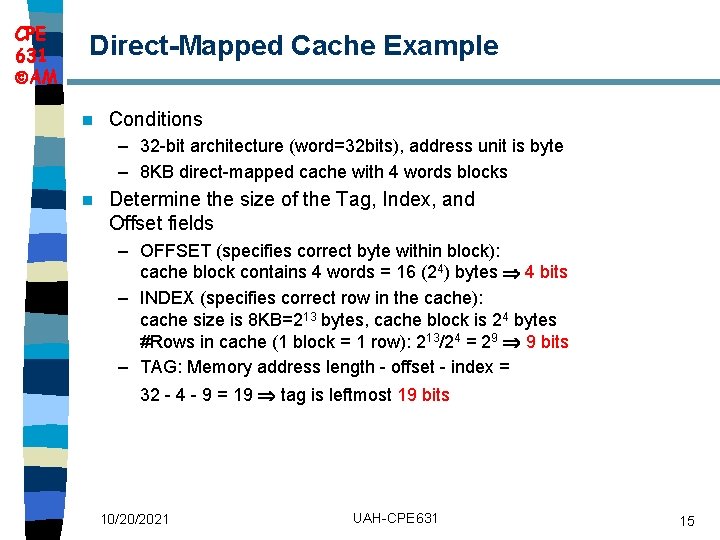

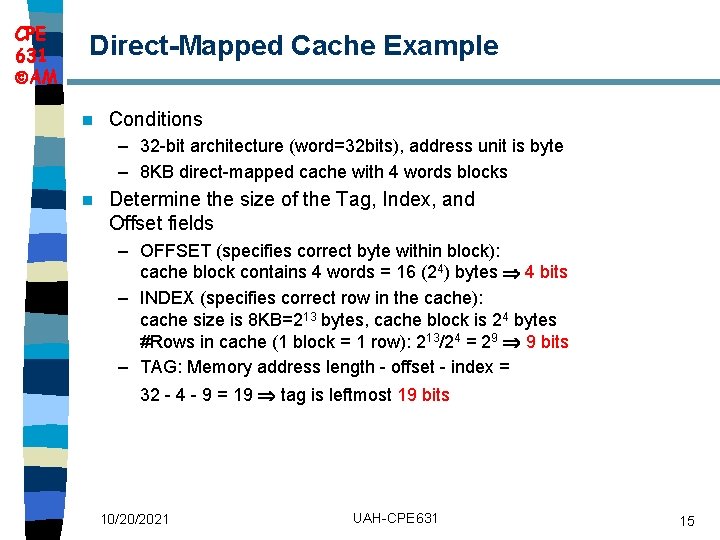

Direct Mapped Cache Example

- Conditions
  - 32-bit architecture (word = 32 bits), address unit is the byte
  - 8 KB direct-mapped cache with 4-word blocks
- Determine the size of the Tag, Index, and Offset fields
  - OFFSET (specifies the correct byte within the block): a cache block contains 4 words = 16 (2^4) bytes => 4 bits
  - INDEX (specifies the correct row in the cache): cache size is 8 KB = 2^13 bytes and a cache block is 2^4 bytes, so the number of rows in the cache (1 block = 1 row) is 2^13 / 2^4 = 2^9 => 9 bits
  - TAG: memory address length - offset - index = 32 - 4 - 9 = 19 => the tag is the leftmost 19 bits
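
The same field-width calculation can be sketched as code, assuming the cache size and block size are both powers of two. The names `field_widths`, `log2_pow2`, and `direct_mapped_widths` are hypothetical.

```c
#include <assert.h>

struct field_widths {
    unsigned tag_bits;
    unsigned index_bits;
    unsigned offset_bits;
};

/* Base-2 logarithm of a power of two. */
static unsigned log2_pow2(unsigned long x)
{
    assert(x != 0 && (x & (x - 1)) == 0);
    unsigned n = 0;
    while (x > 1) {
        x >>= 1;
        n++;
    }
    return n;
}

/* Field widths for a direct-mapped cache (1 block = 1 row). */
struct field_widths direct_mapped_widths(unsigned addr_bits,
                                         unsigned long cache_bytes,
                                         unsigned long block_bytes)
{
    struct field_widths w;
    w.offset_bits = log2_pow2(block_bytes);
    w.index_bits  = log2_pow2(cache_bytes / block_bytes);
    w.tag_bits    = addr_bits - w.index_bits - w.offset_bits;
    return w;
}
```

Calling `direct_mapped_widths(32, 8192, 16)` reproduces the example: 4 offset bits, 9 index bits, and 19 tag bits.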

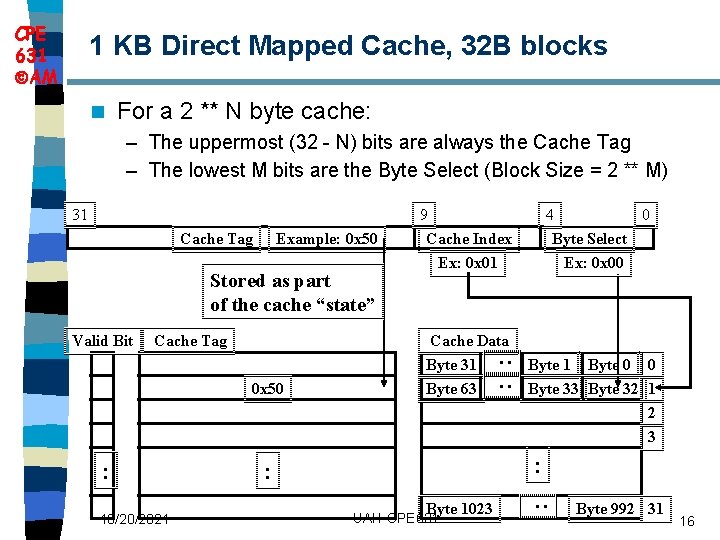

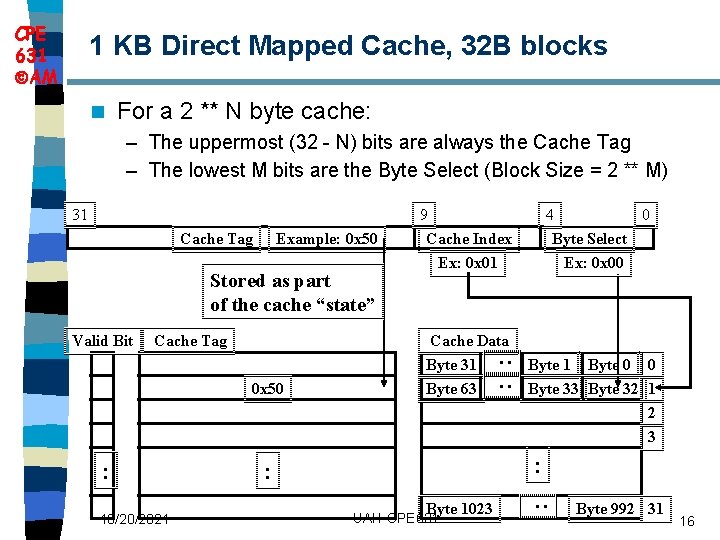

1 KB Direct Mapped Cache, 32 B blocks

- For a 2 ** N byte cache:
  - The uppermost (32 - N) bits are always the Cache Tag
  - The lowest M bits are the Byte Select (Block Size = 2 ** M)

[Figure: the 32-bit address is split into Cache Tag (bits 31-10, example: 0x50), Cache Index (bits 9-5, example: 0x01), and Byte Select (bits 4-0, example: 0x00). The cache has 32 rows (index 0 to 31). Each row holds a Valid Bit and a Cache Tag, stored as part of the cache “state”, plus 32 bytes of Cache Data: Byte 0 to Byte 31 in row 0, Byte 32 to Byte 63 in row 1, and so on up to Byte 992 to Byte 1023 in row 31.]

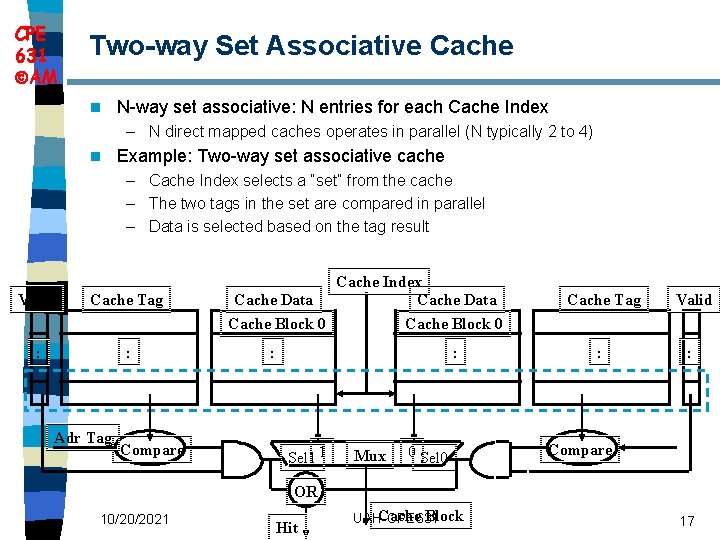

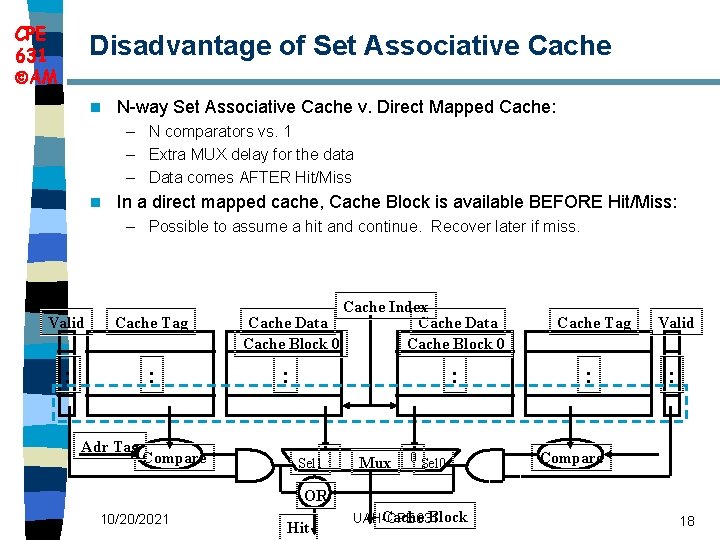

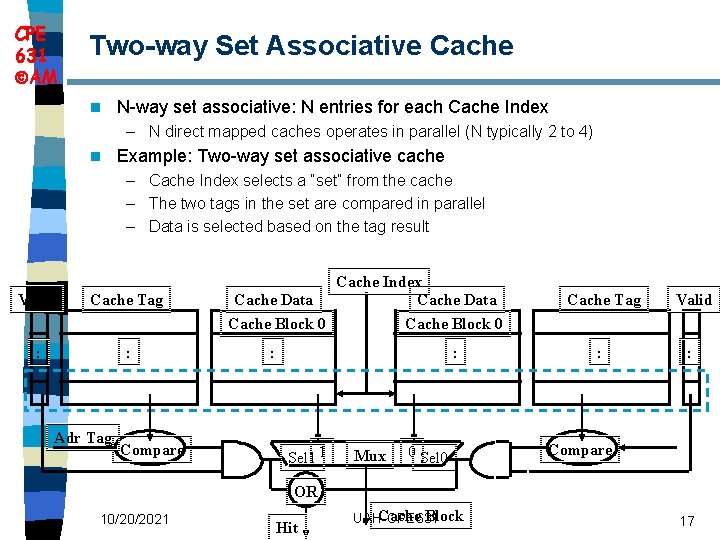

Two-way Set Associative Cache

- N-way set associative: N entries for each Cache Index
  - N direct-mapped caches operate in parallel (N typically 2 to 4)
- Example: two-way set associative cache
  - The Cache Index selects a “set” from the cache
  - The two tags in the set are compared in parallel
  - Data is selected based on the tag result

[Figure: two banks of Valid, Cache Tag, and Cache Data selected by the Cache Index; the address tag (Adr Tag) is compared against both stored tags; the compare results drive the mux selects (Sel0, Sel1) that pick the Cache Block and are ORed to produce Hit]
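
A software model of the two-way lookup might look like the sketch below. It tracks only valid bits and tags (no data), and the sizes and names (`NUM_SETS`, `cache2w`, `cache_lookup`, `cache_fill`) are assumptions chosen to keep the example small.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define NUM_SETS 4  /* hypothetical: 8 blocks organized as 4 sets of 2 */
#define NUM_WAYS 2

struct cache_line {
    bool     valid;
    uint32_t tag;
};

struct cache2w {
    struct cache_line sets[NUM_SETS][NUM_WAYS];
};

/* Returns true on a hit and stores the matching way in *way_out. */
bool cache_lookup(const struct cache2w *c, uint32_t block_addr,
                  unsigned *way_out)
{
    uint32_t index = block_addr % NUM_SETS;  /* Cache Index selects a set */
    uint32_t tag   = block_addr / NUM_SETS;  /* remaining upper bits */

    /* Hardware compares both tags in parallel; this loop checks them in turn. */
    for (unsigned way = 0; way < NUM_WAYS; way++) {
        const struct cache_line *line = &c->sets[index][way];
        if (line->valid && line->tag == tag) {
            *way_out = way;  /* the compare result selects the data */
            return true;     /* Hit is the OR of the per-way compares */
        }
    }
    return false;
}

/* Install a block in the given way of its set. */
void cache_fill(struct cache2w *c, uint32_t block_addr, unsigned way)
{
    assert(way < NUM_WAYS);
    struct cache_line *line = &c->sets[block_addr % NUM_SETS][way];
    line->valid = true;
    line->tag   = block_addr / NUM_SETS;
}
```

Blocks 8 and 12 both map to set 0 here, yet they can be resident at the same time because the set has two ways; in a direct-mapped cache of 4 blocks they would evict each other.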

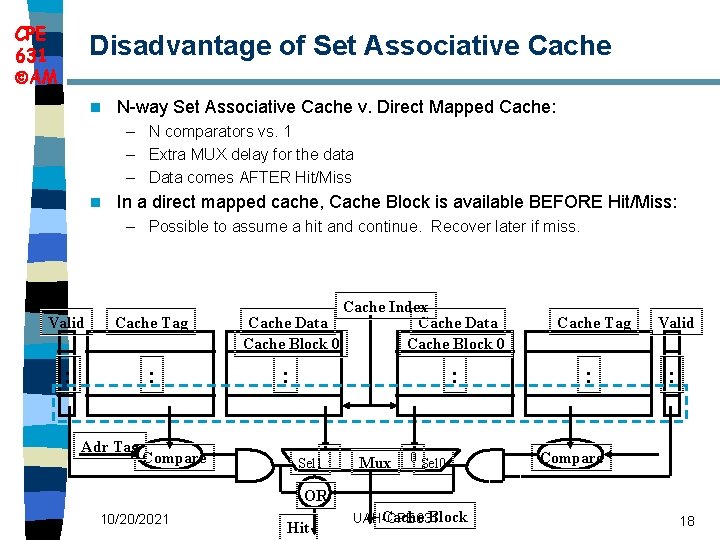

Disadvantage of Set Associative Cache

- N-way set associative cache vs. direct mapped cache:
  - N comparators vs. 1
  - Extra MUX delay for the data
  - Data comes AFTER Hit/Miss
- In a direct mapped cache, the Cache Block is available BEFORE Hit/Miss:
  - Possible to assume a hit and continue; recover later on a miss

[Figure: the two-way set associative datapath with Valid, Cache Tag, and Cache Data banks, tag comparators, mux selects (Sel0, Sel1), and the OR gate producing Hit]

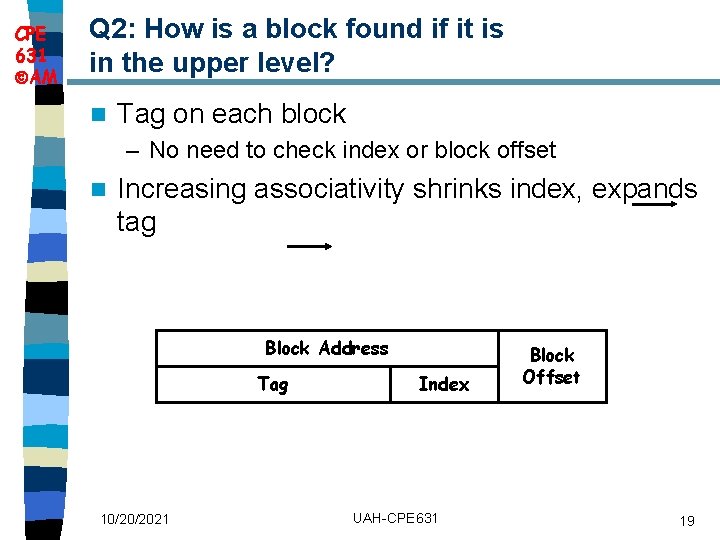

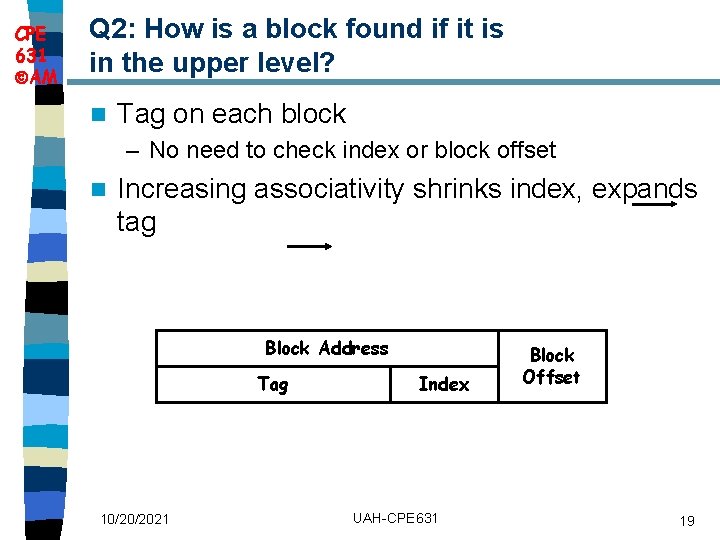

Q2: How is a block found if it is in the upper level?

- Tag on each block
  - No need to check index or block offset
- Increasing associativity shrinks the index and expands the tag
- Address layout: Block Address (Tag, Index), then Block Offset

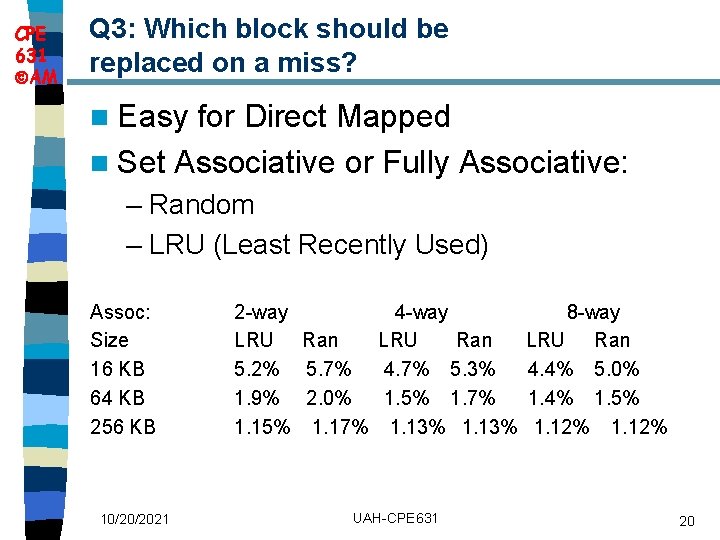

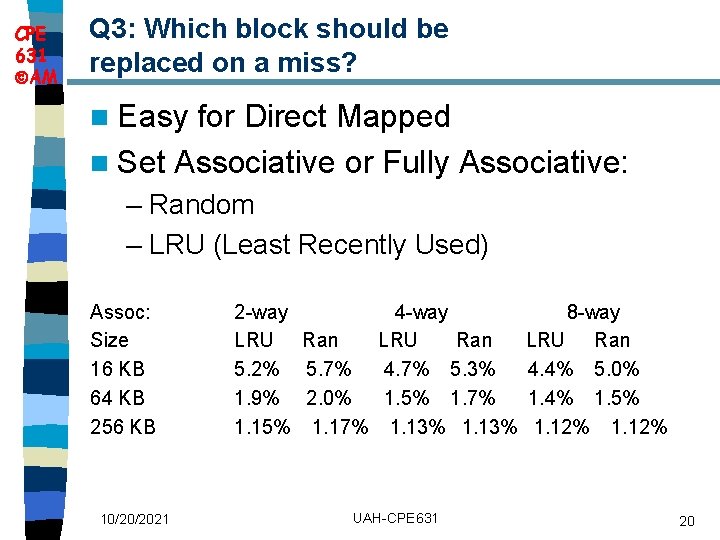

Q3: Which block should be replaced on a miss?

- Easy for direct mapped
- Set associative or fully associative:
  - Random
  - LRU (Least Recently Used)

Miss rates by cache size, associativity, and replacement policy:

| Size | 2-way LRU | 2-way Random | 4-way LRU | 4-way Random | 8-way LRU | 8-way Random |
|---|---|---|---|---|---|---|
| 16 KB | 5.2% | 5.7% | 4.7% | 5.3% | 4.4% | 5.0% |
| 64 KB | 1.9% | 2.0% | 1.5% | 1.7% | 1.4% | 1.5% |
| 256 KB | 1.15% | 1.17% | 1.13% | 1.13% | 1.12% | 1.12% |
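
The two replacement policies can be sketched for a single set as follows. This version keeps a logical timestamp per way, which is one simple way to model LRU in software and is not necessarily how hardware implements it; `WAYS`, `set_state`, and the function names are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

#define WAYS 4  /* hypothetical associativity */

struct set_state {
    unsigned long last_used[WAYS];  /* logical time of the latest access */
    unsigned long now;              /* per-set access counter */
};

/* Record an access to the given way. */
void set_touch(struct set_state *s, unsigned way)
{
    assert(way < WAYS);
    s->now++;
    s->last_used[way] = s->now;
}

/* LRU: evict the way with the oldest access time (lowest way on ties). */
unsigned lru_victim(const struct set_state *s)
{
    unsigned victim = 0;
    for (unsigned way = 1; way < WAYS; way++) {
        if (s->last_used[way] < s->last_used[victim])
            victim = way;
    }
    return victim;
}

/* Random: needs no per-way state at all. */
unsigned random_victim(void)
{
    return (unsigned)rand() % WAYS;
}
```

The bookkeeping difference is the design trade-off: LRU must update state on every access, while random replacement keeps none, and the table above shows how small the miss-rate gap becomes for larger caches.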

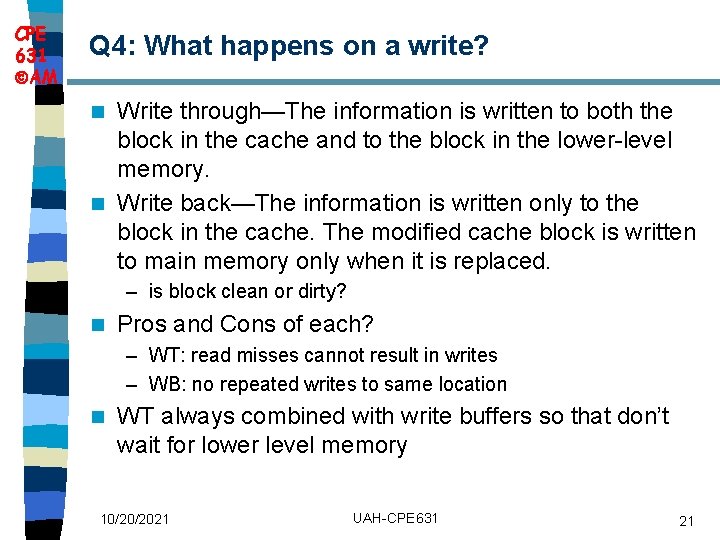

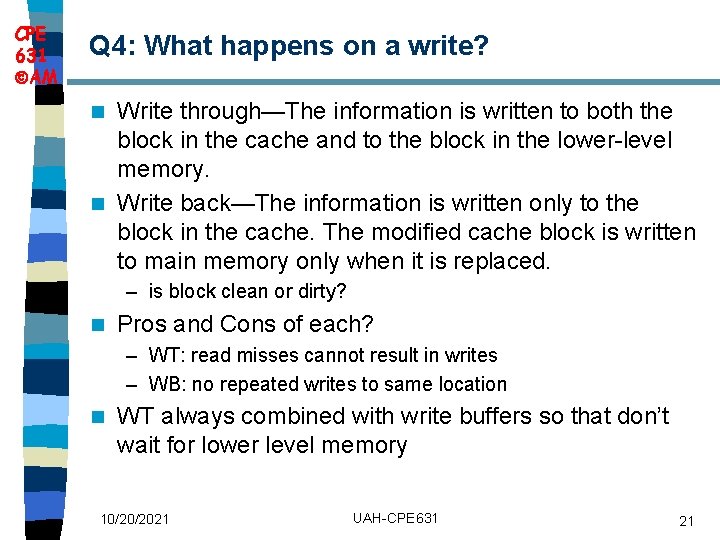

Q4: What happens on a write?

- Write through: the information is written to both the block in the cache and the block in the lower-level memory.
- Write back: the information is written only to the block in the cache. The modified cache block is written to main memory only when it is replaced.
  - Is the block clean or dirty?
- Pros and cons of each?
  - WT: read misses cannot result in writes
  - WB: no repeated writes to the same location
- WT is always combined with write buffers so that the processor does not wait for the lower-level memory

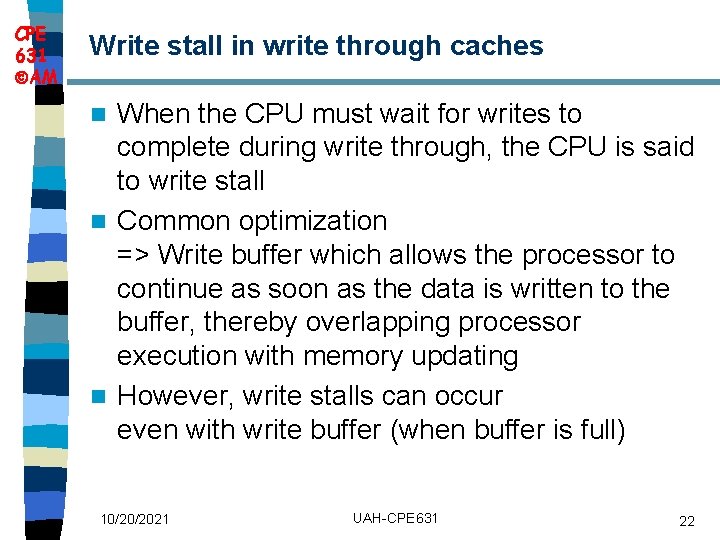

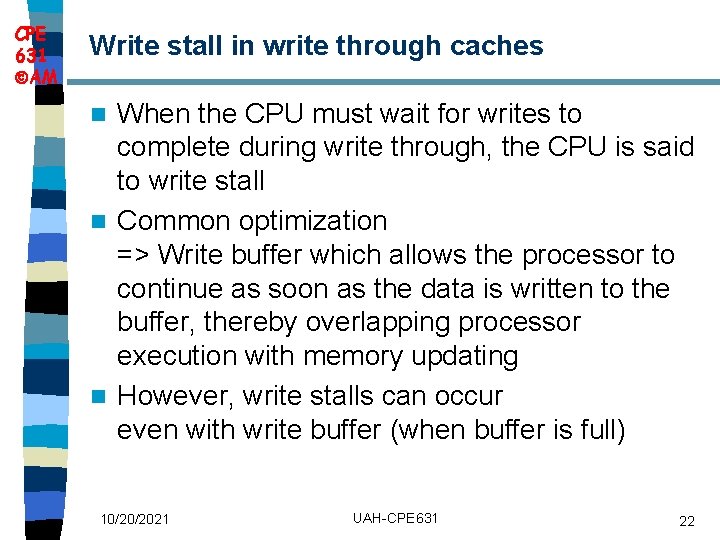

Write stall in write through caches

- When the CPU must wait for writes to complete during write through, the CPU is said to write stall
- Common optimization: a write buffer, which allows the processor to continue as soon as the data is written to the buffer, thereby overlapping processor execution with memory updating
- However, write stalls can occur even with a write buffer (when the buffer is full)

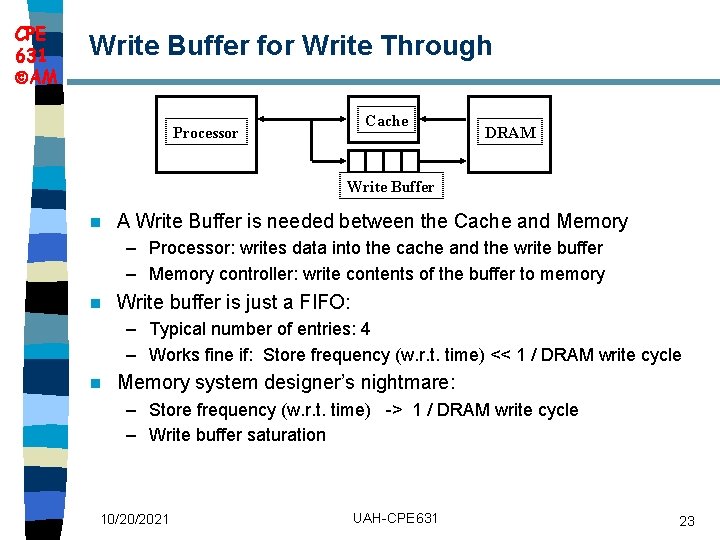

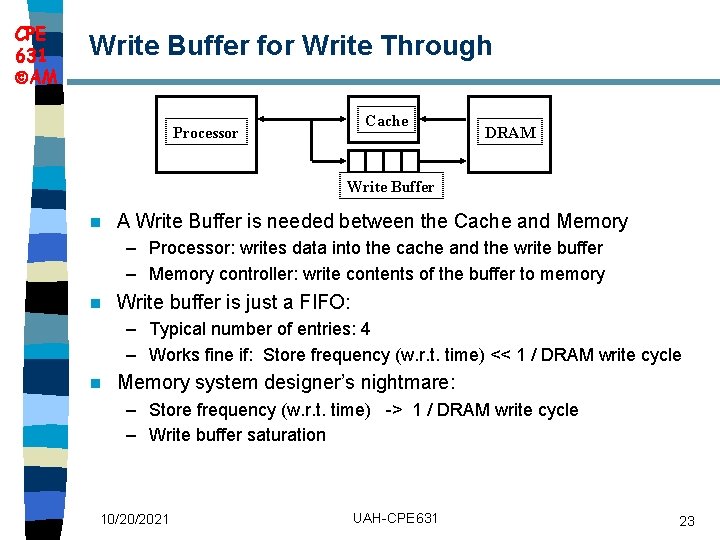

Write Buffer for Write Through Cache

[Figure: processor, write buffer, and DRAM]

- A write buffer is needed between the cache and memory
  - Processor: writes data into the cache and the write buffer
  - Memory controller: writes the contents of the buffer to memory
- The write buffer is just a FIFO:
  - Typical number of entries: 4
  - Works fine if the store frequency (w.r.t. time) is much lower than 1 / DRAM write cycle
- Memory system designer’s nightmare:
  - Store frequency (w.r.t. time) -> 1 / DRAM write cycle
  - Write buffer saturation
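
The FIFO behavior, including the stall when the buffer saturates, can be modeled with a small ring buffer. This is a sketch under the assumption of 4 entries holding one address and one word each; `write_buffer`, `wb_push`, and `wb_pop` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define WB_ENTRIES 4  /* typical number of entries */

struct wb_entry {
    uint32_t addr;
    uint32_t data;
};

struct write_buffer {
    struct wb_entry entries[WB_ENTRIES];
    unsigned head;   /* position of the oldest entry */
    unsigned count;  /* number of occupied entries */
};

/* Processor side: returns false when the buffer is full, which is the
 * case where the processor has to write stall. */
bool wb_push(struct write_buffer *wb, uint32_t addr, uint32_t data)
{
    if (wb->count == WB_ENTRIES)
        return false;
    unsigned tail = (wb->head + wb->count) % WB_ENTRIES;
    wb->entries[tail].addr = addr;
    wb->entries[tail].data = data;
    wb->count++;
    return true;
}

/* Memory-controller side: removes the oldest entry so it can be written
 * to memory. Returns false when the buffer is empty. */
bool wb_pop(struct write_buffer *wb, struct wb_entry *out)
{
    assert(out != NULL);
    if (wb->count == 0)
        return false;
    *out = wb->entries[wb->head];
    wb->head = (wb->head + 1) % WB_ENTRIES;
    wb->count--;
    return true;
}
```

If stores arrive faster than the memory controller drains entries, `wb_push` starts returning false, which corresponds to write buffer saturation.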

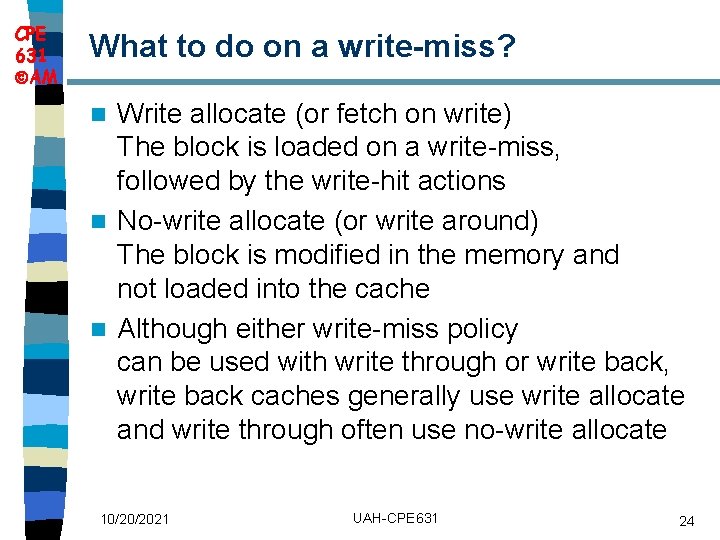

What to do on a write miss?

- Write allocate (or fetch on write): the block is loaded on a write miss, followed by the write-hit actions
- No-write allocate (or write around): the block is modified in memory and not loaded into the cache
- Although either write-miss policy can be used with write through or write back, write-back caches generally use write allocate and write-through caches often use no-write allocate
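
The two common pairings can be compared by counting traffic to the lower level. The sketch below models a tiny direct-mapped cache that tracks only valid, dirty, and tag state; the sizes, the `wcache` type, and the counters are illustrative assumptions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define LINES 4  /* hypothetical direct-mapped cache with 4 blocks */

enum write_policy { WB_WRITE_ALLOCATE, WT_NO_WRITE_ALLOCATE };

struct wline {
    bool     valid;
    bool     dirty;
    uint32_t tag;
};

struct wcache {
    enum write_policy policy;
    struct wline lines[LINES];
    unsigned mem_writes;   /* writes that reach the lower level */
    unsigned mem_fetches;  /* blocks loaded from the lower level */
};

/* Model one store to the given block address. */
void cache_write(struct wcache *c, uint32_t block_addr)
{
    assert(c != NULL);
    struct wline *l = &c->lines[block_addr % LINES];
    uint32_t tag = block_addr / LINES;
    bool hit = l->valid && l->tag == tag;

    if (c->policy == WT_NO_WRITE_ALLOCATE) {
        /* Write through: memory is updated on every store. On a miss the
         * store goes around the cache and the block is not loaded. */
        c->mem_writes++;
        return;
    }

    /* Write back with write allocate. */
    if (!hit) {
        if (l->valid && l->dirty)
            c->mem_writes++;  /* write the replaced dirty block back */
        c->mem_fetches++;     /* load the block (fetch on write) */
        l->valid = true;
        l->tag   = tag;
    }
    l->dirty = true;          /* the store itself stays in the cache */
}
```

Three stores to the same block cost three memory writes under write through, but only one fetch and no memory writes under write back until the dirty block is replaced.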

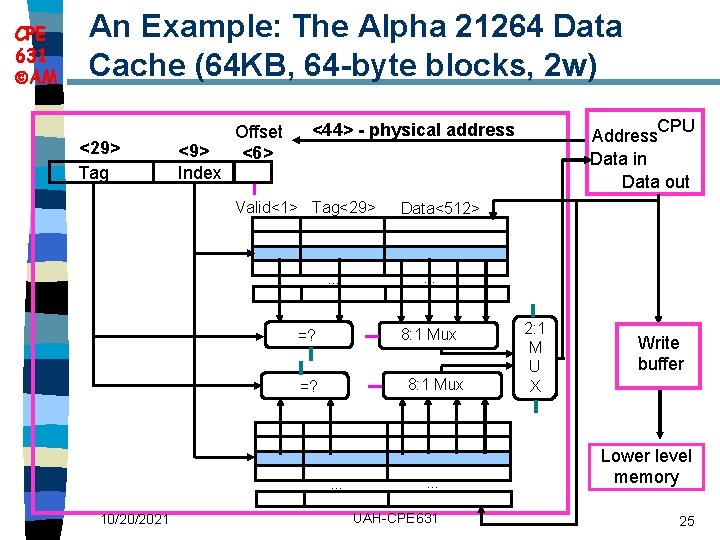

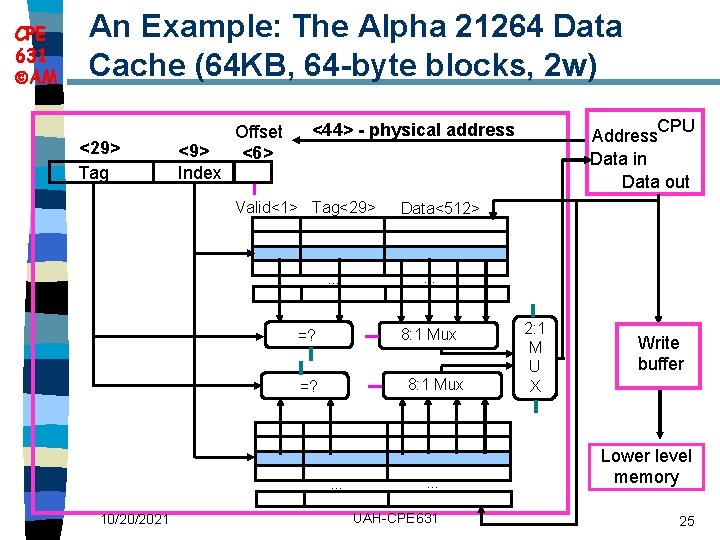

An Example: The Alpha 21264 Data Cache (64 KB, 64-byte blocks, 2-way)

[Figure: the 44-bit physical address from the CPU is split into a 29-bit Tag, a 9-bit Index, and a 6-bit block Offset. Each cache entry holds a 1-bit Valid flag, a 29-bit Tag, and 512 bits of Data. The figure also shows the tag comparators (=?), an 8:1 mux, a 2:1 mux, the Data in and Data out paths, the write buffer, and the lower-level memory.]

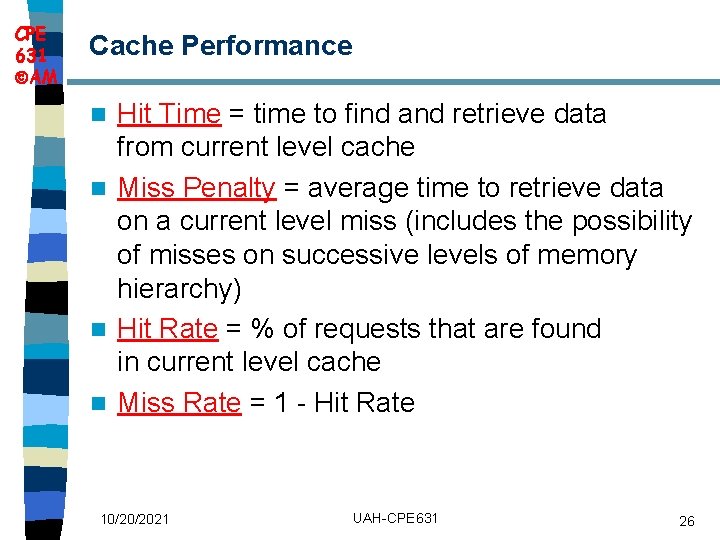

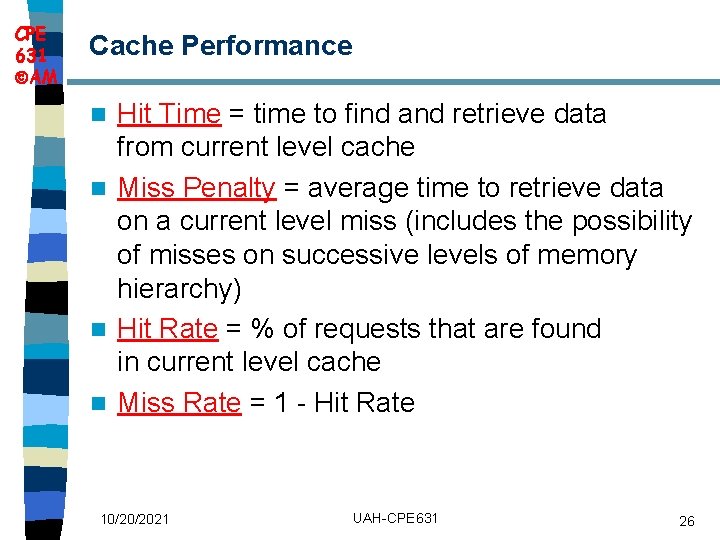

Cache Performance

- Hit Time = time to find and retrieve data from the current-level cache
- Miss Penalty = average time to retrieve data on a current-level miss (includes the possibility of misses on successive levels of the memory hierarchy)
- Hit Rate = % of requests that are found in the current-level cache
- Miss Rate = 1 - Hit Rate

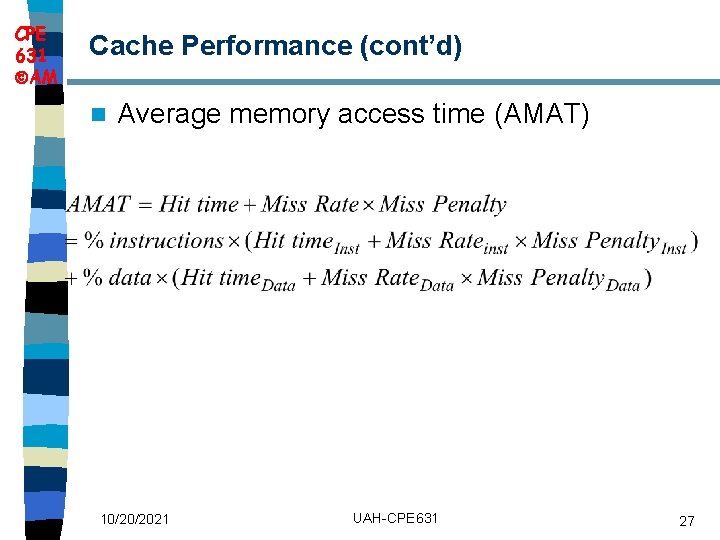

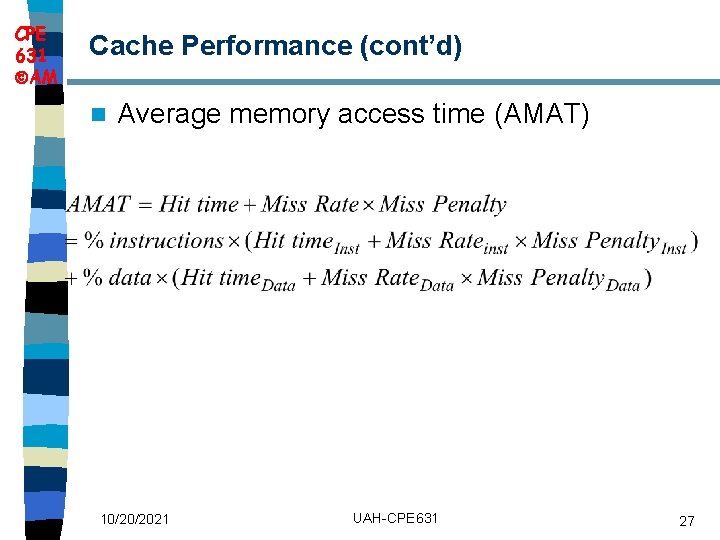

Cache Performance (cont’d)

- Average memory access time (AMAT)

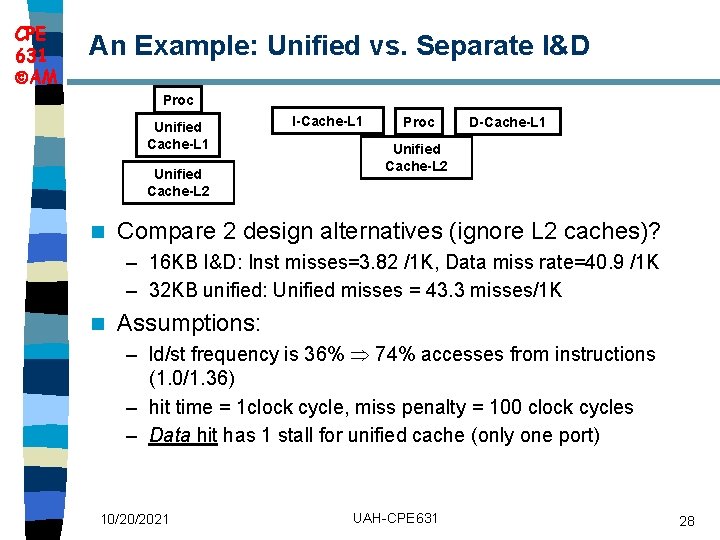

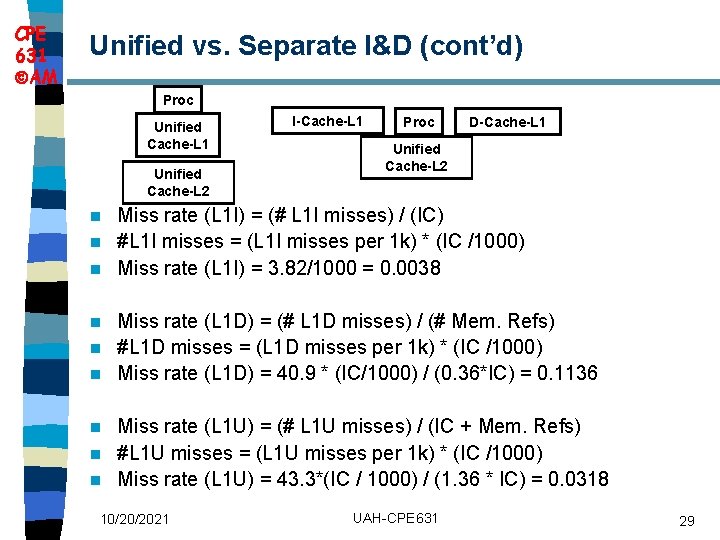

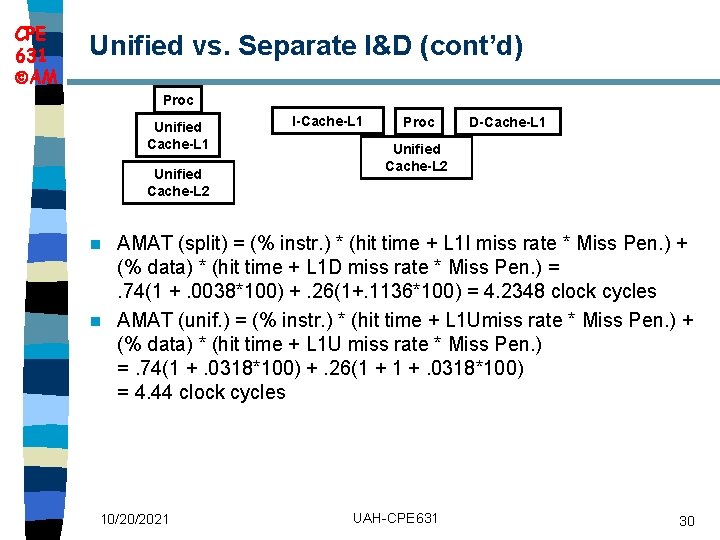

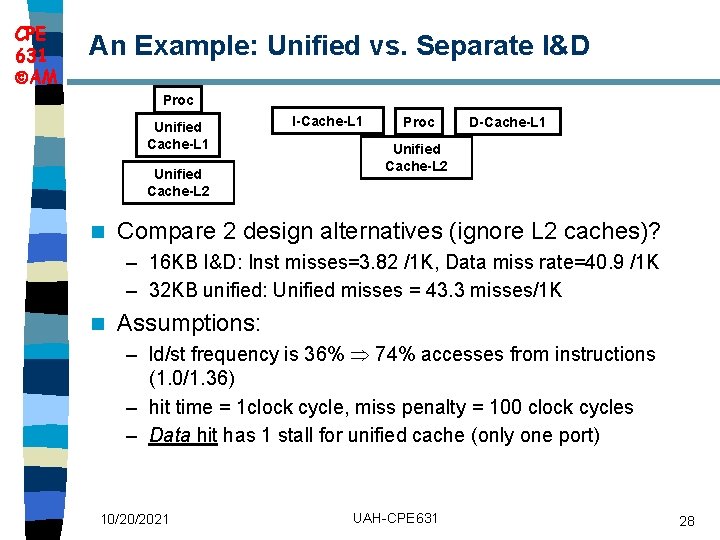

An Example: Unified vs. Separate I&D

[Figure: two organizations. Unified: Proc, Unified Cache L1, Unified Cache L2. Split: Proc, I-Cache L1 and D-Cache L1, Unified Cache L2]

- Compare 2 design alternatives (ignore L2 caches):
  - 16 KB I&D: instruction misses = 3.82 per 1K instructions, data misses = 40.9 per 1K instructions
  - 32 KB unified: unified misses = 43.3 per 1K instructions
- Assumptions:
  - ld/st frequency is 36% => 74% of accesses are from instructions (1.0/1.36)
  - hit time = 1 clock cycle, miss penalty = 100 clock cycles
  - A data hit has 1 stall for the unified cache (only one port)

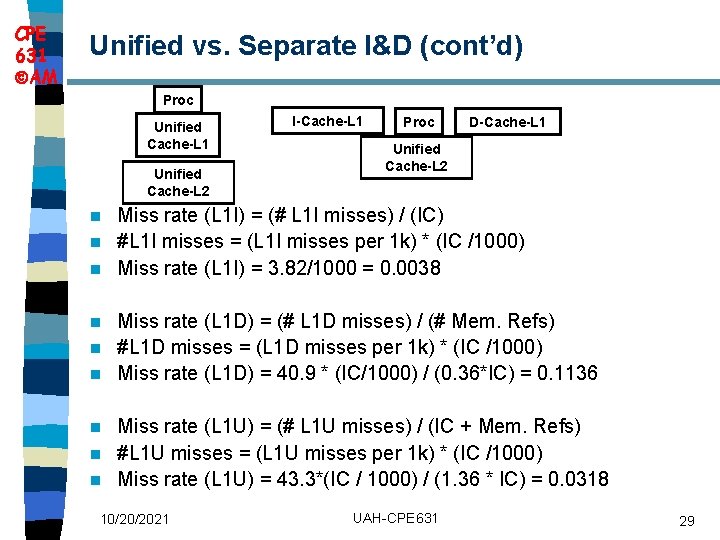

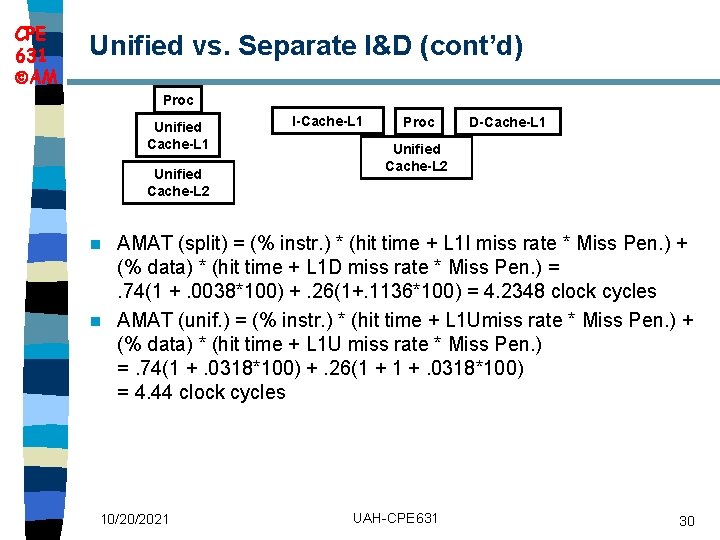

Unified vs. Separate I&D (cont’d)

- Miss rate (L1I) = (# L1I misses) / (IC)
  - # L1I misses = (L1I misses per 1K) * (IC / 1000)
  - Miss rate (L1I) = 3.82 / 1000 = 0.0038
- Miss rate (L1D) = (# L1D misses) / (# Mem. Refs)
  - # L1D misses = (L1D misses per 1K) * (IC / 1000)
  - Miss rate (L1D) = 40.9 * (IC / 1000) / (0.36 * IC) = 0.1136
- Miss rate (L1U) = (# L1U misses) / (IC + Mem. Refs)
  - # L1U misses = (L1U misses per 1K) * (IC / 1000)
  - Miss rate (L1U) = 43.3 * (IC / 1000) / (1.36 * IC) = 0.0318

Unified vs. Separate I&D (cont’d)

- AMAT (split) = (% instr.) * (hit time + L1I miss rate * miss penalty) + (% data) * (hit time + L1D miss rate * miss penalty) = 0.74 * (1 + 0.0038 * 100) + 0.26 * (1 + 0.1136 * 100) = 4.2348 clock cycles
- AMAT (unified) = (% instr.) * (hit time + L1U miss rate * miss penalty) + (% data) * (hit time + 1 stall cycle + L1U miss rate * miss penalty) = 0.74 * (1 + 0.0318 * 100) + 0.26 * (1 + 1 + 0.0318 * 100) = 4.44 clock cycles
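
The comparison can be checked numerically with a short sketch. The function names and parameter order are hypothetical; the inputs in the usage note are the rounded values from the slides.

```c
#include <assert.h>

/* AMAT of one access stream. hit_stall models extra cycles paid on every
 * access of that stream, such as the single-port conflict for data
 * accesses in the unified cache. */
static double stream_amat(double hit_time, double hit_stall,
                          double miss_rate, double miss_penalty)
{
    return hit_time + hit_stall + miss_rate * miss_penalty;
}

/* Split L1: instruction and data streams have their own miss rates. */
double amat_split(double instr_frac, double mr_instr, double mr_data,
                  double hit_time, double miss_penalty)
{
    assert(instr_frac >= 0.0 && instr_frac <= 1.0);
    return instr_frac * stream_amat(hit_time, 0.0, mr_instr, miss_penalty)
         + (1.0 - instr_frac)
           * stream_amat(hit_time, 0.0, mr_data, miss_penalty);
}

/* Unified L1: one miss rate, plus a stall on data accesses. */
double amat_unified(double instr_frac, double mr_unified, double data_stall,
                    double hit_time, double miss_penalty)
{
    assert(instr_frac >= 0.0 && instr_frac <= 1.0);
    return instr_frac * stream_amat(hit_time, 0.0, mr_unified, miss_penalty)
         + (1.0 - instr_frac)
           * stream_amat(hit_time, data_stall, mr_unified, miss_penalty);
}
```

`amat_split(0.74, 0.0038, 0.1136, 1.0, 100.0)` and `amat_unified(0.74, 0.0318, 1.0, 1.0, 100.0)` reproduce the two results above, so the split organization wins despite its higher data miss rate.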

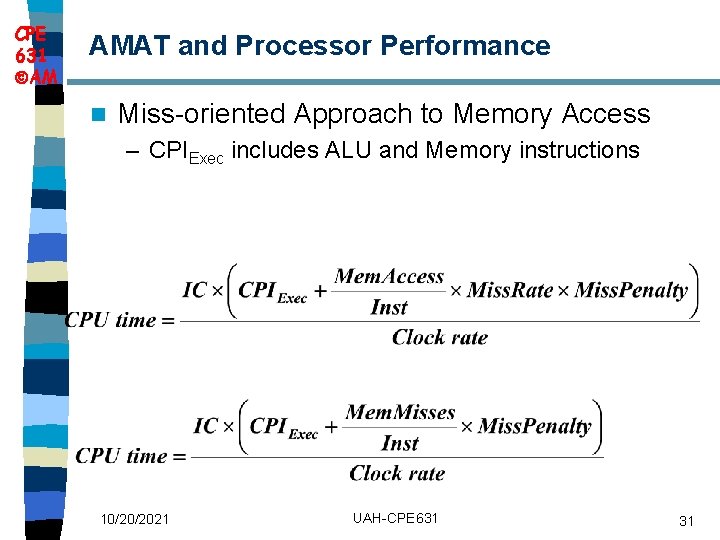

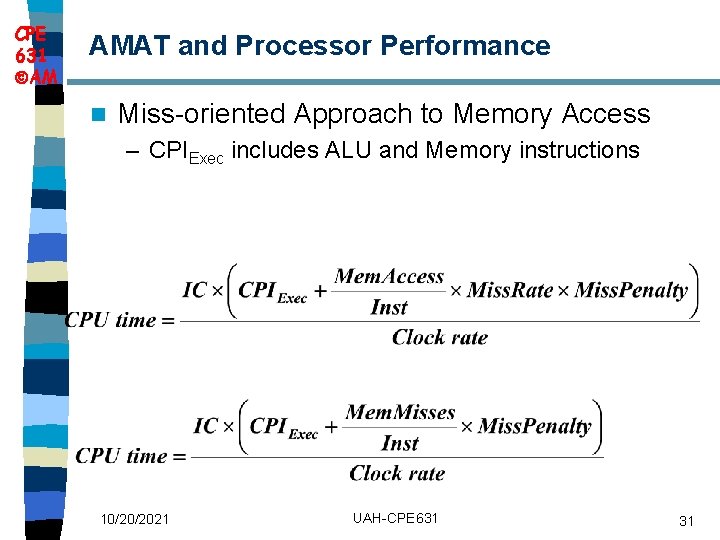

AMAT and Processor Performance

- Miss-oriented approach to memory access
  - CPI_Exec includes ALU and memory instructions
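
The miss-oriented form is usually written as follows. This is the standard textbook formulation, given here on the assumption that it is what the slide intends; the symbol spellings are illustrative.

$$
\text{CPU time} = IC \times \left( CPI_{Exec} + \frac{\text{Memory accesses}}{\text{Instruction}} \times \text{Miss rate} \times \text{Miss penalty} \right) \times \text{Clock cycle time}
$$

Here $IC$ is the instruction count, and the second term inside the parentheses is the average number of memory stall cycles per instruction.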

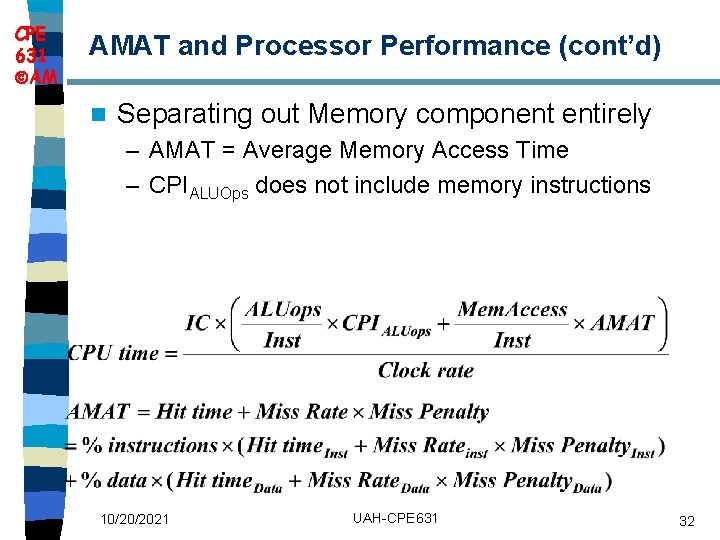

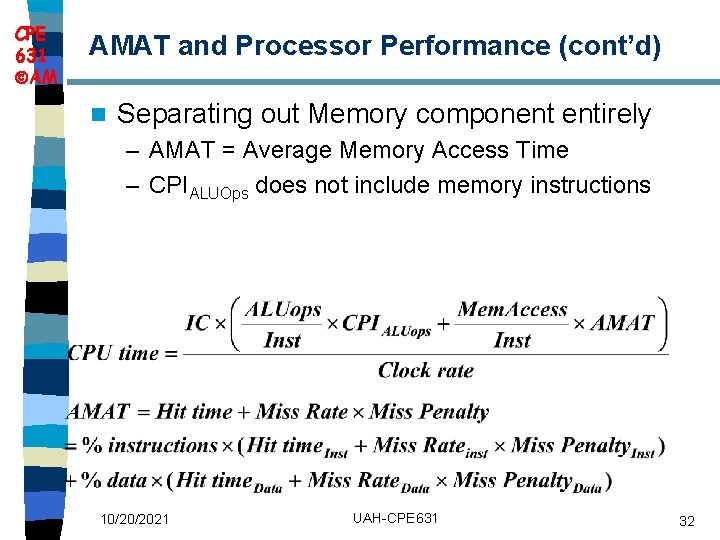

AMAT and Processor Performance (cont’d)

- Separating out the memory component entirely
  - AMAT = Average Memory Access Time
  - CPI_ALUOps does not include memory instructions
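
With the memory component separated out, the CPU time is usually written as follows. This is again the standard textbook formulation, assumed to match the slide; the symbol spellings are illustrative.

$$
\text{CPU time} = IC \times \left( \frac{\text{ALU ops}}{\text{Instruction}} \times CPI_{ALUOps} + \frac{\text{Memory accesses}}{\text{Instruction}} \times AMAT \right) \times \text{Clock cycle time}
$$

In this form every memory access is charged its full average access time, so hit time and miss cost both appear through $AMAT$.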

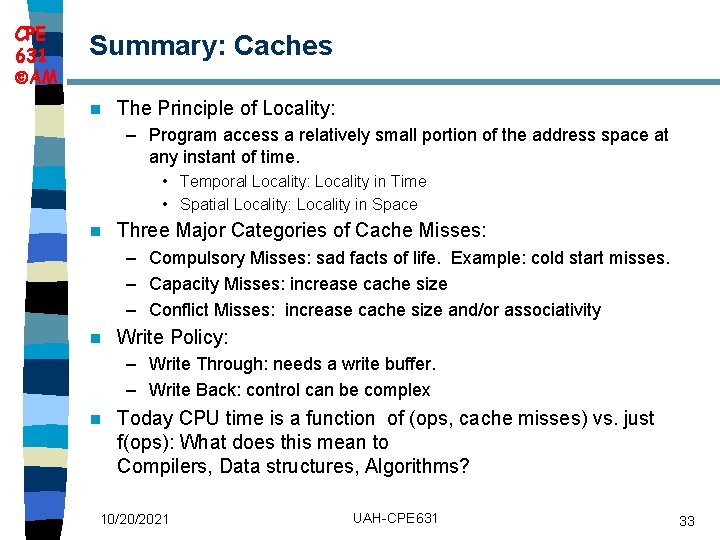

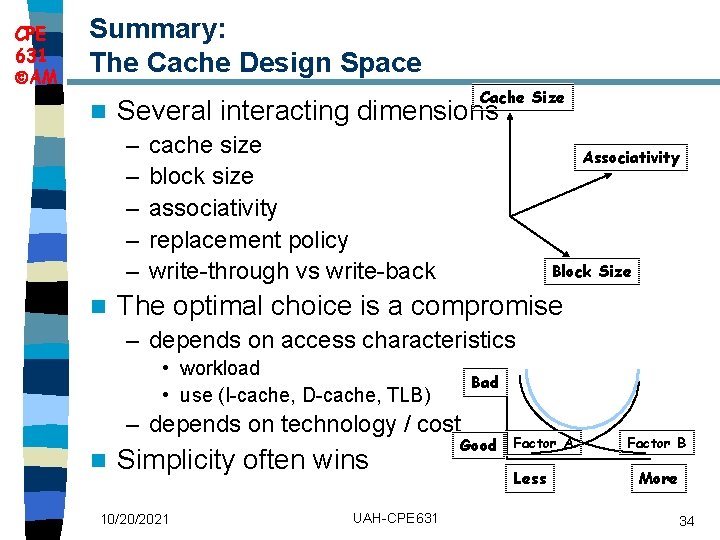

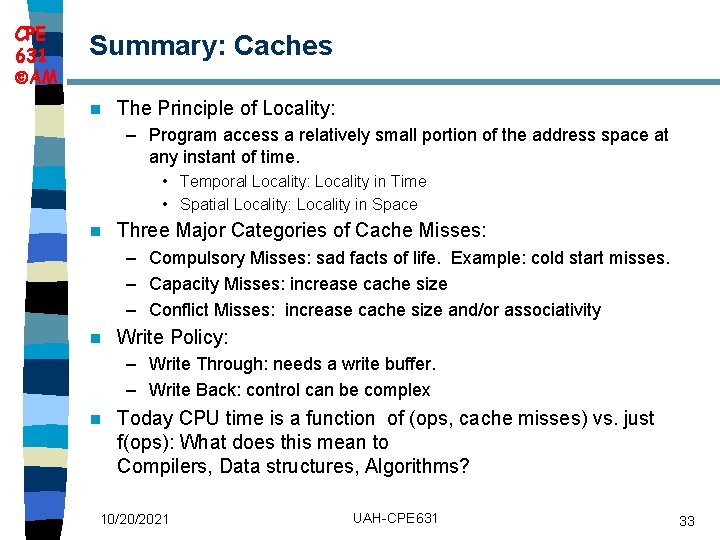

Summary: Caches

- The Principle of Locality:
  - Programs access a relatively small portion of the address space at any instant of time
    - Temporal locality: locality in time
    - Spatial locality: locality in space
- Three major categories of cache misses:
  - Compulsory misses: sad facts of life. Example: cold start misses
  - Capacity misses: increase cache size
  - Conflict misses: increase cache size and/or associativity
- Write policy:
  - Write through: needs a write buffer
  - Write back: control can be complex
- Today CPU time is a function of (ops, cache misses) vs. just f(ops): what does this mean for compilers, data structures, and algorithms?

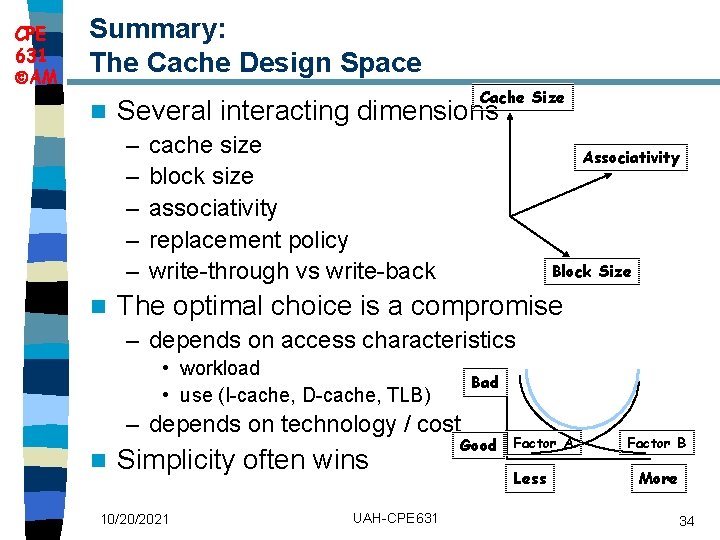

Summary: The Cache Design Space

- Several interacting dimensions
  - cache size
  - block size
  - associativity
  - replacement policy
  - write-through vs. write-back
- The optimal choice is a compromise
  - depends on access characteristics
    - workload
    - use (I-cache, D-cache, TLB)
  - depends on technology / cost
- Simplicity often wins

[Figure: the design space drawn along the Cache Size, Associativity, and Block Size axes, with a Good/Bad curve as Factor A and Factor B go from Less to More]

How to Improve Cache Performance?

- Cache optimizations
  1. Reduce the miss rate
  2. Reduce the miss penalty
  3. Reduce the time to hit in the cache

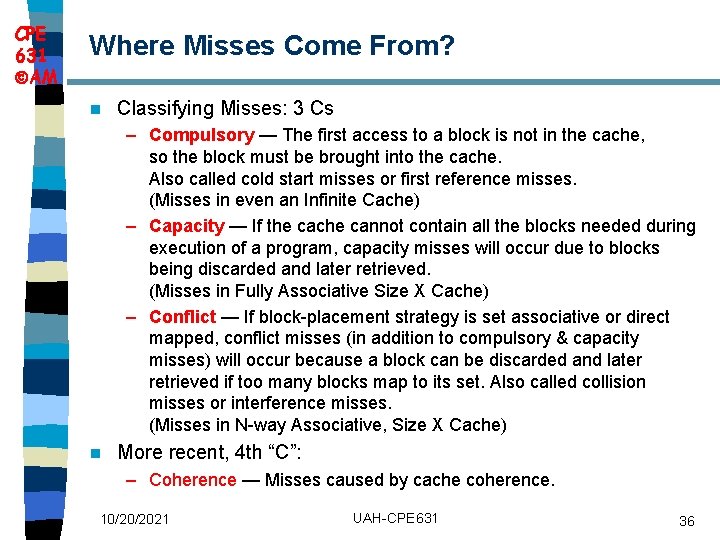

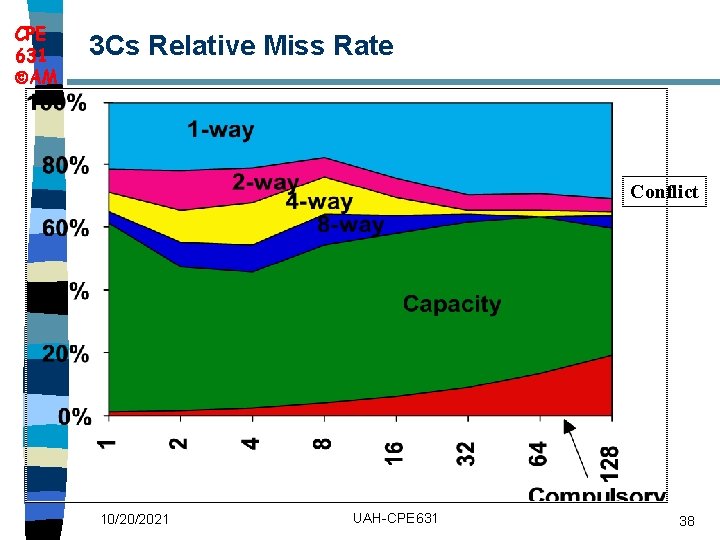

Where Misses Come From?

- Classifying misses: 3 Cs
  - Compulsory: the first access to a block is not in the cache, so the block must be brought into the cache. Also called cold start misses or first reference misses. (Misses in even an infinite cache)
  - Capacity: if the cache cannot contain all the blocks needed during execution of a program, capacity misses will occur due to blocks being discarded and later retrieved. (Misses in a fully associative cache of size X)
  - Conflict: if the block-placement strategy is set associative or direct mapped, conflict misses (in addition to compulsory and capacity misses) will occur because a block can be discarded and later retrieved if too many blocks map to its set. Also called collision misses or interference misses. (Misses in an N-way associative cache of size X)
- More recent, 4th “C”:
  - Coherence: misses caused by cache coherence

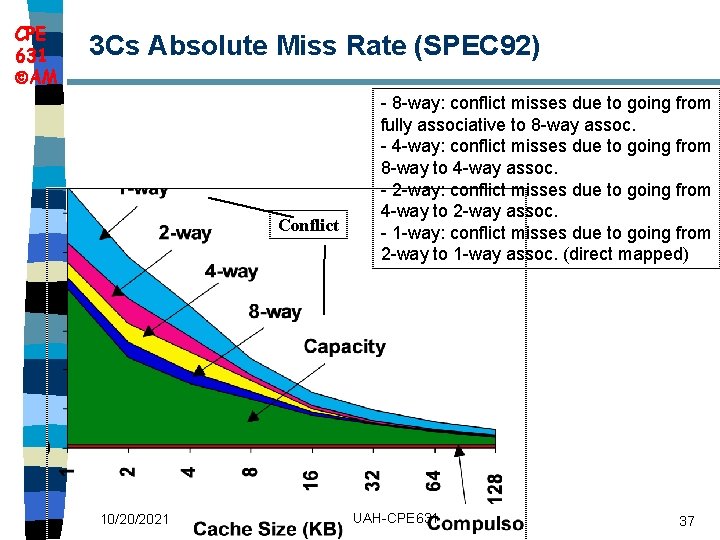

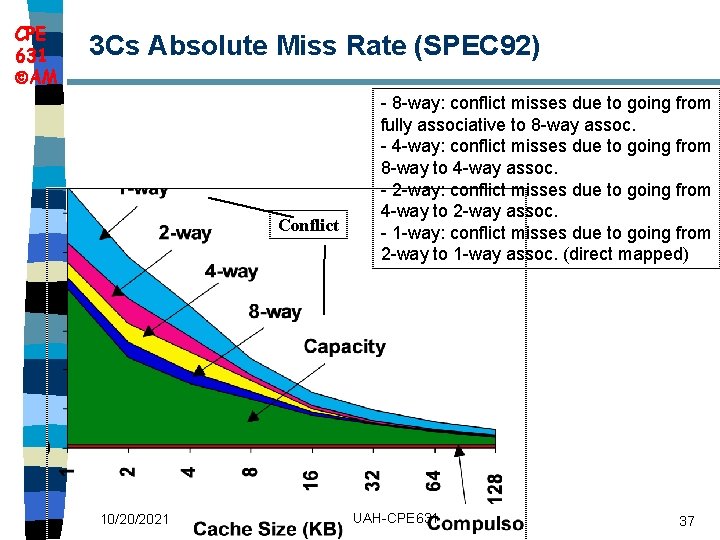

3 Cs Absolute Miss Rate (SPEC 92)

[Figure: absolute miss rate by miss category, with the conflict component split by associativity]

- 8-way: conflict misses due to going from fully associative to 8-way associative
- 4-way: conflict misses due to going from 8-way to 4-way associative
- 2-way: conflict misses due to going from 4-way to 2-way associative
- 1-way: conflict misses due to going from 2-way to 1-way associative (direct mapped)

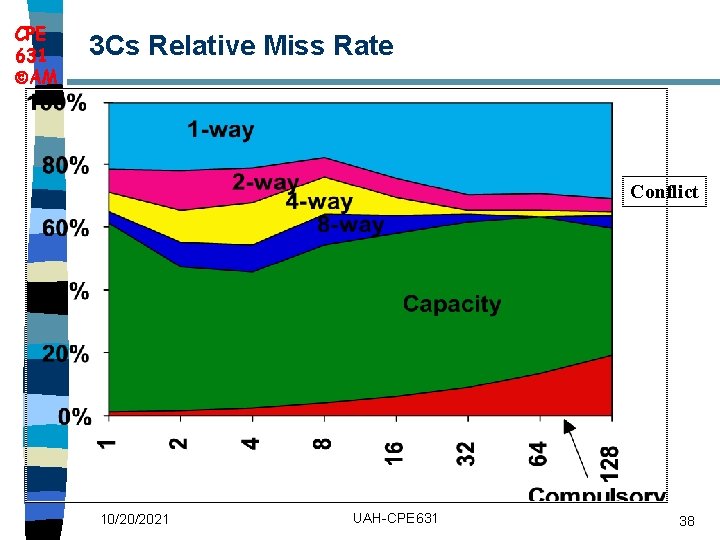

3 Cs Relative Miss Rate

[Figure: relative miss rate by miss category, including the conflict component]

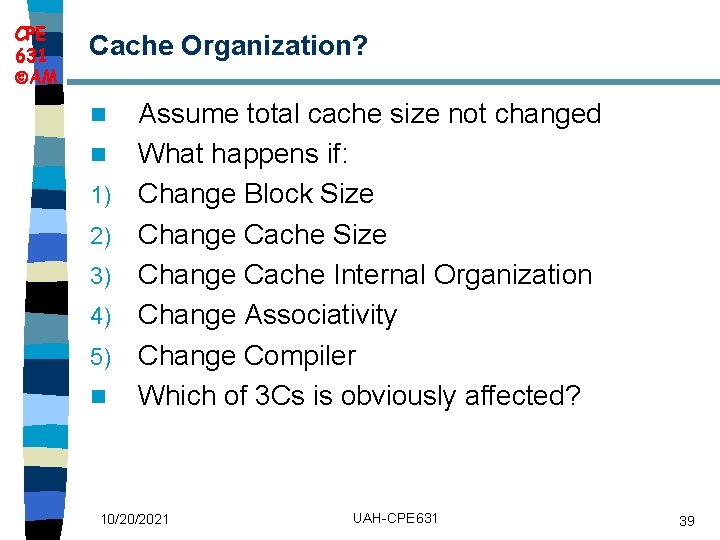

Cache Organization?

- Assume the total cache size is not changed
- What happens if we:
  - Change block size
  - Change cache internal organization
  - Change associativity
  - Change compiler
- Which of the 3 Cs is obviously affected?

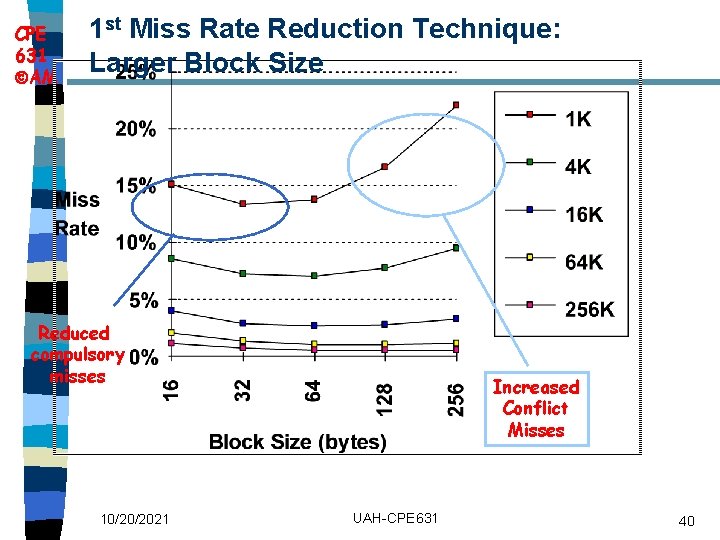

1st Miss Rate Reduction Technique: Larger Block Size

[Figure: miss rate vs. block size; larger blocks reduce compulsory misses but increase conflict misses]

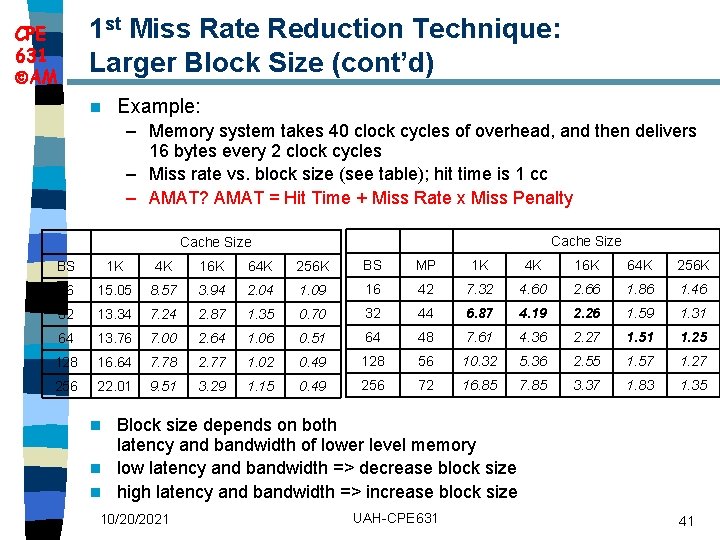

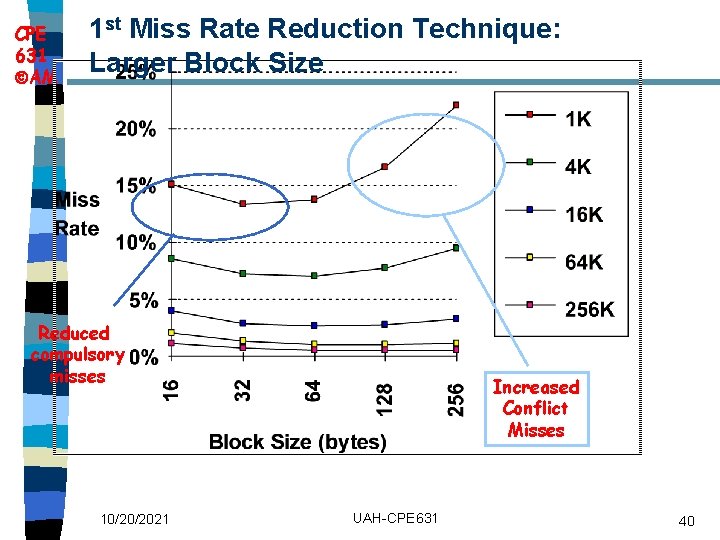

1st Miss Rate Reduction Technique: Larger Block Size (cont’d)

- Example:
  - The memory system takes 40 clock cycles of overhead and then delivers 16 bytes every 2 clock cycles
  - Miss rate vs. block size (see table); hit time is 1 cc
  - AMAT? AMAT = Hit Time + Miss Rate x Miss Penalty

Miss rate (%) by block size (BS, bytes) and cache size:

| BS | 1K | 4K | 16K | 64K | 256K |
|---|---|---|---|---|---|
| 16 | 15.05 | 8.57 | 3.94 | 2.04 | 1.09 |
| 32 | 13.34 | 7.24 | 2.87 | 1.35 | 0.70 |
| 64 | 13.76 | 7.00 | 2.64 | 1.06 | 0.51 |
| 128 | 16.64 | 7.78 | 2.77 | 1.02 | 0.49 |
| 256 | 22.01 | 9.51 | 3.29 | 1.15 | 0.49 |

AMAT (clock cycles) by block size (BS, bytes), miss penalty (MP, clock cycles), and cache size:

| BS | MP | 1K | 4K | 16K | 64K | 256K |
|---|---|---|---|---|---|---|
| 16 | 42 | 7.32 | 4.60 | 2.66 | 1.86 | 1.46 |
| 32 | 44 | 6.87 | 4.19 | 2.26 | 1.59 | 1.31 |
| 64 | 48 | 7.61 | 4.36 | 2.27 | 1.51 | 1.25 |
| 128 | 56 | 10.32 | 5.36 | 2.55 | 1.57 | 1.27 |
| 256 | 72 | 16.85 | 7.85 | 3.37 | 1.83 | 1.35 |

- Block size depends on both the latency and the bandwidth of the lower-level memory
  - low latency and bandwidth => decrease block size
  - high latency and bandwidth => increase block size
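
The miss penalty and AMAT columns follow directly from the memory system parameters. The sketch below hard-codes the example's 40-cycle overhead, 16 bytes per 2 cycles, and 1-cycle hit time; the function names are hypothetical.

```c
#include <assert.h>

/* Miss penalty in clock cycles: 40 cycles of overhead, then 16 bytes
 * delivered every 2 clock cycles. */
unsigned miss_penalty_cc(unsigned block_bytes)
{
    assert(block_bytes >= 16 && block_bytes % 16 == 0);
    return 40 + 2 * (block_bytes / 16);
}

/* AMAT in clock cycles with a 1-cycle hit time. The miss rate is given
 * in percent, as in the table. */
double amat_block_cc(double miss_rate_percent, unsigned block_bytes)
{
    return 1.0 + (miss_rate_percent / 100.0) * miss_penalty_cc(block_bytes);
}
```

For a 1K cache with 16-byte blocks, `amat_block_cc(15.05, 16)` evaluates 1 + 0.1505 x 42, which rounds to the 7.32 shown in the table.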

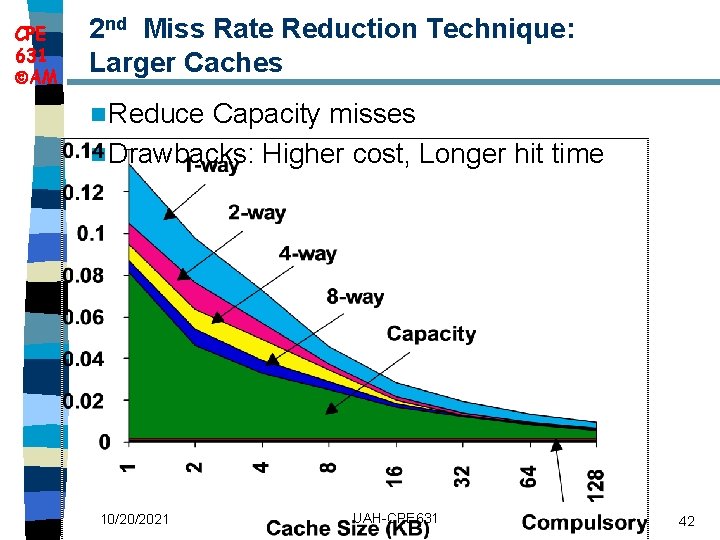

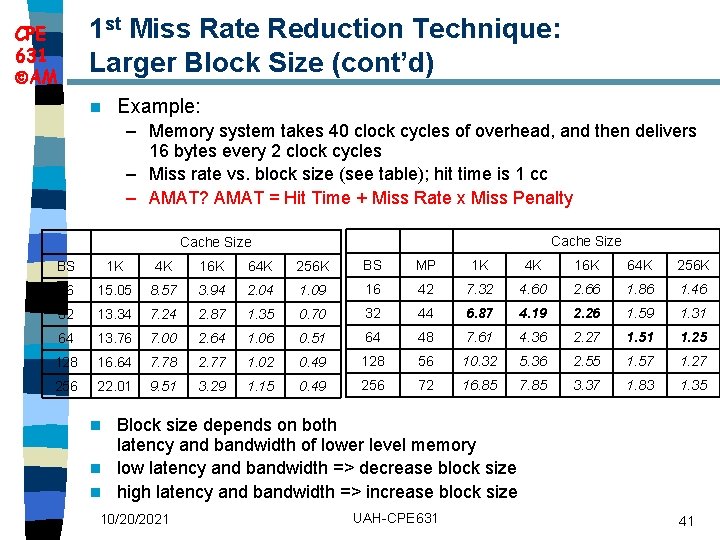

2nd Miss Rate Reduction Technique: Larger Caches

- Reduce capacity misses
- Drawbacks: higher cost, longer hit time

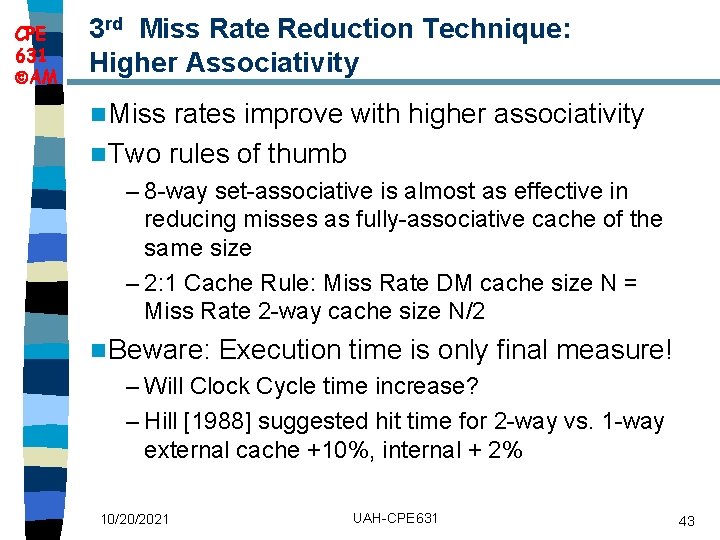

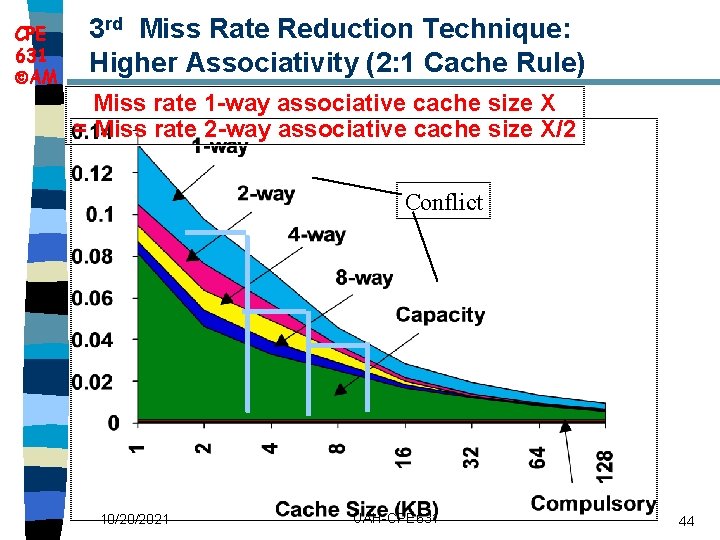

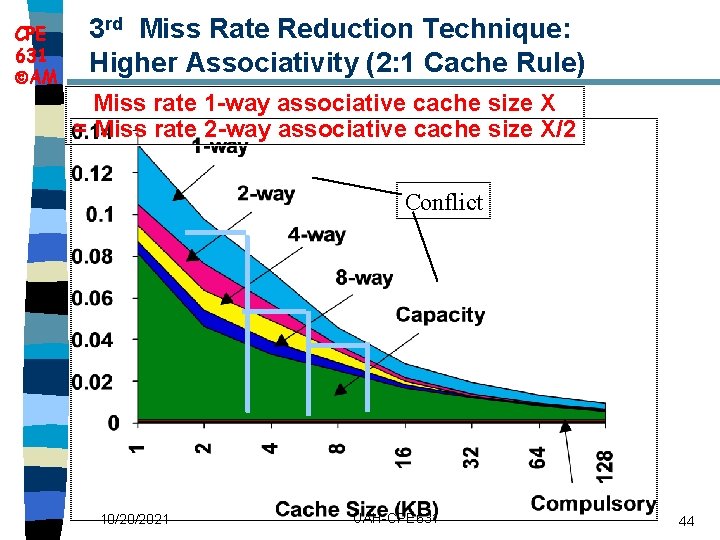

3rd Miss Rate Reduction Technique: Higher Associativity

- Miss rates improve with higher associativity
- Two rules of thumb
  - An 8-way set-associative cache is almost as effective in reducing misses as a fully associative cache of the same size
  - 2:1 Cache Rule: the miss rate of a direct-mapped cache of size N = the miss rate of a 2-way set-associative cache of size N/2
- Beware: execution time is the only final measure!
  - Will the clock cycle time increase?
  - Hill [1988] suggested that the hit time for 2-way vs. 1-way is +10% for an external cache and +2% for an internal cache

3rd Miss Rate Reduction Technique: Higher Associativity (2:1 Cache Rule)

Miss rate of a 1-way associative cache of size X = miss rate of a 2-way associative cache of size X/2

[Figure: miss rate by category, including the conflict component]

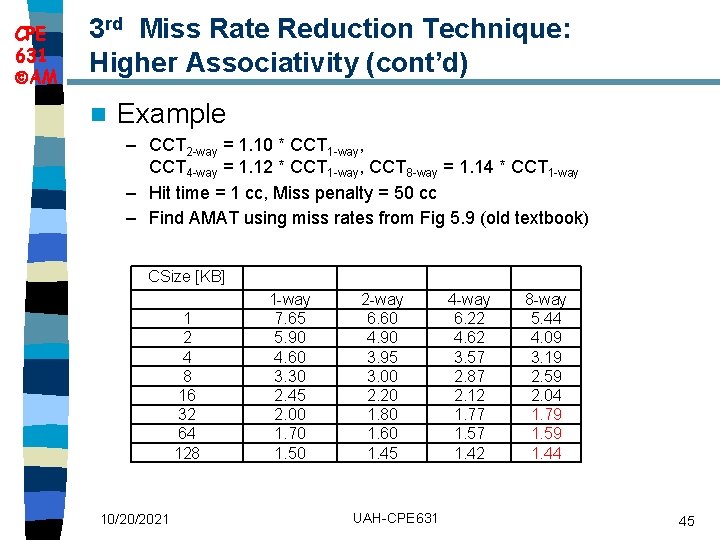

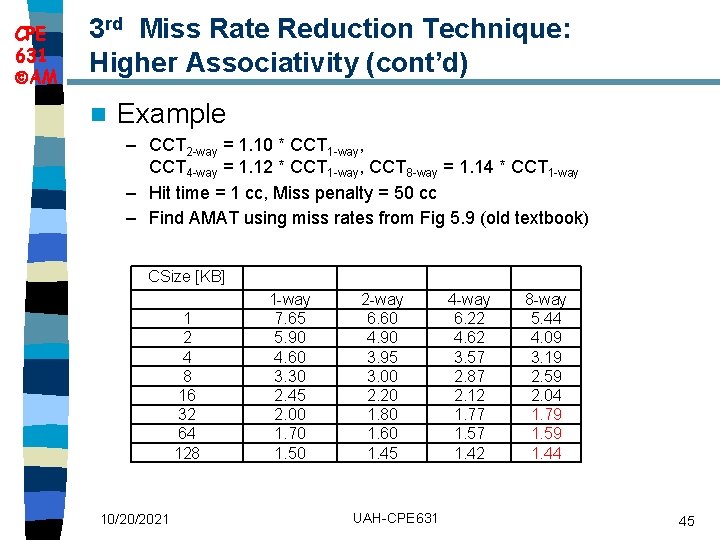

3rd Miss Rate Reduction Technique: Higher Associativity (cont’d)

- Example
  - CCT 2-way = 1.10 * CCT 1-way, CCT 4-way = 1.12 * CCT 1-way, CCT 8-way = 1.14 * CCT 1-way
  - Hit time = 1 cc, miss penalty = 50 cc
  - Find AMAT using miss rates from Fig 5.9 (old textbook)

AMAT (clock cycles) by cache size and associativity:

| Cache size [KB] | 1-way | 2-way | 4-way | 8-way |
|---|---|---|---|---|
| 1 | 7.65 | 6.60 | 6.22 | 5.44 |
| 2 | 5.90 | 4.90 | 4.62 | 4.09 |
| 4 | 4.60 | 3.95 | 3.57 | 3.19 |
| 8 | 3.30 | 3.00 | 2.87 | 2.59 |
| 16 | 2.45 | 2.20 | 2.12 | 2.04 |
| 32 | 2.00 | 1.80 | 1.77 | 1.79 |
| 64 | 1.70 | 1.60 | 1.57 | 1.59 |
| 128 | 1.50 | 1.45 | 1.42 | 1.44 |
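
The calculation behind the table can be sketched as below: the hit time is stretched by the clock cycle time factor while the miss penalty stays at 50 cc. The function name is hypothetical, and the miss rates in the usage note (9.8% for 1-way and 7.6% for 2-way at 2 KB) are assumed values that are not stated in this document; they are used because they reproduce the table's 2 KB entries.

```c
#include <assert.h>

/* AMAT in units of the 1-way clock cycle. cct_factor is the clock cycle
 * time relative to the direct-mapped design (1.0 for 1-way, 1.10 for
 * 2-way in the example). miss_rate is a fraction in [0, 1]. */
double amat_assoc(double hit_time_cc, double cct_factor,
                  double miss_rate, double miss_penalty_cc)
{
    assert(cct_factor >= 1.0);
    assert(miss_rate >= 0.0 && miss_rate <= 1.0);
    return hit_time_cc * cct_factor + miss_rate * miss_penalty_cc;
}
```

Under those assumed miss rates, `amat_assoc(1.0, 1.0, 0.098, 50.0)` and `amat_assoc(1.0, 1.10, 0.076, 50.0)` give the 5.90 and 4.90 listed for the 2 KB cache. The table also shows why execution time is the final measure: at 32 KB and above, 8-way is no better than 4-way once the slower clock is included.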

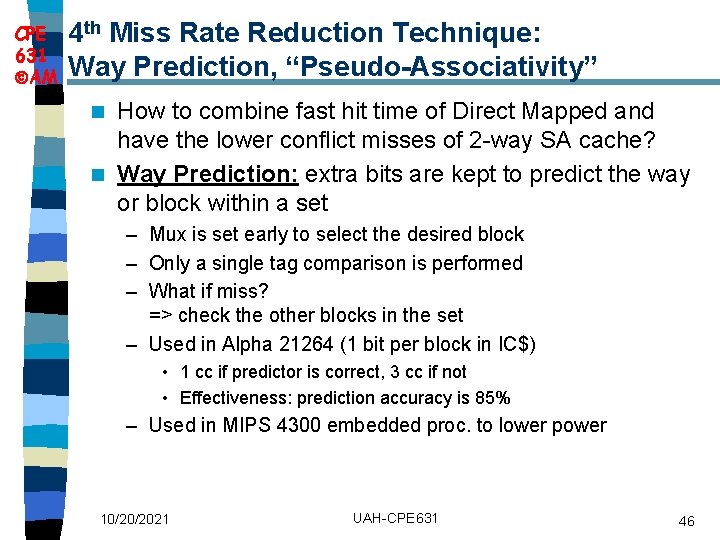

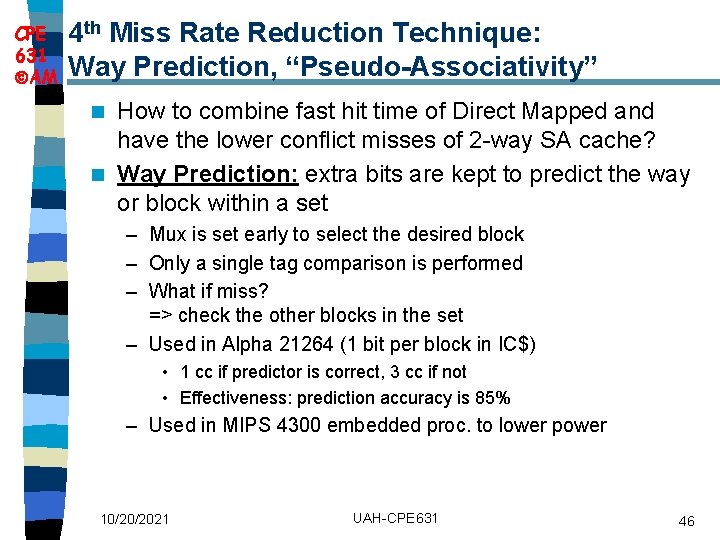

4th Miss Rate Reduction Technique: Way Prediction, “Pseudo Associativity”

- How can we combine the fast hit time of a direct-mapped cache with the lower conflict misses of a 2-way set-associative cache?
- Way prediction: extra bits are kept to predict the way or block within a set
  - The mux is set early to select the desired block
  - Only a single tag comparison is performed
  - What if it misses? => check the other blocks in the set
  - Used in the Alpha 21264 (1 bit per block in the instruction cache)
    - 1 cc if the predictor is correct, 3 cc if not
    - Effectiveness: prediction accuracy is 85%
  - Used in the MIPS 4300 embedded processor to lower power

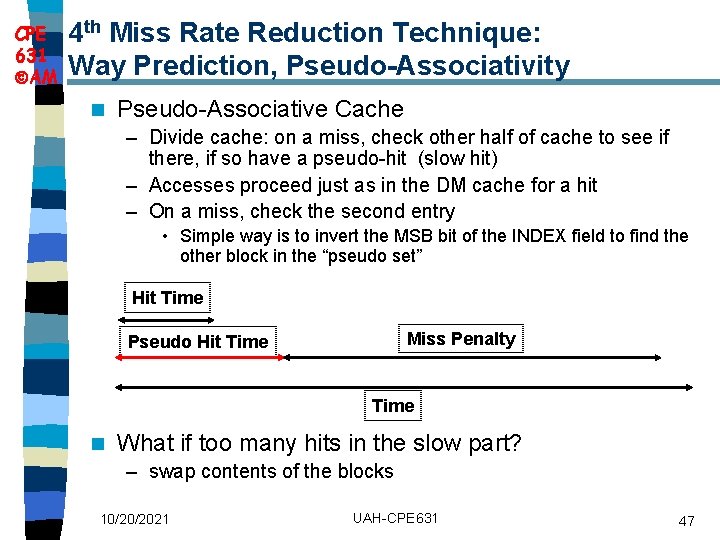

4th Miss Rate Reduction Technique: Way Prediction, Pseudo Associativity

- Pseudo-associative cache
  - Divide the cache: on a miss, check the other half of the cache to see if the block is there; if so, we have a pseudo-hit (slow hit)
  - Accesses proceed just as in the direct-mapped cache for a hit
  - On a miss, check the second entry
    - A simple way is to invert the most significant bit of the INDEX field to find the other block in the “pseudo set”
- What if there are too many hits in the slow part?
  - Swap the contents of the blocks

[Figure: timeline comparing Hit Time, Pseudo Hit Time, and Miss Penalty]
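
Finding the second entry by inverting the most significant index bit is a single XOR. In this sketch the function name `pseudo_partner` and its parameters are hypothetical.

```c
#include <assert.h>

/* Index of the other block in the "pseudo set": the same index with its
 * most significant bit inverted. index_bits is the width of the INDEX
 * field. */
unsigned pseudo_partner(unsigned index, unsigned index_bits)
{
    assert(index_bits > 0 && index_bits < 32);
    assert(index < (1u << index_bits));
    return index ^ (1u << (index_bits - 1));
}
```

The mapping is symmetric: with a 9-bit index, entry 0x003 pairs with 0x103 and 0x103 pairs with 0x003, which is what makes swapping the two blocks straightforward.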

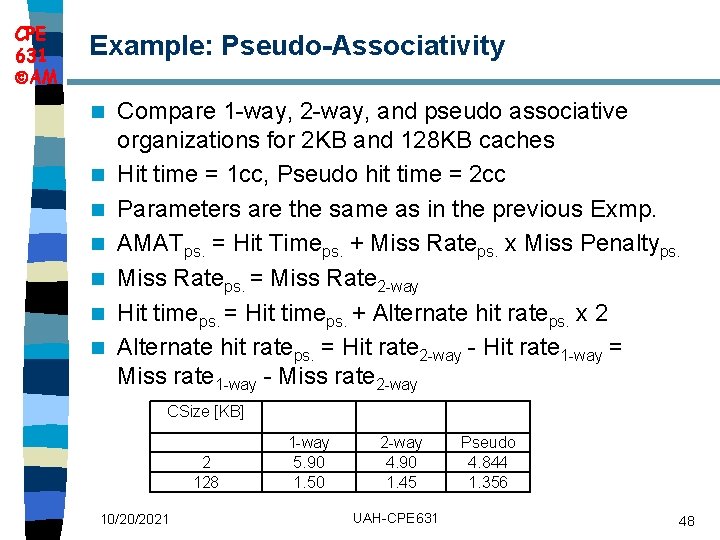

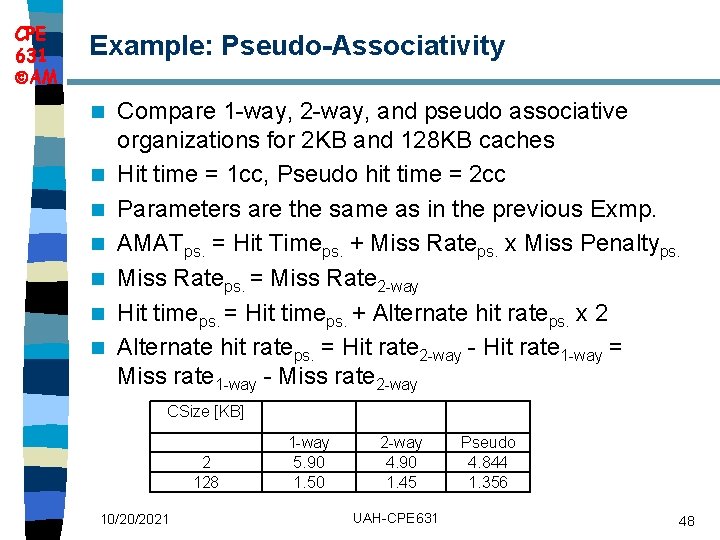

Example: Pseudo Associativity

- Compare 1-way, 2-way, and pseudo-associative organizations for 2 KB and 128 KB caches
- Hit time = 1 cc, pseudo hit time = 2 cc
- Parameters are the same as in the previous example
- AMAT_ps = Hit time_ps + Miss rate_ps x Miss penalty_ps
- Miss rate_ps = Miss rate_2-way
- Hit time_ps = Hit time_1-way + Alternate hit rate_ps x 2
- Alternate hit rate_ps = Hit rate_2-way - Hit rate_1-way = Miss rate_1-way - Miss rate_2-way

AMAT (clock cycles):

| Cache size [KB] | 1-way | 2-way | Pseudo |
|---|---|---|---|
| 2 | 5.90 | 4.90 | 4.844 |
| 128 | 1.50 | 1.45 | 1.356 |
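
The pseudo-associative AMAT can be computed with the sketch below, which follows the formulas above. The function name is hypothetical, and the miss rates in the usage note (9.8% and 7.6% at 2 KB, 1.0% and 0.7% at 128 KB) are assumed values not stated in this document; they are used because they reproduce the table.

```c
#include <assert.h>

/* AMAT of a pseudo-associative cache, in clock cycles.
 * mr_1way, mr_2way: miss rates (fractions) of the direct-mapped and
 * 2-way caches of the same size. alt_hit_cc: cycles charged for a hit
 * in the alternate location (the factor 2 in the formula above). */
double amat_pseudo(double mr_1way, double mr_2way, double hit_time_cc,
                   double alt_hit_cc, double miss_penalty_cc)
{
    assert(mr_1way >= mr_2way);
    double alt_hit_rate = mr_1way - mr_2way;
    double hit_time_ps  = hit_time_cc + alt_hit_rate * alt_hit_cc;
    return hit_time_ps + mr_2way * miss_penalty_cc;
}
```

With the assumed miss rates, `amat_pseudo(0.098, 0.076, 1.0, 2.0, 50.0)` gives 4.844 and `amat_pseudo(0.010, 0.007, 1.0, 2.0, 50.0)` gives 1.356, matching the Pseudo column.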

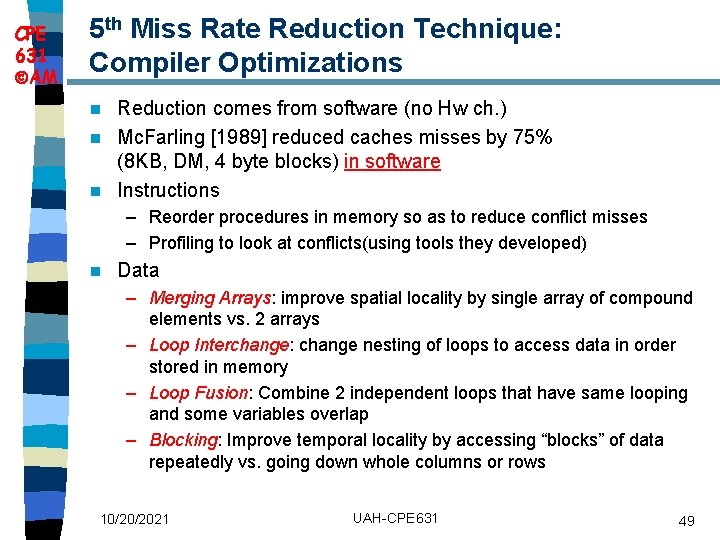

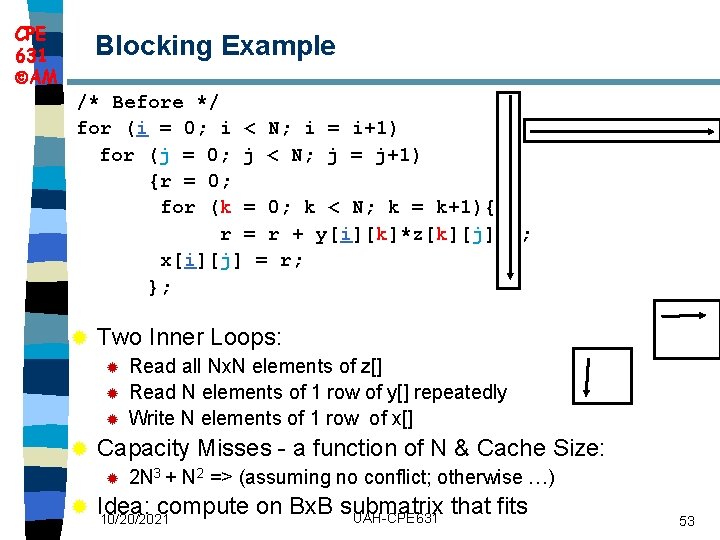

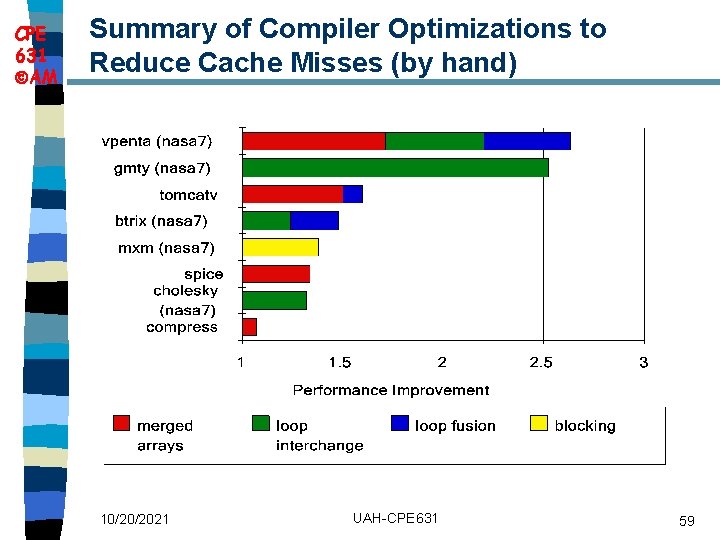

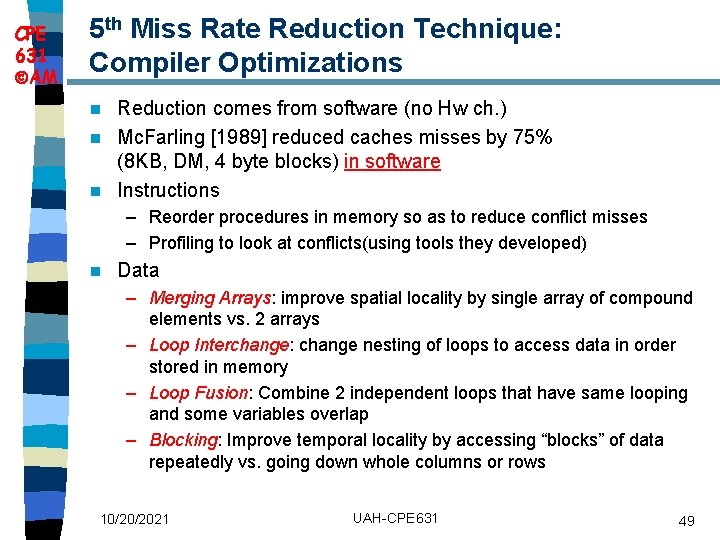

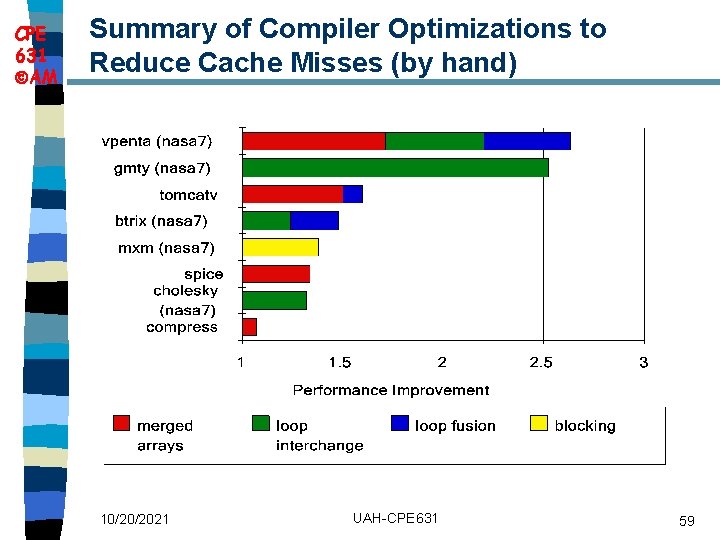

5th Miss Rate Reduction Technique: Compiler Optimizations

- The reduction comes from software (no hardware changes)
- McFarling [1989] reduced cache misses by 75% (8 KB, direct mapped, 4-byte blocks) in software
- Instructions
  - Reorder procedures in memory so as to reduce conflict misses
  - Profiling to look at conflicts (using tools they developed)
- Data
  - Merging arrays: improve spatial locality by using a single array of compound elements vs. 2 arrays
  - Loop interchange: change the nesting of loops to access data in the order it is stored in memory
  - Loop fusion: combine 2 independent loops that have the same looping and some overlapping variables
  - Blocking: improve temporal locality by accessing “blocks” of data repeatedly vs. going down whole columns or rows

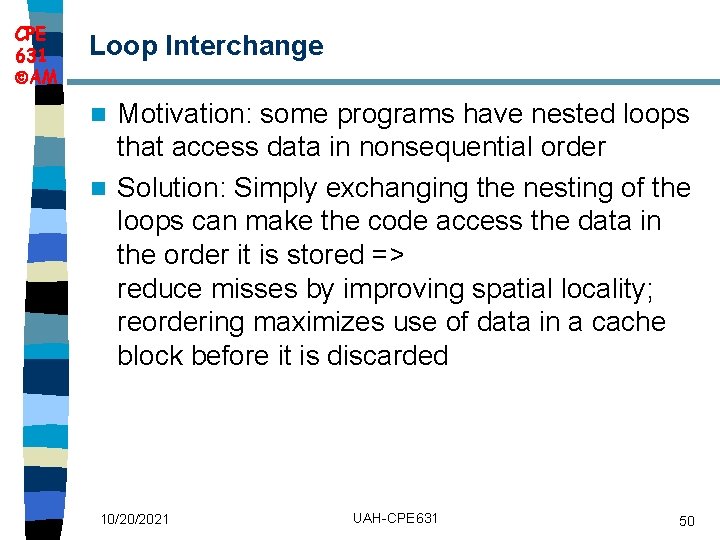

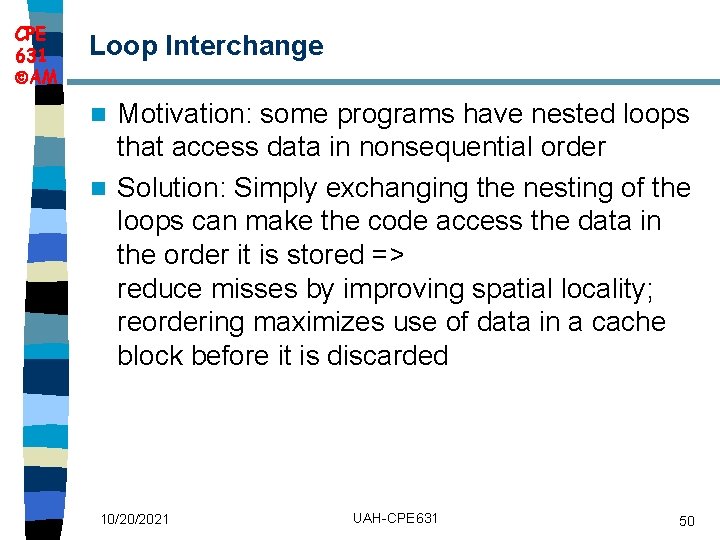

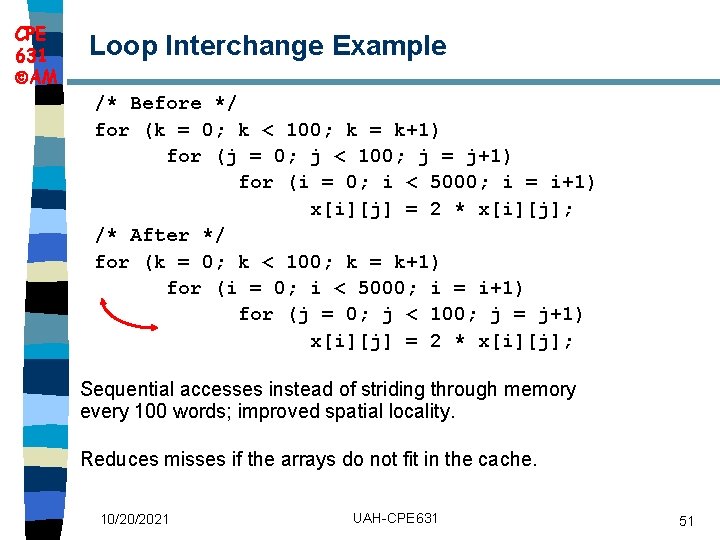

Loop Interchange

- Motivation: some programs have nested loops that access data in nonsequential order
- Solution: simply exchanging the nesting of the loops can make the code access the data in the order it is stored => reduces misses by improving spatial locality; reordering maximizes the use of data in a cache block before it is discarded

Loop Interchange Example

```c
/* x is assumed to be declared as int x[5000][100]; i, j, k are ints */

/* Before */
for (k = 0; k < 100; k = k+1)
    for (j = 0; j < 100; j = j+1)
        for (i = 0; i < 5000; i = i+1)
            x[i][j] = 2 * x[i][j];

/* After */
for (k = 0; k < 100; k = k+1)
    for (i = 0; i < 5000; i = i+1)
        for (j = 0; j < 100; j = j+1)
            x[i][j] = 2 * x[i][j];
```

Sequential accesses instead of striding through memory every 100 words; improved spatial locality. Reduces misses if the arrays do not fit in the cache.

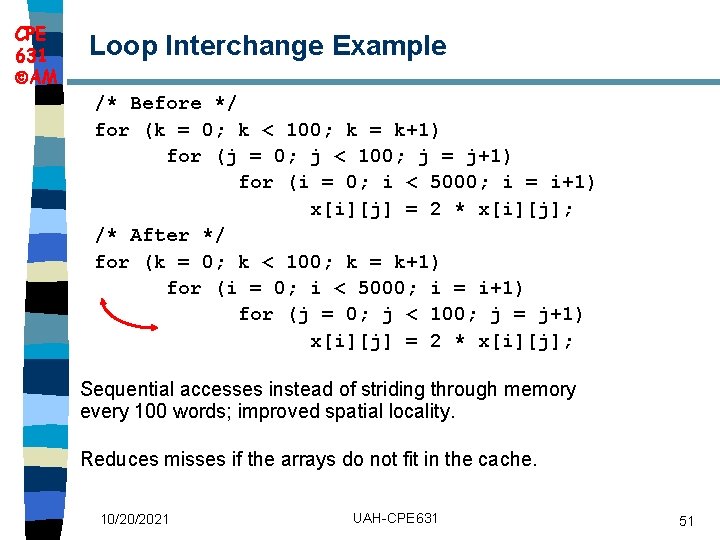

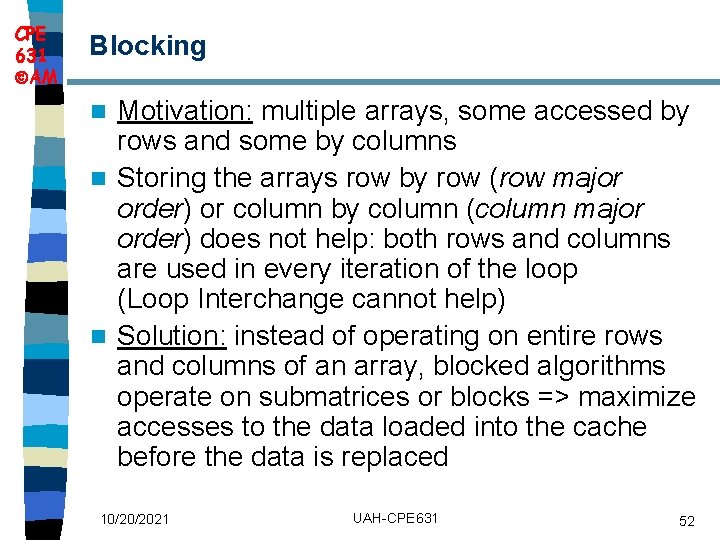

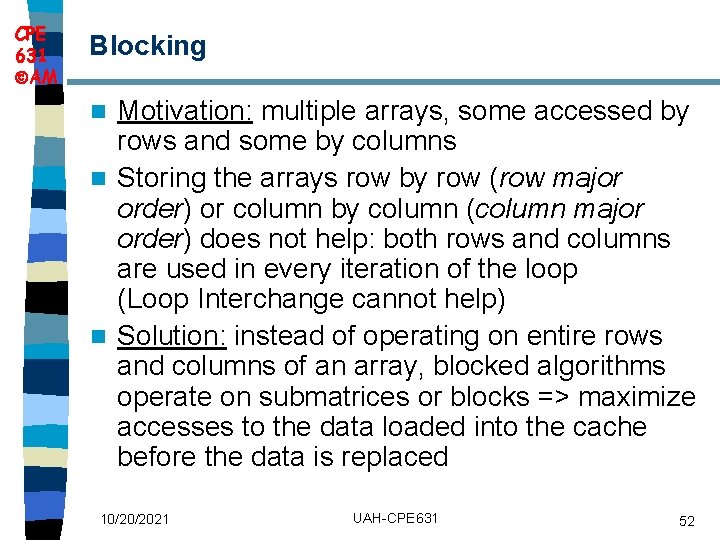

Blocking

- Motivation: multiple arrays, some accessed by rows and some by columns
- Storing the arrays row by row (row-major order) or column by column (column-major order) does not help: both rows and columns are used in every iteration of the loop (loop interchange cannot help)
- Solution: instead of operating on entire rows and columns of an array, blocked algorithms operate on submatrices or blocks => maximize accesses to the data loaded into the cache before the data is replaced

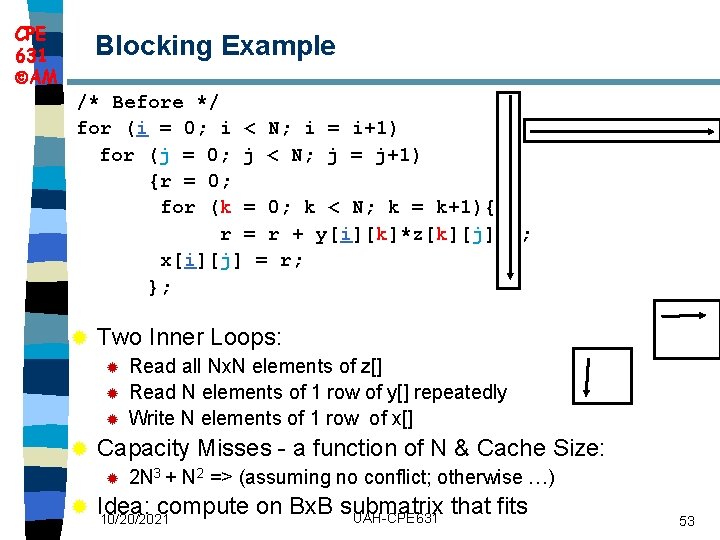

Blocking Example

```c
/* Before */
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1) {
        r = 0;
        for (k = 0; k < N; k = k+1) {
            r = r + y[i][k]*z[k][j];
        }
        x[i][j] = r;
    }
```

- Two inner loops:
  - Read all NxN elements of z[]
  - Read N elements of 1 row of y[] repeatedly
  - Write N elements of 1 row of x[]
- Capacity misses are a function of N and cache size:
  - 2N^3 + N^2 => (assuming no conflict; otherwise …)
- Idea: compute on a BxB submatrix that fits

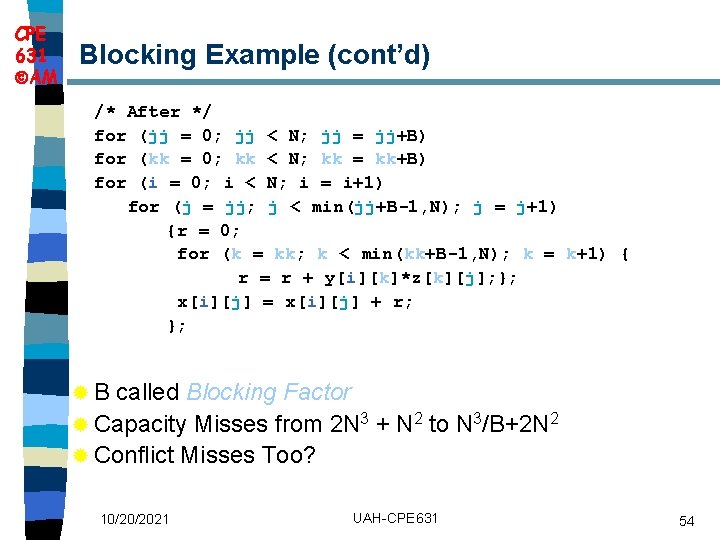

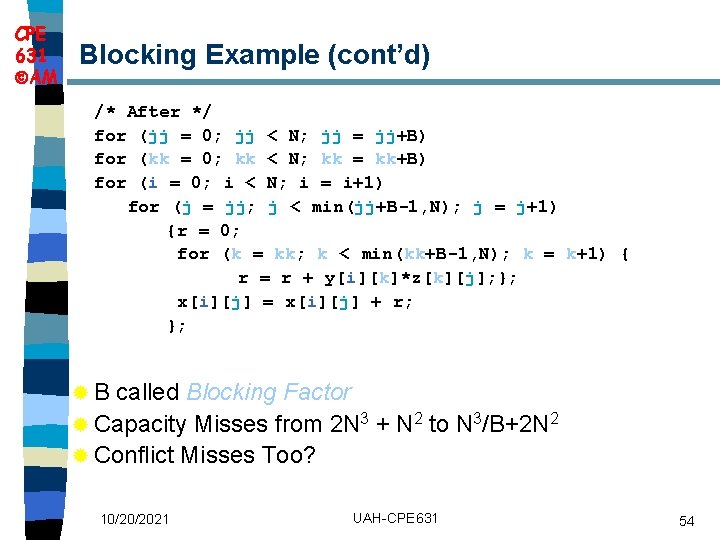

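Blocking Example (cont’d)

```c
/* After */
/* x[][] must be zeroed beforehand, since each (jj, kk) pass adds a
 * partial sum. min(a, b) is assumed to return the smaller argument;
 * it is not part of standard C. The exclusive upper bounds are jj+B
 * and kk+B so that every block covers B columns. */
for (jj = 0; jj < N; jj = jj+B)
    for (kk = 0; kk < N; kk = kk+B)
        for (i = 0; i < N; i = i+1)
            for (j = jj; j < min(jj+B, N); j = j+1) {
                r = 0;
                for (k = kk; k < min(kk+B, N); k = k+1) {
                    r = r + y[i][k]*z[k][j];
                }
                x[i][j] = x[i][j] + r;
            }
```

- B is called the blocking factor
- Capacity misses go from 2N^3 + N^2 to N^3/B + 2N^2
- Conflict misses too?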

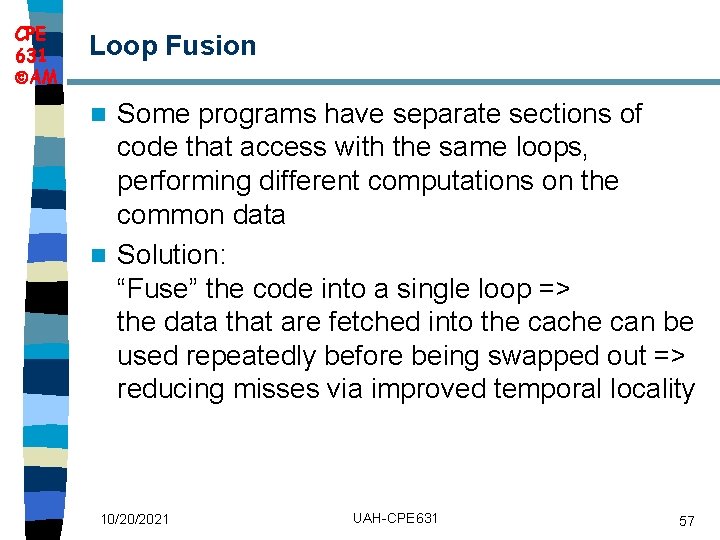

Merging Arrays

- Motivation: some programs reference multiple arrays in the same dimension with the same indices at the same time => these accesses can interfere with each other, leading to conflict misses
- Solution: combine these independent matrices into a single compound array, so that a single cache block can contain the desired elements

![CPE 631 AM Merging Arrays Example Before 2 sequential arrays int valSIZE CPE 631 AM Merging Arrays Example /* Before: 2 sequential arrays */ int val[SIZE];](https://slidetodoc.com/presentation_image_h2/d6f1b21467a0b29dfe3fde46b3f6b40d/image-56.jpg)

Merging Arrays Example

```c
/* Before: 2 sequential arrays */
int val[SIZE];
int key[SIZE];

/* After: 1 array of structures */
struct merge {
    int val;
    int key;
};
struct merge merged_array[SIZE];
```

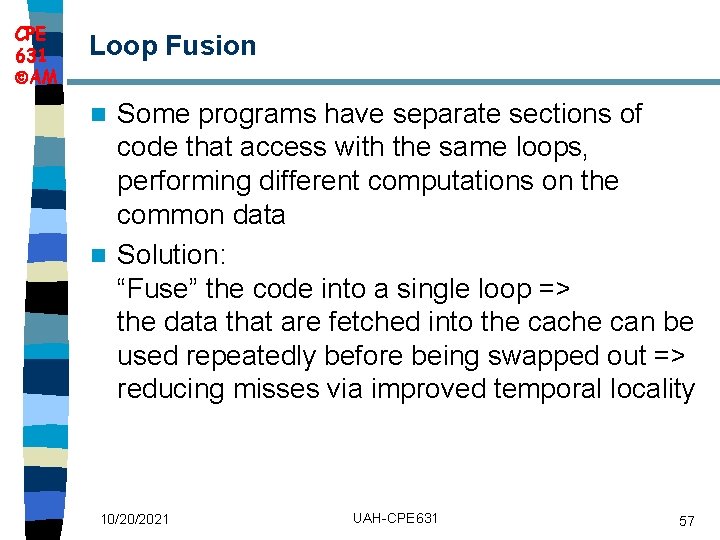

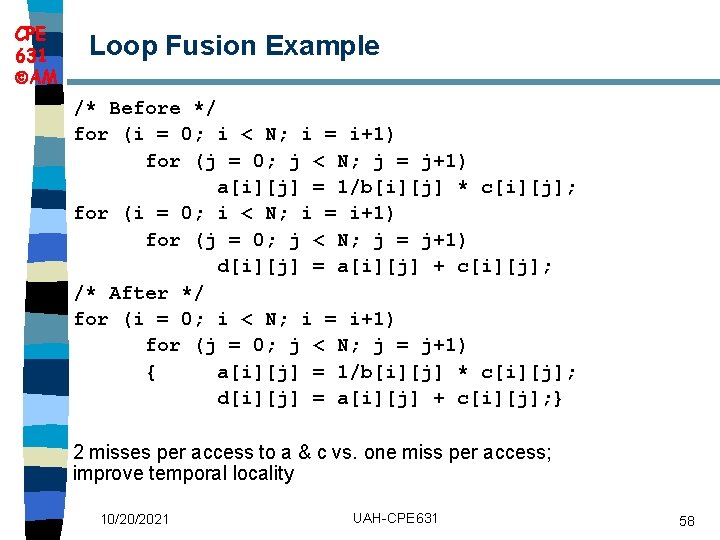

Loop Fusion

- Motivation: some programs have separate sections of code that access the same arrays with the same loops, performing different computations on the common data
- Solution: “fuse” the code into a single loop => the data fetched into the cache can be used repeatedly before being swapped out => reduces misses via improved temporal locality

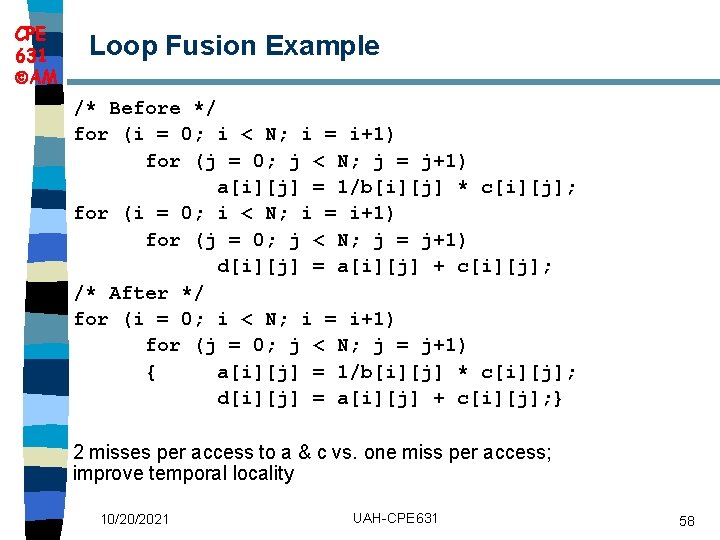

Loop Fusion Example

```c
/* Before */
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1)
        a[i][j] = 1/b[i][j] * c[i][j];
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1)
        d[i][j] = a[i][j] + c[i][j];

/* After */
for (i = 0; i < N; i = i+1)
    for (j = 0; j < N; j = j+1) {
        a[i][j] = 1/b[i][j] * c[i][j];
        d[i][j] = a[i][j] + c[i][j];
    }
```

2 misses per access to a and c vs. one miss per access; improves temporal locality.

Summary of Compiler Optimizations to Reduce Cache Misses (by hand)

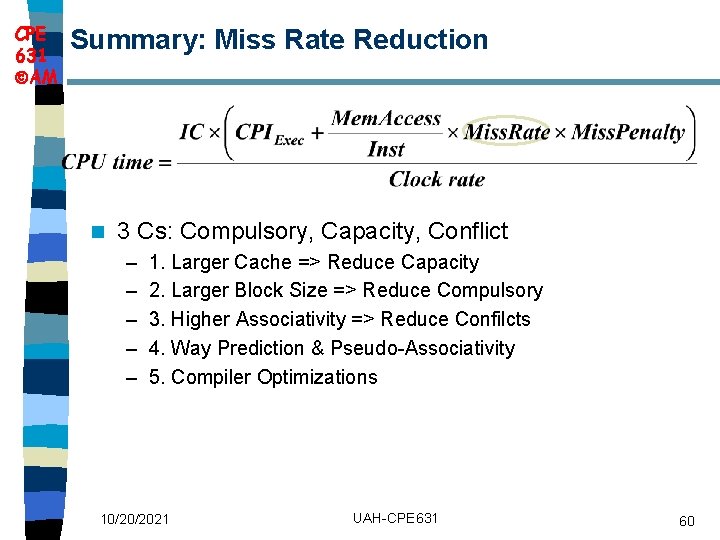

Summary: Miss Rate Reduction

- 3 Cs: Compulsory, Capacity, Conflict
  1. Larger Cache => Reduce Capacity
  2. Larger Block Size => Reduce Compulsory
  3. Higher Associativity => Reduce Conflicts
  4. Way Prediction & Pseudo-Associativity
  5. Compiler Optimizations
