Neural Networks CSE 4309 Machine Learning Vassilis Athitsos

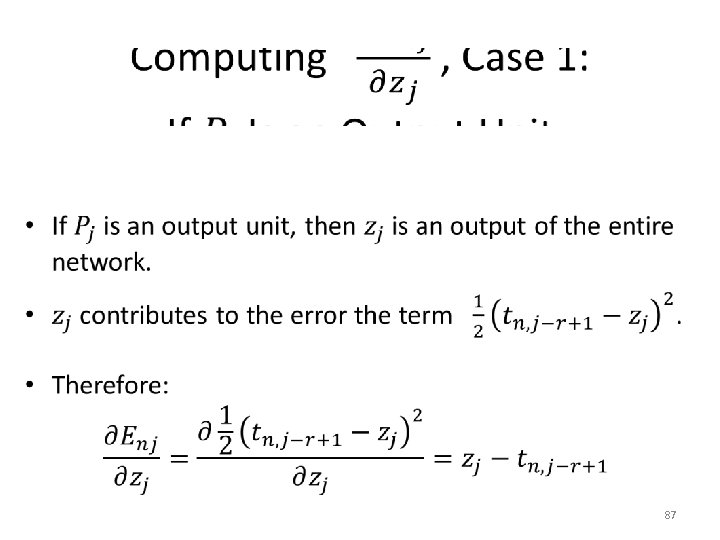


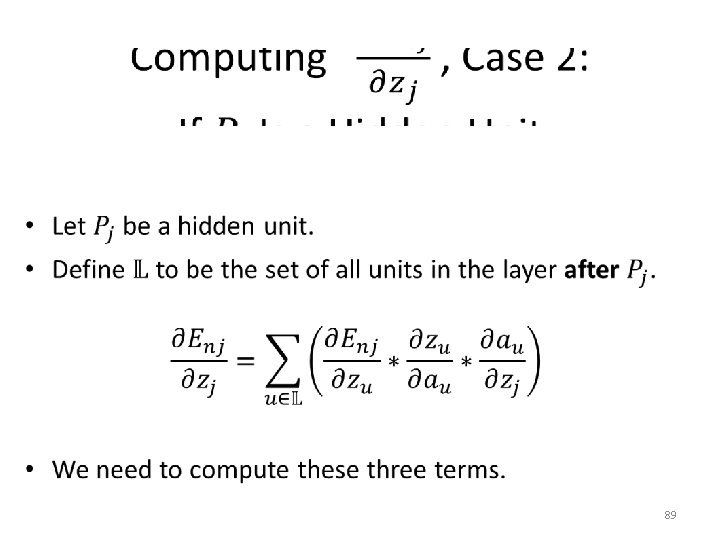

Neural Networks. CSE 4309 – Machine Learning. Vassilis Athitsos, Computer Science and Engineering Department, University of Texas at Arlington.

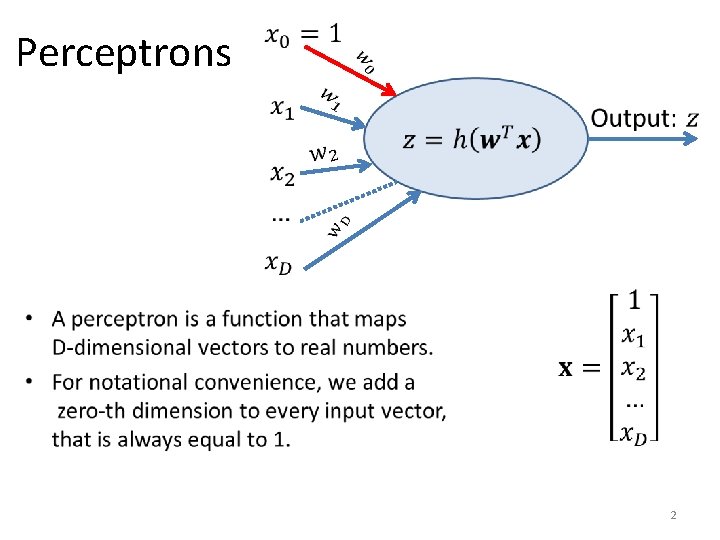

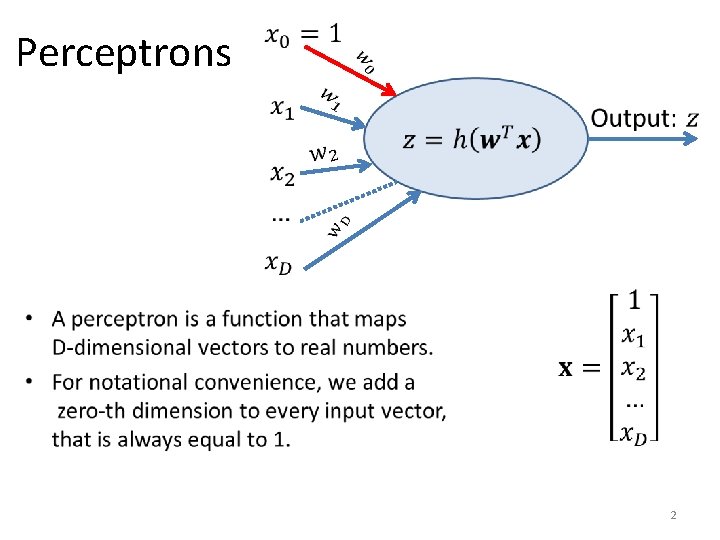

Perceptrons

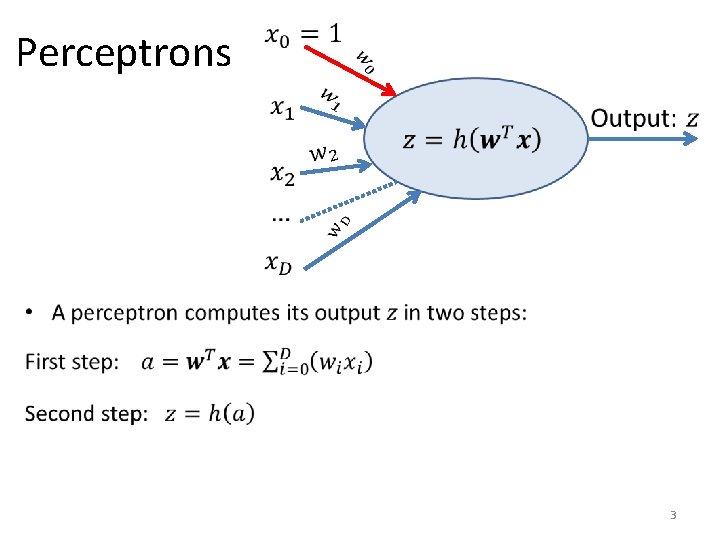

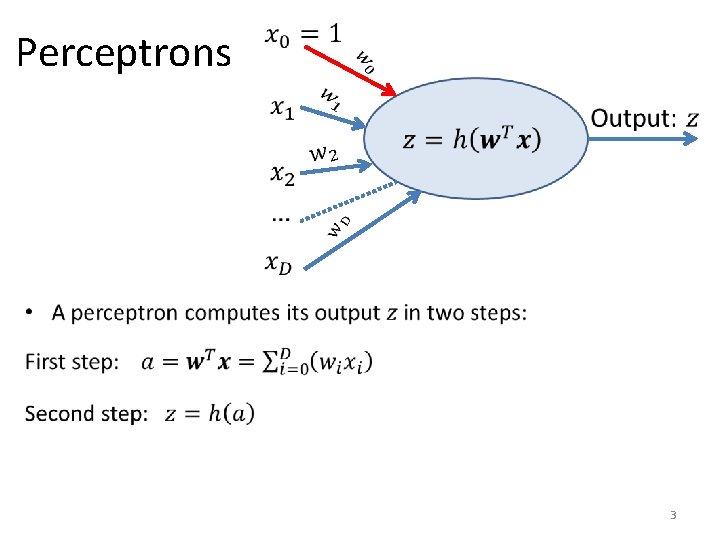

Perceptrons

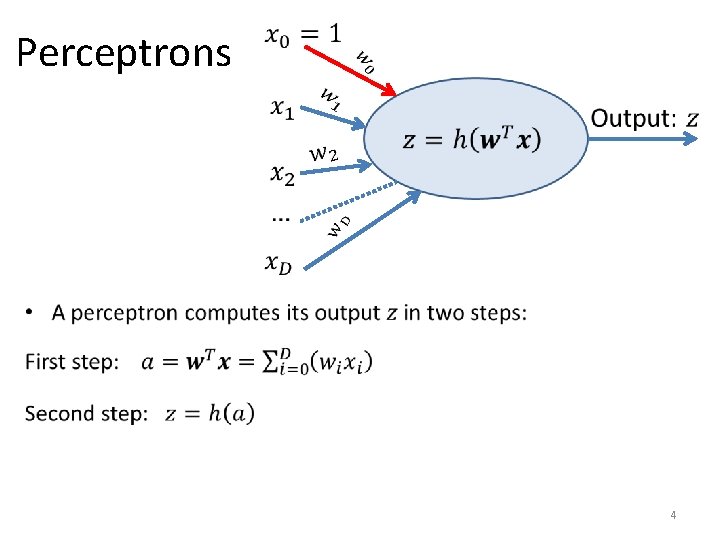

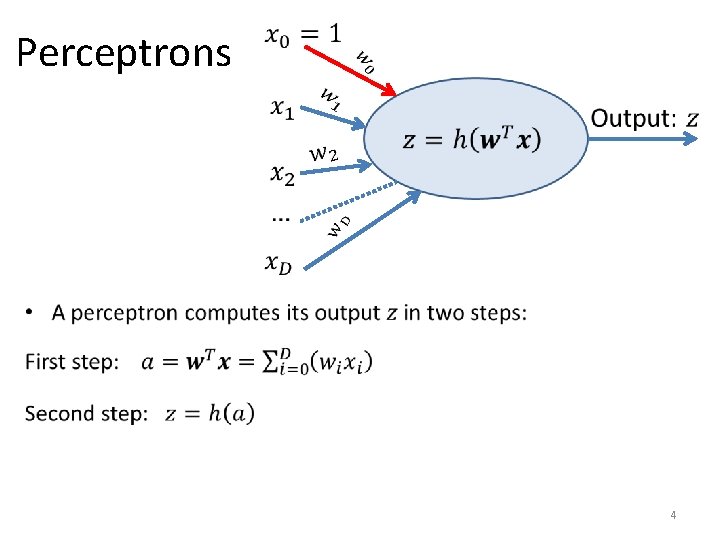

Perceptrons

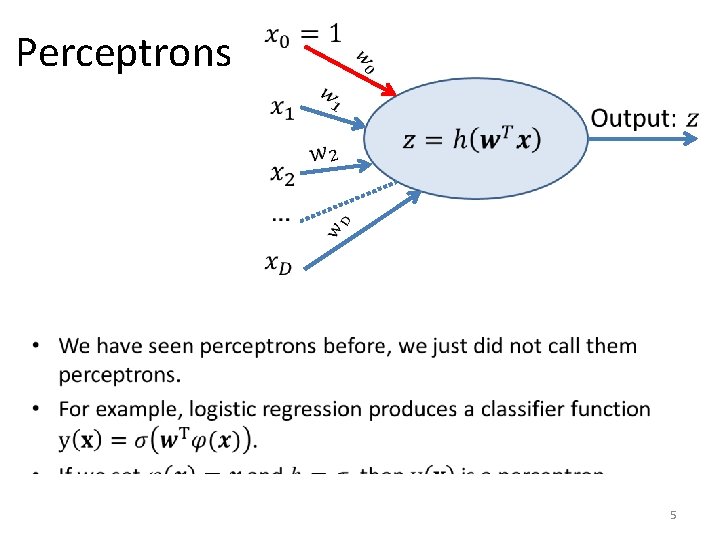

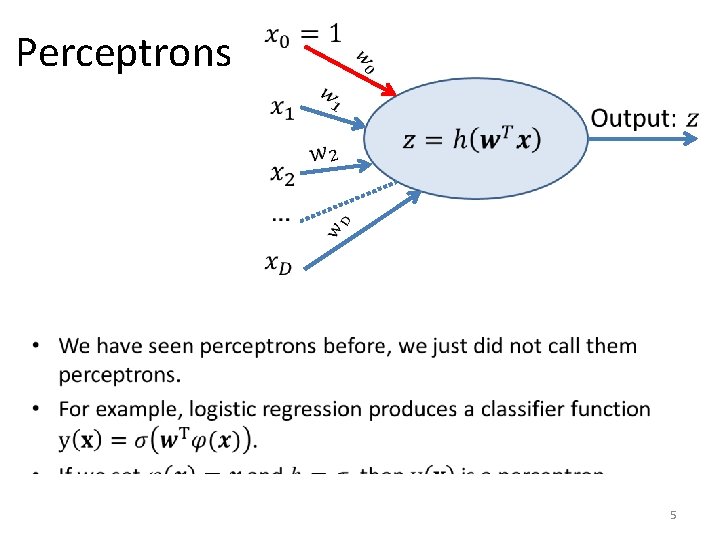

Perceptrons

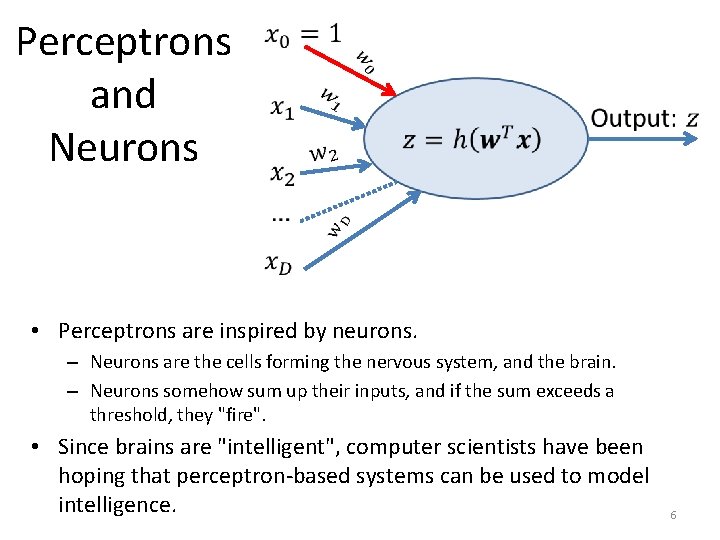

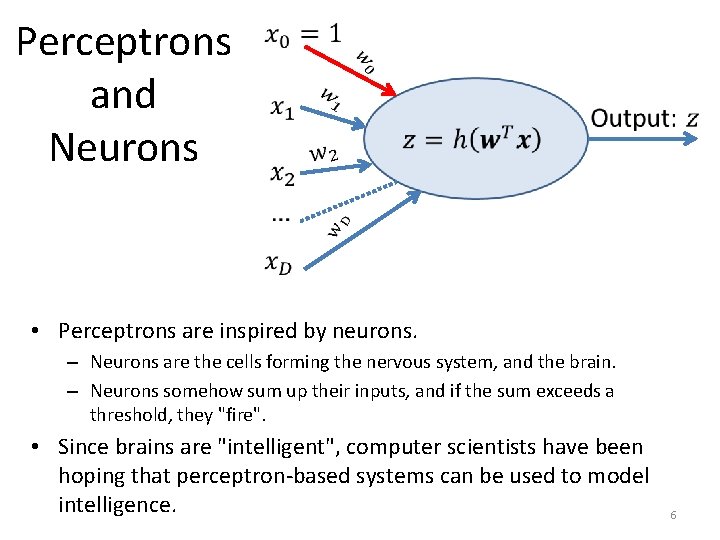

Perceptrons and Neurons

- Perceptrons are inspired by neurons.
  - Neurons are the cells forming the nervous system and the brain.
  - Roughly speaking, a neuron sums up its inputs, and if the sum exceeds a threshold, the neuron "fires".
- Since brains are "intelligent", computer scientists have long hoped that perceptron-based systems could be used to model intelligence.
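The weighted-sum-and-threshold behavior described above can be sketched in Python as follows. This is a minimal illustration under our own assumptions: the threshold is folded in as a bias weight `weights[0]` (a threshold of θ corresponds to a bias weight of -θ), the step function treats a sum exactly at the threshold as firing, and the function names are hypothetical. A sigmoid is included as the usual smooth alternative to the step function.

```python
import math

def step(a: float) -> float:
    """Step activation: 'fire' (1) when the weighted sum reaches the threshold."""
    return 1.0 if a >= 0 else 0.0

def sigmoid(a: float) -> float:
    """Smooth alternative to the step function, with outputs in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-a))

def perceptron(weights, inputs, activation=step):
    """weights[0] is the bias weight; weights[1:] pair up with the inputs."""
    a = weights[0] + sum(w * x for w, x in zip(weights[1:], inputs))
    return activation(a)

# A threshold of 2 written as bias weight -2: fires only if the inputs sum to at least 2.
print(perceptron([-2.0, 1.0, 1.0, 1.0], [1, 1, 0]))  # 1.0
print(perceptron([-2.0, 1.0, 1.0, 1.0], [1, 0, 0]))  # 0.0
```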

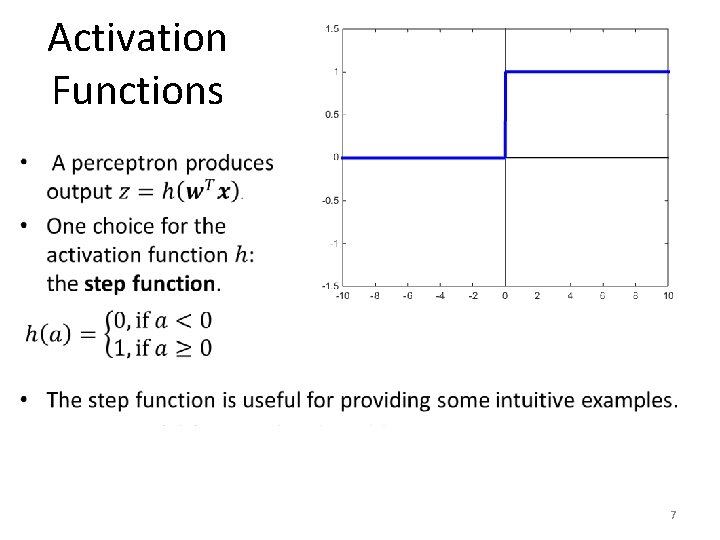

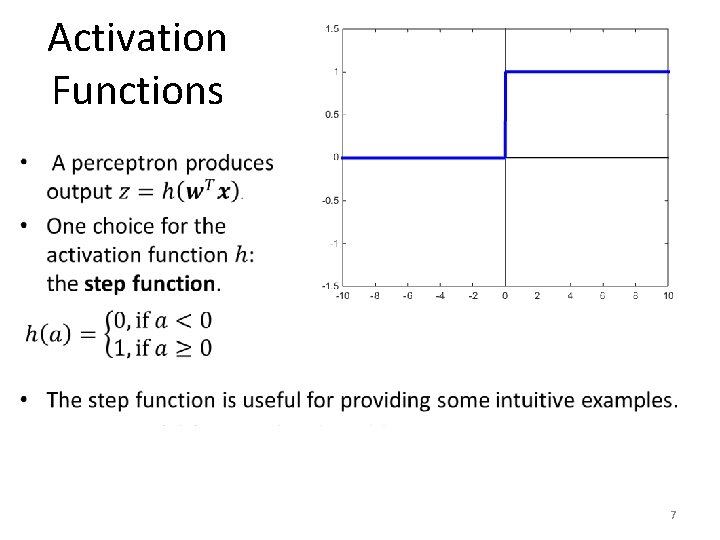

Activation Functions

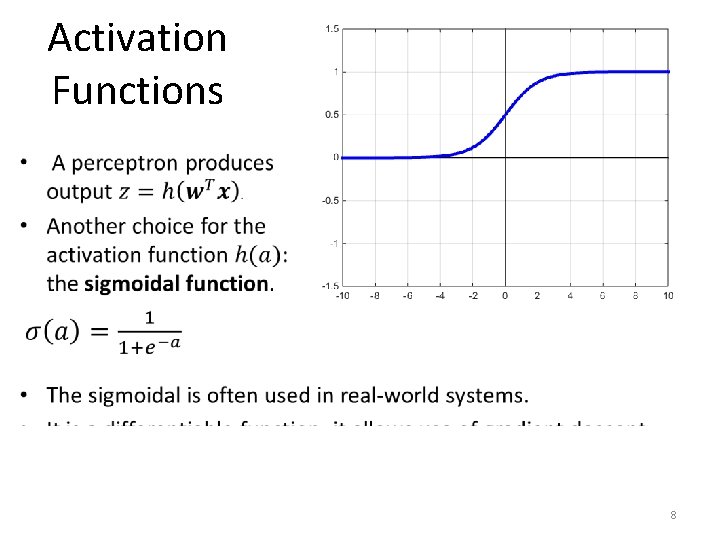

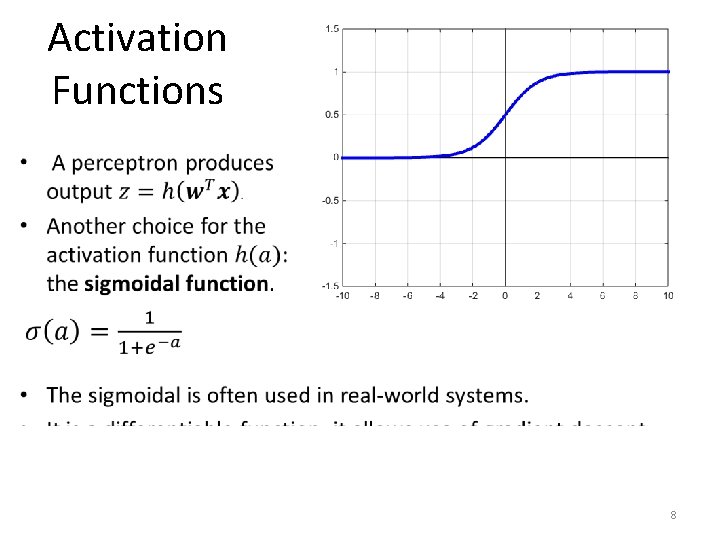

Activation Functions

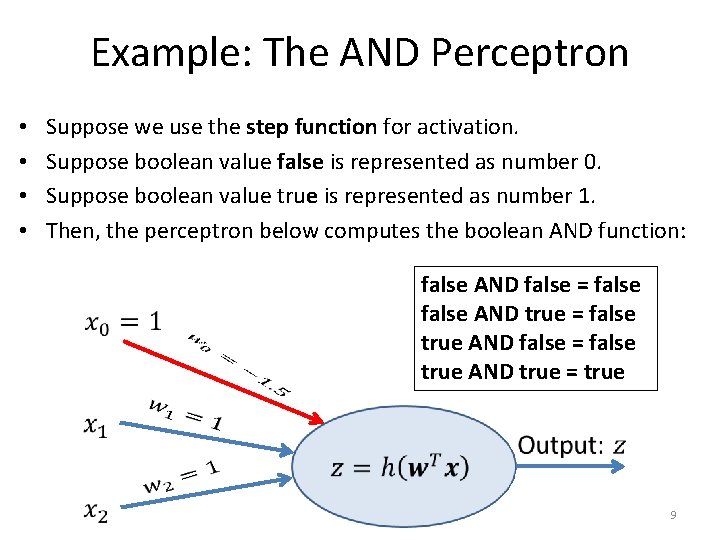

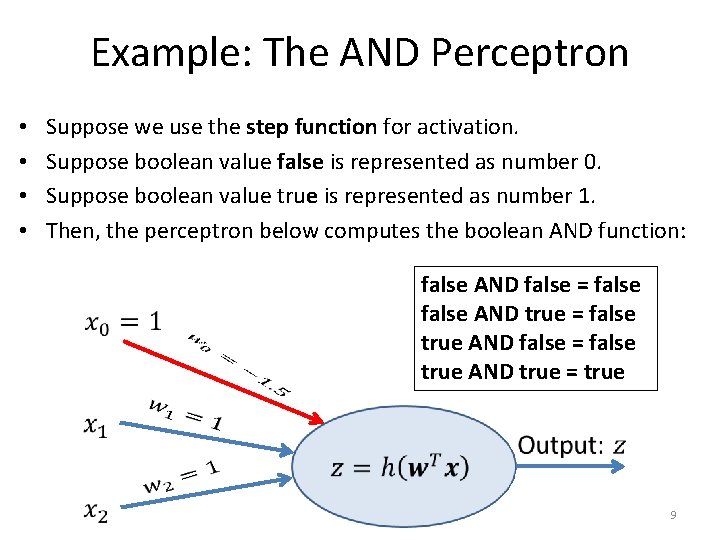

Example: The AND Perceptron

- Suppose we use the step function for activation.
- Suppose boolean value false is represented as number 0, and boolean value true is represented as number 1.
- Then, the perceptron below computes the boolean AND function:
  - false AND false = false
  - false AND true = false
  - true AND false = false
  - true AND true = true
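The perceptron's actual weights appear only in the slide's figure, so the sketch below uses one assumed set of weights that works: bias weight -1.5 and weight 1 on each input, with a step function that outputs 1 when the weighted sum is at least 0. It is an illustration, not necessarily the slide's values; `and_perceptron` is a hypothetical name.

```python
def step(a):
    """Step activation: 1 if a >= 0, else 0."""
    return 1 if a >= 0 else 0

def and_perceptron(x1, x2, w0=-1.5, w1=1.0, w2=1.0):
    """Assumed weights: bias weight -1.5, weight 1 on each input."""
    return step(w0 + w1 * x1 + w2 * x2)

for x1 in (0, 1):
    for x2 in (0, 1):
        print(f"{x1} AND {x2} = {and_perceptron(x1, x2)}")
```

With input weights of 1, any bias weight strictly between -2 and -1 gives the same truth table: only the input (1, 1) pushes the weighted sum up to 0 or above.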

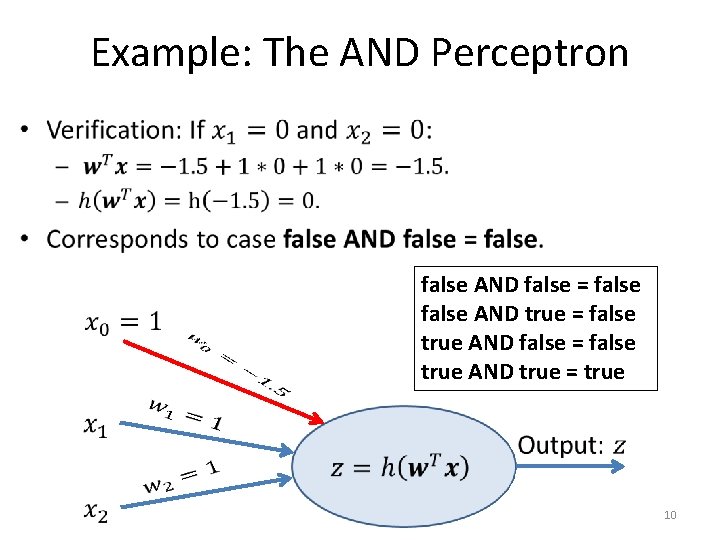

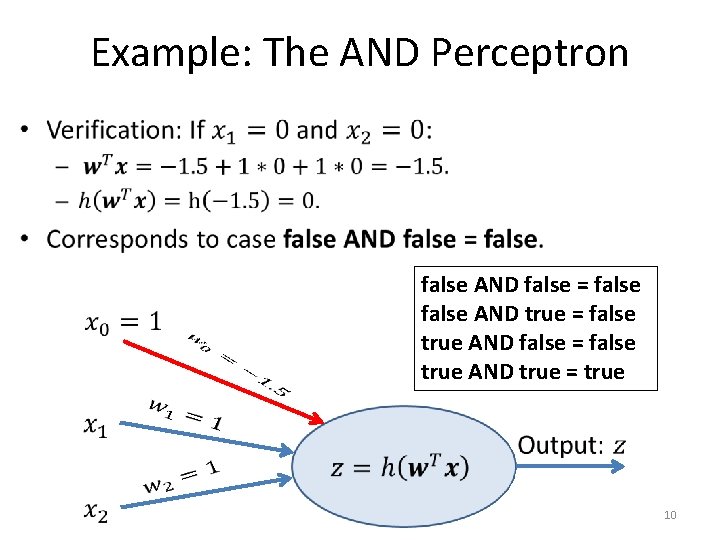

Example: The AND Perceptron

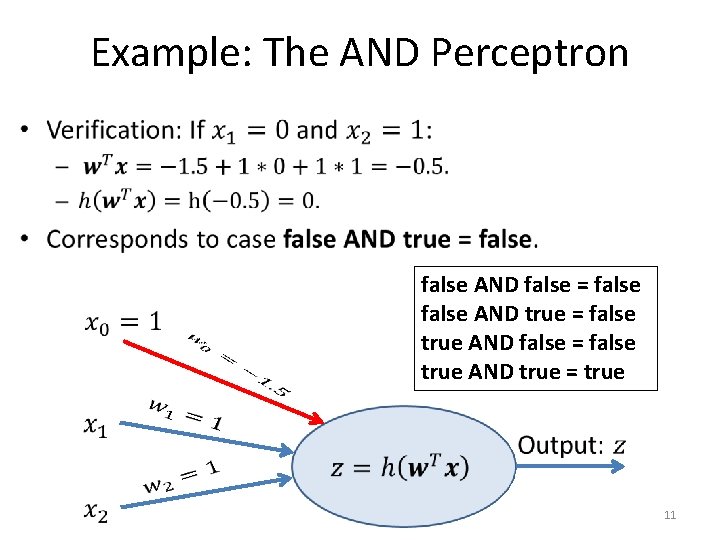

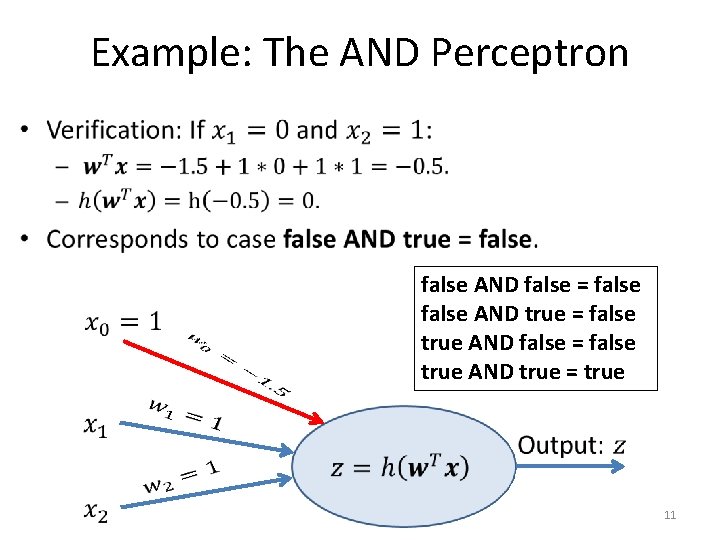

Example: The AND Perceptron

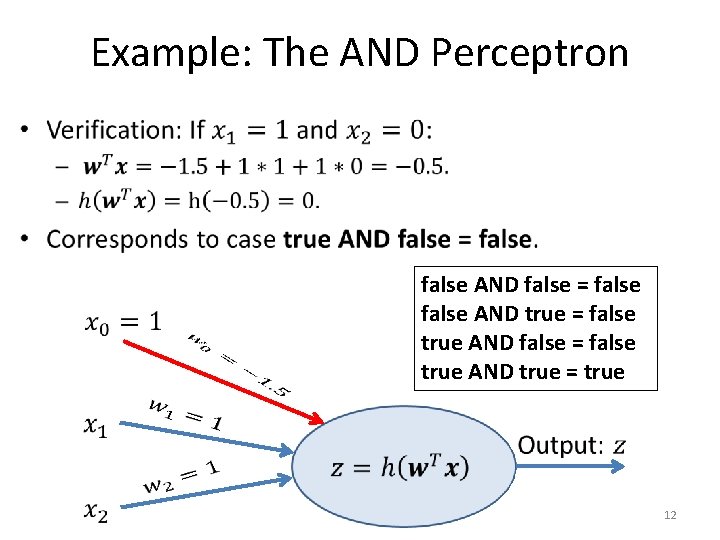

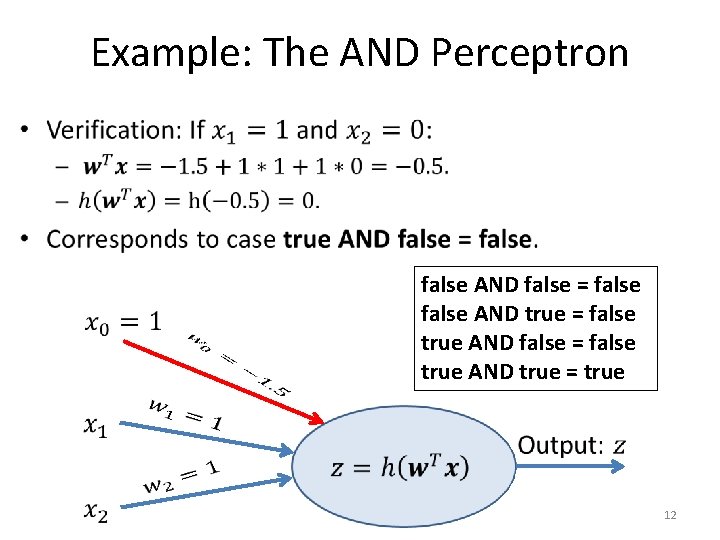

Example: The AND Perceptron

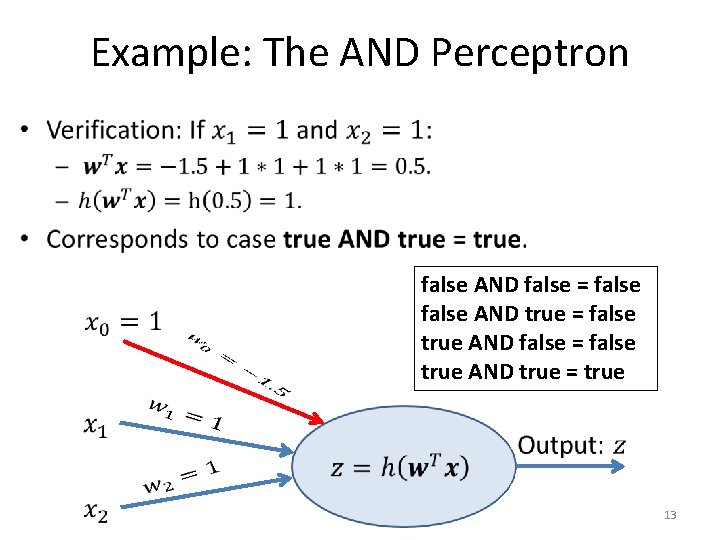

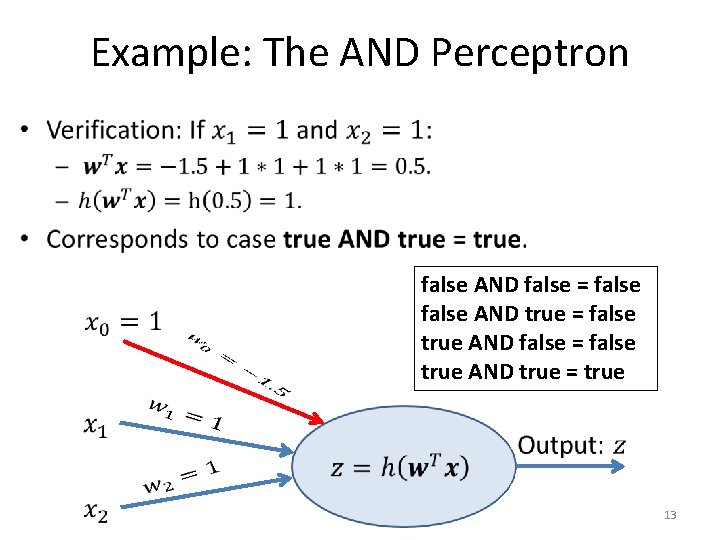

Example: The AND Perceptron

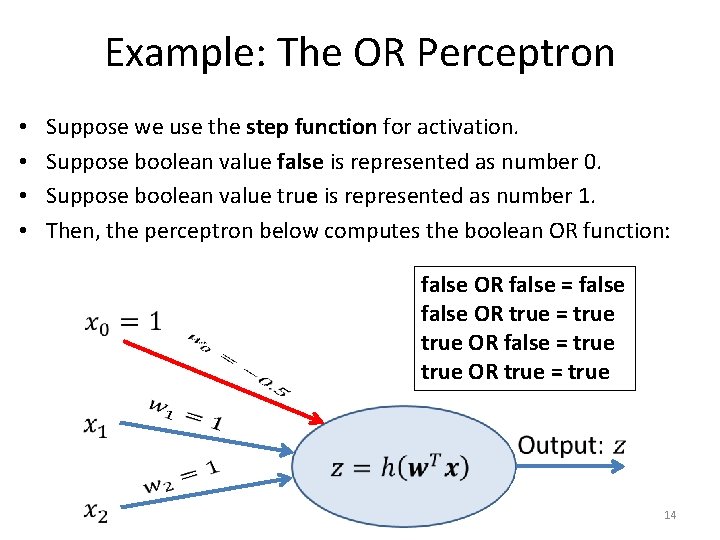

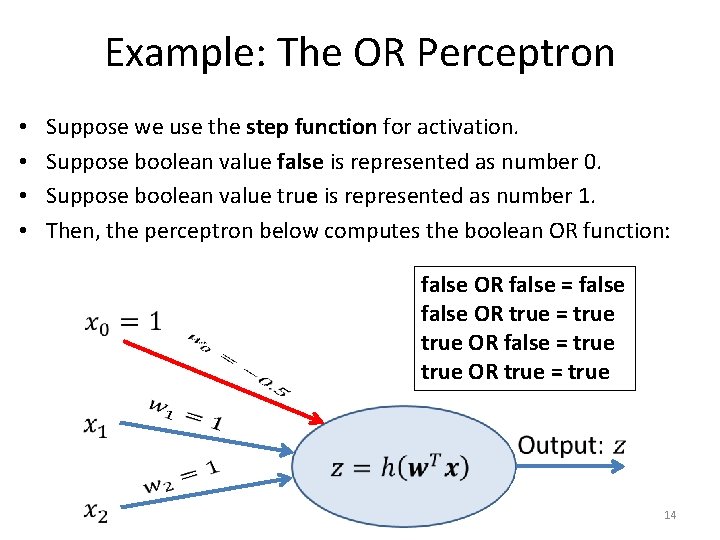

Example: The OR Perceptron

- Suppose we use the step function for activation.
- Suppose boolean value false is represented as number 0, and boolean value true is represented as number 1.
- Then, the perceptron below computes the boolean OR function:
  - false OR false = false
  - false OR true = true
  - true OR false = true
  - true OR true = true
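As with AND, the slide's weights are only in the figure, so here is a sketch with one assumed set of weights that computes OR: bias weight -0.5 and weight 1 on each input, using a step function that outputs 1 when the weighted sum is at least 0. `or_perceptron` is a hypothetical name.

```python
def step(a):
    """Step activation: 1 if a >= 0, else 0."""
    return 1 if a >= 0 else 0

def or_perceptron(x1, x2, w0=-0.5, w1=1.0, w2=1.0):
    """Assumed weights: bias weight -0.5, weight 1 on each input."""
    return step(w0 + w1 * x1 + w2 * x2)

for x1 in (0, 1):
    for x2 in (0, 1):
        print(f"{x1} OR {x2} = {or_perceptron(x1, x2)}")
```

Compared with AND, only the bias weight changes: a single active input is now enough to lift the weighted sum above the threshold.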

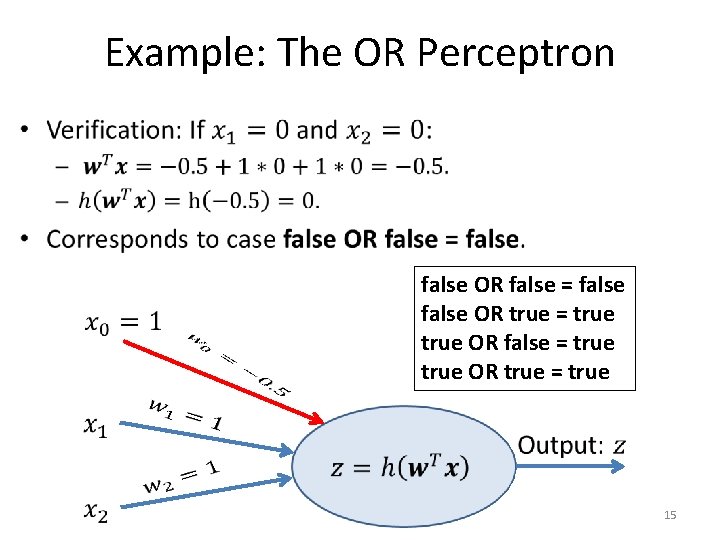

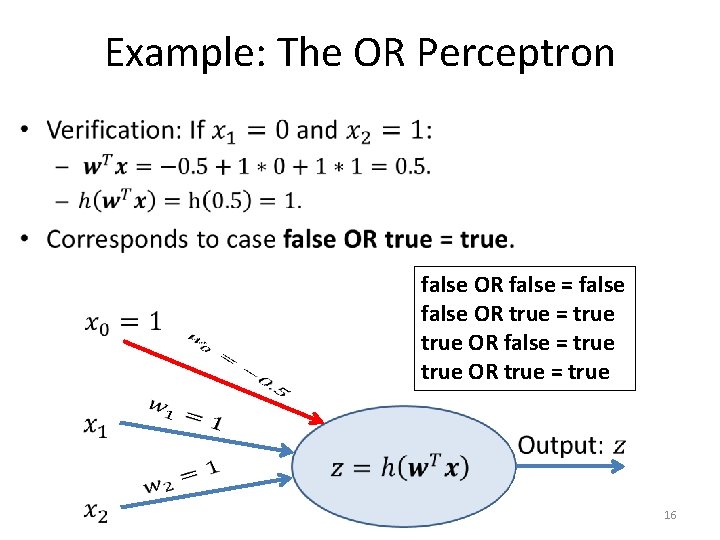

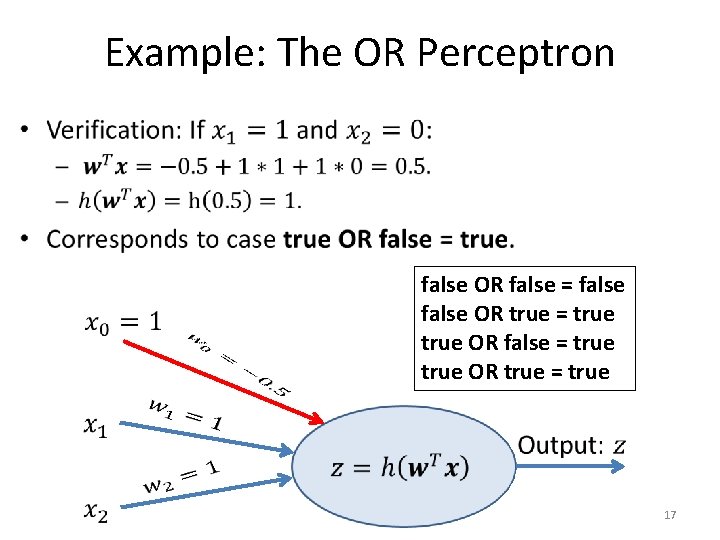

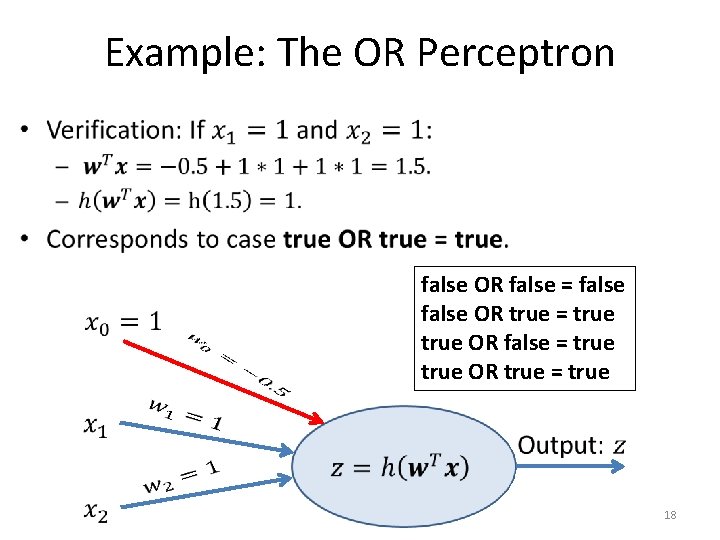

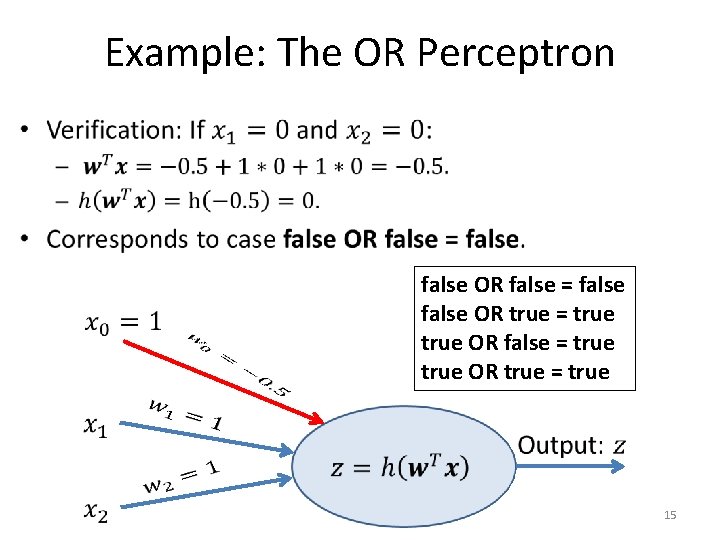

Example: The OR Perceptron

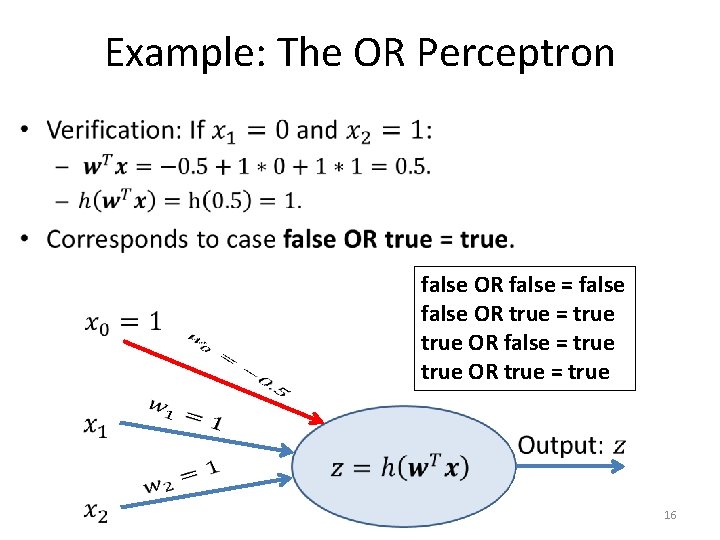

Example: The OR Perceptron

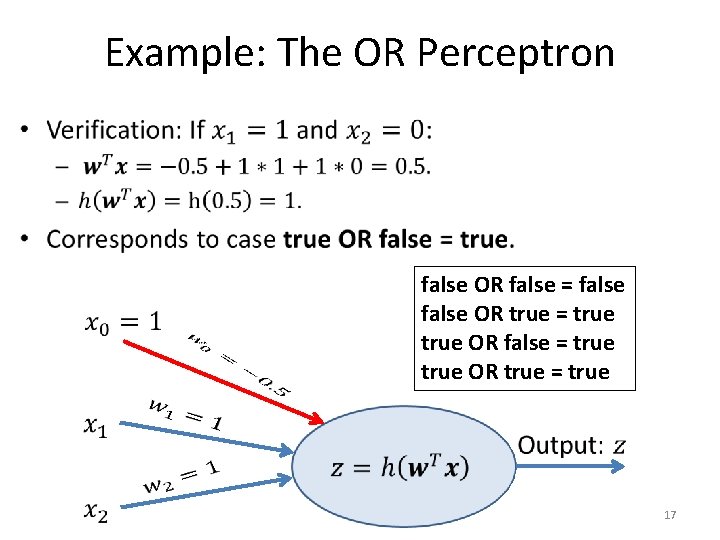

Example: The OR Perceptron

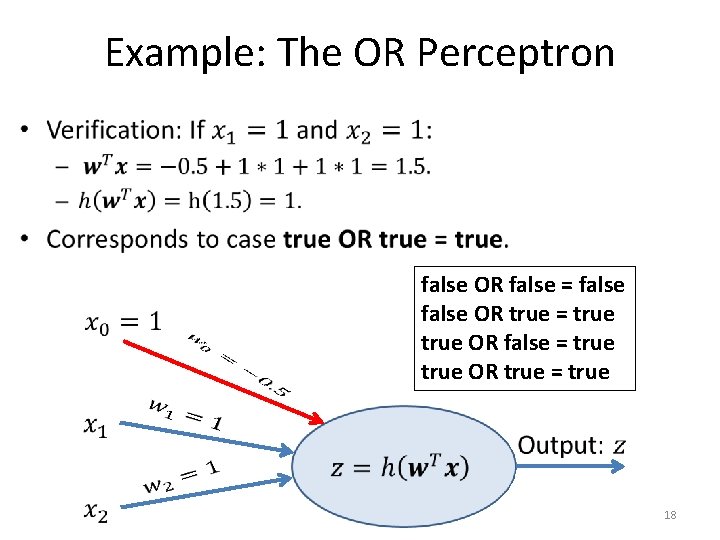

Example: The OR Perceptron

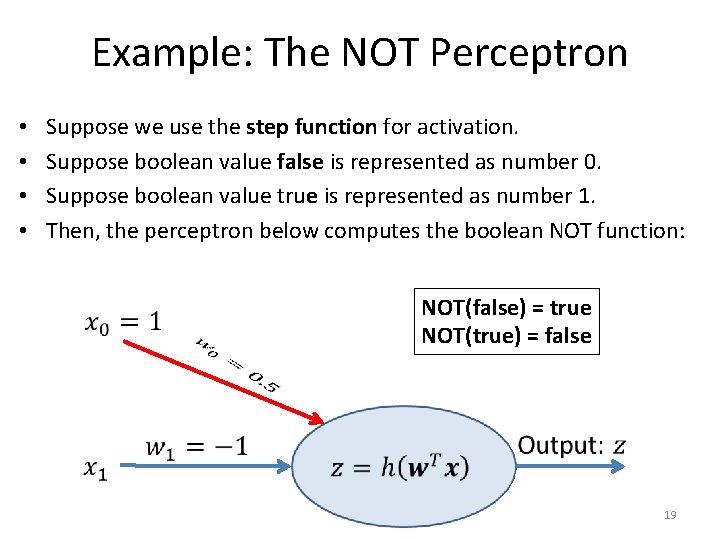

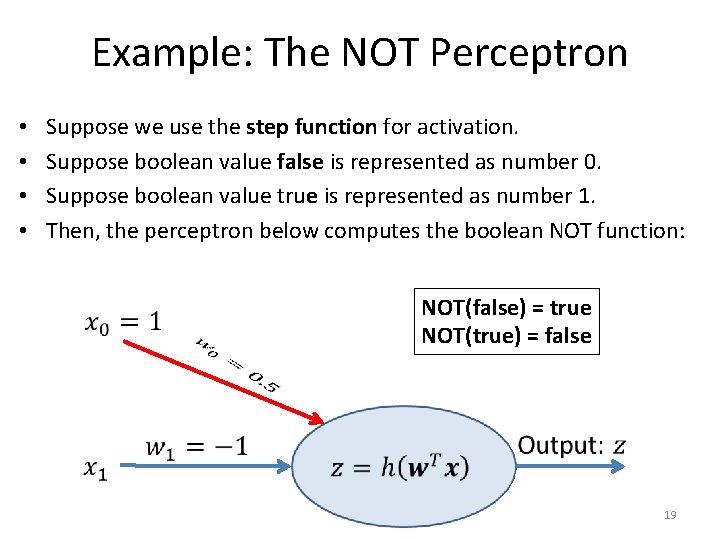

Example: The NOT Perceptron

- Suppose we use the step function for activation.
- Suppose boolean value false is represented as number 0, and boolean value true is represented as number 1.
- Then, the perceptron below computes the boolean NOT function:
  - NOT(false) = true
  - NOT(true) = false
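A NOT perceptron needs only one input. The sketch assumes bias weight 0.5 and input weight -1 (one working choice, not necessarily the slide's values), with a step function that outputs 1 when the weighted sum is at least 0. `not_perceptron` is a hypothetical name.

```python
def step(a):
    """Step activation: 1 if a >= 0, else 0."""
    return 1 if a >= 0 else 0

def not_perceptron(x, w0=0.5, w1=-1.0):
    """Assumed weights: bias weight 0.5, weight -1 on the single input."""
    return step(w0 + w1 * x)

print(not_perceptron(0), not_perceptron(1))  # 1 0
```

The negative input weight is what inverts the input: an active input pulls the weighted sum below the threshold.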

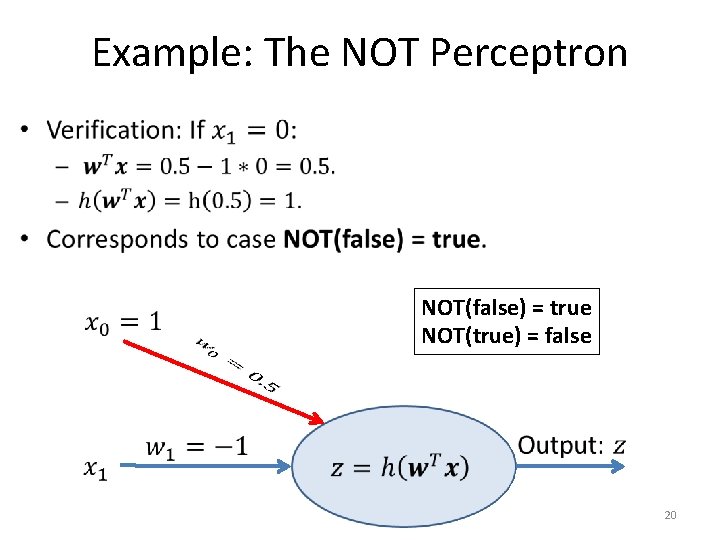

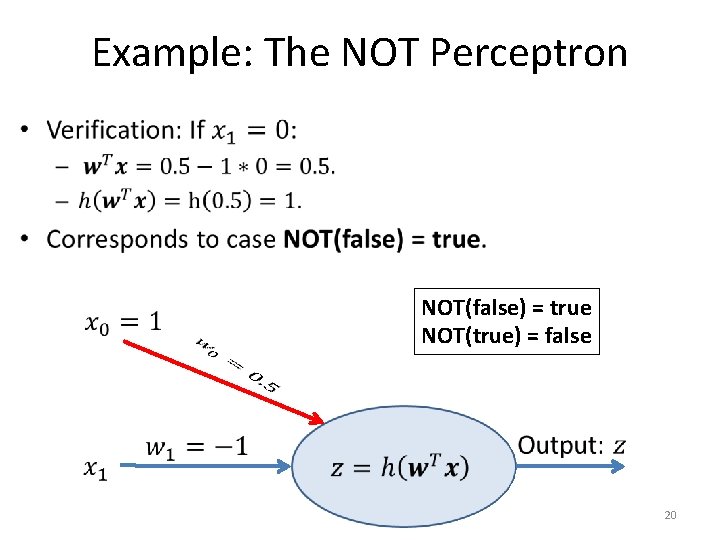

Example: The NOT Perceptron

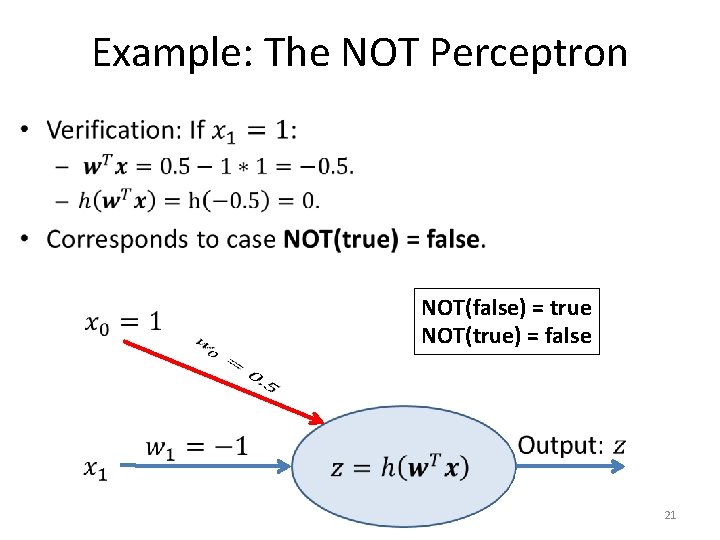

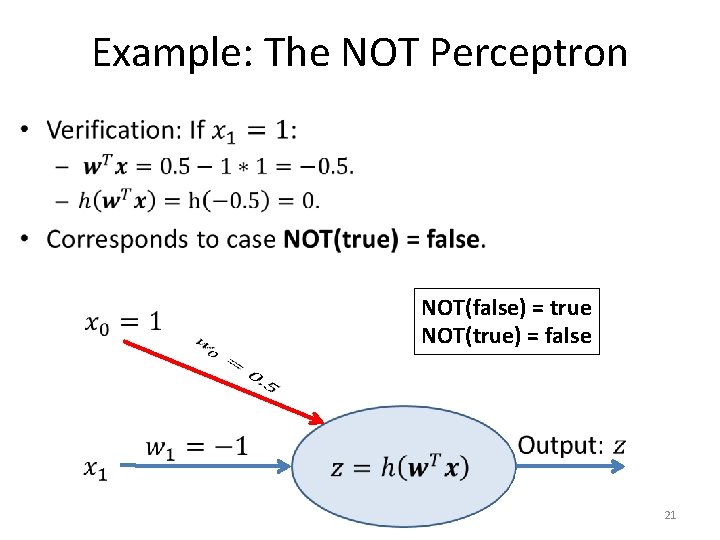

Example: The NOT Perceptron

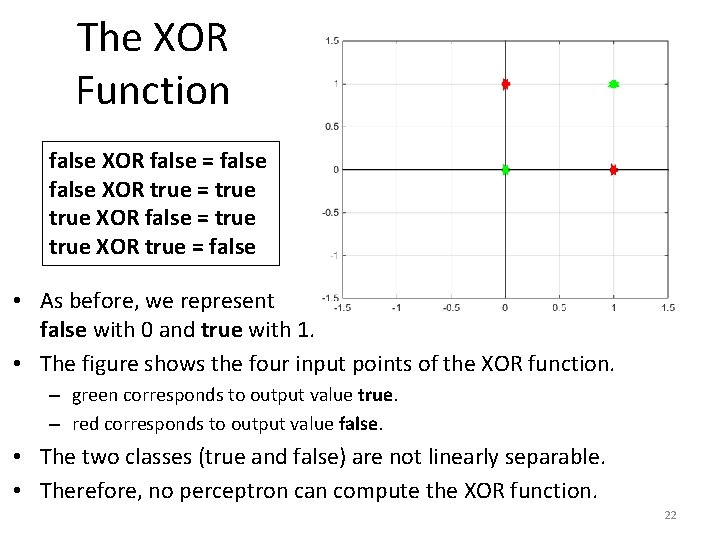

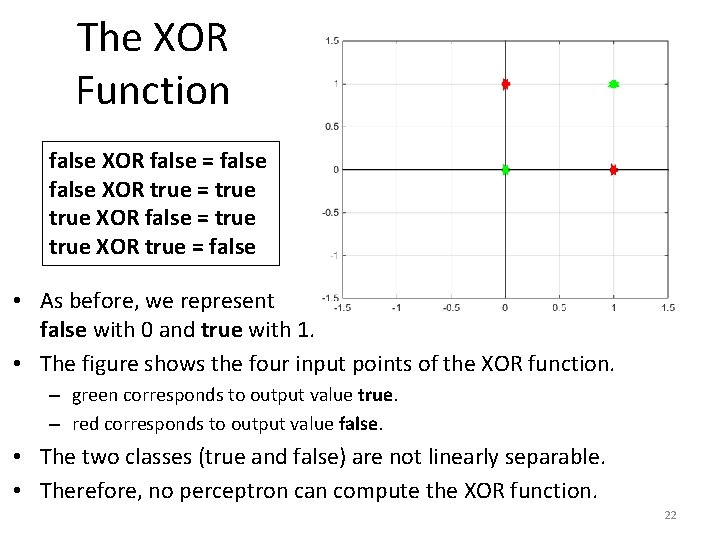

The XOR Function

- false XOR false = false
- false XOR true = true
- true XOR false = true
- true XOR true = false

- As before, we represent false with 0 and true with 1.
- The figure shows the four input points of the XOR function.
  - Green corresponds to output value true.
  - Red corresponds to output value false.
- The two classes (true and false) are not linearly separable.
- Since a perceptron separates its inputs with a linear boundary, no perceptron can compute the XOR function.

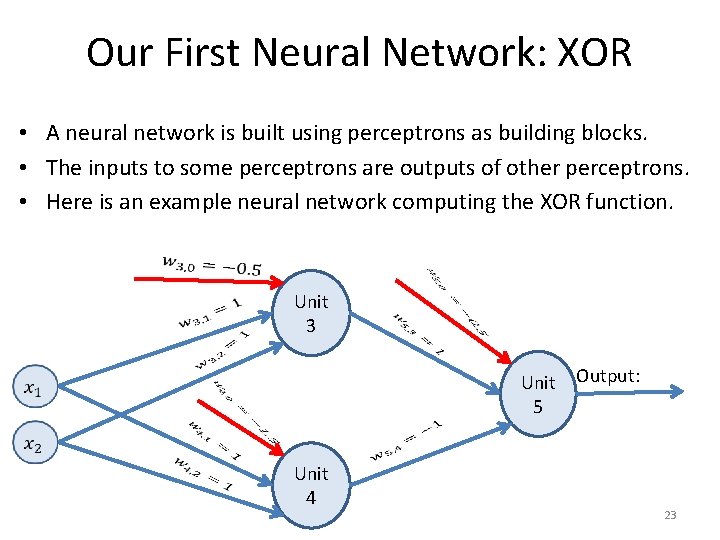

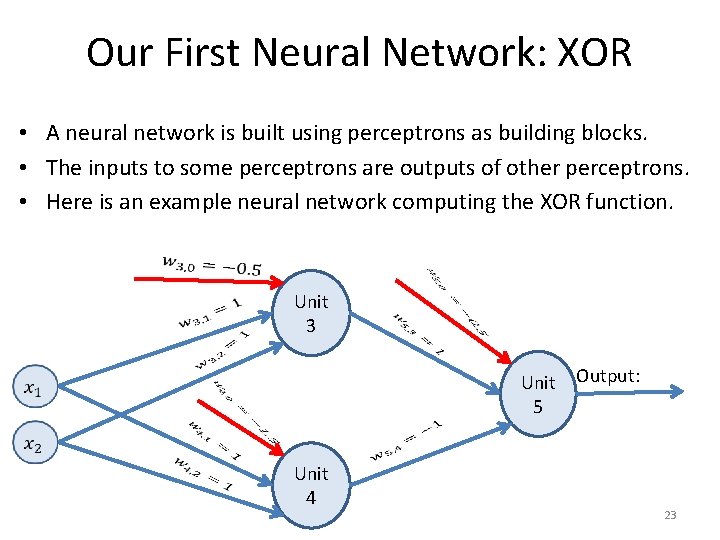

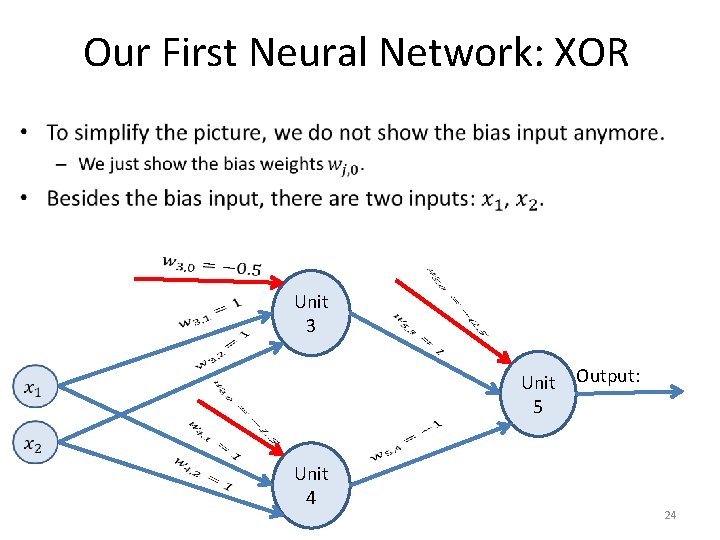

Our First Neural Network: XOR

- A neural network is built using perceptrons as building blocks.
- The inputs to some perceptrons are outputs of other perceptrons.
- Here is an example neural network computing the XOR function.

(Figure: the XOR network, in which units 3 and 4 feed output unit 5.)

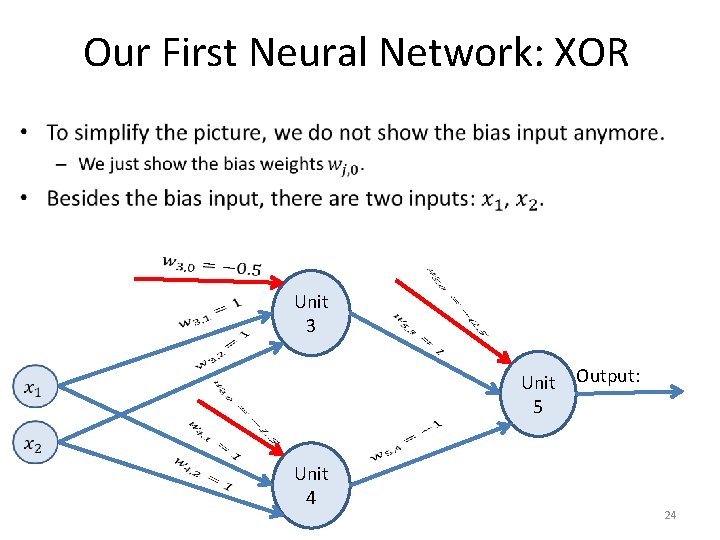

Our First Neural Network: XOR

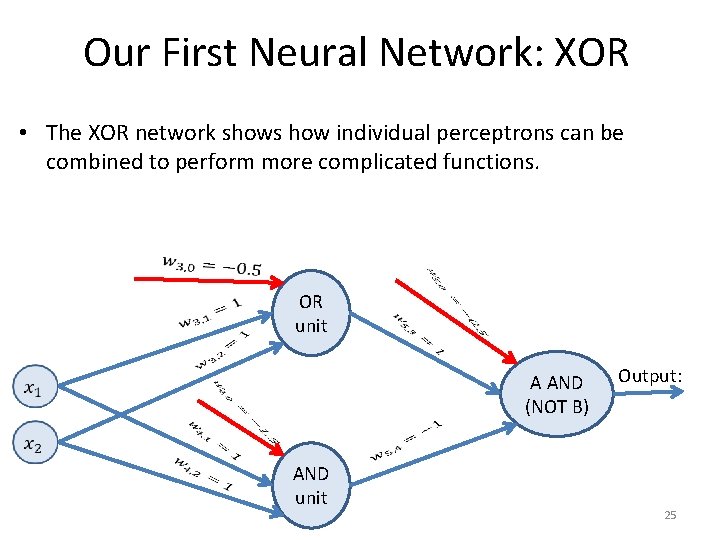

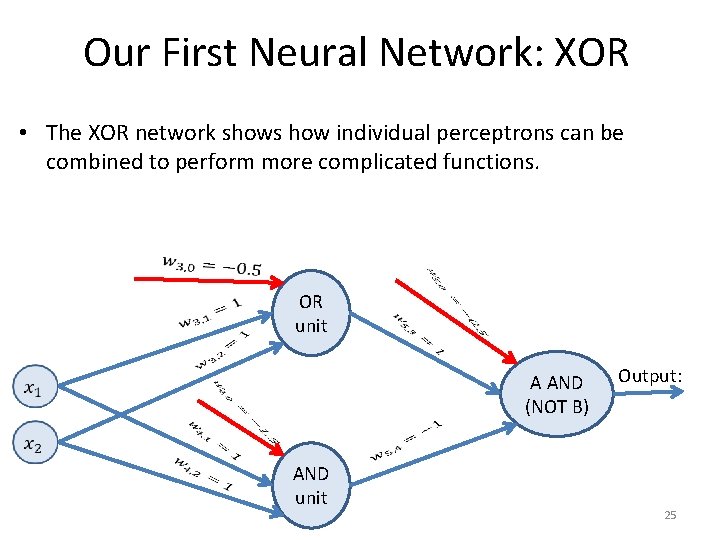

Our First Neural Network: XOR

- The XOR network shows how individual perceptrons can be combined to perform more complicated functions.

(Figure: an OR unit, whose output is A, and an AND unit, whose output is B, feed the output unit, which computes A AND (NOT B).)
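The composition in the figure can be checked in a few lines of Python. This sketch assumes step-function units with weights of our own choosing (OR: bias -0.5, weights 1 and 1; AND: bias -1.5, weights 1 and 1; output unit A AND (NOT B): bias -0.5, weight 1 on A and -1 on B), and it assumes that unit 3 is the OR unit and unit 4 the AND unit. The function names are hypothetical.

```python
def step(a):
    """Step activation: 1 if a >= 0, else 0."""
    return 1 if a >= 0 else 0

def unit(w0, weights, inputs):
    """One perceptron: bias weight w0 plus a weighted sum of inputs, then a step."""
    return step(w0 + sum(w * x for w, x in zip(weights, inputs)))

def xor_network(x1, x2):
    a = unit(-0.5, (1.0, 1.0), (x1, x2))    # unit 3 (assumed): x1 OR x2
    b = unit(-1.5, (1.0, 1.0), (x1, x2))    # unit 4 (assumed): x1 AND x2
    return unit(-0.5, (1.0, -1.0), (a, b))  # unit 5: A AND (NOT B)

for x1 in (0, 1):
    for x2 in (0, 1):
        print(f"{x1} XOR {x2} = {xor_network(x1, x2)}")
```

Tracing input (1, 1): the OR unit outputs 1, the AND unit outputs 1, and the output unit computes -0.5 + 1 - 1 = -0.5 < 0, so the network outputs 0, as XOR requires.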

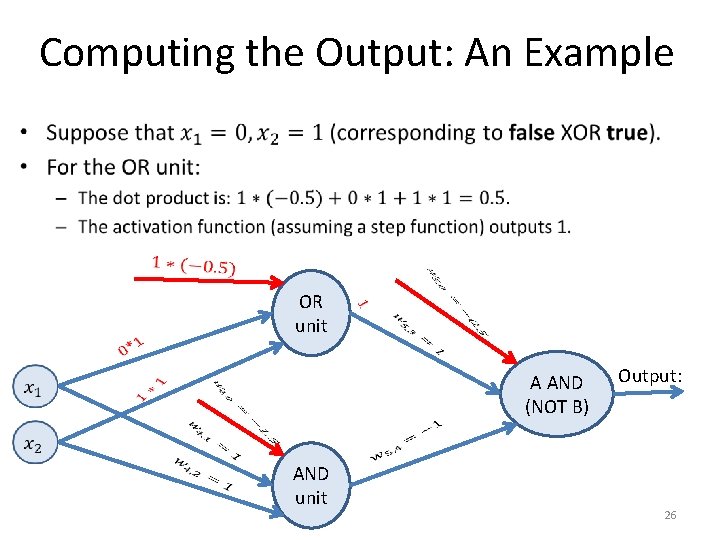

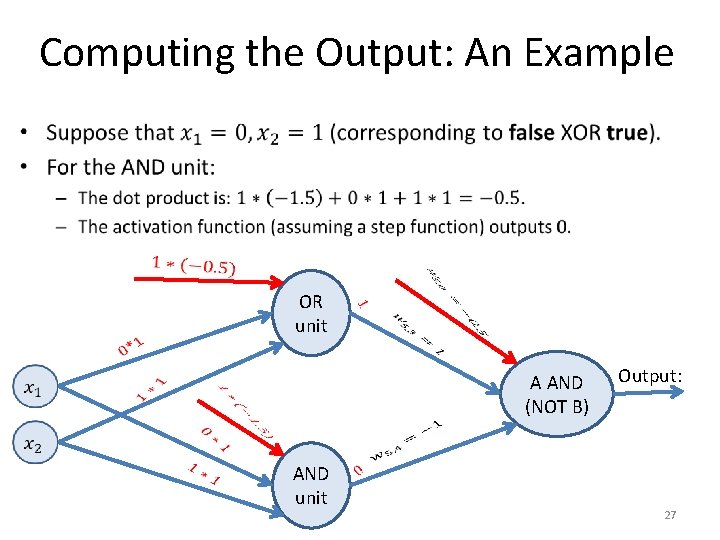

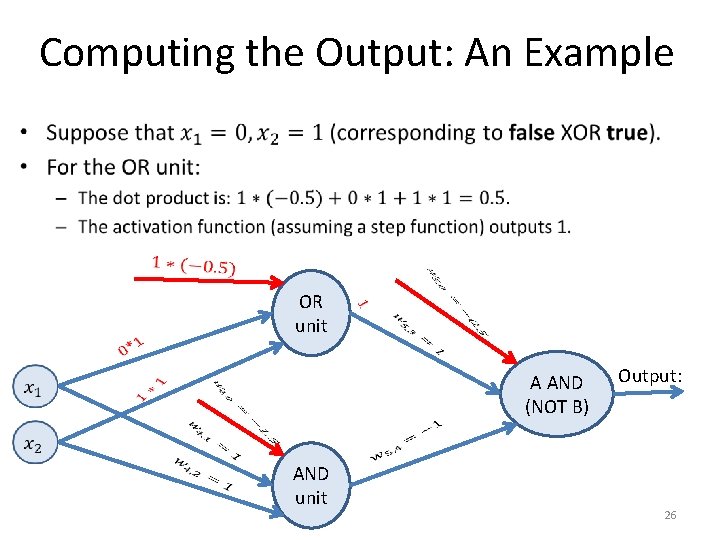

Computing the Output: An Example

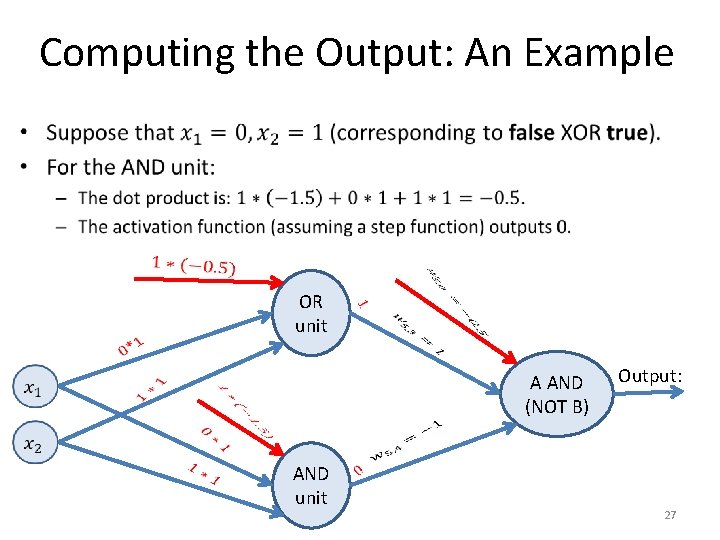

Computing the Output: An Example

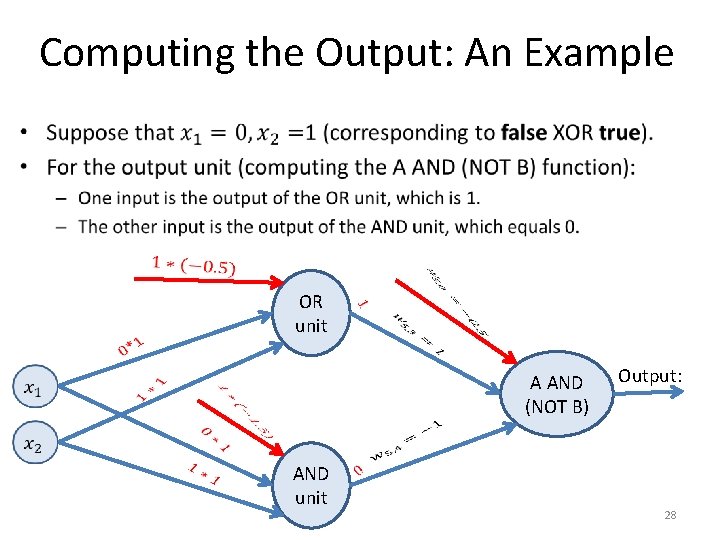

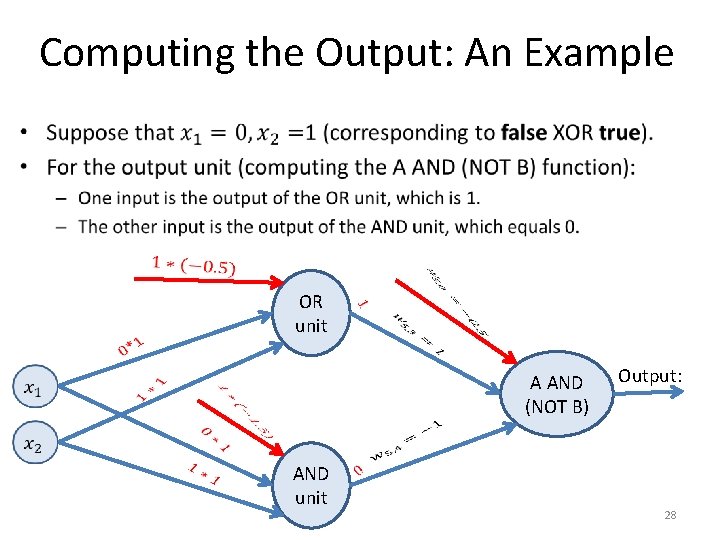

Computing the Output: An Example

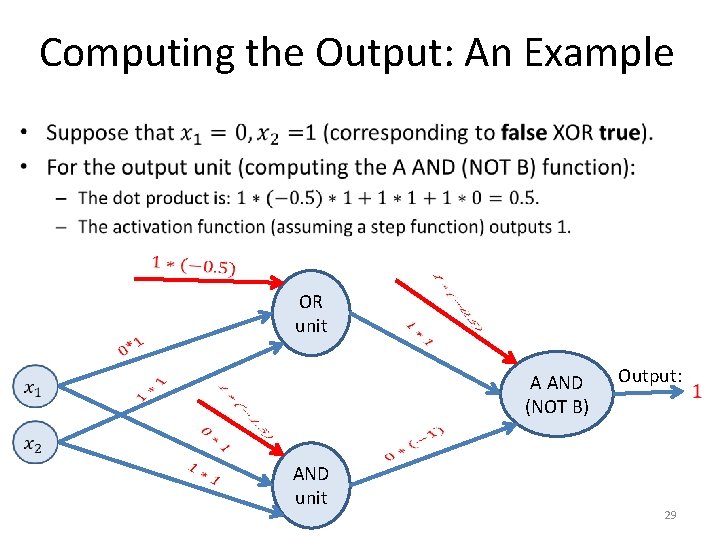

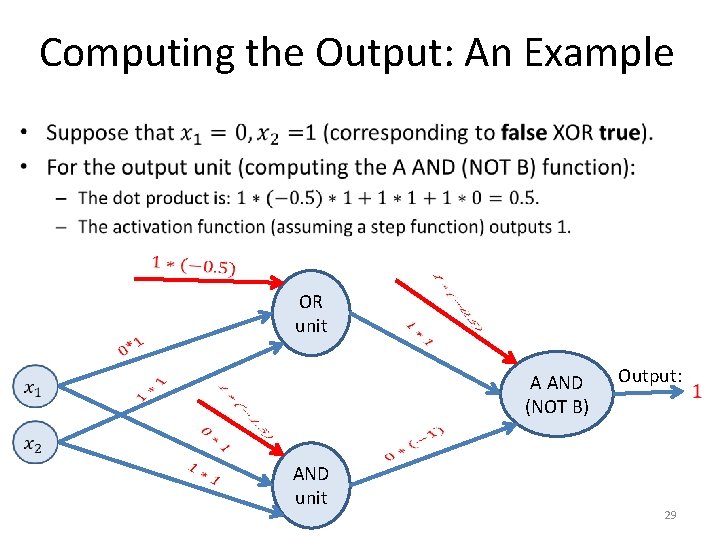

Computing the Output: An Example

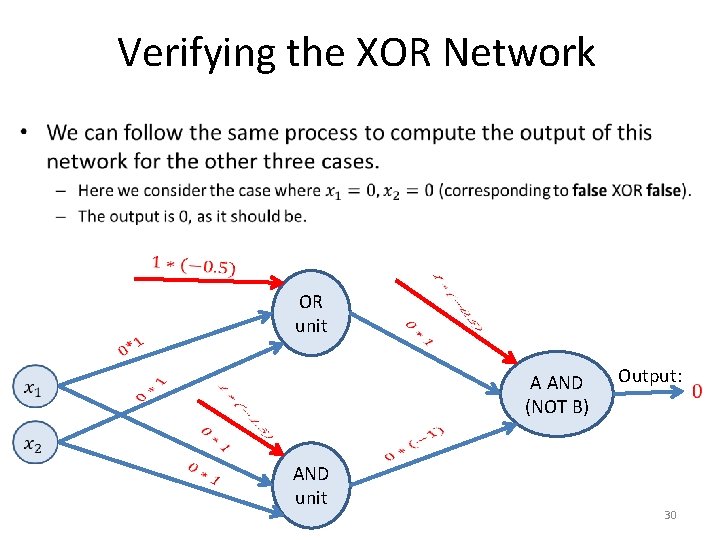

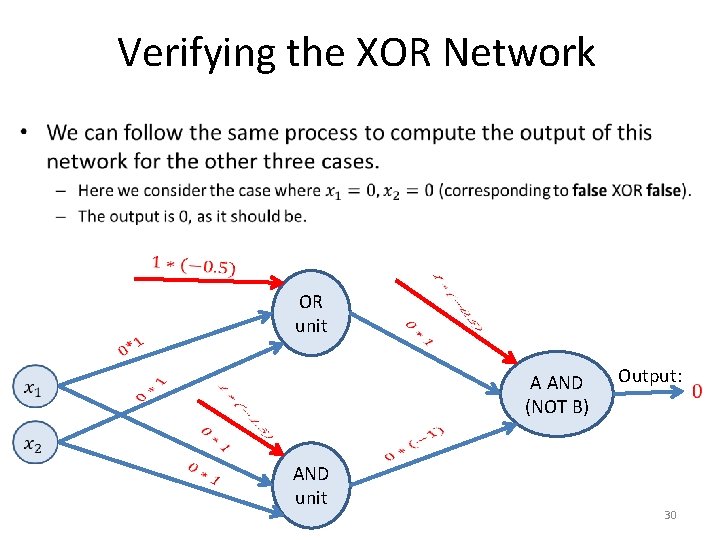

Verifying the XOR Network

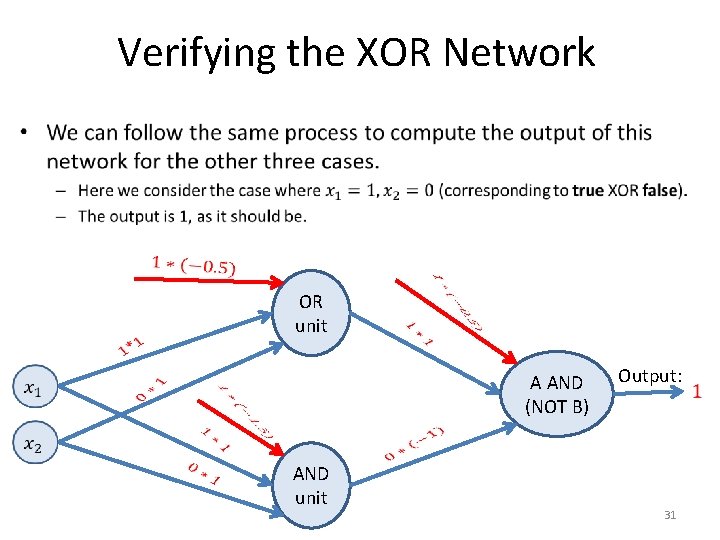

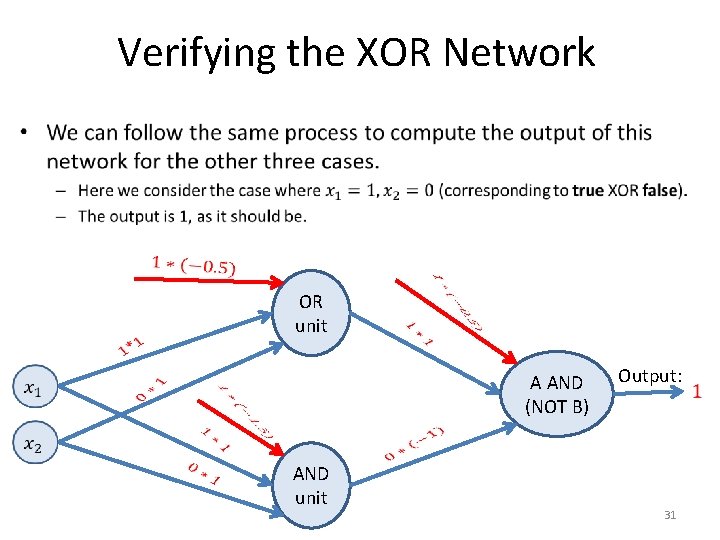

Verifying the XOR Network

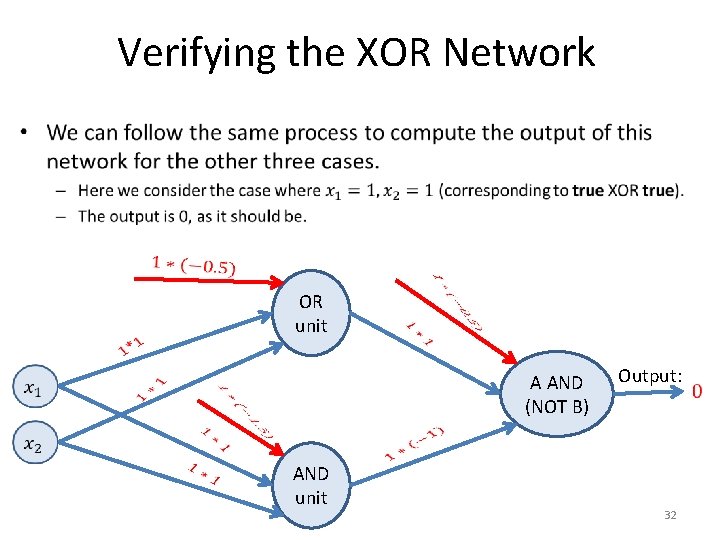

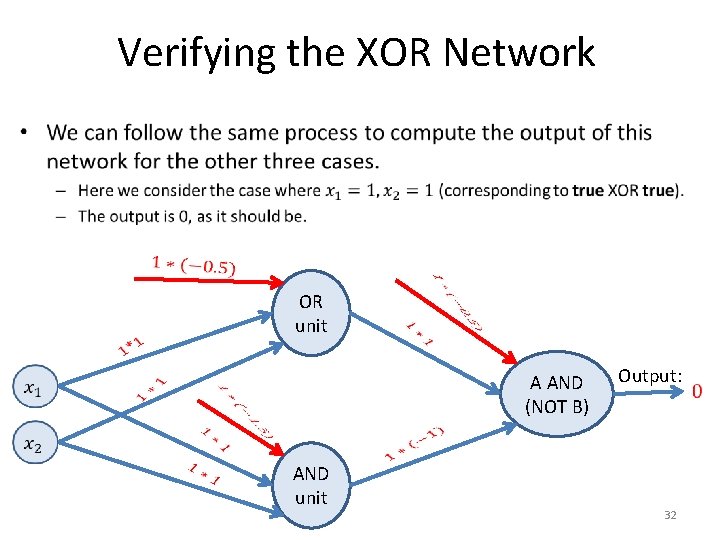

Verifying the XOR Network

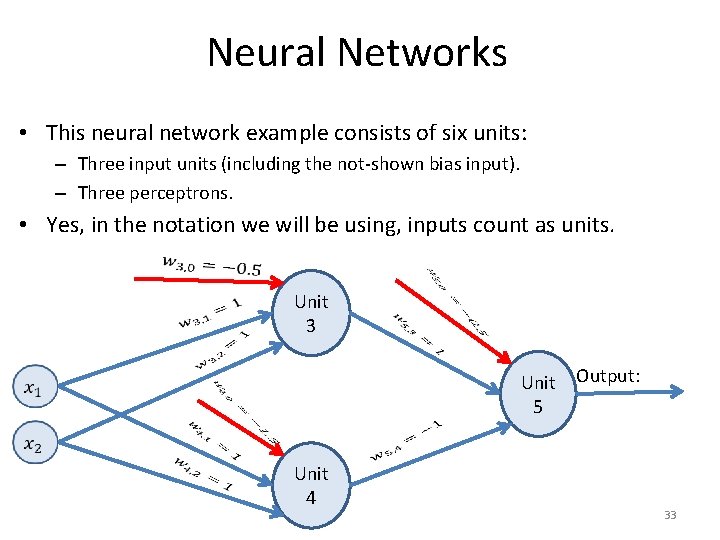

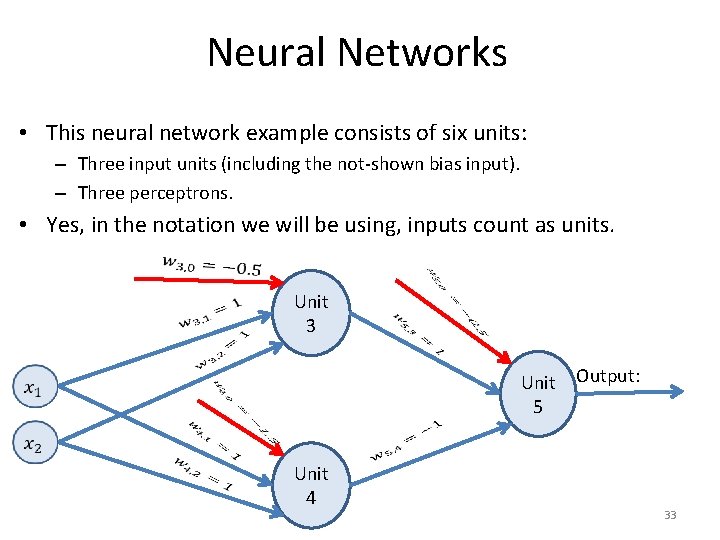

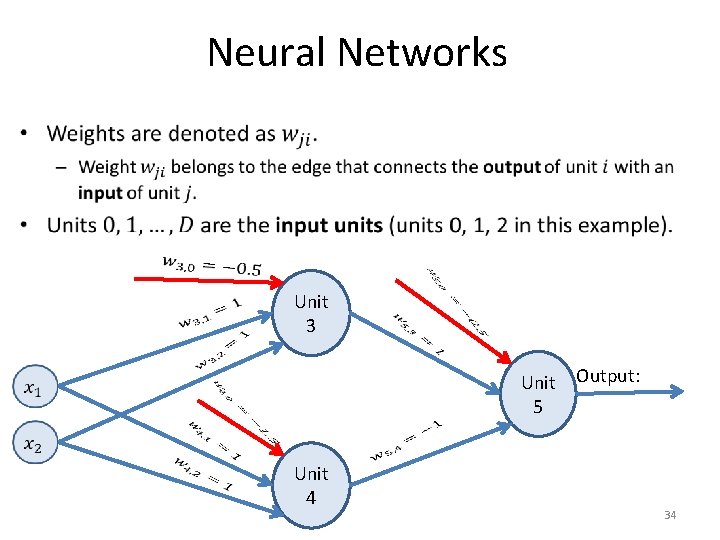

Neural Networks

- This neural network example consists of six units:
  - Three input units (including the bias input, which is not shown).
  - Three perceptrons.
- Yes, in the notation we will be using, inputs count as units.

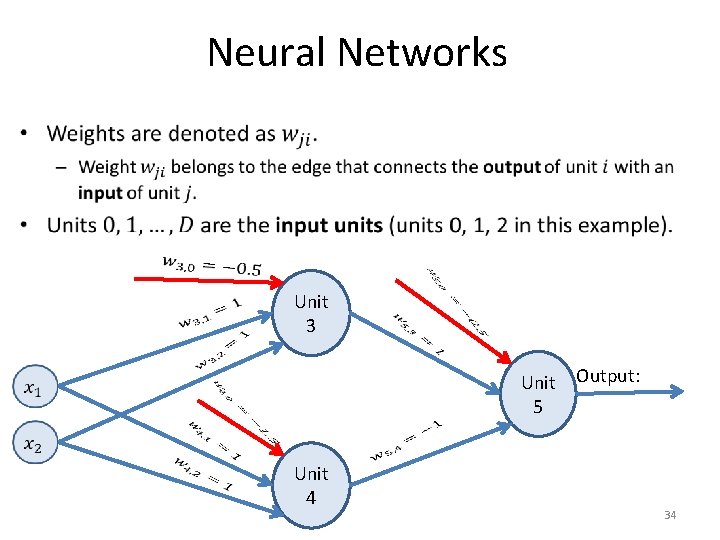

Neural Networks

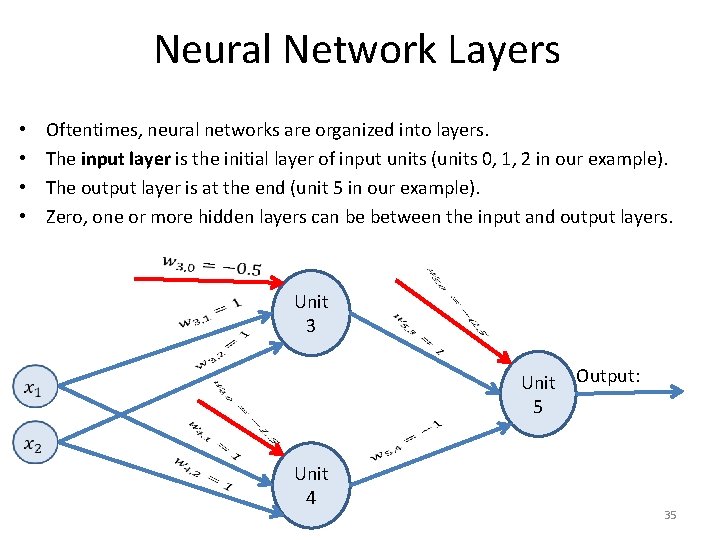

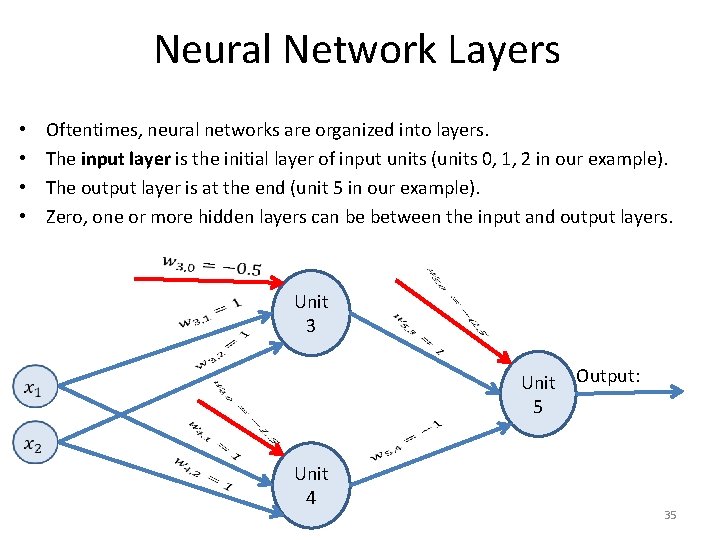

Neural Network Layers

- Oftentimes, neural networks are organized into layers.
  - The input layer is the initial layer of input units (units 0, 1, 2 in our example).
  - The output layer is at the end (unit 5 in our example).
  - Zero, one, or more hidden layers can be between the input and output layers.

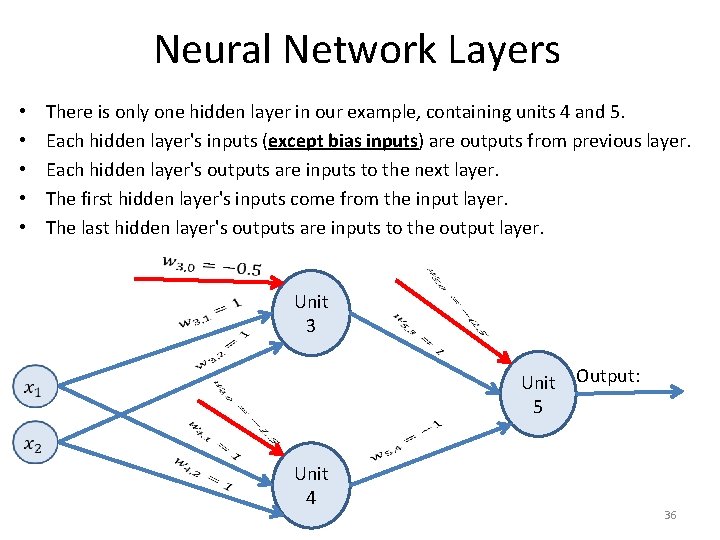

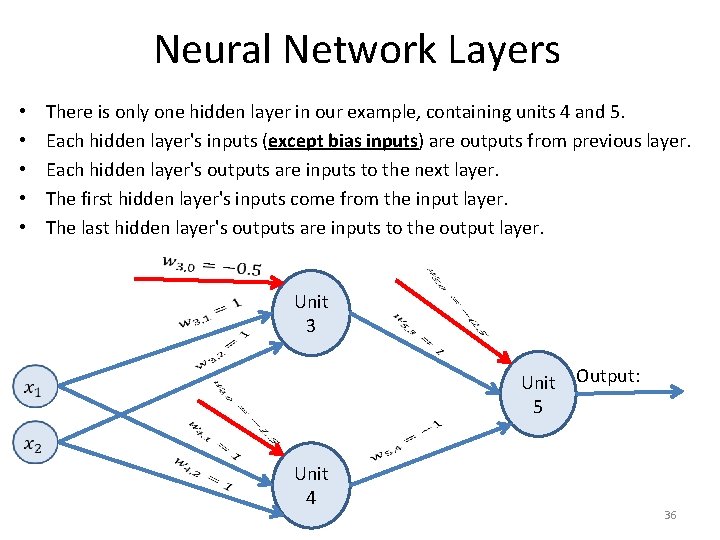

Neural Network Layers

- There is only one hidden layer in our example, containing units 3 and 4.
- Each hidden layer's inputs (except bias inputs) are outputs from the previous layer.
- Each hidden layer's outputs are inputs to the next layer.
- The first hidden layer's inputs come from the input layer.
- The last hidden layer's outputs are inputs to the output layer.

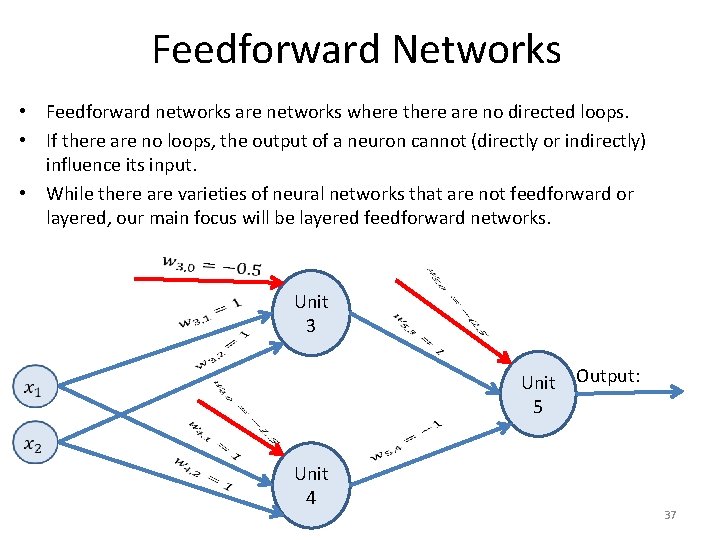

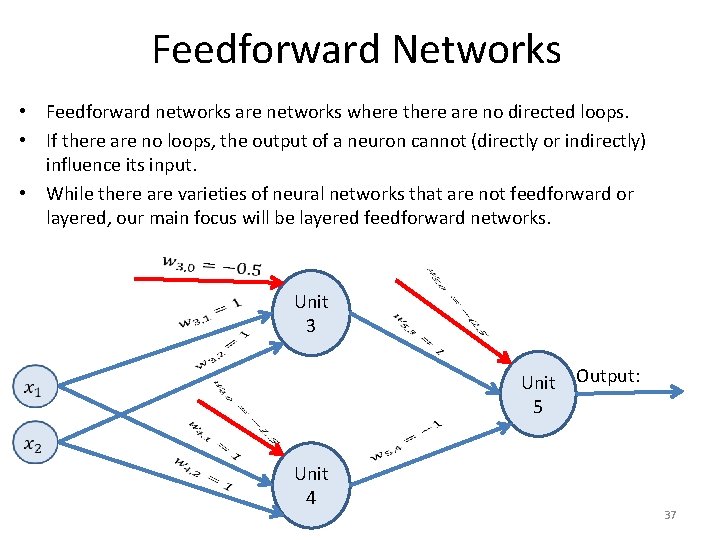

Feedforward Networks

- Feedforward networks are networks where there are no directed loops.
- If there are no loops, the output of a neuron cannot (directly or indirectly) influence its input.
- While there are varieties of neural networks that are not feedforward or layered, our main focus will be layered feedforward networks.

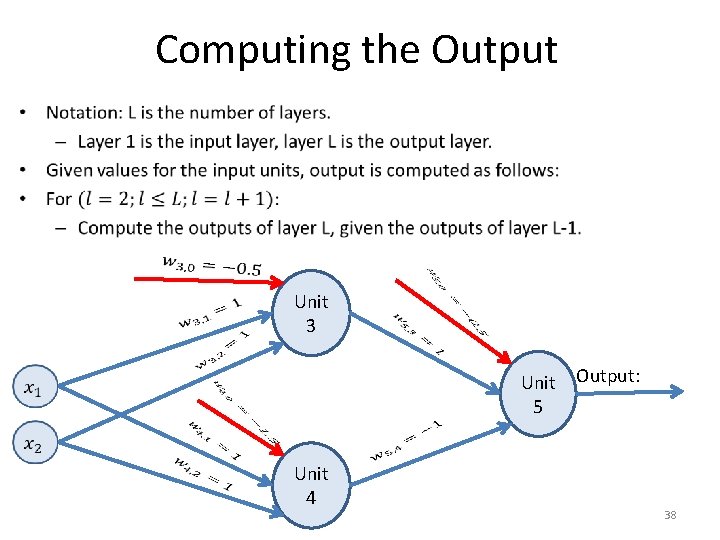

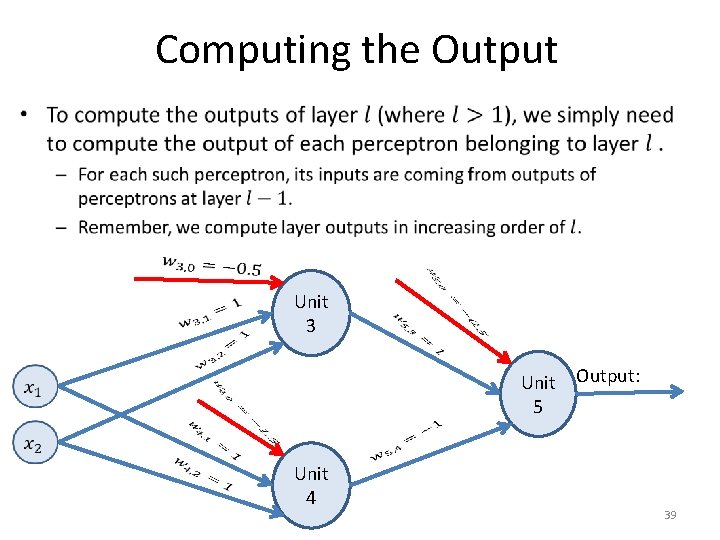

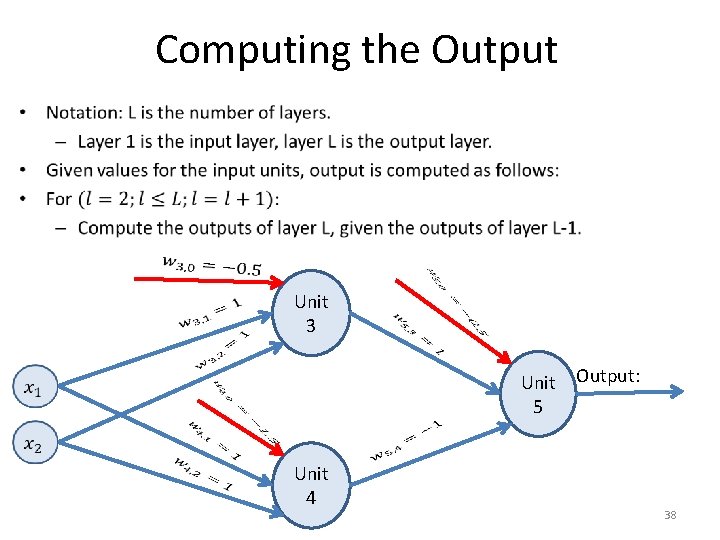

Computing the Output

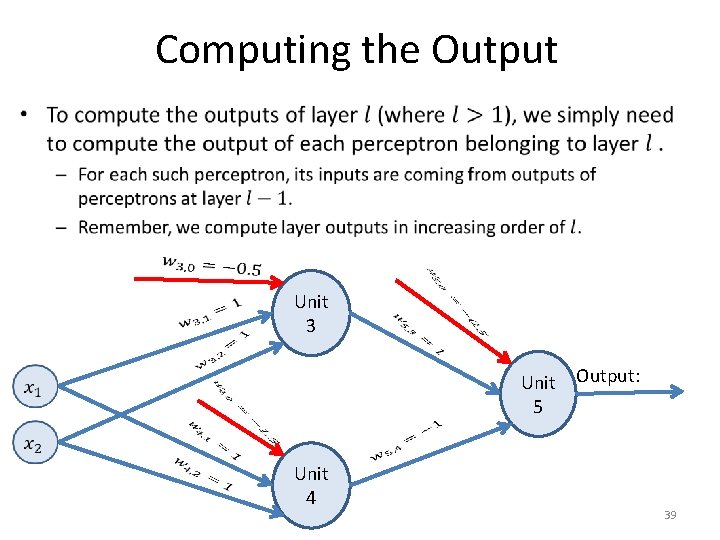

Computing the Output
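In a layered feedforward network, outputs can be computed one layer at a time, because every unit's inputs come from the previous layer. The sketch below assumes sigmoid activations and a weight layout of our own choosing (`layers[l]` holds one weight vector per unit of the next layer, bias weight first). The example weights are hypothetical: they make the two hidden units behave approximately like OR and AND, and the output unit like A AND (NOT B), with magnitudes large enough that the sigmoid acts almost like a step function.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def forward(layers, x):
    """Return the outputs of every layer, starting with the input layer itself.

    layers[l] holds one weight vector per unit of the next layer; each vector
    starts with the bias weight, followed by one weight per unit of the previous layer.
    """
    outputs = [list(x)]
    for layer in layers:
        prev = outputs[-1]
        outputs.append([
            sigmoid(w[0] + sum(wi * zi for wi, zi in zip(w[1:], prev)))
            for w in layer
        ])
    return outputs

# Hypothetical weights for a 2-2-1 network that approximates XOR.
XOR_LAYERS = [
    [[-5.0, 10.0, 10.0],    # hidden unit, roughly OR
     [-15.0, 10.0, 10.0]],  # hidden unit, roughly AND
    [[-5.0, 10.0, -10.0]],  # output unit, roughly A AND (NOT B)
]

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    z = forward(XOR_LAYERS, x)[-1][0]
    print(x, round(z, 3))
```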

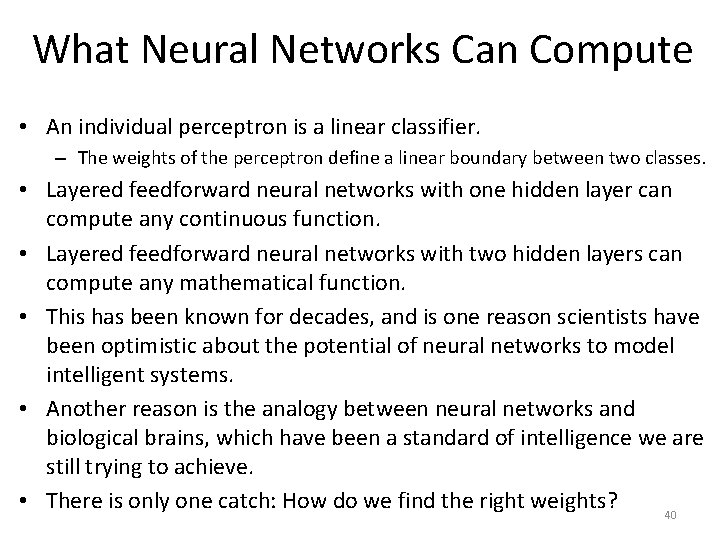

What Neural Networks Can Compute

- An individual perceptron is a linear classifier.
  - The weights of the perceptron define a linear boundary between two classes.
- Layered feedforward neural networks with one hidden layer can approximate any continuous function arbitrarily well, given enough hidden units.
- Layered feedforward neural networks with two hidden layers can approximate an even broader class of functions, including discontinuous ones.
- This has been known for decades, and is one reason scientists have been optimistic about the potential of neural networks to model intelligent systems.
- Another reason is the analogy between neural networks and biological brains, which remain a standard of intelligence we are still trying to achieve.
- There is only one catch: how do we find the right weights?

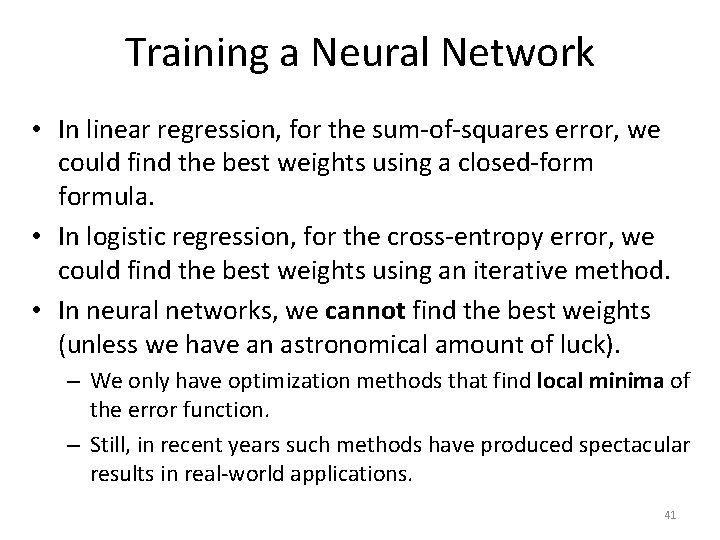

Training a Neural Network

- In linear regression, for the sum-of-squares error, we could find the best weights using a closed-form formula.
- In logistic regression, for the cross-entropy error, we could find the best weights using an iterative method.
- In neural networks, we generally cannot find the best weights (unless we have an astronomical amount of luck).
  - We only have optimization methods that find local minima of the error function.
  - Still, in recent years such methods have produced spectacular results in real-world applications.

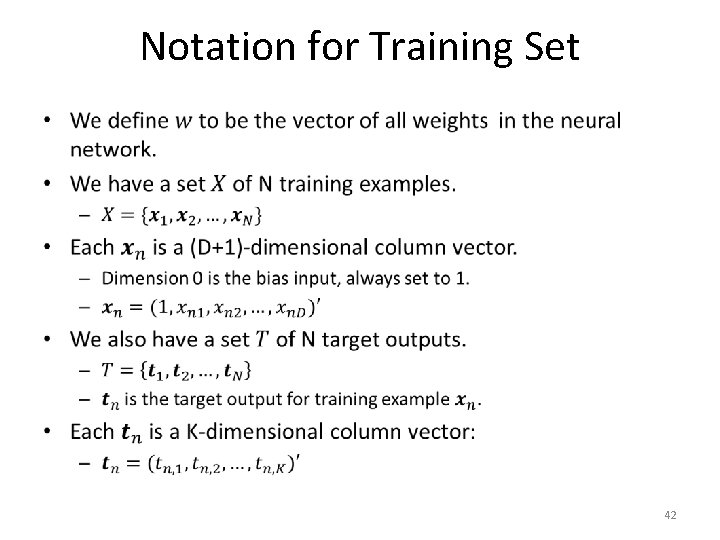

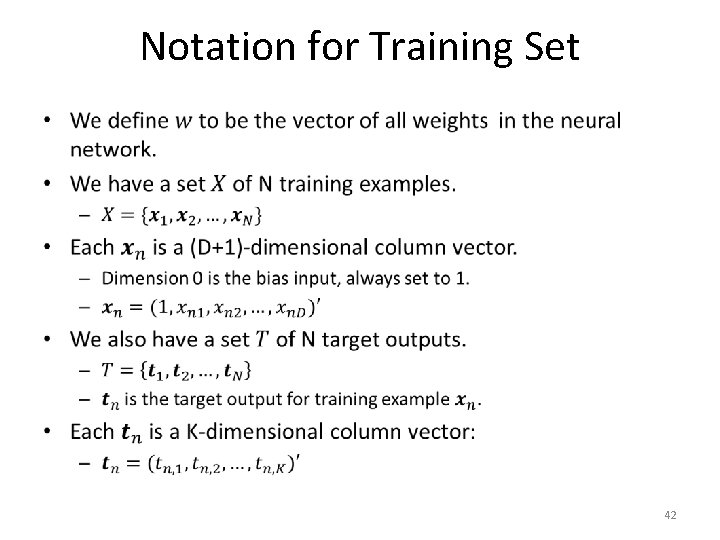

Notation for Training Set

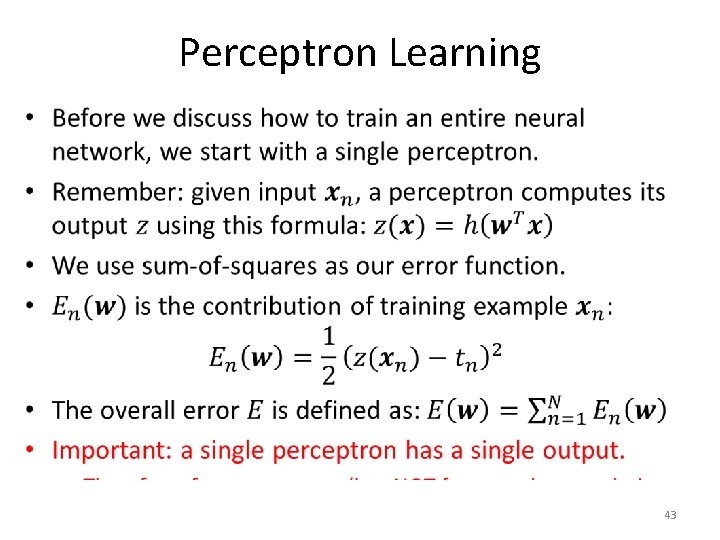

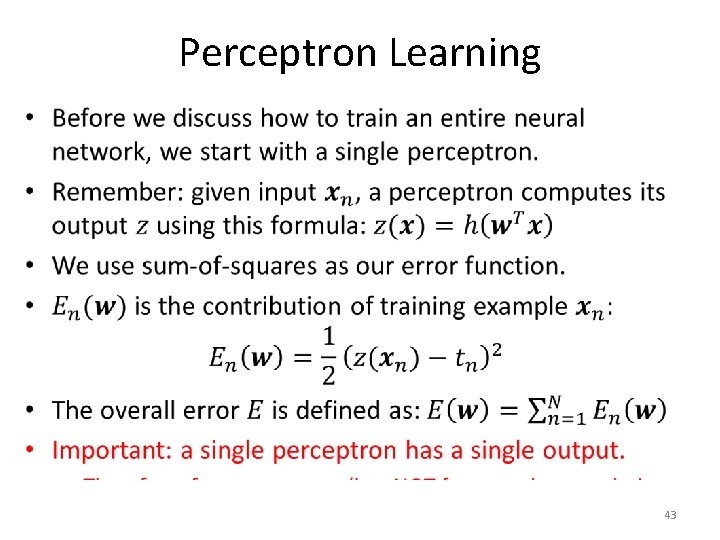

Perceptron Learning

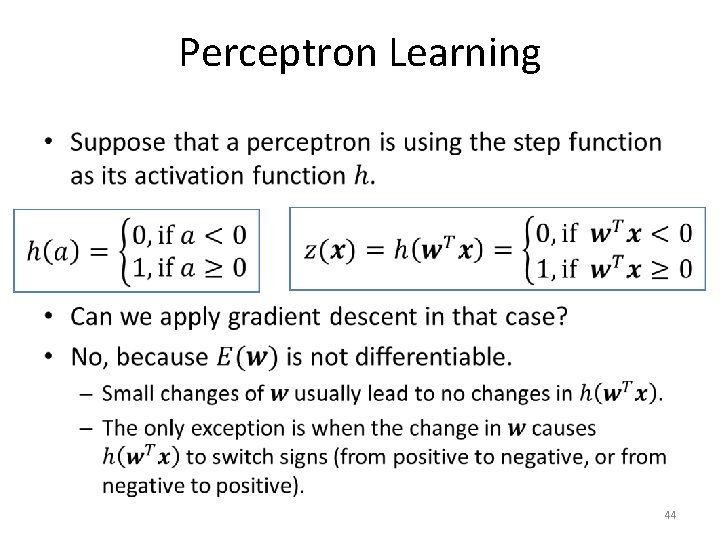

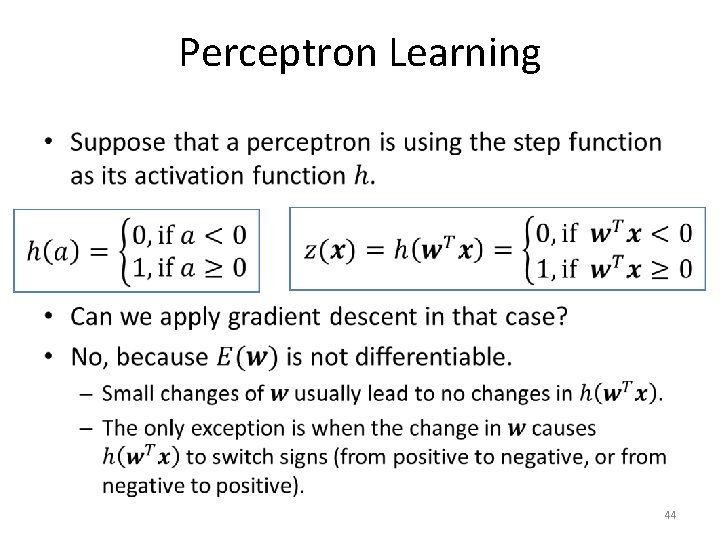

Perceptron Learning

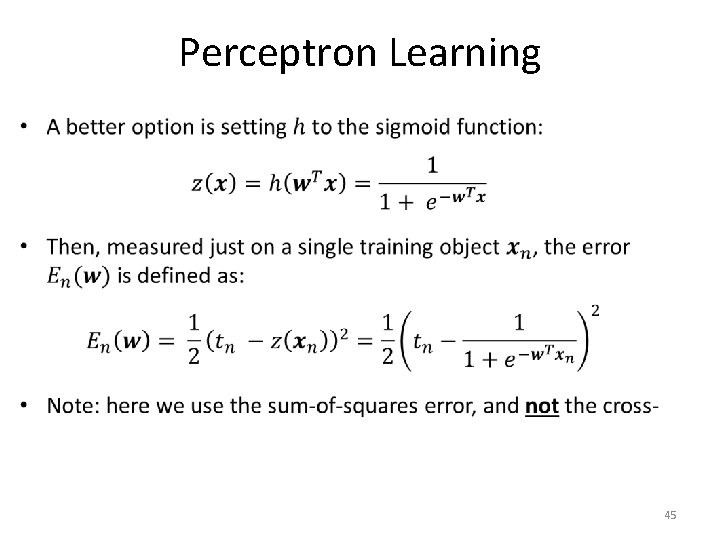

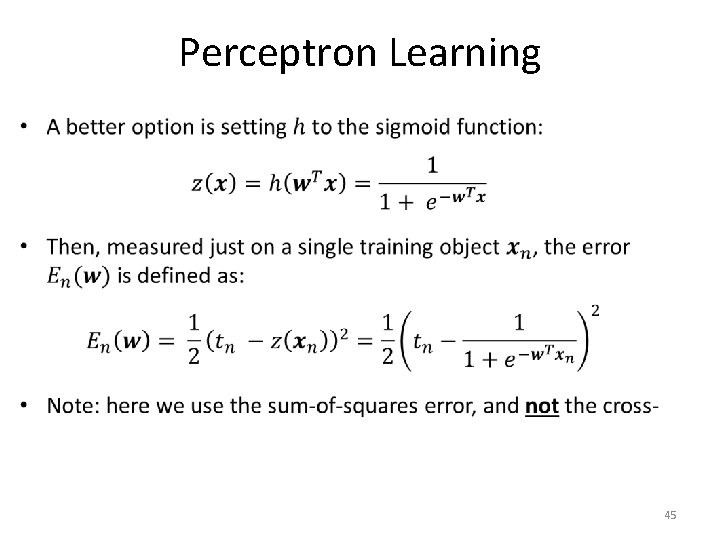

Perceptron Learning

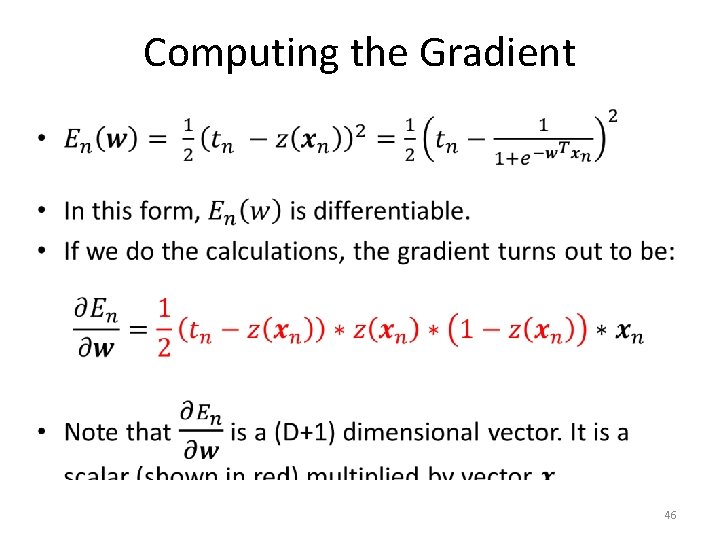

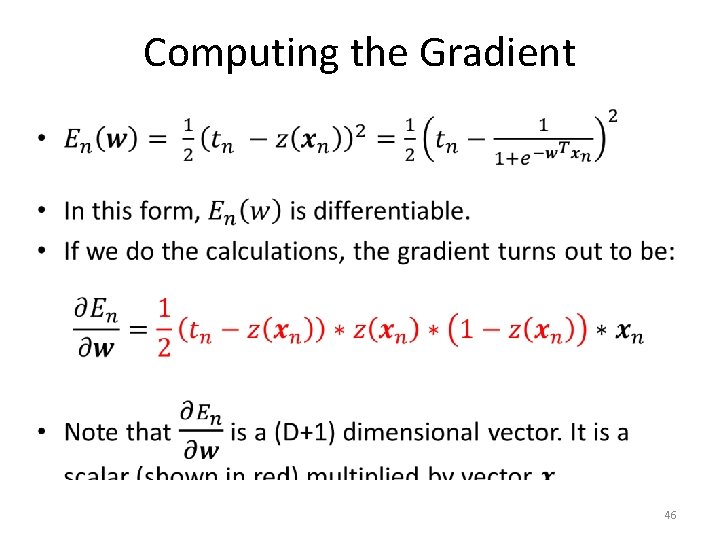

Computing the Gradient

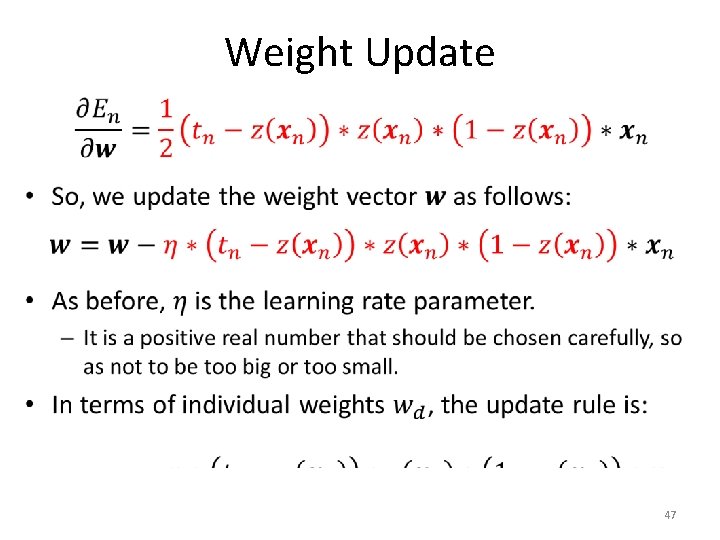

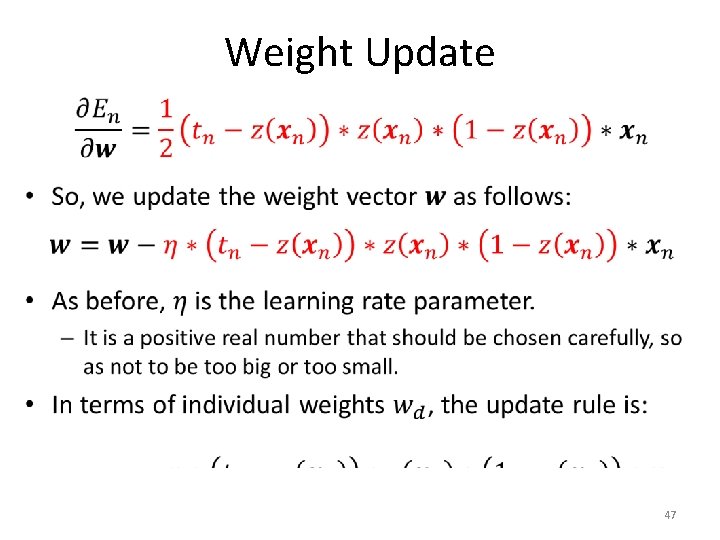

Weight Update

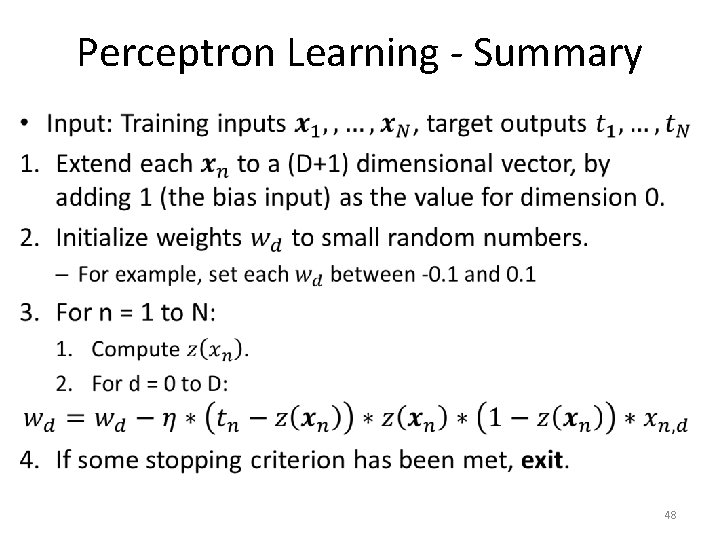

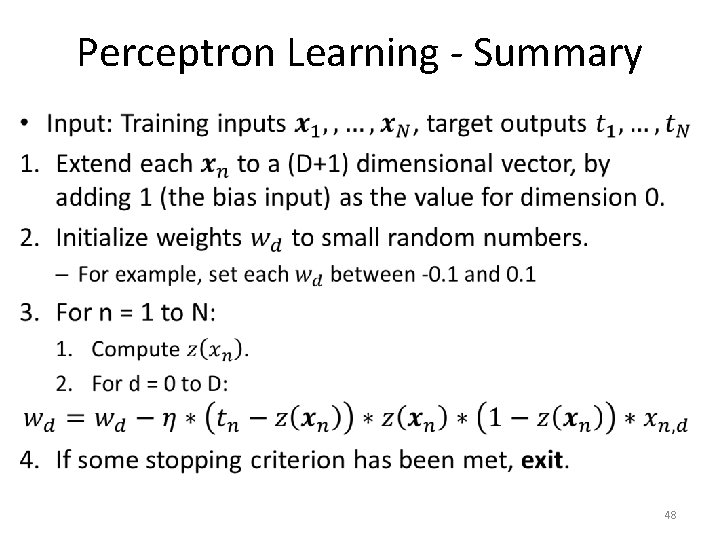

Perceptron Learning - Summary

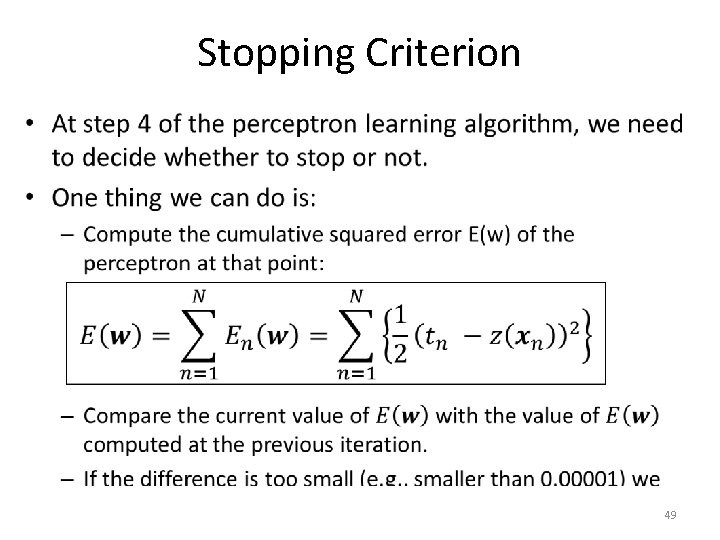

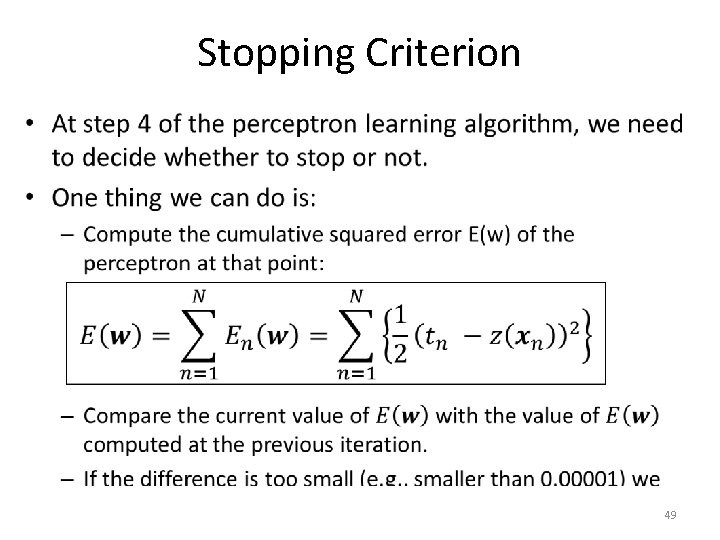

Stopping Criterion
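The formulas for perceptron learning appear only as images here, so the following is our own assumption-labeled sketch rather than a transcription of the slides. It trains a single perceptron with sigmoid activation by stochastic gradient descent on the squared error E = 1/2 (z - t)^2, whose gradient with respect to weight w_i is (z - t) z (1 - z) x_i, with x_0 = 1 for the bias weight. The stopping criterion is simply a fixed number of epochs, which may differ from the criterion on the slides; the function names and default parameter values are hypothetical.

```python
import math
import random

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def output(w, x):
    """Perceptron output; w[0] is the bias weight (its input is the constant 1)."""
    return sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], x)))

def train_perceptron(inputs, targets, eta=0.5, epochs=5000, seed=0):
    """Stochastic gradient descent on E = 1/2 (z - t)^2 for one sigmoid perceptron."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.05, 0.05) for _ in range(len(inputs[0]) + 1)]
    for _ in range(epochs):  # stopping criterion: a fixed number of epochs (assumption)
        for x, t in zip(inputs, targets):
            z = output(w, x)
            g = (z - t) * z * (1 - z)  # dE/da, where a is the weighted sum
            xb = [1.0] + list(x)       # bias input 1, then the actual inputs
            w = [wi - eta * g * xi for wi, xi in zip(w, xb)]
    return w

# Usage: learn the AND function from its truth table.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
T = [0, 0, 0, 1]
w = train_perceptron(X, T)
print([round(output(w, x)) for x in X])
```

A common alternative stopping criterion is to stop once the total error stops decreasing noticeably between epochs.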

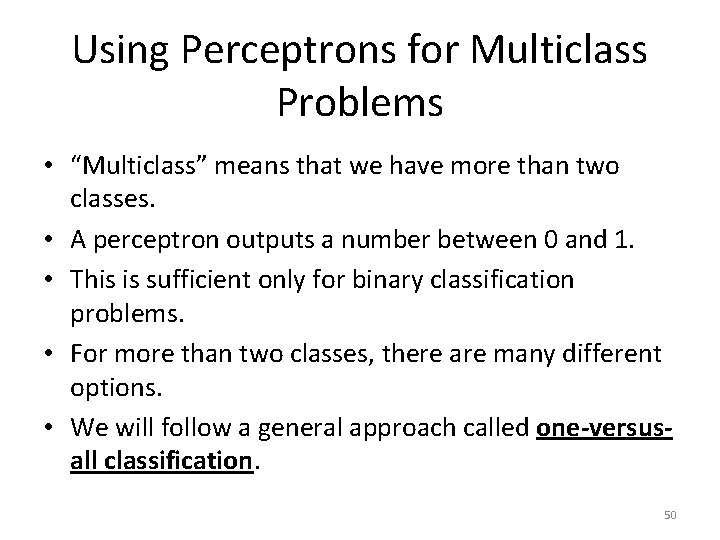

Using Perceptrons for Multiclass Problems

- "Multiclass" means that we have more than two classes.
- A perceptron outputs a number between 0 and 1.
- This is sufficient only for binary classification problems.
- For more than two classes, there are many different options.
- We will follow a general approach called one-versus-all classification.
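The one-versus-all idea can be sketched in Python. This is a minimal illustration, not the slides' code: the helper names are hypothetical, the class labels 1, 2, 3 and the perceptron outputs are made up, and we assume the common decision rule of choosing the class whose perceptron gives the highest output.

```python
def one_versus_all_targets(labels, classes):
    """Build one binary target list per class: 1 for that class, 0 for all others."""
    return {c: [1 if y == c else 0 for y in labels] for c in classes}

def predict(outputs_per_class):
    """Assumed decision rule: pick the class whose perceptron output is highest."""
    return max(outputs_per_class, key=outputs_per_class.get)

labels = [1, 2, 3, 1, 2]
targets = one_versus_all_targets(labels, classes=[1, 2, 3])
print(targets[1])                            # [1, 0, 0, 1, 0]
print(predict({1: 0.20, 2: 0.70, 3: 0.40}))  # 2
```

Each target list becomes the training set for one binary perceptron, so three classes require three separately trained perceptrons.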

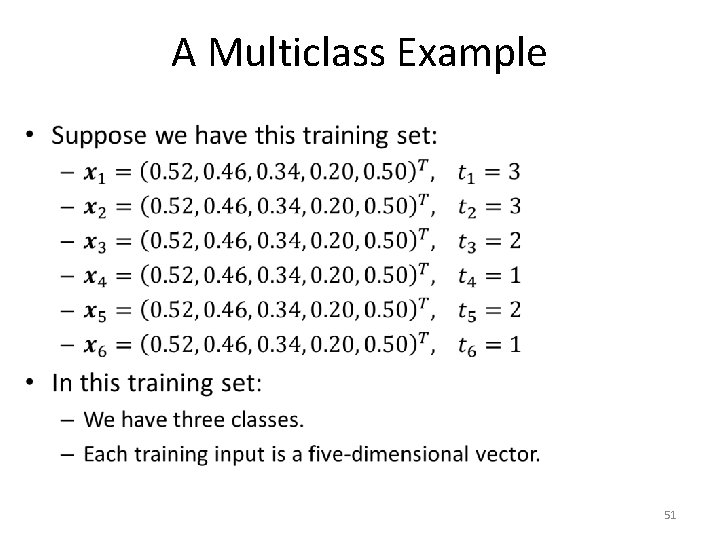

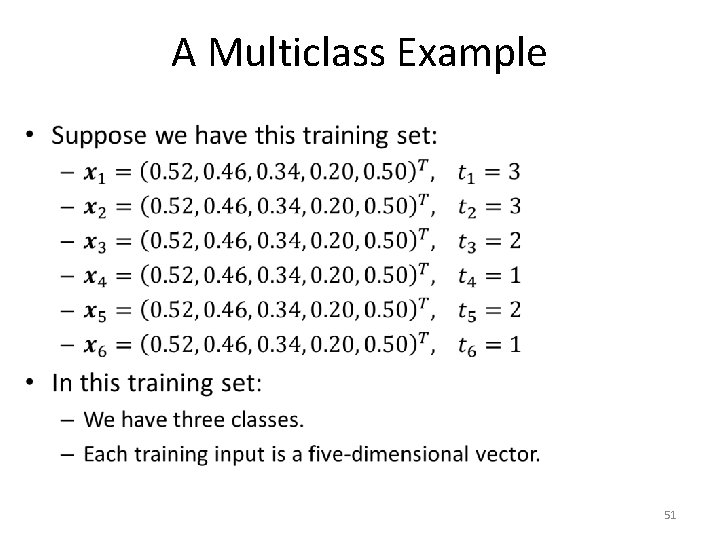

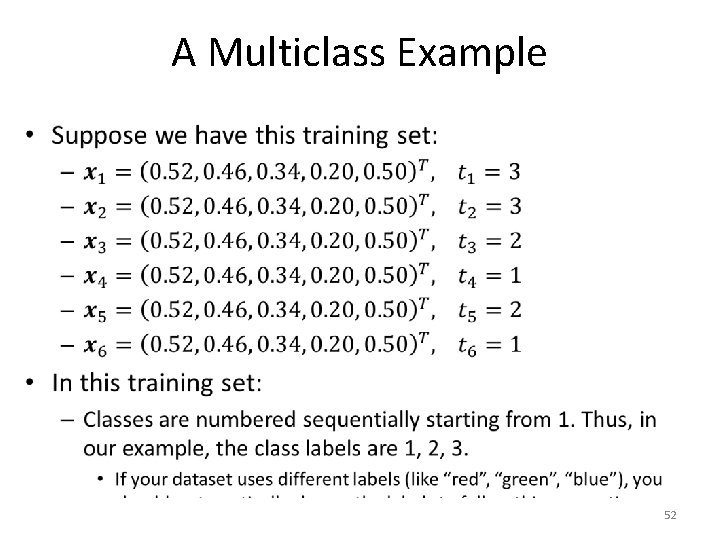

A Multiclass Example

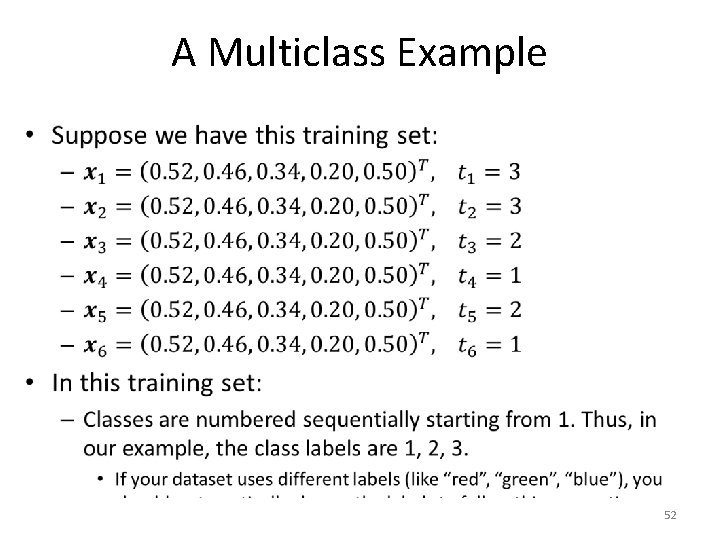

A Multiclass Example

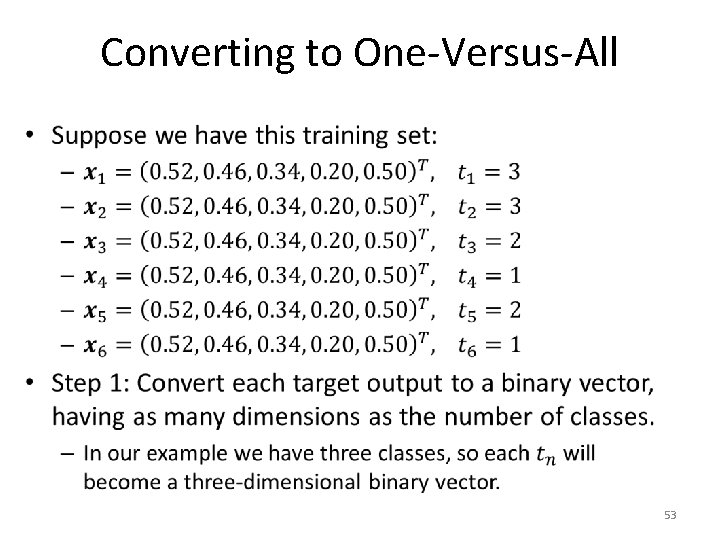

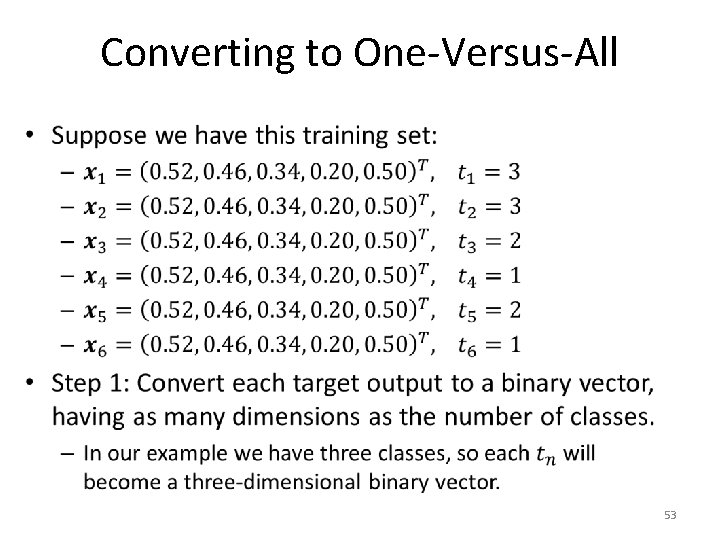

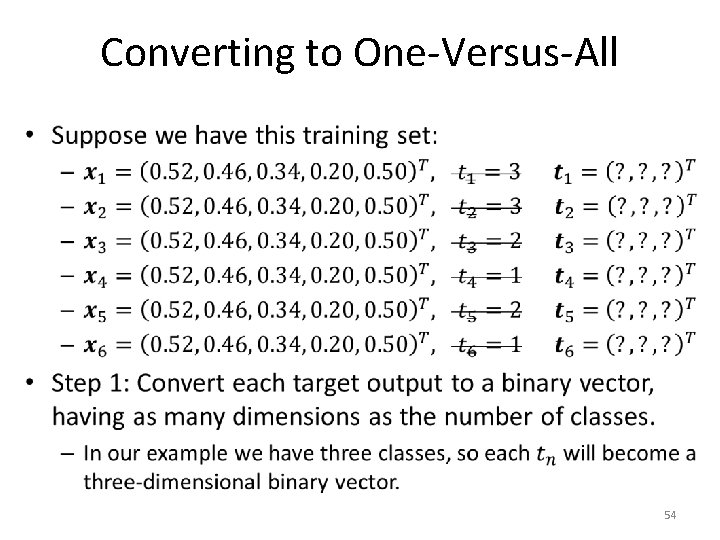

Converting to One-Versus-All

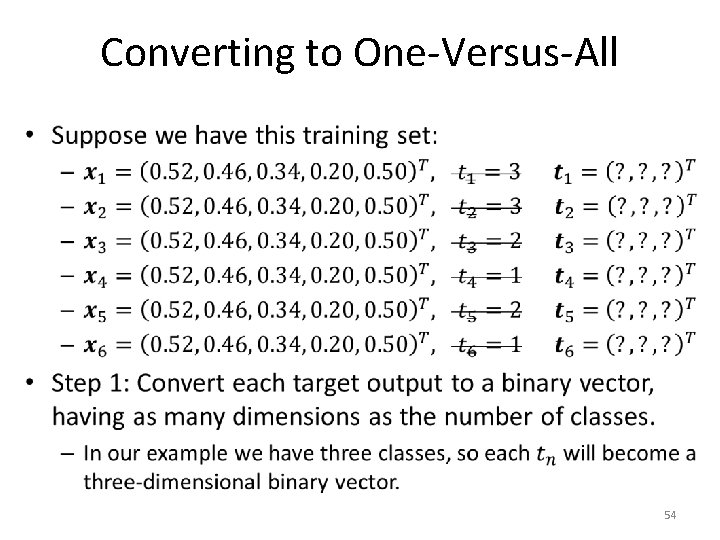

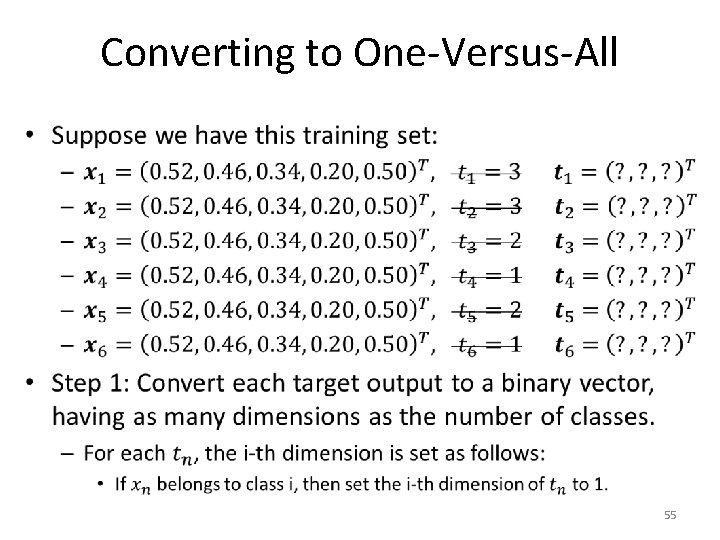

Converting to One-Versus-All

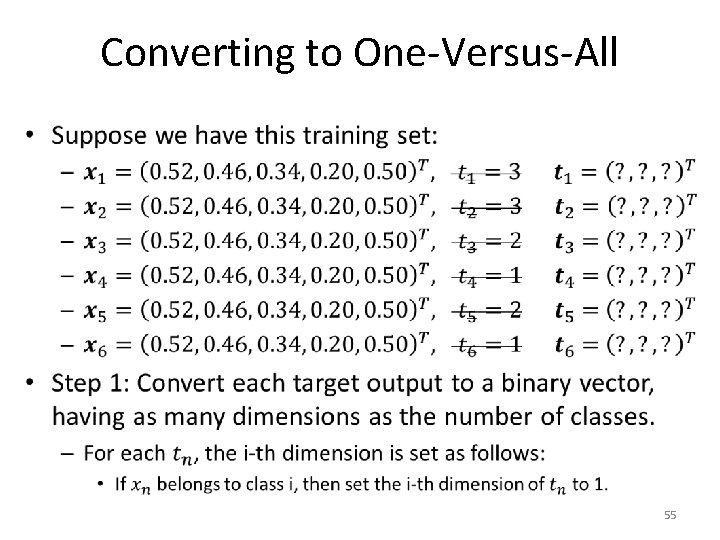

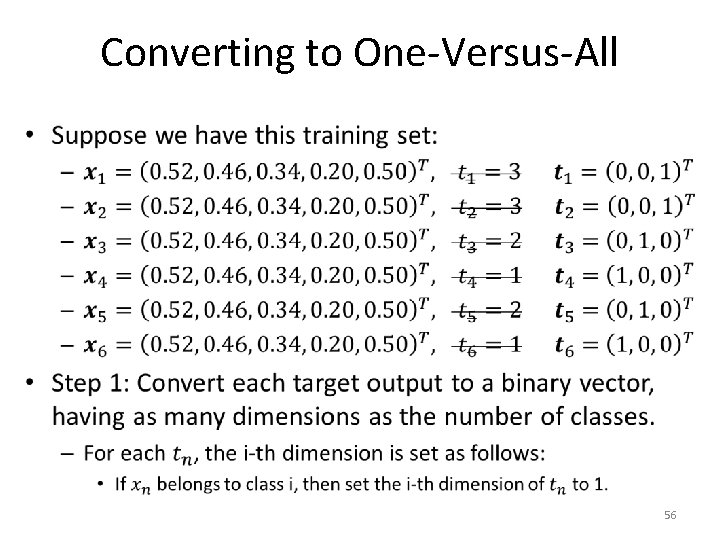

Converting to One-Versus-All

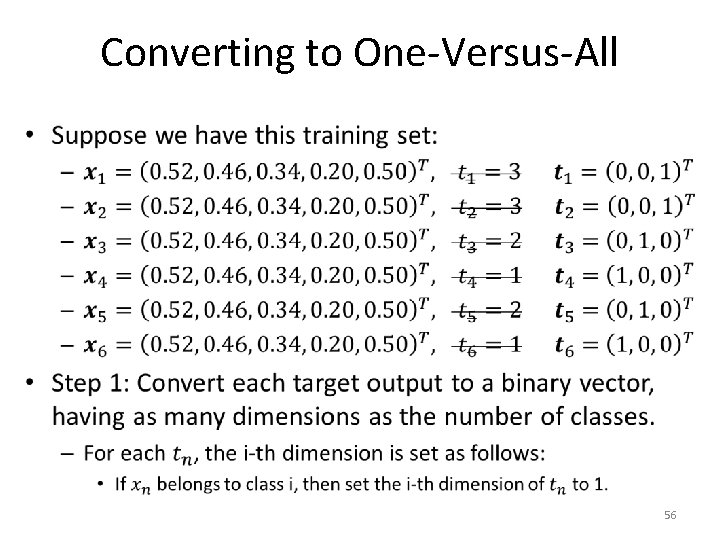

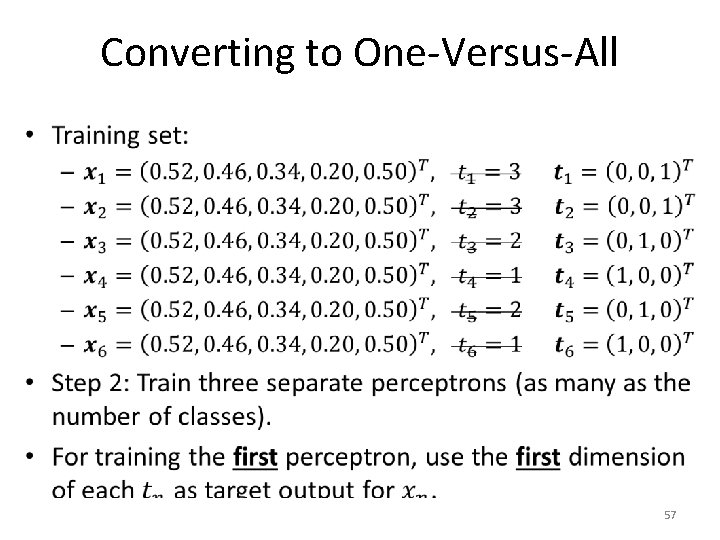

Converting to One-Versus-All

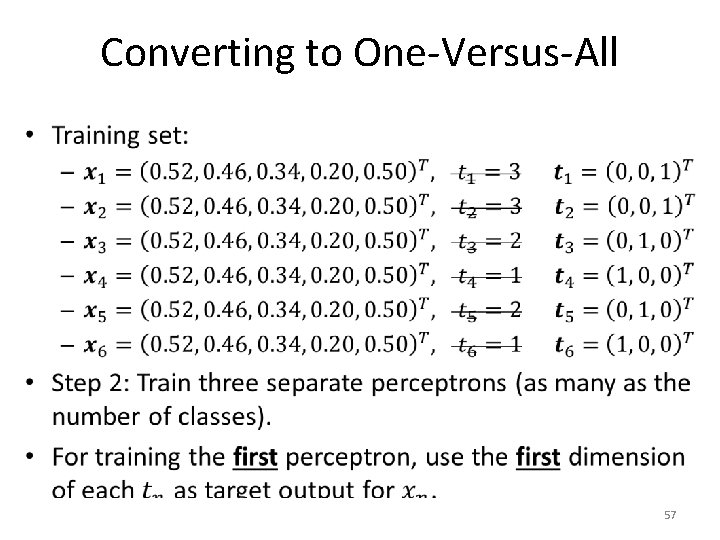

Converting to One-Versus-All

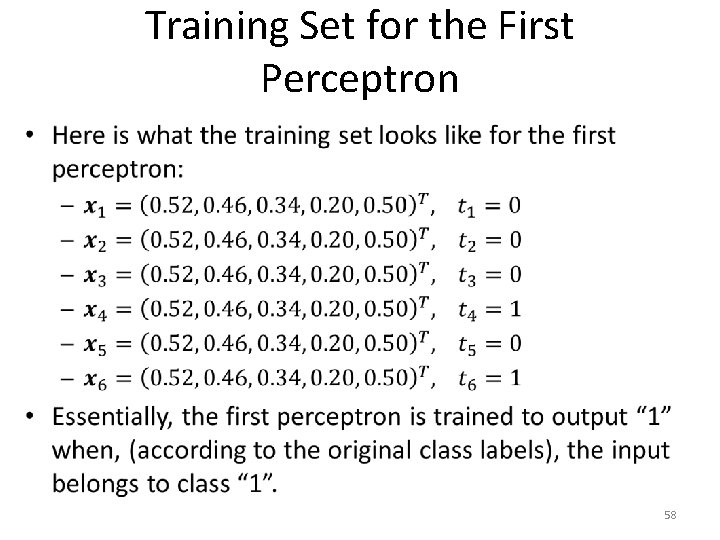

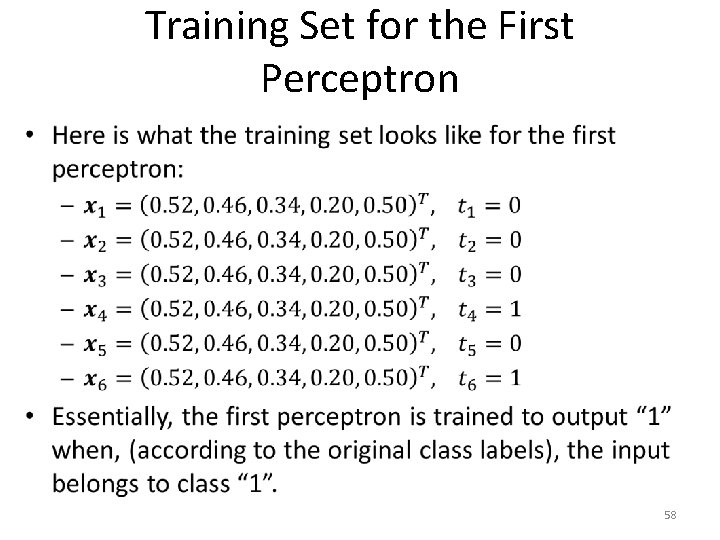

Training Set for the First Perceptron

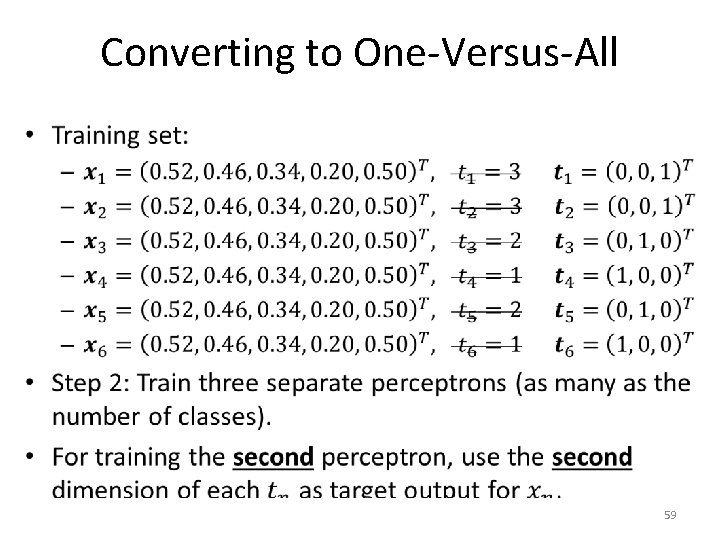

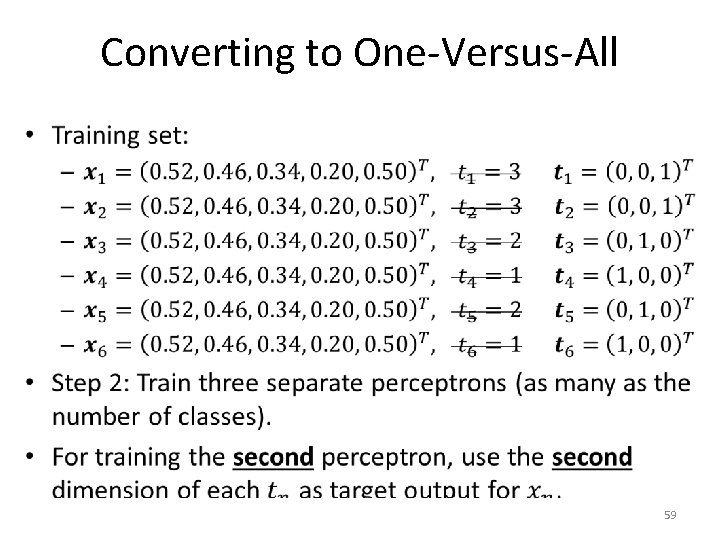

Converting to One-Versus-All

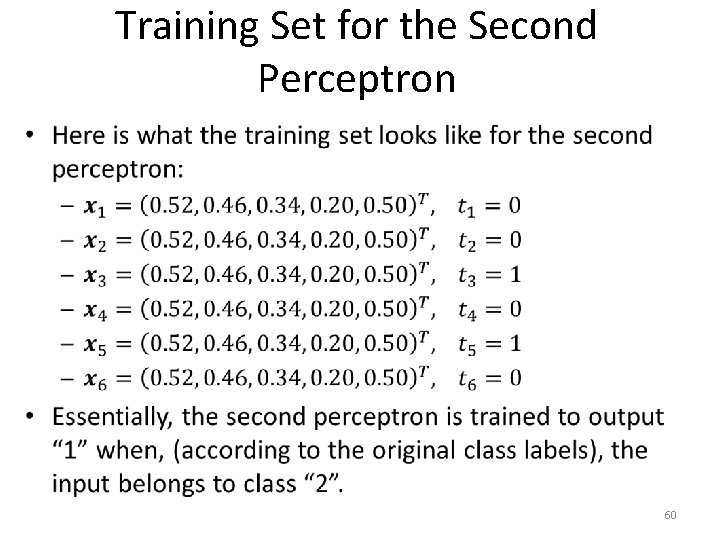

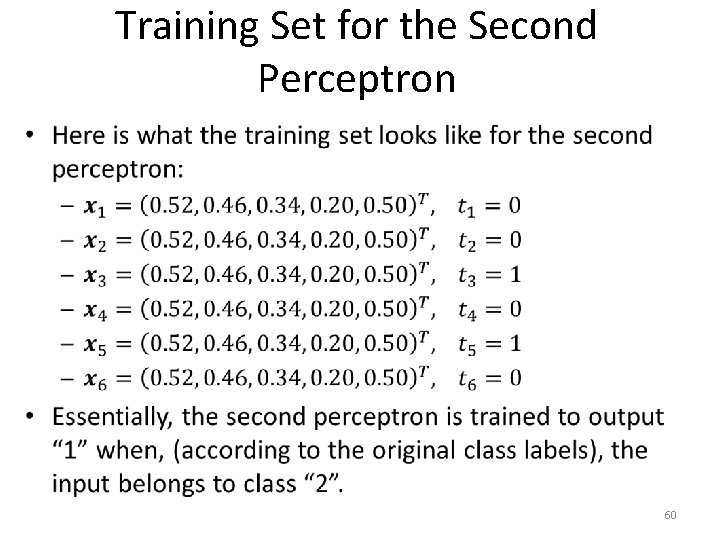

Training Set for the Second Perceptron

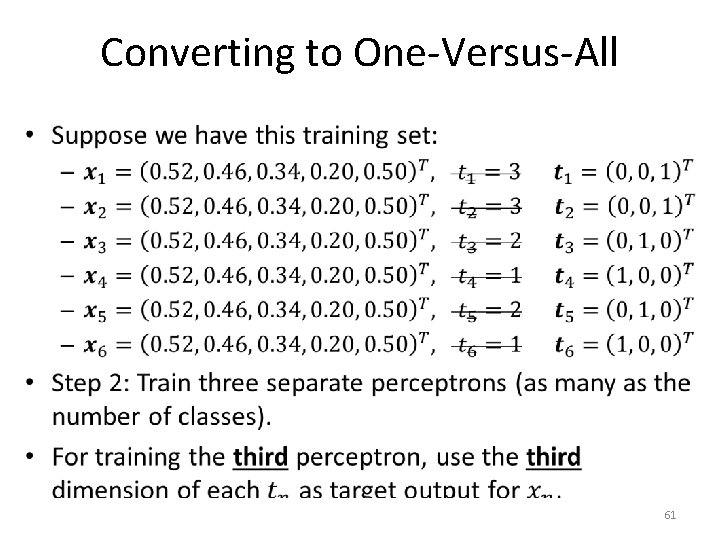

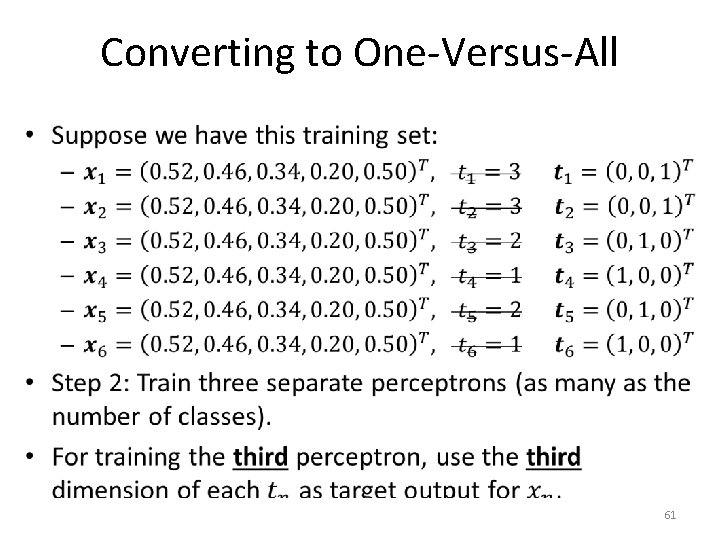

Converting to One-Versus-All

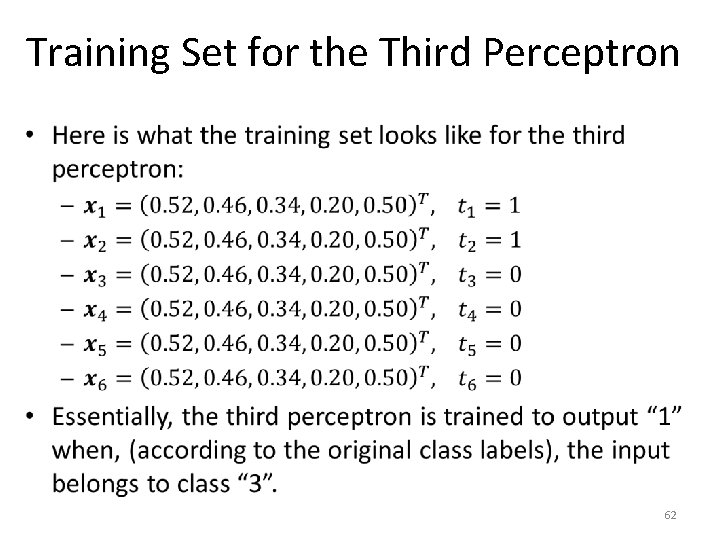

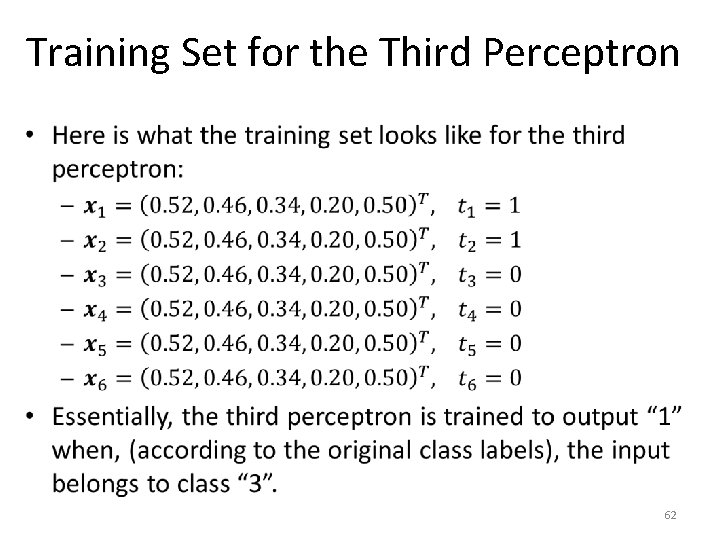

Training Set for the Third Perceptron

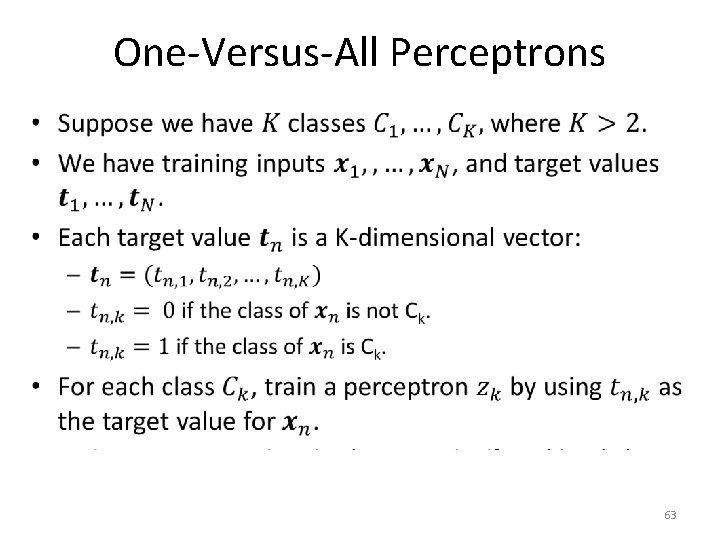

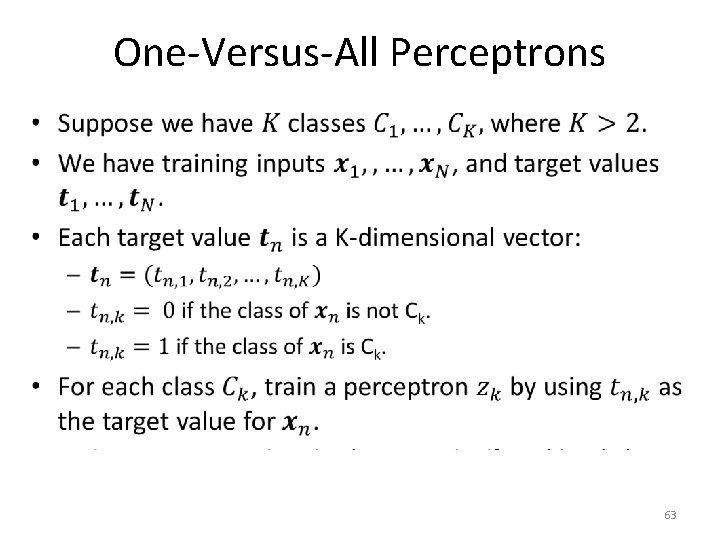

One-Versus-All Perceptrons

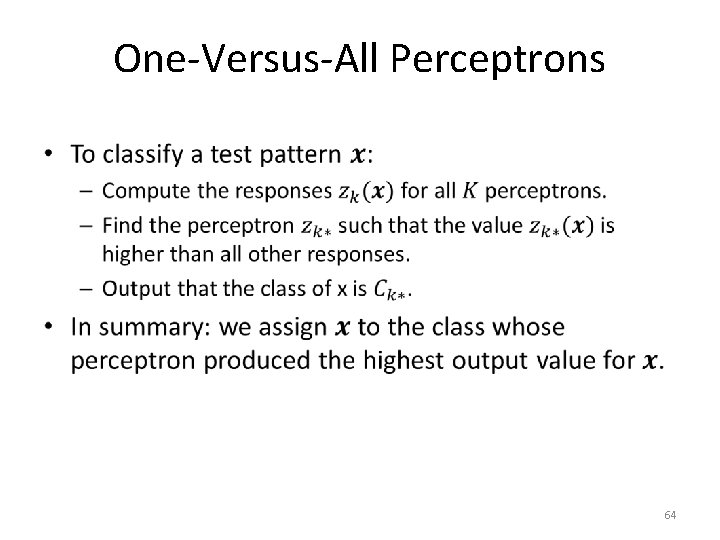

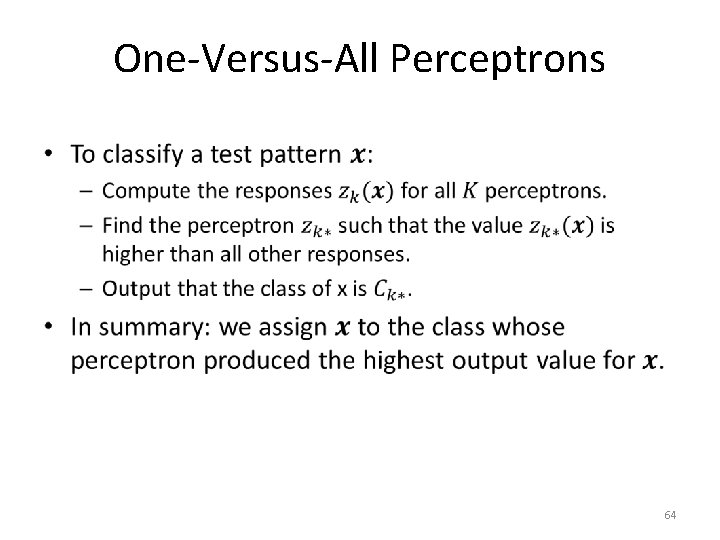

One-Versus-All Perceptrons

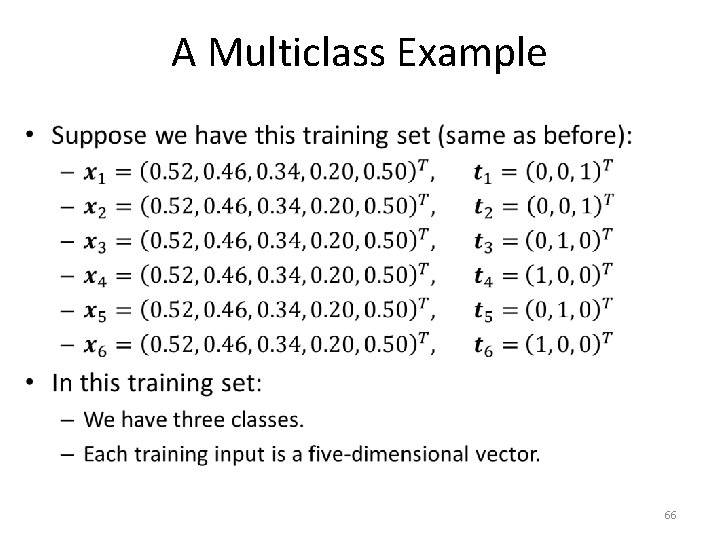

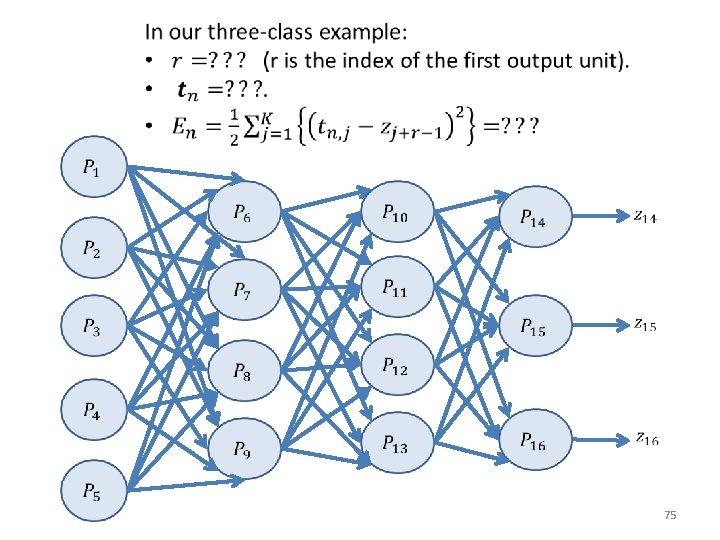

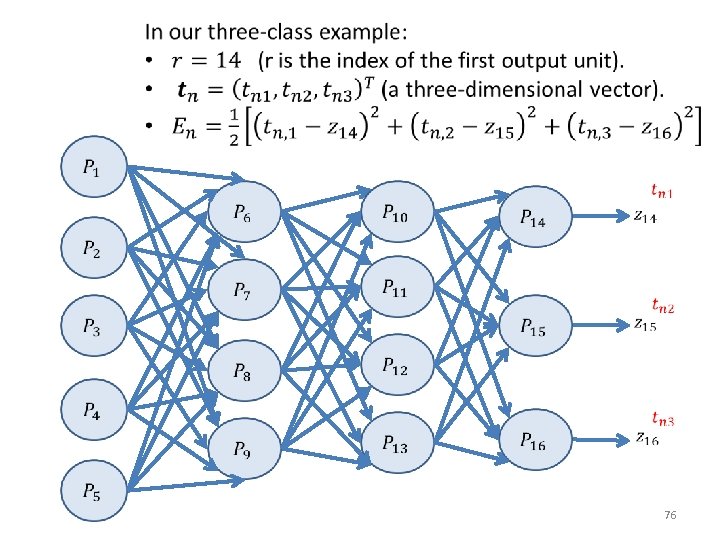

Multiclass Neural Networks

- For perceptrons, we saw that we can perform multiclass (i.e., more than two classes) classification by training one perceptron for each class.
- For neural networks, we will train a SINGLE neural network, with MULTIPLE output units.
  - The number of output units will be equal to the number of classes.
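With one output unit per class, each training label must be turned into a target value for every output unit. The sketch below assumes classes numbered from 0 and the usual convention that the target for output unit k is 1 when the object belongs to class k and 0 otherwise; at classification time it picks the output unit with the highest output. Both function names are hypothetical.

```python
def target_vector(label, num_classes):
    """Target for each output unit: 1 for the unit of the true class, 0 elsewhere."""
    return [1.0 if k == label else 0.0 for k in range(num_classes)]

def classify(outputs):
    """Assign the class whose output unit produced the largest output."""
    return max(range(len(outputs)), key=lambda k: outputs[k])

print(target_vector(1, 3))        # [0.0, 1.0, 0.0]
print(classify([0.1, 0.8, 0.3]))  # 1
```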

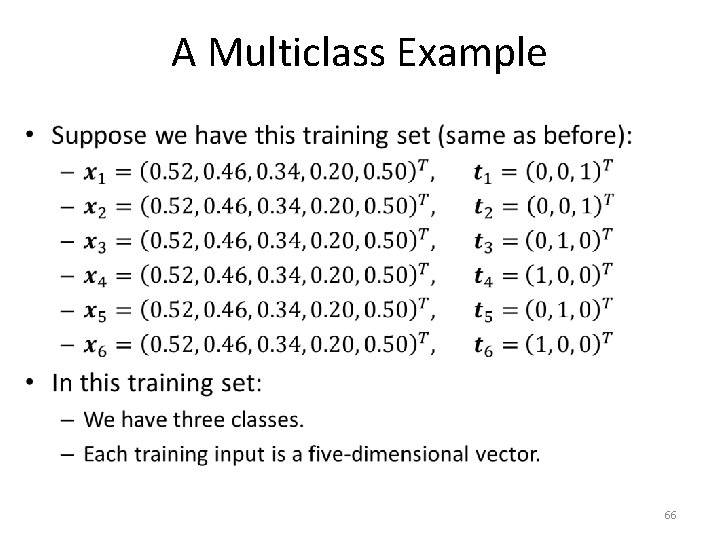

A Multiclass Example

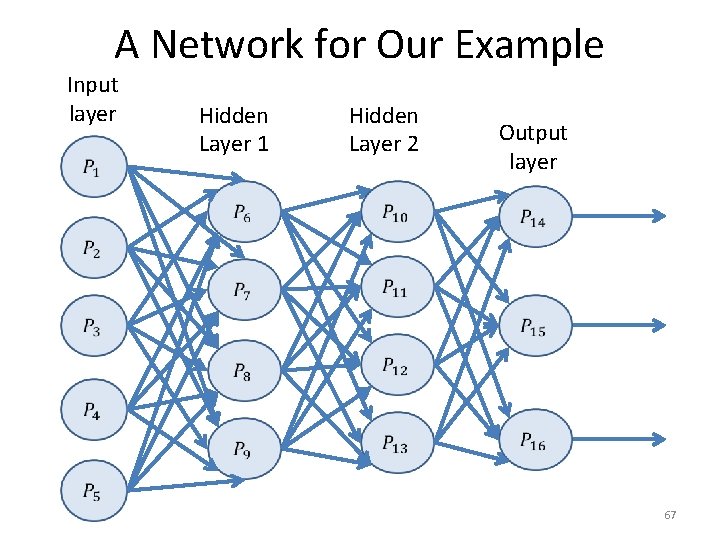

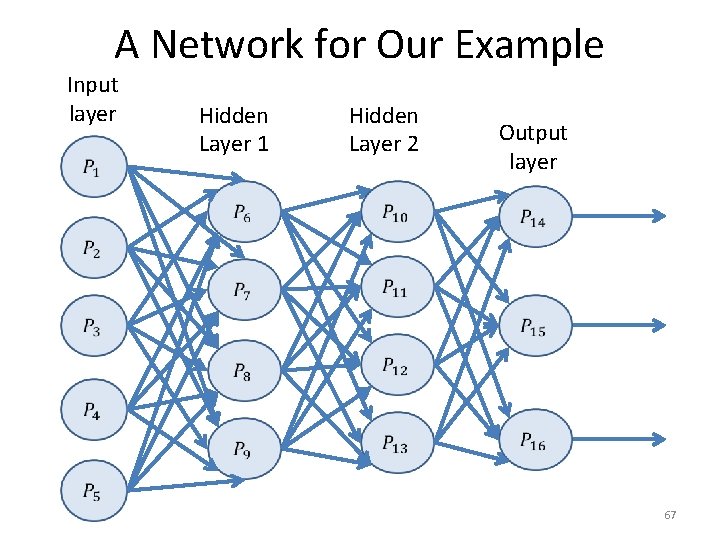

A Network for Our Example

(Figure: input layer, hidden layer 1, hidden layer 2, output layer.)

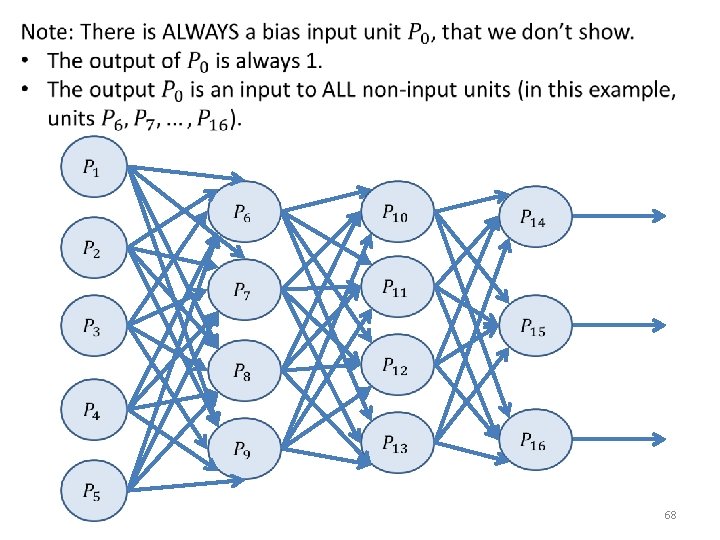

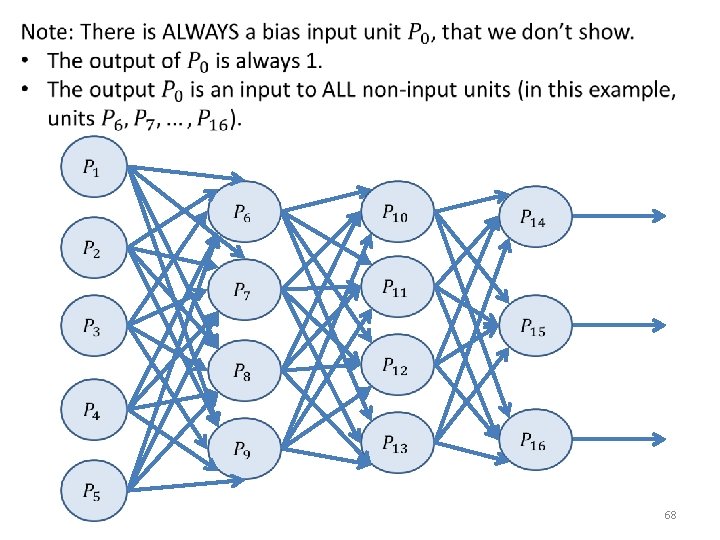


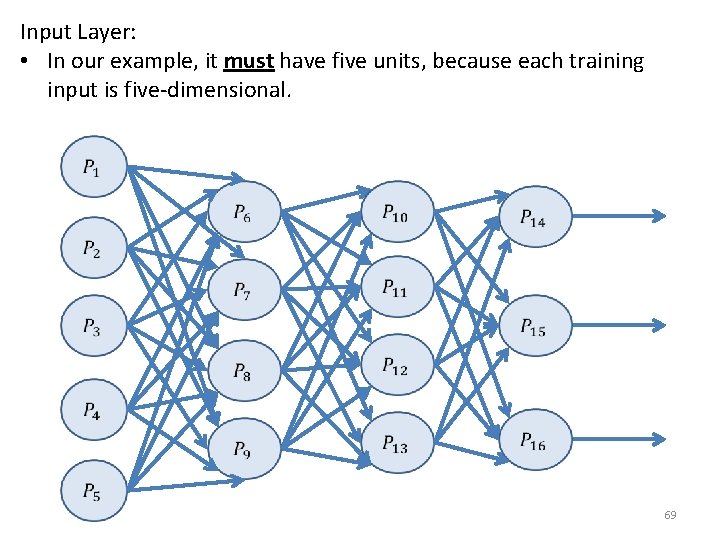

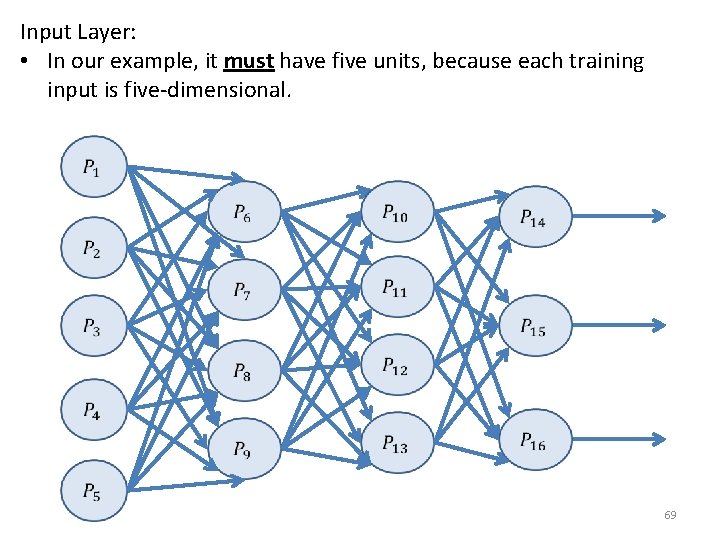

Input layer:

- In our example, it must have five units, because each training input is five-dimensional.

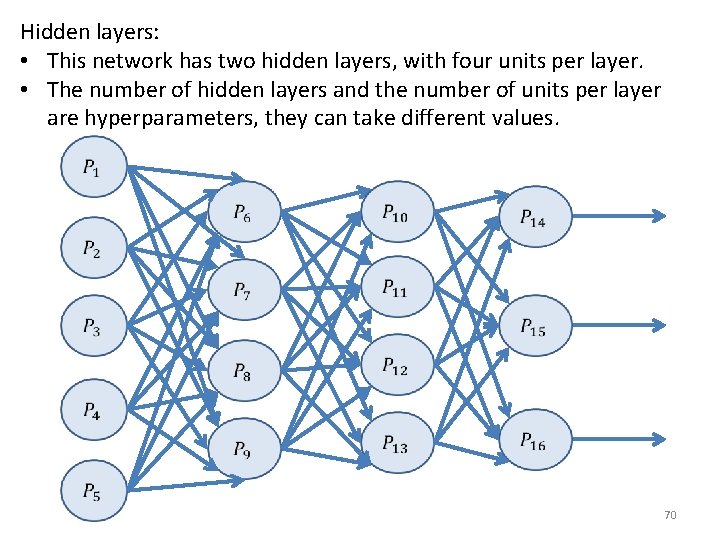

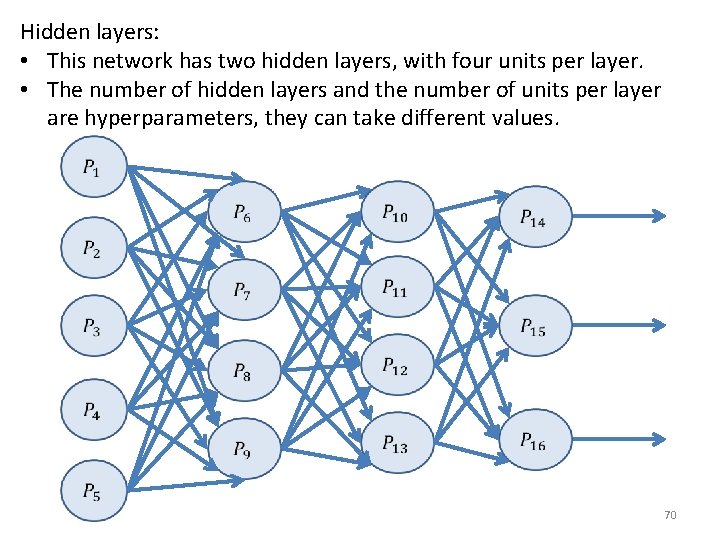

Hidden layers:

- This network has two hidden layers, with four units per layer.
- The number of hidden layers and the number of units per layer are hyperparameters: they can take different values.

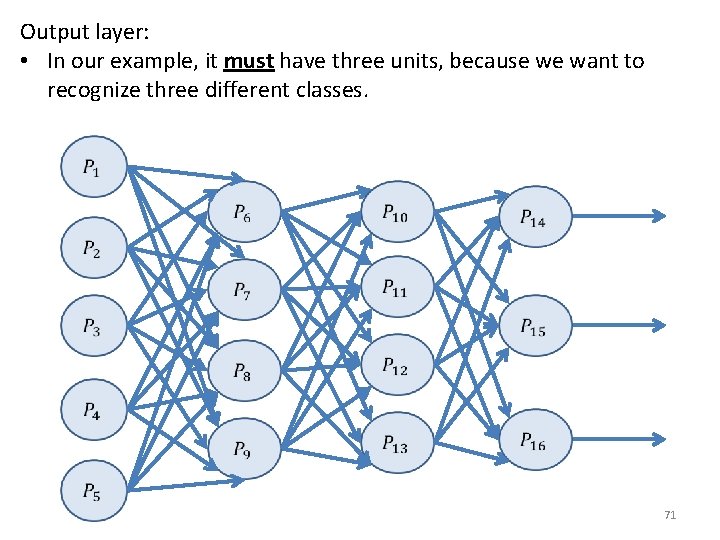

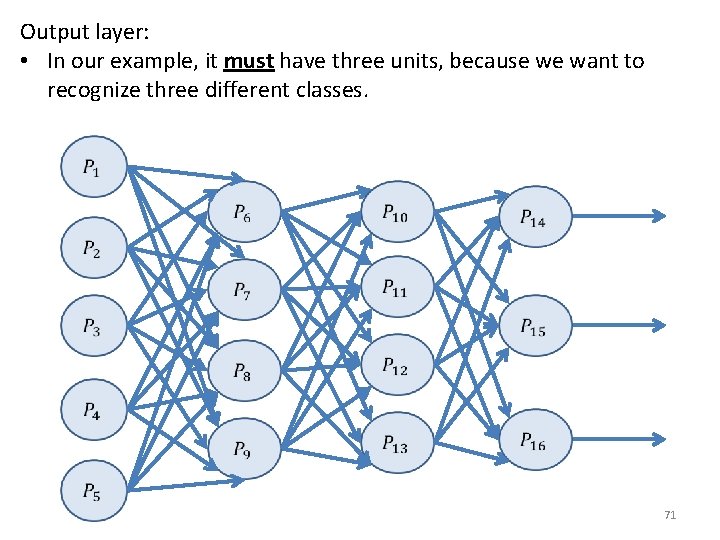

Output layer:

- In our example, it must have three units, because we want to recognize three different classes.

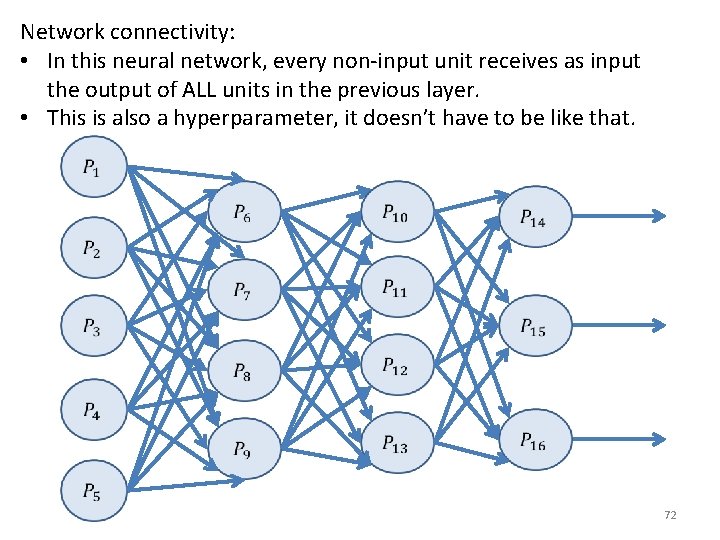

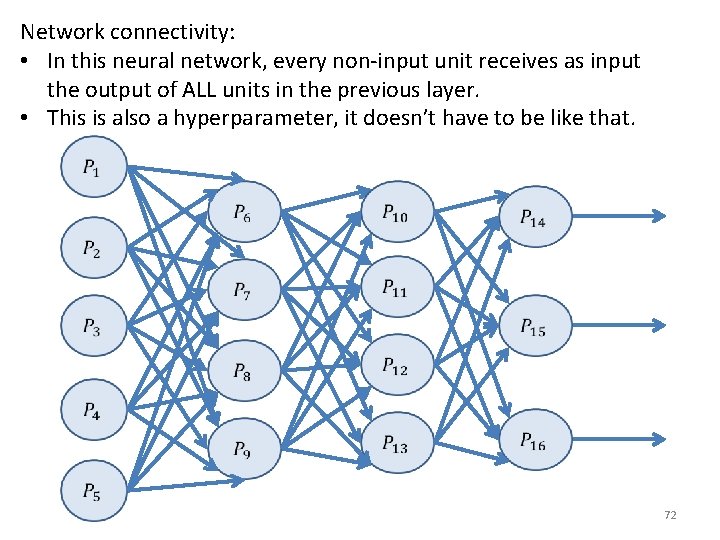

Network connectivity:

- In this neural network, every non-input unit receives as input the output of ALL units in the previous layer.
- This is also a hyperparameter: the network does not have to be connected like that.
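For the example network (five inputs, two hidden layers of four units, three outputs, fully connected), the number of weights follows directly from the connectivity: each non-input unit has one weight per unit in the previous layer, plus one bias weight. The helper below (a hypothetical name) makes that arithmetic explicit.

```python
def weight_counts(layer_sizes):
    """Weights between consecutive fully connected layers, including one bias weight per non-input unit."""
    return [n_out * (n_in + 1) for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

counts = weight_counts([5, 4, 4, 3])
print(counts, sum(counts))  # [24, 20, 15] 59
```

Changing the hyperparameters changes this count; for example, widening both hidden layers to eight units would give 8*6 + 8*9 + 3*9 = 147 weights.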

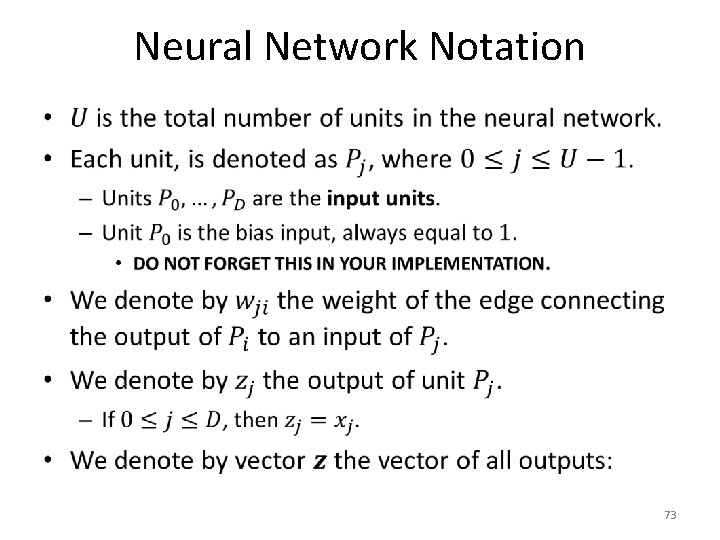

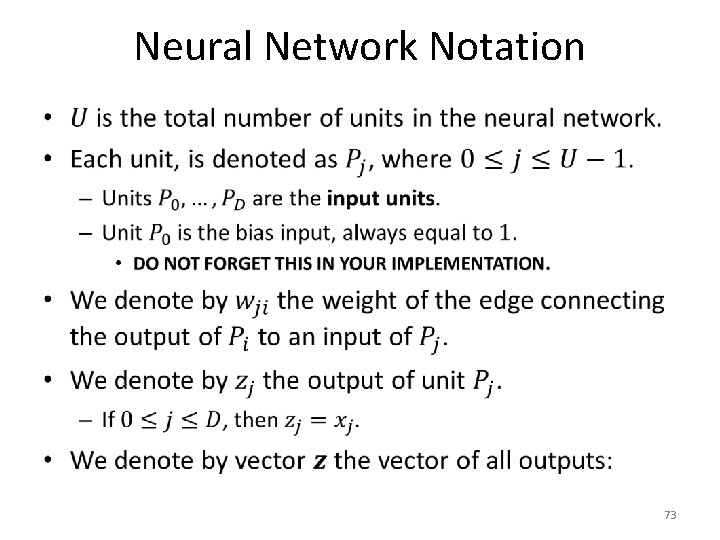

Neural Network Notation

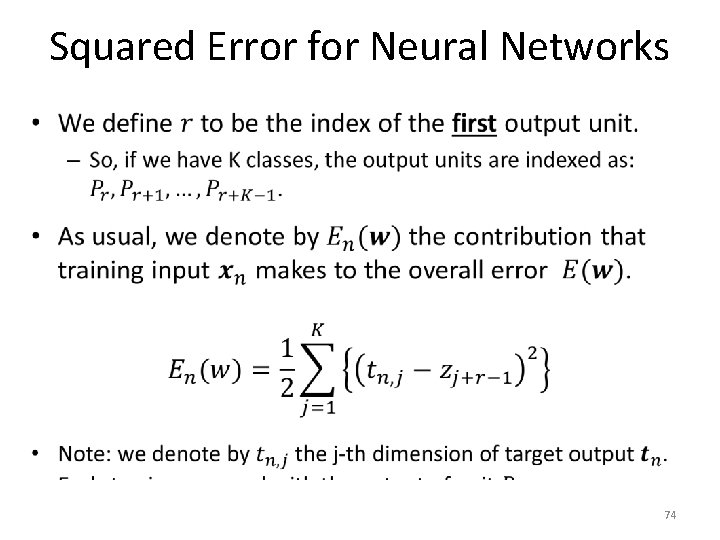

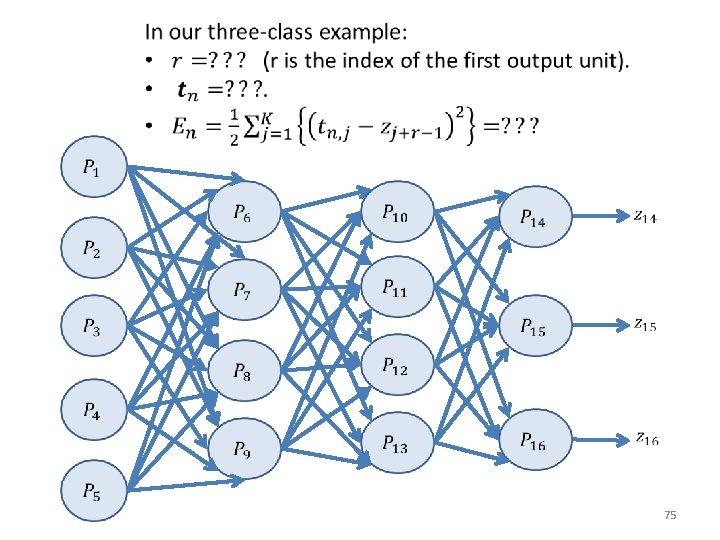

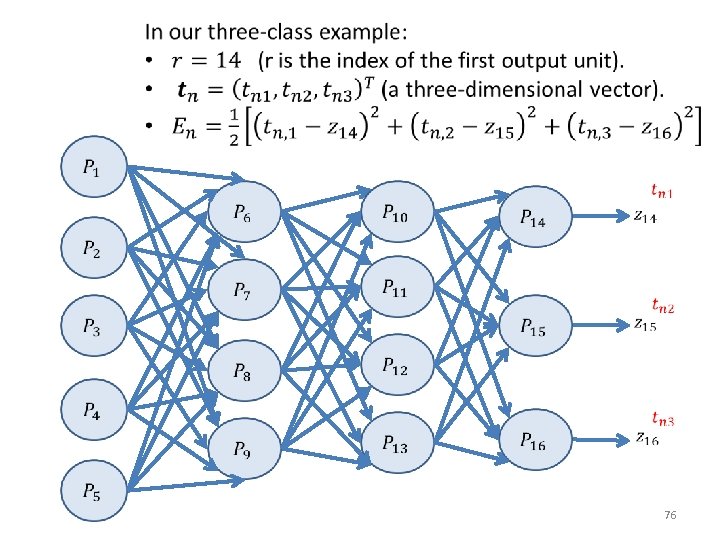

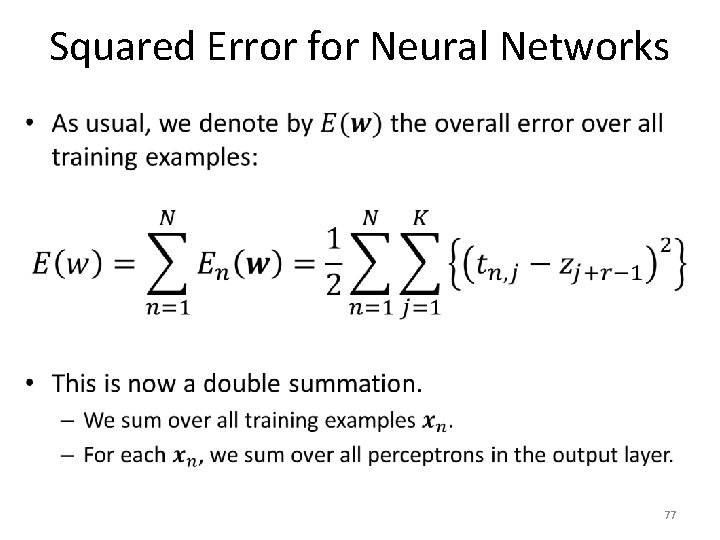

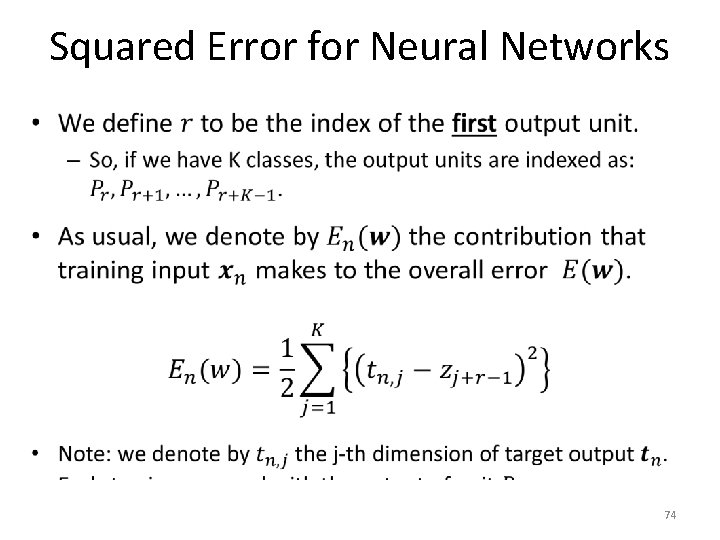

Squared Error for Neural Networks


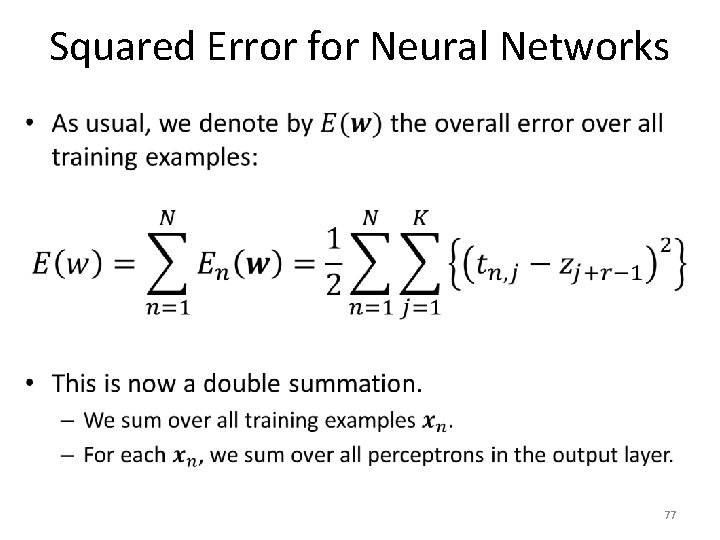

Squared Error for Neural Networks
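For a single training object, the squared error sums over all output units. The sketch assumes the common form E = 1/2 * sum_k (z_k - t_k)^2, where z_k is the output of output unit k and t_k its target; the factor 1/2 only simplifies the derivative. The function name and the numbers are illustrative.

```python
def squared_error(outputs, targets):
    """E = 1/2 * sum_k (z_k - t_k)^2 for a single training object."""
    return 0.5 * sum((z - t) ** 2 for z, t in zip(outputs, targets))

# Three output units; the object belongs to the second class.
print(squared_error([0.2, 0.7, 0.1], [0.0, 1.0, 0.0]))  # approximately 0.07
```

Here the three squared differences are 0.04, 0.09 and 0.01, which sum to 0.14 before halving.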

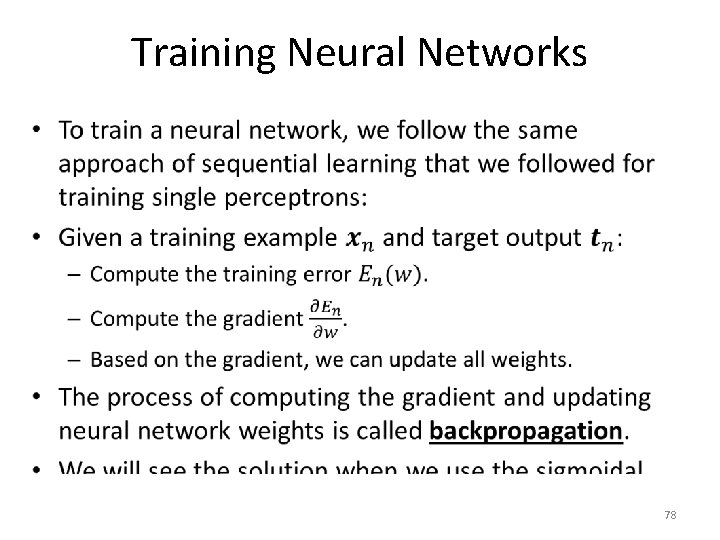

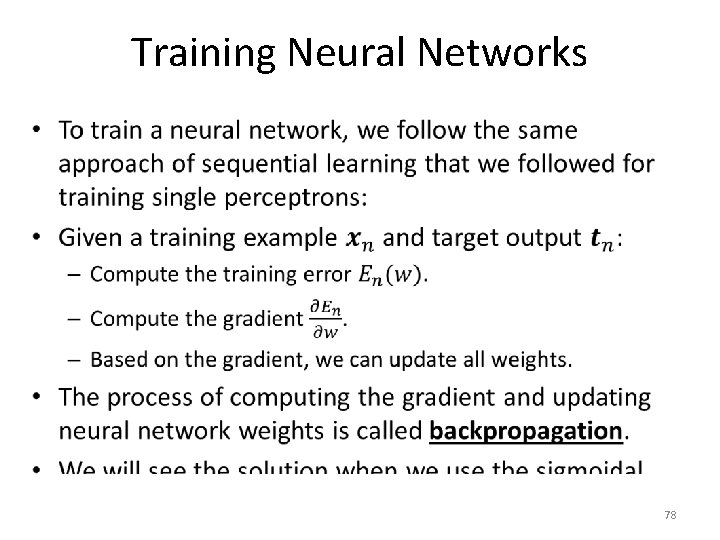

Training Neural Networks

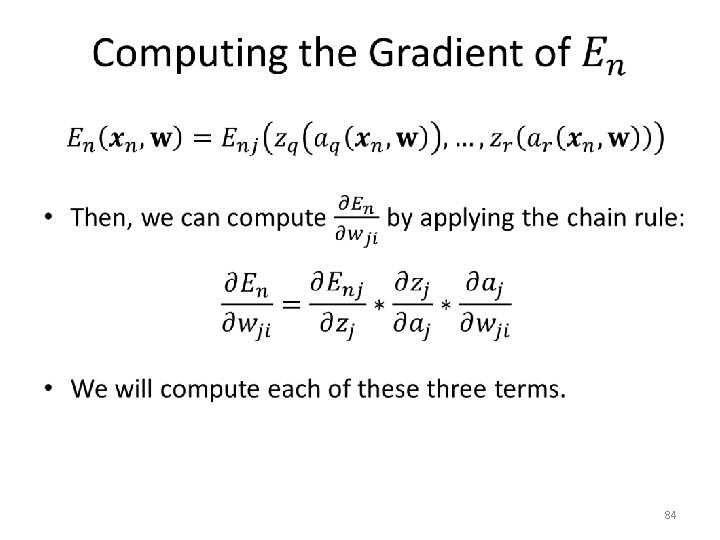

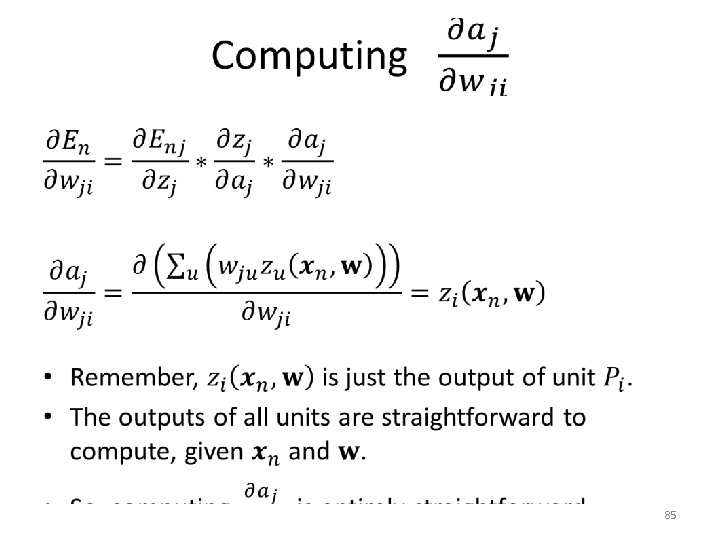

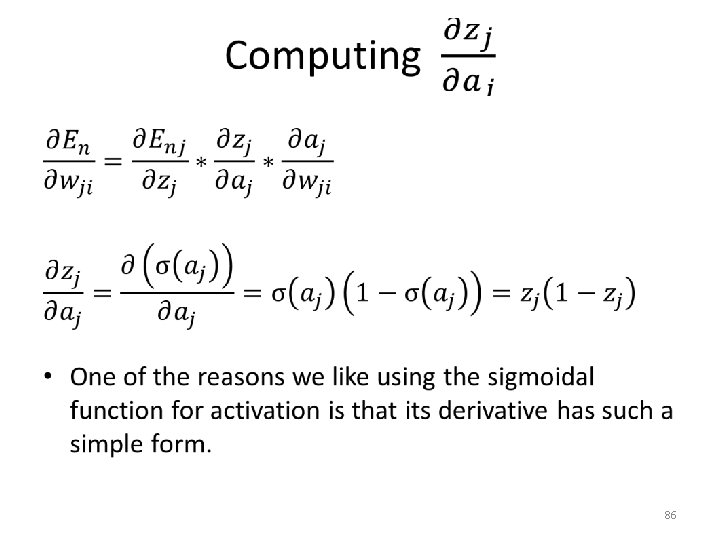

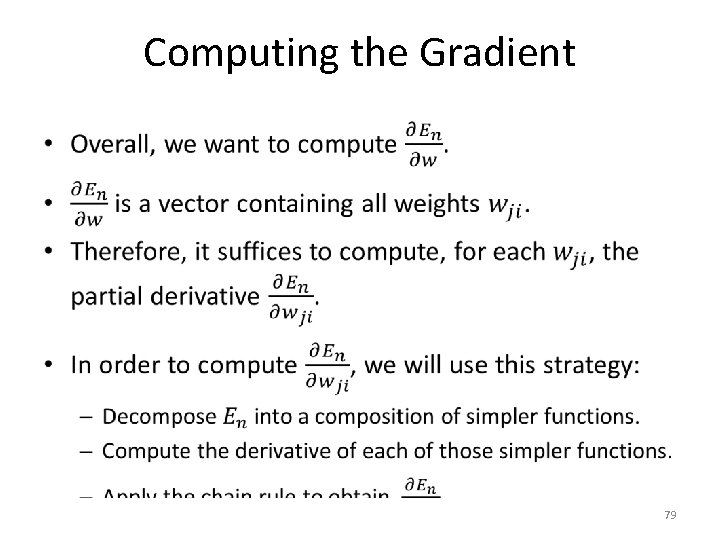

Computing the Gradient

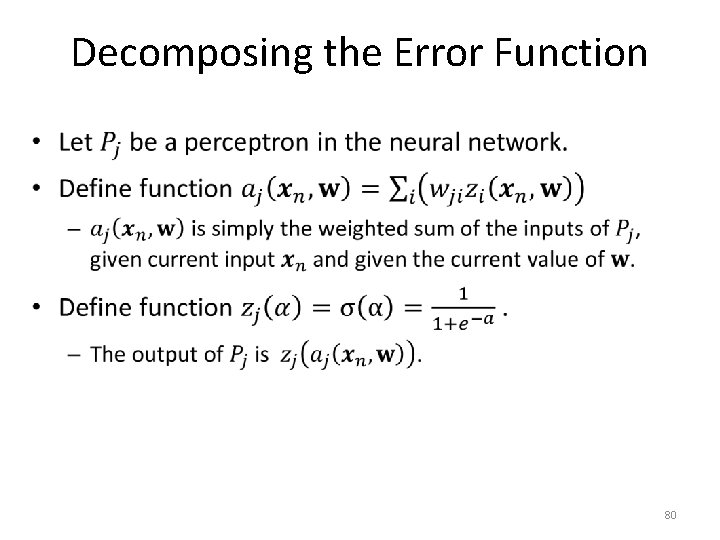

Decomposing the Error Function

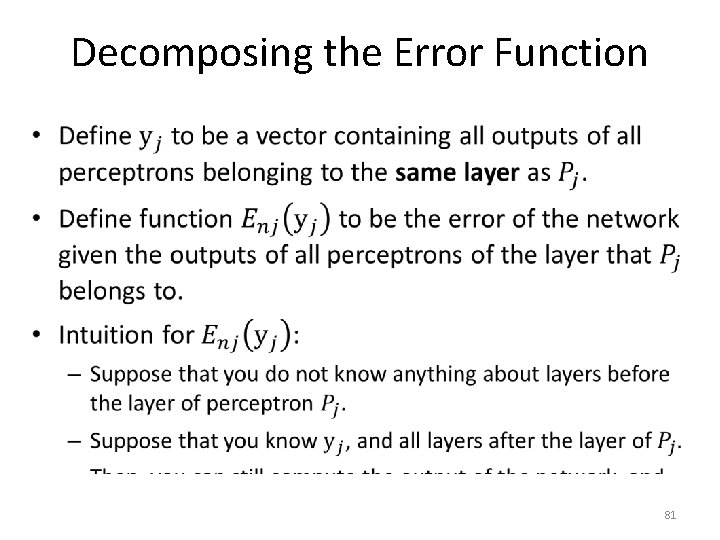

Decomposing the Error Function

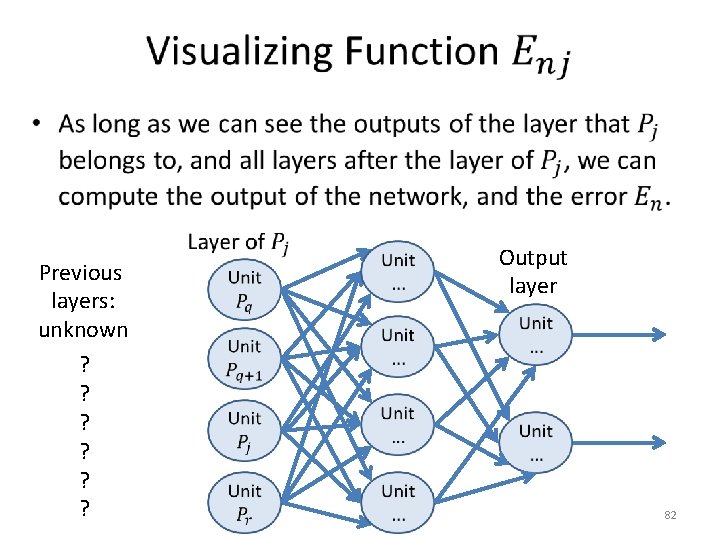

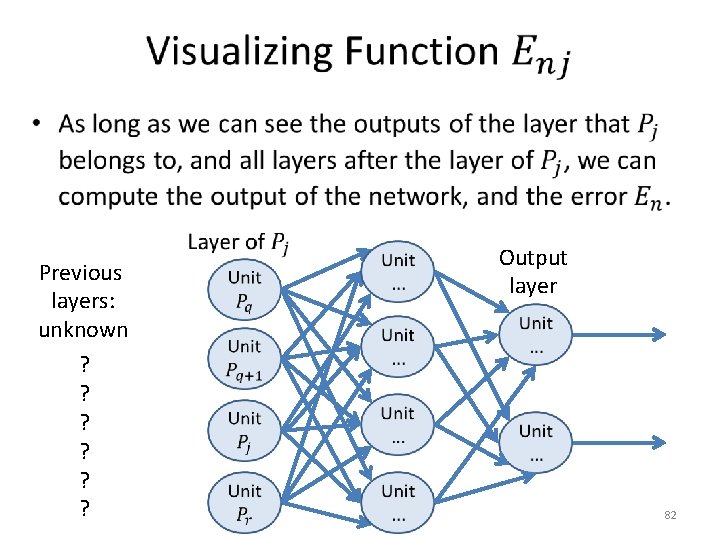

(Figure: the output layer, with the previous layers marked as unknown.)

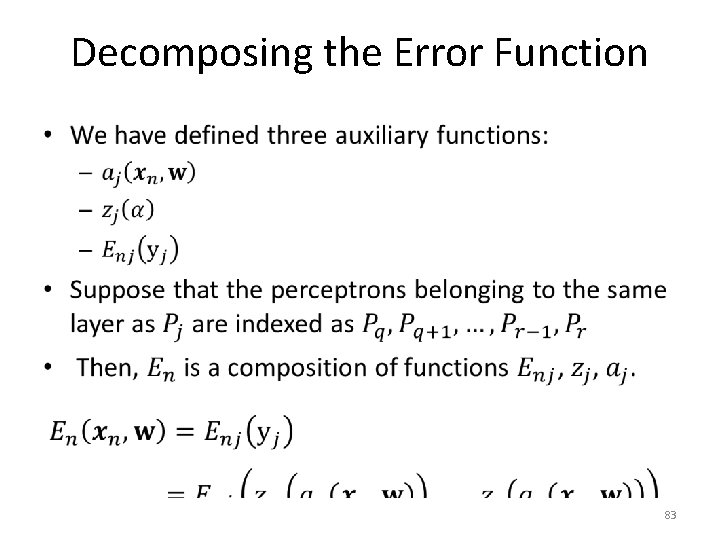

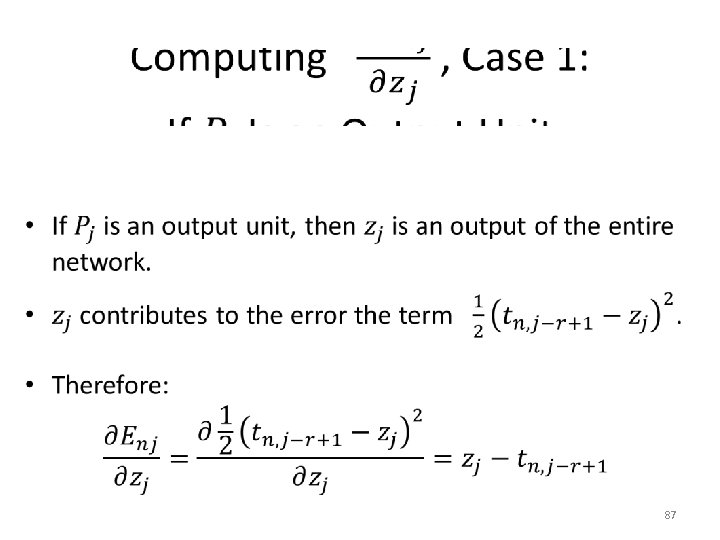

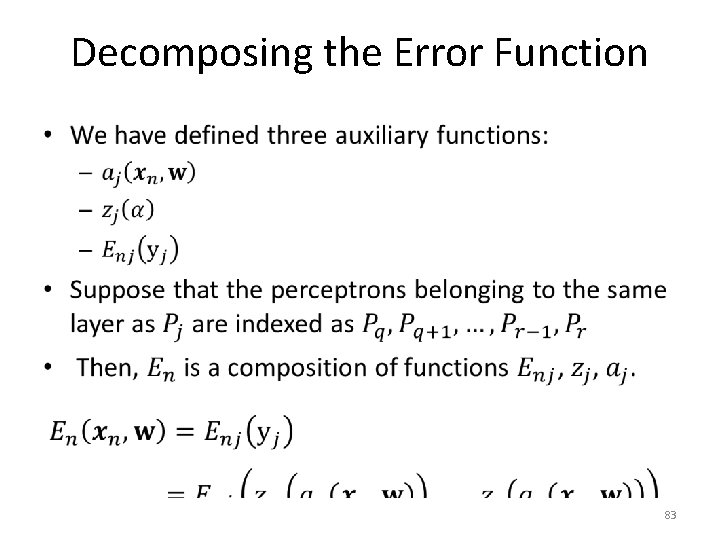

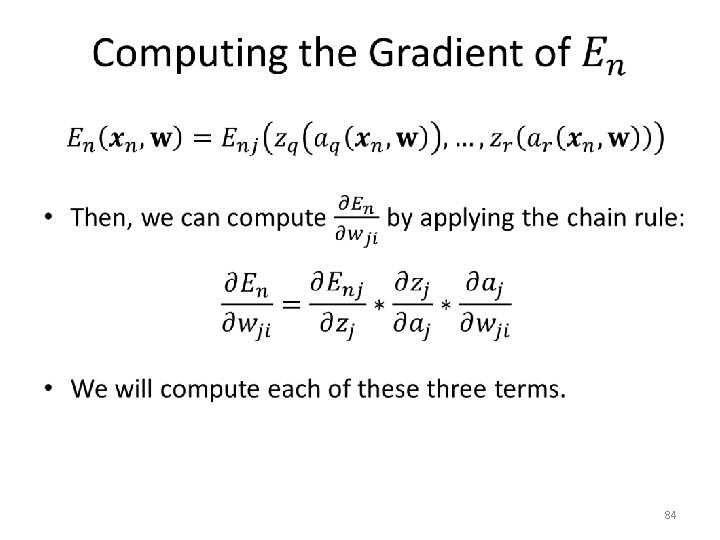

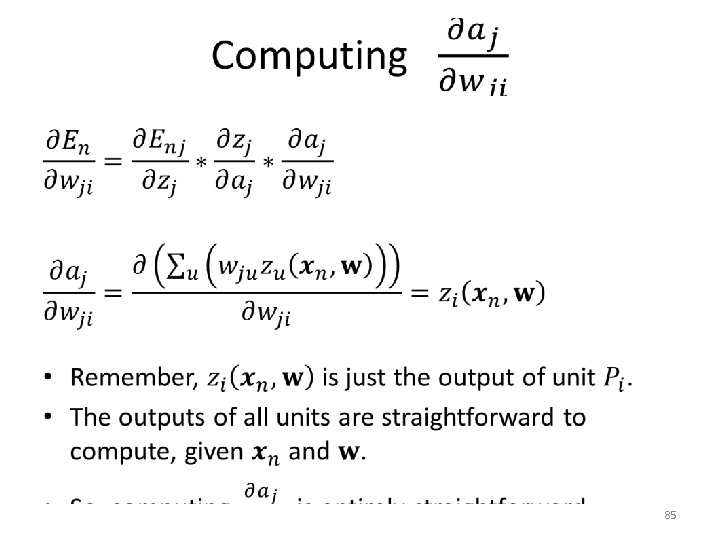

Decomposing the Error Function

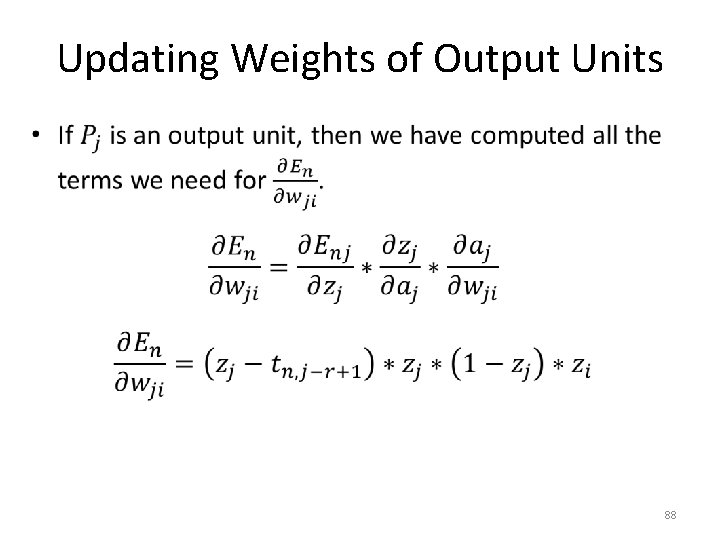

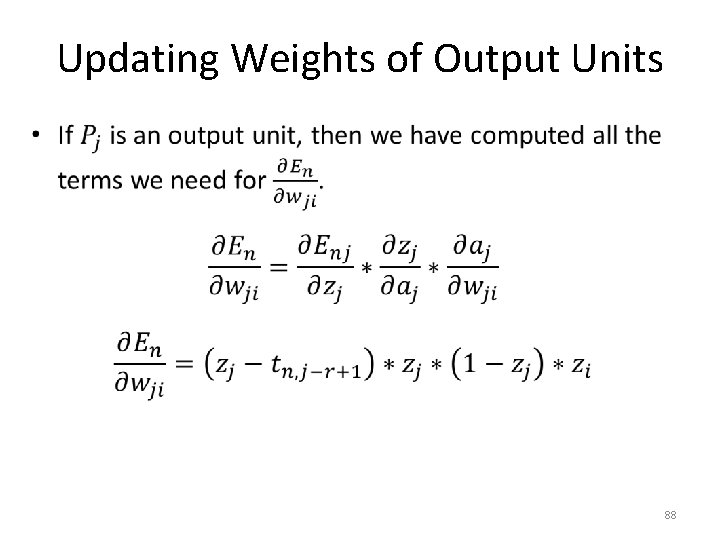

Updating Weights of Output Units

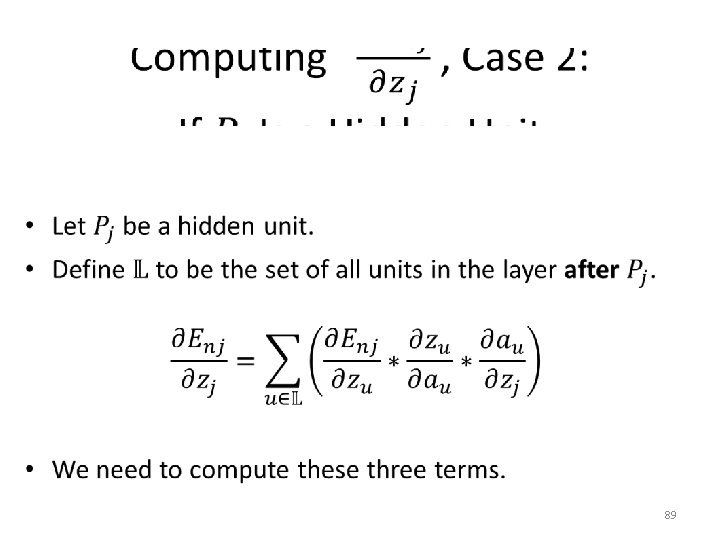

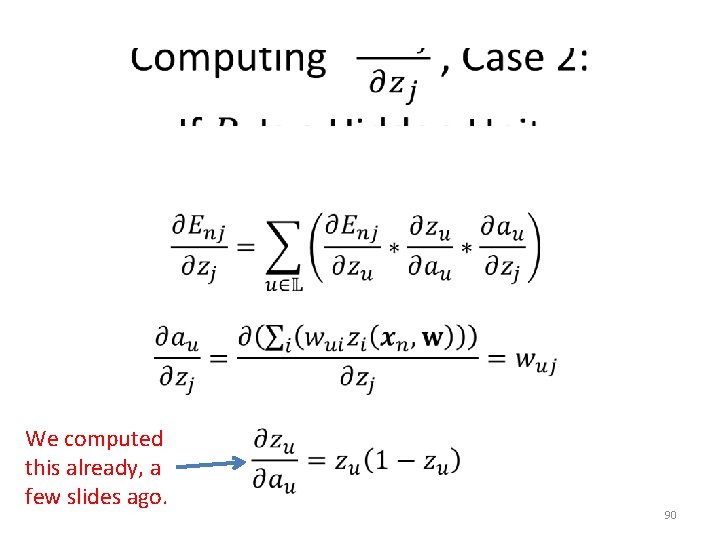

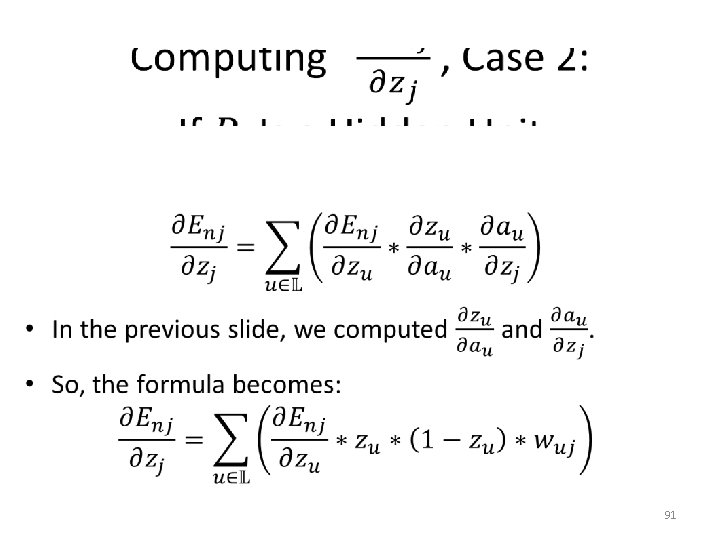

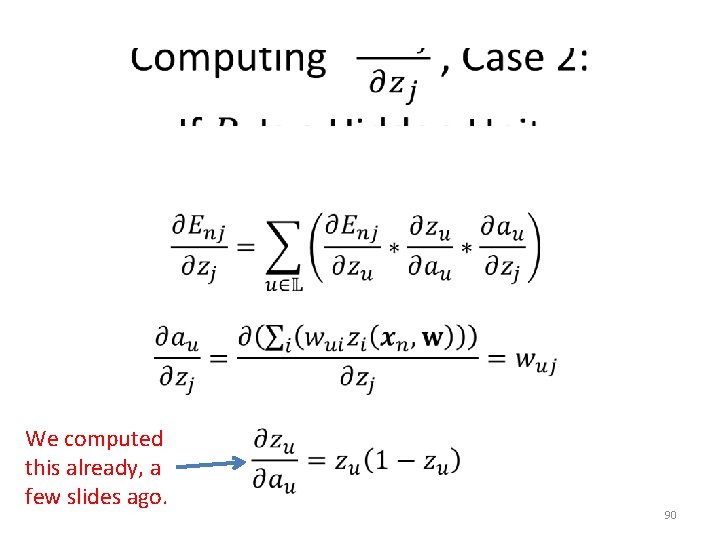

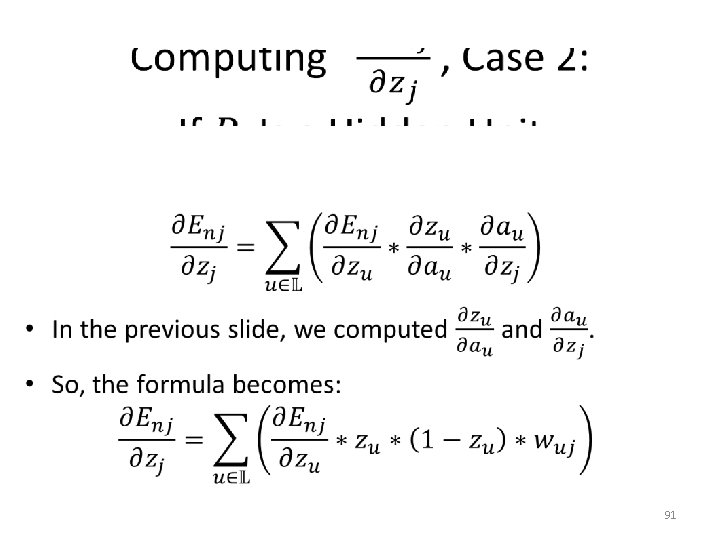

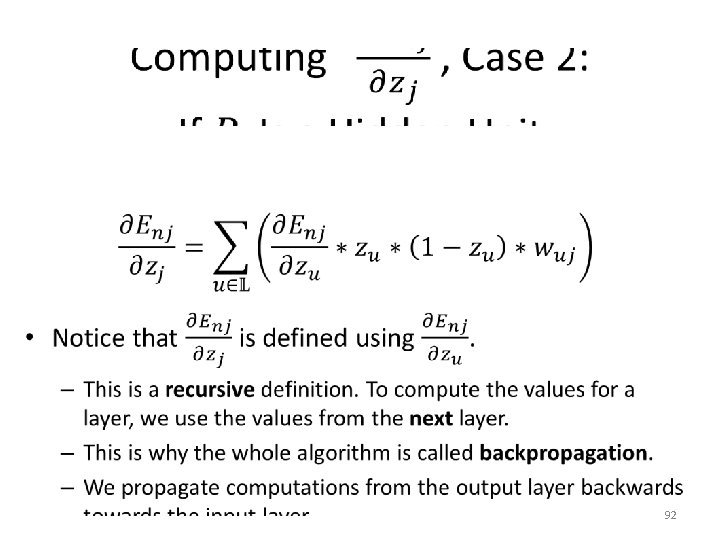

- We computed this already, a few slides ago.

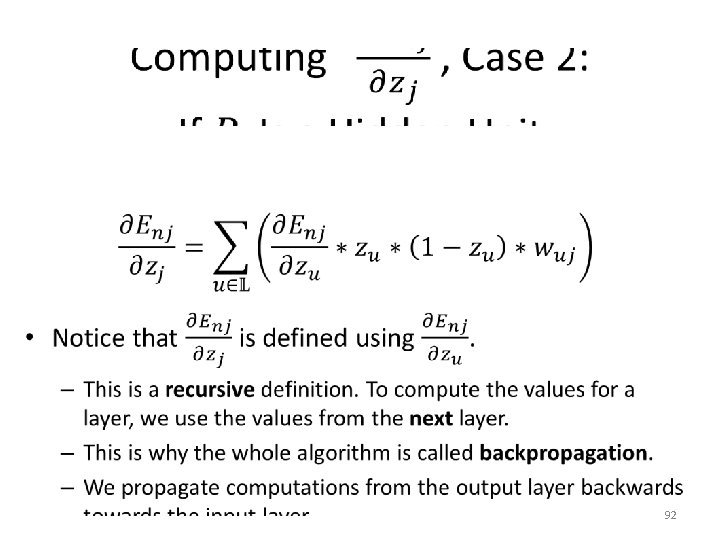

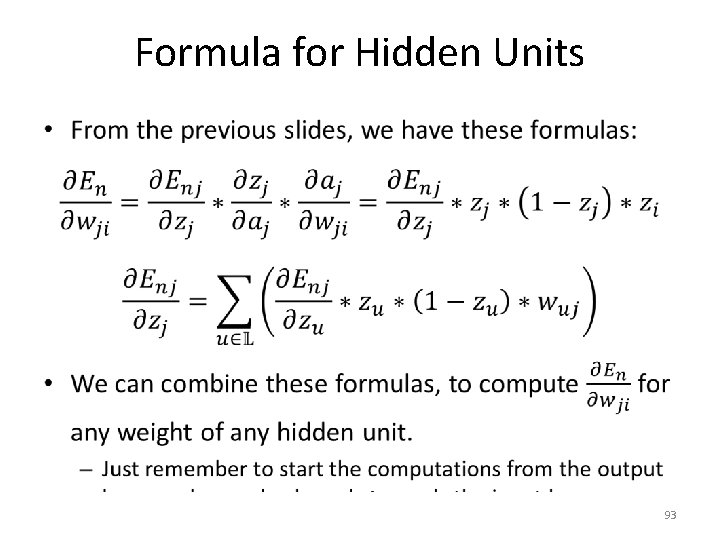

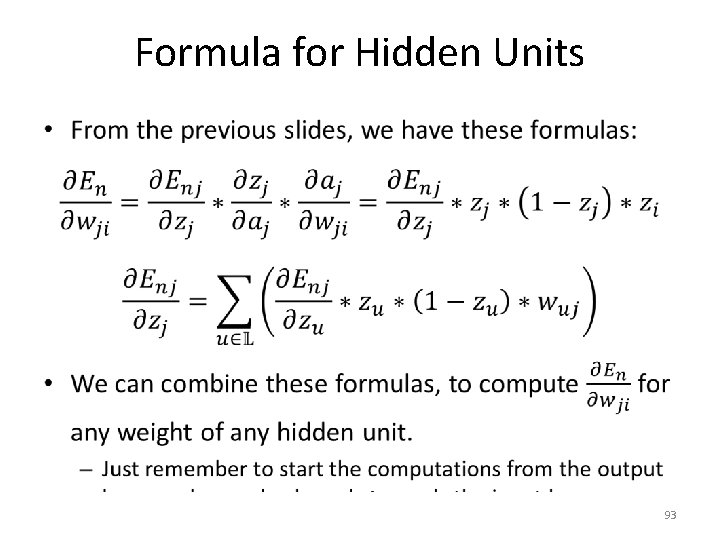

Formula for Hidden Units

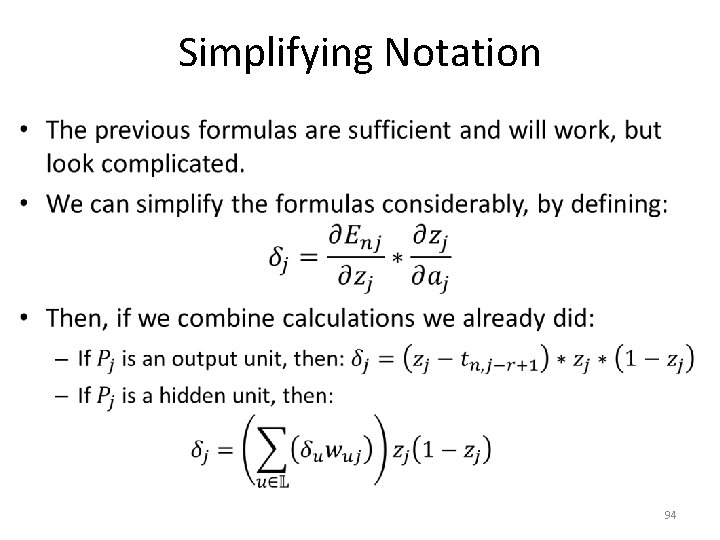

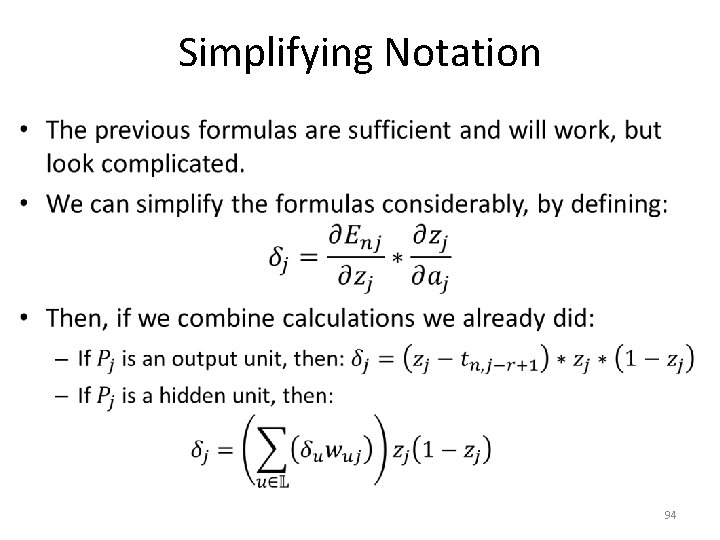

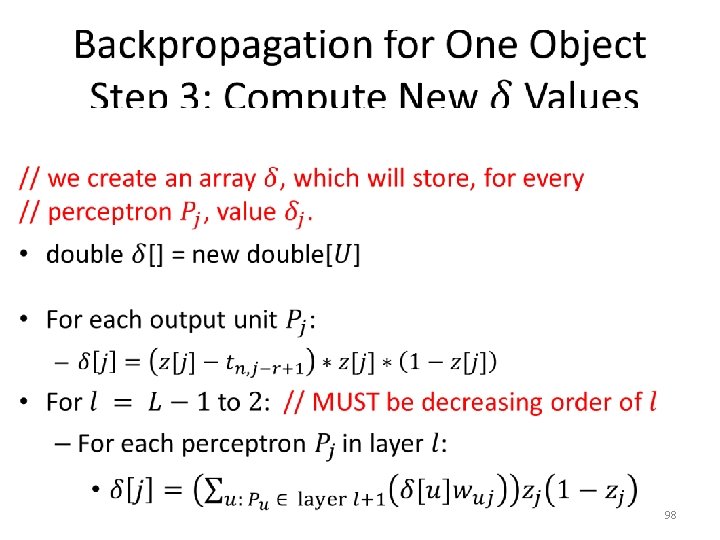

Simplifying Notation

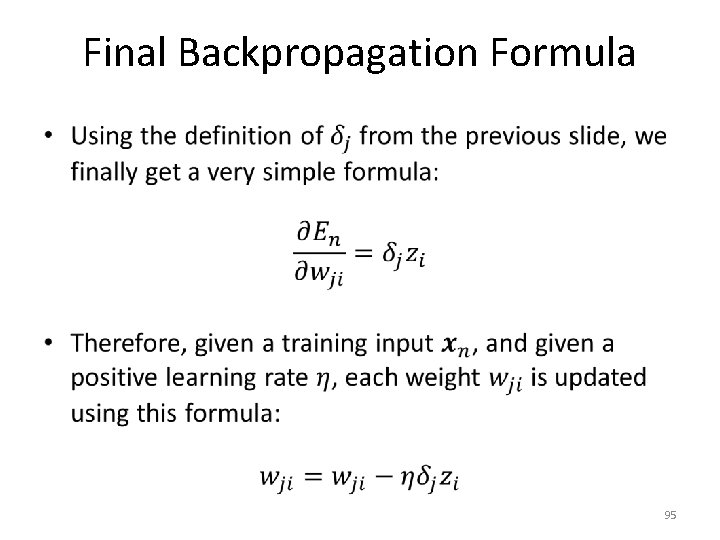

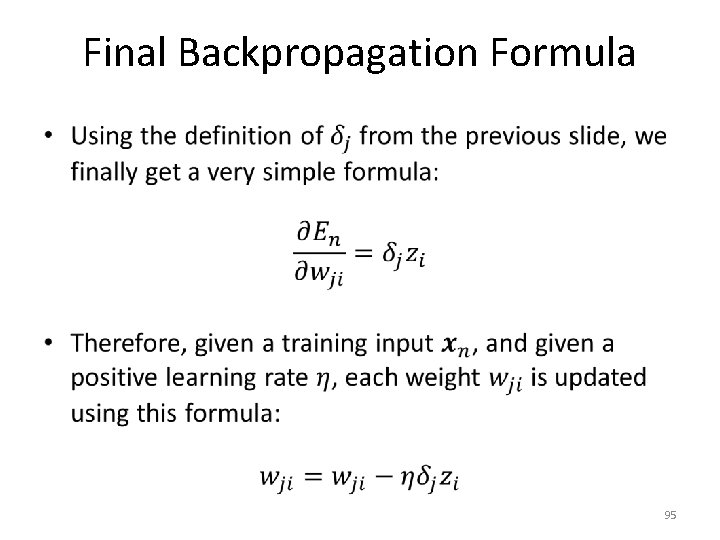

Final Backpropagation Formula
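For reference, the standard backpropagation formulas for sigmoid units trained with the squared error are written out below. This is our own reconstruction under assumed notation, since the slide's formula appears only as an image: $z_j$ is the output of unit $j$, $w_{j,i}$ is the weight from unit $i$ to unit $j$ (with the bias input $z_0 = 1$ included in the sums), $t_k$ is the target for output unit $k$, $\eta$ is the learning rate, $\delta_j = \partial E / \partial a_j$, and the sum over $u$ runs over the units that receive input from unit $j$.

```latex
\begin{aligned}
a_j &= \sum_i w_{j,i}\, z_i, \qquad z_j = \sigma(a_j) = \frac{1}{1 + e^{-a_j}} \\
E &= \frac{1}{2} \sum_k (z_k - t_k)^2 \\
\delta_j &= (z_j - t_j)\, z_j (1 - z_j) && \text{(output unit } j\text{)} \\
\delta_j &= \Big(\sum_u \delta_u\, w_{u,j}\Big)\, z_j (1 - z_j) && \text{(hidden unit } j\text{)} \\
\frac{\partial E}{\partial w_{j,i}} &= \delta_j\, z_i, \qquad
w_{j,i} \leftarrow w_{j,i} - \eta\, \delta_j\, z_i
\end{aligned}
```

The factor $z_j (1 - z_j)$ is the derivative of the sigmoid, $\sigma'(a_j)$, and the hidden-unit formula reuses the $\delta$ values of the next layer, which is why the computation runs backwards from the output layer.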

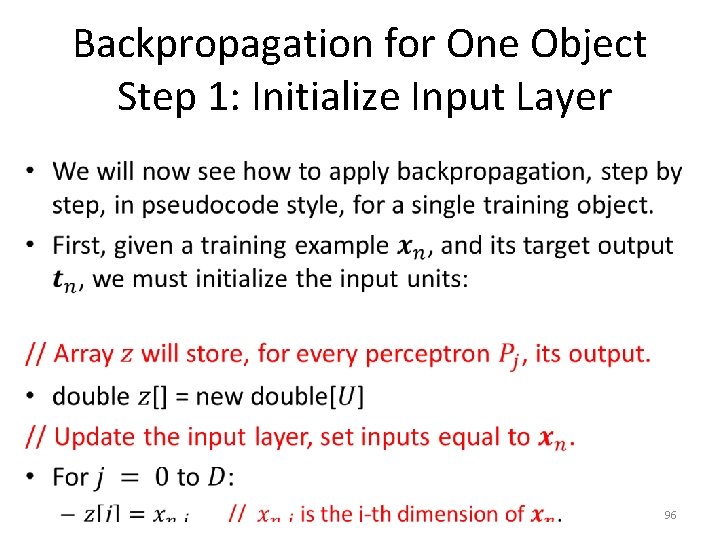

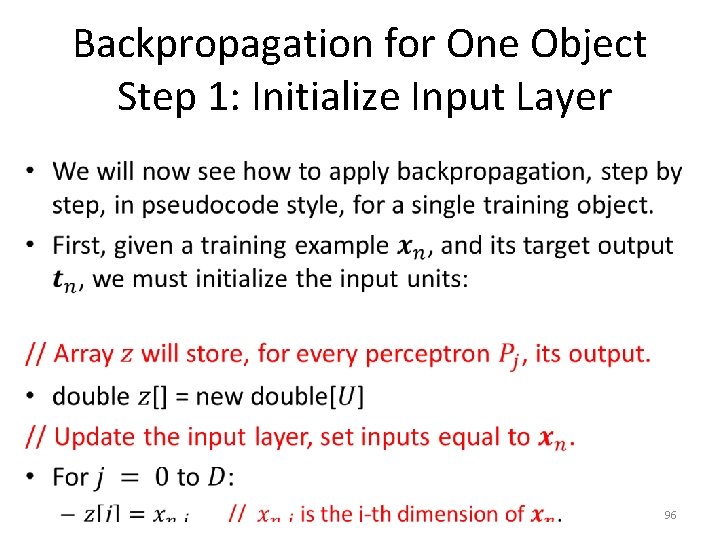

Backpropagation for One Object, Step 1: Initialize Input Layer

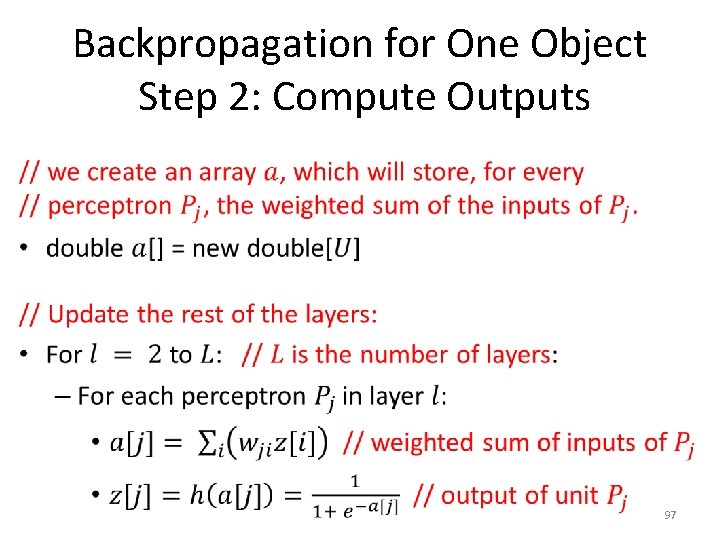

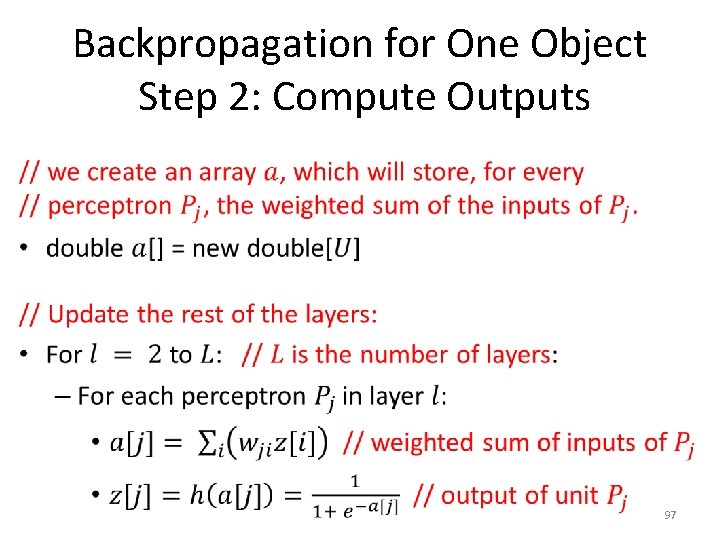

Backpropagation for One Object, Step 2: Compute Outputs

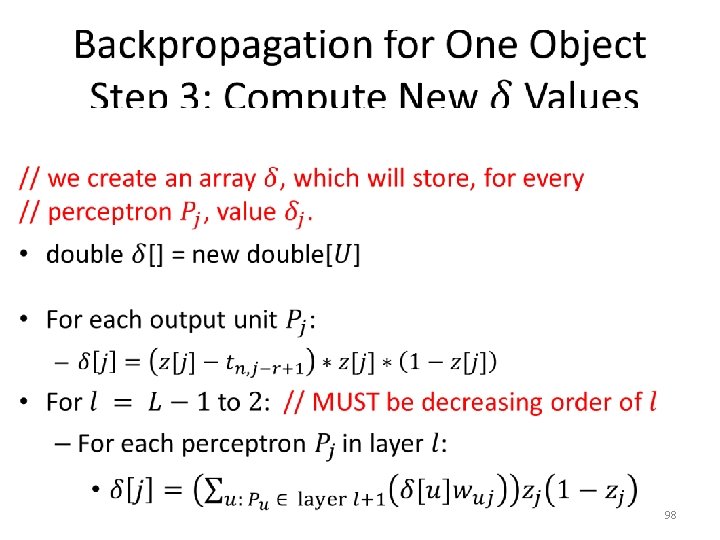

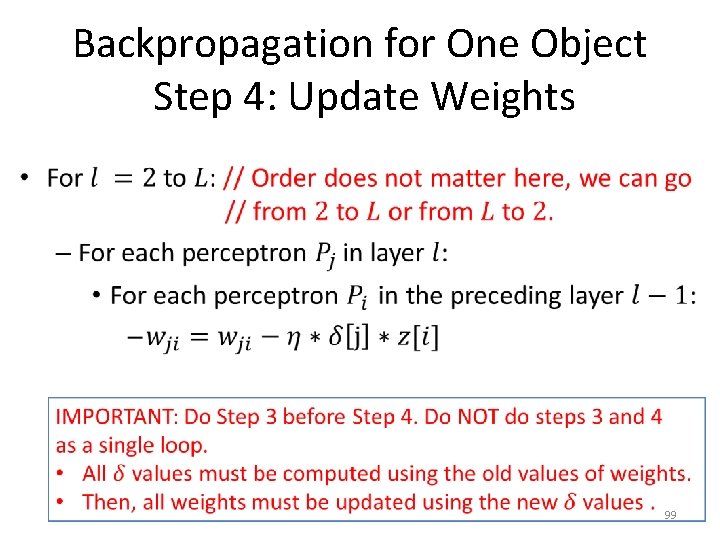

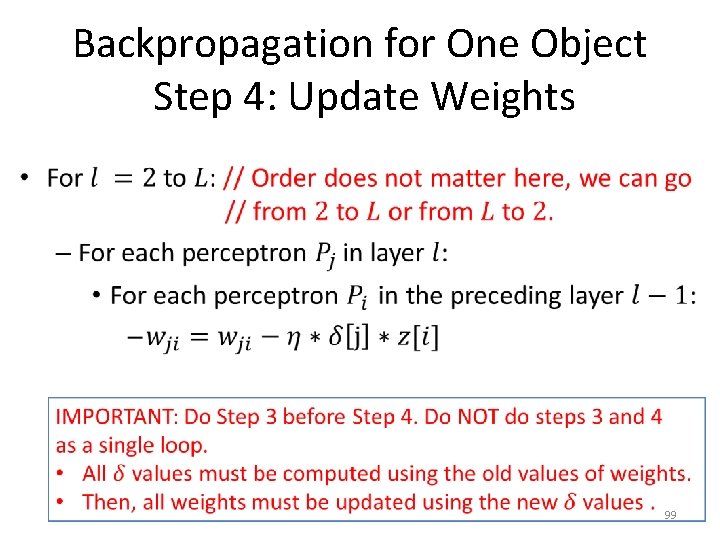

Backpropagation for One Object, Step 4: Update Weights

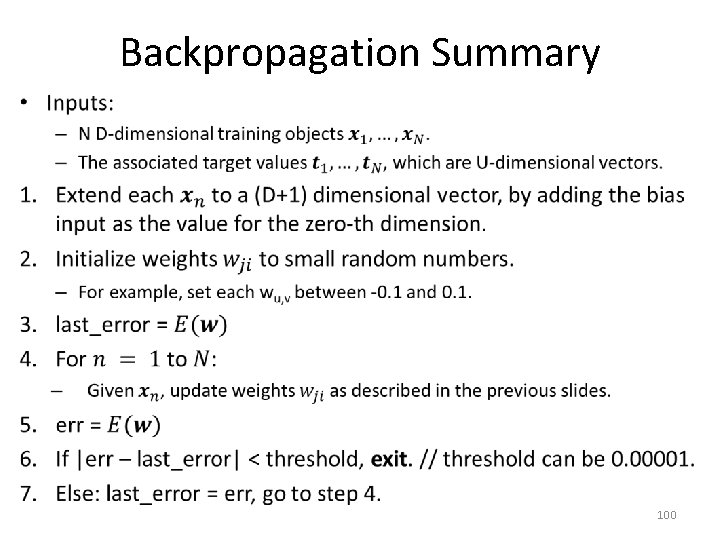

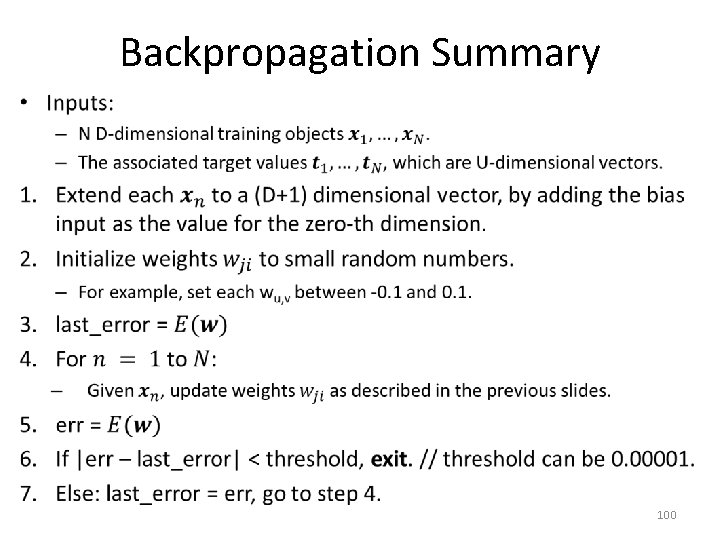

Backpropagation Summary
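Putting the steps together, here is a minimal pure-Python sketch of backpropagation for one training object. It assumes sigmoid units, the squared error E = 1/2 * sum_k (z_k - t_k)^2, a fully connected layered network, and a weight layout of our own choosing: `weights[l][j]` is the weight vector of unit j in layer l + 1, with the bias weight first. It prepends the bias input at every layer, since omitting it is a common bug. The function name is hypothetical, and this is a sketch rather than the course's reference implementation.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def backprop_one_object(weights, x, t, eta):
    """One backpropagation update for a single training object (x, t).

    Updates `weights` in place and returns the error before the update.
    """
    # Initialize the input layer, then compute outputs layer by layer.
    z = [list(x)]
    for layer in weights:
        prev = [1.0] + z[-1]  # bias input; omitting it is a common bug
        z.append([sigmoid(sum(w * p for w, p in zip(wj, prev))) for wj in layer])
    error = 0.5 * sum((zk - tk) ** 2 for zk, tk in zip(z[-1], t))

    # Compute delta values, starting at the output layer and moving backwards.
    deltas = [[(zk - tk) * zk * (1 - zk) for zk, tk in zip(z[-1], t)]]
    for l in range(len(weights) - 1, 0, -1):
        nxt = deltas[0]  # deltas of layer l + 1, which reads from layer l via weights[l]
        deltas.insert(0, [
            sum(nxt[u] * weights[l][u][j + 1] for u in range(len(nxt))) * zj * (1 - zj)
            for j, zj in enumerate(z[l])
        ])

    # Update every weight: w_{j,i} <- w_{j,i} - eta * delta_j * z_i.
    for l, layer in enumerate(weights):
        prev = [1.0] + z[l]
        for j, wj in enumerate(layer):
            for i in range(len(wj)):
                wj[i] -= eta * deltas[l][j] * prev[i]
    return error

# Usage: a 2-2-1 network with arbitrary starting weights, updated on one object.
demo_weights = [[[0.1, 0.2, -0.3], [-0.2, 0.4, 0.1]], [[0.05, 0.3, -0.25]]]
for _ in range(3):
    print(backprop_one_object(demo_weights, [1.0, 0.5], [1.0], eta=0.5))
```

All delta values are computed before any weight changes, so the updates use the gradient at the current weights. Training on a full training set would call this function once per object in each epoch, until a stopping criterion is met.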

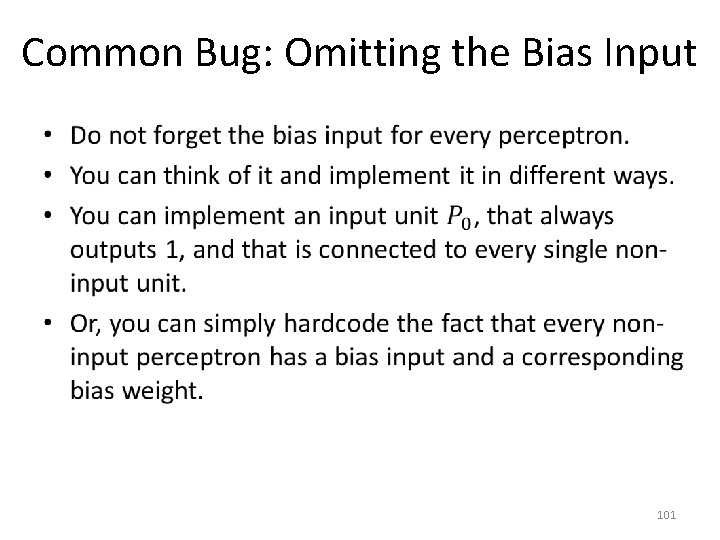

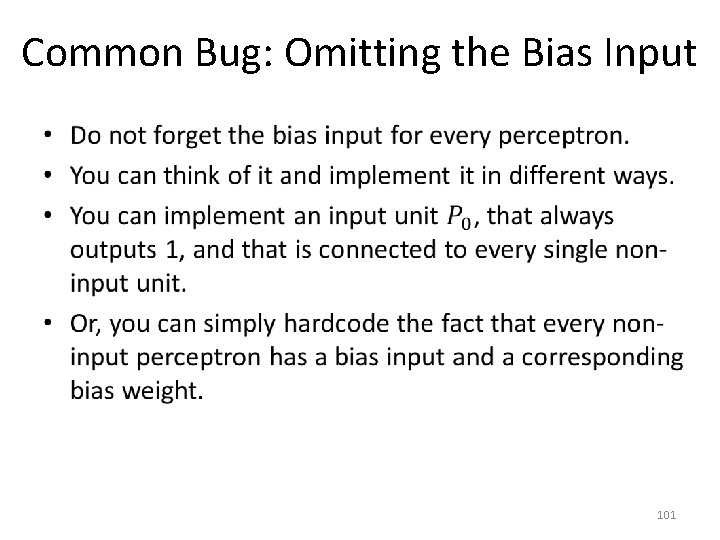

Common Bug: Omitting the Bias Input

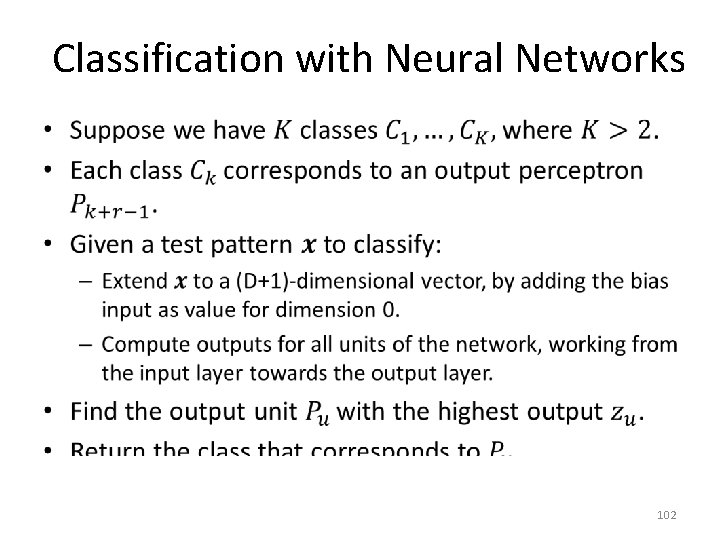

Classification with Neural Networks

Structure of Neural Networks

- Backpropagation describes how to learn weights.
- However, it does not describe how to learn the structure:
  - How many layers?
  - How many units at each layer?
- These are hyperparameters that we have to choose somehow.
- A good way to choose them is by using a validation set, containing examples and their class labels.
  - The validation set should be separate (disjoint) from the training set.


Structure of Neural Networks

- To choose the best structure for a neural network using a validation set, we try many different hyperparameter values (number of layers, number of units per layer).
- For each choice of hyperparameters:
  - We train several neural networks using backpropagation.
  - We measure how well each neural network classifies the validation examples.
  - Why not train just one neural network?
  - Each network is randomly initialized, so after backpropagation it can end up being different from the other networks.
- At the end, we select the neural network that did best on the validation set.
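The selection procedure can be sketched as a small Python function. Training and evaluation are passed in as callables because they stand for your own backpropagation code and your own validation-set accuracy measurement; `select_network`, `train_fn`, and `validation_accuracy` are hypothetical names, and five runs per structure is an arbitrary default.

```python
import random

def select_network(structures, train_fn, validation_accuracy, runs_per_structure=5, seed=0):
    """Return (best_network, best_structure, best_accuracy) over all structures and runs.

    train_fn(structure, rng) should train one randomly initialized network with
    backpropagation; validation_accuracy(network) should score it on the validation set.
    """
    rng = random.Random(seed)
    best = (None, None, float("-inf"))
    for structure in structures:
        for _ in range(runs_per_structure):
            net = train_fn(structure, rng)  # a different random initialization each run
            acc = validation_accuracy(net)
            if acc > best[2]:
                best = (net, structure, acc)
    return best
```

A structure here could be a tuple of hidden-layer sizes, such as (4,) or (4, 4). Keeping the best network across all runs, rather than averaging per structure, matches the procedure described above of selecting the single network that did best on the validation set.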

Vassilis athitsos

Vassilis athitsos Athitsos

Athitsos Vassilis athitsos

Vassilis athitsos Vassilis athitsos

Vassilis athitsos Vassilis athitsos

Vassilis athitsos Passive reinforcement learning

Passive reinforcement learning Vassilis athitsos

Vassilis athitsos Fisher's

Fisher's Least mean square algorithm in neural network

Least mean square algorithm in neural network Few shot learning with graph neural networks

Few shot learning with graph neural networks Neural networks and learning machines

Neural networks and learning machines Visualizing and understanding convolutional networks

Visualizing and understanding convolutional networks Liran szlak

Liran szlak Formation of neural networks ib psychology

Formation of neural networks ib psychology Audio super resolution using neural networks

Audio super resolution using neural networks Convolutional neural networks for visual recognition

Convolutional neural networks for visual recognition Leon gatys

Leon gatys Nvdla

Nvdla Mippers

Mippers What is stride in cnn

What is stride in cnn Pixelrnn

Pixelrnn Toolbox neural network matlab

Toolbox neural network matlab Neural networks for rf and microwave design

Neural networks for rf and microwave design 11-747 neural networks for nlp

11-747 neural networks for nlp Xor problem

Xor problem Csrmm

Csrmm On the computational efficiency of training neural networks

On the computational efficiency of training neural networks Tlu neural networks

Tlu neural networks Fuzzy logic lecture

Fuzzy logic lecture Netinsights

Netinsights Convolutional neural networks

Convolutional neural networks Deep forest: towards an alternative to deep neural networks

Deep forest: towards an alternative to deep neural networks Convolutional neural networks

Convolutional neural networks Neuraltools neural networks

Neuraltools neural networks Andrew ng recurrent neural networks

Andrew ng recurrent neural networks Predicting nba games using neural networks

Predicting nba games using neural networks The wake-sleep algorithm for unsupervised neural networks

The wake-sleep algorithm for unsupervised neural networks Audio super resolution using neural networks

Audio super resolution using neural networks Convolutional neural network alternatives

Convolutional neural network alternatives Slideshare.net

Slideshare.net Datagram network diagram

Datagram network diagram Basestore iptv

Basestore iptv Concept learning task in machine learning

Concept learning task in machine learning Analytical learning in machine learning

Analytical learning in machine learning Pac learning model in machine learning

Pac learning model in machine learning Pac learning model in machine learning

Pac learning model in machine learning Inductive and analytical learning in machine learning

Inductive and analytical learning in machine learning Inductive and analytical learning problem

Inductive and analytical learning problem Instance based learning in machine learning

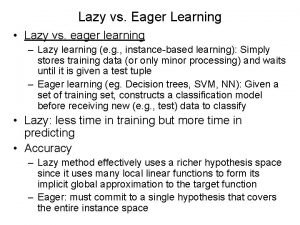

Instance based learning in machine learning Inductive learning machine learning

Inductive learning machine learning First order rule learning in machine learning

First order rule learning in machine learning Eager classification versus lazy classification

Eager classification versus lazy classification Deep learning vs machine learning

Deep learning vs machine learning Weisfeiler-lehman neural machine for link prediction

Weisfeiler-lehman neural machine for link prediction Visualizing and understanding neural machine translation

Visualizing and understanding neural machine translation Cuadro comparativo e-learning y b-learning

Cuadro comparativo e-learning y b-learning Adaptive learning neural network

Adaptive learning neural network My cpu is a neural net processor a learning computer

My cpu is a neural net processor a learning computer Node 2 vec

Node 2 vec A tutorial on learning with bayesian networks

A tutorial on learning with bayesian networks Finite state machine vending machine example

Finite state machine vending machine example Moore machine

Moore machine Moore machine

Moore machine Chapter 10 energy, work and simple machines answer key

Chapter 10 energy, work and simple machines answer key The non-iid data quagmire of decentralized machine learning

The non-iid data quagmire of decentralized machine learning Expected risk machine learning

Expected risk machine learning Sql server predictive analytics

Sql server predictive analytics Azure machine learning studio

Azure machine learning studio Octave machine learning tutorial

Octave machine learning tutorial Jmp neural network

Jmp neural network Machine learning tom mitchell

Machine learning tom mitchell Machine learning infrastructure monitoring

Machine learning infrastructure monitoring Valerie du preez

Valerie du preez Zillow machine learning

Zillow machine learning Tom mitchell machine learning solutions chapter 3

Tom mitchell machine learning solutions chapter 3 Ethem alpaydin

Ethem alpaydin Hypothesis space in machine learning

Hypothesis space in machine learning Machine learning kth

Machine learning kth Introduction to machine learning andrew ng

Introduction to machine learning andrew ng Andrew ng intro machine learning

Andrew ng intro machine learning Hypothesis space in machine learning

Hypothesis space in machine learning Ilp machine learning

Ilp machine learning Ibm qradar uba

Ibm qradar uba Analogizers

Analogizers Avoiding discrimination through causal reasoning

Avoiding discrimination through causal reasoning Bagging boosting stacking in machine learning

Bagging boosting stacking in machine learning Econometrics machine learning

Econometrics machine learning Feature reduction in machine learning

Feature reduction in machine learning Wholesale spark mllib

Wholesale spark mllib Njit machine learning

Njit machine learning Synapse machine learning

Synapse machine learning Hypothesis space in machine learning

Hypothesis space in machine learning Convex optimization in machine learning javatpoint

Convex optimization in machine learning javatpoint Explain general to specific ordering of hypothesis

Explain general to specific ordering of hypothesis Upenn machine learning

Upenn machine learning Aws lambda

Aws lambda Mike mozer

Mike mozer Deep reinforcement learning example

Deep reinforcement learning example Cisco machine learning security

Cisco machine learning security Conclusion of machine learning

Conclusion of machine learning Advice for applying machine learning

Advice for applying machine learning 5 tribes of machine learning

5 tribes of machine learning Machine learning algorithms for restaurants

Machine learning algorithms for restaurants Coarse coding reinforcement learning

Coarse coding reinforcement learning Axis aligned rectangles vc dimension

Axis aligned rectangles vc dimension Multivariate methods in machine learning

Multivariate methods in machine learning