
Introduction to Neural Networks

Terminator II • Terminator: My CPU is a neural net processor, a learning computer. The more contact I have with humans, the more I learn.

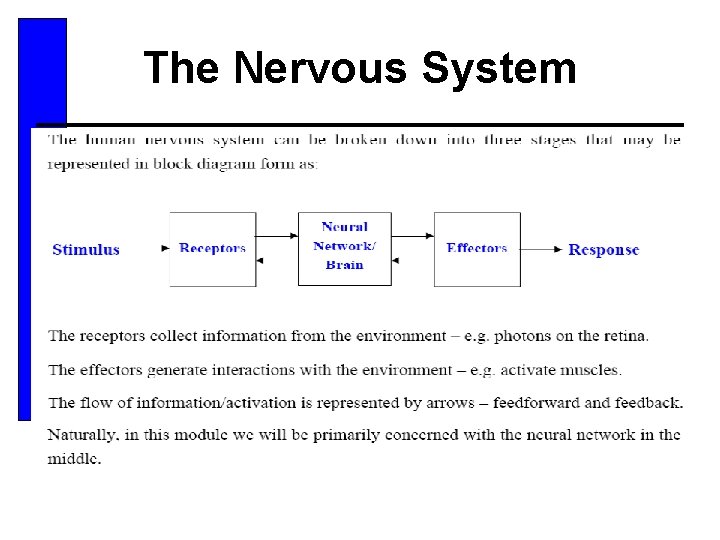

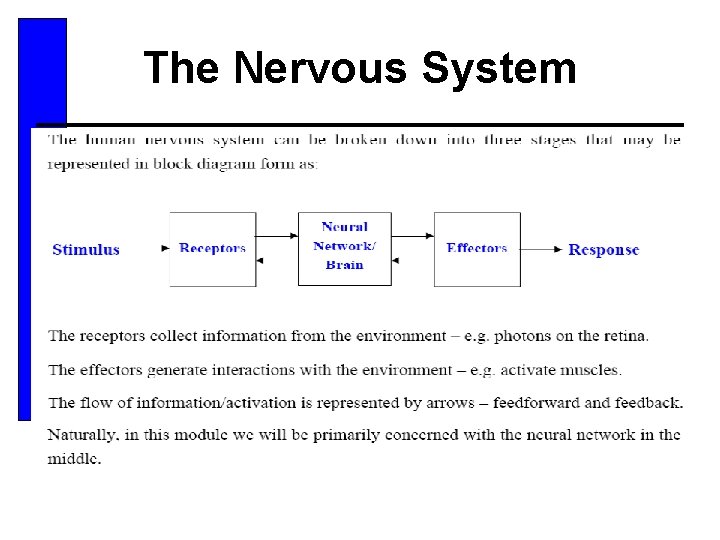

The Nervous System

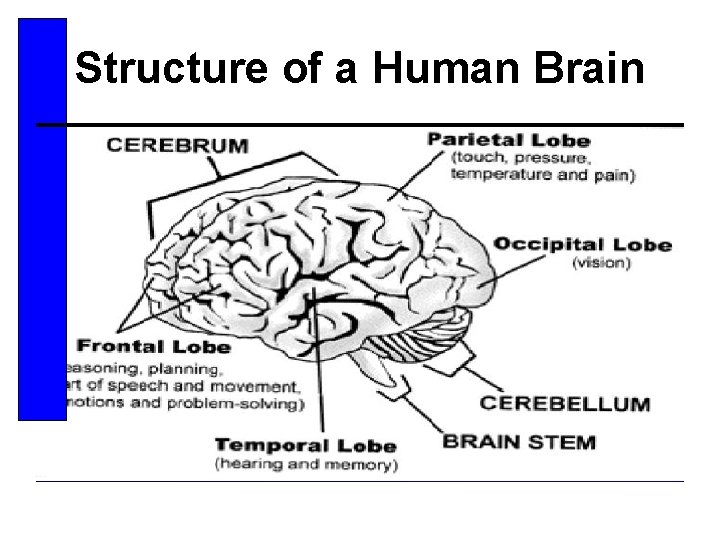

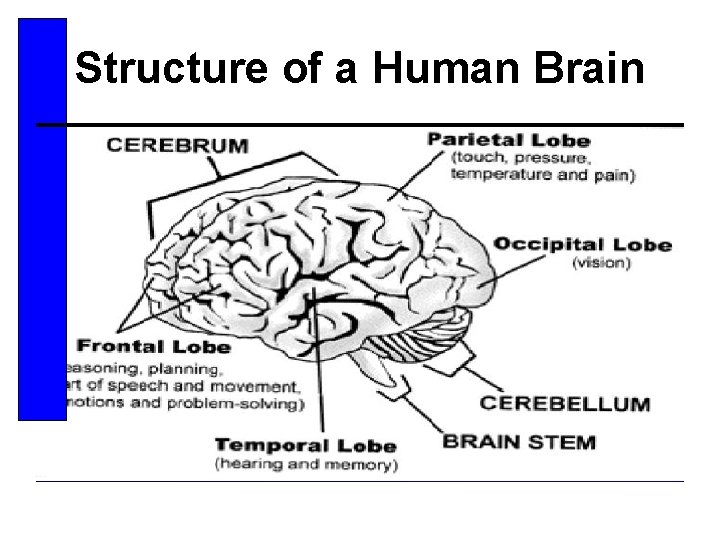

Structure of a Human Brain

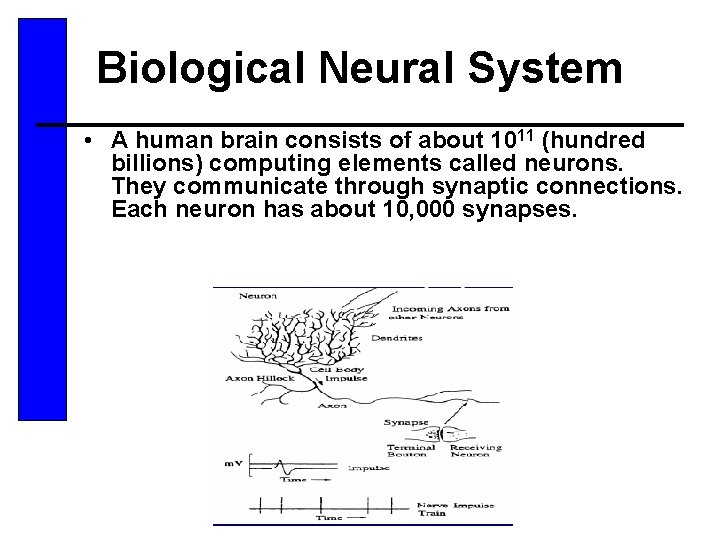

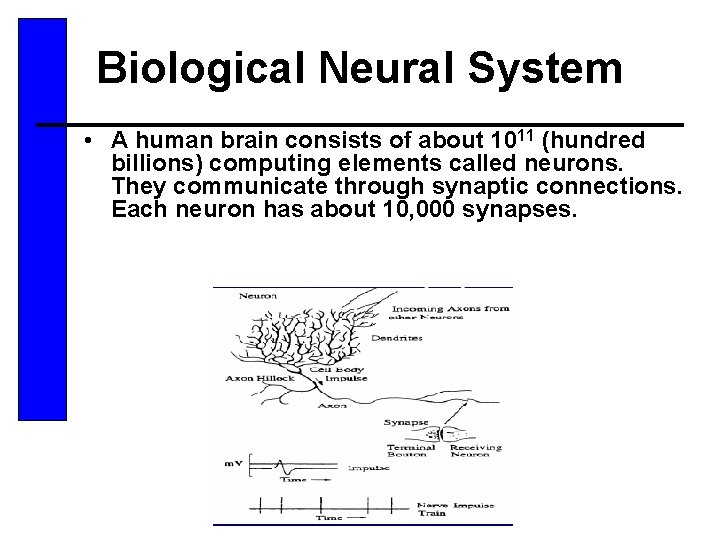

Biological Neural System • A human brain consists of about $10^{11}$ (a hundred billion) computing elements called neurons. They communicate through synaptic connections. Each neuron has about 10,000 synapses.
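Multiplying the two approximate figures on this slide gives a back-of-the-envelope estimate of the total number of synapses in the brain. This is only an order-of-magnitude product of the slide's own numbers, not a measured count:

$$
N_{\text{synapses}} \approx 10^{11}\ \text{neurons} \times 10^{4}\ \frac{\text{synapses}}{\text{neuron}} = 10^{15}\ \text{synapses}
$$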

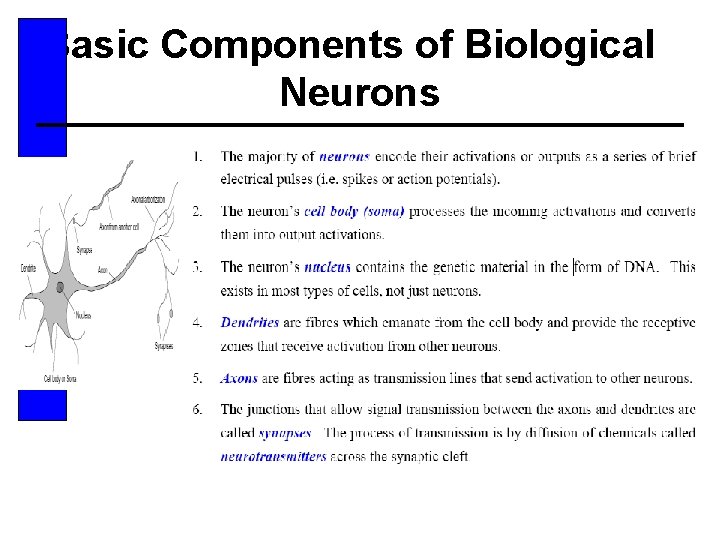

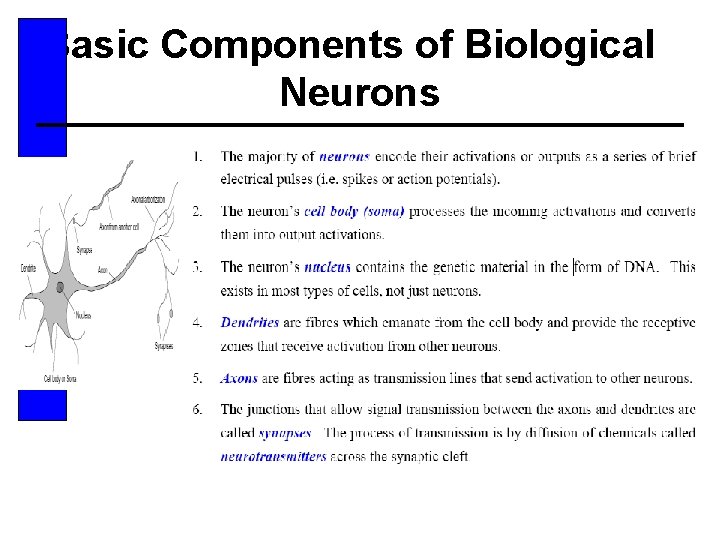

Basic Components of Biological Neurons

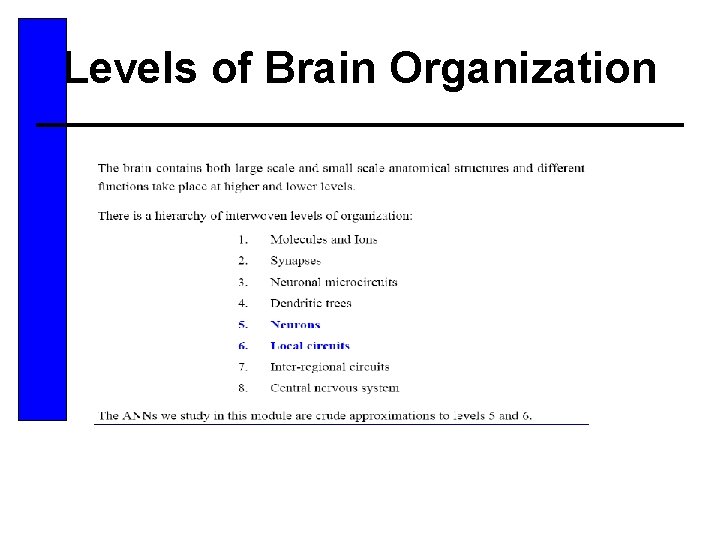

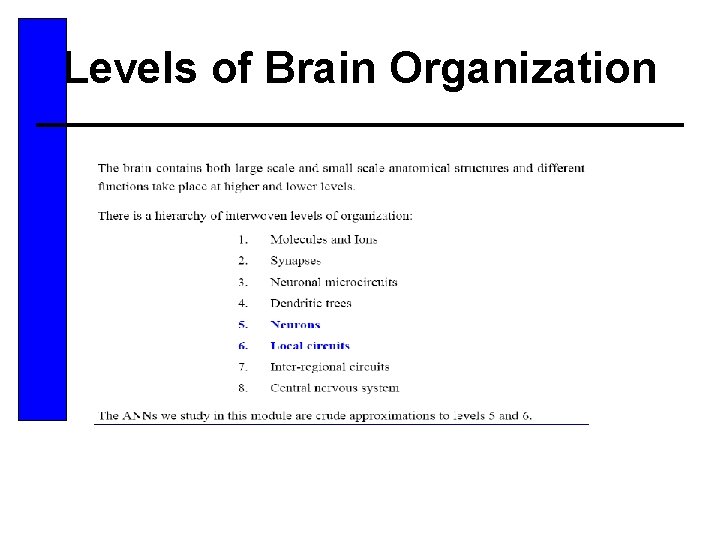

Levels of Brain Organization

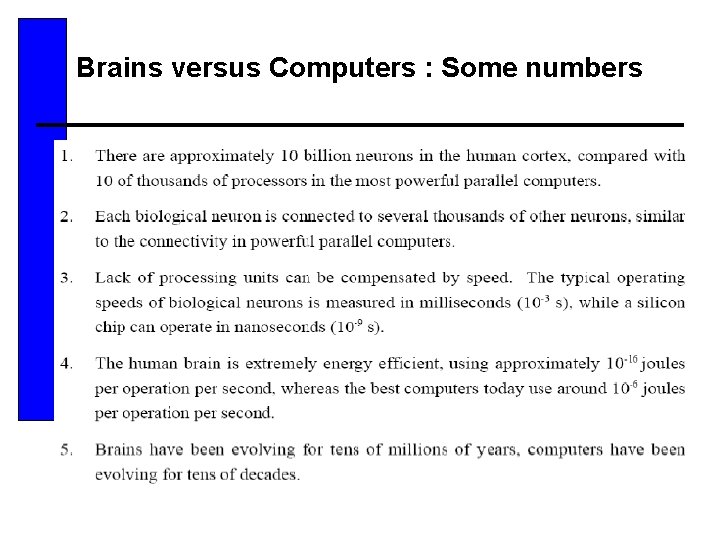

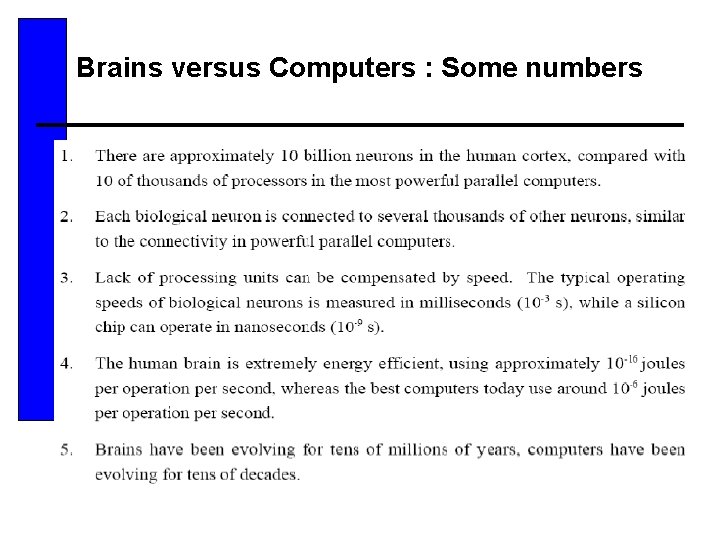

Brains versus Computers: Some numbers

From Biological Neural Systems to Artificial Neural Networks • It is possible to emulate biological neural systems, but achieving the intelligence of biological systems remains an impossible target. • Artificial Neural Networks (ANNs) were designed to emulate biological nervous systems (focusing on functions rather than structures). • ANNs try to simulate the functions of neurons and synapses. • ANNs try to use distributed memory (knowledge representation). • ANNs also use parallel processing. • ANNs can achieve adaptation (evolution) through learning.
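The neuron/synapse analogy above is usually made concrete as a weighted sum followed by an activation function. The following is a minimal Python sketch, assuming a logistic (sigmoid) activation; the function names `neuron` and `layer` are illustrative, not taken from the slides. The weights play the role of synaptic strengths, and because each unit in a layer depends only on the shared inputs and its own weights, the units could be evaluated in parallel.

```python
import math
from typing import Callable, List, Sequence


def sigmoid(z: float) -> float:
    """Logistic activation (an assumed choice): squashes the net input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float,
           activation: Callable[[float], float] = sigmoid) -> float:
    """One artificial neuron: weighted sum of inputs plus bias, passed through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    net = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(net)


def layer(inputs: Sequence[float], weight_rows: Sequence[Sequence[float]],
          biases: Sequence[float],
          activation: Callable[[float], float] = sigmoid) -> List[float]:
    """A layer of neurons sharing the same inputs. Units are independent of each other,
    so they could run in parallel; here they are simply evaluated in a loop."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


# Usage: two inputs feeding one neuron, then a three-unit layer.
print(neuron([1.0, 0.0], [0.5, -0.5], bias=0.0))
print(layer([1.0, 2.0], [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0]))
```

Swapping `activation` for a step function recovers the threshold units of the earliest neuron models; the sigmoid is used here only because it is smooth, which later learning rules rely on.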

Definition of ANNs According to Simon Haykin (Neural Networks: A Comprehensive Foundation, Prentice-Hall, 1999, p. 2) • A neural network is a massively parallel distributed processor made up of simple (adaptive) processing units, which has a natural propensity for storing experiential knowledge and making it available for use. It resembles the brain in two respects: 1) knowledge is acquired by the network from its environment through a learning process; 2) interneuron connection strengths, known as synaptic weights, are used to store the acquired knowledge.

ANN History • Pioneering work • (a) The first neuron model: W. S. McCulloch and W. Pitts (1943), “A logical calculus of the ideas immanent in nervous activity,” Bulletin of Mathematical Biophysics, vol. 5, pp. 115-133. This work marks the birth of neural networks and artificial intelligence. Neurons were modelled by Heaviside (step) functions. A network with a sufficient number of such simple units, with synaptic connections set properly and operating synchronously, is in principle able to compute any computable function. • (b) The first learning rule: D. O. Hebb (1949), The Organization of Behavior: A Neuropsychological Theory, New York: Wiley. • The effectiveness of a variable synapse between two neurons is increased by the repeated activation of one neuron by the other across that synapse.
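The two pioneering ideas on this slide can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the original papers' formulation: binary 0/1 inputs, a threshold unit that fires when its weighted sum reaches the threshold (one common convention for the Heaviside step), and the plain Hebbian update Δw_i = η·x_i·y with an illustrative learning rate η (`lr`). The gate weights are set by hand, matching "synaptic connections set properly"; composing units into XOR hints at why networks of such simple units are so expressive. All function names are hypothetical.

```python
from typing import List, Sequence


def heaviside(z: float) -> int:
    """Step function: the unit fires (1) when its net input is >= 0."""
    return 1 if z >= 0 else 0


def mcp_unit(inputs: Sequence[int], weights: Sequence[float], threshold: float) -> int:
    """McCulloch-Pitts style unit: fixed weights, fires if weighted sum >= threshold."""
    return heaviside(sum(x * w for x, w in zip(inputs, weights)) - threshold)


# Hand-set (not learned) weights and thresholds realise logic gates.
def and_gate(a: int, b: int) -> int:
    return mcp_unit([a, b], [1, 1], threshold=2)


def or_gate(a: int, b: int) -> int:
    return mcp_unit([a, b], [1, 1], threshold=1)


def not_gate(a: int) -> int:
    return mcp_unit([a], [-1], threshold=0)


def xor_gate(a: int, b: int) -> int:
    """XOR needs a small network: (a OR b) AND NOT (a AND b)."""
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


def hebb_update(weights: List[float], pre: Sequence[float], post: float,
                lr: float = 0.1) -> List[float]:
    """Plain Hebbian rule: w_i += lr * pre_i * post, so co-active connections strengthen."""
    return [w + lr * x * post for w, x in zip(weights, pre)]


# Usage: truth table of the composed XOR network, then one Hebbian step.
print([xor_gate(a, b) for a in (0, 1) for b in (0, 1)])
print(hebb_update([0.0, 0.0], pre=[1.0, 0.0], post=1.0, lr=0.5))
```

Note that the plain Hebbian rule only ever grows weights when pre- and post-synaptic activity are both positive; practical variants add normalisation or decay to keep weights bounded.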

History of ANNs (Cont.) • 1950s and 1960s: Early bright years for ANN research • F. Rosenblatt (1958), “The perceptron: a probabilistic model for information storage and organization in the brain,” Psychological Review, vol. 65, pp. 386-408. (Perceptron and delta learning rule) • B. Widrow and M. E. Hoff, Jr. (1960), “Adaptive switching circuits,” IRE WESCON Convention Record, pp. 96-104. (Adaline and least mean squares learning rule)
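The perceptron rule and the Widrow-Hoff LMS rule differ mainly in where the error is measured: the perceptron corrects its weights using the error of its thresholded (0/1) output, while Adaline/LMS uses the error of the linear output before any threshold. A minimal Python sketch under those assumptions, using the AND function as a toy training set; the names, learning rates and epoch limit are illustrative choices, not taken from the cited papers.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], float]


def step(z: float) -> int:
    """Threshold output used by the perceptron: 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0


def train_perceptron(data: Sequence[Sample], lr: float = 1.0, max_epochs: int = 100):
    """Perceptron rule: after each sample, w += lr * (t - y) * x and b += lr * (t - y)."""
    n = len(data[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, t in data:
            y = step(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = t - y
            if err != 0:
                errors += 1
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
        if errors == 0:  # every sample classified correctly in this epoch
            break
    return w, b


def lms_step(w: List[float], b: float, x: Sequence[float], t: float, lr: float = 0.1):
    """One Widrow-Hoff (LMS) update driven by the error of the linear output y = w.x + b."""
    y = sum(wi * xi for wi, xi in zip(w, x)) + b
    err = t - y
    return [wi + lr * err * xi for wi, xi in zip(w, x)], b + lr * err


AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(AND_DATA)
print("perceptron weights:", w, "bias:", b)
print("one LMS step:", lms_step([0.0, 0.0], 0.0, [1.0, 1.0], 1.0))
```

Because AND is linearly separable, the perceptron convergence theorem guarantees that `train_perceptron` stops making errors after a finite number of updates; on XOR it would keep cycling until `max_epochs`.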

History of ANNs
• T. Kohonen (1982), “Self-organised formation of topologically correct feature maps,” Biological Cybernetics, vol. 43, pp. 59-69. (Associative memory, SOM)
• J. J. Hopfield (1982), “Neural networks and physical systems with emergent collective computational abilities,” Proc. of the National Academy of Sciences, USA, vol. 79, pp. 2554-2558.
• J. J. Hopfield and D. W. Tank (1985), “Neural computation of decisions in optimisation problems,” Biological Cybernetics, vol. 52, pp. 141-152. (Hopfield network, energy function, recurrent network)
• S. Kirkpatrick, C. D. Gelatt, Jr. and M. P. Vecchi (1983), “Optimization by simulated annealing,” Science, vol. 220, pp. 671-680. (Simulated annealing)
• D. E. Rumelhart, G. E. Hinton, and R. J. Williams (1986), “Learning representations by back-propagating errors,” Nature, vol. 323, pp. 533-536.
• D. E. Rumelhart, J. L. McClelland, eds. (1986), Parallel Distributed Processing: Explorations in the Microstructure of Cognition, MIT Press. (Back-propagation learning algorithm)
• D. S. Broomhead and D. Lowe (1988), “Multivariable functional interpolation and adaptive networks,” Complex Systems, vol. 2, pp. 321-355. (RBF network)
• R. P. Lippmann (1987), “An introduction to computing with neural nets,” IEEE ASSP Magazine, April 1987, pp. 4-22.
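The back-propagation algorithm listed above can be sketched on XOR, a problem a single perceptron cannot solve. This is a minimal pure-Python sketch, assuming a 2-4-1 network of sigmoid units, the squared-error loss 0.5·(y − t)² and per-sample gradient descent; the hyperparameters (`hidden`, `lr`, `epochs`, `seed`) are illustrative choices, not values from the cited papers. With an unlucky initialisation, training can stall in a poor local minimum, so only a decrease in loss is expected, not a perfect fit.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


XOR_DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]


def train_xor(hidden: int = 4, lr: float = 0.5, epochs: int = 5000, seed: int = 0):
    """Train a 2-hidden-1 sigmoid network with back-propagation (per-sample updates)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]  # w1[j][i]: input i -> hidden j
    b1 = [rng.uniform(-1, 1) for _ in range(hidden)]
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # w2[j]: hidden j -> output
    b2 = rng.uniform(-1, 1)

    def forward(x):
        h = [sigmoid(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j]) for j in range(hidden)]
        y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
        return h, y

    def mean_loss():
        # Mean of 0.5 * (y - t)^2 over the four XOR patterns
        return sum(0.5 * (forward(x)[1] - t) ** 2 for x, t in XOR_DATA) / len(XOR_DATA)

    history = [mean_loss()]
    for _ in range(epochs):
        for x, t in XOR_DATA:
            h, y = forward(x)
            # Output delta: dE/dnet_out for E = 0.5 * (y - t)^2 with a sigmoid output
            d_out = (y - t) * y * (1.0 - y)
            # Hidden deltas: output error propagated back through w2 (before w2 is updated)
            d_hid = [d_out * w2[j] * h[j] * (1.0 - h[j]) for j in range(hidden)]
            for j in range(hidden):
                w2[j] -= lr * d_out * h[j]
                b1[j] -= lr * d_hid[j]
                for i in range(2):
                    w1[j][i] -= lr * d_hid[j] * x[i]
            b2 -= lr * d_out
        history.append(mean_loss())

    return (lambda x: forward(x)[1]), history


predict, history = train_xor()
print(f"mean loss: {history[0]:.4f} -> {history[-1]:.4f}")
for x, t in XOR_DATA:
    print(x, "target", t, "output", round(predict(x), 3))
```

Per-sample (online) updates are used here for brevity; full-batch gradient descent works the same way but sums the gradients over all four patterns before updating.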

ANN Areas of Application • System modeling/approximation: examples include process modeling, prediction, control and image compression. • Classification/recognition: examples include image, handwriting and speech recognition, robotic vision and control, ECG/EEG diagnosis and data clustering. • Optimisation

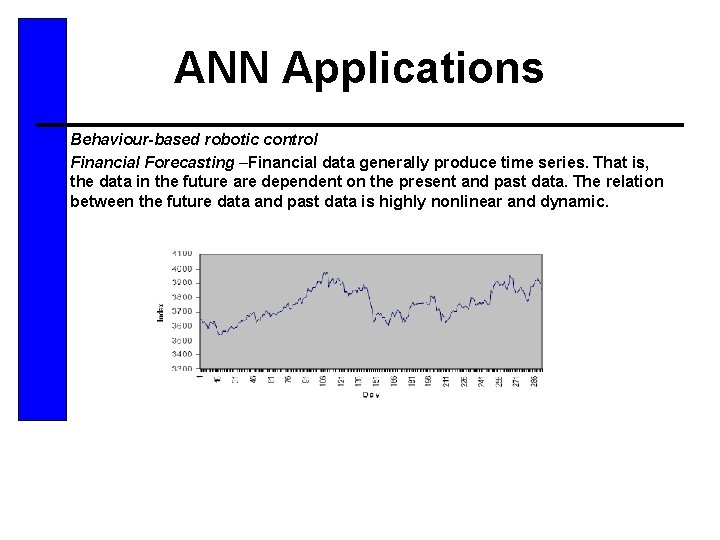

ANN Applications • Behaviour-based robotic control • Financial forecasting: Financial data generally form time series; that is, future values depend on present and past values. The relation between future and past data is highly nonlinear and dynamic.
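Since future values depend on present and past ones, a common way to feed a time series to a feedforward ANN is to slide a window over it: the last few values become the inputs and a later value becomes the target. A minimal Python sketch, assuming a univariate series; the helper name `make_windows` and its `lags`/`horizon` parameters are hypothetical, chosen for illustration. The network (not shown) would then learn the nonlinear mapping from each window to its target.

```python
from typing import List, Sequence, Tuple


def make_windows(series: Sequence[float], lags: int,
                 horizon: int = 1) -> List[Tuple[List[float], float]]:
    """Turn a series into (past window, future value) training pairs.

    Each input holds `lags` consecutive values; the target is the value
    `horizon` steps after the last value in the window.
    """
    if lags < 1 or horizon < 1:
        raise ValueError("lags and horizon must be >= 1")
    pairs = []
    for end in range(lags, len(series) - horizon + 1):
        window = list(series[end - lags:end])  # values at positions end-lags .. end-1
        target = series[end + horizon - 1]     # value `horizon` steps after the window
        pairs.append((window, target))
    return pairs


# Usage: one-step-ahead pairs from a short toy series.
for window, target in make_windows([1.0, 2.0, 3.0, 4.0, 5.0], lags=2):
    print(window, "->", target)
```

Keeping the windows in time order (rather than shuffling before splitting) matters when evaluating such a model, because training on later windows and testing on earlier ones would leak future information.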
