Introduction to ILP: Inductive Logic Programming (machine learning)

- Slides: 32

Introduction to ILP. ILP = Inductive Logic Programming = the intersection of machine learning and logic programming = learning with logic. Introduced by Muggleton in 1992.

(Machine) Learning

- "The process by which relatively permanent changes occur in behavioral potential as a result of experience." (Anderson)
- "Learning is constructing or modifying representations of what is being experienced." (Michalski)
- "A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E." (Mitchell)

Machine Learning Techniques

- Decision tree learning
- Conceptual clustering
- Case-based learning
- Reinforcement learning
- Neural networks
- Genetic algorithms
- and… Inductive Logic Programming

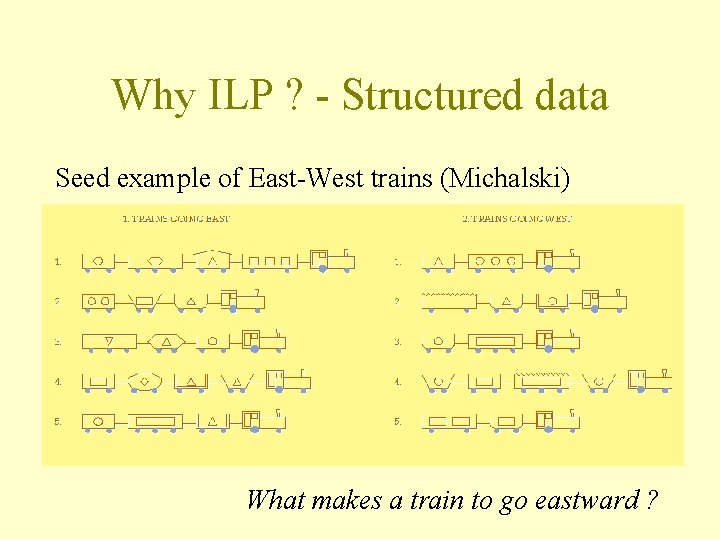

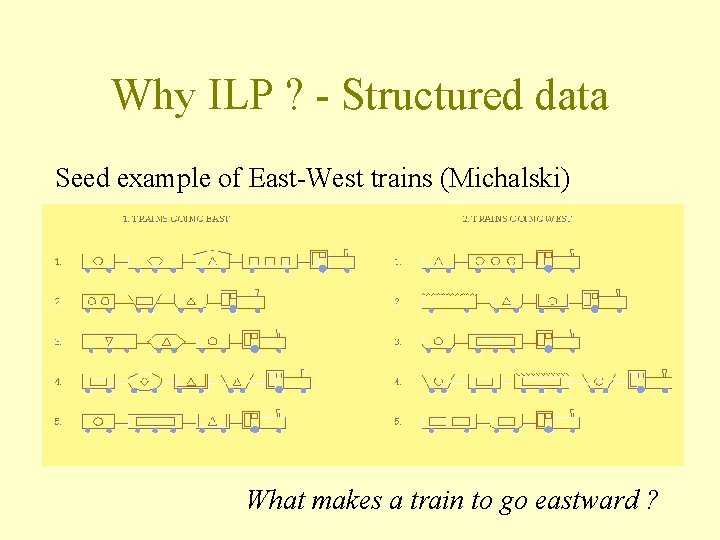

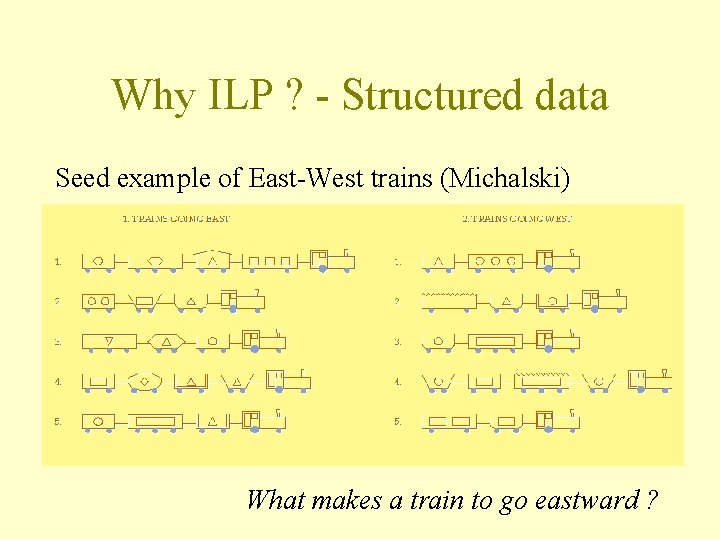

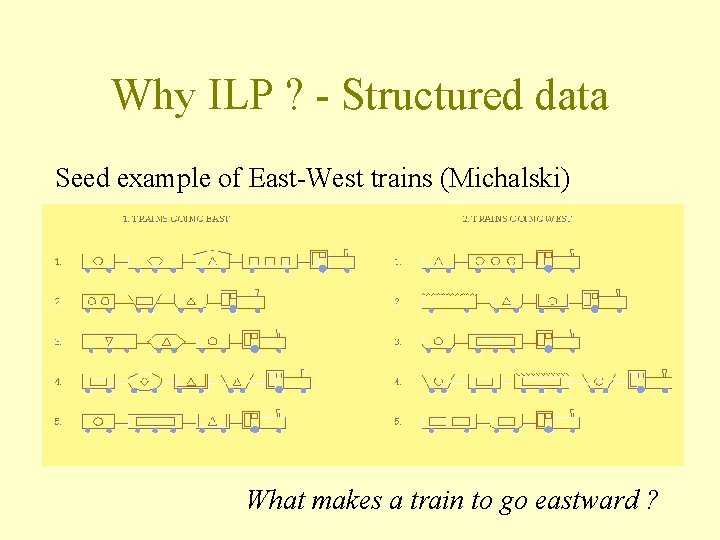

Why ILP? Structured data

Seed example of East-West trains (Michalski): what makes a train go eastward?

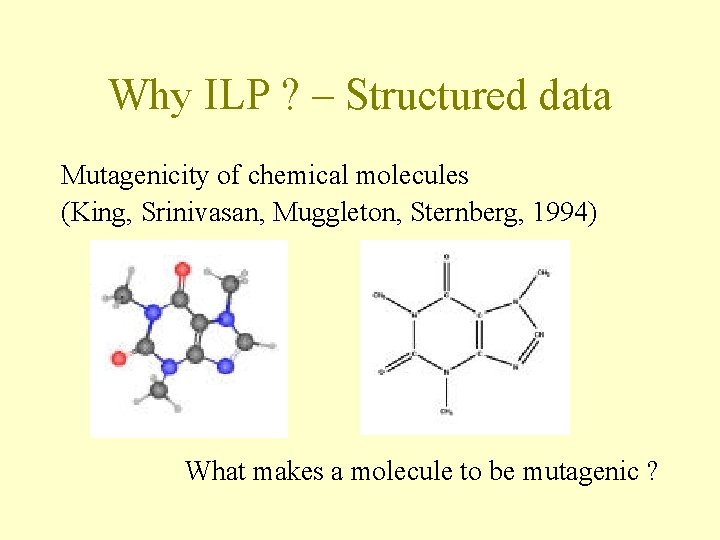

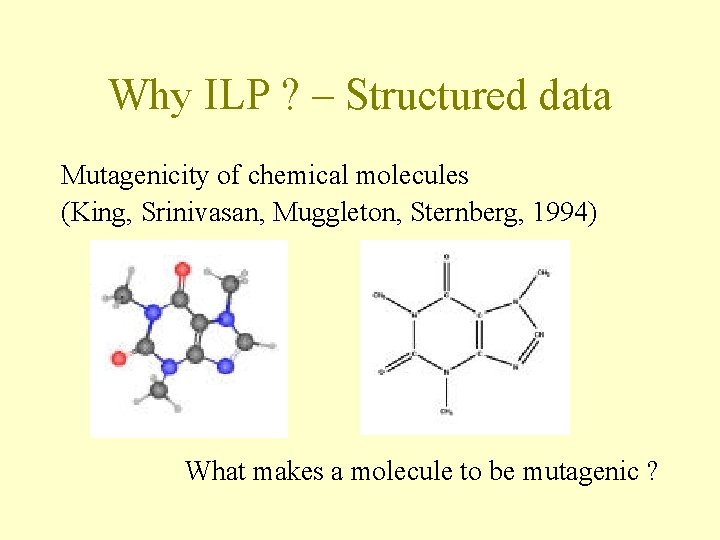

Why ILP? Structured data

Mutagenicity of chemical molecules (King, Srinivasan, Muggleton, Sternberg, 1994): what makes a molecule mutagenic?

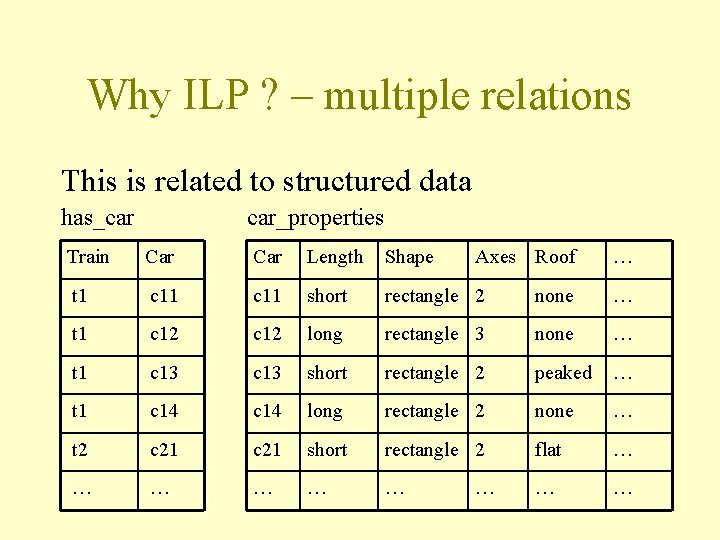

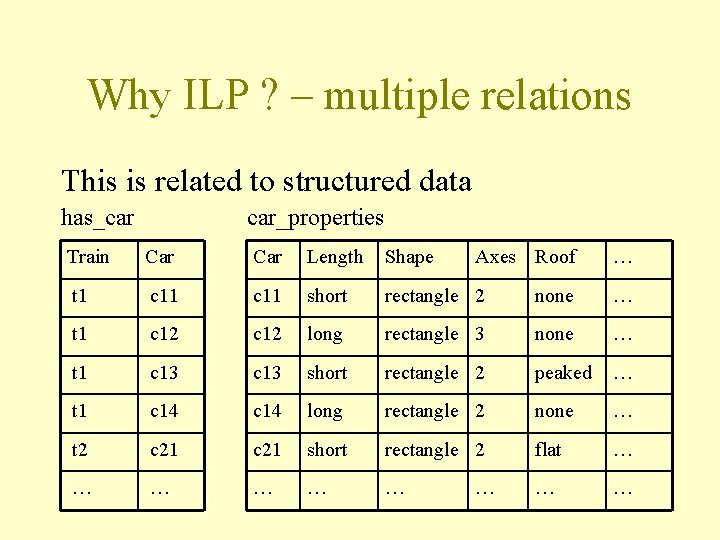

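Why ILP? Multiple relations

This is related to structured data: the relation has_car links trains to cars, and car_properties describes each car.

| Train | Car | Length | Shape | Axles | Roof | … |
|---|---|---|---|---|---|---|
| t1 | c11 | short | rectangle | 2 | none | … |
| t1 | c12 | long | rectangle | 3 | none | … |
| t1 | c13 | short | rectangle | 2 | peaked | … |
| t1 | c14 | long | rectangle | 2 | none | … |
| t2 | c21 | short | rectangle | 2 | flat | … |
| … | … | … | … | … | … | … |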

Why ILP? Multiple relations

Genealogy example:

- Given known relations…
  - father(Old, Young) and mother(Old, Young)
  - male(Somebody) and female(Somebody)
- …learn new relations
  - parent(X, Y) :- father(X, Y).
  - parent(X, Y) :- mother(X, Y).
  - brother(X, Y) :- male(X), father(Z, X), father(Z, Y).

Most ML techniques can't use more than one relation, e.g. decision trees, neural networks, …
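
To illustrate the point about multiple relations, here is a minimal Python sketch that keeps each relation as its own set of tuples and evaluates the rules above as joins. The male/1 facts are assumed for illustration, and the code adds the inequality X \= Y so that nobody counts as their own brother.

```python
# Each relation is its own set of tuples; no single flat table is needed.
father = {("christopher", "victoria"), ("christopher", "arthur")}
mother = {("penelope", "victoria"), ("penelope", "arthur")}
male = {"christopher", "arthur"}  # assumed facts, for illustration only

# parent(X, Y) :- father(X, Y).   parent(X, Y) :- mother(X, Y).
parent = father | mother

# brother(X, Y) :- male(X), father(Z, X), father(Z, Y), X \= Y.
# (a join of father with itself on the shared variable Z)
brother = {
    (x, y)
    for (z1, x) in father
    for (z2, y) in father
    if z1 == z2 and x in male and x != y
}

print(sorted(parent))
print(sorted(brother))
```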

Why ILP? Logical foundation

- Prolog (Programming with Logic) is used to represent:
  - Background knowledge (of the domain): facts
  - Examples (of the relation to be learned): facts
  - Theories (as a result of learning): rules
- Supports two forms of logical reasoning:
  - Deduction
  - Induction

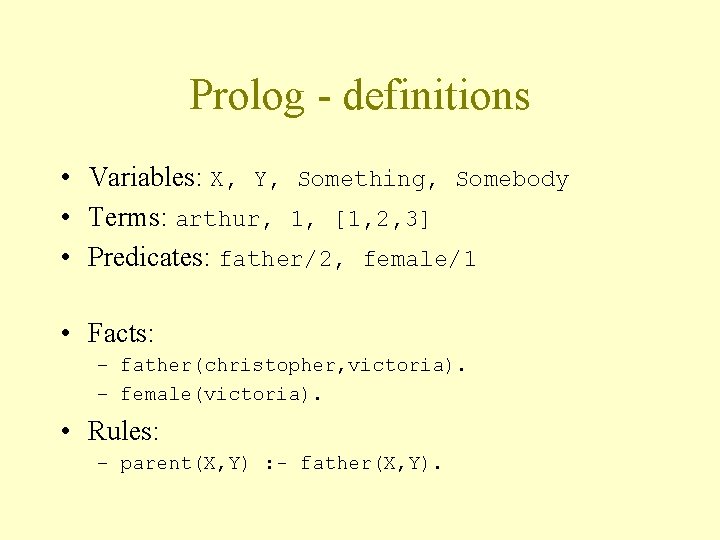

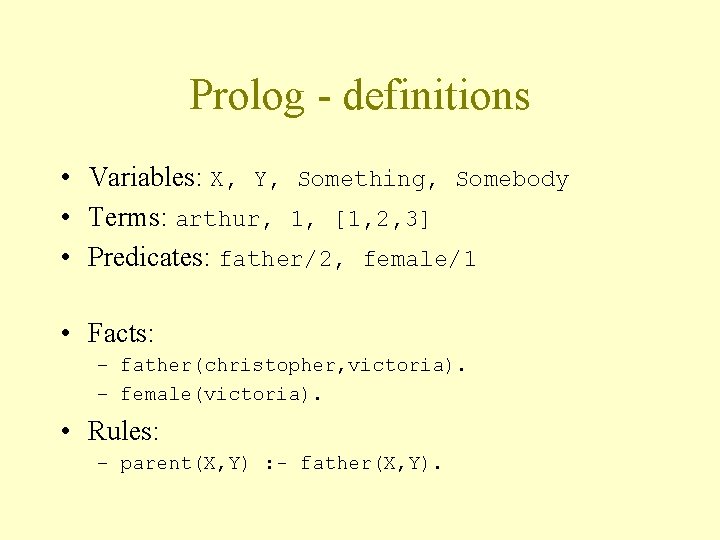

Prolog definitions

- Variables: X, Y, Something, Somebody
- Terms: arthur, 1, [1, 2, 3]
- Predicates: father/2, female/1
- Facts:
  - father(christopher, victoria).
  - female(victoria).
- Rules:
  - parent(X, Y) :- father(X, Y).

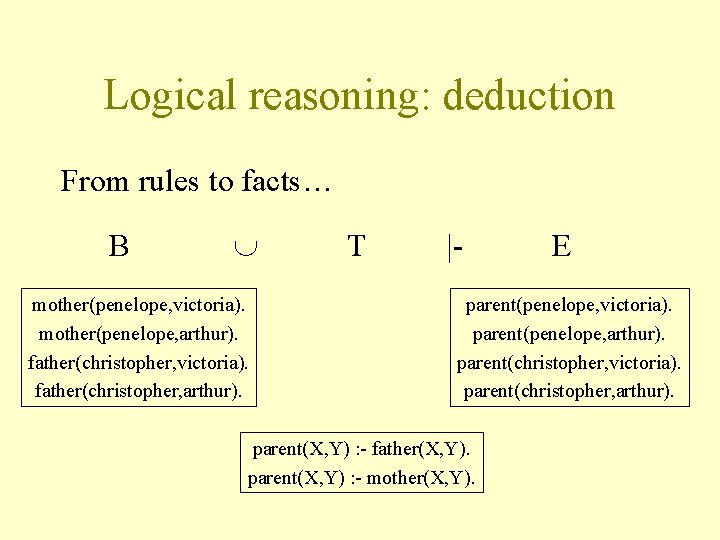

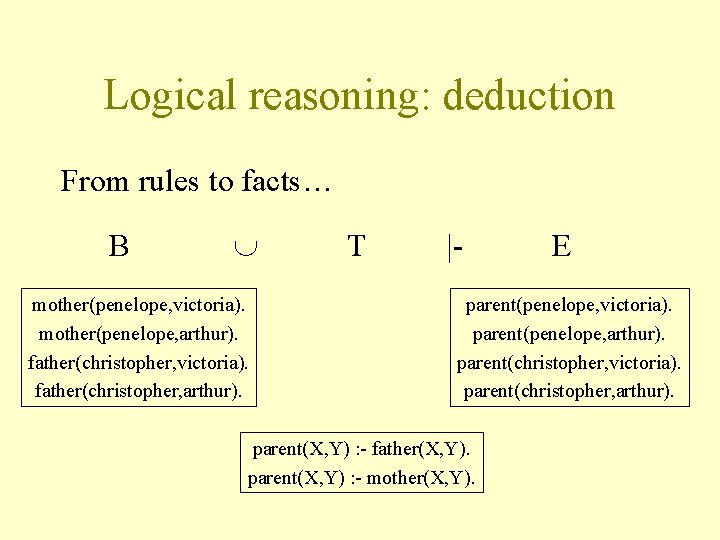

Logical reasoning: deduction

From rules to facts…

- B (background knowledge): mother(penelope, victoria). mother(penelope, arthur). father(christopher, victoria). father(christopher, arthur).
- T (theory): parent(X, Y) :- father(X, Y). parent(X, Y) :- mother(X, Y).
- E, derived by $B \wedge T \vdash E$: parent(penelope, victoria). parent(penelope, arthur). parent(christopher, victoria). parent(christopher, arthur).
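
Deduction can be sketched in Python as naive bottom-up (forward-chaining) evaluation: apply the rules of T to the facts of B until nothing new appears. This assumes function-free rules whose head variables all occur in the body; the tuple encoding of atoms and the helper names (match, solve, deduce) are hypothetical choices for this example. A Prolog system would instead answer queries top-down by SLD resolution.

```python
def is_var(term):
    # Prolog convention: a variable starts with an uppercase letter.
    return term[:1].isupper()

def match(atom, fact, subst):
    """Extend subst so that atom (may contain variables) equals the ground fact, else None."""
    (pred, args), (fpred, fargs) = atom, fact
    if pred != fpred or len(args) != len(fargs):
        return None
    subst = dict(subst)
    for a, f in zip(args, fargs):
        if is_var(a):
            if subst.setdefault(a, f) != f:  # variable already bound to something else
                return None
        elif a != f:
            return None
    return subst

def solve(body, facts, subst):
    """Yield every substitution that makes all body atoms true in facts."""
    if not body:
        yield subst
        return
    for fact in facts:
        s = match(body[0], fact, subst)
        if s is not None:
            yield from solve(body[1:], facts, s)

def deduce(B, T):
    """Apply the rules of T to B until no new fact is derived (a fixpoint)."""
    facts = set(B)
    while True:
        derived = {
            (head[0], tuple(s[a] if is_var(a) else a for a in head[1]))
            for head, body in T
            for s in solve(body, facts, {})
        }
        if derived <= facts:
            return facts
        facts |= derived

B = {
    ("mother", ("penelope", "victoria")), ("mother", ("penelope", "arthur")),
    ("father", ("christopher", "victoria")), ("father", ("christopher", "arthur")),
}
T = [
    (("parent", ("X", "Y")), [("father", ("X", "Y"))]),  # parent(X, Y) :- father(X, Y).
    (("parent", ("X", "Y")), [("mother", ("X", "Y"))]),  # parent(X, Y) :- mother(X, Y).
]
E = deduce(B, T) - B
for pred, args in sorted(E):
    print(f"{pred}({', '.join(args)}).")
```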

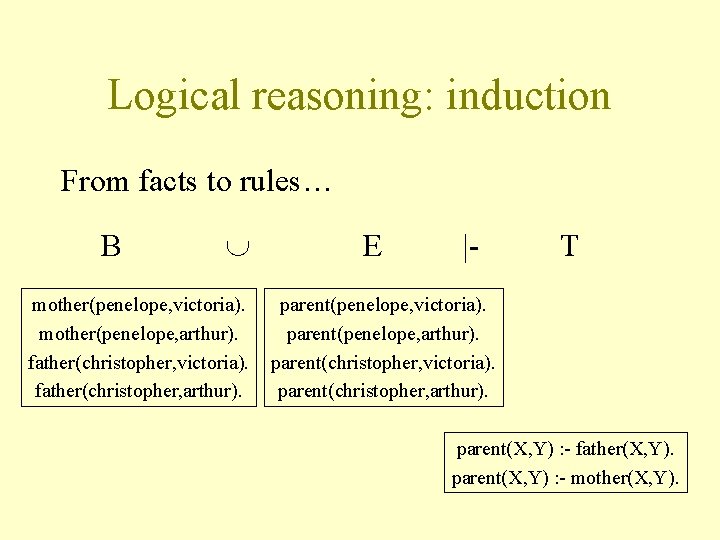

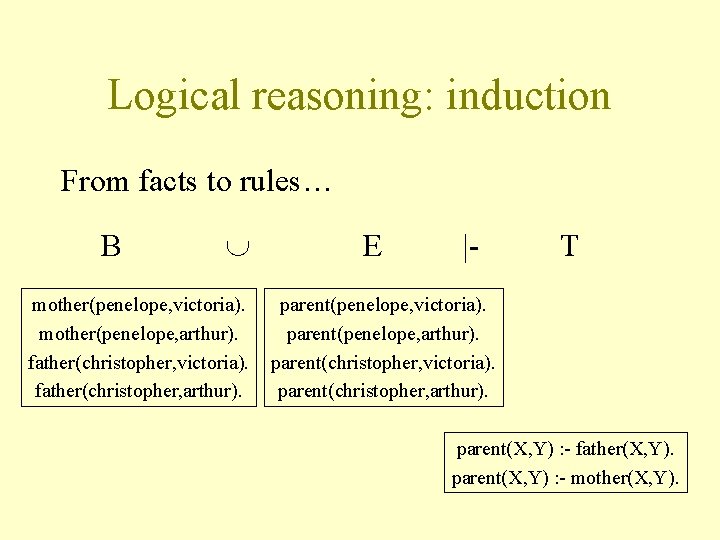

Logical reasoning: induction

From facts to rules…

- B (background knowledge): mother(penelope, victoria). mother(penelope, arthur). father(christopher, victoria). father(christopher, arthur).
- E (examples): parent(penelope, victoria). parent(penelope, arthur). parent(christopher, victoria). parent(christopher, arthur).
- T, induced so that $B \wedge T \vdash E$: parent(X, Y) :- father(X, Y). parent(X, Y) :- mother(X, Y).
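
Induction runs the other way, from B and E to T. The Python sketch below is only a toy under strong assumptions: the hypothesis space is limited to one-literal rules parent(X, Y) :- q(X, Y) or parent(X, Y) :- q(Y, X) over the background predicates, and E is treated as complete (closed world), so a rule that derives a fact outside E is rejected. It keeps every acceptable rule and then checks that together they cover E.

```python
# Background knowledge B and examples E as sets of ground atoms (pred, (arg1, arg2)).
B = {
    ("mother", ("penelope", "victoria")), ("mother", ("penelope", "arthur")),
    ("father", ("christopher", "victoria")), ("father", ("christopher", "arthur")),
}
E = {
    ("parent", ("penelope", "victoria")), ("parent", ("penelope", "arthur")),
    ("parent", ("christopher", "victoria")), ("parent", ("christopher", "arthur")),
}

def derive(body_pred, swap):
    """Facts derived by parent(X, Y) :- body_pred(X, Y), or body_pred(Y, X) if swap."""
    out = set()
    for pred, (a, b) in B:
        if pred == body_pred:
            out.add(("parent", (b, a) if swap else (a, b)))
    return out

def induce():
    theory, covered = [], set()
    for body_pred in sorted({pred for pred, _ in B}):
        for swap in (False, True):
            derived = derive(body_pred, swap)
            # Closed-world assumption: deriving a fact outside E counts as an error.
            if derived and derived <= E:
                body = f"{body_pred}(Y, X)" if swap else f"{body_pred}(X, Y)"
                theory.append(f"parent(X, Y) :- {body}.")
                covered |= derived
    return theory, covered == E

theory, complete = induce()
for rule in theory:
    print(rule)
print("covers all of E:", complete)
```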

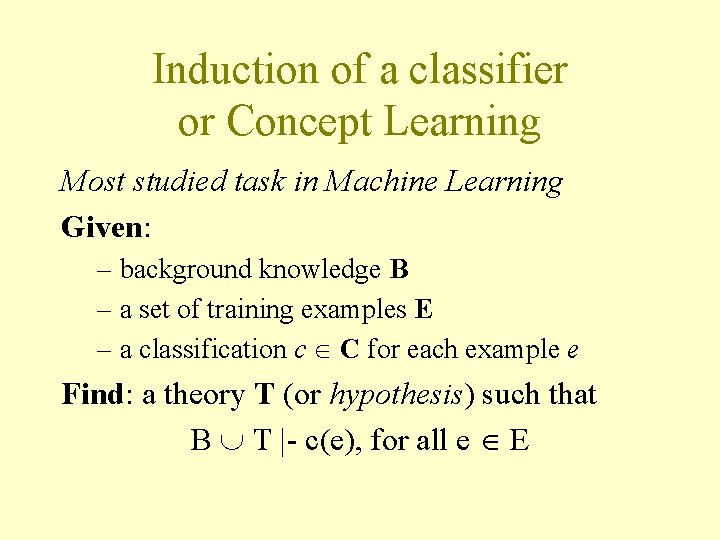

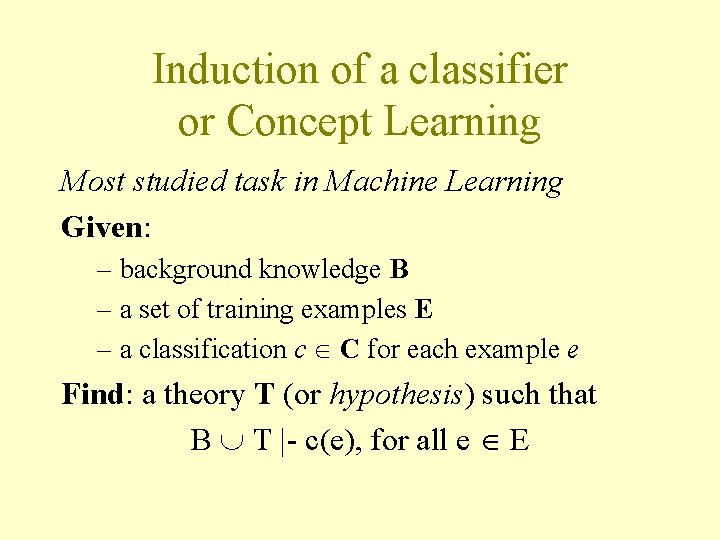

Induction of a classifier, or Concept Learning

The most studied task in Machine Learning.

- Given:
  - background knowledge $B$
  - a set of training examples $E$
  - a classification $c \in C$ for each example $e$
- Find: a theory $T$ (or hypothesis) such that $B \wedge T \vdash c(e)$ for all $e \in E$

Induction of a classifier: example

Example of East-West trains:

- B: relations has_car and car_properties (length, roof, shape, etc.), e.g. has_car(t1, c11), shape(c11, bucket)
- E: the trains t1 to t10
- C: east, west


Induction of a classifier: example

Example of East-West trains:

- B: relations has_car and car_properties (length, roof, shape, etc.), e.g. has_car(t1, c11)
- E: the trains t1 to t10
- C: east, west
- Possible T: east(T) :- has_car(T, C), length(C, short), roof(C, _).
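
A quick check of this possible T against the rows of the multiple-relations table, as a Python sketch. Two assumptions: a roof value of none is read as "no roof/2 fact", so roof(C, _) fails for that car, and train t3 with car c31 is hypothetical, added only to show a train the rule does not cover.

```python
# Rows from the has_car / car_properties table (that table is truncated, so this is partial).
# Train t3 and car c31 are hypothetical, added for contrast.
has_car = {("t1", "c11"), ("t1", "c12"), ("t1", "c13"), ("t1", "c14"),
           ("t2", "c21"), ("t3", "c31")}
length = {"c11": "short", "c12": "long", "c13": "short", "c14": "long",
          "c21": "short", "c31": "short"}
roof = {"c11": "none", "c12": "none", "c13": "peaked", "c14": "none",
        "c21": "flat", "c31": "none"}

def east(train):
    """east(T) :- has_car(T, C), length(C, short), roof(C, _).
    Assumption: roof 'none' means there is no roof/2 fact, so roof(C, _) fails."""
    return any(t == train and length[c] == "short" and roof[c] != "none"
               for t, c in has_car)

for train in ("t1", "t2", "t3"):
    print(train, "covered (east)" if east(train) else "not covered")
```

Train t1 is covered through its short, peaked-roof car c13, and t2 through c21; t3 has a short car but no roof, so the rule does not fire.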

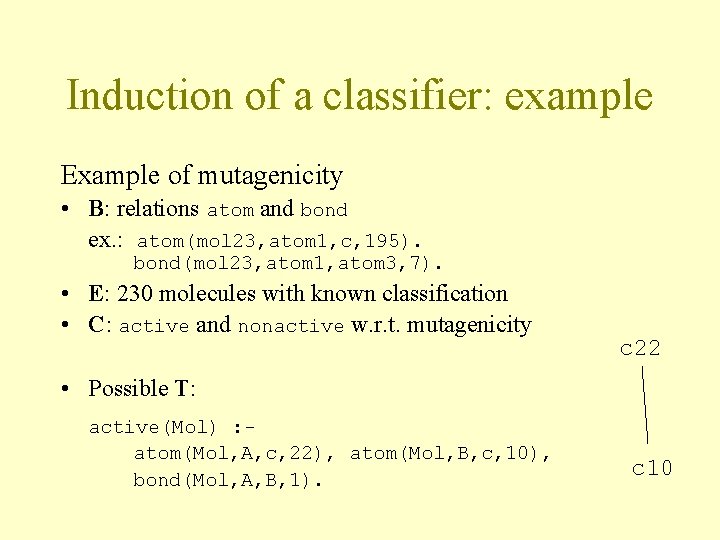

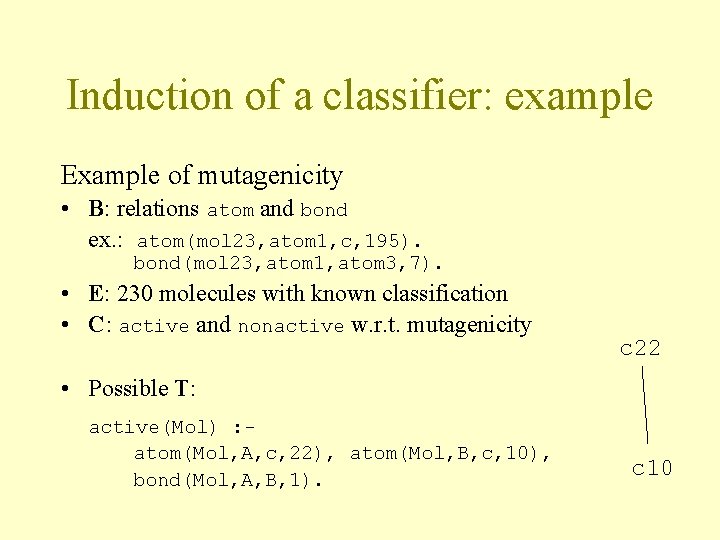

Induction of a classifier: example

Example of mutagenicity:

- B: relations atom and bond, e.g. atom(mol23, atom1, c, 195). bond(mol23, atom1, atom3, 7).
- E: 230 molecules with known classification
- C: active and nonactive w.r.t. mutagenicity
- Possible T: active(Mol) :- atom(Mol, A, c, 22), atom(Mol, B, c, 10), bond(Mol, A, B, 1).
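
The possible T can be evaluated the same way. The facts in this Python sketch (molecules m1 and m2, their atoms and bonds) are hypothetical and only follow the argument order of the examples above; they are not taken from the real mutagenicity data.

```python
# Hypothetical facts in the slide's format:
#   atom(Mol, Atom, Element, AtomType)   bond(Mol, Atom1, Atom2, BondType)
atoms = [("m1", "a1", "c", 22), ("m1", "a2", "c", 10), ("m1", "a3", "o", 40),
         ("m2", "a1", "c", 22), ("m2", "a2", "c", 10)]
bonds = [("m1", "a1", "a2", 1),   # bond type 1 between the type-22 and type-10 carbons
         ("m2", "a1", "a2", 7)]   # same atom types, different bond type

def active(mol):
    """active(Mol) :- atom(Mol, A, c, 22), atom(Mol, B, c, 10), bond(Mol, A, B, 1)."""
    return any(
        (mol, a, b, 1) in bonds
        for (m1, a, e1, t1) in atoms if (m1, e1, t1) == (mol, "c", 22)
        for (m2, b, e2, t2) in atoms if (m2, e2, t2) == (mol, "c", 10)
    )

for mol in ("m1", "m2"):
    print(mol, "active" if active(mol) else "not covered")
```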

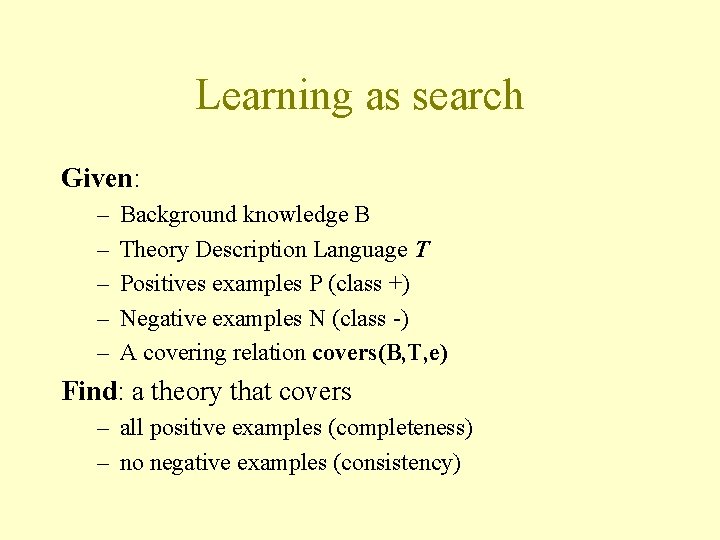

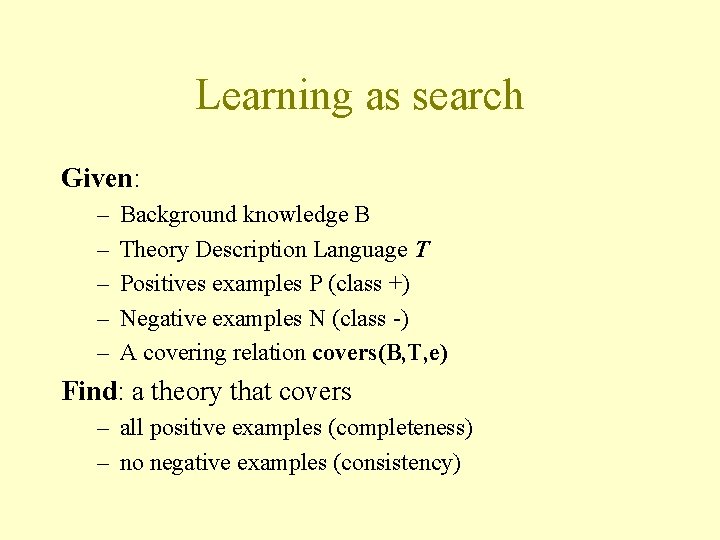

Learning as search

- Given:
  - Background knowledge B
  - Theory Description Language T
  - Positive examples P (class +)
  - Negative examples N (class -)
  - A covering relation covers(B, T, e)
- Find: a theory that covers
  - all positive examples (completeness)
  - no negative examples (consistency)
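
Completeness and consistency can be written directly in terms of covers. In this Python sketch, rules are restricted to the one-literal form parent(X, Y) :- r(X, Y), the negative examples are hypothetical, and family is a made-up over-general relation used to show a theory that is complete but not consistent.

```python
people = {"christopher", "penelope", "victoria", "arthur"}
B = {
    "father": {("christopher", "victoria"), ("christopher", "arthur")},
    "mother": {("penelope", "victoria"), ("penelope", "arthur")},
    # hypothetical over-general relation: any two distinct people
    "family": {(a, b) for a in people for b in people if a != b},
}
P = {("penelope", "victoria"), ("penelope", "arthur"),
     ("christopher", "victoria"), ("christopher", "arthur")}  # positives: parent(X, Y)
N = {("victoria", "penelope"), ("arthur", "christopher")}    # hypothetical negatives

def covers(B, T, e):
    """T is a list of rules parent(X, Y) :- r(X, Y), each given by its body relation r."""
    return any(e in B[r] for r in T)

def complete(B, T):
    return all(covers(B, T, e) for e in P)

def consistent(B, T):
    return not any(covers(B, T, e) for e in N)

for T in (["father"], ["father", "mother"], ["family"]):
    print(T, "complete:", complete(B, T), "consistent:", consistent(B, T))
```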

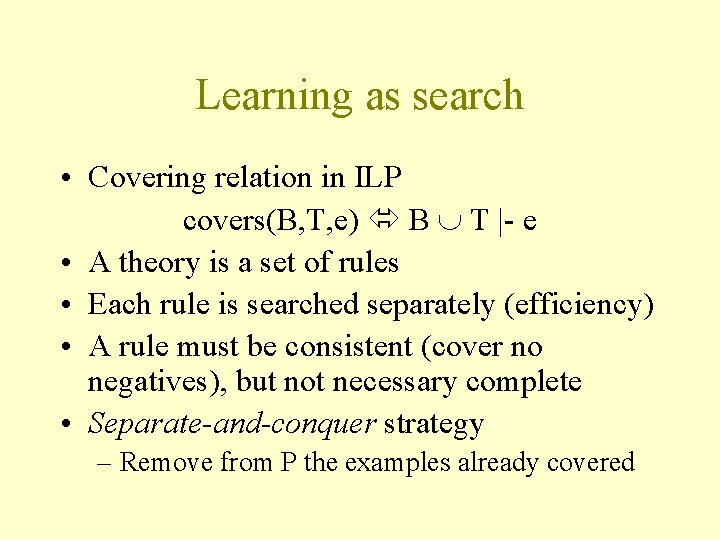

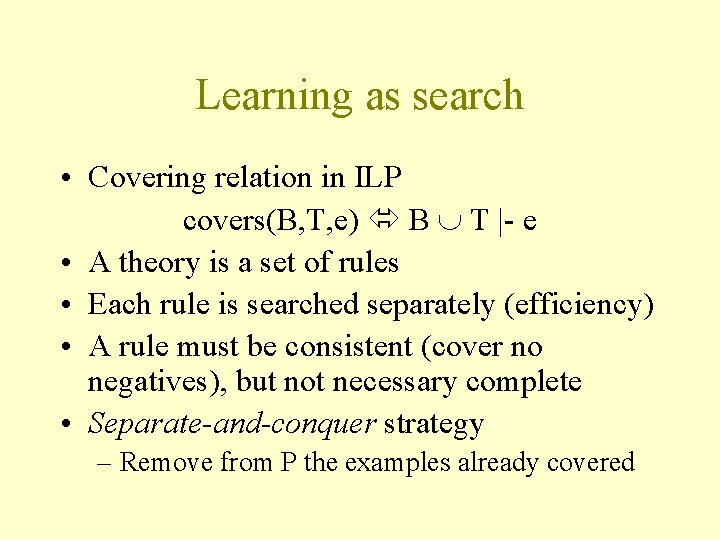

Learning as search

- Covering relation in ILP: covers(B, T, e) holds iff $B \wedge T \vdash e$
- A theory is a set of rules
- Each rule is searched separately (efficiency)
- A rule must be consistent (cover no negatives), but not necessarily complete
- Separate-and-conquer strategy:
  - Remove from P the examples already covered
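
Separate-and-conquer can be sketched as a covering loop: repeatedly pick the consistent rule that covers the most remaining positives, then remove those positives from P. Here the candidate rules and their coverage are given up front (hypothetical data in the shape of the genealogy example); a real ILP system would search for each rule instead of choosing from a fixed list.

```python
# Hypothetical coverage sets for three candidate rules for parent(X, Y).
father = {("christopher", "victoria"), ("christopher", "arthur")}
mother = {("penelope", "victoria"), ("penelope", "arthur")}
people = {"christopher", "penelope", "victoria", "arthur"}
family = {(a, b) for a in people for b in people if a != b}  # over-general: any two people

P = father | mother                                        # positive examples parent(X, Y)
N = {("victoria", "penelope"), ("arthur", "christopher")}  # hypothetical negatives

candidates = {
    "parent(X, Y) :- father(X, Y).": father,
    "parent(X, Y) :- mother(X, Y).": mother,
    "parent(X, Y) :- family(X, Y).": family,
}

def separate_and_conquer(P, N, candidates):
    remaining, theory = set(P), []
    while remaining:
        # Each rule must be consistent (cover no negative) but need not be complete.
        admissible = {r: cov for r, cov in candidates.items() if not cov & N}
        best = max(admissible, key=lambda r: len(admissible[r] & remaining), default=None)
        if best is None or not admissible[best] & remaining:
            break  # no consistent rule covers any remaining positive
        theory.append(best)
        remaining -= admissible[best]  # separate: drop the positives already covered
    return theory, remaining

theory, uncovered = separate_and_conquer(P, N, candidates)
print(theory)
print("uncovered:", uncovered)
```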

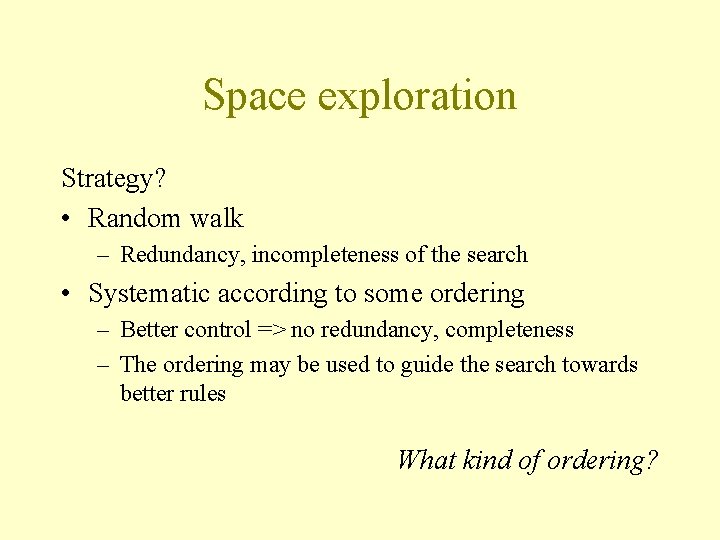

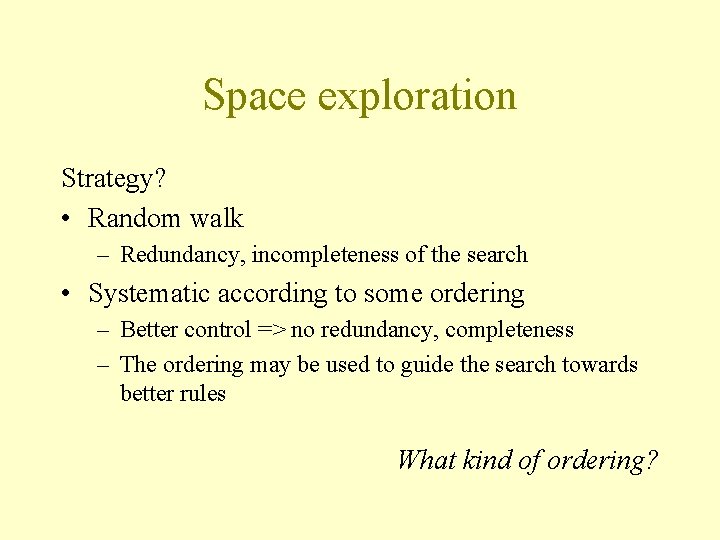

Space exploration

Strategy?

- Random walk
  - Redundancy, incompleteness of the search
- Systematic, according to some ordering
  - Better control => no redundancy, completeness
  - The ordering may be used to guide the search towards better rules

What kind of ordering?

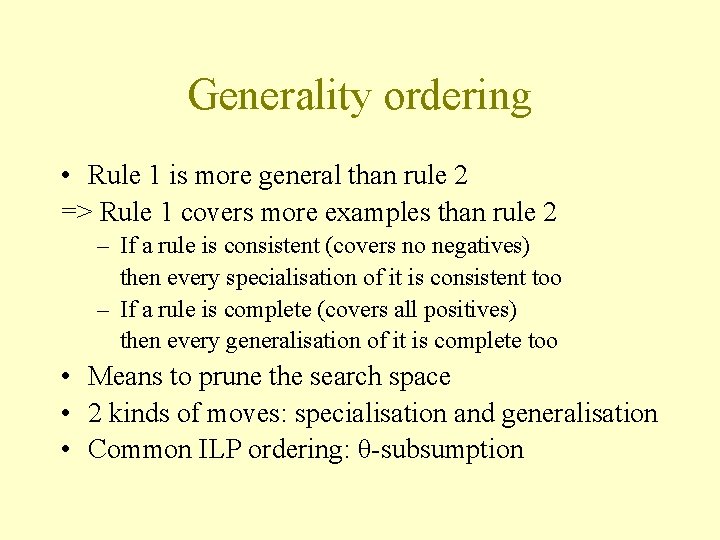

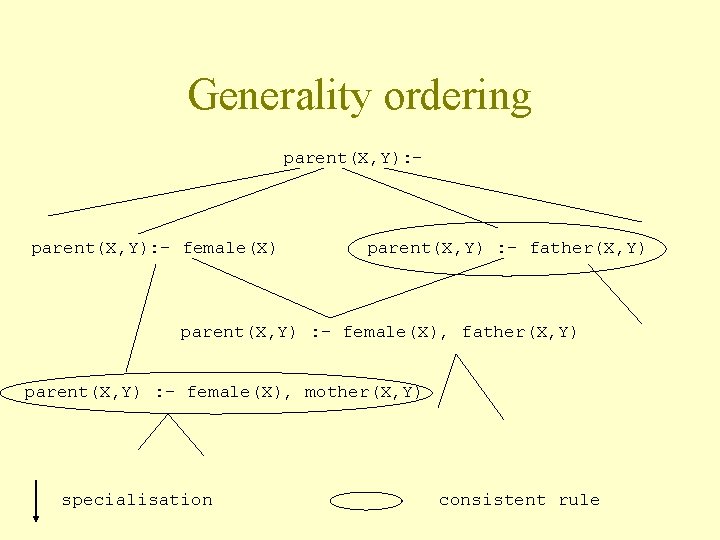

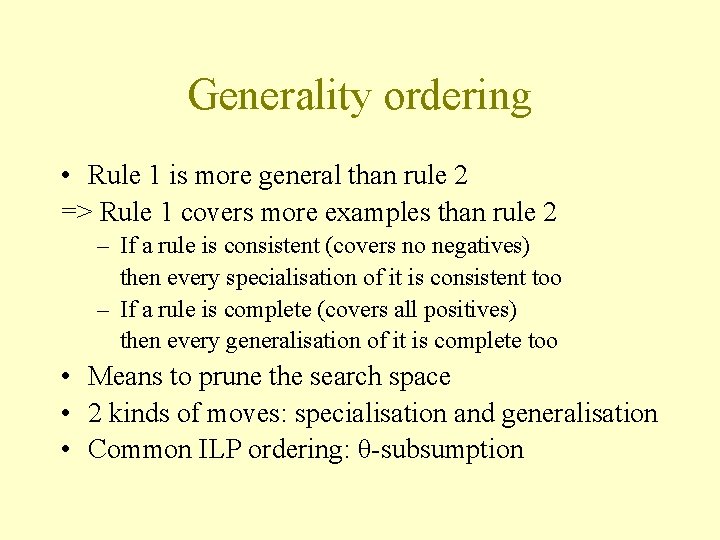

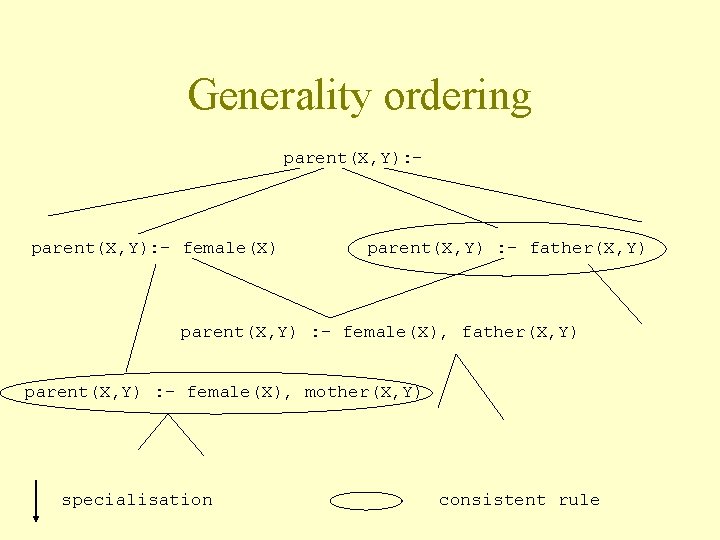

Generality ordering

- Rule 1 is more general than rule 2 => rule 1 covers at least all the examples covered by rule 2
  - If a rule is consistent (covers no negatives), then every specialisation of it is consistent too
  - If a rule is complete (covers all positives), then every generalisation of it is complete too
- Means to prune the search space
- Two kinds of moves: specialisation and generalisation
- Common ILP ordering: θ-subsumption

Generality ordering (example)

- parent(X, Y) :- female(X).
- parent(X, Y) :- father(X, Y).
- parent(X, Y) :- female(X), mother(X, Y).

The third rule is a specialisation of the first one, and it is a consistent rule.
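
θ-subsumption makes this ordering operational: a clause C θ-subsumes a clause D if some substitution θ maps C onto a subset of D, in which case C is at least as general as D. Below is a brute-force Python sketch on the three clauses above. Encoding a clause as a set of (kind, predicate, args) literals is an assumption of this example, the variables of D are treated as fixed terms, and the search is exponential in the number of variables of C.

```python
from itertools import product

# A clause is a set of literals (kind, predicate, args); kind is "head" or "body".
def is_var(term):
    return term[:1].isupper()  # Prolog convention: variables start uppercase

def substitute(theta, clause):
    return {(kind, pred, tuple(theta.get(a, a) for a in args)) for kind, pred, args in clause}

def theta_subsumes(c, d):
    """True iff c·theta is a subset of d for some substitution theta.
    Brute force: try every mapping of c's variables to terms occurring in d."""
    c_vars = sorted({a for _, _, args in c for a in args if is_var(a)})
    d_terms = sorted({a for _, _, args in d for a in args})
    d = set(d)
    return any(
        substitute(dict(zip(c_vars, values)), c) <= d
        for values in product(d_terms, repeat=len(c_vars))
    )

general = {("head", "parent", ("X", "Y")), ("body", "female", ("X",))}
specific = general | {("body", "mother", ("X", "Y"))}
father_rule = {("head", "parent", ("X", "Y")), ("body", "father", ("X", "Y"))}

print(theta_subsumes(general, specific))    # is female(X) more general than female(X), mother(X, Y)?
print(theta_subsumes(specific, general))
print(theta_subsumes(general, father_rule), theta_subsumes(father_rule, general))
```

The first rule θ-subsumes its specialisation but not the other way round, while the father/2 rule is incomparable with both under this ordering.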

Search biases

"Bias refers to any criterion for choosing one generalization over another other than strict consistency with the observed training instances." (Mitchell)

- Restrict the search space (efficiency)
- Guide the search (given domain knowledge)
- Different kinds of bias:
  - Language bias
  - Search bias
  - Strategy bias

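Language bias

- Choice of predicates: roof(C, flat)? roof(C)? flat(C)?
- Types of predicates: with typed arguments, a clause such as east(T) :- roof(T), roof(C, 3) is ruled out, since a train T or the number 3 appears where a car or a roof shape is expected
- Modes of predicates: east(T) :- roof(C, flat) is ruled out, while east(T) :- has_car(T, C), roof(C, flat) is allowed, because the input argument C of roof must first be bound by an earlier literal
- Discretization of numerical values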
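
Type and mode restrictions can be checked mechanically before a clause is ever evaluated. In this Python sketch the declarations MODES and CONSTANTS are hypothetical, loosely modelled on Progol-style mode declarations (+ for an input variable, - for an output variable, # for a constant). The type example uses roof(T, flat) instead of roof(T) so that every literal has a declared arity.

```python
# Hypothetical declarations: each argument has a mode (+ input, - output, # constant) and a type.
MODES = {
    "east": [("+", "train")],
    "has_car": [("+", "train"), ("-", "car")],
    "roof": [("+", "car"), ("#", "roof_shape")],
}
CONSTANTS = {"roof_shape": {"flat", "peaked", "none"}}

def is_var(term):
    return term[:1].isupper()  # Prolog convention: variables start uppercase

def check(head, body):
    """Return None if the clause head :- body respects MODES/CONSTANTS, else a reason."""
    types, bound = {}, set()
    for i, (pred, args) in enumerate([head] + body):
        decl = MODES.get(pred)
        if decl is None or len(decl) != len(args):
            return f"{pred}/{len(args)} is not declared"
        for (mode, typ), arg in zip(decl, args):
            if mode == "#":
                if arg not in CONSTANTS[typ]:
                    return f"{arg} is not a constant of type {typ}"
                continue
            if not is_var(arg):
                return f"{arg} should be a variable"
            if types.setdefault(arg, typ) != typ:  # type clash for the same variable
                return f"{arg} used both as {types[arg]} and {typ}"
            if i > 0 and mode == "+" and arg not in bound:  # inputs must already be bound
                return f"input variable {arg} of {pred} is not bound"
        bound.update(a for a in args if is_var(a))
    return None

clauses = {
    "east(T) :- roof(T, flat).": (("east", ("T",)), [("roof", ("T", "flat"))]),
    "east(T) :- has_car(T, C), roof(C, 3).": (("east", ("T",)), [("has_car", ("T", "C")), ("roof", ("C", "3"))]),
    "east(T) :- roof(C, flat).": (("east", ("T",)), [("roof", ("C", "flat"))]),
    "east(T) :- has_car(T, C), roof(C, flat).": (("east", ("T",)), [("has_car", ("T", "C")), ("roof", ("C", "flat"))]),
}
for text, (head, body) in clauses.items():
    print(text, "->", check(head, body) or "ok")
```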

Search bias

The direction of moves in the search space:

- Top-down
  - start: the empty rule (c(X) :- .)
  - moves: specialisations
- Bottom-up
  - start: the bottom clause (≈ c(X) :- B.)
  - moves: generalisations
- Bi-directional

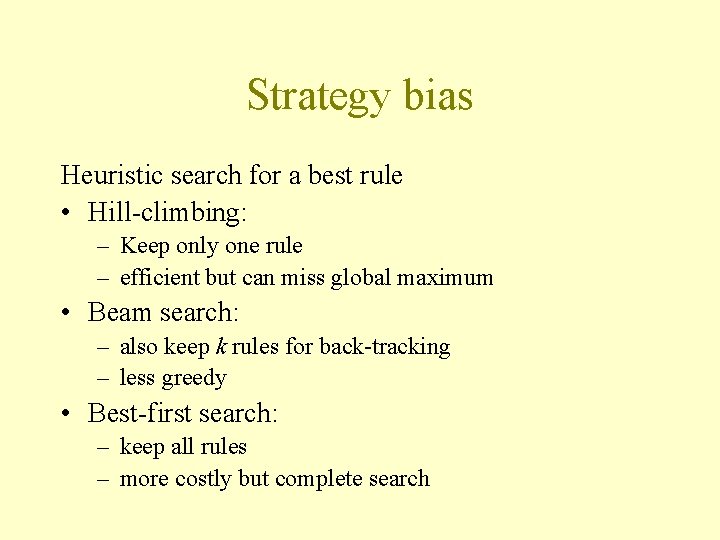

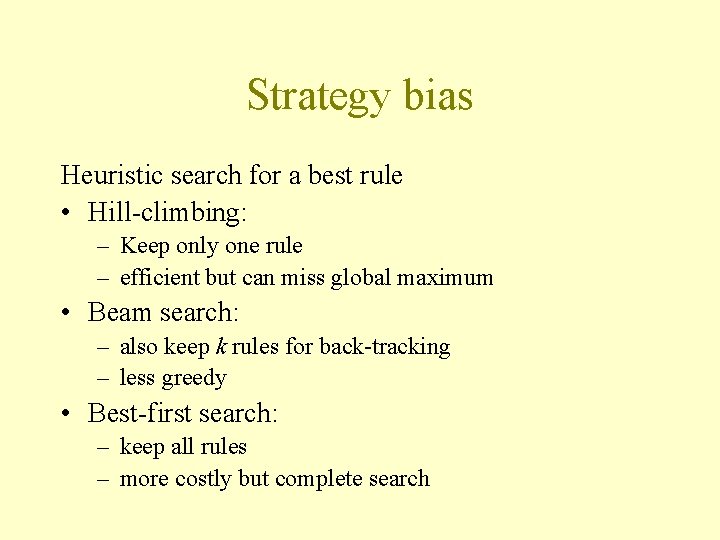

Strategy bias

Heuristic search for a best rule:

- Hill-climbing:
  - keep only one rule
  - efficient, but can miss the global maximum
- Beam search:
  - keep the k best rules, which allows some back-tracking
  - less greedy
- Best-first search:
  - keep all rules
  - more costly, but complete search
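
The difference between these strategies shows up even on a tiny search space. This Python sketch runs a beam search in which width=1 behaves as greedy hill-climbing; the graph of rules and their scores are made up for illustration. Best-first search would instead keep every generated rule in a priority queue.

```python
def beam_search(start, neighbours, score, width, steps):
    """Keep the `width` best states at each step; width=1 is greedy hill-climbing."""
    def rank(s):
        return (score(s), s)  # break score ties by name, deterministically

    beam, best = [start], start
    for _ in range(steps):
        candidates = {n for s in beam for n in neighbours(s)}
        if not candidates:
            break
        beam = sorted(candidates, key=rank, reverse=True)[:width]
        best = max([best] + beam, key=rank)
    return best

# Hypothetical search space: refinements of a start rule r0, with made-up scores.
GRAPH = {"r0": ["a", "b"], "a": ["a1"], "b": ["b1"], "a1": [], "b1": []}
SCORE = {"r0": 0, "a": 5, "b": 4, "a1": 6, "b1": 9}

print(beam_search("r0", GRAPH.get, SCORE.get, width=1, steps=5))  # hill-climbing
print(beam_search("r0", GRAPH.get, SCORE.get, width=2, steps=5))  # beam of width 2
```

Hill-climbing commits to a, whose only refinement scores 6, and misses b1; a beam of width 2 also keeps b and reaches b1 (score 9).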

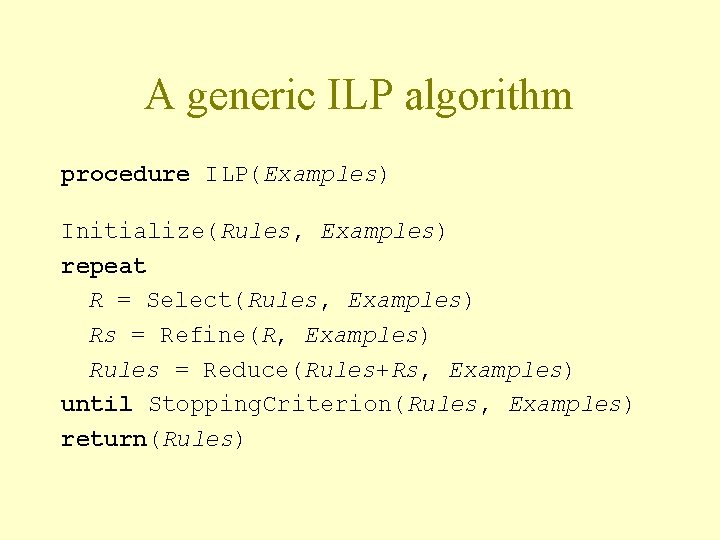

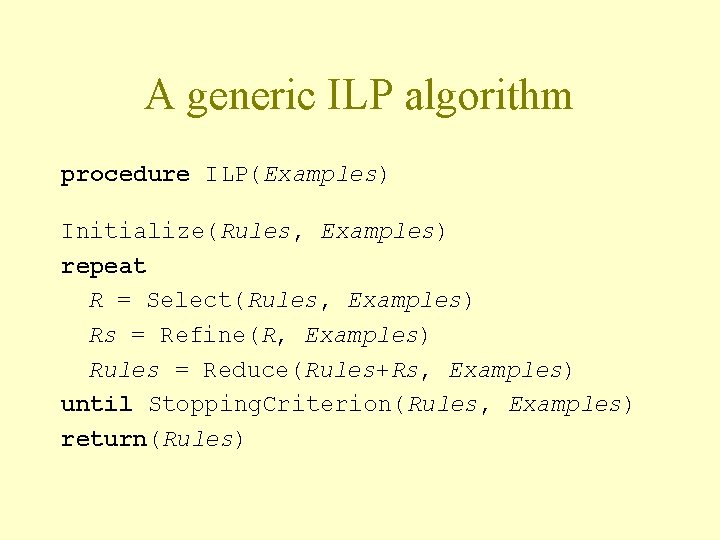

A generic ILP algorithm

    procedure ILP(Examples)
        Initialize(Rules, Examples)
        repeat
            R = Select(Rules, Examples)
            Rs = Refine(R, Examples)
            Rules = Reduce(Rules + Rs, Examples)
        until StoppingCriterion(Rules, Examples)
        return(Rules)

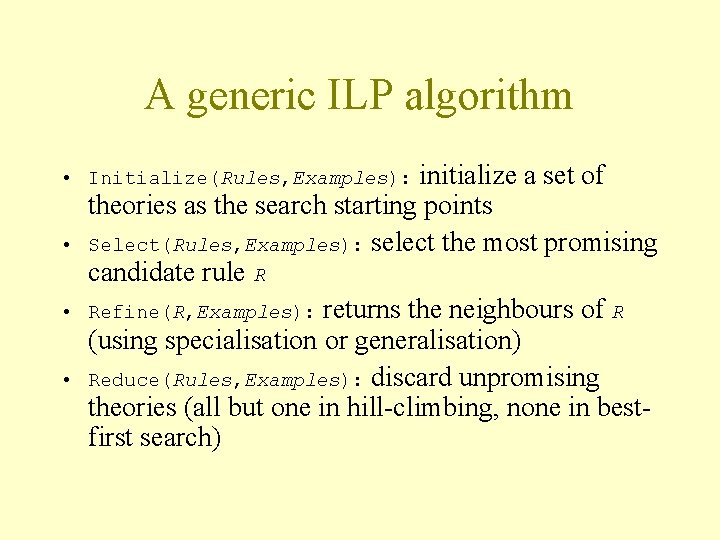

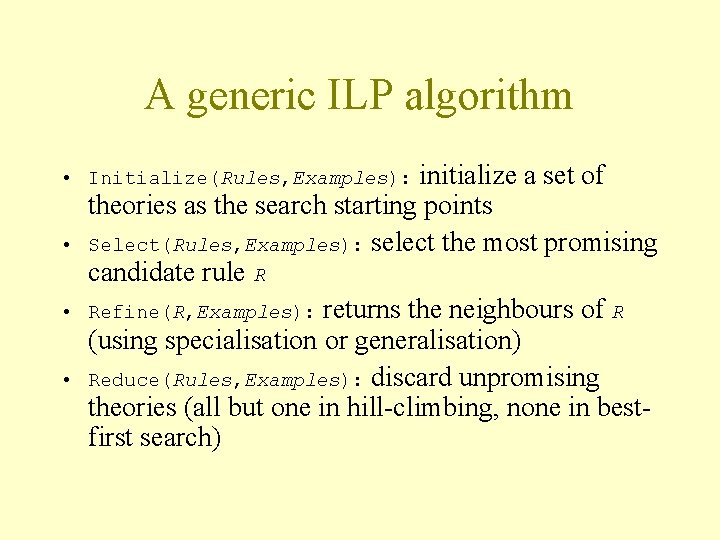

A generic ILP algorithm

- Initialize(Rules, Examples): initialize a set of theories as the search starting points
- Select(Rules, Examples): select the most promising candidate rule R
- Refine(R, Examples): return the neighbours of R (using specialisation or generalisation)
- Reduce(Rules, Examples): discard unpromising theories (all but one in hill-climbing, none in best-first search)
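
A minimal Python sketch of this loop, instantiated as top-down beam search for a single rule (width=1 gives hill-climbing). The target daughter(X, Y), the literal pool, the negative examples and the score (positives covered minus negatives covered) are hypothetical choices for this example; FOIL, for instance, uses an information-based gain instead. A complete system would wrap this in the separate-and-conquer loop to learn several rules.

```python
# Hypothetical data: background facts, target daughter(X, Y), and a few negatives.
parent = {("christopher", "victoria"), ("christopher", "arthur"),
          ("penelope", "victoria"), ("penelope", "arthur")}
female = {"penelope", "victoria"}
male = {"christopher", "arthur"}
POS = [("victoria", "christopher"), ("victoria", "penelope")]
NEG = [("arthur", "christopher"), ("arthur", "penelope"), ("penelope", "victoria")]

# Literal pool for top-down refinement: literal text -> test on a pair (X, Y).
LITERALS = {
    "female(X)": lambda x, y: x in female,
    "male(X)": lambda x, y: x in male,
    "parent(X, Y)": lambda x, y: (x, y) in parent,
    "parent(Y, X)": lambda x, y: (y, x) in parent,
}

def covers(body, example):
    return all(LITERALS[lit](*example) for lit in body)

def score(body):
    # positives covered minus negatives covered
    return sum(covers(body, e) for e in POS) - sum(covers(body, e) for e in NEG)

def consistent(body):
    return not any(covers(body, e) for e in NEG)

def initialize():
    return [()]  # top-down start: the empty body, daughter(X, Y) :- true

def select(rules, expanded):
    pending = [r for r in rules if r not in expanded]
    return pending[0] if pending else None  # rules are kept sorted best-first

def refine(rule):
    return [rule + (lit,) for lit in LITERALS if lit not in rule]  # specialisations

def reduce(rules, width):
    return sorted(set(rules), key=lambda r: (-score(r), r))[:width]  # keep `width` best

def stopping_criterion(rules):
    best = rules[0]
    return consistent(best) and any(covers(best, e) for e in POS)

def ilp(width=1):
    rules, expanded = initialize(), set()
    while True:
        r = select(rules, expanded)
        if r is None:
            break  # nothing left to refine
        expanded.add(r)
        rules = reduce(rules + refine(r), width)
        if stopping_criterion(rules):
            break
    return rules[0]

best = ilp(width=1)
print("daughter(X, Y) :- " + ", ".join(best) + ".")
```

With this data, both width=1 and width=2 first move to female(X) (score 1, still covering one negative) and then stop at daughter(X, Y) :- female(X), parent(Y, X).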

ILPnet2: www.cs.bris.ac.uk/~ILPnet2/

Network of Excellence in ILP in Europe

- 37 universities and research institutes
- Educational materials
- Publications
- Events (conferences, summer schools, …)
- Description of ILP systems
- Applications

ILP systems

- FOIL (Quinlan and Cameron-Jones 1993): top-down hill-climbing search
- Progol (Muggleton 1995): top-down best-first search with bottom clause
- Golem (Muggleton and Feng 1992): bottom-up hill-climbing search
- LINUS (Lavrac and Dzeroski 1994): propositionalisation
- Aleph (similar to Progol), Tilde (relational decision trees), …

ILP applications

- Life sciences
  - mutagenicity, predicting toxicology
  - protein structure/folding
- Natural language processing
  - English verb past tense
  - document analysis and classification
- Engineering
  - finite element mesh design
- Environmental sciences
  - biodegradability of chemical compounds

The end

A few books on ILP…

- J. Lloyd. Logic for Learning: Learning Comprehensible Theories from Structured Data. 2003.
- S. Dzeroski and N. Lavrac, editors. Relational Data Mining. September 2001.
- L. De Raedt, editor. Advances in Inductive Logic Programming. 1996.
- N. Lavrac and S. Dzeroski. Inductive Logic Programming: Techniques and Applications. 1994.