High-Level Synthesis: Creating Custom Circuits from High-Level Code

![High-Level Synthesis acc = 0; for (i=0; i < 128; i++) acc += a[i]; High-Level Synthesis acc = 0; for (i=0; i < 128; i++) acc += a[i];](https://slidetodoc.com/presentation_image_h2/c0b100a67c04c838b4a5f3b5489d4603/image-17.jpg)

High-Level Synthesis: Creating Custom Circuits from High-Level Code
Greg Stitt
ECE Department, University of Florida

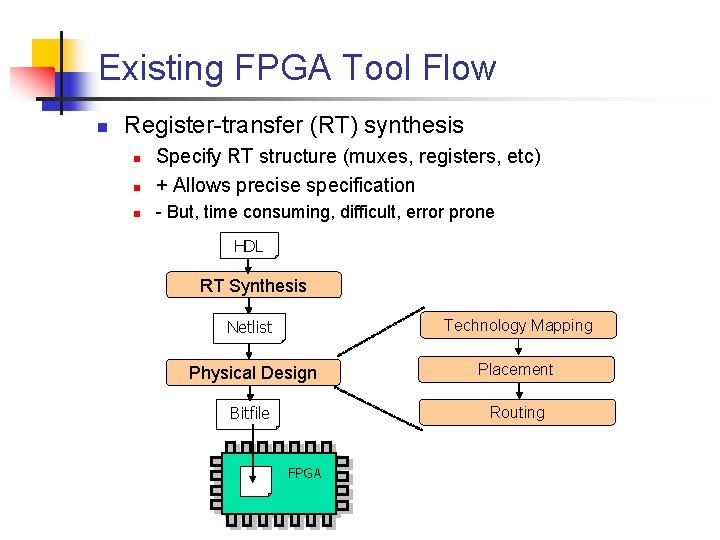

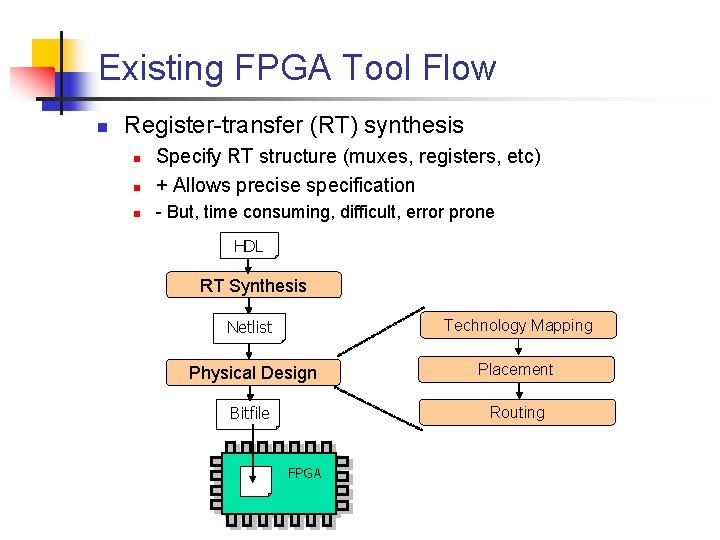

Existing FPGA Tool Flow
- Register-transfer (RT) synthesis
  - Specify RT structure (muxes, registers, etc.)
  - Pro: allows precise specification
  - Con: time consuming, difficult, error prone

[Figure: HDL → RT synthesis → netlist → technology mapping → physical design (placement, routing) → bitfile → FPGA, alongside a processor]

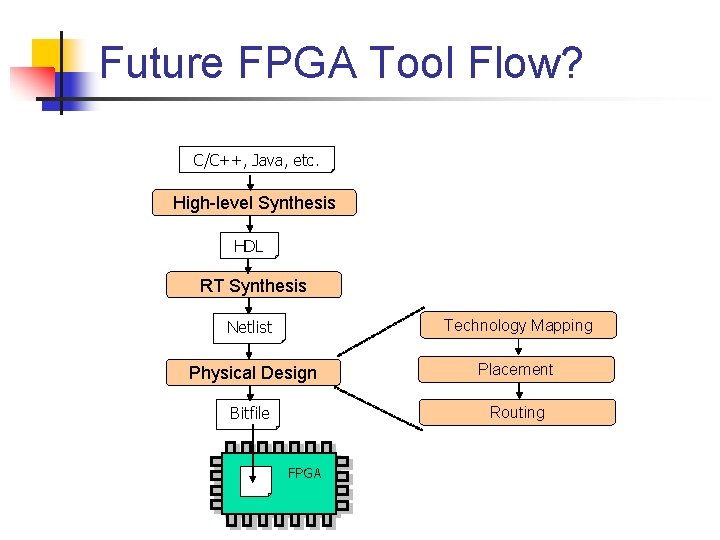

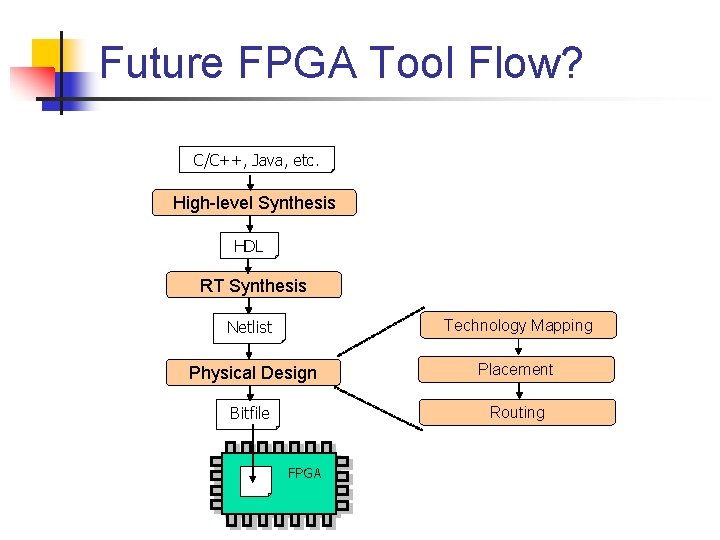

Future FPGA Tool Flow?

[Figure: C/C++, Java, etc. → high-level synthesis → HDL → RT synthesis → netlist → technology mapping → physical design (placement, routing) → bitfile → FPGA, alongside a processor]

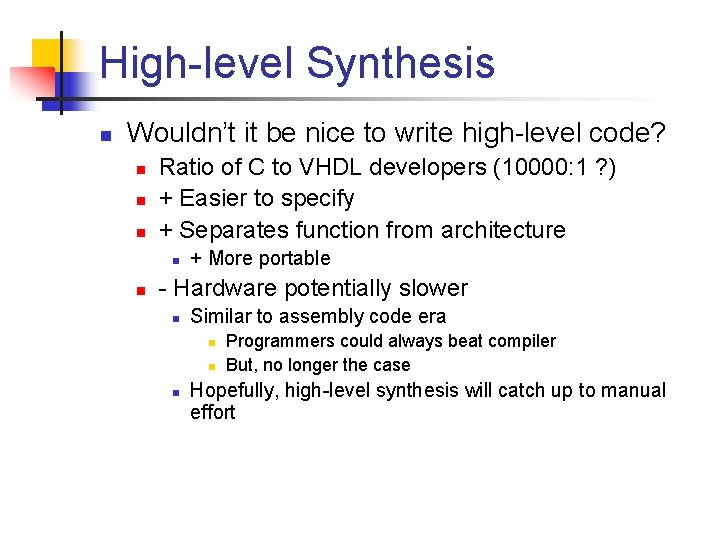

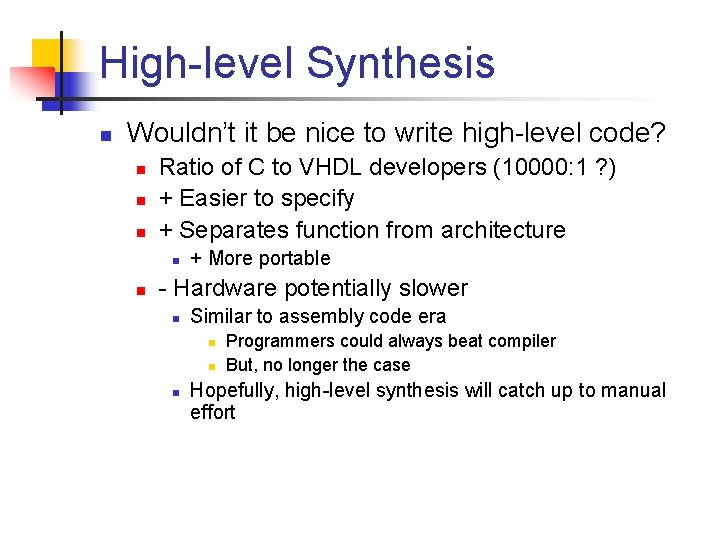

High-level Synthesis
- Wouldn't it be nice to write high-level code?
  - Ratio of C to VHDL developers (10000:1?)
  - Pro: easier to specify
  - Pro: separates function from architecture
  - Pro: more portable
  - Con: hardware potentially slower
- Similar to the assembly-code era
  - Programmers could always beat the compiler
  - But that is no longer the case
- Hopefully, high-level synthesis will catch up to manual effort

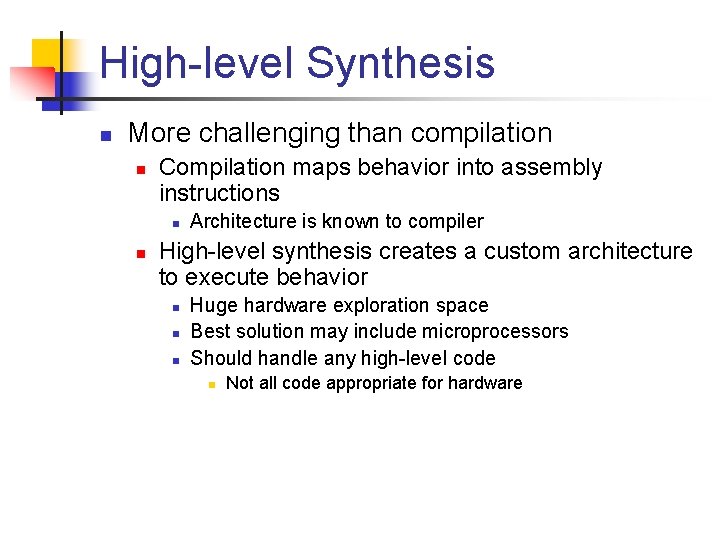

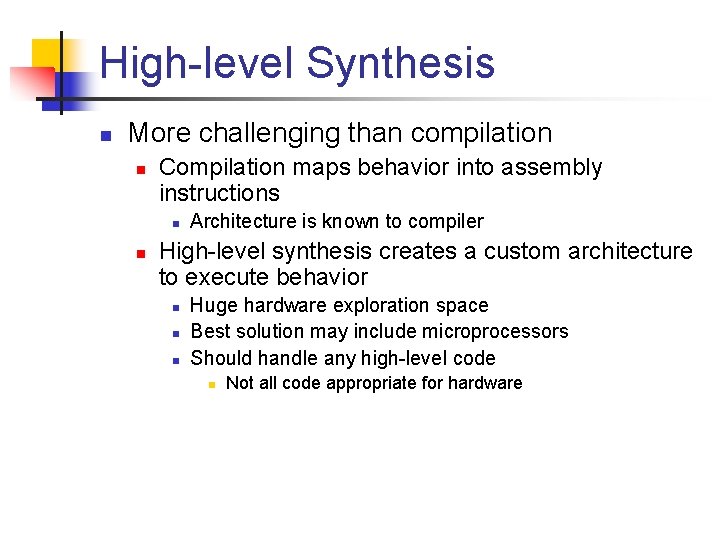

High-level Synthesis
- More challenging than compilation
  - Compilation maps behavior into assembly instructions
    - The architecture is known to the compiler
  - High-level synthesis creates a custom architecture to execute the behavior
    - Huge hardware exploration space
    - Best solution may include microprocessors
    - Should handle any high-level code, but not all code is appropriate for hardware

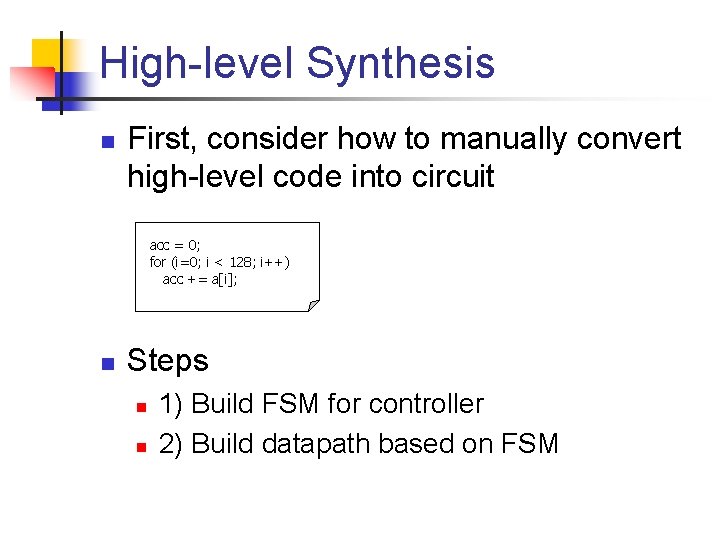

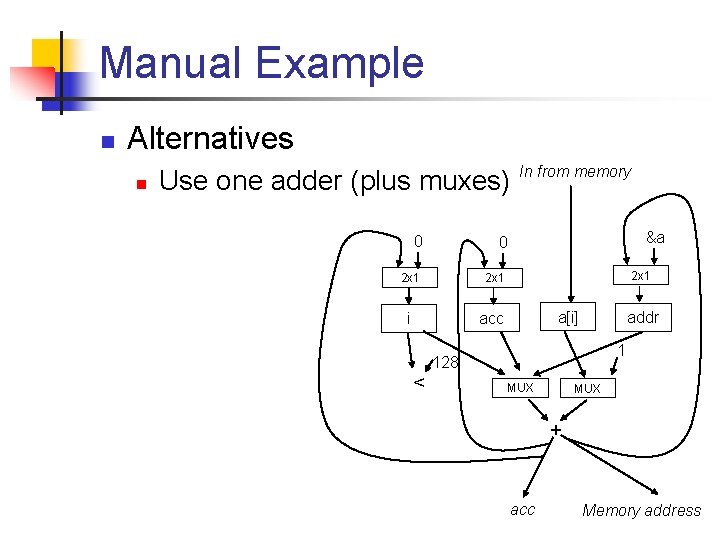

High-level Synthesis
- First, consider how to manually convert high-level code into a circuit:
  `acc = 0; for (i=0; i < 128; i++) acc += a[i];`
- Steps
  1) Build an FSM for the controller
  2) Build a datapath based on the FSM

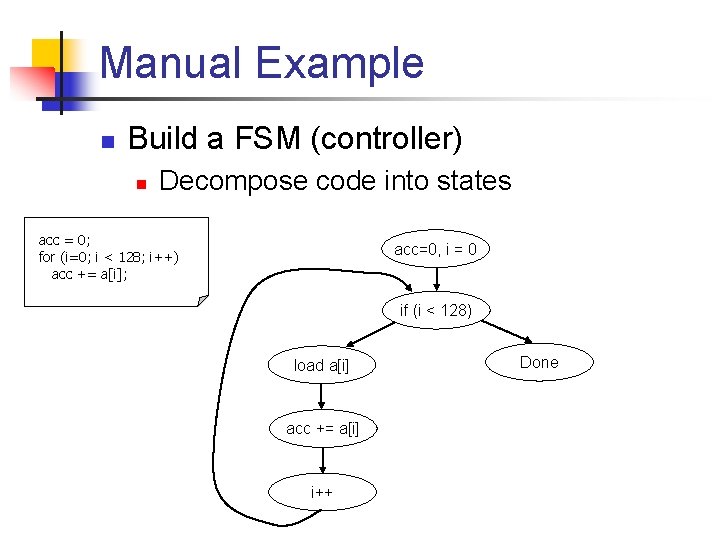

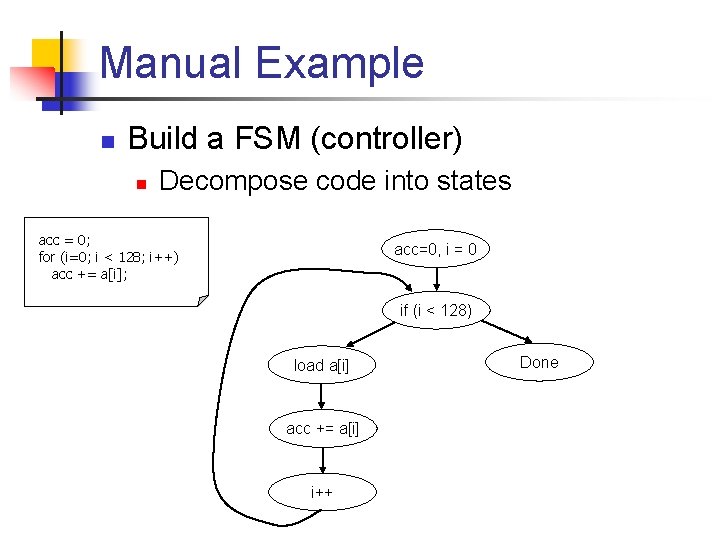

Manual Example
- Build an FSM (controller)
  - Decompose the code `acc = 0; for (i=0; i < 128; i++) acc += a[i];` into states

[Figure: FSM with states acc=0, i=0 → if (i < 128) → load a[i] → acc += a[i] → i++ → back to the test; when i < 128 is false, go to Done]

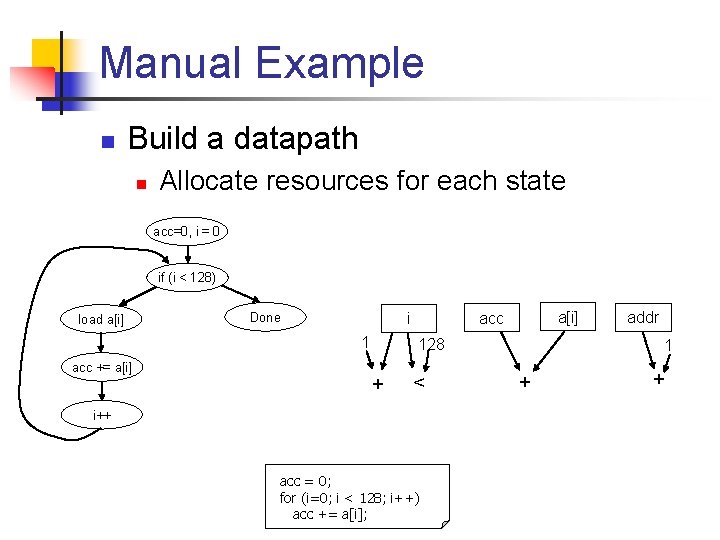

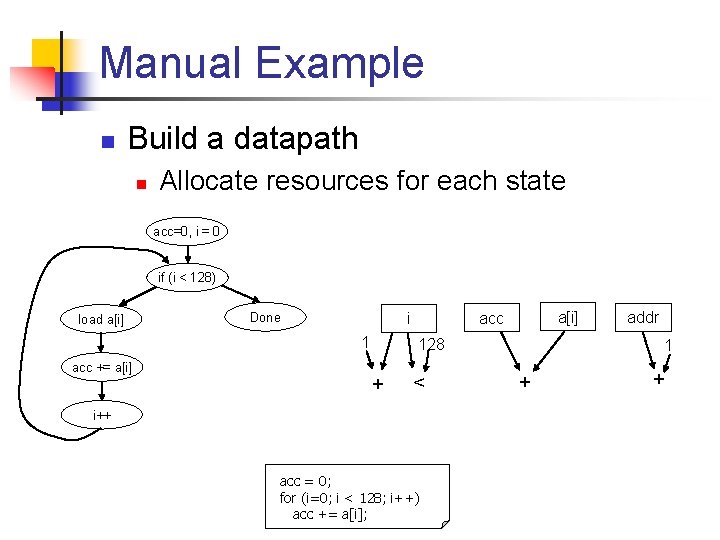

Manual Example
- Build a datapath
  - Allocate resources for each state

[Figure: the FSM states next to the allocated resources: registers acc and i, adders, the i < 128 comparator, and a memory address output]

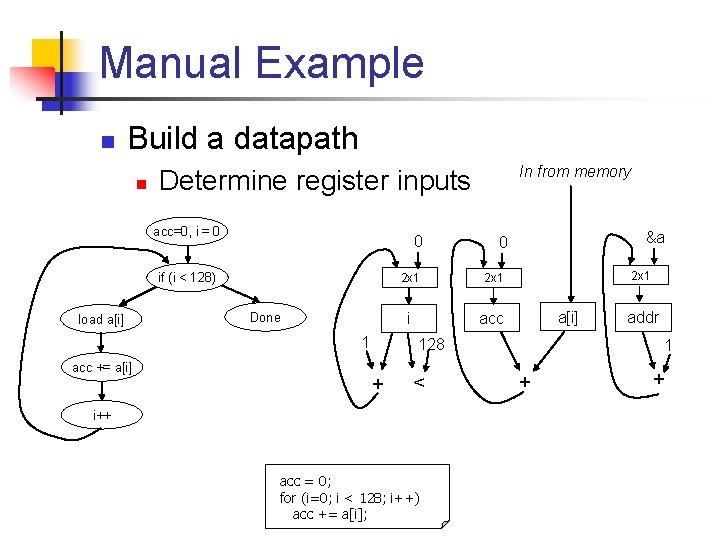

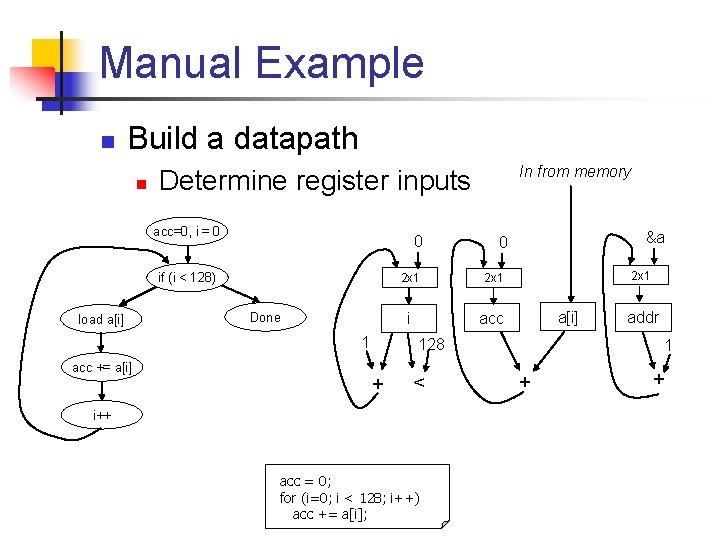

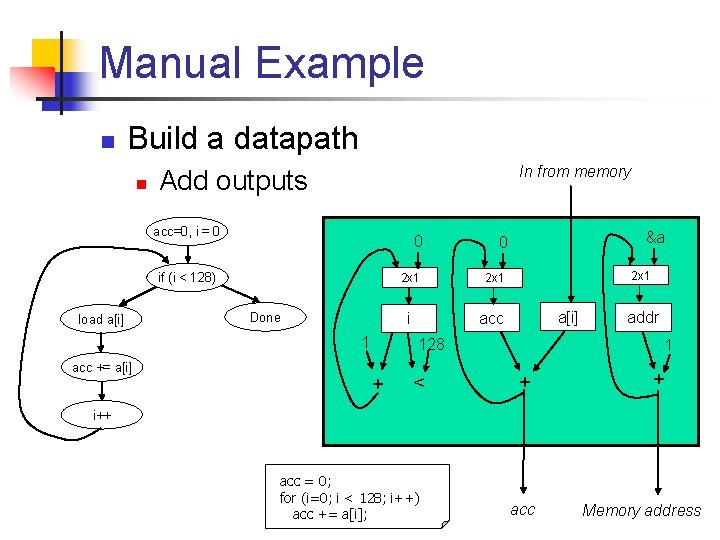

Manual Example
- Build a datapath
  - Determine register inputs

[Figure: 2x1 muxes choose each register's input: i gets 0 or i + 1, acc gets 0 or acc + a[i], where a[i] comes in from memory; &a and i form the memory address]

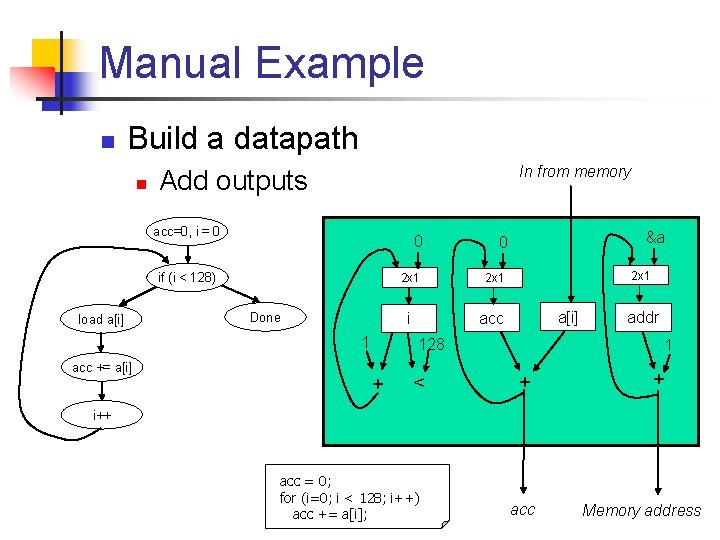

Manual Example
- Build a datapath
  - Add outputs

[Figure: the same datapath with outputs added: acc and the memory address]

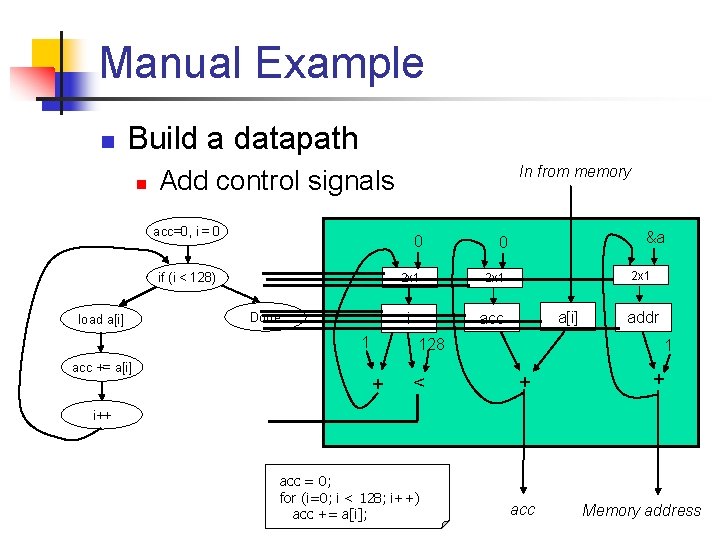

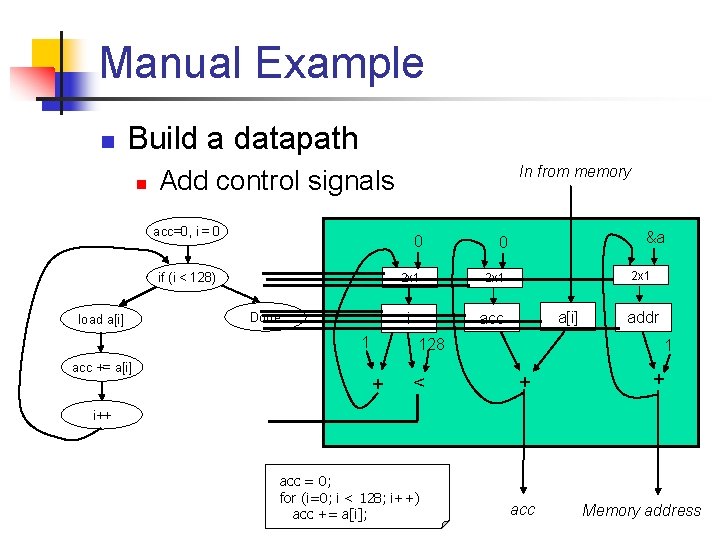

Manual Example
- Build a datapath
  - Add control signals

[Figure: the same datapath with control signals added for the mux selects and register loads]

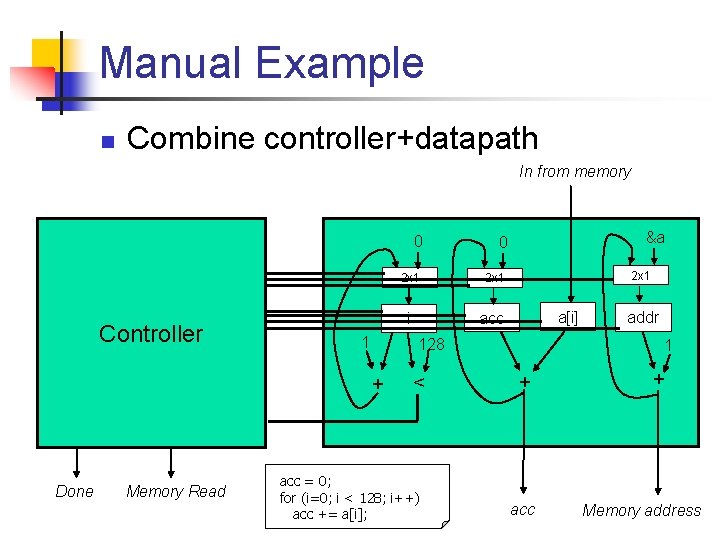

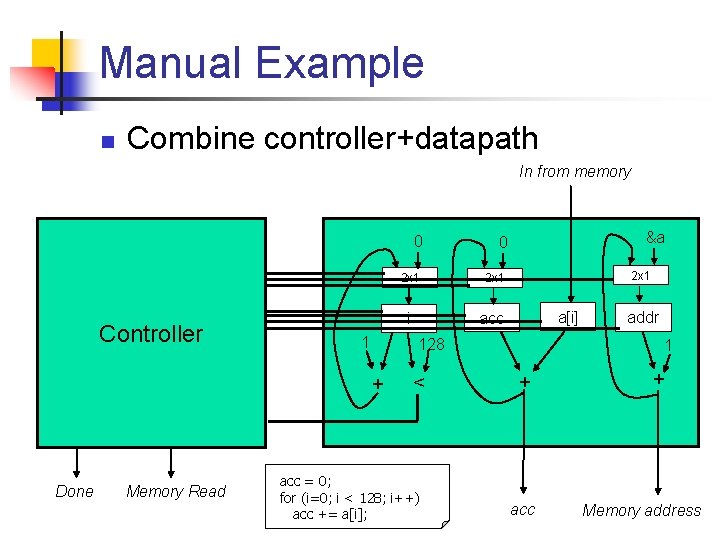

Manual Example
- Combine controller + datapath

[Figure: the controller connected to the datapath, producing Memory Read and Done and using the i < 128 comparison; the datapath takes a[i] in from memory and outputs acc and the memory address]
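A rough software analogue of the combined design can help when checking an FSM by hand. The sketch below is a cycle-level C model under stated assumptions: one FSM state per clock cycle, a memory that returns `a[i]` in the same cycle it is read, and separate states for `acc += a[i]` and `i++` as in the FSM listing. The state names and `run_accumulator` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define N 128

/* Hypothetical state names for the controller FSM of the manual example. */
enum state { S_INIT, S_COND, S_LOAD, S_ACC, S_INC, S_DONE };

/* Cycle-level model of controller + datapath: each pass through the loop is
   one clock edge. Returns acc and stores the number of cycles in *cycles. */
int32_t run_accumulator(const int32_t a[N], int *cycles)
{
    enum state s = S_INIT;
    int32_t i = 0, acc = 0, mem_data = 0;   /* registers */
    int n = 0;

    assert(a != NULL && cycles != NULL);
    while (s != S_DONE) {
        switch (s) {
        case S_INIT:  /* acc = 0, i = 0: the 2x1 muxes select the constant 0 */
            acc = 0;
            i = 0;
            s = S_COND;
            break;
        case S_COND:  /* the i < 128 comparator decides the next state */
            s = (i < N) ? S_LOAD : S_DONE;
            break;
        case S_LOAD:  /* drive the address &a + i and assert Memory Read */
            mem_data = a[i];
            s = S_ACC;
            break;
        case S_ACC:   /* acc += a[i] */
            acc = acc + mem_data;
            s = S_INC;
            break;
        case S_INC:   /* i++ */
            i = i + 1;
            s = S_COND;
            break;
        case S_DONE:  /* not reached: the while condition exits first */
            break;
        }
        n++;
    }
    *cycles = n;  /* 1 init + 4 per iteration + the final failed test */
    return acc;
}
```

With `a[i] = i`, this model returns 8128 (the sum 0 + 1 + ... + 127) after 1 + 4 * 128 + 1 = 514 cycles. Merging `acc += a[i]` and `i++` into one state, with two adders working in parallel, would reduce that to 386 cycles in the same model.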

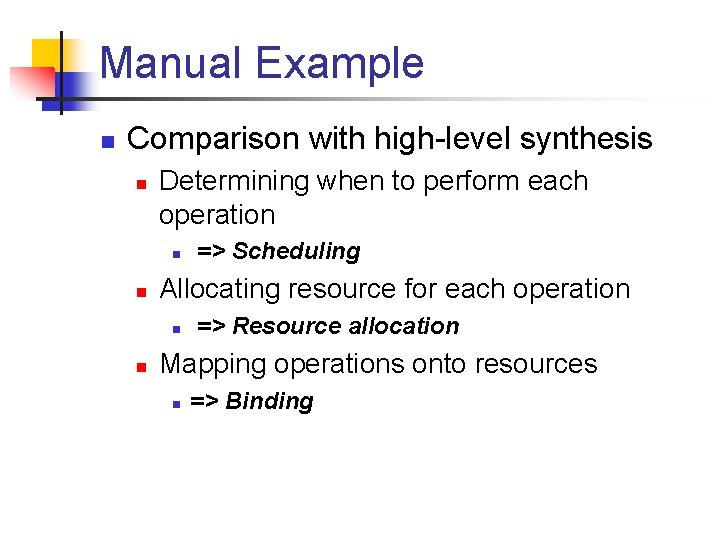

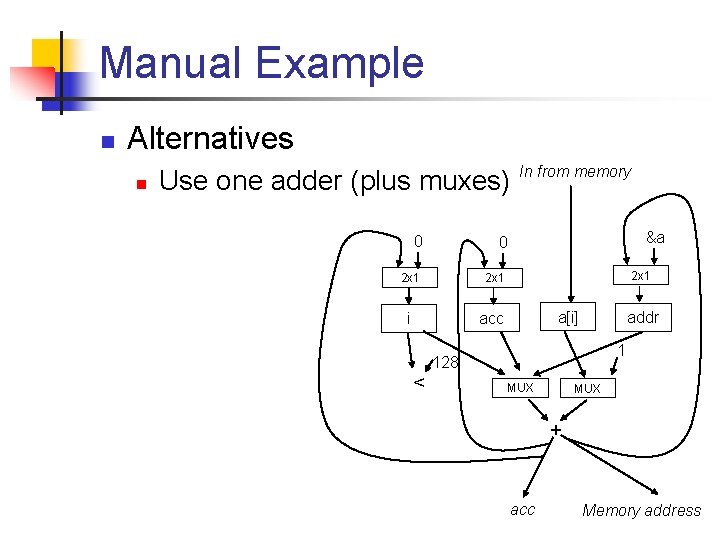

Manual Example
- Alternatives
  - Use one adder (plus muxes)

[Figure: a datapath in which a single adder is shared, through a mux, between acc + a[i] and i + 1]

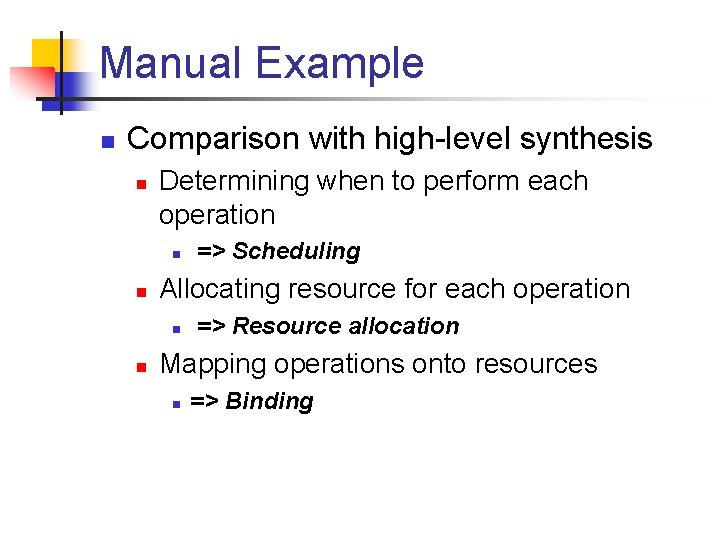

Manual Example
- Comparison with high-level synthesis
  - Determining when to perform each operation => Scheduling
  - Allocating resources for each operation => Resource allocation
  - Mapping operations onto resources => Binding

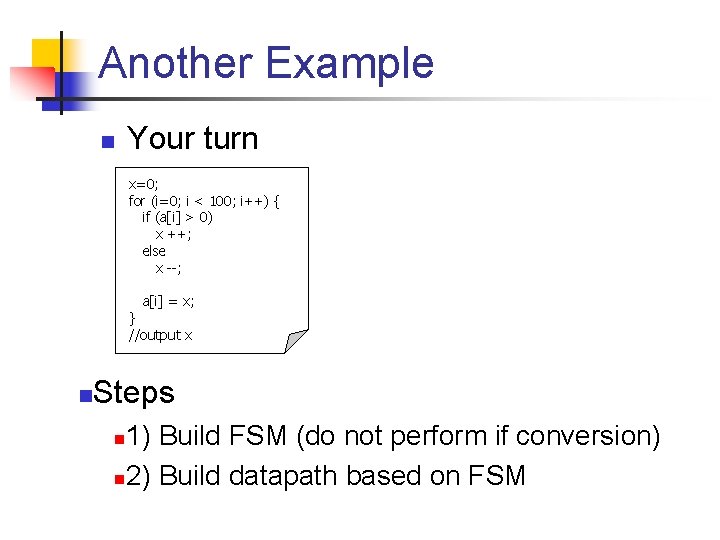

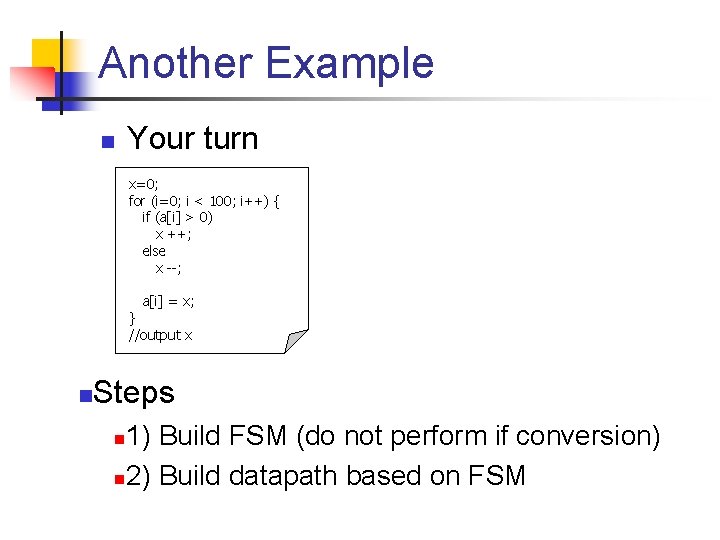

Another Example
- Your turn:
  `x=0; for (i=0; i < 100; i++) { if (a[i] > 0) x++; else x--; a[i] = x; } // output x`
- Steps
  1) Build the FSM (do not perform if-conversion)
  2) Build the datapath based on the FSM

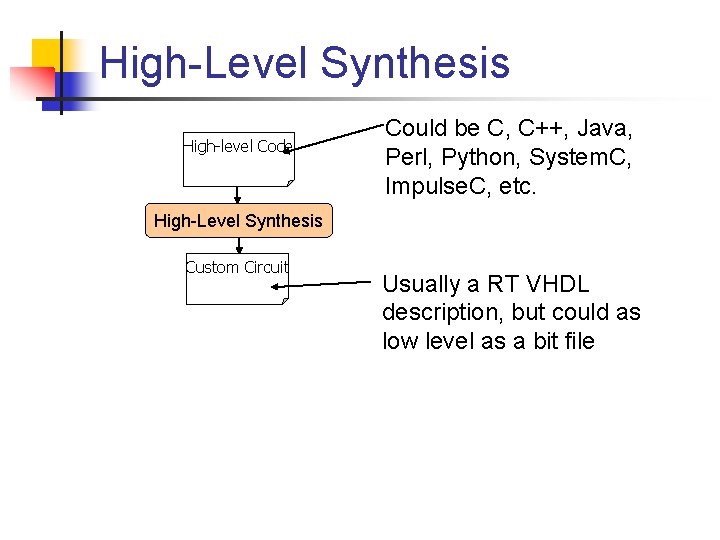

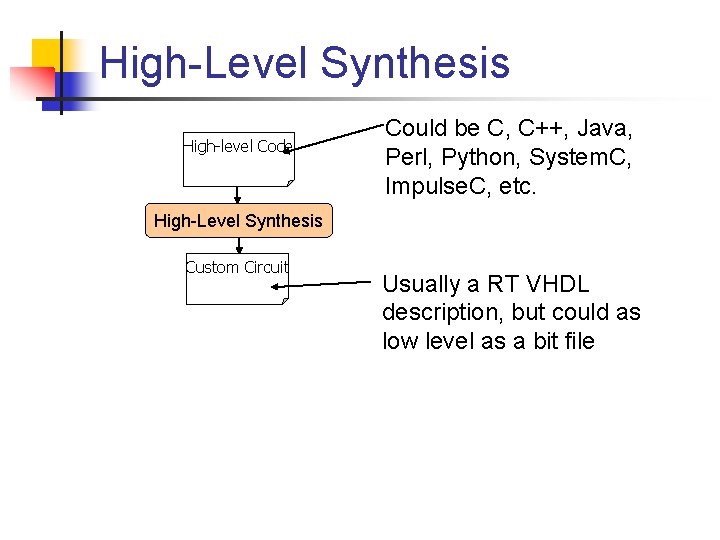

High-Level Synthesis
- High-level code: could be C, C++, Java, Perl, Python, SystemC, Impulse C, etc.
- High-level synthesis produces a custom circuit: usually an RT-level VHDL description, but it could be as low-level as a bitfile

High-Level Synthesis

[Figure: high-level synthesis turns `acc = 0; for (i=0; i < 128; i++) acc += a[i];` into a controller plus datapath like the manual one: muxes, registers i and acc, adders, the i < 128 comparator, Memory Read, and Done]

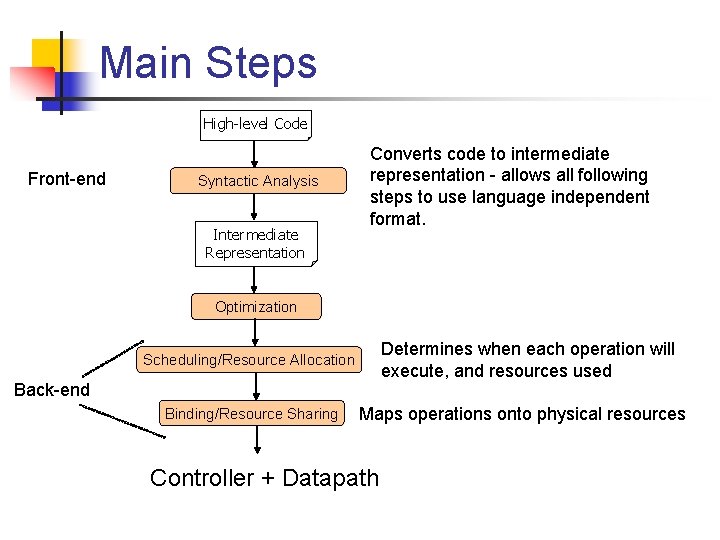

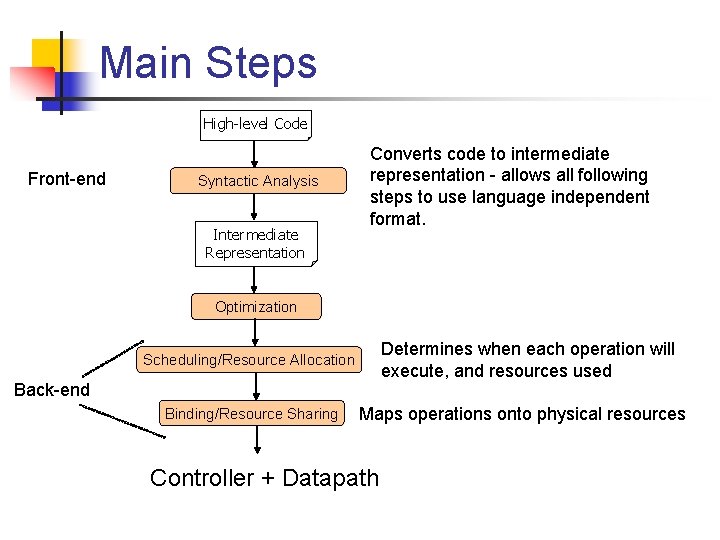

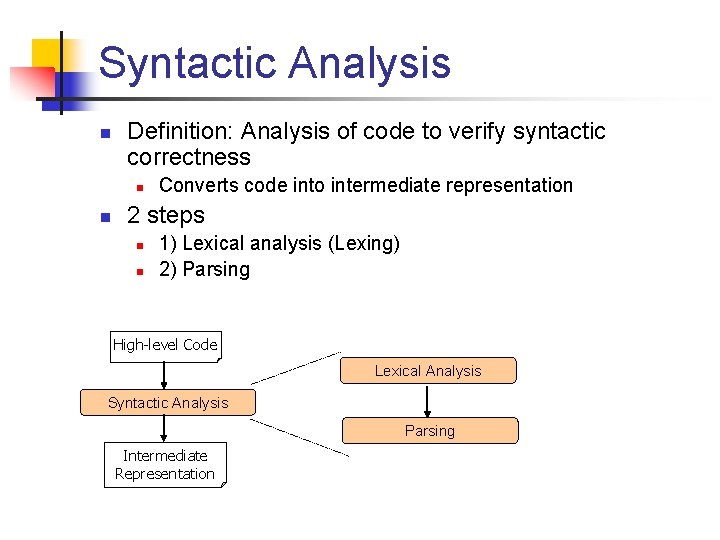

Main Steps
- High-level code → syntactic analysis (front-end) → intermediate representation → optimization → scheduling/resource allocation and binding/resource sharing (back-end) → controller + datapath
- Syntactic analysis: converts code to an intermediate representation, which allows all following steps to use a language-independent format
- Optimization
- Scheduling/resource allocation: determines when each operation will execute, and the resources used
- Binding/resource sharing: maps operations onto physical resources

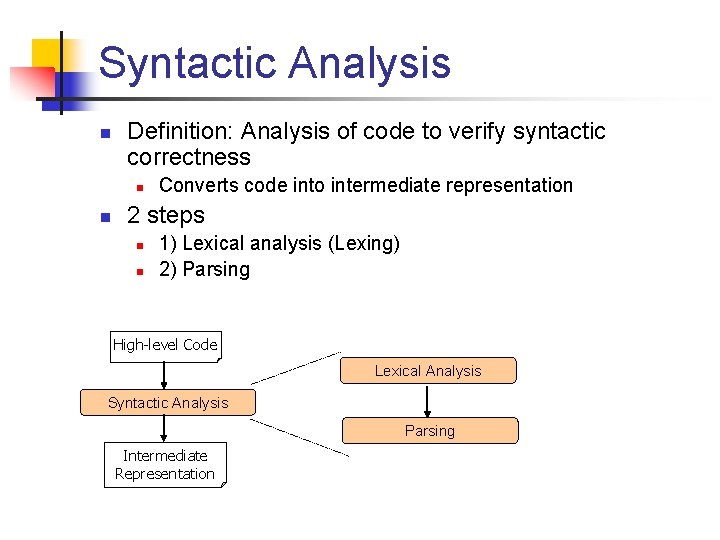

Syntactic Analysis
- Definition: analysis of code to verify syntactic correctness
  - Converts code into an intermediate representation
- 2 steps
  1) Lexical analysis (lexing)
  2) Parsing

[Figure: high-level code → lexical analysis → parsing → intermediate representation]

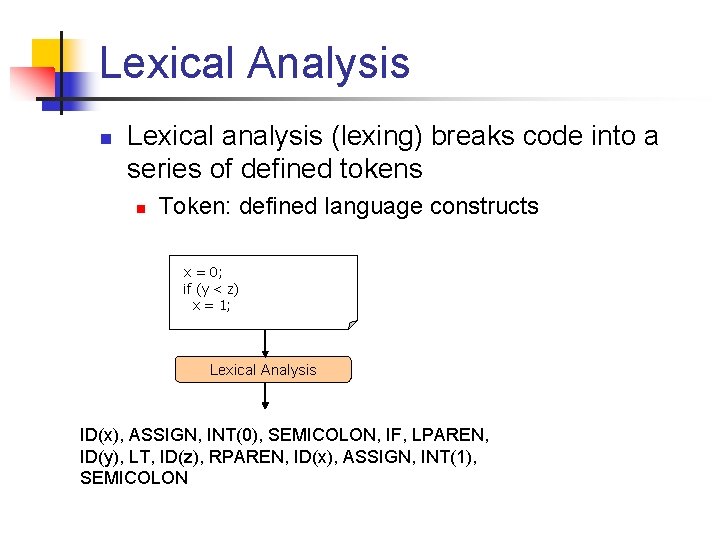

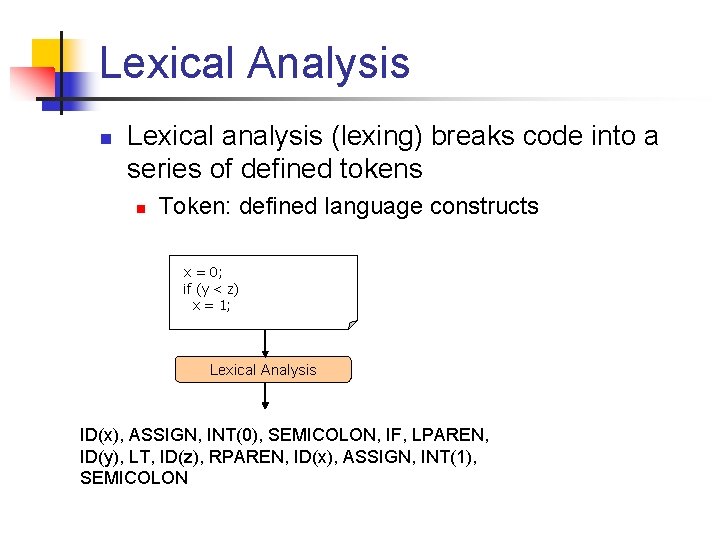

Lexical Analysis
- Lexical analysis (lexing) breaks code into a series of defined tokens
  - Token: a defined language construct
- Example: `x = 0; if (y < z) x = 1;` lexes to
  ID(x), ASSIGN, INT(0), SEMICOLON, IF, LPAREN, ID(y), LT, ID(z), RPAREN, ID(x), ASSIGN, INT(1), SEMICOLON
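For a language this small, a lexer can also be written by hand. The sketch below assumes identifiers are a letter followed by letters or digits, integers are decimal, and `if` is the only keyword; `lex`, `struct token`, and the `T_` names are hypothetical, chosen to mirror the token names above.

```c
#include <assert.h>
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

/* Token kinds for the small example language (names follow the slide). */
enum tok_kind { T_ID, T_INT, T_IF, T_ASSIGN, T_LT, T_NE,
                T_LPAREN, T_RPAREN, T_SEMICOLON };

struct token {
    enum tok_kind kind;
    char text[32];   /* spelling, for T_ID */
    long value;      /* value, for T_INT */
};

/* Breaks src into tokens. Returns the token count, or -1 on a character the
   language does not define or when more than max tokens would be produced. */
int lex(const char *src, struct token *out, int max)
{
    int n = 0;
    const char *p = src;

    assert(src != NULL && out != NULL);
    while (*p != '\0') {
        if (isspace((unsigned char)*p)) { p++; continue; }   /* skip blanks */
        if (n == max) return -1;
        struct token *t = &out[n];
        t->text[0] = '\0';
        t->value = 0;
        if (isalpha((unsigned char)*p)) {                    /* ID or keyword */
            size_t len = 0;
            while (isalnum((unsigned char)p[len])) len++;
            if (len >= sizeof t->text) return -1;
            memcpy(t->text, p, len);
            t->text[len] = '\0';
            t->kind = strcmp(t->text, "if") == 0 ? T_IF : T_ID;
            p += len;
        } else if (isdigit((unsigned char)*p)) {             /* INT literal */
            char *end;
            t->value = strtol(p, &end, 10);
            t->kind = T_INT;
            p = end;
        } else if (p[0] == '!' && p[1] == '=') {             /* two-char operator */
            t->kind = T_NE;
            p += 2;
        } else {                                             /* one-char tokens */
            switch (*p) {
            case '=': t->kind = T_ASSIGN; break;
            case '<': t->kind = T_LT; break;
            case '(': t->kind = T_LPAREN; break;
            case ')': t->kind = T_RPAREN; break;
            case ';': t->kind = T_SEMICOLON; break;
            default:  return -1;
            }
            p++;
        }
        n++;
    }
    return n;
}
```

Running `lex` on `x = 0; if (y < z) x = 1;` yields the 14 tokens listed above, while an input such as `x = 3 + 5;` is rejected because `+` is not a token of this language.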

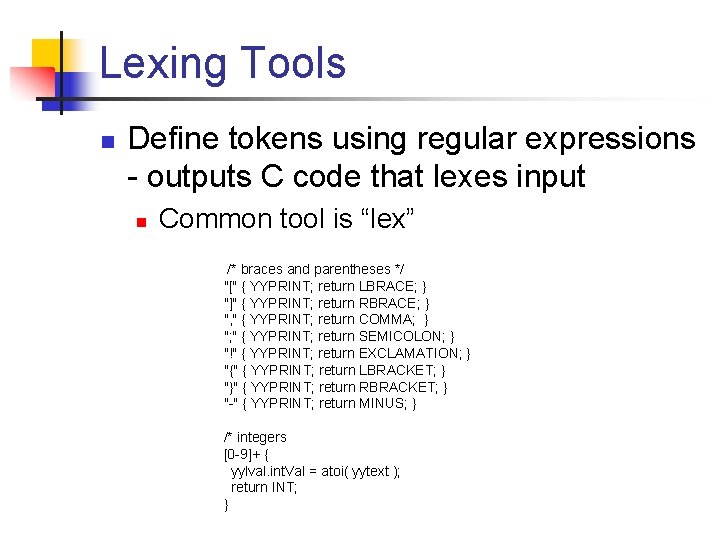

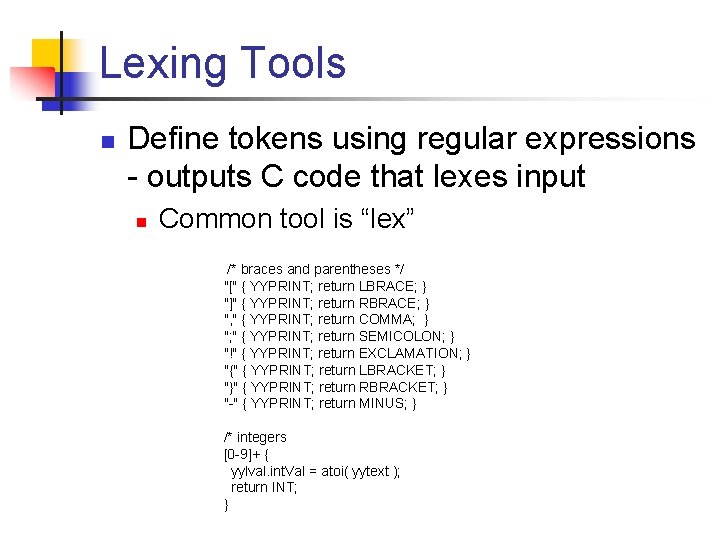

Lexing Tools
- Define tokens using regular expressions; the tool outputs C code that lexes the input
- A common tool is "lex"

```lex
/* brackets, braces, and punctuation */
"["     { YYPRINT; return LBRACKET; }
"]"     { YYPRINT; return RBRACKET; }
","     { YYPRINT; return COMMA; }
";"     { YYPRINT; return SEMICOLON; }
"!"     { YYPRINT; return EXCLAMATION; }
"{"     { YYPRINT; return LBRACE; }
"}"     { YYPRINT; return RBRACE; }
"-"     { YYPRINT; return MINUS; }
/* integers */
[0-9]+  { yylval.intVal = atoi(yytext); return INT; }
```

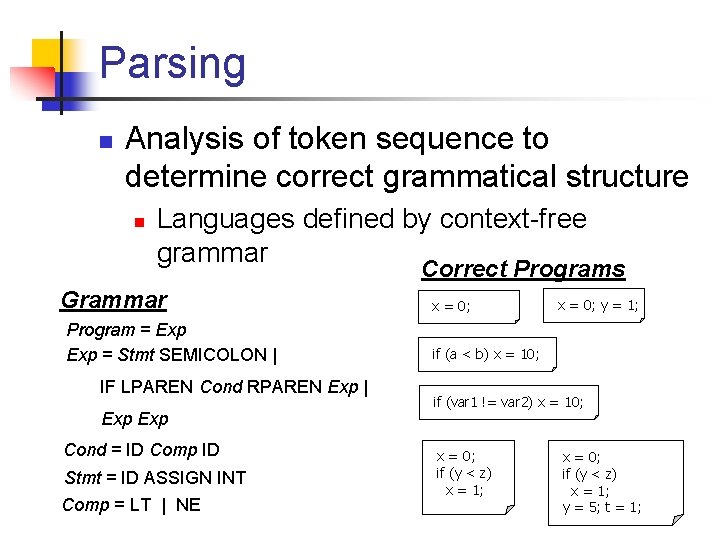

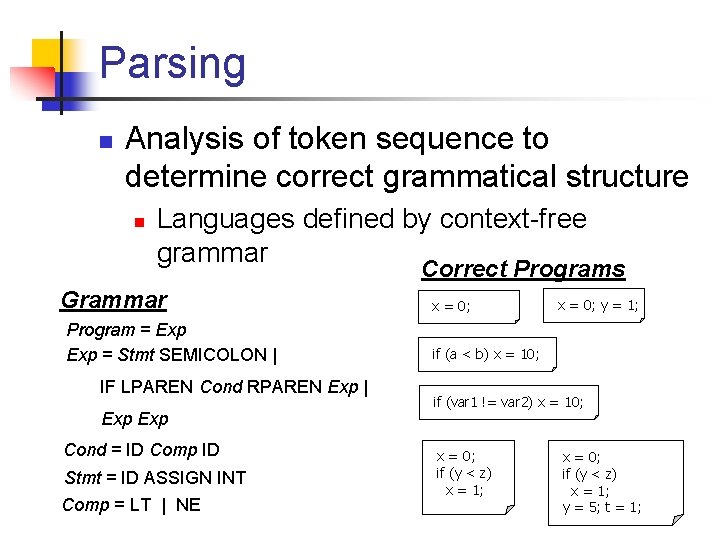

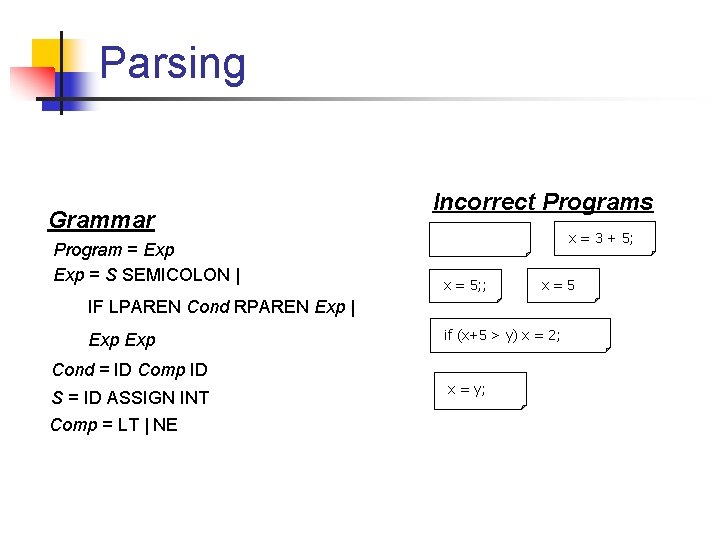

Parsing
- Analysis of the token sequence to determine correct grammatical structure
- Languages are defined by a context-free grammar

Grammar:

```
Program = Exp
Exp     = Stmt SEMICOLON
        | IF LPAREN Cond RPAREN Exp
        | Exp Exp
Cond    = ID Comp ID
Stmt    = ID ASSIGN INT
Comp    = LT | NE
```

Correct programs:
- `x = 0; y = 1;`
- `if (a < b) x = 10;`
- `if (var1 != var2) x = 10;`
- `x = 0; if (y < z) x = 1; y = 5; t = 1;`

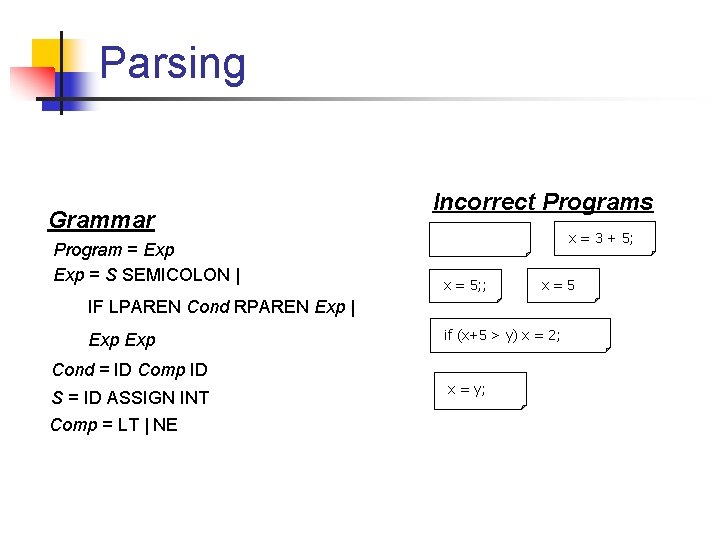

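Parsing
- Same grammar (Program = Exp; Exp = Stmt SEMICOLON | IF LPAREN Cond RPAREN Exp | Exp Exp; Cond = ID Comp ID; Stmt = ID ASSIGN INT; Comp = LT | NE)
- Incorrect programs:
  - `x = 3 + 5;` (Stmt allows only an INT on the right-hand side)
  - `x = 5; ;` (a lone SEMICOLON is not an Exp)
  - `x=5` (missing SEMICOLON)
  - `if (x+5 > y) x = 2;` (Cond allows only ID Comp ID, and Comp is only LT or NE)
  - `x = y;` (the right-hand side must be an INT)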
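One way to check this grammar against the examples is a small recursive-descent recognizer. It is a sketch with two assumptions: the left-recursive, ambiguous `Exp Exp` alternative is rewritten as "one or more `Exp` until end of input", and a minimal hand-written scanner stands in for a generated lexer. All function names are hypothetical.

```c
#include <assert.h>
#include <ctype.h>
#include <string.h>

/* Token kinds used by the grammar; T_END marks end of input. */
enum tok { T_ID, T_INT, T_IF, T_ASSIGN, T_LT, T_NE,
           T_LPAREN, T_RPAREN, T_SEMICOLON, T_END, T_BAD };

static const char *cur;   /* scanner position in the source text */
static enum tok look;     /* one token of lookahead */

/* Minimal scanner: reads the next token into `look`. */
static void next(void)
{
    while (isspace((unsigned char)*cur)) cur++;
    if (*cur == '\0') { look = T_END; return; }
    if (isalpha((unsigned char)*cur)) {
        const char *start = cur;
        while (isalnum((unsigned char)*cur)) cur++;
        look = (cur - start == 2 && strncmp(start, "if", 2) == 0) ? T_IF : T_ID;
        return;
    }
    if (isdigit((unsigned char)*cur)) {
        while (isdigit((unsigned char)*cur)) cur++;
        look = T_INT;
        return;
    }
    if (cur[0] == '!' && cur[1] == '=') { cur += 2; look = T_NE; return; }
    switch (*cur++) {
    case '=': look = T_ASSIGN; break;
    case '<': look = T_LT; break;
    case '(': look = T_LPAREN; break;
    case ')': look = T_RPAREN; break;
    case ';': look = T_SEMICOLON; break;
    default:  look = T_BAD; break;
    }
}

/* Consumes the expected token; returns 0 if the lookahead differs. */
static int expect(enum tok t)
{
    if (look != t) return 0;
    next();
    return 1;
}

/* Cond = ID Comp ID,  Comp = LT | NE */
static int parse_cond(void)
{
    if (!expect(T_ID)) return 0;
    if (look != T_LT && look != T_NE) return 0;
    next();
    return expect(T_ID);
}

/* Exp = Stmt SEMICOLON | IF LPAREN Cond RPAREN Exp,  Stmt = ID ASSIGN INT */
static int parse_exp(void)
{
    if (look == T_IF) {
        next();
        return expect(T_LPAREN) && parse_cond() && expect(T_RPAREN) && parse_exp();
    }
    return expect(T_ID) && expect(T_ASSIGN) && expect(T_INT) && expect(T_SEMICOLON);
}

/* Program = Exp, with "Exp Exp" handled as a loop over one or more Exp.
   Returns 1 if src is accepted by the grammar, 0 otherwise. */
int parse_program(const char *src)
{
    assert(src != NULL);
    cur = src;
    next();
    do {
        if (!parse_exp()) return 0;
    } while (look != T_END);
    return 1;
}
```

Every correct program from the previous slide is accepted and every incorrect one above is rejected; for example, `if (x+5 > y) x = 2;` fails inside `parse_cond` because the token after `x` is neither LT nor NE.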

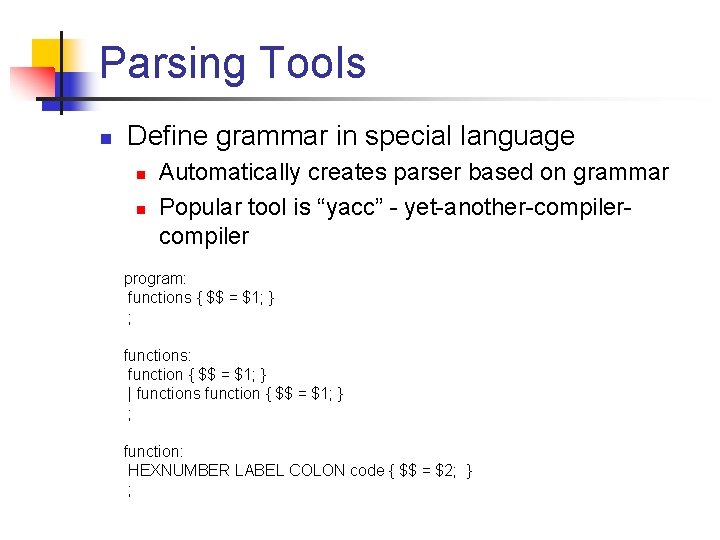

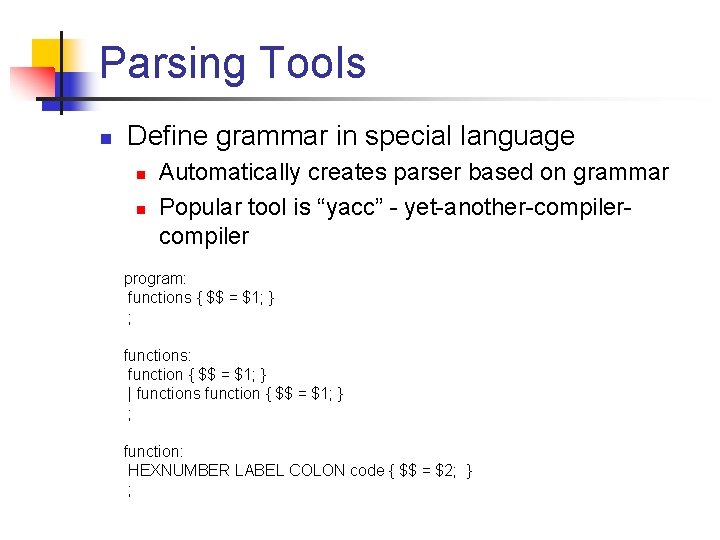

Parsing Tools
- Define the grammar in a special language
  - The tool automatically creates a parser based on the grammar
- A popular tool is "yacc" (Yet Another Compiler-Compiler)

```yacc
program:   functions                    { $$ = $1; }
         ;
functions: function                     { $$ = $1; }
         | functions function           { $$ = $1; }
         ;
function:  HEXNUMBER LABEL COLON code   { $$ = $2; }
         ;
```

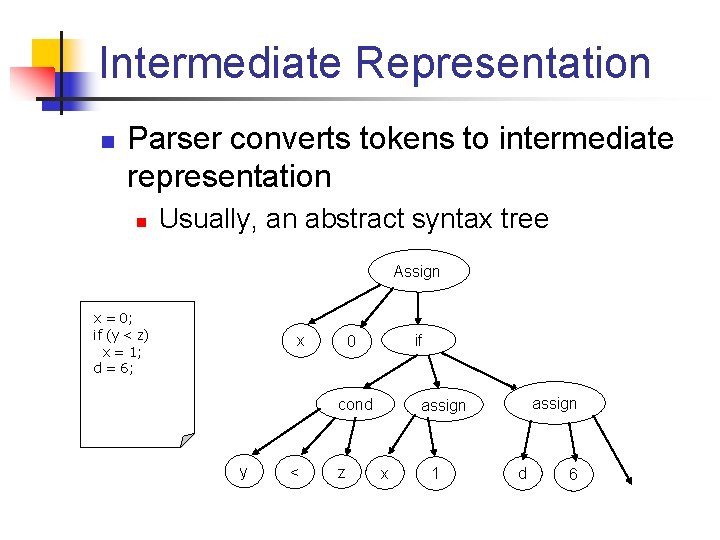

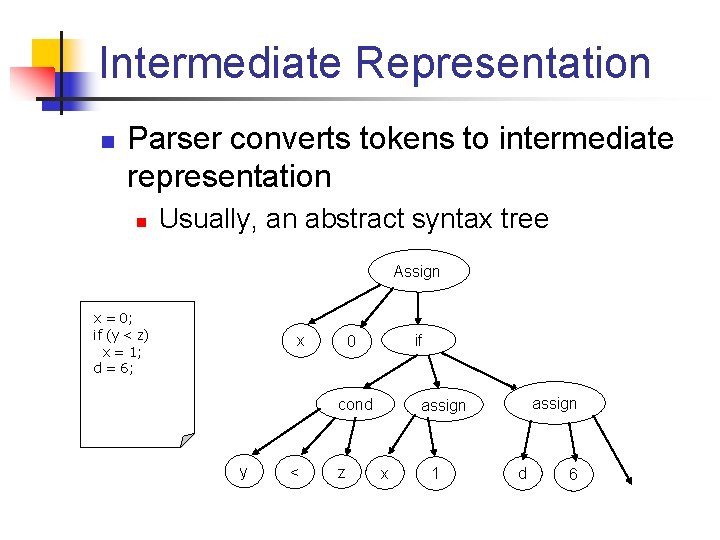

Intermediate Representation
- The parser converts tokens to an intermediate representation
  - Usually an abstract syntax tree

[Figure: AST for `x = 0; if (y < z) x = 1; d = 6;`: assign(x, 0); if with cond (y < z) and body assign(x, 1); assign(d, 6)]

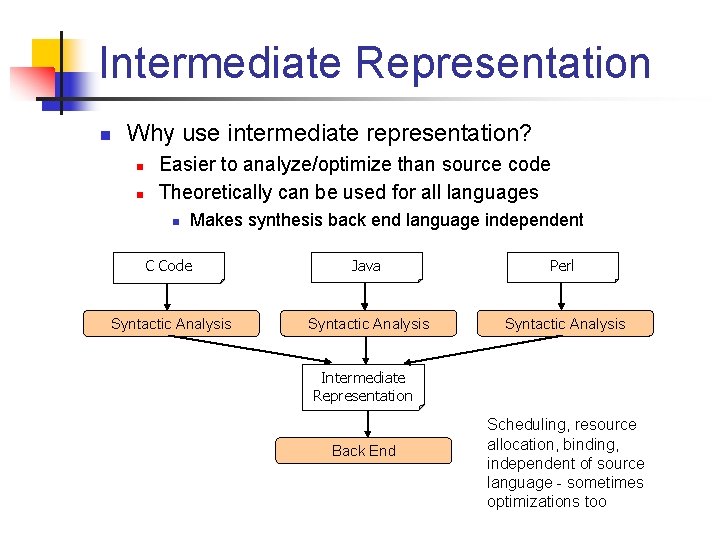

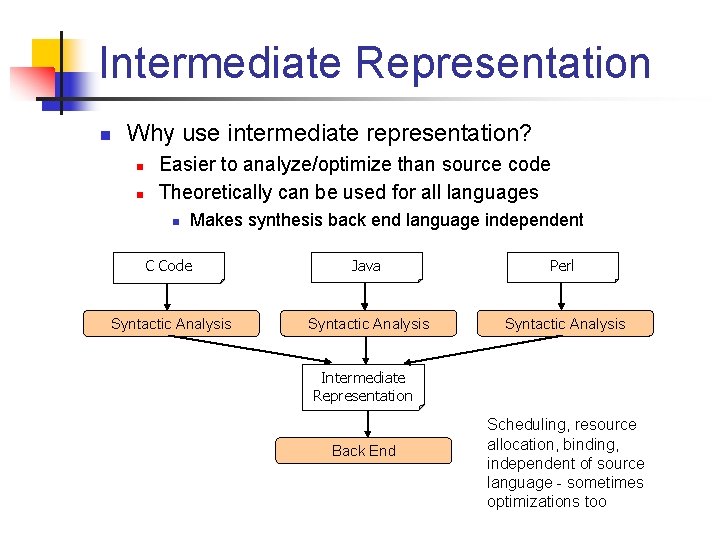

Intermediate Representation
- Why use an intermediate representation?
  - Easier to analyze/optimize than source code
  - Theoretically can be used for all languages
    - Makes the synthesis back end language independent

[Figure: C code, Java, Perl → syntactic analysis → intermediate representation → back end; scheduling, resource allocation, and binding are independent of the source language, and sometimes optimizations are too]

Intermediate Representation
- Different types
  - Abstract syntax tree
  - Control/data flow graph (CDFG)
  - Sequencing graph
  - Etc.
- We will focus on the CDFG
  - Combines a control flow graph (CFG) and a data flow graph (DFG)

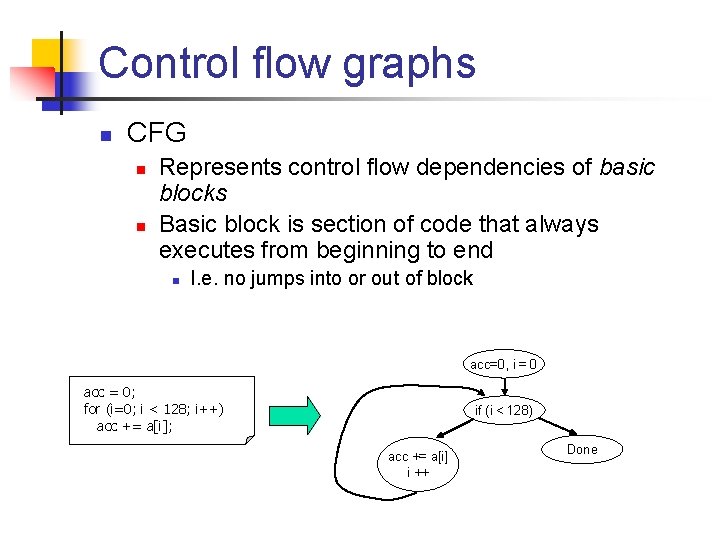

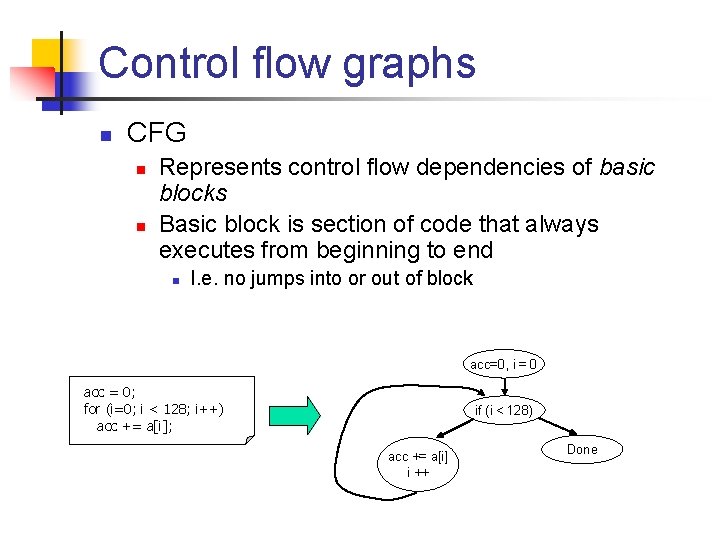

Control flow graphs
- CFG
  - Represents control flow dependencies of basic blocks
  - A basic block is a section of code that always executes from beginning to end
    - i.e., no jumps into or out of the block

[Figure: CFG for `acc = 0; for (i=0; i < 128; i++) acc += a[i];` with blocks acc=0, i=0 → if (i < 128) → acc += a[i], i++ → back to the test; exit to Done]

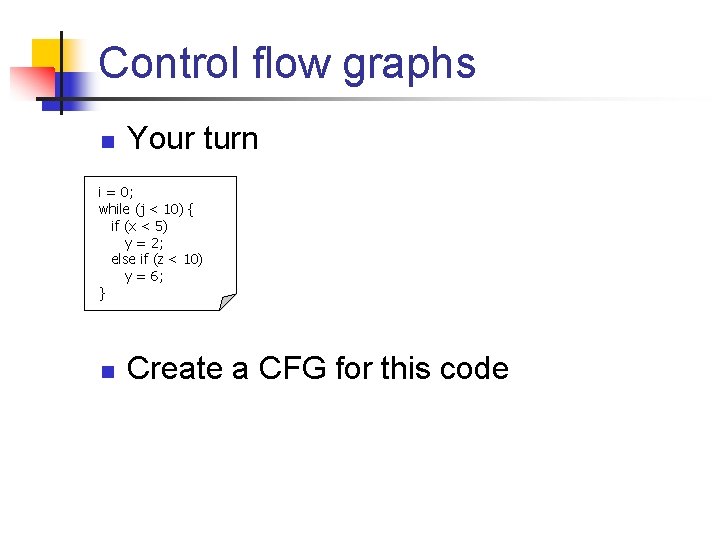

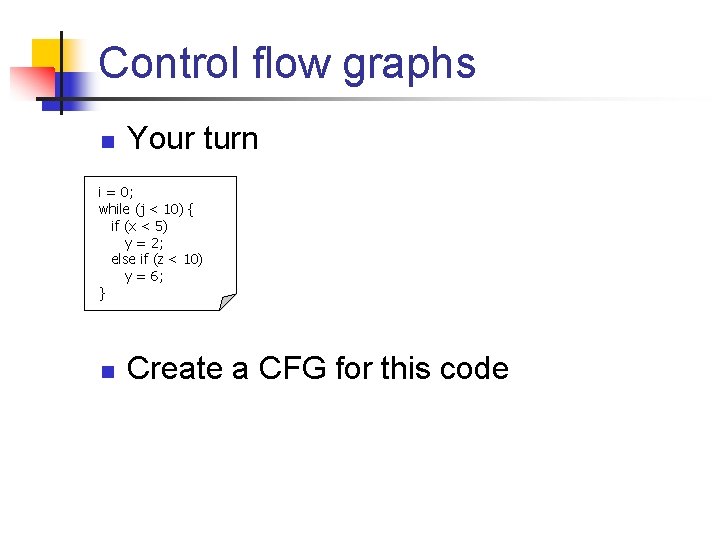

Control flow graphs
- Your turn: create a CFG for this code
  `i = 0; while (j < 10) { if (x < 5) y = 2; else if (z < 10) y = 6; }`

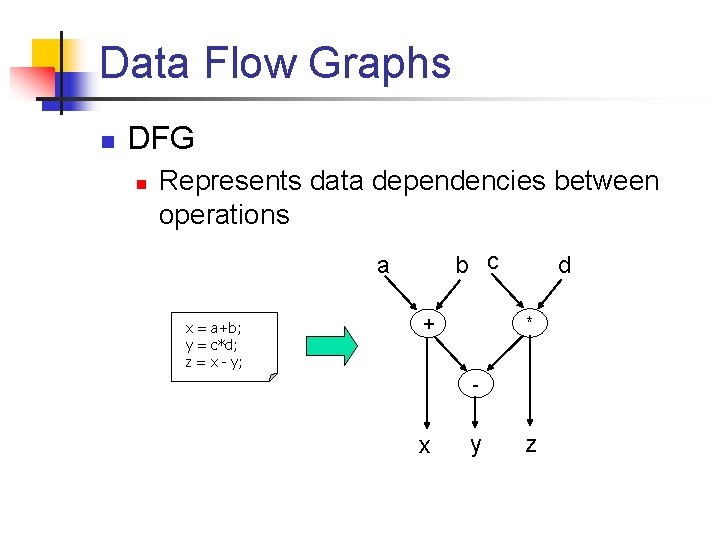

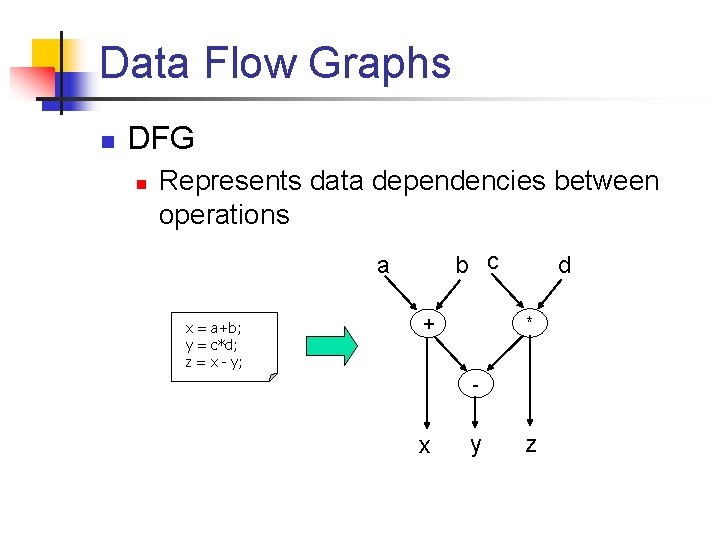

Data Flow Graphs
- DFG
  - Represents data dependencies between operations

[Figure: DFG for `x = a+b; y = c*d; z = x - y;`: a and b feed + (x), c and d feed * (y), and x and y feed - (z)]
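In software, a DFG is often stored as a node array whose operands point either at primary inputs or at earlier nodes. The encoding below (`struct node`, `eval_dfg`, `example_dfg`) is a hypothetical sketch for the three-statement example, assuming nodes are listed in a topological order.

```c
#include <assert.h>

/* Hypothetical encoding: each DFG node is one operation whose operands are
   either primary inputs (is_node == 0, idx into in[]) or earlier nodes. */
enum op { OP_ADD, OP_SUB, OP_MUL };
struct src  { int is_node; int idx; };
struct node { enum op op; struct src l, r; };

/* DFG for  x = a + b;  y = c * d;  z = x - y;  with in[] = {a, b, c, d}. */
static const struct node example_dfg[3] = {
    { OP_ADD, { 0, 0 }, { 0, 1 } },   /* node 0: x = a + b */
    { OP_MUL, { 0, 2 }, { 0, 3 } },   /* node 1: y = c * d */
    { OP_SUB, { 1, 0 }, { 1, 1 } },   /* node 2: z = x - y */
};

static int value_of(struct src s, const int *in, const int *val)
{
    return s.is_node ? val[s.idx] : in[s.idx];
}

/* Evaluates nodes in index order. Each edge must go from a lower to a higher
   index, i.e. a node runs only after the nodes whose results it reads. */
void eval_dfg(const struct node *g, int n, const int *in, int *val)
{
    for (int k = 0; k < n; k++) {
        assert(!g[k].l.is_node || g[k].l.idx < k);
        assert(!g[k].r.is_node || g[k].r.idx < k);
        int l = value_of(g[k].l, in, val), r = value_of(g[k].r, in, val);
        switch (g[k].op) {
        case OP_ADD: val[k] = l + r; break;
        case OP_SUB: val[k] = l - r; break;
        case OP_MUL: val[k] = l * r; break;
        }
    }
}
```

Nodes 0 and 1 read only primary inputs, so no edge orders them; that independence is what lets a scheduler place the `+` and the `*` in the same cycle.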

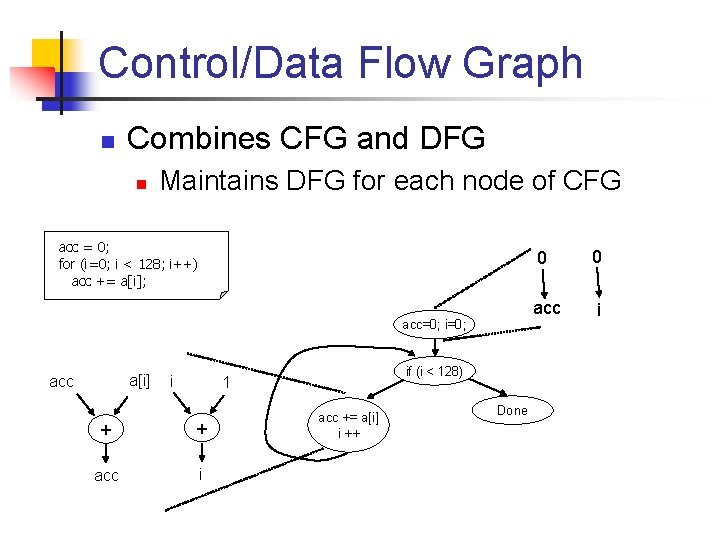

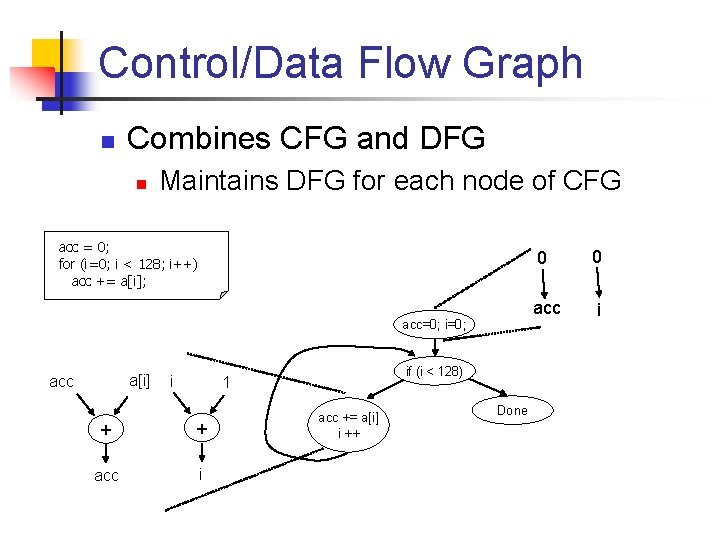

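Control/Data Flow Graph
- Combines CFG and DFG
  - Maintains a DFG for each node of the CFG

[Figure: CDFG for `acc = 0; for (i=0; i < 128; i++) acc += a[i];`: each CFG node (acc=0, i=0; if (i < 128); acc += a[i], i++; Done) holds its own DFG, e.g. constants 0 feeding acc and i, and adders computing acc + a[i] and i + 1]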

High-Level Synthesis: Optimization

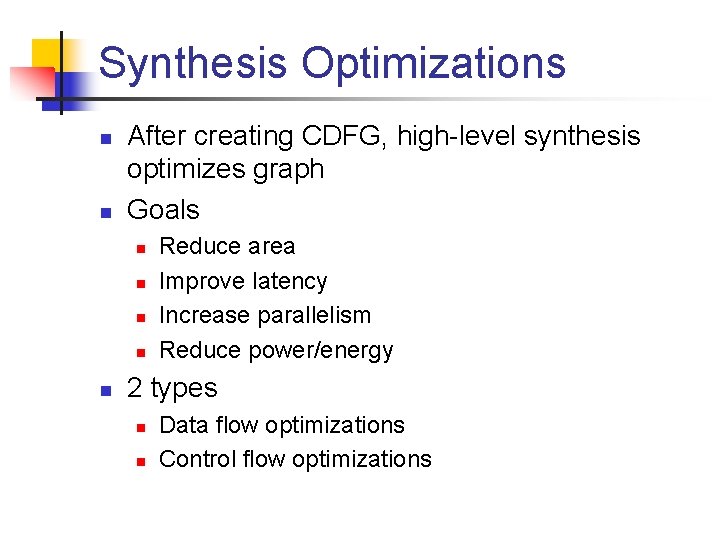

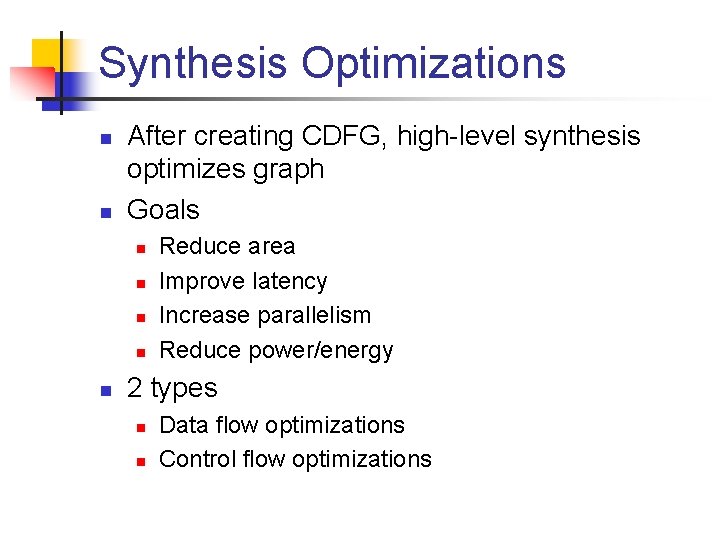

Synthesis Optimizations
- After creating the CDFG, high-level synthesis optimizes the graph
- Goals
  - Reduce area
  - Improve latency
  - Increase parallelism
  - Reduce power/energy
- 2 types
  - Data flow optimizations
  - Control flow optimizations

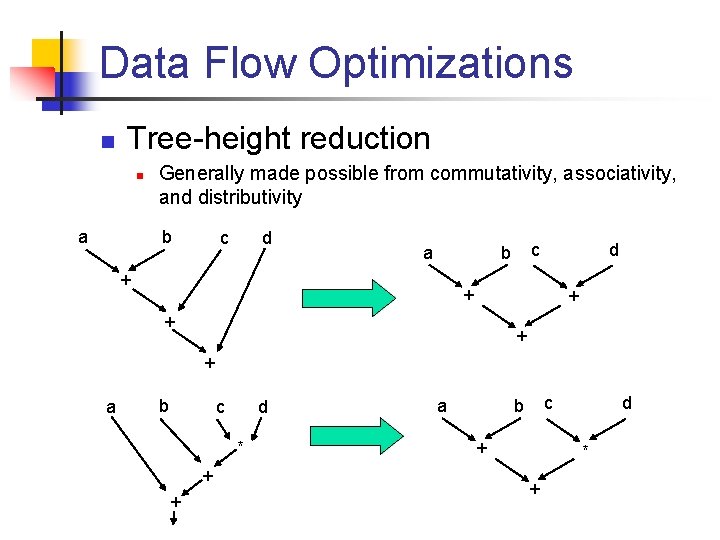

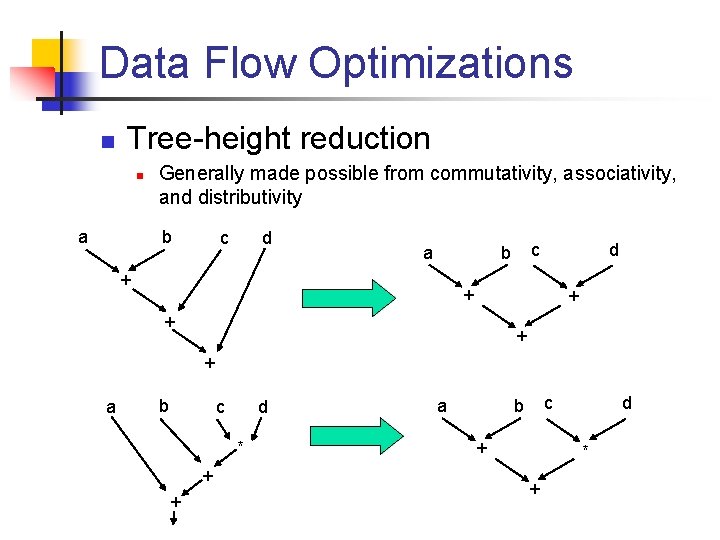

Data Flow Optimizations
- Tree-height reduction
  - Generally made possible by commutativity, associativity, and distributivity

[Figure: a chain of additions over a, b, c, d rebalanced into a shallower tree, and likewise for an expression mixing * and +]
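Tree height is the quantity this transformation shrinks: with unit-delay operators and unlimited resources, it equals the number of cycles the expression needs. The sketch assumes a hypothetical `struct expr` whose leaves are marked by `op == 0`.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical expression tree: leaves (variables) have op == 0; internal
   nodes hold a binary operator such as '+' or '*'. */
struct expr { char op; const struct expr *l, *r; };

/* Height = operator levels on the longest root-to-leaf path. With unit-delay
   operators and unlimited resources, this is the latency in cycles. */
int tree_height(const struct expr *e)
{
    assert(e != NULL);
    if (e->op == 0) return 0;
    int hl = tree_height(e->l), hr = tree_height(e->r);
    return 1 + (hl > hr ? hl : hr);
}
```

For `((a + b) + c) + d` the height is 3; the rebalanced `(a + b) + (c + d)` has height 2, at the cost of needing two adders in the first cycle.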

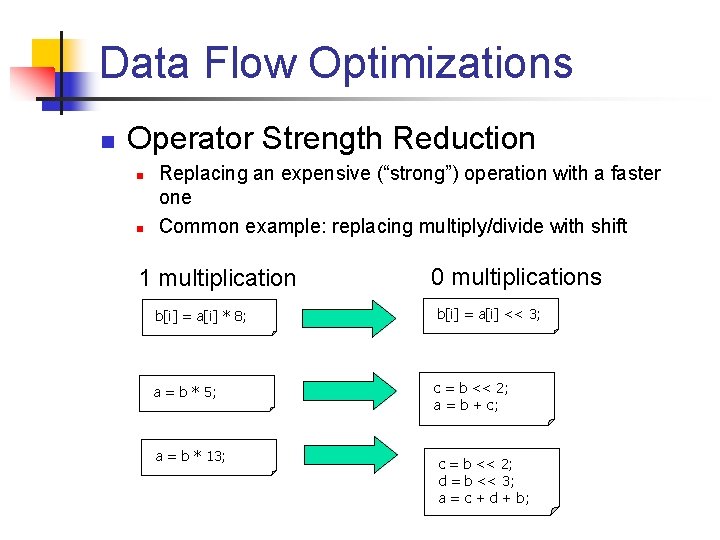

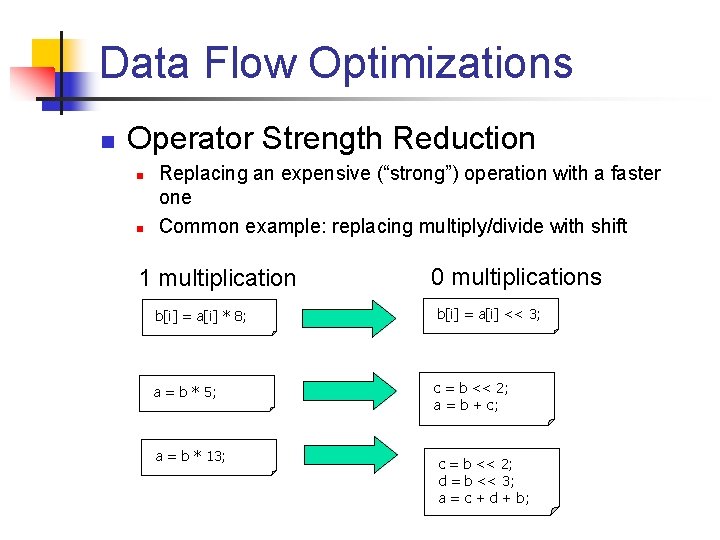

Data Flow Optimizations
- Operator strength reduction
  - Replacing an expensive ("strong") operation with a faster one
  - Common example: replacing multiply/divide with shift
- Examples (1 multiplication → 0 multiplications)
  - `b[i] = a[i] * 8;` → `b[i] = a[i] << 3;`
  - `a = b * 5;` → `c = b << 2; a = b + c;`
  - `a = b * 13;` → `c = b << 2; d = b << 3; a = c + d + b;`
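The rewrites above can be checked mechanically. The sketch uses `uint32_t` so that shifts and overflow are well defined; `mul_by_const` is a hypothetical generalization that adds one shifted copy of `x` per set bit of the constant.

```c
#include <assert.h>
#include <stdint.h>

/* Shift-and-add replacements from the slide. */
uint32_t times8(uint32_t a)  { return a << 3; }                     /* a * 8  */
uint32_t times5(uint32_t b)  { uint32_t c = b << 2; return b + c; } /* b * 5  */
uint32_t times13(uint32_t b)                                        /* b * 13 */
{
    uint32_t c = b << 2, d = b << 3;
    return c + d + b;
}

/* General form: one shifted copy of x for each set bit of the constant k. */
uint32_t mul_by_const(uint32_t x, uint32_t k)
{
    uint32_t acc = 0;
    for (unsigned s = 0; k != 0; s++, k >>= 1)
        if (k & 1u)
            acc += x << s;
    return acc;
}

/* Spot-checks each rewrite against a real multiply. */
void check_strength_reduction(void)
{
    for (uint32_t x = 0; x < 100000u; x += 7u) {
        assert(times8(x) == x * 8u);
        assert(times5(x) == x * 5u);
        assert(times13(x) == x * 13u);
        assert(mul_by_const(x, 100u) == x * 100u);
    }
}
```

Replacing a divide by 2^k with a right shift is exact only for unsigned (or known non-negative) operands: in C, `-7 / 2` is `-3`, while an arithmetic right shift of -7 by 1 gives -4.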

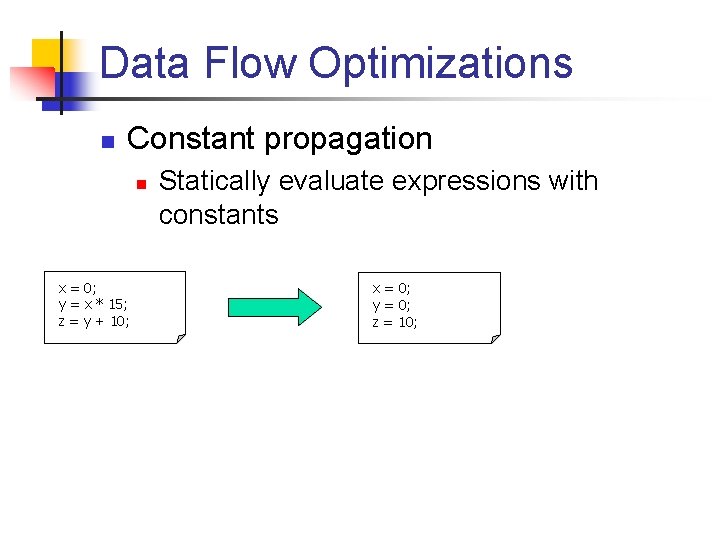

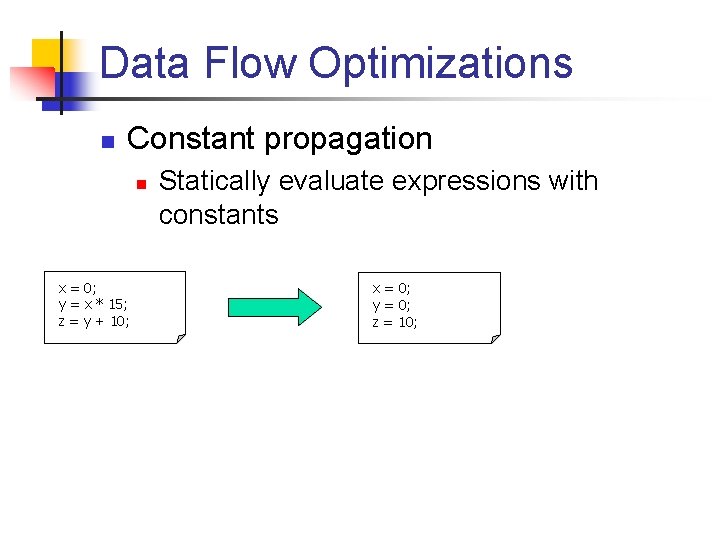

Data Flow Optimizations
- Constant propagation
  - Statically evaluate expressions with constants
- `x = 0; y = x * 15; z = y + 10;` → `x = 0; y = 0; z = 10;`
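The same transformation can be sketched over a tiny three-address IR. Everything here (`struct instr`, `OP_MOV`, `const_prop`) is hypothetical, and the pass only handles straight-line code, i.e. a single basic block.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_VARS 16

/* Hypothetical straight-line IR: dst = a op b. An operand is either a
   constant (is_const) or a variable number; OP_MOV copies operand a. */
enum op { OP_MOV, OP_ADD, OP_MUL };
struct operand { bool is_const; int value; };
struct instr { int dst; enum op op; struct operand a, b; };

/* Constant propagation + folding over one basic block: operands whose
   variables hold known constants are replaced by those constants, and any
   instruction with all-constant operands becomes "dst = constant". */
void const_prop(struct instr *code, int n)
{
    bool known[MAX_VARS] = { false };
    int val[MAX_VARS] = { 0 };

    for (int k = 0; k < n; k++) {
        struct instr *in = &code[k];
        struct operand *ops[2] = { &in->a, &in->b };
        int nops = (in->op == OP_MOV) ? 1 : 2;

        assert(in->dst >= 0 && in->dst < MAX_VARS);
        for (int j = 0; j < nops; j++) {          /* propagate */
            if (ops[j]->is_const) continue;
            assert(ops[j]->value >= 0 && ops[j]->value < MAX_VARS);
            if (known[ops[j]->value]) {
                ops[j]->value = val[ops[j]->value];
                ops[j]->is_const = true;
            }
        }
        bool folded = in->a.is_const && (nops == 1 || in->b.is_const);
        if (folded) {                              /* fold */
            int r = in->a.value;
            if (in->op == OP_ADD) r += in->b.value;
            else if (in->op == OP_MUL) r *= in->b.value;
            in->op = OP_MOV;
            in->a.value = r;
            val[in->dst] = r;
        }
        known[in->dst] = folded;  /* a non-constant write kills old knowledge */
    }
}
```

Applied to the three statements above, the pass rewrites `y = x * 15` to `y = 0` and `z = y + 10` to `z = 10`.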

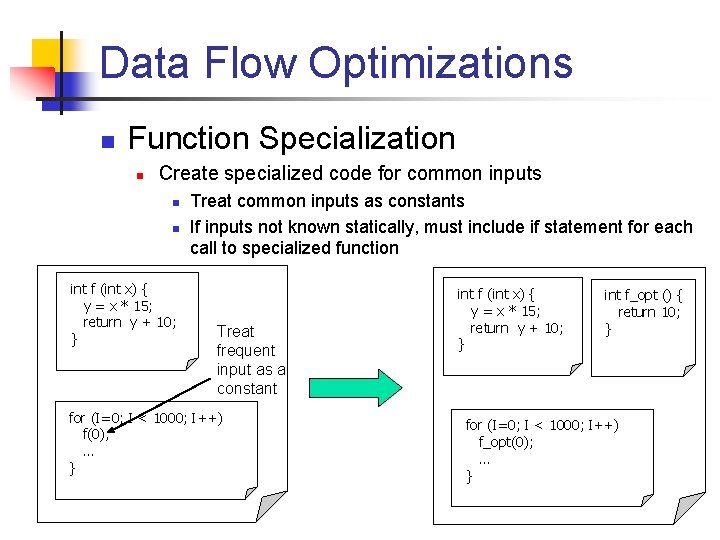

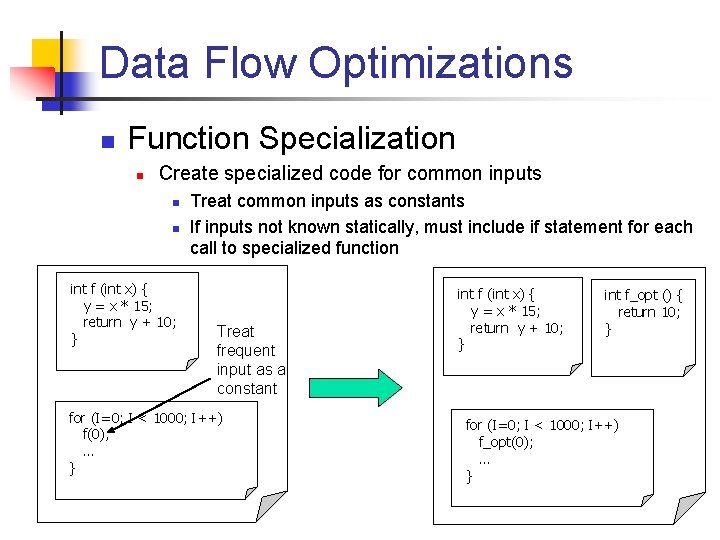

Data Flow Optimizations
- Function specialization
  - Create specialized code for common inputs
    - Treat common inputs as constants
    - If inputs are not known statically, must include an if statement for each call to the specialized function
- Example: treat a frequent input as a constant
  - Original: `int f (int x) { int y = x * 15; return y + 10; }`, called as `for (i=0; i < 1000; i++) f(0); … }`
  - Specialized: `int f_opt () { return 10; }`, called as `for (i=0; i < 1000; i++) f_opt(); … }`

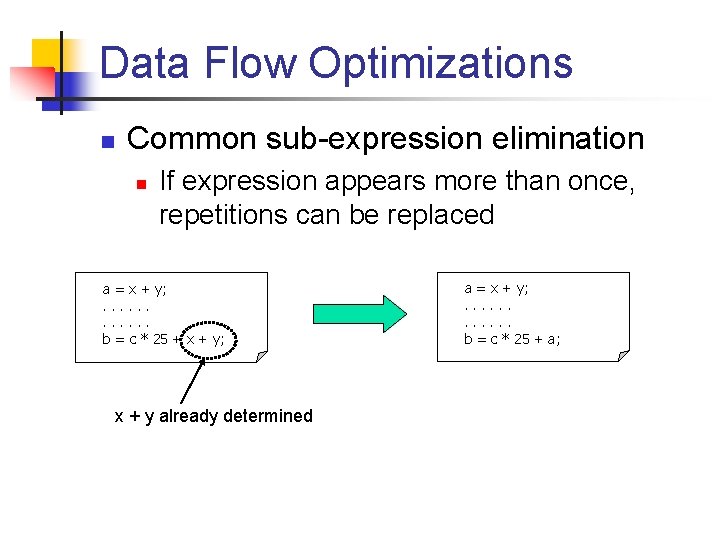

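Data Flow Optimizations
- Common sub-expression elimination
  - If an expression appears more than once, the repetitions can be replaced with the previously computed value
- `a = x + y; . . . b = c * 25 + x + y;` → `a = x + y; . . . b = c * 25 + a;`
  - x + y is already determined (valid as long as x, y, and a are not reassigned in between)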

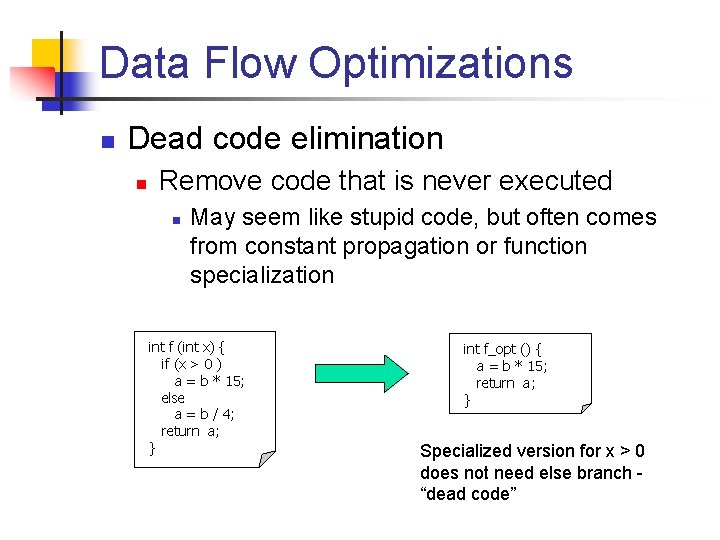

Data Flow Optimizations
- Dead code elimination
  - Remove code that is never executed
  - May seem like stupid code, but it often comes from constant propagation or function specialization
- Example: the specialized version for x > 0 does not need the else branch ("dead code")
  - Original: `int f (int x) { if (x > 0) a = b * 15; else a = b / 4; return a; }`
  - Specialized: `int f_opt () { a = b * 15; return a; }`

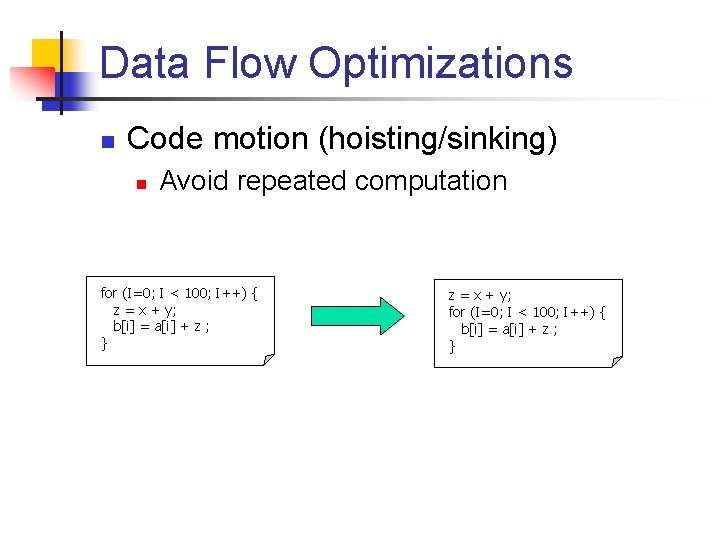

Data Flow Optimizations
- Code motion (hoisting/sinking)
  - Avoid repeated computation
- `for (i=0; i < 100; i++) { z = x + y; b[i] = a[i] + z; }` → `z = x + y; for (i=0; i < 100; i++) { b[i] = a[i] + z; }`

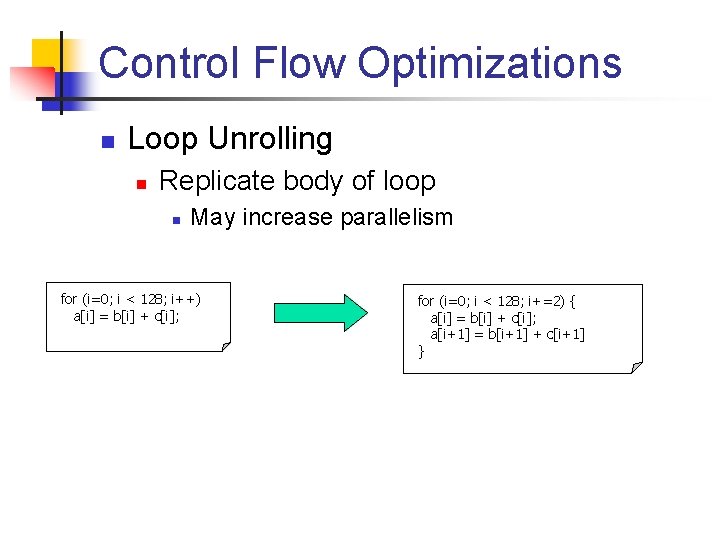

Control Flow Optimizations
- Loop unrolling
  - Replicate the body of the loop
  - May increase parallelism
- `for (i=0; i < 128; i++) a[i] = b[i] + c[i];` → `for (i=0; i < 128; i+=2) { a[i] = b[i] + c[i]; a[i+1] = b[i+1] + c[i+1]; }`
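A runnable version of the transformation, with one detail the slide can skip because 128 is even: when the trip count is not a multiple of the unroll factor, an epilogue must handle the leftover iteration. The function names are hypothetical.

```c
#include <assert.h>

/* Original loop: one add per iteration. */
void add_arrays(int *a, const int *b, const int *c, int n)
{
    for (int i = 0; i < n; i++)
        a[i] = b[i] + c[i];
}

/* Unrolled by 2: two independent adds per iteration, which a synthesis
   tool could map onto two adders in the same cycle. */
void add_arrays_unroll2(int *a, const int *b, const int *c, int n)
{
    int i;
    assert(n >= 0);
    for (i = 0; i + 1 < n; i += 2) {
        a[i]     = b[i]     + c[i];
        a[i + 1] = b[i + 1] + c[i + 1];
    }
    if (i < n)                    /* leftover iteration when n is odd */
        a[i] = b[i] + c[i];
}
```

Unrolling changes only the loop structure, not the results, so both functions fill `a` identically for any `n`.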

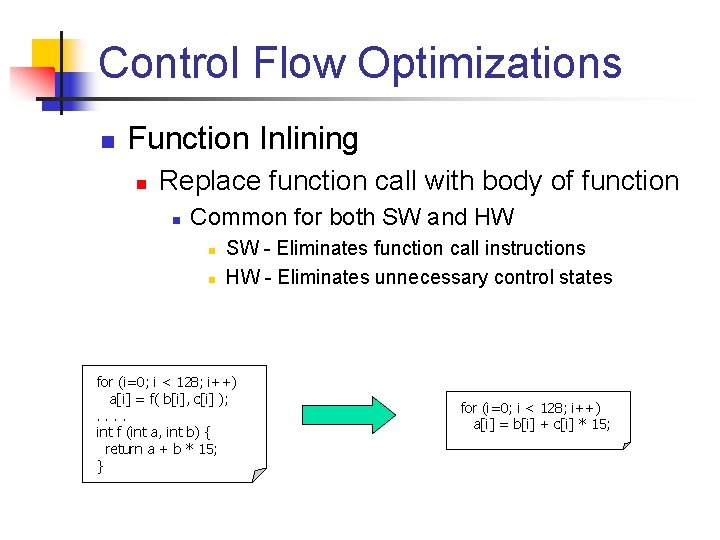

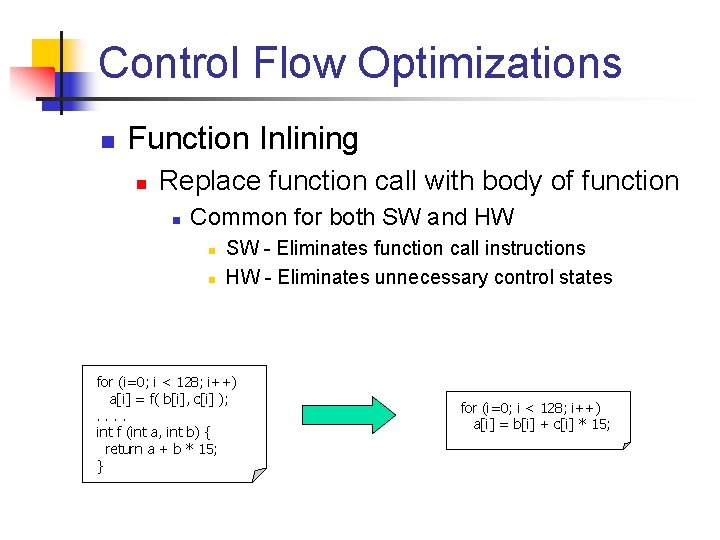

Control Flow Optimizations
- Function inlining
  - Replace a function call with the body of the function
  - Common for both SW and HW
    - SW: eliminates function call instructions
    - HW: eliminates unnecessary control states
- `for (i=0; i < 128; i++) a[i] = f(b[i], c[i]); . . int f (int a, int b) { return a + b * 15; }` → `for (i=0; i < 128; i++) a[i] = b[i] + c[i] * 15;`

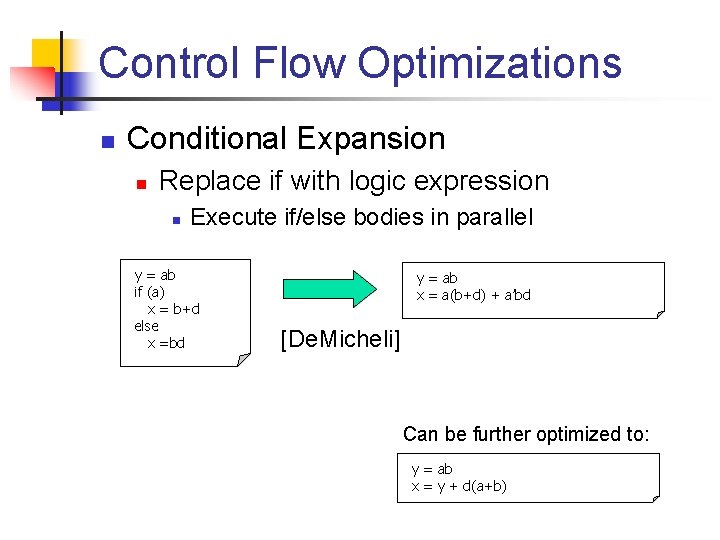

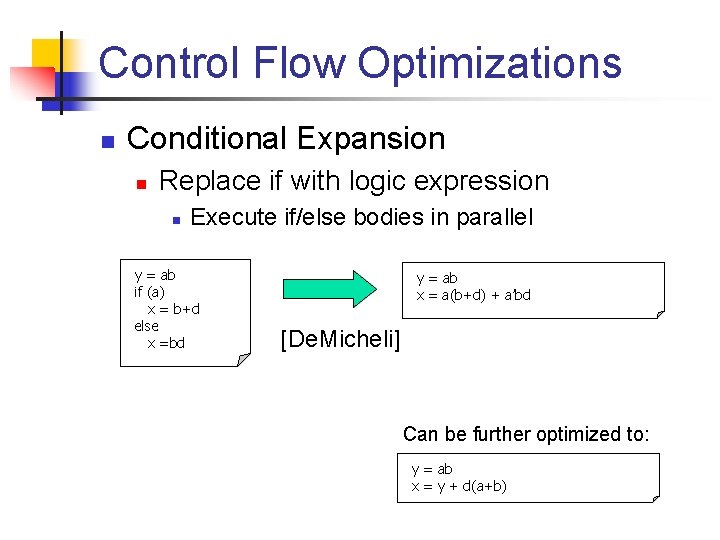

Control Flow Optimizations
- Conditional expansion
  - Replace an if with a logic expression
  - Execute the if/else bodies in parallel
- `y = ab; if (a) x = b+d; else x = bd;` → `y = ab; x = a(b+d) + a'bd` [De Micheli]
  - Can be further optimized to: `y = ab; x = y + d(a+b)`
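Treating a, b, d, x, and y as single-bit (Boolean) signals, as in the De Micheli example, all three forms can be compared exhaustively over the 8 input combinations. The function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Original control flow (Boolean: + is OR, juxtaposition is AND):
   y = ab; if (a) x = b + d; else x = bd; */
bool x_branch(bool a, bool b, bool d) { return a ? (b || d) : (b && d); }

/* Conditional expansion: both bodies computed in parallel, selected by a:
   x = a(b+d) + a'bd. */
bool x_expanded(bool a, bool b, bool d) { return (a && (b || d)) || (!a && b && d); }

/* Further optimized form reusing y = ab: x = y + d(a+b). */
bool x_optimized(bool a, bool b, bool d)
{
    bool y = a && b;
    return y || (d && (a || b));
}

/* Compares the three forms on all 8 combinations of a, b, d. */
bool expansion_equivalent(void)
{
    for (int m = 0; m < 8; m++) {
        bool a = m & 1, b = (m >> 1) & 1, d = (m >> 2) & 1;
        bool ref = x_branch(a, b, d);
        if (x_expanded(a, b, d) != ref || x_optimized(a, b, d) != ref)
            return false;
    }
    return true;
}
```

The identity holds because ab + ad + a'bd = ab + ad + bd: the extra term abd in bd = abd + a'bd is already covered by ab.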

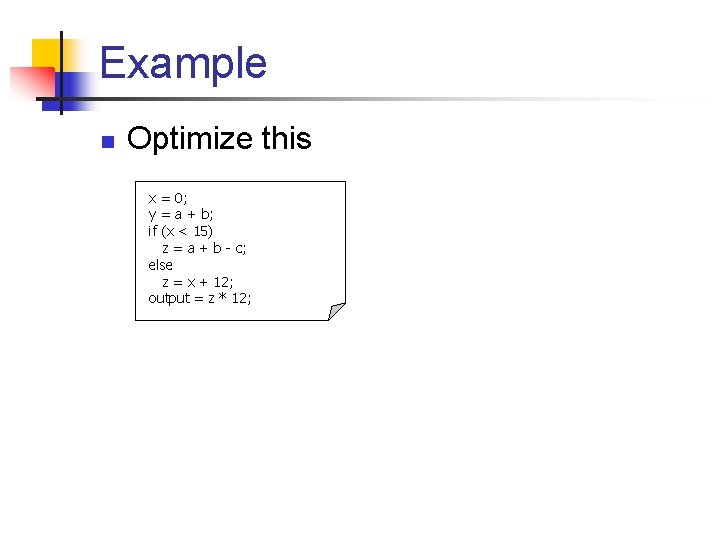

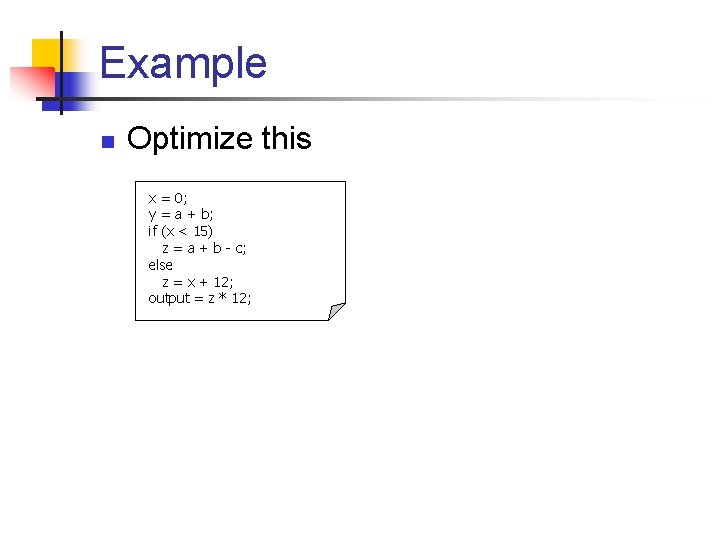

Example
- Optimize this:
  `x = 0; y = a + b; if (x < 15) z = a + b - c; else z = x + 12; output = z * 12;`

High-Level Synthesis: Scheduling/Resource Allocation

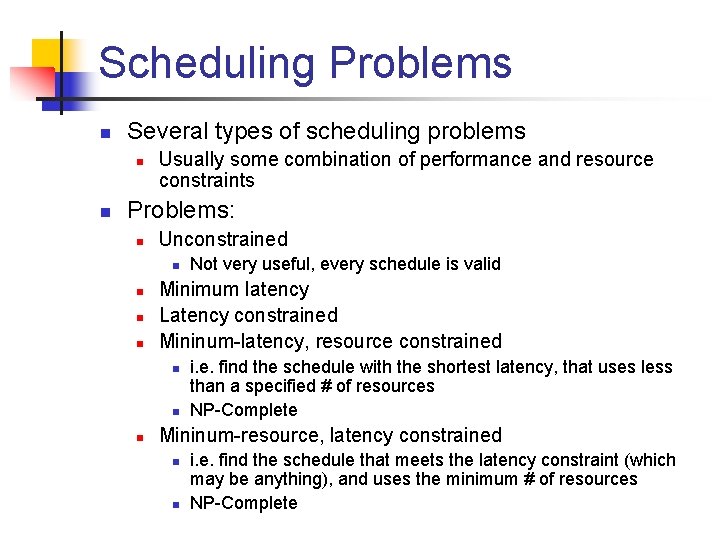

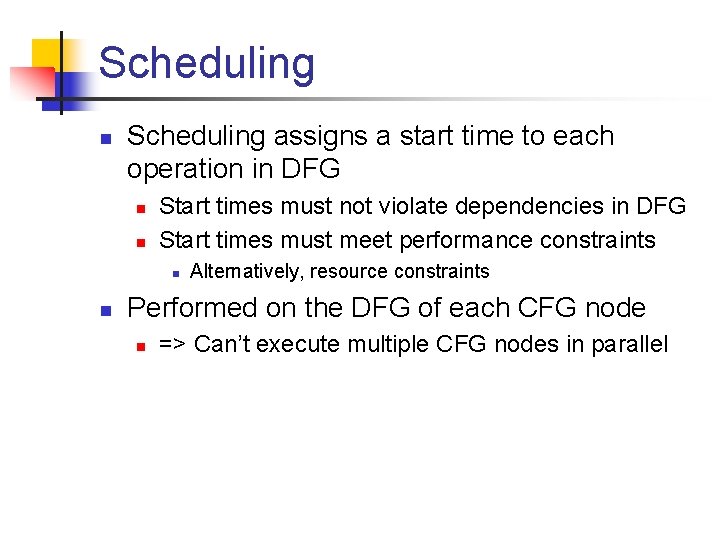

Scheduling
- Scheduling assigns a start time to each operation in the DFG
  - Start times must not violate dependencies in the DFG
  - Start times must meet performance constraints
    - Alternatively, resource constraints
- Performed on the DFG of each CFG node
  - => Can't execute multiple CFG nodes in parallel

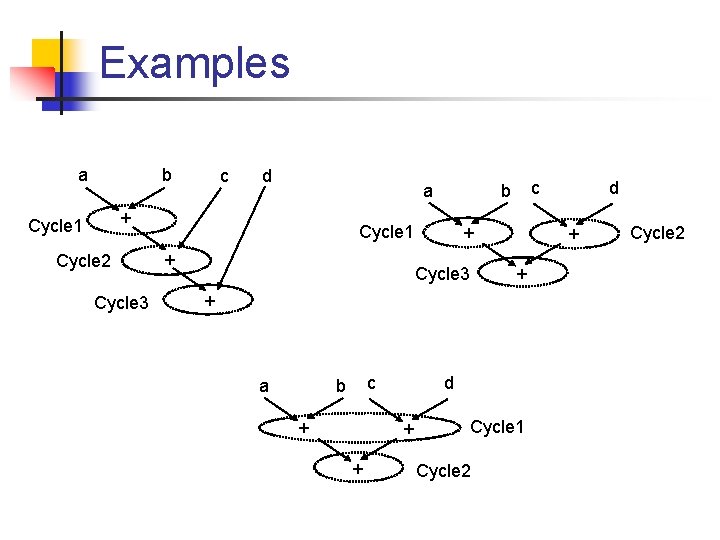

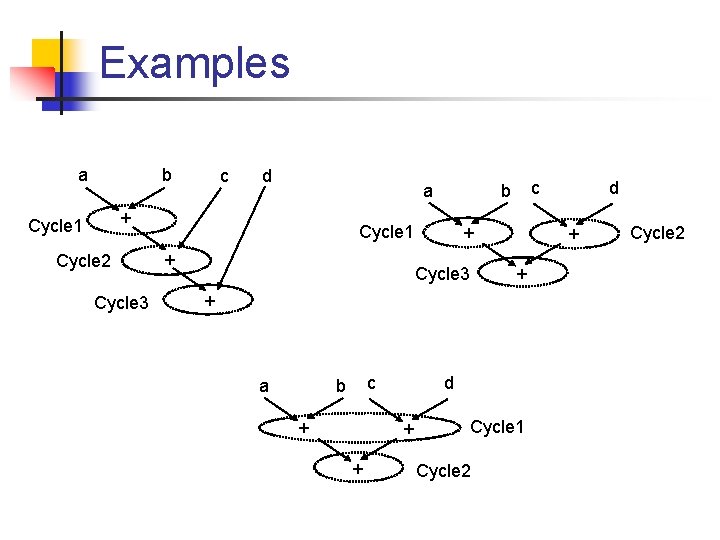

Examples

[Figure: alternative schedules of addition DFGs over inputs a, b, c, d, taking 2 or 3 cycles depending on the tree shape]

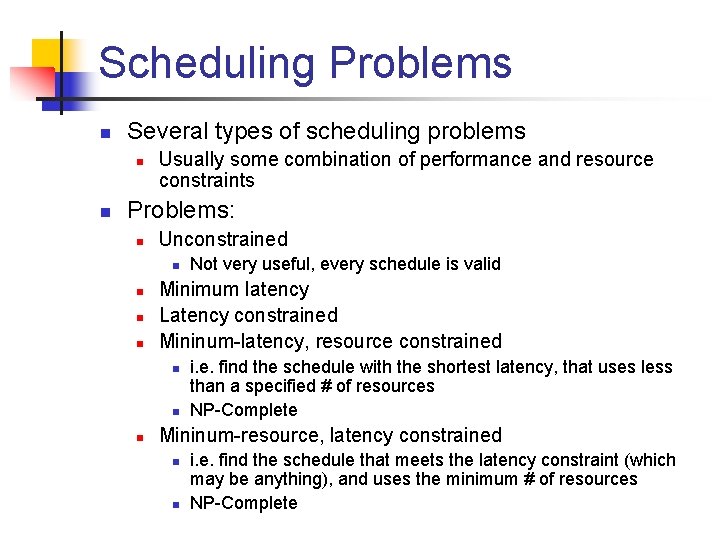

Scheduling Problems
- Several types of scheduling problems
  - Usually some combination of performance and resource constraints
- Problems:
  - Unconstrained
    - Not very useful; every schedule is valid
  - Minimum latency
  - Latency constrained
  - Minimum-latency, resource constrained
    - i.e., find the schedule with the shortest latency that uses no more than a specified # of resources
    - NP-complete
  - Minimum-resource, latency constrained
    - i.e., find the schedule that meets the latency constraint (which may be anything) and uses the minimum # of resources
    - NP-complete

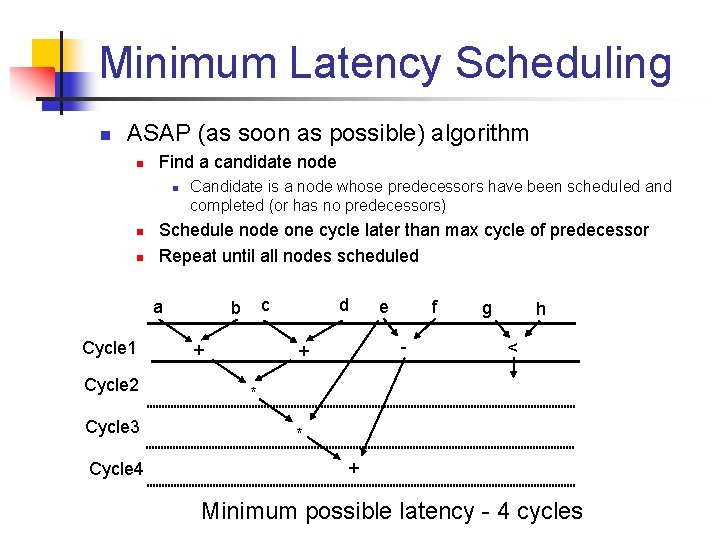

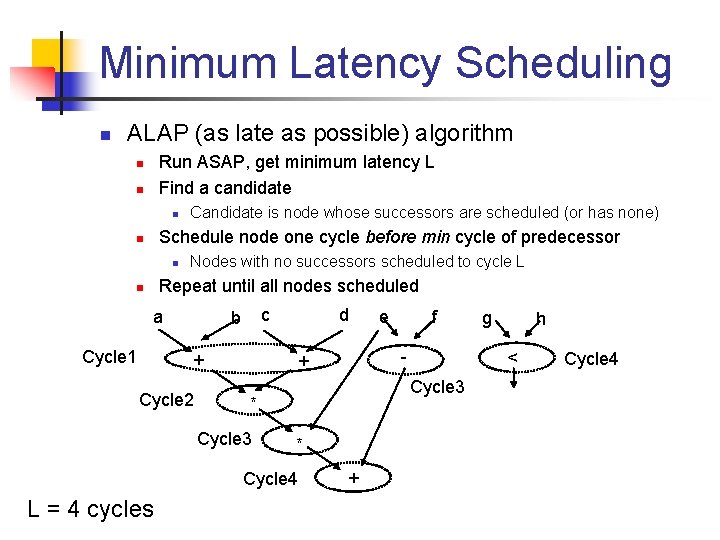

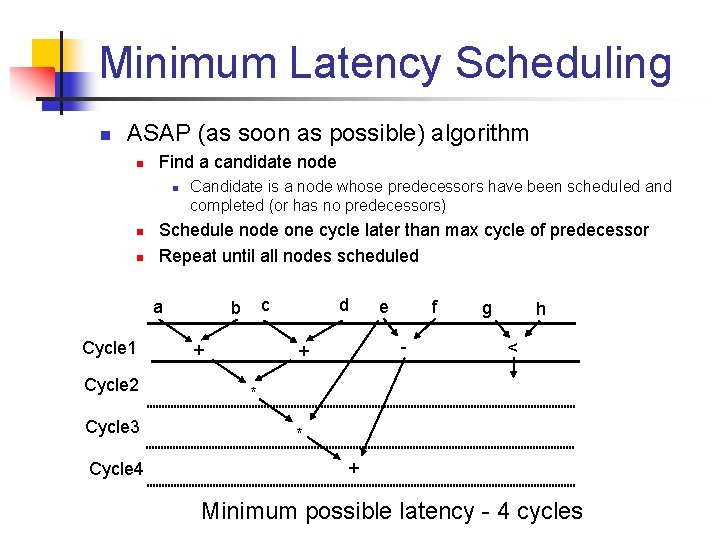

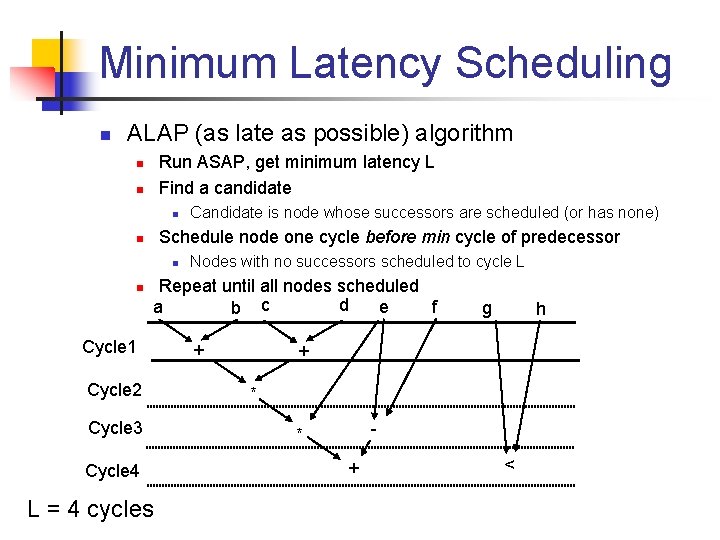

Minimum Latency Scheduling
- ASAP (as soon as possible) algorithm
  - Find a candidate node
    - A candidate is a node whose predecessors have been scheduled and completed (or that has no predecessors)
  - Schedule the node one cycle later than the max cycle of its predecessors
  - Repeat until all nodes are scheduled

[Figure: ASAP schedule of a DFG over inputs a to h (+, -, *, < operations) in cycles 1 to 4; minimum possible latency = 4 cycles]
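A direct transcription of the ASAP steps, as a sketch: the DFG is a hypothetical adjacency matrix `pred[v][u]`, every operation takes one cycle, and cycles are numbered from 1.

```c
#include <assert.h>
#include <stdbool.h>

#define MAXN 16

/* pred[v][u] is true when operation u must finish before v starts.
   Every operation is assumed to take one cycle. */
struct dfg { int n; bool pred[MAXN][MAXN]; };

/* ASAP: repeatedly pick a candidate whose predecessors are all scheduled and
   place it one cycle after its latest predecessor (cycle 1 if it has none).
   Fills cycle[] (1-based) and returns the latency (last cycle used). */
int asap(const struct dfg *g, int cycle[])
{
    int done = 0, latency = 0;
    assert(g->n <= MAXN);
    for (int v = 0; v < g->n; v++) cycle[v] = 0;      /* 0 = unscheduled */
    while (done < g->n) {
        bool progress = false;
        for (int v = 0; v < g->n; v++) {
            if (cycle[v]) continue;
            int start = 1;
            bool ready = true;
            for (int u = 0; u < g->n && ready; u++) {
                if (!g->pred[v][u]) continue;
                if (!cycle[u]) ready = false;          /* not a candidate yet */
                else if (cycle[u] + 1 > start) start = cycle[u] + 1;
            }
            if (!ready) continue;
            cycle[v] = start;
            if (start > latency) latency = start;
            done++;
            progress = true;
        }
        assert(progress);   /* fails only if the graph has a cycle */
    }
    return latency;
}
```

For a hypothetical 7-node graph where node 2 needs nodes 0 and 1, node 4 needs 2 and 3, and node 6 needs 4 and 5, ASAP places nodes 0, 1, 3, and 5 in cycle 1, node 2 in cycle 2, node 4 in cycle 3, and node 6 in cycle 4, for a minimum latency of 4.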

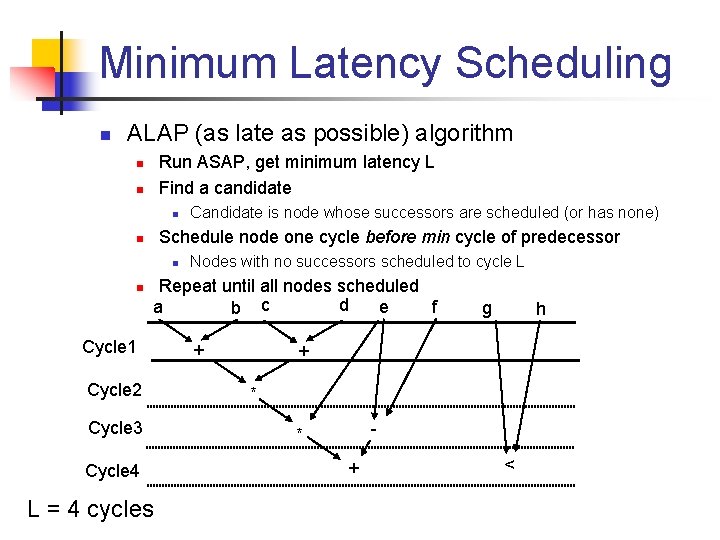

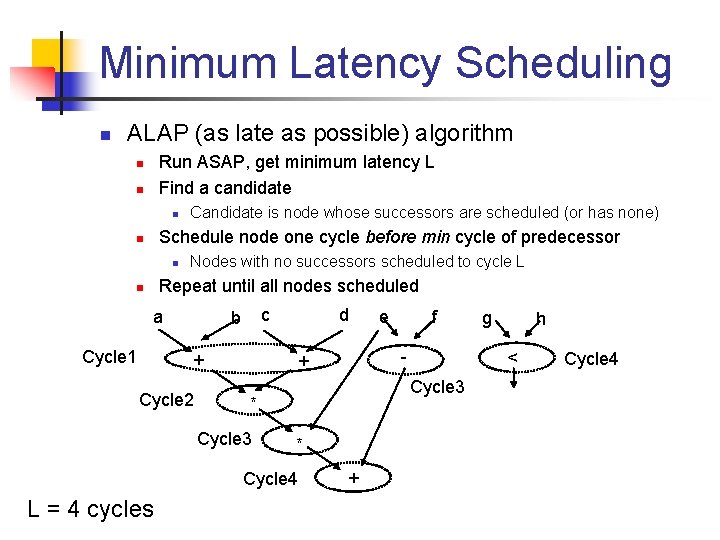

Minimum Latency Scheduling
- ALAP (as late as possible) algorithm
  - Run ASAP, get the minimum latency L
  - Find a candidate
    - A candidate is a node whose successors are scheduled (or that has none)
  - Schedule the node one cycle before the min cycle of its successors
    - Nodes with no successors are scheduled to cycle L
  - Repeat until all nodes are scheduled

[Figure: ALAP schedule being built for the same DFG; L = 4 cycles]

Minimum Latency Scheduling

[Figure: completed ALAP schedule for the same DFG; L = 4 cycles]

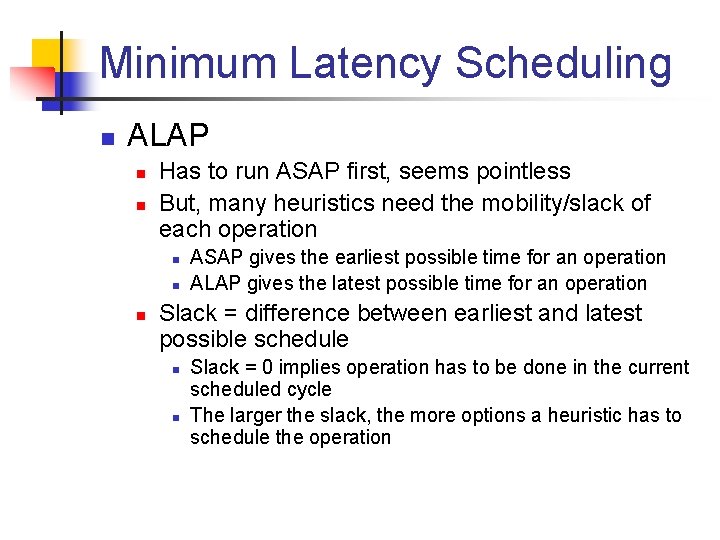

Minimum Latency Scheduling
- ALAP
  - Has to run ASAP first, which seems pointless
  - But many heuristics need the mobility/slack of each operation
    - ASAP gives the earliest possible time for an operation
    - ALAP gives the latest possible time for an operation
    - Slack = difference between the earliest and latest possible schedule
      - Slack = 0 implies the operation has to be done in the currently scheduled cycle
      - The larger the slack, the more options a heuristic has to schedule the operation
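A sketch of ALAP and slack in C, assuming unit-delay operations and, to keep each pass to a single loop, nodes numbered in topological order (every predecessor has a smaller index). `struct dfg`, `asap`, `alap`, and `compute_slack` are hypothetical names. Calling `alap` with a user-chosen bound instead of the ASAP latency gives the latency-constrained variant.

```c
#include <assert.h>
#include <stdbool.h>

#define MAXN 16

/* pred[v][u]: u must finish before v starts. Nodes are numbered in
   topological order; all operations take one cycle. */
struct dfg { int n; bool pred[MAXN][MAXN]; };

/* ASAP as one forward pass (valid because of the topological numbering). */
static int asap(const struct dfg *g, int early[])
{
    int latency = 0;
    for (int v = 0; v < g->n; v++) {
        early[v] = 1;
        for (int u = 0; u < v; u++)
            if (g->pred[v][u] && early[u] + 1 > early[v]) early[v] = early[u] + 1;
        if (early[v] > latency) latency = early[v];
    }
    return latency;
}

/* ALAP for a latency bound L: nodes without successors go in cycle L; every
   other node goes one cycle before its earliest-scheduled successor.
   Returns false if some node would land before cycle 1 (L infeasible). */
static bool alap(const struct dfg *g, int L, int late[])
{
    bool ok = true;
    for (int u = g->n - 1; u >= 0; u--) {
        late[u] = L;
        for (int v = u + 1; v < g->n; v++)
            if (g->pred[v][u] && late[v] - 1 < late[u]) late[u] = late[v] - 1;
        if (late[u] < 1) ok = false;
    }
    return ok;
}

/* Slack (mobility) = latest start - earliest start, with L = the minimum
   latency from ASAP. Returns L. */
int compute_slack(const struct dfg *g, int slack[])
{
    int early[MAXN], late[MAXN];
    assert(g->n <= MAXN);
    int L = asap(g, early);
    bool ok = alap(g, L, late);
    assert(ok);   /* always feasible when L is the ASAP latency */
    (void)ok;
    for (int v = 0; v < g->n; v++) slack[v] = late[v] - early[v];
    return L;
}
```

On a hypothetical 7-node graph (node 2 needs 0 and 1, node 4 needs 2 and 3, node 6 needs 4 and 5), nodes 0, 1, 2, 4, and 6 form the critical path with slack 0, node 3 has slack 1, and node 5 has slack 2.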

Latency-Constrained Scheduling
- Instead of finding the minimum latency, find a schedule whose latency is no greater than L
- Solutions:
  - Use ASAP, and verify that the minimum latency is no greater than L
  - Use ALAP starting with cycle L instead of the minimum latency (doesn't need ASAP)

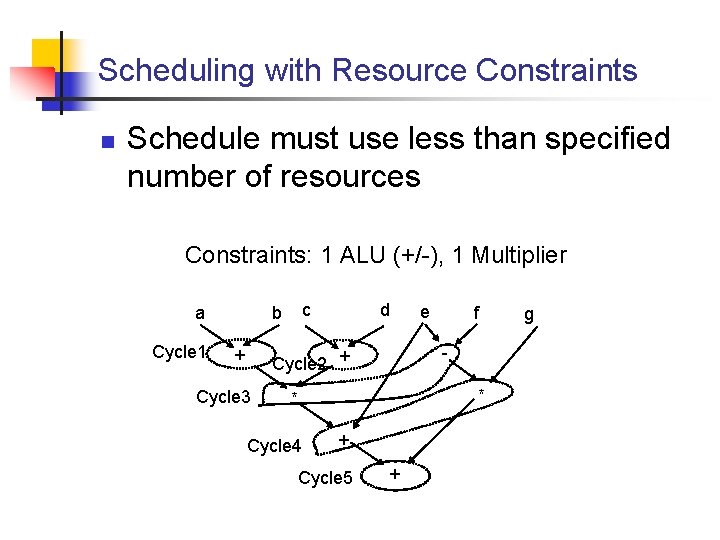

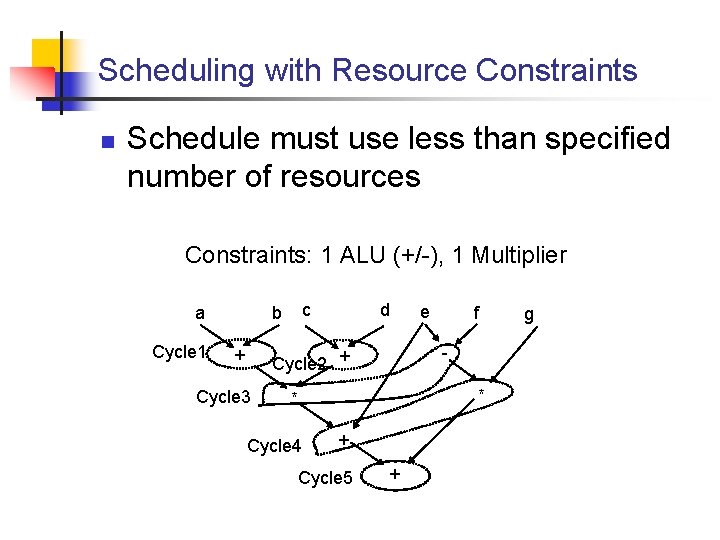

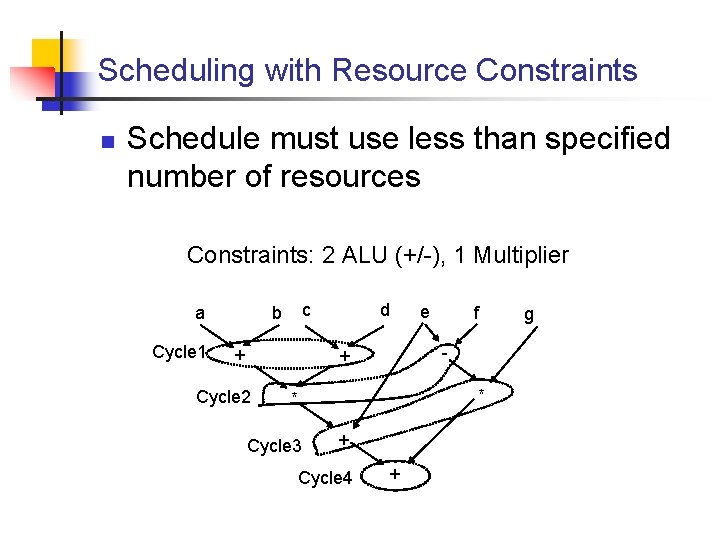

Scheduling with Resource Constraints
- The schedule must use no more than the specified number of resources

[Figure: constraints 1 ALU (+/-), 1 multiplier; the example DFG is scheduled over 5 cycles]

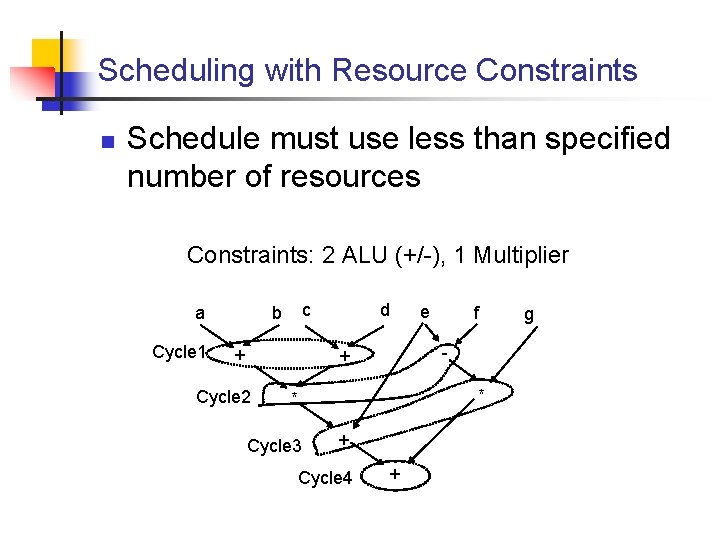

Scheduling with Resource Constraints
- The schedule must use no more than the specified number of resources

[Figure: constraints 2 ALUs (+/-), 1 multiplier; the same DFG is scheduled over 4 cycles]

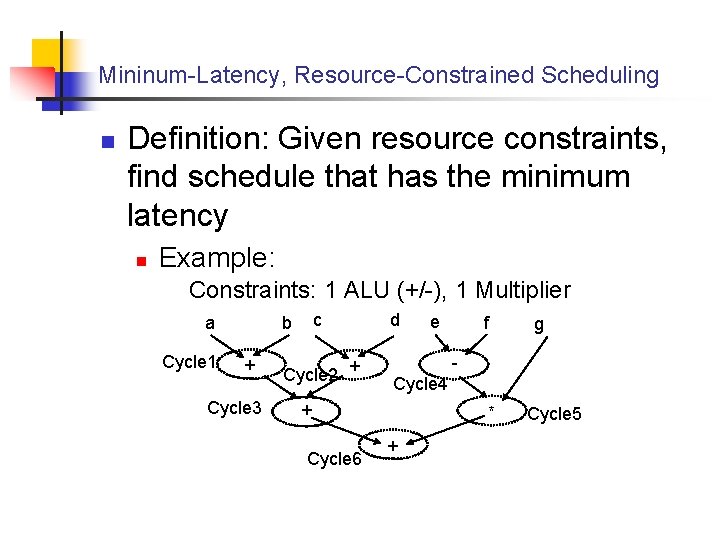

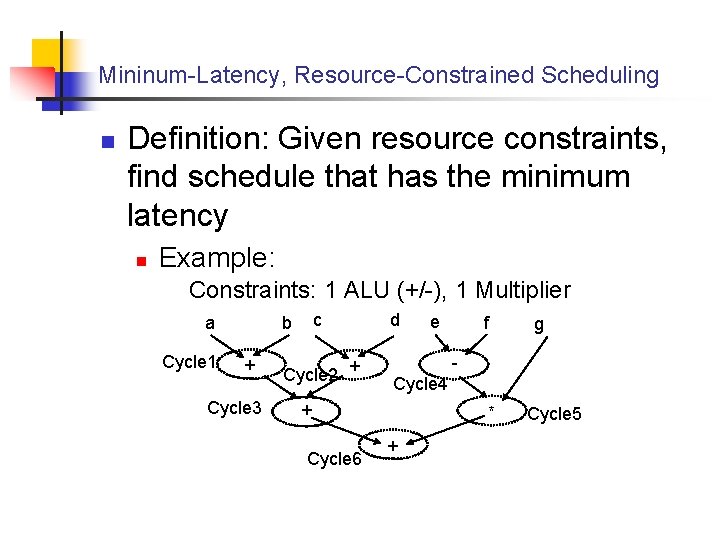

Minimum-Latency, Resource-Constrained Scheduling
- Definition: given resource constraints, find the schedule that has the minimum latency
- Example: constraints 1 ALU (+/-), 1 multiplier

[Figure: one valid schedule of the example DFG, taking 6 cycles]

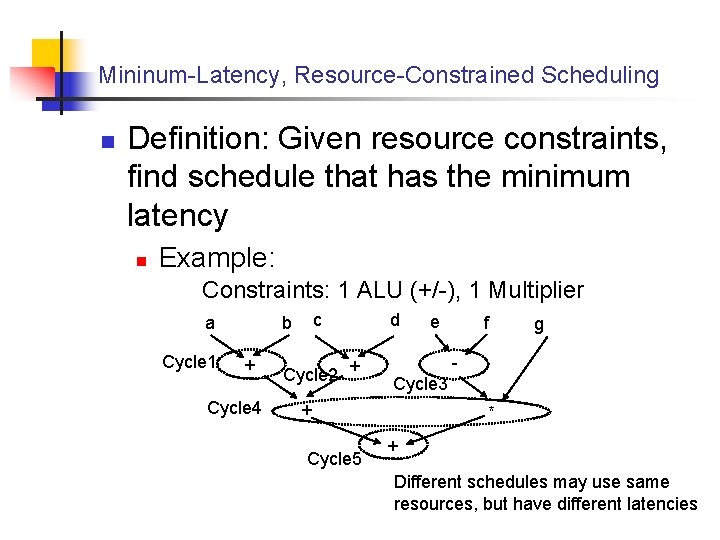

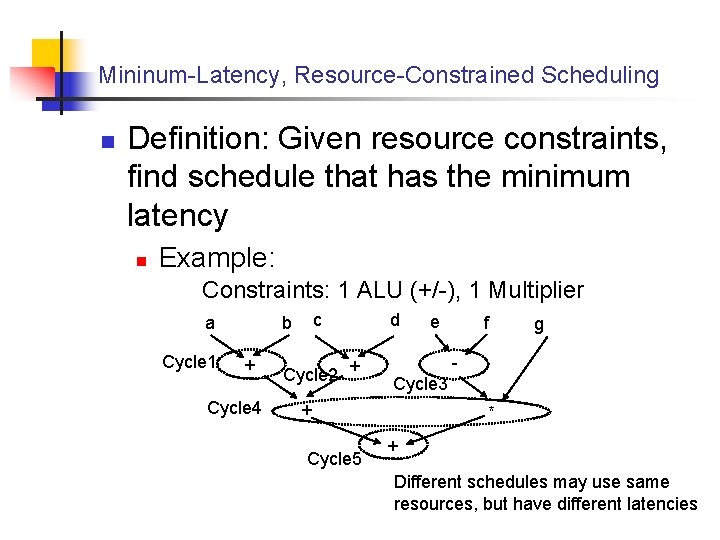

Minimum-Latency, Resource-Constrained Scheduling
- Same example: constraints 1 ALU (+/-), 1 multiplier

[Figure: a different schedule of the same DFG, taking 5 cycles]

- Different schedules may use the same resources but have different latencies

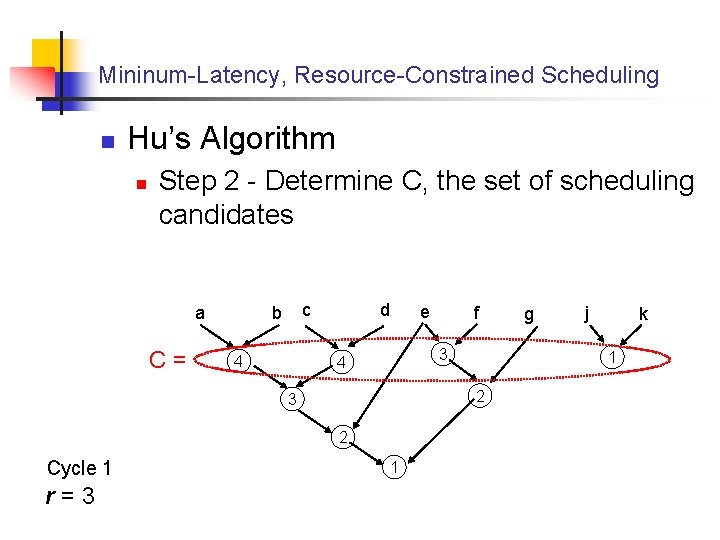

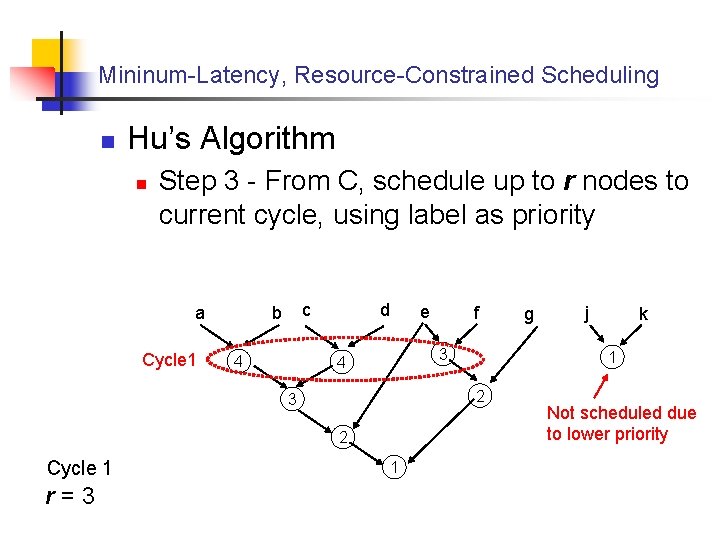

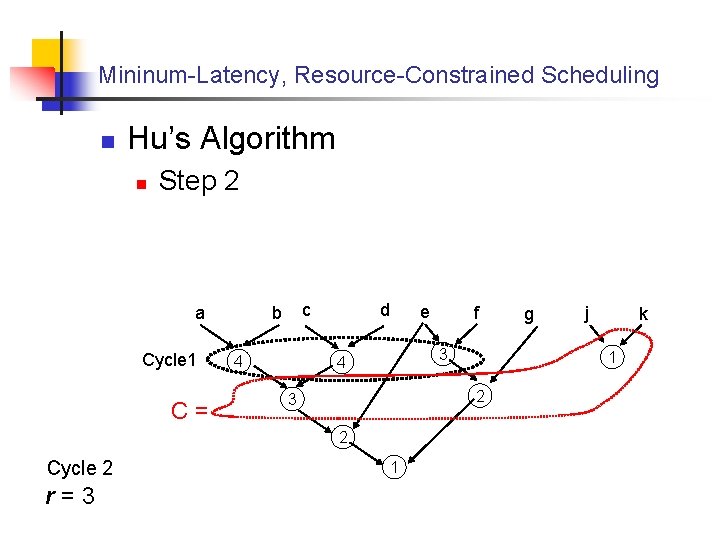

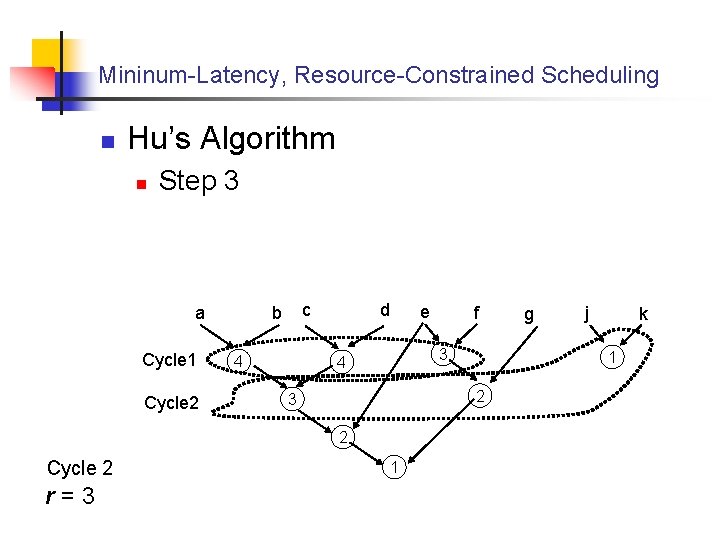

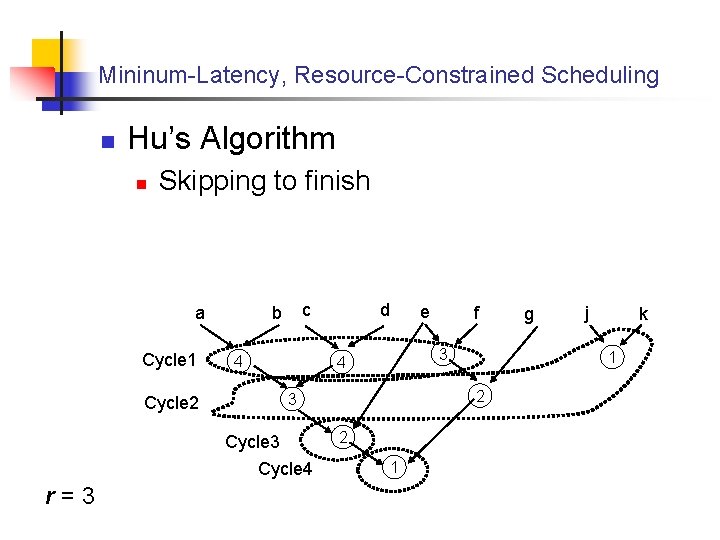

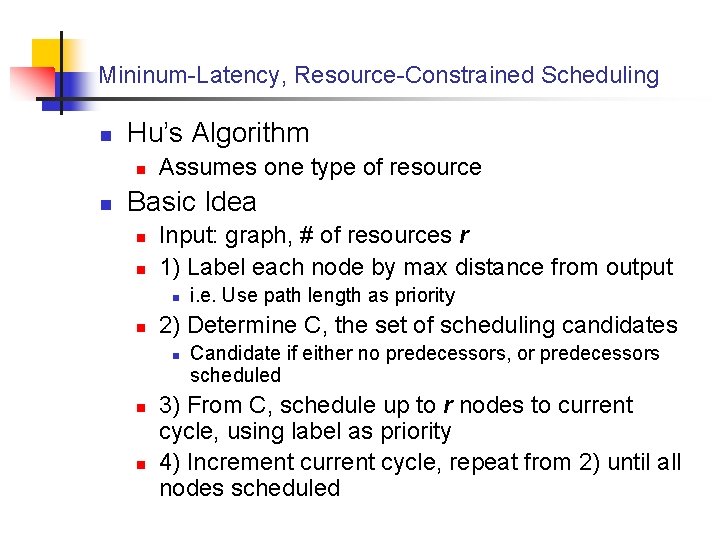

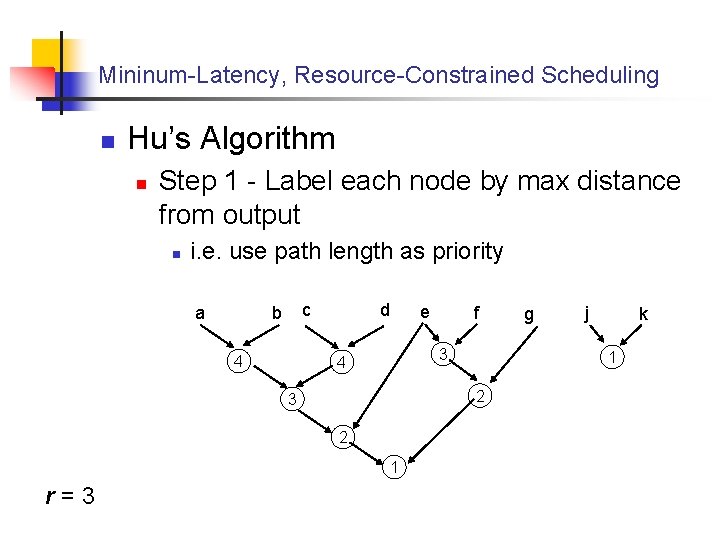

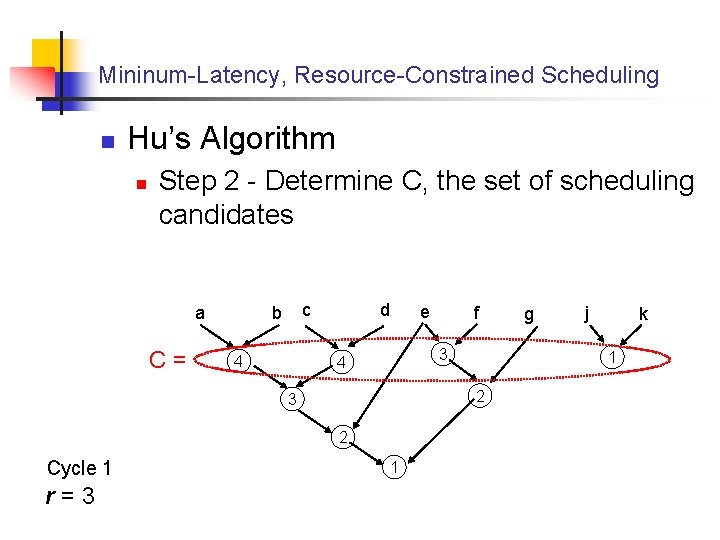

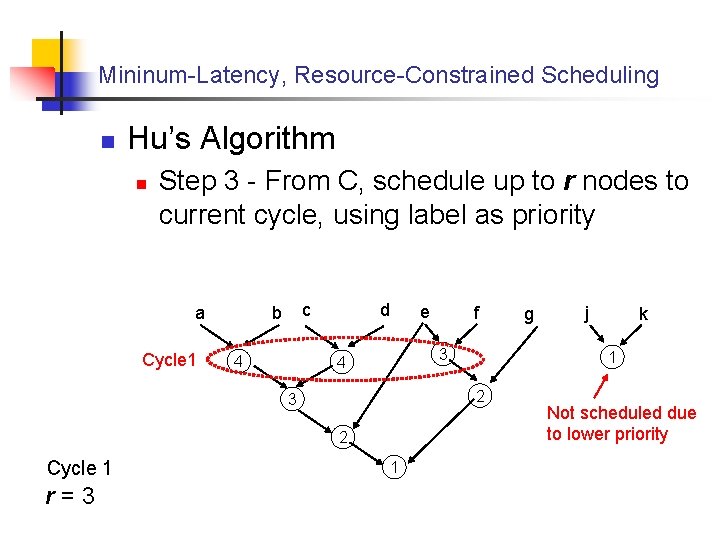

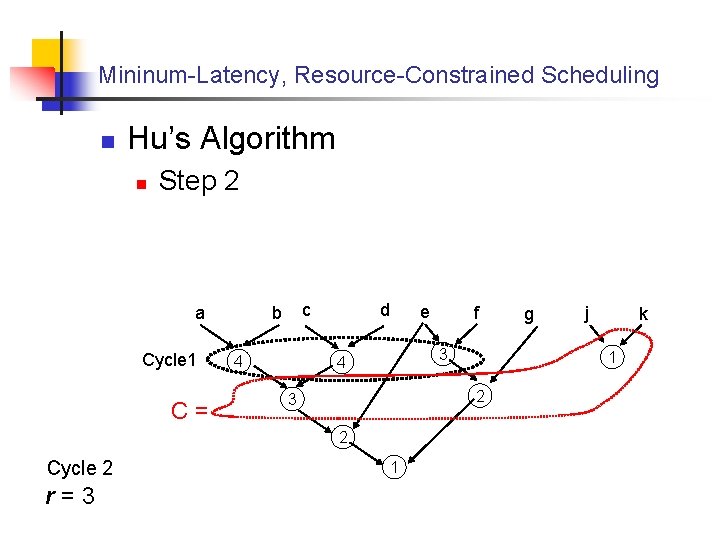

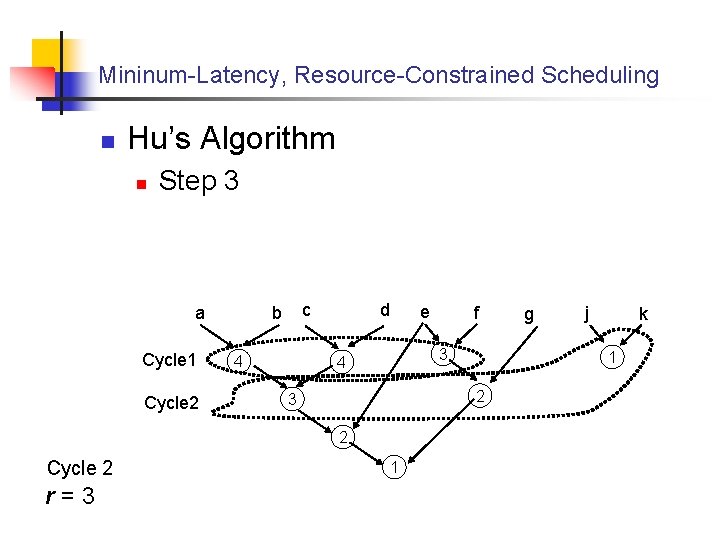

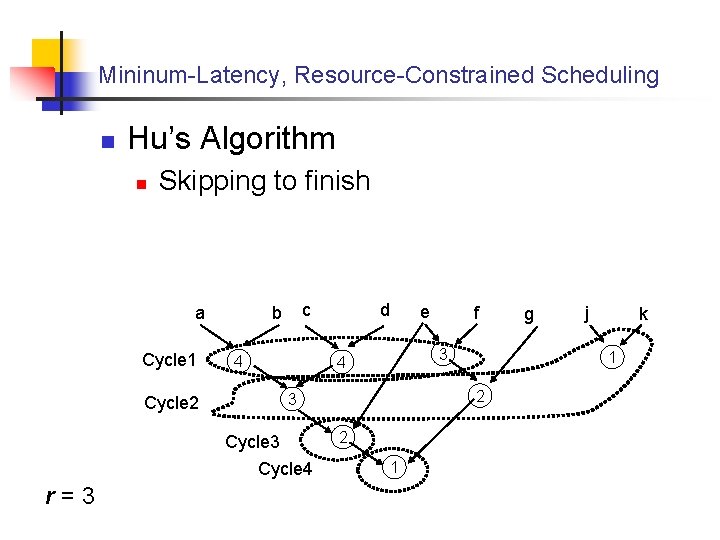

Minimum-Latency, Resource-Constrained Scheduling
- Hu's algorithm
  - Assumes one type of resource
- Basic idea
  - Input: graph, # of resources r
  1) Label each node by its max distance from the output
     - i.e., use path length as priority
  2) Determine C, the set of scheduling candidates
     - A node is a candidate if it has no predecessors, or its predecessors are scheduled
  3) From C, schedule up to r nodes to the current cycle, using the label as priority
  4) Increment the current cycle and repeat from 2) until all nodes are scheduled
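A compact C sketch of Hu's algorithm for a single resource type. It assumes unit-delay operations, nodes numbered in topological order, and ties between equal labels broken by lower node index (the slides do not specify a tie rule); `hu_schedule` and its helpers are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define MAXN 16

/* pred[v][u]: u must finish before v starts. Nodes are numbered in
   topological order; one resource type, unit-delay operations. */
struct dfg { int n; bool pred[MAXN][MAXN]; };

/* Step 1: label = number of nodes on the longest path from v to an output. */
static void label_nodes(const struct dfg *g, int label[])
{
    for (int u = g->n - 1; u >= 0; u--) {
        label[u] = 1;
        for (int v = u + 1; v < g->n; v++)
            if (g->pred[v][u] && label[v] + 1 > label[u]) label[u] = label[v] + 1;
    }
}

/* Step 2 test: unscheduled, and every predecessor ran in an earlier cycle. */
static bool is_candidate(const struct dfg *g, const int cycle[], int v, int c)
{
    if (cycle[v]) return false;
    for (int u = 0; u < g->n; u++)
        if (g->pred[v][u] && (cycle[u] == 0 || cycle[u] >= c)) return false;
    return true;
}

/* Hu's algorithm with r identical resources. Fills cycle[] (1-based) and
   returns the latency. */
int hu_schedule(const struct dfg *g, int r, int cycle[])
{
    int label[MAXN], scheduled = 0, c = 0;
    assert(r > 0 && g->n <= MAXN);
    label_nodes(g, label);
    for (int v = 0; v < g->n; v++) cycle[v] = 0;

    while (scheduled < g->n) {
        c++;                                   /* step 4: next cycle */
        for (int k = 0; k < r; k++) {          /* step 3: up to r nodes */
            int best = -1;
            for (int v = 0; v < g->n; v++)
                if (is_candidate(g, cycle, v, c) &&
                    (best < 0 || label[v] > label[best]))
                    best = v;
            if (best < 0) break;               /* fewer than r candidates */
            cycle[best] = c;
            scheduled++;
        }
    }
    return c;
}
```

For a hypothetical 7-node graph with edges 0,1 → 2; 2,3 → 4; 4,5 → 6, r = 2 gives a latency of 4, while r = 1 serializes all 7 operations.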

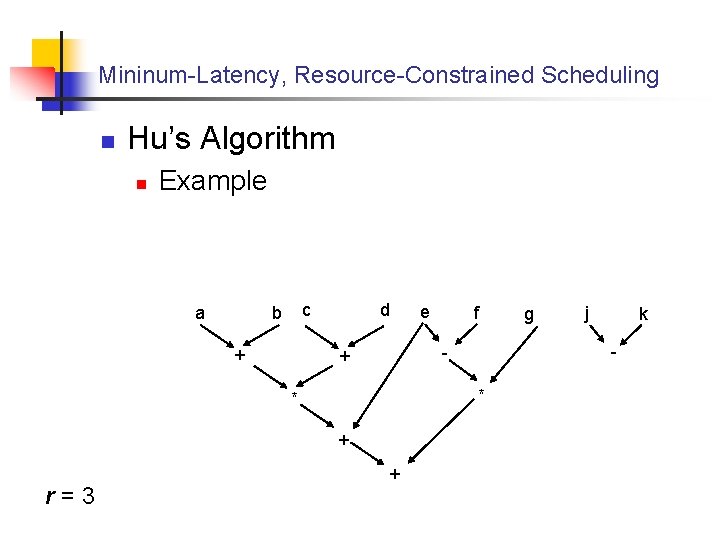

Minimum-Latency, Resource-Constrained Scheduling
- Hu's algorithm: example (r = 3)

[Figure: example DFG over inputs a to k with +, *, and - operations]

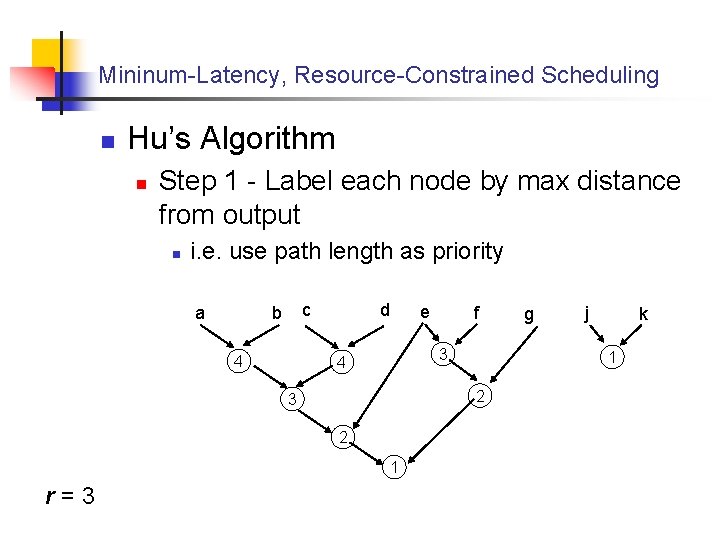

Minimum-Latency, Resource-Constrained Scheduling
- Hu's algorithm
  - Step 1: label each node by its max distance from the output
    - i.e., use path length as priority

[Figure: the example DFG with each node labeled by its distance to the output (labels 1 to 4); r = 3]

Minimum-Latency, Resource-Constrained Scheduling
- Hu's algorithm
  - Step 2: determine C, the set of scheduling candidates

[Figure: candidate set C for cycle 1; r = 3]

Minimum-Latency, Resource-Constrained Scheduling
- Hu's algorithm
  - Step 3: from C, schedule up to r nodes to the current cycle, using the label as priority

[Figure: cycle 1 scheduled with r = 3; one candidate is not scheduled due to its lower priority]

Minimum-Latency, Resource-Constrained Scheduling
- Hu's algorithm
  - Step 2

[Figure: candidate set C for cycle 2; r = 3]

Minimum-Latency, Resource-Constrained Scheduling
- Hu's algorithm
  - Step 3

[Figure: cycle 2 scheduled; r = 3]

Minimum-Latency, Resource-Constrained Scheduling
- Hu's algorithm
  - Skipping to the finish

[Figure: final schedule of the example, ending in cycle 4; r = 3]

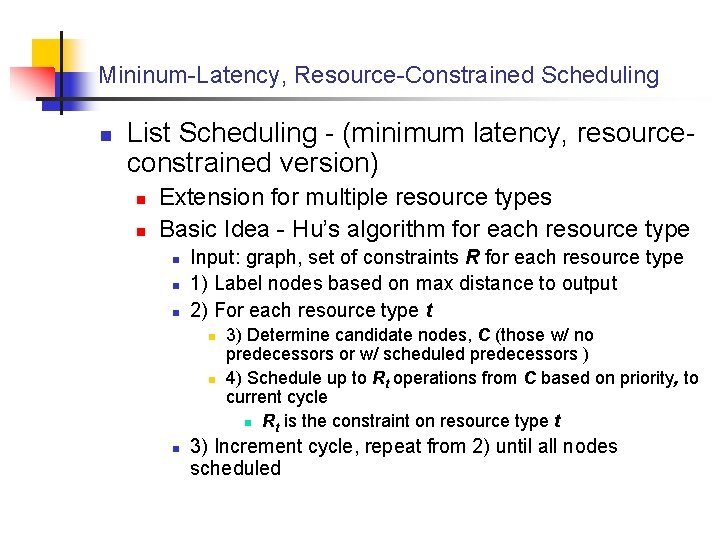

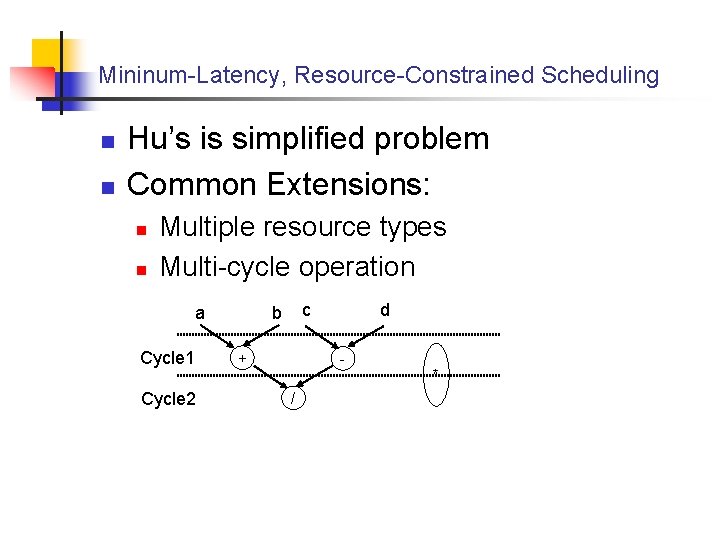

Minimum-Latency, Resource-Constrained Scheduling
- Hu's algorithm solves a simplified problem
- Common extensions:
  - Multiple resource types
  - Multi-cycle operations

[Figure: DFG mixing +, -, /, and * operations, where some operations span more than one cycle]

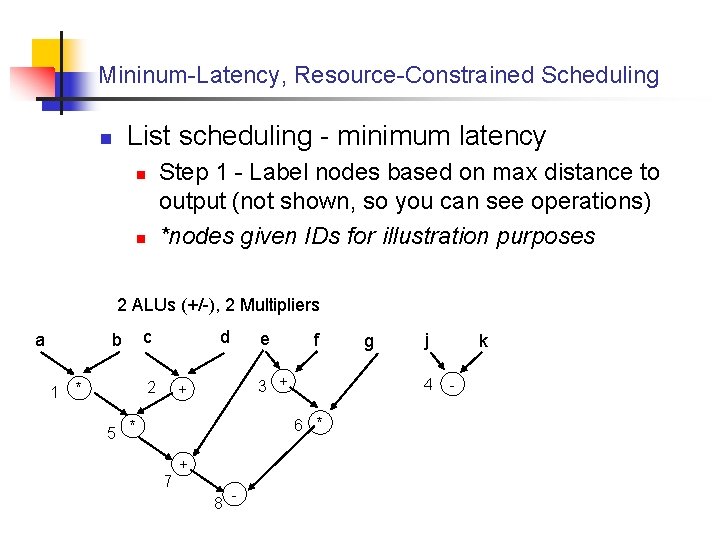

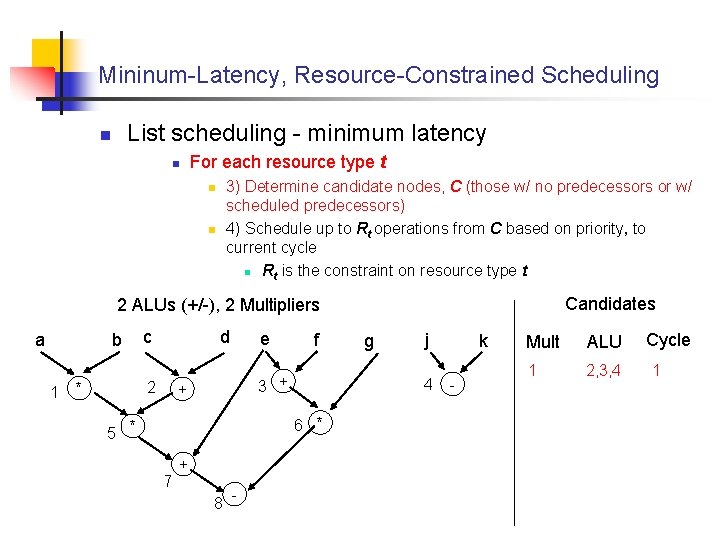

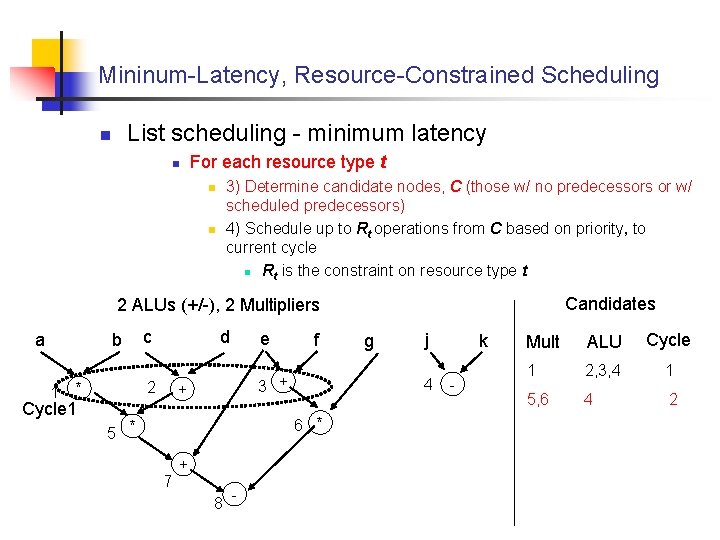

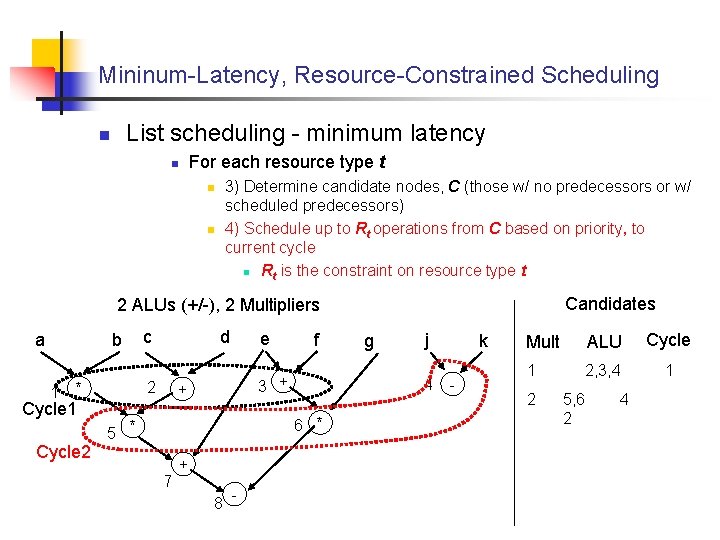

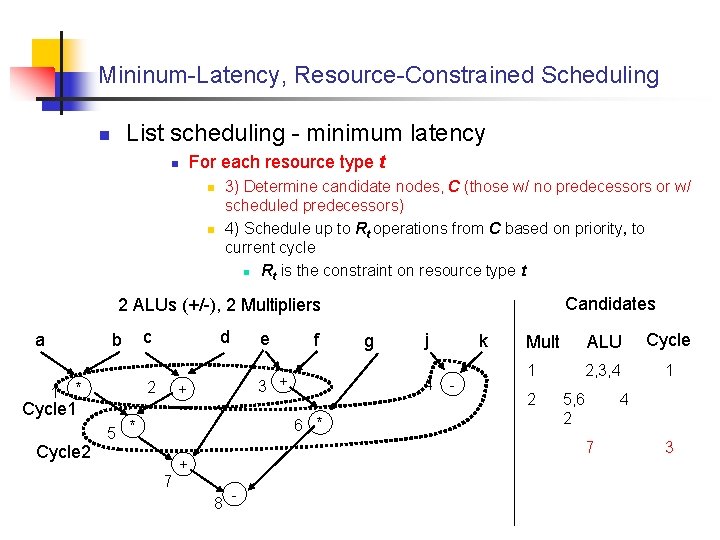

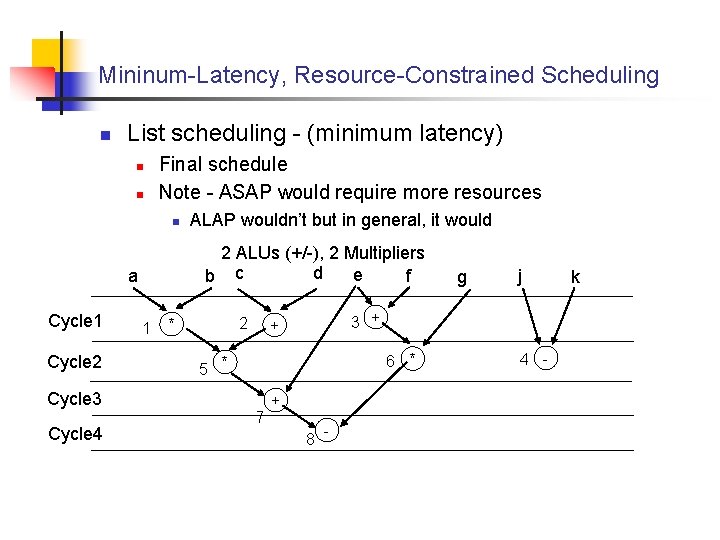

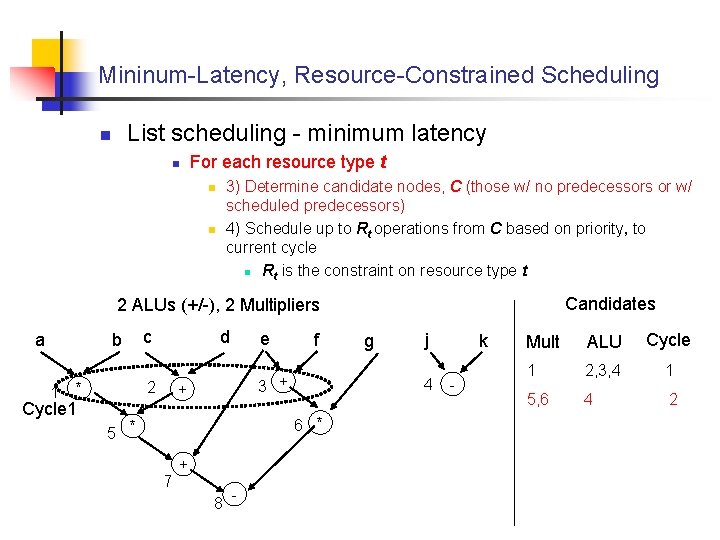

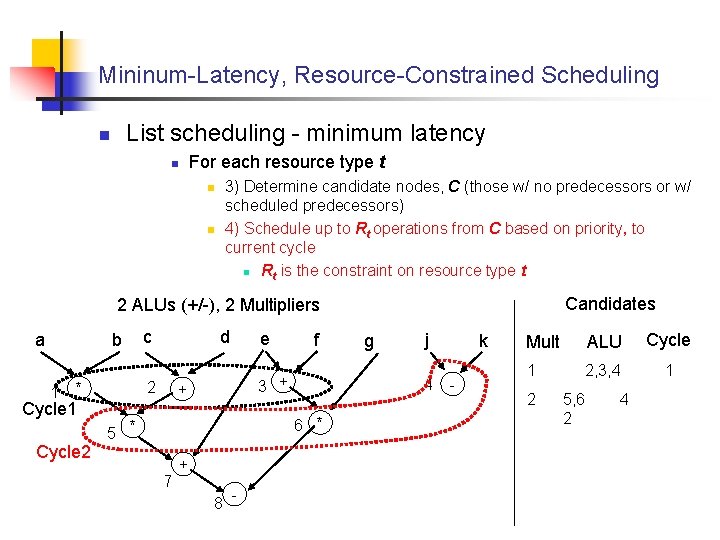

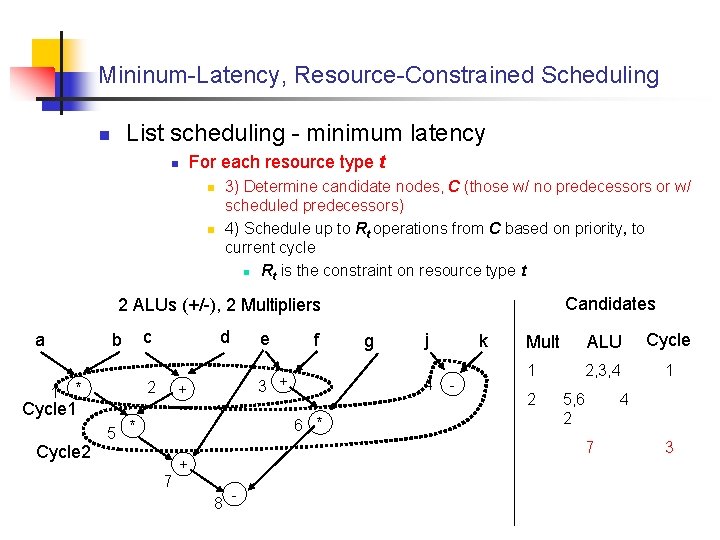

Minimum-Latency, Resource-Constrained Scheduling
- List scheduling (minimum-latency, resource-constrained version)
  - Extension for multiple resource types
- Basic idea: Hu's algorithm for each resource type
  - Input: graph, set of constraints R for each resource type
  1) Label nodes based on max distance to output
  2) For each resource type t
     3) Determine candidate nodes, C (those with no predecessors or with scheduled predecessors)
     4) Schedule up to Rt operations from C based on priority, to the current cycle
        - Rt is the constraint on resource type t
  5) Increment the cycle, and repeat from 2) until all nodes are scheduled
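A minimal list scheduler in C for multiple resource types. It assumes unit-delay operations, nodes numbered in topological order, ties broken by lower node index, and two hypothetical resource types (0 = ALU, 1 = multiplier); all names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define MAXN 16
#define NTYPES 2          /* hypothetical: 0 = ALU (+/-), 1 = multiplier */

/* pred[v][u]: u must finish before v starts. Nodes are numbered in
   topological order; every operation takes one cycle and needs one unit of
   resource type type[v]. */
struct dfg { int n; int type[MAXN]; bool pred[MAXN][MAXN]; };

/* Step 1: priority = number of nodes on the longest path from v to an output. */
static void label_nodes(const struct dfg *g, int label[])
{
    for (int u = g->n - 1; u >= 0; u--) {
        label[u] = 1;
        for (int v = u + 1; v < g->n; v++)
            if (g->pred[v][u] && label[v] + 1 > label[u]) label[u] = label[v] + 1;
    }
}

/* Candidate test: every predecessor was scheduled in an earlier cycle. */
static bool ready(const struct dfg *g, const int cycle[], int v, int c)
{
    for (int u = 0; u < g->n; u++)
        if (g->pred[v][u] && (cycle[u] == 0 || cycle[u] >= c)) return false;
    return true;
}

/* Steps 2-5: each cycle, for each type t, schedule up to R[t] candidates of
   that type in priority order. Fills cycle[] (1-based); returns the latency. */
int list_schedule(const struct dfg *g, const int R[NTYPES], int cycle[])
{
    int label[MAXN], scheduled = 0, c = 0;
    assert(g->n <= MAXN);
    for (int t = 0; t < NTYPES; t++) assert(R[t] > 0);
    label_nodes(g, label);
    for (int v = 0; v < g->n; v++) cycle[v] = 0;

    while (scheduled < g->n) {
        c++;
        for (int t = 0; t < NTYPES; t++) {
            for (int k = 0; k < R[t]; k++) {
                int best = -1;
                for (int v = 0; v < g->n; v++)
                    if (!cycle[v] && g->type[v] == t && ready(g, cycle, v, c) &&
                        (best < 0 || label[v] > label[best]))
                        best = v;
                if (best < 0) break;        /* no more candidates of type t */
                cycle[best] = c;
                scheduled++;
            }
        }
    }
    return c;
}
```

On a hypothetical 7-node graph with multiplies at nodes 0 and 1 and ALU operations elsewhere (edges 0,1 → 2; 2,3 → 4; 4,5 → 6), 1 ALU and 1 multiplier give a latency of 5, and 2 of each give 4.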

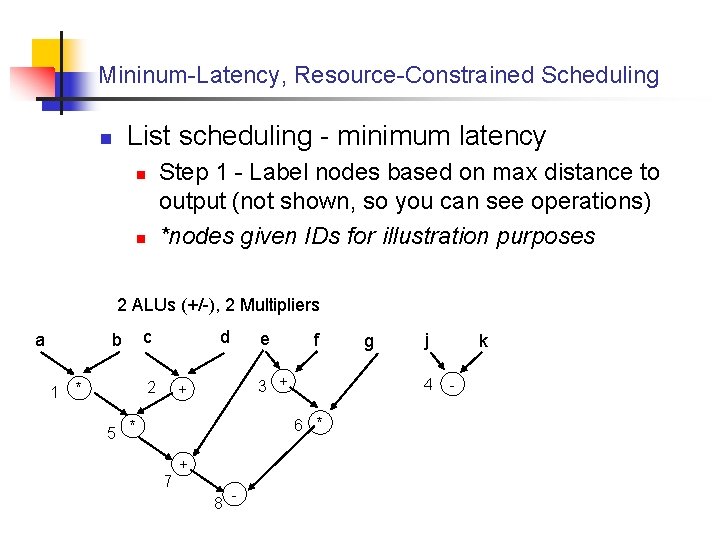

Minimum-Latency, Resource-Constrained Scheduling
- List scheduling, minimum latency (2 ALUs (+/-), 2 multipliers)
  - Step 1: label nodes based on max distance to output (labels not shown, so you can see the operations)
  - Nodes are given IDs 1 to 8 for illustration purposes

[Figure: example DFG over inputs a to k; nodes 1, 5, and 6 are multiplies, and nodes 2, 3, 4, 7, and 8 are ALU operations]

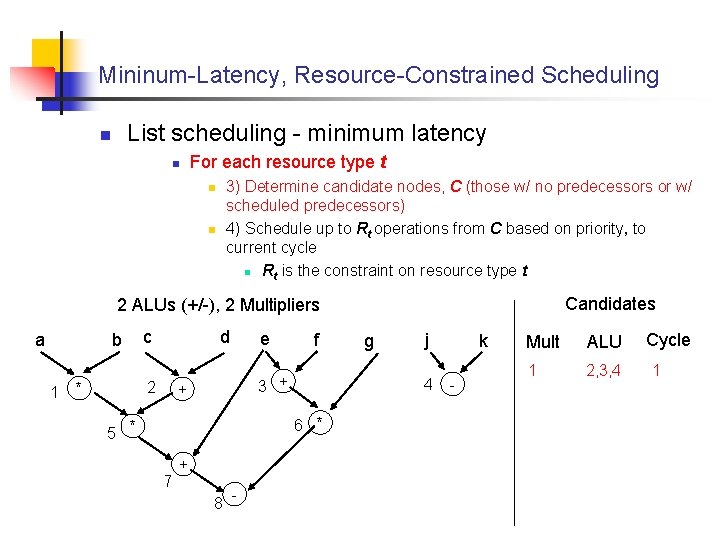

Minimum-Latency, Resource-Constrained Scheduling
- List scheduling, minimum latency (2 ALUs (+/-), 2 multipliers)
  - For each resource type t:
    3) Determine candidate nodes, C (those with no predecessors or with scheduled predecessors)
    4) Schedule up to Rt operations from C based on priority, to the current cycle
       - Rt is the constraint on resource type t

| Cycle | Mult candidates | ALU candidates |
|---|---|---|
| 1 | 1 | 2, 3, 4 |

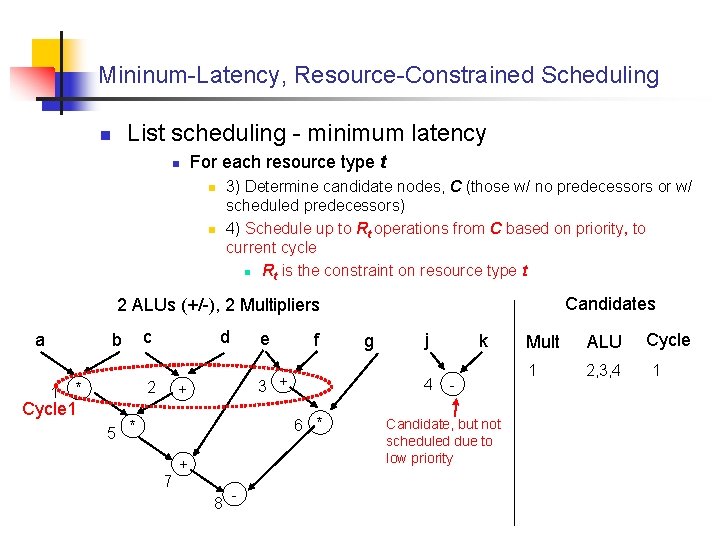

Minimum-Latency, Resource-Constrained Scheduling
- List scheduling, minimum latency (2 ALUs (+/-), 2 multipliers), same steps
- Cycle 1: node 1 is scheduled on a multiplier and nodes 2 and 3 on the ALUs; node 4 is a candidate, but is not scheduled due to its lower priority

| Cycle | Mult candidates | ALU candidates |
|---|---|---|
| 1 | 1 | 2, 3, 4 |

Minimum-Latency, Resource-Constrained Scheduling
- List scheduling, minimum latency (2 ALUs (+/-), 2 multipliers), same steps
- Cycle 2 candidates:

| Cycle | Mult candidates | ALU candidates |
|---|---|---|
| 1 | 1 | 2, 3, 4 |
| 2 | 5, 6 | 4 |

Minimum-Latency, Resource-Constrained Scheduling
- List scheduling, minimum latency (2 ALUs (+/-), 2 multipliers), same steps
- Cycle 2: nodes 5 and 6 are scheduled on the multipliers and node 4 on an ALU

| Cycle | Mult candidates | ALU candidates |
|---|---|---|
| 1 | 1 | 2, 3, 4 |
| 2 | 5, 6 | 4 |

Minimum-Latency, Resource-Constrained Scheduling
- List scheduling, minimum latency (2 ALUs (+/-), 2 multipliers), same steps
- Cycle 3 candidates:

| Cycle | Mult candidates | ALU candidates |
|---|---|---|
| 1 | 1 | 2, 3, 4 |
| 2 | 5, 6 | 4 |
| 3 | | 7 |

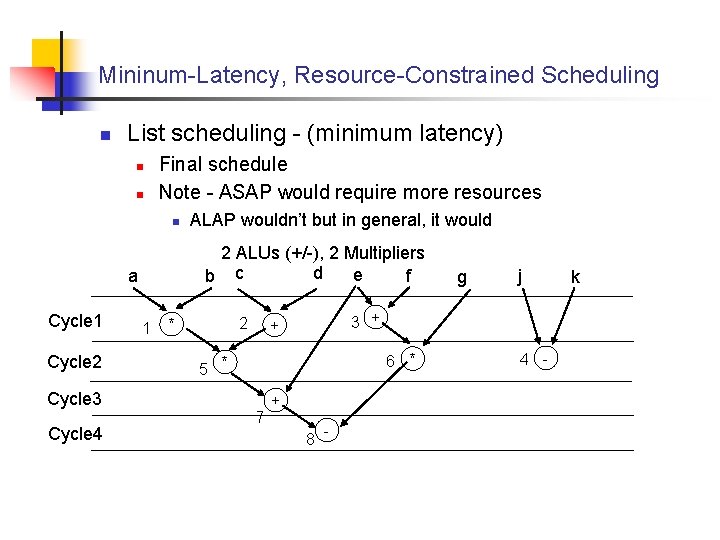

Minimum-Latency, Resource-Constrained Scheduling
- List scheduling (minimum latency): final schedule with 2 ALUs (+/-), 2 multipliers
  - Cycle 1: nodes 1, 2, 3
  - Cycle 2: nodes 4, 5, 6
  - Cycle 3: node 7
  - Cycle 4: node 8
- Note: ASAP would require more resources
  - ALAP wouldn't in this example, but in general it would

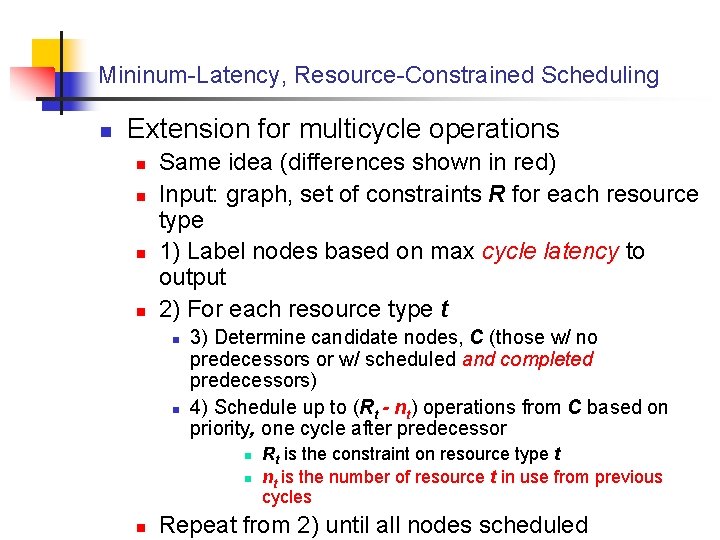

Minimum-Latency, Resource-Constrained Scheduling
- Extension for multicycle operations
  - Same idea; the changes are the cycle-latency labels in step 1, the "completed" requirement in step 3, and the (Rt - nt) limit in step 4
  - Input: graph, set of constraints R for each resource type
  1) Label nodes based on max cycle latency to output
  2) For each resource type t
     3) Determine candidate nodes, C (those with no predecessors or with scheduled and completed predecessors)
     4) Schedule up to (Rt - nt) operations from C based on priority, one cycle after their predecessors
        - Rt is the constraint on resource type t
        - nt is the number of resources of type t still in use from previous cycles
  5) Repeat from 2) until all nodes are scheduled

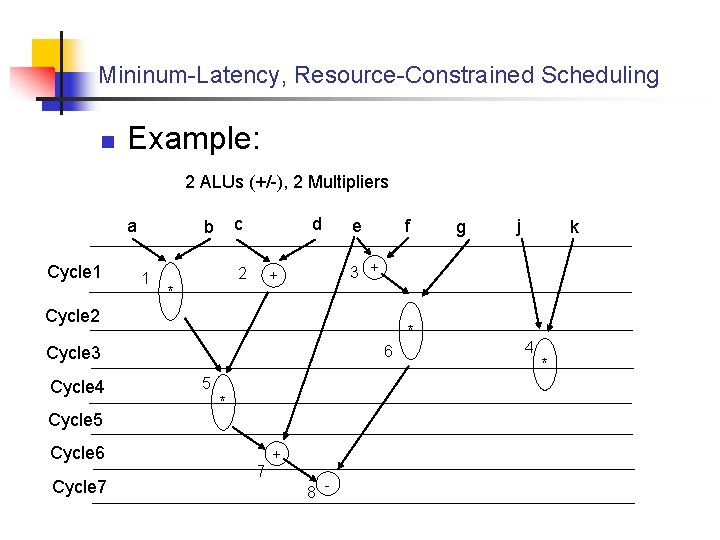

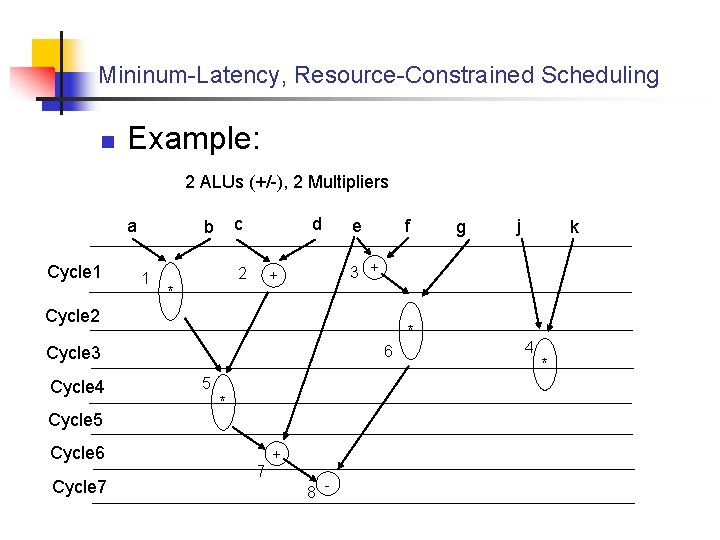

Minimum-Latency, Resource-Constrained Scheduling
- Example: 2 ALUs (+/-), 2 multipliers, with multicycle multiplies

[Figure: multicycle list schedule of the 8-node example, spanning cycles 1 to 7]

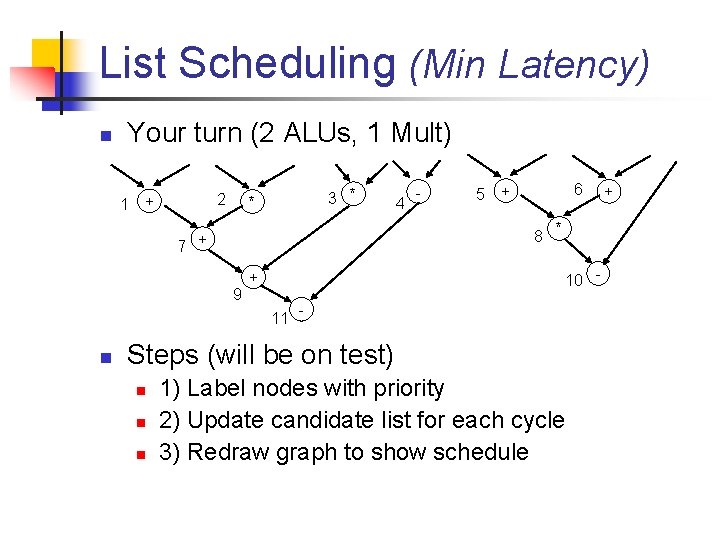

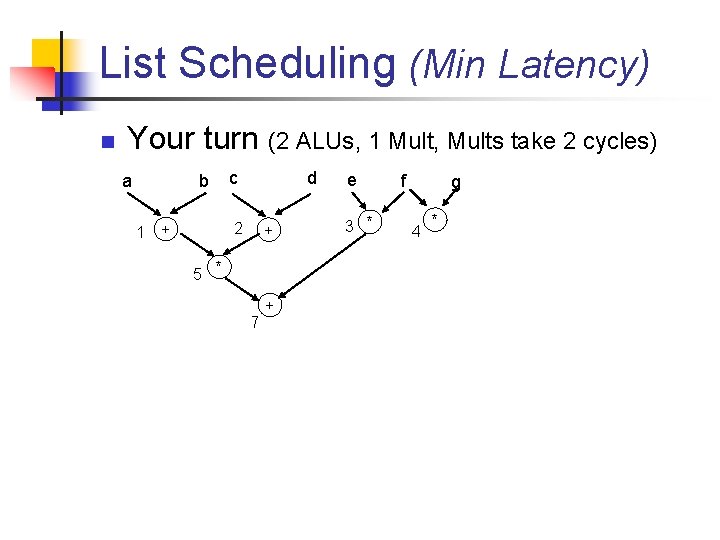

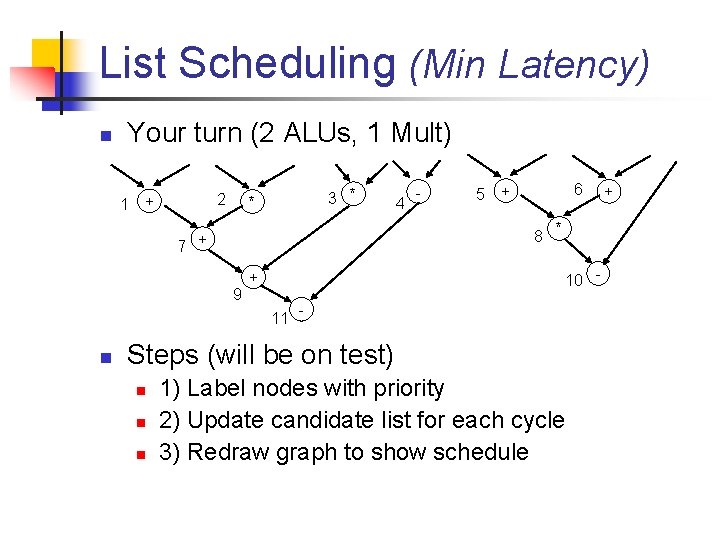

List Scheduling (Min Latency)
- Your turn (2 ALUs, 1 Mult)

[Figure: exercise DFG with 11 numbered nodes (+, *, and - operations)]

- Steps (will be on test)
  1) Label nodes with priority
  2) Update the candidate list for each cycle
  3) Redraw the graph to show the schedule

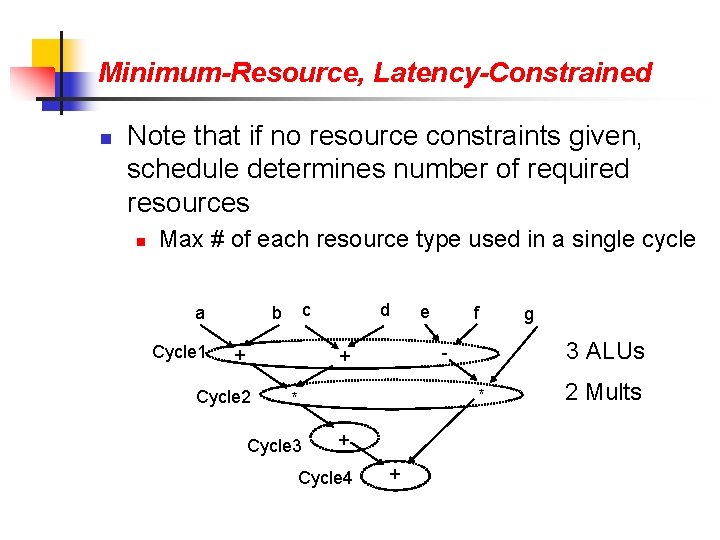

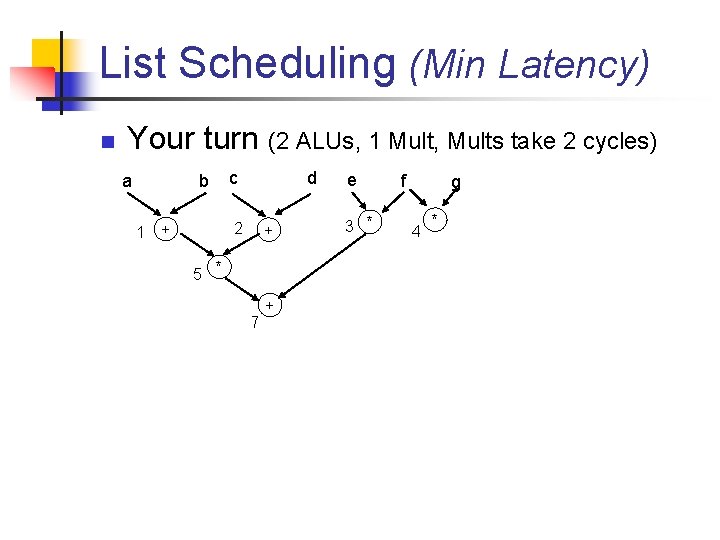

List Scheduling (Min Latency)
- Your turn (2 ALUs, 1 Mult; Mults take 2 cycles)

[Figure: exercise DFG with 7 numbered nodes over inputs a to g (+ and * operations)]

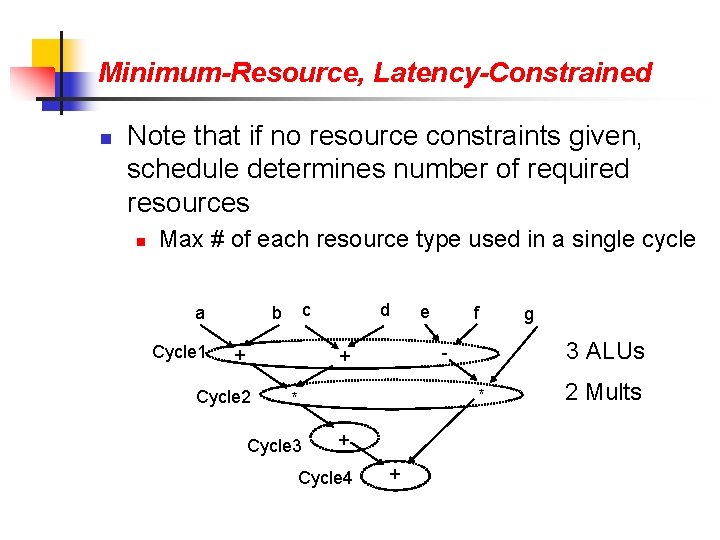

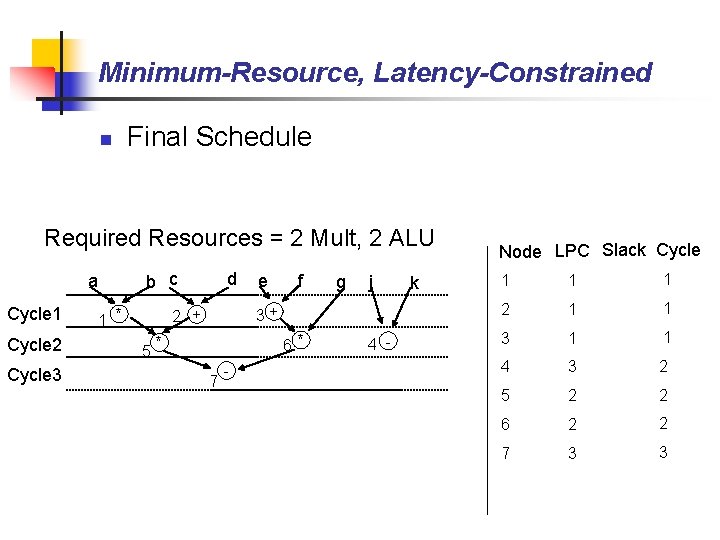

Minimum-Resource, Latency-Constrained
- Note that if no resource constraints are given, the schedule determines the number of required resources
  - The max # of each resource type used in a single cycle

[Figure: example 4-cycle schedule whose peak usage in a single cycle is 3 ALUs, which sets the ALU count; the multiplier count is set the same way]

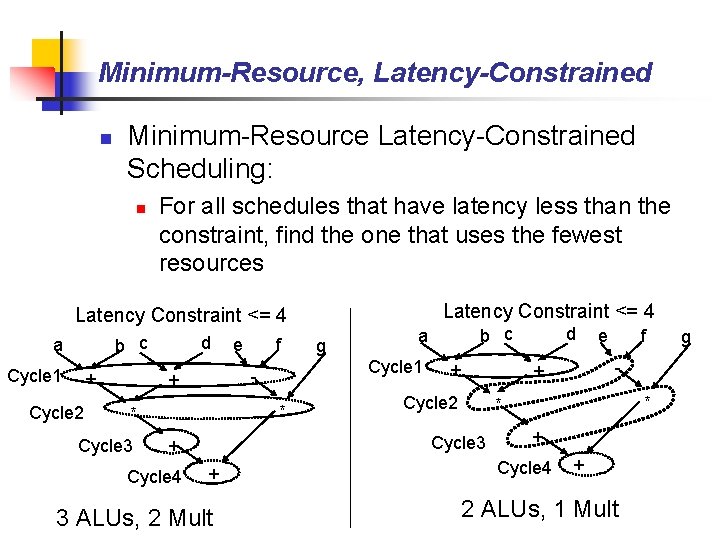

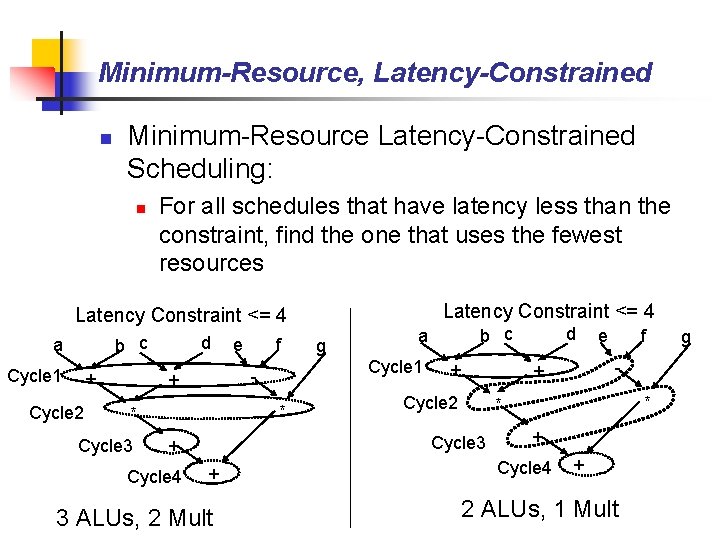

Minimum-Resource, Latency-Constrained
- Minimum-resource, latency-constrained scheduling:
  - Among all schedules whose latency is no greater than the constraint, find the one that uses the fewest resources

[Figure: two schedules meeting a latency constraint of 4 cycles: one needs 3 ALUs and 2 multipliers, the other only 2 ALUs and 1 multiplier]

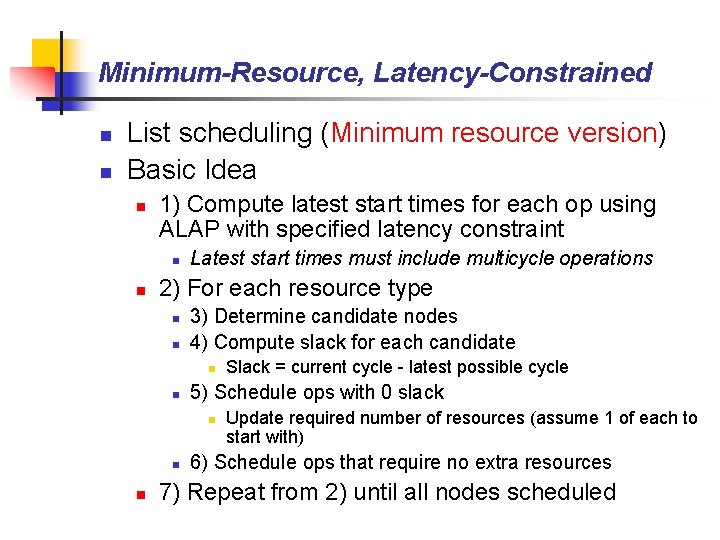

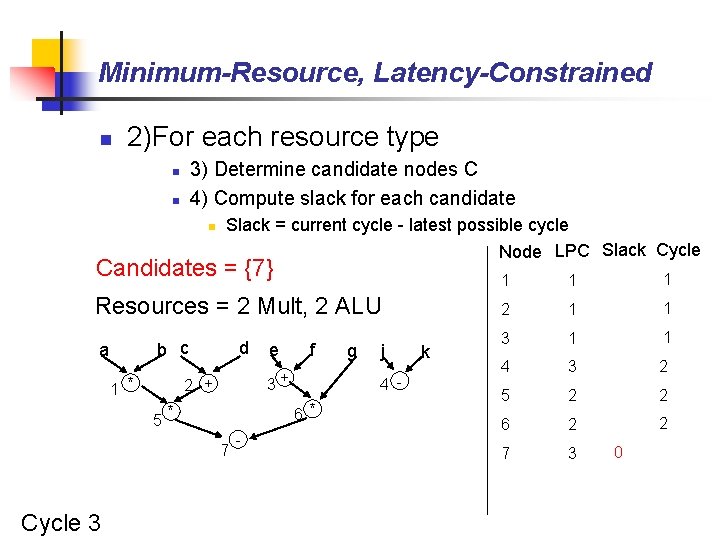

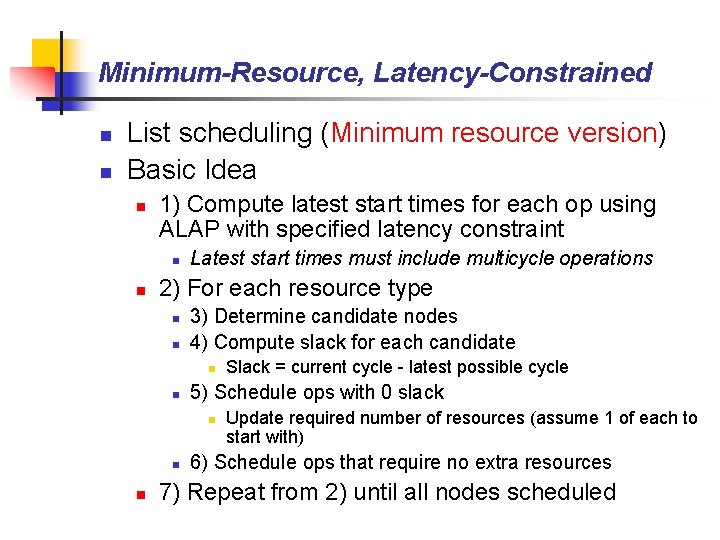

Minimum-Resource, Latency-Constrained
- List scheduling (minimum-resource version)
- Basic idea
  1) Compute the latest start time for each op using ALAP with the specified latency constraint
     - Latest start times must account for multicycle operations
  2) For each resource type
     3) Determine candidate nodes
     4) Compute slack for each candidate
        - Slack = latest possible cycle - current cycle
     5) Schedule ops with 0 slack
        - Update the required number of resources (assume 1 of each to start with)
     6) Schedule ops that require no extra resources
  7) Repeat from 2) until all nodes are scheduled
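The steps above translate into a short C sketch. It assumes unit-delay operations (so the multicycle adjustment in step 1 is omitted), nodes numbered in topological order, and two hypothetical resource types (0 = ALU, 1 = multiplier). In step 6, ready ops with the least slack are taken first, which is an assumed tie rule. All names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define MAXN 16
#define NTYPES 2          /* hypothetical: 0 = ALU (+/-), 1 = multiplier */

/* pred[v][u]: u must finish before v starts. Nodes are numbered in
   topological order and every operation takes one cycle. */
struct dfg { int n; int type[MAXN]; bool pred[MAXN][MAXN]; };

/* Step 1: ALAP with latency bound L gives each op's last possible cycle
   (LPC). Returns false if L is too small for the graph. */
static bool alap(const struct dfg *g, int L, int lpc[])
{
    bool ok = true;
    for (int u = g->n - 1; u >= 0; u--) {
        lpc[u] = L;
        for (int v = u + 1; v < g->n; v++)
            if (g->pred[v][u] && lpc[v] - 1 < lpc[u]) lpc[u] = lpc[v] - 1;
        if (lpc[u] < 1) ok = false;
    }
    return ok;
}

/* Step 3 test: every predecessor finished in a cycle earlier than c. */
static bool ready(const struct dfg *g, const int cycle[], int v, int c)
{
    for (int u = 0; u < g->n; u++)
        if (g->pred[v][u] && (cycle[u] == 0 || cycle[u] >= c)) return false;
    return true;
}

/* Fills cycle[] (1-based) and res[] (units needed per type). Returns false
   if the latency constraint L cannot be met. */
bool min_resource_schedule(const struct dfg *g, int L, int res[NTYPES], int cycle[])
{
    int lpc[MAXN], scheduled = 0;
    assert(g->n <= MAXN);
    if (!alap(g, L, lpc)) return false;
    for (int t = 0; t < NTYPES; t++) res[t] = 1;      /* start with 1 of each */
    for (int v = 0; v < g->n; v++) cycle[v] = 0;

    for (int c = 1; scheduled < g->n; c++) {
        assert(c <= L);   /* zero-slack ops are never postponed */
        for (int t = 0; t < NTYPES; t++) {
            int used = 0;
            /* Step 5: ops with slack lpc - c == 0 must start now. */
            for (int v = 0; v < g->n; v++)
                if (!cycle[v] && g->type[v] == t && ready(g, cycle, v, c) && lpc[v] <= c) {
                    cycle[v] = c;
                    used++;
                    scheduled++;
                }
            if (used > res[t]) res[t] = used;         /* add units if needed */
            /* Step 6: fill idle units with ready ops, least slack first. */
            while (used < res[t]) {
                int best = -1;
                for (int v = 0; v < g->n; v++)
                    if (!cycle[v] && g->type[v] == t && ready(g, cycle, v, c) &&
                        (best < 0 || lpc[v] < lpc[best]))
                        best = v;
                if (best < 0) break;
                cycle[best] = c;
                used++;
                scheduled++;
            }
        }
    }
    return true;
}
```

For a hypothetical 7-node graph (multiplies at nodes 0 and 1; edges 0,1 → 2; 2,3 → 4; 4,5 → 6), a latency bound of 4 needs 2 ALUs and 2 multipliers, a bound of 5 needs only 1 of each, and a bound of 3 is infeasible.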

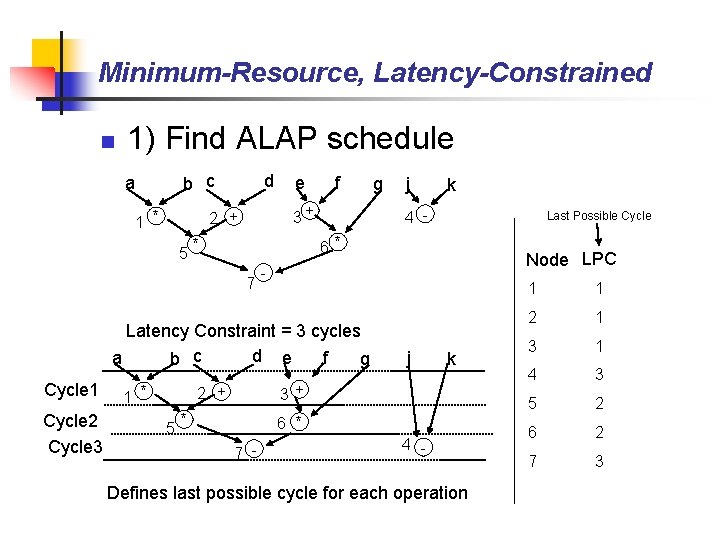

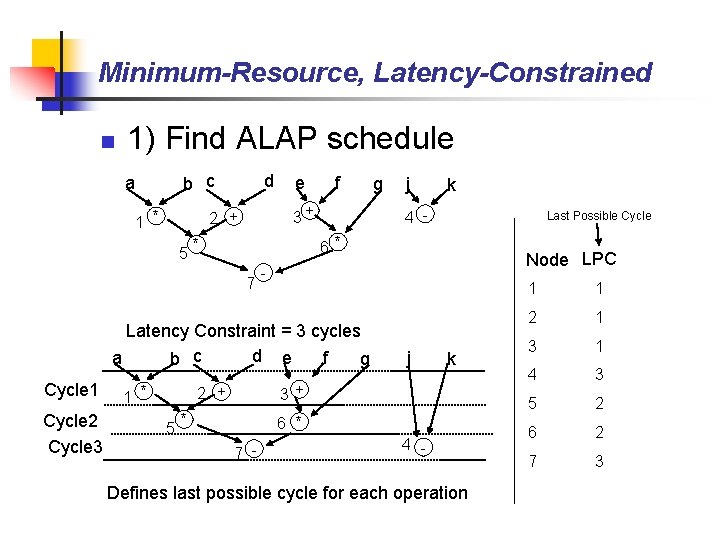

Minimum-Resource, Latency-Constrained
- 1) Find the ALAP schedule (latency constraint = 3 cycles)
  - Defines the last possible cycle (LPC) for each operation

[Figure: ALAP schedule of the 7-node example DFG in cycles 1 to 3]

| Node | LPC |
|---|---|
| 1 | 1 |
| 2 | 1 |
| 3 | 1 |
| 4 | 3 |
| 5 | 2 |
| 6 | 2 |
| 7 | 3 |

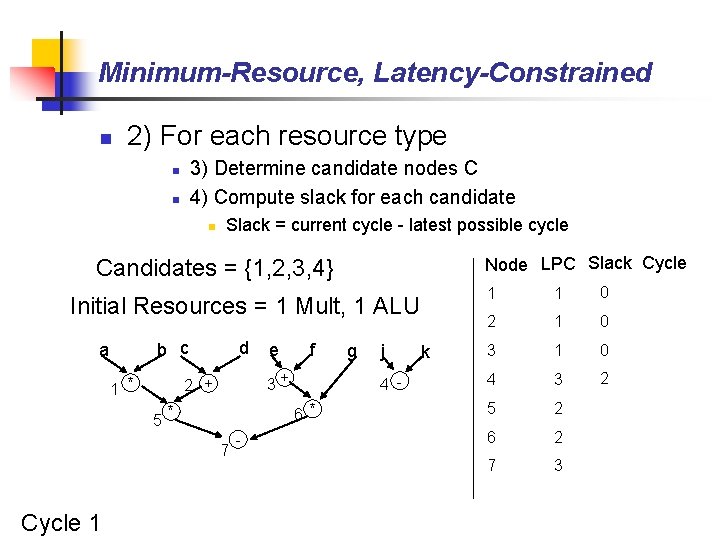

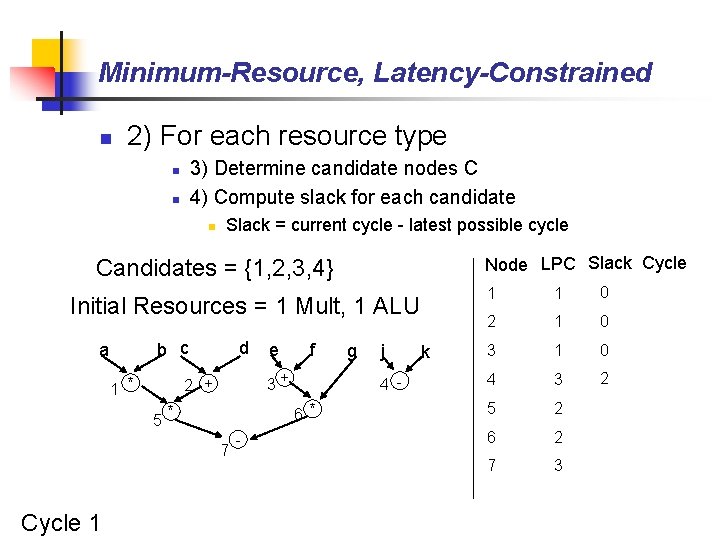

Minimum-Resource, Latency-Constrained
- 2) For each resource type
  - 3) Determine candidate nodes C
  - 4) Compute slack for each candidate
    - Slack = latest possible cycle - current cycle
- Cycle 1: candidates = {1, 2, 3, 4}; initial resources = 1 Mult, 1 ALU

| Node | LPC | Slack |
|---|---|---|
| 1 | 1 | 0 |
| 2 | 1 | 0 |
| 3 | 1 | 0 |
| 4 | 3 | 2 |
| 5 | 2 | |
| 6 | 2 | |
| 7 | 3 | |

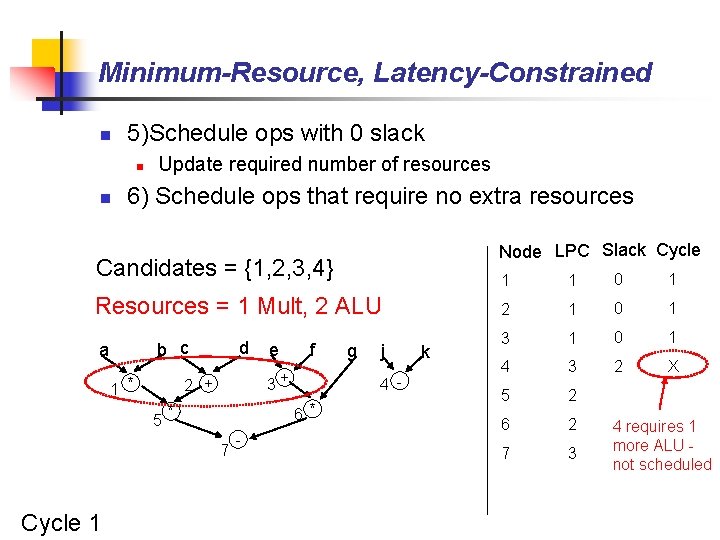

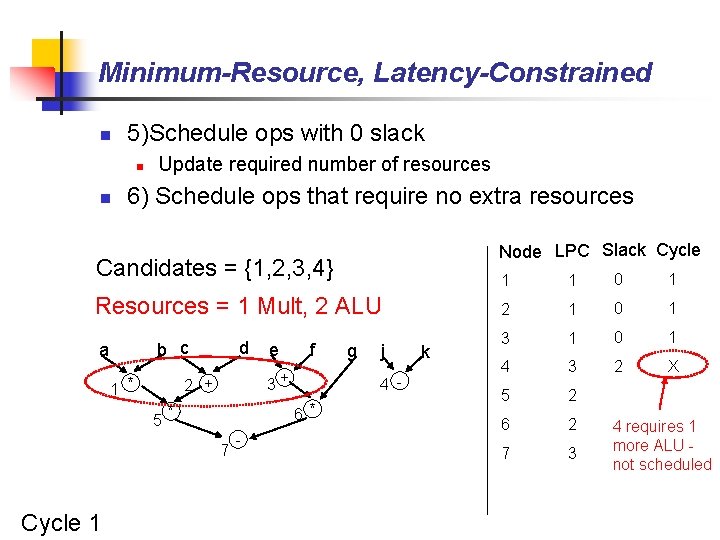

Minimum-Resource, Latency-Constrained
- 5) Schedule ops with 0 slack
  - Update the required number of resources
- 6) Schedule ops that require no extra resources
- Cycle 1: candidates = {1, 2, 3, 4}; resources = 1 Mult, 2 ALU

| Node | LPC | Slack | Cycle |
|---|---|---|---|
| 1 | 1 | 0 | 1 |
| 2 | 1 | 0 | 1 |
| 3 | 1 | 0 | 1 |
| 4 | 3 | 2 | not scheduled |
| 5 | 2 | | |
| 6 | 2 | | |
| 7 | 3 | | |

- Node 4 would require 1 more ALU, so it is not scheduled

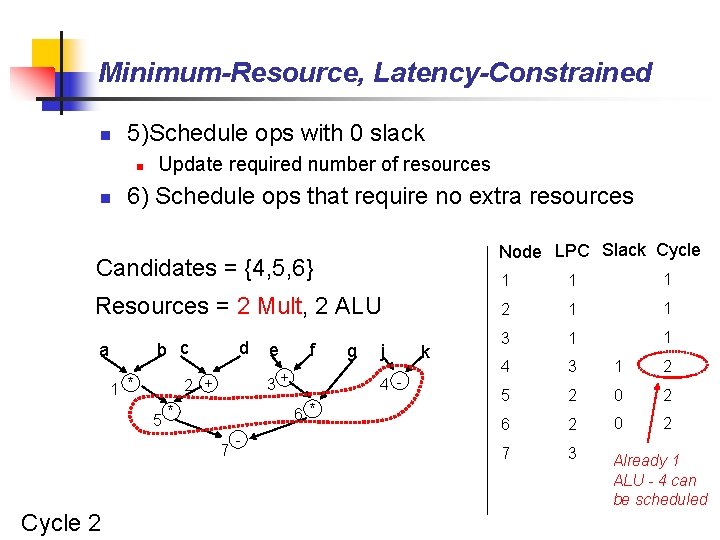

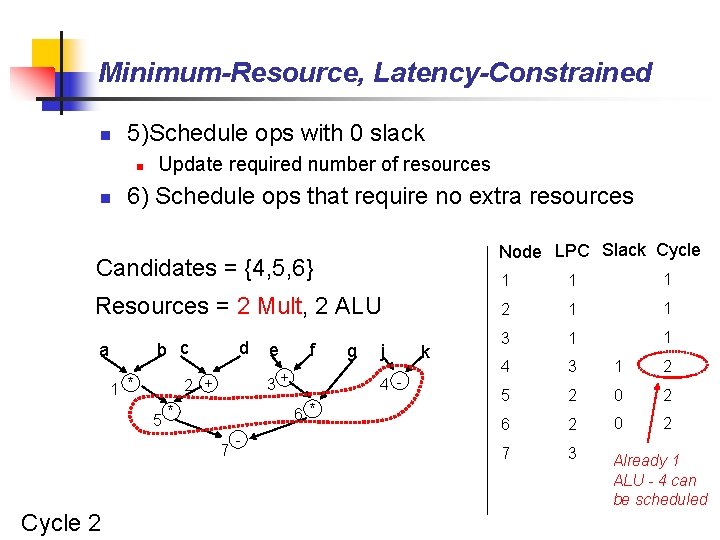

Minimum-Resource, Latency-Constrained
- 2) For each resource type: 3) determine candidate nodes C; 4) compute slack for each candidate (slack = latest possible cycle - current cycle)
- Cycle 2: candidates = {4, 5, 6}; resources = 1 Mult, 2 ALU

| Node | LPC | Slack | Cycle |
|---|---|---|---|
| 1 | 1 | | 1 |
| 2 | 1 | | 1 |
| 3 | 1 | | 1 |
| 4 | 3 | 1 | |
| 5 | 2 | 0 | |
| 6 | 2 | 0 | |
| 7 | 3 | | |

Minimum-Resource, Latency-Constrained
- 5) Schedule ops with 0 slack, updating the required number of resources; 6) schedule ops that require no extra resources
- Cycle 2: candidates = {4, 5, 6}; resources = 2 Mult, 2 ALU

| Node | LPC | Slack | Cycle |
|---|---|---|---|
| 1 | 1 | | 1 |
| 2 | 1 | | 1 |
| 3 | 1 | | 1 |
| 4 | 3 | 1 | 2 |
| 5 | 2 | 0 | 2 |
| 6 | 2 | 0 | 2 |
| 7 | 3 | | |

- An ALU is already available, so node 4 can be scheduled without adding resources

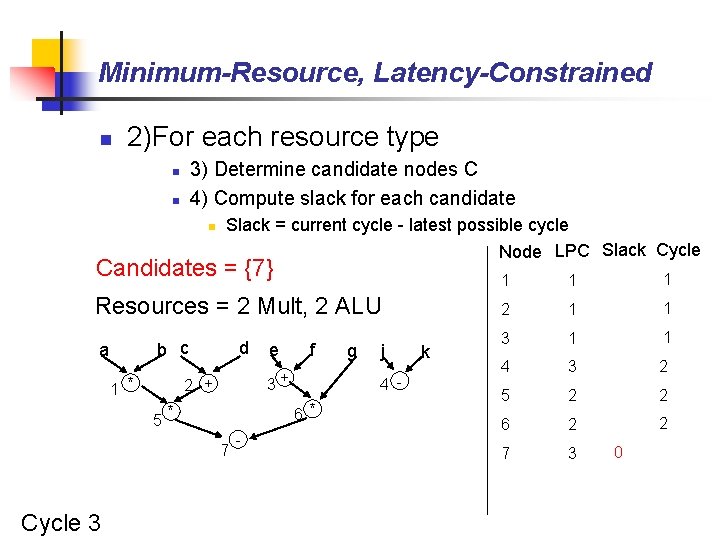

Minimum-Resource, Latency-Constrained
- 2) For each resource type: 3) determine candidate nodes C; 4) compute slack for each candidate (slack = latest possible cycle - current cycle)
- Cycle 3: candidates = {7}; resources = 2 Mult, 2 ALU

| Node | LPC | Slack | Cycle |
|---|---|---|---|
| 1 | 1 | | 1 |
| 2 | 1 | | 1 |
| 3 | 1 | | 1 |
| 4 | 3 | | 2 |
| 5 | 2 | | 2 |
| 6 | 2 | | 2 |
| 7 | 3 | 0 | |

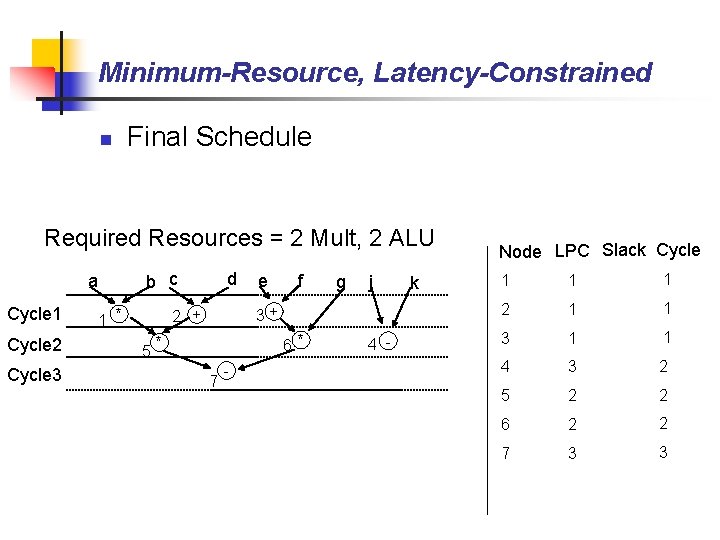

Minimum-Resource, Latency-Constrained
- Final schedule; required resources = 2 Mult, 2 ALU

| Node | LPC | Cycle |
|---|---|---|
| 1 | 1 | 1 |
| 2 | 1 | 1 |
| 3 | 1 | 1 |
| 4 | 3 | 2 |
| 5 | 2 | 2 |
| 6 | 2 | 2 |
| 7 | 3 | 3 |

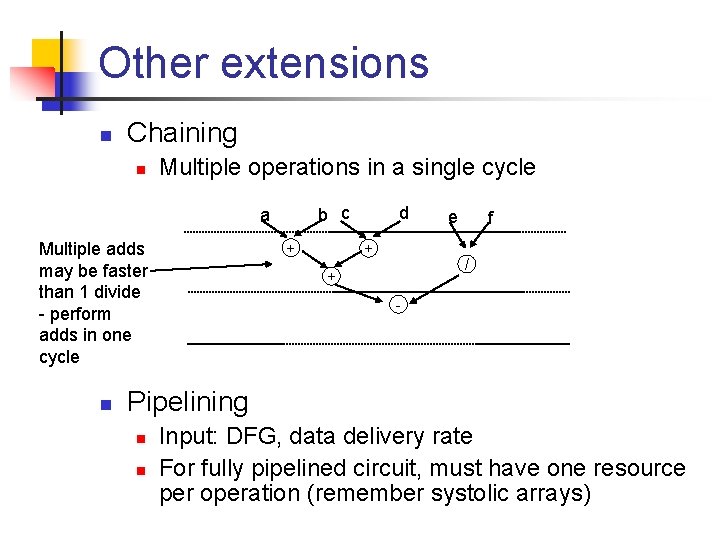

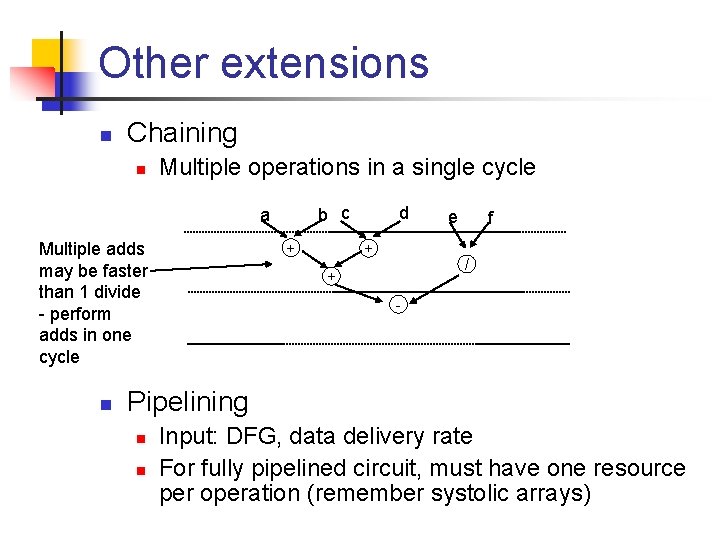

Other extensions
- Chaining
  - Multiple operations in a single cycle
  - Multiple adds may be faster than 1 divide, so perform the adds in one cycle

[Figure: chained additions scheduled in the same cycle as a divide]

- Pipelining
  - Input: DFG, data delivery rate
  - For a fully pipelined circuit, there must be one resource per operation (remember systolic arrays)

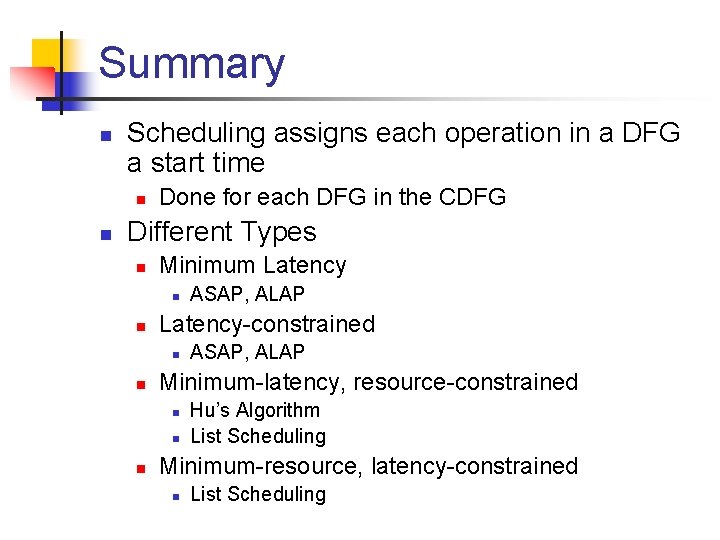

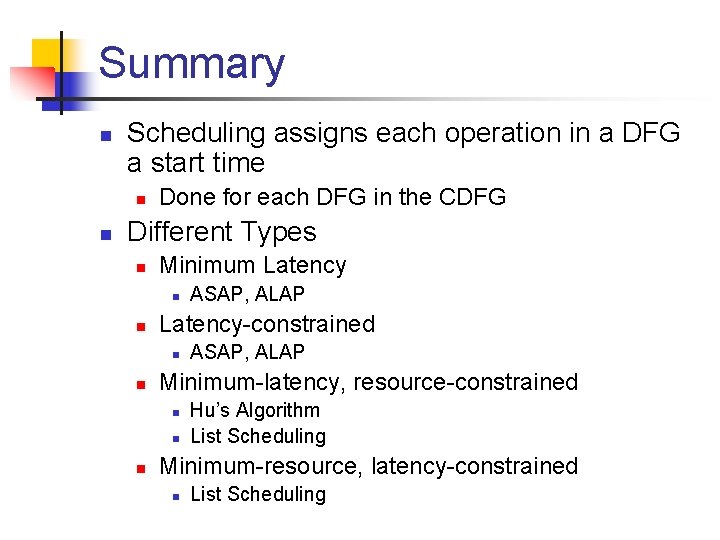

Summary
- Scheduling assigns each operation in a DFG a start time
  - Done for each DFG in the CDFG
- Different types
  - Minimum latency: ASAP, ALAP
  - Latency-constrained: ASAP, ALAP
  - Minimum-latency, resource-constrained: Hu's algorithm, list scheduling
  - Minimum-resource, latency-constrained: list scheduling

High-level Synthesis: Binding/Resource Sharing

Binding
- During scheduling, we determined:
  - When ops will execute
  - How many resources are needed
- We still need to decide which ops execute on which resources
  - => Binding
- If multiple ops use the same resource
  - => Resource sharing

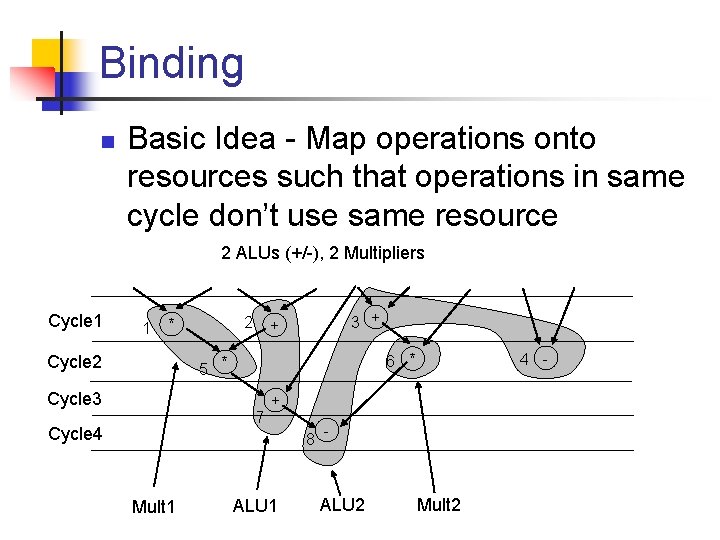

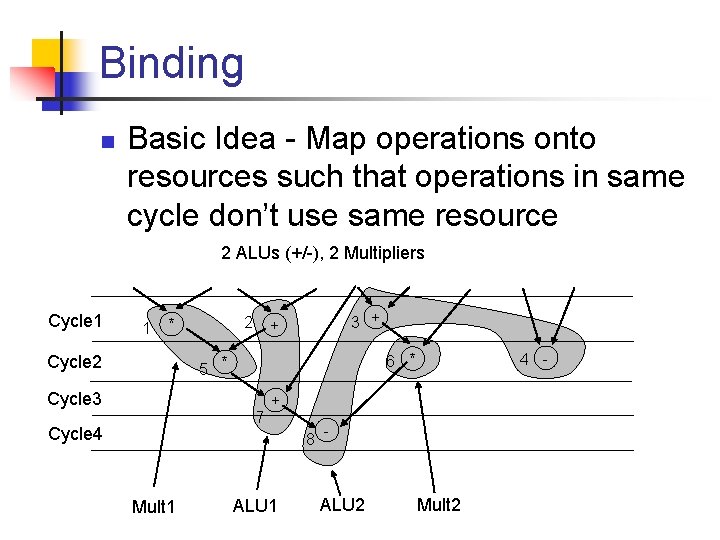

Binding
- Basic idea: map operations onto resources such that operations in the same cycle don't use the same resource

[Figure: the 4-cycle schedule of nodes 1 to 8 (2 ALUs (+/-), 2 multipliers) with each operation assigned to ALU 1, ALU 2, Mult 1, or Mult 2]

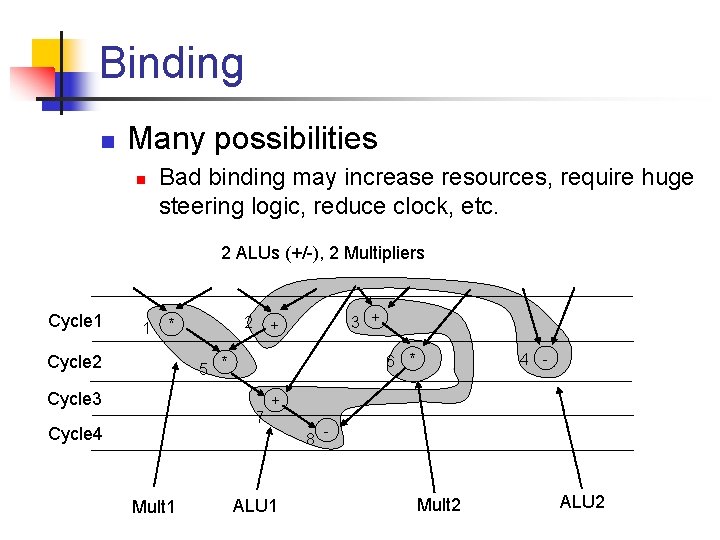

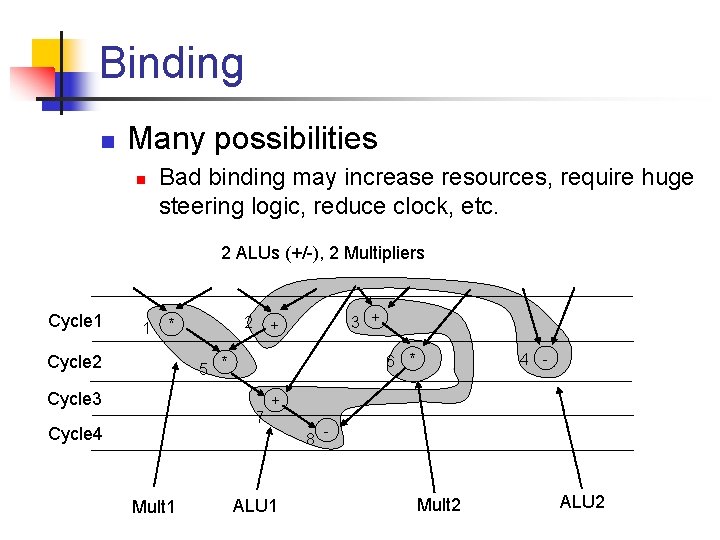

Binding
- Many possibilities
  - A bad binding may increase resources, require huge steering logic, reduce the clock frequency, etc.

[Figure: a different binding of the same schedule onto ALU 1, ALU 2, Mult 1, and Mult 2]

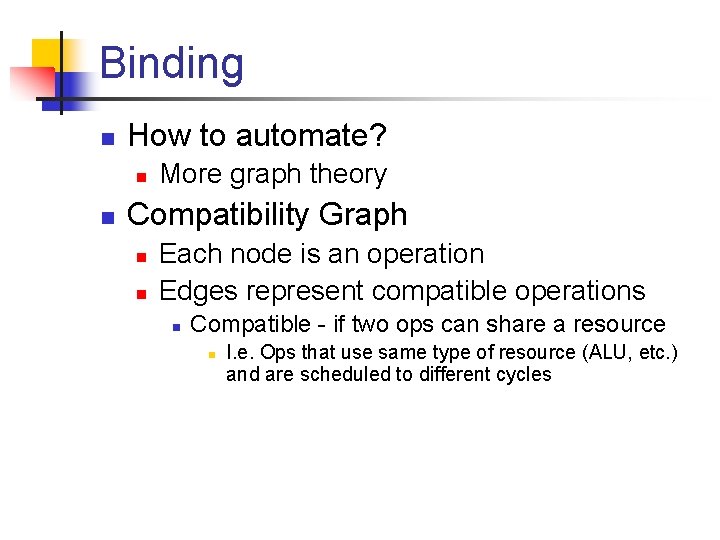

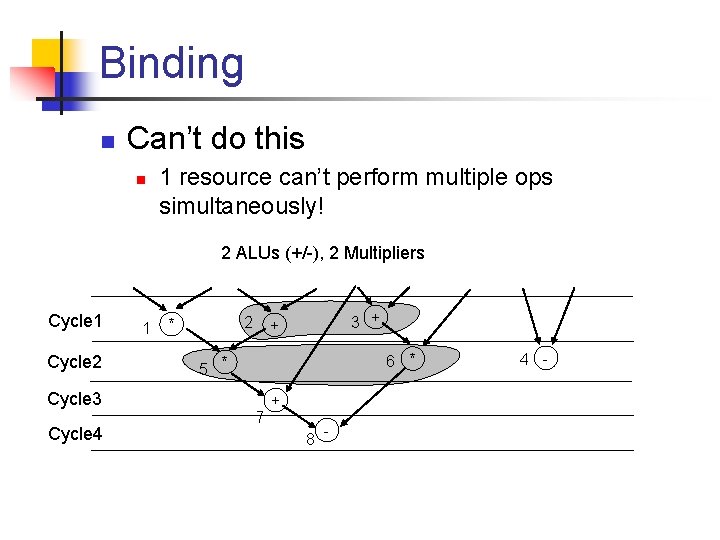

Binding
- Can't do this
  - One resource can't perform multiple ops simultaneously!

[Figure: an invalid binding of the same schedule that maps two operations from one cycle onto the same resource]

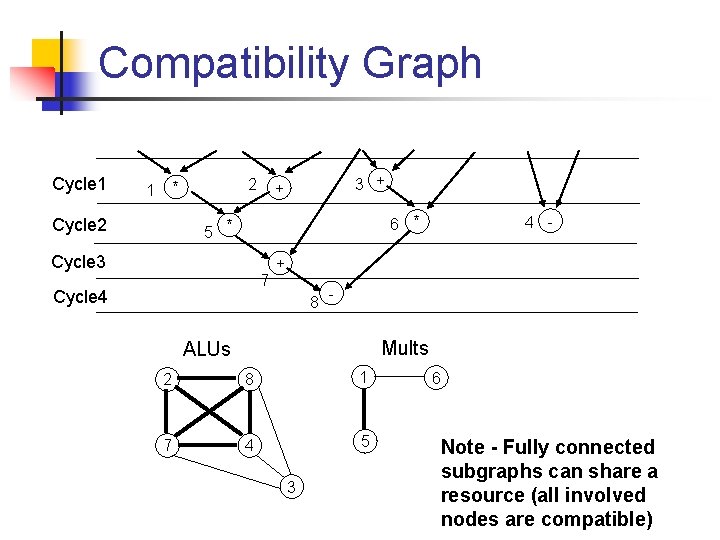

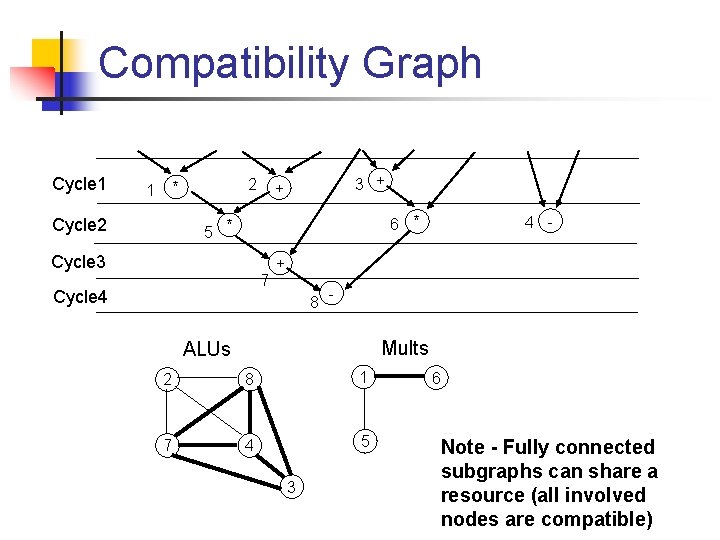

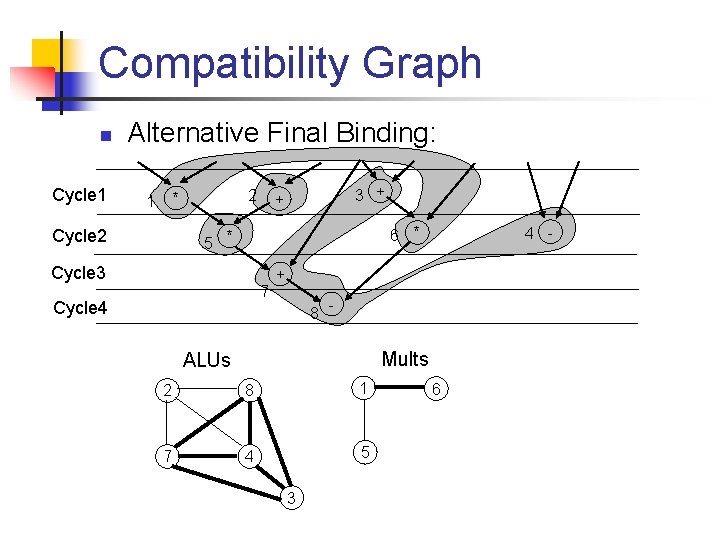

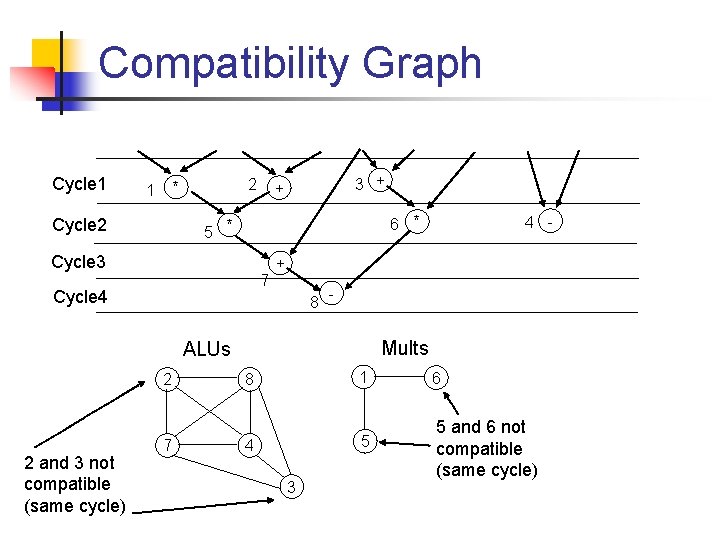

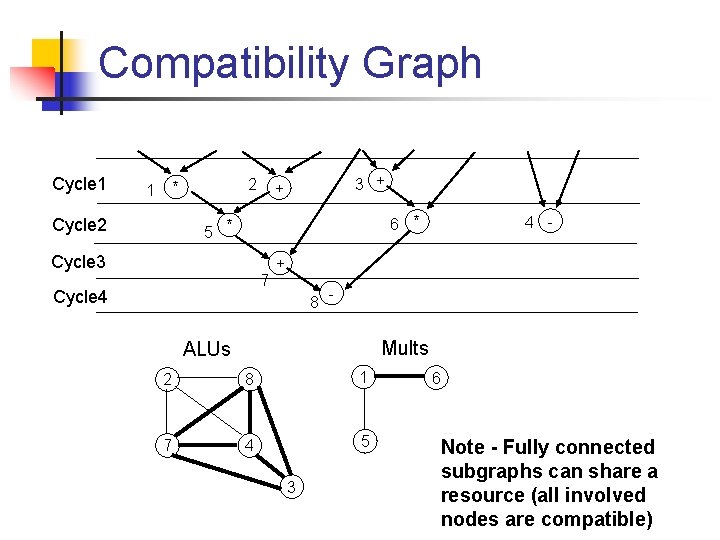

Binding
- How to automate?
  - More graph theory
- Compatibility graph
  - Each node is an operation
  - Edges represent compatible operations
    - Compatible: two ops that can share a resource
    - i.e., ops that use the same type of resource (ALU, etc.) and are scheduled to different cycles

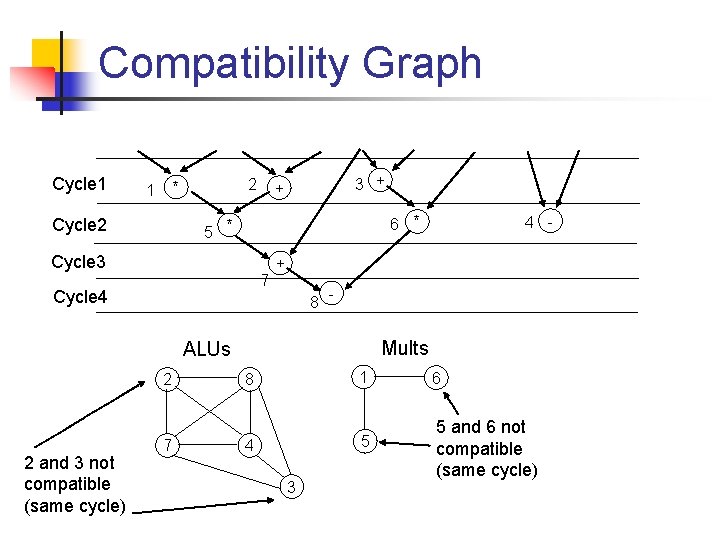

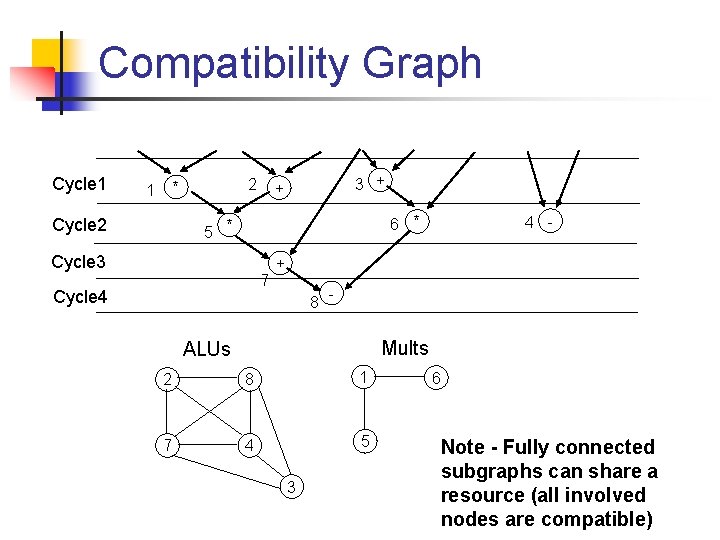

Compatibility Graph

[Figure: the 4-cycle schedule and its compatibility graphs: Mults {1, 5, 6} and ALUs {2, 3, 4, 7, 8}, with edges between compatible ops]

- 2 and 3 are not compatible (same cycle)
- 5 and 6 are not compatible (same cycle)

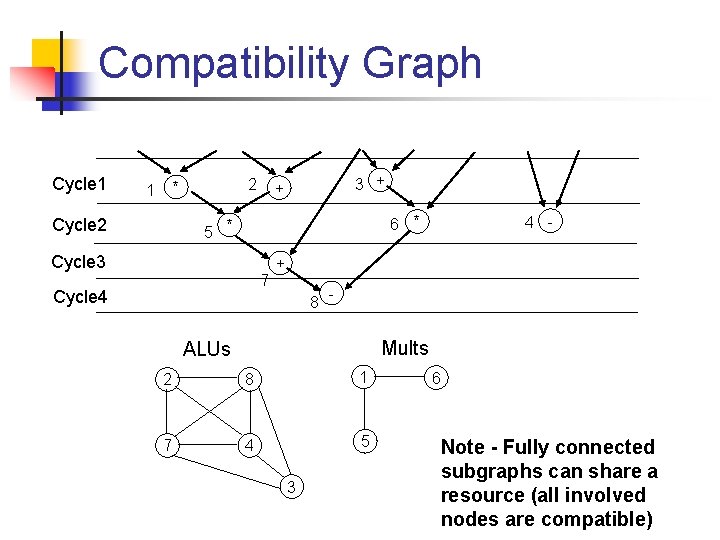

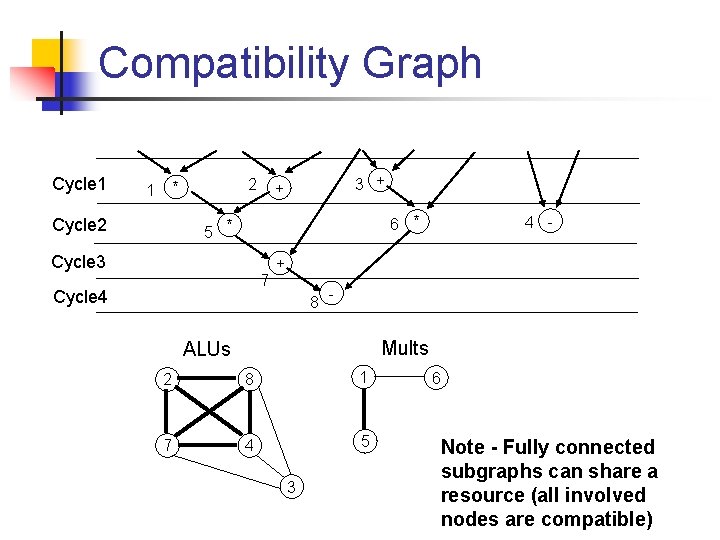

Compatibility Graph Cycle 1 2 * 1 Cycle 2 3 + + 4 - 6 * 5 * Cycle 3 7 Cycle 4 + 8 - Mults ALUs 2 8 1 7 4 5 3 6 Note - Fully connected subgraphs can share a resource (all involved nodes are compatible)

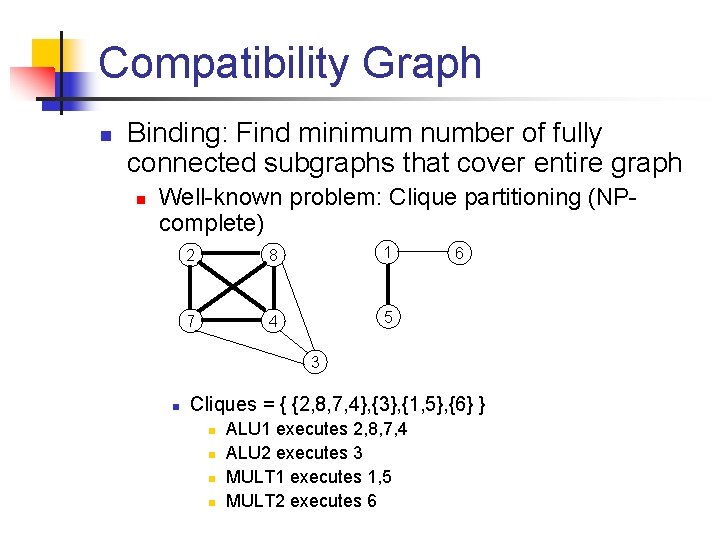

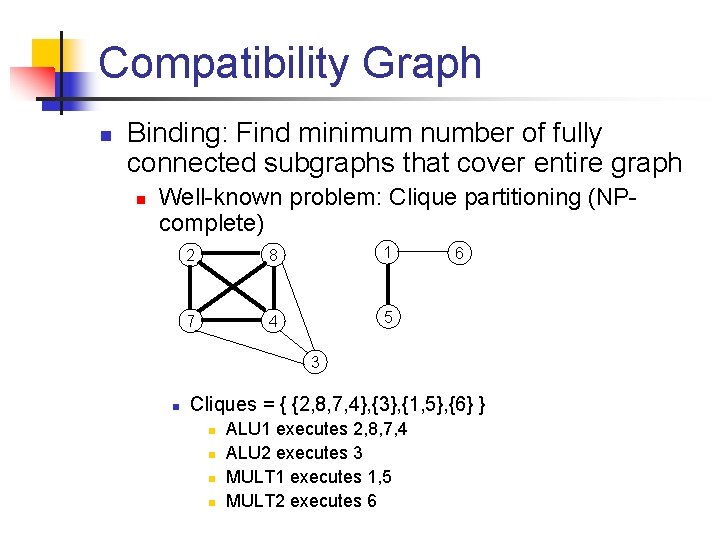

Compatibility Graph
- Binding: find the minimum number of fully connected subgraphs that cover the entire graph
  - Well-known problem: clique partitioning (NP-complete)
- Cliques = { {2, 8, 7, 4}, {3}, {1, 5}, {6} }
  - ALU 1 executes 2, 8, 7, 4
  - ALU 2 executes 3
  - MULT 1 executes 1, 5
  - MULT 2 executes 6
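Since exact clique partitioning is NP-complete, practical flows use heuristics. The sketch below is one simple choice, a first-fit greedy heuristic that we picked for illustration (the slides do not say which algorithm they have in mind): each op joins the first existing clique whose members are all compatible with it, otherwise it starts a new clique, and each clique becomes one resource. It reuses the same assumed schedule as above.

```c
#include <assert.h>

#define MAX_OPS 16

typedef enum { ALU, MULT } ResType;
typedef struct { int id; ResType type; int cycle; } Op;

/* Same type of resource and different cycles => can share a resource. */
static int compatible(const Op *a, const Op *b) {
    return a->type == b->type && a->cycle != b->cycle;
}

/* First-fit greedy clique partitioning. clique_of[i] receives the clique
   (= resource) index of ops[i]; returns the number of cliques. */
int greedy_clique_partition(const Op *ops, int n, int clique_of[]) {
    assert(n <= MAX_OPS);
    int members[MAX_OPS][MAX_OPS]; /* members[c][k]: k-th op index in clique c */
    int size[MAX_OPS] = {0};
    int n_cliques = 0;
    for (int i = 0; i < n; i++) {
        int placed = 0;
        for (int c = 0; c < n_cliques && !placed; c++) {
            int ok = 1; /* must be compatible with every member */
            for (int k = 0; k < size[c] && ok; k++)
                ok = compatible(&ops[i], &ops[members[c][k]]);
            if (ok) {
                members[c][size[c]++] = i;
                clique_of[i] = c;
                placed = 1;
            }
        }
        if (!placed) { /* no clique fits: open a new resource */
            members[n_cliques][0] = i;
            size[n_cliques] = 1;
            clique_of[i] = n_cliques++;
        }
    }
    return n_cliques;
}

/* Assumed schedule: ops 1-3 in cycle 1, 4-6 in cycle 2, 7 in cycle 3, 8 in cycle 4. */
const Op example_ops[8] = {
    {1, MULT, 1}, {2, ALU, 1}, {3, ALU, 1}, {4, ALU, 2},
    {5, MULT, 2}, {6, MULT, 2}, {7, ALU, 3}, {8, ALU, 4},
};
```

Visiting ops in the order 1 to 8, the call returns 4 cliques: {2, 4, 7, 8}, {3}, {1, 5}, {6}, which matches the slide. In general first-fit is order-dependent and is not guaranteed to find the minimum.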

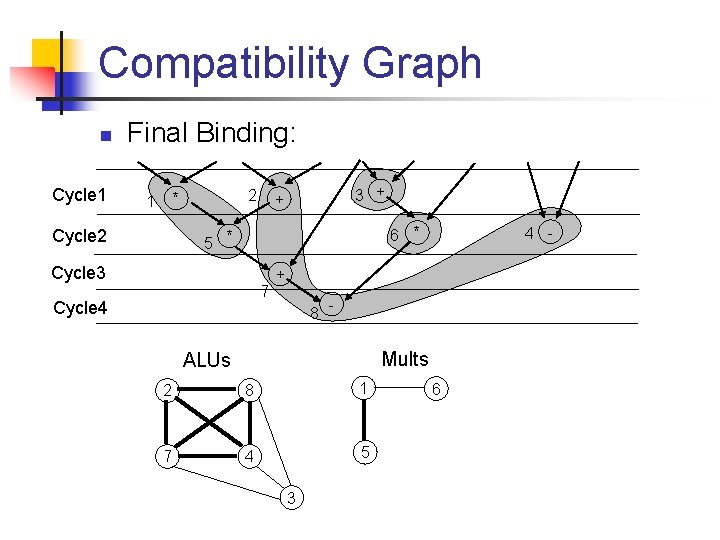

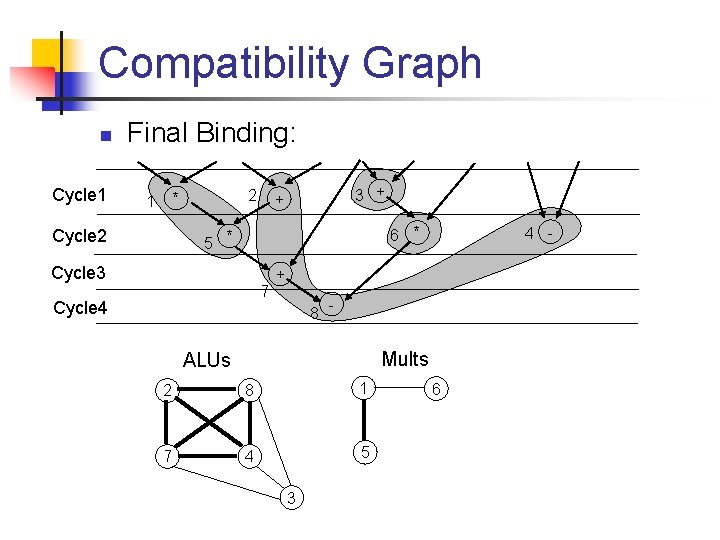

Compatibility Graph
- Final binding:

[Figure: scheduled DFG (cycles 1-4) with the final binding onto Mults and ALUs, alongside the compatibility graph]

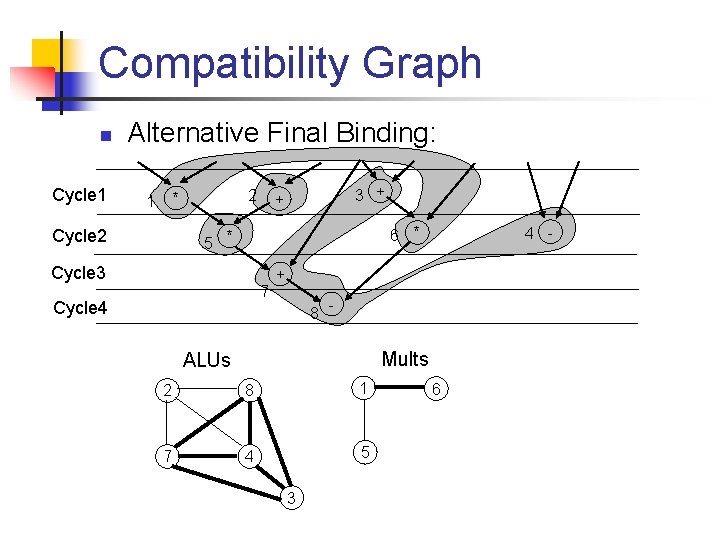

Compatibility Graph
- Alternative final binding:

[Figure: the same scheduled DFG with an alternative final binding, alongside the compatibility graph]

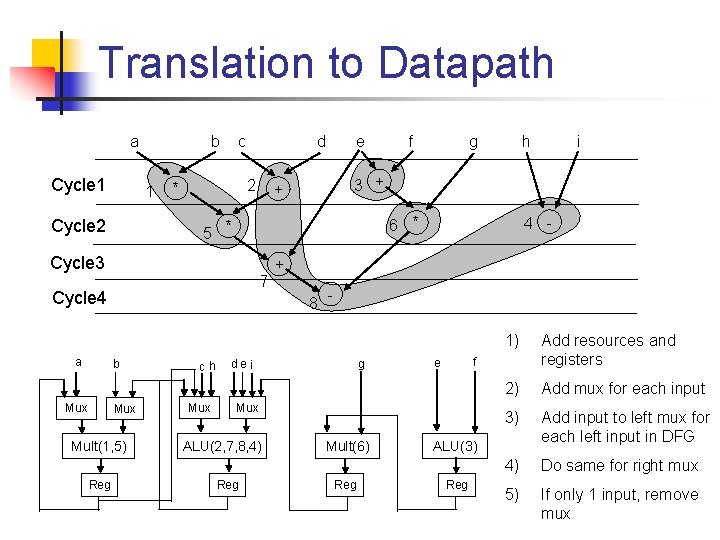

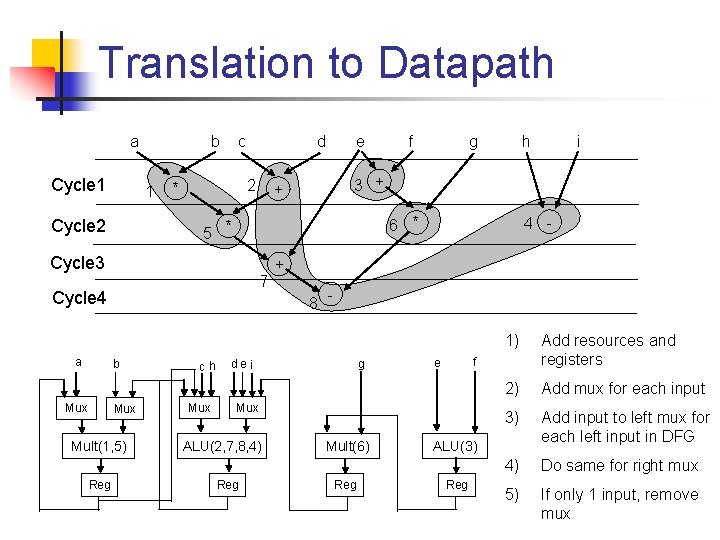

Translation to Datapath
1) Add resources and registers
2) Add mux for each input
3) Add input to left mux for each left input in DFG
4) Do same for right mux
5) If only 1 input, remove mux

[Figure: bound DFG (inputs a-i, cycles 1-4) and the resulting datapath with Mult(1, 5), Mult(6), ALU(2, 7, 8, 4), and ALU(3), plus their input muxes and registers]
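Steps 2-5 boil down to counting, for each resource input, the distinct values that feed it: that count is the mux width, and a width of 1 means a plain wire. The sketch assumes a hypothetical `BoundOp` record with string operand names. The binding matches the slide's (ALU(2, 7, 8, 4), ALU(3), Mult(1, 5), Mult(6)), but the operands (a to i, plus `opN` for the register holding op N's result) are invented for illustration, since the figure's wiring did not survive extraction.

```c
#include <assert.h>
#include <string.h>

#define MAX_SRC 16

/* Hypothetical bound operation with named operands. */
typedef struct {
    int id;
    int unit;          /* 0 = ALU 1, 1 = ALU 2, 2 = Mult 1, 3 = Mult 2 */
    const char *left;  /* value feeding the left input */
    const char *right; /* value feeding the right input */
} BoundOp;

/* Number of distinct sources on the left (side 0) or right (side 1) input
   of `unit`. This is the mux width; 1 means no mux is needed (step 5). */
int mux_inputs(const BoundOp *ops, int n, int unit, int side) {
    const char *seen[MAX_SRC];
    int count = 0;
    for (int i = 0; i < n; i++) {
        if (ops[i].unit != unit)
            continue;
        const char *src = (side == 0) ? ops[i].left : ops[i].right;
        int dup = 0;
        for (int k = 0; k < count && !dup; k++)
            dup = strcmp(seen[k], src) == 0;
        if (!dup) { /* steps 3-4: one mux input per distinct source */
            assert(count < MAX_SRC);
            seen[count++] = src;
        }
    }
    return count;
}

/* Invented operands, consistent with the assumed schedule
   (ops 1-3 in cycle 1, 4-6 in cycle 2, 7 in cycle 3, 8 in cycle 4). */
const BoundOp example[8] = {
    {1, 2, "a",   "b"},   {2, 0, "c",   "d"},
    {3, 1, "e",   "f"},   {4, 0, "op1", "g"},
    {5, 2, "op2", "h"},   {6, 3, "op3", "i"},
    {7, 0, "op4", "op5"}, {8, 0, "op7", "op6"},
};
```

With these operands ALU 1 needs a 4-input mux on each input, Mult 1 needs 2-input muxes, and ALU 2 and Mult 2 are wired directly, so their muxes are removed.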

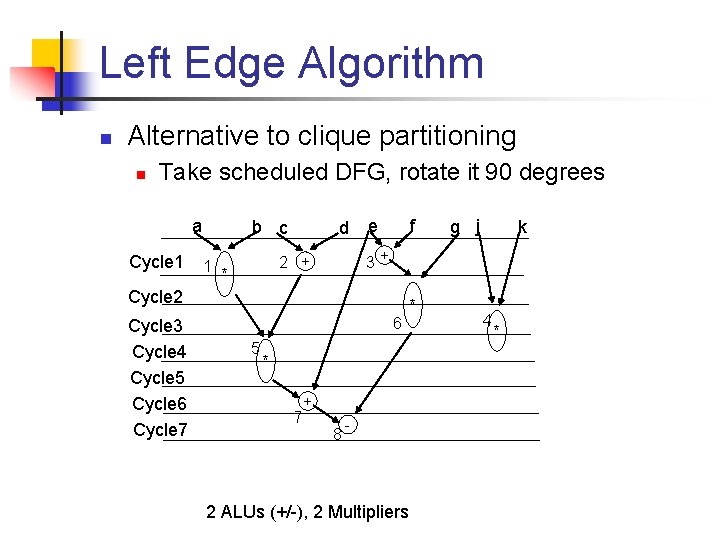

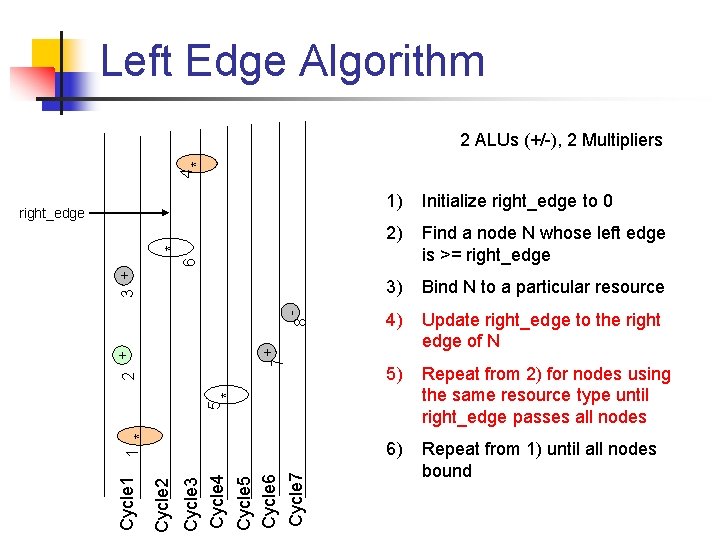

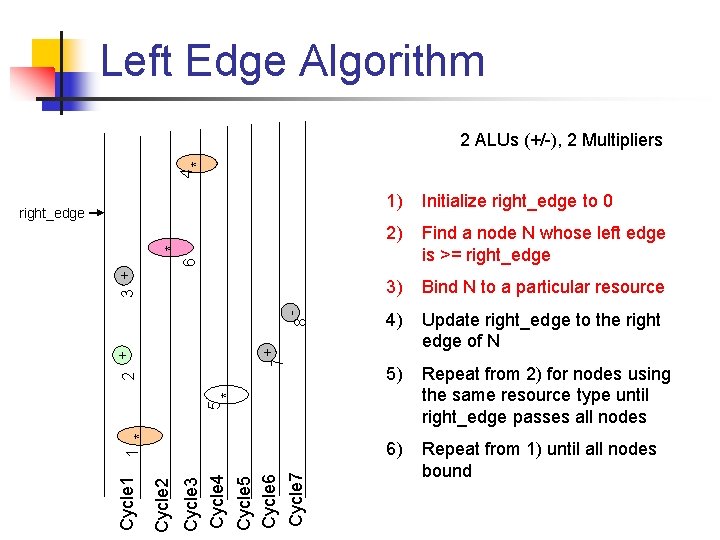

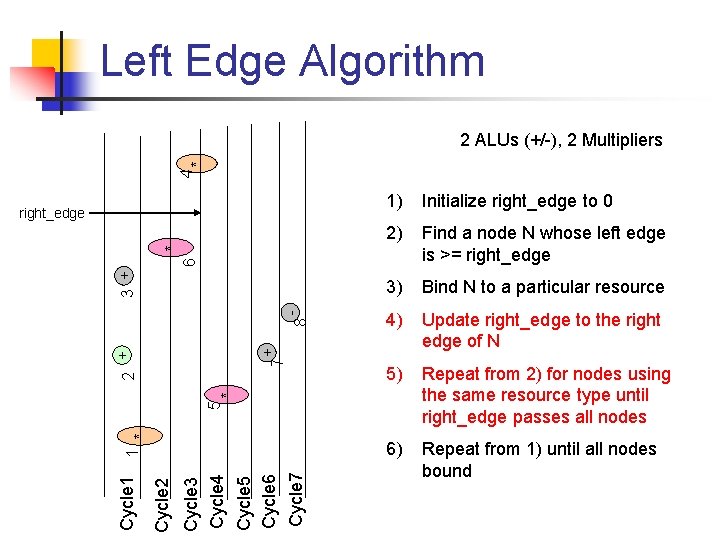

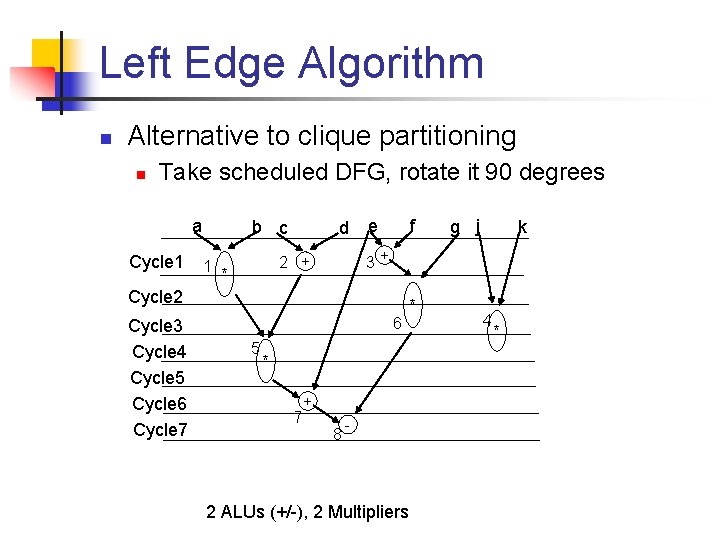

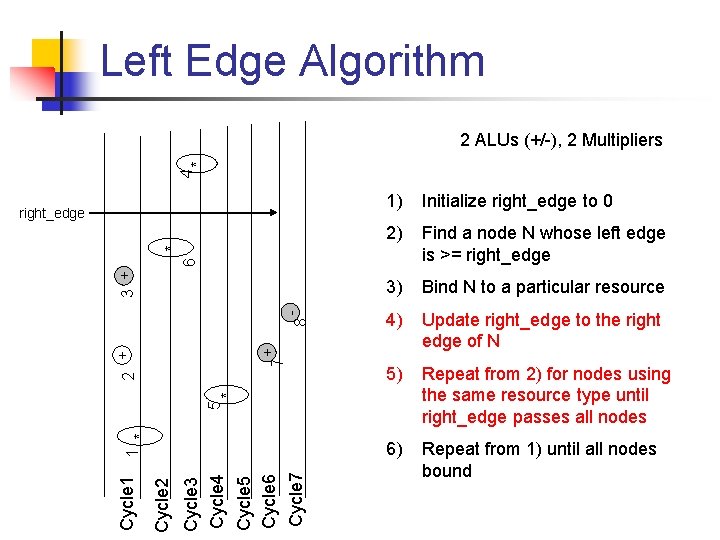

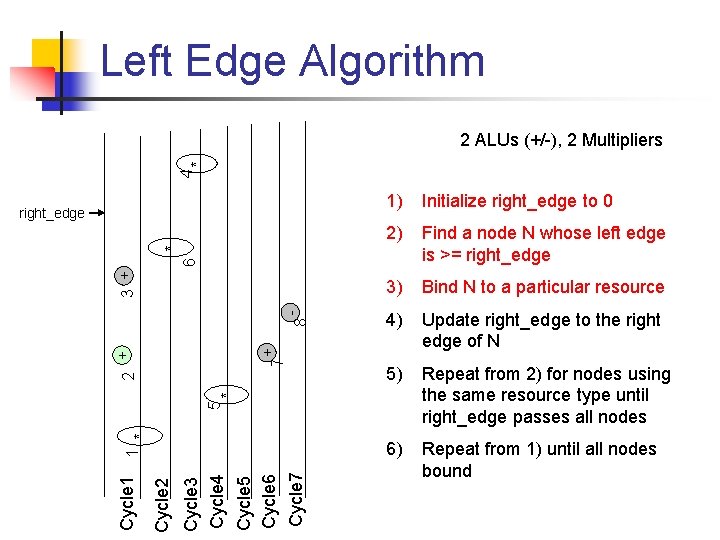

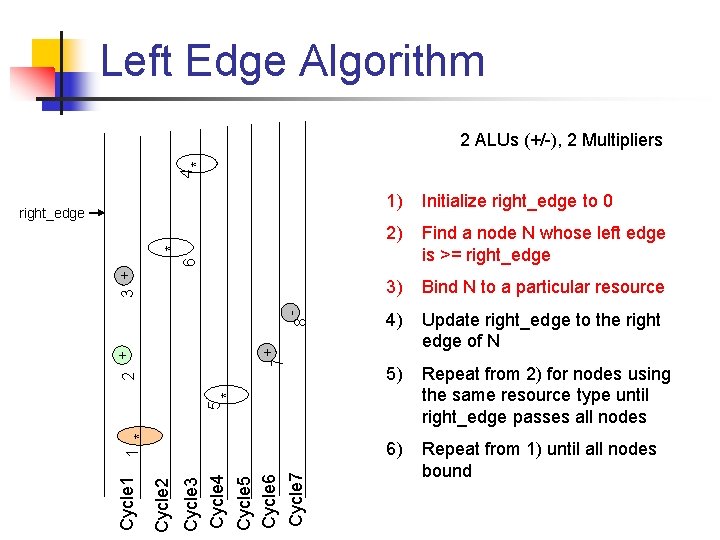

Left Edge Algorithm
- Alternative to clique partitioning
- Take scheduled DFG, rotate it 90 degrees

[Figure: scheduled DFG with operations 1-8 over cycles 1-7, rotated so cycles run left to right; 2 ALUs (+/-), 2 Multipliers]

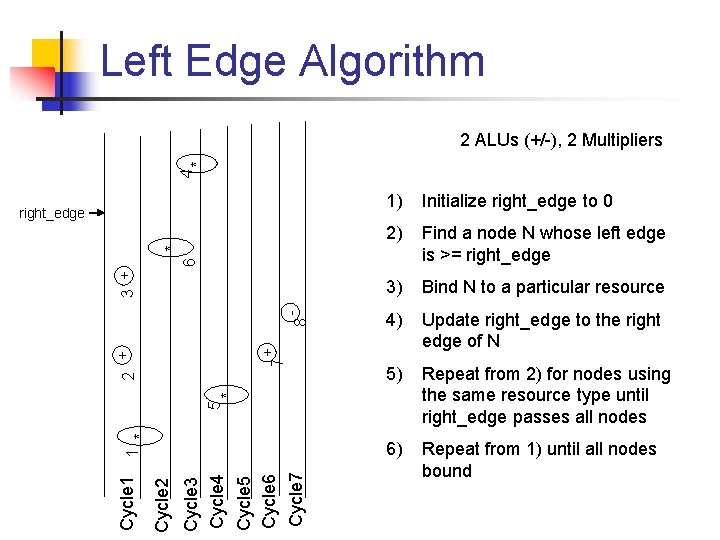

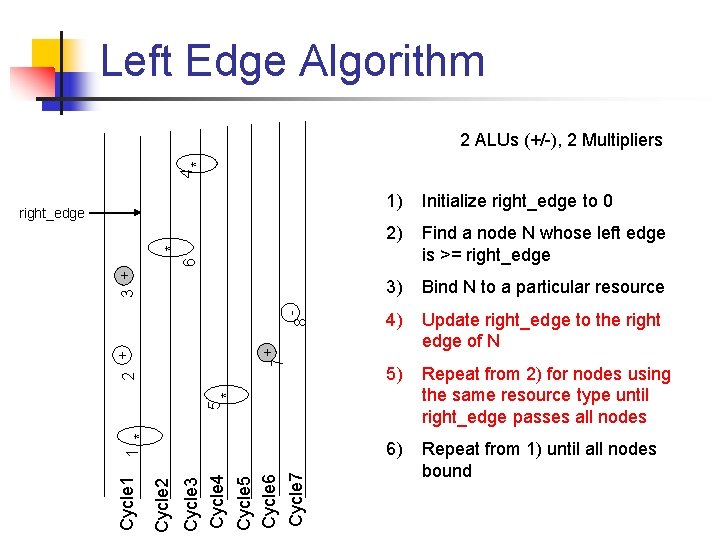

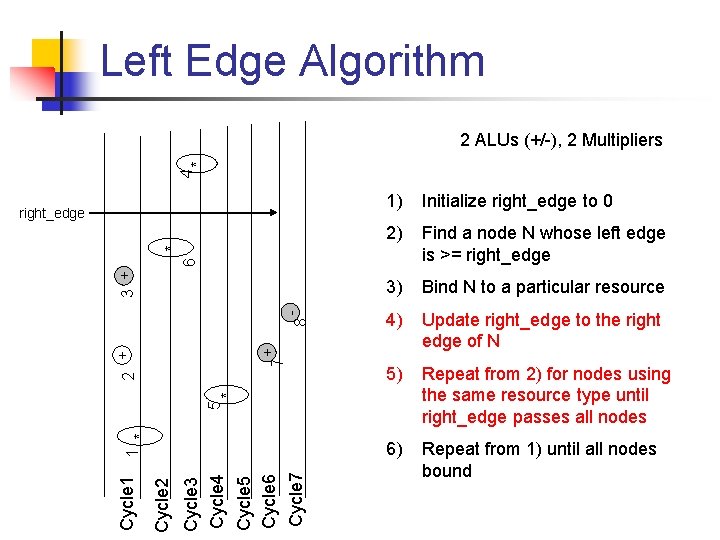

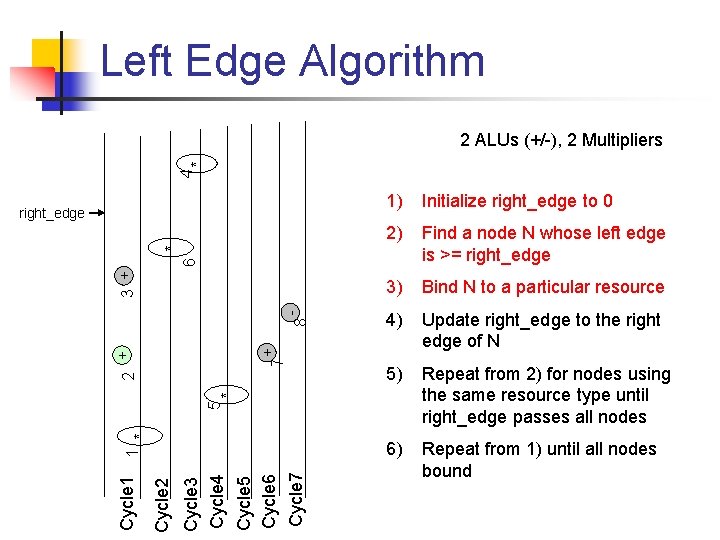

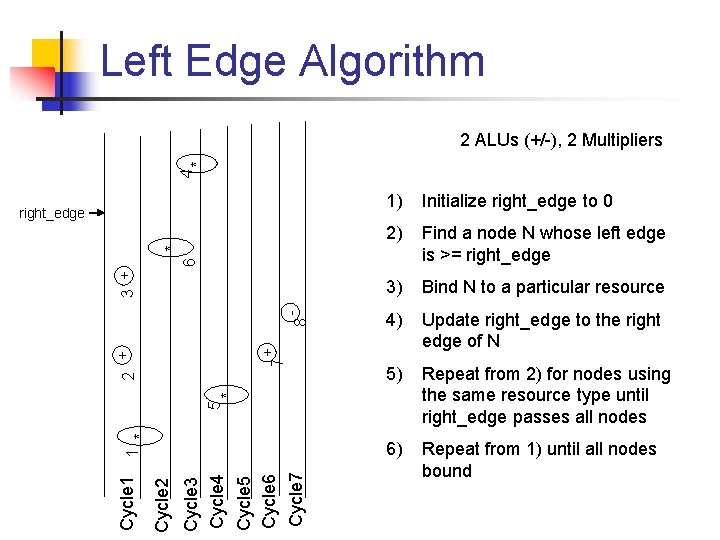

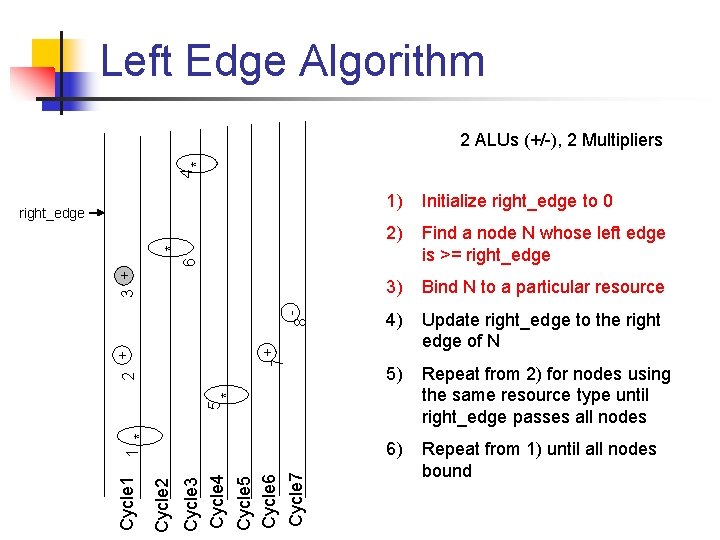

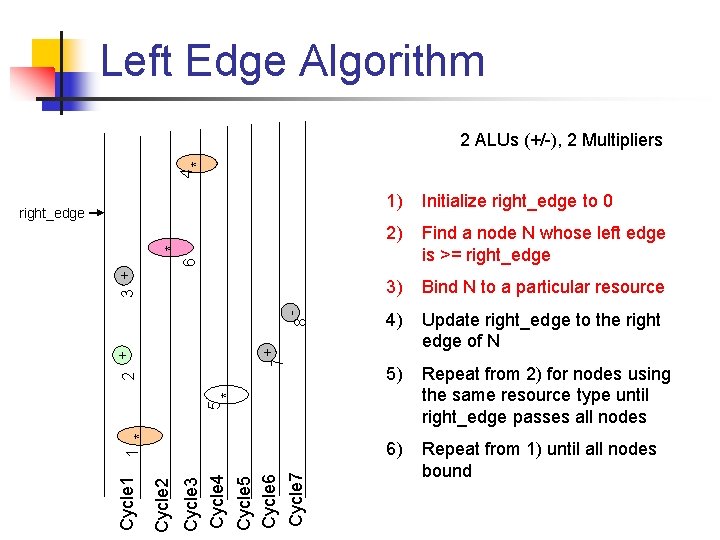

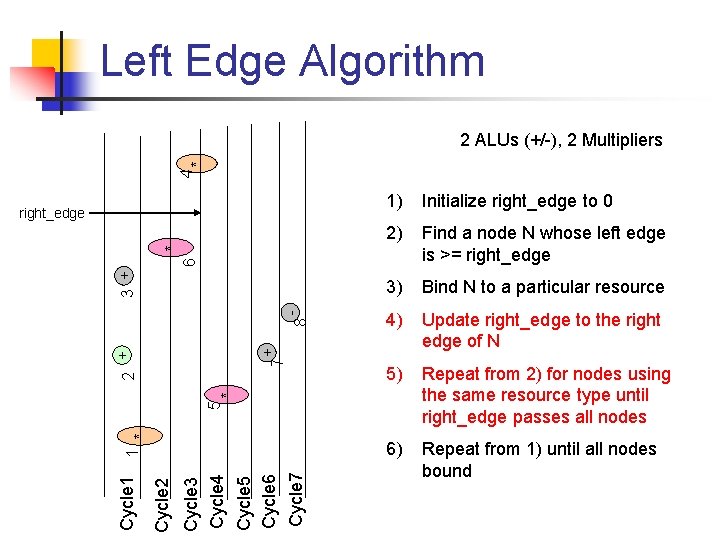

Left Edge Algorithm
1) Initialize right_edge to 0
2) Find a node N whose left edge is >= right_edge
3) Bind N to a particular resource
4) Update right_edge to the right edge of N
5) Repeat from 2) for nodes using the same resource type until right_edge passes all nodes
6) Repeat from 1) until all nodes bound

[Figure: rotated DFG (cycles 1-7 left to right, 2 ALUs (+/-), 2 Multipliers) with a right_edge marker sweeping across operations 1-8]
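The six steps can be sketched as follows, one resource type at a time. Each op is treated as an interval [left, right) of cycle boundaries, so an op in cycle 1 spans [0, 1] and a two-cycle op in cycles 2-3 spans [1, 3]. The `Interval` record and the example intervals are hypothetical (the rotated figure did not survive extraction); the sweep itself follows the steps above.

```c
#include <assert.h>

#define MAX_OPS 16

typedef enum { ALU, MULT } ResType;

typedef struct {
    int id;
    ResType type;
    int left;  /* cycle boundary where the op starts */
    int right; /* cycle boundary where the op finishes */
} Interval;

/* Left-edge binding for one resource type. unit_of[i] receives the
   resource index of ops[i] (-1 if ops[i] is another type).
   Returns the number of resources of `type` used. */
int left_edge_bind(const Interval *ops, int n, ResType type, int unit_of[]) {
    assert(n <= MAX_OPS);
    int order[MAX_OPS], m = 0;
    for (int i = 0; i < n; i++) {
        assert(ops[i].left >= 0 && ops[i].left < ops[i].right);
        unit_of[i] = -1;
        if (ops[i].type == type)
            order[m++] = i;
    }
    for (int i = 1; i < m; i++) { /* stable insertion sort by left edge */
        int key = order[i], j = i - 1;
        while (j >= 0 && ops[order[j]].left > ops[key].left) {
            order[j + 1] = order[j];
            j--;
        }
        order[j + 1] = key;
    }
    int bound = 0, units = 0;
    while (bound < m) {                /* step 6: one sweep per resource */
        int right_edge = 0;            /* step 1 */
        for (int k = 0; k < m; k++) {  /* steps 2 and 5: sweep left to right */
            int i = order[k];
            if (unit_of[i] == -1 && ops[i].left >= right_edge) {
                unit_of[i] = units;        /* step 3 */
                right_edge = ops[i].right; /* step 4 */
                bound++;
            }
        }
        units++;
    }
    return units;
}

/* Hypothetical intervals: multiplies take 2 cycles, ALU ops take 1. */
const Interval example[8] = {
    {1, MULT, 0, 2}, {2, ALU, 0, 1}, {3, ALU, 0, 1}, {4, MULT, 1, 3},
    {5, MULT, 2, 4}, {6, MULT, 4, 6}, {7, ALU, 3, 4}, {8, ALU, 4, 5},
};
```

On these intervals the multiplier sweep binds ops 1, 5, 6 to the first multiplier and op 4 to the second, and the ALU sweep binds 2, 7, 8 to ALU 1 and 3 to ALU 2. For pure interval lifetimes like these, the left-edge sweep is known to use the minimum number of resources, namely the maximum number of overlapping intervals.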

Extensions
- Algorithms presented so far find a valid binding
  - But do not consider amount of steering logic required
  - Different bindings can require significantly different # of muxes
- One solution
  - Extend compatibility graph
    - Use weighted edges/nodes: cost function representing steering logic
    - Perform clique partitioning, finding the set of cliques that minimize weight
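One way to realize the weighted idea, sketched here as an assumption since the slides do not fix the cost function: weight each compatibility edge by the number of input ports the two ops could share without a mux (same left register, same right register), then greedily place each op in the compatible clique with the largest total weight. The register names and the three-op example are hypothetical.

```c
#include <assert.h>

#define MAX_OPS 16

typedef struct {
    int id;
    int type;   /* 0 = ALU, 1 = multiplier */
    int cycle;
    char left;  /* register feeding the left input (hypothetical names) */
    char right; /* register feeding the right input */
} Op;

static int compatible(const Op *a, const Op *b) {
    return a->type == b->type && a->cycle != b->cycle;
}

/* Edge weight: number of input ports the two ops can share without a mux. */
static int weight(const Op *a, const Op *b) {
    return (a->left == b->left) + (a->right == b->right);
}

/* Weighted greedy clique partitioning: each op joins the compatible clique
   with the largest total edge weight to its members (earliest clique on
   ties), or starts a new one. Returns the number of cliques. */
int weighted_partition(const Op *ops, int n, int clique_of[]) {
    assert(n <= MAX_OPS);
    int n_cliques = 0;
    for (int i = 0; i < n; i++) {
        int best = -1, best_w = -1;
        for (int c = 0; c < n_cliques; c++) {
            int ok = 1, w = 0;
            for (int j = 0; j < i && ok; j++) {
                if (clique_of[j] != c)
                    continue;
                ok = compatible(&ops[i], &ops[j]);
                w += weight(&ops[i], &ops[j]);
            }
            if (ok && w > best_w) {
                best = c;
                best_w = w;
            }
        }
        clique_of[i] = (best >= 0) ? best : n_cliques++;
    }
    return n_cliques;
}

/* Hypothetical: two additions in cycle 1, one in cycle 2. */
const Op example[3] = {
    {1, 0, 1, 'x', 'y'},
    {2, 0, 1, 'p', 'q'},
    {3, 0, 2, 'p', 'r'},
};
```

Both bindings use 2 ALUs, but they differ in steering logic. First-fit would pair op 3 with op 1 and need 2-input muxes on both ALU inputs ({x, p} and {y, r}). The weighted version pairs op 3 with op 2 because they share register p, so only the right input needs a 2-input mux ({q, r}).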

Binding Summary
- Binding maps operations onto physical resources
  - Determines sharing among resources
- Binding may greatly affect steering logic
- Trivial for fully-pipelined circuits
  - 1 resource per operation
- Straightforward translation from bound DFG to datapath

High-level Synthesis: Summary

Main Steps
- Front-end (lexing/parsing) converts code into intermediate representation
  - We looked at CDFG
- Scheduling assigns a start time for each operation in DFG
  - CFG node start times defined by control dependencies
  - Resource allocation determined by schedule
- Binding maps scheduled operations onto physical resources
  - Determines how resources are shared
- Big picture:
  - Scheduled/bound DFG can be translated into a datapath
  - CFG can be translated to a controller
  - => High-level synthesis can create a custom circuit for any CDFG!

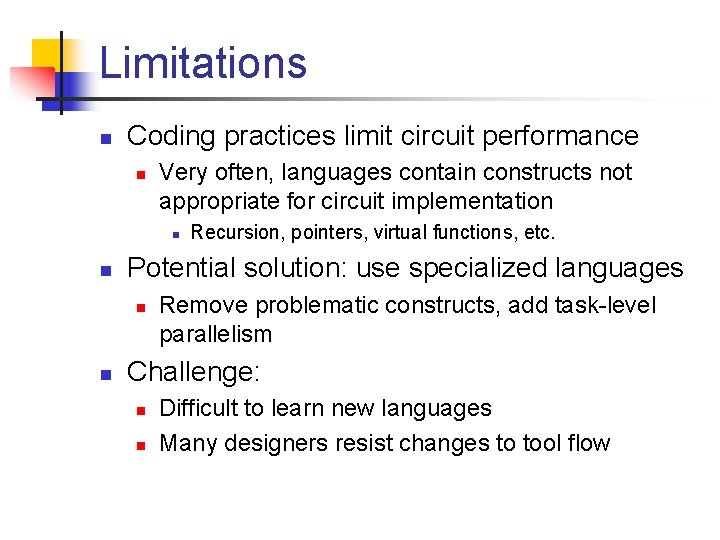

Limitations
- Task-level parallelism
  - Parallelism in CDFG limited to individual control states
    - Can't have multiple states executing concurrently
  - Potential solution: use model other than CDFG
    - Kahn Process Networks
      - Nodes represent parallel processes/tasks
      - Edges represent communication between processes
    - High-level synthesis can create a controller+datapath for each process
      - Must also consider communication buffers
  - Challenge:
    - Most high-level code does not have explicit parallelism
      - Difficult/impossible to extract task-level parallelism from code
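To make the Kahn Process Network idea concrete, here is a small software sketch (not a synthesis flow): two processes joined by a FIFO channel, the first producing a[i] = i and the second running the `acc += a[i]` loop from the opening example. `FIFO_DEPTH`, the process structs, and the round-robin loop are illustrative assumptions. Pure KPN semantics assume unbounded FIFOs with blocking reads, which is why a hardware version must size its communication buffers.

```c
#include <assert.h>

#define FIFO_DEPTH 4 /* assumed buffer size; pure KPNs assume unbounded FIFOs */

typedef struct { int data[FIFO_DEPTH]; int head, count; } Fifo;

static int fifo_push(Fifo *f, int v) {
    if (f->count == FIFO_DEPTH)
        return 0; /* full: the writer must wait */
    f->data[(f->head + f->count) % FIFO_DEPTH] = v;
    f->count++;
    return 1;
}

static int fifo_pop(Fifo *f, int *v) {
    if (f->count == 0)
        return 0; /* empty: the reader blocks */
    *v = f->data[f->head];
    f->head = (f->head + 1) % FIFO_DEPTH;
    f->count--;
    return 1;
}

typedef struct { int next, n; } Producer;    /* process 1: emits 0..n-1 */
typedef struct { int acc, received; } Consumer; /* process 2: acc += a[i] */

static int producer_step(Producer *p, Fifo *out) {
    if (p->next < p->n && fifo_push(out, p->next)) {
        p->next++;
        return 1;
    }
    return 0;
}

static int consumer_step(Consumer *c, Fifo *in) {
    int v;
    if (!fifo_pop(in, &v))
        return 0;
    c->acc += v;
    c->received++;
    return 1;
}

/* In hardware each process would get its own controller+datapath and run
   concurrently; this software model just alternates them until neither
   can make progress. Returns the consumer's accumulated sum. */
int run_network(int n) {
    Fifo ch = {{0}, 0, 0};
    Producer p = {0, n};
    Consumer c = {0, 0};
    int progress = 1;
    while (progress) {
        progress = producer_step(&p, &ch);
        progress |= consumer_step(&c, &ch);
    }
    return c.acc;
}
```

For the 128-element loop, `run_network(128)` returns 0 + 1 + ... + 127 = 8128, the same result as the sequential code. The difference is that the two processes are independent tasks connected only by the channel.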

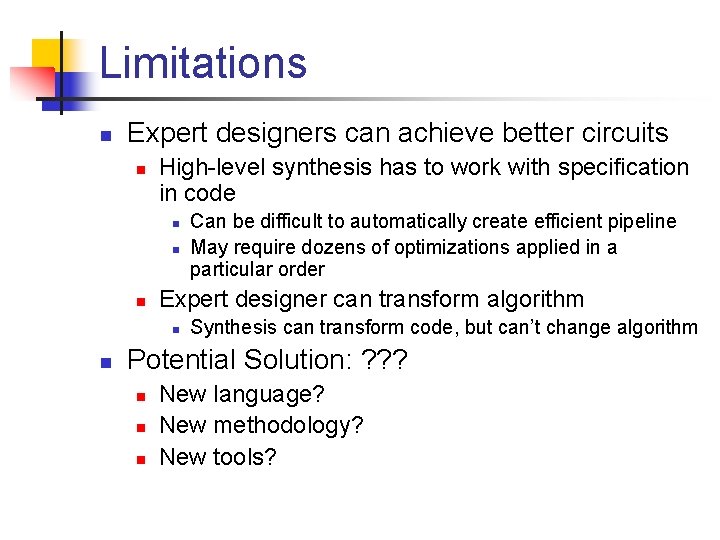

Limitations
- Coding practices limit circuit performance
  - Very often, languages contain constructs not appropriate for circuit implementation
    - Recursion, pointers, virtual functions, etc.
  - Potential solution: use specialized languages
    - Remove problematic constructs, add task-level parallelism
  - Challenge:
    - Difficult to learn new languages
    - Many designers resist changes to tool flow

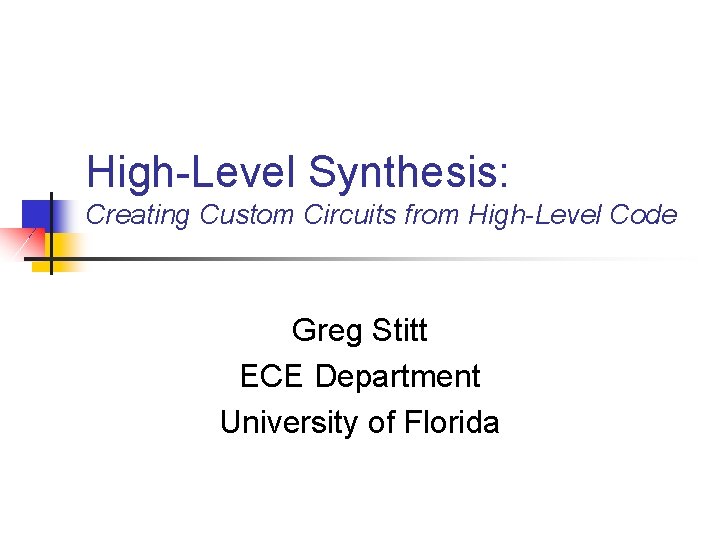

Limitations
- Expert designers can achieve better circuits
  - High-level synthesis has to work with specification in code
    - Can be difficult to automatically create efficient pipeline
    - May require dozens of optimizations applied in a particular order
  - Expert designer can transform algorithm
    - Synthesis can transform code, but can't change algorithm
  - Potential solution: ???
    - New language? New methodology? New tools?