Deep Learning: The Future of Real-Time Rendering?

Marco Salvi, NVIDIA

“Open Problems in Real-Time Rendering” Course

![Deep Learning is Changing the Way We Do Graphics](https://slidetodoc.com/presentation_image_h/c4ed2a0509b2a5a0d845774079513682/image-2.jpg)

Deep Learning is Changing the Way We Do Graphics: [Laine 17] [Karras 17] [Holden 17] [Chaitanya 17] [Dahm 17] [Nalbach 17]

Video: “Audio-Driven Facial Animation by Joint End-to-End Learning of Pose and Emotion”, Tero Karras, Timo Aila, Samuli Laine, Antti Herva, and Jaakko Lehtinen

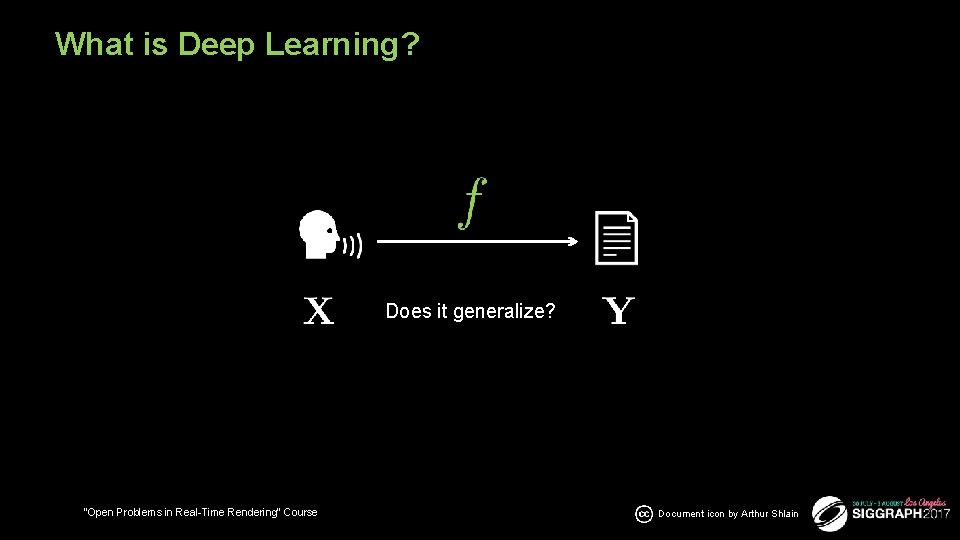

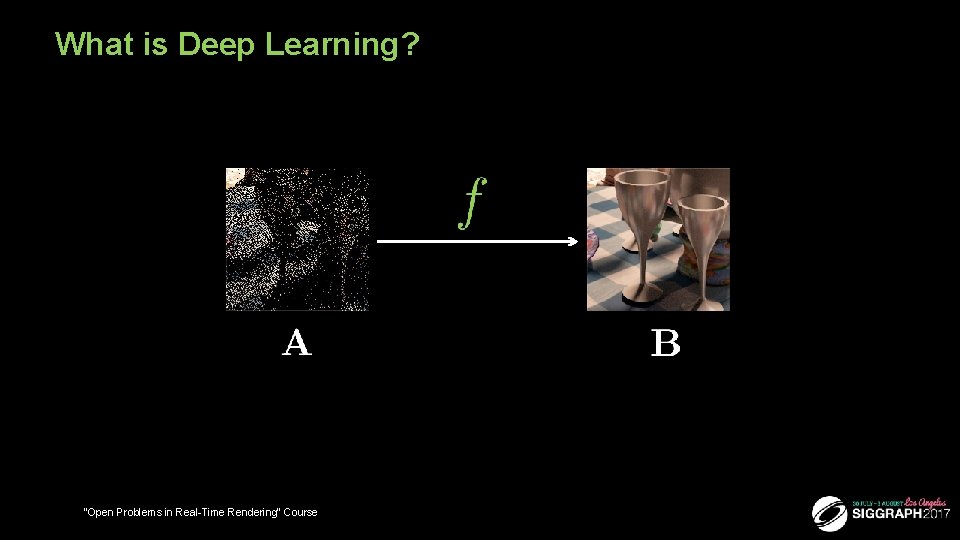

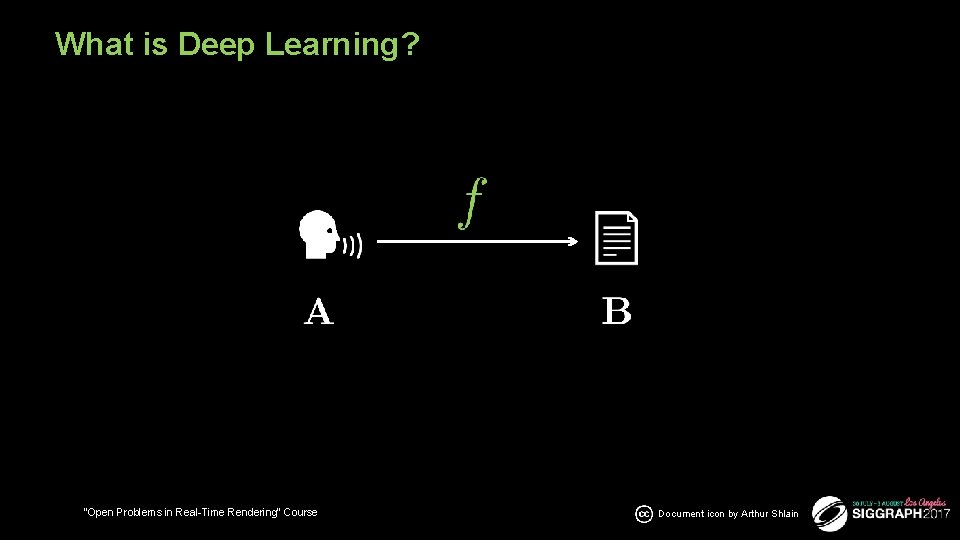

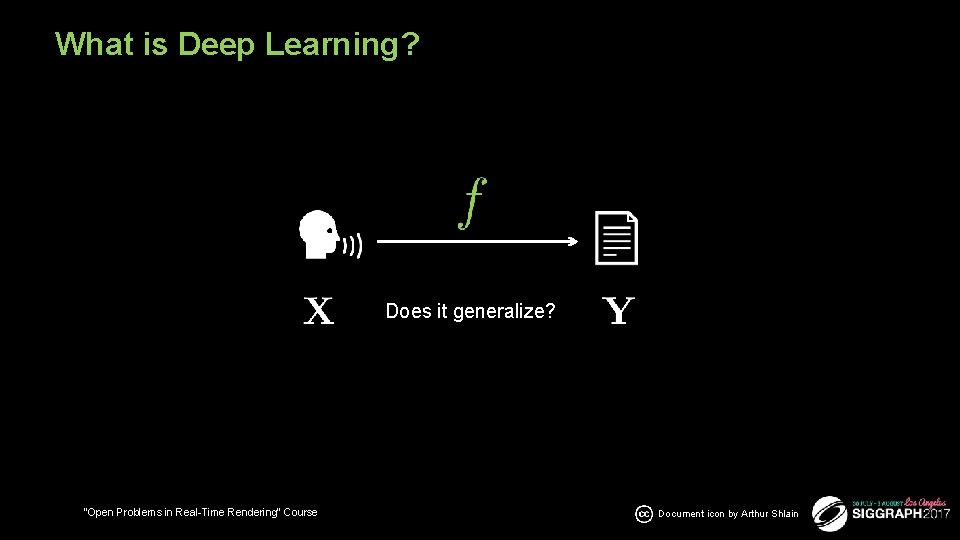

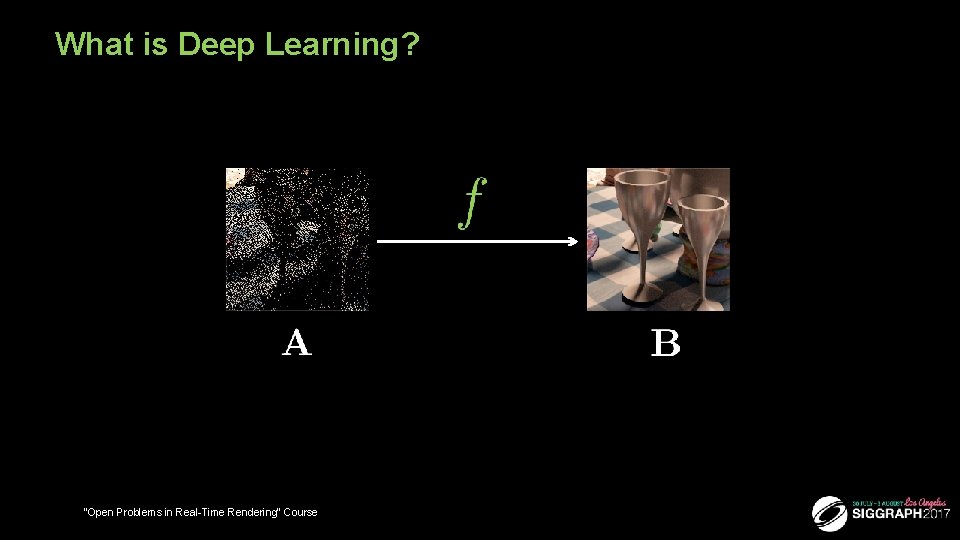

What is Deep Learning? Does it generalize?

Document icon by Arthur Shlain

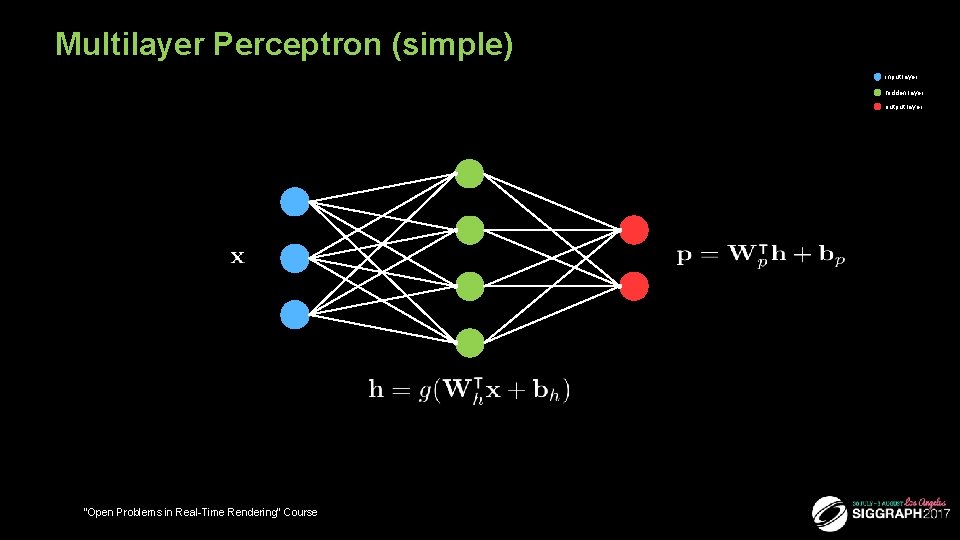

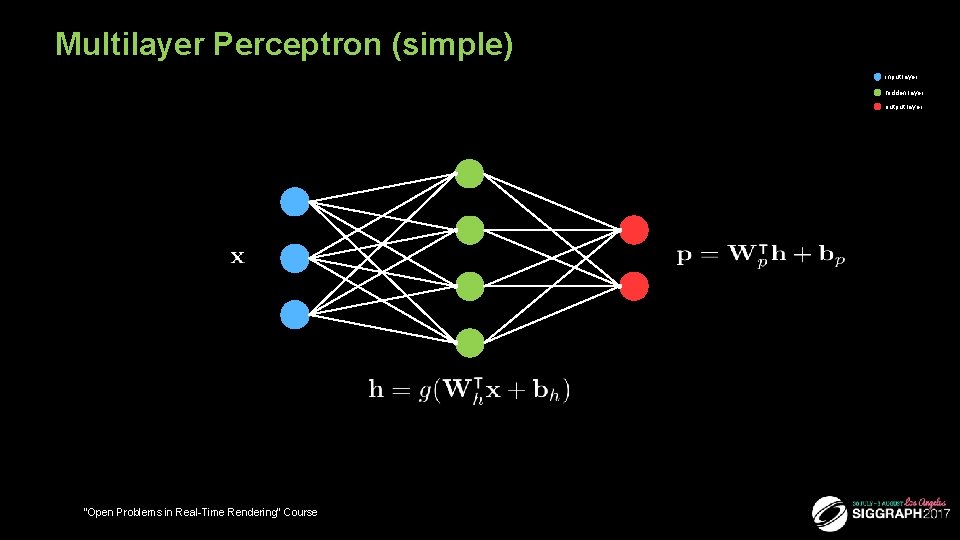

Multilayer Perceptron (simple): input layer → hidden layer → output layer

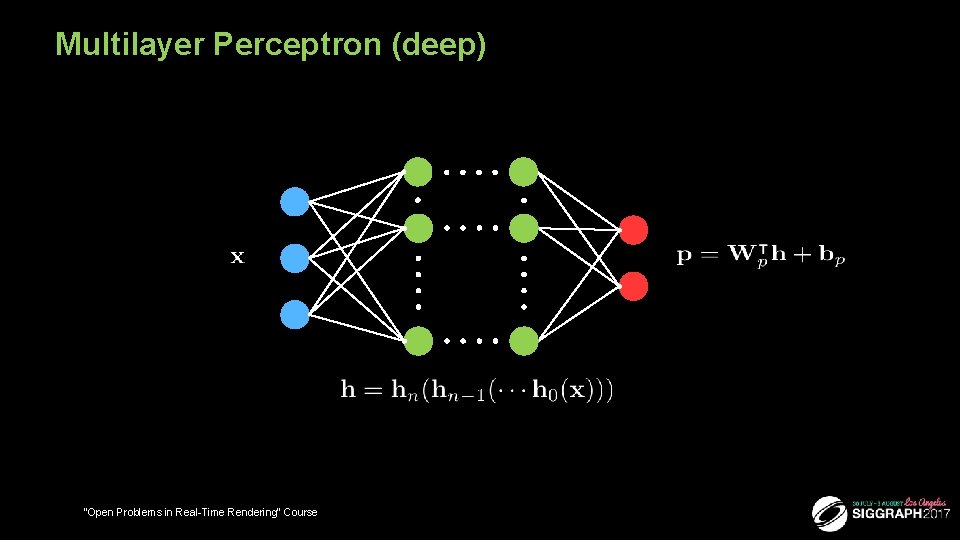

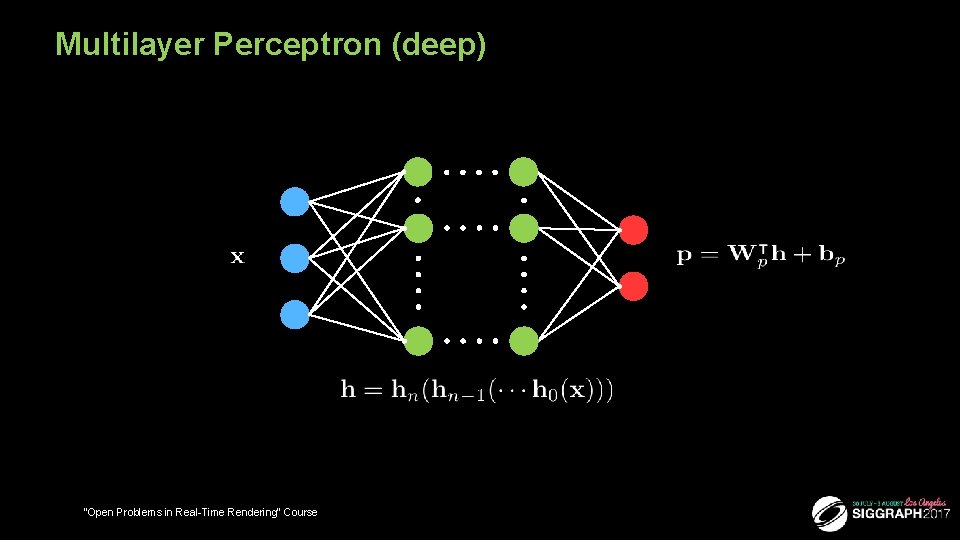

Multilayer Perceptron (deep)
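
A minimal sketch of what a deep multilayer perceptron computes. Each layer is a matrix-vector product plus a bias. Every hidden layer is followed by a nonlinearity, and the output layer stays linear. The choice of tanh, the layer sizes and the helper names (`dense`, `mlp_forward`, `random_mlp`) are assumptions for illustration. Plain Python lists stand in for tensors.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, bias), one weight row per output neuron


def dense(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Fully connected layer: y = W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def mlp_forward(layers: List[Layer], x: Vector) -> Vector:
    """tanh on every hidden layer; the last (output) layer stays linear."""
    for i, (weights, bias) in enumerate(layers):
        x = dense(weights, bias, x)
        if i < len(layers) - 1:
            x = [math.tanh(v) for v in x]
    return x


def random_mlp(sizes: List[int], seed: int = 0) -> List[Layer]:
    """Small random weights and zero biases for the layer widths in `sizes`."""
    rng = random.Random(seed)
    return [
        ([[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)], [0.0] * n_out)
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]


if __name__ == "__main__":
    # Hypothetical sizes: 3 inputs, two hidden layers of 16 units, 4 outputs.
    net = random_mlp([3, 16, 16, 4])
    print(mlp_forward(net, [0.0, 0.0, 1.0]))
```

Making the network "deep" only means adding more entries to `sizes`. The forward pass itself does not change.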

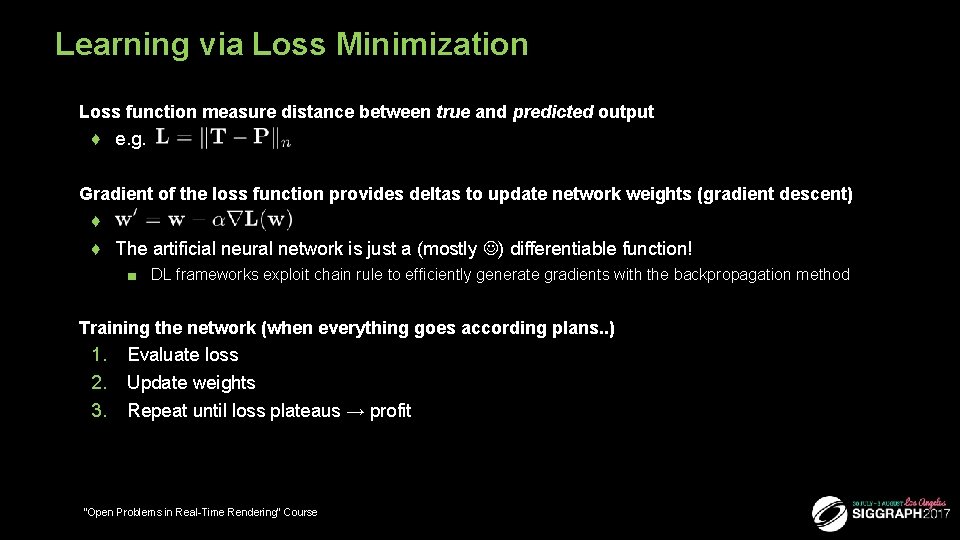

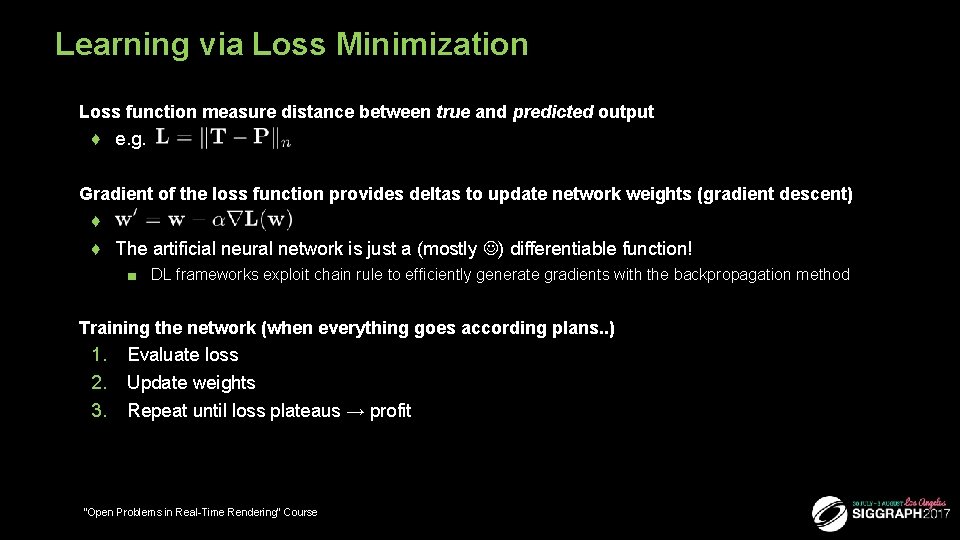

Learning via Loss Minimization

- The loss function measures the distance between the true and the predicted output
  - e.g. …
- The gradient of the loss function provides deltas to update the network weights (gradient descent)
  - The artificial neural network is just a (mostly) differentiable function!
- DL frameworks exploit the chain rule to efficiently generate gradients with the backpropagation method
- Training the network (when everything goes according to plan…)
  1. Evaluate loss
  2. Update weights
  3. Repeat until loss plateaus → profit
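
The three training steps above can be sketched without any framework. The example uses a one-hidden-layer tanh network fitted by full-batch gradient descent, with gradients derived by hand through the chain rule (backpropagation). The example loss on the slide did not survive extraction, so mean squared error is an assumption here. The function name `train`, the learning rate and the toy target are also hypothetical.

```python
import math
import random


def train(samples, hidden=8, lr=0.05, epochs=500, seed=0):
    """Fit scalar y = f(x) with a 1-hidden-layer tanh MLP; return the loss per epoch."""
    rng = random.Random(seed)
    w1 = [rng.uniform(-1, 1) for _ in range(hidden)]  # input -> hidden
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden -> output
    b2 = 0.0
    n = len(samples)
    history = []
    for _ in range(epochs):
        g_w1, g_b1, g_w2, g_b2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
        loss = 0.0
        for x, y in samples:
            # 1. Evaluate the loss (forward pass).
            h = [math.tanh(w * x + b) for w, b in zip(w1, b1)]
            pred = sum(w * hj for w, hj in zip(w2, h)) + b2
            err = pred - y
            loss += err * err / n
            # Backward pass: chain rule from dL/dpred back to every weight.
            d_pred = 2.0 * err / n
            g_b2 += d_pred
            for j in range(hidden):
                g_w2[j] += d_pred * h[j]
                d_pre = d_pred * w2[j] * (1.0 - h[j] * h[j])  # tanh' = 1 - tanh^2
                g_w1[j] += d_pre * x
                g_b1[j] += d_pre
        history.append(loss)
        # 2. Update the weights by stepping against the gradient.
        w1 = [w - lr * g for w, g in zip(w1, g_w1)]
        b1 = [b - lr * g for b, g in zip(b1, g_b1)]
        w2 = [w - lr * g for w, g in zip(w2, g_w2)]
        b2 -= lr * g_b2
    # 3. A real loop would stop once `history` plateaus instead of after `epochs`.
    return history


if __name__ == "__main__":
    data = [(i / 10.0, math.sin(3.0 * i / 10.0)) for i in range(-10, 11)]
    losses = train(data)
    print(losses[0], losses[-1])
```

A DL framework automates exactly the backward-pass block: it records the forward computation and applies the chain rule for you.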

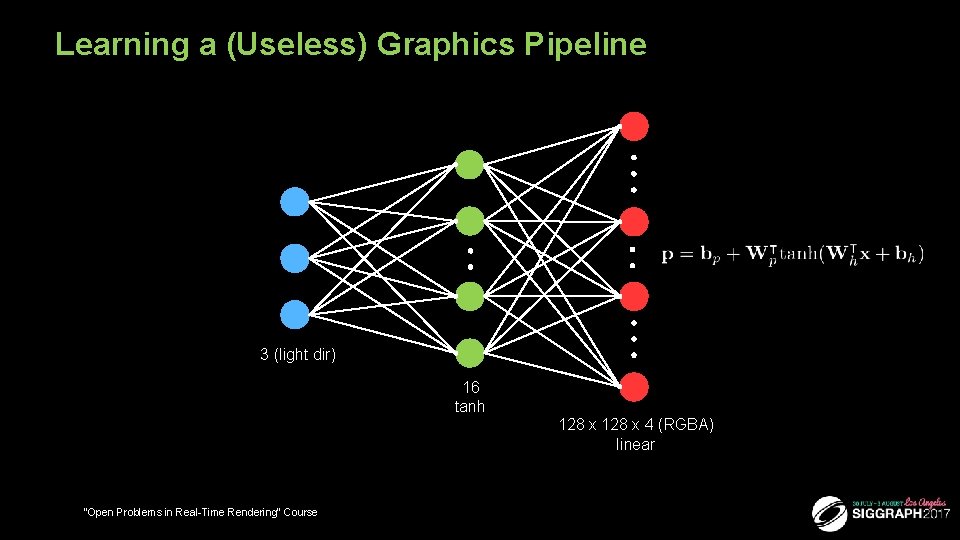

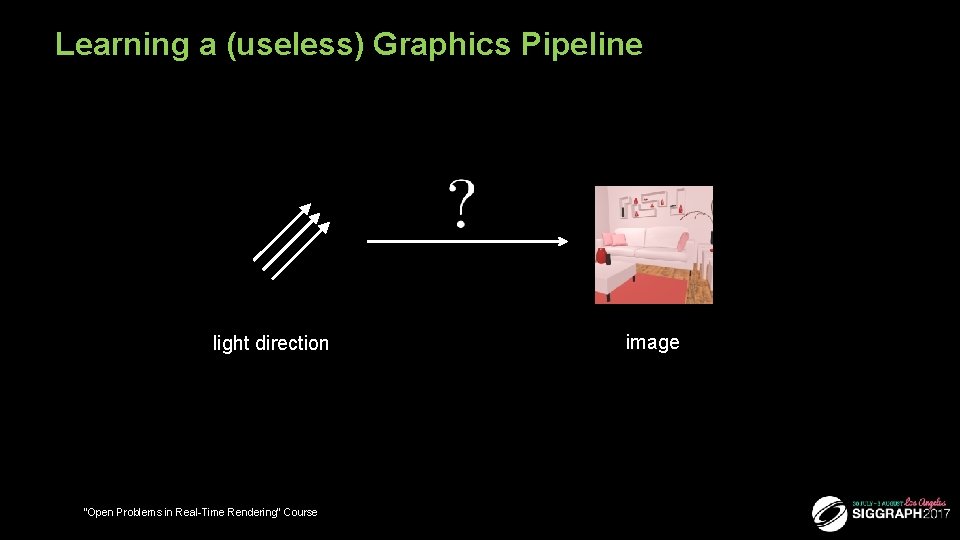

Learning a (Useless) Graphics Pipeline: light direction → image

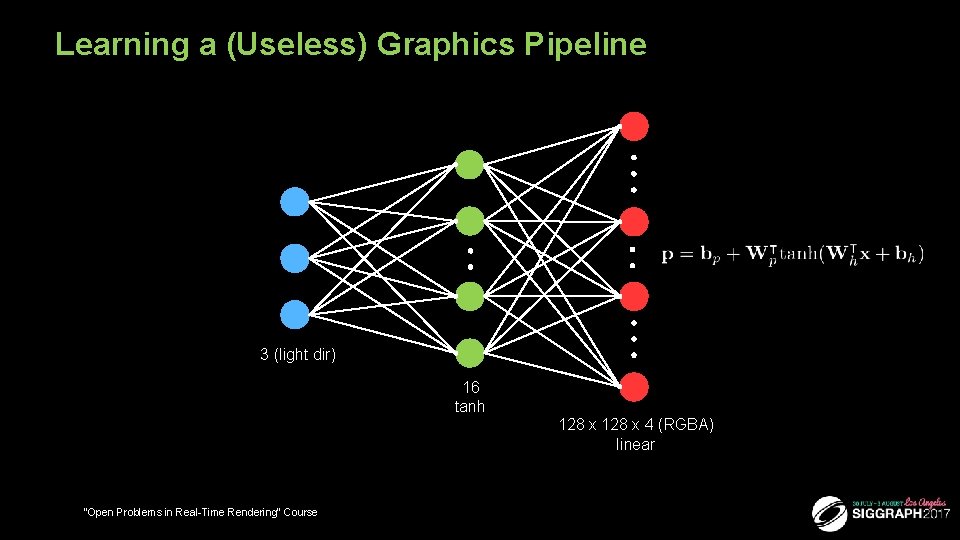

Learning a (Useless) Graphics Pipeline: 3 (light dir), 16 tanh, 128 x 4 (RGBA) linear

Live Training Demo

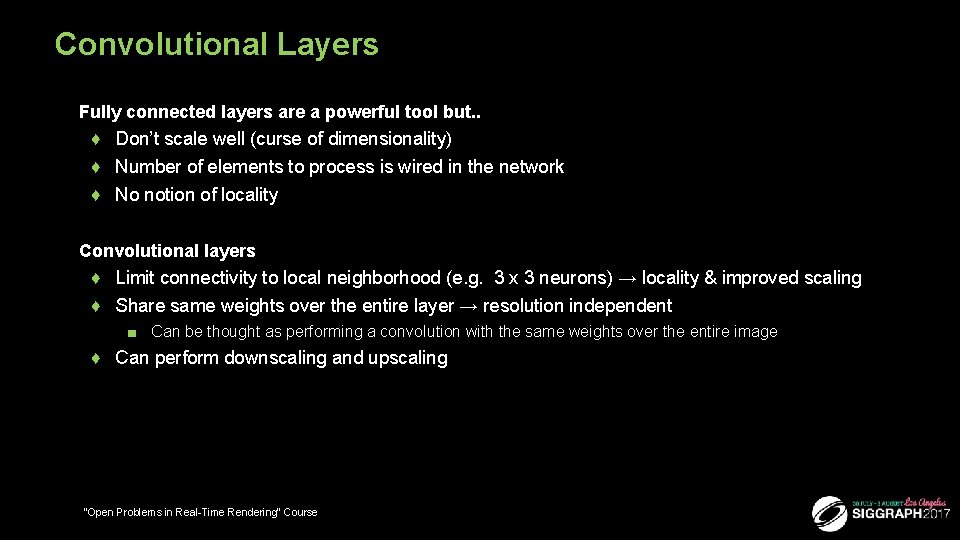

Convolutional Layers

- Fully connected layers are a powerful tool, but…
  - They don't scale well (curse of dimensionality)
  - The number of elements to process is wired into the network
  - They have no notion of locality
- Convolutional layers
  - Limit connectivity to a local neighborhood (e.g. 3 x 3 neurons) → locality & improved scaling
  - Share the same weights over the entire layer → resolution independent
    - Can be thought of as performing a convolution with the same weights over the entire image
  - Can perform downscaling and upscaling
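
The properties listed above can be seen in a minimal single-channel sketch. One shared kernel is applied at every pixel, so the same weights work at any resolution. A stride greater than 1 downscales. A nearest-neighbour repeat followed by a convolution stands in for upscaling. Frameworks typically use transposed convolutions for upscaling, so that substitution is an assumption. Like most DL frameworks, the code computes a cross-correlation (no kernel flip). `conv2d` and `upsample2x` are hypothetical helper names.

```python
from typing import List

Image = List[List[float]]


def conv2d(image: Image, kernel: Image, stride: int = 1) -> Image:
    """Slide one shared kernel over the image with zero padding.

    With stride 1 the output has the input's size; stride s keeps every s-th pixel.
    """
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    h, w = len(image), len(image[0])
    out = []
    for y in range(0, h, stride):
        row = []
        for x in range(0, w, stride):
            acc = 0.0
            for ky in range(kh):
                for kx in range(kw):
                    sy, sx = y + ky - ph, x + kx - pw
                    if 0 <= sy < h and 0 <= sx < w:  # zero padding outside the image
                        acc += kernel[ky][kx] * image[sy][sx]
            row.append(acc)
        out.append(row)
    return out


def upsample2x(image: Image) -> Image:
    """Nearest-neighbour 2x upscale; follow with conv2d for a learnable upscale."""
    return [[v for v in row for _ in range(2)] for row in image for _ in range(2)]


if __name__ == "__main__":
    ones = [[1.0] * 3 for _ in range(3)]
    small = [[1.0] * 5 for _ in range(5)]
    large = [[1.0] * 8 for _ in range(8)]
    # The same 9 weights work on both resolutions.
    print(len(conv2d(small, ones)), len(conv2d(large, ones)))
    print(conv2d(small, ones, stride=2))  # 3 x 3 output: downscaled
```

The weight count (9 here) is fixed by the kernel and does not depend on the image size. That is why the layer is resolution independent.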

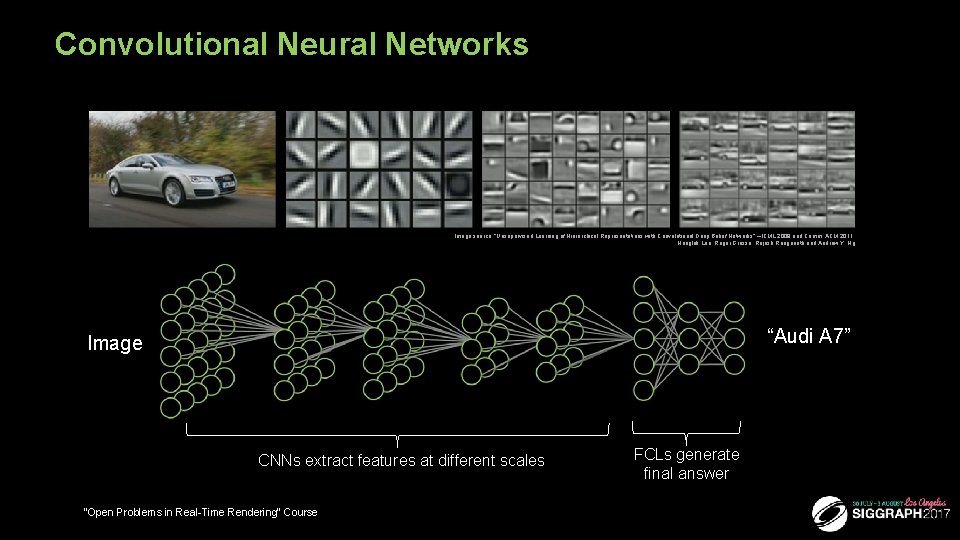

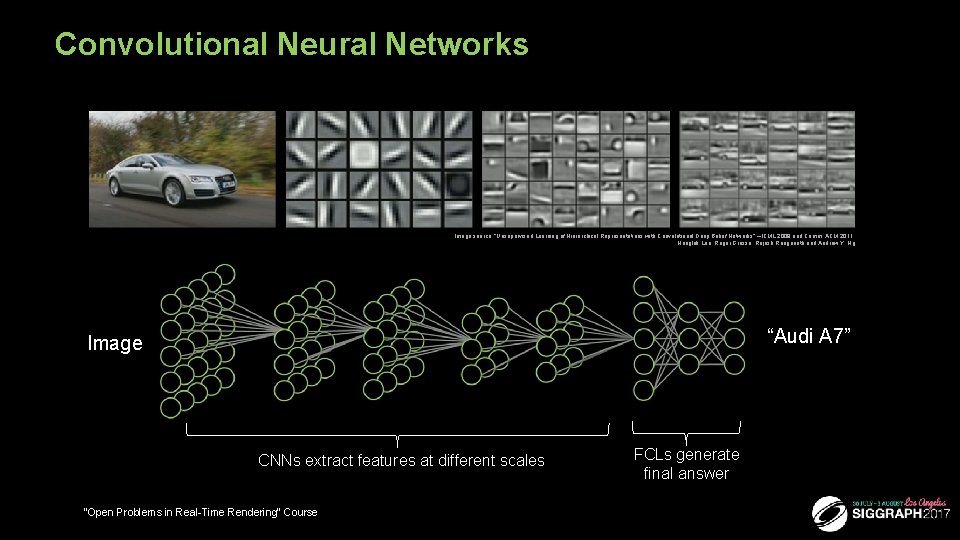

Convolutional Neural Networks: image → CNNs extract features at different scales → FCLs (fully connected layers) generate the final answer (“Audi A7”)

Image source: “Unsupervised Learning of Hierarchical Representations with Convolutional Deep Belief Networks”, ICML 2009 and Comm. ACM 2011, Honglak Lee, Roger Grosse, Rajesh Ranganath and Andrew Y. Ng

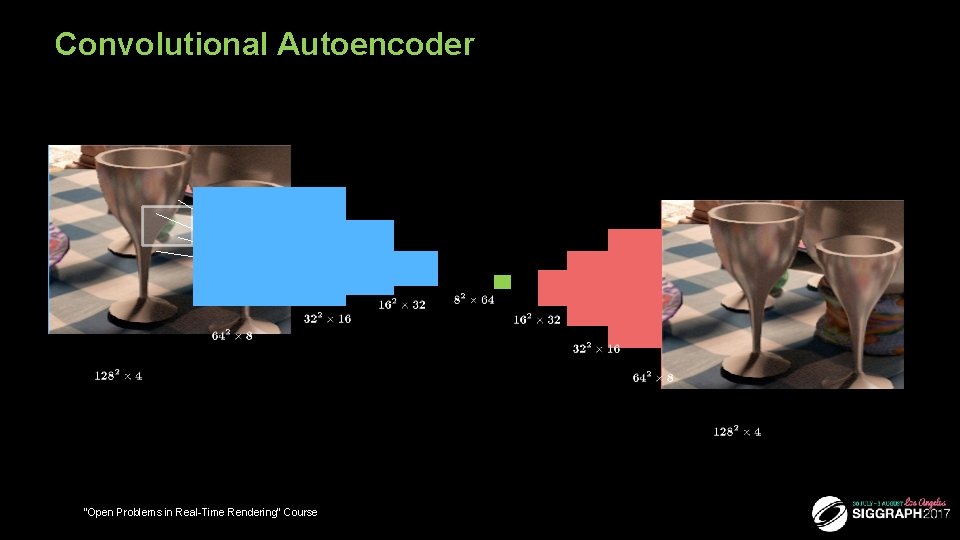

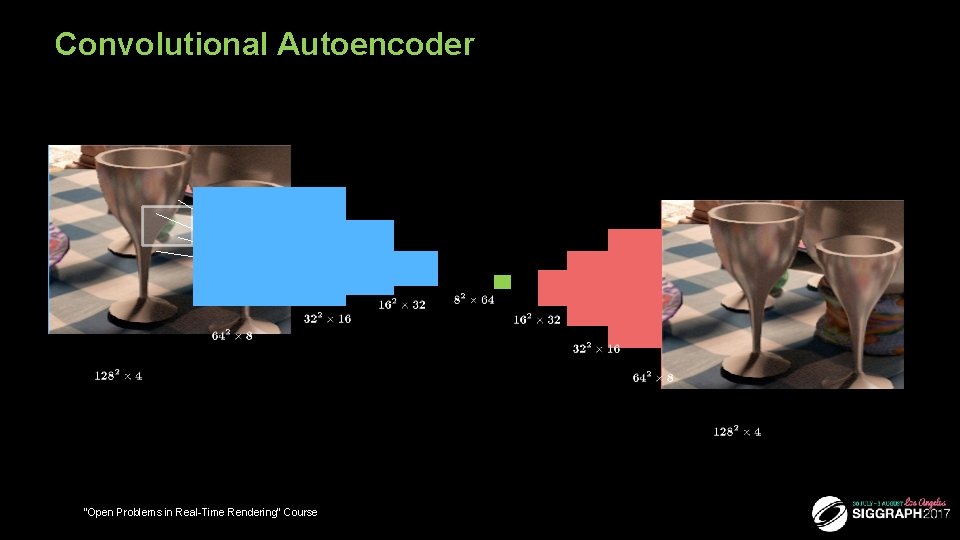

Convolutional Autoencoder

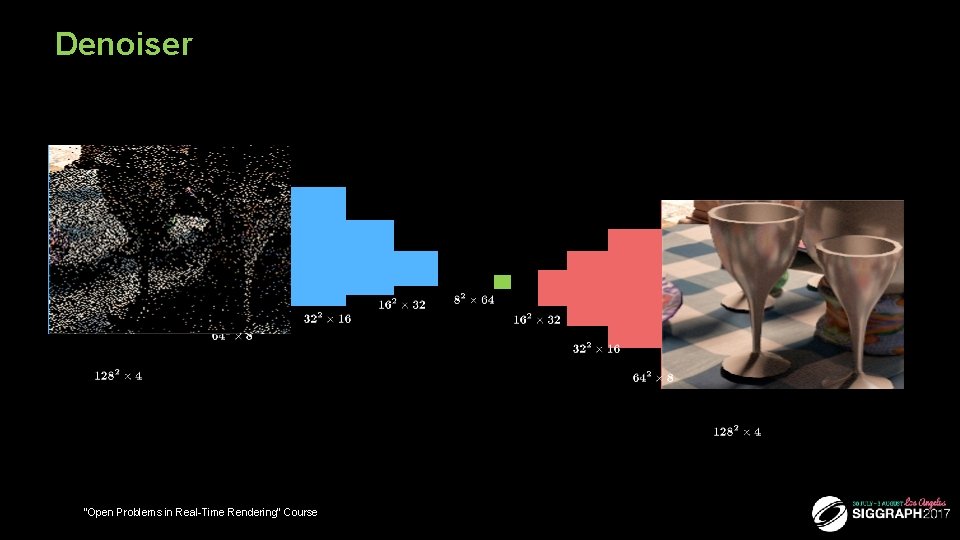

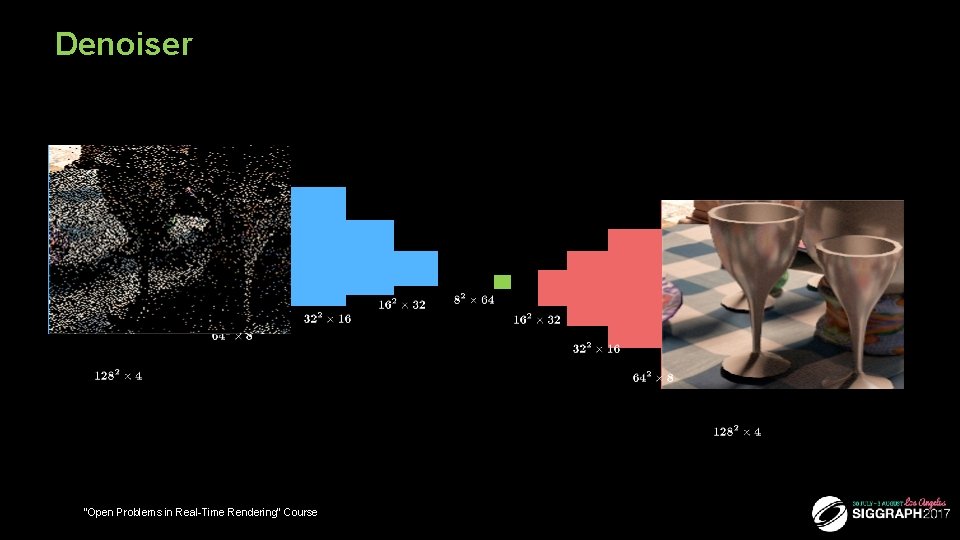

Denoiser

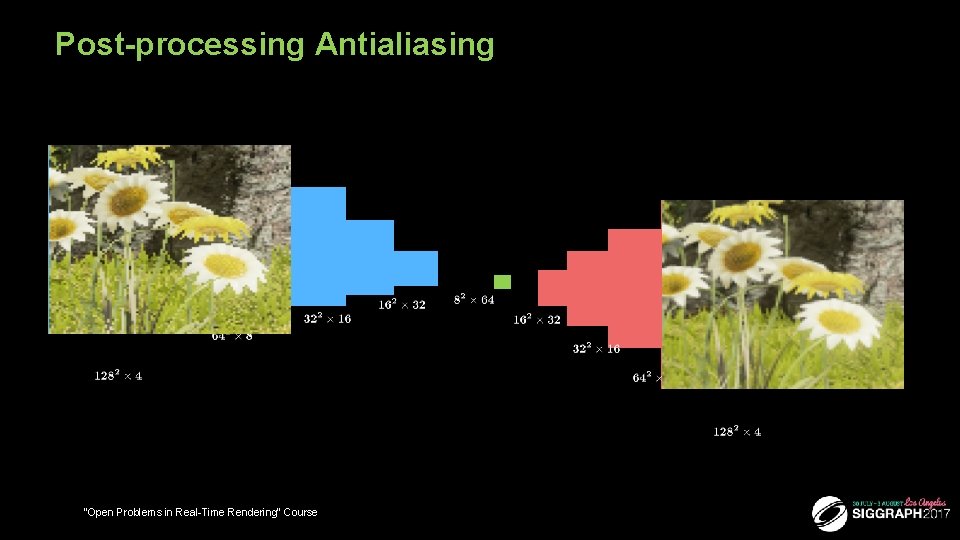

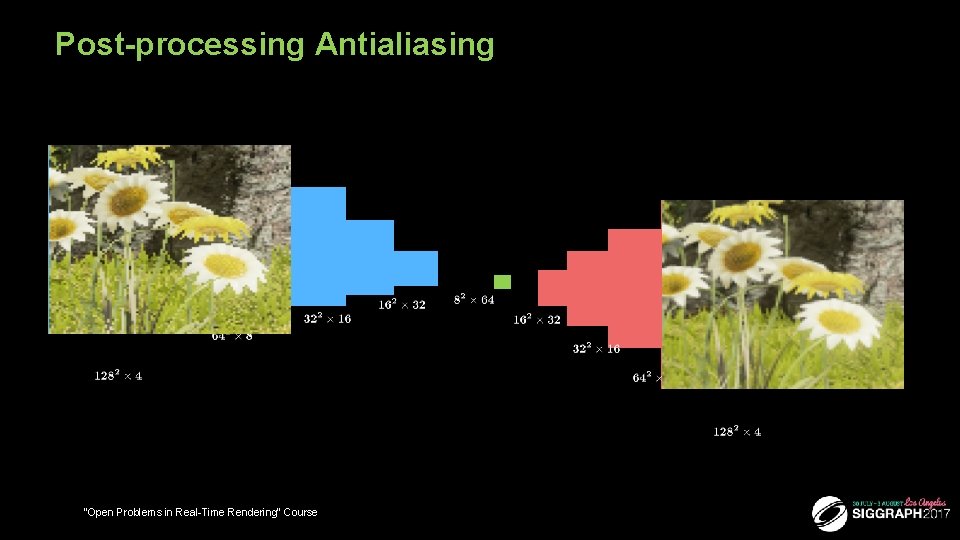

Post-processing Antialiasing

Case Study: Antialiasing

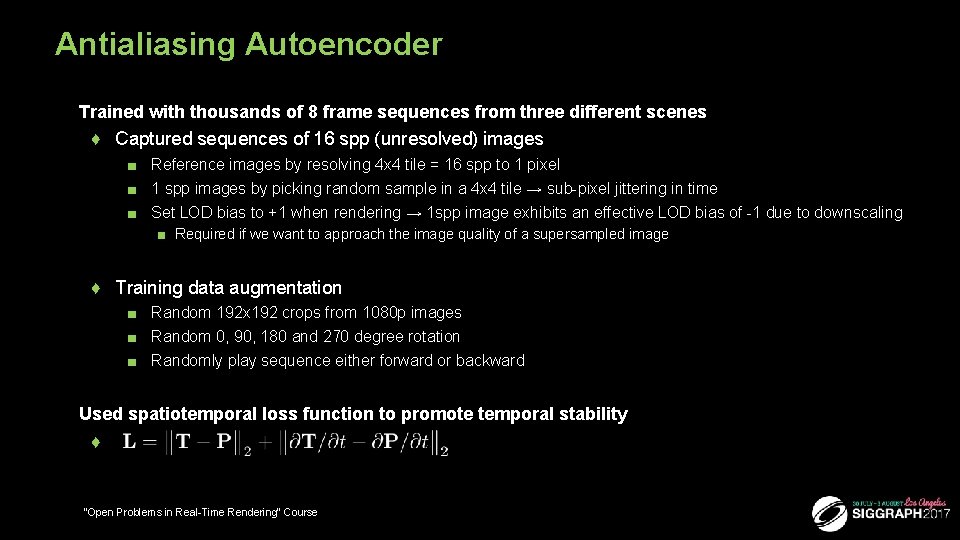

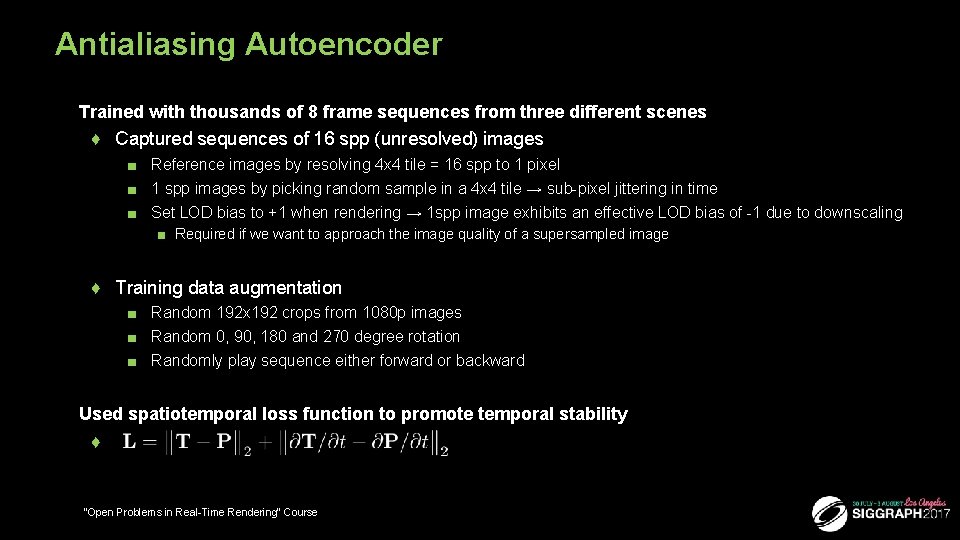

Antialiasing Autoencoder

- Trained with thousands of 8-frame sequences from three different scenes
  - Captured sequences of 16 spp (unresolved) images
    - Reference images by resolving a 4 x 4 tile = 16 spp to 1 pixel
    - 1 spp images by picking a random sample in a 4 x 4 tile → sub-pixel jittering in time
    - Set LOD bias to +1 when rendering → the 1 spp image exhibits an effective LOD bias of -1 due to downscaling
      - Required if we want to approach the image quality of a supersampled image
  - Training data augmentation
    - Random 192 x 192 crops from 1080p images
    - Random 0, 90, 180 and 270 degree rotation
    - Randomly play the sequence either forward or backward
  - Used a spatiotemporal loss function to promote temporal stability
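
The data preparation above can be sketched for single-channel frames stored as nested lists. Each 16 spp capture is assumed to be rendered at 4x the output resolution per axis, so each output pixel owns a 4 x 4 tile of samples. The slide does not say whether the random sample is picked per pixel or per frame. This sketch assumes one offset per frame, which behaves like a camera jitter. The function names and the grayscale simplification are hypothetical.

```python
import random
from typing import List, Tuple

Image = List[List[float]]
TILE = 4  # 4 x 4 samples per output pixel = 16 spp

# LOD note (our reading of the slide): rendering at 4x resolution per axis makes
# mip selection log2(4) = 2 levels sharper than a native render; the +1 LOD bias
# leaves the downscaled 1 spp image with an effective bias of -2 + 1 = -1.


def resolve_reference(hi: Image) -> Image:
    """Average each 4 x 4 tile of a (4H x 4W) 16 spp capture into one pixel."""
    h, w = len(hi) // TILE, len(hi[0]) // TILE
    return [[sum(hi[y * TILE + j][x * TILE + i] for j in range(TILE) for i in range(TILE))
             / (TILE * TILE) for x in range(w)] for y in range(h)]


def pick_1spp(hi: Image, offset: Tuple[int, int]) -> Image:
    """Keep one sample per tile; a new random offset per frame jitters in time."""
    dy, dx = offset
    h, w = len(hi) // TILE, len(hi[0]) // TILE
    return [[hi[y * TILE + dy][x * TILE + dx] for x in range(w)] for y in range(h)]


def rot90(img: Image, k: int) -> Image:
    """Rotate clockwise by k * 90 degrees."""
    for _ in range(k % 4):
        img = [list(row) for row in zip(*img[::-1])]
    return img


def make_training_pair(hi_seq: List[Image], crop: int, rng: random.Random):
    """Build one augmented (1 spp input, reference target) sequence pair."""
    inputs = [pick_1spp(f, (rng.randrange(TILE), rng.randrange(TILE))) for f in hi_seq]
    targets = [resolve_reference(f) for f in hi_seq]
    # One crop, rotation and playback direction shared by the whole sequence.
    h, w = len(targets[0]), len(targets[0][0])
    y0, x0 = rng.randrange(h - crop + 1), rng.randrange(w - crop + 1)
    k = rng.randrange(4)
    reverse = rng.random() < 0.5

    def aug(img: Image) -> Image:
        return rot90([row[x0:x0 + crop] for row in img[y0:y0 + crop]], k)

    inputs, targets = [aug(f) for f in inputs], [aug(f) for f in targets]
    if reverse:
        inputs, targets = inputs[::-1], targets[::-1]
    return inputs, targets
```

For the setup on the slide, the function would be called with 8-frame sequences and `crop=192`. Applying the same crop, rotation and direction to every frame keeps the motion inside a sequence consistent.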

Antialiasing video: 1 spp vs. Autoencoder

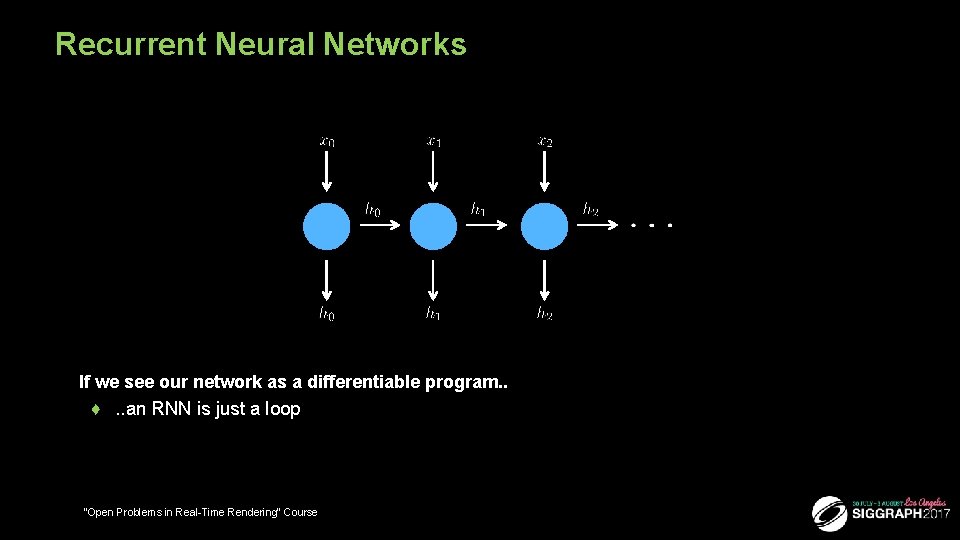

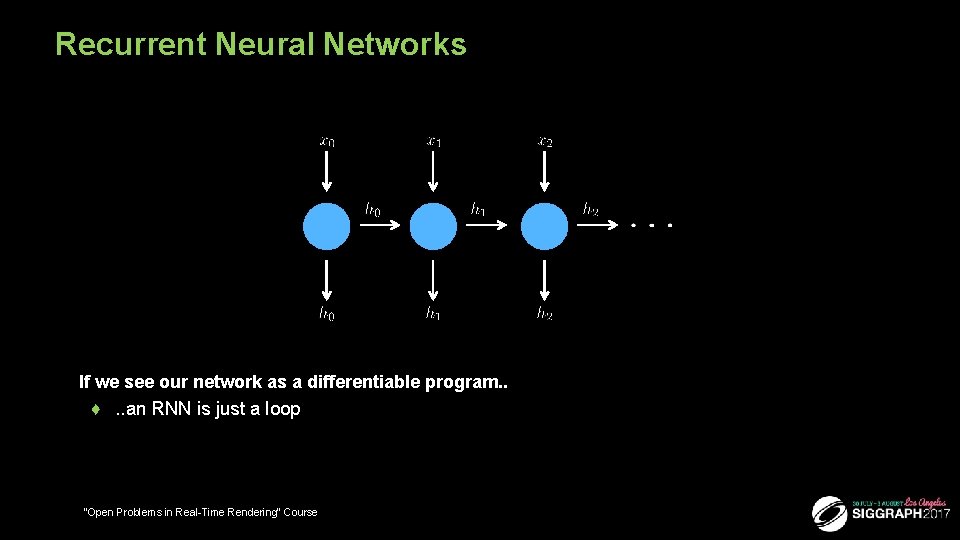

Recurrent Neural Networks

- If we see our network as a differentiable program…
  - …an RNN is just a loop
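
The "an RNN is just a loop" view can be illustrated with a scalar, per-pixel toy. A hidden state is threaded through a sequence of frames, and each step mixes the new input with the previous state. The weights `w_x`, `w_h`, `b` and the tanh cell are hypothetical choices, not the network from the talk.

```python
import math
from typing import List


def rnn_cell(x: float, h: float, w_x: float, w_h: float, b: float) -> float:
    """One recurrent step: the new state depends on the input and on the old state."""
    return math.tanh(w_x * x + w_h * h + b)


def run_rnn(frames: List[float], w_x: float = 0.5, w_h: float = 0.9, b: float = 0.0) -> List[float]:
    """An RNN is just a loop that carries a hidden state from frame to frame."""
    h = 0.0  # initial hidden state
    outputs = []
    for x in frames:  # e.g. one pixel value per frame of an image sequence
        h = rnn_cell(x, h, w_x, w_h, b)
        outputs.append(h)
    return outputs


if __name__ == "__main__":
    # The input drops to zero after frame 0, but the state keeps a fading memory of it.
    print(run_rnn([1.0, 0.0, 0.0, 0.0]))
```

Training such a loop is ordinary backpropagation through the unrolled iterations (backpropagation through time).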

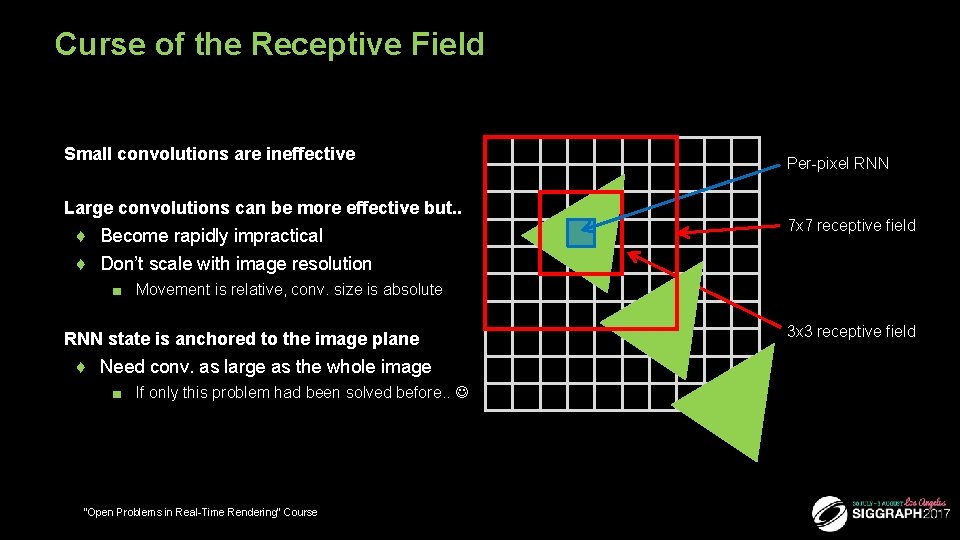

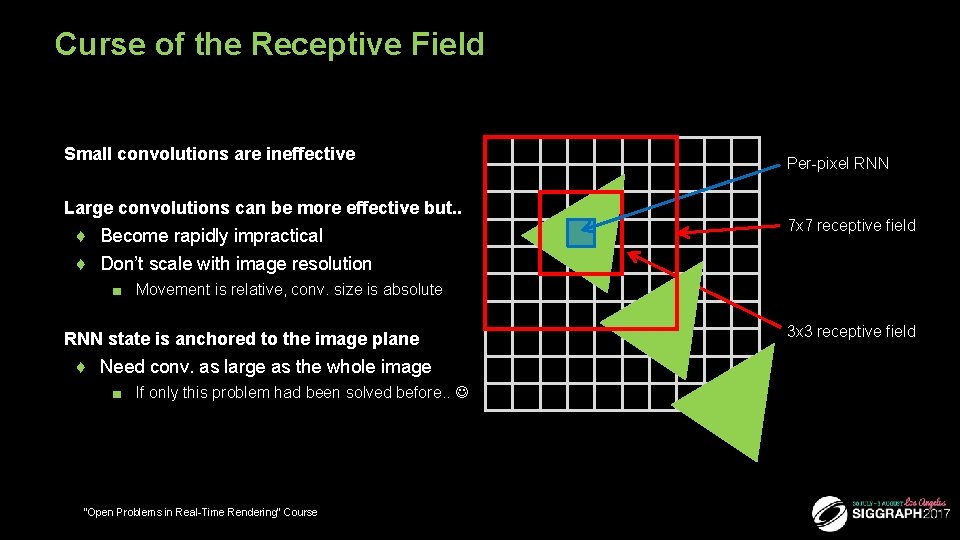

Curse of the Receptive Field

- Small convolutions are ineffective
- Large convolutions can be more effective, but…
  - They rapidly become impractical
  - They don't scale with image resolution
- Movement is relative, convolution size is absolute
- The RNN state is anchored to the image plane
  - We would need a convolution as large as the whole image
- If only this problem had been solved before…

Figure labels: per-pixel RNN; 3 x 3 receptive field; 7 x 7 receptive field
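
A little arithmetic shows why the receptive field becomes a curse. Each stride-1 k x k convolution grows the receptive field by k - 1 pixels per axis. The code also assumes that an image-anchored RNN state must see at least 2m + 1 pixels to follow motion of up to m pixels per frame in either direction. That requirement is our own assumption, not a formula from the slides. The helper names are hypothetical.

```python
def receptive_field(num_layers: int, kernel: int = 3) -> int:
    """Pixels per axis seen by one output after stacking stride-1 k x k convolutions."""
    return 1 + num_layers * (kernel - 1)


def layers_needed(width: int, kernel: int = 3) -> int:
    """Fewest stride-1 layers whose receptive field spans `width` pixels."""
    return -(-(width - 1) // (kernel - 1))  # ceiling division


def field_for_motion(max_pixels_per_frame: int) -> int:
    """Assumed field needed to track motion of up to m pixels/frame: 2m + 1."""
    return 2 * max_pixels_per_frame + 1


if __name__ == "__main__":
    print(receptive_field(3))                  # 7: three 3 x 3 layers
    print(layers_needed(field_for_motion(3)))  # 3
    # The same on-screen motion spans twice as many pixels at twice the resolution.
    print(layers_needed(field_for_motion(30)), layers_needed(field_for_motion(60)))  # 30 60
```

Covering a 1920-pixel-wide frame with plain 3 x 3 layers would take 960 of them. That is the "conv. as large as the whole image" problem in concrete terms.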

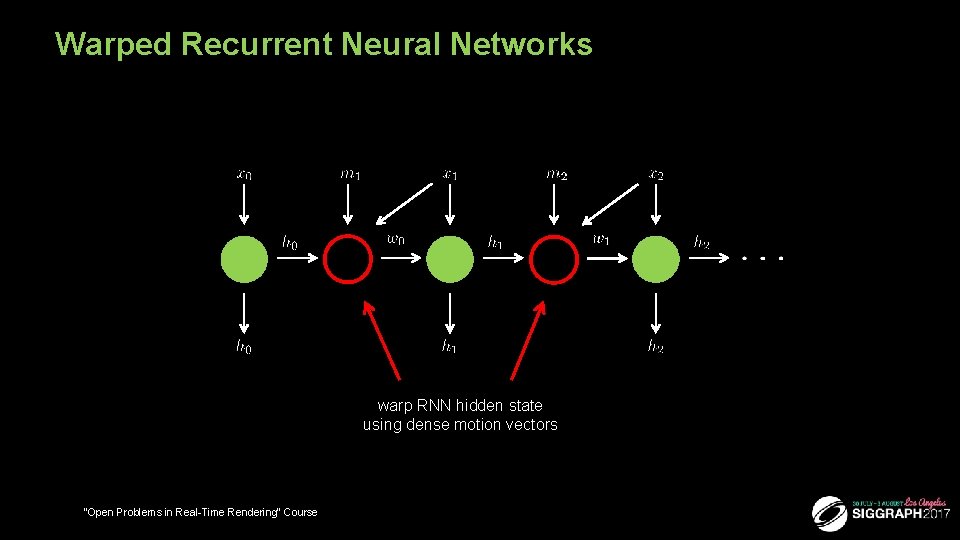

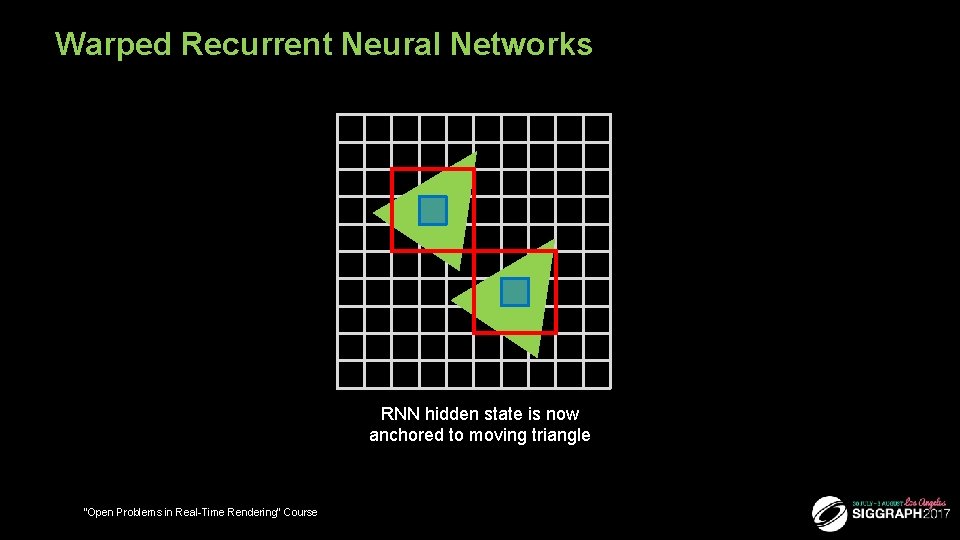

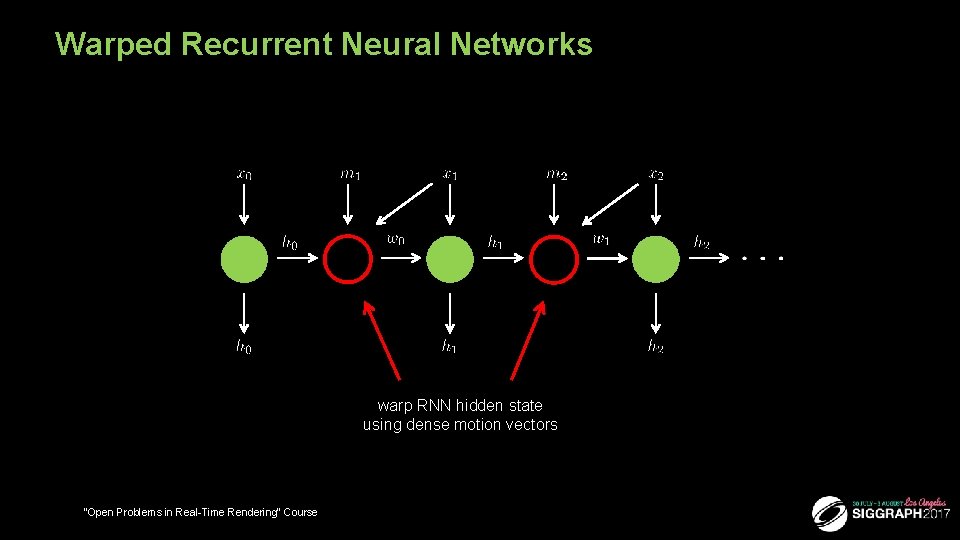

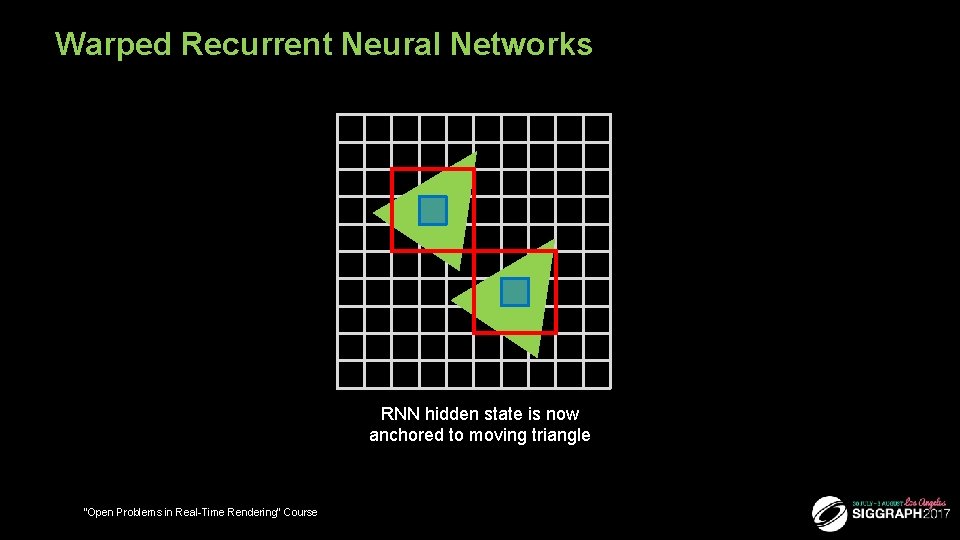

Warped Recurrent Neural Networks: warp the RNN hidden state using dense motion vectors

Warped Recurrent Neural Networks: the RNN hidden state is now anchored to the moving triangle
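
Warping the hidden state can be sketched as a backward warp. Each pixel of the new frame fetches last frame's state from where its surface was, using bilinear filtering with clamp-to-edge addressing, much like a texture fetch. The sketch makes several assumptions: a single-channel state, integer coordinates at pixel centers, and `motion[y][x] = (dx, dy)` holding the displacement since the previous frame. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]
Motion = List[List[Tuple[float, float]]]


def sample_bilinear(img: Grid, x: float, y: float) -> float:
    """Bilinear fetch with clamp-to-edge addressing, like a texture sampler."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def warp_state(prev_state: Grid, motion: Motion) -> Grid:
    """Backward-warp the previous hidden state into the current frame.

    The surface now at (x, y) was at (x - dx, y - dy) one frame ago, so the state
    follows the moving surface instead of staying fixed to the image plane.
    """
    return [[sample_bilinear(prev_state, x - motion[y][x][0], y - motion[y][x][1])
             for x in range(len(prev_state[0]))]
            for y in range(len(prev_state))]
```

In a warped RNN, this warp would run on the hidden state at the start of every frame, before the recurrent convolution consumes it.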

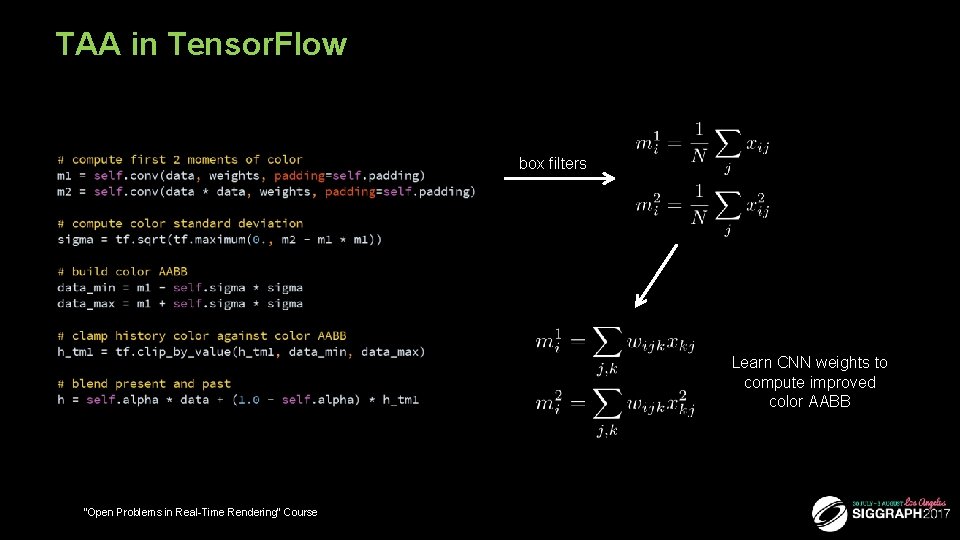

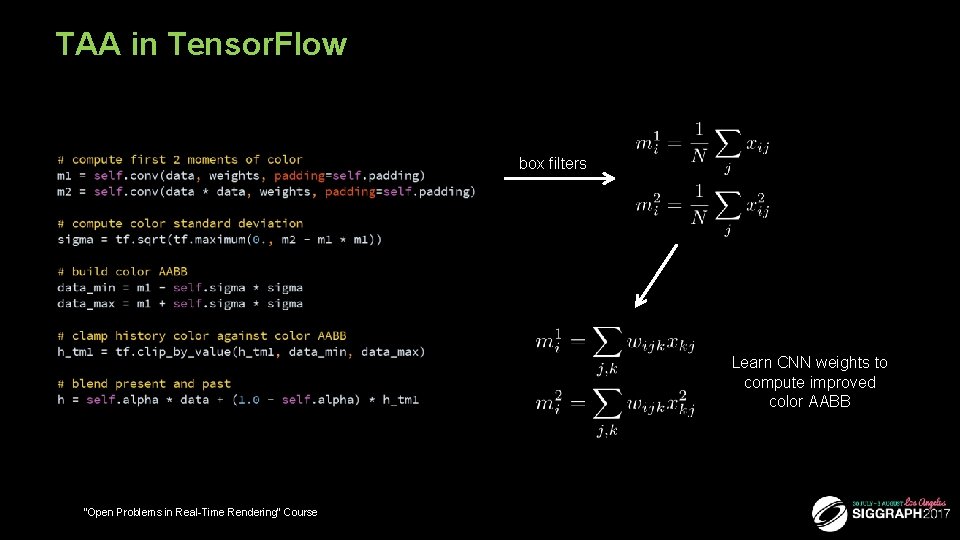

TAA in TensorFlow

- Box filters
- Learn CNN weights to compute an improved color AABB
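
For reference, here is a minimal sketch of a common TAA resolve. The AABB is the per-channel min/max of the current frame's 3 x 3 neighbourhood. The history color is clamped into that box, then blended exponentially with the current color. This is a generic textbook variant, assumed for illustration. The slides do not spell out their exact formulation, and their box-filter version may compute the box differently. The history is assumed to be already reprojected with motion vectors. The names and the blend factor `alpha` are hypothetical.

```python
from typing import List, Tuple

Color = Tuple[float, float, float]
Frame = List[List[Color]]


def neighborhood_aabb(frame: Frame, x: int, y: int) -> Tuple[Color, Color]:
    """Per-channel min/max over the 3 x 3 neighbourhood (clamped at the borders)."""
    h, w = len(frame), len(frame[0])
    neighbours = [frame[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                  for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    lo = tuple(min(c[i] for c in neighbours) for i in range(3))
    hi = tuple(max(c[i] for c in neighbours) for i in range(3))
    return lo, hi


def taa_resolve(current: Frame, history: Frame, alpha: float = 0.1) -> Frame:
    """Clamp reprojected history into the current AABB, then blend exponentially."""
    out = []
    for y, row in enumerate(current):
        out_row = []
        for x, cur in enumerate(row):
            lo, hi = neighborhood_aabb(current, x, y)
            hist = tuple(min(max(hv, l), u) for hv, l, u in zip(history[y][x], lo, hi))
            out_row.append(tuple(hv + alpha * (c - hv) for hv, c in zip(hist, cur)))
        out.append(out_row)
    return out
```

The clamp is what removes stale history (ghosting). The small `alpha` is what accumulates samples over time.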

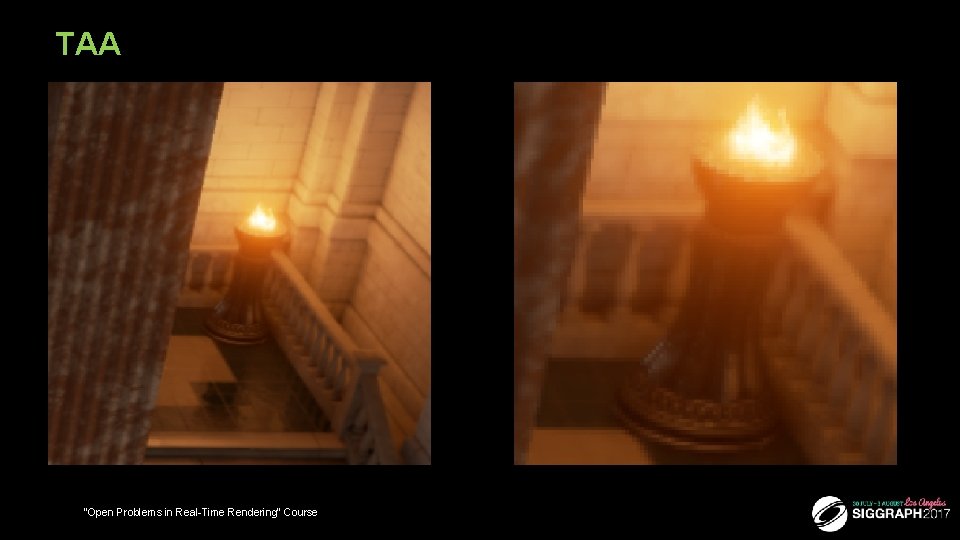

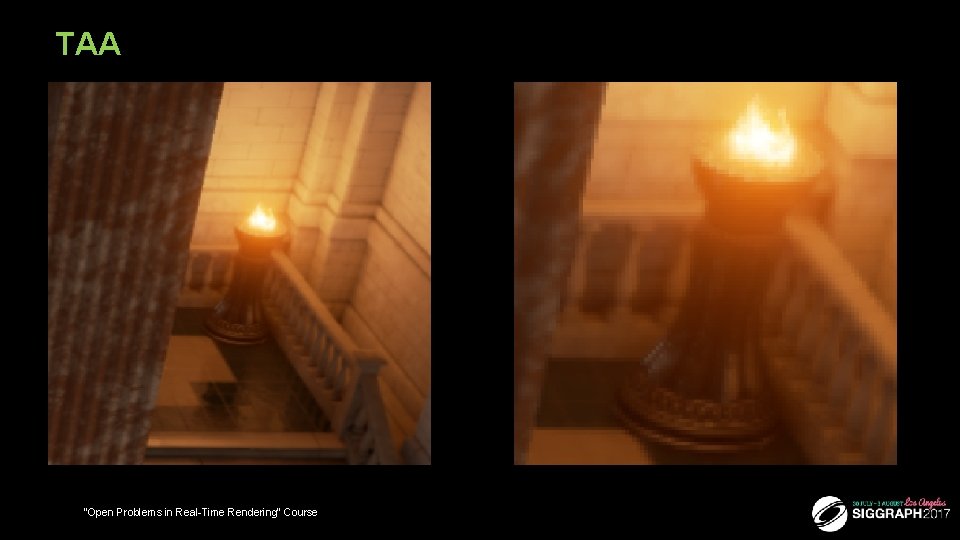

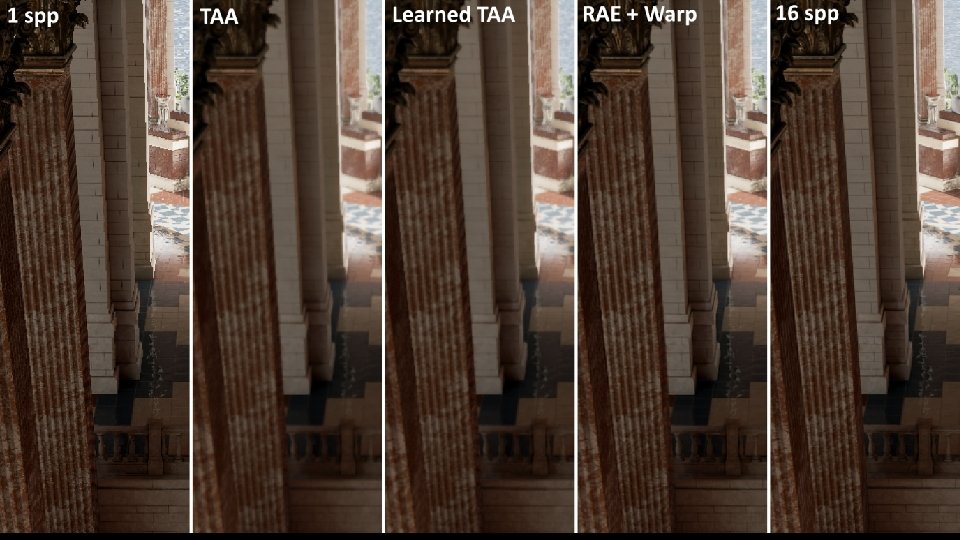

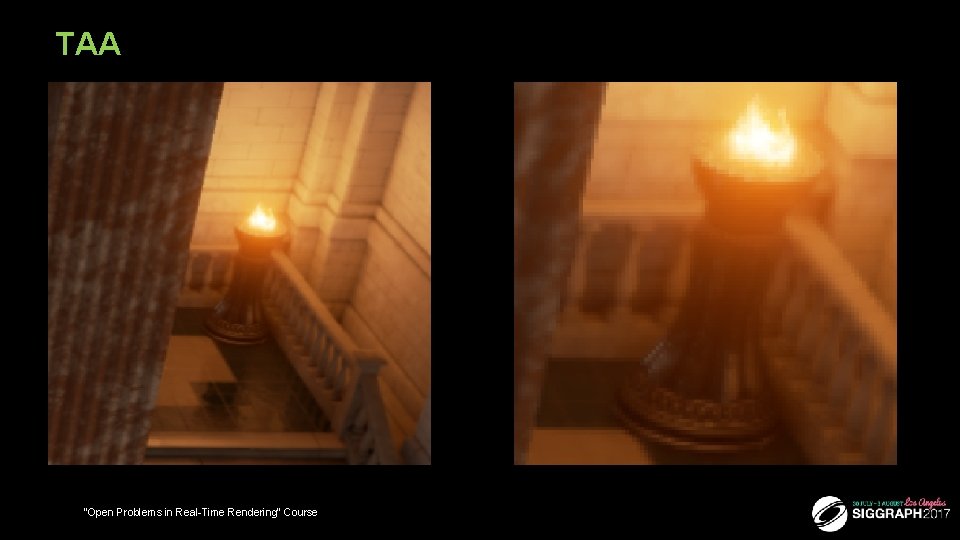

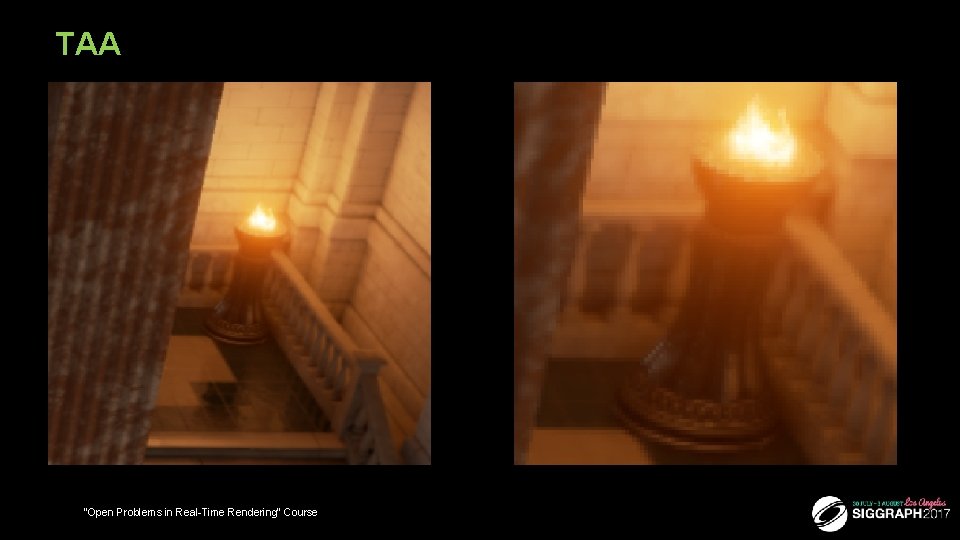

TAA

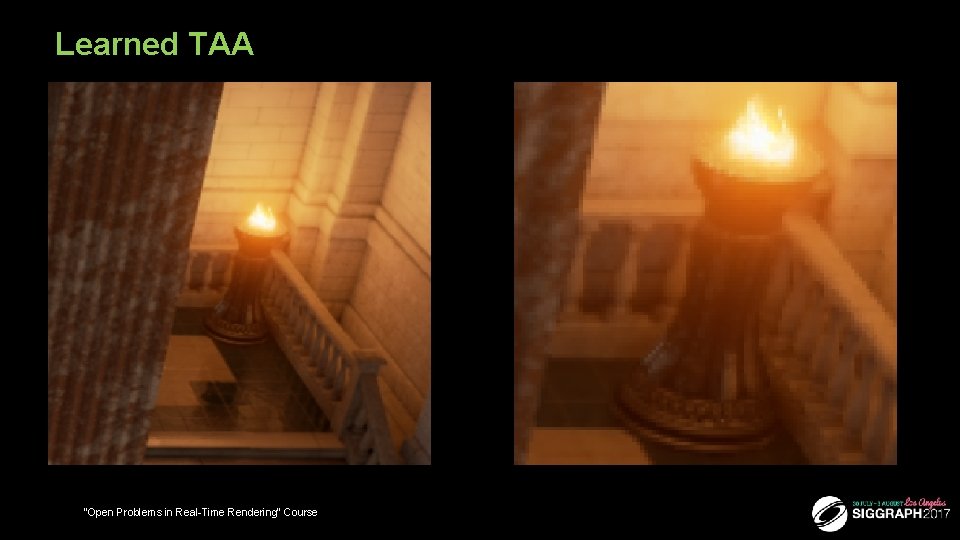

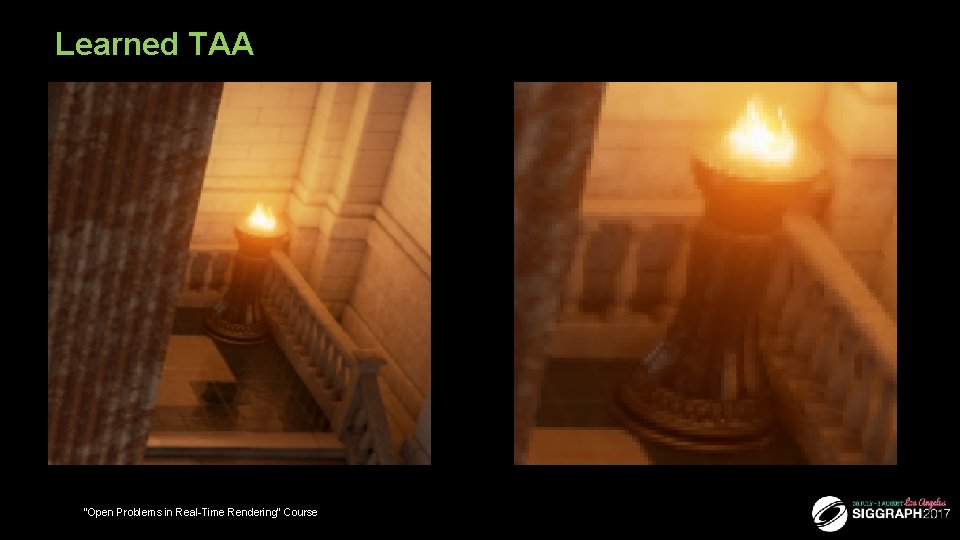

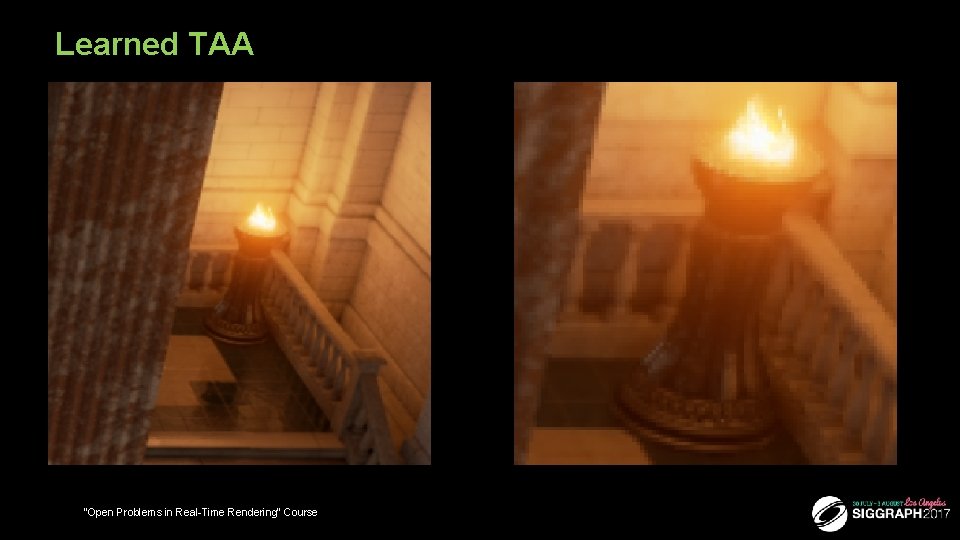

Learned TAA

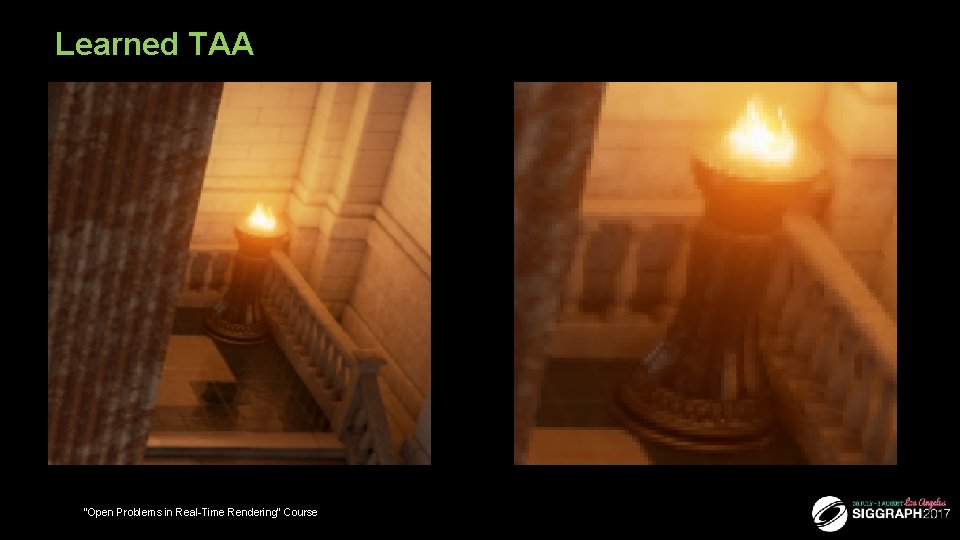

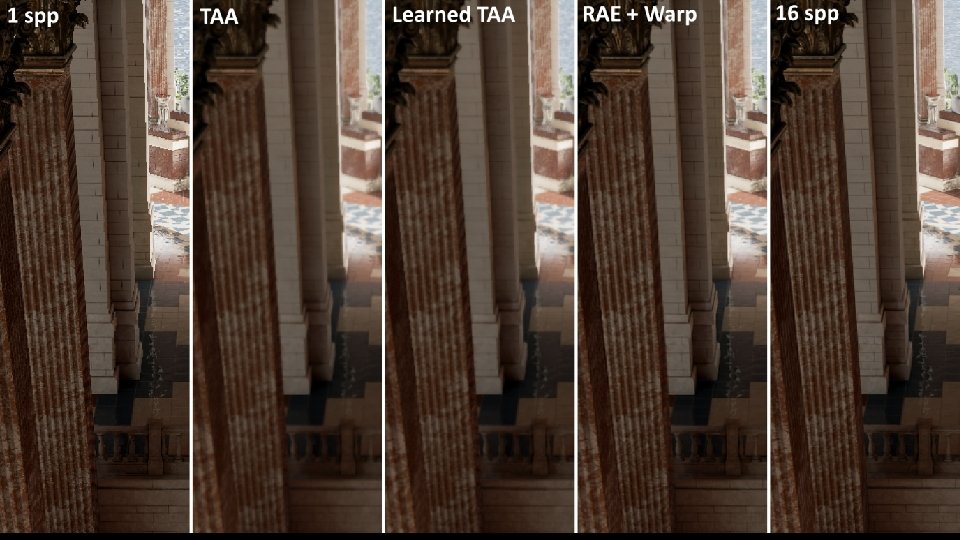

Learned TAA

- Generally sharper image than “regular” TAA
  - Temporal stability seems to be unaffected (still very good)
- Cost should be similar to TAA
  - Slightly more expensive math to compute the color moments
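
The "color moments" can be sketched as a weighted first and second moment over the neighbourhood. They give a per-channel mean and standard deviation, and the AABB is mean ± gamma · sigma. Uniform weights reproduce a box filter. A learned TAA would substitute trained weights. The sketch assumes those weights are non-negative and renormalises them. The `gamma` parameter and the function names are hypothetical; the slide does not give the exact math.

```python
import math
from typing import Sequence, Tuple

Color = Tuple[float, float, float]


def moments_aabb(neighbours: Sequence[Color], weights: Sequence[float], gamma: float = 1.0):
    """AABB = mean +/- gamma * std.dev., from weighted first and second moments.

    Uniform weights act as a box filter; learned (assumed non-negative) weights
    can emphasise some neighbours over others.
    """
    total = sum(weights)
    wn = [w / total for w in weights]
    lo, hi = [], []
    for ch in range(3):
        m1 = sum(w * c[ch] for w, c in zip(wn, neighbours))       # E[c]
        m2 = sum(w * c[ch] ** 2 for w, c in zip(wn, neighbours))  # E[c^2]
        sigma = math.sqrt(max(m2 - m1 * m1, 0.0))                 # guard rounding
        lo.append(m1 - gamma * sigma)
        hi.append(m1 + gamma * sigma)
    return tuple(lo), tuple(hi)


def clip_history(history: Color, lo: Color, hi: Color) -> Color:
    """Clamp the reprojected history color into the AABB, channel by channel."""
    return tuple(min(max(h, l), u) for h, l, u in zip(history, lo, hi))


if __name__ == "__main__":
    box = [1.0] * 9  # box filter over a 3 x 3 neighbourhood
    patch = [(0.2, 0.4, 0.6)] * 8 + [(1.0, 1.0, 1.0)]
    print(moments_aabb(patch, box))
```

Per tap and channel, the moments cost one extra multiply-add for the squared term compared with a plain average. This is consistent with the slide's "slightly more expensive math".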

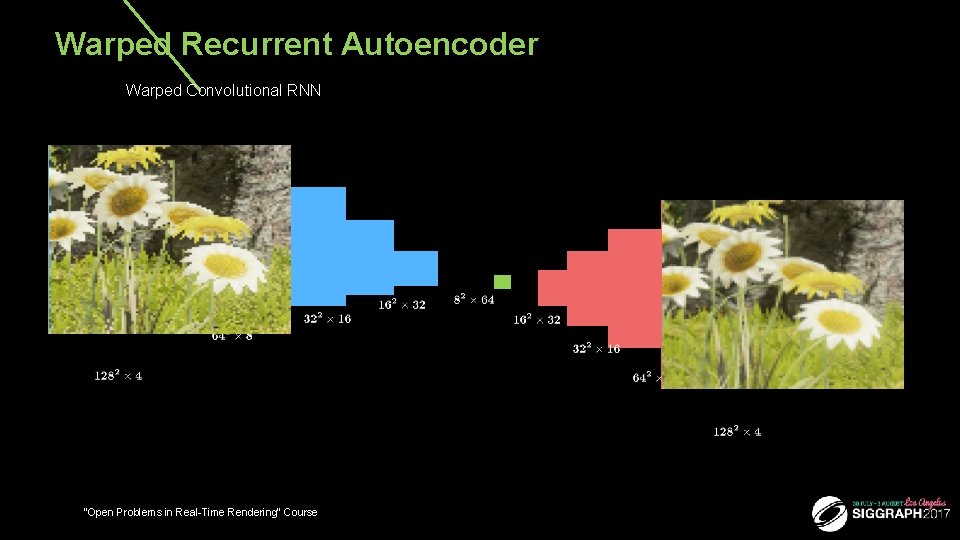

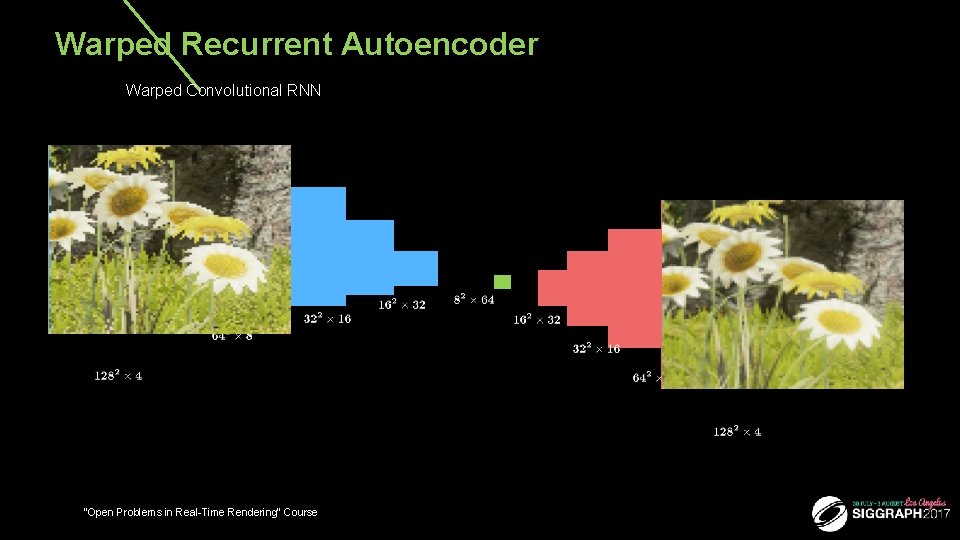

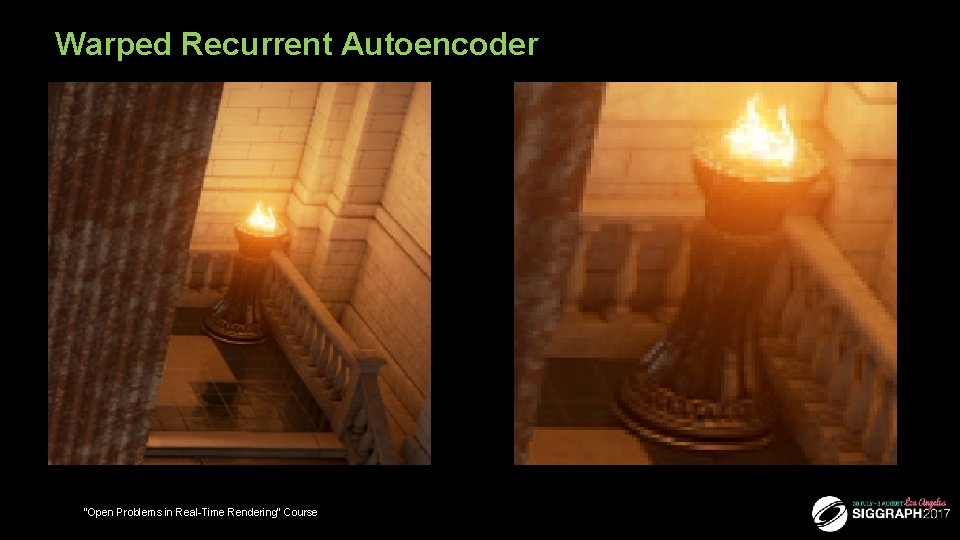

Warped Recurrent Autoencoder: Warped Convolutional RNN

TAA

Learned TAA

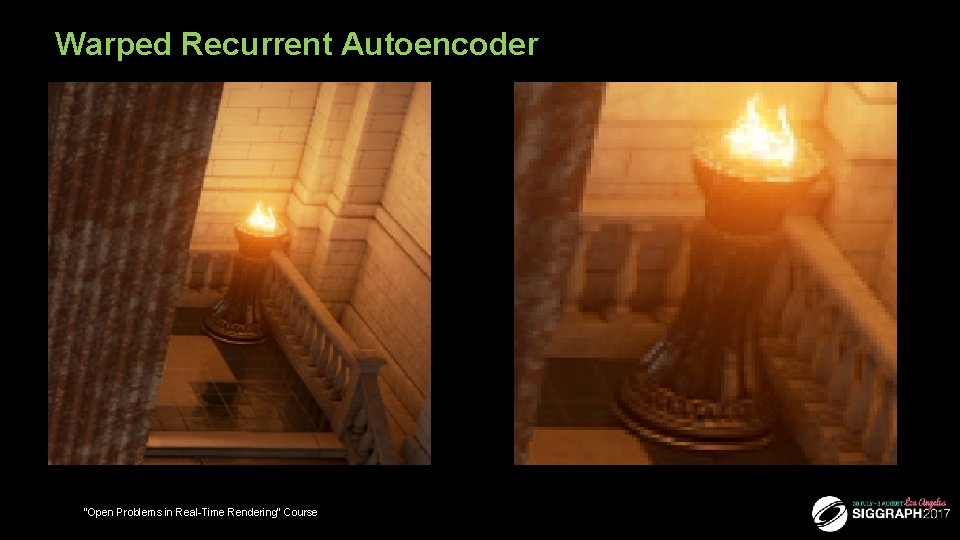

Warped Recurrent Autoencoder

Reference (16 spp)

Antialiasing video: AE vs. Warped RAE

Antialiasing with Warped Recurrent Autoencoder

- The WRAE learned how to temporally integrate color while also removing stale data from the past
  - These capabilities are hardwired in learned TAA
- Generates more detailed and less biased images than learned TAA
  - Still very temporally stable
- Less ghosting than TAA with noisy/high-frequency content
  - But, strangely, we observe more ghosting in simpler situations that TAA handles well

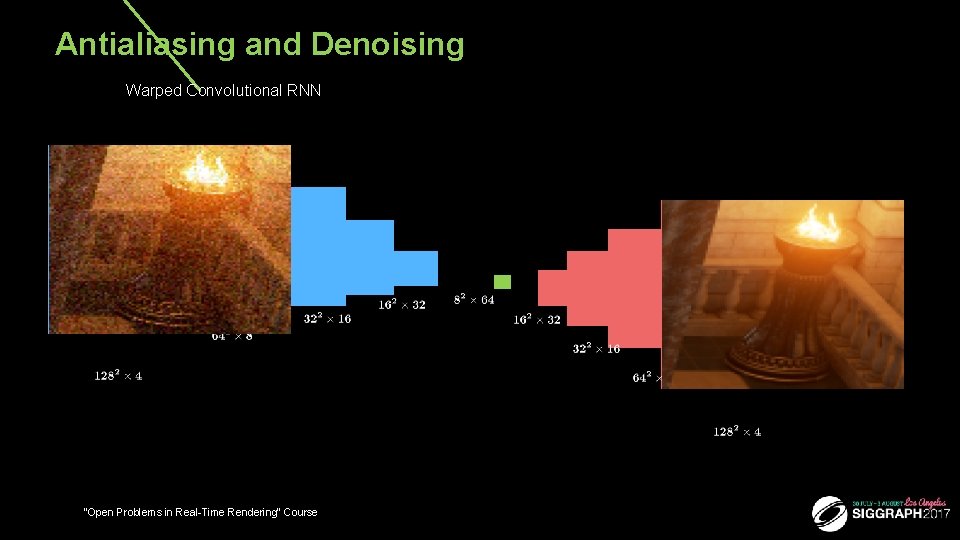

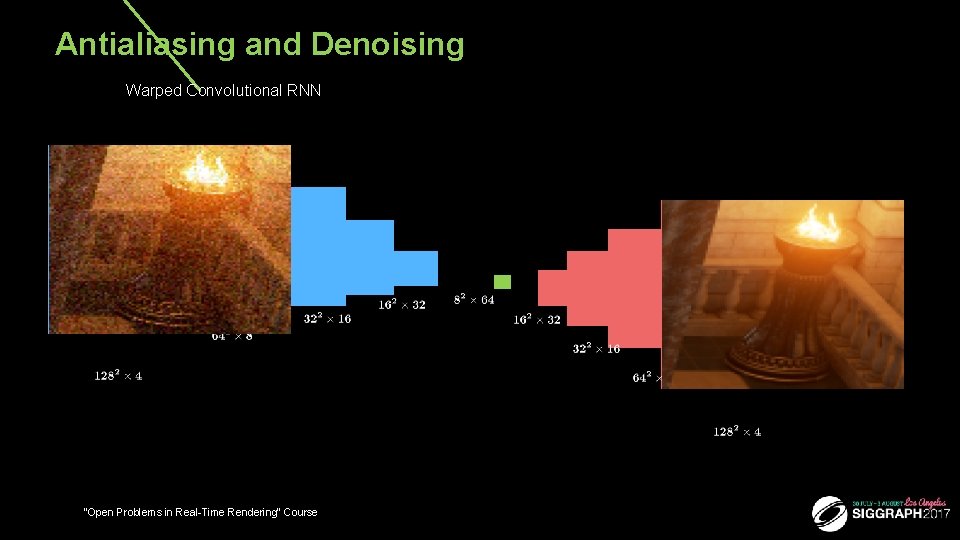

Antialiasing and Denoising: Warped Convolutional RNN

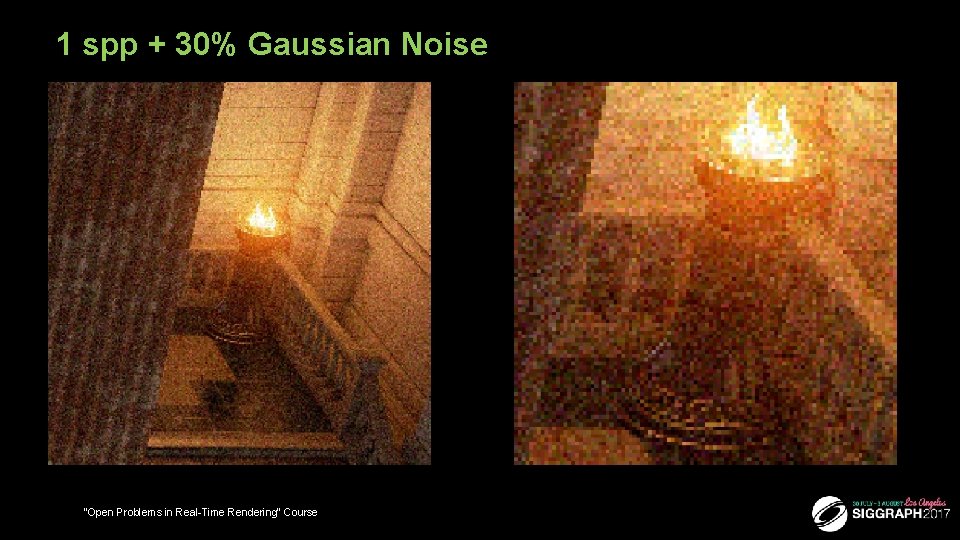

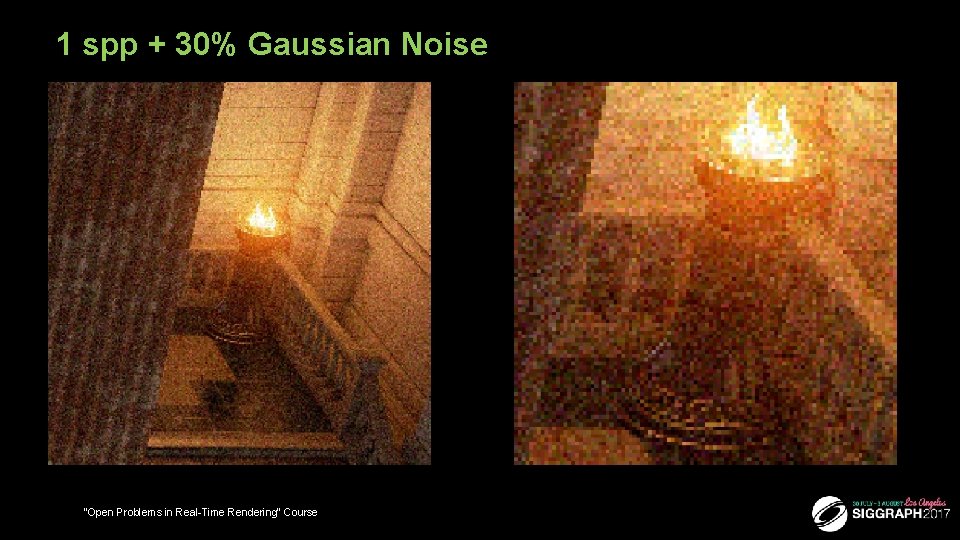

1 spp + 30% Gaussian Noise

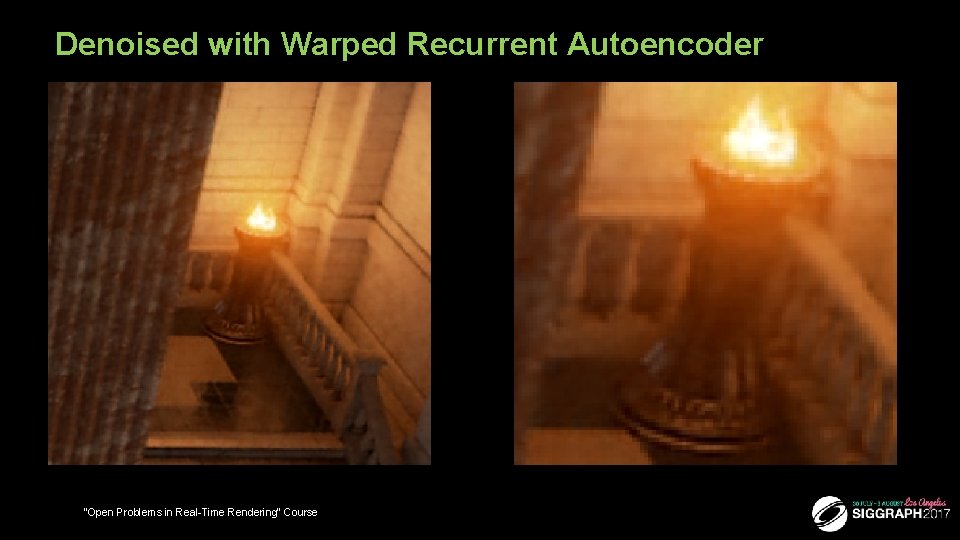

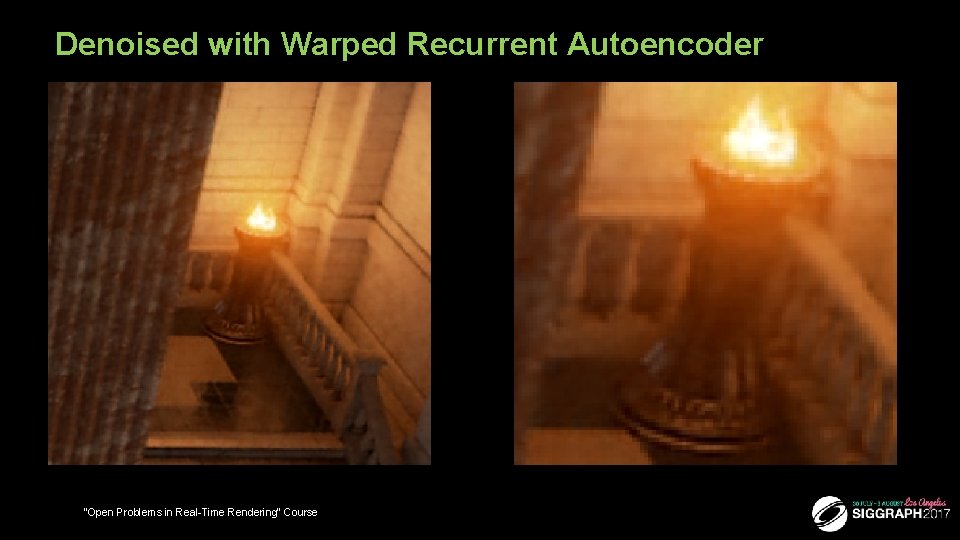

Denoised with Warped Recurrent Autoencoder

Denoising Video

Open Problems & Directions

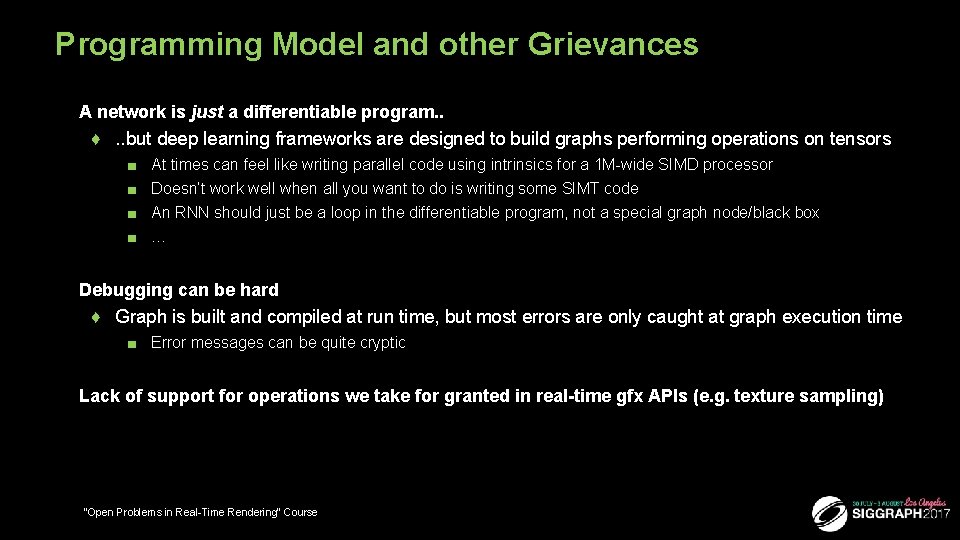

Programming Model and Other Grievances

- A network is just a differentiable program…
  - …but deep learning frameworks are designed to build graphs performing operations on tensors
    - At times this can feel like writing parallel code using intrinsics for a 1M-wide SIMD processor
    - It doesn't work well when all you want to do is write some SIMT code
    - An RNN should just be a loop in the differentiable program, not a special graph node/black box
  - …
- Debugging can be hard
  - The graph is built and compiled at run time, but most errors are only caught at graph execution time
  - Error messages can be quite cryptic
- Lack of support for operations we take for granted in real-time graphics APIs (e.g. texture sampling)

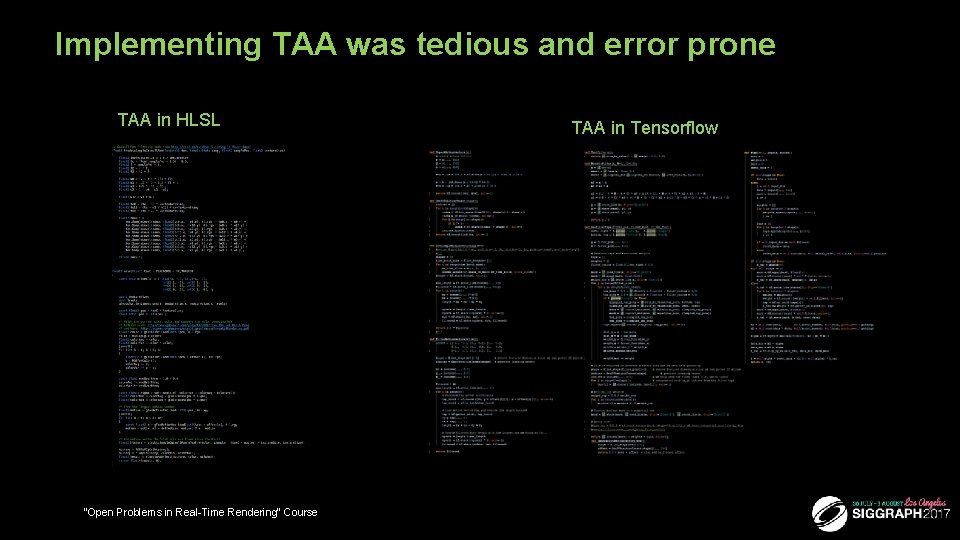

Implementing TAA was tedious and error-prone

Figure: TAA in HLSL vs. TAA in TensorFlow

Temporal Stability Is Hard

- Warped RNNs are a step forward, but they have many limitations
  - It is not always possible to have accurate motion vectors
    - e.g. transparent layers, dynamic shadows, reflections, refractions, animated UVs, etc.
- Many other possibilities to explore
  - Dilated convolutions to reduce the cost of large receptive fields
  - 3D convolutions (space + time)
  - Attention models
  - …
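
The appeal of dilated convolutions can be quantified. A k x k kernel with dilation d still has k² weights but spans d(k - 1) + 1 pixels. Doubling the dilation every layer therefore grows the receptive field exponentially instead of linearly. The sketch assumes stride-1, 3 x 3 kernels. The function names are hypothetical.

```python
from typing import Sequence


def receptive_field(dilations: Sequence[int], kernel: int = 3) -> int:
    """Receptive field of stacked stride-1 convolutions with the given dilation
    rates; a k x k kernel with dilation d spans d * (k - 1) + 1 pixels."""
    return 1 + sum(d * (kernel - 1) for d in dilations)


def doubling_layers_for(width: int, kernel: int = 3) -> int:
    """Layers needed to span `width` pixels when dilation doubles (1, 2, 4, ...)."""
    layers, field = 0, 1
    while field < width:
        layers += 1
        field = receptive_field([2 ** i for i in range(layers)], kernel)
    return layers


if __name__ == "__main__":
    print(receptive_field([1] * 5))           # 11: five plain 3 x 3 layers
    print(receptive_field([1, 2, 4, 8, 16]))  # 63: same weight count, dilated
    print(doubling_layers_for(1920))          # 10
```

Ten dilated layers can span a 1920-pixel-wide frame, compared with 960 plain 3 x 3 layers. The trade-off is that dilated kernels sample the neighbourhood sparsely.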

Autoencoders

- Real-time image reconstruction / restoration
  - Fix artifacts caused by approximations and shortcuts
    - Caution: we must be able to generate a reference image
  - Antialiasing
  - Upsampling / super-resolution
  - Foveation
  - …
- Denoising
  - Soft shadows
  - Motion & defocus blur
  - Interactive path tracing

Countless Opportunities…

- Models
  - Automated appearance-preserving LODs
  - Blended animations that look natural
  - New geometry representations?
- Move the full post-processing pipeline to DL
  - Co-optimize the post-processing pipeline and rendering?
- Shading
  - Faster / higher-quality / pre-filtered materials
  - Learning more optimal G-buffer terms/formats

Conclusion

- Deep learning is a powerful, new and rapidly evolving tool at our disposal
  - Unlike in other fields, we can generate our own training data
  - Consider deep learning when you don't know how to solve a problem otherwise
  - Or to enhance a well-known solution
- Likely a profound impact on real-time rendering in the coming years
  - Reducing content creation costs, improving performance & image quality
- Will deep learning take over significant parts of the graphics pipeline?

Acknowledgments: Timo Aila, Nir Benty, Donald Brittain, Chakravarty R. Alla Chaitanya, Andrew Edelstein, Marco Foco, Jon Hasselgren, Anton Kaplanyan, Jan Kautz, Aaron Lefohn, David Luebke, Jacob Munkberg, Anjul Patney, Natalya Tatarchuk, Chris Wyman

![Bibliography](https://slidetodoc.com/presentation_image_h/c4ed2a0509b2a5a0d845774079513682/image-49.jpg)

Bibliography

- [Chaitanya 17] “Interactive Reconstruction of Monte Carlo Image Sequences using a Recurrent Denoising Autoencoder”
- [Dahm 17] “Learning Light Transport the Reinforced Way”
- [Karras 17] “Audio-Driven Facial Animation by Joint End-to-End Learning of Pose and Emotion”
- [Laine 17] “Production-Level Facial Performance Capture Using Deep Convolutional Neural Networks”
- [Nalbach 17] “Deep Shading: Convolutional Neural Networks for Screen-Space Shading”
- [Holden 17] “Phase-Functioned Neural Networks for Character Control”

Thank You

Backup Material

1 spp
