Tips for Deep Learning


- Slides: 52


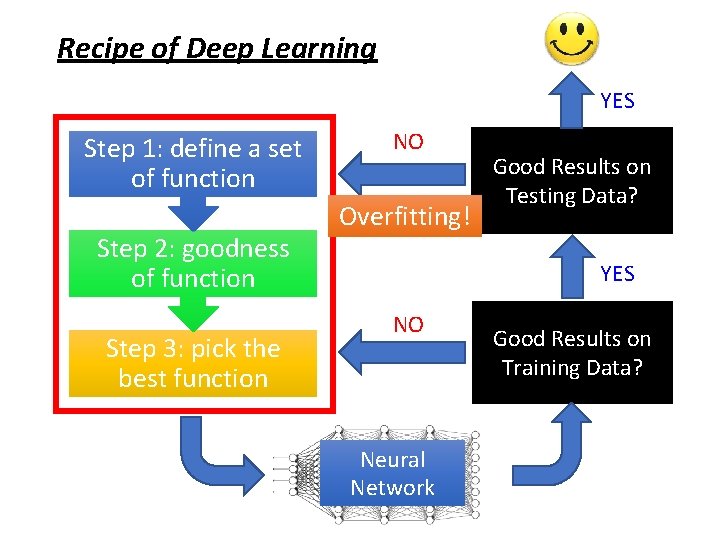

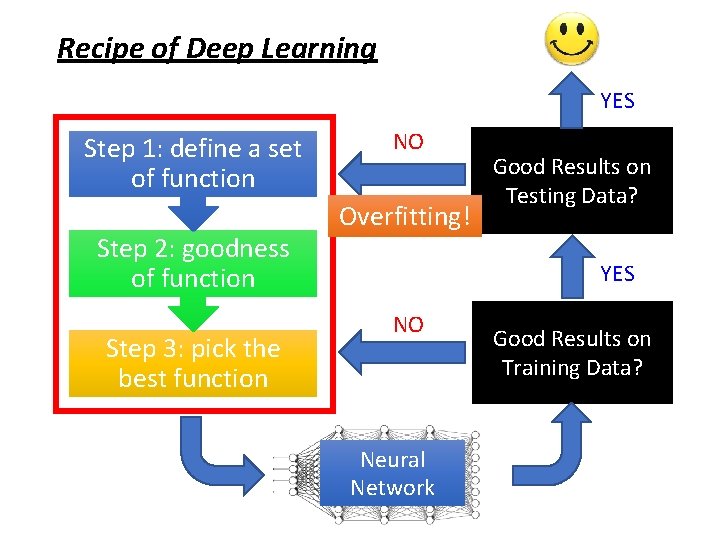

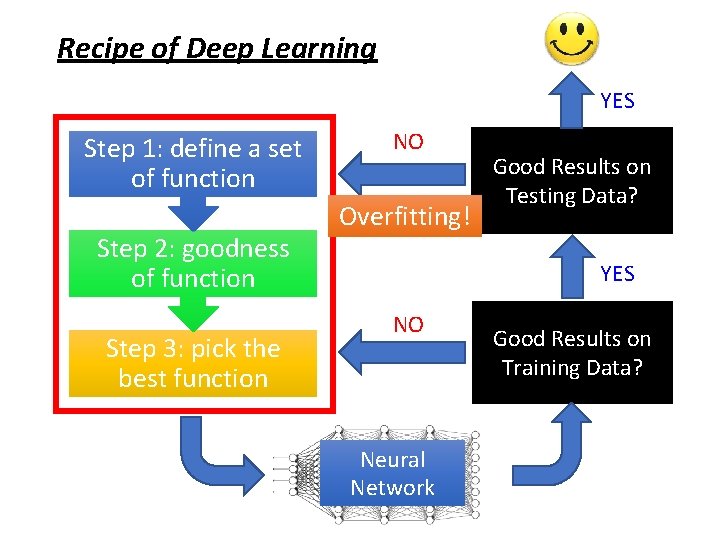

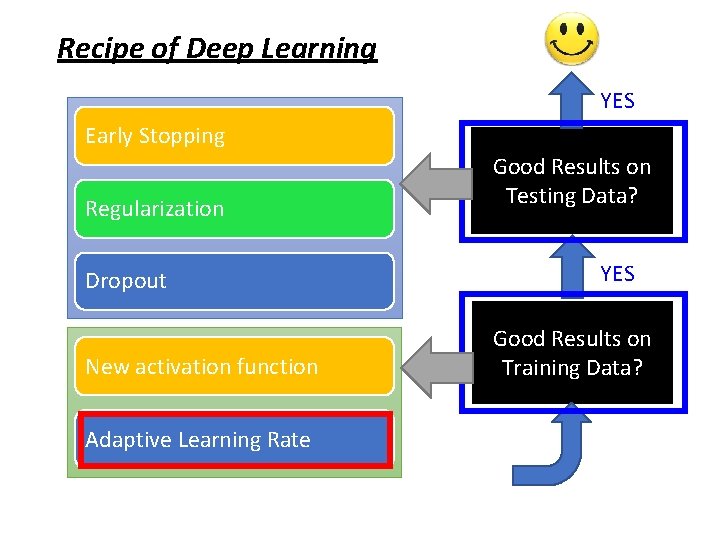

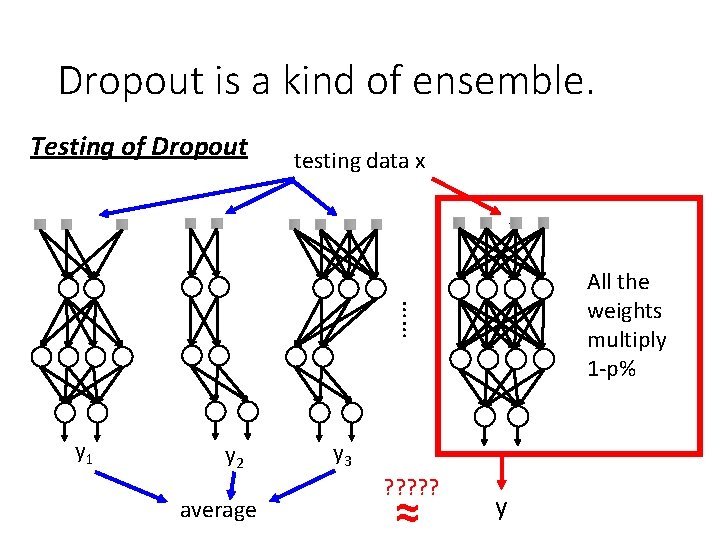

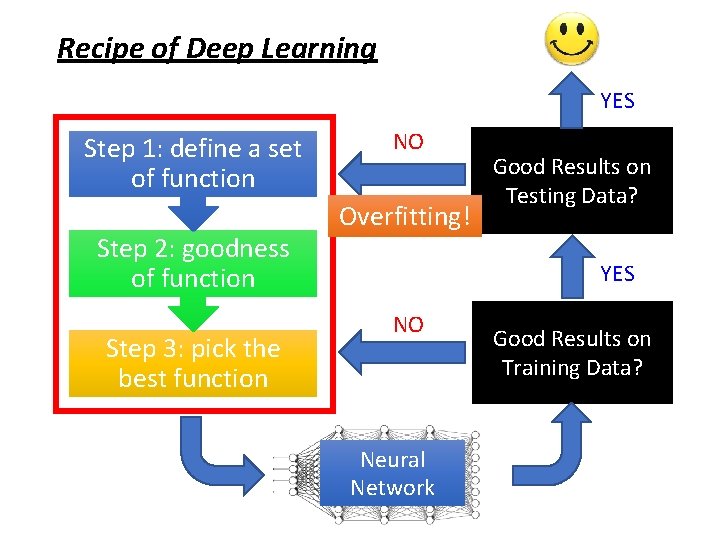

Recipe of Deep Learning

- Step 1: define a set of functions (the neural network).
- Step 2: define the goodness of a function.
- Step 3: pick the best function.
- Good results on training data? If NO, go back to steps 1 to 3.
- If YES: good results on testing data? If NO, this is overfitting; go back and fix it. If YES, done.

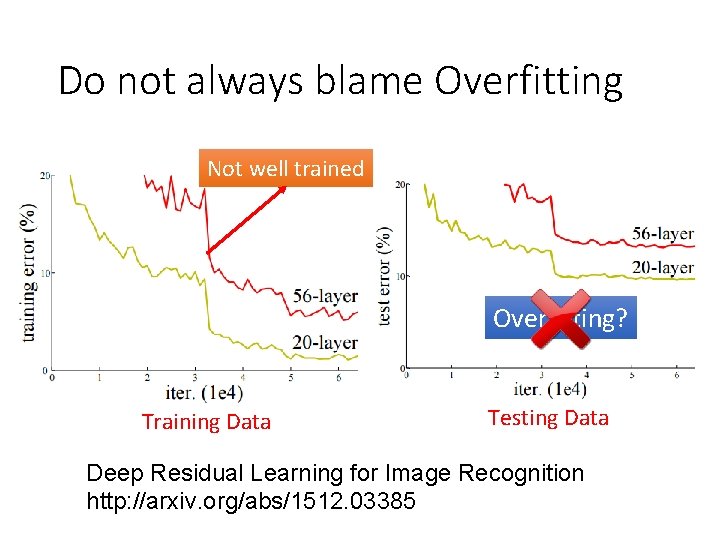

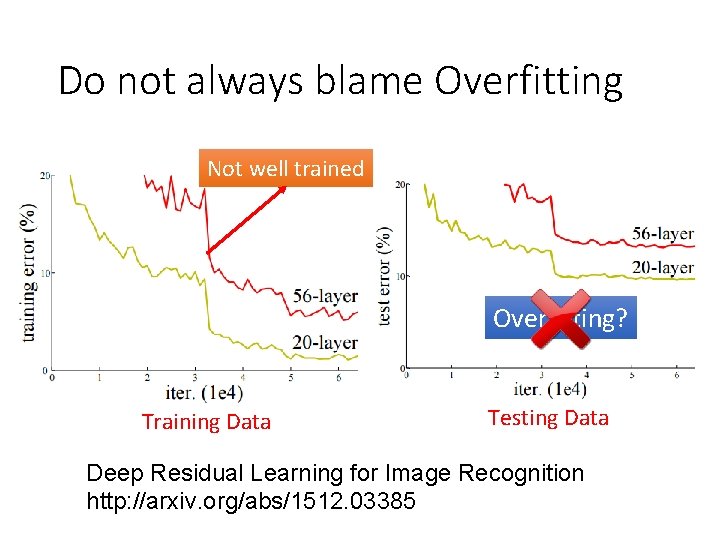

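Do not always blame overfitting. When a deeper network does worse on testing data, check its results on training data first: if it is also worse on training data, it is not well trained, and the problem is not overfitting. (Figure: training data and testing data curves from Deep Residual Learning for Image Recognition, http://arxiv.org/abs/1512.03385.)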
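The check described on this slide can be written as a small decision rule. This is a minimal sketch; the function name `diagnose_deeper` and its error-rate arguments are hypothetical, not part of the slides.

```python
def diagnose_deeper(train_err_shallow, train_err_deep, test_err_shallow, test_err_deep):
    """Hypothetical helper: why is a deeper network worse than a shallower one?

    All arguments are error rates measured on the same training and testing data.
    """
    if test_err_deep <= test_err_shallow:
        return "deeper is not worse"
    # Worse on testing data: look at training data before blaming overfitting.
    if train_err_deep > train_err_shallow:
        return "not well trained"
    return "overfitting"


print(diagnose_deeper(0.05, 0.10, 0.08, 0.12))
```

A deeper network that is worse on both training and testing data falls into the "not well trained" branch, which is the situation the slide warns about.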

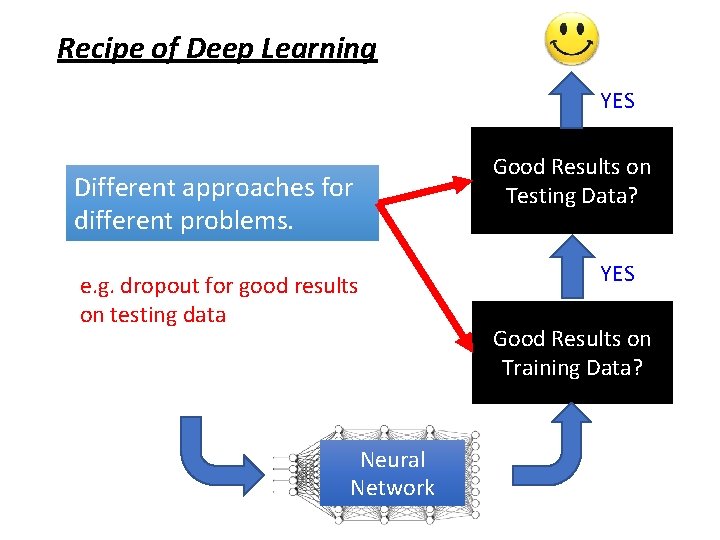

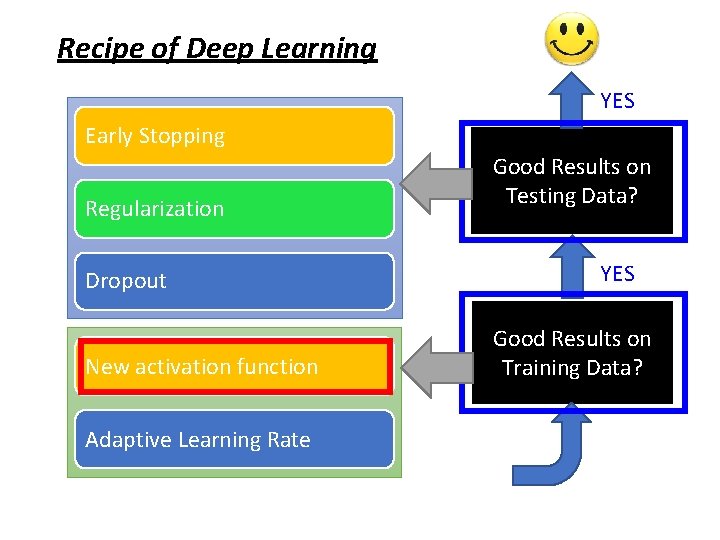

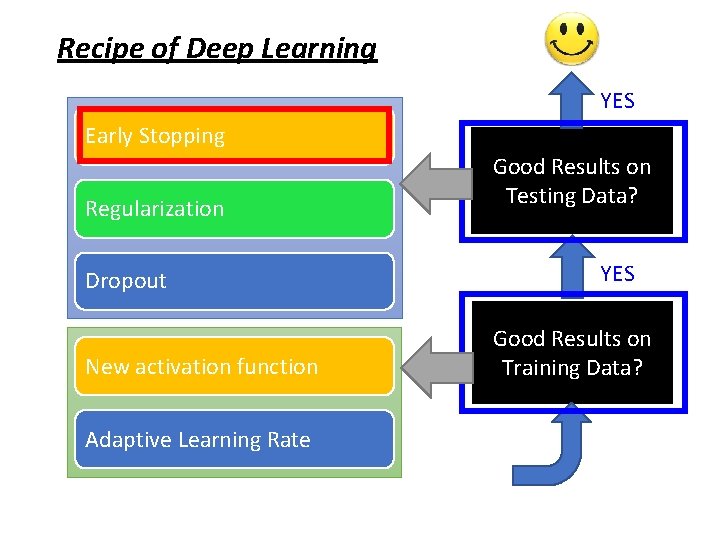

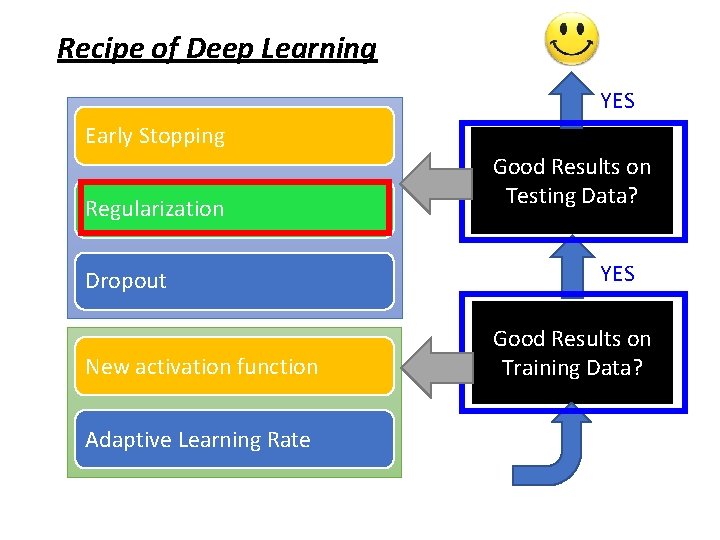

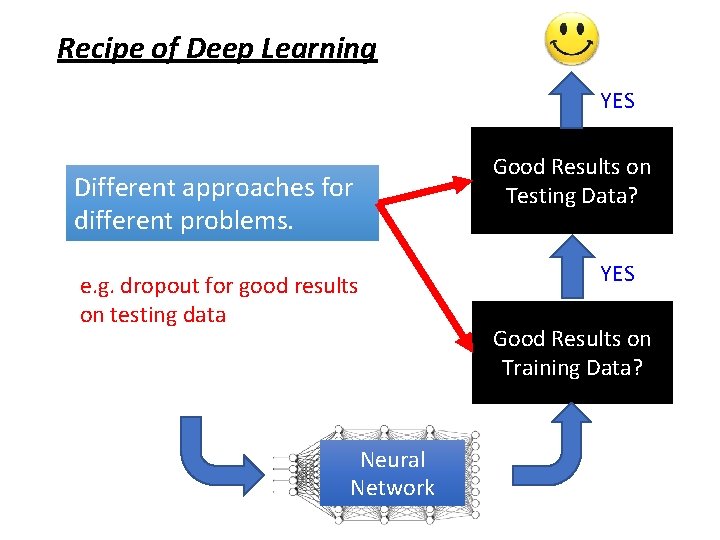

Recipe of Deep Learning

Different problems call for different approaches. For example, dropout is a technique for getting good results on testing data; it is meant for the testing-data check, not for a network that is still doing badly on training data.

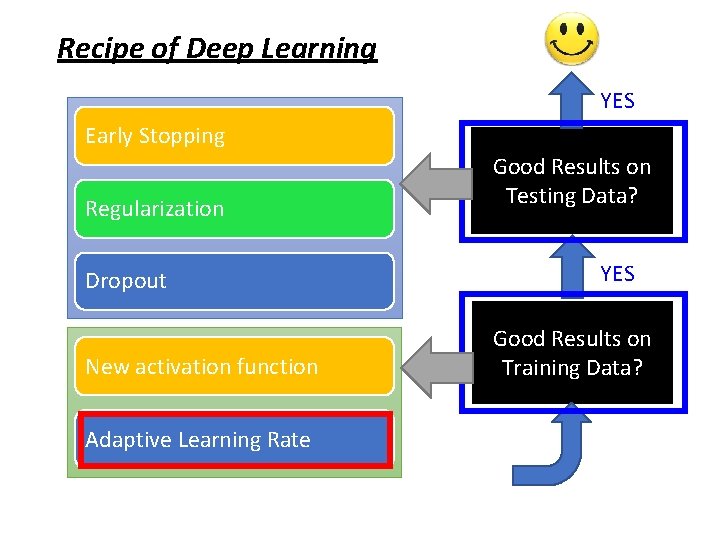

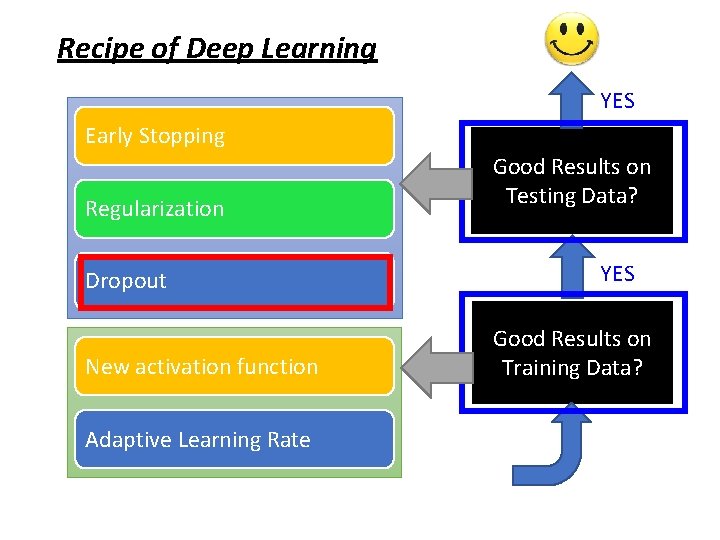

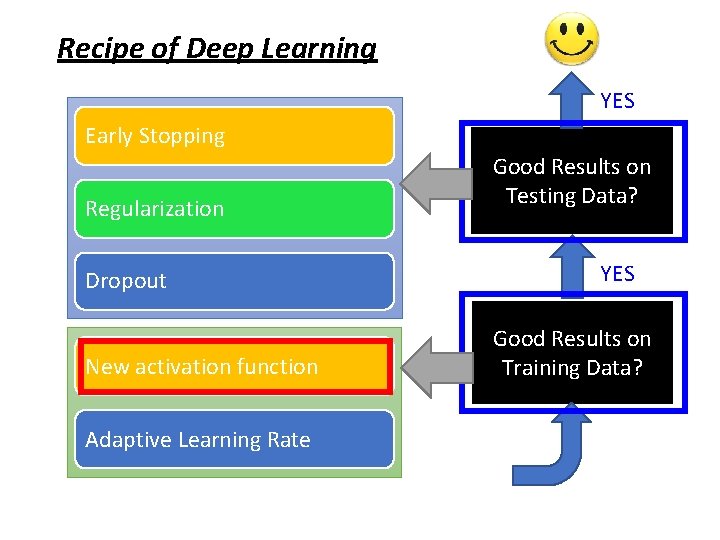

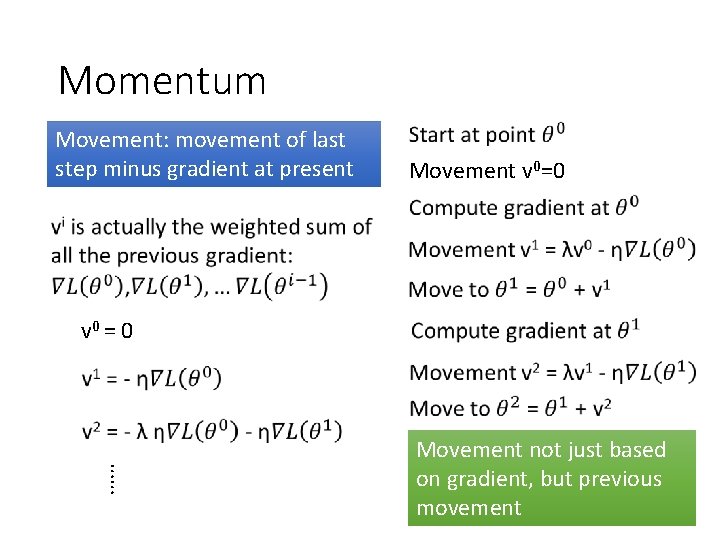

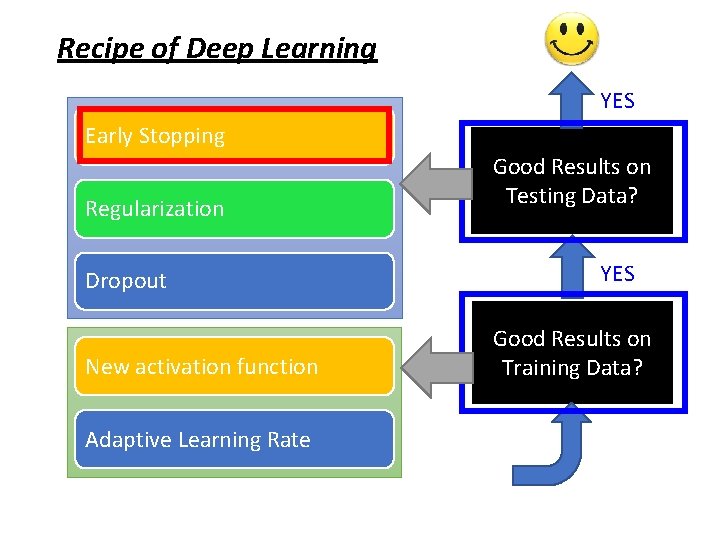

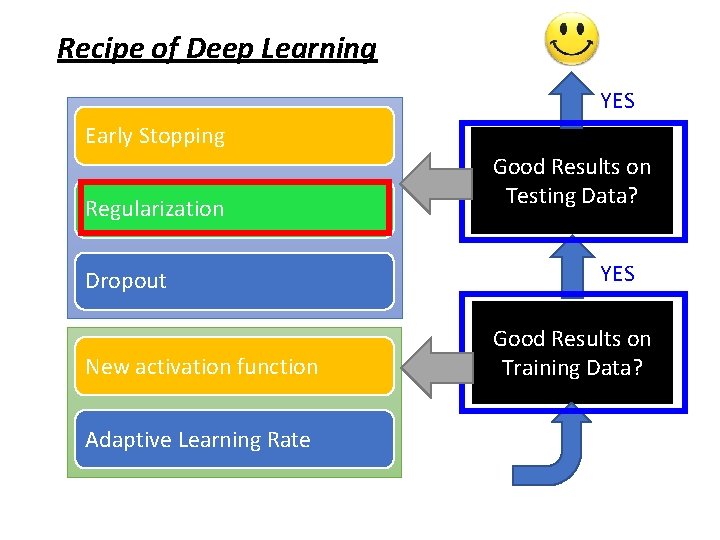

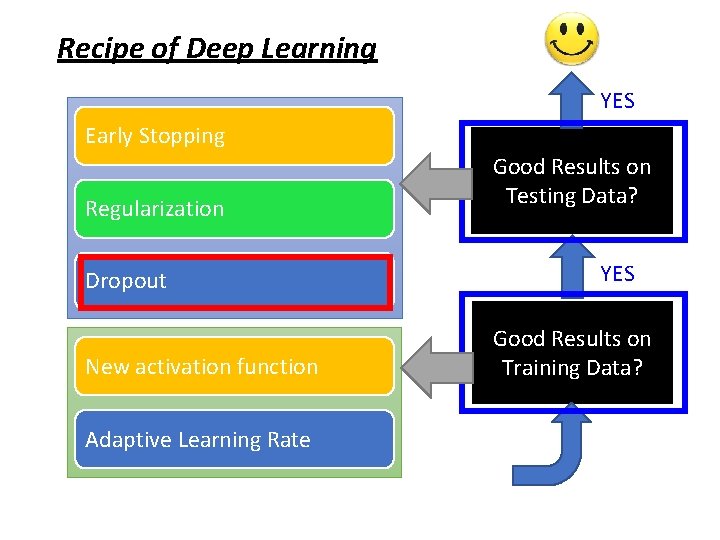

Recipe of Deep Learning

- Good results on training data? If not, try a new activation function or an adaptive learning rate.
- Good results on testing data? If not, try early stopping, regularization, or dropout.
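The mapping on this slide can be sketched as a lookup that follows the recipe's order of checks. The names `REMEDIES` and `next_remedies` are hypothetical; the lists only restate the slide.

```python
# Hypothetical lookup restating the slide: which tips address which check.
REMEDIES = {
    "training": ["new activation function", "adaptive learning rate"],
    "testing": ["early stopping", "regularization", "dropout"],
}


def next_remedies(good_on_training, good_on_testing):
    """Return the tips to try next, checking training data before testing data."""
    if not good_on_training:
        # Tips for testing data (e.g. dropout) are not meant for this case.
        return REMEDIES["training"]
    if not good_on_testing:
        return REMEDIES["testing"]
    return []  # good on both: done


print(next_remedies(True, False))
```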

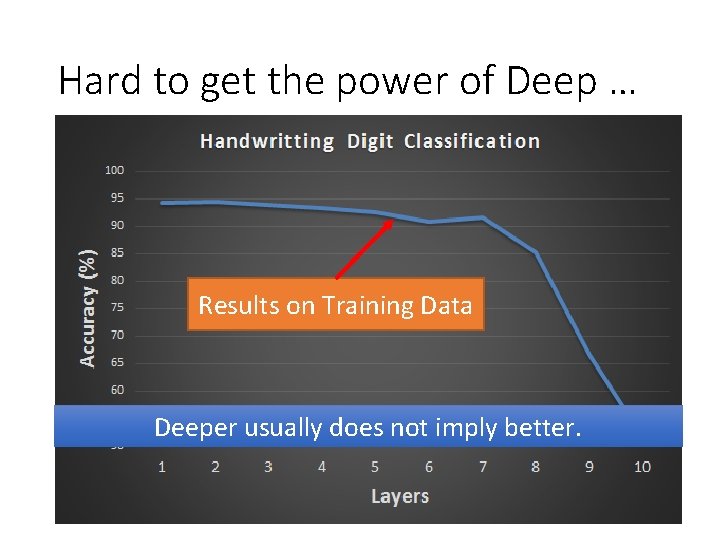

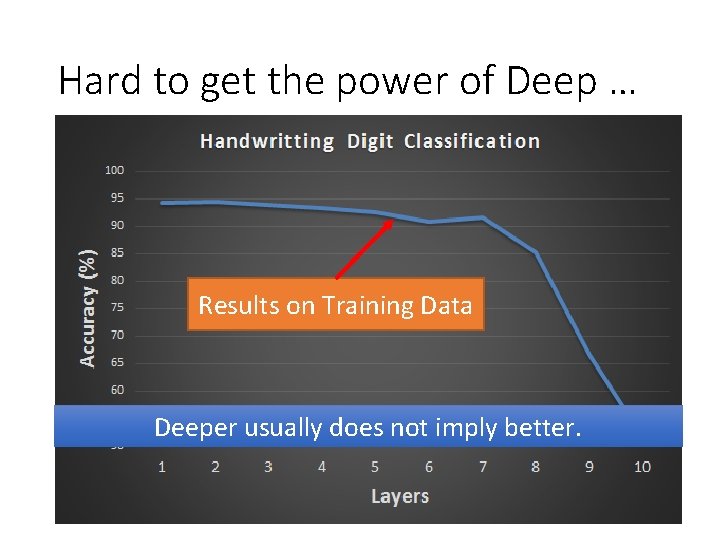

Hard to get the power of Deep …

Results on training data: deeper usually does not imply better.

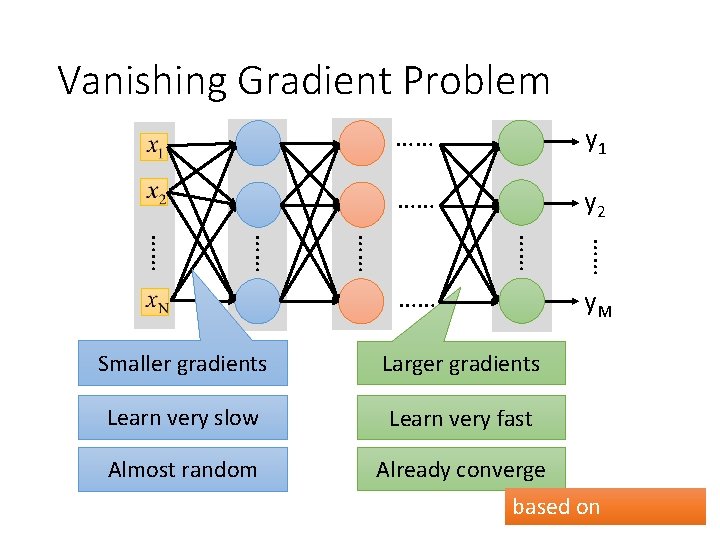

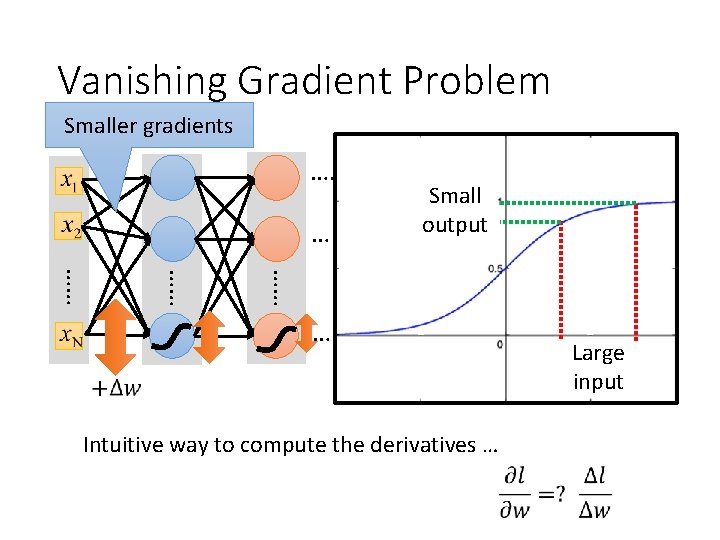

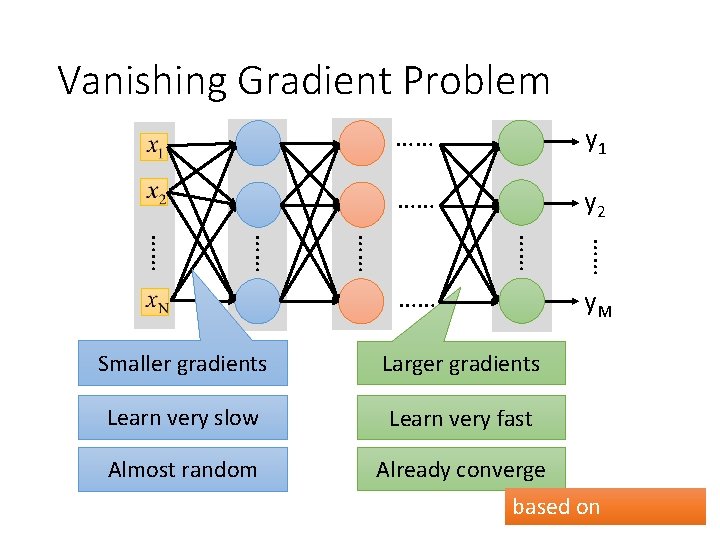

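Vanishing Gradient Problem

(Figure: a deep network with outputs y1, y2, …, yM.)

- Layers near the input have smaller gradients: they learn very slowly and are still almost random.
- Layers near the output have larger gradients: they learn very fast and have already converged, but based on the almost random outputs of the earlier layers.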

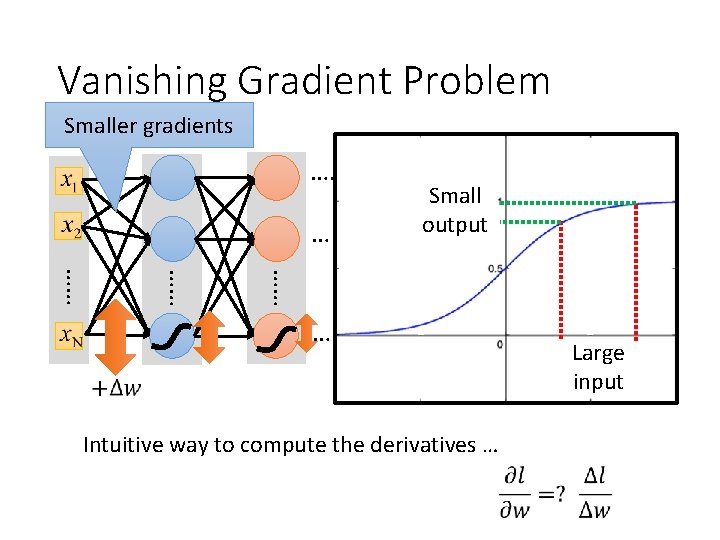

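Vanishing Gradient Problem

An intuitive way to compute the derivative $\partial l / \partial w$: give a weight a small change $\Delta w$ and see how much the loss changes, $\partial l / \partial w \approx \Delta l / \Delta w$. With sigmoid activations, a large change at the input of a sigmoid produces only a small change at its output, so the effect of $\Delta w$ is attenuated at every layer it passes through. Weights near the input therefore get smaller gradients.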
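The attenuation can be checked numerically. This is a minimal sketch assuming a toy chain of scalar sigmoid neurons $a_{k+1} = \sigma(w_k a_k)$ (not a setup from the slides): backpropagation multiplies the derivative by $w_k\,\sigma'(z_k)$ at each layer, and $\sigma'(z) \le 0.25$.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # never larger than 0.25 (reached at z = 0)


def chain_gradient(x, weights):
    """d(output)/d(input) for a chain of scalar sigmoid neurons a_{k+1} = sigmoid(w_k * a_k)."""
    a, grad = x, 1.0
    for w in weights:
        z = w * a
        grad *= w * sigmoid_grad(z)  # chain rule: one factor per layer
        a = sigmoid(z)
    return grad


for depth in (1, 5, 10):
    print(depth, chain_gradient(0.0, [1.0] * depth))
```

With unit weights every factor is at most 0.25, so ten layers shrink the derivative below $0.25^{10}$ (about $10^{-6}$).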

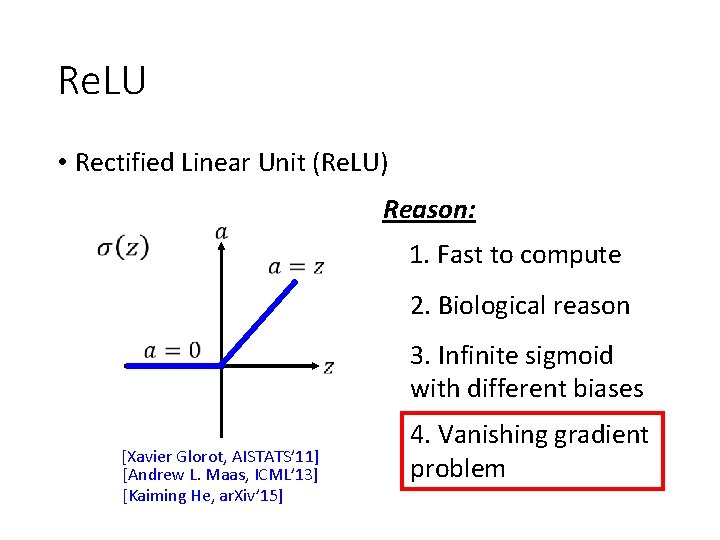

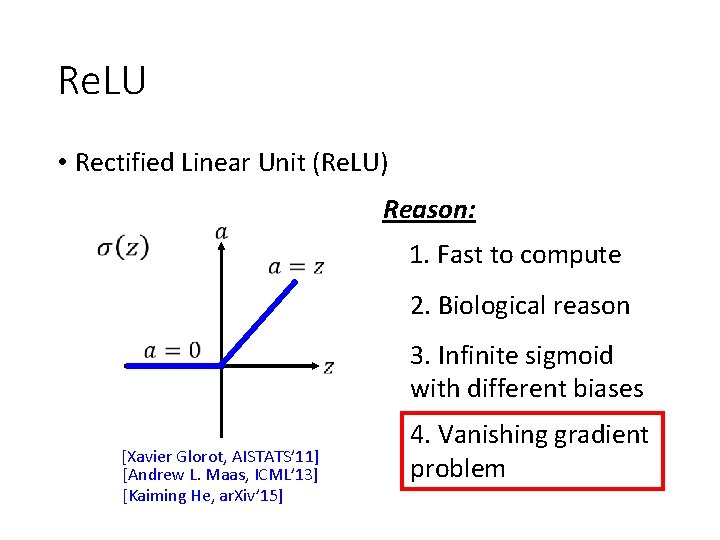

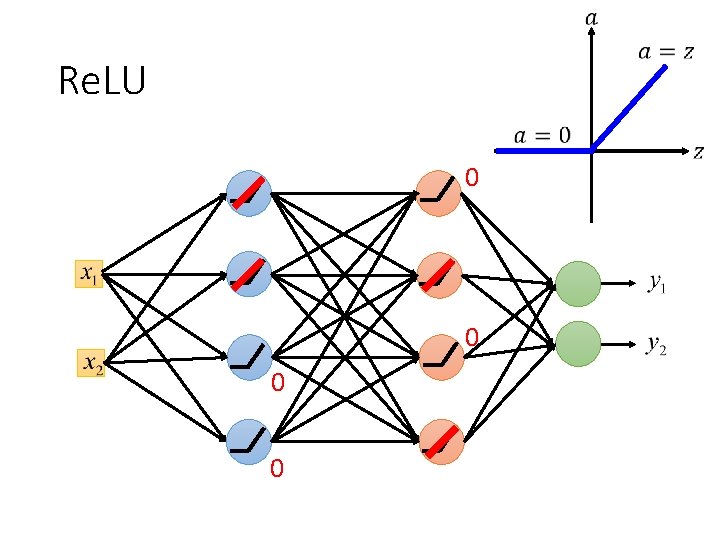

ReLU

- Rectified Linear Unit (ReLU): $a = z$ when $z > 0$, and $a = 0$ otherwise.
- Reasons:
  1. Fast to compute
  2. Biological reason
  3. Equivalent to infinitely many sigmoids with different biases
  4. Handles the vanishing gradient problem
- [Xavier Glorot, AISTATS'11] [Andrew L. Maas, ICML'13] [Kaiming He, arXiv'15]

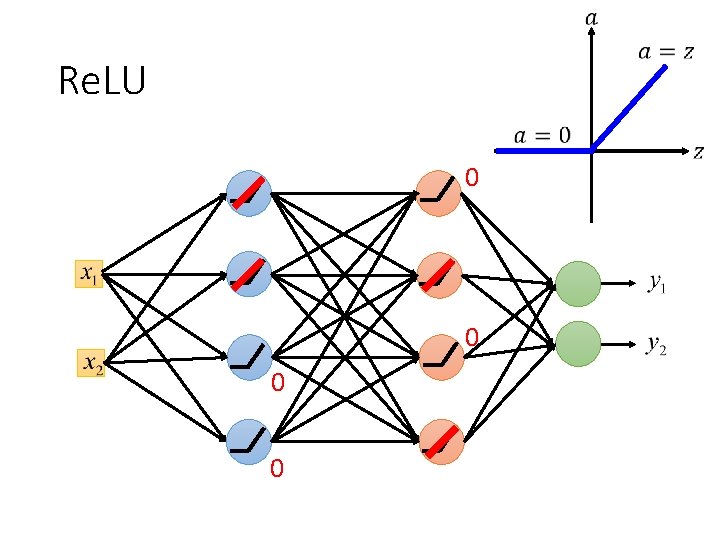

ReLU

(Figure: a network of ReLU neurons in which some neurons output 0.)

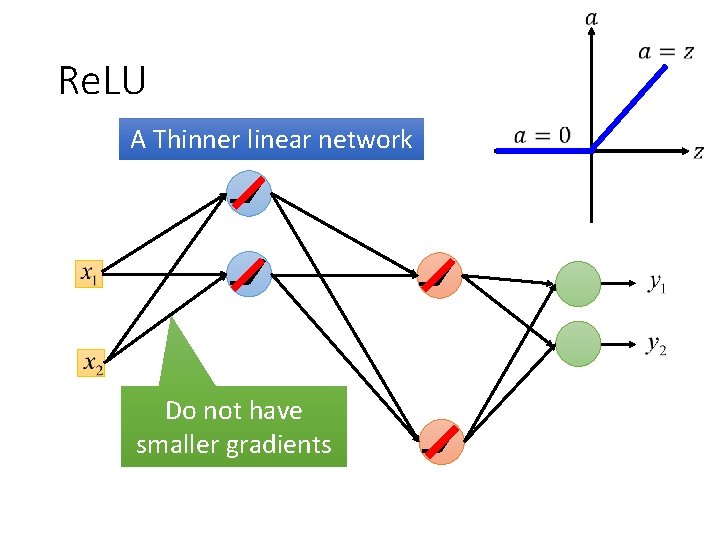

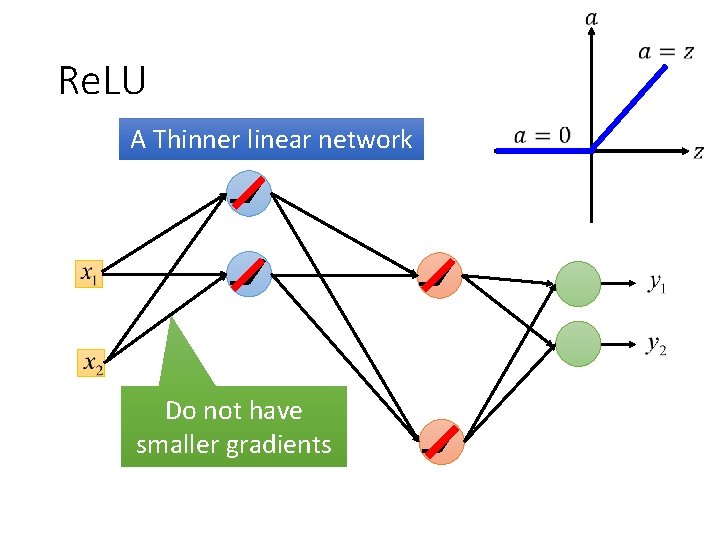

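ReLU: a thinner linear network

For a given input, the neurons that output 0 have no effect on the output and can be removed. What remains is a thinner linear network, because every active neuron simply passes $a = z$. Along this linear network the gradients do not get smaller layer after layer.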

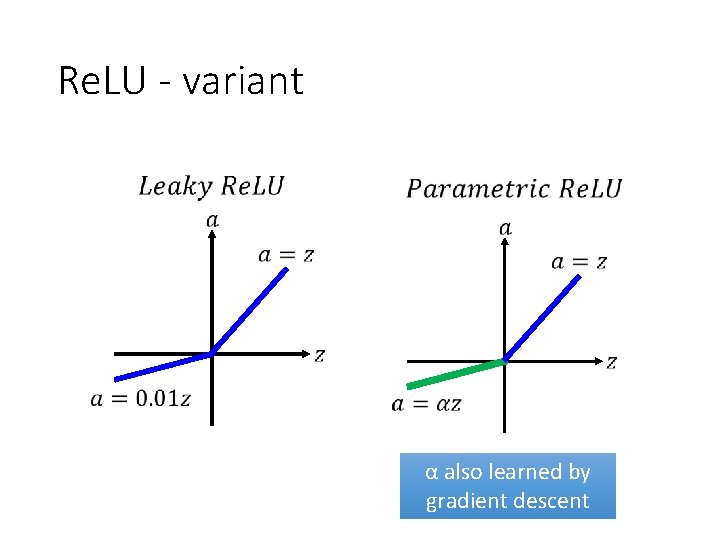

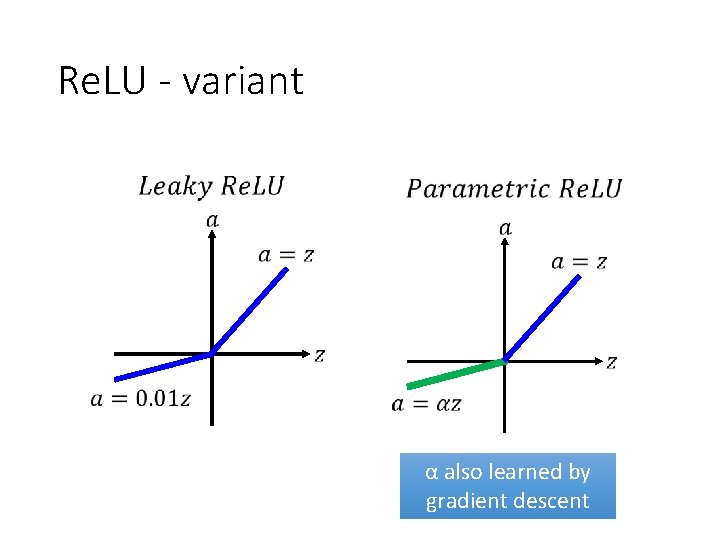

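ReLU variants

- Leaky ReLU: $a = z$ when $z > 0$, and $a = 0.01z$ otherwise.
- Parametric ReLU: $a = z$ when $z > 0$, and $a = \alpha z$ otherwise, where $\alpha$ is also learned by gradient descent.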
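A minimal scalar sketch of ReLU and the two variants. The helper `prelu_step` is hypothetical: it shows one plain gradient descent step on $\alpha$ for a single neuron, given the upstream gradient $\partial l / \partial a$.

```python
def relu(z):
    return z if z > 0 else 0.0


def leaky_relu(z, slope=0.01):
    return z if z > 0 else slope * z


def prelu(z, alpha):
    return z if z > 0 else alpha * z


def prelu_grad_alpha(z):
    # d prelu / d alpha: equals z on the negative side, 0 on the positive side
    return 0.0 if z > 0 else z


def prelu_step(alpha, z, upstream_grad, lr):
    # one gradient descent step on alpha: alpha - lr * dl/da * da/dalpha
    return alpha - lr * upstream_grad * prelu_grad_alpha(z)


print(relu(-3.0), leaky_relu(-2.0), prelu(-2.0, 0.25))
```

$\alpha$ only receives gradient from inputs with $z \le 0$, since the positive side does not depend on it.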

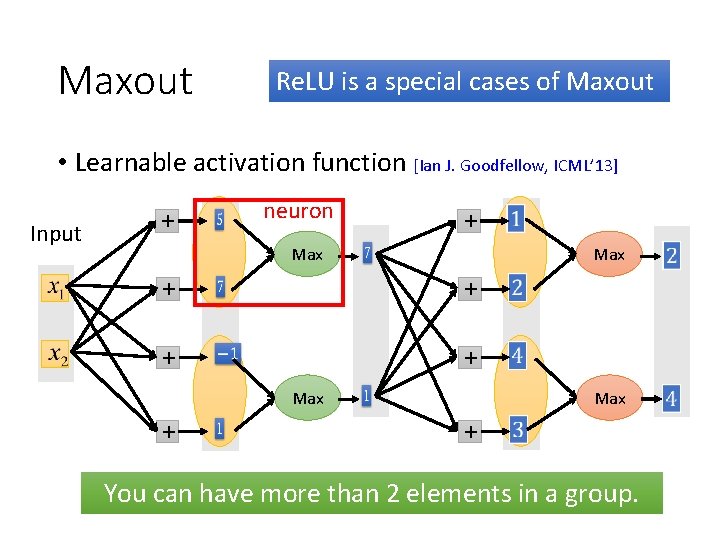

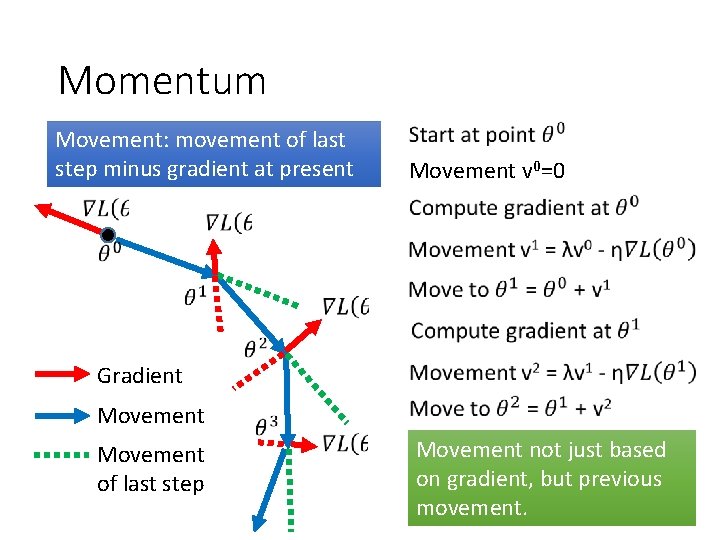

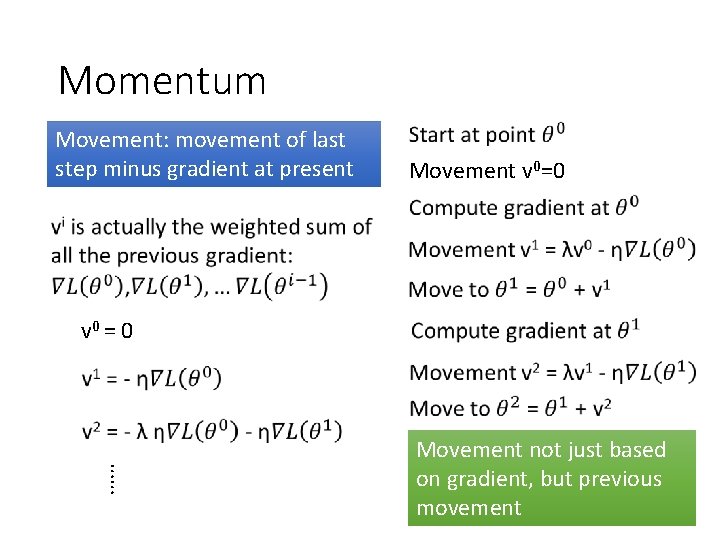

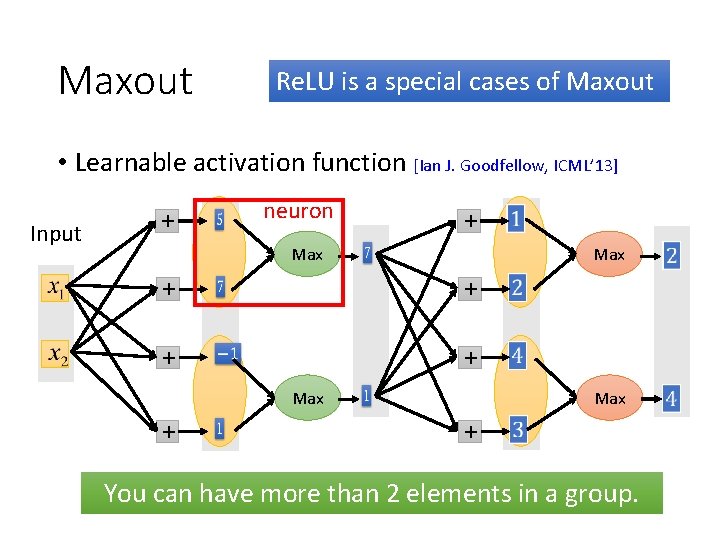

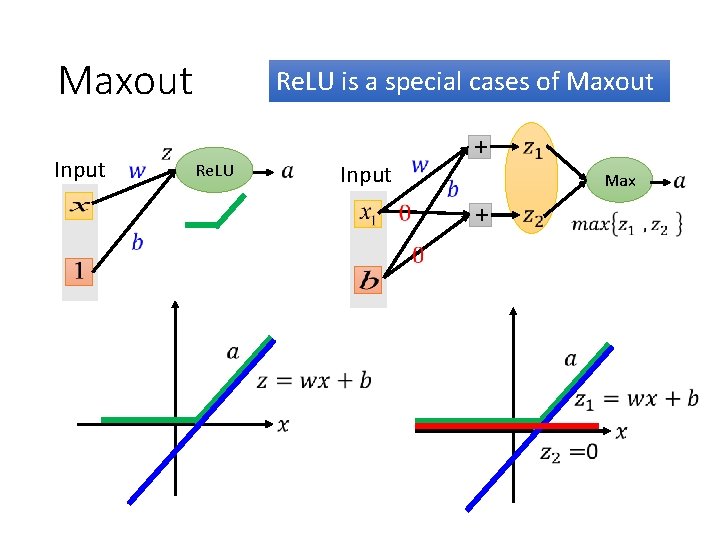

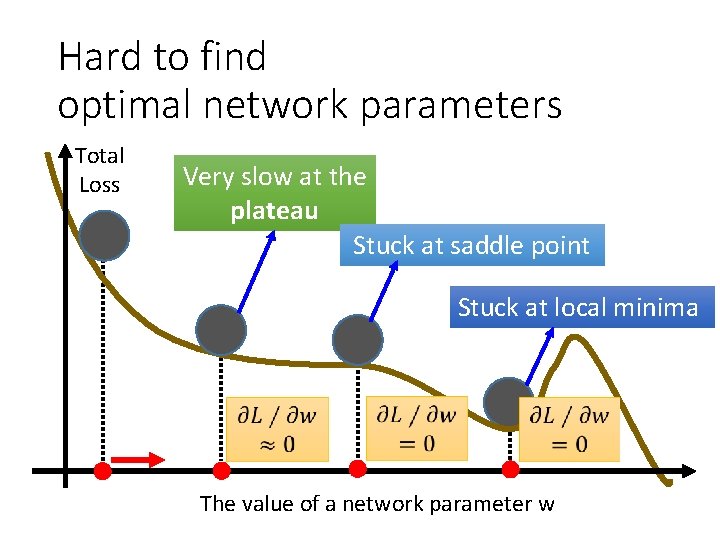

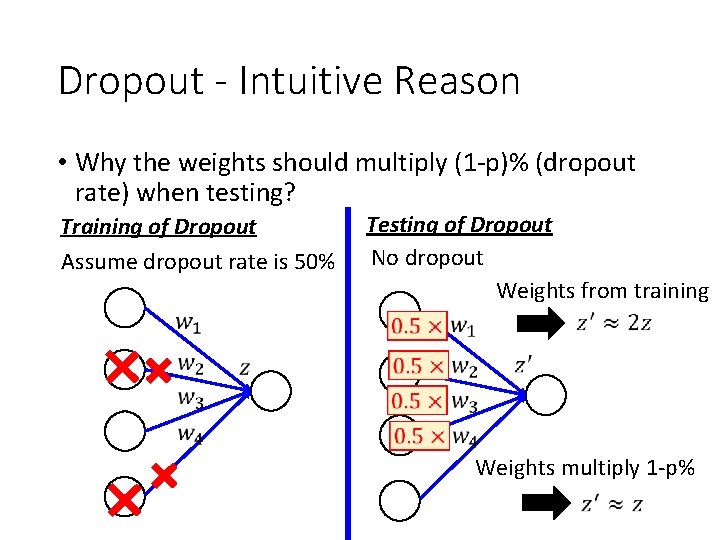

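Maxout

ReLU is a special case of Maxout.

- Learnable activation function [Ian J. Goodfellow, ICML'13]
- The linear outputs ($z = wx + b$) of a layer are split into groups; each group outputs the max of its elements and acts as one neuron.
- You can have more than 2 elements in a group.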

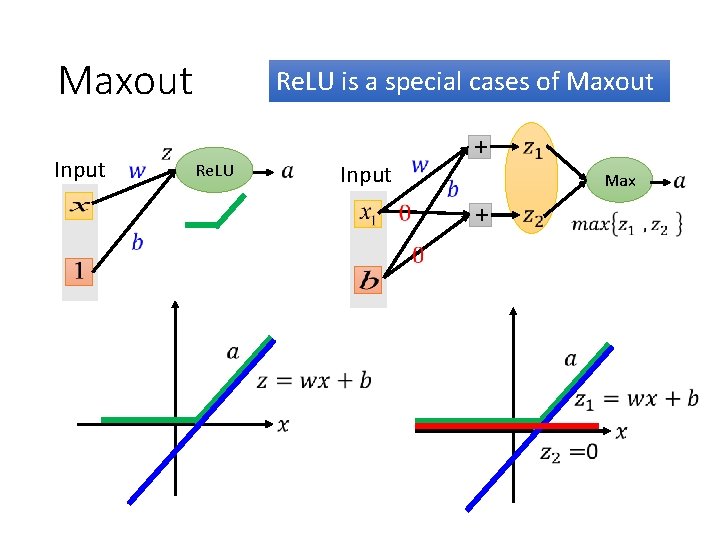

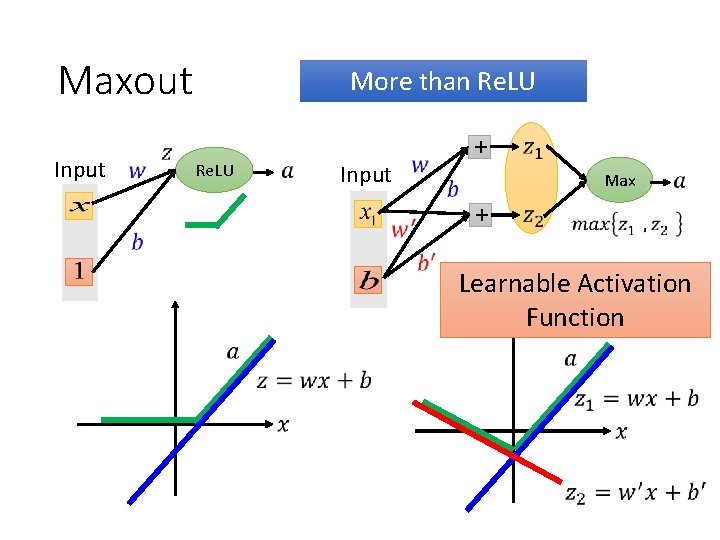

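Maxout: ReLU is a special case of Maxout

A ReLU neuron outputs $\max(z, 0)$ with $z = wx + b$. A Maxout group with two elements, $z_1 = wx + b$ and $z_2 = w'x + b'$ with $w' = 0$ and $b' = 0$, outputs exactly the same value.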

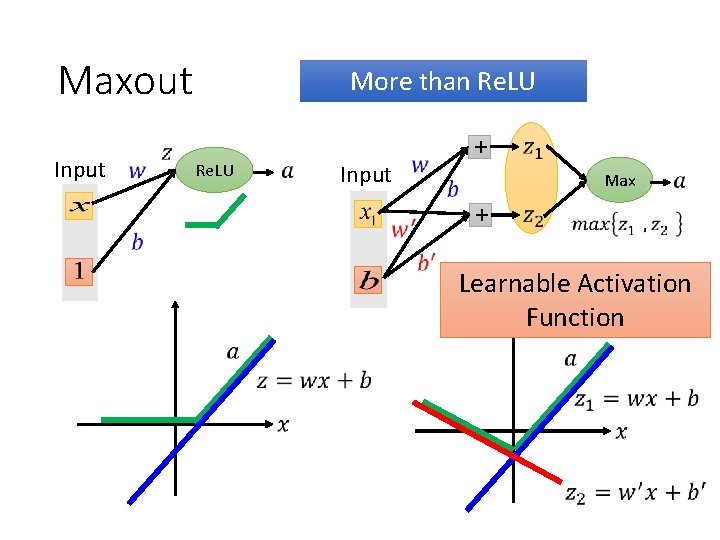

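Maxout: more than ReLU

If $w'$ and $b'$ in the second element are learned instead of fixed to 0, the group can produce other piecewise linear shapes, so the activation function itself is learned (learnable activation function).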

![Maxout: learnable activation function (Ian J. Goodfellow, ICML'13)](https://slidetodoc.com/presentation_image_h2/3ac5e8f45f0d6ca0d26fc678b1cefda2/image-16.jpg)

Maxout

- Learnable activation function [Ian J. Goodfellow, ICML'13]
- The activation function in a maxout network can be any piecewise linear convex function.
- The number of pieces depends on how many elements are in a group (figure: 2 elements in a group; 3 elements in a group).
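A minimal sketch of a Maxout layer in plain Python, assuming the linear units are grouped consecutively; the function name and argument layout are illustrative, not from the slides.

```python
def maxout(x, weights, biases, group_size):
    """Maxout layer on an input vector x (a list of floats).

    weights: one weight vector per linear unit; biases: one float per unit.
    The linear outputs z_i = w_i . x + b_i are split into consecutive groups
    of group_size, and each group outputs the max of its elements.
    """
    z = [sum(wi * xi for wi, xi in zip(w, x)) + b for w, b in zip(weights, biases)]
    if len(z) % group_size != 0:
        raise ValueError("number of linear units must be a multiple of group_size")
    return [max(z[i:i + group_size]) for i in range(0, len(z), group_size)]


# ReLU as a special case: z1 = 2x + 1, z2 = 0x + 0, so the output is max(2x + 1, 0).
print(maxout([1.0], [[2.0], [0.0]], [1.0, 0.0], 2))
# Three elements give a convex function with up to three pieces: max(x, -x, 0.5).
print(maxout([0.2], [[1.0], [-1.0], [0.0]], [0.0, 0.0, 0.5], 3))
```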

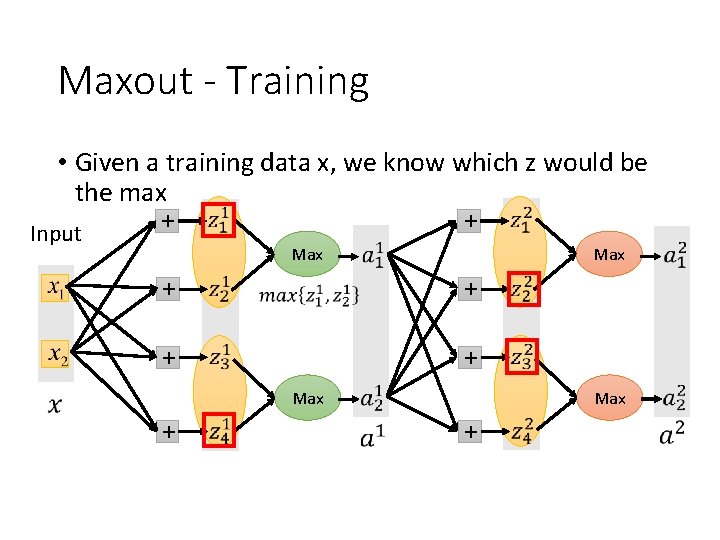

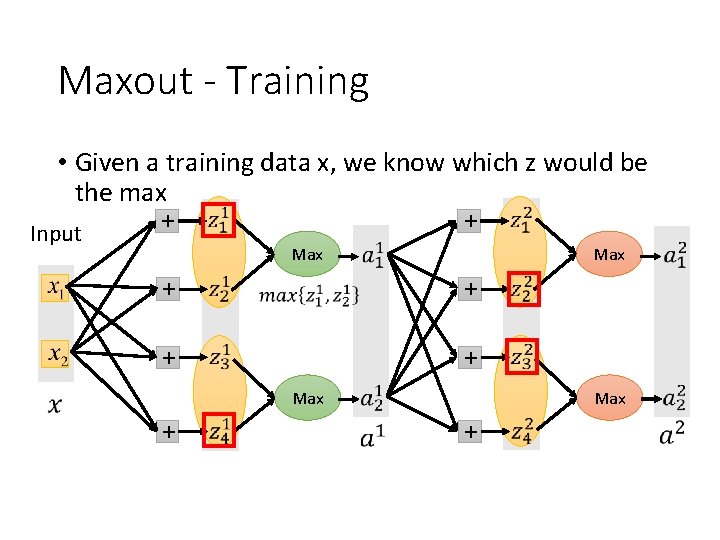

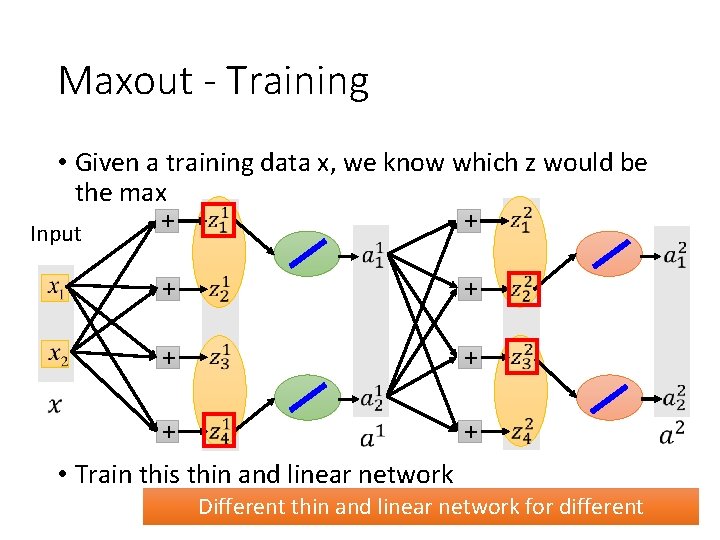

Maxout: training

- Given a training example x, we know which z in each group would be the max.
- Keeping only those max elements leaves a thin and linear network, and we train this thin and linear network.
- Different training examples give different thin and linear networks, so every element can still be trained.
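Backpropagating through one Maxout group can be sketched as routing the gradient to the winning element only. This is a minimal sketch; breaking ties by the first maximal element is an assumption.

```python
def maxout_group_grads(z, upstream_grad):
    """Gradient of max(z) with respect to each element of the group z.

    Only the element that attained the max receives the upstream gradient;
    the others get 0, which is why training uses a thin and linear network.
    Ties go to the first maximal element (Python's max keeps the first one).
    """
    k = max(range(len(z)), key=lambda i: z[i])
    return [upstream_grad if i == k else 0.0 for i in range(len(z))]


print(maxout_group_grads([0.5, 2.0, -1.0], 1.5))
```

For the selected element $z_k = w_k \cdot x + b_k$, the weight gradient is then the upstream gradient times $x$; the other elements' weights are untouched for this example but may win for another one.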

Recipe of Deep Learning: adaptive learning rate (for good results on training data)

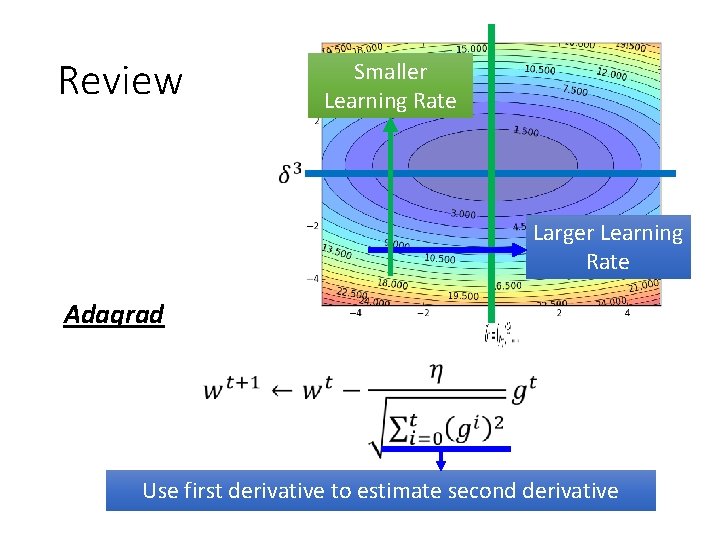

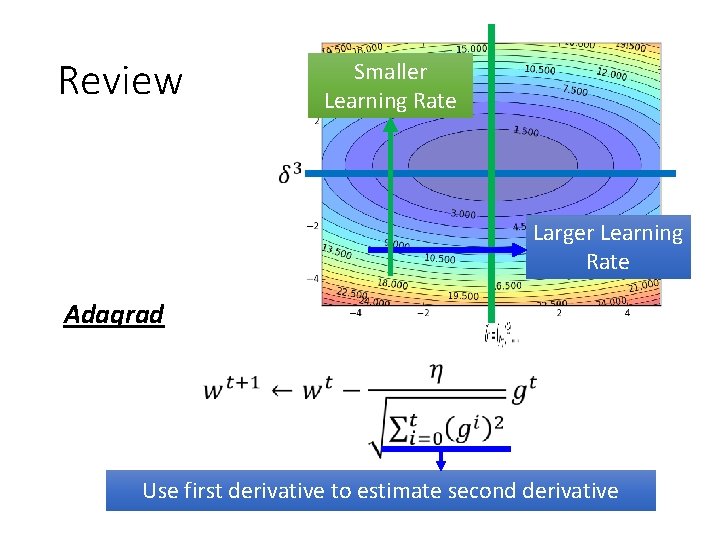

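Review: Adagrad

- In a direction where the loss changes steeply, use a smaller learning rate; in a direction where it is flat, use a larger learning rate.
- Adagrad: $w^{t+1} \leftarrow w^t - \frac{\eta}{\sqrt{\sum_{i=0}^{t} (g^i)^2}}\, g^t$
- The root of the summed squared past gradients uses the first derivative to estimate the second derivative.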
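A minimal Adagrad sketch on a list of parameters. The small constant `eps` is an added assumption to avoid division by zero; it is not part of the formula on the slide.

```python
import math


def adagrad(grad_fn, w0, lr=0.1, steps=100, eps=1e-8):
    """Adagrad: each parameter's step is lr / sqrt(sum of its squared gradients so far) * gradient."""
    w = list(w0)
    sq_sum = [0.0] * len(w)
    for _ in range(steps):
        g = grad_fn(w)
        for i in range(len(w)):
            sq_sum[i] += g[i] ** 2  # includes the current gradient g^t
            w[i] -= lr / (math.sqrt(sq_sum[i]) + eps) * g[i]
    return w


# Loss w1^2 + 100 * w2^2: flat along w1, steep along w2.
w = adagrad(lambda w: [2 * w[0], 200 * w[1]], [1.0, 1.0], lr=0.1, steps=100)
print(w)
```

Because the gradient is divided by its own history, the steep direction (factor 100) and the flat direction follow the same trajectory here, which is the point of per-parameter learning rates.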

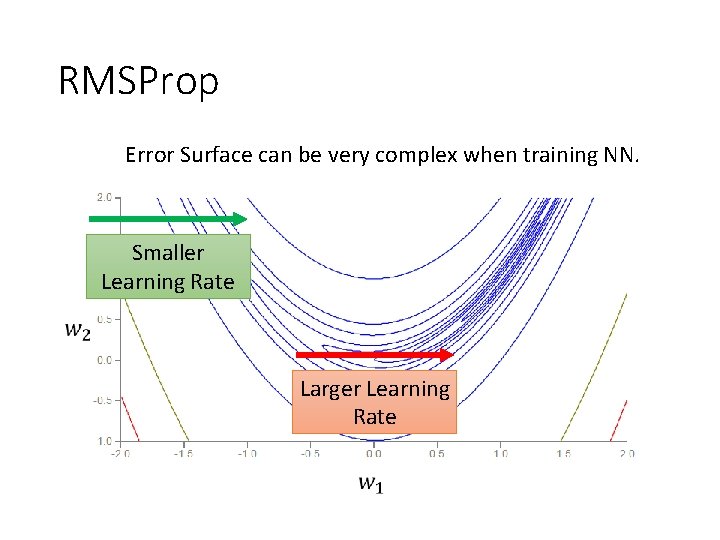

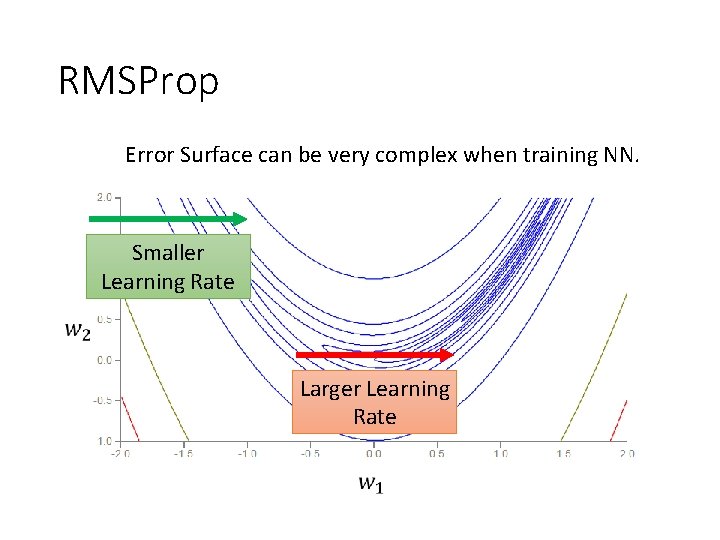

RMSProp

The error surface can be very complex when training a neural network: even along the same direction, we may need a smaller learning rate in one region and a larger learning rate in another.

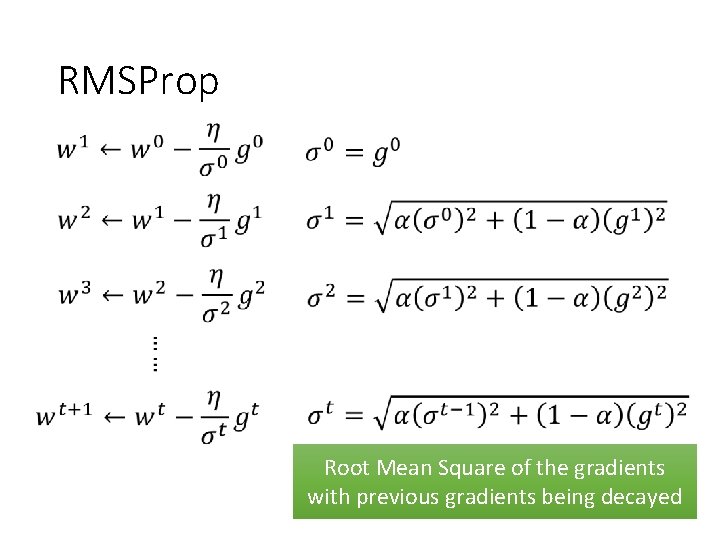

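RMSProp: use the root mean square of the gradients, with previous gradients being decayed.

- $w^1 \leftarrow w^0 - \frac{\eta}{\sigma^0} g^0$, with $\sigma^0 = g^0$
- $w^{t+1} \leftarrow w^t - \frac{\eta}{\sigma^t} g^t$, with $\sigma^t = \sqrt{\alpha(\sigma^{t-1})^2 + (1-\alpha)(g^t)^2}$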
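A minimal RMSProp sketch following the recursion for $\sigma^t$. It takes $\sigma^0 = |g^0|$ so that $\sigma$ stays a magnitude, and adds a small `eps` against division by zero; both are assumptions beyond the slide.

```python
import math


def rmsprop(grad_fn, w0, lr=0.01, alpha=0.9, steps=200, eps=1e-8):
    """RMSProp: sigma^t = sqrt(alpha * (sigma^{t-1})^2 + (1 - alpha) * (g^t)^2)."""
    w = list(w0)
    sigma = None
    for _ in range(steps):
        g = grad_fn(w)
        if sigma is None:
            sigma = [abs(gi) for gi in g]  # sigma^0 = |g^0|
        else:
            sigma = [math.sqrt(alpha * s * s + (1 - alpha) * gi * gi) for s, gi in zip(sigma, g)]
        w = [wi - lr / (s + eps) * gi for wi, s, gi in zip(w, sigma, g)]
    return w


print(rmsprop(lambda w: [2 * w[0], 200 * w[1]], [1.0, 1.0]))
```

A smaller `alpha` forgets old gradients faster, so the effective learning rate reacts more quickly to changes in the error surface.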

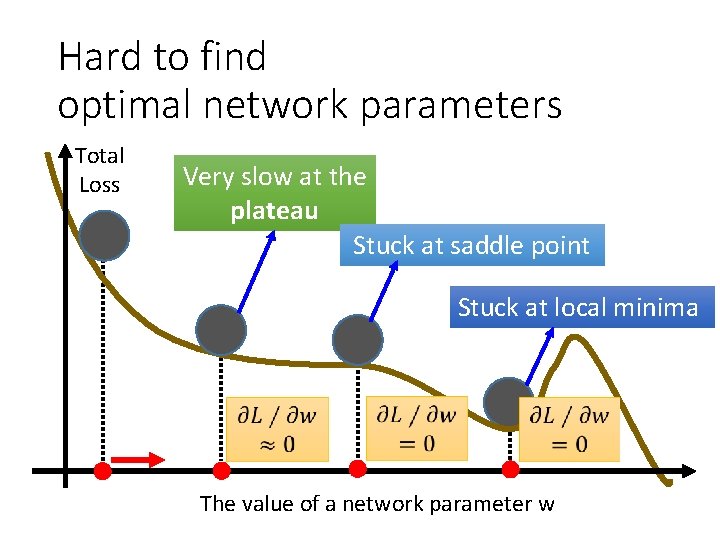

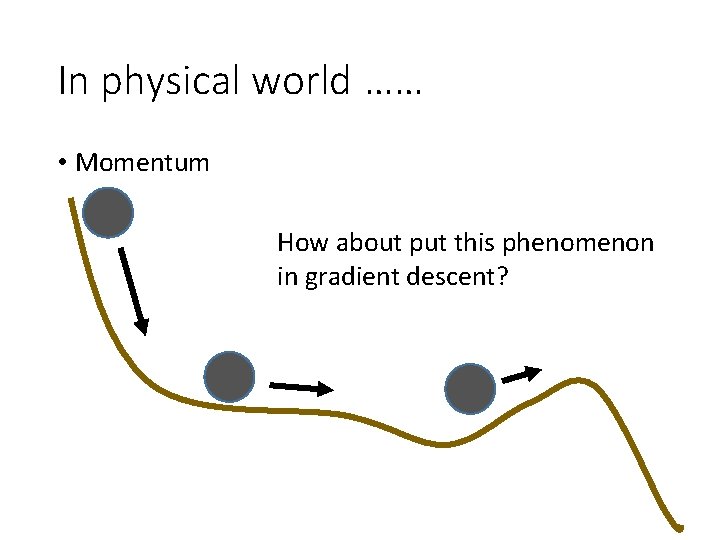

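Hard to find optimal network parameters

(Figure: total loss versus the value of a network parameter w.)

- Very slow at a plateau ($\partial L / \partial w \approx 0$)
- Stuck at a saddle point ($\partial L / \partial w = 0$)
- Stuck at local minima ($\partial L / \partial w = 0$)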

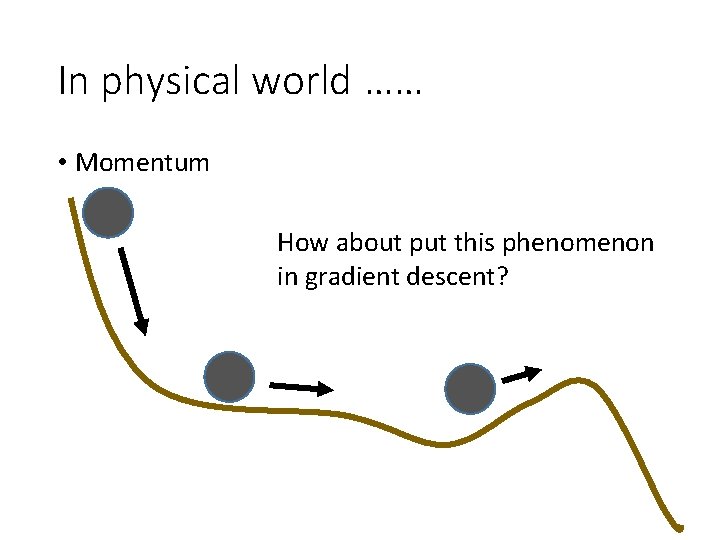

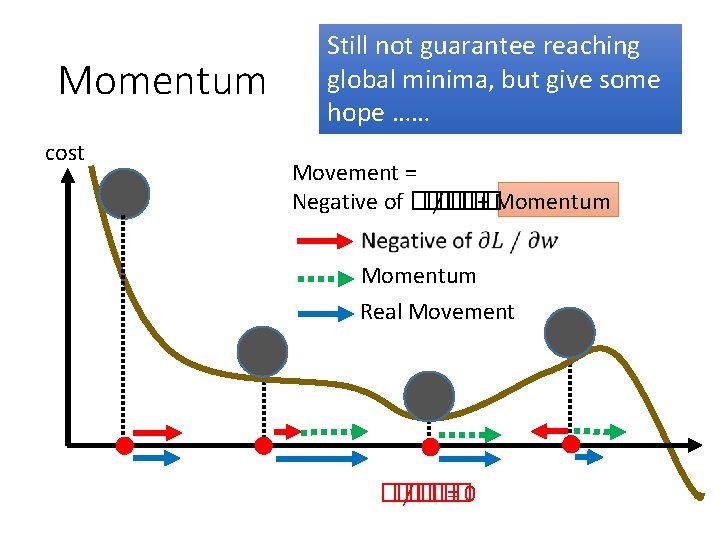

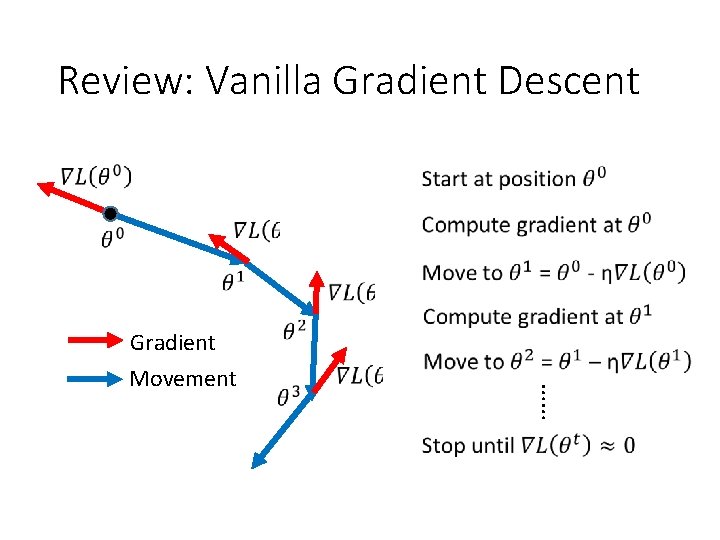

In the physical world, a moving object has momentum (figure: a ball rolling down the loss curve). How about putting this phenomenon into gradient descent?

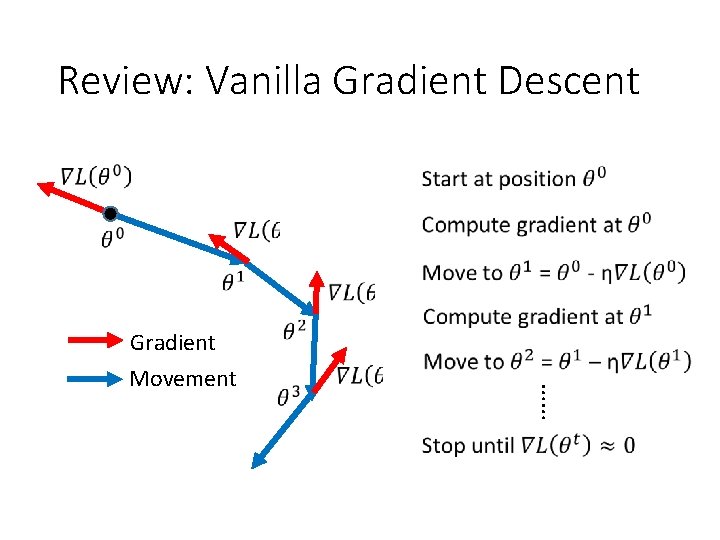

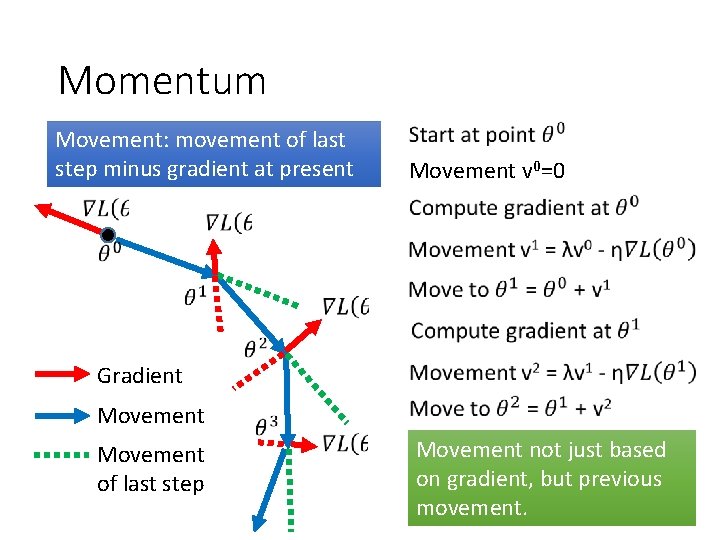

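Review: Vanilla Gradient Descent

- Start at position $\theta^0$.
- Compute the gradient at $\theta^0$ and move to $\theta^1 = \theta^0 - \eta\nabla L(\theta^0)$.
- Compute the gradient at $\theta^1$ and move to $\theta^2 = \theta^1 - \eta\nabla L(\theta^1)$, and so on until $\nabla L(\theta^t) \approx 0$.
- The movement is always opposite to the current gradient.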

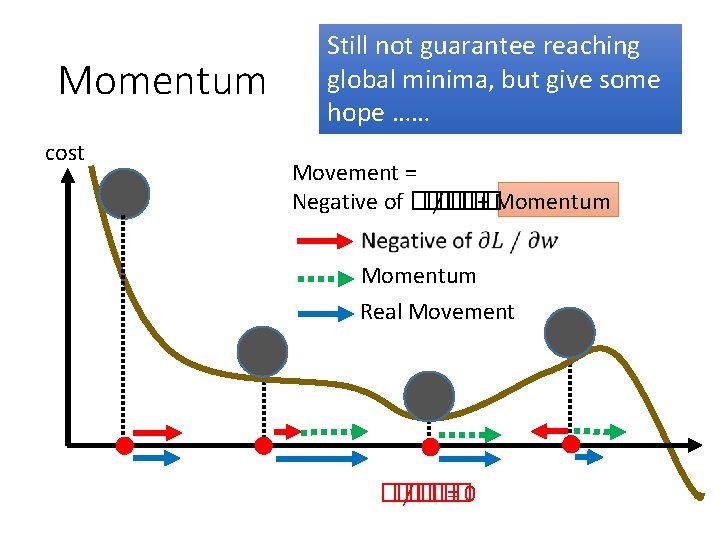

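Momentum

Movement: the movement of the last step minus the gradient at present.

- Start at $\theta^0$ with movement $v^0 = 0$.
- Compute the gradient at $\theta^0$; movement $v^1 = \lambda v^0 - \eta\nabla L(\theta^0)$; move to $\theta^1 = \theta^0 + v^1$.
- Compute the gradient at $\theta^1$; movement $v^2 = \lambda v^1 - \eta\nabla L(\theta^1)$; move to $\theta^2 = \theta^1 + v^2$.

Movement is based not just on the gradient but also on the previous movement. Unrolling the recursion shows that $v^i$ is a weighted sum of all previous gradients, e.g. $v^2 = -\lambda\eta\nabla L(\theta^0) - \eta\nabla L(\theta^1)$.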
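A minimal sketch of gradient descent with momentum using the update $v^{t+1} = \lambda v^t - \eta\nabla L(\theta^t)$, $\theta^{t+1} = \theta^t + v^{t+1}$. The function name and default values are illustrative assumptions.

```python
def momentum_gd(grad_fn, theta0, lr=0.1, lam=0.9, steps=50):
    """Gradient descent with momentum; lam = 0 gives vanilla gradient descent."""
    theta = list(theta0)
    v = [0.0] * len(theta)  # v^0 = 0
    for _ in range(steps):
        g = grad_fn(theta)
        v = [lam * vi - lr * gi for vi, gi in zip(v, g)]  # last movement minus present gradient
        theta = [ti + vi for ti, vi in zip(theta, v)]
    return theta


# Constant slope: each movement grows, because it keeps the previous movement.
print(momentum_gd(lambda t: [1.0], [0.0], lr=0.1, lam=0.9, steps=2))
```

On a constant slope the second movement is $-0.19$ instead of $-0.1$, which matches $v^2 = -\lambda\eta\nabla L(\theta^0) - \eta\nabla L(\theta^1)$.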

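Momentum

(Figure: cost versus a parameter; real movement = negative of $\partial L / \partial w$ + momentum.)

- Where $\partial L / \partial w = 0$, for example at a local minimum, the momentum can still carry the parameter forward.
- This still does not guarantee reaching the global minimum, but it gives some hope.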

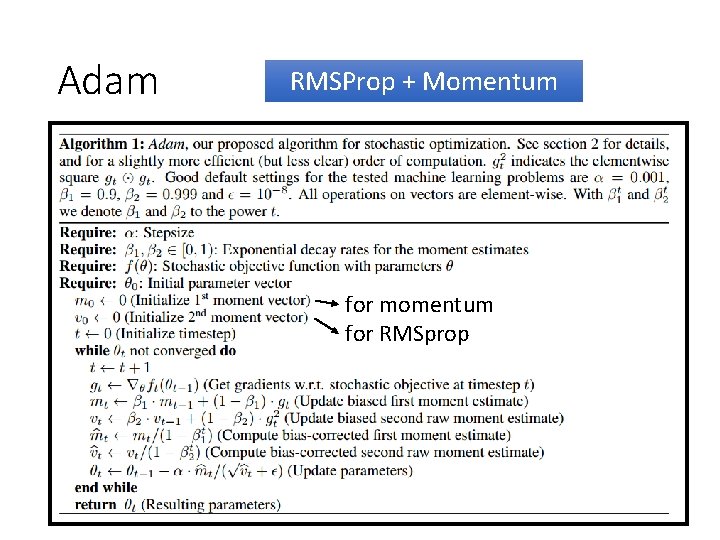

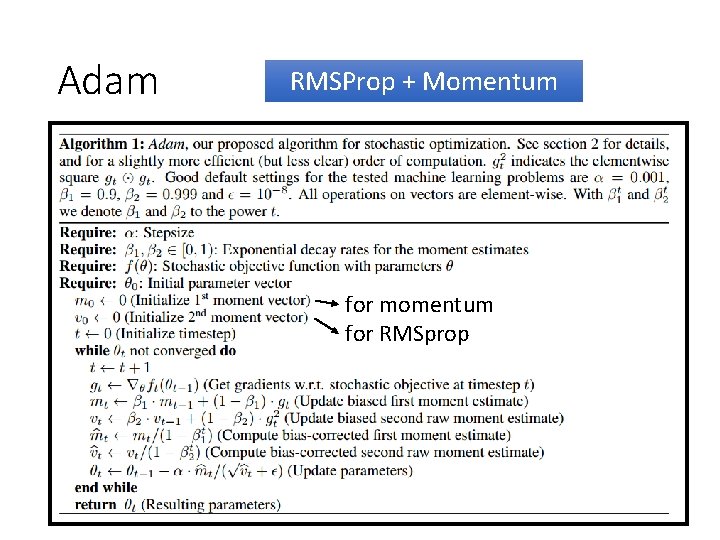

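Adam: RMSProp + Momentum

- $m$ keeps an exponentially decayed average of the gradients: this is the part for momentum.
- $v$ keeps an exponentially decayed average of the squared gradients: this is the part for RMSProp.
- Each update moves the parameters by the (bias-corrected) $m$ divided by the square root of the (bias-corrected) $v$.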
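A minimal Adam sketch. The update rule and default hyperparameters ($\beta_1 = 0.9$, $\beta_2 = 0.999$, $\epsilon = 10^{-8}$) follow the original Adam paper by Kingma and Ba; they are an assumption on top of the slide, which only names the two ingredients.

```python
import math


def adam(grad_fn, theta0, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    theta = list(theta0)
    m = [0.0] * len(theta)  # decayed average of gradients: the momentum part
    v = [0.0] * len(theta)  # decayed average of squared gradients: the RMSProp part
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
        v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
        m_hat = [mi / (1 - beta1 ** t) for mi in m]  # bias correction for m starting at 0
        v_hat = [vi / (1 - beta2 ** t) for vi in v]  # bias correction for v starting at 0
        theta = [th - lr * mh / (math.sqrt(vh) + eps) for th, mh, vh in zip(theta, m_hat, v_hat)]
    return theta


print(adam(lambda th: [2 * th[0]], [1.0], lr=0.01, steps=2000))
```

Thanks to the bias correction, the first step moves each parameter by about `lr`, whatever the scale of its gradient.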

Recipe of Deep Learning: early stopping (for good results on testing data)

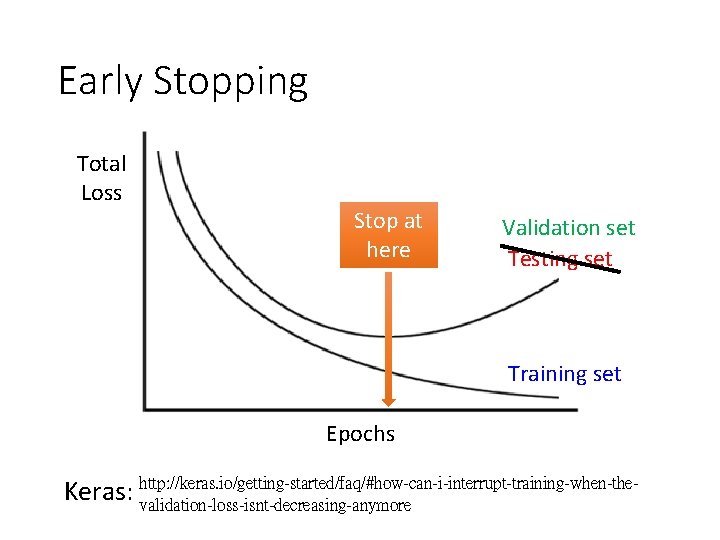

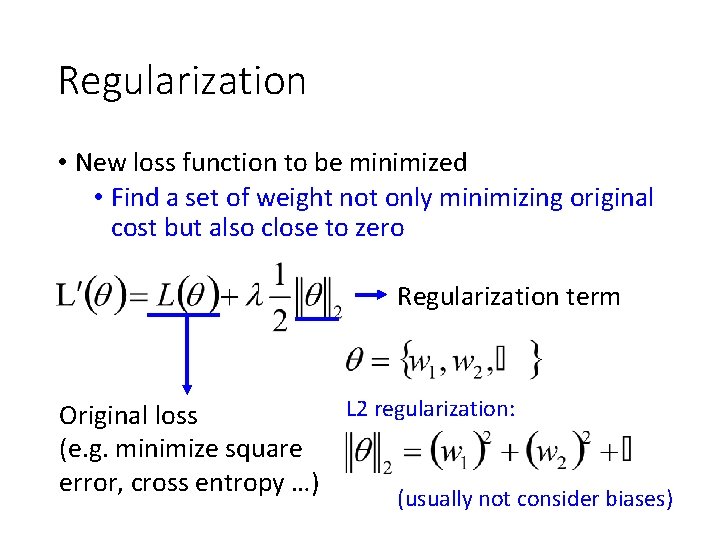

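Early Stopping

(Figure: total loss versus epochs on the training set, validation set, and testing set. The training loss keeps decreasing while the testing loss eventually rises; use the validation set to find where to stop.)

Keras: http://keras.io/getting-started/faq/#how-can-i-interrupt-training-when-the-validation-loss-isnt-decreasing-anymore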
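A minimal sketch of early stopping with a "patience" rule on a list of per-epoch validation losses. The function name and the patience rule are illustrative assumptions; the slide only says to stop where the validation loss is lowest.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the epoch whose parameters to keep.

    Training stops once the validation loss has not improved for
    `patience` epochs after the best epoch seen so far.
    """
    best_epoch, best = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            break  # stop here and roll back to best_epoch
    return best_epoch


losses = [1.0, 0.8, 0.6, 0.65, 0.7, 0.75, 0.5]
print(early_stopping_epoch(losses, patience=3), early_stopping_epoch(losses, patience=5))
```

A small patience stops at epoch 2 and never sees the later improvement at epoch 6; a larger patience finds it at the cost of more training. Keras provides a callback for this, `keras.callbacks.EarlyStopping`, with `monitor` and `patience` arguments.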

Recipe of Deep Learning: regularization (for good results on testing data)

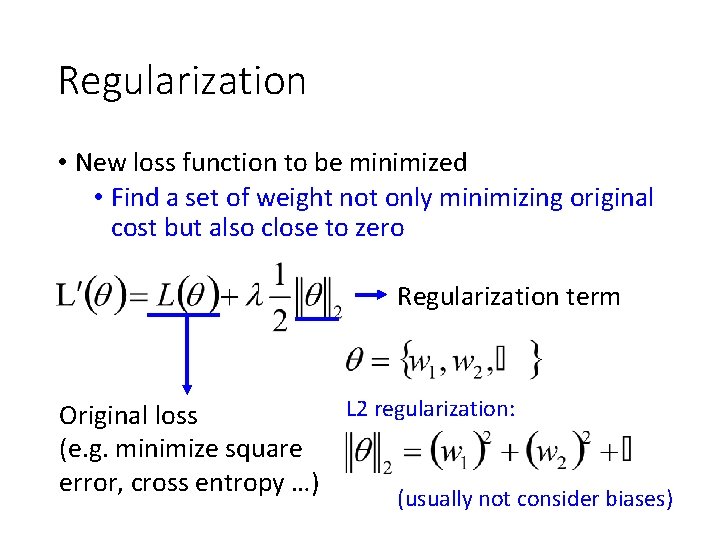

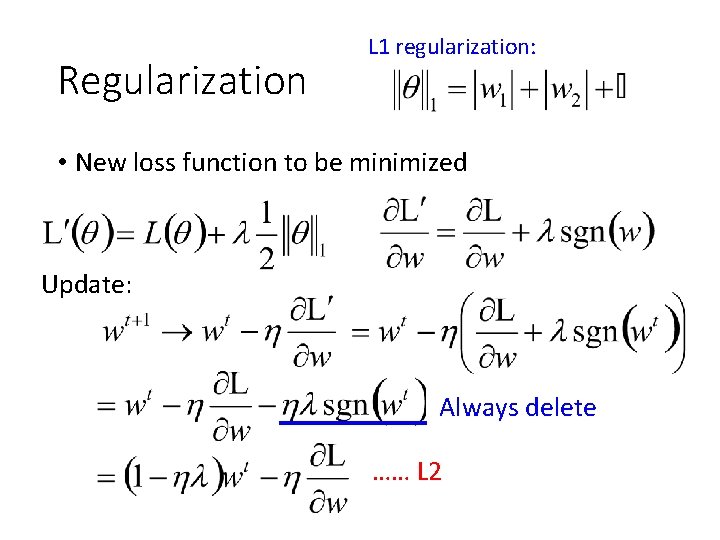

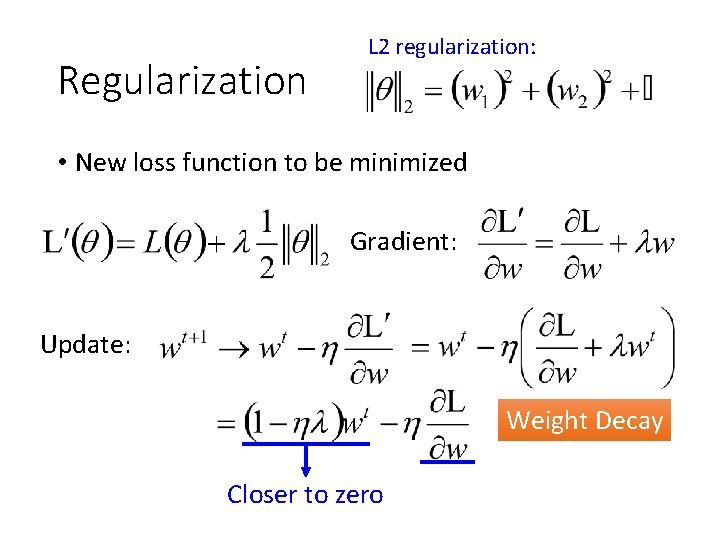

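Regularization

- New loss function to be minimized: $L'(\theta) = L(\theta) + \lambda \frac{1}{2}\|\theta\|_2^2$, where $L(\theta)$ is the original loss (e.g. square error, cross entropy …) and the L2 regularization term is $\|\theta\|_2^2 = (w_1)^2 + (w_2)^2 + \cdots$
- Find a set of weights that not only minimizes the original loss but is also close to zero.
- The regularization term usually does not consider biases.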

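Regularization: L2 regularization

- New loss function to be minimized: $L'(\theta) = L(\theta) + \lambda \frac{1}{2}\|\theta\|_2^2$
- Gradient: $\frac{\partial L'}{\partial w} = \frac{\partial L}{\partial w} + \lambda w$
- Update: $w^{t+1} \leftarrow w^t - \eta \frac{\partial L'}{\partial w} = (1 - \eta\lambda)\, w^t - \eta \frac{\partial L}{\partial w}$
- Because $1 - \eta\lambda$ is slightly smaller than 1, every update first pulls $w$ closer to zero. This is called weight decay.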

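Regularization: L1 regularization

- New loss function to be minimized: $L'(\theta) = L(\theta) + \lambda \|\theta\|_1$, where $\|\theta\|_1 = |w_1| + |w_2| + \cdots$
- Gradient: $\frac{\partial L'}{\partial w} = \frac{\partial L}{\partial w} + \lambda\, \mathrm{sgn}(w)$
- Update: $w^{t+1} \leftarrow w^t - \eta \frac{\partial L}{\partial w} - \eta\lambda\, \mathrm{sgn}(w^t)$, which always deletes the same amount $\eta\lambda$ from $|w|$.
- Compared with L2, whose shrinkage $\eta\lambda w^t$ is proportional to the weight: L2 shrinks large weights quickly and small weights slowly, while L1 pushes small weights all the way to zero, so it tends to give sparse weights.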
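A minimal sketch of one update step for each regularizer on a single weight, matching the two update rules above. The function names are illustrative.

```python
def sgn(w):
    return (w > 0) - (w < 0)  # 1, 0, or -1


def l2_step(w, grad, lr, lam):
    # weight decay: multiply w by (1 - lr * lam), then take the usual gradient step
    return (1 - lr * lam) * w - lr * grad


def l1_step(w, grad, lr, lam):
    # usual gradient step, then move a fixed amount lr * lam toward zero
    return w - lr * grad - lr * lam * sgn(w)


# With no loss gradient, compare a large and a small weight (lr = 0.1, lam = 1).
for w in (10.0, 0.1):
    print(w, l2_step(w, 0.0, 0.1, 1.0), l1_step(w, 0.0, 0.1, 1.0))
```

The large weight loses 1.0 under L2 but only 0.1 under L1; the small weight loses 0.01 under L2 but reaches 0 under L1. This plain L1 step can overshoot past zero when $|w| < \eta\lambda$; the sketch does not guard against that.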

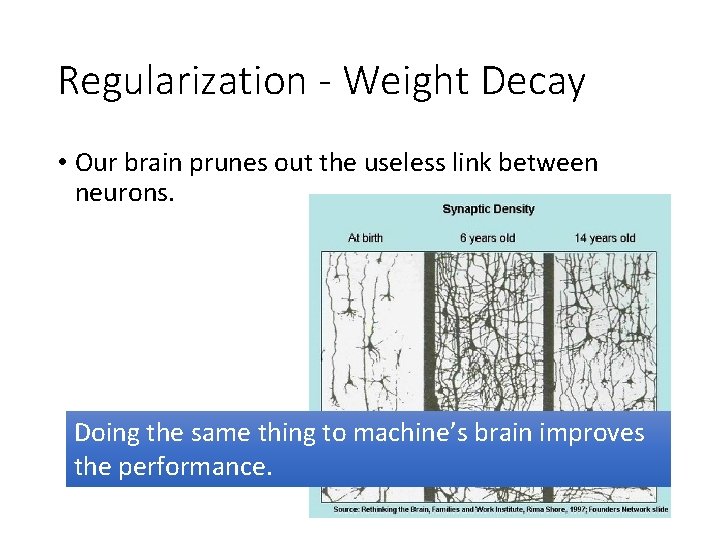

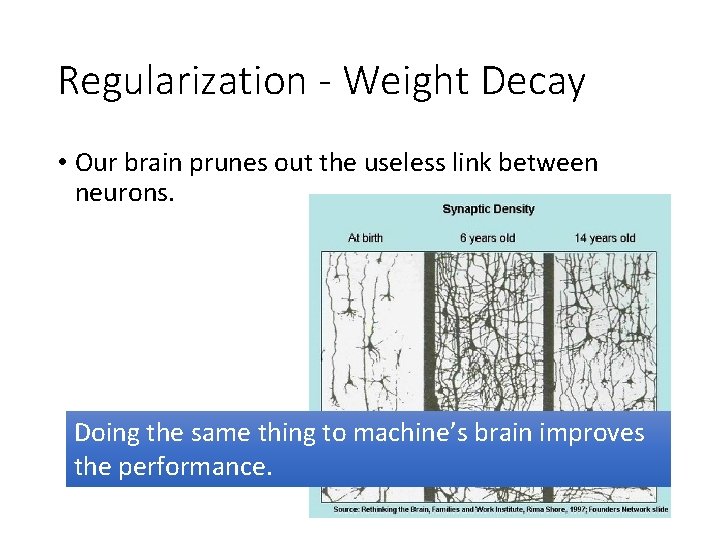

Regularization: Weight Decay

- Our brain prunes out the useless links between neurons.
- Doing the same thing to the machine's brain (decaying weights toward zero) improves the performance.

Recipe of Deep Learning: dropout (for good results on testing data)

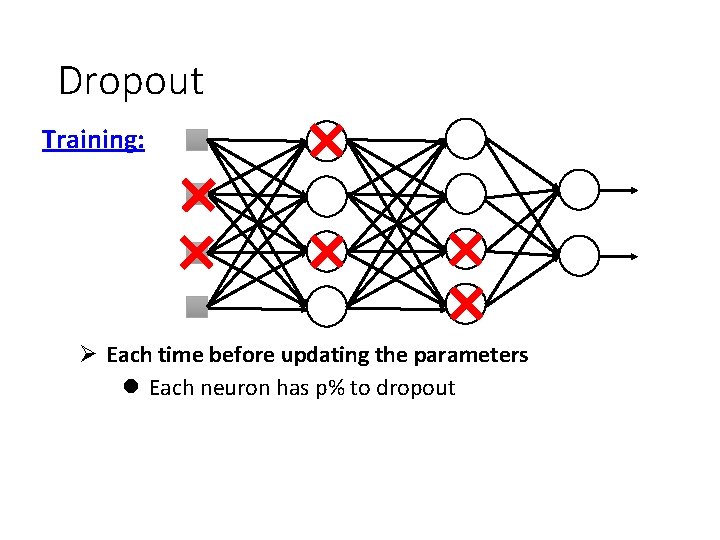

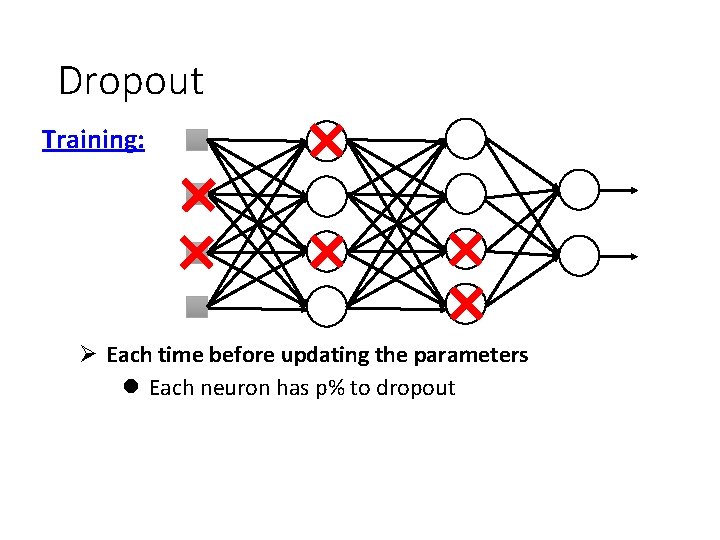

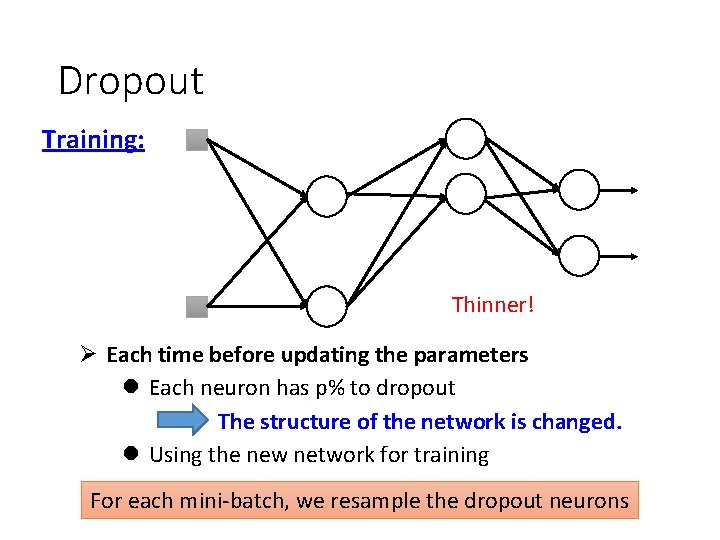

Dropout Training: Ø Each time before updating the parameters l Each neuron has p% to dropout

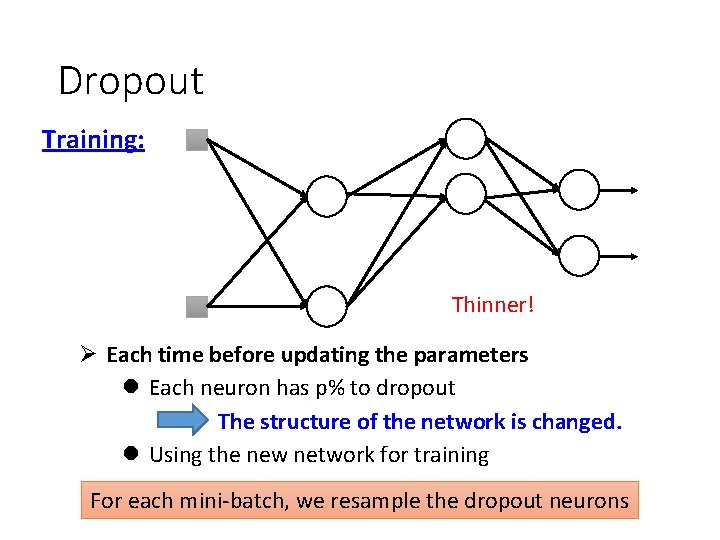

Dropout Training: Thinner! Ø Each time before updating the parameters l Each neuron has p% to dropout The structure of the network is changed. l Using the new network for training For each mini-batch, we resample the dropout neurons
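A minimal sketch of dropout at training time with Python's `random` module: one freshly sampled mask per mini-batch, where a dropped neuron outputs 0. The function names and the fixed `seed` are illustrative assumptions.

```python
import random


def sample_dropout_mask(num_neurons, p, rng):
    """1 = keep, 0 = dropped; each neuron is dropped independently with probability p."""
    return [0 if rng.random() < p else 1 for _ in range(num_neurons)]


def dropout_forward_train(activations, mask):
    """Dropped neurons output 0, which removes them from the thinner network."""
    return [a * m for a, m in zip(activations, mask)]


def train_masks(num_minibatches, num_neurons, p, seed=0):
    """One resampled mask per mini-batch."""
    rng = random.Random(seed)
    return [sample_dropout_mask(num_neurons, p, rng) for _ in range(num_minibatches)]


for mask in train_masks(4, 6, 0.5):
    print(mask, dropout_forward_train([1.0] * 6, mask))
```

This follows the slides' convention of leaving the weights alone during training and scaling them at testing time.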

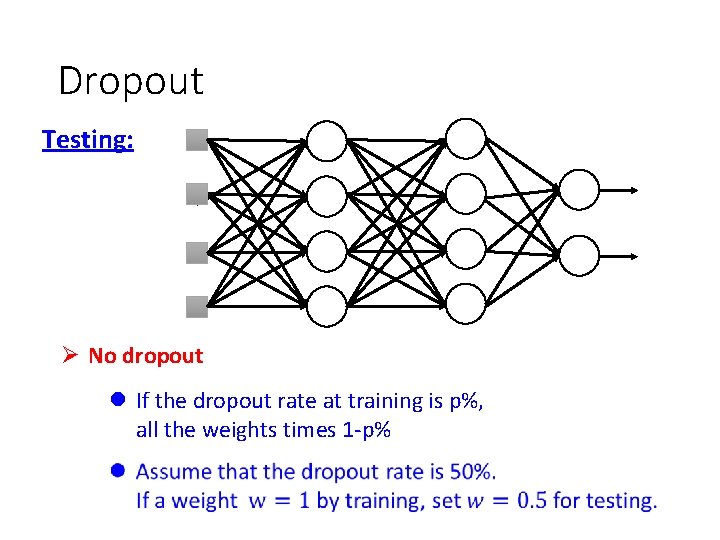

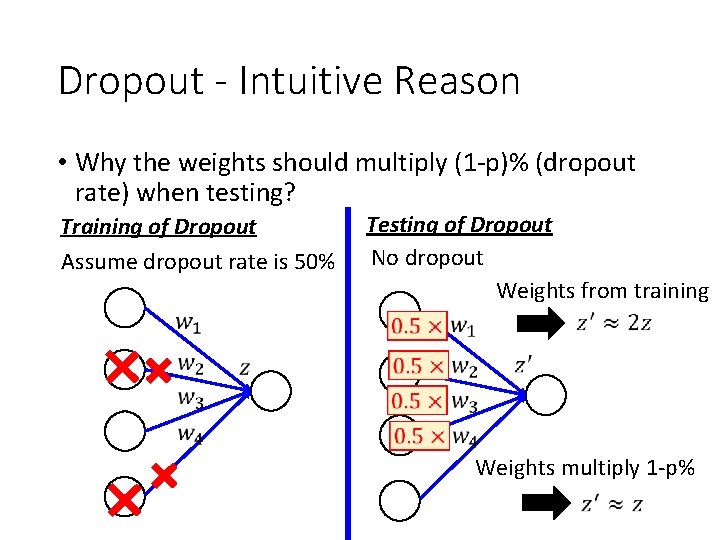

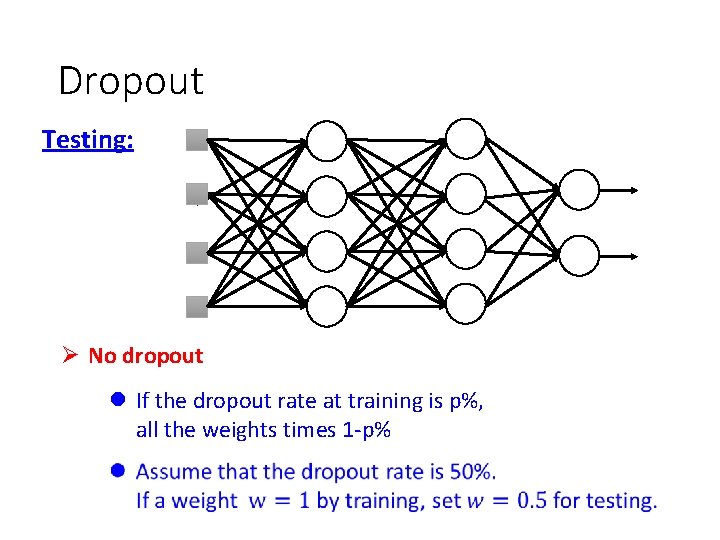

Dropout

Testing:

- No dropout.
- If the dropout rate at training is p%, multiply all the weights by 1 − p% (for example, by 0.5 for a 50% dropout rate).

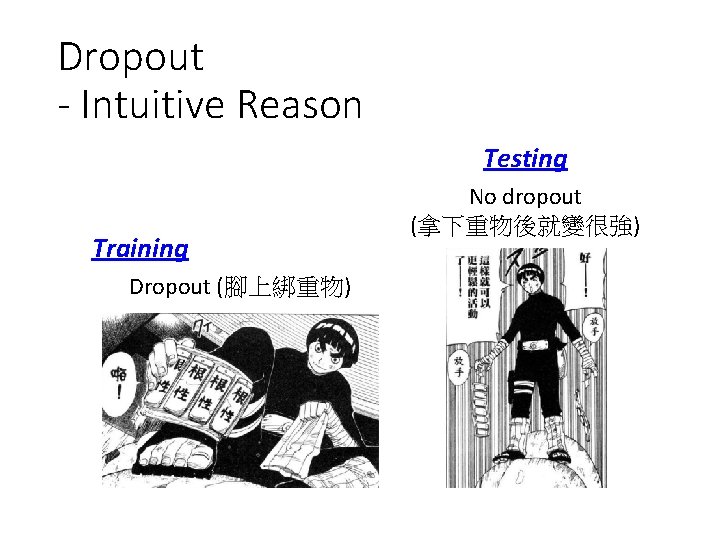

Dropout: intuitive reason

- Training with dropout is like training with weights tied to your legs.
- Testing without dropout is like taking the weights off: you become very strong.

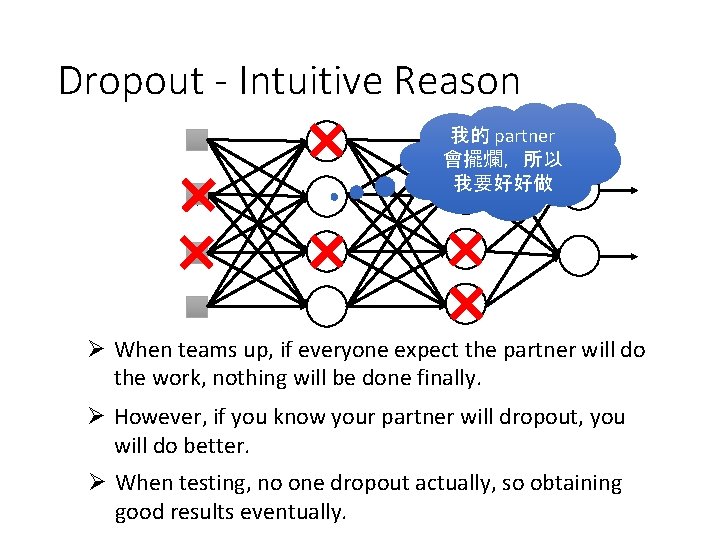

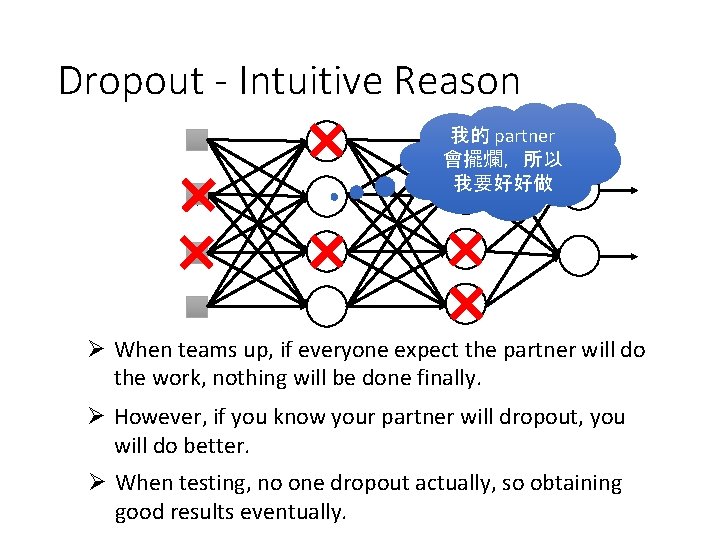

Dropout: intuitive reason

"My partner will slack off, so I have to do a good job."

- When working in a team, if everyone expects their partner to do the work, nothing gets done in the end.
- However, if you know your partner may drop out, you will do better.
- At testing time no one actually drops out, so the results end up good.

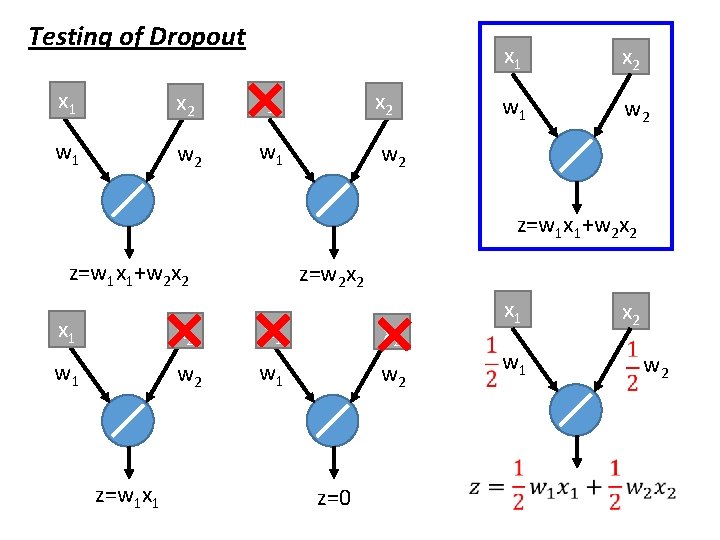

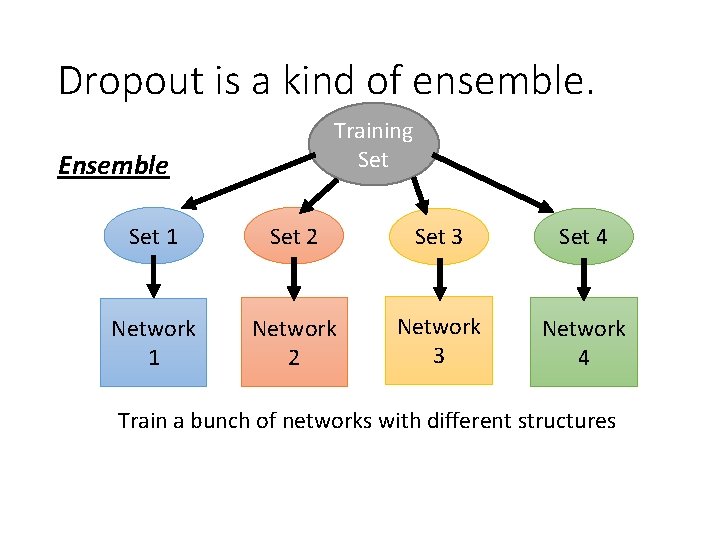

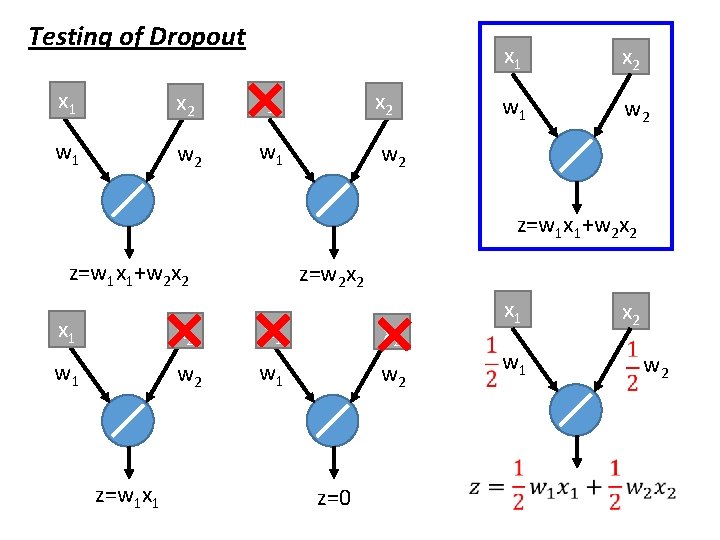

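Dropout: intuitive reason

- Why should the weights be multiplied by 1 − p% when testing, where p% is the dropout rate?
- Assume the dropout rate is 50%. During training about half of a neuron's inputs are dropped, so its weights are learned for that reduced input.
- At testing there is no dropout. With the weights from training used unchanged, a neuron's input $z$ would be about twice what it saw during training; multiplying the weights by 1 − p% = 0.5 brings $z$ back to about the same scale.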

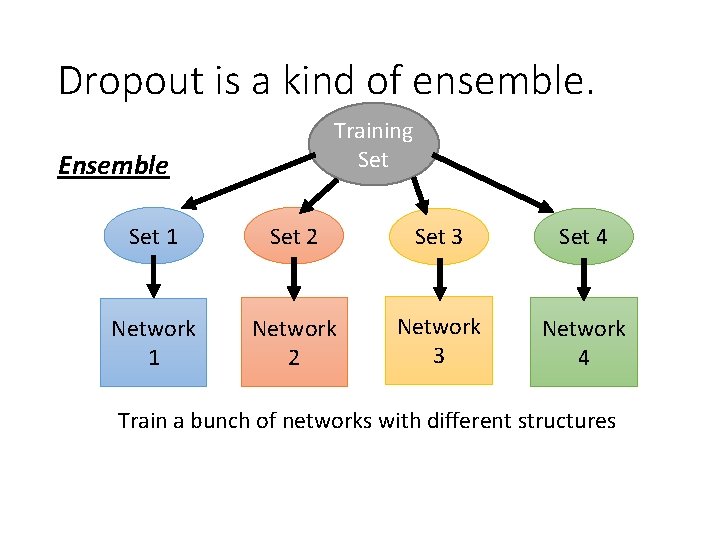

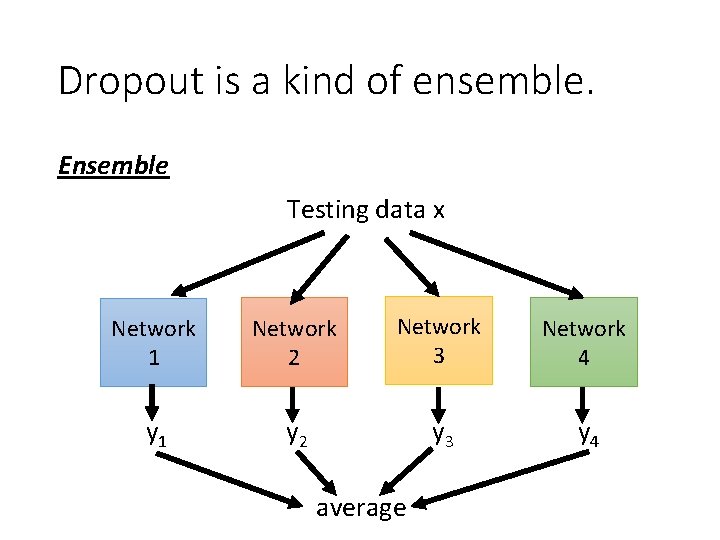

Dropout is a kind of ensemble.

Ensemble: sample Set 1 to Set 4 from the training set and train Network 1 to Network 4 on them, that is, train a bunch of networks with different structures.

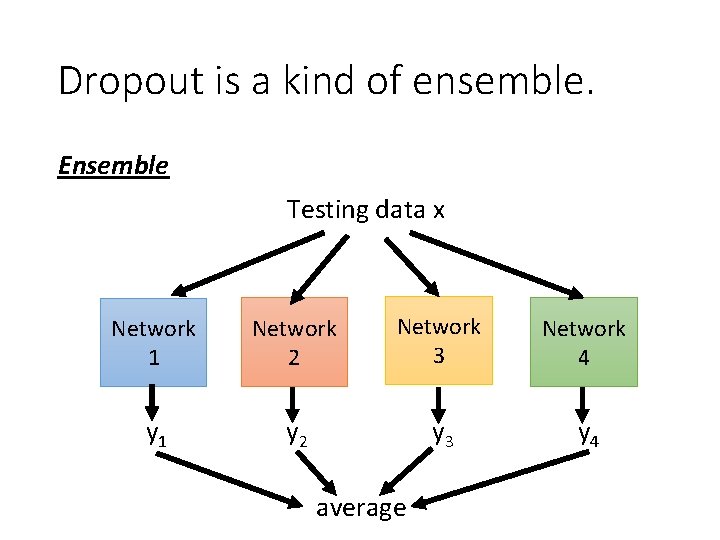

Ensemble at testing: feed testing data x into Network 1 to Network 4 to get outputs y1, y2, y3, y4, and take their average.

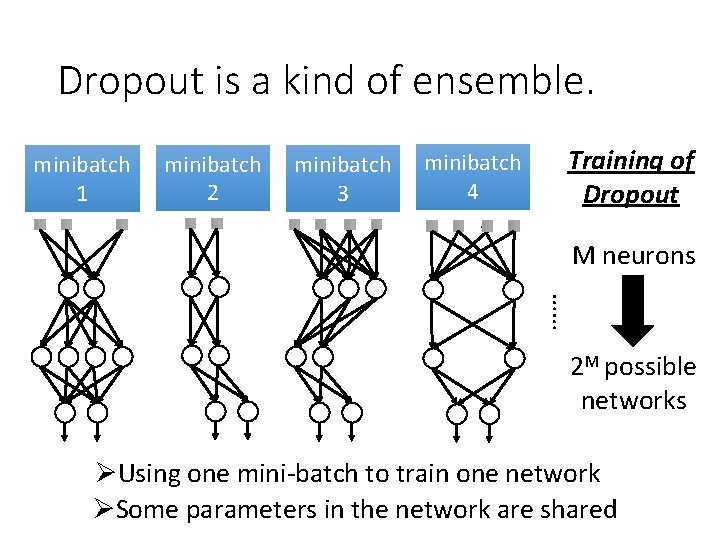

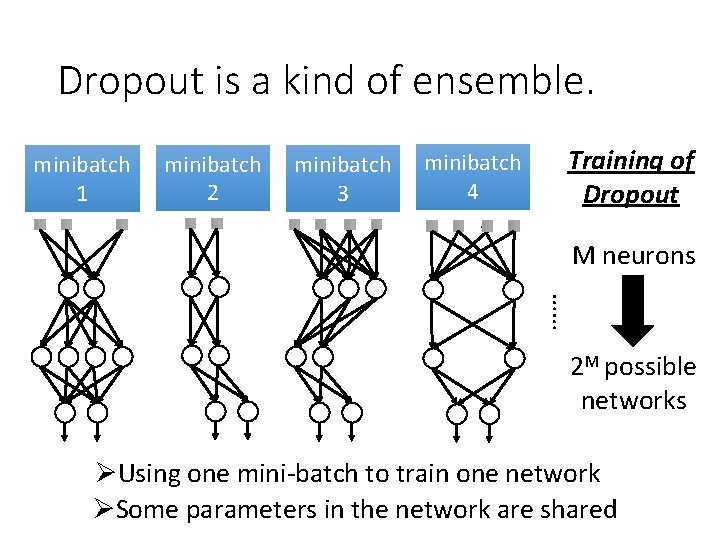

Training of dropout: with M neurons, there are $2^M$ possible networks.

- Each mini-batch (minibatch 1, 2, 3, 4, …) is used to train one of these networks.
- Some parameters in the network are shared among them.

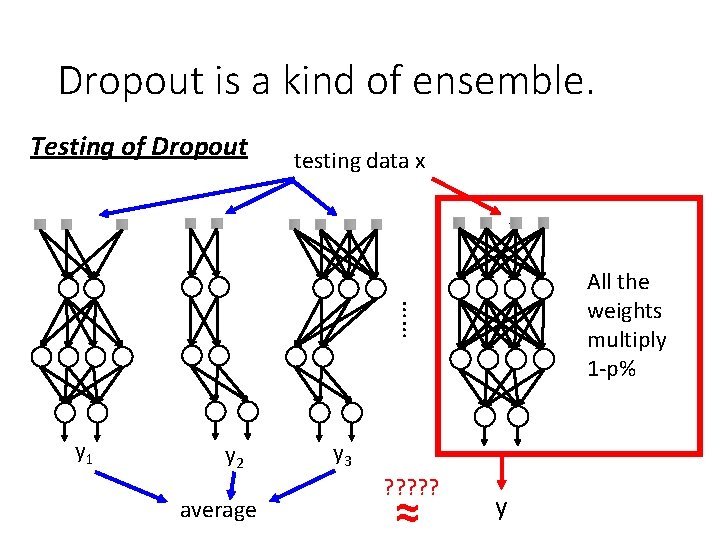

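Testing of dropout: ideally we would feed testing data x into all the possible networks and average their outputs y1, y2, y3, …, which is impractical with $2^M$ networks. Instead, use the full network with all the weights multiplied by 1 − p%; its output y is approximately equal to that average.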

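Testing of Dropout

Consider one linear neuron with inputs $x_1, x_2$, weights $w_1, w_2$, and a dropout rate of 50%. The four possible networks output

$z = w_1x_1 + w_2x_2$, $z = w_2x_2$, $z = w_1x_1$, $z = 0$,

and their average is

$\frac{1}{4}\left[(w_1x_1 + w_2x_2) + w_2x_2 + w_1x_1 + 0\right] = \frac{1}{2}w_1x_1 + \frac{1}{2}w_2x_2$,

which equals the output of the full network with both weights multiplied by 1 − 50% = 0.5. The match is exact here because the neuron is linear; with a nonlinear activation, the scaled-weight network only approximates the ensemble average.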
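To check the weight-scaling rule numerically, a minimal sketch that enumerates all $2^M$ dropout masks on one neuron's M inputs, weights each mask by its probability, and compares the exact ensemble average with the output of the scaled-weight network. The function names are illustrative.

```python
import itertools
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def ensemble_average(x, w, p, activation=lambda z: z):
    """Exact expected output over all 2^M masks (1 = keep) of one neuron's inputs."""
    total = 0.0
    for mask in itertools.product([0, 1], repeat=len(x)):
        kept = sum(mask)
        prob = (1 - p) ** kept * p ** (len(x) - kept)
        z = sum(m * wi * xi for m, wi, xi in zip(mask, w, x))
        total += prob * activation(z)
    return total


def scaled_weights_output(x, w, p, activation=lambda z: z):
    """Testing of dropout: no dropout, all weights multiplied by (1 - p)."""
    return activation(sum((1 - p) * wi * xi for wi, xi in zip(w, x)))


x, w = [1.0, 2.0], [3.0, 4.0]
print(ensemble_average(x, w, 0.5), scaled_weights_output(x, w, 0.5))
print(ensemble_average(x, w, 0.5, sigmoid), scaled_weights_output(x, w, 0.5, sigmoid))
```

The linear case matches exactly (both give 5.5 here), while the sigmoid case does not. Many libraries instead scale activations by $1/(1-p)$ during training ("inverted dropout") and leave the weights untouched at test time, which gives the same expected values.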


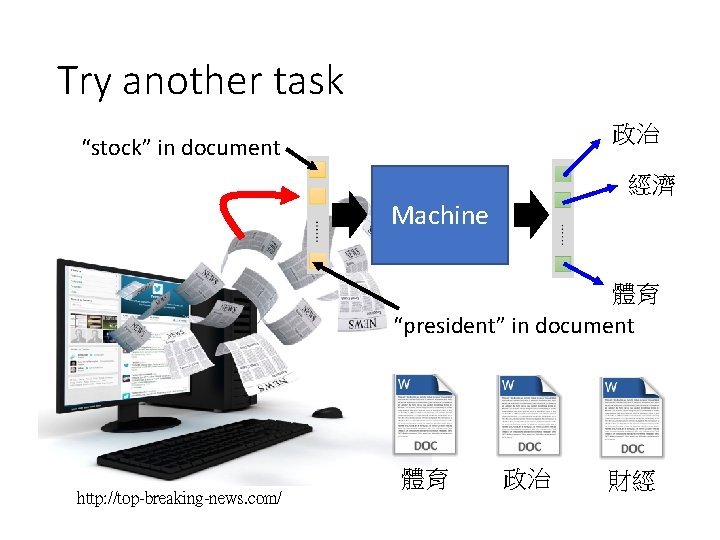

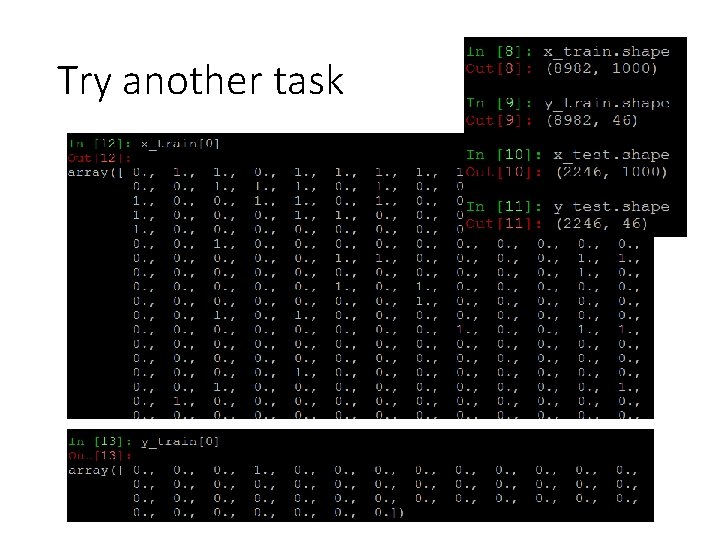

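Try another task: document classification

(Figure: a machine reads a document and assigns it to a category such as politics, economy, or sports; for example, "stock" in the document points to economy and "president" in the document points to politics. Example data: http://top-breaking-news.com/, with categories sports, politics, and finance.)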

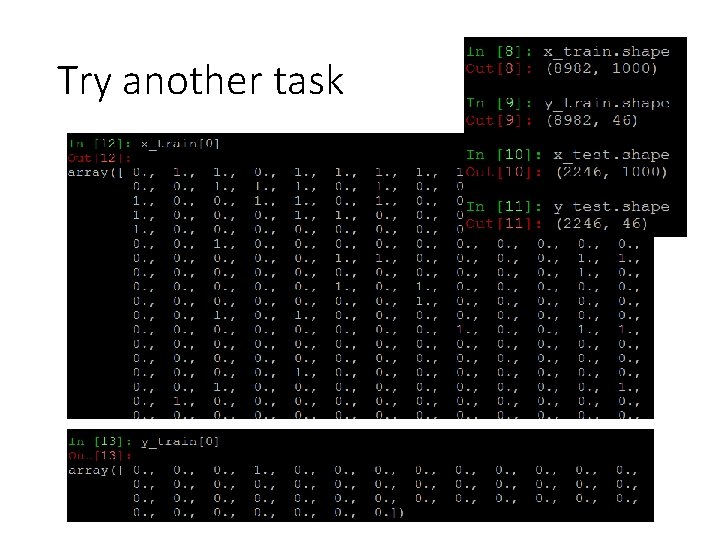

Try another task

Live Demo