Deep Learning, Hung-yi Lee

- Slides: 29

Deep Learning Hung-yi Lee 李宏毅

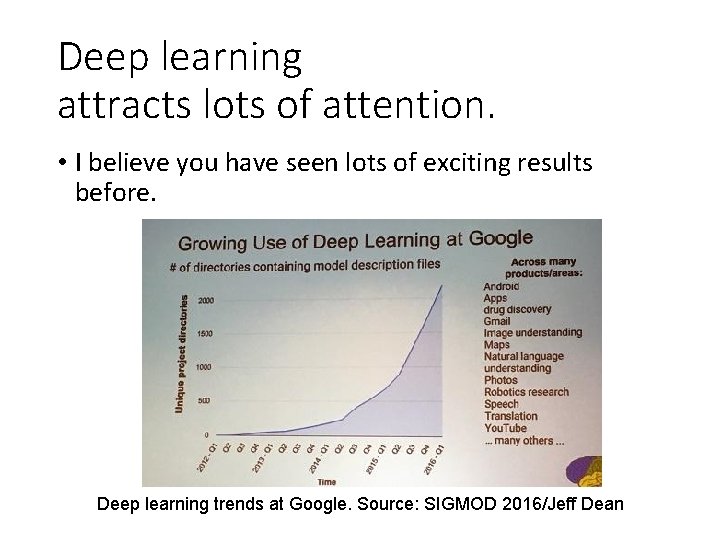

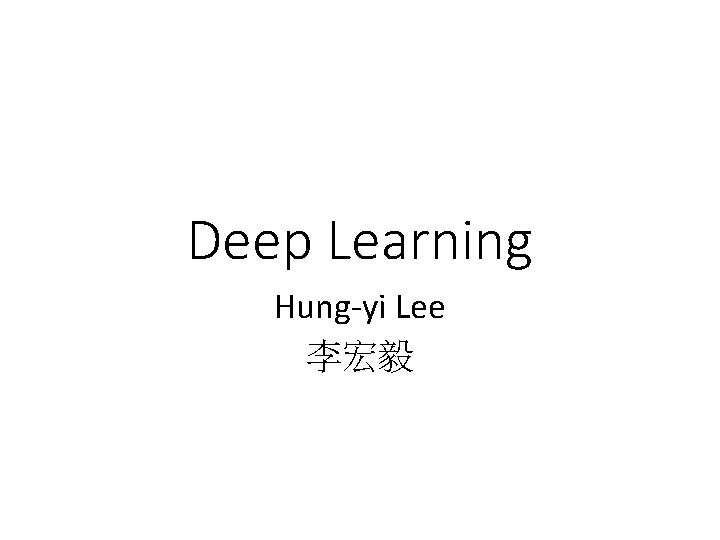

Deep learning attracts lots of attention.

- I believe you have seen lots of exciting results before.

Deep learning trends at Google. Source: SIGMOD 2016/Jeff Dean

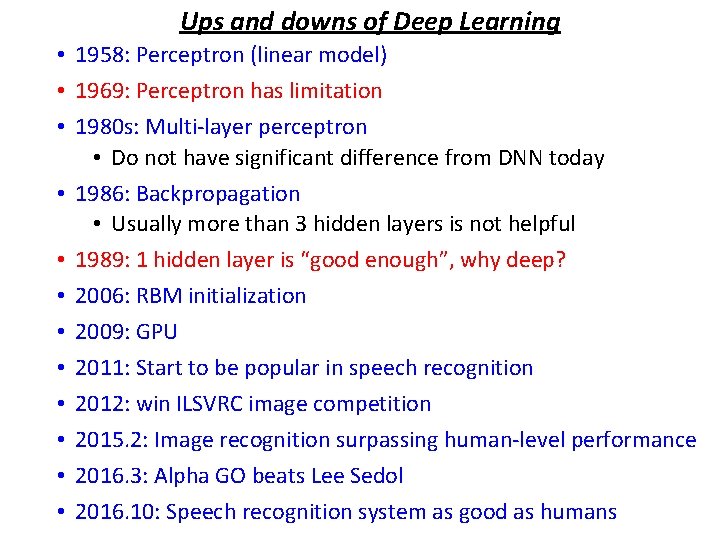

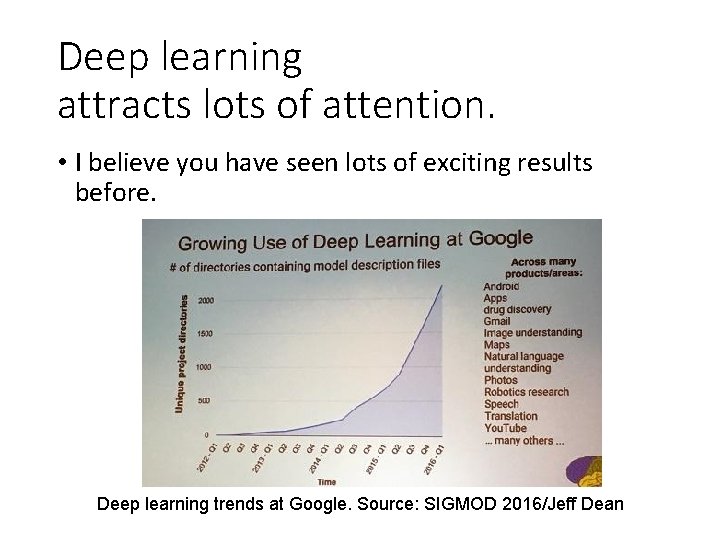

Ups and downs of Deep Learning

- 1958: Perceptron (linear model)
- 1969: Perceptron has limitations
- 1980s: Multi-layer perceptron
  - Not significantly different from today's DNNs
- 1986: Backpropagation
  - Using more than 3 hidden layers is usually not helpful
- 1989: 1 hidden layer is "good enough", so why go deep?
- 2006: RBM initialization
- 2009: GPU
- 2011: Starts to become popular in speech recognition
- 2012: Wins the ILSVRC image competition
- Feb. 2015: Image recognition surpasses human-level performance
- Mar. 2016: AlphaGo beats Lee Sedol
- Oct. 2016: Speech recognition system as good as humans

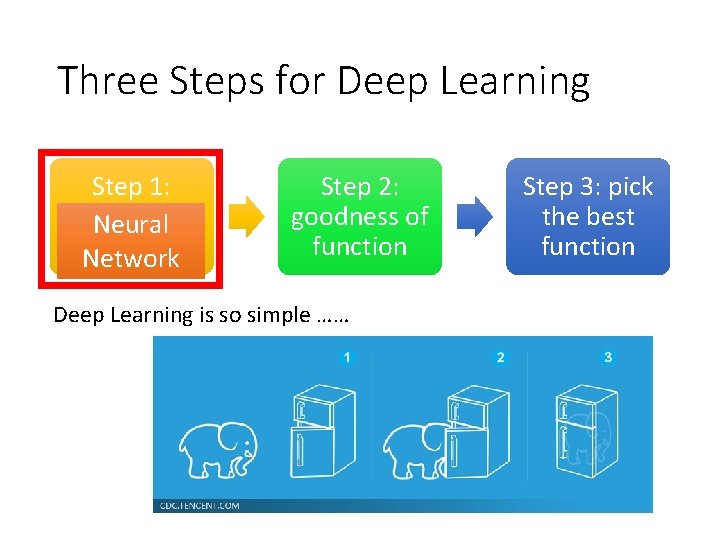

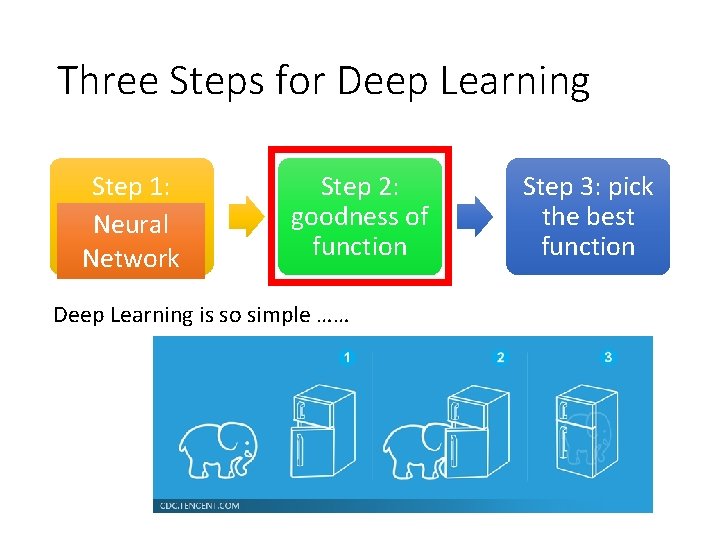

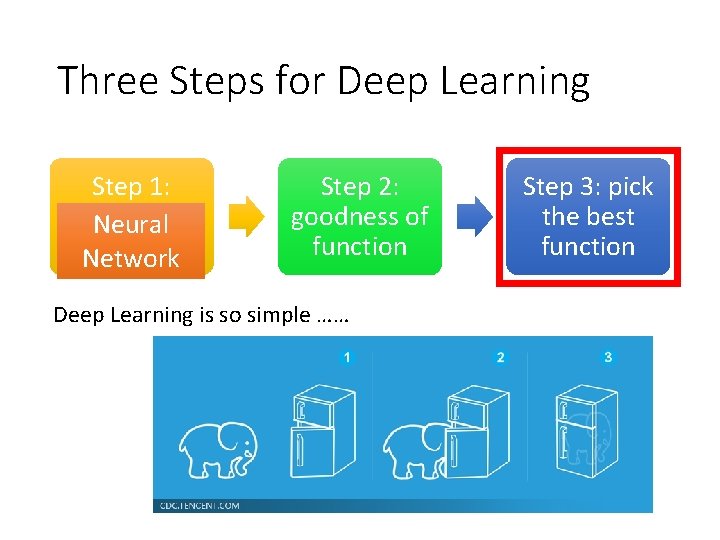

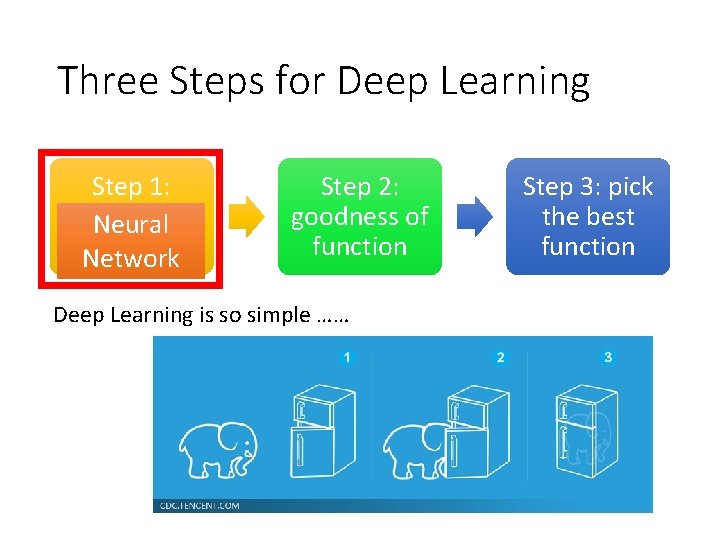

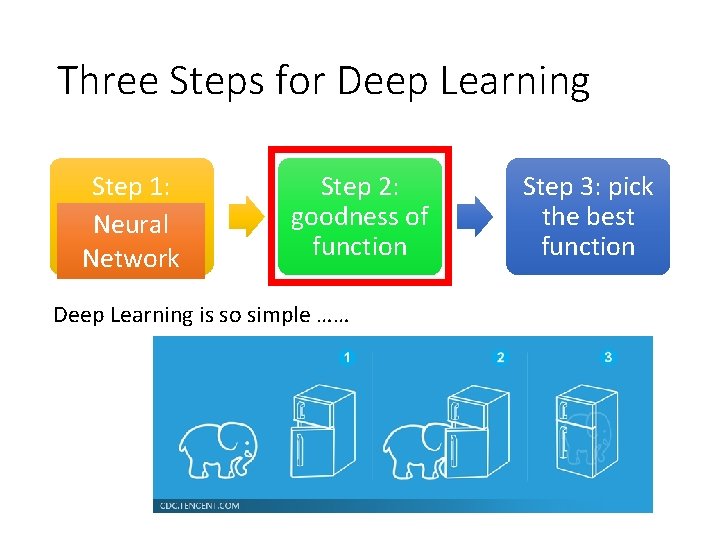

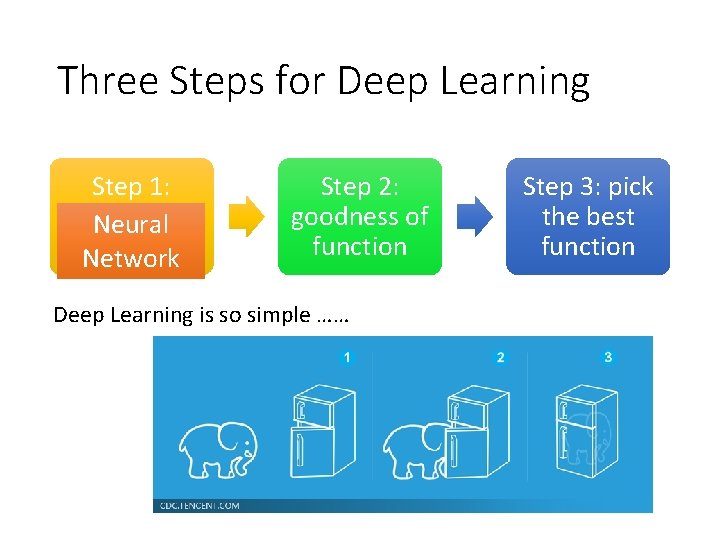

Three Steps for Deep Learning

- Step 1: define a set of functions (Neural Network)
- Step 2: goodness of function
- Step 3: pick the best function

Deep Learning is so simple ……

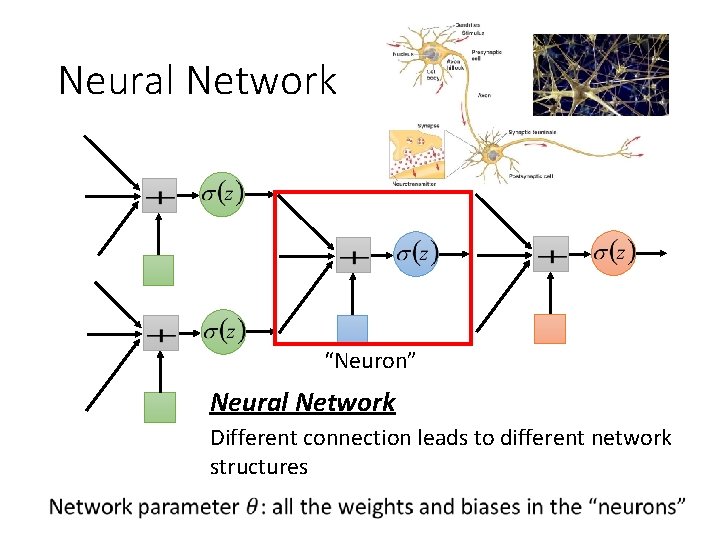

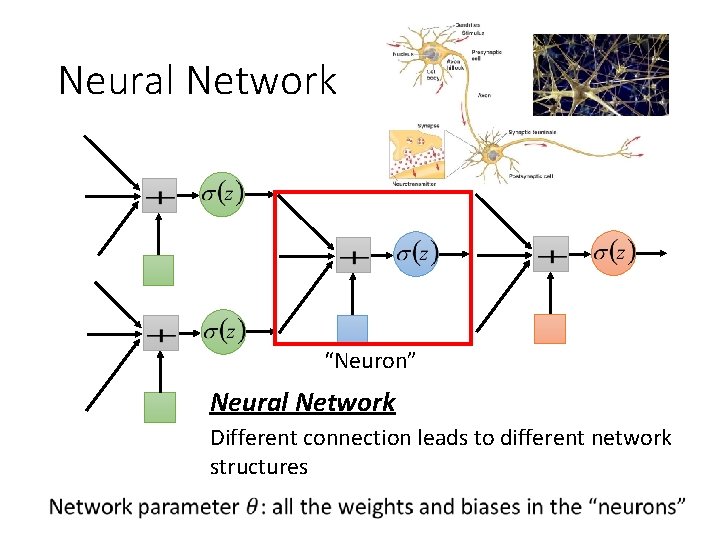

Neural Network

Connecting many "neurons" together gives a neural network. Different connections lead to different network structures.

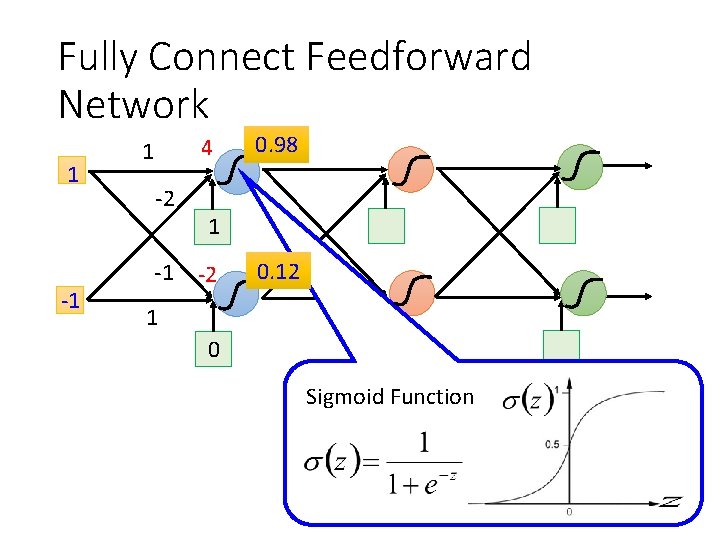

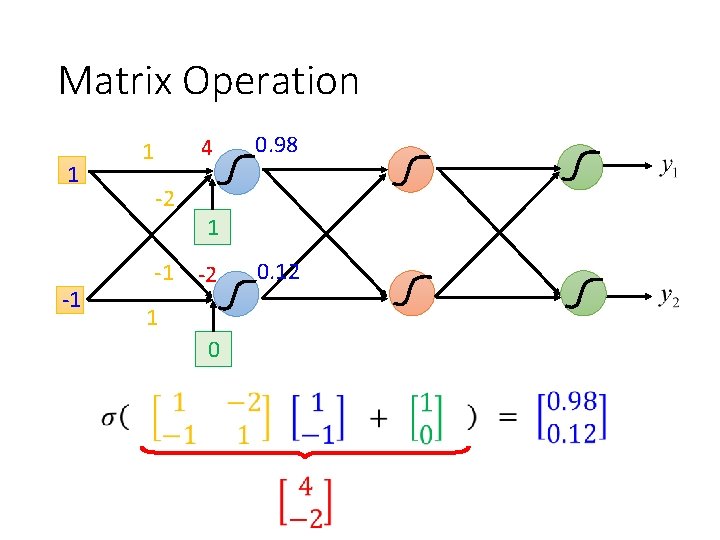

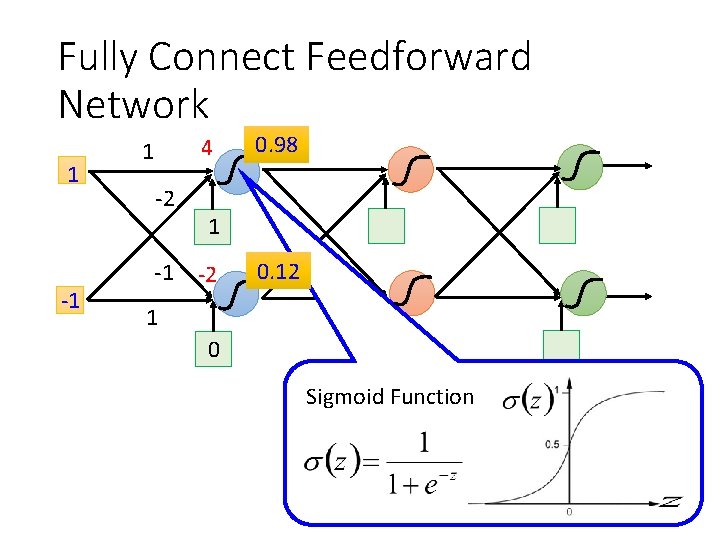

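Fully Connected Feedforward Network

Each neuron multiplies its inputs by weights, adds a bias, and applies the sigmoid function $\sigma(z) = 1 / (1 + e^{-z})$. With input (1, -1), the neuron with weights (1, -2) and bias 1 computes $z = 1 \cdot 1 + (-1) \cdot (-2) + 1 = 4$ and outputs $\sigma(4) \approx 0.98$; the neuron with weights (-1, 1) and bias 0 computes $z = (-1) \cdot 1 + 1 \cdot (-1) + 0 = -2$ and outputs $\sigma(-2) \approx 0.12$.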
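A minimal sketch of the neuron computation above in plain Python (no libraries). The helper names `sigmoid` and `neuron` are illustrative, not from the slides; the weights and biases are those of the two neurons in the example.

```python
import math

def sigmoid(z: float) -> float:
    """Sigmoid activation: sigma(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One neuron: weighted sum of the inputs plus the bias, passed through the sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

x = [1.0, -1.0]
print(round(neuron(x, [1.0, -2.0], 1.0), 2))   # z = 4  -> 0.98
print(round(neuron(x, [-1.0, 1.0], 0.0), 2))   # z = -2 -> 0.12
```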

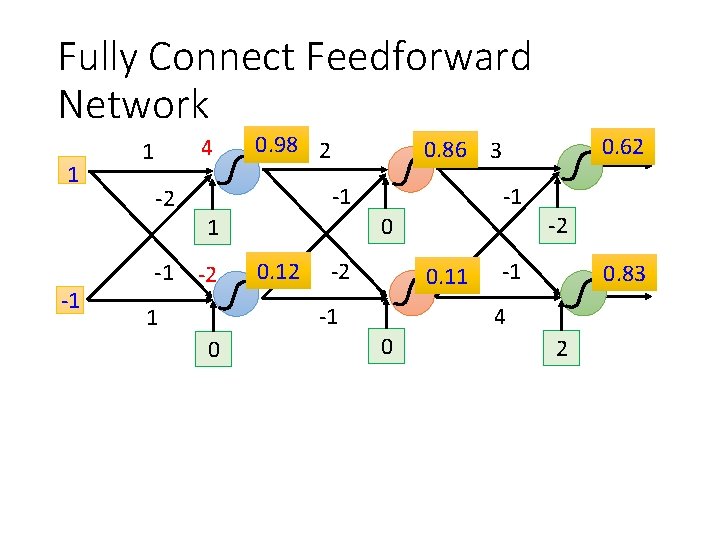

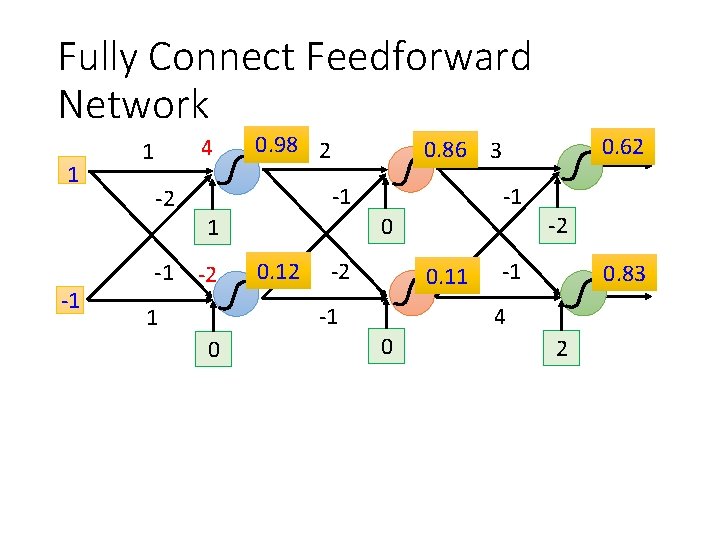

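Fully Connected Feedforward Network

The outputs (0.98, 0.12) feed the next layer. The second layer (weights (2, -1) and (-2, -1), biases 0 and 0) outputs (0.86, 0.11), and the third layer (weights (3, -1) and (-1, 4), biases -2 and 2) outputs (0.62, 0.83).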

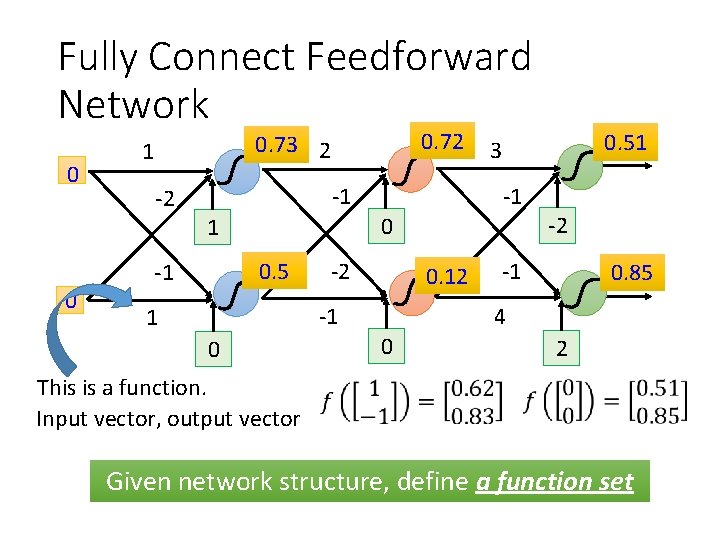

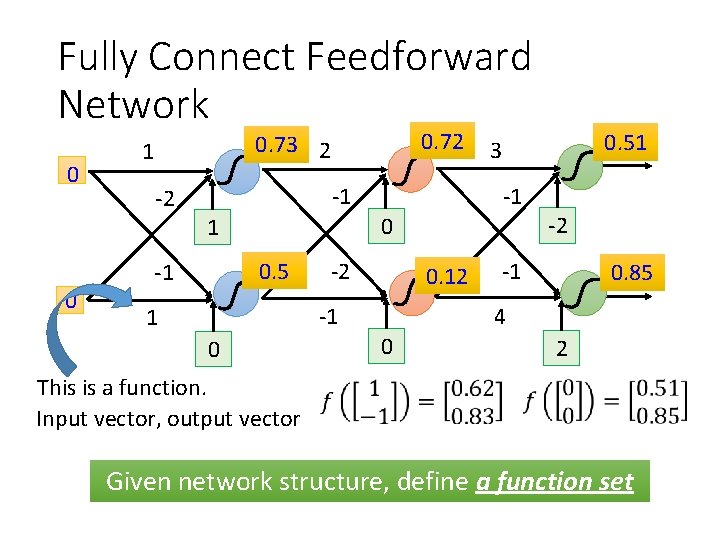

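Fully Connected Feedforward Network

With input (0, 0), the same network produces (0.73, 0.5) after the first layer, (0.72, 0.12) after the second, and (0.51, 0.85) at the output. The network is a function that maps an input vector to an output vector: $f([1, -1]^T) = [0.62, 0.83]^T$ and $f([0, 0]^T) = [0.51, 0.85]^T$. Given a network structure, we define a function set: every choice of weights and biases gives one function in the set.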
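To make "a structure defines a function set" concrete, here is a hypothetical sketch: it fixes a structure with 2 inputs and three layers of 2 sigmoid neurons, then draws random weights and biases. Each draw is a different member of the function set and maps the same input to a different output. The names `random_params` and `forward`, and the uniform range [-3, 3], are assumptions for illustration only.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def random_params(layer_sizes, rng):
    """Pick one member of the function set: one (W, b) pair per layer.

    W has one row per neuron in the layer and one column per input to it.
    """
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        W = [[rng.uniform(-3.0, 3.0) for _ in range(n_in)] for _ in range(n_out)]
        b = [rng.uniform(-3.0, 3.0) for _ in range(n_out)]
        params.append((W, b))
    return params

def forward(params, x):
    """Apply every layer in turn: a <- sigmoid(W a + b)."""
    a = x
    for W, b in params:
        a = [sigmoid(sum(w * ai for w, ai in zip(row, a)) + bi)
             for row, bi in zip(W, b)]
    return a

structure = [2, 2, 2, 2]   # 2 inputs, then three layers of 2 neurons each
rng = random.Random(0)     # fixed seed so runs are reproducible
for _ in range(3):
    f = random_params(structure, rng)   # same structure, different parameters
    print([round(v, 2) for v in forward(f, [1.0, -1.0])])
```

The structure alone does not pick a function; training (the later steps) chooses which member of this set to keep.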

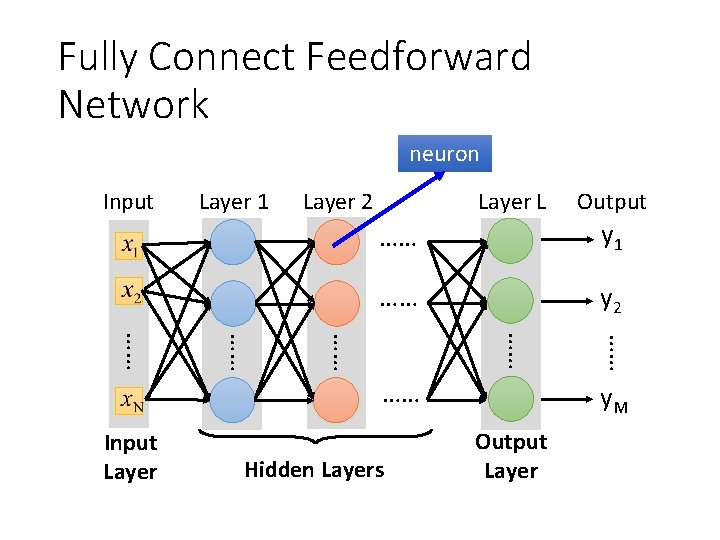

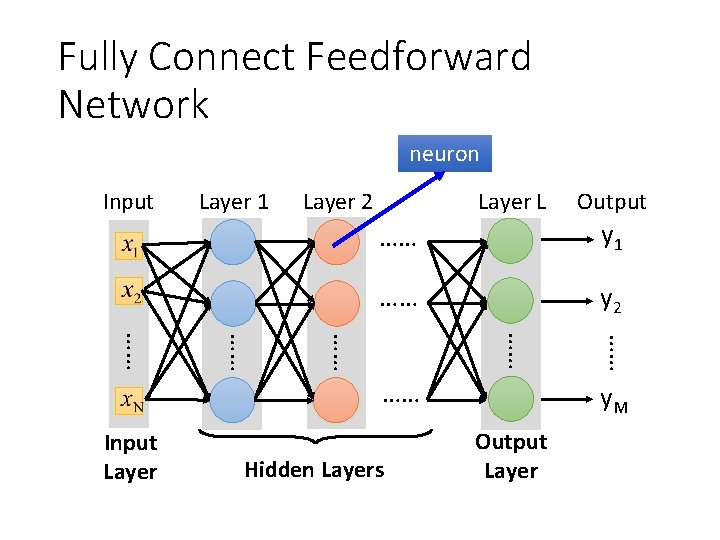

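Fully Connected Feedforward Network

Input layer (the input vector x) → Layer 1 → Layer 2 → … → Layer L. Every layer is made of neurons, each connected to all outputs of the previous layer. The layers between the input and the output are the hidden layers; the last one, Layer L, is the output layer, producing $y_1, y_2, \ldots, y_M$.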
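Deciding the structure also fixes how many parameters the function set has. A short sketch, assuming every layer is fully connected: a layer with `n_out` neurons fed by `n_in` values has an `n_out` × `n_in` weight matrix and `n_out` biases. The layer sizes in the second call are hypothetical.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Count the weights and biases of a fully connected feedforward network.

    layer_sizes[0] is the input dimension; each later entry is the number of
    neurons in one layer (hidden layers first, output layer last).
    """
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_out * n_in   # weight matrix W^l has shape (n_out, n_in)
        total += n_out          # bias vector b^l has n_out entries
    return total

print(count_parameters([2, 2, 2, 2]))          # 18: the small example network
print(count_parameters([256, 500, 500, 10]))   # 384010: a hypothetical larger network
```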

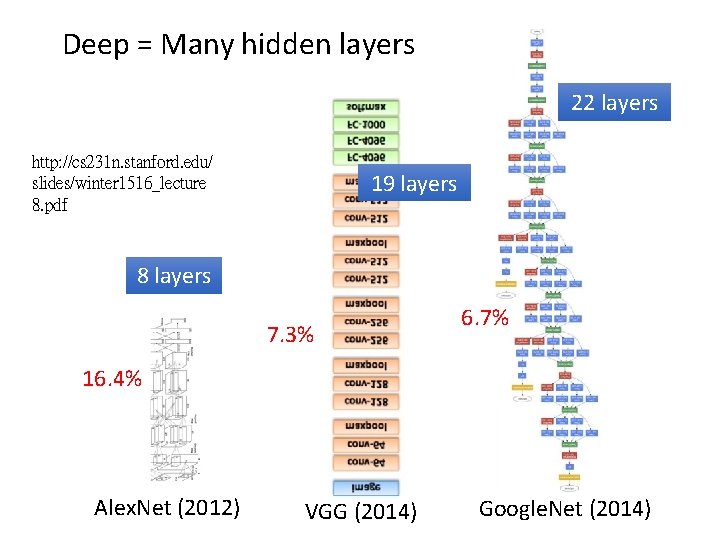

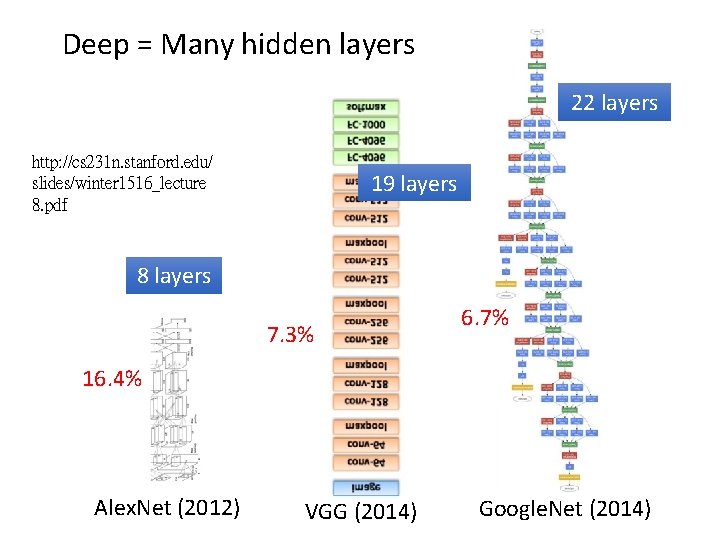

Deep = Many hidden layers

- AlexNet (2012): 8 layers, 16.4% error
- VGG (2014): 19 layers, 7.3% error
- GoogleNet (2014): 22 layers, 6.7% error

Source: http://cs231n.stanford.edu/slides/winter1516_lecture8.pdf

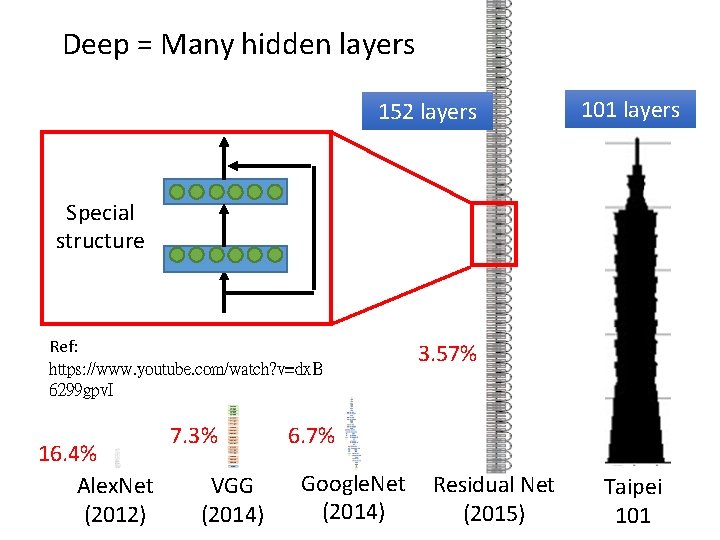

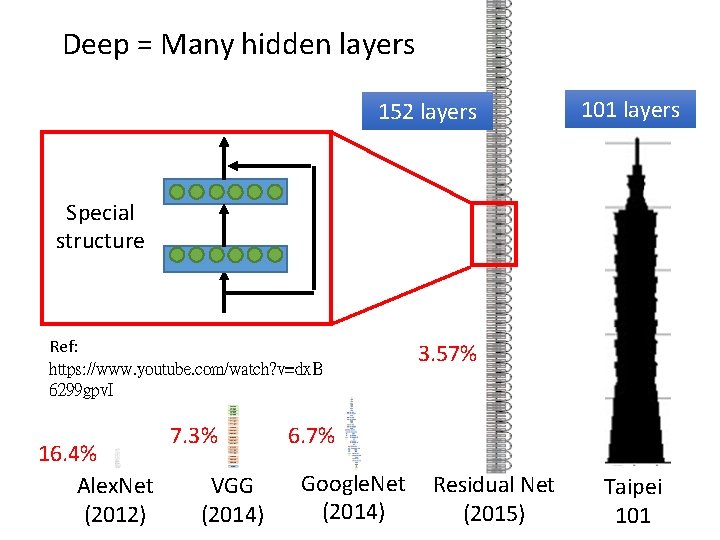

Deep = Many hidden layers

- AlexNet (2012): 16.4% error
- VGG (2014): 7.3% error
- GoogleNet (2014): 6.7% error
- Residual Net (2015): 152 layers with a special structure, 3.57% error

For scale: 101 layers is as many as the floors of Taipei 101.

Ref: https://www.youtube.com/watch?v=dxB6299gpvI

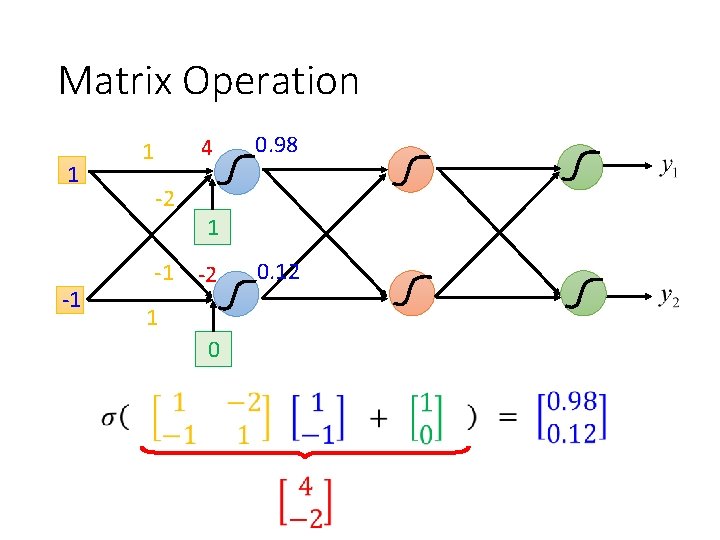

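Matrix Operation

$$\sigma\left(\begin{bmatrix} 1 & -2 \\ -1 & 1 \end{bmatrix}\begin{bmatrix} 1 \\ -1 \end{bmatrix} + \begin{bmatrix} 1 \\ 0 \end{bmatrix}\right) = \sigma\left(\begin{bmatrix} 4 \\ -2 \end{bmatrix}\right) = \begin{bmatrix} 0.98 \\ 0.12 \end{bmatrix}$$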
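The same layer in matrix form, as a plain-Python sketch with hypothetical helpers `matvec` and `layer` (a real implementation would normally use a numerical library instead of nested lists). Row i of `W1` holds the weights of neuron i.

```python
import math

def matvec(W, x):
    """Matrix-vector product: one dot product per row of W."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def layer(W, x, b):
    """One fully connected layer in matrix form: sigma(W x + b), elementwise."""
    z = [zi + bi for zi, bi in zip(matvec(W, x), b)]
    return [1.0 / (1.0 + math.exp(-zi)) for zi in z]

W1 = [[1.0, -2.0],
      [-1.0, 1.0]]
b1 = [1.0, 0.0]
x = [1.0, -1.0]
print(matvec(W1, x))                              # [3.0, -2.0] before adding the bias
print([round(v, 2) for v in layer(W1, x, b1)])    # [0.98, 0.12]
```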

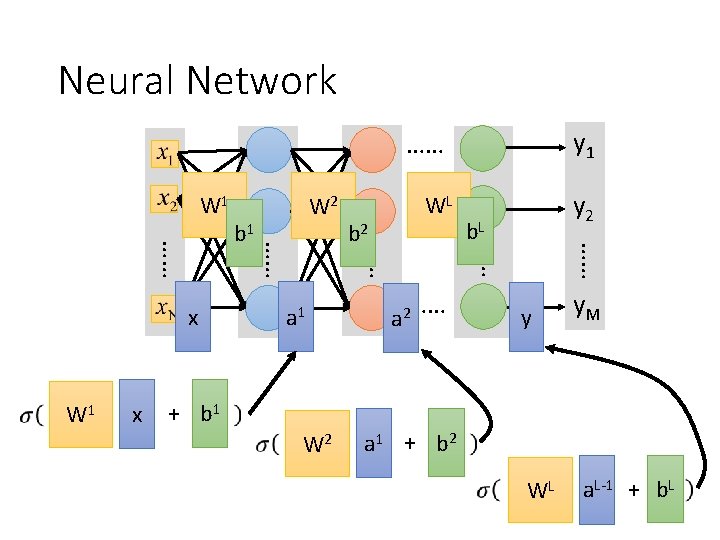

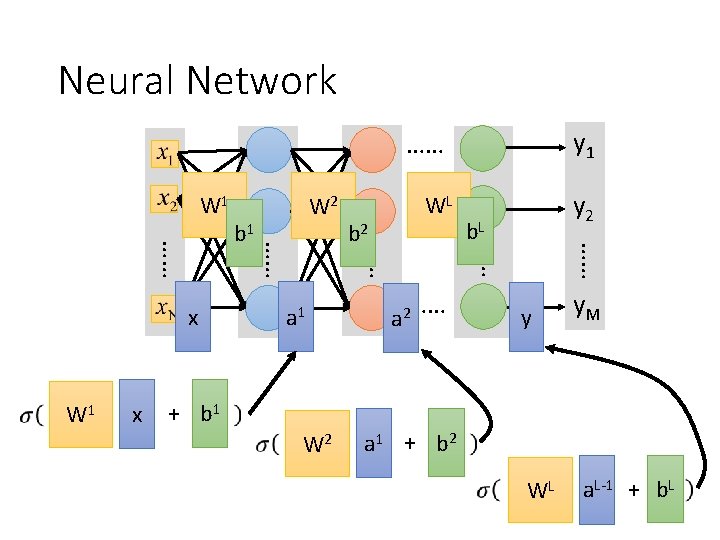

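Neural Network

With weight matrices $W^1, \ldots, W^L$ and bias vectors $b^1, \ldots, b^L$, the layers compute

$$a^1 = \sigma(W^1 x + b^1), \quad a^2 = \sigma(W^2 a^1 + b^2), \quad \ldots, \quad y = \sigma(W^L a^{L-1} + b^L)$$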

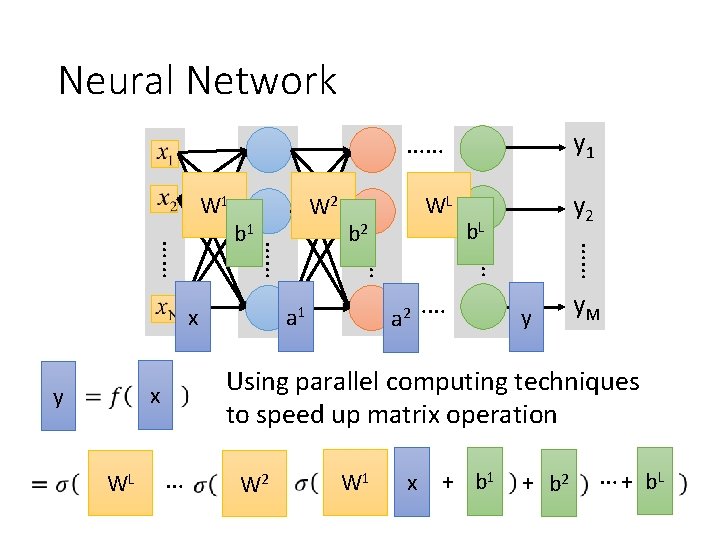

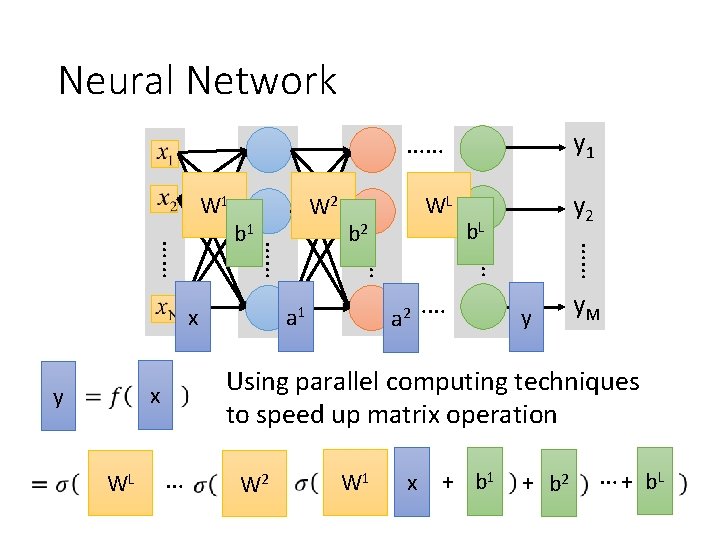

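Neural Network

Composing the layers, the whole network is one function:

$$y = f(x) = \sigma\left(W^L \cdots \sigma\left(W^2\, \sigma\left(W^1 x + b^1\right) + b^2\right) \cdots + b^L\right)$$

Because this is a chain of matrix operations, parallel computing techniques can be used to speed it up.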
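A sketch of the composed function, assuming the three-layer example network with the weights and biases reconstructed from the numbers in the earlier example figures (an assumption; check them against the slide images). The loop applies $a^l = \sigma(W^l a^{l-1} + b^l)$ from the first layer to the last, which evaluates the nested expression from the inside out.

```python
import math

def sigmoid_vec(z):
    return [1.0 / (1.0 + math.exp(-zi)) for zi in z]

def layer(W, a, b):
    """a^l = sigma(W^l a^(l-1) + b^l)."""
    return sigmoid_vec([sum(w * ai for w, ai in zip(row, a)) + bi
                        for row, bi in zip(W, b)])

def network(params, x):
    """y = f(x) = sigma(W^L ... sigma(W^2 sigma(W^1 x + b^1) + b^2) ... + b^L)."""
    a = x
    for W, b in params:   # innermost layer (W^1, b^1) first
        a = layer(W, a, b)
    return a

# (W^l, b^l) per layer, as reconstructed from the example (assumed values).
params = [
    ([[1.0, -2.0], [-1.0, 1.0]], [1.0, 0.0]),
    ([[2.0, -1.0], [-2.0, -1.0]], [0.0, 0.0]),
    ([[3.0, -1.0], [-1.0, 4.0]], [-2.0, 2.0]),
]
print([round(v, 2) for v in network(params, [1.0, -1.0])])   # [0.62, 0.83]
print([round(v, 2) for v in network(params, [0.0, 0.0])])    # [0.51, 0.85]
```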

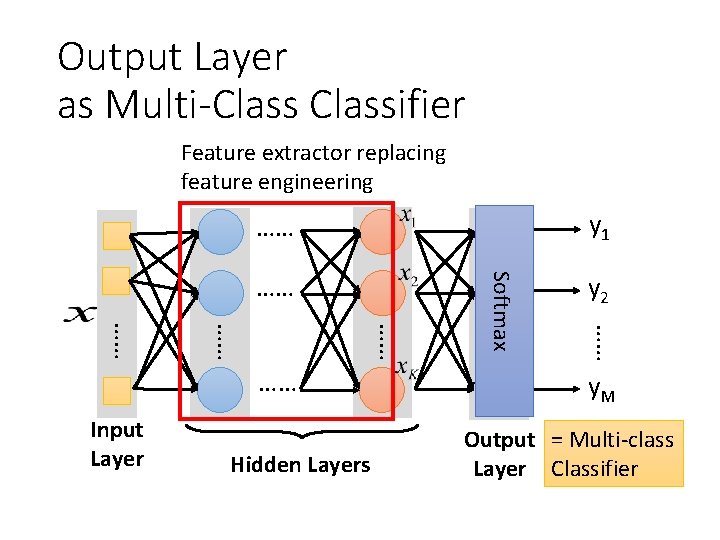

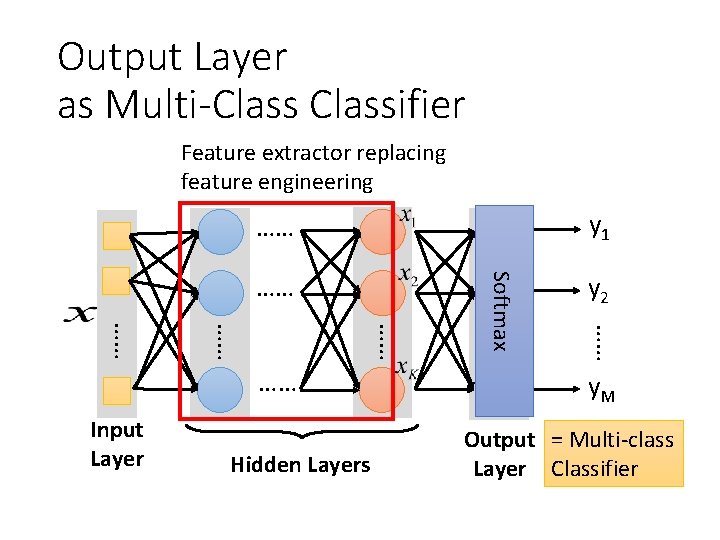

Output Layer as Multi-Class Classifier

The hidden layers act as a feature extractor, replacing feature engineering. The output layer applies softmax to produce $y_1, y_2, \ldots, y_M$, so it works as a multi-class classifier.

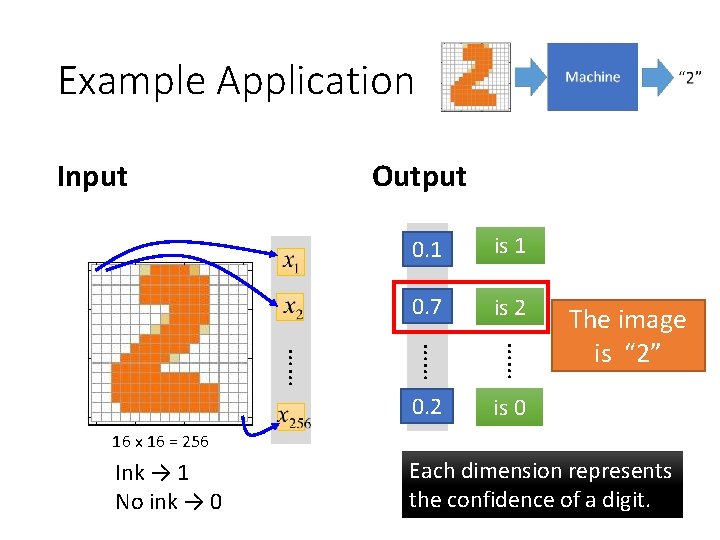

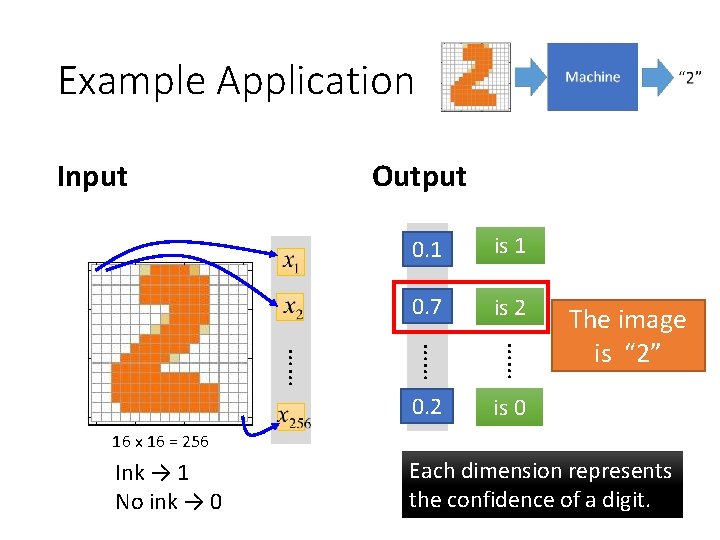

Example Application

Input: a 16 × 16 = 256-pixel image, one dimension per pixel (ink → 1, no ink → 0). Output: $y_1 = 0.1$ (is "1"), $y_2 = 0.7$ (is "2"), …, $y_{10} = 0.2$ (is "0"). Each dimension represents the confidence of a digit; the largest, $y_2$, says the image is "2".
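A sketch of the input and output encoding described above. The text-grid image format (`#` for ink, `.` for no ink) and the helper names are assumptions for illustration; only $y_1$, $y_2$ and $y_{10}$ come from the example, and the zeros filled in for $y_3$ to $y_9$ are hypothetical.

```python
def image_to_vector(image):
    """Flatten a 16 x 16 image into a 256-dim vector: ink -> 1, no ink -> 0.

    Each row is a 16-character string and '#' marks ink (an assumed format).
    """
    assert len(image) == 16 and all(len(row) == 16 for row in image)
    return [1 if pixel == "#" else 0 for row in image for pixel in row]

def predicted_digit(y):
    """y[0] is the confidence for "1", ..., y[8] for "9", and y[9] for "0"."""
    best = max(range(10), key=lambda i: y[i])
    return (best + 1) % 10

rows = ["." * 16 for _ in range(16)]
rows[5] = "....########...."   # one horizontal stroke, for illustration only
x = image_to_vector(rows)
print(len(x), sum(x))           # 256 8

# y_1 = 0.1, y_2 = 0.7 and y_10 = 0.2 follow the example; the zeros are hypothetical.
y = [0.1, 0.7] + [0.0] * 7 + [0.2]
print(predicted_digit(y))       # 2
```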

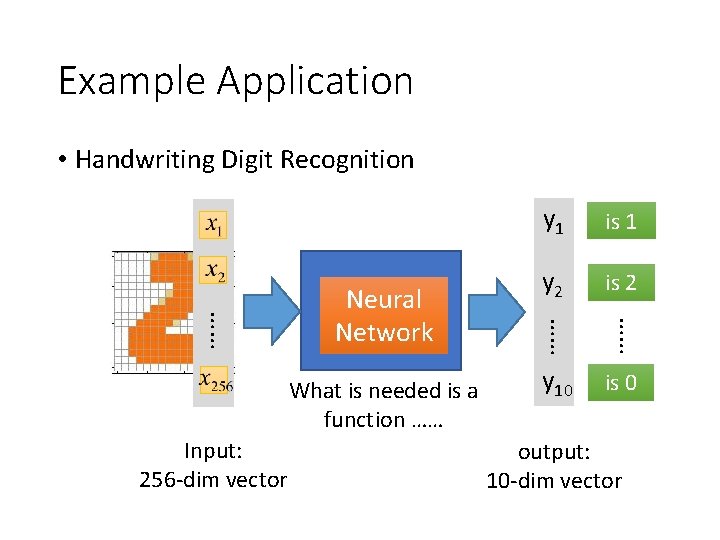

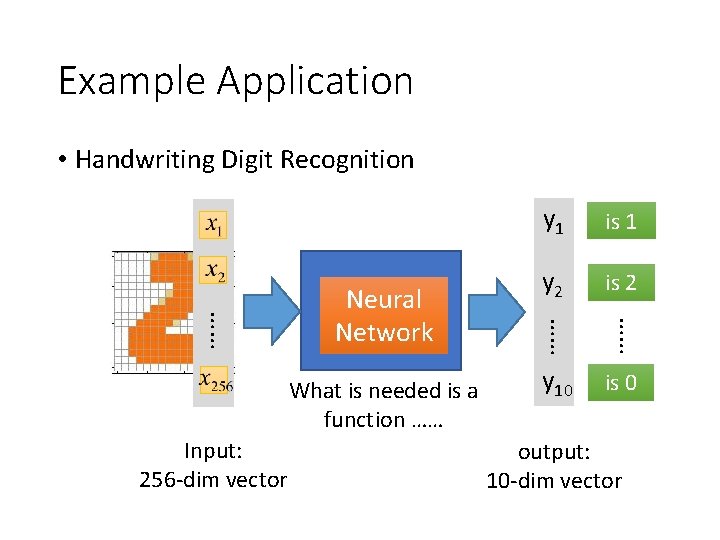

Example Application

- Handwriting Digit Recognition: what is needed is a function (the machine, here a neural network) whose input is a 256-dim vector (e.g., the image of a "2") and whose output is a 10-dim vector ($y_1$: is "1", $y_2$: is "2", …, $y_{10}$: is "0").

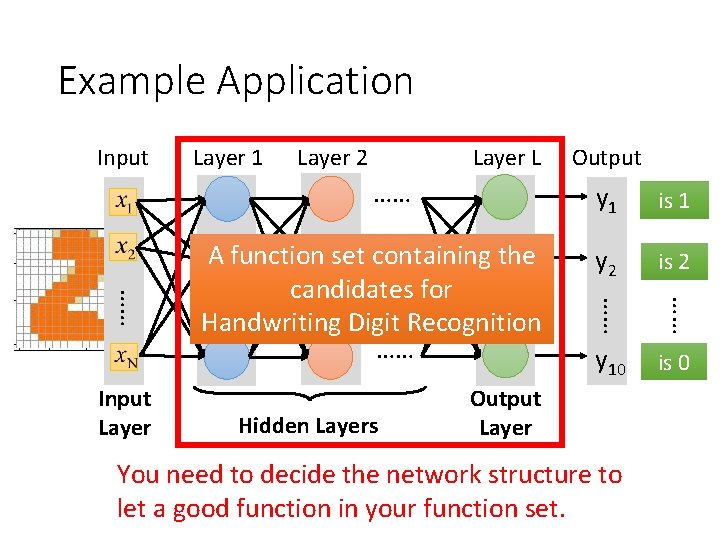

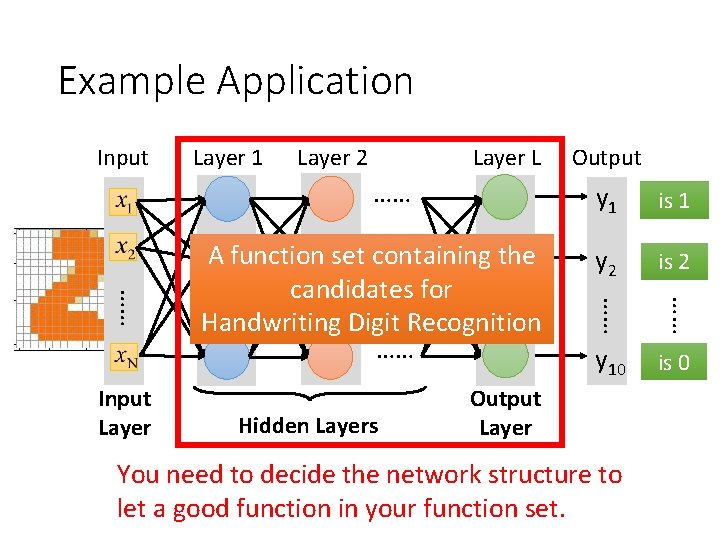

Example Application

The network (input layer, Layer 1 to Layer L, output $y_1$: is "1", $y_2$: is "2", …, $y_{10}$: is "0") defines a function set containing the candidates for handwriting digit recognition. You need to decide the network structure so that a good function is in your function set.

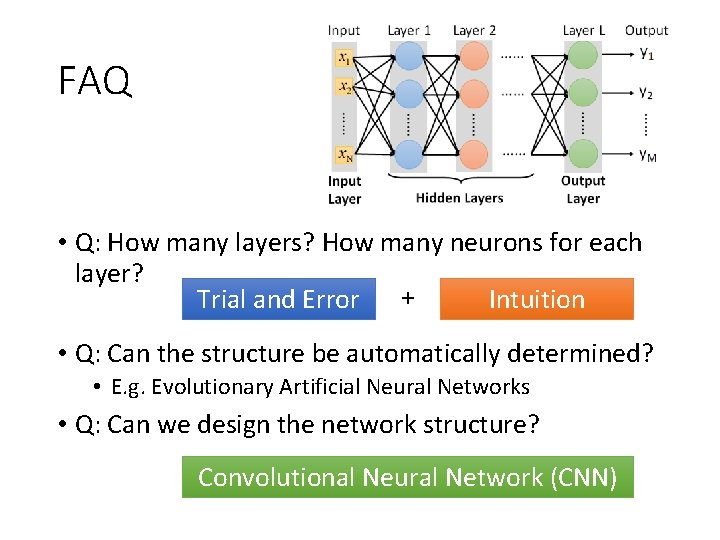

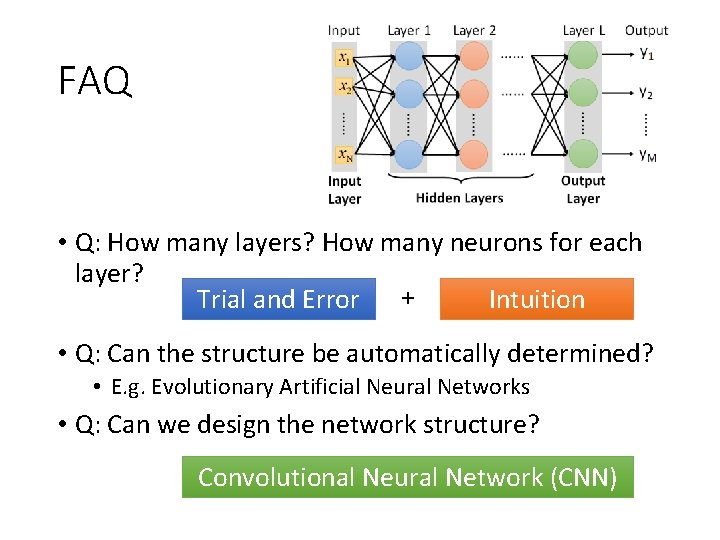

FAQ

- Q: How many layers? How many neurons for each layer?
  - Trial and error + intuition
- Q: Can the structure be automatically determined?
  - E.g., Evolutionary Artificial Neural Networks
- Q: Can we design the network structure?
  - Convolutional Neural Network (CNN)

Three Steps for Deep Learning, Step 2: goodness of function

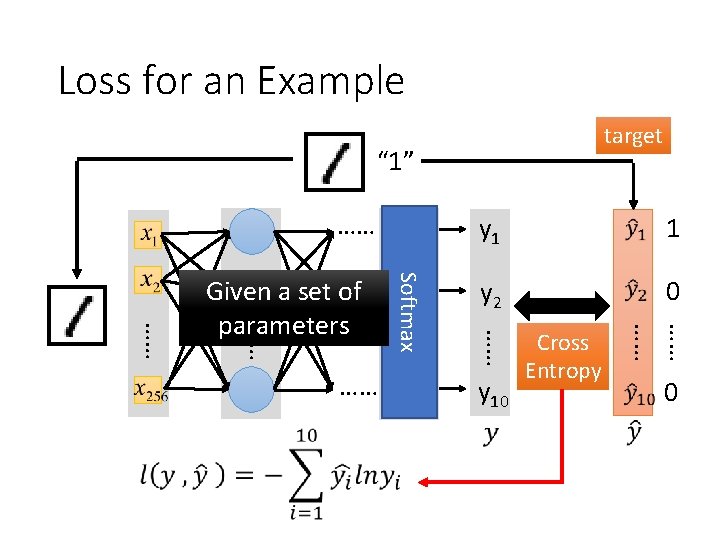

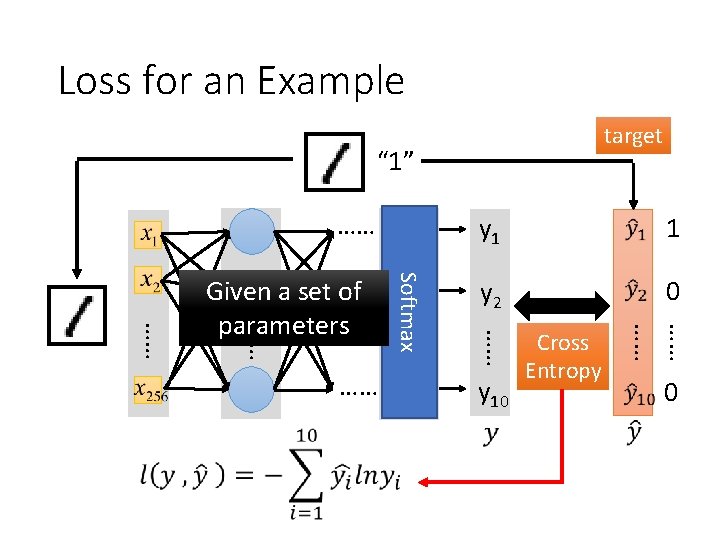

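Loss for an Example

Given a set of parameters, the network maps an image whose target is "1" through softmax to outputs $y_1, \ldots, y_{10}$. The target $\hat{y}$ is one-hot: $\hat{y}_1 = 1$ and $\hat{y}_2 = \cdots = \hat{y}_{10} = 0$. The loss for this example is the cross entropy between $y$ and $\hat{y}$:

$$C(y, \hat{y}) = -\sum_{i=1}^{10} \hat{y}_i \ln y_i$$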
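A sketch of the cross-entropy loss for one example. With a one-hot target, only the term of the correct class survives, so the loss is $-\ln y_1$ for a "1". The output vectors below are hypothetical. Terms with $\hat{y}_i = 0$ are skipped, which avoids evaluating $\ln 0$ and does not change the sum.

```python
import math

def cross_entropy(y, y_hat):
    """C(y, y_hat) = -sum_i y_hat_i * ln(y_i), summed over the classes."""
    # Terms with y_hat_i = 0 contribute nothing; skipping them avoids ln(0).
    return -sum(t * math.log(p) for p, t in zip(y, y_hat) if t > 0)

target_1 = [1] + [0] * 9                     # one-hot target for the digit "1"
y_unsure = [0.7, 0.2, 0.1] + [0.0] * 7       # hypothetical network outputs
y_confident = [0.9, 0.05, 0.05] + [0.0] * 7

print(round(cross_entropy(y_unsure, target_1), 3))      # 0.357
print(round(cross_entropy(y_confident, target_1), 3))   # 0.105
```

The more confidence the network puts on the correct digit, the smaller the loss.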

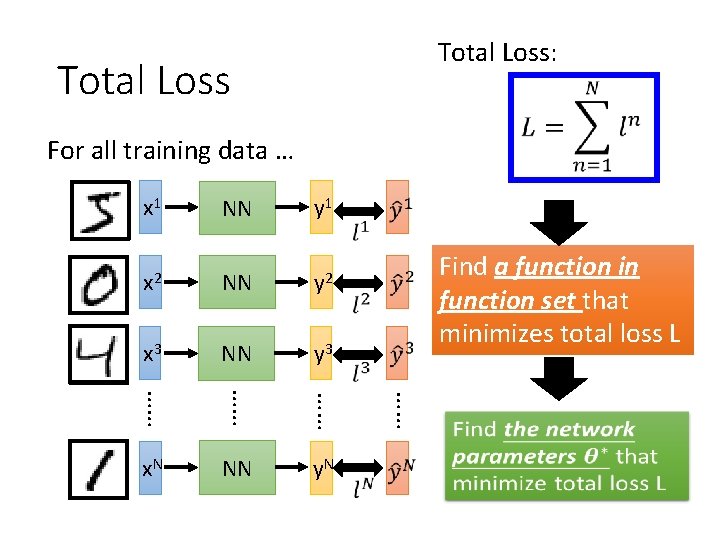

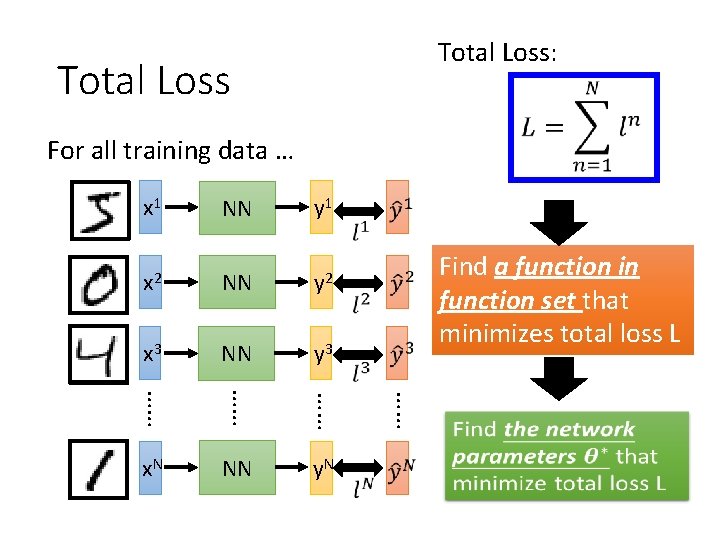

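Total Loss

For all training data $x^1, x^2, \ldots, x^N$, the network (NN) produces $y^1, y^2, \ldots, y^N$, and comparing each $y^n$ with its target $\hat{y}^n$ gives a loss $C^n$. The total loss is

$$L = \sum_{n=1}^{N} C^n$$

Find a function in the function set that minimizes the total loss L, that is, the network parameters (all weights and biases) that minimize L.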
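A sketch of the total loss, and of "find a function that minimizes L" done by brute force over a few candidates. The model is a hypothetical one-parameter, two-class stand-in for a network, $y = \mathrm{softmax}([w x, 0])$, and the data are made up; real training searches the parameters with gradient descent rather than a candidate list.

```python
import math

def cross_entropy(y, y_hat):
    return -sum(t * math.log(p) for p, t in zip(y, y_hat) if t > 0)

def total_loss(network, xs, targets):
    """L = sum over n of C^n, where C^n = cross_entropy(network(x^n), y_hat^n)."""
    return sum(cross_entropy(network(x), t) for x, t in zip(xs, targets))

def make_network(w):
    """Hypothetical one-parameter stand-in for a network: y = softmax([w * x, 0])."""
    def f(x):
        z = [w * x, 0.0]
        m = max(z)
        e = [math.exp(zi - m) for zi in z]
        s = sum(e)
        return [ei / s for ei in e]
    return f

# Made-up training data: positive x belongs to class 0, negative x to class 1.
xs = [2.0, -1.0, 0.5]
targets = [[1, 0], [0, 1], [1, 0]]

candidates = [-1.0, 0.0, 1.0, 3.0]   # a tiny "function set" indexed by w
for w in candidates:
    print(w, round(total_loss(make_network(w), xs, targets), 3))
best_w = min(candidates, key=lambda w: total_loss(make_network(w), xs, targets))
print(best_w)   # 3.0
```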

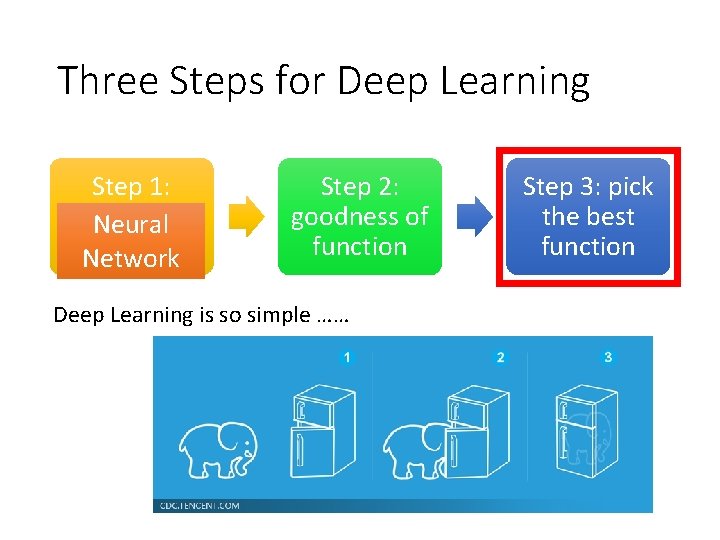

Three Steps for Deep Learning, Step 3: pick the best function

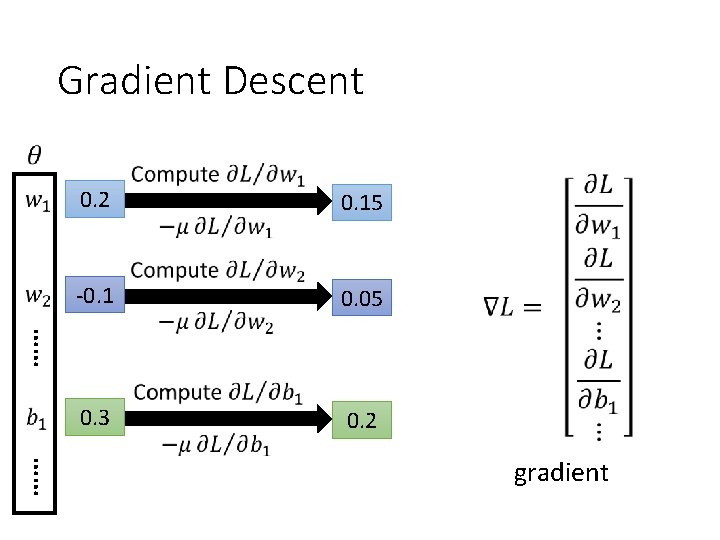

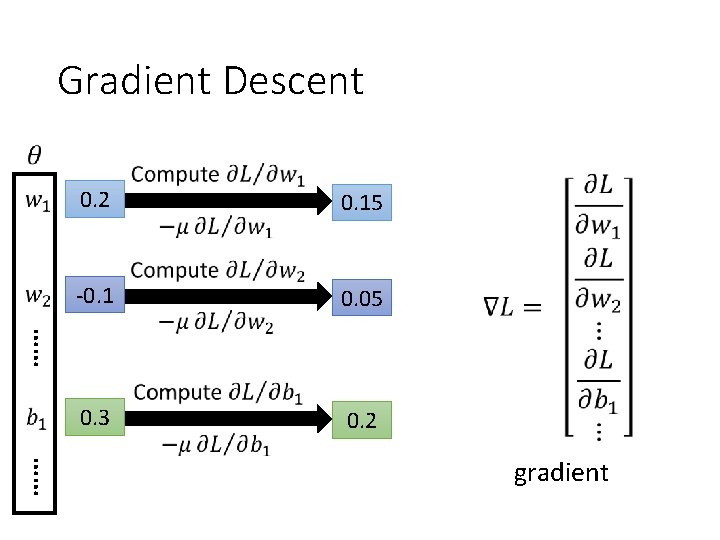

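Gradient Descent

Compute the gradient of the total loss L with respect to every parameter (every weight and bias), then move each parameter a small step against its gradient: $w \leftarrow w - \eta \, \partial L / \partial w$, with learning rate $\eta$. In the example, three parameters starting at 0.2, -0.1 and 0.3 become 0.15, 0.05 and 0.2.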

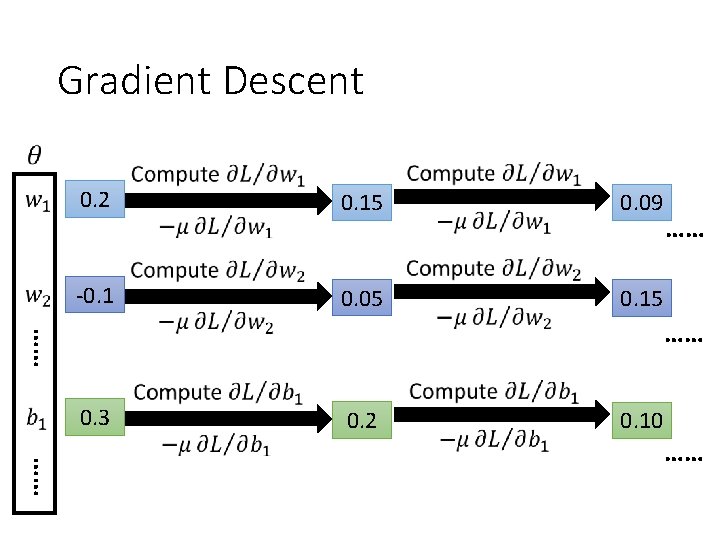

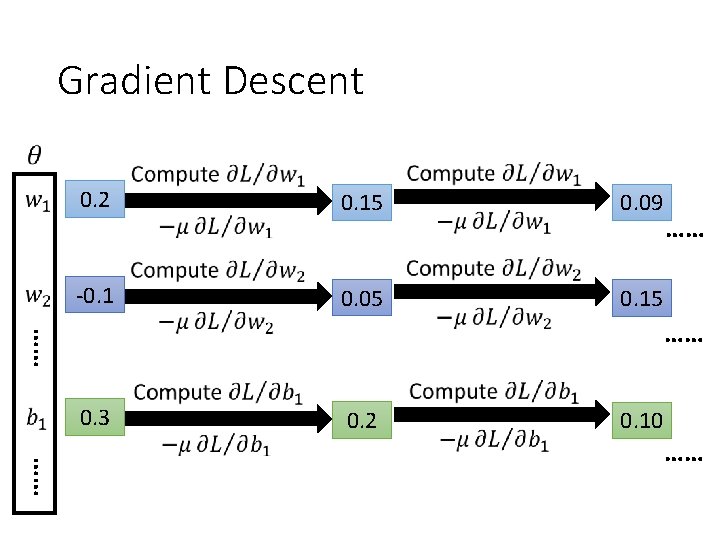

Gradient Descent

Repeat the update: the same parameters go 0.2 → 0.15 → 0.09, -0.1 → 0.05 → 0.15, and 0.3 → 0.2 → 0.10, and so on.
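A sketch of gradient descent on a swapped-in toy model rather than a deep network: a single sigmoid neuron $y = \sigma(w x + b)$ trained with cross-entropy, where the gradient can be written by hand ($\partial C/\partial w = (y - t)x$ and $\partial C/\partial b = y - t$ for one example with target $t$). The data, starting values and learning rate `eta` are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_grad(w, b, data):
    """Total cross-entropy loss of y = sigmoid(w * x + b) over data, plus dL/dw and dL/db."""
    loss, dw, db = 0.0, 0.0, 0.0
    for x, t in data:
        y = sigmoid(w * x + b)
        loss -= t * math.log(y) + (1 - t) * math.log(1 - y)
        dw += (y - t) * x   # per-example dC/dw
        db += y - t         # per-example dC/db
    return loss, dw, db

data = [(2.0, 1), (-1.0, 0), (0.5, 1), (-2.5, 0)]   # hypothetical (x, target) pairs
w, b = 0.2, 0.3   # hypothetical starting parameters
eta = 0.1         # hypothetical learning rate

history = []
for step in range(20):
    loss, dw, db = loss_and_grad(w, b, data)
    history.append(loss)
    w, b = w - eta * dw, b - eta * db   # step against the gradient

print([round(v, 3) for v in history[:5]])   # the total loss shrinks step by step
```

In a deep network the update rule is the same; only computing the gradient is harder, which is what backpropagation addresses.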

Gradient Descent

This is the "learning" of machines in deep learning …… Even AlphaGo uses this approach. What people imagine …… What it actually is ….. I hope you are not too disappointed :p

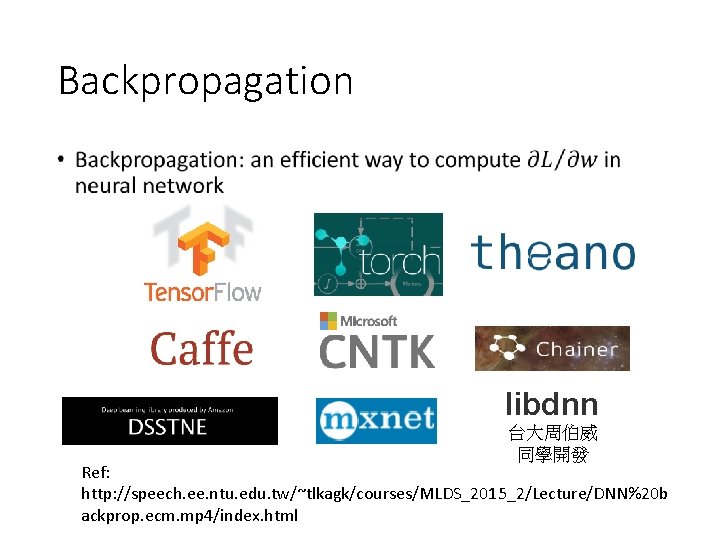

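Backpropagation

- Backpropagation is an efficient way to compute the gradients that gradient descent needs in a neural network.
- libdnn: developed by 周伯威, a student at National Taiwan University
- Ref: http://speech.ee.ntu.edu.tw/~tlkagk/courses/MLDS_2015_2/Lecture/DNN%20backprop.ecm.mp4/index.html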
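A sketch of backpropagation for a tiny network (2 inputs, 2 sigmoid hidden neurons, 2 softmax outputs, cross-entropy loss). This is a hand-written illustration, not libdnn's code; the parameter values are hypothetical. It pushes the output error $y - \hat{y}$ backwards through the layers with the chain rule, then checks the gradients for $W^1$ against a central-difference estimate.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(z):
    m = max(z)
    e = [math.exp(zi - m) for zi in z]
    s = sum(e)
    return [ei / s for ei in e]

def affine(W, v, b):
    """W v + b for a list-of-rows matrix W."""
    return [sum(w * vi for w, vi in zip(row, v)) + bi for row, bi in zip(W, b)]

def forward(W1, b1, W2, b2, x):
    a1 = [sigmoid(z) for z in affine(W1, x, b1)]   # hidden layer
    y = softmax(affine(W2, a1, b2))                # output layer
    return a1, y

def loss(W1, b1, W2, b2, x, t):
    _, y = forward(W1, b1, W2, b2, x)
    return -sum(ti * math.log(yi) for yi, ti in zip(y, t) if ti > 0)

def backprop(W1, b1, W2, b2, x, t):
    """Return dC/dW1, dC/db1, dC/dW2, dC/db2 for one example."""
    a1, y = forward(W1, b1, W2, b2, x)
    # Output layer: with softmax + cross entropy, dC/dz2 = y - t.
    d2 = [yi - ti for yi, ti in zip(y, t)]
    # Hidden layer: send d2 back through W2, then through the sigmoid,
    # whose derivative is a * (1 - a).
    d1 = [a1[j] * (1 - a1[j]) * sum(W2[i][j] * d2[i] for i in range(len(d2)))
          for j in range(len(a1))]
    gW2 = [[d2[i] * a1[j] for j in range(len(a1))] for i in range(len(d2))]
    gW1 = [[d1[i] * x[j] for j in range(len(x))] for i in range(len(d1))]
    return gW1, d1, gW2, d2

def numeric_dW1(W1, b1, W2, b2, x, t, i, j, eps=1e-5):
    """Central-difference estimate of dC/dW1[i][j], used only as a check."""
    Wp = [row[:] for row in W1]
    Wm = [row[:] for row in W1]
    Wp[i][j] += eps
    Wm[i][j] -= eps
    return (loss(Wp, b1, W2, b2, x, t) - loss(Wm, b1, W2, b2, x, t)) / (2 * eps)

# Hypothetical parameters and one training example (input x, one-hot target t).
W1, b1 = [[1.0, -2.0], [-1.0, 1.0]], [1.0, 0.0]
W2, b2 = [[2.0, -1.0], [-2.0, -1.0]], [0.0, 0.0]
x, t = [1.0, -1.0], [1, 0]

gW1, gb1, gW2, gb2 = backprop(W1, b1, W2, b2, x, t)
for i in range(2):
    for j in range(2):
        # The backprop value and the numerical estimate should agree closely.
        print(i, j, round(gW1[i][j], 6), round(numeric_dW1(W1, b1, W2, b2, x, t, i, j), 6))
```

Backpropagation needs one forward and one backward pass per example, while the numerical check needs two extra forward passes per parameter, which is why it is used only for testing.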


Acknowledgment

- Thanks to Victor Chen for spotting typos in the slides.