
Unstructured Lumigraph Rendering
Chris Buehler, Michael Bosse, Leonard McMillan (MIT-LCS)
Steven J. Gortler (Harvard University)
Michael F. Cohen (Microsoft Research)

The Image-Based Rendering Problem
• Synthesize novel views from reference images
• Static scenes, fixed lighting
• Flexible geometry and camera configurations

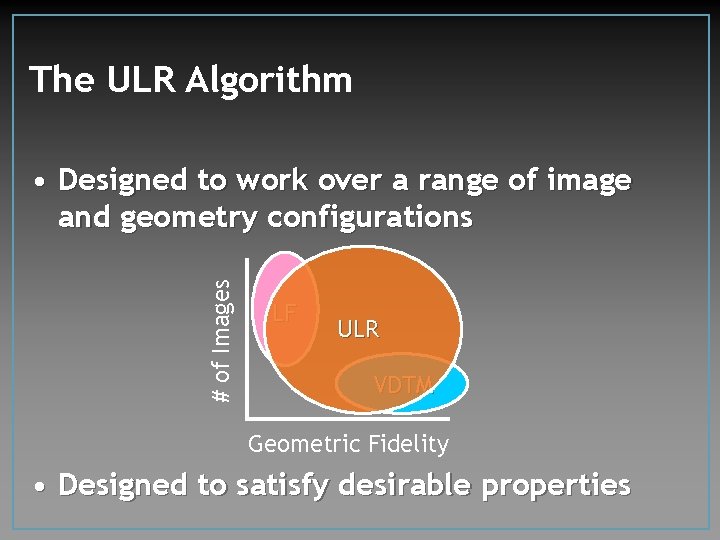

The ULR Algorithm
• Designed to work over a range of image and geometry configurations
• Designed to satisfy desirable properties
[Figure: LF, ULR, and VDTM placed on axes of # of images versus geometric fidelity]

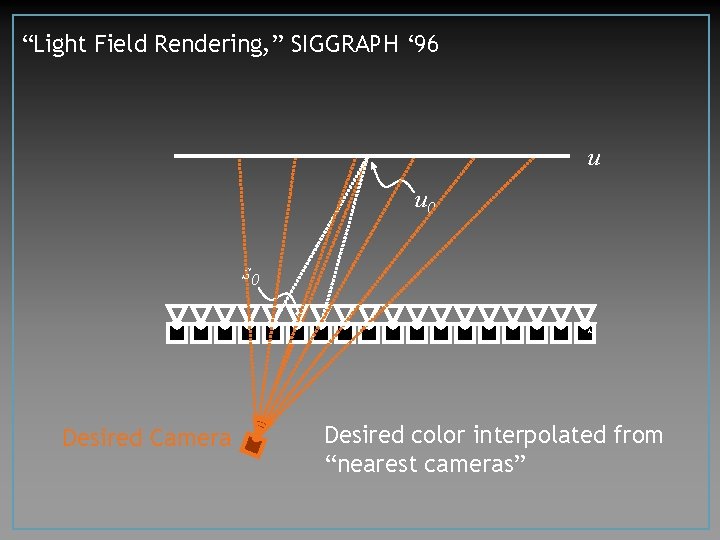

“Light Field Rendering,” SIGGRAPH ’96
Desired color interpolated from “nearest cameras”
[Figure: the desired camera relative to the u and s planes]

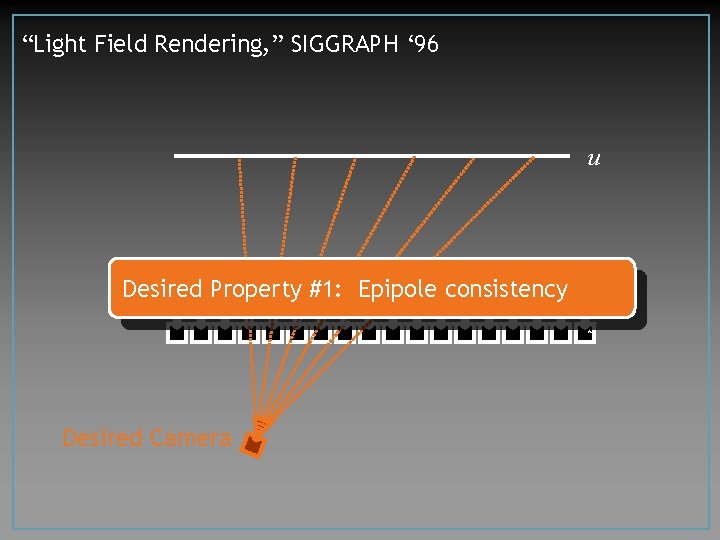

“Light Field Rendering,” SIGGRAPH ’96
Desired Property #1: Epipole consistency
[Figure: the desired camera relative to the u and s planes]

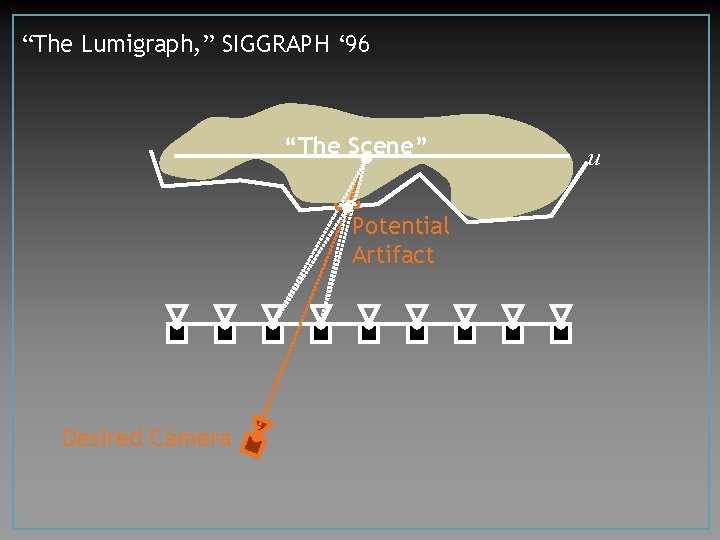

“The Lumigraph,” SIGGRAPH ’96
[Figure: the scene, the desired camera, and a potential artifact]

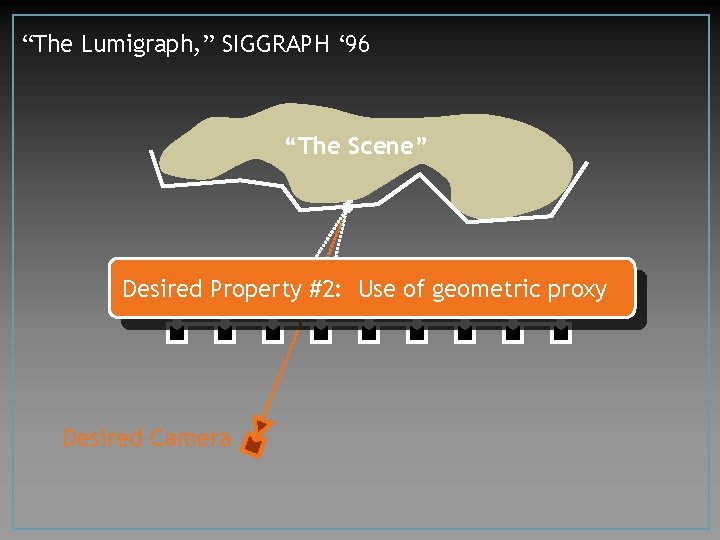

“The Lumigraph,” SIGGRAPH ’96
Desired Property #2: Use of geometric proxy
[Figure: the scene and the desired camera]

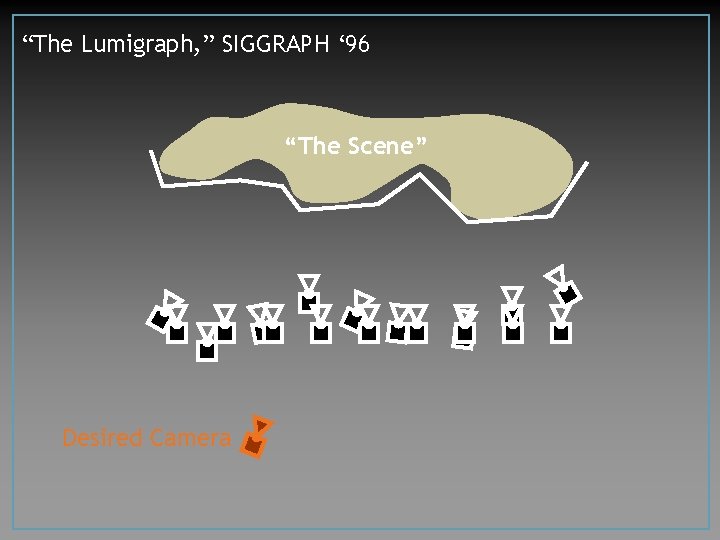

“The Lumigraph,” SIGGRAPH ’96
[Figure: the scene and the desired camera]

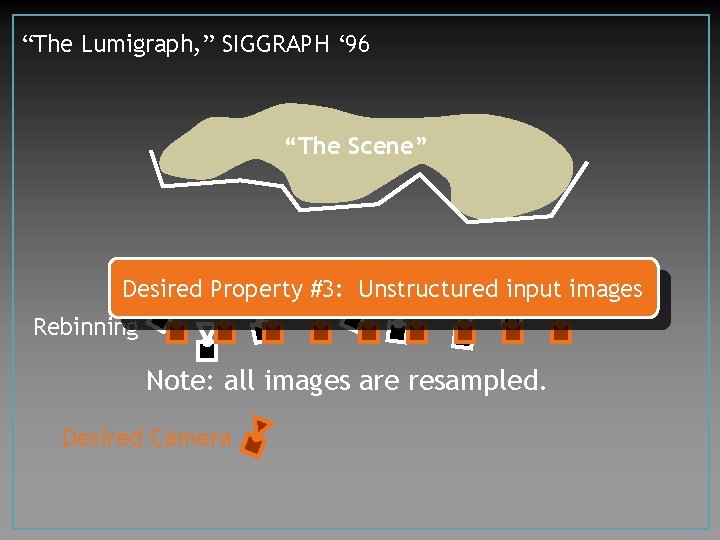

“The Lumigraph,” SIGGRAPH ’96
Desired Property #3: Unstructured input images
Rebinning (note: all images are resampled)
[Figure: the scene and the desired camera]

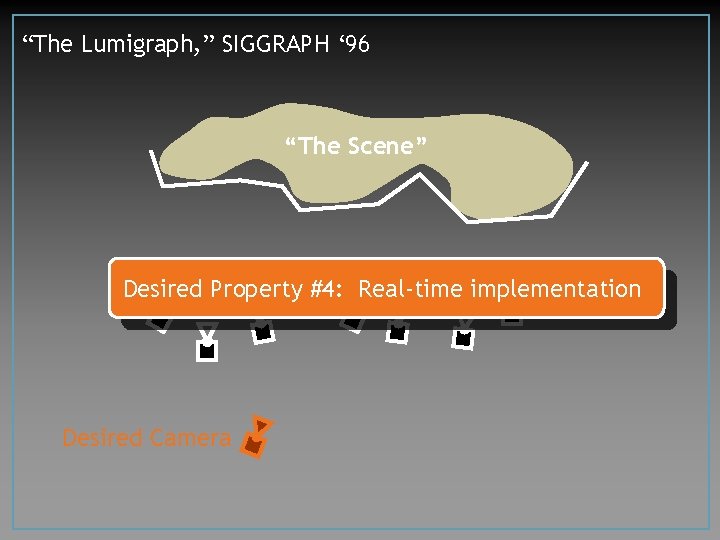

“The Lumigraph,” SIGGRAPH ’96
Desired Property #4: Real-time implementation
[Figure: the scene and the desired camera]

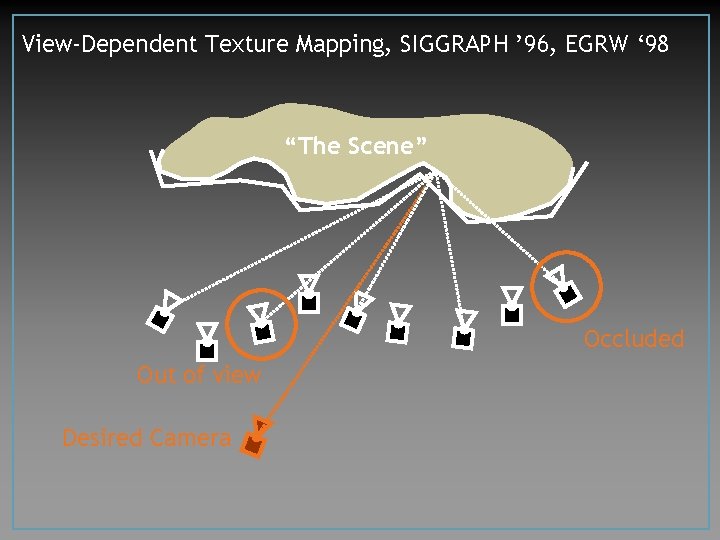

View-Dependent Texture Mapping, SIGGRAPH ’96, EGRW ’98
[Figure: the scene and the desired camera; some source cameras are occluded or out of view]

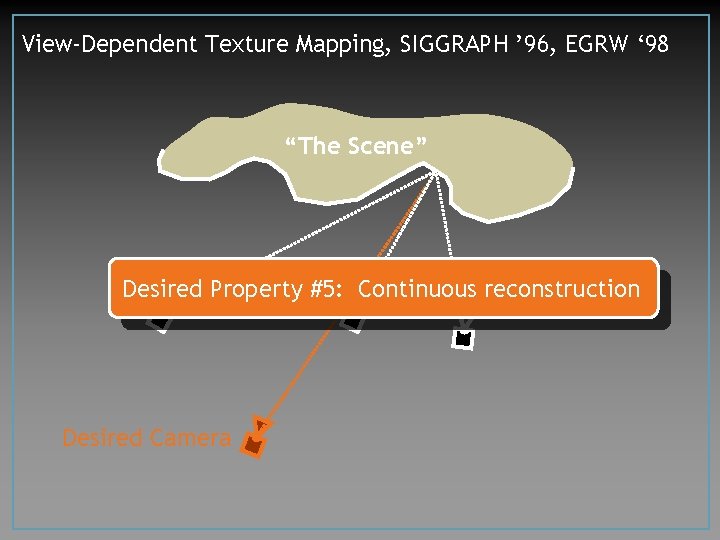

View-Dependent Texture Mapping, SIGGRAPH ’96, EGRW ’98
Desired Property #5: Continuous reconstruction
[Figure: the scene and the desired camera]

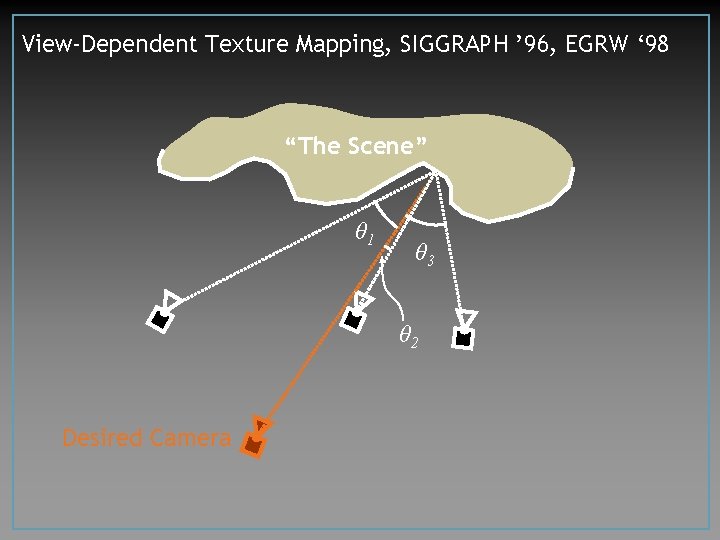

View-Dependent Texture Mapping, SIGGRAPH ’96, EGRW ’98
[Figure: the scene, the desired camera, and angles θ1, θ2, θ3 to three source cameras]

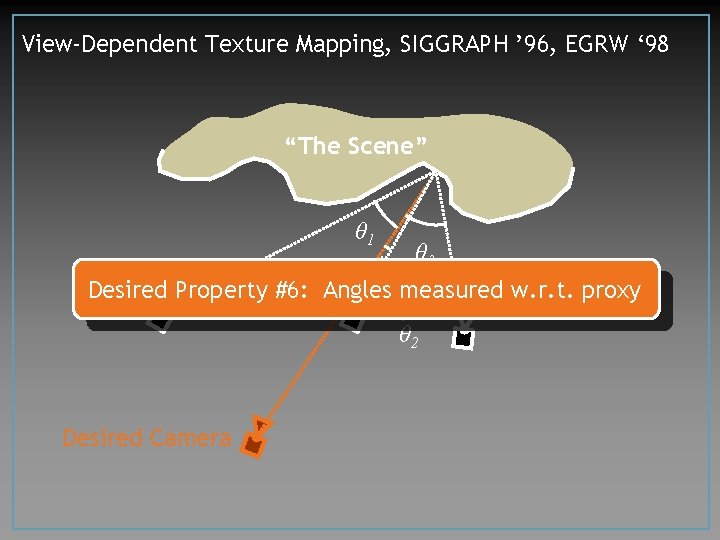

View-Dependent Texture Mapping, SIGGRAPH ’96, EGRW ’98
Desired Property #6: Angles measured w.r.t. proxy
[Figure: the scene, the desired camera, and angles θ1, θ2, θ3 measured at the proxy]

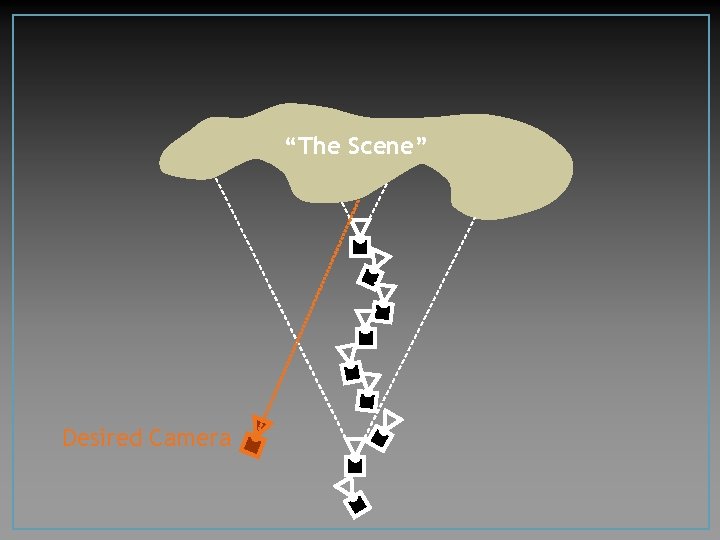

[Figure: the scene and the desired camera]

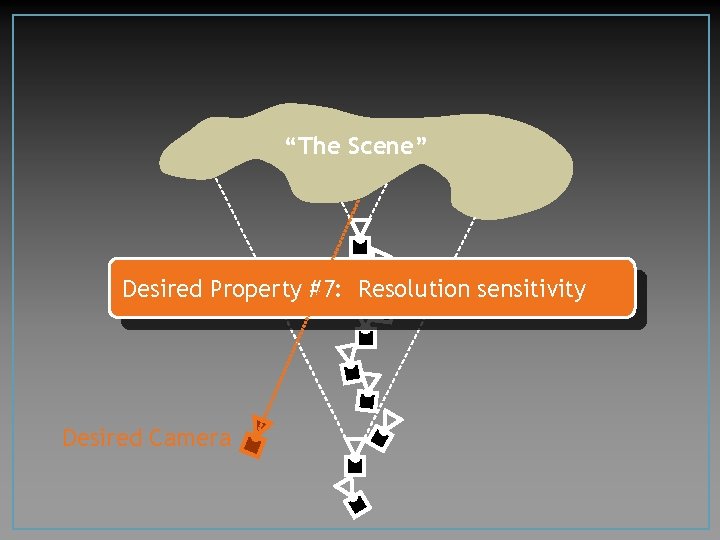

Desired Property #7: Resolution sensitivity
[Figure: the scene and the desired camera]

Previous Work
• Light fields and Lumigraphs: Levoy and Hanrahan; Gortler et al.; Isaksen et al.
• View-dependent texture mapping: Debevec et al.; Wood et al.
• Plenoptic modeling with hand-held cameras: Heigl et al.
• Many others…

Unstructured Lumigraph Rendering
1. Epipole consistency
2. Use of geometric proxy
3. Unstructured input
4. Real-time implementation
5. Continuous reconstruction
6. Angles measured w.r.t. proxy
7. Resolution sensitivity

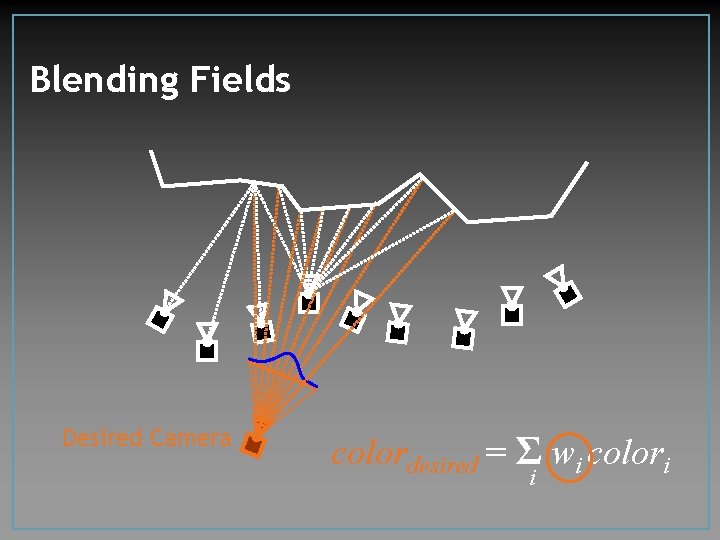

Blending Fields
[Figure: the desired camera]
$$\text{color}_{\text{desired}} = \sum_i w_i \,\text{color}_i$$

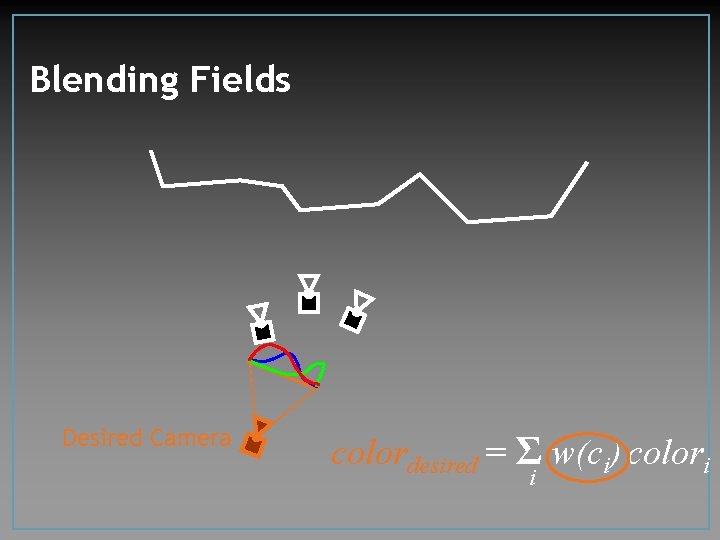

Blending Fields
[Figure: the desired camera]
$$\text{color}_{\text{desired}} = \sum_i w(C_i) \,\text{color}_i$$
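The blending-field equation is a per-pixel weighted sum of the colors contributed by the source cameras. The sketch below assumes RGB colors stored as 3-tuples of floats and weights that are already normalized; the name `blend_colors` is hypothetical and not taken from the ULR implementation.

```python
from typing import Sequence, Tuple

Color = Tuple[float, float, float]

def blend_colors(weights: Sequence[float], colors: Sequence[Color]) -> Color:
    """Hypothetical helper: color_desired = sum_i w_i * color_i for one pixel."""
    if len(weights) != len(colors):
        raise ValueError("need one weight per camera color")
    r = g = b = 0.0
    for w, (cr, cg, cb) in zip(weights, colors):
        # Accumulate each camera's color scaled by its blending weight.
        r += w * cr
        g += w * cg
        b += w * cb
    return (r, g, b)

# Two cameras weighted 0.75 and 0.25.
print(blend_colors([0.75, 0.25], [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]))  # prints (0.75, 0.0, 0.25)
```

If the weights do not sum to one, the result is brightened or darkened accordingly, which is why the slides later normalize them.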

Unstructured Lumigraph Rendering
• Explicitly construct blending field
    • Computed using penalties
    • Sample and interpolate over desired image
• Render with hardware
    • Projective texture mapping and alpha blending

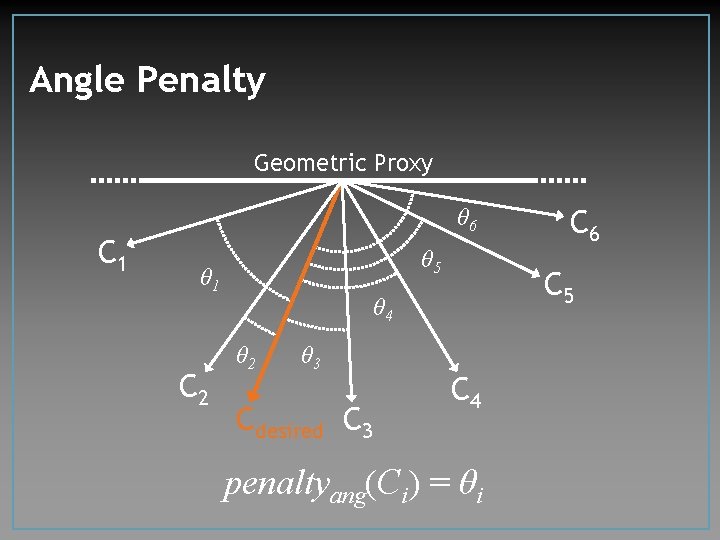

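Angle Penalty
[Figure: source cameras C1 to C6 and Cdesired viewing a point on the geometric proxy; θi is the angle at that point between the rays to Ci and to Cdesired]
$$\text{penalty}_{\text{ang}}(C_i) = \theta_i$$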
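The angle penalty can be computed from three points: the proxy point, the source camera center, and the desired camera center. A minimal sketch, assuming camera centers and proxy points are given as 3D coordinate tuples and angles are in radians; `angle_penalty` is a hypothetical name.

```python
import math
from typing import Sequence

def angle_penalty(proxy_point: Sequence[float],
                  cam_center: Sequence[float],
                  desired_center: Sequence[float]) -> float:
    """Hypothetical helper: penalty_ang(C_i) = theta_i, in radians.

    theta_i is the angle at the proxy point between the ray toward the
    source camera C_i and the ray toward the desired camera.
    """
    u = [c - p for c, p in zip(cam_center, proxy_point)]
    v = [d - p for d, p in zip(desired_center, proxy_point)]
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0.0:
        raise ValueError("a camera center coincides with the proxy point")
    # Clamp so rounding just outside [-1, 1] cannot make acos fail.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.acos(cos_theta)

theta = angle_penalty((0.0, 0.0, 0.0), (1.0, 0.0, 1.0), (0.0, 0.0, 1.0))
print(round(math.degrees(theta), 6))  # prints 45.0
```

Measuring the angle at the proxy point, rather than at the desired camera, is what Desired Property #6 asks for.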

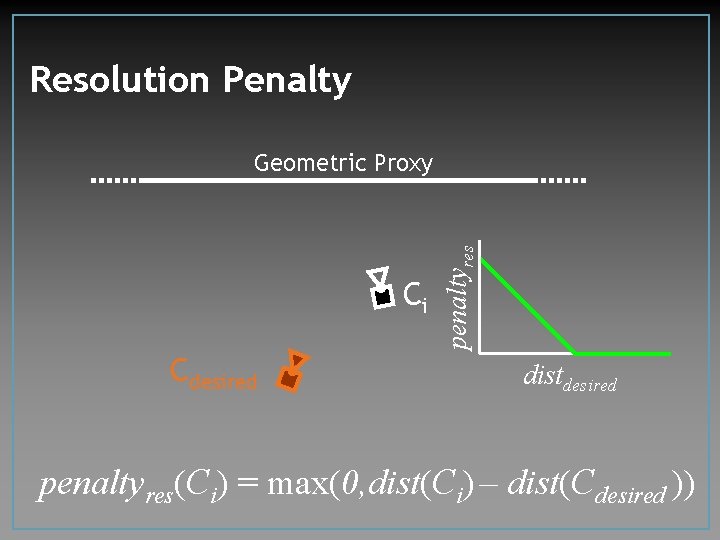

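Resolution Penalty
[Figure: Ci and Cdesired viewing a point on the geometric proxy from distances dist(Ci) and dist(Cdesired)]
$$\text{penalty}_{\text{res}}(C_i) = \max\bigl(0,\ \text{dist}(C_i) - \text{dist}(C_{\text{desired}})\bigr)$$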
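The resolution penalty uses distance to the proxy point as a stand-in for sampling resolution, which implicitly assumes cameras with comparable focal lengths and image sizes. A minimal sketch under that assumption; `resolution_penalty` is a hypothetical name.

```python
import math
from typing import Sequence

def resolution_penalty(proxy_point: Sequence[float],
                       cam_center: Sequence[float],
                       desired_center: Sequence[float]) -> float:
    """Hypothetical helper: penalty_res(C_i) = max(0, dist(C_i) - dist(C_desired)).

    Distances are measured from the point on the geometric proxy. A source
    camera farther away than the desired camera is penalized; a closer one
    is not.
    """
    dist_i = math.dist(cam_center, proxy_point)
    dist_desired = math.dist(desired_center, proxy_point)
    return max(0.0, dist_i - dist_desired)

p = (0.0, 0.0, 0.0)
print(resolution_penalty(p, (0.0, 0.0, 5.0), (0.0, 0.0, 2.0)))  # prints 3.0
print(resolution_penalty(p, (0.0, 0.0, 1.0), (0.0, 0.0, 2.0)))  # prints 0.0
```

The `max(0, …)` clamp makes the penalty one-sided: oversampling from a closer camera is not punished.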

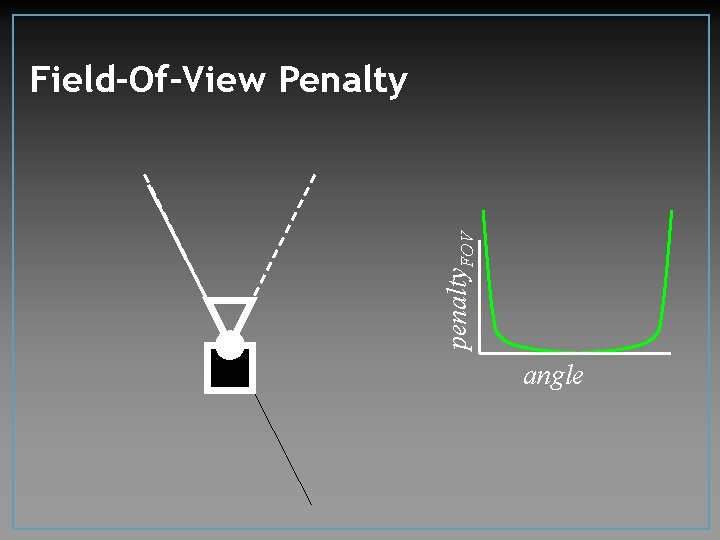

Field-of-View Penalty
[Figure: penalty_FOV plotted as a function of angle]

Total Penalty
$$\text{penalty}(C_i) = \alpha\,\text{penalty}_{\text{ang}}(C_i) + \beta\,\text{penalty}_{\text{res}}(C_i) + \gamma\,\text{penalty}_{\text{fov}}(C_i)$$
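The total penalty is a weighted sum of the three terms, with α, β, and γ left as user-chosen weights. The field-of-view term below is a hypothetical simplification (zero inside the camera's view cone, infinite outside), not the curve shown on the slide; both function names are also hypothetical.

```python
import math

def fov_penalty(angle_from_axis: float, half_fov: float) -> float:
    """Hypothetical FOV penalty: 0 inside the view cone, infinite outside.

    A simplification; the slide plots a shaped penalty versus angle.
    """
    return 0.0 if angle_from_axis <= half_fov else math.inf

def total_penalty(pen_ang: float, pen_res: float, pen_fov: float,
                  alpha: float, beta: float, gamma: float) -> float:
    """penalty(C_i) = alpha*penalty_ang + beta*penalty_res + gamma*penalty_fov."""
    if math.isinf(pen_fov):
        # Avoid 0 * inf = nan when gamma == 0: in this sketch an
        # out-of-view camera is always excluded.
        return math.inf
    return alpha * pen_ang + beta * pen_res + gamma * pen_fov

print(total_penalty(0.1, 2.0, fov_penalty(0.2, 0.5), alpha=1.0, beta=0.5, gamma=1.0))
```

Returning infinity for out-of-view cameras guarantees they never enter the K-nearest set as long as enough in-view cameras exist.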

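K-Nearest Continuous Blending
• Only use cameras with the K smallest penalties
• C⁰ continuity: a camera's contribution drops to zero as it leaves the K-nearest set
$$w(C_i) = 1 - \frac{\text{penalty}(C_i)}{\text{penalty}(C_{(K+1)})}$$
where $C_{(K+1)}$ is the (K+1)st closest camera, i.e., the one with the (K+1)st smallest penalty
• Partition of unity: normalize
$$\tilde{w}(C_i) = \frac{w(C_i)}{\sum_j w(C_j)}$$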
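The two weighting rules above can be combined into one function that maps a list of per-camera penalties to normalized weights. A minimal sketch, assuming finite, non-negative penalties; `k_nearest_weights` and its equal-weight fallback for ties are hypothetical choices, not taken from the slides.

```python
from typing import Dict, Sequence

def k_nearest_weights(penalties: Sequence[float], k: int) -> Dict[int, float]:
    """Hypothetical helper: normalized K-nearest continuous blending weights.

    Returns {camera index: weight}; cameras outside the K smallest
    penalties are omitted (weight 0).
    """
    if not 0 < k < len(penalties):
        raise ValueError("need 0 < k < number of cameras")
    order = sorted(range(len(penalties)), key=lambda i: penalties[i])
    # Penalty of the (K+1)st closest camera: camera i's weight reaches zero
    # exactly when its penalty reaches this threshold (C0 continuity).
    thresh = penalties[order[k]]
    raw = {i: (1.0 - penalties[i] / thresh) if thresh > 0 else 1.0
           for i in order[:k]}
    total = sum(raw.values())
    if total == 0.0:
        # All K cameras tie with the (K+1)st: fall back to equal weights
        # (an assumption of this sketch).
        return {i: 1.0 / k for i in raw}
    # Partition of unity: the returned weights sum to one.
    return {i: w / total for i, w in raw.items()}

print(k_nearest_weights([0.1, 0.4, 0.2, 0.8], k=2))  # prints {0: 0.6, 2: 0.4}
```

Dividing by the (K+1)st penalty, rather than simply dropping cameras beyond rank K, is what keeps the weights continuous as the desired camera moves.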

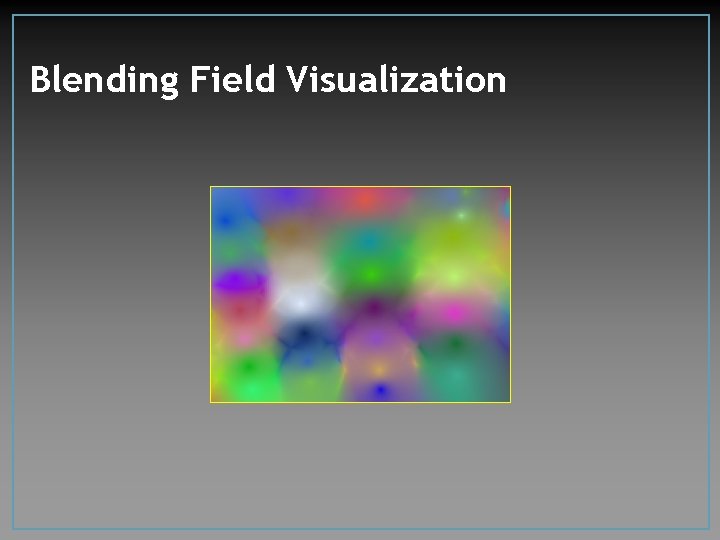

Blending Field Visualization

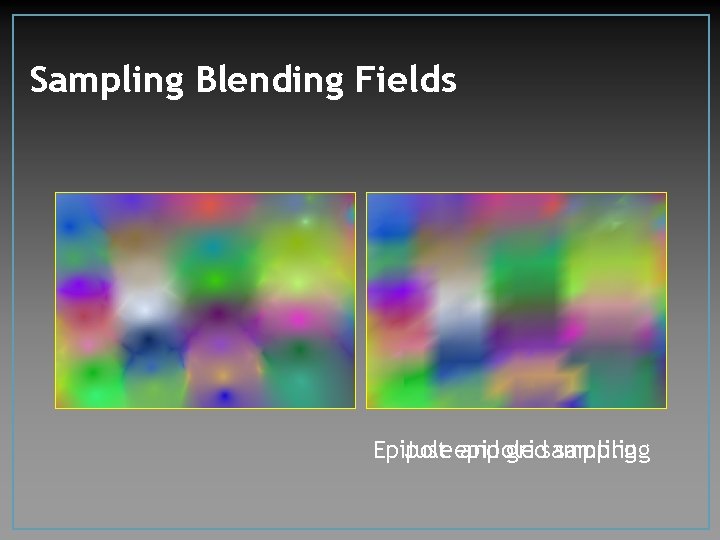

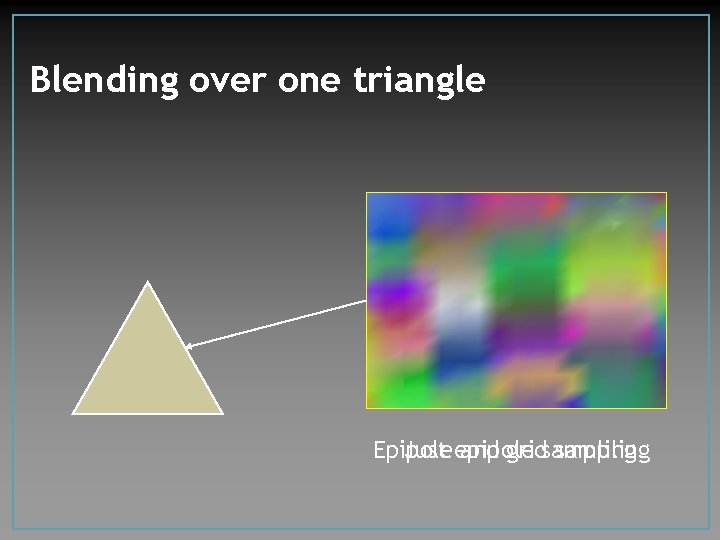

Sampling Blending Fields
[Figure panels: “Just epipole” and “Epipole and grid sampling”]
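One way to build the sample set shown above is to project every source camera center into the desired image (giving that camera's epipole) and add a regular grid. At an epipole the source camera lies on the desired viewing ray, so its angle penalty can reach zero there. A minimal sketch, assuming a 3x4 pinhole projection matrix for the desired camera; `project`, `blending_sample_locations`, and `grid_n` are hypothetical names. Triangulating the samples (for example with a Delaunay triangulation) is not shown.

```python
from typing import List, Optional, Sequence, Tuple

Point2 = Tuple[float, float]

def project(P: Sequence[Sequence[float]], X: Sequence[float]) -> Optional[Point2]:
    """Project 3D point X with a 3x4 pinhole matrix P; None if behind the camera."""
    Xh = (X[0], X[1], X[2], 1.0)
    x, y, w = (sum(P[r][c] * Xh[c] for c in range(4)) for r in range(3))
    if w <= 0.0:
        return None
    return (x / w, y / w)

def blending_sample_locations(P_desired, cam_centers, width, height, grid_n):
    """Hypothetical: epipoles inside the desired image plus a regular grid."""
    samples: List[Point2] = []
    for center in cam_centers:
        e = project(P_desired, center)  # epipole of this source camera
        if e is not None and 0.0 <= e[0] <= width and 0.0 <= e[1] <= height:
            samples.append(e)
    for gy in range(grid_n + 1):
        for gx in range(grid_n + 1):
            samples.append((width * gx / grid_n, height * gy / grid_n))
    return samples

P = [[100, 0, 50, 0], [0, 100, 50, 0], [0, 0, 1, 0]]
cams = [(0.1, 0.2, 2.0), (0.0, 0.0, -1.0), (10.0, 0.0, 1.0)]
s = blending_sample_locations(P, cams, 100, 100, grid_n=2)
print(len(s), s[0])  # prints 10 (55.0, 60.0)
```

Here the second camera is behind the desired camera and the third projects outside the image, so only one epipole joins the nine grid samples.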

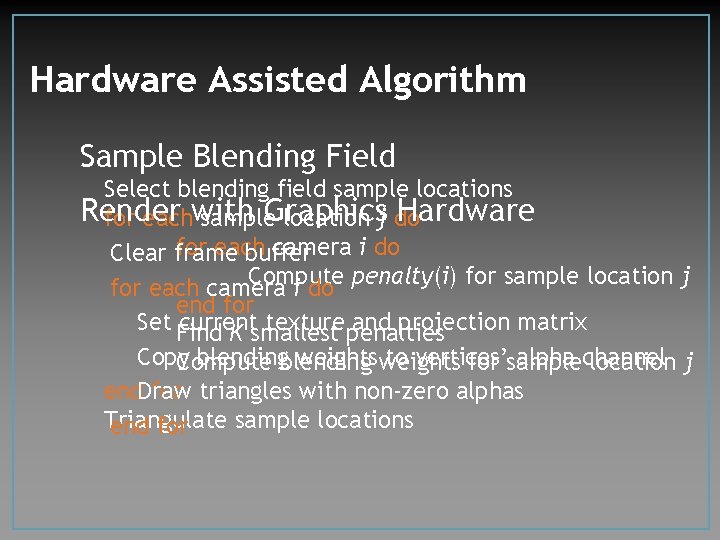

Hardware Assisted Algorithm
Sample Blending Field
    Select blending field sample locations
    for each sample location j do
        for each camera i do
            Compute penalty(i) for sample location j
        end for
        Find K smallest penalties
        Compute blending weights for sample location j
    end for
    Triangulate sample locations
Render with Graphics Hardware
    Clear frame buffer
    for each camera i do
        Set current texture and projection matrix
        Copy blending weights to vertices' alpha channel
        Draw triangles with non-zero alphas
    end for
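The sample phase of this algorithm can be emulated on the CPU to see what ends up in each vertex's alpha channel, together with the per-camera "non-zero alphas" test from the render phase. A minimal sketch, assuming a caller-supplied `penalty(i, j)` function and K-nearest weighting as on the earlier slide; all function names are hypothetical, and the actual projective texturing and alpha blending are left to the graphics API.

```python
from typing import Callable, List, Sequence, Tuple

def sample_blending_field(n_samples: int, n_cameras: int,
                          penalty: Callable[[int, int], float],
                          k: int) -> List[List[float]]:
    """Hypothetical sample phase: alphas[i][j] is camera i's normalized weight
    at sample location j. Assumes finite, non-negative penalties."""
    if not 0 < k < n_cameras:
        raise ValueError("need 0 < k < n_cameras")
    alphas = [[0.0] * n_samples for _ in range(n_cameras)]
    for j in range(n_samples):
        pens = [penalty(i, j) for i in range(n_cameras)]
        order = sorted(range(n_cameras), key=lambda i: pens[i])
        thresh = pens[order[k]]  # (K+1)st smallest penalty
        raw = {i: (1.0 - pens[i] / thresh) if thresh > 0 else 1.0
               for i in order[:k]}
        total = sum(raw.values())
        for i, w in raw.items():
            # Normalize; equal weights if every raw weight is zero.
            alphas[i][j] = w / total if total > 0 else 1.0 / k
    return alphas

def triangles_to_draw(alphas_i: Sequence[float],
                      triangles: Sequence[Tuple[int, int, int]]) -> List[Tuple[int, int, int]]:
    """Render-phase filter for one camera: skip triangles whose vertex alphas are all zero."""
    return [t for t in triangles if any(alphas_i[v] > 0.0 for v in t)]

table = [[0.1, 0.5, 0.9], [0.5, 0.1, 0.9], [0.9, 0.9, 0.1], [2.0, 2.0, 2.0]]
alphas = sample_blending_field(3, 4, lambda i, j: table[i][j], k=1)
print(alphas)  # prints [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
print(triangles_to_draw(alphas[3], [(0, 1, 2)]))  # prints []
```

Because each camera only touches triangles where it has some weight, the number of rendering passes that do real work stays close to K per region of the image.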

Blending over one triangle
[Figure panels: “Just epipole” and “Epipole and grid sampling”]
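Inside one triangle, the hardware linearly interpolates each camera's vertex alphas across the pixels it rasterizes. A minimal sketch of that interpolation using barycentric coordinates, assuming 2D pixel positions; `barycentric` and `interpolate_alpha` are hypothetical names.

```python
from typing import Sequence, Tuple

Point2 = Tuple[float, float]

def barycentric(p: Point2, a: Point2, b: Point2, c: Point2) -> Tuple[float, float, float]:
    """Barycentric coordinates of p with respect to triangle (a, b, c)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0.0:
        raise ValueError("degenerate triangle")
    l1 = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    l2 = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return l1, l2, 1.0 - l1 - l2

def interpolate_alpha(p: Point2, tri: Sequence[Point2],
                      vertex_alphas: Sequence[float]) -> float:
    """Hypothetical: one camera's blending weight at pixel p, linearly
    interpolated from its three vertex alphas."""
    lams = barycentric(p, *tri)
    return sum(l * a for l, a in zip(lams, vertex_alphas))

tri = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
# Per-vertex alphas for three cameras; at each vertex they sum to one.
cams = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.25], [0.0, 0.0, 0.25]]
print([interpolate_alpha((0.25, 0.25), tri, a) for a in cams])  # prints [0.625, 0.3125, 0.0625]
```

Since the alphas at every vertex sum to one across cameras and the interpolation is linear, the interpolated weights at every pixel also sum to one, so accumulating all cameras' passes preserves the partition of unity.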

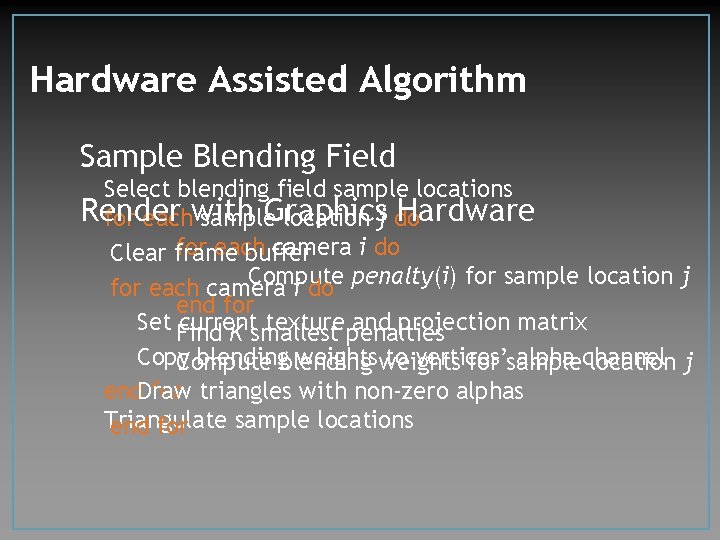


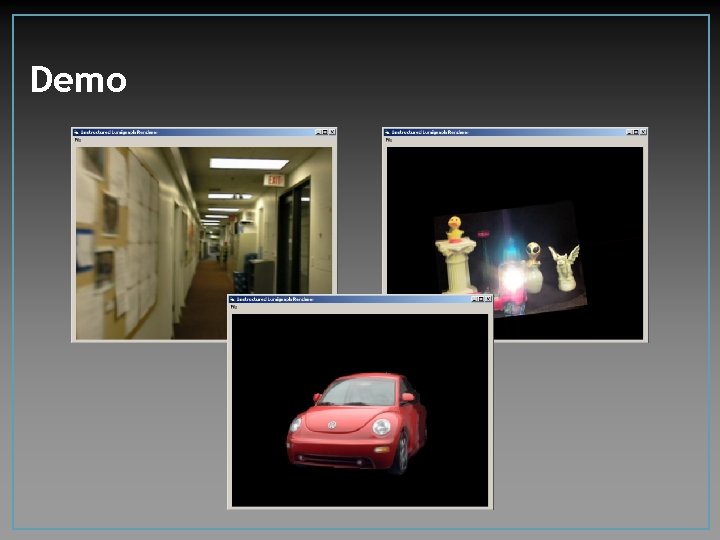

Demo

Future Work
• Optimal sampling of the camera blending field
• More complete treatment of resolution effects in IBR
• View-dependent geometry proxies
• Investigation of geometry vs. images tradeoff

Conclusions
Unstructured Lumigraph Rendering:
• unifies view-dependent texture mapping and lumigraph rendering methods
• allows rendering from unorganized images
• uses a sampled camera blending field

Acknowledgements
Thanks to the members of the MIT Computer Graphics Group and the Microsoft Research Graphics and Computer Vision Groups
• DARPA ITO Grant F30602-97-1-0283
• NSF CAREER Awards 9875859 & 9703399
• Microsoft Research Graduate Fellowship Program
• Donations from Intel Corporation, Nvidia, and Microsoft Corporation
