Computer Graphics: The Rendering Pipeline Review (CO2409)

- Slides: 16

Computer Graphics: The Rendering Pipeline - Review

CO2409 Computer Graphics, Week 15

Lecture Contents

1. The Rendering Pipeline
2. Input-Assembler Stage
   - Vertex Data & Primitive Data
3. Vertex Shader Stage
   - Matrix Transformations
   - Lighting
4. Rasterizer Stage
5. Pixel Shader Stage
   - Textures
6. Output-Merger Stage

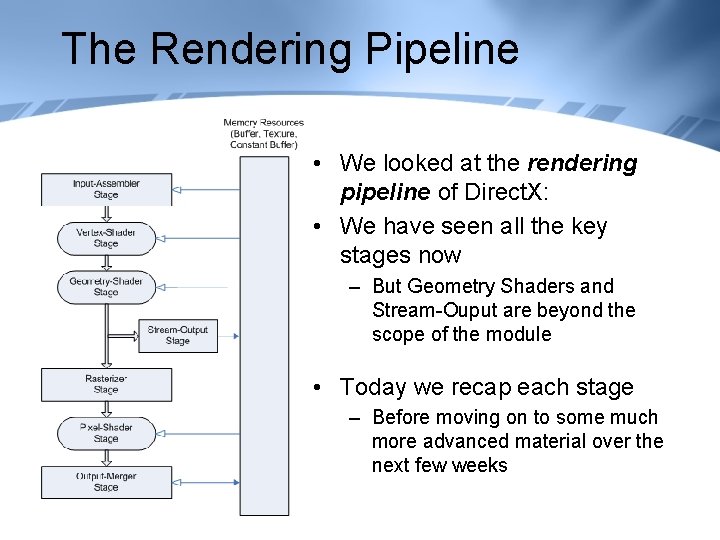

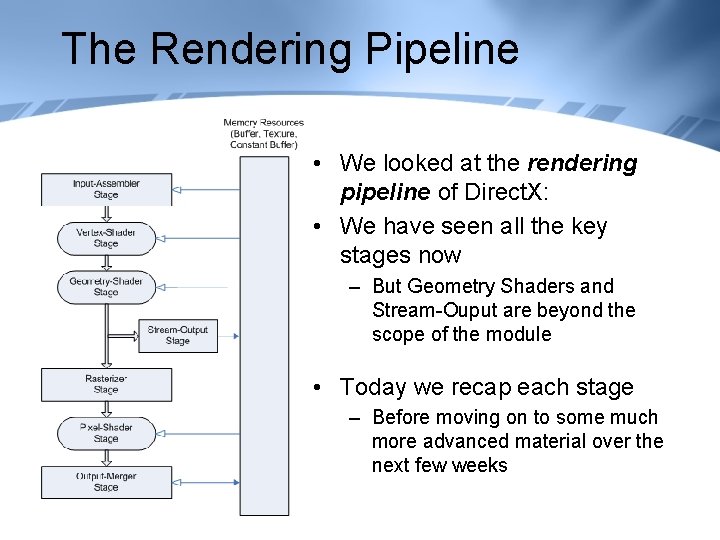

The Rendering Pipeline

- We looked at the rendering pipeline of DirectX
- We have now seen all the key stages
  - But Geometry Shaders and Stream-Output are beyond the scope of the module
- Today we recap each stage
  - Before moving on to some much more advanced material over the next few weeks

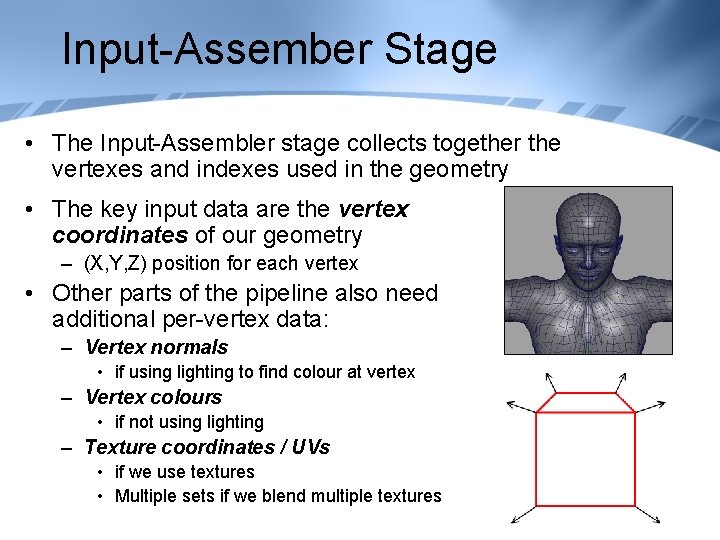

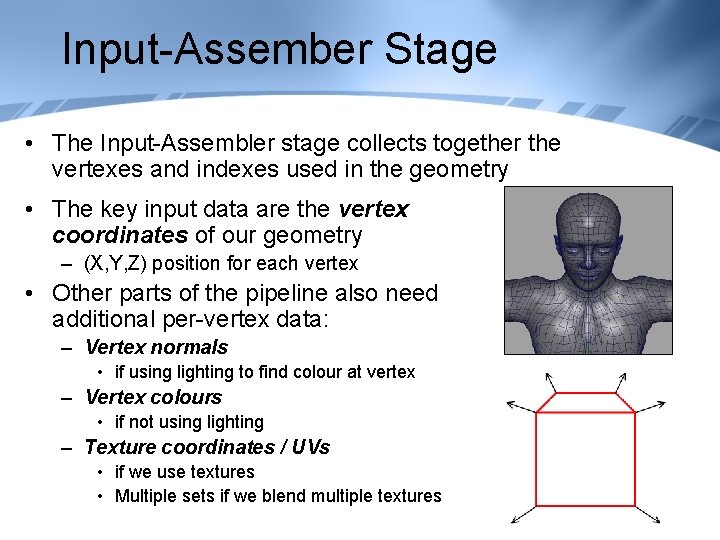

Input-Assembler Stage

- The Input-Assembler stage collects together the vertices and indices used in the geometry
- The key input data are the vertex coordinates of our geometry
  - An (X, Y, Z) position for each vertex
- Other parts of the pipeline also need additional per-vertex data:
  - Vertex normals, if using lighting to find the colour at each vertex
  - Vertex colours, if not using lighting
  - Texture coordinates (UVs), if we use textures
    - Multiple sets if we blend multiple textures

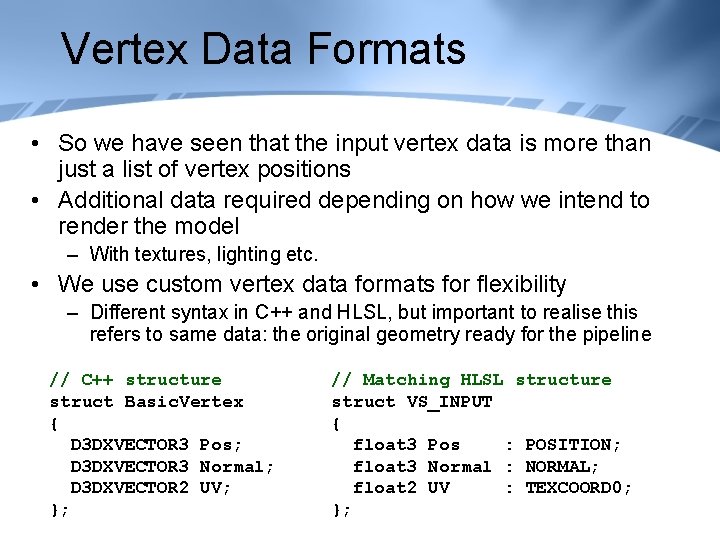

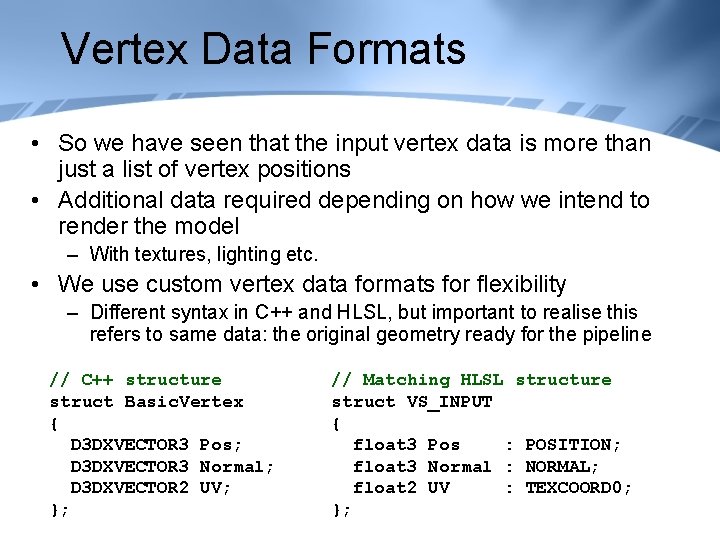

Vertex Data Formats

- So we have seen that the input vertex data is more than just a list of vertex positions
- Additional data is required depending on how we intend to render the model
  - With textures, lighting etc.
- We use custom vertex data formats for flexibility
  - The syntax differs between C++ and HLSL, but it is important to realise that both refer to the same data: the original geometry, ready for the pipeline

```cpp
// C++ structure
struct BasicVertex
{
    D3DXVECTOR3 Pos;
    D3DXVECTOR3 Normal;
    D3DXVECTOR2 UV;
};
```

```hlsl
// Matching HLSL structure
struct VS_INPUT
{
    float3 Pos    : POSITION;
    float3 Normal : NORMAL;
    float2 UV     : TEXCOORD0;
};
```
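To see that the two structures really describe the same bytes, the sketch below checks the memory layout of one vertex. It assumes that `D3DXVECTOR3` and `D3DXVECTOR2` are tightly packed arrays of three and two floats, and uses hypothetical stand-in types `Vec3` and `Vec2` so it compiles without the D3DX headers. The offsets it computes are the kind of values an input-layout description (in Direct3D 10, the `AlignedByteOffset` field of each `D3D10_INPUT_ELEMENT_DESC`) would need so that each HLSL semantic is read from the right place.

```cpp
#include <cstddef>  // offsetof, std::size_t

// Hypothetical stand-ins for D3DXVECTOR3 / D3DXVECTOR2
// (assumption: both are tightly packed floats, like the D3DX types).
struct Vec3 { float x, y, z; };
struct Vec2 { float u, v; };

// Same member order as the BasicVertex structure above.
struct BasicVertex
{
    Vec3 Pos;     // read by float3 Pos    : POSITION
    Vec3 Normal;  // read by float3 Normal : NORMAL
    Vec2 UV;      // read by float2 UV     : TEXCOORD0
};

// Byte offset of each element within one vertex, and the size of a whole
// vertex (the "stride" between consecutive vertices in the buffer).
constexpr std::size_t kPosOffset    = offsetof(BasicVertex, Pos);     // 0
constexpr std::size_t kNormalOffset = offsetof(BasicVertex, Normal);  // 12
constexpr std::size_t kUVOffset     = offsetof(BasicVertex, UV);      // 24
constexpr std::size_t kStride       = sizeof(BasicVertex);            // 32

// Compile-time checks that the C++ layout matches the HLSL input order.
static_assert(kPosOffset == 0 && kNormalOffset == 12 && kUVOffset == 24,
              "C++ vertex layout must match the HLSL input structure");
static_assert(kStride == 32, "one vertex is 8 floats");
```

If the C++ members were reordered without updating the layout description, the shader would silently read normals as positions, which is why checks like these can be worth having.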

Vertex Buffers

- A vertex buffer is just an array of vertices
  - Defining the set of vertices in our geometry
  - Each vertex in a custom format as above
- The buffer is managed by DirectX
  - We must ask DirectX to create and destroy it…
  - …and to access the data inside
- The array is usually created in main memory, then copied to video card memory
  - It needs to be on the video card for performance reasons
- We first saw vertex buffers in the week 5 lab
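A minimal sketch of the main-memory side of a vertex buffer, assuming the same vertex format as above (re-declared here with hypothetical stand-in types). `MakeQuadVertices` and `VertexBufferBytes` are illustrative names, not DirectX functions. The array's data pointer and byte size are what would be handed to DirectX when it creates the buffer on the video card (in Direct3D 10, the `pSysMem` of a `D3D10_SUBRESOURCE_DATA` and the `ByteWidth` of a `D3D10_BUFFER_DESC`).

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for the D3DX vector types used by BasicVertex.
struct Vec3 { float x, y, z; };
struct Vec2 { float u, v; };
struct BasicVertex { Vec3 Pos; Vec3 Normal; Vec2 UV; };

// Builds, in main memory, the vertex array for a 1x1 quad facing -Z.
// Only 4 vertices: the two triangles are formed later by an index
// buffer or a triangle strip.
std::vector<BasicVertex> MakeQuadVertices()
{
    const Vec3 n = { 0.0f, 0.0f, -1.0f };  // all four vertices share this normal
    return {
        { { -0.5f,  0.5f, 0.0f }, n, { 0.0f, 0.0f } },  // 0: top-left
        { {  0.5f,  0.5f, 0.0f }, n, { 1.0f, 0.0f } },  // 1: top-right
        { { -0.5f, -0.5f, 0.0f }, n, { 0.0f, 1.0f } },  // 2: bottom-left
        { {  0.5f, -0.5f, 0.0f }, n, { 1.0f, 1.0f } },  // 3: bottom-right
    };
}

// Size in bytes of the block that would be copied to video card memory.
std::size_t VertexBufferBytes(const std::vector<BasicVertex>& verts)
{
    return verts.size() * sizeof(BasicVertex);
}
```

Once DirectX has copied the data, the CPU-side array can usually be discarded unless the geometry needs to be modified later.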

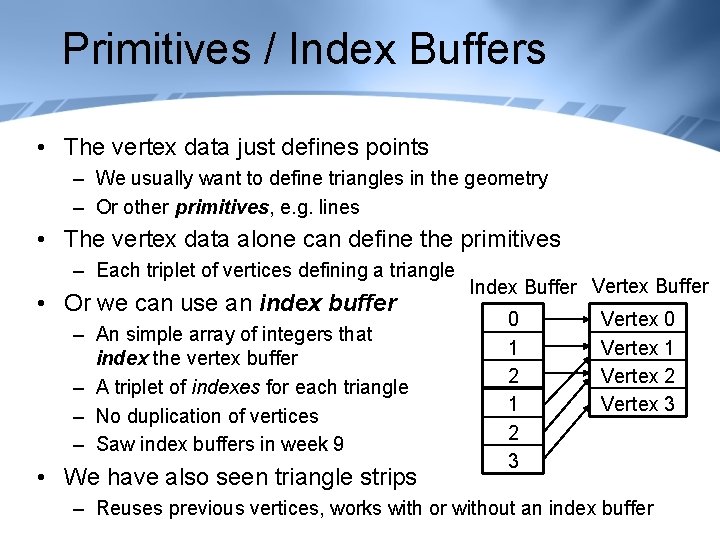

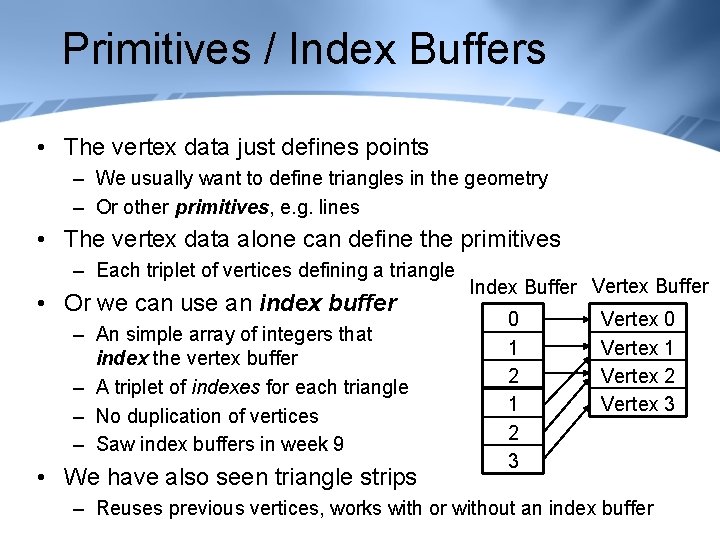

Primitives / Index Buffers

- The vertex data just defines points
  - We usually want to define triangles in the geometry
  - Or other primitives, e.g. lines
- The vertex data alone can define the primitives
  - Each triplet of vertices defines a triangle
- Or we can use an index buffer
  - A simple array of integers that index the vertex buffer
  - A triplet of indices for each triangle
  - No duplication of vertices
  - Saw index buffers in week 9
- We have also seen triangle strips
  - Each new triangle reuses the previous two vertices; works with or without an index buffer

[Diagram: index buffer entries 0, 1, 2, 3 pointing to Vertex 0 to Vertex 3 in the vertex buffer]
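The sketch below shows, on the CPU, how triangles are read out of an index buffer, both as a plain triangle list and as a triangle strip. The function names are hypothetical. The strip version assumes the usual DirectX rule that every other triangle has two of its vertices swapped, so that all triangles in the strip keep the same winding (which matters for back-face culling).

```cpp
#include <array>
#include <cstddef>
#include <vector>

using Triangle = std::array<unsigned, 3>;  // three indices into the vertex buffer

// Triangle list: every consecutive triplet of indices is one triangle.
std::vector<Triangle> TrianglesFromList(const std::vector<unsigned>& indices)
{
    std::vector<Triangle> tris;
    for (std::size_t i = 0; i + 2 < indices.size(); i += 3)
        tris.push_back({ indices[i], indices[i + 1], indices[i + 2] });
    return tris;
}

// Triangle strip: each new index forms a triangle with the previous two.
// Odd-numbered triangles swap their last two vertices to keep the winding.
std::vector<Triangle> TrianglesFromStrip(const std::vector<unsigned>& indices)
{
    std::vector<Triangle> tris;
    for (std::size_t i = 0; i + 2 < indices.size(); ++i)
    {
        if (i % 2 == 0)
            tris.push_back({ indices[i], indices[i + 1], indices[i + 2] });
        else
            tris.push_back({ indices[i], indices[i + 2], indices[i + 1] });
    }
    return tris;
}
```

For a quad with vertices 0 to 3, the list form needs six indices (for example `{0, 1, 2, 2, 1, 3}`), while the strip `{0, 1, 2, 3}` produces the triangles (0, 1, 2) and (1, 3, 2) from just four. Without any index buffer, the same quad as a list would need six full vertices.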

Vertex Shader Stage

- The vertex / index data defines the 3D geometry
  - The next step is to convert this data into 2D polygons
- We use vertex shaders for this vertex processing
- The key operation is a sequence of matrix transforms
  - Transforming vertex coordinates from 3D model space to 2D viewport space (the very last scaling into pixels is performed by DirectX)
- The vertex shader can also calculate lighting (using the vertex normals), perform deformation etc.
  - Although we will move lighting to the pixel shader next week
- Some vertex data (e.g. UVs) is not used at this stage and is simply passed through to later stages

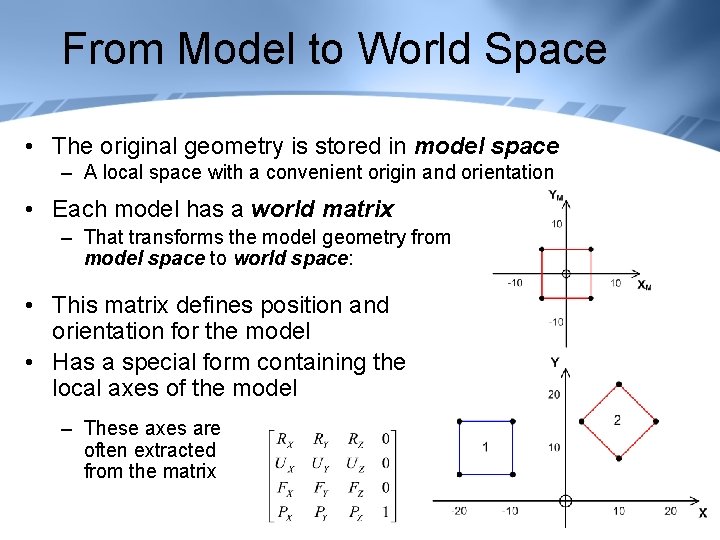

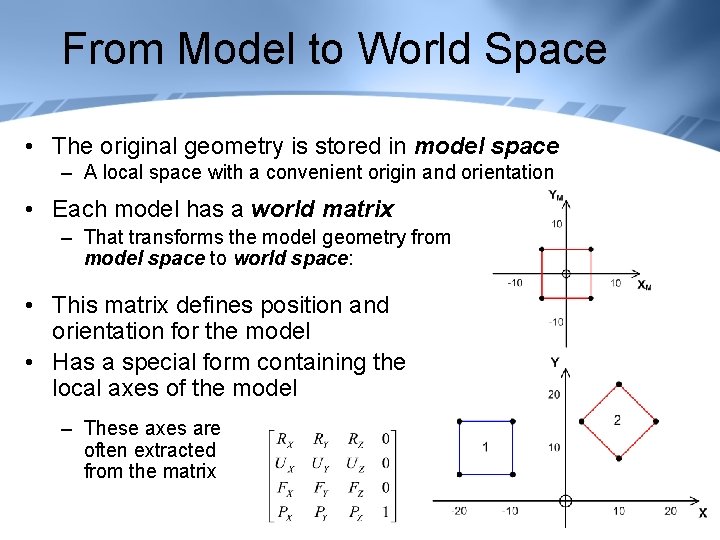

From Model to World Space

- The original geometry is stored in model space
  - A local space with a convenient origin and orientation
- Each model has a world matrix
  - It transforms the model geometry from model space to world space
- This matrix defines the position and orientation of the model
- It has a special form containing the local axes of the model
  - These axes are often extracted from the matrix
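The "special form" can be sketched as follows, assuming the row-vector convention used by D3DX (a point is multiplied as `p * M`), where the first three rows hold the model's local X, Y and Z axes expressed in world space and the fourth row holds its position. `Matrix4`, `MakeWorldMatrix`, `TransformPoint` and `GetZAxis` are hypothetical helpers, not the D3DX API.

```cpp
struct Vec3 { float x, y, z; };

// 4x4 matrix, row-vector convention (assumed to match D3DX): p' = p * M.
struct Matrix4 { float m[4][4]; };

// Builds a world matrix from a model's local axes and its position.
// Rows 0-2: local X, Y, Z axes in world space. Row 3: position.
Matrix4 MakeWorldMatrix(Vec3 xAxis, Vec3 yAxis, Vec3 zAxis, Vec3 pos)
{
    return { {
        { xAxis.x, xAxis.y, xAxis.z, 0.0f },
        { yAxis.x, yAxis.y, yAxis.z, 0.0f },
        { zAxis.x, zAxis.y, zAxis.z, 0.0f },
        { pos.x,   pos.y,   pos.z,   1.0f },
    } };
}

// Transforms a model-space point into world space. The point has w = 1,
// so the position row is added on after the rotation.
Vec3 TransformPoint(Vec3 p, const Matrix4& M)
{
    return {
        p.x * M.m[0][0] + p.y * M.m[1][0] + p.z * M.m[2][0] + M.m[3][0],
        p.x * M.m[0][1] + p.y * M.m[1][1] + p.z * M.m[2][1] + M.m[3][1],
        p.x * M.m[0][2] + p.y * M.m[1][2] + p.z * M.m[2][2] + M.m[3][2],
    };
}

// Extracting an axis is just reading a row, e.g. the model's facing (Z) direction.
Vec3 GetZAxis(const Matrix4& M) { return { M.m[2][0], M.m[2][1], M.m[2][2] }; }
```

For example, a model at (10, 0, 0) whose local axes are X = (0, 0, -1), Y = (0, 1, 0) and Z = (1, 0, 0) (turned 90° about Y) maps the model-space point (0, 0, 1), just in front of it, to (11, 0, 0) in world space.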

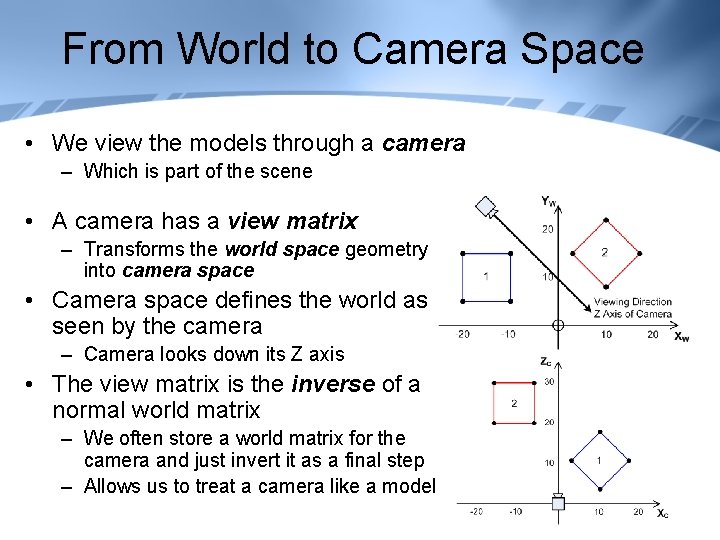

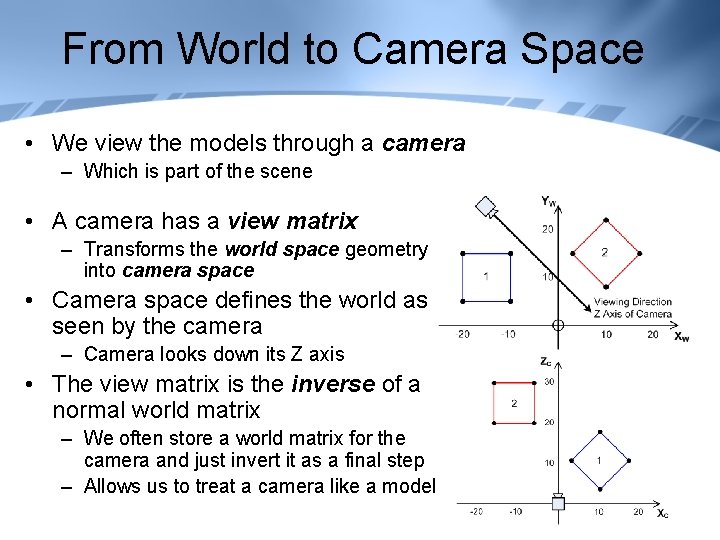

From World to Camera Space

- We view the models through a camera
  - Which is part of the scene
- A camera has a view matrix
  - It transforms the world space geometry into camera space
- Camera space defines the world as seen by the camera
  - The camera looks down its Z axis
- The view matrix is the inverse of an ordinary world matrix (the camera's own)
  - We often store a world matrix for the camera and just invert it as a final step
  - This allows us to treat a camera like a model
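Because a camera's world matrix normally contains only rotation and translation, its inverse does not need a general 4x4 inversion: the rotation part is transposed, and the position is projected onto the camera's axes and negated. A sketch under those assumptions (no scaling, row-vector convention as in D3DX); the helper names are hypothetical.

```cpp
struct Vec3 { float x, y, z; };
struct Matrix4 { float m[4][4]; };  // row-vector convention: p' = p * M

// Inverts a world matrix containing only rotation (orthonormal axes in
// rows 0-2) and translation (row 3). Assumption: no scaling, so the inverse
// rotation is the transpose and the inverse translation is -(position . axis).
Matrix4 InvertRigidWorldMatrix(const Matrix4& W)
{
    Matrix4 V = {};  // all zeros
    for (int r = 0; r < 3; ++r)
        for (int c = 0; c < 3; ++c)
            V.m[r][c] = W.m[c][r];  // transpose the 3x3 rotation part

    for (int c = 0; c < 3; ++c)
    {
        // Dot product of the camera position with camera axis c.
        const float d = W.m[3][0] * W.m[c][0] + W.m[3][1] * W.m[c][1]
                      + W.m[3][2] * W.m[c][2];
        V.m[3][c] = -d;
    }
    V.m[3][3] = 1.0f;
    return V;
}

// Transforms a point (w = 1) by a row-vector matrix.
Vec3 TransformPoint(Vec3 p, const Matrix4& M)
{
    return {
        p.x * M.m[0][0] + p.y * M.m[1][0] + p.z * M.m[2][0] + M.m[3][0],
        p.x * M.m[0][1] + p.y * M.m[1][1] + p.z * M.m[2][1] + M.m[3][1],
        p.x * M.m[0][2] + p.y * M.m[1][2] + p.z * M.m[2][2] + M.m[3][2],
    };
}
```

A camera at (0, 0, -10) with unrotated axes sees the world origin at (0, 0, 10) in camera space, i.e. 10 units straight ahead down its Z axis.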

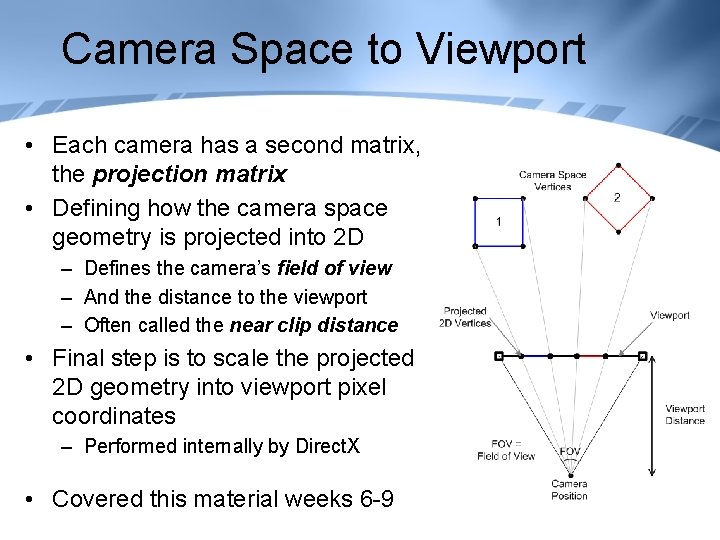

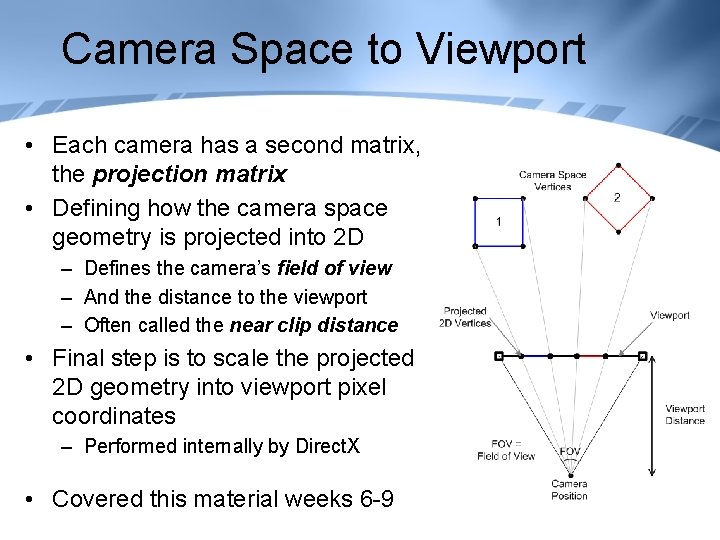

Camera Space to Viewport

- Each camera has a second matrix, the projection matrix
- It defines how the camera space geometry is projected into 2D
  - Defines the camera’s field of view
  - And the distance to the viewport
    - Often called the near clip distance
- The final step is to scale the projected 2D geometry into viewport pixel coordinates
  - Performed internally by DirectX
- Covered this material in weeks 6 to 9
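The projection and the final viewport scale can be written out for a single point. The sketch follows the matrix documented for `D3DXMatrixPerspectiveFovLH` (left-handed, vertical field of view, near and far clip distances), then does the divide by w and the pixel scaling that DirectX performs internally. It assumes a viewport whose top-left corner is pixel (0, 0); `CameraToViewport` is a hypothetical name.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// Camera-space point -> viewport pixel coordinates plus a 0..1 depth value.
// fovY: vertical field of view in radians; zn / zf: near and far clip
// distances; width / height: viewport size in pixels.
Vec3 CameraToViewport(Vec3 p, float fovY, float zn, float zf,
                      float width, float height)
{
    const float yScale = 1.0f / std::tan(fovY * 0.5f);  // cot(fovY / 2)
    const float xScale = yScale / (width / height);     // divide by aspect ratio

    // Projection matrix applied to (x, y, z, 1) gives clip-space coordinates.
    const float clipX = p.x * xScale;
    const float clipY = p.y * yScale;
    const float clipZ = p.z * zf / (zf - zn) - zn * zf / (zf - zn);
    const float clipW = p.z;  // w receives the camera-space depth

    // Perspective divide: distant points move towards the centre.
    const float ndcX  = clipX / clipW;  // -1 (left) .. 1 (right)
    const float ndcY  = clipY / clipW;  // -1 (bottom) .. 1 (top)
    const float depth = clipZ / clipW;  // 0 at near clip, 1 at far clip

    // Viewport scale: y is flipped because pixel rows count downwards.
    return { (ndcX + 1.0f) * 0.5f * width,
             (1.0f - ndcY) * 0.5f * height,
             depth };
}
```

A point on the camera's Z axis always lands on the centre pixel (400, 300 in an 800×600 viewport) whatever its distance, and its depth runs from 0 at the near clip distance to 1 at the far one.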

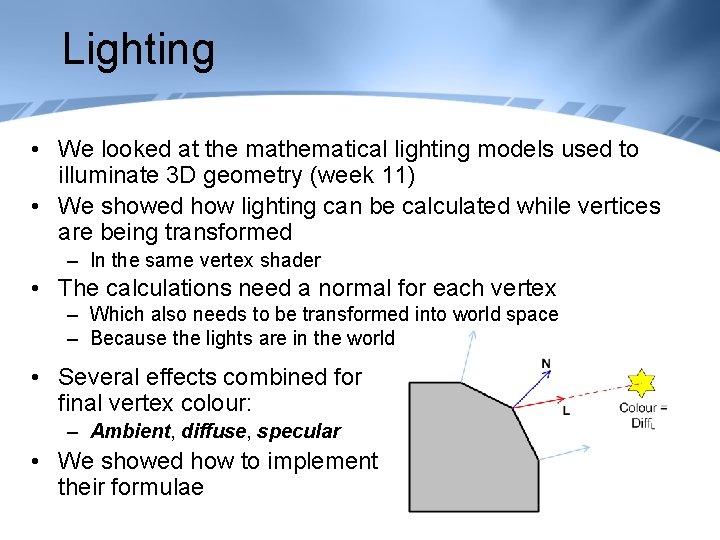

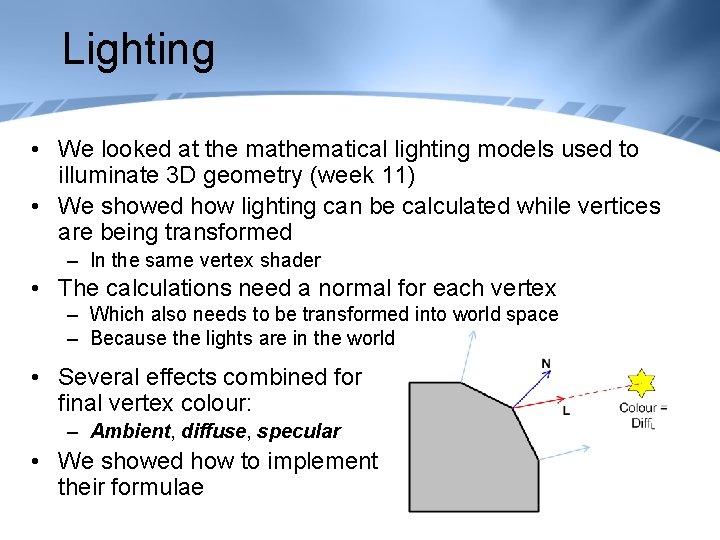

Lighting

- We looked at the mathematical lighting models used to illuminate 3D geometry (week 11)
- We showed how lighting can be calculated while vertices are being transformed
  - In the same vertex shader
- The calculations need a normal for each vertex
  - Which also needs to be transformed into world space
  - Because the lights are in the world
- Several effects are combined for the final vertex colour:
  - Ambient, diffuse, specular
- We showed how to implement their formulae
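A per-vertex version of the three terms for one point light is sketched below. It is an illustration rather than the exact week 11 formulae: it assumes positions and the normal are already in world space, uses Lambert's cosine law for diffuse and the Blinn-Phong halfway vector for specular (one common choice), applies the same light colour to both terms, and leaves out material or texture colour. The function and parameter names are hypothetical.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };  // also used for RGB colours (x=r, y=g, z=b)

static float Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

static Vec3 Normalise(Vec3 v)
{
    const float len = std::sqrt(Dot(v, v));
    return { v.x / len, v.y / len, v.z / len };
}

// Vertex colour from one point light: ambient + diffuse + specular.
// Assumption: all positions and the normal are already in world space.
Vec3 LightVertex(Vec3 worldPos, Vec3 worldNormal, Vec3 cameraPos,
                 Vec3 lightPos, Vec3 lightColour, Vec3 ambientColour,
                 float specularPower)
{
    const Vec3 n = Normalise(worldNormal);
    const Vec3 toLight = Normalise({ lightPos.x - worldPos.x,
                                     lightPos.y - worldPos.y,
                                     lightPos.z - worldPos.z });
    const Vec3 toCamera = Normalise({ cameraPos.x - worldPos.x,
                                      cameraPos.y - worldPos.y,
                                      cameraPos.z - worldPos.z });

    // Diffuse: brightest when the surface faces the light, zero facing away.
    const float diffuse = std::max(Dot(n, toLight), 0.0f);

    // Specular: halfway vector between light and camera directions,
    // raised to a power that controls the size of the highlight.
    const Vec3 halfway = Normalise({ toLight.x + toCamera.x,
                                     toLight.y + toCamera.y,
                                     toLight.z + toCamera.z });
    const float specular =
        std::pow(std::max(Dot(n, halfway), 0.0f), specularPower);

    const float lit = diffuse + specular;
    return { ambientColour.x + lightColour.x * lit,
             ambientColour.y + lightColour.y * lit,
             ambientColour.z + lightColour.z * lit };
}
```

With the light and camera directly above an upward-facing vertex, both diffuse and specular are 1; with the light below the surface only the ambient term remains.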

Rasterizer Stage

- This stage processes 2D polygons before rendering:
  - Off-screen polygons are discarded (culled)
  - Partially off-screen polygons are clipped
  - Back-facing polygons are culled if required (determined by the clockwise/anti-clockwise order of the viewport vertices)
- These steps are controlled by setting states
  - Saw states in the week 14 blending lab
- This stage also scans through the pixels of the polygons
  - Called rasterising / rasterizing
  - Calls the pixel shader for each pixel encountered
- Data output from earlier stages is interpolated for each pixel
  - 2D coordinates, colours, UVs etc.
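The clockwise/anti-clockwise test can be expressed as the sign of the triangle's area in viewport coordinates. The sketch assumes pixel coordinates with y pointing down and DirectX's default rasterizer state, in which clockwise triangles are front-facing and back faces are culled; the names are hypothetical.

```cpp
struct Point2 { float x, y; };  // viewport (pixel) coordinates, y pointing down

// Twice the signed area of triangle (a, b, c). With y pointing down the
// screen, a positive value means the vertices appear in clockwise order.
float SignedArea2(Point2 a, Point2 b, Point2 c)
{
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

// Assumption: DirectX defaults, where clockwise = front-facing and back
// faces are culled. Zero-area triangles cover no pixels, so they go too.
bool IsBackFacing(Point2 a, Point2 b, Point2 c)
{
    return SignedArea2(a, b, c) <= 0.0f;  // anti-clockwise or degenerate
}
```

Reversing the order of any two vertices flips the sign of the area, which is why the vertex order chosen in the index buffer decides which side of a triangle is visible.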

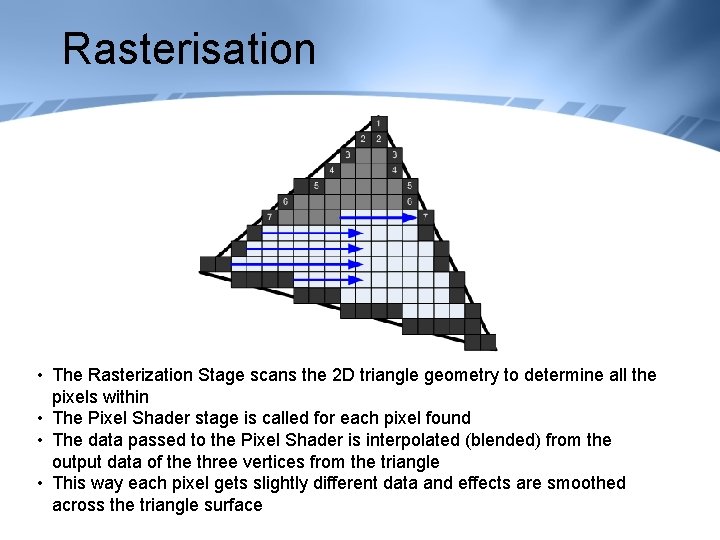

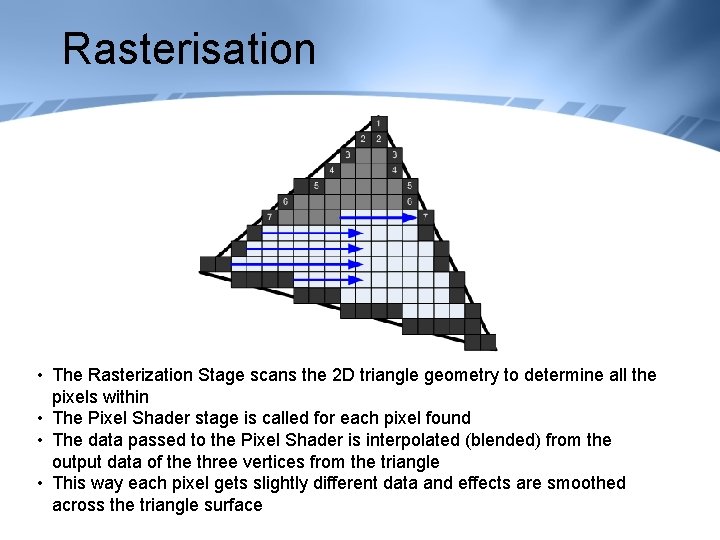

Rasterisation

- The Rasterizer stage scans the 2D triangle geometry to determine all the pixels within it
- The Pixel Shader stage is called for each pixel found
- The data passed to the Pixel Shader is interpolated (blended) from the output data of the triangle's three vertices
- This way each pixel gets slightly different data, and effects are smoothed across the triangle surface
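The interpolation can be sketched with barycentric weights: each vertex's weight is the share of the triangle's area lying opposite it, computed with edge functions, and the same weights tell whether a pixel is inside at all. This is a simplification: it interpolates linearly in screen space, whereas GPUs interpolate most data perspective-correctly. The function names are hypothetical.

```cpp
struct Point2 { float x, y; };

// Edge function: twice the signed area of triangle (a, b, p).
static float Edge(Point2 a, Point2 b, Point2 p)
{
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// If position p lies inside triangle (v0, v1, v2), writes the value
// interpolated from the three per-vertex values and returns true.
bool InterpolateAt(Point2 v0, Point2 v1, Point2 v2,
                   float val0, float val1, float val2,
                   Point2 p, float& out)
{
    const float area = Edge(v0, v1, v2);
    if (area == 0.0f) return false;  // degenerate triangle covers no pixels

    // Barycentric weights; dividing by the signed area makes them positive
    // inside the triangle whichever way it is wound. They sum to 1.
    const float w0 = Edge(v1, v2, p) / area;  // weight of v0
    const float w1 = Edge(v2, v0, p) / area;  // weight of v1
    const float w2 = Edge(v0, v1, p) / area;  // weight of v2
    if (w0 < 0.0f || w1 < 0.0f || w2 < 0.0f) return false;  // outside

    out = w0 * val0 + w1 * val1 + w2 * val2;  // blended vertex data
    return true;
}
```

A rasteriser would run a test like this at each pixel centre, (x + 0.5, y + 0.5), within the triangle's bounding box, and repeat the weighted blend for every piece of vertex data (each colour channel, each UV component, and so on).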

Pixel Shader Stage

- Next each pixel is worked on to get a final colour
  - The work is done in a pixel shader
- Texture coordinates (UVs) map textures onto polygons
  - UV data will have been passed through from the previous stages
  - Textures are provided from the C++ via shader variables
- Textures can be filtered when they are sampled, to improve their look
  - Textures covered in week 12
- Texture colours are combined with lighting or polygon colours to produce the final pixel colours
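Texture sampling can be illustrated on the CPU with a tiny greyscale texture. The sketch compares point sampling with bilinear filtering, assuming clamp addressing at the texture edges and the DirectX convention that UV (0, 0) is the top-left corner of the texture. `Texture`, `SamplePoint` and `SampleBilinear` are hypothetical names, and mipmapping is left out.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// A tiny single-channel (greyscale) texture, stored row by row.
struct Texture
{
    int width, height;
    std::vector<float> texels;

    // Texel lookup with clamp addressing: out-of-range coords use the edge.
    float At(int x, int y) const
    {
        x = std::max(0, std::min(x, width - 1));
        y = std::max(0, std::min(y, height - 1));
        return texels[y * width + x];
    }
};

// Point sampling: nearest texel to the UV (looks blocky when magnified).
float SamplePoint(const Texture& t, float u, float v)
{
    return t.At(static_cast<int>(std::floor(u * t.width)),
                static_cast<int>(std::floor(v * t.height)));
}

// Bilinear filtering: blend the four nearest texels by distance (smoother).
float SampleBilinear(const Texture& t, float u, float v)
{
    const float x = u * t.width - 0.5f;   // texel centres sit at half-integers
    const float y = v * t.height - 0.5f;
    const int x0 = static_cast<int>(std::floor(x));
    const int y0 = static_cast<int>(std::floor(y));
    const float fx = x - x0;              // 0..1 position between columns
    const float fy = y - y0;              // 0..1 position between rows

    const float top    = t.At(x0, y0)     * (1 - fx) + t.At(x0 + 1, y0)     * fx;
    const float bottom = t.At(x0, y0 + 1) * (1 - fx) + t.At(x0 + 1, y0 + 1) * fx;
    return top * (1 - fy) + bottom * fy;
}
```

In a pixel shader the sampled colour would then typically be multiplied by the lit (or polygon) colour to give the final pixel colour.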

Output-Merger Stage

- The final step in the rendering pipeline is the rendering of the pixels to the viewport
  - We don’t always copy the pixels directly, because the object we’re rendering may be partly transparent
- This involves blending the final polygon colour with the existing viewport pixel colours
  - Saw this in the week 14 lab
  - Similar to sprite blending
- The depth buffer values are also tested / written
  - We saw the depth buffer in the week 8 lab
  - Will see it in more detail shortly
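The per-pixel work of this stage can be sketched as a depth test followed by alpha blending. The sketch assumes the common "less than" depth comparison and the usual alpha-blending factors (source alpha and one minus source alpha); the names are hypothetical, and in DirectX this behaviour is configured through blend and depth-stencil states rather than written as code.

```cpp
struct Colour { float r, g, b; };

// Alpha blending: result = src * alpha + dest * (1 - alpha).
// Other blend modes (e.g. additive) simply use different factors.
Colour AlphaBlend(Colour src, float srcAlpha, Colour dest)
{
    return { src.r * srcAlpha + dest.r * (1.0f - srcAlpha),
             src.g * srcAlpha + dest.g * (1.0f - srcAlpha),
             src.b * srcAlpha + dest.b * (1.0f - srcAlpha) };
}

// Depth test then blend and depth write for one pixel. Assumption: a
// "less than" comparison, so the new pixel is kept only if it is nearer.
bool MergePixel(Colour src, float srcAlpha, float srcDepth,
                Colour& viewportColour, float& bufferDepth)
{
    if (srcDepth >= bufferDepth) return false;  // hidden behind existing pixel
    viewportColour = AlphaBlend(src, srcAlpha, viewportColour);
    bufferDepth = srcDepth;  // depth write
    return true;
}
```

Blending a half-transparent red pixel over blue gives an even mix of the two, while a pixel further away than the stored depth leaves the viewport untouched.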