CS 704 Advanced Computer Architecture Lecture 22: Instruction Level Parallelism

- Slides: 85

CS 704 Advanced Computer Architecture

Lecture 22: Instruction Level Parallelism (Software Pipelining and Trace Scheduling)

Prof. Dr. M. Ashraf Chughtai

Today's Topics

- Recap: Eliminating Dependent Computations
- Software pipelining
- Trace Scheduling
- Superblocks
- Summary

MAC/VU-Advanced Computer Architecture Lecture 22 – Instruction Level Parallelism-Static (3)

Recap: Lecture 21

Last time we extended our discussion of static scheduling to VLIW processors. A VLIW is formatted as one large instruction, or a fixed instruction packet, with explicit parallelism among the instructions in the set.

Recap: Lecture 21

The compiler places multiple operations in a packet, and these operations are initiated in the same cycle. Wider processors with multiple independent functional units are used to eliminate recompilation.

Recap: Scheduling in VLIW processor

The compiler finds dependences and schedules instructions for parallel execution. Compared to a superscalar processor, this improved:

- the average issue rate, i.e., operations issued per cycle; and
- the execution speed, i.e., the time to complete execution of the code.

However, the efficiency of VLIW, measured as the percentage of available slots containing an operation, ranges from 40% to 60%.

Recap: Scheduling in VLIW processor

Following this, we distinguished between Instruction Level Parallelism (ILP) and Loop Level Parallelism (LLP). While discussing LLP we found that a loop-carried dependence prevents LLP but not ILP.

Recap: Scheduling in VLIW processor

At the end we studied the affine-based Greatest Common Divisor (GCD) test for detecting dependences in a loop. Continuing our discussion, we will now explore how a compiler reduces dependent computations.

Reducing Dependent Computations

To achieve more ILP, the compiler reduces dependent computations by using the back-substitution technique. This results in algebraic simplification and in optimizations that eliminate operations that copy values, which simplifies the sequence.

Copy Propagation

This approach of simplification and optimization is also referred to as copy propagation. For example, consider the sequence:

    DADDUI  R1, R2, #4
    DADDUI  R1, R1, #4

Copy Propagation

Here, the only use of R1 is to hold the result of the second DADDUI operation. Substituting the result of the first DADDUI operation into the second therefore gives:

    DADDUI  R1, R2, #8

In this way we eliminate the multiple updates of register R1 that arise during loop unrolling.
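The folding step above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: each instruction is a hypothetical `(dest, src, immediate)` tuple standing for `DADDUI dest, src, #immediate`, and `fold_increments` is a name chosen here, not a compiler API.

```python
def fold_increments(instrs):
    """Fold an add-immediate that only re-increments the previous result.

    Each instruction is (dest, src, imm), meaning DADDUI dest, src, #imm.
    """
    out = []
    for dest, src, imm in instrs:
        if out:
            p_dest, p_src, p_imm = out[-1]
            # The second add reads only the value the first add produced
            # and overwrites it, so the pair collapses into a single add.
            if src == p_dest and dest == p_dest:
                out[-1] = (dest, p_src, p_imm + imm)
                continue
        out.append((dest, src, imm))
    return out


seq = [("R1", "R2", 4), ("R1", "R1", 4)]
print(fold_increments(seq))
```

For the two-instruction sequence from the slide, the sketch yields the single tuple `("R1", "R2", 8)`, which corresponds to `DADDUI R1, R2, #8`.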

Copy Propagation - Conclusion

In the case of memory accesses in particular, this technique of reducing computations is used:

- to eliminate the multiple increments of array indices during loop unrolling; and
- to move increments across memory addresses.

Tree-Height Reduction - Optimization

The copy-propagation technique reduces the number of operations, i.e., the code length. Optimizations that increase the parallelism of the code are also possible by restructuring the code in a way that may increase the number of operations while reducing the number of execution cycles.

Such an optimization is called tree-height reduction, since it reduces the height of the tree structure representing a computation, making it wider but shorter.

Optimization - Tree height reduction

For example, the code sequence

    ADD  R1, R2, R3
    ADD  R4, R1, R6
    ADD  R8, R4, R7

requires three cycles to execute, because each instruction depends on its immediate predecessor and so the instructions cannot be issued in parallel.

Optimization - Tree height reduction

Taking advantage of associativity, the code can be transformed and written in the form shown below:

    ADD  R1, R2, R3
    ADD  R4, R6, R7
    ADD  R8, R1, R4

This sequence can be computed in two execution cycles by issuing the first two instructions in parallel.
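As a rough check of the cycle counts, the following Python sketch computes the length of the longest dependence chain for a chained form (each ADD consuming the previous result) and for the restructured form. It assumes one-cycle operations, unlimited issue width, and considers only true dependences; the function names and the register values are illustrative.

```python
def cycles(instrs):
    """Earliest completion cycle, assuming 1-cycle ops and unlimited issue."""
    ready = {}  # register -> cycle in which its value is produced
    last = 0
    for dest, src1, src2 in instrs:
        start = max(ready.get(src1, 0), ready.get(src2, 0)) + 1
        ready[dest] = start
        last = max(last, start)
    return last


def run(instrs, regs):
    """Execute the ADDs sequentially to confirm both forms agree."""
    regs = dict(regs)
    for dest, src1, src2 in instrs:
        regs[dest] = regs[src1] + regs[src2]
    return regs


chain = [("R1", "R2", "R3"), ("R4", "R1", "R6"), ("R8", "R4", "R7")]
tree = [("R1", "R2", "R3"), ("R4", "R6", "R7"), ("R8", "R1", "R4")]
init = {"R2": 1, "R3": 2, "R6": 3, "R7": 4}

print(cycles(chain), cycles(tree))
print(run(chain, init)["R8"], run(tree, init)["R8"])
```

Both forms leave the same sum in R8; only the shape of the dependence tree, and hence the number of cycles, differs.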

Conclusion: Detecting and Enhancing LLP

The analysis of LLP focuses on determining whether data accesses in a later iteration depend on data values produced in an earlier iteration. Such a dependence is referred to as a loop-carried dependence.

Conclusion: Detecting and Enhancing LLP

The Greatest Common Divisor test was defined to detect the existence of a dependence. Compiler techniques such as:

- copy propagation; and
- tree-height reduction

were discussed as ways to eliminate dependent computations.

Uncovering Instruction Level Parallelism (cont'd)

We have already discussed loop unrolling as the basic compiler technique to uncover ILP. We observed that loop unrolling combined with compiler scheduling enhances the overall performance of superscalar and VLIW processors.

Loop Unrolling: Review

Loop unrolling generates a sequence of straight-line code, uncovering parallelism among instructions. To avoid a pipeline stall, each dependent instruction is separated from its source instruction by a distance in clock cycles equal to the pipeline latency of that source instruction.

Loop Unrolling: Review

To perform this scheduling, the compiler determines both:

- the amount of ILP available in the program; and
- the latencies of the functional units in the pipeline.

Advanced Compiler Techniques

Today we will discuss two new compiler techniques to uncover ILP:

- Software pipelining
- Global code scheduling
  - Trace scheduling
  - Superblocks

Software pipelining

Software pipelining is a technique in which the loop is reorganized so that the code for each iteration is made by choosing instructions from different iterations of the original loop. A software-pipelined loop interleaves instructions from different iterations without unrolling the loop.

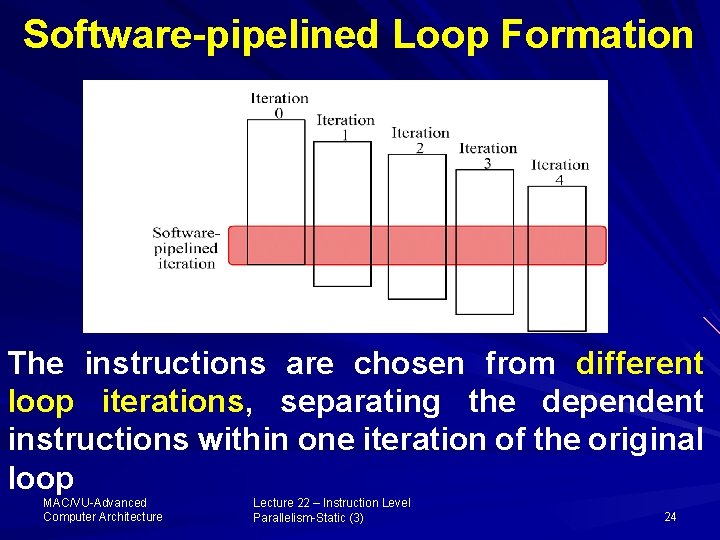

Software-pipelined Loop Formation

The instructions are chosen from different loop iterations, separating the dependent instructions within one iteration of the original loop.

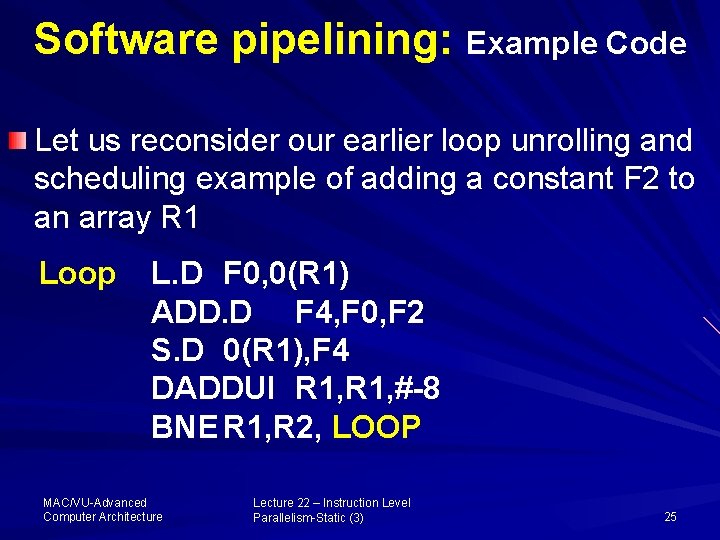

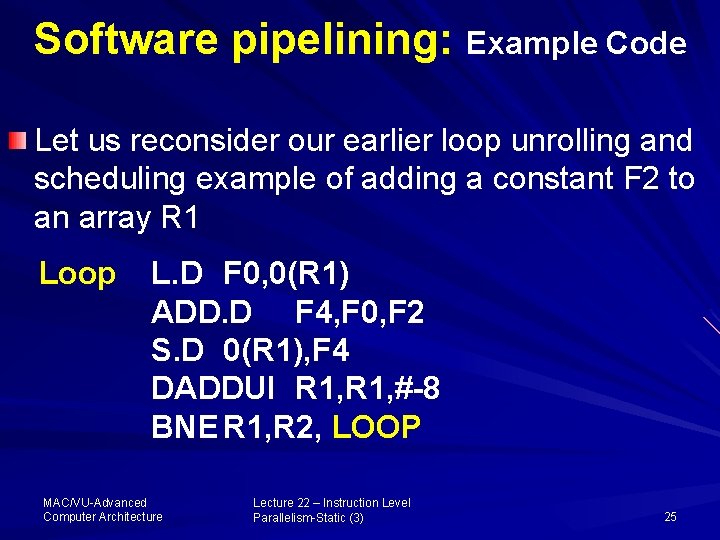

Software pipelining: Example Code

Let us reconsider our earlier loop unrolling and scheduling example, which adds a scalar held in F2 to each element of an array addressed through R1:

    Loop:  L.D     F0, 0(R1)
           ADD.D   F4, F0, F2
           S.D     F4, 0(R1)
           DADDUI  R1, R1, #-8
           BNE     R1, R2, Loop

Software pipelining: Explanation

For software pipelining, the compiler symbolically unrolls the loop and schedules the resulting instructions. It selects instructions from each iteration that have no dependences on one another. The overhead instructions (DADDUI and BNE) are not replicated.

Symbolic Loop Unrolling

The body of the symbolically unrolled loop for three iterations is as follows. The instruction selected from each iteration (highlighted in yellow on the original slide) is marked with an arrow:

    Iteration i:     L.D    F0, 0(R1)
                     ADD.D  F4, F0, F2
                     S.D    F4, 0(R1)     <- selected
    Iteration i+1:   L.D    F0, 0(R1)
                     ADD.D  F4, F0, F2    <- selected
                     S.D    F4, 0(R1)
    Iteration i+2:   L.D    F0, 0(R1)     <- selected
                     ADD.D  F4, F0, F2
                     S.D    F4, 0(R1)

Software pipeline

The selected instructions from different iterations are put together in a loop with the loop-control instructions, as shown below:

    Loop:  S.D     F4, 16(R1)      ; stores into M[i]
           ADD.D   F4, F0, F2      ; adds to M[i-1]
           L.D     F0, 0(R1)       ; loads M[i-2]
           DADDUI  R1, R1, #-8
           BNE     R1, R2, Loop

Software pipeline

Note that we also need some start-up and finish-up code that is executed outside the loop. For start-up we need to run the L.D and ADD.D instructions for iteration 1 and the L.D for iteration 2. Similarly, for finish-up we need to execute the S.D instruction for iteration 2 and the ADD.D and S.D for iteration 3.

Software pipeline

This loop takes 5 clock cycles per result, ignoring the start-up and finish-up code. Because the load and the store are separated by an offset of 16 (two iterations), the loop runs for two fewer iterations than the original loop. Registers F4, F0 and R1 are reused; since no data-dependent stalls occur in this case, the WAR hazards that such reuse could cause are avoided.
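To make the start-up, steady-state and finish-up phases concrete, here is a minimal Python model of the software-pipelined loop, checked against the original loop. It is a sketch under stated assumptions: the array is walked upward by index rather than downward through R1, the variables `f0` and `f4` stand in for the registers F0 and F4, and the function names are illustrative.

```python
def original_loop(m, c):
    out = list(m)
    for i in range(len(out)):
        f0 = out[i]      # L.D
        f4 = f0 + c      # ADD.D
        out[i] = f4      # S.D
    return out


def software_pipelined(m, c):
    out = list(m)
    n = len(out)
    assert n >= 2, "the pipelined form needs at least two iterations"
    # Start-up: L.D and ADD.D for the first iteration, L.D for the second.
    f0 = out[0]
    f4 = f0 + c
    f0 = out[1]
    # Steady state: runs for two fewer iterations than the original loop.
    for i in range(n - 2):
        out[i] = f4          # S.D   for iteration i
        f4 = f0 + c          # ADD.D for iteration i+1
        f0 = out[i + 2]      # L.D   for iteration i+2
    # Finish-up: S.D for the second-to-last iteration,
    # then ADD.D and S.D for the last one.
    out[n - 2] = f4
    f4 = f0 + c
    out[n - 1] = f4
    return out


data = [1.0, 2.0, 3.0, 4.0, 5.0]
print(software_pipelined(data, 10.0) == original_loop(data, 10.0))
```

Each pass through the steady-state loop touches three different iterations, which is why no instruction in the loop body waits on another instruction from the same pass.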

Software pipelining vs. Loop unrolling

Software pipelining can be thought of as symbolic loop unrolling; indeed, some algorithms use loop-unrolling techniques to software-pipeline loops.

- Software pipelining consumes less code space than loop unrolling.

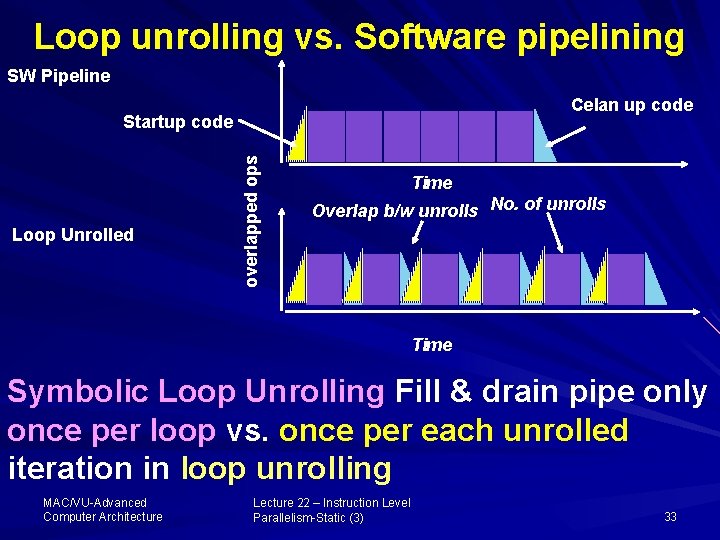

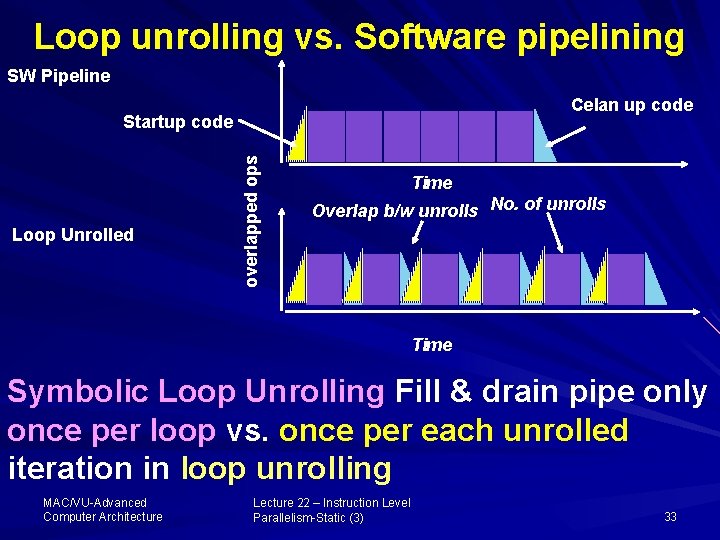

Loop unrolling vs. Software pipelining

Loop unrolling reduces the loop overhead (the branch and counter-update code): for example, if a loop of 100 iterations is unrolled 4 times, we pay that overhead 100/4 = 25 times instead of 100 times. Software pipelining reduces the time during which the loop is not running at peak speed to once per loop, at the beginning and at the end. This is shown in the figure below.

Loop unrolling vs. Software pipelining

[Figure: number of overlapped operations versus time for (a) a software-pipelined loop, with start-up code at the beginning and clean-up code at the end, and (b) an unrolled loop, where the overlap between unrolls repeats in proportion to the number of unrolls.]

Symbolic loop unrolling fills and drains the pipeline only once per loop, versus once per unrolled iteration in loop unrolling.

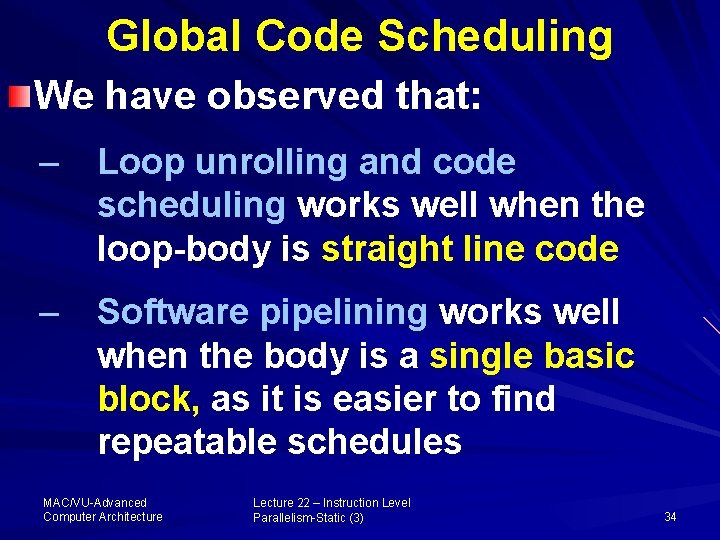

Global Code Scheduling

We have observed that:

- loop unrolling and code scheduling work well when the loop body is straight-line code; and
- software pipelining works well when the body is a single basic block, as it is easier to find repeatable schedules.

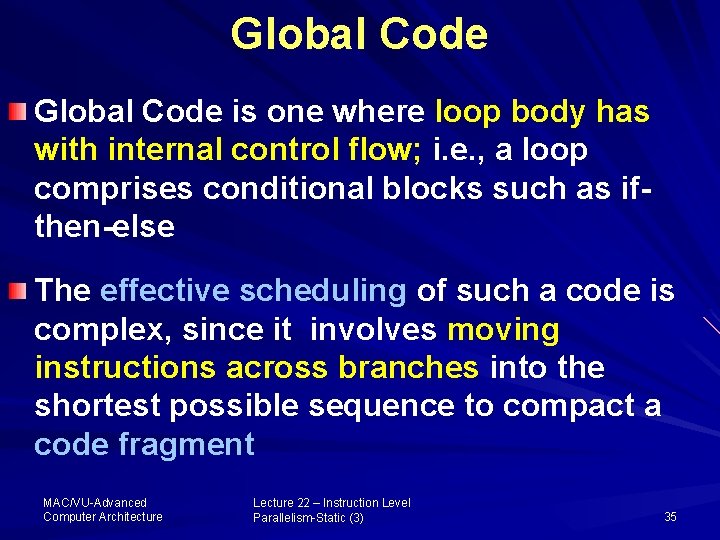

Global Code

Global code is code in which the loop body has internal control flow; i.e., the loop contains conditional blocks such as if-then-else. Scheduling such code effectively is complex, since it involves moving instructions across branches to compact a code fragment into the shortest possible sequence.

Global code scheduling

Global code involves both data dependences and control dependences. Loop unrolling with scheduling and software pipelining do not work efficiently for enhancing ILP in global code.

Global code scheduling

These approaches are suitable for enhancing ILP in straight-line or single-basic-block code, where the body of the loop has only data dependences and the control dependence of the loop itself is overcome by loop unrolling.

Global code scheduling

The most commonly used compiler-based approaches to scheduling global code are:

- trace scheduling, or the critical-path approach; and
- the superblock approach.

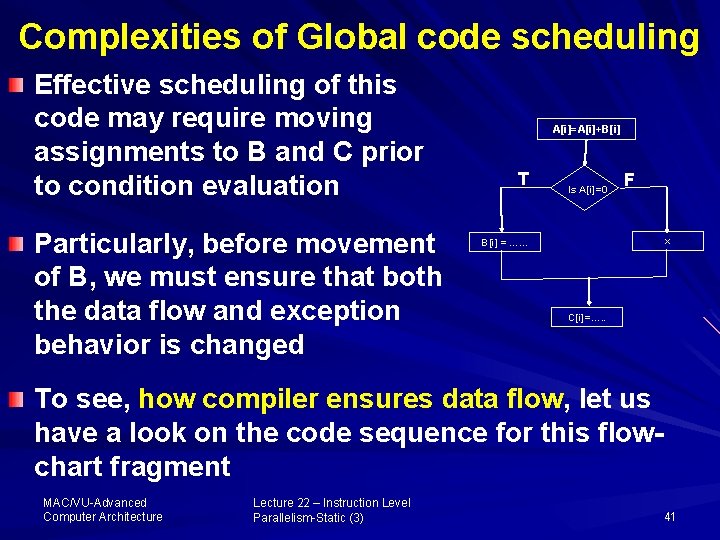

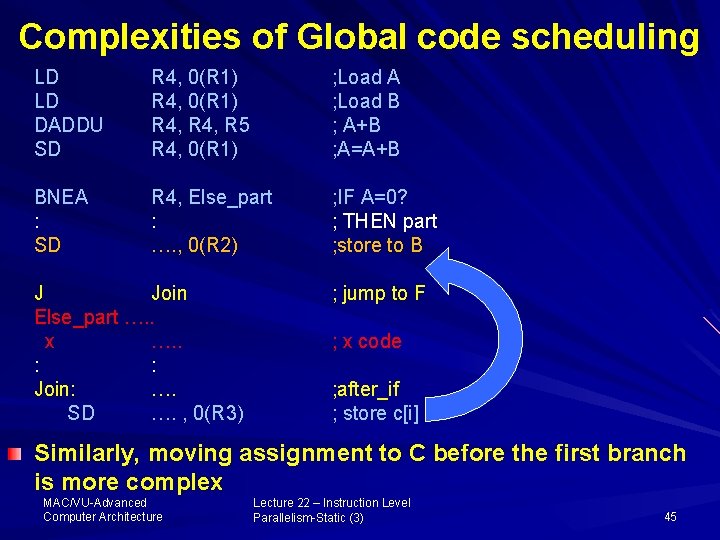

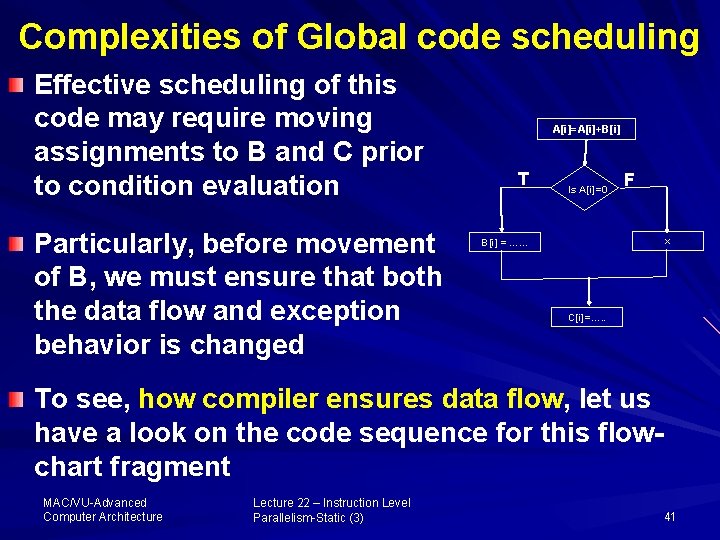

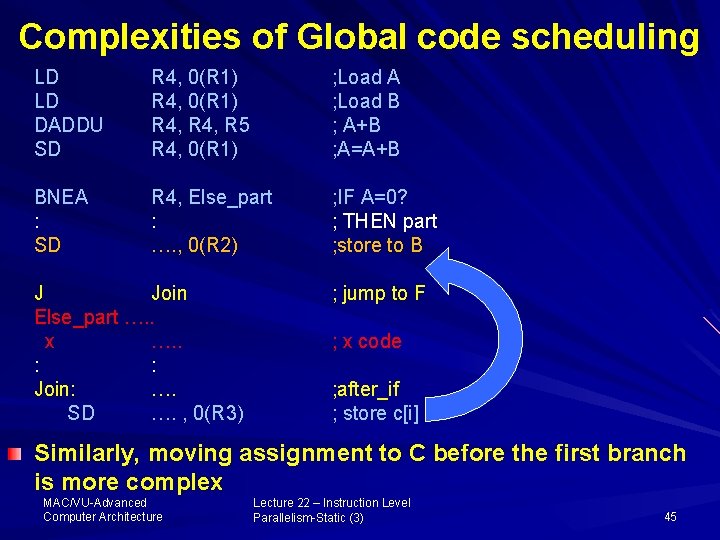

Complexities of Global code scheduling

Before discussing the global code scheduling techniques, let us first look at the complexities of scheduling assignment instructions that sit inside the conditional branches of a loop in global code.

Complexities of Global code scheduling

Let us consider a typical global-code fragment that represents one iteration of an unrolled inner loop. Here, moving B or C (i.e., scheduling them before the condition is evaluated) requires more complex analysis.

[Flowchart: A[i] = A[i] + B[i]; test "Is A[i] = 0?"; true (T) branch: B[i] = ……; false (F) branch: X; after the join: C[i] = ……]

Complexities of Global code scheduling

Effective scheduling of this code may require moving the assignments to B and C to before the condition is evaluated. In particular, before moving B we must ensure that neither the data flow nor the exception behavior is changed.

To see how the compiler preserves the data flow, let us look at the code sequence for this flowchart fragment.

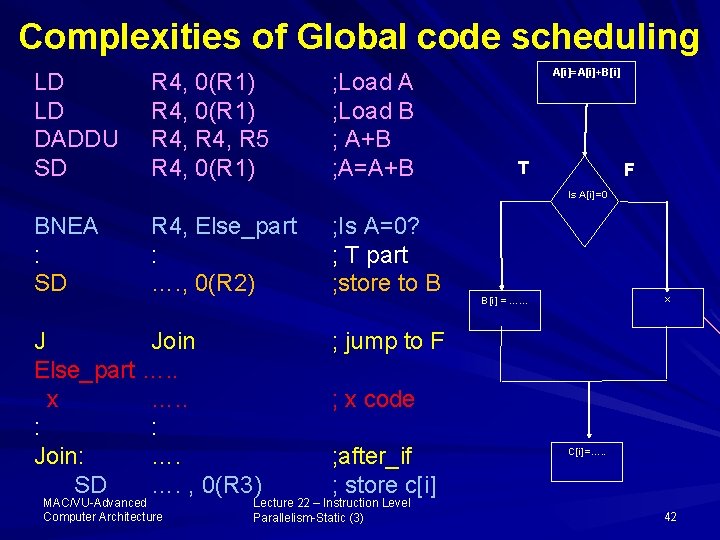

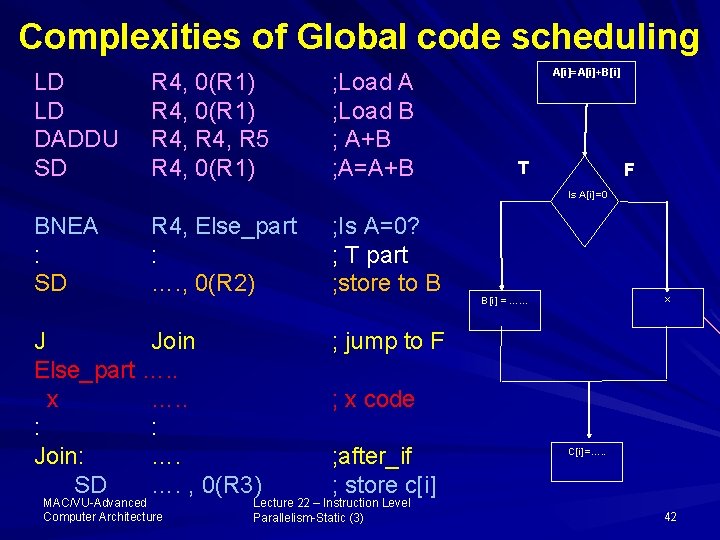

Complexities of Global code scheduling

               LD     R4, 0(R1)        ; load A
               LD     R5, 0(R2)        ; load B
               DADDU  R4, R4, R5       ; A + B
               SD     R4, 0(R1)        ; A = A + B
               …
               BNEZ   R4, Else_part    ; is A = 0?
               …                       ; then (T) part
               SD     …, 0(R2)         ; store to B
               …
               J      Join             ; jump over the else part
    Else_part: …                       ; else (F) part
               X                       ; code for X
               …
    Join:      …                       ; after the if
               SD     …, 0(R3)         ; store C[i]

Complexities of Global code scheduling

Let us see the effect of moving the assignment to B before the BNEZ branch in the code sequence above.

Complexities of Global code scheduling

If B is referenced in X or after the IF statement, then moving the assignment to B before the IF will change the data flow. This can be overcome by making a shadow copy of B before the IF statement and using the shadow copy in X. However, such a copy is usually avoided, as it slows down the program.
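The effect on data flow can be seen in a small Python model of the fragment. The sketch assumes, purely for illustration, that X computes `2 * b` from the old value of B and that the then part stores a value `b_new`; none of these specifics come from the slides.

```python
def original(a, b, b_new):
    a = a + b
    if a == 0:
        b = b_new            # then part: store to B
        x = 0
    else:
        x = 2 * b            # X reads the old value of B
    return a, b, x


def hoisted_naive(a, b, b_new):
    a = a + b
    b = b_new                # store to B moved above the branch
    if a == 0:
        x = 0
    else:
        x = 2 * b            # X now sees the new B: data flow has changed
    return a, b, x


def hoisted_with_shadow(a, b, b_new):
    a = a + b
    shadow = b               # shadow copy of B taken before the store
    b = b_new                # store to B moved above the branch
    if a == 0:
        x = 0
    else:
        b = shadow           # the else path must still see the old B
        x = 2 * shadow       # X uses the shadow copy
    return a, b, x


print(original(1, 2, 9), hoisted_naive(1, 2, 9), hoisted_with_shadow(1, 2, 9))
```

On the else path the naive version returns a different B and a different X, while the shadow-copy version matches the original at the cost of an extra copy on every execution.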

Complexities of Global code scheduling

Similarly, moving the assignment to C before the first branch in the code sequence above is more complex.

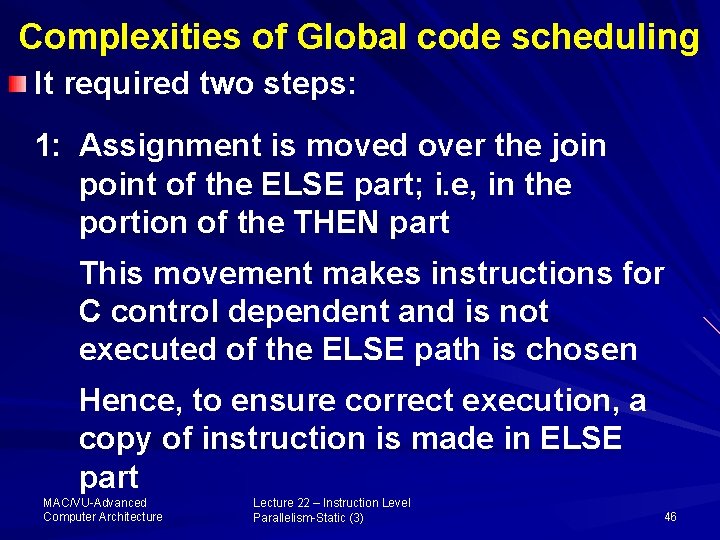

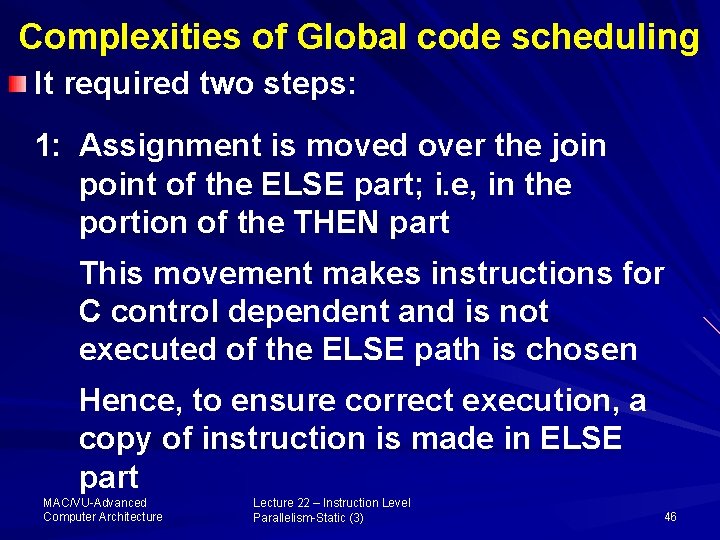

Complexities of Global code scheduling

It requires two steps.

1: The assignment is moved over the join point of the ELSE part, i.e., into the portion corresponding to the THEN part. This movement makes the instructions for C control dependent on the branch, so they are not executed if the ELSE path is chosen. Hence, to ensure correct execution, a copy of these instructions is made in the ELSE part.

Complexities of Global code scheduling

2: The assignment to C can then be moved from the THEN part to before the IF test, provided it does not affect any data flow into the branch condition. In this case the copy of the instructions in the ELSE part can be avoided.

This discussion shows that global code scheduling is an extremely complex problem.

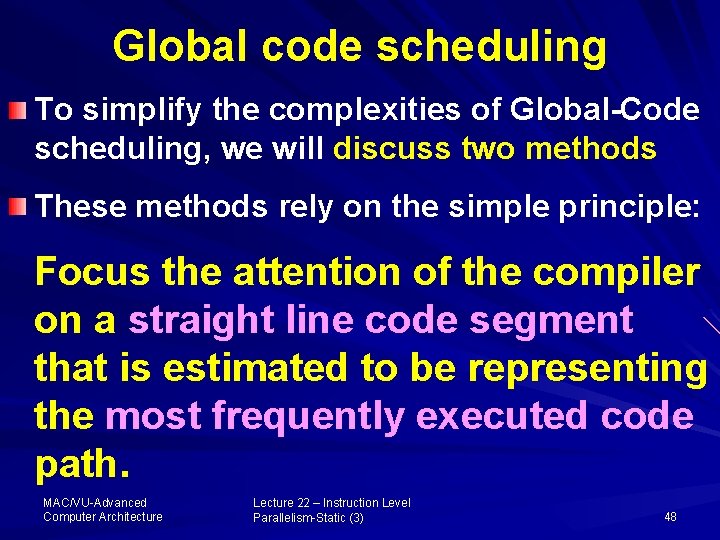

Global code scheduling

To reduce the complexity of global code scheduling, we will discuss two methods. Both rely on a simple principle: focus the attention of the compiler on a straight-line code segment that is estimated to represent the most frequently executed code path.

1: Trace scheduling

A trace is a sequence of basic blocks whose operations could be put together into a smaller number of instructions. Trace scheduling is a way to organize the global code motion process such that the cost of code motion is incurred by the less frequent paths.

1: Trace scheduling

It is useful for processors with a large number of issues per clock, where:

- conditional or predicated execution is inappropriate or unsupported; and
- simple loop unrolling is not sufficient to uncover enough ILP to keep the processor busy.

Trace Scheduling (cont'd)

Trace scheduling is carried out in two steps:

1: Trace selection: find a likely sequence of basic blocks whose operations could be put together into a smaller number of instructions.

2: Trace compaction: squeeze the trace into a small number of wide instructions.

Trace Scheduling (cont'd)

Trace generation: since loop branches are taken with high probability, the trace is generated by loop unrolling. In addition, static branch prediction (taken or not taken) is used to obtain straight-line code by concatenating many basic blocks.
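Trace selection can be sketched as a greedy walk that always follows the most probable successor. The control-flow graph, the block names, and the branch probabilities below are hypothetical, chosen to resemble the if-then-else fragment used in this lecture; real compilers obtain such probabilities from profiling or static prediction.

```python
def select_trace(cfg, start):
    """Follow the most likely successor from `start` until the path ends
    or would revisit a block. `cfg` maps block -> {successor: probability}."""
    trace = [start]
    seen = {start}
    block = start
    while cfg.get(block):
        successors = cfg[block]
        nxt = max(successors, key=successors.get)
        if nxt in seen:
            break
        trace.append(nxt)
        seen.add(nxt)
        block = nxt
    return trace


# Hypothetical CFG: "test" ends with the branch on A[i] == 0.
cfg = {
    "test": {"assign_B": 0.9, "X": 0.1},
    "assign_B": {"assign_C": 1.0},
    "X": {"assign_C": 1.0},
    "assign_C": {},
}
print(select_trace(cfg, "test"))
```

With these assumed probabilities the selected trace is the true path through the assignment to B; block X is left off the trace and is reached only through a trace exit.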

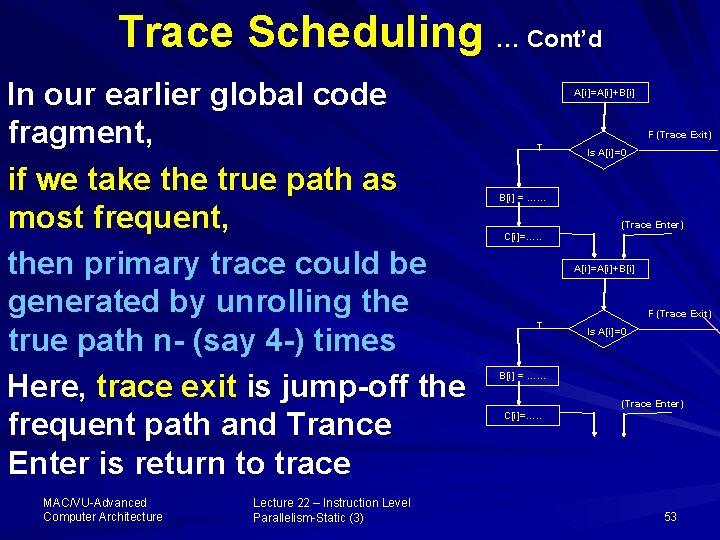

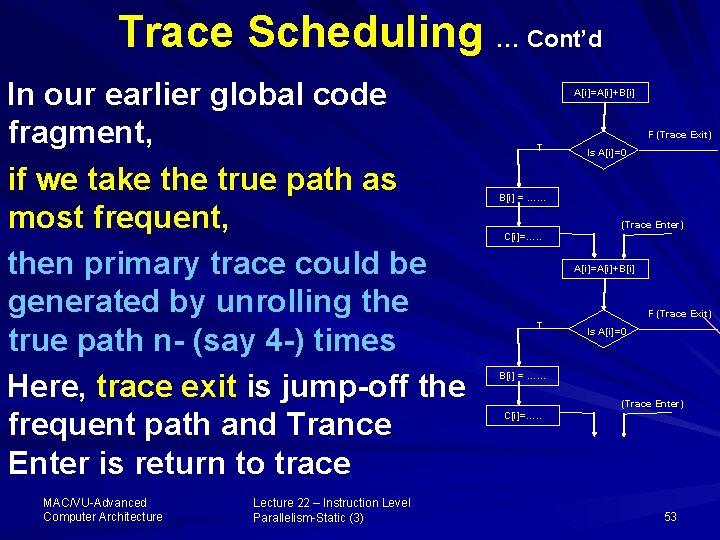

Trace Scheduling (cont'd)

In our earlier global code fragment, if we take the true path as the most frequent one, then the primary trace can be generated by unrolling the true path n (say 4) times. Here, a trace exit is a jump off the frequent path, and a trace entrance is a return to the trace.

[Flowchart: A[i] = A[i] + B[i]; test "Is A[i] = 0?"; the true (T) path stays on the trace through B[i] = …… and C[i] = ……; the false (F) path is the trace exit, and control returns at the trace entrance before C[i] = ……]

Trace scheduling

Trace compaction is code scheduling; hence, the compiler attempts to:

- move operations as early as it can in the sequence (trace); and
- pack the operations into as few wide instructions (or issue packets) as possible.
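A very simplified view of compaction is a greedy packer that places each operation in the earliest packet allowed by its dependences and by the issue width. The sketch below assumes unit latencies, tracks only true dependences, and uses made-up operation names; it illustrates the idea and is not the scheduling algorithm of any particular compiler.

```python
def compact(ops, width):
    """Pack operations into wide instructions (packets).

    `ops` is a list of (name, [names it depends on]) in trace order.
    Each op goes into the earliest packet that comes after all of its
    producers and still has a free slot.
    """
    packet_of = {}
    packets = []
    for name, deps in ops:
        slot = max((packet_of[d] + 1 for d in deps), default=0)
        while slot < len(packets) and len(packets[slot]) >= width:
            slot += 1
        while len(packets) <= slot:
            packets.append([])
        packets[slot].append(name)
        packet_of[name] = slot
    return packets


trace = [
    ("ld_a", []),
    ("ld_b", []),
    ("add", ["ld_a", "ld_b"]),
    ("st_a", ["add"]),
    ("ld_c", []),
]
print(compact(trace, width=2))
```

In this example the independent load `ld_c` moves up beside `add`, so five operations fit into three two-wide packets.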

Advantages of Trace scheduling

Trace scheduling simplifies the decisions concerning global code motion. Branches are viewed as jumps into (trace entrance) or out of (trace exit) the selected trace, which is the most probable path.

Overhead of Trace scheduling

Note that when code is moved across a trace entry or exit point, additional bookkeeping code is needed at that point. Furthermore, when an entry or exit point is in the middle of a trace, the significant overhead of compensation code may make trace scheduling an unattractive approach.

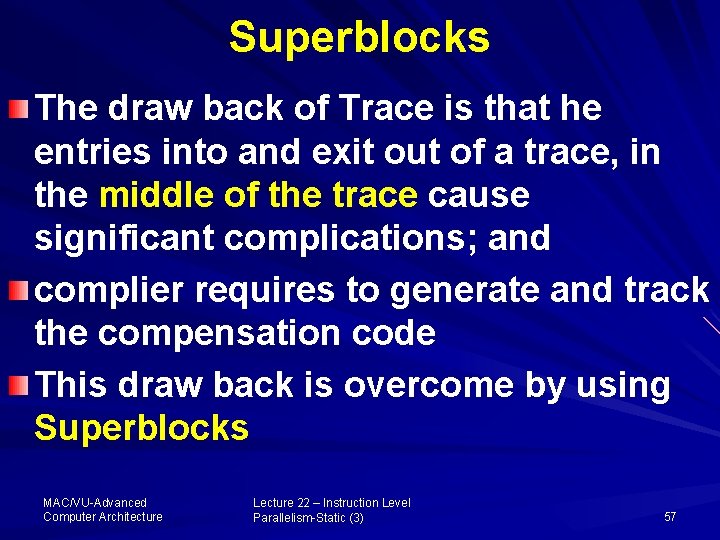

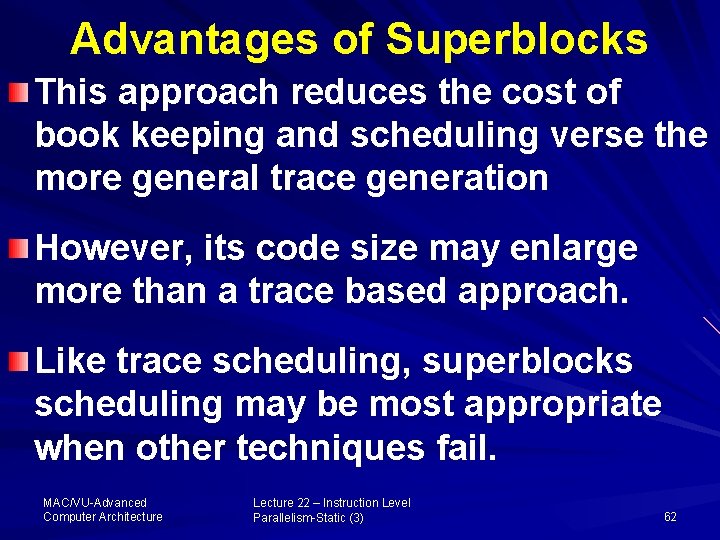

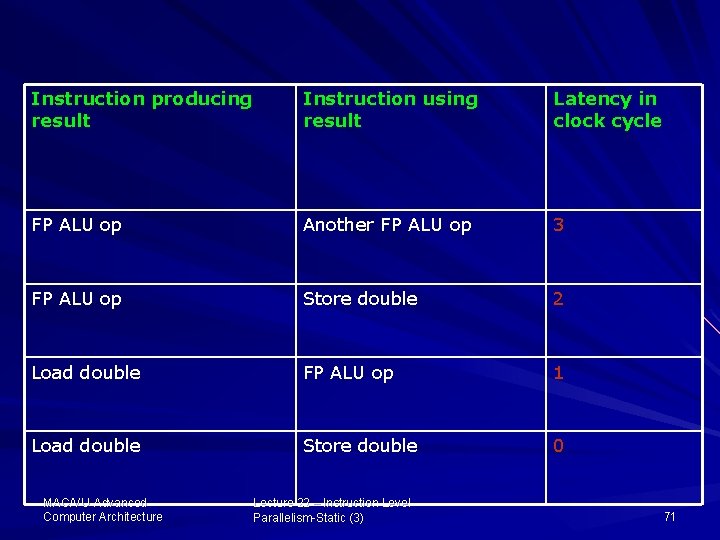

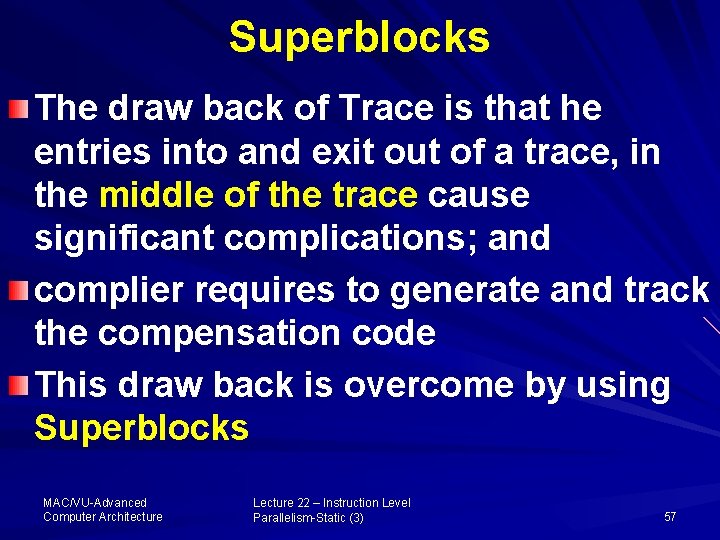

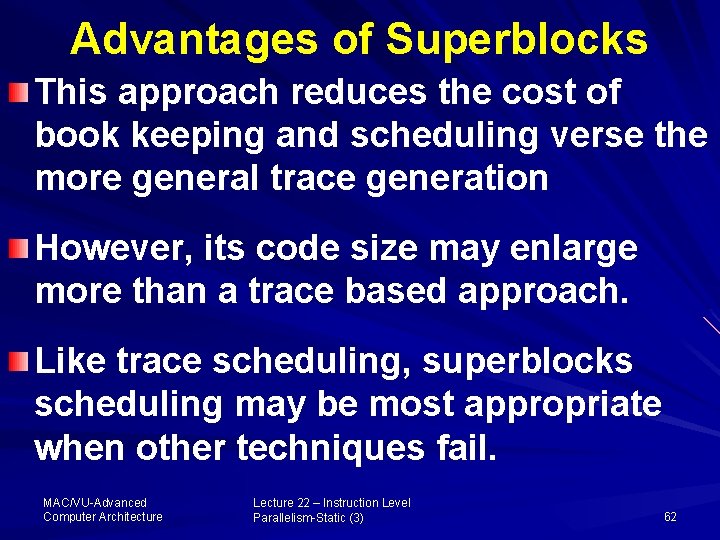

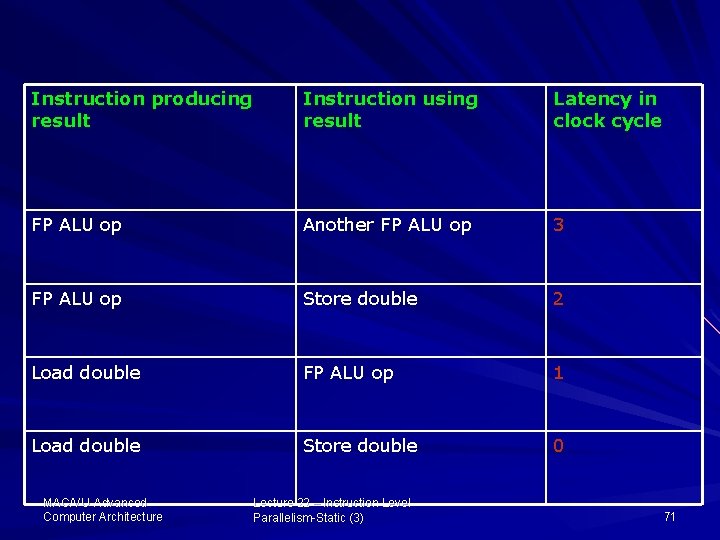

Superblocks

The drawback of traces is that entries into and exits out of the middle of a trace cause significant complications, and the compiler is required to generate and track the compensation code. This drawback is overcome by using superblocks.

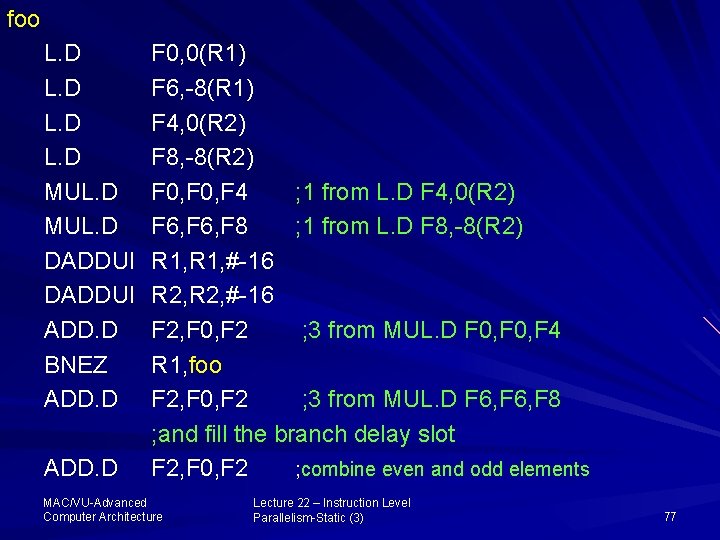

Superblocks

Superblocks are a form of extended basic block that has a single entry point but allows multiple exits. It is therefore easier to compact a superblock than a trace. In our earlier global code example, a superblock with one entrance can easily be constructed by moving C, as shown.

![Superblocks AiAiBi T F Superblock exit n4 Is Ai0 Residual Loop Bi Superblocks A[i]=A[i]+B[i] T F (Superblock exit) n=4 Is A[i]=0 Residual Loop B[i] = ……](https://slidetodoc.com/presentation_image_h2/b0ffaabc7480cb3c26d9a8d3140d742c/image-59.jpg)

[Figure: superblock formed by unrolling the frequent (true) path four times. Each copy computes A[i] = A[i] + B[i], tests "Is A[i] = 0?", and on the true (T) path executes B[i] = …… and C[i] = ……. Each false (F) outcome is a superblock exit (with n = 4, 3, 2, 1) to the residual loop, which contains A[i] = A[i] + B[i], the test, B[i] = …… or X, and C[i] = ……, and is executed n times.]

Superblocks

Tail duplication is used to create a separate block that corresponds to the portion of the trace after the entry. Each unrolling of the loop creates an exit from the superblock to the residual loop, which handles the remaining iterations.

Superblocks

The residual loop handles the iterations that occur when the unpredicted path is selected.

Advantages of Superblocks

This approach reduces the cost of bookkeeping and scheduling versus the more general trace generation. However, its code size may grow more than with a trace-based approach. Like trace scheduling, superblock scheduling may be most appropriate when other techniques fail.

Conclusion: Enhancing ILP

All four approaches (loop unrolling, software pipelining, trace scheduling and superblocks) aim to increase the amount of ILP that can be exploited by a processor issuing more than one instruction on every clock cycle.

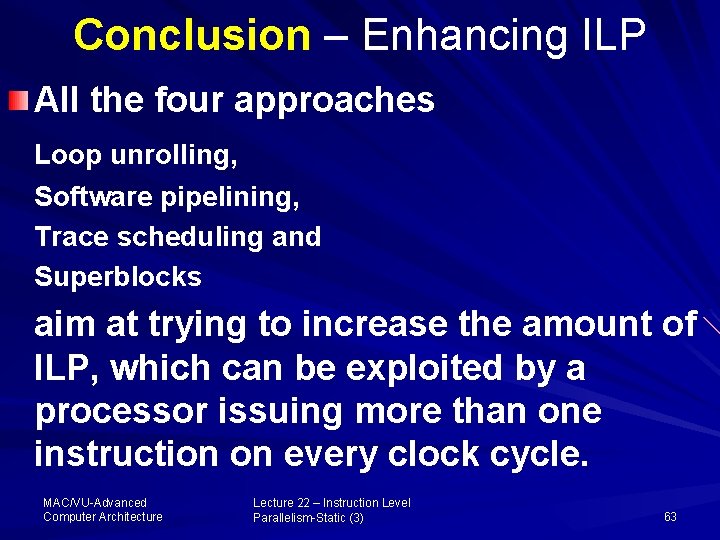

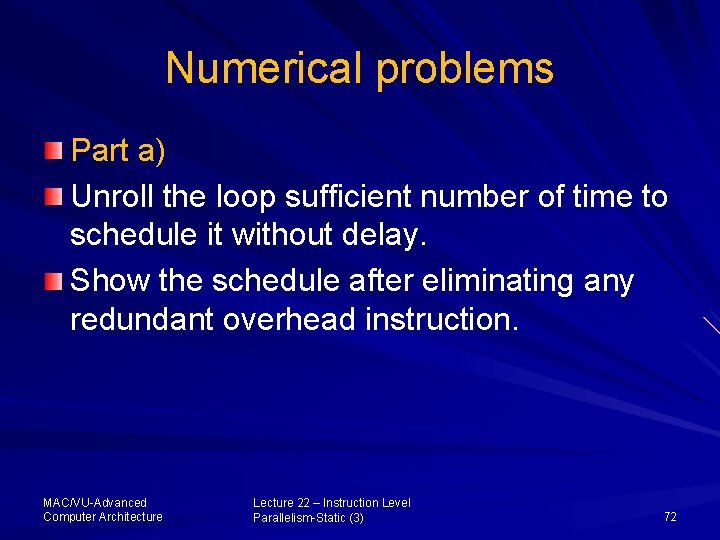

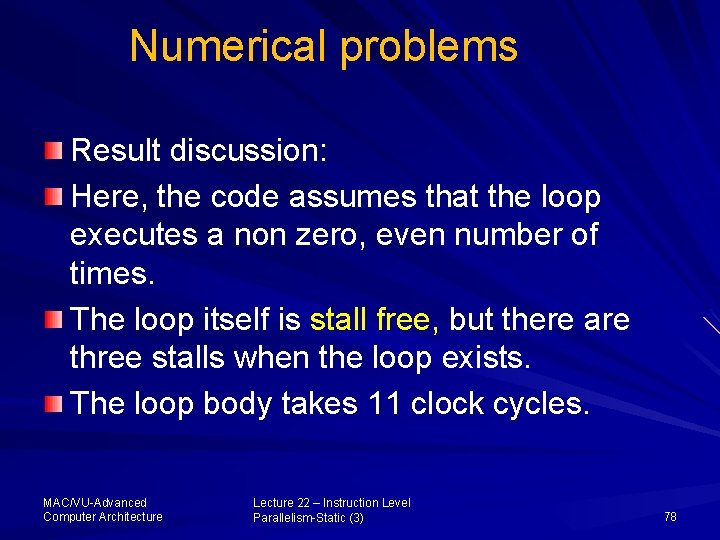

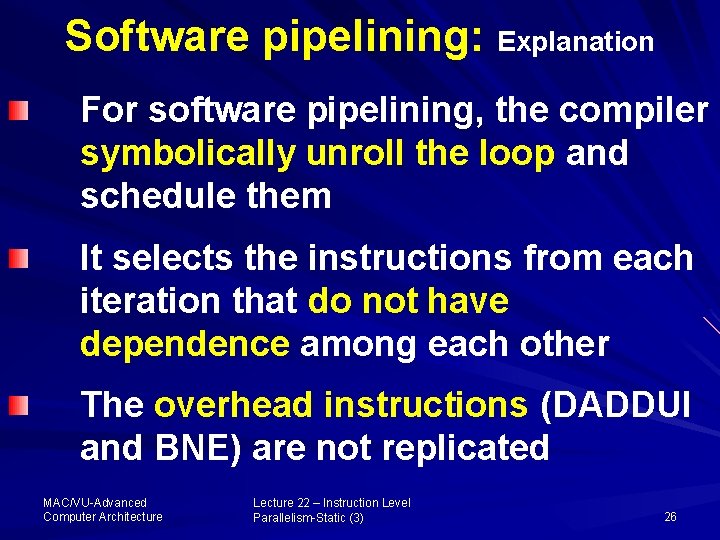

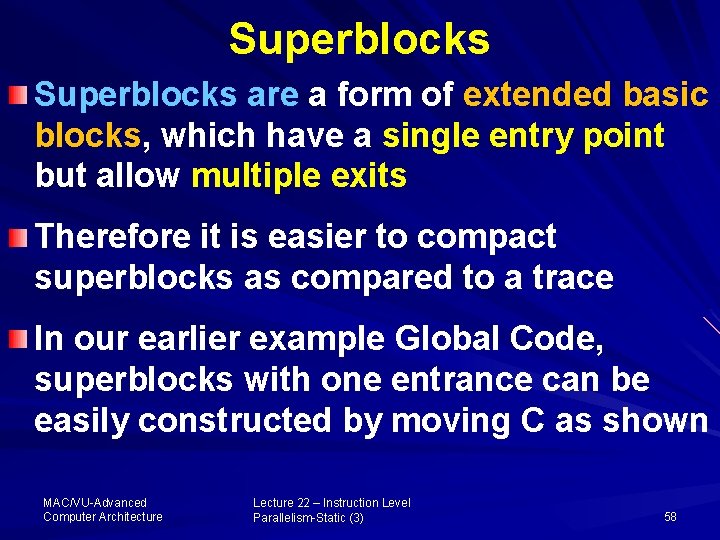

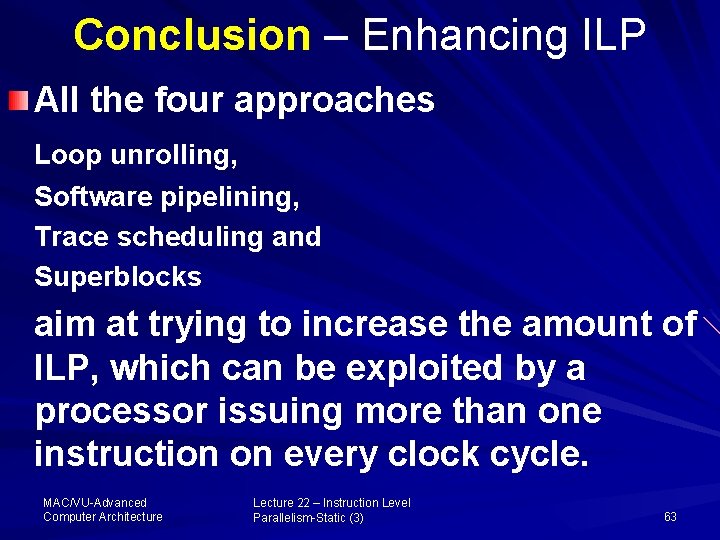

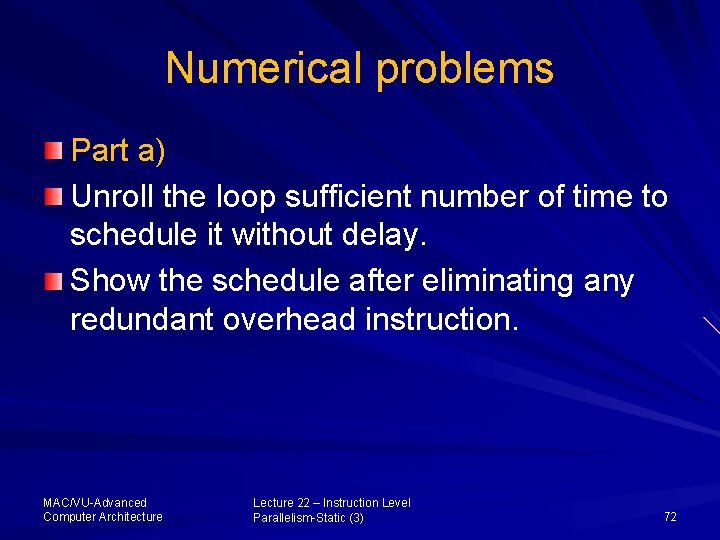

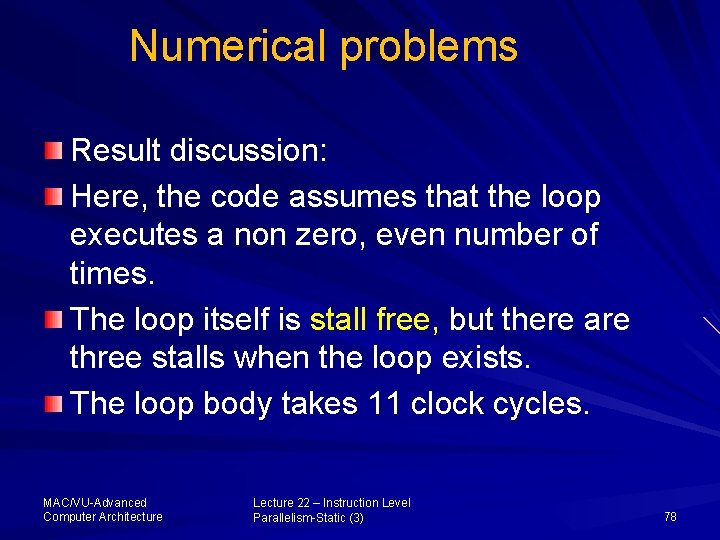

Numerical problems

In the following code:

- List all dependences (output, anti, and true) in the loop.
- Indicate whether the true dependences are loop carried or not.
- Why is the loop not parallel?

![Example 1 For i2 i100 i i1 ai ci1 ai1 Example 1 For (i=2; i<100; i= i+1) { a[i] = c[i-1] = a[i-1] =](https://slidetodoc.com/presentation_image_h2/b0ffaabc7480cb3c26d9a8d3140d742c/image-65.jpg)

Example 1

    for (i = 2; i < 100; i = i + 1) {
        a[i]   = b[i] + a[i];    /* s1 */
        c[i-1] = a[i] + d[i];    /* s2 */
        a[i-1] = 2 * b[i];       /* s3 */
        b[i+1] = 2 * b[i];       /* s4 */
    }

Example Solution

There are six dependences in the loop.

1) There is an antidependence from s1 to s1 on a:

    a[i] = b[i] + a[i];    /* s1 */

2) There is a true dependence from s1 to s2 on a:

    a[i]   = b[i] + a[i];    /* s1 */
    c[i-1] = a[i] + d[i];    /* s2 */

Here, the value of a read in s2 depends on the result written to a in s1.

Numerical problems

3) There is a loop-carried true dependence from s4 to s1 on b.

4) There is a loop-carried true dependence from s4 to s3 on b.

5) There is a loop-carried true dependence from s4 to s4 on b.

6) There is a loop-carried output dependence from s1 to s3 on a.

Numerical problems

Part b) Indicate whether the true dependences are loop carried or not.

For a loop to be parallel, each iteration must be independent of all others. Here, dependences 3, 4 and 5 are true dependences, so they cannot be removed by renaming or any similar technique. They are also loop carried, which means the iterations of the loop are not independent. Dependence 2 is a true dependence as well, but it lies within a single iteration and so is not loop carried.
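The true dependences of Example 1 can be cross-checked by brute force: run through the iterations, remember which statement last wrote each array element, and record every read that finds such a writer. The Python sketch below encodes each statement by the offsets of its accesses relative to i; the encoding and the function name are illustrative assumptions. It reports only true (read-after-write) dependences.

```python
# (statement, array written, write offset, [(array read, read offset), ...])
STMTS = [
    ("s1", "a", 0, [("b", 0), ("a", 0)]),
    ("s2", "c", -1, [("a", 0), ("d", 0)]),
    ("s3", "a", -1, [("b", 0)]),
    ("s4", "b", 1, [("b", 0)]),
]


def true_dependences(lo=2, hi=100):
    """Return sorted (source, sink, array, distance) tuples, where distance
    is the number of iterations between the write and the read."""
    last_writer = {}  # (array, index) -> (statement, iteration)
    found = set()
    for i in range(lo, hi):
        for name, w_arr, w_off, reads in STMTS:
            # Reads happen before the statement's own write.
            for r_arr, r_off in reads:
                src = last_writer.get((r_arr, i + r_off))
                if src:
                    found.add((src[0], name, r_arr, i - src[1]))
            last_writer[(w_arr, i + w_off)] = (name, i)
    return sorted(found)


for source, sink, array, distance in true_dependences():
    kind = "loop carried" if distance > 0 else "within one iteration"
    print(f"{source} -> {sink} on {array}: {kind}")
```

A distance of 0 marks a dependence inside one iteration, and a distance of 1 or more marks a loop-carried one. The check finds one true dependence on a within an iteration and three loop-carried true dependences on b, all originating at s4.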

Numerical problems

These factors imply that the loop is not parallel as written. It can be made parallel only by rewriting it as a loop that is functionally equivalent to the original and whose iterations are independent.

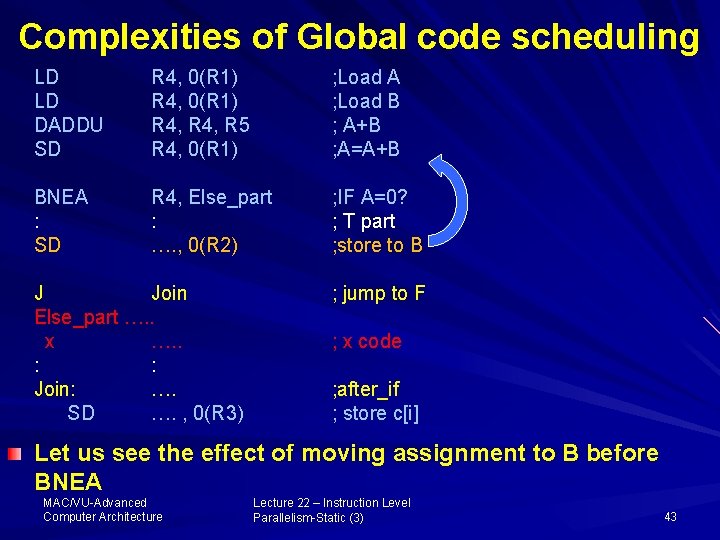

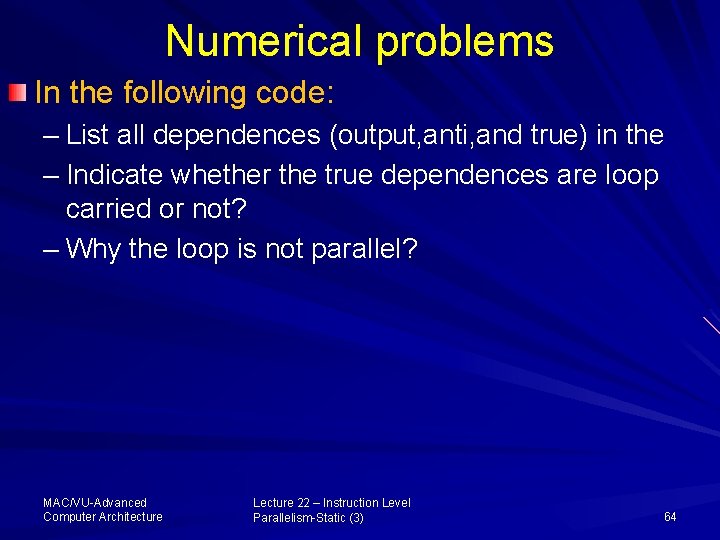

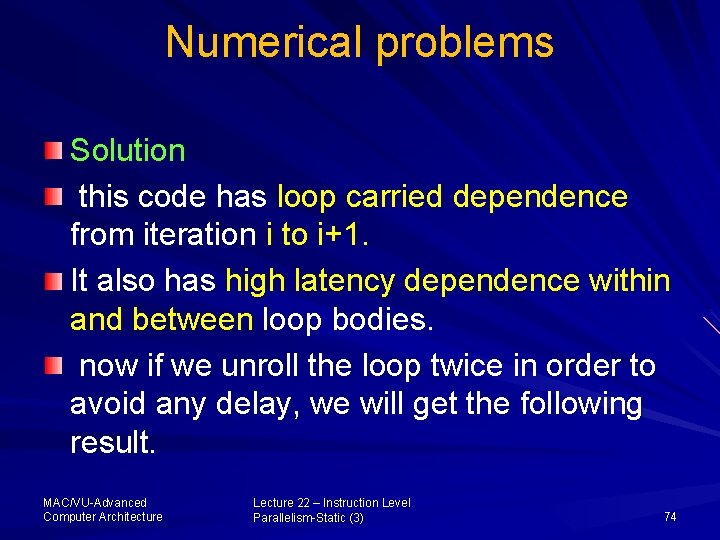

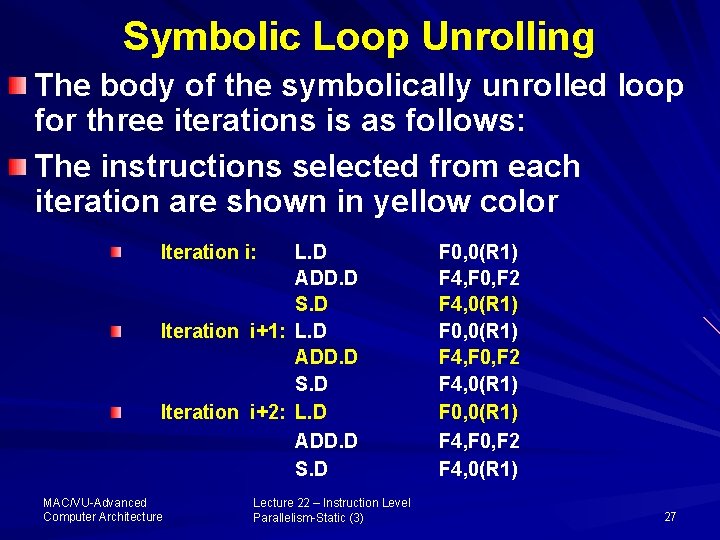

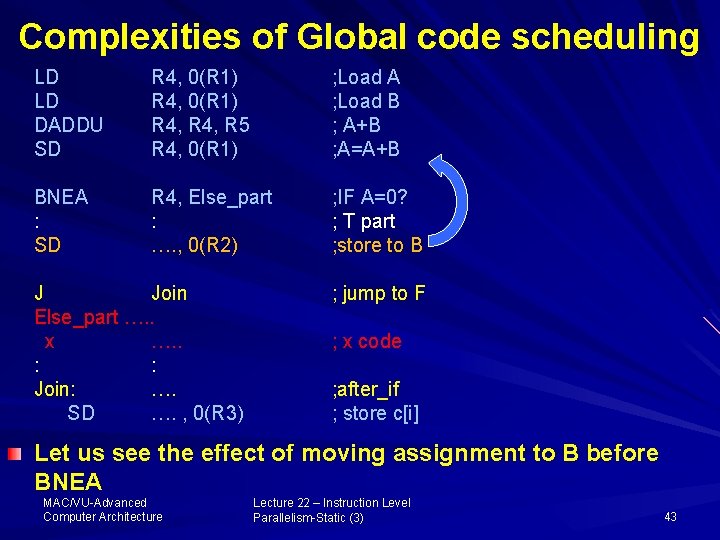

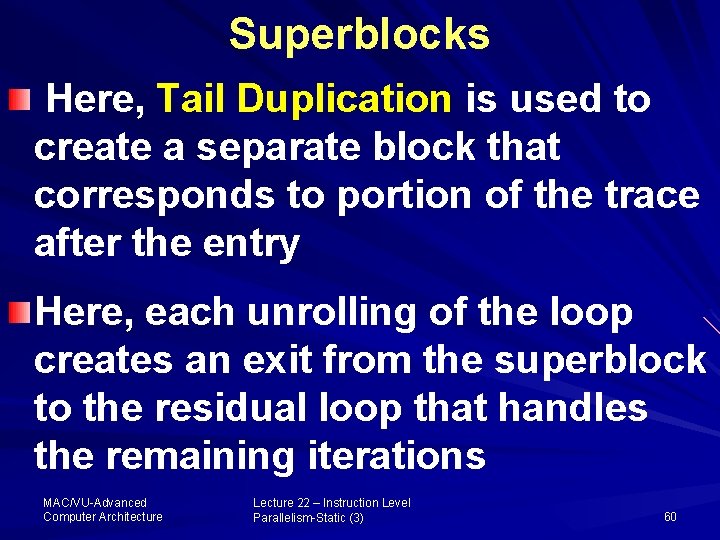

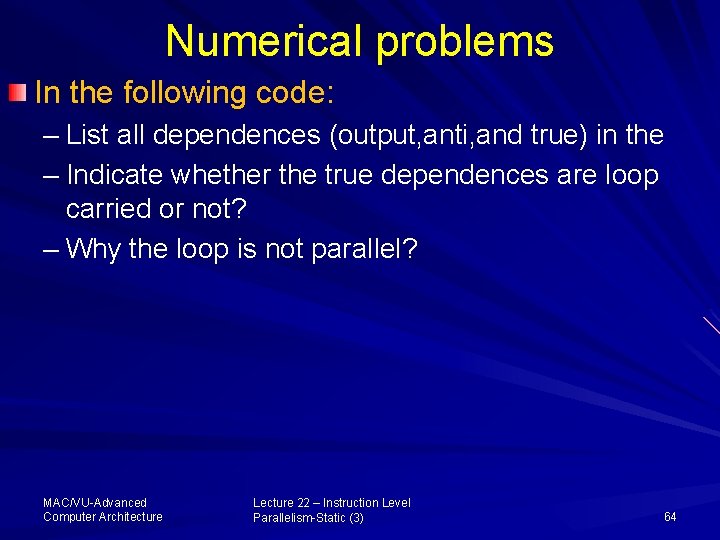

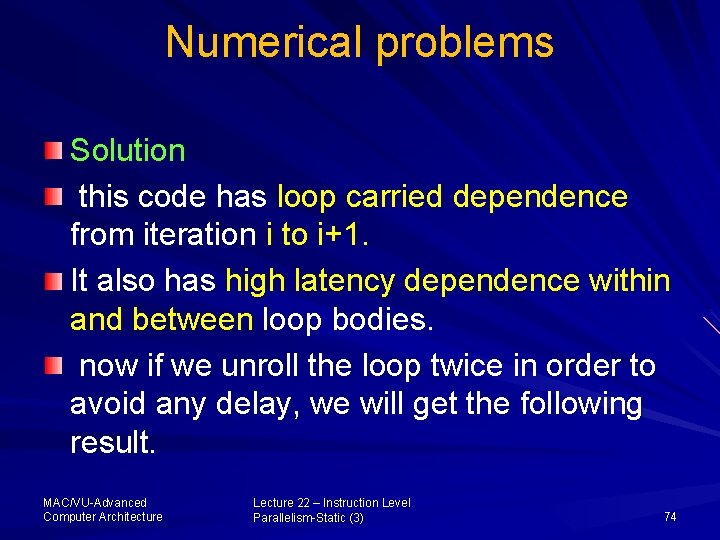

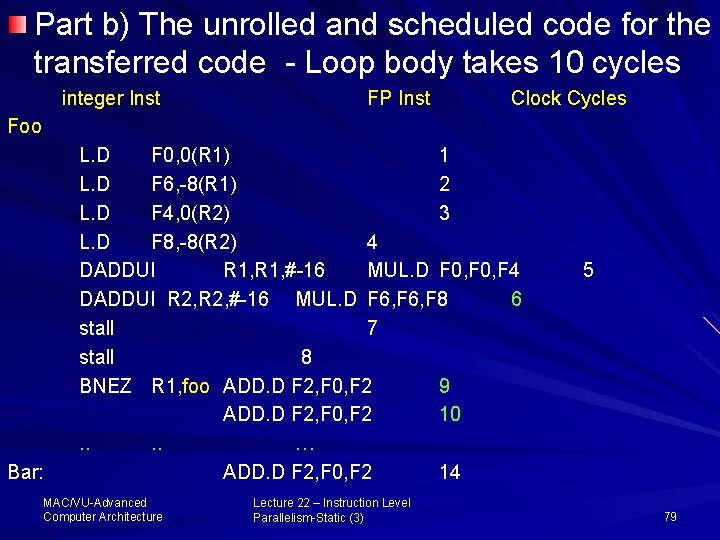

Numerical problems

Problem # 2

The loop given below is a dot product (assuming the running sum in F2 is initially 0) and contains a recurrence. Assume the pipeline latencies from the table shown below, a 1-cycle delayed branch, and a single-issue pipeline.

| Instruction producing result | Instruction using result | Latency in clock cycles |
|---|---|---|
| FP ALU op | Another FP ALU op | 3 |
| FP ALU op | Store double | 2 |
| Load double | FP ALU op | 1 |
| Load double | Store double | 0 |

Numerical problems

Part a) Unroll the loop a sufficient number of times to schedule it without any delay. Show the schedule after eliminating any redundant overhead instructions.

![Numerical problems Foo L D F 0 0R 1 load Xi L D F Numerical problems Foo: L. D F 0, 0(R 1); /load X[i] L. D F](https://slidetodoc.com/presentation_image_h2/b0ffaabc7480cb3c26d9a8d3140d742c/image-73.jpg)

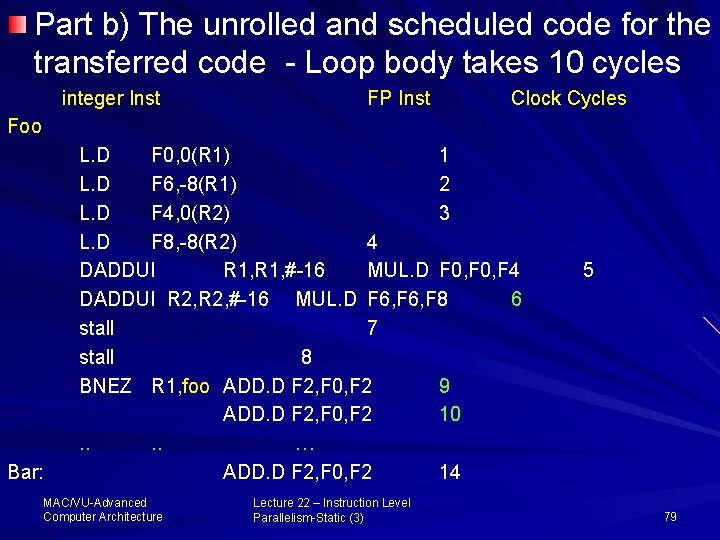

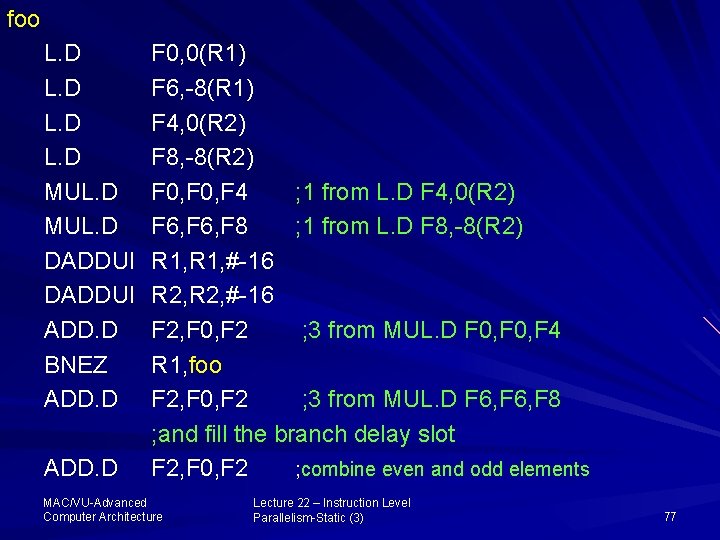

Numerical problems

    foo:  L.D     F0, 0(R1)        ; load X[i]
          L.D     F4, 0(R2)        ; load Y[i]
          MUL.D   F0, F0, F4       ; multiply X[i] * Y[i]
          ADD.D   F2, F0, F2       ; sum = sum + X[i] * Y[i]
          DADDUI  R1, R1, #-8      ; decrement X index
          DADDUI  R2, R2, #-8      ; decrement Y index
          BNEZ    R1, foo          ; loop if not done

Numerical problems

Solution: this code has a loop-carried dependence from iteration i to iteration i+1. It also has high-latency dependences within and between loop bodies. If we unroll the loop twice in an attempt to avoid any delay, we get the following result.

    foo:  L.D     F0, 0(R1)
          L.D     F4, 0(R2)
          L.D     F6, -8(R1)
          MUL.D   F0, F0, F4       ; 1 from L.D F4, 0(R2)
          L.D     F8, -8(R2)
          DADDUI  R1, R1, #-16
          MUL.D   F6, F6, F8       ; 1 from L.D F8, -8(R2)
          ADD.D   F2, F0, F2       ; 3 from MUL.D F0, F0, F4
          DADDUI  R2, R2, #-16
          stall
          BNEZ    R1, foo
          ADD.D   F2, F6, F2       ; in slot, and 3 from ADD.D F2, F0, F2

Numerical problems

Here the dependence chain from one ADD.D to the next ADD.D forces the stall.

Next part: unrolling further would not remove this stall. To eliminate it, we use the commutativity and associativity of addition to keep two running sums in the loop, one for the even elements and one for the odd elements, and combine the two partial sums outside the loop body:
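The transformation itself can be sketched in Python, independently of the MIPS scheduling. The function names are illustrative. The test data use integers so that both forms agree exactly; with floating-point values, reassociating the additions can change the rounding of the result.

```python
def dot_single_sum(x, y):
    total = 0
    for xi, yi in zip(x, y):
        total += xi * yi          # every add waits for the previous add
    return total


def dot_two_sums(x, y):
    # Like the unrolled code, this assumes a non-zero, even element count.
    assert len(x) == len(y) and len(x) > 0 and len(x) % 2 == 0
    even = 0
    odd = 0
    for i in range(0, len(x), 2):
        even += x[i] * y[i]           # chain 1: even elements
        odd += x[i + 1] * y[i + 1]    # chain 2: odd elements, independent of chain 1
    return even + odd                 # combine the partial sums outside the loop


x = [1, 2, 3, 4]
y = [5, 6, 7, 8]
print(dot_single_sum(x, y), dot_two_sums(x, y))
```

Because the two accumulations never read each other's result, consecutive adds in the loop belong to different dependence chains and need not wait for one another.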

    foo:  L.D     F0, 0(R1)
          L.D     F6, -8(R1)
          L.D     F4, 0(R2)
          L.D     F8, -8(R2)
          MUL.D   F0, F0, F4       ; 1 from L.D F4, 0(R2)
          MUL.D   F6, F6, F8       ; 1 from L.D F8, -8(R2)
          DADDUI  R1, R1, #-16
          DADDUI  R2, R2, #-16
          ADD.D   F2, F0, F2       ; 3 from MUL.D F0, F0, F4
          BNEZ    R1, foo
          ADD.D   F10, F6, F10     ; 3 from MUL.D F6, F6, F8, and fills the branch delay slot
                                   ; (F10 is assumed here as the second running sum, initially 0)
          ADD.D   F2, F2, F10      ; combine even and odd elements

Numerical problems

Result discussion: the code assumes that the loop executes a non-zero, even number of times. The loop itself is stall free, but there are three stalls when the loop exits. The loop body takes 11 clock cycles.

Part b) The unrolled and scheduled code for the transformed code. The loop body takes 10 cycles.

| Integer instruction | FP instruction | Clock cycle |
|---|---|---|
| foo: L.D F0, 0(R1) | | 1 |
| L.D F6, -8(R1) | | 2 |
| L.D F4, 0(R2) | | 3 |
| L.D F8, -8(R2) | | 4 |
| DADDUI R1, R1, #-16 | MUL.D F0, F0, F4 | 5 |
| DADDUI R2, R2, #-16 | MUL.D F6, F6, F8 | 6 |
| stall | | 7 |
| stall | | 8 |
| BNEZ R1, foo | ADD.D F2, F0, F2 | 9 |
| | ADD.D F10, F6, F10 (F10 assumed as the second running sum) | 10 |
| … | | … |
| | ADD.D F2, F2, F10 | 14 |

![Problem 3 Consider a code For i2 i100 i2 ai a50i1 Using Problem # 3 Consider a code For (i=2; i<=100; i+=2) a[i] = a[50*i+1] Using](https://slidetodoc.com/presentation_image_h2/b0ffaabc7480cb3c26d9a8d3140d742c/image-80.jpg)

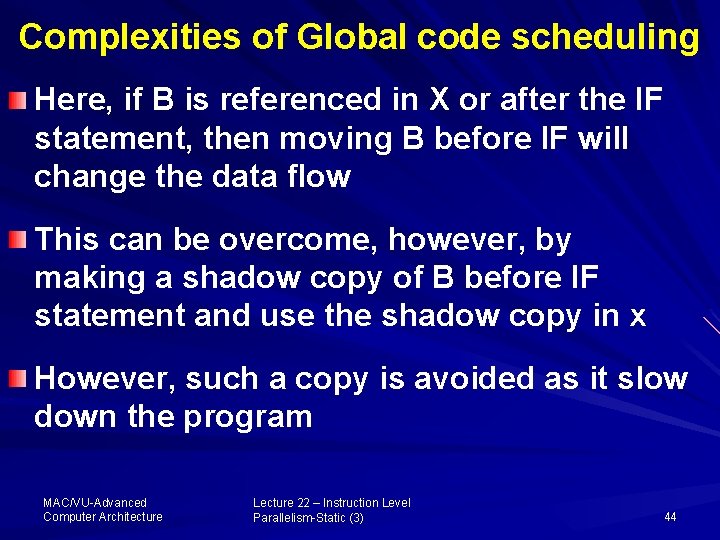

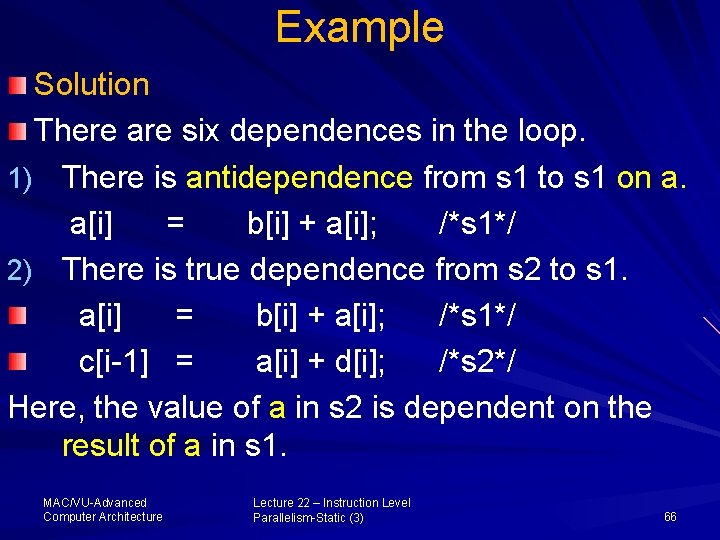

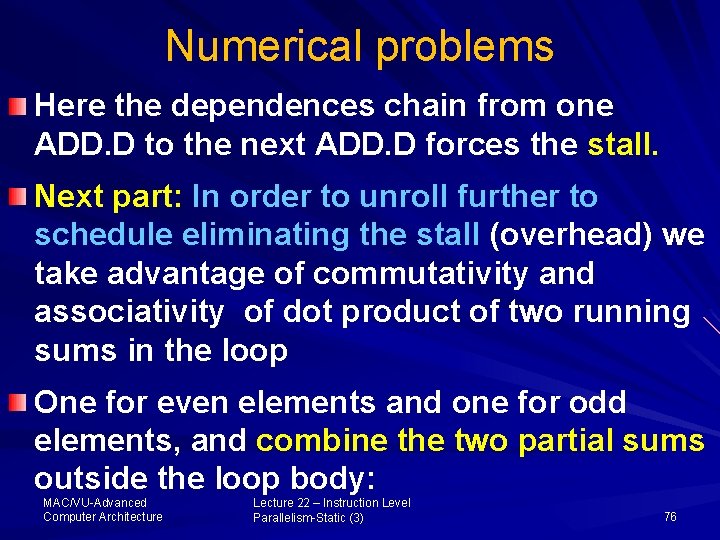

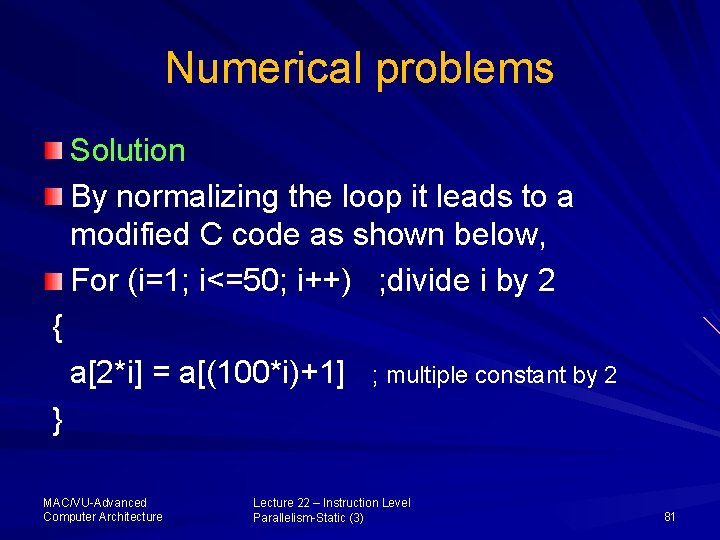

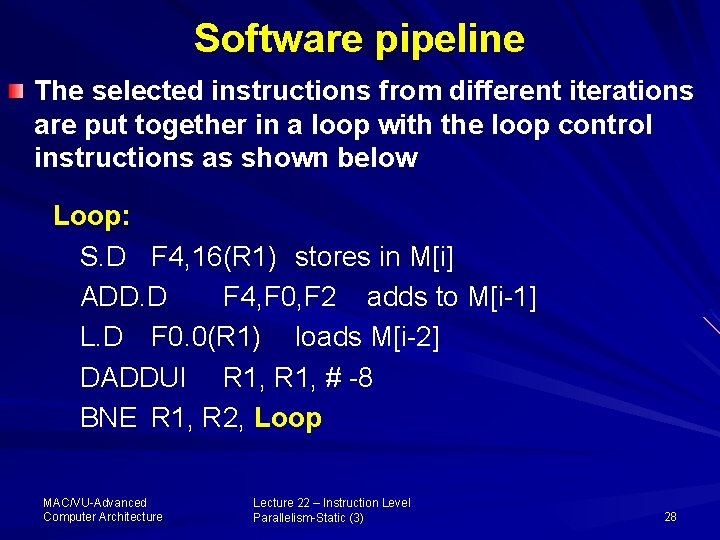

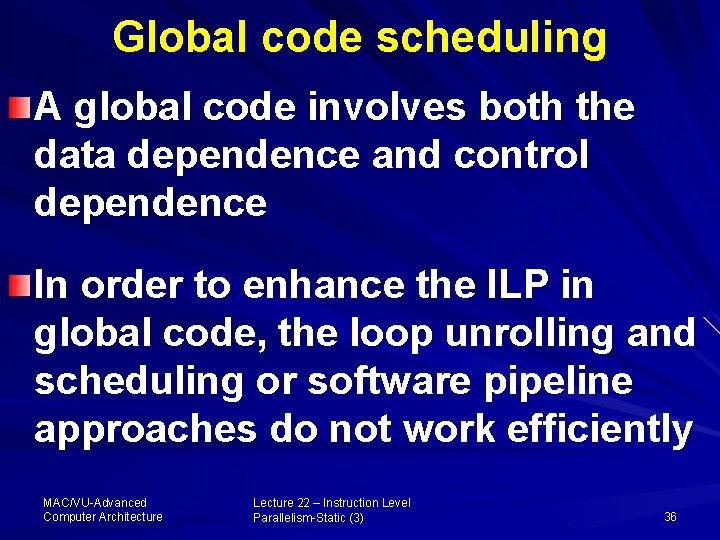

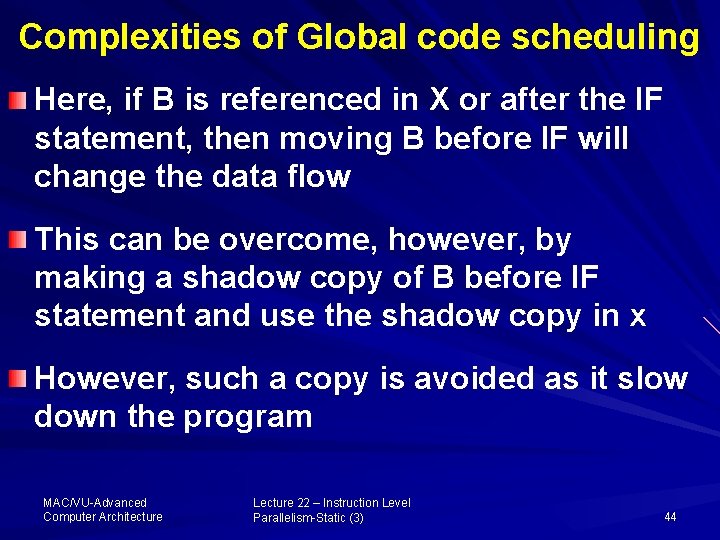

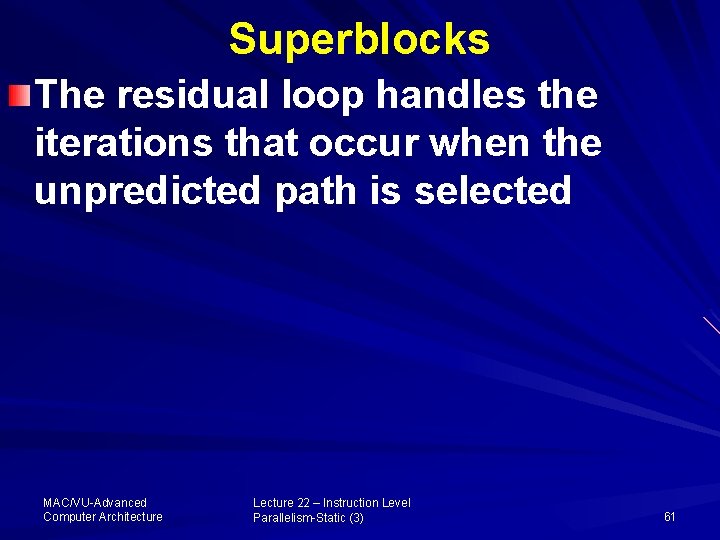

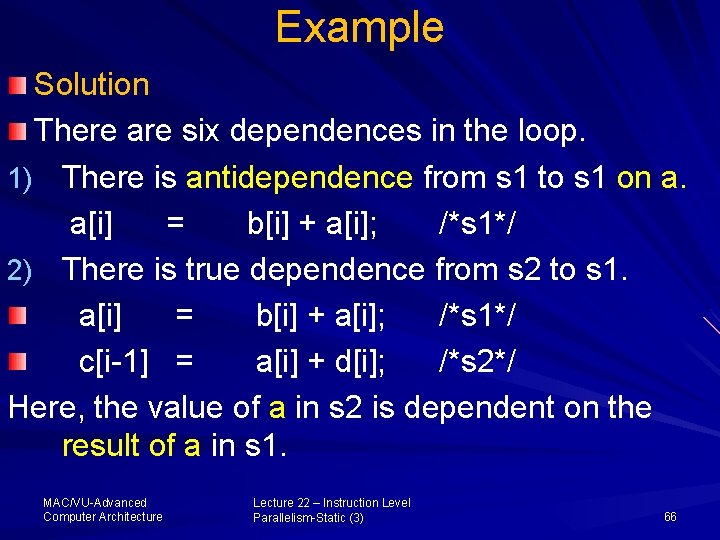

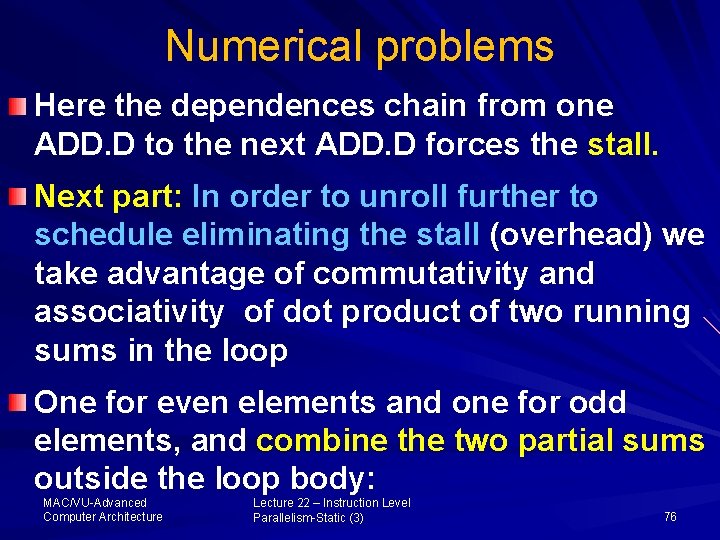

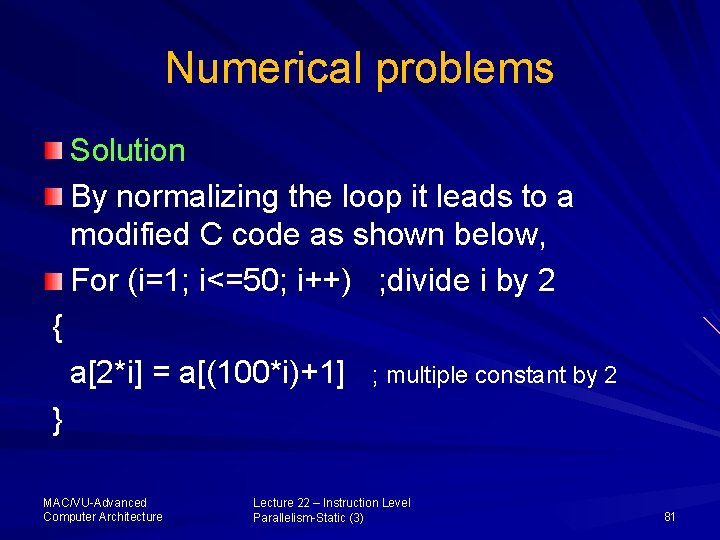

Problem # 3

Consider the code

    for (i = 2; i <= 100; i += 2)
        a[i] = a[50*i + 1];

Normalize the loop: start the index at 1 and increment it by 1 on every iteration. Write the normalized version of the loop, then use the GCD test to see whether there is a dependence.

Numerical problems

Solution: normalizing the loop leads to the modified C code shown below:

    for (i = 1; i <= 50; i++) {        /* divide i by 2 */
        a[2*i] = a[(100*i) + 1];       /* multiply the constants by 2 */
    }

Numerical problems

The GCD test shows the potential for a dependence between two accesses to an array indexed by the affine functions a × i + b and c × j + d. A dependence is possible only if the condition

(d − b) mod gcd(c, a) = 0

is satisfied.

Numerical problems

Applying the GCD test to the normalized loop, we get a = 2, b = 0, c = 100 and d = 1. Thus

gcd(2, 100) = 2 and d − b = 1.

Numerical problems

Here 2 does not divide 1 (1 mod 2 = 1, not 0), so the GCD test indicates that no dependence is possible. This agrees with what the code actually does: the loop loads its values from a[101], a[201], ……, a[5001] and assigns them to a[2], a[4], ……, a[100], so no element is both read and written.
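The test is easy to evaluate mechanically. The Python sketch below implements the condition (d − b) mod gcd(c, a) = 0 and, as a cross-check, enumerates the indices that the normalized loop writes and reads. The function names are illustrative, and the sketch assumes a and c are not both zero.

```python
from math import gcd


def gcd_test(a, b, c, d):
    """True if a dependence between a write to X[a*i + b] and a read of
    X[c*j + d] cannot be ruled out by the GCD test."""
    return (d - b) % gcd(c, a) == 0


def indices_overlap(a, b, c, d, lo, hi):
    """Brute-force check over the loop bounds lo..hi (inclusive)."""
    writes = {a * i + b for i in range(lo, hi + 1)}
    reads = {c * j + d for j in range(lo, hi + 1)}
    return bool(writes & reads)


# Normalized loop of Problem 3: a[2*i] = a[100*i + 1] for i = 1..50.
print(gcd_test(2, 0, 100, 1))
print(indices_overlap(2, 0, 100, 1, 1, 50))
```

For a = 2, b = 0, c = 100, d = 1 the remainder is 1 mod 2 = 1, so the test rules out a dependence, and the brute-force check confirms that the written (even) and read (odd) index sets are disjoint. Note that the GCD test ignores the loop bounds, so in general it can report a possible dependence where none actually occurs.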

Assalam-u-Alaikum and Allah Hafiz